
Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.



About:Community: Firefox 65 new contributors |

With the release of Firefox 65, we are pleased to welcome the 32 developers who contributed their first code change to Firefox in this release, 27 of whom were brand new volunteers! Please join us in thanking each of these diligent and enthusiastic individuals, and take a look at their contributions:

- andrewc.goupil: 1511850

- chandranvishwaak: 1507574

- hereissophie: 1501234

- r2hkri: 1501931, 1504522

- rishabhjairath: 1494045, 1504523, 1509449

- Adam: 1508989

- Adam Holm: 1451591, 1493931

- Adrian Kaczmarek: 1292878

- Barret Rennie: 1457546

- Cheng-You Bai UTC+8: 1506454

- Dragos Crisan: 1501250

- Emily Toop: 1395217, 1485718, 1500560, 1500566

- Fabian Henneke: 1509493

- Ferenc Nagy: 1508631

- Gavin Suntop: 1476458, 1501459

- Hrushikesh Bodas: 1509469

- Isabel Rios: 1502109, 1502113

- James Lee: 1423839, 1507809

- Jay Zuo: 1506604

- Kristin Taylor: 1502999

- Lenka Pelechova: 1499042, 1500018

- Marc Fisher: 1483996

- Maxiwell Luo: 1506338

- Nicklas Boman: 1370224, 1451813

- Ola Gasidlo: 1508651

- Paul Vitale: 1194010, 1477592

- Rehan Dalal: 1504038

- Shubham Kumaram: 1509664

- Tim B: 1500439

- Toby Ward: 1496082

- Vincent: 1435469, 1503175

- Vineeth Karra: 1499661

- Zameer: 1495971

https://blog.mozilla.org/community/2019/01/23/firefox-65-new-contributors/

|

|

Mozilla B-Team: happy bmo push day! |

the following changes have been pushed to bugzilla.mozilla.org:

- [1511261] request queue page shows ‘Bugzilla::User=HASH(…)’ instead of username

- [1520856] “Opt out of these emails” at bottom of overdue request nagging emails doesn’t open desired page

- [1520011] Phabbugz panel short description missing

- [1518886] Remove outdated build plan code from PhabBugz extension used to move…

|

|

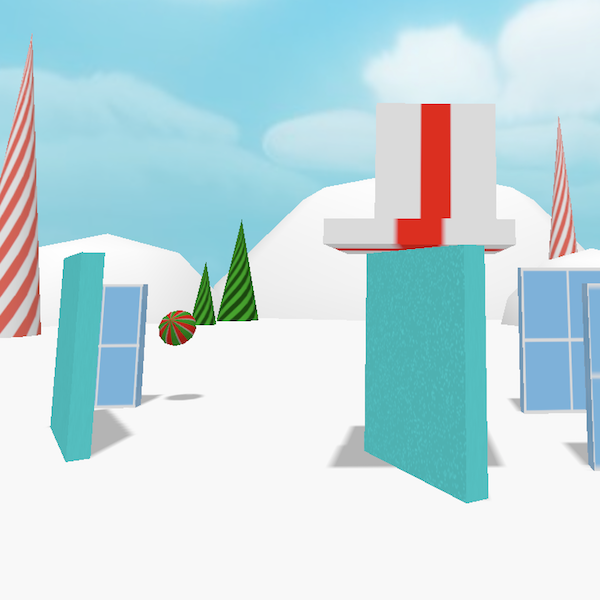

Mozilla VR Blog: How I made Jingle Smash |

When advocating a new technology I always try to use it in the way that real world developers will, and for WebVR (the VR-only precursor to WebXR), building a game is currently one of the best ways to do that. So for the winter holidays I built a game, Jingle Smash, a classic block tumbling game. If you haven't played it yet, put on your headset and give it a try. Now an overview of how I built it.

This article is part of my ongoing series of medium difficulty ThreeJS tutorials. I’ve long wanted something in between the intro “How to draw a cube” and “Let’s fill the screen with shader madness” levels. So here it is.

ThreeJS

Jingle Smash is written in ThreeJS using WebVR and some common boilerplate that I use in all of my demos. I chose to use ThreeJS directly instead of A-Frame because I knew I would be adding custom textures, custom geometry, and a custom control scheme. While it is possible to do this with A-Frame, I'd be writing so much code at the ThreeJS level that it was easier to cut out the middle man.

Physics

Jingle Smash is an Angry Birds style game where you lob an object at blocks to knock them over and destroy targets. Once you have destroyed the required targets you get to the next level. Seems simple enough. And for a 2D side view game like Angry Birds it is. I remember enough of my particle physics from school to write a simple 2D physics simulator, but 3D collisions are way beyond me. I needed a physics engine.

After evaluating the options I settled on Cannon.js because it's 100% JavaScript and has no requirements on the UI. It simply calculates the positions of objects in space and puts your code in charge of stepping through time. This made it very easy to integrate with ThreeJS. It even has an example.

Graphics

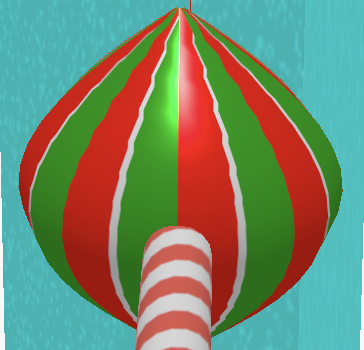

In previous games I have used 3D models created by an artist. For Jingle Smash I created everything in code. The background, blocks, and ornaments all use either standard or generated geometry. All of the textures except for the sky background are also generated on the fly using 2D HTML Canvas, then converted into textures.

I went with a purely generated approach because it let me easily mess with UV values to create different effects and use exactly the colors I wanted. In a future blog post I'll dive deep into how they work. Here is a quick example of generating an ornament texture:

    const canvas = document.createElement('canvas')
    canvas.width = 64
    canvas.height = 16
    const c = canvas.getContext('2d')

    c.fillStyle = 'black'
    c.fillRect(0, 0, canvas.width, canvas.height)
    c.fillStyle = 'red'
    c.fillRect(0, 0, 30, canvas.height)
    c.fillStyle = 'white'
    c.fillRect(30, 0, 4, canvas.height)
    c.fillStyle = 'green'
    c.fillRect(34, 0, 30, canvas.height)

    this.textures.ornament1 = new THREE.CanvasTexture(canvas)
    this.textures.ornament1.wrapS = THREE.RepeatWrapping
    this.textures.ornament1.repeat.set(8, 1)

Level Editor

Most block games are 2D. The player has a view of the entire game board. Once you enter 3D, however, the blocks obscure the ones behind them. This means level design is completely different. The only way to see what a level looks like is to actually jump into VR and see it. That meant I really needed a way to edit the level from within VR, just as the player would see it.

To make this work I built a simple (and ugly) level editor inside of VR. This required building a small 2D UI toolkit for the editor controls. Thanks to using HTML canvas this turned out not to be too difficult.

Next Steps

I'm pretty happy with how Jingle Smash turned out. Lots of people played it at the Mozilla All-hands and said they had fun. I did some performance optimization and was able to get the game up to about 50fps, but there is still more work to do (which I'll cover soon in another post).

Jingle Smash proved that we can make fun games that run in WebVR, and that load very quickly (on a good connection the entire game should load in less than 2 seconds). You can see the full (but messy) code of Jingle Smash in my WebXR Experiments repo.

While you wait for future updates on Jingle Smash, you might want to watch my new YouTube series on How to make VR with the Web.

|

|

Hacks.Mozilla.Org: Fearless Security: Memory Safety |

Fearless Security

Last year, Mozilla shipped Quantum CSS in Firefox, which was the culmination of 8 years of investment in Rust, a memory-safe systems programming language, and over a year of rewriting a major browser component in Rust. Until now, all major browser engines have been written in C++, mostly for performance reasons. However, with great performance comes great (memory) responsibility: C++ programmers have to manually manage memory, which opens a Pandora’s box of vulnerabilities. Rust not only prevents these kinds of errors, but the techniques it uses to do so also prevent data races, allowing programmers to reason more effectively about parallel code.

In the coming weeks, this three-part series will examine memory safety and thread safety, and close with a case study of the potential security benefits gained from rewriting Firefox’s CSS engine in Rust.

What Is Memory Safety

When we talk about building secure applications, we often focus on memory safety. Informally, this means that in all possible executions of a program, there is no access to invalid memory. Violations include:

- use after free

- null pointer dereference

- using uninitialized memory

- double free

- buffer overflow

For a more formal definition, see Michael Hicks’ What is memory safety post and The Meaning of Memory Safety, a paper that formalizes memory safety.

Memory violations like these can cause programs to crash unexpectedly and can be exploited to alter intended behavior. Potential consequences of a memory-related bug include information leakage, arbitrary code execution, and remote code execution.

Managing Memory

Memory management is crucial to both the performance and the security of applications. This section will discuss the basic memory model. One key concept is pointers. A pointer is a variable that stores a memory address. If we visit that memory address, there will be some data there, so we say that the pointer is a reference to (or points to) that data. Just like a home address shows people where to find you, a memory address shows a program where to find data.

Everything in a program is located at a particular memory address, including code instructions. Pointer misuse can cause serious security vulnerabilities, including information leakage and arbitrary code execution.

Allocation/free

When we create a variable, the program needs to allocate enough space in memory to store the data for that variable. Since the memory owned by each process is finite, we also need some way of reclaiming resources (or freeing them). When memory is freed, it becomes available to store new data, but the old data can still exist until it is overwritten.

Buffers

A buffer is a contiguous area of memory that stores multiple instances of the same data type. For example, the phrase “My cat is Batman” would be stored in a 16-byte buffer. Buffers are defined by a starting memory address and a length; because the data stored in memory next to a buffer could be unrelated, it’s important to ensure we don’t read or write past the buffer boundaries.
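As a rough illustration (a sketch added here, not part of the original post), a Rust slice carries exactly the two pieces of information described above: a starting address and a length. The helper name `byte_at` is hypothetical and exists only for this example.

```rust
/// Returns the byte at `index`, or `None` when `index` lies past the end of the buffer.
/// (`byte_at` is a hypothetical helper used only for this illustration.)
fn byte_at(buffer: &[u8], index: usize) -> Option<u8> {
    buffer.get(index).copied()
}

fn main() {
    // The phrase from the text, viewed as a buffer of bytes.
    let phrase = "My cat is Batman";
    let buffer: &[u8] = phrase.as_bytes();

    // A slice is a starting address plus a length.
    println!("start address: {:p}, length: {}", buffer.as_ptr(), buffer.len());

    // In-bounds access returns the stored byte.
    assert_eq!(byte_at(buffer, 0), Some(b'M'));
    // Index 16 is one past the last byte, so nothing is read.
    assert_eq!(byte_at(buffer, 16), None);
}
```

Because the length travels with the address, the access can be checked against the buffer boundary instead of silently reading whatever happens to be stored next to it.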

Control Flow

Programs are composed of subroutines, which are executed in a particular order. At the end of a subroutine, the computer jumps to a stored pointer (called the return address) to the next part of code that should be executed. When we jump to the return address, one of three things happens:

- The process continues as expected (the return address was not corrupted).

- The process crashes (the return address was altered to point at non-executable memory).

- The process continues, but not as expected (the return address was altered and control flow changed).

How languages achieve memory safety

We often think of programming languages on a spectrum. On one end, languages like C/C++ are efficient, but require manual memory management; on the other, interpreted languages use automatic memory management (like reference counting or garbage collection [GC]), but pay the price in performance. Even languages with highly optimized garbage collectors can’t match the performance of non-GC’d languages.

Manually

Some languages (like C) require programmers to manually manage memory by specifying when to allocate resources, how much to allocate, and when to free the resources. This gives the programmer very fine-grained control over how their implementation uses resources, enabling fast and efficient code. However, this approach is prone to mistakes, particularly in complex codebases.

Mistakes that are easy to make include:

- forgetting that resources have been freed and trying to use them

- not allocating enough space to store data

- reading past the boundary of a buffer

A safety video candidate for manual memory management

Smart pointers

A smart pointer is a pointer with additional information to help prevent memory mismanagement. These can be used for automated memory management and bounds checking. Unlike raw pointers, a smart pointer is able to self-destruct, instead of waiting for the programmer to manually destroy it.

There’s no single smart pointer type—a smart pointer is any type that wraps a raw pointer in some practical abstraction. Some smart pointers use reference counting to count how many variables are using the data owned by a variable, while others implement a scoping policy to constrain a pointer lifetime to a particular scope.

In reference counting, the object’s resources are reclaimed when the last reference to the object is destroyed. Basic reference counting implementations can suffer from performance and space overhead, and can be difficult to use in multi-threaded environments. Situations where objects refer to each other (cyclical references) can prohibit either object’s reference count from ever reaching zero, which requires more sophisticated methods.
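To make reference counting and the cycle problem concrete, here is a minimal sketch using Rust's standard `Rc` and `Weak` types. The `Node` type and the `build_family` function are hypothetical names chosen for this example. Using a weak back-pointer is one common way to avoid a cycle of strong references, not the only one.

```rust
use std::cell::RefCell;
use std::rc::{Rc, Weak};

/// A tree node: the parent owns its children through strong references, while each
/// child points back through a weak reference so no cycle of strong references forms.
struct Node {
    name: String,
    parent: RefCell<Weak<Node>>,
    children: RefCell<Vec<Rc<Node>>>,
}

/// Builds a parent with one child and returns both handles.
fn build_family() -> (Rc<Node>, Rc<Node>) {
    let parent = Rc::new(Node {
        name: String::from("parent"),
        parent: RefCell::new(Weak::new()),
        children: RefCell::new(Vec::new()),
    });
    let child = Rc::new(Node {
        name: String::from("child"),
        parent: RefCell::new(Rc::downgrade(&parent)),
        children: RefCell::new(Vec::new()),
    });
    parent.children.borrow_mut().push(Rc::clone(&child));
    (parent, child)
}

fn main() {
    let (parent, child) = build_family();
    // The child is held by `child` and by the parent's list: two strong references.
    println!("{} strong count: {}", child.name, Rc::strong_count(&child));
    // The back-pointer is weak, so the parent has a single strong reference.
    println!("{} strong count: {}", parent.name, Rc::strong_count(&parent));

    drop(parent);
    // The last strong reference is gone, so the parent was freed and the weak
    // pointer can no longer be upgraded.
    println!("parent still alive: {}", child.parent.borrow().upgrade().is_some());
}
```

Had the child held a strong `Rc` to its parent instead, neither count could reach zero and both nodes would leak.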

Garbage Collection

Some languages (like Java, Go, Python) are garbage collected. A part of the runtime environment, named the garbage collector (GC), traces variables to determine what resources are reachable in a graph that represents references between objects. Once an object is no longer reachable, its resources are not needed and the GC reclaims the underlying memory to reuse in the future. All allocations and deallocations occur without explicit programmer instruction.

While a GC ensures that memory is always used validly, it doesn’t reclaim memory in the most efficient way. The last time an object is used could occur much earlier than when it is freed by the GC. Garbage collection has a performance overhead that can be prohibitive for performance critical applications; it requires up to 5x as much memory to avoid a runtime performance penalty.

Ownership

To achieve both performance and memory safety, Rust uses a concept called ownership. More formally, the ownership model is an example of an affine type system. All Rust code follows certain ownership rules that allow the compiler to manage memory without incurring runtime costs:

- Each value has a variable, called the owner.

- There can only be one owner at a time.

- When the owner goes out of scope, the value will be dropped.

Values can be moved or borrowed between variables. These rules are enforced by a part of the compiler called the borrow checker.

When a variable goes out of scope, Rust frees that memory. In the following example, when s1 and s2 go out of scope, they would both try to free the same memory, resulting in a double free error. To prevent this, when a value is moved out of a variable, the previous owner becomes invalid. If the programmer then attempts to use the invalid variable, the compiler will reject the code. This can be avoided by creating a deep copy of the data or by using references.

Example 1: Moving ownership

    let s1 = String::from("hello");
    let s2 = s1;

    // won't compile because s1 is now invalid
    println!("{}, world!", s1);
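The two ways around the error mentioned above, a deep copy or a reference, might look like the following sketch. The `greet` function is a hypothetical helper introduced for the example.

```rust
/// Takes a shared reference, so the caller keeps ownership of the string.
fn greet(s: &str) -> String {
    format!("{}, world!", s)
}

fn main() {
    let s1 = String::from("hello");

    // Option 1: a deep copy. `s1` and `s2` each own separate heap data.
    let s2 = s1.clone();
    println!("{}", greet(&s1));
    println!("{}", greet(&s2));

    // Option 2: a borrow. `s3` is only a reference; `s1` remains the owner.
    let s3 = &s1;
    println!("{}", greet(s3));
}
```

The clone costs an extra allocation, while the borrow is free at runtime but ties `s3` to the lifetime of `s1`.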

Another set of rules verified by the borrow checker pertains to variable lifetimes. Rust prohibits the use of uninitialized variables and dangling pointers, which can cause a program to reference unintended data. If the code in the example below compiled, r would reference memory that is deallocated when x goes out of scope—a dangling pointer. The compiler tracks scopes to ensure that all borrows are valid, occasionally requiring the programmer to explicitly annotate variable lifetimes.

Example 2: A dangling pointer

    let r;
    {
        let x = 5;
        r = &x;
    }
    println!("r: {}", r);
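For the cases where the compiler asks for explicit lifetime annotations, a small sketch may help. The `longest` function is an illustrative example of our own: the annotation `'a` states that the returned reference is valid only as long as both inputs are.

```rust
/// Returns whichever of the two string slices is longer.
/// The lifetime `'a` ties the result to both inputs.
fn longest<'a>(a: &'a str, b: &'a str) -> &'a str {
    if a.len() >= b.len() { a } else { b }
}

fn main() {
    let outer = String::from("ownership");
    let result;
    {
        let inner = String::from("borrow");
        // Copy the answer into an owned String so it can outlive `inner`.
        result = longest(&outer, &inner).to_string();
    }
    println!("longest: {}", result);
}
```

Storing the borrowed result itself in `result`, rather than an owned copy, would be rejected for the same reason as Example 2: `inner` does not live long enough.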

The ownership model provides a strong foundation for ensuring that memory is accessed appropriately, preventing undefined behavior.

Memory Vulnerabilities

The main consequences of memory vulnerabilities include:

- Crash: accessing invalid memory can make applications terminate unexpectedly

- Information leakage: inadvertently exposing non-public data, including sensitive information like passwords

- Arbitrary code execution (ACE): allows an attacker to execute arbitrary commands on a target machine; when this is possible over a network, we call it a remote code execution (RCE)

Another type of problem that can appear is memory leakage, which occurs when memory is allocated, but not released after the program is finished using it. It’s possible to use up all available memory this way. Without any remaining memory, legitimate resource requests will be blocked, causing a denial of service. This is a memory-related problem, but one that can’t be addressed by programming languages.

The best case scenario with most memory errors is that an application will crash harmlessly—this isn’t a good best case. However, the worst case scenario is that an attacker can gain control of the program through the vulnerability (which could lead to further attacks).

Misusing Free (use-after-free, double free)

This subclass of vulnerabilities occurs when some resource has been freed, but its memory position is still referenced. It’s a powerful exploitation method that can lead to out of bounds access, information leakage, code execution and more.

Garbage-collected and reference-counted languages prevent the use of invalid pointers by only destroying unreachable objects (which can have a performance penalty), while manually managed languages are particularly susceptible to invalid pointer use (particularly in complex codebases). Rust’s borrow checker doesn’t allow object destruction as long as references to the object exist, which means bugs like these are prevented at compile time.

Uninitialized variables

If a variable is used prior to initialization, the data it contains could be anything—including random garbage or previously discarded data, resulting in information leakage (these are sometimes called wild pointers). Often, memory managed languages use a default initialization routine that is run after allocation to prevent these problems.

Like C, most variables in Rust are uninitialized until assignment—unlike C, you can’t read them prior to initialization. The following code will fail to compile:

Example 3: Using an uninitialized variable

    fn main() {
        let x: i32;
        println!("{}", x);
    }
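By contrast, declaring a variable first and assigning it later is accepted, as long as the compiler can see that every path assigns a value before the first read. A minimal sketch, with `describe` as a hypothetical function name:

```rust
/// Picks a label for a number. `label` is declared without a value and then
/// assigned on every branch before it is read.
fn describe(n: i32) -> &'static str {
    let label: &'static str;
    if n < 0 {
        label = "negative";
    } else {
        label = "non-negative";
    }
    label
}

fn main() {
    println!("{}", describe(-1));
    println!("{}", describe(3));
}
```

Removing either assignment would bring back the compile error from Example 3.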

Null pointers

When an application dereferences a pointer that turns out to be null, usually this means that it simply accesses garbage that will cause a crash. In some cases, these vulnerabilities can lead to arbitrary code execution. Rust has two types of pointers, references and raw pointers. References are safe to access, while raw pointers could be problematic.

Rust prevents null pointer dereferencing in two ways:

- Avoiding nullable pointers

- Avoiding raw pointer dereferencing

Rust avoids nullable pointers by replacing them with a special Option type. In order to manipulate the possibly-null value inside of an Option, the language requires the programmer to explicitly handle the null case or the program will not compile.
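A short sketch of what handling the null case looks like in practice. The functions `find_user` and `display_name` and their data are hypothetical, made up for this example.

```rust
/// Looks up a user's name by id. `None` plays the role a null pointer would in C.
/// (The function and its data are hypothetical.)
fn find_user(id: u32) -> Option<&'static str> {
    match id {
        1 => Some("alice"),
        2 => Some("bob"),
        _ => None,
    }
}

/// The caller has to handle both cases before it can touch the name.
fn display_name(id: u32) -> String {
    match find_user(id) {
        Some(name) => name.to_uppercase(),
        None => String::from("<unknown>"),
    }
}

fn main() {
    println!("{}", display_name(1));
    println!("{}", display_name(42));
}
```

Calling `to_uppercase` directly on the result of `find_user` would not compile, because `Option<&str>` has no such method; the value has to be unwrapped one way or another first.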

When we can’t avoid nullable pointers (for example, when interacting with non-Rust code), what can we do? Try to isolate the damage. Any dereferencing of raw pointers must occur in an unsafe block. This keyword relaxes Rust’s guarantees to allow some operations that could cause undefined behavior (like dereferencing a raw pointer).
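Isolating the damage might look like the sketch below. The function `read_or_default` is hypothetical, and it assumes that any non-null pointer it receives points to a valid, initialized `i32`. A production API that cannot guarantee this would more likely be declared `unsafe fn`.

```rust
/// Reads through a raw pointer that may be null, as one might receive from C code.
/// Assumption for this sketch: a non-null `ptr` points to a valid, initialized `i32`.
fn read_or_default(ptr: *const i32, default: i32) -> i32 {
    if ptr.is_null() {
        return default;
    }
    // The dereference is confined to this small `unsafe` block.
    unsafe { *ptr }
}

fn main() {
    let value = 7;
    let valid: *const i32 = &value;
    let null: *const i32 = std::ptr::null();

    println!("{}", read_or_default(valid, 0));
    println!("{}", read_or_default(null, 0));
}
```

Creating and passing raw pointers is safe; only the dereference needs `unsafe`, which keeps the code that must be audited by hand small.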

Buffer overflow

While the other vulnerabilities discussed here are prevented by methods that restrict access to undefined memory, a buffer overflow may access legally allocated memory; the problem is that it does so inappropriately. As with a use-after-free bug, out-of-bounds access can also be problematic when it reaches freed memory that hasn’t been reallocated yet and hence still contains sensitive information that’s supposed to not exist anymore.

A buffer overflow simply means an out-of-bounds access. Due to how buffers are stored in memory, they often lead to information leakage, which could include sensitive data such as passwords. More severe instances can allow ACE/RCE vulnerabilities by overwriting the instruction pointer.

Example 4: Buffer overflow (C code)

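    #include <stdio.h>

    int main() {
        int buf[] = {0, 1, 2, 3, 4};

        // read out of bounds: undefined behavior, prints whatever sits past the buffer
        printf("Out of bounds: %d\n", buf[10]);

        // write out of bounds: undefined behavior, may corrupt adjacent memory
        buf[10] = 10;
        printf("Out of bounds: %d\n", buf[10]);

        return 0;
    }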

The simplest defense against a buffer overflow is to always require a bounds check when accessing elements, but this adds a runtime performance penalty.

How does Rust handle this? The built-in buffer types in Rust’s standard library require a bounds check for any random access, but also provide iterator APIs that can reduce the impact of these bounds checks over multiple sequential accesses. These choices ensure that out-of-bounds reads and writes are impossible for these types. Rust promotes patterns that lead to bounds checks only occurring in those places where a programmer would almost certainly have to manually place them in C/C++.
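As a sketch of both access styles on the same five-element buffer as Example 4 (the `sum` helper is a name chosen for this illustration):

```rust
/// Sums a buffer with an iterator: no index is computed by hand, so there is
/// no out-of-bounds access to guard against.
fn sum(values: &[i32]) -> i32 {
    values.iter().sum()
}

fn main() {
    let buf = [0, 1, 2, 3, 4];

    // Checked access: index 10 is out of bounds, so `get` returns `None`.
    match buf.get(10) {
        Some(v) => println!("buf[10] = {}", v),
        None => println!("index 10 is out of bounds"),
    }

    // Sequential access through the iterator API.
    println!("sum = {}", sum(&buf));
}
```

Plain indexing (`buf[i]`) with an out-of-range index computed at run time panics instead of reading adjacent memory, so the failure is a controlled crash, not an information leak.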

Memory safety is only half the battle

Memory safety violations open programs to security vulnerabilities like unintentional data leakage and remote code execution. There are various ways to ensure memory safety, including smart pointers and garbage collection. You can even formally prove memory safety. While some languages have accepted slower performance as a tradeoff for memory safety, Rust’s ownership system achieves memory safety while minimizing the performance costs.

Unfortunately, memory errors are only part of the story when we talk about writing secure code. The next post in this series will discuss concurrency attacks and thread safety.

Exploiting Memory: In-depth resources

Heap memory and exploitation

Smashing the stack for fun and profit

Analogies of Information Security

Intro to use after free vulnerabilities

The post Fearless Security: Memory Safety appeared first on Mozilla Hacks - the Web developer blog.

https://hacks.mozilla.org/2019/01/fearless-security-memory-safety/

|

|

Daniel Glazman: WebExtensions v3 considered harmful |

The Open Web Platform is a careful and fragile construction that billions of people, including millions of implementors, rely on. HTML, CSS, JavaScript, the Document Object Model, the Web API and more are all standardized one way or another; that means vendors and stakeholders gather around a table to discuss all changes and that these changes must pass quality and/or availability criteria to be considered "shippable".

One notable absentee from the list of Web Standards is WebExtensions. WebExtensions is the generalized name for Google Chrome Extensions, which became mainstream when Google achieved dominance over the desktop browser market and when Mozilla abandoned its own, and much more powerful, addons system based on XUL and privileged scripts.

As a reminder, the WebExtension API allows coders to implement extensions to the browser based on:

- HTML/CSS/JS for each and every dialog created by the extension, including the ones "integrated" into the browser's UI

- a dual model with "background scripts" with more privileges than "content scripts" that get added to visited web pages

- a new API (the WebExtension API) that offers - and rather strictly controls - access to information that is not otherwise reachable from JavaScript

- a permissions model that declares which parts of the aforementioned API the extension uses and which remote URLs the embedded scripts can access

- a URL model that puts everything in the extension under a chrome-extension:// URL

- a review process (on the Google Chrome Extension store) supposed to block harmful code and more

A while ago, at a time when Microsoft still had its own rendering engine, it initiated a Community Group on WebExtensions at the World Wide Web Consortium (W3C). With members from most browser vendors plus a few others, this seemed to be a very positive move not only for implementors but also for users.

But unfortunately, that effort went nowhere. Because of a lack of commitment from other browser vendors (Google in particular), Microsoft abandoning its own rendering engine, and the choice of a lax Community Group instead of a formal W3C Working Group, the WebExtension draft specification has been in limbo for a while now. WebExtensions clearly remain the poor relation of Web Standards, even though most people have at least one browser extension installed (usually some sort of ad-blocker).

Today, Google is driving a deep change in its WebExtension model:

- Background HTML pages will be deprecated in favor of ServiceWorkers. That change alone will imply a complete rearchitecture of existing extensions and will also impact their ability to create and deal with the dialogs their UX model requires.

- The webRequest API, which billions of users activate on a daily basis to block advertisements, trackers or undesirable content, is at stake and should be replaced by a new declarative API that will no longer allow extensions to monitor the requested resources. At a time when the advertisement model on the Web is harmed by ad blockers, one can only wonder if this change is triggered only by technical considerations or if ad strategy is also behind it... Furthermore, it will be limited to a few tens of thousands of declarations, which is far below the number of trackers and advertisement scripts available in the wild today.

- Some heavily used APIs will be removed, without consideration for usage metrics or change cost to implementors

- Even the description of the top level of an extension (aka the "browser action" and the "page action") will change and impact extension vendors

- All of that is for the time being decided on the Google side alone, with little or no visible contact with the other WebExtension host (Mozilla) or the thousands of WebExtension (free or commercial) providers. There is even a "migration plans" document but it's not publicly available, the link being access-restricted

On the webRequest part specifically, all major actors of the ad-blocking and security landscape are screaming (see also the chromium-extensions Google group). We at Privowny are also deeply concerned by the proposed v3 changes. Even Amnesty International complained in a recent message! To me, the most important message posted in reply to the proposed changes is the following one:

Hi, we are the developer of a child-protection add-on, which strives to make the Internet safer for minors. This change would cripple our efforts on Chrome.

Talk about "don't be evil"...

All of that gives a set of very bad signals to third-party implementors, including us at Privowny:

- WebExtensions are not a mature part of the Open Web Platform. They completely lack stability, and software vendors willing to use them must be ready for life-threatening (for them) changes at any time

- WebExtensions are fully in the hands of Google, which can and will change them at any time based on its own interests only. They are not a Web Standard.

- Google is ready to make WebExtensions diverge from cross-browser interoperability at any time, killing precisely what brought vendors like us at Privowny to WebExtensions.

- Google Chrome is not what it seems to be: a browser based on an Open Source project that protects users, promotes openness and can serve as a basic tool for the protection of web citizens.

Reading the above, and given the fact that Google is able to drive changes of such magnitude with little or no impact study on vendors like us, we consider that WebExtensions are not a safe development platform any more. We will probably soon study extracting most of our code into a native desktop application, leaving only the minimum minimorum in the browser extension to communicate with web pages and of course with our native app.

After Mozilla, which severely harmed its amazing addons ecosystem (remember, it triggered the success of Firefox), and after Apple, which partly moved away from JavaScript-based Safari extensions, jeopardizing its addons ecosystem so much that it's anemic (I could even say dying), Google is making a move that is harmful to Chrome extension vendors. What is striking here is that Google is making the very same mistake Mozilla did: no prior discussion with stakeholders (read: extension implementors), release of a draft spec that was obviously going to trigger strong reactions, unmeasured impact (complexity, time and finances) on implementors, and more and more restrictions on what it is possible to do alongside too limited a set of new features.

On the legal side of things, this unilateral change could probably even qualify as "Abuse of dominant position" under Article 102 of the European Union's TFEU, and could then cost Google a lot, really a lot...

The Open Web Platform is alive and vibrant. The Browser Extension ecosystem is in jail, subject to unpredictable harmful changes decided by one single actor. This must change, it's not viable any more.

http://www.glazman.org/weblog/dotclear/index.php?post/2019/01/23/WebExtensions-v3-considered-harmful

|

|

Daniel Stenberg: HTTP/3 talk on video |

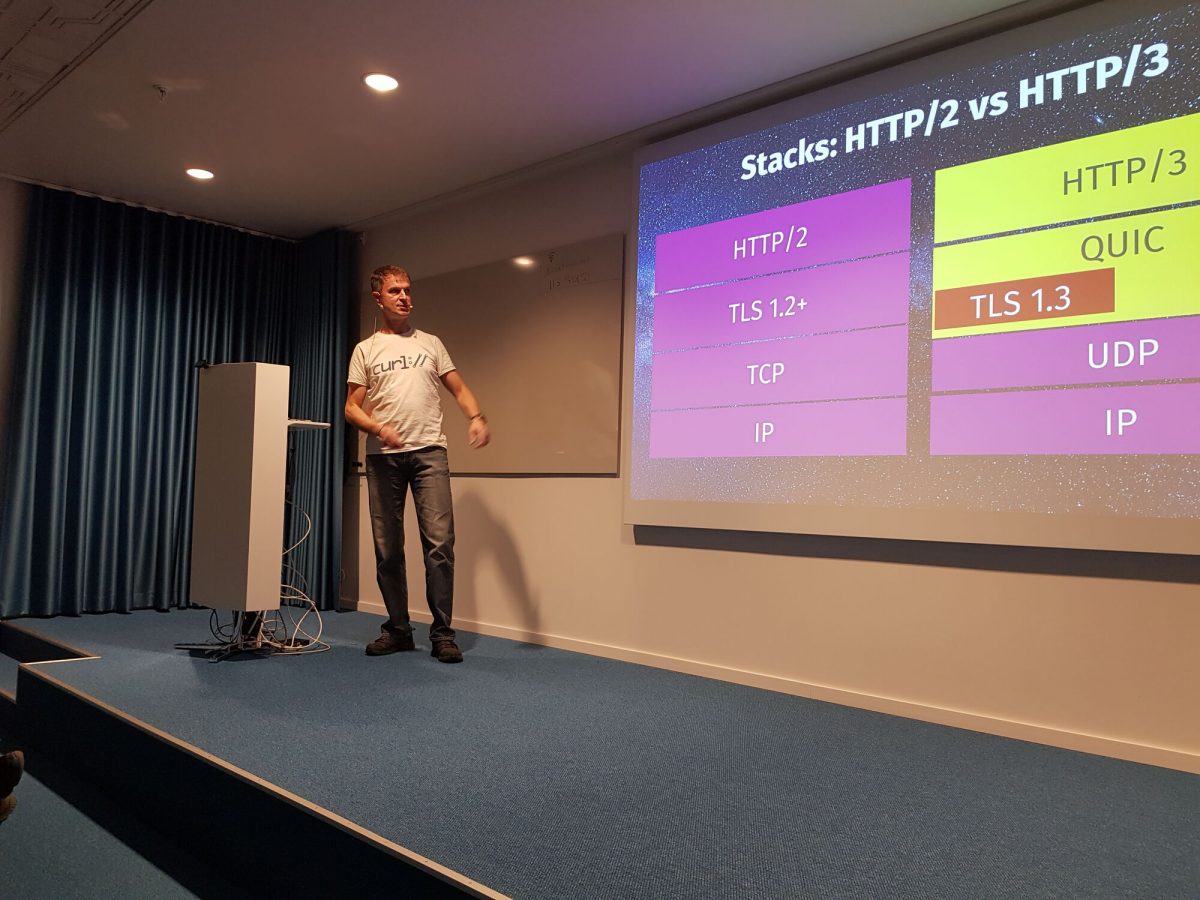

Yesterday, I attracted a large enough audience to fill the largest presentation room GOTO 10 has, which means about one hundred interested souls.

The subject of the day was HTTP/3. The event was filmed with a mevo camera and I captured the presentation directly from my laptop as well, and I then stitched together the two sources into this final version late last night. As you’ll notice, the sound isn’t awesome and the rest of the “production” isn’t exactly top notch either, but hey, I don’t think it matters too much.

The slide set can also be viewed on slideshare.

https://daniel.haxx.se/blog/2019/01/23/http-3-talk-on-video/

|

|

Hacks.Mozilla.Org: How to make VR with the web, a new video series |

Virtual reality (VR) seems complicated, but with a few JavaScript libraries and tools, and the power of WebGL, you can make very nice VR scenes that can be viewed and shared in a headset like an Oculus Go or HTC Vive, in a desktop web browser, or on your smartphone. Let me show you how:

In this new YouTube series, How to make a virtual reality project in your browser with three.js and WebVR, I’ll take you through building an interactive birthday card in seven short tutorials, complete with code and examples to get you started. The whole series clocks in under 60 minutes. We begin by getting a basic cube on the screen, add some nice 3D models, set up lights and navigation, then finally add music.

All you need are basic JavaScript skills and an internet connection.

Here’s the whole series. Come join me:

1: Learn how to build virtual reality scenes on the web with WebVR and JavaScript

2: Set up your WebVR workflow and code to build a virtual reality birthday card

3: Using a WebVR editor (Spoke) to create a fun 3D birthday card

4: How to create realistic lighting in a virtual reality scene

5: How to move around in virtual reality using teleportation to navigate your scene

6: Adding text and text effects to your WebVR scene with a few lines of code

7: How to add finishing touches like sound and sky to your WebVR scene

To learn how to make more cool stuff with web technologies, subscribe to Mozilla Hacks on YouTube. And if you want to get more involved in learning to create mixed reality experiences for the web, you can follow @MozillaReality on twitter for news, articles, and updates.

The post How to make VR with the web, a new video series appeared first on Mozilla Hacks - the Web developer blog.

https://hacks.mozilla.org/2019/01/how-to-make-vr-with-the-web-video-series/

|

|

The Mozilla Blog: The Coral Project is Moving to Vox Media |

Since 2015, the Mozilla Foundation has incubated The Coral Project to support journalism and improve online dialog around the world through privacy-centered, open source software. Originally founded as a two-year collaboration between Mozilla, The New York Times and the Washington Post, it became entirely a Mozilla project in 2017.

Over the past 3.5 years, The Coral Project has developed two software tools, a series of guides and best practices, and grown a community of journalism technologists around the world advancing privacy and better online conversation.

Coral’s first tool, Ask, has been used by journalists in several countries, including the Spotlight team at the Boston Globe, whose series on racism used Ask on seven different occasions, and was a finalist for the Pulitzer Prize in Local Reporting.

The Coral Project’s main tool, the Talk platform, now powers the comments for nearly 50 newsrooms in 11 countries, including The Wall Street Journal, the Washington Post, The Intercept, and the Globe and Mail. The Coral Project has also collaborated with academics and technologists, running events and working with researchers to reduce online harassment and raise the quality of conversation on the decentralized web.

After 3.5 years at Mozilla, the time is right for Coral software to move further into the journalism space, and grow with the support of an organization grounded in that industry. And so, in January, the entire Coral Project team will join Vox Media, a leading media company with deep ties in online community engagement.

Under Vox Media’s stewardship, The Coral Project will receive the backing of a large company with an unrivaled collection of journalists as well as experience in the area of Software as a Service. This combination will help specifically to grow the adoption of Coral’s commenting platform Talk, while continuing as an open source project that respects user privacy.

The Coral Project has built a community of journalists and technologists who care deeply about improving the quality of online conversation. Mozilla will continue to support and highlight the work of this community as champions of a healthy, humane internet that is accessible to all.

We are excited for the new phase of The Coral Project at Vox Media, and hope you will join us in celebrating its success so far, and in supporting our shared vision for a better internet.

The post The Coral Project is Moving to Vox Media appeared first on The Mozilla Blog.

https://blog.mozilla.org/blog/2019/01/22/the-coral-project-is-moving-to-vox-media/

|

|

Mozilla Open Policy & Advocacy Blog: Brussels Mozilla Mornings – Disinformation and online advertising: an unhealthy relationship? |

On the morning of 19 February, Mozilla will host the second of our Mozilla Mornings series – regular breakfast meetings where we bring together policy experts, policymakers and practitioners for insight and discussion on the latest EU digital policy developments. This session will be devoted to disinformation and online advertising.

Our expert panel will seek to unpack the relation between the two and explore policy solutions to ensure a healthy online advertising ecosystem.

Speakers

Marietje Schaake, ALDE MEP

Clara Hanot, EU Disinfo Lab

Raegan MacDonald, Mozilla

Moderated by Brian Maguire, EURACTIV

Logistical information

19 February 2019

08:30-10:00

Silversquare Europe, Square de Meeûs 35

Register your attendance here

The post Brussels Mozilla Mornings – Disinformation and online advertising: an unhealthy relationship? appeared first on Open Policy & Advocacy.

|

|

Jan-Erik Rediger: multi-store - Custom Telemetry with shared data |

Last year I implemented a new feature for Firefox Telemetry that changes how we can collect and analyze data with different requirements in regard to user privacy & frequency. This post will shine some light on the (rather simple) implementation and usage.

Intro: What is Firefox Telemetry?

In order to understand how Firefox performs in the wild, it can collect a bunch of performance metrics and other information. How and why we do this and what data we collect is explained in more detail in a blog post by Rebecca Weiss, Director of Data Science here at Mozilla: It’s your data, we’re just living in it.

I work on the Telemetry component inside Firefox. It provides APIs that are used by the various other parts of the browser to gather data and is responsible for storing, collecting and sending this data in what we call "pings", a periodic collection of measurements. Telemetry data is only ever sent out if the user agreed to it (see "Data Collection and Use" in the "Privacy & Security" preferences of your Firefox).

Most data is collected in one of 3 different formats: histograms, scalars & events (see our collection overview). Firefox sends this data in the "main" ping once in a while (usually roughly daily) and clears out the stored data locally. A "main" ping always corresponds to a subsession, which itself is part of a session. This is further explained in our Session concepts.

People working with the data can therefore make certain assumptions on how to interpret the data from multiple pings across sessions and subsessions.

The problem

Some data should not be correlated with other data due to privacy concerns. So far we had to push Telemetry users to create custom pings and keep track of their own data. If they rely on scalars or histograms as recorded by Telemetry, but send a custom ping at different intervals, they can't make valid assumptions about the metrics, as they might have been reset in between. This leads to weird hacks or unnecessary code duplication. Additionally, we can't provide any help or support for custom data, and our tools can't handle it automatically (e.g. to generate dashboards).

A solution

Multi-Store.

Every metric was always tied to the schedule of the "main" ping (read: subsession/session). Our multi-store solution now enables metrics to be associated with multiple stores at once, defaulting to be in the "main" ping only. Any user with custom requirements can now select metrics to be included in their custom store (which is still subject to Data Collection Review). Telemetry is still responsible for actual data storage and the APIs, but now the custom ping is responsible for collecting, clearing and periodically sending this data.
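To make the store semantics concrete, here is a minimal in-memory sketch. It is not Firefox's implementation: the class `MultiStoreScalars` and its methods are hypothetical names used only for illustration. It shows a scalar recorded into several stores at once, and a custom store being snapshotted and cleared without touching the "main" store.

```javascript
// Hypothetical in-memory model of multi-store scalars; not Firefox's code.
class MultiStoreScalars {
  constructor() {
    this.definitions = new Map(); // scalar name -> array of store names
    this.stores = new Map(); // store name -> Map(scalar name -> value)
  }

  // Register a scalar; it goes into "main" only unless stores are listed.
  define(name, stores = ["main"]) {
    this.definitions.set(name, stores);
  }

  // Write the value into every store the scalar is associated with.
  set(name, value) {
    const stores = this.definitions.get(name);
    if (!stores) {
      throw new Error(`unknown scalar: ${name}`);
    }
    for (const store of stores) {
      if (!this.stores.has(store)) {
        this.stores.set(store, new Map());
      }
      this.stores.get(store).set(name, value);
    }
  }

  // Copy one store into a plain object, optionally clearing that store only.
  snapshot(store, clear = false) {
    const data = Object.fromEntries(this.stores.get(store) || []);
    if (clear) {
      this.stores.delete(store);
    }
    return data;
  }
}

const scalars = new MultiStoreScalars();
scalars.define("tab_open_event_count", ["main", "custom-store"]);
scalars.define("tick_times_rand", ["custom-store"]);
scalars.set("tab_open_event_count", 3);
scalars.set("tick_times_rand", 42);

// The custom ping collects and clears its own store on its own schedule.
const customSnapshot = scalars.snapshot("custom-store", /* clear */ true);
// The "main" store still holds its copy of the shared metric.
const mainSnapshot = scalars.snapshot("main");
console.log(customSnapshot, mainSnapshot);
```

The point of the design is that clearing is per store: each ping can reset its data on its own schedule, so neither ping sees values that were reset by the other.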

An example

To demonstrate how this is used, let's create a custom ping, which will include one metric of its own and one that's also available in the "main" store (and thus the "main" ping).

We start by adding a new metric to Scalars.yaml:

    tick_times_rand:
      bug_numbers:
        - 0
      description: "A random value at every tick"
      expires: '71'
      kind: uint
      notification_emails:
        - janerik@fnordig.de
      release_channel_collection: opt-out
      record_in_processes:
        - "main"
      record_into_store:
        - "custom-store"

This defines a scalar named tick_times_rand in the browser.engagement category. The exact details are not important as we will record random values anyway.

The important bit is setting the store using record_into_store.

We also include browser.engagement.tab_open_event_count into our store by just adding our custom store name to record_into_store (don't forget to also include "main" there).

    --- c/toolkit/components/telemetry/Scalars.yaml
    +++ i/toolkit/components/telemetry/Scalars.yaml
    @@ -66,6 +66,9 @@ browser.engagement:
         release_channel_collection: opt-out
         record_in_processes:
           - 'main'
    +    record_into_store:
    +      - "main"
    +      - "custom-store"

Next, we define our new custom ping in a new file in toolkit/components/telemetry/pings/CustomPing.jsm.

We only define a very simple interface: A way to start the custom ping, which itself sets up a (persistent) timer (firing every 24 hours).

When fired, notify() will be called, which then collects the payload from custom-store and schedules the ping for sending.

Telemetry will take care of actually sending the ping (and retrying, storing it in the archive, etc.).

For test reasons only we also send this ping when start() is called (so we don't actually have to wait 24 hours to see something).

    var EXPORTED_SYMBOLS = ["TelemetryCustomPing"];

    // UpdateTimerManager, Services and TelemetryController are assumed to be
    // imported elsewhere in this module.
    var TelemetryCustomPing = Object.freeze({
      start() {
        UpdateTimerManager.registerTimer(
          "telemetry_custom_ping",
          this,
          24 * 60 * 60 /* 1 day */
        );
        this.sendPing();
      },

      notify() {
        this.sendPing();
      },

      sendPing() {
        // Let's record just one more value, so _something_ is included.
        let randValue = Math.floor(Math.random() * Math.floor(100));
        Services.telemetry.scalarSet("browser.engagement.tick_times_rand", randValue);

        // We only include the scalars, as that's all we are recording for this ping.
        let payload = {
          custom: "This is custom data",
          scalars: Services.telemetry.getSnapshotForScalars("custom-store", /* clear */ true),
        };

        TelemetryController.submitExternalPing("custom", payload, {
          addClientId: false,
          addEnvironment: true,
        });
      }
    });

Now we need to actually start the ping timer. This should be done in the initialization phase of TelemetryController:

    --- c/toolkit/components/telemetry/app/TelemetryController.jsm
    +++ i/toolkit/components/telemetry/app/TelemetryController.jsm
    @@ -62,6 +62,7 @@ XPCOMUtils.defineLazyModuleGetters(this, {
       TelemetryReportingPolicy: "resource://gre/modules/TelemetryReportingPolicy.jsm",
       TelemetryModules: "resource://gre/modules/ModulesPing.jsm",
       TelemetryUntrustedModulesPing: "resource://gre/modules/UntrustedModulesPing.jsm",
    +  TelemetryCustomPing: "resource://gre/modules/CustomPing.jsm",
       UpdatePing: "resource://gre/modules/UpdatePing.jsm",
       TelemetryHealthPing: "resource://gre/modules/HealthPing.jsm",
       TelemetryEventPing: "resource://gre/modules/EventPing.jsm",
    @@ -720,6 +721,9 @@ var Impl = {
             if (AppConstants.NIGHTLY_BUILD && AppConstants.platform == "win") {
               TelemetryUntrustedModulesPing.start();
             }
    +
    +        // Start the custom ping, which reports minimal information.
    +        TelemetryCustomPing.start();
           }

           TelemetryEventPing.startup();

But it's not measuring anything!

That is right, but for now we only record one value when sending the ping.

And that's it! Our custom ping, with data sourced from a custom store and a release-shipped metric, is ready [1]. Let's build Firefox (this is the part where you have time to grab a cup of coffee if you haven't compiled Firefox before):

./mach build

...

2 - 40 minutes later. Still with me?

Now run the freshly built Firefox:

./mach run

Once Telemetry is initialized (should take 60 seconds), you should be able to see the freshly generated custom ping (in a development build this ping is never actually sent out).

To see it, go to about:telemetry, click on Current Ping in the upper-left corner, select Archived ping data and then the custom ping type. There you have it!

The full changeset is available in this commit on the gecko-dev mirror (don't worry, this is not landing in Firefox).

What's next?

Currently there's no user of the multi-store feature, mainly because it was only finished in early December and is currently lacking some documentation (which this post should change a bit). We expect some usage of this soon though.

[1] Not entirely true. This needs some small changes to the build system.

https://fnordig.de/2019/01/22/multi-store-custom-telemetry-with-shared-data

|

|

The Mozilla Blog: Welcome Roxi Wen, our incoming Chief Financial Officer |

I am excited to announce that Roxi Wen is joining Mozilla Corporation as our Chief Financial Officer (CFO) next month.

As a wholly-owned subsidiary of the non-profit Mozilla Foundation, the Mozilla Corporation, with over 1,000 full-time employees worldwide, creates products, advances public policy and explores new technology that give people more control over their lives online, and shapes the future of the global internet platform for the public good.

As our CFO Roxi will become a key member of our senior executive team with responsibility for leading financial operations and strategy as we scale our mission impact with new and existing products, technology and business models to better serve our users and advance our agenda for a healthier internet.

“I’m thrilled to join Mozilla at such a pivotal moment for the technology sector,” said Roxi Wen. “With consumers demanding more and better from the companies that supply the technology they rely upon, Mozilla is well-positioned to become their go-to choice and I am eager to lend my financial know-how to this effort.”

Roxi comes to Mozilla from Elo Touch Solutions, where she was CFO for the private equity-backed (The Gores Group) $400 million global manufacturer of touch screen computing systems. She brings to Mozilla experience across varying sectors from technology to healthcare to banking, having held senior-level positions at GE Energy, Medtronic and Royal Bank of Canada.

Roxi is a CFA charterholder and earned a Bachelor of Economics from Xiamen University, China, and an MBA in Finance and Strategy from the Carlson School of Management at the University of Minnesota. When she assumes her role in mid-February, Roxi will be based in our Mountain View, California headquarters.

Please join me in welcoming Roxi to Mozilla.

The post Welcome Roxi Wen, our incoming Chief Financial Officer appeared first on The Mozilla Blog.

https://blog.mozilla.org/blog/2019/01/22/welcome-roxi-wen-our-incoming-chief-financial-officer/

|

|

Mozilla Addons Blog: Friend of Add-ons: Shivam Singhal |

Please meet our newest Friend of Add-ons, Shivam Singhal! Shivam became involved with the add-ons community in April 2017. Currently, he is an extension developer, Mozilla Rep, and code contributor to addons.mozilla.org (AMO). He also helps mentor good-first-bugs on AMO.

“My skill set grew while contributing to Mozilla,” Shivam says of his experiences over the last two years. “Being the part of a big community, I have learned how to work remotely with a cross-cultural team and how to mentor newbies. I have met some super awesome people like [AMO engineers] William Durand and Rebecca Mullin. The AMO team is super helpful to newcomers and works actively to help them.”

This year, he’s looking forward to submitting patches to the WebExtensions API and Add-ons Manager in Firefox, and mentoring more new code contributors. Shivam has advice for anyone who is interested in contributing to Mozilla’s add-ons projects. “If you are shy or not feeling comfortable commenting on an issue, you can fill out the add-ons contributor survey and someone will help you get started. That’s what I did. You can also check https://whatcanidoformozilla.org for other ways to get involved.”

In his free time, Shivam enjoys watching stand-up comedy and sci-fi web series, exploring food at cafes, and going through pull requests on the AMO frontend repository.

Thanks for all of your contributions, Shivam! Your enthusiasm for the add-ons ecosystem is contagious, and it’s been a pleasure watching you grow.

To learn more about how to get involved with the add-ons community, check out our Contribute wiki.

The post Friend of Add-ons: Shivam Singhal appeared first on Mozilla Add-ons Blog.

https://blog.mozilla.org/addons/2019/01/21/friend-of-add-ons-shivam-singhal/

|

|

Daniel Stenberg: QUIC and missing APIs |

I trust you’ve heard by now that HTTP/3 is coming. It is the next destined HTTP version, targeted to get published as an RFC in July 2019. Not very far off.

HTTP/3 will not be done over TCP. It will only be performed over QUIC, which is a transport protocol replacement for TCP that always is done encrypted. There’s no clear-text version of QUIC.

TLS 1.3

The encryption in QUIC is based on TLS 1.3 technologies which I believe everyone thinks is a good idea and generally the correct decision. We need to successively raise the bar as we move forward with protocols.

However, QUIC is not only a transport protocol that does encryption by itself, whereas TLS is typically used as (and was designed as) a protocol that runs on top of TCP. Its designers also came up with a design that requires APIs from the TLS layer that the traditional TLS-over-TCP use case doesn’t need!

New TLS APIs

A QUIC implementation needs to extract traffic secrets from the TLS connection and it needs to be able to read/write TLS messages directly – not using the TLS record layer. TLS records are what’s used when we send TLS over TCP. (This was discussed and decided back around the time for the QUIC interim in Kista.)

These operations need APIs that are still missing in, for example, the very popular OpenSSL library, but also in other commonly used ones like GnuTLS and LibreSSL, and of course Schannel and Secure Transport.

Libraries known to already have done the job and expose the necessary mechanisms include BoringSSL, NSS, quicly, PicoTLS and Minq. All of those are incidentally TLS libraries with a more limited number of application users and less mainstream. They’re also more or less developed by people who are also actively engaged in the QUIC protocol development.

The QUIC libraries in progress now are typically using either one of the TLS libraries that already are adapted or do what ngtcp2 does: it hosts a custom-patched version of OpenSSL that brings the needed functionality.

Matt Caswell of the OpenSSL development team acknowledged this situation already back in September 2017, but so far we haven’t seen this result in updated code shipped in a released version.

curl and QUIC

curl is TLS library agnostic and can get built with around 12 different TLS libraries – one or many actually, as you can build it to allow users to select TLS backend in run-time!

OpenSSL is without competition the most popular choice to build curl with outside of the proprietary operating systems like macOS and Windows 10. But even the vendor-built versions provided with macOS and Windows are built with libraries that lack APIs for this.

With our current keen interest in QUIC and HTTP/3 support for curl, we’re about to run into an interesting TLS situation. How exactly is someone going to build curl to simultaneously support both traditional TLS based protocols as well as QUIC going forward?

I don’t have a good answer to this yet. Right now (assuming we would have the code ready in our end, which we don’t), we can’t ship QUIC or HTTP/3 support enabled for curl built to use the most popular TLS libraries! Hopefully by the time we get our code in order, the situation has improved somewhat.

This will slow down QUIC deployment

I’m personally convinced that this little API problem will be friction enough when going forward that it will slow down and hinder QUIC deployment at least initially.

When the HTTP/2 spec shipped in May 2015, it introduced a dependency on the fairly new TLS extension called ALPN, which for a long time caused headaches for server admins: ALPN wasn’t supported in the OpenSSL versions that were typically installed and used at the time, so you had to upgrade OpenSSL to version 1.0.2 to get it.

At that time, almost four years ago, OpenSSL 1.0.2 was already released, so solving the problem was just a matter of upgrading to it. This time, the API we’re discussing here is not even in a beta version of OpenSSL and thus hasn’t been released in any version yet. That’s far worse than the HTTP/2 situation we had, and that took a few years to ride out.

Will we get these APIs into an OpenSSL release to test before the QUIC specification is done? If the schedule sticks, there’s about six months left…

https://daniel.haxx.se/blog/2019/01/21/quic-and-missing-apis/

|

|

Marco Castelluccio: Code Coverage on Phabricator |

We have recently implemented a solution to integrate code coverage results into Phabricator.

Coverage information is uploaded either automatically for revisions after they are landed in mozilla-central (for example for release managers when looking at uplift requests), or on-demand for in-progress revisions.

For revisions under review, in order to upload coverage you just need to trigger a try push containing code coverage builds and tests, e.g. by using:

$ mach try fuzzy --full

and selecting the relevant ccov builds and test suites. In the future, we will also likely automatically trigger coverage try builds for revisions we deem to be risky, alongside the on-demand option.

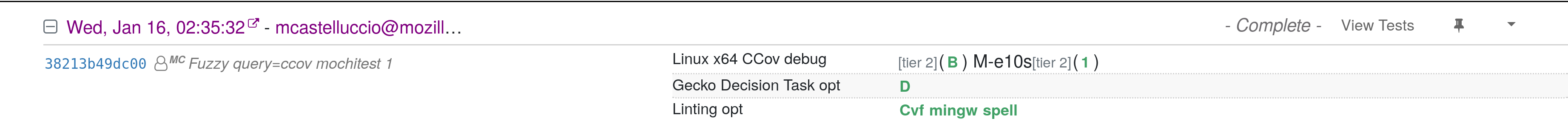

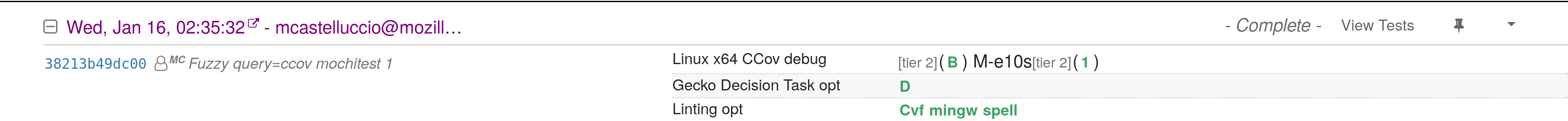

Here is an example of a try build which produced the coverage information for my revision:

Once the try build and linked tests finish, the coverage artifacts get parsed and uploaded to the Phabricator revisions corresponding to the commits in the try push. The analysis works on all commits in the try push that are linked to Phabricator revisions. Stacks of revisions are supported as well.

The coverage information is shown on Phabricator both at a high-level view, in the Revision Contents section, and at a detailed view in the Diff section.

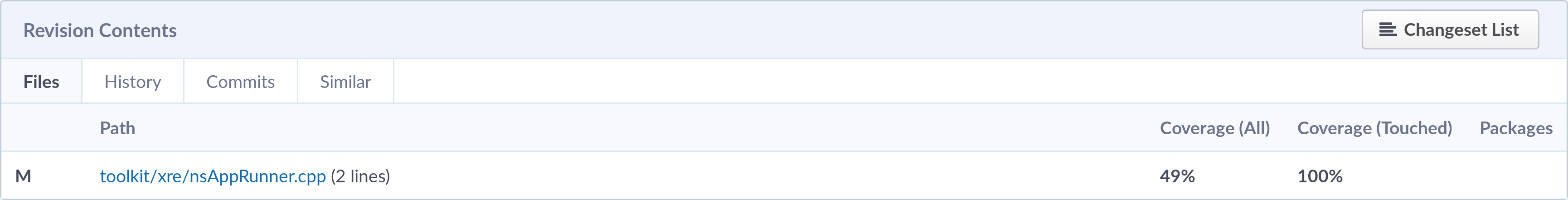

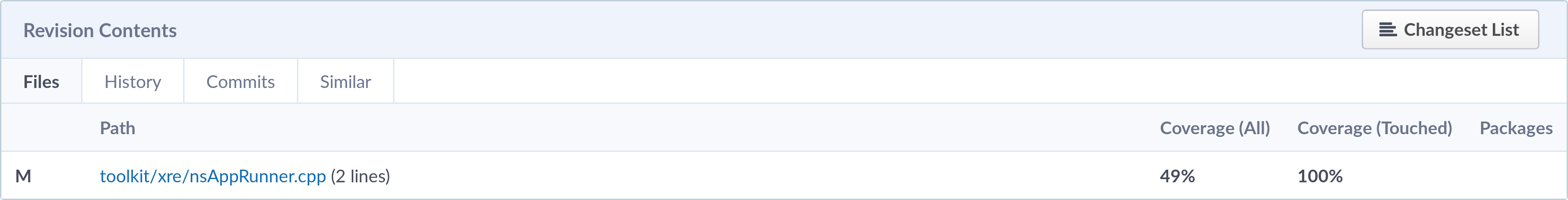

The Revision Contents section contains a list of the files modified by the revision, showing both the coverage percentage of the whole file and the coverage percentage of touched lines. Here’s the screenshot of the section from my revision:

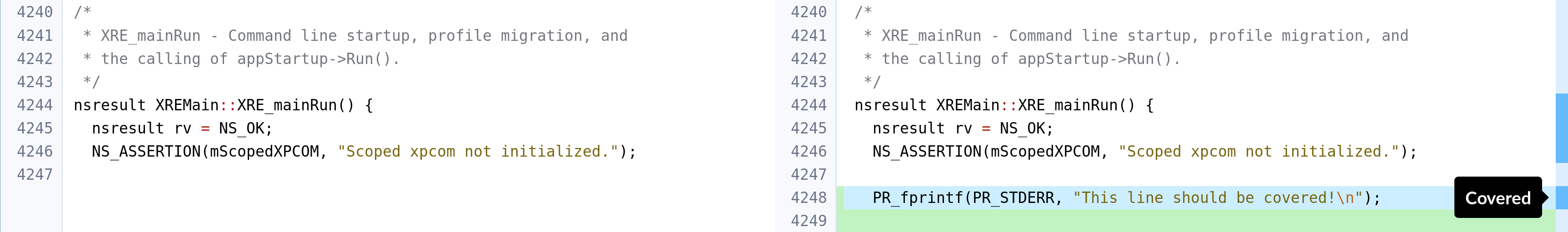

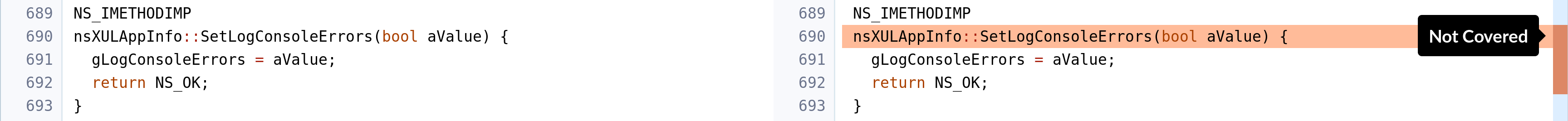

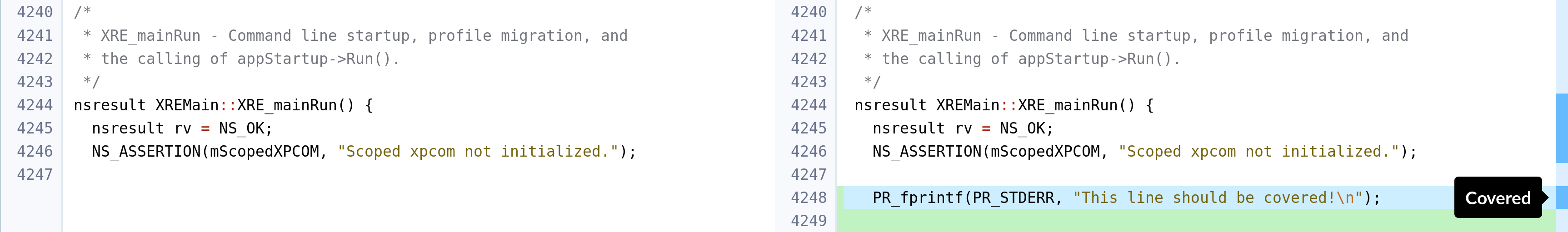

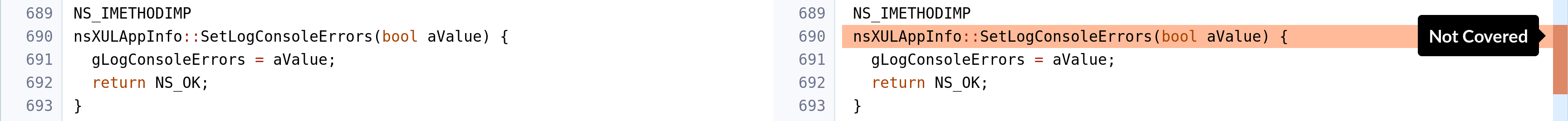

The Diff section instead shows the coverage line by line, coloring the bar on the right-hand side. Orange corresponds to uncovered lines, light blue corresponds to non-executable lines (e.g. a comment, a definition, and so on), dark blue corresponds to covered lines. When hovering over the bar, the corresponding line is highlighted in the same color. The following screenshots show excerpts of my revision, with covered, uncovered and non-executable lines:
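The two percentages shown in the Revision Contents section can be illustrated with a small sketch. The data shape below (one `true`/`false`/`null` entry per line) and the function name `coveragePercent` are assumptions made for this example, not the format Phabricator or the coverage tooling actually uses. Non-executable lines are left out of both the numerator and the denominator.

```javascript
// Hypothetical data shape: lineCoverage[i] describes line i + 1 of a file.
//   true  -> executable and covered
//   false -> executable but not covered
//   null  -> not executable (comment, definition, blank line)
function coveragePercent(lineCoverage, lineNumbers) {
  // Restrict to the given 1-based line numbers, or use the whole file.
  const selected = lineNumbers
    ? lineNumbers.map((n) => lineCoverage[n - 1])
    : lineCoverage;
  const executable = selected.filter(
    (state) => state !== null && state !== undefined
  );
  if (executable.length === 0) {
    return null; // nothing executable, so no percentage can be reported
  }
  const covered = executable.filter((state) => state === true).length;
  return (100 * covered) / executable.length;
}

const fileCoverage = [null, true, true, false, null, true, false, true];
const touchedLines = [3, 4, 5]; // 1-based line numbers changed by a revision

const wholeFile = coveragePercent(fileCoverage); // 4 of 6 executable lines
const touched = coveragePercent(fileCoverage, touchedLines); // 1 of 2
console.log(wholeFile.toFixed(1), touched.toFixed(1));
```

In this toy file, the whole-file figure looks better than the figure for the touched lines, which is exactly the case where the second number is the more useful one for a reviewer.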

https://marco-c.github.io/2019/01/21/code-coverage-phabricator.html

|

|

Mozilla Release Management Team: Code Coverage on Phabricator |

https://release.mozilla.org/tooling/codecoverage/2019/01/20/code-coverage-on-phabricator.html

|

|

Aaron Klotz: 2018 Roundup: Q1 |

I had a very busy 2018. So busy, in fact, that I have not been able to devote any time to actually discussing what I worked on! I had intended to write these posts during the end of December, but a hardware failure delayed that until the new year. Alas, here we are in 2019, and I am going to do a series of retrospectives on last year’s work, broken up by quarter.

(Links to future posts will go here)

Overview

The general theme of my work in 2018 was dealing with the DLL injection problem: On Windows, third parties love to forcibly load their DLLs into other processes – web browsers in particular, thus making Firefox a primary target.

Many of these libraries tend to alter Firefox processes in ways that hurt the stability and/or performance of our code; many chemspill releases have gone out over the years to deal with these problems. While I could rant for hours over this, the fact is that DLL injection is rampant in the ecosystem of Windows desktop applications and is not going to disappear any time soon. In the meantime, we need to be able to deal with it.

Some astute readers might be ready to send me an email or post a comment about how ignorant I am about the new(-ish) process mitigation policies that are available in newer versions of Windows. While those features are definitely useful, they are not panaceas:

- We cannot turn on the “Extension Point Disable” policy for users of assistive technologies; screen readers rely heavily on DLL injection using SetWindowsHookEx and SetWinEventHook, both of which are covered by this policy;

- We could enable the “Microsoft Binary Signature” policy, however that requires us to load our own DLLs first before enabling; once that happens, it is often already too late: other DLLs have already injected themselves by the time we are able to activate this policy. (Note that this could easily be solved if this policy were augmented to also permit loading of any DLL signed by the same organization as that of the process’s executable binary, but Microsoft seems to be unwilling to do this.)

- The above mitigations are not universally available. They do not help us on Windows 7.

For me, Q1 2018 was all about gathering better data about injected DLLs.

Learning More About DLLs Injected into Firefox

One of our major pain points over the years of dealing with injected DLLs has been that the vendor of the DLL is not always apparent to us. In general, our crash reports and telemetry pings only include the leaf name of the various DLLs on a user’s system. This is intentional on our part: we want to preserve user privacy. On the other hand, this severely limits our ability to determine which party is responsible for a particular DLL.

One avenue for obtaining this information is to look at any digital signature that is embedded in the DLL. By examining the certificate that was used to sign the binary, we can extract the organization of the cert’s owner and include that with our crash reports and telemetry.

In bug 1430857 I wrote a bunch of code that enables us to extract that information from signed binaries using the Windows Authenticode APIs. Originally, in that bug, all of that signature extraction work happened from within the browser itself, while it was running: It would gather the cert information on a background thread while the browser was running, and include those annotations in a subsequent crash dump, should such a thing occur.

After some reflection, I realized that I was not gathering annotations in the right place. As an example, what if an injected DLL were to trigger a crash before the background thread had a chance to grab that DLL’s cert information?

I realized that the best place to gather this information was in a post-processing step after the crash dump had been generated, and in fact we already had the right mechanism for doing so: the minidump-analyzer program was already doing post-processing on Firefox crash dumps before sending them back to Mozilla. I moved the signature extraction and crash annotation code out of Gecko and into the analyzer in bug 1436845.

(As an aside, while working on the minidump-analyzer, I found some problems with how it handled command line arguments: it was assuming that main passes its argv as UTF-8, which is not true on Windows. I fixed those issues in bug 1437156.)

In bug 1434489 I also ended up adding this information to the “modules ping” that we have in telemetry; IIRC this ping is only sent weekly. When the modules ping is requested, we gather the module cert info asynchronously on a background thread.

Finally, I had to modify Socorro (the back-end for crash-stats) to be able to understand the signature annotations and be able to display them via bug 1434495. This required two commits: one to modify the Socorro stackwalker to merge the module signature information into the full crash report, and another to add a “Signed By” column to every report’s “Modules” tab to display the signature information (Note that this column is only present when at least one module in a particular crash report contains signature information).

The end result was very satisfying: Most of the injected DLLs in our Windows crash reports are signed, so it is now much easier to identify their vendors!

This project was very satisfying for me in many ways: First of all, surfacing this information was an itch that I had been wanting to scratch for quite some time. Secondly, this really was a “full stack” project, touching everything from extracting signature info from binaries using C++, all the way up to writing some back-end code in Python and a little touch of front-end stuff to surface the data in the web app.

Note that, while this project focused on Windows because of the severity of the library injection problem on that platform, it would be easy enough to reuse most of this code for macOS builds as well; the only major work for the latter case would be for extracting signature information from a dylib. This is not currently a priority for us, though.

Thanks for reading! Coming up in Q2: Refactoring the Windows DLL Interceptor!

|

|

Hacks.Mozilla.Org: MDN Changelog – Looking back at 2018 |

|

|

Nick Desaulniers: Finding compiler bugs with C-Reduce |

Support for a long awaited GNU C extension, asm goto, is in the midst of landing in Clang and LLVM. We want to make sure that we release a high quality implementation, so it’s important to test the new patches on real code and not just small test cases. When we hit compiler bugs in large source files, it can be tricky to find exactly which part of a potentially large translation unit is problematic. In this post, we’ll take a look at using C-Reduce, a multithreaded code bisection utility for C/C++, to help narrow down a reproducer for a real compiler bug (potentially; in a patch that was posted, and will be fixed before it can ship in production) from a real code base (the Linux kernel). It’s mostly a post to myself in the future, so that I can remind myself how to run C-Reduce on the Linux kernel again, since this is now the third real compiler bug it’s helped me track down.

So the bug I’m focusing on when trying to compile the Linux kernel with Clang is a linkage error, all the way at the end of the build.

Hmm…looks like the object file (drivers/spi/spidev.o) has a section (__jump_table) that references a non-existent symbol (.Ltmp), which looks like a temporary label that should have been cleaned up by the compiler. Maybe it was accidentally left behind by an optimization pass?

To run C-Reduce, we need a shell script that returns 0 when it should keep reducing, and an input file. For an input file, it’s just way simpler to preprocess it; this helps cut down on the compiler flags that typically require paths (-I, -L).

Preprocess

First, let’s preprocess the source. For the kernel, if the file compiles correctly, the kernel’s KBuild build process will create a file named in the form path/to/.file.o.cmd, in our case drivers/spi/.spidev.o.cmd. (If the file doesn’t compile, then I’ve had success hooking make path/to/file.o with bear then getting the compile_commands.json for the file.) I find it easiest to copy this file to a new shell script, then strip out everything but the first line. I then replace the -c -o with -E. chmod +x that new shell script, then run it (outputting to stdout) to eyeball that it looks preprocessed, then redirect the output to a .i file. Now that we have our preprocessed input, let’s create the C-Reduce shell script.

Reproducer

I find it helpful to have a shell script in the form:

- remove previous object files

- rebuild object files

- disassemble object files and pipe to grep

For you, it might be some different steps.

As the docs show, you just need the shell script to return 0 when it should keep reducing. From our previous shell script that pre-processed the source and dumped a .i file, let’s change it back to stop before linking rather than preprocessing (s/-E/-c/), and change the input to our new .i file. Finally, let’s add the test for what we want. Since I want C-Reduce to keep reducing until the disassembled object file no longer references anything Ltmp related, I write:

1

| |

Now I can run the reproducer to check that it at least returns 0, which C-Reduce needs to get started.
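The three steps of the reproducer (remove, rebuild, disassemble and search) could look roughly like this if written in Python instead of shell. It is a sketch under stated assumptions: the file names and both command lines are placeholders, not the kernel's real flags, and the names still_reproduces and main are hypothetical.

```python
import os
import subprocess

# Placeholders for illustration only; a real script would reuse the
# kernel's own compiler flags from the .cmd file.
SOURCE = "spidev.i"  # hypothetical name of the preprocessed input
OBJECT = "spidev.o"  # hypothetical name of the rebuilt object file
COMPILE = ["clang", "-c", SOURCE, "-o", OBJECT]
DISASSEMBLE = ["llvm-objdump", "-d", "-r", OBJECT]  # -r also prints relocations


def still_reproduces(disassembly: str) -> bool:
    """True while the disassembly still mentions a temporary label."""
    return "Ltmp" in disassembly


def main() -> int:
    """Return 0 (keep reducing) only if the bug is still visible."""
    if os.path.exists(OBJECT):
        os.remove(OBJECT)  # step 1: remove the previous object file
    build = subprocess.run(COMPILE, capture_output=True)  # step 2: rebuild
    if build.returncode != 0:
        return 1  # reduced input no longer compiles: not interesting
    dump = subprocess.run(DISASSEMBLE, capture_output=True, text=True)
    if dump.returncode != 0:
        return 1
    return 0 if still_reproduces(dump.stdout) else 1  # step 3: search
```

To use it as a reproducer, the script would end with raise SystemExit(main()) so that the process exit status carries the result.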

Running C-Reduce

Now that we have a reproducer script and input file, let’s run C-Reduce.

So it took C-Reduce just over 6 minutes to turn >56k lines of mostly irrelevant code into 12 when running 40 threads on my 48-core workstation.

It’s also highly entertaining to watch C-Reduce work its magic. In another terminal, I highly recommend running watch -n1 cat on the input file to see it pared down before your eyes.

Jump to 4:24 to see where things really pick up.

Finally, we still want to bisect our compiler flags (the kernel uses a lot). I still do this process manually, and it’s not too bad. Having proper and minimal steps to reproduce compiler bugs is critical.

That’s enough for a great bug report for now. In a future episode, we’ll see how to start pulling apart LLVM to see where compilation is going amiss.

http://nickdesaulniers.github.io/blog/2019/01/18/finding-compiler-bugs-with-c-reduce/

|

|

Nick Cameron: Leaving Mozilla and (most of) the Rust project |

Today is my last day as an employee of Mozilla. It's been almost exactly seven years - two years working on graphics and layout for Firefox, and five years working on Rust. Mostly remote, with a few stints in the Auckland office. It has been an amazing time: I've learnt an incredible amount, worked on some incredible projects, and got to work with some absolutely incredible people. BUT, it is time for me to learn some new things, and work on some new things with some new people.

Nearly everyone I've had contact with at Mozilla has been kind and smart and fun to work with. I would have liked to give thanks and a shout-out to a long list of people I've learned from or had fun with, but the list would be too long and still incomplete.

I'm going to be mostly stepping back from the Rust project too. I'm pretty sad about that (although I hope it will be worth it) - it's an extremely exciting, impactful project. As a PL researcher turned systems programmer, it really has been a dream project to work on. The Rust team at Mozilla and the Rust community in general are good people, and I'll miss working with you all terribly.

Concretely, I plan to continue to co-lead the Cargo and IDEs and Editors teams. I'll stay involved with the Rustfmt and Rustup working groups for a little while. I'll be leaving the other teams I'm involved with, including the core team (although I'll stick around in a reduced capacity for a few months). I won't be involved with code and review for Rust projects day-to-day. But I'll still be around on Discord and GitHub if needed for mentoring or occasional review; I will probably take much longer to respond.

None of the projects I've worked on are going to be left unmaintained. I'm very confident in the people working on them, in the teams I'm leaving behind, and in the Rust community in general (did I say you were awesome already?).

I'm very excited about my next steps (which I'll leave for another post), but for now I'm feeling pretty emotional about moving on from the Rust project and the Rust team at Mozilla. It's been a big part of my life for five years and I'm going to miss y'all. <3

P.S., it turns out that Steve is also leaving Mozilla - this is just a coincidence and there is no conspiracy or shared motive. We have different reasons for leaving, and neither of us knew the other was leaving until after we'd put in our notice. As far as I know, there is no bad blood between either of us and the Rust team.

http://www.ncameron.org/blog/leaving-mozilla-and-most-of-the-rust-project/

|

|

Marco Castelluccio: “It’s not a bug, it’s a feature.” - Differentiating between bugs and non-bugs using machine learning |

Bugzilla is a noisy data source: bugs are used to track anything, from “Create an LDAP account for contributor X” to “Printing page Y doesn’t work”. This makes it hard to know which bugs are bugs and which bugs are not bugs but, e.g., feature requests, meta bugs, or refactorings. To make the next paragraphs easier to read, I’ll use bugbug for bugs that are actually bugs, fakebug for bugs that are not actually bugs, and bug for all Bugzilla bugs (bugbug + fakebug).

Why do we need to tell if a bug is actually a bug? There are several reasons, the main two being:

- Quality metrics: to analyze the quality of a project or to measure the churn of a given release, it can be useful to know, for example, how many bugbugs are filed in a given release cycle. If we don’t know which bugs are bugbugs and which are feature requests, we can’t precisely measure how many problems are found (= bugbugs filed) in a given component for a given release. We can only know the overall number, which mixes bugbugs with feature work;

- Bug prediction: given the development history of the project, one can try to predict, with some measure of accuracy, which changes are risky and more likely to lead to regressions in the future. In order to do that, of course, you need to know which changes introduced problems in the past. If you can’t identify problems (i.e. bugbugs), then you can’t identify changes that introduced them!

On BMO, we have some optional keywords to identify regressions vs. features, but they are not used consistently (and, being optional, they can’t be: we can work on improving the practices, but we can’t reach perfection when there is human involvement). So, we need another way to identify them. One possibility is to use handwritten rules (‘mozregression’ in comment -> regression; ‘support’ in title -> feature), which can be precise up to a certain accuracy level, but any improvement beyond that requires hard manual labor. Another option is to use machine learning techniques, leaving the hard work of extracting information from bug features to the machines!
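To make the handwritten-rule option concrete, here is a minimal sketch of the two example rules quoted above. The function name rule_based_label, the case-insensitive matching and the order in which the rules are checked are all assumptions made for illustration; this is not code from bugbug.

```python
from typing import List, Optional


def rule_based_label(title: str, comments: List[str]) -> Optional[str]:
    """Apply the two example rules; return None when neither rule fires."""
    # Rule 1: 'mozregression' mentioned in any comment -> regression
    if any("mozregression" in comment.lower() for comment in comments):
        return "regression"
    # Rule 2: 'support' in the title -> feature
    if "support" in title.lower():
        return "feature"
    return None  # no rule matched: a human (or a model) has to decide
```

Every bug that falls through to None needs yet another hand-written rule, which is the manual labor that the machine learning option tries to avoid.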

The bugbug project is trying to do just that, at first with a very simple ML architecture.

We have a set of 1913 bugs, each manually labelled with one of the two possible classes (bugbug vs nobug). We augment this manually labelled set with Bugzilla bugs containing the keywords ‘regression’ or ‘feature’, which are basically labelled already. The augmented data set contains 10818 bugs. Unfortunately we can’t use all of them indiscriminately, as the dataset is unbalanced towards bugbugs, which would skew the results of the classifier, so we simply perform random under-sampling to reduce the number of bugbug examples. In the end, we have 1928 bugs.

We split the dataset into a training set of 1735 bugs and a test set of 193 bugs (90% - 10%).
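Random under-sampling followed by a 90%/10% split can be sketched with the standard library alone. This is an illustration rather than bugbug's code: the function names are hypothetical, and balancing every class down to exactly the size of the smallest one is an assumption (the post only says the number of bugbug examples is reduced).

```python
import random


def undersample(bugs, labels, seed=0):
    """Randomly drop examples until every class is as small as the smallest."""
    rng = random.Random(seed)
    by_label = {}
    for bug, label in zip(bugs, labels):
        by_label.setdefault(label, []).append(bug)
    smallest = min(len(group) for group in by_label.values())
    balanced = []
    for label, group in by_label.items():
        for bug in rng.sample(group, smallest):  # sampling without replacement
            balanced.append((bug, label))
    rng.shuffle(balanced)
    return balanced


def train_test_split(examples, test_fraction=0.1, seed=0):
    """Shuffle, then hold out test_fraction of the examples for testing."""
    rng = random.Random(seed)
    shuffled = list(examples)
    rng.shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]
```

With 1928 examples and test_fraction=0.1, this split yields 1735 training and 193 test examples, matching the sizes quoted above.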

We extract features both from bug fields (such as keywords, number of attachments, presence of a crash signature, and so on) and from the bug title and comments.

To extract features from text (title and comments), we use a simple BoW model with 1-grams, using TF-IDF to lower the importance of very common words in the corpus and stop word removal mainly to speed up the training phase (stop word removal should not be needed for accuracy in our case since we are using a gradient boosting model, but it can speed up the training phase and it eases experimenting with other models which would really need it).
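As a rough picture of what a bag-of-words model with 1-grams, TF-IDF weighting and stop word removal computes, here is a small standard-library sketch. It is not bugbug's implementation: the stop word list is a tiny made-up sample, the tokenizer is deliberately naive, and the smoothed IDF formula ln((1 + N) / (1 + df)) + 1 is one common variant chosen here as an assumption.

```python
import math
import re
from collections import Counter

# Tiny illustrative stop word list; real lists are much longer.
STOP_WORDS = {"a", "an", "the", "is", "to", "of", "and", "in"}


def tokenize(text):
    """Lowercase, split into 1-grams (single words), drop stop words."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return [word for word in words if word not in STOP_WORDS]


def tfidf(documents):
    """Return one {word: weight} dict per document."""
    tokenized = [tokenize(doc) for doc in documents]
    n_docs = len(tokenized)
    doc_freq = Counter()
    for tokens in tokenized:
        doc_freq.update(set(tokens))  # count each word once per document
    # Words that appear in many documents get a lower IDF.
    idf = {word: math.log((1 + n_docs) / (1 + df)) + 1
           for word, df in doc_freq.items()}
    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)  # term frequency within this document
        vectors.append({word: count * idf[word] for word, count in counts.items()})
    return vectors
```

In a corpus of bug titles where nearly every title contains "crash", that word ends up with a lower weight than a rarer word such as "startup", which is the effect TF-IDF is used for here.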

We then train a gradient boosting model (these models usually work quite well for shallow features) on top of the extracted features.

This very simple approach, in a handful of lines of code, achieves ~93% accuracy. There’s a lot of room for improvement in the algorithm (it was, after all, written in a few hours…), so I’m confident we can get even better results.

This is just the first step: in the near future we are going to implement improvements in Bugzilla directly and in linked tooling so that we can stop guessing and have very accurate data.

Since the inception of bugbug, we have also added experimental models for other related problems (e.g. detecting if a bug is a good candidate for tracking, or predicting the component of a bug), turning bugbug into a platform for quickly building and experimenting with new machine learning applications on Bugzilla data (and maybe soon VCS data too). We have many other ideas to implement; if you are interested, take a look at the open issues on our repo!

|

|