
Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.


Mozilla GFX: WebRender newsletter #36

Hi everyone! This week’s highlight is Glenn’s picture caching work which almost landed about a week ago and landed again a few hours ago. Fingers crossed! If you don’t know what picture caching means and are interested, you can read about it in the introduction of this newsletter’s season 01 episode 28.

On a more general note, the team continues focusing on the remaining list of blocker bugs which grows and shrinks depending on when you look, but the overall trend is looking good.

Without further ado:

Notable WebRender and Gecko changes

- Bobby fixed unbounded interner growth.

- Bobby overhauled the memory reporter.

- Bobby added a primitive highlighting debug tool.

- Bobby reduced code duplication around interners.

- Matt and Jeff continued investigating telemetry data.

- Jeff removed the minimum blob image size, yielding nice improvements on some talos benchmarks (18% raptor-motionmark-animometer-firefox linux64-qr opt and 7% raptor-motionmark-animometer-firefox windows10-64-qr opt).

- kvark fixed a crash.

- kvark reduced the number of vector allocations.

- kvark improved the chasing debugging tool.

- kvark fixed two issues with reference frame and scrolling.

- Andrew fixed an issue with SVGs that embed raster images not rendering correctly.

- Andrew fixed a mismatch between the size used during decoding images and the one we pass to WebRender.

- Andrew fixed a crash caused by an interaction between blob images and shared surfaces.

- Andrew avoided scene building caused by partially decoded images when possible.

- Emilio made the build system take care of generating the ffi bindings automatically.

- Emilio fixed some clipping issues.

- Glenn optimized how picture caching handles world clips.

- Glenn fixed picture caching tiles being discarded incorrectly.

- Glenn split primitive preparation into a separate culling pass.

- Glenn fixed some invalidation issues.

- Glenn improved display list correlation.

- Glenn re-landed picture caching.

- Doug improved the way we deal with document splitting to allow more than two documents.

Ongoing work

The team keeps going through the remaining blockers (14 P2 bugs and 29 P3 bugs at the time of writing).

Enabling WebRender in Firefox Nightly

In about:config, set the pref “gfx.webrender.all” to true and restart the browser.

Reporting bugs

The best place to report bugs related to WebRender in Firefox is the Graphics :: WebRender component in bugzilla.

Note that it is possible to log in with a github account.

https://mozillagfx.wordpress.com/2019/01/17/webrender-newsletter-36/


Mozilla Localization (L10N): L10n report: January edition

Welcome!

New localizers

- Nart Tlisha (Locales: ab, ady, uby)

- Naail, Iffam, Aiesh, Shahu, Ibrahim and Sofwath (Locale: dv)

- Kristian (Locale: vot)

Are you a locale leader and want us to include new members in our upcoming reports? Contact us!

New community/locales added

New content and projects

What’s new or coming up in Firefox desktop

The localization cycle for Firefox 66 in Nightly is approaching its end, and Tuesday (Jan 15) was the last day to get changes into Firefox 65 before it moves to release (Jan 29). These are the key dates for the next cycle:

- January 28: Nightly will be bumped to version 67.

- February 26: deadline to ship updates to Beta (Firefox 66).

As of January, localization of the Pocket add-on has moved back into the Firefox main project. That’s a positive change for localization, since it gives us a clearer schedule for updates, while before they were complex and sparse. All existing translations from the stand-alone process were imported into Mercurial repositories (and Pontoon).

In terms of prioritization, there are a couple of features to keep an eye on:

- Profile per installation: with Firefox 67, Firefox will begin using a dedicated profile for each Firefox version (including Nightly, Beta, Developer Edition, and ESR). This will make Firefox more stable when switching between versions on the same computer and will also allow you to run different Firefox installations at the same time. This introduces a set of dialogs and web pages to warn the user about the change, and explain how to sync data between profiles. Unlike other features, this targets all versions, but Nightly users in particular, since they are more likely to have multiple profiles according to Telemetry data. That’s a good reason to prioritize these strings.

- Security error pages: nothing is more frustrating than being unable to reach a website because of certificate issues. There are a lot of experiments happening around these pages and the associated user experience, both in Beta and Release, so it’s important to prioritize translations for these strings (they’re typically in netError.dtd).

What’s new or coming up in Test Pilot

As explained in this blog post, Test Pilot is reaching its end of life. The website localization has been updated in Pontoon to include messages around this change, while other experiments (Send, Monitor) will continue to exist as stand-alone projects. Screenshots is also going to see changes in the upcoming days, mostly on the server side of the project.

What’s new or coming up in mobile

Just like for Firefox desktop, the last day to get in localizations for Fennec 65 was Tuesday, Jan 15. Please see the desktop section above for more details.

Firefox iOS v15 localization deadline was Friday, January 11. The app should be released to everyone by Jan 29th, after a phased roll-out. This time around we’ve added seven new locales: Angika, Burmese, Corsican, Javanese, Nepali, Norwegian Bokmål and Sundanese. This means that we’re currently shipping 87 locales out of the 88 that are being localized, which is twice as many as when we first shipped the app. Congrats to all the voluntary localizers involved in this effort over the years!

And stay tuned for an update on the upcoming v16 l10n timeline soon.

We’re also still working with the Lockbox Android team to get the project plugged in to Pontoon, and you can expect to see something come up in the next couple of weeks.

The Firefox Reality project is going to be available and open for localization very soon too. We’re working out the specifics right now, and the timeline will be shared as soon as everything is ironed out.

What’s new or coming up in web projects

Mozilla.org has a few updates.

- Navigation bar: The new navigation.lang file contains strings for the redesigned navigation bar. When the language completion rate reaches 80%+, the new layout will be switched on. Try to get your locale completed by the time it is switched over.

- Content Blocking Tour with updated UIs will go live on 29 Jan. Catch up on all the updates by completing the firefox/tracking-protection-tour.lang file before then.

What’s new or coming up in Foundation projects

Mozilla’s big end-of-year push for donations has passed, and thanks in no small part to your efforts, the Foundation’s financial situation is in much better shape this year, ready to pick up the fight where it left off before the break. Thank you all for your help!

In these first days of 2019, the fundraising team takes the opportunity of the quiet time to modernize the donation receipts with a better email sent to donors and migrate the receipts to the same infrastructure used to send Mozilla & Firefox newsletters. Content for the new receipts should be exposed in the Fundraising project by the end of the month for the 10-15 locales with the most donations in 2018.

The Advocacy team is still working on the misinfo campaign in Europe, with a first survey coming up to be sent to the people subscribed to the Mozilla newsletter, to get a flavor of where opinion currently lies on misinformation. Next steps will include launching a campaign about political ads ahead of the EU elections and then promoting anti-disinformation tools. Let’s do this!

What’s new or coming up in Support

- Updates to locales visible (and available) on the Support site. You should see changes soon here and here.

- Content updates in the Knowledge Base: Firefox

- https://support.mozilla.org/en-US/kb/task-manager-tabs-or-extensions-are-slowing-firefox

- https://support.mozilla.org/en-US/kb/personalized-extension-recommendations (hidden for now)

- https://support.mozilla.org/en-US/kb/third-party-trackers

- https://support.mozilla.org/en-US/kb/what-happened-tracking-protection

- https://support.mozilla.org/en-US/kb/content-blocking

- Content updates in the Knowledge Base: Firefox for iOS

- https://support.mozilla.org/en-US/kb/translate-web-pages-firefox-ios

- https://support.mozilla.org/en-US/kb/new-tab-settings-firefox-ios

- https://support.mozilla.org/en-US/kb/add-web-pages-your-reading-list-firefox-ios

- https://support.mozilla.org/en-US/kb/download-photos-and-files-firefox-ios

- https://support.mozilla.org/en-US/kb/customize-firefox-home-ios

- https://support.mozilla.org/en-US/kb/set-homepage-firefox-ios

- Content updates: not related to upcoming releases

- Want to get involved in localizing Support content? Start reading here.

What’s new or coming up in Pontoon

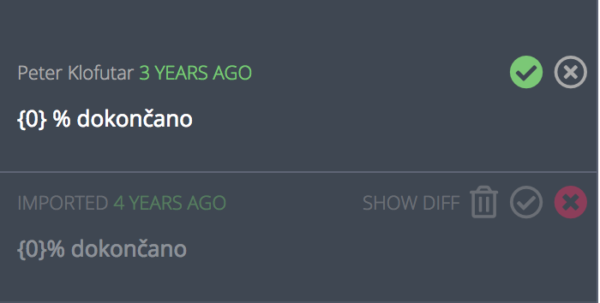

We re-launched the ability to delete translations. First you need to reject a translation, and then click on the trash can icon, which only appears next to rejected translations. The delete functionality had been replaced by the reject functionality, but over time it became obvious there are various use cases for both features to co-exist. See bug 1397377 for more details about why we first removed and then restored this feature.

Events

- Want to showcase an event coming up that your community is participating in? Reach out to any l10n-driver and we’ll include that (see links to emails at the bottom of this report)

Friends of the Lion

Image by Elio Qoshi

- Sofwath came to us right after the new year holiday break through the Common Voice project. As the locale manager of Dhivehi, the official language of Maldives, he gathered all the necessary information in order to onboard several new contributors. Together, they almost completed the web site localization in a matter of days. They are already looking into government sources that are public for sentence collection. Kudos to the entire community!

Know someone in your l10n community who’s been doing a great job and should appear here? Contact one of the l10n-drivers and we’ll make sure they get a shout-out (see list at the bottom)!

Useful Links

- Dev.l10n mailing list and Dev.l10n.web mailing list – where project updates happen. If you are a localizer, then you should be following this

- Facebook group: it’s new! Come check it out!

- Telegram (contact one of the l10n-drivers below so we will add you)

- L10n blog

- #l10n irc channel: this wiki page will help you get set up with IRC. For L10n, we use the #l10n channel for all general discussion. You can also find a list of IRC channels in other languages here.

Questions? Want to get involved?

- If you want to get involved, or have any question about l10n, reach out to:

- Delphine – l10n Project Manager for mobile

- Peiying – l10n Project Manager for web projects, marketing, and legal

- Francesco Lodolo (flod) – l10n Project Manager for desktop

- Théo Chevalier – l10n Project Manager for Mozilla Foundation

- Axel (Pike) – l10n Tech Team Lead

- Staś – l20n/FTL tamer

- Zibi (gandalf) – L10n/Intl Platform Software Engineer

- Matjaz – Pontoon dev

- Adrian – Pontoon dev

- Jeff Beatty (gueroJeff) – l10n-drivers manager

- Michal – l10n & community coordinator for Support

Did you enjoy reading this report? Let us know how we can improve by reaching out to any one of the l10n-drivers listed above.

https://blog.mozilla.org/l10n/2019/01/17/l10n-report-january-edition/


Nick Cameron: proc-macro-rules

I'm announcing a new library for procedural macro authors: proc-macro-rules (and on crates.io). It allows you to do macro_rules-like pattern matching inside a procedural macro. The goal is to smooth the transition from declarative to procedural macros (this works pretty well when used with the quote crate).

(This is part of my Christmas yak mega-shave. That might someday get a blog post of its own, but I only managed to shave about 1/3 of my yaks, so it might take till next Christmas).

Here's an example,

    rules!(tokens => {
        ($finish:ident ($($found:ident)*) # [ $($inner:tt)* ] $($rest:tt)*) => {
            for f in found {
                do_something(finish, f, inner, rest[0]);
            }
        }
        (foo $($bar:expr)?) => {
            match bar {
                Some(e) => foo_with_expr(e),
                None => foo_no_expr(),
            }
        }
    });

The example is kind of nonsense. The interesting thing is that the syntax is very similar to macro_rules macros. The patterns which are matched are exactly the same as in macro_rules (modulo bugs, of course). Metavariables in the pattern (e.g., $finish or $found in the first arm) are bound to fresh variables in the arm's body (e.g., finish and found). The types reflect the type of the metavariable (for example, $finish has type syn::Ident). Because $found occurs inside a $(...)*, it is matched multiple times and so has type Vec<syn::Ident>.

The syntax is:

rules!( $tokens:expr => { $($arm)* })

where $tokens evaluates to a TokenStream and the syntax of an $arm is given by

($pattern) => { $body }

or

($pattern) => $body,

where $pattern is a valid macro_rules pattern (which is not yet verified by the library, but should be) and $body is Rust code (i.e., an expression or block).

The intent of this library is to make it easier to write the 'frontend' of a procedural macro, i.e., to make parsing the input a bit easier. In particular, it should make it easy to convert a macro_rules macro to a procedural macro and replace a small part with some procedural code, without having to roll off the 'procedural cliff' and rewrite the whole macro.

As an example of converting macros, here is a declarative macro which is sort-of like the vec macro (example usage: let v = vec![a, b, c]):

    macro_rules! vec {
        () => {
            Vec::new()
        };
        ( $( $x:expr ),+ ) => {
            {
                let mut temp_vec = Vec::new();
                $(
                    temp_vec.push($x);
                )*
                temp_vec
            }
        };
    }
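To see the declarative version in action, here is a minimal runnable sketch. The macro is renamed `my_vec` so it does not shadow the standard `vec!`, and the helper functions `build_example` and `build_empty` are hypothetical names introduced only for this illustration; they are not part of the original post.

```rust
// A copy of the declarative macro above, renamed to avoid shadowing std's `vec!`.
macro_rules! my_vec {
    () => {
        Vec::new()
    };
    ( $( $x:expr ),+ ) => {
        {
            let mut temp_vec = Vec::new();
            $(
                temp_vec.push($x);
            )+
            temp_vec
        }
    };
}

// Hypothetical helper: exercises the non-empty arm.
fn build_example() -> Vec<i32> {
    my_vec![1, 2, 3]
}

// Hypothetical helper: exercises the empty arm.
fn build_empty() -> Vec<i32> {
    my_vec![]
}
```

Each arm expands to an ordinary expression, which is why the macro can be used wherever a `Vec` value is expected.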

Converting to a procedural macro becomes a mechanical conversion:

    use quote::quote;
    use proc_macro::TokenStream;
    use proc_macro_rules::rules;

    #[proc_macro]
    pub fn vec(input: TokenStream) -> TokenStream {
        rules!(input.into() => {
            () => { quote! {
                Vec::new()
            }}
            ( $( $x:expr ),+ ) => { quote! {{
                // The extra braces make the expansion a single block
                // expression, mirroring the macro_rules version above.
                let mut temp_vec = Vec::new();
                #(
                    temp_vec.push(#x);
                )*
                temp_vec
            }}}
        }).into()
    }

Note that we are using the quote crate to write the bodies of the match arms. That crate allows writing the output of a procedural macro in a similar way to a declarative macro by using quasi-quoting.

How it works

I'm going to dive in a little bit to the implementation because I think it is interesting. You don't need to know this to use proc-macro-rules, and if you only want to do that, then you can stop reading now.

rules is a procedural macro, using syn for parsing, and quote for code generation. The high-level flow is that we parse all code passed to the macro into an AST, then handle each rule in turn (generating a big if/else). For each rule, we make a pass over the rule to collect variables and compute their types, then lower the AST to a 'builder' AST (which duplicates some work at the moment), then emit code for the rule. That generated code includes Matches and MatchesBuilder structs to collect and store bindings for metavariables. We also generate code which uses syn to parse the supplied tokenstream into the Matches struct by pattern-matching the input.

The pattern matching is a little bit interesting: because we are generating code (rather than interpreting the pattern) the implementation is very different from macro_rules. We generate a DFA, but the pattern is not reified in a data structure but in the generated code. We only execute the matching code once, so we must be at the same point in the pattern for all potential matches, but they can be at different points in the input. These matches are represented in the MatchSet. (I didn't look around for a nice way of doing this, so there may be something much better, or I might have made an obvious mistake).

The key functions on a MatchSet are expect and fork. Both operate by taking a function from the client which operates on the input. expect compares each in-progress match with the input and if the input can be matched we continue; if it cannot, then the match is deleted. fork iterates over the in-progress matches, forking each one. One match is matched against the next element in the pattern, and one is not. For example, if we have a pattern ab?c and a single match which has matched a in the input then we can fork and one match will attempt to match b then c, and one will just match c.
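The expect/fork idea can be illustrated with a small standalone sketch. This is not the library's actual code: the `Match` and `MatchSet` types below, their fields, the `finish` method, and the `matches_ab_opt_c` function are simplified assumptions that work on characters instead of tokens, and they only show how the ab?c example plays out.

```rust
/// One in-progress match: how much of the input it has consumed so far.
#[derive(Clone, Debug, PartialEq)]
struct Match {
    pos: usize,
}

/// All in-progress matches. They share the same point in the pattern
/// but may be at different points in the input.
struct MatchSet<'a> {
    input: &'a [char],
    matches: Vec<Match>,
}

impl<'a> MatchSet<'a> {
    fn new(input: &'a [char]) -> Self {
        MatchSet { input, matches: vec![Match { pos: 0 }] }
    }

    /// Advance every match whose next input element satisfies `f`;
    /// delete the matches that cannot continue.
    fn expect(&mut self, f: impl Fn(char) -> bool) {
        let input = self.input;
        self.matches.retain_mut(|m| match input.get(m.pos) {
            Some(&c) if f(c) => {
                m.pos += 1;
                true
            }
            _ => false,
        });
    }

    /// Fork every match: one copy tries the optional element,
    /// the other copy skips it.
    fn fork(&mut self, f: impl Fn(char) -> bool) {
        let skipped = self.matches.clone();
        self.expect(f);
        self.matches.extend(skipped);
    }

    /// The pattern matched if some match consumed the whole input.
    fn finish(&self) -> bool {
        self.matches.iter().any(|m| m.pos == self.input.len())
    }
}

/// Matching code for the pattern `ab?c`, written out step by step,
/// much as generated code would be.
fn matches_ab_opt_c(s: &str) -> bool {
    let input: Vec<char> = s.chars().collect();
    let mut set = MatchSet::new(&input);
    set.expect(|c| c == 'a');
    set.fork(|c| c == 'b');
    set.expect(|c| c == 'c');
    set.finish()
}
```

Note that the pattern itself never appears as data: it exists only as the sequence of `expect` and `fork` calls, which is the sense in which the pattern is reified in code rather than in a data structure.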

One interesting aspect of matching is handling metavariable matching in repeated parts of a pattern, e.g., in $($n:ident: $e: expr),*. Here we would repeatedly try to match $n:ident: $e: expr and find values for n and e, we then need to push each value into a Vec<Ident> and a Vec<Expr>. We call this 'hoisting' the variables (since we are moving out of a scope while converting a T into a container of T, such as Vec<T>). We generate code for this which uses an implementation of hoist in the Fork trait for each MatchesBuilder, a MatchesHandler helper struct for the MatchSet, and generated code for each kind of repeat which can appear in a pattern.
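Hoisting can be sketched in a few lines of plain Rust. Everything here is a simplified assumption for illustration: `PairMatch`, `Matches`, and `match_pairs` are hypothetical names, strings stand in for syn's identifier and expression types, and the naive splitting on `,` and `:` would not cope with real expressions.

```rust
/// Bindings from one iteration of the repeated sub-pattern `$n:ident: $e:expr`.
struct PairMatch {
    n: String,
    e: String,
}

/// Bindings for the enclosing pattern: each metavariable inside the
/// repetition becomes a Vec of the per-iteration values.
#[derive(Debug, Default, PartialEq)]
struct Matches {
    n: Vec<String>,
    e: Vec<String>,
}

impl Matches {
    /// Move the bindings of one iteration out of the inner scope.
    fn hoist(&mut self, inner: PairMatch) {
        self.n.push(inner.n);
        self.e.push(inner.e);
    }
}

/// Match input shaped like `a: 1, b: x + 2` against `$($n:ident: $e:expr),*`.
fn match_pairs(input: &str) -> Option<Matches> {
    let mut outer = Matches::default();
    // `*` allows zero repetitions, so empty input is a valid match.
    if input.trim().is_empty() {
        return Some(outer);
    }
    for item in input.split(',') {
        let (n, e) = item.split_once(':')?;
        outer.hoist(PairMatch {
            n: n.trim().to_string(),
            e: e.trim().to_string(),
        });
    }
    Some(outer)
}
```

An optional repeat (`?`) would hoist into an `Option` instead of a `Vec`, which is one reason separate code is needed for each kind of repeat.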


Hacks.Mozilla.Org: Augmented Reality and the Browser — An App Experiment

We all want to build the next (or perhaps the first) great Augmented Reality app. But there be dragons! The space is new and not well defined. There aren’t any AR apps that people use every day to serve as starting points or examples. Your new ideas have to compete against an already very high quality bar of traditional 2d apps. And building a new app can be expensive, especially for native app environments. This makes AR apps still somewhat uncharted territory, requiring a higher initial investment of time, talent and treasure.

But this also creates a sense of opportunity; a chance to participate early before the space is fully saturated.

From our point of view the questions are: What kinds of tools do artists, developers, designers, entrepreneurs and creatives of all flavors need to be able to easily make augmented reality experiences? What kinds of apps can people build with tools we provide?

For example: Can I watch Trevor Noah on the Daily Show this evening, and then release an app tomorrow that is a riff on a joke he made the previous night? A measure of success is being able to speak in rich media quickly and easily, to be a timely part of a global conversation.

With Blair MacIntyre‘s help I wrote an experiment to play-test a variety of ideas exploring these questions. In this comprehensive post-mortem I’ll review the app we made, what we learned and where we’re going next.

Finding “good” use cases

To answer some of the above questions, we started out surveying AR and VR developers, asking them their thoughts and observations. We had some rules of thumb. What we looked for were AR use cases that people value, that are meaningful enough, useful enough, make enough of a difference, that they might possibly become a part of people’s lives.

Existing AR apps also provided inspiration. One simple AR app I like for example is AirMeasure, which is part of a family of similar apps such as the Augmented Reality Measuring Tape. I use it once or twice a month and while not often, it’s incredibly handy. It’s an app with real utility and has 6500 reviews on the App Store – so there’s clearly some appetite already.

Sean White, Mozilla’s Chief R&D Officer, has a very specific definition for an MVP (minimum viable product). He asks: What would 100 people use every day?

When I hear this, I hear something like: What kind of experience is complete, compelling, and useful enough, that even in an earliest incarnation it captures a core essential quality that makes it actually useful for 100 real world people, with real world concerns, to use daily even with current limitations? Shipping can be hard, and finding those first users harder.

Browser-based AR

New Pixel phones, iPhones and other emerging devices such as the Magic Leap already support Augmented Reality. They report where the ground is, where walls are, and answer other environment-sensing questions critical for AR. They support pass-through vision and 3d tracking and registration. Emerging standards, notably WebXR, will soon expose these powers to the browser in a standards-based way, much like the way other hardware features are built and made available in the browser.

Native app development toolchains are excellent but there is friction. It can be challenging to jump through the hoops required to release a product across several different app stores or platforms. Costs that are reasonable for a AAA title may not be reasonable for a smaller project. If you want to knock out an app tonight for a client tomorrow, or post an app as a response to an article in the press or a current event— it can take too long.

With AR support coming to the browser there’s an option now to focus on telling the story rather than worrying about the technology, costs and distribution. Browsers historically offer lower barriers to entry, and instant deployment to millions of users, unrestricted distribution and a sharing culture. Being able to distribute an app at the click of a link, with no install, lowers the activation costs and enables virality. This complements other development approaches, and can be used for rapid prototyping of ideas as well.

ARPersist – the idea

In our experiment we explored what it would be like to decorate the world with virtual post-it notes. These notes can be posted from within the app, and they stick around between play sessions. Players can in fact see each other, and can see each other moving the notes in real time. The notes are geographically pinned and persist forever.

Using our experiment, a company could decorate their office with hints about how the printers work, or show navigation breadcrumbs to route a bewildered new employee to a meeting. Alternatively, a vacationing couple could walk into an AirBNB, open an “ARBNB” app (pardon the pun) and view post-it notes illuminating where the extra blankets are or how to use the washer.

We had these kinds of aspirational use case goals for our experiment:

- Office interior navigation: Imagine an office decorated with virtual hints and possibly also with navigation support. Often a visitor or corporate employee shows up in an unfamiliar place — such as a regional Mozilla office or a conference hotel or even a hospital – and they want to be able to navigate that space quickly. Meeting rooms are on different floors — often with quirky names that are unrelated to location. A specific hospital bed with a convalescing friend or relative could be right next door or up three flights and across a walkway. I’m sure we’ve all struggled to find bathrooms, or the cafeteria, or that meeting room. And even when we’ve found what we want – how does it work, who is there, what is important? Take the simple example of a printer. How many of us have stood in front of a printer for too long trying to figure out how to make a single photocopy?

- Interactive information for house guests: Being a guest in a person’s home can be a lovely experience. AirBNB does a great job of fostering trust between strangers. But is there a way to communicate all the small details of a new space? How to use the Nest sensor, how to use the fancy dishwasher? Where is the spatula? Where are extra blankets? An AirBNB or shared rental could be decorated with virtual hints. An owner walks around the space and posts up virtual post-it notes attached to some of the items, indicating how appliances work. A machine-assisted approach also is possible – where the owner walks the space with the camera active, opens every drawer and lets the machine learning algorithm label and memorize everything. Or, imagine a real-time variation where your phone tells you where the cat is, or where your keys are. There’s a collaborative possibility as well here, a shared journal, where guests could leave hints for each other — although this does open up some other concerns which are tricky to navigate – and hard to address.

- Public retail and venue navigation: These ideas could also work in a shopping scenario to direct you to the shampoo, or in a scenario where you want to pinpoint friends in a sports coliseum or concert hall or other visually noisy venue.

ARPersist – the app

Taking these ideas, we wrote a standalone app for the iPhone 6S or higher, which you can try at arpersist.glitch.me. You can play with the source code at https://github.com/anselm/arpersist.

Here’s a short video of the app running, which you might have seen some days ago in my tweet:

And more detail on how to use the app if you want to try it yourself:

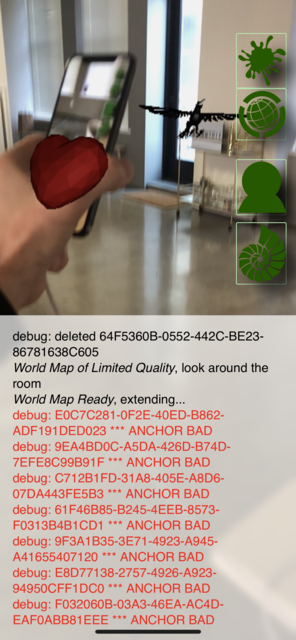

Here’s an image of looking at the space through the iPhone display:

And an image of two players – each player can see the other player’s phone in 3d space and a heart placed on top of that in 3d:

You’ll need the WebXR Viewer for iOS, which you can get on the iTunes store. (WebXR standards are still maturing so this doesn’t yet run directly in most browsers.)

This work is open source, it’s intended to be re-used and intended to be played with, but also — because it works against non-standard browser extensions — it cannot be treated as something that somebody could build a commercial product with (yet).

The videos embedded above offer a good description. Basically, you open ARPersist by going to arpersist.glitch.me in the WebXR Viewer linked above, on an iPhone 6s or higher. This drops you into a pass-through vision display. You’ll see a screen with four buttons on the right. The “seashell” button at the bottom takes you to a page where you can load and save maps. You’ll want to “create an anchor” and optionally “save your map”. At this point, from the main page, you can use the top icon to add new features to the world. Objects you place are going to stick to the nearest floor or wall. If you join somebody else’s map, or are at a nearby geographical location, you can see other players as well in real time.

This app features downloadable 3d models from Sketchfab. These are the assets I’m using:

What went well

Coming out of that initial phase of development I’ve had many surprising realizations, and even a few eureka moments. Here’s what went well, which I describe as essential attributes of the AR experience:

- Webbyness. Doing AR in a web app is very very satisfying. This is good news because (in my opinion) mobile web apps more typically reflect how developers will create content in the future. Of course there are still open questions, such as payment models and the difficulty of encrypting or obfuscating art assets if those assets are valuable. For example, a developer can buy a 3d model off the web and trivially incorporate that model into a web app, but it’s not yet clear how to do this without violating licensing terms around re-distribution, or how to compensate creators per use.

- Hinting. This was a new insight. It turns out semantic hints are critical, both for intelligently decorating your virtual space with objects but also for filtering noise. By hints I mean being able to say that the intent of a virtual object is that it should be shown on the floor, or attached to a wall, or on top of the watercooler. There’s a difference between simply placing something in space and understanding why it belongs in that position. Also, what quickly turns up is an idea of priorities. Some virtual objects are just not as important as others. This can depend on the user’s context. There are different layers of filtering, but ultimately you have some collection of virtual objects you want to render, and those objects need to argue amongst themselves which should be shown where (or not at all) if they collide. The issue isn’t the contention resolution strategy — it’s that the objects themselves need to provide rich metadata so that any strategies can exist. I went as far as classifying some of the kinds of hints that would be useful. When you make a new object there are some toggle fields you can set to help with expressing your intention around placement and priority.

- Server/Client models. In serving AR objects to the client a natural client server pattern emerges. This model begins to reflect a traditional RSS pattern — with many servers and many clients. There’s a chance here to try and avoid some of the risky concentrations of power and censorship that we see already with existing social networks. This is not a new problem, but an old problem that is made more urgent. AR is in your face — and preventing centralization feels more important.

- Login/Signup. Traditional web apps have a central sign-in concept. They manage your identity for you, and you use a password to sign into their service. However, today it’s easy enough to push that back to the user.

This gets a bit geeky — but the main principle is that if you use modern public key cryptography to self-sign your own documents, then a central service is not needed to validate your identity. Here I implemented a public/private keypair system similar to Metamask. The strategy is that the user provides a long phrase and then I use Ian Coleman’s Mnemonic Code Converter bip39 to turn that into a public/private keypair. (In this case, I am using bitcoin key-signing algorithms.)

In my example implementation, a given keypair can be associated with a given collection of objects, and it helps prune a core responsibility away from any centralized social network. Users self-sign everything they create.

- 6DoF control. It can be hard to write good controls for translating, rotating and scaling augmented reality objects through a phone. But towards the end of the build I realized that the phone itself is a 6dof controller. It can be a way to reach, grab, move and rotate — and vastly reduce the labor of building user interfaces. Ultimately I ended up throwing out a lot of complicated code for moving, scaling and rotating objects and replaced it simply with a single power, to drag and rotate objects using the phone itself. Stretching came for free — if you tap with two fingers instead of one finger then your finger distance is used as the stretch factor.

- Multiplayer. It is pretty neat having multiple players in the same room in this app. Each of the participants can manipulate shared objects, and each participant can be seen as a floating heart in the room — right on top of where their phone is in the real world. It’s quite satisfying. There wasn’t a lot of shared compositional editing (because the app is so simple) but if the apps were more powerful this could be quite compelling.
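The “Hinting” point above says that objects need to carry enough metadata for some contention strategy to exist. A minimal sketch of that idea follows. It is not ARPersist’s code: the `Placement` and `VirtualObject` types, the grid `cell` used to detect collisions, and the `resolve` function are all assumptions made for illustration, and "highest priority wins" is just one possible strategy.

```rust
use std::collections::HashMap;

/// Where an object is meant to appear (the semantic hint).
#[derive(Clone, Copy, Debug, PartialEq, Eq, Hash)]
enum Placement {
    Floor,
    Wall,
    OnTopOf(&'static str),
}

/// A virtual object with the metadata a resolution strategy can use.
#[derive(Clone, Debug, PartialEq)]
struct VirtualObject {
    name: &'static str,
    placement: Placement,
    priority: u8,
    /// Coarse grid cell; two objects with the same placement and cell collide.
    cell: (i32, i32),
}

/// One possible strategy: when objects collide, show only the one with the
/// highest priority (the earlier object wins ties). The result is sorted by
/// descending priority, then by name.
fn resolve(objects: &[VirtualObject]) -> Vec<VirtualObject> {
    let mut winners: HashMap<(Placement, (i32, i32)), &VirtualObject> = HashMap::new();
    for obj in objects {
        let key = (obj.placement, obj.cell);
        let replace = winners
            .get(&key)
            .map_or(true, |current| obj.priority > current.priority);
        if replace {
            winners.insert(key, obj);
        }
    }
    let mut shown: Vec<VirtualObject> = winners.into_values().cloned().collect();
    shown.sort_by(|a, b| b.priority.cmp(&a.priority).then(a.name.cmp(b.name)));
    shown
}

/// Hypothetical scene: a help note and an ad compete for the same wall
/// spot, while an arrow sits on the floor.
fn example_scene() -> Vec<VirtualObject> {
    vec![
        VirtualObject { name: "printer help", placement: Placement::Wall, priority: 5, cell: (0, 0) },
        VirtualObject { name: "ad", placement: Placement::Wall, priority: 1, cell: (0, 0) },
        VirtualObject { name: "arrow", placement: Placement::Floor, priority: 3, cell: (0, 0) },
    ]
}
```

Because the hints live on the objects themselves, a different client could apply a different strategy, for example weighting priority by the user's current context, without changing the stored data.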

Challenges that remain

We also identified many challenges. Here are some of the ones we faced:

- Hardware. There’s a fairly strong signal that Magic Leap or Hololens will be better platforms for this experience. Phones just are not a very satisfying way to manipulate objects in Augmented Reality. A logical next step for this work is to port it to the Magic Leap or the Hololens or both or other similar emerging hardware.

- Relocalization. One serious, near-blocking problem was poor relocalization. Between successive runs I couldn’t reestablish where the phone was. Relocalization, my device’s ability to accurately learn its position and orientation in real world space, was unpredictable. Sometimes it would work many times in a row when I ran the app. Sometimes I couldn’t establish relocalization once in an entire day. It appears that optimal relocalization is demanding, and requires very bright sunlight, stable lighting conditions and jumbled, sharp-edged geometry. Relocalization on passive optics is too hard and it disrupts the feeling of continuity — being able to quit the app and restart it, or enabling multiple people to share the same experience from their own devices. I played with a work-around, which was to let users manually relocalize — but I think this still needs more exploration.

This is ultimately a hardware problem. Apple/Google have done an unbelievable job with pure software but the hardware is not designed for the job. Probably the best short-term answer is to use a QRCode. A longer term answer is to just wait a year for better hardware. Apparently next-gen iPhones will have active depth sensors and this may be an entirely solved problem in a year or two. (The challenge is that we want to play with the future before it arrives — so we do need some kind of temporary solution for now.)

- Griefing. Although my test audience was too small to have any griefers — it was pretty self-evident that any canonical layer of reality would instantly be filled with graphical images that could be offensive or not safe for work (NSFW). We have to find a way to allow for curation of layers. Spam and griefing are important to prevent but we don’t want to censor self-expression. The answer here was to not have any single virtual space but to let people self select who they follow. I could see roles emerging for making it easy to curate and distribute leadership roles for curation of shared virtual spaces — similar to Wikipedia.

- Empty spaces. AR is a lonely world when there is nobody else around. Without other people nearby it’s just not a lot of fun to decorate space with virtual objects at all. So much of this feels social. A thought here is that it may be better, and possible, to create portals that wire together multiple AR spaces — even if those spaces are not actually in the same place — in order to bring people together to have a shared consensus. This begins to sound more like VR in some ways but could be a hybrid of AR and VR together. You could be at your house, and your friend at their house, and you could join your rooms together virtually, and then see each other’s post-it notes or public virtual objects in each other’s spaces (attached to the nearest walls or floors based on the hints associated with those objects).

- Security/Privacy. Entire posts could be written on this topic alone. The key issue is that sharing a map to a server, that somebody else can then download, means leaking private details of your own home or space to other parties. Some of this simply means notifying the user intelligently — but this is still an open question and deserves thought.

- Media Proxy. We’re fairly used to being able to cut and paste links into slack or into other kinds of forums, but the equivalent doesn’t quite yet exist in VR/AR, although the media sharing feature in Hubs, Mozilla’s virtual reality chat system and social environment, is a first step. It would be handy to paste not only 3d models but also PDFs, videos and the like. There is a highly competitive anti-sharing war going on between rich media content providers and entities that want to allow and empower sharing of content. Take the example of iframely, a service that aims to simplify and optimize rich media sharing between platforms and devices.

Next steps

Here’s where I feel this work will go next:

- Packaging. Although the app works “technically” it isn’t that user friendly. There are many UI assumptions. When capturing a space one has to let the device capture enough data before saving a map. There’s no real interface for deleting old maps. The debugging screen, which provides hints about the system state, is fairly incomprehensible to a novice. Basically the whole acquisition and tracking phase should “just work” and right now it requires a fair level of expertise. The right way to exercise a more cohesive “package” is to push this experience forward as an actual app for a specific use case. The AirBNB decoration use case seems like the right one.

- HMD (Head-mounted display) support. Magic Leap or Hololens or possibly even Northstar support. The right place for this experience is in real AR glasses. This is now doable and it’s worth doing. Granted every developer will also be writing the same app, but this will be from a browser perspective, and there is value in a browser-based persistence solution.

- Embellishments. There are several small features that would be quick easy wins. It would be nice to show contrails of where people moved through space for example. As well it would be nice to let people type in or input their own text into post-it notes (right now you can place gltf objects off the net or images). And it would be nice to have richer proxy support for other media types as mentioned. I’d like to clarify some licensing issues for content as well in this case. Improving manual relocalization (or using a QRCode) could help as well.

- Navigation. I didn’t do the in-app route-finding and navigation; it’s one more piece that could help tell the story. I felt it wasn’t as critical as basic placement — but it helps argue the use cases.

- Filtering. We had aspirations around social networking, such as filtering by peers, that we just didn’t get to test out. This would be important in the future.

Several architecture observations

This research wasn’t just focused on user experience but also explored internal architecture. As a general rule I believe that the architecture behind an MVP should reflect a mature partitioning of jobs that the fully-blown app will deliver. In nascent form, the MVP has to architecturally reflect a larger code base. The current implementation of this app consists of these parts (which I think reflect important parts of a more mature system):

- Cloud Content Server. A server must exist which hosts arbitrary data objects from arbitrary participants. We needed some kind of hosting that people can publish content to. In a more mature universe there could be many servers. Servers could just be WordPress, and content could just be GeoRSS. Right now however I have a single server — but at the same time that server doesn’t have much responsibility. It is just a shared database. There is a third party ARCloud initiative which speaks to this as well.

- Content Filter. Filtering content is an absurdly critical MVP requirement. We must be able to show that users can control what they see. I imagine this filter as a perfect agent, a kind of copy of yourself that has the time to carefully inspect every single data object and ponder if it is worth sharing with you or not. The content filter is a proxy for you, your will. It has perfect serendipity, perfect understanding and perfect knowledge of all things. The reality of course falls short of this — but that’s my mental model of the job here. The filter can exist on device or in the cloud.

- Renderer. The client-side rendering layer deals with painting stuff on your field of view. It deals with contention resolution between objects competing for your attention. It handles presentation semantics — that some objects want to be shown in certain places — as well as ideas around fundamental UX paradigms for how people will interact with AR. Basically it invents an AR desktop — a fundamental AR interface — for mediating human interaction. Again of course, we can’t do all this, but that’s my mental model of the job here.

- Identity Management. This is unsolved for the net at large and is destroying communication on the net. It’s arguably one of the most serious problems in the world today because if we can’t communicate, and know that other parties are real, then we don’t have a civilization. It is a critical problem for AR as well because you cannot have spam and garbage content in your face. The approach I mentioned above is to have users self-sign their utterances. On top of this would be conventional services to build up follow lists of people (or what I call emitters) and then arbitration between those emitters using a strategy to score emitters based on the quality of what they say, somewhat like a weighted contextual network graph.
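
To make the content filter and follow-list ideas a little more concrete, here is a minimal sketch under stated assumptions. The names `SignedObject` and `filter_objects`, the numeric trust weights, and the threshold are all hypothetical and are not part of the app described here. The sketch also assumes each object's signature has already been verified, so the `emitter` field can be trusted.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class SignedObject:
    emitter: str  # hypothetical: fingerprint of the key that signed the object
    payload: str  # hypothetical: the content to place in the scene


def filter_objects(
    objects: List[SignedObject],
    follow_weights: Dict[str, float],
    threshold: float = 0.5,
) -> List[SignedObject]:
    """Keep objects from followed emitters at or above the threshold,
    ordered from most trusted to least trusted emitter."""
    visible = [
        obj for obj in objects
        if follow_weights.get(obj.emitter, 0.0) >= threshold
    ]
    return sorted(visible, key=lambda obj: follow_weights[obj.emitter], reverse=True)


# Hypothetical follow list: unknown emitters default to a weight of 0.
follows = {"alice": 0.9, "bob": 0.3, "carol": 0.6}
scene = [
    SignedObject("bob", "graffiti"),
    SignedObject("carol", "post-it note"),
    SignedObject("mallory", "spam"),
    SignedObject("alice", "3d model"),
]
for obj in filter_objects(scene, follows):
    print(obj.emitter, obj.payload)
```

A real system would compute the weights from the network graph rather than hard-coding them. The point of the sketch is only that the user's follow list, not a central moderator, decides what is shown.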

An architectural observation regarding geolocation of all objects

One other technical point deserves a bit more elaboration. Before we started we had to answer the question of “how do we represent or store the location of virtual objects?”. Perhaps this isn’t a great conversation starter at the pub on a Saturday night, but it’s important nevertheless.

We take so many things for granted in the real world – signs, streetlights, buildings. We expect them to stick around even when you look away. But programming is like universe building, you have to do everything by hand.

The approach we took may seem obvious: to define object position with GPS coordinates. We give every object a latitude, longitude and elevation (as well as orientation).

But the gotcha is that phones today don’t have precise geolocation. We had to write a wrapper of our own. When users start our app we build up (or load) an augmented reality map of the area. That map can be saved back to a server with a precise geolocation. Once there is a map of a room, then everything in that map is also very precisely geo-located. This means everything you place or do in our app is in fact specified in earth global coordinates.
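
As an illustration of how a position inside a saved map can become a global coordinate, here is a rough sketch. It assumes the map's anchor has a known latitude, longitude and elevation, that object offsets are expressed in meters east, north and up of that anchor, and that the room is small enough for a flat-earth approximation. The names `GeoPose` and `local_to_geo` are made up for this example and do not come from the app.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_378_137.0  # WGS84 equatorial radius, in meters


@dataclass
class GeoPose:
    lat_deg: float
    lon_deg: float
    elevation_m: float


def local_to_geo(anchor: GeoPose, east_m: float, north_m: float, up_m: float) -> GeoPose:
    """Convert an offset from the map anchor into global coordinates.

    Uses a small-offset approximation that treats the ground as flat
    around the anchor, which is reasonable at room scale.
    """
    dlat = math.degrees(north_m / EARTH_RADIUS_M)
    dlon = math.degrees(
        east_m / (EARTH_RADIUS_M * math.cos(math.radians(anchor.lat_deg)))
    )
    return GeoPose(anchor.lat_deg + dlat, anchor.lon_deg + dlon, anchor.elevation_m + up_m)


# Hypothetical anchor: an object placed 2 m east, 3 m north and 1 m above it.
room_anchor = GeoPose(lat_deg=45.0, lon_deg=-122.0, elevation_m=20.0)
print(local_to_geo(room_anchor, east_m=2.0, north_m=3.0, up_m=1.0))
```

Orientation would need the same treatment (a rotation from the map frame to a north-aligned frame), which is omitted here.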

Blair points out that although modern smartphones (or devices) today don’t have very accurate GPS, this is likely to change soon. We expect that in the next year or two GPS will become hyper-precise – augmented by 3d depth maps of the landscape – making our wrapper optional.

Conclusions

Our exploration has been taking place in conversation and code. Personally I enjoy this praxis — spending some time talking, and then implementing a working proof of concept. Nothing clarifies thinking like actually trying to build an example.

At the 10,000 foot view, at the idealistic end of the spectrum, it is becoming obvious that we all have different ideas of what AR is or will be. The AR view I crave is one of many different information objects from many different providers — personal reminders, city traffic overlays, weather bots, friend location notifiers, contrails of my previous trajectories through space etc. It feels like a creative medium. I see users wanting to author objects, where different objects have different priorities, where different objects are “alive” — that they have their own will, mobility and their own interactions with each other. In this way an AR view echoes a natural view of the default world — with all kinds of entities competing for our attention.

Stepping back even further — at a 100,000 foot view — there are several fundamental communication patterns that humans use creatively. We use visual media (signage) and we use audio (speaking, voice chat). We have high-resolution high-fidelity expressive capabilities, that includes our body language, our hand gestures, and especially a hugely rich facial expressiveness. We also have text-based media — and many other kinds of media. It feels like when anybody builds a communication medium that easily allows humans to channel some of their high-bandwidth needs over that pipeline, that medium can become very popular. Skype, messaging, wikis, even music — all of these things meet fundamental expressive human drives; they are channels for output and expressiveness.

In that light a question that’s emerging for me is “Is sharing 3D objects in space a fundamental communication medium?”. If so then the question becomes more “What are reasons to NOT build a minimal capability to express the persistent 3d placement of objects in space?”. Clearly work needs to make money and be sustainable for people who make the work. Are we tapping into something fundamental enough, valuable enough, even in early incarnations, that people will spend money (or energy) on it? I posit that if we help express fundamental human communication patterns — we all succeed.

What’s surprising is the power of persistence. When the experience works well I have the mental illusion that my room indeed has these virtual images and objects in it. Our minds seem deeply fooled by the illusion of persistence. Similar to using the Magic Leap there’s a sense of “magic” — the sense that there’s another world — that you can see if you squint just right. Even after you put down the device that feeling lingers. Augmented Reality is starting to feel real.

The post Augmented Reality and the Browser — An App Experiment appeared first on Mozilla Hacks - the Web developer blog.

|

|

The Mozilla Blog: Evolving Firefox’s Culture of Experimentation: A Thank You from the Test Pilot Program |

For the last three years Firefox has invested heavily in innovation, and our users have been an essential part of this journey. Through the Test Pilot Program, Firefox users have been able to help us test and evaluate a variety of potential Firefox features. Building on the success of this program, we’re proud to announce today that we’re evolving our approach to experimentation even further.

Lessons Learned from Test Pilot

Test Pilot was designed to harness the energy of our most passionate users. We gave them early prototypes and product explorations that weren’t ready for wide release. In return, they gave us feedback and patience as these projects evolved into the highly polished features within our products today. Through this program we have been able to iterate quickly, try daring new things, and build products that our users have been excited to embrace.

Graduated Features

Since the beginning of the Test Pilot program, we’ve built or helped build a number of popular Firefox features. Activity Stream, which now features prominently on the Firefox homepage, was in the first round of Test Pilot experiments. Activity Stream brought new life to an otherwise barren page and made it easier to recall and discover new content on the web. The Test Pilot team continued to draw the attention of the press and users alike with experiments like Containers that paved the way for our highly successful Facebook Container. Send made private, encrypted, file sharing as easy as clicking a button. Lockbox helped you take your Firefox passwords to iOS devices (and soon to Android). Page Shot started as a simple way to capture and share screenshots in Firefox. We shipped the feature now known as Screenshots and have since added our new approach to anti-tracking that first gained traction as a Test Pilot experiment.

So what’s next?

Test Pilot performed better than we could have ever imagined. As a result of this program we’re now in a stronger position where we are using the knowledge that we gained from small groups, evangelizing the benefits of rapid iteration, taking bold (but safe) risks, and putting the user front and center.

We’re applying these valuable lessons not only to continued product innovation, but also to how we test and ideate across the Firefox organization. So today, we are announcing that we will be moving to a new structure that will demonstrate our ability to innovate in exciting ways and as a result we are closing the Test Pilot program as we’ve known it.

More user input, more testing

Migrating to a new model doesn’t mean we’re doing fewer experiments. In fact, we’ll be doing even more! The innovation processes that led to products like Firefox Monitor are no longer the responsibility of a handful of individuals but rather the entire organization. Everyone is responsible for maintaining the Culture of Experimentation Firefox has developed through this process. These techniques and tools have become a part of our very DNA and identity. That is something to celebrate. As such, we won’t be uninstalling any experiments you’re using today. In fact, many of the Test Pilot experiments and features will find their way to Addons.Mozilla.Org, while others like Send and Lockbox will continue to take in more input from you as they evolve into standalone products.

We couldn’t do it without you

We want to thank Firefox users for their input and support of product features and functionality testing through the Test Pilot Program. We look forward to continuing to work closely with our users who are the reason we build Firefox in the first place. In the coming months look out for news on how you can get involved in the next stage of our experimentation.

In the meantime, the Firefox team will continue to focus on the next release and what we’ll be developing in the coming year, while other Mozillians chug away at developing equally exciting and user-centric product solutions and services. You can get a sneak peek at some of these innovations at Mozilla Labs, which touches everything from voice capability to IoT to AR/VR.

And so we say goodbye and thank you to Test Pilot for helping us usher in a bright future of innovation at Mozilla.

The post Evolving Firefox’s Culture of Experimentation: A Thank You from the Test Pilot Program appeared first on The Mozilla Blog.

|

|

Firefox Nightly: Moving to a Profile per Install Architecture |

With Firefox 67 you’ll be able to run different Firefox installs side by side by default.

Supporting profiles per installation is a feature that has been requested by pre-release users for a long time now and we’re pleased to announce that starting with Firefox 67 users will be able to run different installs of Firefox side by side without needing to manage profiles.

What are profiles?

Firefox saves information such as bookmarks, passwords and user preferences in a set of files called your profile. This profile is stored in a location separate from the Firefox program files.

More details on profiles can be found here.

What changes are we making to profiles in Firefox 67?

Previously, all Firefox versions shared a single profile by default. With Firefox 67, Firefox will begin using a dedicated profile for each Firefox version (including Nightly, Beta, Developer Edition, and ESR). This will make Firefox more stable when switching between versions on the same computer and will also allow you to run different Firefox installations at the same time:

- You have not lost any personal data or customizations. Any previous profile data is saved and associated with the first Firefox installation that was opened after this change.

- Starting with Firefox 67, Firefox installations will now have separate profiles. This will apply to Nightly 67 initially and then to all versions of release 67 and above as the change makes its way to Developer Edition, Beta, Firefox, and ESR.
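
The behavior described above can be modeled with a small toy example. This is only an illustration of the idea, not how Firefox actually stores or selects profiles: the `ProfileRegistry` class, the hashing of the install directory and the generated profile names are all assumptions made for the sketch.

```python
import hashlib
from typing import Dict, Optional


class ProfileRegistry:
    """Toy model: one dedicated profile per install location."""

    def __init__(self, legacy_profile: Optional[str] = None) -> None:
        # The single shared profile that existed before the change, if any.
        self._legacy = legacy_profile
        self._by_install: Dict[str, str] = {}

    @staticmethod
    def install_id(install_dir: str) -> str:
        # Hypothetical stable identifier derived from the install path.
        return hashlib.sha256(install_dir.encode("utf-8")).hexdigest()[:16]

    def profile_for(self, install_dir: str) -> str:
        key = self.install_id(install_dir)
        if key not in self._by_install:
            if self._legacy is not None:
                # The first install opened after the change keeps the old data.
                self._by_install[key] = self._legacy
                self._legacy = None
            else:
                self._by_install[key] = "profile-" + key
        return self._by_install[key]


registry = ProfileRegistry(legacy_profile="default")
print(registry.profile_for("/opt/firefox"))          # keeps the existing profile
print(registry.profile_for("/opt/firefox-nightly"))  # gets its own new profile
```

Each install keeps returning the same profile on later runs, which is what lets different installs run side by side without sharing data.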

What are my options?

If you do nothing, your profile data will be different on each version of Firefox.

If you would like the information you save to Firefox to be the same on all versions, you can use a Firefox Account to keep them in sync.

Sync is the easiest way to make your profiles consistent on all of your versions of Firefox. You also get additional benefits like sending tabs and secure password storage. Get started with Sync here.

You will not lose any personal data or customizations. Any previous profile data is safe and attached to the first Firefox installation that was opened after this change.

Users of only one Firefox install, or users of multiple Firefox installs who had already set different profiles for different installations, will not notice the change.

We really hope that this change will make it simpler for Firefox users to start running Nightly. If you come across a bug or have any suggestions we really welcome your input through our support channels.

What if I already use separate profiles for my different Firefox installations?

Users who have already manually created separate profiles for different installations will not notice the change (this has been the advised procedure on Nightly for a while).

https://blog.nightly.mozilla.org/2019/01/14/moving-to-a-profile-per-install-architecture/

|

|

Cameron Kaiser: TenFourFox FPR12b1 available |

Unfortunately, we continue to accumulate difficult-to-solve JavaScript bugs. The newest one is issue 541, which affects Github most severely and is hampering my ability to use the G5 to work in the interface. This one could be temporarily repaired with some ugly hacks and I'm planning to look into that for FPR13, but I don't have this proposed fix in FPR12 since it could cause parser regressions and more testing is definitely required. However, the definitive fix is the same one needed for the frustrating issue 533, i.e., the new frontend bindings introduced with Firefox 51. I don't know if I can do that backport (both with respect to the technical issues and the sheer amount of time required) but it's increasingly looking like it's necessary for full functionality and it may be more than I can personally manage.

Meanwhile, FPR12 is scheduled for parallel release with Firefox 60.5/65 on January 29. Report new issues in the comments (as always, please verify the issue doesn't also occur in FPR11 before reporting a new regression, since sites change more than our core does).

http://tenfourfox.blogspot.com/2019/01/tenfourfox-fpr12b1-available.html

|

|

The Servo Blog: This Week In Servo 123 |

In the past three weeks, we merged 72 PRs in the Servo organization’s repositories.

Congratulations to dlrobertson for their new reviewer status for the ipc-channel library!

Planning and Status

Our roadmap is available online. Plans for 2019 will be published soon.

This week’s status updates are here.

Exciting works in progress

- Maharsh is adding preliminary support for the OffscreenCanvas API.

- jdm is fixing the bug preventing Google from loading.

- sreeise is adding the DOM APIs for manipulating audio and video tracks.

Notable Additions

- nox improved the web compatibility of the MIME type parser.

- Manishearth removed some blocking behaviour from the WebXR implementation.

- Collares implemented the ChannelSplitterNode WebAudio API.

- makepost added musl support to the ipc-channel crate.

- aditj implemented several missing APIs for the resource timing standard.

- dlrobertson exposed the HTMLTrackElement API.

- ferjm added support for backoff to the media playback implementation.

- jdm implemented the missing source API for message events.

- ferjm improved the compatibility of the media playback DOM integration.

- germangc implemented missing DOM APIs for looping and terminating media playback.

New Contributors

Interested in helping build a web browser? Take a look at our curated list of issues that are good for new contributors!

|

|

Hacks.Mozilla.Org: Designing the Flexbox Inspector |

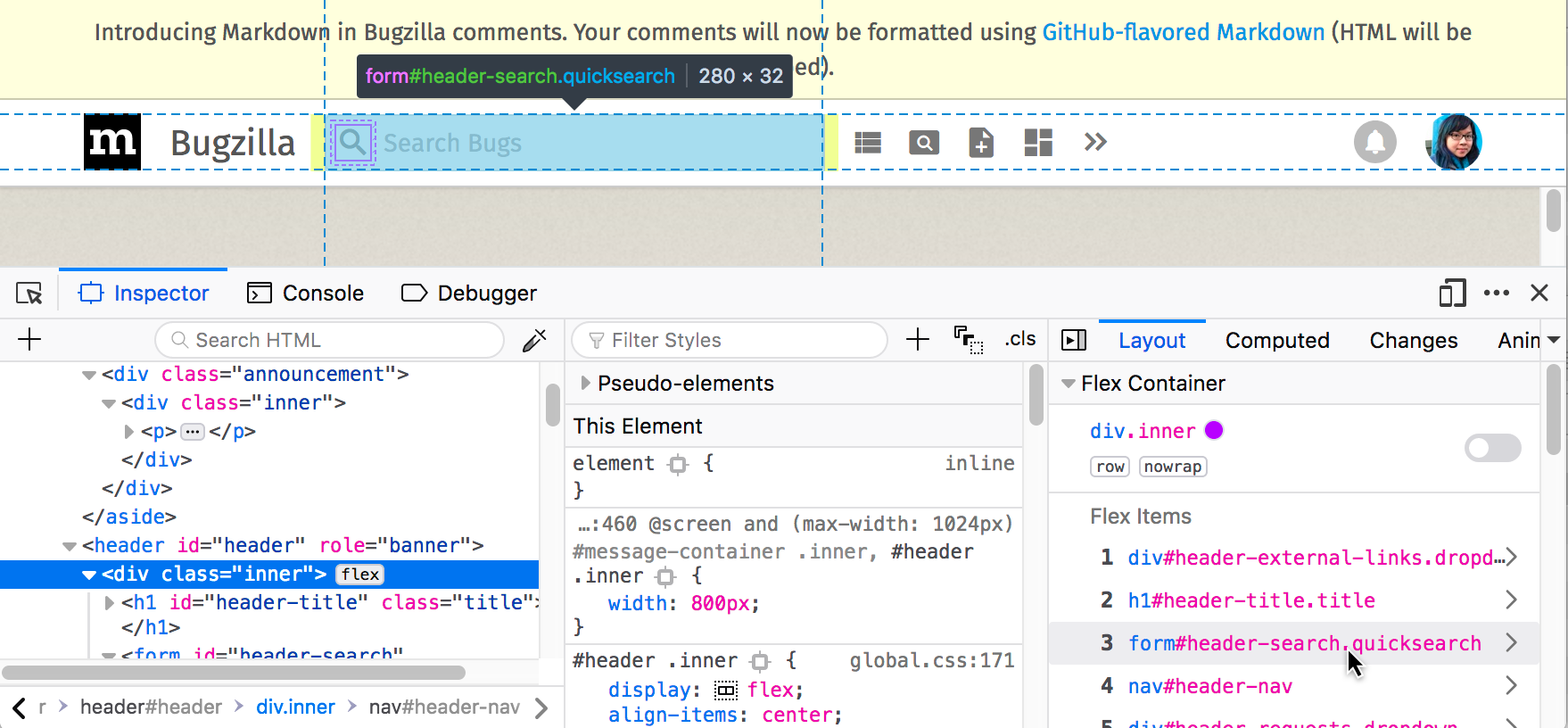

The new Flexbox Inspector, created by Firefox DevTools, helps developers understand the sizing, positioning, and nesting of Flexbox elements. You can try it out now in Firefox DevEdition or join us for its official launch in Firefox 65 on January 29th.

The UX challenges of this tool have been both frustrating and a lot of fun for our team. Built on the basic concepts of the CSS Grid Inspector, we sought to expand on the possibilities of what a design tool could be. I’m excited to share a behind-the-scenes look at the UX patterns and processes that drove our design forward.

Research and ideation

CSS Flexbox is an increasingly popular layout model that helps in building robust dynamic page layouts. However, it has a big learning curve—at the beginning of this project, our team wasn’t sure if we understood Flexbox ourselves, and we didn’t know what the main challenges were. So, we gathered data to help us design the basic feature set.

Our earliest research on design-focused tools included interviews with developer/designer friends and community members who told us they wanted to understand Flexbox better.

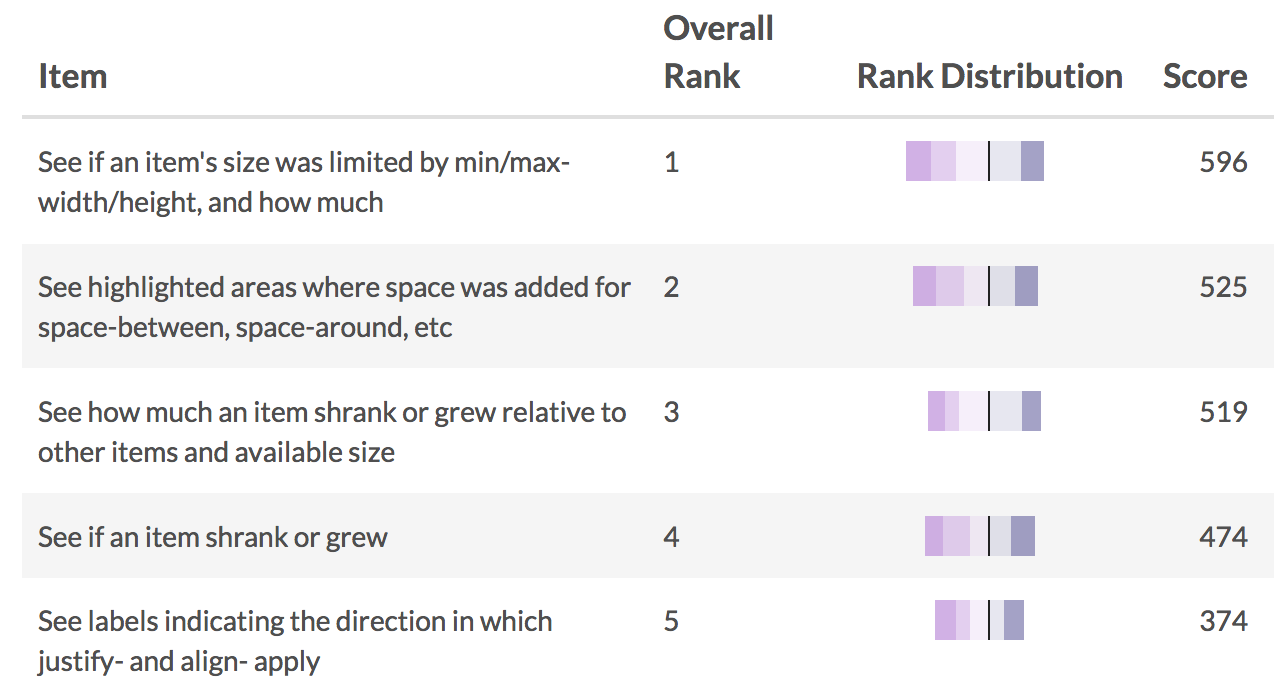

We also ran a survey to rank the Flexbox features folks most wanted to see. Min/max width and height constraints received the highest score. The ranking of shrink/grow features was also higher than we expected. This greatly influenced our plans, as we had originally assumed these more complicated features could wait for a version 2.0. It was clear however that these were the details developers needed most.

Most of the early design work took the form of spirited brainstorming sessions in video chat, text chat, and email. We also consulted the experts: Daniel Holbert, our Gecko engine developer who implemented the Flexbox spec for Firefox; Dave Geddes, CSS educator and creator of the Flexbox Zombies course; and Jen Simmons, web standards champion and designer of the Grid Inspector.

The discussions with friendly and passionate colleagues were among the best parts of working on this project. We were able to deep-dive into the meaty questions, the nitty-gritty details, and the far-flung ideas about what could be possible. As a designer, it is amazing to work with developers and product managers who care so much about the design process and have so many great UX ideas.

Visualizing a new layout model

After our info-gathering, we worked to build our own mental models of Flexbox.

While trying to learn Flexbox myself, I drew diagrams that show its different features.

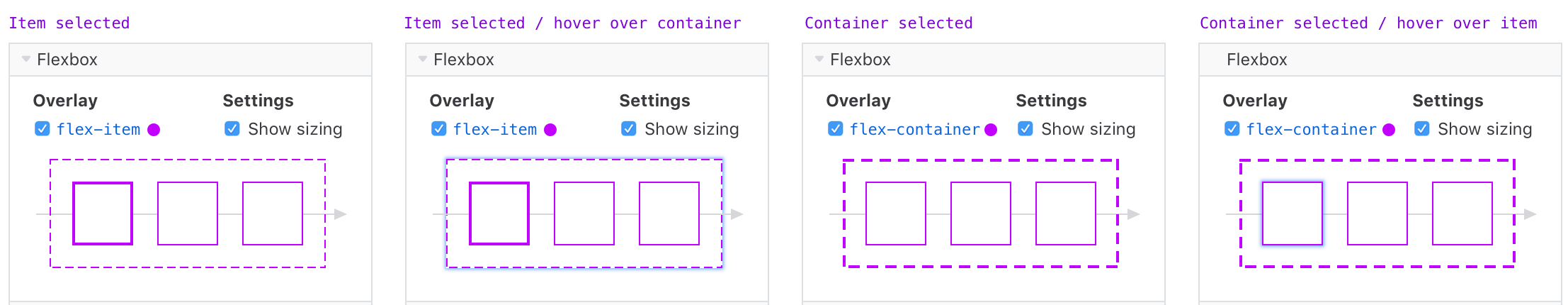

My colleague Gabriel created a working prototype of a Flexbox highlighter that greatly influenced our first launch version of the overlay. It’s a monochrome design similar to our Grid Inspector overlay, with a customizable highlight color to make it clearly visible on any website.

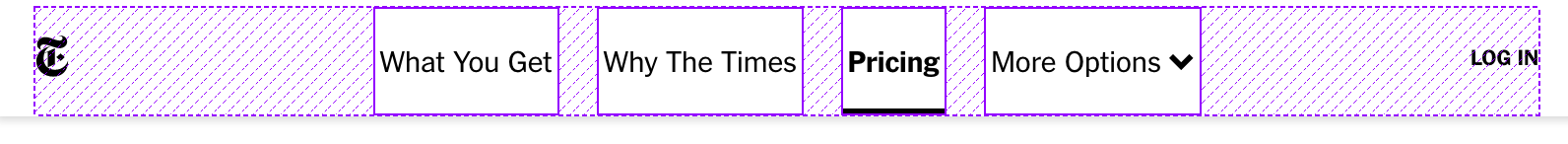

We use a dotted outline for the container, solid outlines for items, and diagonal shading between the items to represent the free space created by justify-content and margins.

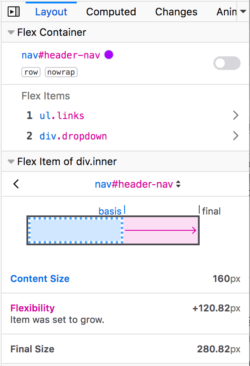

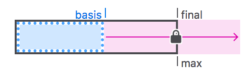

We got more adventurous with the Flexbox pane inside DevTools. The flex item diagram (or “minimap” as we love to call it) shows a visualization of basis, shrink/grow, min/max clamping, and the final size—each attribute appearing only if it’s relevant to the layout engine’s sizing decisions.

Many other design ideas, such as these flex container diagrams, didn’t make it into the final MVP, but they helped us think through the options and may get incorporated later.

Color-coded secrets of the rendering engine

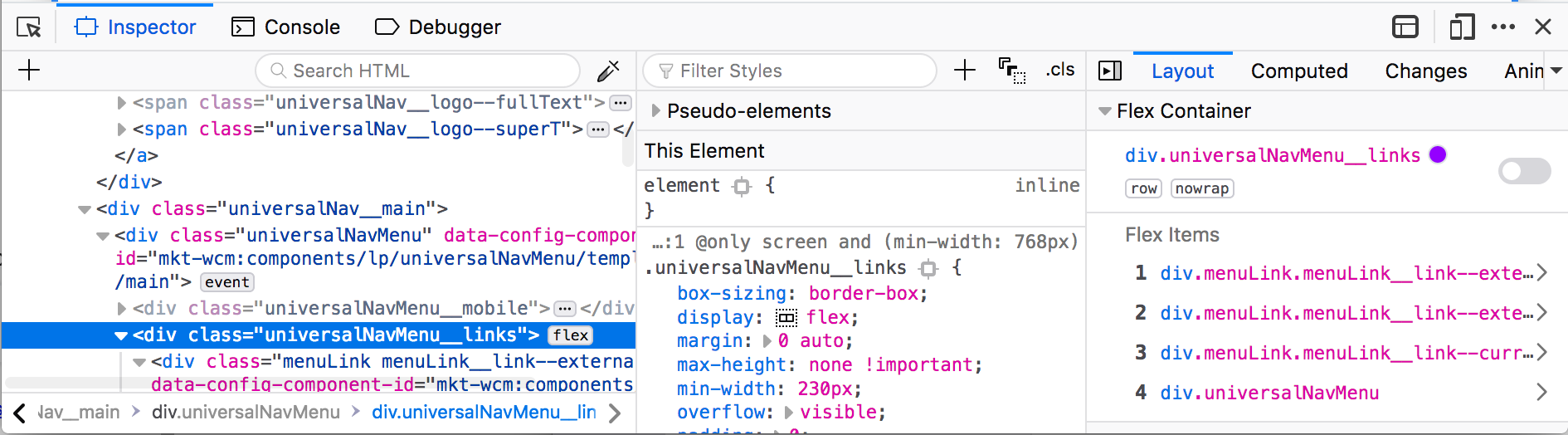

With help from our Gecko engineers, we were able to display a chart with step-by-step descriptions of how a flex item’s size is determined. Basic color-coding between the diagram and chart helps to connect the two UIs.
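
For readers who want a feel for the kind of steps such a chart walks through, here is a deliberately simplified sketch of flex item sizing along the main axis: start from each item's basis, distribute the free space by grow or shrink factors, then clamp to min/max. It is a single-pass approximation written for illustration. The CSS specification repeats the distribution after freezing clamped items and has extra rules for flex factors that sum to less than one, and this is not Gecko's implementation. `FlexItem` and `resolve_sizes` are names invented for the example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class FlexItem:
    basis: float                    # flex-basis, in pixels
    grow: float = 0.0               # flex-grow
    shrink: float = 1.0             # flex-shrink
    min_size: float = 0.0           # min-width / min-height
    max_size: float = float("inf")  # max-width / max-height


def resolve_sizes(container: float, items: List[FlexItem]) -> List[float]:
    """Single-pass approximation of flex sizing along the main axis."""
    free = container - sum(item.basis for item in items)
    sizes = []
    if free >= 0:
        # Extra space is shared in proportion to flex-grow.
        total_grow = sum(item.grow for item in items)
        for item in items:
            share = free * item.grow / total_grow if total_grow else 0.0
            sizes.append(item.basis + share)
    else:
        # Overflow is removed in proportion to flex-shrink times basis.
        total_scaled = sum(item.shrink * item.basis for item in items)
        for item in items:
            scaled = item.shrink * item.basis
            share = free * scaled / total_scaled if total_scaled else 0.0
            sizes.append(item.basis + share)
    # Min/max clamping is applied last; space freed by clamping is not
    # redistributed in this sketch.
    return [min(max(size, item.min_size), item.max_size)
            for size, item in zip(sizes, items)]


print(resolve_sizes(600, [FlexItem(100, grow=1), FlexItem(200, grow=3)]))
```

In the example call, 300 pixels of free space are split one to three between the two items.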

Markup badges and other entry points

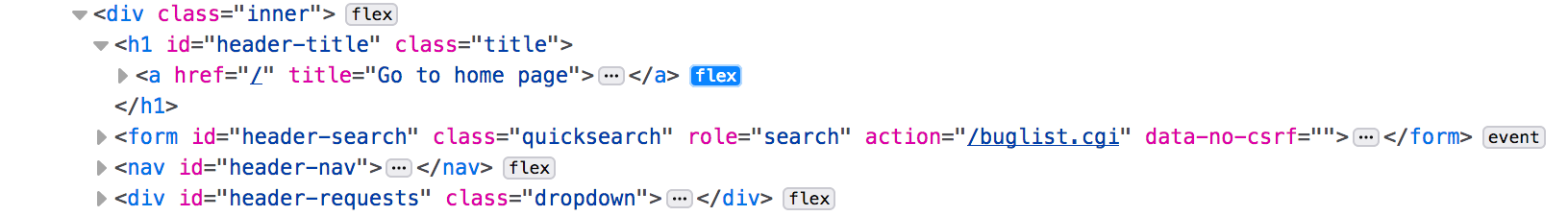

Flex badges in the markup view serve as indicators of flex containers as well as shortcuts for turning on the in-page overlay. Early data shows that this is the most common way to turn on the overlay; the toggle switch in the Layout panel and the button next to the display:flex declaration in Rules are two other commonly used methods. Having multiple entry points accommodates different workflows, which may focus on any one of the three Inspector panels.

Surfacing a brand new tool

Building new tools can be risky due to the presumption of modifying developers’ everyday workflows. One of my big fears was that we’d spend countless hours on a new feature only to hide it away somewhere inside the complicated megaplex that is Firefox Developer Tools. This could result in people never finding it or not bothering to navigate to it.

To invite usage, we automatically show Flexbox info in the Layout panel whenever a developer selects a flex container or item inside the markup view. The Layout panel will usually be visible by default in the third Inspector column which we added in Firefox 62. From there, the developer can choose to dig deeper into flex visualizations and relationships.

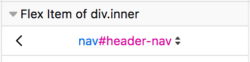

Mobile-inspired navigation & structure

One new thing we’re trying is a page-style navigation in which the developer goes “forward a page” to traverse down the tree (to child elements), or “back a page” to go up the tree (to parent elements). We’re also making use of a select menu for jumping between sibling flex items. Inspired by mobile interfaces, the Firefox hamburger menu, and other page-style UIs, it’s a big experimental departure from the simpler navigation normally used in DevTools.

One of the trickier parts of the structure was coming up with a cohesive design for flex containers, items, and nested container-items. My colleague Patrick figured out that we should have two types of flex panes inside the Layout panel, showing whichever is relevant: an Item pane or a Container pane. Both panes show up when the element is both a container and an item.

Tighter connection with in-page context

When hovering over element names inside the Flexbox panes, we highlight the element in the page, strengthening the connection between the code and the output without including extra ‘inspect’ icons or other steps. I plan to introduce more of this type of intuitive hover behavior into other parts of DevTools.

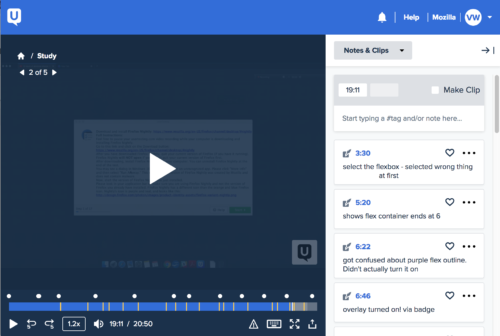

Testing and development

After lots of iteration, I created a high-fidelity prototype to share with our community channels. We received lots of helpful comments that fed back into the design.

We had our first foray into formal user testing, which was helpful in revealing the confusing parts of our tool. We plan to continue improving our user research process for all new projects.

Later this month, developers from our team will be writing a more technical deep-dive about the Flexbox Inspector. Meanwhile, here are some fun tidbits from the dev process:

- Lots and lots of issues were created in Bugzilla to organize every implementation task of the project.

- Silly test pages, like this one, created by my colleague Mike, were made to test out every Flexbox situation.

- Our team regularly used the tool in Firefox Nightly with various sites to dog-food the tool and find bugs.

What’s next

2018 was a big year for Firefox DevTools and the new Design Tools initiative. There were hard-earned lessons and times of doubt, but in the end, we came together as a team and we shipped!

We have more work to do in improving our UX processes, stepping up our research capabilities, and understanding the results of our decisions. We have more tools to build—better debugging tools for all types of CSS layouts and smoother workflows for CSS development. There’s a lot more we can do to improve the Flexbox Inspector, but it’s time for us to put it out into the world and see if we can validate what we’ve already built.

Now we need your help. It’s critical that the Flexbox Inspector gets feedback from real-world usage. Give it a spin in DevEdition, and let us know via Twitter or Discourse if you run into any bugs, ideas, or big wins.

Thanks to Martin Balfanz, Daniel Holbert, Patrick Brosset, and Jordan Witte for reviewing drafts of this article.

The post Designing the Flexbox Inspector appeared first on Mozilla Hacks - the Web developer blog.

https://hacks.mozilla.org/2019/01/designing-the-flexbox-inspector/

|

|

Mozilla GFX: WebRender newsletter #35 |

Bonsoir! Another week, another newsletter. I stealthily published WebRender on crates.io this week. This doesn’t mean anything in terms of API stability and whatnot, but it makes it easier for people to use WebRender in their own rust projects. Many asked for it so there it is. Everyone is welcome to use it, find bugs, report them, submit fixes and improvements even!

In other news we are initiating a notable workflow change: WebRender patches will land directly in Firefox’s mozilla-central repository and a bot will automatically mirror them on github. This change mostly affects the gfx team. What it means for us is that testing webrender changes becomes a lot easier as we don’t have to manually import every single work in progress commit to test it against Firefox’s CI anymore. Also Kats won’t have to spend a considerable amount of his time porting WebRender changes to mozilla-central anymore.

We know that interacting with mozilla-central can be intimidating for external contributors so we’ll still accept pull requests on the github repository although instead of merging them from there, someone in the gfx team will import them in mozilla-central manually (which we already had to do for non-trivial patches to run them against CI before merging). So for anyone who doesn’t work everyday on WebRender this workflow change is pretty much cosmetic. You are still welcome to keep following and interacting with the github repository.

Notable WebRender and Gecko changes

- Jeff fixed a recent regression that was causing blob images to be painted twice.

- Kats did the work to make the repository transition possible without losing any of the tools and testing we have in WebRender. He also set up the repository synchronization.

- Kvark completed the clipping API saga.

- Matt added some new telemetry for paint times that takes vsync into account.

- Matt fixed a bug with a telemetry probe that was mixing content and UI paint times.

- Andrew fixed an image flickering issue.

- Andrew fixed a bug with image decode size and pixel snapping.

- Lee fixed a crash in DWrite font rasterization.

- Lee fixed a bug related to transforms and clips.

- Emilio fixed a bug with clip path and nested clips.

- Glenn fixed caching of fixed position clips.

- Glenn improved the cached tile eviction heuristics (2).

- Glenn fixed an intermittent test failure.

- Glenn fixed caching with opacity bindings that are values.

- Glenn avoided caching tiles that always change.

- Glenn fixed a cache eviction issue.

- Glenn added a debugging overlay for picture caching.

- Nical reduced the overdraw when rendering dashed corners, which was causing freezes in extreme cases.

- Nical added the possibility to run wrench/scripts/headless.py (which lets us run CI under os-mesa) inside gdb, cgdb, rust-gdb and rr both with release and debug builds (see Debugging WebRender on wiki for more info about how to set this up).

- Nical fixed a blob image key leak.

- Sotaro fixed the timing of async animation deletion which addressed bug 1497852 and bug 1505363.

- Sotaro fixed a cache invalidation issue when the number of blob rasterization requests hits the per-transaction limit.

- Doug cleaned up WebRenderLayerManager’s state management.

- Doug fixed a lot of issues in WebRender when using multiple documents at the same time.

Ongoing work

The team keeps going through the remaining blockers (19 P2 bugs and 34 P3 bugs at the time of writing).

Enabling WebRender in Firefox Nightly

In about:config, set the pref “gfx.webrender.all” to true and restart the browser.

Reporting bugs

The best place to report bugs related to WebRender in Firefox is the Graphics :: WebRender component in bugzilla.

Note that it is possible to log in with a github account.

https://mozillagfx.wordpress.com/2019/01/10/webrender-newsletter-35/

|

|

The Mozilla Blog: Eric Rescorla Wins the Levchin Prize at the 2019 Real-World Crypto Conference |

The Levchin Prize awards two entrepreneurs every year for significant contributions to solving global, real-world cryptography issues that make the internet safer at scale. This year, we’re proud to announce that our very own Firefox CTO, Eric Rescorla, was awarded one of these prizes for his involvement in spearheading the latest version of Transport Layer Security (TLS). TLS 1.3 incorporates significant improvements in both security and speed, and was completed in August and already secures 10% of sites.

Eric has contributed extensively to many of the core security protocols used on the internet, including TLS, DTLS, WebRTC, ACME, and the in-development IETF QUIC protocol. Most recently, he was editor of TLS 1.3, which already secures 10% of websites despite having been finished for less than six months. He also co-founded Let’s Encrypt, a free and automated certificate authority that now issues more than a million certificates a day, in order to remove barriers to online encryption, and helped HTTPS grow from around 30% of the web to around 75%. Previously, he served on the California Secretary of State’s Top To Bottom Review, where he was part of a team that found severe vulnerabilities in multiple electronic voting devices.

The 2019 winners were selected by the Real-World Cryptography conference steering committee, which includes professors from Stanford University, University of Edinburgh, Microsoft Research, Royal Holloway University of London, Cornell Tech, University of Florida, University of Bristol, and NEC Research.

This prize was announced on January 9th at the 2019 Real-World Crypto Conference in San Jose, California. The conference brings together cryptography researchers and developers who are implementing cryptography on the internet, the cloud and embedded devices from around the world. The conference is organized by the International Association for Cryptologic Research (IACR) to strengthen and advance the conversation between these two communities.

For more information about the Levchin Prize visit www.levchinprize.com.

The post Eric Rescorla Wins the Levchin Prize at the 2019 Real-World Crypto Conference appeared first on The Mozilla Blog.

|

|

Mozilla Open Policy & Advocacy Blog: Our Letter to Congress About Facebook Data Sharing |

Last week Mozilla sent a letter to the House Energy and Commerce Committee concerning its investigation into Facebook’s privacy practices. We believe Facebook’s representations to the Committee — and more recently — concerning Mozilla are inaccurate and wanted to set the record straight about any past and current work with Facebook. You can read the full letter here.

The post Our Letter to Congress About Facebook Data Sharing appeared first on Open Policy & Advocacy.

https://blog.mozilla.org/netpolicy/2019/01/08/our-letter-to-congress-about-facebook/

|

|

The Mozilla Blog: Mozilla Announces Deal to Bring Firefox Reality to HTC VIVE Devices |

Last year, Mozilla set out to build a best-in-class browser that was made specifically for immersive browsing. The result was Firefox Reality, a browser designed from the ground up to work on virtual reality headsets. To kick off 2019, we are happy to announce that we are partnering with HTC VIVE to power immersive web experiences across Vive’s portfolio of devices.

What does this mean? It means that Vive users will enjoy all of the benefits of Firefox Reality (such as its speed, power, and privacy features) every time they open the Vive internet browser. We are also excited to bring our feed of immersive web experiences to every Vive user. There are so many amazing creators out there, and we are continually impressed by what they are building.

“This year, Vive has set out to bring everyday computing tasks into VR for the first time,” said Michael Almeraris, Vice President, HTC Vive. “Through our exciting and innovative collaboration with Mozilla, we’re closing the gap in XR computing, empowering Vive users to get more content in their headset, while enabling developers to quickly create content for consumers.”

Virtual reality is one example of how web browsing is evolving beyond our desktop and mobile screens. Here at Mozilla, we are working hard to ensure these new platforms can deliver browsing experiences that provide users with the level of privacy, ease-of-use, and control that they have come to expect from Firefox.

In the few months since we released Firefox Reality, we have already released several new features and improvements based on the feedback we’ve received from our users and content creators. In 2019, you will see us continue to prove our commitment to this product and our users with every update we provide.

Stay tuned to our mixed reality blog and twitter account for more details. In the meantime, you can check out all of the announcements from HTC Vive here.

If you have an all-in-one VR device running Vive Wave, you can search for “Firefox Reality” in the Viveport store to try it out right now.

The post Mozilla Announces Deal to Bring Firefox Reality to HTC VIVE Devices appeared first on The Mozilla Blog.

|

|

Mozilla VR Blog: Navigation Study for 3DoF Devices |

Over the past few months I’ve been building VR demos and writing tutorial blogs. Navigation on a device with only three degrees of freedom (3DoF) is tricky, so I decided to survey many native apps and games for the Oculus Go to see how each of them handles it. Below are my results.

For this study I looked only at navigation, meaning how the user moves around in the space, either by directly moving or by jumping to semantically different spaces (e.g., clicking a door to go to the next room). I don't cover other interactions like how buttons or sliders work. Just navigation.

TL;DR

Don’t touch the camera. The camera is part of the user’s head. Don’t try to move it. All apps which move the camera induce some form of motion sickness. Instead use one of a few different forms of teleportation, always under user control.

The ideal control for me was teleportation to semantically meaningful locations, not just 'forward ten steps'. Furthermore, when presenting the user with a full 360 environment it is helpful to have a way to recenter the view, such as by using left/right buttons on the controller. Without a recentering option the user will have to physically turn themselves around, which is cumbersome unless they are in a swivel chair.

To help complete the illusion I suggest subtle sound effects for movement, selection, and recentering. Just make sure they aren't very noticeable.
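As a rough illustration of the teleportation advice above, here is a minimal TypeScript sketch. It assumes a hypothetical "rig" object that parents the camera, so the head-tracked camera itself is never modified. The names `Hotspot`, `Rig`, and `teleportTo` are invented for this example and do not come from any of the apps surveyed.

```typescript
// Hypothetical types for illustration only; not taken from any surveyed app.
type Vec3 = { x: number; y: number; z: number };

interface Hotspot {
  label: string;   // semantic name shown to the user, e.g. "Next room"
  position: Vec3;  // where the rig lands after the jump
}

interface Rig {
  position: Vec3;  // parent of the camera; head tracking is left untouched
}

// Jump the rig to a named hotspot in a single step. There is no interpolation,
// so the user never sees camera motion they did not cause themselves.
function teleportTo(rig: Rig, hotspots: Map<string, Hotspot>, id: string): boolean {
  const target = hotspots.get(id);
  if (target === undefined) {
    return false; // unknown hotspot: leave the user where they are
  }
  rig.position = { ...target.position };
  return true;
}

const hotspots = new Map<string, Hotspot>([
  ["door", { label: "Next room", position: { x: 0, y: 0, z: -5 } }],
  ["balcony", { label: "Balcony", position: { x: 3, y: 2, z: -8 } }],
]);

const rig: Rig = { position: { x: 0, y: 0, z: 0 } };
teleportTo(rig, hotspots, "door"); // triggered by the user clicking the door
```

Keeping the destinations in a named table rather than computing "ten steps forward" is one way to make every jump land somewhere meaningful, and the jump only ever happens in response to a user action.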

Epic Roller Coaster

This is a roller coaster simulator, except it lets you do things that a real roller coaster can’t, such as jumping between tracks and being chased by dinosaurs. To start you have pointer interaction across three panels: left, center, right. Everything has hover/rollover effects with sound. During the actual roller coaster ride you are literally a camera on rails. Press the trigger to start and then the camera moves at constant speed. All you can do is look around. Speed and angle changes made me dizzy and I had to take the headset off after about five minutes, but my 7-year-old loves Epic Roller Coaster.

Space Time

A PBS app that teaches you about black holes, the speed of light, and other physics concepts. You use pointer interaction to click buttons then watch non-interactive 3D scenes/info, though they are in full 360 3D, rather than plain movies.

Within Videos

A collection of many 360 and 3D movies. Pointer interaction to pick videos, some scrolling w/ touch gestures. Then non-interactive videos except for the video controls.

Master Work Journeys

Explore famous monuments and locations like Mount Rushmore. You can navigate around 360 videos by clicking on hotspots with the pointer. Some trigger photos or audio. Others are teleportation spots. There is no free navigation or free teleportation, only to the hotspots. You can adjust the camera with left and right swipes, though.

Thumper

An intense driving and tilting music game. It uses pointer control for menus. In the game you run at a constant speed. The track itself turns but you are always stable in the middle. Particle effects stream at you, reinforcing the illusion of the tube you are in.

Bait

A fishing simulator. You use pointer clicks for navigation in the menus. The main interaction is a fishing pole. Hold then release button at the right time while flicking the pole forward to cast, then click to reel it back in.

Dinosaurs

Basically like the roller coaster game, but you learn about various dinosaurs by riding a rail car at constant speed to different scenes. It felt better to me than Epic Roller Coaster because the velocity is constant, rather than changing.

Breaking Boundaries in Science

Text overlays with audio and a 360 background image. You can navigate through full 3D space by jumping to hard-coded teleport spots. You can click certain spots to get to these points, hear audio, and lightly interact with artifacts. If you look up at an angle you see a flip-180 button to change the view. This avoids the problem of having to be in a swivel chair to navigate around. You cannot adjust the camera with left/right swipes.

WonderGlade

In every scene you float over a static mini-landscape, sort of like you are above a game board. You cannot adjust the angle or camera, just move your head to see stuff. Everything is laid out around you for easy viewing from the fixed camera point. Individual mini games may use different mechanics for playing, but they all use the same camera. Essentially the camera and world never move. You can navigate your player character around the board by clicking on spots, similar to an RTS like Starcraft.

Starchart

Menus are a static camera view with mouse interaction. Once inside of a star field you are at the center and can look in any direction of the virtual night sky. Swipe left / right to move the camera 45 degrees, which happens instantly, not with navigation, though there are sound effects.
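The instant 45-degree turn described here can be sketched in a few lines of TypeScript. This is an assumption-laden illustration, not Starchart's actual code: the `snapTurn` helper, the `SwipeDirection` type, and the convention that a left swipe increases the yaw are all invented for the example.

```typescript
// Hypothetical helper; the 45-degree step matches the behavior described above,
// but the names and the sign convention are assumptions.
const SNAP_DEGREES = 45;

type SwipeDirection = "left" | "right";

// Returns the new yaw of the camera rig in degrees, normalized to [0, 360).
// The change is applied in one step (no animation), so the user never
// experiences a smooth rotation they did not perform with their own head.
function snapTurn(
  yawDegrees: number,
  direction: SwipeDirection,
  step: number = SNAP_DEGREES
): number {
  // Assumed convention: a left swipe rotates counter-clockwise (positive yaw).
  const delta = direction === "left" ? step : -step;
  // Double modulo keeps the result non-negative for negative inputs.
  return (((yawDegrees + delta) % 360) + 360) % 360;
}

let rigYaw = 0;
rigYaw = snapTurn(rigYaw, "right"); // 315
rigYaw = snapTurn(rigYaw, "left");  // back to 0
```

The same helper would cover a flip-180 button by passing `180` as the step. A short sound effect played alongside the turn is what makes the instant change feel deliberate.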