You can add any RSS feed (including a LiveJournal journal) to your friends feed on the syndication page.

Original source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated whenever that feed is updated. It may not match the content of the original page. This syndication was created automatically at the request of readers of this RSS feed.

For any questions about how this service works, use the contact information page.

Niko Matsakis: Rust pattern: Iterating over an Rc<Vec>

Cameron Kaiser: TenFourFox FPR9 available, and introducing Talospace

Allow me to also take the wraps off Talospace, a new spin-off blog primarily oriented toward the POWER9 Raptor Talos family of systems, which will also be where I'll post general Power ISA and PowerPC items, refocusing this blog back on Power Macs specifically. Talospace is a combination of news bits, conjecture and original "first person" content. For a period of time, until it accumulates its own audience, I'll crosspost links here to seed original content (for the news pieces, you'll just have to read it or subscribe to the RSS feed).

The first long-form article is a two-part series on running Mac OS X under KVM-PPC (first part, second part). Upcoming: getting the damn Command key working "as you expect it" in Linux.

http://tenfourfox.blogspot.com/2018/08/tenfourfox-fpr9-available-and.html

Mozilla Addons Blog: Extensions in Firefox 63

Firefox 63 is rolling into Beta and it’s absolutely loaded with new features for extensions. There are some important new API, some major enhancements to existing API, and a large collection of miscellaneous improvements and bug fixes. All told, this is the biggest upgrade to the WebExtensions API since the release of Firefox Quantum.

An upgrade this large would not have been possible in a single release without the hard work of our Mozilla community. Volunteer contributors landed over 25% of all the features and bug fixes for WebExtensions in Firefox 63, a truly remarkable effort. We are humbled and grateful for your support of Firefox and the open web. Thank you.

Note: due to the large volume of changes in this release, the MDN documentation is still catching up. I’ve tried to link to MDN where possible, and more information will appear in the weeks leading up to the public release of Firefox 63.

Less Kludgy Clipboard Access

A consistent source of irritation for developers since the WebExtensions API was introduced is that clipboard access is not optimal. Having to use execCommand() to cut, copy and paste always felt like a workaround rather than a valid way to interact with the clipboard.

That all changes in Firefox 63. Starting with this release, parts of the official W3C draft spec for the asynchronous clipboard API are now available to extensions. Extensions can use the standard Web API to read from and write to the clipboard with navigator.clipboard.readText() and navigator.clipboard.writeText(). A couple of things to note:

- clipboard.writeText is available to secure contexts and extensions, without requiring any permissions, as long as it is used in a user-initiated event callback. Extensions can request the clipboardWrite permission if they want to use clipboard.writeText outside of a user-initiated event callback. This preserves the same use conditions as document.execCommand(“copy”).

- clipboard.readText is available to extensions only and requires the clipboardRead permission. There currently is no way to expose the clipboard.readText API to web content since no permission system exists for it outside of extensions. This preserves the same use conditions as document.execCommand(“paste”).

In addition, the text versions of the API are the only ones available in Firefox 63. Support for the more general clipboard.read() and clipboard.write() API is awaiting clarity around the W3C spec and will be added in a future release.
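A minimal TypeScript sketch of the two text methods follows. It is written against a small structural interface (`TextClipboard`, a hypothetical name) so the logic can be exercised outside the browser; in an extension page you would pass `navigator.clipboard`. The helpers `copyText` and `pasteText` are illustrative, not part of any API, and the permission rules in the comments restate the notes above.

```typescript
// Structural subset of the async Clipboard API that Firefox 63 exposes to extensions.
// In an extension page, pass `navigator.clipboard` wherever a TextClipboard is expected.
interface TextClipboard {
  writeText(data: string): Promise<void>;
  readText(): Promise<string>;
}

// Hypothetical helper: copy text. Without the "clipboardWrite" permission this
// must run inside a user-initiated event callback, such as a click listener.
async function copyText(clipboard: TextClipboard, text: string): Promise<void> {
  await clipboard.writeText(text);
}

// Hypothetical helper: read text. The extension must declare the
// "clipboardRead" permission in manifest.json for readText() to succeed.
async function pasteText(clipboard: TextClipboard): Promise<string> {
  return clipboard.readText();
}

// Example wiring in an extension popup (browser only, shown as a comment):
// document.querySelector("#copy")!.addEventListener("click", () => {
//   copyText(navigator.clipboard, "Hello from my extension");
// });
```

Passing the clipboard in as a parameter keeps the permission-sensitive calls in one place and makes them easy to stub in tests.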

Selecting Multiple Tabs

One of the big changes coming in Firefox 63 is the ability to select multiple tabs simultaneously by either Shift- or CTRL-clicking on tabs beyond the currently active tab. This allows you to easily highlight a set of tabs and move, reload, mute or close them, or drag them into another window. It is a very convenient feature that power users will appreciate.

In concert with this user-facing change, extensions are also gaining support for multi-select tabs in Firefox 63. Specifically:

- The tabs.onHighlighted event now handles multiple selected tabs in Firefox.

- The tabs.highlight API accepts an array of tab indices that should be selected.

- The tabs.Tab object properly sets the value of the highlighted property.

- The tabs.query API now accepts “highlighted” as a parameter and will return an array of the currently selected tabs.

- The tabs.update API can alter the status of selected tabs by setting the highlighted property.
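As a sketch of how an extension might act on a multi-tab selection, the function below queries the highlighted tabs in the current window and reloads each one. `TabInfo`, `TabsApi` and `reloadSelectedTabs` are hypothetical names; the interfaces model only the parts of `browser.tabs` used here (`query` with `highlighted` and `currentWindow`, and `reload` by tab ID), so the logic can be tested with a stub.

```typescript
// Only the tab fields this sketch reads.
interface TabInfo {
  id?: number;
  index: number;
  highlighted: boolean;
}

// Structural subset of the tabs API; in an extension, pass `browser.tabs`.
interface TabsApi {
  query(queryInfo: { highlighted?: boolean; currentWindow?: boolean }): Promise<TabInfo[]>;
  reload(tabId: number): Promise<void>;
}

// Hypothetical helper: reload every tab the user has multi-selected
// (Shift- or Ctrl-clicked) in the current window and return their ids.
async function reloadSelectedTabs(tabs: TabsApi): Promise<number[]> {
  const selected = await tabs.query({ highlighted: true, currentWindow: true });
  const ids: number[] = [];
  for (const tab of selected) {
    // Some tabs (e.g. in special windows) may lack an id; skip those.
    if (tab.id !== undefined) ids.push(tab.id);
  }
  await Promise.all(ids.map((id) => tabs.reload(id)));
  return ids;
}
```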

A huge amount of gratitude goes to Oriol Brufau, the volunteer contributor who implemented every single change listed above. Without his hard work, multi-select tabs would not be available in Firefox 63. Thank you, Oriol!

P.S. Not satisfied with doing all of the work for multi-select tabs, Oriol also fixed several issues with extension icons.

What You’ve Been Searching For

Firefox 63 introduces a completely new API namespace that allows extensions to enumerate and access the search engines built into Firefox. Short summary:

- The new search.get() API returns an array of search engine objects representing all of the search engines currently installed in Firefox.

- Each search engine object contains:

  - name (string)

  - isDefault (boolean)

  - alias (string)

  - favIconUrl (URL string)

- The new search.search() API takes a query string and displays the results. It accepts an optional search engine name (the default search engine is used if omitted) and an optional tab ID in which the results should be displayed (a new tab is opened if omitted).

- Extensions must declare the search permission to use either API.

- The search.search() API can only be called from inside a user-input handler, such as a button, context menu or keyboard shortcut.
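A minimal sketch of combining the two calls: list the installed engines, then search with a preferred engine if it is installed, otherwise omit `engine` so the default is used. It assumes `search.search()` takes a `{ query, engine?, tabId? }` object; `SearchApi` and `searchWithPreferred` are hypothetical names, and the interface models only what the sketch uses, so you would pass `browser.search` in a real extension that declares the `search` permission.

```typescript
interface SearchEngine {
  name: string;
  isDefault: boolean;
  alias?: string;
  favIconUrl?: string;
}

interface SearchProperties {
  query: string;
  engine?: string;
  tabId?: number;
}

// Structural subset of the `search` namespace; in an extension, pass `browser.search`.
interface SearchApi {
  get(): Promise<SearchEngine[]>;
  search(props: SearchProperties): Promise<void> | void;
}

// Hypothetical helper: search with `preferred` if that engine is installed,
// otherwise leave `engine` unset so Firefox uses the default engine.
// Must be called from a user-input handler (button, menu click, keyboard shortcut).
async function searchWithPreferred(
  api: SearchApi,
  query: string,
  preferred: string,
): Promise<string | undefined> {
  const engines = await api.get();
  const match = engines.find((e) => e.name === preferred);
  const props: SearchProperties = { query };
  if (match) props.engine = match.name;
  await api.search(props);
  // Returns the engine name that was requested, or undefined for "use the default".
  return match ? match.name : undefined;
}
```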

More Things to Theme

Once again, the WebExtensions API for themes has received some significant enhancements.

- The built-in Firefox sidebars can now be themed separately using:

  - sidebar

  - sidebar_text

  - sidebar_highlight

  - sidebar_highlight_text

- Support for theming the new tab page was added via the properties ntp_background and ntp_text (both of which are compatible with Chrome).

- The images in the additional_backgrounds property are aligned correctly to the toolbox, making all the settings in additional_backgrounds_alignment work properly. Note that this also changes the default z-order of additional_backgrounds, making those images stack on top of any headerURL image.

- By default, all images for additional_backgrounds are anchored to the top right of the browser window. This was variable in the past, based on which properties were included in the theme.

- The browser action theme_icons property now works with more themes.

- Themes now enforce a maximum of 15 images for additional_backgrounds.

- The theme properties accentcolor and textcolor are now optional.
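To show the new color keys together, here is a sketch of a theme manifest expressed as a TypeScript object (for example, so a build script can generate manifest.json). The theme name and all color values are placeholders, the new tab page text key is assumed to be the Chrome-compatible `ntp_text`, and accentcolor/textcolor are left out because they are now optional.

```typescript
// Theme manifest.json as a TypeScript object. Name and colors are placeholders.
const themeManifest = {
  manifest_version: 2,
  name: "Sidebar demo theme",
  version: "1.0",
  theme: {
    colors: {
      // accentcolor and textcolor are optional as of Firefox 63, so they are omitted.
      sidebar: "#1e1f29",
      sidebar_text: "#f8f8f2",
      sidebar_highlight: "#44475a",
      sidebar_highlight_text: "#ffffff",
      // New tab page colors (Chrome-compatible keys).
      ntp_background: "#282a36",
      ntp_text: "#f8f8f2",
    },
  },
};

// Serialize with two-space indentation; write this string to manifest.json.
const manifestJson: string = JSON.stringify(themeManifest, null, 2);
console.log(manifestJson);
```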

Finally, there is a completely new feature for themes called theme_experiment that allows theme authors to declare their own theme properties based on any Firefox CSS element. You can declare additional properties in general, additional elements that can be assigned a color, or additional elements that can be images. Any of the items declared in the theme_experiment section of the manifest can be used inside the theme declaration in the same manifest file, as if those items were a native part of the WebExtensions theme API.

theme_experiment is available only in the Nightly and Developer editions of Firefox and requires that the ‘extensions.legacy.enabled’ preference be set to true. And while it also requires more detailed knowledge of Firefox, it essentially gives authors the power to completely theme nearly every aspect of the Firefox user interface. Keep an eye on MDN for detailed documentation on how to use it (here is the bugzilla ticket for those of you who can’t wait).

Similar to multi-select tabs, all of the theme features listed above were implemented by a single contributor, Tim Nguyen. Tim has been a long-time contributor to Mozilla and has really been a champion for themes from the beginning. Thank you, Tim!

Gaining More Context

We made a concerted effort to improve the context menu subsystem for extensions in Firefox 63, landing a series of patches to correct or enhance the behavior of this heavily used feature of the WebExtensions API.

- A new API, menus.getTargetElement, was added to return the element for a context menu that was either shown or clicked. The menus.onShown and menus.onClicked events were updated with a new info.targetElementId integer that is accepted by getTargetElement. Available to all extension script contexts (content scripts, background pages, and other extension pages), menus.getTargetElement has the advantage of allowing extensions to detect the clicked element without having to insert a content script into every page.

- The “visible” parameter for menus.create and menus.update is now supported, making it much easier for extensions to dynamically show and hide context menu items.

- Context menus now accept any valid target URL pattern, not just those supported by valid match patterns.

- Extensions can now set a keyboard access key for a context menu item by preceding the key's character with the & symbol in the menu item label.

- The activeTab permission is now granted for any tab on which a context menu is shown, allowing for a more intuitive user experience without extensions needing to request additional permissions.

- The menus.create API was fixed so that the callback is also called when a failure occurs.

- Fixed how menu icons and extension icons are displayed in context menus to match the MDN documentation.

- The menus.onClicked handler can now call other methods that require user input.

- menus.onShown now correctly fires for the bookmark context.

- Made a change that allows menus.refresh() to operate without an onShown listener.
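Several of these changes combine naturally: create an item with an access key, hidden by default, then toggle `visible` from `menus.onShown` and call `menus.refresh()` to redraw the menu that is already open. This is a sketch under stated assumptions: `MenusApi`, `ShownInfo`, `setupLinkMenu` and the menu id are hypothetical, and the interfaces model only the fields used here (pass `browser.menus` in a real extension).

```typescript
// Only the onShown info fields this sketch reads.
interface ShownInfo {
  contexts: string[];
  linkUrl?: string;
}

// Structural subset of the `menus` namespace; in an extension, pass `browser.menus`.
interface MenusApi {
  create(props: { id: string; title: string; contexts: string[]; visible?: boolean }): unknown;
  update(id: string, props: { visible?: boolean }): Promise<void>;
  refresh(): Promise<void>;
  onShown: { addListener(listener: (info: ShownInfo) => unknown): void };
}

const MENU_ID = "copy-link-host"; // hypothetical menu item id

function setupLinkMenu(menus: MenusApi): void {
  // "&h" in the label makes H the keyboard access key for this item.
  menus.create({
    id: MENU_ID,
    title: "Copy link &host",
    contexts: ["link"],
    visible: false,
  });

  // Show the item only for http(s) links, then redraw the already-open menu.
  menus.onShown.addListener(async (info: ShownInfo) => {
    const isHttpLink =
      info.contexts.indexOf("link") !== -1 &&
      info.linkUrl !== undefined &&
      /^https?:/.test(info.linkUrl);
    await menus.update(MENU_ID, { visible: isHttpLink });
    await menus.refresh();
  });
}
```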

Context menus will continue to be a focus and you can expect to see even more improvements in the Firefox 64 timeframe.

A Motley Mashup of Miscellany

In addition to the major feature areas mentioned above, a lot of other patches landed to improve different parts of the WebExtensions API. These include:

- Out-of-process extensions have been enabled for Linux, bringing that platform to parity with Windows and Mac.

- Keyboard shortcut handlers are treated as user input handlers for those API and events that require them.

- Extension-defined keyboard shortcuts can now use more combinations of modifier keys.

- Extensions now work with shadow DOM and Web Components.

- Permission notifications from extension pages display the extension name instead of the extension’s ID.

- The speculative connection type was added for the webRequest and proxy API.

- Browser actions will set the badge text color automatically to maximize contrast or, if you want more control, there are new setBadgeTextColor and getBadgeTextColor API so you can set it yourself.

- tabs.create() supports the “discarded” property so that new tabs can be created in the discarded (unloaded) state.

- Support for the “sameSite” attribute (no_restriction, lax, strict) was added to the cookies API.

- Extensions now have access to a tab’s “attention” flag in the tab object.

- Removed inadvertent restrictions on extension managed proxies.

- The webRequest.getSecurityInfo API was changed so that it no longer returns localized values and leaves keaGroupName and signatureSchemeName undefined when their value is none.
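Two of the smaller additions fit together in a short sketch: open a list of URLs as discarded (unloaded) background tabs via `tabs.create({ discarded: true })`, then show the count on the toolbar badge and pick the badge text color explicitly with `setBadgeTextColor`. `QueueApi` and `queueReadingList` are hypothetical names, the interface models only the calls used here, and opening discarded tabs with `active: false` is an assumption about how such tabs are meant to be created.

```typescript
// Structural subset of the tabs and browserAction namespaces used below; in an
// extension, pass `{ tabs: browser.tabs, browserAction: browser.browserAction }`.
interface QueueApi {
  tabs: {
    create(props: { url: string; active?: boolean; discarded?: boolean }): Promise<unknown>;
  };
  browserAction: {
    setBadgeText(details: { text: string }): unknown;
    setBadgeTextColor(details: { color: string }): unknown;
  };
}

// Hypothetical helper: open each URL as a discarded background tab, then show
// the number of queued tabs on the toolbar button badge.
async function queueReadingList(api: QueueApi, urls: string[]): Promise<number> {
  for (const url of urls) {
    // A discarded tab loads no content until the user activates it.
    await api.tabs.create({ url, active: false, discarded: true });
  }
  api.browserAction.setBadgeText({ text: String(urls.length) });
  // Optional: override the automatic contrast-based badge text color.
  api.browserAction.setBadgeTextColor({ color: "white" });
  return urls.length;
}
```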

Thank You

A total of 111 features and improvements landed as part of Firefox 63, easily the biggest upgrade to the WebExtensions API since Firefox Quantum was released in November of 2017. Volunteer contributors were a huge part of this release and a tremendous thank you goes out to our community, including: Oriol Brufau, Tim Nguyen, ExE Boss, Ian Moody, Peter Simonyi, Tom Schuster, Arshad Kazmi, Tomislav Jovanovic and plaice.adam+persona. It is the combined efforts of Mozilla and our amazing community that make Firefox a truly unique product. If you are interested in contributing to the WebExtensions ecosystem, please take a look at our wiki.

The post Extensions in Firefox 63 appeared first on Mozilla Add-ons Blog.

https://blog.mozilla.org/addons/2018/08/31/extensions-in-firefox-63/

Mozilla Addons Blog: September’s featured extensions

Pick of the Month: Iridium for YouTube

by Particle

Play videos in a pop-out window, only see ads within subscribed channels, take video screenshots, and much more.

“Been using this for a couple of months and it’s the greatest YouTube extension ever. I have tried a lot of different ones and this one melts my heart.”

Featured: Private Bookmarks

by rharel

Password-protect your personal bookmarks.

“This capability was sorely needed, and is well done. Works as advertised, and is easy to use.”

Featured: Universal Bypass

by Tim “TimmyRS” Speckhals

Automatically skip annoying link shorteners.

“Wow you must try this extension.”

Featured: Copy PlainText

by erosman

Easily remove text formatting when saving to your clipboard.

“Works very well and is great for copying from browsers to HTML format emails, which often makes a complete mess of not only fonts but layout spacing as well.”

If you’d like to nominate an extension for featuring, please send it to amo-featured [at] mozilla [dot] org for the board’s consideration. We welcome you to submit your own add-on!

The post September’s featured extensions appeared first on Mozilla Add-ons Blog.

https://blog.mozilla.org/addons/2018/08/31/septembers-featured-extensions-2/

About:Community: Firefox 62 new contributors

With the upcoming release of Firefox 62, we are pleased to welcome the 48 developers who contributed their first code change to Firefox in this release, 35 of whom were brand new volunteers! Please join us in thanking each of these diligent and enthusiastic individuals, and take a look at their contributions:

https://blog.mozilla.org/community/2018/08/31/firefox-62-new-contributors/

Mozilla VR Blog: This Week in Mixed Reality: Issue 18, Hubs Edition

This has been another quiet week full of bug fixes and UI polish. There are a few notable items, though.

Browsers

- Fixed some gamepad issues in Gecko.

- Added a new 3D spinner.

- More improvements to remote debugging.

Social

Hubs attendance is going strong. Along the way, several people requested a space that was wide open and not distracting when sharing content, so the team added a new scene style called Wide Open Space, and it's live now!

Content Ecosystem

We are working on a new release of the WebVR exporter for Unity with refactored code, performance improvements, and other optimizations. Look for it soon.

https://blog.mozvr.com/this-week-in-mixed-reality-issue-18-hubs-edition/

Mozilla Future Releases Blog: Changing Our Approach to Anti-tracking

Anyone who isn’t an expert on the internet would be hard-pressed to explain how tracking on the internet actually works. Some of the negative effects of unchecked tracking are easy to notice, namely eerily specific targeted advertising and a loss of performance on the web. However, many of the harms of unchecked data collection are completely opaque to users and experts alike, only to be revealed piecemeal by major data breaches. In the near future, Firefox will, by default, protect users by blocking tracking while also offering a clear set of controls to give our users more choice over what information they share with sites.

Over the next few months, we plan to release a series of features that will put this new approach into practice through three key initiatives:

Improving page load performance

Tracking slows down the web. In a study by Ghostery, 55.4% of the total time required to load an average website was spent loading third-party trackers. For users on slower networks the effect can be even worse.

Long page load times are detrimental to every user’s experience on the web. For that reason, we’ve added a new feature in Firefox Nightly that blocks trackers that slow down page loads. We will be testing this feature using a shield study in September. If we find that our approach performs well, we will start blocking slow-loading trackers by default in Firefox 63.

Removing cross-site tracking

In the physical world, users wouldn’t expect hundreds of vendors to follow them from store to store, spying on the products they look at or purchase. Users have the same expectations of privacy on the web, and yet in reality, they are tracked wherever they go. Most web browsers fail to help users get the level of privacy they expect and deserve.

In order to help give users the private web browsing experience they expect and deserve, Firefox will strip cookies and block storage access from third-party tracking content. We’ve already made this available for our Firefox Nightly users to try out, and will be running a shield study to test the experience with some of our beta users in September. We aim to bring this protection to all users in Firefox 65, and will continue to refine our approach to provide the strongest possible protection while preserving a smooth user experience.

Mitigating harmful practices

Deceptive practices that invisibly collect identifiable user information or degrade user experience are becoming more common. For example, some trackers fingerprint users — a technique that allows them to invisibly identify users by their device properties, and which users are unable to control. Other sites have deployed cryptomining scripts that silently mine cryptocurrencies on the user’s device. Practices like these make the web a more hostile place to be. Future versions of Firefox will block these practices by default.

Why are we doing this?

This is about more than protecting users — it’s about giving them a voice. Some sites will continue to want user data in exchange for content, but now they will have to ask for it, a positive change for people who up until now had no idea of the value exchange they were asked to make. Blocking pop-up ads in the original Firefox release was the right move in 2004, because it didn’t just make Firefox users happier, it gave the advertising platforms of the time a reason to care about their users’ experience. In 2018, we hope that our efforts to empower our users will have the same effect.

How to Manually Enable the Protections

Do you want to try out these protections in Firefox Nightly? You can control both features from the Firefox Nightly Control Center menu, accessible on the left-hand side of the address bar. In that menu you’ll see a new “Content Blocking” section. From there, you can:

- Enable the blocking of slow-loading trackers or cross-site tracking through third-party cookies by clicking “Add Blocking…” next to the respective option.

- In the “Content Blocking” preferences panel:

  - Click the checkbox next to “Slow-Loading Trackers” to improve page load performance.

  - Click the checkbox next to “Third-Party Cookies” and select “Trackers (recommended)” to block cross-site tracking cookies.

- You can disable these protections by clicking the gear icon in the control center and unchecking the checkboxes next to “Slow-Loading Trackers” and “Third-Party Cookies”.

The post Changing Our Approach to Anti-tracking appeared first on Future Releases.

https://blog.mozilla.org/futurereleases/2018/08/30/changing-our-approach-to-anti-tracking/

Andreas Tolfsen: Lunchtime brown bags

Over the Summer I’ve come to organise quite a number of events in Mozilla’s London office. In early Summer we started doing lunchtime brown bags, where staff give a 10 to 15 minute informal talk about what they are currently working on or a topic of interest to them.

So far we’ve covered MDN’s Browser Compat Data initiative, been given a high-level introduction to Gecko’s graphics stack, learned what goes into releasing Firefox, and had conversations about how web compatibility came about and why it’s a necessary evil. We’ve been given insight into the Spectre security vulnerability (and become mortally terrified as a result of it), learned how TLS 1.3 is analysed and proven, and been gobsmacked by the meticulousness of Firefox Accounts’ security model.

Organising these small events has had a markedly positive effect on the office environment. People have told me they are finding the time to come in to the office more often because of them, and they’ve been great for introducing the six interns we’ve had in London over the Summer to Mozilla and our values.

For me as the organiser, they take very little time to put together. My most important piece of advice if you want to replicate this in another office would be to not ask for voluntary signups: I’ve had far greater success conscripting individuals privately. The irony is that nearly everyone I’ve approached thinks that what they do is not interesting enough to present, but I’d say the list above begs to differ.

Patrick Finch: On leaving Mozilla

I didn’t want to write one of those “all@” goodbye emails. At best, they generate ambivalence, maybe some sadness. And maybe they generate clutter in the inboxes of people who prefer their inboxes uncluttered. The point is, they don’t seem to improve things. I’m not sending one.

But I have taken the decision to leave Mozilla as a full-time employee. I’m leaving the industry, in fact. For the last 10 years, for everything I’ve learned, for the many opportunities and for the shared achievements, I’ve got nothing but gratitude towards my friends and colleagues. I cannot imagine I’ll work anywhere quite like this again.

Long before I joined Mozilla, it was the organisation that had restored my optimism about the future of tech. From the dark days of the dot-com crash and the failure of platform-independent client-side internet applications to live up to their initial promise (I’m looking at you, Java applets), Firefox showed the world that openness wins. Working here was always more than a job. It has been a privilege.

At the very least, Mozilla’s products are open platforms that make their users sovereign, serving as a reference and inspiration for others. And at their best, our products liberate developers, bringing them new opportunities, and they delight users such that complete strangers want to hug you (and no, I didn’t invent the Awesome Bar, but I know someone who did…).

So I’ve always been very proud of Mozilla, and proud of the work the team I’m on – Open Innovation – does. Being Mozilla is not easy. Tilting at windmills is the job. We live in times when the scale of Internet companies means that these giants have resources to buy or copy just about any innovation that comes to market. Building for such a market – as well as the inherent challenge of building world-class user experiences in the extremely complex environment of content on the Web – also means identifying the gaps in the market and who our allies are in filling them. It’s a complex and challenging environment and it needs special people. I will miss them.

I’m in there somewhere [Credit: Gen Kanai, CC BY-NC-ND 2.0]

However, this is probably my last time in the Bay Area for a while. So I took the opportunity to see some old friends (I’ve worked in this industry for over 20 years now). A common theme? Many had gone back to using Firefox since the Quantum release in late 2017.

“Haven’t you heard?”, said one, explaining how his development team asked to prioritise Firefox support for their product, as that’s what they want to use themselves, “Firefox is cool again.”

I had to disagree: it has never stopped being cool.

Hacks.Mozilla.Org: Dweb: Building Cooperation and Trust into the Web with IPFS

In this series we are covering projects that explore what is possible when the web becomes decentralized or distributed. These projects aren’t affiliated with Mozilla, and some of them rewrite the rules of how we think about a web browser. What they have in common: These projects are open source, and open for participation, and share Mozilla’s mission to keep the web open and accessible for all.

Some projects start small, aiming for incremental improvements. Others start with a grand vision, leapfrogging today’s problems by architecting an idealized world. The InterPlanetary File System (IPFS) is definitely the latter – attempting to replace HTTP entirely, with a network layer that has scale, trust, and anti-DDoS measures all built into the protocol. It’s our pleasure to have an introduction to IPFS today from Kyle Drake, the founder of Neocities, and Marcin Rataj, the creator of IPFS Companion, both on the IPFS team at Protocol Labs. – Dietrich Ayala

IPFS – The InterPlanetary File System

We’re a team of people all over the world working on IPFS, an implementation of the distributed web that seeks to replace HTTP with a new protocol that is powered by individuals on the internet. The goal of IPFS is to “re-decentralize” the web by replacing the location-oriented HTTP with a content-oriented protocol that does not require trusting third parties. This allows websites and web apps to be “served” by any computer on the internet with IPFS support, without requiring servers to be run by the original content creator. IPFS and the distributed web unmoor information from physical location and singular distribution, ultimately creating a more affordable, equal, available, faster, and less censorable web.

IPFS aims for a “distributed” or “logically decentralized” design. IPFS consists of a network of nodes, which help each other find data using a content hash via a Distributed Hash Table (DHT). The result is that all nodes help find and serve web sites, and even if the original provider of the site goes down, you can still load it as long as one other computer in the network has a copy of it. The web becomes empowered by individuals, rather than depending on the large organizations that can afford to build large content delivery networks and serve a lot of traffic.
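The DHT used by IPFS is based on Kademlia, where "closeness" between a key and a peer ID is their bitwise XOR read as an unsigned number; a lookup asks the known peers closest to the key first. The toy TypeScript sketch below shows only that metric. The function names are hypothetical, the 1-byte IDs are for readability, and real routing additionally hashes keys into a much larger ID space, maintains k-buckets and runs iterative queries, none of which is modeled here.

```typescript
// XOR of two equal-length IDs; read as a big-endian number, it is the Kademlia distance.
function xorDistance(a: Uint8Array, b: Uint8Array): Uint8Array {
  if (a.length !== b.length) throw new Error("IDs must have the same length");
  const out = new Uint8Array(a.length);
  for (let i = 0; i < a.length; i++) out[i] = a[i] ^ b[i];
  return out;
}

// Compare two distances as big-endian unsigned integers.
function compareDistance(x: Uint8Array, y: Uint8Array): number {
  for (let i = 0; i < x.length; i++) {
    if (x[i] !== y[i]) return x[i] - y[i];
  }
  return 0;
}

// Return the k known peers closest to `key`: the peers a lookup would ask first.
function closestPeers(key: Uint8Array, peers: Uint8Array[], k: number): Uint8Array[] {
  return peers
    .slice()
    .sort((p, q) => compareDistance(xorDistance(p, key), xorDistance(q, key)))
    .slice(0, k);
}

// Toy 1-byte IDs: distances from key 0x00 are 8, 1 and 3.
const nearest = closestPeers(new Uint8Array([0x00]), [
  new Uint8Array([0x08]),
  new Uint8Array([0x01]),
  new Uint8Array([0x03]),
], 2);
console.log(nearest.map((p) => p[0])); // [ 1, 3 ]
```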

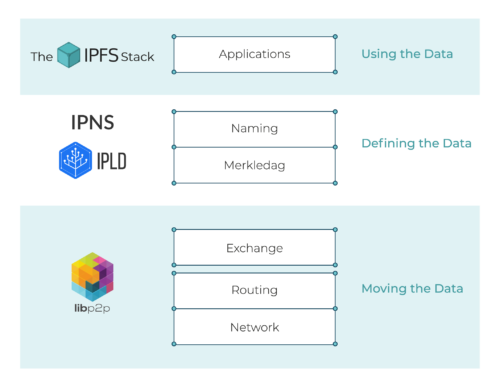

The IPFS stack is an abstraction built on top of IPLD and libp2p:

Hello World

We have a reference implementation in Go (go-ipfs) and a constantly improving one in JavaScript (js-ipfs). There is also a long list of API clients for other languages.

Thanks to the JS implementation, using IPFS in web development is extremely easy. The following code snippet…

- Starts an IPFS node

- Adds some data to IPFS

- Obtains the Content IDentifier (CID) for it

- Reads that data back from IPFS using the CID

That’s it!
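To make the add-then-read-by-identifier flow concrete without relying on a particular js-ipfs version, here is a toy in-memory sketch of content addressing in TypeScript for Node (using `node:crypto`). `ToyContentStore` and its `add`/`cat` methods are hypothetical. The identifier is a plain hex SHA-256 digest, whereas real IPFS CIDs additionally encode a version, a codec and the hash function in a self-describing string.

```typescript
import { createHash } from "node:crypto";

// Toy content-addressed store: each block's key is derived from its bytes, so the
// same content always gets the same id, and a reader can verify what it received.
class ToyContentStore {
  private blocks = new Map<string, string>();

  // Hypothetical stand-in for a CID: hex-encoded SHA-256 of the UTF-8 content.
  private static idFor(content: string): string {
    return createHash("sha256").update(content, "utf8").digest("hex");
  }

  add(content: string): string {
    const id = ToyContentStore.idFor(content);
    this.blocks.set(id, content);
    return id;
  }

  cat(id: string): string {
    const content = this.blocks.get(id);
    if (content === undefined) throw new Error(`unknown content id ${id}`);
    // Re-hash on read, as a node would when data arrives from an untrusted peer.
    if (ToyContentStore.idFor(content) !== id) throw new Error("content does not match its id");
    return content;
  }
}

const store = new ToyContentStore();
const id = store.add("Hello World 101");
console.log(id, store.cat(id));
```

Because the id depends only on the bytes, any peer holding the same content can serve it, which is what lets IPFS fetch data from whoever has a copy.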

Before diving deeper, let’s answer key questions:

Who else can access it?

Everyone with the CID can access it. Sensitive files should be encrypted before publishing.

How long will this content exist? Under what circumstances will it go away? How does one remove it?

The permanence of content-addressed data in IPFS is intrinsically bound to the active participation of peers interested in providing it to others. It is impossible to remove data from other peers, but if no peer is keeping it alive, it will be “forgotten” by the swarm.

The public HTTP gateway will keep the data available for a few hours. If you want to ensure long-term availability, make sure to pin important data on nodes you control. Try IPFS Cluster: a stand-alone application and a CLI client to allocate, replicate and track pins across a cluster of IPFS daemons.
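Pinning is what separates "kept" data from "forgotten" data: go-ipfs exposes it through commands such as `ipfs pin add` and `ipfs repo gc`. The toy TypeScript model below (the `ToyRepo` class is hypothetical, not the go-ipfs or js-ipfs implementation) shows the rule in its simplest form: garbage collection deletes every block that is not pinned.

```typescript
// Toy repository: pinned blocks survive garbage collection, unpinned ones do not.
class ToyRepo {
  private blocks = new Map<string, string>();
  private pins = new Set<string>();

  put(id: string, data: string): void {
    this.blocks.set(id, data);
  }

  pin(id: string): void {
    if (!this.blocks.has(id)) throw new Error(`cannot pin missing block ${id}`);
    this.pins.add(id);
  }

  unpin(id: string): void {
    this.pins.delete(id);
  }

  has(id: string): boolean {
    return this.blocks.has(id);
  }

  // Delete every unpinned block and return the ids that were removed.
  gc(): string[] {
    const removed: string[] = [];
    this.blocks.forEach((_data, id) => {
      if (!this.pins.has(id)) removed.push(id);
    });
    for (const id of removed) this.blocks.delete(id);
    return removed;
  }
}
```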

Developer Quick Start

You can experiment with js-ipfs to make simple browser apps. If you want to run an IPFS server you can install go-ipfs, or run a cluster, as we mentioned above.

There is a growing list of examples; make sure to see the bi-directional file exchange demo built with js-ipfs.

You can add IPFS to the browser by installing the IPFS Companion extension for Firefox.

Learn More

Learn about IPFS concepts by visiting our documentation website at https://docs.ipfs.io.

Readers can participate by improving documentation, visiting https://ipfs.io, developing distributed web apps and sites with IPFS, and exploring and contributing to our git repos and various things built by the community.

A great place to ask questions is our friendly community forum: https://discuss.ipfs.io.

We also have an IRC channel, #ipfs on Freenode (or #freenode_#ipfs:matrix.org on Matrix). Join us!

https://hacks.mozilla.org/2018/08/dweb-building-cooperation-and-trust-into-the-web-with-ipfs/

Will Kahn-Greene: Standup report: End of days

What is Standup?

Standup is a system for capturing standup-style posts from individuals, making it easier to see what's going on for teams and projects. It has an associated IRC bot, standups, for posting messages from IRC.

Short version

The Standups project is done, and we're going to decommission all the parts in the next couple of months.

Long version: End of days

Paul and I run Standups and have for a couple of years now. We do the vast bulk of the work on it, covering code changes, feature implementation, bug fixing, site administration and user support. Neither of us uses it.

There are occasional contributions from other people, but not enough to keep the site going without us.

Standups has a lot of issues and a crappy UI/UX. Standups continues to accrue technical debt.

The activity seems to be dwindling over time. Groups are going elsewhere.

In June, I wrote Standup report: June 8th, 2018 in which I talked about us switching to swag-driven development as a way to boost our energy level in the project, pull in contributors, etc. We added a link to the site. It was a sort of last-ditch attempt to get the project going again.

Nothing happened. I heard absolutely nothing from anyone in any medium about the post or any of the thoughts therein.

Sometimes, it's hard to know when a project is dead. You sometimes have metrics that could mean something about the health of a project, but it's hard to know for sure. Sometimes it's hard to understand why you're sitting in a room all by yourself. Will something happen? Will someone show up? What if we just wait 15 minutes more?

I don't want to wait anymore. The project is dead. If it's not actually really totally dead, it's such a fantastic facsimile of dead that I can't tell the difference. There's no point in me waiting anymore; nothing's going to change and no one is going to show up.

Sure, maybe I could wait another 15 minutes; what's the harm, since it's so easy to just sit and wait? The harm is that I've got so many things on my plate that are more important and have more value than this project. Also, I don't really like working on this project. All I've experienced in the last year was the pointy tips of bug reports, most of them related to authentication. The only time anyone appreciates me spending my very very precious little free time on Standups is when I solicit it.

Also, I don't even know if it's "Standups" with an "s" or "Standup" without the "s". It might be both. I'm tired of looking it up to avoid embarrassment.

Timeline for shutdown

Shutting down projects like Standups is tricky and takes time and energy. I think tentatively, it'll be something like this:

- August 29th -- Announce end of Standups. Add site message (assuming site comes back up). Adjust IRC bot reply message.

- October 1st -- Shut down IRC bots.

- October 15th -- Decommission infrastructure: websites, DNS records, Heroku infra; archive GitHub repositories, etc.

I make no promises on that timeline. Maybe things will happen faster or slower depending on circumstances.

What we're not going to do

There are a few things we're definitely not going to do when decommissioning:

First, we will not be giving the data to anyone. No db dumps, no db access, nothing. If you want the data, you can slurp it down via the existing APIs on your own. [1]

Second, we're not going to keep the site going in read-only mode. No read-only mode or anything like that unless someone gives us a really really compelling reason to do that and has a last name that rhymes with Honnen. If you want to keep a historical mirror of the site, you can do that on your own.

Third, we're not going to point www.standu.ps or standu.ps at another project. Pretty sure the plan will be to have those names point to nothing or something like that. IT probably has a process for that.

[1] Turns out when we did the rewrite in 2016, we didn't reimplement the GET API. Issue 478 covers creating a temporary new one. If you want it, please help them out.

Alternatives for people using it

If you're looking for alternatives or want to discuss alternatives with other people, check out issue 476.

But what if you want to save it!

Maybe you want to save it and you've been waiting all this time for just the right moment? If you want to save it, check out issue 477.

Many thanks!

Thank you to all the people who worked on Standups in the early days! I liked those days--they were fun.

Thank you to everyone who used Standups over the years. I hope it helped more than it hindered.

Update August 30, 2018: Added note about GET API not existing.

http://bluesock.org/~willkg/blog/mozilla/standup_end_of_days.html

|

|

Wladimir Palant: Password managers: Please make sure AutoFill is secure! |

Dear developers of password managers, we communicate quite regularly, typically within the context of security bug bounty programs. Don’t get me wrong, I don’t mind being paid for finding vulnerabilities in your products. But shouldn’t you do your homework before setting up a bug bounty program? Why do I find the same basic mistakes in almost all password managers? Why is it that so few password managers get AutoFill functionality right?

Of course you want AutoFill to be part of your product, because from the user’s point of view it’s the single most important feature of a password manager. Take it away and users will consider your product unusable. But from the security point of view, filling in passwords on the wrong website is almost the worst thing that could happen. So why isn’t this part getting more scrutiny? There is a lot you can do; here are seven recommendations for you.

1. Don’t use custom URL parsers

Parsing URLs is surprisingly complicated. Did you know that the “userinfo” part of it can contain an @ character? Did you think about data: URLs? There are many subtle details here, and even well-established solutions might have corner cases where their parser produces a result that’s different from the browser’s. But you definitely don’t want to use a URL parser that will disagree with the browser’s — if the browser thinks that you are on malicious.com then you shouldn’t fill in the password for google.com no matter what your URL parser says.

Luckily, there is an easy solution: just use the browser’s own URL parser (the URL object). If you worry about supporting very old browsers, the same effect can be achieved by creating an <a> element and assigning the URL to be parsed to its href property. You can then read out the link’s hostname property without even adding the element to the document.
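Here is a minimal Python sketch that makes the failure mode concrete. It is only an analogy. Inside a browser extension you would rely on the browser's own parser as described above. Python's `urllib.parse` is a stand-in that may itself disagree with browsers on edge cases. The `naive_host` function is a hypothetical example of the kind of shortcut to avoid.

```python
import re
from urllib.parse import urlsplit


def naive_host(url: str) -> str:
    """A deliberately naive parser: takes everything after the scheme up to
    the first '/', ':' or '@'. This is the kind of shortcut to avoid."""
    match = re.match(r"^[a-z][a-z0-9+.-]*://([^/:@]+)", url, re.IGNORECASE)
    return match.group(1).lower() if match else ""


def parsed_host(url: str) -> str:
    """Host name as seen by a real URL parser, which treats everything up to
    the last '@' in the authority as userinfo."""
    return urlsplit(url).hostname or ""


url = "https://google.com@malicious.com/login"
print(naive_host(url))   # google.com    (would fill in the Google password)
print(parsed_host(url))  # malicious.com (where the browser actually is)
```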

2. Domain name is not “the last two parts of a host name”

Many password managers will store passwords for a domain rather than an individual host name. In order to do this, you have to deduce the domain name from the host name. Very often, I will see something like the old and busted “last two parts of a host name” heuristic. It works correctly for foo.example.com but for foo.example.co.uk it will consider co.uk to be the domain name. As a result, all British websites will share the same passwords.

No amount of messing with that heuristic will save you; things are just too complicated. What you need is the Public Suffix List, a big database of rules that can be applied to all top-level domains. You don’t need to process that list yourself; there are a number of existing solutions, such as the psl package.
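The difference between the two approaches can be sketched in a few lines of Python. The `SAMPLE_SUFFIXES` set is a tiny hand-picked excerpt, not the real Public Suffix List. The matcher also ignores the list's wildcard and exception rules. A real password manager should use a maintained library such as the psl package mentioned above.

```python
# Tiny illustrative excerpt of the Public Suffix List (NOT the real list).
SAMPLE_SUFFIXES = {"com", "uk", "co.uk", "blogspot.com"}


def last_two_labels(host: str) -> str:
    """The old and busted heuristic."""
    return ".".join(host.lower().split(".")[-2:])


def registrable_domain(host: str, suffixes=SAMPLE_SUFFIXES):
    """Public suffix plus one label, using the longest matching rule.
    Wildcard ("*.") and exception ("!") rules are not handled here."""
    labels = host.lower().rstrip(".").split(".")
    suffix_len = 1  # implicit default rule "*": the last label is a suffix
    for i in range(len(labels)):
        # Candidates are tried from longest to shortest, so the first hit wins.
        if ".".join(labels[i:]) in suffixes:
            suffix_len = len(labels) - i
            break
    if len(labels) <= suffix_len:
        return None  # the host itself is a public suffix
    return ".".join(labels[-(suffix_len + 1):])


print(last_two_labels("foo.example.co.uk"))     # co.uk
print(registrable_domain("foo.example.co.uk"))  # example.co.uk
print(registrable_domain("foo.blogspot.com"))   # foo.blogspot.com
```

Note that `registrable_domain("192.168.0.1")` returns `"0.1"`, which is exactly the IP address pitfall described next.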

3. Don’t forget about raw IP addresses

Wait, there is a catch! The Public Suffix List will only work correctly for actual host names, not for IP addresses. If you give it something like 192.168.0.1 you will get 0.1 back. What about 1.2.0.1? Also 0.1. If your code doesn’t deal with IP addresses separately, it will expose passwords for people’s home routers to random websites.

What you want is to recognize IP addresses up front and consider the entire IP address as the “domain name”: passwords should never be shared between different IP addresses. Recognizing IP addresses is easier said than done, however. Most solutions will use a regular expression like /^\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3}$/. Admittedly, this covers pretty much all IPv4 addresses you will usually see. But did you know that 0xC0.0xA8.0x00.0x01 is a valid IPv4 address? Or that 3232235521 is also an IPv4 address?
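The Python sketch below recognizes IPv4 addresses the way browsers do. It accepts decimal, hexadecimal (`0x`) and octal (leading `0`) parts, with one to four dot-separated parts. It returns a canonical dotted quad that can serve as the "domain name". It loosely follows the WHATWG URL Standard's IPv4 parser but is an illustration, not a drop-in replacement. In particular, it simply returns `None` for anything it cannot parse.

```python
import string

DIGITS = {10: set(string.digits), 8: set("01234567"), 16: set(string.hexdigits)}


def parse_ipv4(host: str):
    """Return the canonical dotted-quad form of host if it is an IPv4
    address in any notation browsers accept, else None."""
    parts = host.split(".")
    if len(parts) > 1 and parts[-1] == "":
        parts.pop()  # a single trailing dot is allowed
    if not 1 <= len(parts) <= 4:
        return None
    numbers = []
    for part in parts:
        if part[:2].lower() == "0x":
            digits, base = part[2:], 16
        elif len(part) > 1 and part.startswith("0"):
            digits, base = part[1:], 8
        else:
            digits, base = part, 10
        if (digits == "" and base != 16) or not set(digits) <= DIGITS[base]:
            return None
        numbers.append(int(digits, base) if digits else 0)  # bare "0x" means 0
    # Every part but the last is one byte; the last fills the remaining bytes.
    if any(n > 255 for n in numbers[:-1]) or numbers[-1] >= 256 ** (5 - len(numbers)):
        return None
    value = numbers[-1]
    for i, n in enumerate(numbers[:-1]):
        value += n << (8 * (3 - i))
    return ".".join(str((value >> shift) & 0xFF) for shift in (24, 16, 8, 0))


for form in ("192.168.0.1", "0xC0.0xA8.0x00.0x01", "3232235521"):
    print(parse_ipv4(form))  # 192.168.0.1 each time
```

A password manager would run this check first and use the returned address, unchanged, as the key. Only hosts that are not IP addresses would go through the Public Suffix List.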

Things get even more complicated once you add IPv6 addresses to the mix. There are plenty of different notations to represent an IPv6 address as well; for example, the last 32 bits of the address can be written like an IPv4 address. So you might want to use an elaborate solution that considers all these details, such as the ip-address package.
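For IPv6, Python's standard `ipaddress` module can play the role the ip-address package plays in JavaScript. It parses any accepted notation and produces one canonical string. Note that `ipaddress` deliberately rejects the hexadecimal, octal and single-integer IPv4 forms discussed above, so it does not replace a browser-compatible IPv4 parser. The `ipv6_key` helper is a hypothetical name.

```python
import ipaddress


def ipv6_key(host: str):
    """Canonical (compressed) form of an IPv6 host such as "[2001:db8::1]",
    or None if host is not an IPv6 address."""
    if host.startswith("[") and host.endswith("]"):
        host = host[1:-1]  # URL host syntax wraps IPv6 in brackets
    try:
        return ipaddress.IPv6Address(host).compressed
    except ValueError:
        return None


# The same address written three ways, including with an embedded IPv4 tail.
for form in ("[2001:db8::192.0.2.1]", "2001:0db8:0:0:0:0:c000:0201", "2001:DB8::C000:201"):
    print(ipv6_key(form))  # 2001:db8::c000:201 each time
```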

4. Be careful with what host names you consider equivalent

It’s understandable that you want to spare your users disappointments like “I added a password on foo.example.com, so why isn’t it being filled in on bar.example.com?” Yet you cannot know that these two subdomains really share the same owner. To give you a real example, foo.blogspot.com and bar.blogspot.com are two blogs owned by different people, and you certainly don’t want to share passwords between them.

As a more extreme example, there are so many Amazon domains that it is tempting to just declare: amazon.<TLD> is always Amazon and should receive Amazon passwords. And then somebody goes there and registers amazon.boots to steal people’s Amazon passwords.

From what I’ve seen, the only safe assumption is that the host name with www. at the beginning and the one without are equivalent. Other than that, assumptions tend to backfire. It’s better to let the users determine which host names are equivalent, while maybe providing a default list populated with popular websites.
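The Python sketch below encodes this advice. The only built-in equivalence is an optional leading `www.`. Everything else comes from groups the user, or a curated default list, has explicitly approved. The function names and the shape of `user_groups` are hypothetical.

```python
def strip_www(host: str) -> str:
    host = host.lower().rstrip(".")
    return host[4:] if host.startswith("www.") else host


def hosts_equivalent(a: str, b: str, user_groups=()) -> bool:
    """True if passwords saved for host a may be filled in on host b."""
    a, b = strip_www(a), strip_www(b)
    if a == b:
        return True
    # Anything beyond www. must be an explicit, user-approved grouping.
    return any(a in group and b in group for group in user_groups)


amazon = [{"amazon.com", "amazon.de"}]  # example of a user-approved group
print(hosts_equivalent("www.example.com", "example.com"))        # True
print(hosts_equivalent("foo.blogspot.com", "bar.blogspot.com"))  # False
print(hosts_equivalent("www.amazon.de", "amazon.com", amazon))   # True
print(hosts_equivalent("amazon.boots", "amazon.com", amazon))    # False
```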

5. Require a user action for AutoFill

This might be a hard sell with your marketing department, but please consider requiring a user action before AutoFill functionality kicks in. While this costs a bit of convenience, it largely defuses potential issues in the implementation of the points above. Think of it as defense in depth. Even if you mess up and websites can trick your AutoFill functionality into thinking that they are some other website, requiring a user action will still prevent attackers from automatically trying out a huge list of popular websites in order to steal users’ credentials for all of them.

There is also another aspect here, discussed in a paper from 2014. Cross-Site Scripting (XSS) vulnerabilities in websites are still common. While such a vulnerability is bad enough on its own, a password manager that fills in passwords automatically allows it to be used to steal users’ credentials, which is considerably worse.

What kind of user action should you require? Typically, it will be clicking on a piece of trusted user interface or pressing a specific key combination. Please don’t forget to check event.isTrusted: whatever event you process should come from the user rather than from the website.
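The gate itself is simple; what matters is that the page cannot satisfy it. The Python sketch below models it with a hypothetical `UserEvent` record. Its `is_trusted` field mirrors the DOM's `event.isTrusted`, which is set by the browser and never by page scripts. The set of triggering event kinds is an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UserEvent:
    kind: str                     # e.g. "click" or "keydown"
    is_trusted: bool              # mirrors event.isTrusted: True only for real user input
    target_is_extension_ui: bool  # did it hit the extension's own, isolated UI?


TRIGGER_KINDS = {"click", "keydown"}  # assumption: which events may trigger a fill


def may_autofill(event) -> bool:
    """Fill only in response to genuine user input on trusted UI; page load
    (event is None) and synthetic page-generated events never qualify."""
    return (
        event is not None
        and event.is_trusted
        and event.kind in TRIGGER_KINDS
        and event.target_is_extension_ui
    )
```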

6. Isolate your content from the webpage

Why did I have to stress that the user needs to click on a trusted user interface? That’s because browser extensions will commonly inject their user interface into web pages and at this point you can no longer trust it. Even if you are careful to accept only trusted events, a web page can manipulate elements and will always find a way to trick the user into clicking something.

Solution here: your user interface should always be isolated within an <iframe> element, so that the website cannot access it due to the same-origin policy. Unfortunately, this is only a partial solution, as it will not prevent clickjacking attacks. Also, the website can always remove your frame or replace it with its own. So asking users to enter their master password in this frame is a very bad idea: users won’t know whether the frame really belongs to your extension or has been faked by the website.

7. Ignore third-party frames

Finally, there is another defense-in-depth measure that you can implement: only fill in passwords in the top-level window or first-party frames. Legitimate third-party frames with login forms are very uncommon. On the other hand, a malicious website seeking to exploit an XSS vulnerability in a website or a weakness in your extension’s AutoFill functionality will typically use a frame with a login form. Even if AutoFill requires a user action, it won’t be obvious to the user that the login form belongs to a different website, so they might still perform that action.
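Here is a Python sketch of that check. It interprets "first-party" conservatively as same origin, meaning scheme, host and port are all equal. A same-site comparison based on the Public Suffix List would be looser. The function names are hypothetical. A real extension would get the frame and top-level URLs from the browser rather than trusting strings supplied by the page.

```python
from urllib.parse import urlsplit

DEFAULT_PORTS = {"http": 80, "https": 443}


def origin(url: str):
    """(scheme, host, port) tuple, with the scheme's default port filled in."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    return (scheme, parts.hostname, parts.port or DEFAULT_PORTS.get(scheme))


def frame_may_autofill(frame_url: str, top_url: str) -> bool:
    """Allow filling only in the top-level document or a same-origin frame."""
    return origin(frame_url) == origin(top_url)
```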

https://palant.de/2018/08/29/password-managers-please-make-sure-autofill-is-secure

|

|

John Ford: Shrinking Go Binaries |

Some background: Go binaries are static binaries with the Go runtime and standard library built into them. This is great if you don't care about binary size, but not great if you do.

This graph shows the binary size (blue, left Y axis, linear scale) and the number of nanoseconds of computation spent per byte of size reduction (right Y axis, logarithmic scale).

To reproduce my results, you can do the following:

go get -u -t -v github.com/taskcluster/taskcluster-lib-artifact-go

cd $GOPATH/src/github.com/taskcluster/taskcluster-lib-artifact-go

git checkout 6f133d8eb9ebc02cececa2af3d664c71a974e833

time (go build) && wc -c ./artifact

time (go build && strip ./artifact) && wc -c ./artifact

time (go build -ldflags="-s") && wc -c ./artifact

time (go build -ldflags="-w") && wc -c ./artifact

time (go build -ldflags="-s -w") && wc -c ./artifact

time (go build && upx -1 ./artifact) && wc -c ./artifact

time (go build && upx -9 ./artifact) && wc -c ./artifact

time (go build && strip ./artifact && upx -1 ./artifact) && wc -c ./artifact

time (go build && strip ./artifact && upx --brute ./artifact) && wc -c ./artifact

time (go build && strip ./artifact && upx --ultra-brute ./artifact) && wc -c ./artifact

time (go build && strip ./artifact && upx -9 ./artifact) && wc -c ./artifact
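To re-run a subset of these variants and compute the "time per byte saved" metric from the graph, a small Python driver could look like this. It assumes it is run from the checkout above with `go`, `strip` and `upx` on the PATH. The variant names are made up. The metric is assumed to be total pipeline time divided by bytes saved relative to a plain `go build`, since the post doesn't say exactly how it was computed.

```python
import os
import subprocess
import time

BINARY = "./artifact"

# A subset of the pipelines above; each starts with a fresh `go build`.
VARIANTS = {
    "plain": "go build",
    "ldflags -s -w": 'go build -ldflags="-s -w"',
    "strip": f"go build && strip {BINARY}",
    "strip + upx -1": f"go build && strip {BINARY} && upx -1 {BINARY}",
    "strip + upx -9": f"go build && strip {BINARY} && upx -9 {BINARY}",
}


def measure(command: str):
    """Run one pipeline through the shell; return (seconds, size in bytes)."""
    start = time.perf_counter()
    subprocess.run(command, shell=True, check=True)
    return time.perf_counter() - start, os.path.getsize(BINARY)


def ns_per_byte_saved(baseline_size: int, size: int, seconds: float) -> float:
    """Nanoseconds spent per byte removed relative to the baseline build."""
    saved = baseline_size - size
    return seconds * 1e9 / saved if saved > 0 else float("inf")


def report():
    _, baseline = measure(VARIANTS["plain"])
    for name, command in VARIANTS.items():
        seconds, size = measure(command)
        cost = ns_per_byte_saved(baseline, size, seconds)
        print(f"{name:16} {size:>10} bytes {seconds:6.2f}s {cost:12.1f} ns/byte")
```

Calling `report()` from the repository checkout prints one line per variant.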

Since I was removing a lot of debugging information, I figured it'd be worthwhile checking that stack traces are still working. To ensure that I could definitely crash, I decided to panic with an error immediately on program startup.

Even with binary stripping and the maximum compression, I'm still able to get valid stack traces. A reduction from 9 MB to 2 MB is definitely significant. The binaries are still large, but they're much smaller than what we started out with. I'm curious whether we can apply this same configuration to other areas of the Taskcluster Go codebase with similar success, and whether the reduction in size is worthwhile there.

I think that using strip and upx -9 is probably the best path forward. This combination provides enough of a benefit over the non-upx options that the time tradeoff is likely worth the effort.

|

|

Firefox Nightly: Firefox Nightly Secure DNS Experimental Results |

A previous post discussed a planned Firefox Nightly experiment involving secure DNS via the DNS over HTTPS (DoH) protocol. That experiment is now complete and this post discusses the results.

Browser users are currently experiencing spying and spoofing of their DNS information due to reliance on the unsecured traditional DNS protocol. A paper from the 2018 Usenix Security Symposium provides a new data point on how often DNS is actively interfered with – to say nothing of the passive data collection that it also endures. DoH will let Firefox securely and privately obtain DNS information from one or more services that it trusts to give correct answers and keep the interaction private.
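For readers unfamiliar with the protocol, the sketch below shows how a DoH GET request is formed as specified in RFC 8484. An ordinary binary DNS query is base64url-encoded, without padding, into the `dns` query parameter. The request is then sent over HTTPS with `Accept: application/dns-message`. This illustrates the standard, not Firefox's implementation, and `https://dns.example/dns-query` is a placeholder server.

```python
import base64
import struct


def dns_query(name: str, qtype: int = 1, query_id: int = 0) -> bytes:
    """Binary DNS query for one name (qtype 1 = A record, class IN)."""
    # Header: ID, flags (0x0100 = recursion desired), 1 question, 0 other records.
    header = struct.pack("!6H", query_id, 0x0100, 1, 0, 0, 0)
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in name.rstrip(".").split(".")
    ) + b"\x00"
    return header + qname + struct.pack("!2H", qtype, 1)


def doh_get_url(server: str, name: str) -> str:
    encoded = base64.urlsafe_b64encode(dns_query(name)).rstrip(b"=").decode("ascii")
    return f"{server}?dns={encoded}"


print(doh_get_url("https://dns.example/dns-query", "www.example.com"))
# https://dns.example/dns-query?dns=AAABAAABAAAAAAAAA3d3dwdleGFtcGxlA2NvbQAAAQAB
```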

Using a trusted cloud-based DoH service in place of traditional DNS is a significant change in how networking operates, and it raises many considerations for how we select servers going forward (see “Moving Forward” at the end of this post). However, the initial experiment focused on validating two separate, important technical questions:

- Does the use of a cloud DNS service perform well enough to replace traditional DNS?

- Does the use of a cloud DNS service create additional connection errors?

During July, about 25,000 Firefox Nightly 63 users who had previously agreed to be part of Nightly experiments participated in some aspect of this study. Cloudflare operated the DoH servers that were used, under the privacy policy it has agreed to with Mozilla. Each user was also given information about the project directly in the browser, including the service provider and an opportunity to decline participation in the study.


The experiment generated over a billion DoH transactions and is now closed. You can continue to manually enable DoH on your copy of Firefox Nightly if you like. See the bottom of the original announcement for instructions.

Results

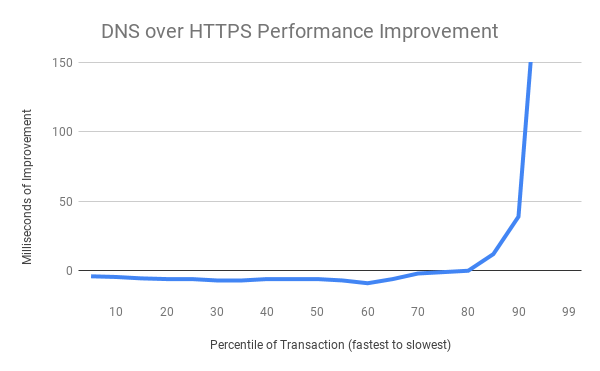

Using HTTPS with a cloud service provider had only a minor performance impact on the majority of non-cached DNS queries as compared to traditional DNS. Most queries were around 6 milliseconds slower, which is an acceptable cost for the benefits of securing the data. However, the slowest DNS transactions performed much better with the new DoH-based system than with the traditional one, sometimes by hundreds of milliseconds.

The above chart shows the net improvement of the DoH performance distribution over the traditional DNS performance distribution. The fastest DNS exchanges are at the left of the chart and the slowest at the right. The slowest 20% of DNS exchanges are radically improved (at the extreme right, improvements of several seconds are truncated for chart formatting reasons), while the majority of exchanges exhibit a small, tolerable amount of overhead when using a cloud service. This is a good result.
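The comparison behind such a chart can be sketched as follows. Sort the latencies measured with each resolver, take the same percentiles of both, and subtract. This is a hypothetical illustration, not Mozilla's analysis code. It uses the simple nearest-rank percentile definition, and the sample numbers are invented purely to show the shape of the output.

```python
import math


def percentile(sorted_values, p):
    """Nearest-rank percentile, 0 < p <= 100, of an already sorted list."""
    rank = math.ceil(p / 100 * len(sorted_values))
    return sorted_values[max(rank, 1) - 1]


def improvement_by_percentile(traditional_ms, doh_ms, points=(10, 25, 50, 75, 90, 99)):
    """Milliseconds saved by DoH at each percentile (negative = DoH slower)."""
    t, d = sorted(traditional_ms), sorted(doh_ms)
    return {p: percentile(t, p) - percentile(d, p) for p in points}


# Invented sample: DoH a few ms slower typically, much faster in the tail.
traditional = [10, 20, 30, 40, 1000]
doh = [16, 26, 36, 46, 100]
print(improvement_by_percentile(traditional, doh))
# {10: -6, 25: -6, 50: -6, 75: -6, 90: 900, 99: 900}
```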

We hypothesize that the improvements at the tail of the distribution derive from two advantages DoH has over traditional DNS. The first is the consistency of the service’s operation: when dealing with thousands of different operating-system-defined resolvers, there are surely some that are overloaded, unmaintained, or forwarded to strange locations. Second, HTTP’s use of modern loss recovery and congestion control allows it to operate better on very busy or low-quality networks.

The experiment also considered connection error rates and found that users of the DoH cloud service in ‘soft-fail’ mode experienced no statistically significant difference in the rate of connection errors compared with users in a control group using traditional DNS. Soft-fail mode primarily uses DoH, but it will fall back to traditional DNS when a name does not resolve correctly or when a connection to the DoH-provided address fails. The connection error rate measures whether an HTTP channel can be successfully established from a name and therefore incorporates the fallbacks into its measurements. These fallbacks are needed to ensure seamless operation in the presence of firewalled services and captive portals.
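The soft-fail logic described here can be sketched as below. The resolver and connection functions are hypothetical callables supplied by the caller, and the exact order of retries in Firefox may differ. The point is that a failure at either the DoH lookup or the connection step falls back to the operating system's resolver. That is why the connection error rate already includes the fallbacks.

```python
from typing import Callable, List, Optional, Tuple

Resolve = Callable[[str], Optional[List[str]]]  # name -> addresses, or None
Connect = Callable[[str], bool]                 # address -> connected?


def soft_fail_connect(name: str, doh: Resolve, native: Resolve,
                      connect: Connect) -> Tuple[Optional[str], str]:
    """Prefer DoH; fall back to traditional DNS if DoH gives no usable
    answer or no DoH-provided address accepts a connection."""
    for addr in doh(name) or []:
        if connect(addr):
            return addr, "doh"
    for addr in native(name) or []:
        if connect(addr):
            return addr, "native"
    return None, "error"  # roughly what the connection error rate counts
```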

Moving Forward

We’re committed long term to building a larger ecosystem of trusted DoH providers that live up to a high standard of data handling. We’re also working on privacy preserving ways of dividing the DNS transactions between a set of providers, and/or partnering with servers geographically. Future experiments will likely reflect this work as we continue to move towards a future with secured DNS deployed for all of our users.

https://blog.nightly.mozilla.org/2018/08/28/firefox-nightly-secure-dns-experimental-results/

|

|

John Ford: Taskcluster Artifact API extended to support content verification and improve error detection |

Background

At Mozilla, we're developing the Taskcluster environment for doing Continuous Integration, or CI. One of the fundamental concerns in a CI environment is being able to upload and download files created by each task execution. We call them artifacts. For Mozilla's Firefox project, an example of how we use artifacts is that each build of Firefox generates a product archive containing a build of Firefox, an archive containing the test files we run against the browser and an archive containing the compiler's debug symbols which can be used to generate stacks when unit tests hit an error.

The problem

In the old Artifact API, we had an endpoint which generated a signed S3 URL that was given to the worker which created the artifact. This worker could upload anything it wanted at that location. This is not to suggest malicious usage, but rather that any error or early termination of an upload could result in a corrupted artifact being stored in S3 as if it were a correct upload.

If you created an artifact with the local contents "hello-world\n", but your internet connection dropped midway through, the S3 object might only contain "hello-w". This went unnoticed until something much later down the pipeline (hopefully!) complained that the file it got was corrupted. This corruption is the cause of many orange-factor bugs, but we had no way to figure out exactly where it was happening.

Our old API was also very challenging to use for artifact handling in tasks. It would often require a task writer to use one of our client libraries to generate a Taskcluster-Signed-URL and Curl to do uploads. In a lot of cases, this is fraught with hazards. Curl doesn't fail on errors by default (!!!), and Curl doesn't automatically handle "Content-Encoding: gzip" responses without "Accept-Encoding: gzip", which we sometimes need to serve. It requires each user to figure all of this out for themselves, each time they want to use artifacts.

We also had a "Completed Artifact" pulse message which wasn't actually sending anything useful. It was sent when the artifact was allocated in our metadata tables, not when the artifact was actually complete. We could also mark a task as completed before all of its artifacts had finished uploading. In practice, workers avoided this by not completing the task until the uploads were done, but that was only a convention.

Our solution

We wanted to address a lot of issues with Taskcluster Artifacts. Specifically, the following issues are ones which we've tackled:

- Corruption during upload should be detected

- Corruption during download should be detected

- Corruption of artifacts should be attributable

- S3 Eventual Consistency error detection

- Caches should be able to verify whether they are caching valid items

- Completed Artifact messages should only be sent when the artifact is actually complete

- Tasks should be unresolvable until all uploads are finished

- Artifacts should be really easy to use

- Artifacts should be able to be uploaded with browser-viewable gzip encoding

Code

Here's the code we wrote for this project:

- https://github.com/taskcluster/remotely-signed-s3 -- A library which wraps the S3 APIs using the lower-level S3 REST API and uses the aws4 request signing library

- https://github.com/taskcluster/taskcluster-lib-artifact -- A light wrapper around remotely-signed-s3 to enable JS based uploads and downloads

- https://github.com/taskcluster/taskcluster-lib-artifact-go -- A library and CLI written in Go

- https://github.com/taskcluster/taskcluster-queue/commit/6cba02804aeb05b6a5c44134dca1df1b018f1860 -- The final Queue patch to enable the new Artifact API

Upload Corruption

If an artifact is uploaded with a different set of bytes from those which were expected, we should fail the upload. The S3 V4 signature system allows us to sign a request's headers, which include the X-Amz-Content-Sha256 and Content-Length headers. This means that the request headers we get back from signing can only be used for a request which sets X-Amz-Content-Sha256 and Content-Length to the values provided at signing. S3 checks that the Sha256 checksum of each request's body matches the value provided in this header, and that its length matches the Content-Length.

The requests we get from the Taskcluster Queue can only be used to upload the exact file we asked permission to upload. This means that the only set of bytes that will allow the request(s) to S3 to complete successfully will be the ones we initially told the Taskcluster Queue about.
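The effect of signing those two headers can be modelled in a few lines of Python. `signed_headers` and `s3_accepts` are hypothetical names, and S3 itself performs the real check. In the real flow the headers are also covered by the AWS Signature Version 4 signature, so a client cannot change them after the Queue has signed the request.

```python
import hashlib


def signed_headers(data: bytes) -> dict:
    """Headers the uploader declares up front and gets signed."""
    return {
        "X-Amz-Content-Sha256": hashlib.sha256(data).hexdigest(),
        "Content-Length": str(len(data)),
    }


def s3_accepts(headers: dict, body: bytes) -> bool:
    """Model of the server-side check: the body that arrives must match both."""
    return (
        headers["Content-Length"] == str(len(body))
        and headers["X-Amz-Content-Sha256"] == hashlib.sha256(body).hexdigest()
    )


headers = signed_headers(b"hello-world\n")
print(s3_accepts(headers, b"hello-world\n"))  # True
print(s3_accepts(headers, b"hello-w"))        # False: truncated upload is rejected
```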

The two main cases we're protecting against here are disk and network corruption. The file ends up being read twice, once to hash and once to upload. Since we have already calculated the hash, we can be sure to catch corruption if the two hashes or sizes don't match. Likewise, network interruption or corruption is handled, because the S3 server will report an error if the connection is interrupted or corrupted before data exactly matching the Sha256 hash is uploaded.

This does not prevent all broken files from being uploaded. This is an important distinction to make. If you upload an invalid zip file, but no corruption occurs once you pass responsibility to taskcluster-lib-artifact, we're going to happily store this defective file, but we're also going to ensure that every step down the pipeline gets an exact copy of it.

Download Corruption

Like corruption during upload, we could experience corruption or interruptions during downloading. In order to combat this, we set some metadata on the artifacts in S3, in the form of extra headers set during uploading:

- x-amz-meta-taskcluster-content-sha256 -- The Sha256 of the artifact passed into a library -- i.e. without our automatic gzip encoding

- x-amz-meta-taskcluster-content-length -- The number of bytes of the artifact passed into a library -- i.e. without our automatic gzip encoding

- x-amz-meta-taskcluster-transfer-sha256 -- The Sha256 of the artifact as passed over the wire to S3 servers. In the case of identity encoding, this is the same value as x-amz-meta-taskcluster-content-sha256. In the case of Gzip encoding, it is almost certainly not identical.

- x-amz-meta-taskcluster-transfer-length -- The number of bytes of the artifact as passed over the wire to S3 servers. In the case of identity encoding, this is the same value as x-amz-meta-taskcluster-content-length. In the case of Gzip encoding, it is almost certainly not identical.

It's important to note that because these are non-standard headers, verification requires explicit action on the part of the artifact downloader. That's a big part of why we've written supported artifact downloading tools.
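A downloader that honours these headers might look like the Python sketch below. `verify_download` is a hypothetical helper, not the API of taskcluster-lib-artifact. It assumes the caller has the raw bytes exactly as they came off the wire, plus the `x-amz-meta-*` values from the response headers. Many HTTP clients decompress gzip transparently, which would make the transfer check impossible.

```python
import gzip
import hashlib

PREFIX = "x-amz-meta-taskcluster-"


def _check(kind: str, data: bytes, meta: dict) -> None:
    """Compare data against the <kind>-length and <kind>-sha256 metadata."""
    if len(data) != int(meta[PREFIX + kind + "-length"]):
        raise ValueError(f"{kind} length mismatch")
    if hashlib.sha256(data).hexdigest() != meta[PREFIX + kind + "-sha256"]:
        raise ValueError(f"{kind} sha256 mismatch")


def verify_download(raw: bytes, meta: dict, content_encoding: str = "identity") -> bytes:
    """Verify bytes as transferred, decode gzip if needed, then verify the
    decoded content. Returns the verified content or raises ValueError."""
    _check("transfer", raw, meta)
    content = gzip.decompress(raw) if content_encoding == "gzip" else raw
    _check("content", content, meta)
    return content
```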

Attribution of Corruption

Corruption is inevitable in a massive system like Taskcluster. What's really important is that when corruption happens, we detect it and we know where to focus our remediation efforts. In the new Artifact API, we can zero in on the culprit for corruption.

With the old Artifact API, we didn't have any way to figure out whether an artifact was corrupted or where that happened. We never knew what the artifact was on the build machine, we couldn't verify corruption in caching systems, and when we had an invalid artifact downloaded on a downstream task, we didn't know whether it was invalid because the file was defective from the start or because of a bad transfer.

Now, if the Sha256 checksums of a downloaded artifact match but the file is still invalid, we know that the original file was broken before it was uploaded. We can build caching systems which ensure that the values they're caching are valid and alert us to corruption. We can track corruption to detect issues in our underlying infrastructure.

Completed Artifact Messages and Task Resolution

Previously, as soon as the Taskcluster Queue stored the metadata about the artifact in its internal tables and generated a signed URL for the S3 object, the artifact was marked as completed. This behaviour resulted in a slightly deceptive message being sent. Nobody cares when this allocation occurs, but someone might care about an artifact becoming available.

On a related theme, we also allowed tasks to be resolved before the artifacts were uploaded. This meant that a task could be marked as "Completed -- Success" without actually uploading any of its artifacts. Obviously, we would always be writing workers with the intention of avoiding this error, but having it built into the Queue gives us a stronger guarantee.

We achieved this result by adding a new method to the flow of creating and uploading an artifact and adding a 'present' field in the Taskcluster Queue's internal Artifact table. For those artifacts which are created atomically, and the legacy S3 artifacts, we just set the value to true. For the new artifacts, we set it to false. When you finish your upload, you have to call the complete artifact method. This is sort of like a commit.

In the complete artifact method, we verify that S3 sees the artifact as present, and only once it's completed do we send the artifact completed message. Likewise, in the complete task method, we ensure that all artifacts have a present value of true before allowing the task to complete.
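The bookkeeping can be modelled with a small Python class. Everything here is a hypothetical stand-in for the Queue's internal logic, written only to show the ordering guarantees. That includes `TaskRun`, `create_artifact`, `complete_artifact`, `complete_task` and the `exists_in_s3` callback.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class TaskRun:
    present: Dict[str, bool] = field(default_factory=dict)
    messages: List[Tuple[str, str]] = field(default_factory=list)
    resolved: bool = False

    def create_artifact(self, name: str, atomic: bool = False) -> None:
        # Atomic (and legacy S3) artifacts are present immediately;
        # new-style uploads start out as not present.
        self.present[name] = atomic

    def complete_artifact(self, name: str, exists_in_s3: Callable[[str], bool]) -> None:
        # The "commit": only after S3 confirms the object do we announce it.
        if not exists_in_s3(name):
            raise RuntimeError(f"{name} is not in S3 yet")
        self.present[name] = True
        self.messages.append(("artifact-completed", name))

    def complete_task(self) -> None:
        missing = [n for n, ok in self.present.items() if not ok]
        if missing:
            raise RuntimeError(f"artifacts not uploaded: {missing}")
        self.resolved = True
```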

S3 Eventual Consistency and Caching Error Detection

S3 works on an eventual consistency model for some operations in some regions. Caching systems also have a certain level of tolerance for corruption. We're now able to determine whether the bytes we're downloading are those which we expect, so we can rely on more than the HTTP status code to know whether the request worked.

In both of these cases, we can programmatically check whether the download is corrupt and try again as appropriate. In the future, we could even build smarts into our download libraries and tools to ask the caches involved to drop their data, or to try bypassing caches as a last resort.
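A retry loop built on that check might look like this Python sketch. `fetch` is a hypothetical callable that returns the downloaded bytes and accepts a `bypass_cache` flag. Whether and how caches can be bypassed is exactly the kind of future improvement mentioned above, so treat that part as an assumption.

```python
import hashlib
from typing import Callable


def download_verified(fetch: Callable[..., bytes], expected_sha256: str, attempts: int = 3) -> bytes:
    """Retry until the bytes match the expected hash; the last attempt asks
    to bypass caches. Raises OSError if every attempt returns wrong bytes."""
    for attempt in range(1, attempts + 1):
        data = fetch(bypass_cache=(attempt == attempts))
        if hashlib.sha256(data).hexdigest() == expected_sha256:
            return data
    raise OSError(f"content still corrupt after {attempts} attempts")
```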

Artifacts should be easy to use

Right now, if you're working with artifacts directly, you're probably having a hard time. You have to use something like Curl and build URLs or signed URLs yourself. You've probably hit pitfalls like Curl not exiting with an error on a non-200 HTTP status. You're not getting any content verification. Basically, it's hard.

Taskcluster is about enabling developers to do their job effectively. Something as critical to CI usage as artifacts should be simple to use. To that end, we've implemented libraries for interacting with artifacts in Javascript and Go. We've also implemented a Go-based CLI for interacting with artifacts in the build system or shell scripts.

Javascript

The Javascript client uses the same remotely-signed-s3 library that the Taskcluster Queue uses internally. It's a really simple wrapper which provides a put() and get() interface. All of the verification of requests is handled internally, as is decompression of Gzip resources. This was primarily written to enable integration in Docker-Worker directly.

Go

We also provide a Go library for downloading and uploading artifacts. This is intended to be used in the Generic-Worker, which is written in Go. The Go library uses the minimal useful interfaces from the standard io package (such as io.Reader) for inputs and outputs. We also use type assertions to do even more intelligent things with those inputs and outputs which support it.

CLI

For all other users of Artifacts, we provide a CLI tool. This provides a simple interface to interact with artifacts. The intention is to make it available in the path of the task execution environment, so that users can simply call "artifact download --latest $taskId $name --output browser.zip".

Artifacts should allow serving to the browser in Gzip

We want to enable large text files which compress extremely well with Gzip to be rendered by web browsers. An example is displaying and transmitting logs. Because of limitations in S3 around Content-Encoding and its complete lack of content negotiation, we have to decide when we upload an artifact whether or not it should be Gzip compressed.

There's an option in the libraries to support automatic Gzip compression of things we're going to upload. We chose Gzip over possibly-better encoding schemes because this is a one time choice at upload time, so we wanted to make sure that the scheme we used would be broadly implemented.
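Because the encoding is fixed at upload time, the uploader has to compute both the "content" and the "transfer" metadata described earlier. The Python sketch below uses `prepare_upload`, a hypothetical helper rather than the libraries' real option. It compresses with a fixed modification time (`mtime=0`), so the same input produces the same bytes, and therefore the same transfer hash, on every run.

```python
import gzip
import hashlib

PREFIX = "x-amz-meta-taskcluster-"


def prepare_upload(content: bytes, use_gzip: bool):
    """Return (body, headers) for an upload, with or without gzip encoding."""
    body = gzip.compress(content, mtime=0) if use_gzip else content
    headers = {
        PREFIX + "content-sha256": hashlib.sha256(content).hexdigest(),
        PREFIX + "content-length": str(len(content)),
        PREFIX + "transfer-sha256": hashlib.sha256(body).hexdigest(),
        PREFIX + "transfer-length": str(len(body)),
    }
    if use_gzip:
        # Lets browsers decode the stored object transparently.
        headers["Content-Encoding"] = "gzip"
    return body, headers
```

With identity encoding, the transfer values simply equal the content values, matching the header descriptions above.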

Further Improvements

As always, there are still some things around artifact handling that we'd like to improve upon. For starters, we should work on splitting artifact handling out of our Queue. We've already agreed on a design for how we should store artifacts. This involves moving all of the artifact handling out of the Queue into a different service and having the Queue track only which artifacts belong to each task run.

We're also investigating an idea to store each artifact in the region it is created in. Right now, all artifacts are stored in AWS's US West 2 region. We could have a situation where a build VM and test VM are running on the same hypervisor in US East 1, but each artifact has to be uploaded to and downloaded from US West 2.

Another area we'd like to work on is supporting other clouds. Taskcluster ideally supports whichever cloud provider you'd like to use. We want to support storage providers other than S3, and splitting out the low-level artifact handling gives us a huge maintainability win.

Possible Contributions

We're always open to contributions! A great one that we'd love to see is allowing concurrency of multipart uploads in Go. It turns out that this is a lot more complicated than I'd like it to be, in order to support passing in the low-level io.Reader interface. We'd want to do some type assertions to see if the input supports io.ReaderAt, and if not, use a per-goroutine offset and a file mutex to guard seeking on the file. I'm happy to mentor this project, so get in touch if that's something you'd like to work on.

Conclusion

This project has been a really interesting one for me. It gave me an opportunity to learn the Go programming language and work with the underlying AWS REST API. It's been an interesting experience after being heads down in Node.js code, and a great reminder of how to use static, strongly typed languages. I'd forgotten how nice a real type system was to work with!

Integration into our workers is still ongoing, but I wanted to give an overview of this project to keep everyone in the loop. I'm really excited to see a reduction in the number of artifact corruptions.

http://blog.johnford.org/2018/08/taskcluster-artifact-api-extended-to.html

|

|

The Mozilla Blog: Let’s be Transparent |

Two years ago, we released the Firefox Hardware Report to share with the public the state of desktop hardware. Whether you’re a web developer deciding what hardware settings to test against or someone just interested in CPUs and GPUs, we wanted to provide a public resource to show exactly what technologies are running in the wild.

This year, we’re continuing the tradition by releasing the Firefox Public Data Report. This report expands on the hardware report by adding data on how Firefox desktop users are using the browser and the web. Ever wanted to know the effect of Spring Festival on internet use in China? (It goes down.) What add-on is most popular this week in Russia? (It’s Визуальные закладки, “Visual Bookmarks”.) Which country averages the most browser use per day? (The United States, with about 6 to 6.5 hours of use a day.) In total there are 10 metrics, broken down by the top 10 countries, with plans to add more in the future.

Just as the hardware report serves developers, we hope this report can be a resource for journalists, researchers, and the public to understand not only the state of desktop browsing but also how data is used at Mozilla. We try to be open by design, and users should know how data is collected, what data is collected, and how that data is used.

We collect non-sensitive data from the Firefox desktop browsers’ Telemetry system, which sends us data on the browser’s performance, hardware, usage and customizations. All data undergoes an extensive review process to ensure that anything we collect is necessary and secure. If you’re curious about exactly what data you’re sending to Mozilla, you can see for yourself by navigating to about:telemetry in the Firefox browser (and if you’re uncomfortable with sending any of this data to Mozilla, you can always disable data collection by going to about:preferences#privacy.)

With this data, we aggregate metrics for a variety of use cases, from tracking crash rates to answering specific product questions (how many clients have add-ons? 35% this week.) In addition, we measure the impact of experiments that we run to improve the browser.

Firefox is an open source project and we think the data generated should be useful to the public as well. Code contributors should be able to see how many users their work impacted last month (256 million), researchers should be able to know how browser usage is changing in developing nations, and the general public should be able to see how we use data.

After all, it’s your data.

The post Let’s be Transparent appeared first on The Mozilla Blog.

https://blog.mozilla.org/blog/2018/08/28/lets-be-transparent/

|

|

The Mozilla Blog: Thank You, Cathy Davidson |

Cathy Davidson joined the Mozilla Foundation board in 2012, and has been a force helping us broaden our horizons and enter new areas. Cathy was the first person to join the Foundation board without a multi-year history with browsers or open source. This was an act of bravery!

Cathy is a leading educational innovator and a pioneer in recognizing the importance of digital literacy. She co-founded HASTAC (Humanities, Arts, Science, and Technology Advanced Collaboratory) in 2002. HASTAC is dedicated to rethinking the future of learning in the digital age, and is considered to be the world’s first and oldest academic social network (older than Facebook or even MySpace). She’s been directing or co-directing HASTAC since its founding.

Cathy has been using the Mozilla Manifesto in her classes for years, asking students to describe how the Manifesto is applicable in their world.

Cathy has been a forceful figure in Mozilla’s expansion into learning, outreach and broader-based programs generally. She set the stage for broadening the expertise on the Mozilla Foundation board. We’ve been building a board with an increasing diversity of perspectives ever since she joined.

Three years ago, Cathy took on a new role as a Distinguished Professor and the Director of the Futures Initiative at the City University of New York, a university that makes higher learning accessible to an astounding 274,000 students per year. With these new responsibilities taking up more of her time, Cathy asked to roll off the Mozilla Foundation board.

Cathy remains a close friend of Mozilla, and we’re in close contact about work we might continue to do together. Currently, we’re exploring the limitations of “STEM” education — in particular the risks of creating new generations of technologists that lack serious training and toolsets for considering the interactions of technology with societies, a topic she explores in her latest book, The New Education: How to Revolutionize the University to Prepare Students for a World in Flux.

Please join me in thanking Cathy for her tenure as a board member, and wishing her tremendous success in her new endeavors.

The post Thank You, Cathy Davidson appeared first on The Mozilla Blog.

https://blog.mozilla.org/blog/2018/08/27/thank-you-cathy-davidson/

|

|

Joel Maher: Experiment: Adjusting SETA to run individual files instead of individual jobs |

3.5 years ago we implemented and integrated SETA. Today it reduces our load by 60-70%. SETA works on the premise of identifying the specific test jobs that find real regressions and marking them as high priority. While this logic is not perfect, it provides great savings in test resources without adding a large burden for our sheriffs.

There are two things we could improve upon:

- a test job that finds a failure runs dozens if not hundreds of tests, even though the job failed because of a single test.

- in jobs that are split into multiple chunks, a test failing in chunk 1 today may run in chunk X in the future, which makes job-level prioritization less reliable

In June I ran an experiment (I was on PTO and busy migrating a lot of tests in July/August) in which I queried the treeherder database to find the actual test cases that caused the failures instead of only the job names. I came up with a list of 171 tests that we needed to run; these ran in 6 jobs in the tree and used 147 minutes of CPU time.

This was a fun project and it gives some insight into what a future could look like. The future I envision is picking high priority tests via SETA and using code coverage to find additional tests to run. There are a few caveats which make this tough:

- Not all failures we find are related to a single test: we have shutdown leaks, hangs, CI and tooling/harness changes, etc. This experiment only covers tests that we could specify in a manifest file (about 75% of the failures).

- My experiment didn’t load balance across configs. SETA does a great job of picking the fewest jobs possible: if it knows a failure is Windows-specific, we can run that job on Windows and not schedule it on Linux/OS X/Android. My experiment only checked whether we could run the selected tests; right now we have no way to schedule a list of test files and specify which configs to run each of them on. Of course, we can fall back to running “all these tests” on “this list of configs”, but running 147 minutes of tests on 27 different configs doesn’t save us much, and it might take more time than what we currently do.

- It was difficult to get the unique test failures. I had to run a series of queries on the treeherder data, parse the results, and adjust a lot of the SETA aggregation/reduction code before finally getting a list of tests. Doing this properly would take a few days of work, and we would need to figure out what to do with the other 25% of failures.

- The only way to run these tests is the per-test style used for test-verify (and the in-progress per-test code coverage). This changes the way we report tests in the treeherder UI: it is hard to know what we did and didn’t run, and summarizing failures across bugs could be interesting. We need a better story for running and reporting tests without caring about chunks and test harnesses (for example, see my running tests by component experiment).

- Assuming this were implemented, this model would need to be tightly integrated into the sheriffing and developer workflows. For developers, if you just want to run xpcshell tests, what does that mean for what you see on your try push? For sheriffs, if there is a new failure, can we backfill it and find which commit caused the problem? Can we easily retrigger the failed test?
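The load-balancing caveat comes down to simple arithmetic: without a way to say which configs each test needs, 147 CPU minutes of tests multiplied across 27 configs is 3,969 CPU minutes. The following Go sketch compares that fallback with per-config scheduling. `testInfo`, the test paths and the config names are hypothetical, and the model ignores per-job overhead such as machine setup time.

```go
package main

import "fmt"

// testInfo is a hypothetical record of one selected test file: its runtime
// in CPU minutes and the configs on which it has caught failures before.
type testInfo struct {
	path    string
	minutes float64
	configs []string
}

// costAllOnAll models the fallback: run "all these tests" on every config
// in "this list of configs".
func costAllOnAll(tests []testInfo, numConfigs int) float64 {
	total := 0.0
	for _, t := range tests {
		total += t.minutes
	}
	return total * float64(numConfigs)
}

// costPerConfig models the scheduling we lack today: each test runs only
// on the configs where it has historically found failures.
func costPerConfig(tests []testInfo) float64 {
	total := 0.0
	for _, t := range tests {
		total += t.minutes * float64(len(t.configs))
	}
	return total
}

func main() {
	// The experiment's figures: 147 CPU minutes of tests on 27 configs.
	fmt.Println(147 * 27) // 3969

	// Hypothetical example: two tests, each tied to the configs where it failed.
	tests := []testInfo{
		{"dom/tests/test_example.html", 2, []string{"windows10-64"}},
		{"netwerk/test/unit/test_example.js", 1.5, []string{"linux64", "osx-10-10"}},
	}
	fmt.Println("all tests on all configs:", costAllOnAll(tests, 27), "minutes")
	fmt.Println("per-config scheduling:", costPerConfig(tests), "minutes")
}
```

The gap between the two totals is what SETA's config awareness buys at the job level, and what a per-test scheduler would need to preserve.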

I realized I had done this work and never documented it. I would be excited to see progress made towards running a more simplified set of tests, ideally reducing our current load by 75% or more while keeping our quality levels high.

|

|

The Mozilla Blog: Dear Venmo: Update Your Privacy Settings |

Last month, privacy researcher and Mozilla Fellow Hang Do Thi Duc released Public By Default, a sobering look at the vast amount of personal data that’s easily accessible on Venmo, the mobile payment app.

By using Venmo’s public API and its “public by default” setting for user transactions, Hang was able to watch a couple feud on Valentine’s Day, observe a woman’s junk food habits, and peer into a marijuana dealer’s business operations. Seven million people use Venmo every month — and many may not know that their transactions are available for anyone to see.

Privacy, and not publicity, should be the default.

Despite widespread coverage of Hang’s work — and a petition by Mozilla that has garnered more than 17,000 signatures — Venmo transactions are still public by default.

But on August 23, Bloomberg reported that “In recent weeks, executives at PayPal Holdings Inc., the parent company of Venmo, were weighing whether to remove the option to post and view public transactions, said a person familiar with the deliberations. It’s unclear if those discussions are still ongoing.”

Today, we’re urging Venmo: See these important discussions through. Put users’ privacy first by making transactions private by default.

Ashley Boyd is Mozilla’s VP of Advocacy.

The post Dear Venmo: Update Your Privacy Settings appeared first on The Mozilla Blog.

https://blog.mozilla.org/blog/2018/08/27/dear-venmo-update-your-privacy-settings/

|

|