You can add any RSS feed (including a LiveJournal journal) to your friends feed on the syndication page.

Source: http://planet.mozilla.org/.

This journal is built from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

For any questions about how this service works, please use the contact information page.

Mozilla Reps Community: H2 2018 Reps Plan for the Council

This blogpost was authored by Daniele Scasciafratte

What we have done

In the first half of the year, our focus was to align the program with Mission Driven Mozillians and to prepare the mandatory Reps onboarding course so that it aligns with the D&I work. We have also started improving our understanding of the issues facing different Reps roles, such as Mentors and Alumni.

We also worked on administrative issues: transitioning inactive Reps to Alumni, cleaning up old Reps applications still open after years and wrong mentors assigned to profiles, and improving our reporting system. Last but not least, we created expertise teams that took over day-to-day tasks from the Council (the newsletter and onboarding).

We’ve chosen to split our ideas and tasks for the second half of the year into 3 areas, each with its share of our effort:

- Prepare the ground for Mission Driven Mozillians: 45%

- Visible wins: 45%

- Miscellaneous: 10%

Prepare the ground for Mission Driven Mozillians

The Reps program is working to prepare the ground for Mission Driven Mozillians, which involves a number of different tasks and issues.

The most important point for the Reps Council is the role of Reps inside the communities. Mozilla has many international, local, and project-specific communities, and we need to understand and be ready to support all of them.

We gathered different ideas that we will investigate soon:

- Role of Reps
  - Update all the Reps on the latest changes to the Mission Driven Mozillians program
  - Understanding and agreement on the Leadership Agreement
  - Start conversations on where Reps belong and what their role is
  - We are already working on:
    - Restarting the newsletter with a new team
    - Discussing migrating the Reps mailing list to Discourse
  - Create courses for various roles
    - The Review Team and Mentors already have a course
    - We are working on a new onboarding course
  - Skills criteria for various roles
    - Every role has a skillset, and we are working to improve these as requirements
- Mozilla Groups (MDM)
  - How they fit inside the Reps program
    - This is an experiment, and we need to understand how the program can become part of this initiative
- Onboarding
  - New SOPs for various roles/teams
    - We updated SOPs in the past, and we need to determine where they need updating now
  - Courses inside the program
    - Only a few roles have courses, but for Reps we need to decide whether we can create general courses or find existing ones
  - CPG alignment (update the Reps with current CPG information and add it as a mandatory part of the onboarding course)

Visible wins

The program also needs to be more visible inside Mozilla and other communities, so we’ve chosen to focus on 3 areas:

- Communication
  - Discover new areas where Reps are missing
    - There are a lot of communities where we don’t have a large representation or where there is no interest
  - Reps news to all of Mozilla
    - Share what Reps are doing, for example during the Weekly Monday Project Call
- Statistics
  - Showcase events inside Mozilla
  - Improve the sharing of statistics about what volunteers are doing
- Campaigns
  - Plan more than 3 weeks out
    - Let the community know about a new campaign in advance
  - Pocket involvement
    - Pocket is part of Mozilla, but there is no volunteer involvement right now
  - Create an event asset repository
    - Events often require the same resources, such as graphics or links, and we need to gather them in one place

Miscellaneous

The last area covers miscellaneous ideas: things that do not block the program’s goals but could improve it, and that have low priority:

- Improving the mentee/mentor relationship
- Improving understanding of the Alumni role
- Improve reps-tweet social usage
- Style guidelines for the community
- Reporting activities
  - Encourage Reps to report activities
    - Without reports it’s difficult to make data-driven decisions
  - Understand the issues Reps face with reporting

This list of ideas will be evaluated in the next quarters by the Council and as usual we are open to feedback!

Will Kahn-Greene: Thoughts on Guido retiring as BDFL of Python

I read the news of Guido van Rossum announcing his retirement as BDFL of Python and it made me a bit sad.

I've been programming in Python for almost 20 years on a myriad of open source projects, tools for personal use, and work. I helped out with several PyCon US conferences and attended several others. I met a lot of amazing people who have influenced me as a person and as a programmer.

I started PyVideo in March 2012. At a PyCon US after that (maybe 2015?), I found myself in an elevator with Guido and somehow we got to talking about PyVideo and he asked point-blank, "Why work on that?" I tried to explain what I was trying to do with it: create an index of conference videos across video sites, improve the meta-data, transcriptions, subtitles, feeds, etc. I remember he patiently listened to me and then said something along the lines of how it was a good thing to work on. I really appreciated that moment of validation. I think about it periodically. It was one of the reasons Sheila and I worked hard to transition PyVideo to a new group after we were burned out.

It wouldn't be an overstatement to say that through programming in Python, I've done some good things and become a better person.

Thank you, Guido, for everything!

http://bluesock.org/~willkg/blog/dev/python/guido_retiring_bdfl.html

Mike Hommey: Announcing git-cinnabar 0.5.0 beta 4

Git-cinnabar is a git remote helper to interact with Mercurial repositories. It allows you to clone, pull from, and push to Mercurial remote repositories using git.

These release notes are also available on the git-cinnabar wiki.

What’s new since 0.5.0 beta 3?

- Fixed incompatibility with Mercurial 3.4.

- Performance and memory consumption improvements.

- Worked around networking issues when downloading clone bundles from the Mozilla CDN, using range requests to continue past a failure.

- Miscellaneous metadata format changes.

- The prebuilt helper for Linux now works across more distributions (as long as libcurl.so.4 is present, it should work)

- Updated git to 2.18.0 for the helper.

- Properly support the `pack.packsizelimit` setting.

- Experimental support for initial clone from a git repository containing git-cinnabar metadata.

- Changed the default make rule to only build the helper.

- Now can successfully clone the pypy and GNU octave mercurial repositories.

- More user-friendly errors.

The Firefox Frontier: Get your game on, in the browser

The web is a gamer’s dream. It works on any device, can connect players across the globe, and can run a ton of games, from classic arcade games to old-school computer …

The post Get your game on, in the browser appeared first on The Firefox Frontier.

https://blog.mozilla.org/firefox/get-your-game-on-in-the-browser/

Robert Kaiser: VR Map - A-Frame Demo using OpenStreetMap Data

The prime driver for writing my first such demo was that I wanted to do something meaningful with A-Frame. Previously, I had only played around with the Hello WebVR example and some small alterations around the basic elements seen in that one, which is also pretty much what I taught to others in the WebVR workshops I held in Vienna last year. Now, it was time to go beyond that, and as I had recently bought an HTC Vive, I wanted something where the controllers could be used, but still something that would fall back nicely and be usable in 2D mode on a desktop browser or even mobile screens.

While I was thinking about what I could work on in that area, another long-standing thought crossed my mind: How feasible is it to render OpenStreetMap (OSM) data in 3D using WebVR and A-Frame? I decided to try and find out.

First, I built on my knowledge from Lantea Maps and the fact that I already had a tile cache server set up for it, and laid a set of map tiles on the ground as the base. That raised a number of issues I had to think about and decide on: First, should I respect the curvature of the earth, possibly putting the tiles and the viewer at a certain place on a virtual globe? Should I respect the terrain, especially the elevation of different points on the map? Also, as the VR scene relates to real-world sizes of objects, how large is a map tile in reality? After a lot of thinking, I decided that this would be a simple demo, so I would assume the earth is flat, both in terms of curvature ("the globe") and terrain, and the viewer would start at coordinates 0/0/0, with x and z being the horizontal coordinates and y the vertical one, as usual in A-Frame scenes. For the tile size, I found that because OpenStreetMap uses the Mercator projection, the tiles always stay square, with sizes that depend on the latitude (and the zoom level, but I always use the same high zoom there). In this respect, I still had to take into account that the real world is a globe.
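The tile-size observation follows from the Web Mercator projection used by OSM tiles: at a given zoom every tile spans the same slice of longitude, and the ground distance it covers shrinks with the cosine of the latitude. The Python sketch below is not taken from VR Map; it assumes the standard OSM "slippy map" tile formulas and the WGS84 equatorial radius of 6,378,137 m.

```python
import math

# Equatorial circumference of the WGS84 ellipsoid in metres (2 * pi * 6378137).
EARTH_CIRCUMFERENCE_M = 2 * math.pi * 6378137


def tile_size_m(lat_deg: float, zoom: int) -> float:
    """Approximate ground edge length, in metres, of a square Web Mercator tile."""
    return EARTH_CIRCUMFERENCE_M * math.cos(math.radians(lat_deg)) / (2 ** zoom)


def latlon_to_tile(lat_deg: float, lon_deg: float, zoom: int) -> tuple[int, int]:
    """Standard OSM slippy-map (x, y) tile indices containing a point."""
    n = 2 ** zoom
    lat_rad = math.radians(lat_deg)
    x = int((lon_deg + 180.0) / 360.0 * n)
    # asinh(tan(lat)) is the Mercator y coordinate, ln(tan(lat) + sec(lat)).
    y = int((1.0 - math.asinh(math.tan(lat_rad)) / math.pi) / 2.0 * n)
    return x, y
```

For example, `latlon_to_tile(0.0, 0.0, 1)` gives `(1, 1)`, the south-east quarter of the 2x2 grid at zoom 1, and a tile at 60° latitude covers half the ground width of one at the equator.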

Once I had those tiles rendering on the ground, I could think about navigation and I added teleport controls, later also movement controls to fly through the scene. With W/A/S/D keys on the desktop (and later the fly controls), it was possible to "fly" underneath the ground, which was awkward, so I wrote a very simple "position-limit" A-Frame control later on, which prohibits that and also is a very nice example for how to build a component, because it's short and easy to understand.
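The position-limit component itself is not reproduced in the post. The idea can be sketched in Python as a per-axis clamp applied to the camera position; in A-Frame, logic like this would typically run in a component's `tick` handler and write the clamped value back to the entity. The function name and the limit values are hypothetical.

```python
import math

UNBOUNDED = (-math.inf, math.inf)

# Hypothetical limits: x and z are free, y (height) may not drop below the ground at 0.
ABOVE_GROUND = (UNBOUNDED, (0.0, math.inf), UNBOUNDED)


def limit_position(position, limits=ABOVE_GROUND):
    """Clamp an (x, y, z) position to per-axis (low, high) bounds."""
    return tuple(
        min(max(value, low), high)
        for value, (low, high) in zip(position, limits)
    )
```

With the default limits, `limit_position((3.0, -1.5, -7.0))` returns `(3.0, 0.0, -7.0)`: only the height is corrected, so horizontal flying is unaffected.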

All this isn't using OSM data per se, but just the pre-rendered tiles, so it was time to go one step further and dig into the Overpass API, which lets you query and retrieve raw geo data from OSM. With Overpass Turbo I could try out and adjust the queries I wanted to use and then move them into my code. I decided the first exercise would be to get something that is a point on the map, a single "node" in OSM speak, and when looking at rendered maps, I found that trees seemed to fit that requirement very well. After an Overpass query for "node[natural=tree]" and some massaging of the result into a format that JavaScript can work with nicely, I was able to place three-dimensional A-Frame entities in the places where the tiles had the symbols for trees! I started with simple brown cylinders for the trunks, then placed a sphere on top of them as the crown, and later got fancy by evaluating various "tags" in the data to render accurate height, crown diameter, trunk circumference, and even a different base model for needle-leaved trees, using a cone for the crown.
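A sketch of the tree step in Python, assuming the Overpass JSON output format (`[out:json]`) and the common OSM tree tags `height`, `diameter_crown`, `circumference` and `leaf_type`. The post does not list the exact tags or fallback values VR Map uses, so the defaults below are hypothetical; the trunk radius is derived from the circumference as r = c / 2π.

```python
import json
import math
import re

# Hypothetical fallbacks for trees that carry no explicit measurements.
DEFAULT_HEIGHT_M = 8.0
DEFAULT_CROWN_M = 4.0
DEFAULT_TRUNK_CIRCUMFERENCE_M = 0.6

_NUMBER = re.compile(r"[-+]?\d+(?:\.\d+)?")


def tree_query(south, west, north, east):
    """Overpass QL for all tree nodes inside a (south, west, north, east) box."""
    return f"[out:json];node[natural=tree]({south},{west},{north},{east});out;"


def parse_metres(value, default):
    """Read the leading number of a tag value such as '12' or '12 m'."""
    if value is None:
        return default
    match = _NUMBER.match(value.strip())
    return float(match.group()) if match else default


def trees_from_overpass(payload):
    """Turn an Overpass JSON response into simple tree descriptions."""
    trees = []
    for element in json.loads(payload).get("elements", []):
        if element.get("type") != "node":
            continue
        tags = element.get("tags", {})
        circumference = parse_metres(tags.get("circumference"), DEFAULT_TRUNK_CIRCUMFERENCE_M)
        trees.append({
            "lat": element["lat"],
            "lon": element["lon"],
            "height": parse_metres(tags.get("height"), DEFAULT_HEIGHT_M),
            "crown_diameter": parse_metres(tags.get("diameter_crown"), DEFAULT_CROWN_M),
            "trunk_radius": circumference / (2 * math.pi),
            # Needle-leaved trees get a cone crown, everything else a sphere.
            "crown_shape": "cone" if tags.get("leaf_type") == "needleleaved" else "sphere",
        })
    return trees
```

Each resulting dictionary carries everything needed to size a trunk cylinder and a crown primitive; converting `lat`/`lon` into scene coordinates is a separate step.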

But to make the demo really look like a map, it of course needed buildings to be rendered as well. Those are more complex, as even the simpler buildings are "ways" with a variable amount of "nodes", and the more complex ones have holes in their base shape and therefore require a compound (or "relation" in OSM speak) of multiple "ways", for the outer shape and the inner holes. And then, the 2D shape given by those properties needs to be extruded to a certain height to form an actual 3D building. After finding the right Overpass query, I realized it would be best to create my own "building" geometry in A-Frame, which would get the inner and outer paths as well as the height as parameters. In the code for that, I used the THREE.js library underlying A-Frame to create a shape (potentially with holes), extrude it to the right height and rotate it to actually stand on the ground. Then I used code similar to what I had for trees to actually create A-Frame entities that had that custom geometry. For the height, I would use the explicit tags in the OSM database, estimate from its levels/floors if given or else fall back to a default. And I would even respect the color of the building if there was a tag specifying it.
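The height and color rules described above, plus splitting a multipolygon relation into outer and inner rings, can be sketched in Python. This assumes the OSM tags `height`, `building:levels` and `building:colour`, and relations fetched with Overpass `out geom;` (which attaches a `geometry` list to each member way). The metres-per-level factor and the defaults are hypothetical, and the sketch assumes each member way is already a closed ring, whereas real outer rings can be split across several ways that must be stitched together first.

```python
import re

# Hypothetical defaults; the post does not state the values VR Map uses.
METRES_PER_LEVEL = 3.0
DEFAULT_HEIGHT_M = 10.0
DEFAULT_COLOUR = "#cccccc"

_NUMBER = re.compile(r"\d+(?:\.\d+)?")


def _number(value):
    """Leading number of a tag value, or None if there is none."""
    match = _NUMBER.match(value.strip()) if value else None
    return float(match.group()) if match else None


def building_height(tags):
    """Explicit height tag first, then an estimate from levels, then a default."""
    height = _number(tags.get("height"))
    if height is not None:
        return height
    levels = _number(tags.get("building:levels"))
    if levels is not None:
        return levels * METRES_PER_LEVEL
    return DEFAULT_HEIGHT_M


def building_colour(tags):
    return tags.get("building:colour", DEFAULT_COLOUR)


def rings_from_relation(element):
    """Split a relation from `out geom;` into outer and inner (hole) rings."""
    outer, inner = [], []
    for member in element.get("members", []):
        if member.get("type") != "way" or "geometry" not in member:
            continue
        ring = [(point["lat"], point["lon"]) for point in member["geometry"]]
        (inner if member.get("role") == "inner" else outer).append(ring)
    return outer, inner
```

The outer and inner rings map naturally onto a THREE.js shape with holes, which is then extruded by `building_height(tags)`.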

With that in place, I had a pretty nice demo that uses data directly from OpenStreetMap to render Virtual Reality scenes that could be viewed in the desktop or mobile browser, or even in a full VR headset!

It's available under the name "VR Map" at vrmap.kairo.at, and of course the source code can also be inspected, copied and forked on GitHub.

Again, this is intended as a demo, not a full-featured product; for example, it currently only renders an area of a defined size and does not include any code to load additional scenery as you move around. Also, it does not support "building parts", which are the way to specify in OSM that different pieces of a building have, e.g., different heights or colors. It could also be extended to render actual models of buildings when they exist and are referenced in the database (so that, e.g., the Eiffel Tower would look less weird when going to the Paris preset). There are certainly many things that could still be done to improve this demo, but as it stands, it's a pretty simple piece of code that shows the power of both A-Frame and the OpenStreetMap data, and that's what I set out to do, after all.

My plan is to take this to multiple meetups and conferences to promote both underlying projects and inspire people to think about what they can do with those ideas. Please let me know if you know of a good event where I can present this work. The first of those presentations happened at the ViennaJS May Meetup; see the slides and video.

I'm also in an email conversation with another OSM contributor who is using this demo as a base for some of his work, e.g. on rendering building models in 3D and VR and allowing people to correct their position data.

I hope that this demo spawns more ideas of what people can do with this toolset, and I'll also be looking into more demos that will probably move into different directions.

https://home.kairo.at/blog/2018-07/vr_map_a_frame_demo_using_openstreetmap

Firefox Test Pilot: The Evolution of Side View

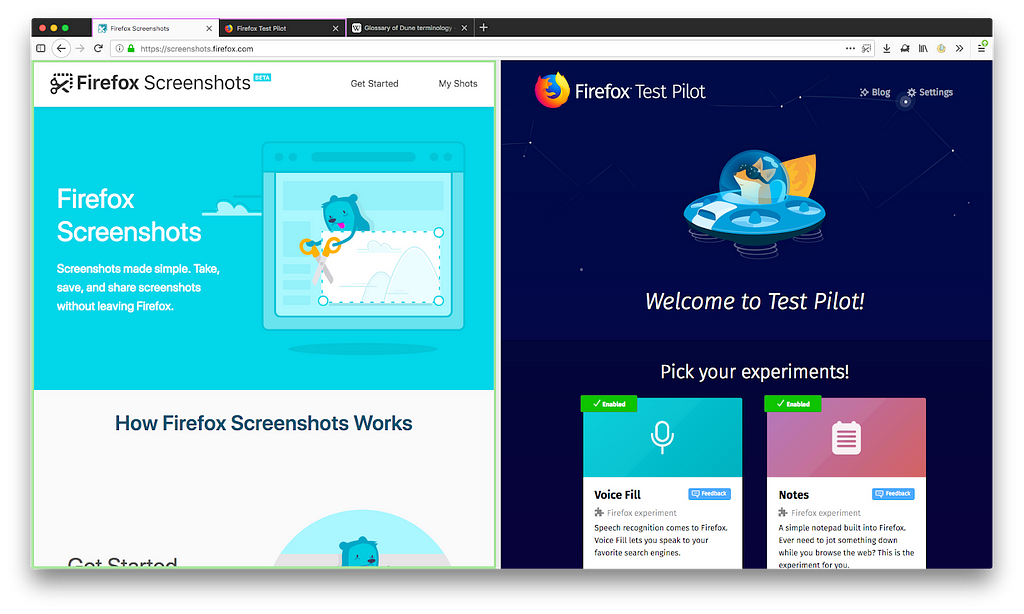

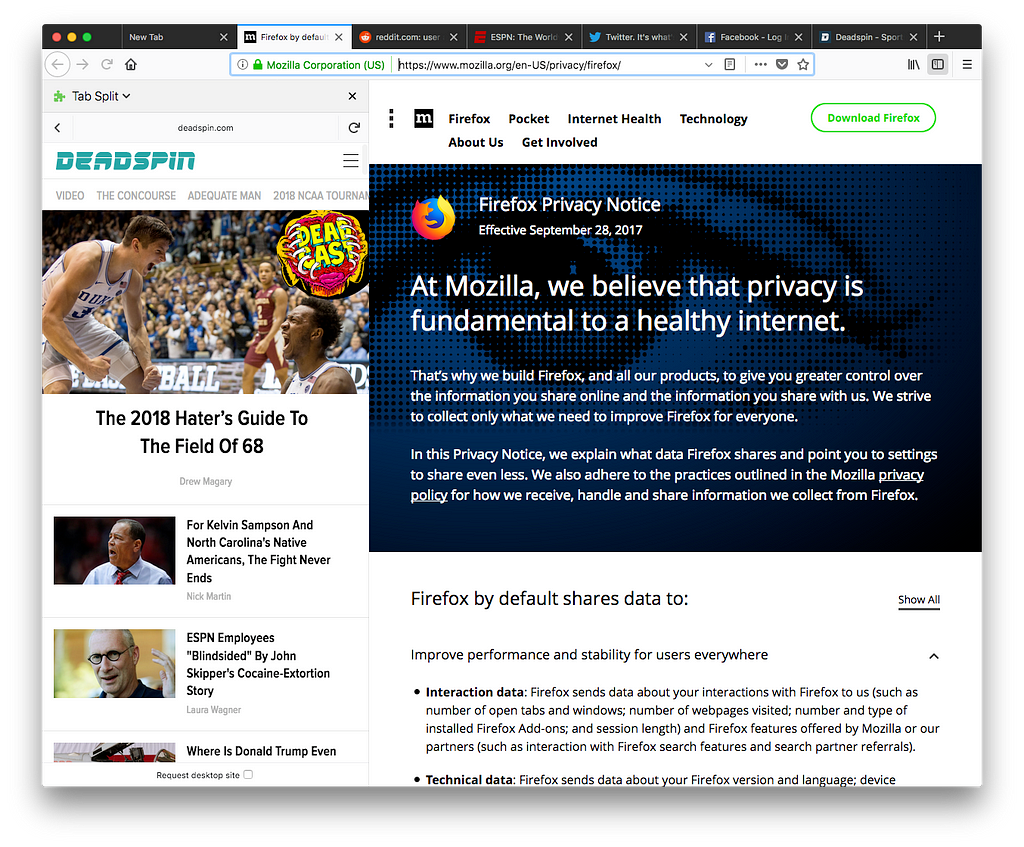

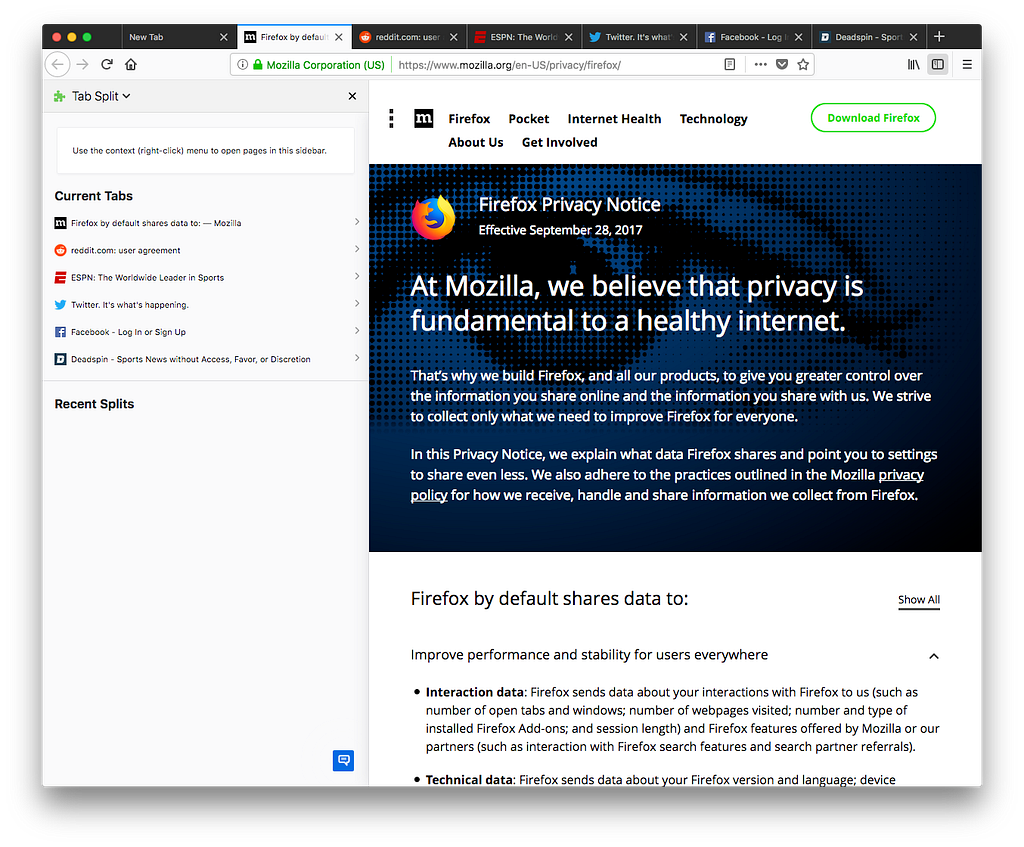

Side View is a new Firefox Test Pilot experiment which allows you to send any webpage to the Firefox sidebar, giving you an easy way to view two webpages side-by-side. It was released June 5 through the Test Pilot program, and we thought we would share with you some of the different approaches we tried while implementing this idea.

Beginnings — Tab Split

The history of Side View implementations goes back to the end of 2017, when a prototype implementation was completed as an old style XPCOM add-on. It was originally called Tab Split and the spec called for being able to split any tab into two, with full control over the URL bar and history for each side of the split. Clicking on either the left side or the right side of the split would focus that side, causing the URL bar, back button, and other controls to apply to that half of the split. The spec also originally mentioned being able to split the window either horizontally or vertically, but this feature may have made it difficult to understand which page the URL bar was referring to, so we decided to focus on only allowing viewing pages side-by-side.

Firefox Quantum

With the release of Firefox Quantum, XPCOM add-ons are no longer supported, so we needed to rewrite our prototype as a WebExtension. The implementation was simply an Embedded WebExtension API Experiment containing the entire previous XPCOM implementation. Our WebExtension wrapper handled the click on the browser action by calling a function exposed by the API Experiment, which then called into the old XPCOM implementation. In this way, we quickly had a working WebExtension implementation that had all the capabilities of the old version. However, we encountered a bug in Firefox which broke all of the WebExtension APIs if an embedded WebExtension experiment was loaded. This bug was eventually fixed, but since this whole approach seemed prone to failure, we decided to see how far we could get with a pure WebExtension implementation.

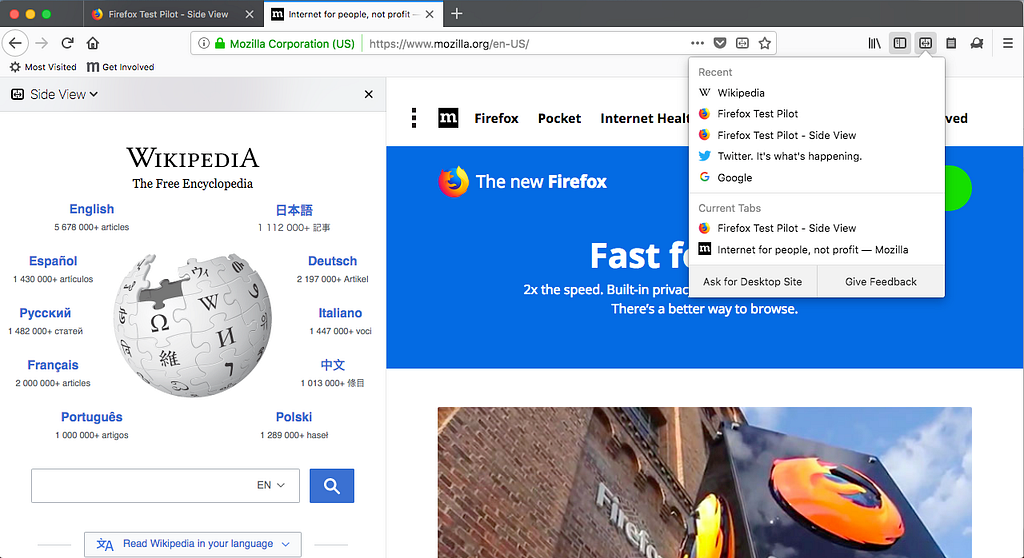

Tab Split 2: WebExtension Sidebar

WebExtension APIs are new standardized APIs which can be used to write web browser add-ons in a cross-browser way. They are supported by Chrome, Opera, Firefox, and Edge, although there are some APIs that are only available on specific browsers. Add-ons written using WebExtension APIs do not have as much freedom to modify the browser as XPCOM add-ons used to have. Therefore, we were not able to implement the user interface we had previously used where any number of tabs could be split into side by side pairs, with the focused side having control over the navigation bar user interface. However, WebExtensions are allowed to display a pane in the sidebar. We decided that we could show a web page in the sidebar with some of our user interface around it to allow the user to change the page that was being shown in the sidebar, since the sidebar webpage would not be able to use the browser’s navigation bar user interface as a normal webpage would. This limited the usefulness of navigating in the web page that was being shown in the sidebar, so any links that were clicked in the sidebar web page would be opened in a new tab instead of navigating the sidebar.

IFRAME troubles

The WebExtension APIs allow a web extension to set the sidebar to a particular webpage. We needed some user interface in the sidebar, so the webpage we set it to was an HTML page inside our add-on which had a bar at the top showing the URL of the page currently being viewed with a back button, the actual webpage embedded using an iframe, and a bottom bar which contained some miscellaneous UI. We also had a Tab Split homepage which would appear when you first pressed the button. This home page showed you a list of all your current tabs and a list of tabs that you had recently sent to the sidebar, allowing you to choose one of them to be loaded in the sidebar.

However, the fact that our implementation required the sidebar webpage to be embedded in an iframe caused a large number of problems. Many webpages use frame busting techniques to detect if they are being embedded in a frame and attempt to prevent it. The original technique for frame busting involves checking whether window.top is equal to window.self. We were able to fix some pages which use this technique by setting the sandbox attribute on the iframe, but this caused a number of other problems.

Some more modern web servers use HTTP response headers (such as `X-Frame-Options`, or the `frame-ancestors` directive of `Content-Security-Policy`) to tell the browser not to frame the page. Since we had code running in the WebExtension, we were able to strip out just the part of the header that caused this. Nevertheless, this approach felt unstable, since we would constantly have to tamper with site security to make it work.
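The post does not show its header-stripping code. As a sketch under assumptions: a WebExtension can rewrite response headers in a blocking `webRequest.onHeadersReceived` listener, which receives them as a list of objects with `name` and `value`. The filtering itself is easy to express in Python: drop `X-Frame-Options` entirely and remove only the `frame-ancestors` directive from `Content-Security-Policy`, keeping the rest of the policy. The function name is hypothetical.

```python
def strip_frame_blocking(headers):
    """Return a copy of response headers without the parts that forbid framing.

    `headers` mirrors the list of {"name": ..., "value": ...} objects that a
    webRequest.onHeadersReceived listener receives.
    """
    cleaned = []
    for header in headers:
        name = header["name"].lower()
        if name == "x-frame-options":
            continue  # This header only controls framing, so drop it entirely.
        if name == "content-security-policy":
            directives = [d.strip() for d in header["value"].split(";")]
            kept = [d for d in directives
                    if d and not d.lower().startswith("frame-ancestors")]
            if not kept:
                continue  # Nothing but frame-ancestors was set.
            header = {"name": header["name"], "value": "; ".join(kept)}
        cleaned.append(header)
    return cleaned
```

Keeping the remaining CSP directives is what "strip out just the part of the header" means in practice: the page still gets its other protections, only the framing restriction is removed.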

A new approach

After struggling with the problems our anti-frame-busting approaches were encountering, I finally had a new idea which removed the need for us to put the sidebar webpage in an iframe. We would put the previous homepage in a pop-up panel instead of in the sidebar. This would allow us to show our user interface in the panel, while reserving the entire sidebar for the chosen webpage. While this required changing the UI slightly, it solved all the technical problems we were encountering and made the implementation much more solid.

A new name for the launch

Since the implementation changed to be more of a sidebar browser than a tab splitting feature, marketing gave us a new name: Side View. Side View is now available for you to try on Firefox Test Pilot. If you try it, we would love your feedback! Press the “Give Feedback” button in the Side View panel to submit suggestions and comments, and use our GitHub repository to submit bug reports.

The Evolution of Side View was originally published in Firefox Test Pilot on Medium, where people are continuing the conversation by highlighting and responding to this story.

Mozilla B-Team: happy bmo push day!

the following changes have been pushed to bugzilla.mozilla.org:

- [1473726] WebExtensions bugs missing crash reports when viewed

- [1469911] Make user autocompletion faster

- [1328659] Add support for utf8=utf8mb4 (switches to dynamic/compressed row format, and changes charset to utf8mb4)

- [1472896] Update to Gear Store inventory dropdown

discuss these changes on mozilla.tools.bmo.

Mozilla VR Blog: This week in Mixed Reality: Issue 12

This week we landed a bunch of core features: in the browsers space, we landed WebVR support and immersive controllers; in the social area, added media tools to Hubs; and in the content ecosystem, we now have WebGL2 support on the WebGLRenderer in three.js.

Browsers

This week has been super exciting for the Firefox Reality team. We spent the last few weeks working on immersive WebVR support and it's finally here!

- Completed prototypes of immersive mode for WebVR support

- Implemented private browsing on the new UI

- Added mini tray

- Immersive mode for presentation is now implemented across the supported standalone headsets

- New controller models landed

- Support for asynchronous loading of models and textures

- Mapped out client side error pages for text only (DNS error, SSL certificate error, etc)

Here are two sneak peeks of Firefox Reality. The first shows entering and exiting WebVR:

Quick enter and exit WebVR test in Firefox Reality from Imanol Fernández Gorostizaga on Vimeo.

The second is controller support:

Firefox Reality WebVR controllers from Imanol Fernández Gorostizaga on Vimeo.

Servo's Android support is receiving some care and attention. Not only can developers now build a real Android app locally that embeds Servo alongside a URL bar and other fundamental UI controls, but we can now support regression tests that verify that the Android port won't crash on startup. These important steps will allow us to work on more complicated Android embedding strategies with greater confidence in the future.

Social

We are making great progress towards adding new features on Hubs by Mozilla:

- Making improvements on the following: scene management and nested scenes, references, GLTF export, breadcrumb UI/UX, many bug fixes

- Demoed media tools (paste a URL: get an image, video, or model in the space) at Friday’s meetup. Finishing up polish and bugfixing on that and adding support for more content types.

- More progress on drawing tools, better geometry generation and networking implementation in place.

this right here, this is some good Internet pic.twitter.com/YBthWL5QAw

— Greg Fodor (@gfodor) July 6, 2018

Interested in joining our public Friday stand-ups? For more details, join our public WebVR Slack #social channel to participate in the discussion!

Content ecosystem

This week, we landed initial WebGL2 support to the WebGLRenderer in three.js!

Found a critical bug on the Unity WebVR exporter? File it in our public GitHub repo or let us know on the public WebVR Slack #unity channel and as always, join us in our discussion!

Stay tuned for new features and improvements across our three areas!

Mozilla Open Policy & Advocacy Blog: India advances globally leading net neutrality regulations

India is now one step away from having some of the strongest net neutrality regulations in the world. This week, the Indian Telecom Commission approved the Telecom Regulatory Authority of India’s (TRAI) recommendations to introduce net neutrality conditions into all Telecom Service Provider (TSP) licenses. This means that any net neutrality violation could cause a TSP to lose its license, a uniquely powerful deterrent. Mozilla commends this vital action by the Telecom Commission, and we urge the Government of India to move swiftly to implement these additions to the license terms.

Eight months ago, TRAI recommended a series of net neutrality conditions to be introduced in TSP licenses, which we applauded at the time. Some highlights of these regulations:

- Prohibit Telecom Service Providers from engaging in “any form of discrimination or interference” in the treatment of online content.

- Any deviance from net neutrality, including for traffic management practices, must be “proportionate, transient and transparent in nature.”

- Specialized services cannot be “usable or offered as a replacement for Internet Access Services;” and “the provision of the Specialised Services is not detrimental to the availability and overall quality of Internet Access Service.”

- The creation of a multistakeholder body to collaborate and assist TRAI in the monitoring and enforcement of net neutrality. While we must be vigilant that this body not become subject to industry capture, there are good international examples of various kinds of multi-stakeholder bodies working collaboratively with regulators, including the Brazilian Internet Steering Committee (CGI.br) and the Broadband Internet Technical Advisory Group (BITAG).

The Telecom Commission’s approval is a critical step to finish this process and ensure that not just differential pricing (prohibited through regulations in 2016) but other forms of differential treatment are also restricted by law. Mozilla has engaged at each step of the two and a half years of consultations and discussions on this topic (see our filings here), and we applaud the Commission for taking this action to protect the open internet.

The post India advances globally leading net neutrality regulations appeared first on Open Policy & Advocacy.

https://blog.mozilla.org/netpolicy/2018/07/12/india-advances-net-neutrality/

Mozilla Addons Blog: Upcoming changes for themes

Theming capabilities on addons.mozilla.org (AMO) will undergo significant changes in the coming weeks. We will be switching to a new theme technology that will give designers more flexibility to create their themes. It includes support for multiple background images, and styling of toolbars and tabs. We will migrate all existing themes to this new format, and their users should not notice any changes.

As part of this upgrade, we need to remove the theme preview feature on AMO. This feature allowed you to hover over the theme image and see it applied on your browser toolbar. It doesn’t work very reliably because image sizes and network speed can make it slow and unpredictable.

Given that the new themes are potentially more complex, the user experience is likely to worsen. Thus, we decided to drop this in favor of a simpler install and uninstall experience (which is also coming soon). The preview feature will be disabled starting today.

It’s only a matter of weeks before we release the new theme format on AMO. Keep following this blog for that announcement.

The post Upcoming changes for themes appeared first on Mozilla Add-ons Blog.

https://blog.mozilla.org/addons/2018/07/12/upcoming-changes-for-themes/

Cameron Kaiser: OverbiteNX is now available from Mozilla Add-Ons for beta testing

Because WebExtensions still doesn't have a TCP sockets API, nor a spec, OverbiteNX uses its bespoke Onyx native component to do network operations. Onyx is written in open-source portable C with no dependencies and is available in pre-built binaries for macOS 10.12+ and Windows (or get the repo and build it yourself on almost any POSIX system).

To try OverbiteNX, install Onyx from the links above, and then install the extension from Mozilla Add-ons. If you use(d) OverbiteWX, which is the proxy-based strict WebExtensions add-on, please disable it as it may conflict. Copious debugging output is emitted to the browser console for this test version. If you file an issue (or better still a pull request) on GitHub, please include the output so that we can see the execution trace.

http://tenfourfox.blogspot.com/2018/07/overbitenx-is-now-available-from.html

Axel Hecht: Localization, Translation, and Machines

TL;DR: Is there research bringing together Software Analysis and Machine Translation to yield Machine Localization of Software?

I’m Telling You, There Is No Word For ‘Yes’ Or ‘No’ In Irish

from Brendan Caldwell

The art of localizing a piece of software with a Yes button is to know what that button will do. This is an example of software UI that makes assumptions about language that hold for English, but might not for other languages. A more frequent example, affecting both UI and many languages, is piecing together text and UI controls:

In the localization tool, you’ll find each of those entries as individual strings. The localizer will recognize that they’re part of one flow, and will move fragments from the shared string to the drop-down as they need. Merely translating the individual segments is not going to be a proper localization of that piece of UI.

If we were to build a rule-based machine localization system, we’d find rules like

gaelic-yes:

If the title of your dialog contains a verb, localize Yes by translating the found verb.

pieced-ui:

For each variant:

- Piece together the English fragments into a single sentence

- Translate the sentence into the target language

- Find shared content in positions matching the original layout

- Split each translated fragment, and adjust the casing and spacing

- Map the sub-fragments to the localizations of the individual English fragments

Then map the shared fragment to the localization of the English shared fragment.
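A toy Python sketch of the pieced-ui rule for the simplest layout: text around a single drop-down. Everything here is hypothetical: the `[[`/`]]` markers that keep the drop-down fragment identifiable through translation, the function name, and the German "translations", which stand in for a real machine translation system. Casing adjustments are left out.

```python
# Hypothetical markers wrapped around the drop-down fragment so it can be found after translation.
OPEN, CLOSE = "[[", "]]"


def localize_pieced_ui(before, after, variants, translate):
    """Localize UI of the form: <before> <drop-down variant> <after>.

    Returns (localized_before, localized_after, {english_variant: localized_variant}).
    """
    shared = None
    localized = {}
    for variant in variants:
        # Piece the English fragments together into a single sentence.
        sentence = f"{before} {OPEN}{variant}{CLOSE} {after}".strip()
        # Translate the whole sentence so word order can change freely.
        translated = translate(sentence)
        # Split the translation back into fragments at the markers, trimming spacing.
        head, rest = translated.split(OPEN, 1)
        inside, tail = rest.split(CLOSE, 1)
        parts = (head.strip(), tail.strip())
        # The text around the drop-down must be identical for every variant to be shared.
        if shared is None:
            shared = parts
        elif parts != shared:
            raise ValueError(f"shared text differs for variant {variant!r}")
        # Map the sub-fragment to the localization of this English variant.
        localized[variant] = inside.strip()
    return shared[0], shared[1], localized


# Toy stand-in for a machine translation system (English to German).
TOY_DE = {
    "Show [[10]] results per page": "[[10]] Ergebnisse pro Seite anzeigen",
    "Show [[20]] results per page": "[[20]] Ergebnisse pro Seite anzeigen",
}
```

Calling `localize_pieced_ui("Show", "results per page", ["10", "20"], TOY_DE.__getitem__)` returns `("", "Ergebnisse pro Seite anzeigen", {"10": "10", "20": "20"})`: in German all of the shared text moves behind the drop-down, which is exactly what translating the fragments one by one would miss.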

Now that’s rule-based, and it’d be tedious to maintain these rules. Neural Machine Translation (NMT) has all the buzz now, and Machine Learning in general. There is plenty of research that improves how NMT systems learn about the context of the sentence they’re translating. But that’s all text.

It’d be awesome if we could bring Software Analysis into the mix, and train NMT to localize software instead of translating fragments.

For Firefox, could one train on English and localized DOM? For Android’s XML layout, a similar approach could work? For projects with automated screenshots, could one train on those? Is there enough software out there to successfully train a neural network?

Do you know of existing research in this direction?

https://blog.mozilla.org/axel/2018/07/12/localization-translation-and-machines/

The Mozilla Blog: New Features in Firefox Focus for iOS, Android – now also on the BlackBerry Key2

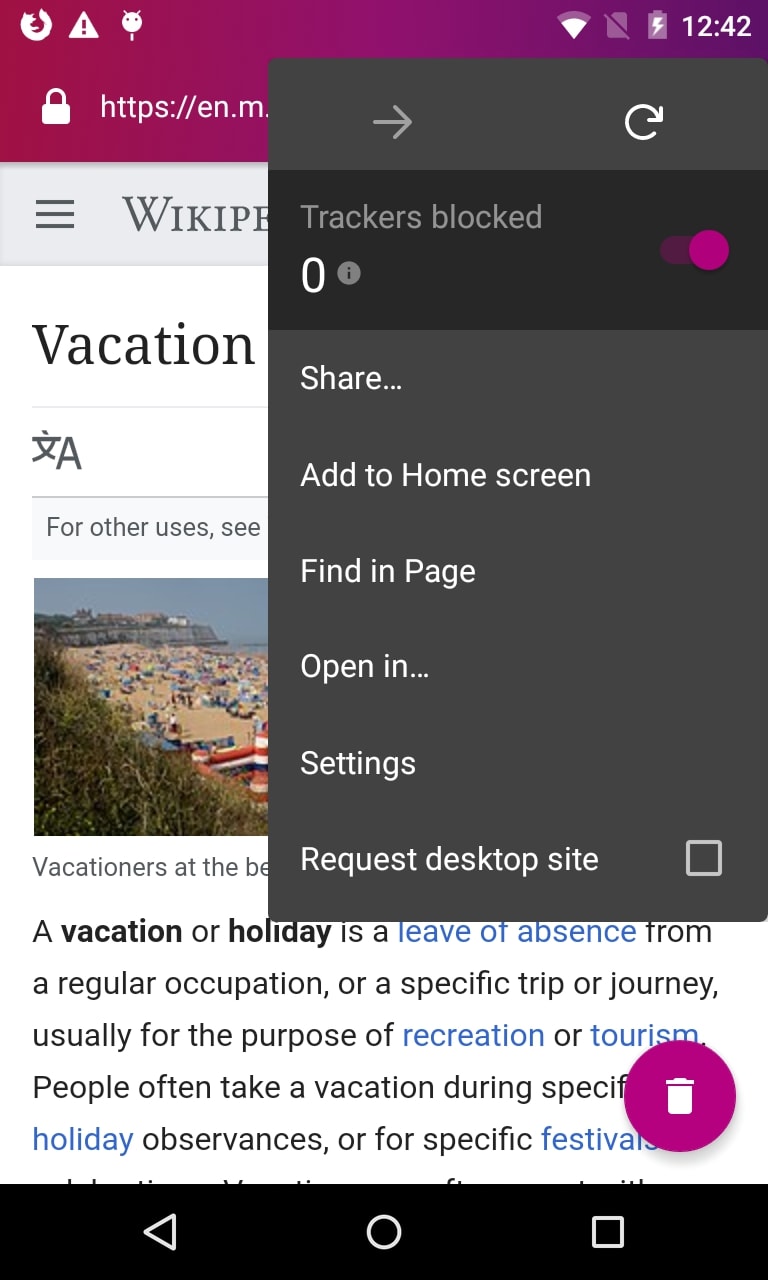

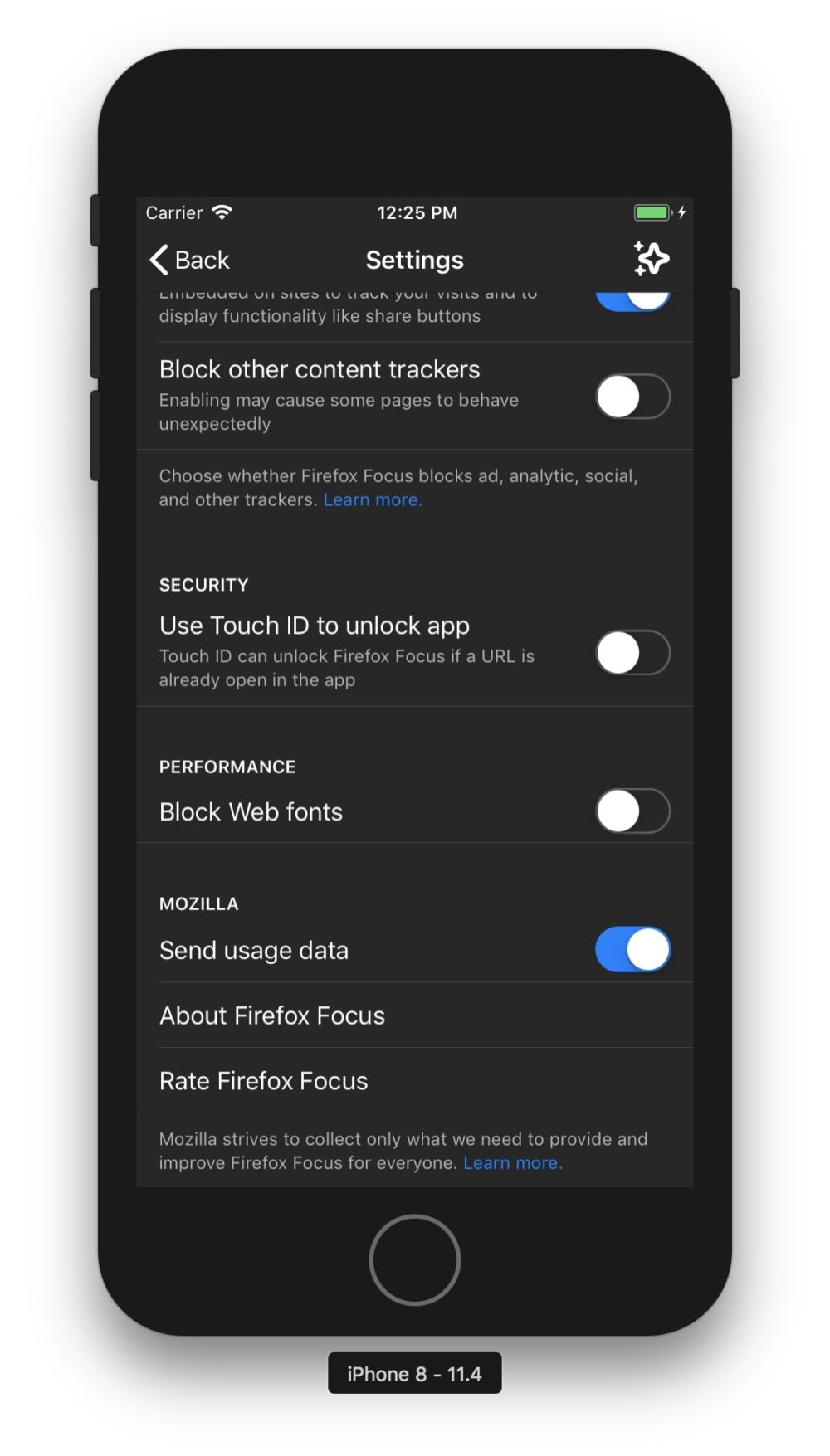

Since the launch of Firefox Focus as a content blocker for iOS in December 2015, we’ve continuously improved the now standalone browser for Apple and Android while always being mindful of users’ requests and suggestions. We analyze app store reviews and evaluate regularly which new features make our privacy browser even more user-friendly, efficient and secure. Today’s update for iOS and Android adds functionality to further simplify accessing information on the web. And we are happy to make Focus for Android available to a new group: BlackBerry Key2 users.

On point: find keywords easily with “Find in page” feature for all websites

Desktop browsing without the “search page” feature? Unthinkable! On mobile, it is now also super simple to find the content you’re looking for on a website: Open the Focus menu, select “Find in page” and enter your search term. Firefox Focus will immediately highlight any mention of your keyword or phrase on the site, including the count of instances. You can then use the handy arrow buttons to jump between the instances.

“Find in page” works on all kinds of websites, whether or not they’re optimized for mobile browsing. Why are we pointing this out? Many users are still not completely comfortable browsing the web on their mobile devices, because the non-responsive mobile versions of their favorite websites may lack the full range of features, may be confusing, or may simply be less appealing, simplified versions of the desktop page, reduced to fit a smaller screen. As of today, Firefox Focus lets users display the desktop page in such cases: simply choose “request desktop page” in the browser menu to browse the more familiar desktop version of your favorite website.

Find your search terms easily on mobile and desktop versions of your favorite websites

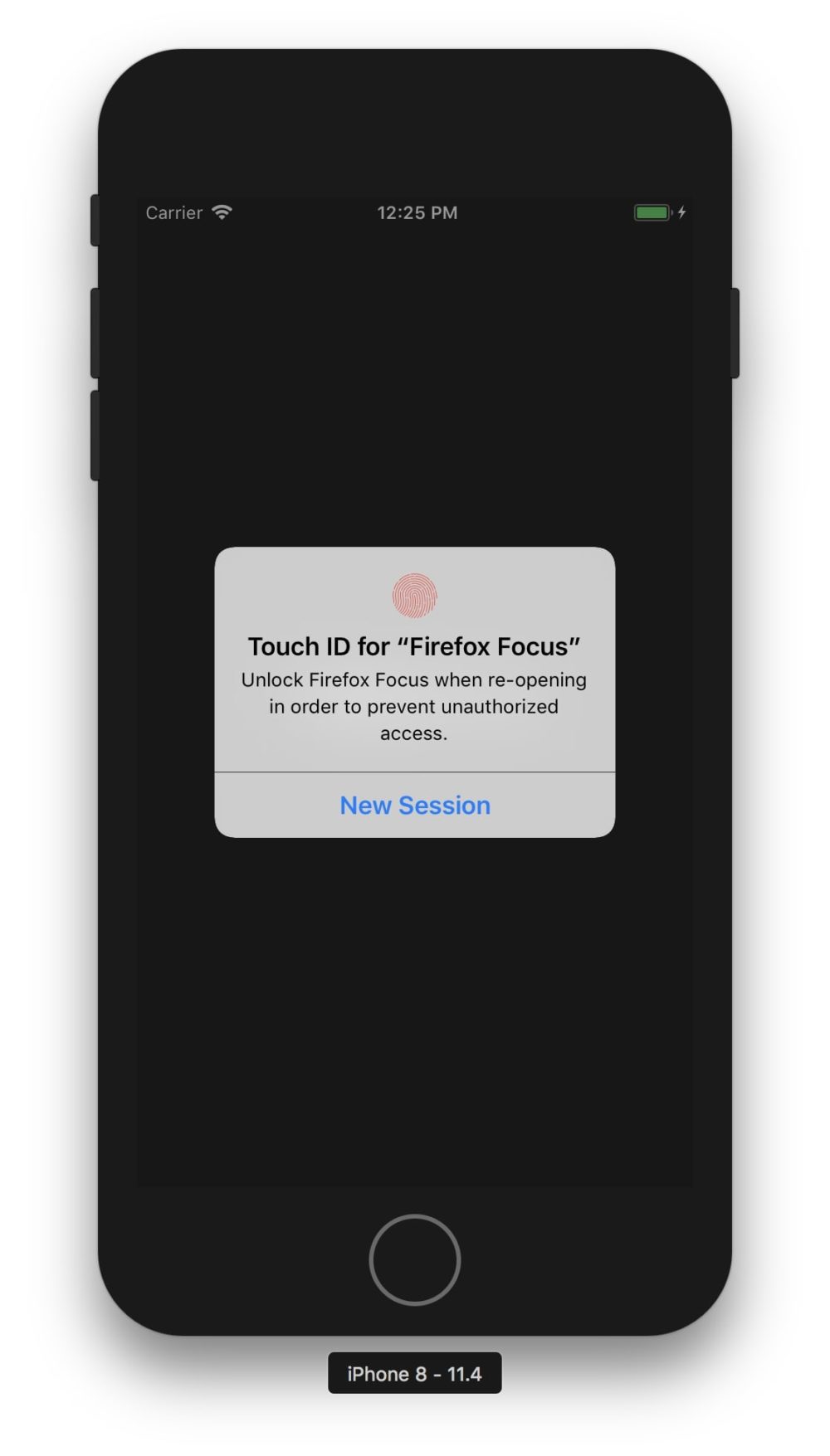

Make Focus your own — with Custom Tabs and biometric access features

Our intent with Firefox Focus is to give users the most comfortable browsing experience: making them feel safe and protected, and enabling them to enjoy intuitive navigation as well as an appealing design. A great example of how we ensure that is our support for, and continuous improvement of, Custom Tabs. When you open links from some third-party apps, such as Twitter or Yelp, and Focus is set as the default browser on the device, Firefox Focus will display the corresponding page in the familiar look and feel of the original app, including menu colors and options. Now you can share this experience with your friends even faster: just long-press the URL to copy it to the clipboard for sharing or pasting elsewhere. Currently, this feature is available only to Android users.

iOS users will enjoy even more personalization with today’s version of Focus: they can now set Focus to lock whenever it is sent to the background, and to unlock only after a successful Face ID or Touch ID verification. This feature is common in banking apps, and it adds another layer of security for private browsing by allowing only you, and no unauthorized user, to access your version of Focus.

Unlock Firefox Focus via Touch or Face ID to add another layer of security to your private browsing experience.

A shout out to BlackBerry users

Recently, the BlackBerry KEY2, manufactured by TCL Communication, was introduced as the most advanced BlackBerry ever. Bringing Firefox’s features, functionality and choice to our users no matter how they browse is important to us. So we’re proud to announce that Firefox Focus is pre-installed as part of the Locker application found on the BlackBerry KEY2.

This data protection application, integrated into the KEY2 user experience, can only be opened by fingerprint or password, which makes it the ideal solution for securely storing sensitive user data such as photos, documents and even apps — as well as the perfect place for Firefox Focus.

The latest version of Firefox Focus for Android and iOS is now available for download on Google Play and in the App Store.

The post New Features in Firefox Focus for iOS, Android – now also on the BlackBerry Key2 appeared first on The Mozilla Blog.

|

|

Robert O'Callahan: Why Isn't Debugging Treated As A First-Class Activity? |

Mark Côté has published a "vision for engineering workflow at Mozilla": part 2, part 3. It sounds really good. These are its points:

- Checking out the full mozilla-central source is fast

- Source code and history is easily navigable

- Installing a development environment is fast and easy

- Building is fast

- Reviews are straightforward and streamlined

- Code is landed automatically

- Bug handling is easy, fast, and friendly

- Metrics are comprehensive, discoverable, and understandable

- Information on “code flow” is clear and discoverable

Consider also GitLab's advertised features:

- Regardless of your process, GitLab provides powerful planning tools to keep everyone synchronized.

- Create, view, and manage code and project data through powerful branching tools.

- Keep strict quality standards for production code with automatic testing and reporting.

- Deploy quickly at massive scale with integrated Docker Container Registry.

- GitLab's integrated CI/CD allows you to ship code quickly, be it on one or one thousand servers.

- Configure your applications and infrastructure.

- Automatically monitor metrics so you know how any change in code impacts your production environment.

- Security capabilities, integrated into your development lifecycle.

One thing developers spend a lot of time on is completely absent from both of these lists: debugging! GitLab doesn't even list anything debugging-related among its missing features. Why isn't debugging treated as worthy of attention? I genuinely don't know, and I'd like to hear your theories!

One of my theories is that debugging is ignored because people working on these systems aren't aware of anything they could do to improve it. "If there's no solution, there's no problem." With Pernosco we need to raise awareness that progress is possible and therefore debugging does demand investment. Not only is progress possible, but debugging solutions can deeply integrate into the increasingly cloud-based development workflows described above.

Another of my theories is that many developers have abandoned interactive debuggers because they're a very poor fit for many debugging problems (e.g. multiprocess, time-sensitive and remote workloads, especially cloud and mobile applications). Record-and-replay debugging solves most of those problems, but perhaps people who have stopped using a class of tools altogether stop looking for better tools in that class. Perhaps people equate "debugging" with "using an interactive debugger", so when trapped in "add logging, build, deploy, analyze logs" cycles they look for ways to improve those steps, but not for tools that short-circuit the process. Update: This HN comment is a great example of the attitude that if you're not using a debugger, you're not debugging.
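To make the record-and-replay idea concrete, here is a toy sketch: every nondeterministic input (here, a random number and the wall-clock time) is logged while recording and served back from the log while replaying, so the replayed run reproduces the original exactly. Real tools such as rr work at a much lower level (system call results, signals, scheduling points); the `Recorder` class and `flaky_computation` function are hypothetical illustrations only.

```python
import random
import time
from typing import Callable, List, Optional


class Recorder:
    """Toy record/replay of nondeterministic inputs."""

    def __init__(self, log: Optional[List[float]] = None) -> None:
        # No log: record mode. Given a log: replay mode, values served in order.
        self.replaying = log is not None
        self.log: List[float] = list(log) if log is not None else []
        self._pos = 0

    def nondet(self, source: Callable[[], float]) -> float:
        if self.replaying:
            value = self.log[self._pos]  # the real source is never consulted
            self._pos += 1
            return value
        value = source()
        self.log.append(value)
        return value


def flaky_computation(rec: Recorder) -> float:
    # Depends on randomness and wall-clock time, so ordinary reruns differ
    jitter = rec.nondet(random.random)
    stamp = rec.nondet(time.time)
    return stamp * jitter


recording = Recorder()
original = flaky_computation(recording)
replayed = flaky_computation(Recorder(recording.log))
print(original == replayed)  # True: the replay reproduces the run exactly
```

The point of the sketch is the one the post makes: once every source of nondeterminism is captured, a failure can be re-executed and inspected as often as needed instead of being chased through repeated log-and-redeploy cycles.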

http://robert.ocallahan.org/2018/07/why-isnt-debugging-treated-as-first.html

|

|

Mozilla Open Policy & Advocacy Blog: Mozilla applauds passage of Brazilian data protection law |

After nearly a decade of debate, the Brazilian Congress has just passed the Brazilian Data Protection Bill (PLC 53/2018). The following statement can be attributed to Mozilla COO Denelle Dixon:

“As a company that has fought for strong data protection around the world, Mozilla congratulates Brazilian lawmakers on their action to protect the rights of Brazilian users. At a time when privacy is at risk like never before, this law contains critical safeguards and will act as a powerful check on both companies and the government. We believe that individual security and privacy is fundamental and cannot be treated as optional, and this is a welcome and important step toward that goal.”

Mozilla’s previous statement supporting the Brazilian Data Protection Bill can be found here. The bill will now go to Brazilian President Michel Temer for his signature.

The post Mozilla applauds passage of Brazilian data protection law appeared first on Open Policy & Advocacy.

https://blog.mozilla.org/netpolicy/2018/07/11/mozilla-applauds-brazilian-data-protection-law/

|

|

Robert Kaiser: My Journey to Tech Speaking about WebVR/XR |

I knew I had to keep my distance from crash stats, despite knowing the area inside and out and having developed some passion for it: staying in the same area as a volunteer as in a job that almost burned me out was just not a good idea, from multiple points of view. I thought about building up some talks about working with data, but that was still a bit too close to that past and not what I do a lot at present (I mostly work in blockchain technology today), so that didn't go far (though maybe it will happen at some point).

On the other hand, I got more and more interested in some things the Open Innovation group at Mozilla was doing, and even more in what the Emerging Technologies teams bring into the Mozilla and web sphere. My talk (slides) at this year's local "Linuxwochen Wien" conference was a very quick run-through of what's going on there, and it's a whole stack of awesomeness, from Mixed Reality via codecs, Rust, Voice and whatnot to IoT. I would love to dig a bit into the latter, but I haven't found the time yet.

What I did find some time for is digging into WebVR (now WebXR, where "XR" means "Mixed Reality") and the A-Frame library that Mozilla has created to make it dead simple to create your own VR/XR experiences. Last year I did two workshops in Vienna in that area, another one this year, and I'm planning more. It's great how people with just some HTML knowledge can build something easily there, while people who are more into JS programming can dig even deeper. And the immersiveness of VR with a real headset blows people away again and again, so it's a good thing to show off.

While last year I only had Cardboard viewers with some left-over Sony Z3C phones (thanks to Mozilla) to show some basic 3DoF (rotation-only) VR at low resolution, this already proved interesting to people I presented to or ran workshops with. This year I decided to buy an HTC Vive, seeing its price go down somewhat before the next generation of headsets would ship. (As a side note, I chose the Vive over the Rift because Linux drivers are available and because I don't want to give money to Facebook.) Along with a new laptop with a high-end GPU that can drive the VR headset, I got into fully immersive 6DoF VR and, I have to say, got somewhat addicted to the experience.

I ran a demo booth with A-Painter at "Linuxwochen Wien" in May, and people were awed both by the VR experience and by the fact that it was all running in plain Firefox! Spreading the word about new web technologies can be really fun and rewarding with experiences like that! Besides showing demos and using VR myself, I also got into building WebVR/XR demos (I'm more the person to do demos and prototypes and spread the word, rather than build long-lasting products), but I'll leave that for another blog post that will be coming very soon!

So, for the moment, I have found a place in the community I feel very comfortable with: doing demos and presentations about WebVR or "Mixed Reality" (I still need to dig into AR, but I don't have fitting hardware for that yet), giving people an overview of the Emerging Technologies "we" (MoCo and the Mozilla community) are bringing to the web, and trying to get people excited to use those technologies, or hopefully even contribute to them. Being at the forefront of innovation for once feels really good; I hope it lasts long!

https://home.kairo.at/blog/2018-07/my_journey_tech_speaking_webvr

|

|

The Mozilla Blog: Mozilla Funds Top Research Projects |

We are very happy to announce the results of the 2018H1 Mozilla Research Grants. This was an extremely competitive process, with over 115 applicants. We selected a total of eight proposals, ranging from tools to fight online harassment to systems for generating speech. All these projects support Mozilla’s mission to make the Internet safer, more empowering, and more accessible.

The Mozilla Research Grants program is part of Mozilla’s Emerging Technologies commitment to being a world-class example of inclusive innovation and impact culture, and reflects Mozilla’s commitment to open innovation, continuously exploring new possibilities with and for diverse communities. We will open the 2018H2 round in Fall 2018: see our Research Grant webpage for more details and to sign up to be notified when applications open.

| Principal Investigator | Institution | Department | Title |
|---|---|---|---|
| Jeff Huang | Texas A&M University | Department of Computer Science and Engineering | Predictively Detecting and Debugging Multi-threaded Use-After-Free Vulnerabilities in Firefox |
| Eduardo Vicente Goncalves | Open Knowledge Foundation, Brazil | Data Science for Civic Innovation Programme | A Brazilian bot to read government gazettes and bills: Using NLP to empower citizens and civic movements |
| Leah Findlater | University of Washington | Human Centered Design and Engineering | Task-Appropriate Synthesized Speech |
| Laura James | University of Cambridge | Trustworthy Technologies Initiative | Trust and Technology: building shared understanding around trust and distrust |
| Libby Hemphill | University of Michigan | School of Information and Institute for Social Research | Learning and Automating De-escalation Strategies in Online Discussions |
| Pamela Wisniewski | University of Central Florida | Department of Computer Science | A Community-based Approach to Co-Managing Privacy and Security for Mozilla’s Web of Things |
| Munmun De Choudhury | Georgia Institute of Technology | School of Interactive Computing | Combating Professional Harassment Online via Participatory Algorithmic and Data-Driven Research |
| David Joyner | Georgia Institute of Technology | College of Computing | Virtual Reality for Classrooms-at-a-Distance in Online Education |

Many thanks to all our applicants in this very competitive and high-quality round.

The post Mozilla Funds Top Research Projects appeared first on The Mozilla Blog.

https://blog.mozilla.org/blog/2018/07/11/mozilla-funds-top-research-projects/

|

|

The Mozilla Blog: An Invisible Tax on the Web: Video Codecs |

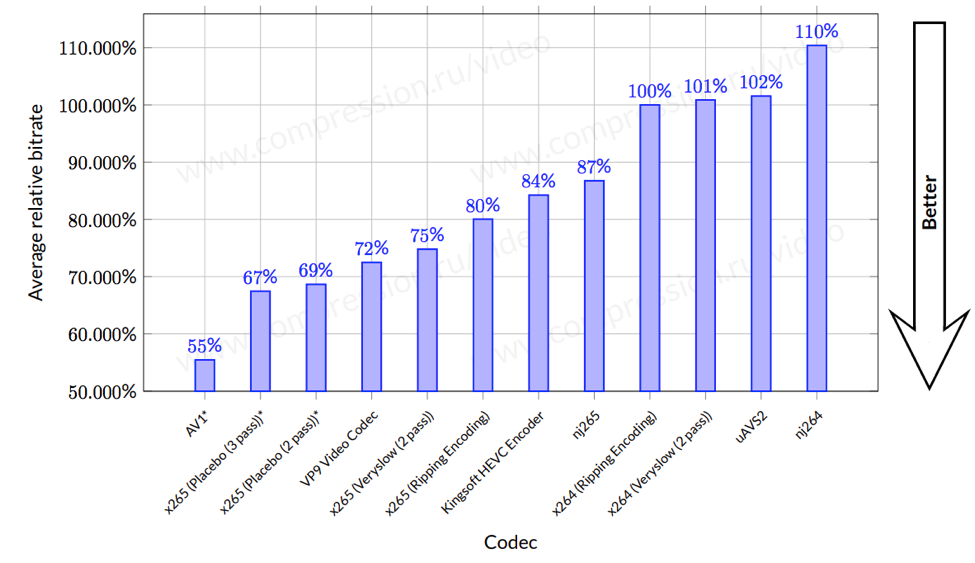

Here’s a surprising fact: It costs money to watch video online, even on free sites like YouTube. That’s because about 4 in 5 videos on the web today rely on a patented technology called the H.264 video codec.

A codec is a piece of software that lets engineers shrink large media files and transmit them quickly over the internet. In browsers, codecs decode video files so we can play them on our phones, tablets, computers, and TVs. As web users, we take this performance for granted. But the truth is, companies pay millions of dollars in licensing fees to bring us free video.

It took years for companies to put this complex, global set of legal and business agreements in place so that H.264 web video works everywhere. Now, as the industry shifts to more efficient video codecs, those businesses are picking and choosing which next-generation technologies they will support. This fragmentation of the market raises concerns about whether our favorite web pastime, watching videos, will remain accessible and affordable to all.

A drive to create royalty-free codecs

Mozilla is driven by a mission to make the web platform more capable, safe, and performant for all users. With that in mind, the company has been supporting work at the Xiph.org Foundation to create royalty-free codecs that anyone can use to compress and decode media files in hardware, software, and web pages.

But when it comes to video codecs, Xiph.org Foundation isn’t the only game in town.

Over the last decade, several companies started building viable alternatives to patented video codecs. Mozilla worked on the Daala Project, Google released VP9, and Cisco created Thor for low-complexity videoconferencing. All these efforts had the same goal: to create a next-generation video compression technology that would make sharing high-quality video over the internet faster, more reliable, and less expensive.

In 2015, Mozilla, Google, Cisco, and others joined with Amazon and Netflix and hardware vendors AMD, ARM, Intel, and NVIDIA to form AOMedia. As AOMedia grew, efforts to create an open video format coalesced around a new codec: AV1. AV1 is based largely on Google’s VP9 code and incorporates tools and technologies from Daala, Thor, and VP10.

Why Mozilla loves AV1

Mozilla loves AV1 for two reasons. First, AV1 is royalty-free, so anyone can use it free of charge. Software companies can use it to build video streaming into their applications. Web developers can build their own video players for their sites. It can open up business opportunities and remove barriers to entry for entrepreneurs, artists, and regular people. Most importantly, a royalty-free codec can help keep high-quality video affordable for everyone.

The second reason we love AV1 is that it delivers better compression technology than even high-efficiency codecs – about 30% better, according to a Moscow State University study. For companies, that translates to smaller video files that are faster and cheaper to transmit and take up less storage space in their data centers. For the rest of us, we’ll have access to gorgeous, high-definition video through the sites and services we already know and love.
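A back-of-the-envelope sketch of what such a gain means for bandwidth and storage. It assumes that "30% better" means 30% fewer bits at equal visual quality; the 5 Mbit/s starting bitrate is an arbitrary example, not a figure from the study, and the helper names are hypothetical.

```python
from typing import NamedTuple


class Estimate(NamedTuple):
    av1_bitrate_mbps: float
    h264_gigabytes: float
    av1_gigabytes: float


def gigabytes(bitrate_mbps: float, hours: float) -> float:
    # Mbit/s * seconds = megabits; / 8 = megabytes; / 1000 = gigabytes (decimal units)
    return bitrate_mbps * hours * 3600 / 8 / 1000


def av1_estimate(h264_bitrate_mbps: float, hours: float, savings: float = 0.30) -> Estimate:
    # Assumption: "30% better" means 30% lower bitrate for the same visual quality
    av1_bitrate = h264_bitrate_mbps * (1 - savings)
    return Estimate(av1_bitrate, gigabytes(h264_bitrate_mbps, hours), gigabytes(av1_bitrate, hours))


print(av1_estimate(5.0, hours=1.0))
```

For one hour at 5 Mbit/s this comes to 2.25 GB with H.264 versus roughly 1.6 GB with AV1, a saving that multiplies across every stream served and every copy stored.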

Open source, all the way down

AV1 is well on its way to becoming a viable alternative to patented video codecs. As of June 2018, the AV1 1.0 specification is stable and available for public use on a royalty-free basis. Looking for a deep dive into the specific technologies that made the leap from Daala to AV1? Check out our Hacks post, AV1: next generation video – The Constrained Directional Enhancement Filter.

The post An Invisible Tax on the Web: Video Codecs appeared first on The Mozilla Blog.

https://blog.mozilla.org/blog/2018/07/11/royalty-free-web-video-codecs/

|

|

Wladimir Palant: FTAPI SecuTransfer - the secure alternative to emails? Not quite... |

Emails aren’t private; that much should be known by now. When you communicate via email, the contents are visible not only to your and the other side’s email providers, but potentially also to numerous others, like the NSA intercepting your email on the network. Encrypting emails is possible via PGP or S/MIME, but neither is particularly easy to deploy and use. Worse yet, both standards were recently found to have security deficits. So it is not surprising that people, and especially companies, look for better alternatives.

It appears that the German company FTAPI has gained a good standing in this market, at least in Germany, Austria and Switzerland. Their website keeps stressing how simple and secure their solution is. And the list of references is impressive, featuring a number of well-known names that should have very high standards when it comes to data security: Bavarian tax authorities, a bank, lawyers etc. A few years ago they even developed a “Secure E-Mail” service for Vodafone customers.

I now had a glimpse at their product. My conclusion: while it definitely offers advantages in some scenarios, it also fails to deliver the promised security.

Quick overview of the FTAPI approach

The primary goal of the FTAPI product is easily exchanging (potentially very large) files. They solve it by giving up on decentralization: data is always stored on a server and both sender and recipient have to be registered with that server. This offers clear security benefits: there is no data transfer between servers to protect, and offering the web interface via HTTPS makes sure that data upload and download are private.

But FTAPI goes beyond that: they claim to follow the Zero Knowledge approach, meaning that data transfers are end-to-end encrypted and not even the server can see the contents. For that, each user defines their “SecuPass” upon registration which is a password unknown to the server and used to encrypt data transfers.

Why bother doing crypto in a web application?

The first issue is already shining through here: your data is being encrypted by a web application in order to protect it from the very server that delivers that web application to you. But the server can easily give you a slightly modified web application, one that will steal your encryption key, for example! With several megabytes of JavaScript code executing here, there is no way you will notice a difference. So the server administrator can still read your emails, e.g. when ordered to by company management; the whole encryption voodoo doesn’t change that fact. Malicious actors who somehow gained access to the server will of course have even fewer scruples. Worse yet, malicious actors don’t need full control of the server: a single cross-site scripting (XSS) vulnerability is sufficient to compromise the web application.

Of course, FTAPI also offers a desktop client as well as an Outlook Add-in. While I haven’t looked at either, it is likely that no such drawbacks exist there. The only trouble: FTAPI fails to communicate that encryption is only secure outside of the browser. The standalone clients are being promoted as improvements to convenience, not as security enhancements.

Another case of weak key derivation function

According to the FTAPI website, there is a whitepaper describing their SecuTransfer 4.0 approach. Too bad that this whitepaper isn’t public, and requesting it didn’t yield any response whatsoever, at least in my case. Then again, figuring out the building blocks of SecuTransfer took merely a few minutes.

Your SecuPass is used as input to PBKDF2 algorithm in order to derive an encryption key. That encryption key can be used to decrypt your private RSA key as stored on the server. And the private RSA key in turn can be used to recover the encryption key for incoming files. So somebody able to decrypt your private RSA key will be able to read all your encrypted data stored on the server.

If somebody in control of the server wants to read your data, how do they decrypt your RSA key? Why, by guessing your SecuPass, of course. While the advice is to choose a long password here, humans are very bad at choosing good passwords. In my previous article I already explained why LastPass doing 5,000 PBKDF2 iterations isn’t a real hurdle preventing attackers from guessing your password. Yet FTAPI is doing merely 1,000 iterations, which means that brute-force attacks will be even faster, by a factor of 5 at least (actually more, because FTAPI is using SHA-1 whereas LastPass is using SHA-256). This means that even the strongest passwords can be guessed within a few days.
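The guessing attack can be sketched with Python's standard `hashlib.pbkdf2_hmac`. Each guess costs exactly one PBKDF2 run, so the iteration count directly sets how many guesses per second an attacker gets. This assumes the salt is stored on the server alongside the encrypted RSA key, as it must be if the web client is to derive the key. For simplicity the sketch compares each candidate's derived key with the real one; an actual attacker would instead test whether the candidate key decrypts the stored private RSA key. The 32-byte key length, the function names and the sample password are assumptions, not FTAPI's documented parameters.

```python
import hashlib
import os
from typing import Iterable, Optional


def derive_key(secupass: str, salt: bytes, iterations: int, hash_name: str = "sha1") -> bytes:
    # PBKDF2-HMAC; the article reports SHA-1 with 1,000 iterations for FTAPI
    return hashlib.pbkdf2_hmac(hash_name, secupass.encode("utf-8"), salt, iterations, dklen=32)


def guess_secupass(target_key: bytes, salt: bytes, iterations: int,
                   candidates: Iterable[str], hash_name: str = "sha1") -> Optional[str]:
    # Offline dictionary attack: with the salt in hand, nothing but the
    # cost of one PBKDF2 run per candidate slows the attacker down
    for candidate in candidates:
        if derive_key(candidate, salt, iterations, hash_name) == target_key:
            return candidate
    return None


salt = os.urandom(16)
stolen_key = derive_key("sommer2018", salt, iterations=1_000)
print(guess_secupass(stolen_key, salt, 1_000, ["passwort", "123456", "sommer2018"]))  # sommer2018
```

Raising `iterations` from 1,000 to 5,000 makes each loop iteration five times as expensive, which is exactly the factor the paragraph compares.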

Mind you, PBKDF2 isn’t a bad algorithm, and with 100,000 iterations (at least; more is better) it can currently be considered reasonably secure. These days there are better alternatives, however: bcrypt and scrypt are the fairly established ones, whereas Argon2 is the new hotness.
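For comparison, here is a sketch of two of the stronger options, using only Python's standard library: PBKDF2-HMAC-SHA256 at 100,000 iterations, and memory-hard scrypt via `hashlib.scrypt` (available when Python is built against OpenSSL 1.1 or later). The scrypt parameters `n=2**14, r=8, p=1` are common illustrative values, not a recommendation from the article. bcrypt and Argon2 are not in the standard library and are omitted.

```python
import hashlib
import os


def derive_pbkdf2_sha256(password: str, salt: bytes, iterations: int = 100_000) -> bytes:
    # PBKDF2-HMAC-SHA256 at the 100,000-iteration floor the article mentions
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations, dklen=32)


def derive_scrypt(password: str, salt: bytes) -> bytes:
    # Memory-hard: with n=2**14 and r=8 each derivation needs about 16 MiB
    # (128 * r * n bytes), which makes parallel hardware guessing more costly
    return hashlib.scrypt(password.encode("utf-8"), salt=salt, n=2**14, r=8, p=1, dklen=32)


salt = os.urandom(16)
print(derive_pbkdf2_sha256("correct horse battery staple", salt).hex())
print(derive_scrypt("correct horse battery staple", salt).hex())
```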

And the key exchange?

One of the big challenges with end-to-end encryption is always the key exchange: how do I know that the public key belongs to the person I want to communicate with? S/MIME solves it via a costly public trust infrastructure, whereas PGP relies on a network of key servers with its own set of issues. At first glance, FTAPI dodges this issue with its centralized architecture: the server makes sure that you always get the right public key.

Oh, but we didn’t want to trust the server. What if the server replaces the real public key with the server administrator’s (or worse: a hacker’s), and we make our files visible to them? There is also a less obvious issue: FTAPI still uses insecure email for bootstrapping. If you aren’t registered yet, email is how you get notified that you received a file. If somebody manages to intercept that email, they will be able to register at the FTAPI server and receive all the “secure” data transfers meant for you.
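One standard mitigation for key substitution, not something the article says FTAPI offers, is to compare a public key's fingerprint over a second channel (in person or by phone) before trusting it. A minimal sketch, assuming the key arrives as raw encoded bytes; the function names, the placeholder key bytes and the colon-separated SHA-256 format are illustrative choices.

```python
import hashlib


def fingerprint(public_key_bytes: bytes) -> str:
    # SHA-256 over the encoded public key, shown as colon-separated hex pairs
    digest = hashlib.sha256(public_key_bytes).hexdigest().upper()
    return ":".join(digest[i:i + 2] for i in range(0, len(digest), 2))


def key_matches(server_supplied_key: bytes, fingerprint_from_recipient: str) -> bool:
    # The expected fingerprint must come from a channel the server does not
    # control; a key swapped in by the server then fails the comparison
    expected = fingerprint_from_recipient.strip().upper()
    return fingerprint(server_supplied_key) == expected


real_key = b"placeholder bytes of the recipient's real public key"
read_aloud = fingerprint(real_key)  # recipient reads this out over the phone
print(key_matches(real_key, read_aloud))                            # True
print(key_matches(b"administrator's substitute key", read_aloud))  # False
```

This shifts trust from the server to the second channel, which is also why it does not help with the email bootstrapping problem: a first-time recipient has no verified fingerprint to compare against.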

Final notes

While sharing private data via an HTTPS-protected web server clearly has benefits over sending it via email, the rest of FTAPI’s security measures are mostly an appearance of security right now. Partly, this is a failure on their end: 1,000 PBKDF2 iterations were already offering way too little protection in 2009, back when the FTAPI prototype was created. But there are also fundamental issues here: real end-to-end encryption is inherently complicated, particularly solving key exchange securely. And of course, end-to-end encryption is impossible to implement in a web application, so you have to choose between convenience (zero overhead: nothing to install, just open the site in your browser) and security.

https://palant.de/2018/07/11/ftapi-secutransfer-the-secure-alternative-to-emails-not-quite

|

|