Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated whenever that feed is updated. It may not match the content of the original page. This syndication was created automatically at the request of the feed's readers.

Mozilla Open Policy & Advocacy Blog: Data localization: bad for users, business, and security |

Mozilla is deeply concerned by news reports that India’s first data protection law may include data localization requirements. Recent leaks suggest that the Justice Srikrishna Committee, the group charged by the Government of India with developing the country’s first data protection law, is considering requiring companies subject to the law to store critical personal data within India’s borders. A data localization mandate would undermine user security, harm the growth and competitiveness of Indian industry, and potentially burden relations between India and other countries. We urge the Srikrishna Committee and the Government of India to exclude such a requirement from the forthcoming legislative proposal.

Security Risks

Locating data within a given jurisdiction does not in itself confer any security benefits; rather, the law should require data controllers to strictly protect the data that they’re entrusted with. One only has to look to the recurring breaches of the Aadhaar demographic data to understand that storing data locally does not, by itself, keep data protected (see here, here and here). Until India has a data protection law and demonstrates robust enforcement of that law, it’s difficult to see how storing user data in India would be the most secure option.

In Puttaswamy, the Supreme Court of India unequivocally stated that privacy is a fundamental right, and put forth a proportionality standard that has profound implications for government surveillance in India. We respectfully recommend that if Indian authorities are concerned about law enforcement access to data, then a legal framework for surveillance with appropriate protections for users is a necessary first step. This would provide the lawful basis for the government to access data necessary for legal proceedings. A data localization mandate is an insufficient instrument for ensuring data access for the legitimate purposes of law enforcement.

Economic and Political Harms

A data localization mandate may also harm the Indian economy. India is home to many inspiring companies that are seeking to move beyond India’s generous borders. Requiring these companies to store data locally may thwart this expansion, and may introduce a tax on Indian industry by requiring them to maintain the legal and technical regimes of multiple jurisdictions.

Most Indian companies handle critical personal data, so even data localization for just this data subset could harm Indian industry. Such a mandate would force companies to use potentially cost-inefficient data storage and prevent them from using the most effective and efficient routing possible. Moreover, the Indian outsourcing industry is predicated on the idea of these firms being able to store and process data in India, and then transfer it to companies abroad. A data localization mandate could pose an existential risk to these companies.

At the same time, if India imposes data localization on foreign companies doing business in India, other countries may impose reciprocal data localization policies that force Indian companies to store user data within that country’s jurisdictional borders, leading to legal conflict and potential breakdown of trade.

Data Transfer, Not Data Localization

There are better ways to ensure user data protection. Above all, obtaining an adequacy determination from the EU would both demonstrate commitment to a global high standard of data protection, and significantly benefit the Indian economy. Adequacy would allow Indian companies to more easily expand overseas, because they would already be compliant with the high standards of the GDPR. It would also open doors to foreign investment and expansion in the Indian market, as companies who are already GDPR-compliant could enter the Indian market with little to no additional compliance burden. Perhaps most significantly, this approach would make the joint EU-India market the largest in the world, thus creating opportunities for India to step into even greater economic global leadership.

If India does choose to enact data localization policies, we strongly urge it to also adopt provisions for transfer via Binding Corporate Rules (BCRs). This model has been successfully adopted by the EU, which allows for data transfer upon review and approval of a company’s data processing policies by the relevant Data Protection Authority (DPA). Through this process, user rights are protected, data is secured, and companies can still do business. However, adequacy offers substantial benefits over a BCR system. By giving all Indian companies the benefits of data transfer, rather than requiring each company to individually apply for approval from a DPA, Indian industry will likely be able to expand globally with fewer policy obstacles.

Necessity of a Strong Regulator

Whether considering user security or economic growth, data localization is a weak tool when compared to a strong data protection framework and regulator.

By creating strong incentives for companies to comply with data use, storage, and transfer regulations, a DPA that has enforcement power will get better data protection results than data localization, and won’t harm Indian users, industry, and innovation along the way. We remain hopeful that the Srikrishna Committee will craft a bill that centers on the user — this means strong privacy protections, strong obligations on public and private-sector data controllers, and a DPA that can enforce rules on behalf of all Indians.

The post Data localization: bad for users, business, and security appeared first on Open Policy & Advocacy.

https://blog.mozilla.org/netpolicy/2018/06/22/data-localization-india/

|

|

Support.Mozilla.Org: State of Mozilla Support: 2018 Mid-year Update – Part 1 |

Hello, present and future Mozillians!

As you may have heard, Mozilla held one of its biannual All Hands meetings, this time in San Francisco. The support.mozilla.org Admin team was there as well, along with several members of the support community.

The All Hands meetings are meant to be gatherings summarizing the work done and the challenges ahead. San Francisco was no different from that model. The four days of the All Hands were full of things to experience and participate in. Aside from all the plenary and “big stage” sessions – most of which you should be able to find at Air Mozilla soon – we also took part in many smaller (formal and informal) meetings, workshops, and chats.

By the way, if you watch Denelle’s presentation, you may hear something about Mozillians being awesome through helping users ;-).

This is the first in a series of posts summarizing what we talked about regarding support.mozilla.org, together with many (maaaaaany) pages of source content we have been working on and receiving from our research partners over the last few months.

We will begin with a summary of quite a heap of metrics, as delivered to us by the analytics and research consultancy from Copenhagen – Analyse & Tal (Analysis & Numbers). You can find all the (105!) pages here but you can also read the summary below, which captures the most important information.

The A&T team used descriptive statistics (to tell a story using numbers) and network analysis (emphasizing interconnectedness and correlations), taking information from the 11 years of data available in Kitsune’s databases and 1 year of Google Analytics data.

Almost all perspectives of the analysis brought to the spotlight the amount of work contributed and the dedication of numerous Mozillians over many years. It’s hard to overstate the importance of that for Mozilla’s mission and continuous presence and support for the millions of users of open source software who want an open web. We are all equally proud and humbled that we can share this voyage with you.

As you can imagine, analyzing a project as complex and stretched in time as Mozilla’s Support site is quite challenging and we could not have done it without cooperation with Open Innovation and our external partners.

Key Takeaways

- In the 2010-2017 period, only 124 contributors were responsible for 63% of all contributions. Given that there are hundreds of thousands of registered accounts in the system, there is a lot of work to do for us to make contributions easier and more fun.

- There are quite a few returning contributors who contribute steadily over several years.

- There are several hundred contributors who are active within a short timeframe, and even more very occasional helpers. In both cases, we need to make sure that long-term contributing is appealing to them.

- While our community has not been shown to be worryingly fragile, we have to better understand how and why contributions happen and what can be done to ensure a steady future for Mozilla’s community-powered Support.

- The Q&A support forums on the site are the most popular place for contributions, with the core and most engaged contributors present mostly there.

- On the other hand, the Knowledge Base, even if it has fewer contributors, sees more long-term commitment from returning contributors.

- Contributors through Twitter are a separate group, usually not engaged in other support channels and focusing on this external platform.

- Firefox is the most active product across all channels, but Thunderbird sees a lot of action as well. Many regular contributors are active supporting both products.

- Among other Firefox related products, Firefox for Android is the most established one.

- The top 15 locales account for 76 percent of the overall revisions in the Knowledge Base, with the vast majority of contributions coming from core contributors.

- Based on network analysis, Russian, Spanish, Czech, and Japanese localization efforts are the most vulnerable to changes in sustainability.
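Concentration figures like the ones above (124 contributors producing 63% of contributions, or the top 15 locales producing 76% of Knowledge Base revisions) can be recomputed from per-contributor counts with a few lines of code. A minimal sketch, assuming a hypothetical mapping of contributor name to contribution count rather than the actual Kitsune schema; the function names and data are illustrative only:

```python
from typing import Dict


def top_share(counts: Dict[str, int], top_n: int) -> float:
    """Fraction of all contributions made by the top_n most active contributors."""
    total = sum(counts.values())
    if total == 0:
        return 0.0
    ranked = sorted(counts.values(), reverse=True)
    return sum(ranked[:top_n]) / total


def contributors_for_share(counts: Dict[str, int], share: float) -> int:
    """Smallest number of top contributors whose combined work reaches `share`."""
    total = sum(counts.values())
    running = 0
    for n, value in enumerate(sorted(counts.values(), reverse=True), start=1):
        running += value
        if running >= share * total:
            return n
    return len(counts)


# Hypothetical toy data, not real SUMO numbers
sample = {"alice": 50, "bob": 30, "carol": 10, "dave": 5, "erin": 5}
print(top_share(sample, 2))                  # 0.8
print(contributors_for_share(sample, 0.63))  # 2
```

The same two functions work unchanged for locales: pass a mapping of locale code to revision count instead of contributor to contribution count.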

What’s Next?

Most of the findings in the report support many anecdotal observations we have had, giving us a very powerful set of perspectives grounded in over 7 years’ worth of data. Based on the analysis, we are able to create future plans for our community that are more realistic and based on facts.

The A&T team provided us with a list of their recommendations:

- Understanding the motivations for contributing and how highly dedicated contributors were motivated to start contributing should be a high priority for further strategic decisions.

- Our metrics should be strategically expanded and used through interactive dashboards and real time measurements. The ongoing evolution of the support community could be better understood and observed thanks to dynamic definitions of more detailed contributor segments and localization, as well as community sustainability scores.

- A better understanding of visitors and how they use the support pages (more detailed behaviour and opinions) would help us understand where to guide contributors to ensure both a better user experience and a higher level of satisfaction among contributors.

Taking our own interpretation of the data analysis and the A&T recommendations into account, over the next few weeks we will be outlining more plans for the second half of the year, focusing on areas like:

- Contributor onboarding and motivation insights

- A review of metrics and tools used to obtain them

- Recruitment and learning experiments

- Backup and contingency plans for emergency gaps in community coverage

- Tailoring support options for new products

As always, thank you for your patience and ongoing support of Mozilla’s mission. Stay tuned for more post-All Hands mid-year summaries and updates coming your way soon – and discuss them in the Contributors or Discourse forum threads.

https://blog.mozilla.org/sumo/2018/06/22/state-of-mozilla-support-2018-mid-year-update-part-1/

|

|

Mozilla VR Blog: This Week in Mixed Reality: Issue 10 |

Last week, the team was in San Francisco for an all-Mozilla company meeting.

This week the team is focusing on adding new features, making improvements and fixing bugs.

Browsers

We are all hands on deck building more components and adding new UI across Firefox Reality:

- Improved keyboard visibility detection

- Added special characters to the keyboard

- Added some features & researched some issues in the VRB renderer, required to properly implement focus mode

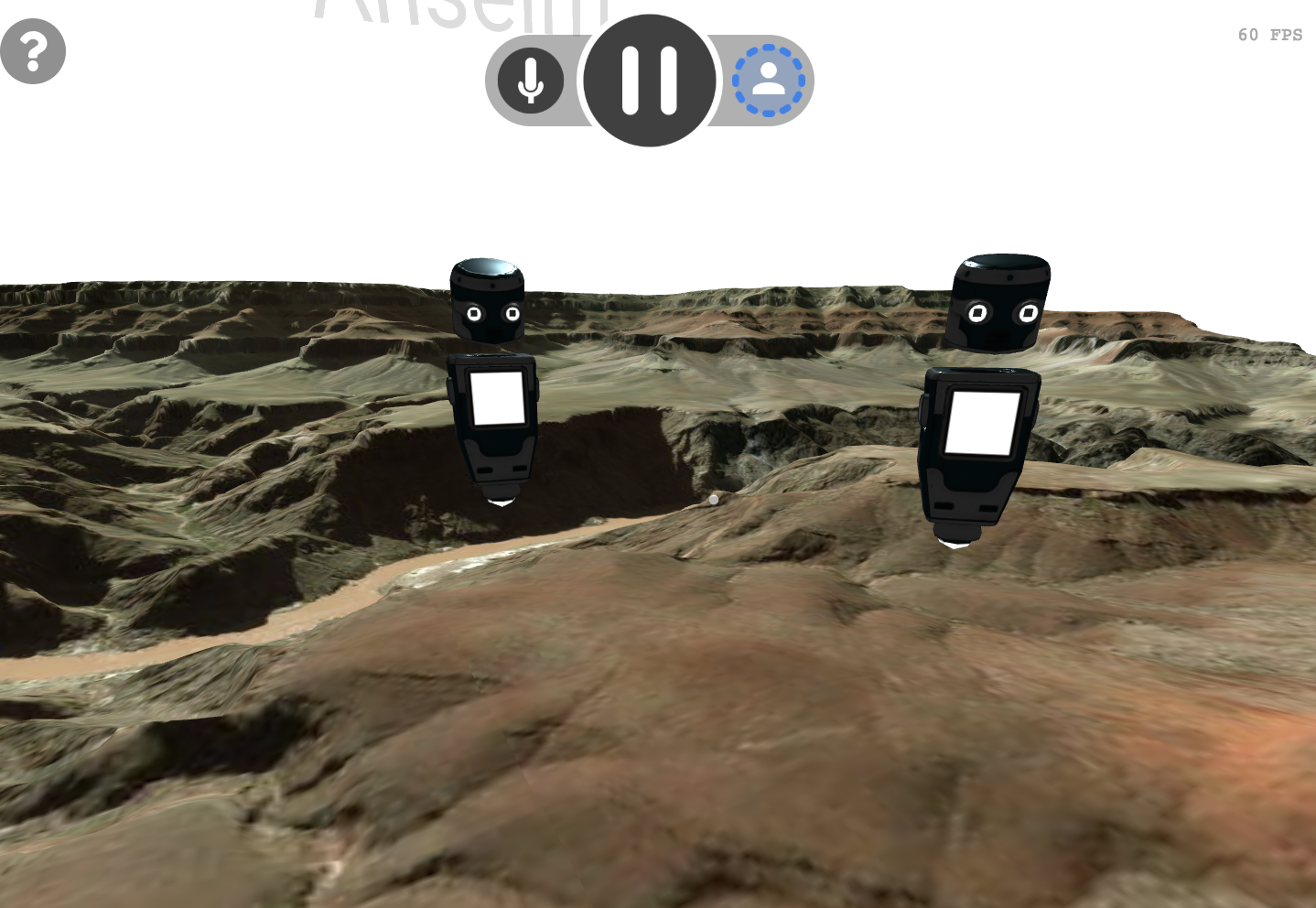

Here is a preview we showed of the skybox support and some of the new UX/UI:

Social

We are continuing to provide a better experience across Hubs by Mozilla:

- Added better flow for iOS webviews

- Added support for VM development and fast entry flow for developers

- Began work on image proxying for sharing 2d images

- Continuing development on 2d/3d object spawning, space editor, and pen tool

Join our public WebVR Slack #social channel to participate in the discussion!

Content ecosystem

Found a critical bug? File it in our public GitHub repo or let us know on the public WebVR Slack #unity channel and as always, join us in our discussion!

Stay tuned for new features and improvements across our three areas!

|

|

Mozilla Open Policy & Advocacy Blog: Parliament adopts dangerous copyright proposal – but the battle continues |

On 20 June the European Parliament’s legal affairs committee (JURI) approved its report on the copyright directive, sending the controversial and dangerous copyright reform into its final stages of lawmaking.

Here is a statement from Raegan MacDonald, Mozilla’s Head of EU Public Policy:

“This is a sad day for the Internet in Europe. Lawmakers in the European Parliament have just voted for a new law that would effectively impose a universal monitoring obligation on users posting content online. As bad as that is, the Parliament’s vote would also introduce a ‘link tax’ that will undermine access to knowledge and the sharing of information in Europe.

It is especially disappointing that just a few weeks after the entry into force of the GDPR – a law that made Europe a global regulatory standard bearer – Parliamentarians have approved a law that will fundamentally damage the Internet in Europe, with global ramifications. But it’s not over yet – the final text still needs to be signed off by the Parliament plenary on 4 July. We call on Parliamentarians, and all those who care for an internet that fosters creativity and competition in Europe, to overturn these regressive provisions in July.”

Article 11 – where press publishers can demand a license fee for snippets of text online – passed by a slim majority of 13 to 12. The provision mandating upload filters for copyright content, Article 13, was adopted 15 to 10.

Mozilla will continue to fight for copyright that suits the 21st century and fosters creativity and competition online. We encourage anyone who shares these concerns to reach out to members of the European Parliament – you can call them directly via changecopyright.org, or tweet and email them at saveyourinternet.eu.

The post Parliament adopts dangerous copyright proposal – but the battle continues appeared first on Open Policy & Advocacy.

|

|

Mozilla Reps Community: Rep of the Month – May 2018 |

Please join us in congratulating Prathamesh Chavan, our Rep of the Month for May 2018!

Prathamesh is from Pune, India and works as a Technical Support Engineer at Red Hat. From his very early days in the Mozilla community, Prathamesh used his excellent people skills to spread the community to different colleges and to evangelise many of the upcoming projects, products and Mozilla initiatives. Prathamesh is also a very resourceful person. As a result, he did a great job of organizing events in Pune and creating many new Mozilla Clubs across the city.

As a Mozilla Reps Council member, Prathamesh has done some great work and has shown great leadership skills. He is always proactive in sharing important updates with the bigger community as well as raising his hand at every new initiative.

Thanks Prathamesh, keep rocking the Open Web!

Please congratulate him by heading over to the Discourse topic.

https://blog.mozilla.org/mozillareps/2018/06/22/rep-of-the-month-may-2018/

|

|

The Firefox Frontier: Open source isn’t just for software: Opensourcery recipe |

Firefox is one of the world’s most successful open source software projects. This means we make the code that runs Firefox available for anyone to modify and use so long …

The post Open source isn’t just for software: Opensourcery recipe appeared first on The Firefox Frontier.

https://blog.mozilla.org/firefox/open-source-isnt-just-for-software-opensourcery-recipe/

|

|

K Lars Lohn: Things Gateway - the RESTful API and the Tide Light |

One of the most important aspects of Project Things is the concept of giving each IoT device a URL on the local area network. This includes the devices that natively cannot speak HTTP, like all those Z-Wave & Zigbee lights and plugs. The Things Gateway gives these devices a voice on your IP network. That's a powerful idea. It enables the ability to write software that can control individual devices in a home using Web standards and Web tools. Is the Things Gateway's rule system not quite sophisticated enough to accomplish what you want? You can use any language capable of communicating with a RESTful API to control devices in your home.
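Because every device has its own URL, reading a device's state is just an HTTP GET against that URL. A minimal sketch using only the Python standard library, assuming the gateway is reachable at http://gateway.local (the host used elsewhere in this post) and that a property URL answers GET with a small JSON object such as {"on": true}; the function names are hypothetical:

```python
import json
import urllib.request

# Host name used in this post's examples; adjust for your own network
GATEWAY = "http://gateway.local"


def build_property_request(thing_id: str, prop: str, auth_key: str) -> urllib.request.Request:
    """Build (but do not send) a GET request for one property of one thing."""
    url = "{}/things/{}/properties/{}".format(GATEWAY, thing_id, prop)
    return urllib.request.Request(
        url,
        method="GET",
        headers={
            "Accept": "application/json",
            "Authorization": "Bearer {}".format(auth_key),
        },
    )


def read_property(thing_id: str, prop: str, auth_key: str, timeout: float = 10.0):
    """Send the request and return the decoded JSON body (assumed shape: {prop: value})."""
    request = build_property_request(thing_id, prop, auth_key)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Splitting request construction from sending keeps the URL and header logic testable without a gateway on the network; on a live system you would call something like `read_property("zb-0017880103415d70", "on", token)`.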

My project today is to control the color of a Philips HUE bulb to tell me at a glance the level and trend for the tide at the Pacific Coast west of my home. When the tide is low, I want the light to be green. When the tide is high, the light will be red. During the transition from low to high, I want the light to slowly transition from green to yellow to orange to red. For the transition from high tide to low tide, the light should go from red to magenta to blue to green.

So how do you tell a Philips HUE bulb to change its color? It's done with an HTTP PUT command to the Things Gateway. It's really pretty simple in any language. Here's an asynchronous implementation in Python:

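```python
import aiohttp
import async_timeout


# The wrapper function name is illustrative; the body is the PUT request itself
async def set_color(thing_id, things_gateway_auth_key, a_color, seconds_for_timeout):
    async with aiohttp.ClientSession() as session:
        # give up if the gateway doesn't answer in time
        async with async_timeout.timeout(seconds_for_timeout):
            async with session.put(
                "http://gateway.local/things/{}/properties/color".format(thing_id),
                headers={
                    'Accept': 'application/json',
                    'Authorization': 'Bearer {}'.format(things_gateway_auth_key),
                    'Content-Type': 'application/json'
                },
                # JSON body such as {"color": "#FF0000"}
                data='{{"color": "{}"}}'.format(a_color)
            ) as response:
                return await response.text()
```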

Most of this code can be treated as boilerplate. There are only three data items that come from outside: thing_id, things_gateway_auth_key, and a_color. The value of a_color is obvious: it's the color that you want to set in the Philips HUE bulb, in the form of a hex string: '#FF0000' for red, '#FFFF00' for yellow, ... The other two, thing_id and things_gateway_auth_key, are not obvious, and you have to do some mining in the Things Gateway to determine the appropriate values.
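The color string and the request body are easy to get subtly wrong when assembled by hand with format(). A small sketch, assuming colors start life as (red, green, blue) tuples of 0-255 integers; rgb_to_hex and color_body are hypothetical helper names, and json.dumps produces the same body as the format() call in the PUT example while handling quoting for you:

```python
import json
from typing import Tuple


def rgb_to_hex(rgb: Tuple[int, int, int]) -> str:
    """Turn an (r, g, b) tuple of 0-255 ints into a '#RRGGBB' string."""
    for channel in rgb:
        if not 0 <= channel <= 255:
            raise ValueError("channel out of range: {}".format(channel))
    return "#{:02X}{:02X}{:02X}".format(*rgb)


def color_body(a_color: str) -> str:
    """JSON body for the PUT request; json.dumps takes care of quoting and escaping."""
    return json.dumps({"color": a_color})


print(rgb_to_hex((255, 0, 0)))    # #FF0000 (red)
print(rgb_to_hex((255, 255, 0)))  # #FFFF00 (yellow)
print(color_body("#FF0000"))      # {"color": "#FF0000"}
```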

The Things Gateway will generate an authorization key for you: go to Settings -> Authorizations -> Create New Local Authorization -> Allow

You can even create authorizations that allow access only to specific things within your gateway. Once you've pressed "Allow", the next screen gives you the Authorization Token as well as examples of its use in various languages:

Copy your Authorization Token somewhere you can refer to it again in the future.

The next task is to find the thing_id for the thing that you want to control. For me, this was the Philips HUE light that I named "Tide Light". The thing_id was the default name that the Things Gateway tried to give it when it was first paired. If you didn't take note of that, you can fetch it again by using the command-line curl example on the Local Token Service page shown above. Unfortunately, that will return a rather dense block of unformatted JSON text. I piped the output through json_pp and then into an editor to make it easier to search for my device called "Tide Light". Once I found it, I looked for the associated color property and found the href entry under it.

```shell
$ curl -H "Authorization: Bearer XDZkRTVK2fLw...IVEMZiZ9Z" \
       -H "Accept: application/json" --insecure \
       http://gateway.local/things | json_pp | vim -
```

```json
{
   "properties" : {
      "color" : {
         "type" : "string",
         "href" : "/things/zb-0017880103415d70/properties/color"
      },
      "on" : {
         "type" : "boolean",
         "href" : "/things/zb-0017880103415d70/properties/on"
      }
   },
   "type" : "onOffColorLight",
   "name" : "Tide Light",
   "links" : [
      {
         "rel" : "properties",
         "href" : "/things/zb-0017880103415d70/properties"
      },
      {
         "href" : "/things/zb-0017880103415d70/actions",
         "rel" : "actions"
      },
      {
         "href" : "/things/zb-0017880103415d70/events",
         "rel" : "events"
      },
      {
         "rel" : "alternate",
         "href" : "/things/zb-0017880103415d70",
         "mediaType" : "text/html"
      },
      {
         "rel" : "alternate",
         "href" : "ws://gateway.local/things/zb-0017880103415d70"
      }
   ],
   "description" : "",
   "href" : "/things/zb-0017880103415d70",
   "actions" : {},
   "events" : {}
}
```
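The manual search through json_pp output can also be scripted. A sketch, assuming the /things endpoint returns a JSON array of thing descriptions shaped like the one above (a single description is tolerated too); find_color_href is a hypothetical name, and it derives the thing_id from the last path segment of the thing's href:

```python
import json
from typing import Optional, Tuple


def find_color_href(things_json: str, name: str) -> Optional[Tuple[str, str]]:
    """Return (thing_id, color_href) for the thing called `name`, or None."""
    things = json.loads(things_json)
    if isinstance(things, dict):  # tolerate a single thing description
        things = [things]
    for thing in things:
        if thing.get("name") != name:
            continue
        href = thing.get("href", "")  # e.g. "/things/zb-0017880103415d70"
        thing_id = href.rstrip("/").split("/")[-1]
        color = thing.get("properties", {}).get("color", {})
        return thing_id, color.get("href")
    return None


# Trimmed-down version of the response shown above
sample = ('[{"name": "Tide Light", "href": "/things/zb-0017880103415d70", '
          '"properties": {"color": {"type": "string", '
          '"href": "/things/zb-0017880103415d70/properties/color"}}}]')
print(find_color_href(sample, "Tide Light"))
# ('zb-0017880103415d70', '/things/zb-0017880103415d70/properties/color')
```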

Now that we can see how to get and change information on a device controlled by the Things Gateway, we can start having fun with it. The table below lists the prerequisites for running my code.

| Item | What's it for? | Where I got it |
|---|---|---|
| A Raspberry Pi running the Things Gateway with the associated hardware from Part 2 of this series | This is the base platform that we'll be adding onto | From Part 2 of this series |
| DIGI XStick | This allows the Raspberry Pi to speak the ZigBee protocol. There are several models; make sure you get the XU-Z11 model. | The only place that I could find this was Mouser Electronics |
| Philips Hue White & Color Ambiance bulb | This will be the Tide Light. Set it up with a HUE Bridge using the instructions from Part 4 of this series, or independently using Part 5 of this series. | Home Depot |
| Weather Underground developer account | This is where the tide data comes from. | The developer account is free and you can get one directly from Weather Underground. |
| A computer with Python 3.6 | My tide_light.py code was written with Python 3.6. The RPi that runs the Things Gateway has only 3.5, so you'll need to either install 3.6 on the RPi or run the Tide Light on another machine. | My workstation has Python 3.6 by default |

Step 1: Install the software modules required to run tide_light.py:

```shell
$ sudo pip3 install configman
$ sudo pip3 install webthing
$ git clone https://github.com/twobraids/pywot.git
$ cd pywot
$ export PYTHONPATH=$PYTHONPATH:$PWD
$ cd demo
$ cp tide_light_sample.ini tide_light.ini
```

Step 2: Recall that the Tide Light is to reflect the real-time tide level at a configurable location, so you need to select one. Weather Underground doesn't supply tide data for every location, and I couldn't find a list of the locations it does cover, so you may have to use some trial and error. I was lucky and found good information on my first try: Waldport, OR.

Edit the tide_light.ini file to reflect your selection of city & state, as well as your Weather Underground access key, the thing_id of your Philips HUE bulb, and the Things Gateway auth key:

```ini
# the name of the city (use _ for spaces)
city_name=INSERT CITY NAME HERE
# the two letter state code
state_code=INSERT STATE CODE HERE
# the id of the color bulb to control
thing_id=INSERT THING ID HERE
# the api key to access the Things Gateway
things_gateway_auth_key=INSERT THINGS GATEWAY AUTH KEY HERE
# the api key to access Weather Underground data
weather_underground_api_key=INSERT WEATHER UNDERGROUND KEY TOKEN HERE
```

Step 3: Run the tide light like this:

```shell
$ ./tide_light.py --admin.conf=tide_light.ini
```

You can always override the values in the ini file with command line switches:

```shell
$ ./tide_light.py --admin.conf=tide_light.ini --city=crescent_city --state_code=CA
```

So how does the Tide Light actually work? Check out the source code. It starts by downloading tide tables from Weather Underground, using asynchronous HTTP requests like the one shown near the top of this page. The program takes the time of the next high/low tide cycle and divides it into 120 time increments. These increments correspond to the 120 colors in the low-to-high and high-to-low color tables. As each time increment passes, the next color is selected from the currently active color table.
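The scheme described above (a tide cycle split into 120 increments, each mapped to one entry in a 120-color table) can be sketched as follows. This is not the code from tide_light.py: the waypoint RGB values, the linear interpolation between them, and the function names are assumptions made for illustration.

```python
from datetime import datetime, timedelta
from typing import List, Tuple

RGB = Tuple[int, int, int]

# Waypoints follow the color sequence described earlier; exact RGB values are assumptions
LOW_TO_HIGH = [(0, 255, 0), (255, 255, 0), (255, 165, 0), (255, 0, 0)]  # green, yellow, orange, red
HIGH_TO_LOW = [(255, 0, 0), (255, 0, 255), (0, 0, 255), (0, 255, 0)]    # red, magenta, blue, green
STEPS = 120


def build_table(waypoints: List[RGB], steps: int = STEPS) -> List[str]:
    """Linearly interpolate `steps` '#RRGGBB' colors through the waypoints."""
    table = []
    segments = len(waypoints) - 1
    for i in range(steps):
        position = i * segments / (steps - 1)   # runs from 0.0 to segments
        index = min(int(position), segments - 1)
        t = position - index                     # 0.0 to 1.0 within one segment
        start, end = waypoints[index], waypoints[index + 1]
        rgb = tuple(round(a + (b - a) * t) for a, b in zip(start, end))
        table.append("#{:02X}{:02X}{:02X}".format(*rgb))
    return table


def current_color(table: List[str], cycle_start: datetime,
                  cycle_end: datetime, now: datetime) -> str:
    """Pick the color for `now` by splitting the tide cycle into len(table) increments."""
    fraction = (now - cycle_start) / (cycle_end - cycle_start)
    fraction = min(max(fraction, 0.0), 1.0)
    index = min(int(fraction * len(table)), len(table) - 1)
    return table[index]


rising = build_table(LOW_TO_HIGH)
low = datetime(2018, 6, 22, 6, 0)   # hypothetical low tide time
high = low + timedelta(hours=6)     # hypothetical next high tide
print(current_color(rising, low, high, low))   # #00FF00
print(current_color(rising, low, high, high))  # #FF0000
```

Precomputing both tables once keeps the per-increment work to a single index lookup, which matches the "next color from the active table" behavior described above.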

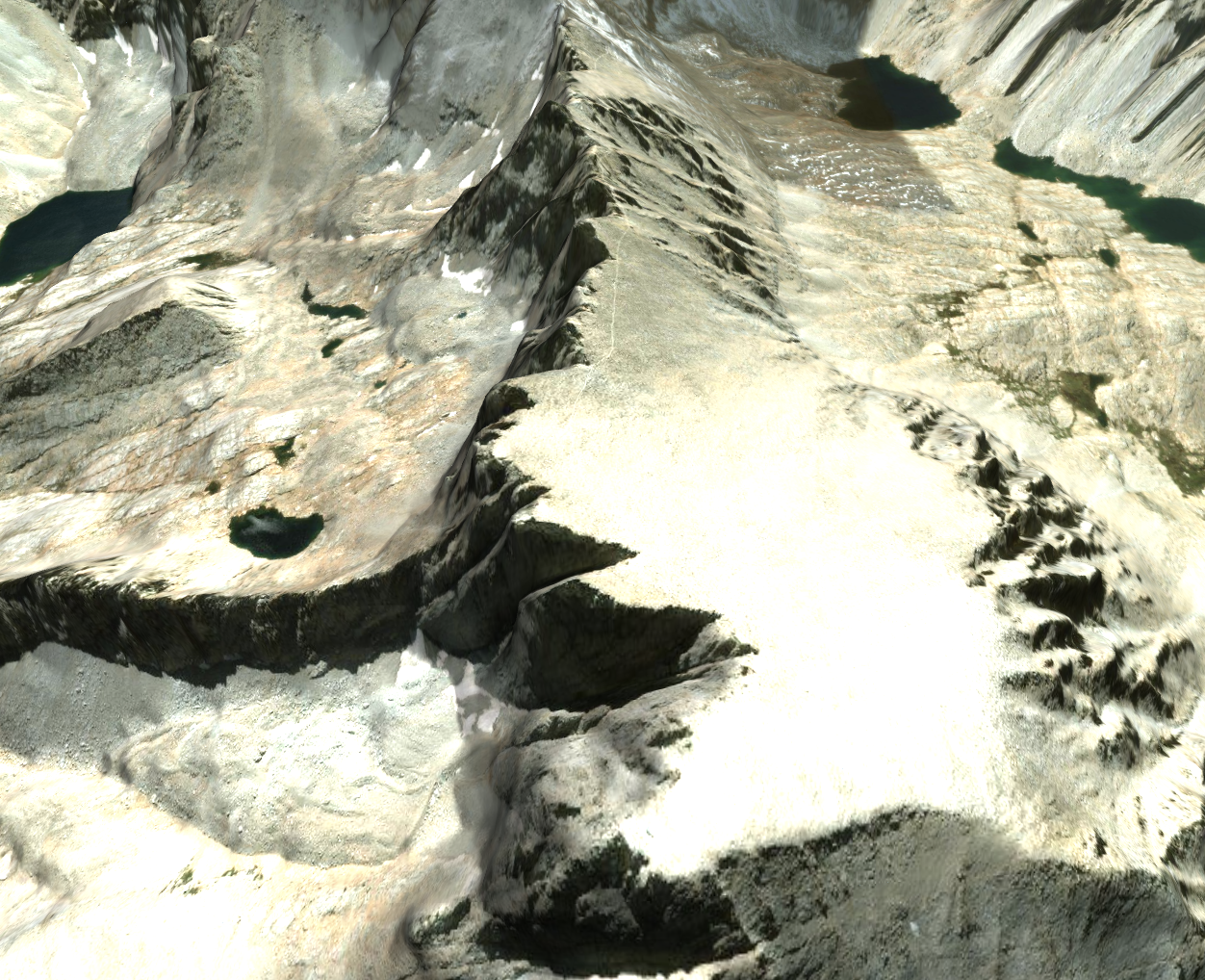

Epilogue: I learned a lot about tides this week. Having spent much of my life in the Rocky Mountains, I've never really had to pay attention to tides. While I live in Oregon now, I just don't go to the coast that much, and I didn't realize how variable tides are. I drove out to the coast to take photos of high and low tides to accompany this blog post, but was surprised to find that on average days the tides on the Oregon coast aren't all that dramatic. In fact, my high and low tide photos from Nye Beach in Newport, OR were nearly indistinguishable. My timing was poor: tides are more interesting around the new moon and full moon, and this week was not the right week for drama. The low tide photo published here is from May 17, 2017, and the high tide photo is from January 18, 2018. The high tide photo was taken during an extreme event in which a high tide and an offshore storm conspired to produce record-breaking waves.

http://www.twobraids.com/2018/06/things-gateway-restful-api-and-tide.html

|

|

Mozilla Addons Blog: Add-ons at the San Francisco All Hands Meeting |

Last week, more than 1,200 Mozillians from around the globe converged on San Francisco, California, for Mozilla’s biannual All Hands meeting to celebrate recent successes, learn more about products from around the company, and collaborate on projects currently in flight.

For the add-ons team, this meant discussing tooling improvements for extension developers, reviewing upcoming changes to addons.mozilla.org (AMO), sharing what’s in store for the WebExtensions API, and checking in on initiatives that help users discover extensions. Here are some highlights:

Developer Tools

During a recent survey, participating extension developers noted two stand-out tools for development: web-ext, a command line tool that can run, lint, package, and sign an extension; and about:debugging, a page where developers can temporarily install their extensions for manual testing. Both tools will see improvements in the coming months.

In the immediate future, we want to add a feature to web-ext that would let developers submit their extensions to AMO. Our ability to add this feature is currently blocked by how AMO handles extension metadata. Once that issue is resolved, you can expect to see web-ext support a submit command. We also discussed implementing a create command that would generate a standard extension template for developers to start from.

Developers can currently test their extensions manually by installing them through about:debugging. Unfortunately, these installations do not persist once the browser is closed or restarted. Making these installations persistent is on our radar, and now that we are back from the All Hands, we will be looking at developing a plan and finding resources for implementation.

Addons.mozilla.org (AMO)

During the next three months, the AMO engineering team will prioritize work around improving user rating and review flows, improving the code review tools for add-on reviewers, and converting dictionaries to WebExtensions.

Engineers will also tackle a project to ensure that users who download Firefox because they want to install a particular extension or theme from AMO are able to successfully complete the installation process. Currently, users who download Firefox from a listing on AMO are not returned to AMO when they start Firefox for the first time, making it hard for them to finish installing the extension they want. By closing this loop, we expect to see an increase in extension and/or theme installations.

WebExtensions APIs

Several new and enhanced APIs have landed in Firefox since January, and more are on their way. In the next six months, we anticipate landing WebExtensions APIs for clipboard support, bookmarks and session management (including bookmark tags), and further expansions of the theming API.

Additionally, we’ll be working towards supporting visual overlays (like notification bars, floating panels, popups, and toolbars) by the end of the year.

Help Users Find Great Extensions Faster

This year, we are focusing on helping Firefox users find and discover great extensions quickly. We have made a few bets on how we can better meet user needs by recommending specific add-ons. In San Francisco, we checked in on the status of projects currently underway:

Recommending extensions to users on AMO

In May, we started testing recommendations on extension listing pages, suggesting extensions that other users commonly co-install.

Results so far have shown that people are discovering and installing more relevant extensions from these recommendations than the control group, who only sees generally popular extensions. We will continue to make refinements and fully graduate it into AMO in the second half of the year.
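The co-installation idea can be illustrated with a simple counting sketch. This shows the general technique only and is an assumption, not AMO's actual recommendation system; the add-on names and install data are made up:

```python
from collections import Counter
from typing import Iterable, List, Set


def co_installed(installs: Iterable[Set[str]], target: str, top_n: int = 3) -> List[str]:
    """Rank other add-ons by how many users installed them alongside `target`."""
    counts = Counter()
    for user_addons in installs:
        if target in user_addons:
            # count every other add-on this user has next to the target
            counts.update(addon for addon in user_addons if addon != target)
    return [addon for addon, _ in counts.most_common(top_n)]


# Hypothetical install data: one set of add-on names per user
users = [
    {"ublock", "privacy-badger", "dark-reader"},
    {"ublock", "privacy-badger"},
    {"ublock", "dark-reader", "privacy-badger"},
    {"dark-reader", "tab-groups"},
]
print(co_installed(users, "ublock", top_n=2))  # ['privacy-badger', 'dark-reader']
```

A control group that "only sees generally popular extensions" corresponds to ranking by overall install counts instead of counts conditioned on the target add-on.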

(For our privacy-minded friends: you can learn more about how Firefox uses data to improve its products by reading the Firefox Privacy Notice.)

Adding extensions to the onboarding tour for new Firefox users

We want to make users aware of the benefits of customizing their browser soon after installing Firefox. We’re currently testing a few prototypes of a new onboarding flow.

And more!

We have more projects to improve extension discovery and user satisfaction on our Trello.

Join Us

Are you interested in contributing to the add-ons ecosystem? Check out our wiki to see a list of current contribution opportunities.

The post Add-ons at the San Francisco All Hands Meeting appeared first on Mozilla Add-ons Blog.

https://blog.mozilla.org/addons/2018/06/21/add-ons-at-the-san-francisco-all-hands-meeting/

|

|

Air Mozilla: Reps Weekly Meeting, 21 Jun 2018 |

This is a weekly call with some of the Reps to discuss all matters about/affecting Reps and invite Reps to share their work with everyone.

|

|

Air Mozilla: The Joy of Coding - Episode 142 |

mconley livehacks on real Firefox bugs while thinking aloud.

|

|

Air Mozilla: Weekly SUMO Community Meeting, 20 Jun 2018 |

This is the SUMO weekly call

https://air.mozilla.org/weekly-sumo-community-meeting-20180620/

|

|

Botond Ballo: Trip Report: C++ Standards Meeting in Rapperswil, June 2018 |

Summary / TL;DR

| Project | What’s in it? | Status |
|---|---|---|
| C++17 | See list | Published! |
| C++20 | See below | On track |
| Library Fundamentals TS v2 | source code information capture and various utilities | Published! Parts of it merged into C++17 |
| Concepts TS | Constrained templates | Merged into C++20 with some modifications |
| Parallelism TS v2 | Task blocks, library vector types and algorithms, and more | Approved for publication! |
| Transactional Memory TS | Transaction support | Published! Not headed towards C++20 |
| Concurrency TS v1 | future.then(), latches and barriers, atomic smart pointers | Published! Parts of it merged into C++20, more on the way |
| Executors | Abstraction for where/how code runs in a concurrent context | Final design being hashed out. Ship vehicle not decided yet. |
| Concurrency TS v2 | See below | Under development. Depends on Executors. |
| Networking TS | Sockets library based on Boost.ASIO | Published! |
| Ranges TS | Range-based algorithms and views | Published! Headed towards C++20 |
| Coroutines TS | Resumable functions, based on Microsoft’s await design | Published! C++20 merge uncertain |
| Modules v1 | A component system to supersede the textual header file inclusion model | Published as a TS |
| Modules v2 | Improvements to Modules v1, including a better transition path | Under active development |
| Numerics TS | Various numerical facilities | Under active development |
| Graphics TS | 2D drawing API | No consensus to move forward |
| Reflection TS | Static code reflection mechanisms | Sent out for PDTS ballot |
| Contracts | Preconditions, postconditions, and assertions | Merged into C++20 |

A few links in this blog post may not resolve until the committee’s post-meeting mailing is published (expected within a few days of June 25, 2018). If you encounter such a link, please check back in a few days.

Introduction

A couple of weeks ago I attended a meeting of the ISO C++ Standards Committee (also known as WG21) in Rapperswil, Switzerland. This was the second committee meeting in 2018; you can find my reports on preceding meetings here (March 2018, Jacksonville) and here (November 2017, Albuquerque), and earlier ones linked from those. These reports, particularly the Jacksonville one, provide useful context for this post.

At this meeting, the committee was focused full-steam on C++20, including advancing several significant features — such as Ranges, Modules, Coroutines, and Executors — for possible inclusion in C++20, with a secondary focus on in-flight Technical Specifications such as the Parallelism TS v2, and the Reflection TS.

C++20

C++20 continues to be under active development. A number of new changes have been voted into its Working Draft at this meeting, which I list here. For a list of changes voted in at previous meetings, see my Jacksonville report.

- Language:

- Support for contract-based programming in C++20. This adds language-level preconditions, postconditions, and assertions to C++. It’s a feature long in the making, and one of the most significant features added to C++20 to date.

- Class types in non-type template parameters. This is a long-desired change that significantly increases the expressiveness of the language for metaprogramming and reflection purposes.

- Allowing virtual function calls in constant expressions.

- Prohibit aggregates with user-declared constructors.

- Efficient sized deletion for variable-sized classes.

- Consistency improvements for <=> and other comparison operators.

- Conditionally explicit constructors, a.k.a. explicit(bool).

- Deprecate implicit capture of this via [=]. This deprecates the arguably misleading semantics of a default by-value capture implicitly capturing the this pointer (which amounts to capturing the enclosing object by reference). If such capture is desired, it can be expressed explicitly as [=, this]. (It’s worth noting that C++ also provides a syntax for capturing the enclosing object by value, [*this], available since C++17.)

- Integrating feature-test macros into the C++ working draft.

- A tweak to the rules about when certain errors related to a class being abstract are reported. This is also a Defect Report against previous versions of the standard. (Recall, this means that implementers are encouraged to adopt the new semantics even in previous standards-conformance modes, like -std=c++11.)

- A tweak to the treatment of padding bits during atomic compare-and-exchange operations.

- Tweaks to the __VA_OPT__ preprocessor feature.

- Updating the reference to the Unicode standard.

- Library:

- The most notable addition at this meeting was standard library Concepts. This rounds out Concepts, the language feature, by adding foundational Concepts — originally developed as part of the Ranges TS — to the standard library, and paving the way for higher-level concept-constrained libraries that make use of these foundational Concepts.

- atomic_ref

- Bit-casting object representations

- Standard library specification in a Concepts and Contracts world

- Checking for the existence of an element in associative containers

- Add shift() to <algorithm>

- Implicit conversion traits and utility functions

- Integral power-of-2 operations

- The identity metafunction

- Improving the return value of erase()-like algorithms

- constexpr comparison operators for std::array

- constexpr for swap and related functions

- fpos requirements

- Eradicating unnecessarily explicit default constructors

- Removing some facilities that were deprecated in C++17 or earlier

Technical Specifications

In addition to the C++ International Standard (IS), the committee publishes Technical Specifications (TS) which can be thought of as experimental “feature branches”, where provisional specifications for new language or library features are published and the C++ community is invited to try them out and provide feedback before final standardization.

At this meeting, the committee voted to publish the second version of the Parallelism TS, and to send out the Reflection TS for its PDTS (“Proposed Draft TS”) ballot. Several other TSes remain under development.

Parallelism TS v2

The Parallelism TS v2 was sent out for its PDTS ballot at the last meeting. As described in previous reports, this is a process where a draft specification is circulated to national standards bodies, who have an opportunity to provide feedback on it. The committee can then make revisions based on the feedback, prior to final publication.

The results of the PDTS ballot had arrived just in time for the beginning of this meeting, and the relevant subgroups (primarily the Concurrency Study Group) worked diligently during the meeting to go through the comments and address them. This led to the adoption of several changes into the TS working draft:

- Finding the right set of traits for simd

- concat() and split() on simd<> objects

- Other tweaks to SIMD

- Various other comment resolutions

The working draft, as modified by these changes, was then approved for publication!

Reflection TS

The Reflection TS, based on the reflexpr static reflection proposal, picked up one new feature, static reflection of functions, and was subsequently sent out for its PDTS ballot! I’m quite excited to see efficient progress on this (in my opinion) very important feature.

Meanwhile, the committee has also been planning ahead for the next generation of reflection and metaprogramming facilities for C++, which will be based on value-based constexpr programming rather than template metaprogramming, allowing users to reap expressiveness and compile-time performance gains. In the list of proposals reviewed by the Evolution Working Group (EWG) below, you’ll see quite a few of them are extensions related to constexpr; that’s largely motivated by this direction.
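
As a toy illustration of the difference in style (not of reflection itself), here is the same compile-time computation written both ways. Factorial and factorial are made-up names for this sketch; the first encodes the computation in template instantiations, the second is an ordinary loop evaluated by the compiler (loops in constexpr functions assume C++14 or later):

```cpp
// Template metaprogramming: each step is a separate class template instantiation.
template <unsigned N>
struct Factorial {
    static constexpr unsigned long long value = N * Factorial<N - 1>::value;
};

template <>
struct Factorial<0> {
    static constexpr unsigned long long value = 1;
};

// Value-based constexpr programming: a plain function with a loop,
// which the compiler evaluates when a constant is required.
constexpr unsigned long long factorial(unsigned n) {
    unsigned long long result = 1;
    for (unsigned i = 2; i <= n; ++i) {
        result *= i;
    }
    return result;
}

static_assert(Factorial<10>::value == factorial(10));
```

The constexpr version reads like ordinary code and avoids instantiating one class per step, which is the expressiveness and compile-time gain the paragraph above alludes to.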

Concurrency TS v2

The Concurrency TS v2 (no working draft yet), whose notable contents include revamped versions of async() and future::then(), among other things, continues to be blocked on Executors. Efforts at this meeting focused on moving Executors forward.

Library Fundamentals TS v3

The Library Fundamentals TS v3 is now “open for business” (has an initial working draft based on the portions of v2 that have not been merged into the IS yet), but no new proposals have been merged to it yet. I expect that to start happening in the coming meetings, as proposals targeting it progress through the Library groups.

Future Technical Specifications

There are (or were, in the case of the Graphics TS) some planned future Technical Specifications that don’t have an official project or working draft at this point:

Graphics

At the last meeting, the Graphics TS, set to contain 2D graphics primitives with an interface inspired by cairo, ran into some controversy. A number of people became convinced that, since this was something that professional graphics programmers / game developers were unlikely to use, the large amount of time that a detailed wording review would require was not a good use of committee time.

As a result of these concerns, an evening session was held at this meeting to decide the future of the proposal. A paper arguing we should stay the course was presented, as was an alternative proposal for a much lighter-weight “diet” graphics library. After extensive discussion, however, neither the current proposal nor the alternative had consensus to move forward.

As a result – while nothing is ever set in stone and the committee can always change its mind – the Graphics TS is abandoned for the time being.

(That said, I’ve heard rumours that the folks working on the proposal and its reference implementation plan to continue working on it all the same, just not with standardization as the end goal. Rather, they might continue iterating on the library with the goal of distributing it as a third-party library/package of some sort (possibly tying into the committee’s exploration of improving C++’s package management ecosystem).)

Executors

SG 1 (the Concurrency Study Group) achieved design consensus on a unified executors proposal (see the proposal and accompanying design paper) at the last meeting.

At this meeting, another executors proposal was brought forward, and SG 1 has been trying to reconcile it with / absorb it into the unified proposal.

As executors are blocking a number of dependent items, including the Concurrency TS v2 and merging the Networking TS, SG 1 hopes to progress them forward as soon as possible. Some members remain hopeful that it can be merged into C++20 directly, but going with the backup plan of publishing it as a TS is also a possibility (which is why I’m listing it here).

Merging Technical Specifications into C++20

Turning now to Technical Specifications that have already been published, but not yet merged into the IS, the C++ community is eager to see some of these merge into C++20, thereby officially standardizing the features they contain.

Ranges TS

The Ranges TS, which modernizes and Conceptifies significant parts of the standard library (the parts related to algorithms and iterators), has been making really good progress towards merging into C++20.

The first part of the TS, containing foundational Concepts that a large spectrum of future library proposals may want to make use of, has just been merged into the C++20 working draft at this meeting. The second part, the range-based algorithms and utilities themselves, is well on its way: the Library Evolution Working Group has finished ironing out how the range-based facilities will integrate with the existing facilities in the standard library, and forwarded the revised merge proposal for wording review.

Coroutines TS

The Coroutines TS was proposed for merger into C++20 at the last meeting, but ran into pushback from adopters who tried it out and had several concerns with it (which were subsequently responded to, with additional follow-up regarding optimization possibilities).

Said adopters were invited to bring forward a proposal for an alternative / modified design that addressed their concerns, no later than at this meeting, and so they did; their proposal is called Core Coroutines.

Core Coroutines was reviewed by the Evolution Working Group (I summarize the technical discussion below), which encouraged further iteration on this design, but also felt that such iteration should not hold up the proposal to merge the Coroutines TS into C++20. (What’s the point in iterating on one design if another is being merged into the IS draft, you ask? I believe the thinking was that further exploration of the Core Coroutines design could inspire some modifications to the Coroutines TS that could be merged at a later meeting, still before C++20’s publication.)

As a result, the merge of the Coroutines TS came to a plenary vote at the end of the week. However, it did not garner consensus; a significant minority of the committee at large felt that the Core Coroutines design deserved more exploration before enshrining the TS design into the standard. (At least, I assume that was the rationale of those voting against. Regrettably, due to procedural changes, there is very little discussion before plenary votes these days to shed light on why people have the positions they do.)

The window for merging a TS into C++20 remains open for approximately one more meeting. I expect the proponents of the Coroutines TS will try the merge again at the next meeting, while the authors of Core Coroutines will refine their design further. Hopefully, the additional time and refinement will allow us to make a better-informed final decision.

Networking TS

The Networking TS is in a situation where the technical content of the TS itself is in fairly good shape and ripe for merging into the IS, but its dependency on Executors makes a merger in the C++20 timeframe uncertain.

Ideas have been floated for coming up with a subset of Executors that would be sufficient for the Networking TS to be based on, and that could get agreement in time for C++20. Multiple proposals on this front are expected at the next meeting.

Modules

Modules is one of the most-anticipated new features in C++. While the Modules TS was published fairly recently, and thus merging it into C++20 is a rather ambitious timeline (especially since there are design changes relative to the TS that we know we want to make), there is a fairly widespread desire to get it into C++20 nonetheless.

I described in my last report that there was a potential path forward to accomplishing this, which involved merging a subset of a revised Modules design into C++20, with the rest of the revised design to follow (likely in the form of a Modules TS v2, and a subsequent merge into C++23).

The challenge with this plan is that we haven’t fully worked out the revised design yet, never mind agreed on a subset of it that’s safe for merging into C++20. (By safe I mean forwards-compatible with the complete design, since we don’t want breaking changes to a feature we put into the IS.)

There was extensive discussion of Modules in the Evolution Working Group, which I summarize below. The procedural outcome was that there was no consensus to move forward with the “subset” plan, but we are moving forward with the revised design at full speed, and some remain hopeful that the entire revised design (or perhaps a larger subset) can still be merged into C++20.

What’s happening with Concepts?

The Concepts TS was merged into the C++20 working draft previously, but excluding certain controversial parts (notably, abbreviated function templates (AFTs)).

As AFTs remain quite popular, the committee has been trying to find an alternative design for them that could get consensus for C++20. Several proposals were heard by EWG at the last meeting, and some refined ones at this meeting. I summarize their discussion below, but in brief, while there is general support for two possible approaches, there still isn’t final agreement on one direction.

The Role of Technical Specifications

We are now about 6 years into the committee’s procedural experiment of using Technical Specifications as a vehicle for gathering feedback based on implementation and use experience prior to standardization of significant features. Opinions differ on how successful this experiment has been so far, with some lauding the TS process as leading to higher-quality, better-baked features, while others feel the process has in some cases just added unnecessary delays.

The committee has recently formed a Direction Group, a small group composed of five senior committee members with extensive experience, which advises the Working Group chairs and the Convenor on matters related to priority and direction. One of the topics the Direction Group has been tasked with giving feedback on is the TS process, and there was an evening session at this meeting to relay and discuss this advice.

The Direction Group’s main piece of advice was that while the TS process is still appropriate for sufficiently large features, it’s not to be embarked on lightly; in each case, a specific set of topics / questions on which the committee would like feedback should be articulated, and success criteria for a TS “graduating” and being merged into the IS should be clearly specified at the outset.

Evolution Working Group

I’ll now write in a bit more detail about the technical discussions that took place in the Evolution Working Group, the subgroup that I sat in for the duration of the week.

Unless otherwise indicated, proposals discussed here are targeting C++20. I’ve categorized them into the usual “accepted”, “further work encouraged”, and “rejected” categories:

Accepted proposals:

- Standard library compatibility promises. EWG looked at this at the last meeting, and asked that it be revised to only list the types of changes the standard library reserves to make; a second list, of code patterns that should be avoided if you want a guarantee of future library updates not breaking your code, was to be removed as it follows from the first list. The revised version was approved and will be published as a Standing Document (pending a plenary vote).

- A couple of minor tweaks to the contracts proposal:

- In response to implementer feedback, the always checking level was removed, and the source location reported for precondition violations was made implementation-defined (previously, it had to be a source location in the function’s caller).

- Virtual functions currently require that overrides repeat the base function’s pre- and postconditions. We can run into trouble in cases where the base function’s pre- or postcondition, interpreted in the context of the derived class, has a different meaning (e.g. because the derived class shadows a base member’s name, or due to covariant return types). Such cases were made undefined behaviour, with the understanding that this is a placeholder for a more principled solution to come at a future meeting.

- try/catch blocks in constexpr functions. Throwing an exception is still not allowed during constant evaluation, but the try/catch construct itself can be present as long as only the non-throwing code paths are exercised at compile time.

- More constexpr containers. EWG previously approved basic support for using dynamic allocation during constant evaluation, with the intention of allowing containers like std::vector to be used in a constexpr context (which is now happening). This is an extension to that, which allows storage that was dynamically allocated at compile time to survive to runtime, in the form of a static (or automatic) storage duration variable.

- Allowing virtual destructors to be “trivial”. This lifts an unnecessary restriction that prevented some commonly used types like std::error_code from being used at compile time.

- Immediate functions. These are a stronger form of constexpr functions, spelt constexpr!, which not only can run at compile time, but have to. This is motivated by several use cases, one of them being value-based reflection, where you need to be able to write functions that manipulate information that only exists at compile time (like handles to compiler data structures used to implement reflection primitives).

- std::is_constant_evaluated(). This allows you to check whether a constexpr function is being invoked at compile time or at runtime. Again there are numerous use cases for this, but a notable one is related to allowing std::string to be used in a constexpr context. Most implementations of std::string use a “small string optimization” (SSO) where sufficiently small strings are stored inline in the string object rather than in a dynamically allocated block. Unfortunately, SSO cannot be used in a constexpr context because it requires using reinterpret_cast (and in any case, the motivation for SSO is runtime performance), so we need a way to make the SSO conditional on the string being created at runtime.

- Signed integers are two’s complement. This standardizes existing practice that has been the case for all modern C++ implementations for quite a while.

- Nested inline namespaces. In C++17, you can shorten namespace foo { namespace bar { namespace baz { to namespace foo::bar::baz {, but there is no way to shorten namespace foo { inline namespace bar { namespace baz {. This proposal allows writing namespace foo::inline bar::baz {. The single-name version, namespace inline foo {, is also valid, and equivalent to inline namespace foo {.

There were also a few that, after being accepted by EWG, were reviewed by CWG and merged into the C++20 working draft the same week, and thus I already mentioned them in the C++20 section above:

- Prohibit aggregate types with user-declared constructors. This addresses a confusing and unexpected hole in the language where some types that have deleted constructors can still be constructed (even using the same number / types of arguments as in the deleted constructor) via aggregate initialization. (An alternative, more targeted fix was considered but rejected.)

- Allowing virtual function calls in constant expressions. This lifts another restriction that’s unnecessary, since during constant evaluation, the compiler tracks the dynamic types of objects anyways.

- Integrating feature-test macros into the C++ working draft. This promotes feature-test macros (like __cpp_constexpr) from being listed in a Standing Document to being listed in the standard itself. My understanding is that this is the “stamp of approval” Microsoft has been waiting for to implement these macros in MSVC.
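
As a rough sketch of how such macros are consumed, the code below branches on the value of __cpp_constexpr, which grows (as a yyyymmL date) each time the feature is extended: 201304L for the relaxed C++14 rules and 201603L for C++17's constexpr lambdas. constexpr_generation and is_small are hypothetical names used only for illustration:

```cpp
// Report which generation of constexpr the compiler advertises.
constexpr int constexpr_generation() {
#if defined(__cpp_constexpr) && __cpp_constexpr >= 201603L
    return 17;  // constexpr lambdas available (C++17 value of the macro)
#elif defined(__cpp_constexpr) && __cpp_constexpr >= 201304L
    return 14;  // relaxed constexpr rules (C++14)
#elif defined(__cpp_constexpr)
    return 11;  // original C++11 constexpr
#else
    return 0;   // the compiler does not report this macro
#endif
}

#if defined(__cpp_if_constexpr)
// Only compiled when the compiler advertises if constexpr support.
template <typename T>
constexpr bool is_small() {
    if constexpr (sizeof(T) <= sizeof(void*)) {
        return true;
    } else {
        return false;
    }
}
#endif
```

Testing the macro's value rather than __cplusplus lets code pick up a feature as soon as a compiler implements it, even in an older language mode.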

Proposals for which further work is encouraged:

- Generalizing alias declarations. The idea here is to generalize C++’s alias declarations (using a = b;) so that you can alias not only types, but also other entities like namespaces or functions. EWG was generally favourable to the idea, but felt that aliases for different kinds of entities should use different syntaxes. (Among other considerations, using the same syntax would mean having to reinstate the recently-removed requirement to use typename in front of a dependent type in an alias declaration.) The author will explore alternative syntaxes for non-type aliases and return with a revised proposal.

- Allow initializing aggregates from a parenthesized list of values. This idea was discussed at the last meeting and EWG was in favour, but people got distracted by the quasi-related topic of aggregates with deleted constructors. There was a suggestion that perhaps the two problems could be addressed by the same proposal, but in fact the issue of deleted constructors inspired independent proposals, and this proposal returned more or less unchanged. EWG liked the idea and initially approved it, but during Core Working Group review it came to light that there are a number of subtle differences in behaviour between constructor initialization and aggregate initialization (e.g. evaluation order of arguments, lifetime extension, narrowing conversions) that need to be addressed. The suggested guidance was to have the behaviour with parentheses match the behaviour of constructor calls, by having the compiler (notionally) synthesize a constructor to call when this notation is used. The proposal will return with these details fleshed out.

- Extensions to class template argument deduction. This paper proposed seven different extensions to this popular C++17 feature. EWG didn’t make individual decisions on them yet. Rather, the general guidance was to motivate the extensions a bit better, choose a subset of the more important ones to pursue for C++20, perhaps gather some implementation experience, and come back with a revised proposal.

- Deducing this. The type of the implicit object parameter (the “this” parameter) of a member function can vary in the same ways as the types of other parameters: lvalue vs. rvalue, const vs. non-const. C++ provides ways to overload member functions to capture this variation (trailing const, ref-qualifiers), but sometimes it would be more convenient to just template over the type of the this parameter. This proposal aims to allow that, with a syntax like this:

template <typename Self>
R foo(this Self&& self, /* other parameters */);

EWG agreed with the motivation, but expressed a preference for keeping information related to the implicit object parameter at the end of the function declaration (where the trailing const and ref-qualifiers are now), leading to a syntax more like this:

template <typename Self>
R foo(/* other parameters */) Self&& self

(the exact syntax remains to be nailed down as the end of a function declaration is a syntactically busy area, and parsing issues have to be worked out).

EWG also opined that in such a function, you should only be able to access the object via the declared object parameter (self in the above example), and not also using this (as that would lead to confusion in cases where e.g. this has the base type while self has a derived type).

- constexpr function parameters. The most ambitious constexpr-related proposal brought forward at this meeting, this aimed to allow function parameters to be marked as constexpr, and accordingly act as constant expressions inside the function body (e.g. it would be valid to use the value of one as a non-type template parameter or array bound). It was quickly pointed out that, while the proposal is implementable, it doesn’t fit into the language’s current model of constant evaluation; rather, functions with constexpr parameters would have to be implemented as templates, with a different instantiation for every combination of parameter values. Since this amounts to being a syntactic shorthand for non-type template parameters, EWG suggested that the proposal be reformulated in those terms.

- Binding returned/initialized objects to the lifetime of parameters. This proposal aims to improve C++’s lifetime safety (and perhaps take one step towards being more like Rust, though that’s a long road) by allowing programmers to mark function parameters with an annotation that tells the compiler that the lifetime of the function’s return value should be “bound” to the lifetime of the parameter (that is, the return value should not outlive the parameter).

There are several options for the associated semantics if the compiler detects that the lifetime of a return value would, in fact, exceed the lifetime of a parameter:

- issue a warning

- issue an error

- extend the lifetime of the returned object

In the first case, the annotation could take the form of an attribute (e.g. [[lifetimebound]]). In the second or third case, it would have to be something else, like a context-sensitive keyword (since attributes aren’t supposed to have semantic effects). The proposal authors suggested initially going with the first option in the C++20 timeframe, while leaving the door open for the second or third option later on.

EWG agreed that mitigating lifetime hazards is an important area of focus, and something we’d like to deliver on in the C++20 timeframe. There was some concern about the proposed annotation being too noisy / viral. People asked whether the annotations could be deduced (not if the function is compiled separately, unless we rely on link-time processing), or if we could just lifetime-extend by default (not without causing undue memory pressure and risking resource exhaustion and deadlocks by not releasing expensive resources or locks in time). The authors will investigate the problem space further, including exploring ways to avoid the attribute being viral, and comparing their approach to Rust’s, and report back.

- Nameless parameters and unutterable specializations. In some corner cases, the current language rules do not give you a way to express a partial or explicit specialization of a constrained template (because a specialization requires repeating the constraint with the specialized parameter values substituted in, which does not always result in valid syntax). This proposal invents some syntax to allow expressing such specializations. EWG felt the proposed syntax was scary, and suggested coming back with better motivating examples before pursuing the idea further.

- How to catch an exception_ptr without even trying. This aims to allow getting at the exception inside an exception_ptr without having to throw it (which is expensive). As a side effect, it would also allow handling exception_ptrs in code compiled with -fno-exceptions. EWG felt the idea had merit, even though performance shouldn’t be the guiding principle (since the slowness of throw is technically a quality-of-implementation issue, although implementations seem to have agreed to not optimize it).

- Allowing class template specializations in associated namespaces. This allows specializing e.g. std::hash for your own type, in your type’s namespace, instead of having to close that namespace, open namespace std, and then reopen your namespace. EWG liked the idea, but the issue of which names (names in your namespace, names in std, or both) would be visible without qualification inside the specialization was contentious.
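
To make the deducing this motivation concrete, here is the overload set it aims to collapse into a single template. Holder is a hypothetical type, and the sketch deliberately uses only today’s trailing const and ref-qualifiers, not the proposed syntax, which was still being worked out:

```cpp
#include <cassert>
#include <string>
#include <type_traits>
#include <utility>

// Hypothetical Holder: forwarding the constness and value category of *this
// currently takes one overload per combination.
class Holder {
public:
    explicit Holder(std::string s) : value_(std::move(s)) {}

    std::string&        get() &       { return value_; }
    const std::string&  get() const&  { return value_; }
    std::string&&       get() &&      { return std::move(value_); }
    const std::string&& get() const&& { return std::move(value_); }

private:
    std::string value_;
};

void demo() {
    Holder h{"payload"};
    const Holder& ch = h;
    static_assert(std::is_same_v<decltype(h.get()), std::string&>);
    static_assert(std::is_same_v<decltype(ch.get()), const std::string&>);
    static_assert(std::is_same_v<decltype(std::move(h).get()), std::string&&>);
    static_assert(std::is_same_v<decltype(std::move(ch).get()), const std::string&&>);

    std::string taken = std::move(h).get();  // the && overload allows moving out
    assert(taken == "payload");
}
```

The four bodies differ only in how they forward value_; templating over the type of the object parameter would let one body handle every case.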

Rejected proposals:

- Define basic_string_view(nullptr). This paper argued that since it’s common to represent empty strings as a const char* with value nullptr, the constructor of string_view which takes a const char* argument should allow a nullptr value and interpret it as an empty string. Another paper convincingly argued that conflating “a zero-sized string” with “not-a-string” does more harm than good, and this proposal was accordingly rejected.

- Explicit concept expressions. This paper pointed out that if constrained-type-specifiers (the language machinery underlying abbreviated function templates) are added to C++ without some extra per-parameter syntax, certain constructs can become ambiguous (see the paper for an example). The ambiguity involves “concept expressions”, that is, the use of a concept (applied to some arguments) as a boolean expression, such as CopyConstructible<T>, outside of a requires-clause. The authors proposed removing the ambiguity by requiring the keyword requires to introduce a concept expression, as in requires CopyConstructible<T>. EWG felt this was too much syntactic clutter, given that concept expressions are expected to be used in places like static_assert and if constexpr, and given that the ambiguity is, at this point, hypothetical (pending what happens to AFTs) and there would be options to resolve it if necessary.

Concepts

EWG had another evening session on Concepts at this meeting, to try to resolve the matter of abbreviated function templates (AFTs).

Recall that the main issue here is that, given an AFT written using the Concepts TS syntax, like void sort(Sortable& s);, it’s not clear that this is a template (you need to know that Sortable is a concept, not a type).

The four different proposals in play at the last meeting have been whittled down to two:

- An updated version of Herb’s in-place syntax proposal, with which the above AFT would be written void sort(Sortable{}& s); or void sort(Sortable{S}& s); (with S in the second form naming the concrete type deduced for this parameter). The proposal also aims to change the constrained-parameter syntax (with which the same function could be written template <Sortable S> void sort(S& s);) to require braces for type parameters, so that you’d instead write template <Sortable{S}> void sort(S& s);. (The motivation for this latter change is to make it so that ConceptName C consistently makes C a value, whether it be a function parameter or a non-type template parameter, while ConceptName{C} consistently makes C a type.)

- Bjarne’s minimal solution to the concepts syntax problems, which adds a single leading template keyword to announce that an AFT is a template: template void sort(Sortable& s);. (This is visually ambiguous with one of the explicit specialization syntaxes, but the compiler can disambiguate based on name lookup, and programmers can use the other explicit specialization syntax to avoid visual confusion.) This proposal leaves the constrained-parameter syntax alone.

Both proposals allow a reader to tell at a glance that an AFT is a template and not a regular function. At the same time, each proposal has downsides. Bjarne’s approach annotates the whole function rather than individual parameters, so in a function with multiple parameters you still don’t know at a glance which parameters are constrained by concepts (so, for example, given a Foo&& parameter, you don’t know whether it’s an rvalue reference or a forwarding reference). Herb’s proposal messes with the well-loved constrained-parameter syntax.

After an extensive discussion, it turned out that both proposals had enough support to pass, with each retaining a vocal minority of opponents. Neither proposal was progressed at this time, in the hope that some further analysis or convergence can lead to a stronger consensus at the next meeting, but it’s quite clear that folks want something to be done in this space for C++20, and so I’m fairly optimistic we’ll end up getting one of these solutions (or a compromise / variation).

In addition to the evening session on AFTs, EWG looked at a proposal to alter the way name lookup works inside constrained templates. The original motivation for this was to resolve the AFT impasse by making name lookup inside AFTs work more like name lookup inside non-template functions. However, it became apparent that (1) that alone will not resolve the AFT issue, since name lookup is just one of several differences between template and non-template code; but (2) the suggested modification to name lookup rules may be desirable anyway (not just in AFTs but in all constrained templates). The main idea behind the new rules is that when performing name lookup for a function call that has a constrained type as an argument, only functions that appear in the concept definition should be found; the motivation is to avoid surprising extra results that might creep in through ADL. EWG was supportive of making a change along these lines for C++20, but some of the details still need to be worked out; among them, whether constraints should be propagated through auto variables and into nested templates for the purpose of applying this rule.

Coroutines

As mentioned above, EWG reviewed a modified Coroutines design called Core Coroutines, which was inspired by various concerns that some early adopters of the Coroutines TS had with its design.

Core Coroutines makes a number of changes to the Coroutines TS design:

- The most significant change, in my opinion, is that it exposes the “coroutine frame” (the piece of memory that stores the compiler’s transformed representation of the coroutine function, where e.g. stack variables that persist across a suspension point are stored) as a first-class object, thereby allowing the user to control where this memory is stored (and, importantly, whether or not it is dynamically allocated).

- Syntax changes:

- To how you define a coroutine. Among other motivations, the changes emphasize that parameters to the coroutine act more like lambda captures than regular function parameters (e.g. for reference parameters, you need to be careful that the referred-to objects persist even after a suspension/resumption).

- To how you call a coroutine. The new syntax is an operator (the initial proposal being [<-]), to reflect that coroutines can be used for a variety of purposes, not just asynchrony (which is what co_await suggests).

- A more compact API for defining your own coroutine types, with fewer library customization points (basically, instead of specializing numerous library traits that are invoked by compiler-generated code, you overload operator [<-] for your type, with more of the logic going into the definition of that function).

EWG recognized the benefits of these modifications, although there were a variety of opinions as to how compelling they are. At the same time, there were also a few concerns with Core Coroutines:

- While having the coroutine frame exposed as a first-class object means you are guaranteed no dynamic memory allocations unless you place it on the heap yourself, it still has a compiler-generated type (much like a lambda closure), so passing it across a translation unit boundary requires type erasure (and therefore a dynamic allocation). With the Coroutines TS, the type erasure was more under the compiler’s control, and it was argued that this allows eliding the allocation in more cases.

- There were concerns about being able to take the sizeof of the coroutine object, as that requires the size to be known by the compiler’s front-end, while with the Coroutines TS it’s sufficient for the size to be computed during the optimization phase.

- While making the customization API smaller, this formulation relies on more new core-language features. In addition to introducing a new overloadable operator, the feature requires tail calls (which could also be useful for the language in general), and lazy function parameters, which have been proposed separately. (The latter is not a hard requirement, but the syntax would be more verbose without them.)

As mentioned, the procedural outcome of the discussion was to encourage further work on Core Coroutines, while not blocking the merger of the Coroutines TS into C++20 on such work.

While in the end there was no consensus to merge the Coroutines TS into C++20 at this meeting, there remains fairly strong demand for having coroutines in some form in C++20, and I am therefore hopeful that some sort of joint proposal that combines elements of Core Coroutines into the Coroutines TS will surface at the next meeting.

Modules

As of the last meeting, there were two alternative Modules designs before the committee: the recently-published Modules TS, and the alternative proposal from the Clang Modules implementers called Another Take On Modules (“Atom”).

Since the last meeting, the authors of the two proposals have been collaborating to produce a merged proposal that combines elements from both proposals.

The merged proposal accomplishes Atom’s goal of providing a better mechanism for existing codebases to transition to Modules via modularized legacy headers (called legacy header imports in the merged proposal) – basically, existing headers that are not modules, but are treated as if they were modules by the compiler. It retains the Modules TS mechanism of global module fragments, with some important restrictions, such as only allowing #includes and other preprocessor directives in the global module fragment.

Other aspects of Atom that are part of the merged proposal include module partitions (a way of breaking up the interface of a module into multiple files), and some changes to export and template instantiation semantics.

EWG reviewed the merged proposal favourably, with a strong consensus for putting these changes into a second iteration of the Modules TS. Design guidance was provided on a few aspects, including tweaks to export behaviour for namespaces, and making export be “inherited”, such that e.g. if the declaration of a structure is exported, then its definition is too by default. (A follow-up proposal is expected for a syntax to explicitly make a structure definition not exported without having to move it into another module partition.) A proposal to make the lexing rules for the names of legacy header units be different from the existing rules for #includes failed to gain consensus.

One notable remaining point of contention about the merged proposal is that module is a hard keyword in it, thereby breaking existing code that uses that word as an identifier. There remains widespread concern about this in multiple user communities, including the graphics community where the name “module” is used in existing published specifications (such as Vulkan). These concerns would be addressed if module were made a context-sensitive keyword instead. There was a proposal to do so at the last meeting, which failed to gain consensus (I suspect because the author focused on various disambiguation edge cases, which scared some EWG members). I expect a fresh proposal will prompt EWG to reconsider this choice at the next meeting.

As mentioned above, there was also a suggestion to take a subset of the merged proposal and put it directly into C++20. The subset included neither legacy header imports nor global module fragments (in any useful form), thereby not providing any meaningful transition mechanism for existing codebases, but it was hoped that it would still be well-received and useful for new codebases. However, there was no consensus to proceed with this subset, because it would have meant having a new set of semantics different from anything that’s implemented today, and that was deemed to be risky.

It’s important to underscore that not proceeding with the “subset” approach does not necessarily mean the committee has given up on having any form of Modules in C++20 (although the chances of that have probably decreased). There remains some hope that the development of the merged proposal might proceed sufficiently quickly that the entire proposal — or at least a larger subset that includes a transition mechanism like legacy header imports — can make it into C++20.

Finally, EWG briefly heard from the authors of a proposal for modular macros, who basically said they are withdrawing their proposal because they are satisfied with Atom’s facility for selectively exporting macros via #export directives, which is being treated as a future extension to the merged proposal.

Papers not discussed

With the continued focus on large proposals that might target C++20 like Modules and Coroutines, EWG has a growing backlog of smaller proposals that haven’t been discussed, in some cases stretching back to two meetings ago (see the committee mailings for a list). A notable item on the backlog is a proposal by Herb Sutter to bridge the two worlds of C++ users, those who use exceptions and those who don’t, by extending the exception model in a way that (hopefully) makes it palatable to everyone.

Other Working Groups

Library Groups

Having sat in EWG all week, I can’t report on technical discussions of library proposals, but I’ll mention where some proposals are in the processing queue.

I’ve already listed the library proposals that passed wording review and were voted into the C++20 working draft above.

The following are among the proposals that have passed design review and are undergoing (or awaiting) wording review:

- Notably, the merge of the Ranges TS into C++20. This includes various proposed enhancements:

- Making std::vector constexpr. (This is the sort of thing that language enhancements to allow dynamic allocation in a constexpr context were meant to unblock.)

- constexpr in std::pointer_traits

- Tightening constraints on std::function

- Well-behaved interpolation for numbers and pointers

- Utility function to implement uses-allocator construction

- Allocator-aware basic_stringbuf

- decay_unwrap and unwrap_reference

- Vectorization policies (taken from the Parallelism TS v2)

- Utility enhancements for std::span

- function_ref: a non-owning reference to a Callable

- A coroutine task type

The following proposals are still undergoing design review, and are being treated with priority:

- Text formatting

- A stack trace library

- Generic none() factories for Nullable types

- Monadic operations for std::optional

The following proposals are also undergoing design review:

- Executors

- A friendlier tuple get()

- Fractional numeric type

- std::embed, which provides a mechanism to access program-external resources at compile time

- Fixing the partial_order comparison algorithm

- Fixed-point real numbers

- User-defined literals for std::filesystem::path

- split()/join() for string and string_view

- A call for a data persistence (“iostream v2”) study group. It looks like there is sufficient interest to proceed with creating a Study Group here.

- Sizes should only span unsigned. This is likely to prompt an evening session at the next meeting to have a more general discussion about the use of signed vs. unsigned types in library interfaces.

- Should span be regular?

- Safe integral comparisons

- Zero-overhead deterministic exceptions: throwing values. Feedback on this in the Library Evolution Working Group has been positive.

As usual, there is a fairly long queue of library proposals that haven’t started design review yet. See the committee’s website for a full list of proposals.

(These lists are incomplete; see the post-meeting mailing when it’s published for complete lists.)

Study Groups

SG 1 (Concurrency)

I’ve already talked about some of the Concurrency Study Group’s work above, related to the Parallelism TS v2, and Executors.

The group has also reviewed some proposals targeting C++20. These are at various stages of the review pipeline:

Proposals before the Library Evolution Working Group include latches and barriers, C atomics in C++, and a joining thread.

Proposals before the Library Working Group include improvements to atomic_flag, efficient concurrent waiting, and fixing atomic initialization.