Добавить любой RSS - источник (включая журнал LiveJournal) в свою ленту друзей вы можете на странице синдикации.

Исходная информация - http://planet.mozilla.org/.

Данный дневник сформирован из открытого RSS-источника по адресу http://planet.mozilla.org/rss20.xml, и дополняется в соответствии с дополнением данного источника. Он может не соответствовать содержимому оригинальной страницы. Трансляция создана автоматически по запросу читателей этой RSS ленты.

По всем вопросам о работе данного сервиса обращаться со страницы контактной информации.

[Обновить трансляцию]

Karl Dubost: Dyslexia, Typo and Web Compatibility |

Because we type code. We do mistakes. Today by chance my fingers typed viewpoet instead of viewport. It made me smile right away and I had to find out if I was the only one who did that typo but in actual code. So I started to search for broken code.

-

Example:

this.$element.on("transitoinEnd webkitTransitionEnd", function() { -

Example:

background: linear-gradeint($direction, $color-stops);

Slip of mind, dyslexia, keys close to each other, many reasons to do beautiful typos. As Descartes was saying:

I do typos therefore I am.

Otsukare!

|

|

Chris McDonald: Started Writing a Game |

I started writing a game! Here’s the origin:

commit 54dbc81f294b612f7133c8a3a0b68d164fd0322c Author: Wraithan (Chris McDonald) <xwraithanx@gmail.com> Date: Mon Sep 5 17:11:14 2016 initial commit

Ok, now that we’re past that, lets talk about how I got there. I’ve been a gamer all my life, a big portion of the reason I went into software development was to work on video games. I’ve started my own games a couple times around when I as 18. Then every couple years I dabble in rendering Final Fantasy Tactics style battle map.

I’ve also played in a bunch of AI competitions over the years. I love writing programs to play games. Also, I recently started working at Sparkypants, a game studio, on Dropzone a modern take on RTS. I develop a bunch of the supporting services in the game our matchmaking and such.

Another desire that has lead me here is the desire to complete something. I have many started projects but rarely get them to a release quality. I think it is time I make my own game, and the games I’ve enjoyed the most have been management and simulation games. I’ll be drawing from a lot of games in that genre. Prison Architect, Rimworld, FortressCraft Evolved, Factorio, and many more. I want to try implementing lots of the features as experiments to decide what I want to dig further into.

The game isn’t open source currently, maybe eventually. I’m building the game mostly from scratch using rust and the glium abstraction over OpenGl/glutin. I’m going to try to talk about portions of the development on my blog. This may be simple things like new stuff I learn about Rust. Celebrating achievements as I feel proud of myself. And whatever else comes up as I go. If you want to discuss stuff I prefer twitter over comments, but comments are there if you would rather.

So far I’ve built a very simple deterministic map generator. I had to make sure the map got it’s own seed so it could decide when to pull numbers out and ensure consistency. It currently requires the map to be generated in a single pass. I plan to change to more of a procedural generator so I can expand the map as needed during game play.

I started to build a way to visualize the map beyond an ascii representation. I knew I wanted to be on OpenGL so I support all the operating systems I work/play in. In Rust the best OpenGL abstraction I could find was glium which is pretty awesome. It includes glutin so I get window creation and interaction as part of it, without having to stitch it together myself.

This post is getting a bit long. I think I’ll wrap it up here. In the near future (maybe later tonight?) I’ll have another post with my thoughts on rendering tiles, coordinate systems, panning and zooming, etc. I’m just a beginner at all of this so I’m very open to ideas and criticisms, but please also be respectful.

|

|

Chris McDonald: Assigning Blocks in Rust |

So, I’m hanging out after an amazing day at RustConf working on a side project. I’m trying to practice a more “just experiment” attitude while playing with some code. This means not breaking things out into functions or other abstractions unless I have to. One thing that made this uncomfortable was when I’d accidentally shadow a variable or have a hard time seeing logical chunks. Usually a chunk of the code could be distilled into a single return value so a function would make sense, but it also makes my code less flexible during this discovery phase.

While reading some code I noticed a language feature I’d never noticed before. I knew about using braces to create scopes. And from that, I’d occasionally declare a variable above a scope, then use the scope to assign to it while keeping the code to create the value separated. Other languages have this concept so I knew it from there. Here is an example:

fn main() {

let a = 2;

let val;

{

let a = 1;

let b = 3;

val = a + b;

} // local a and local b are dropped here

println!("a: {}, val: {}", val); // a: 2, val: 4

}

But this feature I noticed let me do something to declare my intent much more exactly:

fn main() {

let a = 2;

let val = {

let a = 1;

let b = 3;

a + b

} // local a and local b are dropped here

println!("a: {}, val: {}", val); // a: 2, val: 4

}

A scope can have a return value, so you just make the last expression return by omitting the semicolon and you can assign it out. Now I have something that I didn’t have to think about the signature of but separates some logic and namespace out. Then later once I’m done with discovery and ready to abstract a little bit to make things like error handling, testing, or profiling easier I have blocks that may be combined with a couple related blocks into a function.

|

|

Cameron Kaiser: Ars Technica's notes from the OS 9 underground |

Naturally much of my E-mail interview with him could not be used in the article (I expected that) and I think he's done a fabulous job balancing those various parts of the OS 9 retrocomputing community. Still, there are some things I'd like to see entered into posterity publicly from that interview and with his permission I'm posting that exchange here.

Before doing so, though, just a note to Classilla users. I do have some work done on a 9.3.4 which fixes some JavaScript bugs, has additional stelae for some other site-specific workarounds and (controversially, because this will impact performance) automatically fixes screen update problems with many sites using CSS overflow. (They still don't layout properly, but they will at least scroll mostly correctly.) I will try to push that out as a means of keeping the fossil fed. TenFourFox remains my highest priority because it's the browser I personally dogfood 90% of the time, but I haven't forgotten my roots.

The interview follows. Please pardon the hand-conversion to HTML; I wrote this in plain text originally, as you would expect no less from me. This was originally written in January 2016, and, for the record, on a 1GHz iMac G4.

***

Q. What's your motivation for working on Classilla (and TenFourFox, but I'm mostly interested in Classilla for this piece)?A. One of the worst things that dooms otherwise functional hardware to apparent obsolescence is when "they can't get on the Internet." That's complete baloney, of course, since they're just as capable of opening a TCP socket and sending and receiving data now as they were then (things like IPv6 on OS 9 notwithstanding, of course). Resources like Gopherspace (a topic for another day) and older websites still work fine on old Macs, even ones based on the Motorola 680x0 series.

So, realistically, the problem isn't "the Internet" per se; some people just want to use modern websites on old hardware. I really intensely dislike the idea that the ability to run Facebook is the sole determining factor of whether a computer is obsolete to some people, but that's the world we live in now. That said, it's almost uniformly a software issue. I don't see there being any real issues as far as hardware capability, because lots of people dig out their old P3 and P4 systems and run browsers on them for light tasks, and older G4 and G3 systems (and even arguably some 603 and 604s) are more than comparable.

Since there are lots of x86 systems, there are lots of people who want to do this, and some clueful people who can still get it to work (especially since operating system and toolchain support is still easy to come by). This doesn't really exist for architectures out of the mainstream like PowerPC, let alone for a now almost byzantine operating system like Mac OS 9, but I have enough technical knowledge and I'm certifiably insane and by dumb luck I got it to work. I like these computers and I like the classic Mac OS, and I want them to keep working and be "useful." Ergo, Classilla.

TenFourFox is a little more complicated, but the same reason generally applies. It's a bit more pointed there because my Quad G5 really is still my daily driver, so I have a lot invested in keeping it functional. I'll discuss this more in detail at the end.

Q. How many people use Classilla?

A. Hard to say exactly since unlike TenFourFox there is no automatic checkin mechanism. Going from manual checkins and a couple back-of-the-napkin computations from download counts, I estimate probably a few thousand. There's no way to know how many of those people use it exclusively, though, which I suspect is a much smaller minority.

Compare this with TenFourFox, which has much more reliable numbers; the figure, which actually has been slowly growing since there are no other good choices for 10.4 and less and less for 10.5, has been a steady 25,000+ users with about 8,000 checkins occurring on a daily basis. That 30% or so are almost certainly daily drivers.

Q. Has it been much of a challenge to build a modern web browser for OS 9? The problems stem from more than just a lack of memory and processing speed, right? What are there deeper issues that you've had to contend with?

A. Classilla hails as a direct descendant of the old Mozilla Suite (along with SeaMonkey/Iceweasel, it's the only direct descendant still updated in any meaningful sense), so the limitations mostly come from its provenance. I don't think anyone who worked on the Mac OS 9 compatible Mozilla will dispute the build system is an impressive example of barely controlled disaster. It's actually an MacPerl script that sends AppleEvents to CodeWarrior and MPW Toolserver to get things done (primarily the former, but one particularly problematic section of the build requires the latter), and as an example of its fragility, if I switch the KVM while it's trying to build stubs, it hangs up and I usually have to restart the build. There's a lot of hacking to make it behave and I rarely run the builder completely from the beginning unless I'm testing something. The build system is so intimidating few people have been able to replicate it on their computers, which has deterred all but the most motivated (or masochistic) contributors. That was a problem for Mozilla too back in the day, I might add, and they were only too glad to dump OS 9 and move to OS X with Mozilla 1.3.

Second, there's no Makefiles, only CodeWarrior project files (previously it actually generated them on the fly from XML templates, but I put a stop to that since it was just as iffy and no longer necessary). Porting code en masse usually requires much more manual work for that reason, like adding new files to targets by hand and so on, such as when I try to import newer versions of libpng or pieces of code from NSS. This is a big reason why I've never even tried to take entire chunks of code like layout/ and content/ even from later versions of the Suite; trying to get all the source files set up for compilation in CodeWarrior would be a huge mess, and wouldn't buy me much more than what it supports now. With the piecemeal hacks in core, it's already nearly to Mozilla 1.7-level as it is (Suite ended with 1.7.13).

Third is operating system support. Mozilla helpfully swallows up much of the ugly guts in the Netscape Portable Runtime, and NSPR is extremely portable, a testament to its good design. But that doesn't mean there weren't bugs and Mac OS 9 is really bad at anything that requires multithreading or multiprocessing, so some of these bugs (like a notorious race condition in socket handling where the socket state can change while the CPU is busy with something else and miss it) are really hard to fix properly. Especially for Open Transport networking, where complex things are sometimes easy but simple things are always hard, some folks (including Mozilla) adopted abstraction layers like GUSI and then put NSPR on top of the abstraction layer, meaning bugs could be at any level or even through subtleties of their interplay.

Last of all is the toolchain. CodeWarrior is pretty damn good as a C++ compiler and my hat is off to Metrowerks for the job they did. It had a very forward-thinking feature set for the time, including just about all of C++03 and even some things that later made it into C++11. It's definitely better than MPW C++ was and light-years ahead of the execrable classic Mac gcc port, although admittedly rms' heart was never in it. Plus, it has an outstanding IDE even by modern standards and the exceptional integrated debugger has saved my pasty white butt more times than I care to admit. (Debugging in Macsbug is much like walking in a minefield on a foggy morning with bare feet: you can't see much, it's too easy to lose your footing and you'll blow up everything to smithereens if you do.) So that's all good news and has made a lot of code much more forward-portable than I could ever have hoped for, but nothing's ever going to be upgraded and no bugs will ever be fixed. We can't even fix them ourselves, since it's closed source. And because it isn't C++11 compliant, we can forget about pulling in substantially more recent versions of the JavaScript interpreter or realistically anything else much past Gecko 2.

Some of the efficiencies possible with later code aren't needed by Classilla to render the page, but they certainly do make it slower. OS 9 is very quick on later hardware and I do my development work on an Power Mac G4 MDD with a Sonnet dual 1.8GHz 7447A upgrade card, so it screams. But that's still not enough to get layout to complete on some sites in a timely fashion even if Classilla eventually is able to do it, and we've got no JIT at all in Classilla.

Mind you, I find these challenges stimulating. I like the feeling of getting something to do tasks it wasn't originally designed to do, sort of like a utilitarian form of the demoscene. Constraints like these require a lot of work and may make certain things impossible, so it requires a certain amount of willingness to be innovative and sometimes do things that might be otherwise unacceptable in the name of keeping the port alive. Making the browser into a series of patches upon patches is surely asking for trouble, but there's something liberating about that level of desperation, anything from amazingly bad hacks to simply accepting a certain level of wrong behaviour in one module because it fixes something else in another to ruthlessly saying some things just won't be supported, so there.

Q. Do you get much feedback from people about the browser? What sorts of things do they say? Do you hear from the hold-outs who try to do all of their computing on OS 9 machines?

A. I do get some. Forcing Classilla to preferring mobile sites actually dramatically improved its functionality, at least for some period of time until sites starting assuming everyone was on some sufficiently recent version of iOS or Android. That wasn't a unanimously popular decision, but it worked pretty well, at least for the time. I even ate my own dogfood and took nothing but an OS 9 laptop with me on the road periodically (one time I took it to Leo Laporte's show back in the old studio, much to his amazement). It was enough for E-mail, some basic Google Maps and a bit of social media.

Nowadays I think people are reasonable about their expectations. The site doesn't have to look right or support more than basic functionality, but they'd like it to do at least that. I get occasional reports from one user who for reasons of his particular disability cannot use OS X, and so Classilla is pretty much his only means of accessing the Web. Other people don't use it habitually, but have some Mac somewhere that does some specific purpose that only works in OS 9, and they'd like a browser there for accessing files or internal sites while they work. Overall, I'd say the response is generally positive that there's something that gives them someimprovement, and that's heartening. Open source ingrates are the worst.

The chief problem is that there's only one of me, and I'm scrambling to get TenFourFox 45 working thanks to the never-ending Mozilla rapid release treadmill, so Classilla only gets bits and pieces of my time these days. That depresses me, since I enjoy the challenge of working on it.

Q. What's your personal take on the OS 9 web browsing experience?

A. It's ... doable, if you're willing to be tolerant of the appearance of pages and use a combination of solutions. There are some other browsers that can service this purpose in a limited sense. For example, the previous version of iCab on classic Mac is Acid2 compliant, so a lot of sites look better, but its InScript JavaScript interpreter is glacial and its DOM support is overall worse than Classilla's. Internet Explorer 5.1 (and the 5.5 beta, if you can find it) is very fast on those sites it works on, assuming you can find any. At least when it crashes, it does that fast too! Sometimes you can even get Netscape 4.8 to be happy with them or at least the visual issues look better when you don't even try to render CSS. Most times they won't look right, but you can see what's going on, like using Lynx.

Unfortunately, none of those browsers have up-to-date certificate stores or ciphers and some sites can only be accessed in Classilla for that reason, so any layout or speed advantages they have are negated. Classilla has some other tricks to help on those sites it cannot natively render well itself. You can try turning off the CSS entirely; you could try juggling the user agent. If you have some knowledge of JavaScript, you can tell Classilla's Byblos rewrite module to drop or rewrite problematic portions of the page with little snippets called stelae, basically a super-low-level Greasemonkey that works at the HTML and CSS level (a number of default ones are now shipped as standard portions of the browser).

Things that don't work at all generally require DOM features Classilla does not (yet) expose, or aspects of JavaScript it doesn't understand (I backported Firefox 3's JavaScript to it, but that just gives you the syntax, not necessarily everything else). This aspect is much harder to deal with, though some inventive users have done it with limited success on certain sites.

You can cheat, of course. I have Virtual PC 6 on my OS 9 systems, and it is possible (with some fiddling in lilo) to get it to boot some LiveCDs successfully -- older versions of Knoppix, for example, can usually be coaxed to start up and run Firefox and that actually works. Windows XP, for what that's worth, works fine too (I would be surprised if Vista or anything subsequent does, however). The downside to this is the overhead is a killer on laptops and consumes lots of CPU time, and Linux has zero host integration, but if you're able to stand it, you can get away with it. I reserved this for only problematic sites that I had to access, however, because it would bring my 867MHz TiBook to its knees. The MDD puts up with this a lot better but it's still not snappy.

If all this sounds like a lot of work, it is. But at least that makes it possible to get the majority of Web sites functional to some level in OS 9 (and in Classilla), at least one way or another, depending on how you define "functional." To that end I've started focusing now on getting specific sites to work to some personally acceptable level rather than abstract wide-ranging changes in the codebase. If I can't make the core render it correctly, I'll make some exceptions for it with a stele and ship that with the browser. And this helps, but it's necessarily centric to what I myself do with my Mac OS 9 machines, so it might not help you.

Overall, you should expect to do a lot of work yourself to make OS 9 acceptable with the modern Web and you should accept the results are at best imperfect. I think that's what ultimately drove Andrew Cunningham up the wall.

I'm going to answer these questions together:

Q1. How viable do you think OS 9 is as a primary operating system for someone today? How viable is it for you?

[...]

Q2. What do you like about using older versions of Mac OS (in this case, I'm talking in broad terms - so feel free to discuss OS X Tiger and PPC hardware as well)? Why not just follow the relentless march of technology? (It's worth mentioning here that I actually much prefer the look and feel of classic MacOS and pre-10.6 OS X, but for a lot of my own everyday computing I need to use newer, faster machines and operating systems.)

A. I'm used to a command line and doing everything in text. My Mac OS 9 laptop has Classilla and MacSSH on it. I connect to my home server for E-mail and most other tasks like Texapp for command-line posting to App.net, and if I need a graphical browser, I've got one. That covers about 2/3rds of my typical use case for a computer. In that sense, Mac OS 9 is, at least, no worse than anything else for me. I could use some sort of Linux, but then I wouldn't be able to easily run much of my old software (see below). If I had to spend my time in OS 9 even now, with a copy of Word and Photoshop and a few other things, I think I could get nearly all of my work done, personally. There is emulation for the rest. :)

I will say I think OS 9 is a pleasure to use relative to OS X. Part of this is its rather appalling internals, which in this case is necessity made virtue; I've heard it said OS 9 is just a loose jumble of libraries stacked under a file browser and that's really not too far off. The kernel, if you can call it that, is nearly non-existent -- there's a nanokernel, but it's better considered as a primitive hypervisor. There is at best token support for memory protection and some multiprocessing, but none of it is easy and most of it comes with severe compromises. But because there isn't much to its guts, there's very little between you and the hardware. I admit to having an i7 MBA running El Crapitan, and OS 9 still feels faster. Things happen nearly instantaneously, something I've never said about any version of OS X, and certain classes of users still swear by its exceptionally low latency for applications such as audio production. Furthermore, while a few annoyances of the OS X Finder have gradually improved, it's still not a patch on the spatial nature of the original one, and I actually do like Platinum (de gustibus non disputandum, of course). The whole user experience feels cleaner to me even if the guts are a dog's breakfast.

It's for that reason that, at least on my Power Macs, I've said Tiger forever. Classic is the best reason to own a Power Mac. It's very compatible and highly integrated, to the point where I can have Framemaker open and TenFourFox open and cut and paste between them. There's no Rhapsody full-screen blue box or SheepShaver window that separates me from making Classic apps first-class citizens, and I've never had a Classic app take down Tiger. Games don't run so well, but that's another reason to keep the MDD around, though I play most of my OS 9 games on a Power Mac 7300 on the other desk. I've used Macs partially since the late 1980s and exclusively since the mid-late 1990s (the first I owned personally was a used IIsi), and I have a substantial investment in classic Mac software, so I want to be able to have my cake and eat it too. Some of my preference for 10.4 is also aesthetic: Tiger still has the older Mac gamma, which my eyes are accustomed to after years of Mac usage, and it isn't the dreary matte grey that 10.5 and up became infested with. These and other reasons are why I've never even considered running something like Linux/PPC on my old Macs.

Eventually it's going to be the architecture that dooms this G5. This Quad is still sufficient for most tasks, but the design is over ten years old, and it shows. Argue the PowerPC vs x86 argument all you like, but even us PPC holdouts will concede the desktop battle was lost years ago. We've still got a working gcc and we've done lots of hacking on the linker, but Mozilla now wants to start building Rust into Gecko (and Servo is, of course, all Rust), and there's no toolchain for that on OS X/ppc, so TenFourFox's life is limited. For that matter, so is general Power Mac software development: other than freaks like me who still put -arch ppc -arch i386 in our Makefiles, Universal now means i386/x86_64, and that's not going to last much longer either. The little-endian web (thanks to asm.js) even threatens that last bastion of platform agnosticism. These days the Power Mac community lives on Pluto, looking at a very tiny dot of light millions of miles away where the rest of the planets are.

So, after TenFourFox 45, TenFourFox will become another Classilla: a fork off Gecko for a long-abandoned platform, with later things backported to it to improve its functionality. Unlike Classilla it won't have the burden of six years of being unmaintained and the toolchain and build system will be in substantially better shape, but I'll still be able to take the lessons I've learned maintaining Classilla and apply it to TenFourFox, and that means Classilla will still live on in spirit even when we get to that day when the modern web bypasses it completely.

I miss the heterogeneity of computing when there were lots of CPUs and lots of architectures and ultimately lots of choices. I think that was a real source of innovation back then and much of what we take for granted in modern systems would not have been possible were it not for that competition. Working in OS 9 reminds me that we'll never get that diversity back, and I think that's to our detriment, but as long as I can keep that light on it'll never be completely obsolete.

***

http://tenfourfox.blogspot.com/2016/09/ars-technicas-notes-from-os-9.html

|

|

Cameron Kaiser: TenFourFox 45.4.0 available (plus: priorities for feature parity and down with Dropbox) |

The major change in this release is additional tweaking to the MediaSource implementation and I'm now more comfortable with its functioning on G4 systems through a combination of some additional later patches I backported and adjusting our own hacks to not only aggressively report the dropped frames but also force rebuffering if needed. The G4 systems now no longer seize and freeze (and, occasionally, fatally assert) on some streams, and the audio never becomes unsynchronized, though there is some stuttering if the system is too overworked trying to keep the video and audio together. That said, I'm going to keep MediaSource off for 45.4 so that there will be as little unnecessary support churn as possible while you test it (if you haven't already done so, turn media.mediasource.enabled to true in about:config; do not touch the other options). In 45.5, assuming there are no fatal problems with it (I don't consider performance a fatal flaw, just an important one), it will be the default, and it will be surfaced as an option in the upcoming TenFourFox-specific preference pane.

However, to make the most of MediaSource we're going to need AltiVec support for VP9 (we only have it for VP3 and VP8). While upper-spec G5 systems can just power through the decoding process (though this might make hi-def video finally reasonable on the last generation machines), even high-spec G4 systems have impaired decoding due to CPU and bus bandwidth limitations and the low-end G4 systems are nearly hopeless at all but the very lowest bitrates. Officially I still have a minimum 1.25GHz recommendation but I'm painfully aware that even those G4s barely squeak by. We're the only ones who ever used libvpx's moldy VMX code for VP8 and kept it alive, and they don't have anything at all for VP9 (just x86 and ARM, though interestingly it looks like MIPS support is in progress). Fortunately, the code was clearly written to make it easier for porters to hand-vectorize and I can do this work incrementally instead of having to convert all the codec pieces simultaneously.

Interestingly, even though our code now faithfully and fully reports every single dropped frame, YouTube doesn't seem to do anything with this information right now (if you right-click and select "Stats for nerds" you'll see our count dutifully increase as frames are skipped). It does downshift for network congestion, so I'm trying to think of a way to fool it and make dropped frames look like a network throughput problem instead. Doing so would technically be a violation of the spec but you can't shame that which has no shame and I have no shame. Our machines get no love from Google anyway so I'm perfectly okay with abusing their software.

I have the conversion to platform codec of our minimp3 decoder written as a first draft, but I haven't yet set that up or tested it, so this version still uses the old codec wrapper and still has the track-shifting problem with Amazon Music. That is probably the highest priority for 45.5 since it is an obvious regression from 38. On the security side, this release also disables RTCPeerConnection to eliminate the WebRTC IP "leak" (since I've basically given up on WebRTC for Power Macs). You can still reenable it from about:config as usual.

The top three priorities for the next couple versions (with links to the open Github issues) are, highest first, fixing Amazon Music, AltiVec VP9 codepaths and the "little endian typed array" portion of IonPower-NVLE to fix site compatibility with little-endian generated asm.js. Much of this work will proceed in parallel and the idea is to have a beta 45.5 for you to test them in a couple weeks. Other high priority items on my list to backport include allowing WebKit/Blink to eat the web supporting certain WebKit-prefixed properties to make us act the same as regular Firefox, support for ChaCha20+Poly1305, WebP images, expanded WebCrypto support, the "NV" portion of IonPower-NVLE and certain other smaller-scope HTML/CSS features. I'll be opening tracking issues for these as they enter my worklist, but I have not yet determined how I will version the browser to reflect these backported new features. For now we'll continue with 45.x.y while we remain on 45ESR and see where we end up.

As we look into the future, though, it's always instructive to compare it with the past. With the anticipation that even Google Code's Archive will be flushed down the Mountain View memory hole (the wiki looks like it's already gone, but you can get most of our old wikidocs from Github), I've archived 4.0b7, 4.0.3, 8.0, 10.0.11, 17.0.11 and Intel 17.0.2 on SourceForge along with their corresponding source changesets. These Google Code-only versions were selected as they were either terminal (quasi-)ESR releases or have historical or technical relevance (4.0b7 was our first beta release of TenFourFox ever "way back" in 2010, 8.0 was the last release that was pure tracejit which some people prefer, and of course Intel 17.0.2 was our one and so far only release on Intel Macs). There is no documentation or release notes; they're just there for your archival entertainment and foolhardiness. Remember that old versions run an excellent chance of corrupting your profile, so start them up with one you can throw away.

Finally, a good reason to dump Dropbox (besides the jerking around they give those of you trying to keep the PowerPC client working) is their rather shameful secret unauthorized abuse of your Mac's accessibility framework by forging an entry in the privacy database. (Such permissions allow it to control other applications on your Mac as if it were you at the user interface. The security implications of that should be immediately obvious, but if they're not, see this discussion.) The fact this is possible at all is a bug Apple absolutely must fix and apparently has in macOS Sierra, but exploiting it in this fashion is absolutely appalling behaviour on Dropbox's part because it won't even let you turn it off. To their credit they're taking their lumps on Hacker News and TechCrunch, but accepting their explanation of "only asking for privileges we use" requires a level of trust that frankly they don't seem worthy of and saying they never store your administrator password is a bit disingenuous when they use administrative access to create a whole folder of setuid binaries -- they don't need your password at that point to control the system. Moreover, what if there were an exploitable security bug in their software?

Mind you, I don't have a problem with apps requesting that access if I understand why and the request isn't obfuscated. As a comparison, GOG.com has a number of classic DOS games I love that were ported for DOSBox and work well on my MacBook Air. These require that same accessibility access for proper control of input methods. Though I hope they come up with a different workaround eventually, the GOG installer does explain why and does use the proper APIs for requesting that privilege, and you can either refuse on the spot or disable it later if you decide you're not comfortable with it. That's how it's supposed to work, but that's not what Dropbox did, and they intentionally hid it and the other inappropriate system-level things they were sneaking through. Whether out of a genuine concern for user experience or just trying to get around what they felt was an unnecessary security precaution, it's not acceptable and it's potentially exploitable, and they need to answer for that.

Watch for 45.4 going final in a couple days, and hopefully a 45.5 beta in a couple weeks.

http://tenfourfox.blogspot.com/2016/09/tenfourfox-4540-available-plus.html

|

|

Mozilla Security Blog: Firefox AddressSanitizer builds have been moved |

This is a short announcement for all security researchers working on Firefox that use our pre-built AddressSanitzer (ASan) builds. Until recently, you could download these ASan builds from our FTP servers. Due to changes to our internal build infrastructure, these builds are no longer available from the usual location. Instead, they are available on a build system called TaskCluster. Most people just need the latest available build for testing purposes. Fortunately, this is easy to get:

Direct Download for Latest Firefox AddressSanitizer Build

For more advanced queries, TaskCluster offers a public API that can be used to interact with the system (e.g. to retrieve past builds). More information is available in the documentation.

https://blog.mozilla.org/security/2016/09/09/firefox-addresssanitizer-builds-have-been-moved/

|

|

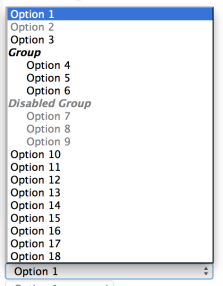

Jared Wein: Improving <select> dropdowns |

Last week marked the beginning of a new 12-week period of mentorship for myself. I’ll be working Mike Conley to share mentorship duties as we mentor a group of five Michigan State University students. The students are all seniors in the Computer Science program.

Last week marked the beginning of a new 12-week period of mentorship for myself. I’ll be working Mike Conley to share mentorship duties as we mentor a group of five Michigan State University students. The students are all seniors in the Computer Science program.

The MSU Capstone is a program that pairs industry companies with small student groups to give students access to real-world software development and the software development life cycle.

This semester, the five students will be working on improving the visual design of the . The project has three main components:

- The

- The default look and feel of the

(left to right) Jared Beach, Fred Luo, Mark Golbeck,

(left to right) Jared Beach, Fred Luo, Mark Golbeck,Miguel Wright, Tyler Maklebust

Since the students were assigned to the project last week, they now have local builds up and running as well as Bugzilla accounts. We’ve been active on IRC and will have weekly check-in meetings over Vidyo.

I’ll have more to share in the coming weeks as the students begin ramping up on their work.

Tagged: capstone, firefox, msu, planet-mozilla

https://msujaws.wordpress.com/2016/09/09/improving-select-dropdowns/

|

|

Michael Kaply: Debugging Firefox AutoConfig Problems |

I get a lot of questions about debugging AutoConfig issues, so I thought I would document what I do to try to track them down.

If Firefox starts, go to about:config and search for general.config. Make sure there are values for general.config.filename and general.config.obscure_value. If they are not there, your defaults/prefs/autoconfig.js file is not being read. The most common reason this happens is permissions or you’ve placed it in the wrong directory.

If both the general.config values are present, the next step is to check if your cfg file is being read. I usually make a very simple cfg file:

// First line is always a comment

lockPref("a.b.c.d", "e.f.g.h");

Then I go to about:config and verify that the a.b.c.d preference is there (it will be at the top). If the preference is not there, then something must be very wrong. I check the browser console to see if there are any errors. If the preference is there, AutoConfig is working correctly. I then start adding it the parts of my AutoConfig file a piece at a time, leaving the setting of the a.b.c.d pref at the end of the file. Then I check about:config each time and see which line is breaking the AutoConfig file.

If the browser doesn’t start at all, the first thing I do is put all my AutoConfig code in a try/catch block like this:

// First line is always a comment

try {

// My AutoConfig code.

} catch (e) {

Components.utils.reportError(e);

}

If the browser then starts, I look in the browser console to see what the error was. If the browser still doesn’t start, I start removing pieces of my AutoConfig until it does.

The most common problem in the past with AutoConfig was the use of let instead of var. This was fixed in Firefox 42 and should no longer be an issue. Some other common problems were around the use of international characters, in particular saving AutoConfig files as UTF-8 versus ASCII. This should also be fixed. All AutoConfig files should be saved as UTF-8.

In general, as long as you don’t have JavaScript syntax errors in your AutoConfig file, they should just work. That’s been my experience, and I’ve written some pretty complicated AutoConfig files.

https://mike.kaply.com/2016/09/08/debugging-firefox-autoconfig-problems/

|

|

Nicholas Nethercote: How to get localized Firefox Nightly builds |

One of the easiest and best ways that someone can help Mozilla and Firefox is to run Firefox Nightly. I’ve been doing it on my Windows, Mac and Linux machines for the past couple of months. It requires daily restarts, but otherwise it has been a smooth experience for me.

Unfortunately the number of Nightly users has been steadily dropping for some time, which hurts our ability to catch crashes and other regressions early. Pascal Chevrel and Marcia Knous are leading efforts underway to reverse this trend.

One problem with Nightly builds has been their visibility. In particular, finding localized (non-English) builds was difficult. That situation has just improved: thanks to Kohei Yoshino there is now a single page containing Nightly builds for all platforms and locales. As far as I know there are no other pages that currently link to that page, but perhaps that will happen as part of the planned work to give Nightly builds a place on mozilla.org.

If you have friends and family who would like to help Mozilla and are willing to use pre-release versions of Firefox, please suggest Firefox Nightly to them.

https://blog.mozilla.org/nnethercote/2016/09/09/how-to-get-localized-firefox-nightly-builds/

|

|

Asa Dotzler: SiteSonar: Measuring the Impact of Ads |

A while ago I wrote about about web performance and ad blockers. In that blog post I explained that I block ads because I can’t take the performance hit, that running an ad blocker or using Firefox’s tracking protection makes the web responsive again and a real pleasure to use. That blog post lead to discussions with a few people, including Mozilla intern Francesco Polizzi. Francesco and I discussed a study Tracking Protection in Firefox for Privacy and Performance (pdf) and how we could build on that. That study measured a 44% increase in page load performance and a 39% reduction in bandwidth usage across the Alexa top 200 news sites when tracking protection was enabled

So, ad networks, on average, are dramatically burdening page load times and drastically increasing data usage. This makes people sad and makes the Web less competitive with mobile. But publishers depend on ad networks for their livelihoods and surely some ad networks are better than others, right? Francesco and I wanted to quantify this and because Francesco is awesome, he created a browser extension for Firefox and Chrome, called SiteSonar, that identifies and benchmarks performance information about ad-related assets on the web as you browse.

(The extension uses Disconnect‘s list of ad domains to identify ad-related assets and then uses the WebRequest API to determine network response time for individual ad assets. That benchmark, along with information received like file size, status codes, and a timestamp are recorded locally. Finally, every few minutes the anonymous benchmarking stats are sent to a server where they’re parsed and logged into a database. The data powers a dashboard that displays aggregated information about ad network performance that the extension collected. To learn more, check it out on github.)

The early results from project SiteSonar are available at this dashboard. These are preliminary results from just a couple of us running the extension. We’d like to improve the results by broadening the base of people using this extension and submitting data. If you browse without an ad-blocker and would like to share some of your browsing history with us to improve the results of this experiment, you can find and install the Firefox extension at addons.mozilla.org and the Chrome extension at the Chrome web store.

https://asadotzler.com/2016/09/08/site-sonar-measuring-the-impact-of-ads/

|

|

Robert Kaiser: IDIC: Embrace Differences |

Well, if we want to go by the book, IDIC is actually seen as the basis of a philosophy, specifically that of Star Trek's Vulcan species, it's "native language" name is Kol-Ut-Shan, and it's symbolized by that really nice-looking jewel that has a triangle/pyramid with a marked point/ball on top and a circle around it (see image). That said, it ends up culminating Gene Roddenberry's philosophy behind a lot of what Star Trek depicts, and the philosophy that even 50 years (to this exact day) after the show first aired is still largely shared by the fans of the franchise (including myself).

What IDIC centers around is to increase and heavily embrace diversity in all things - and that can be applied to and give thought inspiration to many things.

Everything of course starts with Gene's vision of a lead crew as diverse as the mid-1960s would allow it, a United Federation of Planets that is a utopian in-between of UN and US in a galactic dimension, to other figures than white mean being in leadership positions in various incarnations of the franchise, and preserving diversity of life forms beyond the two-legged variety in various stories as well (if you like deeply digging into messages and philosophy of Star Trek episodes, the Mission Log Podcast may be something for you).

I like looking beyond Star Trek when it comes to this philosophy though. Take for example the genomes of life forms we know (in reality, on this planet) - no two life forms have the exact same genes, not even twins. Nature shows that "infinite" diversity (created from seemingly infinite combinations of very few elements) not because it's fun, or because our design sucks, or it's Vulcan, of course. It gives life an ingenious robustness by making it hard for attacks to affect large amounts of different individuals and species, it makes life forms complement each other to cover different environments, and adaptive to react to different circumstances.

And from all I hear from studies and see in practice, when we put together diverse groups of people, they usually excel in creativity and putting up different ideas, they are harder to control by a single bad influence, they develop more respect for other humans, higher sensitivity towards the needs of other people, deeper understanding of and respect for different persons - at least in comparison to many groups of people very similar to each other. Fun fact on the side, the crowd I see at Star Trek conventions is probably one of the most diverse group of "geeks" you can find (across gender, race, age, profession, and other criteria) - thanks to the role models and the philosophy put front and center in that franchise. That kind of diversity is something I want to see in many more areas of my life and around me. The more we get different people to sit down or stand together, the more we create and show role models of diversity enriching life, the more we get people to respect other people, no matter who they are, and the more we create a better world - and universe.

Now, what about things other than life forms? What for example about computer systems? About software?

There's a lot of people advocating for hardware, operating systems, software packages that are exactly the same for everyone, so it's easy to verify that they haven't been modified unduly, and that software updates are easier to apply. And that surely has merit in a number of dimensions, and reproducible builds, Flatpak and Snap, even reducing "fingerprintability" on the Web and quite a few other mechanisms exist to reduce differences between our systems.

But then, we as users of those computers and that software are all different. We want our systems to be personalized and therefore to be different from anyone else's system. We install different add-ons into our Firefox, different apps or applications on our computers and smartphones, log into different accounts on different websites, we want our system to be uniquely ours, or at least feel like it is that. So at some level, we as users want "infinite" diversity of computers. Different people may even want different screen numbers and sizes, have different focus on what is important for them that their computer does, desire different set-ups of the hardware on their home and/or work desks. And there are security reasons to put randomization (like ASLR and other RoP defense mechanisms) into our computer (runtime) setups in some cases. Would a higher degree of diversity on software make it harder for attacks to break a large amount of systems? Maybe, I don't know which benefits outweigh the others there.

It's clear that's a principle which works pretty decently in nature at a low level, and for groups of people at a high level, and we definitely should embrace it there. At which layers of our software and hardware it's useful or detrimental is not always entirely clear, but it has to work in personalizing our computer systems to our requirements, desires and wishes as we are all different and that diversity needs to end up being reflected so we can use its strength to work together and improve this world.

Thanks to Gene Roddenberry and Star Trek in general for giving me something interesting to think about - and Happy 50th Birthday Star Trek!

|

|

Firefox UX: A New Mobile Experience |

|

|

Support.Mozilla.Org: What’s Up with SUMO – 8th September |

Hello, SUMO Nation!

September sun… Still hot! At least in some parts of the world. How are you doing? Are you missing summer holidays already? We surely are… But there’s loads to do before the end of this year, so let’s not delay and get cracking!

Welcome, new contributors!

If you just joined us, don’t hesitate – come over and say “hi” in the forums!

Contributors of the week

- The forum supporters who tirelessly helped users out for the last week.

- The writers of all languages who worked tirelessly on the KB for the last week.

- All the Social Superheroes – thank you!

We salute you!

SUMO Community meetings

- LATEST ONE: 7th of September- you can read the notes here and see the video at AirMozilla.

- NEXT ONE: happening on the 14th of September!

- If you want to add a discussion topic to the upcoming meeting agenda:

- Start a thread in the Community Forums, so that everyone in the community can see what will be discussed and voice their opinion here before Wednesday (this will make it easier to have an efficient meeting).

- Please do so as soon as you can before the meeting, so that people have time to read, think, and reply (and also add it to the agenda).

- If you can, please attend the meeting in person (or via IRC), so we can follow up on your discussion topic during the meeting with your feedback.

Community

- LAST CALL! Is anyone going to the Participation Meeting in Berlin on 10th & 11th of September? Make yourselves known!

- Reminder: We need to be inspired by you! Share your favourite superhero with Rachel. We are looking for different heroes from all parts of the world.

- Reminder: MozFest is coming our way! London, October 28-30. Are you excited yet?

- Ongoing reminder #1: if you think you can benefit from getting a second-hand device to help you with contributing to SUMO, you know where to find us.

- Ongoing reminder #2: we are looking for more contributors to our blog. Do you write on the web about open source, communities, SUMO, Mozilla… and more? Do let us know!

- Ongoing reminder #3: want to know what’s going on with the Admins? Check this thread in the forum.

Platform

- PLATFORM REMINDER! The Platform Meetings are BACK! If you missed the previous ones, you can find the notes in this document. (here’s the channel you can subscribe to).

- Last meeting includes a huge set of questions and answers from the 8th of September meeting – thanks to John99 for asking them!

- Also… A complete and detailed breakdown of all the migration tasks – huge thanks to Madalina for managing the whole project with care.

- QUESTIONS? CONCERNS? Please take a look at this migration document and use this migration thread to put questions/comments about it for everyone to share and discuss. As much as possible, please try to keep the migration discussion and questions limited to those two places – we don’t want to chase ten different threads in too many different places.

Social

- SUMO Day coming up next week!

- Reminder: Please email Rachel and/or Madalina to get started supporting Mozilla’s product users on Facebook and Twitter. We need your help! Use the step-by-step guide here. Take a look at some useful videos:

Support Forum

- SUMO Day coming up next week!

- Reminder: If you are using email notifications to know what posts to return to, jscher2000 has a great tip (and tool) for you. Check it out here!

Knowledge Base & L10n

- We are 1 week before next release / 5 weeks after current release. What does that mean? (Reminder: we are following the process/schedule outlined here)

- Only Joni or other admins can introduce and/or approve potential last minute changes of next release content; only Joni or other admins can set new content to RFL; localizers should focus on this content.

- All other existing content is deprioritized, but can be edited and localized as usual.

- The content to be localized with high priority can be found on this list.

- Pontoon / user interface reminder: please do not work on UI strings in Pontoon as we are moving to Lithium – work on localizing articles instead.

- Thanks to AliceWyman for her watchful eyes that helped us update this set of review guidelines and best practices.

Firefox

- for Android

- Version 49 coming next week (tentatively on the 13th of September). Highlights include:

- caching selected pages (e.g. mozilla.org) for offline retrieval

- usual platform and bug fixes

- caching selected pages (e.g. mozilla.org) for offline retrieval

- Version 49 coming next week (tentatively on the 13th of September). Highlights include:

- for Desktop

- Version 49 coming next week (tentatively on the 13th of September) – read more about it in the release thread (thank you, Philipp!). Highlights include:

- text-to-speech in Reader mode

- ending support for older Mac OS versions

- ending support for older CPUs

- ending support for Firefox Hello

- usual platform and bug fixes

- There may be a possible delay to the release, as a number of last-minute fixes are being worked on.

- Version 49 coming next week (tentatively on the 13th of September) – read more about it in the release thread (thank you, Philipp!). Highlights include:

- for iOS

- Nothing new, steady as she goes, captain! ;-)

- Nothing new, steady as she goes, captain! ;-)

…and that’s it for today! If you need a bit less SUMO and a bit more fun, visit the Off Topic forums for some inspiration. We’ll see you around the helpful web, heroes and heroettes :-)

https://blog.mozilla.org/sumo/2016/09/08/whats-up-with-sumo-8th-september/

|

|

Air Mozilla: Intern Presenations 2016 |

Group 7 of the interns will be presenting on what they worked on this summer.

Group 7 of the interns will be presenting on what they worked on this summer.

|

|

Jared Wein: Outreachy wrap-up |

Just a couple of weeks ago marked the end of my first Outreachy session. During the session I mentored Katie Broida as she worked on some pretty cool features for Firefox.

Just a couple of weeks ago marked the end of my first Outreachy session. During the session I mentored Katie Broida as she worked on some pretty cool features for Firefox.

I learned a lot during the session, and I think she did too. I learned how to advertise and recruit for job openings, gather initial work, and to try to be as attentive as possible for the people that I’m working with.

We started advertising the internship a few weeks before the deadline to apply. Prior to advertising the internship there was little to no outreach to potential applicant pools. In the future, I hope we can reach out to campus groups, hobbyists, and other places that we might find potential candidates. We also need to do this work sooner, as a good number of potential candidates will already have made their internship plans four to six months earlier.

One of the advantages of being an open source company is that Mozilla can help potential applicants get involved in the community far before the application period opens. Applicants can get a chance to see if they will enjoy the work of their internship, and mentors can get a chance to see if the applicants will be successful. This becomes more powerful and insightful than the traditional interview.

As far as Katie’s work, she did an amazing job. Katie fixed numerous bugs throughout the Firefox user interface as well as added a couple new features too. On Windows 10, we now show a larger graphic for the Start Menu tile (it doesn’t work for everyone though, still some ghosts in the shadows here), and for all users we now have a new zoom indicator in the location bar that appears when a site is not at 100% zoom.

Restoring defaults in the Customize Mode now also restores the theme, and she introduced a new API for tab-specific panels to opt-in to closing when the selected tab changes.

Not only did Katie fix a bunch of bugs during the past four months but she also blogged about them, keeping detailed reports about how she got stuck and later how she was able to move around, over, or through the obstacles.

I had a great time working with the Outreachy program and I’ll do it again when Mozilla sponsors another session.

Tagged: firefox, planet-mozilla

|

|

Air Mozilla: Connected Devices Weekly Program Update, 08 Sep 2016 |

Weekly project updates from the Mozilla Connected Devices team.

Weekly project updates from the Mozilla Connected Devices team.

https://air.mozilla.org/connected-devices-weekly-program-update-20160908/

|

|

Air Mozilla: Reps weekly, 08 Sep 2016 |

This is a weekly call with some of the Reps to discuss all matters about/affecting Reps and invite Reps to share their work with everyone.

This is a weekly call with some of the Reps to discuss all matters about/affecting Reps and invite Reps to share their work with everyone.

|

|

Andy McKay: Mozilla is messy |

Mozilla is very open about the stuff it does compared to many companies. It's a perpetual worry for me that we are never open enough and could always be more open. But this has some interesting consequences. One of them is that we can be very messy in communciations.

Some examples:

"Person X said this, you said this. You don't even agree with each other."

"Person X said this and now you are saying this, who's right."

"Listen to the advice of your own people who don't agree with you."

Mozilla tries to be an open source organisation of contributors to the project, but we can't ignore that there are about (currently) 1,000 people employed at the Mozilla Corporation and they are paid contributors to the project. Most of the time criticism like this is directed at the paid contributors.

But in an organisation where all the paid contributors are on IRC and mailing lists and blogs all day long, you have a scenario where any paid contributor can say whatever they want, when they want. If you work at Mozilla you likely care about the open web, the code area you work in, your colleagues, believe in open source and likely have strong opinions on things.

People care and are willing to talk about things openly. And because people are people, people disagree with each other. This isn't a large secretive corporation where people can't talk. This isn't a place where answers to questions are ignored or funnelled through one or two people.

In fact I've seen some amazing things in meetings and mailing lists where lowly Engineers directly and openly question Directors and Vice Presidents in front of others.

But that's important, because it ensures that good ideas are formulated by the most knowledgeable people and discussed openly. That's the cost you pay dealing with the Mozilla community. You might get differing, conflicting or incoherent answers as you interact with it.

Would you rather have the alternative?

|

|

Emma Irwin: Open Source nostalgia — Can we just move forward please? |

This is #3 of 5 posts I had in draft state for a few months, that I decided finish up & post. Here’s hoping my research helps others. I started writing this in May.

“Inessential weirdness of open source”

This term (crediting to Katrina Owen at Github) perfectly describes a conundrum of open participation, whereby we hold onto symbols, processes, and idiosyncrasies of open source in a mix of nostalgia, delusion and … I’m going to say it – arrogance , as the primary (nearly holy) measures of ‘being open’ in community building .

‘But’ve always done x’, is a very common response to change in open communities. Whereby we unintentionally (yet deliberately) avoid change because we believe that that purity of ‘open’ is the only way to innovate further . We even avoid change despite huge potential to grow more diverse and healthy open communities – because… there are slivers of non-open. gah!

Two years ago I ran the ‘Open Hatch Comes To Campus’ workshop at the University of Victoria. I spent 1.5 hours teaching people the skills they needed to ultimately… type ‘hello’ on an IRC channel.. Our workshop implied IRC was a critical doorway, and on-ramp to participation in open source. Saying hello, asking for help – with an instructors guidance: 1.5 hours. What?

I’ve often heard project maintainers say, that obtuse processes like these actually help ensure the success of those who are truly serious about contribution. As if asking basic questions is a holy grail of volunteering- one where only those willing to waste ridiculous amounts of time on discombobulated, obtuse processes and tools are worthy of participation. I call bullshit on any process that makes connecting with others, in an ‘open project’ – an obstacle.

“open and accessible doesn’t beat usable and intelligent”

In the last couple of years we’ve seen open communities faced with an interesting choice of using tools that work really well for working open, but are not themselves open. Github being the most obvious example. Similarly I’ve also followed the Open Data communities use of + Slack + Slackin!

Still in the voice of nostalgia asking us to remember our legacy IRC.

Anyway….what exactly do we need our community software to do? Here’s a short list I used when measuring chat solutions (and sure I am missing things)

- Open source – I want the ability to inspect, and improve-on software we use for community conversation, and to propose improvement via pull requests.

- Data is discover-able via web search. So much success of ‘open’ is that people can stumble on conversations that push innovation further.

- Open Conversations – no login or registration required. Anyone can ‘lurk’.

- Easy to grasp & intuitive – Lets not ask newbies to install software to ask for help. Lets’ not expect that contributors are technical contributors.

- Github feed (my own requirement, that everyone can see new issues, and comments they subscribe to).

A clever human-connection setup should allow new contributors an ability to answer these questions with some clarity:

- Who is here?

- Am I welcome here?

- What’s happening in this community?

- How can I contribute?

- How do I ask for help?

With this criteria, and questions in mind, here are the results of those I researched for education contributors at Mozilla:

Mattermost – Has potential, but seems unfinished, and little ‘alpha’. Without installing myself ,I couldn’t figure out how to enable a Github feed.

Gitter – I discovered this when looking around Free Code Club. I liked the UI, and possibilities for multiple channels easily toggled, searchable and friendly. Plugins tend to be more developer-friendly, which was a drawback for non-technical contribution – but not a show stopper. Has a great search option for communities. Chat rooms are associated with Github Repos, which has huge potential for building communities around projects and initiatives.

I think Gitter is doing with Github, what Github should be doing for Github projects interested in nurturing participation.

Discord – I found found Reactiflux development via Facebook React’s repo, but was nervous about jumping in. Seems more like a team project, than community. I found it intimidating, especially with voice, and it wasn’t clear what preferences where. Quickly left.

I revisited this after comments were left about this project portal being community organized (as it had been months since I was there). Aside from struggling to switch login/register status, I do have to say it’s a very easy to lurk into – and has desktop versions (it seems I didn’t have a lot of time to test). I’m not clear on how discover able conversations are outside of this app, but the community has set things up very well to ask questions in a number of ways (which is awesome). Still on the fence about voice chat, but maybe that’s because it’s harder to stay gender-anonymous with voice. Thanks for the comment that made me take another look Mark!

Rocketchat – It’s open source, it looks great – it has the potential to do what Gitter is doing for communities, but it feels very single-instance and Slack-replacement focused. Don’t get me wrong, it’s beautiful, very capable of being a good alternative but I want more – I want ‘open’ feel like more than code. If I had to choose an alternative it would be this one.

Rivr – I couldn’t find inspiration other than free, and not-Slack. Guessing it’s a great alternative too.

Slack should be thought of as first generation example of how community might meet, connect with participation and community, but not as a template, and not as a ‘bar’ that we now try to replicate openly. Reactiflux community has also demonstrated that a cohesive collection of support vrs any one solution is often the best way to go as well.

It’s time we prioritized connection of humans ‘ in the open’- lets end the inessential weirdness of open source.

|

|

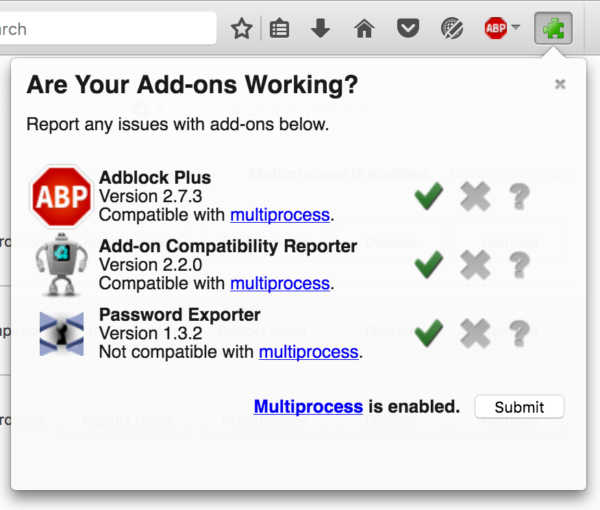

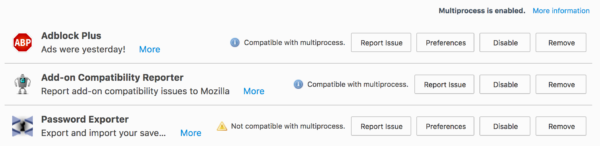

Mozilla Addons Blog: Help make add-ons multiprocess compatible with Add-on Compatibility Reporter |

Firefox is currently transitioning to a multiprocess architecture (e10s), which will give users a more stable and responsive browser. This transition affects certain add-ons, which must adapt to the new model or they won’t work correctly, and will be flagged as incompatible. We’re reaching out to add-on developers in various ways so they can check whether their add-ons are affected, and get support for updating their add-ons.

Now, there’s a way for you to help as well with outreach efforts, and that is by reporting incompatible add-ons.

Add-on Compatibility Reporter (ACR) enables you to tell us if an add-on works in a particular version of Firefox. Its reports have been a very useful tool for us in tracking incompatible add-ons and helping developers fix them. Add-on developers are also able to see reports sent for their add-ons in the developer tools. If you want to give it a try, we suggest that you do on one of the prerelease versions: Beta, Developer Edition, or Nightly. The latter two have multiprocess enabled for all add-ons by default, which is what we’re most interested in at the moment.

The latest versions of ACR have integrated support for multiprocess Firefox. Once you install the add-on, you will see which of your add-ons have declared multiprocess compatibility. You will also see if your version of Firefox has multiprocess enabled. The icons let you report if an add-on works or not.

You can also do all of this from the Add-ons Manager, if you prefer.

To determine if an add-on is multiprocess compatible, ACR looks for a flag in the add-on manifest, set by the developer. So, for example, Password Exporter appears as not compatible, even though it works correctly with e10s on. I maintain Password Exporter and haven’t yet updated its manifest to reflect this (I will soon!).

Knowing which add-ons are working with e10s is critical for a successful transition, so we hope you can help us by installing the Add-on Compatibility Reporter and letting us know which add-ons aren’t working for you.

|

|