Source: http://planet.mozilla.org/.

This journal is generated from the open RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

Matjaz Horvat: Triage translations by author in Pontoon |

A few months ago we rolled out bulk actions in Pontoon, allowing you to perform various operations on multiple strings at the same time. Today we’re introducing a new string filter, taking mass operations a step further.

From now on you can filter translations by author, which simplifies tasks like triaging suggestions from a particular translator. The new filter is especially useful in combination with bulk actions.

For example, you can delete all suggestions submitted by Prince of Nigeria, because they are spam. Or approve all suggestions from Mia Müller, who was just granted Translator permission and was previously unable to submit approved translations.

See how to filter by translation author in the video.

P.S.: Gasper, don’t freak out. I didn’t actually remove your translations.

http://horv.at/blog/easily-triage-translations-by-their-author/

|

|

Daniel Stenberg: syscast discussion on curl and life |

I sat down and talked curl, HTTP, HTTP/2, IETF, the web, Firefox and various internet subjects with Mattias Geniar on his podcast, the syscast, the other day.

https://daniel.haxx.se/blog/2016/06/22/syscast-discussion-on-curl-and-life/

|

|

Air Mozilla: Mozilla H1 2016 |

What did Mozilla accomplish in the first half of 2016? Here's the mind-boggling list in rapid-fire review.

|

|

Air Mozilla: Martes mozilleros, 21 Jun 2016 |

Reunión bi-semanal para hablar sobre el estado de Mozilla, la comunidad y sus proyectos. Bi-weekly meeting to talk (in Spanish) about Mozilla status, community and...

|

|

David Lawrence: Happy BMO Push Day! |

the following changes have been pushed to bugzilla.mozilla.org:

- [1277600] Custom field “Crash Signature” link is deprecated

- [1274757] hourly whine being run every 15 minutes

- [1278592] “status” label disappears when changing the resolution in view mode

- [1209219] Add an option to the disable the “X months ago” format for dates

discuss these changes on mozilla.tools.bmo.

https://dlawrence.wordpress.com/2016/06/21/happy-bmo-push-day-22/

|

|

John O'Duinn: Joining the U.S. Digital Service |

I’ve never worked in government before – or even considered it until I read Dan Portillo’s blog post when he joined the U.S. Digital Service. Mixing the technical skills and business tactics honed in Silicon Valley with the domain specific skills of career government employees is a brilliant way to solve long-standing complex problems in the internal mechanics of government infrastructure. Since their initial work on healthcare.gov, they’ve helped out at the Veterans Administration, Dept of Education and IRS to mention just a few public examples. Each of these solutions has a material impact on real humans, every single day.

Building Release Engineering infrastructure at scale, in all sorts of different environments, has always been interesting to me. The more unique the situation, the more interesting. The possibility of doing this work, at scale, while also making a difference to the lives of many real people made me stop, ask a bunch of questions and then apply.

The interviews were the most thorough and detailed of my career so far, and the consequence of this was clear once I started working with other USDS folks – they are all super smart, great at their specific field, unflappable when suddenly faced with unimaginable projects and downright nice, friendly people. These are not just “nice to have” attributes – they’re essential for the role and you can instantly see why once you start.

The range of skills needed is staggering. In the few weeks since I started, projects I’ve been involved with have involved some combinations of: Ansible, AWS, Cobol, GitHub, NewRelic, Oracle PL/SQL, nginx, node.js, PowerBuilder, Python, Ruby, REST and SAML. All while setting up fault tolerant and secure hybrid physical-colo-to-AWS production environments. All while meeting with various domain experts to understand the technical and legal constraints behind why things were done in a certain way and also to figure out some practical ideas of how to help in an immediate and sustainable way. All on short timelines – measured in days/weeks instead of years. In any one day, it is not unusual to jump from VPN configurations to legal policy to branch merging to debugging intermittent production alerts to personnel discussions.

Being able to communicate effectively up-and-down the technical stack and also the human stack is tricky, complicated and also very very important to succeed in this role. When you see just how much the new systems improve people’s lives, the rewards are self-evident, invigorating and humbling – kinda like the view walking home from the office – and I find myself jumping back in to fix something else. This is very real “make a difference” stuff and is well worth the intense long days.

Over the coming months, please be patient with me if I contact you looking for help/advice – I may very well be fixing something crucial for you, or someone you know!

If you are curious to find out more about USDS, feel free to ask me. There is a lot of work to do (before starting, I was advised to get sleep!) and yes, we are hiring (for details, see here!). I suspect you’ll find it is the hardest, most rewarding job you’ve ever had!

John.

http://oduinn.com/blog/2016/06/21/joining-the-u-s-digital-service/

|

|

Tantek Celik: microformats.org at 11 |

Thanks to Julie Anne Noying for the meme birthday card.

10,000s of microformats2 sites and now 10 microformats2 parsers

The past year saw a huge leap in the number of sites publishing microformats2, from 1000s to now 10s of thousands of sites, primarily by adoption in the IndieWebCamp community, and especially the excellent Known publishing system and continually improving WordPress plugins & themes.

New modern microformats2 parsers continue to be developed in various languages, and this past year, four new parsing libraries (in three different languages) were added, almost doubling our previous set of six (in five different languages). That brings our year 11 total to 10 microformats2 parsing libraries available in 8 different programming languages.

microformats2 parsing spec updates

The microformats2 parsing specification has made significant progress in the past year, all of it incremental iteration based on real world publishing and parsing experience, each improvement discussed openly, and tested with real world implementations. The microformats2 parsing spec is the core of what has enabled even simpler publishing and processing of microformats.

The specification has reached a level of stability and interoperability where fewer issues are being filed, and those that are being filed are in general more and more minor, although once in a while we find some more interesting opportunities for improvement.

We reached a milestone two weeks ago of resolving all outstanding microformats2 parsing issues thanks to Will Norris leading the charge with a developer spec hacking session at the recent IndieWeb Summit where he gathered parser implementers and myself (as editor) and walked us through issue by issue discussions and consensus resolutions. Some of those still require minor edits to the specification, which we expect to complete in the next few days.

One of the meta-lessons we learned in that process is that the wiki really is less suitable for collaborative issue filing and resolving, and as of today we are switching to using a GitHub repo for filing any new microformats2 parsing issues.

more microformats2 parsers

The number of microformats2 parsers in different languages continues to grow, most of them with deployed live-input textareas so you can try them on the web without touching a line of parsing code or a command line! All of these are open source (repos linked from their sections), unless otherwise noted. These are the new ones:

The Java parsers are a particularly interesting development as one is part of the upgrade to Apache Any23 to support microformats2 (thanks to Lewis John McGibbney). Any23 is a library used for analysis of various web crawl samples to measure representative use of various forms of semantic markup.

The other Java parser is mf2j, an early-stage Java microformats2 parser, created by Kyle Mahan.

The Elixir, Haskell, and Java parsers add to our existing in-development parser libraries in Go and Ruby. The Go parser in particular has recently seen a resurgence in interest and improvement thanks to Will Norris.

These in-development parsers add to existing production parsers, that is, those being used live on websites to parse and consume microformats for various purposes:

- Javascript (cross-browser client-side, and Node)

- PHP

- Python

As with any open source projects, tests, feedback, and contributions are very much welcome! Try building the production parsers into your projects and sites and see how they work for you.
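To give a feel for what such a parser consumes and produces, here is a deliberately tiny sketch in Python. It is not one of the parsers listed above and does not implement the microformats2 parsing specification: it assumes well-formed markup containing a single, non-nested h-entry, and it only understands `p-*` (plain text) and `u-*` (taken from `href`) properties. The class name `ToyHEntryParser`, the helper `parse_h_entry`, and the sample markup are all hypothetical.

```python
from html.parser import HTMLParser

# Elements that never have a closing tag, so they must not change the depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class ToyHEntryParser(HTMLParser):
    """Toy illustration only: one non-nested h-entry, p-* and u-* properties."""

    def __init__(self):
        super().__init__()
        self.properties = {}   # property name -> list of values
        self._depth = 0        # nesting depth inside the h-entry (0 = outside)
        self._capture = None   # (name, depth, text chunks) for an open p-* element

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        classes = (attr.get("class") or "").split()
        if self._depth == 0:
            # Outside any h-entry: only look for the root element.
            if "h-entry" in classes and tag not in VOID_TAGS:
                self._depth = 1
            return
        for cls in classes:
            if cls.startswith("u-") and attr.get("href"):
                self.properties.setdefault(cls[2:], []).append(attr["href"])
            elif (cls.startswith("p-") and self._capture is None
                  and tag not in VOID_TAGS):
                # Collect text until this element closes again.
                self._capture = (cls[2:], self._depth + 1, [])
        if tag not in VOID_TAGS:
            self._depth += 1

    def handle_data(self, data):
        if self._capture is not None:
            self._capture[2].append(data)

    def handle_endtag(self, tag):
        if self._depth == 0 or tag in VOID_TAGS:
            return
        if self._capture is not None and self._capture[1] == self._depth:
            name, _, chunks = self._capture
            self.properties.setdefault(name, []).append("".join(chunks).strip())
            self._capture = None
        self._depth -= 1


def parse_h_entry(html):
    """Return the properties of the h-entry found in the given markup."""
    parser = ToyHEntryParser()
    parser.feed(html)
    parser.close()
    return parser.properties


SAMPLE = """
<article class="h-entry">
  <h1 class="p-name">microformats.org at 11</h1>
  <a class="u-url" href="https://example.com/post">permalink</a>
  <p class="p-summary">Ten parsers, <b>eight</b> languages.</p>
</article>
"""

if __name__ == "__main__":
    print(parse_h_entry(SAMPLE))
```

For anything beyond experimentation, the production parsers mentioned above are the better choice, since they handle nesting, implied properties and the other cases the specification covers.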

Still simpler, easier, and smaller after all these years

Usually technologies (especially standards) get increasingly complex and more difficult to use over time. With microformats we have been able to maintain (and in some cases improve) their simplicity and ease of use, and continue to this day to get testimonials saying as much, especially in comparison to other efforts:

…hmm, looks like I should use a separate meta element: https://schema.org/startDate .

Man, Schema is verbose. @microformats FTW!

On the broader problem of schema.org verbosity (no matter the syntax), Kevin Marks wrote a very thorough blog post early in the past year:

More testimonials:

I still prefer @microformats over microdata

@microformats are easier to write, easier to maintain and the code is so much smaller than microdata.

I am not a big fan of RDF, semanticweb, or predefined ontologies. We need something lightweight and emergent like the microformats

This last testimonial really gets at the heart of one of the deliberate improvements we have made to iterating on microformats vocabularies in particular.

evolving h-entry

We have had an implementation-driven and implementation-tested practice for the microformats2 parsing specification for quite some time.

More and more we are adopting a similar approach to growing and evolving microformats vocabularies like h-entry.

We have learned to start vocabularies as minimal as possible, rather than start with everything you might want to do. That “start with everything you might want” is a common theory-first approach taken by a-priori vocabularies or entire "predefined ontologies" like schema.org's 150+ objects at launch, very few of which (single digits?) Google or anyone bothers to do anything with, a classic example of premature overdesign, of YAGNI.

With h-entry in particular, we started with an implementation filtered subset of hAtom, and since then have started documenting new properties through a few deliberate phases (which helps communicate to implementers which are more experimental or more stable)

- Proposed Additions – when someone proposes a property, gets some sort of consensus among their community peers, and perhaps one more person to implement it in the wild beyond themselves (e.g. as the IndieWebCamp community does), it's worth capturing it as a proposed property to communicate that this work is happening between multiple people, and that feedback, experimentation, and iteration is desired.

- Draft Properties - when implementations begin to consume proposed properties and do something explicit with them, then a positive reinforcement feedback loop has started and it makes sense to indicate that such a phase change has occurred by moving those properties to "draft". There is growing activity around those properties, and thus this should be considered a last call of sorts for any non-trivial changes, which get harder to make with each new implementation.

- Core Properties - these properties have gained so much publishing and consuming support that they are for all intents and purposes stable. Another phase change has occurred: it would be much harder to change them (too many implementations to coordinate) than keep them the same, and thus their stability has been determined by real world market adoption.

The three levels here, proposed, draft, and core, are merely "working" names, that is, if you have a better idea what to call these three phases by all means propose it.

In h-entry in particular, it's likely that some of the draft properties are now mature (implemented) enough to move them to core, and some of the proposed properties have gained enough support to move to draft. The key to making this happen is finding and citing documentation of such implementation and support. Anyone can speak up in the IRC channel etc. and point out such properties that they think are ready for advancement.

How we improve moving forward

We have made a lot of progress and have much better processes than we did even a year ago, however I think there’s still room for improvement in how we evolve both microformats technical specifications like the microformats2 parsing spec, and in how we create and improve vocabularies.

It’s pretty clear that to enable innovation we have to have ways of encouraging constructive experimentation, and yet we also need a way of indicating what is stable vs in-progress. For both of those we have found that real world implementations provide both a good focusing mechanism and a good way to test experiments.

In the coming year I expect we will find even better ways to explain these methods, in the hopes that others can use them in their efforts, whether related to microformats or in completely different standards efforts. For now, let’s appreciate the progress we’ve made in the past year from publishing sites, to parsing implementations, from process improvements, to continuously improving living specifications. Here's to year 12.

Also published on: microformats.org.

|

|

Daniel Stenberg: curl user survey results 2016 |

The annual curl user poll was up 11 days from May 16 to and including May 27th, and it has taken me a while to summarize and put together everything into a single 21 page document with all the numbers and plenty of graphs.

Full 2016 survey analysis document

The conclusion I’ve drawn from it: “We’re not done yet”.

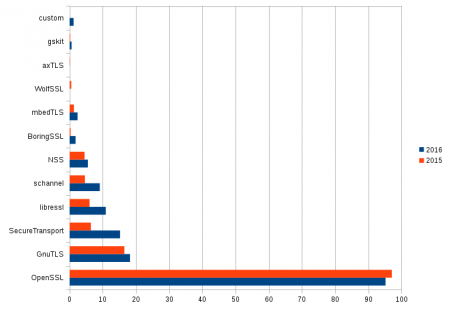

Here’s a bonus graph from the report, showing what TLS backends people are using with curl in 2016 and 2015:

https://daniel.haxx.se/blog/2016/06/21/curl-user-survey-results-2016/

|

|

Christian Heilmann: My closing keynote at Awwwards NYC 2016: A New Hope – the web strikes back |

Last week I was lucky enough to give the closing keynote at the Awwwards Conference in New York.

Following my current fascination, I wanted to cover the topic of Progressive Web Apps for an audience that is not too technical, and also very focused on delivering high-fidelity, exciting and bleeding edge experiences on the web.

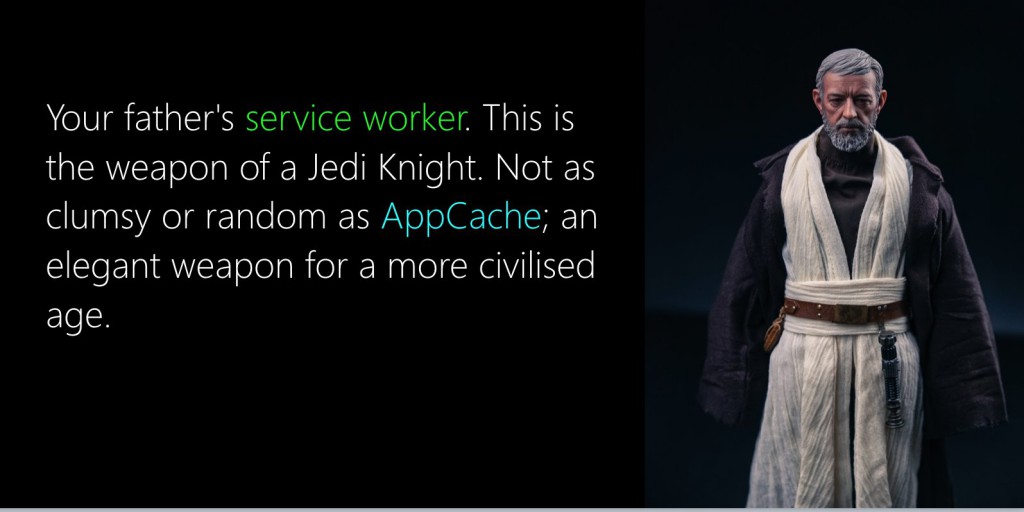

Getting slightly too excited about my Star Wars based title, I went a bit overboard with bastardising Star Wars quotes in the slides, but I managed to cover a lot of the why of progressive web apps and how it is a great opportunity right now.

I covered:

- The web as an idea and its inception: independent, distributed and based on open protocols

- The power of links

- The horrible environment that was the first browser wars

- The rise of standards as a means to build predictable, future-proof products

- How we became too dogmatic about standards

- How this led to rebelling developers using JavaScript to build everything

- Why this is a brittle environment and a massive bet on things working flawlessly on our users’ computers

- How we never experience this as our environments are high-end and we’re well connected

- How we defined best practices for JavaScript, like Unobtrusive JavaScript and defensive coding

- How libraries and frameworks promise to fix all our issues and we’ve become dependent on them

- How a whole new generation of developers learned development by copying and pasting library-dependent code on Stackoverflow

- How this, among other factors, led to a terribly bloated web full of multi-megabyte web sites littered with third party JavaScript and library code

- How the rise of mobile, with its limitations, makes for a terrible environment for those sites to run in

- How native apps were heralded as the solution to that

- How we retaliated by constantly repeating that the web will win out in the end

- How we failed to retaliate by building web-standard based apps that played by the rules of native – an environment where the deck was stacked against browsers

- How right now our predictions partly came true – the native environments and closed marketplaces are failing to deliver right now. Users on mobile use 5 apps and download on average not a single new one per month

- How users are sick of having to jump through hoops to try out some new app and having to lock themselves in a certain environment

- How the current state of supporting mobile hardware access in browsers is a great opportunity to build immersive experiences with web technology

- How ServiceWorker is a great opportunity to offer offline capable solutions and have notifications to re-engage users and allow solutions to hibernate

- How Progressive Web Apps are a massive opportunity to show native how software distribution should happen in 2016

Yes, I got all that in. See for yourself :).

The slides are available on SlideShare

You can watch the screencast of the video on YouTube.

|

|

Daniel Pocock: WebRTC and communications projects in GSoC 2016 |

This year a significant number of students are working on RTC-related projects as part of Google Summer of Code, under the umbrella of the Debian Project. You may have already encountered some of them blogging on Planet or participating in mailing lists and IRC.

WebRTC plugins for popular CMS and web frameworks

There is already a range of pseudo-WebRTC plugins available for CMS and blogging platforms like WordPress. Unfortunately, many of them are either not releasing all their source code, locking users into their own servers or requiring the users to download potentially untrustworthy browser plugins (also without any source code) to use them.

Mesut is making plugins for genuinely free WebRTC with open standards like SIP. He has recently created the WPCall plugin for WordPress, based on the highly successful DruCall plugin for WebRTC in Drupal.

Keerthana has started creating a similar plugin for MediaWiki.

What is great about these plugins is that they don't require any browser plugins and they work with any server-side SIP infrastructure that you choose. Whether you are routing calls into a call center or simply using them on a personal blog, they are quick and convenient to install. Hopefully they will be made available as packages, like the DruCall packages for Debian and Ubuntu, enabling even faster installation with all dependencies.

Would you like to try running these plugins yourself and provide feedback to the students? Would you like to help deploy them for online communities using Drupal, WordPress or MediaWiki to power their web sites? Please come and discuss them with us in the Free-RTC mailing list.

You can read more about how to run your own SIP proxy for WebRTC in the RTC Quick Start Guide.

Finding all the phone numbers and ham radio callsigns in old emails

Do you have phone numbers and other contact details such as ham radio callsigns in old emails? Would you like a quick way to data-mine your inbox to find them and help migrate them to your address book?

Jaminy is working on Python scripts to do just that. Her project takes some inspiration from the Telify plugin for Firefox, which detects phone numbers in web pages and converts them to hyperlinks for click-to-dial. The popular libphonenumber from Google, used to format numbers on Android phones, is being used to help normalize any numbers found. If you would like to test the code against your own mailbox and address book, please make contact in the #debian-data channel on IRC.
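As a rough illustration of the idea (this is not Jaminy's code), the sketch below scans the plain-text body of one email for international-style phone numbers using only the Python standard library. The regular expression, the `normalize` helper and the sample message are assumptions made for this example; a simple regex stands in for libphonenumber, which copes with far more real-world formats.

```python
import re
from email import message_from_string

# Loose pattern for numbers written with a leading "+", e.g. "+1 514 555 0100".
# An assumption for illustration; it will miss many valid formats.
PHONE_RE = re.compile(r"\+\d[\d ().-]{6,}\d")


def normalize(raw):
    """Keep the leading '+' and the digits, dropping spaces and punctuation."""
    return "+" + re.sub(r"\D", "", raw)


def find_phone_numbers(raw_email):
    """Return the unique normalized numbers found in a plain-text email body."""
    message = message_from_string(raw_email)
    body = message.get_payload()
    if not isinstance(body, str):
        # Multipart messages are skipped in this sketch.
        return []
    found = []
    for match in PHONE_RE.finditer(body):
        number = normalize(match.group())
        if number not in found:
            found.append(number)
    return found


SAMPLE_EMAIL = """\
From: alice@example.org
To: bob@example.org
Subject: new number

Call me on +1 (514) 555-0100 or at the office: +44 20 7946 0958.
My old number was +1 514 555 0100.
"""

if __name__ == "__main__":
    for number in find_phone_numbers(SAMPLE_EMAIL):
        print(number)
```

Normalizing before de-duplicating is what lets the two spellings of the same number collapse into one address book candidate.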

A truly peer-to-peer alternative to SIP, XMPP and WebRTC

The team at Savoir Faire Linux has been busy building the Ring softphone, a truly peer-to-peer solution based on the OpenDHT distributed hash table technology.

Several students (Simon, Olivier, Nicolas and Alok) are actively collaborating on this project, and some of them have been fortunate enough to participate at SFL's offices in Montreal, Canada. These GSoC projects have also provided a great opportunity to raise Debian's profile in Montreal ahead of DebConf17 next year.

Linux Desktop Telepathy framework and reSIProcate

Another group of students, Mateus, Udit and Balram have been busy working on C++ projects involving the Telepathy framework and the reSIProcate SIP stack. Telepathy is the framework behind popular softphones such as GNOME Empathy that are installed by default on the GNU/Linux desktop.

I previously wrote about starting a new SIP-based connection manager for Telepathy based on reSIProcate. Using reSIProcate means more comprehensive support for all the features of SIP, better NAT traversal, IPv6 support, NAPTR support and TLS support. The combined impact of all these features is much greater connectivity and much greater convenience.

The students are extending that work, completing the buddy list functionality, improving error handling and looking at interaction with XMPP.

Streamlining provisioning of SIP accounts

Currently there is some manual effort for each user to take the SIP account settings from their Internet Telephony Service Provider (ITSP) and transpose these into the account settings required by their softphone.

Pranav has been working to close that gap, creating a JAR that can be embedded in Java softphones such as Jitsi, Lumicall and CSipSimple to automate as much of the provisioning process as possible. ITSPs are encouraged to test this client against their services and will be able to add details specific to their service through Github pull requests.

The project also hopes to provide streamlined provisioning mechanisms for privately operated SIP PBXes, such as the Asterisk and FreeSWITCH servers used in small businesses.

Improving SIP support in Apache Camel and the Jitsi softphone

Apache Camel's SIP component and the widely known Jitsi softphone both use the JAIN SIP library for Java.

Nik has been looking at issues faced by SIP users in both projects, adding support for the MESSAGE method in camel-sip and looking at why users sometimes see multiple password prompts for SIP accounts in Jitsi.

If you are trying either of these projects, you are very welcome to come and discuss them on the mailing lists, Camel users and Jitsi users.

GSoC students at DebConf16 and DebConf17 and other events

Many of us have been lucky to meet GSoC students attending DebConf, FOSDEM and other events in the past. From this year, Google now expects the students to complete GSoC before they become eligible for any travel assistance. Some of the students will still be at DebConf16 next month, assisted by the regular travel budget and the diversity funding initiative. Nik and Mesut were already able to travel to Vienna for the recent MiniDebConf / LinuxWochen.at

As mentioned earlier, several of the students and the mentors at Savoir Faire Linux are based in Montreal, Canada, the destination for DebConf17 next year and it is great to see the momentum already building for an event that promises to be very big.

Explore the world of Free Real-Time Communications (RTC)

If you are interested in knowing more about the Free RTC topic, you may find the following resources helpful:

- Come and join the Free-RTC mailing list.

- Debian community members are encouraged to set up your SIP/XMPP/WebRTC account and join the debian-rtc list to discuss your experiences using these services.

- Start reading the RTC Quick Start Guide or consider printing the PDF version to read on your commute

- Try Lumicall and Ring apps for Android with your friends

RTC mentoring team 2016

We have been very fortunate to build a large team of mentors around the RTC-themed projects for 2016. Many of them are first time GSoC mentors and/or new to the Debian community. Some have successfully completed GSoC as students in the past. Each of them brings unique experience and leadership in their domain.

Helping GSoC projects in 2016 and beyond

Not everybody wants to commit to being a dedicated mentor for a GSoC student. In fact, there are many ways to help without being a mentor and many benefits of doing so.

Simply looking out for potential applicants for future rounds of GSoC and referring them to the debian-outreach mailing list or an existing mentor helps ensure we can identify talented students early and design projects around their capabilities and interests.

Testing the projects on an ad-hoc basis, greeting the students at DebConf and reading over the student wikis to find out where they are and introduce them to other developers in their area are all possible ways to help the projects succeed and foster long term engagement.

Google gives Debian a USD $500 grant for each student who completes a project successfully this year. If all 2016 students pass, that is over $10,000 to support Debian's mission.

|

|

Shing Lyu: Show Firefox Bookmark Toolbar in Fullscreen Mode |

By default, the bookmark toolbar is hidden when Firefox goes into fullscreen mode. It’s quite annoying because I use the bookmark toolbar a lot. And since I use the i3 window manager, I also use the fullscreen mode very often to avoid resizing the window. After some googling I found this quick solution on SUMO (the Firefox community is awesome!).

The idea is that the Firefox chrome (not to be confused with the Google Chrome browser) is defined using XUL. You can adjust its styling using CSS. The user defined chrome CSS is located in your Firefox profile. Here is how you do it:

- Open your Firefox profile folder, which is ~/.mozilla/firefox/ on Linux. If you can’t find it, you can open about:support in your Firefox and click the “Open Directory” button in the “Profile Directory” field.

- Create a folder named chrome if it doesn’t exist yet.

- Create a file called userChrome.css in the chrome folder, copy the following content into it and save.

@namespace url("http://www.mozilla.org/keymaster/gatekeeper/there.is.only.xul"); /* only needed once */

/* full screen toolbars */
#navigator-toolbox[inFullscreen] toolbar:not([collapsed="true"]) {
  visibility: visible !important;
}

- Restart your Firefox and Voila!
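If you would rather script the steps above, the following Python sketch creates the chrome folder and writes userChrome.css with the rule from this post. The function name `install_user_chrome` is hypothetical, and you have to pass in your own profile directory (the one shown by about:support). The sketch refuses to overwrite an existing userChrome.css, on the assumption that you would want to merge the rule by hand in that case.

```python
from pathlib import Path

# The CSS rule from the post, stored verbatim apart from whitespace.
USER_CHROME_CSS = """\
@namespace url("http://www.mozilla.org/keymaster/gatekeeper/there.is.only.xul"); /* only needed once */

/* full screen toolbars */
#navigator-toolbox[inFullscreen] toolbar:not([collapsed="true"]) {
  visibility: visible !important;
}
"""


def install_user_chrome(profile_dir):
    """Create chrome/userChrome.css inside the given Firefox profile directory."""
    chrome_dir = Path(profile_dir) / "chrome"
    chrome_dir.mkdir(parents=True, exist_ok=True)  # keep an existing folder
    css_path = chrome_dir / "userChrome.css"
    if css_path.exists():
        # Do not clobber customizations that are already there.
        raise FileExistsError(f"{css_path} already exists; merge the rule by hand")
    css_path.write_text(USER_CHROME_CSS, encoding="utf-8")
    return css_path


if __name__ == "__main__":
    import sys
    # Usage: python install_user_chrome.py /path/to/your/profile
    print(install_user_chrome(sys.argv[1]))
```

Firefox still needs a restart afterwards, exactly as in the manual steps.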

https://shinglyu.github.io/web/2016/06/20/firefox_bookmark_toolbar_in_fullscreen.html

|

|

Mozilla Open Policy & Advocacy Blog: EU Internet Users Can Stand Up For Net Neutrality |

Over the past 18 months, the debate around the free and open Internet has taken hold in countries around the world, and we’ve been encouraged to see governments take steps to secure net neutrality. A key component of these movements has been strong public support from users upholding the internet as a global public resource. From the U.S. to India, public opinion has helped to positively influence internet regulators and shape internet policy.

Now, it’s time for internet users in the EU to speak out and stand up for net neutrality.

The Body of European Regulators of Electronic Communications (BEREC) is currently finalising implementation guidelines for the net neutrality legislation passed by EU Parliament last year. This is an important moment — how the legislation is interpreted will have a major impact on the health of the internet in the EU. A clear, strong interpretation can uphold the internet as a free and open platform. But a different interpretation can grant big telecom companies considerable influence and the ability to implement fast lanes, slow lanes, and zero-rating. It would make the internet more closed and more exclusive.

At Mozilla, we believe the internet is at its best as a free, open, and decentralised platform. This is the kind of internet that enables creativity and collaboration; that grants everyone equal opportunity; and that benefits competition and innovation online.

Everyday internet users in the EU have the opportunity to stand up for this type of internet. From now through July 18, BEREC is accepting public comments on the draft guidelines. It’s a small window — and BEREC is simultaneously experiencing pressure from telecom companies and other net neutrality foes to undermine the guidelines. That’s why it’s so important to sound off. When more and more citizens stand up for net neutrality, we’re empowering BEREC to stand their ground and interpret net neutrality legislation in a positive way.

Mozilla is proud to support savetheinternet.eu, an initiative by several NGOs — like European Digital Rights (EDRi) and Access Now — to uphold strong net neutrality in the EU. savetheinternet.eu makes it simple to submit a public comment to BEREC and stand up for an open internet in the EU. BEREC’s draft guidelines already address many of the ambiguities in the Regulation; your input and support can bring needed clarity and strength to the rules. We hope you’ll join us: visit savetheinternet.eu and write BEREC before the July 18 deadline.

https://blog.mozilla.org/netpolicy/2016/06/20/eu-users-can-stand-up-for-net-neutrality/

|

|

The Servo Blog: This Week In Servo 68 |

In the last week, we landed 55 PRs in the Servo organization’s repositories.

The entire Servo team and several of our contributors spent last week in London for the Mozilla All Hands meeting. While this meeting resulted in fewer PRs than normal, there were many great meetings that resulted in both figuring out some hard problems and introducing more people to Servo’s systems. These meetings included:

- Security work planning

- Detailed Rust/Servo-in-Gecko planning

- In-depth overview of Servo’s style and layout systems

- Overview of WebRender and lessons learned in WR1 applied to WR2

Planning and Status

Our overall roadmap and quarterly goals are available online.

This week’s status updates are here.

Notable Additions

- connorgbrewster added tests for the history interface

- ms2ger moved ServoLayoutNode to script, as part of the effort to untangle our build dependencies

- nox is working to reduce our shameless dependencies on Nightly Rust across our dependencies

- aneeshusa added better support for installing the Android build tools for cross-compilation on our builders

- jdm avoided a poor developer experience when debugging on OS X

- darinm223 fixed the layout of images with percentage dimensions

- izgzhen implemented several APIs related to Blob URLs

- srm912 and jdm added support for private mozbrowser iframes (i.e. incognito mode)

- nox improved the performance of several 2d canvas APIs

- jmr0 implemented throttling for mozbrowser iframes that are explicitly marked as invisible

- notriddle fixed the positioning of the cursor in empty input fields

New Contributors

Interested in helping build a web browser? Take a look at our curated list of issues that are good for new contributors!

Screenshot

No screenshots this week.

|

|

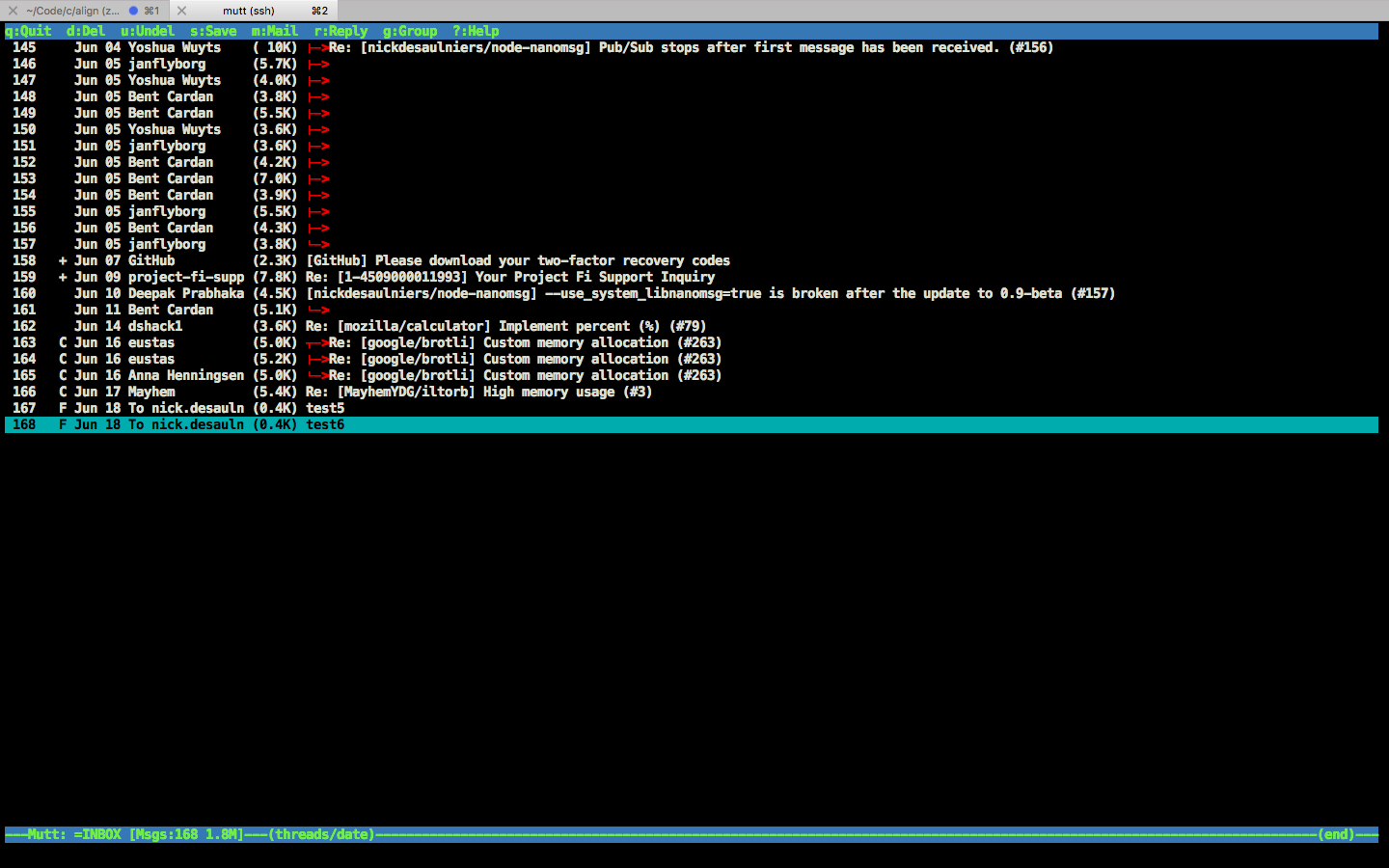

Nick Desaulniers: Setting up mutt with gmail on Ubuntu |

I was looking to set up the mutt email client on my Ubuntu box to go through my gmail account. Since it took me a couple of hours to figure out, and I’ll probably forget by the time I need to know again, I figured I’d post my steps here.

I’m on Ubuntu 16.04 LTS (lsb_release -a)

Install mutt:


In gmail, allow other apps to access gmail:

Allowing less secure apps to access your account Less Secure Apps

Create the folder


where $MAIL for me was /var/mail/nick.

Create the ~/.muttrc file


I’m sure there’s better/more config options. Feel free to go wild, this is by no means a comprehensive setup.

Run mutt:


We should see it connect and download our messages. Press m to start composing a new message and G to fetch new messages.

http://nickdesaulniers.github.io/blog/2016/06/18/mutt-gmail-ubuntu/

|

|

Daniel Glazman: Why he should not have stopped using CSS |

This article is a comment on this one. I found it through a tweet from Korben, obviously. It rubbed me the wrong way, obviously, so I need to answer its author through this blog.

Selectors

The author of the article makes the following three complaints about CSS selectors:

- A style definition associated with a selector can be redefined elsewhere

- If several styles are associated with a selector, the ones defined last in the CSS will always take priority

- Someone can break a component's styles simply by not knowing that a selector is used elsewhere

The least I can say is that I could not believe my eyes when reading this. A few lines above, the author was drawing a comparison with JavaScript. So let's go through his three grievances...

For number 1, he is complaining because if (foo) { a = 1; } ... if (foo) { a = 2;} is simply possible. Bwarf.

In case number 2, he is complaining because in if (foo) { a = 1; a = 2; ... } the ... will see the variable a hold the value 2.

In case number 3, yes, sure, I don't know of any language in which someone who makes changes without knowing the context will never break anything...

Specificity

The "complete madness" of !important (and I am the first to admit that it did not help me when implementing BlueGriffon) is nonetheless what has allowed zillions of bookmarklets and pieces of code injected into totally arbitrary pages to get a guaranteed visual result. The author complains not only about specificity and how it is computed, but also about the ability to have a contextual basis for applying a rule. He is partly right, and a long time ago I myself proposed a CSS Editing Profile restricting the selectors used in such a profile to make manipulation easier. But where he is wrong is that zillions of professional sites using components absolutely need complex selectors and this specificity computation...

Regressions

Reading this section, I let out a loud "come on, he is exaggerating"... Yes, in any interpreted or compiled language, changing something somewhere without taking the rest into account can have negative side effects. His example is exactly equivalent to deriving a class: an addition to the base class shows up in the derived class. Oh dear, that is not good... Let's be serious for a second, please.

The choice of style prioritization

Here, clearly, the author has not understood something fundamental about web browsers, the DOM and the application of CSS. There are only two possible choices: either use the order of the document tree, or use the cascade rules of style sheets. From the DOM's point of view, class="red blue" and class="blue red" are strictly equivalent, and there is no guarantee, I repeat, none, that browsers preserve this order in their DOMTokenList.
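The point about class order can be illustrated with a small Python model. This is only a sketch under simplifying assumptions: the class attribute is treated as an unordered set (following the claim above), every rule is a single-class selector with equal specificity, and the names `winning_color` and `STYLESHEET` are hypothetical, not taken from any browser or library.

```python
from typing import List, Optional, Set, Tuple

# A rule is (class name matched by the selector, declared color).
# All rules are assumed to have the same specificity.
Rule = Tuple[str, str]

STYLESHEET: List[Rule] = [("red", "red"), ("blue", "blue")]


def parse_class_attribute(value: str) -> Set[str]:
    """Model the class attribute as an unordered set of tokens."""
    return set(value.split())


def winning_color(class_attr: str, stylesheet: List[Rule]) -> Optional[str]:
    """Return the color of the last matching rule in stylesheet order."""
    classes = parse_class_attribute(class_attr)
    winner = None
    for class_name, color in stylesheet:
        if class_name in classes:
            # A later rule overrides an earlier one of equal specificity.
            winner = color
    return winner
```

In this model, `winning_color("red blue", STYLESHEET)` and `winning_color("blue red", STYLESHEET)` give the same result, because only the order of the rules in the style sheet decides the winner.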

The future of CSS

Back to the author's JS comparison. Roughly, if line 1 has var a=1 and line 2 has alert(a), the author complains that inserting var a = 2 between the two lines displays the value 2 and not 1... As an argument, that is clearly inadmissible (in the sense of unacceptable).

The BEM methodology

A bandage on a wooden leg... Nothing changes, but we throw in indentation that increases file size, hinders editing and manipulation, and is in no way understandable by a machine, since none of it is preserved by the CSS Object Model.

His alternative proposal

I coughed my lungs out reading this section. It is an unmaintainable, verbose and error-prone horror.

In conclusion...

Yes, CSS has birth defects. I gladly admit it. And even adult defects, when I see some of the nastiness that ShadowDOM wants us to put into CSS Selectors. But that is a steamroller to squash a fly, an overengineered contraption of rarely equalled magnitude.

Overall, I do not agree at all with bloodyowl, who too easily forgets the immense benefits we have gained from everything he decries in his article. Hundreds of things would be impossible without all of it. So yes, fine, the Cascade is a bit far-fetched. But you do not catch flies with vinegar, and if the whole world has adopted CSS (including the publishing world, which comes from solutions quite radically different from the Web), it is because it is good and it works.

In short, no, CSS is not "a horribly dangerous language". But yes, if you let anyone add things any which way to an existing corpus, it can end in disaster. The same is true in a programming language, in a book, in a thesis, in mechanics, everywhere. So there you go.

|

|

Soledad Penades: Post #mozlondon |

Writing this from the comfort of my flat, in London, just as many people are tweeting about their upcoming flight from “#mozlondon”—such a blissful post-all Hands travel experience for once!

Note: #mozlondon was a Mozilla all hands which was held in London last week. And since everything is quite social networked nowadays, the “#mozlondon” tag was chosen. Previous incarnations: mozlando (for Orlando), mozwww (for Vancouver’s “Whistler Work Week” which made for a very nice mountainous jagged tag), and mozlandia (because it was held in Portland, and well, Portlandia. Obviously!)

I always left previous all hands feeling very tired and unwell to various degrees. There’s so much going on, in different places, and there’s almost no time to let things sink into your brain (let alone into your stomach as you rush from location to location). The structure of previous editions also didn’t lend itself very well to collaboration between teams: too many, too long plenaries, and very little time to grab other people’s already exhausted attention.

This time, the plenaries were shortened and reduced in number. No long and windy “inspirational” keynotes, and way more room for arranging our own meetings with other teams, and for arranging open sessions to talk about your work to anyone interested. More BarCamp style than big and flashy, plus optional elective training sessions in which we could learn new skills, related or not to our area of expertise.

I’m glad to say that this new format has worked so much better for me. I actually was quite surprised that it was going really well for me half-way during the week, and being the cynic that I sometimes am, was expecting a terrible blow to be delivered before the end of the event. But… no.

We have got better at meetings. Our team meeting wasn’t a bunch of people interrupting each other. That was a marvel! I loved that we got things done and agreements and disagreements settled in a civilised manner. The recipe for this successful meeting: have an agenda, a set time, and a moderator, and demand one or more “conclusions” or “action items” after the meeting (otherwise why did you meet?), and make everyone aware that time is precious and running out, to avoid derailments.

We also met with the Servo team. With almost literally all of them. This was quite funny: we had set up a meeting with two or three of them, and other members of the team saw it in somebody else’s calendar and figured a meeting to discuss Servo+DevRel sounded interesting, so they all came, out of their own volition! It was quite unexpected, but welcome, and that way we could meet everyone and put faces to IRC nicknames in just one hour. Needless to say, it’s a great caring team and I’m really pleased that we’re going to work together during the upcoming months.

I also enjoyed the elective training sessions.

I went to two training sessions on Rust; it reminded me how much fun “systems programming” can be, and made me excited about the idea of safe parallelism (among other cool stuff). I also re-realised how hard programming and teaching programming can be as I confronted my total inexperience in Rust and increasing frustration at the amount of new concepts thrown at me in such a short interval—every expert on any field should regularly try learning something new every now and then to bring some ‘humility’ back and replenish the empathy stores.

The people sessions were quite long and exhausting, packing a ton of content into 3 hours each, and after them I was just an empty hungry shell. But a shell that had learned good stuff!

One was about having difficult conversations, navigating conflict, etc. I quickly saw how many of my ways had been wrong in the past (e.g. replying to a hurt person with self-defense instead of trying to find why they were hurt). Hopefully I can avoid falling into the same traps in the future! This is essential for so many aspects of life, not only open source or software development; I don’t know why this is not taught to everyone by default.

The second session was about doing good interviews. In this respect, I was a bit relieved to see that my way of interviewing was quite close to the recommendations, but it was good to learn additional techniques, like the STAR interview technique. Which surfaces an irony: even “non-technical” skills have a technique to them.

A note to self (that I’m also sharing with you): always make an effort to find good adjectives that aren’t a negation, but a description. E.g. in this context “people sessions” or “interpersonal skills sessions” work so much better and are more descriptive and specific than “non-technical” while also not disrespecting those skills because they’re “just not technical”.

A thing I really liked from these two sessions is that I had the chance to meet people from areas I would not have ever met otherwise, as they work on something totally different from what I work on.

The session on becoming a more senior engineer was full of good inspiration and advice. Some of the ideas I liked the most:

- as soon as you get into a new position, start thinking of who should replace you so you can move on to something else in the future (so you set more people in a path of success). You either find that person or make it possible for others to become that person…

- helping people be successful as a better indicator of your progress to seniority than being very good at coding

- being a good generalist is as good as being a good specialist—different people work differently and add different sets of skills to an organisation

- but being a good specialist is “only good” if your special skill is something the organisation needs

- changing projects and working on different areas as an antidote to burn out

- don’t be afraid to occasionally jump into something even if you’re not sure you can do it; it will probably grow you!

- canned projects are not your personal failure, it’s simply a signal to move on and make something new and great again, using what you learned. Most of the people on the panel had had projects canned, and survived, and got better

- if a project gets cancelled there’s a very high chance that you are not going to be “fired”, as there are always tons of problems to be fixed. Maybe you were trying to fix the wrong problem. Maybe it wasn’t even a problem!

- as you get more senior you speak less to machines and more to people: you develop less, and help more people develop

- you also get less strict about things that used to worry you a lot and turn out to be… not so important! you also delegate more and freak out less. Tolerance.

- I was also happy to hear a very clear “NO” to programming during every single moment of your spare time to prove you’re a good developer, as that only leads to burn out and being a mediocre engineer.

Deliberate strategies

I designed this week with the full intent of making the most of it while still keeping healthy. These are my strategies for future reference:

- A week before: I spent time going through the schedule and choosing the sessions I wanted to attend.

- I left plenty of space between meetings in order to have some “buffer” time to process information and walk between venues (the time pedestrians spend in traffic lights is significantly higher than you would expect). Even then, I had to rush between venues more than once!

- I would not go to events outside of my timetable – no last-minute stressing over going to an unexpected session!

- If a day was going to be super busy in the afternoon, I took it easier in the morning

- Drank lots of water. I kept track of how much, although I never met my target, but I felt much better the days I drank more water.

- Avoided the terrible coffee at the venues, and also caffeine as much as possible. Also avoided the very-nice-looking desserts, and snacks in general, and didn’t eat a lot because why, if we are just essentially sitting down all day?

- Allowed myself a good coffee a day–going to the nice coffee places I compiled, which made for a nice walk

- Brought layers of clothes (for the venues were either scorching hot and humid or plainly freezing) and comfy running trainers (to walk 8 km a day between venues and rooms without developing sore feet)

- Saying no to big dinners. Actively seeking out smaller gatherings of 2-4 people so we all hear each other and also have more personal conversations.

- Saying no to dinner with people when I wasn’t feeling great.

The last points were super essential to being socially functional: by having enough time to ‘recharge’, I felt energised to talk to random people I encountered in the “Hallway track”, and had a number of fruitful conversations over lunch, drinks or dinner which would otherwise not have happened because I would have felt aloof.

I’m now tired anyway, because there is no way to not get tired after so many interactions and information absorbing, but I am not feeling sick and depressed! Instead I’m just thinking about what I learnt during the last week, so I will call this all hands a success!

|

|

Giorgos Logiotatidis: Build and Test against Docker Images in Travis |

The road towards the absolute CI/CD pipeline goes through building Docker images and deploying them to production. The code included in the images gets unit tested, both locally during development and after merging into the master branch using Travis.

But Travis builds its own environment to run the tests in, which could differ from the environment of the Docker image. For example, Travis may be running tests in a Debian-based VM with libjpeg version X while our to-be-deployed Docker image runs code on top of Alpine with libjpeg version Y.

To ensure that the image to be deployed to production is OK, we need to run the tests inside that Docker image. That is still possible with Travis with only a few changes to .travis.yml:

Sudo is required

    sudo: required

Start by requesting to run tests in a VM instead of in a container.

Request Docker service:

    services:
      - docker

The VM must run the Docker daemon.

Add TRAVIS_COMMIT to Dockerfile (Optional)

    before_install:
      - docker --version
      - echo "ENV GIT_SHA ${TRAVIS_COMMIT}" >> Dockerfile

It's very useful to export the git SHA of HEAD as a Docker environment variable. This way you can always identify the code included, even if you have the .git directory in .dockerignore to reduce the size of the image.

The resulting Docker image is also tagged with the same SHA for easier identification.
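Inside the container, the application can read this variable back at runtime, for example to report which commit is deployed. A minimal Python sketch, assuming the image was built with the ENV GIT_SHA line above; the function name `deployed_revision` and the "unknown" fallback are illustrative choices, not part of the original setup:

```python
import os


def deployed_revision(default: str = "unknown") -> str:
    """Return the git SHA baked into the image through the GIT_SHA variable.

    Falls back to `default` when the variable is absent, for instance
    when the code runs outside the Docker image.
    """
    return os.environ.get("GIT_SHA", default)
```

A health-check or version endpoint could return this value so that a running container can be matched to a commit.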

Build the image

    install:
      - docker pull ${DOCKER_REPOSITORY}:last_successful_build || true
      - docker pull ${DOCKER_REPOSITORY}:${TRAVIS_COMMIT} || true
      - docker build -t ${DOCKER_REPOSITORY}:${TRAVIS_COMMIT} --pull=true .

Instead of pip installing packages, override the install step to build the Docker image.

Start by pulling previously built images from the Docker Hub. Remember that Travis runs each job in an isolated VM, so there is no Docker cache. Pulling previously built images seeds the cache.

Travis' built-in cache functionality can also be used, but I find it more convenient to push to the Hub. Production will later pull from there, and if debugging is needed I can pull the same image locally too.

Each docker pull is followed by a || true which translates to "If the Docker Hub doesn't have this repository or tag it's OK, don't stop the build".

Finally trigger a docker build. The --pull=true flag forces downloading the latest versions of the base images, the ones from the FROM instructions. For example, if an image is based on Debian, this flag forces Docker to download the latest version of the Debian image. The Docker cache has already been populated, so this is not superfluous. If skipped, the new build could use an outdated base image which could have security vulnerabilities.

Run the tests

    script:
      - docker run -d --name mariadb -e MYSQL_ALLOW_EMPTY_PASSWORD=yes -e MYSQL_DATABASE=foo mariadb:10.0
      - docker run ${DOCKER_REPOSITORY}:${TRAVIS_COMMIT} flake8 foo
      - docker run --link mariadb:db -e CHECK_PORT=3306 -e CHECK_HOST=db giorgos/takis
      - docker run --env-file .env --link mariadb:db ${DOCKER_REPOSITORY}:${TRAVIS_COMMIT} coverage run ./manage.py test

First start mariadb, which is needed for the Django tests to run. Fork to the background with the -d flag. The --name flag makes linking with other containers easier.

Then run the flake8 linter. This is run after mariadb, although it doesn't depend on it, to allow some time for the database to download and initialize before it gets hit with tests.

Travis needs about 12 seconds to get MariaDB ready, which is usually more than the time the linter runs. To wait for the database to become ready before running the tests, run Takis. Takis waits for the container named mariadb to open port 3306. I blogged in detail about Takis before.
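The waiting step can be pictured as a loop that retries a TCP connection until the port accepts it. The following Python sketch shows that general idea only; it is not Takis' actual implementation, and the function name `wait_for_port` with its `timeout` and `interval` parameters is hypothetical.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 30.0,
                  interval: float = 0.5) -> bool:
    """Retry a TCP connection to (host, port) until it succeeds.

    Returns True as soon as a connection is accepted, or False if the
    port is still unreachable once `timeout` seconds have elapsed.
    """
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            # A successful connect means something is listening on the port.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Refused, timed out or not resolvable yet: wait and retry.
            time.sleep(interval)
    return False
```

With the values passed in the script step above, the equivalent call would be `wait_for_port("db", 3306)`. An open port does not strictly prove that the database is ready to serve queries, so this remains an approximation of readiness.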

Finally run the tests, making sure that the database is linked using --link and that the environment variables needed for the application to initialize are set using --env-file.

Upload built images to the Hub

    deploy:
      - provider: script
        script: bin/dockerhub.sh
        on:
          branch: master
          repo: foo/bar

And dockerhub.sh

    docker login -e "$DOCKER_EMAIL" -u "$DOCKER_USERNAME" -p "$DOCKER_PASSWORD"
    docker push ${DOCKER_REPOSITORY}:${TRAVIS_COMMIT}
    docker tag -f ${DOCKER_REPOSITORY}:${TRAVIS_COMMIT} ${DOCKER_REPOSITORY}:last_successful_build
    docker push ${DOCKER_REPOSITORY}:last_successful_build

The deploy step is used to run a script that logs in to Docker Hub, tags the images and finally pushes those tags. This step is run only on branch: master and not on pull requests.

Pull requests will not be able to push to Docker Hub anyway, because Travis does not expose encrypted environment variables to pull requests and therefore there will be no $DOCKER_PASSWORD. At the end of the day this is not a problem, because you don't want pull requests with arbitrary code to end up in your Docker image repository.

Set the environment variables

Set the environment variables needed to build, test and deploy in env section:

    env:
      global:
        # Docker
        - DOCKER_REPOSITORY=example/foo
        - DOCKER_EMAIL="foo@example.com"
        - DOCKER_USERNAME="example"
        # Django
        - DEBUG=False
        - DISABLE_SSL=True
        - ALLOWED_HOSTS=*
        - SECRET_KEY=foo
        - DATABASE_URL=mysql://root@db/foo
        - SITE_URL=http://localhost:8000
        - CACHE_URL=dummy://

and save them to .env file for the docker daemon to access with --env-file

    before_script:
      - env > .env

Variables with private data like DOCKER_PASSWORD can be added through Travis' web interface.

That's all!

Pull requests and merges to master are both tested against Docker images, and successful builds of master are pushed to the Hub and can be used directly in production.

You can find a real-life example of .travis.yml in the Snippets project.

https://giorgos.sealabs.net/build-and-test-against-docker-images-in-travis.html

|

|

Mozilla Addons Blog: Multi-process Firefox and AMO |

In Firefox 48, which reaches the release channel on August 1, 2016, multi-process support (code name “Electrolysis”, or “e10s”) will begin rolling out to Firefox users without any add-ons installed.

In preparation for the wider roll-out to users with add-ons installed, we have implemented compatibility checks on all add-ons uploaded to addons.mozilla.org (AMO).

There are currently three possible states:

- The add-on is a WebExtension and hence compatible.

- The add-on has marked itself in the install.rdf as multi-process compatible.

- The add-on has not marked itself compatible, so the state is currently unknown.

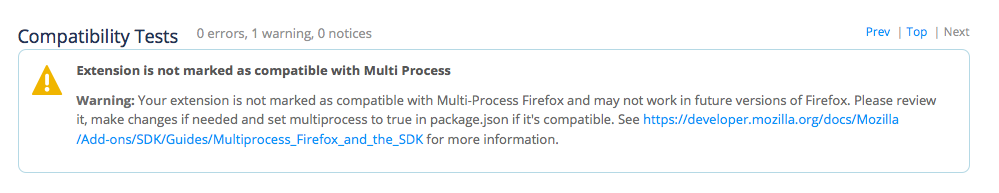

If a new add-on or a new version of an old add-on is not multi-process compatible, a warning will be shown in the validation step. Here is an example:

In future releases, this warning might become more severe as the feature nears full deployment.

For add-ons that fall into the third category, we might implement a more detailed check in a future release, to provide developers with more insight into the “unknown” state.

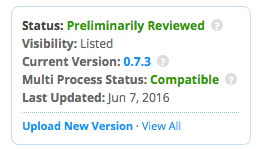

After an add-on is uploaded, the state is shown in the Developer Hub. Here is an example:

Once you verify that your add-on is compatible, be sure to mark it as such and upload a new version to AMO. There is documentation on MDN on how to test and mark your add-on.

If your add-on is not compatible, please head to our resource center where you will find information on how to update it and where to get help. We’re here to support you!

https://blog.mozilla.org/addons/2016/06/17/multi-process-firefox-and-amo/

|

|

John O'Duinn: RelEng Conf 2016: Call for papers |

(Suddenly, it’s June! How did that happen? Where did the year go already?!? Despite my recent public silence, there’s been a lot of work going on behind the scenes. Let me catch up on some overdue blog posts – starting with RelEngConf 2016!)

We’ve got a venue and a date for this conference sorted out, so now it’s time to start gathering presentations and speakers, and figuring out all the other “little details” that go into making a great, memorable conference. This means two things:

1) RelEngCon 2016 is now accepting proposals for talks/sessions. If you have a good industry-related or academic-focused topic in the area of Release Engineering, please have a look at the Release Engineering conference guidelines, and submit your proposal before the deadline of 01-jul-2016.

2) Like all previous RelEng Conferences, the mixture of attendees and speakers, from academia and battle-hardened industry, makes for some riveting topics and side discussions. Come talk with others of your tribe, swap tips-and-gotchas with others who do understand what you are talking about and enjoy brainstorming with people with very different perspectives.

For further details about the conference, or submitting proposals, see http://releng.polymtl.ca/RELENG2015/html/index.html. If you build software delivery pipelines for your company, or if you work in a software company that has software delivery needs, I recommend you follow @relengcon, block off November 18th, 2016 on your calendar and book your travel to Seattle now. It will be well worth your time.

I’ll be there – and look forward to seeing you there!

John.

http://oduinn.com/blog/2016/06/16/releng-conf-2016-call-for-papers/

|

|

Michael Comella: Enhancing Articles Through Hyperlinks |

When reading an article, I often run into a problem: there are links I want to open but now is not a good time to open them. Why is now not a good time?

- If I open them and read them now, I’ll lose the context of the article I’m currently reading.

- If I open them in the background now and come back to them later, I won’t remember the context that this page was opened from and may not remember why it was relevant to the original article.

I prototyped a solution – at the end of an article, I attach all of the links in the article to the end of the article with some additional context. For example, from my Android thread annotations post:

To remember why I wanted to open the link, I provide the sentence the link appeared in.

To see if the page is worth reading, I access the page the link points to and include some of its data: the title, the host name, and a snippet.

There is more information we can add here as well, e.g. a “trending” rating (a fake implementation is pictured), a favicon, or a descriptive photo.
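The first part of this idea, collecting each link together with the sentence it appeared in, can be sketched in a few lines of Python. This is an illustrative sketch rather than the prototype's code: it assumes links sit inside `<p>` elements, uses a deliberately naive sentence splitter, and all names (`LinkContext`, `LinkContextParser`, `sentence_at`, `extract_link_contexts`) are hypothetical. Fetching the target page for its title and snippet is left out.

```python
import re
from html.parser import HTMLParser
from typing import List, NamedTuple, Tuple
from urllib.parse import urlparse


class LinkContext(NamedTuple):
    href: str      # link target
    host: str      # host name of the target
    sentence: str  # sentence of the article the link appeared in


def sentence_at(text: str, offset: int) -> str:
    """Return the sentence of `text` containing character `offset`.

    Naive splitter: a sentence ends at '.', '!' or '?'.
    """
    for match in re.finditer(r"[^.!?]+[.!?]*", text):
        if match.start() <= offset < match.end():
            return match.group().strip()
    return text.strip()


class LinkContextParser(HTMLParser):
    """Collect every <a href> found inside a <p>, with its sentence."""

    def __init__(self) -> None:
        super().__init__()
        self.results: List[LinkContext] = []
        self._in_paragraph = False
        self._text: List[str] = []                # text of current paragraph
        self._pending: List[Tuple[str, int]] = []  # (href, offset in text)

    def handle_starttag(self, tag, attrs):
        if tag == "p":
            self._in_paragraph = True
            self._text, self._pending = [], []
        elif tag == "a" and self._in_paragraph:
            href = dict(attrs).get("href")
            if href:
                offset = sum(len(piece) for piece in self._text)
                self._pending.append((href, offset))

    def handle_data(self, data):
        if self._in_paragraph:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "p" and self._in_paragraph:
            self._in_paragraph = False
            text = "".join(self._text)
            for href, offset in self._pending:
                host = urlparse(href).hostname or ""
                self.results.append(
                    LinkContext(href, host, sentence_at(text, offset)))


def extract_link_contexts(html: str) -> List[LinkContext]:
    parser = LinkContextParser()
    parser.feed(html)
    parser.close()
    return parser.results
```

The resulting list could then be rendered at the end of the article, one entry per link.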

And vice versa

You can also provide the original article’s context on a new page after you click a link:

This context can be added for more than just articles.

Shout-out to Chenxia & Stefan for independently discovering this idea and a context graph brainstorming group for further fleshing this out.

Note: this is just a mock-up – I don’t have a prototype for this.

Themes

The web is a graph. In a graph, we can access new nodes, and their content, by traversing backwards or forwards. Can we take advantage of this relationship?

Alan Kay once said, people largely use computers “as a convenient way of getting at old media…” This is prevalent on the web today – many web pages fill fullscreen browser windows that allow you to read the news, create a calendar, or take notes, much like we can with paper media. How can we better take advantage of the dynamic nature of computers? Of the web?

Can we mix and match live content from different pages? Can we find clever ways to traverse & access the web graph?

This blog & prototype provide two simple examples of traversing the graph and being (ever so slightly) more dynamic: 1) showing the context of where the user is going to go and 2) showing the context of where they came from. Wikipedia (with a certain login-needed feature enabled) has another interesting example when mousing over a link:

They provide a summary and an image of the page the hyperlink will open. Can we use this technique, and others, to provide more context for hyperlinks on every page on the web?

To summarize, perhaps we can solve user problems by considering:

- The web as a graph – accessing content backwards & forwards from the current page

- Computers & the web as a truly dynamic medium, with different capabilities than their print predecessors

For the source and a chance to run it for yourself, check out the repository on github.

http://mcomella.xyz/blog/2016/enhancing-articles-through-hyperlinks.html

|

|