Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

Giorgos Logiotatidis: Takis - A util that blocks until a port is open. |

Over at Mozilla's Engagement Engineering we use Docker to ship our websites. We build the Docker images in CI and then run tests against them. Our tests usually need a database or a cache server, which you can get running with a single command:

docker run -d mariadb

The problem is that this container takes some time to initialize and become available to accept connections. Depending on what you test and how you run your tests, this delay can cause test failures due to database connection timeouts.

We used to delay our tests with a sleep command, but that, besides being an ugly hack, will not always work. For example, you may set the sleep timeout to 10 seconds, and due to CI server load the database initialization takes 11 seconds. And nobody wants a non-deterministic test suite.

Meet Takis. Takis checks once per second if a host:port is open. Once it's open, it just returns. It blocks the execution of your pipeline until services become available. No messages or other output to get in the way of your build logs. No complicated configuration either: it reads the CHECK_PORT and, optionally, CHECK_HOST environment variables, waits and eventually returns.
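The behaviour described above (poll a host:port about once per second, stay silent, return once a connection succeeds) can be sketched in a few lines. This is a Python illustration rather than Takis's actual Go source; the function names and the `localhost` default for CHECK_HOST are assumptions made for the example.

```python
import os
import socket
import time


def wait_for_port(host: str, port: int, interval: float = 1.0) -> None:
    """Block until a TCP connection to host:port succeeds, printing nothing."""
    while True:
        try:
            # A successful connect means something is listening on the port.
            with socket.create_connection((host, port), timeout=interval):
                return
        except OSError:
            # Refused, unreachable or timed out: wait and try again.
            time.sleep(interval)


def wait_from_env(environ=os.environ) -> None:
    """Read CHECK_PORT (required) and CHECK_HOST (optional), then wait."""
    host = environ.get("CHECK_HOST", "localhost")  # default is an assumption
    wait_for_port(host, int(environ["CHECK_PORT"]))
```

Unlike a fixed sleep, the loop returns as soon as the service accepts connections and keeps waiting for as long as it does not.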

Takis is built using Go and is fully statically linked, as Adriaan explains in this intriguing read. You can download it and use it directly in your scripts

~$ wget https://github.com/glogiotatidis/takis/raw/master/bin/takis
~$ chmod +x takis
~$ CHECK_PORT=3306 ./takis

or use its super small Docker image

docker run -e CHECK_PORT=3306 -e CHECK_HOST=database.example.com giorgos/takis

For example here's how we use it to build Snippets Service in TravisCI:

script:
  - docker run -d --name mariadb -e MYSQL_ALLOW_EMPTY_PASSWORD=yes -e MYSQL_DATABASE=snippets mariadb:10.0
  # Wait mariadb to initialize.
  - docker run --link mariadb:db -e CHECK_PORT=3306 -e CHECK_HOST=db giorgos/takis
  - docker run --env-file .env --link mariadb:db mozorg/snippets:latest coverage run ./manage.py test

My colleague Paul also built urlwait, a Python utility and library with similar functionality that can be nicely added to your docker-compose workflow to fight the same problem. Neat!

https://giorgos.sealabs.net/takis-a-util-that-blocks-until-a-port-is-open.html

|

|

Daniel Stenberg: curl user poll 2016 |

It is time for our annual survey on how you use curl and libcurl. Your chance to tell us how you think we’ve done and what we should do next. The survey will close at midnight (Central European Time) on May 27th, 2016.

If you use curl or libcurl from time to time, please consider helping us out by providing your feedback and opinions on a few things:

http://goo.gl/forms/e4CoSDEKde

It’ll take you a couple of minutes and it’ll help us a lot when making decisions going forward. Thanks a lot!

The poll is hosted by Google and that short link above will take you to:

https://docs.google.com/forms/d/1JftlLZoOZLHRZ_UqigzUDD0AKrTBZqPMpnyOdF2UDic/viewform

|

|

Karl Dubost: [worklog] Make Web sites simpler. |

Not a song this week, but a documentary to remind me that some sites are overly complicated, and that there are strong benefits and resilience in choosing a solid, simple framework for working. Not that it makes the work easier. I think it's even the opposite: it's basically harder to make a solid, simple Web site. But the cost pays off in the long term. Tune of the week: The Depth of simplicity in Ozu's movie.

Webcompat Life

Progress this week:

Today: 2016-05-16T10:12:01.879159
354 open issues
----------------------
needsinfo       3
needsdiagnosis  109
needscontact    30
contactready    55
sitewait        142
----------------------

In my journey to get the contactready and needscontact counts lower, we are making progress. You are welcome to participate.

Reorganizing the wiki a bit so it better aligns with our current work. In progress.

Good news on the front of appearance in CSS.

The CSSWG just resolved that "appearance: none" should turn checkbox & radio elements into a normal non-replaced element.

Learning how to do mozregression.

We are looking at creating a mechanism similar to Opera's browser.js in Firefox. Read and participate in the discussion.

Webcompat issues

(a selection of some of the bugs worked on this week).

- Pizza being unresponsive or more to the point being slow. Issue with React not being reactive? When I see the markup, I start crying. Why do we hurt ourselves like this?

- Finally closed the Firefox Gmail on Android issue. Happiness.

- irresponsible responsive: 353 requests, 25 MB…. The number of insane Web sites, be it on mobile or desktop… It's like driving a Hummer SUV around your neighborhood to buy milk.

- OffShore Panama CSS

Reading List

- Intent to Use Counter: Everything

- My URL isn’t your URL

- Cache-Control: immutable. Good stuff.

- Compression, Anti-virus, MITM and Facebook: recipes for disaster.

- Being where the people are:

Vendor Prefixes are dead but with them mass author involvement in early stage specifications. The history of Grid shows that it is incredibly difficult to get people to do enough work to give helpful feedback with something they can’t use – even a little bit – in production.

Follow Your Nose

TODO

- Document how to write tests on webcompat.com using test fixtures.

- ToWrite: rounding numbers in CSS for width

- ToWrite: Amazon prefetching resources with for Firefox only.

Otsukare!

|

|

Nick Desaulniers: What's in a Word? |

Recently, there was some confusion between myself and a coworker over the definition of a “word.” I’m currently working on a blog post about data alignment and figured it would be good to clarify some things now that we can refer to later.

Having studied computer engineering and being quite fond of processor design, when I think of a “word,” I think of the number of bits wide a processor’s general purpose registers are (aka word size). This places hard requirements on the largest representable number and address space. A 64 bit processor can represent 2^64-1 (1.8x10^19) as the largest unsigned long integer, and address up to 2^64 different byte addresses (16 EiB) in memory.
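As a quick check of those numbers, here is a small Python sketch (the helper names are made up for this example) that computes the largest unsigned value and the number of byte addresses for a given word size. For 64 bits it gives 18446744073709551615 and exactly 16 EiB.

```python
EIB = 1 << 60  # one exbibyte, in bytes


def max_unsigned(bits: int) -> int:
    """Largest unsigned integer representable in `bits` bits: 2**bits - 1."""
    return (1 << bits) - 1


def addressable_bytes(bits: int) -> int:
    """Number of distinct byte addresses a `bits`-wide pointer can form."""
    return 1 << bits


for bits in (8, 16, 32, 64):
    # Print the word size, its largest unsigned value and its address space.
    print(bits, max_unsigned(bits), addressable_bytes(bits))
```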

Further, word size limits the possible combinations of operations the processor can perform, length of immediate values used, inflates the size of binary files and memory needed to store pointers, and puts pressure on instruction caches.

Word size also has implications on loads and stores based on alignment, as we’ll see in a follow up post.

When I think of 8 bit computers, I think of my first microcontroller: an Arduino with an Atmel AVR processor. When I think of 16 bit computers, I think of my first game console, a Super Nintendo with a Ricoh 5A22. When I think of 32 bit computers, I think of my first desktop with Intel’s Pentium III. And when I think of 64 bit computers, I think modern smartphones with ARMv8 instruction sets. When someone mentions a particular word size, what are the machines that come to mind for you?

So to me, when someone’s talking about a 64b processor, to that machine (and me) a word is 64b. When we’re referring to a 8b processor, a word is 8b.

Now, some confusion.

Back in my previous blog posts about x86-64 assembly, JITs, or debugging, you might have seen me use instructions that have suffixes of b for byte (8b), w for word (16b), dw for double word (32b), and qw for quad word (64b) (since SSE2 there’s also double quadwords of 128b).

Wait a minute! How suddenly does a “word” refer to 16b on a 64b processor, as opposed to a 64b “word?”

In short, historical baggage. Intel’s first hit processor was the 4004, a 4b processor released in 1971. It wasn’t until 1979 that Intel created the 16b 8086 processor.

The 8086 was created to compete with other 16b processors that beat it to the market, like the Zilog Z80 (any Gameboy emulator fans out there? Yes, I know about the Sharp LR35902). The 8086 was the first design in the x86 family, and it allowed for the same assembly syntax from the earlier 8008, 8080, and 8085 to be reassembled for it. The 8086’s little brother (8088) would be used in IBM’s PC, and the rest is history. x86 would become one of the most successful ISAs in history.

For backwards compatibility, it seems that both Microsoft’s (whose success has tracked that of x86 since MS-DOS and IBM’s PC) and Intel’s documentation refers to words still as being 16b. This allowed 16b PE32+ executables to be run on 32b or even 64b newer versions of Windows, without requiring recompilation of source or source code modification.

This isn’t necessarily wrong to refer to a word based on backwards compatibility, it’s just important to understand the context in which the term “word” is being used, and that there might be some confusion if you have a background with x86 assembly, Windows API programming, or processor design.

So the next time someone asks: why does Intel’s documentation commonly refer to a “word” as 16b, you can tell them that the x86 and x86-64 ISAs have maintained the notion of a word being 16b since the first x86 processor, the 8086, which was a 16b processor.
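The two meanings can be put side by side in a short Python sketch. The dictionary and function names are illustrative only; the widths are the ones listed earlier for the x86 suffixes, and the `struct` format codes are simply fixed-width integer types of the same sizes.

```python
import struct

# x86 operand-size names and their widths in bits, as used in x86 documentation.
X86_SIZES = {"byte": 8, "word": 16, "double word": 32, "quad word": 64}

# struct codes with standard ("<") sizes: B = 1, H = 2, I = 4, Q = 8 bytes.
STRUCT_CODES = {"byte": "<B", "word": "<H", "double word": "<I", "quad word": "<Q"}


def x86_words_per_register(native_bits: int) -> int:
    """How many 16b x86 "words" fit in a register of `native_bits` bits."""
    return native_bits // X86_SIZES["word"]


# On a 64b processor the native word holds four x86 "words".
print(x86_words_per_register(64))
```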

Side Note: for an excellent historical perspective on programming early x86 chips, I recommend Michael Abrash’s Graphics Programming Black Book. For instance he talks about 8086’s little brother, the 8088, being a 16b chip but only having an 8b bus with which to access memory. This caused a mysterious “cycle eater” to prevent fast access to 16b variables, though they were the processor’s natural size. Michael also alludes to alignment issues we’ll see in a follow up post.

http://nickdesaulniers.github.io/blog/2016/05/15/whats-in-a-word/

|

|

Mark Côté: BMO's database takes a leap forward |

For historical reasons (or “hysterical raisins” as gps says) that elude me, the BMO database has been in (ughhh) Pacific Time since it was first created. This caused some weirdness on every daylight saving time switch (particularly in the fall, when the 1:00-2:00 am hour technically occurs twice), but not enough to justify the work in fixing it (it’s been this way for close to two decades, so that means lots of implicit assumptions in the code).

However, we’re planning to move BMO to AWS at some point, and their standard db solution (RDS) only supports UTC. Thus we finally had the excuse to do the work, and, after a bunch of planning, developing, and reviewing, the migration happened yesterday without issues. I am unreasonably excited by this and proud to have witnessed the correction of this egregious violation of standard db principles 18 years after BMO was originally deployed.
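To illustrate why local-time storage is awkward around the fall switch, here is a minimal Python sketch. It is unrelated to BMO's actual code and uses fixed UTC offsets for PDT and PST instead of a timezone database. On the night US daylight saving time ended in 2016 (November 6), two instants an hour apart produce the same Pacific wall-clock time once the offset is dropped, while the UTC values stay distinct.

```python
from datetime import datetime, timedelta, timezone

PDT = timezone(timedelta(hours=-7), "PDT")  # Pacific Daylight Time
PST = timezone(timedelta(hours=-8), "PST")  # Pacific Standard Time

# Two instants one hour apart, either side of the switch at 09:00 UTC.
first = datetime(2016, 11, 6, 8, 30, tzinfo=timezone.utc)
second = first + timedelta(hours=1)

# Before the switch the zone is on PDT, after it on PST.
local_first = first.astimezone(PDT)
local_second = second.astimezone(PST)

# Stored without an offset, both collapse to the same wall-clock value.
naive_first = local_first.replace(tzinfo=None)
naive_second = local_second.replace(tzinfo=None)

print(naive_first, naive_second, naive_first == naive_second)
```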

Thanks to the BMO team and the DBAs!

https://mrcote.info/blog/2016/05/15/bmos-database-takes-a-leap-forward/

|

|

David Lawrence: Happy BMO Push Day! |

the following changes have been pushed to bugzilla.mozilla.org:

- [1270295] don’t update timestamps when the tab is not active / in the background

- [1270867] confusing error message when I was just searching for a bug

- [232193] bmo’s systems (webheads, database, etc) should use UTC natively for o/s timezone and date storage

discuss these changes on mozilla.tools.bmo.

https://dlawrence.wordpress.com/2016/05/14/happy-bmo-push-day-18/

|

|

Emma Humphries: Readable Bug Statuses For Bugzilla: Update |

First, thank you for your interest in this project. Over 100 npm users have downloaded the package this week!

Second, I've been making updates:

- Added a script so that you can run npm script bundle and create a browserify'ed version of the module to include on web pages.

- Use target milestone to indicate the release, before any uplifts, a bug is targeted for.

- Aggressive exception handling so you just need to handle an error message if the package can't parse a bug.

Make sure you're using the latest version, and if you have a feature request, find a bug, or want to make an improvement, submit it to the GitHub repo.

|

|

Yunier José Sosa Vázquez: Mozilla opens its free software support program to all projects |

Last year Mozilla launched MOSS (Mozilla Open Source Support), a program to give financial support to open source projects. In its early days, MOSS was aimed mainly at the projects that Mozilla uses every day. Now, with the addition of “Mozilla Partners”, any project doing work related to Mozilla's Mission can apply.

Our mission, as set out in our Manifesto, is to ensure that the Internet remains a global public resource, open and accessible to all. An Internet that truly puts people first. We know that many other software projects share these goals with us, and we want to use our resources to help and encourage others to work towards them.

If you think your project qualifies, we encourage you to apply by filling out this form. The selection criteria used by the committee that chooses among the applying projects can be read on the Wiki. The budget for this year is approximately 1.25 million US dollars (USD).

The deadline for applications for the initial round is Thursday, May 31 at 11:59 PM (Pacific time). The first awardees will be announced in mid-June in London during the Mozilla All Hands event. It is worth noting that applications will remain open.

If you want to join the discussion list or stay informed about the program's progress, you can do so through the following channels:

- Mailing list: moss@lists.mozilla.org

- Newsgroup: mozilla.moss

- Web: Google Groups

Source: The Mozilla Blog

|

|

Matt Thompson: Our network is full of stories |

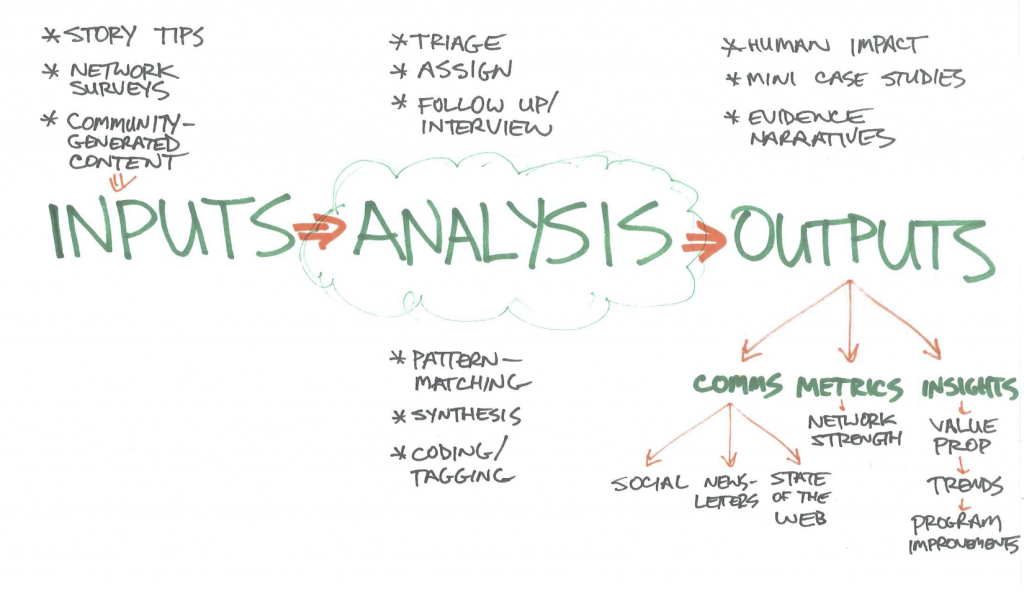

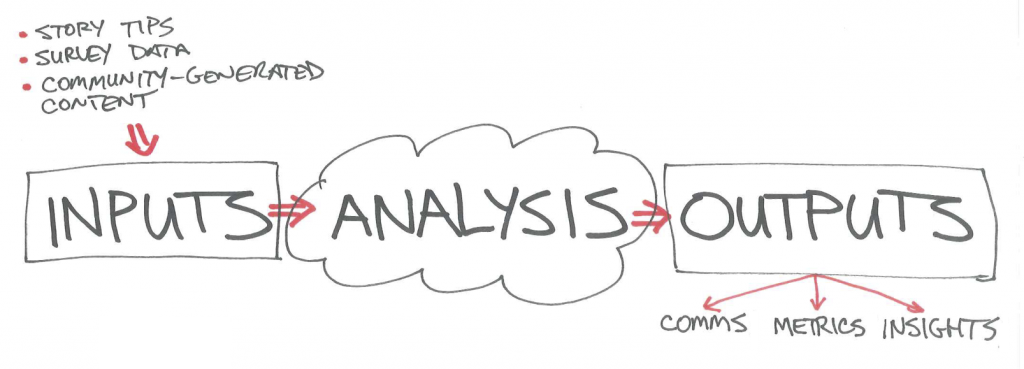

Our network is full of stories, impact and qualitative data. Colleagues and community members discover and use these narratives daily across a broad range — from communications and social media, to metrics and evaluation, to grant-writing, curriculum case studies, and grist for new outlets like the State of the Web.

Our challenge is: how do we capture and analyze these stories and qualitative data in a more systematic and rigorous way?

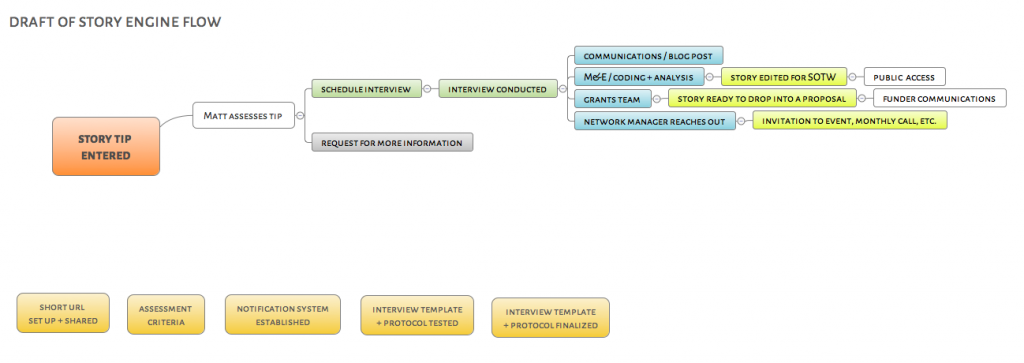

Can we design a unified “Story Engine” that serves multiple customers and use cases simultaneously, in ways that knit together much of our existing work? That’s the challenge we undertook in our first “Story Engine 0.1” sprint: document the goals, interview colleagues, and develop personae. Then design a process, ship a baby prototype, and test it out using some real data.

Designing a network story Engine

Here’s what we shipped in our first 3-day sprint:

- A prototype web site. With a “file a story tip” intake process.

- A draft business plan / workflow

- A successful test around turning network survey data into story leads

- Some early pattern-matching / ways to code and tag evidence narratives

- Documented our key learnings and next steps

1) A prototype web site

http://mzl.la/story is now a thing! It packages what we’ve done so far. Plus a work-bench for ongoing work. It includes:

- “File a story” tip sheet — A quick, easy intake form for filing potential story leads to follow up on. Goal: make it fast and easy for anyone to file the “minimum viable info” we’d need to then triage and follow up. http://mzl.la/tip

- See stories — See story tips submitted via the tip sheet. (Requires password for now, as it contains member emails.) Just a spreadsheet at this point — it will eventually become a Git Hub repo for easier tasking, routing and follow-up. And maybe: a “story garden” with a prettier, more usable interface for humans to browse through and see the people and stories inside our network. http://mzl.la/leads

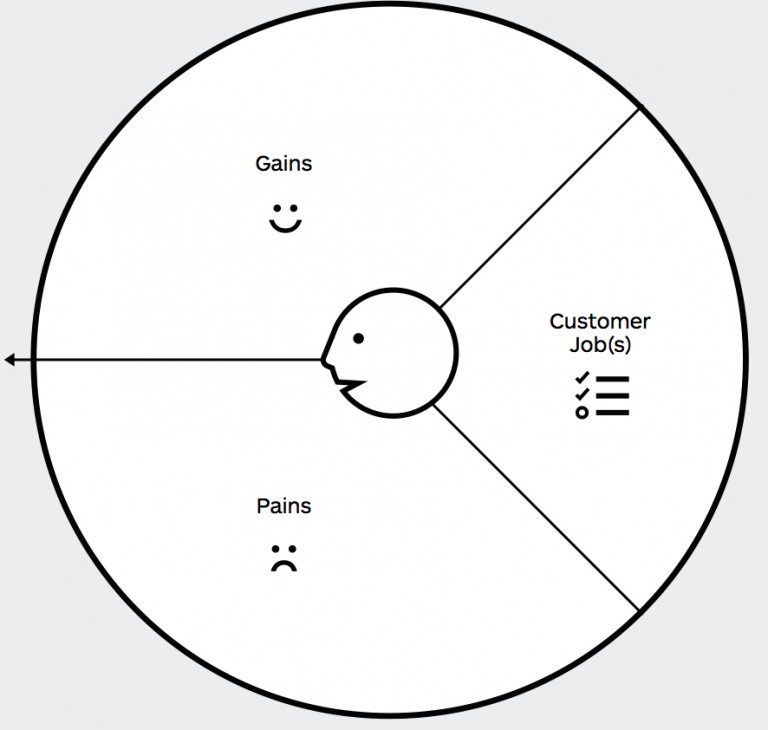

- Personas — Who does this work need to serve? Who are the humans at the center of the design? We interviewed colleagues and documented their context, jobs, pains, and gains. Plus the claims they’d want to make and how they might use our findings. Focused on generating quick wins for the Mozilla Foundation grants, State of the Web, communications and metrics teams. http://mzl.la/customers

- About — Outlining our general thinking, approach and potential methodologies. http://mzl.la/about

- How-To Guides — (Coming soon.) Will eventually become: interview templates, guidance and training on how to conduct effective interviews, our methodology, and coding structure.

2) A draft business process / workflow

What happens when a story tip gets filed? Who does what? Where are the decision points? We mapped some of this initial process, including things like: assess the lead, notify the right staff, conduct follow-up interviews, generate writing/ artefacts, share via social, code and analyze the story, then package and use findings.

3) Turning network survey data into stories

Our colleagues in the “Insights and Impact” team recently conducted their first survey of the network. These survey responses are rich in potential stories, evidence narratives, and qualitative data that can help test and refine our value proposition.

We tested the first piece of our baby story engine by pulling from the network survey and mapping data we just gathered.

This proved to be rich. It proved that our network surveys are not only great ways to gather quantitative data and map network relationships — they also provide rich leads for our grants / comms / M&E / strategy teams to follow up on.

Sample story leads

(Anonymous for privacy reasons):

- “The network helps us form connections to small organizations that offer digital media and learning programs. We learn from their practices and are able to share them out to our broader network of over 1600 Afterschool providers in NYC. It also expands our staff capacity to teach Digital Media and Learning activities.”

- “My passion is youth advocacy and fighting in solidarity with them in their corner. Being part of the network helps me do more with them, like working with libraries in the UK to develop ‘Open source library days’ led by our youths, who have so much to share with us all.”

- “The collaboration has allowed the local community to learn about the Internet and be able to contribute to it. The greatest joy is seeing young community girls being a part of this revolution through clubs. Through the process of learning they also meet local girls who share the same passion as they do.”

These are examples of leads that may be worth following up on to help flesh out theory of change, analyze trends, and tell a story about impact. Some of the leads we gathered also include critique or ways we need to do better — combined with explicit offers to help.

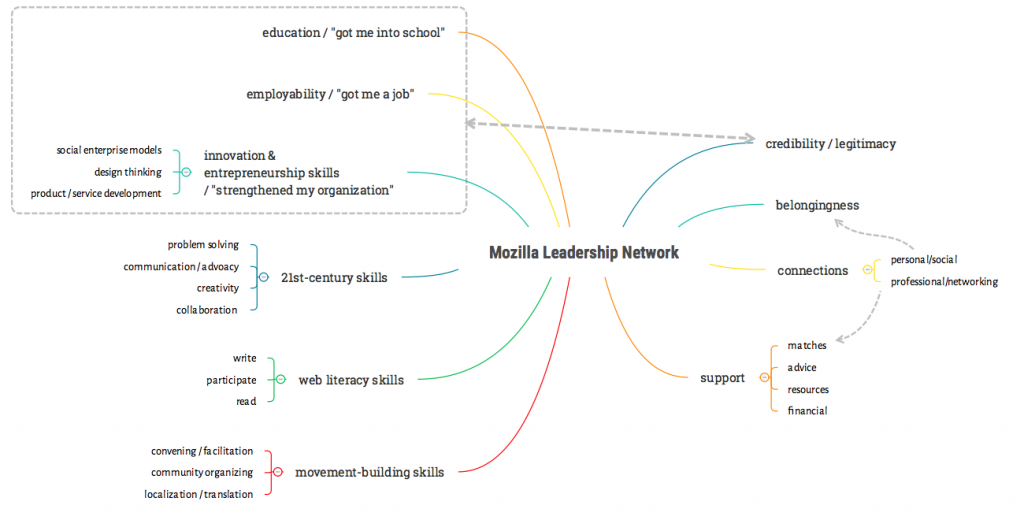

4) Early Pattern-matching / coding AND tagging

One of our goals is to combine the power of both qualitative and quantitative data. Out of this can come tagging and codes around the benefit / value the network is providing to members. Some early patterns in the benefits network members are reporting:

- Support — advice, links to resources, financial support, partners (“matchmaking”)

- Connections — professional, social

- Credibility / legitimacy of being associated with Mozilla

- Belongingness — being part of a group and drawing strength from that

- Skills / practises / knowhow

- Employability / “Helped me get a job”

- Educational opportunity / “Helped me get into school”

- Entrepreneurship & innovation / developing new models, products, services

Imagine these as simple tags we could apply to story tickets in a repo. This will help colleagues sift, sort and follow up on specific evidence narratives that matter to them. Plus allow us to spot patterns, develop claims, and test assumptions over time.
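As a rough sketch of what such tagging could look like in practice, the Python snippet below indexes a few story leads by tag so they can be sifted and sorted. The lead records and the tag spellings are hypothetical, not taken from the prototype.

```python
from collections import defaultdict

# Hypothetical story leads; tag names follow the benefit patterns listed above.
leads = [
    {"id": 1, "tags": {"connections", "skills"}},
    {"id": 2, "tags": {"support", "connections"}},
    {"id": 3, "tags": {"employability"}},
]


def index_by_tag(items):
    """Map each tag to the sorted ids of the leads that carry it."""
    index = defaultdict(list)
    for item in items:
        for tag in item["tags"]:
            index[tag].append(item["id"])
    return {tag: sorted(ids) for tag, ids in index.items()}


# Look up every lead that mentions the "connections" benefit.
print(index_by_tag(leads)["connections"])
```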

5) Key Learnings

Some of our “a ha!” moments from this first sprint:

- Increased empathy and understanding is key. Increasing our empathy and understanding of network members is a key goal for this work.

This is a key muscle we want to strengthen in MoFo’s culture and systems: the ability to empathize with our members’ aspirations, challenges and benefits.

Regularly exposing staff and community to these stories from our network can ground our strategy, boost motivation, aid our change management process, and regularly spark “a ha” moments.

- We are rich in qualitative data. We sometimes fall into a trap of assuming that what we observe, hear about and help facilitate is too ephemeral and anecdotal to be useful. In reality, it’s a rich source of data that needs to be systematically aggregated, analyzed, and fed back to teams and partners. Working on processes and frameworks to improve that was illuminating in terms of the quality of what we already have.

- The network mapping survey is already full of great stories. Our early review and testing proved this thesis: there’s great fodder for evidence narratives / human impact in that data.

- Connect the dots between existing work. This “story engine” work is not about creating another standalone initiative; the opportunity is to provide some process and connective tissue to good work that is already ongoing.

- We can start to see patterns emerging. In terms of: the value members are seeing in the network. We can turn these into a recurring set of themes / tags / codes that can inform our research and feedback loops.

Feedback on the network survey process:

Open-ended questions like: “what’s the value or benefit you get from the network” generate great material.

- This survey question was a rich vein. (Mozilla Science Lab did not ask this open-ended question about value, which meant we lost an opportunity to gather great stories there — we can’t get story tips when people are selecting from a list of benefits.)

- Criticism / suggestions for improvement are great. We’re logging people who will likely also have good critiques, not just ra-ra success stories. And (importantly) some of these critiques come with explicit offers to help.

- Consider adding an open-ended “link or artefact field” to the survey next time. e.g., “Got a link to something cool that you made or documented as part of your interaction with the network?” This could be blog posts, videos, tweets, etc. These can generate easy wins and rich media.

What’s next?

We’ve documented our next steps here. Over the last three days, we’ve dug into how to better capture the impact of what we do. We’ve launched the first discovery phase of a design thinking process centred around: “How might we create stories that are also data?”

We’re listening, reviewing existing thinking, digging into people’s needs and context — asking “what if?” Based on the Mozilla Foundation strategy, we’ve created personas, thought about claims they might want to make, pulled from the results of a first round of surveys on network impacts (New York Hive, Open Science Lab, Mozilla Clubs), and created a prototype workflow and tip sheet. Next up: more digging, listening, and prototyping.

What would you focus on next?

If we consider what we’ve done above as version 0.1, what would you prioritize or focus on for version 0.2? Let us know!

https://openmatt.org/2016/05/13/our-network-is-full-of-stories/

|

|

Tim Taubert: Six Months as a Security Engineer |

It’s been a little more than six months since I officially switched to the Security Engineering team here at Mozilla to work on NSS and related code. I thought this might be a good time to share what I’ve been up to in a short status update:

Removed SSLv2 code from NSS

NSS contained quite a lot of SSLv2-specific code that was waiting to be removed. It was not compiled by default so there was no way to enable it in Firefox even if you wanted to. The removal was rather straightforward as the protocol changed significantly with v3 and most of the code was well separated. Good riddance.

Added ChaCha20/Poly1305 cipher suites to Firefox

Adam Langley submitted a patch to bring ChaCha20/Poly1305 cipher suites to NSS two years ago, but at that time we likely didn’t have enough resources to polish and land it. I picked up where he left off and updated it to conform to the slightly updated specification. Firefox 47 will ship with two new ECDHE/ChaCha20 cipher suites enabled.

RSA-PSS for TLS v1.3 and the WebCrypto API

Ryan Sleevi, also a while ago, implemented RSA-PSS in freebl, the lower cryptographic layer of NSS. I hooked it up to some more APIs so Firefox can support RSA-PSS signatures in its WebCrypto API implementation. In NSS itself we need it to support new handshake signatures in our experimental TLS v1.3 code.

Improve continuous integration for NSS

Kai Engert from Red Hat is currently doing a hell of a job maintaining quite a few buildbots that run all of our NSS tests whenever someone pushes a new changeset. Unfortunately the current setup doesn’t scale too well and the machines are old and slow.

Similar to e.g. Travis CI, Mozilla maintains its own continuous integration and release infrastructure, called TaskCluster. Using TaskCluster we now have an experimental Docker image that builds NSS/NSPR and runs all of our 17 (so far) test suites. The turnaround time is already very promising. This is an ongoing effort, there are lots of things left to do.

Joined the WebCrypto working group

I’ve been working on the Firefox WebCrypto API implementation for a while, long before I switched to the Security Engineering team, and so it made sense to join the working group to help finalize the specification. I’m unfortunately still struggling to carve out more time for involvement with the WG than just attending meetings and representing Mozilla.

Added HKDF to the WebCrypto API

The main reason the WebCrypto API in Firefox did not support HKDF until recently is that no one found the time to implement it. I finally did find some time and brought it to Firefox 46. It is fully compatible with Chrome’s implementation (RFC 5869); the WebCrypto specification still needs to be updated to reflect those changes.
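For readers unfamiliar with HKDF, here is a minimal sketch of the extract-then-expand construction from RFC 5869, written with Python's standard `hmac` and `hashlib` modules and fixed to SHA-256. It illustrates the algorithm only; it is not the Firefox or NSS implementation, and the function name is an assumption.

```python
import hashlib
import hmac


def hkdf_sha256(ikm: bytes, salt: bytes, info: bytes, length: int) -> bytes:
    """Derive `length` bytes from input keying material per RFC 5869."""
    hash_len = hashlib.sha256().digest_size
    if length > 255 * hash_len:
        raise ValueError("requested output is too long for HKDF")
    # Extract: condense the input keying material into a pseudorandom key.
    # An empty salt is replaced by a string of zero bytes, as the RFC specifies.
    prk = hmac.new(salt or b"\x00" * hash_len, ikm, hashlib.sha256).digest()
    # Expand: T(i) = HMAC(PRK, T(i-1) | info | i), concatenated and truncated.
    okm = b""
    block = b""
    counter = 1
    while len(okm) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]
```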

Added SHA-2 for PBKDF2 in the WebCrypto API

Since we shipped the first early version of the WebCrypto API, SHA-1 was the only available PRF to be used with PBKDF2. We now support PBKDF2 with SHA-2 PRFs as well.
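The PRF choice is just a parameter of PBKDF2. As an illustration outside the browser, Python's standard `hashlib.pbkdf2_hmac` accepts the hash name directly; the wrapper function and its defaults below are assumptions for the example, not part of the WebCrypto API.

```python
import hashlib


def derive_key(password: bytes, salt: bytes, iterations: int,
               prf_hash: str = "sha256", length: int = 32) -> bytes:
    """Derive a key with PBKDF2, using HMAC over the named hash as the PRF."""
    return hashlib.pbkdf2_hmac(prf_hash, password, salt, iterations, dklen=length)


# Same password and salt, different PRFs: the derived keys are unrelated.
sha1_key = derive_key(b"password", b"salt", 1, prf_hash="sha1", length=20)
sha256_key = derive_key(b"password", b"salt", 1)
print(sha1_key.hex())
print(sha256_key.hex())
```

A single iteration is used here only to keep the example fast; real deployments use a far higher count.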

Improved the Firefox WebCrypto API threading model

Our initial implementation of the WebCrypto API would naively spawn a new thread every time a crypto.subtle.* method was called. We now use a thread pool per process that is able to handle all incoming API calls much faster.
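The difference between the two models can be sketched in Python with `concurrent.futures`. This is only an analogy for the approach described above, not Firefox code; the pool size of four and the function names are arbitrary choices for the example.

```python
import hashlib
from concurrent.futures import Future, ThreadPoolExecutor

# One shared pool per process; its worker threads are reused across calls.
_POOL = ThreadPoolExecutor(max_workers=4)


def _sha256(data: bytes) -> bytes:
    """The actual work, run on a pool thread."""
    return hashlib.sha256(data).digest()


def digest_async(data: bytes) -> Future:
    """Queue the work on the shared pool instead of spawning a new thread."""
    return _POOL.submit(_sha256, data)


# Twenty calls are served by at most four long-lived threads.
futures = [digest_async(bytes([i])) for i in range(20)]
results = [f.result() for f in futures]
```

The saving comes from paying the thread start-up cost once per worker rather than once per call.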

Added WebCrypto API to Workers and ServiceWorkers

After working on this on and off for more than six months, so even before I officially joined the security engineering team, I managed to finally get it landed, with a lot of help from Boris Zbarsky who had to adapt our WebIDL code generation quite a bit. The WebCrypto API can now finally be used from (Service)Workers.

What’s next?

In the near future I’ll be working further on improving our continuous integration infrastructure for NSS, and on cleaning up the library and its tests. I will hopefully find the time to write more about it as we progress.

https://timtaubert.de/blog/2016/05/six-months-as-a-security-engineer/

|

|

Kim Moir: Welcome Mozilla Releng summer interns |

Francis and Connor will be working on implementing some new features in release promotion as well as migrating some builds to taskcluster. I'll be mentoring Francis, while Rail will be mentoring Connor. If you are in the Toronto office, please drop by to say hi to them. Or welcome them on irc as fkang or sheehan.

| Kim, Francis, Connor and Rail |

Mentoring an intern provides an opportunity to see the systems we run from a fresh perspective. They both have lots of great questions, which make us revisit why design decisions were made and whether we could do things better. Like all teaching roles, I always find that I learn a tremendous amount from the experience, and I hope they have fun learning real world software engineering concepts with respect to running large distributed systems.

Welcome to Mozilla!

http://relengofthenerds.blogspot.com/2016/05/welcome-mozilla-releng-summer-interns.html

|

|

QMO: Firefox 47 Beta 7 Testday, May 20th |

Hey y’all!

I am writing to let you know that next week on Friday (May 20th) we are organizing the Firefox 47 Beta 7 Testday. The main focus will be on the APZ feature and plugin compatibility. Check out all the details via this etherpad.

No previous testing experience is needed, so feel free to join us on the #qa IRC channel where our moderators will offer you guidance and answer your questions.

Join us and help us make Firefox better!

https://quality.mozilla.org/2016/05/firefox-47-beta-7-testday-may-20th/

|

|

Pascal Chevrel: MozFR Transvision Reloaded: 1 year later |

Just one year ago, the French Mozilla community was living through times of major change: several key historical contributors were leaving the project, our various community portals were no longer updated or were broken, and our tools were no longer maintained. At the same time, a few new contributors were popping into our IRC channel asking for ways to get involved in the French Mozilla community.

As a result, Kaze decided to organize the first ever community meetup for the French-speaking community in the Paris office (and we will repeat this meetup in June in the brand new Paris office!).

This resulted in a major and successful community reboot. Departing contributors passed the torch to other members of the community, and newer contributors met in real life for the first time. This is how Clarista officially became our events organizer, how Théo replaced Cédric as the main Firefox localizer and how I became the new developer for Transvision!

What is Transvision? Transvision is a web application created by Philippe Dessantes which helped the French team find localized/localizable strings in Mozilla repositories.

Summarized like that, it doesn't sound that great, but believe me, it is! Mozilla applications have gigantic repos: there are tens of thousands of strings in our Mercurial repositories, some of which we translated a decade ago. When you decide to change a verb for a better one, for example, it is important to be able to find all occurrences of the verb you used in the past to see if they need updating too. When somebody spots a typo or a clumsy wording, it's good to be able to check whether you made the same translation mistake in other parts of the Mozilla applications several years ago, and of course it's good to be able to check that in just a few seconds. Basically, Philippe had built the QA/assistive technology for our localization process that best fitted our team's needs, and we just couldn't let it die.

During the MozFR meetup, Philippe showed to me how the application worked and we created a github repository where we put the currently running version of the code. I tagged that code as version 1.0.

Over the summer, I familiarized myself with the code, which was mostly procedural PHP, several Bash scripts to maintain copies of our Mercurial repos and a Python script used to extract the strings. Quickly, I decided that I would follow the old Open Source strategy of "Release early, release often". Since I was doing that on the sidelines of my job at Mozilla, I needed the changes to be small but frequent incremental steps, as I didn't know how much time I could devote to this project. Basically, having frequent releases means that I always have the codebase in mind, which is good since I can implement an idea quickly, without having to dive into the code to remember it.

One year and 15 releases later, we are now at version 2.5, so here are the features and achievements I am most proud of:

- Transvision is alive and kicking

- We are now a team! Jesús Perez has been contributing code since last December, a couple more people have shown interest in contributing, and Philippe is interested in helping again too. We also have a dynamic community of localizers giving feedback, reporting bugs and asking for improvements

- The project is now organized, and if some day I need to step down and pass the torch to another maintainer, they should not have difficulty setting the project up and maintaining it. We have a GitHub repo, release notes, bugs, tagged releases, a beta server, unit testing, basic stats to understand what is used in the app and a mostly cleaned up codebase using much more modern PHP and tools (Atoum, Composer). It's not perfect, but I think that for amateur developers it's not bad at all, and the most important thing is that the code keeps on improving!

- There are now more than 3000 searches per week done by localizers on Transvision. That was more like 30 per week a year ago. There are searches in more than 70 languages, although 30 locales are doing the bulk of searches and French is still the biggest consumer with 40% of requests.

- Some people are using Transvision in ways I hadn't anticipated. For example, our documentation localizers use it to find the translation of UI strings mentioned in the help articles they translate for support.mozilla.org, and people in QA use it to point to localized strings in Bugzilla

A quick recap of what we have done, feature-wise, in the last 12 months:

- Completely redesigned the application to look and feel good

- Locale to Locale searches: English is not necessarily the locale you want to use as the source (very useful to check differences between languages of the same family, for example Occitan/French/Catalan/Spanish...).

- Hints and warnings for strings that look too long or too short compared to English, potentially bad typography, access keys that don't match your translation...

- Possibility for anybody to file a bug in Bugzilla with a pointer to the badly translated string (yes we will use it for QA test days within the French community!)

- Firefox OS strings are now there

- Search results are a lot more complete and accurate

- We now have a stable JSON/JSONP API. I know that Pontoon uses it to provide translation suggestions, and I heard that the Moses project uses it too. (If you use the Transvision API, ping me, I'd like to know!)

- We can point any string to the right revision-controlled file in the source and target repos

- For heavy users of the tool, we have a companion add-on called MozTran, provided by Goofy from our Babelzilla friends.

The above list is of course just a highlight of the main features, you can get more details on the changelog.
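To make the "too long or too short compared to English" hint from the feature list more concrete, here is a minimal sketch of how such a check could work. It is not Transvision's actual code (which is PHP): the function name `length_hint` and the ratio thresholds of 0.5 and 2.0 are assumptions chosen purely for illustration.

```python
from typing import Optional


def length_hint(source: str, translation: str,
                low: float = 0.5, high: float = 2.0) -> Optional[str]:
    """Return a hint when a translation's length looks suspicious.

    `low` and `high` are hypothetical ratio thresholds, not the values
    Transvision really uses.
    """
    # Empty strings carry no useful length information, so skip them.
    if not source or not translation:
        return None

    # Compare the translated length to the source (English) length.
    ratio = len(translation) / len(source)
    if ratio > high:
        return "too long"
    if ratio < low:
        return "too short"
    return None


if __name__ == "__main__":
    print(length_hint("Save", "Enregistrer"))
    print(length_hint("Cancel", "Annuler"))
```

A simple character ratio like this will produce false positives for very short strings, which is why the result is best treated as a hint for a human reviewer rather than an error.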

If you use Transvision, I hope you enjoy it and that it is useful to you. If you don't use Transvision (yet), give it a try; it may help you in your translation process, especially if your localization process is similar to the French one (targets Firefox Nightly builds first, works directly on the mercurial repo, focuses on QA).

This was the first year of the rebirth of Transvision, and I hope that the year to come will be just as good as this one. I learnt a lot with this project and I am happy to see it grow both in terms of usage and community. I am also happy that a tool created by one specific localization team is now used by so many other teams in the world.

|

|

Yunier José Sosa Vázquez: Help us build the future of Firefox with the new Test Pilot program |

Mozilla's Test Pilot program has a new look and a new website, as Mozilla showed in an article published on its blog by Nick Nguyen, Vice President of Firefox. Test Pilot lets you try the experimental features that will be incorporated into Firefox and say what you think of them, what should be changed, or which new ideas you have, through the feedback channel attached to each new feature.

In the video below you can take a quick look at the available experiments.

|

|

Air Mozilla: Bay Area Rust Meetup May 2016 |

Bay Area Rust Meetup for May 2016.


|

|

Mozilla Addons Blog: AMO technical architecture |

addons.mozilla.org (AMO) has been around for more than 12 years, making it one of the oldest websites at Mozilla. It celebrated its 10th anniversary a couple of years ago, as Wil blogged about.

AMO started as a PHP site that grew and grew as new pieces of functionality were bolted on. In October 2009 the rewrite from PHP to Python began. New features were added, the site grew ever larger, and now a few cracks are starting to appear. These are merely the result of a site that has lots of features and functionality and has been around for a long time.

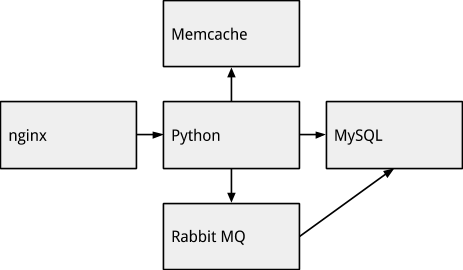

The site architecture is currently something like below, but please note this simplifies the site and ignores the complexities of AWS, the CDN and other parts of the site.

Basically, all the code is in one repository and the main application (a Django app) is responsible for generating everything, from HTML to emails to APIs, and it all gets deployed at the same time. There are a few problems with this:

- The amount of functionality in the site has caused such a growth in interactions between the features that it is harder and harder to test.

- Large JavaScript parts of the site have no automated testing.

- The JavaScript and CSS spill over between different parts of the site, so changes in one regularly break other parts of the site.

- Not all parts of the site have the same expectation of uptime but are all deployed at the same time.

- Not all parts of the site have the same requirements for code contributions.

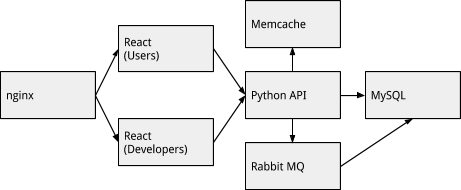

We are moving towards a new model similar to the one used for Firefox Marketplace. Whereas Marketplace built its own front-end framework, we are going to be using React on the front end.

The end result will start to look something like this:

A separate version of the site is rendered for the different use cases, for example developers or users. In this case a request comes in and hits the appropriate front-end stack. That stack will render the site using universal React in node.js on the server. It will access the data store by calling the appropriate Python REST APIs.

In this scenario, the legacy Python code will migrate to being a REST API that manages storage, transactions, workflow, permissions and the like. All the front-facing user interface work will be done in React and be independent from each other as much as possible.
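The separation described above can be sketched in a few lines. This is only an illustration of the boundary, written in Python for brevity: in the planned architecture the front ends are React applications running in node.js, and every name here (`api_get_addon`, `render_user_page`, `render_developer_page`, `handle_request`, the sample data) is hypothetical.

```python
import json

# Hypothetical in-memory data standing in for the real data store.
_ADDONS = {1: {"id": 1, "name": "Example Add-on", "status": "public"}}


def api_get_addon(addon_id: int) -> str:
    """Stand-in for a Python REST endpoint: returns JSON, never HTML."""
    addon = _ADDONS.get(addon_id)
    if addon is None:
        return json.dumps({"error": "not found"})
    return json.dumps(addon)


def render_user_page(addon_id: int) -> str:
    """Front end for users: only talks to the API, never to storage."""
    data = json.loads(api_get_addon(addon_id))
    if "error" in data:
        return "<h1>Not found</h1>"
    return f"<h1>{data['name']}</h1>"


def render_developer_page(addon_id: int) -> str:
    """Front end for developers: same API, different presentation."""
    data = json.loads(api_get_addon(addon_id))
    if "error" in data:
        return "<h1>Not found</h1>"
    return f"<h1>{data['name']}</h1><p>Status: {data['status']}</p>"


# Each audience gets its own independent front-end stack.
FRONTENDS = {"users": render_user_page, "developers": render_developer_page}


def handle_request(audience: str, addon_id: int) -> str:
    """Route an incoming request to the front end for its audience."""
    return FRONTENDS[audience](addon_id)


if __name__ == "__main__":
    print(handle_request("users", 1))
    print(handle_request("developers", 1))
```

The point of the sketch is that the two renderers share nothing except the JSON contract of the API, so either one could be changed, tested or deployed without touching the other.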

It’s not quite micro services, but the breaking of a larger site into smaller independent pieces. The first part of this is happening with the “discovery pane” (accessible at about:addons). This is our first project using this infrastructure, which features a new streamlined way to install add-ons with a new technical architecture to serve it to users.

As we roll out this new architecture we’ll be doing more blog posts, so if you’d like to get involved then join our mailing list or check out our repositories on Github.

https://blog.mozilla.org/addons/2016/05/12/amo-technical-architecture/

|

|

Support.Mozilla.Org: What’s Up with SUMO – 12th May |

Hello, SUMO Nation!

Yes, we know, Friday the 13th is upon us… Fear not, in good company even the most unlucky days can turn into something special ;-) Pet a black cat, find a four leaf clover, smile and enjoy what the weekend brings!

As for SUMO, we have a few updates coming your way. Here they are!

Welcome, new contributors!

Contributors of the week

- The ever-helpful jscher2000 for cracking the amazing “5000 solutions given” milestone on the forums – hats off!

- The forum supporters who helped users out for the last week.

- The writers of all languages who worked on the KB for the last week.

We salute you!

Most recent SUMO Community meeting

- You can read the notes here and see the video on our YouTube channel and at AirMozilla.

The next SUMO Community meeting…

- …is happening on WEDNESDAY the 18th of May – join us!

- Reminder: if you want to add a discussion topic to the upcoming meeting agenda:

- Start a thread in the Community Forums, so that everyone in the community can see what will be discussed and voice their opinion here before Wednesday (this will make it easier to have an efficient meeting).

- Please do so as soon as you can before the meeting, so that people have time to read, think, and reply (and also add it to the agenda).

- If you can, please attend the meeting in person (or via IRC), so we can follow up on your discussion topic during the meeting with your feedback.

Community

- Remember our great Ivory Coast Mozillians? They’re organizing a SUMO sprint this Saturday in Abidjan! Thanks to Abbackar for his continuous community efforts!

- We are slowly reaching the end of the discussions and presentations about possible platform substitutions for Kitsune, as per this thread. Stay tuned for announcements next week! HUGE thanks to everyone who provided feedback and helped us review various options.

- Reminder: want to know what’s going on with the Admins? Check this thread in the forum.

- Ongoing reminder #1: if you think you can benefit from getting a second-hand device to help you with contributing to SUMO, you know where to find us.

- Ongoing reminder #2: we are looking for more contributors to our blog. Do you write on the web about open source, communities, SUMO, Mozilla… and more? Do let us know!

Social

- No major updates – keep tweeting helpfully, you’re doing an awesome job!

- Ongoing reminder: We have a training out there for all those interested in Social Support – talk to Madalina or Costenslayer on #AoA (IRC) for more information.

Support Forum

- Turkish SUMO forums are live! Thanks to Selim and Emin for being its first stewards.

- Remember, if you’re new to the support forum, come over and say hi!

Knowledge Base & L10n

- If you want to participate in the ongoing discussion about source material quality and frequency, take a look at this thread.

- Reminder: L10n hackathons everywhere! Find your people and get organized!

Firefox

- for Android

- Version 46 support discussion thread.

- Reminder: version 47 will stop supporting Gingerbread. High time to update your Android installations!

- for Desktop

- Fly away with the Firefox Test Pilot!

- We are aware of compatibility issues between Firefox, Kaspersky Internet Security, and Facebook. More details here.

- For the main thread on our forums about Firefox 46, click here.

- for iOS

- Firefox for iOS 4.0 IS HERE! The highlights are:

- Firefox is now present on the Today screen.

- You can access your bookmarks in the search bar.

- You can override the certificate warning on sites that present them (but be careful!).

- You can print webpages.

- Users with older versions of iOS 8 or lower will not be able to add the Firefox widget. See Common Response Available.

- Start your countdown clocks ;-) Firefox for iOS 5.0 should be with us in approximately 6 weeks!


Thanks for your attention and see you around SUMO, soon!

https://blog.mozilla.org/sumo/2016/05/12/whats-up-with-sumo-12th-may/

|

|

Air Mozilla: Web QA Team Meeting, 12 May 2016 |

Weekly Web QA team meeting - please feel free and encouraged to join us for status updates, interesting testing challenges, cool technologies, and perhaps a...


|

|

Air Mozilla: Reps weekly, 12 May 2016 |

This is a weekly call with some of the Reps to discuss all matters about/affecting Reps and invite Reps to share their work with everyone.


|

|

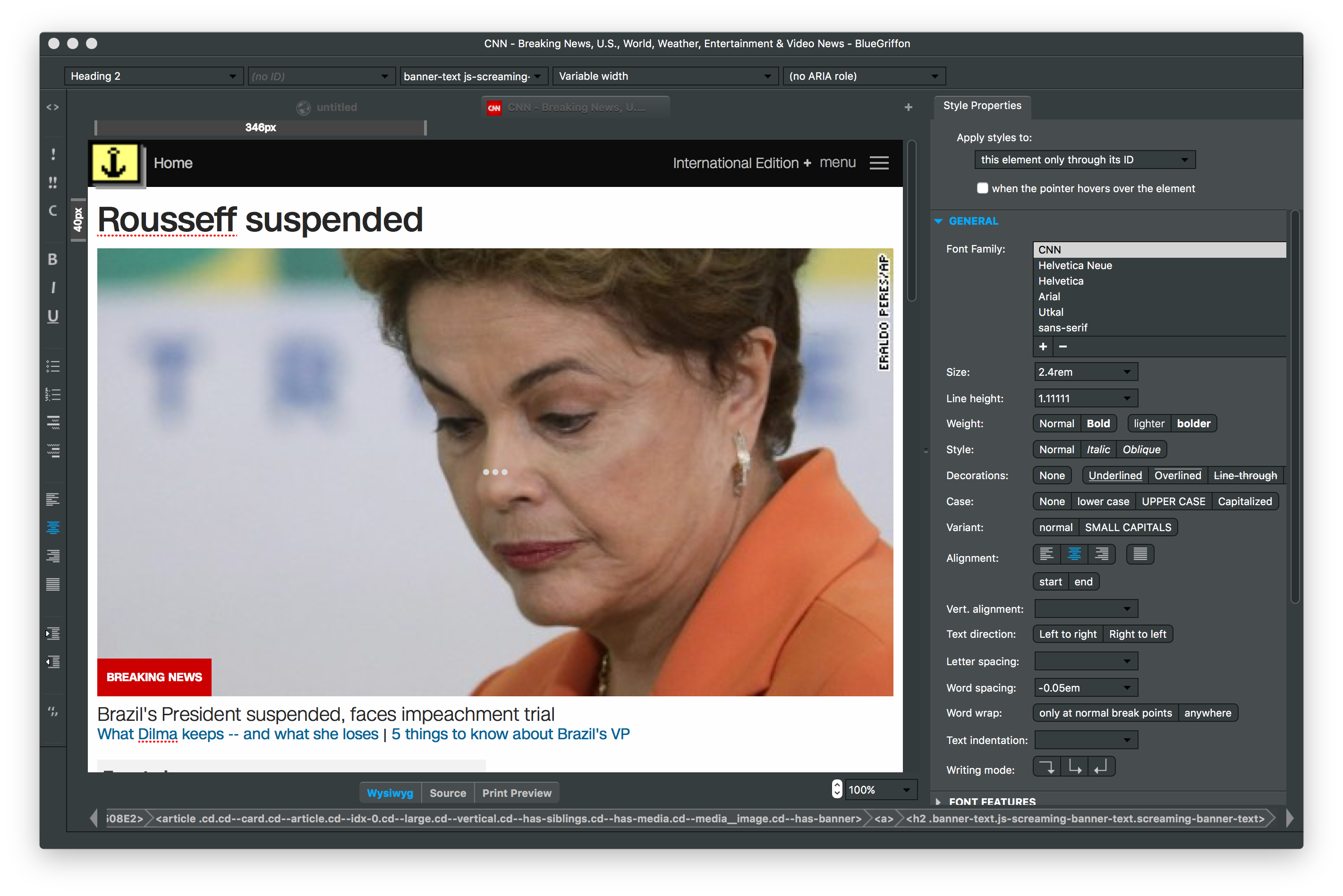

Daniel Glazman: BlueGriffon 2.0 approaching... |

BlueGriffon 2.0 is approaching, a major revamp of my cross-platform Wysiwyg Gecko-based editor. You can find previews here for OSX, Windows and Ubuntu 16.04 (64 bits).

Warnings:

- it's HIGHLY recommended to NOT overwrite your existing 1.7 or 1.8 version ; install it for instance in /tmp instead of /Applications

- it's VERY HIGHLY recommended to start it by creating a dedicated profile

- open BlueGriffon.app --args -profilemanager (on OSX)

- bluegriffon.exe -profilemanager (on Windows)

- add-ons will NOT work with it, don't even try to install them in your test profile

- it's a work in progress, expect bugs, issues and more

Changes:

- major revamp, you won't even recognize the app

- based on a very recent version of Gecko, that was a HUGE work.

- no more floating panels, too hacky and expensive to maintain

- rendering engine support added for Blink, Servo, Vivliostyle and Weasyprint!

- tons of debugging in *all* areas of the app

- BlueGriffon now uses the native colorpicker on OSX. Yay!!! The native colorpicker of Windows is so weak and ugly we just can't use it (it can't even deal with opacity...) and decided to stick to our own implementation. On Linux, the situation is more complicated, the colorpicker is not as ugly as the Windows' one, but it's unfortunately too weak compared to what our own offers.

- more CSS properties handled

- helper link from each CSS property in the UI to MDN

- better templates handling

- auto-reload of html documents if modified outside of BlueGriffon

- better Markdown support

- zoom in Source View

- tech changes for future improvements: support for :active and other dynamic pseudo-classes, support for ::before and ::after pseudo-elements in CSS Properties; rely on Gecko's CSS lexer instead of our own. We're also working on cool new features on the CSS side like CSS Variables and even much cooler than that

http://www.glazman.org/weblog/dotclear/index.php?post/2016/05/12/BlueGriffon-2.0-approaching...

|

|