You can add any RSS source (including a LiveJournal journal) to your friends feed on the syndication page.

Source information: http://planet.mozilla.org/.

This journal is generated from the open RSS source at http://planet.mozilla.org/rss20.xml and is updated whenever that source is updated. It may not match the content of the original page. The feed was created automatically at the request of readers of this RSS feed.

For any questions about how this service works, please use the contact information page.


QMO: Firefox 47 beta 7 Testday Results

Howdy mozillians!

Last week on Friday (May 20th), we held another successful event – Firefox 47 beta 7 Testday.

Thank you all – Ilse Macías, Stelian Ionce, Iryna Thompson, Nazir Ahmed Sabbir, Rezaul Huque Nayeem, Tanvir Rahman, Zayed News, Azmina Akter Papeya, Roman Syed, Raihan Ali, Sayed Ibn Masudn, Samad Talukdar, John Sujoy, Nafis Ahmed Muhit, Sajedul Islam, Asiful Kabir Heemel, Sunny, Maruf Rahman, Md. Tanvir Ahmed, Saddam Hossain, Wahiduzzaman Hridoy, Ishak Herock, Md.Tarikul Islam Oashi, Md Rakibul Islam, Niaz Bhuiyan Asif, MD. Nnazmus Shakib (Robin), Akash, Towkir Ahmed, Saheda Reza Antora, Md. Almas Hossain, Hasibul Hasan Shanto, Tazin Ahmed, Badiuzzaman Pranto, Md.Majedul islam, Aminul Islam Alvi, Toufiqul Haque Mamun, Fahim, Zubayer Alam, Forhad Hossain, Mahfuza Humayra Mohona – for the participation!

A big thank you goes out to all our active moderators too!

Results:

- no bugs were verified or triaged

- some failures were mentioned for the APZ feature in the etherpads (link 1 and link 2); therefore, please add the requested details in the etherpads or, even better, join us on the #qa IRC channel and let’s figure them out

https://quality.mozilla.org/2016/05/firefox-47-beta-7-testday-results/


Yunier José Sosa Vázquez: Firefox for iOS improves its security and gets you around the Web faster

Last week Mozilla released a new version of Firefox for iOS, and here at Mozilla Hispano we walk you through what is new. Above all, this release improves people’s privacy and security while browsing the Web and offers a more streamlined experience that gives you greater control over your mobile browsing.

What is new in this update?

The iOS Today widget: We know that getting to what you are looking for on the Web quickly matters to you, especially on your phone. For that reason, you can now reach Firefox through the iOS Today widget to open new tabs or a recently copied link.

The Awesome Bar: From now on, as you type in the address bar, it shows the bookmarks, history entries and search suggestions that match the term you entered. This makes getting to your favorite websites faster and easier.

Manage your security: By default, Firefox helps keep you safe by warning you when the connection to a given site is not secure. When you try to access an insecure site, you will see an “error” message warning you that the connection is not trustworthy, and you will be protected when accessing it. With Firefox for iOS, you can temporarily bypass these error messages for websites that you have deemed “safe” but that Firefox may still flag as potentially insecure.

Because the mechanism Apple uses for downloading and installing applications on its phones is very complicated, we cannot offer this version for download from our site. Perhaps later on, if this rule changes, we will be able to do so and complete the set of Firefox versions. So, to try out and enjoy these new features added to Firefox for iOS, you need to download this update from the App Store.

Sources: The Mozilla Blog and Mozilla Press

http://firefoxmania.uci.cu/firefox-para-ios-mejora-su-seguridad-y-te-hace-ir-mas-rapido-por-la-web/


Niko Matsakis: Unsafe abstractions

The unsafe keyword is a crucial part of Rust’s design. For those not familiar with it, the unsafe keyword is basically a way to bypass Rust’s type checker; it essentially allows you to write something more like C code, but using Rust syntax.

The existence of the unsafe keyword sometimes comes as a surprise at first. After all, isn’t the point of Rust that Rust programs should not crash? Why would we make it so easy then to bypass Rust’s type system? It can seem like a kind of flaw in the design.

In my view, though, unsafe is anything but a flaw: in fact, it’s a critical piece of how Rust works. The unsafe keyword basically serves as a kind of “escape valve”: it means that we can keep the type system relatively simple, while still letting you pull whatever dirty tricks you want to pull in your code. The only thing we ask is that you package up those dirty tricks with some kind of abstraction boundary.

This post introduces the unsafe keyword and the idea of unsafety boundaries. It is in fact a lead-in for another post I hope to publish soon that discusses a potential design of the so-called Rust memory model, which is basically a set of rules that help to clarify just what is and is not legal in unsafe code.

Unsafe code as a plugin

I think a good analogy for thinking about how unsafe works in Rust is to think about how an interpreted language like Ruby (or Python) uses C modules. Consider something like the JSON module in Ruby. The JSON bundle includes a pure Ruby implementation (JSON::Pure), but it also includes a re-implementation of the same API in C (JSON::Ext). By default, when you use the JSON bundle, you are actually running C code – but your Ruby code can’t tell the difference. From the outside, that C code looks like any other Ruby module – but internally, of course, it can play some dirty tricks and make optimizations that wouldn’t be possible in Ruby. (See this excellent blog post on Helix for more details, as well as some suggestions on how you can write Ruby plugins in Rust instead.)

Well, in Rust, the same scenario can arise, although the scale is different. For example, it’s perfectly possible to write an efficient and usable hashtable in pure Rust. But if you use a bit of unsafe code, you can make it go faster still. If this is a data structure that will be used by a lot of people or is crucial to your application, this may be worth the effort (so e.g. we use unsafe code in the standard library’s implementation). But, either way, normal Rust code should not be able to tell the difference: the unsafe code is encapsulated at the API boundary.

Of course, just because it’s possible to use unsafe code to make things run faster doesn’t mean you will do it frequently. Just like the majority of Ruby code is in Ruby, the majority of Rust code is written in pure safe Rust; this is particularly true since safe Rust code is very efficient, so dropping down to unsafe Rust for performance is rarely worth the trouble.

In fact, probably the single most common use of unsafe code in Rust is for FFI. Whenever you call a C function from Rust, that is an unsafe action: this is because there is no way the compiler can vouch for the correctness of that C code.

Extending the language with unsafe code

To me, the most interesting reason to write unsafe code in Rust (or a C module in Ruby) is so that you can extend the capabilities of the language. Probably the most commonly used example of all is the Vec type in the standard library, which uses unsafe code so it can handle uninitialized memory; Rc and Arc, which enable shared ownership, are other good examples. But there are also much fancier examples, such as how Crossbeam and deque use unsafe code to implement non-blocking data structures, or Jobsteal and Rayon use unsafe code to implement thread pools.

In this post, we’re going to focus on one simple case: the split_at_mut method found in the standard library. This method is defined over mutable slices like &mut [T]. It takes as arguments a slice and an index (mid), and it divides that slice into two pieces at the given index. Hence it returns two subslices: one that covers the range 0..mid, and one that covers the range mid.. (from mid to the end).

You might imagine that split_at_mut would be defined like this:

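As a rough sketch, such a definition might look like the commented-out function below. It is written as a free function under the hypothetical name `naive_split_at_mut`, since code outside the standard library cannot add methods to `[T]`. The compiled part, `bump_both_halves`, is likewise an illustrative helper: it shows the behavior we are after, using the real `split_at_mut` from the standard library.

```rust
// Hypothetical naive definition, kept in a comment because the borrow
// checker rejects it: both index expressions count as mutable borrows of
// the whole slice, so the second one conflicts with the first.
//
// fn naive_split_at_mut<T>(slice: &mut [T], mid: usize) -> (&mut [T], &mut [T]) {
//     (&mut slice[0..mid], &mut slice[mid..])
// }

/// Illustrative helper: mutate both halves at once through the two
/// disjoint subslices returned by the standard library's `split_at_mut`.
fn bump_both_halves(data: &mut [i32], mid: usize) {
    let (left, right) = data.split_at_mut(mid);
    for x in left.iter_mut() {
        *x += 1;
    }
    for x in right.iter_mut() {
        *x += 10;
    }
}
```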

If it compiled, this definition would do the right thing, but in fact if you try to build it you will find it gets a compilation error. It fails for two reasons:

- In general, the compiler does not try to reason precisely about indices. That is, whenever it sees an index like foo[i], it just ignores the index altogether and treats the entire array as a unit (foo[_], effectively). This means that it cannot tell that &mut self[0..mid] is disjoint from &mut self[mid..]. The reason for this is that reasoning about indices would require a much more complex type system.

- In fact, the [] operator is not builtin to the language when applied to a range anyhow. It is implemented in the standard library. Therefore, even if the compiler knew that 0..mid and mid.. did not overlap, it wouldn’t necessarily know that &mut self[0..mid] and &mut self[mid..] return disjoint slices.

Now, it’s plausible that we could extend the type system to make this example compile, and maybe we’ll do that someday. But for the time being we’ve preferred to implement cases like split_at_mut using unsafe code. This lets us keep the type system simple, while still enabling us to write APIs like split_at_mut.

Abstraction boundaries

Looking at unsafe code as analogous to a plugin helps to clarify the idea of an abstraction boundary. When you write a Ruby plugin, you expect that when users from Ruby call into your function, they will supply you with normal Ruby objects and pointers. Internally, you can play whatever tricks you want: for example, you might use a C array instead of a Ruby vector. But once you return values back out to the surrounding Ruby code, you have to repackage up those results as standard Ruby objects.

It works the same way with unsafe code in Rust. At the public boundaries of your API, your code should act as if it were any other safe function. This means you can assume that your users will give you valid instances of Rust types as inputs. It also means that any values you return or otherwise output must meet all the requirements that the Rust type system expects. Within the unsafe boundary, however, you are free to bend the rules (of course, just how free you are is the topic of debate; I intend to discuss it in a follow-up post).

Let’s look at the split_at_mut method we saw in the previous section. For our purposes here, we only care about the public interface of the function, which is its signature:

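One way to spell out that signature in compilable form is as a trait method. The trait name `SplitAtMutSketch` and the method name `split_at_mut_sketch` are made up for this illustration; the real method is an inherent method on slices, and the body here simply delegates to it.

```rust
/// Hypothetical trait that exists only to show the shape of the signature.
trait SplitAtMutSketch<T> {
    /// Takes a mutable slice and an index, returns two mutable subslices.
    fn split_at_mut_sketch(&mut self, mid: usize) -> (&mut [T], &mut [T]);
}

impl<T> SplitAtMutSketch<T> for [T] {
    fn split_at_mut_sketch(&mut self, mid: usize) -> (&mut [T], &mut [T]) {
        // The body is beside the point here; delegate to the standard library.
        self.split_at_mut(mid)
    }
}
```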

So what can we derive from this signature? To start, split_at_mut can assume that all of its inputs are “valid” (for safe code, the compiler’s type system naturally ensures that this is true; unsafe callers would have to ensure it themselves). Part of writing the rules for unsafe code will require enumerating more precisely what this means, but at a high level it’s stuff like this:

- The self argument is of type &mut [T]. This implies that we will receive a reference that points at some number N of T elements. Because this is a mutable reference, we know that the memory it refers to cannot be accessed via any other alias (until the mutable reference expires). We also know the memory is initialized and the values are suitable for the type T (whatever it is).

- The mid argument is of type usize. All we know is that it is some unsigned integer.

There is one interesting thing missing from this list, however. Nothing in the API assures us that mid is actually a legal index into self. This implies that whatever unsafe code we write will have to check that.

Next, when split_at_mut returns, it must ensure that its return value meets the requirements of the signature. This basically means it must return two valid &mut [T] slices (i.e., pointing at valid memory, with a length that is not too long). Crucially, since those slices are both valid at the same time, this implies that the two slices must be disjoint (that is, pointing at different regions of memory).

Possible implementations

So let’s look at a few different implementation strategies for split_at_mut and evaluate whether they might be valid or not. We already saw that a pure safe implementation doesn’t work. So what if we implemented it using raw pointers like this:

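A sketch along these lines, written as a free function, might look as follows. The name `split_at_mut_no_check` is hypothetical, and the function is deliberately incomplete: it never checks `mid`, so it is only sound when the caller happens to pass `mid <= slice.len()`.

```rust
use std::slice::from_raw_parts_mut;

/// Deliberately incomplete sketch: nothing verifies `mid <= slice.len()`,
/// so safe callers can trigger undefined behavior. Not for real use.
fn split_at_mut_no_check<T>(slice: &mut [T], mid: usize) -> (&mut [T], &mut [T]) {
    let len = slice.len();
    // Pointer to the first element of the slice.
    let p: *mut T = slice.as_mut_ptr();
    // By writing `unsafe`, we claim that nothing inside triggers undefined
    // behavior. That claim only holds if `mid` is in range.
    unsafe {
        // Pointer to element `mid`.
        let q: *mut T = p.add(mid);
        // Number of elements from `mid` to the end.
        let remainder = len - mid;
        (from_raw_parts_mut(p, mid), from_raw_parts_mut(q, remainder))
    }
}
```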

This is a mostly valid implementation, and in fact fairly close to what the standard library actually does. However, this code is making a critical assumption that is not guaranteed by the input: it is assuming that mid is “in range”. Nowhere does it check that mid <= len, which means that the q pointer might be out of range, and also means that the computation of remainder might overflow and hence (in release builds, at least by default) wrap around. So this implementation is incorrect, because it requires more guarantees than what the caller is required to provide.

We could make it correct by adding an assertion that mid is a valid index (note that the assert macro in Rust always executes, even in optimized code):

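With the check in place, a sketch of the function becomes sound for any input. This is a free-function approximation under an invented name, `split_at_mut_checked`, not the standard library's source.

```rust
use std::slice::from_raw_parts_mut;

/// Sketch of a sound version: the assertion runs in every build profile,
/// so the pointer arithmetic below never sees an out-of-range `mid`.
fn split_at_mut_checked<T>(slice: &mut [T], mid: usize) -> (&mut [T], &mut [T]) {
    let len = slice.len();
    assert!(mid <= len, "mid ({}) is past the end of the slice ({})", mid, len);
    let p: *mut T = slice.as_mut_ptr();
    // SAFETY: `mid <= len`, so `p..p+mid` and `p+mid..p+len` both lie inside
    // the original slice and do not overlap.
    unsafe { (from_raw_parts_mut(p, mid), from_raw_parts_mut(p.add(mid), len - mid)) }
}
```

An out-of-range `mid` now panics before any raw pointer is formed, which is the behavior a safe caller is entitled to expect.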

OK, at this point we have basically reproduced the implementation in the standard library (it uses some slightly different helpers, but it’s the same idea).

Extending the abstraction boundary

Of course, it might happen that we actually wanted to assume that mid is in bounds, rather than checking it. We couldn’t do this for the actual split_at_mut, of course, since it’s part of the standard library. But you could imagine wanting a private helper for safe code that made this assumption, so as to avoid the runtime cost of a bounds check. In that case, split_at_mut is relying on the caller to guarantee that mid is in bounds. This means that split_at_mut is no longer “safe” to call, because it has additional requirements for its arguments that must be satisfied in order to guarantee memory safety.

Rust allows you to express the idea of a fn that is not safe to call by moving the unsafe keyword out of the fn body and into the public signature. Moving the keyword makes a big difference as to the meaning of the function: the unsafety is no longer just an implementation detail of the function, it’s now part of the function’s interface. So we could make a variant of split_at_mut called split_at_mut_unchecked that avoids the bounds check:

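A sketch of such a function is below. The `_sketch` suffix marks it as an illustration rather than the standard library's code, and the `# Safety` section is where the extra requirement on callers gets documented.

```rust
use std::slice::from_raw_parts_mut;

/// Splits `slice` at `mid` without a bounds check.
///
/// # Safety
///
/// The caller must guarantee that `mid <= slice.len()`.
unsafe fn split_at_mut_unchecked_sketch<T>(
    slice: &mut [T],
    mid: usize,
) -> (&mut [T], &mut [T]) {
    let len = slice.len();
    let p: *mut T = slice.as_mut_ptr();
    // SAFETY: relies entirely on the caller's promise that `mid <= len`.
    unsafe { (from_raw_parts_mut(p, mid), from_raw_parts_mut(p.add(mid), len - mid)) }
}
```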

When a fn is declared as unsafe like this, calling that fn becomes an unsafe action: what this means in practice is that the caller must read the documentation of the function and ensure that whatever conditions the function requires are met. In this case, it means that the caller must ensure that mid <= self.len().

If you think about abstraction boundaries, declaring a fn as unsafe means that it does not form an abstraction boundary with safe code. Rather, it becomes part of the unsafe abstraction of the fn that calls it.

Using split_at_mut_unchecked, we could now re-implement split_at_mut to just layer the bounds check on top:

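Sketched as free functions, the layering might look like this. Both names are hypothetical, and the unchecked helper appears in compact form only so that the block compiles on its own.

```rust
use std::slice::from_raw_parts_mut;

/// Unsafe building block. Safety: the caller guarantees `mid <= slice.len()`.
unsafe fn split_unchecked<T>(slice: &mut [T], mid: usize) -> (&mut [T], &mut [T]) {
    let (len, p) = (slice.len(), slice.as_mut_ptr());
    unsafe { (from_raw_parts_mut(p, mid), from_raw_parts_mut(p.add(mid), len - mid)) }
}

/// Safe wrapper: the bounds check here is what re-establishes the
/// abstraction boundary for safe callers.
fn split_checked<T>(slice: &mut [T], mid: usize) -> (&mut [T], &mut [T]) {
    assert!(mid <= slice.len());
    // SAFETY: the assertion above is exactly the helper's precondition.
    unsafe { split_unchecked(slice, mid) }
}
```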

Unsafe boundaries and privacy

Although there is nothing in the language that explicitly connects the privacy rules with unsafe abstraction boundaries, they are naturally interconnected. This is because privacy allows you to control the set of code that can modify your fields, and this is a basic building block to being able to construct an unsafe abstraction.

Earlier we mentioned that the Vec type in the standard library is implemented using unsafe code. This would not be possible without privacy. If you look at the definition of Vec, it looks something like this:

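A simplified sketch of such a definition follows, wrapped in a module to show where the privacy boundary sits. `SketchVec` and the module name are invented for illustration; the three fields are named `pointer`, `capacity` and `length` for readability and are not taken from the real standard library source.

```rust
mod sketch_vec {
    use std::ptr::NonNull;

    pub struct SketchVec<T> {
        // All three fields are private to this module.
        pointer: *mut T, // start of the allocation
        capacity: usize, // number of elements allocated
        length: usize,   // number of elements initialized
    }

    impl<T> SketchVec<T> {
        /// An empty vector owns no memory, so a dangling pointer suffices.
        pub fn new() -> Self {
            SketchVec { pointer: NonNull::dangling().as_ptr(), capacity: 0, length: 0 }
        }

        /// Outside code may read the length but has no way to change it.
        pub fn len(&self) -> usize {
            self.length
        }
    }
}
```

Code outside `sketch_vec` that tried to assign to `length` directly would be rejected by the compiler, which is what lets the module's own code rely on that field.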

Here the field pointer is a pointer to the start of some memory. capacity is the amount of memory that has been allocated and length is the amount of memory that has been initialized.

The vector code is all very careful to maintain the invariant that it is always safe to access the first length elements of the memory that pointer refers to. You can imagine that if the length field were public, this would be impossible: anybody from the outside could go and change the length to whatever they want!

For this reason, unsafety boundaries tend to fall into one of two categories:

- a single function, like split_at_mut
  - this could include unsafe callees like split_at_mut_unchecked

- a type, typically contained in its own module, like Vec
  - this type will naturally have private helper functions as well
  - and it may contain unsafe helper types too, as described in the next section

Types with unsafe interfaces

We saw earlier that it can be useful to define unsafe functions like split_at_mut_unchecked, which can then serve as the building block for a safe abstraction. The same is true of types. In fact, if you look at the actual definition of Vec from the standard library, you will see that it looks just a bit different from what we saw above:


What is this RawVec? Well, that turns out to be an unsafe helper type that encapsulates the idea of a pointer and a capacity:


What makes RawVec an “unsafe” helper type? Unlike with functions, the idea of an “unsafe type” is a rather fuzzy notion. I would define such a type as a type that doesn’t really let you do anything useful without using unsafe code. Safe code can construct RawVec, for example, and even resize the backing buffer, but if you want to actually access the data in that buffer, you can only do so by calling the ptr method, which returns a *mut T. This is a raw pointer, so dereferencing it is unsafe; which means that, to be useful, RawVec has to be incorporated into another unsafe abstraction (like Vec) which tracks initialization.
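To make that division of labor concrete, here is a small sketch. `RawBuf` and `TrackedVec` are invented stand-ins, not the standard library's `RawVec` and `Vec`: the buffer is a boxed slice of `MaybeUninit<T>` rather than a hand-managed allocation, it never grows, `ptr` takes `&mut self` to keep the sketch simple, and the outer type is limited to `Copy` elements so that it needs no destructor.

```rust
use std::mem::MaybeUninit;

/// Hypothetical stand-in for `RawVec`: it owns a buffer and knows its
/// capacity, but has no idea which slots hold initialized values.
struct RawBuf<T> {
    storage: Box<[MaybeUninit<T>]>,
}

impl<T> RawBuf<T> {
    /// Safe to call: allocating uninitialized slots harms nobody.
    fn with_capacity(cap: usize) -> Self {
        let mut slots: Vec<MaybeUninit<T>> = Vec::with_capacity(cap);
        slots.resize_with(cap, MaybeUninit::uninit);
        RawBuf { storage: slots.into_boxed_slice() }
    }

    fn cap(&self) -> usize {
        self.storage.len()
    }

    /// Also safe to call, but the raw pointer it returns can only be
    /// dereferenced inside `unsafe`.
    fn ptr(&mut self) -> *mut T {
        self.storage.as_mut_ptr() as *mut T
    }
}

/// The outer abstraction adds the missing piece: tracking initialization.
/// Restricted to `Copy` types so this sketch needs no `Drop` logic.
struct TrackedVec<T: Copy> {
    buf: RawBuf<T>,
    len: usize, // invariant: the first `len` slots of `buf` are initialized
}

impl<T: Copy> TrackedVec<T> {
    fn with_capacity(cap: usize) -> Self {
        TrackedVec { buf: RawBuf::with_capacity(cap), len: 0 }
    }

    /// Returns false when the fixed-size buffer is full.
    fn push(&mut self, value: T) -> bool {
        if self.len == self.buf.cap() {
            return false;
        }
        // SAFETY: `len < cap`, so the slot is inside the allocation.
        unsafe { self.buf.ptr().add(self.len).write(value) };
        self.len += 1;
        true
    }

    fn get(&mut self, index: usize) -> Option<T> {
        if index < self.len {
            // SAFETY: slots below `len` were initialized by `push`.
            Some(unsafe { self.buf.ptr().add(index).read() })
        } else {
            None
        }
    }
}
```

Every method on `RawBuf` is safe to call, yet nothing useful can be read or written without the `unsafe` blocks in `TrackedVec`, which is the sense in which the inner type is an unsafe helper.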

Conclusion

Unsafe abstractions are a pretty powerful tool. They let you play just about any dirty performance trick you can think of – or access any system capability – while still keeping the overall language safe and relatively simple. We use unsafety to implement a number of the core abstractions in the standard library, including core data structures like Vec and Rc. But because all of these abstractions encapsulate the unsafe code behind their API, users of those modules don’t carry the risk.

How low can you go?

One thing I have not discussed in this post is a lot of specifics about exactly what is legal within unsafe code and not. Clearly, the point of unsafe code is to bend the rules, but how far can you bend them before they break? At the moment, we don’t have a lot of published guidelines on this topic. This is something we aim to address. In fact there has even been a first RFC introduced on the topic, though I think we can expect a fair amount of iteration before we arrive at the final and complete answer.

As I wrote on the RFC thread, my take is that we should be shooting for rules that are “human friendly” as much as possible. In particular, I think that most people will not read our rules and fewer still will try to understand them. So we should ensure that the unsafe code that people write in ignorance of the rules is, by and large, correct. (This implies also that the majority of the code that exists ought to be correct.)

Interestingly, there is something of a tension here: the more unsafe code we allow, the less the compiler can optimize. This is because it would have to be conservative about possible aliasing and (for example) avoid reordering statements.

In my next post, I will describe how I think that we can leverage unsafe abstractions to actually get the best of both worlds. The basic idea is to aggressively optimize safe code, but be more conservative within an unsafe abstraction (but allow people to opt back in with additional annotations).

Edit note: Tweaked some wording for clarity.

http://smallcultfollowing.com/babysteps/blog/2016/05/23/unsafe-abstractions/


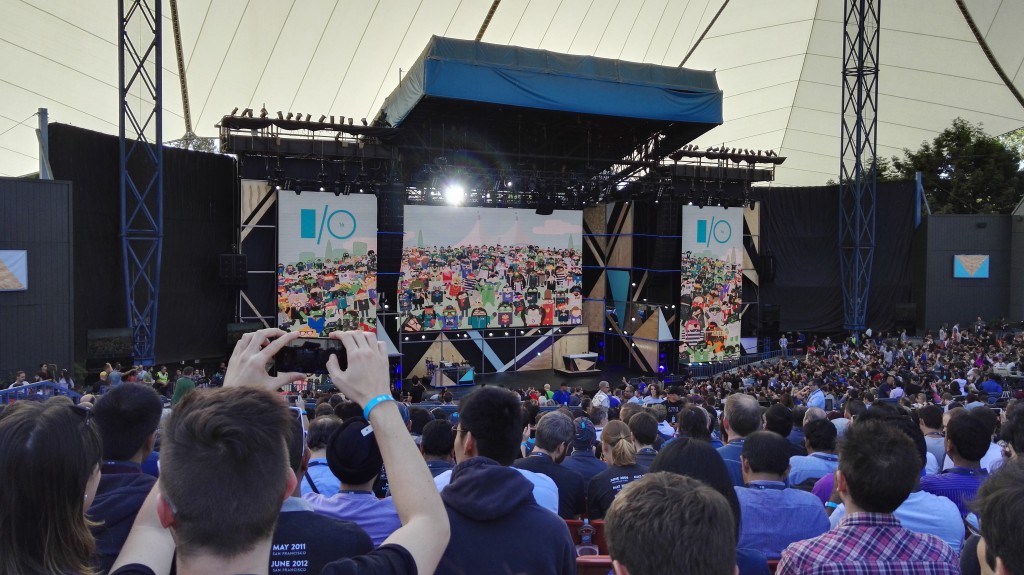

Christian Heilmann: Google IO – A tale of two Googles

Disclaimer: The following are my personal views and experiences at this year’s Google IO. They are not representative of my employer. Should you want to quote me, please do so as Chris Heilmann, developer.

TL;DR: Is Google IO worth the $900? Yes, if you’re up for networking, getting information from experts and enjoy social gatherings. No, if you expect to be able to see talks. You’re better off watching them from home. The live streaming and recordings are excellent.

Google IO this year left me confused and disappointed. I found a massive gap between the official messaging and the tech on display. I’m underwhelmed with the keynote and the media outreach. The much more interesting work in the breakout sessions, talks and demos excited me. It seems to me that what Google wants to promote and the media to pick up is different to what its engineers showed. That’s OK, but it feels like sales stepping on a developer conference turf.

I enjoyed the messaging of the developer outreach and product owner team in the talks and demos. At times I was wondering if I was at a Google or a Mozilla event. The web and its technologies were front and centre. And there was a total lack of “our product $X leads the way” vibes.

Kudos to everyone involved. The messaging about progressive Web Apps, AMP and even the new Android Instant Apps was honest. It points to a drive in Google to return to the web for good.

The vibe of the event changed a lot since moving out of Moscone Center in San Francisco. Running it on Google’s homestead in Mountain View made the whole show feel more like a music festival than a tech event. It must have been fun for the presenters to stand on the same stage they went to see bands at.

Having smaller tents for the different product and technology groups was great. It invited much more communication than booths. I saw a lot of neat demos. Having experts at hand to talk with about technologies I wanted to learn about was great.

Organisation

Here are the good and bad things about the organisation:

- Good: traffic control wasn’t as much of a nightmare as I expected. I got there two hours in advance as I anticipated traffic jams, but it wasn’t bad at all. Shuttles and bike sheds helped get people there.

- Good: there was no queue at badge pickup. Why I had to have my picture taken and a – somehow sticky – plastic badge printed was a bit beyond me, though. It seems wasteful.

- Good: the food and beverages were plentiful and applicable. With a group this big it is hard to deliver safe to eat and enjoyable food. The sandwiches, apples and crisps did the trick. The food at the social events was comfort food/fast food, but let’s face it – you’re not at a food fair. I loved that all the packaging was paper and cardboard and there was not too much excess waste in the form of plastics. We also got a reusable water bottle you could re-fill at water dispensers like you have in offices. Given the weather, this was much needed. Coffee and tea were also available throughout the day. We were well fed and watered. I’m no Vegan, and I heard a few complaints about a lack of options, but that may have been personal experiences.

- Good: the toilets were amazing. Clean, with running water and plenty of paper, mirrors, free sunscreen and no queues. Not what I expected from a music festival surrounding.

- Great: as it was scorching hot on the first day the welcome pack you got with your badge had a bandana to cover your head, two sachets of sun screen, a reusable water bottle and sunglasses. As a ginger: THANK YOU, THANK YOU, THANK YOU. The helpers even gave me a full tube of sunscreen on re-entry the second day, taking pity on my red skin.

- Bad: the one thing that was exactly the same as in Moscone was the abysmal crowd control. Except for the huge stage tent number two (called HYDRA - I am on to you, people) all others were far too small. It was not uncommon to stand for an hour in a queue for the talk you wanted to see just to be refused entry as it was full up. Queuing up in the scorching sun isn’t fun for anyone and impossible for me. Hence I missed all but two talks I wanted to see.

- Good: if you were lucky enough to see a talk, the AV quality was great. The screens were big and readable, all the talks were live transcribed and the presenters audible.

The bad parts

Apart from the terrible crowd control, two things let me down the most. The keynote and a total lack of hardware giveaway – something that might actually be related.

Don’t get me wrong, I found the showering of attendees with hardware excessive at the first few IOs. But announcing something like a massive move into VR with Daydream and Tango without giving developers something to test it on is assuming a lot. Nine hundred dollars plus flying to the US and spending a lot of money on accommodation is a lot for many attendees. Getting something amazing to bring back would be a nice “Hey, thanks”.

There was no announcement at the keynote about anything physical except for some vague “this will be soon available” products. This might be the reason.

My personal translation of the keynote is the following:

We are Google, we lead in machine learning, cloud technology and data insights. Here are a few products that may soon come out that play catch-up with our competition. We advocate diversity and try to make people understand that the world is bigger than the Silicon Valley. That’s why we solve issues that aren’t a problem but annoyances for the rich. All the things we’re showing here are solving issues of people who live in huge houses, have awesome cars and suffer from the terrible ordeal of having to answer text messages using their own writing skills. Wouldn’t it be better if a computer did that for you? Why go and wake up your children with a kiss using the time you won by becoming more effective with our products when you can tell Google to do that for you? Without the kiss that is – for now.

I actually feel poor looking at the #io16 keynote. We have lots of global problems technology can help with. This is pure consumerism.

I stand by this. Hardly anything in the keynote excited me as a developer. Or even as a well-off professional who lives in a city where public transport is a given. The announcement of Instant Apps, the Firebase bits and the new features of Android Studio are exciting. But it all got lost in an avalanche of “Look what’s coming soon!” product announcements without the developer angle. We want to look under the hood. We want to add to the experience and we want to understand how things work. This is how developer events work. Google Home has some awesome features. Where are the APIs for that?

As far as I understand it, there was a glitch in the presentation. But the part where a developer in Turkey used his skills to help the Syrian refugee crisis was borderline insulting. There was no information about what the app did, who benefited from it and what it ran on. No information about how the data got in and how the data was going to the people who help the refugees. The same goes for using machine learning to help with the issue of blindness. Both were teasers without any meat and felt like “Well, we’re also doing good, so here you go”.

Let me make this clear: I am not criticising the work of any Google engineer, product owner or other worker here. All these things are well done and I am excited about the prospects. I find it disappointing that the keynote was a sales pitch. It did not pay respect to this work and failed to show the workings rather than the final product. IO is advertised as a developer conference, not an end-user-oriented sales show. It felt disconnected.

Things that made me happy

- The social events were great – the concert in the amphitheatre was for those who wanted to go. Outside was a lot of space to have a chat if you’re not the dancing type. The breakout events on the second day were plentiful, all different and arty. The cynic in me sniggered at Burning Man performers (the antithesis to commercialism by design) doing their thing at a commercial IT event, but it gave the whole event a good vibe.

- Video recording and live streaming – I watched quite a few of the talks I missed the last two days in the gym and I am grateful that Google offers these on YouTube immediately, well described and easy to find in playlists. Using the app after the event makes it easy to see the talks you missed.

- Boots on the ground – everyone I wanted to meet from Google was there and had time to chat. My questions got honest and sensible answers and there was no hand-waving or over-promising.

- A good focus on health and safety – first aid tents, sunscreen and wet towels for people to cool down, creature comforts for an outside environment. The organisers did a good job making sure people are safe. Huge printouts of the Code of Conduct also left no doubt that antisocial or aggressive behaviour would not be tolerated.

Conclusion

I will go again to Google IO, to talk, to meet, to see product demos and to have people at hand that can give me insight further than the official documentation. I am likely to not get up early next time to see the keynote though and I would love to see a better handle on the crowd control. It is frustrating to queue and not be able to see talks at the conference of a company that prides itself on organising huge datasets and having self-driving cars.

Here are a few things that could make this better:

- Having screening tents with the video and the transcription screens outside the main tents. These don’t even need sound (which is the main outside issue)

- Use the web site instead of two apps. Advocating progressive web apps and then telling me in the official conference mail to download the Android app was not a good move. Especially as the PWA outperformed the native app at every turn – including usability (the thing native should be much better). It was also not helpful that the app showed the name of the stage but not the number of the tent.

- Having more places to charge phones would have been good, or giving out power packs. As we were outside all the time and moving I didn’t use my computer at all and did everything on the phone.

I look forward to interacting and working with the tech Google. I am confused about the Google that tries to be in the hands of end users without me being able to crack the product open and learn from how it is done.

https://www.christianheilmann.com/2016/05/23/google-io-a-tale-of-two-googles/


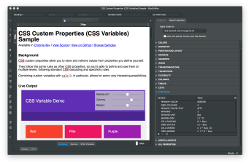

Daniel Glazman: CSS Variables in BlueGriffon

I guess the title says it all. Click on the thumbnail to enlarge it.

http://www.glazman.org/weblog/dotclear/index.php?post/2016/05/22/CSS-Variables-in-BlueGriffon


Gian-Carlo Pascutto: Technical Debt, Episode 1

Although we go to great lengths to make this impossible, there is always a chance that a bug in Firefox would allow an attacker to exploit and take over a Web Content process. But by using features provided by the operating system, we can prevent them from taking over the rest of the computing device by disallowing many ways to interact with it, for example by stopping them from starting new programs or reading or writing specific files.

This feature has been enabled on Firefox Nightly builds for a while, at least on Windows and Mac OS X. Due to the diversity of the ecosystem, it's taken a bit longer for Linux, but we are now ready to flip that switch too.

The initial version on Linux will block very, very little. It's our goal to get Firefox working and shipping with this first and foremost, while we iterate rapidly and hammer down the hatches as we go, shipping a gradual stream of improvements to our users.

One of the first things to hammer down is filesystem access. If an attacker is free to write to any file on the filesystem, he can quickly take over the system. Similarly, if he can read any file, it's easy to leak out confidential information to an attacking webpage. We're currently figuring out the list of files and locations the Web Content process needs to access (e.g. system font directories) and which ones it definitely shouldn't (your passwords database).
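As a rough illustration of the allow/deny decision described above, here is a minimal Python sketch. The directory list and the `may_read` helper are hypothetical and not taken from Firefox; a real sandbox enforces its policy through operating system facilities rather than string checks on paths, and this sketch does not resolve symlinks.

```python
import posixpath
from pathlib import PurePosixPath

# Hypothetical allowlist: locations a content process might legitimately read.
ALLOWED_READ_DIRS = (
    PurePosixPath("/usr/share/fonts"),
    PurePosixPath("/etc/fonts"),
)


def may_read(path: str) -> bool:
    """Return True only if `path` lies inside an allowed directory."""
    if not path.startswith("/"):
        return False  # deny relative paths outright
    # Collapse "." and ".." so "/usr/share/fonts/../../../etc/passwd"
    # is judged as "/etc/passwd". Symlinks are NOT resolved here.
    normalized = PurePosixPath(posixpath.normpath(path))
    return any(
        normalized == allowed or allowed in normalized.parents
        for allowed in ALLOWED_READ_DIRS
    )
```

Anything not explicitly listed is denied, so a file such as /etc/passwd is rejected by default instead of having to be remembered on a blocklist.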

And that's where this story about technical debt really starts.

While tracing filesystem access, we noticed at some point that the Web Content process accesses /etc/passwd. Although on most modern Unix systems this file doesn't actually contain any (hashed) passwords, it still typically contains the complete real name of the users on the system, so it's definitely not something that we'd want to leave accessible to an attacker.

My first thought was that something was trying to enumerate valid users on the system, because that would've been a good reason to try to read /etc/passwd.

Tracing the system call to its origin revealed another caller, though. libfreebl, a part of NSS (Network Security Services) was reading it during its initialization. Specifically, we traced it to this array in the source. Reading on what it is used for is, eh, quite eyebrow-raising in the modern security age.

The NSS random number generator seeds itself by attempting to read /dev/urandom (good), ignoring whether that fails or not (not so good), and then continuing by reading and hashing the password file into the random number generator as additional entropy. The same code then goes on to read in several temporary directories (and I do mean directories, not the files inside them) and perform the same procedure.

Should all of this have failed, it will make a last ditch effort to fork/exec "netstat -ni" and hash the output of that. Note that the usage of fork here is especially "amusing" from the sandboxing perspective, as it's the one thing you'll absolutely never want to allow.

Now, almost none of this has ever been a *good* idea, but in its defense NSS is old and caters to many exotic and ancient configurations. The discussion about /dev/urandom reliability was raised in 2002, and I'd wager the relevant Linux code has seen a few changes since. I'm sure that 15 years ago, this might've been a defensible decision to make. Apparently one could even argue that some unnamed Oracle product running on Windows 2000 was a defensible use case to keep this code in 2009.

Nevertheless, it's technical debt. Debt that hurt on the release of Firefox 3.5, when it caused Firefox startup to take over 2 minutes on some people's systems.

It's not that people didn't notice this idea was problematic:

I'm fully tired of this particular trail of tears. There's no good reason to waste users' time at startup pretending to scrape entropy off the filesystem.

-- Brendan Eich, July 2009

RNG_SystemInfoForRNG - which tries to make entropy appear out of the air.

-- Ryan Sleevi, April 2014

Though sandboxing was clearly not considered much of a use case in 2006:

Only a subset of particularly messed-up applications suffer from the use of fork.

-- Well meaning contributor, September 2006

Nevertheless, I'm - still - looking at this code in the year of our Lord 2016 and wondering if it shouldn't all just be replaced by a single getrandom() call.

If your system doesn't have getrandom(), well maybe there's a solution for that too.

Don't agree? Can we then at least agree that if your /dev/urandom isn't secure, it's your problem, not ours?
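To make the "single call" idea concrete, here is a minimal Python sketch of seeding from the kernel only. It is not NSS code: `read_seed` is a made-up name, and the fallback to /dev/urandom is one assumption about how a system without getrandom() might be handled. Unlike the behaviour described above, a failure is reported to the caller instead of being ignored.

```python
import os


def read_seed(nbytes: int = 32) -> bytes:
    """Return `nbytes` from the kernel CSPRNG, or raise OSError."""
    # os.getrandom wraps the Linux getrandom() syscall; it is missing on
    # platforms that lack it, hence the getattr probe.
    getrandom = getattr(os, "getrandom", None)
    if getrandom is not None:
        try:
            seed = b""
            while len(seed) < nbytes:
                seed += getrandom(nbytes - len(seed))
            return seed
        except OSError:
            pass  # e.g. a kernel without the syscall; try the device instead

    # Fallback: read the device directly and fail loudly on any problem.
    seed = b""
    with open("/dev/urandom", "rb", buffering=0) as dev:
        while len(seed) < nbytes:
            chunk = dev.read(nbytes - len(seed))
            if not chunk:
                raise OSError("unexpected end of /dev/urandom")
            seed += chunk
    return seed
```

There is no password file, no directory listing and no fork here, so nothing in this path would need an exception in a sandbox policy beyond the random source itself.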

|

|

Air Mozilla: Webdev Beer and Tell: May 2016 |

Once a month web developers across the Mozilla community get together (in person and virtually) to share what cool stuff we've been working on in...

|

|

Support.Mozilla.Org: Event Report: Mozilla Ivory Coast SUMO Sprint |

We’re back, SUMO Nation! This time with a great event report from Abbackar Diomande, our awesome community spirit in Ivory Coast! Grab a cup of something nice to drink and enjoy his report from the Mozilla Ivory Coast SUMO Sprint.

The Mozilla Ivory Coast community is not yet ready to forget Saturday, May 15. It was then that the first SUMO Sprint in Ivory Coast took place, lasting six hours!

For this occasion, we were welcomed and hosted by the Abobo Adjame University, the second largest university in the country.

Many students, some members of the Mozilla local community, and other members of the free software community gathered on this day.

The event began with a Mozilla manifesto presentation by Kouadio – a young member of our local SUMO team and the Lead of the Firefox Club at the university.

After that, I introduced everyone to SUMO, the areas of SUMO contribution, our Nouchi translation project, and Locamotion (the tool we use to localize).

During my presentation I learned that all the guests were really surprised and happy to learn of the existence of support.mozilla.org and a translation project for Nouchi.

They were very happy and excited to participate in this sprint, and you can see that in the photos, in their smiles and the joy that you can read on their faces.

After all presentations and introductions, the really serious things could begin. Everyone spent two hours answering questions of French users on Twitter – the session passed very quickly in the friendly atmosphere.

We couldn’t reach the goal of answering all the Army of Awesome posts in French, but everyone appreciated what we achieved, providing answers to over half the posts – we were (and still are) very proud of our job!

After the Army of Awesome session, our SUMO warriors turned to Locamotion for Nouchi localization. It was at once serious and fun. Originally planned for three hours, we localized for four – because it was so interesting :-)

Mozilla and I received congratulations from all participants for this initiative, which promotes the Ivorian language and Ivory Coast as a digital country present on the internet.

Even though we were not able to reach all our objectives, we are still very proud of what we have done. We contributed very intensely, both to help people who needed it and to improve the scale and quality of Nouchi translations in open source, with the help of new and dynamic contributors.

The sprint ended with a group tasting of garba (a traditional local dish) and a beautiful family picture.

Thank you, Abbackar! It’s always great to see happy people contributing their skills and time to open source initiatives like this. SUMO is proud to be included in Ivory Coast’s open source movement! We hope to see more awesomeness coming from the local community in the future – in the meantime, I think it’s time to cook some garba! ;-)

https://blog.mozilla.org/sumo/2016/05/20/event-report-mozilla-ivory-coast-sumo-sprint/

|

|

Patrick Cloke: Google Summer of Code 2016 projects |

I’d like to introduce the 13 students that are being mentored by Mozilla this year as part of Google Summer of Code 2016! Currently the “community bonding” period is ongoing, but we are on the cusp of the “coding period” starting.

As part of Google Summer of Code (GSoC), we ask students to provide weekly updates of their progress in a public area (usually a blog). If you’re interested in a particular project, please follow along! Lastly, remember that GSoC is a community effort: if a student is working in an area where you consider yourself knowledgeable, please introduce yourself and offer to provide help and/or advice!

Below is a listing of each student’s project (linked to their weekly updates), the name of each student and the name of their mentor(s).

| Project | Student | Mentor(s) |
|---|---|---|
| Download app assets at runtime (Firefox for Android) | Krish | skaspari |
| File API Support (Servo) | izgzhen | Manishearth |
| Implement RFC7512 PKCS#11 URI support and system integration (NSS) | varunnaganathan | Bob Relyea, David Woodhouse |
| Implementing Service Worker Infrastructure in Servo Browser Engine | creativcoder | jdm |
| Improving and expanding the JavaScript XMPP Implementation | Abdelrhman Ahmed | aleth, nhnt11 |
| Mozilla Calendar – Event in a Tab | paulmorris | Philipp Kewisch |
| Mozilla Investigator (MIG): Auditd integration | Arun | kang |
| Prevent Failures due to Update Races (Balrog) | varunjoshi | Ben Hearsum |
| Proposal of Redesign SETA | MikeLing | Joel Maher |
| Schedule TaskCluster Jobs in Treeherder | martianwars | armenzg |
| Thunderbird - Implement mbox -> maildir converter | Shiva | mkmelin |
| Two Projects to Make A-Frame More Useful, Accessible, and Exciting | bryik | Diego Marcos |
| Web-based GDB Frontend | baygeldin | jonasfj |

http://patrick.cloke.us/posts/2016/05/20/google-summer-of-code-2016-projects/

|

|

Air Mozilla: Foundation Demos May 20 2016 |

Foundation Demos May 20 2016

|

|

Doug Belshaw: What does it mean to be a digitally literate school leader? |

As part of the work I’m doing with London CLC, their Director, Sarah Horrocks, asked me to write something on what it means to be a digitally literate school leader. I’d like to thank her for agreeing to me writing this for public consumption.

Image CC BY K.W. Barrett

Before I start, I think it’s important to say why I might be in a good position to be able to answer this question. First off, I’m a former teacher and senior leader. I used to be Director of E-Learning of a large (3,000 student), all-age, multi-site Academy. I worked for Jisc on their digital literacies programme, writing my thesis on the same topic. I’ve written a book entitled The Essential Elements of Digital Literacies. I also worked for the Mozilla Foundation on their Web Literacy Map, taking it from preliminary work through to version 1.5. I now consult with clients around identifying, developing, and credentialing digital skills.

That being said, it’s now been a little over six years since I last worked in a school, and literacy practices change quickly. So I’d appreciate comments and pushback on what follows.

Let me begin by saying that, as Allan Martin (2006) pointed out, “Digital literacy is a condition, not a threshold.” That’s why, as I pointed out in my 2012 TEDx talk, we shouldn’t talk about ‘digital literacy’ as a binary. People are not either digitally literate or digitally illiterate - instead literacy practices in a given domain exist on a spectrum.

In the context of a school and other educational institutions, we should be aware that there are several cultures at play. As a result, there are multiple, overlapping literacy practices. For this reason we should talk of digital literacies in their plurality. As I found in the years spent researching my thesis, there is no one, single, definition of digital literacy that is adequate in capturing the complexity of human experience when using digital devices.

In addition, I think that it’s important to note that digital literacies are highly context dependent. This is perhaps most evident when addressing the dangerous myth of the 'digital native’. We see young people confidently using smartphones, tablets, and other devices and therefore we assume that their skillsets in one domain are matched by the requisite mindsets from another.

So to recap so far, I think it’s important to note that digital literacies are plural and context-dependent. Although it’s tempting to attempt to do so, it’s impossible to impose a one-size-fits-all digital literacy programme on students, teachers, or leaders and meet with success. Instead, and this is the third 'pillar’ on which my approach rests, I’d suggest that definitions of digital literacies need to be co-created.

By 'co-created’ I mean that there are so many ways in which one can understand both the 'digital’ and 'literacies’ aspects of the term 'digital literacies’ that it can be unproductively ambiguous. Instead, a dialogic approach to teasing out what this means in your particular context is much more useful. In my thesis and book I came up with eight elements of digital literacies from the research literature which prove useful to scaffold these conversations:

- Cultural

- Cognitive

- Constructive

- Communicative

- Confident

- Creative

- Critical

- Civic

In order not to make this post any longer than it needs to be, I’ll encourage you to look at my book and thesis for more details on this. Suffice to say, it’s important both to collaboratively define the above eight terms and then define what you mean by 'digital literacies’ in a particular context.

All of this means that the job of the school leader is not to reach a predetermined threshold laid down by a governing body or professional body. Instead, the role of the school leader is to be always learning, questioning their practice, and encouraging colleagues and students in all eight of the 'essential elements’ listed above.

As with any area of interest and focus, school leaders should model the kinds of knowledge, skills, and behaviours they want to see develop in those around them. Just as we help people learn that being punctual is important by always turning up on time ourselves, so the importance of developing digital literacies can be demonstrated by sharing learning experiences and revelations.

There is much more on this in my thesis, book, and presentations but I’ll finish with some recommendations as to what school leaders can do to ensure they are constantly improving their practices around digital literacies:

- Seek out new people: it’s easy for us to become trapped in what are known as filter bubbles, either through the choices we make as a result of confirmation bias, or algorithmically-curated newsfeeds. Why not find people and organisations who you wouldn’t usually follow, and add them to your daily reading habits?

- Share what you learn: why not create a regular way to update those in your school community about issues relating to the considered use of technology? This could be a discussion forum, a newsletter pointing to the work of people like the Electronic Frontier Foundation or Common Sense Media, or 'clubs’ that help staff and students get to grips with new technologies.

- Find other ways: the danger of 'best practices’ or established workflows is that they can make you blind to new, better ways of doing things. As Clay Shirky notes in this interview it can be liberating to jettison existing working practices in favour of new ones. What other ways can you find to write documents, collaborate with others, be creative, and/or keep people informed?

Comments? Questions? I’m @dajbelshaw or you can get in touch with me at: hello@dynamicskillset.com. I consult around identifying, developing, and credentialing digital skills.

|

|

Air Mozilla: Bay Area Accessibility and Inclusive Design meetup: Fifth Annual Global Accessibility Awareness Day |

Digital Accessibility meetup with speakers for Global Accessibility Awareness Day. #a11ybay. 6pm Welcome with 6:30pm Start Time.

https://air.mozilla.org/fifth-annual-global-accessibility-awareness-day/

|

|

Support.Mozilla.Org: What’s Up with SUMO – 19th May |

Hello, SUMO Nation!

Glad to see all of you on this side of spring… How are you doing? Have you missed us as much as we missed you? Here we go yet again, another small collection of updates for your reading pleasure :-)

Welcome, new contributors!

Contributors of the week

- The “by default awesome” MikkCZ, for his ongoing triage of SUMO issues reported in Bugzilla.

- The forum supporters who helped users out for the last week.

- The writers of all languages who worked on the KB for the last week.

We salute you!

Most recent SUMO Community meeting

- You can read the notes here and see the video on our YouTube channel and at AirMozilla.

The next SUMO Community meeting…

- …is happening on WEDNESDAY the 25th of May – join us!

- Reminder: if you want to add a discussion topic to the upcoming meeting agenda:

- Start a thread in the Community Forums, so that everyone in the community can see what will be discussed and voice their opinion here before Wednesday (this will make it easier to have an efficient meeting).

- Please do so as soon as you can before the meeting, so that people have time to read, think, and reply (and also add it to the agenda).

- If you can, please attend the meeting in person (or via IRC), so we can follow up on your discussion topic during the meeting with your feedback.

Community

- Got ideas? Not afraid of dinos? Take a look here!

- Last Saturday in Abidjan is going to be showcased at a blog near you, soon – thanks to Abbackar’s reporting skills :-)

- Reminder: we are slowly reaching the end of the discussions and presentations about possible platform substitutions for Kitsune, as per this thread. We have received a lot of your feedback already, but we are always eager to hear more from you. Let us know what you think and add your thoughts to this document.

- Ongoing reminder #1: if you think you can benefit from getting a second-hand device to help you with contributing to SUMO, you know where to find us.

- Ongoing reminder #2: we are looking for more contributors to our blog. Do you write on the web about open source, communities, SUMO, Mozilla… and more? Do let us know!

- Ongoing reminder #3: want to know what’s going on with the Admins? Check this thread in the forum.

Social

- The resident socialites are working on activity reports – stay tuned for more details!

- Ongoing reminder: We have a training out there for all those interested in Social Support – talk to Madalina or Costenslayer on #AoA (IRC) for more information.

Support Forum

- Check out Test Pilot if you have not already, there is a discussion going on here.

- Rachel says: “Thank you for all of you answering questions and editing and cleaning out articles this week, it is much appreciated!”

- Please report bugs that were filed from support threads in the last 3 weeks – contact Rachel

- Remember, if you’re new to the support forum, come over and say hi!

Knowledge Base & L10n

- The Polish team have reached their monthly milestone – congratulations!

- Final reminder: if you want to participate in the ongoing discussion about source material quality and frequency, take a look at this thread. We are going to propose a potential way of addressing your issues once we collate enough feedback.

- Reminder: L10n hackathons everywhere! Find your people and get organized!

Firefox

- for Android

- Version 46 support discussion thread.

- Reminder: version 47 will stop supporting Gingerbread. High time to update your Android installations!

- Other than that, it should be a minor release. Documentation in progress!

- for Desktop

- For the main thread on our forums about Firefox 46, click here.

- Version 47 should not bring any revolution, but it will be as good as the previous ones… if not better ;-)

- for iOS

- Firefox for iOS 4.0 IS HERE!

- Firefox for iOS 5.0 should be with us in approximately 5 weeks!

And that’s it! We hope you are looking forward to the end of this week and the beginning of the next one… We surely are! Don’t forget to follow us on Twitter!

https://blog.mozilla.org/sumo/2016/05/19/whats-up-with-sumo-19th-may/

|

|

Yunier José Sosa Vázquez: Mozilla introduces Alex Salkever as Vice President of Marketing |

Today, Mozilla announced the latest addition to the leadership team at the foundation: Alex Salkever, who will serve as the new Vice President of Marketing.

In the article published on the Mozilla blog, Jascha Kaykas-Wolff (Chief Marketing Officer) says that in his new role Alex will lead strategic positioning and marketing campaigns. He will also oversee global communications, social media, user support, and the content marketing teams, and will work across the organization to develop impactful external communications for Mozilla and Firefox products.

Previously, Alex was Chief Marketing Officer of Silk.co, where he focused on user growth and platform partnerships. Salkever has also held a variety of product marketing roles in the fields of scientific instruments, cloud computing, telecommunications, and the Internet of Things. In these various capacities, Alex has managed campaigns across all aspects of marketing and product marketing, including public relations, content marketing, user acquisition, developer recruitment, and marketing analytics.

Alex will also bring to Mozilla his experience as a former technology editor at BusinessWeek.com. Among his many accomplishments, Alex is the co-author of “The Immigrant Exodus”, a book named to The Economist's Book of the Year list in the Business Books category in 2012.

Welcome to Mozilla, Alex!

http://firefoxmania.uci.cu/mozilla-presenta-a-alex-salkever-como-vice-presidente-de-marketing/

|

|

Air Mozilla: Reps weekly, 19 May 2016 |

This is a weekly call with some of the Reps to discuss all matters about/affecting Reps and invite Reps to share their work with everyone.

|

|

Air Mozilla: Web QA Team Meeting, 19 May 2016 |

Weekly Web QA team meeting - please feel free and encouraged to join us for status updates, interesting testing challenges, cool technologies, and perhaps a...

|

|

About:Community: Jakarta Community Space Launch |

This post was written by Fauzan Alfi.

It was not an ordinary Friday 13th for Mozilla Indonesia because on May 13th, 2016, it was a very big day for us. After months of planning and preparation, the Mozilla Community Space Jakarta finally launched and opened for the community. It’s the 4th volunteer-run physical community space after Bangalore (now closed), Manila and Taipei and another one is opening soon in Berlin. Strategically located in Cikini – Central Jakarta, the Space will become a place for Mozillians from Greater Jakarta and Bandung to do many activities, especially developer-focused events, and to build relationships with other tech communities in the city.

The Space. Photo by Yofie Setiawan

Many open source and other communities from around the city were invited to the event. Mozilla Reps, FSAs and Mozillians also joined to celebrate the Space opening. In his presentation, Yofie Setiawan (Mozilla Rep, Jakarta Space Manager) said he hopes that the Jakarta Community Space can be useful for many people and communities, especially to educate anyone who comes and joins events that take place in the space.

Also joining the event was Brian King from the Participation Team at Mozilla. During his remarks, Brian said that the reason behind the Jakarta Community Space is that “the Mozilla community here is one of the most active globally, with deep roots and a strong network in tech scene”. He also added that “Indonesia is an important country with a very dynamic Web presence, and we’d like to engage with more people to make the online experience better for everyone.”

The Jakarta Community Space is around 40 square meters in area and fits 20-30 people inside. On the front side, it has a glass wall covered by a frosted sticker with wording from some Mozilla projects printed on it. Inside, we have some chairs, tables, a home theater set, food & drink supplies and a coffee machine. Most of the items were donated by Mozillians in Jakarta.

One area where the Jakarta Community excelled was the planning and design. The whole process was done by the community itself. One of the Reps from Indonesia, Fauzan Alfi, who has a background in architecture, helped design the space and kept the process transparent on the Community Design GitHub. The purpose is to ignite collaborative design, not only from the Indonesian community but also from other parts of the globe. More creativity was shown by creating mural drawings of landmarks in selected cities around the world – including Monas of Jakarta.

Jakarta Community Space means a lot for Mozilla community in Greater Jakarta and Indonesia, in general. Having a physical place means the Indonesian community will have their own home to spread the mission and collaborate with more communities that are aligned with Mozilla, especially developer communities. Hopefully, the Space will bring more and more people to contribute to Mozilla and help shape the future of the Web.

http://blog.mozilla.org/community/2016/05/19/jakarta-community-space-launch/

|

|

Pascal Chevrel: Let's give Firefox Nightly some love! |

After a decade working on making Mozilla Web properties available in dozens of languages, creating communities of localizers around the globe and building Quality Assurance tools, dashboards and APIs to help ship our software and websites internationally, I recently left the Localization department to report to Doug Turner and work on a new project directly benefiting the Platform and Firefox teams!

I am now in charge of a project aiming to turn Nightly into a maintained channel (just as we have the Aurora, Beta and Release channels) whose goal will be to engage our very technical Nightly users into the Mozilla project in activities that have a measurable impact on the quality of our products.

Here are a few key goals I would like us to achieve in 2016-2017:

Double the number of Nightly users so as to detect regressions, crashes and Web compatibility issues much earlier. A regression detected and reported a couple of days after the code landed on mozilla-central is a simple backout; the same regression reported weeks or even months later on the Aurora or Beta channels, or even discovered on the Release channel, can be much more work to get fixed.

Make Firefox Nightly a real entry point for the more technical users who want to get involved in Mozilla and help us ship software (QA, code, Web Compatibility, security…), not only for Firefox but also for all technical Mozilla projects that would benefit from wider participation.

Make Firefox Nightly a better experience for these technical contributors. This means, as a first step, using the built-in communication channels (about:home promotional snippets, default tiles, first run / what's New pages…) to communicate information adapted to technical users and propose resources, activities and ways to participate in Mozilla that are technical by nature. I also want to have a specific focus on three countries, Germany, France and Spain, where we have strong local communities, staff and MozSpaces and can engage people more easily IRL.

I will not work on that alone: Sylvestre Ledru, our Release Management Lead, has created a new team (with Marcia Knous in the US and Calixte Denizet in France) to work on improving the quality of the Nightly channel and analyse crashes and regressions. Members of other departments (Participation, MDN, Security, Developer Relations…) have also shown interest in the project and intend to get involved.

But first and foremost, I do intend to get the Mozilla community involved and hopefully also get people not involved in Mozilla yet to join us and help us make this "Nightly Reboot" project a success!

A few pointers for this project:

There is an existing #nightly IRC channel that we are restoring with Marcia and a few contributors. I am pascalc on IRC and I am in the CET timezone, don't hesitate to ping me there if you want to propose your help, know more about the project or propose your own ideas.

Marcia created a "Nightly Testers" Telegram channel, ping me if you are already using Nightly to report bugs and want to be added

For asynchronous communication, there is a Nightly Testers mailing list

If you want to download Nightly, go to nightly.mozilla.org. Unfortunately the site only proposes en-US builds and this is definitely something I want to get fixed! If you are a French speaker, our community maintains its own download site for Nightly with links to French builds that you can find at nightly.mozfr.org, otherwise other localized builds can be found on our FTP.

If you want to know all the new stuff that gets into our Nightly channel, follow our @FirefoxNightly twitter account

If you are a Nightly user and report a bug on https://bugzilla.mozilla.org, please put the tag [nightly-community] in the whiteboard field of your bug report; this allows us to measure the impact of our active Nightly community on Bugzilla.

Interested? Do get involved and don't hesitate to contact me if you have any suggestion or idea that could fit into that project. Several people I spoke with in the last weeks gave me very interesting feedback and concrete ideas that I carefully noted!

You can contact me (in English, French or Spanish) through the following communication channels:

- Email: pascal AT mozilla DOT com

- IRC on Moznet and Freenode: pascalc

- Twitter: @pascalchevrel

update 15:33 See also this blog post by Mozilla Engineer Nicholas Nethercote I want more users on the Nightly channel

https://www.chevrel.org/carnet/?post/2016/05/19/Let-s-give-Firefox-Nightly-some-love

|

|

Pascal Chevrel: Let's give Firefox Nightly some love! |

After a decade working on making Mozilla Web properties available in dozens of languages, creating communities of localizers around the globe and building Quality Assurance tools, dashboards and APIs to help ship our software and websites internationally, I recently left the Localization department to report to Doug Turner and work on a new project benefiting directly the Platform and Firefox teams!

I am now in charge of a project aiming to turn Nightly into a maintained channel (just as we have the Aurora, Beta and Release channels) whose goal will be to engage our very technical Nightly users into the Mozilla project in activities that have a measurable impact on the quality of our products.

Here are a few key goals I would like us to achieve in 2016-2017:

Double the number of Nightly users so as to detect much earlier regressions, crashes and Web compatibility issues. A regression detected and reported a couple of days after the code landed on mozilla-central is a simple backout, the same regression reported weeks or even months later in the Aurora, Beta or even discovered on the Release channel can be much more work to get fixed.

Make of Firefox Nightly a real entry point for the more technical users that want to get involved in Mozilla and help us ship software (QA, code, Web Compatibility, security…). Not only for Firefox but also to all technical Mozilla projects that would benefit from a wider participation.

Make Firefox Nightly a better experience for these technical contributors. As a first step, this means using the built-in communication channels (about:home promotional snippets, default tiles, first run / What's New pages…) to communicate information adapted to technical users and to propose resources, activities and ways to participate in Mozilla that are technical by nature. I also want to focus specifically on three countries, Germany, France and Spain, where we have strong local communities, staff and MozSpaces and can engage people more easily IRL.

I will not work on this alone. Sylvestre Ledru, our Release Management Lead, has created a new team (with Marcia Knous in the US and Calixte Denizet in France) to work on improving the quality of the Nightly channel and to analyse crashes and regressions. Members of other departments (Participation, MDN, Security, Developer Relations…) have also shown interest in the project and intend to get involved.

But first and foremost, I intend to get the Mozilla community involved, and hopefully also to get people not yet involved in Mozilla to join us and help us make this "Nightly Reboot" project a success!

A few pointers for this project:

There is an existing #nightly IRC channel that we are reviving with Marcia and a few contributors. I am pascalc on IRC and I am in the CET timezone; don't hesitate to ping me there if you want to offer your help, learn more about the project or propose your own ideas.

Marcia created a "Nightly Testers" Telegram channel; ping me if you are already using Nightly to report bugs and want to be added.

For asynchronous communication, there is a Nightly Testers mailing list.

If you want to download Nightly, go to nightly.mozilla.org. Unfortunately the site only offers en-US builds, and this is definitely something I want to get fixed! If you are a French speaker, our community maintains its own download site for Nightly with links to French builds at nightly.mozfr.org; other localized builds can be found on our FTP.

If you want to know about all the new stuff that gets into our Nightly channel, follow our @FirefoxNightly Twitter account.

If you are a Nightly user and report a bug on https://bugzilla.mozilla.org, please put the tag [nightly-community] in the whiteboard field of your bug report; this allows us to measure the impact of our active Nightly community on Bugzilla.
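
As a rough illustration of how such a whiteboard tag could be used for measurement, here is a minimal sketch that only builds a search URL. It assumes the Bugzilla REST API accepts `whiteboard` and `include_fields` as search parameters on the `/rest/bug` endpoint; the function name and defaults are hypothetical, and this is not necessarily how the project itself gathers its numbers.

```python
from urllib.parse import urlencode

# Assumption: Bugzilla's REST search endpoint and its "whiteboard" parameter.
BUGZILLA_SEARCH = "https://bugzilla.mozilla.org/rest/bug"


def nightly_community_query(tag="[nightly-community]", fields=("id", "summary")):
    """Build a search URL for bugs whose whiteboard contains the given tag."""
    params = {
        "whiteboard": tag,
        # Ask only for the fields we need, to keep the response small.
        "include_fields": ",".join(fields),
    }
    return BUGZILLA_SEARCH + "?" + urlencode(params)


if __name__ == "__main__":
    print(nightly_community_query())
```

Fetching that URL and counting the returned bugs would give one possible measure of how many reports come from the Nightly community.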

Interested? Do get involved, and don't hesitate to contact me if you have any suggestions or ideas that could fit into this project. Several people I spoke with in the last few weeks gave me very interesting feedback and concrete ideas that I carefully noted!

You can contact me (in English, French or Spanish) through the following communication channels:

- Email: pascal AT mozilla DOT org

- IRC on Moznet and Freenode: pascalc

- Twitter: @pascalchevrel

https://www.chevrel.org/carnet/?post/2016/05/19/Let-s-give-Firefox-Nightly-some-love-

|

|

Nicholas Nethercote: I want more users on the Nightly channel |

I have been working recently on a new Platform Engineering initiative called Uptime, the goal of which is to reduce Firefox’s crash rate on both desktop and mobile. As a result I’ve been spending a lot of time looking at crash reports, particularly on the Nightly channel. This in turn has increased my appreciation of how important Nightly channel users are.

A crash report from a Nightly user is much more useful than a crash report from a non-Nightly user, for two reasons.

- If a developer lands a change that triggers crashes for Nightly users, they will get fast feedback via crash reports, often within a day or two. This maximizes the likelihood of a fix, because the particular change will be fresh in the developer’s mind. Also, backing out changes is usually easy at this point. In contrast, finding out about a crash weeks or months later is less useful.

- Because a new Nightly build is done every night, if a new crash signature appears, we have a fairly small regression window. This makes it easier to identify which change caused the new crashes.
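
The regression-window idea can be sketched in a few lines. This is only an illustration under stated assumptions: the input is a hypothetical list of (build date, whether the new crash signature was seen) pairs sorted by date, not a real crash-stats data format.

```python
from datetime import date


def regression_window(builds):
    """Return (last_good, first_bad) build dates for a crash signature.

    `builds` is a list of (build_date, crashed) tuples sorted by date.
    Returns None if the signature never appears; last_good is None if
    the very first build already shows the crash.
    """
    last_good = None
    for build_date, crashed in builds:
        if crashed:
            # The offending change landed between these two builds.
            return (last_good, build_date)
        last_good = build_date
    return None


if __name__ == "__main__":
    history = [
        (date(2016, 5, 16), False),
        (date(2016, 5, 17), False),
        (date(2016, 5, 18), True),
        (date(2016, 5, 19), True),
    ]
    print(regression_window(history))
```

With one build per night, the window spans a single day of landed changes, which is what keeps the list of suspect changes short.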

Nightly builds also contain some extra diagnostics and checks that can help identify a range of problems. (See MOZ_DIAGNOSTIC_ASSERT for one example.)

If we could significantly increase the size of our Nightly user population, that would definitely help reduce crash rates. We would get data about a wider range of crashes. We would also get stronger signals for specific crash-causing defects. This is important because the number of crash reports received for each Nightly build is relatively low, and it’s often the case that a cluster of crash reports that come from two or more different users will receive more attention than a cluster that comes from a single user.

(You might be wondering how we distinguish those two cases. Each crash report doesn’t contain enough information to individually identify the user — unless the user entered their email address into the crash reporting form — but crash reports do contain enough information that you can usually tell if two different crash reports have come from two different users. For example, the installation time alone is usually enough, because it’s measured to the nearest second.)
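
A minimal sketch of that heuristic, assuming each crash report is a dictionary with hypothetical `signature` and `install_time` keys (the real crash report schema may differ): reports that share a signature but have different installation times are counted as coming from different installations.

```python
from collections import defaultdict


def distinct_installations(reports):
    """Count distinct installation times per crash signature.

    `reports` is an iterable of dicts with hypothetical keys
    "signature" and "install_time" (seconds since the epoch).
    """
    install_times = defaultdict(set)
    for report in reports:
        install_times[report["signature"]].add(report["install_time"])
    return {sig: len(times) for sig, times in install_times.items()}


if __name__ == "__main__":
    sample = [
        {"signature": "sig_a", "install_time": 1463600001},
        {"signature": "sig_a", "install_time": 1463612345},
        {"signature": "sig_b", "install_time": 1463600001},
        {"signature": "sig_b", "install_time": 1463600001},
    ]
    print(distinct_installations(sample))
```

In the sample, `sig_a` would count as two installations and `sig_b` as one, so the first cluster would be the stronger signal even though both have two reports.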

All this is doubly true on Android, where the number of Nightly users is much smaller than on Windows, Mac and Linux.

Using the Nightly channel is not the best choice for everyone. There are some disadvantages.

- Nightly is less stable than later channels, but not drastically so. The crash rate is typically 1.5–2.5 times higher than Beta or Release, though occasionally it spikes higher for a short period. So a Nightly user should be comfortable with the prospect of less stability.

- Nightly gets updated every 24 hours, which some people would find annoying.

There are also advantages.

- Nightly users get to experience new features and fixes immediately.

- Nightly users get the satisfaction that they are helping produce a better Firefox. The frustration of any crash is offset by the knowledge that the information in the corresponding crash report is disproportionately valuable. Indeed, there’s a non-trivial likelihood that a single crash report from a Nightly user will receive individual attention from an engineer.

If you, or somebody you know, thinks that those advantages outweigh the disadvantages, please consider switching. Thank you.

https://blog.mozilla.org/nnethercote/2016/05/19/i-want-more-users-on-the-nightly-channel/

|

|