Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

Henrik Skupin: Firefox Automation report – week 5/6 2014

A lot happened in weeks 5 and 6, and we made good progress on the stability of Mozmill.

Highlights

The unexpected and intermittent Jenkins crashes that affected our Mozmill CI system are completely gone now. Most likely the delayed creation of jobs made that possible, since it gives Jenkins more breathing room instead of bombarding it with hundreds of API calls.

For the upcoming release of Mozmill 2.0.4 a version bump for package dependencies was necessary for mozdownload. So we released mozdownload 1.11. Sadly a newly introduced regression in packaging caused us to release mozdownload 1.11.1 a day later.

After a lot of work on stability improvements we were able to release Mozmill 2.0.4. This version has one of the largest sets of changes in the last couple of months. Restarts and shutdowns of the application are now handled much better by Mozmill. Sadly we noticed another problem during restarts of the application on OS X (bug 966234), which forced us to fix mozrunner.

Henrik released mozrunner 5.34, which includes a fix for how mozrunner retrieves the state of the application during a restart. It was failing by telling us that the application had quit while it was still running. As a result Mozmill started a new Firefox process, which was not able to access the profile still in use. A follow-up Mozmill release was necessary, so we started testing it.

As another great highlight for community members who are usually not able to attend our Firefox Automation meetings, we have started to record our meetings now. So if you want to replay the meetings please check our archive.

Individual Updates

For more granular updates of each individual team member please visit our weekly team etherpad for week 5 and week 6.

Meeting Details

If you are interested in further details and discussions you might also want to have a look at the meeting agenda and notes from the Firefox Automation meetings of week 5 and week 6.

http://www.hskupin.info/2014/03/14/firefox-automation-report-week-56-2014/


Henrik Skupin: Firefox Automation report – week 3/4 2014

Due to the high workload and a week of vacation I was not able to give updates on the work done by the Firefox Automation team. In the next few days I really want to catch up with the reports and bring you all up to date.

Highlights

After the staging system for Mozmill CI was set up by IT and Henrik got all the VMs connected, the remaining Mac Minis for OS X 10.6 to 10.9 were also delivered. That means our staging system is complete and can be used to test upcoming updates and to investigate failures.

For the production system the Ubuntu 13.04 machines have been replaced by 13.10. It’s a bit late again, but other work kept us from updating earlier. The next update to 14.04 should go live faster.

Besides the above news we also had two major blockers. The first was a top crasher of Firefox caused by the cycle collector. Henrik filed it as bug 956284, and together with Tim Taubert we got it fixed pretty quickly. The second was a critical problem with Mozmill, which no longer let us run restart tests successfully. As it turned out, the zombie processes that had been affecting us for a while kept the socks server port open, so the new Firefox process couldn’t start its own server. As a result JSBridge failed to establish a connection. Henrik got this fixed in bug 956315.

Individual Updates

For more granular updates of each individual team member please visit our weekly team etherpad for week 3 and week 4.

Meeting Details

If you are interested in further details and discussions you might also want to have a look at the meeting agenda and notes from the Firefox Automation meetings of week 3 and week 4.

http://www.hskupin.info/2014/03/14/firefox-automation-report-week-3-4-2014/


Joshua Cranmer: Understanding email charsets

This time, I modified my data-collection scripts to make it much easier to mass-download NNTP messages. The first script effectively lists all the newsgroups, and then all the message IDs in those newsgroups, stuffing the results in a set to remove duplicates (cross-posts). The second script uses Python's nntplib package to attempt to download all of those messages. Of the 32,598,261 messages identified by the first set, I succeeded in obtaining 1,025,586 messages in full or in part. Some messages failed to download due to crashing nntplib (which appears to be unable to handle messages of unbounded length), and I suspect my newsserver connections may have just timed out in the middle of the download at times. Others failed due to expiring before I could download them. All in all, 19,288 messages were not downloaded.

Analysis of the contents of messages was hampered by a strong desire to find techniques that would mangle messages as little as possible. Prior experience with Python's message-parsing libraries leads me to believe that they are rather poor at handling some of the crap that comes into existence, and the errors in nntplib suggest they haven't fixed them yet. The only message parsing framework I truly trust to give me that level of finesse is the JSMime that I'm writing, but that happens to be in the wrong language for this project. After reading some blog posts of Jeffrey Stedfast, though, I decided I would give GMime a try instead of trying to rewrite ad-hoc MIME parser #N.

Ultimately, I wrote a program to investigate the following questions on how messages operate in practice:

- What charsets are used in practice? How are these charsets named?

- For undeclared charsets, what are the correct charsets?

- For charsets unknown to a decoder, how often would ASCII suffice?

- What charsets are used in RFC 2047 encoded words?

- How prevalent are malformed RFC 2047 encoded words?

- When HTML and MIME are mixed, who wins?

- What is the state of 8-bit headers?

While those were the questions I originally sought answers to, I did come up with others as I worked on my tool, some in part due to what information I was basically already collecting. The tool I wrote primarily uses GMime to convert the body parts to 8-bit text (no charset conversion), as well as to parse the Content-Type headers, which are really annoying to handle without writing a full parser. I used ICU to handle charset conversion and detection. RFC 2047 decoding is done largely by hand since I needed very specific information that I couldn't convince GMime to give me. All code that I used is available upon request; the exact dataset is harder to transport, given that it is some 5.6GiB of data.

Other than GMime being built on GObject and exposing a C API, I can't complain much, although I didn't try to use it to do magic. Then again, in my experience (and as this post will probably convince you as well), you really want your MIME library to do charset magic for you, so in doing well for my needs, it's actually not doing well for a larger audience. ICU's C API similarly makes me want to complain. However, I'm now very suspicious of the quality of its charset detection code, which is the main reason I used it. Trying to figure out how to get it to handle the charset decoding errors also proved far more annoying than it really should be.

Some final background regarding the biases I expect to crop up in the dataset. As the approximately 1 million messages were drawn from the python set iterator, I suspect that there's no systematic bias towards or away from specific groups, excepting that the ~11K messages found in the eternal-september.* hierarchy are completely represented. The newsserver I used, Eternal September, has a respectably large set of newsgroups, although it is likely to be biased towards European languages and to under-represent East Asian ones. The less well-connected South America, Africa, and central Asia are going to be almost completely unrepresented. The download process will be biased away from particularly heinous messages (such as those with exceedingly long lines), since nntplib itself fails on them.

This being news messages, I also expect that use of 8-bit will be far more common than would be the case in regular mail messages. On a related note, the use of 8-bit in headers would be commensurately elevated compared to normal email. What would be far less common is HTML. I also expect that undeclared charsets may be slightly higher.

Charsets

Charset data is mostly collected on the basis of individual body parts within body messages; some messages have more than one. Interestingly enough, the 1,025,587 messages yielded 1,016,765 body parts with some text data, which indicates that either the messages on the server had only headers in the first place or the download process somehow managed to only grab the headers. There were also 393 messages that I identified having parts with different charsets, which only further illustrates how annoying charsets are in messages.

The aliases in charsets are mostly uninteresting in variance, except for the various labels used for US-ASCII (us - ascii, 646, and ANSI_X3.4-1968 are the less-well-known aliases), as well as the list of charsets whose names ICU was incapable of recognizing, given below. Unknown charsets are treated as equivalent to undeclared charsets in further processing, as there were too few to merit separate handling (45 in all).

- x-windows-949

- isolatin

- ISO-IR-111

- Default

- ISO-8859-1 format=flowed

- X-UNKNOWN

- x-user-defined

- windows-874

- 3D"us-ascii"

- x-koi8-ru

- windows-1252 (fuer gute Newsreader)

- LATIN-1#2 iso-8859-1

For the next step, I used ICU to attempt to detect the actual charset of the body parts. ICU's charset detector doesn't support the full gamut of charsets, though, so charset names not claimed to be detected were instead processed by checking if they decoded without error. Before using this detection, I detect if the text is pure ASCII (excluding control characters, to enable charsets like ISO-2022-JP, and +, if the charset we're trying to check is UTF-7). ICU has a mode which ignores all text in things that look like HTML tags, and this mode is set for all HTML body parts.
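The pure-ASCII pre-check described above can be sketched in a few lines. This is an illustration in Python rather than the C++ the tool was written in; the function name `is_pure_ascii` and the decision to tolerate tab, LF, and CR are assumptions, not details taken from the original code.

```python
def is_pure_ascii(data: bytes, charset: str = "") -> bool:
    """Return True if data needs no charset detection at all.

    Assumption: tab, LF and CR are tolerated; every other control
    character (notably ESC, which ISO-2022-JP uses for mode shifts)
    disqualifies the text, as does '+' when the declared charset is UTF-7.
    """
    allowed_controls = {0x09, 0x0A, 0x0D}
    plus_is_special = charset.lower() == "utf-7"
    for byte in data:
        if byte >= 0x7F:  # DEL and every 8-bit octet
            return False
        if byte < 0x20 and byte not in allowed_controls:
            return False
        if plus_is_special and byte == 0x2B:  # '+' starts a UTF-7 shift
            return False
    return True
```

Only parts that fail this check would need to be handed to a detector or decoder.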

I don't quite believe ICU's charset detection results, so I've collapsed the results into a simpler table to capture the most salient feature. The correct column indicates the cases where the detected result was the declared charset. The ASCII column captures the fraction which were pure ASCII. The UTF-8 column indicates if ICU reported that the text was UTF-8 (it always seems to try this first). The Wrong C1 column refers to an ISO-8859-1 text being detected as windows-1252 or vice versa, which is set by ICU if it sees or doesn't see an octet in the appropriate range. The other column refers to all other cases, including invalid cases for charsets not supported by ICU.

| Declared | Correct | ASCII | UTF-8 | Wrong C1 | Other | Total |
|---|---|---|---|---|---|---|
| ISO-8859-1 | 230,526 | 225,667 | 883 | 8,119 | 1,035 | 466,230 |
| Undeclared | | 148,054 | 1,116 | | 37,626 | 186,796 |
| UTF-8 | 75,674 | 37,600 | | | 1,551 | 114,825 |
| US-ASCII | | 98,238 | 0 | | 304 | 98,542 |
| ISO-8859-15 | 67,529 | 18,527 | | | 0 | 86,056 |
| windows-1252 | 21,414 | 4,370 | 154 | 3,319 | 130 | 29,387 |
| ISO-8859-2 | 18,647 | 2,138 | 70 | 71 | 2,319 | 23,245 |
| KOI8-R | 4,616 | 424 | 2 | | 1,112 | 6,154 |
| GB2312 | 1,307 | 59 | 0 | | 112 | 1,478 |
| Big5 | 622 | 608 | 0 | | 174 | 1,404 |
| windows-1256 | 343 | 10 | 0 | | 45 | 398 |
| IBM437 | 84 | 257 | | | 0 | 341 |
| ISO-8859-13 | 311 | 6 | | | 0 | 317 |
| windows-1251 | 131 | 97 | 1 | | 61 | 290 |
| windows-1250 | 69 | 69 | 0 | 14 | 101 | 253 |
| ISO-8859-7 | 26 | 26 | 0 | 0 | 131 | 183 |
| ISO-8859-9 | 127 | 11 | 0 | 0 | 17 | 155 |
| ISO-2022-JP | 76 | 69 | 0 | | 3 | 148 |
| macintosh | 67 | 57 | | | 0 | 124 |
| ISO-8859-16 | 0 | 15 | | | 101 | 116 |
| UTF-7 | 51 | 4 | | | 0 | 55 |
| x-mac-croatian | 0 | 13 | | | 25 | 38 |
| KOI8-U | 28 | 2 | | | 0 | 30 |
| windows-1255 | 0 | 18 | 0 | 0 | 6 | 24 |
| ISO-8859-4 | 23 | 0 | | | 0 | 23 |
| EUC-KR | 0 | 3 | 0 | | 16 | 19 |
| ISO-8859-14 | 14 | 4 | | | 0 | 18 |
| GB18030 | 14 | 3 | 0 | | 0 | 17 |
| ISO-8859-8 | 0 | 0 | 0 | 0 | 16 | 16 |
| TIS-620 | 15 | 0 | | | 0 | 15 |
| Shift_JIS | 8 | 4 | 0 | | 1 | 13 |
| ISO-8859-3 | 9 | 1 | | | 1 | 11 |
| ISO-8859-10 | 10 | 0 | | | 0 | 10 |
| KSC_5601 | 3 | 6 | | | 0 | 9 |
| GBK | 4 | 2 | | | 0 | 6 |
| windows-1253 | 0 | 3 | 0 | 0 | 2 | 5 |
| ISO-8859-5 | 1 | 0 | 0 | | 3 | 4 |
| IBM850 | 0 | 4 | | | 0 | 4 |
| windows-1257 | 0 | 3 | | | 0 | 3 |
| ISO-2022-JP-2 | 2 | 0 | | | 0 | 2 |
| ISO-8859-6 | 0 | 1 | 0 | | 0 | 1 |
| Total | 421,751 | 536,373 | 2,226 | 11,523 | 44,892 | 1,016,765 |

The most obvious thing shown in this table is that the most common charsets remain ISO-8859-1, Windows-1252, US-ASCII, UTF-8, and ISO-8859-15, which is to be expected, given an expected prior bias to European languages in newsgroups. The low prevalence of ISO-2022-JP is surprising to me: it means a lower incidence of Japanese than I would have expected. Either that, or Japanese have switched to UTF-8 en masse, which I consider very unlikely given that Japanese have tended to resist the trend towards UTF-8 the most.

Beyond that, this dataset has caused me to lose trust in the ICU charset detectors. KOI8-R is recorded as being 18% malformed text, with ICU believing most of that to be ISO-8859-1 instead. Judging from the results, it appears that ICU has a bias towards guessing ISO-8859-1, which means I don't believe the numbers in the Other column to be accurate at all. For some reason, I don't appear to have decoders for ISO-8859-16 or x-mac-croatian on my local machine, but running some tests by hand appears to indicate that they are valid and not incorrect.

Somewhere between 0.1% and 1.0% of all messages are subject to mojibake, depending on how much you trust the charset detector. The cases of UTF-8 being misdetected as non-UTF-8 could potentially be explained by having very few non-ASCII sequences (ICU requires four valid sequences before it confidently declares text UTF-8); someone who writes a post in English but has a non-ASCII signature (such as myself) could easily fall into this category. Despite this, however, it does suggest that there is enough mojibake around that users need to be able to override charset decisions.

The undeclared charsets are described, in descending order of popularity, by ISO-8859-1, Windows-1252, KOI8-R, ISO-8859-2, and UTF-8, describing 99% of all non-ASCII undeclared data. ISO-8859-1 and Windows-1252 are probably over-counted here, but the interesting tidbit is that KOI8-R is used half as much undeclared as it is declared, and I suspect it may be undercounted. The practice of using locale-default fallbacks that Thunderbird has been using appears to be the best way forward for now, although UTF-8 is growing enough in popularity that using a specialized detector that decodes as UTF-8 if possible may be worth investigating (3% of all non-ASCII, undeclared messages are UTF-8).
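A UTF-8-first decoder with a locale fallback, as floated above, might look like the following sketch. The function name `decode_undeclared` and the default fallback of windows-1252 are assumptions for illustration; this is not Thunderbird's actual code, and a real client would pick the fallback from the user's locale.

```python
def decode_undeclared(data: bytes, fallback: str = "windows-1252") -> tuple[str, str]:
    """Decode a body part that declares no charset.

    Strict UTF-8 decoding is tried first. Non-UTF-8 8-bit text rarely
    happens to be valid UTF-8, so a successful decode is strong evidence.
    Otherwise the (hypothetical) locale fallback is used.
    Returns the decoded text and the charset that was applied.
    """
    try:
        return data.decode("utf-8"), "utf-8"
    except UnicodeDecodeError:
        return data.decode(fallback, errors="replace"), fallback
```

For example, `decode_undeclared(b"f\xfcr")` falls through to the fallback, while the UTF-8 encoding of the same word is accepted on the first attempt.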

HTML

Unsurprisingly (considering I'm polling newsgroups), very few messages contained any HTML parts at all: there were only 1,032 parts in the total sample size, of which only 552 had non-ASCII characters and were therefore useful for the rest of this analysis. This means that I'm skeptical of generalizing the results of this to email in general, but I'll still summarize the findings.

HTML, unlike plain text, contains a mechanism to explicitly identify the charset of a message. The official algorithm for determining the charset of an HTML file can be described simply as "look for a `<meta>` tag in the first 1024 bytes. If it can be found, attempt to extract a charset using one of several different techniques depending on what's present or not." Since doing this fully properly is complicated in library-less C++ code, I opted to look first for a `<meta` production, guess the extent of the tag, and try to find a charset= string somewhere in that tag. This appears to be an approach which is more reflective of how this parsing is actually done in email clients than the proper HTML algorithm. One difference is that my regular expressions also support the newer `<meta charset="...">` construct, although I don't appear to see any use of this.
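As a rough illustration of that shortcut (not the author's C++ code), the same idea can be written in Python with two regular expressions. The names `sniff_html_charset`, `META_TAG`, and `CHARSET` are made up for this sketch, and the patterns are deliberately simpler than the full HTML prescan algorithm.

```python
import re

# Find anything that looks like a <meta ...> tag, then look for charset= inside it.
META_TAG = re.compile(rb"<meta\b[^>]*>", re.IGNORECASE)
CHARSET = re.compile(rb"charset\s*=\s*[\"']?\s*([A-Za-z0-9._:\-]+)", re.IGNORECASE)


def sniff_html_charset(html: bytes):
    """Return the lower-cased charset label from the first 1024 bytes, or None."""
    for tag in META_TAG.finditer(html[:1024]):
        match = CHARSET.search(tag.group(0))
        if match:
            return match.group(1).decode("ascii").lower()
    return None
```

Because the search is for `charset=` anywhere inside the tag, it covers both the older `http-equiv`/`content` form and the newer `charset` attribute.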

I found only 332 parts where the HTML declared a charset. Only 22 parts had both a MIME charset and an HTML charset where the two disagreed with each other. I neglected to count how many messages had HTML charsets but no MIME charsets, but random sampling appeared to indicate that this is very rare on the data set (the same order of magnitude or less as those where they disagreed).

As for the question of who wins: of the 552 non-ASCII HTML parts, only 71 messages did not have the MIME type be the valid charset. Then again, 71 messages did not have the HTML type be valid either, which strongly suggests that ICU was detecting the incorrect charset. Judging from manual inspection of such messages, it appears that the MIME charset ought to be preferred if it exists. There are also a large number of HTML charset specifications saying unicode, which ICU treats as UTF-16, which is most certainly wrong.

Headers

In the data set, 1,025,856 header blocks were processed for the following statistics. This is slightly more than the number of messages since the headers of contained message/rfc822 parts were also processed. The good news is that 97% (996,103) of headers were completely ASCII. Of the remaining 29,753 headers, 3.6% (1,058) were UTF-8 and 43.6% (12,965) matched the declared charset of the first body part. This leaves 52.9% (15,730) that did not match that charset, however.

Now, NNTP messages can generally be expected to have a higher 8-bit header ratio, so this is probably exaggerating the setup in most email messages. That said, the high incidence is definitely an indicator that even non-EAI-aware clients and servers cannot blindly presume that headers are 7-bit, nor can EAI-aware clients and servers presume that 8-bit headers are UTF-8. The high incidence of mismatching the declared charset suggests that fallback-charset decoding of headers is a necessary step.
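One way to picture fallback-charset decoding of a raw 8-bit header is the chain below. It is only a sketch under stated assumptions: the function name `decode_raw_header`, the order of candidates, and the windows-1252 default are all hypothetical choices, not what any particular client does.

```python
def decode_raw_header(raw: bytes, body_charset=None, fallback="windows-1252"):
    """Decode an unencoded header value, trying progressively weaker guesses.

    Order (an assumption): pure ASCII, then UTF-8 as RFC 6532 expects,
    then the charset declared on the first body part, then a fallback.
    Returns the decoded text and the charset that was applied.
    """
    candidates = ["ascii", "utf-8"]
    if body_charset:
        candidates.append(body_charset)
    for charset in candidates:
        try:
            return raw.decode(charset), charset
        except (UnicodeDecodeError, LookupError):
            continue  # wrong guess or unknown charset label; try the next one
    return raw.decode(fallback, errors="replace"), fallback
```

Note that a single-byte body charset will decode almost any byte sequence, so a successful decode at that step is a guess, not proof.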

RFC 2047 encoded-words are also an interesting statistic to mine. I found 135,951 encoded-words in the data set, which is rather low, considering that messages can be reasonably expected to carry more than one encoded-word. This is likely an artifact of NNTP's tendency towards 8-bit instead of 7-bit communication and understates their presence in regular email.

Counting encoded-words can be difficult, since there is a mechanism to let them continue in multiple pieces. For the purposes of this count, a sequence of such words counts as a single word, and I indicate the number of them that had more than one element in a sequence in the Continued column. The 2047 Violation column counts the number of sequences where decoding words individually does not yield the same result as decoding them as a whole, in violation of RFC 2047. The Only ASCII column counts those words containing nothing but ASCII symbols and where the encoding was thus (mostly) pointless. The Invalid column counts the number of sequences that had a decoder error.

| Charset | Count | Continued | 2047 Violation | Only ASCII | Invalid |
|---|---|---|---|---|---|
| ISO-8859-1 | 56,355 | 15,610 | | 499 | 0 |
| UTF-8 | 36,563 | 14,216 | 3,311 | 2,704 | 9,765 |
| ISO-8859-15 | 20,699 | 5,695 | | 40 | 0 |
| ISO-8859-2 | 11,247 | 2,669 | | 9 | 0 |
| windows-1252 | 5,174 | 3,075 | | 26 | 0 |
| KOI8-R | 3,523 | 1,203 | | 12 | 0 |
| windows-1256 | 765 | 568 | | 0 | 0 |
| Big5 | 511 | 46 | 28 | 0 | 171 |
| ISO-8859-7 | 165 | 26 | | 0 | 3 |
| windows-1251 | 157 | 30 | | 2 | 0 |
| GB2312 | 126 | 35 | 6 | 0 | 51 |
| ISO-2022-JP | 102 | 8 | 5 | 0 | 49 |
| ISO-8859-13 | 78 | 45 | | 0 | 0 |
| ISO-8859-9 | 76 | 21 | | 0 | 0 |
| ISO-8859-4 | 71 | 2 | | 0 | 0 |
| windows-1250 | 68 | 21 | | 0 | 0 |
| ISO-8859-5 | 66 | 20 | | 0 | 0 |
| US-ASCII | 38 | 10 | | 38 | 0 |
| TIS-620 | 36 | 34 | | 0 | 0 |
| KOI8-U | 25 | 11 | | 0 | 0 |
| ISO-8859-16 | 22 | 1 | | 0 | 22 |
| UTF-7 | 17 | 2 | 1 | 8 | 3 |
| EUC-KR | 17 | 4 | 4 | 0 | 9 |
| x-mac-croatian | 10 | 3 | | 0 | 10 |
| Shift_JIS | 8 | 0 | 0 | 0 | 3 |
| Unknown | 7 | 2 | | 0 | 7 |
| ISO-2022-KR | 7 | 0 | 0 | 0 | 0 |
| GB18030 | 6 | 1 | 0 | 0 | 1 |
| windows-1255 | 4 | 0 | | 0 | 0 |
| ISO-8859-14 | 3 | 0 | | 0 | 0 |
| ISO-8859-3 | 2 | 1 | | 0 | 0 |
| GBK | 2 | 0 | 0 | 0 | 2 |
| ISO-8859-6 | 1 | 1 | | 0 | 0 |
| Total | 135,951 | 43,360 | 3,361 | 3,338 | 10,096 |

This table somewhat mirrors the distribution of regular charsets, with one major class of differences: charsets that represent non-Latin scripts (particularly Asian scripts) appear to be overdistributed compared to their corresponding use in body parts. The exception to this rule is GB2312 which is far lower than relative rankings would presume—I attribute this to people using GB2312 being more likely to use 8-bit headers instead of RFC 2047 encoding, although I don't have direct evidence.

Clearly continuations are common, which is to be relatively expected. The sad part is how few people bother to try to adhere to the specification here: out of 14,312 continuations in languages that could violate the specification, 23.5% of them violated the specification. The mode-shifting versions (ISO-2022-JP and EUC-KR) are basically all violated, which suggests that no one bothered to check if their encoder "returns to ASCII" at the end of the word (I know Thunderbird's does, but the other ones I checked don't appear to).
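The violation being counted here can be made concrete with a small check: decode each encoded word of a sequence on its own, decode the concatenated bytes as a whole, and compare. The sketch below is an illustration in Python, not the tool used for the statistics; `ENCODED_WORD`, `payload_bytes`, and `violates_rfc2047` are hypothetical names, and mixed-charset sequences are simply skipped.

```python
import base64
import quopri
import re

ENCODED_WORD = re.compile(r"=\?([^?]*)\?([QqBb])\?([^?]*)\?=")


def payload_bytes(encoding: str, text: str) -> bytes:
    """Undo the B (base64) or Q (quoted-printable variant) transfer encoding."""
    if encoding.upper() == "B":
        return base64.b64decode(text)
    return quopri.decodestring(text.encode("ascii"), header=True)


def violates_rfc2047(header_value: str) -> bool:
    """True if the words only decode correctly when joined together."""
    words = ENCODED_WORD.findall(header_value)
    charsets = {charset.lower() for charset, _, _ in words}
    if len(words) < 2 or len(charsets) != 1:
        return False  # not a continuation, or mixed charsets (not handled here)
    charset = charsets.pop()
    chunks = [payload_bytes(enc, text) for _, enc, text in words]
    try:
        whole = b"".join(chunks).decode(charset)
    except (UnicodeDecodeError, LookupError):
        return False  # invalid or unknown charset: a different column
    try:
        pieces = "".join(chunk.decode(charset) for chunk in chunks)
    except UnicodeDecodeError:
        return True  # a multi-byte character was split across words
    return pieces != whole
```

A UTF-8 character split across two words, as in `=?UTF-8?Q?f=C3?= =?UTF-8?Q?=BCr?=`, is flagged, while the same text split on a character boundary is not.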

The number of invalid UTF-8 decoded words, 26.7%, seems impossibly high to me. A brief check of my code indicates that this is working incorrectly in the face of invalid continuations, which certainly exaggerates the effect but still leaves a value too high for my tastes. Of more note are the elevated counts for the East Asian charsets: Big5, GB2312, and ISO-2022-JP. I am not an expert in charsets, but I believe that Big5 and GB2312 in particular are a family of almost-but-not-quite-identical charsets and it may be that ICU is choosing the wrong candidate of each family for these instances.

There is a surprisingly large number of encoded words that encode only ASCII. When searching specifically for the ones that use the US-ASCII charset, I found that these can be divided into three categories. One set comes from a few people who apparently have an unsanitized whitespace (space and LF were the two I recall seeing) in the display name, producing encoded words like =?us-ascii?Q?=09Edward_Rosten?=. Blame 40tude Dialog here. Another set encodes some basic characters (most commonly = and ?, although a few other interpreted characters popped up). The final set of errors were double-encoded words, such as =?us-ascii?Q?=3D=3FUTF-8=3FQ=3Ff=3DC3=3DBCr=3F=3D?=, which appear to be all generated by an Emacs-based newsreader.
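The double-encoded case is easy to reproduce with Python's standard `email.header` module: one decoding pass over the example above yields another encoded word, and only a second pass yields the text. The helper name `decode_once` is made up for this sketch.

```python
from email.header import decode_header


def decode_once(value: str) -> str:
    """Apply exactly one round of RFC 2047 decoding to a header value."""
    parts = []
    for chunk, charset in decode_header(value):
        if isinstance(chunk, bytes):
            chunk = chunk.decode(charset or "ascii", errors="replace")
        parts.append(chunk)
    return "".join(parts)


double_encoded = "=?us-ascii?Q?=3D=3FUTF-8=3FQ=3Ff=3DC3=3DBCr=3F=3D?="
first_pass = decode_once(double_encoded)   # still an encoded word
second_pass = decode_once(first_pass)      # the intended text
```

Whether a client should ever apply the second pass automatically is a separate question; the sketch only shows why such headers look like encoded words after decoding.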

One interesting thing when sifting the results is finding the crap that people produce in their tools. By far the worst single instance of an RFC 2047 encoded-word that I found is this one: Subject: Re: [Kitchen Nightmares] Meow! Gordon Ramsay Is =?ISO-8859-1?B?UEgR lqZ VuIEhlYWQgVH rbGeOIFNob BJc RP2JzZXNzZW?= With My =?ISO-8859-1?B?SHVzYmFuZ JzX0JhbGxzL JfU2F5c19BbXiScw==?= Baking Company Owner (complete with embedded spaces), discovered by crashing my ad-hoc base64 decoder (due to the spaces). The interesting thing is that even after investigating the output encoding, it doesn't look like the text is actually correct ISO-8859-1... or any obvious charset for that matter.
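For the narrow problem of stray whitespace inside a base64 payload, a tolerant decoder can strip the whitespace and repair the padding before decoding. This is a minimal sketch with a hypothetical helper name, `lenient_b64decode`; it is not the ad-hoc decoder mentioned above, and it makes no attempt to recover text that was corrupted in other ways.

```python
import base64


def lenient_b64decode(payload: str) -> bytes:
    """Decode base64 that may contain embedded whitespace or lack padding."""
    compact = "".join(payload.split())       # drop spaces, tabs and newlines
    compact += "=" * (-len(compact) % 4)     # restore any missing '=' padding
    return base64.b64decode(compact)
```

Using it on a payload such as `"SGVs bG8g d29y bGQ="` returns the same bytes as the unbroken form.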

I looked at the unknown charsets by hand. Most of them were actually empty charsets (looked like =??B?Sy4gSC4gdm9uIFLDvGRlbg==?=), and all but one of the outright empty ones were generated by KNode and really UTF-8. The other one was a Windows-1252 generated by a minor newsreader.

Another important aspect of headers is how to handle 8-bit headers. RFC 5322 blindly hopes that headers are pure ASCII, while RFC 6532 dictates that they are UTF-8. Indeed, 97% of headers are ASCII, leaving just 29,753 headers that are not. Of these, only 1,058 (3.6%) are UTF-8 per RFC 6532. Deducing which charset they are is difficult because the large amount of English text for header names and the important control values will greatly skew any charset detector, and there is too little text to give a charset detector confidence. The only metric I could easily apply was testing Thunderbird's heuristic as "the header blocks are the same charset as the message contents"—which only worked 45.2% of the time.

Encodings

While developing an earlier version of my scanning program, I was intrigued to know how often various content transfer encodings were used. I found 1,028,971 parts in all (1,027,474 of which are text parts). The transfer encoding of binary did manage to sneak in, with 57 such parts. Using 8-bit text was very popular, at 381,223 samples, second only to 7-bit at 496,114 samples. Quoted-printable had 144,932 samples and base64 only 6,640 samples. Extremely interesting is the presence of 4 illegal transfer encodings in 5 messages, two of them obvious typos and the others appearing to be a client mangling header continuations into the transfer-encoding.

Conclusions

So, drawing from the body of this data, I would like to make the following conclusions as to using charsets in mail messages:

- Have a fallback charset. Undeclared charsets are extremely common, and I'm skeptical that charset detectors are going to get this stuff right, particularly since email can more naturally combine multiple languages than other bodies of text (think signatures). Thunderbird currently uses a locale-dependent fallback charset, which roughly mirrors what Firefox and I think most web browsers do.

- Let users override charsets when reading. On a similar token, mojibake text, while not particularly common, is common enough to make declared charsets sometimes unreliable. It's also possible that the fallback charset is wrong, so users may need to override the chosen charset.

- Testing is mandatory. In this set of messages, I found base64 encoded words with spaces in them, encoded words without charsets (even UNKNOWN-8BIT), and clearly invalid Content-Transfer-Encodings. Real email messages that are flagrantly in violation of basic spec requirements exist, so you should make sure that your email parser and client can handle the weirdest edge cases.

- Non-UTF-8, non-ASCII headers exist. EAI notwithstanding, 8-bit headers are a reality. Combined with a predilection for saying ASCII when text is really ASCII, this means that there is often no good in-band information to tell you what charset is correct for headers, so you have to go back to a fallback charset.

- US-ASCII really means ASCII. Email clients appear to do a very good job of only emitting US-ASCII as a charset label if it's US-ASCII. The sample size is too small for me to grasp what charset 8-bit characters should imply in US-ASCII.

- Know your decoders. ISO-8859-1 actually means Windows-1252 in practice. Big5 and GB2312 are actually small families of charsets with slightly different meanings. ICU notably disagrees with some of these realities, so be sure to include in your tests various charset edge cases so you know that the decoders are correct.

- UTF-7 is still relevant. Of the charsets I found not mentioned in the WHATWG encoding spec, IBM437 and x-mac-croatian are in use only due to specific circumstances that limit their generalizable presence. IBM850 is too rare. UTF-7 is common enough that you need to actually worry about it, as abominable and evil a charset it is.

- HTML charsets may matter—but MIME matters more. I don't have enough data to say if charsets declared in HTML are needed to do proper decoding. I do have enough to say fairly conclusively that the MIME charset declaration is authoritative if HTML disagrees.

- Charsets are not languages. The entire reason x-mac-croatian is used at all can be traced to Thunderbird displaying the charset as "Croatian," despite it being pretty clearly not a preferred charset. Similarly most charsets are often enough ASCII that, say, an instance of GB2312 is a poor indicator of whether or not the message is in English. Anyone trying to filter based on charsets is doing a really, really stupid thing.

- RFCs reflect an ideal world, not reality. This is most notable in RFC 2047: the specification may state that encoded words are supposed to be independently decodable, but the evidence is pretty clear that more clients break this rule than uphold it.

- Limit the charsets you support. Just because your library lets you emit a hundred charsets doesn't mean that you should let someone try to do it. You should emit US-ASCII or UTF-8 unless you have a really compelling reason not to, and those compelling reasons don't require obscure charsets. Some particularly annoying charsets should never be written: EBCDIC is already basically dead on the web, and I'd like to see UTF-7 die as well.

When I have time, I'm planning on taking some of the more egregious or interesting messages in my dataset and packaging them into a database of emails to help create testsuites on handling messages properly.

http://quetzalcoatal.blogspot.com/2014/03/understanding-email-charsets.html


Dave Townsend: An update for hgchanges

Nearly a year ago I showed off the first version of my webapp for displaying recent changes in mercurial repositories. I’ve heard directly from a number of people that use it but it’s had a few problems that I’ve spent some of my spare time trying to solve. I’m now pleased to say that a new version is up and running. I’m not sure it’s accurate to call this a rewrite since it was entirely incremental, but when I look back over the code changes there really isn’t much that wasn’t touched in some way or other. So what’s new? For you, a person using the site, absolutely nothing! So what on earth have I been doing rewriting the code?

Hopefully I’ve been making the thing a whole lot more stable. Eagle-eyed users may have noticed that periodically the site would stop updating, sometimes for days at a time. The site is split into two pieces. One is a django website to display the changes. The other is the important bit that reads the change data out of mercurial and puts it into a database that the website can use. I do this caching because it would be too expensive to work it out on the fly. The problem is that the process of reading the data out of mercurial meant keeping a local version of every repository on the server (some 5GB for the four currently tracked), and reading the changes using mercurial’s python API was slow and used a lot of memory. The shared hosting environment that I run this out of kept killing the process when it used too many resources. Normally it could recover but occasionally it would need a manual kick. Worse, there is some mercurial bug where sometimes pulling a repository will end up with an inconsistent database. It’s rare enough that many people don’t see it, but when you’re pulling repositories every ten minutes it starts happening every couple of weeks, again requiring manual work to fix the problem.

I did dabble with getting this into shape so I could run it on a mozilla server. PAAS seemed like the obvious option but after a lot of work it turned out that the database size restrictions there made this impossible. Since then I’ve seen so many horror stories about PAAS falling over that I think I’m glad I never got there. I also tried getting a dedicated server from Mozilla to run this on, but they were quite rightly wary when I mentioned that the process used a bunch of memory and wanted harder figures before committing. Unfortunately I never found a way to give those figures.

The final solution has been ripping out the mercurial API dependency. Now rather than needing local mercurial repositories the import process instead talks to them over the web. The default mercurial web APIs aren’t great but luckily all of the repositories I care about have pushlog installed which has a good JSON API for retrieving recently pushed changesets. So now the process is to pull the recent pushlog, get the patches for every changeset and parse them to work out which files have changed. By not having to load the mercurial structures there is far less memory used and since the process now has to delay as it downloads each patch file it keeps the CPU usage low.
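The patch-parsing step could look roughly like the sketch below. It is not taken from the hgchanges source: the function name `changed_files` is hypothetical, and it assumes the patches arrive as git-style diffs whose file sections start with a `diff --git a/... b/...` line and whose paths contain no spaces.

```python
import re

# Matches the header line that opens each file section of a git-style patch.
# Assumption: paths contain no spaces; real patches may need more care.
DIFF_HEADER = re.compile(r"^diff --git a/(\S+) b/(\S+)$")


def changed_files(patch_text):
    """Return a sorted list of the file paths touched by a patch."""
    files = set()
    for line in patch_text.splitlines():
        match = DIFF_HEADER.match(line)
        if match:
            # A rename touches both the old and the new path.
            files.add(match.group(1))
            files.add(match.group(2))
    return sorted(files)


# Made-up patch used only to exercise the function.
SAMPLE_PATCH = """\
# HG changeset patch
# User Example <user@example.com>
diff --git a/browser/base/content/browser.js b/browser/base/content/browser.js
--- a/browser/base/content/browser.js
+++ b/browser/base/content/browser.js
@@ -1,3 +1,3 @@
-old line
+new line
diff --git a/toolkit/old.js b/toolkit/new.js
rename from toolkit/old.js
rename to toolkit/new.js
"""

print(changed_files(SAMPLE_PATCH))
```

Because only the header lines are inspected, the patch body never has to be held in any structure beyond the text itself, which fits the low-memory goal described above.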

I’ve also made the database itself a lot more efficient. Previously I recorded every changeset for every repository, but many repositories have the same changeset in them. By recording each shared changeset only once, the database gets a little more complicated but uses much less space, and since you only have to parse the changeset once the import process becomes faster. It took me a while to get the website performing as fast as before with these changes but I think it’s there now (barring some strange slowness in Django that I can’t identify). I’ve also turned on some quite aggressive caching both on the client and server side: if someone has recently visited the same page as you’re trying to access then it should load super fast.
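To illustrate the idea of storing a shared changeset once, here is a minimal sketch using Python's built-in `sqlite3`. The table names, column names and the `record_changeset` helper are all hypothetical, and the repository names are only examples; the real site uses Django models whose schema is not shown in this post.

```python
import sqlite3


def create_schema(conn):
    # One row per unique changeset, plus a link table saying which
    # repositories contain it (hypothetical schema for illustration).
    conn.executescript("""
        CREATE TABLE changeset (
            hash TEXT PRIMARY KEY,
            description TEXT
        );
        CREATE TABLE repository_changeset (
            repository TEXT NOT NULL,
            hash TEXT NOT NULL REFERENCES changeset(hash),
            PRIMARY KEY (repository, hash)
        );
    """)


def record_changeset(conn, repository, changeset_hash, description):
    """Link a changeset to a repository, storing the changeset only once.

    Returns True when the changeset was new and so still needs parsing.
    """
    exists = conn.execute(
        "SELECT 1 FROM changeset WHERE hash = ?", (changeset_hash,)
    ).fetchone()
    is_new = exists is None
    if is_new:
        conn.execute(
            "INSERT INTO changeset (hash, description) VALUES (?, ?)",
            (changeset_hash, description),
        )
    conn.execute(
        "INSERT OR IGNORE INTO repository_changeset (repository, hash) "
        "VALUES (?, ?)",
        (repository, changeset_hash),
    )
    return is_new


conn = sqlite3.connect(":memory:")
create_schema(conn)
first = record_changeset(conn, "mozilla-central", "abc123", "Example change")
second = record_changeset(conn, "mozilla-inbound", "abc123", "Example change")
changeset_rows = conn.execute("SELECT COUNT(*) FROM changeset").fetchone()[0]
link_rows = conn.execute(
    "SELECT COUNT(*) FROM repository_changeset"
).fetchone()[0]
print(first, second, changeset_rows, link_rows)
```

The second call returns `False`, which is the signal that the patch does not need to be downloaded and parsed again.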

So, the new version is up and running at the same location as the old. Chances are you’re using it already so if you’re noticing any unexpected slowness or something not looking right please let me know.

http://www.oxymoronical.com/blog/2014/03/An-update-for-hgchanges

|

|

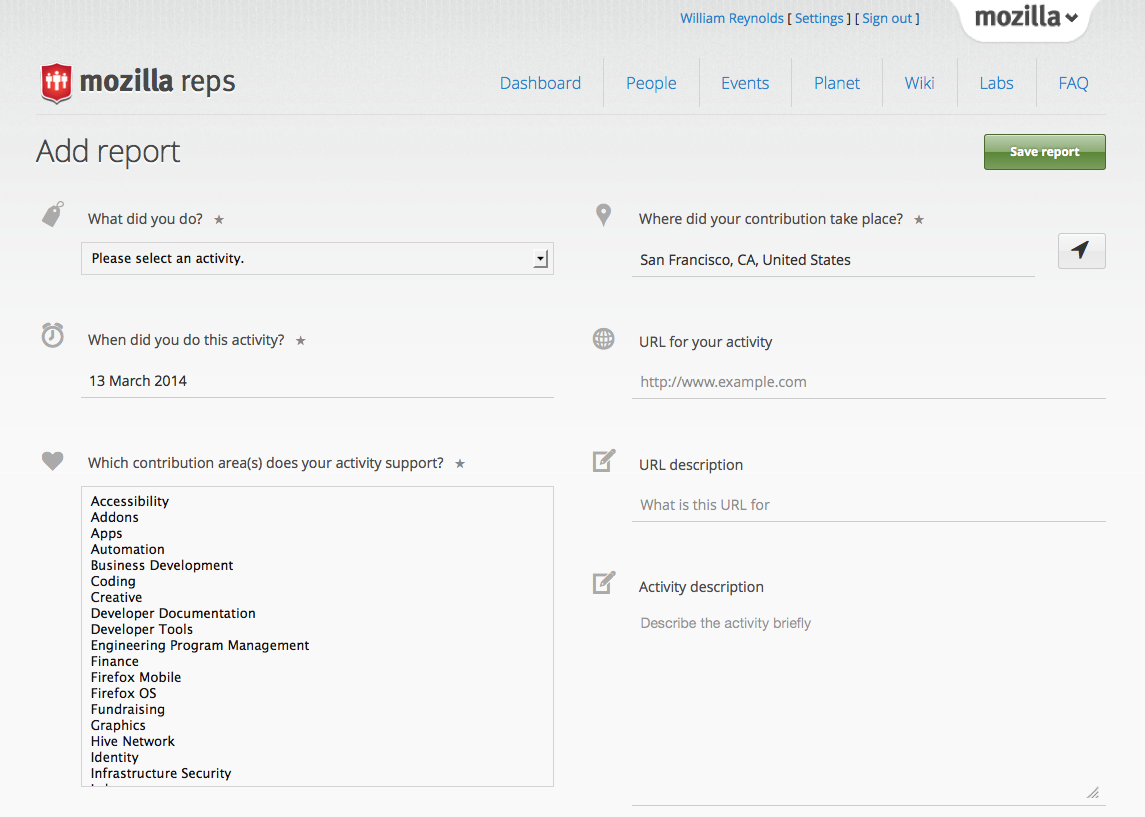

William Reynolds: Introducing a better reporting system for Mozilla Reps |

Starting today we are switching to a new reporting system for Mozilla Reps that is easier, faster, and activity-based. This means there are no more monthly reports, and Reps can report activities as they happen.

We originally chose a monthly reporting system because of technical limitations when reports were first posted on the wiki. This new activity-based reporting will provide more frequent reporting, more valuable information and better measurement of the impact of the Reps program. Reps do great things all the time, and we want to be able to show that success to them and others involved with Mozilla.

The new reporting system introduces two types of reporting (active and passive) in order to make it easier to communicate activities and save time. Active reports are done by completing a simple form. Passive reports are automatically generated as Reps work on different efforts. When a Rep creates an event or attends an event on the Reps Portal, a passive report will automatically be created about that action. A Rep’s mentor will be notified when a Rep organizes or attends an event, and that information will appear on the Rep’s profile.

A Rep no longer needs to create reports saying she or he is organizing or attending an event, since it will be done automatically. In the future, we plan to add more types of passive reports, such as tweeting or blogging about a Mozilla topic.

To help Reps get started with these new reports, we will send some initial reminders to the reps-general forum, and then the friendly ReMoBot will send email reminders when a Rep has not reported any activities in 3 weeks. To prevent mentors from getting too many report notifications by email, mentors will receive daily digests that summarize the activities of their mentees.

What happens to existing reports? They have been migrated and now show up as individual activities where it makes sense.

Reps do a lot for Mozilla. We think this new reporting system will greatly improve our ability to show the impact each Rep and the Reps program as a whole are having as Mozilla builds the Internet that the world needs.

Like any process change, the new reporting system may not be perfect and may have bugs, so please do share feedback and ideas with the Reps Dev team or Council. We will continue to improve our tools and processes. If you see some odd behavior, please file a bug.

You can read more about the reporting system in the updated Reports SOP. To add a report go to the Dashboard and click ‘Add report’ or bookmark the direct link.

Welcome to a world of better Reps reports! Special thanks to the ReMo Dev team, Council, and Mentors who have worked on the new reporting system for the last few months, from design to testing. It has been a big effort, and we are excited to launch today.

http://dailycavalier.com/2014/03/introducing-a-better-reporting-system-for-mozilla-reps/

|

|

Christie Koehler: Joining the Community Building Team at Mozilla |

After almost a year and a half on the Technical Evangelism team, my role at Mozilla is changing. As of March 3rd, I am the Education Lead on the recently formed Community Building Team (CBT) led by David Boswell.

The purpose of the CBT is to empower contributors to join us in furthering Mozilla’s mission. We strive to create meaningful and clear contribution pathways, to collect and make available useful data about the contribution life-cycle, to provide relevant and necessary educational resources and to help build meaningful recognition systems.

As Education Lead, I’ll drive efforts to: 1) identify the education and culture-related needs common across Mozilla, and 2) develop and implement strategies for creating and maintaining these needs. Another part of my role as Education Lead will be to organize the Education and Culture Working Group as well as the Wiki Working Group.

Community Building Team, March 2014 in SF

I’m super excited to be joining Dino Anderson, David Boswell, Michelle Marovich, Pierros Papadeas, William Quiviger, and Larissa Shapiro and for community building to be a recognized, full-time aspect of my job at Mozilla.

If I had been working with you on Firefox OS App localization and you still have questions, let me know or email appsdev@mozilla.com.

http://subfictional.com/2014/03/13/joining-the-community-building-team-at-mozilla/

|

|

Peter Bengtsson: Buggy - A sexy Bugzilla offline webapp |

Buggy is a single-page webapp that relies entirely on the Bugzilla Native REST API. And it works offline. Sort of. I say "sort of" because obviously without a network connection you're bound to have outdated information from the bugzilla database but at least you'll have what you had when you went offline.

When you post a comment from Buggy, the posted comment is added to an internal sync queue and if you're online it immediately processes that queue. There is, of course, always a risk that you might close a bug when you're in a tunnel or on a plane without WiFi and when you later get back online the sync fails because of some conflict.
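The queue behaviour described above can be modelled in a few lines. Buggy itself is written in JavaScript with AngularJS, so the Python below is only a language-neutral sketch: `SyncQueue`, `ConflictError` and the fake network are hypothetical names, not Buggy's actual code.

```python
from collections import deque


class ConflictError(Exception):
    """Raised by the (hypothetical) send function when the server refuses a change."""


class SyncQueue:
    """Hold actions made while offline and replay them in order once online."""

    def __init__(self, send, is_online):
        self._send = send            # callable that performs the network request
        self._is_online = is_online  # callable returning True when connected
        self._pending = deque()
        self.conflicts = []          # refused actions, kept so the user can be told

    def post(self, action):
        # Always queue first so nothing is lost, then try to flush right away.
        self._pending.append(action)
        self.process()

    def process(self):
        while self._pending and self._is_online():
            action = self._pending[0]
            try:
                self._send(action)
            except ConflictError as error:
                # The bug changed on the server in the meantime; set it aside.
                self.conflicts.append((action, str(error)))
            self._pending.popleft()

    def __len__(self):
        return len(self._pending)


# Usage with a fake network, to show the offline-then-online flow.
network = {"online": False}
sent = []
queue = SyncQueue(send=sent.append, is_online=lambda: network["online"])

queue.post({"bug": 1, "comment": "Written in a tunnel"})
pending_while_offline = len(queue)

network["online"] = True
queue.process()
pending_after_sync = len(queue)
print(pending_while_offline, pending_after_sync, sent)
```

Conflicts are collected rather than retried because, as noted above, a change made offline may simply no longer apply once the connection returns.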

The reason I built this was partly to scratch an itch I had ("What's the ideal way possible for me to use Bugzilla?") and also to experiment with some new techniques, namely AngularJS and localforage.

So, the way it works is:

- You pick your favorite product and components.

- All bugs under these products and components are downloaded and stored locally in your browser (thank you localforage).

- When you click any bug it then proceeds to download its change history and its comments.

- Periodically it checks each of your chosen product and components to see if new bugs or new comments have been added.

- If you refresh your browser, all bugs are loaded from a local copy stored in your browser and in the background it downloads any new bugs or comments or changes.

- If you enter your username and password, an auth token is stored in your browser and you can thus access secure bugs.

Pros and cons

The main advantage of Buggy compared to Bugzilla is that it's fast to navigate. You can instantly filter bugs by status(es), components and/or by searching in the bug summary.

The disadvantage of Buggy is that you can't see all fields, file new bugs or change all fields.

The code

The code is of course open source. It's available on https://github.com/peterbe/buggy and released under an MPL 2 license.

The code requires no server. It's just an HTML page with some CSS and Javascript.

Everything is done using AngularJS. It's only my second AngularJS project but this is also part of why I built this. To learn AngularJS better.

Much of the inspiration came from the CSS framework Pure and one of their sample layouts which I started with and hacked into shape.

The deployment

Because Buggy doesn't require a server, this is the very first time I've been able to deploy something entirely on CDN. Not just the images, CSS and Javascript but the main HTML page as well. Before I explain how I did that, let me explain about the make.py script.

I really wanted to use Grunt but it just didn't work for me. There are many positive things about Grunt, such as the ease with which you can add plugins, and I like how you just have one "standard" file that defines how a bunch of meta tasks should be done. However, I just couldn't get the concatenation and minification and stuff to work together. Individually each tool works fine, such as the grunt-contrib-uglify plugin, but together none of them appeared to want to work. Perhaps I just required too much.

In the end I wrote a script in python that does exactly what I want for deployment. Its features are:

- Hashes in the minified and concatenated CSS and Javascript files (e.g. vendor-8254f6b.min.js)

- Custom names for the minified and concatenated CSS and Javascript files so I can easily set far-future cache headers (e.g. /_cache/vendor-8254f6b.min.js)

- Ability to fold all CSS minified into the HTML (since there's only one page, there's little reason to make the CSS external)

- A Git revision SHA into the HTML of the generated ./dist/index.html file

- All files in ./client/static/ copied intelligently into ./dist/static/

- Images in CSS to be given hashes so they too can have far-future cache headers
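The hashed file names in the list above can be produced along these lines. This is a sketch and not the actual make.py: the helper name `hashed_name` is hypothetical, and using a truncated SHA-1 of the file content is an assumption, since the post does not say how the hash is derived.

```python
import hashlib


def hashed_name(prefix, content, suffix, length=7):
    """Build a cache-busting file name such as 'vendor-<hash>.min.js'.

    Assumption: the hash is a truncated SHA-1 of the file content; the
    original script may derive it differently.
    """
    digest = hashlib.sha1(content).hexdigest()[:length]
    return "{}-{}{}".format(prefix, digest, suffix)


# Stand-in for the real concatenated and minified bundle.
bundle = b"console.log('vendor code');"
name = hashed_name("vendor", bundle, ".min.js")
url = "/_cache/" + name
print(url)
```

Because the name changes whenever the content changes, the file can be served with far-future cache headers without the risk of browsers holding on to a stale copy.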

So, the way I have it set up is that, on my server, I have it run python make.py and that generates a complete site in a ./dist/ directory. I then point Nginx to that directory and run it under http://buggy-origin.peterbe.com. Then I set up an Amazon Cloudfront distribution to that domain and then lastly I set up a CNAME for buggy.peterbe.com to point to the Cloudfront distribution.

The future

I try my best to maintain a TODO file inside the repo. That's where I write down things to come. It also works as a changelog, since I also use this file to write down what's been done.

One of the main features I want to add is the ability to add bugs that are outside your chosen products and components. It'll be a "fake" component called "Misc". This is for bugs outside the products and components you usually monitor and work in but perhaps bugs you've filed or been assigned to. Or just other bugs you're interested in in general.

Another major feature to work on is the ability to choose to see more fields and the ability to edit these too. This will require some configuration on the individual users' behalf. For example, some people use the "Target Milestone" a lot. Some use the "Importance" a lot. So, some generic solution is needed to accommodate all these non-basic fields.

And last but not least, the Bugzilla team here at Mozilla is working on a very exciting project that allows you to register a certain list of bugs with a WebSocket and have it push to you as soon as these bugs change. That means that I won't have to query bugzilla every 30 seconds to see if certain bugs have changed but instead get instant notifications when they do. That's going to be major! I confidently speculate that that will be implemented some time this summer.

Give it a go. What are you waiting for? :) Go to http://buggy.peterbe.com/, pick your favorite products and components and try to use it for a week.

|

|

David Burns: Management is hard |

I have been a manager within the A*Team for 6 months now and I wanted to share what I have learnt in that time. The main thing that I have learnt is being a manager is hard work.

Why has it been hard?

Well, being a manager requires a certain amount of personal skills. Being able to speak to people and check they are doing the tasks they are supposed to is trivial. You can be a "good" manager on this but being a great manager is knowing how to fix problems when members of your team aren't doing the things that you expect.

It's all about the people

As an engineer you learn how to break down hardware and software problems and solve them. Unfortunately breaking down problems with people in your team is nowhere near the same. Engineering skills can be taught, even if the person struggles at first, but people skills can't be taught in the same way.

Working at Mozilla I get to work with a very diverse group of people literally from all different parts of the world. This means that when speaking to people, what you say and how you say things can be taken in the wrong way. It can be the simplest of things that can go wrong.

Careers

Being a manager means that you are there to help shape people's careers. Do a great job of it and the people that you manage will go far; do a bad job of it and you will stifle their careers and possibly make them leave the company. The team that I am in is highly skilled and highly sought after in the tech world so losing them isn't really an option.

Feedback

Part of growing people's careers is asking for feedback and then acting upon that feedback. At Mozilla we have a process of setting goals and then measuring the impact of those goals on others. The others part is quite broad, from team mates to fellow paid contributors to unpaid contributors and the wider community. As a manager I need to give feedback on how I think they are doing. Personally I reach out to people who might be interacting with people I manage and get their thoughts.

But I don't stop at asking for feedback for the people I manage, I also ask for feedback about how I am doing from the people I manage. If you are a manager and have never done this I highly recommend doing it. What you can learn about yourself in a 360 review is quite eye opening. You need to set ground rules like the feedback is private and confidential AND MUST NOT influence how you interact with that person in the future. Criticism is hard to receive but a manager is expected to be the adult in the room and if you don't act that way you head to the realm of a bad manager, a place you don't want to be.

So... do I still want to be a manager?

Definitely! Being a manager is hard work, let's not kid ourselves but seeing your team succeed and the joy on people's faces when they succeed is amazing.

http://www.theautomatedtester.co.uk/blog/2014/management-is-hard.html

|

|

Joel Maher: mochitests and manifests |

Of all the tests that are run on tbpl, mochitests are the last ones to receive manifests. As of this morning, we have landed all the changes that we can to have all our tests defined in mochitest.ini files and have removed the entries in b2g*.json, by putting entries in the appropriate mochitest.ini files.

Ahal has done a good job of outlining what this means for b2g in his post. As mentioned there, this work was done by a dedicated community member, :vaibhav1994, as he continues to write patches, investigate failures, and repeat until success.

For those interested in the next steps, we are looking forward to removing our build time filtering and starting to filter tests at runtime. This work is being done by billm in bug 938019. Once that is landed we can start querying which tests are enabled/disabled per platform and track that over time!

http://elvis314.wordpress.com/2014/03/13/mochitests-and-manifests/

|

|

Lucas Rocha: How Android transitions work |

One of the biggest highlights of the Android KitKat release was the new Transitions framework which provides a very convenient API to animate UIs between different states.

The Transitions framework got me curious about how it orchestrates layout rounds and animations between UI states. This post documents my understanding of how transitions are implemented in Android after skimming through the source code for a bit. I’ve sprinkled a bunch of source code links throughout the post to make it easier to follow.

Although this post does contain a few development tips, this is not a tutorial on how to use transitions in your apps. If that’s what you’re looking for, I recommend reading Mark Allison’s tutorials on the topic.

With that said, let’s get started.

The framework

Android’s Transitions framework is essentially a mechanism for animating layout changes e.g. adding, removing, moving, resizing, showing, or hiding views.

The framework is built around three core entities: scene root, scenes, and transitions. A scene root is an ordinary ViewGroup that defines the piece of the UI on which the transitions will run. A scene is a thin wrapper around a ViewGroup representing a specific layout state of the scene root.

Finally, and most importantly, a transition is the component responsible for capturing layout differences and generating animators to switch UI states. The execution of any transition always follows these steps:

- Capture start state

- Perform layout changes

- Capture end state

- Run animators

The process as a whole is managed by the TransitionManager but most of the steps above (except for step 2) are performed by the transition. Step 2 might be either a scene switch or an arbitrary layout change.

How it works

Let’s take the simplest possible way of triggering a transition and go through what happens under the hood. So, here’s a little code sample:

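    // Ask the framework to animate whatever layout changes happen next.
    TransitionManager.beginDelayedTransition(sceneRoot);

    // Resize the child; the change is animated on the next frame.
    View child = sceneRoot.findViewById(R.id.child);
    LayoutParams params = child.getLayoutParams();
    params.width = 150;
    params.height = 25;
    child.setLayoutParams(params);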

This code triggers an AutoTransition on the given scene root animating child to its new size.

The first thing the TransitionManager will do in beginDelayedTransition() is check whether there is a pending delayed transition on the same scene root and just bail if there is one. This means only the first beginDelayedTransition() call within the same rendering frame will take effect.

Next, it will reuse a static AutoTransition instance. You could also provide your own transition using a variant of the same method. In any case, it will always clone the given transition instance to ensure a fresh start, consequently allowing you to safely reuse Transition instances across beginDelayedTransition() calls.

It then moves on to capturing the start state. If you set target view IDs on your transition, it will only capture values for those. Otherwise it will capture the start state recursively for all views under the scene root. So, please, set target views on all your transitions, especially if your scene root is a deep container with tons of children.

An interesting detail here: the state capturing code in Transition has special treatment for ListViews using adapters with stable IDs. It will mark the ListView children as having transient state to prevent them from being recycled during the transition. This means you can very easily perform transitions when adding or removing items to/from a ListView. Just call beginDelayedTransition() before updating your adapter and an AutoTransition will do the magic for you (see this gist for a quick sample).

The state of each view taking part in a transition is stored in TransitionValues instances which are essentially a Map with an associated View. This is one part of the API that feels a bit hand wavy. Maybe TransitionValues should have been better encapsulated?

Transition subclasses fill the TransitionValues instances with the bits of the View state that they’re interested in. The ChangeBounds transition, for example, will capture the view bounds (left, top, right, bottom) and location on screen.

Once the start state is fully captured, beginDelayedTransition() will exit whatever previous scene was set in the scene root, set current scene to null (as this is not a scene switch), and finally wait for the next rendering frame.

TransitionManager waits for the next rendering frame by adding an OnPreDrawListener which is invoked once all views have been properly measured and laid out, and are ready to be drawn on screen (Step 2). In other words, when the OnPreDrawListener is triggered, all the views involved in the transition have their target sizes and positions in the layout. This means it’s time to capture the end state (Step 3) for all of them, following the same logic as the start state capturing described before.

With both the start and end states for all views, the transition now has enough data to animate the views around. It will first update or cancel any running transitions for the same views and then create new animators with the new TransitionValues (Step 4).

The transitions will use the start state for each view to “reset” the UI to its original state before animating them to their end state. This is only possible because this code runs just before the next rendering frame is drawn i.e. inside an OnPreDrawListener.

Finally, the animators are started in the defined order (together or sequentially) and magic happens on screen.
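The four steps walked through above can be condensed into a small model. The sketch below is plain Python and deliberately not Android code: `capture`, `run_transition` and the dictionary-based "views" are hypothetical stand-ins, linear interpolation is an assumption, and the starting size of 300x50 is invented (only the target size of 150x25 comes from the sample earlier in the post).

```python
def capture(views):
    """Snapshot the animatable properties of every view (steps 1 and 3)."""
    return {name: dict(props) for name, props in views.items()}


def run_transition(views, change_layout, frames=2):
    """Model of the four steps: capture, change, capture, animate.

    Assumes the same views exist before and after the layout change.
    """
    start = capture(views)       # step 1: capture start state
    change_layout(views)         # step 2: perform layout changes
    end = capture(views)         # step 3: capture end state

    timeline = []                # step 4: interpolate from start to end
    for index in range(frames + 1):
        fraction = index / frames
        frame = {}
        for name, end_props in end.items():
            frame[name] = {
                key: start[name][key]
                + (end_props[key] - start[name][key]) * fraction
                for key in end_props
            }
        timeline.append(frame)
    return timeline


def shrink_child(views):
    # Mirrors the target size used in the sample earlier in the post.
    views["child"]["width"] = 150
    views["child"]["height"] = 25


views = {"child": {"width": 300, "height": 50}}
timeline = run_transition(views, shrink_child)
for frame in timeline:
    print(frame)
```

The first frame of the timeline equals the captured start state even though the layout has already changed, which corresponds to the "reset" the transitions perform before animating to the end state.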

Switching scenes

The code path for switching scenes is very similar to beginDelayedTransition()—the main difference being in how the layout changes take place.

go() and transitionTo() differ only in how they get their transition instance. The former will just use an AutoTransition and the latter will get the transition defined by the TransitionManager e.g. toScene and fromScene attributes.

Maybe the most relevant aspect of scene transitions is that they effectively replace the contents of the scene root. When a new scene is entered, it will remove all views from the scene root and then add itself to it.

So make sure you update any class members (in your Activity, Fragment, custom View, etc.) holding view references when you switch to a new scene. You’ll also have to re-establish any dynamic state held by the previous scene. For example, if you loaded an image from the cloud into an ImageView in the previous scene, you’ll have to transfer this state to the new scene somehow.

Some extras

Here are some curious details in how certain transitions are implemented that are worth mentioning.

The ChangeBounds transition is interesting in that it animates, as the name implies, the view bounds. But how does it do this without triggering layouts between rendering frames? It animates the view frame (left, top, right, and bottom) which triggers size changes as you’d expect. But the view frame is reset on every layout() call which could make the transition unreliable. ChangeBounds avoids this problem by suppressing layout changes on the scene root while the transition is running.

The Fade transition fades views in or out according to their presence and visibility between layout or scene changes. Among other things, it fades out the views that have been removed from the scene root, which is an interesting detail because those views will not be in the tree anymore on the next rendering frame. Fade temporarily adds the removed views to the scene root’s overlay to animate them out during the transition.

Wrapping up

The overall architecture of the Transitions framework is fairly simple—most of the complexity is in the Transition subclasses to handle all types of layout changes and edge cases.

The trick of capturing start and end states before and after an OnPreDrawListener can be easily applied outside the Transitions framework, within the limitations of not having access to certain private APIs such as ViewGroup’s suppressLayout().

As a quick exercise, I sketched a LinearLayout that animates layout changes using the same technique—it’s just a quick hack, don’t use it in production! The same idea could be applied to implement transitions in a backwards compatible way for pre-KitKat devices, among other things.

That’s all for now. I hope you enjoyed it!

|

|

William Duyck: Happy 25th Birthday, World Wide Web! |

The web has become an integral part of all our lives, shaping how we learn, how we connect and how we communicate. Looking ahead, the web is faced with new opportunities and new challenges. As we celebrate its 25th birthday, let’s all take a moment to imagine the future of the open web.

Say a HUGE happy birthday to the web, and do a little remixing at the same time. mzl.la/webwewantremix

http://blog.wduyck.com/2014/03/happy-25th-birthday-world-wide-web/

|

|

Erik Vold: A Blockbuster Firefox, Part 2 - Your Brain On The Web |

This is part two for A Blockbuster Firefox.

I’ve been thinking about Firefox as a user agent instead of a viewport. If the parts of the web that I have visited are part of my knowledge, then Firefox is my brain on the web. Sometimes I will use the history, bookmarks, and search tools which Firefox provides to store that knowledge; in other words, I’ll use a database which Firefox provides me access to. Other times I may just store the information in the biomass in my skull and access it with my brain.

If we think about Firefox as a cognitive extension then it should be more clear where work can be done.

Retrieval

One very common use case for a browser is fact checking, and the common way to do this is to Google it. I would argue that this is not the most efficient way.

Ubiquity is a good example of an add-on that worked on this issue, Search Tabs was another.

Recall

Recalling information is another use case for browsers. This is why the history and bookmark features exist, but progress has died off since then, and those two assets are not being used to their full potential.

Tab Groups and Recall Monkey were good examples of add-ons that tackled this issue. These days I’m starting to think fewer tabs is better, so tab organization is not quite as useful as just getting to the page that I want to go to, if I can do that efficiently without tabs, then I only need one tab, or maybe two tabs.

Refinement

When I am researching something I start with a search, then I have distractions, later I return to this search by re-searching. Why should both of these searches be equal? At the moment I have to use a search engine only. What if I could search multiple search engines and my history with Firefox, purge some results, and highlight the ones that I’ve seen and thought were good results for that search?

I don’t think I’ve seen any good example of this, Google had something like this at one point, but I think the searches should be doable offline and privately too, that should make researching a better experience anyhow.

I plan to work on this during the upcoming months in my spare time.

Routine

There are many routines that I wish to establish and maintain on the web that basically come down to checking a page for updates, or making updates to a page.

Feedly is a good example of this done for blog updates, but this could be done better by the browser.

Live bookmarks are a good start.

Analysis

It is important to know thyself.

about:me, about:profile, and Lightbeam are all good examples here, but what if this information was used somehow for good? Perhaps to suggest new pages. Something like Predictive Newtab.

Making Connections

Say I’ve visited a number of blog posts that link to some MDN page, and perhaps I never noticed the link, or didn’t have a chance to visit it yet. There is a good chance that I want to read that MDN page and just don’t know it. This would be a good opportunity for Firefox to suggest it to me, and help me establish that connection.

http://work.erikvold.com/firefox/2014/03/13/a-blockbuster-firefox-part-2-your-brain-on-the-web.html

|

|

Christian Heilmann: Speaking = sponsoring |

Following Remy Sharp’s excellent You’re paying to speak post about conferences not paying speakers I thought it might be interesting to share some of my experiences.

I’ve been on the road now for almost three years constantly presenting at conferences, running workshops, giving brownbags and the like. I am also part of the group in Mozilla that sifts through all the event requests we get (around 30-40 a month right now).

A labour of love

I love speaking. I love the rush of writing a talk, organising my thoughts and trying to distill the few points I want the audience to take away to impress their peers with. I do it out of passion, not because I get paid. If I were to do it to get paid, I’d quit my job and ask for payment for my talks and run many more workshops. This is where real money comes in for speakers. As it is now, all I ask for is to be put up in a hotel, and – if possible – get my flights or travel expenses paid.

Drowning in mediocrity

That’s why I get annoyed when conference organisers see speakers as a commodity. In the last year I had a few incidents that made me wonder why I bother putting effort into my work instead of writing one or two talks a year and repeating them (which works, I see people do it):

- I had a few events where the organisers flat out didn’t want to put me up in a hotel.

- I had events where the organisers got me a flight and a hotel – just not for the same dates, so I had to get a last minute room for myself without being reimbursed.

- I had a few events where I arrived in time for my talk just to see that the speaker before me was still speaking and my talk time was half of what I planned for.

- I had an event where the room planning was all askew and people couldn’t find my talk, which meant I presented for 10 people who were lucky enough to already be in the room (2 of whom were trying to find another talk).

- I even had an event where I had perfectly set up my computer (including already running screen recording) only to have a “sponsored pitch” speaker disconnect my laptop and give a five minute intro I wasn’t told about before my keynote.

And here comes the kicker: every single one of these bad examples happened in the United States – most in San Francisco. The place where everybody dreams of speaking, the place where all the cool kids are and where apparently everything happens that rocks our web world.

The happy incidents

On the flipside of this, almost every conference in Europe and Asia I’ve been to was an amazing experience. Organisers know you are what makes a conference and they make you feel welcome. A few outstanding ones:

- When I lost my credit card in Romania, the organisers of the Internet and Mobile World Romania came by the hotel, organised with the local restaurant that I could eat for free, got me some money to spend and took me out for an amazing evening to get my mind off the dilemma. That on top of a flawless pickup from the airport, great hotel to stay in and transport to and from the venue.

- The Login conference in Lithuania had dedicated helpers for each speaker to help you get around and pick you up at the airport. Instead of chancing us using the expensive room service in the hotel or organising one huge dinner, they cut deals with all the restaurants around the hotel to have ledgers for speakers, so we could eat whatever we wanted and the organisers paid afterwards.

- Marc Thiele’s Beyond Tellerrand conference in Germany is all about a cozy atmosphere encouraging speakers and attendees to mingle. He even had music artists sample the talks into songs live at the event.

- The Smashing Conf had flawless travel organisation and a super nice welcome basket in the hotel room for speakers, with tourism info on how to get around town for partners the speakers might have brought.

Keep the talent happy

I am not saying I expect all of these things, I am saying that organisers outside the US seem to understand one thing others have forgotten and Remy mentioned as well: without good speakers putting effort in, there is no conference.

Remy had an excellent point, namely that having speakers and taking care of them should be part of the budget of every conference:

That’s what a budget is for – which comes from ticket sales and sponsorship agreements. It is part of their budget because without a speaker, they have no content to sell.

Good talks make a conference

Of course there is more to a conference than just the presentations: there are booths, there is networking during breaks and at the afterparties. But you don’t need a conference for that – you could just attend a meetup. A conference is about good presentations and workshops. And this is where I get very angry when I see corners being cut when it comes to speakers.

Yes, running a conference is expensive. And running one in San Francisco even more so. But why make cuts to the core content? Maybe spend less on the afterparty in a loud club with lots of drinks leading to socially inept people behaving like cavemen and creating the next “conference incident”. Maybe don’t move into the bigger location and instead allow your event to retain its soul. Conferences like dconstruct, Full Frontal and Edge Conf show that this works.

As people who buy tickets, you deserve to get a good show. You deserve to be challenged, to learn something new. Of course, most of you do not pay for the tickets – your companies do – but that doesn’t make a difference. If organisers don’t value their speakers and instead pad their events with lots of tracks of thinly veiled sales pitches, you shouldn’t make jokes about this and have a coffee instead – you should ask for your money back.

Nicholas Zakas also covered this in The Problem with Tech Conference talks lately.

Too many conferences

The hype we experience in our market also leads to a massive overload of events. Not all are needed – by a long shot. Some have been around so long they’ve become a spoof of themselves. It is time to ask for better quality.

In the US, this is a tradition thing: tech conferences have been around for ages and there are a lot of terrible “this is how it always worked” shortcuts being taken. IT conferences have become cookie-cutter. Not only do you see the same booths, the same catering and the same companies, you also see the same kind of talks over and over again.

As a company owner, I’d frankly not set up a budget for conference attendance any longer. It is not part of training when you don’t learn something new or get in-depth insight into something you already should know. And there is really no shortage of online content or even meetups where you can get the same information. The job of a speaker is to bring this content to life, to explain the why instead of the how or bring a new angle to the subject matter. This doesn’t happen if conference talks are limited to local speakers who are in the same echo chamber.

Show us the money so you can talk?

Which brings me to sponsoring. Mozilla’s and my policy is that we don’t sponsor events to get speaking slots. We want Mozillians to attend an event and have one speaker even before we consider sponsorship. Giving talks and giving money to the event are disconnected. I wish every company did that. There is far too much “pay to play” going on and that leads to boring sales pitches that are lucrative for organisers, insulting to the audience and, frankly, painful to watch.

Having insight into the numbers around events is another thing that keeps amazing me. I cannot share them here, but let’s say sponsoring a coffee break at a San Francisco conference for 200 people easily pays for sponsoring a whole venue in Poland, India or Romania – including the posters and branding.

So here’s my view: when I speak at your conference, I already sponsor it. I attach my name to it, I dedicate time to make it worthwhile for your audience to listen to me and you can add your branding to the video of my talk. I will not pay to “get a better speaking slot” and I will not sponsor to “get another talk for Mozilla in”.

I am happy to be at an event that values what I do. I will stop going to those where I feel like just a name to attract people, without really being challenged to give a good talk. As a speaker, I am a sponsor. Not with money, but with my reputation and my time and effort. That should get rewarded, much like any other sponsorship gets placement and mentions.

Comment on Google+ or Facebook or Twitter if you are so inclined.

http://christianheilmann.com/2014/03/12/speaking-sponsoring/

|

|

Rebeccah Mullen: Loving tech for 25 years – Happy Birthday World Wide Web! |

I’ll start with a very frank admission – I didn’t always love technology. I remember feeling utterly out of my depth in grade five while trying to create code that would display green @ and # symbols across my black screen in a lovely tree shape. I managed the shape – but it took 25 minutes to render, and I got a failing grade for not quite comprehending how to optimize my code.

http://bosslevel.ca/2014/03/12/loving-tech-for-25-years-happy-birthday-world-wide-web/

|

|

Daniel Stenberg: what’s --next for curl |

curl is finally getting support for doing multiple independent requests specified in the same command line, which allows users to make even better use of curl’s excellent persistent connection handling and more. I don’t know when I first got the question of how to do a GET and a POST in a single command line with curl, but I do know that we’ve had the TODO item about adding such a feature mentioned since 2004 – and I know it wasn’t added there right away…

Starting in curl 7.36.0, we can respond with a better answer: use the --next option!

curl has been able to work with multiple URLs on the command line virtually since day 1, but all the command line options would then mostly apply and be used for all specified URLs.

This new --next option introduces a “boundary”, or a wall if you like, between options on the command line. The options set before --next will be handled as one request and the options set on the right side of --next will start adding up to another request. You of course then need to specify at least one URL per section, and you can add any number of --next on the command line. If the command line then gets too long, we also support the same logic and sequence in the “config files”, which let you put command line arguments in a text file and have curl read them from there using -K or --config.

Here’s a somewhat silly example to illustrate. This first makes a POST and then a HEAD request to two different pages on the same host:

curl -d FOO example.com/input.cgi --next --head example.com/robots.txt
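
To picture the boundary, here is a toy sketch in Python. It is not how curl parses its command line internally; the function name split_on_next is made up for illustration, and it only shows how the arguments from the example above fall into two independent sections.

```python
def split_on_next(args):
    """Group arguments into per-request sections, starting a new one at each --next."""
    sections = [[]]
    for arg in args:
        if arg == "--next":
            # Boundary: arguments after this point belong to a new request.
            sections.append([])
        else:
            sections[-1].append(arg)
    return sections


# The arguments from the example command line above (without the leading "curl").
example_args = ["-d", "FOO", "example.com/input.cgi",
                "--next", "--head", "example.com/robots.txt"]

for number, section in enumerate(split_on_next(example_args), start=1):
    print(number, section)
```

In this toy model the first section holds the POST data and its URL, the second holds the HEAD request, and nothing set in one section carries over to the other.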

Thanks to Steve Holme for his hard work on implementing this!

|

|

John Zeller: Triggering of Arbitrary builds/tests is now possible! |

Bug 793989 asks for buildapi's self-serve to be able to trigger arbitrary builds/tests on a given push, and this is now possible! If you are interested in triggering an arbitrary build/test, this simple Python script will get you started.

This new buildapi REST functionality takes a POST request with the parameters properties and files, as a dictionary and list, respectively. The REST URL is built as https://secure.pub.build.mozilla.org/buildapi/self-serve/{branch}/builders/{buildername}/{revision} where {branch} is a valid branch, {revision} is an existing revision on that branch, and {buildername} is the arbitrary build/test that you wish to trigger. Examples of appropriate buildernames are "Ubuntu VM 12.04 try opt test mochitest-1" or "Linux x86-64 try build". Any buildername that shows up in the builder column on buildapi should work. Please file a bug if you find any exceptions!

As a specific example, if you are launching the test "Ubuntu VM 12.04 x64 try opt test jetpack" after having run the build "Linux x86-64 try build", then you need to supply the files list parameter as ["http://ftp.mozilla.org/pub/mozilla.org/firefox/try-builds/cviecco@mozilla.com-b5458592c1f3/try-linux64-debug/firefox-30.0a1.en-US.linux-x86_64.tar.bz2", "http://ftp.mozilla.org/pub/mozilla.org/firefox/try-builds/cviecco@mozilla.com-b5458592c1f3/try-linux64-debug/firefox-30.0a1.en-US.linux-x86_64.tests.zip"].
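
For illustration, here is a minimal Python sketch of how such a request could be put together. It only builds the URL and the two parameters and does not send anything. Several things here are assumptions: the helper name build_trigger_request is made up, the branch is assumed to be try, the revision is guessed from the try-builds directory name in the URLs above, and sending properties and files as JSON-encoded strings is a guess about the encoding, so check the script mentioned earlier for what buildapi really expects.

```python
import json
from urllib.parse import quote

SELF_SERVE = "https://secure.pub.build.mozilla.org/buildapi/self-serve"


def build_trigger_request(branch, buildername, revision, properties=None, files=None):
    """Return (url, params) for a POST that triggers one builder on one revision."""
    # Buildernames contain spaces, so each path segment is percent-encoded.
    url = "/".join([
        SELF_SERVE,
        quote(branch, safe=""),
        "builders",
        quote(buildername, safe=""),
        quote(revision, safe=""),
    ])
    # Assumption: the dictionary and the list are sent as JSON-encoded strings.
    params = {
        "properties": json.dumps(properties or {}),
        "files": json.dumps(files or []),
    }
    return url, params


# File URLs from the example above; the revision is assumed from the directory name.
TRY_DIR = ("http://ftp.mozilla.org/pub/mozilla.org/firefox/try-builds/"
           "cviecco@mozilla.com-b5458592c1f3/try-linux64-debug/")
url, params = build_trigger_request(
    branch="try",
    buildername="Ubuntu VM 12.04 x64 try opt test jetpack",
    revision="b5458592c1f3",
    files=[TRY_DIR + "firefox-30.0a1.en-US.linux-x86_64.tar.bz2",
           TRY_DIR + "firefox-30.0a1.en-US.linux-x86_64.tests.zip"],
)
print(url)
```

The resulting pair could then be handed to whatever HTTP client you prefer, along with whatever authentication the server asks for.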

Once you have submitted an arbitrary build/test request, you can see the status of that build/test on BuildAPI in multiple locations. To see which masters have grabbed the build/test, use the following URL: https://secure.pub.build.mozilla.org/buildapi/revision/{branch}-selfserve/{revision}, where {branch} is the branch you submitted your request on and {revision} is the revision you submitted on that branch.
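
As a small sketch, the status URL can be assembled the same way in Python. The function name selfserve_status_url and the sample branch and revision are placeholders chosen for illustration, not part of buildapi.

```python
from urllib.parse import quote

BUILDAPI = "https://secure.pub.build.mozilla.org/buildapi"


def selfserve_status_url(branch, revision):
    """Build the status page URL for a self-serve request on a branch and revision."""
    # The path segment is "{branch}-selfserve", as described in the text above.
    return "{}/revision/{}-selfserve/{}".format(
        BUILDAPI, quote(branch, safe=""), quote(revision, safe=""))


# "try" and the revision below are placeholder values for illustration.
print(selfserve_status_url("try", "b5458592c1f3"))
```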

NOTE: Currently TBPL is not showing the pending/running status of builds/tests started with this new BuildAPI functionality because of some issues outlined in Bug 981825. However, once a build/test that was triggered with this new functionality has finished, it shows up on TBPL just as you would expect any other build/test to.

http://johnzeller.com/blog/2014/03/12/triggering-of-arbitrary-buildstests-is-now-possible/

|

|

Mark Surman: Happy birthday world wide web. I love you. And want to keep you free. |

As my business card says, I have an affection for the world wide web. And, as the web turns 25 this week, I thought it only proper to say to the web ‘I love you’ and ‘I want to keep you free’.

From its beginning, the web has been a force for innovation and education, reshaping the way we interact with the world around us. Interestingly, the original logo and tag line for the web was ‘let’s share what we know!’, which is what billions of us have now done.

As we have gone online to connect and share, the web has revolutionized how we work, live and love: it has brought friends and families closer even when they are far away; it has decentralized once closed and top-down industries; it has empowered citizens to pursue democracy and freedom. It has become a central building block for all that we do.

Yet, on its 25th birthday, the web is at an inflection point.

Despite its positive impact, too many of us don’t understand its basic mechanics, let alone its culture or what it means to be a citizen of the web. Mobile, the platform through which the next billion users will join the web, is increasingly closed, not allowing the kind of innovation and sharing that has made the world wide web such a revolutionary force in the first place. And, in many parts of the world, the situation is made worse by governments who censor the web or use the web to surveil people at a massive scale, undermining the promise of the web as an open and trusted resource for all of humanity.

Out of crisis comes opportunity. As the Web turns 25, let us all say to the web: ‘I love you’ and ‘I want to keep you free’. Let’s take the time to reflect not just on the web we have, but on the web we want.

Mozilla believes the web needs to be both open and trusted. We believe that users should be able to control how their private information is used. And we believe that the web is not a one-way platform: it should give us all a chance to be makers, not just consumers. Making this web means we all need access to an open network, we all need software that is open and puts us in control, and we all need to be literate in the technology and culture of the web. The Mozilla community around the world stands for all these things.

So on the web’s 25th birthday, we are joining with the Web at 25 campaign and the Web We Want campaign to enable and amplify the voice of the Internet community. We encourage you to visit www.webat25.org to sign a birthday card for the web and visit our interactive quilt to share your vision for the type of web you want.

Happy Birthday to the web — and to all of us who are on and in it!

–Mark

ps: Also, check out these birthday wishes for the web from Mozilla’s Mitchell Baker and Brendan Eich.

pps: Here’s the quilt: the web I want enables everyone around the world to be a maker. Add yourself.

|

|

Mihai Sucan: Web Console improvements, episode 30 |

Hello Mozillians!

We are really close to the next Firefox release, which will happen next week. This is a rundown of Web Console changes in current Firefox release channels.

Here is a really nice introduction video for the Web Console, made by Will Bamberg:

You can learn more about the Web Console on Mozilla's Developer Network web site.

Stable release (Firefox 27)

Added page reflow logging. Enable this in the "CSS > Log" menu option (bug 926371).

You can use the -jsconsole command line option when you start Firefox to automatically start the Browser Console (bug 860672).

Beta channel (Firefox 28)

Added split console: you can press Escape in any tool to quickly open the console (bug 862558).

Added support for console.assert() (bug 760193).

Added console.exception() as an alias for the console.error() method (bug 922214).