Source: http://planet.mozilla.org/.

This journal is generated from the open RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

Nathan Froyd: for-purpose instead of non-profit |

I began talking with a guy in his midforties who ran an investment fund and told me about his latest capital raise. We hit it off while discussing the differences between start-ups on the East and West Coasts, and I enjoyed learning about how he evaluated new investment opportunities. Although I’d left that space a while ago, I still knew it well enough to carry a solid conversation and felt as if we were speaking the same language. Then he asked what I did.

“I run a nonprofit organization called Pencils of Promise.”

“Oh,” he replied, somewhat taken aback. “And you do that full-time?”

More than full-time, I thought, feeling a bit judged. “Yeah, I do. I used to work at Bain, but left to work on the organization full-time.”

“Wow, good for you,” he said in the same tone you’d use to address a small child, then immediately looked over my shoulder for someone new to approach…

On my subway ride home that night I began to reflect on the many times that this scenario had happened since I’d started Pencils of Promise. Conversations began on an equal footing, but the word nonprofit could stop a discussion in its tracks and strip our work of its value and true meaning. That one word could shift the conversational dynamic so that the other person was suddenly speaking down to me. As mad as I was at this guy, it suddenly hit me. I was to blame for his lackluster response. With one word, nonprofit, I had described my company as something that stood in stark opposition to the one metric that his company was being most evaluated by. I had used a negative word, non, to detail our work when that inaccurately described what we did. Our primary driver was not the avoidance of profits, but the abundance of social impact…

That night I decided to start using a new phrase that more appropriately labeled the motivation behind our work. By changing the words you use to describe something, you can change how others perceive it. For too long we had allowed society to judge us with shackling expectations that weren’t supportive of scale. I knew that the only way to win the respect of our for-profit peers would be to wed our values and idealism to business acumen. Rather than thinking of ourselves as nonprofit, we would begin to refer to our work as for-purpose.

From The Promise of a Pencil by Adam Braun.

https://blog.mozilla.org/nfroyd/2016/01/28/for-purpose-instead-of-non-profit/

|

|

The Mozilla Blog: It’s International Data Privacy Day: Help us Build a Better Internet |

Update your Software and Share the Lean Data Practices

Today is International Data Privacy Day. What can we all do to help ourselves and each other improve privacy on the Web? We have something for everyone:

- Users can greatly improve their own data privacy by simply updating their software.

- Companies can increase user trust in their products and user privacy by implementing Lean Data Practices that increase transparency and offer user control.

By taking action on these two simple ideas, we can create a better Web together.

Why is updating important?

Updating your software is a basic but crucial step you can take to help increase your privacy and security online. Outdated software is one of the easiest ways for hackers to access your data online because it’s prone to vulnerabilities and security holes that can be exploited and that may have been patched in the updated versions. Updating can make your friends and family more secure because a computer that has been hacked can be used to hack others. Not updating software is like driving with a broken tail light – it might not seem immediately urgent, but it compromises your safety and that of people around you.

For our part, we’ve tried to make updating Firefox as easy as possible by automatically sending users updates by default so they don’t have to worry about it. Updates for other software may not come automatically, but they are equally important.

Once you complete your updates, share the “I Updated” badge using #DPD2016 and #PrivacyAware and encourage your friends and family to update, too!

Why should companies implement Lean Data Practices?

Today we’re also launching a new way for companies and projects to earn user trust through a simple framework that helps companies think about the decisions they make daily about data. We call these Lean Data Practices, and the three central questions they help companies work through are how you can stay lean, build in security and engage your users. The more companies and projects that implement these concepts, the more we as an industry can earn user trust. You can read more in this blog post from Mozilla’s Associate General Counsel Jishnu Menon.

As a nonprofit with a mission to promote openness, innovation and opportunity on the Web, Mozilla is dedicated to putting users in control of their online experiences. That’s why we think about online privacy and security every day and have privacy principles that show how we build it into everything we do. All of us – users and businesses alike – can contribute to a healthy, safe and trusted Web. The more we focus on ways to reach that goal, the easier it is to innovate and keep the Web open and accessible to all. Happy International Data Privacy Day!

|

|

Mozilla Open Policy & Advocacy Blog: Introducing Lean Data Practices |

At Mozilla, we believe that users trust products more when companies build in transparency and user control. Earned trust can drive a virtuous cycle of adoption, while conversely, mistrust created by even just a few companies can drive a negative cycle that can damage a whole ecosystem.

Today on International Data Privacy Day, we are happy to announce a new initiative aimed at assisting companies and projects of all sizes to earn trust by staying lean and being smart about collecting and using data.

We call these Lean Data Practices.

Lean Data Practices are not principles, nor are they a way to address legal compliance— rather, they are a framework to help companies think about the decisions they make about data. They do not prescribe a particular outcome and can help even the smallest companies to begin building user trust by fostering transparency and user control.

We have designed Lean Data Practices to be simple and direct:

- stay lean by focusing on data you need,

- build in security appropriate to the data you have and

- engage your users to help them understand how you use their data.

We have even created a toolkit to make it easy to implement them.

We use these practices as a starting point for our own decisions about data at Mozilla. We believe that as more companies and projects use Lean Data Practices, the better they will become at earning trust and, ultimately, the more trusted we will all become as an industry.

Please check them out and help us spread the word!

https://blog.mozilla.org/netpolicy/2016/01/27/lean-data-practices/

|

|

Nick Cameron: Macros and name resolution |

This should be the last blog post on the future of macros. Next step is to make some RFCs!

I've talked previously about how macros should be nameable just like other items, rather than having their own system of naming. That means we must be able to perform name resolution at the same time as macro expansion. That's not currently possible. In this blog post I want to spell out how we might make it happen and make it work with the sets of scopes hygiene algorithm. Niko Matsakis has also been thinking about name resolution a lot; you can find his prototype algorithm in this repo. This blog post will basically describe that algorithm, tied in with the sets of scopes hygiene algorithm.

Name resolution is the phase in the compiler where we match uses of a name with definitions. For example, in

fn foo() { ... }

fn bar() { foo(); }

We match up the use of foo in the body of bar with the definition of foo above.

This gets a bit more complicated because of shadowing, e.g., if we change bar:

    fn bar() {
        fn foo() { ... }
        foo();
    }

Now the use of foo refers to the nested foo function, not the one outside bar.
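As a small runnable illustration of the shadowing described above (the return values are made up purely so the two definitions can be told apart; they are not part of the original example):

```rust
// Outer definition of `foo`.
fn foo() -> &'static str {
    "outer foo"
}

fn bar() -> &'static str {
    // Nested item: inside the body of `bar` it shadows the outer `foo`.
    fn foo() -> &'static str {
        "nested foo"
    }
    foo()
}

fn main() {
    println!("bar() calls: {}", bar());
    println!("top-level call: {}", foo());
}
```

Calling bar reaches the nested definition, while a call at the top level still reaches the outer one.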

Imports also complicate resolution: to resolve a name we might need to resolve an import (which might be a glob import). The compiler also enforces rules about imports during name resolution, for example we can't import two names into the same namespace.

Name resolution today

Name resolution takes place fairly early on in the compiler, just after the AST has been lowered to the HIR. There are three passes over the AST: the first builds the 'reduced graph', the second resolves names, and the third checks for unused names. The 'reduced graph' is a record of all definitions and imports in a program (without resolving anything); I believe it is necessary to allow forward references.

Goals

We would like to make it possible to resolve names during macro expansion so that macros can be modularised in the same way as other items. We would also like to be a little more permissive with restricted imports - it should be allowed to import the same name into a scope twice if it refers to the same definition, or if that name is never used.

There are some issues with this idea. In particular, a macro expansion can introduce new names. With no restrictions, a name could refer to different definitions at different points in program expansion, or even be legal at some point and become illegal later due to an introduced duplicate name. This is unfortunate because as well as being confusing, it means the order of macro expansion becomes important, whereas we would like for the expansion order to be immaterial.

Using sets of scopes for name resolution

Previously, I outlined how we could use the sets of scopes hygiene algorithm in Rust. Sets of scopes can also be used to do name resolution; in fact, that is how it is intended to be used. The traditional name resolution approach is, essentially, walking up a tree of scopes to find a binding. With sets of scopes, all bindings are treated as being in a global 'namespace'. A name lookup will result in a set of bindings; we then compare the sets of scopes for each binding with the set of scopes for the name use and try to find an unambiguously best binding.

The plan

The plan is basically to split name resolution into two stages. The first stage will happen at the same time as macro expansion and will resolve imports to define (conceptually) a mapping from names in scope to definitions. The second stage is looking up a definition in this mapping for any given use of a name. This could happen at any time, to start with we'll probably leave it where it is. Later it could be merged with the AST to HIR lowering or performed lazily.

When we need to look up a macro during expansion, we use the sets of names as currently known. We must ensure that each such lookup remains unchanged throughout expansion.

In addition, the method of actually looking up a name is changed. Rather than looking through nested scopes to find a name, we use the sets of scopes from the hygiene algorithm. We pretty much have a global table of names, and we use the name and sets of scopes to look up the definition.

Details

Currently, parsing and macro expansion happen at the same time. With this proposal, we add import resolution to that mix too. The output of libsyntax is an expanded AST with sets of scopes for each identifier and tables of name bindings which bind names to sets of scopes to definitions.

We rely on a monotonicity property in macro expansion - once an item exists in a certain place, it will always exist in that place. It will never disappear and never change. Note that for the purposes of this property, I do not consider code annotated with a macro to exist until it has been fully expanded.

A consequence of this is that if the compiler resolves a name, then does some expansion and resolves it again, the first resolution will still be valid. However, a better resolution may appear, so the resolution of a name may change as we expand. It can also change from a good resolution to an ambiguity. It is also possible to change from good to ambiguous to good again. There is even an edge case where we go from good to ambiguous to the same good resolution (but via a different route).

In order to keep macro expansion comprehensible to programmers, we must enforce that all macro uses resolve to the same binding at the end of resolution as they did when they were first resolved.

We start by parsing as much of the program as we can - like today we don't parse macros. We then walk the AST top-down computing sets of scopes. We add these to every identifier. When we find items which bind a name, we add it to the binding table. When entering a module we also add self and super bindings. When we find imports and macro uses we add these to a work list. When we find a glob import, we have to record a 'back link'; so that when a public name is added for the supplying module, we can add it for the importing module. At this stage a glob would not add any names for a module since we don't resolve the path prefix. For each macro definition, we add the pending set of scopes.

We then loop over the work list trying to resolve names. If a name has exactly one best resolution then we use it (and record it on a list of resolved names). If there are zero, or more than one resolution, then we put it back on the work list. When we reach a fixed point, i.e., the work list no longer changes, then we are done. If the work list is empty, then expansion/import resolution succeeded, otherwise there are names not found or ambiguous names and we failed.
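The work-list loop above can be sketched roughly as follows. This is a toy model, not the compiler's code: the Import struct, the Bindings type and the resolve_imports function are hypothetical names, scopes and macros are left out entirely, and "exactly one best resolution" is simplified to "exactly one known definition for the target name".

```rust
use std::collections::HashMap;

/// A pending import, roughly `use <target> as <alias>` (hypothetical, simplified).
#[derive(Debug, Clone, PartialEq)]
struct Import {
    alias: String,
    target: String,
}

/// Toy binding table: name -> every definition currently known for that name.
type Bindings = HashMap<String, Vec<String>>;

/// Loop over the work list until it stops changing (a fixed point).
/// Returns the imports that could not be resolved; empty means success.
fn resolve_imports(bindings: &mut Bindings, mut work_list: Vec<Import>) -> Vec<Import> {
    loop {
        let mut still_pending = Vec::new();
        for import in &work_list {
            // Exactly one candidate: use it. Zero or several: retry later.
            let unique = match bindings.get(&import.target) {
                Some(defs) if defs.len() == 1 => Some(defs[0].clone()),
                _ => None,
            };
            match unique {
                Some(def) => bindings.entry(import.alias.clone()).or_default().push(def),
                None => still_pending.push(import.clone()),
            }
        }
        // Fixed point: a whole pass over the list made no progress.
        if still_pending.len() == work_list.len() {
            return still_pending;
        }
        work_list = still_pending;
    }
}

fn main() {
    let mut bindings = Bindings::new();
    bindings.insert("foo".to_string(), vec!["fn foo".to_string()]);
    let work = vec![
        Import { alias: "c".into(), target: "b".into() }, // needs `b` first
        Import { alias: "b".into(), target: "foo".into() },
        Import { alias: "d".into(), target: "missing".into() }, // never resolves
    ];
    let failed = resolve_imports(&mut bindings, work);
    println!("unresolved: {:?}", failed);
    println!("c resolves to: {:?}", bindings.get("c"));
}
```

Note that the import of c only succeeds on the second pass, after b has been added, which is why the list has to be revisited until nothing changes. The sketch does not model the later re-check that earlier resolutions are still the best ones.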

If the name we are resolving is for a non-glob import, then we just record it in the binding table. If it is a glob import, we add bindings for every public name currently known.

If the resolved name is for a macro, then we expand that macro by parsing the arguments, pattern matching, and doing hygienic expansion. At the end of expansion, we apply the pending scopes and compute scopes due to the expanded macro. This may include applying more scopes to identifiers outside the expansion. We add new names to the binding table and push any new macro uses and imports to the work list to resolve.

If we add public names for a module which has back links, we must follow them and add these names to the importing module. When following these back links, we check for cycles, signaling an error if one is found.

If we succeed, then after running the fix-point algorithm, we check our record of name resolutions. We re-resolve and check we get the same result. We also check for unused macros at this point.

The second part of name resolution happens later, when we resolve names of types and values. We could hopefully merge this with the lowering from AST to HIR. After resolving all names, we can check for unused imports and names.

Resolving names

To resolve a name, we look up the 'literal' name in the binding table. That gives us a list of sets of scopes. We can discard any which are not a subset of the scopes on the name, then order the remainder by sub-setting and pick the largest (according to the subset ordering, not the set with most elements). If there is no largest set, then name resolution is ambiguous. If there are no subsets, then the binding is undefined. Otherwise we get the definition we're looking for.
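A minimal sketch of that lookup, under some assumptions: scopes are plain integers, a definition is just a string, and the names Scopes, Resolution, resolve and scopes are invented for this example. Treating two candidates with identical scope sets as ambiguous is also an assumption of the sketch, since the text does not cover that case.

```rust
use std::collections::BTreeSet;

type Scopes = BTreeSet<u32>;

#[derive(Debug, PartialEq)]
enum Resolution<'a> {
    Found(&'a str),
    Ambiguous,
    Undefined,
}

/// Helper to build a set of scopes from a slice of scope ids.
fn scopes(ids: &[u32]) -> Scopes {
    ids.iter().copied().collect()
}

/// `candidates` are the binding-table entries for one literal name:
/// (set of scopes on the binding, definition).
fn resolve<'a>(candidates: &[(Scopes, &'a str)], use_scopes: &Scopes) -> Resolution<'a> {
    // Discard bindings whose scopes are not a subset of the scopes on the use.
    let viable: Vec<&(Scopes, &'a str)> = candidates
        .iter()
        .filter(|c| c.0.is_subset(use_scopes))
        .collect();
    if viable.is_empty() {
        return Resolution::Undefined;
    }
    // The largest set (by subset ordering) contains every other viable set.
    let winners: Vec<&(Scopes, &'a str)> = viable
        .iter()
        .copied()
        .filter(|cand| viable.iter().all(|other| other.0.is_subset(&cand.0)))
        .collect();
    if winners.len() == 1 {
        Resolution::Found(winners[0].1)
    } else {
        Resolution::Ambiguous
    }
}

fn main() {
    let table = vec![(scopes(&[0]), "outer x"), (scopes(&[0, 3]), "inner x")];
    println!("{:?}", resolve(&table, &scopes(&[0, 3, 5])));

    // Neither {0, 1} nor {0, 2} contains the other, so there is no largest set.
    let clash = vec![(scopes(&[0, 1]), "a"), (scopes(&[0, 2]), "b")];
    println!("{:?}", resolve(&clash, &scopes(&[0, 1, 2])));

    println!("{:?}", resolve(&table, &scopes(&[7])));
}
```

The second call shows why "largest" has to mean largest under the subset ordering: both candidate sets have two elements, yet neither is a subset of the other, so the lookup is ambiguous.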

To resolve a path we look up the first segment like a name. We then look up the next segment using the scope defined by the previous segment. Furthermore, in all lookups apart from the initial segment, we ignore outside edge scopes. For example, consider:

    // scope 0
    mod foo { // scope 1
        fn bar() {} // scope 2
    }
    fn main() { // scope 3
        use foo::bar;
    }

The use item has the set of scopes {0, 3}; the foo name is thus bound by the module foo in scope {0}. We then look up bar with scopes {1} (the scope defined by foo) and find the bar function. By ignoring outside edge scopes, this will be the case even if bar is introduced by a macro (if the macro is expanded in the right way).

Names which are looked up based on type are first looked up using the type, then sets of scopes are compared when checking names. E.g., to check a field lookup e.f we find the type of e then get all fields on the struct with that type. We then try and find the one called f and with the largest subset of the scopes found on f in e.f. The same applies for enum variants, methods, etc.

To be honest, I'm not sure if type based lookups should be hygienic. I can't think of strong use cases. However, the only consistent position seems to be hygiene everywhere or nowhere, including items (which sometimes causes problems in current Rust).

Data structures

The output of expansion is now more than just the AST. Each identifier in the AST should be annotated with its sets of scopes info. We pass the complete binding table to the compiler. Exported macros are still part of the crate and come with sets of pending scopes. I don't think we need any information about imports.

When a crate is packed into metadata, we must also include the binding table. We must include private entries due to macros that the crate might export. We don't need data for function bodies though. For functions which are serialised for inlining/monomorphisation, we should include local data (although it's probably better to serialise the HIR or MIR, then it should be unnecessary). We need pending scopes for any exported macros.

Due to the nature of functions, I think we can apply an optimisation of having local binding tables for function bodies. That makes preserving and discarding the right data easier, as well as making lookups faster because there won't be a million global entries for x. Any binding with a function's scope is put into that function's local table. Other than nested functions, I don't think an item can have scopes from multiple functions. I don't think there is a good reason to extend this to nested functions.

Questions

- Do we ever need to find an AST node from a scope? I hope not.

- How do we represent a definition in a binding? An id, a reference to an AST node?

- See above, hygiene for 'type-based' lookups.

|

|

Air Mozilla: Quality Team (QA) Public Meeting, 27 Jan 2016 |

The bi-monthly status review of the Quality team at Mozilla. We showcase new and exciting work on the Mozilla project, highlight ways you can get...

https://air.mozilla.org/quality-team-qa-public-meeting-20160127/

|

|

Air Mozilla: Bugzilla Development Meeting, 27 Jan 2016 |

Join the core team to discuss the on-going development of the Bugzilla product. Anyone interested in contributing to Bugzilla, in planning, development, testing, documentation, or...

|

|

Dave Townsend: Improving the performance of the add-ons manager with asynchronous file I/O |

The add-ons manager has a dirty secret. It uses an awful lot of synchronous file I/O. This is the kind of I/O that blocks the main thread and can cause Firefox to be janky. I’m told that that is a technical term. Asynchronous file I/O is much nicer, it means you can let the rest of the app continue to function while you wait for the I/O operation to complete. I rewrote much of the current code from scratch for Firefox 4.0 and even back then we were trying to switch to asynchronous file I/O wherever possible. But still I used mostly synchronous file I/O.

Here is the problem. For many moons we have allowed other applications to install add-ons into Firefox by dropping them into the filesystem or registry somewhere. We also have to do things like updating and installing non-restartless add-ons during startup when their files aren’t in use. And we have to know the full set of non-restartless add-ons that we are going to activate quite early in startup, so the startup function for the add-ons manager has to do all those installs and a scan of the extension folders before returning to the code starting up the browser, and that means being synchronous.

The other problem is that for the things that we could conceivably use async I/O for, like installs and updates of restartless add-ons during runtime, we need to use the same code for loading and parsing manifests, extracting zip files and others that we need to be synchronous during startup. So we can either write a second version that is asynchronous so we can have nice performance at runtime or use the synchronous version so we only have one version to test and maintain. Keeping things synchronous was where things fell in the end.

That’s always bugged me though. Runtime is the most important time to use asynchronous I/O. We shouldn’t be janking the browser when installing a large add-on particularly on mobile and so we have taken some steps since Firefox 4 to make parts of the code asynchronous. But there is still a bunch there.

The plan

The thing is that there isn’t actually a reason we can’t use the asynchronous I/O functions. All we have to do is make sure that startup is blocked until everything we need is complete. It’s pretty easy to spin an event loop from JavaScript to wait for asynchronous operations to complete so why not do that in the startup function and then start making things asynchronous?

Performance is pretty important for the add-ons manager startup code: the longer we spend in startup, the more it hurts us. Would this switch slow things down? I assumed that there would be some losses due to other things happening during an event loop tick that otherwise wouldn’t have, but that the file I/O operations should take around the same time. And here is the clever bit. Because it is asynchronous I could fire off operations to run in parallel. Why check the modification time of every file in a directory one file at a time when you can just request the times for every file and wait until they all complete?

There are really a tonne of things that could affect whether this would be faster or slower and no amount of theorising was convincing me either way and last night this had finally been bugging me for long enough that I grabbed a bottle of wine, fired up the music and threw together a prototype.

The results

It took me a few hours to switch most of the main methods to use Task.jsm, switch much of the likely hot code to use OS.File and to run in parallel where possible and generally cover all the main parts that run on every startup and when add-ons have changed.

The challenge was testing. Default talos runs don’t include any add-ons (or maybe one or two) and I needed a few different profiles to see how things behaved in different situations. It was possible that startups with no add-ons would be affected quite differently to startups with many add-ons. So I had to figure out how to add extensions to the default talos profiles for my try runs and fired off try runs for the cases where there were no add-ons, 200 unpacked add-ons with a bunch of files and 200 packed add-ons. I then ran all those a second time with deleting extensions.json between each run to force the database to be loaded and rebuilt. So six different talos runs for the code without my changes and then another six with my changes and I triggered ten runs per test and went to bed.

The first thing I did this morning was check the performance results. The first result ready was for 200 packed add-ons in the profile, which should be a good check of the file scanning. How did it do? Amazing! Incredible! A greater than 50% performance improvement across the board! That’s astonishing! No really that’s pretty astonishing. It would have to mean the add-ons manager takes up at least 50% of the browser startup time and I’m pretty sure it doesn’t. Oh right I’m accidentally comparing to the test run with 200 packed add-ons and a database reset with my async code. Well I’d expect that to be slower.

Ok, let’s get it right. How did it really do? Abysmally! Like incredibly badly. Across the board in every test run startup is significantly slower with the asynchronous I/O than without. With no add-ons in the profile the new code incurs a 20% performance hit. In the case with 200 unpacked add-ons? An almost 1000% hit!

What happened?

Ok so that wasn’t the best result but at least it will stop bugging me now. I figure there are two things going on here. The first is that OS.File might look like you can fire off I/O operations in parallel but in fact you can’t. Every call you make goes into a queue and the background worker thread doesn’t start on one operation until the previous has completed. So while the I/O operations themselves might take about the same time you have the added overhead of passing messages between the background thread and promises. I probably should have checked that before I started! Oh, and promises. Task.jsm and OS.File make heavy use of promises and I have to say I’m sold on using them for async code. But. Every time you wait for a promise you have to wait at least one tick of the event loop longer than you would with a simple callback. That’s great if you want responsive UI but during startup every event loop tick costs time since other code might be running that you don’t care about.
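The queueing behaviour described above can be modelled in a few lines. To be clear, this is not OS.File or its worker, and it is written in Rust rather than JavaScript only to match the other code in this feed; the Event type and run_on_single_worker function are invented for the illustration. It simply shows that requests queued all at once on a single worker still run strictly one after another.

```rust
use std::sync::mpsc;
use std::thread;

/// Events recorded by the worker, in the order they happen.
#[derive(Debug, PartialEq, Clone, Copy)]
enum Event {
    Start(usize),
    End(usize),
}

/// Queue `n` jobs at once on one background worker and return its event log.
fn run_on_single_worker(n: usize) -> Vec<Event> {
    let (job_tx, job_rx) = mpsc::channel::<usize>();
    let (log_tx, log_rx) = mpsc::channel::<Event>();

    let worker = thread::spawn(move || {
        // One job at a time: the next is not started until the previous ends.
        for job in job_rx {
            log_tx.send(Event::Start(job)).unwrap();
            // (the actual I/O operation would run here)
            log_tx.send(Event::End(job)).unwrap();
        }
    });

    // "Fire off" every request up front, without waiting in between.
    for job in 0..n {
        job_tx.send(job).unwrap();
    }
    drop(job_tx); // closing the queue lets the worker loop finish

    worker.join().unwrap();
    // The worker has exited and dropped its sender, so this iteration ends.
    log_rx.iter().collect()
}

fn main() {
    for event in run_on_single_worker(3) {
        println!("{:?}", event);
    }
}
```

In the log every Start is immediately followed by its matching End, so issuing the requests together buys no overlap; the only thing added is the message passing.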

I still wonder if we could get more threads for OS.File whether it would speed things up but that’s beyond where I want to play with things for now so I guess this is where this fun experiment ends. Although now I have a bunch of code converted I wonder if I can create some replacements for OS.File and Task.jsm that behave synchronously during startup and asynchronously at runtime, then we get the best of both worlds … where did that bottle of wine go?

|

|

Air Mozilla: January 2016 Brantina: The Right Way to Build Software: Ideals Over Ideology with Jocelyn Goldfein |

Rapid releases. Closed source software. Dogfooding. For legitimate reasons, Mozillians end up on all sides of these (and other) debates. We're not alone. Leading software...

|

|

Air Mozilla: The Joy of Coding - Episode 42 |

mconley livehacks on real Firefox bugs while thinking aloud.

|

|

Mozilla Addons Blog: Add-ons Update – Week of 2016/01/27 |

I post these updates every 3 weeks to inform add-on developers about the status of the review queues, add-on compatibility, and other happenings in the add-ons world.

The Review Queues

In the past 3 weeks, 1446 add-ons were reviewed:

- 1068 (74%) were reviewed in less than 5 days.

- 74 (5%) were reviewed between 5 and 10 days.

- 304 (21%) were reviewed after more than 10 days.

There are 212 listed add-ons and 2 unlisted add-ons awaiting review.

If you’re an add-on developer and would like to see add-ons reviewed faster, please consider joining us. Add-on reviewers get invited to Mozilla events and earn cool gear with their work. Visit our wiki page for more information.

Firefox 44 Compatibility

This compatibility blog post is up. The bulk compatibility validation was run last week. Some of you may have received more than one email because the first validation run had many false positives due to a bug and many add-ons failed validation incorrectly.

If you’re using the Add-ons SDK to build your add-on, make sure you’re using the latest version of jpm, since some of the JavaScript syntax changes in 44 affect add-ons built with cfx and older versions of jpm.

As always, we recommend that you test your add-ons on Beta and Firefox Developer Edition to make sure that they continue to work correctly. End users can install the Add-on Compatibility Reporter to identify and report any add-ons that aren’t working anymore.

Extension Signing

The wiki page on Extension Signing has information about the timeline, as well as responses to some frequently asked questions. The current plan is to remove the signing override preference in Firefox 46 (updated from the previous deadline of Firefox 44).

Electrolysis

Electrolysis, also known as e10s, is the next major compatibility change coming to Firefox. Firefox will run on multiple processes now, running content code in a different process than browser code.

This is the time to test your add-ons and make sure they continue working in Firefox. We’re holding regular office hours to help you work on your add-ons, so please drop in on Tuesdays and chat with us!

WebExtensions

If you read the post on the future of add-on development, you should know there are big changes coming. We’re working on the new WebExtensions API, and we recommend that you start looking into it for your add-ons. You can track progress of its development in http://www.arewewebextensionsyet.com/.

We will be talking about development with WebExtensions in the upcoming FOSDEM. Come hack with us if you’re around!

https://blog.mozilla.org/addons/2016/01/27/add-ons-update-76/

|

|

Wil Clouser: Meet the New Test Pilot |

Effective immediately, we've renamed the Idea Town program to Test Pilot.

The original Test Pilot was an opt-in "labs" project around measuring what people actually did with the browser in small experiments, but hasn't been used in over a year and has no future plans. We'll be using the name and some of the assets to accelerate our original Idea Town plans.

At first glance the two projects appear similar but have key differences. I originally wrote a list to clarify what the new Test Pilot program was but decided it would be most useful if everyone could see it. Below is a rough description of the new Test Pilot.

- Test Pilot is an evolving set of stepping stones for getting an idea from a concept stage to landing in Firefox itself in an expedient way, being measured and verified along that path.

- Test Pilot is not a prototyping team.

- Test Pilot team members are amplifiers of people participating in the program. When a person or group submits an idea to Test Pilot they are starting a process in which they should expect to be involved until a conclusion is reached.

- Test Pilot team members help participants progress through the Test Pilot process in whichever ways are needed - from boiling an idea down to get at a measurable core concept, documenting an idea thoroughly, iterating on designs and prototypes, assisting with coding, communicating with appropriate teams around Mozilla, and helping uplift a successful idea to its next stage in life. An analogy could be made between Test Pilot team members and consultants.

- Test Pilot's intention is to facilitate providing decision-makers with quality, focused data in a short period of time.

- Test Pilot works very closely with the Mozilla community (including paid staff).

I'm working on our migration plan but the Test Pilot wiki page is up and contains links to getting involved and staying up to date on further changes.

Long live Test Pilot. Again.

http://micropipes.com/blog//2016/01/27/meet-the-new-test-pilot/

|

|

Aaron Klotz: Announcing Mozdbgext |

A well-known problem at Mozilla is that, while most of our desktop users run Windows, most of Mozilla’s developers do not. There are a lot of problems that result from that, but one of the most frustrating to me is that sometimes those of us that actually use Windows for development find ourselves at a disadvantage when it comes to tooling or other productivity enhancers.

In many ways this problem is also a Catch-22: people don’t want to use Windows for many reasons, but tooling is a big part of the problem. OTOH, nobody is motivated to improve the tooling situation if nobody is actually going to use it.

A couple of weeks ago my frustrations with the situation boiled over when I learned that our Cpp unit test suite could not log symbolicated call stacks, resulting in my filing of bug 1238305 and bug 1240605. Not only could we not log those stacks, in many situations we could not view them in a debugger either.

Because PDB files consume a large amount of disk space, we don’t keep them when building from integration or try repositories. Unfortunately they are quite useful to have when there is a build failure. Most of our integration builds, however, do include breakpad symbols. Developers may also explicitly request symbols for their try builds.

A couple of years ago I had begun working on a WinDbg debugger extension that was tailored to Mozilla development. It had mostly bitrotted over time, but I decided to resurrect it for a new purpose: to help WinDbg* grok breakpad.

Enter mozdbgext

mozdbgext is the result. This extension adds a few commands that make Win32 debugging with breakpad a little bit easier.

My original plan was for mozdbgext to load breakpad symbols and then insert them into the debugger’s symbol table via the IDebugSymbols3::AddSyntheticSymbol API. Unfortunately the design of this API is not well equipped for bulk loading of synthetic symbols: each individual symbol insertion causes the debugger to re-sort its entire symbol table. Since xul.dll’s quantity of symbols is in the six-figure range, using this API to load that many symbols is prohibitively expensive. I tweeted a Microsoft PM who works on Debugging Tools for Windows, asking if there would be any improvements there, but it sounds like this is not going to happen any time soon.
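To get a feel for why per-symbol insertion is so costly, here is a minimal Python sketch. It is not WinDbg code: the function names and the toy symbol table are assumptions made purely for illustration. It contrasts a table that is re-sorted after every insertion with one that is loaded in full and sorted once.

```python
def load_one_at_a_time(symbols):
    """Mimic a table that is fully re-sorted after every insertion."""
    table = []
    sorts = 0
    for address, name in symbols:
        table.append((address, name))
        table.sort()  # full re-sort for each inserted symbol
        sorts += 1
    return table, sorts


def load_in_bulk(symbols):
    """Append everything first, then sort a single time."""
    table = list(symbols)
    table.sort()
    return table, 1


# Hypothetical (address, name) pairs standing in for breakpad symbols.
symbols = [(0x3000, "c"), (0x1000, "a"), (0x2000, "b")]
slow_table, slow_sorts = load_one_at_a_time(symbols)
fast_table, fast_sorts = load_in_bulk(symbols)
```

Both routes end with the same table, but the first performs one sort per symbol. With a six-figure symbol count that means hundreds of thousands of sorts instead of one.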

My original plan would have been ideal from a UX perspective: the breakpad symbols would look just like any other symbols in the debugger and could be accessed and manipulated using the same set of commands. Since synthetic symbols would not work for me in this case, I went for “Plan B”: extension commands that are separate from, but analogous to, regular WinDbg commands.

I plan to continuously improve the commands that are available. Until I have a proper README checked in, I’ll introduce the commands here.

Loading the Extension

- Use the .load command: .load

Loading the Breakpad Symbols

- Extract the breakpad symbols into a directory.

- In the debugger, enter !bploadsyms

- Note that this command will take some time to load all the relevant symbols.

Working with Breakpad Symbols

Note: You must have successfully run the !bploadsyms command first!

As a general guide, I am attempting to name each breakpad command similarly to the native WinDbg command, except that the command name is prefixed by !bp.

- Stack trace: !bpk

- Find nearest symbol to address: !bpln address, where address is specified as a hexadecimal value.
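As a rough illustration of what a nearest-symbol lookup involves, the Python sketch below parses FUNC records from a breakpad symbol file and finds the function that starts at or before a given module offset. This is not how mozdbgext is implemented. It assumes the usual breakpad FUNC record layout (`FUNC [m] address size parameter_size name`, with hexadecimal fields), and the sample records and function names are hypothetical.

```python
import bisect


def parse_func_records(lines):
    """Collect (address, size, name) from breakpad FUNC records, sorted by address."""
    funcs = []
    for line in lines:
        parts = line.split()
        if not parts or parts[0] != "FUNC":
            continue
        if len(parts) > 1 and parts[1] == "m":  # optional marker in newer files
            parts.pop(1)
        if len(parts) < 5:
            continue  # malformed record, skip it
        address = int(parts[1], 16)
        size = int(parts[2], 16)
        name = " ".join(parts[4:])  # parts[3] is the parameter size
        funcs.append((address, size, name))
    funcs.sort()
    return funcs


def nearest_symbol(funcs, offset):
    """Return (name, displacement) for the last function starting at or before offset."""
    starts = [f[0] for f in funcs]
    i = bisect.bisect_right(starts, offset) - 1
    if i < 0:
        return None
    address, _size, name = funcs[i]
    return name, offset - address


# Hypothetical symbol file contents; offsets are relative to the module base.
sample = [
    "FUNC 1040 10 4 Baz",
    "FUNC 1000 20 0 Foo::Bar(int)",
    "PUBLIC 2000 0 Qux",
]
funcs = parse_func_records(sample)
```

Sorting once and then using binary search keeps each lookup cheap even when the table holds a six-figure number of symbols.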

Downloading mozdbgext

I have pre-built a 32-bit binary (which obviously requires 32-bit WinDbg). I have not built a 64-bit binary yet, but the code should be source compatible.

Note that there are several other commands that are “roughed-in” at this point and do not work correctly yet. Please stick to the documented commands at this time.

* When I write “WinDbg”, I am really referring to any debugger in the Debugging Tools for Windows package, including cdb.

|

|

Yunier José Sosa Vázquez: Support for WebM/VP9, more security, and new developer tools in the new Firefox |

How time flies, friends! Almost without noticing, 6 weeks have gone by and we have even started a new year, a year in which Mozilla is preparing new features that will make Firefox a better browser, such as process separation, alternative ways to run plugins, and the new API for developing “cross-browser” add-ons.

Since the WebM video format was announced in 2010, Mozilla has shown particular interest in it as a powerful alternative to the proprietary formats on the market at the time, and as a way to improve the user experience when playing videos on the web. With this release, WebM/VP9 support has been enabled on systems that do not support MP4/H.264.

For several versions now, Firefox has included the OpenH264 plugin provided by Cisco to comply with the WebRTC specifications and enable calls with devices that require it. Now, if an H.264 decoder is available on the system, this video codec is enabled.

What's new for developers

This time around, developers get animation and CSS filter tools, memory usage reports, WebSocket debugging, and more. You can read about all of this on the Mozilla Hispano Labs blog.

What's new on Android

- Users can choose the home page to show instead of the most visited sites.

- The Android print service makes it possible to enable cloud printing.

- When trying to open a URI, the user is asked whether to open it in a private tab.

- Added support for launching URIs with the mms protocol.

- Easy access to the search settings while searching the Internet.

- Search history suggestions are now shown.

- The Firefox Accounts page is now web-based.

Other changes

- Support for the RC4 cryptographic algorithm has been removed.

- Support for the brotli compression format when using HTTPS.

- Use of a SHA256 signing certificate for the Windows versions, to meet the new requirements.

- To support the unicode-range descriptor for web fonts, the font matching algorithm on Linux uses the same code as on the other platforms.

- Firefox will no longer trust the Equifax Secure Certificate Authority 1024-bit root or UTN – DATACorp SGC certificate authorities to validate secure web certificates.

- On-screen keyboard support has been temporarily disabled on Windows 8 and 8.1.

If you want to know more, you can read the release notes (in English).

Note on the mobile version

The downloads include 3 versions for Android. The file whose name contains i386 is for devices with the Intel architecture. Among those named arm, the one marked api11 works with Honeycomb (3.0) or later, and the api9 one is for Gingerbread (2.3).
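The choice between the three Android downloads can be sketched as a small Python function. This is only an illustration: the function name and the returned labels are hypothetical and are not the actual file names. It relies on Gingerbread (2.3) corresponding to Android API levels 9 and 10, and Honeycomb (3.0) to API level 11.

```python
def pick_android_build(cpu, api_level):
    """Return a label for the download matching a device, or None if none fits."""
    if cpu == "x86":
        return "i386"  # Intel architecture devices
    if cpu == "arm":
        if api_level >= 11:  # Honeycomb (3.0) or later
            return "arm api11"
        if api_level >= 9:  # Gingerbread (2.3)
            return "arm api9"
    return None  # no matching download described in the post
```

For example, an ARM phone running Gingerbread would call `pick_android_build("arm", 10)` and get the api9 build.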

You can get this version from our Downloads area, in Spanish and English, for Linux, Mac, Windows, and Android. Remember that to browse through proxy servers you must set the preference network.auth.force-generic-ntlm to true in about:config.

If you liked it, please share this news with your friends on social networks. Feel free to leave us a comment.

|

|

QMO: Firefox 45.0 Beta 3 Testday, February 5th |

Hello Mozillians,

We are happy to announce that on Friday, February 5th, we are organizing the Firefox 45.0 Beta 3 Testday. We will be focusing our testing on the following features: Search Refactoring, Synced Tabs Menu, Text to Speech and Grouped Tabs Migration. Check out the detailed instructions via this etherpad.

No previous testing experience is required, so feel free to join us on the #qa IRC channel, where our moderators will offer you guidance and answer your questions.

Join us and help us make Firefox better! See you on Friday!

https://quality.mozilla.org/2016/01/firefox-45-0-beta-3-testday-february-5th/

|

|

David Lawrence: Happy BMO Push Day! |

the following changes have been pushed to bugzilla.mozilla.org:

discuss these changes on mozilla.tools.bmo.

https://dlawrence.wordpress.com/2016/01/26/happy-bmo-push-day-4/

|

|

Tanvi Vyas: Updated Firefox Security Indicators |

Cross-posting this. It was written a couple of months ago and posted to Mozilla’s Security Blog.

This article was coauthored by Aislinn Grigas, Senior Interaction Designer, Firefox Desktop

November 3, 2015

Over the past few months, Mozilla has been improving the user experience of our privacy and security features in Firefox. One specific initiative has focused on the feedback shown in our address bar around a site’s security. The major changes are highlighted below along with the rationale behind each change.

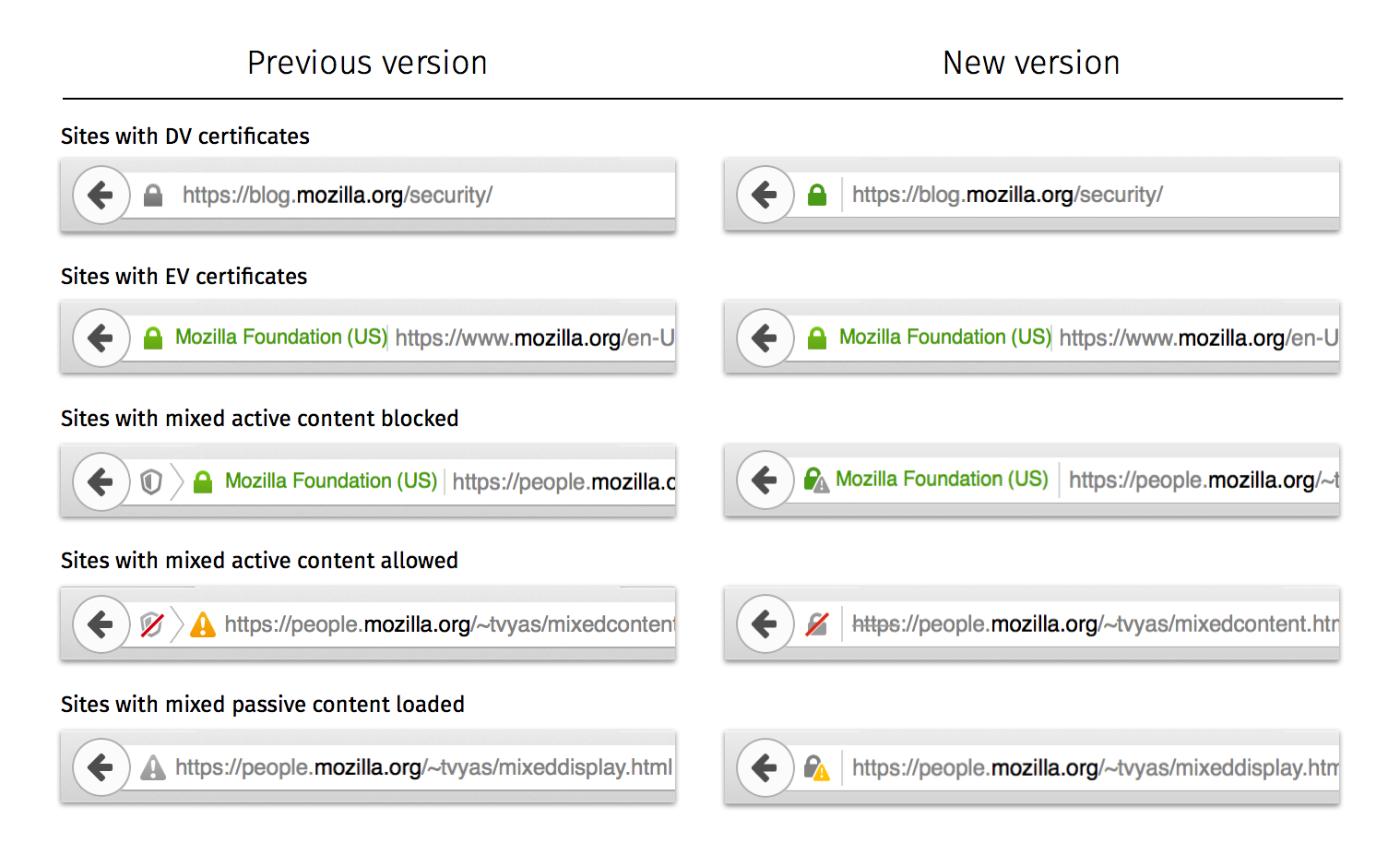

Change to DV Certificate treatment in the address bar

Color and iconography are commonly used today to communicate to users when a site is secure. The most widely used patterns are coloring a lock icon and parts of the address bar green. This treatment has a straightforward rationale given green = good in most cultures. Firefox has historically used two different color treatments for the lock icon – a gray lock for Domain-validated (DV) certificates and a green lock for Extended Validation (EV) certificates. The average user is unlikely to understand this color distinction between EV and DV certificates. The overarching message we want users to take from both certificate states is that their connection to the site is secure. We’re therefore updating the color of the lock when a DV certificate is used to match that of an EV certificate.

Although the same green icon will be used, the UI for a site using EV certificates will continue to differ from a site using a DV certificate. Specifically, EV certificates are used when Certificate Authorities (CA) verify the owner of a domain. Hence, we will continue to include the organization name verified by the CA in the address bar.

Changes to Mixed Content Blocker UI on HTTPS sites

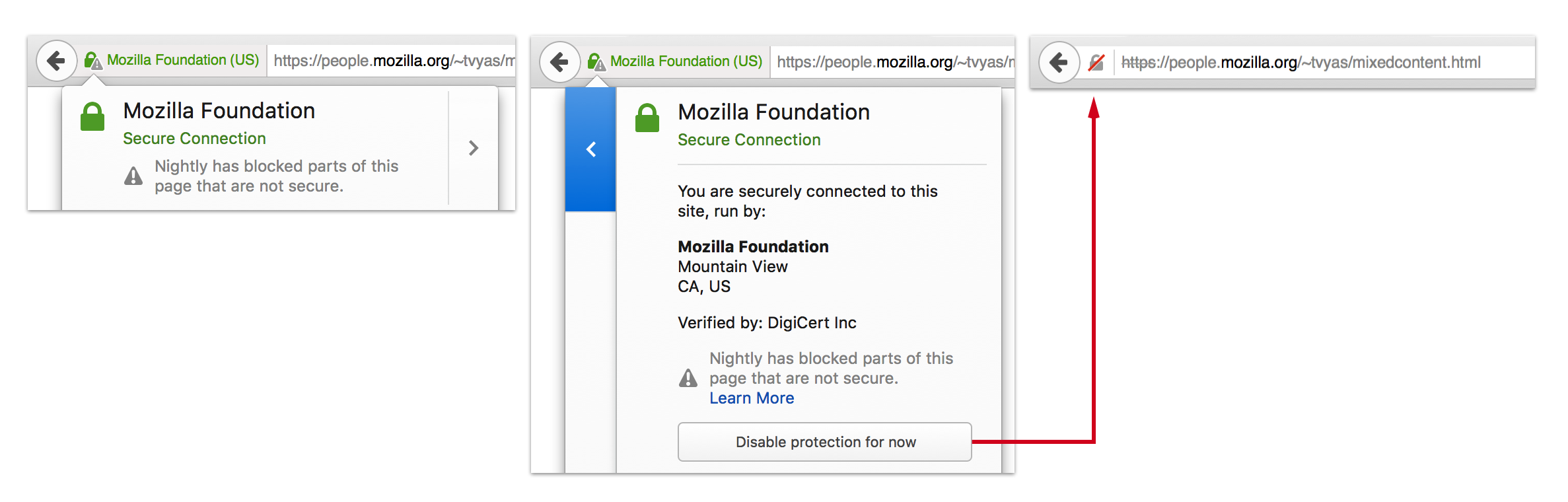

A second change we’re introducing addresses what happens when a page served over a secure connection contains Mixed Content. Firefox’s Mixed Content Blocker proactively blocks Mixed Active Content by default. Users historically saw a shield icon when Mixed Active Content was blocked and were given the option to disable the protection.

Since the Mixed Content state is closely tied to site security, the information should be communicated in one place instead of having two separate icons. Moreover, we have seen that the number of times users override mixed content protection is slim, and hence the need for dedicated mixed content iconography is diminishing. Firefox is also using the shield icon for another feature in Private Browsing Mode and we want to avoid making the iconography ambiguous.

The updated design that ships with Firefox 42 combines the lock icon with a warning sign which represents Mixed Content. When Firefox blocks Mixed Active Content, we retain the green lock since the HTTP content is blocked and hence the site remains secure.

For users who want to learn more about a site’s security state, we have added an informational panel to further explain differences in page security. This panel appears anytime a user clicks on the lock icon in the address bar.

Previously users could click on the shield icon in the rare case they needed to override mixed content protection. With this new UI, users can still do this by clicking the arrow icon to expose more information about the site security, along with a disable protection button.

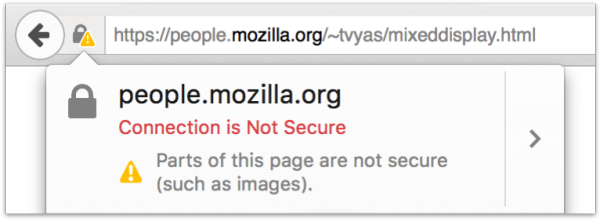

Loading Mixed Passive Content on HTTPS sites

There is a second category of Mixed Content called Mixed Passive Content. Firefox does not block Mixed Passive Content by default. However, when it is loaded on an HTTPS page, we let the user know with iconography and text. In previous versions of Firefox, we used a gray warning sign to reflect this case.

We have updated this iconography in Firefox 42 to a gray lock with a yellow warning sign. We degrade the lock from green to gray to emphasize that the site is no longer completely secure. In addition, we use a vibrant color for the warning icon to amplify that there is something wrong with the security state of the page.

We also use this iconography when the certificate or TLS connection used by the website relies on deprecated cryptographic algorithms.
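The indicator rules described so far can be summarized in a short Python sketch. This is not Firefox code: the class, field, and function names are hypothetical, and the sketch covers only the states this post describes. In particular, the post does not say whether the organization name is still shown when the lock is degraded, so the sketch assumes it is shown only alongside the green lock.

```python
from dataclasses import dataclass


@dataclass
class PageState:
    https: bool
    ev_certificate: bool = False
    mixed_active_blocked: bool = False
    mixed_passive_loaded: bool = False
    deprecated_crypto: bool = False


def address_bar_indicator(state):
    """Return (icon, show_organization_name), or None for cases not covered here."""
    if not state.https:
        return None  # plain HTTP pages are outside the scope of this sketch
    if state.mixed_passive_loaded or state.deprecated_crypto:
        # Degraded: the site is no longer considered completely secure.
        return "gray lock with yellow warning sign", False
    # DV and EV now share the green lock. Blocked Mixed Active Content keeps
    # it too, because the HTTP content never loaded.
    # Assumption: the organization name appears only in this fully secure state.
    return "green lock", state.ev_certificate
```

For instance, `address_bar_indicator(PageState(https=True, mixed_active_blocked=True))` yields the green lock, while a page that loads Mixed Passive Content yields the gray lock with the yellow warning sign.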

The above changes will be rolled out in Firefox 42. Overall, the design improvements make it simpler for our users to understand whether or not their interactions with a site are secure.

Firefox Mobile

We have made similar changes to the site security indicators in Firefox for Android, which you can learn more about here.

https://blog.mozilla.org/tanvi/2016/01/26/updated-firefox-security-indicators/

|

|

Benoit Girard: Using RecordReplay to investigate intermittent oranges |

This is a quick write-up to summarize my and Jeff’s experience using RR to debug a fairly rare intermittent reftest failure. There’s still a lot to be learned about how to use RR effectively, so I’m hoping sharing this will help others.

Finding the root of the bad pixel

First, given an offending pixel, I was able to set a breakpoint on it using these instructions. Next, using rr-dataflow, I was able to step from the offending pixel to the display item responsible for it. Let me emphasize this for a second, since it’s incredibly impressive: rr + rr-dataflow allows you to go from a buffer, through an intermediate surface, to the compositor on another thread, through another intermediate surface, back to the main thread and eventually back to the relevant display item. All of this was automated except for the point where two pixels are blended together, which is logically ambiguous. The speed at which rr was able to reverse-continue through this execution was very impressive!

Here’s the trace of this part: rr-trace-reftest-pixel-origin

Understanding the decoding step

From here I started comparing a replay of a failing test and a non-failing one, and it was clear that the DisplayList was different: in one we have an nsDisplayBackgroundColor, in the other we don’t. From here I was able to step through the decoder and compare the sequence. This was very useful in ruling out possible theories. It was easy to step forward and backwards in the good and bad replay debugging sessions to test out various theories about race conditions and to understand at which part of the decode process the image was rejected. It turned out that we sent two decodes, one for the metadata that is used to size the frame tree and the other for the image data itself.

Comparing the frame tree

In hindsight, it would have been quicker to start debugging this test by looking at the frame tree first (and, I imagine, for other tests, at the display list and layer tree). This works even better if you have a good and a bad trace, so that you can compare the frame trees. From here, I found that the difference in the layer tree came from a change hint that wasn’t guaranteed to come in before the draw.
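Comparing dumps from two traces can be done with any diff tool. As a minimal sketch, the Python snippet below uses the standard difflib module to list the lines that differ between two text dumps. The function name and the dump contents are hypothetical: real frame tree or display list dumps are far more detailed, and only nsDisplayBackgroundColor is taken from this investigation.

```python
import difflib


def diff_dumps(dump_a, dump_b):
    """Return the lines present in only one of the two text dumps."""
    diff = list(difflib.unified_diff(
        dump_a.splitlines(), dump_b.splitlines(),
        fromfile="trace_a", tofile="trace_b", lineterm="", n=0))
    # Skip the two header lines, then keep only added/removed lines.
    return [line for line in diff[2:] if line.startswith(("+", "-"))]


# Hypothetical, heavily simplified display list dumps from two replays.
trace_a = "nsDisplayBackgroundColor\nnsDisplayImage"
trace_b = "nsDisplayImage"
changes = diff_dumps(trace_a, trace_b)
```

A leading "-" marks a line that appears only in the first dump and a leading "+" one that appears only in the second, which makes a missing display item stand out immediately.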

The problem is now well understood: when we do a sync decode on reftest draw, if there’s an image error we won’t flush the style hints since we’re already too deep in the painting pipeline.

Take away

- Finding the root cause of a bad pixel is very easy, and fast, to do using rr-dataflow.

- However it might be better to look for obvious frame tree/display list/layer tree difference(s) first.

- Debugging a replay is a lot simpler than debugging against non-deterministic re-runs and a lot less frustrating too.

- rr is really useful for race conditions, especially rare ones.

|

|

The Servo Blog: These Weeks In Servo 48 |

In the last two weeks, we landed 130 PRs in the Servo organization’s repositories.

After months of work by vlad and many others, Windows support landed! Thanks to everyone who contributed fixes, tests, reviews, and even encouragement (or impatience!) to help us make this happen.

Notable Additions

- nikki added tests and support for checking the Fetch redirect count

- glennw implemented horizontal scrolling with arrow keys

- simon created a script that parses all of the CSS properties parsed by Servo

- ms2ger removed the legacy reftest framework

- fernando made crowbot able to rejoin IRC after it accidentally floods the channel

- jack added testing the geckolib target to our CI

- antrik fixed transfer corruption in ipc-channel on 32-bit

- valentin added and simon extended IDNA support in rust-url, which is required for both web and Gecko compatibility

New Contributors

- Chandler Abraham

- Darin Minamoto

- Josh Leverette

- Joshua Holmer

- Kishor Bhat

- Lanza

- Matthew Kuo

- Oleksii Fedorov

- St.Spyder

- Vladimir Vukicevic

- apopiak

- askalski

Screenshot

Screencast of this post being upvoted on reddit… from Windows!

Meetings

We had a meeting on some CI-related woes, documenting tags and mentoring, and dependencies for the style subsystem.

|

|

Air Mozilla: Mozilla Weekly Project Meeting, 25 Jan 2016 |

The Monday Project Meeting


https://air.mozilla.org/mozilla-weekly-project-meeting-20160125/

|

|

A meetup about web tech.
