You can add any RSS feed (including a LiveJournal journal) to your friends feed on the syndication page.

Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated whenever that feed is updated. It may not match the content of the original page. This syndication was created automatically at the request of readers of this RSS feed.

For any questions about how this service works, use the contact information page.


Nick Desaulniers: Intro to Debugging x86-64 Assembly

I’m hacking on an assembly project, and wanted to document some of the tricks I was using for figuring out what was going on. This post might seem a little basic for folks who spend all day heads down in gdb or who do this stuff professionally, but I just wanted to share a quick intro to some tools that others may find useful. (oh god, I’m doing it)

If you’re coming from gdb to lldb, there are a few differences in commands. LLDB has great documentation on some of the differences. Everything in this post about LLDB is pretty much there.

The bread and butter commands when working with gdb or lldb are:

- r (run the program)

- s (step in)

- n (step over)

- finish (step out)

- c (continue)

- q (quit the program)

You can hit enter if you want to run the last command again, which is really useful if you want to keep stepping over statements repeatedly.

I’ve been using LLDB on OSX. Let’s say I want to debug a program that I can build but that is crashing or something:


Setting a breakpoint on jump to label:


Running the program until breakpoint hit:


Seeing more of the current stack frame:


Getting a back trace (call stack):


Peeking at the upper stack frame:


Back down to the breakpoint-halted stack frame:


Dumping the values of registers:


Reading just one register:


When you’re trying to figure out what system calls are made by some C code, using dtruss is very helpful. dtruss is available on OSX and seems to be some kind of wrapper around DTrace.


If you compile with -g to emit debug symbols, you can use lldb’s disassemble command to get the equivalent assembly:


Anyways, I’ve been learning some interesting things about OSX that I’ll be sharing soon. If you’d like to learn more about x86-64 assembly programming, you should read my other posts about writing x86-64 and a toy JIT for Brainfuck (the creator of Brainfuck liked it).

I should also do a post on Mozilla’s rr, because it can do amazing things like step backwards. Another day…

http://nickdesaulniers.github.io/blog/2016/01/20/debugging-x86-64-assembly-with-lldb-and-dtrace/


Rail Aliiev: Rebooting productivity

Every new year gives you an opportunity to sit back, relax, have some scotch and rethink the past year. Holidays give you enough free time. Even if you decide not to take a vacation around the holidays, it's usually calm and peaceful.

This time, I found myself thinking mostly about productivity, being effective, feeling busy, overwhelmed with work and other related topics.

When I started at Mozilla (almost 6 years ago!), I tried to apply all my GTD and time management knowledge and techniques. Working remotely and in a different time zone was an advantage - I had close to zero interruptions. It worked perfectly.

Last year I realized that my productivity skills had somehow faded away. 40h+ workweeks, working on weekends and delivering goals in the last week of the quarter don't sound like good signs. Instead of being productive, I felt busy.

"Every crisis is an opportunity". Time to take a step back and reboot myself. Burning out at work is not a good idea. :)

Here are some ideas/tips that I wrote down for myself and that you may find useful.

Concentration

- Task #1: make a daily plan. No plan - no work.

- Don't start your day by reading emails. Get one (little) thing done first - THEN check your email.

- Try to define outcomes, not tasks. "Ship XYZ" instead of "Work on XYZ".

- Meetings are time-consuming, so "Set a goal for each meeting". Consider skipping a meeting if you don't have a goal for it, unless it's a beer-and-tell meeting! :)

- Constantly ask yourself if what you're working on is important.

- 3-4 times a day ask yourself whether you are doing something towards your goal or just finding something else to keep you busy. If you want to look busy, take your phone and walk around the office with some papers in your hand. Everybody will think that you are a busy person! This way you can take a break and look busy at the same time!

- Take breaks! The Pomodoro technique has this built in. Taking breaks not only helps you avoid RSI, but also keeps your brain sane and gives you time to ask yourself the questions mentioned above. I use Workrave on my laptop, but you can use a real kitchen timer instead.

- Wear headphones, especially at the office. Noise-cancelling ones are even better. White noise, nature sounds, or instrumental music are your friends.
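The Pomodoro technique mentioned above boils down to a simple schedule of work blocks and breaks. Here is a minimal Python sketch of such a plan, assuming the commonly cited defaults of 25-minute work blocks, 5-minute short breaks and a longer break after every fourth block; the 15-minute long break and the names `Block` and `pomodoro_schedule` are hypothetical choices for illustration, not anything from the post or from Workrave.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Block:
    kind: str      # "work", "short break" or "long break"
    minutes: int


def pomodoro_schedule(pomodoros: int, work: int = 25, short_break: int = 5,
                      long_break: int = 15, cycle: int = 4) -> List[Block]:
    """Plan `pomodoros` work blocks with breaks in between.

    Every `cycle`-th work block is followed by a long break instead of a
    short one. No break is scheduled after the last work block.
    """
    plan: List[Block] = []
    for i in range(1, pomodoros + 1):
        plan.append(Block("work", work))
        if i == pomodoros:
            break  # the plan ends with work, not with a break
        kind, length = (("long break", long_break) if i % cycle == 0
                        else ("short break", short_break))
        plan.append(Block(kind, length))
    return plan


if __name__ == "__main__":
    for block in pomodoro_schedule(6):
        print(f"{block.kind:>11}: {block.minutes} min")
```

The durations are plain parameters, so a kitchen timer routine that differs from the defaults is just a different call.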

(Home) Office

- Make sure you enjoy your work environment. Why on earth would you spend your valuable time working without joy?!

- De-clutter and organize your desk. Fewer things around - fewer distractions.

- Desk, chair, monitor, keyboard, mouse, etc - don't cheap out on them. Your health is more important and expensive. Thanks to mhoye for this advice!

Other

- Don't check email every 30 seconds. If there is an emergency, they will call you! :)

- Reward yourself at a certain time. "I'm going to have a chocolate at 11am", or "MFBT at 4pm sharp!" are good examples. Don't forget, you are Pavlov's dog too!

- Don't try to read everything NOW. Save it for later and read in a batch.

- Capture all creative ideas. You can delete them later. ;)

- Prepare for the next task before a break. Make sure you know what's next, so you can think about it during the break.

This is my list of things that I try to use every day. Looking forward to seeing improvements!

I would appreciate your thoughts on this topic. Feel free to comment or send a private email.

Happy Productive New Year!


Mozilla Addons Blog: Archiving AMO Stats

One of the advantages of listing an add-on or theme on addons.mozilla.org (AMO) is that you’ll get statistics on your add-on’s usage. These stats, which are covered by the Mozilla privacy policy, provide add-on developers with information such as the number of downloads and daily users, among other insights.

Currently, the data that generates these statistics can go back as far as 2007, as we haven’t had an archiving policy. As a result, statistics take up the vast majority of disk space in our database and require a significant amount of processing and operations time. Statistics over a year old are very rarely accessed, and the value of their generation is very low, while the costs are increasing.

To reduce our operating and development costs, and increase the site’s reliability for developers, we are introducing an archiving policy.

In the coming weeks, statistics data over one year old will no longer be stored in the AMO database, and reports generated from them will no longer be accessible through AMO’s add-on statistics pages. Instead, the data will be archived and maintained as plain text files, which developers can download. We will write a follow-up post when these archives become available.
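To make the one-year retention rule concrete, here is a minimal Python sketch of how such a policy could split statistics rows into data that stays in the database and data that is exported as plain text. It is not AMO's actual code: the row layout `(day, addon_id, downloads, daily_users)`, the 365-day cutoff and the CSV output format are assumptions chosen for illustration, and `split_by_age` and `to_plain_text` are hypothetical helpers.

```python
import csv
import io
from datetime import date, timedelta
from typing import Iterable, List, Tuple

# Hypothetical row layout: (day, addon_id, downloads, daily_users)
Row = Tuple[date, int, int, int]


def split_by_age(rows: Iterable[Row], today: date,
                 max_age_days: int = 365) -> Tuple[List[Row], List[Row]]:
    """Return (rows to keep in the database, rows to move to the archive)."""
    cutoff = today - timedelta(days=max_age_days)
    keep: List[Row] = []
    archive: List[Row] = []
    for row in rows:
        # Rows dated on or after the cutoff stay queryable; older ones are archived.
        (keep if row[0] >= cutoff else archive).append(row)
    return keep, archive


def to_plain_text(rows: Iterable[Row]) -> str:
    """Serialize archived rows as CSV text that a developer could download."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["date", "addon_id", "downloads", "daily_users"])
    for day, addon_id, downloads, users in rows:
        writer.writerow([day.isoformat(), addon_id, downloads, users])
    return buf.getvalue()


if __name__ == "__main__":
    rows = [
        (date(2007, 6, 1), 42, 10, 3),
        (date(2015, 12, 31), 42, 120, 80),
    ]
    keep, old = split_by_age(rows, today=date(2016, 1, 20))
    print(len(keep), "row(s) kept")
    print(to_plain_text(old), end="")
```

Keeping the split and the serialization separate means the cutoff can change without touching the export format.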

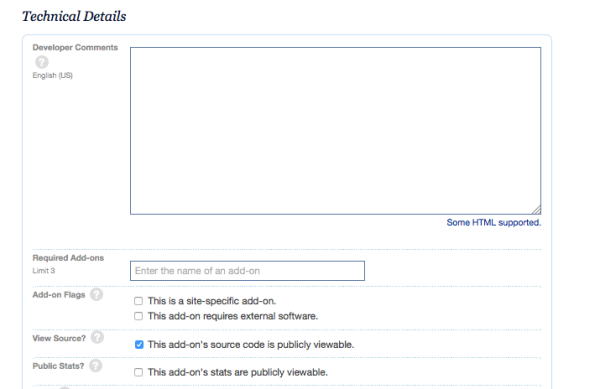

If you’ve chosen to keep your add-on’s statistics private, they will remain private when stats are archived. You can check your privacy settings by going to your add-on in the Developer Hub, clicking on Edit Listing, and then Technical Details.

The total number of users and other cumulative counts on add-ons and themes will not be affected and these will continue to function.

If you have feedback or concerns, please head to our forum post on this topic.

https://blog.mozilla.org/addons/2016/01/20/archiving-amo-stats/


Air Mozilla: The Joy of Coding - Episode 41

mconley livehacks on real Firefox bugs while thinking aloud.



Nathan Froyd: gecko and c++ onboarding presentation

One of the things the Firefox team has been doing recently is having onboarding sessions for new hires. This onboarding currently covers:

- 1st day setup

- Bugzilla

- Building Firefox

- Desktop Firefox Architecture / Product

- Communication and Community

- Javascript and the DOM

- C++ and Gecko

- Shipping Software

- Telemetry

- Org structure and career development

My first day consisted of some useful HR presentations and then I was given my laptop and a pointer to a wiki page on building Firefox. Needless to say, it took me a while to get started! It would have been super convenient to have an introduction to all the stuff above.

I’ve been asked to do the C++ and Gecko session three times. All of the sessions are open to whoever wants to come, not just the new hires, and I think yesterday’s session was easily the most well-attended yet: somewhere between 10 and 20 people showed up. Yesterday’s session was the first session where I made the slides available to attendees (should have been doing that from the start…) and it seemed equally useful to make the slides available to a broader audience as well. The Gecko and C++ Onboarding slides are up now!

This presentation is a “living” presentation; it will get updated for future sessions with feedback and as I think of things that should have been in the presentation or better ways to set things up (some diagrams would be nice…). If you have feedback (good, bad, or ugly) on particular things in the slides or you have suggestions on what other things should be covered, please contact me! Next time I do this I’ll try to record the presentation so folks can watch that if they prefer.

https://blog.mozilla.org/nfroyd/2016/01/20/gecko-and-c-onboarding-presentation/


Andreas Gal: Brendan is back to save the Web

Brendan is back, and he has a plan to save the Web. It’s a big and bold plan, and it may just work. I am pretty excited about this. If you have 5 minutes to read along, I’ll explain why I think you should be as well.

The Web is broken

Let’s face it, the Web today is a mess. Everywhere we go online we are constantly inundated with annoying ads. Often pages are more ads than content, and the more ads the industry throws at us, the more we ignore them, and the more obnoxious the ads get in trying to catch our attention. As Brendan explains in his blog post, the browser used to be on the user’s side; we call browsers the user agent for a reason. Part of the early success of Firefox was that it blocked popup ads. But somewhere over the last 10 years of modern Web browsers, browsers lost their way and stopped being the user’s agent alone. Why?

Browsers aren’t free

Making a modern Web browser is not free. It takes hundreds of engineers to make a competitive modern browser engine. Someone has to pay for that, and that someone needs to have a reason to pay for it. Google doesn’t make Chrome for the good of mankind. Google makes Chrome so you can consume more Web and, along with it, more Google ads. Each time you click on one, Google makes more money. Chrome is a billion-dollar business for Google. And the same is true for pretty much every other browser. Every major browser out there is funded through advertisement. No browser maker can escape this dilemma. Maybe now you understand why no major browser ships with a built-in ad blocker enabled by default, even though ad blockers are by far the most popular add-ons.

Our privacy is at stake

It’s not just the unregulated flood of advertisement that needs a solution. The ads you see are often selected based on sensitive private information that advertisement networks have extracted from your browsing behavior through tracking. Remember how the FBI used to track what books Americans read at the library, and it was a big scandal? Today the Googles and Facebooks of the world know almost every site you visit, everything you buy online, and they use this data to target you with advertisement. I am often puzzled why people are so afraid of the NSA spying on us but show so little concern about all the deeply personal data Google and Facebook are amassing about everyone.

Blocking alone doesn’t scale

I wish the solution was as easy as just blocking all ads. There is a lot of great Web content out there: news, entertainment, educational content. It’s not free to make all this content, but we have gotten used to consuming it “for free”. Banning all ads without an alternative mechanism would break the economic backbone of the Web. This dilemma has existed for many years, and the big browser vendors seem to have given up on it. It’s hard to blame them. How do you disrupt the status quo without sawing off the (ad revenue) branch you are sitting on?

It takes a newcomer to fix this mess

I think it’s unlikely that the incumbent browser vendors will make any bold moves to solve this mess. There is too much money at stake. I am excited to see a startup take a swipe at this problem, because they have little to lose (seed money aside). Brave is getting the user agent back into the game. Browsers have intentionally remained silent onlookers to the ad industry invading users’ privacy. With Brave, Brendan makes the user agent step up and fight for the user as it was always intended to do.

Brave basically consists of two parts: part one blocks third-party ad content and tracking signals; part two inserts alternative ad content in their place. Sites can sign up to get a fair share of any ads that Brave displays for them. The big change compared to the status quo is that the Brave user agent is in control and can regulate what you see. It’s like a speed limit for advertisement on the Web, with the goal of restoring balance and giving sites a fair way to monetize while giving the user control through the user agent.

Making money with a better Web

The ironic part of Brave is that it’s for-profit. Brave can make money by reducing obnoxious ads and protecting your privacy at the same time. If Brave succeeds, it’s going to drain money away from the crappy privacy-invasive obnoxious advertisement world we have today, and publishers and sites will start transacting in the new Brave world that is regulated by the user agent. Brave will take a cut of these transactions. And I think this is key. It aligns the incentives right. The current funding structure of major browsers encourages them to keep things as they are. Brave’s incentive is to bring down the whole diseased temple and usher in a better Web. Exciting.

Quick update: I had a chance to look over the Brave GitHub repo. It looks like the Brave Desktop browser is based on Chromium, not Gecko. Yes, you read that right. Brave is using Google’s rendering engine, not Mozilla’s. Much to write about this one, but it will definitely help Brave “hide” better in the large volume of Chrome users, making it harder for sites to identify and block Brave users. Brave for iOS seems to be a fork of Firefox for iOS, but it manages to block ads (Mozilla says they can’t).

Filed under: Mozilla

http://andreasgal.com/2016/01/20/brendan-is-back-to-save-the-web/


Byron Jones: happy bmo push day!

the following changes have been pushed to bugzilla.mozilla.org:

- [1236161] when converting a BMP attachment to PNG fails a zero byte attachment is created

- [1231918] error handler doesn’t close multi-part responses

discuss these changes on mozilla.tools.bmo.

Filed under: bmo, mozilla

https://globau.wordpress.com/2016/01/20/happy-bmo-push-day-166/


Alex Johnson: Removing Honeycomb Code

As an effort to reduce the APK size of Firefox for Android and to remove unnecessary code, I will be helping remove the Honeycomb code throughout the Fennec project. Honeycomb will no longer be supported as of Firefox 46, so this code is not necessary.

Bug 1217675 will keep track of the progress.

Hopefully this will reduce the APK size somewhat and clear the road for killing Gingerbread sometime in the near future.


Brian R. Bondy: Brave Software

Since June of last year, I’ve been co-founding a new startup called Brave Software with Brendan Eich. With our amazing team, we're developing something pretty epic.

We're building the next generation of browsers for smartphones and laptops as part of our new ad-tech platform. Our terms of use give our users control over their personal data by blocking ad trackers and third party cookies. We re-integrate fewer and better ads directly into programmatic ad positions, paying revenue shares to users and publishers to support both of these essential parties in the web ecosystem.

Built in, we have new, faster engines for tracking protection, ad blocking, HTTPS Everywhere, safe ads with rev-share, and more. We're seeing massive web page load time speedups.

We're starting to bring people in for early developer build access on all platforms.

I’m happy to share that the browsers we’re developing have been made fully open source. We welcome contributors, and would love your help.

Some of the repositories include:

- Brave OSX and Windows x64 browsers: Prototyped as a Gecko-based browser, but now replaced with a powerful new browser built on top of the Electron framework. The Electron framework is the same one used by Slack and the Atom editor. It uses the latest libchromiumcontent and Node.

- Brave for Android: Formerly Link Bubble, working as a background service so you can use other apps as your pages load.

- Brave for iOS: Originally forked from Firefox for iOS but with all of the built-in greatness described above.

- And many others: Website, updater code, vault, Electron fork, and others.


James Socol: PIEfection Slides Up

I put the slides for my ManhattanJS talk, "PIEfection" up on GitHub the other day (sans images, but there are links in the source for all of those).

I completely neglected to talk about the Maillard reaction, which is responsible for food tasting good, and specifically for browning pie crusts. tl;dr: Amino acid (protein) + sugar + ~300°F (~150°C) = delicious. There are innumerable and poorly understood combinations of amino acids and sugars, but this class of reaction is responsible for everything from searing steaks to browning crusts to toasting marshmallows.

Above ~330°F, you get caramelization, which is also a delicious part of the pie and crust, but you don't want to overdo it. Starting around ~400°F, you get pyrolysis (burning, charring, carbonization) and below 285°F the reaction won't occur (at least not quickly) so you won't get the delicious compounds.

(All of these are, of course, temperatures measured in the material, not in the air of the oven.)
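The temperature thresholds above are easier to compare side by side in both units. This Python sketch converts °F to °C with C = (F − 32) × 5/9 and maps a material temperature to the reactions the post describes. The cutoffs (285, 330 and 400°F) are the post's own approximations; treating them as hard switches is a simplification, since real reactions speed up gradually, and the names `f_to_c` and `crust_reactions` are hypothetical.

```python
from typing import List


def f_to_c(fahrenheit: float) -> float:
    """Convert degrees Fahrenheit to degrees Celsius."""
    return (fahrenheit - 32) * 5 / 9


# Approximate thresholds from the post, in °F, measured in the food itself.
MAILLARD_MIN_F = 285        # below this, browning is negligibly slow
CARAMELIZATION_MIN_F = 330  # caramelization kicks in above this
PYROLYSIS_MIN_F = 400       # burning/charring starts around here


def crust_reactions(temp_f: float) -> List[str]:
    """Return the browning reactions expected at a given material temperature."""
    reactions: List[str] = []
    if temp_f >= MAILLARD_MIN_F:
        reactions.append("Maillard")
    if temp_f > CARAMELIZATION_MIN_F:
        reactions.append("caramelization")
    if temp_f >= PYROLYSIS_MIN_F:
        reactions.append("pyrolysis")
    return reactions


if __name__ == "__main__":
    for t in (250, 300, 350, 420):
        print(f"{t}°F ≈ {f_to_c(t):.0f}°C: {crust_reactions(t) or ['none']}")
```

For example, 300°F works out to about 149°C, which matches the post's "~150°C".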

So, instead of an egg wash on your top crust, try whole milk, which has more sugar to react with the gluten in the crust.

I also didn't get a chance to mention a rolling technique I use, that I learned from a cousin of mine, in whose baking shadow I happily live.

When rolling out a crust after it's been in the fridge, first roll it out in a long stretch, then fold it in thirds; do it again; then start rolling it out into a round. Not only do you add more layer structure (mmm, flaky, delicious layers) but it'll fill in the cracks that often form if you try to roll it out directly, resulting in a stronger crust.

Those pepper flake shakers, filled with flour, are a great way to keep adding flour to the workspace without worrying about your buttery hands.

For transferring the crust to the pie plate, try rolling it up onto your rolling pin and unrolling it on the plate. Tapered (or "French") rolling pins (or a wine bottle) are particularly good at this since they don't have moving parts.

Finally, thanks again to Jenn for helping me get pies from one island to another. It would not have been possible without her!


Myk Melez: New Year, New Blogware

Four score and many moons ago, I decided to move this blog from Blogger to WordPress. The transition took longer than expected, but it’s finally done.

If you’ve been following along at the old address, https://mykzilla.blogspot.com/, now’s the time to update your address book! If you’ve been going to https://mykzilla.org/, however, or you read the blog on Planet Mozilla, then there’s nothing to do, as that’s the new address, and Planet Mozilla has been updated to syndicate posts from it.


Michael Kohler: Mozilla’s strategic guidelines for 2016 and beyond

This post was first published on the blog at https://blog.mozilla.org/community. Many thanks to Aryx and Coce for the translation!

All over the world, passionate Mozillians are working to advance Mozilla’s mission. But ask five different Mozillians what the mission is, and you may well get seven different answers.

At the end of last year, Mozilla’s CEO Chris Beard laid out a clear picture of Mozilla’s mission, vision and role, and showed how our products will bring us closer to this goal over the next five years. The aim of these strategic guidelines is to give Mozilla as a whole a concise, shared understanding of our goals, which makes it easier for each of us as individuals to make decisions and recognize opportunities to move Mozilla forward.

We cannot achieve Mozilla’s mission alone. The thousands of Mozillians around the world need to stand behind it so that we can achieve incredible things quickly and with a louder voice than ever before.

That is why one of the Participation Team’s six strategic initiatives for the first half of the year is to inform as many Mozillians as possible about these guidelines, so that in 2016 we can have our most significant impact yet. We will publish another post that looks more closely at the Participation Team’s strategy for 2016.

Understanding this strategy will be essential for anyone who wants to make a difference at Mozilla this year, because it will determine what we stand for, where we invest our resources and which projects we focus on in 2016.

At the start of the year we will go into this strategy in more depth and announce further details about how the various teams and projects at Mozilla are working toward these goals.

The current call to action is to think about these goals in the context of your work, and about how you would like to contribute to Mozilla in the coming year. This will help shape your innovations, ambitions and impact in 2016.

We hope you will join the discussion and share your questions, comments and plans for advancing the strategic guidelines in 2016 here on Discourse, and share your thoughts on Twitter with the hashtag #Mozilla2016Strategy.

Mission, Vision & Strategy

Our Mission

To ensure that the Internet is a global public resource that is accessible to all.

Our Vision

An Internet in which people truly come first. An Internet in which people can shape their own experience. An Internet in which people make their own choices and are safe and independent.

Our Role

In the truest sense of the word, Mozilla stands up for you in your online life. We stand up for you, both in your online experience and for your interests in the state of the Internet.

Our Work

Our Pillars

- Products: We build people-centered products and educational programs that help people reach their full potential online.

- Technology: We develop robust technical solutions that bring the Internet to life across different platforms.

- People: We develop community leaders and contributors who invent, shape and defend the Internet.

How we want to drive positive change in the future

How we work is just as important as what we work toward. Our health and lasting impact depend on how much our products and activities:

- promote interoperability, open source and open standards,

- build and strengthen communities,

- advocate for policy change and legal protections, and

- educate and engage digital citizens.

https://michaelkohler.info/2016/mozillas-strategische-leitlinien-fur-2016-und-danach


David Lawrence: happy bmo push day!

the following changes have been pushed to bugzilla.mozilla.org:

- [1238573] Change label of “New Bug” menu to “New/Clone Bug”

- [1239065] Project Kickoff Form: Adjustments needed to Mozilla Infosec review portion

- [1240157] Fix a typo in bug.rst

- [1236461] Mass update mozilla-reps group

discuss these changes on mozilla.tools.bmo.

https://dlawrence.wordpress.com/2016/01/19/happy-bmo-push-day-3/


Soledad Penades: Hardware Hack Day @ MozLDN, 1

Last week we ran an internal “hack day” here at the Mozilla space in London. It was just a bunch of software engineers looking at various hardware boards and things and learning about them.

Here’s what we did!

Sole

I essentially kind of bricked my Arduino Duemilanove trying to get it working with Johnny-Five, but it was fine: apparently there’s a way to recover it using another Arduino, and someone offered to help with that at the next NodeBots London, which I’m going to attend.

Francisco

He thinks he’s having issues with cables. It seems the boards are no longer reset automatically by the Arduino IDE? He found that the button on the board actually resets it when pressed, i.e. it’s the RESET button.

On the Raspberry Pi side of things, he was very happy to put all his old-school Linux skills in action configuring network interfaces without GUIs!

Guillaume

Played with mDNS advertising and listening to services on Raspberry Pi.

(He was very quiet)

(He also built a very nice LEGO case for the Raspberry Pi, but I do not have a picture, so just imagine it).

Wilson

Wilson: “I got my Raspberry Pi on the Wi-Fi”

Francisco: “Sorry?”

Wilson: “I mean, you got my Raspberry Pi on the network. And now I’m trying to build a web app on the Pi…”

Chris

Exploring the Pebble with Linux. There’s a libpebble, and he managed to connect…

(sorry, I had to leave early, so I do not know what else Chris did!)

Updated, 20 January: Chris told me he just managed to successfully connect to the Pebble watch using the Bluetooth WebAPI. It requires two Gecko patches (one regression fix and one fix for an obvious logic error that he hasn’t filed yet). PROGRESS!

~~~

So as you can see we didn’t really get super far in just a day, and I even ended up with unusable hardware. BUT! we all learned something, and next time we know what NOT to do (or at least I DO KNOW what NOT to do!).

http://soledadpenades.com/2016/01/19/hardware-hack-day-mozldn-1/


Daniel Stenberg: “Subject: Urgent Warning”

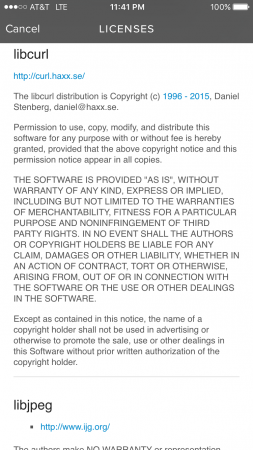

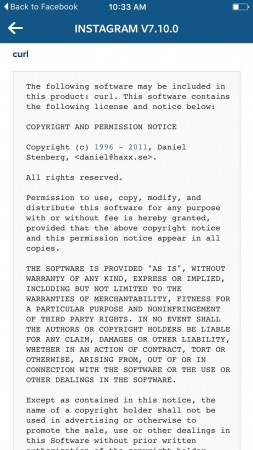

Back in December I got a desperate email from this person: a woman who said her Instagram had been hacked, and since she had found my contact info in the app, she emailed me and asked for help. I of course replied and said that I have nothing to do with her being hacked, and that I also have nothing to do with Instagram other than that they use software I’ve written.

Today she writes back. Clearly not convinced that I told the truth before, she now strikes back with more “evidence” of my wrongdoing.

Dear Daniel,

I had emailed you a couple months ago about my “screen dumps” aka screenshots and asked for your help with restoring my Instagram account since it had been hacked, my photos changed, and your name was included in the coding. You claimed to have no involvement whatsoever in developing a third party app for Instagram and could not help me salvage my original Instagram photos, pre-hacked, despite Instagram serving as my Photography portfolio and my career is a Photographer.

Since you weren’t aware that your name was attached to Instagram related hacking code, I thought you might want to know, in case you weren’t already aware, that your name is also included in Spotify terms and conditions. I came across this information using my Spotify which has also been hacked into and would love your help hacking out of Spotify. Also, I have yet to figure out how to unhack the hackers from my Instagram so if you change your mind and want to restore my Instagram to its original form as well as help me secure my account from future privacy breaches, I’d be extremely grateful. As you know, changing my passwords did nothing to resolve the problem. Please keep in mind that Facebook owns Instagram and these are big companies that you likely don’t want to have a trail of evidence that you are a part of an Instagram and Spotify hacking ring. Also, Spotify is a major partner of Spotify so you are likely familiar with the coding for all of these illegally developed third party apps. I’d be grateful for your help fixing this error immediately.

Thank you,

[name redacted]

P.S. Please see attached screen dump for a screen shot of your contact info included in Spotify (or what more likely seems to be a hacked Spotify developed illegally by a third party).

Here’s the Instagram screenshot she sent me in a previous email:

I’ve tried to respond with calm and clear reasonable logic and technical details on why she’s seeing my name there. That clearly failed. What do I try next?

http://daniel.haxx.se/blog/2016/01/19/subject-urgent-warning/


Emily Dunham: How much knowledge do you need to give a conference talk?


I was recently asked an excellent question when I promoted the LFNW CFP on IRC:

As someone who has never done a talk, but wants to, what kind of knowledge do you need about a subject to give a talk on it?

If you answer “yes” to any of the following questions, you know enough to propose a talk:

- Do you have a hobby that most tech people aren’t experts on? Talk about applying a lesson or skill from that hobby to tech! For instance, I turned a habit of reading about psychology into my Human Hacking talk.

- Have you ever spent a bunch of hours forcing two tools to work with each other, because the documentation wasn’t very helpful and Googling didn’t get you very far, and built something useful? “How to build ___ with ___” makes a catchy talk title, if the thing you built solves a common problem.

- Have you ever had a mentor sit down with you and explain a tool or technique, and the new understanding improved the quality of your work or code? Passing along useful lessons from your mentors is a valuable talk, because it allows others to benefit from the knowledge without taking as much of your mentor’s time.

- Have you seen a dozen newbies ask the same question over the course of a few months? When your answer to a common question starts to feel like a broken record, it’s time to compose it into a talk then link the newbies to your slides or recording!

- Have you taken a really interesting class lately? Can you distill part of it into a 1-hour lesson that would appeal to nerds who don’t have the time or resources to take the class themselves? (thanks lucyw for adding this to the list!)

- Have you built a cool thing that over a dozen other people use? A tutorial talk can not only expand your community, but its recording can augment your documentation and make the project more accessible for those who prefer to learn directly from humans!

- Did you benefit from a really great introductory talk when you were learning a tool? Consider doing your own tutorial! Any conference with beginners in its target audience needs at least one Git lesson, an IRC talk, and some discussions of how to use basic Unix utilities. These introductory talks are actually better when given by someone who learned the technology relatively recently, because newer users remember what it’s like not to know how to use it. Just remember to have a more expert user look over your slides before you present, in case you made an incorrect assumption about the tool’s more advanced functionality.

I personally try to propose talks I want to hear, because the deadline of a CFP or conference is great motivation to prioritize a cool project over ordinary chores.

http://edunham.net/2016/01/19/how_much_knowledge_do_you_need_to_give_a_conference_talk.html


QMO: Aurora 45.0 Testday Results |

Howdy mozillians!

Last week – on Friday, January 15th – we held Aurora 45.0 Testday; and, of course, it was another outstanding event!

Thank you all – Mahmoudi Dris, Iryna Thompson, Chandrakant Dhutadmal, Preethi Dhinesh, Moin Shaikh, Ilse Macías, Hossain Al Ikram, Rezaul Huque Nayeem, Tahsan Chowdhury Akash, Kazi Nuzhat Tasnem, Fahmida Noor, Tazin Ahmed, Md. Ehsanul Hassan, Mohammad Maruf Islam, Kazi Sakib Ahmad, Khalid Syfullah Zaman, Asiful Kabir, Tabassum Mehnaz, Hasibul Hasan, Saddam Hossain, Mohammad Kamran Hossain, Amlan Biswas, Fazle Rabbi, Mohammed Jawad Ibne Ishaque, Asif Mahmud Shuvo, Nazir Ahmed Sabbir, Md. Raihan Ali, Md. Almas Hossain, Sadik Khan, Md. Faysal Alam Riyad, Faisal Mahmud, Md. Oliullah Sizan, Asif Mahmud Rony, Forhad Hossain and Tanvir Rahman – for participating!

A big thank you to all our active moderators too!

Results:

- 15 issues were verified: 1235821, 1228518, 1165637, 1232647, 1235379, 842356, 1222971, 915962, 1180761, 1218455, 1222747, 1210752, 1198450, 1222820, 1225514

- 1 bug was triaged: 1230789

- some failures were mentioned for the Search Refactoring feature in the etherpads (link 1 and link 2); please feel free to add the requested details in the etherpads or, even better, join us on the #qa IRC channel and let’s figure them out

I strongly advise all of you to reach out to us, the moderators, via #qa during the events whenever you encounter any kind of failure. Keep up the great work! \o/

And keep an eye on QMO for upcoming events!

https://quality.mozilla.org/2016/01/aurora-45-0-testday-results/


Eitan Isaacson: It’s MLK Day and It’s Not Too Late to Do Something About It |

For the last three years I have had the opportunity to send out a reminder to Mozilla staff that Martin Luther King Jr. Day is coming up, and that U.S. employees get the day off. It has turned into my MLK Day eve ritual. I read his letters, listen to speeches, and then I compose a belabored paragraph about Dr. King with some choice quotes.

If you didn’t get a chance to celebrate Dr. King’s legacy and the movements he was a part of, you still have a chance:

- Watch Selma.

- Watch The Black Power Mixtape (it’s on Netflix).

- Read A Letter from a Birmingham Jail (it’s really really good).

- Listen to his speech Beyond Vietnam.

- Listen to his last speech I Have Been To The Mountaintop.

http://blog.monotonous.org/2016/01/18/its-mlk-day-and-its-not-too-late-to-do-something-about-it/


Nick Cameron: Libmacro |

As I outlined in an earlier post, libmacro is a new crate designed to be used by procedural macro authors. It provides the basic API for procedural macros to interact with the compiler. I expect higher level functionality to be provided by library crates. In this post I'll go into a bit more detail about the API I think should be exposed here.

This is a lot of stuff. I've probably missed something. If you use syntax extensions today and do something with libsyntax that would not be possible with libmacro, please let me know!

I previously introduced MacroContext as one of the gateways to libmacro. All procedural macros will have access to a &mut MacroContext.

Tokens

I described the tokens module in the last post; I won't repeat that here.

There are a few more things I thought of. I mentioned a TokenStream, which is a sequence of tokens. We should also have TokenSlice, which is a borrowed slice of tokens (the slice to TokenStream's Vec). These should implement the standard methods for sequences; in particular, they should support iteration, so they can be mapped, etc.
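The owned/borrowed pairing described above mirrors `Vec<T>` and `[T]`. A minimal sketch, assuming a hypothetical two-variant `Token` (not libmacro's), of how `TokenStream` could deref to a `TokenSlice` so that length, slicing, iteration and `map` come for free, and how code can be written once against the borrowed type:

```rust
use std::iter::FromIterator;
use std::ops::Deref;

// Hypothetical stand-in for a libmacro token; the real design is in the tokens module.
#[derive(Clone, Debug, PartialEq)]
pub enum Token {
    Ident(String),
    Punct(char),
}

// Borrowed view of tokens: the "slice" half of the pair, like `[T]` for `Vec<T>`.
pub type TokenSlice = [Token];

// Owned sequence of tokens: the "Vec" half of the pair.
#[derive(Clone, Debug, Default, PartialEq)]
pub struct TokenStream(Vec<Token>);

impl From<Vec<Token>> for TokenStream {
    fn from(tokens: Vec<Token>) -> Self {
        TokenStream(tokens)
    }
}

// Deref lets a &TokenStream be used wherever a &TokenSlice is expected.
impl Deref for TokenStream {
    type Target = TokenSlice;
    fn deref(&self) -> &TokenSlice {
        &self.0
    }
}

// Lets `.map(...).collect()` build a new TokenStream.
impl FromIterator<Token> for TokenStream {
    fn from_iter<I: IntoIterator<Item = Token>>(iter: I) -> Self {
        TokenStream(iter.into_iter().collect())
    }
}

// Example consumer written against the borrowed type only.
pub fn idents(tokens: &TokenSlice) -> Vec<&str> {
    tokens
        .iter()
        .filter_map(|t| match t {
            Token::Ident(s) => Some(s.as_str()),
            Token::Punct(_) => None,
        })
        .collect()
}
```

Because `idents` takes a `&TokenSlice`, it accepts a whole stream (`&ts`) or any sub-slice of one (`&ts[1..]`) without copying.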

In the earlier blog post, I talked about a token kind called Delimited which contains a delimited sequence of tokens. I would like to rename that to Sequence and add a None variant to the Delimiter enum. The None option is so that we can have blocks of tokens without using delimiters. It will be used for noting unsafety and other properties of tokens. Furthermore, it is useful for macro expansion (replacing the interpolated AST tokens currently present). Although None blocks do not affect scoping, they do affect precedence and parsing.
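A minimal sketch of the proposed `Delimiter::None` variant, using hypothetical `TokenTree` and `Delimiter` types rather than libmacro's. It illustrates the precedence point: if `1 + 2` is substituted for `$e` in `$e * 3` as a `None` group, the tree still has three top-level operands, even though the printed text `1 + 2 * 3` would re-parse with different precedence.

```rust
// Hypothetical delimiter enum including the proposed `None` variant.
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub enum Delimiter {
    Paren,
    Bracket,
    Brace,
    // Groups tokens without any visible delimiter.
    None,
}

#[derive(Clone, Debug, PartialEq)]
pub enum TokenTree {
    Word(String),
    // The proposed rename of `Delimited`.
    Sequence(Delimiter, Vec<TokenTree>),
}

// Helper for building a single-word tree.
pub fn word(s: &str) -> TokenTree {
    TokenTree::Word(s.to_string())
}

// Prints a tree as source text. A `None` group prints no delimiters,
// so its grouping survives only in the tree structure.
pub fn to_source(tt: &TokenTree) -> String {
    match tt {
        TokenTree::Word(w) => w.clone(),
        TokenTree::Sequence(delim, inner) => {
            let body = inner.iter().map(to_source).collect::<Vec<_>>().join(" ");
            match delim {
                Delimiter::Paren => format!("({})", body),
                Delimiter::Bracket => format!("[{}]", body),
                Delimiter::Brace => format!("{{{}}}", body),
                Delimiter::None => body,
            }
        }
    }
}
```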

We should provide an API for creating tokens. By default these have no hygiene information and come with a span which has no place in the source code, but shows the source of the token to be the procedural macro itself (see below for how this interacts with expansion of the current macro). I expect a make_ function for each kind of token. We should also have an API for creating tokens in a given scope (which does the same thing but with provided hygiene information). This could be considered an over-rich API, since the hygiene information could be set after construction. However, since hygiene is fiddly and annoying to get right, we should make it as easy as possible to work with.

There should also be a function for creating a token which is just a fresh name. This is useful for creating new identifiers. Although this can be done by interning a string and then creating a token around it, it is used frequently enough to deserve a helper function.

Emitting errors and warnings

Procedural macros should report errors, warnings, etc. via the MacroContext. They should avoid panicking as much as possible since this will crash the compiler (once catch_panic is available, we should use it to catch such panics and exit gracefully; however, such panics will certainly still mean aborting compilation).

Libmacro will 're-export' DiagnosticBuilder from syntax::errors. I don't actually expect this to be a literal re-export. We will use libmacro's version of Span, for example.

impl MacroContext {
    pub fn struct_error(&self, &str) -> DiagnosticBuilder;
    pub fn error(&self, Option<Span>, &str);
}

pub mod errors {
    pub struct DiagnosticBuilder { ... }
    impl DiagnosticBuilder { ... }
    pub enum ErrorLevel { ... }
}

There should be a macro try_emit!, which reduces a Result to its success value of type T, or calls emit() on the error and then calls unreachable!() (if the error is not fatal, then it should be upgraded to a fatal error).
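A minimal sketch of the `try_emit!` behaviour described above, with a hypothetical mock `DiagnosticBuilder` standing in for the one libmacro would re-export. In the compiler, emitting a fatal error would abort compilation and never return; in this mock `emit()` does return, so the `unreachable!()` panics instead.

```rust
// Hypothetical stand-in for libmacro's re-exported DiagnosticBuilder.
#[derive(Debug)]
pub struct DiagnosticBuilder {
    pub message: String,
    pub fatal: bool,
}

impl DiagnosticBuilder {
    // Mock emit: just reports the diagnostic on stderr.
    pub fn emit(&mut self) {
        let level = if self.fatal { "fatal error" } else { "error" };
        eprintln!("{}: {}", level, self.message);
    }
}

// Sketch of try_emit!: Ok(v) becomes v; Err(diag) is upgraded to fatal,
// emitted, and then control "cannot" continue.
macro_rules! try_emit {
    ($e:expr) => {
        match $e {
            Ok(value) => value,
            Err(mut diag) => {
                diag.fatal = true;
                diag.emit();
                unreachable!()
            }
        }
    };
}
```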

Tokenising and quasi-quoting

The simplest function here is tokenize which takes a string (&str) and returns a Result. The string is treated like source text. The success option is the tokenised version of the string. I expect this function must take a MacroContext argument.
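To show what "treated like source text" means, here is a toy `tokenize` under stated simplifications: it takes no `MacroContext`, handles only identifiers, decimal integers and single-character punctuation, and uses a hypothetical `Tok` type. A real implementation would reuse the compiler's lexer (string literals, lifetimes, multi-character operators, and so on).

```rust
// Hypothetical, much simplified token type.
#[derive(Debug, PartialEq)]
pub enum Tok {
    Ident(String),
    Int(u64),
    Punct(char),
}

// Toy tokenizer: Ok with the tokens, or Err describing the first problem.
pub fn tokenize(src: &str) -> Result<Vec<Tok>, String> {
    const PUNCT: &str = "(){}[];,.=+-*/<>!&|:#$";
    let mut out = Vec::new();
    let mut chars = src.chars().peekable();
    while let Some(&c) = chars.peek() {
        if c.is_whitespace() {
            chars.next();
        } else if c.is_ascii_alphabetic() || c == '_' {
            // Identifier: [A-Za-z_][A-Za-z0-9_]*
            let mut s = String::new();
            while let Some(&d) = chars.peek() {
                if d.is_ascii_alphanumeric() || d == '_' {
                    s.push(d);
                    chars.next();
                } else {
                    break;
                }
            }
            out.push(Tok::Ident(s));
        } else if c.is_ascii_digit() {
            // Decimal integer, rejecting values that overflow u64.
            let mut n: u64 = 0;
            while let Some(&d) = chars.peek() {
                match d.to_digit(10) {
                    Some(v) => {
                        n = n
                            .checked_mul(10)
                            .and_then(|n| n.checked_add(v as u64))
                            .ok_or("integer literal too large")?;
                        chars.next();
                    }
                    None => break,
                }
            }
            out.push(Tok::Int(n));
        } else if PUNCT.contains(c) {
            out.push(Tok::Punct(c));
            chars.next();
        } else {
            return Err(format!("unexpected character {:?}", c));
        }
    }
    Ok(out)
}
```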

We will offer a quasi-quoting macro. This will return a TokenStream (in contrast to today's quasi-quoting, which returns AST nodes), or, to be precise, a Result wrapping a TokenStream. The string which is quoted may include metavariables ($x), and these are filled in with variables from the environment. The type of the variables should be either a TokenStream, a TokenTree, or a Result wrapping a TokenStream (in this last case, if the variable is an error, then it is just returned by the macro). For example,

fn foo(cx: &mut MacroContext, tokens: TokenStream) -> TokenStream {
    quote!(cx, fn foo() { $tokens }).unwrap()
}

The quote! macro can also handle multiple tokens when the variable corresponding to the metavariable has type [TokenStream] (or is dereferenceable to it). In this case, the same syntax as used in macros-by-example can be used. For example, if x: Vec<TokenStream>, then quote!(cx, ($x),*) will produce a TokenStream of a comma-separated list of tokens from the elements of x.
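The shape of what a `($x),*` repetition produces can be modelled without any compiler machinery. A minimal sketch, with strings standing in for tokens and a hypothetical helper name: each element's tokens are spliced in order, separated by the separator, with no trailing separator (as in macros-by-example).

```rust
// Models the output of a `($x),*` repetition: splice each element's tokens,
// inserting `separator` between elements but not after the last one.
pub fn splice_separated<T: Clone>(items: &[Vec<T>], separator: T) -> Vec<T> {
    let mut out = Vec::new();
    for (i, item) in items.iter().enumerate() {
        if i > 0 {
            out.push(separator.clone());
        }
        out.extend(item.iter().cloned());
    }
    out
}
```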

Since the tokenize function is a degenerate case of quasi-quoting, an alternative would be to always use quote! and remove tokenize. I believe there is utility in the simple function, and it must be used internally in any case.

These functions and macros should create tokens with spans and hygiene information set as described above for making new tokens. We might also offer versions which takes a scope and uses that as the context for tokenising.

Parsing helper functions

There are some common patterns for tokens to follow in macros. In particular those used as arguments for attribute-like macros. We will offer some functions which attempt to parse tokens into these patterns. I expect there will be more of these in time; to start with:

pub mod parsing {
    // Expects `(foo = "bar"),*`
    pub fn parse_keyed_values(&TokenSlice, &mut MacroContext) -> Result, ErrStruct>;

    // Expects `"bar"`
    pub fn parse_string(&TokenSlice, &mut MacroContext) -> Result;
}

To be honest, given the token design in the last post, I think parse_string is unnecessary, but I wanted to give more than one example of this kind of function. If parse_keyed_values is the only one we end up with, then that is fine.
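A minimal sketch of what `parse_keyed_values` might do, over a hypothetical simplified `Tok` type and without a `MacroContext`. The success type in the signature above is garbled in this copy of the post, so returning `Vec<(String, String)>` is an assumption. Zero pairs is accepted and a trailing comma is rejected, matching `,*` in macros-by-example.

```rust
// Hypothetical simplified token type (not libmacro's).
#[derive(Clone, Debug, PartialEq)]
pub enum Tok {
    Ident(String),
    Str(String),
    Punct(char),
}

// Parses `(key = "value"),*` into (key, value) pairs.
pub fn parse_keyed_values(tokens: &[Tok]) -> Result<Vec<(String, String)>, String> {
    let mut pairs = Vec::new();
    let mut i = 0;
    while i < tokens.len() {
        // Expect `ident = "string"`.
        match (tokens.get(i), tokens.get(i + 1), tokens.get(i + 2)) {
            (Some(Tok::Ident(key)), Some(Tok::Punct('=')), Some(Tok::Str(value))) => {
                pairs.push((key.clone(), value.clone()));
                i += 3;
            }
            _ => return Err(format!("expected `key = \"value\"` at token {}", i)),
        }
        // Expect the end of input, or a `,` followed by another pair.
        match tokens.get(i) {
            None => break,
            Some(Tok::Punct(',')) if i + 1 < tokens.len() => i += 1,
            Some(Tok::Punct(',')) => return Err("trailing `,`".to_string()),
            Some(other) => return Err(format!("expected `,`, found {:?}", other)),
        }
    }
    Ok(pairs)
}
```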

Pattern matching

The goal with the pattern matching API is to allow procedural macros to operate on tokens in the same way as macros-by-example. The pattern language is thus the same as that for macros-by-example.

There is a single macro, which I propose calling matches. Its first argument is the name of a MacroContext. Its second argument is the input, which must be a TokenSlice (or dereferenceable to one). The third argument is a pattern definition. The macro produces a Result whose success value has type T, the type produced by the pattern arms. If the pattern has multiple arms, then each arm must have the same type. An error is produced if none of the arms in the pattern are matched.

The pattern language follows the language for defining macros-by-example (but is slightly stricter). There are two forms, a single pattern form and a multiple pattern form. If the first character is a { then the pattern is treated as a multiple pattern form, if it starts with ( then as a single pattern form, otherwise an error (causes a panic with a Bug error, as opposed to returning an Err).

The single pattern form is (pattern) => { code }. The multiple pattern form is {(pattern) => { code } (pattern) => { code } ... (pattern) => { code }}. code is any old Rust code which is executed when the corresponding pattern is matched. The pattern follows from macros-by-example - it is a series of characters treated as literals, meta-variables indicated with $, and the syntax for matching multiple variables. Any meta-variables are available as variables in the right-hand side, e.g., $x becomes available as x. These variables have type TokenStream if they appear singly or Vec<TokenStream> if they appear multiply (or Vec<Vec<TokenStream>> and so forth).

Examples:

matches!(cx, input, (foo($x:expr) bar) => { quote!(cx, foo_bar($x)).unwrap() }).unwrap()

matches!(cx, input, {
    () => {
        cx.error(None, "No input?");
    }
    (foo($($x:ident),+) bar) => {
        println!("found {} idents", x.len());
        quote!(cx, ($x);*).unwrap()
    }
})

Note that since we match AST items here, our backwards compatibility story is a bit complicated (though hopefully not much more so than with current macros).

Hygiene

The intention of the design is that the actual hygiene algorithm applied is irrelevant. Procedural macros should be able to use the same API if the hygiene algorithm changes (of course the result of applying the API might change). To this end, all hygiene objects are opaque and cannot be directly manipulated by macros.

I propose one module (hygiene) and two types: Context and Scope.

A Context is attached to each token and contains all hygiene information about that token. If two tokens have the same Context, then they may be compared syntactically. The reverse is not true - two tokens can have different Contexts and still be equal. Contexts can only be created by applying the hygiene algorithm and cannot be manipulated, only moved and stored.

MacroContext has a method fresh_hygiene_context for creating a new, fresh Context (i.e., a Context not shared with any other tokens).

MacroContext has a method expansion_hygiene_context for getting the Context of the scope where the macro is being expanded. This is equivalent to .expansion_scope().direct_context(), but might be more efficient (and I expect it to be used a lot).

A Scope provides information about a position within an AST at a certain point during macro expansion. For example,

fn foo() {
    a
    {
        b
        c
    }
}

a and b will have different Scopes. b and c will have the same Scopes, even if b was written in this position and c is due to macro expansion. However, a Scope may contain more information than just the syntactic scopes, for example, it may contain information about pending scopes yet to be applied by the hygiene algorithm (i.e., information about let expressions which are in scope).

Note that a Scope means a scope in the macro hygiene sense, not the commonly used sense of a scope declared with {}. In particular, each let statement starts a new scope and the items and statements in a function body are in different scopes.

The functions lookup_item_scope and lookup_statement_scope take a MacroContext and a path, represented as a TokenSlice, and return the Scope which that item defines or an error if the path does not refer to an item, or the item does not define a scope of the right kind.

The function lookup_scope_for is similar, but returns the Scope in which an item is declared.

MacroContext has a method expansion_scope for getting the scope in which the current macro is being expanded.

Scope has a method direct_context which returns a Context for items declared directly in that Scope (as opposed to via macro expansion).

Scope has a method nested which creates a fresh Scope nested within the receiver scope.

Scope has a static method empty for creating an empty scope, that is one with no scope information at all (note that this is different from a top-level scope).
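A minimal sketch of how `Context` and `Scope` might look under the sets-of-scopes algorithm the post refers to: a context is a set of scope ids, and a scope is the list of ids in effect at a position. `ScopeIds` is a hypothetical stand-in for compiler state that would really sit behind `MacroContext`, which is why `nested` takes it explicitly here; the fields are private, in keeping with the post's requirement that hygiene objects be opaque.

```rust
use std::collections::BTreeSet;

// Opaque hygiene context: a set of scope ids in the sets-of-scopes model.
#[derive(Clone, Debug, PartialEq, Eq)]
pub struct Context(BTreeSet<u32>);

// A position in the program: the scope ids in effect there, innermost last.
#[derive(Clone, Debug)]
pub struct Scope {
    ids: Vec<u32>,
}

// Hypothetical source of fresh scope ids.
#[derive(Default)]
pub struct ScopeIds {
    next: u32,
}

impl ScopeIds {
    fn fresh(&mut self) -> u32 {
        self.next += 1;
        self.next
    }

    // A Context not shared with any other token.
    pub fn fresh_hygiene_context(&mut self) -> Context {
        Context(std::iter::once(self.fresh()).collect())
    }
}

impl Scope {
    // No scope information at all (not the same as a top-level scope).
    pub fn empty() -> Scope {
        Scope { ids: Vec::new() }
    }

    // A fresh Scope nested within `self`.
    pub fn nested(&self, ids: &mut ScopeIds) -> Scope {
        let mut v = self.ids.clone();
        v.push(ids.fresh());
        Scope { ids: v }
    }

    // Context for items written directly in this scope.
    pub fn direct_context(&self) -> Context {
        Context(self.ids.iter().copied().collect())
    }
}
```

Tokens written at the same position get equal contexts and so can be compared syntactically; a nested scope yields a different context.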

I expect the exact API around Scopes and Contexts will need some work. Scope seems halfway between an intuitive, algorithm-neutral abstraction and the scopes from the sets-of-scopes hygiene algorithm. I would prefer Scope to be more abstract; on the other hand, macro authors may want fine-grained control over hygiene application.

Manipulating hygiene information on tokens:

pub mod hygiene {
    pub fn add(cx: &mut MacroContext, t: &Token, scope: &Scope) -> Token;

    // Maybe unnecessary if we have direct access to Tokens.
    pub fn set(t: &Token, cx: &Context) -> Token;

    // Maybe unnecessary - can use set with cx.expansion_hygiene_context().
    // Also, bad name.
    pub fn current(cx: &MacroContext, t: &Token) -> Token;
}

add adds scope to any context already on t (Context should have a similar method). Note that the implementation is a bit complex - the nature of the Scope might mean we replace the old context completely, or add to it.
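To make `add` concrete, a minimal sketch assuming the sets-of-scopes model: a context is a set of scope ids, `add` unions a scope's ids into a copy of the token, and a simplified resolver shows the effect. A binding introduced by a macro carries an extra scope (`2` below), so a plain user reference (context `{1}`) cannot see it, while a macro-introduced reference can. The types and the resolver are hypothetical, not libmacro's.

```rust
use std::collections::BTreeSet;

// Hypothetical token: a name plus its hygiene context (a set of scope ids).
#[derive(Clone, Debug, PartialEq, Eq)]
pub struct Token {
    pub name: String,
    pub ctx: BTreeSet<u32>,
}

// Convenience constructor for a set of scope ids.
pub fn ctx(ids: &[u32]) -> BTreeSet<u32> {
    ids.iter().copied().collect()
}

// Sketch of hygiene::add: a copy of `t` with `scope`'s ids added to its context.
pub fn add(t: &Token, scope: &[u32]) -> Token {
    let mut out = t.clone();
    out.ctx.extend(scope.iter().copied());
    out
}

// Simplified sets-of-scopes lookup: a reference can see a same-named binding
// whose scope set is a subset of its own; the largest such set wins.
// (The full algorithm also reports ambiguity; that check is omitted.)
pub fn resolve<'a>(reference: &Token, bindings: &'a [Token]) -> Option<&'a Token> {
    bindings
        .iter()
        .filter(|b| b.name == reference.name && b.ctx.is_subset(&reference.ctx))
        .max_by_key(|b| b.ctx.len())
}
```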

Applying hygiene when expanding the current macro

By default, the current macro will be expanded in the standard way, having hygiene applied as expected. Mechanically, hygiene information is added to tokens when the macro is expanded. Assuming the sets of scopes algorithm, scopes (for example, for the macro's definition, and for the introduction) are added to any scopes already present on the token. A token with no hygiene information will thus behave like a token in a macro-by-example macro. Hygiene due to nested scopes created by the macro does not need to be taken into account by the macro author; this is handled at expansion time.

Procedural macro authors may want to customise hygiene application (it is common in Racket), for example, to introduce items that can be referred to by code in the call-site scope.

We must provide an option to expand the current macro without applying hygiene; the macro author must then handle hygiene. For this to work, the macro must be able to access information about the scope in which it is applied (see MacroContext::expansion_scope, above) and to supply a Scope indicating scopes that should be added to tokens following the macro expansion.

pub mod hygiene {
    pub enum ExpansionMode {
        Automatic,
        Manual(Scope),
    }
}

impl MacroContext {
    pub fn set_hygienic_expansion(&mut self, mode: hygiene::ExpansionMode);
}

We may wish to offer other modes for expansion which allow for tweaking hygiene application without requiring full manual application. One possible mode is where the author provides a Scope for the macro definition (rather than using the scope where the macro is actually defined), but hygiene is otherwise applied automatically. We might wish to give the author the option of applying scopes due to the macro definition, but not the introduction scopes.

On a related note, might we want to affect how spans are applied when the current macro is expanded? I can't think of a use case right now, but it seems like something that might be wanted.

Blocks of tokens (that is, a Sequence token) may be marked (not sure how, exactly, perhaps using a distinguished context) such that they are expanded without any hygiene being applied or spans changed. There should be a function for creating such a Sequence from a TokenSlice in the tokens module. The primary motivation for this is to handle the tokens representing the body on which an annotation-like macro is present. For a 'decorator' macro, these tokens will be untouched (passed through by the macro), and since they are not touched by the macro, they should appear untouched by it (in terms of hygiene and spans).
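The expansion modes and the untouched Sequence blocks described above can be modelled together. A minimal sketch, assuming the sets-of-scopes model with a scope represented as a list of ids; `finish_expansion` and the `passthrough` flag are hypothetical. `Automatic` adds the definition scopes plus an introduction scope, `Manual` adds exactly what the author supplied, and passthrough tokens (such as the item under a decorator) are left as they were.

```rust
use std::collections::BTreeSet;

// Hypothetical output token: name, scope set, and whether it sits in a
// Sequence block marked to pass through expansion untouched.
#[derive(Clone, Debug, PartialEq)]
pub struct Token {
    pub name: String,
    pub scopes: BTreeSet<u32>,
    pub passthrough: bool,
}

// Mirrors the proposed hygiene::ExpansionMode, with a Scope modeled as ids.
pub enum ExpansionMode {
    Automatic,
    Manual(Vec<u32>),
}

// What the expander might do with a macro's output tokens.
pub fn finish_expansion(
    mut output: Vec<Token>,
    mode: &ExpansionMode,
    definition_scopes: &[u32],
    introduction_scope: u32,
) -> Vec<Token> {
    let added: Vec<u32> = match mode {
        ExpansionMode::Automatic => definition_scopes
            .iter()
            .copied()
            .chain(std::iter::once(introduction_scope))
            .collect(),
        ExpansionMode::Manual(scopes) => scopes.clone(),
    };
    // Passthrough tokens keep their hygiene information unchanged.
    for token in output.iter_mut().filter(|t| !t.passthrough) {
        token.scopes.extend(added.iter().copied());
    }
    output
}
```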

Applying macros

We provide functionality to expand a provided macro or to lookup and expand a macro.

pub mod apply {
    pub fn expand_macro(cx: &mut MacroContext,
                        expansion_scope: Scope,
                        macro: &TokenSlice,
                        macro_scope: Scope,
                        input: &TokenSlice)
                        -> Result<(TokenStream, Scope), ErrStruct>;

    pub fn lookup_and_expand_macro(cx: &mut MacroContext,
                                   expansion_scope: Scope,
                                   macro: &TokenSlice,
                                   input: &TokenSlice)
                                   -> Result<(TokenStream, Scope), ErrStruct>;
}

These functions apply macro hygiene in the usual way, with expansion_scope dictating the scope into which the macro is expanded. Other spans and hygiene information are taken from the tokens. expand_macro takes pending scopes from macro_scope; lookup_and_expand_macro uses the proper pending scopes. In order to apply the hygiene algorithm, the result of the macro must be parsable. The returned scope will contain pending scopes that can be applied by the macro to subsequent tokens.

We could provide versions that don't take an expansion_scope and use cx.expansion_scope(). Probably unnecessary.

pub mod apply {
    pub fn expand_macro_unhygienic(cx: &mut MacroContext,
                                   macro: &TokenSlice,
                                   input: &TokenSlice)
                                   -> Result;

    pub fn lookup_and_expand_macro_unhygienic(cx: &mut MacroContext,
                                              macro: &TokenSlice,
                                              input: &TokenSlice)
                                              -> Result;
}

The _unhygienic variants expand a macro as in the first functions, but do not apply the hygiene algorithm or change any hygiene information. Any hygiene information on tokens is preserved. I'm not sure if _unhygienic are the right names - using these is not necessarily unhygienic, just that we are not automatically applying the hygiene algorithm.

Note that all these functions are doing an eager expansion of macros, or in Scheme terms they are local-expand functions.

Looking up items

The function lookup_item takes a MacroContext and a path represented as a TokenSlice and returns a TokenStream for the item referred to by the path, or an error if name resolution failed. I'm not sure where this function should live.

Interned strings

pub mod strings {
    pub struct InternedString;

    impl InternedString {
        pub fn get(&self) -> String;
    }

    pub fn intern(cx: &mut MacroContext, s: &str) -> Result;
    pub fn find(cx: &mut MacroContext, s: &str) -> Result;
    pub fn find_or_intern(cx: &mut MacroContext, s: &str) -> Result;
}

intern interns a string and returns a fresh InternedString. find tries to find an existing InternedString.
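A minimal in-memory sketch of the three interning functions, under stated assumptions: it is a standalone `Interner` rather than state behind `MacroContext`, `find` returns an `Option` instead of a `Result`, and `get` lives on the interner. Following the post's wording, `intern` always hands out a fresh symbol, which is what makes `find_or_intern` distinct from it; `find` returns the most recently interned symbol for a string.

```rust
use std::collections::HashMap;

// Hypothetical symbol handle.
#[derive(Clone, Copy, Debug, PartialEq, Eq, Hash)]
pub struct InternedString(u32);

#[derive(Default)]
pub struct Interner {
    strings: Vec<String>,
    index: HashMap<String, u32>,
}

impl Interner {
    // Always allocates a fresh symbol, even for text seen before.
    pub fn intern(&mut self, s: &str) -> InternedString {
        let id = self.strings.len() as u32;
        self.strings.push(s.to_string());
        // find() will return the most recently interned symbol for this text.
        self.index.insert(s.to_string(), id);
        InternedString(id)
    }

    // Looks up an existing symbol without creating one.
    pub fn find(&self, s: &str) -> Option<InternedString> {
        self.index.get(s).map(|&id| InternedString(id))
    }

    // Reuses an existing symbol if there is one, otherwise interns.
    pub fn find_or_intern(&mut self, s: &str) -> InternedString {
        match self.find(s) {
            Some(sym) => sym,
            None => self.intern(s),
        }
    }

    pub fn get(&self, sym: InternedString) -> &str {
        &self.strings[sym.0 as usize]
    }
}
```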

Spans

A span gives information about where in the source code a token is defined. It also gives information about where the token came from (how it was generated, if it was generated code).

There should be a spans module in libmacro, which will include a Span type which can be easily inter-converted with the Span defined in libsyntax. Libsyntax spans currently include information about stability, this will not be present in libmacro spans.

If the programmer does nothing special with spans, then they will be 'correct' by default. There are two important cases: tokens passed to the macro and tokens made fresh by the macro. The former will have the source span indicating where they were written and will include their history. The latter will have no source span and indicate they were created by the current macro. All tokens will have the history relating to expansion of the current macro added when the macro is expanded. At macro expansion, tokens with no source span will be given the macro use-site as their source.
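The default span rules above amount to a small fix-up pass at expansion time. A minimal sketch with a hypothetical `Span` (a source location plus a textual expansion history) and a hypothetical `apply_expansion_spans`: tokens with a source keep it, tokens made fresh by the macro get the use-site, and every token records the current expansion in its history.

```rust
// Hypothetical span: optional (file, line) source plus expansion history.
#[derive(Clone, Debug, PartialEq)]
pub struct Span {
    pub source: Option<(String, u32)>,
    pub history: Vec<String>,
}

// Applied to every output token's span when the current macro is expanded.
pub fn apply_expansion_spans(spans: &mut [Span], macro_name: &str, use_site: (&str, u32)) {
    for sp in spans.iter_mut() {
        // Fresh tokens have no source yet: point them at the macro use-site.
        if sp.source.is_none() {
            sp.source = Some((use_site.0.to_string(), use_site.1));
        }
        // All tokens get the history of this expansion added.
        sp.history.push(format!("in expansion of {}!", macro_name));
    }
}
```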

Spans can be freely copied between tokens.

It will probably be useful to make it easy to manipulate spans. For example, rather than pointing at the macro's defining function, a span could point at a helper function where the token is made. Or it could set the origin to the current macro when the token was produced by another macro which should be an implementation detail. I'm not sure what such an interface should look like (and it is probably not necessary in an initial library).

Feature gates

pub mod features {
    pub enum FeatureStatus {
        // The feature gate is allowed.
        Allowed,
        // The feature gate has not been enabled.
        Disallowed,
        // Use of the feature is forbidden by the compiler.
        Forbidden,
    }

    pub fn query_feature(cx: &MacroContext, feature: Token) -> Result;
    pub fn query_feature_by_str(cx: &MacroContext, feature: &str) -> Result;
    pub fn query_feature_unused(cx: &MacroContext, feature: Token) -> Result;
    pub fn query_feature_by_str_unused(cx: &MacroContext, feature: &str) -> Result;

    pub fn used_feature_gate(cx: &MacroContext, feature: Token) -> Result<(), ErrStruct>;
    pub fn used_feature_by_str(cx: &MacroContext, feature: &str) -> Result<(), ErrStruct>;

    pub fn allow_feature_gate(cx: &MacroContext, feature: Token) -> Result<(), ErrStruct>;
    pub fn allow_feature_by_str(cx: &MacroContext, feature: &str) -> Result<(), ErrStruct>;
    pub fn disallow_feature_gate(cx: &MacroContext, feature: Token) -> Result<(), ErrStruct>;
    pub fn disallow_feature_by_str(cx: &MacroContext, feature: &str) -> Result<(), ErrStruct>;
}

The query_* functions query if a feature gate has been set. They return an error if the feature gate does not exist. The _unused variants do not mark the feature gate as used. The used_ functions mark a feature gate as used, or return an error if it does not exist.

The allow_ and disallow_ functions set a feature gate as allowed or disallowed for the current crate. These functions will only affect feature gates which take effect after parsing and expansion are complete. They do not affect feature gates which are checked during parsing or expansion.
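A minimal sketch of the query/used/allow semantics for the `_by_str` variants, assuming a standalone `Features` table (in the proposal this state would sit behind `MacroContext`, which would need interior mutability since the functions take `&MacroContext`). The `declare` setup method and the rule that a `Forbidden` gate cannot be re-allowed are assumptions for illustration, not part of the post.

```rust
use std::collections::{HashMap, HashSet};

#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub enum FeatureStatus {
    Allowed,
    Disallowed,
    Forbidden,
}

// Hypothetical per-crate feature-gate table.
#[derive(Default)]
pub struct Features {
    status: HashMap<String, FeatureStatus>,
    used: HashSet<String>,
}

impl Features {
    // Setup only: registers a known gate with an initial status.
    pub fn declare(&mut self, name: &str, status: FeatureStatus) {
        self.status.insert(name.to_string(), status);
    }

    // Errors if the gate does not exist; does not mark it as used.
    pub fn query_feature_by_str_unused(&self, name: &str) -> Result<FeatureStatus, String> {
        self.status
            .get(name)
            .copied()
            .ok_or_else(|| format!("unknown feature gate `{}`", name))
    }

    // Same query, but also marks the gate as used.
    pub fn query_feature_by_str(&mut self, name: &str) -> Result<FeatureStatus, String> {
        let status = self.query_feature_by_str_unused(name)?;
        self.used.insert(name.to_string());
        Ok(status)
    }

    // Assumption: a Forbidden gate cannot be re-allowed by a macro.
    pub fn allow_feature_by_str(&mut self, name: &str) -> Result<(), String> {
        match self.status.get_mut(name) {
            None => Err(format!("unknown feature gate `{}`", name)),
            Some(FeatureStatus::Forbidden) => Err(format!("feature gate `{}` is forbidden", name)),
            Some(status) => {
                *status = FeatureStatus::Allowed;
                Ok(())
            }
        }
    }

    pub fn is_used(&self, name: &str) -> bool {
        self.used.contains(name)
    }
}
```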

Question: do we need the used_ functions? Could just call query_ and ignore the result.

Attributes

We need some mechanism for setting attributes as used. I don't actually know how the unused attribute checking in the compiler works, so I can't spec this area. But, I expect MacroContext to make available some interface for reading attributes on a macro use and marking them as used.


Seif Lotfy: Skizze progress and REPL |

Over the last 3 weeks, based on feedback, we proceeded to flesh out the concepts and the code behind Skizze.

Neil Patel suggested the following:

So I've been thinking about the server API. I think we want to choose one thing and do it as well as possible, instead of having six ways to talk to the server. I think that helps to keep things sane and simple overall.

Thinking about usage, I can only really imagine Skizze in an environment like ours, which is high-throughput. I think that is its 'home', and we should be optimising for that all day long.

Taking that into account, I believe we have two options:

We go the gRPC route, provide .proto files and let people use the existing gRPC tooling to build support for their favourite language. That means we can happily give Ruby/Node/C#/etc devs a real way to get started up with Skizze almost immediately, piggy-backing on the gRPC docs etc.

We absorb the Redis Protocol. It does everything we need, is very lean, and we can (mostly) easily adapt it for what we need to do. The downside is that to get support from other libs, there will have to be actual libraries for every language. This could slow adoption, or it might be easy enough if people can reuse existing REDIS code. It's hard to tell how that would end up.

gRPC is interesting because it's already built for distributed systems, across bad networks, and obviously is bi-directional etc. Without us having to spend time on the protocol, gRPC lets us easily add features that require streaming. Like, imagine a client being able to listen for changes in count/size and be notified instantly. That's something that gRPC is built for right now.

I think gRPC is a bit verbose, but I think it'll pay off for ease of third-party lib support and as things grow.

The CLI could easily be built to work with gRPC, including adding support for streaming stuff etc. Which could be pretty exciting.

With that in mind, we gave Skizze a new home, where, based on this feedback, we developed .proto files and started rewriting big chunks of the code.

We added a new wrapper called "domain" which represents a stream. It wraps around Count-Min-Log, Bloom Filter, Top-K and HyperLogLog++, so when feeding it values it feeds all the sketches. Later we intend to allow attaching and detaching sketches from "domains" (We need a better name).
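Skizze itself is written in Go; the following is only a Rust sketch of the "domain" idea, assuming simple textbook versions of two of the four sketches: a plain Count-Min sketch (not Count-Min-Log) and a Bloom filter. Sizes and hash seeds are arbitrary. Every value added to the domain is fed to each sketch it wraps.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

// Derives several independent-ish hashes from one hasher by varying a seed.
fn hash_with_seed(item: &str, seed: u64) -> u64 {
    let mut h = DefaultHasher::new();
    seed.hash(&mut h);
    item.hash(&mut h);
    h.finish()
}

// Textbook Count-Min sketch: never underestimates, may overestimate.
pub struct CountMin {
    width: usize,
    rows: Vec<Vec<u64>>,
}

impl CountMin {
    pub fn new(width: usize, depth: usize) -> Self {
        CountMin { width, rows: vec![vec![0; width]; depth] }
    }

    pub fn add(&mut self, item: &str) {
        let w = self.width as u64;
        for (i, row) in self.rows.iter_mut().enumerate() {
            row[(hash_with_seed(item, i as u64) % w) as usize] += 1;
        }
    }

    pub fn estimate(&self, item: &str) -> u64 {
        let w = self.width as u64;
        self.rows
            .iter()
            .enumerate()
            .map(|(i, row)| row[(hash_with_seed(item, i as u64) % w) as usize])
            .min()
            .unwrap_or(0)
    }
}

// Textbook Bloom filter: no false negatives, possible false positives.
pub struct Bloom {
    bits: Vec<bool>,
    k: u64,
}

impl Bloom {
    pub fn new(m: usize, k: u64) -> Self {
        Bloom { bits: vec![false; m], k }
    }

    fn positions(&self, item: &str) -> Vec<usize> {
        let m = self.bits.len() as u64;
        (0..self.k).map(|i| (hash_with_seed(item, 1000 + i) % m) as usize).collect()
    }

    pub fn add(&mut self, item: &str) {
        for p in self.positions(item) {
            self.bits[p] = true;
        }
    }

    pub fn contains(&self, item: &str) -> bool {
        self.positions(item).iter().all(|&p| self.bits[p])
    }
}

// The "domain": one stream of values, fanned out to every sketch.
pub struct Domain {
    pub counts: CountMin,
    pub membership: Bloom,
}

impl Domain {
    pub fn new() -> Self {
        Domain { counts: CountMin::new(256, 4), membership: Bloom::new(1024, 3) }
    }

    pub fn add(&mut self, value: &str) {
        self.counts.add(value);
        self.membership.add(value);
    }
}
```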

We also implemented a gRPC API which should allow easy wrapper creation in other languages.

Special thanks go to Martin Pinto for helping out with unit tests and Soren Macbeth for thorough feedback and ideas about the "domain" concept.

Take a look at our initial REPL work there:
