You can add any RSS source (including a LiveJournal journal) to your friends feed on the syndication page.

Original source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. This syndication was created automatically at the request of readers of this RSS feed.

For any questions about how this service works, see the contact information page.

Mozilla Addons Blog: Firefox Accounts on AMO

In order to provide a more consistent experience across all Mozilla products and services, addons.mozilla.org (AMO) will soon begin using Firefox Accounts.

During the first stage of the migration, which will begin in a few weeks, you can continue logging in with your current credentials and use the site as you normally would. Once you’re logged in, you will be asked to log in with a Firefox Account to complete the migration. If you don’t have a Firefox Account, you can easily create one during this process.

Once you are done with the migration, everything associated with your AMO account, such as add-ons you’ve authored or comments you’ve written, will continue to be linked to your account.

A few weeks after that, when enough people have migrated to Firefox Accounts, old AMO logins will be disabled. This means when you log in with your old AMO credentials, you won’t be able to use the site until you follow the prompt to log in with or create a Firefox Account.

For more information, please take a look at the Frequently Asked Questions below, or head over to the forums. We’re here to help, and we apologize for any inconvenience.

Frequently asked questions

What happens to my add-ons when I convert to a new Firefox Account?

All your add-ons will remain accessible from your new Firefox Account.

Why do I want a Firefox Account?

Firefox Accounts is the identity system that is used to synchronize Firefox across multiple devices. Many Firefox products and services will soon begin migrating over, simplifying your sign-in process and making it easier for you to manage all your accounts.

Where do I change my password?

Once you have a Firefox Account, you can go to accounts.firefox.com, sign in, and click on Password.

If you have forgotten your current password:

- Go to the AMO login page

- Click on "I forgot my password"

- Proceed to reset the password

https://blog.mozilla.org/addons/2016/02/01/firefox-accounts-on-amo/

The Mozilla Blog: Dr. Karim Lakhani Appointed to Mozilla Corporation Board of Directors

Today we are very pleased to announce an addition to the Mozilla Corporation Board of Directors, Dr. Karim Lakhani, a scholar in innovation theory and practice.

Dr. Lakhani is the first of the new appointments we expect to make this year. We are working to expand our Board of Directors to reflect a broader range of perspectives on people, products, technology and diversity. That diversity encompasses many factors: from geography to gender identity and expression, cultural to ethnic identity, expertise to education.

Born in Pakistan and raised in Canada, Karim received his Ph.D. in Management from Massachusetts Institute of Technology (MIT) and is Associate Professor of Business Administration at the Harvard Business School, where he also serves as Principal Investigator for the Crowd Innovation Lab and NASA Tournament Lab at the Harvard University Institute for Quantitative Social Science.

Karim’s research focuses on open source communities and distributed models of innovation. Over the years I have regularly reached out to Karim for advice on topics related to open source and community-based processes. I’ve always found the combination of his deep understanding of Mozilla’s mission and his research-based expertise to be extremely helpful. As an educator and expert in his field, he has developed frameworks of analysis around open source communities and leaderless management systems. He has many workshops, cases, presentations, and journal articles to his credit. He co-edited a book of essays about open source software titled Perspectives on Free and Open Source Software, and he recently co-edited the upcoming book Revolutionizing Innovation: Users, Communities and Openness, both from MIT Press.

However, what is most interesting to me is the “hands-on” nature of Karim’s research into community development and activities. He has been a supporter and ready advisor to me and Mozilla for a decade.

Please join me now in welcoming Dr. Karim Lakhani to the Board of Directors. He supports our continued investment in open innovation and joins us at the right time, in parallel with Katharina Borchert’s transition off of our Board of Directors into her role as our new Chief Innovation Officer. We are excited to extend our Mozilla network with these additions, as we continue to ensure that the Internet stays open and accessible to all.

Mitchell

Air Mozilla: Mozilla Weekly Project Meeting, 01 Feb 2016

The Monday Project Meeting

https://air.mozilla.org/mozilla-weekly-project-meeting-20160201/

Ludovic Hirlimann: Fosdem 2016 day 2

Day 2 was a bit different from day 1, as I was less tired. It started with me visiting a few booths to decorate my bag and get a few more T-shirts, thanks to wiki-mania, Apache and OpenStack. I picked up the mini-port to VGA cable I had left in the conference room and then headed for the talks.

The first one, “Active supervision and monitoring with Salt, Graphite and Grafana”, was interesting because I knew nothing about any of these except Graphite, and I knew so little about it that I learned a lot.

The second one, titled “War Story: Puppet in a Traditional Enterprise”, was about someone implementing Puppet at enterprise scale in a big company. It reminded me of all the big companies I consulted for a few years back; nothing surprising. It was quite interesting anyway.

The third talk I attended was about hardening and securing configuration management software. It was more about general principles than a how-to. It was quite interesting, especially the hardening.io link given at the end of the documentation, and the idea of removing SSH from all servers where possible and enabling it through configuration management only when you need to investigate issues. I didn’t learn much, but it was a good refresher.

I then attended a talk about mapping with your phone, in a very small room that was packed, packed, packed. As I’ve started contributing to OSM, it was nice to listen and discover all the other apps I can run on my Android phone to add data to the maps. I’ll probably share that next month at the local OSM meeting that was announced this weekend.

Last but not least, I attended the key signing party. According to my paperwork, I’ll have to sign 98 keys twice (twice because I’m creating a new key).

I’ve of course added a few pictures to my Fosdem set.

Kartikaya Gupta: Frameworks vs libraries (or: process shifts at Mozilla)

At some point in the past, I learned about the difference between frameworks and libraries, and it struck me as a really important conceptual distinction that extends far beyond just software. It's really a distinction in process, and that applies everywhere.

The fundamental difference between frameworks and libraries is that when dealing with a framework, the framework provides the structure, and you have to fill in specific bits to make it apply to what you are doing. With a library, however, you are provided with a set of functionality, and you invoke the library to help you get the job done.
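To make the distinction concrete, here is a minimal Python sketch. It is not from the post, and the word-counting task and every name in it are hypothetical. In the library style, your own code calls small helpers and decides the order of the steps. In the framework style, the framework's run() owns the control flow and only calls back into the hooks you fill in.

```python
from typing import Iterable, List

# Library style: small helpers that you call, in whatever order you choose.
def strip_comments(lines: Iterable[str]) -> List[str]:
    return [line for line in lines if not line.lstrip().startswith("#")]

def count_words(lines: Iterable[str]) -> int:
    return sum(len(line.split()) for line in lines)

def my_report(lines: List[str]) -> str:
    # Your code owns the workflow: steps can be dropped, swapped or added freely.
    kept = strip_comments(lines)
    return f"{count_words(kept)} words"

# Framework style: the framework owns the workflow and calls your hooks.
class ReportFramework:
    """Fixed pipeline (filter, then measure, then format); subclasses fill in the blanks."""

    def keep(self, line: str) -> bool:
        # Optional hook with a default: keep every line.
        return True

    def measure(self, lines: List[str]) -> int:
        # Mandatory hook: the framework cannot guess what to measure.
        raise NotImplementedError

    def run(self, lines: List[str]) -> str:
        # The order of the steps is decided here, not by the caller. Adding a
        # new stage means overriding run() itself, i.e. fighting the framework.
        kept = [line for line in lines if self.keep(line)]
        return f"{self.measure(kept)} words"

class WordReport(ReportFramework):
    def keep(self, line: str) -> bool:
        return not line.lstrip().startswith("#")

    def measure(self, lines: List[str]) -> int:
        return sum(len(line.split()) for line in lines)

text = ["# header", "hello world", "foo"]
print(my_report(text))         # 3 words
print(WordReport().run(text))  # 3 words
```

Both approaches give the same answer here. The difference only shows up when you need a step that ReportFramework.run() did not plan for.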

It may not seem like a very big distinction at first, but it has a huge impact on various properties of the final product. For example, a framework is easier to use if what you are trying to do lines up with the goal the framework is intended to accomplish. The only thing you need to do is provide (or override) specific things that you need to customize, and the framework takes care of the rest. It's like a builder building your house, and you picking which tile pattern you want for the backsplash. With libraries there's a lot more work - you have a Home Depot full of tools and supplies, but you have to figure out how to put them together to build a house yourself.

The flip side, of course, is that with libraries you get a lot more freedom and customizability than you do with frameworks. With the house analogy, a builder won't add an extra floor for your house if it doesn't fit with their pre-defined floorplans for the subdivision. If you're building it yourself, though, you can do whatever you want.

The library approach makes the final workflow a lot more adaptable when faced with new situations. Once you are in a workflow dictated by a framework, it's very hard to change the workflow because you have almost no control over it - you only have as much control as it was designed to let you have. With libraries you can drop a library here, pick up another one there, and evolve your workflow incrementally, because you can use them however you want.

In the context of building code, the *nix toolchain (a pile of command-line tools that do very specific things) is a great example of the library approach: it's very adaptable, as you can swap out commands for other commands to do what you need. An IDE, on the other hand, is more of a framework. It's easier to get started because the heavy lifting is taken care of; all you have to do is "insert code here". But if you want to do some special processing of the code that the IDE doesn't allow, you're out of luck.

An interesting thing to note is that usually people start with frameworks and move towards libraries as their needs get more complex and they need to customize their workflow more. It's not often that people go the other way, because once you've already spent the effort to build a customized workflow it's hard to justify throwing the freedom away and locking yourself down. But that's what it feels like we are doing at Mozilla - sometimes on purpose, and sometimes unintentionally, without realizing we are putting on a straitjacket.

The shift from Bugzilla/Splinter to MozReview is one example of this. Going from a customizable, flexible tool (attachments with flags) to a unified review process (push to MozReview) is a shift from libraries to frameworks. It forces people to conform to the workflow which the framework assumes, and for people used to their own customized, library-assisted workflow, that's a very hard transition. Another example of a shift from libraries to frameworks is the bug triage process that was announced recently.

I think in both of these cases the end goal is desirable and worth working towards, but we should realize that it entails (by definition) making things less flexible and adaptable. In theory the only workflows that we eliminate are the "undesirable" ones, e.g. a triage process that drops bugs on the floor, or a review process that makes patch context hard to access. In practice, though, other workflows (both legitimate workflows currently in use and potential improved workflows) get eliminated as well.

Of course, things aren't all as black-and-white as I might have made them seem. As always, the specific context/situation matters a lot, and it's always a tradeoff between different goals - in the end there's no one-size-fits-all and the decision is something that needs careful consideration.

Ludovic Hirlimann: Fosdem 2016 day 1

This year I’m attending Fosdem, after skipping it last year. It’s good to be back, even if I was very tired when I arrived last night after managing to visit three of Brussels’ train stations. I was up early and the directions displayed on bus 71 were messed up, so it took me a short walk in the rain to get to the campus, but I made it early and was able to take some interesting pictures of the empty campus.

The first talk I attended was about MIPS for the embedded world. It was interesting for some tidbits, but felt more like a marketing pitch for using MIPS in future embedded projects.

After that I wandered around, found a bunch of ex-joosters and had very interesting conversations with all of them.

I delivered my talk in 10 minutes and then answered questions for the next 20 minutes.

The HTTP/2 talk was interesting and the room was packed, but it probably didn’t go deep enough for me. Still, I think we should consider enabling HTTP/2 on mozfr.org.

I left to get some rest after talking to otto about blockchain and Bitcoin.

Mike Hommey: Enabling TLS on this blog

Long overdue, I finally enabled TLS on this blog. It went almost like a breeze.

I used simp_le to get the certificate from Let’s Encrypt, along with Mozilla’s Web Server Configuration generator. SSL Labs now reports a rating of A+.

I just had a few issues:

- I had some hard-coded http:// links in my WordPress theme that needed changing,

- Since my WordPress instance is reverse-proxied and the real server is not behind HTTPS, I had to adjust the WordPress configuration so that it doesn’t do an infinite redirect loop,

- Nginx’s config for multiple virtual hosts needs the SSL configuration to be repeated. Fortunately, one can use include statements,

- Contrary to the suggested configuration, setting ssl_session_tickets off; makes browsers unhappy (at least, it made my Firefox unhappy, with an SSL_ERROR_RX_UNEXPECTED_NEW_SESSION_TICKET error message).
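The post doesn't show the WordPress change or say which mechanism fixed it. As a rough illustration of why the loop happens, here is a Python sketch. It is neither PHP nor WordPress's code, and it assumes the proxy reports the original scheme in an X-Forwarded-Proto header. A backend that only looks at its own connection always sees plain HTTP, so it redirects to HTTPS, and the browser comes back through the proxy as plain HTTP again.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

@dataclass
class BackendRequest:
    """What the WordPress server sees: the proxy always talks to it over plain HTTP."""
    scheme: str
    headers: Dict[str, str] = field(default_factory=dict)

def is_secure_naive(req: BackendRequest) -> bool:
    # Only looks at the proxy-to-backend hop, which is never HTTPS here.
    return req.scheme == "https"

def is_secure_behind_proxy(req: BackendRequest) -> bool:
    # Also trusts the scheme the proxy reports. Assumes the proxy sets
    # X-Forwarded-Proto itself and overwrites any client-supplied value.
    return req.scheme == "https" or req.headers.get("X-Forwarded-Proto", "").lower() == "https"

def follow_redirects(is_secure: Callable[[BackendRequest], bool], max_hops: int = 20) -> int:
    """Simulate a browser loading https://blog/ through a TLS-terminating proxy.

    Returns the number of redirects before the page was served, or max_hops if
    the browser gave up (max_hops stands in for the browser's redirect limit).
    """
    for hop in range(max_hops):
        # The proxy terminates TLS and forwards the request over HTTP.
        req = BackendRequest(scheme="http", headers={"X-Forwarded-Proto": "https"})
        if is_secure(req):
            return hop  # page served
        # Otherwise the backend answers "redirect to https://blog/" and the
        # browser requests exactly the same URL again.
    return max_hops

print(follow_redirects(is_secure_naive))         # 20 (redirect loop)
print(follow_redirects(is_secure_behind_proxy))  # 0
```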

I’m glad that there are tools helping to get a proper configuration of SSL. It is sad, though, that the defaults are not better and that we still need to tweak at all. Setting where the certificate and the private key files are should, in 2016, be the only thing to do to have a secure web server.

Chris Cooper: RelEng & RelOps Weekly Highlights - January 29, 2016

Well, that was a quick month! Time flies when you’re having fun…or something.

Modernize infrastructure:

In an effort to be more agile in creating and/or migrating webapps, Releng has a new domain name and wildcard SSL certificate! The new domain (mozilla-releng.net) is set up for management under inventory, and an SSL endpoint has been established in Heroku. See https://wiki.mozilla.org/ReleaseEngineering/How_Tos/Heroku:Add_a_custom_domain

Improve CI pipeline:

Coop (hey, that’s me!) re-enabled partner repacks as part of release automation this week, and was happy to see the partner repacks for the Firefox 44 release get generated and published without any manual intervention. Back in August, we moved the partner repack process and configuration from Mercurial into GitHub. This made it trivially easy for Mozilla partners to issue a pull request (PR) when a configuration change was needed. This did require some re-tooling on the automation side, and we took the opportunity to fix and update a lot of partner-related cruft, including moving the repack hosting to S3. I should note that the EME-free repacks are also generated automatically now as part of this process, so those of you who prefer less DRM with your Firefox can now also get your builds on a regular basis.

Release:

One of the main reasons why build promotion is so important for releng and Mozilla is that it removes the current disconnect between the nightly/aurora and beta/release build processes, the builds for which are created in different ways. This is one of the reasons why uplift cycles are so frequently “interesting” - build process changes on nightly and aurora don’t often have an existing analog in beta/release. And so it was this past Tuesday when releng started the beta1 process for Firefox 45. We quickly hit a blocker issue related to gtk3 support that prevented us from even running the initial source builder, a prerequisite for the rest of the release process. Nick, Rail, Callek, and Jordan put their heads together and quickly came up with an elegant solution that unblocked progress on all the affected branches, including ESR. In the end, the solution involved running tooltool from within a mock environment, rather than running it outside the mock environment and trying to copy relevant pieces in. Thanks for the quick thinking and extra effort to get this unblocked. Maybe the next beta1 cycle won’t suck quite as much! The patch that Nick prepared (https://bugzil.la/886543) is now in production and being used to notify users on unsupported versions of GTK why they can’t update. In the past, they would’ve simply received no update with no information as to why.

Operational:

Dustin made security improvements to TaskCluster, ensuring that expired credentials are not honored.

We had a brief Balrog outage this morning [Fri Jan 29]. Balrog is the server-side component of the update system used by Firefox and other Mozilla products. Ben quickly tracked the problem down to a change in the caching code. Big thanks to mlankford, Usul, and w0ts0n from the MOC for their quick communication and help in getting things back to a good state.

Outreach:

On Wednesday, Dustin spoke at Siena College, holding an information session on Google Summer of Code and speaking to a Software Engineering class about Mozilla, open source, and software engineering in the real world.

See you next week!

Air Mozilla: Foundation Demos January 29 2016

Mozilla Foundation Demos January 29 2016

Yunier José Sosa Vázquez: Visualizing the invisible

This is a Spanish translation of the original article published on The Mozilla Blog, written by Jascha Kaykas-Wolff.

Today, privacy and threats such as invisible third-party tracking on the Web seem very abstract. Many of us are not aware of what is happening to our data online, or we feel powerless because we don't know what to do. More and more, the Internet is becoming a giant glass house where your personal information is exposed to third parties who collect it and use it for their own purposes.

We recently launched Private Browsing with Tracking Protection in Firefox, a feature focused on giving anyone who uses Firefox a meaningful choice about third parties on the Web that might collect their data without their knowledge or control. This feature addresses the need for greater control over online privacy, but it is also connected to an ongoing and important debate about preserving a healthy, open and sustainable Web ecosystem, and about the problems and possible solutions around content blocking.

The glass house

Earlier this month we held a three-day event on online privacy in Hamburg, Germany. Today we would like to share some impressions of the event, as well as an experiment we filmed on the famous Reeperbahn.

Our experiment?

We set out to see whether we could explain something that is not easily visible, online privacy, in a very tangible way. We built an apartment fully equipped with everything needed to enjoy a short trip to the pearl of northern Germany, and made it available to different travelers arriving to spend the night. Once they connected to the apartment's Wi-Fi, all the walls were removed, exposing the travelers to the onlookers and the outside commotion caused

Daniel Glazman: Google, BlueGriffon.org and blacklists

Several painful things happened to bluegriffon.org yesterday... In chronological order:

- during my morning, two users reported that their browser did not let them reach the downloads section of bluegriffon.org without a security warning. I could not see it myself from here, whatever the browser or the platform.

- during the evening, I could see the warning using Chrome on OS X

- apparently, if I believe the "Search Console", Google thought two files in my web repository of releases were infected. I launched a complete verification of the whole web site and ran all the software releases through three anti-virus systems (Sophos, Avast and AVG) and an anti-adware system. Nothing at all to report. No infection, no malware, no adware, nothing.

- since this was my only option, I deleted the two reported files from my server. Just for the record, the timestamps were unchanged, and I even verified the files were precisely the ones I uploaded in January and April 2012. Yes, 2012... Yesterday, without having been touched or modified in any manner during the last four years, they were erroneously reported as infected.

- this morning, Firefox also reports a security warning on most large sections of BlueGriffon.org and its Downloads section. I guess Firefox is also using the Google blacklist. Just for the record, both Spamhaus and CBL have nothing to say about bluegriffon.org...

- the Google Search Console now reports my site is ok, but Firefox still reports it as insecure, ahem.

I need to draw a few conclusions here:

- Google does not tell you how the reported files are insecure, which is really lame. The online tool they provide to "analyze" a web site did not help at all.

- Since all my antivirus/anti-adware tools declared all files in my repo clean, I had absolutely no option but to delete the files that are now missing from my repo

- two reported files in bluegriffon.org/freshmeat/1.4/ and bluegriffon.org/freshmeat/1.5.1/ led to the blacklisting of all of bluegriffon.org/freshmeat, and that's hundreds of files... Hey guys, we are programmers and so are you, right? Surely you can do better than that?

- for more than a day, customers fled from bluegriffon.org because of these security warnings, security warnings I consider fake reports. Since no public antimalware app could find anything to say about my files, I suspect a fake report of human origin. How such a report can reach the blacklist when the files are reported safe by four up-to-date antimalware apps, and without any infection information reported to the webmaster, is far beyond my understanding.

- blacklists are a tool that can be harmful to businesses if they're not well managed.

Update: oh, and I forgot one thing: during the evening, Earthlink.net blacklisted one of Dreamhost's Mail Transport Agents. Not my email address, that whole SMTP gateway at Dreamhost... So all my emails to one of my customers bounced and I can't even let her know some crucial information. I suppose thousands at Dreamhost are impacted. I reported the issue to both Earthlink and DH, of course.

Karl Dubost: [worklog] APZ bugs, Some webcompat bugs and HTTP Refresh

Monday, January 25, 2016. It's morning in Japan. The office room temperature is around 3°C (37.4°F). I just put on the Aladdin. My sleep was short, or more exactly, interrupted a couple of times. Let's go through emails after two weeks away.

My tune for WebCompat for this first quarter 2016 is Jurassic 5 - Quality Control.

MFA (Multi Factor Authentication)

Multi Factor Authentication in the computing industry is sold as something easier and more secure. I still have to be convinced about the easier part.

WebCompat Bugs

- Bugzilla Activity

- Github Activity

- In Bug 1239922, Google Docs is sending HiRes icons only to WebKit devices because of the media query @media screen and (-webkit-min-device-pixel-ratio:2) {} in the CSS. One difference is that outside of the media query the images are set through content on a pseudo-element, but inside it they are set directly on the element itself. That is currently a non-standard feature, as explained by Daniel Holbert. We contacted them. A discussion has been started and the magical Daniel dived a bit deeper into the issue. Read it.

- Google Search seems to have ditched the opensearch link in the HTML head. As a result, it is more difficult for users to add the search engine for specific locales. Contacted.

- Google Custom Search is not sending the same search result links to Firefox Desktop and iOS 9 on one side and to Firefox Android on the other. Firefox Android receives http://cse.google.com/url? instead of http://www.google.com/url?. This is unfortunate because http://cse.google.com/url? is a 404.

- DeKlab is sending some resources (a template for Dojo) with a bogus BOM. Firefox is stricter than Chrome on this and rejects the document, which in turn breaks the application. Being stricter is cool and the site should be contacted, but at the same time, the pressure of it working in Chrome makes it harder to convince devs.

- When creating a set of images for srcset, make absolutely sure that your images are actually on the server.

- Some bugs are harder to test than others. redbox.com is not accessible from Japan. Maybe it works from the USA. If someone can test it for us, please do.

- When mixing em and px in a design, you always risk that the fonts and the rounding of values will not be exactly the same in all browsers.

- There is an interesting bug with fonts being truncated on Twitter differently on Linux and on other platforms, depending on the fonts. Boris Zbarsky suspects that Twitter is somehow playing with the font. I started digging to extract more information.

- A strange issue with a 360 video not playing in IceWeasel. It seems specifically related to the Debian version.

- Some of the bugs we had with Japanese Web sites are being fixed, silently as usual. Once we have reached out, the person doesn't always get back to us to say "hey, we deployed a fix."

- A developer is working on a Web site and, because of a performance or feature issue, feels the need to write a script that blocks a specific user-agent string. Time passes. We analyze. We find the contact information given on the site, try to make contact, and get no answer. We try again, this time on Twitter, and the person replies that he no longer works there, so he can't fix the user agent sniffing. Apart from the frustration this creates, you can see how the user agent sniffing strategy is broken: it doesn't take into account the evolution of implementations or changes in the people involved. User agent sniffing may work, but it is a very high-maintenance choice with long-term consequences. It is not zero cost.
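The worklog doesn't say what exactly was wrong with DeKlab's BOM. As a hypothetical first check a site owner could run on a served resource, the Python sketch below reports which encoding a leading byte order mark announces. It also flags any extra UTF-8 BOMs later in the body, which can happen, for example, when files that each start with one are concatenated. inspect_bom is an illustrative name, and the sketch does not model how Firefox or Chrome actually decode the resource.

```python
import codecs
from typing import List, Optional, Tuple

# Known byte order marks, UTF-32 first: the UTF-32-LE BOM (FF FE 00 00)
# begins with the UTF-16-LE BOM (FF FE), so the order matters.
KNOWN_BOMS = [
    (codecs.BOM_UTF32_LE, "utf-32-le"),
    (codecs.BOM_UTF32_BE, "utf-32-be"),
    (codecs.BOM_UTF8, "utf-8"),
    (codecs.BOM_UTF16_LE, "utf-16-le"),
    (codecs.BOM_UTF16_BE, "utf-16-be"),
]

def inspect_bom(body: bytes) -> Tuple[Optional[str], List[int]]:
    """Return (encoding named by a leading BOM or None, offsets of stray UTF-8 BOMs)."""
    leading: Optional[str] = None
    start = 0
    for bom, name in KNOWN_BOMS:
        if body.startswith(bom):
            leading, start = name, len(bom)
            break

    stray: List[int] = []
    # Only scan byte-wise for EF BB BF when the body is (presumably) UTF-8;
    # in UTF-16/32 those bytes can legitimately appear inside other characters.
    if leading in (None, "utf-8"):
        pos = body.find(codecs.BOM_UTF8, start)
        while pos != -1:
            stray.append(pos)
            pos = body.find(codecs.BOM_UTF8, pos + len(codecs.BOM_UTF8))
    return leading, stray

print(inspect_bom(codecs.BOM_UTF8 + b"<div>"))      # ('utf-8', [])
print(inspect_bom(codecs.BOM_UTF8 * 2 + b"<div>"))  # ('utf-8', [3])
```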

APZ Bugs

There's a list of scrolling bugs which affect Firefox. The issue is documented on MDN.

These effects work well in browsers where the scrolling is done synchronously on the browser's main thread. However, most browsers now support some sort of asynchronous scrolling in order to provide a consistent 60 frames per second experience to the user. In the asynchronous scrolling model, the visual scroll position is updated in the compositor thread and is visible to the user before the scroll event is updated in the DOM and fired on the main thread. This means that the effects implemented will lag a little bit behind what the user sees the scroll position to be. This can cause the effect to be laggy, janky, or jittery — in short, something we want to avoid.

So if you know someone (or a friend of a friend) who can fix one of these APZ bugs, or put us in contact with the owners of the affected sites, please tell us in the bug comments or on IRC (#webcompat on irc.mozilla.org). I did a first pass at triaging the bugs for site outreach.

- The Python docs website needs a patch

- MediaTek Web site needs a fix

- When working on this scrolling bug, I discovered, completely unrelated to the issue, that they were using the HTML name attribute to store a JSON data structure, with quotes escaped so that they would not be mangled by the HTML. Crazy!

Some of these bugs are not mature yet in terms of outreach status. With add-ons/e10s and APZ, the Web Compat team may be contacted more often to reach out to sites that have quirks, specific code hindering Firefox, etc. But reaching out to a Web site first requires a couple of steps and some understanding. I need to write something about this. Added to my TODO list.

Addons e10s

Difficult to make progress in some cases, because the developers have completely disappeared. I wonder whether, in some cases, it would be better to simply remove the add-on from the add-ons list. That would give someone else the chance to propose something if users need it (nature abhors a vacuum), and/or make the main developer wake up and wonder why the add-on is no longer downloadable.

Webcompat Life

We had a meeting (https://wiki.mozilla.org/Compatibility/Mobile/2016-01-26). We discussed APZ, the WebCompat wiki, Firefox OS Tier 3 and the status of the WebkitCSS bugs.

Pile of mails

- Around 1000 emails after 2 weeks; this is not too bad. I dealt first with those where my email address is directly in the To: field (dynamic mailbox), then the ones where I'm in needinfo in Bugzilla, then finally the ones addressed to specific mailing lists where I have work to do. For the rest, a rapid scan of the subjects (good email subjects are important, remember!) and marking as read all the ones I'm not interested in. I'm almost done with all these emails in one day.

HTTP Refresh

- I finally decided to open an issue about documenting HTTP Refresh. I had written about this HTTP header in the past. As usual, it is a big mess.
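Part of the mess is that Refresh was never standardized in the HTTP RFCs, so browsers largely parse its value the way they parse a meta http-equiv="refresh" content attribute. The Python sketch below is loosely modeled on that meta refresh parsing and is not a copy of any browser's code. parse_refresh is a hypothetical helper. It shows the kinds of variation a parser has to tolerate: a separator that can be ";" or ",", an optional case-insensitive "url=" prefix, optional quotes, and a fractional delay, which this sketch truncates.

```python
from typing import Optional, Tuple

DIGITS = "0123456789"  # ASCII only; str.isdigit() would also accept e.g. "²"

def parse_refresh(value: str) -> Optional[Tuple[int, Optional[str]]]:
    """Parse a Refresh value such as "5; url=/next" into (delay_seconds, url).

    Returns None if there is no leading integer. Real browsers differ in details.
    """
    s = value.strip()
    n = len(s)
    i = 0
    while i < n and s[i] in DIGITS:
        i += 1
    if i == 0:
        return None  # no delay at all: ignore the value
    delay = int(s[:i])

    # Ignore any fractional part such as "1.5", then an optional separator.
    while i < n and (s[i] in DIGITS or s[i] == "."):
        i += 1
    while i < n and s[i].isspace():
        i += 1
    if i < n and s[i] in ";,":
        i += 1
    while i < n and s[i].isspace():
        i += 1

    # Optional "url=" prefix, case-insensitive, spaces allowed before "=".
    if s[i:i + 3].lower() == "url":
        j = i + 3
        while j < n and s[j].isspace():
            j += 1
        if j < n and s[j] == "=":
            i = j + 1
            while i < n and s[i].isspace():
                i += 1

    # Optional quotes around the URL; an unmatched quote runs to the end.
    url = s[i:]
    if url[:1] in ("'", '"'):
        quote, url = url[0], url[1:]
        end = url.find(quote)
        if end != -1:
            url = url[:end]
    url = url.strip()
    return delay, (url or None)

print(parse_refresh("5; url=http://example.com/"))  # (5, 'http://example.com/')
print(parse_refresh("3;URL='page.html'"))           # (3, 'page.html')
```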

TODO

- explain what is necessary to consider a bug "ready for outreach".

- check the webkitcss bugs we had in Bugzilla and WebCompat.com

- check if the bugs still open about Firefox OS are happening on Firefox Android

- test Google search properties and see if we can find a version that works better on Firefox Android than the current default version sent by Google. Maybe test with the Chrome UA and the iPhone UA.

Otsukare!

Ehsan Akhgari: Building Firefox With clang-cl: A Status Update

Last June, I wrote about enabling building Firefox with clang-cl. We didn’t get these builds up on the infrastructure, things regressed on both the Mozilla and LLVM sides, and we got to a state where clang-cl either wouldn’t compile Firefox any more, or the resulting build would be severely broken. It took us months, but earlier today we finally managed to get a full x86-64 Firefox build with clang-cl! The build works for basic browsing (except that it crashes on yahoo.com for reasons we haven’t diagnosed yet) and, just for extra fun, I ran all of our service worker tests (which I happen to run many times a day on other platforms) and they all passed.

This time, we got to an impressive milestone. Previously, we were building Firefox with the help of clang-cl’s fallback mode (which falls back to MSVC when clang fails to build a file for whatever reason) but this time we have a build of Firefox that is fully produced with clang, without even using the MSVC compiler once. And that includes all of our C++ tests too. (Note that we still use the rest of the Microsoft toolchain, such as the linker, resource compiler, etc. to produce the ultimate binaries; I’m only focusing on C and C++ compilation in this post.)

We should now try to keep these builds working. I believe this is a big opportunity for Firefox to leverage, on Windows, where we have most of our users, the modern toolchain we have been enjoying on other platforms. An open source compilation toolchain has long been needed on Windows, and clang-cl is the first open source replacement designed to be a drop-in replacement for MSVC, which makes it an ideal target for Firefox. Also, Microsoft’s recent integration of clang as a front-end for the MSVC code generator holds out the prospect of switching to clang/C2 as our default compiler on Windows in the future (assuming that the performance of our clang-cl builds doesn’t end up being on par with the MSVC PGO compiler).

My next priority for this project is to stand up Windows static analysis builds on TreeHerder. That requires getting our clang-plugin to work on Windows, fixing the issues it may find (since that would be the first time we would be running our static analyses on Windows!), and getting them up and running on TaskCluster. That way we would be able to leverage our static analysis on Windows as the first fruit of this effort, and also keep these builds working in the future. Since clang-cl is still under heavy development, we will periodically update the compiler, fixing any issues this uncovers in either Firefox or LLVM, and thereby keep up with development in both projects.

Some of the future things that I think we should look into, sorted by priority:

- Get DXR to work on Windows. Once we port our static analysis clang plugin to Windows, the next logical step would be to get the DXR indexer clang plugin to work on Windows, so that we can get a DXR that works for Windows-specific code too.

- Look into getting these builds to pass our test suites. So far we are at a stage where clang-cl can understand all of our C and C++ code on Windows, but the generated code is still not completely correct. Right now we’re at such an early stage that one can find crashes etc. within minutes of browsing. But we need to get all of our tests to pass in these builds in order to get a rock-solid browser built with clang-cl. This will also help the clang-cl project, since we have been reporting the clang-cl bugs that Firefox code has continually uncovered, and on occasion contributing fixes for those bugs. Although the rate of LLVM-side issues has decreased dramatically as the compiler has matured, I expect to find LLVM bugs occasionally, and hope to continue to work with the LLVM project to resolve them.

- Get the clang-based sanitizers working on Windows to extend their coverage to that platform. This includes tools such as AddressSanitizer, ThreadSanitizer, LeakSanitizer, etc. The security team has been asking for AddressSanitizer for a long time. We could definitely benefit from the Windows-specific coverage of all of these tools. Obviously the first step is to get a solid build that works. We have previously attempted to use AddressSanitizer on Windows, but we ran into a lot of AddressSanitizer-specific issues that we had to fix on both sides. These efforts have been on hold for a while since we have been focusing on getting basic compilation to work again.

- Start to do hybrid builds, where we randomly pick either clang-cl or MSVC to build each individual file. This is needed to improve clang-cl’s ABI compatibility. That matters because compiler bugs in a lot of cases manifest themselves as hard-to-explain artifacts in the functionality, anything from a random crash somewhere where everything should be working fine, to parts of the rendering going black for unclear reasons. A cost-effective way to diagnose such issues is to get to a point where we can mix and match object files produced by either compiler to get a correct build, then bisect between the compilers to find the translation unit that is being miscompiled, and then keep bisecting to find the function (and sometimes the exact part of the code) that is being miscompiled. This is basically impractical without a good story for ABI compatibility in clang-cl. We have recently hit a few of these issues.
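The translation-unit bisection described above can be sketched in Python. This is a minimal illustration under stated assumptions, not Mozilla’s actual tooling: `find_miscompiled_unit` and `build_passes` are hypothetical names, and `build_passes` stands in for a hook that compiles the given files with clang-cl and everything else with MSVC, links the mixed objects, runs the tests, and reports whether they pass. The sketch also assumes the mix is ABI-compatible and that exactly one translation unit is miscompiled.

```python
from typing import Callable, Sequence, Set


def find_miscompiled_unit(
    units: Sequence[str],
    build_passes: Callable[[Set[str]], bool],
) -> str:
    """Bisect over translation units to find the one clang-cl miscompiles.

    build_passes(clang_units) is a HYPOTHETICAL hook: it should compile the
    files in clang_units with clang-cl, all other files with MSVC, link the
    mixed objects, run the tests, and return True if they pass.
    Assumes an ABI-compatible mix and exactly one miscompiled unit.
    """
    candidates = list(units)
    if not candidates:
        raise ValueError("no translation units to bisect")
    # Invariant: the miscompiled unit is always somewhere in `candidates`.
    while len(candidates) > 1:
        mid = len(candidates) // 2
        first_half = candidates[:mid]
        if build_passes(set(first_half)):
            # Building the first half with clang-cl is fine: look in the rest.
            candidates = candidates[mid:]
        else:
            # The failure reproduces: the culprit is in the first half.
            candidates = first_half
    return candidates[0]


# Usage with a fake hook that pretends js/c.cpp is miscompiled.
units = ["gfx/a.cpp", "dom/b.cpp", "js/c.cpp", "layout/d.cpp"]
culprit = find_miscompiled_unit(units, lambda clang: "js/c.cpp" not in clang)
print(culprit)  # js/c.cpp
```

Each step halves the candidate set, so a tree with n translation units needs only about log2(n) mixed builds instead of n, which is why mix-and-match ABI compatibility pays off so quickly.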

- Improve support for debug information generation in LLVM to the point that we can use Breakpad to generate crash stacks for crash-stats. This should enable us to advertise these builds to a small community of dogfooders.

- Start to look at a performance comparison between clang-cl and MSVC builds. This plus the bisection infrastructure I touched on above should generate a wealth of information on performance issues in clang-cl, and then we can fix them with the help of the LLVM community. Also, in case the numbers aren’t too far apart, maybe even ship one Firefox Nightly for Windows built with clang-cl, as a milestone!

Longer term, we can look into issues such as helping to add full debug information support, with the goal of making it possible to use Visual Studio on Windows with these builds. Right now, we basically debug at the assembly level. That said, facilitating this would probably help speed up development too, so perhaps we should start on it earlier. There is also LLDB on Windows, which should in theory be able to consume the DWARF debug information that clang-cl can generate, similar to how it does on Linux, so that is worth looking into as well. I’m sure there are other things that I’m not currently thinking of that we can do as well.

Last but not least, this has been a collaboration between quite a few people: on the Mozilla side, Jeff Muizelaar, David Major, Nathan Froyd, Mike Hommey, Raymond Forbes and myself; and on the LLVM side, many members of the Google compiler and Chromium teams: Reid Kleckner, David Majnemer, Hans Wennborg, Richard Smith, Nico Weber, Timur Iskhodzhanov, and the rest of the LLVM community who made clang-cl possible. I’m sure I’m forgetting some important names. I would like to thank all of these people for their help and effort.

https://ehsanakhgari.org/blog/2016-01-29/building-firefox-with-clang-cl-a-status-update

|

|

Air Mozilla: Privacy Lab - Privacy for Startups - January 2016 |

Privacy for Startups: Practical Guidance for Founders, Engineers, Marketing, and those who support them Startups often espouse mottos that make traditional Fortune 500 companies cringe....

https://air.mozilla.org/privacy-lab-privacy-for-startups-january-2016/

|

|

Tanvi Vyas: No More Passwords over HTTP, Please! |

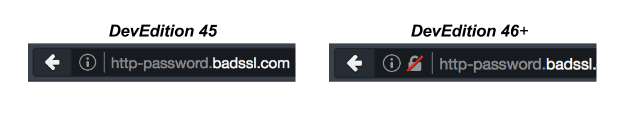

Firefox Developer Edition 46 warns developers when login credentials are requested over HTTP.

Username and password pairs control access to users’ personal data. Websites should handle this information with care and only request passwords over secure (authenticated and encrypted) connections, like HTTPS. Unfortunately, we too frequently see non-secure connections, like HTTP, used to handle user passwords. To inform developers about this privacy and security vulnerability, Firefox Developer Edition warns developers of the issue by changing the security iconography of non-secure pages to a lock with a red strikethrough.

How does Firefox determine if a password field is secure or not?

Firefox determines if a password field is secure by examining the page it is embedded in. The embedding page is checked against the algorithm in the W3C’s Secure Contexts Specification to see if it is secure or non-secure. Anything on a non-secure page can be manipulated by a Man-In-The-Middle (MITM) attacker. The MITM can use a number of mechanisms to extract the password entered onto the non-secure page. Here are some examples:

- Change the form action so the password submits to an attacker controlled server instead of the intended destination. Then seamlessly redirect to the intended destination, while sending along the stolen password.

- Use JavaScript to grab the contents of the password field before submission and send it to the attacker’s server.

- Use JavaScript to log the user’s keystrokes and send them to the attacker’s server.

Note that all of the attacks mentioned above can occur without the user realizing that their account has been compromised.

Firefox has been alerting developers of this issue via the Developer Tools Web Console since Firefox 26.
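To make the secure-context check more concrete, here is a simplified Python sketch of the “potentially trustworthy origin” test from the W3C Secure Contexts specification. It is an approximation for illustration, not Firefox’s code: the function names are hypothetical, it treats `https`, `wss` and `file` URLs, loopback IP addresses, and `localhost` names (as recent versions of the specification do) as trustworthy, and it counts a page as a secure context only if the page and every ancestor frame pass the check. The real specification covers more cases, such as opaque origins and user-agent configuration.

```python
import ipaddress
from typing import List
from urllib.parse import urlsplit


def is_potentially_trustworthy(url: str) -> bool:
    """Simplified take on the Secure Contexts 'potentially trustworthy' test."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    host = (parts.hostname or "").lower()  # hostname drops [] around IPv6
    # Authenticated, encrypted schemes (and local files) are trusted.
    if scheme in ("https", "wss", "file"):
        return True
    # Names under localhost are expected to resolve to the loopback interface.
    if host == "localhost" or host.endswith(".localhost"):
        return True
    # Loopback addresses: 127.0.0.0/8 and ::1.
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        return False  # an ordinary host name reached over plain HTTP


def is_secure_context(frame_urls: List[str]) -> bool:
    """frame_urls: the page holding the password field plus all ancestor frames."""
    return all(is_potentially_trustworthy(u) for u in frame_urls)


print(is_secure_context(["http://example.com/login"]))  # False
print(is_secure_context(["https://example.com/login"]))  # True
print(is_secure_context(["https://a.example/", "http://b.example/"]))  # False
```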

Why isn’t submitting over HTTPS enough? Why does the page have to be HTTPS?

We get this question a lot, so I thought I would call it out specifically. Although transmitting over HTTPS instead of HTTP does prevent a network eavesdropper from seeing a user’s password, it does not prevent an active MITM attacker from extracting the password from the non-secure HTTP page. As described above, active attackers can MITM an HTTP connection between the server and the user’s computer to change the contents of the webpage. The attacker can take the HTML content that the site attempted to deliver to the user and add JavaScript to the HTML page that will steal the user’s username and password. The attacker then sends the updated HTML to the user. When the user enters their username and password, it will get sent to both the attacker and the site.

What if the credentials for my site really aren’t that sensitive?

Sometimes sites require usernames and passwords, but don’t actually store data that is very sensitive. For example, a news site may save which news articles a user wants to go back and read, but not save any other data about a user. Most users don’t consider this highly sensitive information. Web developers of the news site may be less motivated to secure their site and their user credentials. Unfortunately, password reuse is a big problem. Users use the same password across multiple sites (news sites, social networks, email providers, banks). Hence, even if access to the username and password for your site doesn’t seem like a huge risk to you, it is a great risk to users who have used the same username and password to log in to their bank accounts. Attackers are getting smarter; they steal username/password pairs from one site, and then try reusing them on more lucrative sites.

How can I remove this warning from my site?

Put your login forms on HTTPS pages.

Of course, the most straightforward way to do this is to move your whole website to HTTPS. If you aren’t able to do this today, create a separate HTTPS page that is used just for logins. Whenever a user wants to log in to your site, they will visit the HTTPS login page. If your login form submits to an HTTPS endpoint, parts of your domain may already be set up to use HTTPS.

In order to host content over HTTPS, you need a TLS Certificate from a Certificate Authority. Let’s Encrypt is a Certificate Authority that can issue you free certificates. You can reference these pages for some guidance on configuring your servers.
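Before moving pages to HTTPS, it can help to find which pages still serve password fields over HTTP. The Python sketch below is a hypothetical audit helper (`LoginFormScanner` and `audit_login_page` are illustrative names, not part of Firefox or any Mozilla tool) built on the standard library’s `html.parser`. It flags a password field on a non-HTTPS page, even when the form submits to HTTPS, and a login form whose action resolves to a non-HTTPS URL. It only inspects static HTML, so password fields added later by scripts are not detected.

```python
from html.parser import HTMLParser
from typing import List, Optional
from urllib.parse import urljoin, urlsplit


class LoginFormScanner(HTMLParser):
    """Records the action of every <form> that contains a password input."""

    def __init__(self) -> None:
        super().__init__()
        self._action: Optional[str] = None  # None means "not inside a <form>"
        self.form_actions: List[str] = []
        self.stray_password_fields = 0  # password inputs outside any <form>

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "form":
            # A missing or empty action submits back to the page itself.
            self._action = attributes.get("action") or ""
        elif tag == "input" and (attributes.get("type") or "").lower() == "password":
            if self._action is None:
                self.stray_password_fields += 1
            else:
                self.form_actions.append(self._action)

    def handle_endtag(self, tag):
        if tag == "form":
            self._action = None


def audit_login_page(page_url: str, html: str) -> List[str]:
    """Hypothetical helper: list reasons a page would expose passwords over HTTP."""
    scanner = LoginFormScanner()
    scanner.feed(html)
    scanner.close()
    problems = []
    has_password = bool(scanner.form_actions) or scanner.stray_password_fields > 0
    # The page itself must be HTTPS, even if the form submits to HTTPS.
    if has_password and urlsplit(page_url).scheme != "https":
        problems.append(f"password field on non-HTTPS page {page_url}")
    for action in scanner.form_actions:
        target = urljoin(page_url, action)
        if urlsplit(target).scheme != "https":
            problems.append(f"login form submits to non-HTTPS URL {target}")
    return problems


form = '<form action="https://example.com/auth"><input type="password"></form>'
print(audit_login_page("http://example.com/", form))
# ['password field on non-HTTPS page http://example.com/']
print(audit_login_page("https://example.com/login", form))  # []
```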

What can I do if I don’t control the webpage?

We know that users of Firefox Developer Edition don’t only use Developer Edition to work on their own websites. They also use it to browse the net. Developers who see this warning on a page they don’t control can still take a couple of actions. You can try adding “https://” to the beginning of the URL in the address bar and see if you are able to log in over a secure connection to help protect your data. You can also try to reach out to the website administrator and alert them to the privacy and security vulnerability on their site.

Do you have examples of real life attacks that occurred because of stolen passwords?

There are ample examples of password reuse leading to large-scale compromise. There are fewer well-known examples of passwords being stolen by performing MITM attacks on login forms, but the basic techniques of JavaScript injection have been used at scale by Internet Service Providers and governments.

Why does my browser sometimes show this warning when I don’t see a password field on the page?

Sometimes password fields are in a hidden

Will this feature become available to Firefox Beta and Release Users?

Right now, the focus for this feature is on developers, since they’re the ones that ultimately need to fix the sites that are exposing users’ passwords. In general, though, since we are working on deprecating non-secure HTTP in the long run, you should expect to see more and more explicit indications of when things are not secure. For example, in all current versions of Firefox, the Developer Tools Network Monitor shows the lock with a red strikethrough for all non-secure HTTP connections.

How do I enable this warning in other versions of Firefox?

Users of Firefox version 44+ (on any branch) can enable or disable this feature by following these steps:

- Open a new window or tab in Firefox.

- Type about:config and press Enter.

- You will get to a page that asks you to promise to be careful. Promise you will be.

- The value of the security.insecure_password.ui.enabled preference determines whether or not Firefox warns you about non-secure login pages. You can enable the feature and be warned about non-secure login pages by setting this value to true. You can disable the feature by setting the value to false.

Thank you!

A special thanks to Paolo Amadini and Aislinn Grigas for their implementation and user experience work on this feature!

https://blog.mozilla.org/tanvi/2016/01/28/no-more-passwords-over-http-please/

|

|

Support.Mozilla.Org: What’s up with SUMO – 28th January |

Hello, SUMO Nation!

Starting from this week, we’re moving things around a bit (to keep them fresh and give you more time to digest and reply). The Friday posts are moving to Thursday, and Fridays will be open for guest posts (including yours) – if you’re interested in writing a post for this blog, let me know in the comments.

Welcome, new contributors!

Contributors of the week

- The Mozilla Bangladesh crew for the next leg of the SUMO Tour, this time visiting the port city of Chittagong. Take a look at the photos here. Woot!

We salute you!

Most recent SUMO Community meeting

- You can read the notes here and see the video on our YouTube channel and at AirMozilla.

- IMPORTANT: We are considering changing the way the meetings work. Help us figure out what’s best for you – join the discussion on the forums in this thread: (Monday) Community Meetings in 2016.

The next SUMO Community meeting…

- is happening on Monday the 1st of February – join us!

- Reminder: if you want to add a discussion topic to the upcoming meeting agenda:

- Start a thread in the Community Forums, so that everyone in the community can see what will be discussed and voice their opinion here before Monday (this will make it easier to have an efficient meeting).

- Please do so as soon as you can before the meeting, so that people have time to read, think, and reply (and also add it to the agenda).

- If you can, please attend the meeting in person (or via IRC), so we can follow up on your discussion topic during the meeting with your feedback.

Developers

- The new Ask A Question flow is being tested this week, so keep your eyes peeled for novelty ;-)

- You can see the current state of the backlog our developers are working on here.

- The latest SUMO Platform meeting notes can be found here.

- Interested in learning how Kitsune (the engine behind SUMO) works? Read more about it here and fork it on GitHub!

Community

- The Singapore Participation Gathering took place recently and we’re waiting for updates from those of you who made it there.

- The SUMO Event Kit has been updated with swag links for your events!

Ongoing reminder: if you think you can benefit from getting a second-hand device to help you with contributing to SUMO, you know where to find us.

Social

- We have a training out there for all those interested in Social Support.

- Talk to Madalina or Costenslayer on #AoA (IRC) for more information.

Support Forum

- Today (was/is/will still be for a few hours) a SUMO Day, connected to the release week for Version 44. Keep answering those questions, heroes of the helpful web!

Knowledge Base

- Lauren and Joni are working to create a training guide to teach new writers to write articles.

- Version 44 articles have all been updated!

- Firefox for iOS 2.0 articles are ready for localization:

Localization

- Dinesh is the new Locale Leader for Telugu – congratulations & thank you for all your hard work so far!

- The Portuguese L10ns have reached this month’s goal of 300 KB articles – thumbs up for the awesome crew from Lusitania! :-) Has your locale reached a milestone? Mark it in the spreadsheet!

- Reminder: we’re talking about helping infrequent contributors get back to us (or helping new ones stay longer with us) – join the discussion!

Firefox

- for Android

- It’s the Firefox 44 Release Week! Forum talk about the release.

- Draft release notes are here.

- This is a staged rollout using Google Play, addressing the crash rate discussions

- IMPORTANT: Android OS versions 3.0 – 3.2.6 (Honeycomb) are no longer supported – the app will not be visible on the Google Play Store for users of these OS versions

- for Desktop

- The certificate error pages have been redesigned and contain a new “Learn more” link to a SUMO page – this should increase site traffic.

- The “Ask me every time” option for cookies has been removed from the Privacy panel.

- Two major issues noted so far:

- Drag & drop from some external applications into Firefox is broken – Bug 1243507

- Firefox 44 is hungry and may be eating some passwords – Bug 1242176

- for iOS

- 2.0 is still under wraps – thank you for your patience!

That’s it for today, dear SUMOnians! We still have Friday to enjoy, so see you around SUMO and not only… tomorrow!

https://blog.mozilla.org/sumo/2016/01/28/whats-up-with-sumo-28th-january/

|

|

Air Mozilla: Web QA Weekly Meeting, 28 Jan 2016 |

This is our weekly gathering of Mozilla's Web QA team filled with discussion on our current and future projects, ideas, demos, and fun facts.

|

|

Air Mozilla: Reps weekly, 28 Jan 2016 |

This is a weekly call with some of the Reps to discuss all matters about/affecting Reps and invite Reps to share their work with everyone.

|

|

Mark Surman: Inspired by our grassroots leaders |

Last weekend, I had the good fortune to attend our grassroots Leadership Summit in Singapore: a hands on learning and planning event for leaders in Mozilla’s core contributor community.

We’ve been doing these sorts of learning / planning / doing events with our broader community of allies for years now: they are at the core of the Mozilla Leadership Network we’re rolling out this year. It was inspiring to see the participation team and core contributor community dive in and use a similar approach.

I left Singapore feeling inspired and hopeful — both for the web and for participation at Mozilla. Here is an email I sent to everyone who participated in the Summit explaining why:

As I flew over the Pacific on Monday night, I felt an incredible sense of inspiration and hope for the future of the web — and the future of Mozilla. I have all of you to thank for that. So, thank you.

This past weekend’s Leadership Summit in Singapore marked a real milestone: it was Mozilla’s first real attempt at an event consciously designed to help our core contributor community (that’s you!) develop important skills like planning and dig into critical projects in areas like connected devices and campus outreach all at the same time. This may not seem like a big deal. But it is.

For Mozilla to succeed, *all of us* need to get better at what we do. We need to reach and strive. The parts of the Summit focused on personality types, planning and building good open source communities were all meant to serve as fuel for this: giving us a chance to hone skills we need.

Actually getting better comes by using these skills to *do* things. The campus campaign and connected devices tracks at the Summit were designed to make this possible: to get us all working on concrete projects while applying the skills we were learning in other sessions. The idea was to get important work done while also getting better. We did that. You did that.

Of course, it’s the work and the impact we have in the world that matter most. We urgently need to explore what the web — and our values — can mean in the coming era of the internet of things. The projects you designed in the connected devices track are a good step in this direction. We also need to grow our community and get more young people involved in our work. The plans you made for local campus campaigns focused on privacy will help us do this. This is important work. And, by doing it the way we did it, we’ve collectively teed it up to succeed.

I’m saying all this partly out of admiration and gratitude. But I’m also trying to highlight the underlying importance of what happened this past weekend: we started using a new approach to participation and leadership development. It’s an approach that I’d like to see us use even more both with our core participation leaders (again, that’s you!) and with our Mozilla Leadership Network (our broader network of friends and allies). By participating so fully and enthusiastically in Singapore, you helped us take a big step towards developing this approach.

As I said in my opening talk: this is a critical time for the web and for Mozilla. We need to simultaneously figure out what technologies and products will bring our values into the future and we need to show the public and governments just how important those values are. We can only succeed by getting better at working together — and by growing our community around the world. This past weekend, you all made a very important step in this direction. Again, thank you.

I’m looking forward to all the work and exploration we have ahead. Onwards!

As I said in my message, the Singapore Leadership Summit is a milestone. We’ve been working to recast and rebuild our participation team for about a year now. This past weekend I saw that investment paying off: we have a team teed up to grow and support our contributor community from around the world. Nicely done! Good things ahead.

The post Inspired by our grassroots leaders appeared first on Mark Surman.

http://marksurman.commons.ca/2016/01/28/inspired-by-our-grassroots-leaders/

|

|