Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

Joel Maher: Adventures in Task Cluster – Running tests locally |

There is a lot of promise around Taskcluster (the replacement for BuildBot in our CI system at Mozilla) to be the best thing since sliced bread. One of the deliverables on the Engineering Productivity team this quarter is to stand up the Linux debug tests on Taskcluster in parallel to running them normally via Buildbot. Of course next quarter it would be logical to turn off the BuildBot tests and run tests via Taskcluster.

This post will outline some of the things I did to run the tests locally. What is neat is that we run the taskcluster jobs inside a Docker image (yes this is Linux only), and we can download the exact OS container and configuration that runs the tests.

I started out with a try server push which generated some data and a lot of failed tests. Sadly I found that the treeherder integration was not really there for results. We have a fancy popup in treeherder when you click on a job, but for taskcluster jobs, all you need is to find the link to inspect task. When you inspect a task, it takes you to a task cluster specific page that has information about the task. In fact you can watch a test run live (at least from the log output point of view). In this case, my test job is completed and I want to see the errors in the log, so I can click on the link for live.log and search away. The other piece of critical information is the ‘Task‘ tab at the top of the inspect task page. Here you can see the details about the docker image used, what binaries and other files were used, and the golden nugget at the bottom of the page, the “Run Locally” script! You can cut and paste this script into a bash shell and theoretically reproduce the exact same failures!

As you can imagine this is exactly what I did and it didn’t work! Luckily in the #taskcluster channel, there were a lot of folks to help me get going. The problem I had was I didn’t have a v4l2loopback device available. This is interesting because we need this in many of our unittests and it means that our host operating system running docker needs to provide video/audio devices for the docker container to use. Now it is time to hack this up a bit, so let me start:

First, let’s pull down the docker image used (from the run locally script):

docker pull 'taskcluster/desktop-test:0.4.4'

Next, let’s prepare my local host machine by installing and setting up v4l2loopback:

sudo apt-get install v4l2loopback-dkms

sudo modprobe v4l2loopback devices=2

Now we can try to run docker again, this time adding the --device option:

docker run -ti \
  --name "${NAME}" \
  --device=/dev/video1:/dev/video1 \
  -e MOZILLA_BUILD_URL='https://queue.taskcluster.net/v1/task/c7FbSCQ9T3mE9ieiFpsdWA/artifacts/public/build/target.tar.bz2' \
  -e MOZHARNESS_SCRIPT='mozharness/scripts/desktop_unittest.py' \
  -e MOZHARNESS_URL='https://queue.taskcluster.net/v1/task/c7FbSCQ9T3mE9ieiFpsdWA/artifacts/public/build/mozharness.zip' \
  -e GECKO_HEAD_REPOSITORY='https://hg.mozilla.org/try/' \
  -e MOZHARNESS_CONFIG='mozharness/configs/unittests/linux_unittest.py mozharness/configs/remove_executables.py' \
  -e GECKO_HEAD_REV='5e76c816870fdfd46701fd22eccb70258dfb3b0c' \
  taskcluster/desktop-test:0.4.4

Now when I run the test command, I don’t get v4l2loopback failures!

bash /home/worker/bin/test.sh --no-read-buildbot-config '--installer-url=https://queue.taskcluster.net/v1/task/c7FbSCQ9T3mE9ieiFpsdWA/artifacts/public/build/target.tar.bz2' '--test-packages-url=https://queue.taskcluster.net/v1/task/c7FbSCQ9T3mE9ieiFpsdWA/artifacts/public/build/test_packages.json' '--download-symbols=ondemand' '--mochitest-suite=browser-chrome-chunked' '--total-chunk=7' '--this-chunk=1'

In fact, I get the same failures as I did when the job originally ran :) This is great, except for the fact that I don’t have an easy way to run the test by itself, debug, or watch the screen. Let me go into a few details on that.

Given a failure in browser/components/search/test/browser_searchbar_keyboard_navigation.js, how do we get more information on that? Locally I would do:

./mach test browser/components/search/test/browser_searchbar_keyboard_navigation.js

Then at least see if anything looks odd in the console, on the screen, etc. I might look at the test and see where we are failing to give me more clues. How do I do this in a docker container? The command above to run the tests calls test.sh, which then calls test-linux.sh as the user ‘worker’ (not as user root). It is important that we use the ‘worker’ user, as the pactl program used to find audio devices will fail as root. Next, the script sets up the box for testing, including running pulseaudio, Xvfb, and compiz (after bug 1223123), and bootstraps mozharness. Finally we call the mozharness script to run the job we care about, in this case ‘mochitest-browser-chrome-chunked’, chunk 1. It is important to follow these details because mozharness downloads all python packages, tools, firefox binaries, other binaries, test harnesses, and tests. Then it creates a python virtualenv to set up the python environment to run the tests, while unpacking all the files into the proper places. Now mozharness can call the test harness (python runtests.py --browser-chrome …). Given this overview of what happens, it seems as though we should be able to run:

test.sh --test-path browser/components/search/test

This doesn’t work because mozharness has no method for passing in a directory or a single test, let alone doing other simple things that |./mach test| allows. In fact, in order to run this single test, we need to:

- download Firefox binary, tools, and harnesses

- unpack them (in all the right places)

- setup the virtual env and install needed dependencies

- then run the mochitest harness with the dirty dozen (just too many commands to memorize)

Of course most of this is scripted, so how can we take advantage of our scripts to set things up for us? What I did was hack test-linux.sh locally to not run mozharness and instead echo the command. Likewise, I hacked the mozharness script to echo the test harness call instead of calling it. Here are the commands I ended up using:

- bash /home/worker/bin/test.sh --no-read-buildbot-config '--installer-url=https://queue.taskcluster.net/v1/task/c7FbSCQ9T3mE9ieiFpsdWA/artifacts/public/build/target.tar.bz2' '--test-packages-url=https://queue.taskcluster.net/v1/task/c7FbSCQ9T3mE9ieiFpsdWA/artifacts/public/build/test_packages.json' '--download-symbols=ondemand' '--mochitest-suite=browser-chrome-chunked' '--total-chunk=7' --this-chunk=1

- # now that it failed, we can do:

- cd workspace/build

- . venv/bin/activate

- cd ../build/tests/mochitest

- python runtests.py --app ../../application/firefox/firefox --utility-path ../bin --extra-profile-file ../bin/plugins --certificate-path ../certs --browser-chrome browser/browser/components/search/test/

- # NOTE: you might not want --browser-chrome or the specific directory, but you can adjust the parameters used

This is how I was able to run a single directory, and then a single test. Unfortunately that just proved that I could hack around the test case a bit and look at the output. In docker there is no simple way to view the screen. To solve this I had to install x11vnc:

apt-get install x11vnc

Assuming the Xvfb server is running, you can then do:

x11vnc &

This allows you to connect to the docker container with VNC! The catch is that you need the container’s IP address, which I get from the host by doing:

docker ps #find the container id (cid) from the list

docker inspect <cid> | grep IPAddress

For me this is 172.17.0.64, and now from my host I can do:

xtightvncviewer 172.17.0.64

This is great as I can now see what is going on with the machine while the test is running!

This is it for now. I suspect in the future we will make this simpler by doing:

- allowing mozharness (and test.sh/test-linux.sh scripts) to take a directory instead of some args

- create a simple bootstrap script that allows for running ./mach style commands and installing tools like x11vnc.

- figuring out how to run a local objdir in the docker container (I tried mapping the objdir, but had GLIBC issues based on the container being based on Ubuntu 12.04)

Stay tuned for my next post on how to update your own custom TaskCluster image- yes it is possible if you are patient.

https://elvis314.wordpress.com/2015/11/09/adventures-in-task-cluster-running-tests-locally/

|

|

Air Mozilla: Mozilla Weekly Project Meeting, 09 Nov 2015 |

The Monday Project Meeting

https://air.mozilla.org/mozilla-weekly-project-meeting-20151109/

|

|

The Servo Blog: This Week In Servo 41 |

In the last week, we landed 129 PRs in the Servo organization’s repositories!

James Graham, a long-time Servo contributor who has been one of the main architects of our testing strategy, now has reviewer privileges. No good deed goes unpunished!

Notable additions

- Patrick Walton reduced the number of spurious reflows and compositor events

- Alan Jeffrey got us a huge SpiderMonkey speed boost by using nativeRegExp

- Martin Robinson’s layerization work has allowed him to remove the incredibly-complicated layers_needed_for_descendants handling!

- Bobby Holley continued his work on fixing performance and correctness of restyling

- Lars added CCACHE support and turned it on for our SpiderMonkey build, shaving a couple of minutes off the CI builder times

- Manish made the CI system verify that the Cargo.lock did not change during the build, a common source of build woes

- Matt Brubeck and others have been working on cleaning the libcpocalypse

- Lars changed how the Android build works, so that now we can have a custom icon, Java code for handling intents, and debug

New Contributors

- Abhishek Kumar

- Benjamin Herr

- Jitendra Jain

- Mohammed Attia

- Nikki

- Raphael Nestler

- Sylvester Willis

- Ulysse Carion

- jsharda

Meetings

At last week’s meeting, we discussed review carry-over, test coverage, the 2016 roadmap, rebase/autosquash for the autolander, the overwhelming PR queue, debug logging, and the CSSWG reftests.

There was also an Oxidation meeting, about the support for landing Rust/Servo components in Gecko. Though it mainly covers the needs of larger systems projects, some of the proposed Cargo features (like flat-source-tree) might also be interesting for Servo.

|

|

Chris Finke: Introducing Reenact: an app for reenacting photos |

Here’s an idea that I’ve been thinking about for a long time: a camera app for your phone that helps you reenact old photos, like those seen in Ze Frank’s “Young Me Now Me” project. For example, this picture that my wife took with her brother, sister, and childhood friend:

Reenacting photographs from your youth, taking pregnancy belly progression pictures, saving a daily selfie to show off your beard growth: all of these are situations where you want to match angles and positions with an old photo. A specialized camera app could be of considerable assistance, so I’ve developed one for Firefox OS. It’s called Reenact.

The app’s opening screen is simply a launchpad for choosing your original photo.

The photo picker in this case is handled by any apps that have registered themselves as able to provide a photo, so these screens come from whichever app the user chooses to use for browsing their photos.

The camera screen of the app begins by showing the original photo at full opacity.

The photo then will continually fade out and back in, allowing you to match up your current pose to the old photo.

Take your shot and then compare the two photos before saving. The thumbs-up icon saves the shot, or you can go back and try again.

Reenact can either save your new photo as its own file or create a side-by-side composite of the original and new photos.

And finally, you get a choice to either share this photo or reenact another shot.

Voila!

If you’re running Firefox OS 2.5 or later, you can install Reenact from the Firefox OS Marketplace, and the source is available on GitHub. I used Firefox OS as a proving ground for the concept, but now that I’ve seen that the idea works, I’ll be investigating writing Android and iOS versions as well.

What do you think? Let me know in the comments.

|

|

Botond Ballo: Trip Report: C++ Standards Meeting in Kona, October 2015 |

Summary / TL;DR

| Project | What’s in it? | Status |

| C++14 | C++14 | Published! |

| C++17 | Very much in flux. Significant language features under consideration include default comparisons, operator., a unified function call syntax, coroutines, and concepts. | On track for 2017 |

| Filesystems TS | Standard filesystem interface | Published! |

| Library Fundamentals TS I | optional, any, string_view and more | Published! |

| Library Fundamentals TS II | source code information capture and various utilities | Voted out for balloting by national standards bodies |

| Concepts (“Lite”) TS | Constrained templates | Publication imminent |

| Parallelism TS I | Parallel versions of STL algorithms | Published! |

| Parallelism TS II | TBD. Exploring task blocks, progress guarantees, SIMD. | Under active development |

| Transactional Memory TS | Transaction support | Published! |

| Concurrency TS I | improvements to future, latches and barriers, atomic smart pointers | Voted out for publication! |

| Concurrency TS II | TBD. Exploring executors, synchronic types, atomic views, concurrent data structures | Under active development |

| Networking TS | Sockets library based on Boost.ASIO | Design review completed; wording review of the spec in progress |

| Ranges TS | Range-based algorithms and views | Design review completed; wording review of the spec in progress |

| Numerics TS | Various numerical facilities | Beginning to take shape |

| Array Extensions TS | Stack arrays whose size is not known at compile time | Direction given at last meeting; waiting for proposals |

| Reflection | Code introspection and (later) reification mechanisms | Still in the design stage, no ETA |

| Graphics | 2D drawing API | Waiting on proposal author to produce updated standard wording |

| Modules | A component system to supersede the textual header file inclusion model | Microsoft and Clang continuing to iterate on their implementations and converge on a design. The feature will target a TS, not C++17. |

| Coroutines | Resumable functions | At least two competing designs. One of them may make C++17. |

| Contracts | Preconditions, postconditions, etc. | In early design stage |

Introduction

Last week I attended a meeting of the ISO C++ Standards Committee in Kona, Hawaii. This was the second committee meeting in 2015; you can find my reports on the past few meetings here (June 2014, Rapperswil), here (November 2014, Urbana-Champaign), and here (May 2015, Lenexa). These reports, particularly the Lenexa one, provide useful context for this post.

The focus of this meeting was primarily C++17. There are many ambitious features underway for standardization, and the time has come to start deciding which of them will make C++17 and which of them won’t. The ones that won’t will target a future standard, or a Technical Specification (which can eventually also be merged into a future standard). In addition, there are a number of existing Technical Specifications in various stages of completion.

C++17

After C++11, the committee adopted a release train model (much like Firefox’s) for new revisions of the C++ International Standard. Instead of targeting specific features for the next revision of the standard, and waiting until they are all ready before publishing the revision (the way it was done in C++11, which ended up being published 13 years after the previous major revision, C++98), new revisions are released on a three-year train (thus C++14, C++17, C++20, etc.), and a feature is either ready in time to make a particular train, or not (in which case, too bad; it can ride the next train).

As such, there isn’t a list of features planned for C++17 per se. Rather, there are many features in the works, and some will be ready to ride the C++17 train while others will not. More specifically, the committee plans to release the first draft of C++17 for balloting by national standards bodies, called the Committee Draft or CD, at the end of the June 2016 meeting in Oulu, Finland. (This is the timeline required to achieve a publication date in 2017.) This effectively means that for a feature to be in C++17, it must be voted in at that meeting at the latest.

Features already in C++17 coming into the meeting

As a recap, here are the features that have already been voted into C++17 at previous meetings:

- Language:
  - static_assert(condition) without a message
  - Allowing auto var{expr};
  - Writing a template template parameter as template <...> typename Name
  - Removing trigraphs
  - Folding expressions
  - std::uncaught_exceptions()
  - Attributes for namespaces and enumerators
  - Shorthand syntax for nested namespace definitions
  - u8 character literals
  - Allowing full constant expressions in non-type template parameters
- Library:
  - Removing some legacy library components
  - Contiguous iterators
  - Safe conversions to unique_ptr
  - Making std::reference_wrapper trivially copyable
  - Cleaning up noexcept in containers
  - Improved insertion interface for unique-key maps
  - void_t alias template
  - invoke function template
  - Non-member size(), empty(), and data() functions
  - Improvements to pair and tuple
  - bool_constant
  - shared_mutex
  - Incomplete type support for standard containers

I’d classify all of the above as “minor” except for folding expressions, which significantly increase the expressiveness of variadic template code.
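To give a feel for why folding expressions stand out, here is a minimal sketch. The names sum, all_equal, and fold_demo are made up for illustration and do not come from any proposal, and the block assumes a compiler with C++17 support. It also uses the message-less static_assert from the list above.

```cpp
#include <cassert>

// Sum any number of arguments with a unary left fold: ((a + b) + c) + ...
template <typename... Args>
auto sum(Args... args) {
    static_assert(sizeof...(Args) > 0);  // C++17: the message is optional
    return (... + args);
}

// True only if every remaining argument equals `first` (fold over &&).
// With no remaining arguments, the fold over && yields true.
template <typename T, typename... Rest>
bool all_equal(const T& first, const Rest&... rest) {
    return (... && (first == rest));
}

// Small self-check exercising both helpers.
inline void fold_demo() {
    assert(sum(1, 2, 3) == 6);
    assert(all_equal(7, 7, 7));
    assert(!all_equal(1, 2));
}
```

Before C++17, the same functions would typically need a recursive overload set or an initializer-list trick to expand the pack.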

Features voted into C++17 at this meeting

Here are the features that have been voted into C++17 at this meeting:

- Language:
  - Removing the register keyword, while keeping it reserved for future use
  - Removing operator++ for bool
  - Making exception specifications part of the type system. This resolves a long-standing issue where exception specifications sort-of contributed to a function’s type but not quite, and as such their handling in various contexts (passing template arguments, conversions between function pointer types, and others) required special rules.
  - __has_include(), a portable way of testing for the presence of a header. (The original proposal also included a __has_attribute() facility that would portably test for support for an attribute, but EWG rejected this on the basis that it encourages wrapping attributes into macros, and will have limited utility once compiler support for standard attributes becomes universal.)
  - Choosing an official name for what are commonly called “non-static data member initializers” or NSDMIs. The official name is “default member initializers”.
  - A minor change to the semantics of inheriting constructors
- Library:
  - Type traits variable templates. These are variable template versions of type traits that return a value (as opposed to a type), such as is_convertible. The variable template version’s name is the original trait’s name suffixed with _v. For example, is_convertible::value can now be written is_convertible_v. This feature is also in the Library Fundamentals TS II, but it’s so popular that people wanted it in C++17 as well.
  - as_const(), a function that provides a constant view of its argument
  - Removing deprecated iostreams aliases
  - Making std::owner_less more flexible
  - Polishing
  - Variadic lock_guard
  - Logical type traits. These are type traits that take boolean arguments and perform logical operations on them. The traits are conjunction, disjunction, and negation.
  - not_fn was rejected on the basis that the proposal also included removing the legacy not1 and not2 facilities without deprecating them first, and there was opposition to this. An updated version of the proposal that changes the removal to deprecation is expected to pass at the next meeting.

By and large, this is also minor stuff.
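As a rough illustration of a few of the library additions listed above, the sketch below assumes a standard library with C++17 support. The names all_integral_v, Probe, and traits_demo are hypothetical and exist only for this example.

```cpp
#include <cassert>
#include <string>
#include <type_traits>
#include <utility>

// is_convertible_v<From, To> is shorthand for is_convertible<From, To>::value.
static_assert(std::is_convertible_v<int, double>);
static_assert(std::is_convertible<int, double>::value);  // older spelling

// conjunction combines traits with a logical AND; here: "all Ts are integral".
template <typename... Ts>
constexpr bool all_integral_v = std::conjunction_v<std::is_integral<Ts>...>;

// A type with const and non-const overloads, to show what as_const selects.
struct Probe {
    std::string which() { return "non-const"; }
    std::string which() const { return "const"; }
};

// Small self-check exercising the pieces above.
inline void traits_demo() {
    static_assert(all_integral_v<int, long, char>);
    static_assert(!all_integral_v<int, double>);
    static_assert(std::negation_v<std::is_integral<float>>);

    Probe p;
    assert(p.which() == "non-const");
    assert(std::as_const(p).which() == "const");  // const view of p
}
```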

Features on the horizon for C++17

Finally, the most interesting bunch: features that haven’t been voted into C++17 yet, but that people are working hard to try and get into C++17.

I’d like to stress that, while obviously the committee tries to standardize features as expeditiously as is reasonable, there is a high quality bar that has to be met for standardization, and a feature isn’t in C++17 until it’s voted in. The features I mention here all have a chance of making C++17 (some better than others), but there are no guarantees.

Concepts

The Concepts Technical Specification (informally called “Concepts Lite”) has been voted for publication in July of this year, and the release of the published TS by ISO is imminent.

The idea behind Technical Specifications is that they give implementers and users a chance to gain experience with a feature, without tying the committee’s hands by standardizing the feature, which would make it very difficult to change in non-backwards-compatible ways. The eventual fate envisioned for a TS is being merged into a future standard, possibly with modifications motivated by feedback from implementers and users.

The question of whether the Concepts TS should be merged into C++17 thus naturally came up. This was certainly the original plan in 2012, when the decision was made to pursue Concepts Lite as a TS aimed for publication in 2014; people then envisioned the TS as being ripe for merger by C++17. Today, with the publication of the TS having slipped to 2015, and the effective deadline for getting changes into C++17 being mid-2016, the timeline looks a bit tighter.

The Concepts TS currently has one complete implementation (modulo bugs), in GCC trunk, which will make it into a release (GCC 6) next year. This, currently experimental, implementation has already been used to gain experience with the feature. Most notably, the Ranges Technical Specification, which revamps significant parts of the C++ Standard Library using Concepts, has an implementation that uses Concepts (in contrast to the previous implementation which emulated Concepts in C++11) and compiles with GCC trunk.

Based on this and other experience, several committee members have argued that Concepts is ripe for standardization in C++17. Others have expressed various concerns about the current design, and argued on this basis that Concepts is not ready for C++17:

- There is some concern that the current design gives programmers too many syntactic ways of expressing the same thing, and that some of these are redundant. There is no specific proposal for removing one or more of them, but some feel that additional use experience may give insight into what could be removed.

- Some have argued that Concepts as a language feature, and a revamped standard library that takes advantage of Concepts, should be standardized simultaneously, to demonstrate confidence that the language feature is able to meet the needs of complex, demanding use cases such as those in the standard library. The latter is being worked on in the form of the Ranges TS, but that’s quite unlikely to make C++17, so some argue the language feature shouldn’t, either.

- Some have expressed concern that we don’t yet have a good picture of how well the current Concepts design lends itself to separate checking of template definitions, and that it’s premature to standardize the design until we do.

This last point deserves some elaboration.

When you write a reusable piece of code, such as a function or class, you’re defining an interface between the users of the code, and its implementation. When the function or class is a template, the interface includes requirements on the types (or values) of the template parameters, which are checked at compile time.

Prior to Concepts, these requirements were implicit; you could document the requirements, and you could approximate making them explicit in code by using certain techniques like enable_if, but there was no first-class language support for making them explicit in code. As a result, violations of these requirements – either on the user side by passing template arguments that don’t meet the requirements, or on the implementation side by using the template arguments in ways that go beyond the requirements – would only be caught at instantiation time, that is, when the template is instantiated with concrete arguments for a particular use site. Compiler errors resulting from such violations typically come with long instantiation backtraces, and are notoriously difficult to understand.
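For readers who haven’t seen the enable_if technique mentioned above, here is a small sketch of how a requirement can be approximated in code without Concepts. The names twice, can_twice, and enable_if_demo are invented for illustration, and the requirement itself (“T is an integral type”) is an assumption picked to keep the example short.

```cpp
#include <cassert>
#include <type_traits>
#include <utility>

// Pre-Concepts: the requirement "T must be an integral type" is encoded
// with enable_if, which removes this overload when the condition is false.
template <typename T,
          typename = typename std::enable_if<std::is_integral<T>::value>::type>
T twice(T value) {
    return value + value;
}

// Detects whether twice(x) is callable for a given T without causing a
// hard compile error: the specialization only matches if the call is valid.
template <typename T, typename = void>
struct can_twice : std::false_type {};

template <typename T>
struct can_twice<T, decltype(void(twice(std::declval<T>())))>
    : std::true_type {};

// Small self-check exercising the constrained function and the detector.
inline void enable_if_demo() {
    assert(twice(21) == 42);
    static_assert(can_twice<int>::value, "int satisfies the requirement");
    static_assert(!can_twice<double>::value, "double is rejected");
}
```

Note that the requirement lives in a default template argument rather than in the function’s visible signature, which is part of why this style is only an approximation.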

Concepts Lite allows us to express these requirements explicitly in code, and to catch violations on the user side “early”, by checking the concrete template arguments passed at a use site against the requirements, without having to look at the implementation of the template. The resulting compiler errors are much easier to understand, and do not contain instantiation backtraces.

However, with the current Concepts Lite design, violations of the requirements on the implementation side – that is, using the template arguments in ways that go beyond the specified requirements – continue to be caught only at instantiation time, and produce hard-to-understand errors with long backtraces. A complete Concepts design, such as the one originally proposed for C++11, includes checking the body of a template against the specified requirements independently of any particular instantiation, to catch implementation-side errors “early” as well; this is referred to as separate checking of template definitions.

Concepts Lite doesn’t currently provide for separate checking of template definitions. Claims have been made that it can be extended to support this, but as this is a difficult-to-implement feature, some would like to see stronger evidence for this (such as a proof-of-concept implementation, or a detailed description of how one would implement it) prior to standardizing Concepts Lite and thus locking us into the current design.

No decision about merging Concepts Lite into C++17 has been made at this meeting; I expect that the topic will continue to be discussed over the next two meetings, with a decision made no later than the June 2016 meeting.

Other published Technical Specifications

The first Parallelism TS, which contains parallel versions of standard algorithms, and was published earlier this year, will be proposed for merger into C++17.

The Filesystem TS, the first Library Fundamentals TS, and the Transactional Memory TS have also been published earlier this year, and could plausibly be proposed for merger into C++17, although I haven’t heard specific talk of such proposals yet.

TS’es that are still in earlier stages of the publication pipeline, such as the Concurrency TS, will almost certainly not make C++17.

Coroutines

As I’ve described in previous posts, there are three competing coroutines proposals in front of the committee:

- A proposal for stackless coroutines, also known as resumable functions. (This is sometimes called “the await proposal” after one of the keywords it introduces.)

- A proposal for stackful coroutines.

- A hybrid proposal called resumable expressions that tries to achieve some of the appealing characteristics of both stackful and stackless coroutines.

I talk more about the tradeoffs between stackful and stackless coroutines in my Urbana report, and describe the hybrid approach in more detail in my Lenexa report.

In terms of standardization, at the Urbana meeting the consensus was that stackful and stackless coroutines should advance as independent proposals in the form of a Technical Specification, with a possible view of unifying them in the future. Developments since then can be summed up as follows:

- The stackless / resumable functions / await proposal has advanced to a stage where it is fully fleshed out, has standard wording, and the Core Working Group has begun reviewing the standard wording.

- Purely stackful coroutines can be used today as a library-only feature (see e.g. Boost.Coroutine); as such, there is less of a pressing need to standardize them than designs that require language changes.

- Attempts to achieve a unified proposal are still very much in the design stage. The most recent development on this front is that the author of the “resumable expressions” proposal, Chris Kohlhoff, has decided to abandon the syntax of his proposal, and instead join forces with the authors of the stackful coroutines proposal to come up with an attempt at a unified proposal where the syntax would be similar to the one in the stackful proposal, but there would be provisions for the compiler to transform coroutines into a stackless form where possible as an optimization.
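To make “stackless” a bit more concrete, here is a hand-written sketch of the kind of object a stackless coroutine could conceptually be turned into. This is only an illustration under simplifying assumptions, not the transformation specified by any of the proposals; CountTo, resume, and coroutine_demo are hypothetical names.

```cpp
#include <cassert>

// Hand-written stand-in for a stackless coroutine yielding 0, 1, ..., limit-1.
// Everything that must survive a suspension lives in the object itself,
// so no separate call stack has to be kept alive between resumptions.
class CountTo {
public:
    explicit CountTo(int limit) : limit_(limit) {}

    // Resume the "coroutine": stores the next value in `out` and returns
    // true, or returns false once the sequence is exhausted.
    bool resume(int& out) {
        if (next_ >= limit_) return false;
        out = next_++;
        return true;
    }

private:
    int limit_;
    int next_ = 0;  // the saved "suspension point" state
};

// Small self-check: drain the sequence and add up the yielded values.
inline int coroutine_demo(int limit) {
    CountTo counter(limit);
    int value = 0;
    int total = 0;
    while (counter.resume(value)) total += value;
    return total;
}
```

A stackful coroutine, by contrast, keeps its own stack, so it can suspend from inside nested function calls without each of them being rewritten this way.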

Given this state of affairs, and particularly the advanced stage of the await proposal, the question of whether it should be standardized in C++17, rather than a TS, came up. A poll was held on this topic in the Evolution Working Group, with the options being (1) standardizing the await proposal in C++17, and (2) having a TS with both proposals as originally planned. There wasn’t a strong consensus favouring either of these choices over the other; opinion was mostly divided between people who felt we should have some form of coroutines in C++17, and people who felt there was still room for iteration on and convergence between the proposals, making a TS the more appropriate vehicle.

For now, the Core Working Group will continue reviewing the wording of the await proposal; the ship vehicle will presumably be decided by a vote of the full committee at a future meeting.

Contracts

Contracts are a way to express preconditions, postconditions, and other runtime requirements in code, with a view toward opening up use cases such as:

- Optionally checking the requirements at runtime, and handling their violation in some way.

- Exposing the requirements to tools such as an analyzer that might attempt to check some of them statically

- Exposing the requirements to optimizers that might make assumptions (such as assuming that the requirements are met) when optimizing
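The first use case above, runtime checking, can be roughly emulated today with ordinary functions. The sketch below is not the syntax of any contracts proposal: expects, ensures, and isqrt are hypothetical names, and throwing std::logic_error is just one assumed way of handling a violation.

```cpp
#include <cassert>
#include <stdexcept>

// Hypothetical helpers (not from any proposal): they emulate runtime
// checking of a precondition or postcondition by throwing on violation.
inline void expects(bool condition, const char* what) {
    if (!condition) throw std::logic_error(what);
}
inline void ensures(bool condition, const char* what) {
    if (!condition) throw std::logic_error(what);
}

// Integer square root: the largest r such that r * r <= n.
inline int isqrt(int n) {
    expects(n >= 0, "precondition violated: n >= 0");
    int r = 0;
    // Widen to long long so the comparison cannot overflow for large n.
    while (static_cast<long long>(r + 1) * (r + 1) <= n) ++r;
    ensures(static_cast<long long>(r) * r <= n,
            "postcondition violated: r * r <= n");
    return r;
}
```

Because the conditions here are buried in the function body, tools such as static analyzers and optimizers cannot easily see them, which is the gap the other two use cases point at.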

Initial proposals in the area tended to cater to one or more of these use cases to the detriment of others; guidance from the committee was to aim for a unified proposal. While no such unified proposal has been written yet, the authors of the original proposal and other interested parties have been hard at work trying to solve some of the technical problems involved. I give some details further down.

Given the relatively early stage of these efforts, it’s in my opinion unlikely that they will result in a proposal that makes it into C++17. However, it’s not out of the question, if a written proposal is produced for the next meeting, and its design and wording reviews go sufficiently smoothly.

Operator Dot

Overloading operator dot (the member access operator) allows new forms of interface composition that weren’t possible before.

A proposal for doing so was approved by EWG at the previous meeting; it’s currently pending wording review by CWG.

Another proposal that brings even more expressive power was looked at this meeting; it was sent to the Reflection Study Group as the abilities it unlocks effectively constitute a form of reflection.

The original proposal is slated to come up for a vote to go into C++17 once it passes wording review; however, some object to it on the basis that reflection facilities (such as those that might be produced by iterating on the second proposal) would supersede it. Therefore, I would classify its fate in C++17 as uncertain at this point.

Other Language Features

Beyond the big-ticket items mentioned above, numerous smaller language features may be included in C++17. For a list, please refer to the “Evolution Working Group” section below. Proposals approved by EWG at this meeting are fairly likely to make C++17, as they only need to go through wording review by the Core Working Group before being voted into C++17. Proposals deemed by EWG to need further work face a bit of a higher hurdle, as an updated proposal would need to go through both design and wording reviews before being voted into C++17.

What about Modules?

Modules are possibly the single hottest feature on the horizon for C++ right now. Everyone wants them, and everyone agrees that they will solve very significant problems ranging from code organization to build times. They are also a very challenging feature to specify and implement.

There are two work-in-progress Modules implementations: one in Microsoft’s compiler, and one in Clang, developed primarily by Google. The two implementations take slightly different approaches, and the implementers have been working hard to try to converge their designs.

The Evolution Working Group held a lengthy design discussion about Modules, which culminated in a poll about which of two possible ship vehicles is more appropriate: C++17, or a Technical Specification. A Technical Specification had a stronger consensus, and this is what is currently being pursued. This means Modules are not currently slated for inclusion in C++17. It’s not inconceivable for this to change, if the implementors make very significant progress before the next meeting and convince the committee to change its mind; in my opinion, that’s unlikely to happen.

I talk about the technical issues surrounding Modules in more detail below.

What about other features?

Major language features that I haven’t mentioned above, such as reflection, aren’t even being considered for inclusion for C++17.

Evolution Working Group

As usual, I spent practically all of my time in the Evolution Working Group, which spends its time evaluating and reviewing the design of proposed language features.

EWG categorizes incoming proposals into three rough categories:

- Approved. The proposal is approved without design changes. Such proposals are sent on to CWG, which revises them at the wording level, and then puts them in front of the committee at large to be voted into whatever IS or TS they are targeting.

- Further Work. The proposal’s direction is promising, but it is either not fleshed out well enough, or there are specific concerns with one or more design points. The author is encouraged to come back with a modified proposal that is more fleshed out and/or addresses the stated concerns.

- Rejected. The proposal is unlikely to be accepted even with design changes.

Accepted proposals:

- Inline variables allow declaring namespace-scope variables and static data members as inline, in which case the declaration counts as a definition. (The declaration must then include any initializer.) This is analogous to inline functions, and spares the programmer from having to provide an out-of-line definition in a single translation unit (which can force an otherwise header-only library to no longer be header-only, among other annoyances). The proposal was accepted, with two notable changes. First, to reduce verbosity, constexpr will imply inline. Second, namespace-scope variables will only be allowed to be inline if they are also const. The motivation for the second change is to discourage proliferation of mutable global state; it passed despite objections from some who thought it was complicating the rules with little benefit.

- A proposal to allow lambdas to appear in a constexpr context. These have good use cases, and there isn’t any compelling motivation to disallow them; the proposal had near unanimous support.

- Removing dynamic exception specifications, i.e. constructs of the form throw(<exception types>) at the end of a function declaration. These have been deprecated since C++11 introduced noexcept. throw() is kept as a (deprecated) synonym for noexcept(true).

- An extension to aggregate initialization that allows aggregate initialization of types with base classes. The base classes are treated as subobjects, in order of declaration, preceding the class’s own members. Not allowed for classes with virtual bases.

- constexpr_if, which was called static_if in previous iterations of the proposal. It’s like an if statement, but its condition is evaluated at compile time, and if it appears in a template, the branch not taken is not instantiated. This is neat because it allows code like constexpr_if (/* T is refcounted */) { /* do something */ } constexpr_else { /* do something else */ }; currently, things like this need to be accomplished more verbosely via specialization.

- Guaranteeing copy elision in certain contexts. Copy elision refers to the compiler eliding (i.e. not performing) a copy or move of an object in some situations. It differs from a pure optimization in that the compiler is allowed to do it even if the elided copy or move constructor has side effects. Every major compiler does this, but it’s not mandatory, and as a result, the language requires the type whose copy or move is elided to still be copyable or movable. This precludes some useful patterns, such as writing a factory function for a non-copyable, non-movable type. This proposal rectifies the problem by requiring that copy elision be performed in certain contexts (specifically, when a temporary object is used to initialize another object; this happens when returning a temporary from a function, initializing a function parameter with a temporary, and throwing a temporary as an exception), and removing the requirement that types which are only notionally copied or moved in those circumstances, be copyable or movable.

- A proposal to allow an empty enumeration (that is, one with no enumerators) with a fixed underlying type to be constructed from any integer in the range of its underlying type using the EnumName{42} syntax. This was already allowed using the EnumName(42) syntax, but it was considered a narrowing conversion which is not allowed with the {} syntax. This allows using an enumeration as an opaque/strong typedef for an integer type more effectively. It passed despite objections that a full opaque typedefs proposal would make using enums for this purpose unnecessary.

- A proposal to specify the order of evaluation of operands for all expressions. This is a breaking change, but one people agree we need to make because not specifying the order of evaluation leads to a lot of subtle bugs.

- Unified function call syntax, in one direction only: f(x, y) can resolve to x.f(y) if regular name lookup finds no results for f. This is #3 out of the six design alternatives presented in the original proposal. It satisfies the proposal’s primary use case of making it easier to write generic code using Concepts, by allowing concepts to require the non-member syntax to work (and have template implementations use the non-member syntax), while a type can be made to model a concept using either member or non-member functions. The other direction (x.f(y) resolving to f(x, y) if member name lookup finds no results for f) was excluded because it was too controversial, as it enabled writing rather brittle code that’s susceptible to “action at a distance”.

- A proposal to disallow unary folds of some operators over an empty parameter pack was approved by EWG, with the modification that it should be disallowed for all operators. However, the proposal failed to achieve consensus at the plenary meeting, and will not be moving forward at this time.

- Several modifications to the await proposal (a.k.a. resumable functions / stackless coroutines). Most notably, EWG settled on the keyword choices co_await, co_yield, and co_return; the proposed alternative of “soft keywords” that tried to allow using await and yield without making them keywords, was rejected on the basis that “the difficulty of adding keywords to C++ is a feature”. The various modifications listed in this paper and this one were also accepted.

(I didn’t list features which also passed CWG review and were voted into C++17 at this meeting; those are listed in the C++17 section above.)
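As a rough illustration of several of the accepted proposals, here is a sketch that assumes a compiler with C++17 support. It uses the spellings that C++17 ended up with, so the compile-time branch is written `if constexpr` rather than the `constexpr_if` discussed in this report, and details of the final rules may differ from the designs described above. All type and function names (`Config`, `Point2`, `Point3`, `value_of`, `Pinned`, `make_pinned`, `UserId`) are hypothetical.

```cpp
#include <cassert>
#include <type_traits>

// Inline variable: the in-class declaration is also the definition,
// so no out-of-line definition in a single translation unit is needed.
struct Config {
    static inline const int default_port = 8080;
};

// A lambda used in a constant expression.
constexpr auto square = [](int x) { return x * x; };
static_assert(square(4) == 16, "evaluated at compile time");

// Aggregate initialization of a type with a base class: the base is
// treated as a subobject that precedes the class's own members.
struct Point2 { int x; int y; };
struct Point3 : Point2 { int z; };

// Compile-time branch: in a template, the branch not taken is not instantiated.
template <typename T>
int value_of(const T& value) {
    if constexpr (std::is_pointer_v<T>) {
        return *value;   // only well-formed when T is a pointer
    } else {
        return value;    // only well-formed when T converts to int
    }
}

// Guaranteed copy elision: a factory function for a type that is
// neither copyable nor movable.
struct Pinned {
    int id;
    explicit Pinned(int i) : id(i) {}
    Pinned(const Pinned&) = delete;
    Pinned(Pinned&&) = delete;
};
inline Pinned make_pinned(int id) { return Pinned(id); }

// An enumeration with a fixed underlying type and no enumerators,
// used as a strong typedef for an integer.
enum class UserId : unsigned {};
```

With these definitions, `Point3 p{{1, 2}, 3};` initializes the `Point2` base first, `Pinned pinned = make_pinned(5);` compiles even though `Pinned` has no usable copy or move constructor, and `UserId id{42};` is accepted with the brace syntax.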

Proposals for which further work is encouraged:

- Allowing lambdas declared at class scope to capture *this by value. Currently, capturing this captures the containing object by reference; in situations where capture by value is desired (for example, because the lambda can outlive the object), a temporary copy of the object has to be made and then captured, which is easy to forget to do. The paper proposes introducing two new forms in the capture list: *this, to mean “capture the containing object by value”, and *, to mean “capture all referenced variables including the containing object by value”. EWG advised going forward with the first one only (* looks like “capture by pointer”, and we don’t need a third kind of default capture).

- Dynamic allocation with user-specified alignment. Currently, the user can specify custom alignment for a type using alignas(N), but this does not affect dynamic allocation; the proposal makes it do so. EWG agreed that this should be fixed, but there were some concerns about backward-compatibility; the proposal author will iterate on the proposal to address these concerns.

- The C++ standard library includes parts of the C standard library by reference. Currently, the referenced version of C is C99. EWG looked at a proposal to update the referenced version to C11. EWG encouraged this, and further suggested that the topic of compatibility between C++ and C in two areas, threads and atomics, be explored.

- A proposal to obtain the ability to create opaque aliases (a form of typedefs that create a new type) by adding two new language features: function aliases, and allowing inheritance from built-in types. The idea is that the mechanism of creating an opaque alias would be derivation; to allow aliases of built-in types, inheritance from built-in types would be allowed. Function aliases, meanwhile, would provide a mechanism for lifting the operations of the base class into the derived class, so that if you e.g. inherit from int, the inherited operator + could be “aliased” to take and return your derived type instead. EWG liked the idea of function aliases, and encouraged developing it into an independent proposal. Regarding opaque aliases, EWG felt inheritance wasn’t the appropriate mechanism; in particular, deriving from built-in types opens up a can of worms (e.g. “are the operations on int virtual?”). Instead, wrapping the underlying type as a member should be explored as the mechanism for creating opaque aliases. This can already be done today, if you define all the wrapped operations yourself; the language should make that easier to do. It was pointed out that reflection may provide the answer to this.

- A different approach to overloading “operator dot” where, in the presence of an overloaded operator ., obj.member would resolve to obj.operator.(f), where f is a compiler-generated function object that accepts an argument a of any type, and returns a.member. (Similarly, obj.func(args) would be transformed in the same way, with the function object returning a.func(args).) This proposal has more expressive power than the existing “operator dot” proposal, allowing additional patterns like a form of duck typing (see the paper for a list). EWG liked the abilities this would open up, but wasn’t convinced that “operator dot” was the right spelling for such a feature. In addition, it was pointed out that a lot of these abilities fall under the purview of reflection; EWG recommended continuing to pursue the idea in the Reflection Study Group.

- A proposal to allow initializer lists to contain movable elements. EWG didn’t find anything objectionable about this per se, but didn’t feel it was sufficiently motivated, and encouraged the author to return with more compelling motivating examples.

- A proposal to standardize a [[pure]] attribute, which would apply to a function and indicate that the function had no side effects. Everyone wants some form of this, but people disagree on the exact semantics, and how to specify them. The prevailing suggestion seemed to be to specify the behaviour in terms of the “abstract machine” (an abstraction used in the C++ standard text to specify behaviour without getting into implementation-specific details), and to explore standardizing two attributes with related semantics: one to mean “the function can observe the state of the abstract machine, but not modify it”, and another to mean “the function cannot observe the state of the abstract machine, except for its arguments”. To illustrate the difference, a function which reads the value of a global variable (which could change between invocations) would satisfy the first condition, but not the second; such a function has no side effects, but different invocations can potentially return different values. GCC has (non-standard) attributes matching these semantics, [[gnu::pure]] and [[gnu::const]], respectively.

- A proposal to allow non-type template parameters of deduced type, spelt template <auto N>. This was almost approved, but then someone noticed a potential conflict with the Concepts TS. In the Concepts TS, auto is treated as a concept which is modelled by all types, and in most contexts where auto can be used, so can a concept name. Extending those semantics to this proposal, the meaning of template <ConceptName N> ought to be “a template with a single non-type template parameter whose type is deduced but must satisfy the concept ConceptName”. The problem is, template <ConceptName T> currently has a different meaning in the Concepts TS: “a template with a single type template parameter which must satisfy ConceptName”. EWG encouraged the proposal author to work with the editor of the Concepts TS to resolve this conflict, and to propose any resulting feature for addition into the Concepts TS.

- A revised version of a proposal to allow template argument deduction for constructors. This would allow omitting the template argument list from the name of a class when constructing it, if the template arguments can be deduced from the constructor. The proposal contained two complementary mechanisms for performing the deduction. The first is to perform deduction against the set of constructors of the primary template of the class, as if they were non-member functions and their template parameters included those of the class. The second is to introduce a new construct called a “canonical factory function”, which would be outside the class, and would look something like this:

    template <typename Iter>
    vector(Iter begin, Iter end) -> vector<ValueTypeOf<Iter>>;

The meaning of this is “if vector is constructed without explicit template arguments and the constructor arguments have type Iter, deduce vector’s template argument to be ValueTypeOf<Iter>”. The proposal author recommended allowing both forms of deduction, and EWG, after discussing both at length, agreed; the author will write standard wording for the next meeting.

- Extend the for loop syntax to run different code when the loop exited early (using break) than when the loop exited normally. This avoids needing to save enough state outside the loop’s scope to be able to test how the loop exited, which is particularly problematic when looping over ranges with single-pass iterators. EWG agreed this problem is worth solving, but thought the proposal wasn’t nearly well baked enough. A notable concern was that the proposed syntax, if for (...) { /* loop body */ } { /* normal exit block */ } else { /* early exit block */ }, had the reverse of the semantics of Python’s existing for ... else syntax, in which else denotes code to be run if the loop ran to completion.

- A proposal to allow something like using-declarations inside attributes. The proposed syntax was [[using(ns), foo, bar, baz]], which would be a shorthand for [[ns::foo, ns::bar, ns::baz]]. EWG liked the idea, but felt some more work was necessary to get the lookup rules right (e.g. what should happen if an attribute name following a using is not found in the namespace named by the using).

- [[unused]], [[nodiscard]], and [[fallthrough]] attributes, whose meanings are roughly “if this entity isn’t used, that’s intentional”, “it’s important that callers of this function use the return value”, and “this switch case deliberately falls through to the next”. The purpose of the attributes is to allow implementations to give or omit warnings related to these scenarios more accurately; they all exist in the wild as implementation-specific attributes, so standardizing them makes sense. EWG liked these attributes, but slightly preferred the name [[maybe_unused]] for the first, as [[unused]] might wrongly suggest the semantics “this should not be used”. The notion that [[nodiscard]] should be something other than an attribute (such as a keyword) so that the standard can require a diagnostic if the result of a function so marked is discarded, came up but was rejected.

- A proposal for de-structuring initialization, that would allow writing auto {x, y, z} = expr; where the type of expr was a tuple-like object, whose elements would be bound to the variables x, y, and z (which this construct declares). “Tuple-like objects” include std::tuple, std::pair, std::array, and aggregate structures. The proposal lacked a mechanism to adapt a non-aggregate user-defined type to be “tuple-like” and work with this syntax; EWG’s feedback was that such a mechanism is important. Moreover, EWG recommended that the proposal be expanded to allow (optionally) specifying types for x, y, and z, instead of having their types be deduced.

- A paper outlining a strategy for unifying the stackless and stackful coroutine proposals. The paper argued that the stackless/stackful distinction is focusing in on one dimension of the design space (the call stack implementation), while there are a number of other dimensions, such as forward progress guarantees, thread-local storage, and lock ownership; it further observed that coroutines have a lot in common with threads, fibres, task-region tasks, and other similar constructs – collectively, “threads of execution” – and that unification should be sought across all these dimensions. EWG encouraged the author to come back with a fleshed-out proposal.

- EWG looked at some design issues that came up during wording review of the default comparisons proposal. The most significant one concerned the name lookup rules for auto-generated comparisons. The current wording effectively lexically expands a comparison like a == b into something like a.foo == b.foo && a.bar == b.bar at each call site, performing lookup for each member’s comparison operator at the call site. As these lookups can yield different results for different call sites, the comparison can have different semantics at different call sites. People didn’t like this; several alternatives were proposed along the lines of generating a single, canonical comparison operator for a type, and using it at each call site. An updated proposal that formalizes one of these alternatives is expected at the next meeting.
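Several of the proposals in this list can be tried out in the form in which they later appeared in C++17, so the sketch below assumes a C++17 compiler and uses the C++17 spellings, which differ in places from what was presented at this meeting: the de-structuring syntax is written with square brackets (`auto [x, y, z]`), and the attribute is named `[[maybe_unused]]`. The names `Counter`, `Constant`, `Bag`, `make_triple`, and `score` are hypothetical.

```cpp
#include <cassert>
#include <iterator>
#include <tuple>
#include <vector>

// Capturing *this by value: the lambda owns a copy of the object,
// so it is unaffected by later changes to (or destruction of) the original.
struct Counter {
    int value = 0;
    auto snapshot() const {
        return [*this] { return value; };
    }
};

// Non-type template parameter whose type is deduced from the argument.
template <auto N>
struct Constant {
    static constexpr auto value = N;
};

// Template argument deduction for constructors, guided by a declaration
// outside the class that maps constructor argument types to Bag's argument.
template <typename T>
struct Bag {
    std::vector<T> items;
    template <typename Iter>
    Bag(Iter first, Iter last) : items(first, last) {}
};
template <typename Iter>
Bag(Iter, Iter) -> Bag<typename std::iterator_traits<Iter>::value_type>;

// A tuple-like return value, to be unpacked by de-structuring initialization.
inline std::tuple<int, double, char> make_triple() {
    return std::make_tuple(1, 2.5, 'c');
}

// The three warning-related attributes in use.
[[nodiscard]] inline int score(int level, [[maybe_unused]] bool verbose) {
    int points = 0;
    switch (level) {
    case 0:
        points += 1;
        [[fallthrough]];  // deliberate: level 0 also earns the level 1 point
    case 1:
        points += 1;
        break;
    default:
        break;
    }
    return points;
}
```

For example, `Bag bag(source.begin(), source.end());` with a `std::vector<int> source` deduces `Bag<int>` without spelling out the template argument, and `auto [x, y, z] = make_triple();` declares three variables bound to the tuple's elements.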

Rejected proposals:

- Making void be an object type, so that you can use it the way you can use any object type (that is, you can instantiate it, form a reference to it, and other things currently forbidden for void). The motivation was to ease the writing of generic code, which often needs to specialize templates to handle void correctly. EWG agreed the problem was worth solving, but didn’t like this approach; the strongest objections revolved around situations where the inability to use void in a particular way is an important safeguard, such as trying to delete a void*, or trying to perform pointer arithmetic on one, both of which would have to be allowed (or otherwise special-cased) under this scheme.

- Extension methods, which would allow the first parameter of a non-member function to be named this, and allow calling such functions using the member function call syntax. This is essentially an opt-in version of the “x.f(y) can resolve to f(x, y)” half of the unified function call syntax proposal, which is the half that didn’t pass. It was shot down mostly for the same reasons that the full (no opt-in) version was (concerns about making code more brittle), but even proponents of a full unified call syntax opposed it because they felt an opt-in policy was too restrictive (not allowing existing free functions to be called with a member syntax), and that the safety objectives that motivated opt-in could be better accomplished through Modules.

- A revised version of the generalized lifetime extension proposal (originally presented in Urbana) that would extend C++’s “lifetime extension” rules. These rules specify that if a local reference variable is bound to a temporary, the lifetime of the temporary (which would normally end at the end of the statement) is extended to match the lifetime of the local variable. The proposal would extend the rules to apply to temporaries appearing as subexpressions of the variable’s initializer, if the compiler can determine that the result of the entire initializer expression refers to one of these temporaries. To make this analysis possible for initializers that contain calls to separately-defined functions, annotations on function parameters (that essentially say whether the result of the function refers to the parameter) would be required. EWG’s view was that the problem this aims to solve (a category of subtle object lifetime issues) is not big enough to warrant requiring such annotations. It’s worth noting that Microsoft is developing a tool that would use annotations of a very similar kind (with well-chosen defaults to reduce verbosity) to warn about such object lifetime issues.

- noexcept(auto). This wasn’t so much a rejection, as a statement from the proposal author that he does not intend to pursue this proposal further, in spite of EWG having consensus for it at the last meeting. A notable reason for this change in course was the observation that authors of generic code who would benefit most from this feature, often want the function’s return expression to appear in the declaration (such as in the noexcept-specification) for SFINAE purposes.

- Relaxing a rule about a particular use of unions in constant expressions. If all union members share a “common initial sequence”, then C++ allows accessing something in this sequence through any union member, not just the active one. In constant expressions, this is currently disallowed; the proposal would allow it. Rejected because an implementer argued that it would have a significant negative impact on the performance of their constant expression evaluation.

- Replacing std::uncaught_exceptions(), previously added to C++17, with a different API that the author argued is harder to misuse. EWG didn’t find this compelling, and there was no consensus to move forward with it.

A proposal for looping simultaneously over multiple ranges was not heard because there was no one available to present it.
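To show the kind of special-casing that motivated the void proposal in the rejected list above, here is a small sketch of a generic wrapper that has to treat void separately. It assumes a C++17 compiler and uses `if constexpr` (the C++17 spelling of the `constexpr_if` discussed earlier) for the branch. `Outcome` and `run` are hypothetical names, not something from the proposal.

```cpp
#include <cassert>
#include <type_traits>

// Holds the result of a call. The primary template stores a T ...
template <typename T>
struct Outcome {
    T value;
};

// ... but a member of type void is not allowed, so void needs
// its own specialization with nothing stored.
template <>
struct Outcome<void> {};

// Invokes a callable and packages its result, whatever the return type.
template <typename F>
auto run(F callable) -> Outcome<decltype(callable())> {
    using Result = decltype(callable());
    if constexpr (std::is_void_v<Result>) {
        callable();           // nothing to store
        return {};
    } else {
        return {callable()};  // store the returned value
    }
}
```

If void were an ordinary object type, both the specialization and the branch inside `run` could in principle be dropped; the objections recorded above concern what else would become legal as a side effect.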

Modules

I talked above about the target ship vehicle for Modules being a Technical Specification rather than C++17. Here I’ll summarize the technical discussion that led to this decision.

Modules enable C++ programmers to fundamentally change how they structure their code, and derive many benefits as a result, ranging from improved build times to better tooling. However, programmers often don’t have the luxury of making such fundamental changes to their existing codebases, so there is an enormous demand for implementations to support paradigms that allow reaping some of these benefits with minimal changes to existing code. A big open question is, to what extent should this set of use cases – transitioning existing codebases to a modular world – influence the design of the language feature as standardized, versus being provided for in implementation-specific extensions. Having different answers for this question is the largest source of divergence between the two current Modules implementations.

The most significant issue that this question manifests itself in, is whether modules should “carry” macros; that is, whether macros should be among the set of semantic entities that one module can export and another import.

There are compelling arguments on both sides. On the one hand, due to their nature (being preprocessor constructs, handled during a phase of translation when no syntactic or semantic information is yet available), macros hugely complicate the analysis of C++ code by tools. The vast majority of their use cases now have non-preprocessor-based alternatives, from const variables and inline functions introduced back in the days of C, to reflection features like source code information capture (to replace things like __FILE__) being standardized today. They are widely viewed as a scourge on the language, and a legacy feature that has no place in new code and does not deserve consideration when designing new language features. As a result, many argue that Modules should not have first-class support for macros. This is the position reflected in Microsoft’s implementation. (It’s important to note that Microsoft’s implementation does have a mechanism for dealing with macros to support transitioning existing codebases: a compiler flag that can be used when compiling a module that, in addition to generating a module interface file, generates a “side header” containing macro definitions that a consumer of the module can #include in addition to importing the module. However, this is strictly an extension and not part of the language feature as they propose it.)

On the other hand, practically all existing large codebases include components that use macros in their interfaces – most notably system headers – and this is unlikely to change in the near future. (As someone put it, “no one is going to rewrite all of POSIX to not use macros any time soon”.) To allow modularizing such codebases in a portable way, many argue that it’s critical that Modules have first-class, standardized support for macros. This is the position reflected in Clang’s implementation.
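To make the trade-off a little more concrete, the sketch below contrasts a macro-based interface with language-level counterparts of the kind mentioned above (const variables and inline functions). It is ordinary header-style code and does not use any Modules syntax; the names `LEGACY_BUFFER_SIZE`, `LEGACY_SQUARE`, `buffer_size`, and `square` are made up for illustration.

```cpp
#include <cassert>

// Macro-based interface: these exist only for the preprocessor, so a tool
// (or a module system that does not carry macros) never sees them as entities.
#define LEGACY_BUFFER_SIZE 4096
#define LEGACY_SQUARE(x) ((x) * (x))

// Language-level counterparts: ordinary named entities with types and scopes,
// which is what a module could export without any macro support.
constexpr int buffer_size = 4096;
constexpr int square(int x) { return x * x; }
```

One practical difference is that `LEGACY_SQUARE(next())` expands to two calls of `next()`, whereas `square(next())` evaluates its argument once. A codebase whose interface relies on macros like the first pair is the case that the “modules should carry macros” position is meant to serve.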

Factoring into this debate is the state of progress of the two implementations. Microsoft claims to have a substantially complete implementation of their design (where modules do not carry macros), to be released as part of Visual C++ 2015 Update 1, and has submitted a paper with standard wording to the committee. The Clang folks have not yet written such a paper or wording for their design (where modules do carry macros), because they feel their implementation is not yet sufficiently complete that they can be confident that the design works.

EWG discussed all this, and recognized the practical need to support macros in some way, while also recognizing that there is a lot of demand to have some form of Modules available for people to use as soon as possible. A preference was expressed for standardizing Microsoft’s “ready to go” design in some form, while treating the additional abilities conferred by Clang’s design (namely, modules carrying macros) as a possible future extension. The decision to pursue Microsoft’s design as a Technical Specification rather than in C++17 was made primarily because at this stage, we cannot yet be confident that Clang’s design can be expressed as a pure, no-breaking-changes extension to Microsoft’s design. A Technical Specification comes with no guarantee that future standards will be compatible with it, making it the safer and more appropriate choice of ship vehicle.

Later in the week, there was an informal evening session about the implementation of Modules. Many interesting topics were brought up, such as whether the result of compiling a module is suitable as a distribution format for code, and what the implications of that are for things like DRM. Such topics are strictly out of scope of standardization by the committee, but it’s good to hear implementers are thinking about them.

Contracts

Two evening sessions were held on the topic of contract programming, to try to make progress towards standardization. Building on the consensus from the previous meeting that it should be possible to express contracts both in the interface of a function (such as a precondition stated in the declaration) and in the implementation (such as an assertion in the function body), the group tackled some remaining technical challenges.

The security implications of having a global “contract violation handler” which anyone can install were discussed: it opens up an attack vector where malicious code sets a handler and causes the program to violate a contract, leading to execution of the handler. It was observed that two existing language features, the “terminate handler” (called when std::terminate() is invoked) and the “unexpected handler” (called when a function throws an exception that its dynamic exception specification does not allow) have a similar problem, and protection mechanisms employed for those can be applied to the contract violation handler as well.

The thorniest issue was the interaction between a possibly-throwing contract violation handler and a noexcept function: what should happen if a noexcept function has a precondition, the program is compiled in a mode where preconditions are checked, the precondition is checked and fails, the contract violation handler is invoked, and it throws an exception? The possibility of “just allowing it” was considered but rejected, as it would be very problematic for noexcept to mean “does not throw, unless the precondition fails” (code relying on the noexcept would be operating on a possibly-incorrect assumption). The possibilities of not allowing contract violation handlers to throw, and of not allowing preconditions on noexcept functions, were also considered but discarded as being too restrictive. The consensus in the end was that noexcept functions can have preconditions, and contract violation handlers can throw, but if an exception is thrown from a contract violation handler while checking the preconditions of a noexcept function, the program will terminate. This is consistent with the more general rule that if an exception is thrown from a noexcept function, the program terminates.
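The agreed rule can be approximated with today's language. The sketch below is only a model of the behaviour, with the precondition check written as an ordinary function call inside the function body; the handler type, `installed_handler`, `check_precondition`, and the two `element_at` functions are all hypothetical names rather than anything proposed.

```cpp
#include <cassert>
#include <stdexcept>

// Hypothetical handler type: called with a description of the failed check.
using violation_handler = void (*)(const char*);

// A handler that reports the violation by throwing.
inline void throwing_handler(const char* description) {
    throw std::logic_error(description);
}

// Hypothetical global handler slot, standing in for an installable handler.
inline violation_handler& installed_handler() {
    static violation_handler handler = throwing_handler;
    return handler;
}

inline void check_precondition(bool condition, const char* description) {
    if (!condition) installed_handler()(description);
}

// Not noexcept: an exception thrown by the handler propagates to the caller.
inline int element_at(const int* data, int size, int index) {
    check_precondition(index >= 0 && index < size, "index out of range");
    return data[index];
}

// noexcept: if the handler throws here, the exception cannot leave the
// function, so std::terminate() is called instead.
inline int element_at_noexcept(const int* data, int size, int index) noexcept {
    check_precondition(index >= 0 && index < size, "index out of range");
    return data[index];
}
```

Calling `element_at` with a bad index lets the caller catch `std::logic_error`, while the same call to `element_at_noexcept` would end the program, which matches the existing rule for exceptions escaping a noexcept function.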

The question of whether a contract violation handler should be allowed to return, i.e. neither throw nor terminate but allow execution of the contract-violated function to proceed, came up, but there was no time for a full discussion on this topic.

Concepts Design Review

When the Concepts TS was balloted by national standards bodies, some of the resulting ballot comments were deferred as design issues to be considered for the next revision of the TS (or its merger into the standard, whichever happens first). EWG looked at these issues at this meeting. Here’s the outcome for the most notable ones:

- A suggestion to remove terse notation (where void foo(ConceptName c) declares a constrained template function) was rejected on the basis that user feedback about this feature so far has been mostly positive.

- A suggestion to remove one or more of the four current ways of declaring a constrained template function was rejected on the basis that no specific proposal as to what to remove has been made; the comment authors are welcome to write such a specific proposal if they wish.

- Unifying the two ways of declaring concepts (as a variable and as a function) was discussed. EWG agreed that this is a worthy goal, but there is no specific proposal on the table. (“Just remove function concepts” isn’t a viable approach because variable concepts cannot be overloaded on the kind and arity of their template parameters, and such overloading is considered an important use case.)

- Allowing the evaluation of a concept anywhere (such as in a static_assert or a constexpr_if), not just in a requires-clause, was approved.

- A suggestion to add syntax for same-type constraints (in addition to the existing syntax for “convertible-to” constraints) was rejected on the basis that same-type constraints can easily be expressed as convertible-to constraints with the use of a simple helper concept (e.g. { expr } -> Same).
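The difference between a convertible-to check and a same-type check, and the idea of evaluating such a check directly in a static_assert, can be approximated with plain type traits. This is only an analogue, not Concepts TS syntax: `ConvertibleTo` and `Same` below are ordinary class templates written for this sketch, and `count_items` is a hypothetical function.

```cpp
#include <cassert>
#include <type_traits>

// Hypothetical function whose result type we want to constrain.
inline int count_items() { return 3; }

// Type-trait stand-in for a "convertible-to" constraint on an expression's type.
template <typename From, typename To>
struct ConvertibleTo : std::is_convertible<From, To> {};

// Type-trait stand-in for a "same-type" helper: true only for an exact match.
template <typename T, typename U>
struct Same : std::is_same<T, U> {};

// Checks evaluated outside of any template, directly in static_assert.
static_assert(ConvertibleTo<decltype(count_items()), long>::value,
              "int is convertible to long");
static_assert(!Same<decltype(count_items()), long>::value,
              "int is not the same type as long");
static_assert(Same<decltype(count_items()), int>::value,
              "int is the same type as int");

// Runtime view of the same checks, callable from a program's main().
inline void check_constraints() {
    assert((ConvertibleTo<int, double>::value));
    assert((!Same<int, double>::value));
}
```

The convertible-to check accepts any result type that converts to the target, while the same-type helper accepts only an exact match, which is why a small helper is enough to express the stricter constraint.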

See also the section above where I talk about the bigger picture about Concepts and the possibility of the TS being merged into C++17.

Library / Library Evolution Working Groups

Having spent practically all of my time in EWG, I didn’t have much of a chance to follow developments on the library side of things, but I’ll summarize what I’ve gathered during the plenary sessions.

I already listed library features accepted into C++17 at this meeting (and at previous meetings) above.

Library Fundamentals TS

The first revision of the Library Fundamentals TS was recently published and could be proposed for merger into C++17.

The second revision came into the meeting containing the following:

|

|

Matt Thompson: GitDone |

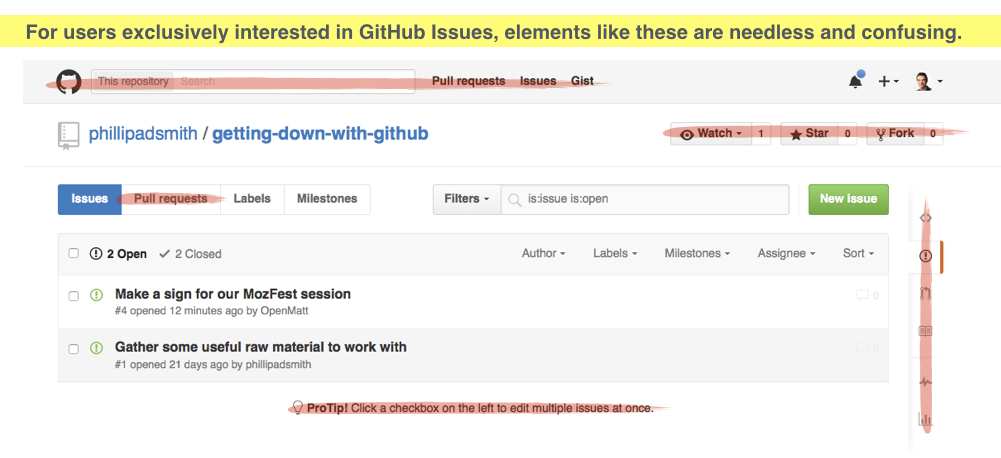

GitDone aims to make GitHub Issues easier to use. It’s a simple browser extension that streamlines the GitHub Issues interface for new users and non-developers — and can add handy features and shortcuts that project managers and Task Rabbits will love.

GitDone was born at the 2015 Mozilla Festival. It was one of twelve ideas our session came up with for making GitHub easier and more powerful for new audiences. Phillip Smith and the heroic Darren Mothersele then spent the weekend hacking on an early prototype.

Right now it’s just a bare-bones proof of concept for developers. We’re hoping the next 0.2 release will start to deliver enough value that we can begin testing and human trials with actual project managers and novice GitHub Issues users.

Who is GitDone for?

- Project Managers

- Non-technical users. People new to GitHub or GitHub Issues.

- Anyone looking for a fast, easy task manager. Or an issue tracker that both technical and non-technical audiences will like and use.

Why GitDone?

In many ways, GitHub Issues is like a “product unto itself.” For a growing segment of users, it’s the only part of GitHub they regularly use.

Project managers like me and my team-mates at Mozilla love it. Of all the issue trackers out there (Bugzilla, Asana, etc.), it’s arguably the easiest to use. And it has a huge added benefit: it’s the same tool our developer colleagues are already using — a major bonus.

The challenge is…

- GitHub can be intimidating for new users. On first use, GitHub looks and smells like a place that’s “just for developers.” That can make it harder for project managers to explain and get their non-technical team-mates to embrace.

- GitHub’s language and terminology is foreign. For project managers or people just trying to get tasks done, terms like “repos” and “pull requests” don’t make sense.

- Routine tasks take too many clicks. It’d be nice to get common features like “create new task” or “see all my tasks” into a toolbar that’s always close to hand. And some features are hard to find in the current GitHub interface. (e.g., “How do I add a new label?”)

By hiding some of GitHub’s complexity for “Task Rabbits,” our hope is that we can make it easier for new users to understand and get started. We can also make UI tweaks, translate terms and add shortcuts that make project managers’ lives easier.

GitDone 0.1 and beyond

We just released the GitDone 0.1 “cupcake” release — a bite-sized proof of concept. We’d love your ideas and help with release 0.2, which we can then test with some real-life project managers, get feedback, and see whether GitDone 0.3 should… get done.

GitDone 0.1 focused on:

- Removing stuff that’s confusing. Hide parts of the GitHub Issues interface that are confusing for new users.

- Providing some tool buttons for commonly performed tasks. A lot of value can be provided just by pulling helpful GitHub functions and links into a toolbar.

GitDone 0.2 will:

- Add stuff that’s helpful. Add elements to the interface that project managers will love. (Like: displaying labels and milestones right on the project page, adding a “new label” button right on the label list, etc.)

- Then: test with humans. Gather feedback. Decide whether / what for release 0.3

What’s the ultimate goal?

- Make a simpler issues management experience

- Provide a gateway drug / on-ramp into the rest of GitHub for new users

- Help non-developers interact with developers productively on a shared platform

|

|

Daniel Pocock: debian.org RTC: announcing XMPP, SIP presence and more |

Announced 7 November 2015 on the debian-devel-announce mailing list.

The Debian Project now has an XMPP service available to all Debian Developers. Your Debian.org email identity can be used as your XMPP address.

The SIP service has also been upgraded and now supports presence. SIP and XMPP presence, rosters and messaging are not currently integrated.

The Lumicall app has been improved to enable rapid setup for Debian.org SIP users.

This announcement concludes the maintenance window on the RTC services. All services are now running on jessie (using packages from jessie-backports).

XMPP and SIP enable a whole new world of real-time multimedia communications possibilities: video/webcam, VoIP, chat messaging, desktop sharing and distributed, federated communication are the most common use cases.

Details about how to get started and get support are explained in the User Guide in the Debian wiki. As it is a wiki, you are completely welcome to help it evolve.

Several of the people involved in the RTC team were also at the Cambridge mini-DebConf (7-8 November).

The password for all these real time communication services can be set via the LDAP control panel. Please note that this password needs to be different to any of your other existing debian.org passwords. Please use a strong password and please keep it secure.

Some of the infrastructure, like the TURN server, is shared by clients of both SIP and XMPP. Please configure your XMPP and SIP clients to use the TURN server for audio or video streaming to work most reliably through NAT.

A key feature of both our XMPP and SIP services is that they support federated inter-connectivity with other domains. Please try it. The FedRTC service for Fedora developers is one example of another SIP service that supports federation. For details of how it works and how we establish trust between domains, please see the RTC Quick Start Guide. Please reach out to other communities you are involved with and help them consider enabling SIP and XMPP federation of their own communities/domains: as Metcalfe's law suggests, each extra person or community who embraces open standards like SIP and XMPP has far more than just an incremental impact on the value of these standards and makes them more pervasive.

If you are keen to support and collaborate on the wider use of Free RTC technology, please consider joining the Free RTC mailing list sponsored by FSF Europe. There will also be a dedicated debian-rtc list for discussion of these technologies within Debian and derivatives.

This service has been made possible by the efforts of the DSA team in the original SIP+WebRTC project and the more recent jessie upgrades and XMPP project. Real-time communications systems have specific expectations for network latency, connectivity, authentication schemes and various other things. Therefore, it is a great endorsement of the caliber of the team and the quality of the systems they have in place that they have been able to host this largely within their existing framework for Debian services. Feedback from the DSA team has also been helpful in improving the upstream software and packaging to make them convenient for system administrators everywhere.

Special thanks to Peter Palfrader and Luca Filipozzi from the DSA team, Matthew Wild from the Prosody XMPP server project, Scott Godin from the reSIProcate project, Juliana Louback for her contributions to JSCommunicator during GSoC 2014, Iain Learmonth for helping get the RTC team up and running, Enrico Tassi, Sergei Golovan and Victor Seva for the Prosody and prosody-modules packaging and also the Debian backports team, especially Alexander Wirt, helping us ensure that rapidly evolving packages like those used in RTC are available on a stable Debian system.

http://danielpocock.com/debian.org-rtc-announcing-xmpp-sip-presence-and-more

|

|

This Week In Rust: This Week in Rust 104 |

Hello and welcome to another issue of This Week in Rust! Rust is a systems language pursuing the trifecta: safety, concurrency, and speed. This is a weekly summary of its progress and community. Want something mentioned? Tweet us at @ThisWeekInRust or send us an email! Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub. If you find any errors in this week's issue, please submit a PR.

This week's edition was edited by: nasa42, brson, and llogiq.

Updates from Rust Community

News & Blog Posts

- There were two major breaking events in the ecosystem this week due to a libc upgrade and a winapi upgrade.

- Bare metal Rust: Low-level CPU I/O ports.

- Macros in Rust - Part 1.

- Macros in Rust - Part 2. Procedural macros.

- Macros in Rust - Part 3. Macro hygiene in Rust.

- Using rustfmt in Vim.

- Writing my first Rust crate: jsonwebtoken.

- Learning to 'try!' things in Rust.

- [video] Concurrency in Rust.

- This week in Redox 5.

- This week in Servo 40.

Notable New Crates & Projects

- Organn. A simple drawbar organ in Rust.

- libloading. A safer binding to platform’s dynamic library loading utilities.

Updates from Rust Core

104 pull requests were merged in the last week.

See the triage digest for more details.

Notable changes

- Library FCP Issues for the 1.6 cycle.

- Deprecate _ms functions that predate the Duration API.

- Implement IntoIterator for &{Path, PathBuf}.

- Use guard-pages also on DragonFly/FreeBSD.

- Make Windows directory layout uniform with everything else.

- Expose drop_in_place as ptr::drop_in_place.

- Make all integer intrinsics generic.

- Check whether a supertrait contains Self even without being it.

- Improve error handling in libsyntax.

New Contributors

- Amanieu d'Antras

- Amit Saha

- Bruno Tavares

- Daniel Trebbien

- Ivan Kozik

- Jake Worth

- jrburke

- Kyle Mayes

- Oliver Middleton

- Rizky Luthfianto

Approved RFCs

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

Final Comment Period

Every week the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now. This week's FCPs are:

- Allow overlapping implementations for marker traits.

- #[deprecated] for Everyone.

- Improvements to the Time APIs.

- Define the general semantics of intrinsic functions.

- Add an alias attribute to #[link] and -l.

New RFCs

- Add retain_mut to Vec and VecDeque.

- Add #[cfg(...)] attribute to enable conditional compilation dependent on size and alignment of FFI types.

Upcoming Events

- 11/9. Seattle Rust Meetup.

- 11/10. San Diego Rust Meetup #10.

- 11/11. RustBerlin Hack and Learn.

- 11/13. Rust Rhein-Main.

- 11/14. Rust at the Hungarian Web Conference.

- 11/16. Rust Paris.

- 11/17. Rust Hack and Learn Hamburg.

- 11/18. Rust Los Angeles Monthly Meetup.

If you are running a Rust event please add it to the calendar to get it mentioned here. Email Erick Tryzelaar or Brian Anderson for access.

fn work(on: RustProject) -> Money

- Research Engineer - Servo at Mozilla.

Tweet us at @ThisWeekInRust to get your job offers listed here!

Crate of the Week

This week's Crate of the Week is ramp. Ramp supplies high-performance, low-memory, easy-to-use big integral types.

Whenever you need integers too large for a u64 and cannot afford to lose precision, ramp has just what you need.

Thanks to zcdziura for this week's suggestion. Submit your suggestions for next week!

Quote of the Week

with unsafe .... if you have to ask, then you probably shouldn't be doing it basically

— Steve Klabnik on #rust IRC.

Thanks to Oliver Schneider for the tip.

Submit your quotes for next week!

http://this-week-in-rust.org/blog/2015/11/09/this-week-in-rust-104/

|

|

Air Mozilla: Mozfest 2015: Second Day Closing |

The wrap-up session for Mozfest 2015, from Ravensbourne College in London.

|

|

Air Mozilla: Doc Searles: Giving Users Superpowers |

David "Doc" Searls (born July 29, 1947), co-author of The Cluetrain Manifesto and author of The Intention Economy: When Customers Take Charge, is an American...

https://air.mozilla.org/doc-searles-giving-users-superpowers/

|

|

Christian Heilmann: [Excellent talks] “OnConnectionLost: The life of an offline web application” at JSConf EU 2015 |

I spend a lot of time giving and listening to talks at conferences and I want to point out a few here I enjoyed.

At JSConfEU this year Stefanie Grewenig and Johannes Thönes talked about offline applications: