You can add any RSS source (including a LiveJournal journal) to your friends feed on the syndication page.

Original source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

For any questions about how this service works, please use the contact information page.

Nick Cameron: Macros in Rust pt4

Previously: intro, macro_rules, procedural macros, hygiene.

This post is a grab-bag of stuff that didn't fit elsewhere. First, I'll discuss some more macro_rules stuff around import/export/modularisation of macros. Then I'll cover a few more implementation details. Next time, I'll get into what I think are issues that we should fix.

scoping and import/export

In Rust, the ordering of items is mostly irrelevant, i.e., you can refer to a function before it is declared. For macros, however, order is important: you can only refer to a macro after it is declared. This applies even when the declaration and/or use is inside a module. E.g.,

```rust
mod a; // can't name foo!

macro_rules! foo { ... }

mod b; // can name foo!
```

The module system works differently for macros: the module structure does not impose a naming scheme on macros as it does on other items. Macros defined inside a module cannot be used outside it unless that module is annotated with #[macro_use]. That means you can define module-private macros. If you only want to use some macros in the module, you can list those macros in parentheses, e.g., #[macro_use(foo, bar)]. Macros defined before and outside a module can be used inside the module without being imported.

```rust
#[macro_use]
mod foo {
    pub fn bar() {}

    // Doesn't need to be `pub` (and can't be).
    macro_rules! baz ...
}

fn main() {
    ::foo::bar(); // bar cannot be named without importing it here or using a path.
    baz!(); // baz can - modules don't matter for naming.
}
```

Macros are encapsulated by crates. They must be explicitly imported and exported. When declaring a macro, if you want it to be visible in other crates, you must mark it with #[macro_export]. When importing a crate using extern crate you must annotate the extern crate statement with #[macro_use]. You can also re-export macros from a crate (so somebody that pulls in your crate can use the macros) using #[macro_reexport(reexported)]. Note that #[macro_reexport(reexported)] is feature gated.

As mentioned in the last post, you should nearly always refer to items and types in macro definitions using absolute paths. If a macro is exported and used in another crate, there is no way (in regular Rust code) to write an absolute path for the exporting crate which is valid in the importing crate. For example, in the crate foo we might refer to ::bar::baz but in the importing crate we would need foo::bar::baz. To solve this you can use the magic path element $crate, e.g., $crate::bar::baz. It will be replaced with the crate name when the macro is exported, but ignored (giving an absolute path) when the macro is used in the same crate in which it is declared.
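Here is a rough single-crate sketch of `$crate`. It assumes a current (2018 or later) edition of Rust. The module `util`, the function `double` and the macro `double!` are made-up names used only for illustration. Inside the defining crate, `$crate` resolves to the crate root. If another crate imported the macro, the same expansion would point back at this crate instead.

```rust
// Hypothetical module holding the helper that the macro calls.
pub mod util {
    pub fn double(x: i32) -> i32 {
        x * 2
    }
}

// `#[macro_export]` makes the macro visible to other crates; `$crate`
// keeps the path to `util::double` valid wherever the macro is expanded.
#[macro_export]
macro_rules! double {
    ($e:expr) => {
        $crate::util::double($e)
    };
}

fn main() {
    // Used inside the defining crate, `$crate` resolves to this crate's root.
    let v = double!(21);
    println!("{}", v); // prints 42
}
```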

Note that if crate A exports a macro that uses items defined in crate B, then any crate C that uses the macro will need an extern crate B;.

For more details and discussion, see RFC 453. Note that not everything in that RFC was actually implemented.

more implementation details

data structures

When discussing macros, there are three representations of the program which are important: the source text, token trees, and the AST.

The source text is the text as passed to rustc. It is stored (pretty much verbatim) in the codemap, a data structure representing an entire crate. Files loaded due to modules are included, but not inline (a CodeMap has many FileMaps, one per file). The codemap is immutable: it is not modified by macro expansion, or for any other reason. It can be indexed by character indices, byte indices, or by line.
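Below is a toy model of the codemap idea in Rust. It is not rustc's actual CodeMap or FileMap types. Files are appended into one shared range of byte offsets. A global offset is then mapped back to a file, line and column by binary search over the recorded line starts. Only byte indexing is shown, and all names and fields are illustrative assumptions.

```rust
// Toy model: each file's text lives in a FileMap, and all files share
// one global byte-offset space inside the CodeMap.
struct FileMap {
    name: String,
    start_pos: usize,        // global offset of this file's first byte
    src: String,
    line_starts: Vec<usize>, // file-relative offsets where lines begin
}

impl FileMap {
    fn new(name: &str, start_pos: usize, src: &str) -> FileMap {
        let mut line_starts = vec![0];
        for (i, b) in src.bytes().enumerate() {
            if b == b'\n' {
                line_starts.push(i + 1);
            }
        }
        FileMap { name: name.to_string(), start_pos, src: src.to_string(), line_starts }
    }
}

struct CodeMap {
    files: Vec<FileMap>,
}

impl CodeMap {
    fn new() -> CodeMap {
        CodeMap { files: Vec::new() }
    }

    // Files are appended, never edited; each takes the next range of offsets.
    fn add_file(&mut self, name: &str, src: &str) {
        let start = self.files.last().map_or(0, |f| f.start_pos + f.src.len());
        self.files.push(FileMap::new(name, start, src));
    }

    // Map a global byte offset to (file name, 1-based line, 1-based byte column).
    fn lookup(&self, pos: usize) -> Option<(&str, usize, usize)> {
        // Last file starting at or before `pos` (files are sorted by start_pos).
        let idx = self.files.partition_point(|f| f.start_pos <= pos).checked_sub(1)?;
        let file = &self.files[idx];
        let rel = pos - file.start_pos;
        if rel >= file.src.len() {
            return None;
        }
        // The number of line starts at or before `rel` is the 1-based line.
        let line = file.line_starts.partition_point(|&s| s <= rel);
        let col = rel - file.line_starts[line - 1] + 1;
        Some((file.name.as_str(), line, col))
    }
}

fn main() {
    let mut cm = CodeMap::new();
    cm.add_file("lib.rs", "mod a;\nfn main() {}\n");
    cm.add_file("a.rs", "pub fn f() {}\n");
    // Offset 7 is the `f` of `fn main` in lib.rs.
    println!("{:?}", cm.lookup(7)); // Some(("lib.rs", 2, 1))
}
```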

Once the source text has been lexed, it is not used very much by the compiler. The main use case is in error messages.

Lexing is the first stage of compilation, where source text is transformed into tokens. In Rust, we have token trees, rather than a token stream. There are tokens for each keyword, each punctuation mark recognised by Rust, string literals, names, etc. Tokens are mostly defined in token.rs. There are some interesting edge cases in tokenisation; for example, in <X: Foo<Y>> the tokens are <, X, :, Foo, <, Y, and >>. The >> is transformed into two > tokens during parsing.
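The `>>` case can be sketched with a hypothetical token cursor. This is not rustc's parser API. When the parser expects a closing `>` and sees `>>`, it consumes one half and leaves a single `>` behind for the next expectation. Tokens are plain strings here for brevity.

```rust
// Toy token cursor. Tokens are plain strings; the point is only how a
// `>>` token can be split when the parser expects a single `>`.
struct Parser {
    tokens: Vec<String>,
    pos: usize,
}

impl Parser {
    fn new(tokens: &[&str]) -> Parser {
        Parser { tokens: tokens.iter().map(|t| t.to_string()).collect(), pos: 0 }
    }

    // Return the current token and advance.
    fn bump(&mut self) -> Option<String> {
        let tok = self.tokens.get(self.pos).cloned();
        self.pos += 1;
        tok
    }

    // Consume one `>`. If the current token is `>>`, consume only its first
    // half by rewriting it in place to a single `>`.
    fn expect_gt(&mut self) -> Result<(), String> {
        let tok = self.tokens.get(self.pos).cloned();
        match tok.as_deref() {
            Some(">") => {
                self.pos += 1;
                Ok(())
            }
            Some(">>") => {
                self.tokens[self.pos] = ">".to_string();
                Ok(())
            }
            other => Err(format!("expected `>`, found {:?}", other)),
        }
    }
}

fn main() {
    // Tokens of `<X: Foo<Y>>` as listed above.
    let mut p = Parser::new(&["<", "X", ":", "Foo", "<", "Y", ">>"]);
    for _ in 0..6 {
        p.bump(); // `<`, `X`, `:`, `Foo`, `<`, `Y`
    }
    p.expect_gt().unwrap(); // closes `Foo<Y`, leaving one `>`
    p.expect_gt().unwrap(); // closes the outer `<X: ...`
    assert!(p.bump().is_none()); // nothing left
}
```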

Token trees are trees because we match opening and closing brackets - (), {}, and [] (but not <>). We do this so that we can find the scope of macros from the token tree without having to parse. This does mean you can't use unbalanced brackets in your input to macros, but that is a minor limitation. TokenTree is defined in ast.rs.
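Here is a minimal sketch of building token trees from an already-lexed token list, using a stack of open delimiters. The `TokenTree` enum below is a simplified stand-in for the real type in ast.rs. Only `()`, `[]` and `{}` are matched, so `<` and `>` stay ordinary leaf tokens. Unbalanced input is rejected.

```rust
// Simplified stand-in for a token tree: a leaf token, or a delimited group.
#[derive(Debug, PartialEq)]
enum TokenTree {
    Token(String),
    Delimited(char, Vec<TokenTree>), // opening delimiter and its contents
}

fn closing(open: char) -> char {
    match open {
        '(' => ')',
        '[' => ']',
        _ => '}',
    }
}

fn build(tokens: &[&str]) -> Result<Vec<TokenTree>, String> {
    // Each stack entry is an open delimiter and the trees collected inside
    // it; ' ' marks the implicit top level.
    let mut stack: Vec<(char, Vec<TokenTree>)> = vec![(' ', Vec::new())];
    for &tok in tokens {
        match tok {
            "(" | "[" | "{" => stack.push((tok.chars().next().unwrap(), Vec::new())),
            ")" | "]" | "}" => {
                let (open, trees) = stack.pop().unwrap();
                if open == ' ' || tok.chars().next() != Some(closing(open)) {
                    return Err(format!("unbalanced `{}`", tok));
                }
                stack.last_mut().unwrap().1.push(TokenTree::Delimited(open, trees));
            }
            // Everything else, including `<` and `>`, is a leaf token.
            _ => stack.last_mut().unwrap().1.push(TokenTree::Token(tok.to_string())),
        }
    }
    if stack.len() != 1 {
        return Err("unclosed delimiter".to_string());
    }
    Ok(stack.pop().unwrap().1)
}

fn main() {
    // `foo!(a, [b])` as pre-lexed tokens.
    let toks = ["foo", "!", "(", "a", ",", "[", "b", "]", ")"];
    println!("{:?}", build(&toks));
    println!("{:?}", build(&["(", "]"])); // Err: mismatched delimiter
}
```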

Parsing a token tree gives an AST (abstract syntax tree). An AST is a tree of nodes representing the source text in a fairly concrete way. Rust's AST even includes parentheses and doc comments, although not regular comments. Each expression and sub-expression is a node in the AST; for example, x.foo(a, b, c) is an ExprMethodCall with four sub-expressions for x, a, b, and c, each of which is an ExprPath. Likewise, an item like a trait is an AST node and its functions are child nodes.
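To illustrate the shape of such a tree, here is a toy expression AST with just two node kinds. They are loosely named after ExprPath and ExprMethodCall, but the real nodes also carry ids, spans and much more. The example builds x.foo(a, b, c) and counts its direct sub-expressions.

```rust
// Toy expression AST with two node kinds (illustrative, not rustc's types).
#[derive(Debug)]
enum Expr {
    Path(String),
    MethodCall { method: String, receiver: Box<Expr>, args: Vec<Expr> },
}

impl Expr {
    // Direct sub-expressions of this node: the receiver, then the arguments.
    fn children(&self) -> Vec<&Expr> {
        match self {
            Expr::Path(_) => Vec::new(),
            Expr::MethodCall { receiver, args, .. } => {
                let mut v = vec![&**receiver];
                v.extend(args.iter());
                v
            }
        }
    }
}

fn path(name: &str) -> Expr {
    Expr::Path(name.to_string())
}

fn main() {
    // x.foo(a, b, c)
    let call = Expr::MethodCall {
        method: "foo".to_string(),
        receiver: Box::new(path("x")),
        args: vec![path("a"), path("b"), path("c")],
    };
    println!("{} sub-expressions", call.children().len()); // 4 sub-expressions
}
```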

Many AST nodes have an id field, which is a NodeId; this gives an easy way to identify a node in the AST. NodeIds are crate-relative; for referring to nodes in other crates, we use DefIds. Many nodes also have a Span, which is basically a pair of start and end indices into the codemap. Spans are used for highlighting sections of code in error messages, among other things (more on these later).

parsing process

At the coarsest level you can think of Rust compilation in three phases: parsing and expansion, analysis, and code generation. The first phase is implemented in libsyntax and consists of lexing, parsing, and macro expansion. Everything in this phase is purely syntactic: we don't do any analysis which requires assigning meaning to code. The analysis and code generation phases are implemented in various librustc_* crates and begin with name resolution, which we discussed briefly in the last post. Libsyntax is slightly more user-facing than the rest of the compiler. We convert the AST into a high-level intermediate representation (HIR) in the compiler, before name resolution, so that the compiler's internals are isolated from libsyntax and from tools or procedural macros which hook into it.

Although we talk about lexing, parsing, and macro expansion as distinct phases, that is not really the case. As far as the compiler is concerned there is just parsing, which takes a file or string and returns an AST.

The process begins by reading the source text of the first file into a FileMap, which is stored in a CodeMap. That FileMap is lexed to produce a token tree, which is wrapped in a Parser. We then start parsing by reading the tokens in order.

If we encounter a mod foo; item, then we read the corresponding file into a FileMap (and store that in our codemap), lex it, wrap it in a parser, and parse it. That will give an ItemMod AST node, which we insert into the outer module's AST.

If we encounter a macro use (which includes uses of macro_rules!, i.e., a macro_rules macro definition), then we do not parse its arguments; we save the token tree into the AST without parsing it.

Once parsing is complete, we have an AST for the whole crate, which will include macro uses with un-parsed token trees as arguments. We can then start macro expansion.

As described last time, macro expansion has to walk the entire AST in order to properly implement macro hygiene. During this walk we build up a table of macro definitions. When we encounter a macro use on the walk, we look it up in this table and try to match the token tree argument with one of the patterns in the macro definition. If that succeeds then we perform substitution into the macro body, parse the result (which may even cause us to read more files and lex them), and insert it into the AST to replace the macro use. We then continue to walk down into the new AST to further expand macros.
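The expansion loop can be sketched very roughly on a flat token list rather than a real AST. This toy version makes three simplifying assumptions. Each macro has a single `$e` parameter. Definitions are supplied up front, whereas rustc collects them during the walk. Hygiene is ignored entirely.

What the sketch does show is the core cycle:
- keep the argument as unparsed tokens;
- substitute it into the body;
- splice the result in place of the macro use;
- re-scan, so macro uses produced by the expansion are expanded too.

A cap on the number of expansions stands in for a recursion limit.

```rust
use std::collections::HashMap;

// Toy macro expander over a flat token list. A use looks like
// `name ! ( tokens )`; each body may mention the single parameter `$e`.
fn expand(mut toks: Vec<String>, defs: &HashMap<&str, Vec<&str>>) -> Result<Vec<String>, String> {
    let mut i = 0;
    let mut expansions = 0;
    while i + 2 < toks.len() {
        if toks[i + 1] != "!" || toks[i + 2] != "(" {
            i += 1;
            continue;
        }
        // The argument stays as unparsed tokens: find the matching `)`.
        let mut depth = 0;
        let mut close = None;
        for (j, t) in toks.iter().enumerate().skip(i + 2) {
            if t == "(" {
                depth += 1;
            } else if t == ")" {
                depth -= 1;
                if depth == 0 {
                    close = Some(j);
                    break;
                }
            }
        }
        let close = close.ok_or("unclosed macro argument")?;
        let body = defs
            .get(toks[i].as_str())
            .ok_or_else(|| format!("unknown macro `{}`", toks[i]))?;
        // Substitute the argument tokens for `$e` in the macro body.
        let mut out = Vec::new();
        for t in body {
            if *t == "$e" {
                out.extend_from_slice(&toks[i + 3..close]);
            } else {
                out.push(t.to_string());
            }
        }
        // Splice the expansion in place of the use and re-scan from `i`.
        let rest = toks.split_off(close + 1);
        toks.truncate(i);
        toks.extend(out);
        toks.extend(rest);
        expansions += 1;
        if expansions > 128 {
            return Err("recursion limit reached".to_string());
        }
    }
    Ok(toks)
}

fn main() {
    let mut defs: HashMap<&str, Vec<&str>> = HashMap::new();
    defs.insert("foo", vec!["$e"]); // foo!($e) => $e
    defs.insert("bar", vec!["foo", "!", "(", "$e", ")"]); // bar!($e) => foo!($e)
    let src: Vec<String> = ["bar", "!", "(", "42", ")"].iter().map(|s| s.to_string()).collect();
    println!("{:?}", expand(src, &defs)); // Ok(["42"])
}
```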

Expanding procedural macros is similar, except that the procedural macro must explicitly do any parsing required.

At the end of this process we have an expanded AST. This contains a subset of AST nodes (no macro uses, although definitions are left in). Even at this stage there may still be unparsed token trees in the AST due to macro definitions. However, these are not used when compiling the crate; they are left in so that they can be referred to from other crates via the crate's metadata.

Spans and expansion stacks

A span identifies a section of code in the source text. It is implemented as two indices into the codemap: a start and an end. This saves a lot of memory compared with saving text inline. For example, for the statement let foo = bar(a, b); we store spans for a, b, bar, bar(a, b), foo, and the whole statement.

The primary use of spans is in error messages. Say we couldn't find a variable called b; then we would use the span on b's AST node to highlight it in the error message, along with some context.
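Here is a sketch of how a span can drive such a message. The function takes a (lo, hi) pair of byte offsets, finds the surrounding source line, and underlines the span with carets. It assumes the span lies on one line and that the text is ASCII, so byte offsets and display columns coincide. The `Span` struct here is a simplified stand-in for rustc's.

```rust
// A span as a pair of byte offsets into the source (hi is exclusive).
#[derive(Clone, Copy, Debug)]
struct Span {
    lo: usize,
    hi: usize,
}

// Render the source line containing `sp` with carets under the span,
// roughly in the style of a compiler diagnostic.
fn highlight(src: &str, sp: Span) -> String {
    let line_start = src[..sp.lo].rfind('\n').map_or(0, |i| i + 1);
    let line_end = src[sp.lo..].find('\n').map_or(src.len(), |i| sp.lo + i);
    let line = &src[line_start..line_end];
    let pad = " ".repeat(sp.lo - line_start);
    let carets = "^".repeat((sp.hi - sp.lo).max(1));
    format!("{}\n{}{}", line, pad, carets)
}

fn main() {
    let src = "fn main() {\n    let foo = bar(a, b);\n}\n";
    // Locate `b` inside `bar(a, b)`: three bytes after the `a`.
    let b = src.find("a, b").unwrap() + 3;
    println!("error: cannot find value `b`");
    println!("{}", highlight(src, Span { lo: b, hi: b + 1 }));
}
```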

If an error occurs due to code that came from a macro expansion, then there is no single part of the source code to highlight. We want to highlight the erroneous code in the macro, and the macro use, since the error could be due to code in either place. Furthermore, if the macro expansion had many steps (due to nested macros), we want to record each stage in expansion.

To handle this scenario, spans are more complex than just a start and end index. (By the way, Spans are defined in codemap.rs.) They also hold an ExpnId (expansion id), which is an index into a table of expansion traces; this table is stored in a CodeMap. Each entry in the table is an ExpnInfo instance. If there is no expansion trace (i.e., the span is code written directly by the user with no macro expansion), then the expansion id is NO_EXPANSION.

An ExpnInfo contains information about the macro use and the macro definition (called call_site and callee respectively, although technically, macros are not called).

Consider

```
 1 | fn main() {
 2 |     macro_rules! foo {
 3 |         ($e: expr) => {
 4 |             $e
 5 |         }
 6 |     }
 7 |
 8 |     macro_rules! bar {
 9 |         ($e: expr) => {
10 |             foo!($e);
11 |         }
12 |     }
13 |
14 |
15 |     bar!(42);
16 | }
```

This will expand to 42. The span of that token (expressed as row, column) will be Span { lo: (15, 10), hi: (15, 12), expn_id: 0 }, since that is where the token originates. The ExpnInfo entries for ids 0 and 1 (1 is referenced by 0) are:

```
0: ExpnInfo {
    call_site: Span { lo: (10, 13), hi: (10, 21), expn_id: 1 },
    callee: (foo, Span { lo: (2, 5), hi: (6, 6), expn_id: NO_ }),
}

1: ExpnInfo {
    call_site: Span { lo: (15, 5), hi: (15, 14), expn_id: NO_ },
    callee: (bar, Span { lo: (8, 5), hi: (12, 6), expn_id: NO_ }),
}
```

The call_site always refers to a macro call somewhere in the source; the callee refers to the whole macro which is used. Since our example macros are not defined by macros, there is no further expansion info for either callee. However, in general, expansion traces are trees of expansions.

If we think of the stages of expansion, the first step is to expand bar!(42) to foo!(42); this is given by ExpnInfo 1. Then we expand foo!(42) to 42, given by ExpnInfo 0. Note that the expansion trace starts with the fully expanded code and goes 'backwards' to the pre-expansion code. Note also that the span we have for 42 is for an argument to a macro, but the expansion trace is all about macros and their calls; we don't have information about how arguments are propagated.
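Here is a toy model of walking such a trace, using the example's data. Entry 1's callee is named `bar` here, since lines 8-12 define `bar`. `Option<usize>` stands in for an ExpnId, with `None` playing the role of NO_EXPANSION; these are illustrative types, not rustc's. Starting from the span of `42`, the walk follows each call_site back towards code the user wrote.

```rust
// Illustrative span: (row, column) positions plus an optional expansion id.
#[derive(Clone, Copy, Debug, PartialEq)]
struct Span {
    lo: (u32, u32),
    hi: (u32, u32),
    expn_id: Option<usize>, // None plays the role of NO_EXPANSION
}

// One entry in the expansion table: the macro use and the macro used.
struct ExpnInfo {
    call_site: Span,
    callee: (&'static str, Span),
}

// Follow call sites from a span back to user-written code, returning the
// name of each macro the span's code was produced by, innermost first.
fn trace(span: Span, table: &[ExpnInfo]) -> Vec<&'static str> {
    let mut names = Vec::new();
    let mut cur = span.expn_id;
    while let Some(id) = cur {
        let info = &table[id];
        names.push(info.callee.0);
        cur = info.call_site.expn_id;
    }
    names
}

fn main() {
    let table = vec![
        ExpnInfo {
            call_site: Span { lo: (10, 13), hi: (10, 21), expn_id: Some(1) },
            callee: ("foo", Span { lo: (2, 5), hi: (6, 6), expn_id: None }),
        },
        ExpnInfo {
            call_site: Span { lo: (15, 5), hi: (15, 14), expn_id: None },
            callee: ("bar", Span { lo: (8, 5), hi: (12, 6), expn_id: None }),
        },
    ];
    let lit = Span { lo: (15, 10), hi: (15, 12), expn_id: Some(0) };
    println!("{:?}", trace(lit, &table)); // ["foo", "bar"]
}
```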

Nicholas Nethercote: I rewrote Firefox’s BMP decoder

Recently I’ve been deliberately working on some areas of Firefox I’m unfamiliar with, particularly relating to graphics. This led me to rewrite Firefox’s BMP decoder and to learn a number of interesting things along the way.

Image decoding

Image decoding is basically the process of taking an image encoded in a file and extracting its pixels. In principle it’s simple. You start by reading some information about the image, such as its size and colour depth, which typically comes in some kind of fixed-size header. Then you read the pixel data, which is variable-sized.

This isn’t hard if you have all the data available at the start. But in the context of a browser it makes sense to decode incrementally as data comes in over the network. In that situation you have to be careful and constantly check if you have enough data yet to safely read the next chunk of data. This checking is error-prone and tends to spread itself all over the image decoder.

For this reason, Seth Fowler recently wrote a new class called StreamingLexer that encapsulates this checking and exposes a nice state-based interface to image decoders. When a decoder changes state (e.g. it finishes reading the header) it tells StreamingLexer how many bytes it needs to safely enter the next state (e.g. to read the first row of pixels) and StreamingLexer won’t return control to the decoder until that many bytes are available.
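Here is a much-simplified Rust sketch of a state-based streaming lexer. StreamingLexer itself is C++ with its own API, which this does not reproduce. The `Lexer`, `Decoder` and `State` types are assumptions made for illustration. So is the toy image format: a 4-byte header holding width and height as little-endian u16s, followed by one byte per pixel. The decoder says how many bytes its next state needs, and the lexer only hands over control once that many bytes have arrived, however the data is chunked.

```rust
// Hypothetical decoder states for a toy image format.
#[derive(Clone, Copy, Debug, PartialEq)]
enum State {
    Header,
    Row,
    Done,
}

struct Decoder {
    width: usize,
    rows_left: usize,
    rows: Vec<Vec<u8>>,
}

impl Decoder {
    // Handle exactly the bytes requested for `state`; return the next state
    // and how many bytes it needs.
    fn step(&mut self, state: State, data: &[u8]) -> (State, usize) {
        match state {
            State::Header => {
                // Toy header: width and height as little-endian u16s.
                self.width = u16::from_le_bytes([data[0], data[1]]) as usize;
                self.rows_left = u16::from_le_bytes([data[2], data[3]]) as usize;
            }
            State::Row => {
                self.rows.push(data.to_vec());
                self.rows_left -= 1;
            }
            State::Done => {}
        }
        if self.rows_left == 0 { (State::Done, 0) } else { (State::Row, self.width) }
    }
}

struct Lexer {
    buf: Vec<u8>,
    state: State,
    needed: usize,
}

impl Lexer {
    fn new() -> Lexer {
        Lexer { buf: Vec::new(), state: State::Header, needed: 4 }
    }

    // Feed a chunk as it arrives; run the decoder only when enough is buffered.
    fn feed(&mut self, chunk: &[u8], dec: &mut Decoder) -> State {
        self.buf.extend_from_slice(chunk);
        while self.state != State::Done && self.buf.len() >= self.needed {
            let data: Vec<u8> = self.buf.drain(..self.needed).collect();
            let (next, needed) = dec.step(self.state, &data);
            self.state = next;
            self.needed = needed;
        }
        self.state
    }
}

fn main() {
    let mut dec = Decoder { width: 0, rows_left: 0, rows: Vec::new() };
    let mut lex = Lexer::new();
    // A 3x2 image split awkwardly across network chunks.
    let chunks: [&[u8]; 3] = [&[3, 0, 2], &[0, 10, 11], &[12, 20, 21, 22]];
    for c in chunks.iter() {
        println!("{:?}", lex.feed(c, &mut dec)); // Header, Row, Done
    }
    println!("{:?}", dec.rows); // [[10, 11, 12], [20, 21, 22]]
}
```

Because the decoder is only ever handed exactly the bytes it asked for, the header code can index `data[0..4]` without any availability checks of its own.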

Another consideration when decoding images is that you can’t trust them. E.g. an image might claim to be 100 x 100 pixels but actually contain less data than that. If you’re not careful you could easily read memory you shouldn’t, which could cause crashes or security problems. StreamingLexer helps with this, too.
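One standard defence is to compute how much pixel data the header implies, using overflow-checked arithmetic, and compare it with what is actually present before reading anything. The sketch below assumes BMP-style rows padded to a multiple of 4 bytes. It also assumes unsigned dimensions, whereas real BMP heights can be negative to mean top-down rows. The function names are hypothetical.

```rust
// Bytes of pixel data implied by a header, with each row padded to a
// multiple of 4 bytes. Returns None if the computation would overflow.
fn pixel_data_size(width: u32, height: u32, bits_per_pixel: u32) -> Option<u64> {
    let row_bits = u64::from(width).checked_mul(u64::from(bits_per_pixel))?;
    let row_bytes = row_bits.checked_add(31)? / 32 * 4;
    row_bytes.checked_mul(u64::from(height))
}

// Accept the header only if the file really contains the data it claims.
fn check_claim(width: u32, height: u32, bpp: u32, available: usize) -> Result<u64, String> {
    let needed = pixel_data_size(width, height, bpp).ok_or("size overflow")?;
    if needed > available as u64 {
        return Err(format!("header claims {} bytes of pixels, only {} present", needed, available));
    }
    Ok(needed)
}

fn main() {
    // 100 x 100 at 24 bits per pixel: rows are 300 bytes, already 4-aligned.
    println!("{:?}", check_claim(100, 100, 24, 30_000)); // Ok(30000)
    // Same header, but the file is truncated.
    println!("{:?}", check_claim(100, 100, 24, 512));
}
```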

StreamingLexer makes image decoders simpler and safer, and converting the BMP decoder to use it was my starting point.

The BMP format

The BMP format comes from Windows. On the web it’s mostly used for favicons, though it can be used for normal images.

There’s no specification for BMP. There are eight in-use versions of the format that I know of, with later versions mostly(!) extending earlier versions. If you’re interested, you can read the brief description of all these versions that I wrote in a big comment at the top of nsBMPDecoder.cpp.

Because the format is so gnarly I started getting nervous that my rewrite might introduce bugs in some of the darker corners, especially once Seth told me that our BMP test coverage wasn’t that good.

So I searched around and found Jason Summers’ wonderful BMP Suite, which exercises pretty much every corner of the BMP format. Version 2.3 of the BMP Suite contains 57 images, 23 of which are “good” (obviously valid), 14 of which are “bad” (obviously invalid) and 20 of which are “questionable” (not obviously valid or invalid). The presence of this last category demonstrates just how ill-specified BMP is as a format, and some of the “questionable” tests have two or three reference images, any of which could be considered a correct rendering. (Furthermore, it’s possible to render a number of the “bad” images in a reasonable way.)

This test suite was enormously helpful. As well as giving me greater confidence in my changes, it immediately showed that we had several defects in the existing BMP decoder, particularly relating to the scaling of 16-bit colors and an almost complete lack of transparency handling. In comparison, Chrome rendered pretty much all the images in BMP Suite reasonably, and Safari and Edge got a few wrong but still did better than Firefox.
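The post doesn't say exactly what the 16-bit scaling defect was, but a classic pitfall in this area is easy to show. In the default 16-bit BMP layout, each of red, green and blue gets 5 bits. Expanding a 5-bit value to 8 bits by shifting alone maps the maximum, 31, to 248 rather than 255. Here is a minimal sketch of one common fix, which replicates the top bits into the low bits. The function names are hypothetical.

```rust
// Expand a 5-bit channel to 8 bits by replicating its high bits into the
// low bits, so 0 maps to 0 and 31 maps to 255 (a plain `v << 3` gives 248).
fn scale5(v: u16) -> u8 {
    let v = (v & 0x1f) as u8;
    (v << 3) | (v >> 2)
}

// Unpack an RGB555 pixel (bit 15 unused) into 8-bit (red, green, blue).
fn rgb555_to_rgb888(px: u16) -> (u8, u8, u8) {
    (scale5(px >> 10), scale5(px >> 5), scale5(px))
}

fn main() {
    println!("{:?}", rgb555_to_rgb888(0x7fff)); // (255, 255, 255)
    println!("{:?}", rgb555_to_rgb888(0x0000)); // (0, 0, 0)
}
```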

Fixing the problems

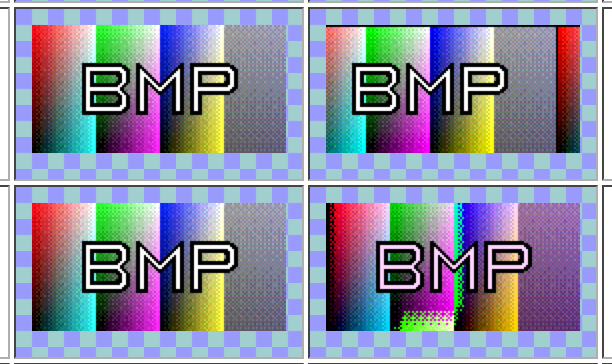

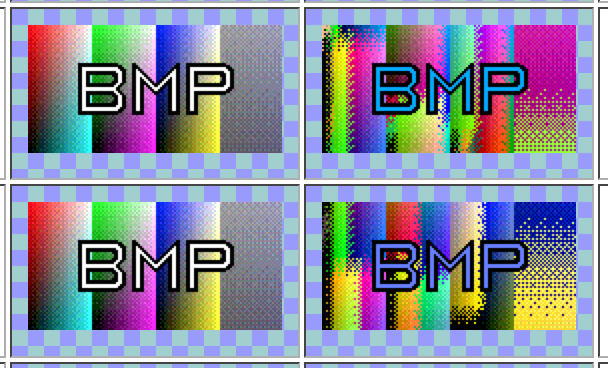

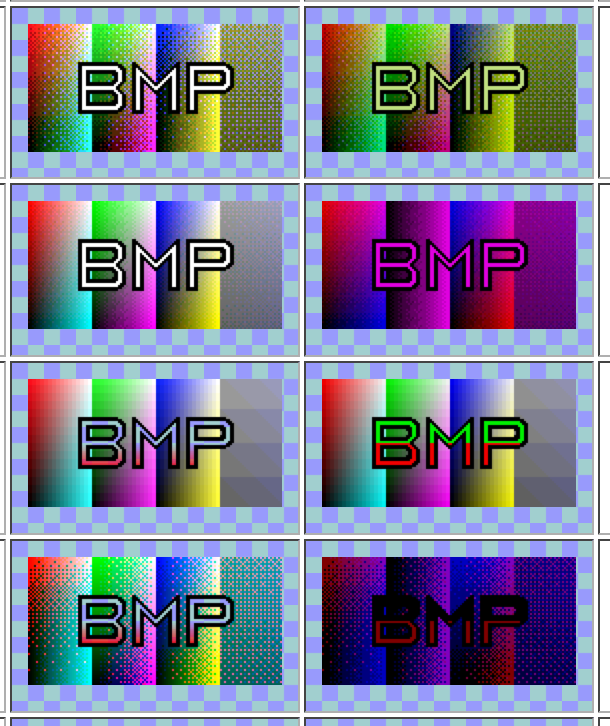

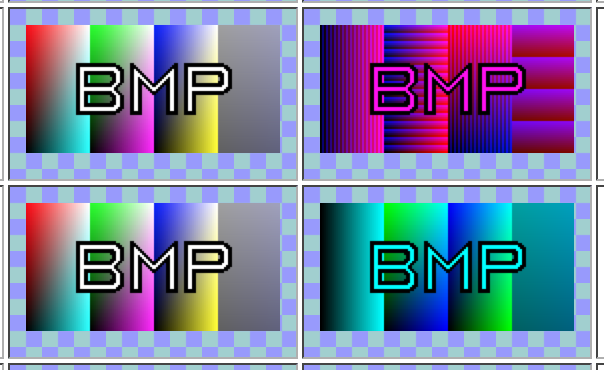

So I fixed these problems as part of my rewrite. The following images show a number of test images that Firefox used to render incorrectly; in each case a correct rendering is on the left, and our old incorrect rendering is on the right.

It’s clear that the old defects were mostly related to colour-handling, though the first pair of images shows a problem relating to the starting point of the pixel data.

(These images are actually from an old version of Firefox with version 2.4 of BMP Suite, which I just discovered was released only a few days ago. I just filed a bug to update the copy we use in automated testing. Happily, it looks like the new code does reasonable things with all the images added in v2.4.)

These improvements will ship in Firefox 44, which is scheduled to be released in late January, 2016. And with that done I now need to start thinking about rewriting the GIF decoder…

https://blog.mozilla.org/nnethercote/2015/11/06/i-rewrote-firefoxs-bmp-decoder/

Mozilla Addons Blog: Friend of Add-ons: Tom Schuster (evilpie)

Our newest Friend of add-ons is Tom Schuster, aka evilpie. Tom is a JavaScript expert who has been a Mozillian for five years. Recently, he has contributed to the Web Extensions API initiative, implementing the chrome.cookies API and parts of the chrome.bookmarks API. He says,

“I have been contributing to Mozilla since 2010, because I feel like improving Firefox is the best way for me to ensure an open web. I am thankful for everything I have learned and the people I get to interact with.”

Thanks to Tom for his support!

We recently revamped the add-ons contribution wiki page, and it is now updated with the latest contribution opportunities, including information on helping with the Web Extensions API and FirefoxOS add-ons.

As always, please remember to document your contributions on the Recognition page. Thanks!

https://blog.mozilla.org/addons/2015/11/05/friend-of-add-ons-tom-schuster-evilpie/

Planet Mozilla Interns: Michael Sullivan: End of summer Rust internship slides from 2011-2013

Slides from my end of summer talks for all of my Rust internships. (This post is heavily backdated, and the slides are hilariously out of date.)

2012 – Vector Reform and Static Typeclass Methods

2013 – Default Methods in Rust

http://www.msully.net/blog/2013/09/01/end-of-summer-rust-internship-slides-from-2011-2013/

Air Mozilla: Web QA Weekly Meeting, 05 Nov 2015

This is our weekly gathering of Mozilla's Web QA team, filled with discussion of our current and future projects, ideas, demos, and fun facts.

Yunier José Sosa Vázquez: Meet the featured add-ons for November

2015 is nearly over, but that is no reason to stop showing you a selection of the best Firefox add-ons, chosen by the Addons Members Board Team. If you use a translation service, we have an add-on that will suit you perfectly; and if you want to browse while reporting that you use a different browser, here is a solution.

Pick of the month: Google Translator for Firefox

by nobzol

Google Translator for Firefox translates any text into your language with a single click or a keyboard shortcut. You can translate whole pages or just part of a document; when you do, the add-on replaces the text on the web page. As its name suggests, it uses Google's translation service, and according to the people who use it, it is impressive.

Install Google Translator for Firefox »

We also recommend User-Agent Switcher

by Linder

With this add-on you can quickly and easily change your browser's User Agent. The User Agent is a string that browsers send to websites to identify themselves, revealing whether you use Firefox, Chrome, Opera or another browser, as well as your platform and other details. User-Agent Switcher is very useful for web developers and lets you browse while claiming to be using, for example, Safari on iOS.

Install User Agent Switcher »

Join the Addons Members Board Team.

This month the community team that selects the featured add-ons is being renewed, and you can be part of it. Just send an email to amo-featured@mozilla.org with your name and information about how you are involved with AMO (Mozilla's add-ons gallery). The deadline for sending your details is November 9 at 23:59 PDT. On this page you can find information about the tasks involved.

That's all for this month. If you liked this selection, please let us know what you think.

http://firefoxmania.uci.cu/conoce-los-complementos-destacados-para-noviembre-2015/

Jonathan Griffin: Engineering Productivity Update, November 5, 2015

It’s the first week of November, and because of the December all-hands and the end-of-year holidays, this essentially means the quarter is half over. You can see what the team is up to and how we’re tracking against our deliverables with this spreadsheet.

Highlights

hg.mozilla.org: gps did some interesting work investigating ways to increase cloning performance on Windows; it turns out that closing files that have been appended to is very expensive there. He also helped roll out bundle-related cloning improvements in Mercurial 3.6.

Community: jmaher has posted details about our newest Quarter of Contribution. One of our former Outreachy interns, adusca, has blogged about what she gets out of contributing to open source software.

MozReview and Autoland: dminor blogged about the most recent MozReview work week in Toronto. Meanwhile, mcote is busy trying to design a more intuitive way to deal with parent-child review requests. And glob, who is jumping in to help out with MozReview, has created a high-level diagram sketching out MozReview’s primary components and dependencies.

Autoland has been enabled for the version-control-tools repo and is being dogfooded by the team. We hope to have it turned on for landings to mozilla-inbound within a couple of weeks.

Treeherder: the team is in London this week working on the automatic starring project. They should be rolling out an experimental UI soon for feedback from sheriffs and others. armenzg has fixed several issues with automatic backfilling so it should be more useful.

Perfherder: wlach has blogged about recent improvements to Perfherder, including the ability to track the size of the Firefox installer.

Developer Workflows: gbrown has enabled |mach run| to work with Android.

TaskCluster Support: the mochitest-gl job on linux64-debug is now running in TaskCluster side-by-side with buildbot. Work is ongoing to green up other suites in TaskCluster. A few other problems (like failure to upload structured logs) need to be fixed before we can turn off the corresponding buildbot jobs and make the TaskCluster jobs “official”.

e10s Support: we are planning to turn on e10s tests on Windows 7 as they are greened up; the first job which will be added is the e10s version of mochitest-gl, and the next is likely mochitest-devtools-chrome. To help mitigate capacity impacts, we’ve turned off Windows XP tests by default on try in order to allow us to move some machines from the Windows XP pool to the Windows 7 pool, and some machines have already been moved from the Linux 64 pool (which only runs Talos and Android x86 tests) to the Windows 7 pool. Combined with some changes recently made by Releng, Windows wait times are currently not problematic.

WebDriver: ato, jgraham and dburns recently went to Japan to attend W3C TPAC to discuss the WebDriver specification. They will be extending the charter of the working group to get it through to CR. This will mean certain parts of the specification need to be finished as soon as possible to start getting feedback.

The Details

hg.mozilla.org

- Better error messages during SSH failures (bug 1217964)

- Make pushlog compatible with Mercurial 3.6 (bug 1217569)

- Support Mercurial 3.6 clone bundles feature on hg.mozilla.org (bug 1216216)

- Functionality from bundleclone extension that Mozilla wrote and deployed is now a feature in Mercurial itself!

- Advertise clone bundles feature to 3.6+ clients that don’t have it enabled (bug 1217155)

- Update the bundleclone extension to seamlessly integrate with now built-in clone bundles feature

MozReview/Autoland

- We’ve enabled Autoland to “Inbound” for the version-control-tools repository and are dogfooding it while working on UI and workflow improvements.

- Following up on some discussion around “squashed diffs”, an explanatory note has been added to the parent (squashed) review requests, which serves to distinguish them from, and to promote, review requests for individual commits.

- “Complete Diff” has been renamed to the more accurate “Squashed Diff”. The “Review Summary” link has been removed, but you can still get to the squashed-diff reviews via the squashed diff itself—but note that we’ll likely be removing support for squashed-diff reviews in order to promote the practices of splitting up large commits into smaller, standalone ones and reviewing each individually.

- A patch to track the review status of files is now under review; it should land in the next few days.

Mobile Automation

- [gbrown] ‘mach run’ now supports Firefox for Android

- [bc] Helping out with Autophone Talos, mozdevice adb*.py maintenance

Firefox and Media Automation

- [maja_zf] Marionette test runner is now a little more flexible and extensible: I’ve added some features needed by Firefox UI and Update tests that are useful to all desktop tests. (Bug 1212608)

ActiveData

- [ekyle] Buildbot JSON logs are imported, along with all text logs they point to: we should now have a complete picture of the time spent on all steps by all machines on all pools. Still verifying the data though.

bugzilla.mozilla.org

- (bug 1213757) delegate password and 2fa resets to servicedesk

- (bug 1218457) Allow localconfig to override (force) certain data/params values (needed for AWS)

- (bug 1219750) Allow Apache2::SizeLimit to be configured via params

- (bug 1177911) Determine and implement better password requirements for BMO

- (bug 1196743) – Fix information disclosure vulnerability that allows attacker to obtain victim’s GitHub OAuth return code

Perfherder/Performance Testing

- Lots of improvements: http://wrla.ch/blog/2015/11/perfherder-onward/

- We are now tracking installer size for Firefox in Perfherder and have made it easy to submit data to Perfherder from any Treeherder job. Posted to dev.platform asking for feedback on what else we could start tracking: https://groups.google.com/forum/#!topic/mozilla.dev.platform/W0Xtx9yup5E

- Compare view now supports filtering, links to graph view for viewing numbers in context

- Lots of small fixes from Mike Ling (see blog post ^^ for details)

- Performance sheriffing interface coming along (again, see blog post for screenshot)

TaskCluster Support

- [ahal] mochitest-webgl running on linux64 debug on all trunk branches

General Automation

- [ahal] reftest with structured logging working on b2g, except for a shutdown crash

- [armenzg] Fixed automatic backfilling of permanent failures and hidden jobs

- [armenzg] Fixed automated backfilling dealing with out-of-date information about builders

- [armenzg] Added alerts for automated backfilling

WebDriver

- We (ato, AutomatedTester, jgraham) went to Japan to W3C TPAC to discuss the WebDriver specification. We will be extending the charter of the working group to get it through to CR. This will mean certain parts of the specification need to be finished as soon as possible to start getting feedback.

https://jagriffin.wordpress.com/2015/11/05/engineering-productivity-update-november-5-2015/

QMO: Firefox 43.0 Beta 3 Testday, November 13th

Great news, y’all! On Friday, November 13th :), we are hosting a new event – Firefox 43.0 Beta 3 Testday. The main focus will be on the new features: Add-ons Signing, Search Suggestions and Unified Autocomplete. Check out the detailed instructions via this etherpad.

No previous testing experience is required, so feel free to join us on the #qa IRC channel, where our moderators will happily offer you guidance.

Looking forward to seeing you on Friday!

https://quality.mozilla.org/2015/11/firefox-43-0-beta-3-testday-november-13th/

Robert O'Callahan: rr In VMWare: Solved!

Well, sort of.

I previously explained how rr running in a VMWare guest encounters a VMWare bug where performance counters measuring conditional branches executed fail to count a conditional branch executed between a pair of CPUID instructions. Today a helpful VMWare developer confirmed that this is an issue caused by clever optimizations in the virtual machine monitor. Better still, he provided an easy way to work around it! Just add

```
monitor_control.disable_hvsim_clusters = true
```

to your .vmx file. This may impact guest performance a little bit, but it will make rr work reliably in a VMWare guest, which should be useful to a lot of people. Excellent!

http://robert.ocallahan.org/2015/11/rr-in-vmware-solved.html

Jeff Walden: Linus Torvalds’s “communication style”

Linus Torvalds recently wrote a long rant rejecting a patch. Read it now.

Fellow Mozillian Nick Nethercote commented on that rant. Now read that.

I began commenting on Nick’s post, but my thoughts spiraled. And I’ve been pondering this awhile, in the context of Linus and other topics. So I made it a full-blown post.

Ranting is sometimes funny

Linus’s rants are entertaining, in a certain sense: much the same sense that makes us laugh at a teacher’s statement (to a student, if an odd one) in Billy Madison:

What you’ve just said is one of the most insanely idiotic things I have ever heard. At no point in your rambling, incoherent response were you even close to anything that could be considered a rational thought. Everyone in this room is now dumber for having listened to it. I award you no points, and may God have mercy on your soul.

We’re entertained not because we approve of the teacher’s (or Linus’s) rants. We laugh because they’re creative. (Linus’s rants are creative, if not screenwriter-creative.) The flame can be an art form, even to flame war participants. My college dorm mailing list had regular flame wars (sometimes instigated or continued by regular trolls). I hated flame wars as a freshman. But eventually I grew to appreciate the art of the flame. In utter seriousness, that learning process was a valuable part of my college education, as it’s been for others.

(Yes, Billy Madison is low-brow humor. So are Linus’s flames, as entertainment. Not all humor must be high-brow.)

Even funny ranting is sometimes bad behavior

We also sometimes laugh because the behavior’s bad. (Humor’s core, I think, is a contradiction between expectation and reality. I highly recommend Stranger in a Strange Land for, among other things, its meditations on the nature of humor.) That’s one reason we laugh at the Billy Madison teacher-student interaction (allowing for its fictionality), and at Linus.

But somewhat pace Nick, I absolutely cannot equate laughing, or being entertained, with approval or celebration. This and this don’t celebrate ISIS, no matter how much ISIS appalls us. Naming Linus’s mail an “epic rant” doesn’t celebrate it. It’s just a description of five hundred over-the-top words when fewer words and less drama would have been better (as Nick observes). (At least one place calling the rant “epic” also linked this ironically-abusive decrial.)

Linux thrives despite Linus’s behavior, not because of it

Many of Linus’s rants are unacceptable. (I’ve seen a few that were overheated but not abusive.) Many developers weather them. But some developers have left the Linux community in response, when they wouldn’t have for gentler criticism. That’s a problem.

Linus gets away with bad behavior because: he’s abusive just infrequently enough; Linux has unusually high technical barriers to entry; it’s indispensable to many companies; and those companies fund development regardless of Linus’s behavior. Linux led by Linus would have died already, absent these considerations. Linux is one of very, very few projects that can survive Linus’s abusiveness.

Linus is still very smart, and his rants can be right

I often recognize reasonable technical criticism beneath Linus’s rants. Regarding this patch, Linus is right. The proposed changes are harder to read than his suggestion — I’d reject them, too. I don’t agree with every Linus rant. But it’s normal to sometimes disagree even with smart developers.

What’s not normal is being required to sift through abuse for usable feedback. It’s a skill worth having. If you can weather excess criticism from a coworker having an off day, you’ll be more productive. But it still shouldn’t be necessary (let alone routine), especially not for new open source contributors.

Conclusion

Linus is really, really smart. Because of that, and because Linux is really valuable, Linus unfortunately can keep being Linus. Barring a strongly-backed fork, nothing will change.

http://whereswalden.com/2015/11/05/linus-torvaldss-communication-style/

|

|

Gregory Szorc: hg.mozilla.org Updates |

A number of changes have been made to hg.mozilla.org over the past few days:

- Bookmarks are now replicated properly from master to mirrors (before, you may have seen foo@default bookmarks appearing). This means bookmarks now work properly on hg.mozilla.org! (bug 1139056).

- All servers have been upgraded to Mercurial 3.5.2. Previously we were running 3.5.1 on the SSH master server and 3.4.1 on the HTTP endpoints. Some HTML templates received minor changes. (bug 1200769).

- Pushes from clients running Mercurial older than 3.5 will now see an advisory message encouraging them to upgrade. (bug 1221827).

- Author/user fields are now validated to be an RFC 822-like value (e.g. "Joe Smith <someone@example.com>"). (bug 787620).

- We can now mark individual repositories as read-only. (bug 1183282).

- We can now mark all repositories read-only (useful during maintenance events). (bug 1183282).

- The pushlog commit list view now shows only the first line of each commit message. (bug 666750).
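An author check of this kind could look something like the following minimal Rust sketch. It is hypothetical: the function name `looks_like_author` and its rules (a non-empty display name followed by an address with exactly one `@` inside angle brackets) are assumptions for illustration, not the validation hg.mozilla.org actually runs, and it is far looser than real RFC 822 parsing.

```rust
/// Hypothetical, loose check that `value` has the shape "Name <local@domain>".
/// Not a full RFC 822 parser and not the hg.mozilla.org hook.
fn looks_like_author(value: &str) -> bool {
    let value = value.trim();
    // The value must end with '>' ...
    let Some(inner) = value.strip_suffix('>') else {
        return false;
    };
    // ... and split at the first '<' into a display name and an address.
    let Some((name, address)) = inner.split_once('<') else {
        return false;
    };
    // No stray angle brackets or whitespace inside the address.
    if address.contains(&['<', '>'][..]) || address.contains(char::is_whitespace) {
        return false;
    }
    // Exactly one '@', with something on each side of it.
    let mut parts = address.split('@');
    let (Some(local), Some(domain), None) = (parts.next(), parts.next(), parts.next()) else {
        return false;
    };
    !name.trim().is_empty() && !local.is_empty() && !domain.is_empty()
}

fn main() {
    for candidate in [
        "Joe Smith <someone@example.com>",
        "someone@example.com",
        "<someone@example.com>",
    ] {
        println!("{candidate:?}: {}", looks_like_author(candidate));
    }
}
```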

Please report any issues in their respective bugs or, if it is an emergency, in #vcs on irc.mozilla.org.

http://gregoryszorc.com/blog/2015/11/05/hg.mozilla.org-updates

|

|

Nicholas Nethercote: moz-icon: a curious corner of Firefox |

Here’s an odd little feature in Firefox. Enter the following text into the address bar.

moz-icon://.pdf?size=128

On my Linux box, it shows the following icon image.

On my Windows laptop, it shows a different icon image.

On my Mac laptop, that URL doesn’t work, but if I change the “128” to “64” it shows this icon image.

In each case we get the operating system’s icon for a PDF file.

Change the “size” value (up to a maximum of 255) and you’ll get a different size, except on Mac, where the limit seems to be lower, probably due to the Retina screen in some way.

Change the file extension and you’ll get a different icon. You can try all sorts, like “.xml”, “.cpp”, “.js”, “.py”, “.doc”, etc.
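As a small illustration of the URL shape, here is a Rust sketch that builds such URLs. The helper `moz_icon_url` is hypothetical, and clamping the size to 1..=255 is an assumption based on the maximum mentioned above; the post does not say how Firefox treats out-of-range values.

```rust
/// Hypothetical helper: builds a URL like `moz-icon://.pdf?size=128`.
/// Assumption: sizes are clamped to 1..=255 (255 being the reported maximum).
fn moz_icon_url(extension: &str, size: u32) -> String {
    // Accept both "pdf" and ".pdf".
    let ext = extension.trim_start_matches('.');
    let size = size.clamp(1, 255);
    format!("moz-icon://.{ext}?size={size}")
}

fn main() {
    for ext in ["pdf", ".xml", "cpp", "js", "py", "doc"] {
        println!("{}", moz_icon_url(ext, 128));
    }
}
```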

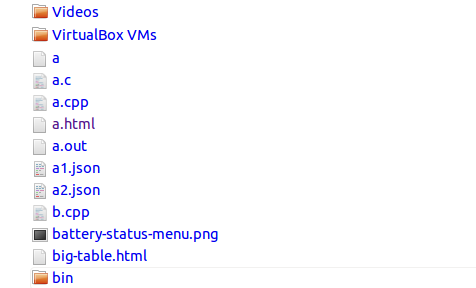

How interesting. So what’s this for? As far as I understand, it’s used to make local directory listing pages look nice. E.g. on my Linux box if I type “file:///home/njn/” into the address bar I get a listing of my home directory, part of which looks like the following.

That listing uses this mechanism to show the appropriate system icon for each file type.

Furthermore, this feature is usable from regular web pages! Just put a “moz-icon” URL into an <img> element.

That’ll only work on Firefox, though. Chrome actually has a similar mechanism, though it’s not usable from regular web pages. (For more detail — much more — read here.) As far as I know Safari, IE and Edge do not have such a mechanism; I’m not sure if they support listing of local directories in this fashion.

https://blog.mozilla.org/nnethercote/2015/11/05/moz-icon-a-curious-corner-of-firefox/

|

|

Yunier José Sosa Vázquez: Tracking Protection in private mode, 64-bit Windows support, and much more in the new Firefox |

Keeping up the pace of updates, yesterday, Tuesday the 3rd, Mozilla released a new version of Firefox, the browser that puts you in control of your data and privacy. More and more, Firefox stands out above other solutions when it comes to privacy, and it provides tools so that users are the ones who choose whether to share their data. This release also brings support for 64-bit Windows, improvements to password management, new features for developers, and much more.

We recently wrote here about tracking protection, how it works, and the advantages of using it. This option is enabled by default in Firefox, but if it doesn't appear for you, follow these steps:

- Click the menu button, then Preferences.

- Click Privacy and check the box next to Use Tracking Protection in Private Windows.

- To save the changes, close the tab.

While you browse, a shield icon will appear in the address bar whenever Firefox blocks tracking domains.

If you'd like to learn more about this new feature, I suggest visiting this page on Mozilla Support.

Although it can't be downloaded from the official downloads page and Mozilla hasn't commented on it, the arrival of Firefox for 64-bit Windows is news: it completes support for this architecture, which was already available for Mac and Linux. From now on, Windows users will be able to install this optimized version and make full use of the advantages the architecture offers in Firefox.

The login manager has been improved in several ways, including:

- Better heuristics when saving usernames and passwords.

- Account details can be edited and copied from the context menu.

- Passwords are now included when migrating data into Firefox.

WebRTC has also been updated with IPv6 support, new preferences for controlling ICE candidate generation, and a better ability for applications to check which devices are being used by getUserMedia.

What's new on Android

- The new mobile login manager (about:login), which lists all stored logins and lets you view, edit, or delete passwords.

- Family-friendly browsing when users create restricted profiles on tablets.

- External links opened from other Android apps now load in the background.

- Support for voice input searches from the address bar.

- Scrollable tabs in the browsing panels.

Other new features

- ES6 Reflect implemented.

- Support for ImageBitmap and createImageBitmap().

- View the HTML source in a new tab.

- Remote debugging of websites over Wi-Fi, without needing a cable or ADB.

- The Firefox OS simulator can now be configured to simulate other devices such as phones, tablets, and even TVs.

- CSS styles can now be filtered in the web inspector.

To learn about more new features, you can read the release notes (in English).

A note on the mobile version.

There are 3 versions for Android in the downloads. The file containing i386 is for devices with Intel architecture. Of the ones named arm, the one labeled api11 works with Honeycomb (3.0) or later, and the api9 one is for Gingerbread (2.3).

You can get this version from our Downloads area, in Spanish and English, for Linux, Mac, Windows, and Android. Remember that to browse through proxy servers, you must set the preference network.auth.force-generic-ntlm to true in about:config.

|

|

Air Mozilla: View Source: Wednesday PM Session |

View Source is a brand new conference for web developers, presented by Mozilla and friends, produced by the folks who also bring you the Mozilla...


|

|

Nick Cameron: Macros in Rust pt3 |

Previously I covered macro_rules and procedural macros. In this post I'll cover macro hygiene in a bit more detail. In upcoming posts, I'll cover modularisation for macros, some implementation details, some areas where I think we should improve the system, and some ideas for doing so.

macro hygiene in Rust

I explained the general concept of macro hygiene in this blog post.

The good news is that all macros in Rust are hygienic (with caveats; see the bad news below). When you use macros in Rust, variable naming is hygienic; this is best illustrated with some examples:

macro_rules! foo {
    () => {
        let x = 0;
    }
}

fn main() {
    let mut x = 42;
    foo!();
    println!("{}", x);
}

Here the xs defined in main and foo! are disjoint, so we print 42.

macro_rules! foo {
    ($x: ident) => {
        $x = 0;
    }
}

fn main() {
    let mut x = 42;
    foo!(x);
    println!("{}", x);
}

Here, x is passed in to the macro so foo! can modify main's x. We print 0.

macro_rules! foo {
    ($x: ident) => {
        let $x = 0;
    }
}

fn main() {
    let x = 42;
    foo!(x);
    println!("{}", x);
}

Again, we print 0. This version is (IMO) slightly unfortunate because looking at main it seems like x should not be mutable, but foo! redefines x. A limitation of Rust's hygiene system is that although it limits a macro's effects to objects 'passed' to the macro, it does not limit those effects to what you would expect from the rest of the language. One could imagine a stricter system where the user must explicitly state how the macro can affect the use context (in this case by adding a variable to the environment).

fn main() {
    let mut x = 42;

    macro_rules! foo {
        () => {
            x = 0;
        }
    }

    foo!();
    println!("{}", x);
}

In this example, main's x is in scope for foo!, so we print 0.

fn main() {
    let mut x = 42;

    macro_rules! foo {
        () => {
            x = 0;
        }
    }

    let mut x = 33;
    foo!();
    println!("{}", x);
}

Finally, in this example we print 33 because foo! can only 'see' the first x and modifies that one, not the second x which is printed. Note that you can't write code without macros to do what foo! does here - you can't name the first x because it is hidden by the second one, but macro hygiene means the two xs are different to the macro.

Rust is also hygienic with respect to labels, e.g.,

fn main() {
    macro_rules! foo {
        ($e: expr) => {
            'foo: loop {
                $e;
            }
        }
    }

    'foo: loop {
        foo!{
            break 'foo
        }
    }
}

This example terminates after a single iteration, whereas with unhygienic macro expansion, it would loop forever. (Compiling this example does give an erroneous warning, but that is due to a bug).

The macro system is also hygienic with respect to Rust's system of feature gating and stability checking.

limitations

There is some bad news too: hygiene basically only works on expression variables and labels, and there are a bunch of places where we're poor with hygiene. We don't apply hygiene to lifetime or type variables, or to types themselves. We are also unhygienic with respect to privacy and safety (i.e., use of unsafe blocks).

When referring to items from a macro, we resolve names in the context of the use, without taking into account the definition site. That means that to be safe, all references to non-local names must use an absolute path, and such names must be visible anywhere the macro is to be used.
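To make the absolute-path advice concrete, here is a small sketch; the macro `count_words!` and the module `user` are hypothetical names introduced for illustration. The macro names `HashMap` through the full path `::std::collections::HashMap`, so it still works in a module that never imports `HashMap` and even defines an unrelated type with that name.

```rust
// Hypothetical macro: it names HashMap by its absolute path rather than
// relying on a `use` at the definition site, because item paths in the
// expansion are resolved where the macro is used.
macro_rules! count_words {
    ($text:expr) => {{
        let mut counts = ::std::collections::HashMap::new();
        for word in $text.split_whitespace() {
            *counts.entry(word).or_insert(0usize) += 1;
        }
        counts
    }};
}

mod user {
    // No `use std::collections::HashMap;` here, and an unrelated type with
    // the same name is in scope; the absolute path is unaffected by either.
    #[allow(dead_code)]
    pub struct HashMap;

    pub fn run() -> usize {
        let counts = count_words!("a b a c a");
        counts["a"]
    }
}

fn main() {
    println!("{}", user::run()); // prints 3
}
```

Had the macro written plain `HashMap::new()`, the expansion inside `user` would pick up the local unit struct instead and fail to compile.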

It is not exactly clear what hygienic expansion of items should look like. For example, if a macro defines a function (or any other item), should that function be nameable from outside the macro (assuming its name wasn't passed in)? It currently behaves naively: it can be accessed and has the scope of the use, without applying any kind of hygiene.
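A minimal example of this naive behaviour, assuming a rustc that behaves as described: the hypothetical macro `define_helper!` writes the function name `helper` into its own body rather than taking it as an argument, yet the caller can still call `helper()`.

```rust
// The function name `helper` comes from the macro body, not from the caller.
macro_rules! define_helper {
    () => {
        fn helper() -> u32 {
            7
        }
    };
}

// Expand the macro at module level.
define_helper!();

fn main() {
    // `helper` is visible at the use site: no hygiene is applied to the
    // names of items the macro defines.
    println!("{}", helper()); // prints 7
}
```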

implementation

For the purposes of this discussion, the phases of the Rust compiler basically look like this:

lexing -> parsing -> macro expansion -> name resolution -> analysis and translation

Note that lexing, parsing, and macro expansion are all sort of mixed up together to some extent, but that doesn't matter too much. The macro expansion phase is hygienic; we'll get back to exactly why later. Name resolution is the phase where we take syntactic names and resolve them to definitions. For example, in

let foo = ...;
...
let x = foo;

when we resolve foo in the second statement, we find the definition of foo in the first statement and store a 'link' between the two. Name resolution is a bit complicated: it must take into account relative and absolute paths, use items (including pub use), glob imports, imports which introduce an alias, lexical scoping, redefinitions using let, the type vs value namespaces, and so forth. At the end of all of that, we have to be able to answer the question: does foo == foo? In an unhygienic system, this just comes down to string equality (well, modulo possible Unicode normalisation). In Rust, the question is a little more complicated.

When we parse Rust code, identifiers are represented as Names. These are just strings, interned for the sake of efficiency. For name resolution, we consider Idents: an Ident is a Name plus a syntax context. These syntax contexts are created during macro expansion. To check if two Idents are equal, we resolve each Name under its syntax context (note that this resolution is a completely separate concept from name resolution). The result of this resolution is a new Name; we then compare these resolved Names for equality (which is an integer comparison, since they are interned strings).

Syntax contexts are added to Idents during macro expansion. There is a distinguished context, EMPTY_CTXT, which is initially given to each Ident during parsing. Each Ident gets a new context during expansion.

Macro hygiene works in the same way for macro_rules and procedural macros. It depends only on the AST produced by the macro, not how it was produced. However, procedural macros can manually override this process by assigning syntax contexts to Idents themselves. If the syntax context is unknown to the hygiene algorithm, then it will be left alone. If it is known, then it will be treated the same way as if the algorithm had assigned it during a previous expansion.

Since we compare Names by comparing indices into a table, if we create two different entries in that table, then we will have two Names with the same string representation, but which are not equal when compared. This is sometimes useful for creating fresh variables with useful names which will not clash with other variables in the same scope. To get such a name use the gensym or gensym_ident functions in token.rs.

Note that if you use such identifiers in a syntax extension, then macro hygiene using syntax contexts is applied 'on top'. So two Idents will be incompatible if they are different entries in the interner table to start with or if they are renamed differently. In fact, such gensym'ed names are a key part of the hygiene algorithm.
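A minimal model of interned Names and gensym, written as standalone Rust rather than against rustc's internals. The `Interner` type and its `intern`, `gensym`, and `as_str` methods are illustrative stand-ins, not the `gensym`/`gensym_ident` functions in token.rs. It shows the property described above: a gensym'd name prints the same as an ordinary one but compares unequal.

```rust
use std::collections::HashMap;

/// Illustrative model only: a `Name` is an index into the interner's table.
#[derive(Clone, Copy, PartialEq, Eq, Hash, Debug)]
struct Name(u32);

#[derive(Default)]
struct Interner {
    strings: Vec<String>,
    lookup: HashMap<String, Name>,
}

impl Interner {
    /// Returns the existing entry for `s`, creating one if needed, so
    /// interning the same string twice gives the same `Name`.
    fn intern(&mut self, s: &str) -> Name {
        if let Some(&name) = self.lookup.get(s) {
            return name;
        }
        let name = self.push(s);
        self.lookup.insert(s.to_string(), name);
        name
    }

    /// Always creates a new entry, even if `s` was interned before. The
    /// result looks like `intern(s)` but compares unequal to it.
    fn gensym(&mut self, s: &str) -> Name {
        self.push(s)
    }

    fn push(&mut self, s: &str) -> Name {
        let name = Name(self.strings.len() as u32);
        self.strings.push(s.to_string());
        name
    }

    fn as_str(&self, name: Name) -> &str {
        &self.strings[name.0 as usize]
    }
}

fn main() {
    let mut interner = Interner::default();
    let a = interner.intern("tmp");
    let b = interner.intern("tmp");
    let fresh = interner.gensym("tmp");

    // Equality is an integer comparison of table indices...
    assert_eq!(a, b);
    assert_ne!(a, fresh);
    // ...even though both names render as the same string.
    assert_eq!(interner.as_str(a), interner.as_str(fresh));
    println!("{:?} {:?} {:?}", a, b, fresh); // Name(0) Name(0) Name(1)
}
```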

The mtwt algorithm

The macro hygiene algorithm we use in Rust comes from a paper titled Macros That Work Together, thus we call it the mtwt algorithm. It is mostly implemented in mtwt.rs.

We use the mtwt algorithm during macro expansion. We walk the whole AST for the crate, expanding macro uses and applying the algorithm to all identifiers. Note that mtwt requires we touch the whole tree, not just those parts which are produced by macros.

In some ways, we apply hygiene lazily. We walk the AST during expansion computing syntax contexts, but we don't actually apply those syntax contexts until we resolve an Ident to a Name using its syntax context (I think this is only done in name resolution).

Mtwt has two key concepts - marking and renaming. Marking is applied when we expand a macro and renaming is done when we enter a new scope. Conceptually, a syntax context under mtwt consists of a series of marks and renames. However, syntax contexts are interned, and so the syntax context in an Ident is an integer index into the table of syntax contexts - two Idents with the same conceptual syntax context should have the same index.
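A sketch of such an interned table, loosely following the description above: each entry extends a parent context with one mark or one rename, and interning means that building the same conceptual context twice yields the same index. `ContextTable`, `Entry`, and the method names are hypothetical; this is not rustc's mtwt.rs.

```rust
use std::collections::HashMap;

#[derive(Clone, Copy, PartialEq, Eq, Hash, Debug)]
struct Name(u32);

#[derive(Clone, Copy, PartialEq, Eq, Hash, Debug)]
struct Mark(u32);

/// Index into the syntax-context table; index 0 is the empty context.
#[derive(Clone, Copy, PartialEq, Eq, Hash, Debug)]
struct SyntaxContext(u32);

const EMPTY_CTXT: SyntaxContext = SyntaxContext(0);

#[derive(Clone, Copy, PartialEq, Eq, Hash, Debug)]
struct Ident {
    name: Name,
    ctxt: SyntaxContext,
}

/// One table entry: a mark or a rename layered on top of a parent context.
#[derive(Clone, Copy, PartialEq, Eq, Hash, Debug)]
enum Entry {
    Empty,
    Mark(Mark, SyntaxContext),
    Rename(Ident, Name, SyntaxContext), // from, to, parent
}

struct ContextTable {
    entries: Vec<Entry>,
    index: HashMap<Entry, SyntaxContext>,
}

impl ContextTable {
    fn new() -> Self {
        let mut index = HashMap::new();
        index.insert(Entry::Empty, EMPTY_CTXT);
        ContextTable { entries: vec![Entry::Empty], index }
    }

    /// Reuses the index of an identical existing entry, else appends one.
    fn intern(&mut self, entry: Entry) -> SyntaxContext {
        if let Some(&ctxt) = self.index.get(&entry) {
            return ctxt;
        }
        let ctxt = SyntaxContext(self.entries.len() as u32);
        self.entries.push(entry);
        self.index.insert(entry, ctxt);
        ctxt
    }

    fn apply_mark(&mut self, mark: Mark, parent: SyntaxContext) -> SyntaxContext {
        self.intern(Entry::Mark(mark, parent))
    }

    fn apply_rename(&mut self, from: Ident, to: Name, parent: SyntaxContext) -> SyntaxContext {
        self.intern(Entry::Rename(from, to, parent))
    }
}

fn main() {
    let mut table = ContextTable::new();
    let a = table.apply_mark(Mark(1), EMPTY_CTXT);
    let b = table.apply_mark(Mark(1), EMPTY_CTXT);
    let c = table.apply_mark(Mark(2), a);
    let x = Ident { name: Name(10), ctxt: EMPTY_CTXT };
    let d = table.apply_rename(x, Name(11), c);
    // Same conceptual context, same index; a different context, a new index.
    assert_eq!(a, b);
    assert_ne!(a, c);
    println!("{:?} {:?} {:?} {:?}", a, b, c, d);
    // SyntaxContext(1) SyntaxContext(1) SyntaxContext(2) SyntaxContext(3)
}
```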

marking

When we expand a macro, the newly created code is marked with a fresh mark (you can imagine just using integers for the marks). Arguments are not marked when they are expanded. So, if we have the following macro definition,

macro_rules! foo {
    ($x: ident) => {
        let y = 42;
        let $x = y;
    }
}

We would replace foo!(a) with the code

let y = 42;
let a = y;

where the declaration and use of y would be marked with a fresh mark, but a would not.
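A toy model of this marking step, assuming tokens are plain strings and the only substitution is `$x`. The types `Token` and `Piece` and the function `expand` are hypothetical simplifications; real expansion works on token trees and the AST.

```rust
/// A token produced during expansion, with the marks applied to it so far.
#[derive(Clone, Debug, PartialEq)]
struct Token {
    text: String,
    marks: Vec<u32>,
}

/// One piece of a simplified macro template: either text copied from the
/// macro body or the place where the argument `$x` is substituted.
enum Piece {
    Lit(&'static str),
    Arg,
}

/// Expands `template`, giving every token copied from the macro body the
/// fresh mark; the argument is substituted unchanged, so it gets no new mark.
fn expand(template: &[Piece], arg: &Token, fresh_mark: u32) -> Vec<Token> {
    template
        .iter()
        .map(|piece| match piece {
            Piece::Lit(text) => Token { text: text.to_string(), marks: vec![fresh_mark] },
            Piece::Arg => arg.clone(),
        })
        .collect()
}

fn main() {
    // The body of the foo! example: `let y = 42; let $x = y;`
    let template = [
        Piece::Lit("let"), Piece::Lit("y"), Piece::Lit("="), Piece::Lit("42"), Piece::Lit(";"),
        Piece::Lit("let"), Piece::Arg, Piece::Lit("="), Piece::Lit("y"), Piece::Lit(";"),
    ];
    // foo!(a): the argument arrives with no marks of its own.
    let arg = Token { text: "a".to_string(), marks: vec![] };
    for token in expand(&template, &arg, 1) {
        if token.text == "y" || token.text == "a" {
            println!("{} {:?}", token.text, token.marks);
        }
    }
}
```

Running it prints `y [1]`, `a []`, `y [1]`: both occurrences of y carry the fresh mark and a carries none.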

renaming

Whenever we enter a scope during expansion we rename all identifiers. However, the meaning of scope here is somewhat interesting. In a traditional setting, a let expression looks something like (let var expr body) (please excuse the pseudo-code) which defines a new variable called var with value given by expr and scope body. This is roughly equivalent to { let var = expr; body } in Rust. Note that in the traditional syntax, the scope of the variable is very explicit.

In Rust, a scope from the hygiene algorithm's point of view includes functions, but not blocks (i.e., { ... }). It also includes an implicit scope which starts with any let statement (or other pattern introduction) and ends when the variables declared go out of scope. E.g.,

fn foo(w: Bar) {        // | w
    let x = ...;        // | | x
    {                   // | |
        let y = ...;    // | | | y
        ...             // | | |
        let z = ...;    // | | | | z
        ...             // | | | |
    }                   // | |
    ...                 // | |
}                       //

Here, there are four scopes, shown on the right hand side.

Every identifier in a scope is 'renamed'. A renaming syntax context consists of a 'from' Ident and a 'to' Name. The 'from' Ident is the variable identifier that starts the scope (e.g., w or z in the above example). We use an Ident rather than a Name for the 'from' identifier because we may have applied the mtwt algorithm to it earlier, so it may already be marked and/or renamed.

Note that renaming is cumulative, so the syntax context for the ... under let z... in the above example will include renames for w, x, y, and z. The name we rename to is a fresh name which looks the same as the original name, but is not equal to it. We use the gensym approach described above to implement this. So we might rename from foo interned at index 31 to foo interned at 45234.

resolution

When we call mtwt::resolve on an Ident, we compute how its syntax context affects the Name. We essentially walk the syntax context applying marks and renames where relevant, ending up with a renamed Name. Marks are simple: we apply them all. So at any point of resolution, we have a name and a Vec of marks applied. Marks do not affect the end result of resolution (i.e., once we have fully resolved, we then ignore the marks), but they do affect renaming. When we come across a rename, we compare the 'from' Ident with the current name being resolved; they are considered equal if the names are equal (which will take into account renaming already performed) and the lists of marks on each are the same.

Let's go through an example. We'll denote names as foo[i] where foo is the name as written in the source code and i is the index into the interned string table. So, foo[1] and foo[2] will look the same in the source code, but are not equal. It is not possible to have different names with the same index.

A mark is denoted as mark(n) where n is an integer, this just marks all names with n.

A renaming is denoted as rename(from -> to) where from is an Ident; however, we will consider it pre-resolved to a name and a set of marks, e.g., foo[42](2, 5, 6) is the name foo with index 42 marked with 2, 5, and 6.

Let's look at the following syntax context:

rename(x[1]() -> x[4]), mark(1), mark(2), rename(y[2]() -> y[5]), rename(y[2](1, 2) -> y[6])

Let's first apply this context to the name x[1]. We start with no marks: x[1](). The first rename matches, so we rename to x[4](), then we add the two marks: x[4](1, 2). Neither of the later two renames matches, so we end up with the resolved name x[4].

Now consider y[2]. In this case the first rename does not match, but we still apply the marks, giving y[2](1, 2). The next rename matches the name but not the marks, so it is not applied. The last rename does match, so we get y[6](1, 2), and the resolved name is y[6].
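The worked example can be replayed with a short sketch of the resolution walk, assuming a context is stored as a flat list of steps applied left to right (as written above) and that `from` Idents are given pre-resolved to a name plus marks. The types and function names are illustrative, and the full algorithm has details this sketch leaves out.

```rust
/// A name as written in the source plus its interner index, shown as x[4].
#[derive(Clone, Copy, PartialEq, Eq, Debug)]
struct Name {
    text: &'static str,
    index: u32,
}

fn n(text: &'static str, index: u32) -> Name {
    Name { text, index }
}

/// One element of a syntax context.
enum Step {
    Mark(u32),
    /// `from` is given pre-resolved: a name plus the marks it carries.
    Rename { from: (Name, Vec<u32>), to: Name },
}

/// Walks the context left to right. Every mark is recorded; a rename is
/// applied only if both the current name and the marks seen so far equal
/// the rename's `from`. The marks are discarded at the end.
fn resolve(name: Name, ctxt: &[Step]) -> Name {
    let mut current = name;
    let mut marks: Vec<u32> = Vec::new();
    for step in ctxt {
        match step {
            Step::Mark(m) => marks.push(*m),
            Step::Rename { from, to } => {
                if from.0 == current && from.1 == marks {
                    current = *to;
                }
            }
        }
    }
    current
}

/// rename(x[1]() -> x[4]), mark(1), mark(2),
/// rename(y[2]() -> y[5]), rename(y[2](1, 2) -> y[6])
fn example_context() -> Vec<Step> {
    vec![
        Step::Rename { from: (n("x", 1), vec![]), to: n("x", 4) },
        Step::Mark(1),
        Step::Mark(2),
        Step::Rename { from: (n("y", 2), vec![]), to: n("y", 5) },
        Step::Rename { from: (n("y", 2), vec![1, 2]), to: n("y", 6) },
    ]
}

fn main() {
    let ctxt = example_context();
    for start in [n("x", 1), n("y", 2)] {
        let resolved = resolve(start, &ctxt);
        // Prints "x[1] -> x[4]" and then "y[2] -> y[6]".
        println!("{}[{}] -> {}[{}]", start.text, start.index, resolved.text, resolved.index);
    }
}
```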

|

|

Mozilla Addons Blog: Add-ons Update – Week of 2015/11/04 |

I post these updates every 3 weeks to inform add-on developers about the status of the review queues, add-on compatibility, and other happenings in the add-ons world.

The Review Queues

- Most nominations for full review are taking less than 10 weeks to review.

- 142 nominations in the queue awaiting review.

- Most updates are being reviewed within 7 weeks.

- 70 updates in the queue awaiting review.

- Most preliminary reviews are being reviewed within 7 weeks.

- 141 preliminary review submissions in the queue awaiting review.

Review times for most add-ons have improved recently due to more volunteer activity. Add-ons that are admin-flagged or very complex are still moving along slowly, but we’re in the process of getting more paid reviewer time in the coming weeks, which should help in that area.

If you’re an add-on developer and would like to see add-ons reviewed faster, please consider joining us. Add-on reviewers get invited to Mozilla events and earn cool gear with their work. Visit our wiki page for more information.

Firefox 42 Compatibility

The compatibility blog post is up, and the automatic validation was run last week.

Firefox 43 Compatibility

The compatibility blog post should come up soon.

As always, we recommend that you test your add-ons on Beta and Firefox Developer Edition to make sure that they continue to work correctly. End users can install the Add-on Compatibility Reporter to identify and report any add-ons that aren’t working anymore.

Changes in let and const in Firefox 44

Firefox 44 includes some breaking changes that you should all be aware of. Please read the post carefully and test your add-ons on Nightly or the newest Developer Edition.

Extension Signing

The wiki page on Extension Signing has information about the timeline, as well as responses to some frequently asked questions. The current plan is to turn on enforcement by default in Firefox 43.

Electrolysis

Electrolysis, also known as e10s, is the next major compatibility change coming to Firefox. In a nutshell, Firefox will run on multiple processes now, running content code in a different process than browser code. If you have questions about this, please join the #e10s channel on IRC.

Web Extensions

If you read the post on the future of add-on development, you should know there are big changes coming. We’re investing heavily in the new WebExtensions API, so we strongly recommend that you start looking into it for your add-ons. You can track the progress of its development at http://www.arewewebextensionsyet.com/.

https://blog.mozilla.org/addons/2015/11/04/add-ons-update-73/

|

|

Air Mozilla: The Joy of Coding - Episode 33 |

mconley livehacks on real Firefox bugs while thinking aloud.


|

|

Air Mozilla: View Source: Wednesday AM Session |

View Source is a brand new conference for web developers, presented by Mozilla and friends, produced by the folks who also bring you the Mozilla...


|

|

William Lachance: Perfherder: Onward! |

In addition to the database refactoring I mentioned a few weeks ago, some cool stuff has been going into Perfherder lately.

Tracking installer size

Perfherder is now tracking the size of the Firefox installer for the various platforms we support (bug 1149164). I originally only intended to track Android .APK size (on request from the mobile team), but installer sizes for other platforms came along for the ride. I don’t think anyone will complain.

Just as exciting to me as the feature itself is how it’s implemented: I added a log parser to treeherder which just picks up a line called “PERFHERDER_DATA” in the logs with specially formatted JSON data, and then automatically stores whatever metrics are in there in the database (platform, options, etc. are automatically determined). For example, on Linux:

PERFHERDER_DATA: {"framework": {"name": "build_metrics"}, "suites": [{"subtests": [{"name": "libxul.so", "value": 99030741}], "name": "installer size", "value": 55555785}]}

This should make it super easy for people to add their own metrics to Perfherder for build and test jobs. We’ll have to be somewhat careful about how we do this (we don’t want to add thousands of new series with irrelevant / inconsistent data) but I think there’s lots of potential here to be able to track things we care about on a per-commit basis. Maybe build times (?).
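A sketch of the extraction step, assuming the marker can appear anywhere in a log line (for example after a timestamp prefix). `perfherder_payloads` is a hypothetical helper that only pulls out the raw JSON text; decoding it and storing the metrics in the database, as the Treeherder parser does, is not shown, and the log lines in `main` are made up.

```rust
/// Hypothetical helper: returns the raw JSON text that follows each
/// `PERFHERDER_DATA:` marker in a job log, one entry per matching line.
/// It does not parse or validate the JSON.
fn perfherder_payloads(log: &str) -> Vec<&str> {
    const MARKER: &str = "PERFHERDER_DATA:";
    log.lines()
        .filter_map(|line| {
            // The marker may be preceded by a timestamp or log-level prefix.
            line.find(MARKER).map(|pos| line[pos + MARKER.len()..].trim())
        })
        .collect()
}

fn main() {
    // Made-up log lines for illustration.
    let log = "\
12:00:01 INFO - packaging done
12:00:02 INFO - PERFHERDER_DATA: {\"framework\": {\"name\": \"build_metrics\"}, \"suites\": []}
12:00:03 INFO - uploading";
    for payload in perfherder_payloads(log) {
        println!("{payload}");
    }
}
```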

More compare view improvements

I added filtering to the Perfherder compare view and added back links to the graphs view. Filtering should make it easier to highlight particular problematic tests in bug reports, etc. The graphs links shouldn’t really be necessary, but unfortunately are due to the unreliability of our data — sometimes you can only see if a particular difference between two revisions is worth paying attention to in the context of the numbers over the last several weeks.

Miscellaneous

Even after the summer of contribution has ended, Mike Ling continues to do great work. Looking at the commit log over the past few weeks, he’s been responsible for the following fixes and improvements:

- Bug 1218825: Can zoom in on perfherder graphs by selecting the main view

- Bug 1207309: Disable ‘<’ button in test chooser if no test selected

- Bug 1210503: Include non-summary tests in main comparison view

- Bug 1153956: Persist the selected revision in the url on perfherder (based on earlier work by Akhilesh Pillai)

Next up

My main goal for this quarter is to create a fully functional interface for actually sheriffing performance regressions, to replace alertmanager. Work on this has been going well. More soon.

|

|

Support.Mozilla.Org: Firefox 42 SUMO Day this Thursday, November 5th |

Remember, remember, the 5th of November! For some people it’s Guy Fawkes Night; for us it’s Firefox 42 release SUMO Day!

What’s so special about this SUMO Day?

A SUMO Day is the time of the week when everybody who loves doing support (contributors, admins, moderators) gathers together to try to answer all the incoming questions on the Mozilla support forums. Firefox 42 has just been released with a load of exciting new features, so we’re organizing a special SUMO Day for it, as we expect many more incoming questions on the support forums.

I want to participate! Where do I start?

Just create an account and then take some time to help with unanswered questions. We have an etherpad ready with all the details plus additional tips and resources. We have also created a special common responses spreadsheet for Firefox 42 that will be constantly updated.

This is a 24-hour event: we will start early in the European morning and finish late in the US Pacific evening.

We are also hanging out having fun and helping each other in #sumo on IRC.

If you get stuck with questions that are too difficult feel free to ping us on IRC #sumo or ask for help on the contributors forums.

Moderators

SUMO Day will be moderated by madasan (EU morning/afternoon), marksc (EU afternoon/US morning), guigs (US morning/afternoon). We can always use more people to help moderate through the day so if you would like to do this just add your name in the etherpad!

What does it mean to be a SUMO Day moderator?

It’s easy! Just check out the forums and monitor incoming questions. Don’t forget to hang out on IRC on #sumo and the contributor forums and chat with the other SUMO Day participants about possible solutions to questions. As a moderator you also help out contributors who are stuck with difficult questions and need help.

What else is happening?

During this SUMO Day and throughout the following weeks, we are working together with our friends from the Social team, who are answering user questions on social media channels such as Twitter and Facebook. As not all the “Social” contributors are familiar with support, we are giving them a hand.

How does this work?

*If you are already familiar with SUMO and the support forum, you can join us in helping out with escalations. When the contributors working on Social encounter a post or a question that they don’t know how to deal with, they escalate it to us and we point them in the right direction.

IMPORTANT: We will not be replying to the user directly but we will explain to the Social contributors how to answer and show them the right KB articles they should point the user to. Also, expect most escalations to be very easy, this is just a matter of educating Social contributors on how to deal with certain issues. There should be none of the crazy troubleshooting we sometimes see in the forums :)

*If you are a member of the SUMO community and also a fluent speaker of French or German, you can help by approving the posts of the French and German contributors who answer questions on the social media channels. As not everybody is familiar with SUMO, some of the posts will need to be checked for accuracy and approved the same way we review and approve edits for L10N.

If you are interested in helping with any of the above please let Madasan know.

I hope everybody is as excited as we are about this new release! We will be trying to answer each and every incoming question on the support forum on Thursday so please join us. The more the merrier!

See you online and happy SUMO Day!

https://blog.mozilla.org/sumo/2015/11/04/firefox-42-sumo-day-this-thursday-november-5th/

|

|