Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of this RSS feed's readers.

Cameron Kaiser: Two interesting things |

First, from the Google Chrome blog:

Today, we’re announcing the end of Chrome’s support for Windows XP, as well as Windows Vista, and Mac OS X 10.6, 10.7, and 10.8, since these platforms are no longer actively supported by Microsoft and Apple. Starting April 2016, Chrome will continue to function on these platforms but will no longer receive updates and security fixes.

(Recall that Google had already dropped 32-bit Intel Macs with Chrome 39.)

I've long warned on this blog that Firefox dropping 10.6 support will probably really hurt us in TenFourFox-land, since there are major API changes and assumptions about the compiler, as well as the loss of 32-bit support (we are a hybrid Carbon/Cocoa 32-bit application, even on G5 systems). Naturally, we would of course add it back in just like we did when 10.4 and 10.5 support was progressively removed from the tree, but it would greatly hurt long-term maintainability and may force dependencies on certain features Tiger just doesn't have. However, with 45 (the next ESR) now on Nightly with 10.6 support still fully in effect, and with even plans to merely drop 10.6.0-10.6.2 stalling, it doesn't look like that will happen before 45ESR. That at least gives us a chance at one more ESR version assuming Electrolysis isn't mandatory by then.

Now, second. I've never particularly been a big fan of Grüber Alles, but he says something very interesting in his review of the iPad Pro:

According to Geekbench's online results, the iPad Pro is faster in single-core testing than Microsoft's new Surface Pro 4 with a Core-i5 processor. The Core-i7 version of the Surface Pro 4 isn't shipping until December -- that model will almost certainly test faster than the iPad Pro. But that's a $1599 machine with an Intel x86 CPU. The iPad Pro starts at $799 and runs an ARM CPU -- Apple's A9X. There is no more trade-off. You don't have to choose between the performance of x86 and the battery life of ARM. We've now reached an inflection point. The new MacBook is slower, gets worse battery life, and even its cheapest configuration costs $200 more than the top-of-the-line iPad Pro.

Although it's quite possible this says more about how bad the new MacBook sucks than about how good the iPad Pro is, it really looks like those P.A. Semi folks Apple snapped up a few years back (yes, the people who worked on the PWRficient PA6T, one of the PowerPC CPUs in the running for the post-PowerBook G4 PowerBook) are starting to make serious inroads against x86. POWER remains a monster in the datacenter because it can scale, and, well, it's IBM, but IBM hasn't cared (as much) about performance per watt in that environment for quite some time whereas that matters a great deal in the consumer market, and that's why we never got a PowerBook G5. For years industry pundits said the reason ARM chips were so thrifty with their power usage is that they weren't all that powerful, comparatively speaking. Well, the A9X changes that landscape substantially: now you've got an ARM core that's seriously threatening the low to mid range of Chipzilla's market segment and is highly power efficient, and I can't imagine Intel isn't scared about Apple licensing their design. This blog may be written by an old RISC fetishist -- in this very room I have a PA-RISC, a SuperH, a SPARC, a DEC Alpha, several SGI-MIPS and of course a whole crapload of PowerPC hardware -- but after seeing these surprising benchmark numbers all those old rumours about Apple eventually coming up with an ARM MacBook suddenly don't seem all that far-fetched.

Finally, okay, okay, three things, this Engrish gem from mozilla.governance, emphasis mine:

I’m now writing to you to tell you something that make me puzzled. As I know, Firefox is a free browser which offers users independent web suffering.

We all know it, brother. We all know it. :)

http://tenfourfox.blogspot.com/2015/11/two-interesting-things.html

Air Mozilla: Quality Team (QA) Public Meeting, 11 Nov 2015 |

This is the meeting where all the Mozilla quality teams meet, swap ideas, exchange notes on what is upcoming, and strategize around community building and...

https://air.mozilla.org/quality-team-qa-public-meeting-20151111/

Air Mozilla: Building a Community around MOSS |

Learn more about the Mozilla Open Source Support Program (MOSS), what is it, what's involved and how you can help support open source and free...

Jeff Muizelaar: Debugging reftests with RR |

When debugging reftests it's common to want to trace back the contents of a pixel to see where they came from. I wrote a tool called rr-dataflow to help with this.

What follows is a log of an rr session where I use this tool to trace back the contents of a pixel to the code responsible for it being set. In this case I'm using the softpipe mesa driver, which is a simple software implementation of OpenGL. This means that I can trace through the entire graphics pipeline as needed.

Breakpoint 1, mozilla::WebGLContext::ReadPixels (this=0x7fc064bc7000, x=0, y=0, width=64, height=64, format=6408,

type=5121, pixels=..., rv=...) at /home/jrmuizel/src/gecko/dom/canvas/WebGLContextGL.cpp:1411

1411 {

(gdb) c

Continuing.

Breakpoint 11, mozilla::ReadPixelsAndConvert (gl=0x7fc05c4e7000, x=0, y=0, width=64, height=64, readFormat=6408,

readType=5121, pixelStorePackAlignment=4, destFormat=6408, destType=5121, destBytes=0x7fc05c9d5000)

at /home/jrmuizel/src/gecko/dom/canvas/WebGLContextGL.cpp:1310

1310 {

(gdb) list

1305

1306 static void

1307 ReadPixelsAndConvert(gl::GLContext* gl, GLint x, GLint y, GLsizei width, GLsizei height,

1308 GLenum readFormat, GLenum readType, size_t pixelStorePackAlignment,

1309 GLenum destFormat, GLenum destType, void* destBytes)

1310 {

1311 if (readFormat == destFormat && readType == destType) {

1312 gl->fReadPixels(x, y, width, height, destFormat, destType, destBytes);

1313 return;

1314 }

(gdb) n

1311 if (readFormat == destFormat && readType == destType) {

(gdb)

1312 gl->fReadPixels(x, y, width, height, destFormat, destType, destBytes);

(gdb)

1313 return;

Let's disable the two breakpoints and set a watchpoint on the first pixel of the destination.

(gdb) dis 1

(gdb) dis 11

(gdb) watch -location *(int*)destBytes

Hardware watchpoint 12: -location *(int*)destBytes

Then reverse-continue back to where the first pixel was set.

(gdb) rc

Continuing.

Hardware watchpoint 12: -location *(int*)destBytes

Old value = -16711936

New value = 0

__memcpy_avx_unaligned () at ../sysdeps/x86_64/multiarch/memcpy-avx-unaligned.S:213

213 ../sysdeps/x86_64/multiarch/memcpy-avx-unaligned.S: No such file or directory.

We end up at a memcpy inside of readpixels that copies into the destination buffer.

(gdb) bt 9

#0 0x00007fc0ad9ed955 in __memcpy_avx_unaligned () at ../sysdeps/x86_64/multiarch/memcpy-avx-unaligned.S:213

#1 0x00007fc075c5080f in _mesa_readpixels (__len=, __src=, __dest=)

at /usr/include/x86_64-linux-gnu/bits/string3.h:53

#2 0x00007fc075c5080f in _mesa_readpixels (packing=0x7fc05c3e92e8, pixels=, type=5121, format=766008576, height=64, width=64, y=0, x=0, ctx=0x7fc05c3ce000) at main/readpix.c:245

#3 0x00007fc075c5080f in _mesa_readpixels (ctx=ctx@entry=0x7fc05c3ce000, x=x@entry=0, y=y@entry=0, width=width@entry=64, height=height@entry=64, format=format@entry=6408, type=5121, packing=0x7fc05c3e92e8, pixels=)

at main/readpix.c:873

#4 0x00007fc075ce0985 in st_readpixels (ctx=0x7fc05c3ce000, x=0, y=0, width=64, height=64, format=6408, type=5121, pack=0x7fc05c3e92e8, pixels=0x7fc05c9d5000) at state_tracker/st_cb_readpixels.c:255

#5 0x00007fc075c519d4 in _mesa_ReadnPixelsARB (x=0, y=0, width=64, height=64, format=6408, type=5121, bufSize=2147483647, pixels=0x7fc05c9d5000) at main/readpix.c:1120

#6 0x00007fc075c51c82 in _mesa_ReadPixels (x=, y=, width=, height=, format=, type=, pixels=0x7fc05c9d5000) at main/readpix.c:1128

#7 0x00007fc09c3a9b3b in mozilla::gl::GLContext::raw_fReadPixels(int, int, int, int, unsigned int, unsigned int, void*) (this=0x7fc05c4e7000, x=0, y=0, width=64, height=64, format=6408, type=5121, pixels=0x7fc05c9d5000)

at /home/jrmuizel/src/gecko/gfx/gl/GLContext.h:1511

#8 0x00007fc09c39abe1 in mozilla::gl::GLContext::fReadPixels(int, int, int, int, unsigned int, unsigned int, void*) (this=0x7fc05c4e7000, x=0, y=0, width=64, height=64, format=6408, type=5121, pixels=0x7fc05c9d5000)

at /home/jrmuizel/src/gecko/gfx/gl/GLContext.cpp:2873

#9 0x00007fc09d78696d in mozilla::ReadPixelsAndConvert(mozilla::gl::GLContext*, GLint, GLint, GLsizei, GLsizei, GLenum, GLenum, size_t, GLenum, GLenum, void*) (gl=0x7fc05c4e7000, x=0, y=0, width=64, height=64, readFormat=6408, readType=5121, pixelStorePackAlignment=4, destFormat=6408, destType=5121, destBytes=0x7fc05c9d5000)

at /home/jrmuizel/src/gecko/dom/canvas/WebGLContextGL.cpp:1312

From here we can see that the memcpy is storing the value of ymm4 into [r10]. We use the origin command to step back to the place where ymm4 is loaded.

(gdb) origin

0x1000: vmovdqu ymmword ptr [r10], ymm4

1

reg used ymm4

212 in ../sysdeps/x86_64/multiarch/memcpy-avx-unaligned.S

0x1000: add rdx, rdi

211 in ../sysdeps/x86_64/multiarch/memcpy-avx-unaligned.S

0x1000: add edx, eax

210 in ../sysdeps/x86_64/multiarch/memcpy-avx-unaligned.S

0x1000: jae 0xfd2

209 in ../sysdeps/x86_64/multiarch/memcpy-avx-unaligned.S

0x1000: sub edx, eax

208 in ../sysdeps/x86_64/multiarch/memcpy-avx-unaligned.S

0x1000: add rdi, rax

207 in ../sysdeps/x86_64/multiarch/memcpy-avx-unaligned.S

0x1000: vmovdqa ymmword ptr [rdi + 0x60], ymm3

206 in ../sysdeps/x86_64/multiarch/memcpy-avx-unaligned.S

0x1000: vmovdqa ymmword ptr [rdi + 0x40], ymm2

205 in ../sysdeps/x86_64/multiarch/memcpy-avx-unaligned.S

0x1000: vmovdqa ymmword ptr [rdi + 0x20], ymm1

204 in ../sysdeps/x86_64/multiarch/memcpy-avx-unaligned.S

0x1000: vmovdqa ymmword ptr [rdi], ymm0

203 in ../sysdeps/x86_64/multiarch/memcpy-avx-unaligned.S

0x1000: add rsi, rax

202 in ../sysdeps/x86_64/multiarch/memcpy-avx-unaligned.S

0x1000: vmovdqu ymm3, ymmword ptr [rsi + 0x60]

201 in ../sysdeps/x86_64/multiarch/memcpy-avx-unaligned.S

0x1000: vmovdqu ymm2, ymmword ptr [rsi + 0x40]

200 in ../sysdeps/x86_64/multiarch/memcpy-avx-unaligned.S

0x1000: vmovdqu ymm1, ymmword ptr [rsi + 0x20]

199 in ../sysdeps/x86_64/multiarch/memcpy-avx-unaligned.S

0x1000: vmovdqu ymm0, ymmword ptr [rsi]

197 in ../sysdeps/x86_64/multiarch/memcpy-avx-unaligned.S

0x1000: sub edx, eax

196 in ../sysdeps/x86_64/multiarch/memcpy-avx-unaligned.S

0x1000: add rsi, r11

195 in ../sysdeps/x86_64/multiarch/memcpy-avx-unaligned.S

0x1000: vmovdqu ymm4, ymmword ptr [rsi]

We end up at the instruction that loads ymm4 from [rsi]. Origin does this by single-stepping backwards, looking for writes to the ymm4 register. From here we want to continue tracking the origin. We use the origin command again. This time it sets a hardware watchpoint on the address in rsi.
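The backward search described above can be illustrated with a toy model. This is only a sketch of the idea, not rr-dataflow's actual code: the `Step` record and the hand-written `trace` list are hypothetical stand-ins for a recorded execution, and the real tool single-steps gdb in reverse for registers and uses hardware watchpoints for memory.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Step:
    """One executed instruction in a recorded trace (hypothetical toy model)."""
    text: str               # disassembly, for display only
    writes: Optional[str]   # location written by the instruction, if any
    reads: Optional[str]    # location the written value was read from, if any


def origin(trace: List[Step], start: int) -> int:
    """Walk backwards from trace[start] to the most recent earlier step
    that wrote the location trace[start] reads. Returns -1 if none exists."""
    wanted = trace[start].reads
    for i in range(start - 1, -1, -1):
        if trace[i].writes == wanted:
            return i
    return -1


# A heavily abbreviated version of the memcpy sequence from the session.
trace = [
    Step("vmovdqu ymm4, ymmword ptr [rsi]", writes="ymm4", reads="[rsi]"),
    Step("add rsi, r11", writes="rsi", reads="r11"),
    Step("vmovdqu ymm0, ymmword ptr [rsi]", writes="ymm0", reads="[rsi]"),
    Step("vmovdqu ymmword ptr [r10], ymm4", writes="[r10]", reads="ymm4"),
]

hit = origin(trace, len(trace) - 1)
print(trace[hit].text)  # the load that filled ymm4
```

Starting from the store of ymm4, the scan skips the unrelated instructions and stops at the load that last wrote ymm4, which is the point from which the next origin step continues.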

(gdb) origin

0x1000: vmovdqu ymm4, ymmword ptr [rsi]

3

mem used *(int*)(0x7fc05c6c5000)

Hardware watchpoint 13: *(int*)(0x7fc05c6c5000)

Hardware watchpoint 13: *(int*)(0x7fc05c6c5000)

Old value = -16711936

New value = -452919552

__memcpy_avx_unaligned () at ../sysdeps/x86_64/multiarch/memcpy-avx-unaligned.S:238

238 in ../sysdeps/x86_64/multiarch/memcpy-avx-unaligned.S

We end up in another memcpy. This memcpy is flushing the tile buffer, which is used for rendering to the backbuffer that ReadPixels is reading from.

(gdb) bt 9

#0 0x00007fc0ad9ed9a6 in __memcpy_avx_unaligned () at ../sysdeps/x86_64/multiarch/memcpy-avx-unaligned.S:238

#1 0x00007fc075e89753 in pipe_put_tile_raw (pt=pt@entry=0x7fc05c4f8740, dst=dst@entry=0x7fc05c6c5000, x=x@entry=0, y=y@entry=0, w=, w@entry=64, h=, h@entry=64, src=0x7fc05c9d9000, src_stride=)

at util/u_tile.c:80

#2 0x00007fc075e8a268 in pipe_put_tile_rgba_format (pt=0x7fc05c4f8740, dst=0x7fc05c6c5000, x=0, y=0, w=w@entry=64, h=h@entry=64, format=PIPE_FORMAT_R8G8B8A8_UNORM, p=0x7fc05c9c5000) at util/u_tile.c:524

#3 0x00007fc0760034aa in sp_flush_tile (tc=tc@entry=0x7fc06c1e9400, pos=pos@entry=0) at sp_tile_cache.c:427

#4 0x00007fc076003c05 in sp_flush_tile_cache (tc=0x7fc06c1e9400) at sp_tile_cache.c:457

#5 0x00007fc075fe6a0e in softpipe_flush (pipe=pipe@entry=0x7fc0650d5000, flags=flags@entry=0, fence=fence@entry=0x7fff2da85b40) at sp_flush.c:72

#6 0x00007fc075fe6b0d in softpipe_flush_resource (pipe=0x7fc0650d5000, texture=texture@entry=0x7fc07fad4380, level=level@entry=0, layer=, flush_flags=flush_flags@entry=0, read_only=, cpu_access=1 '\001', do_not_block=0 '\000') at sp_flush.c:148

#7 0x00007fc07600304f in softpipe_transfer_map (pipe=, resource=0x7fc07fad4380, level=0, usage=1, box=0x7fff2da85bf0, transfer=0x7fc0665e0a58) at sp_texture.c:387

#8 0x00007fc075cdd6bd in st_MapRenderbuffer (transfer=0x7fc0665e0a58, h=64, w=, y=0, x=0, access=, layer=, level=, resource=, context=)

at ../../src/gallium/auxiliary/util/u_inlines.h:457

#9 0x00007fc075cdd6bd in st_MapRenderbuffer (ctx=, rb=0x7fc0665e09d0, x=0, y=, w=, h=64, mode=1, mapOut=0x7fff2da85cf8, rowStrideOut=0x7fff2da85cf0) at state_tracker/st_cb_fbo.c:772

We use the origin command again. This time we have a rep movsb operation that reads from memory as a source. Origin uses a hardware watchpoint on that address again.

(gdb) origin

0x1000: rep movsb byte ptr [rdi], byte ptr [rsi]

3

mem used *(int*)(0x7fc05c9d9003)

Hardware watchpoint 14: *(int*)(0x7fc05c9d9003)

Hardware watchpoint 14: *(int*)(0x7fc05c9d9003)

Old value = 16711935

New value = -437918209

util_format_r8g8b8a8_unorm_pack_rgba_float (dst_row=0x7fc05c9d9000 "", dst_stride=256, src_row=0x7fc05c9c5000,

src_stride=, width=64, height=64) at util/u_format_table.c:15204

15204 *(uint32_t *)dst = value;

This watchpoint takes us back to the function that converts from the floating point output of the graphics pipeline to the byte value that goes in the destination tile.

(gdb) bt 9

#0 0x00007fc075eabe44 in util_format_r8g8b8a8_unorm_pack_rgba_float (dst_row=0x7fc05c9d9000 "", dst_stride=256, src_row=0x7fc05c9c5000, src_stride=, width=64, height=64) at util/u_format_table.c:15204

#1 0x00007fc075e8a23b in pipe_put_tile_rgba_format (pt=0x7fc05c4f8740, dst=0x7fc05c6c5000, x=0, y=0, w=w@entry=64, h=h@entry=64, format=PIPE_FORMAT_R8G8B8A8_UNORM, p=0x7fc05c9c5000) at util/u_tile.c:518

#2 0x00007fc0760034aa in sp_flush_tile (tc=tc@entry=0x7fc06c1e9400, pos=pos@entry=0) at sp_tile_cache.c:427

#3 0x00007fc076003c05 in sp_flush_tile_cache (tc=0x7fc06c1e9400) at sp_tile_cache.c:457

#4 0x00007fc075fe6a0e in softpipe_flush (pipe=pipe@entry=0x7fc0650d5000, flags=flags@entry=0, fence=fence@entry=0x7fff2da85b40) at sp_flush.c:72

#5 0x00007fc075fe6b0d in softpipe_flush_resource (pipe=0x7fc0650d5000, texture=texture@entry=0x7fc07fad4380, level=level@entry=0, layer=, flush_flags=flush_flags@entry=0, read_only=, cpu_access=1 '\001', do_not_block=0 '\000') at sp_flush.c:148

#6 0x00007fc07600304f in softpipe_transfer_map (pipe=, resource=0x7fc07fad4380, level=0, usage=1, box=0x7fff2da85bf0, transfer=0x7fc0665e0a58) at sp_texture.c:387

#7 0x00007fc075cdd6bd in st_MapRenderbuffer (transfer=0x7fc0665e0a58, h=64, w=, y=0, x=0, access=, layer=, level=, resource=, context=)

at ../../src/gallium/auxiliary/util/u_inlines.h:457

#8 0x00007fc075cdd6bd in st_MapRenderbuffer (ctx=, rb=0x7fc0665e09d0, x=0, y=, w=, h=64, mode=1, mapOut=0x7fff2da85cf8, rowStrideOut=0x7fff2da85cf0) at state_tracker/st_cb_fbo.c:772

#9 0x00007fc075c507b2 in _mesa_readpixels (packing=0x7fc05c3e92e8, pixels=0x7fc05c9d5000, type=5121, format=766008576, height=64, width=64, y=0, x=0, ctx=0x7fc05c3ce000) at main/readpix.c:234

We see that ecx is being stored into [r10 - 4]. We use origin to track back to the source.

(gdb) origin

0x1000: mov dword ptr [r10 - 4], ecx

1

reg used ecx

15206 src += 4;

0x1000: add rax, 0x10

15207 dst += 4;

0x1000: add r10, 4

15204 *(uint32_t *)dst = value;

0x1000: or ecx, esi

We end at an or instruction. Looking at the source below we see that each of the floating point channels is being converted to a byte. We'll manually set a watchpoint on the channel that we're interested in to avoid getting lost in the conversion code.

(gdb) list

15199 uint32_t value = 0;

15200 value |= (float_to_ubyte(src[0])) & 0xff;

15201 value |= ((float_to_ubyte(src[1])) & 0xff) << 8;

15202 value |= ((float_to_ubyte(src[2])) & 0xff) << 16;

15203 value |= (float_to_ubyte(src[3])) << 24;

15204 *(uint32_t *)dst = value;

15205 #endif

15206 src += 4;

15207 dst += 4;

15208 }

(gdb) watch -location src[1]

Hardware watchpoint 15: -location src[1]

(gdb) rc

Continuing.

Hardware watchpoint 15: -location src[1]

Old value = 1

New value = -1.35707841e+23

0x00007fc076003783 in clear_tile_rgba (tile=0x7fc05c9c5000, format=PIPE_FORMAT_R8G8B8A8_UNORM, clear_value=0x7fc06c1e968c)

at sp_tile_cache.c:272

272 tile->data.color[i][j][1] = clear_value->f[1];

We end up at the clear_tile_rgba function, which is setting the data in the buffer from the clear value.

(gdb) bt 9

#0 0x00007fc076003783 in clear_tile_rgba (tile=0x7fc05c9c5000, format=

PIPE_FORMAT_R8G8B8A8_UNORM, clear_value=0x7fc06c1e968c) at sp_tile_cache.c:272

#1 0x00007fc0760040e5 in sp_find_cached_tile (tc=0x7fc06c1e9400, addr=...) at sp_tile_cache.c:579

#2 0x00007fc075febee9 in single_output_color (layer=, y=, x=, tc=) at sp_tile_cache.h:155

#3 0x00007fc075febee9 in single_output_color (qs=0x7fc07fb6c780, quads=0x7fc0665f3500, nr=1) at sp_quad_blend.c:1179

#4 0x00007fc075fefc9f in flush_spans (setup=setup@entry=0x7fc0665f1000) at sp_setup.c:251

#5 0x00007fc075ff0112 in subtriangle (setup=setup@entry=0x7fc0665f1000, eleft=eleft@entry=0x7fc0665f1058, eright=eright@entry=0x7fc0665f1028, lines=64) at sp_setup.c:759

#6 0x00007fc075ff0af2 in sp_setup_tri (setup=setup@entry=0x7fc0665f1000, v0=v0@entry=0x7fc089aea7c0, v1=v1@entry=0x7fc089aea7d0, v2=v2@entry=0x7fc089aea7e0) at sp_setup.c:853

#7 0x00007fc075fe71a2 in sp_vbuf_draw_arrays (vbr=, start=, nr=6) at sp_prim_vbuf.c:422

#8 0x00007fc075e2b704 in draw_pt_emit_linear (emit=, vert_info=, prim_info=0x7fff2da85f80)

at draw/draw_pt_emit.c:261

#9 0x00007fc075e2d025 in fetch_pipeline_generic (prim_info=0x7fff2da85f80, vert_info=0x7fff2da85e40, emit=) at draw/draw_pt_fetch_shade_pipeline.c:196

We use origin again twice to track through the store and the load.

(gdb) origin

0x1000: movss dword ptr [rax - 0xc], xmm0

272 tile->data.color[i][j][1] = clear_value->f[1];

0x1000: movss xmm0, dword ptr [rbp + 4]

(gdb) origin

0x1000: movss xmm0, dword ptr [rbp + 4]

3

mem used *(int*)(0x7fc06c1e9690)

Hardware watchpoint 16: *(int*)(0x7fc06c1e9690)

Hardware watchpoint 16: *(int*)(0x7fc06c1e9690)

Old value = 1065353216

New value = 0

sp_tile_cache_clear (tc=0x7fc06c1e9400, color=color@entry=0x7fc05c3cfa4c, clearValue=clearValue@entry=0)

at sp_tile_cache.c:640

640 tc->clear_color = *color;

We end up in sp_tile_cache_clear which is setting up the clear color.

(gdb) bt 9

#0 0x00007fc076004376 in sp_tile_cache_clear (tc=0x7fc06c1e9400, color=color@entry=0x7fc05c3cfa4c, clearValue=clearValue@entry=0) at sp_tile_cache.c:640

#1 0x00007fc075fe5c84 in softpipe_clear (pipe=0x7fc0650d5000, buffers=5, color=0x7fc05c3cfa4c, depth=1, stencil=0)

at sp_clear.c:71

#2 0x00007fc075cd8181 in st_Clear (ctx=0x7fc05c3ce000, mask=272) at state_tracker/st_cb_clear.c:539

#3 0x00007fc09c3a9427 in mozilla::gl::GLContext::raw_fClear(unsigned int) (this=0x7fc05c4e7000, mask=16640)

at /home/jrmuizel/src/gecko/gfx/gl/GLContext.h:952

#4 0x00007fc09c3a9456 in mozilla::gl::GLContext::fClear(unsigned int) (this=0x7fc05c4e7000, mask=16640)

at /home/jrmuizel/src/gecko/gfx/gl/GLContext.h:959

#5 0x00007fc09d7830bf in mozilla::WebGLContext::Clear(unsigned int) (this=0x7fc064bc7000, mask=16640)

at /home/jrmuizel/src/gecko/dom/canvas/WebGLContextFramebufferOperations.cpp:46

#6 0x00007fc09d202460 in mozilla::dom::WebGLRenderingContextBinding::clear(JSContext*, JS::Handle, mozilla::WebGLContext*, JSJitMethodCallArgs const&) (cx=0x7fc078086400, obj=..., self=0x7fc064bc7000, args=...)

at /home/jrmuizel/src/gecko/obj-x86_64-unknown-linux-gnu/dom/bindings/WebGLRenderingContextBinding.cpp:11027

#7 0x00007fc09d6de3fa in mozilla::dom::GenericBindingMethod(JSContext*, unsigned int, JS::Value*) (cx=0x7fc078086400, argc=1, vp=0x7fc08f230210) at /home/jrmuizel/src/gecko/dom/bindings/BindingUtils.cpp:2644

#8 0x00007fc0a03af188 in js::Invoke(JSContext*, JS::CallArgs const&, js::MaybeConstruct) (args=..., native=0x7fc09d6de0bd , cx=0x7fc078086400)

at /home/jrmuizel/src/gecko/js/src/jscntxtinlines.h:235

#9 0x00007fc0a03af188 in js::Invoke(JSContext*, JS::CallArgs const&, js::MaybeConstruct) (cx=0x7fc078086400, args=..., construct=js::NO_CONSTRUCT) at /home/jrmuizel/src/gecko/js/src/vm/Interpreter.cpp:489

(gdb)

Running a backtrace we see that this goes back to a call to WebGLContext::Clear. This is the actual clear call that triggers the code that eventually sets the pixel to the value that we see when we call glReadPixels. At this point we've travelled through the entire pipeline, and we've done it with minimal effort through the magic of rr.
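The raw integers printed by the watchpoints in this session can be decoded by hand. The sketch below mirrors the packing shown in the u_format_table.c listing; `float_to_ubyte` here is a simplified stand-in (clamp, scale, round) and is an assumption, not Mesa's actual implementation, and the helper names are made up for illustration.

```python
import struct


def float_to_ubyte(x: float) -> int:
    """Simplified stand-in for Mesa's float_to_ubyte (assumption):
    clamp to [0, 1] and scale to 0..255 with rounding."""
    x = min(max(x, 0.0), 1.0)
    return int(x * 255.0 + 0.5)


def pack_rgba(r: float, g: float, b: float, a: float) -> int:
    """Pack four float channels into one 32-bit value, in the same
    order as the listing: R in the low byte, A in the high byte."""
    value = 0
    value |= float_to_ubyte(r) & 0xFF
    value |= (float_to_ubyte(g) & 0xFF) << 8
    value |= (float_to_ubyte(b) & 0xFF) << 16
    value |= float_to_ubyte(a) << 24
    return value


def as_int32(u: int) -> int:
    """Reinterpret an unsigned 32-bit value as signed, the way gdb
    prints *(int*)addr."""
    return struct.unpack("<i", struct.pack("<I", u))[0]


def bits_to_float(bits: int) -> float:
    """Reinterpret a 32-bit pattern as an IEEE 754 single."""
    return struct.unpack("<f", struct.pack("<I", bits))[0]


green = pack_rgba(0.0, 1.0, 0.0, 1.0)
print(hex(green), as_int32(green))   # 0xff00ff00 -16711936
print(bits_to_float(1065353216))     # 1.0
```

Under these assumptions, the value -16711936 seen in the destination buffer is consistent with an opaque green pixel, and 1065353216 is the bit pattern of the float 1.0 stored in the clear color.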

http://muizelaar.blogspot.com/2015/11/debugging-reftests-with-rr.html

Mozilla Addons Blog: AMO experiencing technical difficulties |

Update: as of now (1:10pm PT), it looks like all major features are back to normal.

Due to a recent server migration, AMO has been experiencing some problems for the past few hours. While the site is up, installing and submitting add-ons isn’t working correctly at the moment. The developer and operations team are working hard on fixing these problems as soon as possible and we will update this post once the problems are resolved.

https://blog.mozilla.org/addons/2015/11/11/amo-experiencing-technical-difficulties/

Mozilla WebDev Community: Extravaganza – November 2015 |

Once a month, web developers from across Mozilla get together to revolutionize layoffs. While we calculate the optimum distance away an employee should be from their desk when they’re informed of their impending doom, we find time to talk about the work that we’ve shipped, share the libraries we’re working on, meet new folks, and talk about whatever else is on our minds. It’s the Webdev Extravaganza! The meeting is open to the public; you should stop by!

You can check out the wiki page that we use to organize the meeting, or view a recording of the meeting in Air Mozilla. Or just read on for a summary!

Shipping Celebration

The shipping celebration is for anything we finished and deployed in the past month, whether it be a brand new site, an upgrade to an existing one, or even a release of a library.

Pontoon is replacing Verbatim

Osmose (that’s me!) started us off with the news that Pontoon, a Mozilla-developed website for translating software, is going to replace Verbatim, Mozilla’s instance of Pootle, which is an externally-developed website for translating software. Pontoon is able to do certain things that Pootle doesn’t, such as in-page translation.

Over 60% of active localization teams have voluntarily moved to Pontoon already, and the team currently aims to have 100% of active localization teams moved over by the first week of December.

mozilla.org on Deis Update

Next was jgmize, who shared a small update about the previously-mentioned move of mozilla.org to Deis, an open-source PaaS. The site has now fully transitioned out of the PHX1 datacenter and is split between Deis on AWS and SCL3.

Open-source Citizenship

Here we talk about libraries we’re maintaining and what, if anything, we need help with for them.

django-browserid 1.0.1

Last was Osmose again with the news that django-browserid 1.0.1 has been released. The only changes are the addition of a universal wheel distribution and preliminary Django 1.9 support.

This month’s excellence award went to Mythmon’s “Severance Santa” plan for holding layoffs over the holiday break.

If you’re interested in web development at Mozilla, or want to attend next month’s Extravaganza, subscribe to the dev-webdev@lists.mozilla.org mailing list to be notified of the next meeting, and maybe send a message introducing yourself. We’d love to meet you!

See you next month!

https://blog.mozilla.org/webdev/2015/11/11/extravaganza-november-2015/

The Mozilla Blog: A New Yahoo Search Experience for Firefox Users in U.S. |

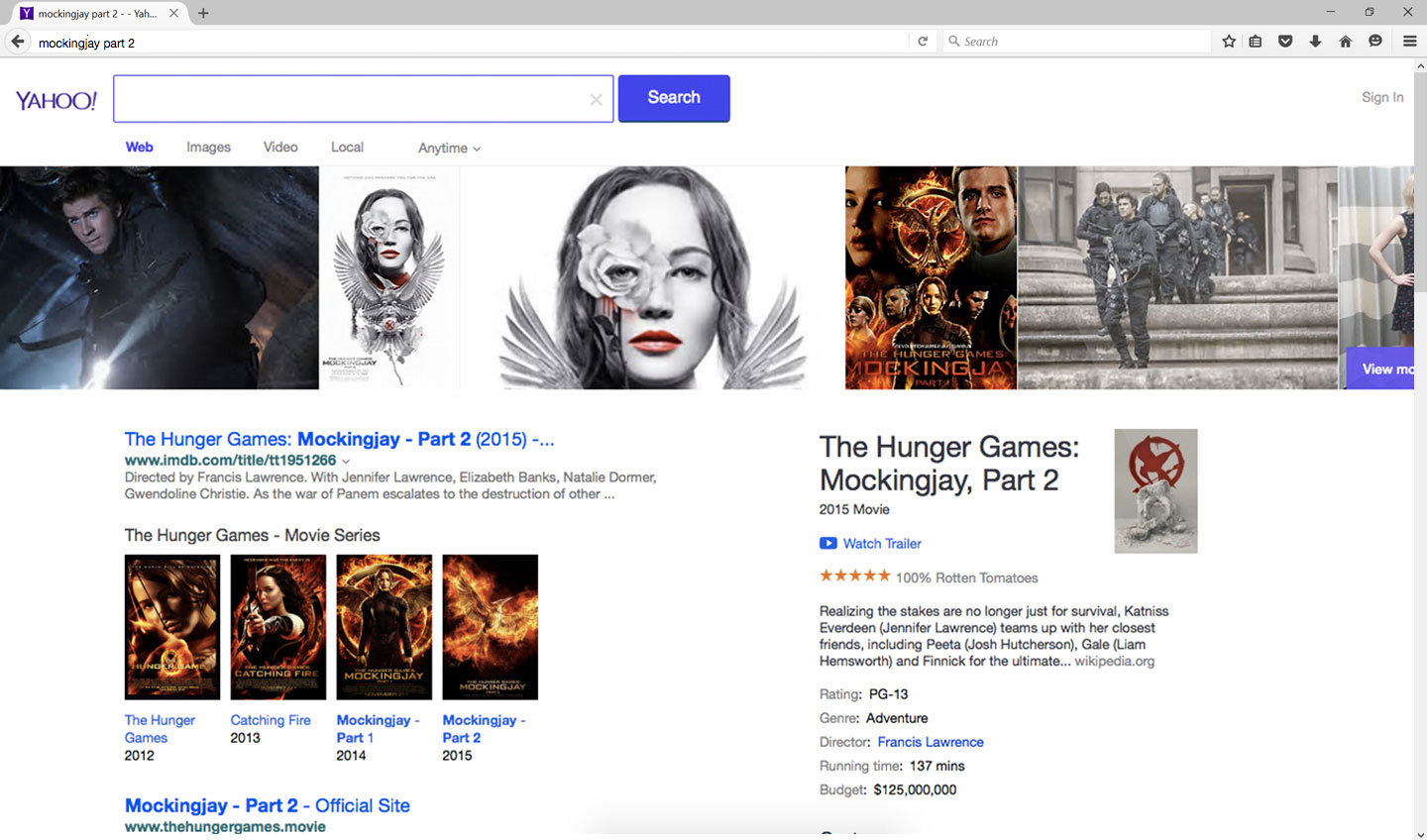

Today, we are excited that Firefox users in the U.S. will see a new, improved, innovative and fun experience on Yahoo Search. A year ago we entered into a strategic partnership with Yahoo to be the default search experience for Firefox users in the U.S. We ended our practice of having a single global default search provider for Firefox because we wanted to encourage growth in search, provide users with local choices and promote innovation in the space.

We have accomplished a lot over the last year. We worked closely with Yahoo to improve the search experience for our U.S. users. And, while we tend to be an opinionated and passionate project, Yahoo has been collaborative and flexible as we’ve provided continual feedback. Ultimately, these advances in the experience improve the competitive landscape for search which is good for our users.

Firefox users in the U.S. can try this exclusive new search experience in the Firefox Awesome bar on Windows, Mac and Linux. Yahoo Search has been redesigned to surface relevant, actionable answers and display images and videos up front so that you can browse, find and discover more about your queries. You can learn more about the new Yahoo Search experience here.

Give it a try and let them know what you think!

https://blog.mozilla.org/blog/2015/11/11/a-new-yahoo-search-experience-for-firefox-users-in-u-s/

Air Mozilla: The Joy of Coding - Episode 34 |

mconley livehacks on real Firefox bugs while thinking aloud.

Michael Kaply: Identifying Preferences Names in Firefox |

One question I get a lot is how to associate a preference option that you see in the Firefox preferences with the name of a preference to set or lock.

To make getting that information easier, I've made a very simple extension called Pref Helper that when installed highlights any item that has a corresponding preference in blue and shows that preference when you right click on the item. This should make it easy to find the preference name you are looking for.

Then you can set or lock it with the CCK2.

You can download it here.

https://mike.kaply.com/2015/11/11/identifying-preferences-names-in-firefox/

Joel Maher: Adventures in Task Cluster – running a custom Docker image |

I needed to get compiz on the machine (bug 1223123), and I thought it should be on the base image. So to take the path of most resistance, I dove deep into the internals of taskcluster/docker and figured this out. To be fair, :ahal gave me a lot of pointers; in fact, if I had taken better notes this might have been a single pass to success vs. 3 attempts.

First let me explain a bit about how the docker image is defined and how it is referenced/built up. We define the image to use in-tree. In this case we are using taskcluster/desktop-test:0.4.4 for the automation jobs. If you look carefully at the definition in-tree, we have a Dockerfile which names the base image we inherit from:

FROM taskcluster/ubuntu1204-test-upd:0.1.2.20151102105912

This means there is another image called ubuntu1204-test-upd, which is also defined in tree and in turn references a 3rd image, ubuntu1204-test. These all build upon each other, creating the final image we use for testing. If you look in each of the directories, there is a REGISTRY and a VERSION file. These are used to determine the image to pull, so in the case of wanting:

docker pull taskcluster/desktop-test:0.4.4

we would effectively be using:

docker pull {REGISTRY}/desktop-test:{VERSION}

For our use case, taskcluster/desktop-test is defined on hub.docker.com. This means that you could create a new version of the container ‘desktop-test’ and use that while pushing to try. In fact that is all that is needed.
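As a rough illustration of how the REGISTRY and VERSION files combine into an image reference, here is a minimal sketch. It assumes the image name is simply the directory name, which matches the examples in this post but is not confirmed as the in-tree tooling's exact logic; `image_reference` is a hypothetical helper, not part of taskcluster.

```python
from pathlib import Path
import tempfile


def image_reference(image_dir: Path) -> str:
    """Build '{REGISTRY}/{name}:{VERSION}' from the two files in an
    image directory. Assumes the image name is the directory name."""
    registry = (image_dir / "REGISTRY").read_text().strip()
    version = (image_dir / "VERSION").read_text().strip()
    return f"{registry}/{image_dir.name}:{version}"


# Demonstrate with a throwaway directory laid out like an in-tree image.
with tempfile.TemporaryDirectory() as tmp:
    image_dir = Path(tmp) / "desktop-test"
    image_dir.mkdir()
    (image_dir / "REGISTRY").write_text("taskcluster\n")
    (image_dir / "VERSION").write_text("0.4.4\n")
    example = image_reference(image_dir)

print(example)  # taskcluster/desktop-test:0.4.4
```

Changing the contents of REGISTRY to another Docker Hub account and bumping VERSION is then enough to make the same lookup resolve to a custom image.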

First let's talk about how to create an image. I found that I needed to create both a desktop-test and an ubuntu1204-test image on Docker Hub. Luckily, in tree there is a script, build.sh, which will take a currently running container and make a convenient package ready to upload. Some steps would be:

- docker pull taskcluster/desktop-test:0.4.4

- docker run taskcluster/desktop-test:0.4.4

- apt-get install compiz; # inside of docker, make modifications

- # now on the host we prepare the new image (using elvis314 as the docker hub account)

- echo elvis314 > testing/docker/desktop-test/REGISTRY

- echo 0.4.5 > testing/docker/desktop-test/VERSION # NOTE: I incremented the version

- cd testing/docker

- build.sh desktop-test # go run a 5K

- docker push elvis314/desktop-test # go run a 10K

Those are the simple steps to update an image; what we want to do next is actually verify that this image has what we need. While I am not an expert in docker, I do like to keep my containers under control, so I do a |docker ps -a| and then |docker rm

- docker pull elvis314/desktop-test:0.4.5

- docker run elvis314/desktop-test:0.4.5

- compiz # this verifies my change is there, I should get an error about display not found!

I will continue on here assuming things are working. As you saw earlier, I had modified files in testing/docker/desktop-test; these should be part of a patch to push to try. In fact that is all the magic. To actually use compiz successfully, I also needed to edit test-linux.sh to launch |compiz &| after initializing Xvfb.

Now when you push to try with your patch, any tasks that used taskcluster/desktop-test before will use the new image (i.e. elvis314/desktop-test). In this case I was able to see the test cases that opened dialogs and windows pass on try!

https://elvis314.wordpress.com/2015/11/11/adventures-in-task-cluster-running-a-custom-docker-image/

Pascal Chevrel: Progressively moving from Sublime Text 3 to Atom |

I am currently moving from Sublime Text (I am a happy paying customer) to Atom. I love Sublime but unfortunately, the project is dying and I want to invest in tools that are maintained in the long term.

Sublime 3 is still not released after years, and the only developer completely disappeared for months, which is not the first time this has happened. It is also not an open source project, which means that the project will die if this only developer leaves. The support forum is filled with spam and, basically, there is no roadmap nor any official commitment to pursue the project.

Of course, Sublime still works fine, but there are already reports of it getting crashy on newer versions of Linux, and the addons ecosystem is slowing down. In the meantime, there is Atom, created by the vibrant GitHub community, with regular releases and attractive features (mainly clearly copied from Sublime).

So far I hadn't switched because Atom was not ready for prime time, despite its 1.0 number. It was just not usable on my machine for any serious dev work.

That said, the recent 1.2beta builds did get improvements and many extensions are actually working around core bugs that were making Atom a no go until now. So today, I am trying to use Atom 50% of the time instead of 10% as before.

To get a working setup, I did have to install a few must-have extensions that just make the app usable. First and foremost, I needed Atom to work on my X1 Carbon, which boasts a 2560*1440 resolution, and, well, HiDPI is not Chrome's forte. I couldn't even read the microscopic UI, and using CSS to fix that was a pain and does not scale when you switch to an external monitor or give a presentation at a different resolution. One point for Sublime, which just respects my OS DPI settings. Fortunately, this extension fixes it all:

The second thing I needed was decent speed. It's still lagging behind Sublime, but the Atom builds over the summer made a significant effort in that respect, and the boot-up time is now acceptable for my daily use.

The third problem, which is probably still a blocker for me, is that Atom basically sucks at managing non-US QWERTY keyboards. Like, seriously, it's bad. Most of the shortcuts didn't work on my French keyboard, and the same problem affects most keyboards in the world. Again, this seems to be a Chrome engine limitation according to the GitHub issues, and it should be fixed upstream a few Chrome versions from now. In the meantime, this package is an acceptable workaround that makes many non-US keyboards work more or less with Atom:

There is one big caveat, and it is one of my day-to-day blockers: I can't comment out a single line with a keyboard shortcut. If that simple bug were fixed in the package or in Atom, I would certainly use it 75% of the time and not 50%.

In terms of UI and color scheme, it took me some time to find something agreeable to the eyes (on Sublime, I tended to like the Cobalt color scheme), but Atom Light for the UI and Code Dark for the color scheme are rather agreeable. Of course I am a PHP developer, so my color scheme tends towards having clear syntax in this language. The 1.2beta builds also stopped opening the context menu in silly locations on my screen instead of below my cursor, another pain point gone.

The Zen and minimap extensions are just must haves for Sublime defectors like me:

Other extensions I use a lot are available:

In terms of PHP specific extensions, I found several equivalents to what I use in Sublime:

There are other PHP extensions I installed but haven't had much luck with yet. Some require configuration files, others don't seem to work or conflict with the keyboard shortcuts (stuff like php-cs-fixer).

The one extension I really miss from Sublime is Codeintel, which autocompletes method names and shows tooltips explaining methods, using data extracted from the DocBlocks in classes. I really hope it will be ported some day or that an equivalent feature will be created.

I also need to see how to launch simple scripts when saving files, to run Atoum unit tests for example. Hopefully somebody will hack it and I won't have to dig into doing it myself.

On the plus side for Atom, Git/GitHub integration in the UI is obviously out of the box, I didn't have to install any extension for that. The UI is also improving regularly and just more pleasing to the eyes than Sublime's, which is a bit too geeky to my taste. There is a real preference panel where you can manage your extensions and many interesting small features for which you don't have to edit configuration files like in Sublime (seriously, setting your font size in a JSON file is not user friendly).

It does have its share of bugs though. For example, syntax coloring seems to choke on very large files (like 2MB) and everything is displayed as plain text (not cool for XML or long PHP arrays, for example). The UI also sometimes locks up, often when I switch to preferences.

But all in all, the experience is getting better over time and I think I have found a credible alternative to Sublime for 2016. I know there are other options. I actually have PHPStorm, for example, which is super powerful, but just as with Eclipse, NetBeans and other heavyweight IDEs, I have better things to do in my life than spend 3 months learning the tool just to be able to edit a couple of files, and I don't buy the argument that this is a lifetime investment.

The one aspect of Atom that I think is still basic is project management. I need something just as simple as Sublime's, but I may just have overlooked the right extension for that, and anyway, it's such a popular request that I have no doubt it will be in core at some point.

That's it. If you haven't tried Atom in a while and, like me, are worried about Sublime Text's future, maybe this post will make you want to give it a try again.

I still love Sublime. Even in its beta form it is a solid and complete product, and I would be happy to keep paying for it and getting updates. Unfortunately, its days seem numbered because all the eggs are in one basket: a single developer who may have good reasons to have vanished. I just need to get work done, and there are already enough bus-factor people in my own job that I can't afford to have the same problem with the main tool I use on a daily basis. I'd be happy to be proven wrong and see a Sublime Renaissance. The only dev is obviously incredibly talented and deserves to make a living out of his work; I just think he got overwhelmed by the incredible success of his product and can't keep up. At some point, open source or proprietary software, you need a team to scale up and keep your customers satisfied. I do hope that if he completely gives up on this project to pursue other adventures, he will open source Sublime Text and not let all this coding beauty disappear forever into the limbo of the proprietary world.

PS: And yes, I prepared this blog post in Markdown syntax thanks to the built-in Markdown preview pane in Atom.

http://www.chevrel.org/carnet/?post/2015/11/11/Progressively-moving-from-Sublime-Text-3-to-Atom

|

|

Mozilla Addons Blog: Promote your add-ons with the “Get the add-on” button |

If you are an add-on developer, and you’d like something a little snazzy to help you promote your add-ons, our creative team has cooked up something for you!

The new “Get the add-on” button comes in two sizes:

172 x 60 pixels

129 x 45 pixels

Simply save these images, put them on your website, and link them to your add-on’s listing on addons.mozilla.org. Questions? Please head to our forums.

If you’re interested in localizing the button for your language, please contact us.

Enjoy!

https://blog.mozilla.org/addons/2015/11/10/promote-your-add-ons-with-the-get-the-add-on-button/

|

|

Air Mozilla: Martes mozilleros, 10 Nov 2015 |

Biweekly meeting to talk about the state of Mozilla, the community and its projects.

|

|

Yunier José Sosa Vázquez: Odisleysi Martínez: “Mozilla makes me want to keep learning and opened the doors to a new world for me” |

Once again we have the chance to learn more about the members of Firefoxmanía, and today our guest is one of our women. She is a young woman who stands out for her peculiar habit of covering her laptop with stickers (there is no room for one more) and for referencing Firefox in the way she dresses. I mean F!r3f0x G!rl, or simply Odi, as we all know her.

Question:/ What is your name and what do you do?

Answer:/ My name is Odisleysi Martínez, and I am an interface programmer at the Universidad de las Ciencias Informáticas.

Q:/ Why did you decide to contribute to Mozilla/Firefoxmanía?

A:/ I decided to contribute to Mozilla/Firefoxmanía because it is a really interesting community project. Firefoxmanía (the Mozilla community in Cuba) has supported Mozilla's mission since 2008. Mozilla has an altruistic mission: it protects its users' privacy, fosters innovation and creativity, takes its users' opinions into account, and tries to keep the web open and participatory, and I like that a lot. When I found out about the community at the Universidad de las Ciencias Informáticas (UCI), they happened to be starting work on the famous giant Firefox logo that was made next to teaching building 1, and it seemed like fun to help. It was not going to be just posting on a blog, but sharing, helping, teaching and making friends, who have turned out to be very good friends ever since.

Q:/ What role do you currently play in the Community?

A:/ Right now I look after the outreach and lightweight themes areas. That said, all of us active contributors are musicians, poets and madmen. Everyone helps a lot with promoting events, activities, releases and so on, especially the community leader.

Q:/ What do you value most, or what is the most positive thing, about Mozilla / the Community?

A:/ I greatly value innovation. For me, that is what Mozilla and Firefoxmanía are: innovation, creativity, and always working with the end user in mind.

Q:/ What do you get out of Mozilla / the Community?

A:/ Mozilla makes me want to keep learning. It opened the doors to a new world for me, to other technologies I had not explored in depth. It got me interested in web design and development; in short, I owe it a lot. Best of all, I can apply what I have learned in my job, and that gives me great professional satisfaction.

Q:/ What do you think Mozilla will be like in the future?

A:/ Mozilla always surprises me in a positive way. I remember the first time I installed the Firefox browser; I think it was version 3.5, hehe. Before long I discovered Thunderbird, Firefox OS came out, and add-ons have appeared for every taste, for all kinds of functionality, and to improve the user experience. If it has taken giant steps between then and now, and the world today revolves around the Web, I have no doubt it will go far. I have faith that it will.

Q:/ In a few words, what is Firefoxmanía to you?

A:/ I think the perfect word to sum up Firefoxmanía is: Family.

Q:/ What do you think about women in Mozilla?

A:/ That is a difficult question. Without a doubt I can say that women are present in every Mozilla decision. In every meeting, news item or event, women are never absent. I think Mozilla takes us into account and works to bring in more women contributors. One example is the support it gives to projects dedicated to improving the visibility and gender equality of women in free software, such as WoMoz.

Q:/ You are one of the theme creators for the Personas Gallery. How do you feel when you see someone wearing one of your themes?

A:/ When I see someone using a theme I made, I get very happy. I remember the moment I made it and how I surveyed my lab mates at the time to find out which category would be most popular. It was funny because almost nobody had the same interests, and in some cases I ended up asking strangers, just to find out which theme would be the next hit. Seeing something I put so much effort into being well received gives me a lot of positive energy and reasons to work more, to contribute more, and to give the best of myself.

Q:/ Besides contributing to Firefoxmanía and your job, what other hobbies do you have?

A:/ I like listening to music, singing and learning songs on the guitar. I have not done it for a while because I do not have time, but I love holding guateques (the name I gave to two or more people getting together to play and sing trova), hehehe. I like following web design trends and tinkering with CSS and JS frameworks. Sometimes I read, a habit I picked up from a very good friend (Carmen). I like to crochet and to post the things I make on a Facebook page called Croché y mucho +. Watching a good film or a good series while I crochet, :D. Visiting friends, although I admit I do not do it much for lack of time.

Q:/ A few words for people who want to join the Community.

A:/ I remember that I used to visit the site, look at the lightweight themes and want to contribute, yet I would have doubts. I am telling you about this experience because I know I was not the only one it happened to back then, and for a while I took the wrong attitude about it. Never be afraid to show what you can do, and never be shy about coming forward and contributing, not only with this community but with any development community. In the long run the goal is to “collaborate”. Help is always very welcome, and you surely bring things all of us could learn from. We are waiting with open arms for everyone who wants to be part of Firefoxmanía.

Many thanks, Firefox girl, for agreeing to the interview.

|

|

Daniel Pocock: Aggregating tasks from multiple issue trackers |

After my experiments with the iCalendar format at the beginning of 2015, including Bugzilla feeds from Fedora and reSIProcate, aggregating tasks from the Debian Maintainer Dashboard, GitHub issue lists and even unresolved Nagios alerts, I decided this was fertile ground for a GSoC student.

In my initial experiments, I tried using the Mozilla Lightning plugin (Iceowl-extension on Debian/Ubuntu) and GNOME Evolution's task manager. Setting up the different feeds in these clients is not hard, but they have some rough edges. For example, Mozilla Lightning doesn't tell you if a feed is not responding. This can be troublesome: if the Nagios server goes down, no alerts are visible, so you assume all is fine.

To take things further, Iain Learmonth and I proposed a GSoC project for a student to experiment with the concept. Harsh Daftary from Mumbai, India was selected to work on it. Over the summer, he developed a web application to pull together issue, task and calendar feeds from different sources and render them as a single web page.

Harsh presented his work at DebConf15 in Heidelberg, Germany; the video is available here. The source code is in a GitHub repository. The code is currently running as a service at horizon.debian.net, although it is not specific to Debian and is probably helpful for any developer who works with more than one issue tracker.

http://danielpocock.com/aggregating-tasks-multiple-issue-trackers-gsoc-2015-summary

|

|

Nick Cameron: Macros in Rust pt5 |

I've previously laid out the current state of affairs in Rust (0, 1, 2, 3, 4). In this post I want to highlight what I think are issues with the current system and if/when I think we should fix them. I plan to do some focused design work on this leading to an RFC and to work on the implementation as well. Help with both will be greatly appreciated; let me know if you're interested.

I'd like to gather the community's thoughts on this before getting to the RFC stage (and I'm only really gathering requirements at this stage, not coming up with a plan). To help with that I have opened a thread on internals.rust-lang; please comment there if you have thoughts.

RFC issue 440 describes many of the issues. I hope I have collected here all of those which are still relevant.

high-level motivation

The most pressing issue is the instability of procedural macros. We've seen people do really cool things with them, they are an extremely powerful mechanism, and one of the most requested features for stability. However, there are many doubts about the current system. We would like to have a system of procedural macros we are happy with and move towards stability.

There are many issues with macro_rules macros which we would have liked to address before 1.0, but couldn't due to time constraints and prioritisation. Many of the 'rough edges' would be breaking changes to fix. It is unclear how difficult such changes would be to cope with and if it is worth making such changes. In particular, we can have the best macro system in the world, but if the old one has momentum and no-one uses the new one, then that would not be a good investment.

Finally, the two macro systems interact a lot. We need to make sure that any decisions we make in one area will leave us the freedom to do what we want in the other.

macro_rules issues

Our hygiene story should be more complete. See blog post 3 for details of what is not covered by Rust's hygiene implementation. In particular, type and lifetime variables should be covered, and we should have unsafety and privacy hygiene. One open question here is how items introduced by macro expansion should be scoped.

Modularisation of macros needs work. It would be nice if macros could be named in the same way as other items and importing worked the same way for macros as for other items. This will require some modifications to our name resolution implementation (which is probably a good thing, it is kinda ugly at the moment).

Having to use absolute paths for naming is a drag. It would be better if naming respected the scopes in which macros are defined.

Ordering of macro definitions and uses shouldn't matter, in the same way that it doesn't for other items. E.g.,

    foo!();
    macro_rules! foo { ... }

should work.

- Ordering of uses shouldn't matter, e.g.,

    macro_rules! foo {
        () => {
            baz!();
        }
    }

    macro_rules! bar {
        () => {
            macro_rules! baz {
                () => {
                    ...
                }
            }
        }
    }

    foo!();
    bar!();

should work.

- There are some significant-sounding bugs that should be addressed, or at least assessed. E.g., around parsing macro arguments: 6994, 3232, and 27832
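The hygiene and ordering points in this list can be sketched with today's macro_rules. This is only a minimal illustration of current behaviour, not a proposal from the post; all macro and function names (`times_two`, `define_helper`, `answer` and the `*_demo` functions) are made up for the example.

```rust
// Local variables are hygienic: the `x` declared inside the macro body
// is distinct from the caller's `x`, so neither captures the other.
macro_rules! times_two {
    ($e:expr) => {{
        let x = 2;
        $e * x
    }};
}

fn hygiene_demo() -> i32 {
    let x = 10;
    // `x + 1` uses the caller's `x`; the multiplier is the macro's `x`.
    times_two!(x + 1)
}

// Items are NOT hygienic: a function introduced by a macro is visible
// by name at the expansion site.
macro_rules! define_helper {
    () => {
        fn helper() -> i32 {
            7
        }
    };
}

define_helper!();

fn item_demo() -> i32 {
    helper()
}

// Ordering matters today: this definition has to appear textually before
// the use below. Moving `ordering_demo` above it fails to compile.
macro_rules! answer {
    () => {
        42
    };
}

fn ordering_demo() -> i32 {
    answer!()
}

fn main() {
    println!("{} {} {}", hygiene_demo(), item_demo(), ordering_demo());
}
```

The contrast between `times_two` and `define_helper` is the gap described above: variable names are protected, while item names leak into the surrounding scope.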

stability

The big question is whether we can adapt the current system with minimal breakage, or whether we need to define a macros 2.0 system and if so, how to do that. I believe that even if we go the macros 2.0 path, it must be close enough to the current system that the majority of macros continue to work unchanged, otherwise changing will be too painful for the new system to be successful. That obviously limits some of the things we can do.

procedural macro issues

The current breakdown of procedural macros into different kinds using traits is a bit ad hoc. We should try to rationalise this breakdown. Hopefully we can merge ItemDecorator and ItemModifier, remove IdentTT, and make MacroRulesTT more of an implementation detail. We should also find a simpler interface than the current mess of traits and data structures.

All kinds of macros should take and produce token trees, rather than ASTs. However, there should be good facilities for working with the token trees as ASTs, either provided by the compiler or from external libraries.

There should be powerful and easy to use libraries for working with hygiene and spans.

The plugin registry system is not ideal, instead we should allow scoped attributes and support modularisation of procedural macros.

Having to build procedural macros as separate crates is not ideal, it would be nice to be able to have procedural macros defined inline like macro_rules macros. I imagine we would still allow something like the current system, but also support inline macros as a kind of sugar (nesting these inside macro_rules macros will make things fun).

stability

Currently procedural macros have access to all of the compiler. If we were to stabilise macros like this, then we could never change the compiler or language. We need to present APIs to procedural macros that allow the language to evolve whilst they remain stable. Various ways have been suggested for this, such as working only with quasi-quoting, relying on external libraries, some kind of extensible AST API, extensible enums, and a more flexible token tree structure.

other stuff

Scoped attributes were mentioned for procedural macros, it would be nice to allow tools to make use of these too. This could allow more precise interaction with the various attribute lints (unknown attribute, etc.).

Concatenating idents is supported by concat_idents; however, it is not very useful due to hygiene constraints. I believe we can do much better. (See tracking issue). We might also want to allow macros in ident position.

Proper modularisation of macros would require moving some of name resolution to the macro expansion phase. This would be a big change. However, name resolution is due for some serious refactoring and we could move what is left to the AST->HIR lowering step or even later and make it 'on-demand' during type checking. The latter would allow us to use the same code for associated items as for plain names.

We seem to be paying the price for some unification/orthogonality without getting a great deal of benefit. It would be nice to either make macro_rules macros more built-in and/or be able to do more compiler stuff as pure procedural macros (e.g., all the built-in macros, cfg, maybe even macro_rules macros).

out of scope?

There are some things which are touched on here or in the last few blog posts, but which I don't want to think about at the moment. I think most of them are orthogonal to the main focus here (modularisation, hygiene, stabilising procedural macros) and backwards compatible. Things I can think of:

- other plugin registry stuff - the plugin registry is pretty ugly, I'd rather it disappeared completely. Eliminating the registration of procedural macros is a step towards that, but I don't want to think about lints, LLVM passes, etc. for now.

- Macro uses in more positions (I mentioned ident position above, I'm not sure how important it is to consider that now. I'm not sure if there are other positions we should consider) - we can add more if there is demand, but it doesn't intersect with much else here.

- Expansion stacks and other implementation stuff - we can probably do better, but I think it is mostly orthogonal to the high level design.

- Tooling - we need better tooling around macros, but it's pretty orthogonal to this work

- Compiler as a library.

- gensym in macro_rules.

- Allow matching of + and * in macro patterns.

- External deriving implementations - hopefully this will extend the procedural macros work, but I don't think it affects the design at this stage.

- Dealing with repetition in sequences - i.e., generating n of something in a macro, counting the number of repetitions in a pattern.
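For the last item, counting repetitions, a common workaround in today's macro_rules is a recursive counting macro. The sketch below shows only that workaround, not anything proposed in this post; `count_tts` and `int_array` are hypothetical names.

```rust
// Count token trees by peeling one off per recursive step.
macro_rules! count_tts {
    () => { 0usize };
    ($head:tt $($tail:tt)*) => { 1usize + count_tts!($($tail)*) };
}

// Use the count as an array length, so the size is derived from the
// number of repetitions matched in the pattern.
macro_rules! int_array {
    ($($value:expr),*) => {{
        let array: [i32; count_tts!($($value)*)] = [$($value),*];
        array
    }};
}

fn main() {
    let values = int_array!(4, 5, 6);
    println!("{} elements: {:?}", values.len(), values);
}
```

Because the recursion depth grows with the number of tokens, long inputs can hit the compiler's recursion limit, which is one reason built-in support would be attractive.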

|

|

The Mozilla Blog: MozFest 2015 Demo Garage: Showing What’s Possible with the Open Web |

Mozilla Festival 2015 was a productive and dynamic event celebrating the world’s most valuable public resource — the open Web. MozFest is also a gathering place for Mozilla community members from around the world and brings together makers, designers, builders, coders and creative folks to showcase their ideas of how the Web can enable the sort of innovative tinkering you might do (or want to do) in your own garage.

In fact one of the many exciting exploration spaces at MozFest was a demo zone we called “The Garage”. Here we had a steady stream of demos, workshops and spontaneous conversations about a wide-range of maker movement topics including 3D printing, robotics, sensors, connected devices, digitally discovering the world around us, and open hardware platforms like Raspberry Pi and Arduino. Naturally the Web, JavaScript, Firefox and Firefox OS were featured prominently in those experiences, a few of which we wanted to share.

First up Developer Kannan Vijayan showed me FlyWeb, an experiment that enables loading Web content using a peer to peer connection from one smartphone to another:

Also you’ll remember we were excited to work with Panasonic to launch Panasonic VIERA Smart TVs powered by Firefox OS earlier this year. Product Manager Joe Cheng showed me how Firefox OS performs on a smart TV:

Next up Product Manager Cindy Hsiang and team showed me a series of demos showcasing use of JavaScript to program connected devices and sensors through a common microcontroller such as Arduino. Evan Tseng built WotMaker, a general-purpose development tool to facilitate maker-style prototyping, and Eddie Lin leveraged an open source framework to build an automatic cat feeder and a small robot that could be controlled wirelessly from a simple web application running on a smart phone.

Blog Post from Mark Surman, Executive Director, Mozilla Foundation

MozFest 2015: Connecting Leaders and Rallying Communities for the Open Web

|

|

James Long: My ReactiveConf Talk |

Last week I gave a talk at ReactiveConf about how React and Redux solve a lot of issues in complex apps. I talked about how vital it is to keep things as simple as possible, and how we're trying to clean up the code of Firefox Developer Tools.

I thought it would be good to post it here and give a little retrospective on it. Watch it here:

I gave a similar talk at Strange Loop which can be watched here. It's similar content, but I restructured the whole talk the second time around. I went into more details at Strange Loop.

A few notes now that my trip is over:

- Even though I was trying to be very conscious of it, man, I still said "um" a lot. It's crazy how hard it is to speak elegantly if it doesn't come naturally.

- I always find 30 minutes to be a very hard time slot. I prefer 45 min by far. I would have spent the last 15 minutes talking about migrating code again and going into more detail about how we're actually cleaning up code in the Firefox devtools. I feel like I wasn't able to wrap it up.

- To give some more details about how Firefox devtools is using all of this, the new memory tool is fully written in React and Redux, and I'm cleaning up the debugger to use Redux. I am very, very close to landing that work. I have only cleaned up the core of the debugger, but that's the hardest part. Interestingly, I am not even using React yet. Watch my Strange Loop talk to see how I'm using Redux without React.

- I think the synchronous nature of React/Redux is one of the most under-hyped selling points. The fact that changing state and updating the UI is always synchronous and happens on the same tick makes it very easy for me to grasp what's going on. It's a big relief, especially coming from our current code where there are promises everywhere.

- I had some good discussions about Elm, Cycle.js, and observables, where async UIs are more common (I don't know much about Elm; Cycle.js definitely embraces it though). Paul Taylor (one of the creators of Falcor) especially helped me understand how observables work (watch his talk, as well as André's Cycle.js talk). It's powerful stuff, but I still can't get behind making state implicit. With observables, state is implicitly held in the data flow that you build with streams, whereas with Redux you store state explicitly (like "is loading", etc.) in a store. Implicit state makes some things hard, like server rendering (Elm doesn't even support that).
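To make the "explicit state in a store" point concrete, here is a rough sketch of the pattern. It is written in Rust rather than JavaScript and does not use the real Redux API; `State`, `Action`, `reduce` and `Store` are hypothetical names chosen for the illustration.

```rust
// All application state lives in one explicit value, including flags
// such as "is loading".
#[derive(Debug, Clone, PartialEq)]
struct State {
    is_loading: bool,
    items: Vec<String>,
}

// Everything that can change the state is described by an action.
enum Action {
    FetchStarted,
    FetchSucceeded(Vec<String>),
}

// A pure function: old state plus action gives new state.
fn reduce(state: &State, action: Action) -> State {
    match action {
        Action::FetchStarted => State {
            is_loading: true,
            items: state.items.clone(),
        },
        Action::FetchSucceeded(items) => State {
            is_loading: false,
            items,
        },
    }
}

struct Store {
    state: State,
}

impl Store {
    fn new() -> Self {
        Store {
            state: State {
                is_loading: false,
                items: Vec::new(),
            },
        }
    }

    // Dispatch is synchronous: the state is updated before this returns.
    fn dispatch(&mut self, action: Action) {
        self.state = reduce(&self.state, action);
    }
}

fn main() {
    let mut store = Store::new();
    store.dispatch(Action::FetchStarted);
    println!("{:?}", store.state);
    store.dispatch(Action::FetchSucceeded(vec!["a".to_string()]));
    println!("{:?}", store.state);
}
```

Since the whole state is a plain value, it can be inspected or serialized at any point, which is what makes things like server rendering straightforward in this style.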

It was a great conference, even if my baggage got lost and I sat beside an angry old man for 10 hours on the flight who kept shaking me awake.

|

|

Yunier José Sosa Vázquez: 11 years of Firefox, 11 years defending the Web |

It was November 2004, a time when Internet Explorer's supremacy over its competitors was considerable, helped along by Microsoft, despite Judge Jackson's 2000 ruling on monopolistic and anti-competitive practices, which ordered the Redmond company to separate IE from Windows.

Thanks to a small group of programmers, designers and idealists who had the idea of creating a global community to drive innovation and confront the situation the Web was in at the time, something simply better emerged: Mozilla. And with Mozilla came Firefox, the browser that would make the difference and defend open web standards. Those were exciting days for the open source community, days when 9 out of 10 users browsed with Internet Explorer.

Firefox became the official alternative to IE on Windows. Firefox pushed for the creation of an even more open Web. It forced thousands of sites to follow open standards rather than those imposed by a proprietary technology. Over these 11 years, countless new features have been added to Firefox, and we followers of this browser have lived through many good moments.

Today Firefox keeps improving, and with each release Mozilla reaffirms that the project is still alive and remains among the best browsers, with a commitment to users' privacy and control over their browsing.

Let's congratulate Firefox on its day and hope it stays with us for a long time to come.

Long live Firefox.

http://firefoxmania.uci.cu/11-anos-de-firefox-11-anos-defendiendo-la-web/

|

|