Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

For any questions about this service, use the contact information page.

Daniel Pocock: Want to be selected for Google Summer of Code 2016?

I mentored a number of students in 2013, 2014 and 2015 for Debian and Ganglia, and most of the companies I've worked with have run internships and graduate programs from time to time. GSoC 2015 has just finished and, with all the excitement, many students are already asking what they can do to prepare and be selected for Outreachy or GSoC in 2016.

My own observation is that the more time the organization has to get to know the student, the more confident they can be selecting that student. Furthermore, the more time that the student has spent getting to know the free software community, the more easily they can complete GSoC.

Here I present a list of things that students can do to maximize their chance of selection and career opportunities at the same time. These tips are useful for people applying for GSoC itself and related programs such as GNOME's Outreachy or graduate placements in companies.

Disclaimers

There is no guarantee that Google will run the program again in 2016 or any future year until the Google announcement.

There is no guarantee that any organization or mentor (including myself) will be involved until the official list of organizations is published by Google.

Do not follow the advice of web sites that invite you to send pizza or anything else of value to prospective mentors.

Following the steps in this page doesn't guarantee selection. That said, people who do follow these steps are much more likely to be considered and interviewed than somebody who hasn't done any of the things in this list.

Understand what free software really is

You may hear terms like free software and open source software used interchangeably.

They don't mean exactly the same thing and many people use the term free software for the wrong things. Not all projects declaring themselves to be "free" or "open source" meet the definition of free software. Those that don't, usually as a result of deficiencies in their licenses, are fundamentally incompatible with the majority of software that does use genuinely free licenses.

Google Summer of Code is about both writing and publishing your code and it is also about community. It is fundamental that you know the basics of licensing and how to choose a free license that empowers the community to collaborate on your code well after GSoC has finished.

Please review the definition of free software early on and come back and review it from time to time. The GNU Project / Free Software Foundation have excellent resources to help you understand what a free software license is and how it works to maximize community collaboration.

Don't look for shortcuts

There is no shortcut to GSoC selection and there is no shortcut to GSoC completion.

The student stipend (USD $5,500 in 2014) is not paid to students unless they complete a minimum amount of valid code. This means that even if a student did find some shortcut to selection, it is unlikely they would be paid without completing meaningful work.

If you are the right candidate for GSoC, you will not need a shortcut anyway. Are you the sort of person who can't leave a coding problem until you really feel it is fixed, even if you keep going all night? Have you ever woken up in the night with a dream about writing code still in your head? Do you become irritated by tedious or repetitive tasks and often think of ways to write code to eliminate such tasks? Does your family get cross with you because you take your laptop to Christmas dinner or some other significant occasion and start coding? If some of these statements summarize the way you think or feel you are probably a natural fit for GSoC.

An opportunity money can't buy

The GSoC stipend will not make you rich. It is intended to make sure you have enough money to survive through the summer and focus on your project. Professional developers make this much money in a week in leading business centers like New York, London and Singapore. When you get to that stage in 3-5 years, you will not even be thinking about exactly how much you made during internships.

GSoC gives you an edge over other internships because it involves publicly promoting your work. Many companies still try to hide the potential of their best recruits for fear they will be poached or that they will be able to demand higher salaries. Everything you complete in GSoC is intended to be published and you get full credit for it. Imagine a young musician getting the opportunity to perform on the main stage at a rock festival. This is how the free software community works. It is a meritocracy and there is nobody to hold you back.

Having a portfolio of free software that you have created or collaborated on and a wide network of professional contacts that you develop before, during and after GSoC will continue to pay you back for years to come. While other graduates are being screened through group interviews and testing days run by employers, people with a track record in a free software project often find they go straight to the final interview round.

Register your domain name and make a permanent email address

Free software is all about community and collaboration. Register your own domain name as this will become a focal point for your work and for people to get to know you as you become part of the community.

This is sound advice for anybody working in IT, not just programmers. It gives the impression that you are confident and have a long term interest in a technology career.

Choosing the provider: as a minimum, you want a provider that offers DNS management, static web site hosting, email forwarding and XMPP services all linked to your domain. You do not need to choose the provider that is linked to your internet connection at home and that is often not the best choice anyway. The XMPP foundation maintains a list of providers known to support XMPP.

Create an email address within your domain name. The most basic domain hosting providers will let you forward the email address to a webmail or university email account of your choice. Configure your webmail to send replies using your personalized email address in the From header.

Update your ~/.gitconfig file to use your personalized email address in your Git commits.
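As a minimal sketch of what that file needs to contain, the snippet below renders the `[user]` section for a given identity. The helper name `render_git_identity`, the name and the address are placeholders chosen for illustration, not anything Git provides. Running `git config --global user.email jane@example.org` writes the same setting from the command line.

```python
def render_git_identity(name: str, email: str) -> str:
    """Return the text of a [user] section for a ~/.gitconfig file."""
    # Git reads the author name and address for commits from this section.
    return f"[user]\n\tname = {name}\n\temail = {email}\n"


# Placeholder identity: substitute your own name and personal domain.
print(render_git_identity("Jane Student", "jane@example.org"))
```

The printed text can be pasted into `~/.gitconfig` as it stands, provided the file does not already have a `[user]` section.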

Create a web site and blog

Start writing a blog. Host it using your domain name.

Some people blog every day, other people just blog once every two or three months.

Create links from your web site to your other profiles, such as a Github profile page. This helps reinforce the pages/profiles that are genuinely related to you and avoid confusion with the pages of other developers.

Many mentors are keen to see their students writing a weekly report on a blog during GSoC so starting a blog now gives you a head start. Mentors look at blogs during the selection process to try and gain insight into which topics a student is most suitable for.

Create a profile on Github

Github is one of the most widely used software development web sites. Github makes it quick and easy for you to publish your work and collaborate on the work of other people. Create an account today and get in the habit of forking other projects, improving them, committing your changes and pushing the work back into your Github account.

Github will quickly build a profile of your commits and this allows mentors to see and understand your interests and your strengths.

In your Github profile, add a link to your web site/blog and make sure the email address you are using for Git commits (in the ~/.gitconfig file) is based on your personal domain.

Start using PGP

Pretty Good Privacy (PGP) is the industry standard in protecting your identity online. All serious free software projects use PGP to sign tags in Git, to sign official emails and to sign official release files.

The most common way to start using PGP is with the GnuPG (GNU Privacy Guard) utility. It is installed by the package manager on most Linux systems.

When you create your own PGP key, use the email address involving your domain name. This is the most permanent and stable solution.

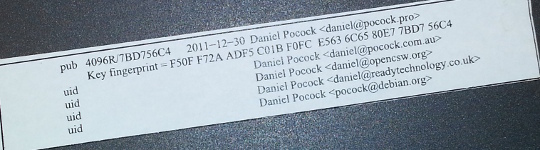

Print your key fingerprint using the gpg-key2ps command, which is in the signing-party package on most Linux systems. Keep copies of the fingerprint slips with you.

This is what my own PGP fingerprint slip looks like. You can also print the key fingerprint on a business card for a more professional look.

Using PGP, it is recommended that you sign any important messages you send but you do not have to encrypt the messages you send, especially if some of the people you send messages to (like family and friends) do not yet have the PGP software to decrypt them.

If using the Thunderbird (Icedove) email client from Mozilla, you can easily send signed messages and validate the messages you receive using the Enigmail plugin.

Get your PGP key signed

Once you have a PGP key, you will need to find other developers to sign it. For people I mentor personally in GSoC, I'm keen to see that you try and find another Debian Developer in your area to sign your key as early as possible.

Free software events

Try and find all the free software events in your area in the months between now and the end of the next Google Summer of Code season. Aim to attend at least two of them before GSoC.

Look closely at the schedules and find out about the individual speakers, the companies and the free software projects that are participating. For events that span more than one day, find out about the dinners, pub nights and other social parts of the event.

Try and identify people who will attend the event who have been GSoC mentors or who intend to be. Contact them before the event: if you are keen to work on something in their domain, they may be able to make time to discuss it with you in person.

Take your PGP fingerprint slips. Even if you don't participate in a formal key-signing party at the event, you will still find some developers to sign your PGP key individually. You must take a photo ID document (such as your passport) for the other developer to check the name on your fingerprint but you do not give them a copy of the ID document.

Events come in all shapes and sizes. FOSDEM is an example of one of the bigger events in Europe, linux.conf.au is a similarly large event in Australia. There are many, many more local events such as the Debian UK mini-DebConf in Cambridge, November 2015. Many events are either free or free for students but please check carefully if there is a requirement to register before attending.

On your blog, discuss which events you are attending and which sessions interest you. Write a blog during or after the event too, including photos.

Quantcast generously hosted the Ganglia community meeting in San Francisco, October 2013. We had a wild time in their offices with mini-scooters, burgers, beers and the Ganglia book. That's me on the pink mini-scooter and Bernard Li, one of the other Ganglia GSoC 2014 admins is on the right.

Install Linux

GSoC is fundamentally about free software. Linux is to free software what a tree is to the forest. Using Linux every day on your personal computer dramatically increases your ability to interact with the free software community and increases the number of potential GSoC projects that you can participate in.

This is not to say that people using Mac OS or Windows are unwelcome. I have worked with some great developers who were not Linux users. Linux gives you an edge though and the best time to gain that edge is now, while you are a student and well before you apply for GSoC.

If you must run Windows for some applications used in your course, it will run just fine in a virtual machine using Virtual Box, a free software solution for desktop virtualization. Use Linux as the primary operating system.

Here are links to download ISO DVD (and CD) images for some of the main Linux distributions:

If you are nervous about getting started with Linux, install it on a spare PC or in a virtual machine before you install it on your main PC or laptop. Linux is much less demanding on the hardware than Windows so you can easily run it on a machine that is 5-10 years old. Having just 4GB of RAM and 20GB of hard disk is usually more than enough for a basic graphical desktop environment although having better hardware makes it faster.

Your experiences installing and running Linux, especially if it requires some special effort to make it work with some of your hardware, make interesting topics for your blog.

Decide which technologies you know best

Personally, I have mentored students working with C, C++, Java, Python and JavaScript/HTML5.

In a GSoC program, you will typically do most of your work in just one of these languages.

From the outset, decide which language you will focus on and do everything you can to improve your competence with that language. For example, if you have already used Java in most of your course, plan on using Java in GSoC and make sure you read Effective Java (2nd Edition) by Joshua Bloch.

Decide which themes appeal to you

Find a topic that has long-term appeal for you. Maybe the topic relates to your course or maybe you already know what type of company you would like to work in.

Here is a list of some topics and some of the relevant software projects:

- System administration, servers and networking: consider projects involving monitoring, automation, packaging. Ganglia is a great community to get involved with and you will encounter the Ganglia software in many large companies and academic/research networks. Contributing to a Linux distribution like Debian or Fedora packaging is another great way to get into system administration.

- Desktop and user interface: consider projects involving window managers and desktop tools or adding to the user interface of just about any other software.

- Big data and data science: this can apply to just about any other theme. For example, data science techniques are frequently used now to improve system administration.

- Business and accounting: consider accounting, CRM and ERP software.

- Finance and trading: consider projects like R, market data software like OpenMAMA and connectivity software (Apache Camel)

- Real-time communication (RTC), VoIP, webcam and chat: look at the JSCommunicator or the Jitsi project

- Web (JavaScript, HTML5): look at the JSCommunicator

Before the GSoC application process begins, you should aim to learn as much as possible about the theme you prefer and also gain practical experience using the software relating to that theme. For example, if you are attracted to the business and accounting theme, install the PostBooks suite and get to know it. Maybe you know somebody who runs a small business: help them to upgrade to PostBooks and use it to prepare some reports.

Make something

Make some small project, less than two weeks' work, to demonstrate your skills. It is important to make something that somebody will use for a practical purpose, as this will help you gain experience communicating with other users through Github.

For an example, see the servlet Juliana Louback created for fixing phone numbers in December 2013. It has since been used as part of the Lumicall web site and Juliana was selected for a GSoC 2014 project with Debian.

There is no better way to demonstrate to a prospective mentor that you are ready for GSoC than by completing and publishing some small project like this yourself. If you don't have any immediate project ideas, many developers will also be able to give you tips on small projects like this that you can attempt. Just come and ask us on one of the mailing lists.

Ideally, the project will be something that you would use anyway even if you do not end up participating in GSoC. Such projects are the most motivating and rewarding and usually end up becoming an example of your best work. To continue the example of somebody with a preference for business and accounting software, a small project you might create is a plugin or extension for PostBooks.

Getting to know prospective mentors

Many web sites provide useful information about the developers who contribute to free software projects. Some of these developers may be willing to be a GSoC mentor.

For example, look through some of the following:

- Planet / Blog aggregation sites: these sites all have links to the blogs of many developers. They are useful sources of information about events and also finding out who works on what.

- Developer profile pages. Many projects publish a page about each developer and the packages, modules or other components he/she is responsible for. Look through these lists for areas of mutual interest.

- Developer Github profiles. Github makes it easy to see what projects a developer has contributed to. To see many of my own projects, browse through the history at my own Github profile.

Getting on the mentor's shortlist

Once you have identified projects that are interesting to you and developers who work on those projects, it is important to get yourself on the developer's shortlist.

Basically, the shortlist is a list of all students who the developer believes can complete the project. If I feel that a student is unlikely to complete a project or if I don't have enough information to judge a student's probability of success, that student will not be on my shortlist.

If I don't have any student on my shortlist, then a project will not go ahead at all. If there are multiple students on the shortlist, then I will be looking more closely at each of them to try and work out who is the best match.

One way to get a developer's attention is to look at bug reports they have created. Github makes it easy to see complaints or bug reports they have made about their own projects or other projects they depend on. Another way to do this is to search through their code for strings like FIXME and TODO. Projects with standalone bug trackers like the Debian bug tracker also provide an easy way to search for bug reports that a specific person has created or commented on.
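Searching a checked-out source tree for those strings can be sketched in a few lines of Python. This is only an illustration: the function name `find_markers` and the list of file suffixes are assumptions, and a plain recursive `grep` would do the same job.

```python
import re
from pathlib import Path

# Match FIXME or TODO as whole words, so "TODOS" or "MYTODO" are skipped.
MARKER = re.compile(r"\b(FIXME|TODO)\b")


def find_markers(root, suffixes=(".c", ".h", ".py", ".java", ".js")):
    """Yield (path, line_number, text) for each FIXME/TODO under root."""
    for path in sorted(Path(root).rglob("*")):
        if not path.is_file() or path.suffix not in suffixes:
            continue
        # errors="replace" keeps the scan going on files that are not valid UTF-8.
        with path.open(encoding="utf-8", errors="replace") as handle:
            for number, line in enumerate(handle, start=1):
                if MARKER.search(line):
                    yield path, number, line.strip()
```

Calling `list(find_markers("path/to/checkout"))` gives a list you can skim for an easy first issue before emailing the developer.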

Once you find some relevant bug reports, email the developer. Ask if anybody else is working on those issues. Try and start with an issue that is particularly easy and where the solution is interesting for you. This will help you learn to compile and test the program before you try to fix any more complicated bugs. It may even be something you can work on as part of your academic program.

Find successful projects from the previous year

Contact organizations and ask them which GSoC projects were most successful. In many organizations, you can find the past students' project plans and their final reports published on the web. Read through the plans submitted by the students who were chosen. Then read through the final reports by the same students and see how they compare to the original plans.

Start building your project proposal now

Don't wait for the application period to begin. Start writing a project proposal now.

When writing a proposal, it is important to include several things:

- Think big: what is the goal at the end of the project? Does your work help the greater good in some way, such as increasing the market share of Linux on the desktop?

- Details: what are specific challenges? What tools will you use?

- Time management: what will you do each week? Are there weeks where you will not work on GSoC due to vacation or other events? These things are permitted but they must be in your plan if you know them in advance. If an accident or death in the family cut a week out of your GSoC project, which work would you skip and would your project still be useful without that? Having two weeks of flexible time in your plan makes it more resilient against interruptions.

- Communication: are you on mailing lists, IRC and XMPP chat? Will you make a weekly report on your blog?

- Users: who will benefit from your work?

- Testing: who will test and validate your work throughout the project? Ideally, this should involve more than just the mentor.

If your project plan is good enough, could you put it on Kickstarter or another crowdfunding site? This is a good test of whether or not a project is going to be supported by a GSoC mentor.

Learn about packaging and distributing software

Packaging is a vital part of the free software lifecycle. It is very easy to upload a project to Github but it takes more effort to have it become an official package in systems like Debian, Fedora and Ubuntu.

Packaging and the communities around Linux distributions help you reach out to users of your software and get valuable feedback and new contributors. This boosts the impact of your work.

To start with, you may want to help the maintainer of an existing package. Debian packaging teams are existing communities that work in a team and welcome new contributors. The Debian Mentors initiative is another great starting place. In the Fedora world, the place to start may be in one of the Special Interest Groups (SIGs).

Think from the mentor's perspective

After the application deadline, mentors have just 2 or 3 weeks to choose the students. This is actually not a lot of time to be certain if a particular student is capable of completing a project. If the student has a published history of free software activity, the mentor feels a lot more confident about choosing the student.

Some mentors have more than one good student while other mentors receive no applications from capable students. In this situation, it is very common for mentors to send each other details of students who may be suitable. Once again, if a student has a good Github profile and a blog, it is much easier for mentors to try and match that student with another project.

Conclusion

Getting into the world of software engineering is much like joining any other profession or even joining a new hobby or sporting activity. If you run, you probably have various types of shoe and a running watch and you may even spend a couple of nights at the track each week. If you enjoy playing a musical instrument, you probably have a collection of sheet music, accessories for your instrument and you may even aspire to build a recording studio in your garage (or you probably know somebody else who already did that).

The things listed on this page will not just help you walk the walk and talk the talk of a software developer, they will put you on a track to being one of the leaders. If you look over the profiles of other software developers on the Internet, you will find they are doing most of the things on this page already. Even if you are not selected for GSoC at all or decide not to apply, working through the steps on this page will help you clarify your own ideas about your career and help you make new friends in the software engineering community.

http://danielpocock.com/getting-selected-for-google-summer-of-code-2016

Will Kahn-Greene: Input: Trigger rule project Phase 1

Summary

Last quarter, I finished up the suggester framework for Input. When a user leaves feedback, registered suggester modules would look at the feedback metadata and text and return suggested links. The suggested links would then show up on the Thank You page. Users could then read a bit about the link and click on it if it was appealing.

The first suggester I wrote does a search against SUMO kb articles to see if any of the kb articles seemed relevant to the feedback. Users frequently leave feedback about problems they're having that could be known issues with known solutions or even problems Firefox solves with features the user wasn't aware of. Because of this, it behooves us greatly to guide these users to the solutions that make their Firefox experience better. I wrote a post about that.

This project covers adding a new suggester that allows analyzers to set up trigger rules for suggestions which is stored in the database. When feedback matches the criteria for a trigger rule, then the suggestion is shown.

I pushed out the last code changes on September 9th, 2015. On September 25th, we created a trigger rule for feedback talking about Norton's addon and suggested a link for a SUMO kb article that talks about the problem. In the 5 days, 22 people saw the suggestion and 6 clicked on the link.
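To make the idea of a trigger rule concrete, here is a rough sketch of how matching feedback against stored rules might look. It is not Input's actual code or schema: the `TriggerRule` fields, the `suggest` helper and the URL are hypothetical, and the only detail taken from the post is that a rule about Norton's addon points at a kb article.

```python
from dataclasses import dataclass, field


@dataclass
class TriggerRule:
    """Hypothetical rule; the field names are illustrative, not Input's schema."""
    title: str
    url: str
    keywords: list = field(default_factory=list)  # any one keyword may match
    products: list = field(default_factory=list)  # empty means "any product"

    def matches(self, product: str, text: str) -> bool:
        if self.products and product not in self.products:
            return False
        lowered = text.lower()
        # A rule with no keywords never matches in this sketch.
        return any(keyword.lower() in lowered for keyword in self.keywords)


def suggest(rules, product, text):
    """Return (title, url) for every rule that the feedback matches."""
    return [(rule.title, rule.url) for rule in rules if rule.matches(product, text)]


rules = [
    TriggerRule(
        title="Help with a security add-on",
        url="https://example.com/kb/security-addon",  # placeholder URL
        keywords=["norton"],
        products=["Firefox"],
    )
]

print(suggest(rules, "Firefox", "Norton toolbar stopped working after update"))
print(suggest(rules, "Firefox", "Pages load slowly"))
```

Keeping the criteria as plain data is what would let them live in a database row that analyzers can edit without a code change.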

This blog post is a write-up for the Trigger rule project phase 1.

Read more… (10 mins to read)

http://bluesock.org/%7Ewillkg/blog/mozilla/input_trigger_rules_phase1.html

Support.Mozilla.Org: What’s up with SUMO – 2nd October

What have you been up to this week, SUMO warriors? We have some news and announcements for you, so start reading before the weekend sweeps us all away from our screens!

Welcome, New Contributors!

Contributors of the week

- A group nomination to all the people who keep being awesome and supporting millions of users around the world :-) If you are reading these words… this most likely means YOU!

Last SUMO Community meeting

- You can read the notes here and see the video on our YouTube channel.

Reminder: the next SUMO Community meeting…

- …is going to take place on Monday, 5th of October. Join us!

- If you want to add a discussion topic to the upcoming live meeting agenda:

- Start a thread in the Community Forums, so that everyone in the community can see what will be discussed and voice their opinion here before Monday (this will make it easier to have an efficient meeting).

- Please do so as soon as you can before the meeting, so that people have time to read, think, and reply (and also add it to the agenda).

Developers

- The notes from the most recent Platform meeting can be found here.

- The next Platform meeting will take place on the 8th of October.

Community

- Let’s talk about contributor information at SUMO.

- The SUMO Event Kit page has been updated – take a look and let us know what you think on the forums!

- If you think you can benefit from getting a second-hand device to help you with contributing to SUMO, you know where to find us.

- The first set of invites to upcoming global community events should be heading your way.

Support Forum

- Some details from the last SUMO Day:

- 45 participants: 32 for Firefox, 5 for Firefox for Android, 2 for Firefox for iOS

- 93% issues replied to in 24 hours, 96% in 72 hours (FF, FF OS and FF iOS)

- 37% issues replied to in 24 hours and 87% in 72 hours for FF for Android

- Once again – HUGE thanks to all participants! You rock!

- Save the date: the next SUMO Questions Day is coming up on the 8th of October.

Knowledge Base

- The tracking protection articles will be revised and finalized by mid-October, depending on the coordination with Legal and Product teams.

- If you want to work with us directly on the Knowledge Base as an intern, take a look at this Outreachy opportunity.

L10n

- Happy post-International Translation Day!

- Huge thanks to everyone rocking the KB last week!

- Let’s talk about new ideas for l10n badges.

- Help needed! Let’s build a database of English departments at universities around the world – this will help us find new SUMO l10ns in the future.

Firefox

- (for Android) The documentation for version 42 has started, and a dot release for 41 took place as well.

- (for Desktop) Version 41 has landed! Head over to our forums to learn more and discuss.

- There has been a general increase in the number of browser hanging cases, so expect to see users reporting problems of that type. Yes, Farmville 2 is unfortunately affected, as well.

https://blog.mozilla.org/sumo/2015/10/02/whats-up-with-sumo-2nd-october/

Mark Finkle: Fun With Telemetry: URL Suggestions

Firefox for Android has a UI Telemetry system. Here is an example of one of the ways we use it.

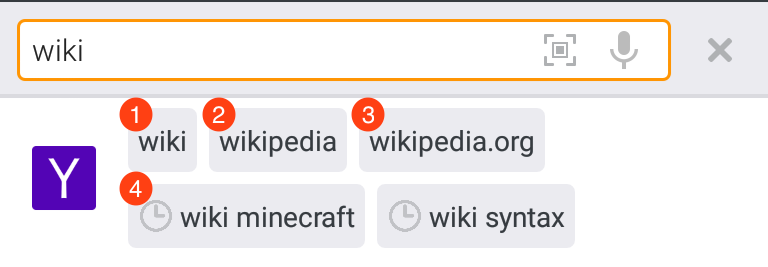

As you type a URL into Firefox for Android, matches from your browsing history are shown. We also display search suggestions from the default search provider. We also recently added support for displaying matches to previously entered search history. If any of these are tapped, with one exception, the term is used to load a search results page via the default search provider. If the term looks like a domain or URL, Firefox skips the search results page and loads the URL directly.

- This suggestion is not really a suggestion. It’s what you have typed. Tagged as user.

- This is a suggestion from the search engine. There can be several search suggestions returned and displayed. Tagged as engine.#

- This is a special search engine suggestion. It matches a domain, and if tapped, Firefox loads the URL directly. No search results page. Tagged as url

- This is a matching search term from your search history. There can be several search history suggestions returned and displayed. Tagged as history.#
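The choice between loading a URL directly and going through the search results page can be pictured with a small sketch. This is not Firefox's implementation: the regular expression is a deliberately rough heuristic, and `resolve` and the `search.example` template are made-up names for illustration.

```python
import re
from urllib.parse import quote_plus

# Rough heuristic (an assumption): optional http(s) scheme, dot-separated
# labels ending in an alphabetic TLD, then an optional path without spaces.
URL_LIKE = re.compile(
    r"^(https?://)?([a-z0-9-]+\.)+[a-z]{2,}(/\S*)?$", re.IGNORECASE
)


def resolve(term, search_template="https://search.example/?q={}"):
    """Return ('url', target) or ('search', target) for a typed term."""
    term = term.strip()
    if URL_LIKE.match(term):
        # Looks like a domain or URL: skip the search results page.
        target = term if "://" in term else "http://" + term
        return "url", target
    # Anything else goes to the default search provider.
    return "search", search_template.format(quote_plus(term))


print(resolve("mozilla.org"))
print(resolve("firefox for android"))
```

A real browser has to handle many more cases (IP addresses, internationalized domains, other schemes), so treat the pattern as a stand-in for whatever check Firefox actually performs.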

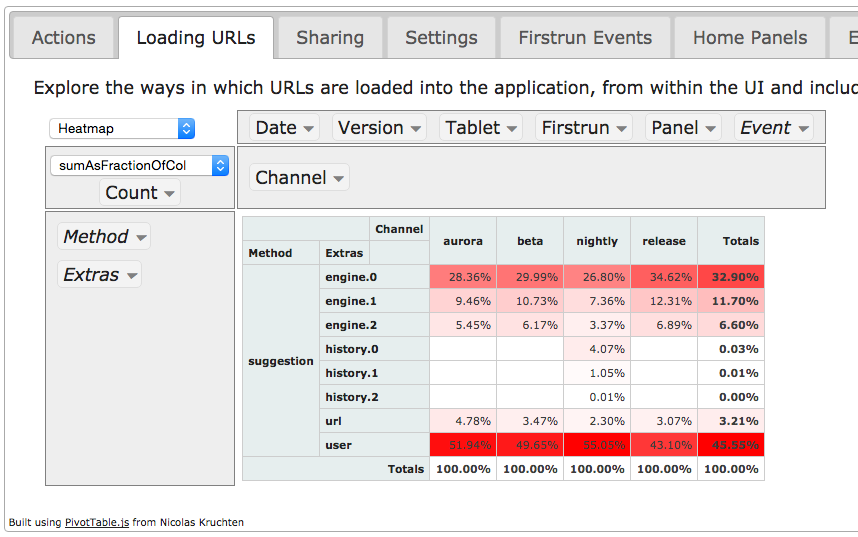

Since we only recently added the support for search history, we want to look at how it’s being used. Below is a filtered view of the URL suggestion section of our UI Telemetry dashboard. Looks like history.# is starting to get some usage, and following a similar trend to engine.# where the first suggestion returned is used more than the subsequent items.
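A tally like the one behind that dashboard view can be sketched as follows. The event list is invented sample data, and the sketch assumes the `#` in `engine.#` and `history.#` is a numeric position in the list of suggestions; the real dashboard and its data pipeline are not shown in this post.

```python
from collections import Counter

# Made-up sample of tapped-suggestion tags; real data comes from UI Telemetry.
events = ["user", "engine.0", "engine.0", "engine.1", "url",
          "history.0", "history.0", "history.2", "user"]


def tally(tags):
    """Count taps per suggestion kind and per position within a kind."""
    by_kind = Counter()
    by_position = Counter()
    for tag in tags:
        kind, _, position = tag.partition(".")
        by_kind[kind] += 1
        if position:  # only engine.# and history.# carry a position
            by_position[(kind, int(position))] += 1
    return by_kind, by_position


by_kind, by_position = tally(events)
print(by_kind.most_common())
print(sorted(by_position.items()))
```

Splitting the counts by position is what makes a trend such as "the first suggestion is used more than the later ones" visible.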

It is also worth pointing out that we get a non-trivial number of url taps. This should be expected. Most search keyword data released by Google show that navigational keywords are the most heavily used keywords.

An interesting observation is how often people use the user suggestion. Remember, this is not actually a suggestion. It’s what the person has already typed. Pressing “Enter” or “Go” would result in the same outcome. One theory for the high usage of that suggestion is it provides a clear outcome: Firefox will search for this term. Other ways of triggering the search might be more ambiguous.

http://starkravingfinkle.org/blog/2015/10/fun-with-telemetry-url-suggestions/

Yunier José Sosa Vázquez: Pablo Pérez: “Mozilla will keep watching over the rights of Internet users”

To get to know the people who make up Mozilla Hispano much better, the interviews with community members are back. This space serves to recognize the work of our contributors.

This time our guest is Pablo Pérez, from Spain.

What is your name and what do you do?

My name is Pablo Pérez Diez and I was born in a village in the north of Spain. I later moved to the city of Valladolid (also in the north of Spain) to study for my qualifications as Técnico Superior en Telemática and Ingeniero Técnico en Telemática (higher technician and technical engineer in telematics). After a few years working there as a programmer, I now live in Madrid.

Why did you decide to contribute to Mozilla?

I decided to contribute in order to do my bit in defending users' rights online (neutrality, privacy, the right to be forgotten, etc.), all of which are increasingly under threat.

What work do you currently do in the Community?

I currently coordinate the support area, which covers both the forum and Twitter.

What do you value most, or what is the most positive thing, about Mozilla / the Community?

What I value most is how people from different countries, beliefs, ideologies, etc. are able to work together towards a common goal, which is ultimately the common good.

What does Mozilla / the Community give you?

It gives me the experience of working with many other people and learning the best from all of them, from Spanish-speaking countries and from many others.

What do you think Mozilla will be like in the future?

In my opinion Mozilla will keep watching over the rights of Internet users, relying more and more on the community.

A few words for people who want to join the Community.

If you share the values of both Mozilla and Mozilla Hispano, this is the perfect place to help ensure those values are respected while having great experiences with the rest of the community.

Many thanks, Pablo, for agreeing to the interview.

Source: Mozilla Hispano

|

|

Hal Wine: duo MFA & viscosity no-cell setup |

The Duo application is nice if you have a supported mobile device, and thanks to TOTP it’s usable even when you have no cell connection. However, getting Viscosity to allow both choices took some work for me.

For various reasons, I don’t want to always use the Duo application, so I would like Viscosity to always prompt for a password. (I had already saved a password; a fresh install likely would not have that issue.) That took a bit of work, and some web searches.

Disable any saved passwords for Viscosity. On a Mac, this means opening up “Keychain Access” application, searching for “Viscosity” and deleting any associated entries.

Ask Viscosity to save the “user name” field (optional). I really don’t need this, as my setup uses a certificate to identify me. So it doesn’t matter what I type in the field. But, I like hints, so I told Viscosity to save just the user name field:

defaults write com.viscosityvpn.Viscosity RememberUsername -bool true

With the above, you’ll be prompted every time. You have to put “something” in the user name field, so I chose to put “push or TOTP” to remind me of the valid values. You can put anything there, just do not check the “Remember details in my Keychain” toggle.

http://dtor.com/halfire/2015/10/02/duo_mfa___viscosity_no_cell_setup.html

|

|

Julien Vehent: Introducing SOPS: a manager of encrypted files for secrets distribution |

Automating the distribution of secrets and credentials to components of an infrastructure is a hard problem. We know how to encrypt secrets and share them between humans, but extending that trust to systems is difficult. Particularly when these systems follow devops principles and are created and destroyed without human intervention. The issue boils down to establishing the initial trust of a system that just joined the infrastructure, and providing it access to the secrets it needs to configure itself.

The initial trust

In many infrastructures, even highly dynamic ones, the initial trust is established by a human. An example is seen in Puppet by the way certificates are issued: when a new system attempts to join a Puppetmaster, an administrator must, by default, manually approve the issuance of the certificate the system needs. This is cumbersome, and many puppetmasters are configured to auto-sign new certificates to work around that issue. This is obviously not recommended and far from ideal.

AWS provides a more flexible approach to trusting new systems. It uses a powerful mechanism of roles and identities. In AWS, it is possible to verify that a new system has been granted a specific role at creation, and it is possible to map that role to specific resources. Instead of trusting new systems directly, the administrator trusts the AWS permission model and its automation infrastructure. As long as AWS keys are safe, and the AWS API is secure, we can assume that trust is maintained and systems are who they say they are.

KMS, Trust and secrets distribution

Using the AWS trust model, we can create fine grained access controls to Amazon's Key Management Service (KMS). KMS is a service that encrypts and decrypts data with AES_GCM, using keys that are never visible to users of the service. Each KMS master key has a set of role-based access controls, and individual roles are permitted to encrypt or decrypt using the master key. KMS helps solve the problem of distributing keys, by shifting it into an access control problem that can be solved using AWS's trust model.

Since KMS's inception a few months ago, a number of projects have popped up to use its capabilities to distribute secrets: credstash and sneaker are such examples. Today I'm introducing sops: a secrets editor that uses KMS and PGP to manage encrypted files.

SOPS: Secrets OPerationS

A few weeks ago, Mozilla's Services Operations team started revisiting the issue of distributing secrets to EC2 instances, with a goal to store these secrets encrypted until the very last moment, when they need to be decrypted on target systems. Not unlike many other organizations that operate sufficiently complex automation, we found this to be a hard problem with a number of prerequisites:

- Secrets must be stored in YAML files for easy integration into hiera

- Secrets must be stored in GIT, and when a new CloudFormation stack is built, the current HEAD is pinned to the stack. (This allows secrets to be changed in GIT without impacting the current stack that may autoscale).

- Encrypt entries separately. Encrypting entire files as blobs makes git conflict resolution almost impossible. Encrypting each entry separately is much easier to manage.

- Secrets must always be encrypted on disk (admin laptop, upstream git repo, jenkins and S3) and only be decrypted on the target systems

Daniel Thornton and I brainstormed a number of ideas, and eventually ended up with a workflow similar to the one described below.

The idea behind SOPS is to provide a wrapper around a text editor that takes care of the encryption and decryption transparently. When creating a new file, sops generates a data encryption key "Kd" that is itself encrypted with one or more KMS master keys and PGP public keys. Kd is used to encrypt the content of the file with AES256-GCM. In order to decrypt the files, sops must have access to any of the KMS or PGP master keys.

SOPS can be used to encrypt YAML, JSON and TEXT files. In TEXT mode, the content of the file is treated as a blob, the same way PGP would encrypt an entire file. In YAML and JSON modes, however, the content of the file is manipulated as a tree where keys are stored in cleartext, and values are encrypted. hiera-eyaml does something similar, and over the years we learned to appreciate its benefits, namely:

- diffs are meaningful. If a single value of a file is modified, only that value will show up in the diff. The diff is still limited to only showing encrypted data, but that information is already more granular than indicating that an entire file has changed.

- conflicts are easier to resolve. If multiple users are working on the same encrypted files, as long as they don't modify the same values, changes are easy to merge. This is an improvement over the PGP encryption approach where unsolvable conflicts often happen when multiple users work on the same file.

# edit a file
$ sops example.yaml
file written to example.yaml

# take a look at the diff
$ git diff example.yaml
diff --git a/example.yaml b/example.yaml
index 00fe479..5f40330 100644
--- a/example.yaml
+++ b/example.yaml
@@ -1,5 +1,5 @@
 # The secrets below are unreadable without access to one of the sops master key
-myapp1: ENC[AES256_GCM,data:Tr7oo=,iv:1vw=,aad:eo=,tag:ka=]
+myapp1: ENC[AES256_GCM,data:krm,iv:0Y=,aad:KPyE=,tag:oIA==]
 app2:
     db:
         user: ENC[AES256_GCM,data:YNKE,iv:H4JQ=,aad:jk0=,tag:Neg==]
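The encrypted values in the diff above share a visible layout: a cipher name followed by `data`, `iv`, `aad` and `tag` fields. As a rough sketch, and assuming that layout holds for every encrypted value, the fields could be split out in Python as follows. The regular expression and the `parse_enc_value` helper are illustrative names, not part of sops itself.

```python
import re

# Layout as it appears in the examples in this post:
# ENC[AES256_GCM,data:<base64>,iv:<base64>,aad:<base64>,tag:<base64>]
ENC_PATTERN = re.compile(
    r"ENC\[AES256_GCM,"
    r"data:(?P<data>[^,]*),"
    r"iv:(?P<iv>[^,]*),"
    r"aad:(?P<aad>[^,]*),"
    r"tag:(?P<tag>[^,\]]*)\]"
)


def parse_enc_value(value):
    """Return the four fields of an ENC[...] string as text, or None.

    The fields are returned exactly as written (still base64 text);
    nothing is decoded or decrypted here.
    """
    match = ENC_PATTERN.fullmatch(value)
    if match is None:
        return None
    return match.groupdict()


if __name__ == "__main__":
    sample = "ENC[AES256_GCM,data:YNKE,iv:H4JQ=,aad:jk0=,tag:Neg==]"
    print(parse_enc_value(sample))
    # A cleartext value does not match the layout.
    print(parse_enc_value("eve"))
```

A helper like this is only useful for inspection, for example to check which values in a file are already encrypted; decrypting them still requires the data key.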

Below are two examples of SOPS encrypted files. The first one in YAML, the second one in JSON:

YAML

cleartext:

# The secrets below are unreadable without access to one of the sops master key
myapp1: t00m4nys3cr3tzupdated
app2:
    db:
        user: eve
        password: c4r1b0u
    # private key for secret operations in app2
    key: |
        -----BEGIN RSA PRIVATE KEY-----
        MIIBPAIBAAJBAPTMNIyHuZtpLYc7VsHQtwOkWYobkUblmHWRmbXzlAX6K8tMf3Wf
        ImcbNkqAKnELzFAPSBeEMhrBN0PyOC9lYlMCAwEAAQJBALXD4sjuBn1E7Y9aGiMz
        bJEBuZJ4wbhYxomVoQKfaCu+kH80uLFZKoSz85/ySauWE8LgZcMLIBoiXNhDKfQL
        vHECIQD6tCG9NMFWor69kgbX8vK5Y+QL+kRq+9HK6yZ9a+hsLQIhAPn4Ie6HGTjw
        fHSTXWZpGSan7NwTkIu4U5q2SlLjcZh/AiEA78NYRRBwGwAYNUqzutGBqyXKUl4u
        Erb0xAEyVV7e8J0CIQC8VBY8f8yg+Y7Kxbw4zDYGyb3KkXL10YorpeuZR4LuQQIg
        bKGPkMM4w5blyE1tqGN0T7sJwEx+EUOgacRNqM2ljVA=
        -----END RSA PRIVATE KEY-----
number: 1234567890
an_array:
- secretuser1
- secretuser2
- somelongvalueAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA
- some other value

encrypted:

# The secrets below are unreadable without access to one of the sops master key

myapp1: ENC[AES256_GCM,data:krwEdH2fxWRexFuvZHS816Wz46Lm,iv:0STqWePc0HOPuDn2EizQdNepx9ksx0guHGeKrshlYSY=,aad:Krl8HyPGQmnIWIZh74Ib+y0OdiVEvRDBv3jTdMGSPyE=,tag:oI2THtQeUX4ZLNnbrdel2A==]

app2:

db:

user: ENC[AES256_GCM,data:YNKE,iv:H9CDb4aUHBJeF2MSTKHQuOwlLxQVdx12AhT0+Dob4JQ=,aad:jlF2KvytlQIgyMpOoO/BiQbukiMwrh1j94Oys+YMgk0=,tag:NeDysIHV9CGtMAQq9i4vMg==]

password: ENC[AES256_GCM,data:p673JCgHYw==,iv:EOOeivCp/Fd80xFdMYX0QeZn6orGTK8CeckmipjKqYY=,aad:UAhi/SHK0aCzptnFkFG4dW8Vv1ASg7TDHD6lui9mmKQ=,tag:QE6uuhRx+cGInwSVdmxXzA==]

# private key for secret operations in app2

key: |-

ENC[AES256_GCM,data:Ea3zTFSOlg1PDZmBa1U2dtKl3pO4nTmaFswJx41fPfq3u8O2/Bq1UVfXn2SrO13obfr6xH4zuUceCDTvW2qvphlan5ir609EXt4dE2TEEcjVKhmAHf4LMwlZVAbvTJtlsnvo/aYJH95uctjsSX5h8pBlLaTGBGYwMrZuMyRU6vdcMWyha+piJckUc9sq7fevy1TSqIxf1Usbn/0NEklWm2VSNzQ2Urqtny6EXar+xU7NfYSRJ3mqmcJZ14oIeXPdpk962RwMEFWdYrbE7D59kWU2BgMjDxYJD5KXpWiw2YCrA/wsATxVCbZlwqC+TJFA5WAUZX756mFhV/t2Li3zQyDNUe6KkMXV9qwf/oV1j5sVRVFsKDYIBqhi3qWBVA+SO9RloQMjhru+IsdbQcS4LKq/1DrBENeZuJ0djUAxKLVfJzMGUf89ju3m9IEPovW8mfF0RbfAGRwFHMO9nEXCxrTLERf3owdR3u4j5/rNBpIvvy1z+2dy6sAx/eyNdS+cn5qO9BPAxsXpSwkaI96rlBagwH1Pfxus0x/D00j93OpE+M8MgQ/9LA68FlCFU4OAQlvw8f7MPoxnq+/+gFTS/qqjTR6EoUuX5NH2WY93YCC5TCbe4GOXyP0H05PbIWq55UMVLNcpAyac3gO4kL5O5U8=,iv:Dl61tsemKH0fdmNul/PmEEsRYFAh8GorR8GRupus/EM=,aad:Ft2aSYYukD1x8pMj1WvmodLjJV6waPy5FqdlImWyQKA=,tag:EPg4KpWqni/buCFjFL857A==]

number: ENC[AES256_GCM,data:XMrBalgZ9tvBxQ==,iv:XyEAAaIzVy/2trnJhLrjMInLg8tMI4CAX9+ccnj3T1Y=,aad:JOlAkP159UxDjL1CrumTuQDqgW2+VOIwz7bdfaJIIn4=,tag:WOHOMJS4nhSdj/aQcGbU1A==]

an_array:

- ENC[AES256_GCM,data:td1aAv4s4cOzSo0=,iv:ErVqte7GpQ3JfzVpVRf7pWSQZDHn6W0iAntKWFsMqio=,aad:RiYy8fKX/yVY7KRgXSOIzydT0+TwK7WGzSFSy+1GmVM=,tag:aSGLCmNZsGcBjxEGvNQRwA==]

- ENC[AES256_GCM,data:2K8C418jef8zoAY=,iv:cXE4Hwdl4ZHzAHHyyXqaIMFs0mn65JUehDdaw/aM0WI=,aad:RlAgUZUZ1DvxD9/lZQk9KOHKl4L+fYETaAdpDVekCaA=,tag:CORSBzis6Vy45dEvT/UtMg==]

- ENC[AES256_GCM,data:hbcOBbsaWmlnrpeuwLfh1ttsi8zj/pxMc1LYqhdksT/oQb80g2z0FE4QwUVb7VV+x98LAWHofVyV8Q==,iv:/sXHXde82r2FyG3Z3vC5x8zONB14RwC0GmtkiYEUNLI=,aad:BQb8l5fZzF/aa/EYnrOQvRfGUTq9QmJOAR/zmgOfYDA=,tag:fjNeg3Manjl6B2U2oflRhg==]

- ENC[AES256_GCM,data:LLHkzGobqL53ws6E2zglkA==,iv:g9z3zz4DUzJr4Cim0SVqKF736w2mZoItqbB0TcsGrQU=,aad:Odrvz0loqFdd9wKJz0ULMX/lyEQcX8WaHE59MgeXkcI=,tag:V+rV/AeZ4uEgtwGhlamTag==]

sops:

kms:

- enc: CiC6yCOtzsnFhkfdIslYZ0bAf//gYLYCmIu87B3sy/5yYxKnAQEBAQB4usgjrc7JxYZH3SLJWGdGwH//4GC2ApiLvOwd7Mv+cmMAAAB+MHwGCSqGSIb3DQEHBqBvMG0CAQAwaAYJKoZIhvcNAQcBMB4GCWCGSAFlAwQBLjARBAyGdRODuYMHbA8Ozj8CARCAO7opMolPJUmBXd39Zlp0L2H9fzMKidHm1vvaF6nNFq0ClRY7FlIZmTm4JfnOebPseffiXFn9tG8cq7oi

enc_ts: 1439568549.245995

arn: arn:aws:kms:us-east-1:656532927350:key/920aff2e-c5f1-4040-943a-047fa387b27e

pgp:

- fp: 85D77543B3D624B63CEA9E6DBC17301B491B3F21

enc: |

-----BEGIN PGP MESSAGE-----

Version: GnuPG v1

hQIMA0t4uZHfl9qgAQ//ZvUMJOLUJyzKa/Uigwh1jKVhx3feHUitVjCWBfVTPgj1

rRbaTcaF/mYi+rLdW+6kmAg1UEPoVgEBEiBvCTcHjyDzw3m0DoQwvK85nqOpEhkx

rjU1XAnKZ8LNFfIaj8Xo/L6qzE882gwOhfCPU+QmnkWdijs6dQof06DButQDTx5D

KlFvr9CgSa52/uPazZ41disho9guS06k+KrV/P2F4jrU5aB5mfP7YZY9mkVcm2bv

9C5O9neNlXcivgWqKQjB5fmv1Z9yUFAUBNg98wjT8o5Hxz6P6hIbV3f+vn/Vu+VZ

Qo+E7g3/2ItaT89KAIVXgQdHhwJneoDBVpJ4rYz7LLbcvEyAbipKIY4Fl3Cn1ggH

9odIZWA6FWZxHNhRVonMVHZ8Jei5NkUdpJltjDmPJpl3B+7XiWg4NS8dp860fLeL

8nrkR0Z4nVK8DNg+7nQiOxHL9wye6ljWl7/xapJ5r+mYA6eLybsSSlxDo9/OmeON

CYo3jV8HT8amrXYVi4MyZ3LV2TTyGVPObnthYEN2lPSJmms6ei6t/xKaZtAj6779

EzGbUP9VpTKKf5tqGcy9MeGEk2p5ed5hJGinrrt92cNIebcMBJpkLQAy++V/fKnH

Meecaoj1NThBnRguNuz73WSy2C5u/g7OoI50HJJCmoVXY+8D64tWmCZc8Ib2fprS

XgFcoR9u5yPkLZW8xASpRXfKKTbRTTjAXdYEyaYuuOW2nFWo62/d1mZsT7kY21ja

AhVVoxwsj45FCuk63bDVceAJJm+9xxufMp0gNW1GUk858VLyE8gn+uAB5zBcS5c=

=BN/t

-----END PGP MESSAGE-----

created_at: 1443203323.058362

JSON

cleartext:

{

"address": {

"city": "New York",

"postalCode": "10021-3100",

"state": "NY",

"streetAddress": "21 2nd Street"

},

"age": 25,

"firstName": "John",

"lastName": "Smith",

"phoneNumbers": [

{

"number": "212 555-1234",

"type": "home"

},

{

"number": "646 555-4567",

"type": "office"

}

]

}

encrypted:

{

"address": {

"city": "ENC[AES256_GCM,data:2wNRKB+Sjjw=,iv:rmATLCPii2WMzcT80Wp9gOpYQqzx6juRmCf9ioz2ZLM=,aad:dj0QZW0BvZVjF1Dn25hOJpcwcVB0qYvEIhGWgxq6YzQ=,tag:wOoPYU+8BA9DiNFlsal3Aw==]",

"postalCode": "ENC[AES256_GCM,data:xwWZ/np9Gxv3CQ==,iv:OLwOr7iliPyWWBtKfUUH7E1wQlxJLA6aFxIfNAEC/M0=,aad:8mw5NU8MpyBlrh7XaUqa642jeyJWGqKvduaQ5bWJ5pc=,tag:VFmnc4Ay+yKzyHcrKeEzZQ==]",

"state": "ENC[AES256_GCM,data:3jY=,iv:Y2bEgkjdn91Pbf5RgJMbyCsyfhV7XWdDhe8wVwTQue0=,aad:DcA5kW1rrET9TxQ4kn9jHSpoMlkcPKs5O5n9wZjZYCQ=,tag:ad1xdNnFwkqx/8EOKVVHIA==]",

"streetAddress": "ENC[AES256_GCM,data:payzP57DGPl5S9Z7uQ==,iv:UIz34fk9zH4z6hYfu0duXmAnI8CqnoRhoaIUqg1YoYA=,aad:hll9Baw40lMjwj7HePQ1o1Lsuh1LCwrE6+bkG4025sg=,tag:FDBhYxMmJ1Wj/uxYxdvVZg==]"

},

"age": "ENC[AES256_GCM,data:4Y4=,iv:hi1iSH19dHSgG/c7yVbNj4yzueHSmmY46yYqeNCoX5M=,aad:nnyubQyaWeLTcz9k9cMHUlgTwVDMyHf32sWCBm7KWAA=,tag:4lcMjstadzI8K40BoDEfDA==]",

"firstName": "ENC[AES256_GCM,data:KVe8Dw==,iv:+eg+Rjvaqa2EEp6ufw9c4hwWwObxRLPmxx3fG6rkyps=,aad:3BdHcorHfbvM2Jcs96zX0JY2VQL5dBNgy7zwhqLNqAU=,tag:5OD6MN9SPhBmXuA81hyxhQ==]",

"lastName": "ENC[AES256_GCM,data:1+koqsI=,iv:b2kBxSW4yOnLFc8qoeylkMtiO/6qr4cZ5VTntXTyXO8=,aad:W7HXukq3lUUMj9i57UehILG2NAp8XCgJMYbvgflWJIY=,tag:HOrgi1L+IRP+X5JGMnm7Ig==]",

"phoneNumbers": [

{

"number": "ENC[AES256_GCM,data:Oo0IxdtBrnfE+bTf,iv:tQ1E/JQ4lHZvj1nQnGL2sKE30sCctjiMCiagS2Yzch8=,aad:P+m5gD3pKfNEOy6t61vbKhEpPtMFI2NZjBPrD/m8T9w=,tag:6iRMUVUEx3UZvUTGTjCdwg==]",

"type": "ENC[AES256_GCM,data:M3zOKQ==,iv:pD9RO4BPUVu6AWPo2DprRsOqouN+0HJn+RXQAXhfB2s=,aad:KFBBVEEnSjdmah3i2XmPx7wWEiFPrxpnfKYW4BSolhk=,tag:liwNnip/L6SZ9srn0N5G4g==]"

},

{

"number": "ENC[AES256_GCM,data:BI2f/qFUea6UHYQ+,iv:jaVLMju6h7s+AlF7CsPbpUFXO2YtYAqYsCIsyHgfrfI=,aad:N+8sVpdTlY5I+DcvnY018Iyh/QesD7bvwfKHRr7q2L0=,tag:hHPPpQKP4cUIXfh9CFe4dA==]",

"type": "ENC[AES256_GCM,data:EfKAdEUP,iv:Td+sGaS8XXRqzY98OK08zmdqsO2EqVGK1/yDTursD8U=,aad:h9zi8s+EBsfR3BQG4r+t+uqeChK4Hw6B9nJCrValXnI=,tag:GxSk1LAQIJNGyUy7AvlanQ==]"

}

],

"sops": {

"kms": [

{

"arn": "arn:aws:kms:us-east-1:656532927350:key/920aff2e-c5f1-4040-943a-047fa387b27e",

"created_at": 1443204393.48012,

"enc": "CiC6yCOtzsnFhkfdIslYZ0bAf//gYLYCmIu87B3sy/5yYxKnAQEBAgB4usgjrc7JxYZH3SLJWGdGwH//4GC2ApiLvOwd7Mv+cmMAAAB+MHwGCSqGSIb3DQEHBqBvMG0CAQAwaAYJKoZIhvcNAQcBMB4GCWCGSAFlAwQBLjARBAwBpvXXfdPzEIyEMxICARCAOy57Odt9ngHHyIjVU8wqMA4QszXdBglNkr+duzKQO316CRoV5r7bO8JwFCb7699qreocJd+RhRH5IIE3"

},

{

"arn": "arn:aws:kms:ap-southeast-1:656532927350:key/9006a8aa-0fa6-4c14-930e-a2dfb916de1d",

"created_at": 1443204394.74377,

"enc": "CiBdfsKZbRNf/Li8Tf2SjeSdP76DineB1sbPjV0TV+meTxKnAQEBAgB4XX7CmW0TX/y4vE39ko3knT++g4p3gdbGz41dE1fpnk8AAAB+MHwGCSqGSIb3DQEHBqBvMG0CAQAwaAYJKoZIhvcNAQcBMB4GCWCGSAFlAwQBLjARBAwag3w44N8+0WBVySwCARCAOzpqMpvzIXV416ycCJd7mn9dBvjqzkUDag/zHlKse57uNN7P0S9GeRVJ6TyJsVNM+GlWx8++F9B+RUE3"

}

],

"pgp": [

{

"created_at": 1443204394.748745,

"enc": "-----BEGIN PGP MESSAGE-----\nVersion: GnuPG v1\n\nhQIMA0t4uZHfl9qgAQ//dpZVlRD9WGvz6Pl+PRKvBf661IHLkCeOq5ubzqLIJZu7\nJMNu0KBoO0qX+rgIQtzMU+04QlbIukw01q9ELSDYjBDQPRQJ+6OAeauawxf5mPGa\nZKOaSuoCuPbfOmGj8AENdSCpDaDz+KvOPvo5NNe16kC8BeerFJGewyEwbnkx5dxZ\ngk+LJBOuWRVUEzjsB1pzGfGRzvuzHcrUzWAoA8N936hDFIpoeDYC/8KLc0CWTltA\nYYGaKh5cZxC0R0TgQ5S9GjcU2nZjhcL94XRxZ+9BZDLCDRnjnRfUpPSTHoxr9wmR\nAuLtgyCIolrPl3fqRLJSLUH6FyTo2CO+2mFSx7y9m2OXkKQd1z2tkOlpC9PDTjGT\nVfGvy9nMUsmrgWG35soEmk0nNJaZehiscvZfomBnnHQgqx7DMSMxAnBneFqjsyOQ\nGK7Jacs/tigxe8NZcYhx+usITeQzVLmuqZ2pO5nEGyq0XJhJjxce9YVaeC4QOft0\nlm6qq+m6oABOdKTGh6zuIiWxU1r417IEgV8mkwjlraAvNNPKowQq5j8dohG4HaNK\nOKoOt8aIZWvD3HE9szuH+uDRXBBEAbIvnojQIyrqeIYv1xU8hDTllJPKw/kYD6nx\nMKrw4UAFand5qAgN/6QoIrOPXC2jhA2VegXkt0LXXSoP1ccR4bmlrGRHg0x6Y8zS\nXAE+BVEMYh8l+c86BNhzVOaAKGRor4RKtcZIFCs/Gpa4FxzDp5DfxNn/Ovrhq/Xc\nlmzlWY3ywrRF8JSmni2Asxet31RokiA0TKAQj2Q7SFNlBocR/kvxWs8bUZ+s\n=Z9kg\n-----END PGP MESSAGE-----\n",

"fp": "85D77543B3D624B63CEA9E6DBC17301B491B3F21"

}

]

}

}

As you can see, in each key/value pair only the values are encrypted and the keys are kept in clear. It can be argued that this approach leaks sensitive information, but it’s a tradeoff we’re willing to accept in exchange for increased usability.
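To make the "keys in clear, values encrypted" idea concrete, here is a minimal Python sketch of a tree walk that leaves every key untouched and transforms only the leaf values. It is not how sops is implemented: `transform_values` and `fake_encrypt` are hypothetical names, and `fake_encrypt` is a base64 stand-in used purely for illustration. It provides no security and does not handle the `sops` metadata branch.

```python
import base64


def fake_encrypt(value):
    """Stand-in for real encryption (assumption for illustration only).

    This merely base64-encodes the value; it is NOT encryption.
    """
    encoded = base64.b64encode(str(value).encode("utf-8")).decode("ascii")
    return "ENC[PLACEHOLDER,data:" + encoded + "]"


def transform_values(node, transform):
    """Return a copy of the tree with `transform` applied to each leaf.

    Dictionary keys and the shape of the tree are preserved, so a diff
    of two transformed documents only shows the values that changed.
    """
    if isinstance(node, dict):
        return {key: transform_values(child, transform) for key, child in node.items()}
    if isinstance(node, list):
        return [transform_values(child, transform) for child in node]
    return transform(node)


if __name__ == "__main__":
    document = {
        "myapp1": "t00m4nys3cr3tzupdated",
        "app2": {"db": {"user": "eve", "password": "c4r1b0u"}},
        "an_array": ["secretuser1", "secretuser2"],
    }
    protected = transform_values(document, fake_encrypt)
    for key, value in protected.items():
        print(key, "=>", value)
```

Because the structure survives the transformation, two people changing different values produce changes on different lines, which is what makes merges manageable.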

Simplifying key management

OpenPGP gets a lot of bad press for being an outdated crypto protocol, and while that is true, what really made us look for alternatives is the difficulty of managing and distributing keys to systems. With KMS, we manage permissions to an API, not keys, and that's a lot easier to do.

But PGP is not dead yet, and we still rely on it heavily as a backup solution: all our files are encrypted with KMS and with one PGP public key, with its private key stored securely for emergency decryption in the event that we lose all our KMS master keys.

That said, nothing prevents you from using SOPS the same way you would use an encrypted PGP file: by referencing the pubkeys of each individual who has access to the file. It can easily be done by providing sops with a comma-separated list of public keys when creating a new file:

$ sops --pgp "E60892BB9BD89A69F759A1A0A3D652173B763E8F, 84050F1D61AF7C230A12217687DF65059EF093D3, 85D77543B3D624B63CEA9E6DBC17301B491B3F21" mynewfile.yaml

Updating the master keys

GnuPG can be a little obscure when it comes to managing the keys that have access to a file. While its command line is powerful, it takes a few minutes to find the right commands and figure out how to provide access to a new member of the team.

In SOPS, managing master keys is easy: they are simply stored as entries of the document under sops->{kms,pgp}. By default, that information is hidden during editing, but calling sops with the "-s" flag will display the master keys in the editor. From there, add new keys by creating entries in the document, or remove them by deleting the lines.

Rotation

Rotating data keys is also trivial: sops provides a rotation flag "-r" that will generate a new data key Kd and re-encrypt all values in the file with it. Coupled with in-place encryption/decryption, it is easy to rotate all the keys on a group of files:

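# decrypt each file in place, then re-encrypt it with a fresh data key
# (note: this loop assumes file names without spaces)
for file in $(find . -type f -name "*.yaml"); do
    sops -d -i "$file"
    sops -e -i -r "$file"
done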

Something that should be done every few months for good practice ;)

Assuming roles

SOPS has the ability to use KMS in multiple AWS accounts by assuming roles in each account. Being able to assume roles is a nice feature of AWS that allows administrators to establish trust relationships between accounts, typically from the most secure account to the least secure one. In our use-case, we use roles to indicate that a user of the Master AWS account is allowed to make use of KMS master keys in development and staging AWS accounts. Using roles, a single file can be encrypted with KMS keys in multiple accounts, thus increasing reliability and ease of use.

Check it out, and contribute!

SOPS is available on GitHub at http://github.com/mozilla/sops and on PyPI at https://pypi.python.org/pypi/sops. We are progressively reaching a stable stage, with a goal to support Python 2.6.6 to 3.4.

|

|

Mozilla Addons Blog: October 2015 Featured Add-ons |

Pick of the Month: New Tab Tools

by Geoff Lankow

Customize the new tab page, add more tiles, add the launcher from Firefox start. Set new tile images and titles, see recently closed tabs, and more!

“I was trying to figure out how to get more tiles without zooming the page out. I stumbled on this and it’s perfect.”

Featured: Privacy Settings

by Jeremy Schomery

Alter Firefox’s built-in privacy settings easily with a toolbar panel.

Featured: PriceZombie

by Price Zombie

Price Zombie is a price tracker and price comparison browser extension. PriceZombie lets you see full price history on millions of products across hundreds of stores such as Amazon, BestBuy, Bloomingdale’s, and many more.

Nominate your favorite add-ons

Featured add-ons are selected by a community board made up of add-on developers, users, and fans. Board members change every six months, so there’s always an opportunity to participate. Stay tuned to this blog for the next call for applications.

If you’d like to nominate an add-on for featuring, please send it to amo-featured@mozilla.org for the board’s consideration. We welcome you to submit your own add-on!

https://blog.mozilla.org/addons/2015/10/01/october-2015-featured-add-ons/

|

|

Jonathan Griffin: Engineering Productivity Update, Oct 1, 2015 |

We’ve said good-bye to Q3, and are moving on to Q4. Planning for Q4 goals and deliverables is well underway; I’ll post a link to the final versions next update.

Last week, a group of 8-10 people from Engineering Productivity gathered in Toronto to discuss approaches to several aspects of developer workflow. You can look at the notes we took; next up is articulating a formal Vision and Roadmap for 2016, which incorporates both this work and other planning that is ongoing separately for things like MozReview and Treeherder.

Highlights

Bugzilla: Support for 2FA has been enhanced.

Treeherder:

- The automatic starring backend, along with related database changes, is now in production. In Q4 we’ll be developing a simple UI for this, and by the end of the quarter, automatic starring for at least simple failures should be a reality.

- Treeherder will soon stop posting bug comments for each intermittent failure. Instead OrangeFactor will post periodic summaries on bugs – see: https://groups.google.com/d/msg/mozilla.dev.tree-management/az643p0u4hs/3el7fqIDBwAJ

- Job Ingestion via Pulse Exchanges is in the final review stages. This will allow projects like Task Cluster to send JSON Schema-validated job data to Treeherder via a Pulse Exchange, rather than our APIs. It also gives developers and testers the ability to ingest production jobs from Task Cluster to their local machine. Blog post: https://cheshirecam.wordpress.com/2015/09/30/treeherder-loading-data-from-pulse/

- :Goma’s line highlighting and linking in the log viewer are now live. See this blog post for details.

- Jonathan French, our awesome contractor and contributor, has landed onscreen shortcuts; see this blog post. Jonathan will be moving on to other things soon, and we’ll sorely miss him!

Perfherder and Performance Automation:

- Work is underway to prototype a UI in Perfherder which can be used for performance sheriffing sans Alert Manager or Graphserver; follow bug 1201154 for more details. Separately, work has started to allow other performance harnesses (besides Talos) to submit data to Perfherder; see bug 1175295.

- Talos on linux32 has been turned off; the machines that had been used for this are being repurposed as Windows 7 and Windows 8 test workers, in order to reduce overall wait times on those platforms.

- The dromaeo DOM Talos test has been enabled on linux64.

MozReview and Autoland: mcote posted a blog post detailing some of the rough edges in MozReview, and explaining how the team intends to tackle them. dminor blogged about the state of autoland; in short, we’re getting close to rolling out an initial implementation which will work similarly to the current “checkin-needed” mechanism, except, of course, it will be entirely automated. May you never have to worry about closed trees again!

Mobile Automation: gbrown made some additional improvements to mach commands on Android; bc has been busy with a lot of Autophone fixes and enhancements.

Firefox Automation: maja_zf has enabled MSE playback tests on trunk, running per-commit. They will go live at the next buildbot reconfig.

Developer Workflow: numerous enhancements have been made to |mach try|; see list below in the Details section. run-by-dir has been applied to mochitest-plain on most platforms, and to mochitest-chrome-opt, by kaustabh93, one of the team’s contributors. This reduces test bleedthrough, a source of intermittent failures, and improves our ability to change job chunking without breaking tests.

Build System: gps has improved test package generation, which results in significantly faster builds – a savings of about 5 minutes per build on OSX and Windows in automation; about 90s on linux.

TaskCluster Migration: linux64 debug builds are now running, so ahal is unblocked on getting linux64 debug tests running in TaskCluster. armenzg has landed mozharness code to support running buildbot jobs via TaskCluster scheduling, via buildbot bridge.

The Details

bugzilla.mozilla.org

- bug 1199087 – 2fa protection tidied up and extended beyond login

- bug 1199090 – add printable 2fa recovery codes

- as always: https://wiki.mozilla.org/BMO/Recent_Changes

Treeherder

- [jgraham] Autoclassification backend now working on Treeherder production

- [jgraham+mdoglio] API endpoint for autoclassification data now landed on master

- [jfrench] :Goma’s Line highlighting and line linking in Logviewer is now on master https://tojonmz.wordpress.com/2015/09/28/line-highlighting-and-line-linking-in-logviewer/ – https://bugzilla.mozilla.org/show_bug.cgi?id=1108764

- [jfrench] Onscreen keyboard shortcuts are now on master https://tojonmz.wordpress.com/2015/09/29/onscreen-keyboard-shortcuts/ – ‘part 1’ of https://bugzilla.mozilla.org/show_bug.cgi?id=1141569

- [jfrench] Over the last several weeks treeherder.css has been split into 8 separate components https://github.com/mozilla/treeherder/tree/master/ui/css for anyone who is adding new styles – https://bugzilla.mozilla.org/show_bug.cgi?id=1193804

- [emorley] Treeherder will soon stop posting bug comments for each intermittent failure. Instead OrangeFactor will post periodic summaries on bugs – see: https://groups.google.com/d/msg/mozilla.dev.tree-management/az643p0u4hs/3el7fqIDBwAJ

- [camd] Job Ingestion via Pulse Exchanges is in the final review stages. This will allow projects like Task Cluster to send JSON Schema-validated job data to Treeherder via a Pulse Exchange, rather than our APIs. It also gives developers and testers the ability to ingest production jobs from Task Cluster to their local machine. Blog post: https://cheshirecam.wordpress.com/2015/09/30/treeherder-loading-data-from-pulse/

Perfherder/Performance Testing

- [jmaher] Linux32 Talos is turned off

- [jmaher] Dromaeo DOM is enabled for Linux64 Talos

- Big perfherder database refactoring landed, which paves the way to expanding the scope of the system — https://bugzilla.mozilla.org/show_bug.cgi?id=1192976

- [wlach] Prototyping UI for sheriffing performance alerts in https://bugzilla.mozilla.org/show_bug.cgi?id=1201154

- [wlach] Started on work to let other harnesses besides talos easily submit performance artifacts to perfherder. https://bugzilla.mozilla.org/show_bug.cgi?id=1175295

TaskCluster Support

- armenzg – Mozharness code landed to support Buildbot Bridge test jobs

- http://armenzg.blogspot.ca/2015/09/mozharness-support-for-buildbot-bridge.html

- [ahal] started work getting linux64 tests running with taskcluster

Mobile Automation

- mach cppunittest now supports Firefox for Android

- mach test commands now download host utilities for Firefox for Android

- [bc] Autophone

- Bug 1202826 – Autophone – 2015-09-09 deployment

- Bug 1202833 – Autophone – CHARGING state should not prevent Autophone shutdown/restart

- Bug 1201061 – Autophone – deploy robocop_adobe_flash.html

- Bug 1196115 – Intermittent Crash Autophone S1S2Test beginning 2015-08-18

- Bug 1207836 – Autophone – 2015-09-23 deployment

- Bug 1205864 – Autophone – phonetest.py:Logcat collects duplicate messages

- Bug 1206954 – Autophone – better handle failures to submit results to PhoneDash

- Bug 1209796 – Autophone – next deployment (In progress)

- Bug 1205836 – Autophone – investigate orange for remote nytimes s1s2

- Bug 1208782 – Autophone – do not attempt to get response json during Treeherder submission error if response is None

- Bug 1209647 – Autophone – eliminate startup check for network connectivity

- Bug 1209651 – Autophone – do not allow logcat device error to prevent setup_job initialization

- Bug 1209653 – Autophone – after clearing logcat, specifying -b main can hang

- Bug 1209675 – Autophone – Logcat should use PhoneTest loggerdeco

- Bug 1209691 – Autophone – handle incorrect logcat dates emitted by devices.

- jmaher/wlach working to get Autophone Talos reporting results to PerfHerder

Firefox and Media Automation

- [maja_zf] MSE Video Playback buildbot jobs will be deployed to run per-commit on mozilla-inbound any day now…

General Automation

- [ahal] started work on reftest using structured logging

- [ahal] consolidate mochitest + xpcshell’s StructuredLog.jsm

- [jgraham] Landed new |mach try| implementation that passes test paths rather than manifest paths; this adds support for web-platform-tests in |mach try|

- [jgraham] Added support for saving and reusing try strings in |mach try|

- [jgraham] Added Talos support to |mach try|

- [jgraham] reftest and xpcshell test harnesses now take paths to multiple test locations on the command line and expose more functionality through mach

- [jmaher] Kaustabh93 has runbydir live for mochitest-plain osx debug, and mochitest-chrome opt; All that is left is mochitest-chrome debug and linux64 ASAN e10s.

- [ato] Support for running Marionette tests using `mach try` in review

ActiveData

- [ekyle] Upgraded cluster to 1.7.1 (1.4.2 had known recovery issues)

- [ekyle] Added third `zone` with a full copy of data for redundancy (zone awareness on three zones does not work as expected? seems to cause instability. Looking into the problem further: https://github.com/elastic/elasticsearch/issues/13667#issuecomment-141903363)

- [ekyle] fixed problems with deep queries and deployed: We can now query subtests: http://activedata.allizom.org/tools/query.html#query_id=HyQAbwOd , turns out they are a bit of a mess, so of limited use right now.

WebDriver (highlights)

- [ato] Defined remote end steps for Element Clear command

- [ato] Element location strategies have been outlined

- [ato] Added steps to Base64 encode screen capture results

- [ato] Because implementors have relied on prose from outdated sections, warnings were added to those sections which have yet to be redefined

- + a ton of various fixes and rewording

Marionette

- [ato] findChildElement and findChildElements commands removed

bughunter

- [bc] Have been keeping the system running, helping triage bugs

- [tomcat] Has been filing bugs, sent a September status report to internal set of people.

bugherder

- bugs 924405/1199788 – Bugherder now uses Bugzilla’s native REST API and can use bugzilla api keys for authentication even when 2FA is enabled.

Firefox build system

- [gps] Test packaging is now drastically faster in automation. 50% reduction across all platforms. This is a 5+ minute decrease on OS X build jobs!

https://jagriffin.wordpress.com/2015/10/01/engineering-productivity-update-oct-01-2015/

|

|

Will Kahn-Greene: Input: Moving to Django 1.8 |

Over the course of 2015, we've been reworking large parts of the Fjord codebase to do the following:

- ditch jingo and friends and other libraries that deviate from typical Django and aren't active projects

- reduce complexity by moving closer to a "default/typical Django project"

- upgrade to Django 1.8

This blog post covers many grueling details, including the order in which we did things, the design decisions we made and some anecdotes.

Read more… (13 mins to read)

http://bluesock.org/%7Ewillkg/blog/mozilla/input_django_1_8_upgrade.html

|

|

Ben Hearsum: Improvements to updates for Foxfooders |

We've been providing on-device updates (that is to say: no flashing required) to users in the Foxfood program for nearly 6 months now. These updates are intended for users who are officially part of the Foxfooding program, but the way our update system works means that anyone who puts themselves on the right update channel can receive them. This makes things tough for us, because we'd like to be able to provide official Foxfooders with some extra bits and we can't do that while these populations are on the same update channel. Thanks to work that Rob Wood and Alexandre Lissy are doing, we'll soon be able to resolve this and get Foxfooders the bits they need to do the best possible testing.

To make this possible, we've implemented a short term solution that lets us serve updates only to official Foxfooders. Once it lands, their devices will send a hashed version of their IMEI as part of each update request. A list of acceptable IMEI hashes will be maintained in Balrog (the update server), which lets us serve an update only if the incoming hash matches one on the whitelist.
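
A minimal sketch of the whitelist check described above, not Balrog's actual implementation. The hash function (SHA-256), the function names and the sample IMEIs are all assumptions made for illustration; the post does not say how the IMEI is hashed or how the list is stored.

```javascript
const crypto = require("crypto");

// Hypothetical: hash an IMEI the way a device might before sending it.
// SHA-256 is an assumption; the post does not name the hash function.
function hashImei(imei) {
  return crypto.createHash("sha256").update(imei).digest("hex");
}

// Hypothetical server-side check: serve the update only when the hash
// sent with the request is on the whitelist.
function shouldServeUpdate(whitelist, incomingHash) {
  return whitelist.has(incomingHash);
}

// Made-up IMEIs, for illustration only.
const whitelist = new Set([hashImei("490154203237518")]);

const allowed = shouldServeUpdate(whitelist, hashImei("490154203237518"));
const denied = shouldServeUpdate(whitelist, hashImei("356938035643809"));
console.log(allowed, denied);
```

The server in this sketch only ever sees and stores hashes, never the raw IMEI.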

To really make this work we need to detangle the current "dogfood" update channel. As I mentioned, it's currently being used by two distinct populations of users: those who are part of the official program, and those who aren't. In order to support both populations of users we'll be splitting the "dogfood" update channel into two:

- The new "foxfood" channel will be for users who are officially part of the Foxfooding program. Users on this channel will be part of the IMEI whitelist, and could receive FOTA or OTA updates.

- The "dogfood" channel will continue to serve OTA updates to anyone who puts themselves on it.

To transition, we will be asking folks who are officially part of the Foxfooding program to flash with a new image that switches them to the "foxfood" update channel. When this is ready to go, it will be announced and communicated appropriately.

Big thanks to everyone who was involved in this effort, particularly Rob Wood, who implemented the new whitelisting feature in Balrog, and Alexandre Lissy and Jean Gong, who went through multiple rounds of back and forth before we settled on this solution.

It's worth noting that this solution isn't ideal: sending IMEIs (even hashed versions) isn't something we like to do, for reasons of both user privacy and protection of the bits. In the longer term, we'd like to look at a solution that wouldn't require IMEIs to be sent to us. This could come in the form of embedding or asking for credentials, and using those to access the updates. This type of solution would enhance user privacy and make it harder to get around the protections by brute forcing.

http://hearsum.ca/blog/improvements-to-updates-for-foxfooders.html

|

|

Air Mozilla: Web QA Weekly Meeting |

This is our weekly gathering of Mozilla's Web QA team filled with discussion on our current and future projects, ideas, demos, and fun facts.


|

|

Dan Minor: Autoland to Inbound |

We’re currently putting the finishing touches on Autoland to Inbound from MozReview. We have a relatively humble goal for the initial version of this: we’d like to replace the current manual “checkin-needed” process done by a sheriff. Basically, if a patch is reviewed and a try run has been done, the patch author can flag “checkin-needed” in Bugzilla and at some point in the future, a sheriff will land the changes.

In the near future, if a “ship-it” has been granted and a try run has been made, a button to land to Inbound will appear in the Automation menu in MozReview. At the moment, the main benefit of using Autoland is that it will retry landings in the event that the tree is closed. Enforcing a try run should also cut down on tree closures: past analysis of closure data has shown that a not insignificant number of the patches which end up breaking the tree had no try run performed.

A number of workflow improvements should be easy to implement once this is in place, for instance automatically closing MozReview requests to keep people’s incoming review requests sane and rewriting any r? in the commit summary to be r=.
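
As a rough illustration of the second improvement, rewriting r? to r= could be a single string replacement. The helper name and the sample commit summary below are made up, and this is not the Autoland code.

```javascript
// Hypothetical helper: rewrite review requests ("r?name") in a commit
// summary into granted reviews ("r=name").
function markReviewed(summary) {
  // \b keeps words that merely end in "r" (such as "for?") untouched.
  return summary.replace(/\br\?(?=\S)/g, "r=");
}

// Made-up commit summary, for illustration only.
const rewritten = markReviewed("Fix the widget; r?alice, r?bob");
console.log(rewritten);
```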

http://www.lowleveldrone.com/mozilla/mozreview/autoland/2015/10/01/autoland.html

|

|

James Long: Immutable Data Structures and JavaScript |

A little while ago I briefly talked about my latest blog rewrite and promised to go more in-depth on specific things I learned. Today I'm going to talk about immutable data structures in JavaScript, specifically two libraries: immutable.js and seamless-immutable. There are other libraries (mori exposes ClojureScript's persistent data structures and is similar to immutable.js), but we can get a good idea of the tradeoffs by just looking at these two. I'll also talk a little about transit-js, which is a great way to serialize anything.

Very little of this applies specifically to Redux. I talk about using immutable data structures generally, but provide pointers for using it specifically in Redux. In Redux, you have a single app state object and update it immutably, and there are various ways to achieve this, each with tradeoffs. I explore this below.

One thing to think about with Redux is how you combine reducers to form the single app state atom; the default method that Redux provides (combineReducers) assumes that you are combining multiple values into a single JavaScript object. If you really want to combine them into a single Immutable.js object, for example, you would need to write your own combineReducers that does so. This might be necessary if you need to serialize your app state and you assume that it's entirely made up of Immutable.js objects.

Most of this applies to using immutable objects in JavaScript in general. It's a bit awkward sometimes because you're fighting the default semantics, and it can feel like you're juggling types. However, depending on your app and how you set things up, you can get a lot out of it.

Currently there is a proposal for adding immutable data structures to JavaScript natively, but it's not clear if it will work out yet. It would certainly remove most problems with using them in JavaScript currently.

Immutable.js

Immutable.js comes from Facebook and is one of the most popular implementations of immutable data structures. It's the real deal; it implements fully persistent data structures from scratch using advanced things like tries to implement structural sharing. All updates return new values, but internally structures are shared to drastically reduce memory usage (and GC thrashing). This means that if you append to a vector with 1000 elements, it does not actually create a new vector 1001-elements long. Most likely, internally only a few small objects are allocated.
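
To make structural sharing concrete, here is a small sketch that uses path copying on plain JavaScript objects. It is a simplified stand-in for the idea, not how Immutable.js is implemented internally (it uses tries), and the setIn helper is written here only for illustration.

```javascript
// Return a copy of `node` with the value at `path` replaced. Only the
// objects along `path` are copied; every other branch is reused as-is.
function setIn(node, path, value) {
  if (path.length === 0) return value;
  const [key, ...rest] = path;
  return { ...node, [key]: setIn(node[key], rest, value) };
}

const v1 = {
  left: { a: 1, b: 2 },
  right: { c: 3, d: 4 }
};
const v2 = setIn(v1, ["left", "a"], 10);

console.log(v1.left.a);             // 1: the old version is untouched
console.log(v2.left.a);             // 10
console.log(v2.right === v1.right); // true: the untouched branch is shared
```

Only two small objects are allocated for the update (the new root and the new left branch), no matter how large the right branch is.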

Advances in data structures with structural sharing, greatly helped by Okasaki's groundbreaking work, have all but shattered the myth that immutable values are too slow for Real Apps. In fact, it's surprising how many apps can be made faster with them. Apps which read and copy data structures heavily (to avoid having them mutated by someone else) will easily benefit from immutable data structures (simply copying a large array once will diminish your performance wins from mutability).

Another example is how ClojureScript discovered that UIs are given a huge performance boost when backed by immutable data structures. If you're mutating a UI, you commonly touch the DOM more than necessary (because you don't know whether the value needs updating or not). React will minimize DOM mutations, but you still need to generate the virtual DOM for it to work with. When components are immutable, you don't even have to generate the virtual DOM; a simple === equality check tells you if it needs to update or not.

Is it Too Good to Be True?

You might wonder why we don't use immutable data structures all the time with the benefits they provide. Well, some languages do, like ClojureScript and Elm. It's harder in JavaScript because they are not the default in the language, so we need to weigh the pros and cons.

Space and GC Efficiency

I already explained why structural sharing makes immutable data structures efficient. Nothing is going to beat mutating an array at an index, but the overhead of immutability isn't large. If you need to avoid mutations, they are going to beat copying objects hands-down.

In Redux, immutability is enforced. You won't see any updates on the screen unless you return a new value. There are big wins because of this, and if you want to avoid copying you might want to look at Immutable.js.
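
A minimal sketch of what "return a new value" means for a reducer, using plain objects and copying rather than Immutable.js. The counter reducer and its action types are hypothetical.

```javascript
// Hypothetical reducer: returns a new state object for actions it
// handles and the same object for everything else.
function counter(state = { count: 0 }, action) {
  switch (action.type) {
    case "increment":
      return { ...state, count: state.count + 1 };
    default:
      return state;
  }
}

const s0 = counter(undefined, { type: "init" });
const s1 = counter(s0, { type: "increment" });
const s2 = counter(s1, { type: "unknown" });

console.log(s1 !== s0); // true: a new value, so the change is visible
console.log(s2 === s1); // true: nothing changed, same reference
console.log(s0.count);  // 0: the previous state was not mutated
```

The spread copy is cheap for a small object like this one; for large collections this copying is the cost that a library such as Immutable.js is meant to avoid.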

Reference & Value Equality

Let's say you internally stored a reference to an object, and called it obj1. Later on, obj2 comes down the pipe. If you never mutate objects, and obj1 === obj2 is true, you know absolutely nothing has changed. In many architectures, like React, this allows you to easily do powerful optimizations.

That's called "reference equality," where you can simply just compare pointers. But there's also the concept of "value equality," where you can check if two objects are identical by doing obj1.equals(obj2). When things are immutable, you treat objects as just values.

In ClojureScript everything is a value, and even the default equality operator performs the value equality check (as if === did). If you actually wanted to compare instances you would use identical?. The benefit of value equality with immutable data structures is that it can usually do the checks more performantly than a full recursive scan (if two values share structure, it can skip the shared part).
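
The difference between the two kinds of equality, and the shortcut that shared structure allows, can be sketched with plain objects. The valueEquals function below is written for illustration only; it is not the algorithm Immutable.js or ClojureScript uses, and it only handles plain objects, arrays and primitives.

```javascript
// Structural ("value") equality for plain objects, arrays and primitives.
function valueEquals(a, b) {
  if (a === b) return true; // same reference: skip the whole subtree
  if (typeof a !== "object" || typeof b !== "object" || a === null || b === null) {
    return false;
  }
  if (Array.isArray(a) !== Array.isArray(b)) return false;
  const keysA = Object.keys(a);
  const keysB = Object.keys(b);
  if (keysA.length !== keysB.length) return false;
  return keysA.every(
    key => Object.prototype.hasOwnProperty.call(b, key) && valueEquals(a[key], b[key])
  );
}

const shared = { tags: ["a", "b"] };
const p1 = { x: 1, meta: shared };
const p2 = { x: 1, meta: shared }; // a different object with a shared subtree
const p3 = { x: 1, meta: { tags: ["a", "c"] } };

console.log(p1 === p2);           // false: reference equality
console.log(valueEquals(p1, p2)); // true: value equality
console.log(valueEquals(p1, p3)); // false
```

When comparing p1 and p2, the meta subtree is never walked, because both point at the same object.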

So where does this come into play? I already explained how it makes optimizing React trivial. Just implement shouldComponentUpdate and check if the state is identical, and skip rendering if so.
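
Outside of React, the same idea can be sketched as a wrapper that skips rendering when it is handed the same state reference again. The renderOnChange name and the sample state are hypothetical; inside a React component the equivalent comparison would live in shouldComponentUpdate.

```javascript
// Hypothetical wrapper: re-run `render` only when the state reference
// changes, the same test a shouldComponentUpdate method could perform.
function renderOnChange(render) {
  let lastState;
  let lastOutput;
  let hasRendered = false;
  return function (state) {
    if (hasRendered && state === lastState) {
      return lastOutput; // nothing changed, skip the work
    }
    hasRendered = true;
    lastState = state;
    lastOutput = render(state);
    return lastOutput;
  };
}

let renderCount = 0;
const view = renderOnChange(state => {
  renderCount += 1;
  return "Hello, " + state.name;
});

const state1 = { name: "James" };
view(state1);
view(state1);                                  // same reference: skipped
const output = view({ ...state1, name: "Jim" }); // new value: rendered
console.log(renderCount, output);
```

This is only safe because the state is never mutated in place; with mutable state the reference would stay the same even when the contents changed.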

I also discovered that while using === with Immutable.js does not perform a value equality check (obviously, you can't override JavaScript's semantics), Immutable.js uses value equality for identities of objects. Anywhere that it wants to check if objects are the same, it uses value equality.

For example, keys of a Map object are value equality checked. This means I can store an object in a Map, and retrieve it later just by supplying an object of the same shape:

let map = Immutable.Map();

map = map.set(Immutable.Map({ x: 1, y: 2}), "value");

map.get(Immutable.Map({ x: 1, y: 2 })); // -> "value"

This has a lot of really nice implications. For example, let's say I have a function that takes a query object that specifies fields to pull from a server:

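function runQuery(query) {
  // pseudo-code: somehow pass the query to the server and
  // get some results
  return fetchFromServer(serialize(query));
}

// fromJS converts nested objects too; Immutable.Map() would leave
// `filter` as a plain object, which is only compared by reference
runQuery(Immutable.fromJS({
  select: 'users',
  filter: { name: 'James' }
}));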

If I wanted to implement query caching, this is all I would have to do:

let queryCache = Immutable.Map();

function runQuery(query) {
  let cached = queryCache.get(query);
  if (cached) {
    return cached;
  } else {
    let results = fetchFromServer(serialize(query));
    // set() returns a new Map, so the result must be reassigned
    queryCache = queryCache.set(query, results);
    return results;
  }
}

I can treat the query object as a value, and store the results with it as a key. Later on, if something runs the same query, I'll get back the cached results even if the query object isn't the same instance.

There are all sorts of patterns that value equality simplifies. In fact, I use the exact same technique when querying for posts.

JavaScript Interop

The major downside to Immutable.js data structures is the reason that they are able to implement all the above features: they are not normal JavaScript data structures. An Immutable.js object is completely different from a JavaScript object.

That means you must do map.get("property") instead of map.property, and array.get(0) instead of array[0]. While Immutable.js goes to great lengths to provide JavaScript-compatible APIs, even they are different (push must return a new array instead of mutating the existing instance). You can feel it fighting the default mutation-heavy semantics of JavaScript.
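
The difference in push semantics can be seen with plain arrays. The push helper below is hypothetical and copies the whole array, so it only illustrates the API shape (return a new value instead of mutating), not the structural sharing an Immutable.js List provides.

```javascript
// Hypothetical helper: like Array.prototype.push, but returns a new
// array and leaves the original alone.
function push(list, item) {
  return [...list, item];
}

const original = [1, 2, 3];
const appended = push(original, 4);

console.log(original.length); // 3
console.log(appended.length); // 4

// The built-in push mutates in place and returns the new length.
const mutable = [1, 2, 3];
const result = mutable.push(4);
console.log(result, mutable.length); // 4 4
```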

The reason this makes things complicated is that unless you're really hardcore and are starting a project from scratch, you can't use Immutable objects everywhere. You don't really need to anyway for local objects of small functions. Even if you create every single object/array/etc as immutable, you're going to have to work with 3rd party libraries which use normal JavaScript objects/arrays/etc.