
Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.



Mike Conley: Things I’ve Learned This Week (May 25 – May 29, 2015)

MozReview will now create individual attachments for child commits

Up until recently, anytime you pushed a patch series to MozReview, a single attachment would be created on the bug associated with the push.

That single attachment would link to the “parent” or “root” review request, which contains the folded diff of all commits.

We noticed a lot of MozReview users were (rightfully) confused about this mapping from Bugzilla to MozReview. It was not at all obvious that Ship It on the parent review request would cause the attachment on Bugzilla to be r+’d. Consequently, reviewers used a number of workarounds, including, but not limited to:

- Manually setting the r+ or r- flags in Bugzilla for the MozReview attachments

- Marking Ship It on the child review requests, and letting the reviewee take care of setting the reviewer flags in the commit message

- Just writing “r+” in a MozReview comment

Anyhow, this model wasn’t great, and caused a lot of confusion.

So it’s changed! Now, when you push to MozReview, there’s one attachment created for every commit in the push. That means that when different reviewers are set for different commits, that’s reflected in the Bugzilla attachments, and when those reviewers mark “Ship It” on a child commit, that’s also reflected in an r+ on the associated Bugzilla attachment!

I think this makes quite a bit more sense. Hopefully you do too!

See gps’s blog post for the nitty gritty details, and some other cool MozReview announcements!

http://mikeconley.ca/blog/2015/06/01/things-ive-learned-this-week-may-25-may-29-2015/


Nick Cameron: My Git and GitHub work flow

Also, I only describe what I do, not why, i.e., the underlying concepts you should understand. To do that would probably take a book rather than a blog post and I'm not the right person to write such a thing.

Starting out

I operate in two modes when using Git - either I'm contributing to an existing repo (e.g., Rust) or I'm working on my own repo (e.g., rustfmt), which might just be a personal thing, essentially just using GitHub for backup, or which might be a community project that I started. The workflow for the two scenarios is a bit different.

Let's start with contributing to someone else's repo. The first step is to find that repo on GitHub and fork it (I'm assuming you have a GitHub account set up; it's very easy to do if you haven't). Forking means that you have your own personal copy of the repo hosted by GitHub and associated with your account. So for example, if you fork https://github.com/rust-lang/rust, then you'll get https://github.com/nrc/rust. It is important you fork the version of the repo you want to contribute to. In this case, make sure you fork rust-lang's repo, not somebody else's fork of that repo (e.g., nrc's).

Then make a local clone of your fork so you can work on it locally. I create a directory, then `cd` into it and use:

git clone git@github.com:nrc/rust.git .

Here, you'll replace the 'git@...' string with the identifier for your repo found on its GitHub page. The trailing `.` means we clone into the current directory instead of creating a new directory.

Finally, you'll want to create a reference to the original repo, so your local copy of the repo knows about both your fork (nrc/rust, called 'origin') and the original repo (rust-lang/rust, called 'upstream'):

git remote add upstream https://github.com/rust-lang/rust.git

Now you're all set to go and contribute something!
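As a quick sanity check on the two remotes described above, `git remote -v` lists each one with its URLs. The sketch below runs in a throwaway directory so it is safe to try anywhere; the `example/rust` fork address is a placeholder for your own fork, and 'origin' is added by hand only because there is no real clone here.

```shell
set -eu
# Throwaway repo so the example does not touch any real work.
repo="$(mktemp -d)"
cd "$repo"
git init -q
# 'origin' is normally created by `git clone`; added manually in this sketch.
git remote add origin git@github.com:example/rust.git   # placeholder fork
git remote add upstream https://github.com/rust-lang/rust.git
# Show every remote with its fetch and push URLs (no network access needed).
git remote -v
remotes="$(git remote | sort | tr '\n' ' ')"
```

If both `origin` and `upstream` show up, the local repo knows about your fork and the original repo.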

If I'm starting out with my own repo, then I'll first create a directory and write a bit of code in there, probably add a README.md file, and make sure something builds. Then, to make it a git repo I use

git init

then make an initial commit (see the next section for more details). Over on GitHub, go to the repos page and add a new, empty repo, choose a cool name, etc. Then we have to associate the local repo with the one on GitHub:

git remote add origin git@github.com:nrc/rust-fmt.git

Finally, we can make the GitHub repo up to date with the local one (again, see below for more details):

git push origin master

Doing work

I usually start off by creating a new branch for my work. Create a branch called 'foo' using:

git checkout -b foo

There is always a 'master' branch which corresponds with the current state (as of the last time you updated) of the repo without any of your branches. I try to avoid working on master. You can switch between branches using `git checkout`, e.g.,

git checkout master

git checkout foo

Once I've done some work, I commit it. Eventually, when you submit the work upstream, a commit should be a self-contained, modular piece of work. However, when working locally I prefer to make many small commits and then sort them out later. I generally commit when I context switch to work on something else, when I have to make a decision I'm not sure about, or when I reach a point which seems like it could be a natural break in the proper commits I'll submit later. I usually commit using

git commit -a

The `-a` means all the changed files git knows about will be included in the commit. This is usually what I want. I sometimes use `-m "The commit message"`, but often prefer to use a text editor since it allows me to check which files are being committed.

Often, I don't want to create a new commit, but just want to add my current work to the last commit. Then I use:

git commit -a --amend

If you've created new files as part of your work, you need to tell Git about them before committing. Use:

git add path/to/file_name.rs

Updating

When I want to update the local repo to the upstream repo I use `git pull upstream master` (with my master branch checked out locally). Commonly, I want to update my master and then rebase my working branch to branch off the updated master.

Assuming I'm working on the foo branch, the recipe I use to rebase is:

git checkout master

git pull upstream master

git checkout foo

git rebase master

The last step will often require manual resolution of conflicts. After that, you must `git add` the changed files and then run `git rebase --continue`. That might happen several times.

If you've got a lot of commits, I find it is usually easier to squash a bunch of commits before rebasing - it sometimes means dealing with conflicts fewer times.
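One way to squash before rebasing can be sketched without the interactive editor. This is an alternative to `git rebase -i`, not the method used in this post: `git reset --soft` moves the branch back to where it left master while keeping every change staged, so a single commit replaces the series. The repo, file names, and the "Example" identity are all made up for the demonstration.

```shell
set -eu
repo="$(mktemp -d)"
cd "$repo"
git init -q
git symbolic-ref HEAD refs/heads/master   # make sure the first branch is 'master'
git config user.name "Example"
git config user.email "example@example.com"
echo base > file.txt
git add file.txt
git commit -q -m "base"
git checkout -q -b foo
# Three small work-in-progress commits on the branch.
for i in 1 2 3; do
  echo "wip $i" >> file.txt
  git commit -q -a -m "wip $i"
done
# Move foo back to the point where it branched from master, keeping all
# changes staged, then record them as one commit.
git reset -q --soft "$(git merge-base master foo)"
git commit -q -m "One tidy commit"
count="$(git rev-list --count master..foo)"
lines="$(wc -l < file.txt | tr -d ' ')"
```

After this, `git rebase master` only has one commit to replay, so any conflict is resolved once rather than once per small commit.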

On the subject of updating the repo, there is a bit of a debate about rebasing vs merging. Rebasing has the advantage that it gives you a clean history and fewer merge commits (which are just boilerplate, most of the time). However, it does change your history, which if you are sharing your branch is very, very bad news. My rule of thumb is to rebase private branches (never merge) and to only merge (never rebase) branches which have been shared publicly. The latter generally means the master branch of repos that others are also working on (e.g., rustfmt). But sometimes I'll work on a project branch with someone else.

Current status

With all these repos, branches, commits, and so forth, it is pretty easy to get lost. Here are a few commands I use to find out what I'm doing.

As an aside, because Rust is a compiled language and the compiler is big, I have multiple Rust repos on my local machine so I don't have to checkout branches too often.

Show all branches in the current repo and highlight the current one:

git branch

Show the history of the current branch (or any branch, foo):

git log

git log foo

Which files have been modified, deleted, etc.:

git status

All changes since last commit (excludes files which Git doesn't know about, e.g., new files which haven't been `git add`ed):

git diff

The changes in the last commit and since that commit:

git diff HEAD~1

Tidying up

Like I said above, I like to make a lot of small, work in progress commits and then tidy up later. To do that I use:

git rebase -i HEAD~n

Where `n` is the number of commits I want to tidy up. `rebase -i` lets you move commits around, squash them together, reword the commit messages, and so forth. I usually do a `rebase -i` before every rebase and a thorough one before submitting work.

Submitting work

Once I've tidied up the branch, I push it to my GitHub repo using:

git push origin foo

I'll often do this to back up my work too if I'm spending more than a day or so on it. If I've done this and rebased since then, I need to add `-f` to the above command. Sometimes I want my branch to have a different name on the GitHub repo than I've had locally:

git push origin foo:bar

(The common use case here is foo = "fifth-attempt-at-this-stupid-piece-of-crap-bar-problem").
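The need for `-f` after rewriting an already-pushed branch can be seen in a small local experiment. In this sketch a bare repository in a temporary directory stands in for the GitHub fork, and `--amend` stands in for a rebase (both rewrite history); all names are hypothetical.

```shell
set -eu
work="$(mktemp -d)"
git init -q --bare "$work/origin.git"   # stand-in for the fork on GitHub
git init -q "$work/local"
cd "$work/local"
git symbolic-ref HEAD refs/heads/master
git config user.name "Example"
git config user.email "example@example.com"
git remote add origin "$work/origin.git"
echo one > a.txt
git add a.txt
git commit -q -m "first"
git checkout -q -b foo
echo two >> a.txt
git commit -q -a -m "work in progress"
git push -q origin foo                      # backup push
git commit -q --amend -m "tidied commit"    # history rewritten locally
# A plain push is refused because the remote branch is no longer an ancestor.
if git push -q origin foo 2>/dev/null; then plain=accepted; else plain=rejected; fi
git push -q -f origin foo                   # -f overwrites the remote branch
remote_head="$(git ls-remote origin refs/heads/foo | cut -f1)"
local_head="$(git rev-parse foo)"
```

Because `-f` replaces whatever is on the remote branch, it fits the rule of thumb above: fine for private branches, risky for anything shared.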

When ready to submit the branch, I go to the GitHub website and make a pull request (PR). Once that is reviewed, the owner of the upstream repo (or, often, a bot) will merge it into master.

Alternatively, if it is my repo I might create a branch and pull request, or I might manually merge and push:

git checkout master

git merge foo

git push origin master

Misc.

And here is a bunch of stuff I do all the time, but I'm not sure how to classify.

Delete a branch when I'm all done:

git branch -d foo

or

git branch -D foo

You need the capital 'D' if the branch has not been merged to master. With complex merges (e.g., if the branch got modified) you sometimes need capital 'D', even if the branch is merged.

Sometimes you need to throw away some work. If I've already committed, I use the following to throw away the last commit:

git reset HEAD~1

or

git reset HEAD~1 --hard

The first version leaves changes from the commit as uncommitted changes in your working directory. The second version throws them away completely. You can change the '1' to a larger number to throw away more than one commit.

If I have uncommitted changes I want to throw away, I use:

git checkout HEAD -f

This only gets rid of changes to tracked files. If you created new files, those won't be deleted.
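For the new files that are left behind, `git clean` is one option. It is not part of the workflow described in this post, so treat this as an aside: `-n` is a dry run that only lists what would go, and `-f` actually deletes. The sketch uses a throwaway repo with made-up file names.

```shell
set -eu
repo="$(mktemp -d)"
cd "$repo"
git init -q
git config user.name "Example"
git config user.email "example@example.com"
echo tracked > tracked.txt
git add tracked.txt
git commit -q -m "initial"
echo change >> tracked.txt      # uncommitted edit to a tracked file
echo scratch > untracked.txt    # new file Git does not know about
git checkout -q -f HEAD         # discards the edit; untracked.txt survives
survived="no"
if [ -e untracked.txt ]; then survived="yes"; fi
git clean -n                    # dry run: prints what would be removed
git clean -q -f                 # really delete untracked files
```

Running the dry run first is a cheap safeguard, since files removed by `git clean` were never committed and cannot be recovered through Git.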

Sometimes I need more fine-grained control of which changes to include in a commit. This often happens when I'm reorganising my commits before submitting a PR. I usually use some combination of `git rebase -i` to get the ordering right, then pop off a few commits using `git reset HEAD~n`, then add changes back in using:

git add -p

which prompts you about each change. (You can also use `git add filename` to add all the changes in a file). After doing all this, use `git commit` to commit. My muscle memory often appends the `-a`, which ruins all the work put in to separating out changes.

Sometimes this is too much work, in which case the best thing to do is save all the changes from your commits as a diff, edit them around in a text editor, then patch them back piece by piece when committing. Something like:

git diff ... >patch.diff

...

patch -p1

Every now and again, I'll need to copy a commit from one branch to another. I use `git log branch-name` to show the commits, copy the hash from the commit I want to copy, then use

git cherry-pick hash

to copy the commit into the current branch.
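Here is the cherry-pick step worked through end to end in a throwaway repo. The branch names match the ones used in this post; the files, messages, and the "Example" identity are invented for the demonstration.

```shell
set -eu
repo="$(mktemp -d)"
cd "$repo"
git init -q
git symbolic-ref HEAD refs/heads/master
git config user.name "Example"
git config user.email "example@example.com"
echo base > base.txt
git add base.txt
git commit -q -m "base"
git checkout -q -b foo
echo fix > fix.txt
git add fix.txt
git commit -q -m "useful fix"
hash="$(git rev-parse HEAD)"    # the hash you would copy from `git log foo`
git checkout -q master
echo other > other.txt
git add other.txt
git commit -q -m "unrelated work on master"
# Copy the single commit from foo onto the current branch (master).
git cherry-pick "$hash"
subject="$(git log -1 --format=%s)"
new_hash="$(git rev-parse HEAD)"
```

The copied commit keeps its message and changes but gets a new hash, because its parent on master is different from its parent on foo.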

Finally, if things go wrong and you can't see a way out, `git reflog` is the secret magic that can fix nearly everything. It shows a log of pretty much everything Git has done, down to a fine level of detail. You can usually use this info to get out of any pickle (you'll have to google the specifics). However, Git only knows about files which have been committed at least once, so even more reason to do regular, small commits.
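As one concrete instance of a reflog rescue, the sketch below throws away a commit with `git reset --hard` and then brings it back. It assumes the simplest case, where the lost commit is the previous reflog entry (`HEAD@{1}`); in a real mess you would read the `git reflog` output and pick the right entry. The repo and file names are made up.

```shell
set -eu
repo="$(mktemp -d)"
cd "$repo"
git init -q
git config user.name "Example"
git config user.email "example@example.com"
echo one > f.txt
git add f.txt
git commit -q -m "first"
echo two >> f.txt
git commit -q -a -m "second"
lost="$(git rev-parse HEAD)"
git reset -q --hard HEAD~1      # "second" is no longer on the branch
git reflog                      # lists where HEAD has pointed, newest first
# HEAD@{1} is where HEAD pointed just before the reset.
git reset -q --hard 'HEAD@{1}'
recovered="$(git rev-parse HEAD)"
lines="$(wc -l < f.txt | tr -d ' ')"
```

This only works because "second" had been committed; changes that were never committed do not appear in the reflog.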

http://featherweightmusings.blogspot.com/2015/06/my-git-and-github-work-flow.html


Karl Dubost: What a Web developer should know about cross compatibility?

This morning on reddit I discovered the following question:

What big cross browser compatibility issues (old and new) should we know about as web developers?

followed by the summary:

I am a relative noob to web dev and have my first interview for my first front end dev role coming up. Cross browser compatibility isn't a strong point of mine. What issues should I (and any developers) know about and cater for?

Any links to articles so I can do some further reading would be awesome sauce. Thanks!

When talking about compatibility, I usually prefer Web Compatibility to cross browser compatibility. The difference is small, but it shifts the focus away from browsers and toward the Web as used by people.

First of all, there's no magic bullet. Browsers evolve, devices and networks change, Web development practices and tooling are emerging at a pace which is difficult to follow. Your knowledge will always be outdated. There's no curriculum which once known will guarantee that all Web development practices will be flawless. It leads me to a first rule.

Web Compatibility is a practice.

Read blogs, look at Stack Overflow, understand the issues that some people have with their Web development. But more than blindly copying a quick solution, understand your choices and the impact on users. Often your users are in a blind spot. You can't see them because your Web development prevents them from accessing the content you have created. One of the quick answers we receive when contacting sites about Web Compatibility issues is: "This browser is not part of our targets list, because it's not visible in our stats." As a matter of fact, indeed, a browser Z is not visible in their stats, because… the Web site is not usable at all with this browser. There's no chance it will ever appear in the stats. Worse: browsers have to lie about what they are to be able to get the site working, inflating the stats of the targeted list. Another strategy is to make your Web site resilient enough that you do not have to question yourselves about Web Compatibility.

Web Compatibility is about being resilient.

Where do you learn about Web Compatibility?

- Web Compatibility with a punk twist by Mike Taylor

- What could be wrong by Hallvord Reiar Michaelsen Steen

- Dev Opera. Opera had a time with a strong stance on Web Compatibility. They have bitten the dust because of Web Compatibility issues and switched to Blink. There are still a lot of good people there, caring about Web Compatibility.

- Intent to ship and deprecate

- (Google) Blink dev mailing list

- Mozilla Dev Platform

- Microsoft

- Web Compat Teams

- Microsoft also their teams

- Mozilla

- WebCompat A cross-browsers effort for Web Compatibility

All of these you may find on Planet WebCompat

Where do I report Web Compatibility issues?

Previously, I would have told you to use each browser's bug reporting system. But now we have a place for this:

Some Reference Resources

- can I use

- MDN compatibility tables. At the bottom of each feature page, you will find a browser compatibility table.

- Firefox Site Compatibility maintained by Kohei Yoshino giving information about regressions and backward compatibility issues for Firefox. Very useful.

- Safari Guides

Understanding some meta issues about the Web

There are some people you should absolutely read about the Web in general and why it's important to be inclusive in Web development. They do not necessarily talk often about Web Compatibility per se, but they approach the Web with more of a 30,000 meters view. A more humane Web. This list is open ended, but I would start with:

Otsukare!

http://www.otsukare.info/2015/06/01/webdev-cross-compatibility-101


Kartikaya Gupta: Management, TRIBE, and other thoughts

At the start of 2014, I became a "manager". At least in the sense that I had a couple of people reporting to me. Like most developers-turned-managers I was unsure if management was something I wanted to do but I figured it was worth trying at least. Somebody recommended the book First, Break All The Rules to me as a good book on management, so I picked up a copy and read it.

The book is based on data from many thousands of interviews and surveys that the Gallup organization did, across all sorts of organizations. There were lots of interesting points in the book, but the main takeaway relevant here was that people who build on their strengths instead of trying to correct their weaknesses are generally happier and more successful. This leads to some obvious follow-up questions: how do you know what your strengths are? What does it mean to "build on your strengths"?

To answer the first question I got the sequel, Now, Discover Your Strengths, which includes a single-use code for the online StrengthsFinder assessment. I read the book, took the assessment, and got a list of my top 5 strengths. While interesting, the list was kind of disappointing, mostly because I didn't really know what to do with it. Perhaps the next book in the series, Go Put Your Strengths To Work, would have explained but at this point I was disillusioned and didn't bother reading it.

Fast-forward to a month ago, when I finally got to attend the first TRIBE session. I'd heard good things about it, without really knowing anything specific about what it was about. Shortly before it started though, they sent us a copy of Strengths Based Leadership, which is a book based on the same Gallup data as the aforementioned books, and includes a code to the 2.0 version of the same online StrengthsFinder assessment. I read the book and took the new assessment (3 of the 5 strengths I got matched my initial results; the variance is explained on their FAQ page) but didn't really end up with much more information than I had before.

However, the TRIBE session changed that. It was during the session that I learned the answer to my earlier question about what it means to "build on strengths". If you're familiar with the 4 stages of competence, that TRIBE session took me from "unconscious incompetence" to "conscious incompetence" with regard to using my strengths - it made me aware of when I'm using my strengths and when I'm not, and to be more purposeful about when to use them. (Two asides: (1) the TRIBE session also included other useful things, so I do recommend attending and (2) being able to give something a name is incredibly powerful, but perhaps that's worth a whole 'nother blog post).

At this point, I'm still not 100% sure if being a manager is really for me. On the one hand, the strengths I have are not really aligned with the strengths needed to be a good manager. On the other hand, the Strengths Based Leadership book does provide some useful tips on how to leverage whatever strengths you do have to help you fulfill the basic leadership functions. I'm also not really sold on the idea that your strengths are roughly constant over your lifetime. Having read about neuroplasticity I think your strengths might change over time just based on how you live and view your life. That's not really a case for or against being a manager or leader, it just means that you'd have to be ready to adapt to an evolving set of strengths.

Thankfully, at Mozilla, unlike many other companies, it is possible to "grow" without getting pushed into management. The Mozilla staff engineer level descriptions provide two tracks - one as an individual contributor and one as a manager (assuming these descriptions are still current - and since the page was last touched almost 2 years ago it might very well not be!). At many companies this is not even an option.

For now I'm going to try to level up to "conscious competence" with respect to using my strengths and see where that gets me. Probably by then the path ahead will be more clear.


Andy McKay: Docker in development (part 2)

Tips for developing with Docker.

Write critical logs outside the container

You are developing an app, so it will go wrong. It will go wrong a lot, that's fine. But at the beginning you will have a cycle that goes like this: container starts up, container starts your app, app fails and exits, container stops. What went wrong with your app? You've got no idea.

Because the container died, you lost the logs and if you start the container up again, the same thing happens.

If you store the critical logs of your app outside your container, then you can see the problems after it exits. If you use a process runner like supervisord, then you'll find that `docker logs` contains your process runner's output.

You can do a few things here, like move your logs into the process runner logs, or just write your logs to a location that allows you to save them. There are lots of ways to do that, but since we use supervisord and docker-compose, for us it's a matter of making sure supervisord writes its logs out to a volume.

See also part 1.

http://www.agmweb.ca/2015-05-31-docker-in-development-part-2/


Christian Heilmann: That one tweet…

One simple tweet made me feel terrible. One simple tweet made me doubt myself. One simple tweet – hopefully not meant to be mean – had a devastating effect on me. Here’s how and why, and a reminder that you should not be the person that with one simple tweet causes anguish like that.

Beep beep, I’m a roadrunner

As readers of this blog, you know that the last weeks have been hectic for me:

I bounced from conference to conference, delivering a new talk at each of them, making my slides available for the public. I do it because I care about people who can not get to the conference and for those I coach about speaking so they can re-use the decks if they wanted to. I also do a recording of my talks and publish them on YouTube so people can listen. Mostly I do these for myself, so I can get better at what I do. This is a trick I explained in the developer evangelism handbook – another service I provide for free.

Publishing on the go is damn hard:

- Most Wi-Fi at events is flaky or very slow.

- I travel world-wide which means I have no data on my phone without roaming and such.

- Many times uploading my slides needs four to five attempts

- Creating the screencast can totally drain the battery of my laptop with no power plug in sight.

- Uploading the screencast can mean I do it over night.

The format of your slides is irrelevant to these issues. HTML, Powerpoint, Keynote – lots of images means lots of bytes.

Traveling and presenting is tough – physical space still matters

Presenting and traveling is both stressful and taxing. Many people ask me how I do it and my answer is simply this: the positive feedback I get and seeing people improve when they get my advice is a great reward and keeps me going. The last few weeks have been especially taxing as I also need to move out of my flat. I keep getting calls from my estate agent that I need to wire money or be somewhere I can not. I also haven’t seen my partner more than a few hours because we are both busy.

I love my job. I still get excited to go to conferences, hear other presenters, listen to people’s feedback and help them out. A large part of my career is based on professional relationships that formed at events.

The lonely part of the rockstar life

Being a public speaker means you don’t have much time for yourself. At the event you sleep on average 4-5 hours as you don’t want to be the rockstar presenter that arrives, delivers a canned talk and leaves. You are there for the attendees, so you sacrifice your personal time. That’s something to prepare for. If you make promises, you also need to deliver on them immediately. Any promise to look into something or contact someone that you don’t follow up on as soon as you can piles up into a large backlog, and you will have a hard time remembering what it was you wanted to find out.

It also can make you feel very lonely. I’ve had many conversations with other presenters who feel very down as you are not with the people you care about, in the place you call home or in an environment you understand and feel comfortable in. Sure, hotels, airports and conference venues are all lush and have a “jet set” feel to them. They are also very nondescript and make you feel like a stranger.

Progressive Enhancement discussions happen and I can’t be part of it!

I care deeply about progressive enhancement. To me, it means you care more for the users of your products than you care about development convenience. It is a fundamental principle of the web, and – to me – the start of caring about accessibility.

In the last few weeks progressive enhancement was a hot topic in our little world and I wanted to chime in many a time. After all, I wrote training materials on this 11 years ago, published a few books on it and keep banging that drum. But, I was busy with the other events on my backlog and the agreed topics I would cover.

That’s why I was very happy when “at the frontend” came up as a speaking opportunity and I submitted a talk about progressive enhancement. In this talk, I explain in detail that it is not about the JavaScript on or off case. I give out a lot of information and insight into why progressive enhancement is much more than that.

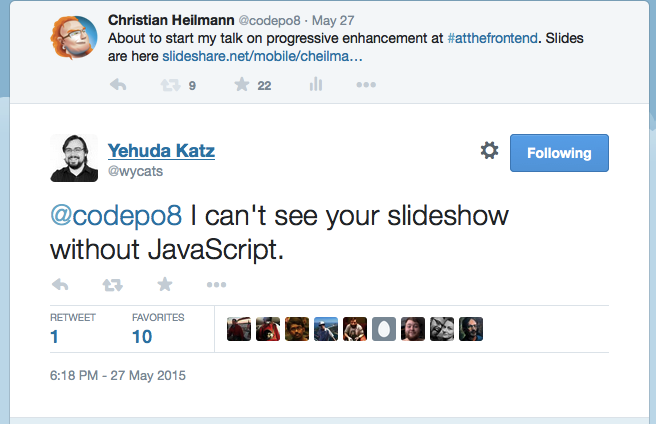

That Tweet

Just before my talk, I uploaded and tweeted my deck, in case people are interested. And then I get this tweet as an answer:

It made me angry – a few minutes before my talk. It made me angry because of a few things:

- it is insincere – this is not someone who has trouble accessing my content. It is someone who wants to point out one flaw to have a “ha-ha you’re doing it wrong” moment.

- the poster didn’t bother to read what I wrote at all – not even the blog post I wrote a few days before covering exactly the same topic or others explaining that the availability of JS is not what PE is about at all.

- it judges the content of a publication by the channel it was published on. Zeldman wrote an excellent piece on this years ago how this is a knee-jerk reaction and an utter fallacy.

- it makes me responsible for Slideshare’s interface – a service used by many people as a great place to share decks

- it boils the topic I talked about and care deeply for down to a simple binary state. This state isn’t even binary if you analyse it and is not the issue. Saying something is PE because of JavaScript being available or not is the same technical nonsense that is saying a text-only version means you are accessible.

I posted the Zeldman article as an answer to the tweet and got reprimanded for not using any of the dozens of available HTML slide deck versions that are progressively enhancing a document. Never mind that using Keynote makes me more effective and helps me with re-use. I have betrayed the cause and should do better and feel bad for being such a terrible slide-creator. OK then. I shrugged this off before, and will again. But, this time, I was vulnerable and it hurt more.

Siding with my critics

In addition to my having a lot of respect for what Yehuda has achieved, other people started favouriting the tweet. People I look up to, people I care about:

- Alex Russell, probably one of the most gifted engineers I know with a vocabulary that makes a Thesaurus blush.

- Michael Mahemoff, always around with incredibly good advice when HTML5 and apps were the question.

- My colleague Jacob Rossi, who blows me away every single day with his insight and tech knowledge

And that’s when my anger turned inward and the ugly voice of impostor syndrome reared its head. Here is what it told me:

- You’re a fool. You’re making a clown of yourself trying to explain something everyone knows and nobody gives a shit about. This battle is lost.

- You’re trying to cover up your loss of reality of what’s needed nowadays to be a kick-ass developer by releasing a lot of talks nobody needs. Why do you care about writing a new talk every time? Just do one, keep delivering it and do some real work instead

- Everybody else moved on, you just don’t want to admit to yourself that you lost track.

- They are correct in mocking you. You are a hypocrite for preaching things and then violating them by not using HTML for a format of publication it wasn’t intended for.

I felt devastated, I doubted everything I did. When I delivered the talk I had so looked forward to and many people thanked me for my insights I felt even worse:

- Am I just playing a role?

- Am I making the lives of those who want to follow what I advocate unnecessarily hard?

- Shouldn’t they just build things that work in Chrome now and burn them in a month and replace them with the next new thing?

Eventually, I did what I always do and what all of you should: tell the impostor syndrome voice in your head to fuck off and let my voice of experience ratify what I am doing. I know my stuff, I did this for a long time and I have a great job working on excellent products.

Recovery and no need for retribution

I didn’t have any time to dwell more on this, as I went to the next conference. A wonderful place where every presentation was full of personal stories, warmth and advice on how to be better at communicating with one another. A place with people from 37 countries coming together to celebrate their love for a product that brings them closer. A place where people brought their families and children although it was a geek event.

I’m not looking for pity here. I am not harbouring a grudge against Yehuda and I don’t want anyone to reprimand him. My insecurities and how they manifest themselves when I am vulnerable and tired are my problem. There are many other people out there with worse issues and they are being attacked and taken advantage of and scared. These are the ones we need to help.

Think before trying to win with a tweet

What I want though is to make you aware that everything you do online has an effect. And I want you to think next time before you post the “ha-ha you are wrong” tweet or favourite and amplify it. I want you to consider to:

- read the whole publication before judging it

- question if your criticism really is warranted

- wonder how much work it was to publish the thing

- consider how the author feels when the work is reduced to one thing that might be wrong.

Social media was meant to make media more social. Not to make it easier to attack and shut people up or tell them what you think they should do without asking about the how and why.

I’m happy. Help others to be the same.


Patrick Cloke: New Position in Cyber Security at Percipient Networks

Note

If you’re hitting this from planet mozilla, this doesn’t mean I’m leaving the Mozilla Community since I’m not (nor was I ever) a Mozilla employee.

After working for The MITRE Corporation for a bit over four years, I left a few weeks ago to begin work at a cyber security start-up: Percipient Networks. Currently our main product is STRONGARM: an intelligent DNS blackhole. Usually DNS blackholes are set up to block known bad domains by sending requests for those domains to a non-routable address or localhost. STRONGARM redirects that traffic for identification and analysis. You could give it a try and let us know of any feedback you might have! Much of my involvement has been in the design and creation of the blackhole, including writing protocol parsers for both standard protocols and malware.

So far, I’ve been greatly enjoying my new position. There’s been a renewed focus on technical work, while being in a position to greatly influence both STRONGARM and Percipient Networks. My average day involves many more activities now, including technical work: reverse engineering, reviewing/writing code, or reading RFCs; as well as other work: mentoring [1], user support, writing documentation, and putting desks together [2]. I’ve been thoroughly enjoying the varied activities!

Shifting software stacks has also been nice. I’m now writing mostly Python code, instead of mostly MATLAB, Java and C/C++ [3]. It has been great how many ready-to-use packages are available for Python! I’ve been very impressed with the ecosystem, and have been encouraged to feed back into the open-source community, where appropriate.

[1] We currently have four interns, so there’s always some mentoring to do!

[2] We got a delivery of 10 desks a couple of weeks ago and spent the evening putting them together.

[3] I originally titled this post "xx days since my last semi-colon!", since that has gone from being a common key press of mine to a rare one. Although now I just get confused when switching between Python and JavaScript, since semicolons are optional in both, but encouraged in JavaScript and discouraged in Python…

http://patrick.cloke.us/posts/2015/05/30/new-position-in-cyber-security-at-percipient-networks/

|

|

Niko Matsakis: Virtual Structs Part 2: Classes strike back |

This is the second post summarizing my current thoughts about ideas related to “virtual structs”. In the last post, I described how, when coding C++, I find myself missing Rust’s enum type. In this post, I want to turn it around. I’m going to describe why the class model can be great, and something that’s actually kind of missing from Rust. In the next post, I’ll talk about how I think we can get the best of both worlds for Rust. As in the first post, I’m focusing here primarily on the data layout side of the equation; I’ll discuss virtual dispatch afterwards.

(Very) brief recap

In the previous post, I described how one can set up a class hierarchy in C++ (or Java, Scala, etc) with a base class and one subclass for every variant:


This winds up being very similar to a Rust enum:


However, there are some important differences. Chief among them is that the Rust enum has a size equal to the size of its largest variant, which means that Rust enums can be passed “by value” rather than using a box. This winds up being absolutely crucial to Rust: it’s what allows us to use Option<&T>, for example, as a zero-cost nullable pointer. It’s what allows us to make arrays of enums (rather than arrays of boxed enums). It’s what allows us to overwrite one enum value with another, e.g. to change from None to Some(_). And so forth.
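These layout properties can be checked directly. The following is a small sketch using a made-up `Shape` enum (not a type from the post); it shows that the enum is at least as large as its largest variant, that `Option<&T>` costs no more than a plain reference, and that a variant can be overwritten in place inside an array.

```rust
use std::mem::size_of;

/// Hypothetical enum with one empty variant and one large variant.
#[allow(dead_code)]
pub enum Shape {
    Point,             // no payload
    Polygon([u64; 8]), // 64 bytes of payload
}

/// Size of the enum: at least as large as its largest variant.
pub fn shape_size() -> usize {
    size_of::<Shape>()
}

/// `Option<&T>` has the same size as `&T`: `None` is encoded as the null pointer.
pub fn option_ref_is_free() -> bool {
    size_of::<Option<&u32>>() == size_of::<&u32>()
}

/// Overwrite one variant with another in place. This works because every
/// `Option<u32>` occupies the same number of bytes, whichever variant it holds.
pub fn fill(slot: &mut Option<u32>, value: u32) {
    *slot = Some(value);
}
```

For example, given `let mut slots = [None, None];`, calling `fill(&mut slots[1], 7)` changes the second element from `None` to `Some(7)` without any allocation.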

Problem #1: Memory bloat

There are a lot of use cases, however, where having a size equal to the largest variant is actually a handicap. Consider, for example, the way the rustc compiler represents Rust types (this is actually a cleaned up and simplified version of the real thing).

The type Ty represents a Rust type:


As you can see, it is in fact a reference to a TypeStructure (this is called sty in the Rust compiler, which isn’t completely up to date with modern Rust conventions). The lifetime 'tcx here represents the lifetime of the arena in which we allocate all of our type information. So when you see a type like &'tcx, it represents interned information allocated in an arena. (As an aside, we added the arena back before we even had lifetimes at all, and used to use unsafe pointers here. The fact that we use proper lifetimes here is thanks to the awesome eddyb and his super duper safe-ty branch. What a guy.)

So, here is the first observation: in practice, we are already boxing all the instances of TypeStructure (you may recall that the fact that classes forced us to box was a downside before). We have to, because types are recursively structured. In this case, the ‘box’ is an arena allocation, but still the point remains that we always pass types by reference. And, moreover, once we create a Ty, it is immutable – we never switch a type from one variant to another.

The actual TypeStructure enum is defined something like this:


You can see that, in addition to the types themselves, we also intern a lot of the data in the variants themselves. For example, the BareFn variant takes a &'tcx BareFnData<'tcx>. The reason we do this is because otherwise the size of the TypeStructure type balloons very quickly. This is because some variants, like BareFn, have a lot of associated data (e.g., the ABI, the types of all the arguments, etc). In contrast, types like structs or references have relatively little associated data. Nonetheless, the size of the TypeStructure type is determined by the largest variant, so it doesn’t matter if all the variants are small but one: the enum is still large. To fix this, Huon spent quite a bit of time analyzing the size of each variant and introducing indirection and interning to bring it down.
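The effect of that indirection can be measured with a toy model. This sketch is not rustc's real definition: `BareFnData` here is a made-up, deliberately bulky payload, and `Inline` and `Interned` are hypothetical enums that differ only in whether the large payload is stored in the variant or behind a reference.

```rust
use std::mem::size_of;

/// Hypothetical payload for a bare fn type: deliberately bulky.
#[allow(dead_code)]
pub struct BareFnData {
    abi: u8,
    arg_type_ids: [u32; 16],
    return_type_id: u32,
}

/// Variant data stored inline: every value is as big as the `BareFn` case.
#[allow(dead_code)]
pub enum Inline {
    Bool,
    Reference(u32),
    BareFn(BareFnData),
}

/// Variant data behind a reference: `BareFn` now costs only one pointer.
#[allow(dead_code)]
pub enum Interned<'tcx> {
    Bool,
    Reference(u32),
    BareFn(&'tcx BareFnData),
}

/// Returns (size with inline payload, size with interned payload) in bytes.
pub fn sizes() -> (usize, usize) {
    (size_of::<Inline>(), size_of::<Interned<'static>>())
}
```

Every `Inline::Bool` pays for the space a `BareFn` would need, while `Interned` stays within two machine words regardless of how large `BareFnData` grows.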

Consider what would have happened if we had used classes instead. In that case, the type structure might look like:


In this case, whenever we allocated a Reference from the arena, we would allocate precisely the amount of memory that a Reference needs. Similarly, if we allocated a BareFn type, we’d use more memory for that particular instance, but it wouldn’t affect the other kinds of types. Nice.

Problem #2: Common fields

The definition for Ty that I gave in the previous section was actually somewhat simplified compared to what we really do in rustc. The actual definition looks more like:


As you can see, Ty is in fact a reference not to a TypeStructure directly but to a struct wrapper, TypeData. This wrapper defines a few fields that are common to all types, such as a unique integer id and a set of flags. We could put those fields into the variants of TypeStructure, but it’d be repetitive, annoying, and inefficient.
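A minimal sketch of this wrapper arrangement might look like the following. The names `Ty`, `TypeData`, and `TypeStructure` and the `id` and `flags` fields come from the description above; the field types, the field name `structure`, the two variants shown, and the `id_of` helper are assumptions made for illustration.

```rust
/// Hypothetical stand-in for the enum of variants (only two shown).
#[derive(Debug, PartialEq)]
pub enum TypeStructure<'tcx> {
    Bool,
    Reference(Ty<'tcx>),
}

/// Wrapper holding the fields every type shares, plus the variant-specific part.
#[derive(Debug, PartialEq)]
pub struct TypeData<'tcx> {
    pub id: u32,
    pub flags: u32,
    pub structure: TypeStructure<'tcx>,
}

/// A type is a reference to the wrapper, not to the enum directly.
pub type Ty<'tcx> = &'tcx TypeData<'tcx>;

/// Common fields are reachable without matching on the variant.
pub fn id_of(ty: Ty<'_>) -> u32 {
    ty.id
}
```

In this sketch the values would normally live in an arena; plain local variables work just as well for trying it out, since all that matters is that the referenced `TypeData` outlives the `Ty` pointing at it.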

Nonetheless, introducing this wrapper struct feels a bit indirect. If we are using classes, it would be natural for these fields to live on the base class:


In fact, we could go further. There are many variants that share common bits of data. For example, structs and enums are both just a kind of nominal type (“named” type). Almost always, in fact, we wish to treat them the same. So we could refine the hierarchy a bit to reflect this:


Now code that wants to work uniformly on either a struct or enum could just take a Nominal*.

Note that while it’s relatively easy in Rust to handle the case where all variants have common fields, it’s a lot more awkward to handle a case like Struct or Enum, where only some of the variants have common fields.
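To illustrate the awkwardness, here is one way the "some variants share fields" case tends to come out with a plain enum. `NominalData`, its fields, and the `nominal` helper are hypothetical names chosen for this sketch.

```rust
/// Data shared by structs and enums only (a hypothetical "nominal" payload).
#[derive(Debug, PartialEq)]
pub struct NominalData {
    pub def_id: u32,
    pub name: String,
}

#[derive(Debug, PartialEq)]
pub enum TypeStructure {
    Bool,
    Struct(NominalData),
    Enum(NominalData),
}

/// Code that treats structs and enums alike has to funnel both variants
/// through a helper, and callers get an `Option` back even when they
/// already "know" the type is nominal.
pub fn nominal(ty: &TypeStructure) -> Option<&NominalData> {
    match ty {
        TypeStructure::Struct(data) | TypeStructure::Enum(data) => Some(data),
        TypeStructure::Bool => None,
    }
}
```

Nothing in the signature of a function taking `&TypeStructure` says "only structs or enums are accepted", so that knowledge lives in comments and runtime checks rather than in the types.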

Problem #3: Initialization of common fields

Rust differs from purely OO languages in that it does not have special constructors. An instance of a struct in Rust is constructed by supplying values for all of its fields. One great thing about this approach is that “partially initialized” struct instances are never exposed. However, the Rust approach has a downside, particularly when we consider code where you have lots of variants with common fields: there is no way to write a fn that initializes only the common fields.

C++ and Java take a different approach to initialization based on constructors. The idea of a constructor is that you first allocate the complete structure you are going to create, and then execute a routine which fills in the fields. This approach to constructors has a lot of problems – some of which I’ll detail below – and I would not advocate for adding it to Rust. However, it does make it convenient to separately abstract over the initialization of base class fields from subclass fields:


Here, the constructor for TypeStructure initializes the TypeStructure fields, and the Bool constructor initializes the Bool fields. Imagine we were to add a field to TypeStructure that is always 0, such as some sort of counter. We could do this without changing any of the subclasses:


If you have a lot of variants, being able to extract the common initialization code into a function of some kind is pretty important.

Now, I promised a critique of constructors, so here we go. The biggest reason we do not have them in Rust is that constructors rely on exposing a partially initialized this pointer. This raises the question of what value the fields of that this pointer have before the constructor finishes: in C++, the answer is just undefined behavior. Java at least guarantees that everything is zeroed. But since Rust lacks the idea of a “universal null” – which is an important safety guarantee! – we don’t have such a convenient option. And there are other weird things to consider: what happens if you call a virtual function during the base type constructor, for example? (The answer here again varies by language.)

So, I don’t want to add OO-style constructors to Rust, but I do want some way to pull out the initialization code for common fields into a subroutine that can be shared and reused. This is tricky.
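For comparison, one approximation available in Rust as it stands is to group the common fields into their own struct and give that struct an ordinary constructor function. This is only a sketch of a workaround, not the design this series is working toward; `Common`, its fields, and the `new` functions are hypothetical.

```rust
/// Fields shared by every type (hypothetical).
#[derive(Debug, PartialEq)]
pub struct Common {
    pub id: u32,
    pub flags: u32,
    pub counter: u32,
}

impl Common {
    /// The one place that knows how the common fields start out.
    pub fn new(id: u32, flags: u32) -> Self {
        Common { id, flags, counter: 0 }
    }
}

#[derive(Debug, PartialEq)]
pub struct Bool {
    pub common: Common,
}

#[derive(Debug, PartialEq)]
pub struct Reference {
    pub common: Common,
    pub referent_id: u32,
}

impl Bool {
    pub fn new(id: u32) -> Self {
        Bool { common: Common::new(id, 0) }
    }
}

impl Reference {
    pub fn new(id: u32, referent_id: u32) -> Self {
        Reference { common: Common::new(id, 0), referent_id }
    }
}
```

Adding a new always-zero field to `Common` touches only `Common::new`, and no partially initialized value is ever visible. The cost is an extra level of nesting (`r.common.id` rather than `r.id`), which is the same indirection the wrapper struct introduced earlier.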

Problem #4: Refinement types

Related to the last point, Rust currently lacks a way to “refine” the type of an enum to indicate the set of variants that it might be. It would be great to be able to say not just “this is a TypeStructure”, but also things like “this is a TypeStructure that corresponds to some nominal type (i.e., a struct or an enum), though I don’t know precisely which kind”. As you’ve probably surmised, making each variant its own type – as you would in the classes approach – gives you a simple form of refinement types for free.

To see what I mean, consider the class hierarchy we built for TypeStructure:


Now, I can pass around a TypeStructure* to indicate “any sort of type”, or a Nominal* to indicate “a struct or an enum”, or a BareFn* to mean “a bare fn type”, and so forth.

If we limit ourselves to single inheritance, that means one can construct an arbitrary tree of refinements. Certainly one can imagine wanting arbitrary refinements, though in my own investigations I have always found a tree to be sufficient. In C++ and Scala, of course, one can use multiple inheritance to create arbitrary refinements, and I think one can imagine doing something similar in Rust with traits.
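As a rough illustration of the trait-based direction, the sketch below makes each variant its own struct and uses a trait hierarchy to express the refinements. It is only one way such refinements might be approximated, not a proposal from this post; the struct names, trait methods, and `nominal_label` are hypothetical.

```rust
/// "Any sort of type."
pub trait TypeStructure {
    fn describe(&self) -> String;
}

/// Refinement: "a struct or an enum, though I don't know precisely which."
pub trait Nominal: TypeStructure {
    fn name(&self) -> &str;
}

pub struct StructTy { pub name: String }
pub struct EnumTy { pub name: String }
pub struct BoolTy;

impl TypeStructure for StructTy {
    fn describe(&self) -> String { format!("struct {}", self.name) }
}
impl TypeStructure for EnumTy {
    fn describe(&self) -> String { format!("enum {}", self.name) }
}
impl TypeStructure for BoolTy {
    fn describe(&self) -> String { "bool".to_string() }
}

impl Nominal for StructTy {
    fn name(&self) -> &str { &self.name }
}
impl Nominal for EnumTy {
    fn name(&self) -> &str { &self.name }
}

/// Accepts only nominal types; passing a `BoolTy` is a compile error.
pub fn nominal_label(ty: &dyn Nominal) -> String {
    format!("nominal type {}", ty.name())
}
```

Here the refinement is checked at compile time, but the common `name` field has to be repeated in each struct and exposed through a method, so this recovers refinement without recovering common fields.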

As an aside, the right way to handle ‘datasort refinements’ has been a topic of discussion in Rust for some time; I’ve posted a different proposal in the past, and, somewhat amusingly, my very first post on this blog was on this topic as well. I personally find that building on a variant hierarchy, as above, is a very appealing solution to this problem, because it avoids introducing a “new concept” for refinements: it just leverages the same structure that is giving you common fields and letting you control layout.

Conclusion

So we’ve seen that there are also advantages to the approach of using subclasses to model variants. I showed this using the TypeStructure example, but there are lots of cases where this arises. In the compiler alone, I would say that the abstract syntax tree, the borrow checker’s LoanPath, the memory categorization cmt types, and probably a bunch of other cases would benefit from a more class-like approach. Servo developers have long been requesting something more class-like for use in the DOM. I feel quite confident that there are many other crates at large that could similarly benefit.

Interestingly, Rust can gain a lot of the benefits of the subclass approach—namely, common fields and refinement types—just by making enum variants into types. There have been proposals along these lines before, and I think that’s an important ingredient for the final plan.

Perhaps the biggest difference between the two approaches is the size of the “base type”. That is, in Rust’s current enum model, the base type (TypeStructure) is the size of the maximal variant. In the subclass model, the base class has an indeterminate size, and so must be referenced by pointer. Neither of these is an “expressiveness” distinction: we’ve seen that you can model anything in either approach. But it has a big effect on how easy it is to write code.

One interesting question is whether we can concisely state conditions in which one would prefer to have “precise variant sizes” (class-like) vs “largest variant” (enum). I think the “precise sizes” approach is better when the following apply:

- A recursive type (like a tree), which tends to force boxing anyhow. Examples: the AST or types in the compiler, DOM in servo, a GUI.

- Instances never change what variant they are.

- Potentially wide variance in the sizes of the variants.

The fact that this is really a kind of efficiency tuning is an important insight. Hopefully our final design can make it relatively easy to change between the ‘maximal size’ and the ‘unknown size’ variants, since it may not be obvious from the get go which is better.

Preview of the next post

The next post will describe a scheme in which we could wed together enums and structs, gaining the advantages of both. I don’t plan to touch virtual dispatch yet, but instead just keep focusing on concrete types.

http://smallcultfollowing.com/babysteps/blog/2015/05/29/classes-strike-back/

|

|

Adam Munter: “The” Problem With “The” Perimeter |

“It’s secure, we transmit it over SSL to a cluster behind a firewall in a restricted vlan.”

“But my PCI QSA said I had to do it this way to be compliant.”

This study by Gemalto discusses interesting survey results about perceptions of security perimeters: 64% of IT decision makers are looking to increase spend on perimeter security within the next 6 months, and 1/3 of those polled believe that unauthorized users have access to their information assets. It also revealed that only 8% of data affected by breaches was protected by encryption.

The perimeter is dead, long live the perimeter! The Jericho Forum started discussing “de-perimeterization” in 2003. If you hung out with pentesters, you already knew the concept of ‘perimeter’ was built on shaky foundations. The growth of mobile, web APIs, and the Internet of Things has only served to drive the point home. Yet, there is an entire industry of VC-funded product companies and armies of consultants who are still operating from the mental model of there being “a perimeter.”[0]

In discussion about “the perimeter,” it’s not the concept of “perimeter” that is most problematic, it’s the word “the.”

There is not only “a” perimeter, there are many possible logical perimeters, depending on the viewpoint of the actor you are considering. There are an unquantifiable number of theoretical overlaid perimeters based on the perspective of the actors you’re considering and their motivation, time and resources, what they can interact with or observe, what each of those components can interact with, including humans and their processes and automated data processing systems, authentication and authorization systems, all the software, libraries, and hardware dependencies going down to the firmware, the interaction between different systems that might interpret the same data to mean different things, and all execution paths, known and unknown, etc, etc.

The best CSOs know they are working on a problem that has no solution endpoint, and that thinking so isn’t even the right mindset or model. They know they are living in a world of resource scarcity and have a problem of potentially unlimited size and start by asset classification, threat modeling[1] and inventorying. Without that it’s impossible to even have a rough idea of the shape and size of the problem. They know that their actual perimeter isn’t what’s drawn inside an arbitrary theoretical border in a diagram. It’s based on the attackable surface area seen by a potential attacker, the value of the resource to the attacker, and the many possible paths that could be taken to reach it in a way that is useful to the attacker, not some imaginary mental model of logical border control.

You’ve deployed anti-malware and anti-APT products, network and web app firewalls, endpoint protection and database encryption. Fully PCI compliant! All useful when applied with knowledge of what you’re protecting, how, from whom, and why. But if you don’t consider what you’re protecting and from whom as you design and build systems, not so useful. Imagine the following scenario: All of these perimeter protection technologies allow SSL traffic through port 443 to your webserver’s REST API listeners. The listening application has permission to access the encrypted database to read or modify data. And when the attacker finds a logic vulnerability that lets them access data which their user id should not be able to see, it looks like normal application traffic to your IDS/IPS and web app firewall. As requested, the application uses its credentials to retrieve decrypted data and present it to the user.
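The kind of logic vulnerability in that scenario can be sketched in a few lines. This is a hypothetical, in-memory stand-in for the application and its database, written in Rust; `Store`, `Record`, and both lookup methods are invented for illustration. The vulnerable handler returns any record whose id the caller supplies, and the fixed one checks ownership inside the application, which is the only layer that knows who should see what.

```rust
use std::collections::HashMap;

/// Hypothetical record; `owner` is the user id allowed to read it.
pub struct Record {
    pub owner: u32,
    pub data: String,
}

/// Stands in for the application plus its (already decrypted) database access.
pub struct Store {
    records: HashMap<u32, Record>,
}

impl Store {
    pub fn new() -> Self {
        Store { records: HashMap::new() }
    }

    pub fn insert(&mut self, id: u32, owner: u32, data: &str) {
        self.records.insert(id, Record { owner, data: data.to_string() });
    }

    /// Vulnerable: any authenticated user can read any record by guessing its id.
    pub fn get_unchecked(&self, _user: u32, id: u32) -> Option<&str> {
        self.records.get(&id).map(|r| r.data.as_str())
    }

    /// Fixed: the application checks that the requester owns the record.
    pub fn get_checked(&self, user: u32, id: u32) -> Option<&str> {
        self.records
            .get(&id)
            .filter(|r| r.owner == user)
            .map(|r| r.data.as_str())
    }
}
```

From the network's point of view, a call to either method is the same well-formed request over port 443, which is why the perimeter products in the scenario have nothing to flag.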

Footnotes

0. I’m already skeptical about the usefulness of studies that aggregate data in this way. N percent of respondents think that y% is the correct amount to spend on security technology categories A, B, C. Who cares? The increasing yoy numbers of attacks are the result of the distribution of knowledge during the time surveyed and in any event these numbers aggregate a huge variety of industries, business histories, risk tolerance, and other tastes and preferences.

1. Threat modeling doesn’t mean technical decomposition to identify possible attacks, that’s attack modeling, though the two are often confused, even in many books and articles. The “threat” is “customer data exposed to unauthorized individuals.” The business “risk” is “Data exposure would lead to headline risk (bad press) and loss of data worth approx $N.” The technical risk is “Application was built using inline SQL queries and is vulnerable to SQL injection” and “Database is encrypted but the application’s credentials let it retrieve cleartext data” and probably a bunch of other things.

Filed under: infosec, web security Tagged: infosec, Mozilla, perimeter, webappsec

https://adammuntner.wordpress.com/2015/05/29/the-problem-with-the-perimeter/

|

|

Air Mozilla: World Wide Haxe Conference 2015 |

Fifth International Conference in English about the Haxe programming language


|

|

Mozilla Open Policy & Advocacy Blog: Copyright reform in the European Union |

The European Union is considering broad reform of copyright regulations as part of a “Digital Single Market” reform agenda. Review of the current Copyright Directive, passed in 2001, began with a report by MEP Julia Reda. The European Parliament will vote on that report and a number of amendments this summer, and the process will continue with a legislative proposal from the European Commission in the autumn. Over the next few months we plan to add our voice to this debate; in some cases supporting existing ideas, in other cases raising new issues.

This post lays out some of the improvements we’d like to see in the EU’s copyright regime – to preserve and protect the Web, and to better advance the innovation and competition principles of the Mozilla Manifesto. Most of the objectives we identify are actively being discussed today as part of copyright reform. Our advocacy is intended to highlight these, and characterize positions on them. We also offer a proposed exception for interoperability to push the conversation in a slightly new direction. We believe an explicit exception for interoperability would directly advance the goal of promoting innovation and competition through copyright law.

Promoting innovation and competition

“The effectiveness of the Internet as a public resource depends upon interoperability (protocols, data formats, content), innovation and decentralized participation worldwide.” – Mozilla Manifesto Principle #6

Clarity, consistency, and new exceptions are needed to ensure that Europe’s new copyright system encourages innovation and competition instead of stifling it. If new and creative uses of copyrighted content can be shut down unconditionally, innovation suffers. If copyright is used to unduly restrict new businesses from adding value to existing data or software, competition suffers.

Open norm: Implement a new, general exception to copyright allowing actions which pass the 3-step test of the Berne Convention. That test says that any exception to copyright must be a special case, that it should not conflict with a normal exploitation of the work, and it should not unreasonably prejudice the legitimate interests of the author. The idea of an “open norm” is to capture a natural balance for innovation and competition, allowing the copyright holder to retain normal exclusionary rights but not exceptional restrictive capabilities with regard to potential future innovative or competing technologies.

Quotation: Expand existing protections for text quotations to all media and a wider range of uses. An exception of this type is fundamental not only for free expression and democratic dialogue, but also to promote innovation when the quoter is adding value through technology (such as a website which displays and combines excerpts of other pages to meet a new user need).

Interoperability: An exception for acts necessary to enable ongoing interoperability with an existing computer program, protocol, or data format. This would directly enable competition and technology innovation. Such interoperation is also necessary for full accessibility for the disabled (who are often not appropriately catered for in standard programs), and to allow citizens to benefit fully from other exceptions to copyright.

Not breaking the Internet

“The Internet is a global public resource that must remain open and accessible.” – Mozilla Manifesto Principle #2

The Internet has numerous technical and policy features which have combined, sometimes by happy coincidence, to make it what it is today. Clear legislation to preserve and protect these core capabilities would be a powerful assurance, and avoid creating chilling risk and uncertainty.

Hyperlinking: hyperlinking should not be considered as any form of “communication to a public”. A recent Court of Justice of the EU ruling stated that hyperlinking was generally legal, as it does not consist of communication to a “new public.” A stronger and more common-sense rule would be a legislative determination that linking, in and of itself, does not constitute communicating the linked content to a public under copyright law. The acts of communicating and making content available are done by the person who placed the content on the target server, not by those making links to content.

Robust protections for intermediaries: a requirement for due legal process before intermediaries are compelled to take down content. While it makes sense for content hosters to be asked to remove copyright-infringing material within their control, a mandatory requirement to do so should not be triggered by mere assertion, but only after appropriate legal process. The existing waiver for liability for intermediaries should thus be strengthened with an improved definition of “actual knowledge” that requires such process, and (relatedly) to allow minor, reasonable modifications to data (e.g. for network management) without loss of protection.

We look forward to working with European policymakers to build consensus on the best ways to protect and promote innovation and competition on the Internet.

Chris Riley

Gervase Markham

Jochai Ben-Avie

https://blog.mozilla.org/netpolicy/2015/05/28/copyright-reform-in-the-european-union/

|

|

The Servo Blog: This Week In Servo 33 |

In the past two weeks, we merged 73 pull requests.

We have a new member on our team. Please welcome Emily Dunham! Emily will be the DevOps engineer for both Servo and Rust. She has a post about her ideas regarding open infrastructure which is worth reading.

Josh discussed Servo and Rust at a programming talk show hosted by Alexander Putilin.

We have an impending upgrade of the SpiderMonkey JavaScript engine by Michael Wu. This moves us from a very old SpiderMonkey to a recent-ish one. Naturally, the team is quite excited about the prospect of getting rid of all the old bugs and getting shiny new ones in their place.

Notable additions

- We landed a tiny Rust upgrade that caused a lot of trouble for its size. Shortly after, we landed another tiny one that brought us to 1.2.0

- Corey found a bug in rust-url using afl.rs, a fuzzer for Rust by Keegan. It’s had quite a few success stories so far, try it out on your own project!

- Patrick improved support for incremental reflow

- Glenn fixed our Android build after a long bout of brokenness

- Lars and Mike bravely fought the Cargo.lock beast and won; in an epic battle of man vs machine. There even was a sequel!

- Patrick implemented per-glyph font fallback

- Keith started work on the fetch module

- Jinwoo added support for CSS background-origin, background-clip, padding-box and box-sizing

- Anthony cleaned up our usages of RootedVec

- Patrick made our reflowing more in line with what Gecko does

- Glenn fixed a bunch of race conditions with reftests and the compositor

- James improved our web platform tests integration to allow for upstreaming binary changes

New contributors

Screenshots

This shows off the CSS direction property. RTL text still needs some work

Meetings

- We discussed forking or committing to maintaining the extensions we need in glutin. Glutin is trying to stay focused and “not become a toolkit”, but there are some changes we need in it for embedding. Currently we have some changes on our fork; but we’d prefer to not use tweaked forks for community-maintained dependencies and were exploring the possibilities.

- Mike is back and working on more embedding!

- There was some planning for the Rust-in-Gecko sessions at Whistler

- RTL is coming!

|

|

Mike Conley: The Joy of Coding (Ep. 16): Wacky Morning DJ |

I’m on vacation this week, but the show must go on! So I pre-recorded a shorter episode of The Joy of Coding last Friday.

In this episode, I focused on a tool I wrote that I alluded to in the last episode, which is a soundboard to use during Joy of Coding episodes.

I demo the tool, and then I explain how it works. After I finished the episode, I pushed the repository to GitHub, and you can check that out right here.

So I’ll see you next week with a full length episode! Take care!

http://mikeconley.ca/blog/2015/05/27/the-joy-of-coding-ep-16-wacky-morning-dj/

|

|

Air Mozilla: Kids' Vision - Mentorship Series |

Mozilla hosts Kids Vision Bay Area Mentor Series


https://air.mozilla.org/kids-vision-mentorship-series-20150527/

|

|

Air Mozilla: Quality Team (QA) Public Meeting |

This is the meeting where all the Mozilla quality teams meet, swap ideas, exchange notes on what is upcoming, and strategize around community building and...


https://air.mozilla.org/quality-team-qa-public-meeting-20150527/

|

|

Benjamin Smedberg: Yak Shaving |

Yak shaving tends to be looked down on. I don’t necessarily see it that way. It can be a way to pay down technical debt, or learn a new skill. In many ways I consider it a sign of broad engineering skill if somebody is capable of solving a multi-part problem.

It started so innocently. My team has been working on unifying the Firefox Health Report and Telemetry data collection systems, and there was a bug that I thought I could knock off pretty easily: “FHR data migration: org.mozilla.crashes”. Below are the roadblocks, mishaps, and sideshows that resulted, and I’m not even done yet:

- Tryserver failure: crashes

- Constant crashes only on Linux opt builds. It turned out this was entirely my fault. The following is not a safe access pattern because of C++ temporary lifetimes:

nsCSubstringTuple str = str1 + str2; Fn(str);

- Backout #1: talos xperf failure

- After landing, the code was backed out because the xperf Talos test detected main-thread I/O. On desktop, this was a simple ordering problem: we always do that I/O during startup to initialize the crypto system; I just moved it slightly earlier in the startup sequence. Why are we initializing the crypto system? To generate a random number. Fixed this by whitelisting the I/O. This involved landing code to the separate Talos repo and then telling the main Firefox tree to use the new revision. Much thanks to Aaron Klotz for helping me figure out the right steps.

- Backout #2: test timeouts

- Test timeouts if the first test of a test run uses the PopupNotifications API. This wasn’t caught during initial try runs because it appeared to be a well-known random orange. I was apparently changing the startup sequence just enough to tickle a focus bug in the test harness. It so happened that the particular test which runs first depends on the e10s or non-e10s configuration, leading to some confusion about what was going on. Fortunately, I was able to reproduce this locally. Gavin Sharp and Neil Deakin helped get the test harness in order in bug 1138079.

- Local test failures on Linux

- I discovered that several xpcshell tests were failing locally on Linux which were working fine on tryserver. After some debugging, I discovered that the tests thought I wasn’t using Linux, because the cargo-culted test for Linux was let isLinux = ("@mozilla.org/gnome-gconf-service;1" in Cc). This means that if gconf is disabled at build time or not present at runtime, the tests will fail. I installed GConf2-devel and rebuilt my tree and things were much better.

- Incorrect failure case in the extension manager

- While debugging the test failures, I discovered an incorrect codepath in GMPProvider.jsm for clients which are not Windows, Mac, or Linux (Android and the non-Linux Unixes).

- Android performance regression

- The landing caused an Android startup performance regression, bug 1163049. On Android, we don’t initialize NSS during startup, and the earlier initialization of the addon manager caused us to generate random Sync IDs for addons. I first fixed this by using Math.random() instead of good crypto, but Richard Newman suggested that I just make Sync generation lazy. This appears to work and will land when there is an open tree.

- mach bootstrap on Fedora doesn’t work for Android

- As part of debugging the performance regression, I built Firefox for Android for the first time in several years. I discovered that mach bootstrap for Android isn’t implemented on Fedora Core. I manually installed packages until it built properly. I have a list of the packages I installed and I’ll file a bug to fix mach bootstrap when I get a chance.

- android build-tools not found

- A configure check for the android build-tools package failed. I still don’t understand exactly why this happened; it has something to do with a version that’s too new and unexpected. Nick Alexander pointed me at a patch on bug 1162000 which fixed this for me locally, but it’s not the “right” fix and so it’s not checked into the tree.

- Debugging on Android (jimdb)

- Binary debugging on Android turned out to be difficult. There are some great docs on the wiki, but those docs failed to mention that you have to pass the configure flag --enable-debug-symbols. After that, I discovered that pending breakpoints don’t work by default with Android debugging, and since I was debugging a startup issue that was a critical failure. I wrote an ask.mozilla.org question and got a custom patch which finally made debugging work. I also had to patch the implementation of DumpJSStack() so that it would print to logcat on Android; this is another bug that I’ll file later when I clean up my tree.

- Crash reporting broken on Mac

- I broke crash report submission on Mac for some users. Crash report annotations were being truncated from Unicode instead of converted from UTF-8. Because JSON.stringify doesn’t produce ASCII, this was breaking crash reporting when we tried to parse the resulting data. This was an API bug that existed prior to the patch, but I should have caught it earlier. Shoutout to Ted Mielczarek for fixing this and adding automated tests!

- Semi-related weirdness: improving the startup performance of Pocket

- The Firefox Pocket integration caused a significant startup performance issue on some trees. The details are especially gnarly, but it seems that by reordering the initialization of the addon manager, I was able to turn a performance regression into a win by accident. Probably something to do with I/O wait, but it still feels like spooky action at a distance. Kudos to Joel Maher, Jared Wein and Gijs Kruitbosch for diving into this under time pressure.
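To make the crash-reporting bug above a little more concrete, here is a minimal Python sketch of the general failure mode: serialized JSON that contains non-ASCII text survives a proper UTF-8 encoding, but becomes unparseable if each character is simply truncated to one byte. This is not the Firefox code, and the post does not show the exact conversion involved; the function names and the sample annotation are hypothetical and chosen only for illustration.

```python
import json


def truncate_to_bytes(text: str) -> bytes:
    # Hypothetical lossy conversion: keep only the low 8 bits of each
    # code point, which is roughly what "truncating" wide text does.
    return bytes(ord(ch) & 0xFF for ch in text)


def encode_utf8(text: str) -> bytes:
    # Proper conversion: every code point becomes a valid UTF-8 sequence.
    return text.encode("utf-8")


def can_parse(raw: bytes) -> bool:
    # A consumer that expects UTF-8 encoded JSON, as a server might.
    try:
        json.loads(raw.decode("utf-8"))
        return True
    except (UnicodeDecodeError, json.JSONDecodeError):
        return False


# ensure_ascii=False mimics a serializer that emits non-ASCII characters
# directly instead of escaping them. The annotation content is made up.
annotation = json.dumps({"name": "café"}, ensure_ascii=False)

print(can_parse(encode_utf8(annotation)))        # properly encoded data
print(can_parse(truncate_to_bytes(annotation)))  # truncated data
```

In this sketch the truncated bytes contain a lone 0xE9, which is not a valid UTF-8 sequence on its own, so the consumer fails before it even reaches the JSON parser.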

Experiences like this are frustrating, but as long as it’s possible to keep the final goal in sight, fixing unrelated bugs along the way might be the best thing for everyone involved. It will certainly save context-switches from other experts to help out. And doing the Android build and debugging was a useful learning experience.

Perhaps, though, I’ll go back to my primary job of being a manager.

|

|

Air Mozilla: Security Services / MDN Update |

An update on the roadmap and plan for Minion and some new MDN projects coming up in Q3 and the rest of 2015

|

|

Air Mozilla: Product Coordination Meeting |

Duration: 10 minutes. This is a weekly status meeting, every Wednesday, that helps coordinate the shipping of our products (across 4 release channels) in order...

https://air.mozilla.org/product-coordination-meeting-20150527/

|

|

Armen Zambrano: Welcome adusca! |

adusca has made an outstanding number of contributions over the last few months, including to Mozilla CI Tools (which we're working on together).

Here's a bit about herself from her blog:

“Hi! I’m Alice. I studied Mathematics in college. I was doing a Master’s degree in Mathematical Economics before getting serious about programming.”

She is also a graduate of Hacker's School.

Even though Alice has not been a programmer for many years, she has already shown a lot of potential. For instance, she wrote a script to generate scheduling relations for buildbot; for this and many other reasons I tip my hat.

adusca will initially help me out with creating a generic pulse listener to handle job cancellations and retriggers for Treeherder. The intent is to create a way for Mozilla CI tools to manage scheduling on behalf of TH, pave the way for more sophisticated Mozilla CI actions and allow other people to piggyback on this pulse service and trigger their own actions.

If you have not yet had a chance to welcome her and get to know her, I highly encourage you to do so.

Welcome Alice!

This work by Zambrano Gasparnian, Armen is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 3.0 Unported License.

http://feedproxy.google.com/~r/armenzg_mozilla/~3/PV4N6y2VOos/welcome-adusca.html

|

|

Mark Surman: Mozilla Academy Strategy Update |

One of MoFo’s main goals for 2015 is to come up with an ambitious learning and community strategy. The codename for this is ‘Mozilla Academy’. As a way to get the process rolling, I wrote a long post in March outlining what we might include in that strategy. Since then, I’ve been putting together a team to dig into the strategy work formally.

This post is an update on that process in FAQ form. More substance and meat is coming in future posts. Also, there is lots of info on the wiki.

Q1. What are we trying to do?

Our main goal is alignment: to get everyone working on Mozilla’s learning and leadership development programs pointed in the same direction. The three main places we need to align are:

- Purpose: help people learn and hone the ability to read | write | participate.

- Process: people learn and improve by making things (in a community of like-minded peers).

- Poetry: tie back to ‘web = public resource’ narrative. Strong Mozilla brand.

At the end of the year, we will have a unified strategy that connects Mozilla’s learning and leadership development offerings (Webmaker, Hive, Open News, etc.). Right now, we do good work in these areas, but they’re a bit fragmented. We need to fix that by creating a coherent story and common approaches that will increase the impact these programs can have on the world.

Q2. What is ‘Mozilla Academy’?

That’s what we’re trying to figure out. At the very least, Mozilla Academy will be a clearly packaged and branded harmonization of Mozilla’s learning and leadership programs. People will be able to clearly understand what we’re doing and which parts are for them. Mozilla Academy may also include a common set of web literacy skills, curriculum format and learning approaches that we use across programs. We are also reviewing the possibility of a shared set of credentials or roles for people participating in Mozilla Academy.

Q3. Who is ‘Mozilla Academy’ for?

Over the past few weeks, we’ve started to look at who we’re trying to serve with our existing programs (blog post on this soon). Using the ‘scale vs depth’ graph in the Mozilla Learning plan as a framework, we see three main audiences:

- 1.4 billion Facebook users. Or, whatever metric you use to count active people on the internet. We can reach some percentage of these people with software or marketing that invites people to ‘read | write | participate’. We probably won’t get them to want to ‘learn’ in an explicit way. They will learn by doing. Which is fine. Webmaker and SmartOn currently focus on this group.

- People who actively want to grow their web literacy and skills. These are people interested enough in skills or technology or Mozilla that they will choose to participate in an explicit learning activity. They include everyone from young people in afterschool programs to web developers who might be interested in taking a course with Mozilla. Mozilla Clubs, Hive and MDN’s nascent learning program currently focus on this group.

- People who want to hone their skills *and* have an impact on the world. These are people who already understand the web and technology at some level, but want to get better. They are also interested in doing something good for the web, the world or both. They include everyone from an educator wanting to create digital curriculum to a developer who wants to make the world of news or science better. Hive, ReMo and our community-based fellowships currently serve these people.

A big part of the strategy process is getting clear on these audiences. From there we can start to ask questions like: Who can Mozilla best serve? Where can we have the most impact? Can people in one group serve or support people in another? Once we have the answers to these questions we can decide where to place our biggest bets (we need to do this!). And we can start raising more money to support our ambitious plans.

Q4. What is a ‘strategy’ useful for?

We want to accomplish a few things as a result of this process:

- A way to clearly communicate the ‘what and why’ of Mozilla’s learning and leadership efforts.

- A framework for designing new programs, adjusting program designs and fundraising for program growth.

- Common approaches and platforms we can use across programs.

These things are important if we want Mozilla to stay in learning and leadership for the long haul, which we do.

Q5. What do you mean by ‘common approaches’?

There are a number of places where we do similar work in different ways. For example, Mozilla Clubs, Hive, Mozilla Developer Network, Open News and Mozilla Science Lab are all working on curriculum but do not yet have a shared curriculum model or repository. Similarly, Mozilla runs four fellowship programs but does not have a shared definition of a ‘Mozilla Fellow’. Common approaches could help here.

Q6. Are you developing a new program for Mozilla?

That’s not our goal. We like most of the work we’re doing now. As outlined in the 2015 Mozilla Learning Plan, our aim is to keep building on the strongest elements of our work and then connect these elements where it makes sense. We may modify, add or cut program elements in the future, but that’s not our main focus.

Q7. Are you set on the ‘Mozilla Academy’ name?

It’s pretty unlikely that we will use that name. Many people hate it. However, we needed a moniker to use during the strategy process. For better or for worse, that’s the one we chose.

Q8. What’s the timing for all of this?

We will have a basic alignment framework around ‘purpose, process and poetry’ by the end of June. We’ll work with the team at the Mozilla All Hands in Whistler. We will develop specific program designs, engage in a broad conversation and run experiments. By October, we will have an updated version of the Mozilla Learning plan, which will lay out our work for 2016+.

—

As indicated above, the aim of this post is to give a process update. There is much more info on the process, who’s running it and what all the pieces are in the Mozilla Learning strategy wiki FAQ. The wiki also has info on how to get involved. If you have additional questions, ask them here. I’ll respond to the comments and also add my answers to the wiki.

In terms of substance, I’m planning a number of posts in coming weeks on topics like the essence of web literacy, who our audiences are and how we think about learning. People leading Mozilla Academy working groups will also be posting on substantive topics like our evolving thinking around the web literacy map and fellows programs. And, of course, the wiki will be growing with substantive strategy documents covering many of the topics above.

Filed under: education, mozilla, webmakers

https://commonspace.wordpress.com/2015/05/27/mozilla-academy-strategy-update/

|

|