Source: http://planet.mozilla.org/.

This journal is generated from the open RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

Andy McKay: Docker in development (part 4) |

Tips for developing with Docker.

Keep your container small

Each layer in your Dockerfile is cached. This means that if you have the same layer repeated in multiple images, it will cache and reuse that layer.

So it's easy not to worry about how big a layer is, until you start pulling your containers onto servers, test runners, QA servers, developers' laptops and so on. Then you start to wonder how your container blew up to 2 gigs [1].

After you add a layer, do yourself a favour and see how much it adds. To find out, use the docker history [image id] command. The results can be surprising, especially when it comes to yum.

Installing supervisor using pip:

540868cb5bab 35 seconds ago /bin/sh -c pip install supervisor 2.429 MB

Installing supervisor using yum:

16bb922e5ff5 6 hours ago /bin/sh -c yum install -y supervisor 224.5 MB

That's a 222.071 MB difference.

You can do a yum clean and that's when it gets interesting. Three separate lines, no clean:

392cecc77eae 12 hours ago /bin/sh -c yum install -y cronie 34.72 MB
91154ebe69d8 12 hours ago /bin/sh -c yum install -y bash-completion 18.67 MB
760d1b735093 12 hours ago /bin/sh -c yum install -y supervisor 224.5 MB

Install and clean in three lines:

832fe193df7d About a minute ago /bin/sh -c yum install -y cronie && yum clean 34.69 MB
331bc45fc42a About a minute ago /bin/sh -c yum install -y bash-completion && 18.64 MB
f74a8b922149 2 minutes ago /bin/sh -c yum install -y supervisor && yum c 21.54 MB

Install and clean in one line:

23d486d7bc04 2 minutes ago /bin/sh -c yum install -y supervisor bash-com 38.7 MB

The last one saves you 239.19 MB.

It's a pretty simple and quick check to see how big each layer in your Dockerfile is. Next time you add a layer, give it a try.

See also part 3.

http://www.agmweb.ca/2015-06-03-docker-in-development-part-4/

|

|

Andy McKay: 5-Eyes |

I'm glad the US is for once restricting its laws around mass data surveillance. Well, for US people anyway, because there is nothing about anyone else. Nothing about the approx. 6.8 billion other people on the planet for whom mass data surveillance from the NSA will happen anyway.

That's me, all my family, most of my friends and probably a majority of the people reading this blog: all still being spied on by the NSA.

But hey, don't worry US people, you aren't safe. Canada will spy on you. We recently reduced the amount of oversight on our intelligence agencies, so we aren't really sure what they are doing.

The term "judicial oversight", as used by members of the Conservative Party in this debate, is truly a perversion of reality. It is one of the most offensive sections of the whole bill.

What can Canada do? Share it with the US as part of 5-Eyes.

Until now, it had been generally understood that the citizens of each country were protected from surveillance by any of the others.

But...

The NSA has been using the UK data to conduct so-called "pattern of life" or "contact-chaining" analyses, under which the agency can look up to three "hops" away from a target of interest - examining the communications of a friend of a friend of a friend

But hey, it's not just Canada, there's the UK, Australia and New Zealand who are all part of 5-Eyes and will share it back with the US. So that means you are still screwed. And then there's China and other countries that will spy on you and not share the data with the US.

So you are pretty screwed, we are all pretty screwed.

|

|

Daniel Stenberg: server push to curl |

The next step in my efforts to complete curl’s HTTP/2 implementation, after having made sure downloading and uploading transfers in parallel work, was adding support for HTTP/2 server push.

A quick recap

HTTP/2 Server push is a way for the server to initiate the transfer of a resource. Like when the client asks for resource X, the server can deem that the client most probably also wants to have resource Y and Z and initiate their transfers.

The server then sends a PUSH_PROMISE to the client for the new resource and hands over a set of “request headers” that a GET for that resource could have used, and then it sends the resource in a way that it would have done if it was requested the “regular” way.

The push promise frame gives the client information to make a decision if the resource is wanted or not and it can then immediately deny this transfer if it considers it unwanted. Like in a browser case if it already has that file in its local cache or similar. If not denied, the stream has an initial window size that allows the server to send a certain amount of data before the client has to give the stream more allowance to continue.

It is also worth remembering that server push is a new protocol feature in HTTP/2. As such it has not been widely used yet, and it remains to be seen exactly how it will best be used and what will turn out popular and useful. We have this “immaturity” in mind when designing this support for libcurl.

Enter libcurl

When setting up a transfer over HTTP/2 with libcurl you do it with the multi interface to make it able to work multiplexed. That way you can set up and perform any number of transfers in parallel, and if they happen to use the same host they can be done multiplexed but if they use different hosts they will use separate connections.

To the application, transfers pretty much look the same and it can remain agnostic to whether the transfer is multiplexed or not, it is just another transfer.

With the libcurl API, the application creates an “easy handle” for each transfer and sets options in that handle for the upcoming transfer before it adds the handle to the “multi handle”. libcurl then drives all those individual transfers at the same time.

Server-initiated transfers

Starting in the future version 7.44.0, with a planned release date in August, the plan is to introduce the API support for server push. It couldn’t happen sooner because I missed the merge window for 7.43.0, so 7.44.0 is simply the next opportunity. The wiki link here is however updated and reflects what is currently being implemented.

An application sets a callback to allow server pushed streams. The callback gets called by libcurl when a PUSH_PROMISE is received by the client side, and the callback can then tell libcurl if the new stream should be allowed or not. It could be as simple as this:

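static int server_push_callback(CURL *parent,
                                CURL *easy,
                                size_t num_headers,
                                struct curl_pushheaders *headers,
                                void *userp)
{
  char *headp;
  FILE *out;

  /* not needed in this simple example */
  (void)parent;
  (void)num_headers;
  (void)userp;

  /* here's a new stream, save it in a file (this simple example
     reuses the same file name for every push) */
  out = fopen("push-stream", "wb");
  if(!out)
    /* could not open the output file, so refuse the pushed stream */
    return CURL_PUSH_DENY;

  /* write to this file */
  curl_easy_setopt(easy, CURLOPT_WRITEDATA, out);

  /* look at the path the server is about to push */
  headp = curl_pushheader_byname(headers, ":path");
  if(headp)
    fprintf(stderr, "The PATH is %s\n", headp);

  return CURL_PUSH_OK;
}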

The callback would instead return CURL_PUSH_DENY if the stream isn’t desired. If no callback is set, no pushes will be accepted.

An interesting effect of this API is that libcurl now creates and adds easy handles to the multi handle by itself when the callback okays it, so there will be more easy handles to clean up at the end of the operations than the application added. Each pushed transfer needs to be cleaned up by the application, which “inherits” the ownership of the transfer and the easy handle for it.

PUSH_PROMISE headers

The headers passed along in that frame will contain the mandatory “special” request ones (“:method”, “:path”, “:scheme” and “:authority”) but other than those it really isn’t certain which other headers servers will provide and how this will work. To prepare for this fact, we provide two accessor functions for the push callback to access all PUSH_PROMISE headers libcurl received:

- curl_pushheader_byname() lets the callback get the contents of a specific header. I imagine that “:path” for example is one of those that most typical push callbacks will want to take a closer look at.

- curl_pushheader_bynum() allows the function to iterate over all received headers and do whatever it needs to do; it gets the full header by index.

These two functions are also somewhat special and new in the libcurl world since they are only possible to use from within this particular callback and they are invalid and wrong to use in any and all other contexts.

HTTP/2 headers are compressed on the wire using HPACK compression, but when accessed from this callback all headers use the familiar HTTP/1.1 style of “name:value”.

Work in progress

As I mentioned above already, this is work in progress and I welcome all and any comments or suggestions on how this API can be improved or tweaked to even better fit your needs. Features such as these usually turn out better when there are users trying them out before they are written in stone.

To try it out, build a libcurl from the http2-push branch:

https://github.com/bagder/curl/commits/http2-push

And while there are docs and an example in that branch already, you may opt to read the wiki version of the docs:

https://github.com/bagder/curl/wiki/HTTP-2-Server-Push

The best way to send your feedback on this is to post to the curl-library mailing list, but if you find obvious bugs or want to provide patches you can also opt to file issues or pull-requests on github.

|

|

Ted Clancy: Which Unicode character should represent the English apostrophe? (And why the Unicode committee is very wrong.) |

The Unicode committee is very clear that U+2019 (RIGHT SINGLE QUOTATION MARK) should represent the English apostrophe.

Section 6.2 of the Unicode Standard 7.0.0 states:

U+2019 […] is preferred where the character is to represent a punctuation mark, as for contractions: “We’ve been here before.”

This is very, very wrong. The character you should use to represent the English apostrophe is U+02BC (MODIFIER LETTER APOSTROPHE). I’m here to tell you why.

Using U+2019 is inconsistent with the rest of the standard

Earlier in section 6.2, the standard explains the difference between punctuation marks and modifier letters:

Punctuation marks generally break words; modifier letters generally are considered part of a word.

Consider any English word with an apostrophe, e.g. “don’t”. The word “don’t” is a single word. It is not the word “don” juxtaposed against the word “t”. The apostrophe is part of the word, which, in Unicode-speak, means it’s a modifier letter, not a punctuation mark, regardless of what colloquial English calls it.

According to the Unicode character database, U+2019 is a punctuation mark (General Category = Pf), while U+02BC is a modifier letter (General Category = Lm). Since English apostrophes are part of the words they’re in, they are modifier letters, and hence should be represented by U+02BC, not U+2019.

(It would be different if we were talking about French. In French, I think it makes more sense to consider «L’Homme» as two words, or «jusqu’ici» as two words. But that’s a conversation for another time. Right now I’m talking about English.)

Using U+2019 breaks regular expressions

When doing word matching on Unicode text, programmers might reasonably assume they can detect “words” with the regex /\w+/ (which, in a Unicode context, matches characters with General Category L*, M*, N*, or Pc). This won’t actually work with English words that contain apostrophes if the apostrophes are represented as U+2019, but it will work if the apostrophes are represented as U+02BC.

To be fair, this problem exists in ASCII right now, where /\w+/ fails to match \x27 (the ASCII apostrophe). This leads to common bugs where users named O’Brien get told they can’t enter their name on a form, or where blog titles get auto-formatted as “Don’T Stop The Music”. Programmers soon learn they need to include the ASCII apostrophe in their regex as an exception.

But we shouldn’t be perpetuating this problem. When a programmer is writing a regex that can match text in Chinese, Arabic, or any other human language supported by Unicode, they shouldn’t have to add an exception for English. Furthermore, if apostrophes are represented as U+2019, the programmer would have to add both \x27 and \u2019 to their regex as exceptions.

The solution is to represent apostrophes as U+02BC, and let programmers simply write /\w+/ to match words like O’Brien and don’t.
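
The word-matching argument can be tried out with a small sketch. It is an approximation, not a regex engine: the helper `words` below is hypothetical, and it uses Rust's `char::is_alphanumeric` as a rough stand-in for /\w+/ (it does not cover Pc or most M* characters). The point it illustrates is only that U+02BC counts as a letter while U+2019 and \x27 do not.

```rust
/// Split text into "words": maximal runs of alphanumeric characters.
/// This is a rough stand-in for the regex /\w+/, not an exact equivalent.
fn words(text: &str) -> Vec<&str> {
    text.split(|c: char| !c.is_alphanumeric())
        .filter(|w| !w.is_empty())
        .collect()
}

fn main() {
    // U+2019 RIGHT SINGLE QUOTATION MARK is punctuation, so it breaks the word.
    let with_quote_mark = "don\u{2019}t stop";
    // U+02BC MODIFIER LETTER APOSTROPHE is a letter, so the word stays whole.
    let with_modifier_letter = "don\u{02BC}t stop";

    println!("{:?}", words(with_quote_mark));
    println!("{:?}", words(with_modifier_letter));
}
```

With U+2019 the text splits into "don", "t" and "stop"; with U+02BC it splits into the two words a reader would expect.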

Using U+2019 means that Word Processors can’t distinguish between apostrophes and actual quotation marks, leading to a heap of problems.

How many times have you seen things like ‘Tis the Season or Up and at ‘em with the apostrophe curled the wrong way, because someone’s word processor mistook the apostrophe for an opening single quotation mark? Or, have you ever cut-and-pasted a block of text to use as a quote, put quotation marks around it, and then had to manually change all the nested quotation marks from double to single? Or maybe you’ve received text from the UK to use in your American presentation, but first you had to change all the quotation marks, because the UK prefers single-quotes while the US prefers double-quotes.

These are all things your word processor should be able to handle automatically and properly, but it can’t due to the ambiguity of whether a U+2019 character represents a single quotation mark or an apostrophe. We wouldn’t have these problems if apostrophes were represented by U+02BC. Allow me to explain.

1) In my perfect world, Word processors would automatically ensure that quotes (and nested quotes) were properly formatted according to your locale’s conventions, whether it be US, UK, or one of the myriad crazy quote conventions found across Europe. All quote marks would be reformatted on the fly according to their position in the paragraph, e.g. changing ‘ to “ (and vice versa) or ’ to ” (and vice versa).

2) Right now, Microsoft Word users use the " key to type a double-quote (either opening or closing) and the ' key to type either a single-quote (either opening or closing) or an apostrophe. In my perfect world, since the word processor could automatically convert between single- and double-quotes as needed, you’d only need one key (the " key) to type all quotation marks, and the ' key would be reserved exclusively for typing apostrophes. Therefore, the word processor would know that '-t-i-l means ’til, not ‘til.

But because U+2019 can represent either an apostrophe or single quote, it’s hard for Word Processors to do (1), which means they also can’t do (2).

So whenever you see ‘Til we meet again with the apostrophe curled the wrong way, remember that’s because of the Unicode committee telling you to use U+2019 for English apostrophes.

Common bloody sense

For godsake, apostrophes are not closing quotation marks!

U+2019 (RIGHT SINGLE QUOTATION MARK) is classed as a closing punctuation mark. Its general category is Pf, which is defined in section 4.5 of the standard as meaning:

Punctuation, final quote (may behave like Ps [opening punctuation] or Pe [closing punctuation] depending on usage [They’re talking about right-to-left text –Ed])

When you use U+2019 to represent an apostrophe, it’s behaving as neither a Ps nor a Pe. (Or, if it is, it’s an unbalanced one. As of Unicode 6.3, the Unicode bidi algorithm attempts to detect bracket pairs for bidi processing. It would be unable to do the same for quotation marks, due to all these unbalanced “quotation marks”.)

Compare that to U+02BC (MODIFIER LETTER APOSTROPHE) which has “apostrophe” right in its name. LOOK AT IT. RIGHT THERE, IT SAYS APOSTROPHE.

Which do you think makes more sense for representing apostrophes?

C’mon, let’s fix this.

|

|

Emma Irwin: Heartbeat #3 (my) Participation Opportunities |

As you may or may not yet be aware, the Participation Team at Mozilla is working more openly using the Heartbeat process. This week we start Heartbeat #3, and in the next two days you’ll see this page fill with the issues the team will be working on for the next three weeks. You can see our previous issues and see how those went by viewing this page.

I thought I would surface a couple of things I’m working on this Heartbeat and would LOVE help with, should you want to get involved as a contributor in any way. I always offer lots of feedback, training (where it helps) and #mozlove with every chance I get. I also outlined what you might learn as part of contributing.

For my ‘base task’ this Heartbeat:

1) Education Portal Theming

Our platform needs some love from someone interested in bringing this site in line with Mozilla design standards. It’s close, but not close enough. It is built on Jekyll (GitHub Pages, Liquid, Bootstrap); ideally we need something like Makerstrap.

You’ll learn (or get better at) : SASS, Jekyll, Twitter Bootstrap

2) Mozilla Domain Transfer

Right now the Education platform is under my repository account. Deb has kindly given me some instructions on how to transfer it, but I need someone to book time with me to walk through it and make it so.

You’ll learn (or get better at) : Github repository management

3) Figure out a way to add ‘chapters’ to training videos

I need a way to add chapters to a couple of our longer training videos. Research to help identify the best way (not Popcorn Maker, which is being deprecated) would be super-helpful.

You’ll learn (or get better at) : Media / Teaching Technologies

For my Marketpulse task this Heartbeat:

1) Targeted Outreach

We have a community growing around Market Research contribution (Marketpulse) thanks to the clarity of our steps in the participation ladder. We still need a lot more people completing steps #1 and #2 in areas where Firefox OS phone is sold.

2) For a creative challenge I need help identifying non-traditional ways to reach out to people already skilled in Market Research. Basically outside the Mozilla community where do we go for this skill-set? I need not only ideas but someone interested in testing those ideas. If we could have even 1 new and skilled Market Researcher join as a volunteer I would do a happy dance and share that on Social Media.

You’ll learn (or get better at) : Strategy, Communication, Community Building, Writing

For my Community Onboarding Task

Community members will soon be part of the onboarding process for new staff and interns (yay!), as part of that we’ll need a scheduling tool. Onboarding is every Monday, and we need to ensure that all slots are covered. So!

1) Tool Research

I need help figuring out a way to create an open and accessible scheduling tool for sign-up. Is there a good (free and open) tool for this? Maybe it’s just a calendar, but I need help researching, evaluating and selecting.

You’ll learn (or get better at) : Community Management, Scheduling

So these are the things that would really be helpful if you’re looking to get involved with the Participation Team this Heartbeat, or at least my small place on it. Please email me at eirwin at mozilla, OR leave a comment, OR tweet at me if you’re interested. Thanks!

I would also be curious if this is helpful to those looking to get involved (above the Github issues). If not what else would work?

http://tiptoes.ca/heartbeat-3-my-contribution-opportunities/

|

|

Nicholas Nethercote: Measuring data structure sizes: Firefox (C++) vs. Servo (Rust) |

Firefox’s about:memory page presents fine-grained measurements of memory usage. Here’s a short example.

725.84 MB (100.0%) -- explicit
+--504.36 MB (69.49%) -- window-objects
| +--115.84 MB (15.96%) -- top(https://treeherder.mozilla.org/#/jobs?repo=mozilla-inbound, id=2147483655)
| | +---85.30 MB (11.75%) -- active
| | | +--84.75 MB (11.68%) -- window(https://treeherder.mozilla.org/#/jobs?repo=mozilla-inbound)
| | | | +--36.51 MB (05.03%) -- dom
| | | | | +--16.46 MB (02.27%) -- element-nodes
| | | | | +--13.08 MB (01.80%) -- orphan-nodes
| | | | | +---6.97 MB (00.96%) ++ (4 tiny)
| | | | +--25.17 MB (03.47%) -- js-compartment(https://treeherder.mozilla.org/#/jobs?repo=mozilla-inbound)
| | | | | +--23.29 MB (03.21%) ++ classes
| | | | | +---1.87 MB (00.26%) ++ (7 tiny)
| | | | +--21.69 MB (02.99%) ++ layout
| | | | +---1.39 MB (00.19%) ++ (2 tiny)
| | | +---0.55 MB (00.08%) ++ window(https://login.persona.org/communication_iframe)
| | +---30.54 MB (04.21%) ++ js-zone(0x7f131ed6e000)

A typical about:memory invocation contains many thousands of measurements. Although they can be hard for non-experts to interpret, they are immensely useful to Firefox developers. For this reason, I’m currently implementing a similar system in Servo, which is a next-generation browser engine that’s implemented in Rust. Although the implementation in Servo is heavily based on the Firefox implementation, Rust has some features that make the Servo implementation a lot nicer than the Firefox implementation, which is written in C++. This blog post is a deep dive that explains how and why.

Measuring data structures in Firefox

A lot of the measurements done for about:memory are of heterogeneous data structures that live on the heap and contain pointers. We want such data structures to be able to measure themselves. Consider the following simple example.

struct CookieDomainTuple
{
  nsCookieKey key;
  nsRefPtr<nsCookie> cookie;

  size_t SizeOfExcludingThis(mozilla::MallocSizeOf aMallocSizeOf) const;
};

The things to immediately note about this type are as follows.

- The details of nsCookieKey and nsCookie don’t matter here.

- nsRefPtr is a smart pointer type.

- There is a method, called SizeOfExcludingThis, for measuring the size of a CookieDomainTuple.

That measurement method has the following form.

size_t
CookieDomainTuple::SizeOfExcludingThis(MallocSizeOf aMallocSizeOf) const
{
  size_t amount = 0;
  amount += key.SizeOfExcludingThis(aMallocSizeOf);
  amount += cookie->SizeOfIncludingThis(aMallocSizeOf);
  return amount;
}

Things to note here are as follows.

- aMallocSizeOf is a pointer to a function that takes a pointer to a heap block and returns the size of that block in bytes. Under the covers it’s implemented with a function like malloc_usable_size. Using a function like this is superior to computing the size analytically, because (a) it’s less error-prone and (b) it measures the actual size of heap blocks, which is often larger than the requested size because heap allocators round up some request sizes. It will also naturally measure any padding between members.

- The two data members are measured by invocations of size measurement methods that they provide.

  - The first of these is called SizeOfExcludingThis. The “excluding this” here is necessary because key is an nsCookieKey that sits within a CookieDomainTuple. We don’t want to measure the nsCookieKey struct itself, just any additional heap blocks that it has pointers to.

  - The second of these is called SizeOfIncludingThis. The “including this” here is necessary because cookie is just a pointer to an nsCookie struct, which we do want to measure, along with any additional heap blocks it has pointers to.

  - We need to be careful with these calls. If we call SizeOfIncludingThis when we should call SizeOfExcludingThis, we’ll likely get a crash due to calling aMallocSizeOf on a non-heap pointer. And if we call SizeOfExcludingThis when we should call SizeOfIncludingThis, we’ll miss measuring the struct.

- If this struct had a pointer to a raw heap buffer (e.g. a char* member), it would measure it by calling aMallocSizeOf directly on the pointer.

With that in mind, you can see that this method is itself a SizeOfExcludingThis method, and indeed, it doesn’t measure the memory used by the struct instance itself. A method that did include that memory would look like the following.

size_t
CookieDomainTuple::SizeOfIncludingThis(MallocSizeOf aMallocSizeOf)
{
  return aMallocSizeOf(this) + SizeOfExcludingThis(aMallocSizeOf);
}

All it does is measure the CookieDomainTuple struct itself — i.e. this — and then call the SizeOfExcludingThis method, which measures all child structures.

There are a few other wrinkles.

- Often we want to ignore a data member. Perhaps it’s a scalar value, such as an integer. Perhaps it’s a non-owning pointer to something and that thing would be better measured as part of the measurement of another data structure. Perhaps it’s something small that isn’t worth measuring. In these cases we generally use comments in the measurement method to explain why a field isn’t measured, but it’s easy for these comments to fall out-of-date. It’s also easy to forget to update the measurement method when a new data member is added.

- Every SizeOfIncludingThis method body looks the same: return aMallocSizeOf(this) + SizeOfExcludingThis(aMallocSizeOf);

- Reference-counting complicates things, because you end up with pointers that conceptually own a fraction of another structure.

- Inheritance complicates things.

(The full documentation goes into more detail.)

Even with all the wrinkles, it all works fairly well. Having said that, there are a lot of SizeOfExcludingThis and SizeOfIncludingThis methods that are boilerplate-y and tedious to write.

Measuring data structures in Servo

When I started implementing a similar system in Servo, I naturally followed a similar design. But I soon found I was able to improve upon it.

With the same functions defined for lots of types, it was natural to define a Rust trait, like the following.

pub trait HeapSizeOf {

fn size_of_including_self(&self) -> usize;

fn size_of_excluding_self(&self) -> usize;

}

Having to repeatedly define size_of_including_self when its definition always looks the same is a pain. But heap pointers in Rust are handled via the parameterized Box<T> type, and it’s possible to implement traits for this type. This means we can implement size_of_excluding_self for all Box<T> types, thus removing the need for size_of_including_self, in one fell swoop, as the following code shows.

impl<T: HeapSizeOf> HeapSizeOf for Box<T> {
    fn size_of_excluding_self(&self) -> usize {
        heap_size_of(&**self as *const T as *const c_void) + (**self).size_of_excluding_self()
    }
}

The pointer manipulations are hairy, but basically it says that if T implements the HeapSizeOf trait, then we can measure Box<T> by measuring the T struct itself (via heap_size_of, which is similar to the aMallocSizeOf function in the Firefox example), and then measuring the things hanging off T (via the size_of_excluding_self call). Excellent!

With the including/excluding distinction gone, I renamed size_of_excluding_self as heap_size_of_children, which I thought communicated the same idea more clearly; it seems better for the name to describe what it is measuring rather than what it is not measuring.
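
To make the single-method design concrete, here is a minimal, self-contained sketch. It is not Servo's code: size_of::<T>() is an assumed stand-in for asking the allocator how big a heap block really is (so rounding-up by the allocator is not captured), and the Node type and the Vec<T> implementation are hypothetical, added only so that there is something to measure.

```rust
use std::mem::size_of;

/// Types that can report the heap memory hanging off them.
pub trait HeapSizeOf {
    /// Bytes of heap memory owned by `self`, not counting `self` itself.
    fn heap_size_of_children(&self) -> usize;
}

// Scalars own no heap memory.
impl HeapSizeOf for u32 {
    fn heap_size_of_children(&self) -> usize {
        0
    }
}

// A Box owns one heap block holding a T, plus whatever that T owns.
// ASSUMPTION: size_of::<T>() stands in for a real allocator query.
impl<T: HeapSizeOf> HeapSizeOf for Box<T> {
    fn heap_size_of_children(&self) -> usize {
        size_of::<T>() + (**self).heap_size_of_children()
    }
}

// A Vec owns one buffer of `capacity` slots, plus whatever each element owns.
impl<T: HeapSizeOf> HeapSizeOf for Vec<T> {
    fn heap_size_of_children(&self) -> usize {
        self.capacity() * size_of::<T>()
            + self.iter().map(|x| x.heap_size_of_children()).sum::<usize>()
    }
}

// Hypothetical tree node, used only for illustration.
struct Node {
    id: u32,
    children: Vec<Box<Node>>,
}

impl HeapSizeOf for Node {
    fn heap_size_of_children(&self) -> usize {
        self.id.heap_size_of_children() + self.children.heap_size_of_children()
    }
}

fn main() {
    let leaf = Box::new(Node { id: 2, children: Vec::new() });
    let root = Node { id: 1, children: vec![leaf] };
    println!("root owns {} heap bytes", root.heap_size_of_children());
}
```

Because the Box<T> implementation already counts the pointed-to block, there is no separate "including" method that a caller could pick by mistake.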

But there was still a need for a lot of tedious boilerplate code, as this example shows.

pub struct DisplayList {
    pub background_and_borders: LinkedList<DisplayItem>,
    pub block_backgrounds_and_borders: LinkedList<DisplayItem>,
    pub floats: LinkedList<DisplayItem>,
    pub content: LinkedList<DisplayItem>,
    pub positioned_content: LinkedList<DisplayItem>,
    pub outlines: LinkedList<DisplayItem>,
    pub children: LinkedList<Arc<StackingContext>>,
}

impl HeapSizeOf for DisplayList {
    fn heap_size_of_children(&self) -> usize {
        self.background_and_borders.heap_size_of_children() +
        self.block_backgrounds_and_borders.heap_size_of_children() +
        self.floats.heap_size_of_children() +
        self.content.heap_size_of_children() +
        self.positioned_content.heap_size_of_children() +
        self.outlines.heap_size_of_children() +
        self.children.heap_size_of_children()
    }
}

However, the Rust compiler has the ability to automatically derive implementations for some built-in traits. Even better, the compiler lets you write plug-ins that do arbitrary transformations of the syntax tree, which makes it possible to write a plug-in that does the same for non-built-in traits on request. And the delightful Manish Goregaokar has done exactly that. This allows the example above to be reduced to the following.

#[derive(HeapSizeOf)]
pub struct DisplayList {
    pub background_and_borders: LinkedList<DisplayItem>,
    pub block_backgrounds_and_borders: LinkedList<DisplayItem>,
    pub floats: LinkedList<DisplayItem>,
    pub content: LinkedList<DisplayItem>,
    pub positioned_content: LinkedList<DisplayItem>,
    pub outlines: LinkedList<DisplayItem>,
    pub children: LinkedList<Arc<StackingContext>>,
}

The first line is an annotation that triggers the plug-in to do the obvious thing: generate a heap_size_of_children definition that just calls heap_size_of_children on all the struct fields. Wonderful!

But you may remember that I mentioned that sometimes in Firefox’s C++ code we want to ignore a particular member. This is also true in Servo’s Rust code, so the plug-in supports an ignore_heap_size_of annotation which can be applied to any field in the struct definition; the plug-in will duly ignore any such field.

If a new field is added which has a type for which HeapSizeOf has not been implemented, the compiler will complain. This means that we can’t add a new field to a struct and forget to measure it. The ignore_heap_size_of annotation also requires a string argument, which (by convention) holds a brief explanation why the member is ignored, as the following example shows.

#[ignore_heap_size_of = "Because it is a non-owning reference."]
pub image: Arc<Image>,

(An aside: the best way to handle Arc is an open question. If one of the references is clearly the owner, it probably makes sense to count the full size for that one reference. Otherwise, it is probably best to divide the size equally among all the references.)

The plug-in also has a known_heap_size_of! macro that lets us easily dictate the heap size of built-in types (such as integral types, whose heap size is zero). This works because Rust allows implementations of custom traits for built-in types. It provides additional uniformity because built-in types don’t need special treatment. The following line says that all the built-in signed integer types have a heap_size_of_children value of zero.

known_heap_size_of!(0, i8, i16, i32, i64, isize);
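
A macro of this shape can be sketched with plain macro_rules!. The definition below is an assumption made for illustration, not the plug-in's actual source; it only shows how one invocation could expand into a trait implementation per listed type.

```rust
pub trait HeapSizeOf {
    fn heap_size_of_children(&self) -> usize;
}

// Hypothetical definition: implement HeapSizeOf for every listed type,
// always reporting the same fixed heap size.
macro_rules! known_heap_size_of {
    ($size:expr, $($ty:ty),+) => {
        $(
            impl HeapSizeOf for $ty {
                fn heap_size_of_children(&self) -> usize {
                    $size
                }
            }
        )+
    };
}

// Same invocation as in the text: signed integers own no heap memory.
known_heap_size_of!(0, i8, i16, i32, i64, isize);

fn main() {
    let x: i32 = 42;
    println!("{}", x.heap_size_of_children());
}
```

With this in place a struct field of type i32 can be measured through the same trait call as any other field.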

Finally, if there is a type for which the measurement needs to do something more complicated, we can still implement heap_size_of_children manually.

Conclusion

The Servo implementation is much nicer than the Firefox implementation, in the following ways.

- There is no need for an including/excluding split thanks to trait implementations on Box<T>. This avoids some boilerplate code and makes it impossible to accidentally call the wrong method.

- Struct fields that use built-in types are handled the same way as all others, because Rust trait implementations can be defined for built-in types.

- Even more boilerplate is avoided thanks to the compiler plug-in that auto-derives HeapSizeOf implementations; it can even ignore fields.

- For ignored fields, the required string parameter makes it impossible to forget to explain why the field is ignored.

These are possible due to several powerful language and compiler features of Rust that C++ lacks. There may be some C++ features that could improve the Firefox code — and I’d love to hear suggestions — but it’s never going to be as nice as the Rust code.

|

|

Air Mozilla: Unboxing the New Firefox with Greg |

Unboxing the New Firefox with Greg.

|

|

Mozilla Open Policy & Advocacy Blog: Mozilla applauds U.S. Senate’s passage of the USA FREEDOM Act |

Today, the U.S. Senate voted to approve the USA FREEDOM Act. We will digest the news fully over the next few days but in the meantime would like to share this response from Denelle Dixon-Thayer, Senior Vice President for Business and Legal Affairs:

“Mozilla has long supported the USA FREEDOM Act, and we are glad to see today’s passage, which is a significant first step to restore trust online, and a foundation for further needed reform. We have more work to do, in the United States and in many other countries around the world, to protect user privacy and security on the Internet. Mozilla will continue our efforts to secure the Web and its users, demand greater oversight of surveillance activities by all governments, and keep backdoors out of our private communications.”

We’re not done yet. We’re going to rally the troops and plan for next steps to defend privacy all around the world. Stay tuned for more, soon.

|

|

Byron Jones: happy bmo push day! |

the following changes have been pushed to bugzilla.mozilla.org:

- [1146787] “take” buttons missing in edit mode

- [1165866] “See Also” allows github issues but not github pull requests

- [1169227] “Short URL” fails on any new query – I don’t get a link

- [1169783] ‘Edit’ option shown on user context menus even if I don’t have permission to edit users

- [1163760] Bugzilla Auth Delegation via API Keys

- [1170007] Collapse “Tracking” section when values are set to defaults (eg “—“)

- [1169479] 500 error when attaching patch with r? but no reviewer

- [1169173] Empty emails for overdue requests

- [1146771] implement comment tagging

- [1168824] stop nagging about massively overdue requests

- [1169418] Can’t enter space in Keyword field

- [1169301] The REST link at the bottom of buglist.cgi does not work for saved searches as currently written

- [1168392] Automatic watch user creation not working when editing component without prior watch user account

discuss these changes on mozilla.tools.bmo.

Filed under: bmo, mozilla

https://globau.wordpress.com/2015/06/02/happy-bmo-push-day-145/

|

|

Smokey Ardisson: The Old Web: Follow-up |

Today’s comments on Tim Bray’s post that I linked to yesterday have been very interesting, both as to the scale of link rot and efforts underway to ameliorate it (you should read those comments if you have not done so).

One commenter on ongoing also linked to two recent posts by Bret Victor; the second one is an incisive examination of the web’s permanence/impermanence problem that I briefly wrestled with before closing yesterday’s post with congratulations to Zeldman. (You should really read Victor’s post.)

Tuesday update: In Tuesday morning’s comments on Bray’s post, Andy Jackson of the British Library links to their study of URLs in their archive, where one of the conclusions is “50% of the content is lost after just one year, with more being lost each subsequent year” (emphasis mine).

http://www.ardisson.org/afkar/2015/06/01/the-old-web-follow-up/

|

|

Cameron Kaiser: TenFourFox 38 is GO! (plus: SourceForge gets sleazy) |

First, some good news: TenFourFox 38 successfully compiled and linked, and took its first halting steps last night. There are still some critical bugs in it; among others, there is a glitch with the application menus that Mozilla seems to have regressed on 10.4 between Fx36 and 38, and JIT changes have caused IonPower to fail six tests it used to pass (and needs to pass to run the browser chrome). However, these issues are almost certainly solvable and we should be officially on target for switching from 31ESR to 38ESR after 31.8.0 with another year of Mozilla updates in the bag. Once again Power Mac users will be fully current with Firefox ESR, meaning even the very last G5 to roll off the assembly line in 2006 will have enjoyed at least 10 years of Gecko-powered browsing (and even if Fx38 is the last source-parity TenFourFox, we'll still be doing feature parity releases for a good long while afterwards). Although I will not be able to work much on the browser for the next couple weeks due to my Master's program getting in the way again, I'm still hoping for a beta release by July so that we have enough time for the localizers to work their magic once more.

In the storm clouds department, I continue to monitor the functional state of Electrolysis (multi-process Firefox) closely; I still believe, and some of the code I've had to hack around only confirms this, that Electrolysis will not work correctly on the 10.4 SDK (nor likely, for that matter, faster, since many Power Macs still in use are single core) even if the underlying system functions are implemented in userland. However, Mozilla is now in the midst of developing Fx41, and Electrolysis still has some major issues preventing it from primetime; the current calendar has Electrolysis reaching the release channel no earlier than Fx42, and I'm betting this is probably a release or two behind. If Mozilla still allows the main browser to start single-process in 45ESR, then we have a shot at one more ESR. If it allows the main browser to start single-process only in safe mode, we may be able to hack around that, but add-on compatibility might be impacted. If neither is allowed, we drop source parity with 38.

TenFourFox 38 and derivatives are also the last release(s) that will support gcc 4.6. Mozilla already dropped support for it, but I really didn't want to certify a new compiler and toolchain on top of a new stable release branch, and the support change was late enough in development that most of the browser still builds. The 4.6 changes are being segregated into a single changeset so that they can be quickly discarded when a later compiler comes online, which will be mandatory for any test releases we make beyond 38 (we might use Sevan Janiyan's pkgsrc gcc since MacPorts is kind of a dog's breakfast for PowerPC nowadays -- more on this later). Speaking of, now that the last remnants of our old JaegerMonkey and PPCBC JIT backends are purged from the changeset pack, we've cut our merge overhead by about 30 percent. Now, that's a diet.

G3 owners should also be warned that Mozilla is doing more with SIMD in JavaScript, and our hot new IonPower JavaScript compiler may be required post-38 to support it. SIMD, of course, is implemented in the PowerPC ISA as AltiVec/VMX, but G3 systems don't have AltiVec support, which may mean no JavaScript compilation. That would be a very suboptimal configuration to ship particularly on the lowest spec platforms that need a JIT the very most. Although I would not prevent the browser from being built for G3 without JIT support should someone wish to do so, I may stop offering G3 builds myself if SIMD support became mandatory (G4/7400 builds would still continue), especially as it represents 25% of total buildtime and is consistently our least used configuration. This does not apply to TenFourFox 38 -- G3 folks are still guaranteed at least that much, and if we drop source parity, G3 builds will likely continue since there would be no technical reason to drop them then. But, if you can find a G4 for your Yosemite's ZIF socket, now might be a good time. :)

Finally, the transition off Google Code should be complete by July as well. However, I'm displeased to see that SourceForge has engaged in some fairly questionable behaviour with hosted downloads, admittedly with abandoned Windows-based projects, but definitely without project owner consent. I'm not going to begrudge them a buck for continuing to allow free binary hosting by displaying ads, but I intensely dislike them monkeying with existing binaries without opting into it.

It is highly unlikely we would ever be victimized in that fashion since our now exotic architecture would make it very technically difficult for them to do so, but since it's easy to get things into SourceForge, just not out of it, I'm now considering using Github (despite my Mercurial preference) for tickets and wiki and eventually source code, and just leaving binaries on SourceForge as long as they remain unmolested, since Google Code does offer an export-to-Github option. If Github doesn't work out, we can import to SourceForge from there, if we have to; Classilla will probably transition the same way. But I have to say with sadness tinged with disgust that I'm really disappointed in them. Once upon a time, they were the go-to site for FOSS, and now they're scrabbling around in the muck for a dollar. Lo, how the mighty have fallen.

http://tenfourfox.blogspot.com/2015/06/tenfourfox-38-is-go-plus-sourceforge.html

|

|

George Wright: Electrolysis: A tale of tab switching and themes |

Electrolysis (“e10s”) is a project by Mozilla to bring Firefox from its traditional single-process roots to a modern multi-process browser. The general idea here is that with modern computers having multiple CPU cores, we should be able to make effective use of that by parallelising as much of our work as possible. This brings benefits like being able to have a responsive user interface when we have a page that requires a lot of work to process, or being able to recover from content process crashes by simply restarting the crashed process.

However, I want to touch on one particular aspect of e10s that works differently to non-e10s Firefox, and that is the way tab switching is handled.

Currently, when a user switches tabs, we do the operation synchronously. That is, when a user requests a new tab for display, the browser effectively halts what it’s doing until it is in a position to display that tab, at which point it will switch the tab. This is fine for the most part, but if the browser gets bogged down and is stuck waiting for CPU time, it can take a jarring amount of time for the tab to be switched.

With e10s, we are able to do better. In this case, we are able to switch tabs asynchronously. When the user requests a new tab, we fire off the request to the process that handles processing that tab, and wait for a message back from that process that says “I’m ready to be displayed now”, and crucially we do not switch the selected tab in the user interface (the “chrome”) until we get that message. In most cases, this should happen in under 300ms, but if we hit 300ms and we still haven’t received the message, we switch the tab anyway and show a big spinner where the tab content would be to indicate that we’re still waiting for the initial content to be ready for display.

Unfortunately, this affects themes. Basically, there’s an attribute on the tab element called “selected” which is used by the CSS to style the tab accordingly. With the switch to asynchronous tab switching, I added a new attribute, “visuallyselected”, which is used by the CSS to style the tab. The “selected” attribute is now used to signify a logical selection within the browser.

We thought long and hard about making this change, and concluded that this was the best approach to take. It was the least invasive in terms of changing our existing browser code, and kept all our test assumptions valid (primarily that “selected” is applied synchronously with a tab switch). The impact on third party themes that haven’t been updated is that if they’re styled off the “selected” attribute, they will immediately show a tab switch but can show the old tab’s content for up to 300ms until either the new tab’s content is ready, or the spinner is shown. Using “visuallyselected” on non-e10s works fine as well, because we now apply both the “selected” and “visuallyselected” attributes on the tab when it is selected in the non-e10s case.
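To make the timing concrete, here is a minimal Python sketch of the behaviour described above. It is not Firefox code: the `Tab` class, `switch_tab` and `demo` are hypothetical names invented for illustration, and the content process is simulated with an asyncio future. Only the two attribute names and the 300ms limit come from the post.

```python
import asyncio

SPINNER_DELAY_S = 0.3  # the 300 ms limit described in the post


class Tab:
    """Hypothetical stand-in for a tab element and its attributes."""

    def __init__(self, name):
        self.name = name
        self.attributes = set()


async def switch_tab(old, new, content_ready, spinner_delay_s=SPINNER_DELAY_S):
    """Switch from `old` to `new` and return what the content area shows."""
    # The logical selection changes as soon as the switch is requested.
    old.attributes.discard("selected")
    new.attributes.add("selected")
    try:
        # Wait for the content process to say it is ready, but only up to
        # the delay. shield() keeps the timeout from cancelling the future.
        await asyncio.wait_for(asyncio.shield(content_ready), spinner_delay_s)
        shown = "content"
    except asyncio.TimeoutError:
        shown = "spinner"
    # Only now does the tab strip change what the user sees as selected.
    old.attributes.discard("visuallyselected")
    new.attributes.add("visuallyselected")
    return shown


async def demo(ready_after_s, spinner_delay_s=SPINNER_DELAY_S):
    """Simulate a content process that becomes ready after `ready_after_s`."""
    loop = asyncio.get_running_loop()
    content_ready = loop.create_future()
    loop.call_later(ready_after_s, content_ready.set_result, True)
    old, new = Tab("old"), Tab("new")
    old.attributes.update({"selected", "visuallyselected"})
    return await switch_tab(old, new, content_ready, spinner_delay_s)


if __name__ == "__main__":
    print(asyncio.run(demo(0.05)))  # content process ready in time
    print(asyncio.run(demo(1.0)))   # too slow, so the spinner is shown
```

In this sketch a theme keyed on "selected" would restyle the tab at the first step, while one keyed on "visuallyselected" would wait until the content or the spinner is about to be shown.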

http://www.hackermusings.com/2015/06/electrolysis-a-tale-of-tab-switching-and-themes/

|

|

Mozilla Open Policy & Advocacy Blog: Amendments at risk of derailing USA FREEDOM |

On Sunday night, the U.S. Senate advanced the USA FREEDOM Act on a vote of 77-17, taking the largest step toward surveillance reform by this chamber in decades. We’re glad the Senate heard the loud chorus of voices demanding reform and voted in favor of the legislation, and we hope they will swiftly overcome the remaining procedural hurdles standing in the way of this important legislation without attaching any harmful amendments.

Real reform now is critical and this legislation will further serve as a foundation for the many reforms needed to restore users’ trust in the Internet, which is why we’re concerned to see several amendments put forward by Senate Majority Leader McConnell. Mozilla opposes all of the amendments up for a vote tomorrow:

- Amendment 1451 – Guts amicus provision: The USA FREEDOM Act as approved by the House included good (not great) language appointing outside experts in certain cases, who would be charged to argue for protections for privacy and civil liberties. Amendment 1451 would remove these duties, rendering the amicus advocates ineffective and removing most benefits of the amicus provision.

- Amendment 1450 – Doubles the waiting period before the law goes into effect: This amendment would extend from 6 months to 12 the transition period from current bulk collection to company-held data, despite clear indications from the NSA that they can transition fully in 6 months. The primary practical impact of the extended waiting period would be six months of unnecessary bulk collection and opportunities to weaken or curtail reforms.

- Amendment 1449 – Requires providers to give notice about data retention policies: This amendment requires an “electronic communication service provider” to give 6 months notice to the Attorney General if that service provider intends to retain its call detail records for a period of less than 18 months. In practice, this could be interpreted as a data retention mandate, even though the Director of National Intelligence has already said that no new data retention mandate is needed. Requiring this kind of notification before a company can change its internal policies is unnecessary interference and seems to serve no purpose other than to undermine user privacy and security.

Due to a procedural move by Majority Leader McConnell, these are likely to be the only amendments considered by the Senate. However, if any of these amendments are adopted, this bill will need to go back to the House for another vote. It is unclear whether the House would adopt an amended version or how long it would take to approve a watered-down version of a bill that the House so overwhelmingly approved. We urge the Senate to pass the House version of the USA FREEDOM Act swiftly to avoid any further delay in enacting the reforms this country needs.

https://blog.mozilla.org/netpolicy/2015/06/01/amendments-at-risk-of-derailing-usa-freedom/

|

|

Mozilla Addons Blog: June 2015 Featured Add-ons |

Pick of the Month: Location Guard

by Marco Stronati, Kostas Chatzikokolakis

Enjoy the useful applications of geolocation while protecting your privacy. Location Guard does so by reporting a fake location to websites, obtained by adding a certain amount of “noise” to the real location. The noise is randomly selected in a way that ensures that the real location cannot be inferred with high accuracy.

“Many thanks! That’s just what I need and also I like the idea of hiding it but making the icon visible only if there is a location ask.”

Featured: Tab Scope

by Gomita

Shows a popup on tabs and enables you to preview and navigate tab contents on a popup.

Featured: Password Tags

by Daniel Dawson

Adds metadata and sortable tags to the password manager.

Featured: Mind the Time

by Paul Morris

Keep track of how much time you spend on the web, and where you spend it. Ticker widget shows (1) time spent at the current site and (2) total time spent on the web today.

Nominate your favorite add-ons

Featured add-ons are selected by a community board made up of add-on developers, users, and fans. Each quarter, the board also selects a featured complete theme and featured mobile add-on.

Board members change every six months, so there’s always an opportunity to participate. Stay tuned to this blog for the next call for applications.

If you’d like to nominate an add-on for featuring, please send it to amo-featured@mozilla.org for the board’s consideration. We welcome you to submit your own add-on.

https://blog.mozilla.org/addons/2015/06/01/june-2015-featured-add-ons/

|

|

Hannah Kane: Short-term roadmap for teach.mozilla.org |

Teach.mozilla.org was released into the wild at the end of April, and we spent part of the last month doing a round of iterations based on early feedback.

The most notable iteration is the homepage. Our goals were to clarify the three main actions a user can take, incorporate the blog, and use more plain language across the page. Do you think we succeeded?

I want to share an updated short-term roadmap, so you can know what’s coming up in the immediate future:

- The next thing we’ll release is a revised workflow for new Clubs. The workflow reflects our evolving thinking about the organizing model. It will work like this: people will submit their Clubs on the site, they’ll then be connected with a Regional Coordinator who will work with the Club Captain to refine the description if necessary. Once approved, the Club will be visible to everyone who visits the site. For more on what a Regional Coordinator is, check out Michelle’s blog post.

- Next up: Since the three main actions require a pretty heavy lift for users, we want to provide a lower-effort action. We’re going to test out a “Pledge to Teach” action that simply allows the user to, well, pledge to teach the web in their community.

- Maker Party is right around the corner. We’ll be promoting it on the homepage, and making some changes to the Maker Party page itself, including adding case studies of successful parties, and linking to the recommended Maker Party activities for this year.

- The Clubs page will get a makeover soon, too. The goal is to provide better scaffolding for people who are thinking about starting a Club, as well as to provide a more useful experience for people who are running Clubs.

- We’ll keep on adding more curriculum as it’s tested and finalized!

- We’re starting the localization effort! Check out this globalization ticket for an overview of all the different pieces of this project.

I’ll blog about the medium-term roadmap soon!

|

|

Rob Wood: Raptor on Gaia CI |

What is Raptor?

No, I’m not talking about Jurassic World (although the movie looks great), I’m actually referring to Raptor – the Firefox OS Performance Framework, created by Eli Perelman.

If you work on Gaia you’ve probably heard about Raptor already. If not, I’ll give you a quick intro. Raptor is the next generation performance measurement tool for Firefox OS. Raptor-based tests perform automated actions on Firefox OS devices, while listening for performance markers (generated by Gaia via the User Timing Gecko API). The performance data is gathered, then results calculated and summarized in a nifty table in the console. For more details about the Raptor tool itself, please see the excellent article “Raptor: Performance Tools for Gaia” that Eli authored on MDN.

Raptor Launch Test

The Raptor launch test automatically launches the specified Firefox OS application, listens for specific end markers, and gathers launch-related performance data. In the case of the launch test, once the ‘fullyLoaded’ marker has been heard, the test iteration is considered complete.

Each time the launch test runs, the application is launched 30 times. Raptor then calculates results based on data from all 30 launches and displays it in the console like this:

Currently the main value that we are concerned with for application launches is the 95th percentile (p95) value for the ‘visuallyLoaded’ metric. In the above example the value is 38,316 ms. The ‘visuallyLoaded’ metric is the one to keep your eye on when concerned about application launch time.
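For readers less familiar with percentiles, the sketch below shows one common way to get a p95 from 30 launch times, the nearest-rank method. The post does not say which percentile method Raptor uses, so the method, the function name `percentile` and the sample data are all assumptions made for illustration.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample that is greater than
    or equal to at least `pct` percent of all samples."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    # Multiply before dividing so whole-number ranks stay exact.
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


# Invented data: 30 launches taking 400, 410, ..., 690 ms.
launch_times_ms = [400 + 10 * i for i in range(30)]
p95 = percentile(launch_times_ms, 95)  # 29th of 30 sorted values: 680
```

With 30 samples the p95 is the 29th fastest launch, so a single unusually slow launch does not move the value on its own.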

Where Raptor is Running

I am pleased to note that Raptor performance tests are now running on Gaia on a per check-in basis. The test suite runs on Gaia (try) whenever a Gaia pull request is created. The same suite also runs on gaia-master, after each pull request is merged. The test suite is powered by TaskCluster, runs on the b2g emulator, and reports results on Treeherder.

Currently the Raptor suite running on Gaia check-in consists of the application launch test running on the Firefox OS Settings app. In the near future the launch test will be extended to run on several more Firefox OS apps, and the suite will also be expanded to include the B2G restart test.

The Raptor suite is also running on real Firefox OS devices against several commits per day. That suite is maintained by Eli and reports to an awesome Raptor dashboard. This blog article concerns the Raptor suite running on a per check-in basis, not the daily device suite.

Why Run on Gaia CI?

The purpose of the Raptor test suite running on Gaia check-in is to find Gaia performance regressions before or at the time they are introduced. Pull requests that result in a flagged performance regression can be denied merge, or reverted.

Why the Emulator?

We use the B2G emulator for the Raptor tests running on Gaia CI because of scalability. There are potentially a large number of Gaia pull requests and merges submitted on a daily basis, and it wouldn’t be feasible to use a real Firefox OS device to run the tests on each check-in.

The B2G emulator is sufficient for this task because we are concerned with the change in performance numbers, not the actual performance numbers themselves. The Raptor suite running on actual Firefox OS device hardware mentioned previously provides real, and historical, performance numbers.

The latest successful TaskCluster emulator-ics build is used for all of the tasks; the same emulator and Gecko build is used for all of the tests.

Raptor Base Tasks vs Patch Tasks

The Raptor launch test is run six times on the emulator using the Gaia “base” build, and six times using the Gaia “patch” build, all concurrently. When the tests are triggered by a pull request (Gaia try), the “base” build is the current base/branch master at the time of the pull request creation, and the “patch” build is the base build + the pull request code. Therefore the test measures performance without the pull request code, and with the pull request code added.

When the tests are triggered by a Gaia merge (gaia-master) the “base” build is the current base/master -1 commit (rev before the code merge), and the “patch” build is base rev (has the merged code). Therefore the test measures performance without the merged code, and with the merged code.

Interpreting the Treeherder Dashboard

There is a new Raptor group now appearing on Treeherder for Gaia. At the time of writing this article, the launch test is running on the Settings app only. After the launch test suite is expanded, the explanation of how to interpret the dashboard can be applied to other apps in the same manner.

The Raptor test tasks appear on Treeherder in the “Rpt” group. The task name has a two letter prefix, which matches the first two letters of the Firefox OS application under test (in the above example “se”, which is for the Settings app).

The first set of numbered tasks (se1, se2, se3, se4, se5, se6) are the tasks that ran the Raptor launch test on the ‘base’ build. On Gaia try (testing a pull request), these tasks run the launch test on Gaia BASE_REV (code without the PR). On gaia-master (not testing a PR), these tasks run the launch test on Gaia BASE_REV – 1 commit (commit right before the merged PR).

The second set of numbered tasks (se7, se8, se9, se10, se11, se12) are the tasks that ran the Raptor launch test on the ‘patch’ build. On Gaia try (testing a PR), these tasks run the launch test on Gaia HEAD_REV (code with the PR). On gaia-master (not testing a PR) these tasks run the launch test on BASE_REV (commit with the PR).

The task with no number suffix (se) represents the launch test results task for the settings app. This is the task that will determine if a performance regression has been introduced. If this task shows orange on Treeherder then that means a performance regression was detected.

How the Results are Calculated

The Raptor launch test suite results are determined by comparing the results from the tests run on the base build vs the same tests run on the patch build.

Each individual Raptor launch test has a single result that we are concerned with, the p95 value for the ‘visuallyLoaded’ performance metric. All six of the results for the base tasks (se1 to se6) are taken and then the median of these is calculated and used as the median base build result. The same thing is applied to the patch builds; all six of the results of the patch tasks (se7 to se12) are taken and the median of those calculated and used as the median patch build result.

If the median patch build result is greater than the median base build result, that means that the median app launch time in the patch has increased. If this increase is greater than 15%, then it is flagged as a performance regression, and the result task (se) is marked as a failure on Treeherder.
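As a rough illustration of the comparison just described, here is a minimal Python sketch. It is not Raptor's actual code: the function name `check_regression`, the result dictionary and the sample numbers are invented for illustration. Only the median-of-six comparison and the 15% threshold come from the description above.

```python
from statistics import median

REGRESSION_THRESHOLD_PCT = 15.0  # threshold stated in the post


def check_regression(base_p95s, patch_p95s,
                     threshold_pct=REGRESSION_THRESHOLD_PCT):
    """Compare the median p95 'visuallyLoaded' value of the base runs
    against the median of the patch runs."""
    base = median(base_p95s)
    patch = median(patch_p95s)
    if base <= 0:
        raise ValueError("base median must be positive")
    increase_pct = (patch - base) / base * 100
    return {
        "base_median": base,
        "patch_median": patch,
        "increase_pct": increase_pct,
        # Only an increase above the threshold counts as a regression.
        "regression": increase_pct > threshold_pct,
    }


# Invented p95 values in milliseconds, six runs per build.
base_runs = [1010, 990, 1000, 1020, 980, 1005]
patch_runs = [1190, 1210, 1200, 1180, 1220, 1205]
result = check_regression(base_runs, patch_runs)
# result["regression"] is True here (an increase of roughly 20%).
```

Using the median of six runs rather than a single run means one noisy emulator run on either side is unlikely to trigger or hide a regression by itself.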

To view the results task (se) results, click on the results task symbol (se) on Treeherder. Then on the panel that appears towards the bottom, click on “Job details”, and then “Inspect Task”. The Task Inspector opens in a new tab. Scroll down and you will see the console output for the results task. This includes the summary table for each base and patch task, the final median for each set, and the overall result (if a regression has been detected or not). If a regression has been detected, the regression percentage is displayed; this represents the increase in application launch time.

Questions or comments?

For more information feel free to join the #raptor channel on Mozilla IRC, or send me a message via the contact form on the About page of this blog. I hope you found this post useful, and don’t forget to go see Jurassic World in theatres June 12.

|

|

Jeff Muizelaar: Direct2D on top of WARP |

Profiling Internet Explorer shows the following private APIs used by Direct2D:

d3d10warp.dll!UMDevice::DrawGlyphRun

d3d10warp.dll!UMDevice::AlphaBlt2

d3d10warp.dll!UMDevice::InternalGetDC

d3d10warp.dll!UMDevice::CreateGeometry

d3d10warp.dll!UMDevice::DrawGeometryInternal

It looks like these turn into fairly traditional 2D graphics operations, as the following call stack snippets show:

RasterizationStage::Rasterize_TEXT

DrawGlyphRun6x1_B8G8R8A8_SSE<1>

DrawGlyphRun4x4_B8G8R8A8_SSE

RasterizationStage::Rasterize_GEOMETRY

PixelJITRasterizeGeometry

PixelJITGeometryRasterizer::Rasterize

WarpGeometry::Rasterize

CAntialiasedFiller::RasterizeEdges

CAntialiasedFiller::FillEdges

CAntialiasedFiller::GenerateOutput

PixelJITGeometryRasterizer::RasterizeComplexScan

PixelJITGeometryRasterizer::BeginSpan

InitializeEdges

InitializeInactiveArray

QuickSortEdges

This suggests that using Direct2D on top of WARP is more efficient than expected and might actually make more sense than our current strategy of only using WARP for composition.

http://muizelaar.blogspot.com/2015/06/direct2d-on-top-of-warp.html

|

|

Air Mozilla: Mozilla Weekly Project Meeting |

The Monday Project Meeting

https://air.mozilla.org/mozilla-weekly-project-meeting-20150601/

|

|

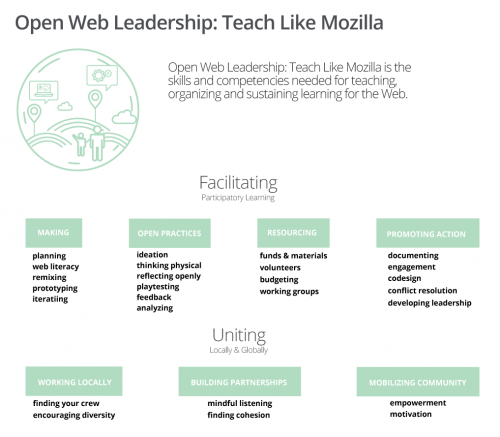

Laura Hilliger: Collapsing Open Leadership Strands |

Missing context? Catch up with recent posts tagged with "Methods & Theories".

A couple weeks ago, in the Open Web Leadership call we talked about the last iteration of the Open Leadership Map (OLM) (notice: this call has been changed! New pad + details here). One of the things that we discussed was the idea that theory and practice are too often separated – that understanding theory is important for improving practice, but practicing is important for understanding. It’s cyclical, so trying to divide the competencies we believe are necessary for leaders in the Open Community into arbitrary theory/practice based groupings is simply not necessary. Upon conclusion of that discussion, we decided to collapse the “Understanding: Participatory Learning” and the “Facilitating: People, Processes and Content” strands together. Makes sense, right? To have “what you need to know” and “how you wield what you know” as “What you need to know and how to wield it”? While working through this, I made some decisions based on our conversations, so I’m particularly interested to hear your thoughts on how this worked out:

[Image: Open Web Leadership Map]

First, as discussed, “Making” replaced “Prototyping” as the competency, and I moved Prototyping as a note on topics to cover (we may yet get to the granularity of skills!). Next, I moved “Open Practices” and looked at “Making” and “Open Practices” in concert. Design thinking, it was noted, fits well under making, but what seemed to work better was exploding the term “design thinking” into the steps in the process and organizing them under these two competencies. It was here that I started moving around what I viewed as mainly thinking versus mainly producing – to clarify, I say “mainly” because we are constantly producing AND thinking with our methods, so Making + Open Practices are always in concert with one another (for open leaders anyhow).

Aside: We had discussed that the terminology “Open Thinking” isn’t right, but I did find it difficult to not want to address just how brain-heavy ideation, reflection and analysis can be. Also, I sort of threw out “Connected Learning”. I was thinking that “Connected Learning” as a model comes in during a content step, rather than a meta organizing step. Though, at the same time, I found that “web literacy” as a model can be included because I feel that web literacy directly contributes to the ability to make, teach, organize and sustain learning for the web.

All of this was the hard part of the iteration. Afterwards, I simply moved all the topics from under participation to under “Promoting Action” because designing for participation is about promoting action IMHO. I also see a congruency between “promoting action” and “mobilizing community”, so I’m looking forward to continuing the conversation.

Another thing we should talk about – naming for this strand – in the meantime, I called it “Facilitating: Participatory Learning”.

Looking forward to hearing what folks think! Join us on June 2nd 16:00 UTC (18:00 CET / 12:00 ET / 09:00 PT) and share your thoughts, or leave a comment / tweet at me / tweet using #teachtheweb or send me an email :D

http://www.zythepsary.com/education/collapsing-open-leadership-strands/

|

|