You can add any RSS feed (including a LiveJournal journal) to your friends feed on the syndication page.

Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The feed was created automatically at the request of readers of this RSS feed.

For any questions about how this service works, see the contact information page.

Joel Maher: A-Team contribution opportunity – Dashboard Hacker

I am excited to announce a new focused contribution project: Dashboard Hacker. Last week we previewed that today we would announce two contribution projects. This is an unpaid program, and we are looking for 1-2 contributors who will dedicate 5-10 hours per week for at least 8 weeks. More time is welcome, but not required.

What is a dashboard hacker?

When developers are ready to land code, they want to test it. Good dashboards and tools make it much easier to get the results and understand them. For this project, our starting point is the performance data view: we want to add a series of nice-to-have polish features and then make sure it is easy to use in a normal developer workflow. Another part of that workflow is the regular job view; if time permits, there are some fun experiments we would like to implement there. These bugs, features, and projects are all small and self-contained, which makes them great projects for someone looking to contribute.

What is required of you to participate?

- A willingness to learn and ask questions

- A general knowledge of programming (most of this will be in JavaScript, Django, and AngularJS, and some work will be in Python)

- A promise to show up regularly and take ownership of the issues you are working on

- Good at solving problems and thinking out of the box

- Comfortable with (or willing to try) working with a variety of people

What we will guarantee from our end:

- A dedicated mentor for the project whom you will work with regularly throughout the project

- A single area of work to reduce the need to get up to speed over and over again.

- This project will cover many tools, but the general problem space will be the same

- The opportunity to work with many people (different bugs could have a specific mentor) while retaining a single mentor to guide you through the process

- The ability to be part of the team: you will be welcome in meetings, and we will value your input on solving problems, brainstorming, and figuring out new problems to tackle.

How do you apply?

Get in touch with us by replying to the post, commenting in the bug, or contacting us on IRC (I am :jmaher in #ateam on irc.mozilla.org; wlach on IRC will be the primary mentor). We will point you at a starter bug and introduce you to the bugs and problems to solve. If you have prior work that would help us learn more about you (links to Bugzilla, GitHub, blogs, etc.), that would be a plus.

How will you select the candidates?

There are no strict criteria here. One factor will be whether you meet the requirements outlined above and how well you pick up the problem space. Ultimately, the decision is up to the mentor (for this project, :wlach). If you apply and we have already picked a candidate, or we don't choose you for other reasons, we plan to repeat this every few months.

Looking forward to building great things!

https://elvis314.wordpress.com/2015/05/18/a-team-contribution-opportunity-dashboard-hacker/

Joel Maher: A-Team contribution opportunity – DX (Developer Ergonomics)

I am excited to announce a new focused contribution project: Developer Ergonomics/Experience, otherwise known as DX. Last week we previewed that today we would announce two contribution projects. This is an unpaid program, and we are looking for 1-2 contributors who will dedicate 5-10 hours per week for at least 8 weeks. More time is welcome, but not required.

What does DX mean?

We chose this project because we keep running into frustrations while fixing bugs and debugging test failures. Many people suggest great ideas; in this case we have set aside a few of them (see the dependent bugs: cleaning up argument parsers, helping our tests run in smarter chunks, making it easier to run tests locally or on a server, etc.). Each of these would clean things up and is harder than a good first bug, yet each issue on its own would be too small for an internship. Our goal is to clean up our test harnesses and tools and, if time permits, add things to the workflow that make it easier for developers to do their jobs!

What is required of you to participate?

- A willingness to learn and ask questions

- A general knowledge of programming (this will be mostly in python with some javascript as well)

- A promise to show up regularly and take ownership of the issues you are working on

- Good at solving problems and thinking out of the box

- Comfortable with (or willing to try) working with a variety of people

What we will guarantee from our end:

- A dedicated mentor for the project whom you will work with regularly throughout the project

- A single area of work to reduce the need to get up to speed over and over again.

- This project will cover many tools, but the general problem space will be the same

- The opportunity to work with many people (different bugs could have a specific mentor) while retaining a single mentor to guide you through the process

- The ability to be part of the team: you will be welcome in meetings, and we will value your input on solving problems, brainstorming, and figuring out new problems to tackle.

How do you apply?

Get in touch with us by replying to the post, commenting in the bug, or contacting us on IRC (I am :jmaher in #ateam on irc.mozilla.org). We will point you at a starter bug and introduce you to the bugs and problems to solve. If you have prior work that would help us learn more about you (links to Bugzilla, GitHub, blogs, etc.), that would be a plus.

How will you select the candidates?

There are no strict criteria here. One factor will be whether you meet the requirements outlined above and how well you pick up the problem space. Ultimately, the decision is up to the mentor (for this project, that will be me). If you apply and we have already picked a candidate, or we don't choose you for other reasons, we plan to repeat this every few months.

Looking forward to building great things!

https://elvis314.wordpress.com/2015/05/18/a-team-contribution-opportunity-dx-developer-ergonomics/

Air Mozilla: Mozilla Weekly Project Meeting

The Monday Project Meeting

https://air.mozilla.org/mozilla-weekly-project-meeting-20150519/

Daniel Pocock: Free and open WebRTC for the Fedora Community

In January 2014, we launched the rtc.debian.org service for the Debian community. An equivalent service has been in testing for the Fedora community at FedRTC.org.

Some key points about the Fedora service:

- The web front-end is just HTML, CSS and JavaScript. PHP is only used for account creation; the actual WebRTC experience requires no server-side web framework, just a SIP proxy.

- The web code is all available in a GitHub repository so people can extend it.

- Anybody who can authenticate against the FedOAuth OpenID is able to get a fedrtc.org test account immediately.

- The server is built entirely with packages from CentOS 7 + EPEL 7, except for the SIP proxy itself. The SIP proxy is reSIProcate, which is available as a Fedora package and builds easily on RHEL / CentOS.

Testing it with WebRTC

Create an RTC password and then log in. Other users can call you. It is federated, so people can also call from rtc.debian.org or from freephonebox.net.

Testing it with other SIP softphones

You can use the RTC password to connect to the SIP proxy from many softphones, including Jitsi or Lumicall on Android.

Copy it

The process to replicate the server for another domain is entirely described in the Real-Time Communications Quick Start Guide.

Discuss it

The FreeRTC mailing list is a great place to discuss any issues involving this site or free RTC in general.

WebRTC opportunities expanding

Just this week, the first batch of Firefox OS televisions are hitting the market. Every one of these is a potential WebRTC client that can interact with free communications platforms.

http://danielpocock.com/free-and-open-webrtc-for-the-fedora-community

Mozilla Reps Community: New council members – Spring 2015

We are happy to announce that three new members of the Council have been elected.

Welcome Michael, Shahid and Christos! They bring with them skills they have picked up as Reps mentors, and as community leaders both inside Mozilla and in other fields. A HUGE thank you to the outgoing council members – Arturo, Emma and Raj. We are hoping you continue to use your talents and experience to continue in a leadership role in Reps and Mozilla.

The new members will be gradually onboarded over the next three weeks.

The Mozilla Reps Council is the governing body of the Mozilla Reps Program. It provides the general vision of the program and oversees day-to-day operations globally. Currently, 7 volunteers and 2 paid staff sit on the council. Find out more on the ReMo wiki.

Congratulate new Council members on this Discourse topic!

https://blog.mozilla.org/mozillareps/2015/05/18/new-council-members-spring-2015/

Tim Taubert: Implementing a PBKDF2-based Password Storage Scheme for Firefox OS

My esteemed colleague Frederik Braun recently took on rewriting the module responsible for storing and checking the passcodes that unlock Firefox OS phones. While we are still working on actually landing it in Gaia, I wanted to seize the chance to talk about this great real-world use case of the WebCrypto API and highlight a few important points about using password-based key derivation (PBKDF2) to store passwords.

The Passcode Module

Let us take a closer look, not at the verbatim implementation but at a slightly simplified version. The API offers the only two operations such a module needs to support: setting a new passcode and verifying that a given passcode matches the stored one.

```javascript
let Passcode = {
  store(code) {
    // ...
  },

  verify(code) {
    // ...
  }
};
```

When setting up the phone for the first time, or when changing the passcode later, we call Passcode.store() to write a new code to disk. Passcode.verify() will help us determine whether we should unlock the phone. Both methods return a Promise, as all operations exposed by the WebCrypto API are asynchronous.

```javascript
Passcode.store("1234").then(() => {
  return Passcode.verify("1234");
}).then(valid => {
  console.log(valid);
});

// Output: true
```

Make the passcode look “random”

The module should absolutely not store passcodes in the clear. We will use PBKDF2 as a pseudorandom function (PRF) to retrieve a result that looks random. An attacker with read access to the part of the disk storing the user’s passcode should not be able to recover the original input, assuming limited computational resources.

The function deriveBits() is a PRF that takes a passcode and returns a Promise resolving to a random-looking sequence of bytes. To be a little more specific, it uses PBKDF2 to derive pseudorandom bits.

```javascript
function deriveBits(code) {
  // Convert string to a TypedArray.
  let bytes = new TextEncoder("utf-8").encode(code);

  // Create the base key to derive from.
  let importedKey = crypto.subtle.importKey(
    "raw", bytes, "PBKDF2", false, ["deriveBits"]);

  return importedKey.then(key => {
    // Salt should be at least 64 bits.
    // (This simplified version does not return the salt; a real store()
    // must persist it so that verify() can recompute the same bits.)
    let salt = crypto.getRandomValues(new Uint8Array(8));

    // All required PBKDF2 parameters.
    let params = {name: "PBKDF2", hash: "SHA-1", salt, iterations: 5000};

    // Derive 160 bits using PBKDF2.
    return crypto.subtle.deriveBits(params, key, 160);
  });
}
```

Choosing PBKDF2 parameters

As you can see above PBKDF2 takes a whole bunch of parameters. Choosing good values is crucial for the security of our passcode module so it is best to take a detailed look at every single one of them.
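For reference, RFC 2898 (PKCS #5 v2.0) defines PBKDF2 in terms of a password P, a salt S, an iteration count c and a desired key length dkLen, with HMAC as the PRF whose output length is hLen. Mapping this onto the deriveBits() sketch above is our own reading: P is the UTF-8-encoded passcode, S is the 8-byte random salt, c = 5000 and dkLen = 160 bits. Because HMAC-SHA-1 also outputs 160 bits, only the single block T_1 is needed.

$$
\begin{aligned}
\mathrm{DK} &= T_1 \,\|\, T_2 \,\|\, \cdots \,\|\, T_{\lceil dkLen / hLen \rceil} \quad (\text{truncated to } dkLen \text{ bits}),\\
T_i &= U_1 \oplus U_2 \oplus \cdots \oplus U_c,\\
U_1 &= \mathrm{PRF}\big(P,\; S \,\|\, \mathrm{INT}(i)\big),\\
U_j &= \mathrm{PRF}\big(P,\; U_{j-1}\big), \qquad j = 2, \dots, c,
\end{aligned}
$$

where INT(i) is the four-byte big-endian encoding of the block index i.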

Select a cryptographic hash function

PBKDF2 is a big PRF that iterates a small PRF. The small PRF, iterated multiple times (more on why this is done later), is fixed to be an HMAC construction; you are however allowed to specify the cryptographic hash function used inside HMAC itself. To understand why you need to select a hash function it helps to take a look at HMAC’s definition, here with SHA-1 at its core:

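$$\mathrm{HMAC\text{-}SHA\text{-}1}(k, m) = \mathrm{SHA\text{-}1}\big((k \oplus \mathit{opad}) \,\|\, \mathrm{SHA\text{-}1}((k \oplus \mathit{ipad}) \,\|\, m)\big)$$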

Gregory Szorc: Firefox Mercurial Repository with CVS History

When Firefox made the switch from CVS to Mercurial in March 2007, the CVS history wasn't imported into Mercurial. There were good reasons for this at the time. But it's a decision that continues to have side-effects. I am surprised how often I hear of engineers wanting to access blame and commit info from commits now more than 9 years old!

When individuals created a Git mirror of the Firefox repository a few years ago, they correctly decided that importing CVS history would be a good idea. They also correctly decided to combine the logically same but physically separate release and integration repositories into a unified Git repository. These are things we can't easily do to the canonical Mercurial repository because it would break SHA-1 hashes, breaking many systems, and it would require significant changes in process, among other reasons.

While Firefox developers do have access to a single Firefox repository with full CVS history (the Git mirror), they still aren't satisfied.

Running git blame (or hg blame for that matter) can be very expensive. For this reason, the blame interface is disabled on many web-based source viewers by default. On GitHub, some blame URLs for the Firefox repository time out and cause GitHub to display an error message. No matter how hard you try, you can't easily get blame results (running a local Git HTTP/HTML interface is still difficult compared to hg serve).

Another reason developers aren't satisfied with the Git mirror is that Git's querying tools pale in comparison to Mercurial's. I've said it before and I'll say it again: Mercurial's revision sets and templates are incredibly useful features that enable advanced repository querying and reporting. Git's offerings come nowhere close. (I really wish Git would steal these awesome features from Mercurial.)

Anyway, enough people were complaining about the lack of a Mercurial Firefox repository with full CVS history that I decided to create one. If you point your browsers or Mercurial clients to https://hg.mozilla.org/users/gszorc_mozilla.com/gecko-full, you'll be able to access it.

The process used for the conversion was the simplest possible: I used hg-git to convert the Git mirror back to Mercurial.

Unlike the Git mirror, I didn't include all heads in this new repository. Instead, there is only mozilla-central's head (the current development tip). If I were doing this properly, I'd include all heads, like gecko-aggregate.

I'm well aware there are oddities in the Git mirror and they now exist in this new repository as well. My goal for this conversion was to deliver something: it wasn't a goal to deliver the most correct result possible.

At this time, this repository should be considered an unstable science experiment. By no means should you rely on this repository. But if you find it useful, I'd appreciate hearing about it. If enough people ask, we could probably make this more official.

http://gregoryszorc.com/blog/2015/05/18/firefox-mercurial-repository-with-cvs-history

Gervase Markham: Eurovision Bingo

Some people say that all Eurovision songs are the same. That’s probably not quite true, but there is perhaps a hint of truth in the suggestion that some themes tend to recur from year to year. Hence, I thought, Eurovision Bingo.

I wrote some code to analyse a directory full of lyrics, normally those from the previous year of the competition, and work out the frequency of occurrence of each word. It will then generate Bingo cards, with sets of words of different levels of commonness. You can then use them to play Bingo while watching this year’s competition (which is on Saturday).
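The frequency analysis described above can be sketched in Rust. This is not Gervase's code, which is in his GitHub repo. The function names `word_frequencies` and `commonness_tiers`, the tokenization rule (split on anything that isn't a letter, digit or apostrophe, then lowercase), and the choice to cut the ranked word list into equal-sized tiers are all assumptions made for illustration.

```rust
use std::collections::HashMap;

/// Count how often each (lowercased) word occurs across all lyrics.
/// Hypothetical helper: splits on any character that is not alphanumeric or an apostrophe.
fn word_frequencies(lyrics: &[&str]) -> HashMap<String, usize> {
    let mut counts = HashMap::new();
    for text in lyrics {
        for word in text.split(|c: char| !c.is_alphanumeric() && c != '\'') {
            if word.is_empty() {
                continue;
            }
            *counts.entry(word.to_lowercase()).or_insert(0) += 1;
        }
    }
    counts
}

/// Rank words from most to least common and cut the ranking into `tiers`
/// roughly equal bands, so a card can mix common and rarer words.
fn commonness_tiers(counts: &HashMap<String, usize>, tiers: usize) -> Vec<Vec<String>> {
    assert!(tiers > 0, "need at least one tier");
    let mut words: Vec<(&String, &usize)> = counts.iter().collect();
    // Highest count first; ties broken alphabetically so the output is deterministic.
    words.sort_by(|a, b| b.1.cmp(a.1).then_with(|| a.0.cmp(b.0)));
    let per_tier = ((words.len() + tiers - 1) / tiers).max(1);
    words
        .chunks(per_tier)
        .map(|chunk| chunk.iter().map(|&(w, _)| w.clone()).collect())
        .collect()
}
```

A card generator would then draw a few words from each tier; that step is left out here.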

There’s a GitHub repo, or if you want to go straight to pre-generated cards for this year, they are here.

Here’s a sample card from the 2014 lyrics:

| fell | cause | rising | gonna | rain |

| world | believe | dancing | hold | once |

| every | mean | LOVE | something | chance |

| hey | show | or | passed | say |

| because | light | hard | home | heart |

Have fun :-)

http://feedproxy.google.com/~r/HackingForChrist/~3/_3Rvju8PEvE/

Air Mozilla: OuiShare Labs Camp #3

OuiShare Labs Camp #3 is a participative conference dedicated to decentralization, IndieWeb, semantic web and open source community tools.

Mark Côté: Project Isolation

The other day I read about another new Mozilla project that decided to go with GitHub issues instead of our Bugzilla installation (BMO). The author’s arguments make a lot of sense: GitHub issues are much simpler and faster, and if you keep your code in GitHub, you get tighter integration. The author notes that a downside is the inability to file security or confidential bugs, for which Bugzilla has a fine-grained permission system, and that he’d just put those (rare) issues on BMO.

The one downside he doesn’t mention is interdependencies with other Mozilla projects, e.g. the Depends On/Blocks fields. This is where Bugzilla gets into project, product, and perhaps even program management by allowing people to easily track dependency chains, which is invaluable in planning. Many people actually file bugs solely as trackers for a particular feature or project, hanging all the work items and bugs off of it, and sometimes that work crosses product boundaries. There are also a number of tracking flags and fields that managers use to prioritize work and decide which releases to target.

If I had to rebut my own point, I would argue that the projects that use GitHub issues are relatively isolated, and so dependency tracking is not particularly important. Why clutter up and slow down the UI with lots of features that I don’t need for my project? In particular, most of the tracking features are currently used only by, and thus designed for, the Firefox products (aside: this is one reason the new modal UI hides most of these fields by default if they have never been set).

This seems hard to refute, and I certainly wouldn’t want to force an admittedly complex tool on anyone who had much simpler needs. But something still wasn’t sitting right with me, and it took a while to figure out what it was. As usual, it was that a different question was going unasked, leading to unspoken assumptions: why do we have so many isolated projects, and what are we giving up by having such loose (or even no) integration amongst all our work?

Working on projects in isolation is comforting because you don’t have to think about all the other things going on in your organization—in other words, you don’t have to communicate with very many people. A lack of communication, however, leads to several problems:

- low visibility: what is everyone working on?

- redundancy: how many times are we solving the same problem?

- barriers to coordination: how can we become greater than the sum of our parts by delivering inter-related features and products?

By working in isolation, we can’t leverage each other’s strengths and accomplishments. We waste effort and lose great opportunities to deliver amazing things. We know that places like Twitter use monorepos to get some of these benefits, like a single build/test/deploy toolchain and coordination of breaking changes. This is what facilitates architectures like microservices and SOAs. Even if we don’t want to go down those paths, there is still a clear benefit to program management by at least integrating the tracking and planning of all of our various endeavours and directions. We need better organization-wide coordination.

We’re already taking some steps in this direction, like moving Firefox and Cloud Services to one division. But there are many other teams that could benefit from better integration, many teams that are duplicating effort and missing out on chances to work together. It’s a huge effort, but maybe we need to form a team to define a strategy and process—a Strategic Integration Team perhaps?

Mike Conley: The Joy of Coding (Ep. 14): More OS X Printing

In this episode, I kept working on the same bug as last week – proxying the print dialog from the content process on OS X. We actually finished the serialization bit, and started doing deserialization!

Hopefully, next episode we can polish off the deserialization and we’ll be done. Fingers crossed!

Note that this episode was about 2 hours and 10 minutes long, but the standard-definition recording on Air Mozilla only plays for about 13 minutes and 5 seconds. We’re not sure what’s going on there; we’ve filed a bug with the people who encoded it. Hopefully, we’ll have the full episode up in standard definition soon.

In the meantime, if you’d like to watch the whole episode, you can go to the Air Mozilla page and watch it in HD, or you can go to the YouTube mirror.

References

Bug 1091112 – Print dialog doesn’t get focus automatically, if e10s is enabled – Notes

http://mikeconley.ca/blog/2015/05/17/the-joy-of-coding-ep-14-more-osx-printing/

Manish Goregaokar: The Problem With Single-threaded Shared Mutability

This is a post that I’ve been meaning to write for a while now, and the release of Rust 1.0 gives me the perfect impetus to go ahead and do it.

Whilst this post discusses a choice made in the design of Rust and uses examples in Rust, the principles discussed here apply, for the most part, to other languages as well. I’ll also try to make the post easy to understand for those without a Rust background; please let me know if some code or terminology needs to be explained.

What I’m going to discuss here is the choice made in Rust to disallow having multiple mutable aliases to the same data (or a mutable alias when there are active immutable aliases), even from the same thread. In essence, it disallows one from doing things like:

```rust
let mut x = Vec::new();
{
    let ptr = &mut x; // Take a mutable reference to `x`
    ptr.push(1);      // Allowed
    let y = x[0];     // Not allowed (will not compile): as long as `ptr` is active,
                      // `x` cannot be read from ...
    x.push(1);        // ... or written to
    ptr.push(2);      // `ptr` is used again here, so it is still active above
}

// alternatively,
let mut x = Vec::new();
x.push(1);            // Allowed
{
    let ptr = &x;     // Create an immutable reference
    let y = ptr[0];   // Allowed, nobody can mutate
    let y = x[0];     // Similarly allowed
    x.push(1);        // Not allowed (will not compile): as long as `ptr` is active,
                      // `x` is frozen for mutation
    println!("{}", ptr[0]); // `ptr` is used again here, so it is still active above
}
```

This is essentially the “Read-Write lock” (RWLock) pattern, except it’s not being used in a threaded context, and the “locks” are done via static analysis (compile time “borrow checking”).
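To make the analogy concrete, here is a minimal sketch using the standard library's `std::sync::RwLock`, which enforces the same "many readers or one writer" rule at run time instead of at compile time. The function name `rwlock_demo` is hypothetical; the sketch relies on `try_write()` returning an error (rather than blocking) while a read guard is held.

```rust
use std::sync::RwLock;

/// Hypothetical demo: RwLock allows shared readers OR one exclusive writer.
/// Returns (was a writer refused while a reader was alive?, final contents).
fn rwlock_demo() -> (bool, Vec<i32>) {
    let lock = RwLock::new(vec![1]);

    let writer_refused = {
        let reader = lock.read().unwrap();       // shared access, like `&x`
        assert_eq!(reader[0], 1);
        let refused = lock.try_write().is_err(); // exclusive access refused while `reader` lives
        refused
    }; // `reader` is dropped here, releasing the read lock

    lock.write().unwrap().push(2);               // exclusive access, like `&mut x`
    let data = lock.into_inner().unwrap();       // take the Vec back out of the lock
    (writer_refused, data)
}
```

The borrow checker performs the same bookkeeping statically, so none of these checks happen at run time in the examples above.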

Newcomers to the language have the recurring question as to why this exists. Ownership semantics and immutable borrows can be grasped because there are concrete examples from languages like C++ of problems that these concepts prevent. It makes sense that having only one “owner” and then multiple “borrowers” who are statically guaranteed to not stick around longer than the owner will prevent things like use-after-free.

But what could possibly be wrong with having multiple handles for mutating an object? Why do we need an RWLock pattern?[1]

It causes memory unsafety

This issue is specific to Rust, and I promise that this will be the only Rust-specific answer.

Rust enums provide a form of algebraic data types. A Rust enum is allowed to “contain” data, for example you can have the enum

```rust
enum StringOrInt {
    Str(String),
    Int(i64),
}
```

which gives us a type that can either be a variant Str, with an associated string, or a variant Int[2], with an associated integer.

With such an enum, we could cause a segfault like so:

```rust
use StringOrInt::{Int, Str}; // Bring the variants into scope

let mut x = Str("Hi!".to_string()); // Create an instance of the `Str` variant with associated string "Hi!"
let y = &mut x;                     // Create a mutable alias to `x`

if let Str(ref insides) = x {       // If `x` is a `Str`, assign its inner data to the variable `insides`
    *y = Int(1);                    // Set `*y` to `Int(1)`, therefore setting `x` to `Int(1)` too
    println!("x says: {}", insides); // Uh oh!
}
```

Here, we invalidated the insides reference because setting x to Int(1) meant that there is no longer a string inside it.

However, insides is still a reference to a String, and the generated assembly would try to dereference the memory location where the pointer to the allocated string used to be, probably end up trying to dereference 1 or some nearby data instead, and cause a segfault.
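The compiler rejects the example above, so how can one legitimately overwrite `x` while still using the old string? One option (a sketch, not the only approach) is `std::mem::replace`, which moves the old value out of the slot and returns it by value before the new value is written, so nothing is left pointing into the overwritten variant. The helper name `take_string_then_overwrite` is hypothetical.

```rust
use std::mem;

#[derive(Debug, PartialEq)]
enum StringOrInt {
    Str(String),
    Int(i64),
}

/// Hypothetical helper: overwrite `*x` with `Int(1)` and hand back the
/// string it used to hold. The old value is moved out before the write,
/// so the caller owns the `String` instead of holding a dangling reference.
fn take_string_then_overwrite(x: &mut StringOrInt) -> Option<String> {
    match mem::replace(x, StringOrInt::Int(1)) {
        StringOrInt::Str(s) => Some(s), // we now own the String
        StringOrInt::Int(_) => None,
    }
}
```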

Okay, so far so good. We know that for Rust-style enums to work safely in Rust, we need the RWLock pattern. But are there any other reasons we need the RWLock pattern? Not many languages have such enums, so this shouldn’t really be a problem for them.

Iterator invalidation

Ah, the example that is brought up almost every time the question above is asked. While I’ve been quite guilty of using this example often myself (and feel that it is a very appropriate example that can be quickly explained), I also find it to be a bit of a cop-out, for reasons which I will explain below. This is partly why I’m writing this post in the first place; a better idea of the answer to The Question should be available for those who want to dig deeper.

Iterator invalidation involves using tools like iterators whilst modifying the underlying dataset somehow.

For example,

```rust
let mut buf = vec![1, 2, 3, 4];

for i in &buf {
    buf.push(*i); // Will not compile: `buf` is borrowed by the loop
}
```

Firstly, this will loop infinitely (if it compiled, which it doesn’t, because Rust prevents this). The equivalent C++ example would be this one, which I use at every opportunity.

What’s happening in both code snippets is that the iterator is really just a pointer to the vector and an index. It doesn’t contain a snapshot of the original vector; so pushing to the original vector will make the iterator iterate for longer. Pushing once per iteration will obviously make it iterate forever.
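If a snapshot is what you actually want, you can make one explicitly. The sketch below (the helper name `push_each_from_snapshot` is hypothetical) clones the vector and iterates over the copy while pushing to the original. It compiles and stops after the original four elements, at the cost of copying the data.

```rust
/// Hypothetical helper: push a copy of every original element onto `buf`.
/// The loop walks a clone, so pushing to `buf` cannot affect the iteration.
fn push_each_from_snapshot(mut buf: Vec<i32>) -> Vec<i32> {
    for i in buf.clone() {
        buf.push(i);
    }
    buf
}
```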

The infinite loop isn’t even the real problem here. The real problem is that after a while, we could get a segmentation fault. Internally, vectors have a certain amount of allocated space to work with. If the vector is grown past this space, a new, larger allocation may need to be done (freeing the old one), since vectors must use contiguous memory.

This means that when the vector overflows its capacity, it will reallocate, invalidating the reference stored in the iterator, and causing use-after-free.

Of course, there is a trivial solution in this case: store a reference to the Vec/vector object inside the iterator instead of just the pointer to the vector's data on the heap. This leads to some extra indirection or a larger stack size for the iterator (depending on how you implement it), but overall it prevents the memory unsafety.
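Safe Rust won't let an iterator keep a reference to the `Vec` while the loop pushes to it. The spirit of the fix, going through the vector object on every step instead of caching a pointer to its heap buffer, can still be approximated with a cursor that stores only an index. This is a hypothetical sketch (`IndexCursor` and `grow_while_iterating` are made-up names). It terminates only because elements of 10 or more are not pushed again.

```rust
/// Hypothetical cursor that remembers only a position. Each step reads the
/// element through the vector itself, so a reallocation caused by `push`
/// cannot leave it pointing at freed memory.
struct IndexCursor {
    idx: usize,
}

impl IndexCursor {
    fn new() -> Self {
        IndexCursor { idx: 0 }
    }

    /// Return the element at the current position (if any) and move forward.
    fn advance(&mut self, v: &[i32]) -> Option<i32> {
        let item = v.get(self.idx).copied();
        self.idx += 1;
        item
    }
}

/// Walk `v` while appending to it. Elements below 10 get `x + 10` appended,
/// so the loop sees the new elements but still terminates.
/// Returns the final vector and how many elements were visited.
fn grow_while_iterating(mut v: Vec<i32>) -> (Vec<i32>, usize) {
    let mut cursor = IndexCursor::new();
    let mut visited = 0;
    while let Some(x) = cursor.advance(&v) {
        visited += 1;
        if x < 10 {
            v.push(x + 10); // may reallocate; the cursor is unaffected
        }
    }
    (v, visited)
}
```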

This would still cause problems in more complex situations involving multidimensional vectors, however.

“It’s effectively threaded”

Aliasing with mutability in a sufficiently complex, single-threaded program is effectively the same thing as accessing data shared across multiple threads without a lock

(The above is my paraphrasing of someone else’s quote; but I can’t find the original or remember who made it)

Let’s step back a bit and figure out why we need locks in multithreaded programs. Because of the way caches and memory work, we’ll never need to worry about two processes writing to the same memory location simultaneously and coming up with a hybrid value, or about a read happening halfway through a write.

What we do need to worry about is the rug being pulled out from under our feet. A bunch of related reads/writes would have been written with some invariants in mind, and arbitrary reads/writes happening between them could invalidate those invariants. For example, a bit of code might first read the length of a vector, and then go ahead and iterate through it with a regular for loop bounded on the length.

The invariant assumed here is the length of the vector. If pop() were called on the vector in some other thread, this invariant could be invalidated after the length is read but before the elements are read, possibly causing a segfault or use-after-free in the last iteration.
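This is why the usual fix is to hold a lock across the whole sequence of related reads. The Rust sketch below shows that. The function names and the 10-element vector are illustrative. Because the length read and the element reads happen under one `Mutex` guard, the writer thread's `pop()` calls land entirely before or entirely after the loop, never in the middle.

```rust
use std::sync::{Arc, Mutex};
use std::thread;

/// Reads the length and the elements under one lock guard, so no other
/// thread can pop() between the length read and the element reads.
fn sum_with_lock(shared: &Mutex<Vec<u64>>) -> u64 {
    let guard = shared.lock().unwrap();
    let len = guard.len(); // invariant captured here...
    let mut total = 0;
    for i in 0..len {
        total += guard[i]; // ...and still valid: the lock is held throughout
    }
    total
}

/// Runs the reader concurrently with a writer that pops three elements.
fn run_concurrently() -> u64 {
    let shared = Arc::new(Mutex::new((1..=10).collect::<Vec<u64>>()));
    let writer = {
        let shared = Arc::clone(&shared);
        thread::spawn(move || {
            for _ in 0..3 {
                shared.lock().unwrap().pop();
            }
        })
    };
    let total = sum_with_lock(&shared);
    writer.join().unwrap();
    total
}

fn main() {
    // Depending on scheduling, the reader sees 10, 9, 8 or 7 elements,
    // but never a length that disagrees with the data it then reads.
    println!("{}", run_concurrently());
}
```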

However, we can have a situation similar to this (in spirit) in single-threaded code. Consider the following:

```rust
let mut x = some_big_thing();
let len = x.some_vec.len(); // invariant: some_vec has `len` elements
for i in 0..len {
    x.do_something_complicated(x.some_vec[i]);
}
```

We have the same invariant here, but can we be sure that x.do_something_complicated() doesn’t modify x.some_vec for some reason? In a complicated codebase, where do_something_complicated() itself calls a lot of other functions which may also modify x, this can be hard to audit.
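The loop above can be made concrete. `BigThing` and its method are hypothetical stand-ins for `some_big_thing()` and `do_something_complicated()`. The method has a side effect that is easy to miss: it shrinks `some_vec`. The sketch uses `get` instead of indexing so that the stale length shows up as a count. In Rust, `x.some_vec[i]` would panic on those indices rather than corrupt memory, but the logic bug is the same.

```rust
/// Hypothetical stand-in for the `some_big_thing()` value in the text.
struct BigThing {
    some_vec: Vec<u32>,
    processed: Vec<u32>,
}

impl BigThing {
    /// Hypothetical "complicated" method with a side effect that is easy to
    /// miss: it shrinks `some_vec` whenever it processes an even value.
    fn do_something_complicated(&mut self, value: u32) {
        self.processed.push(value);
        if value % 2 == 0 {
            self.some_vec.pop();
        }
    }
}

/// The loop from the text, but using `get` instead of `x.some_vec[i]` so the
/// broken invariant shows up as a count rather than a panic.
fn count_stale_indices(x: &mut BigThing) -> usize {
    let len = x.some_vec.len(); // invariant captured once, up front
    let mut stale = 0;
    for i in 0..len {
        let item = x.some_vec.get(i).copied();
        match item {
            Some(value) => x.do_something_complicated(value),
            None => stale += 1, // `x.some_vec[i]` would panic here
        }
    }
    stale
}

fn main() {
    let mut x = BigThing { some_vec: vec![2, 4, 5, 7], processed: Vec::new() };
    let stale = count_stale_indices(&mut x);
    println!("stale indices: {stale}, processed: {:?}", x.processed);
}
```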

Of course, the above example is simplified and contrived, but it doesn’t seem unreasonable to assume that such bugs can happen in large codebases, where many of the methods being called have side effects which may not always be evident.

This means that in large codebases we have almost the same problem as in threaded ones. It’s very hard to maintain invariants when one is not completely sure of what each line of code is doing. It’s possible to become sure of this by reading through the code (which takes a while), but anyone making further modifications would have to do the same. It’s impractical to do this all the time, and eventually bugs will start cropping up.

On the other hand, having a static guarantee that this can’t happen is great. And when the code is too convoluted for a static guarantee (or you just want to avoid the borrow checker), Rust provides RefCell, a single-threaded RwLock-esque type. It provides interior mutability and behaves like a runtime version of the borrow checker. Similar wrappers can be written in other languages.
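A small sketch of RefCell behaving like a runtime-checked read/write lock. The function name is illustrative. While a shared borrow is alive, more shared borrows succeed but a mutable borrow is refused. The non-panicking `try_borrow`/`try_borrow_mut` are used so the refusal is observable. Once the reader is dropped, mutation is allowed again.

```rust
use std::cell::RefCell;

/// Shows RefCell acting like a single-threaded read/write lock whose rules
/// are checked at runtime instead of at compile time.
fn refcell_rules() -> (bool, bool, usize) {
    let data = RefCell::new(vec![1, 2, 3]);

    let reader = data.borrow(); // like taking a read lock
    let second_reader_ok = data.try_borrow().is_ok(); // many readers: fine
    let writer_refused = data.try_borrow_mut().is_err(); // writer while reading: refused
    drop(reader);

    data.borrow_mut().push(4); // no readers left, so mutation is allowed
    let len = data.borrow().len();
    (second_reader_ok, writer_refused, len)
}

fn main() {
    println!("{:?}", refcell_rules());
}
```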

Edit: For many primitives, like simple integers, the problems with shared mutability turn out not to be a major issue. For these, we have a type called Cell which lets them be mutated and shared simultaneously. This works on all Copy types, i.e. types which can be copied simply by copying their bytes on the stack (unlike types involving pointers or other indirection).
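A minimal sketch of Cell, with illustrative names. Cell only copies values in and out and never hands out a reference to its contents, so several shared aliases can all mutate it without invalidating anything.

```rust
use std::cell::Cell;

/// Increments a counter through a shared reference; no `&mut` needed.
fn bump(counter: &Cell<u32>) {
    counter.set(counter.get() + 1);
}

fn shared_counter() -> u32 {
    let hits = Cell::new(0);
    let alias_a = &hits; // two shared aliases of the same Cell...
    let alias_b = &hits;
    bump(alias_a); // ...both of which can mutate it
    bump(alias_b);
    bump(alias_a);
    hits.get() // Cell::get copies the value out (requires T: Copy)
}

fn main() {
    println!("{}", shared_counter());
}
```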

This sort of bug is a good source of reentrancy problems too.

Safe abstractions

In particular, the issue in the previous section makes it hard to write safe abstractions, especially with generic code. While this problem is clearer in the case of Rust (where abstractions are expected to be safe and preferably low-cost), this isn’t unique to any language.

Every method you expose has a contract that is expected to be followed. Many times, a contract is handled by type safety itself, or you may have some error-based model to reject data that violates the contract (for example, division by zero).
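For the division example, an error-based contract might look like the sketch below. `DivError` and `checked_divide` are hypothetical names. The standard `i32::checked_div` is used underneath. Callers that violate the contract get an error value they must handle, rather than a crash or undefined behaviour.

```rust
/// Hypothetical error type for the division contract.
#[derive(Debug, PartialEq)]
enum DivError {
    DivideByZero,
    Overflow,
}

/// The contract "divisor must be non-zero" is enforced by returning an error
/// for inputs that violate it, rather than trusting the caller.
fn checked_divide(a: i32, b: i32) -> Result<i32, DivError> {
    if b == 0 {
        return Err(DivError::DivideByZero);
    }
    // `checked_div` returns None on overflow; for i32 that is only MIN / -1.
    a.checked_div(b).ok_or(DivError::Overflow)
}

fn main() {
    println!("{:?}", checked_divide(7, 2));
    println!("{:?}", checked_divide(1, 0));
}
```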

But as an API (either internal or exposed) gets more complicated, so does its contract. It’s not always possible to detect contract violations at runtime either; for example, many cases of iterator invalidation are hard to prevent in nontrivial code, even with asserts.

It’s easy to create a method and add the documentation “the first two arguments should not point to the same memory”. But if this method is used by other methods, the contract can turn into much more complicated things that are harder to express or check. When generics get involved, it only gets worse: you sometimes have no way of enforcing that there are no shared mutable aliases, or even of expressing in the documentation what isn’t allowed. Nor will it be easy for an API consumer to enforce this.
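In Rust, a contract like "the first two arguments should not point to the same memory" can live in the signature instead of the documentation. `append_doubled` is a hypothetical function for illustration. Because `&mut` is exclusive, the aliased call is rejected by the borrow checker, so it appears below only as a comment. The caller has to make a copy explicitly.

```rust
/// The contract "`dst` and `src` must not point to the same memory" is part
/// of the signature: `&mut` is exclusive, so in safe Rust `src` can never
/// alias `dst`.
fn append_doubled(dst: &mut Vec<i32>, src: &[i32]) {
    for &x in src {
        // If `src` pointed into `dst`, this push could reallocate `dst`'s
        // buffer and leave `src` dangling (the C++ failure mode).
        dst.push(x * 2);
    }
}

fn doubled_copy_appended() -> Vec<i32> {
    let mut v = vec![1, 2, 3];
    // append_doubled(&mut v, &v); // rejected by the borrow checker
    let snapshot = v.clone(); // the caller has to make the copy explicit
    append_doubled(&mut v, &snapshot);
    v
}

fn main() {
    println!("{:?}", doubled_copy_appended());
}
```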

This makes it harder and harder to write safe, generic abstractions. Such abstractions rely on invariants, and these invariants can often be broken by the problems in the previous section. It’s not always easy to enforce these invariants, and such abstractions will either be misused or not written in the first place, opting for a heavier option. Generally one sees that such abstractions or patterns are avoided altogether, even though they may provide a performance boost, because they are risky and hard to maintain. Even if the present version of the code is correct, someone may change something in the future breaking the invariants again.

My previous post outlines a situation where Rust was able to choose the lighter path in a situation where getting the same guarantees would be hard in C++.

Note that this is a wider problem than just mutable aliasing. Rust has this problem too, but not when it comes to mutable aliasing. Mutable aliasing is important to fix, however, because we can make a lot of assumptions about our program when there are no mutable aliases. Namely, by looking at a line of code we can know what happened with respect to the locals. If mutable aliasing is possible, other locals may have been modified too. A very simple example is:

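```rust
fn look_ma_no_temp_var_l33t_interview_swap(x: &mut i32, y: &mut i32) {
    // Wrapping arithmetic so the trick doesn't overflow-panic in debug builds.
    *x = x.wrapping_add(*y);
    *y = x.wrapping_sub(*y);
    *x = x.wrapping_sub(*y);
}

// or

fn look_ma_no_temp_var_rockstar_interview_swap(x: &mut i32, y: &mut i32) {
    *x = *x ^ *y;
    *y = *x ^ *y;
    *x = *x ^ *y;
}
```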

In both cases, when the two references point to the same variable³, that variable gets set to zero instead of being left unchanged.
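Safe Rust won't let you pass the same `&mut` twice. To reproduce the failure, this sketch writes the XOR swap against `Cell`, which does permit shared mutable aliases. The function names are illustrative. With distinct cells it swaps correctly. With the same cell passed twice, the first line computes `v ^ v = 0` and the value never recovers.

```rust
use std::cell::Cell;

/// The XOR swap from above, written against `Cell` so that the two arguments
/// are allowed to alias in safe Rust.
fn xor_swap(x: &Cell<u32>, y: &Cell<u32>) {
    x.set(x.get() ^ y.get());
    y.set(x.get() ^ y.get());
    x.set(x.get() ^ y.get());
}

fn swap_results() -> (u32, u32, u32) {
    let a = Cell::new(5);
    let b = Cell::new(9);
    xor_swap(&a, &b); // distinct cells: swapped as expected

    let same = Cell::new(7);
    xor_swap(&same, &same); // aliased: first line computes 7 ^ 7 = 0
    (a.get(), b.get(), same.get())
}

fn main() {
    println!("{:?}", swap_results());
}
```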

A user (internal to your library, or an API consumer) would expect swap() not to change anything when fed equal references, but it does something totally different. This assumption could get used in a program: for example, instead of skipping the passes in an array sort where a slot is being compared with itself, one might just go ahead with them because swap() won’t change anything there anyway. But it does, and suddenly your sort function fills everything with zeroes. This could be solved by documenting the precondition and using asserts, but the documentation gets harder and harder as swap() is used in the guts of other methods.

Of course, the example above was contrived. It’s well known that those swap() implementations have that precondition and shouldn’t be used in such cases. Also, in most algorithms that call swap() it’s trivial to skip the cases where an element is compared with itself, generally with a simple check on the indices.
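The sort scenario can be sketched as a hypothetical selection sort over `Cell`s. `Cell` is used because safe Rust won't alias two `&mut`s. The sort uses the aliasing-unsafe XOR swap. Without the index check it swaps the minimum with itself whenever the minimum is already in place, and those slots become zero. Adding the trivial `min == i` check fixes it.

```rust
use std::cell::Cell;

fn xor_swap(x: &Cell<i32>, y: &Cell<i32>) {
    x.set(x.get() ^ y.get());
    y.set(x.get() ^ y.get());
    x.set(x.get() ^ y.get());
}

/// Selection sort over cells. With `skip_self_swap == false` it relies on
/// the (wrong) assumption that swapping a slot with itself is a no-op.
fn selection_sort(values: &[i32], skip_self_swap: bool) -> Vec<i32> {
    let slots: Vec<Cell<i32>> = values.iter().copied().map(Cell::new).collect();
    for i in 0..slots.len() {
        let mut min = i;
        for j in (i + 1)..slots.len() {
            if slots[j].get() < slots[min].get() {
                min = j;
            }
        }
        if skip_self_swap && min == i {
            continue; // the trivial index check mentioned in the text
        }
        xor_swap(&slots[i], &slots[min]); // aliases itself when min == i
    }
    slots.iter().map(|c| c.get()).collect()
}

fn main() {
    println!("{:?}", selection_sort(&[3, 1, 2], false)); // corrupted
    println!("{:?}", selection_sort(&[3, 1, 2], true)); // sorted
}
```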

But the example is a simplified sketch of the problem at hand.

In Rust, since this is statically checked, one doesn’t worry much about these problems, and robust APIs can be designed since knowing when something won’t be mutated can help simplify invariants.

Wrapping up

Aliasing that doesn’t fit the RWLock pattern is dangerous. If you’re using a language like Rust, you don’t need to worry. If you’re using a language like C++, it can cause memory unsafety, so be very careful. If you’re using a language like Java or Go, while it can’t cause memory unsafety, it will cause problems in complex bits of code.

This doesn’t mean that this problem should force you to switch to Rust, either. If you feel that you can avoid writing APIs where this happens, that is a valid way to get around it. This problem is much rarer in languages with a GC, so you might be able to avoid it altogether without much effort. It’s also okay to use runtime checks and asserts to maintain your invariants; performance isn’t everything.

But this is a real issue in programming, so make sure you think about it when designing your code.

http://manishearth.github.io/blog/2015/05/17/the-problem-with-shared-mutability/


Emma Irwin: Towards a Participation Standard |

Participation at Mozilla is a personal journey: no two stories are the same, no two paths are identical, and while motivations may be similar at times, what sustains and rewards our participation is unique. Knowing this, it feels slightly ridiculous to use the visual of a ladder to model the richness of opportunity and the value/risk of ‘every step’. The impression that there is a single starting point, a single end and a predictable series of rigid steps in between seems contrary to the journey.

Yet… the ‘ladder’ to me has always seemed like the perfect way to visualize the potential of ‘starting’. Even more importantly, I think ladders help people visualize how finishing a single step leads to greater things: greater impact, depth of learning and personal growth among other things.

After numerous conversations (inside and outside Mozilla) on this topic, I’ve come to realize that the focus should be more on the rung or ‘step’, and not on building a rigid project-focused connection between them. In the spirit of our virtuous circle, I believe that being thoughtful and deliberate about step design leads to the emergence of personalized learning and participating pathways: “Cowpaths of participation”.

In designing steps, we also need to consider that not everyone needs to jump to a next thing, and that specializations and ‘depth’ exist in opportunities as well. Here’s the template I’m using to build participation steps right now:

* Realize I need to add ‘mentorship available’ as well.

This model (or an evolution of it), if adopted, could provide a way for contributors to traverse between projects and grow valuable skill sets and experience for life, with increasing impact on Mozilla’s mission. For example, if as a result of participating in the Marketpulse project I find my ‘place’ in User Research, I can look for steps across the project in need of that skill, or ones offering ways to specialize even further. A Python developer, perhaps, can look for QA ‘steps’ after realizing the most enjoyable part of one project ladder was actually the QA process.

I created a set of Participation Personas to help me visualize the people we’re engaging, and what their unique perspectives, opportunities and risks are. I’m building these on the ‘side of my desk’ so only Lurking Lucinda has a full bio at the moment, but you can see all profiles in this document (feel free to add comments).

I believe all of this thinking and design has helped me build a compelling and engaging ladder for Marketpulse, where one of our goals is to sustain project connection through learning opportunities.

In reality, though, while this can help us design really well for single projects, to actually support personalized ladders we need adoption across the project. At some point we just need to agree on standards that help us scale participation: a “Participation Standard”.

Last year I spent a lot of time working with a number of other open projects, trying to solve many of the same participation challenges present in Mozilla. And so, I also dream that something like this can empower other projects in a similar way, with personalized learning and participating pathways that extend between Mozilla and other projects whose missions people care about. Perhaps this is something Mark can consider in his thinking on ‘Building a Mozilla Academy’.


Air Mozilla: Rust Release Party |

The release party for Rust 1.0.



Air Mozilla: Webdev Beer and Tell: May 2015 |

Once a month web developers across the Mozilla community get together (in person and virtually) to share what cool stuff we've been working on in...



Armen Zambrano: mozci 0.6.0 - Trigger based on Treeherder filters, Windows support, flexible and encrypted password management |

We also have our latest experimental script mozci-triggerbyfilters (http://mozilla-ci-tools.readthedocs.org/en/latest/scripts.html#triggerbyfilters-py).

How to update

Run "pip install -U mozci" to update.

Notice

We have moved all scripts from scripts/ to mozci/scripts/. Note that you can now use "pip install" and have all scripts available as mozci-name_of_script_here in your PATH.

Contributions

We want to welcome @KWierso as our latest contributor! Our gratitude to @Gijs for reporting the Windows issues and for all his feedback.

Congratulations to @parkouss for making https://github.com/parkouss/mozbattue the first project using mozci as its dependency.

In this release we had @adusca and @vaibhavmagarwal as our main and very active contributors.

Major highlights

- Added a script to trigger jobs based on Treeherder filters

  - This allows using filters like --include "web-platform-tests", which will trigger all matching builders

  - You can also use --exclude to exclude builders you don't want

  - With the new trigger-by-filters script you can preview what will be triggered:

    233 jobs will be triggered, do you wish to continue? y/n/d (d=show details) d
    05/15/2015 02:58:17 INFO: The following jobs will be triggered:
    Android 4.0 armv7 API 11+ try opt test mochitest-1
    Android 4.0 armv7 API 11+ try opt test mochitest-2

- Removed storing passwords in plain text (Sorry!)

  - We now prompt users to choose whether to store their password encrypted

- When you use "pip install" we will also install the main scripts as mozci-name_of_script_here binaries

  - This makes it easier to use the binaries from any location

- Windows issues

  - The Python module gzip.py is incapable of decompressing large binaries

  - Do not store buildjson in a temp file and then move it
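The include/exclude semantics described above can be illustrated with a small Rust sketch. This is not mozci's implementation, which is a Python tool. `filter_builders` is hypothetical, and it assumes plain substring matching where every include pattern must match and any exclude pattern removes a builder. mozci's real matching rules may differ.

```rust
/// Hypothetical include/exclude filter over builder names. It assumes plain
/// substring matching, that every --include pattern must match, and that any
/// --exclude pattern removes a builder; mozci's real rules may differ.
fn filter_builders<'a>(builders: &[&'a str], include: &[&str], exclude: &[&str]) -> Vec<&'a str> {
    builders
        .iter()
        .copied()
        .filter(|name| include.iter().all(|pat| name.contains(*pat)))
        .filter(|name| !exclude.iter().any(|pat| name.contains(*pat)))
        .collect()
}

fn main() {
    let builders = [
        "Android 4.0 armv7 API 11+ try opt test mochitest-1",
        "Android 4.0 armv7 API 11+ try opt test mochitest-2",
    ];
    let chosen = filter_builders(&builders, &["mochitest"], &["mochitest-2"]);
    println!("{} jobs will be triggered", chosen.len());
    for name in &chosen {
        println!("{name}");
    }
}
```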

Minor improvements

- Updated docs

- Improve wording when triggering a build instead of a test job

- Loosened up the python requirements from == to >=

- Added filters to alltalos.py

All changes

You can see all changes here: 0.5.0...0.6.0

This work by Zambrano Gasparnian, Armen is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 3.0 Unported License.


Joel Maher: Watching the watcher – Some data on the Talos alerts we generate |

What are the performance regressions at Mozilla, who monitors them, and what kind of regressions do we see? I want to answer these questions with a few peeks at the data. There are plenty of previous blog posts I have written outlining stats, trends, and the process. Let's briefly recap what we do, then look at the breakdown of alerts (not necessarily bugs).

When Talos uploads numbers to graph server, they get stored and eventually run through a calculation loop to find regressions and improvements. As of Jan 1, 2015, we post these alerts to mozilla.dev.tree-alerts and also email them to the offending patch author (if they can easily be identified). There are a couple of folks (performance sheriffs) who look at the alerts and triage them. If necessary, a bug is filed for further investigation. This brief recap of what happens to our performance numbers probably doesn’t inspire folks; what is interesting is the actual data we have.

Let's start with some basic facts about alerts in the last 12 months:

- We have collected 8232 alerts!

- 4213 of those alerts are regressions (the rest are improvements)

- 3780 of those alerts have a manually marked status

- the rest have been programmatically marked as merged and associated with the original

- 278 bugs have been filed (or 17 alerts/bug)

  - 89 fixed!

  - 61 open!

  - 128 closed otherwise (5 invalid, 8 duplicate, 115 wontfix/worksforme)
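A quick consistency check, derived only from the figures in the list above. The bug outcomes add up to the number of bugs filed, the closed bugs add up to their listed resolutions, and the non-regression alerts are the improvements:

$$
\underbrace{89}_{\text{fixed}} + \underbrace{61}_{\text{open}} + \underbrace{128}_{\text{closed}} = 278,
\qquad 5 + 8 + 115 = 128,
\qquad 8232 - 4213 = 4019 \text{ improvements}.
$$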

As you can see, this is not a casual hobby; it is a real system that helps us fix and understand hundreds of performance issues.

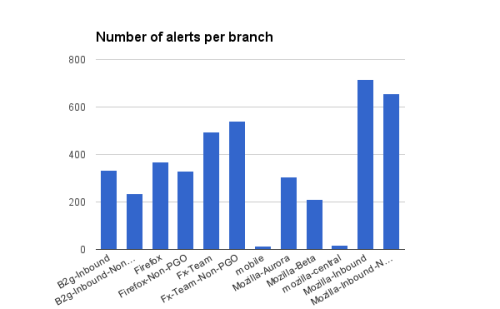

We generate alerts on a variety of branches; here is the breakdown of alerts per branch:

There are a few things to keep in mind here: mobile/mozilla-central/Firefox are the same branch, and the non-pgo branches only cover linux/windows/android, not osx.

That graph is not particularly inspiring: most of the alerts land on fx-team and mozilla-inbound, then show up on the other branches as we merge code. We run more tests/platforms and land/back out patches more frequently on mozilla-inbound and fx-team, which is why they have a larger number of alerts.

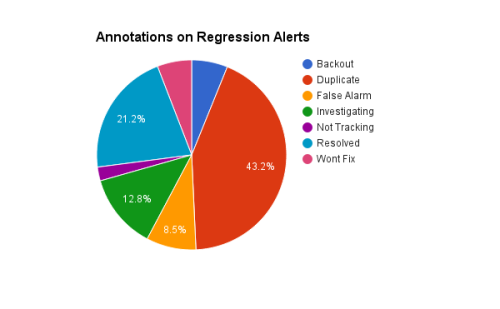

Given that we have so many alerts and have manually triaged them, what state do the alerts end up in?

The interesting data point here is that 43% of our alerts are duplicates. A few reasons for this:

- we see an alert on non-pgo, then on pgo (we usually mark the pgo ones as duplicates)

- we see an alert on mozilla-inbound, then the same alert shows up on fx-team, b2g-inbound, firefox (due to merging)

- and then later we see the pgo versions on the merged branches

- sometimes we retrigger or backfill to find the root cause, which often generates a new alert

- in a few cases we have landed/backed out/landed a patch and we end up with duplicate sets of alerts

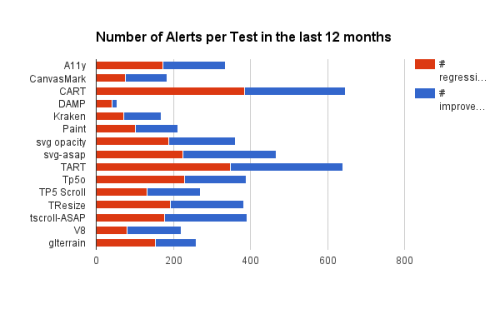

The last piece of information I would like to share is the breakdown of alerts per test:

There are a few outliers, but we need to keep in mind that active work was being done in certain areas which would explain a lot of alerts for a given test. There are 35 different test types which wouldn’t look good in an image, so I have excluded retired tests, counters, startup tests, and android tests.

Personally, I am looking forward to the next year as we transition some tools and do some hacking on the reporting, alert generation and overall process. Thanks for reading!


Mozilla Reps Community: Reps Weekly Call – May 14th 2015 |

Last Thursday we had our weekly call about the Reps program, where we talk about what’s going on in the program and what Reps have been doing during the last week.

Summary

- Advocacy Task force

- Featured Events

- Council elections results.

- Help me with my project.

AirMozilla video

Detailed notes

Advocacy Task force

Stacy and Jochai joined the call to hear Reps feedback on two new initiatives: Request for Policy Support & Advocacy Task Forces.

Request for Policy Support

The goal is to enable mozillians to request support for policy in their local countries. Mozillians will be able to collaborate with a Mozilla Rep to submit an issue, which is reviewed and acted upon by the public policy team.

Prior to rollout, they will develop training for Mozilla Reps.

Advocacy task force

These task forces will be self-organized local groups focused on educating people about open Web issues and organizing action on regional political issues.

The members will partner with a Mozilla Rep and communicate using the Advocacy Discourse.

You can check the full presentation and send any feedback to smartin@mozilla.com and jochai@mozilla.com.

Featured Events of the week

In this new section we want to talk about some of the events happening this week.

- Open tech summit (Berlin, Germany), May 14th

- Mozilla Reps African community meetup (Nairobi, Kenya), May 16th-17th

- RSJS 2015 (Porto Alegre, Brazil) May 16th

- DORS/CLUC (Zagreb, Croatia) May 18th

- BucharestJS (Bucharest, Romania) May 20th

- MozBalkans Meetup May 21/24th

Council elections results

Council elections are over and we have just received the results.

Three seats had to be renewed so the next new council members will be:

The on-boarding process will start now, and they should be fully integrated into the Council in the coming weeks. More announcements about this will be made in all Reps channels.

Help me with my project!

In this new section, the floor is yours to present in 1 minute a project you are working on and ask other Reps for help and support.

If you can’t make the call, you can add your project and a link with more information and we’ll read it for you during the call.

On this occasion we talked about:

- FSA WoMoz recruitment campaign – Manuela

  - She needs feedback on the project.

- Mozilla Festival East Africa – Lawrence

  - They need help with promotion and finding partners.

- Marketpulse – Emma

  - She needs help with outreach in Brazil, Mexico, India, Bangladesh, Philippines, Russia, Colombia

More details in the pad.

For next week

Next week Greg will be on the call to talk about the Shape of the Web project. Please check the presentation on Air Mozilla (starting at minute 15) and the site, and gather any questions you might have.

Amira and the Webmaker team will also be on the call next week; check her email on reps-general and gather questions too.

Don’t forget to comment about this call on Discourse and we hope to see you next week!

https://blog.mozilla.org/mozillareps/2015/05/15/reps-weekly-call-may-14th-2015/


Robert O'Callahan: Using rr To Debug Dropped Video Frames In Gecko |

Lately I've been working on a project to make video rendering smoother in Firefox, by sending our entire queue of decoded timestamped frames to the compositor and making the compositor responsible for choosing the correct video frame to display every time we composite the window. I've been running a testcase with a 60fps video, which should draw each video frame exactly once, with logging to show when that isn't the case (i.e. we dropped or duplicated a frame). rr is excellent for debugging such problems! It has low overhead, so it doesn't change the results much. After recording a run, I examine the log to identify dropped or dup'ed frames, and then it's easy to use rr to replay the execution and figure out exactly why each frame was dropped or dup'ed. Using a regular interactive debugger to debug such issues is nigh-impossible, since stopping the application in the debugger totally messes up the timing; and you don't know which frame is going to have a problem, so you don't know when to stop anyway.
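The log check described above can be sketched as follows. The post does not describe Gecko's actual log format. This sketch assumes a hypothetical log that records the index of the video frame drawn at each composite, with one composite per video frame (60fps video on a 60Hz display). Under that assumption, consecutive entries should differ by exactly one, and anything else is a duplicate or a drop worth replaying under rr.

```rust
/// One problem found in a (hypothetical) per-composite log of frame indices.
#[derive(Debug, PartialEq)]
enum FrameIssue {
    Duplicated(u64),
    Dropped { after: u64, missing: u64 },
}

/// `shown[k]` is the index of the video frame drawn at composite `k`.
/// Assuming one composite per video frame (60fps video at 60Hz), consecutive
/// entries should differ by exactly one; anything else is flagged.
fn find_frame_issues(shown: &[u64]) -> Vec<FrameIssue> {
    let mut issues = Vec::new();
    for pair in shown.windows(2) {
        let (prev, cur) = (pair[0], pair[1]);
        if cur == prev {
            issues.push(FrameIssue::Duplicated(cur));
        } else if cur > prev + 1 {
            issues.push(FrameIssue::Dropped { after: prev, missing: cur - prev - 1 });
        }
        // cur < prev (going backwards) is not classified in this sketch.
    }
    issues
}

fn main() {
    for issue in find_frame_issues(&[0, 1, 2, 2, 3, 5, 6]) {
        println!("{issue:?}");
    }
}
```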

I've been using rr on optimized Firefox builds because debug builds are too slow for this work, and it turns out rr with reverse execution really helps debugging optimized code. One of the downsides of debugging optimized code is the dreaded "Value optimized out" errors you often get trying to print values in gdb. When that happens under rr, you can nearly always find the correct value by doing "reverse-step" or "reverse-next" until you reach a program point where the variable wasn't optimized out.

I've found it's taking me some time to learn to use reverse execution effectively. Finding the fastest way to debug a problem is a challenge, because reverse execution makes new and much more effective strategies available that I'm not used to having. For example, several times I've found myself exploring the control flow of a function invocation by running (forwards or backwards) to the start of a function and then stepping forwards from there, because that's how I'm used to working, when it would be more effective to set a breakpoint inside the function and then reverse-next a few times to see how we got there.

But even though I'm still learning, debugging is much more fun now!

http://robert.ocallahan.org/2015/05/using-rr-to-debug-dropped-video-frames.html
