
Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.


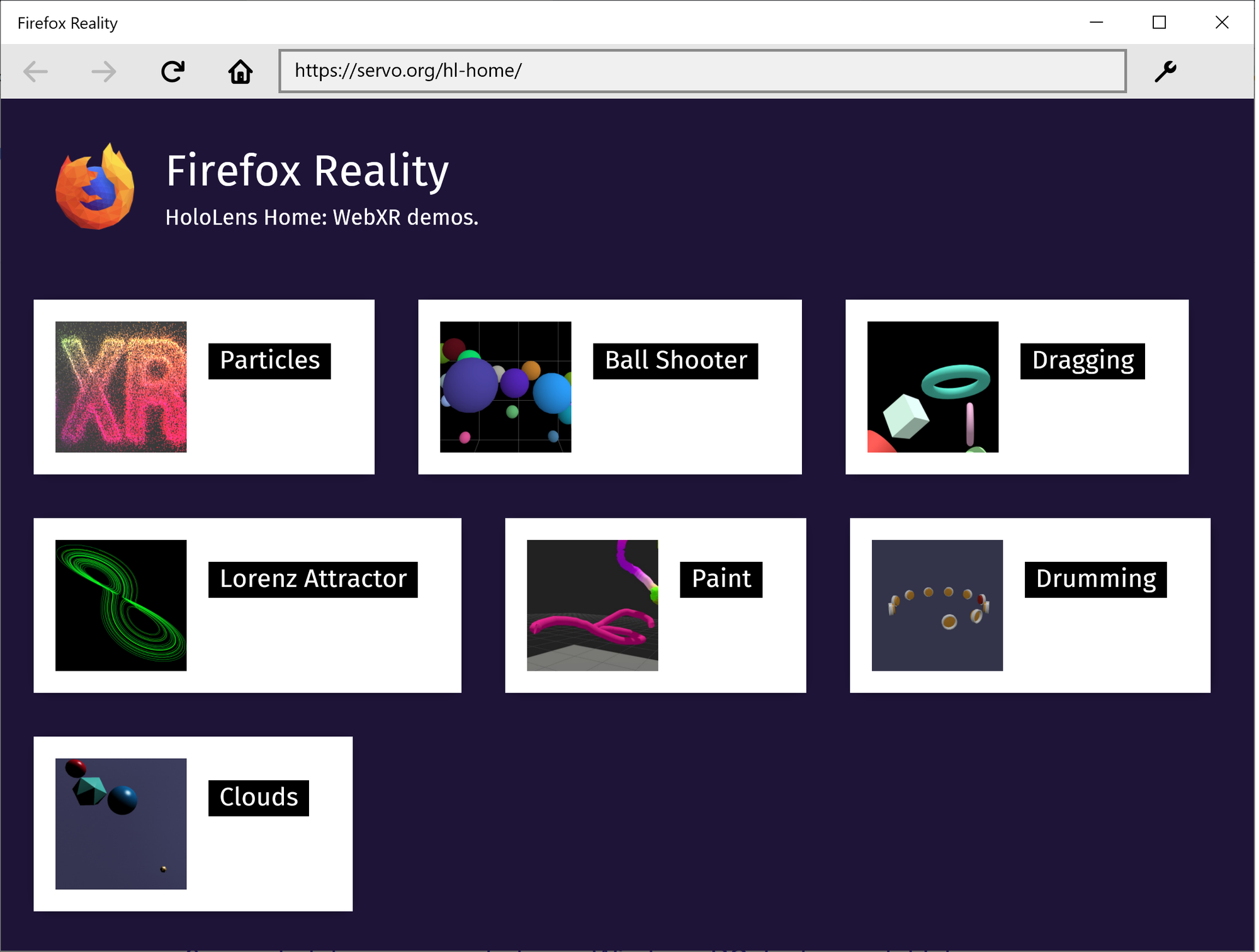

Mozilla VR Blog: Scaling Virtual Events with Hubs and Hubs Cloud

Virtual events are unique, and each one has varying needs for how many users can be present. In this blog post, we’ll talk about the different ways that you can consider concurrency as part of a virtual event, the current capabilities of Mozilla Hubs and Hubs Cloud for supporting users, and considerations for using Hubs as part of events of varying sizes. If you’ve considered using Hubs for a meetup or conference, or are just generally interested in how the platform works, read on!

Concurrency Planning for Virtual Events

When we think about traditional event planning, we have one major restriction that guides us: the physical amount of space that is available to us. When we take away the physical location as a restriction for our event by moving our event online, it can be hard to understand what limits might exist for a given platform.

With online collaboration software, there are many factors that come into play. Applications might have a per-event limit, a per-room limit, or a per-platform limit that can influence how capacity planning is done. Hubs has both ‘per-room’ and ‘per-platform’ user limits. These limits are dependent on whether you are creating a room on hubs.mozilla.com, or using Hubs Cloud. When considering the number of people who you might want to have participate in your event, it’s important to think about the distribution of those users and the content of the event that you’re planning. This relates back to physical event planning: while your venue may have an overall capacity in the thousands, you will also need to consider how many people can fit in different rooms.

When thinking about how to use Hubs or Hubs Cloud for an event, you can consider two different options -- one where you start from how many people you want to support, and how they will be split across rooms, and one where you start from the total event size and figure out how many rooms will be necessary. Below, we cover some of the considerations for room and system-wide capabilities for Hubs and Hubs Cloud.

Hubs Room Capacity

With Hubs, the question gets a bit more complicated, because you can control the room capacity and the “venue” capacity via Hubs Cloud. Hubs supports up to 50 users in an individual room. However, it is important to note that this is the server limit on the number of people who can be accommodated in a single room - it does not guarantee that everyone will be able to have a good experience. Generally speaking, users who are connecting with a mobile phone or standalone virtual reality headset (for example, something with a Qualcomm 835 chipset) will start to see performance drops before users on a gaming PC with hardwired internet will. While Hubs offers a great amount of flexibility in sharing all sorts of content from around the web, user-generated content can often bring with it a large amount of overhead to support sharing large files or streaming content. Oftentimes, these performance issues manifest in a degraded audio experience or frame rate drops.

With virtual events, the device that your attendees are connecting from will make a difference, as will their home network configurations. Factors that impact performance in Hubs include:

- The number of people who are in a room

- The amount of content that is in a room

- The type of content that is in a room

As a result of these considerations, we set a default room capacity at about half of the total server capacity, because users will likely experience issues connecting to a room that has 50 avatars in it. The server limit is capped because each user that connects to a room opens up a channel to the server to send network data (specifically, voice data), and at higher numbers of users, the software running on the server has to do a lot of work to get that data processed and sent back out to the other clients in real time. While increasing the size and number of servers that are available can help the system-wide concurrency, it will not increase individual room capacity beyond the 50-connection cap, given that the underlying session description protocol (SDP) negotiation between the connected clients is a single-threaded process.
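To make the room arithmetic concrete, here is a minimal sketch of how an organizer might estimate the number of rooms needed for a given number of embodied attendees. The helper name `roomsNeeded` is hypothetical, and the default of 25 per room is an assumption that reads "about half" of the 50-user server limit literally; it is not an official Hubs setting.

```typescript
// Hard per-room server limit described in the post.
const SERVER_ROOM_LIMIT = 50;
// Assumption: "about half" of the server limit, taken here as exactly 25.
const DEFAULT_ROOM_CAPACITY = 25;

// Hypothetical helper: rooms needed to embody `attendees` avatars
// when each room holds at most `perRoom` of them.
function roomsNeeded(attendees: number, perRoom: number = DEFAULT_ROOM_CAPACITY): number {
  if (!Number.isInteger(attendees) || attendees < 0) {
    throw new RangeError("attendees must be a non-negative integer");
  }
  if (!Number.isInteger(perRoom) || perRoom < 1 || perRoom > SERVER_ROOM_LIMIT) {
    throw new RangeError("perRoom must be between 1 and " + SERVER_ROOM_LIMIT);
  }
  return Math.ceil(attendees / perRoom);
}

// 120 attendees at the assumed default of 25 per room.
const keynoteRooms = roomsNeeded(120); // 5
```

Raising `perRoom` toward 50 reduces the room count, at the cost of the performance issues described above for attendees on phones or standalone headsets.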

Hubs also has the concept of a room ‘lobby’. While in the lobby, visitors can see what is happening in the room, hear what is being discussed, and send messages via chat, but they are not represented as an avatar in the space. While the lobby system in Hubs was initially designed to provide context to a user before they were embodied in a room, it is now possible to use the lobby as a lighter-weight viewing experience for larger events similar to what you might experience on a 2D streaming platform, such as Twitch. We recently made an experimental change that tested up to 90 clients watching from the lobby, with 10 people in the room. For events where a passive viewing experience is contextually appropriate, this approach can facilitate a larger group than the room itself can, because the lobby viewers are not sending data to the server, only receiving it, which has a reduced impact on room load.

Scaling Hubs Cloud

While room limits are one consideration for virtual events, the system-wide concurrency then becomes another question. Like physical venues, virtual platforms will have a cap on the total number of connected users that they can support. This is generally the result of the server capabilities and hardware that the backend of the application is running on. For Hubs Cloud, this is primarily dependent on the EC2 server size that is running your deployment, and on whether you are using a single server or multiple servers. A small t3.micro server (the smallest one that can run Hubs Cloud) will be able to handle a smaller simultaneous user load than a c5.24xlarge. This is an important consideration when figuring out how large a server instance to use for a Hubs Cloud deployment.

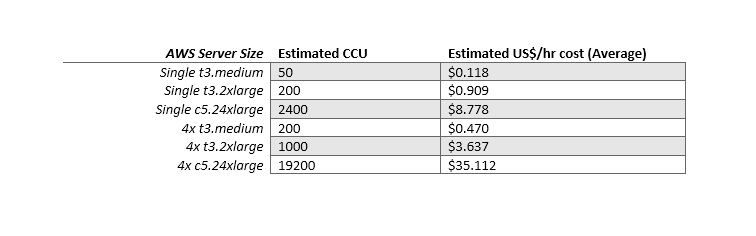

The exact configuration for a large event running on Hubs Cloud will be dependent on the type of sessions that will be running, how many users will be in each Hubs room, and whether the users will participate as audience members from the lobby or be embodied in a space. We recently released an estimated breakdown of how different instance sizes on AWS deployments of Hubs Cloud translate to a total number of concurrent users (CCU).

Hubs Strategies for Events

Building out a strategy to incorporate Hubs into a virtual event that you’re holding will be unique to your individual event. With any event, we recommend that you familiarize yourself with the moderation tools and best practices, but we’ve offered a few sample configurations and strategies below based on what we’ve heard works well for different types of meetings and organizations.

For groups up to 25 users (e.g. small meetup or meeting, breakout sessions for a larger event) - a single room on hubs.mozilla.com will generally be sufficient. If all of your users are connecting via mobile phones or standalone headsets as avatars, you may want to experiment with splitting the groups across two or three rooms and linking them together.

For groups up to 50 users (e.g. a networking event and panel of speakers) - a single room on hubs.mozilla.com, where the speakers are represented as avatars and about half of the participants view from the lobby, or two rooms on hubs.mozilla.com, where one room is the panel room and one room is for viewers.

For events with speakers broadcasting to more than 50 users (e.g. conference keynote) - you will likely need to set up a streaming service that will allow you to broadcast video into several rooms simultaneously, with a central location made available for attendees to discover one another. This could be a separate “lobby” room with links to the other viewing areas, or a simple web page that links to the different rooms or embeds them. While it is possible to host events this way on hubs.mozilla.com, managing more than two rooms as part of a single event on the hosted Mozilla Hubs infrastructure can make it difficult to coordinate the event and create a unified experience. Instead, for events that require more than two or three rooms, we recommend deploying a Hubs Cloud instance.

With Hubs Cloud, you have more granular control over the rooms and availability of your event, because instead of simply controlling the permissions and settings of an individual set of rooms, you are hosting your own version of hubs.mozilla.com - so you not only can set platform-wide permissions and configurations, you also have the ability to add your own URL, branding, accounts, avatars, scenes, and more.

The Future of Scaling and Concurrency with Hubs and Hubs Cloud

We’ve been impressed with the different ways that groups have configured Hubs to meet, even with the existing infrastructure. Hubs was originally built to more closely mirror platforms that have closed, private meeting rooms, but as more people have looked to virtual solutions to stay connected during times of physical distance, we’ve been looking at new ways that we can scale the platform to better support larger audiences. This means considering a few big questions:

- What is the right design for gathering large groups of people virtually? In thinking about this question, there are technical and social considerations that come into play when considering how to best support larger communities. We can take the approach of trying to mimic our physical environment, and simply focus on increasing the raw number of avatars that can be present in a room, or we can take more experimental approaches to the unique affordances that technology presents in reshaping how we socialize and gather.

- What are the trade-offs in our assumptions about the capabilities offered by virtual meeting platforms, and how do we preserve principles of privacy and safety in larger, potentially less-trusted groups? In our day-to-day lives, we find our actions heavily influenced by the context and community around us. As we look to software solutions to enable some of these use cases, it is important for us to think about how these safety and privacy structures continue to be core considerations for the platform.

Of course, there are also the underlying architectural questions about the ways that web technologies are evolving, too, which also influences how we’re able to explore and respond to our community of users as the product grows. We welcome discussions, questions, and contributions as we explore this space in our community Discord server, on GitHub, or via email, and look forward to continuing to grow the platform in different ways to best support the needs of our users!

https://blog.mozvr.com/scaling-virtual-events-with-hubs-and-hubs-cloud/


Mozilla Addons Blog: Extensions in Firefox 77

Firefox 77 is loaded with great improvements for the WebExtensions API. These additions to the API will help you provide a great experience for your users.

Optional Permissions

Since Firefox 57, users have been able to see what permissions an extension wants to access during the installation process. The addition of any new permissions to the extension triggers another notification that users must accept during the extension’s next update. If they don’t, they won’t receive the updated version.

These notifications were intended to provide transparency about what extensions can do and help users make informed decisions about whether they should complete the installation process. However, we’ve seen that users can feel overwhelmed by repeated prompts. Worse, failure to see and accept new permissions requests for updated versions can leave users stranded on older versions.

We’re addressing this with optional permissions. First, we have made a number of permissions optional. Optional permissions don’t trigger a permission prompt for users during installation or when the extension updates. It also means that users have less of a chance of becoming stranded.

If you use the following permissions, please feel welcome to move them from the permissions manifest.json key to the optional_permissions key:

- management

- devtools

- browsingData

- pkcs11

- proxy

- session
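As an illustration of the move described above, the following sketch shifts any of the listed permissions from `permissions` to `optional_permissions` in a parsed manifest object. The helper `moveToOptional` and the `ManifestPermissions` type are hypothetical, and the permission names are copied from the list exactly as written, so check them against the manifest.json documentation before relying on them.

```typescript
// Permission names copied verbatim from the list in the post.
const NOW_OPTIONAL: readonly string[] = [
  "management", "devtools", "browsingData", "pkcs11", "proxy", "session",
];

// Only the two manifest.json keys this sketch touches.
interface ManifestPermissions {
  permissions?: string[];
  optional_permissions?: string[];
}

// Hypothetical helper: returns new arrays and leaves the input object untouched.
function moveToOptional(manifest: ManifestPermissions): ManifestPermissions {
  const stillRequired: string[] = [];
  const optional: string[] = (manifest.optional_permissions ?? []).slice();
  for (const name of manifest.permissions ?? []) {
    if (NOW_OPTIONAL.indexOf(name) === -1) {
      stillRequired.push(name); // not on the list: stays an install-time permission
    } else if (optional.indexOf(name) === -1) {
      optional.push(name); // on the list: becomes optional, without duplicates
    }
  }
  return { permissions: stillRequired, optional_permissions: optional };
}

const updated = moveToOptional({ permissions: ["tabs", "proxy", "browsingData"] });
// updated.permissions          -> ["tabs"]
// updated.optional_permissions -> ["proxy", "browsingData"]
```

Permissions that are moved this way then have to be requested at runtime before the corresponding API is used.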

Second, we’re encouraging developers who use optional permissions to request them at runtime. When you use optional permissions with the permissions.request API, permission requests will be triggered when permissions are needed for a feature. Users can then see which permissions are being requested in context of using the extension. For more information, please see our guide on requesting permissions at runtime.

As an added bonus, we’ve also implemented the permissions.onAdded and permissions.onRemoved events, allowing you to react to permissions being granted or revoked.
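One way an extension might use these events is to keep a live record of which optional permissions are currently granted. The sketch below assumes the listeners receive an object with `permissions` and `origins` arrays, as `browser.permissions` does; the interfaces and the `trackGranted` helper are hypothetical and only model the parts of the API used here.

```typescript
// Minimal shapes modelled on browser.permissions (the real types are richer).
interface PermissionSet { permissions?: string[]; origins?: string[]; }
interface PermissionEvent { addListener(cb: (p: PermissionSet) => void): void; }
interface PermissionsApi { onAdded: PermissionEvent; onRemoved: PermissionEvent; }

// Hypothetical helper: tracks API permissions as they are granted or revoked.
function trackGranted(api: PermissionsApi): { has(name: string): boolean } {
  const granted: string[] = [];
  api.onAdded.addListener((p) => {
    for (const name of p.permissions ?? []) {
      if (granted.indexOf(name) === -1) granted.push(name);
    }
  });
  api.onRemoved.addListener((p) => {
    for (const name of p.permissions ?? []) {
      const i = granted.indexOf(name);
      if (i !== -1) granted.splice(i, 1);
    }
  });
  return { has: (name: string) => granted.indexOf(name) !== -1 };
}

// In an extension this would be called as: trackGranted(browser.permissions)
```

Passing the API in as a parameter keeps the helper easy to exercise outside a browser with a stand-in object.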

Merging CSP headers

Users who have multiple add-ons installed that modify the content security policy headers of requests may have been seeing their add-ons behave erratically and will likely blame the add-on(s) for not working. Luckily, we now properly merge the CSP headers when two add-ons modify them via webRequest. This is especially important for content blockers leveraging the CSP to block resources such as scripts and images.
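For context, this is roughly what the add-on side of such a modification can look like. The sketch adds one more Content-Security-Policy header to a response's header list; the helper `withExtraCsp` and the example policy are hypothetical, and the code says nothing about how Firefox itself combines the results from several add-ons.

```typescript
// Shape of one entry in webRequest's responseHeaders array.
interface HttpHeader { name: string; value?: string; }

// Hypothetical policy, for illustration only.
const EXTRA_POLICY = "script-src 'none'";

// Returns a copy of `headers` with one more CSP header appended, leaving
// headers set by the site (or by other add-ons) untouched.
function withExtraCsp(headers: HttpHeader[], policy: string = EXTRA_POLICY): HttpHeader[] {
  return headers.concat([{ name: "Content-Security-Policy", value: policy }]);
}

// In a background script this would be wired up roughly as:
//   browser.webRequest.onHeadersReceived.addListener(
//     (details) => ({ responseHeaders: withExtraCsp(details.responseHeaders ?? []) }),
//     { urls: ["<all_urls>"] },
//     ["blocking", "responseHeaders"]
//   );
```

The listener registration is shown as a comment because it only runs inside an extension that holds the `webRequest` and `webRequestBlocking` permissions for the matching hosts.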

Handling SameSite cookie restrictions

We’ve seen developers trying to work around SameSite cookie restrictions. If you have been using iframes on your extension pages and expecting them to behave like first-party frames, the SameSite cookie attribute will keep your add-on from working properly. In Firefox 77, the cookies for these frames will behave as if they were part of a first-party request. This should ensure that your extension continues to work as expected.

Other updates

Please also see these additional changes:

- The tabs.duplicate API now allows the position and active status of a duplicated tab to be specified.

- We’ve limited the ability to monitor data: URIs using the webRequest API. This feature was never clearly documented, and is also not supported by Chrome. Please see the bug for more details.

- WebExtensions can now also clear IndexedDB and ServiceWorkers by hostname using the hostnames removal option of browsingData.remove.

- tabs.goBack and tabs.goForward are now supported.
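As a small sketch of the hostname-based clearing mentioned in the list above, the helper below builds the two arguments for `browsingData.remove`. The function name `siteStorageRemovalArgs` and the reduced interfaces are hypothetical; only the `hostnames`, `indexedDB` and `serviceWorkers` fields are modelled.

```typescript
// Argument shapes modelled on browsingData.remove(removalOptions, dataTypes).
interface RemovalOptions { hostnames?: string[]; }
interface DataTypeSet { indexedDB?: boolean; serviceWorkers?: boolean; }

// Hypothetical helper: arguments that clear IndexedDB and service workers
// for the given hostnames only.
function siteStorageRemovalArgs(hostnames: string[]): [RemovalOptions, DataTypeSet] {
  return [{ hostnames: hostnames.slice() }, { indexedDB: true, serviceWorkers: true }];
}

// In an extension with the "browsingData" permission:
//   const [options, types] = siteStorageRemovalArgs(["example.com"]);
//   await browser.browsingData.remove(options, types);
```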

I’m very excited about the number of patches from the community that are included in this release. Please congratulate Tom Schuster, Ajitesh, Tobias, Mélanie Chauvel, Atique Ahmed Ziad, and a few teams across Mozilla that are bringing these great additions to you. I’m looking forward to finding out what is in store for Firefox 78; please stay tuned!

The post Extensions in Firefox 77 appeared first on Mozilla Add-ons Blog.

https://blog.mozilla.org/addons/2020/05/28/extensions-in-firefox-77/


The Firefox Frontier: Firefox features for remote school (that can also be used for just about anything)

Helping kids with school work can be challenging in the best of times (“new” math anyone?) let alone during a worldwide pandemic. These Firefox features can help make managing school …

The post Firefox features for remote school (that can also be used for just about anything) appeared first on The Firefox Frontier.

https://blog.mozilla.org/firefox/firefox-features-remote-school/


Mozilla Localization (L10N): L10n Report: May 2020 Edition

Please note some of the information provided in this report may be subject to change as we are sometimes sharing information about projects that are still in early stages and are not final yet.

Welcome!

New localizer

- Darli of Luganda

Are you a locale leader and want us to include new members in our upcoming reports? Contact us!

New community/locales added

- Armenian Western (hyw)

New content and projects

What’s new or coming up in Firefox desktop

Upcoming deadlines:

- Firefox 77 is currently in beta and will be released on Jun 2. The deadline to update localization was on May 19.

- The deadline to update localizations for Firefox 78, currently in Nightly, will be Jun 16 (4 weeks after the previous deadline).

IMPORTANT: Firefox 78 is the next ESR (Extended Support Release) version. That’s a more stable version designed for enterprises, but also used in some Linux distributions, and it remains supported for about a year. Once Firefox 78 moves to release, that content will remain frozen until that version becomes unsupported (about 15 months), so it’s important to ship the best localization possible.

In Firefox 78 there’s a lot of focus on the about:protections page. Make sure to test your changes on a new profile and in different states (e.g. logged out of Firefox Account and Monitor, logged into one of them, etc.). For example, the initial description changes depending on whether the user has enabled Enhanced Tracking Protection (ETP):

- Protections enabled: Firefox is actively protecting them, hence “Firefox protects your privacy behind the scenes while you browse”.

- Protections disabled: Firefox could protect them, but it currently isn’t. So, “Firefox can protect your privacy behind the scenes while you browse”.

Make sure to keep these nuances in your translation.

New Locales

With Firefox 78 we added 3 new locales to Nightly, since they made good progress and are ready for initial testing:

- Central Kurdish (ckb)

- Eastern Armenian with Classic Orthography (hye)

- Mixteco Yucuhiti (meh)

All three are available for download on mozilla.org.

What’s new or coming up in mobile

- Fennec Nightly and Beta channels have been successfully migrated to Fenix! Depending on the outcome of an experiment that launched this week, we may see the release channel migration happen soon. We’ll be sending some Fenix-specific comms this week.

- The localization cycle for Firefox for iOS v26 has ended as of Monday, May 25th.

What’s new or coming up in web projects

Mozilla.org

Mozilla.org is now at its new home: it was switched from the .lang format to Fluent last Friday. The new format allows us:

- to put more natural expressive power in localizers’ hands.

- to gain greater flexibility for translations and for making string changes.

- to make `en` the source for localization, while en-US is a locale.

- to push localized content to production more often and automatically throughout the day.

Only a handful of high-priority files have been migrated to the new format, as you have seen in the project dashboard. Please dedicate some time to go over the migrated files and report any technical issues you discover. Please go over the updated How to test mozilla.org documentation for what to focus on and how to report technical issues regarding the new file format.

The migration from the old format to the new one will take some time to complete. Check the mozilla.org dashboard in Pontoon regularly for updates. In the coming weeks, there will be new pages added, including the next WNP.

Web of Things

No new feature development is planned for the foreseeable future. However, new locales, bug fixes and localized content are being added to the GitHub repository on a regular basis.

What’s new or coming up in SuMo

Firefox 77 will be released next week. It brings a few updates to our KB articles:

In the coming weeks, we will share with members a lot of news about what we have been working on over the past months, including:

- Reports on views and helpfulness of the translated articles

- Content for new contribution channels

What’s new or coming up in Pontoon

Django 2. As of April, Pontoon runs on Django 2. The effort to make the transition happen was started a while ago by Jotes, who first made the Pontoon codebase run on Python 3, which is required for Django 2. And the effort was also completed by Jotes, who took care of squashing DB migrations and other required steps for Pontoon to say goodbye to Django 1. Well done, Jotes!

New user avatars. Pontoon uses the popular Gravatar service for displaying user avatars. Until recently, we’ve been showing a headshot of a red panda as the fallback avatar for users without a Gravatar. But that made it hard to distinguish users just by scanning their avatars sometimes, so we’re now building custom avatars from their initials. Thanks and welcome to our new contributor, Vishnudas!

Private projects. Don’t worry, we’re not limiting access to any projects on pontoon.mozilla.org, but there are 3rd party deployments of Pontoon, which would benefit from such a feature. Jotes started paving the way for that by adding the ability to switch projects between private and public and making all new projects private after they’ve been set up. That means they are only accessible to project managers, which allows the project setup to be tested without using a separate deployment for that purpose (e.g. a stage server) and without confusing users with broken projects. If any external team wants to take the feature forward as specified, we’d be happy to take patches upstream, but we don’t plan to develop the feature ourselves, since we don’t need it at Mozilla.

More reliable Sync. Thanks to Vishal, Pontoon sync is now more immune to errors we’ve been hitting every now and then and spending a bunch of time debugging. Each project sync task is now encapsulated in a single Celery task, which makes it easier to roll back and recover from errors.

Newly published localizer facing documentation

- Updated: Testing mozilla.org includes information on testing Fluent files.

Friends of the Lion

- Gürkan Güllü, who is the second person to exceed 2000 suggestions on Pontoon in the Turkish community, and is making significant contributions to SUMO as well.

Know someone in your l10n community who’s been doing a great job and should appear here? Contact one of the l10n-drivers and we’ll make sure they get a shout-out (see list at the bottom)!

Useful Links

- #l10n-community channel on Matrix

- Dev.l10n mailing list and Dev.l10n.web mailing list – where project updates happen. If you are a localizer, then you should be following this

- Telegram (contact one of the l10n-drivers below so we will add you)

- L10n blog

Questions? Want to get involved?

- If you want to get involved, or have any question about l10n, reach out to:

- Delphine – l10n Project Manager for mobile

- Peiying (CocoMo) – l10n Project Manager for mozilla.org, marketing, and legal

- Francesco Lodolo (flod) – l10n Project Manager for desktop

- Théo Chevalier – l10n Project Manager for Mozilla Foundation

- Axel (Pike) – l10n Tech Team Lead

- Staś – l20n/FTL tamer

- Matjaz – Pontoon dev

- Jeff Beatty (gueroJeff) – l10n-drivers manager

- Giulia Guizzardi – Community manager for Support

Did you enjoy reading this report? Let us know how we can improve by reaching out to any one of the l10n-drivers listed above.

https://blog.mozilla.org/l10n/2020/05/28/l10n-report-may-2020-edition/


The Mozilla Blog: Mozilla’s journey to environmental sustainability

Process, strategic goals, and next steps

The programme may be new, but the process has been shaping for years: In March 2020, Mozilla officially launched a dedicated Environmental Sustainability Programme, and I am proud and excited to be stewarding our efforts.

Since we launched, the world has been held captive by the COVID-19 pandemic. People occasionally ask me, “Is this really the time to build up and invest in such a large-scale, ambitious programme?” My answer is clear: Absolutely.

A sustainable internet is built to sustain economic well-being and meaningful social connection, just as it is mindful of a healthy environment. Through this pandemic, we’re reminded how fundamental the internet is to our social connections and that it is the baseline for many of the businesses that keep our economies from collapsing entirely. The internet has a significant carbon footprint of its own — data centers, offices, hardware and more require vast amounts of energy. The climate crisis will have lasting effects on infrastructure, connectivity and human migration. These affect the core of Mozilla’s business. Resilience and mitigation are therefore critical to our operations.

In this world, and looking towards desirable futures, sustainability is a catalyst for innovation.

To embark on this journey towards environmental sustainability, we’ve set three strategic goals:

- Reduce and mitigate Mozilla’s operational impact;

- Train and develop Mozilla staff to build with sustainability in mind;

- Raise awareness for sustainability, internally and externally.

We are currently busy conducting our Greenhouse Gas (GHG) baseline emissions assessment, and we will publish the results later this year. This will only be the beginning of our sustainability work. We are already learning that transparently and openly creating, developing and assessing GHG inventories, sustainability data management platforms and environmental impact is a lot harder than it should be, given the importance of these assessments.

If Mozilla, as an international organisation, struggles with this, what must that mean for smaller non-profit organisations? That is why we plan to continuously share what we learn, how we decide, and where we see levers for change.

Four principles that guide us:

Be humble

We’re new to this journey and to the larger environmental movement, and we recognise that the mitigation of our own operational impact won’t be enough to address the climate crisis. We understand what it means to fuel larger movements that create the change we want to see in the world. We are leveraging our roots and experience towards this global, systemic challenge.

Be open

We will openly share what we learn, where we make progress, and how our thinking evolves — in our culture as well as in our innovation efforts. We intend to focus our efforts and thinking on the internet’s impact. Mozilla’s business builds on and grows with the internet. We understand the tech, and we know where and how to challenge the elements that aren’t working in the public interest.

Be optimistic

We approach the future in an open-minded, creative and strategic manner. It is easy to be overwhelmed in the face of a systemic challenge like the climate crisis. We aim to empower ourselves and others to move from inertia towards action, working together to build a sustainable internet. Art, strategic foresight, and other thought-provoking engagements will help us imagine positive futures we want to create.

Be opinionated

Mozilla’s mission drives us to develop and maintain the internet as a global public resource. Today, we understand that an internet that serves the public interest must be sustainable. A sustainable internet is built to sustain economic wellbeing and meaningful social connection; it is also mindful of the environment. Starting with a shared glossary, we will finetune our language, step up, and speak out to drive change.

I look forward to embarking on this journey with all of you.

The post Mozilla’s journey to environmental sustainability appeared first on The Mozilla Blog.

https://blog.mozilla.org/blog/2020/05/28/mozillas-journey-to-environmental-sustainability/


Mike Hommey: The influence of hardware on Firefox build times

I recently upgraded my aging “fast” build machine. Back when I assembled the machine, it could do a full clobber build of Firefox in about 10 minutes. That was slightly more than 10 years ago. This upgrade, and the build times I’m getting on the brand new machine (now 6 months old) and other machines led me to look at how some parameters influence build times.

Note: most of the data that follows was gathered a few weeks ago, building off Mercurial revision 70f8ce3e2d394a8c4d08725b108003844abbbff9 of mozilla-central.

Old vs. new

The old “fast” build machine had an i7-870, with 4 cores, 8 threads running at 2.93GHz, turbo at 3.6GHz, and 16GiB RAM. The new machine has a Threadripper 3970X, with 32 cores, 64 threads running at 3.7GHz, turbo at 4.5GHz (rarely reached to be honest), and 128GiB RAM (unbuffered ECC).

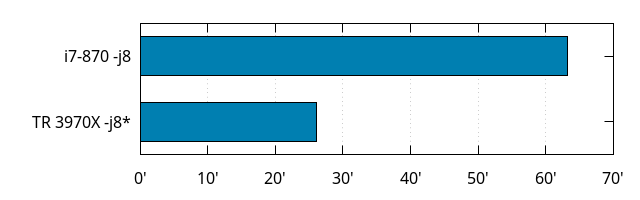

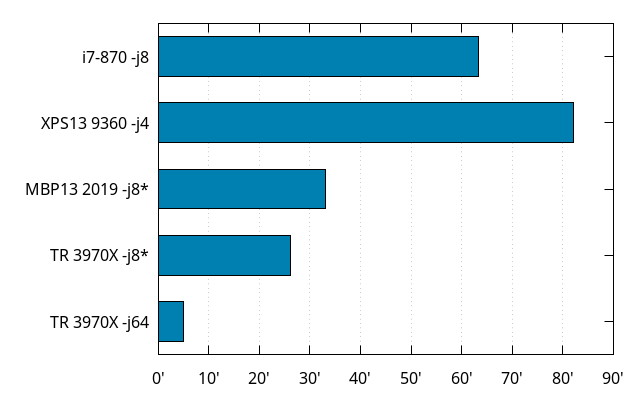

Let’s compare build times between them, at the same level of parallelism:

That is 63 minutes for the i7-870 vs. 26 for the Threadripper, with a twist: the Threadripper was explicitly configured to use 4 physical cores (with 2 threads each) so as to be fair to the poor i7-870.

Assuming the i7 maxed out at its base clock, and the Threadripper at turbo speed (which it actually doesn’t, but it’s closer to the truth than the base clock is with an eighth of the cores activated), the speed-up from the difference in frequency alone would make the build 1.5 times faster, but we got close to 2.5 times faster.
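The arithmetic behind those two figures can be spelled out as follows. This is only a sketch using the numbers quoted above (63 and 26 minutes, 2.93GHz base and 4.5GHz turbo); the constant names are made up for the example, and the "unexplained" factor is simply what remains after dividing out the clock ratio.

```typescript
// Build times at the same level of parallelism, in minutes.
const I7_BUILD_MINUTES = 63;
const THREADRIPPER_BUILD_MINUTES = 26;

// Clock speeds assumed in the text: i7-870 at base, Threadripper at turbo.
const I7_BASE_GHZ = 2.93;
const THREADRIPPER_TURBO_GHZ = 4.5;

// Speed-up expected from frequency alone.
const clockSpeedup = THREADRIPPER_TURBO_GHZ / I7_BASE_GHZ; // ≈ 1.54

// Speed-up actually measured.
const observedSpeedup = I7_BUILD_MINUTES / THREADRIPPER_BUILD_MINUTES; // ≈ 2.42

// Factor that the clock difference does not account for.
const unexplainedFactor = observedSpeedup / clockSpeedup; // ≈ 1.58
```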

But that doesn’t account for other factors we’ll explore further below.

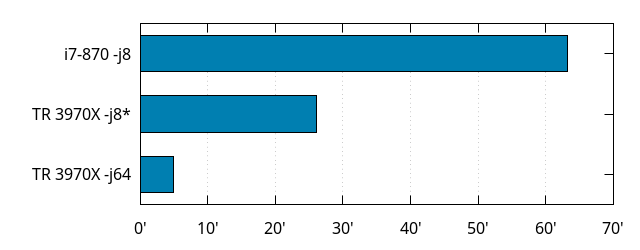

Before going there, let’s look at what unleashing the full power of the Threadripper brings to the table:

Yes, that is 5 minutes for the Threadripper 3970X, when using all its cores and threads.

Spinning disk vs. NVMe

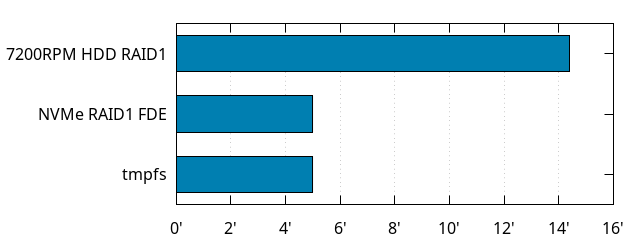

The old machine was using spinning disks in RAID 1. I can’t really test much faster SSDs on that machine because I don’t have any that would fit, and the machine is now dismantled, but I was able to try the spinning disks on the Threadripper.

Using spinning disks instead of modern solid-state drives makes the build almost 3 times as slow (an addition of almost 10 minutes)! And while I kind of went overboard with this new machine by setting up not one but two NVMe PCIe 4.0 SSDs in RAID 1, I also slowed them down by using Full Disk Encryption. It doesn’t matter, though, because I get the same build times (within noise) if I put everything in memory (because I can).

It would be interesting to get more data with different generations of SSDs, though (SATA ones may still have a visible overhead, for instance).

Going back to the original comparison between the i7-870 and the downsized Threadripper, assuming the overhead from disk alone corresponds to what we see here (which it probably doesn’t, actually, because less parallelism means fewer concurrent accesses, means fewer seeks, means more speed), the speed difference now looks closer to “only” 2x.
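One way to arrive at a figure near 2x is to subtract the disk overhead from the i7-870 build time before comparing. The sketch below assumes the overhead is additive and rounds "almost 10 minutes" up to 10; that reading is an assumption, since the text does not say exactly how the estimate was made, and the constant names are made up for the example.

```typescript
// Build times from the 4-core comparison, in minutes.
const OLD_MACHINE_MINUTES = 63; // i7-870, spinning disks
const NEW_MACHINE_MINUTES = 26; // Threadripper limited to 4 cores, NVMe

// Assumption: spinning disks add a flat ~10 minutes, as measured on the Threadripper.
const ASSUMED_DISK_OVERHEAD_MINUTES = 10;

// Old machine's hypothetical build time without the disk penalty.
const oldWithoutDiskPenalty = OLD_MACHINE_MINUTES - ASSUMED_DISK_OVERHEAD_MINUTES; // 53

// Speed difference once the disk penalty is removed.
const adjustedSpeedup = oldWithoutDiskPenalty / NEW_MACHINE_MINUTES; // ≈ 2.04
```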

Desktop vs. Laptop

My most recent laptop is a 3.5-year-old Dell XPS13 9360 that came with an i7-7500U (2 cores, 4 threads, because that’s all you could get in the 13'' form factor back then; 2.7GHz, 3.5GHz turbo) and 16GiB RAM.

A more recent 13'' laptop would be the Apple Macbook Pro 13'' Mid 2019, sporting an i7-8569U (4 cores, 8 threads, 2.8GHz, 4.7GHz turbo), and 16GiB RAM. I don’t own one, but Mozilla’s procurement system contains build times for it (although I don’t know what changeset that corresponds to, or what compilers were used; also, the OS is different).

The XPS13 being old, it is subject to thermal throttling, making it slower than it should be, but it wouldn’t beat the 10-year-old desktop anyway. Macbook Pros tend to get into these thermal issues after a while too.

I’ve relied on laptops for a long time. My previous laptop before this XPS was another XPS, which is now about 6 to 7 years old, and while the newer one had more RAM, it was barely getting better build times than the older one when I switched. The evolution of laptop performance has been underwhelming for a long time, but things finally changed last year. At long last.

I wish I had numbers with a more recent laptop under the same OS as the XPS for fairer comparison. Or with the more recent larger laptops that sport even more cores, especially the fancy ones with Ryzen processors.

Local vs. Cloud

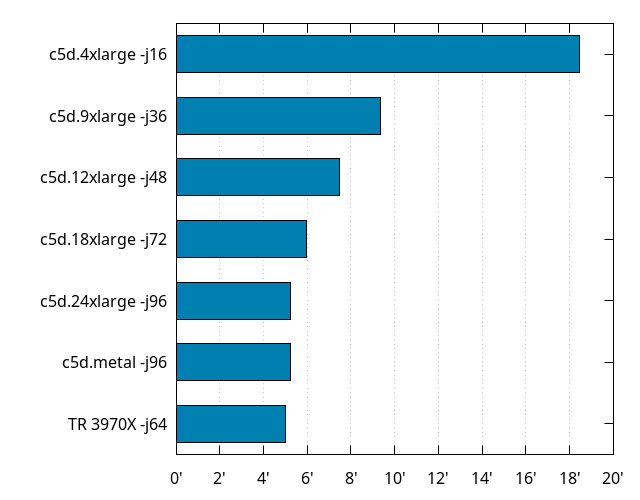

As seen above, my laptop was not really a great experience. Neither was my faster machine. When I needed power, I actually turned to AWS EC2. That’s not exactly cheap for long term use, but I only used it for a couple of hours at a time, on relatively rare occasions.

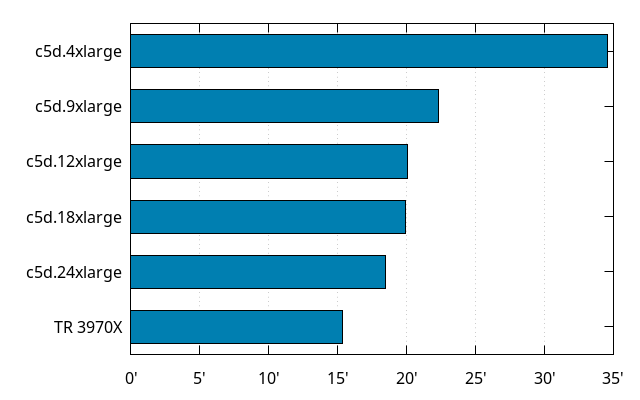

The c5d instances are AFAIK, and as of writing, the most powerful you can get on EC2 CPU-wise. They are based on 3.0GHz Xeon Platinum CPUs, either 8124M or 8275CL (which don’t exist on Intel’s website. Edit: apparently, at least the 8275CL can turbo up to 3.9GHz). The 8124M is Skylake-based and the 8275CL is Cascade Lake, which I guess is the best you could get from Intel at the moment. I’m not sure if it’s a lottery of some sort between 8124M and 8275CL, or if it’s based on instance type, but the 4xlarge, 9xlarge and 18xlarge were 8124M and 12xlarge, 24xlarge and metal were 8275CL. Skylake or Cascade Lake doesn’t seem to make much difference here, but that would need to be validated on a non-virtualized environment with full control over the number of cores and threads being used.

Speaking of virtualization, one might wonder what kind of overhead it has, and as far as building Firefox goes, the difference between c5d.metal (without) and c5d.24xlarge (with), is well within noise.

As seen earlier, storage can have an influence on build times, and EC2 instances can use either EBS storage or NVMe. On the c5d instances, it made virtually no difference. I even compared with everything in RAM (on instances with enough of it), and that didn’t make a difference either. Take this with a grain of salt, though, because EBS can be slower on other types of instances. Also, I didn’t test NVMe or RAM on c5d.4xlarge, and those have slower networking so there could be a difference there.

There are EPYC-based m5a instances, but they are not Zen 2 and have lower frequencies, so they’re not all that good. Who knows when Amazon will deliver their Zen 2 offerings.

Number of cores

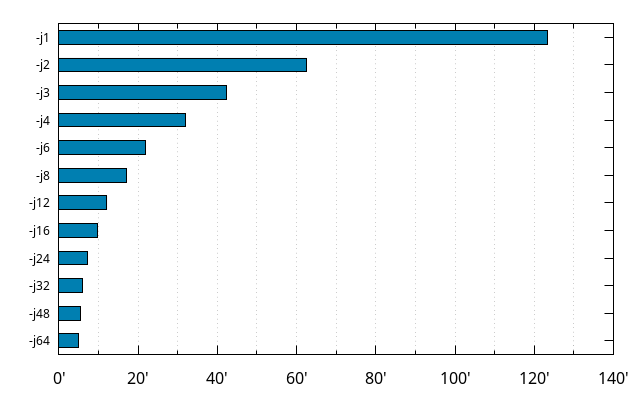

The Firefox build system is not perfect, and the number of jobs it’s allowed to run at once can have an influence on the overall build times, even on the same machine. This can be observed to some extent in the AWS results above, but testing different values of -jn on a machine with a large number of cores is another way to investigate how well the build system scales. Here it is, on the Threadripper 3970X:

Let’s pause a moment to appreciate that this machine can build slightly faster, with only 2 cores, than the old machine could with 4 cores and 8 threads.

You may also notice that the build times at -j6 and -j8 are better than the -j8 build time given earlier. More on that further below.

The data, however, is somewhat skewed as far as getting a clear picture of how well the Firefox build system scales, because the fewer cores and threads are being used, the faster those cores can get, thanks to “Turbo”. As mentioned earlier, the base frequency for the processor is 3.7GHz, but it can go up to 4.5GHz. In practice, /proc/cpuinfo doesn’t show much more than 4.45GHz when few cores are used, but at the opposite end, it would frequently show 3.8GHz when all of them are used. This means the values in that last graph are not entirely comparable to each other.
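For readers who want to watch the same effect on their own machine, here is a minimal sketch of reading per-core frequencies. It assumes a Linux x86 system where /proc/cpuinfo reports a "cpu MHz" field for each logical CPU; the function names and the sample values are made up for illustration.

```python
import re

def core_frequencies_mhz(cpuinfo_text):
    """Return the 'cpu MHz' value listed for each logical CPU, in order."""
    matches = re.findall(r"^cpu MHz\s*:\s*([0-9.]+)", cpuinfo_text, flags=re.MULTILINE)
    return [float(value) for value in matches]

def read_core_frequencies(path="/proc/cpuinfo"):
    """Read the live values (only meaningful on Linux)."""
    with open(path) as handle:
        return core_frequencies_mhz(handle.read())

# Sample text in the layout the kernel uses on x86; the numbers are invented.
SAMPLE = (
    "processor\t: 0\n"
    "cpu MHz\t\t: 4450.012\n"
    "processor\t: 1\n"
    "cpu MHz\t\t: 3800.000\n"
)

sample_freqs = core_frequencies_mhz(SAMPLE)
print("fastest core:", max(sample_freqs), "MHz; slowest core:", min(sample_freqs), "MHz")
```

Sampling `read_core_frequencies()` a few times during a build at a low and at a high -jn value should show the spread described above.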

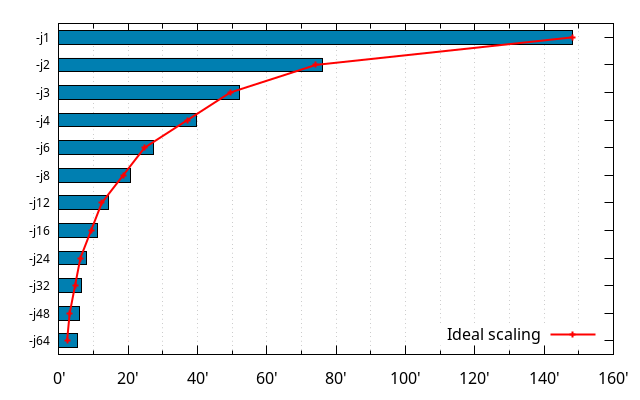

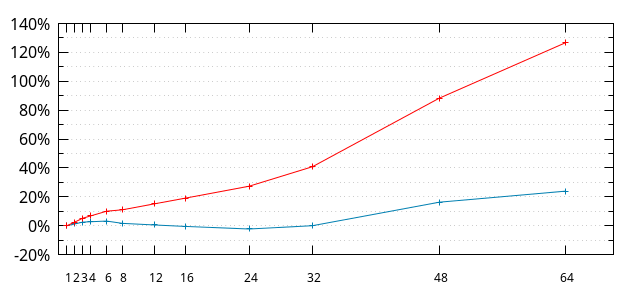

So let’s see how things go with Turbo disabled (note, though, that I didn’t disable dynamic CPU frequency scaling):

The ideal scaling (red line) is the build time at -j1 divided by the n in -jn.

So, at the scale of the graph above, things look like there’s some kind of constant overhead (the parts of the build that aren’t parallelized all that well), and a part that is within what you’d expect for parallelization, except above -j32 (we’ll get to that last part further down).

Based on the above, let’s assume a modeled build time of the form O + P / n. We can find some O and P that makes the values for e.g. -j1 and -j32 correct. That works out to be 1.9534 + 146.294 / n (or, in time format: 1:57.2 + 2:26:17.64 / n).
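As a sketch of how such a fit can be done, two measurements are enough to solve for O and P. The post does not list the raw -j1 and -j32 times, so the two inputs below are back-computed from the coefficients quoted above; the function names are only illustrative.

```python
def fit_overhead_model(n_a, t_a, n_b, t_b):
    """Solve t = O + P / n for (O, P) from two (n, t) measurements."""
    p = (t_a - t_b) / (1.0 / n_a - 1.0 / n_b)
    o = t_a - p / n_a
    return o, p

def as_clock(minutes):
    """Format fractional minutes as m:ss.s, or h:mm:ss.ss past one hour."""
    hours, rest = divmod(minutes * 60.0, 3600.0)
    mins, secs = divmod(rest, 60.0)
    if hours:
        return f"{int(hours)}:{int(mins):02d}:{secs:05.2f}"
    return f"{int(mins)}:{secs:04.1f}"

# Assumed inputs, in minutes, derived from the quoted model rather than measured.
T_J1 = 148.2474
T_J32 = 6.5250875

overhead, parallel = fit_overhead_model(1, T_J1, 32, T_J32)
print(f"O = {overhead:.4f} min ({as_clock(overhead)})")
print(f"P = {parallel:.3f} min ({as_clock(parallel)})")
```

Any other pair of -jn values could be used for the fit; picking the two ends of the range simply forces the model to agree with the data there.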

Let’s see how it fares:

The red line is how far from the ideal case (-j1/n) the actual data is, in percent from the ideal case. At -j32, the build time is slightly more than 1.4 times what it ideally would be. At -j24, about 1.3 times, etc.

The blue line is how far from the modeled case the actual data is, in percent from the modeled case. The model is within 3% of reality (without Turbo) until -j32.
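The two ratios quoted for the red line can be cross-checked from the model alone. This is a rough sketch: it uses the modeled time in place of the measured one, which is exact at -j1 and -j32 by construction and only approximate (within about 3%) in between.

```python
O, P = 1.9534, 146.294  # minutes, coefficients of the model quoted in the text

def modeled(n):
    """Modeled build time at -jn."""
    return O + P / n

def ideal(n):
    """Perfect scaling: the -j1 time divided by n."""
    return modeled(1) / n

def slowdown_vs_ideal(n):
    """How many times slower than perfect scaling the build is at -jn."""
    return modeled(n) / ideal(n)

def percent_off(actual, reference):
    """Deviation of actual from reference, in percent of reference."""
    return 100.0 * (actual - reference) / reference

for jobs in (24, 32):
    print(f"-j{jobs}: {slowdown_vs_ideal(jobs):.2f}x the ideal time, "
          f"{percent_off(modeled(jobs), ideal(jobs)):.0f}% above it")
```

Algebraically the ratio is 1 + (n - 1) * O / (O + P), so the constant overhead O costs proportionally more as n grows.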

And above -j32, things blow up. And the reason is obvious: the CPU only has 32 actual cores. Which brings us to…

Simultaneous Multithreading (aka Hyperthreading)

Ever since the Pentium 4, many (and now most) modern desktop and laptop processors have had some sort of Simultaneous Multithreading (SMT) technology. The underlying principle is that CPUs are waiting for something a lot of the time (usually data in RAM), and that they could actually be executing something else when that happens.

From the software perspective, though, it only appears to be more cores than there physically are. So on a processor with 2 threads per core like the Threadripper 3970X, that means the OS exposes 64 “virtual” cores instead of the 32 physical ones.

When the workload doesn’t require all the “virtual” cores, the OS will schedule code to run on virtual cores that don’t share the same physical core, so as to get the best of one’s machine.

This is why -jn on a machine that has n real cores or more will have better build times than -jn on a machine with fewer cores, but at least n virtual cores.

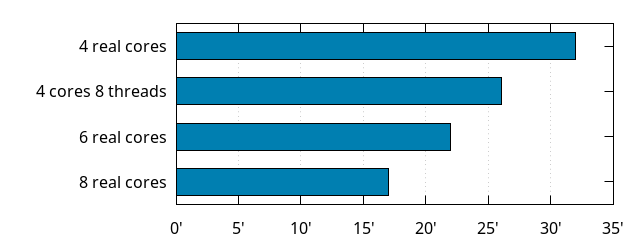

This was already exposed in some way above, where the first -j8 build time shown was worse than the subsequent ones. That one was taken while disabling all physical cores but 4, and still keeping 2 threads per core. Let’s compare that with similar number of real cores:

As we can see, 4 cores with 8 threads is better than 4 cores, but it doesn’t beat 6 physical cores. Which kind of matches the general wisdom that SMT brings an extra 30% performance.

By the way, this being all on a Zen 2 processor, I’d expect a Ryzen 3 3300X to provide similar build times to that 4 cores 8 threads case.

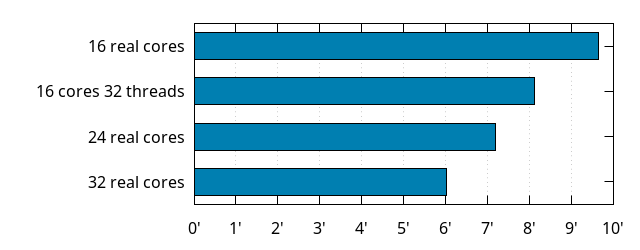

Let’s look at 16 cores and 32 threads:

Similar observation: obviously better than 16 cores alone, but worse than 24 physical cores. And again, this being all on a Zen 2 processor, I’d expect a Ryzen 9 3950X to provide similar build times to the 16 cores and 32 threads case.

Based on the above, I’d estimate a Threadripper 3960X to have build times close to those of 32 real cores on the 3970X (32 cores is 33% more cores than 24).
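The reasoning behind these estimates can be written down as a back-of-the-envelope calculation. It treats the "extra 30%" figure as a flat multiplier on physical cores, which is a simplifying assumption and not something measured here.

```python
SMT_GAIN = 0.30  # rule-of-thumb figure from the text, not a measured constant

def effective_cores(physical_cores, smt_gain=SMT_GAIN):
    """Physical cores with SMT enabled, expressed as SMT-less core equivalents."""
    return physical_cores * (1.0 + smt_gain)

# (cores with SMT, number of plain cores it is compared against in the text)
for with_smt, plain in [(4, 6), (16, 24), (24, 32)]:
    print(f"{with_smt} cores with SMT ~ {effective_cores(with_smt):.1f} plain cores "
          f"(compared against {plain})")
```

Under that assumption, 4 and 16 SMT cores land below 6 and 24 plain cores respectively, and 24 SMT cores land close to 32, which is consistent with the comparisons above.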

Operating System

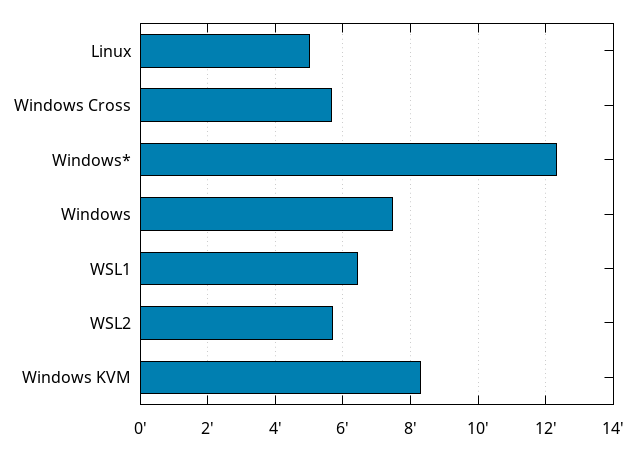

All the build times mentioned above except for the Macbook Pro have been taken under Debian GNU/Linux 10, building Firefox for Linux64 from a specific revision of mozilla-central without any tweaks.

Things get different if you build for a different target (say, Firefox for Windows), or under a different operating system.

Our baseline on this machine, the Firefox for Linux64 build, takes 5 minutes. Building Firefox for Windows (as a cross-compilation) takes 40 more seconds (5:40), because of building some extra Windows-specific stuff.

A similar Firefox for Windows build, natively, on a fresh Windows 10 install takes … more than 12 minutes! Until you realize you can disable Windows Defender for the source and build tree, at which point it only takes 7:27. That’s still noticeably slower than cross-compiling, but not as catastrophic as when the antivirus is enabled.

Recent versions of Windows come with a Windows Subsystem for Linux (WSL), which allows running native Linux programs unmodified. There are now actually two versions of WSL. One emulates Linux system calls (WSL1), and the other runs a real Linux kernel in a virtual machine (WSL2). With a Debian GNU/Linux 10 system under WSL1, building Firefox for Linux64 takes 6:25, while it takes 5:41 under WSL2, so WSL2 is definitely faster, but still not close to metal.

Edit: Ironically, it’s very much possible that a cross-compiled Firefox for Windows build in WSL2 would be faster than a native Firefox for Windows build on Windows (but I haven’t tried).

Finally, a Firefox for Windows build, on a fresh Windows 10 install in a KVM virtual machine under the original Debian GNU/Linux system takes 8:17, which is a lot of overhead. Maybe there are some tweaks to do to get better build times, but it’s probably better to go with cross-compilation.

Let’s recap as a graph:

Automation

All the build times mentioned so far have been building off mozilla-central without any tweaks. That’s actually not how a real Firefox release is built, which enables more optimizations. Without even going to the full set of optimizations that go into building a real Firefox Nightly (including Link Time Optimizations and Profile Guided Optimizations), simply switching --enable-release on will up the optimization game.

Building on Mozilla automation also enables things that don’t happen during what we call “local developer builds”, such as dumping debug info for processing crash reports, linking a second libxul library for some unit tests, the full packaging of Firefox, the preparation of archives that will be used to run tests, etc.

As of writing, most Firefox builds on Mozilla automation happen on c5d.4xlarge (or similarly sized) AWS EC2 instances. They benefit from the use of a shared compilation cache, so their build times are very noisy, as they depend on the cache hit rate.

So, I took some Linux64 optimized build that runs on automation (not one with LTO or PGO), and ran its full script, with the shared compilation cache disabled, on corresponding and larger AWS EC2 instances, as well as the Threadripper:

The immediate observation is that these builds scale much less gracefully than a “local developer build” does, and there are multiple factors that come into play, but the common thread is that parts of the build that don’t run during those “local developer builds” are slow and not well parallelized. Work is in progress to make things better, though.

Another large contributor is that with the higher level of optimizations, the compilation of Rust code takes longer. And compiling Rust doesn’t parallelize very well at the moment (for a variety of reasons, but the main one is the long sequences of crate dependencies, i.e. when A depends on B, which depends on C, which depends on D, etc.). So what happens the more cores are available, is that compiling all the C/C++ code finishes well before compiling all the Rust code does, and with the long tail of crate dependencies, what’s remaining doesn’t parallelize well. And that also blocks other portions of the build that need the compiled Rust code, and aren’t very well parallelized themselves.
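To illustrate why a dependency chain caps the benefit of extra cores, here is a small sketch that computes the critical path of a made-up crate graph. The crate names follow the A, B, C, D chain from the paragraph above; the extra independent crates and the unit compile costs are hypothetical.

```python
from functools import lru_cache

# Hypothetical graph: each crate maps to the crates it depends on.
DEPS = {
    "A": ["B"], "B": ["C"], "C": ["D"], "D": [],
    "E": [], "F": [], "G": [], "H": [],
}
COST = {name: 1.0 for name in DEPS}  # invented compile time per crate

@lru_cache(maxsize=None)
def finish_time(crate):
    """Earliest finish with unlimited cores: own cost after the slowest dependency."""
    return COST[crate] + max((finish_time(dep) for dep in DEPS[crate]), default=0.0)

critical_path = max(finish_time(crate) for crate in DEPS)
total_work = sum(COST.values())
best_speedup = total_work / critical_path

print(f"total work: {total_work}, critical path: {critical_path}, "
      f"best possible speedup: {best_speedup}x")
```

However many cores are available, the build cannot finish before the chain does, so the speedup is bounded by total work divided by the critical path.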

All in all, it’s not all that great to go with bigger instances, because they cost more but won’t compensate by doing as many more builds in the same amount of time… except if doing more builds in parallel, which is being experimented with.

On the Threadripper 3970X, I can do 2 “local developer builds” in parallel in less time than doing them one after the other (1:20 less, so 8:40 instead of 10 minutes). Even with -j64. Heck, I can do 4 builds in parallel at -j16 in 16 minutes and 15 seconds, which is somewhere between a single -j12 and a single -j8, which is where one -j16 with 8 cores and 16 threads should be. That doesn’t seem all that useful, until you think of it this way: I can locally build for multiple platforms at once in well under 20 minutes. All on one machine, from one source tree.
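Put in terms of throughput, the numbers above amount to the following amortized time per build. This is plain arithmetic on the wall-clock figures quoted in the paragraph; the 5:00 baseline is the single-build time implied by "10 minutes" for two sequential builds.

```python
def to_seconds(clock):
    """Convert an m:ss string to seconds."""
    minutes, seconds = clock.split(":")
    return int(minutes) * 60 + int(seconds)

def amortized_seconds(wall_clock, builds):
    """Wall-clock time divided by the number of builds that ran concurrently."""
    return to_seconds(wall_clock) / builds

scenarios = [("1 build", "5:00", 1), ("2 in parallel", "8:40", 2), ("4 in parallel", "16:15", 4)]
for label, wall_clock, builds in scenarios:
    per_build = amortized_seconds(wall_clock, builds)
    print(f"{label}: {per_build:.2f} s per build")
```

Each individual build takes longer when sharing the machine, but the machine as a whole finishes more builds per hour.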

Closing words

We looked at various ways build times can vary depending on different hardware (and even software) configurations. Note this only covers hardware parameters that didn’t require reboots to test out (e.g. this excludes variations like RAM speed; and yes, this means there are ways to disable turbo, cores and threads without fiddling with the BIOS, I’ll probably write a separate blog post about this).

We saw there is room for improvements in Firefox build times, especially on automation. But even for local builds, on machines like mine, it should be possible to get under 4 minutes eventually. Without any form of caching. Which is kind of mind blowing. Even without these future improvements, these build times changed the way I approach things. I don’t mind clobber builds anymore, although ideally we’d never need them.

If you’re in the market for a new machine, I’d advise getting a powerful desktop machine (based on a 3950X, a 3960X or a 3970X) rather than refreshing your aging laptop. I don’t think any laptop currently available would get below 15-minute build times. And those that can build in less than 20 minutes probably cost more than a desktop machine that would build in well under half that. Edit: Also, pick a fast NVMe SSD that can sustain tons of IOPS.

Of course, if you use some caching method, build times will be much better even on a slower machine, but even with a cache, it happens quite often that you get a lot of cache misses that cancel out the benefit of the cache. YMMV.

You may also have legitimate concerns that rr doesn’t work yet, but when I need it, I can pull my old Intel-based laptop. rr actually works well enough on Ryzen for single-threaded workloads, and I haven’t needed to debug Firefox itself with rr recently, so I haven’t actually pulled the old laptop a lot (although I have been using rr and Pernosco).

|

|

Ryan Harter: Writing inside organizations |

Tom Critchlow has a great post here outlining some points on how important writing is for an organization.

I'm still working through the links, but his post already sparked some ideas. In particular, I'm very interested in the idea of an internal blog for sharing context.

Snippets

My team keeps …

|

|

Mozilla Open Policy & Advocacy Blog: An opportunity for openness and user agency in the proposed Facebook-Giphy merger |

Facebook is squarely in the crosshairs of global competition regulators, but despite that scrutiny, is moving to acquire Giphy, a popular platform that lets users share images on social platforms, such as Facebook, or messaging applications, such as WhatsApp. This merger – how it is reviewed, whether it is approved, and if approved under what sort of conditions – will set a precedent that will influence not only future mergers, but also the shape of legislative reforms being actively developed all around the world. It is crucial that antitrust agencies incorporate into their processes a deep understanding of the nature of the open internet and how it promotes competition, how data flows between integrated services, and in particular the role played by interoperability.

Currently Giphy is integrated with numerous independent social messaging services, including, for example, Slack, Signal, and Twitter. A combined Facebook-Giphy would be in a position to restrict access by those companies, whether to preserve their exclusivity or to get leverage for some other reason. This would bring clear harm to users who would suddenly lose the capabilities they currently enjoy, and make it harder for other companies to compete.

As is typical at this stage of a merger, Facebook said in its initial blog post that “developers and API partners will continue to have the same access to GIPHY’s APIs” and “we’re looking forward to investing further in its technology and relationships with content and API partners.” But we have to evaluate that promise in light of Facebook’s history of limiting third-party access to its own APIs and making plans to integrate its own messaging services into a modern-day messaging silo.

We also know of one other major risk: the loss of user agency and control over data. In 2014, Facebook acquired the new messaging service WhatsApp for a staggering $19 billion. WhatsApp had done something remarkable even for the high tech sector: its user growth numbers were off the charts. At that time, before today’s level of attention to interoperability and competition, the primary concern raised by the merger was the possibility of sharing user data and profiles across services. So the acquisition was permitted under conditions limiting such sharing, although Facebook was subsequently fined by the European Commission for violating these promises.

We don’t know, at this point, the full scale of potential data sharing harms that could result from the Giphy acquisition. Certainly, that’s a question that deserves substantial exploration. User data previously collected by Giphy – such as the terms used to look for images – may at some point in the future be collected by Facebook, and potentially integrated with other services, the same fact pattern as with WhatsApp.

We must learn from the missed opportunity of the Facebook/WhatsApp merger. If this merger is allowed to proceed, Facebook should at minimum be required to keep to its word and preserve the same access to Giphy’s APIs for developers and API partners. Facebook should go one step further, and commit to using open standards whenever possible, while also making available to third parties under fair, reasonable, and nondiscriminatory terms and conditions any APIs or other interoperability interfaces it develops between Giphy and Facebook’s current services. In order to protect users from yet more tracking of their behavior, Facebook should commit not to combine data collected by Giphy with other user data that it collects – a commitment that fell short in the WhatsApp case, but can be strengthened here. Together these restrictions would help open up the potential benefits of technology integration while simultaneously making them available to the entire ecosystem, protecting users broadly.

As antitrust agencies begin their investigations of this transaction, they must conduct a full and thorough review based on a detailed technical understanding of the services, data flows, and partnerships involved, and ensure that the outcome protects user agency and promotes openness and interoperability. It’s time to chart a new course for tech competition, and this case represents a golden opportunity.

The post An opportunity for openness and user agency in the proposed Facebook-Giphy merger appeared first on Open Policy & Advocacy.

|

|

This Week In Rust: This Week in Rust 340 |

Hello and welcome to another issue of This Week in Rust! Rust is a systems language pursuing the trifecta: safety, concurrency, and speed. This is a weekly summary of its progress and community. Want something mentioned? Tweet us at @ThisWeekInRust or send us a pull request. Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub. If you find any errors in this week's issue, please submit a PR.

Check out this week's This Week in Rust Podcast

Updates from Rust Community

News & Blog Posts

- Compiling Rust binaries for Windows 98 SE and more: a journey

- Zero To Production #0: Foreword

- Some Extensive Projects Working with Rust

- Writing Python inside your Rust code — Part 4

- Drawing SVG Graphics with Rust

- Designing the Rust Unleash API Client

- Conway's Game of Life on the NES in Rust

- How to organize your Rust tests

- Just: How I Organize Large Rust Programs

- Integrating Qt events into Actix and Rust

- Actix-Web in Docker: How to build small and secure images

- Angular, Rust, WebAssembly, Node.js, Serverless, and... the NEW Azure Static Web Apps!

- The Chromium project finds that around 70% of our serious security bugs are memory safety problems

- Integration of AV-Metrics Into rav1e, the AV1 Encoder

- Oxidizing the technical interview

- Porting K-D Forests to Rust

- Rust Macro Rules in Practice

- Rust: Dropping heavy things in another thread can make your code 10000 times faster

- Rust's Runtime

- [audio] Tech Except!ons: What Microsoft has to do with Rust? With Ryan Levick

- [video] [Russian] Rust: Not as hard as you think - Meta/conf: Backend Meetup 2020

- [video] 3 Part Video for Beginners to Rust Programming on Iteration

- [video] Bringing WebAssembly outside the web with WASI by Lin Clark

- [video] Microsoft's Safe Systems Programming Languages Effort

- [video] Rust, WebAssembly, and the future of Serverless by Steve Klabnik

Crate of the Week

This week's crate is cargo-asm, a cargo subcommand to show the resulting assembly of a function. Useful for performance work.

Thanks to Jay Oster for the suggestion!

Submit your suggestions and votes for next week!

Call for Participation

Always wanted to contribute to open-source projects but didn't know where to start? Every week we highlight some tasks from the Rust community for you to pick and get started!

Some of these tasks may also have mentors available, visit the task page for more information.

- pijama: easy issues

- mdbx-rs: Add support for more compile time options

- ruma: Replace impl_enum! with strum derives

- time-rs: Revamped parsing/formatting

- http-types: Request::query should match Tide's behavior

- http-types: Status should take TryInto

- http-types: Expose method shorthands for Request constructor

If you are a Rust project owner and are looking for contributors, please submit tasks here.

Updates from Rust Core

359 pull requests were merged in the last week

- update to LLVM 10

- llvm: expose tiny code model to users

- enable ARM TME (Transactional Memory Extensions)

- implement new asm! syntax from RFC #2873

- always generated object code for #![no_builtins]

- break tokens before checking if they are 'probably equal'

- emit a better diagnostic when function actually has a 'self' parameter

- stabilize fn-like proc macros in expression, pattern and statement positions

- use once_cell crate instead of custom data structure

- simple NRVO

- remove ReScope

- exhaustively check ty::Kind during structural match checking

- move borrow-of-packed-field unsafety check out of loop

- fix InlineAsmOperand expressions being visited twice during liveness checking

- chalk: cleanup crate structure and add features for SLG/recursive solvers

- check non-Send/Sync upvars captured by generator

- support coercion between FnDef and arg-less closure and vice versa

- more lazy normalization of constants

- miri: prepare Dlsym system for dynamic symbols on Windows

- use T's discriminant type in mem::Discriminant instead of u64

- fix discriminant type in generator transform

- impl From for Box, Rc, and Arc

- another attempt to reduce size_of

- set initial non-empty Vec size to 4 instead of 1

- make std::char functions and constants associated to char

- stabilize saturating_abs and saturating_neg

- add len and slice_from_raw_parts to NonNull<[T]>

- add fast-path optimization for Ipv4Addr::fmt

- impl Ord for proc_macro::LineColumn

- cargo: try installing exact versions before updating

- cargo: automatically update patch, and provide better errors if an update is not possible

- cargo: add option to strip binaries

- rustfmt: merge configs from parent directories

- rustfmt: improve error message when module resolution failed

- rustfmt: parse comma-separated branches in macro definitions

Approved RFCs

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

No RFCs were approved last week.

Final Comment Period

Every week the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now.

RFCs

No RFCs are currently in the final comment period.

Tracking Issues & PRs

- [disposition: merge] Tracking issue for std::io::{BufReader, BufWriter}::capacity

- [disposition: merge] impl From<[T; N]> for Box<[T]>

- [disposition: merge] Implement PartialOrd and Ord for SocketAddr*

- [disposition: merge] Stabilize AtomicN::fetch_min and AtomicN::fetch_max

- [disposition: merge] Resolve overflow behavior for RangeFrom

- [disposition: merge] impl Step for char (make Range* iterable)

- [disposition: merge] Stabilize core::panic::Location::caller

- [disposition: merge] Stabilize str_strip feature

New RFCs

No new RFCs were proposed this week.

Upcoming Events

Online

- May 27. Montréal, QC, CA - Remote - RustMTL May 2020

- May 27. Wroclaw, PL - Remote - Rust Wroclaw Meetup #20

- May 27. London, UK - Remote - LDN Talks May 2020

- May 28. Berlin, DE - Remote - Rust Hack and Learn

- June 3. Johannesburg, ZA - Remote - Johannesburg Rust Meetup

- June 8. Auckland, NZ - Remote - Rust AKL

- June 9. Seattle, WA - Remote - Seattle Rust Meetup

North America

If you are running a Rust event please add it to the calendar to get it mentioned here. Please remember to add a link to the event too. Email the Rust Community Team for access.

Rust Jobs

Tweet us at @ThisWeekInRust to get your job offers listed here!

- Rust Back End Engineer, Core Banking - TrueLayer - Milan, Italy

- Sr. SW Engineer (TW or Remote) - NZXT - Taipei, Taiwan

- Rust Graphics Engineer - Elektron - Gothenburg, Sweden

Quote of the Week

Things that are programming patterns in C are types in Rust.

– Kornel Lesiński on rust-users

Thanks to trentj for the suggestions!

Please submit quotes and vote for next week!

This Week in Rust is edited by: nellshamrell, llogiq, and cdmistman.

https://this-week-in-rust.org/blog/2020/05/27/this-week-in-rust-340/

|

|

Mozilla Localization (L10N): How to unleash the full power of Fluent as a localizer |

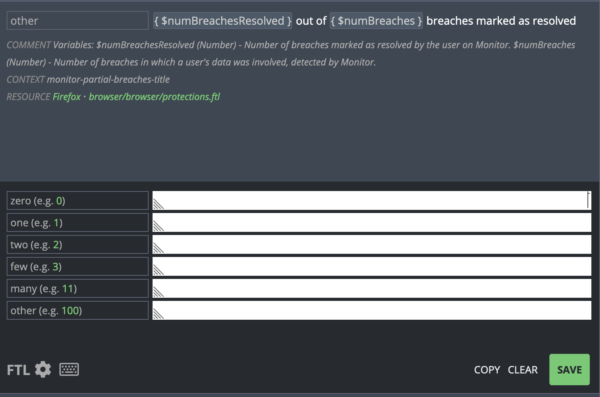

Fluent is an extremely powerful system, providing localizers with a level of flexibility that has no equivalent in other localization systems. It can be as straightforward as older formats, thanks to Pontoon’s streamlined interface, but it requires some understanding of the syntax to fully utilize its potential.

Here are a few examples of how you can get the most out of Fluent. But, before jumping in, you should get familiar with our documentation about Fluent syntax for localizers, and make sure to know how to switch to the Advanced FTL mode, to work directly with the syntax of each message.

Plurals

Plural forms are a really complex subject; some locales don’t use any (e.g. Chinese), English and most Romance languages use two (one vs many), others use all the six forms defined by CLDR (e.g. Arabic). Fluent gives you all the flexibility that you need, without forcing you to provide all forms if you don’t need them (unlike Gettext, for example).

Consider this example displayed in Pontoon for Arabic:

The underlying reference message is:

# Variables:
# $numBreachesResolved (Number) - Number of breaches marked as resolved by the user on Monitor.
# $numBreaches (Number) - Number of breaches in which a user's data was involved, detected by Monitor.
monitor-partial-breaches-title =
    { $numBreaches ->
       *[other] { $numBreachesResolved } out of { $numBreaches } breaches marked as resolved
    }

You can see it if you switch to the FTL advanced mode, and temporarily COPY the English source.

This gives you a lot of information:

- It’s a message with plurals, although only the other category is defined for English.

- The other category is also the default, since it’s marked with an asterisk.

- The value of $numBreaches defines which plural form will be used.

First of all, since you only have one form, you can decide to remove the select logic completely. This is equivalent to:

monitor-partial-breaches-title = { $numBreachesResolved } out of { $numBreaches } breaches marked as resolved

You might ask: why is there a plural in English then? That’s a great question! It’s just a small hack to trick Pontoon into displaying the UI for plurals.

But there’s more: what if this doesn’t work for your language? Consider Italian, for example: to get a more natural sounding sentence, I would translate it as “10 breaches out of 20 marked as resolved”. There are two issues to solve: I need $numBreachesResolved (number of solved breaches) to determine which plural form to use, not the total number, and I need a singular form. While I can’t make these changes directly through Pontoon’s UI, I can still write my own syntax:

monitor-partial-breaches-title =
    { $numBreachesResolved ->
        [one] { $numBreachesResolved } violazione su { $numBreaches } contrassegnata come risolta
       *[other] { $numBreachesResolved } violazioni su { $numBreaches } contrassegnate come risolte
    }

Notice the two relevant changes:

- There is now a one form (used for 1 in Italian).

- $numBreachesResolved is used to determine which plural form is selected ($numBreachesResolved ->).
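To make the selection logic concrete, here is a rough sketch of how such a select expression resolves. It is not how Fluent is implemented: the plural rule is a simplified stand-in for the CLDR rule for Italian, and all function and variable names are made up for illustration.

```python
def plural_category(number):
    """Simplified stand-in for a CLDR plural rule: 'one' for exactly 1, else 'other'."""
    return "one" if number == 1 else "other"

# Variants of the Italian message above, keyed by plural category.
VARIANTS = {
    "one": "{resolved} violazione su {total} contrassegnata come risolta",
    "other": "{resolved} violazioni su {total} contrassegnate come risolte",
}
DEFAULT_VARIANT = "other"  # the variant marked with an asterisk

def format_title(resolved, total):
    """Pick the variant from the selector value, falling back to the default."""
    pattern = VARIANTS.get(plural_category(resolved), VARIANTS[DEFAULT_VARIANT])
    return pattern.format(resolved=resolved, total=total)

print(format_title(1, 20))
print(format_title(10, 20))
```

The key point is that the selector is the number of resolved breaches, so the singular form is chosen when exactly one breach is resolved, whatever the total is.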

Terms

We recently started migrating mozilla.org to Fluent, and there are a lot of terms defined for specific feature names and brands. Using terms instead of hard-coding text in strings helps ensure consistency and enforce branding rules. The best example is probably the term for Firefox Account, where the “account” part is localizable.

-brand-name-firefox-account = Firefox Account

In Italian it’s translated as “account Firefox”. But it should be “Account Firefox” if used at the beginning of a sentence. How can I solve this, without removing the term and getting warnings in Pontoon? I can define a parameterized term.

-brand-name-firefox-account =
    { $capitalization ->
       *[lowercase] account Firefox
        [uppercase] Account Firefox
    }

In this example, the default is lowercase, since it’s the most common form in Italian. By using only the term reference { -brand-name-firefox-account }, it will be automatically displayed as “account Firefox”.

login-button = Accedi al tuo { -brand-name-firefox-account }

What if I need the uppercase version?

login-title = { -brand-name-firefox-account(capitalization: "uppercase") }
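The behavior of a parameterized term can be pictured as a lookup with a default. The sketch below only mimics the outcome for the two messages above; it is not Fluent's implementation, and the names are illustrative.

```python
# Variants of the Italian term, keyed by the value of the capitalization parameter.
TERM_VARIANTS = {
    "lowercase": "account Firefox",
    "uppercase": "Account Firefox",
}
TERM_DEFAULT = "lowercase"  # the variant marked with an asterisk

def brand_name_firefox_account(capitalization=None):
    """Return the requested variant, or the default when no parameter is passed."""
    return TERM_VARIANTS.get(capitalization, TERM_VARIANTS[TERM_DEFAULT])

login_button = f"Accedi al tuo {brand_name_firefox_account()}"
login_title = brand_name_firefox_account(capitalization="uppercase")

print(login_button)
print(login_title)
```

Because the default covers the most common case, existing messages that reference the term without arguments keep working unchanged.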

You can find more information about terms, as well as other examples, in our documentation. And don’t forget to check the rules about brand names.

Things you shouldn’t translate

Variables and placeables should not be translated. The good news is that they’re easy to spot, since they’re surrounded by curly parentheses:

- Variable reference: { $somevariable }

- Term reference: { -someterm }

- Message reference: { somemessage }

The content between parentheses must be kept as in the English source string.

The same is valid for the data-l10n-name attribute in HTML elements. Consider for example:

update-downloading = <img data-l10n-name="icon"/>Downloading update — <label data-l10n-name="download-status"/>

The only thing to translate in this message is “Downloading update -”. “Icon” and “download-status” should be kept untranslated.

The rule of thumb is to pay extra attention when there are curly parentheses, since they’re used to identify special elements of the syntax.

Another example:

menu-menuitem-preferences =
    { PLATFORM() ->
        [windows] Options
       *[other] Preferences
    }

PLATFORM() is a function, and should not be translated. windows and other are variant names, and should be kept unchanged as well.

One other thing to look out for are style attributes, since they’re used for CSS rules.

downloads-panel-list =
    .style = width: 70ch

width: 70ch is a CSS rule to define the width of an element, and should be kept untranslated. The only thing that you might want to change is the actual value, for example to make it larger (width: 75ch). Note that ch is a unit of measure used in CSS.

https://blog.mozilla.org/l10n/2020/05/26/how-to-unleash-the-full-power-of-fluent-as-a-localizer/

|

|

Data@Mozilla: How does the Glean SDK send gzipped pings |

(“This Week in Glean” is a series of blog posts that the Glean Team at Mozilla is using to try to communicate better about our work. They could be release notes, documentation, hopes, dreams, or whatever: so long as it is inspired by Glean.)

Last week’s blog post: This Week in Glean: mozregression telemetry (part 2) by William Lachance.

All “This Week in Glean” blog posts are listed in the TWiG index (and on the Mozilla Data blog).

In the Glean SDK, when a ping is submitted it gets internally persisted to disk and then queued for upload. The actual upload may happen later on, depending on factors such as the availability of an Internet connection or throttling. To save users’ bandwidth and reduce the costs to move bytes within our pipeline, we recently introduced gzip compression for outgoing pings.

This article will go through some details of our upload system and what it took us to enable the ping compression.

How does ping uploading work?

Within the Glean SDK, the glean-core Rust component does not provide any specific implementation to perform the upload of pings. This means that either the language bindings (e.g. Glean APIs for Android in Kotlin) or the product itself (e.g. Fenix) have to provide a way to transport data from the client to the telemetry endpoint.

Before our recent changes (by Beatriz Rizental and Jan-Erik) to the ping upload system, the language bindings needed to understand the format with which pings were persisted to disk in order to read and finally upload them. This is not the case anymore: glean-core will provide language bindings with the headers and the data (ping payload!) of the request they need to upload.

The new upload API empowers the SDK to provide a single place in which to compress the payload to be uploaded: glean-core, right before serving upload requests to the language bindings.

gzipping: the implementation details

The implementation of the function to compress the payload is trivial, thanks to the `flate2` Rust crate:

use std::io::Write;

use flate2::write::GzEncoder;
use flate2::Compression;

/// Attempt to gzip the provided ping content.
fn gzip_content(path: &str, content: &[u8]) -> Option<Vec<u8>> {
    let mut gzipper = GzEncoder::new(Vec::new(), Compression::default());

    // Attempt to add the content to the gzipper.
    if let Err(e) = gzipper.write_all(content) {
        log::error!("Failed to write to the gzipper: {} - {:?}", path, e);
        return None;
    }

    gzipper.finish().ok()
}

And an even simpler way to use it to compress the body of outgoing requests:

pub fn new(document_id: &str, path: &str, body: JsonValue) -> Self {
    let original_as_string = body.to_string();
    let gzipped_content = Self::gzip_content(path, original_as_string.as_bytes());
    let add_gzip_header = gzipped_content.is_some();
    let body = gzipped_content.unwrap_or_else(|| original_as_string.into_bytes());
    let body_len = body.len();

    Self {
        document_id: document_id.into(),
        path: path.into(),
        body,
        headers: Self::create_request_headers(add_gzip_header, body_len),
    }
}
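The post does not show `create_request_headers`, so here is a minimal sketch of what such a helper could look like, assuming it only needs to describe the body that `new` ends up with. The header set and the `Vec<(String, String)>` return type are assumptions for illustration, not the actual Glean implementation.

```rust
/// Hypothetical stand-in for `create_request_headers`; the real Glean
/// function is not shown in the post and may set different headers.
fn create_request_headers(is_gzipped: bool, body_len: usize) -> Vec<(String, String)> {
    let mut headers = vec![
        (
            "Content-Type".to_string(),
            "application/json; charset=utf-8".to_string(),
        ),
        ("Content-Length".to_string(), body_len.to_string()),
    ];

    // Only advertise gzip when compression actually succeeded, so the
    // receiver never tries to inflate a plain JSON body.
    if is_gzipped {
        headers.push(("Content-Encoding".to_string(), "gzip".to_string()));
    }

    headers
}
```

The point of passing `add_gzip_header` separately is that the fallback path (an uncompressed body after a gzip failure) stays a valid request: the `Content-Encoding` header is simply left out.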

What’s next?

The new upload mechanism and its compression improvement are currently available only for the iOS and Android Glean SDK language bindings. Our next step (currently in progress!) is to add the newer APIs to the Python bindings as well, moving the complexity of handling the upload process to the shared Rust core.

In the future, the new upload mechanism will also provide a flexible constraint-based scheduler (e.g. “send at most 10 pings per hour”) alongside pre-defined rules for products to use.
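To make a constraint like “send at most 10 pings per hour” concrete, here is a rough sketch of a sliding-window limiter. This is not the planned Glean scheduler, whose design the post does not describe; `RateLimiter` and `try_send` are hypothetical names used only for illustration.

```rust
use std::collections::VecDeque;
use std::time::{Duration, Instant};

/// Hypothetical sliding-window limiter: at most `max_pings` per `window`.
struct RateLimiter {
    max_pings: usize,
    window: Duration,
    /// Timestamps of the uploads still inside the window, oldest first.
    sent: VecDeque<Instant>,
}

impl RateLimiter {
    fn new(max_pings: usize, window: Duration) -> Self {
        Self {
            max_pings,
            window,
            sent: VecDeque::new(),
        }
    }

    /// Returns true and records the upload if a ping may be sent at `now`.
    fn try_send(&mut self, now: Instant) -> bool {
        // Forget uploads that have fallen out of the window.
        while let Some(&oldest) = self.sent.front() {
            if now.duration_since(oldest) >= self.window {
                self.sent.pop_front();
            } else {
                break;
            }
        }

        if self.sent.len() < self.max_pings {
            self.sent.push_back(now);
            true
        } else {
            false
        }
    }
}
```

With `RateLimiter::new(10, Duration::from_secs(3600))`, the eleventh call to `try_send` within the same hour returns `false`, and the caller would keep the ping queued on disk until a later attempt.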

https://blog.mozilla.org/data/2020/05/26/how-does-the-glean-sdk-send-gzipped-pings/

|

|

Daniel Stenberg: curl ootw: --socks5 |

(Previous option of the week posts.)

--socks5 was added to curl back in 7.18.0. It takes an argument and that argument is the host name (and port number) of your SOCKS5 proxy server. There is no short option version.

Proxy

A proxy, often called a forward proxy in the context of clients, is a server that the client needs to connect to in order to reach its destination. A middle man/server that we use to get us what we want. There are many kinds of proxies. SOCKS is one of the proxy protocols curl supports.

SOCKS

SOCKS is a really old proxy protocol. SOCKS4 is the predecessor protocol version to SOCKS5. curl supports both and the newer version of these two, SOCKS5, is documented in RFC 1928 dated 1996! And yes: they are typically written exactly like this, without any space between the word SOCKS and the version number 4 or 5.

One of the better-known services that still uses SOCKS is Tor. When you want to reach services on Tor, or the web through Tor, you run the client on your machine or local network and you connect to that over SOCKS5.

Which one resolves the host name

One peculiarity with SOCKS is that it can do the name resolving of the target server either in the client or have it done by the proxy. Both alternatives exist for both SOCKS versions. For SOCKS4, a SOCKS4a version was created that has the proxy resolve the host name. For SOCKS5, which is really the topic of today, the protocol has an option that lets the client pass on either the IP address or the host name of the target server.

The --socks5 option makes curl itself resolve the name. You’d instead use --socks5-hostname if you want the proxy to resolve it.
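On the wire, the difference comes down to the address type field of the SOCKS5 request defined in RFC 1928. The following is a small sketch of how a client could build a CONNECT request for each case. It is not curl's code; `Target` and `socks5_connect_request` are made-up names, and IPv6 and the initial method negotiation are left out for brevity.

```rust
use std::net::Ipv4Addr;

/// Who resolves the target host name (hypothetical type for this sketch).
enum Target<'a> {
    /// The client already resolved the name, as with `--socks5`.
    Ipv4(Ipv4Addr),
    /// The proxy resolves the name, as with `--socks5-hostname`.
    HostName(&'a str),
}

/// Builds the bytes of a SOCKS5 CONNECT request as laid out in RFC 1928.
/// Returns None if the host name does not fit in the one-byte length field.
fn socks5_connect_request(target: &Target, port: u16) -> Option<Vec<u8>> {
    // VER = 5, CMD = 1 (CONNECT), RSV = 0
    let mut req = vec![0x05, 0x01, 0x00];

    match target {
        Target::Ipv4(addr) => {
            req.push(0x01); // ATYP: IPv4 address
            req.extend_from_slice(&addr.octets());
        }
        Target::HostName(name) => {
            if name.len() > 255 {
                return None;
            }
            req.push(0x03); // ATYP: domain name
            req.push(name.len() as u8); // one length byte, then the name
            req.extend_from_slice(name.as_bytes());
        }
    }

    // DST.PORT in network byte order.
    req.extend_from_slice(&port.to_be_bytes());
    Some(req)
}
```

In the first case the proxy only ever sees an address; in the second it receives the name itself and does the lookup on its side.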

--proxy

The --socks5 option has basically been considered obsolete since curl 7.21.7. Starting in that release, you can specify the proxy protocol directly in the string that already holds the proxy host name and port number: the server you specify with --proxy. If you use a socks5:// scheme, curl will go with SOCKS5 with local name resolving, but if you instead use socks5h:// it will pick SOCKS5 with proxy-resolved host name.

SOCKS authentication

A SOCKS5 proxy can also be set up to require authentication, so you might also have to specify name and password in the --proxy string, or set them separately with --proxy-user. Authentication can also be done with GSSAPI, so curl supports --socks5-gssapi and friends as well.

Examples

Fetch HTTPS from example.com over the SOCKS5 proxy at socks5.example.org port 1080. Remember that --socks5 implies that curl resolves the host name itself and passes the address to use to the proxy.

curl --socks5 socks5.example.org:1080 https://example.com/

Or download FTP over the SOCKS5 proxy at socks5.example port 9999:

curl --socks5 socks5.example:9999 ftp://ftp.example.com/SECRET

Useful trick!

A very useful trick that involves a SOCKS proxy is the ability OpenSSH has to create a SOCKS tunnel for us. If you sit at your friend’s house, you can open a SOCKS proxy to your home machine and access the network via that. First invoke ssh, log in to your home machine and ask it to set up a SOCKS proxy:

ssh -D 8080 user@home.example.com

Then tell curl (or your browser, or both) to use this new SOCKS proxy when you want to access the Internet:

curl --socks5 localhost:8080 https://www.example.net/

This will effectively hide all your Internet traffic from your friend’s snooping and instead pass it all through your encrypted ssh tunnel.

Related options

As already mentioned above, --proxy is typically the preferred option these days to set the proxy. But --socks5-hostname is there too, along with the related --socks4 and --socks4a.

|

|

Mozilla Open Policy & Advocacy Blog: Mozilla Mornings on advertising and micro-targeting in the EU Digital Services Act |

On 4 June, Mozilla will host the next installment of Mozilla Mornings – our regular breakfast series that brings together policy experts, policymakers and practitioners for insight and discussion on the latest EU digital policy developments.

In 2020 Mozilla Mornings has adopted a thematic focus, starting with a three-part series on the upcoming Digital Services Act. This second virtual event in the series will focus on advertising and micro-targeting; whether and to what extent the DSA should address them. With several European Parliament resolutions dealing with this question and given its likely prominence in the upcoming European Commission public consultation, the discussion promises to be timely and insightful.

Speakers

Tiemo Wölken MEP

JURI committee rapporteur, Digital Services Act INI

Katarzyna Szymielewicz

President

Panoptykon Foundation

Stephen Dunne

Senior Policy Advisor

Centre for Data Ethics & Innovation

Brandi Geurkink

Senior Campaigner

Mozilla Foundation

With opening remarks by Raegan MacDonald

Head of European Public Policy, Mozilla Corporation

Moderated by Jennifer Baker

EU Tech Journalist

Logistical information

4 June, 2020

10:30-12:00 CEST

Zoom webinar (conferencing details post-registration)

Register here or by email to mozillabrussels@loweurope.eu

The post Mozilla Mornings on advertising and micro-targeting in the EU Digital Services Act appeared first on Open Policy & Advocacy.

|

|

William Lachance: The humble blog |

I’ve been thinking a lot about markdown, presentation, dashboards, and other frontendy sorts of things lately, partly inspired by my work on Iodide last year, partly inspired by my recent efforts on improving docs.telemetry.mozilla.org. I haven’t fully fleshed out my thoughts on this yet, but in general I think blogs (for some value of “blog”) are still a great way to communicate ideas and concepts to an interested audience.

Like many organizations, Mozilla’s gone down the path of Google Docs, Zoom and Slack which makes me more than a little sad: good ideas disappear down the memory hole super quickly with these tools, not to mention the fact that they are closed-by-default (even to people inside Mozilla!). My view on “open” is a bit more nuanced than it used to be: I no longer think everything need be all-public, all-the-time— but I still think talking about and through our ideas (even if imperfectly formed or stated) with a broad audience builds trust and leads to better outcomes.

Is there some way we can blend some of these old school ideas (blogs, newsgroups, open discussion forums) with better technology and social practices? Let’s find out.

https://wlach.github.io/blog/2020/05/the-humble-blog/?utm_source=Mozilla&utm_medium=RSS

|

|

The Mozilla Blog: The USA Freedom Act and Browsing History |

Last Thursday, the US Senate voted to renew the USA Freedom Act which authorizes a variety of forms of national surveillance. As has been reported, this renewal does not include an amendment offered by Sen. Ron Wyden and Sen. Steve Daines that would have explicitly prohibited the warrantless collection of Web browsing history. The legislation is now being considered by the House of Representatives and today Mozilla and a number of other technology companies sent a letter urging them to adopt the Wyden-Daines language in their version of the bill. This post helps fill in the technical background of what all this means.

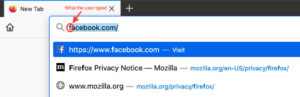

Despite what you might think from the term “browsing history,” we’re not talking about browsing data stored on your computer. Web browsers like Firefox store, on your computer, a list of the places you’ve gone so that you can go back and find things and to help provide better suggestions when you type stuff in the awesomebar. That’s how it is that you can type ‘f’ in the awesomebar and it might suggest you go to Facebook.

Browsers also store a pile of other information on your computer, like cookies, passwords, cached files, etc. that help improve your browsing experience and all of this can be used to infer where you have been. This information obviously has privacy implications if you share a computer or if someone gets access to your computer, and most browsers provide some sort of mode that lets you surf without storing history (Firefox calls this Private Browsing). Anyway, while this information can be accessed by law enforcement if they have access to your computer, it’s generally subject to the same conditions as other data on your computer and those conditions aren’t the topic at hand.

In this context, what “web browsing history” refers to is data which is stored outside your computer by third parties. It turns out there is quite a lot of this kind of data, generally falling into four broad categories:

- Telecommunications metadata. Typically, as you browse the Internet, your Internet Service Provider (ISP) learns every website you visit. This information leaks via a variety of channels (DNS lookups, the IP address of sites, TLS Server Name Indication (SNI)), and ISPs have various policies for how much of this data they log and for how long. Now that most sites use TLS encryption, this data will generally be just the name of the website you are going to, not which pages you visit on the site. For instance, if you go to WebMD, the ISP won’t know what page you went to; it just knows that you went to WebMD.

- Web Tracking Data. As is increasingly well known, a giant network of third party trackers follows you around the Internet. What these trackers are doing is building up a profile of your browsing history so that they can monetize it in various ways. This data often includes the exact pages that you visit and will be tied to your IP address and other potentially identifying information.