Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of this RSS feed's readers.

Daniel Stenberg: curl ootw: -U for proxy credentials

(older options of the week)

-U, --proxy-user

The short version of this option uses the uppercase letter ‘U’. It is important since the lower case letter ‘u’ is used for another option. The longer form of the option is spelled --proxy-user.

This command option existed already in the first ever curl release!

The man page’s first paragraph describes this option as:

Specify the user name and password to use for proxy authentication.

Proxy

This option is for using a proxy. So let’s first briefly look at what a proxy is.

A proxy is a “middle man” in the communication between a client (curl) and a server (the one that holds the contents you want to download or will receive the content you want to upload).

The client communicates via this proxy to reach the server. When a proxy is used, the server communicates only with the proxy and the client also only communicates with the proxy:

curl <===> proxy <===> server

There exist several different types of proxies, and a proxy can require authentication before it allows itself to be used.

Proxy authentication

Sometimes the proxy you want or need to use requires authentication, meaning that you need to provide your credentials to the proxy in order to be allowed to use it. The -U option is used to set the name and password used when authenticating with the proxy (separated by a colon).

You need to know this name and password; curl can’t figure them out. The exception is Windows with a curl built with SSPI support, where it can magically use the current user’s credentials if you provide blank credentials in the option: -U :.

Security

Providing passwords in command lines is a bit icky. If you write it in a script, someone else might see the script and figure them out.

If the proxy communication is done in clear text (for example over HTTP) some authentication methods (for example Basic) will transmit the credentials in clear text across the network to the proxy, possibly readable by others.
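
To make the clear-text point concrete: with the Basic method the credentials are only Base64-encoded, which is an encoding and not encryption. The sketch below is in Python rather than curl, and both function names are made up for illustration. It builds the header value an HTTP client would send to the proxy and then shows that anyone who sees the header can reverse it.

```python
import base64


def basic_proxy_header(user: str, password: str) -> str:
    """Build the Proxy-Authorization header used by HTTP Basic auth.

    Hypothetical helper for illustration only; curl does this internally.
    """
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return f"Proxy-Authorization: Basic {token}"


def recover_credentials(header: str) -> str:
    """Decode the credentials back out of the header, as an observer could."""
    token = header.rsplit(" ", 1)[1]
    return base64.b64decode(token).decode("utf-8")


if __name__ == "__main__":
    header = basic_proxy_header("user", "password")
    print(header)
    print(recover_credentials(header))
```

This is why Basic authentication over a clear-text connection to the proxy should be treated as sending the password itself.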

Command line options may also appear in process listing so other users on the system can see them there – although curl will attempt to blank them out from ps outputs if the system supports it (Linux does).

Needs other options too

A typical command line that uses -U also sets at least which proxy to use, with the -x option and a URL that specifies which type of proxy, the proxy host name and which port number the proxy runs on.

If the proxy is an HTTP or HTTPS type, you might also need to specify which type of authentication you want to use, for example with --proxy-anyauth to let curl figure it out by itself.

If you know what HTTP auth method the proxy uses, you can also explicitly enable that directly on the command line with the correct option, for example --proxy-basic or --proxy-digest.

SOCKS proxies

curl also supports SOCKS proxies, which are a different type than HTTP or HTTPS proxies. When you use a SOCKS proxy, you need to tell curl that, either with the correct prefix in the -x argument or with one of the --socks* options.

Example command line

curl -x http://proxy.example.com:8080 -U user:password https://example.com

See Also

The corresponding option for sending credentials to a server instead of proxy uses the lowercase version: -u / --user.

https://daniel.haxx.se/blog/2020/02/04/curl-ootw-u-for-proxy-credentials/

Jan-Erik Rediger: This Week in Glean: Cargo features - an investigation

(“This Week in Glean” is a series of blog posts that the Glean Team at Mozilla is using to try to communicate better about our work. They could be release notes, documentation, hopes, dreams, or whatever: so long as it is inspired by Glean. You can find an index of all TWiG posts online.)

The last blog post: This Week in Glean: Glossary by Bea.

As :chutten outlined in the first TWiG blog post, we're currently prototyping Glean on Desktop. After a couple of rounds of review, some adjustments and some learnings from doing Rust on mozilla-central, we were ready to land the first working prototype code earlier this year (Bug 1591564).

Unfortunately the patch set was backed out nearly immediately [1] for 2 failures. The first one was a "leak" (we missed cleaning up memory in a way to satisfy the rigorous Firefox test suite; that was fixed in another patch). The second one was a build failure on a Windows platform.

This is what the log had to say about it (shortened to the relevant parts here, see the full log output):

lld-link: error: undefined symbol: __rbt_backtrace_pcinfo

>>> referenced by gkrust_gtest.lib(backtrace-5286ea09b9822175.backtrace.3kzojw1m-cgu.3.rcgu.o)

lld-link: error: undefined symbol: __rbt_backtrace_create_state

>>> referenced by gkrust_gtest.lib(backtrace-5286ea09b9822175.backtrace.3kzojw1m-cgu.3.rcgu.o)

lld-link: error: undefined symbol: __rbt_backtrace_syminfo

>>> referenced by gkrust_gtest.lib(backtrace-5286ea09b9822175.backtrace.3kzojw1m-cgu.3.rcgu.o)

clang-9: error: linker command failed with exit code 1 (use -v to see invocation)

/builds/worker/workspace/build/src/config/rules.mk:608: recipe for target 'xul.dll' failed]

I set out to investigate this error.

While I had not seen that particular error before, I knew about the backtrace crate. It caused me some trouble before (it depends on a C library, and won't work on all targets easily).

I knew that the Glean SDK doesn't really depend on its functionality [2] and thus removing it from our dependency graph would probably solve the issue.

But first I had to find out why we depend on it somewhere and why it is causing these linker errors to begin with.

The first thing I noticed is that we didn't include anything new in the patch set that was now rejected.

Through some experimentation and the use of cargo-tree I could tell that backtrace was included in the build before our Glean patch [3], as a transitive dependency of another crate: failure.

So why didn't it fail the build before? As per the errors above, the build failed only during linking, not compilation, which makes me believe those functions were never linked in previously, because no one passed around any errors that would cause these functions to be used.

As said before, the Glean SDK doesn't really need failure's backtrace feature, so I tried disabling its default features. Due to how cargo currently works, this needs to be done across all transitive dependencies (the final feature set a crate is compiled with is the union across everything).

- Disabling it in ffi-support in application-services

- Disabling it in Glean (and depending on that changed ffi-support)
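
The union rule described above can be illustrated with a toy model in Python. This is not Cargo's resolver, only a sketch. The feature table is copied from the Cargo.toml diff shown later in this post, while the list of dependents (`some_other_crate` and what each one requests) and the function names are assumptions made up for the example.

```python
# Feature table for `failure`, taken from the Cargo.toml diff in this post.
FAILURE_FEATURES = {
    "default": ["std", "derive"],
    "std": ["backtrace"],
    "derive": ["failure_derive"],
}

# Hypothetical dependents and the features each one requests.
REQUESTS = [
    ("glean", []),                      # default features disabled
    ("some_other_crate", ["default"]),  # made-up crate using the defaults
]


def expand(features, table):
    """Follow feature edges until nothing new gets enabled."""
    enabled, todo = set(), list(features)
    while todo:
        name = todo.pop()
        if name not in enabled:
            enabled.add(name)
            todo.extend(table.get(name, []))
    return enabled


def unified(requests, table):
    """Union what every dependent asks for, then expand once for the build."""
    wanted = set()
    for _dependent, features in requests:
        wanted.update(features)
    return expand(wanted, table)


if __name__ == "__main__":
    print(sorted(unified(REQUESTS, FAILURE_FEATURES)))
```

In this model a single dependent asking for the defaults is enough to bring `backtrace` back, no matter how many other dependents disabled it.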

I then changed mozilla-central to use the crates from git directly for testing.

Turns out that still fails with the same issue on the Windows target.

Something was re-enabling the "std" feature of failure in tree.

cargo-feature-set was able to show me all enabled features for all dependencies, so I tracked it down further [4].

Turns out the quantum_render feature enables the webrender_bindings crate, which then somehow pulls in failure through transitive dependencies again.

More trial-and-error revealed it's a dependency of the dirs crate, only used on Windows.

Except dirs doesn't need failure for the target we're building for (x86_64-pc-windows-gnu or Mac or Linux).

It's again a transitive dependency for a crate called redox_users, which is only pulled in when compiled for Redox [5].

Except that's not how Cargo works. Cargo always pulls in all dependencies, merges all features and only later ignores the crates it doesn't actually need. That's a long standing issue.

So now we identified who's pulling in the backtrace crate and maybe even identified why it was not a problem before. How do we fix this?

As shown before, just disabling the backtrace feature in crates we use directly doesn't solve it, so one quick workaround was to force failure itself to not have that feature ever.

Easily done:

--- Cargo.toml
+++ Cargo.toml
@@ -23,5 +23,5 @@ members = [".", "failure_derive"]
 [features]
 default = ["std", "derive"]
 #small-error = ["std"]
-std = ["backtrace"]
+std = []
 derive = ["failure_derive"]

I forked failure and committed that patch, then made mozilla-central use my forked version instead.

Later I also removed failure from both Glean and application-services' ffi-support, as the small functionality we got from it was easily reimplemented manually.

Both approaches are short-term fixes for getting Glean into Firefox and it's clear that this issue might easily come up in some form soon again for either us or another team.

It's also a major hassle for lots of people outside of Mozilla; for example, people working on embedded Rust frequently run into problems with no_std libraries suddenly linking in libstd again.

Initially I also planned to figure out a way forward for Cargo and come up with a fix for it, but as it turns out: Someone is already doing that!

@ehuss started working on adding a new feature resolver.

While not yet final, it will bring a new -Zfeatures flag initially:

- itarget: Ignores features for target-specific dependencies for targets that don't match the current compile target.

- build_dep: Prevents features enabled on build dependencies from being enabled for normal dependencies.

- dev_dep: Prevents features enabled on dev dependencies from being enabled for normal dependencies.

I'm excited to see this being worked on and can't wait to try it out. It will take longer for mozilla-central to rely on this of course, but I hope this will eventually solve one of the long standing issues with cargo.

[1] When patches are merged, a full set of tests is run on the Mozilla CI servers. If these tests fail, the patch is reverted ("backed out") and the initial committer is informed.

[2] Glean's error handling is pretty simplistic. We mostly only log errors on the FFI boundary and don't propagate them over this boundary. So far we didn't need a backtrace for errors there.

[3] The exact command enabling all the same features as the build I run in toolkit/library/rust/shared was (on the parent commit of my later patch to fix it: 7a3be2bbc):

cargo tree --features bitsdownload,cranelift_x86,cubeb-remoting,fogotype,gecko_debug,gecko_profiler,gecko_refcount_logging,moz_memory,moz_places,new_cert_storage,new_xulstore,quantum_render

[4] Same feature set as above, just running cargo feature-set this time.

[5] "Redox is a Unix-like Operating System written in Rust, aiming to bring the innovations of Rust to a modern microkernel and full set of applications". Firefox doesn't build on Redox.

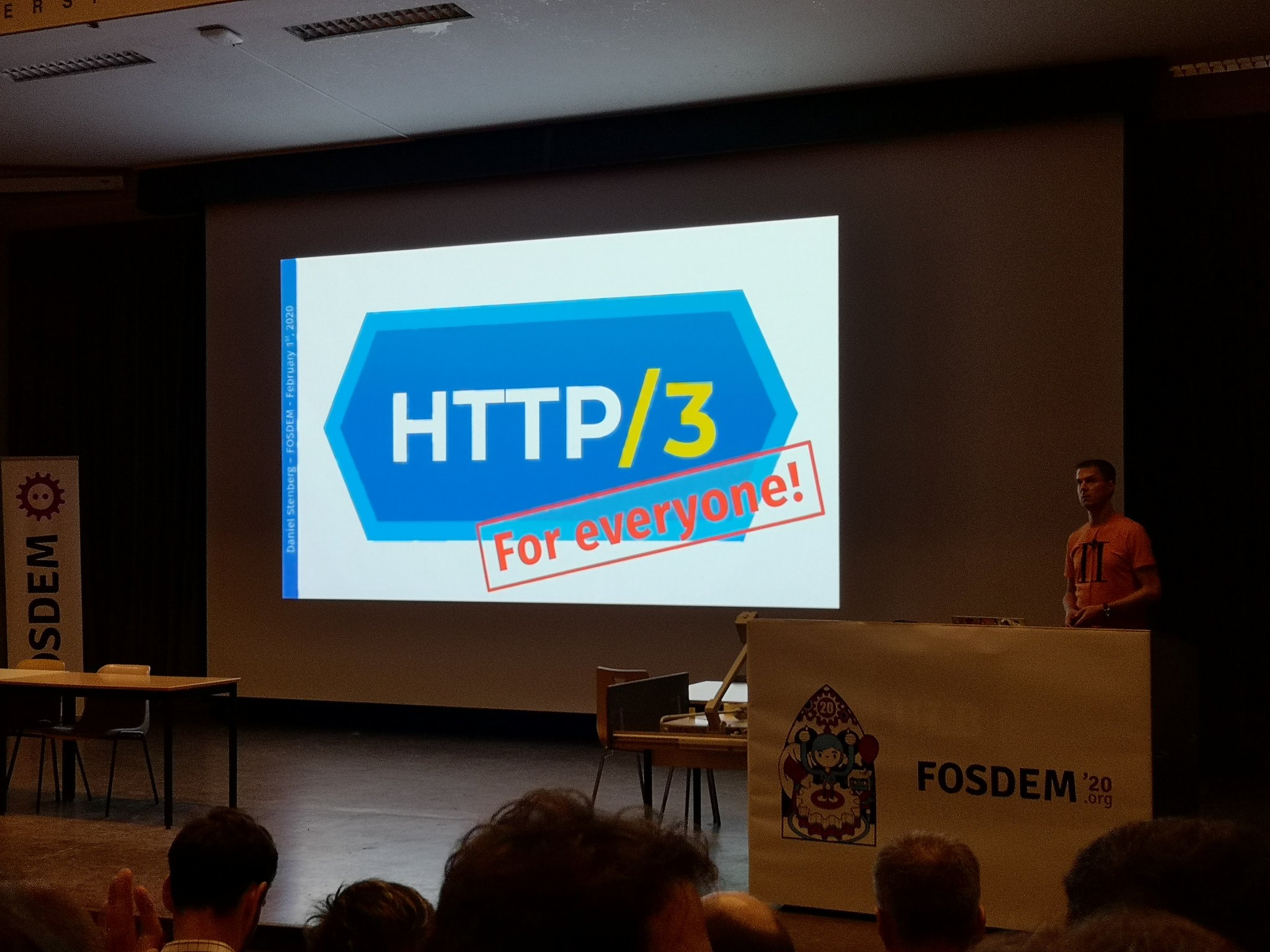

Daniel Stenberg: HTTP/3 for everyone

FOSDEM 2020 is over for this time and I had an awesome time in Brussels once again.

Stickers

I brought a huge collection of stickers this year and I kept going back to the wolfSSL stand to refill the stash and it kept being emptied almost as fast. Hundreds of curl stickers were given away! The photo on the right shows my “sticker bag” as it looked before I left Sweden.

Lesson for next year: bring a larger amount of stickers! If you missed out on curl stickers, get in touch and I’ll do my best to satisfy your needs.

The talk

“HTTP/3 for everyone” was my single talk this FOSDEM. Just two days before the talk, I landed updated commits in curl’s git master branch for doing HTTP/3 up-to-date with the latest draft (-25). Very timely and I got to update the slide mentioning this.

As I talked about HTTP/3 already last year in the Mozilla devroom, I also made sure to go through the slides I used then to compare and make sure I wouldn’t do too much of the same talk. But lots of things have changed and most of the content is updated and different this time around. Last year, literally hundreds of people were lining up outside wanting to get into the room when the doors were closed. This year, I talked in the room Janson, which features 1415 seats. The biggest one on campus. It was packed full!

It is kind of an adrenaline rush to stand in front of such a wall of people. At one time in my talk I paused for a brief moment and then I felt I could almost hear the complete silence when a huge amount of attentive faces captured what I had to say.

I got a lot of positive feedback on the presentation. I also thought that my decision to not even try to take questions in the big room was correct, and I ended up talking and discussing details behind the scenes for a good while after my talk was done. Really fun!

The video

The video is also available from the FOSDEM site in webm and mp4 formats.

The slides

If you want the slides only, run over to slideshare and view them.

The Firefox Frontier: Tracking Diaries with Melanie Ehrenkranz

In Tracking Diaries, we invited people from all walks of life to share how they spent a day online while using Firefox’s privacy protections to keep count of the trackers …

https://blog.mozilla.org/firefox/tracking-diaries-melanie-ehrenkranz/

The Rust Programming Language Blog: The 2020 Rust Event Lineup

A new decade has started, and we are excited about the Rust conferences coming up. Each conference is an opportunity to learn about Rust, share your knowledge, and to have a good time with your fellow Rustaceans. Read on to learn more about the events we know about so far.

FOSDEM

February 2nd, 2020

FOSDEM stands for the Free and Open Source Developers European Meeting. At this event software developers from around the world will meet up, share ideas and collaborate. FOSDEM will be hosting a Rust devroom workshop that aims to present the features and possibilities offered by Rust, as well as some of the many exciting tools and projects in its ecosystem.

Located in Brussels, Belgium

RustFest Netherlands

Q2, 2020

The RustFest Netherlands team are working hard behind the scenes on getting everything ready. We hope to tell you more soon so keep an eye on the RustFest blog and follow us on Twitter!

Located in Netherlands

Rust+GNOME Hackfest

April 29th to May 3rd, 2020

The goal of the Rust+GNOME hackfest is to improve the interactions between Rust and the GNOME libraries. During this hackfest, we will be improving the interoperability between Rust and GNOME, improving the support of GNOME libraries in Rust, and exploring solutions to create GObject APIs from Rust.

Located in Montréal, Quebec

Rust LATAM

May 22nd-23rd, 2020

Where Rust meets Latin America! Rust Latam is Latin America's leading event for and by the Rust community. Two days of interactive sessions, hands-on activities and engaging talks to bring the community together. Schedule to be announced at this link.

Located in Mexico City, Mexico

Oxidize

July, 2020

The Oxidize conference is about learning, and improving your programming skills with embedded systems and IoT in Rust. The conference plans on having one day of guided workshops for developers looking to start or improve their Embedded Rust skills, one day of talks by community members, and a two day development session focused on Hardware and Embedded subjects in Rust. The starting date is to be announced at a later date.

Located in Berlin, Germany

RustConf

August 20th-21st, 2020

The official RustConf will be taking place in Portland, Oregon, USA. Last year's conference was amazing, and we are excited to see what happens next. See the website and Twitter for updates as the event date approaches!

Located in Oregon, USA

Rusty Days

Fall, 2020

Rusty Days is a new conference located in Wroclaw, Poland. Rustaceans of all skill levels are welcome. The conference is still being planned. Check out the information on their site, and twitter as we get closer to fall.

Located in Wroclaw, Poland

RustLab

October 16th-17th, 2020

RustLab 2020 is a two-day conference with talks and workshops. The date is set, but the talks are still being planned. We expect to learn more details as we get closer to the date of the conference.

Located in Florence, Italy

For the most up-to-date information on events, visit timetill.rs. For meetups and other events, see the calendar.

Armen Zambrano: Web performance issue — reoccurrence |

In June we discovered that Treeherder’s UI slowdowns were due to database slowdowns (for full details you can read this post). After a couple of months of investigations, we made various changes to the RDS setup. The changes that made the most significant impact were doubling the DB size to double our IOPS cap and adding Heroku auto-scaling for web nodes. Alternatively, we could have used Provisioned IOPS instead of General SSD storage to double the IOPS, but the cost was over $1,000/month more.

Looking back, we made the mistake of not involving AWS from the beginning (I didn’t know we could have used their help). The AWS support team would have looked at the database and would likely have recommended the parameter changes required for a write-intensive workload (the changes they recommended during our November outage; see bug 1597136 for details). For the next four months we did not have any issues; however, their help would have saved a lot of time and would have prevented the major outage we had in November.

There were some good things that came out of these two episodes: the team has learned how to better handle DB issues, there are improvements we can make to prevent future incidents (see bug 1599095), we created an escalation path, and we worked closely as a team to get through the crisis (thanks bobm, camd, dividehex, ekyle, fubar, habib, kthiessen & sclements for your help!).

The Rust Programming Language Blog: Announcing Rust 1.41.0

The Rust team is happy to announce a new version of Rust, 1.41.0. Rust is a programming language that is empowering everyone to build reliable and efficient software.

If you have a previous version of Rust installed via rustup, getting Rust 1.41.0 is as easy as:

rustup update stable

If you don't have it already, you can get rustup from the appropriate page on our website, and check out the detailed release notes for 1.41.0 on GitHub.

What's in 1.41.0 stable

The highlights of Rust 1.41.0 include relaxed restrictions for trait implementations, improvements to cargo install, a more git-friendly Cargo.lock, and new FFI-related guarantees for Box<T>. See the detailed release notes to learn about other changes not covered by this post.

Relaxed restrictions when implementing traits

To prevent breakages in the ecosystem when a dependency adds a new trait impl, Rust enforces the orphan rule. The gist of it is that a trait impl is only allowed if either the trait or the type being implemented is local to (defined in) the current crate as opposed to a foreign crate. What this means exactly is complicated, however, when generics are involved.

Before Rust 1.41.0, the orphan rule was unnecessarily strict, getting in the way of composition. As an example, suppose your crate defines the BetterVec<T> struct, and you want a way to convert your struct to the standard library's Vec<T>. The code you would write is:

impl<T> From<BetterVec<T>> for Vec<T> {
    // ...
}

...which is an instance of the pattern:

impl<T> ForeignTrait<LocalType> for ForeignType<T> {
    // ...
}

In Rust 1.40.0 this impl was forbidden by the orphan rule, as both From and Vec are defined in the standard library, which is foreign to the current crate. There were ways to work around the limitation, such as the newtype pattern, but they were often cumbersome or even impossible in some cases.

While it's still true that both From and Vec were foreign, the trait (in this case From) was parameterized by a local type. Therefore, Rust 1.41.0 allows this impl.

For more details, read the stabilization report and the RFC proposing the change.

cargo install updates packages when outdated

With cargo install, you can install binary crates in your system. The command is often used by the community to install popular CLI tools written in Rust.

Starting from Rust 1.41.0, cargo install will also update existing installations of the crate if a new release came out since you installed it. Before this release the only option was to pass the --force flag, which reinstalls the binary crate even if it's up to date.

Less conflict-prone Cargo.lock format

To ensure consistent builds, Cargo uses a file named Cargo.lock, containing dependency versions and checksums. Unfortunately, the way the data was arranged in it caused unnecessary merge conflicts when changing dependencies in separate branches.

Rust 1.41.0 introduces a new format for the file, explicitly designed to avoid those conflicts. This new format will be used for all new lockfiles, while existing lockfiles will still rely on the previous format. You can learn about the choices leading to the new format in the PR adding it.

More guarantees when using Box in FFI

Starting with Rust 1.41.0, we have declared that a Box<T>, where T: Sized, is now ABI compatible with the C language's pointer (T*) types. So if you have an extern "C" Rust function, called from C, your Rust function can now use Box<T>, for some specific T, while using T* in C for the corresponding function. As an example, on the C side you may have:

/* C header */

// Returns ownership to the caller.
struct Foo* foo_new(void);

// Takes ownership from the caller; no-op when invoked with NULL.
void foo_delete(struct Foo*);

...while on the Rust side, you would have:

#[repr(C)]
pub struct Foo;

#[no_mangle]
pub extern "C" fn foo_new() -> Box<Foo> {
    Box::new(Foo)
}

// The possibility of NULL is represented with the `Option<_>`.
#[no_mangle]
pub extern "C" fn foo_delete(_: Option<Box<Foo>>) {}

Note however that while Box<T> and T* have the same representation and ABI, a Box<T> must still be non-null, aligned, and ready for deallocation by the global allocator. To ensure this, it is best to only use Boxes originating from the global allocator.

Important: At least at present, you should avoid using Box<T> types for functions that are defined in C but invoked from Rust. In those cases, you should directly mirror the C types as closely as possible. Using types like Box<T> where the C definition is just using T* can lead to undefined behavior.

To read more, consult the documentation for Box.

Library changes

In Rust 1.41.0, we've made the following additions to the standard library:

- The Result::map_or and Result::map_or_else methods were stabilized. Similar to Option::map_or and Option::map_or_else, these methods are shorthands for the .map(|val| process(val)).unwrap_or(default) pattern.

- NonZero* numerics now implement From<NonZero*> if it's a smaller integer width. For example, NonZeroU16 now implements From<NonZeroU8>.

- The weak_count and strong_count methods on Weak pointers were stabilized: std::rc::Weak::weak_count, std::rc::Weak::strong_count, std::sync::Weak::weak_count, and std::sync::Weak::strong_count. These methods return the number of weak (rc::Weak<T> and sync::Weak<T>) or strong (Rc<T> and Arc<T>) pointers to the allocation respectively.

Reducing support for 32-bit Apple targets soon

Rust 1.41.0 is the last release with the current level of compiler support for 32-bit Apple targets, including the i686-apple-darwin target. Starting from Rust 1.42.0, these targets will be demoted to the lowest support tier.

You can learn more about this change in this blog post.

Other changes

There are other changes in the Rust 1.41.0 release: check out what changed in Rust, Cargo, and Clippy. We also have started landing MIR optimizations, which should improve compile time: you can learn more about them in the "Inside Rust" blog post.

Contributors to 1.41.0

Many people came together to create Rust 1.41.0. We couldn't have done it without all of you. Thanks!

Karl Dubost: Week notes - 2020 w04 - worklog - Python

Monday

Some webcompat diagnosis. Nothing exciting in the issues found, except maybe something about clipping and scrolling. Update: there is a bug! Thanks Daniel

Tuesday

Scoping requests to the webhook to the actual repo. That way we do not do useless work or, worse, create conflicts of label assignments. I struggled a bit with the mock. Basically, for the test I wanted to avoid making the call to GitHub. When I think about it, there are possibly two options:

- Mocking.

- Or putting a flag in the code to avoid the call if in a test environment.

Not sure what the best strategy is.

I also separated some tests which were tied together under the same function, so that it is clearer when one of them is failing.

Phone meeting with the webcompat team this night from 23:00 to midnight. Minutes.

About Mocking

Yes mocking is evil in unit tests, but it becomes necessary if you have dependencies on external services (that you do not control). A good reminder is that you need to mock the function where it is actually called and not where it is imported from. In my case, I wanted to make a couple of tests for our webhook without actually sending requests to GitHub. The HTTP response from GitHub which interests us would be either:

- 4**

- 200

So I created a mock for the case where it is successful and actually makes the call. I added comments here to explain.

@patch('webcompat.webhooks.new_opened_issue')
def test_new_issue_right_repo(self, mock_proxy):
    """Test that repository_url matches the CONFIG for public repo.

    Success is:
    payload: 'gracias amigos'
    status: 200
    content-type: text/plain
    """
    json_event, signature = event_data('new_event_valid.json')
    headers = {
        'X-GitHub-Event': 'issues',
        'X-Hub-Signature': 'sha1=2fd56e551f8243a4c8094239916131535051f74b',
    }
    with webcompat.app.test_client() as c:
        mock_proxy.return_value.status_code = 200
        rv = c.post(
            '/webhooks/labeler',
            data=json_event,
            headers=headers
        )
        self.assertEqual(rv.data, b'gracias, amigo.')
        self.assertEqual(rv.status_code, 200)
        self.assertEqual(rv.content_type, 'text/plain')
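
The reminder about mocking the function where it is called, not where it is defined, can be checked with a small self-contained sketch. Everything here is hypothetical: `demo_helpers` and `demo_webhooks` are throwaway in-memory modules standing in for a helper module and a webhook module, not the real webcompat code.

```python
import sys
import types
from unittest import mock

# Hypothetical module that owns the function doing the real network call.
demo_helpers = types.ModuleType("demo_helpers")


def _new_opened_issue(payload):
    raise RuntimeError("would send a request to GitHub")


demo_helpers.new_opened_issue = _new_opened_issue
sys.modules["demo_helpers"] = demo_helpers

# Hypothetical module that imports the function by name and calls it,
# the way a webhook module might.
demo_webhooks = types.ModuleType("demo_webhooks")
exec(
    "from demo_helpers import new_opened_issue\n"
    "def handle(payload):\n"
    "    return new_opened_issue(payload).status_code\n",
    demo_webhooks.__dict__,
)
sys.modules["demo_webhooks"] = demo_webhooks


def status_when_patching(target):
    """Patch `target`, call the handler, and report what happened."""
    with mock.patch(target) as fake:
        fake.return_value.status_code = 200
        try:
            return demo_webhooks.handle({"action": "opened"})
        except RuntimeError:
            return "real function was called"


if __name__ == "__main__":
    print(status_when_patching("demo_webhooks.new_opened_issue"))
    print(status_when_patching("demo_helpers.new_opened_issue"))
```

Patching the name inside `demo_webhooks` replaces the reference the handler actually looks up. Patching it in `demo_helpers` leaves that reference untouched, so the real function still runs.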

Wednesday

Asia Dev Roadshow

Sandra has published the summary and the videos of the Developer Roadshow in Asia. This is the talk we gave about Web Compatibility and devtools in Seoul.

Anonymous reporting

Still working on our new anonymous reporting workflow.

Started to work on the PATCH issue when it is moderated positively, but before adding code I needed to refactor a bit so we don't end up with a pile of things. I think we can further simplify. Unit tests make it so much easier to move things around. Because when moving code into different modules and files, we break tests. And then we need to fix both code and tests so it's working again. But we know in the end that all the features that were essential are still working.

Skipping tests before completion

I had ideas for tests and I didn't want to forget them, so I wanted to add them to the code, so that they would be there without making the test suite fail.

I could use pass:

def test_patch_not_acceptable_issue(self):
    pass

but this will be silent, and so you might forget about them.

Then I thought, let's use the NotImplementedError

def test_patch_not_acceptable_issue(self):
    raise NotImplementedError

but here everything will break and the test suite will stop working. So not good. I searched and I found unittest.SkipTest

def test_patch_not_acceptable_issue(self):
    """Test for not acceptable issues from private repo.

    payload: 'Moderated issue rejected'
    status: 200
    content-type: text/plain
    """
    raise unittest.SkipTest('TODO')

Exactly what I needed for nose.

(env) ~/code/webcompat.com % nosetests tests/unit/test_webhook.py -v

gives:

Extract browser label name. ... ok
Extract 'extra' label. ... ok
Extract dictionary of metadata for an issue body. ... ok
Extract priority label. ... ok
POST without bogus signature on labeler webhook is forbidden. ... ok
POST with event not being 'issues' or 'ping' fails. ... ok
POST without signature on labeler webhook is forbidden. ... ok
POST with an unknown action fails. ... ok
GET is forbidden on labeler webhook. ... ok
Extract the right information from an issue. ... ok
Extract list of labels from an issue body. ... ok
Validation tests for GitHub Webhooks: Everything ok. ... ok
Validation tests for GitHub Webhooks: Missing X-GitHub-Event. ... ok
Validation tests for GitHub Webhooks: Missing X-Hub-Signature. ... ok
Validation tests for GitHub Webhooks: Wrong X-Hub-Signature. ... ok
Test that repository_url matches the CONFIG for public repo. ... ok
Test when repository_url differs from the CONFIG for public repo. ... ok
Test the core actions on new opened issues for WebHooks. ... ok
Test for acceptable issues comes from private repo. ... SKIP: TODO
Test for rejected issues from private repo. ... SKIP: TODO
Test for issues in the wrong repo. ... SKIP: TODO
Test the private scope of the repository. ... ok
Test the public scope of the repository. ... ok
Test the unknown of the repository. ... ok
Test the signature check function for WebHooks. ... ok
POST with PING events just return a 200 and contains pong. ... ok
----------------------------------------------------------------------
Ran 26 tests in 0.102s

OK (SKIP=3)

It doesn't fail the test suite, but at least I know I have work to do. We can perfectly see what is missing.

Test for acceptable issues comes from private repo. ... SKIP: TODO
Test for rejected issues from private repo. ... SKIP: TODO
Test for issues in the wrong repo. ... SKIP: TODO
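
For comparison, here is a small sketch of how the three placeholder styles are recorded. It uses the standard library's unittest runner rather than nose, which is an assumption: nose's reporting may differ in detail. The class and function names are invented for the example.

```python
import io
import unittest


def run_placeholders():
    """Run three placeholder styles and return the unittest result object."""

    class PlaceholderTests(unittest.TestCase):
        def test_silent(self):
            pass  # counted as a pass, so it is easy to forget

        def test_not_implemented(self):
            raise NotImplementedError  # recorded as an error

        def test_todo(self):
            raise unittest.SkipTest("TODO")  # recorded as skipped, with reason

    suite = unittest.defaultTestLoader.loadTestsFromTestCase(PlaceholderTests)
    # Send the runner's report to a buffer so only our summary is printed.
    runner = unittest.TextTestRunner(stream=io.StringIO(), verbosity=0)
    return runner.run(suite)


if __name__ == "__main__":
    result = run_placeholders()
    print("run:", result.testsRun)
    print("errors:", len(result.errors))
    print("skipped:", [reason for _test, reason in result.skipped])
```

Only the SkipTest variant keeps the run green while still leaving a visible reminder in the report.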

Thursday and Friday

I dedicated most of my time to advancing the new anonymous reporting workflow. The interesting part of the process was having tests and having to refactor some functions a couple of times so they made more sense.

Tests are really a safe place to make progress. A new function will break test results, and we will work to fix the tests and/or the function until it reaches a cleaner place. And then we work on the next modification of the code. Tests become a lifeline in your development.

Another thing I realized is that it may be time to create a new module for our issues themselves. It would model and instantiate our issues, and we could use it in multiple places. Currently we have too much back and forth on parsing texts, calling dictionary items, etc. We can probably improve this with a dedicated module. Probably for phase 2 of our new workflow project.

Also, I have not been as effective as I wished. The windmill of thoughts about my ex-work colleagues' future is running wild.

Otsukare!

Mozilla Thunderbird: Thunderbird’s New Home

As of today, the Thunderbird project will be operating from a new wholly owned subsidiary of the Mozilla Foundation, MZLA Technologies Corporation. This move has been in the works for a while as Thunderbird has grown in donations, staff, and aspirations. This will not impact Thunderbird’s day-to-day activities or mission: Thunderbird will still remain free and open source, with the same release schedule and people driving the project.

There was a time when Thunderbird’s future was uncertain, and it was unclear what was going to happen to the project after it was decided Mozilla Corporation would no longer support it. But in recent years donations from Thunderbird users have allowed the project to grow and flourish organically within the Mozilla Foundation. Now, to ensure future operational success, following months of planning, we are forging a new path forward. Moving to MZLA Technologies Corporation will not only allow the Thunderbird project more flexibility and agility, but will also allow us to explore offering our users products and services that were not possible under the Mozilla Foundation. The move will allow the project to collect revenue through partnerships and non-charitable donations, which in turn can be used to cover the costs of new products and services.

Thunderbird’s focus isn’t going to change. We remain committed to creating amazing, open source technology focused on open standards, user privacy, and productive communication. The Thunderbird Council continues to steward the project, and the team guiding Thunderbird’s development remains the same.

Ultimately, this move to MZLA Technologies Corporation allows the Thunderbird project to hire more easily, act more swiftly, and pursue ideas that were previously not possible. More information about the future direction of Thunderbird will be shared in the coming months.

Update: A few of you have asked how to make a contribution to Thunderbird under the new corporation, especially when using the monthly option. Please check out our updated site at give.thunderbird.net!

|

|

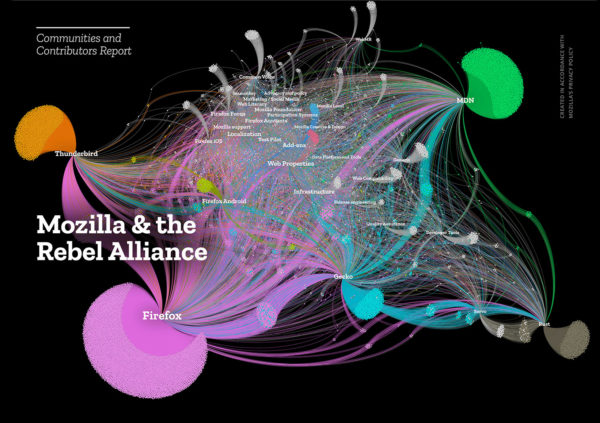

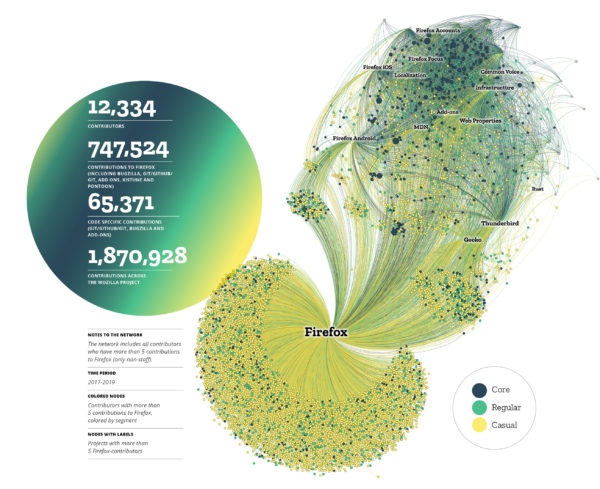

The Mozilla Blog: Mapping the power of Mozilla’s Rebel Alliance |

At Mozilla, we often speak of our contributor communities with gratitude, pride and even awe. Our mission and products have been supported by a broad, ever-changing rebel alliance — full of individual volunteers and organizational contributors — since we shipped Firefox 1.0 in 2004. It is this alliance that comes up with new ideas, innovative approaches and alternatives to the ongoing trends towards centralisation and an internet that doesn’t always work in the interests of people.

But we’ve been unable to speak in specifics. And that’s a problem, because the threats to the internet we love have never been greater. Without knowing the strength of the various groups fighting for a healthier internet, it’s hard to predict or achieve success.

We know there are thousands around the globe who help build, localize, test, de-bug, deploy, and support our products and services. They help us advocate for better government regulation and ‘document the web’ through the Mozilla Developer Network. They speak about Mozilla’s mission and privacy-preserving products and technologies at conferences around the globe. They help us host events around the globe too, like this year’s 10th anniversary of MozFest, where participants hacked on how to create a multi-lingual, equitable internet and so much more.

With the publication of the Mozilla and the Rebel Alliance report, we can now speak in specifics. And what we have to say is inspiring. As we rise to the challenges of today’s internet, from the injustices of the surveillance economy to widespread misinformation and the rise of untrustworthy AI, we take heart in how powerful we are as a collective.

Making the connections

In 2018, well over 14,000 people supported Mozilla by contributing their expertise, work, creativity, and insights. Between 2017 and 2019, more than 12,000 people contributed to Firefox. These counts only consider those people whose contributions we can see, such as through Bugzilla, GitHub, or Kitsune, our support platform. They don’t include non-digital contributions. Firefox and Gecko added almost 3,500 new contributors in 2018. The Mozilla Developer Network added over 1,000 in 2018. 52% of all traceable contributions in 2018 came from individual volunteers and commercial contributors, not employees.

The report’s network graphs demonstrate that there are numerous Mozilla communities, not one. Many community members participate across multiple projects: core contributors participate in an average of 4.3 of them. Our friends at Analyse & Tal helped create an interactive version of Mozilla’s contributor communities, highlighting common patterns of contribution and distinguishing between levels of contribution by project. Also, it’s important to note what isn’t captured in the report: the value of social connections, the learning and the mutual support people find in our communities.

We can make a reasonable estimate of the discrete value of some contributions from our rebel alliance. For example, community contributions comprise 58% of all filed Firefox regression bugs, which are particularly costly in their impact on the number of people who use and keep using the browser.

But the real value in our rebel alliance and their contributions is in how they inform and amplify our voice. The challenges around the state of the internet are daunting: disinformation, algorithmic bias and discrimination, the surveillance economy and greater centralisation. We believe this report shows that with the creative strength of our diverse contributor communities, we’re up for the fight.

If you’d like to contribute yourself, check out various opportunities here or dive right into one of our Activate Campaigns!

The post Mapping the power of Mozilla’s Rebel Alliance appeared first on The Mozilla Blog.

https://blog.mozilla.org/blog/2020/01/27/mapping-the-power-of-mozillas-rebel-alliance/

|

|

The Mozilla Blog: Firefox Team Looks Within to Lead Into the Future |

For Firefox products and services to meet the needs of people’s increasingly complex online lives, we need the right organizational structure. One that allows us to respond quickly as we continue to excel at delivering existing products and develop new ones into the future.

Today, I announced a series of changes to the Firefox Product Development organization that will allow us to do just that, including the promotion of long-time Mozillian Selena Deckelmann to Vice President, Firefox Desktop.

“Working on Firefox is a dream come true,” said Selena Deckelmann, Vice President, Firefox Desktop. “I collaborate with an inspiring and incredibly talented team, on a product whose mission drives me to do my best work. We are all here to make the internet work for the people it serves.”

Selena Deckelmann, VP Firefox Desktop

During her eight years with Mozilla, Selena has been instrumental in helping the Firefox team address over a decade of technical debt, beginning with transitioning all of our build infrastructure over from Buildbot. As Director of Security and then Senior Director, Firefox Runtime, Selena led her team to some of our biggest successes, ranging from big infrastructure projects like Quantum Flow and Project Fission to key features like Enhanced Tracking Protection and new services like Firefox Monitor. In her new role, Selena will be responsible for growth of the Firefox Desktop product and search business.

Rounding out the rest of the Firefox Product Development leadership team are:

Joe Hildebrand, who moves from Vice President, Firefox Engineering into the role of Vice President, Firefox Web Technology. He will lead the team charged with defining and shipping our vision for the web platform.

James Keller, who currently serves as Senior Director, Firefox User Experience, will help us better navigate the difficult trade-off between empowering teams and maintaining a consistent user journey. This work is critically important because, since the Firefox Quantum launch in November 2017, we have been focused on putting the user back at the center of our products and services. That begins with a coherent, engaging and easy-to-navigate experience in the product.

I’m extraordinarily proud to have such a strong team within the Firefox organization that we could look internally to identify this new leadership team.

These Mozillians and I will eventually be joined by two additional team members: one who will head up our Firefox Mobile team, and another who will lead the team that has been driving our paid subscription work. Searches for both roles will be posted.

Alongside Firefox Chief Technology Officer Eric Rescorla and Vice President, Product Marketing Lindsey Shepard, I look forward to working with this team to meet Mozilla’s mission and serve internet users as we build a better web.

You can download Firefox here.

The post Firefox Team Looks Within to Lead Into the Future appeared first on The Mozilla Blog.

https://blog.mozilla.org/blog/2020/01/24/firefox-team-looks-within-to-lead-into-the-future/

|

|

Daniel Stenberg: Coming to FOSDEM 2020 |

I’m going to FOSDEM again in 2020. This will be the 11th consecutive year I’m traveling to this awesome conference in Brussels, Belgium.

At this, my 11th FOSDEM visit, I will also deliver my 11th FOSDEM talk: “HTTP/3 for everyone”. It will happen at 16:00 on Saturday the 1st of February 2020, in Janson, the largest room on the campus. (My third talk in the main track.)

For those who have seen me talk about HTTP/3 before, this talk will certainly have overlaps, but I’m also always refreshing and improving the slides, and I update them as the process moves on, things change and I get feedback. I already spoke about HTTP/3 at FOSDEM 2019 in the Mozilla devroom (at which time there was a looong line of people who tried, but couldn’t get a seat in the room) – but I think you’ll find that there are enough changes and improvements in this talk to keep you entertained this year as well!

If you come to FOSDEM, don’t hesitate to come say hi and grab a curl sticker or two – I intend to bring and distribute plenty – and talk curl, HTTP and Internet transfers with me!

You will most likely find me at my talk, in the cafeteria area or at the wolfSSL stall. (DM me on twitter to pin me down! @bagder)

https://daniel.haxx.se/blog/2020/01/24/coming-to-fosdem-2020/

|

|

Patrick Cloke: Cleanly removing a Django app (with models) |

While pruning features from our product it was necessary to fully remove some Django apps that had models in them. If the code is just removed, then the tables (and some other references) will be left in the database.

After doing this a few times for work I came up …

https://patrick.cloke.us/posts/2020/01/23/cleanly-removing-a-django-app-with-models/

|

|

Patrick Cloke: Using MySQL’s LOAD DATA with Django |

While attempting to improve the performance of bulk inserting data into a MySQL database, my coworker came across the LOAD DATA SQL statement. It allows you to read data from a text file (in a format similar to comma-separated values) and quickly insert it into a table. There are two variations of it …

https://patrick.cloke.us/posts/2020/01/23/using-mysqls-load-data-with-django/
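As a rough illustration of the idea (the post's own approach is cut off in this feed, so this is not necessarily the author's method), the sketch below writes rows to a CSV file and builds a `LOAD DATA LOCAL INFILE` statement for it. The helper names `write_rows` and `build_load_data`, the table `myapp_person`, and its columns are all hypothetical, and it assumes that `local_infile` is enabled on both the MySQL server and the client driver.

```python
import csv
import tempfile
from typing import Iterable, List, Sequence, Tuple


def write_rows(rows: Iterable[Sequence[object]]) -> str:
    """Write rows to a temporary CSV file and return its path."""
    with tempfile.NamedTemporaryFile(
        "w", suffix=".csv", delete=False, newline=""
    ) as handle:
        csv.writer(handle, lineterminator="\n").writerows(rows)
        return handle.name


def build_load_data(
    path: str, table: str, columns: Sequence[str]
) -> Tuple[str, List[str]]:
    """Return (sql, params) for a LOAD DATA LOCAL INFILE statement.

    Table and column names are interpolated directly, so they must come
    from trusted code, never from user input. The file path is passed as
    a query parameter instead.
    """
    column_list = ", ".join(f"`{name}`" for name in columns)
    sql = (
        "LOAD DATA LOCAL INFILE %s "
        f"INTO TABLE `{table}` "
        "FIELDS TERMINATED BY ',' OPTIONALLY ENCLOSED BY '\"' "
        "LINES TERMINATED BY '\\n' "
        f"({column_list})"
    )
    return sql, [path]


# Hypothetical table and data, for illustration only.
path = write_rows([(1, "alice"), (2, "bob")])
sql, params = build_load_data(path, "myapp_person", ["id", "name"])

# With Django, the statement would then be run roughly like this:
#     from django.db import connection
#     with connection.cursor() as cursor:
#         cursor.execute(sql, params)
```

Building the statement separately from executing it keeps the sketch runnable without a database; whether this beats Django's own bulk insert tools depends on the data volume and server configuration.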

|

|

Mozilla VR Blog: Hello WebXR |

We are happy to share a brand new WebXR experience we have been working on called Hello WebXR!

Here is a preview video of how it looks:

We wanted to create a demo to celebrate the release of the WebXR v1.0 API!

The demo is designed as a playground where you can try different experiences and interactions in VR, and introduce newcomers to the VR world and its special language in a smooth, easy and nice way.

How to run it

You just need to open the Hello WebXR page in a WebXR-capable browser (or a WebVR-capable one, thanks to the WebXR polyfill), such as Firefox Reality or Oculus Browser on standalone devices like the Oculus Quest, or Chrome >79 on desktop. For an updated list of supported browsers, please visit the ImmersiveWeb.dev support table.

Features

- The demo starts in the main hall where you can find:

  - Floating spheres containing 360° mono and stereo panoramas

  - A pair of sticks that you can grab to play the xylophone

  - A painting exhibition where paintings can be zoomed and inspected at will

  - A wall where you can use a graffiti spray can to paint whatever you want

  - A twitter feed panel where you can read tweets with hashtag #hellowebxr

- Three doors that will teleport you to other locations:

  - A dark room to experience positional audio (can you find where the sounds come from?)

  - A room displaying a classical sculpture captured using photogrammetry

  - The top of a building in a skyscrapers area (are you scared of heights?)

Goals

Our main goal for this demo was to build a nice looking and nice performing experience where you could try different interactions and explore multiple use cases for WebXR. We used Quest as our target device to demonstrate WebXR is a perfectly viable platform not only for powerful desktops and headsets but also for more humble devices like the Quest or Go, where resources are scarce.

Also, by building real-world examples we learn how web technologies, tools, and processes can be optimized and improved, helping us to focus on implementing actual, useful solutions that can bring more developers and content to WebXR.

Tech

The demo was built with web technologies, using the three.js engine and, in some parts, our ECSY framework. We also used the latest standards, such as glTF with Draco compression for models and Basis for textures. The models were created using Blender, and baked lighting is used throughout the demo.

We also used third-party content: the photogrammetry sculpture (from this fantastic scan by Geoffrey Marchal on Sketchfab), public domain sounds from freesound.org, and classic paintings taken from the public online galleries of the museums where they are exhibited.

Conclusions

There are many things we are happy with:

- The overall aesthetic and “gameplay” fit perfectly with the initial concepts.

- The way we handle the different interactions in the same room, based on proximity or states, made everything easier to scale.

- The demo was created initially using only Three.js, but we successfully integrated some functionality using ECSY.

And other things that we could improve:

- We released fewer experiences than we initially planned.

- Overall the tooling is still a bit rough and we need to keep on improving it:

- When something goes wrong it is hard to debug remotely on the device. This is even worse if the problem comes from WebGL. ECSY tools will help here in the future.

- State of the art technologies like Basis or glTF still lack good tools.

- Many components could be designed to be more reusable.

What’s next?

- One of our main goals for this project is also to have a sandbox that we could use to prototype new experiences and interactions, so you can expect this demo to grow over time.

- At the same time, we would like to release a template project with an empty room and a set of default VR components, so you can build your own experiments using it as a boilerplate.

- Improve the input support by using the great WebXR gamepads module and the WebXR Input profiles.

- We plan to write a more technical postmortem article explaining the implementation details and content creation.

- ECSY was released after the project started so we only used it on some parts of the demo. We would like to port other parts in order to make them reusable in other projects easily.

- Above all, we will keep investing in new tools to improve the workflow for content creators and developers.

Of course, the source code is available for everyone. Please give Hello WebXR! a try and share your feedback or issues with us on the GitHub repository.

|

|

The Firefox Frontier: Data detox: Five ways to reset your relationship with your phone |

There’s a good chance you’re reading this on a phone, which is not surprising considering that most of us spend up to four hours a day on our phones. And … Read more

The post Data detox: Five ways to reset your relationship with your phone appeared first on The Firefox Frontier.

|

|

Rubén Martín: Modernizing communities – The Mozilla way |

It’s been a long time since I’ve wanted to write deeply about my work empowering communities. I want to start with this article sharing some high-level learnings around working on community strategy.

Hi, I’m Rubén Martín and I work as a Community Strategist for Mozilla, the non-profit behind Firefox Browser.

I’ve been working with, and participating in open/libre source communities since 2004 – the first decade as a volunteer before making open source my full time career – joining Mozilla as staff five years ago, where as part of my Community Strategist role I’ve been focused on:

- Identifying and designing opportunities for generating organizational impact through participation of volunteer communities.

- Designing open processes for collaboration that provide a nice, empowering and rich experience for contributors.

During these many years, I have witnessed incredible change in how communities engage, grow and thrive in the open source ecosystem and beyond, and ‘community’ has become a term more broadly implicated in product marketing and its success in many organizations. At Mozilla our community strategy, while remaining dedicated to the success of projects and people, has been fundamentally modernized and optimized to unlock the true potential of a Mission-Driven Organization by:

- Prioritizing data, or what I refer to as ‘humanizing the data-driven approach’

- Implementing our Open By Design strategy.

- Investing into our contributor experience holistically.

Today Mozilla’s communities are a powerhouse of innovation, unlocking much more impact in different areas of the organization. Examples include the Common Voice 2019 Campaign, where we collected 406 hours of public domain voices for unlocking the speech-to-text market, and the Firefox Preview Bug Hunter Campaign, where more than 500 issues filed and 8000 installs in just two weeks were fundamental to launching this browser to the market sooner.

Follow along in this post to learn how we got there.

The roots, how did we get here?

Mozilla has grown from a small community of volunteers and a few employees, to an organization with 1200+ employees and tens of thousands of volunteers around the world. You can imagine that Mozilla and its needs in 2004 were completely different to the current ones.

Communities were created and organized organically for a long time here at Mozilla. Anyone with the time and energy to mobilize a local community created one and tried to provide value to the organization, usually through product localization or helping with user support.

I like a quote from my colleague Rosana Ardila, who long ago said that contributing opportunities at Mozilla were a “free buffet” where anyone could jump into anything with no particular order of importance and where many of the dishes were long empty. We needed to “refill” the buffet.

This is how a lot of libre and open source communities usually operate: open by default, with a ton of entry points to contribute. Unfortunately, some or most of those entry points are not fully optimized for contributions and therefore don’t offer a great contribution experience.

Impact and humanizing the data-driven approach

Focusing on impact and at the same time humanizing the data-driven approach were a couple of fundamental changes that happened around 4-5 years ago and completely changed our approach to communities.

When you have a project with a community around there are usually two fundamental problems to solve:

- Provide value to the organization’s current goals.

- Provide value to the volunteers contributing *

If you move the balance too much toward the first one, you risk your contributors becoming “free labor”, but if you move it too much in the other direction, your contributors’ efforts are highly likely to become irrelevant for the organization.

* The second point is the key factor to humanize the approach, and something people forget when using data to make decisions: It’s not just about numbers, it’s also people, human beings!

How do you even start to balance both?

RESEARCH!

Any decision you take should be informed by data. Sometimes people in charge of community strategy or management have “good hunches” or “assumptions”, but that’s a risky business you need to avoid unless you have data to support them.

Do internal research to understand your organization: where it is heading, what the most important things are, and what the immediate goals for this year are. Engage in conversations with key decision makers to understand why these goals are important.

Do internal research to also understand your communities and contributors: who they are, why they are contributing (motivation), where and how. Do this both quantitatively (stats from tools) and qualitatively (surveys, conversations).

This will provide you with an enormous amount of information to figure out where impact can be boosted and to understand how your communities and contributors are operating. Are they aligned? Are they happy? If not, why?

Also do external research to understand how other similar organizations are solving the same problems. Get out of your internal bubble and be open to learning from others.

A few years ago we did all of this at Mozilla from the Open Innovation team, and it really informed our strategy moving forward. We keep doing internal and external research regularly in all of our projects to inform any important decisions.

Open by default vs open by design

I initially mentioned that being open by default can lead to a poor contributor experience, which is something we learned from this research. If you think your approach will benefit from being open, please do so with intention, do so by design.

Pointing people to donate their free time to suboptimal contributor experiences will do more harm than good. If something is not optimized or doesn’t need external contributions, you shouldn’t point people there, and you should clarify the expectations with everyone upfront.

Working with different teams and stakeholders inside the organization is key in order to design and optimize impactful opportunities, and this is something we have done in the past years at Mozilla in the form of Activate Campaigns, a regular-cadence set of opportunities designed by the community team in collaboration with different internal projects, optimized for boosting their immediate goals and optimized to be an engaging and fun experience for our Mission-Driven contributors.

The contributor experience

In every organization there is always going to be a tension between immediate impact and long term sustainability, especially when we are talking about communities and contributors.

Some organizations will have more room than others to operate in the long term, and I’m privileged to work in an organization that understands the value of long term sustainability.

If you optimize only for immediate value, you risk your communities falling apart in the medium term, but if you optimize only for the long term, you risk the immediate success of the organization.

Find the sweet spot between both. Maybe that’s 70-30% immediate-long or 80-20%; it’s really going to depend on the resources you have and where your organization is right now.

The way we approached it was to always have relevant and impactful (measurable) opportunities for people to jump into (through campaigns) and at the same time work on the big 7 themes we found we needed to fix as part of our internal research.

I suspect these themes are also relevant to other organizations, I won’t go into full details in this article but I’d like to list them here:

- Group identities: Recognize and support groups both at regional and functional level.

- Metrics: Increase your understanding of the impact and health of your communities.

- Diversity and inclusion: How do you create processes, standards and workflows to be more inclusive and diverse?

- Volunteer leadership: Shared principles and agreements on volunteer responsibility roles to have healthier and more impactful communities.

- Recognition: Create a rewarding contributor experience for everyone.

- Resource distribution: Standards and systems to make resource distribution fair and consistent across the project.

- Contributor journey and opportunity matching: Connect more people to high impact opportunities and make it easy to join.

Obviously, you will need a strong community team to move this forward, and I was lucky to work on it with excellent colleagues at the Mozilla Community Development Team: Emma, Konstantina, Lucy, Christos, George, Kiki and Mrinalini, plus a ton of Mozilla volunteers from the Reps program and Reps Council.

You can watch a short video of the project we called “Mission-Driven Mozillians” and how we applied all of this:

What’s next?

I hope this article has helped you understand how we have been modernizing our community approach at Mozilla, and I also hope this can inspire others in their work. I’ve been personally following this approach in all the projects I’ve been helping with Community Strategy, from Mission-Driven Mozillians, to Mozilla Reps, Mozilla Support and Common Voice.

I truly believe that having a strong community strategy is key for any organization where volunteers play a key role, and not only for providing value to the organization or project but also to bring this value back to the people who decided to donate their precious free time because they believe in what you are doing.

There is no way for your strategy to succeed in the long term if volunteers don’t feel and ARE part of the team, working together with you and your team and influencing the direction of the project.

Which part of my work would you most like me to write about in more detail next?

Feel free to reach out to me via email (rmartin at mozilla dot com) or twitter if you have questions or feedback, I also really want to know and hear from others solving similar problems.

Thanks!

https://www.nukeador.com/23/01/2020/modernizing-communities-the-mozilla-way/

|

|

The Mozilla Blog: ICANN Directors: Take a Close Look at the Dot Org Sale |

As outlined in two previous posts, we believe that the sale of the nonprofit Public Interest Registry (PIR) to Ethos Capital demands close and careful scrutiny. ICANN — the body that granted the dot org license to PIR and which must approve the sale — needs to engage in this kind of scrutiny.

When ICANN’s board meets in Los Angeles over the next few days, we urge directors to pay particular attention to the question of how the new PIR would steward and be accountable to the dot org ecosystem. We also encourage them to seriously consider the analysis and arguments being made by those who are proposing alternatives to the sale, including the members of the Cooperative Corporation of .ORG Registrants.

As we’ve said before, there are high stakes behind this sale: Public interest groups around the world rely on the dot org registrar to ensure free expression protections and affordable digital real estate. Should this reliance fail under future ownership, a key part of the public interest internet infrastructure would be diminished — and so would the important offline work it fuels.

Late last year, we asked ISOC, PIR and Ethos to answer a series of questions about how the dot org ecosystem would be protected if the sale went through. They responded and we appreciate their engagement, but key questions remain unanswered.

In particular, the responses from Ethos and ISOC proposed a PIR stewardship council made up of representatives from the dot org community. However, no details about the structure, role or powers of this council have been shared publicly. Similarly, Ethos has promised to change PIR’s corporate structure to reinforce its public benefit orientation, but provided few details.

Ambiguous promises are not nearly enough given the stakes. A crystal-clear stewardship charter — and a chance to discuss and debate its contents — are needed before ICANN and the dot org community can even begin to consider whether the sale is a good idea.

One can imagine a charter that provides the council with broad scope, meaningful independence, and practical authority to ensure PIR continues to serve the public benefit. One that guarantees Ethos and PIR will keep their promises regarding price increases, and steer any additional revenue from higher prices back into the dot org ecosystem. One that enshrines quality service and strong rights safeguards for all dot orgs. And one that helps ensure these protections are durable, accounting for the possibility of a future resale.

At the ICANN board meeting tomorrow, directors should discuss and agree upon a set of criteria that would need to be satisfied before approving the sale. First and foremost, this list should include a stewardship charter of this nature, a B corp registration with a publicly posted charter, and a public process of feedback related to both. These things should be in place before ICANN considers approving the sale.

ICANN directors should also discuss whether alternatives to the current sale should be considered, including an open call for bidders. Internet stalwarts like Wikimedia, experts like Marietje Schaake and dozens of important non-profits have proposed other options, including the creation of a co-op of dot orgs. In a Washington Post op-ed, former ICANN chair Esther Dyson argues that such a co-op would “[keep] dot-org safe, secure and free of any motivation to profit off its users’ data or to upsell them pricy add-ons.”

Throughout this process, Mozilla will continue to ask tough questions, as we have on December 3 and December 19. And we’ll continue to push ICANN to hold the sale up against a high bar.

The post ICANN Directors: Take a Close Look at the Dot Org Sale appeared first on The Mozilla Blog.

https://blog.mozilla.org/blog/2020/01/23/icann-directors-take-a-close-look-at-the-dot-org-sale/

|

|