You can add any RSS source (including a LiveJournal journal) to your friends feed on the syndication page.

Source: http://planet.mozilla.org/.

This journal is generated from the open RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

For any questions about this service, use the contact information page.


Hacks.Mozilla.Org: js13kGames 2020: A lean coding challenge with WebXR and Web Monetization |

Have you heard about the js13kGames competition? It’s an online code-golfing challenge for HTML5 game developers. The month-long competition has been happening annually since 2012; it runs from August 13th through September 13th. And the fun part? We set the size limit of the zip package to 13 kilobytes, and that includes all sources—from graphic assets to lines of JavaScript. For the second year in a row you will be able to participate in two special categories: WebXR and Web Monetization.

WebXR entries

The WebXR category started in 2017, introduced as A-Frame. We allowed the A-Frame framework to be used outside the 13k size limit. All you had to do was to link to the provided JavaScript library in the head of your index.html file to use it.

// ...

// ...

Then, the following year, 2018, we added Babylon.js to the list of allowed libraries. Last year, we made Three.js another library option.

Which brings us to 2020. Amazingly, the top prize in this year’s WebXR category is a Magic Leap device, thanks to the Mozilla Mixed Reality team.

Adding Web Monetization

Web Monetization is the newest category. Last year, it was introduced right after the W3C Workshop about Web Games. The discussion had identified discoverability and monetization as key challenges facing indie developers. Then, after the 2019 competition ended, some web monetized entries were showcased at MozFest in London. You could play the games and see how authors were paid in real time.

A few months later, Enclave Games was awarded a Grant for the Web, which meant the Web Monetization category in js13kGames 2020 would happen again. For a second year, Coil is offering free membership coupon codes to all participants, so anyone who submits an entry or just wants to play can become a paying web-monetized user.

Implementation details

Enabling the Web Monetization API in your entry is as straightforward as the WebXR implementation. Once again, all you do is add one tag to the head of your index:

// ...

// ...

Voila, your game is web monetized! Now you can work on adding extra features to your own creations based on whether or not the visitor playing your game is monetized.

function startEventHandler(event) {
  // user monetized, offer extra content
}

document.monetization.addEventListener('monetizationstart', startEventHandler);

The API allows you to detect this, and provide extra features like bonus points, items, secret levels, and much more. You can get creative with monetizable perks.
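As a rough sketch of how such a perk could be wired up, the snippet below wraps the `monetizationstart` listener shown above in a small helper that also copes with browsers where `document.monetization` is missing. The helper name `watchMonetization`, the `game` object, and its `bonusLevels` flag are hypothetical. The check on `state === 'started'` assumes the draft API exposed by Coil at the time.

```javascript
// Hypothetical helper: calls onStart once the visitor is web monetized.
// Returns false when the Web Monetization API is not available at all.
function watchMonetization(doc, onStart) {
  const monetization = doc && doc.monetization;
  if (!monetization) {
    return false; // no API: keep the game playable without perks
  }
  // Assumption: the draft API reports 'started' when payments are already flowing.
  if (monetization.state === 'started') {
    onStart();
  }
  monetization.addEventListener('monetizationstart', onStart);
  return true;
}

// Hypothetical game state; the callback is idempotent, so repeated calls are harmless.
const game = { bonusLevels: false };

if (typeof document !== 'undefined') {
  watchMonetization(document, () => {
    game.bonusLevels = true;
  });
}
```

Passing the document in as a parameter keeps the helper easy to exercise with a stub object outside the browser.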

Learn from others

Last year, we had 28 entries in the WebXR category, and 48 entries in Web Monetization, out of the 245 total. Several submissions were entered into two categories. The best part? All the source code—from all the years, for all the entries—is available in a readable format in the js13kGames repo on GitHub, so you can see how anything was built.

Also, be sure to read lessons learned blog posts from previous years. Participants share what went well and what could have been improved. It’s a perfect opportunity to learn from their experiences.

Take the challenge

Remember: The 13 kilobyte zip size limit may seem daunting, but with the right approach, it could be doable. If you avoid big images and procedurally generate as much as possible, you should be fine. Plus, the WebXR libraries allow you to build a scene with components faster than from scratch. And most of those entries didn’t even use up all the allocated zip file space!

Personally, I’m hoping to see more entries in the WebXR and Web Monetization categories this year. Good luck to all, and don’t forget to have fun!

The post js13kGames 2020: A lean coding challenge with WebXR and Web Monetization appeared first on Mozilla Hacks - the Web developer blog.

https://hacks.mozilla.org/2020/08/js13kgames-2020-a-lean-coding-challenge/

|

|

Karl Dubost: Browser Wish List - Distressful Content Filtering |

Let's continue with my browser wish list. Previous ideas:

Here are a couple of propositions:

- There is content online which may cause distress for individuals.

- Individuals do not all have the same emotional and cognitive responses to different types of content.

- Censorship and wide bans on online content based on its nature are very problematic.

So how do we make it possible for individuals to shield themselves against what they consider to be distressful content? I'm deliberately not defining what distressful content is, because that is exactly the point of this post. The nature of content which creates emotional harm is a very personal topic, which cannot be decided by others.

Universal shields

Systems like the security alert for harmful websites or the privacy shields all rely on a general list decided for the user. Some systems offer a level of customization: you may decide to allow or bypass the shielding system. But in the first place, the shielding system was based on a general rule you had no control over.

That's an issue, because when it's about the nature of the content, this can lead to catastrophic decisions that exclude some types of content considered harmful by the shield owner.

Personal shields

On the other hand, there are systems where you can shield yourself against a website's practices. For example, for privacy, you may want to use something like uMatrix, where you can block everything by default and allow certain HTTP response types for each individual URI. This is what I do on my main browser. It requires a strong effort in tailoring each individual page. It builds a policy on the go. It creates a general list for future sites (you may block Google Analytics for every future site you encounter), but it still doesn't really learn more than that about how to act on your future browsing.

We could imagine applying this method to distressful content with keywords in the page. In terms of distressful content, it may dramatically fail for the same reasons that universal shields fail: such shields don't understand the content, they just apply a set of rules.
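To make that failure mode concrete, here is a minimal sketch of a keyword-based shield. The function name `containsBlockedKeyword`, the sample keyword, and the sample titles are all made up for illustration. This is not how uMatrix or any existing shield works.

```javascript
// Hypothetical keyword shield: flags a text if it contains any blocked word.
function containsBlockedKeyword(text, blockedKeywords) {
  // Split on anything that is not a letter or a digit, ignoring case.
  const words = new Set(
    text.toLowerCase().split(/[^\p{L}\p{N}]+/u).filter(Boolean)
  );
  return blockedKeywords.some((keyword) => words.has(keyword.toLowerCase()));
}

const blocked = ['attack'];

// Flagged, although the page is probably helpful rather than distressing.
console.log(containsBlockedKeyword('How to recognize a heart attack early', blocked)); // true

// Not flagged, although the page may well be distressing.
console.log(containsBlockedKeyword('Graphic footage from the scene', blocked)); // false
```

The rule matches words, not meaning, so it produces both false alarms and misses.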

Personal ML shields

So I was wondering if machine learning could help here: a personal in-browser machine learning engine would flag the content of links while we are browsing. When we reach a webpage, the engine could follow links before we click on them and create a pre-analysis of each linked page.

If we click or hover on a link and the analysis is not finished, we could get a popup message saying that the browser doesn't yet know the nature of the content because it has not finished the analysis. Or if the analysis has been done, it could tell us that, based on the analysis and past browsing experience, the content is about this and that, and whether it matches our interests.

It should be possible to bypass such a system if the user wishes so.

It could also help to create pages which are easier to cope with. For example, on a page full of images with violent representation whose text we still want to read, we could want the images blurred by default, with a reveal on click if we wish.
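The blur-by-default idea can be approximated today with a few lines of page script. This is only a sketch under stated assumptions: `blurUntilClicked` and the blur radius are invented here, and a real shield would decide which images to blur instead of blurring all of them.

```javascript
// Hypothetical blur radius; any value strong enough to hide details would do.
const BLUR = 'blur(24px)';

// Blur each image and reveal it the first time the reader clicks on it.
function blurUntilClicked(images) {
  for (const img of images) {
    img.style.filter = BLUR;
    img.addEventListener(
      'click',
      () => {
        img.style.filter = 'none';
      },
      { once: true } // the reveal handler is only needed once per image
    );
  }
  return images.length;
}

if (typeof document !== 'undefined') {
  blurUntilClicked(Array.from(document.images));
}
```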

PS: To address the elephant in the room, I still have a job, but if you read this, know that many qualified people will need your help in finding a new job.

Otsukare!

https://www.otsukare.info/2020/08/12/browser-content-filtering

|

|

The Mozilla Blog: Changing World, Changing Mozilla |

This is a time of change for the internet and for Mozilla. From combatting a lethal virus and battling systemic racism to protecting individual privacy — one thing is clear: an open and accessible internet is essential to the fight.

Mozilla exists so the internet can help the world collectively meet the range of challenges a moment like this presents. Firefox is a part of this. But we know we also need to go beyond the browser to give people new products and technologies that both excite them and represent their interests. Over the last while, it has been clear that Mozilla is not structured properly to create these new things — and to build the better internet we all deserve.

Today we announced a significant restructuring of Mozilla Corporation. This will strengthen our ability to build and invest in products and services that will give people alternatives to conventional Big Tech. Sadly, the changes also include a significant reduction in our workforce by approximately 250 people. These are individuals of exceptional professional and personal caliber who have made outstanding contributions to who we are today. To each of them, I extend my heartfelt thanks and deepest regrets that we have come to this point. This is a humbling recognition of the realities we face, and what is needed to overcome them.

As I shared in the internal message sent to our employees today, our pre-COVID plan for 2020 included a great deal of change already: building a better internet by creating new kinds of value in Firefox; investing in innovation and creating new products; and adjusting our finances to ensure stability over the long term. Economic conditions resulting from the global pandemic have significantly impacted our revenue. As a result, our pre-COVID plan was no longer workable. Though we’ve been talking openly with our employees about the need for change — including the likelihood of layoffs — since the spring, it was no easier today when these changes became real. I desperately wish there was some other way to set Mozilla up for long term success in building a better internet.

But to go further, we must be organized to be able to think about a different world. To imagine that technology will become embedded in our world even more than it is, and we want that technology to have different characteristics and values than we experience today.

So going forward we will be smaller. We’ll also be organizing ourselves very differently, acting more quickly and nimbly. We’ll experiment more. We’ll adjust more quickly. We’ll join with allies outside of our organization more often and more effectively. We’ll meet people where they are. We’ll become great at expressing and building our core values into products and programs that speak to today’s issues. We’ll join and build with all those who seek openness, decency, empowerment and common good in online life.

I believe this vision of change will make a difference — that it can allow us to become a Mozilla that excites people and shapes the agenda of the internet. I also realize this vision will feel abstract to many. With this in mind, we have mapped out five specific areas to focus on as we roll out this new structure over the coming months:

- New focus on product. Mozilla must be a world-class, modern, multi-product internet organization. That means diverse, representative, focused on people outside of our walls, solving problems, building new products, engaging with users and doing the magic of mixing tech with our values. To start, that means products that mitigate harms or address the kinds of the problems that people face today. Over the longer run, our goal is to build new experiences that people love and want, that have better values and better characteristics inside those products.

- New mindset. The internet has become the platform. We love the traits of it — the decentralization, its permissionless innovation, the open source underpinnings of it, and the standards part — we love it all. But to enable these changes, we must shift our collective mindset from a place of defending, protecting, sometimes even huddling up and trying to keep a piece of what we love to one that is proactive, curious, and engaged with people out in the world. We will become the modern organization we aim to be — combining product, technology and advocacy — when we are building new things, making changes within ourselves and seeing how the traits of the past can show up in new ways in the future.

- New focus on technology. Mozilla is a technical powerhouse of the internet activist movement. And we must stay that way. We must provide leadership, test out products, and draw businesses into areas that aren’t traditional web technology. The internet is the platform now with ubiquitous web technologies built into it, but vast new areas are developing (like Wasmtime and the Bytecode Alliance vision of nanoprocesses). Our vision and abilities should play in those areas too.

- New focus on community. Mozilla must continue to be part of something larger than ourselves, part of the group of people looking for a better internet. Our open source volunteers today — as well as the hundreds of thousands of people who donate to and participate in Mozilla Foundation’s advocacy work — are a precious and critical part of this. But we also need to go further and think about community in new ways. We must be increasingly open to joining others on their missions, to contribute to the better internet they’re building.

- New focus on economics. Recognizing that the old model where everything was free has consequences means we must explore a range of different business opportunities and alternate value exchanges. How can we lead towards business models that honor and protect people while creating opportunities for our business to thrive? How can we, or others who want a better internet, or those who feel like a different balance should exist between social and public benefit and private profit, offer an alternative? We need to identify those people and join them. We must learn and expand different ways to support ourselves and build a business that isn’t what we see today.

We’re fortunate that Firefox and Mozilla retain a high degree of trust in the world. Trust and a feeling of authenticity feel unusual in tech today. But there is a sense that people want more from us. They want to work with us, to build with us. The changes we are making today are hard. But with these changes we believe we’ll be ready to meet these people — and the challenges and opportunities facing the future of the internet — head on.

The post Changing World, Changing Mozilla appeared first on The Mozilla Blog.

https://blog.mozilla.org/blog/2020/08/11/changing-world-changing-mozilla/

|

|

This Week In Rust: This Week in Rust 351 |

Hello and welcome to another issue of This Week in Rust! Rust is a systems language pursuing the trifecta: safety, concurrency, and speed. This is a weekly summary of its progress and community. Want something mentioned? Tweet us at @ThisWeekInRust or send us a pull request. Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub. If you find any errors in this week's issue, please submit a PR.

Check out this week's This Week in Rust Podcast

Updates from Rust Community

No official Rust announcements this week! :)

Tooling

Newsletters

Observations/Thoughts

- Steve Klabnik Interview

- Why QEMU should move from C to Rust

- First Impressions of Rust

- How to Stick with Rust

- Why learning Rust is great...As a second language

- What Is The Minimal Set Of Optimizations Needed For Zero-Cost Abstraction?

- Propane: an experimental generator syntax for Rust

- [ES] ¿Por qué me gusta tanto Rust?

Learn Standard Rust

- Moves, copies and clones in Rust

- Rust for a Pythonista #2: Building a Rust crate for CSS inlining

- Surviving Rust async interfaces

- Two Easy Ways to Test Async Functions in Rust

- Cloning yourself - a refactoring for thread-spawning Rust types

- Allocation API, allocators and virtual memory

- Variables and memory management in Rust

- [ES] Polimorfismo con traits en Rust

- [PT] Aprendendo Rust: 06 - Controles de fluxo

- [PT] Meia hora aprendendo Rust - Parte 1

- [video] Crust of Rust: Channels

Learn More Rust

- Zero To Production #3: How To Bootstrap A Rust Web API From Scratch

- Using Rust Lambdas in Production

- Incorporate Postgres with Rust

- Let's implement a Bloom Filter

- A Guide to Contiguous Data in Rust

- Inbound & Outbound FFI

- Tutorial: Deno Apps with WebAssembly, Rust, and WASI

- Single Page Applications using Rust

- Implementing a Type-safe printf in Rust

- Building a Brainf*ck Compiler with Rust and LLVM

- Modernize network function development with this Rust-based framework

- Let's build a RedditBot to curate playlist links - I

- Let's build a RedditBot to curate playlist links - II

- Rust on iOS with SDL2

- [video] Using Linux libc in Rust - with the file-locker Crate

- [video] Embedded Rust Mob Programming

- [video] Implementing TCP in Rust (part 1)

- [video] Define a function with parameters in Rust

Project Updates

- Knurling-rs Announcement

- Meili raises 1.5M€ for open source search in Rust

- hors - instant coding answers via the command line has released v0.6.4, with pretty colorized output by default

Miscellaneous

- Building a faster CouchDB View Server in Rust

- The promise of Rust async-await for embedded

- Implementing the .NET Profiling API in Rust

- Possibly one step towards named arguments in Rust

- Embedded Rust tooling for the 21st century

- rustc 1.44.1 is reproducible in Debian

- Google engineers just submitted a new LLVM optimizer for consideration which gains an average of 2.33% perf.

Crate of the Week

This week's crate is bevy, a very capable yet simple game engine.

Thanks to mmmmib for the suggestion!

Submit your suggestions and votes for next week!

Call for Participation

Always wanted to contribute to open-source projects but didn't know where to start? Every week we highlight some tasks from the Rust community for you to pick and get started!

Some of these tasks may also have mentors available, visit the task page for more information.

- cargo: Deduplicate Cargo workspace information

- dotenv-linter: several good first issues

- ruma: several help wanted issues

- tensorbase: several good first issues

- kanidm: several good first issues

- Libre-SOC: Add PowerPC64 to Rust's new inline assembly implementation (payment available)

If you are a Rust project owner and are looking for contributors, please submit tasks here.

Updates from Rust Core

307 pull requests were merged in the last week

- add back unwinding support for Sony PSP

- fix ICE when using asm! on an unsupported architecture

- handle well known traits for more types

- resolve char as a primitive even if there is a module in scope

- forbid #[track_caller] on main

- remove restriction on type parameters preceding consts w/ feature const-generics

- implement the min_const_generics feature gate

- tweak confusable idents checking

- miri: accept some post-monomorphization errors

- bubble up errors from FileDescriptor::as_file_handle

- simplify array::IntoIter

- polymorphize: unevaluated constants

- instance: polymorphize upvar closures/generators

- clean up const-hacks in int endianess conversion functions

- add as_mut_ptr to NonNull<[T]>

- make MaybeUninit::as_(mut_)ptr const

- make IntoIterator lifetime bounds of &BTreeMap match with &HashMap

- implement into_keys and into_values for associative maps

- stabilize Ident::new_raw

- limit I/O vector count on Unix

- add unsigned_abs to signed integers

- BTreeMap: better way to postpone root access in DrainFilter

- hashbrown: do not iterate to drop if empty

- hashbrown: relax bounds on HashSet constructors

- hashbrown: avoid closures to improve compile times

- stdarch: add more things that do adds

- futures: avoid writes without any data in write_all_vectored

- clean up rustdoc's main()

- rustdoc: display elided lifetime for non-reference type in doc

Rust Compiler Performance Triage

- 2020-08-11. 1 regression, 1 improvement, 1 of them on rollups. No outstanding nags from last week.

Approved RFCs

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

No RFCs were approved this week.

Final Comment Period

Every week the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now.

RFCs

No RFCs are currently in the final comment period.

Tracking Issues & PRs

No Tracking Issues or PRs are currently in the final comment period.

New RFCs

- Proposal for POSIX error numbers in std::os::unix

- Standardize methods for leaking containers

- Introduce '$self' macro metavar for hygienic macro items

Upcoming Events

Online

- August 11. Saarbrücken, DE - Rust-Saar Meetup 3u16

- August 11. Dallas, TX, US - Dallas Rust - Second Tuesday

- August 13. San Diego, CA, US - San Diego Rust - August 2020 Tele-Meetup

- August 19. Vancouver, BC, CA - Vancouver Rust - Rust Study/Hack/Hang-out Night

- August 20. RustConf

North America

- August 13. Columbus, OH, US - Columbus Rust Society - Monthly Meeting

- August 25. Dallas, TX, US - Dallas Rust - Last Tuesday

Asia Pacific

If you are running a Rust event please add it to the calendar to get it mentioned here. Please remember to add a link to the event too. Email the Rust Community Team for access.

Rust Jobs

- Senior Rust Engineer at equilibrium (Remote)

- Software Developer at DerivaDEX (Remote)

- Rust Core Engineer at CasperLabs (Remote)

Tweet us at @ThisWeekInRust to get your job offers listed here!

Quote of the Week

You're not allowed to use references in structs until you think Rust is easy. They're the evil-hardmode of Rust that will ruin your day.

Thanks to Tom Phinney for the suggestion!

Please submit quotes and vote for next week!

This Week in Rust is edited by: nellshamrell, llogiq, and cdmistman.

https://this-week-in-rust.org/blog/2020/08/11/this-week-in-rust-351/

|

|

The Servo Blog: This Week In Servo 135 |

In the past two weeks, we merged 108 PRs in the Servo organization’s repositories.

The latest nightly builds for common platforms are available at download.servo.org.

Last week we released Firefox Reality v1.2, which includes a smoother developer tools experience, along with support for Unity WebXR content and self-signed SSL certificates. See the full release notes for more information about the new release.

Planning and Status

Our roadmap is available online, including the team’s plans for 2020.

This week’s status updates are here.

Exciting works in progress

- paulrouget is adding bookmarks to Firefox Reality.

- Manishearth is implementing basic table support in the new Layout 2020 engine.

- jdm is making it easy to create builds that integrate AddressSanitizer.

- pcwalton is implementing support for CSS floats in the new Layout 2020 engine.

- kunalmohan is implementing the draft WebGPU specification.

Notable Additions

New layout engine

- jdm made

- Manishearth implemented support for the clip CSS property.

- Manishearth fixed the behaviour of the inset CSS property for absolutely positioned elements.

Non-layout changes

- kunalmohan added the WebGPU conformance test suite to Servo’s automated tests.

- jdm improved the macOS nightly GStreamer packaging.

- nicoabie made a SpiderMonkey Rust binding API more resilient.

- utsavoze added support for mouseenter and mouseleave DOM events.

- avr1254 removed some unnecessary UTF-8 to UTF-16 conversions when interacting with SpiderMonkey.

- jdm implemented preserveDrawingBuffer support in WebGL code.

- paulrouget added a crash reporting UI to Firefox Reality.

- mustafapc19 implemented the Console.clear DOM API.

- kunalmohan fixed a WebGPU crash related to the GPUErrorScope API, and improved the reporting behaviour to match the specification.

- asajeffrey fixed a source of WebGL texture corruption in WebXR.

- asajeffrey added infrastructure to the GStreamer plugin to allow live-streaming 360 degree videos of Hubs rooms to YouTube.

- kunalmohan improved the error reporting behaviour of the WebGPU API.

- asajeffrey updated the WebXR Layers implementation to match the latest specification.

- paulrouget improved the Firefox Reality preferences panel to highlight specific experimental features.

- jdm fixed a crash when playing media in Firefox Reality.

- paulrouget fixed a source of memory corruption in the C++ embedding layer.

- jdm avoided panicking when a devtools client disconnects unexpectedly.

- asajeffrey made it easier to test AR web content in desktop builds.

New Contributors

Interested in helping build a web browser? Take a look at our curated list of issues that are good for new contributors!

|

|

David Teller: Possibly one step towards named arguments in Rust |

A number of programming languages offer a feature called “Named Arguments” or “Labeled Arguments”, which makes some function calls much more readable and safer.

Let’s see how hard it would be to add these in Rust.

|

|

Firefox UX: Driving Value as a Tiny UX Content Team: How We Spend Content Strategy Resources Wisely |

Source: Vlad Tchompalov, Unsplash.

Our tiny UX content strategy team works to deliver the right content to the right users at the right time. We make sure product content is useful, necessary, and appropriate. This includes everything from writing an error message in Firefox to developing the full end-to-end content experience for a stand-alone product.

Mozilla has around 1,000 employees, and many of those are developers. Our UX team has 20 designers, 7 researchers, and 3 content strategists. We support the desktop and mobile Firefox browsers, as well as satellite products.

There’s no shortage of requests for content help, but there is a shortage of hours and people to tackle them. When the organization wants more of your time than you actually have, what’s a strategic content strategist to do?

1. Identify high-impact projects and work on those

We prioritize our time for the projects with high impact — those that matter most to the business and reach the most end users. Tactically, this means we do the following:

- Review high-level organizational goals as a team at the beginning of every planning cycle.

- Ask product managers to identify and rank order the projects needing content strategy support.

- Evaluate those requests against the larger business priorities and estimate the work. Will this require 10 percent of one content strategist’s time? 25 percent?

- Communicate out the projects that content strategy will be able to support.

By structuring our work this way, we aim to develop deep expertise on key focus areas rather than surface-level understanding of many.

2. Embed yourself and partner with product management

People sometimes think ‘content strategist’ is another name for ‘copywriter.’ While we do write the words that appear in the product, writing interface copy is about 10–20 percent of our work. The lion’s share of a content strategist’s day is spent laying the groundwork leading up to writing the words:

- Driving clarity

- Collaborating with designers

- Understanding technical feasibility and constraints

- Conducting user research

- Developing processes

- Aligning teams

- Documenting and communicating decisions up and out

To do this work, we embed with cross-functional teams. Content strategy shows up early and stays late. We attend kickoffs, align on user problems, crystallize solutions, and act as connective tissue between the product experience and our partners in localization, legal, and the support team. We often stay involved after designs are handed off because content decisions have long tentacles.

We also work closely with our product managers to ensure that the strategy we bring to the table is the right one. A clear up-front understanding of user and business goals leads to more thoughtful content design.

3. Participate in and lead research

A key way we shape strategy is by participating in user research (as observers and note-takers) and leading usability studies.

“I’m not completely certain what you mean when you say, ‘found on your device.’ WHICH device?” — Usability study participant on a redesigned onboarding experience (User Research Firefox UX, Jennifer Davidson, 2020)

Designing a usability study not only requires close partnership with design and product management, but also forces you to tackle product clarity and strategy issues early. It gives you the opportunity to test an early solution and hear directly from your users about what’s working and what’s not. Hearing the language real people use is enlightening, and it can help you articulate and justify the decisions you make in copy later on.

4. Collaborate with each other

Just because your team works on different projects doesn’t mean you can’t collaborate. In fact, it’s more of a reason to share and align. We want to present our users with a unified end experience, no matter when they encounter our product — or which content strategist worked on a particular aspect of it.

- Peer reviews. Ask one of your content strategy teammates to provide a second set of eyes on your work. This is especially useful for identifying confusing terminology or jargon that you, as the dedicated content strategist, may have adopted as an ‘insider.’ Reviews also keep team members in the loop so they can spread the word to other project teams.

- Document your decisions and why you made them. This builds your team’s institutional memory, saving you time when you (inevitably) need to dig back into those decisions later on.

- Share learnings. Did you just complete a usability testing study that revealed a key insight about language? Share it! Are you developing a framework to help your team think through how to approach product discovery? Share it! The more we can share what we’re each learning individually, the better we can learn and grow as a team.

- Content-only team meetings. At our weekly team meetings, we bring requests for feedback, content challenges we’re struggling with, and other in-the-weeds content topics like how our capitalization rules are evolving. It’s important to have a space to focus on your specialized craft.

- Legal and localization meetings. Our team also meets weekly with product counsel for legal review and monthly with our localization team to discuss terminology. What your teammates bring to those meetings provides visibility into content-related discussions outside of our day-to-day purview.

- Team work days. A few times a year, we clear our calendars and spend the day together. This is an opportunity for us to check in. What’s going well? What would we like to improve? What would we like to accomplish as a team in the coming months?

Cross-platform coordination between content strategy colleagues

5. Communicate the impact of your work

In addition to delivering the work, it’s critical that you evangelize it. Content strategists may love a long copy deck or beautifully formatted spreadsheet, but a lot of people…don’t. And many folks still don’t understand what a product content strategist or UX writer does, especially as an embedded team member.

To make your work and impact tangible, take the time to package it up for demo presentations and blogs. We’re storytellers. Put those skills to work to tell the story of how you arrived at the end result and what it took to get there.

Look for opportunities in your workplace to share — lunch-and-learns, book clubs, lightning talks, etc. When you get to represent your work alongside your team members, people outside of UX can begin to see that you aren’t just a Lorem ipsum magician.

6. Create and maintain a style guide

Creating a style guide is a massive effort, but it’s well worth the investment. First and foremost, it’s a tool that helps the entire team write from a unified perspective and with consistency.

Style guides need to be maintained and updated. The ongoing conversations you have with your team about those changes ensure you consider a variety of use cases and applications. A hard-and-fast rule established for your desktop product might not work so well on mobile or a web-based product, and vice versa. As a collective team, we can make better decisions as they apply across our entire product ecosystem.

A style guide also scales your content work — even if you aren’t the person actually doing the work. The reality of a small content team is that copy will need to be created by people outside of your group. With a comprehensive style guide, you can equip those people with guidance. And, while style guides require investment upfront, they can save you time in the long-term by automating decisions already made so you can focus on solving new problems.

The Firefox Photon Design System includes copy guidelines.

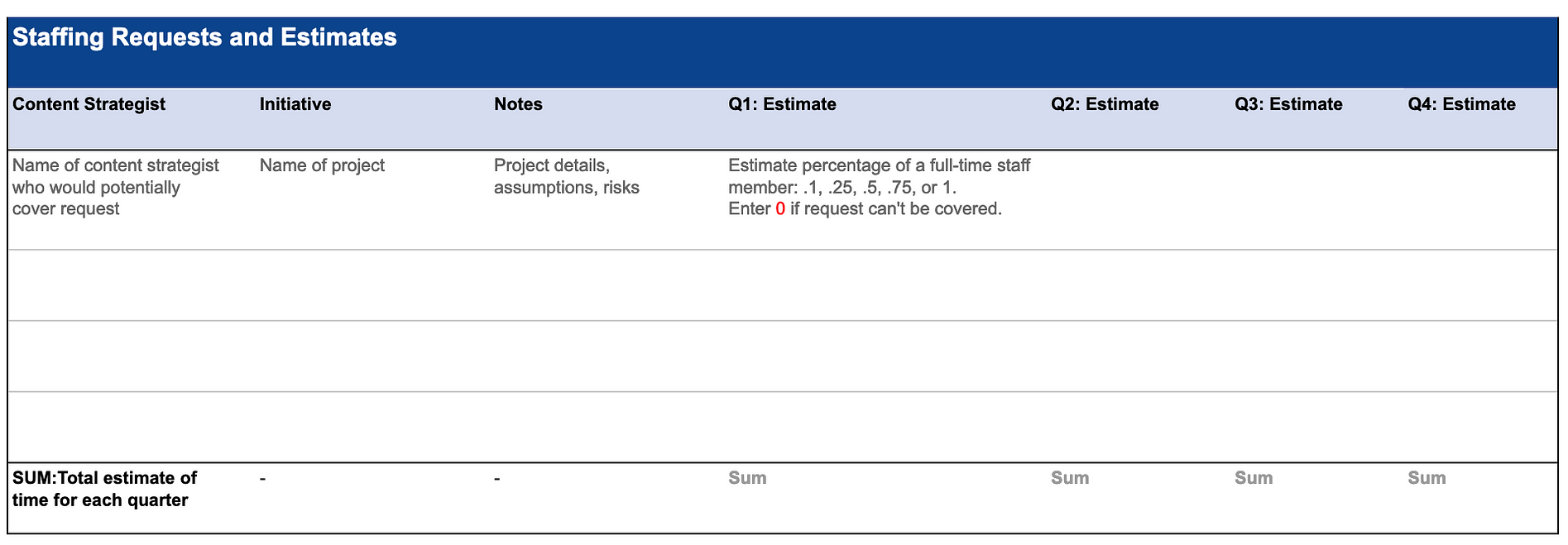

7. Keep track of requests, even when you can’t cover them

Even though you won’t be able to cover it all, it’s a good idea to track and catalogue all of the requests you receive. It’s one thing to just say more content strategy support is needed — it’s another to quantify it. To prioritize your work, you already had to document the projects somewhere, anyway.

Our team captures every request in a staffing spreadsheet and estimates the percentage of one content strategist’s time that the work would require. Does that mean you should be providing 100 percent coverage for everything? Unfortunately, it’d be impossible to deliver 100 percent on all of it. But it’s eye-opening to see that we’d need more people just to cover the business-critical requests.

Here’s a link for creating your own staffing requests tracker.

Template for tracking staffing requests

Closing thoughts

To realize the full breadth and depth of their impact, content strategists should prioritize strategically, collaborate deeply, and document and showcase their work. When you’re building your practice and trying to demonstrate value, it’s tough to take this approach. It’s hard to get a seat at the table initially, and it’s hard to prioritize requests. But even doing a version of these things can yield results. Maybe you are able to embed on just one project, and the rest of the time you are tackling a deluge of strings. That’s okay. That’s progress.

Acknowledgements

Special thanks to Michelle Heubusch for making it possible for our tiny team to work strategically. And thanks to Sharon Bautista for editing help.

|

|

Data@Mozilla: Improving Your Experience across Products |

When you log into your Firefox Account, you expect a seamless experience across all your devices. In the past, we weren’t doing the best job of delivering on that experience, because we didn’t have the tools to collect cross-product metrics to help us make educated decisions in a way that fulfilled our lean data practices and our promise to be a trusted steward of your data. Now we do.

Firefox 81 will include new telemetry measurements that help us understand the experience of Firefox Account users across multiple products, answering questions such as: Do users who set up Firefox Sync spend more time on desktop or mobile devices? How is Firefox Lockwise, the password manager built into the Firefox desktop browser, used differently than the Firefox Lockwise apps? We will use the unique privacy features of Firefox Accounts to answer questions like these while staying true to Mozilla’s data principles of necessity, privacy, transparency, and accountability. In particular, cross-product telemetry will only gather non-identifiable interaction data, like button clicks, used to answer specific product questions.

We achieve this by introducing a new telemetry ping that is specifically for gathering cross-product metrics. This ping will include a pseudonymous account identifier or pseudonym so that we can tell when two pings were created by the same Firefox Account. We manage this pseudonym in a way that strictly limits the ability to map it back to the real user account, by using the same technology that keeps your Firefox Sync data private (you can learn more in this post if you’re curious). Without getting into the cryptographic details, we use your Firefox Account password as the basis for creating a secret key on your client. The key is known only to your instances of Firefox, and only when you are signed in to your Firefox account, and we use that key to generate the pseudonym for your account.
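Since the post skips the cryptographic details, the following is only an illustrative sketch of the general idea (password, then a client-side secret key, then a pseudonym). It is not Mozilla's actual scheme: the function names, the salt, the iteration count, the label string, and the choice of PBKDF2 and HMAC-SHA-256 are all assumptions made for this example.

```javascript
const crypto = require("crypto");

// Hypothetical: stretch the account password into a secret key on the client.
// The salt and iteration count are illustrative assumptions.
function deriveClientKey(password, salt) {
  return crypto.pbkdf2Sync(password, salt, 100000, 32, "sha256");
}

// Hypothetical: the pseudonym is a keyed hash of a fixed label, so it cannot
// be recomputed without the client-side key.
function accountPseudonym(clientKey) {
  return crypto
    .createHmac("sha256", clientKey)
    .update("cross-product-telemetry")
    .digest("hex");
}

const key = deriveClientKey("correct horse battery staple", "user@example.com");

// Two pings from different products carry the same pseudonym,
// so they can be attributed to the same account.
const pingA = { pseudonym: accountPseudonym(key), event: "sync_button_click" };
const pingB = { pseudonym: accountPseudonym(key), event: "lockwise_open" };

console.log(pingA.pseudonym === pingB.pseudonym); // true
```

In this sketch the server only ever sees the pseudonym; linking it back to an account would require the key, which stays on the signed-in client.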

We’ll have more to share soon. As we gain more insight into Firefox, we’ll come back to tell you what we learn!

https://blog.mozilla.org/data/2020/08/07/improving-your-experience-across-products/

|

|

Mozilla Privacy Blog: By embracing blockchain, a California bill takes the wrong step forward. |

The California legislature is currently considering a bill directing a public board to pilot the use of blockchain-type tools to communicate Covid-19 test results and other medical records. We believe the bill unduly dictates one particular technical approach, and does so without considering the privacy, security, and equity risks it poses. We urge the California Senate to reconsider.

The bill in question is A.B. 2004, which would direct the Medical Board of California to create a pilot program using verifiable digital credentials as electronic patient records to communicate COVID-19 test results and other medical information. The bill seems like a well-intentioned attempt to use modern technology to address an important societal problem, the ongoing pandemic. However, by assuming the suitability of cryptography-based verifiable credential models for this purpose, rather than setting out technology-neutral principles and guidelines for the proposed pilot program, the bill would set a dangerous precedent by effectively legislating particular technology outcomes. Furthermore, the chosen direction risks exacerbating the potential for discrimination and exclusion, a lesson Mozilla has learned in our work on digital identity models being proposed around the world. While we appreciate the safeguards that have been introduced into the legislation in its current form, such as its limitations on law enforcement use, they are insufficient. A new approach, one that maximizes public good while minimizing harms of privacy and exclusion, is needed.

A.B. 2004 is grounded in large part on legislative findings that the verifiable credential models being explored by the World Wide Web Consortium (W3C) “show great promise” (in the bill’s words) as a technology for communicating sensitive health information. However, W3C’s standards should not be misconstrued as endorsement of any particular use-case. Mozilla is an active member of and participant in W3C, but does not support the W3C’s verifiable credentials work. From our perspective, this bill over-relies on the potential of verifiable credentials without unpacking the tradeoffs involved in applying them to the sensitive public health problems at hand. The bill also fails to appreciate the many limitations of blockchain technology in this context, as others have articulated.

Fortunately, this bill is designed as the start of a process, establishing a pilot program rather than committing to a long term direction. However, a start in the wrong direction should nevertheless be avoided, rather than spending time and resources we can’t spare. Tying digital real world identities (almost certainly implicated in electronic patient records) to contact tracing solutions and, in time, vaccination and “other medical test results” is categorically concerning. Such a move risks creating new avenues for the discrimination and exclusion of vulnerable communities, who are already being disproportionately impacted by COVID-19. It sets a poor example for the rest of the United States and for the world.

At Mozilla, our view is that digital identity systems — for which verifiable credentials for medical status, the subject at issue here, are a stepping stone and test case — are a key real-world implementation challenge for central policy values of privacy, security, competition, and social inclusion. As lessons from India and Kenya have shown us, attempting to fix digital ID systems retroactively is a convoluted process that often lets real harms continue unabated for years. It’s therefore critical to embrace openness as a core methodology in system design. We published a white paper earlier this year to identify recommendations and guardrails to make an “open” ID system work in reality.

A better approach to developing the pilot program envisioned in this bill would establish design principles, guardrails, and outcome goals up front. It would not embrace any specific technical models in advance, but would treat feasible technology solutions equally, and set up a diverse working group to evaluate a broad range of approaches and paradigms. Importantly, the process should build in the possibility that no technical solution is suitable, even if this outcome forces policymakers back to the drawing board.

We stand with the Electronic Frontier Foundation and the ACLU of California in asking the California Senate to send A.B. 2004 back to the drawing board.

The post By embracing blockchain, a California bill takes the wrong step forward. appeared first on Open Policy & Advocacy.

|

|

Mozilla VR Blog: What's new in ECSY v0.4 and ECSY-THREE v0.1 |

We just released ECSY v0.4 and ECSY-THREE v0.1!

Since the initial release of ECSY we have been focusing on API stability and bug fixing as well as providing some features (such as components’ schemas) to improve the developer experience and provide better validation and descriptive errors when working in development mode.

The API layer hasn't changed that much since its original release but we introduced some new concepts:

Component schemas: Components are now required to have a schema (unless you are defining a TagComponent).

Defining a component schema is really simple: you just need to define the properties of your component, their types, and, optionally, their default values.

import { Component, Types } from "ecsy";

class Velocity extends Component {}

Velocity.schema = {

x: { type: Types.Number, default: 0 },

y: { type: Types.Number, default: 0 }

};

We provide type definitions for the basic JavaScript types, and also in ecsy-three for most of the three.js data types, but you can create your own custom types too.

Defining a schema for a component will give you some benefits for free like:

- Default copy, clone and reset implementations for the component, although you could define your own implementations of these functions for maximum performance.

- Components pool to reuse instances to improve performance and GC stability.

- Tooling such as validators or editors which could show specific widgets depending on the data type and value.

- Serializers for components so we can store them in JSON or ArrayBuffers, useful for networking, tooling or multithreading.
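To make the first benefit concrete, here is a minimal sketch of how a schema can drive default copy, clone and reset behaviour. The SketchComponent base class is a hypothetical stand-in written for this example, not ECSY's implementation, and it only handles primitive property values.

```javascript
// Hypothetical stand-in for illustration only; this is not ECSY's Component.
class SketchComponent {
  constructor(props = {}) {
    const schema = this.constructor.schema;
    // Initialise every schema property from props, or fall back to its default.
    for (const key in schema) {
      this[key] = key in props ? props[key] : schema[key].default;
    }
  }

  // Copy every schema property from another instance.
  copy(source) {
    for (const key in this.constructor.schema) this[key] = source[key];
    return this;
  }

  // Create a new instance holding the same values.
  clone() {
    return new this.constructor().copy(this);
  }

  // Restore every schema property to its default.
  reset() {
    const schema = this.constructor.schema;
    for (const key in schema) this[key] = schema[key].default;
  }
}

class Velocity extends SketchComponent {}
Velocity.schema = {
  x: { type: "number", default: 0 },
  y: { type: "number", default: 0 }
};

const a = new Velocity({ x: 3 });
const b = a.clone();
a.reset();
console.log(a.x, a.y, b.x, b.y); // 0 0 3 0
```

Because the base class knows every property and its default, the same data could also feed a pool, a validator, or a serializer without extra code in each component.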

Registering components: One of the requirements we introduced recently is that you must always register your components before using them, and that includes registering them before registering systems that use these components too. This was initially done to support an optimization to Queries, but we feel making this an explicit rule will also allow us to add further optimizations down the road, as well as just generally being easier to reason about.
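The ordering rule can be illustrated with a small sketch. SketchWorld below is a hypothetical mini-world written for this example, and its check and error message are assumptions; ECSY's real World does much more and may report the problem differently.

```javascript
// Hypothetical mini-world for illustration; not ECSY's World class.
class SketchWorld {
  constructor() {
    this.components = new Set();
    this.systems = [];
  }

  registerComponent(Component) {
    this.components.add(Component);
    return this;
  }

  registerSystem(System) {
    const queries = System.queries || {};
    // Every component used in a query must already be registered.
    for (const name in queries) {
      for (const Component of queries[name].components) {
        if (!this.components.has(Component)) {
          throw new Error(
            `${System.name} queries ${Component.name}, which is not registered yet`
          );
        }
      }
    }
    this.systems.push(new System());
    return this;
  }
}

class Position {}
class MoveSystem {}
MoveSystem.queries = { moving: { components: [Position] } };

// Wrong order: the system is registered before its component.
let failed = false;
try {
  new SketchWorld().registerSystem(MoveSystem);
} catch (e) {
  failed = true;
}

// Right order: components first, then the systems that use them.
const world = new SketchWorld()
  .registerComponent(Position)
  .registerSystem(MoveSystem);

console.log(failed, world.systems.length); // true 1
```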

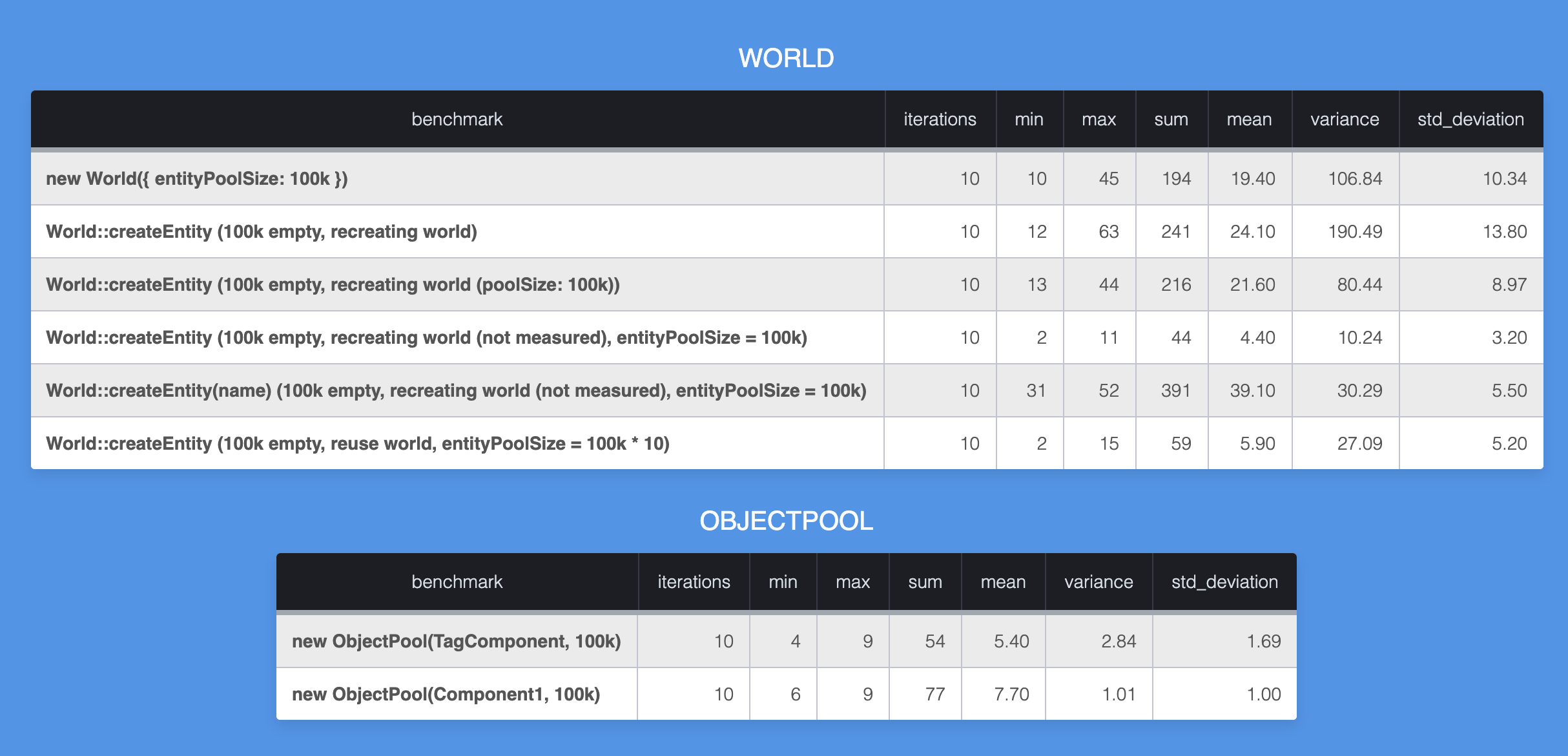

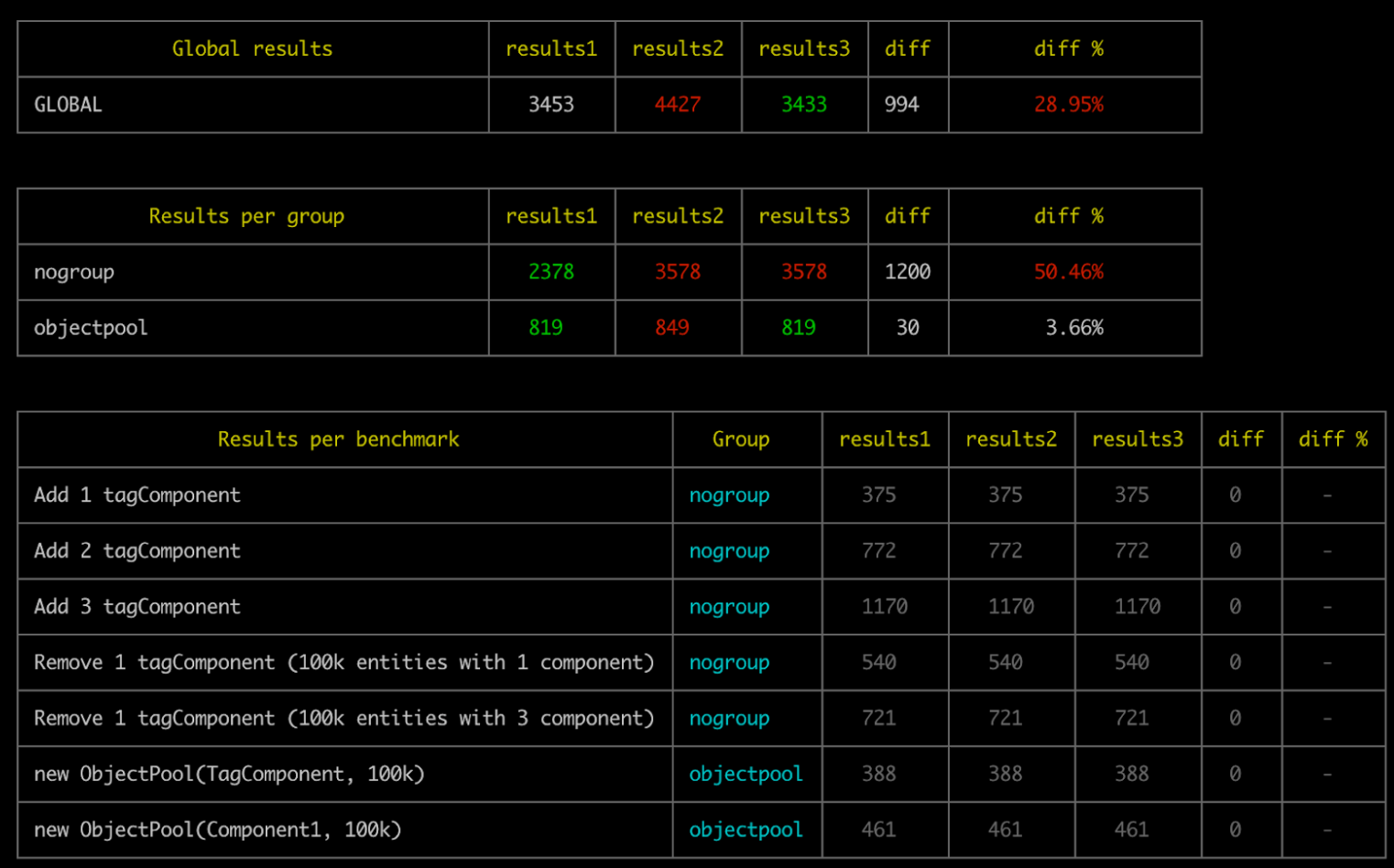

Benchmarks: When building a framework like ECSY that is expected to be doing a lot of operations per frame, it’s hard to infer how a change in the implementation will impact the overall performance. So we introduced a benchmark command to run a set of tests and measure how long they take.

We can use this data to compare different branches and estimate how much specific changes affect performance.
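As a rough sketch of the idea, a benchmark run can be as simple as timing named functions and comparing the results of two runs. The runBenchmarks and compareResults helpers below are hypothetical names for this example and do not reflect how ECSY's benchmark command is implemented.

```javascript
const { performance } = require("perf_hooks");

// Hypothetical harness: time each named function over `iterations` runs
// and report the average time per run in milliseconds.
function runBenchmarks(benchmarks, iterations = 1000) {
  const results = {};
  for (const [name, fn] of Object.entries(benchmarks)) {
    const start = performance.now();
    for (let i = 0; i < iterations; i++) fn();
    results[name] = (performance.now() - start) / iterations;
  }
  return results;
}

// Compare two result sets (for example from two branches).
// A ratio above 1 means the candidate is slower than the baseline.
function compareResults(baseline, candidate) {
  const ratios = {};
  for (const name in baseline) {
    if (name in candidate) ratios[name] = candidate[name] / baseline[name];
  }
  return ratios;
}

const results = runBenchmarks(
  {
    "create 1000 objects": () =>
      Array.from({ length: 1000 }, () => ({ x: 0, y: 0 })),
    "sum 1000 numbers": () => {
      let sum = 0;
      for (let i = 0; i < 1000; i++) sum += i;
      return sum;
    }
  },
  200
);

console.log(results);
```

Timings vary between machines and runs, so comparisons like this are only meaningful when both sets of results come from the same environment.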

ECSY-THREE

We created ecsy-three to facilitate developing applications using ECSY and three.js by providing a set of components and systems for interacting with ThreeJS from ECSY. In this release it has gotten a major refactor and we got rid of all the “extras” in the main repository, to focus on the “core” API to make it easy to use with three.js without adding unneeded abstractions.

We introduce extended implementations of ECSY's World and Entity classes (ECSYThreeWorld and ECSYThreeEntity), which provide some helpers to interact with three.js' Object3Ds.

The main helper you will be using is Entity.addObject3DComponent(Object3D, parent), which adds the Object3DComponent to the entity, holding a reference to a three.js Object3D, as well as adding extra tag components to identify the type of Object3D we are adding.

Please note that we have been adding tag components for almost every type of Object3D in three.js (Mesh, Light, Camera, Scene, …) but you could still add any other you may need.

For example:

// Create a three.js Mesh

let mesh = new THREE.Mesh(

new THREE.BoxBufferGeometry(),

new THREE.MeshBasicMaterial()

);

// Attach the mesh to a new ECSY entity

let entity = world.createEntity().addObject3DComponent(mesh);

If we inspect the entity, it will have the following components:

- Object3DComponent with { value: mesh }

- MeshTagComponent, automatically added by addObject3DComponent

Then you can query for each one of these components in your systems and grab the Object3D by using entity.getObject3D(), which is an alias for entity.getComponent(Object3DComponent).value.

import { MeshTagComponent, Object3DComponent } from "ecsy-three";

import { System } from "ecsy";

class RandomColorSystem extends System {

execute(delta) {

this.queries.entities.results.forEach(entity => {

// This will always return a Mesh since

// we are querying for the MeshTagComponent

const mesh = entity.getObject3D();

mesh.material.color.setHex(Math.random() * 0xffffff);

});

}

}

RandomColorSystem.queries = {

entities: {

components: [MeshTagComponent, Object3DComponent]

}

};

For removing Object3D components, we also introduced entity.removeObject3DComponent(unparent), which gets rid of the Object3DComponent as well as all the tag components that were added automatically (for example, the MeshTagComponent in the previous example).

One important difference between adding or removing the Object3D components yourself or using these new helpers is that they can handle parenting/unparenting too.

For example the following code will attach the mesh to the scene (Internally it will be doing sceneEntity.getObject3D().add(mesh)):

let entity = world.createEntity().addObject3DComponent(mesh, sceneEntity);

When removing the Object3D component we can pass true as parameter to indicate that we want to unparent this Object3D from its current parent:

entity.removeObject3DComponent(true);

// The previous line is equivalent to:

entity.getObject3D().parent.remove(entity.getObject3D());
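The parenting behaviour described above can be sketched without three.js. SketchObject3D and SketchEntity are hypothetical stand-ins written for this example; they only mimic the add/remove bookkeeping and are not the ecsy-three or three.js classes.

```javascript
// Hypothetical stand-ins for illustration; not three.js or ecsy-three classes.
class SketchObject3D {
  constructor() {
    this.parent = null;
    this.children = [];
  }
  add(child) {
    child.parent = this;
    this.children.push(child);
    return this;
  }
  remove(child) {
    const index = this.children.indexOf(child);
    if (index !== -1) {
      this.children.splice(index, 1);
      child.parent = null;
    }
    return this;
  }
}

class SketchEntity {
  // Store the object and, if a parent entity is given, attach it to that
  // entity's object.
  addObject3DComponent(object3D, parentEntity) {
    this.object3D = object3D;
    if (parentEntity) parentEntity.getObject3D().add(object3D);
    return this;
  }
  // Drop the reference and, if requested, detach the object from its parent.
  removeObject3DComponent(unparent) {
    const object3D = this.object3D;
    if (unparent && object3D.parent) object3D.parent.remove(object3D);
    this.object3D = undefined;
  }
  getObject3D() {
    return this.object3D;
  }
}

const sceneEntity = new SketchEntity().addObject3DComponent(new SketchObject3D());
const mesh = new SketchObject3D();
const entity = new SketchEntity().addObject3DComponent(mesh, sceneEntity);

const attached = mesh.parent === sceneEntity.getObject3D();
entity.removeObject3DComponent(true);

console.log(attached, mesh.parent); // true null
```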

"Initialize" helper

Most ecsy & three.js applications will need a set of common components:

- World

- Camera

- Scene

- WebGLRenderer

- Render loop

So we added a helper function initialize in ecsy-three that will create all these for you. It is completely optional, but is a great way to quickly bootstrap an application.

import { initialize } from "ecsy-three";

const { world, scene, camera, renderer } = initialize();

You can find a complete example using all these methods in the following Glitch:

Developer tools

We updated the developer tools extension (Read about them) to support the newest version of ECSY core, but it should still be backward compatible with previous versions.

We fixed the remote debugging mode that allows you to run the extension in one browser while debugging an application in another browser or device (for example an Oculus Quest).

You can grab them on your favourite browser extension store:

The community

We are so happy with how the community has been helping us build ECSY and with the projects that have emerged from it.

We have a bunch of cool experiments around physics, networking, games, and boilerplates and bindings for pixijs, phaser, babylon, three.js, react, and many more!

It’s been especially useful for us to open a Discord, where a lot of interesting discussions about both ECSY and ECS as a paradigm have happened.

What's next?

There is still a long road ahead, and we have a lot of features and projects in mind to keep improving the ECSY ecosystem, for example:

- Revisit the current reactive queries implementation and design, especially the deferred removal step.

- Continue to experiment with using ECSY in projects internally at Mozilla, like Hubs and Spoke.

- Improving the sandbox and API examples

- Keep adding new systems and components for higher level functionality: physics, teleport, networking, hands & controllers, …

- Keep building new demos to showcase the features we are releasing

Please feel free to use our GitHub repositories (ecsy, ecsy-three, ecsy-devtools) to follow the development, request new features or file issues on bugs you find. Also come participate in community discussions on our Discourse forum and Discord server.

|

|

Support.Mozilla.Org: Review of the year so far, and looking forward to the next 6 months. |

In 2019 we started looking into our experiences and 2020 saw us release the new responsive redesign, a new AAQ flow, a finalized Firefox Accounts migration, and a few other minor tweaks. We have also performed a Python and Django upgrade carrying on with the foundational work that will allow us to grow and expand our support platform. This was a huge win for our team and the first time we have improved our experience in years! The team is working on tracking the impact and improvement to our overall user experience.

We also know that contributors in Support have had to deal with an old, sometimes very broken, toolset, and so we wanted to work on that this year. You may have already heard the updates from Kiki and Giulia through their monthly strategy updates. The research and opportunity identification the team did was hugely valuable, and the team identified onboarding as an immediate area for improvement. We are currently working through an improved onboarding process and look forward to implementing and launching ongoing work.

Apart from that, we’ve done quite a chunk of work on the Social Support side with the transition from Buffer Reply to Conversocial. The change had been planned since the beginning of this year, and we worked together with the Pocket team on the implementation. We’ve also collaborated closely with the marketing team to kick off the @FirefoxSupport Twitter account that we’ll be using to focus our Social Support community effort.

Now, the community managers are focusing on supporting the Fennec to Fenix migration. A community campaign to promote the Respond Tool is lining up in parallel with the migration rollout this week and will run until the end of August as we’re completing the rollout.

We plan to continue implementing the information architecture we developed late last year, which will improve our navigation and clean up a lot of the old categories that are clogging up our knowledge base editing tools. We’re also looking into redesigning our internal search architecture, re-implementing it from scratch, and expanding our search UI.

2020 is also the year we have decided to focus more on data. Roland and JR have been busy building out our product dashboards, all internal for now, and we are working on how to make some of this data publicly available. It is still a work in progress, but we hope to make this possible sometime in early 2021.

In the meantime, we welcome feedback, ideas, and suggestions. You can also fill out this form or reach out to Kiki/Giulia for questions. We hope you are all as excited about all the new things happening as we are!

Thanks,

Patrick, on behalf of the SUMO team

|

|

The Mozilla Blog: Virtual Tours of the Museum of the Fossilized Internet |

Let’s brainstorm a sustainable future together.

Imagine: We are in the year 2050 and we’re opening the Museum of the Fossilized Internet, which commemorates two decades of a sustainable internet. The exhibition can now be viewed in social VR. Join an online tour and experience what the coal and oil-powered internet of the past was like.

Visit the Museum from home

In March 2020, Michelle Thorne and I announced office tours of the Museum of the Fossilized Internet as part of our new Sustainability programme. Then the pandemic hit, and we teamed up with the Mozilla Mixed Reality team to make it more accessible while also demonstrating the capabilities of social VR with Hubs.

We now welcome visitors to explore the museum at home through their browsers.

The museum was created to be a playful source of inspiration and an invitation to imagine more positive, sustainable futures. Here’s a demo tour to help you get started on your visit.

Video Production: Dan Fernie-Harper; Spoke scene: Liv Erickson and Christian Van Meurs; Tour support: Elgin-Skye McLaren.

Foresight workshops

But that’s not all. We are also building on the museum with a series of foresight workshops. Once we know what preferable, sustainable alternatives look like, we can start building towards them so that in a few years, this museum is no longer just a thought experiment, but real.

Our first foresight workshop will focus on policy with an emphasis on trustworthy AI. In a pilot, facilitators Michelle Thorne and Fieke Jansen will specifically focus on the strategic opportunity that arises because the European Commission is in the process of defining its AI strategy, climate agenda and COVID-19 recovery plans. Considering these together, the workshop will develop options to advance both trustworthy AI and climate justice.

More foresight workshops should and will follow. We are currently looking at businesses, technology, or the funders community as additional audiences. Updates will be available on the wiki.

You are also invited to join the sustainability team as well as our environmental champions on our Matrix instance to continue brainstorming sustainable futures. More updates on Mozilla’s journey towards sustainability will be shared here on the Mozilla Blog.

The post Virtual Tours of the Museum of the Fossilized Internet appeared first on The Mozilla Blog.

https://blog.mozilla.org/blog/2020/08/06/virtual-tours-of-the-museum-of-the-fossilized-internet/

|

|

Data@Mozilla: Experimental integration Glean with Unity applications |

(“This Week in Glean” is a series of blog posts that the Glean Team at Mozilla is using to try to communicate better about our work. They could be release notes, documentation, hopes, dreams, or whatever: so long as it is inspired by Glean. You can find an index of all TWiG posts online.)

This is a special guest post by non-Glean-team member Daosheng Mu!

You might have noticed that Firefox Reality PC Preview has been released in HTC’s Viveport store. It is a VR web browser that provides 2D overlay browsing alongside immersive content and supports web-based immersive experiences for PC-connected VR headsets. To deploy our product into the Viveport store easily, we use Unity to build our application launcher. That, in turn, raises another challenge: how to use Mozilla’s existing telemetry system.

The Glean SDK provides language bindings for Kotlin, Swift, and Python. However, for applications that use Unity as their development toolkit, no existing bindings are available. Unity lets users embed Python scripts in a Unity project through a Python interpreter; however, because Unity’s technology is based on the Mono framework, that is not the same as the familiar Python runtime for running Python scripts. So the alternative we need to find is a way to run Python on the .NET Framework, or more precisely on the Mono framework. For running a Python script in the main process, IronPython is the only solution. However, it is only available for Python 2.7, and the Glean SDK Python language binding needs Python 3.6. Hence, we started planning a new Glean binding for C#.

Getting started

The Glean team and I started by discussing the requirements for running Glean in Unity and for implementing a C# binding for Glean. We followed a minimum viable product strategy and defined very simple goals to evaluate whether the plan was workable. Technically, we only need to send built-in and custom pings, as the current Glean Python binding does, and we can use just StringMetricType as our first metric in this experimental Unity project. We also noticed that the .NET Framework comes in various versions, and it is essential to consider compatibility with the Mono framework in Unity. Therefore, we decided that the Glean C# binding would be based on .NET Standard 2.0. Thanks to these focused MVP goals and the Glean team’s rapid work, we got our first alpha version of the C# binding in a very short time. I really appreciate Alessio, Mike, Travis, and the other members of the Glean team. Their hard work made it happen so quickly, and they were patient with my concerns and requirements.

How it works

First, it is worth explaining how to integrate Glean into a Hello World C# application. We can either import the C# bindings source code from glean-core/csharp, or build csharp.sln from the Glean repository and then copy the generated Glean.dll into our own project. Then, in your C# project’s Dependencies setting, add this Glean.dll. We also need to copy glean_ffi.dll, which can be found in the Glean checkout folder after running `cargo build`. Lastly, add the Serilog library to your project via NuGet. We can install it through the NuGet Package Manager Console as below:

PM> Install-Package Serilog

PM> Install-Package Serilog.Sinks.Console

Defining pings and metrics

Before we start to write our program, let’s design our metrics first. Based on the current capabilities of the Glean SDK C# language binding, we can create a custom ping and set a string metric for this custom ping. Then, at the end of the program, we will submit this custom ping, and the string metric will be collected and uploaded to our data server. The ping and metric description files are as below:

Testing and verifying it

Now, it is time for us to write our HelloWorld program.

As we can see, the code above is very straightforward. Although the C# binding does not yet support the Glean parser to help us create metrics, we can still create these metrics in our program manually. One thing you might notice is Thread.Sleep(1000); at the bottom of main(). It pauses the current thread for 1000 milliseconds. Because this HelloWorld program is a console application, it quits as soon as there are no other operations. We need this line to make sure the Glean ping uploader has enough time to finish uploading its pings before the main process quits. We could also replace it with Console.ReadKey(); to let users quit the application instead of having it close itself directly. The better solution would be to launch a separate process for the Glean uploader; this is a current limitation of our C# binding implementation, and we will work on it in the future. For the extended code, see glean/samples/csharp; we will also use the same code in the Unity section.

After that, we want to see whether our pings are uploaded to a data server successfully. We can set the Glean debug view environment variable by running set GLEAN_DEBUG_VIEW_TAG=samplepings in our command-line tool; samplepings can be any value you would like to use as a tag for your pings. Then, run your executable file in the same command-line window. Finally, you can see the result in the Glean Debug Ping Viewer, as below:

It looks great now. But you might notice that the os_version does not look right if you are running it on Windows 10. This is because we are using Environment.OSVersion to get the OS version, and it is not reliable. For the follow-up discussion, please see Bug 1653897. The solution is to add a manifest file and then uncomment the lines for Windows 10 compatibility.

Your first Glean program in Unity

That covers the general C# program. Let’s move on to the main point of this article: using Glean in Unity. Using Glean in Unity is a little bit different, but it is not too complicated. First of all, open the C# solution that your Unity project creates for you. Because Unity needs more dependencies when using Glean, we let the Glean NuGet package install them for us in advance. As the Glean NuGet package page mentions, install the package with the command below:

Install-Package Mozilla.Telemetry.Glean -Version 0.0.2-alpha

Then, check your Packages folder; you should see that some packages have already been downloaded into it.

However, the Unity project builder does not recognize this Packages folder, so we need to move the libraries from these packages into the Unity Assets\Scripts\Plugins folder. You may notice that these libraries come in builds for several runtime versions. The basic idea is that Unity is .NET Standard 2.0 compatible, so we can just grab them from the lib\netstandard2.0 folders. In addition, Unity allows users to build their application for x86 and x86_64 platforms. Therefore, when moving the Glean FFI library, glean_ffi.dll, into this Plugins folder, we have to put its x86 and x86_64 builds into Assets\Scripts\Plugins\x86 and Assets\Scripts\Plugins\x86_64 respectively. Fortunately, in Unity, we don’t need to worry about the Windows compatibility manifest; Unity already handles it for us.

Now, we can start to copy the same code to its main C# script.

You might notice that we didn’t add Thread.Sleep(1000); at the end of the start() function. That is because a Unity application is generally a windowed application, and the main way to exit it is by closing its window, so the Glean ping uploader has enough time to finish its task. However, if your application needs to use the Unity-specific API Application.Quit() to close the program, please make sure the main thread waits for a couple of seconds to leave time for the Glean ping uploader to finish its jobs. For more details about this example code, please go to GleanUnity to see its source code.

Next steps

The C# binding is still at an early stage. Although we already support a few metric types, many metric types and features available in other bindings are still missing. We also look forward to providing a better solution for off-main-process ping upload, so that the main thread no longer needs to wait for the Glean ping uploader before the main process can quit. We will keep working on it!

https://blog.mozilla.org/data/2020/08/06/experimental-integration-glean-with-unity-applications/

|

|

Cameron Kaiser: Google, nobody asked for a new Blogger interface |

I'm writing this post in what Google is euphemistically referring to as an improvement. I don't understand this. I managed to ignore New Blogger for a few weeks but Google's ability to fark stuff up has the same air of inevitability as rotting corpses. Perhaps on mobile devices it's better, and even that is a matter of preference, but it's space-inefficient on desktop due to larger buttons and fonts, it's noticeably slower, it's buggy, and very soon it's going to be your only choice.

My biggest objection, however, is what they've done to the HTML editor. I'm probably the last person on earth to do so, but I write my posts in raw HTML. This was fine in the old Blogger interface which was basically a big freeform textbox you typed tags into manually. There was some means to intercept tags you didn't close, which was handy, and when you added elements from the toolbar you saw the HTML as it went in. Otherwise, WYTIWYG (what you typed is what you got). Since I personally use fairly limited markup and rely on the stylesheet for most everything, this worked well.

The new one is a line editor ... with indenting. Blogger has always really, really wanted you to use <p> as a container, even though a closing tag has never been required. But now, thanks to the indenter, if you insert a new paragraph then it starts indenting everything, including lines you've already typed, and there's no way to turn this off! Either you close every <p> tag immediately to defeat this behaviour, or you start using a lot of <br>s, which specifically defeats any means of semantic markup. (More about this in a moment.) First world problem? Absolutely. But I didn't ask for this "assistance" either, nor to require me to type additional unnecessary content to get around a dubious feature.

But wait, there's less! By switching into HTML view, you lose ($#@%!, stop indenting that line when I type emphasis tags!) the ability to insert hyperlinks, images or other media by any means other than manually typing them out. You can't even upload an image, let alone automatically insert the HTML boilerplate and edit it.

So switch into Compose view to actually do any of those things, and what happens? Like before, Blogger rewrites your document, but now this happens all the time because of what you can't do in HTML view. Certain arbitrarily-determined naughtytags(tm) like <em> become <i> (my screen-reader friends will be disappointed). All those container close tags that are unnecessary bloat suddenly appear. Oh, and watch out for that dubiously-named "Format HTML" button, the only special feature to appear in the HTML view, as opposed to anything actually useful. To defeat the HTML autocorrupt while I was checking things as I wrote this article, I actually copied and repasted my entire text multiple times so that Blogger would stop the hell messing with it. Who asked for this?? Clearly the designers of this travesty, assuming it isn't some cruel joke perpetrated by a sadistic UI anti-expert or a covert means to make people really cheesed off at Blogger so Google can claim no one uses it and shut it down, now intend HTML view to be strictly touch-up only, if that, and not a primary means of entering a post. Heaven forbid people should learn HTML anymore and try to write something efficient.

Oh, what else? It's slower, because of all the additional overhead (remember, it used to be just a big ol' box o' text that you just typed into, and a selection of mostly static elements making up the UI otherwise). Old Blogger was smart enough (or perhaps it was a happy accident) to know you already had a preview tab open and would send your preview there. New Blogger opens a new, unnecessary tab every time. The fonts and the buttons are bigger, but the icons are of similar size, which defeats any reasonable argument of accessibility and just looks stupid on the G5 or the Talos II. There's lots of wasted empty space, too. This may reflect the contents of the crania of the people who worked on it, and apparently they don't care (I complained plenty of times before switching back, I expect no reply because they owe me nothing), so I feel no shame in abusing them.

Most of all, however, there is no added functionality. There is no workflow I know of that this makes better, and by removing stuff that used to work, it demonstrably makes at least my own workflow worse.

So am I going to rage-quit Blogger? Well, no, at least not for the blogs I have that presently exist (feel free to visit, linked in the blogroll). I have years of documents here going back to TenFourFox's earliest inception in 2010, many of which are still very useful to vintage Power Mac users, and people know where to find them. It was the lowest effort move at the time to start a blog here and while Blogger wasn't futzing around with their own secret sauce it worked out well.

So, for future posts, my anticipated Rube Goldbergian nightmare is to use Compose view to load my images, copy the generated HTML off, type the rest of the tags manually in a text editor as God and Sir Tim intended and cut and paste it into a blank HTML view before New Blogger has a chance to mess with it. Hopefully they don't close the hole with paste not auto-indenting, for all that's holy. And if this is the future of Blogger, then if I have any future projects in mind, I think it's time for me to start self-hosting them and take a hike. Maybe this really is Google's way of getting this place to shut down.

(I actually liked New Coke, by the way.)

http://tenfourfox.blogspot.com/2020/08/google-nobody-asked-for-new-blogger.html


Karl Dubost: Browser developer tools timeline |

I was reading In a Land Before Dev Tools by Amber, and I thought: oh, the beautifully chiseled Opera Dragonfly and F12 for Internet Explorer are missing from this history. So let's see what else I didn't know myself.

(This is slightly broader than just browser devtools, but close to what web developers needed to be able to work. If you feel I made a glaring omission in this list, send me an email: kdubost is working at mozilla.com)

- 1997-12: JavaScript debugger for Netscape Communicator, future Firefox. (JavaScript Debugger)

- 1998-02: Microsoft Script Debugger for IE 4.0. Thanks to Kilian Valkhof (added on 2020-08-07)

- ~2001: DOM Inspector in Mozilla code base.

- 2001-10: Venkman, JavaScript debugger inside Mozilla Suite by Robert Ginda. (Venkman)

- 2003-10: Fiddler for IE for understanding HTTP network issues (History of releases)

- 2005-09: Developer Toolbar for IE, which later became F12. (Developer Toolbar for IE announced at PDC)

- 2006-01: Firebug by Joe Hewitt, as an extension for Firefox. Work probably started in 2005, but the first release was in January 2006. (11 years of history)

- 2006-01: Web Inspector for Safari. (Introducing the Web Inspector and 10 Years of Web Inspector)

- 2006-06: Drosera for Safari, JavaScript debugger (Introducing Drosera)

- 2007-07: YSlow, made by Yahoo!, originally for Firebug. It analyzed pages for their performance. (YSlow Release on YDN)

- 2008-05: Opera Dragonfly in… Opera. Died in 2012 when the company decided to switch to Blink. Sniff. One of the best debugging tools at the time. (Opera Dragonfly homepage)

- 2008-09: DevTools in Chrome (10 years anniversary post)

- 2009-06: PageSpeed, a tool to evaluate the performance of a Web page, similar to YSlow. (Introducing Page Speed)

- 2009-07: Opera Scope Protocol, initially proposed as a common protocol for all devtools. Browser implementers decided that they were not interested in converging. (Opera Scope Protocol Specification Released)

- 2009-10: HTTP Archive Specification, a format for HTTP monitoring tools.

- 2010-02: Firebug Lite for Chrome

Note: Without WebArchive, it would not have been possible to retrace all this history. The rust of the Web is still ongoing.

Otsukare!

https://www.otsukare.info/2020/08/06/browser-devtools-timeline


Daniel Stenberg: Upcoming Webinar: curl: How to Make Your First Code Contribution |

When: Aug 13, 2020 10:00 AM Pacific Time (US and Canada) (17:00 UTC)

Length: 30 minutes

Abstract: curl is a wildly popular and well-used open source tool and library, and is the result of more than 2,200 named contributors helping out. Over 800 individuals have written at least one commit so far.

In this presentation, curl’s lead developer Daniel Stenberg talks about how any developer can get their first code contribution submitted and ultimately landed in the curl git repository. Topics include the approach to code and commits, style, editing, pull requests, using GitHub, etc. After you’ve seen this, you’ll know how to easily submit your improvement to curl and potentially end up running in ten billion installations world-wide.

Register in advance for this webinar:

https://us02web.zoom.us/webinar/register/WN_poAshmaRT0S02J7hNduE7g


Nicholas Nethercote: How to speed up the Rust compiler some more in 2020 |

I last wrote in April about my work on speeding up the Rust compiler. Time for another update.

Weekly performance triage

First up is a process change: I have started doing weekly performance triage. Each Tuesday I have been looking at the performance results of all the PRs merged in the past week. For each PR that has regressed or improved performance by a non-negligible amount, I add a comment to the PR with a link to the measurements. I also gather these results into a weekly report, which is mentioned in This Week in Rust, and also looked at in the weekly compiler team meeting.

The goal of this is to ensure that regressions are caught quickly and appropriate action is taken, and to raise awareness of performance issues in general. It takes me about 45 minutes each time. The instructions are written in such a way that anyone can do it, though it will take a bit of practice for newcomers to become comfortable with the process. I have started sharing the task around, with Mark Rousskov doing the most recent triage.

This process change was inspired by the “Regressions prevented” section of an excellent blog post from Nikita Popov (a.k.a. nikic), about the work they have been doing to improve the speed of LLVM. (The process also takes some ideas from the Firefox Nightly crash triage that I set up a few years ago when I was leading Project Uptime.)

The speed of LLVM directly impacts the speed of rustc, because rustc uses LLVM for its backend. This is a big deal in practice. The upgrade to LLVM 10 caused some significant performance regressions for rustc, though enough other performance improvements landed around the same time that the relevant rustc release was still faster overall. However, thanks to nikic’s work, the upgrade to LLVM 11 will win back much of the performance lost in the upgrade to LLVM 10.

It seems that LLVM performance hasn’t received much attention in the past, so I am pleased to see this new focus. Methodical performance work takes a lot of time and effort, and can’t effectively be done by a single person over the long term. I strongly encourage those working on LLVM to make this a team effort, and anyone with the relevant skills and/or interest to get involved.

Better benchmarking and profiling

There have also been some major improvements to rustc-perf, the performance suite and harness that drives perf.rust-lang.org, and which is also used for local benchmarking and profiling.

#683: The command-line interface for the local benchmarking and profiling commands was ugly and confusing, so much so that one person mentioned on Zulip that they tried and failed to use them. We really want people to be doing local benchmarking and profiling, so I filed this issue and then implemented PRs #685 and #687 to fix it. To give you an idea of the improvement, the following shows the minimal commands to benchmark the entire suite.

# Old
target/release/collector --db <DB> bench_local --rustc <RUSTC> --cargo <CARGO> <ID>

# New
target/release/collector bench_local <RUSTC> <ID>

Full usage instructions are available in the README.

#675: Joshua Nelson added support for benchmarking rustdoc. This is good because rustdoc performance has received little attention in the past.

#699, #702, #727, #730: These PRs added some proper CI testing for the local benchmarking and profiling commands, which had a history of being unintentionally broken.

Mark Rousskov also made many small improvements to rustc-perf, including reducing the time it takes to run the suite, and improving the presentation of status information.

cargo-llvm-lines

Last year I wrote about inlining and code bloat, and how they can have a major effect on compile times. I mentioned that tooling to measure code size would be helpful. So I was happy to learn about the wonderful cargo-llvm-lines, which measures how many lines of LLVM IR are generated for each function. The results can be surprising, because generic functions (especially common ones like Vec::push(), Option::map(), and Result::map_err()) can be instantiated dozens or even hundreds of times in a single crate.

I worked on multiple PRs involving cargo-llvm-lines.

#15: This PR added percentages to the output of cargo-llvm-lines, making it easier to tell how important each function’s contribution is to the total amount of code.

#20, #663: These PRs added support for cargo-llvm-lines within rustc-perf, which made it easy to measure the LLVM IR produced for the standard benchmarks.

#72013: RawVec::grow() is a function that gets called by Vec::push(). It’s a large generic function that deals with various cases relating to the growth of vectors. This PR moved most of the non-generic code into a separate non-generic function, for wins of up to 5%.
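
The general shape of that kind of change can be sketched as follows. This is a minimal illustration of the pattern, not the actual RawVec code: the function names, the doubling policy, and the minimum capacity of 4 are all assumptions made for the example. The idea is that the generic function shrinks to a thin wrapper that passes the element size down to a non-generic helper, so the bulk of the logic is compiled once rather than once per element type.

```rust
use std::mem;

/// Non-generic helper (hypothetical name). It takes the element size as a
/// plain value, so only one copy of this code is generated no matter how
/// many element types call into it.
#[inline(never)] // hint to keep this as a single shared function
fn next_capacity(current_cap: usize, required: usize, elem_size: usize) -> Option<usize> {
    // Amortized growth: at least double the current capacity.
    let doubled = current_cap.checked_mul(2)?;
    // Illustrative minimum capacity of 4 (an assumption, not rustc's policy).
    let new_cap = required.max(doubled).max(4);
    // Reject allocations whose byte size overflows or exceeds isize::MAX.
    let bytes = new_cap.checked_mul(elem_size)?;
    if bytes > isize::MAX as usize {
        return None;
    }
    Some(new_cap)
}

/// Thin generic wrapper (hypothetical name). It is still instantiated once
/// per `T`, but each instantiation is only a few lines of LLVM IR.
fn grow_capacity<T>(current_cap: usize, len: usize, additional: usize) -> Option<usize> {
    let required = len.checked_add(additional)?;
    next_capacity(current_cap, required, mem::size_of::<T>())
}

fn main() {
    // Each call below instantiates only the small wrapper for its type.
    println!("{:?}", grow_capacity::<u8>(0, 0, 1));
    println!("{:?}", grow_capacity::<u64>(4, 4, 1));
    println!("{:?}", grow_capacity::<[u8; 16]>(8, 8, 1));
}
```

Running cargo-llvm-lines before and after a split like this is one way to check whether the per-type instantiations actually got smaller.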