Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated whenever that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.


The Servo Blog: This Week In Servo 131 |

Welcome back everyone - it’s been a year without written updates, but we’re getting this train back on track! Servo hasn’t been dormant in that time; the biggest news was the public release of Firefox Reality (built on Servo technology) in the Microsoft store.

In the past week, we merged 44 PRs in the Servo organization’s repositories.

The latest nightly builds for common platforms are available at download.servo.org.

Planning and Status

Our roadmap is available online, including the team’s plans for 2020.

This week’s status updates are here.

Exciting works in progress

- jdm is replacing the websocket backend.

- cybai and jdm are implementing dynamic module script imports.

- kunalmohan is implementing the draft WebGPU specification.

Notable Additions

- SimonSapin fixed a source of Undefined Behaviour in the smallvec crate.

- muodov improved the compatibility of invalid form elements with the HTML specification, and added the missing requestSubmit API.

- kunalmohan implemented GPUQueue APIs for WebGPU, avoided synchronous updates, and implemented the getMappedRange API for GPUBuffer.

- alaryso fixed a bug preventing running tests when using rust-analyzer.

- alaryso avoided a panic in pages that perform layout queries on disconnected iframes.

- paulrouget integrated virtual keyboard support for text inputs into Firefox Reality, as well as added support for keyboard events.

- Manishearth implemented WebAudio node types for reading and writing MediaStreams.

- gterzian improved the responsiveness of the browser when shutting down.

- utsavoza updated the referrer policy implementation to match the updated specification.

- ferjm implemented support for WebRTC data channels.

New Contributors

Interested in helping build a web browser? Take a look at our curated list of issues that are good for new contributors!

|

|

The Rust Programming Language Blog: Announcing Rustup 1.22.0 |

|

|

Frédéric Wang: Contributions to Web Platform Interoperability (First Half of 2020) |

Note: This blog post was co-authored by AMP and Igalia teams.

Web developers continue to face challenges with web interoperability issues and a lack of implementation of important features. As an open-source project, the AMP Project can help represent developers and aid in addressing these challenges. In the last few years, we have partnered with Igalia to collaborate on helping advance predictability and interoperability among browsers. Reaching standards and the degree of interoperability that we want can be a long process. New features frequently require experimentation to get things rolling and course corrections along the way. Then, as more implementations and users begin exploring the space, doing really interesting things and finding issues at the edges, we continue to advance interoperability.

Both AMP and Igalia are very pleased to have been able to play important roles at all stages of this process and help drive things forward. During the first half of this year, here’s what we’ve been up to…

Default Aspect Ratio of Images

In our previous blog post we mentioned our experiment to implement the intrinsic size attribute in WebKit. Although this was a useful prototype for standardization discussions, in the end there was a consensus to switch to an alternative approach. This new approach addresses the same use case without the need for a new attribute. The idea is pretty simple: use the specified width and height attributes of an image to determine the default aspect ratio. If additional CSS is used, e.g. “width: 100%; height: auto;”, browsers can then compute the final size of the image without waiting for it to be downloaded. This avoids any relayout that could cause a bad user experience. This was implemented in Firefox and Chromium and we did the same in WebKit. We implemented this under a flag which is currently on by default in Safari Tech Preview and the latest iOS 14 beta.
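As an illustration of the arithmetic involved, here is a minimal Python sketch. It is not browser code: the function name and the sample dimensions are assumptions made for this example only.

```python
def reserved_height(attr_width: int, attr_height: int, rendered_width: float) -> float:
    """Height a layout engine could reserve for an image before it loads.

    attr_width / attr_height are the width and height attributes from the markup.
    rendered_width is the width the CSS resolves to (e.g. from "width: 100%").
    """
    if attr_width <= 0 or attr_height <= 0:
        raise ValueError("width and height attributes must be positive")
    # The default aspect ratio is attr_width : attr_height, so the height
    # scales by the same factor as the width.
    return rendered_width * attr_height / attr_width


# An 800x600 image laid out in a 400px-wide column keeps its 4:3 ratio.
print(reserved_height(800, 600, 400.0))
```

Because the height is known before any image bytes arrive, the surrounding content does not need to move once the download completes.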

Scrolling

We continued our efforts to enhance scroll features. In WebKit, we began with scroll-behavior, which provides the ability to do smooth scrolling. Based on our previous patch, it has landed and is guarded by an experimental flag “CSSOM View Smooth Scrolling” which is disabled by default. Smooth scrolling currently has a generic platform-independent implementation controlled by a timer in the web process, and we continue working on a more efficient alternative relying on the native iOS UI interfaces to perform scrolling.

We have also started to work on overscroll and overscroll customization, especially for the scrollend event. The scrollend event, as you might expect, is fired when the scroll is finished, but it lacked interoperability and required some additional tests. We added web platform tests for programmatic scroll and user scroll including scrollbar, dragging selection and keyboard scrolling. With these in place, we are now working on a patch in WebKit which supports scrollend for programmatic scroll and Mac user scroll.

On the Chrome side, we continue working on the standard scroll values in non-default writing modes. This is an interesting set of challenges surrounding the scroll API and how it works with writing modes which was previously not entirely interoperable or well defined. Gaining interoperability requires changes, and we have to be sure that those changes are safe. Our current changes are implemented and guarded by a runtime flag “CSSOM View Scroll Coordinates”. With the help of Google engineers, we are trying to collect user data to decide whether it is safe to enable it by default.

Another minor interoperability fix that we were involved in was to ensure that the scrolling attribute of frames recognizes values “noscroll” or “off”. That was already the case in Firefox and this is now the case in Chromium and WebKit too.

Intersection and Resize Observers

As mentioned in our previous blog post, we drove the implementation of IntersectionObserver (enabled in iOS 12.2) and ResizeObserver (enabled in iOS 14 beta) in WebKit. We have made a few enhancements to these useful developer APIs this year.

Users reported difficulties observing the root of an inner iframe, and the specification was modified to accept an explicit document as a root parameter. This was implemented in Chromium and we implemented the same change in WebKit and Firefox. It is currently available in Safari Tech Preview, iOS 14 beta and Firefox 75.

A bug was also reported with ResizeObserver incorrectly computing size for non-default zoom levels, which was in particular causing a bug on twitter feeds. We landed a patch last April and the fix is available in the latest Safari Tech Preview and iOS 14 beta.

Resource Loading

Another thing that we have been concerned with is how we can give more control and power to authors to more effectively tell the browser how to manage the loading of resources and improve performance.

The work that we started in 2019 on lazy loading has matured a lot along with the specification.

The lazy image loading implementation in WebKit therefore passes the related WPT tests and is functional and comparable to the Firefox and Chrome implementations. However, as you might expect, as we compare uses and implementation notes it becomes apparent that determining the moment when the lazy image load should start is not defined well enough. Before this can be enabled in releases some more work has to be done on improving that. The related frame lazy loading work has not started yet since the specification is not in place.

We also added an implementation for stale-while-revalidate. The stale-while-revalidate Cache-Control directive allows a grace period in which the browser is permitted to serve a stale asset while the browser is checking for a newer version. This is useful for non-critical resources where some degree of staleness is acceptable, like fonts. The feature has been enabled recently in WebKit trunk, but it is still disabled in the latest iOS 14 beta.
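The grace period can be sketched as a small decision function. This is a simplified Python model, not WebKit's implementation; the names and the example values (max-age=600, stale-while-revalidate=30) are assumptions chosen for illustration.

```python
from enum import Enum


class CacheDecision(Enum):
    FRESH = "serve the cached copy"
    STALE_REVALIDATE = "serve the stale copy and revalidate in the background"
    MUST_FETCH = "fetch from the network before responding"


def decide(age_s: int, max_age_s: int, swr_s: int) -> CacheDecision:
    """Classify a cached response by its age, in seconds.

    max_age_s and swr_s model a header such as
    "Cache-Control: max-age=600, stale-while-revalidate=30".
    """
    if age_s < max_age_s:
        return CacheDecision.FRESH
    if age_s < max_age_s + swr_s:
        # Inside the grace period: staleness is tolerated while a newer
        # version is checked for.
        return CacheDecision.STALE_REVALIDATE
    return CacheDecision.MUST_FETCH


for age in (100, 610, 700):
    print(age, decide(age, 600, 30).value)
```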

Contributions were made to improve prefetching in WebKit taking into account its cache partitioning mechanism. Before this work can be enabled some more patches have to be landed and possibly specified (for example, prenavigate) in more detail. Finally, various general Fetch improvements have been done, improving the fetch WPT score. Examples are:

- Remove certain headers when a redirect causes a request method change

- Implement wildcard behavior for Cross-Origin-Expose-Headers

- Align with Origin header changes

- Fetch: URL parser not always using UTF-8

What’s next

There is still a lot to do in scrolling and resource loading improvements and we will continue to focus on the features mentioned such as scrollend event, overscroll behavior and scroll behavior, lazy loading, stale-while-revalidate and prefetching.

As a continuation of the work done for aspect ratio calculation of images, we will consider the more general CSS aspect-ratio property. Performance metrics such as the ones provided by the Web Vitals project are also critical for web developers to ensure that their websites provide a good user experience, and we are willing to investigate support for these in Safari.

We love doing this work to improve the platform and we’re happy to be able to collaborate in ways that contribute to bettering the web commons for all of us.

http://frederic-wang.fr/amp-contributions-to-web-platform-interoperability-H1.html

|

|

Armen Zambrano: In Filter Treeherder jobs by test or manifest path I describe the feature. |

In Filter Treeherder jobs by test or manifest path I describe the feature. In this post I will explain how it came about.

I want to highlight the process between a conversation and a deployed feature. Many times, it is an unseen part of the development process that can be useful for contributors and junior developers who are trying to grow as developers.

Back in the Fall of 2019 I started inquiring into developers’ satisfaction with Treeherder. This is one of the reasons I used to go to the office once in a while. One of these casual face-to-face conversations led to this feature. Mike Conley explained to me how he would look through various logs to find a test path that had failed on another platform (see referenced post for further details).

After I understood the idea, I tried to determine what options we had to implement it. I wrote a Google Doc with various alternative implementations and with information about what pieces were needed for a prototype. I requested feedback from various co-workers to help discover blind spots in my plans.

Once I had some feedback from immediate co-workers, I made my idea available in a Google group (increasing the circle of people giving feedback). I described my intent to implement the idea and was curious to see if anyone else was already working on it or had better ideas on how to implement it. I did this to raise awareness in larger circles, reduce duplicate efforts and learn from prior work.

I also filed a bug to drive further technical discussions and for interested parties to follow up on the work. Fortunately, around the same time Andrew Halberstadt started working on defining explicitly what manifests each task executes before the tasks are scheduled (see bug). This is a major component to make the whole feature on Treeherder functional. In some cases, talking enough about the need can enlist others from their domains of expertise to help with your project.

At the end of 2019 I had time to work on it. After navigating through Treeherder’s code for a few days, I decided that I wanted to see a working prototype. This would validate its value and determine if all the technical issues had been ironed out. In a couple of days I had a working prototype. Most of the code could be copy/pasted into Treeherder once I found the correct module to make changes in.

Finally, in January the feature landed. There were some small bugs and other follow up enhancements later on.

Stumbling upon this feature was great because in H1 we started looking at changing our CI’s scheduling to use manifests instead of tasks, and this feature lines up well with that.

|

|

Armen Zambrano: Filter Treeherder jobs by test or manifest path |

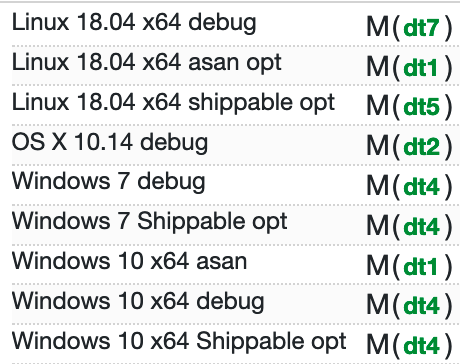

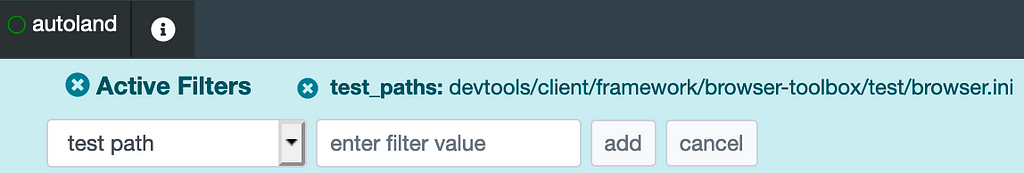

At the beginning of this year we landed a new feature on Treeherder. This feature helps our users to filter jobs using test paths or manifest paths.

This feature is useful for developers and code sheriffs because it permits them to determine whether or not a test that fails in one platform configuration also fails in other ones. Previously, this was difficult because certain test suites are split into multiple tasks (aka “chunks”). In the screenshot below, you can see that the manifest path devtools/client/framework/browser-toolbox/test/browser.ini is executed in different chunks.

NOTE: A manifest is a file that defines various test files, thus, a manifest path defines a group of test paths. Both types of paths can be used to filter jobs.
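To illustrate the idea (this is not Treeherder's code), here is a minimal Python sketch. The Job class, the job names and the test file name are hypothetical; only the manifest path comes from the text above.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Job:
    name: str  # hypothetical job label
    # Maps each manifest path the job runs to the test paths it defines.
    manifests: Dict[str, List[str]] = field(default_factory=dict)


def filter_jobs(jobs: List[Job], path: str) -> List[Job]:
    """Keep the jobs that execute the given manifest path or test path."""
    matches = []
    for job in jobs:
        for manifest, tests in job.manifests.items():
            if path == manifest or path in tests:
                matches.append(job)
                break
    return matches


MANIFEST = "devtools/client/framework/browser-toolbox/test/browser.ini"
TEST = "devtools/client/framework/browser-toolbox/test/browser_example.js"  # hypothetical

jobs = [
    Job("linux-bc1", {MANIFEST: [TEST]}),
    Job("linux-bc2", {"some/other/browser.ini": []}),
    Job("windows-bc3", {MANIFEST: [TEST]}),
]
print([job.name for job in filter_jobs(jobs, MANIFEST)])
```

Filtering by the test path selects the same two jobs, since a manifest path stands for its group of test paths.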

This filtering method has been integrated into the existing feature, “Filter by a job field” (the funnel icon). See below what the UI looks like:

If you’re curious about the work you can visit the PR.

There’s a lot more coming around this realm as we move toward manifest-based scheduling in the Firefox CI instead of task-based scheduling. Stay tuned! Until then keep calm and filter away.

|

|

Spidermonkey Development Blog: SpiderMonkey Newsletter 5 (Firefox 78-79) |

|

|

Daniel Stenberg: Video: testing curl for security |

The webinar from June 30, now on video. The slides are here.

https://daniel.haxx.se/blog/2020/07/02/video-testing-curl-for-security/

|

|

Support.Mozilla.Org: Let’s meet online: Virtual All Hands 2020 |

Hi folks,

Here I am again sharing with you the amazing experience of another All Hands.

This time no traveling was involved, and every meeting, coffee, and chat were left online.

Virtuality seems to be the focus of 2020. On the one hand, we strongly missed being together with colleagues and contributors; on the other hand, we were grateful to be able to connect.

Virtual All Hands ran for a week, from the 15th to the 18th of June, and was full of events and meetups.

As SUMO team we had three events running on Tuesday, Wednesday, and Thursday, along with the plenaries and Demos that were presented on Hubs. Floating in virtual reality space while experiencing and listening to new products and features that will be introduced in the second part of the year has been a super exciting experience and something really enjoyable.

Let’s talk about our schedule, shall we?

On Tuesday we ran our Community update meeting, in which we focused on what happened in the last 6 months, the projects that we successfully completed, and the ones that we have left for the next half of the year.

We talked a lot about the community plan and the next steps we need to take to complete everything and release the new onboarding experience before the end of the year.

We did not forget to mention everything that happened to the platform. The new responsive redesign and the ask-a-question flow have greatly changed the face of the support forum, and everything was implemented while the team was working on a solution for the spam flow we have been experiencing in the last month.

If you want to read more about this, here are some forum posts we wrote in the last few weeks you can go through regarding these topics:

On Wednesday we focused on presenting the campaign for the Respond Tool. For those of you who don’t know what I am talking about, we shared some resources regarding the tool here. The campaign will run up until today, but we still need your input on many aspects, so join us on the tool!

The main points we went through during the meeting were:

- Introduction about the tool and the announcement on the forum

- Updates on Mozilla Firefox Browser

- Update about the Respond Tool

- Demo (how to reply, moderate, or use canned response) – Teachable course

- Bugs. If you use the Respond Tool, please file bugs here

- German and Spanish speakers needed: we have a high volume of reviews in Spanish and German that need your help!

On Thursday we took care of Conversocial, the new tool that replaces Buffer from now on. We already have some contributors joining us on the tool and we are really happy with everyone’s excitement about using the tool and finally having a full Twitter account dedicated to SUMO. @firefoxsupport is here, please go, share and follow!

The agenda of the meeting was the following:

- Introduction about the tool

- Contributor roles

- Escalation process

- Demo on Conversocial

- @FirefoxSupport overview

If you were invited to the All Hands or you have NDA access, you can access the meetings at this link: https://onlinexperiences.com

Thank you for your participation and your enthusiasm as always, we are missing live interaction but we have the opportunity to use some great tools as well. We are happy that so many people could enjoy those opportunities and created such a nice environment during the few days of the All Hands.

See you really soon!

The SUMO Team

https://blog.mozilla.org/sumo/2020/07/01/lets-meet-online-virtual-all-hands-2020/

|

|

Hacks.Mozilla.Org: Securing Gamepad API |

Firefox release dates for Gamepad API updates

As part of Mozilla’s ongoing commitment to improve the privacy and security of the web platform, over the next few months we will be making some changes to how the Gamepad API works.

Here are the important dates to keep in mind:

- 25 of August 2020 (Firefox 81 Beta/Developer Edition):

  - The .getGamepads() method will only return gamepads if called in a “secure context” (e.g., https://).

- 22 of September 2020 (Firefox 82 Beta/Developer Edition):

  - Switch to requiring a permission policy for third-party contexts/iframes.

We are collaborating on making these changes with folks from the Chrome team and other browser vendors. We will update this post with links to their announcements as they become available.

Restricting gamepads to secure contexts

Starting with Firefox 81, the Gamepad API will be restricted to what are known as “secure contexts” (bug 1591329). Basically, this means that Gamepad API will only work on sites served as “https://”.

For the next few months, we will show a developer console warning whenever the .getGamepads() method is called from an insecure context.

From Firefox 81, we plan to require secure context for .getGamepads() by default. To avoid significant code breakage, calling .getGamepads() will return an empty array. We will display this console warning indefinitely:

The developer console now shows a warning when the .getGamepads() method is called from insecure contexts

Permission Policy integration

From Firefox 82, third-party contexts (i.e., <iframe>s that are not same origin) that require access to the Gamepad API will have to be explicitly granted access by the hosting website via a Permissions Policy.

In order for a third-party context to be able to use the Gamepad API, you will need to add an “allow” attribute to your HTML like so:

Once this ships, calling .getGamepads() from a disallowed third-party context will throw a JavaScript security error.
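The two restrictions described in this post can be summarised in a small Python model. It is only a sketch of the stated behavior, not Firefox code: the function and parameter names are assumptions, PermissionError stands in for the JavaScript security error, and the order in which the two checks run is also an assumption.

```python
from typing import List


def get_gamepads(
    secure_context: bool,
    third_party: bool,
    allowed_by_policy: bool,
    connected: List[str],
) -> List[str]:
    """Model of what a page would observe when calling .getGamepads()."""
    if not secure_context:
        # Insecure contexts get an empty array rather than an exception,
        # to avoid significant code breakage.
        return []
    if third_party and not allowed_by_policy:
        # Stand-in for the JavaScript security error.
        raise PermissionError("gamepad access not granted by the hosting page")
    return list(connected)


print(get_gamepads(True, False, False, ["pad-0"]))  # first-party, secure
print(get_gamepads(False, False, False, ["pad-0"]))  # insecure context
```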

You can track our implementation progress in bug 1640086.

WebVR/WebXR

As WebVR and WebXR already require a secure context to work, these changes shouldn’t affect any sites relying on .getGamepads(). In fact, everything should continue to work as it does today.

Future improvements to privacy and security

When we ship APIs we often find that sites use them in unintended ways – mostly creatively, sometimes maliciously. As new privacy and security capabilities are added to the web platform, we retrofit those solutions to better protect users from malicious sites and third-party trackers.

Adding “secure contexts” and “permission policy” to the Gamepad API is part of this ongoing effort to improve the overall privacy and security of the web. Although we know these changes can be a short-term inconvenience to developers, we believe it’s important to constantly evolve the web to be as secure and privacy-preserving as it can be for all users.

The post Securing Gamepad API appeared first on Mozilla Hacks - the Web developer blog.

|

|

Daniel Stenberg: curl 7.71.1 – try again |

This is a follow-up patch release a mere week after the grand 7.71.0 release. While we added a few minor regressions in that release, one of them was significant enough to make us decide to fix and ship an update sooner rather than later. I’ll elaborate below.

Every early patch release we do is a minor failure in our process as it means we shipped annoying/serious bugs. That of course tells us that we didn’t test all features and areas well enough before the release. I apologize.

Numbers

the 193rd release

0 changes

7 days (total: 8,139)

18 bug fixes (total: 6,227)

32 commits (total: 25,943)

0 new public libcurl function (total: 82)

0 new curl_easy_setopt() option (total: 277)

0 new curl command line option (total: 232)

16 contributors, 8 new (total: 2,210)

5 authors, 2 new (total: 805)

0 security fixes (total: 94)

0 USD paid in Bug Bounties

Bug-fixes

compare cert blob when finding a connection to reuse – when specifying the client cert to libcurl as a “blob”, it needs to compare that when it subsequently wants to reuse a connection, as curl already does when specifying the certificate with a file name.

curl_easy_escape: zero length input should return a zero length output – a regression when I switched over the logic to use the new dynbuf API: I inadvertently modified behavior for escaping an empty string which then broke applications. Now verified with a new test.

set the correct URL in pushed HTTP/2 transfers – the CURLINFO_EFFECTIVE_URL variable previously didn’t work for pushed streams. They would all just claim to be the parent stream’s URL.

fix HTTP proxy auth with blank password – another dynbuf conversion regression that now is verified with a new test. curl would pass in “(nil)” instead of a blank string (“”).

terminology: call them null-terminated strings – after discussions and an informal twitter poll, we’ve rephrased all documentation for libcurl to use the phrase “null-terminated strings” and nothing else.

allow user + password to contain “control codes” for HTTP(S) – previously byte values below 32 would maybe work but not always. Someone with a newline in the user name reported a problem. It can be noted that those kinds of characters will not work in the credentials for most other protocols curl supports.

Reverted the implementation of “wait using winsock events” – another regression that apparently wasn’t tested well enough before it landed, and we take the opportunity here to move back to the solution we had before. This change will probably take another round and aim to get landed in a better shape in the future.

ngtcp2: sync with current master – interestingly enough, the ngtcp2 project managed to yet again update their API exactly this week between these two curl releases. This means curl 7.71.1 can be built against the latest ngtcp2 code to speak QUIC and HTTP/3.

In parallel with that ngtcp2 sync, I also ran into a new problem with BoringSSL’s master branch that is fixed now. Timely for us, as we can now also boast of having the quiche backend in sync and speaking HTTP/3 fine with the latest and most up-to-date software.

Next

We have not updated the release schedule. This means we will have almost three weeks for merging new features coming up then four weeks of bug-fixing only until we ship another release on August 19 2020. And on and on we go.
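The dates can be worked out with a few lines of Python. The sketch assumes the feature window opens on the day of this release (1 July 2020, the date of this post), which the text does not state explicitly.

```python
from datetime import date, timedelta

this_release = date(2020, 7, 1)   # assumed start of the feature window
next_release = date(2020, 8, 19)  # date given above

# The last four weeks before the release are reserved for bug fixes only.
feature_window_closes = next_release - timedelta(weeks=4)
merge_days = (feature_window_closes - this_release).days

print(feature_window_closes.isoformat(), merge_days)
```

Under that assumption the merge window is at most 21 days; it is shorter if the window opens a few days after the release.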

https://daniel.haxx.se/blog/2020/07/01/curl-7-71-1-try-again/

|

|

The Talospace Project: Firefox 78 on POWER |

UPDATE: The patch has landed on release, beta and ESR 78, so you should be able to build straight from source.

|

|

Honza Bambas: Firefox enables link rel=”preload” support |

We enabled the link preload web feature support in Firefox 78, at this time only on the Nightly channel and Firefox Early Beta, and not on Firefox Release, because of pending deeper product integrity checking and performance evaluation.

What is “preload”

Web developers may use the Link: <..>; rel=preload response header or <link rel="preload"> markup to give the browser a hint to preload some resources with a higher priority and in advance.

Firefox can now preload a number of resource types, such as styles, scripts, images and fonts, as well as responses to be later used by plain fetch() and XHR. Use preload in a smart way to help the web page to render and get into the stable and interactive state faster.
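For a concrete picture of the response header form, here is a small Python helper that formats one. The helper name and the example URL are assumptions for illustration; the accepted "as" values are the types this post lists as supported in Firefox.

```python
SUPPORTED_TYPES = {"style", "script", "image", "font", "fetch"}


def preload_link_header(url: str, as_type: str) -> str:
    """Format a Link response header that hints a preload for url."""
    if as_type not in SUPPORTED_TYPES:
        raise ValueError(f"unsupported preload type: {as_type}")
    return f"Link: <{url}>; rel=preload; as={as_type}"


print(preload_link_header("/css/site.css", "style"))
```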

Don’t mistake this for “prefetch”. Prefetching (with a similar technique using <link rel="prefetch"> tags) loads resources for the next user navigation that is likely to happen. The browser fetches those resources with a very low priority, without affecting the currently loading page.

Web Developer Documentation

Mozilla provides MDN documentation on how to use <link rel="preload">. It is definitely worth reading for details; explaining how to use preload is outside the scope of this post.

Implementation overview

Firefox parses the document’s HTML in two phases: a prescan (or also speculative) phase and actual DOM tree building.

The prescan phase only quickly tokenizes tags and attributes and starts so-called “speculative loads” for the tags it finds; this is handled by resource loaders specific to each type. A preload is just another type of speculative load, but with a higher priority. We limit speculative loads to only one per URL, so only the first tag referring to that URL starts a speculative load. Hence, if the consumer tag comes first and the related <link rel="preload"> tag for the same URL comes after it, the speculative load will only have a regular priority.

At the DOM tree building phase, during which we create actual consuming DOM node representations, the respective resource loader first looks for an existing speculative load to use it instead of starting a new network load. Note that except for stylesheets and images, a speculative load is used only once, then it’s removed from the speculative load cache.
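The two phases can be modelled with a toy cache. This Python sketch is not Firefox's implementation; the class, method names and priority labels are assumptions that mirror only the rules stated above.

```python
from typing import Dict, Optional

REUSABLE_TYPES = {"style", "image"}  # kept in the cache after first use


class SpeculativeLoads:
    def __init__(self) -> None:
        self._loads: Dict[str, Dict[str, str]] = {}

    def prescan(self, url: str, res_type: str, is_preload: bool) -> bool:
        """Prescan phase: start at most one speculative load per URL."""
        if url in self._loads:
            return False  # a later tag for the same URL is ignored
        priority = "high" if is_preload else "regular"
        self._loads[url] = {"type": res_type, "priority": priority}
        return True

    def consume(self, url: str) -> Optional[Dict[str, str]]:
        """DOM building phase: reuse a speculative load if one exists."""
        load = self._loads.get(url)
        if load is None:
            return None  # the caller starts a new network load
        if load["type"] not in REUSABLE_TYPES:
            del self._loads[url]  # single use for other resource types
        return load


loads = SpeculativeLoads()
loads.prescan("/app.js", "script", is_preload=False)  # consumer tag seen first
loads.prescan("/app.js", "script", is_preload=True)   # preload tag comes too late
print(loads.consume("/app.js"))
```

The example reproduces the ordering pitfall: because the consumer tag was seen first, the load keeps its regular priority.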

Firefox preload behavior

Supported types

“style”, “script”, “image”, “font”, “fetch”.

The “fetch” type is for use by fetch() or XHR.

The “error” event notification

Conditions to deliver the error event in Firefox are slightly different from e.g. Chrome.

For all resource types we trigger the error event when there is a network connection error (but not a DNS error – we taint error event for cross-origin request and fire load instead) or on an error response from the server (e.g. 404).

Some resource types also fire the error event when the mime type of the response is not supported for that resource type, this applies to style, script and image. The style type also produces the error event when not all @imports are successful.

Coalescing

If there are two or more <link rel="preload"> tags before the consuming tag, all mapping to the same resource, they all use the same speculative preload: they coalesce to it and deliver event notifications, and only one network load is started.

If there is a <link rel="preload"> tag after the consuming tag, then it will start a new preload network fetch during the DOM tree building phase.

Sub-resource Integrity

Handling of the integrity metadata for Sub-resource integrity checking (SRI) is a little bit more complicated. It’s currently supported only for the “script” and “style” types.

The rules are: for the first tag for a resource that we hit during the prescan phase, either a <link rel="preload"> or a consuming tag, we fetch with SRI according to that tag’s integrity attribute. All other tags matching the same resource (URL) are ignored during the prescan phase, as mentioned earlier.

At the DOM tree building phase, the consuming tag reuses the preload only if this consuming tag is either of:

- missing the integrity attribute completely,

- the value of it is exactly the same,

- or the value is “weaker” – by means of the hash algorithm of the consuming tag is weaker than the hash algorithm of the link preload tag;

- otherwise, the consuming tag starts a completely new network fetch with differently setup SRI.

As link preload is an optimization technique, we start the network fetch as soon as we encounter it. If the preload tag doesn’t specify integrity then any later found consuming tag can’t enforce integrity checking on that running preload because we don’t want to cache the data unnecessarily to save memory footprint and complexity.
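The reuse rules above can be sketched as a single predicate. This is a simplified Python model, not Firefox code: it assumes each integrity value holds one hash of the form "algorithm-digest", it ranks algorithms as sha256 < sha384 < sha512, and the digests in the example are placeholders.

```python
from typing import Optional

STRENGTH = {"sha256": 1, "sha384": 2, "sha512": 3}


def algorithm(integrity: str) -> str:
    """Return the hash algorithm prefix of a single-hash integrity value."""
    return integrity.split("-", 1)[0]


def can_reuse_preload(preload: Optional[str], consumer: Optional[str]) -> bool:
    """True if the consuming tag may reuse the running preload."""
    if consumer is None:
        return True  # the consumer has no integrity attribute at all
    if preload is None:
        return False  # integrity cannot be enforced on the preload afterwards
    if consumer == preload:
        return True  # exactly the same value
    consumer_rank = STRENGTH.get(algorithm(consumer))
    preload_rank = STRENGTH.get(algorithm(preload))
    if consumer_rank is None or preload_rank is None:
        return False  # unknown algorithm: start a new fetch to be safe
    return consumer_rank < preload_rank  # the consumer asks for a weaker hash


print(can_reuse_preload("sha384-PLACEHOLDER", "sha256-PLACEHOLDER"))
print(can_reuse_preload(None, "sha256-PLACEHOLDER"))
```

Whenever the predicate is false, the consuming tag starts a completely new network fetch with its own SRI setup.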

Doing something like this is considered a website bug causing the browser to do two network fetches:

https://www.janbambas.cz/firefox-enables-link-rel-preload-support/

|

|

Mozilla Localization (L10N): L10n Report: June 2020 Edition |

Welcome!

New community/locales added

New content and projects

What’s new or coming up in Firefox desktop

Deadlines

Upcoming deadlines:

- Firefox 78 is currently in beta and will be released on June 30. The deadline to update localization was on Jun 16.

- The deadline to update localizations for Firefox 79, currently in Nightly, will be July 14 (4 weeks after the previous deadline).

Fluent and migration wizard

Going back to the topic of how to use Fluent’s flexibility to your advantage, we recently ported the Migration Wizard to Fluent. That’s the dialog displayed to users when they import content from other browsers.

Before Fluent, this is what the messages for “Bookmarks” looked like:

32_ie=Favorites
32_edge=Favorites
32_safari=Bookmarks
32_chrome=Bookmarks
32_360se=Bookmarks

That’s one string for each supported browser, even if they’re all identical. This is what the same message looks like in Fluent:

browser-data-bookmarks-checkbox =

.label = { $browser ->

[ie] Favorites

[edge] Favorites

*[other] Bookmarks

}

If all browsers use the same translations in a specific language, this can take advantage of the asymmetric localization concept available in Fluent, and be simplified (“flattened”) to just:

browser-data-bookmarks-checkbox =
    .label = Translated_bookmarks

The same is true the other way around. The section comment associated to this group of strings says:

## Browser data types
## All of these strings get a $browser variable passed in.
## You can use the browser variable to differentiate the name of items,
## which may have different labels in different browsers.
## The supported values for the $browser variable are:
##   360se
##   chrome
##   edge
##   firefox
##   ie
##   safari
## The various beta and development versions of edge and chrome all get
## normalized to just "edge" and "chrome" for these strings.

So, if English has a flat string without selectors:

browser-data-cookies-checkbox =

.label = Cookies

A localization can still provide variants if, for example, Firefox is using a different term for cookies than other browsers:

browser-data-cookies-checkbox =

.label = { $browser ->

[firefox] Macarons

*[other] Cookies

}
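The selector behavior shown in these examples can be modelled in a few lines of Python. This is a plain-Python illustration of how a variant is chosen, not the Fluent library's API; the function name and the dictionaries are assumptions for the example.

```python
from typing import Dict


def select_variant(variants: Dict[str, str], default_key: str, browser: str) -> str:
    """Pick the variant matching $browser, else the default (starred) one."""
    return variants.get(browser, variants[default_key])


# English source with a selector, as in the bookmarks message.
english = {"ie": "Favorites", "edge": "Favorites", "other": "Bookmarks"}
# A localization that uses one term everywhere can flatten to a single value.
flattened = {"other": "Translated_bookmarks"}

for browser in ("edge", "safari"):
    print(browser, select_variant(english, "other", browser),
          select_variant(flattened, "other", browser))
```

The same lookup works in the other direction: a localization may define more variants than the English source does, as in the cookies example.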

HTTPS-Only Error page

There’s a new mode, called “HTTPS-Only”, currently tested in Nightly: when users visit a page not available with a secure connection, Firefox will display a warning.

In order to test this page, you can change the value of the dom.security.https_only_mode preference in about:config, then visit this website. Make sure to test the page with the window at different sizes, to make sure all elements fit.

What’s new or coming up in mobile

Concerning mobile right now, we just got updated screenshots for the latest v27 of Firefox for iOS: https://drive.google.com/drive/folders/1ZsmHA-qt0n8tWQylT1D2-J4McjSZ-j4R

We are trying out several options for screenshots going forward, so stay tuned so you can tell us which one you prefer.

Otherwise our Fenix launch is still in progress. We are string frozen now, so if you’d like to catch up and test your work, it’s this way: https://pontoon.mozilla.org/projects/android-l10n/tags/fenix/

You should have until July 18th to finish all l10n work on this project, before the cut-off date.

What’s new or coming up in web projects

Firefox Accounts

A third file called main.ftl was added to Pontoon a couple of weeks ago in preparation for supporting subscription-based products. This component contains payment strings for the subscription platform, which will be rolled out to a few countries initially. The staging server will be opened up for localization testing in the coming days. An email with testing instructions and information on supported markets will be sent out as soon as all the information is gathered and confirmed. Stay tuned.

Mozilla.org

In the past month, several dozen files were added to Pontoon, including new pages. Many of the migrated pages include updates. To help prioritize, please focus on

- resolving the orange warnings first. This usually means that a brand or product name was not converted in the process. Placeables no longer match those in English.

- completing translation one page at a time. Coordinate with other community members, splitting up the work page by page, and conduct peer review.

Speaking of brands, the browser comparison pages are laden with brand, product, and well-known company names. Not all of these names went into brands.ftl, because some are mentioned only once or twice, or are limited to a single file, and we do not want to overload brands.ftl with rarely used names. The general rule for these third-party brand and product names is to keep them unchanged whenever possible.

We skipped WNP#78 but we will have WNP#79 ready for localization in the coming weeks.

Transvision now supports mozilla.org in Fluent format. You can leverage the tool the same way you did before.

What’s new or coming up in Foundation projects

Donate websites

Back in November last year, we mentioned we were working on making the remaining content from the new donate website (the content stored in a CMS) localizable. The site was launched in February, but the CMS localization systems still need some work before the CMS-based content can be properly localized.

Over the next few weeks, Théo will be working closely with the makers of the CMS the site is using, to fix the remaining issues, develop new localization capabilities and enable CMS content localization.

Once the systems are operational, and if you’re already translating the Donate website UI project, we will add two new projects with the remaining content to your dashboard: one for the Thunderbird instance and another for the Mozilla instance. The vast majority of this content has already been translated, so you should be able to leverage previous translations using the translation memory feature in Pontoon. But because some longer strings may have been split differently by the system, they may not show up in translation memory. For this reason, we will re-enable the old “Fundraising” project in Pontoon, in read-only mode, so that you can easily search and access those translations if you need to.

What’s new or coming up in Pontoon

- Translate Terminology. We’ve added a new Terminology project to Pontoon, which contains all Terms from Mozilla’s termbase and lets you translate them. As new terms are added to Pontoon, they will instantly appear in the project and be ready for translation. There’s also a “Translate” link next to each term in the Terms tab and panel, which makes it easy to translate terms as they are used.

- More relevant API results. Thanks to Vishnudas, system projects (e.g. Tutorial) are now excluded from the default list of projects returned by the API. You can still include system projects in the response if you set the includeSystem flag to true.

Events

- Want to showcase an event coming up that your community is participating in? Reach out to any l10n-driver, and we’ll include that (see links to emails at the bottom of this report)

Friends of the Lion

- Robb P., who has not only become the top localizer for the Romanian community, but also a reliable and proactive one.

Know someone in your l10n community who’s been doing a great job and should appear here? Contact one of the l10n-drivers and we’ll make sure they get a shout-out (see list at the bottom)!

https://blog.mozilla.org/l10n/2020/06/30/l10n-report-june-2020-edition/


Giorgio Maone: Save Trust, Save OTF |

As the readers of this blog almost surely know, I'm the author of NoScript, a web browser security enhancer which can be installed on Firefox and Chrome, and comes built-in with the Tor Browser.

NoScript has received support from the Open Technology Fund (OTF) for specific development efforts: in particular, to make it cross-browser, better internationalized and ultimately able to serve a wider range of users.

OTF's mission is to support technology that counters surveillance and censorship by repressive regimes and fosters Internet Freedom. One critical and strict requirement for OTF to fund or otherwise help software projects is that they be licensed as Free/Libre Open Source Software (FLOSS), i.e. that their code be publicly available for inspection, modification and reuse by anyone. Among the successful projects funded by OTF, you may know or use Signal, Tor, Let's Encrypt, Tails, Qubes OS, Wireshark, OONI and GlobaLeaks. Millions of users all around the world, no matter their political views, trust them because they are FLOSS, which makes vulnerabilities and even intentionally malicious code harder to hide.

Now this virtuous modus operandi is facing an existential threat, which began when the whole OTF leadership was fired and replaced by Michael Pack, the controversial new CEO of the U.S. Agency for Global Media (USAGM), the agency OTF reports to.

Lobbying documents emerged on the eve of former OTF CEO Libby Liu's defenestration, strongly suggesting this purge preludes a push to de-fund FLOSS, and especially "p2p, privacy-first" tools, in favor of large scale, centralized and possibly proprietary "alternatives": two closed source commercial products are explicitly named among the purportedly best recipients of funding.

Besides the weirdness of seeing "privacy-first" used as a pejorative when talking about technologies protecting journalists and human rights defenders from repressive regimes such as Iran or the People's Republic of China (even more so now, while the so-called "Security Law" is enforced against Hong Kong protesters), I am alarmed by the failure to recognize how essential it is that these tools be open source if their users are to trust them, no matter the country or the fight they're in, when their lives are at risk.

Speaking from my own experience (but I'm confident most other successful and effective OTF-funded software projects have similar stories to tell): I've been repeatedly approached by law enforcement representatives from different countries (including PRC), and also by less "formal" groups, with a mix of allegedly noble reasons, interesting financial incentives and veiled threats, to put ad-hoc backdoors in NoScript. I could deny all such requests not because of any exceptional moral fiber of mine, even though being part of the "OTF community", where the techies who build the tools meet the human rights activists who use them in the field, helped me grow more aware of my responsibilities. I could say "no" simply because NoScript being FLOSS made it impractical/suicidal: anyone looking at the differences in the source code could spot the backdoor, and I would lose any credibility as a security software developer. NoScript would be forked, in the best case scenario, or dead.

The strict FLOSS requirement is only one of the great features in OTF's transparent, fair, competitive and evidence-based award process, but I believe it's the best assurance we can actually trust our digital freedom tools.

I'm aware of (very few) other organizations and funds adopting similar criteria, and likely managing larger budgets too, especially in Europe: so if the USA really decides to give up its leadership in the Internet Freedom space, NoScript and other tools such as Tor, Tails or OONI would still have a door to knock on.

But none of these entities, AFAIK, own OTF's "secret sauce": bringing together technologists and users in a unique, diverse and inclusive community of caring humans, where real and touching stories of oppression and danger are shared in a safe space, and help shape effective technology which can save lives.

So please, do your part to save Internet Freedom, save OTF, save trust.


Hacks.Mozilla.Org: New in Firefox 78: DevTools improvements, new regex engine, and abundant web platform updates |

A new stable Firefox version rolls out today, providing new features for web developers. A new regex engine, updates to the ECMAScript Intl API, new CSS selectors, enhanced support for WebAssembly, and many improvements to the Firefox Developer Tools await you.

This blog post provides merely a set of highlights; for all the details, check out the following:

Developer tool improvements

Source-mapped variables, now also in Logpoints

With our improvements over the recent releases, debugging your projects with source maps will feel more reliable and faster than ever. But there are more capabilities that we can squeeze out of source maps. Did you know that Firefox’s Debugger also maps variables back to their original name? This especially helps babel-compiled code with changed variable names and added helper variables. To use this feature, pause execution and enable the “Map” option in the Debugger’s “Scopes” pane.

As a hybrid between the worlds of the DevTools Console and Debugger, Logpoints make it easy to add console logs to live code–or any code, once you’ve added them to your toolbelt. New in Firefox 75, original variable names in Logpoints are mapped to the compiled scopes, so references will always work as expected.

To make mapping scopes work, ensure that your source maps are correctly generated and include enough data. In Webpack this means avoiding the “cheap” and “nosources” options for the “devtool” configuration.
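As a rough sketch of what that looks like in practice, the fragment below shows a webpack configuration object using the full `source-map` setting. The `avoidsCheapAndNosources` helper is hypothetical (it is not part of webpack) and only illustrates the rule of thumb above; adapt the rest of the configuration to your own project.

```javascript
// Sketch of a webpack.config.js fragment. 'source-map' emits complete source
// maps, unlike the "cheap" and "nosources" variants.
const config = {
  mode: 'development',
  devtool: 'source-map',
};

// Hypothetical sanity check, not a webpack API: flags devtool values that
// contain the "cheap" or "nosources" options.
function avoidsCheapAndNosources(devtool) {
  return typeof devtool === 'string' && !/cheap|nosources/.test(devtool);
}

console.log(avoidsCheapAndNosources(config.devtool)); // true
console.log(avoidsCheapAndNosources('cheap-module-source-map')); // false
console.log(avoidsCheapAndNosources('nosources-source-map')); // false

// In a real webpack.config.js you would export the object:
// module.exports = config;
```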

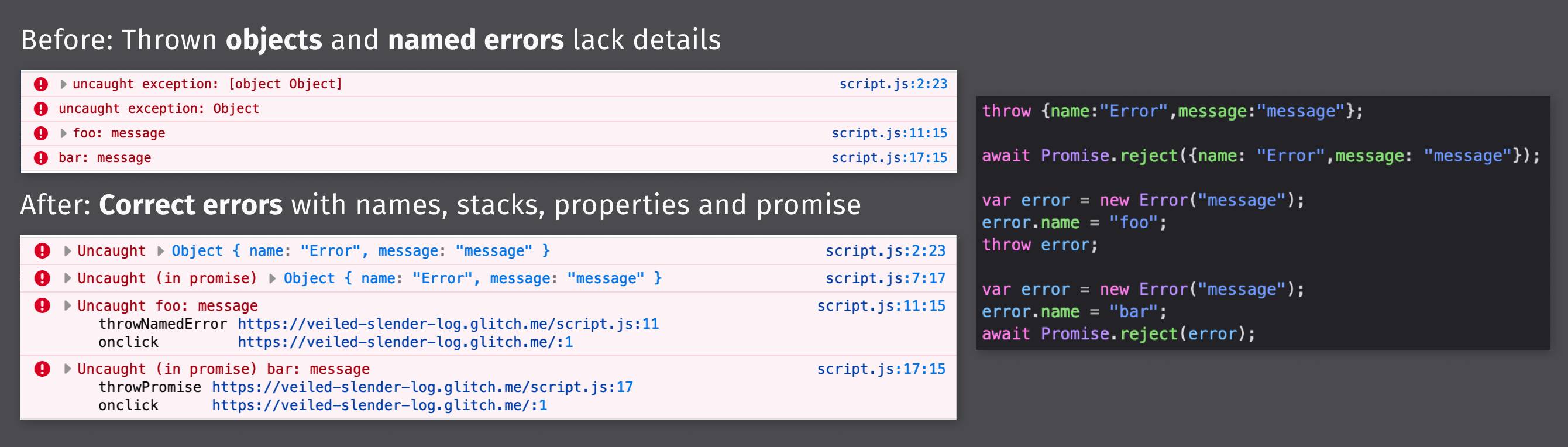

Promises and frameworks error logs get more detailed

Uncaught promise errors are critical in modern asynchronous JavaScript, and even more so in frameworks like Angular. In Firefox 78, you can expect to see all details for thrown errors show up properly, including their name and stack:

The implementation of this functionality was only possible through the close collaboration between the SpiderMonkey engineering team and a contributor, Tom Schuster. We are investigating how to improve error logging further, so please let us know if you have suggestions.

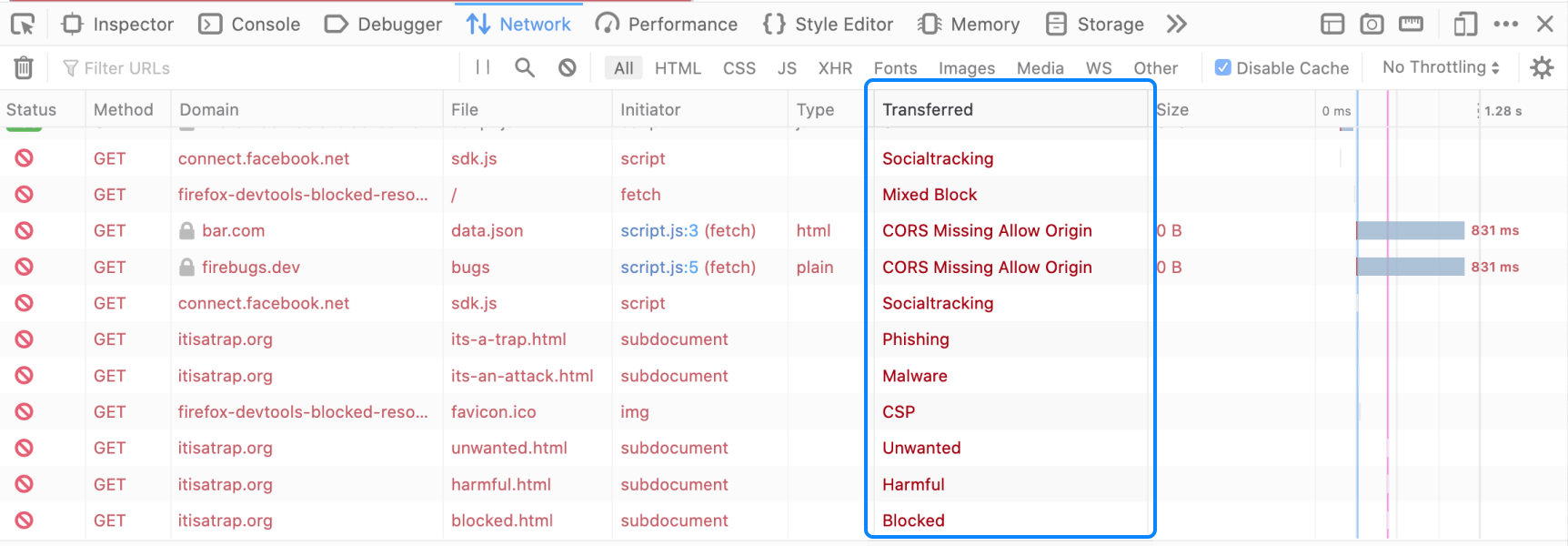
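To see which details are involved, here is a small sketch of a promise rejected with a custom error. The `ValidationError` class and `saveProfile` function are made up for illustration. The rejection is handled here so the snippet runs cleanly anywhere; remove the `.catch()` and the error becomes an uncaught promise error of the kind described above.

```javascript
// A custom error type, so that the reported name differs from plain "Error".
class ValidationError extends Error {
  constructor(message) {
    super(message);
    this.name = 'ValidationError';
  }
}

// Hypothetical async operation that rejects when required data is missing.
function saveProfile(profile) {
  return new Promise((resolve, reject) => {
    if (!profile.email) {
      reject(new ValidationError('email is required'));
      return;
    }
    resolve('saved');
  });
}

// Collect the pieces of information a console can show for the error.
const outcome = saveProfile({}).catch((error) => ({
  name: error.name,
  message: error.message,
  hasStack: typeof error.stack === 'string',
}));

outcome.then((details) => console.log(details));
```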

Monitoring failed request issues

Failed or blocked network requests come in many varieties. Resources may be blocked by tracking protection, add-ons, CSP/CORS security configurations, or flaky connectivity, for example. A resilient web tries to gracefully recover from as many of these cases as possible automatically, and an improved Network monitor can help you with debugging them.

Firefox 78 provides detailed reports in the Network panel for requests blocked by Enhanced Tracking Protection, add-ons, and CORS.

Quality improvements

Faster DOM navigation in Inspector

Inspector now opens and navigates a lot faster than before, particularly on sites with many CSS custom properties. Some modern CSS frameworks were especially affected by slowdowns in the past. If you see other cases where Inspector isn’t as fast as expected, please report a performance issue. We really appreciate your help in reporting performance issues so that we can keep improving.

Remotely navigate your Firefox for Android for debugging

Remote debugging’s new navigation elements make it more seamless to test your content for mobile with the forthcoming new edition of Firefox for Android. After hooking up the phone via USB and connecting remote debugging to a tab, you can navigate and refresh pages from your desktop.

Early-access DevTools features in Developer Edition

Developer Edition is Firefox’s pre-release channel. You get early access to tooling and platform features. Its settings enable more functionality for developers by default. We like to bring new features quickly to Developer Edition to gather your feedback, including the following highlights.

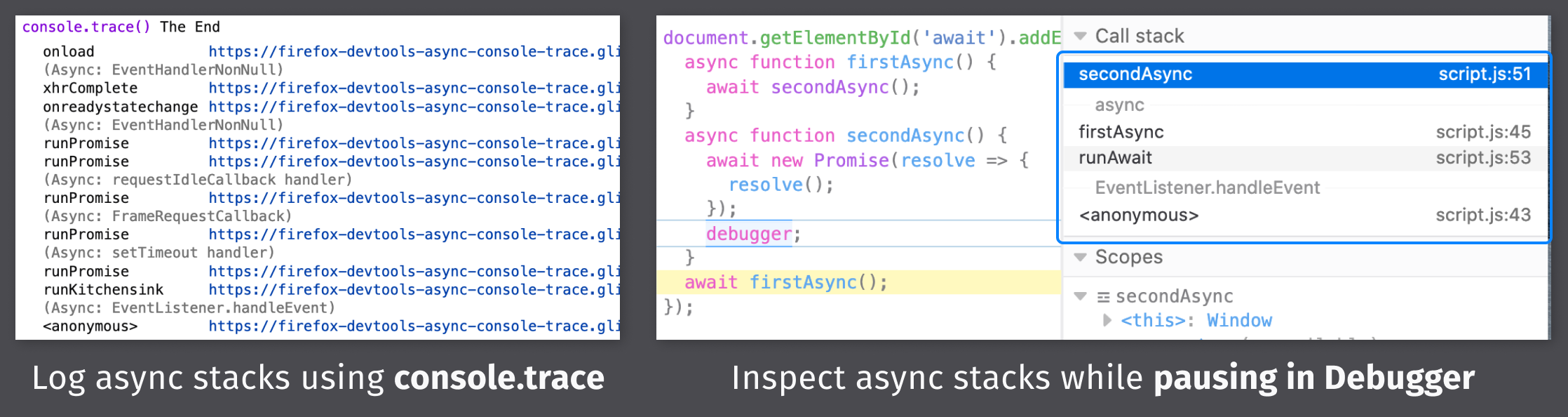

Async stacks in Console & Debugger

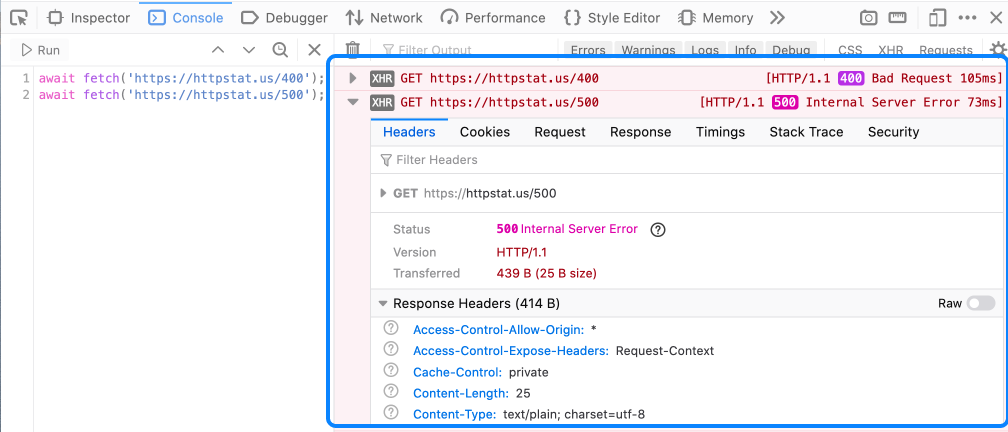

We’ve built new functionality to better support async stacks in the Console and Debugger, extending stacks with information about the events, timers, and promises that lead to the execution of a specific line of code. We have been improving asynchronous stacks for a while now, based on early feedback from developers using Firefox DevEdition. In Firefox 79, we expect to enable this feature across all release channels.

Console shows failed requests

Network requests with 4xx/5xx status codes now log as errors in the Console by default. To make them easier to understand, each entry can be expanded to view embedded network details.

Web platform updates

New CSS selectors :is and :where

Version 78 sees Firefox add support for the :is() and :where() pseudo-classes, which allow you to present a list of selectors to the browser. The browser will then apply the rule to any element that matches one of those selectors. This can be useful for reducing repetition when writing a selector that matches a large number of different elements. For example:

header p, main p, footer p,

header ul, main ul, footer ul { … }

Can be cut down to

:is(header, main, footer) :is(p, ul) { … }

Note that :is() is not particularly new: it has been supported for a while in various browsers. Sometimes this has been with a prefix and the name any (e.g. :-moz-any). Other browsers have used the name :matches(). :is() is the final standard name that the CSSWG agreed on.

:is() and :where() basically do the same thing, but what is the difference? Well, :is() counts towards the specificity of the overall selector, taking the specificity of its most specific argument. However, :where() has a specificity value of 0 — it was introduced to provide a solution to the problems found with :is() affecting specificity.

What if you want to add styling to a bunch of elements with :is(), but then later on want to override those styles using a simple selector? You won’t be able to because class selectors have a higher specificity. This is a situation in which :where() can help. See our :where() example for a good illustration.

Styling forms with CSS :read-only and :read-write

At this point, HTML forms have a large number of pseudo-classes available to style inputs based on different states related to their validity — whether they are required or optional, whether their data is valid or invalid, and so on. You can find a lot more information in our UI pseudo-classes article.

In this version, Firefox has enabled support for the non-prefixed versions of :read-only and :read-write. As their name suggests, they style elements based on whether their content is editable or not:

input:read-only, textarea:read-only {

border: 0;

box-shadow: none;

background-color: white;

}

textarea:read-write {

box-shadow: inset 1px 1px 3px #ccc;

border-radius: 5px;

}

(Note: Firefox has supported these pseudo-classes with a -moz- prefix for a long time now.)

You should be aware that these pseudo-classes are not limited to form elements. You can use them to style any element based on whether it is editable or not, for example a <p> element with or without contenteditable set:

p:read-only {

background-color: red;

color: white;

}

p:read-write {

background-color: lime;

}

New regex engine

Thanks to the new RegExp engine in SpiderMonkey, Firefox now supports all new regular expression features introduced in ECMAScript 2018, including lookbehinds (positive and negative), the dotAll flag, Unicode property escapes, and named capture groups.

Lookbehind and negative lookbehind assertions make it possible to find patterns that are (or are not) preceded by another pattern. In this example, a negative lookbehind is used to match a number only if it is not preceded by a minus sign. A positive lookbehind matches only values that are preceded by a minus sign.

'1 2 -3 0 -5'.match(/(?<!-)\d+/g);

// -> Array [ "1", "2", "0" ]

'1 2 -3 0 -5'.match(/(?<=-)\d+/g);

// -> Array [ "3", "5" ]

Unicode property escapes are written in the form \p{…} and \P{…}. They can be used to match any decimal number in Unicode, for example. Here’s a Unicode-aware version of \d that matches any Unicode decimal number instead of just the ASCII numbers 0-9.

const regex = /^\p{Decimal_Number}+$/u;
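A quick way to see the difference is to test both patterns against digits from another script. The sketch below uses Arabic-Indic digits, written as escapes to avoid encoding problems; the variable names are arbitrary.

```javascript
const unicodeDigits = /^\p{Decimal_Number}+$/u;
const asciiDigits = /^\d+$/;
// The uppercase form \P{…} negates the property.
const noDigits = /^\P{Decimal_Number}+$/u;

// "2020" written with Arabic-Indic digits (U+0662, U+0660).
const arabicIndic = '\u0662\u0660\u0662\u0660';

console.log(unicodeDigits.test('2020'));      // true
console.log(unicodeDigits.test(arabicIndic)); // true
console.log(asciiDigits.test(arabicIndic));   // false
console.log(noDigits.test('abc'));            // true
console.log(noDigits.test('a1c'));            // false
```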

Named capture groups allow you to refer to a certain portion of a string that a regular expression matches, as in:

let re = /(?<year>\d{4})-(?<month>\d{2})-(?<day>\d{2})/u;

let result = re.exec('2020-06-30');

console.log(result.groups);

// -> { year: "2020", month: "06", day: "30" }

ECMAScript Intl API updates

Rules for formatting lists vary from language to language. Implementing your own proper list formatting is neither straightforward nor fast. Thanks to the new Intl.ListFormat API, the JavaScript engine can now format lists for you:

const lf = new Intl.ListFormat('en');

lf.format(["apples", "pears", "bananas"]);

// -> "apples, pears, and bananas"

const lfdis = new Intl.ListFormat('en', { type: 'disjunction' });

lfdis.format(["apples", "pears", "bananas"]);

// -> "apples, pears, or bananas"

Enhanced language-sensitive number formatting as defined in the Unified NumberFormat proposal is now fully implemented in Firefox. See the NumberFormat constructor documentation for the new options available.
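As a brief sketch of what the proposal adds, here are three of the newer options: compact notation, unit formatting, and explicit sign display. The values and variable names are arbitrary examples, and the output shown is for the 'en' locale.

```javascript
// Compact notation shortens large numbers.
const compact = new Intl.NumberFormat('en', { notation: 'compact' });
const compactResult = compact.format(1234567);
console.log(compactResult); // -> "1.2M"

// Unit formatting attaches a localized unit to the number.
const speed = new Intl.NumberFormat('en', {
  style: 'unit',
  unit: 'kilometer-per-hour',
});
const speedResult = speed.format(50);
console.log(speedResult); // -> "50 km/h"

// signDisplay controls when a sign is shown, here also for positive values.
const delta = new Intl.NumberFormat('en', { signDisplay: 'always' });
const deltaResult = delta.format(5);
console.log(deltaResult); // -> "+5"
```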

ParentNode.replaceChildren

Firefox now supports ParentNode.replaceChildren(), which replaces the existing children of a Node with a specified new set of children. This is typically represented as a NodeList, such as that returned by Document.querySelectorAll().

This method provides an elegant way to empty a node of children, if you call replaceChildren() with no arguments. It also is a nice way to shift nodes from one element to another. For example, in this case, we use two buttons to transfer selected options from one box to another:

const noSelect = document.getElementById('no');

const yesSelect = document.getElementById('yes');

const noBtn = document.getElementById('to-no');

const yesBtn = document.getElementById('to-yes');

yesBtn.addEventListener('click', () => {

const selectedTransferOptions = document.querySelectorAll('#no option:checked');

const existingYesOptions = document.querySelectorAll('#yes option');

yesSelect.replaceChildren(...selectedTransferOptions, ...existingYesOptions);

});

noBtn.addEventListener('click', () => {

const selectedTransferOptions = document.querySelectorAll('#yes option:checked');

const existingNoOptions = document.querySelectorAll('#no option');

noSelect.replaceChildren(...selectedTransferOptions, ...existingNoOptions);

});

You can see the full example at ParentNode.replaceChildren().

WebAssembly multi-value support

Multi-value is a proposed extension to core WebAssembly that enables functions to return many values, and enables instruction sequences to consume and produce multiple stack values. The article Multi-Value All The Wasm! explains what this means in greater detail.
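As a rough illustration (not taken from the linked article), the hand-assembled module below exports a hypothetical function `pair` that returns two i32 values. When it is called from JavaScript, the results arrive as an array. The sketch assumes an engine with multi-value enabled, such as Firefox 78 or a recent Node.js.

```javascript
// Hand-assembled WebAssembly binary: pair(x: i32) -> (i32, i32) returns [x, x + 1].
const pairBytes = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00, // "\0asm" magic, version 1
  0x01, 0x07, 0x01, 0x60, 0x01, 0x7f, 0x02, 0x7f, 0x7f, // type: (i32) -> (i32, i32)
  0x03, 0x02, 0x01, 0x00, // function section: one function using type 0
  0x07, 0x08, 0x01, 0x04, 0x70, 0x61, 0x69, 0x72, 0x00, 0x00, // export "pair"
  0x0a, 0x0b, 0x01, 0x09, 0x00, // code section: one body, no locals
  0x20, 0x00, // local.get 0
  0x20, 0x00, // local.get 0
  0x41, 0x01, // i32.const 1
  0x6a, // i32.add
  0x0b, // end
]);

const pairInstance = new WebAssembly.Instance(new WebAssembly.Module(pairBytes));

// Multiple return values are exposed to JavaScript as an array.
const pairResult = pairInstance.exports.pair(5);
console.log(pairResult); // -> [ 5, 6 ]
```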

WebAssembly large integer support

WebAssembly now supports import and export of 64-bit integer function parameters (i64) using BigInt from JavaScript.
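Here is a minimal sketch of what that looks like from JavaScript. The module bytes are hand-assembled for illustration and export a hypothetical `add` function taking and returning i64. Arguments must be BigInt values; passing a plain Number throws a TypeError.

```javascript
// Hand-assembled WebAssembly binary: add(a: i64, b: i64) -> i64.
const addBytes = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00, // "\0asm" magic, version 1
  0x01, 0x07, 0x01, 0x60, 0x02, 0x7e, 0x7e, 0x01, 0x7e, // type: (i64, i64) -> i64
  0x03, 0x02, 0x01, 0x00, // function section: one function using type 0
  0x07, 0x07, 0x01, 0x03, 0x61, 0x64, 0x64, 0x00, 0x00, // export "add"
  0x0a, 0x09, 0x01, 0x07, 0x00, // code section: one body, no locals
  0x20, 0x00, // local.get 0
  0x20, 0x01, // local.get 1
  0x7c, // i64.add
  0x0b, // end
]);

const addInstance = new WebAssembly.Instance(new WebAssembly.Module(addBytes));

// i64 parameters and results map to BigInt on the JavaScript side.
const sum = addInstance.exports.add(2n ** 40n, 1n);
console.log(typeof sum, sum); // -> bigint 1099511627777n

// A plain Number is not implicitly converted to i64.
let numberRejected = false;
try {
  addInstance.exports.add(1, 2);
} catch (error) {
  numberRejected = error instanceof TypeError;
}
console.log(numberRejected); // -> true
```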

WebExtensions

We’d like to highlight three changes to the WebExtensions API for this release:

- When using proxy.onRequest, a filter that limits based on tab id or window id is now correctly applied. This is useful for add-ons that want to provide proxy functionality in just one window.

- Clicking within the context menu from the “all tabs” dropdown now passes the appropriate tab object. In the past, the active tab was erroneously passed.

- When using downloads.download with the saveAs option, the recently used directory is now remembered. While this data is not available to developers, it is very convenient to users.

TLS 1.0 and 1.1 removal

Support for versions 1.0 and 1.1 of the Transport Layer Security (TLS) protocol has been dropped from all browsers as of Firefox 78 and Chrome 84. Read TLS 1.0 and 1.1 Removal Update for the previous announcement and what actions to take if you are affected.

Firefox 78 is an ESR release

Firefox follows a rapid release schedule: every four weeks we release a new version of Firefox.

In addition to that, we provide a new Extended Support Release (ESR) for enterprise users once a year. Firefox 78 ESR includes all of the enhancements since the last ESR (Firefox 68), along with many new features to make your enterprise deployment easier.

A noteworthy feature: In previous ESR versions, Service workers (and the Push API) were disabled. Firefox 78 is the first ESR release to support them. If your enterprise web application uses AppCache to provide offline support, you should migrate to these new APIs as soon as possible as AppCache will not be available in the next major ESR in 2021.

Firefox 78 is the last supported Firefox version for macOS users of OS X 10.9 Mavericks, OS X 10.10 Yosemite and OS X 10.11 El Capitan. These users will be moved to the Firefox ESR channel by an application update. For more details, see the Mozilla support page.

See also the release notes for Firefox for Enterprise 78.

The post New in Firefox 78: DevTools improvements, new regex engine, and abundant web platform updates appeared first on Mozilla Hacks - the Web developer blog.


Dzmitry Malyshau: Missing structure in technical discussions |

People are amazing creatures. When discussing a complex issue, they are able to keep multiple independent arguments in their heads, the pieces of supporting and disproving evidence, and can collapse this system into a concrete solution. We can spend hours navigating through the issue comments on Github, reconstructing the points of view, and making sense of the discussion. Problem is: we don’t actually want to apply this superpower and waste time nearly as often.

Problem with technical discussions

Have you heard of async in Rust? Ever wondered why the core team opted into a completely new syntax for this feature? Let’s dive in and find out! Here is #57640 with 512 comments, kindly asking everyone to check #50547 (with just 308 comments) before expressing their point of view. Following this discussion must have been exhausting. I don’t know how it would be possible to navigate it without the summary comments.

Another example is the loop syntax in WebGPU. Issue #569 has only 70 comments, with multiple attempts to summarize the discussion in the middle. It would probably take somebody from the outside a few hours at minimum to get the gist of the group's reasoning. And that doesn’t include the call transcripts.

Github has emojis which allow certain comments to show more support. Unfortunately, our nature is such that comments are getting liked when we agree with them, not when they advance the discussion in a constructive way. They are all over the place and don’t really help.

What would help though is having a non-linear structure for the discussion. Trees! They make following HN and Reddit threads much easier, but they too have problems. Sometimes, a really important comment is buried deep in one of the branches. Plus, trees don’t work well for a dialog, when there is some back-and-forth between people.

That brings us to the point: most technical discussions are terrible. Not in the sense that people can’t make good points and progress through it, but rather that there is no structure to a discussion, and it’s too hard to follow. What I see in reality is a lot of focus from a very few dedicated people, and delegation by the others to those focused few. Many views get misrepresented, and many perspectives are never heard, because the flow of comments quickly filters out most potential participants.

Structured discussion

My first stop in search of a solution was Discourse. It is successfully used by many communities, including Rust users. Unfortunately, it still has a linear structure, and doesn’t bring a lot to the table on top of Github. Try following this discussion about Rust in 2020 for example.

Then I looked at platforms designed specifically for structured argumentation. One of the most popular today is Kialo. I haven’t done a good evaluation of it, but it seemed that Kialo isn’t targeted at engineers, and it’s a platform that we’d have to register on to use. Wishing to use Markdown with a system like that, I stumbled upon Argdown, and realized that it concluded my search.

Argdown introduces a syntax for defining the structure of an argument in text. Statements, arguments, propositions, conclusions - it has it all, written simply in your text editor (especially if it’s VSCode, for which there is a plugin), or in the playground. It has command-line tools to produce all sorts of derivatives, like dot graphs, web components, JSON, you name it, from an .argdown file. Naturally, formatting with Markdown in it is also supported.

That discovery led me to two questions. (1) - what would an existing debate look like in such a system? And (2) - how could we shift the workflow towards using one?

So I picked the most contentious topic in WebGPU discussions and tried to reconstruct it. The topic was the choice of shading language, and why SPIR-V wasn’t accepted. It was discussed by the W3C group over the course of 2+ years, and it’s evident that there is some misunderstanding of why the decision was made to go with WGSL, taking Google’s Tint proposal as a starting point.

I attempted to reconstruct the debate in https://github.com/kvark/webgpu-debate, building the SPIR-V.argdown with the first version of the argumentation graph, solving (1). The repository accepts pull requests that are checked by CI for syntax correctness, inviting everyone to collaborate, solving (2). Moreover, the artifacts are automatically uploaded to Github-pages, rendering the discussion in a way that is easy to explore.

Way forward

I’m excited to have this new way of preserving and growing the structure of a technical debate. We can keep using the code hosting platforms, and arguing in issues and PRs, while solidifying the core points in these .argdown files. I hope to see it applied more widely to the workflows of technical working groups.

http://kvark.github.io/tech/arguments/2020/06/30/technical-discussions.html


Mozilla Open Policy & Advocacy Blog: Mozilla’s analysis: Brazil’s fake news law harms privacy, security, and free expression |

While fake news is a real problem, the Brazilian Law of Freedom, Liability, and Transparency on the Internet (colloquially referred to as the “fake news law”) is not a solution. This hastily written legislation — which could be approved by the Senate as soon as today — represents a serious threat to privacy, security, and free expression. The legislation is a major step backwards for a country that has been hailed around the world for its landmark Internet Civil Rights Law (Marco Civil) and its more recent data protection law.

Substantive concerns

While this bill poses many threats to internet health, we are particularly concerned by the following provisions:

Breaking end-to-end encryption: According to the latest informal congressional report, the law would mandate all communication providers to retain records of forwards and other forms of bulk communications, including origination, for a period of three months. As companies are required to report much of this information to the government, in essence, this provision would create a perpetually updating, centralized log of digital interactions of nearly every user within Brazil. Apart from the privacy and security risks such a vast data retention mandate entails, the law seems to be infeasible to implement in end-to-end encrypted services such as Signal and WhatsApp. This bill would force companies to leave the country or weaken the technical protections that Brazilians rely on to keep their messages, health records, banking details, and other private information secure.

Mandating real identities for account creation: The bill also broadly attacks anonymity and pseudonymity. If passed, in order to use social media, Brazilian users would have to verify their identity with a phone number (which itself requires government ID in Brazil), and foreigners would have to provide a passport. The bill also requires telecommunication companies to share a list of active users (with their cellphone numbers) with social media companies to prevent fraud. At a time when many are rightly concerned about the surveillance economy, this massive expansion of data collection and identification seems particularly egregious. Just weeks ago, the Brazilian Supreme Court held that mandatory sharing of subscriber data by telecom companies was illegal, making such a provision legally tenuous.

As we have stated before, such a move would be disastrous for the privacy and anonymity of internet users while also harming inclusion. This is because people coming online for the first time (often from households with just one shared phone) would not be able to create an email or social media account without a unique mobile phone number.

This provision would also increase the risk from data breaches and entrench power in the hands of large players in the social media space who can afford to build and maintain such large verification systems. There is no evidence to prove that this measure would help fight misinformation (its motivating factor), and it ignores the benefits that anonymity can bring to the internet, such as whistleblowing and protection from stalkers.

Vague Criminal Provisions: The draft version of the law over the past week has additional criminal provisions that make it illegal to:

- create or share content that poses a serious risk to “social peace or to the economic order” of Brazil, with neither term clearly defined, OR

- be a member of an online group knowing that its primary activity is sharing defamatory messages.

These provisions, which might be modified in the subsequent drafts based on widespread opposition, would clearly place untenable, subjective restrictions on the free expression rights of Brazilians and have a chilling effect on their ability to engage in discourse online. The draft law also contains other concerning provisions surrounding content moderation, judicial review, and online transparency that pose significant challenges for freedom of expression.

Procedural concerns, history, and next steps

This legislation was nominally first introduced into the Brazilian Congress in April 2020. However, on June 25, a radically different and substantially more dangerous version of the bill was sprung on Senators mere hours ahead of being put to a vote. This led to pushback from Senators, who asked for more time to peruse the changes, accompanied by widespread international condemnation from civil society groups.

Thanks to concentrated pushback from civil society groups such as the Coalizão Direitos na Rede, some of the most drastic changes in the June 25 draft (such as data localisation and the blocking of non-compliant services) have now been informally dropped by the Rapporteur, who is still pushing for the law to be passed as soon as possible. Despite these improvements, the most worrying proposals remain, and this legislation could pass the Senate as soon as later today, 30 June 2020.

Next steps

We urge Senator Angelo Coronel and the Brazilian Senate to immediately withdraw this bill, and hold a rigorous public consultation on the issues of misinformation and disinformation before proceeding with any legislation. The Commission on Constitution, Justice, and Citizenship in the Senate remains one of the best avenues for such a review to take place, and should seek the input of all affected stakeholders, especially civil society. We remain committed to working with the government to address these important issues, but not at the cost of Brazilians’ privacy, security, and free expression.

The post Mozilla’s analysis: Brazil’s fake news law harms privacy, security, and free expression appeared first on Open Policy & Advocacy.

|

|

This Week In Rust: This Week in Rust 345 |

Hello and welcome to another issue of This Week in Rust! Rust is a systems language pursuing the trifecta: safety, concurrency, and speed. This is a weekly summary of its progress and community. Want something mentioned? Tweet us at @ThisWeekInRust or send us a pull request. Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub. If you find any errors in this week's issue, please submit a PR.

Check out this week's This Week in Rust Podcast

Updates from Rust Community

News & Blog Posts

- A practical guide to async in Rust

- Secure Rust Guidelines - ANSSI (National Cybersecurity Agency of France)

- Faster Rust development on AWS EC2 with VSCode

- Rust verification tools

- Examining ARM vs X86 Memory Models with Rust

- Disk space and LTO improvements

- Building a faster CouchDB View Server in Rust

- Implementing a Job queue with SQLx and Postgres

- Rust + Actix + CosmosDB (MongoDB) tutorial api

- Extremely Simple Rust Rocket Framework Tutorial

- Build a Smart Bookmarking Tool with Rust and Rocket

- A Future is a Suspending Scheduler

- Cross building Rust GStreamer plugins for the Raspberry Pi

- xi-editor retrospective

- This month in my Database project written in Rust

- A Few More Reasons Rust Compiles Slowly

- Fixing Rust's test suite on RISC-V

- Protobuf code generation in Rust

- Tracking eye centers location with Rust & OpenCV

- Using rust, blurz to read from a BLE device

- rust-analyzer changelog #31

- IntelliJ Rust Changelog #125

- [video] Manipulating ports, virtual ports and pseudo terminals - Rust Wroclaw Webinar

- [video] Rust Stream: Iterators

Crate of the Week

This week's crate is print_bytes, a library to print arbitrary bytes to a stream as losslessly as possible.

Thanks to dylni for the suggestion!

Submit your suggestions and votes for next week!

Call for Participation

Always wanted to contribute to open-source projects but didn't know where to start? Every week we highlight some tasks from the Rust community for you to pick and get started!

Some of these tasks may also have mentors available, visit the task page for more information.

- Gooseberry: Set the kb_dir somewhere more accessible to the user

- Ruma: Add directory and profile query endpoints

If you are a Rust project owner and are looking for contributors, please submit tasks here.

Updates from Rust Core

339 pull requests were merged in the last week

- move leak-check to during coherence, candidate eval

- account for multiple impl/dyn Trait in return type when suggesting '_

- tweak binop errors

- adds a clearer message for when the async keyword is missing from a function

- allow dynamic linking for iOS/tvOS targets

- always capture tokens for macro_rules! arguments

- change heuristic for determining range literal

- check for assignments between non-conflicting generator saved locals

- const prop: erase all block-only locals at the end of every block

- emit line info for generator variants

- explain move errors that occur due to method calls involving self

- fix handling of reserved registers for ARM inline asm

- improve compiler error message for wrong generic parameter order

- point at the call span when overflow occurs during monomorphization

- provide suggestions for some moved value errors

- self contained linking option

- perform obligation deduplication to avoid buggy ExistentialMismatch

- show the values and computation that would overflow a const evaluation or propagation

- stabilize #![feature(const_if_match)] and #![feature(const_loop)]

- A way forward for pointer equality in const eval

- the const propagator cannot trace references

- warn if linking to a private item

- improper_ctypes_definitions lint

- add Windows system error codes that should map to io::ErrorKind::TimedOut

- errors: use -Z terminal-width in JSON emitter

- proc_macro: stop flattening groups with dummy spans

- rustc_lint: only query typeck_tables_of when a lint needs it

- rustdoc: fix doc aliases with crate filtering

- chalk: .chalk file syntax writer

- chalk: add method to get repr data of an ADT to ChalkDatabase

- chalk: fix built-in Fn impls when generics are involved

- chalk: fix coherence issue with associated types in generic bound

- miri: implement rwlocks on Windows

- miri: supply our own implementation of the CTFE pointer comparison intrinsics

- shortcuts for min/max on ordinary BTreeMap/BTreeSet iterators

- add TryFrom<{int}> for NonZero{int}

- add a fast path for std::thread::panicking.

- add [T]::partition_point

- add unstable core::mem::variant_count intrinsic

- added io forwarding methods to the stdio structs

- stabilize leading_trailing_ones

- impl PartialEq> for &[A], &mut [A]

- forward Hash::write_iN to Hash::write_uN

- libc: add ancillary socket data accessor functions for solarish OSes

- libc: FreeBSD: machine register structs

- libc: add wexecv, wexecve, wexecvp, wexecvpe

- cargo: add support for workspace.metadata table

- cargo: adding environment variable CARGO_PKG_LICENSE_FILE

- cargo: enable "--target-dir" in "cargo install"

- cargo: expose built cdylib artifacts in the Compilation structure

- cargo: improve support for non-master main branches

- docs.rs: don't panic when a crate doesn't exist for target-redirect

- docs.rs: improve executing tests

- clippy: lint iterator.map(|x| x)

- clippy: new lint: suggest ptr::read instead of mem::replace(..., uninitialized())

- clippy: clippy-driver: pass all args to rustc if --rustc is present

- clippy: cmp_owned: handle when PartialEq is not implemented symmetrically

- rustfmt: do not reorder module declaration with #![macro_use]

- rustfmt: don't reformat with errors unless --force flag supplied
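A few of the language and standard library items in the list above are easy to try out. The following is a minimal sketch, assuming a recent stable toolchain on which these features are all available (several were still unstable or newly merged at the time of this issue). The helper names gcd, first_at_least and to_non_zero are hypothetical and exist only for illustration.

```rust
use std::convert::TryFrom;
use std::num::NonZeroU32;

// `if` and `loop` inside a const fn, as enabled by stabilizing
// const_if_match and const_loop. (Hypothetical helper.)
const fn gcd(mut a: u32, mut b: u32) -> u32 {
    loop {
        if b == 0 {
            return a;
        }
        let r = a % b;
        a = b;
        b = r;
    }
}

// Evaluated entirely at compile time.
const GCD_48_18: u32 = gcd(48, 18);

// [T]::partition_point returns the index of the first element for which
// the predicate is false, assuming the slice is partitioned by it.
// (Hypothetical helper.)
fn first_at_least(sorted: &[i32], threshold: i32) -> usize {
    sorted.partition_point(|&x| x < threshold)
}

// TryFrom<u32> for NonZeroU32 fails on zero. (Hypothetical helper.)
fn to_non_zero(n: u32) -> Option<NonZeroU32> {
    NonZeroU32::try_from(n).ok()
}

fn main() {
    assert_eq!(GCD_48_18, 6);

    let sorted = [1, 2, 3, 5, 8, 13];
    assert_eq!(first_at_least(&sorted, 5), 3);

    assert!(to_non_zero(0).is_none());
    assert_eq!(to_non_zero(7).map(NonZeroU32::get), Some(7));

    // leading_ones / trailing_ones (the leading_trailing_ones feature).
    assert_eq!(0b1110_0000u8.leading_ones(), 3);
    assert_eq!(0b0000_0111u8.trailing_ones(), 3);

    println!("all checks passed");
}
```

The slice passed to partition_point must already be partitioned by the predicate (for example, sorted), otherwise the returned index is unspecified.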

Rust Compiler Performance Triage

- 2020-06-30. Three regressions, two of them on rollups; two improvements, one on a rollup.

Approved RFCs

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

Final Comment Period

Every week the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now.

RFCs

Tracking Issues & PRs

- [disposition: merge] impl From for String

- [disposition: merge] mv std libs to std/

- [disposition: merge] Stabilize transmute in constants and statics but not const fn

- [disposition: merge] added .collect() into String from Box

- [disposition: merge] Stabilize const_type_id feature

New RFCs

- Linking modifiers for native libraries

- Hierarchic anonymous life-time

- Portable packed SIMD vector type

- crates.io token scopes

Upcoming Events

Online

- June 30. Berlin, DE - Remote - Berlin Rust - Rust and Tell

- July 1. Johannesburg, ZA - Remote - Monthly Joburg Rust Chat!

- July 1. Dublin, IE - Remote - Rust Dublin - July Remote Meetup

- July 1. Indianapolis, IN, US - Indy Rust - Indy.rs - with Social Distancing

- July 13. Seattle, WA, US - Seattle Rust Meetup - Monthly Meetup

North America

- June 30. Dallas, TX, US - Dallas Rust - Last Tuesday

- July 8. Atlanta, GA, US - Rust Atlanta - Grab a beer with fellow Rustaceans

- July 9. Lehi, UT, US - Utah Rust - The Blue Pill: Rust on Microcontrollers

Asia Pacific

If you are running a Rust event please add it to the calendar to get it mentioned here. Please remember to add a link to the event too. Email the Rust Community Team for access.

Rust Jobs

- Senior Software Engineer - Backend at LogDNA, Remote, US

- Senior Software Engineer - Protocols in Rust at Ockam, Remote

Tweet us at @ThisWeekInRust to get your job offers listed here!

Quote of the Week

References are a sharp tool and there are roughly three different approaches to sharp tools.

- Don't give programmers sharp tools. They may make mistakes and cut their fingers off. This is the Java/Python/Perl/Ruby/PHP... approach.

- Give programmers all the sharp tools they want. They are professionals and if they cut their fingers off it's their own fault. This is the C/C++ approach.

- Give programmers sharp tools, but put guards on them so they can't accidentally cut their fingers off. This is Rust's approach.

Lifetime annotations are a safety guard on references. Rust's references have no synchronization and no reference counting -- that's what makes them sharp. References in category-1 languages (which typically do have synchronization and reference counting) are "blunted": they're not really quite as effective as category-2 and -3 references, but they don't cut you, and they still work; they might just slow you down a bit.

So, frankly, I like lifetime annotations because they prevent me from cutting my fingers off.

Thanks to Ivan Tham for the suggestions!

Please submit quotes and vote for next week!

This Week in Rust is edited by: nellshamrell, llogiq, and cdmistman.

https://this-week-in-rust.org/blog/2020/06/30/this-week-in-rust-345/

|

|

Mozilla Open Policy & Advocacy Blog: Brazil’s fake news law will harm users |

The “fake news” law being rushed through Brazil’s Senate will massively harm privacy and freedom of expression online. Among other dangerous provisions, this bill would force traceability of forwarded messages, which will require breaking end-to-end encryption. This legislation will substantially harm online security, while entrenching state surveillance.

Brazil currently enjoys some of the most comprehensive digital protections in the world via its Internet Bill of Rights, and the upcoming data protection law is poised to add even more. In order to preserve these rights, the ‘fake news’ law should be immediately withdrawn from consideration and be subject to rigorous congressional review with input from all affected parties.

The post Brazil’s fake news law will harm users appeared first on Open Policy & Advocacy.

https://blog.mozilla.org/netpolicy/2020/06/29/brazils-fake-news-law-will-harm-users/

|

|

William Lachance: mozregression GUI: now available for Linux |

Thanks to @AnAverageHuman, mozregression once again has an easy-to-use, easy-to-install GUI version for Linux! This used to work a few years ago, but it was broken by some changes in the mozregression-python2 era and didn’t get resolved until now.

This is an area where using telemetry in mozregression can help us measure the impact of a change like this: although Windows still dominates in terms of market share, Linux is very widely used by contributors. Of the mozregression usage in the past 2 months, fully 30% of the sessions were on Linux (and it is possible we were undercounting that due to bug 1646402).

link to query (internal-only)

It will be interesting to watch the usage numbers for Linux evolve over the next few months. In particular, I’m curious to see what percentage of users on that platform prefer a GUI.

Appendix: reducing mozregression-GUI’s massive size

One thing that’s bothered me a bunch lately is that the mozregression GUI’s size is massive, and this is even more apparent on Linux, where the initial distribution of the GUI came in at over 120 megabytes! Why so big? There were a few reasons:

- PySide2 (the GUI library we use) is very large (10s of megabytes), and PyInstaller packages all of it by default into your application distribution.

- The binary/Rust portions of the Glean Python SDK were being built with debugging information included (basically as a carry-over from when it was a pre-alpha product), which made it 38 megabytes big (!) on Linux.

- On Linux at least, a large number of other system libraries are packaged into the distribution.