
Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.


Mozilla Addons Blog: Changes to storage.sync in Firefox 79

Firefox 79, which will be released on July 28, includes changes to the storage.sync area. Items that extensions store in this area are automatically synced to all devices signed in to the same Firefox Account, similar to how Firefox Sync handles bookmarks and passwords. The storage.sync area has been ported to a new Rust-based implementation, allowing extension storage to share the same infrastructure and backend used by Firefox Sync.

Extension data that had been stored locally in existing profiles will automatically migrate the first time an installed extension tries to access storage.sync data in Firefox 79. After the migration, the data will be stored locally in a new storage-sync2.sqlite file in the profile directory.

If you are the developer of an extension that syncs extension storage, you should be aware that the new implementation now enforces client-side quota limits. This means that:

- You can make a call using `storage.sync.getBytesInUse` to estimate how much data your extension is storing locally over the limit.

- If your extension previously stored data above quota limits, all that data will be migrated and available to your extension, and will be synced. However, attempting to add new data will fail.

- If your extension tries to store data above quota limits, the storage.sync API call will raise an error. However, the extension should still successfully retrieve existing data.

We encourage you to use the Firefox Beta channel to test all extension features that use the storage.sync API to see how they behave if the client-side storage quota is exceeded before Firefox 79 is released. If you notice any regressions, please check your about:config preferences to ensure that webextensions.storage.sync.kinto is set to false and then file a bug. We do not recommend flipping this preference to true as doing so may result in data loss.

If your users report that their extension data does not sync after they upgrade to Firefox 79, please also file a bug. This is likely related to the storage.sync data migration.

Please let us know if there are any questions on our developer community forum.

The post Changes to storage.sync in Firefox 79 appeared first on Mozilla Add-ons Blog.

https://blog.mozilla.org/addons/2020/07/09/changes-to-storage-sync-in-firefox-79/

Mozilla Security Blog: Reducing TLS Certificate Lifespans to 398 Days

We intend to update Mozilla’s Root Store Policy to reduce the maximum lifetime of TLS certificates from 825 days to 398 days, with the aim of protecting our users’ HTTPS connections. Many reasons for reducing the lifetime of certificates have been provided and summarized in the CA/Browser Forum’s Ballot SC22. Here are Mozilla’s top three reasons for supporting this change.

1. Agility

Certificates with lifetimes longer than 398 days delay responding to major incidents and upgrading to more secure technology. Certificate revocation is highly disruptive and difficult to plan for. Certificate expiration and renewal is the least disruptive way to replace an obsolete certificate, because it happens at a pre-scheduled time, whereas revocation suddenly causes a site to stop working. Certificates with lifetimes of no more than 398 days help mitigate the threat across the entire ecosystem when a major incident requires certificate or key replacements. Additionally, phasing out certificates with MD5-based signatures took five years, because TLS certificates were valid for up to five years. Phasing out certificates with SHA-1-based signatures took three years, because the maximum lifetime of TLS certificates was three years. Weakness in hash algorithms can lead to situations in which attackers can forge certificates, so users were at risk for years after collision attacks against these algorithms were proven feasible.

2. Limit exposure to compromise

Keys valid for longer than one year have greater exposure to compromise, and a compromised key could enable an attacker to intercept secure communications and/or impersonate a website until the TLS certificate expires. A good security practice is to change key pairs frequently, which should happen when you obtain a new certificate. Thus, one-year certificates will lead to more frequent generation of new keys.

3. TLS Certificates Outliving Domain Ownership

TLS certificates provide authentication, meaning that you can be sure that you are sending information to the correct server and not to an imposter trying to steal your information. If the owner of the domain changes or the cloud service provider changes, the holder of the TLS certificate’s private key (e.g. the previous owner of the domain or the previous cloud service provider) can impersonate the website until that TLS certificate expires. The Insecure Design Demo site describes two problems with TLS certificates outliving their domain ownership:

- “If a company acquires a previously owned domain, the previous owner could still have a valid certificate, which could allow them to MitM the SSL connection with their prior certificate.”

- “If a certificate has a subject alt-name for a domain no longer owned by the certificate user, it is possible to revoke the certificate that has both the vulnerable alt-name and other domains. You can DoS the service if the shared certificate is still in use!”

The change to reduce the maximum validity period of TLS certificates to 398 days is being discussed in the CA/Browser Forum’s Ballot SC31 and can have two possible outcomes:

a) If that ballot passes, then the requirement will automatically apply to Mozilla’s Root Store Policy by reference.

b) If that ballot does not pass, then we intend to proceed with our regular process for updating Mozilla’s Root Store Policy, which will involve discussion in mozilla.dev.security.policy.

In preparation for updating our root store policy, we surveyed all of the certificate authorities (CAs) in our program and found that they all intend to limit TLS certificate validity periods to 398 days or less by September 1, 2020.
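As a rough illustration of what the 398-day limit means in practice, the sketch below checks whether a certificate's validity window is longer than the limit. It is only a sketch: the function names are hypothetical, the dates are made up, and it measures the window by plain subtraction of the two timestamps, which may differ in edge cases from how a formal policy counts the validity period.

```python
from datetime import datetime, timedelta, timezone

MAX_VALIDITY = timedelta(days=398)  # limit discussed in the post


def validity_period(not_before: datetime, not_after: datetime) -> timedelta:
    """Return the length of the certificate's validity window."""
    return not_after - not_before


def exceeds_limit(not_before: datetime, not_after: datetime,
                  limit: timedelta = MAX_VALIDITY) -> bool:
    """True if the certificate is valid for longer than `limit`."""
    return validity_period(not_before, not_after) > limit


# Made-up issuance date and expiry dates, for illustration only.
issued = datetime(2020, 9, 1, tzinfo=timezone.utc)
short_cert_expiry = issued + timedelta(days=397)
long_cert_expiry = issued + timedelta(days=825)

print(exceeds_limit(issued, short_cert_expiry))  # False
print(exceeds_limit(issued, long_cert_expiry))   # True
```

A certificate issued under the older 825-day maximum would fail this check, while one renewed roughly yearly would pass.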

We believe that the best approach to safeguarding secure browsing is to work with CAs as partners, to foster open and frank communication, and to be diligent in looking for ways to keep our users safe.

The post Reducing TLS Certificate Lifespans to 398 Days appeared first on Mozilla Security Blog.

https://blog.mozilla.org/security/2020/07/09/reducing-tls-certificate-lifespans-to-398-days/

Hacks.Mozilla.Org: Testing Firefox more efficiently with machine learning

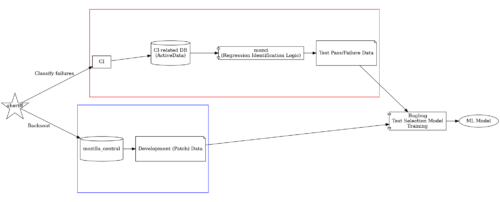

A browser is an incredibly complex piece of software. With such enormous complexity, the only way to maintain a rapid pace of development is through an extensive CI system that can give developers confidence that their changes won’t introduce bugs. Given the scale of our CI, we’re always looking for ways to reduce load while maintaining a high standard of product quality. We wondered if we could use machine learning to reach a higher degree of efficiency.

Continuous integration at scale

At Mozilla we have around 50,000 unique test files. Each contains many test functions. These tests need to run on all our supported platforms (Windows, Mac, Linux, Android) against a variety of build configurations (PGO, debug, ASan, etc.), with a range of runtime parameters (site isolation, WebRender, multi-process, etc.).

While we don’t test against every possible combination of the above, there are still over 90 unique configurations that we do test against. In other words, for each change that developers push to the repository, we could potentially run all 50k tests 90 different times. On an average work day we see nearly 300 pushes (including our testing branch). If we simply ran every test on every configuration on every push, we’d run approximately 1.35 billion test files per day! While we do throw money at this problem to some extent, as an independent non-profit organization, our budget is finite.
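The 1.35 billion figure follows directly from the rounded numbers quoted above. A short back-of-the-envelope check, using those rounded values (the constant names are only for illustration):

```python
TEST_FILES = 50_000      # "around 50,000 unique test files"
CONFIGURATIONS = 90      # "over 90 unique configurations"
PUSHES_PER_DAY = 300     # "nearly 300 pushes" on an average work day

runs_per_push = TEST_FILES * CONFIGURATIONS
runs_per_day = runs_per_push * PUSHES_PER_DAY

print(f"{runs_per_push:,} test-file runs per push")  # 4,500,000
print(f"{runs_per_day:,} test-file runs per day")    # 1,350,000,000
```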

So how do we keep our CI load manageable? First, we recognize that some of those ninety unique configurations are more important than others. Many of the less important ones only run a small subset of the tests, or only run on a handful of pushes per day, or both. Second, in the case of our testing branch, we rely on our developers to specify which configurations and tests are most relevant to their changes. Third, we use an integration branch.

Basically, when a patch is pushed to the integration branch, we only run a small subset of tests against it. We then periodically run everything and employ code sheriffs to figure out if we missed any regressions. If so, they back out the offending patch. The integration branch is periodically merged to the main branch once everything looks good.

A subset of the tasks we run on a single mozilla-central push. The full set of tasks was too hard to distinguish when scaled to fit in a single image.

A new approach to efficient testing

These methods have served us well for many years, but it turns out they’re still very expensive. Even with all of these optimizations our CI still runs around 10 compute years per day! Part of the problem is that we have been using a naive heuristic to choose which tasks to run on the integration branch. The heuristic ranks tasks based on how frequently they have failed in the past. The ranking is unrelated to the contents of the patch. So a push that modifies a README file would run the same tasks as a push that turns on site isolation. Additionally, the responsibility for determining which tests and configurations to run on the testing branch has shifted over to the developers themselves. This wastes their valuable time and tends towards over-selection of tests.

About a year ago, we started asking ourselves: how can we do better? We realized that the current implementation of our CI relies heavily on human intervention. What if we could instead correlate patches to tests using historical regression data? Could we use a machine learning algorithm to figure out the optimal set of tests to run? We hypothesized that we could simultaneously save money by running fewer tests, get results faster, and reduce the cognitive burden on developers. In the process, we would build out the infrastructure necessary to keep our CI pipeline running efficiently.

Having fun with historical failures

The main prerequisite to a machine-learning-based solution is collecting a large and precise enough regression dataset. On the surface this appears easy. We already store the status of all test executions in a data warehouse called ActiveData. But in reality, it’s very hard to do for the reasons below.

Since we only run a subset of tests on any given push (and then periodically run all of them), it’s not always obvious when a regression was introduced. Consider the following scenario:

| | Test A | Test B |
| --- | --- | --- |
| Patch 1 | PASS | PASS |
| Patch 2 | FAIL | NOT RUN |
| Patch 3 | FAIL | FAIL |

It is easy to see that the “Test A” failure was introduced by Patch 2, as that’s where it first started failing. However, with the “Test B” failure, we can’t really be sure. Was it caused by Patch 2 or 3? Now imagine there are 8 patches in between the last PASS and the first FAIL. That adds a lot of uncertainty!
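The uncertainty in the table can be made concrete with a small sketch. It is not the heuristic Mozilla actually uses: the function name and data layout are hypothetical, and it deliberately ignores intermittent failures. It returns every patch between the last known PASS and the first observed FAIL as a possible culprit.

```python
from typing import List, Sequence

PASS, FAIL, NOT_RUN = "PASS", "FAIL", "NOT RUN"


def candidate_culprits(patches: Sequence[str], results: Sequence[str]) -> List[str]:
    """Return the patches that could have introduced the first observed failure.

    `results[i]` is the status of a single test on `patches[i]`, oldest first.
    """
    first_fail = next((i for i, r in enumerate(results) if r == FAIL), None)
    if first_fail is None:
        return []  # the test never failed in this window

    # Walk back to the last known PASS before the first FAIL.
    last_pass = -1
    for i in range(first_fail - 1, -1, -1):
        if results[i] == PASS:
            last_pass = i
            break

    # Everything after the last PASS, up to and including the first FAIL.
    return list(patches[last_pass + 1:first_fail + 1])


patches = ["Patch 1", "Patch 2", "Patch 3"]
print(candidate_culprits(patches, [PASS, FAIL, FAIL]))     # ['Patch 2']
print(candidate_culprits(patches, [PASS, NOT_RUN, FAIL]))  # ['Patch 2', 'Patch 3']
```

The more pushes on which a test was not run, the longer the returned list gets, which is exactly the uncertainty described above.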

Intermittent (aka flaky) failures also make it hard to collect regression data. Sometimes tests can both pass and fail on the same codebase for all sorts of different reasons. It turns out we can’t be sure that Patch 2 regressed “Test A” in the table above after all! That is, unless we re-run the failure enough times to be statistically confident. Even worse, the patch itself could have introduced the intermittent failure in the first place. We can’t assume that a failure is not a regression just because it is intermittent.

The writers of this post having a hard time.


Our heuristics

In order to solve these problems, we have built quite a large and complicated set of heuristics to predict which regressions are caused by which patch. For example, if a patch is later backed out, we check the status of the tests on the backout push. If they’re still failing, we can be pretty sure the failures were not due to the patch. Conversely, if they start passing we can be pretty sure that the patch was at fault.
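The backout heuristic described above can be sketched with plain set operations. This is a simplified illustration with hypothetical names and made-up test identifiers, not the actual implementation:

```python
from typing import Dict, Set


def classify_with_backout(failing_on_patch: Set[str],
                          failing_on_backout: Set[str]) -> Dict[str, Set[str]]:
    """Split failures seen on a patch using the results on its backout push."""
    return {
        # Failing on the patch, passing again once it was backed out.
        "likely_caused_by_patch": failing_on_patch - failing_on_backout,
        # Still failing after the backout, so probably not due to the patch.
        "likely_unrelated": failing_on_patch & failing_on_backout,
    }


verdict = classify_with_backout(
    failing_on_patch={"test_a", "test_b"},
    failing_on_backout={"test_b"},
)
print(verdict["likely_caused_by_patch"])  # {'test_a'}
print(verdict["likely_unrelated"])        # {'test_b'}
```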

Some failures are classified by humans. This can work to our advantage. Part of the code sheriff’s job is annotating failures (e.g. “intermittent” or “fixed by commit” for failures fixed at some later point). These classifications are a huge help in finding regressions in the face of missing or intermittent tests. Unfortunately, due to the sheer number of patches and failures happening continuously, 100% accuracy is not attainable. So we even have heuristics to evaluate the accuracy of the classifications!

Sheriffs complaining about intermittent failures.


Another trick for handling missing data is to backfill missing tests. We select tests to run on older pushes where they didn’t initially run, for the purpose of finding which push caused a regression. Currently, sheriffs do this manually. However, there are plans to automate it in certain circumstances in the future.

Collecting data about patches

We also need to collect data about the patches themselves, including files modified and the diff. This allows us to correlate with the test failure data. In this way, the machine learning model can determine the set of tests most likely to fail for a given patch.

Collecting data about patches is way easier, as it is totally deterministic. We iterate through all the commits in our Mercurial repository, parsing patches with our rust-parsepatch project and analyzing source code with our rust-code-analysis project.

Designing the training set

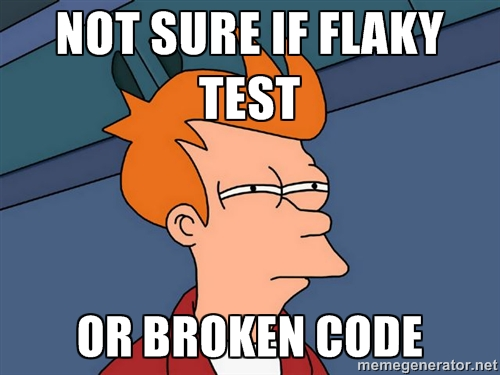

Now that we have a dataset of patches and associated tests (both passes and failures), we can build a training set and a validation set to teach our machines how to select tests for us.

90% of the dataset is used as a training set, 10% is used as a validation set. The split must be done carefully. All patches in the validation set must be later than those in the training set. If we were to split randomly, we’d leak information from the future into the training set, causing the resulting model to be biased and artificially making its results look better than they actually are.

For example, consider a test which had never failed until last week and has failed a few times since then. If we train the model with a randomly picked training set, we might find ourselves in the situation where a few failures are in the training set and a few in the validation set. The model might be able to correctly predict the failures in the validation set, since it saw some examples in the training set.

In a real-world scenario though, we can’t look into the future. The model can’t know what will happen in the next week, but only what has happened so far. To evaluate properly, we need to pretend we are in the past, and future data (relative to the training set) must be inaccessible.
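A minimal sketch of such a time-ordered split, assuming each sample carries the date of its push (the `Sample` layout and function name are hypothetical, and the data is made up):

```python
from datetime import date, timedelta
from typing import List, Tuple

Sample = Tuple[date, str]  # (push date, patch identifier)


def chronological_split(samples: List[Sample],
                        train_fraction: float = 0.9
                        ) -> Tuple[List[Sample], List[Sample]]:
    """Sort by date, then cut: the oldest samples train, the newest validate."""
    ordered = sorted(samples, key=lambda s: s[0])
    cut = int(len(ordered) * train_fraction)
    return ordered[:cut], ordered[cut:]


start = date(2020, 1, 1)
samples = [(start + timedelta(days=i), f"patch-{i}") for i in range(10)]
train, validation = chronological_split(samples)

print(len(train), len(validation))  # 9 1
# No validation sample is older than any training sample.
print(max(d for d, _ in train) < min(d for d, _ in validation))  # True
```

Unlike a random shuffle, this keeps every validation patch strictly in the "future" of the training data.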

Visualization of our split between training and validation set.


Building the model

We train an XGBoost model, using features from the test, the patch, and the links between them, e.g.:

- In the past, how often did this test fail when the same files were touched?

- How far in the directory tree are the source files from the test files?

- How often in the VCS history were the source files modified together with the test files?
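To give a feel for what one of these features might look like, here is a sketch of a "directory distance" between a source file and a test file. The definition used (the number of directory steps up to the common ancestor and back down) and the example paths are assumptions for illustration; the post does not say how the real feature is computed.

```python
from pathlib import PurePosixPath


def directory_distance(source_file: str, test_file: str) -> int:
    """Count directory hops between the folders containing the two files."""
    src = PurePosixPath(source_file).parent.parts
    tst = PurePosixPath(test_file).parent.parts

    # Length of the shared leading path.
    common = 0
    for a, b in zip(src, tst):
        if a != b:
            break
        common += 1

    # Steps up from the source directory plus steps down to the test directory.
    return (len(src) - common) + (len(tst) - common)


# Illustrative paths only.
print(directory_distance("dom/base/Document.cpp", "dom/base/test/test_doc.html"))   # 1
print(directory_distance("dom/base/Document.cpp", "layout/style/test/test_x.html"))  # 5
```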

Full view of the model training infrastructure.


The input to the model is a tuple (TEST, PATCH), and the label is a binary FAIL or NOT FAIL. This means we have a single model that is able to take care of all tests. This architecture allows us to exploit the commonalities between test selection decisions in an easy way. A normal multi-label model, where each test is a completely separate label, would not be able to extrapolate the information about a given test and apply it to another completely unrelated test.

Given that we have tens of thousands of tests, even if our model were 99.9% accurate (which is pretty accurate, just one error every 1000 evaluations), we’d still be making mistakes for pretty much every patch! Luckily, the cost associated with false positives (tests which are selected by the model for a given patch but do not fail) is not as high in our domain as it would be if, say, we were trying to recognize faces for policing purposes. The only price we pay is running some useless tests. At the same time we avoid running hundreds of them, so the net result is a huge savings!
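The "mistakes for pretty much every patch" claim can be checked with simple arithmetic. The sketch below reuses the figure of roughly 50,000 test files from earlier in the post and assumes, purely for illustration, that each (TEST, PATCH) prediction errs independently:

```python
TESTS = 50_000        # roughly one prediction per test file for a given patch
ERROR_RATE = 1 / 1000  # 99.9% accuracy means one error per 1000 evaluations

expected_errors = TESTS * ERROR_RATE
# Probability that at least one prediction is wrong (independence assumed).
p_at_least_one = 1 - (1 - ERROR_RATE) ** TESTS

print(f"{expected_errors:.0f} expected wrong predictions per patch")  # 50
print(p_at_least_one > 0.999)  # True
```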

As developers periodically switch what they are working on, the dataset we train on evolves, so we currently retrain the model every two weeks.

Optimizing configurations

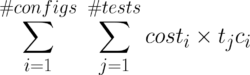

After we have chosen which tests to run, we can further improve the selection by choosing where the tests should run, in other words, the set of configurations they should run on. We use the dataset we’ve collected to identify redundant configurations for any given test. For instance, is it really worth running a test on both Windows 7 and Windows 10? To identify these redundancies, we use a solution similar to frequent itemset mining:

- Collect failure statistics for groups of tests and configurations

- Calculate the “support” as the number of pushes in which both X and Y failed over the number of pushes in which they both ran

- Calculate the “confidence” as the number of pushes in which both X and Y failed over the number of pushes in which they both ran and only one of the two failed.

We only select configuration groups where the support is high (low support would mean we don’t have enough proof) and the confidence is high (low confidence would mean we had many cases where the redundancy did not apply).
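The two ratios can be sketched as follows. This is an interpretation, not the real implementation: the data layout and names are hypothetical, and for "confidence" the sketch assumes the denominator is the number of pushes where both configurations ran and at least one of them failed, so that the ratio stays between 0 and 1. The wording above can be read differently.

```python
from typing import Dict, List, Tuple

# One dict per push: configuration name -> True if the test failed there.
# A configuration missing from the dict means the test did not run on it.
PushResults = Dict[str, bool]


def support_and_confidence(pushes: List[PushResults],
                           x: str, y: str) -> Tuple[float, float]:
    both_ran = [p for p in pushes if x in p and y in p]
    both_failed = sum(1 for p in both_ran if p[x] and p[y])
    any_failed = sum(1 for p in both_ran if p[x] or p[y])  # assumed denominator

    support = both_failed / len(both_ran) if both_ran else 0.0
    confidence = both_failed / any_failed if any_failed else 0.0
    return support, confidence


# Made-up history of one test on two configurations.
pushes = [
    {"win7": True, "win10": True},
    {"win7": False, "win10": False},
    {"win7": True, "win10": True},
    {"win7": True, "win10": False},
    {"win7": True},  # did not run on win10, so this push is ignored
]
support, confidence = support_and_confidence(pushes, "win7", "win10")
print(support)               # 0.5
print(round(confidence, 3))  # 0.667
```

With these made-up numbers the two configurations disagree on one of the three pushes where something failed, so the pair would probably not count as redundant.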

Once we have the set of tests to run, information on whether their results are configuration-dependent or not, and a set of machines (with their associated cost) on which to run them, we can formulate a mathematical optimization problem which we solve with a mixed-integer programming solver. This way, we can easily change the optimization objective we want to achieve without invasive changes to the optimization algorithm. At the moment, the optimization objective is to select the cheapest configurations on which to run the tests.

Using the model

A machine learning model is only as useful as a consumer’s ability to use it. To that end, we decided to host a service on Heroku using dedicated worker dynos to service requests and Redis Queues to bridge between the backend and frontend. The frontend exposes a simple REST API, so consumers need only specify the push they are interested in (identified by the branch and topmost revision). The backend will automatically determine the files changed and their contents using a clone of mozilla-central.

Depending on the size of the push and the number of pushes in the queue to be analyzed, the service can take several minutes to compute the results. We therefore ensure that we never queue up more than a single job for any given push. We cache results once computed. This allows consumers to kick off a query asynchronously, and periodically poll to see if the results are ready.
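The "at most one job per push, cache the result, let consumers poll" behaviour can be illustrated with a small in-memory stand-in. The real service uses Heroku workers and Redis queues; the class, method names, branch name, revision, and test names below are all hypothetical.

```python
from typing import Callable, Dict, List, Optional, Set, Tuple

Push = Tuple[str, str]  # (branch, topmost revision)


class ScheduleService:
    """In-memory stand-in for the queue-plus-cache behaviour described above."""

    def __init__(self, compute: Callable[[str, str], List[str]]) -> None:
        self._compute = compute
        self._queued: Set[Push] = set()
        self._cache: Dict[Push, List[str]] = {}

    def request(self, branch: str, rev: str) -> str:
        """Kick off a query without blocking; returns 'ready' or 'pending'."""
        push = (branch, rev)
        if push in self._cache:
            return "ready"
        self._queued.add(push)  # a set, so a push is never queued twice
        return "pending"

    def work(self) -> None:
        """Process every queued push once and cache its result."""
        while self._queued:
            push = self._queued.pop()
            self._cache[push] = self._compute(*push)

    def result(self, branch: str, rev: str) -> Optional[List[str]]:
        return self._cache.get((branch, rev))


calls: List[Push] = []


def fake_model(branch: str, rev: str) -> List[str]:
    calls.append((branch, rev))
    return ["test_a.js", "test_b.js"]  # made-up selection


service = ScheduleService(fake_model)
print(service.request("integration", "abc123"))  # pending
print(service.request("integration", "abc123"))  # pending (still one job)
service.work()
print(service.request("integration", "abc123"))  # ready
print(len(calls))                                # 1
```

A consumer would call `request` once, then poll it periodically until it reports `ready` and read the cached result.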

We currently use the service when scheduling tasks on our integration branch. It’s also used when developers run the special mach try auto command to test their changes on the testing branch. In the future, we may also use it to determine which tests a developer should run locally.

Sequence diagram depicting the communication between the various actors in our infrastructure.


Measuring and comparing results

From the outset of this project, we felt it was crucial that we be able to run and compare experiments, measure our success and be confident that the changes to our algorithms were actually an improvement on the status quo. There are effectively two variables that we care about in a scheduling algorithm:

- The amount of resources used (measured in hours or dollars).

- The regression detection rate. That is, the percentage of introduced regressions that were caught directly on the push that caused them. In other words, we didn’t have to rely on a human to backfill the failure to figure out which push was the culprit.

We defined our metric:

scheduler effectiveness = 1000 * regression detection rate / hours per push
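Written as a function, the metric looks like the sketch below. The post does not say whether the detection rate is expressed as a fraction or a percentage; this example assumes a fraction between 0 and 1, and the numbers for the two schedulers are invented.

```python
def scheduler_effectiveness(regression_detection_rate: float,
                            hours_per_push: float) -> float:
    """1000 * regression detection rate / hours per push."""
    if hours_per_push <= 0:
        raise ValueError("hours_per_push must be positive")
    return 1000 * regression_detection_rate / hours_per_push


# Invented numbers: the candidate catches slightly fewer regressions
# directly on the offending push, but uses half the machine time.
baseline = scheduler_effectiveness(regression_detection_rate=0.80, hours_per_push=100)
candidate = scheduler_effectiveness(regression_detection_rate=0.75, hours_per_push=50)

print(baseline)   # 8.0
print(candidate)  # 15.0
```

Because the detection rate is divided by the cost, a scheduler can score higher while catching marginally fewer regressions, as long as it is much cheaper to run.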

The higher this metric, the more effective a scheduling algorithm is. Now that we had our metric, we invented the concept of a “shadow scheduler”. Shadow schedulers are tasks that run on every push, which shadow the actual scheduling algorithm. Rather than actually scheduling anything, they only output what they would have scheduled had they been the default. Each shadow scheduler may interpret the data returned by our machine learning service a bit differently, or may run additional optimizations on top of what the machine learning model recommends.

Finally we wrote an ETL to query the results of all these shadow schedulers, compute the scheduler effectiveness metric of each, and plot them all in a dashboard. At the moment, there are about a dozen different shadow schedulers that we’re monitoring and fine-tuning to find the best possible outcome. Once we’ve identified a winner, we make it the default algorithm. And then we start the process over again, creating further experiments.

Conclusion

The early results of this project have been very promising. Compared to our previous solution, we’ve reduced the number of test tasks on our integration branch by 70%! Compared to a CI system with no test selection, by almost 99%! We’ve also seen pretty fast adoption of our mach try auto tool, suggesting a usability improvement (since developers no longer need to think about what to select). But there is still a long way to go!

We need to improve the model’s ability to select configurations and default to that. Our regression detection heuristics and the quality of our dataset need to improve. We have yet to implement usability and stability fixes to mach try auto.

And while we can’t make any promises, we’d love to package the model and service up in a way that is useful to organizations outside of Mozilla. Currently, this effort is part of a larger project that contains other machine learning infrastructure originally created to help manage Mozilla’s Bugzilla instance. Stay tuned!

If you’d like to learn more about this project or Firefox’s CI system in general, feel free to ask on our Matrix channel, #firefox-ci:mozilla.org.

The post Testing Firefox more efficiently with machine learning appeared first on Mozilla Hacks - the Web developer blog.

https://hacks.mozilla.org/2020/07/testing-firefox-more-efficiently-with-machine-learning/

Karl Dubost: Browser Wish List - Tab Splitting for Contextual Reading

On Desktop, I'm very often in a situation where I want to read a long article in a browser tab with a certain number of hypertext links. The number of actions I have to take to read the text properly is tedious. The process is prone to errors, requires a bit of preparation and involves a lot of manual steps.

The Why?

Take for example this article about The End of Tourism. There are multiple ways to read it.

- We can read the text only.

- We can click on each individual link when we reach it to open it in a background tab that we will check later.

- We can click, read and come back to the main article.

- We can open a new window and drag and drop the link to this new window.

- We can ctrl+click to open the link in a new tab or a new window and then switch to the context of that tab or window.

We can do better.

Having the possibility to read contextual information is useful for having a better understanding of the current article we are reading. Making this process accessible with only one click without losing the initial context would be tremendous.

What I Want: Tab Splitting For Contextual Reading

The "open a new window + link drag and drop" model of interactions is the closest to what I would like as a feature for reading things with hypertext links, but it's not practical.

- I want to be able to switch my current tab into a split tab, either horizontally or vertically.

- Once the tab is in split mode, the first part is the current article I'm reading. The second part is blank.

- Each time I click on a link in the first part (current article), it loads the content of the link in the second part.

- If I find that I want to keep the second part, I would be able to extract it in a new tab or new window.

This provides benefits for reading long forms with a lot of hypertext links. But you can imagine also how practical it could become for code browsing sites. It would create a very easy way to access another part of the code for contextual information or documentation.

There's nothing new required in terms of technologies to implement this. This is just browser UI manipulation and in which context to display a link.

Otsukare!

https://www.otsukare.info/2020/07/09/split-tab-contextual-reading

Mozilla Open Policy & Advocacy Blog: Criminal proceedings against Malaysiakini will harm free expression in Malaysia

The Malaysian government’s decision to initiate criminal contempt proceedings against Malaysiakini for third party comments on the news portal’s website is deeply concerning. The move sets a dangerous precedent against intermediary liability and freedom of expression. It ignores the internationally accepted norm that holding publishers responsible for third party comments has a chilling effect on democratic discourse. The legal outcome the Malaysian government is seeking would upend the careful balance which places liability on the bad actors who engage in illegal activities, and only holds companies accountable when they know of such acts.

Intermediary liability safe harbour protections have been fundamental to the growth of the internet. They have enabled hosting and media platforms to innovate and flourish without the fear that they would be crushed by a failure to police every action of their users. Imposing the risk of criminal liability for such content would place a tremendous, and in many cases fatal, burden on many online intermediaries while negatively impacting international confidence in Malaysia as a digital destination.

We urge the Malaysian government to drop the proceedings and hope the Federal Court of Malaysia will meaningfully uphold the right to freedom of expression guaranteed by Malaysia’s Federal Constitution.

The post Criminal proceedings against Malaysiakini will harm free expression in Malaysia appeared first on Open Policy & Advocacy.

The Mozilla Blog: A look at password security, Part I: history and background

Mozilla Addons Blog: Additional JavaScript syntax support in add-on developer tools

When an add-on is submitted to Firefox for validation, the add-ons linter checks its code and displays relevant errors, warnings, or friendly messages for the developer to review. JavaScript is constantly evolving, and when the linter lags behind the language, developers may see syntax errors for code that is generally considered acceptable. These errors block developers from getting their add-on signed or listed on addons.mozilla.org.

On July 2, the linter was updated from ESLint 5.16 to ESLint 7.3 for JavaScript validation. This upgrades linter support to most ECMAScript 2020 syntax, including features like optional chaining, BigInt, and dynamic imports. As a quick note, the linter is still slightly behind what Firefox allows. We will post again in this blog the next time we make an update.

Want to help us keep the linter up-to-date? We welcome code contributions and encourage developers to report bugs found in our validation process.

The post Additional JavaScript syntax support in add-on developer tools appeared first on Mozilla Add-ons Blog.

This Week In Rust: This Week in Rust 346

Hello and welcome to another issue of This Week in Rust! Rust is a systems language pursuing the trifecta: safety, concurrency, and speed. This is a weekly summary of its progress and community. Want something mentioned? Tweet us at @ThisWeekInRust or send us a pull request. Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub. If you find any errors in this week's issue, please submit a PR.

Check out this week's This Week in Rust Podcast

Updates from Rust Community

News & Blog Posts

- Announcing Rustup 1.22.0

- Ownership of the standard library implementation

- Compiler Team 2020-2021 Roadmap Meeting Minutes

- Back to old tricks ..(or, baby steps in Rust)

- Small strings in Rust

- Choosing a Rust web framework, 2020 edition

- Writing Interpreters in Rust: a Guide

- Transpiling A Kernel Module to Rust: The Good, the Bad and the Ugly

- Bad Apple!! and how I wrote a Rust video player for Task Manager!!

- Boa release v0.9 and make use of Rust's measureme

- RiB (Rust in Blockchain) Newsletter #13

- 7 Things I learned from Porting a C Crypto Library to Rust

- This Month in Rust GameDev #11 (June 2020)

- AWS Lambda with Rust

- Writing a winning 4K intro in Rust

- Ringbahn II: the central state machine

- Bastion floating on Tide - Part 2

- Porting Godot Games To Rust (Part 1)

- Image decay as a service

- IntelliJ Rust Changelog #125

- Abstracting away correctness

- Rendering in Rust

- Super hero Rust fuzzing

- What Is a Dangling Pointer?

- Simple Rocket Web Framework Tutorial | POST Request

- Adventures of OS - System Calls

- Allocation API, Allocators and Virtual Memory

- Cargo [features] explained with examples

- Concurrency Patterns in Embedded Rust

- Getting started with WebAssembly and Rust

- How to Write a Stack in Rust

- Implementing WebSockets in Rust

- rust-analyzer changelog 32

- Rust for JavaScript Developers - Functions and Control Flow

- Rust: The New LLVM

- Using Rust and WebAssembly to Process Pixels from a Video Feed

- WebAssembly with Rust and React (Using create-react-app)

- [Portuguese] Aprendendo Rust: 01 - Hello World

- [audio] Mun

- [audio] Rust and machine learning #3 with Alec Mocatta (Ep. 109)

- [video] Authentication Service in Actix - Part 1: Configuration

- [video] Rust FLTK gui tutorial

Crate of the Week

This week's crate is suckit, a tool to recursively download a website.

Thanks to Martin Schmidt for the suggestion!

Submit your suggestions and votes for next week!

Call for Participation

Always wanted to contribute to open-source projects but didn't know where to start? Every week we highlight some tasks from the Rust community for you to pick and get started!

Some of these tasks may also have mentors available, visit the task page for more information.

No issues were proposed for CfP.

If you are a Rust project owner and are looking for contributors, please submit tasks here.

Updates from Rust Core

308 pull requests were merged in the last week

- add format_args_capture feature

- don't implement Fn* traits for #[target_feature] functions

- fix wasm32 being broken due to a NodeJS version bump

- handle macro_rules! tokens consistently across crates

- implement slice_strip feature

- make likely and unlikely const, gated by feature const_unlikely

- optimise fast path of checked_ops with unlikely

- provide more information on duplicate lang item error.

- remove TypeckTables::empty(None) and make hir_owner non-optional.

- remove unnecessary release from Arc::try_unwrap

- serialize all foreign SourceFiles into proc-macro crate metadata

- stabilize #[track_caller].

- use WASM's saturating casts if they are available

- use Spans to identify unreachable subpatterns in or-patterns

- Update the rust-lang/llvm-project submodule to include AVR fixes recently merged

- mir-opt: Fix mis-optimization and other issues with the SimplifyArmIdentity pass

- added .collect() into String from Box<str>

- impl From<char> for String

- linker: create GNU_EH_FRAME header by default when producing ELFs

- resolve: disallow labelled breaks/continues through closures/async blocks

- ship rust analyzer

- chalk: add type outlives goal

- chalk: allow printing lifetime placeholders

- chalk: support for ADTs

- hashbrown: add RawTable::erase and remove

- hashbrown: expose RawTable::try_with_capacity

- hashbrown: improve RawIter re-usability

- libc: add a bunch of constants and functions which were missing on Android

- libc: add more WASI libc definitions.

- libc: declare seekdir and telldir for WASI.

- stdarch: fix or equals integer comparisons

- cargo: write GNU tar files, supporting long names.

- crates.io: use default branch alias instead of "master"

- clippy: added restriction lint: pattern-type-mismatch

- clippy: suggest Option::map_or(_else) for if let Some { y } else { x }

- rustfmt: do not duplicate const keyword on parameters

- rustfmt: do not remove fn headers (e.g., async) on extern fn items

- rustfmt: pick up comments between trait where clause and open block

Rust Compiler Performance Triage

- 2020-07-07. One unimportant regression on a rollup; six improvements, two on rollups.

Approved RFCs

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

No RFCs were approved this week.

Final Comment Period

Every week the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now.

RFCs

- RFC: Add a new #[instruction_set(...)] attribute for supporting per-function instruction set changes

- Inline const expressions and patterns

- Inline assembly

Tracking Issues & PRs

- [disposition: merge] stabilize const mem::forget

- [disposition: merge] Stabilize casts and coercions to &[T] in const fn

- [disposition: merge] mv std libs to std/

- [disposition: merge] Stabilize transmute in constants and statics but not const fn

- [disposition: merge] Stabilize const_type_id feature

- [disposition: merge] Accept tuple.0.0 as tuple indexing (take 2)

New RFCs

Upcoming Events

Online

- July 9. Berlin, DE - Rust Hack and Learn

- July 9. San Diego, CA, US - July 2020 Tele-Meetup

- July 13. Seattle, WA, US - Seattle Rust Meetup - Monthly Meetup

- July 16. Turin, IT - Rust Italia - Gruppo di studio di Rust

North America

- July 8. Atlanta, GA, US - Rust Atlanta - Grab a beer with fellow Rustaceans

- July 9. Lehi, UT, US - Utah Rust - The Blue Pill: Rust on Microcontrollers

- July 15. Vancouver, BC, CA - Vancouver Rust - Rust Study/Hack/Hang-out night

If you are running a Rust event please add it to the calendar to get it mentioned here. Please remember to add a link to the event too. Email the Rust Community Team for access.

Rust Jobs

- Rust Developer at 1Password, Remote (US or Canada)

- Security Engineer at 1Password, Remote (US or Canada)

- Part-time Backend Engineer at Tagnifi, Remote (North America)

Tweet us at @ThisWeekInRust to get your job offers listed here!

Quote of the Week

Rust is like a futuristic laser gun with an almost AI-like foot detector that turns the safety on when it recognises your foot.

Thanks to Synek317 for the suggestions!

Please submit quotes and vote for next week!

This Week in Rust is edited by: nellshamrell, llogiq, and cdmistman.

https://this-week-in-rust.org/blog/2020/07/08/this-week-in-rust-346/

|

|

The Rust Programming Language Blog: Announcing Rustup 1.22.1 |

The rustup working group is happy to announce the release of rustup version 1.22.1. Rustup is the recommended tool to install Rust, a programming language that is empowering everyone to build reliable and efficient software.

If you have a previous version of rustup installed, getting rustup 1.22.1 may be as easy as closing your IDE and running:

rustup self update

Rustup will also automatically update itself at the end of a normal toolchain update:

rustup update

If you don't have it already, or if the 1.22.0 release of rustup caused you to experience the problem that 1.22.1 fixes, you can get rustup from the appropriate page on our website.

What's new in rustup 1.22.1

When updating dependency crates for 1.22.0, a change in behaviour of the url crate slipped in which caused env_proxy to cease to work with proxy data set in the environment. This is unfortunate since those of you who use rustup behind a proxy and have updated to 1.22.0 will now find that rustup may not work properly for you.

If you are affected by this, simply re-download the installer and run it. It will update your existing installation of Rust with no need to uninstall first.

Thanks

Thanks to Ivan Nejgebauer who spotted the issue, provided the fix, and made rustup 1.22.1 possible, and to Ben Chen who provided a fix for our website.

|

|

Mozilla Addons Blog: New Extensions in Firefox for Android Nightly (Previously Firefox Preview) |

Firefox for Android Nightly (formerly known as Firefox Preview) is a sneak peek of the new Firefox for Android experience. The browser is being rebuilt based on GeckoView, an embeddable component for Android, and we are continuing to gradually roll out extension support.

Including the add-ons from our last announcement, there are currently nine Recommended Extensions available to users. The latest three additions are in Firefox for Android Nightly and will be available on Firefox for Android Beta soon:

Decentraleyes prevents your mobile device from making requests to content delivery networks (i.e. advertisers), and instead provides local copies of common libraries. In addition to the benefit of increased privacy, Decentraleyes also reduces bandwidth usage—a huge benefit in the mobile space.

Privacy Possum has a unique approach to dealing with trackers. Instead of playing along with the cat and mouse game of removing trackers, it falsifies the information trackers used to create a profile of you, in addition to other anti-tracking techniques.

YouTube High Definition gives you more control over how videos are displayed on YouTube. You have the opportunity to set your preferred visual quality option and have it shine on your high-DPI device, or use a lower quality to save bandwidth.

If you have more questions on extensions in Firefox for Android Nightly, please check out our FAQ. We will be posting further updates about our future plans on this blog.

The post New Extensions in Firefox for Android Nightly (Previously Firefox Preview) appeared first on Mozilla Add-ons Blog.

|

|

Karl Dubost: Browser Wish List - Tabs Time Machine |

Whenever you ask around how many tabs people have open in their current desktop browser session, most people will have around (per session, July 2020):

- Release: 4 tabs (median) and 8 tabs (mean)

- Nightly: 4 tabs (median) and 51 tabs (mean)

(Having a graph of the full distribution would be interesting here.)

It would be interesting to see the exact distribution, because there is a cohort with a very high number of tabs. I usually have between 300 and 500 tabs open. And sometimes I clean everything up. But after an internal discussion at Mozilla, I realized some people had even more, up to a couple of thousand tabs open at once.

While we are not the sheer majority, we are definitely a group of people probably working with browsers intensively and with specific needs that the browsers currently do not address. Also, we have to be careful with these stats, which come from a self-selecting group of people. If there's nothing to manage a high number of tabs, it is likely that not many people will be ready to painstakingly manage a high number of tabs.

The Why?

I use a lot of tabs.

But if I turn my head to my bookshelf, there are probably around 2000+ books in there. My browser is a bookshelf or library of content and a desk. But one which is currently not very good at organizing my content. I keep tabs opened

- to access reference content (articles, guidebook, etc)

- to talk about it later on with someone else or in a blog post

- to have access to tasks (opening 30 bugs I need to go through this week)

I sometimes open some tabs twice. I close a tab by mistake without realizing, and then when I search for the content again I can't find it. I can't do a full text search on all open tabs. I can only manage the tabs vertically with an addon (right now I'm using Tabs Center Redux). And if by bad luck we are offline and the tabs have not been loaded beforehand, we lose the full content we needed.

So I’m often grumpy at my browser.

What I want: Content Management

Here I will be focusing on my own use case and needs.

What I would love is an “Apple Time Machine”-like feature for my browser, aka dated archives of my browsing session, with full text search.

- Search by keyword through the full content of all tabs, not only the titles.

- Possibility to filter search queries with time and uri. "Search this keyword only on wikipedia pages opened less than one week ago"

- Tag tabs to create collections of content.

- Archive the same exact uri at different times. Imagine the homepage of the NYTimes at different dates or times and keeping each version locally. (Webarchive is incomplete and online, I want it to work offline).

- The storage format doesn't need to be the full stack of technologies of the current page. Opera Mini for example is using a format which is compressing the page as a more or less interactive image with limited capabilities.

- You could add automation with an automatic backup of everything you are browsing, or have the possibility to manually select the pages you want to keep (like when you decide to pin a tab)

- If the current computer doesn't have enough storage for your needs, an encrypted (paid) service could be provided where you would specify which page you want to be archived away and the ones that you want to keep locally.
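The search-related wishes in the list above can be sketched in a few lines. This is only an illustration of the idea, not an existing browser API: the TabArchive class, its fields and the sample data are all hypothetical, and a real implementation would need persistent storage and a proper full-text index.

```javascript
// Hypothetical in-memory archive of dated tab snapshots.
class TabArchive {
  constructor() {
    this.snapshots = [];
  }

  // Store one dated snapshot; the same URI may be archived many times.
  add(uri, title, text, capturedAt = new Date(), tags = []) {
    this.snapshots.push({ uri, title, text, capturedAt, tags });
  }

  // Full-text search over page content (not only titles), with optional
  // filters on host name, capture date and tag.
  search(keyword, { host, since, tag } = {}) {
    const needle = keyword.toLowerCase();
    return this.snapshots.filter((s) => {
      if (!s.text.toLowerCase().includes(needle)) return false;
      if (host && !new URL(s.uri).hostname.endsWith(host)) return false;
      if (since && s.capturedAt < since) return false;
      if (tag && !s.tags.includes(tag)) return false;
      return true;
    });
  }
}

const archive = new TabArchive();
archive.add('https://en.wikipedia.org/wiki/Tab_(interface)', 'Tab (interface)',
  'A tab is a graphical control element.', new Date('2020-07-05'), ['reference']);
archive.add('https://en.wikipedia.org/wiki/Web_browser', 'Web browser',
  'A web browser displays tabs and windows.', new Date('2020-06-01'));
archive.add('https://www.nytimes.com/', 'The New York Times',
  'Front page mentioning browser tabs.', new Date('2020-07-06'));

// "Search this keyword only on wikipedia pages opened less than one week ago"
const now = new Date('2020-07-07');
const oneWeekAgo = new Date(now.getTime() - 7 * 24 * 60 * 60 * 1000);
const hits = archive.search('tab', { host: 'wikipedia.org', since: oneWeekAgo });
console.log(hits.map((s) => s.uri));
```

Keeping every snapshot as a separate dated record is what makes it possible to archive the same URI at different times.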

Firefox becomes a portable bookshelf and the desk with the piles of papers you are working on.

Browser Innovation

Innovation in browsers doesn't have to be only about supported technologies, but also about features of the browser itself. I have the feeling that we have dropped the ball on many things, as we race to be transparent with regards to websites and applications. Allowing technologies that give web developers tools to create cool things is certainly very useful, but making a browser more useful for its immediate users is just as important. I don't want the browser to disappear in this mediating UI, I want it to give me more ways to manage and mitigate my interactions with the Web.

Slightly Related

Open tabs are cognitive spaces by Michail Rybakov.

It is time we stop treating websites as something solitary and alien to us. Web pages that we visit and leave open are artifacts of externalized cognition; keys to thinking and remembering.

The browser of today is a transitory space that brings us into a mental state, not just to a specific website destination. And we should design our browsers for this task.

Otsukare!

Responses/Comments from the Web

- The Disadvantages of Adding Todo Management Features to Arbitrary Software, by Karl Voit. I guess somehow I poorly explained what I meant, because I didn't think nor want to create a todo management system. I don't want deadlines, nor timestamp for executing things, nor a transition of tasks.

- "Although not nearly so much (maybe 30 or 40 tabs at a time), you can count me in this group", Christine Hall

- "Interesting request, forwarded to the browser UX team of @MicrosoftEdge", Chris Heilmann

https://www.otsukare.info/2020/07/07/browser-tabs-time-machine

|

|

Mozilla Accessibility: Broadening Our Impact |

Last year, the accessibility team worked to identify and fix gaps in our screen reader support, as well as on some new areas of focus, like improving Firefox for users with low vision. As a result, we shipped some great features. In addition, we’ve begun building awareness across Mozilla and putting in place processes to help ensure delightful accessibility going forward, including a Firefox wide triage process.

With a solid foundation for delightful accessibility well underway, we’re looking at the next step in broadening our impact: expanding our engagement with our passionate, global community. It’s our hope that we can get to a place where a broad community of interested people become active participants in the planning, design, development and testing of Firefox accessibility. To get there, the first step is open communication about what we’re doing and where we’re headed.

To that end, we’ve created this blog to keep you all informed about what’s going on with Firefox accessibility. As a second step, we’ve published the Firefox Accessibility Roadmap. This document is intended to communicate our ongoing work, connecting the dots from our aspirations, as codified in our Mission and Manifesto, through our near term strategy, right down to the individual work items we’re tackling today. The roadmap will be updated regularly to cover at least the next six months of work and ideally the next year or so.

Another significant area of new documentation, pushed by Eitan and Morgan, is around our ongoing work to bring VoiceOver support to Firefox on macOS. In addition to the overview wiki page, which covers our high level plan and specific lists of bugs we’re targeting, there’s also a work in progress architectural overview and a technical guide to contributing to the Mac work.

We’ve also transitioned most of our team technical discussions from a closed Mozilla Slack to the open and participatory Matrix instance. Some exciting conversations are already happening and we hope that you’ll join us.

And that’s just the beginning. We’re always improving our documentation and onboarding materials so stay tuned to this channel for updates. We hope you find access to the team and the documents useful and that if something in our docs calls out to you that you’ll find us on Matrix and help out, whether that’s contributing ideas for better solutions to problems we’re tackling, writing code for features and fixes we need, or testing the results of development work.

We look forward to working with you all to make the Firefox family of products and services the best they can be, a delight to use for everyone, especially people with disabilities.

The post Broadening Our Impact appeared first on Mozilla Accessibility.

https://blog.mozilla.org/accessibility/broadening-our-impact/

|

|

Mozilla Open Policy & Advocacy Blog: Next Steps for Net Neutrality |

Two years ago we first brought Mozilla v. FCC in federal court, in an effort to save the net neutrality rules protecting American consumers. Mozilla has long fought for net neutrality because we believe that the internet works best when people control their own online experiences.

Today is the deadline to petition the Supreme Court for review of the D.C. Circuit decision in Mozilla v. FCC. After careful consideration, Mozilla, along with its partners in this litigation, is not seeking Supreme Court review of the D.C. Circuit decision. Even though we did not achieve all that we hoped for in the lower court, the court recognized the flaws of the FCC’s action and sent parts of it back to the agency for reconsideration. And the court cleared a path for net neutrality to move forward at the state level. We believe the fight is best pursued there, as well as on other fronts including Congress or a future FCC.

Net neutrality is more than a legal construct. It is a reflection of the fundamental belief that ISPs have tremendous power over our online experiences and that power should not be further concentrated in actors that have often demonstrated a disregard for consumers and their digital rights. The global pandemic has moved even more of our daily lives—our work, school, conversations with friends and family—online. Internet videos and social media debates are fueling an essential conversation about systemic racism in America. At this moment, net neutrality protections ensuring equal treatment of online traffic are critical. Recent moves by ISPs to favor their own content channels or impose data caps and usage-based pricing make concerns about the need for protections all the more real.

The fight for net neutrality will continue on. The D.C. Circuit decision positions the net neutrality movement to continue on many fronts, starting with a defense of California’s strong new law to protect consumers online—a law that was on hold pending resolution of this case.

Other states have followed suit and we expect more to take up the mantle. We will look to a future Congress or future FCC to take up the issue in the coming months and years. Mozilla is committed to continuing our work, with our broad community of allies, in this movement to defend the web and consumers and ensure the internet remains open and accessible to all.

The post Next Steps for Net Neutrality appeared first on Open Policy & Advocacy.

https://blog.mozilla.org/netpolicy/2020/07/06/next-steps-for-net-neutrality/

|

|

Mozilla Security Blog: Performance Improvements via Formally-Verified Cryptography in Firefox |

Cryptographic primitives, while extremely complex and difficult to implement, audit, and validate, are critical for security on the web. To ensure that NSS (Network Security Services, the cryptography library behind Firefox) abides by Mozilla’s principle of user security being fundamental, we’ve been working with Project Everest and the HACL* team to bring formally-verified cryptography into Firefox.

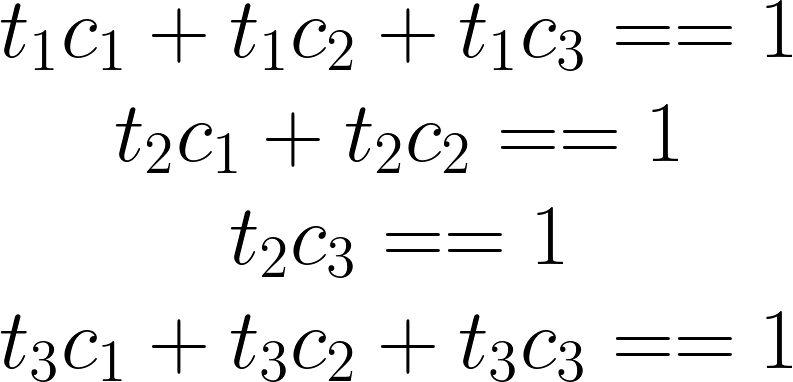

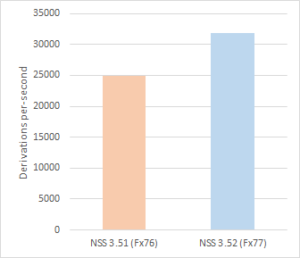

In Firefox 57, we introduced formally-verified Curve25519, which is a mechanism used for key establishment in TLS and other protocols. In Firefox 60, we added ChaCha20 and Poly1305, providing high-assurance authenticated encryption. Firefox 69, 77, and 79 improve and expand these implementations, providing increased performance while retaining the assurance granted by formal verification.

Performance & Specifics

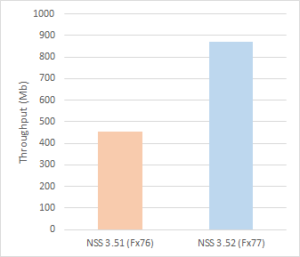

For key establishment, we recently replaced the 32-bit implementation of Curve25519 with one from the Fiat-Crypto project. The arbitrary-precision arithmetic functions of this implementation are proven to be functionally correct, and it improves performance by nearly 10x over the previous code. Firefox 77 updates the 64-bit implementation with new HACL* code, benefitting from a ~27% speedup. Most recently, Firefox 79 also brings this update to Windows. These improvements are significant: Telemetry shows Curve25519 to be the most widely used elliptic curve for ECDH(E) key establishment in Firefox, and increased throughput reduces energy consumption, which is particularly important for mobile devices.
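To make "key establishment" concrete, here is a minimal sketch of an X25519 (Curve25519 Diffie-Hellman) exchange. It uses the built-in crypto module of Node.js, which is backed by OpenSSL, so it illustrates the primitive only and says nothing about the NSS, Fiat-Crypto or HACL* code discussed above.

```javascript
const crypto = require('node:crypto');

// Each side generates an ephemeral X25519 key pair.
const client = crypto.generateKeyPairSync('x25519');
const server = crypto.generateKeyPairSync('x25519');

// Each side combines its own private key with the peer's public key.
const clientSecret = crypto.diffieHellman({
  privateKey: client.privateKey,
  publicKey: server.publicKey,
});
const serverSecret = crypto.diffieHellman({
  privateKey: server.privateKey,
  publicKey: client.publicKey,
});

// Both sides arrive at the same 32-byte shared secret.
console.log(clientSecret.equals(serverSecret), clientSecret.length);
```

In a protocol such as TLS the shared secret is not used directly as a key; it is fed into a key derivation step first.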

For encryption and decryption, we improved the performance of ChaCha20-Poly1305 in Firefox 77. Throughput is doubled by taking advantage of vectorization with 128-bit and 256-bit integer arithmetic (via the AVX2 instruction set on x86-64 CPUs). When these features are unavailable, NSS will fall back to an AVX or scalar implementation, both of which have been further optimized.
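As an illustration of what ChaCha20-Poly1305 provides, the sketch below encrypts and authenticates a message and then decrypts it again. It again relies on the Node.js crypto module rather than NSS, and the seal and open helper names are made up for this example.

```javascript
const crypto = require('node:crypto');

// Encrypt and authenticate a UTF-8 string with a fresh 96-bit nonce.
function seal(key, plaintext) {
  const nonce = crypto.randomBytes(12); // must never repeat for the same key
  const cipher = crypto.createCipheriv('chacha20-poly1305', key, nonce, { authTagLength: 16 });
  const ciphertext = Buffer.concat([cipher.update(plaintext, 'utf8'), cipher.final()]);
  return { nonce, ciphertext, tag: cipher.getAuthTag() };
}

// Verify the Poly1305 tag and decrypt; throws if anything was modified.
function open(key, { nonce, ciphertext, tag }) {
  const decipher = crypto.createDecipheriv('chacha20-poly1305', key, nonce, { authTagLength: 16 });
  decipher.setAuthTag(tag);
  return Buffer.concat([decipher.update(ciphertext), decipher.final()]).toString('utf8');
}

const key = crypto.randomBytes(32); // 256-bit key
const sealed = seal(key, 'hello from Firefox');
console.log(open(key, sealed));
```

The authentication tag is what makes this "authenticated encryption": flipping a single bit of the ciphertext causes open to throw instead of returning corrupted plaintext.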

The HACL* project has introduced new techniques and libraries to improve efficiency in writing verified primitives for both scalar and vectorized variants. This allows aggressive code sharing and reduces the verification effort across many different platforms.

What’s Next?

For Firefox 81, we intend to incorporate a formally-verified implementation of the P256 elliptic curve for ECDSA and ECDH. Middle-term targets for verified implementations include GCM, the P384 and P521 elliptic curves, and the ECDSA signature scheme itself. While there remains work to be done, these updates provide an improved user experience and ease the implementation burden for future inclusion of platform-optimized primitives.

The post Performance Improvements via Formally-Verified Cryptography in Firefox appeared first on Mozilla Security Blog.

|

|

Wladimir Palant: Dismantling BullGuard Antivirus' online protection |

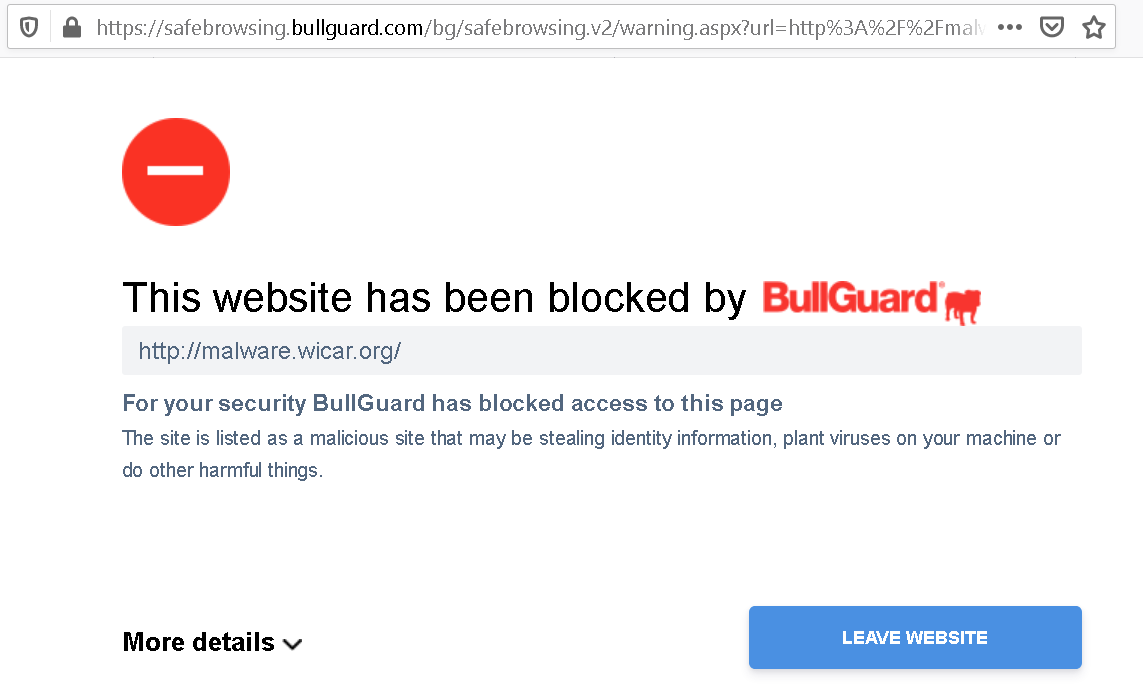

Just like so many other antivirus applications, BullGuard antivirus promises to protect you online. This protection consists of the three classic components: protection against malicious websites, marking of malicious search results and BullGuard Secure Browser for your special web surfing needs. As so often, this functionality comes with issues of its own, some being unusually obvious.


Summary of the findings

The first and very obvious issue was found in the protection against malicious websites. While this functionality often cannot be relied upon, circumventing it typically requires some effort. Not so with BullGuard Antivirus: merely adding a hardcoded character sequence to the address would make BullGuard ignore a malicious domain.

Further issues affected BullGuard Secure Browser: multiple Cross-Site Scripting (XSS) vulnerabilities in its user interface potentially allowed websites to spy on the user or crash the browser. The crash might be exploitable for Remote Code Execution (RCE). Proper defense in depth prevented worse here.

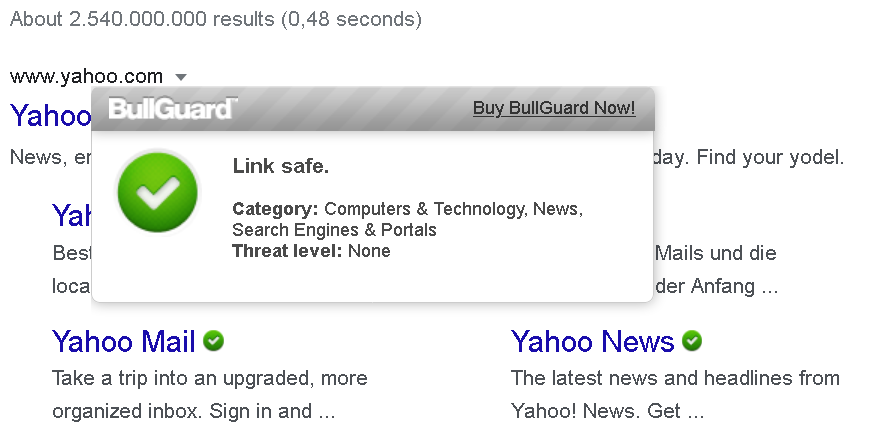

Online protection approach

BullGuard Antivirus listens in on all connections made by your computer. For some of these connections it will get between the server and the browser in order to manipulate server responses. That’s especially the case for malicious websites of course, but the server response for search pages will also be manipulated in order to indicate which search results are supposed to be trustworthy.

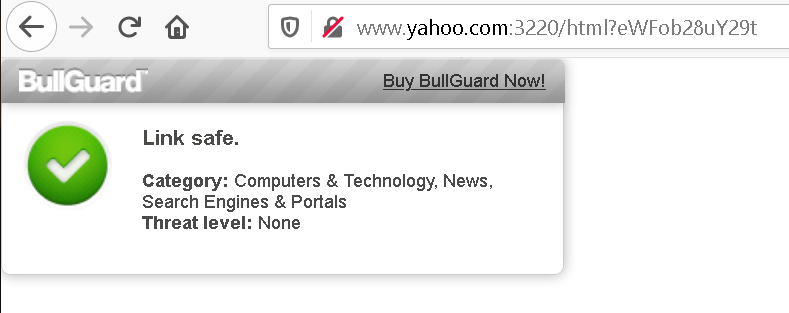

To implement this pop-up, the developers used an interesting trick: connections to port 3220 will always be redirected to the antivirus application, no matter which domain. So navigating to http://www.yahoo.com:3220/html?eWFob28uY29t will yield the following response:

This approach is quite dangerous: a vulnerability in the content served up here will be exploitable in the context of any domain on the web. I’ve seen such Universal XSS vulnerabilities before. In the case of BullGuard, however, none of the content served appears to be vulnerable. It would still be a good idea to avoid the unnecessary risk: the server could respond to http://localhost:3220/ only and use CORS appropriately.
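As a side note, the query string in the example URL above is simply the Base64 encoding of the domain name, which can be checked with a few lines of JavaScript (run in Node.js here). The helper names are made up for this illustration, and whether the application accepts other encodings is not something this sketch tries to establish.

```javascript
// Decode the query string of a port 3220 URL back into a domain name.
function decodeDomain(url) {
  const query = new URL(url).search.slice(1); // drop the leading "?"
  return Buffer.from(query, 'base64').toString('utf8');
}

// Encode a domain name the same way.
function encodeDomain(domain) {
  return Buffer.from(domain, 'utf8').toString('base64');
}

console.log(decodeDomain('http://www.yahoo.com:3220/html?eWFob28uY29t')); // yahoo.com
console.log(encodeDomain('yahoo.com')); // eWFob28uY29t
```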

Unblocking malicious websites

To be honest, I couldn’t find a malicious website that BullGuard Antivirus would block. So I cheated and added malware.wicar.org to the block list in the application’s settings. Navigating to the site now resulted in a redirect to bullguard.com:

If you click “More details” you will see a link labeled “Continue to website. NOT RECOMMENDED” at the bottom. So far so good, making overriding the warning complicated and discouraged is sane user interface design. But how does that link work?

Each antivirus application came up with its own individual answer to this question. Some answers turned out more secure than others, but BullGuard’s is still rather unique. The warning page will send you to https://malware.wicar.org/?gid=13fd117f-bb07-436e-85bb-f8a3abbd6ad6 and this gid parameter will tell BullGuard Antivirus to unblock malware.wicar.org for the current session. Where does its value come from? It’s hardcoded in BullGuardFiltering.exe. Yes, it’s exactly the same value for any website and any BullGuard install.

So if someone were to run a malicious email campaign and they were concerned about BullGuard blocking the link to https://malicious.example.com/ – no problem, changing the link into https://malicious.example.com/?gid=13fd117f-bb07-436e-85bb-f8a3abbd6ad6 would disable antivirus protection.

The current BullGuard Antivirus release uses a hid parameter with a value that depends on the website and the current session. So neither predicting its value nor reusing a value from one website to unblock another should work any more.
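How BullGuard actually computes the new value isn't described here. One common way to get a token with these properties is an HMAC over the host name, keyed with a random secret that exists only for the current session. The following Node.js sketch shows that idea under this assumption; the function names are hypothetical.

```javascript
const crypto = require('node:crypto');

// Hypothetical per-session secret: generated at startup, never sent to websites.
const sessionSecret = crypto.randomBytes(32);

// Token that is bound to both the session and the host name.
function unblockToken(hostname) {
  return crypto.createHmac('sha256', sessionSecret).update(hostname).digest('hex');
}

// Accept a token only if it matches the one expected for this host.
function isValidUnblock(hostname, token) {
  const expected = Buffer.from(unblockToken(hostname), 'hex');
  const given = Buffer.from(String(token), 'hex');
  return given.length === expected.length && crypto.timingSafeEqual(given, expected);
}

const token = unblockToken('malware.wicar.org');
console.log(isValidUnblock('malware.wicar.org', token));     // true
console.log(isValidUnblock('malicious.example.com', token)); // false
```

A website cannot compute a valid token without knowing the secret, and a token issued for one host is useless for another.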

XSSing the secure browser

Unlike some other antivirus solutions, BullGuard doesn’t market their BullGuard Secure Browser as an online banking browser. Instead, they seem to suggest that it is good enough for everyday use, if you can live with a horrible user experience, that is. It’s a Chromium-based browser which “protects you from vulnerable and malicious browser extensions” by not supporting any browser extensions.

Unlike Chromium’s, this browser’s user interface is a collection of various web pages. For example, secure://address_bar.html is the location bar and secure://find_in_page.html the find bar. All pages rely heavily on HTML manipulation via jQuery, so you are probably not surprised that there are cross-site scripting vulnerabilities here.

Vulnerability in the location bar

The code displaying search suggestions when you type something into the location bar went like this:

else if (vecFields[i].type == 3) {

var string_search = BG_TRANSLATE_String('secure_addressbar_search');

// type is search with Google

strHtml += '> + vecFields[i].icon +

'" alt="favicon" class="address_drop_favicon"/> ' +

'

+ vecFields[i].icon +

'" alt="favicon" class="address_drop_favicon"/> ' +

'' +

vecFields[i].title + ' - ' +

string_search + '

';

}

No escaping is performed here, so if you type HTML markup carrying a script payload into the location bar, the injected JavaScript code will run in the context of secure://address_bar.html. Not only will the code stay there for the duration of the current browser session, it will be able to spy on location bar changes. Ironically, BullGuard’s announcement claims that their browser protects against man-in-the-browser attacks, which is exactly what this is.

You think that no user would be stupid enough to copy untrusted code into the location bar, right? But regular users have no reason to expect the world to explode simply because they typed in something. That’s why no modern browser allows typing in javascript: addresses any more. And javascript: addresses are far less problematic than the attack depicted here.
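For comparison, this is roughly what escaping user input before it is concatenated into HTML looks like. It is a generic sketch, not BullGuard's fix: the escapeHtml and renderSuggestion helpers and the markup they produce are made up for the example.

```javascript
// Replace the characters that are significant in HTML text and attribute values.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;')
    .replace(/'/g, '&#39;');
}

// Hypothetical suggestion renderer; markup and class name are illustrative only.
function renderSuggestion(title, searchLabel) {
  return '<span class="suggestion">' + escapeHtml(title) + ' - ' +
    escapeHtml(searchLabel) + '</span>';
}

const typed = '<img src=x onerror=alert(1)>';
console.log(renderSuggestion(typed, 'Search with Google'));
```

With escaping in place the typed text is displayed literally instead of being parsed as markup. Building the DOM with text nodes rather than HTML strings avoids the problem altogether.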

Vulnerability in the display of blocked popups

The address bar issue isn’t the only or even the most problematic XSS vulnerability. The page secure://blocked-popups.html runs the following code:

var li = $('<li>')

.addClass('bg_blocked_popups-item')

.attr('onclick', 'jsOpenPopup(\'' + list[i] + '\', ' + i + ');')

.appendTo(cList);

No escaping performed here either, so a malicious website only needs to use single quotation marks in the pop-up address:

window.open(`/document/download.pdf#'+alert(location.href)+'`, "_blank");

That’s it, the browser will now indicate a blocked pop-up.

And if the user clicks this message, a message will appear indicating that arbitrary JavaScript code is running in the context of secure://blocked-popups.html now. That code can for example call window.bgOpenPopup() which allows it to open arbitrary URLs, even data: URLs that normally cannot be opened by websites. It could even open an ad page every few minutes, and the only way to get rid of it would be restarting the browser.
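To see why the single quotation marks matter, the sketch below redoes the string concatenation outside of the browser. The buildOnclick helper is a simplified stand-in for the vulnerable code, and the exact string the browser stores for the pop-up (for example an absolute URL) may differ from the one used here.

```javascript
// Simplified stand-in for the vulnerable concatenation: data becomes code.
function buildOnclick(url, index) {
  return "jsOpenPopup('" + url + "', " + index + ");";
}

const popupUrl = "/document/download.pdf#'+alert(location.href)+'";
console.log(buildOnclick(popupUrl, 0));
// The quotes inside the URL end the string literal early, so
// alert(location.href) is parsed as code rather than as part of the address.

// One safer pattern is to never build code from data. In a DOM environment:
//   li.addEventListener('click', () => jsOpenPopup(url, index));
// The closure below shows the same idea without needing a DOM.
function makeClickHandler(openPopup, url, index) {
  return () => openPopup(url, index);
}

const handler = makeClickHandler((url, index) => [url, index], popupUrl, 0);
console.log(handler()); // the URL is passed along unchanged, as plain data
```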

But the purpose of window.bgOpenPopup() function isn’t merely allowing the pop-up, it also removes a blocked pop-up from the list. By position, without any checks to ensure that the index is valid. So calling window.bgOpenPopup("...", 100000) will crash the browser – this is an access violation. Use a smaller index and the operation will “succeed,” corrupting memory and probably allowing remote code execution.
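The missing check is easy to state in code. The real list lives in the browser's native code, so the following JavaScript function is only a hypothetical stand-in that shows the validation that should happen before an entry is removed by position.

```javascript
// Hypothetical stand-in for the native list of blocked pop-ups.
function removeBlockedPopup(list, index) {
  // Reject anything that is not a valid position in the list.
  if (!Number.isInteger(index) || index < 0 || index >= list.length) {
    throw new RangeError(`invalid pop-up index: ${index}`);
  }
  return list.splice(index, 1)[0];
}

const blocked = ['https://example.com/a', 'https://example.com/b'];
console.log(removeBlockedPopup(blocked, 0)); // https://example.com/a

try {
  removeBlockedPopup(blocked, 100000);
} catch (e) {
  console.log(e.name); // RangeError
}
```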

And finally, this vulnerability allows calling the window.bgOnElementRectMeasured() function, setting the size of this pop-up to an arbitrary value. This allows displaying the pop-up on top of the legitimate browser user interface and displaying a fake user interface there. In theory, malicious code could conjure up a fake location bar and content area, messing with any website loaded and exfiltrating data. Normally, browsers have visual clues to clearly separate their user interface from the content area, but these would be useless here. Also, it would be comparably easy to make the fake user interface look convincing as this page can access the CSS styles used for the real interface.

What about all the other API functions?

While the above is quite bad, BullGuard Secure Browser exposes a number of other functions to its user interface. However, it seems that these can only be used by the pages which actually need them. So the blocked pop-ups page cannot use anything other than window.bgOpenPopup() and window.bgOnElementRectMeasured(). That’s proper defense in depth and it prevented worse here.
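This kind of restriction amounts to a per-page allowlist. How BullGuard Secure Browser implements it internally is unknown, so the sketch below only illustrates the principle; the allowedApis table, the canCall helper and the bgSomeOtherFunction name are made up.

```javascript
// Hypothetical mapping from internal page to the API functions it may call.
const allowedApis = new Map([
  ['secure://blocked-popups.html', new Set(['bgOpenPopup', 'bgOnElementRectMeasured'])],
]);

// A call is allowed only if the page is known and the function is on its list.
function canCall(pageUrl, functionName) {
  const allowed = allowedApis.get(pageUrl);
  return allowed !== undefined && allowed.has(functionName);
}

console.log(canCall('secure://blocked-popups.html', 'bgOpenPopup'));         // true
console.log(canCall('secure://blocked-popups.html', 'bgSomeOtherFunction')); // false
console.log(canCall('https://example.com/', 'bgOpenPopup'));                 // false
```

Even when one page is compromised, as with the XSS above, the attacker only gains the functions that page legitimately needs.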

Conclusions

BullGuard simply hardcoding security tokens isn’t an issue I’ve seen before. It should be obvious that websites cannot be allowed to disable security features, and yet it seems that the developers somehow didn’t consider this attack vector at all.

The other conclusion isn’t new but worth repeating: if you build a “secure” product, you should use a foundation that eliminates the most common classes of vulnerabilities. There is no reason why a modern day application should have XSS vulnerabilities in its user interface, and yet it happens all the time.

Timeline

- 2020-04-09: Started looking for a vulnerability disclosure process on BullGuard website, found none. Asked on Twitter, without success.

- 2020-04-10: Sent an email to security@ address, bounced. Contacted mail@ support address asking how vulnerabilities are supposed to be reported.

- 2020-04-10: Response asks for “clarification.”

- 2020-04-11: Sent a slightly reformulated question.

- 2020-04-11: Response tells me to contact feedback@ address with “application-related feedback.”

- 2020-04-12: Sent the same inquiry to feedback@ support address.

- 2020-04-15: Received apology for the “unsatisfactory response” and was told to report the issues via this support ticket.

- 2020-04-15: Sent report about circumventing protection against malicious websites.

- 2020-04-17: Sent report about XSS vulnerabilities in BullGuard Secure Browser (delay due to miscommunication).

- 2020-04-17: Protection circumvention vulnerability confirmed and considered critical.

- 2020-04-23: Told that the HTML attachment (proof of concept for the XSS vulnerability) was rejected by the email system, resent the email with a 7zip-packed attachment.

- 2020-05-04: XSS vulnerabilities confirmed and considered critical.

- 2020-05-18: XSS vulnerabilities fixed in 20.0.378 Hotfix 2.

- 2020-06-29: Protection circumvention vulnerability fixed in 20.0.380 Hotfix 2 (release announcement wrongly talks about “XSS vulnerabilities in Secure Browser” again).

- 2020-06-07: Got reply from the vendor that publishing the details one week before deadline is ok.

https://palant.info/2020/07/06/dismantling-bullguard-antivirus-online-protection/

|

|

Firefox UX: UX Book Club Recap: Writing is Designing, in Conversation with the Authors |

The Firefox UX book club comes together a few times a year to discuss books related to the user experience practice. We recently welcomed authors Michael Metts and Andy Welfle to discuss their book Writing is Designing: Words and the User Experience (Rosenfeld Media, Jan. 2020).

To make the most of our time, we collected questions from the group beforehand, organized them into themes, and asked people to upvote the ones they were most interested in.

An overview of Writing is Designing

“In many product teams, the words are an afterthought, and come after the “design,” or the visual and experiential system. It shouldn’t be like that: the writer should be creating words as the rest of the experience is developed. They should be iterative, validated with research, and highly collaborative. Writing is part of the design process, and writers are designers.” — Writing is Designing

Andy and Michael kicked things off with a brief overview of Writing is Designing. They highlighted how writing is about fitting words together and design is about solving problems. Writing as design brings the two together. These activities — writing and designing — need to be done together to create a cohesive user experience.

They reiterated that effective product content must be:

- Usable: It makes it easier to do something. Writing should be clear, simple, and easy.

- Useful: It supports user goals. Writers need to understand a product’s purpose and their audience’s needs to create useful experiences.

- Responsible: What we write can be misused by people or even algorithms. We must take care in the language we use.

We then moved on to Q&A, which covered the following themes and ideas.

On writing a book that’s not just for UX writers

“Even if you only do this type of writing occasionally, you’ll learn from this book. If you’re a designer, product manager, developer, or anyone else who writes for your users, you’ll benefit from it. This book will also help people who manage or collaborate with writers, since you’ll get to see what goes into this type of writing, and how it fits into the product design and development process.” — Writing is Designing

You don’t have to be a UX writer or content strategist to benefit from Writing is Designing. The book includes guidance for anyone involved in creating content for a user experience, including designers, researchers, engineers, and product managers. Writing is just as much of a design tool as Sketch or Figma; it’s just that the material is words, not pixels.

When language perpetuates racism

“The more you learn and the more you are able to engage in discussions about racial justice, the more you are able to see how it impacts everything we do. Not questioning systems can lead to perpetuating injustice. It starts with our workplaces. People are having important conversations and questioning things that already should have been questioned.” — Michael Metts

Given the global focus on racial justice issues, it wasn’t surprising that we spent a good part of our time discussing how the conversation intersects with our day-to-day work.

Andy talked about the effort at Adobe, where he is the UX Content Strategy Manager, to expose racist terminology in its products, such as ‘master-slave’ and ‘whitelist-blacklist’ pairings. It’s not just about finding a neutral replacement term that appears to users in the interface, but rethinking how we’ve defined these terms and underlying structures entirely in our code.

Moving beyond anti-racist language

“We need to focus on who we are doing this for. We worry what we look like and that we’re doing the right thing. And that’s not the priority. The goal is to dismantle harmful systems. It’s important for white people to get away from your own feelings of wanting to look good. And focus on who you are doing it for and making it a better world for those people.” — Michael Metts

Beyond the language that appears in our products, Michael encouraged the group to educate themselves, follow Black writers and designers, and be open and willing to change. Any effective UX practitioner needs to approach their work with a sense of humility and openness to being wrong.

Supporting racial justice and the Black Lives Matter movement must also include raising long-needed conversations in the workplace, asking tough questions, and sitting with discomfort. Michael recommended reading How To Be An Antiracist by Ibram X. Kendi and So You Want to Talk About Race by Ijeoma Oluo.

Re-examining and revisiting norms in design systems

“In design systems, those who document and write are the ones who are codifying information for long term. It’s how terms like whitelist and blacklist, and master/slave keep showing up, decade after decade, in our stuff. We have a responsibility not to be complicit in codifying and continuing racist systems.” — Andy Welfle

Part of our job as UX practitioners is to codify and frame decisions. Design systems, for example, document content standards and design patterns. Andy reminded us that our own biases and assumptions can be built into these systems. Not questioning the systems we build and contribute to can perpetuate injustice.

It’s important to keep revisiting our own systems and asking questions about them. Why did we frame it this way? Could we frame it in another way?

Driving towards clarity early on in the design process

“It’s hard to write about something without understanding it. While you need clarity if you want to do your job well, your team and your users will benefit from it, too.” — Writing is Designing

Helping teams align and get clear on goals and user problems is a big part of a product writer’s job. While writers are often the ones to ask these clarifying questions, every member of the design team can and should participate in this clarification work—it’s the deep strategy work we must do before we can write and visualize the surface manifestation in products.

Before you open your favorite design tool (be it Sketch, Figma, or Adobe XD), Andy and Michael recommend that writers and visual designers start with the simplest tool of all: a text editor. There you can do the foundational design work of figuring out what you’re trying to accomplish.

The longevity of good content

A book club member asked, “How long does good content last?” Andy’s response: “As long as it needs to.”

Software work is never ‘done.’ Products and the technology that supports them continue to evolve. With that in mind, there are key touch points to revisit copy. For example, when a piece of desktop software becomes available on a different platform like tablet or mobile, it’s a good time to revisit your context (and entire experience, in fact) to see if it still works.

Final thoughts—an ‘everything first’ approach

In the grand scheme of tech things, UX writing is still a relatively new discipline. Books like Writing is Designing are helping to define and shape the practice.

When asked (at another meet-up, not our own) if he’s advocating for a ‘content-first approach,’ Michael’s response was that we need an ‘everything first approach’ — meaning, all parties involved in the design and development of a product should come to the planning table together, early on in the process. By making the case for writing as a strategic design practice, this book helps solidify a spot at that table for UX writers.

Prior texts read by Mozilla’s UX book club

- Technically Wrong: Sexist Apps, Biased Algorithms, and Other Threats of Toxic Tech by Sara Wachter-Boettcher

- Future Ethics by Cennydd Bowles

- Resonate: Present Visual Stories that Transform Audiences by Nancy Duarte

- Ruined by Design by Mike Monteiro

- Calm Technology: Principles and Patterns for Non-Intrusive Design by Amber Case


Tantek Celik: Changes To IndieWeb Organizing, Brief Words At IndieWebCamp West |

A week ago Saturday morning co-organizer Chris Aldrich opened IndieWebCamp West and introduced the keynote speakers. After their inspiring talks he asked me to say a few words about changes we’re making in the IndieWeb community around organizing. This is an edited version of those words, rewritten for clarity and context. — Tantek

Chris mentioned that one of his favorite parts of our code of conduct is that we prioritize marginalized people’s safety above privileged folks’ comfort.

That was a change we deliberately made last year, announced at last year’s summit. It was well received, but it’s only one minor change.

Those of us that have organized and have been organizing our all-volunteer IndieWebCamps and other IndieWeb events have been thinking a lot about the events of the past few months, especially in the United States. We met the day before IndieWebCamp West and discussed our roles in the IndieWeb community and what we can do to examine the structural barriers and systemic racism and/or sexism that exist even in our own community. We have been asking, what can we do to explicitly dismantle those?

We have done a bunch of things. Rather, we as a community have improved things organically, in a distributed way, sharing with each other, rather than any explicit top-down directives. Some improvements are smaller, such as renaming things like whitelist & blacklist to allowlist & blocklist (though we had documented blocklist since 2016, allowlist since this past January, and only added whitelist/blacklist as redirects afterwards).

Many of these changes have been part of larger quieter waves already happening in the technology and specifically open source and standards communities for quite some time. Waves of changes that are now much more glaringly obviously important to many more people than before. Choosing and changing terms to reinforce our intentions, not legacy systemic white supremacy.

Part of our role & responsibility as organizers (as anyone who has any power or authority, implied or explicit, in any organization or community), is to work to dismantle any aspect or institution or anything that contributes to white supremacy or to patriarchy, even in our own volunteer-based community.

We’re not going to get everything right. We’re going to make mistakes. An important part of the process is acknowledging when that happens, making corrections, and moving forward; keep listening and keep learning.

The most recent change we’ve made has to do with Organizers Meetups that we have been doing for several years, usually a half day logistics & community issues meeting the day before an IndieWebCamp. Or Organizers Summits a half day before our annual IndieWeb Summits; in 2019 that’s when we made that aforementioned update to our Code of Conduct to prioritize marginalized people’s safety.

Typically we have asked people to have some experience with organizing in order to participate in organizers meetups. Since the community actively helps anyone who wants to put in the work to become an organizer, and provides instructions, guidelines, and tips for successfully doing so, this seemed like a reasonable requirement. It also kept organizers meetups very focused on both pragmatic logistics, and dedicated time for continuous community improvement, learning from other events and our own IndieWebCamps, and improving future IndieWebCamps accordingly.

However, we must acknowledge that our community, like a lot of online, open communities, volunteer communities, unfortunately reflects a very privileged demographic. If you look at the photos from Homebrew Website Clubs, they’re mostly white individuals, mostly male, mostly apparently cis. Mostly white cis males. This does not represent the users of the Web. For that matter, it does not represent the demographics of the society we're in.

One of our ideals, I believe, is to better reflect in the IndieWeb community, both the demographic of everyone that uses the Web, and ideally, everyone in society. While we don't expect to solve all the problems of the Web (or society) by ourselves, we believe we can take steps towards dismantling white supremacy and patriarchy where we encounter them.

One step we are taking, effective immediately, is making all of our organizers meetups forward-looking for those who want to organize a Homebrew Website Club or IndieWebCamp. We still suggest people have experience organizing. We also explicitly recognize that any kind of requirement of experience may serve to reinforce existing systemic biases that we have no interest in reinforcing.

We have updated our Organizers page with a new statement of who should participate, our recognition of broader systemic inequalities, and an explicit:

… welcome to Organizers Meetups all individuals who identify as BIPOC, non-male, non-cis, or any marginalized identity, independent of any organizing experience.

This is one step. As organizers, we’re all open to listening, learning, and doing more work. That's something that we encourage everyone to adopt. We think this is an important aspect of maintaining a healthy community and frankly, just being the positive force that we want the IndieWeb to be on the Web and hopefully for society as a whole.

If folks have questions, I or any other organizers are happy to answer them, either in chat or privately, however anyone feels comfortable discussing these changes.

Thanks. — Tantek

https://tantek.com/2020/187/b1/changes-indieweb-organizing-indiewebcamp-west


Karl Dubost: Just Write A Blog Post |

This post will be very short. That's the goal. And this is addressed to the Mozilla community at large (be they employees or contributors).

- Create a blog.^1

- Ask to be added to Planet Mozilla.^2

- Write small, simple short things.^3

And that's all.

The more you write, the easier it will become.