Original source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated whenever that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

Guillaume Destuynder: Lesser-known tool of the day: getcap, setcap and file capabilities

tl;dr intro to Linux capabilities

See also: `man capabilities`

Linux’s thread/process privilege checking is based on capabilities. They’re flags on a thread that indicate which additional privileges it is allowed to use. By default, root has all of them.

Examples:

CAP_DAC_OVERRIDE: Override read/write/execute permission checks (full filesystem access).

CAP_DAC_READ_SEARCH: Only override reading files and opening/listing directories (full filesystem READ access).

CAP_KILL: Can send any signal to any process (such as SIGKILL).

CAP_SYS_CHROOT: Ability to call chroot().

And so on.

These are useful when you want to restrict your own processes after performing privileged operations, for example after setting up a chroot and binding to a socket. (However, this is still more limited than seccomp or SELinux, which are based on system calls instead.)

Setting/Getting capabilities from userland

You can assign capabilities to program files with setcap, and query them with getcap.

For example, on many Linux distributions you’ll find ping with cap_net_raw (which allows ping to create raw sockets). This means ping no longer needs to run as root (generally via setuid):

getcap /sbin/ping
/sbin/ping = cap_net_raw+ep

This was originally set by a user with CAP_SETFCAP (root has it by default), via this command:

setcap cap_net_raw+ep /sbin/ping

You can find the list of capabilities via:

man capabilities

The “+ep” means you’re adding the capability (“-” would remove it) as Effective and Permitted.

There are three flags, one for each capability set:

- e: Effective. The capability is “activated”.

- p: Permitted. The capability is allowed to be used.

- i: Inheritable. The capability can be kept across execve(), for example by the programs a process launches.
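To make the `+ep` notation concrete, here is a minimal Python sketch that turns simple clauses such as `cap_net_raw+ep` into the three flag sets. `parse_cap_text` is a hypothetical helper, not a libcap function, and it only handles the form "capability names, then `=`, `+` or `-` followed by flag letters"; `man cap_from_text` documents the full grammar, including clauses that omit the capability names, which this sketch rejects.

```python
import re

# Hypothetical helper: a simplified reading of the text format described in
# `man cap_from_text`. Only "names OP flags [OP flags ...]" clauses are handled.
CLAUSE = re.compile(r"^([a-z0-9_,]+)((?:[=+-][eip]*)+)$")
ACTION = re.compile(r"([=+-])([eip]*)")


def parse_cap_text(text):
    """Return the effective, inheritable and permitted sets named by `text`."""
    sets = {"e": set(), "i": set(), "p": set()}
    for clause in text.split():
        match = CLAUSE.match(clause.lower())
        if not match:
            raise ValueError(f"unsupported clause: {clause!r}")
        names = match.group(1).split(",")
        for op, flags in ACTION.findall(match.group(2)):
            if op == "=":
                # '=' first clears the named capabilities from every set,
                # then raises the listed flags, just like '+'.
                for cap_set in sets.values():
                    cap_set.difference_update(names)
                op = "+"
            for flag in flags:
                if op == "+":
                    sets[flag].update(names)
                else:  # '-' lowers the listed flags
                    sets[flag].difference_update(names)
    return sets
```

For `cap_net_raw+ep`, the capability lands in the effective and permitted sets and the inheritable set stays empty, which is exactly what the ping example above sets up.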

More info:

man cap_from_text

Usage

While capabilities are relatively well known to Linux C programmers (they are generally used either in the kernel to limit access to resources, or in user space to drop capabilities while still running as the root user), they’re obscure to most non-programmers.

It’s important to know they exist, for example for forensic purposes, since such programs hold a subset of what’s available to a setuid(0) (i.e. root) program.
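For that kind of forensic sweep, it helps to know that file capabilities are stored in the `security.capability` extended attribute. The Python sketch below decodes that attribute and walks a directory tree looking for it. It assumes the little-endian revision 2/3 layout from `<linux/capability.h>`: a magic/flags word, then the permitted and inheritable masks split into low and high 32-bit words. `decode_vfs_cap`, `mask_to_names` and `scan` are illustrative names, and `os.getxattr` is Linux-only.

```python
import os
import struct

# Capability bit numbers 0-31 from <linux/capability.h>; higher bits are
# reported as "cap_<n>" rather than guessed.
CAP_NAMES = [
    "cap_chown", "cap_dac_override", "cap_dac_read_search", "cap_fowner",
    "cap_fsetid", "cap_kill", "cap_setgid", "cap_setuid", "cap_setpcap",
    "cap_linux_immutable", "cap_net_bind_service", "cap_net_broadcast",
    "cap_net_admin", "cap_net_raw", "cap_ipc_lock", "cap_ipc_owner",
    "cap_sys_module", "cap_sys_rawio", "cap_sys_chroot", "cap_sys_ptrace",
    "cap_sys_pacct", "cap_sys_admin", "cap_sys_boot", "cap_sys_nice",
    "cap_sys_resource", "cap_sys_time", "cap_sys_tty_config", "cap_mknod",
    "cap_lease", "cap_audit_write", "cap_audit_control", "cap_setfcap",
]
VFS_CAP_REVISION_MASK = 0xFF000000
VFS_CAP_FLAGS_EFFECTIVE = 0x000001
SUPPORTED_REVISIONS = (0x02000000, 0x03000000)  # revision 3 appends a root uid


def mask_to_names(mask):
    """Translate a capability bitmask into names, lowest bit first."""
    names, bit = [], 0
    while mask:
        if mask & 1:
            names.append(CAP_NAMES[bit] if bit < len(CAP_NAMES) else f"cap_{bit}")
        mask >>= 1
        bit += 1
    return names


def decode_vfs_cap(blob):
    """Decode a revision 2 or 3 `security.capability` value."""
    if len(blob) < 20:
        raise ValueError("truncated or revision 1 capability attribute")
    magic, perm_lo, inh_lo, perm_hi, inh_hi = struct.unpack_from("<5I", blob)
    revision = magic & VFS_CAP_REVISION_MASK
    if revision not in SUPPORTED_REVISIONS:
        raise ValueError(f"unsupported revision {revision:#x}")
    return {
        "permitted": mask_to_names(perm_lo | (perm_hi << 32)),
        "inheritable": mask_to_names(inh_lo | (inh_hi << 32)),
        # One bit: all permitted capabilities become effective on execve().
        "effective": bool(magic & VFS_CAP_FLAGS_EFFECTIVE),
    }


def scan(root):
    """Yield (path, decoded capabilities) for files under `root` that have any."""
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                blob = os.getxattr(path, "security.capability", follow_symlinks=False)
            except OSError:
                continue  # no such attribute, unsupported filesystem, or unreadable
            yield path, decode_vfs_cap(blob)
```

From the shell, `getcap -r /path` gives a similar recursive listing.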

Here are some more usage examples:

- Make a chroot wrapper that runs as a regular user

- Have a program that can check all files on the system without being root (such as a scanner for vulnerable software or rootkits)

- Enable/Disable use of chattr

- A program that can handle netfilter and network configuration without running as root

- Bind to a port below 1024 without running as root

- Use ptrace on all processes as a regular user (for example, by setting this on gdb)

https://www.insecure.ws/2013/12/17/lesser-known-tool-of-the-day-getcap-setcap-and-file-capabilities/

Henrik Skupin: Automation Development report – week 49/50 2013

It’s getting closer to Christmas, so here is the second-to-last automation development report for 2013. Please also note that all team members who were dedicated to Firefox Desktop automation, including myself, have been transitioned back from the A-Team into the Mozilla QA team. This will give us a better relationship with QA feature owners and let us train them to write automated tests for Firefox. Therefore my posts will be titled “Firefox Automation” in the future.

Highlights

With the latest release of Firefox 26.0, a couple of merges had to be done in our mozmill-tests repository. All of this work was done by Andreea and Andrei on bug 945708.

To get rid of failed attempts to remove files after a Mozmill test run, Henrik worked on a new version of mozfile, which now includes a remove() method. Any code that deletes files should use it, since it retries removing files or directories several times if access is denied on Windows systems.
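The retry idea can be sketched in a few lines of Python. This is not mozfile's actual implementation: `remove_with_retries`, its `attempts` and `delay` parameters, and the choice to retry only on `PermissionError` are assumptions made for illustration.

```python
import os
import shutil
import time


def remove_with_retries(path, attempts=5, delay=0.5):
    """Remove a file or directory tree, retrying when access is denied.

    Hypothetical helper; returns True once `path` is gone (or never existed).
    """
    for attempt in range(1, attempts + 1):
        try:
            if os.path.isdir(path) and not os.path.islink(path):
                shutil.rmtree(path)
            elif os.path.lexists(path):
                os.remove(path)
            return True
        except PermissionError:
            # On Windows a file still held open by another process (for
            # example a browser that is just shutting down) raises "access
            # denied"; wait a little and try again.
            if attempt == attempts:
                raise
            time.sleep(delay)
    return False  # only reached when attempts < 1
```

Retrying only on `PermissionError` keeps genuine errors, such as a bad path type, from being silently swallowed.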

We released Mozmill 2.0.2 with a couple of minor fixes and the file removal fixes mentioned above. Besides that, mozmill-automation 2.0.2 and new mozmill-environments have been released.

We are still working on the remaining issues with Mozmill 2.0 and hope to get them fixed as soon as possible, so that an upgrade to the 2.0.x branch can happen.

Individual Updates

For more granular updates of each individual team member please visit our weekly team etherpad for week 49 and week 50.

Meeting Details

If you are interested in further details and discussions you might also want to have a look at the meeting agenda and notes from the last two Automation Development meetings of week 49 and week 50.

http://www.hskupin.info/2013/12/17/automation-development-report-week-4950-2013/

Nicholas Nethercote: System-wide memory measurement for Firefox OS

Have you ever wondered exactly how all the physical memory in a Firefox OS device is used? Wonder no more. I just landed a system-wide memory reporter which works on any Firefox product running on a Linux system. This includes desktop Firefox builds on Linux, Firefox for Android, and Firefox OS.

This memory reporter is a bit different to the existing ones, which work entirely within Mozilla processes. The new reporter provides measurements for the entire system, including every user-space process (Mozilla or non-Mozilla) that is running. It’s aimed primarily at profiling Firefox OS devices, because we have full control over the code running on those devices, and so it’s there that a system-wide view is most useful.

Here is some example output from a GeeksPhone Keon.

System Other Measurements
397.24 MB (100.0%) -- mem
+--215.41 MB (54.23%) -- free
+--105.72 MB (26.61%) -- processes
| +---57.59 MB (14.50%) -- process(/system/b2g/b2g, pid=709)
| | +--42.29 MB (10.65%) -- anonymous
| | | +--42.25 MB (10.63%) -- outside-brk
| | | | +--41.94 MB (10.56%) -- [rw-p] [69]
| | | | +---0.31 MB (00.08%) ++ (2 tiny)
| | | +---0.05 MB (00.01%) -- brk-heap/[rw-p]
| | +--13.03 MB (03.28%) -- shared-libraries
| | | +---8.39 MB (02.11%) -- libxul.so
| | | | +--6.05 MB (01.52%) -- [r-xp]
| | | | +--2.34 MB (00.59%) -- [rw-p]
| | | +---4.64 MB (01.17%) ++ (69 tiny)
| | +---2.27 MB (00.57%) ++ (2 tiny)
| +---21.73 MB (05.47%) -- process(/system/b2g/plugin-container, pid=756)
| | +--12.49 MB (03.14%) -- anonymous
| | | +--12.48 MB (03.14%) -- outside-brk
| | | | +--12.41 MB (03.12%) -- [rw-p] [30]
| | | | +---0.07 MB (00.02%) ++ (2 tiny)
| | | +---0.02 MB (00.00%) -- brk-heap/[rw-p]
| | +---8.88 MB (02.23%) -- shared-libraries
| | | +--7.33 MB (01.85%) -- libxul.so
| | | | +--4.99 MB (01.26%) -- [r-xp]
| | | | +--2.34 MB (00.59%) -- [rw-p]
| | | +--1.54 MB (00.39%) ++ (50 tiny)
| | +---0.36 MB (00.09%) ++ (2 tiny)
| +---14.08 MB (03.54%) -- process(/system/b2g/plugin-container, pid=836)
| | +---7.53 MB (01.89%) -- shared-libraries
| | | +--6.02 MB (01.52%) ++ libxul.so
| | | +--1.51 MB (00.38%) ++ (47 tiny)
| | +---6.24 MB (01.57%) -- anonymous
| | | +--6.23 MB (01.57%) -- outside-brk
| | | | +--6.23 MB (01.57%) -- [rw-p] [22]
| | | | +--0.00 MB (00.00%) -- [r--p]
| | | +--0.01 MB (00.00%) -- brk-heap/[rw-p]
| | +---0.31 MB (00.08%) ++ (2 tiny)
| +---12.32 MB (03.10%) ++ (23 tiny)
+---76.11 MB (19.16%) -- other

The data is obtained entirely from the operating system, specifically from /proc/meminfo and the /proc/ files, which are files provided by the Linux kernel specifically for measuring memory consumption.

I wish that the mem entry at the top was the amount of physical memory available. Unfortunately there is no way to get that on a Linux system, and so it’s instead the MemTotal value from /proc/meminfo, which is “Total usable RAM (i.e. physical RAM minus a few reserved bits and the kernel binary code)”. And if you’re wondering about the exact meaning of the other entries, as usual if you hover the cursor over an entry in about:memory you’ll get a tool-tip explaining what it means.
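Because the mem entry comes straight from /proc/meminfo, here is a minimal Python sketch of reading that file. `parse_meminfo` and `read_meminfo` are hypothetical helpers. Most fields are reported in kB, but a few (such as `HugePages_Total`) are plain counts, and this sketch stores both kinds as bare numbers without distinguishing them.

```python
def parse_meminfo(text):
    """Parse /proc/meminfo-style text into {field: number}."""
    values = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        if not sep:
            continue  # not a "Field: value" line
        parts = rest.split()
        if parts:
            values[key.strip()] = int(parts[0])  # the unit ("kB"), if any, is dropped
    return values


def read_meminfo(path="/proc/meminfo"):
    """Read and parse the live file (Linux only)."""
    with open(path) as f:
        return parse_meminfo(f.read())
```

On a device, `read_meminfo()["MemTotal"] / 1024` gives the MemTotal value in MB.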

The measurements given for each process are the PSS (proportional set size) measurements. These attribute any shared memory equally among all processes that share it, and so PSS is the only measurement that can be sensibly summed across processes (unlike “Size” or “RSS”, for example).
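As a worked example of that definition, take a fully resident 12 kB shared-library mapping shared by three processes. It adds 12 kB to each process's RSS but only 4 kB to each process's PSS. Summing PSS therefore counts those pages exactly once (3 × 4 kB = 12 kB), whereas summing RSS counts them three times (36 kB). The Python sketch below sums the per-mapping `Pss:` lines of /proc/<pid>/smaps. Reading smaps is this sketch's own choice of data source, and the function names are hypothetical. `system_pss_kb` silently skips processes it cannot read, so it undercounts when run without root.

```python
import os


def total_pss_kb(smaps_text):
    """Sum every 'Pss:' line in /proc/<pid>/smaps-style text (values in kB)."""
    total = 0
    for line in smaps_text.splitlines():
        if line.startswith("Pss:"):  # excludes e.g. "Pss_Dirty:" on newer kernels
            total += int(line.split()[1])
    return total


def system_pss_kb():
    """Best-effort PSS total over all readable processes (Linux only)."""
    total = 0
    for entry in os.listdir("/proc"):
        if not entry.isdigit():
            continue  # only numeric entries are process directories
        try:
            with open(f"/proc/{entry}/smaps") as f:
                total += total_pss_kb(f.read())
        except OSError:
            continue  # process exited, or we lack permission to read it
    return total
```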

For each process there is a wealth of detail about static code and data. (The above example only shows a tiny fraction of it, because a number of the sub-trees are collapsed. If you were viewing it in about:memory, you could expand and collapse sub-trees to your heart’s content.) Unfortunately, there is little information about anonymous mappings, which constitute much of the non-static memory consumption. I have some patches that will add an extra level of detail there, distinguishing major regions such as the jemalloc heap, the JS GC heap, and JS JIT code. For more detail than that, the existing per-process memory reports in about:memory can be consulted. Unfortunately the new system-wide reporter cannot be sensibly combined with the existing per-process memory reporters because the latter are unaware of implicit sharing between processes. (And note that the amount of implicit sharing is increased significantly by the new Nuwa process.)

Because this works with our existing memory reporting infrastructure, anyone already using the get_about_memory.py script with Firefox OS will automatically get these reports along with all the usual ones once they update their source code, and the system-wide reports can be loaded and viewed in about:memory as usual. On Firefox and Firefox for Android, you’ll need to set the memory.system_memory_reporter flag in about:config to enable it.

My hope is that this reporter will supplant most or all of the existing tools that are commonly used to understand system-wide memory consumption on Firefox OS devices, such as ps, top and procrank. And there will certainly be other interesting, available OS-level measurements that are not currently obtained. For example, Jed Davis has plans to measure the pmem subsystem. Please file a bug or email me if you have other suggestions for adding such measurements.

https://blog.mozilla.org/nnethercote/2013/12/17/system-wide-memory-measurement-for-firefox-os/

Aki Sasaki: LWR (job scheduling) part vi: modularity, cross-cluster communication, and moving faster

“The most long-term success tends to come from reducing, to the greatest extent possible, the need for agreement and consensus.”

I think we could rephrase "reducing the need for agreement and consensus" as, "increasing our ability to agree-to-disagree and still work well together". I'm going to talk a bit about modularity, forks, and shared APIs.

modularity

Catlee was pushing for LWR modularity, to distance us from buildbot's monolithic app architecture. I was as well, to a degree, with standalone clusters and delegating pooling decisions to a SlaveAPI/Mozpool analog. If you take that a step further, you reach Catlee's view of it (as I understand it):

- minion¹ allocation module: this could simply iterate down a list of minions for smaller clusters, or ask SlaveAPI/Mozpool for an appropriate minion in the gecko cluster.

- the job launching module could use sshd on the minion pool if the scripts have their own logging and bootstrapping; another version could talk to a custom minion-side daemon.

- The graph-generation and graph-processing pieces could be modular, allowing for different graph- and job- definition formats between clusters.

- the pending jobs piece, the graphs db + api piece, the event api, and configs pieces as well

By making these modular, we reduce the monolithic app to compatible LEGO® pieces that can be mixed and matched and forked if necessary. Plus, it allows for easier development in parallel.
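Here is a minimal Python sketch of what one swappable piece could look like, assuming a hypothetical `MinionAllocator` interface. The scheduler only knows the interface, so a small cluster can use a list-walking allocator like the one in the first bullet, while the gecko cluster could plug in a module that asks SlaveAPI/Mozpool (not shown). None of these names come from an actual LWR codebase.

```python
from typing import List, Optional, Protocol, Sequence


class MinionAllocator(Protocol):
    """Hypothetical pluggable interface for the minion allocation module."""

    def allocate(self, job: str) -> Optional[str]: ...

    def release(self, minion: str) -> None: ...


class ListAllocator:
    """Small-cluster strategy: walk down a fixed list, take the first idle minion."""

    def __init__(self, minions: Sequence[str]):
        self._minions = list(minions)
        self._busy = set()

    def allocate(self, job: str) -> Optional[str]:
        for minion in self._minions:
            if minion not in self._busy:
                self._busy.add(minion)
                return minion
        return None  # nothing idle; the caller keeps the job pending

    def release(self, minion: str) -> None:
        self._busy.discard(minion)


class Scheduler:
    """Depends only on the interface, so allocation modules can be swapped or forked."""

    def __init__(self, allocator: MinionAllocator):
        self.allocator = allocator
        self.pending: List[str] = []

    def submit(self, job: str) -> Optional[str]:
        minion = self.allocator.allocate(job)
        if minion is None:
            self.pending.append(job)
        return minion
```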

Similarly, we had been talking about versioning each API and the job- and graph- definitions, so we wouldn't always be locked in to being backwards-compatible. That would allow us to make faster decisions early on that wouldn't necessarily have major long-term implications. It's easier to fail early + fail often if decisions are easily reversible.

This versioning would also allow us to potentially have different workflows or job+graph definitions between LWR clusters, should we need it. The only requirement here would be that each LWR cluster be internally consistent. This could allow for simpler workflows to operate without the overhead of being fully compatible with a complex workflow; similarly, we wouldn't have to shoehorn a complex workflow onto a simpler one.

¹ Earlier I mentioned that I (and others) wanted to move away from the buildbot master/slave terminology. I think we've settled on minions for the compute farm machines. We're a bit mixed on the server side: Overlord? Professor? Mastermind? I'd prefer to think of the server cluster as more of a traffic cop than anything overarchingly powerful and omniscient, but I'm not too picky here.

cross-cluster communication

As a bit of [additional] background: Catlee sees LWR as event-based. We would define types of events, and what happens when an event occurs. And I mentioned wanting to support graphs-of-graphs, and hinted at potentially supporting cross-project or cross-corporation architectures here and here².

Why not allow LWR clusters to talk to each other? They certainly would be able to, with events. If events involve hitting a specific server on a specific port, that may be tricky if there's a firewall in the way, but that might be solvable with listeners on the DMZ.

Why not allow graphs-of-graphs to reference graphs in another LWR cluster? (If that's feasible.) With this workflow, once the dependencies had all finished, we could trigger a subgraph on another LWR cluster, either via an event or directly in the graph API.

If we're able to read the other cluster's status and/or request a callback event at the end of that subgraph, this could work, and allow for loose coupling between related LWR clusters without requiring their internals be identical.

² While re-reading this post, I noticed that I was writing about next-gen, post-buildbot build infrastructure at Mozilla back in January 2009. My picture back then was a lot more RDBMS-based, but a lot of the concepts still hold.

We put that project on the back burner so we could help Mozilla launch a 1.0. Four 1.0's (or equivalent: Maemo Fennec, Android Fennec, Android native Fennec, B2G) and nearly five years later, it looks like we're finally poised to tackle it, together.

moving faster

I tend to want to tackle the hardest piece of a problem first. It's easier to keep the simpler problems in mind when designing solutions for the hardest problem, than vice versa. In LWR's case, the hardest workflow is the existing Gecko builds and tests.

The initial LWR phase 1 may now involve a smaller-scoped, Gaia-oriented, pull request autoland system. This worried me: if I'm concerned about forward-compatibility with Gecko phase 1, will I be forced into a stop-energy role during the Gaia phase 1 brainstorming? That sounds counterproductive for everyone involved. That is, until I realized that modularity and cross-cluster communication could help us avoid having to make future-proof decisions at the outset. If we don't have to worry that decisions made during phase 1 are irreversible, we'll be able to take more chances and move faster.

If we wanted the Gaia workflow to someday be part of the Gecko dependency graphs, that could happen either by moving those jobs into the Gecko LWR cluster, or by having the Gecko cluster drive the Gaia cluster.

Forked LWR clusters aren't ideal. But blocking Gaia LWR progress on hand-wavy ideas of what Gecko might need in the future isn't helpful. The option of avoiding the tyranny of a single shared workflow or manifest definition frees us to brainstorm the best solution for each, and converge later if it makes sense.

In part 1, I covered where we are currently, and what needs to change to scale up.

In part 2, I covered a high level overview of LWR.

In part 3, I covered some hand-wavy LWR specifics, including what we can roll out in phase 1.

In part 4, I drilled down into the dependency graph.

In part 5, I covered some thoughts on the jobs and graphs db.

We met with the A-team about this a couple of times, and are planning on working on this together!

Now I'm going to take care of some vcs-sync tasks, prep for our next meeting, and start writing some code.

Ludovic Hirlimann: If you value privacy

And are not too far away from Brussels, Belgium, consider attending the biggest PGP signing party in Europe this February. Instructions and details are here: https://fosdem.org/2014/keysigning/

Sean McArthur: client-sessions v0.5

It rather bothered me that client-sessions required a native module, but it used a Proxy so that it would only re-encrypt the cookie if a property changed. Stuck unnaturally 30,000 feet in the air, I spent some time removing the use of the Proxy and using getters and setters instead.
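client-sessions is a Node.js module, so the following is only a Python analog of the getter/setter idea, not its code. Each field gets a property whose setter marks the session dirty, and the (hypothetical) `encrypt` callback runs only when something actually changed. `session_class` and `SessionBase` are illustrative names.

```python
class SessionBase:
    """Holds decoded session data plus the last encrypted cookie value."""

    def __init__(self, data, cookie):
        self._data = dict(data)
        self._cookie = cookie  # cookie value as previously encrypted
        self.dirty = False

    def cookie(self, encrypt):
        # Pay for re-encryption only if some setter recorded a change.
        if self.dirty:
            self._cookie = encrypt(self._data)
            self.dirty = False
        return self._cookie


def _field(name):
    """Build a getter/setter pair for one session field."""

    def get(self):
        return self._data.get(name)

    def set_(self, value):
        if self._data.get(name) != value:  # assigning an equal value is not a change
            self._data[name] = value
            self.dirty = True

    return property(get, set_)


def session_class(*fields):
    """Create a session type with one property per field."""
    return type("Session", (SessionBase,), {name: _field(name) for name in fields})
```

One limitation of this approach: only assignments to top-level fields pass through a setter, so mutating a nested value in place (for example, appending to a stored list) is not detected.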

I also mopped up some bugs while I was at it.

So, v0.5 is live, works better, and such!

Chris Lord: Linking CSS properties with scroll position: A proposal

As I, and many others have written before, on mobile, rendering/processing of JS is done asynchronously to responding to the user scrolling, so that we can maintain touch response and screen update. We basically have no chance of consistently hitting 60fps if we don’t do this (and you can witness what happens if you don’t by running desktop Firefox (for now)). This does mean, however, that you end up with bugs like this, where people respond in JavaScript to the scroll position changing and end up with jerky animation because there are no guarantees about the frequency or timeliness of scroll position updates. It also means that neat parallax sites like this can’t be done in quite the same way on mobile. Although this is currently only a problem on mobile, this will eventually affect desktop too. I believe that Internet Explorer already uses asynchronous composition on the desktop, and I think that’s the way we’re going in Firefox too. It’d be great to have a solution for this problem first.

It’s obvious that we could do with a way of declaring a link between a CSS property and the scroll position. My immediate thought is to do this via CSS. I had this idea for a syntax:

scroll-transition-(x|y): [, ]*

where transition-declaration = ( [, ]+ )

and transition-stop =

This would work quite similarly to standard transitions, where a limited number of properties would be supported, and perhaps their interpolation could be defined in the same way too. Relative scroll position is 0px when the scroll position of the particular axis matches the element’s offset position. This would lead to declarations like this:

scroll-transition-y: opacity( 0px 0%, 100px 100%, 200px 0% ), transform( 0px scale(1%), 100px scale(100%), 200px scale(1%) );

This would define a transition that would grow and fade in an element as the user scrolled it towards 100px down the page, then shrink and fade out as you scrolled beyond that point.

But then Paul Rouget made me aware that Anthony Ricaud had the same idea, but instead of this slightly arcane syntax, to tie it to CSS animation keyframes. I think this is more easily implemented (at least in Firefox’s case), more flexible and more easily expressed by designers too. Much like transitions and animations, these need not be mutually exclusive though, I suppose (though the interactions between them might mean as a platform developer, it’d be in my best interests to suggest that they should :)).

I’m not aware of any existing proposal along these lines, so I’ll describe the syntax I would expect. I think it should inherit from the CSS animation spec, but prefix the animation-* properties with scroll-. Instead of animation-duration, you would have scroll-animation-bounds, which would describe a vector; your distance along that vector would determine the position of the animation. Imagine that this vector was actually a plane that extended infinitely, perpendicular to its direction of travel; your distance along the vector is unaffected by your distance to the vector. In other words, if you had a scroll-animation-bounds that described a line going straight down, your horizontal scroll position wouldn’t affect the animation. Animation keyframes would be defined in exactly the same way.
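To make the scroll-animation-bounds idea concrete, the Python sketch below projects the scroll offset onto the bounds vector to get an animation progress between 0 and 1, then interpolates keyframes at that progress. The `origin` parameter (the scroll position at which progress is 0) and the function names are assumptions that the proposal does not define. Real CSS keyframes would also interpolate whole property values rather than single numbers.

```python
def scroll_progress(scroll, origin, bounds):
    """Project (scroll - origin) onto the bounds vector; clamp to [0, 1]."""
    rx, ry = scroll[0] - origin[0], scroll[1] - origin[1]
    bx, by = bounds
    # The dot product measures distance along the vector only, so movement
    # perpendicular to it (the "plane" in the text) has no effect.
    t = (rx * bx + ry * by) / (bx * bx + by * by)
    return min(1.0, max(0.0, t))


def interpolate(keyframes, t):
    """Linearly interpolate sorted (offset, value) keyframes at progress t."""
    if t <= keyframes[0][0]:
        return keyframes[0][1]
    for (o0, v0), (o1, v1) in zip(keyframes, keyframes[1:]):
        if t <= o1:
            return v0 + (v1 - v0) * (t - o0) / (o1 - o0)
    return keyframes[-1][1]
```

With bounds of (0, 200) pointing straight down, scroll positions (0, 100) and (500, 100) both give a progress of 0.5, matching the point above that horizontal scroll position would not affect the animation.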

[Edit] Paul Rouget suggests that, rather than having a prefixed copy of animation, a new property be introduced, animation-controller, whose default would be time but which could also be set to scroll. We would still need an equivalent to duration, so I would re-purpose my above-suggested property as animation-scroll-bounds.

What do people think about either of these suggestions? I’d love to hear some conversation/suggestions/criticisms in the comments, after which perhaps I can submit a revised proposal and begin an implementation.

http://chrislord.net/index.php/2013/12/16/linking-css-properties-with-scroll-position-a-proposal/

Robert O'Callahan: Blood Clot

I'm tagging this post with 'Mozilla' because many Mozilla people travel a lot.

In September, while in California for a week, I developed pain in my right calf. For a few days I thought it was a muscle niggle but after I got back to New Zealand it kept getting worse and my leg was swelling, so I went to my doctor, who diagnosed a blood clot. A scan confirmed that I had one but it was small and non-threatening. I went to a hospital, got a shot of the anticoagulant clexane to stop the clot growing, and then went home. The next day I was put on a schedule of regular rivaroxaban, an oral anticoagulant. Symptoms abated over the next few days and I haven't had a problem since. No side-effects either. I cut myself shaving at the Mozilla Summit and bled for hours, but that's more of an intended effect than a side effect :-).

I had heard of the dreaded DVT, but have never known anyone with it until now, and apparently the same is true for my friends. Fortunately I didn't have a full-blown DVT since the clot did not reach a "deep vein". Still, I can confirm these clots are real and some of the warnings about them are worth paying attention to :-).

I saw a specialist for a followup visit today. Apparently plane travel is not actually such a high risk so in my case it was probably just a contributing factor, along with other factors such as sitting around too much in my hotel room, possibly genetic factors, and probably some bad luck. (Being generally healthy is no sure protection; ironically, I got this clot when I'm fitter than ever before.) So when flying, getting up to walk around, wiggling your toes, and keeping fluid intake up are all worth doing. I used to do none of them :-).

My long-term prognosis is completely fine as long as I take the above precautions, wear compression socks on flights and take an anticoagulant before flights. I will be given a blood test to screen for known genetic factors.

I did everything through the public health system and it worked very well. Like health systems everywhere, New Zealand's has its good and bad points, but overall I think it's good. At least it doesn't have the obvious flaws of the USA's system (the only other one I've used). In this case I had basically zero paperwork (signed a couple of forms that staff filled out), personal costs of about $20 (standard GP consultation), good care, reasonable wait times, and modern drugs. I was pleased to see sensible things being done to deliver care efficiently; for example, my treatment program was determined by a specialist nurse, who checked it out with a doctor over the phone. New Zealand has a central drug-buying agency, Pharmac, which is a great system for getting good deals from drug companies (which is why they keep trying to undermine it, via TPP most recently ... of course they managed to make the Pharmac approach illegal in the USA :-(.) Rivaroxaban is relatively new and not yet funded by Pharmac, but Bayer has been basically giving it away to try to encourage Pharmac to fund it.

Overall, blood clots can be nasty, but I got off easy. Thank God!

Byron Jones: happy bmo push day!

the following changes have been pushed to bugzilla.mozilla.org:

- [947675] Bug id are sorted in wrong order on the dashboard

- [885148] Commenting via patch details does not clear needinfo flag

- [946353] Attachments should include can_edit field

- [948991] Congratulations on having your first patch approved mail should mention which patch it refers to

- [948961] Add “My Activity” link to account drop down in Mozilla skin

- [928293] Add number of current reviews in queue on user profile

- [949291] add link to a wiki page describing comment tagging

- [946457] Add Ember.attachments API that includes possible flag values

- [947012] The BIDW teams needs a new “product” on BMO

discuss these changes on mozilla.tools.bmo.

Filed under: bmo, mozilla

http://globau.wordpress.com/2013/12/16/happy-bmo-push-day-76/

Tantek Celik: XFN 10th Anniversary

Ten years ago to the day, on 2003-12-15, Eric Meyer, Matthew Mullenweg and I launched XFN 1.0:

- my launch post: XFN

- Matt's: Distributed Social Networking Software

- Eric's: XFN

I'm frankly quite pleased that all three of us have managed to keep those post permalinks working for 10 years, and in Matt's case, across a domain redirect as well (in stark contrast to all the sharecropped silo deaths since).

Since then, numerous implementations and sites deployed XFN, many of which survive to this day (both aforelinked URLs are wiki pages, and thus could likely use some updates!).

Thanks to deployment and use in WordPress, despite the decline of blogrolls, XFN remains the most used distributed (cross-site) social network "format" (as much as a handful of rel values can be called a format).

With successes we've also seen rises and falls, such as the amazing Google Social Graph API which indexed and allowed you to quickly query XFN links across the web, which Google subsequently shut down.

A couple of lessons I think we've learned since:

- Simply using rel="contact" (introduced in v1.1) turned out to be easier and used far more than all of the other granular person-to-person relationship values.

- The most useful XFN building block has turned out to be rel="me" (also introduced in v1.1), first for RelMeAuth, and now for the growing IndieAuth delegated single-sign-on protocol.

Iterations and additions to XFN have developed slowly but steadily on the XFN brainstorming page on the microformats wiki. Most recently, the work on fans and followers has been stable for a couple of years and is thus being used experimentally (as XFN 1.0 itself started), awaiting more experience and implementation adoption.

- rel="follower": a link to someone who is a follower

- rel="following": a link to someone you are following
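Because XFN values are ordinary rel attributes, consuming them needs nothing more than an HTML parser. This Python sketch uses the standard library's `html.parser` to group link targets by XFN value. `xfn_links` and the chosen value set are illustrative. A real RelMeAuth or IndieAuth implementation does considerably more, for example checking that the linked profile links back.

```python
from html.parser import HTMLParser

# The values discussed above; follower/following are still experimental.
XFN_VALUES = {"me", "contact", "follower", "following"}


class RelCollector(HTMLParser):
    """Collect href targets of <a> and <link> elements, keyed by XFN rel value."""

    def __init__(self):
        super().__init__()
        self.links = {}

    def handle_starttag(self, tag, attrs):
        # Also called for self-closing tags such as <link ... />.
        if tag not in ("a", "link"):
            return
        attrs = dict(attrs)
        href = attrs.get("href")
        for rel in (attrs.get("rel") or "").lower().split():  # rel is space-separated
            if href and rel in XFN_VALUES:
                self.links.setdefault(rel, []).append(href)


def xfn_links(html):
    parser = RelCollector()
    parser.feed(html)
    parser.close()
    return parser.links
```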

With the work in the IndieWebCamp community advancing steadily to include the notion of having an "Indie Reader" built into your own personal site, I expect we'll be seeing more publishing and use of follower/following in the coming year.

These days a lot of the latest cutting edge work in designing, developing and publishing independent web sites that work directly peer-to-peer is happening in the IndieWebCamp community, so if you have some experience with XFN you'd like to share, or use-cases for how you want to use XFN on your own site, I recommend you join the #IndieWebCamp IRC channel on Freenode.

Consider also stopping by the upcoming Homebrew Website Club meeting(s) this Wednesday at 18:30, simultaneously in San Francisco and Portland.

Either way, I'm still a bit amazed pieces of a simple proposal on an independent website 10 years ago are still in active use today. I look forward to hearing others' stories and experiences with XFN and how we might improve it in the next 10 years.

Mike Conley: Australis Performance Post-mortem Part 3: As Good As Our Tools

While working on the ts_paint and tpaint regressions, we didn’t just stab blindly at the source code. We had some excellent tools to help us along the way. We also MacGyver’d a few of those tools to do things that they weren’t exactly designed to do out of the box. And in some cases, we built new tools from scratch when the existing ones couldn’t cut it.

I just thought I’d write about those.

MattN’s Spreadsheet

I already talked about this one in my earlier post, but I think it deserves a second mention. MattN has mad spreadsheet skills. Also, it turns out you can script spreadsheets on Google Docs to do some pretty magical things – like pull down a bunch of talos data, and graph it for you.

I think this spreadsheet was amazingly useful in getting a high-level view of all of the performance regressions. It also proved very, very useful in the next set of performance challenges that came along – but more on those later.

MattN’s got a blog post up about his spreadsheet that you should check out.

The Gecko Profiler

This is a must-have for Gecko hackers who are dealing with some kind of performance problem. The next time I hit something performance related, this is the first tool I’m going to reach for. We used a number of tools in this performance work, but I’m pretty sure this was the most powerful one in our arsenal.

Very simply, Gecko ships with a built-in sampling profiler, and there’s an add-on you can install to easily dump, view and share these profiles. That last bit is huge – you click a button, it uploads, and bam – you have a link you can send to someone over IRC to have them look at your profile. It’s sheer gold.

We also built some tools on top of this profiler, which I’ll go into in a few paragraphs.

You can read up on the Gecko Profiler in the official documentation.

Homebrew Profiler

At one point, jaws built a very simple profiler for the CustomizableUI component, to give us a sense of how many times we were entering and exiting certain functions, and how much time we were spending in them.
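Beyond counting entries and exits and timing them, the post does not describe how jaws' profiler worked, and its code is lost. What follows is therefore only a Python analog of the idea: a decorator that records call counts and cumulative wall-clock time per function. The names are hypothetical. The times are inclusive, so a function's total also includes time spent in anything it calls.

```python
import functools
import time
from collections import defaultdict

# Per-function totals, keyed by qualified function name.
stats = defaultdict(lambda: {"calls": 0, "seconds": 0.0})


def profiled(fn):
    """Count calls to `fn` and accumulate the wall-clock time spent inside it."""

    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            return fn(*args, **kwargs)
        finally:
            # Runs on normal return and on exceptions, so every exit is counted.
            entry = stats[fn.__qualname__]
            entry["calls"] += 1
            entry["seconds"] += time.perf_counter() - start

    return wrapper


def report():
    """Print functions sorted by total (inclusive) time, slowest first."""
    ranked = sorted(stats.items(), key=lambda item: item[1]["seconds"], reverse=True)
    for name, entry in ranked:
        print(f"{name}: {entry['calls']} calls, {entry['seconds'] * 1000:.2f} ms")
```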

Why did we build this? To be honest, it’s been too long and I can’t quite remember. We certainly knew about the Gecko Profiler at this point, so I imagine there was some deficiency with the profiler that we were dealing with.

My hypothesis is that this was when we were dealing strictly with the ts_paint / tpaint regression on Windows XP. Take a look at the graphs in my last post again. Notice how UX (red) and mozilla-central (green) converge at around July 1st on Ubuntu? And how OS X finally converges on t_paint around August 1st?

I haven’t included the Windows 7 and 8 platform graphs, but I’m reasonably certain that at this point, Windows XP was the last regressing platform on these tests.

And I know for a fact that we were having difficulty using the Gecko Profiler on Windows XP, due to this bug.

Basically, on Windows XP, the call tree wasn’t interleaving the JavaScript and native-code calls properly, so we couldn’t trust the order of the tree, which made the profile essentially useless. This was a serious problem, and we weren’t sure how to work around it at the time.

And so I imagine that this is what prompted jaws to write the homebrew profiler. And it worked – we were able to find sections of CustomizableUI that were causing unnecessary reflow, or taking too long doing things that could be shortcutted.

I don’t know where jaws’ homebrew profiler is – I don’t have the patch on my machine, and somehow I doubt he does either. It was a tool of necessity, and I think we moved past it once we sorted out the Windows XP stack interleaving thing.

And how did we do that, exactly?

Using the Gecko Profiler on Windows XP

jaws’ profiler got us some good data, but it was limited in scope, since it only paid attention to CustomizableUI. Thankfully, at some point, Vladan from the Perf team figured out what was going wrong with the Gecko Profiler on Windows XP, and gave us a workaround that lets us get proper profiles again. I have since updated the Gecko Profiler MDN documentation to point to that workaround.

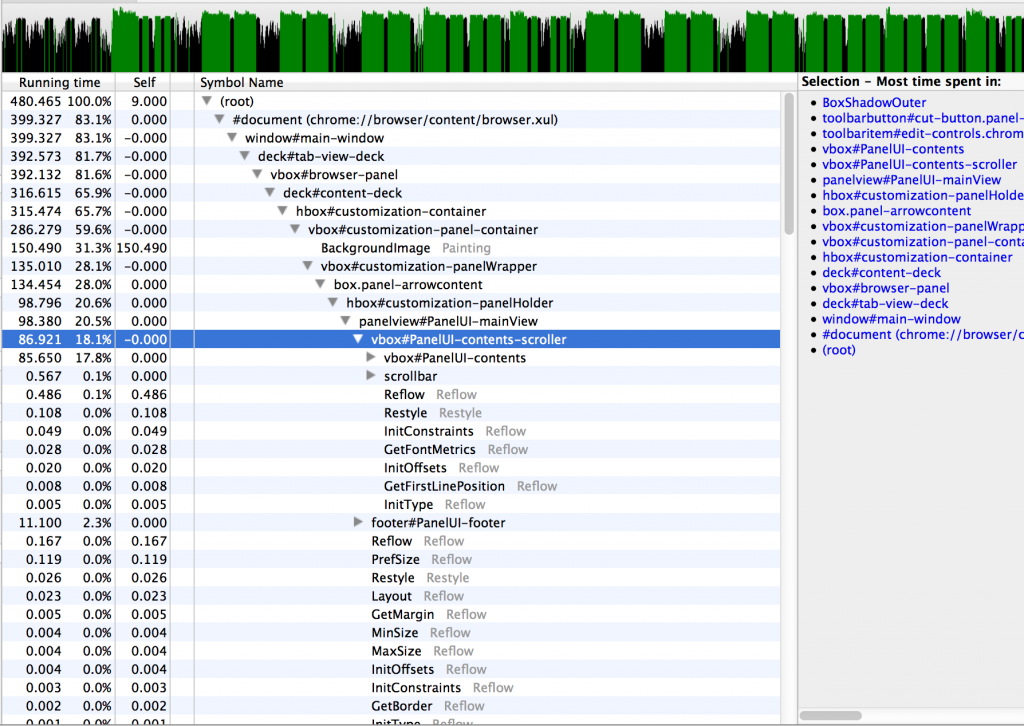

Reflow Profiles

This is where we start getting into some really neat stuff. So while we were hacking on ts_paint and tpaint, Markus Stange from the layout team wrote a patch for Gecko to take “reflow profiles”. This is a pretty big deal – instead of telling us what code is slow, a reflow profile tells us what things take a long time to layout and paint. And, even better, it breaks it down by DOM id!

This was hugely powerful, and I really hope something like this can be built into the Gecko Profiler.

Markus’ patch can be found in this bug, but it’ll probably require de-bitrotting. If and when you apply it, you need to run Firefox with an environment variable MOZ_REFLOW_PROFILE_FILE pointing at the file you’d like the profile written out to.

Once you have that profile, you can view it on Markus’ special fork of the Gecko Profiler viewer.

This is what a reflow profile looks like:

I haven’t linked to one I’ve shared because reflow profiles tend to be very large – too large to upload. If you’d like to muck about with a real reflow profile, you can download one of the reflow profiles attached to this bug and upload it to Markus’ Gecko Profiler viewer.

These reflow profiles were priceless throughout all of the Australis performance work. I cannot stress that enough. They were a way for us to focus on just a facet of the work that Gecko does – layout and painting – and determine whether or not our regressions lay there. If they did, that meant that we had to find a more efficient way to paint or layout. And if the regressions didn’t show up in the reflow profiles, that was useful too – it meant we could eliminate graphics and layout from our pool of suspects.

Comparison Profiles

Profiles are great, but you know what’s even better? Comparison profiles. This is some more Markus Stange wizardry.

Here’s the idea – we know that ts_paint and tpaint have regressed on the UX branch. We can take profiles of both the UX and mozilla-central. What if we can somehow use both profiles and find out what UX is doing that’s uniquely different and uniquely slow?

Sound valuable? You’re damn right it is.

The idea goes like this – we take the “before” profile (mozilla-central), and weight all of its samples by -1. Then, we add the samples from the “after” profile (UX).

The stuff that is positive in the resulting profile is an indicator that UX is slower in that code path. The stuff that is negative means that UX is faster.

How did we do this? Via these scripts. There’s a script in this repository called create_comparison_profile.py that does all of the work in generating the final comparison profile.
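The script itself isn’t reproduced in this post. As a rough illustration of the weighting idea only, here is a minimal Python sketch, assuming each sample is just a tuple of frame names from root to leaf and that both profiles use the same sampling interval. The real profile format is much richer, and `comparison_profile` is a hypothetical name, not the script’s API.

```python
from collections import Counter
from typing import Iterable, Tuple

# A sample is modelled as a call stack: frame names from the root to the leaf.
Stack = Tuple[str, ...]


def inclusive_weights(samples: Iterable[Stack], weight: int) -> Counter:
    """Add `weight` to every call path (stack prefix) that appears in each sample."""
    totals: Counter = Counter()
    for stack in samples:
        for depth in range(1, len(stack) + 1):
            totals[stack[:depth]] += weight
    return totals


def comparison_profile(before: Iterable[Stack], after: Iterable[Stack]) -> Counter:
    """Weight "before" samples by -1, "after" samples by +1, and sum per call path.

    Positive totals mark call paths where "after" spent more samples (slower);
    negative totals mark paths where it spent fewer (faster).
    """
    combined = inclusive_weights(before, -1)
    combined.update(inclusive_weights(after, +1))  # Counter.update adds counts
    return combined
```

In this toy model the root’s value is simply the number of “after” samples minus the number of “before” samples, so it depends on how long each profile recorded as well as on what the code did.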

Here’s a comparison profile to look at, with mozilla-central as “before” and UX as “after”.

Now I know what you’re thinking – Mike – the root of that comparison profile is a negative number, so doesn’t that mean that UX is faster than mozilla-central?

That would seem logical based on what I’ve already told you, except that talos consistently returns the opposite opinion. And here’s where I expose some ignorance on my part – I’m simply not sure why that root node is negative when we know that UX is slower. I never got a satisfying answer to that question. I’ll update this post if I find out.

What I do know is that drilling into the high positive numbers of these comparison profiles yielded very valuable results. It allowed us to quickly determine what was uniquely slow about UX.

And in performance work, knowing is more than half the battle – knowing what’s slow is most of the battle. Fixing it is often the easy part – it’s the finding that’s hard.

Oh, and I should also point out that these scripts were able to generate comparison profiles for reflow profiles as well. Outstanding!

Profiles from Talos

Profiling locally is all well and good, but in the end, if we don’t clear the regressions on the talos hardware that runs the tests, we’re still not good enough. So that means gathering profiles on the talos hardware.

So how do we do that?

Talos is not currently baked into the mozilla-central tree. Instead, there’s a file called testing/talos/talos.json that knows about a talos repository and a revision in that repository. The talos machines then pull talos from that repository, check out that revision, and execute the talos suites on the build of Firefox they’ve been given.

We were able to use this configuration to our advantage. Markus cloned the talos repository, and modified the talos tests to be able to dump out both SPS and reflow profiles into the logs of the test runs. He then pushed those changes to his user repository for talos, and then simply modified the testing/talos/talos.json file to point to his repo and the right revision.

The upshot was that Try would happily clone Markus’ talos, and we’d get profiles in the test logs on talos hardware! Brilliant!

Now we were cooking with gas – reflow and SPS profiles from the test hardware. Could it get better?

Actually, yes.

Getting the Good Stuff

When the talos tests run, the stuff we really care about is the stuff being timed. We care about how long it takes to paint the window, but not how long it takes to tear down the window. Unfortunately, things like tearing down the window get recorded in the SPS and reflow profiles, and that adds noise.

Wouldn’t it be wonderful to get samples just from the stuff we’re interested in? Just to get samples only when the talos test has its stopwatch ticking?

It’s actually easier than it sounds. As I mentioned, Markus had cloned the talos tests, and he was able to modify tpaint and ts_paint to his liking. He made it so that just as these tests started their stopwatches (waiting for the window to paint), an SPS profile marker was added to the sample taken at that point. A profile marker simply allows us to decorate a sample with a string. When the stopwatch stopped (the window had finished painting), we added another marker to the profile.

With that done, the extraction scripts simply had to exclude all samples that didn’t occur between those two markers.
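The extraction step amounts to a simple filter. This is a minimal Python sketch, not Markus’ actual scripts: the `Sample` shape and the marker strings `"timer-start"` / `"timer-stop"` are hypothetical stand-ins for whatever the modified talos tests emitted.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Sample:
    time_ms: float
    stack: Tuple[str, ...]
    # Strings attached to this sample via profile markers (hypothetical representation).
    markers: List[str] = field(default_factory=list)


def samples_between_markers(samples: List[Sample], start: str, stop: str) -> List[Sample]:
    """Keep only samples taken while the test's stopwatch was running.

    Samples are assumed to be in time order. The sample carrying the start marker
    is kept, the one carrying the stop marker is dropped, and several start/stop
    pairs (e.g. one per opened window) are all honoured.
    """
    kept: List[Sample] = []
    recording = False
    for sample in samples:
        if start in sample.markers:
            recording = True
        if stop in sample.markers:
            recording = False
        if recording:
            kept.append(sample)
    return kept
```

Everything outside the marked span, such as window teardown, simply never reaches the final profile.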

The end result? Super concentrated profiles. It’s just the stuff we care about. Markus made it work for reflow profiles too – it was really quite brilliant.

And I think that pretty much covers it.

Lessons

- If you don’t have the tools you need, go get them.

- If the tools you need don’t exist, build them, or find someone who can. That someone might be Markus Stange.

- If the tools you need are broken, fix them, or find someone who can.

So with these amazing tools we were eventually able to grind down our ts_paint and tpaint regressions into dust.

And we celebrated! We were very happy to clear those regressions. We were all clear to land!

Or so we thought. Stay tuned for Part 4.

http://mikeconley.ca/blog/2013/12/14/australis-performance-post-mortem-part-3-as-good-as-our-tools/

|

|

John O'Duinn: The financial cost of a checkin (part 2) |

My earlier blog post shows how much we spend per checkin using AWS “on demand” instances. Rail and catlee have been working on using much cheaper AWS spot instances (see here and here for details).

As of today, AWS spot instances are being used for 30-40% of our linux test jobs in production. We continue to monitor closely, and ramp up more every day. Builds are still being done on on-demand instances, but even so, we’re already seeing this work reduce our costs on AWS: our AWS cost per checkin is now USD$26.40 (down from $30.60), broken out as follows: USD$8.44 (down from $11.93) for Firefox builds/tests, USD$5.31 (unchanged) for Fennec builds/tests and USD$12.65 (down from $13.36) for B2G builds/tests.
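As a quick arithmetic check of the figures above, the three per-checkin components add up to the quoted totals, and the drop works out to roughly 13.7% per checkin:

```python
# Per-checkin AWS costs in USD, as quoted above.
before = {"Firefox": 11.93, "Fennec": 5.31, "B2G": 13.36}
after = {"Firefox": 8.44, "Fennec": 5.31, "B2G": 12.65}

total_before = round(sum(before.values()), 2)       # 30.6
total_after = round(sum(after.values()), 2)         # 26.4
saving = round(total_before - total_after, 2)       # 4.2
saving_pct = round(100 * saving / total_before, 1)  # 13.7

print(f"${total_before:.2f} -> ${total_after:.2f} per checkin, saving ${saving:.2f} ({saving_pct}%)")
```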

It is worth noting that our AWS bill for November was *down*, even though our checkin load was *up*. Spot instances get more attention because they are more technically interesting, but for the record, only a small percentage of this cost saving came from using spot instances. Most of the November savings came from the less-glamorous work of identifying and turning off unwanted tests: ~20% of our overall load was no longer needed.

Note:

- A spot instance can be deleted out from under you at zero notice, killing your job-in-progress, if someone else bids more than you for that instance. We’re only seeing ~1% of jobs on spot instances being killed. We’ve changed our scheduling automation so that any spot job which is killed will automatically be re-triggered on a *non-spot* instance. Given the significant cost savings, a developer might tolerate a delay/restart once, but they would quickly become frustrated if a job was unlucky enough to be killed multiple times.

- When you ask for an on-demand instance, it is almost instant. By contrast, when you ask for a spot instance, you have no guarantee of how quickly Amazon will provide it. We’ve seen delays of up to 20-30 minutes, all totally unpredictable. Handling delays like this requires changes to our automation logic. That work is well in progress, but there is still work to be done.

- This post only includes costs for AWS jobs, but we run a lot more builds+tests on in-house machines. Cshields continues to work with mmayo to calculate TCO (Total Cost of Ownership) numbers for the different physical machines Mozilla runs in Mozilla colos. Until we have accurate numbers from IT, I’ve set those in-house costs to $0.00. This is obviously unrealistic, but felt better than confusing this post with inaccurate data.

- The Amazon prices used here are “OnDemand” prices. For context, Amazon Web Services offers 4 different price brackets for each type of machine:

** OnDemand Instance: The most expensive. No need to prepay. Get an instance in your requested region, within a few seconds of asking. Very high reliability – out of the hundreds of instances that RelEng runs daily, we’ve only lost a few instances over the last ~18 months. Our OnDemand builders cost us $0.45 per hour, while our OnDemand testers cost us $0.12 per hour.

** 1 year Reserved Instance: Pay in advance for 1 year of use, get a discount from OnDemand price. Functionally, totally identical to OnDemand, the only change is in billing. Using 1 year Reserved Instances, our builders would cost us $0.25 per hour, while our 1 year Reserved Instance testers would cost us $0.07 per hour.

** 3 year Reserved Instances: Pay in advance for 3 years of use, get a discount from OnDemand price. Functionally, totally identical to OnDemand, the only change is in billing. Using 3 year Reserved Instances, our builders would cost us $0.20 per hour, while our 3 year Reserved Instance testers would cost us $0.05 per hour.

** Spot Instances: The cheapest. No need to prepay. Like a live auction, you bid how much you are willing to pay, and so long as you are the highest bidder, you’ll get an instance. This price varies throughout the day, depending on what demand other companies place on that AWS region. We’re using a fixed bid of $0.025, and in the future, it might be possible to save even more by doing trickier dynamic bidding.
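To make the brackets concrete, here is a small Python sketch comparing the hourly prices quoted above. It treats the fixed $0.025 spot bid as a ceiling on the tester price (with spot instances you pay the market price, which can be lower than your bid). Pairing the bid with testers is an assumption based on spot currently being used for test jobs, and the dictionary keys are just labels chosen for this sketch.

```python
# Hourly prices in USD quoted in this post. The "spot" entry is the fixed bid,
# used here as an upper bound on what a spot tester costs per hour (assumption).
HOURLY_RATES = {
    "builder": {"on-demand": 0.45, "reserved-1y": 0.25, "reserved-3y": 0.20},
    "tester": {"on-demand": 0.12, "reserved-1y": 0.07, "reserved-3y": 0.05, "spot": 0.025},
}


def cost(role: str, bracket: str, hours: float) -> float:
    """Cost in USD of running one machine of `role` for `hours` in `bracket`."""
    return round(HOURLY_RATES[role][bracket] * hours, 2)


def saving_vs_on_demand(role: str, bracket: str) -> float:
    """Percentage saved per hour compared with the OnDemand price for the same role."""
    on_demand = HOURLY_RATES[role]["on-demand"]
    return round(100 * (1 - HOURLY_RATES[role][bracket] / on_demand), 1)


for role, rates in HOURLY_RATES.items():
    for bracket in rates:
        print(f"{role:8} {bracket:12} {saving_vs_on_demand(role, bracket):5.1f}% cheaper than OnDemand")
```

On these numbers the spot bid is roughly a fifth of the OnDemand tester price (about 79% cheaper), while 3 year reservations save about 56-58%.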

More news as we have it.

John.

http://oduinn.com/blog/2013/12/13/the-financial-cost-of-a-checkin-part-2/

|

|

Cameron McCormack: CSS Variables in Firefox 29 |

One of the most frequent requests to the CSS Working Group over the years has been to have some kind of support for declaring and using variables in style sheets. After much discussion, the CSS Custom Properties for Cascading Variables specification took the approach of allowing the author to specify custom properties in style rules that cascade and inherit as other inherited properties do. References to variables can be made in property values using the var() functional syntax.

Custom properties that declare variables must all have names beginning with var-. The value of these custom properties is nearly freeform: it can be nearly any stream of tokens, as long as it is balanced.

For example, an author might declare some common values on a style rule that matches the root element, so that they are available on every element in the document:

```css
:root {
  var-theme-colour-1: #009EE0;
  var-theme-colour-2: #FFED00;
  var-theme-colour-3: #E2007A;
  var-spacing: 24px;
}
```

Variables can be referenced at any position within the value of another property, including other custom properties. The variables in the style sheet above can be used, for example, as follows:

```css
h1, h2 {
  color: var(theme-colour-1);
}

h1, h2, p {
  margin-top: var(spacing);
}

em {
  background-color: var(theme-colour-2);
}

blockquote {
  margin: var(spacing) calc(var(spacing) * 2);
  padding: calc(var(spacing) / 2) 0;
  border-top: 2px solid var(theme-colour-3);
  border-bottom: 1px dotted var(theme-colour-3);
  font-style: italic;
}
```

When applied to this document:

```html
<h1>The title of the document</h1>
<h2>A witty subtitle</h2>
<p>Please consider the following quote:</p>
<blockquote>Text of the quote goes here.</blockquote>
```

the result would be something that looks like this:

Variables are resolved based on the value of the variable on the element that the property with a variable reference is applied to. If the h2 element had a style="var-theme-colour-1: black" attribute on it, then the h2 { color: var(theme-colour-1); } rule would be resolved using that value rather than the one specified in the :root rule.
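This per-element resolution can be modelled in a few lines. This is a toy Python sketch, not Gecko’s implementation: it assumes custom properties simply inherit down the element tree and are overridden by declarations on the element itself (including a `style` attribute), and it keys variables by the name used inside `var()`, without the `var-` prefix.

```python
from typing import Dict, Optional


class Element:
    def __init__(self, tag: str, parent: Optional["Element"] = None,
                 declared: Optional[Dict[str, str]] = None) -> None:
        self.tag = tag
        self.parent = parent
        # Variables declared on this element by matching rules or a style attribute.
        self.declared = declared or {}

    def variables(self) -> Dict[str, str]:
        """Computed variables: inherited from the parent, overridden locally."""
        inherited = self.parent.variables() if self.parent else {}
        return {**inherited, **self.declared}

    def lookup(self, name: str) -> Optional[str]:
        """Value of var(name) as seen by a property applied to this element."""
        return self.variables().get(name)
```

With `h2 = Element("h2", body, declared={"theme-colour-1": "black"})`, `h2.lookup("theme-colour-1")` returns `"black"`, while a sibling `h1` still sees the value declared on the root.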

Variable references can also include a fallback value to use in case the variable is not defined or is invalid (due to being part of a variable reference cycle). The first rule in the style sheet using the variables could be rewritten as:

```css
h1, h2 {
  color: var(theme-colour-1, rgb(14, 14, 14));
}
```

which would result in the colour being a dark grey if the theme-colour-1 variable is not defined on one of the heading elements.

Since variable references are expanded using the variable value on the element, this process must be done while determining the computed value of a property. Whenever there is an error during the variable substitution process, it causes the property to be “invalid at computed-value time”. These errors can be due to referencing an undeclared variable with no fallback, or because the substituted value for the property does not parse (e.g. if we assigned a non-colour value to the theme-colour-1 variable and then used that variable in the ‘color’ property). When a property is invalid at computed-value time, the property declaration itself is parsed successfully and will be visible if you inspect the CSSStyleDeclaration object in the DOM. The computed value of the property will take on a default value, however. For inherited properties, like ‘color’, the default value will be ‘inherit’. For non-inherited properties, it is ‘initial’.
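The substitution rules described above (fallbacks, reference cycles, and falling back to ‘inherit’ or ‘initial’) can also be sketched. Again this is a simplified Python illustration using the draft `var()` syntax from this post, not Gecko’s code. Whether a substituted value parses is delegated to a caller-supplied `is_valid` predicate, and `INHERITED_PROPERTIES` is a tiny hypothetical subset of the real list.

```python
from typing import Callable, Dict, FrozenSet, List, Optional

INHERITED_PROPERTIES = {"color", "font-style"}  # tiny illustrative subset


class InvalidAtComputedValueTime(Exception):
    """Raised when a var() reference cannot be substituted."""


def _resolve_variable(name: str, variables: Dict[str, str], seen: FrozenSet[str]) -> Optional[str]:
    """Fully expanded value of a variable, or None if undefined, cyclic or itself invalid."""
    if name in seen or name not in variables:
        return None
    try:
        return substitute(variables[name], variables, seen | {name})
    except InvalidAtComputedValueTime:
        return None


def substitute(value: str, variables: Dict[str, str], seen: FrozenSet[str] = frozenset()) -> str:
    """Expand every var(name) or var(name, fallback) reference in `value`."""
    out: List[str] = []
    i = 0
    while (start := value.find("var(", i)) != -1:
        out.append(value[i:start])
        # Find the matching ')', allowing nested functions such as rgb() in the fallback.
        depth, j = 0, start + 3
        while True:
            if j >= len(value):
                raise InvalidAtComputedValueTime("unbalanced var()")
            if value[j] == "(":
                depth += 1
            elif value[j] == ")":
                depth -= 1
                if depth == 0:
                    break
            j += 1
        name, comma, fallback = value[start + 4:j].partition(",")
        resolved = _resolve_variable(name.strip(), variables, seen)
        if resolved is None:
            if not comma:
                raise InvalidAtComputedValueTime(f"no usable value for {name.strip()}")
            resolved = substitute(fallback.strip(), variables, seen)
        out.append(resolved)
        i = j + 1
    out.append(value[i:])
    return "".join(out)


def computed_value(prop: str, value: str, variables: Dict[str, str],
                   is_valid: Callable[[str], bool] = lambda v: True) -> str:
    """Substitute variables; if that fails, the property is invalid at computed-value time."""
    try:
        result = substitute(value, variables)
        if is_valid(result):
            return result
    except InvalidAtComputedValueTime:
        pass
    # Default for an invalid property: 'inherit' if inherited, otherwise 'initial'.
    return "inherit" if prop in INHERITED_PROPERTIES else "initial"
```

For example, `var(missing, rgb(14, 14, 14))` yields the fallback, a bare `var(missing)` on ‘color’ yields `inherit`, and a variable caught in a cycle behaves as if it were undefined.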

Implementation

An initial implementation of CSS Variables has just landed in Firefox Nightly, which is currently at version 29. The feature is not yet enabled for release builds (that is, Firefox Beta and the release version of Firefox), as we are waiting for a few issues to be resolved in the specification and for it to advance a little further in the W3C Process before making it more widely available. It will, however, continue to be available in Nightly and, after the February 3 merge, in Firefox Aurora.

The only part of the specification that has not yet been implemented is the CSSVariableMap part, which provides an object that behaves like an ECMAScript Map, with get, set and other methods, to get the values of variables on a CSSStyleDeclaration. Note, however, that you can still get at them in the DOM by using the getPropertyValue and setProperty methods, as long as you use the full property names such as "var-theme-colour-1".

The work for this feature was done in bug 773296, and my thanks to David Baron for doing the reviews there and to Emmanuele Bassi who did some initial work on the implementation. If you encounter any problems using the feature, please file a bug!

|

|

Eric Shepherd: Kuma update: December 13, 2013 |

We’ve pushed a number of updates to Kuma (the software that powers the MDN web site) lately. Here are a few of the more interesting changes:

- Our redesigned user experience has launched! Key features include a new, more attractive look and feel, sidebar navigation in several areas of the site to help you get around specific APIs and technology areas, and more.

- A new search engine is in place, which not only searches better, but provides filters to let you narrow down your search more easily.

- Pages’ tables of contents no longer have numbers next to each item, and items are indented by heading level. This will make the TOCs much more readable.

- A bug causing menus to be cut off on mobile devices has been fixed.

- A bug causing horizontal scrolling on mobile devices has been fixed.

- The initialization of “Tabzilla” (the unified Mozilla tab at the top of the page) is now done in a different place, which will improve page load performance.

- We’ve removed some unused CSS and JavaScript, which hopefully will help load times a little bit.

- The “Interested in reading this offline” link that took you to a page suggesting you download some apps that just provide a different way to read MDN has been removed. This was an experiment that somehow never ended.

- When editing profiles, the correct email address is now displayed.

- Several legacy files have been removed.

There are a ton of nice improvements! The team will be focusing on fixing performance issues for the next few weeks. Other stuff will be happening too, but we’re going to make a big push to try to improve our load times.

http://www.bitstampede.com/2013/12/13/kuma-update-december-13-2013/

|

|

Chris McAvoy: BadgeKit Future! |

This is the second post in a two-part series on BadgeKit. In the first part, BadgeKit Now, I looked at how you can set up a version of BadgeKit on your own servers today.

BadgeKit is coming! Like I wrote last time, it’s already here. You can pull a handful of Mozilla Open Badges projects together, host them on your servers and build a complete badge platform for your project. All that’s easy to say, but not easy to do. BadgeKit will streamline launching an open badge system so that just about anybody can issue open badges with an open platform that grows with them.

Sunny and Jess wrote an overview of where we want to get to by March: an MVP beta release for the Digital Media & Learning conference. The release will give users two ways to work with BadgeKit:

- SaaS – define your badge system on the fully hosted site (or your self-hosted site), integrate the system with your existing web applications through our APIs.

- Self hosted – the full experience outlined above, but hosted on your own servers.

In both cases, the issuers build a website that interacts with our APIs to offer the badges to their communities.

The project divides easily into two big buckets: first, building out the APIs we created in Open Badger, Aestimia and the Backpack; second, building a solid administrative toolkit to define badges and assemble them into larger badge systems. Issuers will use the toolkit (which will live at badgekit.org) to design and launch their badge systems, then integrate the community-facing portions of the system into their own site (preserving their custom look and feel) with our APIs. Here’s a quick sketch of what it will all look like:

Lots of APIs

More Flexibility

Last week, in the BadgeKit Now post, we talked about a few services: OpenBadger, Aestimia, Badge Studio, a ‘badging site’ and the backpack. None of those pieces are going away, but they are all going to get a makeover: each is stripped down to the roots and rebuilt with an emphasis on its web API. We’re going to standardize on MySQL as a datastore, explore Node.js streaming APIs for database access and output, and rebuild the account authentication model to allow the services to support more than a single ‘badge system’.

Data Model

The current data model uses a three-tier ownership chain to define a single badge: a badge belongs to a program, a program belongs to an issuer, and an issuer is part of a badge system by being defined in the instance of Open Badger. There’s a one-to-one mapping of a ‘badge system’ like Chicago Summer of Learning to an instance of Open Badger. Adding an extra layer, so that a badge belongs to a program, a program belongs to an issuer, and an issuer is part of a badge system that sits beside other badge systems in a single instance of Open Badger, will open us up to hosting several large systems per ‘cluster’ of Open Badger servers. We’re also breaking the one-to-many relationships of badge to program and program to issuer, allowing badges to belong to multiple programs, programs to multiple issuers and issuers to multiple systems, which gives us more of a graph-like collection of objects.
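The graph-like model can be sketched with plain many-to-many edge sets. This is a hypothetical Python illustration, not Open Badger’s schema; in a relational store like the MySQL datastore mentioned above, each edge set would naturally become a join table.

```python
from dataclasses import dataclass, field
from typing import Set, Tuple

Edge = Tuple[str, str]  # (child name, parent name)


@dataclass
class BadgeGraph:
    """Many-to-many links between badges, programs, issuers and badge systems."""
    badge_programs: Set[Edge] = field(default_factory=set)
    program_issuers: Set[Edge] = field(default_factory=set)
    issuer_systems: Set[Edge] = field(default_factory=set)

    def systems_for_badge(self, badge: str) -> Set[str]:
        """Follow badge -> program -> issuer -> system; a badge may reach several systems."""
        programs = {p for b, p in self.badge_programs if b == badge}
        issuers = {i for p, i in self.program_issuers if p in programs}
        return {s for i, s in self.issuer_systems if i in issuers}
```

Because every link is just an edge, a badge shared by two programs run by different issuers shows up in both of their badge systems without being duplicated.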

Authentication and Authorization

This collection of applications authenticates the identity of each piece in the chain by signing API calls with JSON Web Tokens. The key used in each app is configured as an environment variable; as long as each app knows the key, it can read from and write to other applications with the same key. When we build badge systems that are co-hosted but span multiple applications (Open Badger talking to the badge site, the badge site passing messages to Aestimia), we’ll have to change the way keys are distributed between the applications. Client sites’ API keys will give them access to the hosted APIs.
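To show why a shared key is enough for these apps to trust each other, here is a minimal, standard-library-only Python sketch of HS256 JSON Web Token signing and verification. It is an illustration rather than BadgeKit’s code: the claim names and the `BADGEKIT_SHARED_SECRET` environment variable are hypothetical, and a production service would more likely use a maintained JWT library and also check claims such as expiry.

```python
import base64
import hashlib
import hmac
import json
import os


def _b64url(data: bytes) -> str:
    """Base64url without padding, as used in JWT compact serialization."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    """Inverse of _b64url: restore the stripped padding, then decode."""
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def _signature(signing_input: str, key: bytes) -> str:
    return _b64url(hmac.new(key, signing_input.encode("ascii"), hashlib.sha256).digest())


def sign_request(claims: dict, key: bytes) -> str:
    """Return an HS256-signed JWT carrying `claims`."""
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = ".".join(
        _b64url(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, claims)
    )
    return f"{signing_input}.{_signature(signing_input, key)}"


def verify_request(token: str, key: bytes) -> dict:
    """Check the token with the shared key and return its claims, or raise ValueError."""
    header_b64, payload_b64, signature = token.split(".")
    if json.loads(_b64url_decode(header_b64)).get("alg") != "HS256":
        raise ValueError("unexpected algorithm")
    expected = _signature(f"{header_b64}.{payload_b64}", key)
    if not hmac.compare_digest(signature, expected):
        raise ValueError("bad signature")
    return json.loads(_b64url_decode(payload_b64))


# Each app reads the same secret from its environment (variable name is hypothetical).
SHARED_KEY = os.environ.get("BADGEKIT_SHARED_SECRET", "dev-only-secret").encode("utf-8")
```

Because HS256 is symmetric, any app that can verify a token can also mint one, which is why key distribution matters once several badge systems share hosted services.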

What’s Next?

You can follow the development of all these pieces on GitHub and read more about the system as it develops on Planet Badges.

|

|

Ben Hearsum: This week in Mozilla RelEng – December 13th, 2013 |

I thought it might be interesting for folks to get an overview of the rate of change of RelEng infrastructure and perhaps a better idea of what RelEng work looks like. The following list was generated by looking at Release Engineering bugs that were marked as FIXED in the past week. Because it looks at only the “Release Engineering” product in Bugzilla it doesn’t represent everything that everyone in RelEng did, nor was all the work below done by people on the Release Engineering team, but I think it’s a good starting point!

- Emergency responses:
  - disable updates for Windows, until the Windows nightly on the second rev finishes
  - bouncer checks failing

- Regularly scheduled work:
  - b2g merge day work
  - Migrate mozilla-beta and comm-beta during 09-dec-2013 merge duty
  - Kill mozilla-esr17 (2 versions after FF24 ships)
  - Need automatic hsts preload list updates on esr24
  - tracking bug for build and release of Firefox 24.2.0 ESR
  - Disable Aurora 28 daily updates until merge to mozilla-aurora has stabilized
  - tracking bug for build and release of Firefox and Fennec 26.0
  - Remove old b2g branches: mozilla-b2g18_v1_0_1 and mozilla-b2g18_v1_0_0
  - Disable Desktop Nightly builds and Windows 32 builds on b2g18 and b2g18_v1_1_0hd
  - tracking bug for build and release of Firefox and Fennec 27.0b1

- General automation changes:
  - Run Valgrind builds on every push
  - Use mozinstall for installing b2g desktop builds in gaia-ui-tests script
  - Trigger a retry for metro talos suites that fail to initialize metro browser
  - Tests on the jetpack tree always test the most recent version of jetpack, not the push they are meant to test
  - Servo build’s backup-rust step breaks on first-time slaves
  - rootanalysis should be --enable-threadsafe
  - Please schedule jit-tests on Try
  - Please schedule cpp unittests on Try
  - Collect and report output from Android x86 emulator
  - Check connectivity to android emulators before and after tests
  - blobber failed to upload minidump but didn’t give any useful debugging information
  - Schedule b2g desktop mochitests on Cedar for OSX
  - Run Valgrind builds on all mozilla-central-based trees (inbound, try, fx-team, etc)
  - rootanalysis should be --enable-threadsafe
  - Please schedule cpp unittests on all trunk trees and make them ride the trains
  - OSX 10.6 talos is running on b2g-inbound
  - npm mirror script fails occasionally
  - Linkify and improve spidermonkey_build TinderboxPrint messages
  - Marionette mozharness script should use proper xre.zip if running on osx
  - Make it possible to perform editable installs in mozharness virtualenvs
  - legacy vcs-sync process “silently” ignores missing repositories
  - Schedule debug b2g emulator xpcshell tests on all trunk branches
  - Hf builds need to output failures in a tbpl-recognizable way

- Build/test platform changes:
  - Migrate w64-ix-slave day7 batch1 to rev2 image
  - Manage slave secrets with puppet
  - can’t ssh to some w64 rev2 machines

- Build/test machine requests:

- Other:

http://hearsum.ca/blog/this-week-in-mozilla-releng-december-13th-2013/

|

|

Chris AtLee: Valgrind now running per-push |

This week we started running valgrind jobs per push (bug 946002) on mozilla-central and project branches (bug 801955).

We've been running valgrind jobs nightly on mozilla-central for years, but they were very rarely ever green. Few people looked at their results, and so they were simply ignored.

Recently Nicholas Nethercote has taken up the torch and put in a lot of hard work to get these valgrind jobs working again. They're now running successfully per-push on mozilla-central and project branches and on Try.

Thanks Nicholas! Happy valgrinding all!

http://atlee.ca/blog/posts/valgrind-now-running-per-push.html

|

|

Tarek Ziadé: Presence on Firefox OS |

Note

The topic is still in flux, this blog post represents my own thoughts, not Mozilla's.

For a couple of months now, my team at Mozilla has been thinking about presence, trying to figure out what it means and whether we need to build something in this area for the future of the web.

The discussion was initiated with this simple question: if you are not currently running social application Foo on your phone, how hard would it be for your friends to know if you're available and to send you an instant notification to reach you?

A few definitions

Before going any further, we need to define a few words, because I realized through all the discussions I had that everyone has their own definitions of words like presence.

The word presence, as we use it, is how XMPP defines it. To summarize, it's a flag that lets you know whether someone is online or offline. This flag is updated by the users themselves and made available to others in different ways.

By device we mean any piece of hardware that may update the user's presence. Most of the time it's a phone, but by extension it can also be the desktop browser. Maybe one day we will extend this to the whole operating system on desktop, but right now it's easier to stay in our realm: the Firefox desktop browser and Firefox OS.

We also make a distinction between app-level presence and device-level presence. The first is basically the ability for an online app to keep track of who's currently connected to its service. The latter, device-level presence, is the ability to keep track of who's active on a device - even if that user is not active in an application, which may not even be running.

What counts as being active depends on the kind of device. For the desktop browser, it could simply be the fact that the browser is running and the user has toggled an 'online' button. For the phone, there are things like the Idle API that could be used to determine the status. But the best way to use these is still quite fuzzy.

Last very important point: we're keeping the notion of contacts out of the picture here because we've realized it's a bit of a dream to think that we could embed people's contacts into our service and ask all of the social apps out there to drop their own social graphs in favor of ours. That's another fight. :)

Anyway, I investigated what has been done on iOS and Android and found that both more or less provide ways for apps to reach their users even when those users aren't using the app. I am not a mobile expert at all, so if I've missed something, let me know!

Presence on iOS

In iOS >= 7.x, applications that want to provide a presence feature can keep a socket open in the background even when the application is no longer running in the foreground.

The feature is called setKeepAliveTimeout and will give the app the ability to register a handler that will be called periodically to check on the socket connection.

The handler has a limited time to do its work (10 seconds at most), but this is enough to handle presence for the user by interacting with a server.

The Apple Push Notification Service is also often used by applications to keep a connection open to a server to receive push notifications. That's comparable to the Simple Push service Mozilla has added in Firefox OS.

Therefore, building an application with device-level presence on an iOS device is doable, but it requires the publisher to keep one or several connections per user open all the time - which drains the battery. Apple mitigates the problem by giving the handler at most 10 seconds to call back its server, but it seems that some resources are still kept per application in the background.

Presence on Android

Like iOS, Android provides features to run some services in the background, see http://developer.android.com/guide/components/services.html

However, the service can be killed when memory runs low, and if TCP/IP is used it can be hard to keep the service reliable. That's also what currently happens in Firefox OS: you can't bet that your application will run forever in the background.

Google also provides a "Google Cloud Messaging" (GCM) service, which offers features similar to Simple Push for pushing notifications to users.

There's also a new feature called GCM Cloud Connection Server (CCS), which allows applications to communicate with the device via XMPP and with client-side "Intent Services". The app and the devices interact with CCS, which relays the messages back and forth.

Their documentation has a full example of a Python server that uses the GCM service to interact with users.

What's interesting is that the device keeps a single connection to a Google service, that relays calls from application servers. So instead of keeping one connection in every application, the phone shares the same pipe.

It's still up to the app to leverage this service to keep track of connected devices and get device-level presence, but the idea of keeping a single service in the background that dispatches messages to apps, and eventually wakes them up, is very appealing for optimizing battery life.
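The single-pipe idea can be sketched as one connection object that routes incoming messages to per-app handlers and holds messages for apps that aren't running. This is a hypothetical Python illustration of the pattern, not GCM's or Firefox OS's API. Actually waking a sleeping app is left out; queued messages are simply delivered when the app registers again.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Handler = Callable[[str], None]


class SharedConnection:
    """One long-lived device connection that relays messages to many apps."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Handler] = {}
        self._pending: Dict[str, List[str]] = defaultdict(list)

    def register(self, app_id: str, handler: Handler) -> None:
        """Called when an app is running; delivers anything queued while it was asleep."""
        self._handlers[app_id] = handler
        for message in self._pending.pop(app_id, []):
            handler(message)

    def unregister(self, app_id: str) -> None:
        """Called when the system kills an app in the background."""
        self._handlers.pop(app_id, None)

    def on_message(self, app_id: str, message: str) -> None:
        """Route a message that arrived on the single shared pipe."""
        handler = self._handlers.get(app_id)
        if handler is None:
            # The app is not running: keep the message until it comes back.
            self._pending[app_id].append(message)
        else:
            handler(message)
```

The device only pays for one open socket, no matter how many apps want to be reachable.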

Plus, XMPP is a widely known protocol. Offering app developers this standard to interact with the devices is pretty neat.

And Firefox OS ?

If you were to build a chat application today on Firefox OS, you would need to keep your own connection open to your own server. Once your application is sent to the background, you cannot really control what happens when the system decides to shut it down to free some resources.

In other words, you're blacking out, and the application's service will not really know what your status on the device is. It will soon be able to send a notification via SimplePush to wake up the app - but there's still this grey zone when the app is sent to the background.

The goal of the Presence project is to improve this and provide a better solution for app developers.

At first, we thought about running our own presence service, be it based on ejabberd or any other XMPP server out there. Since we're hackers, we quickly dived into all the challenges of scaling such a service for Firefox OS users. Making a presence service scale to millions of users is no small task, but it's a really interesting one.

The problem, though, is the incentive for an app publisher to use our presence service. Why would they do this? They have all already solved presence in their applications, so why would they use our own thing? They would rather have us provide a better story for background applications, and keep their client side talking to their own servers.

But we felt that we could provide a better service for our user experience: something that drains less battery and puts the user back at the center of the picture.

Through these discussions, Ben Bangert came up with a nice proposal that partially answered those questions: if users agree, Mozilla can keep track of their device status (online/offline/available), and every user can authorize the applications she uses to fetch these presence updates through the Mozilla service, via a doorhanger page.

This indirection is a bit similar to Android's GCM architecture.

Like GCM, we'd be able to tune battery usage because we'd be in control of the background service that keeps a connection open to one of our servers. There are several ways to optimize battery usage for such a service, and we're exploring them.

One extra benefit of having a Mozilla service keep track of the presence flag is that users will be able to stay in control: they can revoke an application's authorization to see their online presence at any time.
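The authorization model described above (the device reports a status, the user grants an app access through the doorhanger, and can revoke it later) can be sketched as a small in-memory registry. This is a toy model under stated assumptions, not the Presence project's code: `PresenceService`, `Status` and the method names are hypothetical, and persistence, authentication and the doorhanger UI are left out.

```python
from enum import Enum
from typing import Dict, Set


class Status(Enum):
    ONLINE = "online"
    OFFLINE = "offline"
    AVAILABLE = "available"


class PresenceService:
    """Toy model of a user-controlled presence service (hypothetical API)."""

    def __init__(self) -> None:
        self._status: Dict[str, Status] = {}    # user -> current device status
        self._grants: Dict[str, Set[str]] = {}  # user -> apps allowed to see it

    def set_status(self, user: str, status: Status) -> None:
        # Reported by the device's background connection, only if the user opted in.
        self._status[user] = status

    def authorize(self, user: str, app: str) -> None:
        # What accepting the doorhanger prompt would record.
        self._grants.setdefault(user, set()).add(app)

    def revoke(self, user: str, app: str) -> None:
        # The user can withdraw an app's access at any time.
        self._grants.get(user, set()).discard(app)

    def get_presence(self, user: str, app: str) -> Status:
        # Apps only ever see presence through this check.
        if app not in self._grants.get(user, set()):
            raise PermissionError(f"{app} is not authorized to see {user}'s presence")
        return self._status.get(user, Status.OFFLINE)


if __name__ == "__main__":
    svc = PresenceService()
    svc.set_status("alice", Status.AVAILABLE)
    svc.authorize("alice", "BeerChat")
    print(svc.get_presence("alice", "BeerChat").value)  # available
    svc.revoke("alice", "BeerChat")
    # From now on, get_presence("alice", "BeerChat") raises PermissionError.
```

Keeping the grants on the service side, rather than in each app, is what makes revocation immediate: the app has no copy of the permission to hold on to.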

There's also a lot of potential for tweaking who sees this information and how. For example, I can decide that BeerChat, my favorite chat app for talking about beer, can see my presence only between 9pm and 11pm.
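A rule like "BeerChat sees my presence only between 9pm and 11pm" boils down to a per-app time-window check. Here is a minimal sketch, assuming a hypothetical `VISIBILITY_WINDOWS` table and half-open windows (the end time itself is excluded); it only covers the time rule, so an app would still need the user's authorization on top of it. Windows that cross midnight (say 22:00 to 02:00) are handled too.

```python
from datetime import time
from typing import Dict, Tuple


def in_window(now: time, start: time, end: time) -> bool:
    """True if `now` falls in [start, end); handles windows that cross midnight."""
    if start <= end:
        return start <= now < end
    # e.g. 22:00-02:00: late in the evening OR early in the morning
    return now >= start or now < end


# Hypothetical per-app policy: app name -> (start, end) of its visibility window.
VISIBILITY_WINDOWS: Dict[str, Tuple[time, time]] = {
    "BeerChat": (time(21, 0), time(23, 0)),  # only between 9pm and 11pm
}


def can_see_presence(app: str, now: time) -> bool:
    """Time rule only; authorization is checked separately."""
    window = VISIBILITY_WINDOWS.get(app)
    if window is None:
        return True  # no time restriction configured for this app
    return in_window(now, *window)


if __name__ == "__main__":
    print(can_see_presence("BeerChat", time(22, 15)))  # True
    print(can_see_presence("BeerChat", time(8, 0)))    # False
```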

And of course, like Firefox Sync, the devices could point to a custom Presence service that's not running on a Mozilla server.

What's next?

The Presence project is just an experiment right now, but we're trying to reach a point where we can have a solid proposal for Firefox OS.

As usual for any Mozilla project, everything is built in the open, and we're trying to hold weekly meetings to talk about the project.

The project's wiki page is here: https://wiki.mozilla.org/CloudServices/Presence

It's a big mess right now, but it should improve over time into something more readable.

We're also trying to keep an up-to-date prototype at https://github.com/mozilla-services/presence, along with a demo end-user application that uses it, a chat room: https://github.com/mozilla-services/presence-chatroom

There's a screencast at http://vimeo.com/80780042 showing the whole flow: a user authorizes an application to see her presence, and another user reaches out to the first user through a notification.

The desktop prototype is based on the excellent Social API feature, and we're now building the Firefox OS prototype to see how the whole thing looks from a mobile perspective.

There's a mailing list, if you want to get involved: https://mail.mozilla.org/listinfo/wg-presence

Benjamin Kerensa: Community Building Team: Meetup Day One |

Day One (Wednesday) of the Mozilla Community Building Team Meetup here in San Francisco started with a bit of an icebreaker game so all 40+ of us could learn a little bit about each other. The exercise consisted of throwing a purple plushie around the room and answering a question.

After the icebreaker, the track leads and organizers gave some introductions and described the format of the rest of the week. We then broke into workgroups (Education, Events, Recognition, Systems & Data, and Pathways). Each workgroup's first task was to put together some ideas for its focus area for 2014.

Later we came back to the main area we would be working from this week and did more exercises to generate ideas for increasing participation in various areas of the Mozilla project. My evening wrapped up with a group of Mozillians and my cousin heading down to a local restaurant to unwind and have dinner.

Chris Cooper: On John Leaving Mozilla |

John has posted his farewell over on his blog.

John’s been managing me now for 6.5 years. I’ve had other friends/mentors/managers before, but it was under John that I became a manager myself.

Everything I’ve learned about people-first, no-nonsense management has come from him. Everything I’ve learned about cross-group co-ordination and asking the right questions has come from him. Everything I’ve learned about Irish whiskey has come from him.

On the technical and pure getting-shit-done side, the Google Tech Talk he gave on “Release Engineering as a Force Multiplier” is probably the only resume he’ll ever need, even if he doesn’t actually need it.

Where does John’s departure leave Mozilla, and more specifically, our release engineering team, and even more specifically, me?

I don’t know, but I’m optimistic, mostly because of the imprint left by John on all of us.
