You can add any RSS source (including a LiveJournal journal) to your friends feed on the syndication page.

Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated whenever that feed is updated. It may not match the content of the original page. This syndication was created automatically at the request of readers of this RSS feed.

For any questions about how this service works, please use the contact information page.


Eric Shepherd: A brand-new MDN design to make making the Web better better

Today we launched the new design for the Mozilla Developer Network Web site. We’ve been working on this for a long time, with discussions starting as much as a year ago, and actual coding starting this past summer. It was a huge effort involving a ton of brilliant people, and I’m thrilled with the results! Not only is the new design more attractive, but it has a number of entirely new features that will help you find what you want—and read it—more easily, and in more places, than ever before.

Updated home page

The new home page has a completely revamped organization, with a large search box front and center. Below that are links to key areas of the MDN Web site, including much of our most-used documentation, such as the JavaScript, CSS, and HTML docs, content about Firefox OS and developer tools, and the brand-new Mozilla Developer Program, whose goal is to help Web developers learn more, and more quickly.

Zoning in

You may notice the new concept we call “zones” in the screenshot above. A “zone” is a special topic area that can accumulate documentation and samples from across MDN to cover that topic. For example, the Firefox OS zone provides documentation about Firefox OS, which involves not only Firefox OS-specific content, but also information about HTML, CSS, and so forth.

Here you see our new zone navigation bar along the left side of the screen, promotional boxes for specific content, and lists of key articles.

Search and ye shall find

Search has been significantly improved. We now have an on-site search powered by Elasticsearch rather than Google, which lets us customize the search in useful ways. One particularly helpful feature is the addition of search filters. Once you’ve done a search, you can narrow it further by using these filters. For example, here’s a search for “window element”:

Well. 5186 results is a lot. You can use our new search filters, though, to narrow those results down a bit. You can choose to restrict your search to one or more topic areas, types of document, and/or skill level. Let’s look for articles specifically about the DOM:


It’s worth noting here that these filters rely on content being tagged properly, and much of our content is still in the process of being tagged (especially regarding skill level). This is something we can always use help with, so please drop into #mdn on IRC if you’re interested in helping with this quick and easy way to help improve our search quality!


Responsive design

An area in which MDN was sorely lacking in the past was responsive design. This is the concept of designing content to adapt to the device on which it’s being used. The new MDN design makes key adjustments to its layout based on the size of your screen.


Here’s the Firefox zone’s landing page in standard “desktop” mode. This is what you’ll see when browsing to it in a typical desktop browser environment.

Here’s what the same page looks like in “small desktop” mode. This is what the page looks like when your browser window is smaller, such as when viewing on a netbook.

And here’s the same page in “mobile” view. The page’s layout is adjusted to be friendlier on a mobile device.

Each view mode rearranges the layout, and in some cases removes less important page elements, to improve the page’s utility in that environment.

Quicklinks

The last new feature I’ll point out (although not the last improvement by a long shot!) is the new quicklink feature. We now have the ability to add a collection of quicklinks to pages; this can be done manually by building the list while editing the page, or by using macros.

Here’s a screenshot of the quicklinks area on the page for the CSS background property:

The quicklinks in the CSS reference provide fast access to related properties; when looking at a background-related property, as seen above, you get quick access to all of the background-related properties at once.

There’s also an expandable “CSS Reference” section. Clicking it gives you an alphabetical list of all of the CSS reference pages:

As you see, this lets you quickly navigate through the entire CSS reference without having to backtrack to the CSS landing page. I think this will come as an enormous relief to a lot of MDN users!

To top it off, if you want to have more room for content and don’t need the quicklinks, you can hide them by simply clicking the “Hide sidebar” button at the top of the left column; this results in something like the following:

The quicklinks feature is rapidly becoming my favorite feature of this new MDN look-and-feel. Not all of our content is making full use of it yet, but we’re rapidly expanding its use. It makes navigating content so much easier, and is easy to work with as a content creator on MDN, too.


Next steps

Our development team and the design and UX teams did a fantastic job building this platform, and our community of writers threw in their share as well. Their work ranged from testing the changes to providing feedback, not to mention contributing and updating enormous amounts of documentation to take advantage of new features and to look right in the new design. I’m enormously proud of everyone involved.

There’s plenty left to do. There are new platform features yet to be built, and the content always needs more work. If you’d like to help, drop into #mdn to talk about content or #mdndev to talk about helping with the platform itself. And feel free to file bugs with your suggestions and input.

See you online!

http://www.bitstampede.com/2013/12/09/a-brand-new-mdn-design-to-make-making-the-web-better-better/


Sean McArthur: Tally

I had need of a simple counter application on my phone, and I scoured the Play Store for a good one. They all have atrocious UIs, with far too many buttons and features. I found one that was nicely designed, Tap Counter, but the touch target was too small, only directly in the center, making it very easy to miss when counting people and not looking at the screen.

So, over the weekend, I made one.

It’s a simple, beautiful Holo design. It picks one of the Android Holo colors for the circle on app launch, so the color changes each time you use it. There’s only one button, and it takes up the whole screen. To reset, simply hold down that button, and you’ll get a fun animation.

That’s it. That’s Tally.


Armen Zambrano Gasparnian: Killing ESR17 and Thunderbird-ESR17

Hello all,

Next week, we will have our next merge date [1] on Dec. 9th, 2013.

As part of that merge day we will be killing the ESR17 [2][3] builds and tests on tbpl.mozilla.org as well as Thunderbird-Esr17 [4].

This is part of our normal process where, two merge days after the creation of the latest ESR release (e.g. ESR24 [5]), we obsolete the last one (e.g. ESR17).

On an unrelated note, we will keep creating updates from ESR17 to ESR24 even after that date. However, there will be no more builds and tests on check-in.

Please let me know if you have any questions.

regards,

Armen

#############

Zambrano Gasparnian, Armen (armenzg)

Mozilla Senior Release Engineer

https://mozillians.org/en-US/u/armenzg/

http://armenzg.blogspot.ca

[1] https://wiki.mozilla.org/RapidRelease/Calendar

[2] https://wiki.mozilla.org/Enterprise/Firefox/ExtendedSupport:Proposal

[3] https://tbpl.mozilla.org/?tree=Mozilla-Esr17

[4] https://tbpl.mozilla.org/?tree=Thunderbird-Esr17

[5] https://tbpl.mozilla.org/?tree=Mozilla-Esr24

This work by Zambrano Gasparnian, Armen is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 3.0 Unported License.

http://armenzg.blogspot.com/2013/12/killing-esr17-and-thunderbird-esr17.html


Chris AtLee: Now using AWS Spot instances for tests

Release Engineering makes heavy use of Amazon's EC2 infrastructure. The vast majority of our Firefox builds for Linux and Android, as well as our Firefox OS builds, happen in EC2. We also do a ton of unit testing inside EC2.

Amazon offers a service inside EC2 called spot instances. This is a way for Amazon to sell off unused capacity by auction. You can place a bid for how much you want to pay by the hour for a particular type of VM, and if your price is more than the current market price, you get a cheap VM! For example, we're able to run tests on machines for $0.025/hr instead of the regular $0.12/hr on-demand price. We started experimenting with spot instances back in November.
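The sketch below works through the arithmetic behind those prices: at $0.025/hr versus $0.12/hr, a spot instance costs roughly a fifth of the on-demand rate. It is a simplified model, not EC2's actual auction logic. The function names are made up for illustration, and the model assumes, as described above, that a bid is filled whenever it exceeds the current market price.

```python
def spot_bid_wins(bid_per_hour: float, market_price_per_hour: float) -> bool:
    """Simplified model: a bid gets an instance when it exceeds the market price."""
    return bid_per_hour > market_price_per_hour


def savings_fraction(spot_per_hour: float, on_demand_per_hour: float) -> float:
    """Fraction of the on-demand cost saved by paying the spot rate instead."""
    return 1.0 - spot_per_hour / on_demand_per_hour


if __name__ == "__main__":
    spot, on_demand = 0.025, 0.12  # $/hr figures quoted in the post
    print(f"savings: {savings_fraction(spot, on_demand):.1%}")  # savings: 79.2%
    # Cost of 1,000 test-hours at each rate.
    print(f"spot: ${spot * 1000:.2f}, on-demand: ${on_demand * 1000:.2f}")
```

Under this model, 1,000 test-hours cost about $25 on spot instances versus $120 on demand.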

There are a few downsides to using spot instances, however. One is that your VM can be killed at any moment if somebody else bids a higher price than yours. The second is that your instances can't (easily) use extra EBS volumes for persistent storage. Once the VM powers off, the storage is gone.

These issues posed different challenges for us. In the first case, we were worried about the impact that interrupted jobs would have on the tree. We wanted to avoid the case where a job ran on a spot instance, was interrupted because the market price changed, and was then retried on another spot instance subject to the same termination. This required changes to two systems:

- aws_watch_pending needed to know to start regular on-demand EC2 instances in the case of retried jobs. This has been landed and has been working well, but really needs the next piece to be complete.

- buildbot needed to know to not pick a spot instance to run retried jobs. This work is being tracked in bug 936222. It turns out that we're not seeing too many spot instances being killed off due to market price [1], so this work is less urgent.
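The policy these two changes aim for fits in a few lines: first attempts go to spot instances, and retried jobs go to on-demand instances. This is an illustrative sketch only. `Job`, `instance_kind_for`, and `instances_to_start` are hypothetical names, not the actual aws_watch_pending or buildbot code.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Job:
    name: str
    retry_count: int = 0  # 0 for a first attempt, > 0 once the job has been retried


def instance_kind_for(job: Job) -> str:
    """First attempts may run on spot instances; retries go to on-demand ones,
    so a retried job cannot be killed by the spot market a second time."""
    return "on-demand" if job.retry_count > 0 else "spot"


def instances_to_start(pending: List[Job]) -> Dict[str, int]:
    """Count how many instances of each kind the pending queue calls for."""
    counts = {"spot": 0, "on-demand": 0}
    for job in pending:
        counts[instance_kind_for(job)] += 1
    return counts
```

For example, a queue with two fresh jobs and one retried job would call for two spot instances and one on-demand instance.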

The second issue, the VM storage, turned out to be much more complicated to fix. We rely on puppet to make sure that VMs have consistent software packages and configuration files. Puppet requires per-host SSL certificates to be generated, and at Mozilla, these certificates need to be signed by a central certificate authority. In our previous usage of EC2 we worked around this by puppetizing new instances on first boot and saving the disk image for later use.

With spot instances, we essentially need to re-puppetize every time we create a new VM.

Having fresh storage on boot also impacts the type of jobs we can run. We're starting with running test jobs on spot instances, since there's no state from previous tests that is valuable for the next test.

Builds are more complicated, since we depend on the state of previous builds to have faster incremental builds. In addition, the cost of having to retry a build is much higher than it is for a test. It could be that the spot instances stay up long enough or that we end up clobbering frequently enough that this won't have a major impact on build times. Try builds are always clobbers though, so we'll be running try builds on spot instances shortly.

All this work is being tracked in https://bugzilla.mozilla.org/show_bug.cgi?id=935683

Big props to Rail for getting this done so quickly. With all this great work, we should be able to scale better while reducing our costs.

[1] https://bugzilla.mozilla.org/show_bug.cgi?id=935533#c21

http://atlee.ca/blog/posts/now-using-aws-spot-instances.html


Aki Sasaki: LWR (job scheduling) part iv: drilling down into the dependency graph

I already wrote a bit about the dependency graph here, and :catlee wrote about it here. While I was writing part 2, it became clear that

- I had a lot more ideas about the dependency graph, enough for its own blog post, and

- since I want to tackle writing the dependency graph first, solidifying my ideas about it beforehand would be beneficial to writing it.

I've been futzing around with graphviz with :hwine's help. Not half as much fun as drawings on napkins, but hopefully they make sense. I'm still thinking things through.

jobs and graphs

A quick look at TBPL was enough to convince me that the dependency graph would be complex enough just describing the relationships between jobs. The job details should be separate. Per-checkin, nightly, and periodic-PGO dependency graphs trigger overlapping sets of jobs, so avoiding duplicate job definitions is a plus.
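One way to keep graphs and job details separate is for graphs to refer to jobs only by name and look the details up in a shared table, so overlapping graphs never duplicate a definition. This is a hypothetical format written as Python dicts. The job names, the `command`/`platform` keys, and `jobs_for` are invented for illustration.

```python
# Hypothetical format: every job is defined once, keyed by name...
JOB_DEFINITIONS = {
    "linux64-build": {"command": "build", "platform": "linux64"},
    "linux64-pgo-build": {"command": "build --pgo", "platform": "linux64"},
    "linux64-mochitest-1": {"command": "mochitest --chunk 1", "platform": "linux64"},
}

# ...and each graph only records which jobs run and what they depend on.
PER_CHECKIN_GRAPH = {
    "linux64-build": [],
    "linux64-mochitest-1": ["linux64-build"],
}
PERIODIC_PGO_GRAPH = {
    "linux64-pgo-build": [],
    "linux64-mochitest-1": ["linux64-pgo-build"],  # same job, different parent
}


def jobs_for(graph):
    """Resolve a graph's job names against the shared definitions."""
    missing = sorted(name for name in graph if name not in JOB_DEFINITIONS)
    if missing:
        raise KeyError(f"graph refers to undefined jobs: {missing}")
    return {name: JOB_DEFINITIONS[name] for name in graph}
```

Submitting a graph would then mean sending the graph together with `jobs_for(graph)`, so that the dependency graph and its associated job definitions travel together.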

We'll need to submit both the dependency graph and the associated job definitions to LWR together. I'll say more about how jobs and graphs could work in the db in part 5.

For phase 1, I think job definitions will only cover enough to feed into buildbot and have them work.

dummy jobs


In my initial dependency graph thoughts, I mentioned breakpoint jobs as a throwaway idea, but it's stuck with me.

We could use these at the beginning of graphs that we want to view or edit in the web app before proceeding. Or if we submit an experimental patch to Try and want to verify the results after a specific job or set of jobs before proceeding further. Or if we want to represent QA signoff in a release graph, and allow them to continue the release via the web app.

I imagine we would want a request timeout on this breakpoint, after which it's marked as timed out, and all child jobs are skipped. I imagine we'd also want to set an ACL on at least a subset of these, to limit who can sign off on releases.
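A breakpoint job with a request timeout and an ACL might look roughly like this. It is a hypothetical sketch: the class, its fields, and the status strings are assumptions. A real version would live in the graph processing pool and persist its state in the db.

```python
import time
from dataclasses import dataclass, field
from typing import Optional, Set


@dataclass
class BreakpointJob:
    """Hypothetical breakpoint job that waits for a human to sign off."""

    name: str
    request_timeout: float      # seconds to wait for sign-off before giving up
    allowed_signers: Set[str]   # ACL: who may continue the graph
    created_at: float = field(default_factory=time.time)
    status: str = "pending"

    def sign_off(self, user: str) -> None:
        if user not in self.allowed_signers:
            raise PermissionError(f"{user} may not sign off on {self.name}")
        if self.status == "pending":
            self.status = "success"  # children may now be scheduled

    def check_timeout(self, now: Optional[float] = None) -> str:
        now = time.time() if now is None else now
        if self.status == "pending" and now - self.created_at > self.request_timeout:
            # The graph processor would then mark every child job as skipped.
            self.status = "timed out"
        return self.status
```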

Also in releases, we have simple notification jobs that send email when the release has passed certain milestones. We could later potentially support IRC pings and bug comments.

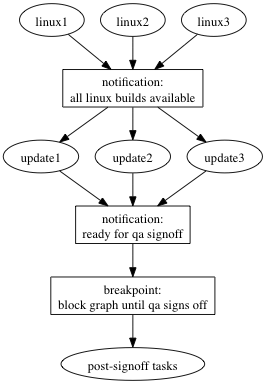

A highly simplified representation of part of a release:

We currently continue the release via manual Release Engineering intervention, after we see a "go" email. It would be great to represent this in the dependency graph and give the correct group of people access. That means less of a RelEng bottleneck.

We could also have timer jobs that pause the graph until either cancelled or the timeout is hit. So if you want this graph to run at 7pm PST, you could schedule the graph with an initial timer job that marks itself successful at 7, triggering the next steps in the graph.
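A timer job can be modeled as a job with no work of its own. It marks itself successful once a wall-clock time has passed, unless it was cancelled first. The sketch below is hypothetical. `TimerJob`, `poll`, and the fixed UTC-8 offset standing in for PST are assumptions for illustration.

```python
from datetime import datetime, timedelta, timezone

# Fixed UTC-8 offset for illustration; real scheduling would want a tz database.
PST = timezone(timedelta(hours=-8), "PST")


class TimerJob:
    """Hypothetical timer job: succeeds once a wall-clock time is reached."""

    def __init__(self, fire_at: datetime):
        self.fire_at = fire_at
        self.status = "pending"

    def cancel(self) -> None:
        if self.status == "pending":
            self.status = "cancelled"

    def poll(self, now: datetime) -> str:
        """Called periodically by the graph processor."""
        if self.status == "pending" and now >= self.fire_at:
            # Marking success is what releases the timer's child jobs.
            self.status = "success"
        return self.status


timer = TimerJob(datetime(2013, 12, 10, 19, 0, tzinfo=PST))
```

Both datetimes are timezone-aware, so they compare correctly across offsets. This timer succeeds on the first poll at or after 03:00 UTC on the following day.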


In buildbot, we currently have a dummy factory that sleeps 5 and exits successfully. We used this back in the dark ages to skip certain jobs in a release, since we could only restart the release from the beginning; by replacing long-running jobs with dummy jobs, we could start at the beginning and still skip the previously successful portions of the release.

We could use dummy jobs to:

- simplify the relationships between jobs. In the above graph, we avoided a many-to-many relationship by inserting a notification job in between the linux jobs and the updates.

- trigger when certain groups of jobs finish (e.g. all linux64 mochitests), so downstream jobs can watch for the dummy job in Pulse rather than having to know how many chunks of mochitests we expect to run, and keep track as each one finishes.

- quickly test dependency graph processing: instead of waiting for a full build or test, replace it with a dummy job. For instance, we could set all the jobs of a type to "success" except one "timed out; retry" to test max retry limits quickly. This assumes we can set custom exit statuses for each dummy job, as well as potentially pointing at pre-existing artifact manifest URLs for downstream jobs to reference.
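The second use above is a dummy job that completes when a group of jobs finishes. It could work along these lines. This is a hypothetical sketch: the class name and the set of statuses treated as "finished" are assumptions. Here, "success" only means the group has finished, not that every member passed.

```python
class GroupFinishedJob:
    """Hypothetical dummy job that completes once every job in a group finishes.

    Downstream consumers (e.g. something listening on Pulse) only need to watch
    this one job instead of knowing how many mochitest chunks to expect.
    """

    FINISHED = {"success", "warning", "failure", "exception"}  # "retry" is not final

    def __init__(self, name, member_jobs):
        self.name = name
        self.pending = set(member_jobs)
        self.status = "pending"

    def on_job_finished(self, job_name, job_status):
        if job_status in self.FINISHED:
            self.pending.discard(job_name)
        if not self.pending and self.status == "pending":
            self.status = "success"
        return self.status
```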

Looking at this list, it appears to me that timer and breakpoint jobs are pretty close in functionality, as are notification and dummy (status?) jobs. We might be able to define these in one or two job types. And these jobs seem simple enough that they may be runnable on the graph processing pool, rather than calling out to SlaveAPI/MozPool for a new node to spawn a script on.

statuses

At first glance, it's probably easiest to reuse the set of TBPL statuses: success, warning, failure, exception, retry. But there are also the grey statuses 'pending' and 'running'; the pink status 'cancelled'; and the statuses 'timed out' and 'interrupted', which are subsets of the first five statuses.

Some statuses I've brainstormed:

- inactive (skipped during scheduling)

- request cancelled

- pending blocked by dependencies

- pending blocked by infrastructure limits

- skipped due to coalescing

- skipped due to dependencies

- request timed out

- running

- interrupted due to user request

- interrupted due to network/infrastructure/spot instance interrupt

- interrupted due to max runtime timeout

- interrupted due to idle time timeout (no output for x seconds)

- completed successful

- completed warnings

- completed failure

- retried (auto)

- retried (user request)

The "completed warnings" and "completed failure" statuses could be split further into "with crash", "with memory leak", "with compilation error", etc., which could be useful to specify, but are job-type-specific.

If we continue as we have been, some of these statuses are only detectable by log parsing. Differentiating these statuses allows us to act on them in a programmatic fashion. We do have to strike a balance, however. Adding more statuses to the list later might force us to revisit all of our job dependencies to ensure the right behavior with each new status. Specifying non-useful statuses at the outset can lead to unneeded complexity and cruft. Perhaps 'state' could be separated from 'status', where 'state' is in the set ('inactive', 'pending', 'running', 'interrupted', 'completed'); we could also separate 'reasons' and 'comments' from 'status'.
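A minimal sketch of splitting 'state' from 'status' keeps 'reason' and 'comment' as separate fields. The enums reuse the state set proposed above and the first four TBPL statuses. The `JobRun` class, and the rule that only completed runs carry a status, are assumptions for illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class State(Enum):
    INACTIVE = "inactive"
    PENDING = "pending"
    RUNNING = "running"
    INTERRUPTED = "interrupted"
    COMPLETED = "completed"


class Status(Enum):
    SUCCESS = "success"
    WARNING = "warning"
    FAILURE = "failure"
    EXCEPTION = "exception"


@dataclass
class JobRun:
    state: State
    status: Optional[Status] = None  # outcome, only set once the run completes
    reason: Optional[str] = None     # e.g. "spot instance interrupt", "idle timeout"
    comment: Optional[str] = None    # free-form human note

    def __post_init__(self):
        if self.status is not None and self.state is not State.COMPLETED:
            raise ValueError("only completed runs carry a status")
```

With this split, a brainstormed status like "interrupted due to max runtime timeout" becomes `JobRun(State.INTERRUPTED, reason="max runtime timeout")`. Adding a new reason later does not change which states downstream dependencies need to handle.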

Timeouts are split into request timeouts or runtime timeouts (idle timeouts, max job runtime timeouts). If we hit a request timeout, I imagine the job would be marked as 'skipped'. I also imagine we could mark it as 'skipped successful' or 'skipped failure' depending on configuration: the former would work for timer jobs, especially if the request timeout could be specified by absolute clock time in addition to relative seconds elapsed. I also think both graphs and jobs could have request timeouts.

I'm not entirely sure how to coalesce jobs in LWR, or if we want to. Maybe we leave that to graph and job prioritization, combined with request timeouts. If we did coalesce jobs, that would probably happen in the graph processing pool.

For retries, we need to track max [auto] retries, as well as job statuses per run. I'm going to go deeper into this in part 5.

relationships

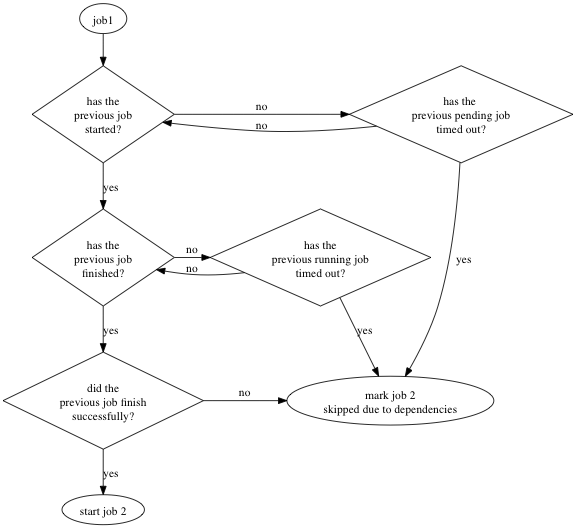

For the most part, I think relationships between jobs can be shown by the following flowchart:

If we mark job 2 as skipped-due-to-dependencies, we need to deal with that somehow if we retrigger job 1. I'm not sure if that means we mark job 2 as "pending-blocked-by-dependencies" if we retrigger job 1, or if the graph processing pool revisits skipped-due-to-dependencies jobs after retriggered jobs finish. I'm going to explore this more in part 5, though I'm not sure I'll have a definitive answer there either.

It should be possible, at some point, to block the next job until we see a specific job status:

- don't run until this dependency is finished/cancelled/timed out

- don't run unless the dependency is finished and marked as failure

- don't run unless the dependency is finished and there's a memory leak or crash

For the most part, we should be able to define all of our dependencies with this type of relationship: block this job on (job X1 status Y1, job X2 status Y2, ...). A request timeout with a predefined behavior-on-expiration would be the final piece.
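The "block this job on (job X1 status Y1, job X2 status Y2, ...)" relationship could be evaluated with a function as small as the one below. The encoding (a job name paired with a set of accepted statuses) and the names `can_run`, `FINISHED`, `cleanup`, and `failure_handler` are hypothetical.

```python
from typing import Dict, Iterable, Set, Tuple

# Hypothetical encoding: each requirement pairs an upstream job name with the
# statuses that satisfy it.
Requirement = Tuple[str, Set[str]]

FINISHED = {"success", "warning", "failure", "exception"}


def can_run(requirements: Iterable[Requirement], statuses: Dict[str, str]) -> bool:
    """True once every upstream job has reached one of its accepted statuses."""
    return all(statuses.get(job) in accepted for job, accepted in requirements)


# "Don't run until this dependency is finished/cancelled/timed out."
cleanup = [("build", FINISHED | {"cancelled", "timed out"})]
# "Don't run unless the dependency is finished and marked as failure."
failure_handler = [("build", {"failure"})]
```

If the build succeeds, `failure_handler` stays blocked forever. That is exactly the case a request timeout with a predefined behavior-on-expiration would resolve.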

I could see more powerful commands being useful, like "cancel the rest of the [downstream?] jobs in this graph", "retrigger this other job in the graph", or "increase the request timeout for this other job". Perhaps we could add those to dummy status jobs. I could also see them significantly increasing the complexity of graphs, including the potential for infinite recursion in some constructs.

I think I should mark any ideas that potentially introduce too much complexity as out of scope for phase 1.

branch specific definitions

Since job and graph definitions will be in-tree, riding the trains, we need some branch-specific definitions. Is this a PGO branch? Are nightlies enabled on this branch? Are all products and platforms enabled on this branch?

This branch definition config file could also point at a revision in a separate, standalone repo for its dependency graph + job definitions, so we can easily refer to different sets of graph and job definitions by SHA. I'm going to explore that further in part 5.

I worry about branch merges overwriting branch-specific configs. The inbound and project branches have different branch configs than mozilla-central, so it's definitely possible. I think the solution here is a generic branch-level config, and an optional branch-named file. If that branch-named file doesn't exist, use the generic default. (e.g. generic.json, mozilla-inbound.json) I know others disagree with me here, but I feel pretty strongly that human decisions need to be reduced or removed at merge time.
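The generic-plus-optional-override lookup described above is simple to implement. This sketch assumes the config files sit together in one directory and that the branch-named file replaces the generic one rather than merging with it. `load_branch_config` is a hypothetical name.

```python
import json
from pathlib import Path


def load_branch_config(config_dir, branch):
    """Load <branch>.json if it exists, otherwise fall back to generic.json."""
    config_dir = Path(config_dir)
    branch_file = config_dir / f"{branch}.json"
    path = branch_file if branch_file.exists() else config_dir / "generic.json"
    with path.open() as f:
        return json.load(f)
```

Because the fallback is automatic, a merge that does not carry a branch-named file leaves the branch on the generic default, with no human decision needed at merge time.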

graphs of graphs

I think we need to support graphs-of-graphs. B2G jobs are completely separate from Firefox desktop or Fennec jobs; they only start with a common trigger. Similarly, win32 desktop jobs have no real dependencies on macosx desktop jobs. However, it's useful to refer to them as a single set of jobs. If graphs can include other graphs, we could create a superset graph that includes the appropriate product- and platform-specific graphs, and trigger that.

If we have PGO jobs defined in their own graph, we could potentially include it in the per-checkin graph with a branch config check. On a per-checkin-PGO branch, the PGO graph would be included and enabled in the per-checkin graph. Otherwise, the PGO graph would be included, but marked as inactive; we could then trigger those jobs as needed via the web app. (On a periodic-PGO branch, a periodic scheduler could submit an enabled PGO graph, separate from the per-checkin graph.)
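Graphs-of-graphs, including the per-checkin PGO case, could be flattened into a single job list before submission. In this hypothetical sketch, a graph has a "jobs" list and an optional "includes" list, and an include can name a branch-config flag. When that flag is false, the subgraph's jobs are kept but marked inactive. The graph format and the `enabled_if` key are assumptions.

```python
def flatten(graph, branch_config, active=True):
    """Return {job_name: is_active} for a graph and every graph it includes."""
    jobs = {name: active for name in graph.get("jobs", [])}
    for include in graph.get("includes", []):
        flag = include.get("enabled_if")
        # A disabled subgraph is still included, just marked inactive.
        sub_active = active and (flag is None or bool(branch_config.get(flag)))
        jobs.update(flatten(include["graph"], branch_config, sub_active))
    return jobs


WIN32_GRAPH = {"jobs": ["win32-build", "win32-mochitest-1"]}
B2G_GRAPH = {"jobs": ["b2g-emulator-build"]}
PGO_GRAPH = {"jobs": ["win32-pgo-build", "win32-pgo-talos"]}

# Superset graph: B2G and desktop jobs only share the common trigger.
PER_CHECKIN = {
    "includes": [
        {"graph": WIN32_GRAPH},
        {"graph": B2G_GRAPH},
        {"graph": PGO_GRAPH, "enabled_if": "per_checkin_pgo"},
    ]
}
```

On a branch whose config has `per_checkin_pgo` set to false, the PGO jobs still appear in the flattened graph, marked inactive, so they could be triggered later via the web app.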

It's not immediately clear to me if we'll be able to depend on a specific job in a subgraph, or if we'll only be able to depend on the entire subgraph finishing. (For example: can an external graph depend on the linux32 mochitest-2 job finishing, or would it need to wait until all linux32 jobs finish?) Maybe named dummy status jobs will help here: graph1.start, graph1.end, graph1.builds_finished, etc. Maybe I'm overthinking things again.

We need a balancing act between ease of reading and ease of writing; ease of use and ease of maintenance. We've seen the mess a strong imbalance can cause, in our own buildbot configs. The fact that we're planning on making the final graph easily viewable and testable without any infrastructure dependencies helps, in this regard.

graphbuilder.py

I think graphbuilder.py, our [to be written] dependency graph generator, may need to cover several use cases:

- Create a graph in an api-submittable format. This may be all we do in phase 1, but the others are tempting...

- Combine graphs as needed, with branch-specific definitions, and user customizations (think TryChooser and per-product builds).

- Verify that this is a well-formed graph.

- Run other graph unit tests, as needed.

- Potentially output graphviz files for user-friendly local graph visualization?

- It's unclear if we want it to also do the graph+job submitting to the api.
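The "well-formed graph" and graphviz items above could be handled as in this sketch. It checks that every dependency is defined, detects cycles with Kahn's topological sort, and emits Graphviz DOT text. The `{job: [parents]}` input format and the function names are assumptions. graphbuilder.py has not been written yet, so this is not its interface.

```python
from collections import deque


def validate(graph):
    """Check a {job: [parent jobs]} graph and return a topological order.

    Raises ValueError if a job depends on an undefined job or if the
    dependencies contain a cycle (Kahn's algorithm leaves jobs unvisited).
    """
    for job, parents in graph.items():
        for parent in parents:
            if parent not in graph:
                raise ValueError(f"{job} depends on undefined job {parent}")
    unmet = {job: len(parents) for job, parents in graph.items()}
    children = {job: [] for job in graph}
    for job, parents in graph.items():
        for parent in parents:
            children[parent].append(job)
    ready = deque(job for job, count in unmet.items() if count == 0)
    order = []
    while ready:
        job = ready.popleft()
        order.append(job)
        for child in children[job]:
            unmet[child] -= 1
            if unmet[child] == 0:
                ready.append(child)
    if len(order) != len(graph):
        raise ValueError("dependency cycle detected")
    return order


def to_dot(graph):
    """Render a {job: [parent jobs]} graph as Graphviz DOT text."""
    lines = ["digraph jobs {"]
    for job, parents in sorted(graph.items()):
        lines.append(f'    "{job}";')
        for parent in parents:
            lines.append(f'    "{parent}" -> "{job}";')
    lines.append("}")
    return "\n".join(lines)
```

The DOT output can be rendered locally with the Graphviz `dot` tool, without any infrastructure dependencies.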

I think the per-checkin graph would be good to build first; the nightly and PGO graphs, as well as the branch-specific defines, might also be nice to have in phase 1.

I have 4 more sections I wrote skeletons for. Since those sections are more db-oriented, I'm going to move those into a part 5.

In part 1, I covered where we are currently, and what needs to change to scale up.

In part 2, I covered a high level overview of LWR.

In part 3, I covered some hand-wavy LWR specifics, including what we can roll out in phase 1.

In part 5, I'm going to cover some dependency graph db specifics.

Now I'm going to meet with the A-team about this, take care of some vcs-sync tasks, and start writing some code.


K Lars Lohn: Socorro Support Classifiers

Think of the term “Support” as a category for a set of tags. Another term for “category” in this context is “facet”. Support Classifiers are intended for helping with user support. For example, let's say we're getting crashes from installations of Firefox for which there are known support articles. This tagging system will make it simpler to associate the crash with the support article. Eventually, we could implement a system where the user could be automatically directed to a support article based on how the processor categorized the crash.

Classifications are defined by a list of rules. The first rule to match gets to assign the classification. Rules are in two parts: a predicate and an action:

- predicate: a Python function that implements a test to see if a condition within the raw and/or processed crash is True

- action: a Python function that will attach a tag to the appropriate place within a processed crash

Support Classifiers are the second implementation of Classifiers within the processor. The first was the experimental SkunkClassifiers. We can add as many Classifiers as we wish. While both Support and Skunk classifiers define a single facet with a single value, more complex rules could add multiple classifications. Sets of rules can work together in many ways: apply all rules, apply rules until one fails, apply rules until one succeeds, etc.

Support Classifiers are pretty simple, since they can only assign one value to the facet “support”. Each rule is tried one at a time, and the first one to succeed gets to assign the value. The Skunk Classifiers work the same way. Future Signature Classifiers could include alternate or experimental signature generation algorithms.
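The ways of combining rules mentioned above (apply all rules, apply until one fails, apply until one succeeds) can be sketched as three small loops. This is an illustration, not Socorro's implementation. The `Rule` class and the `apply_*` function names are hypothetical, and "fails" is read here as "the predicate does not match".

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    predicate: Callable[[dict, dict, object], bool]
    action: Callable[[dict, dict, object], None]

    def apply(self, raw_crash, processed_crash, processor) -> bool:
        """Run the action only if the predicate matches; report whether it matched."""
        if self.predicate(raw_crash, processed_crash, processor):
            self.action(raw_crash, processed_crash, processor)
            return True
        return False


def apply_all(rules, *crash_args):
    """Try every rule; several may attach classifications."""
    return [rule.apply(*crash_args) for rule in rules]


def apply_until_failure(rules, *crash_args):
    """Apply rules in order, stopping at the first whose predicate is false."""
    for rule in rules:
        if not rule.apply(*crash_args):
            return False
    return True


def apply_until_success(rules, *crash_args):
    """First matching rule wins: how the Support and Skunk classifiers behave."""
    return any(rule.apply(*crash_args) for rule in rules)
```

`any()` stops at the first `True`, so later rules are never tried once one has matched.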

Do you have a Classifier that you'd like to see applied to crashes? There are two ways that you can get your idea implemented in the processor.

- Decide if your classifier is one for Support or some other categorization.

- Define, in plain English, what you want your predicate and action to be. For example:

    - predicate: if the user put a comment in the crash submission AND they specified an email address AND the crash has the signature “EnterBaseline”

    - action: add a Support Classification: “contact about EnterBaseline”

- Enter a bug in Bugzilla with the topic “New Classifier”, giving your classification category as well as the predicate and action. Make sure that you CC :lars so I can vet your work.

- Pending approval, your classifier will be implemented by someone on the Socorro team and pushed to production with the next release.

At the moment, the UI for Socorro does not support searching for classifiers. See Bug 947723 for the current status of adding classifications to the UI.

Want to try your hand at writing your own Support classifier?

Classifier functions are implemented as methods _predicate and _action in a class derived from the base class SupportClassifierBase.

The predicate is a function that accepts references to the raw and processed crashes as well as a reference to the Socorro Processor object itself. It returns a boolean value. Its purpose is to determine whether the rule is eligible to be applied. For example, the rule could test whether the crash is from a specific product and version. If the test is true, the predicate returns True and execution passes to the action function. If the test is false, the predicate returns False, the action is skipped, and we move on to the next rule.

The action is a function that also accepts a copy of the raw and processed crashes as well as a reference to the Socorro Processor object. The action is generally to just add the classification to the processed crash.

All together, a support classifier should look like this:

from socorro.processor.support_classifiers import SupportClassificationRule

class WriteToThisPersonSupportClassifier(SupportClassificationRule):

    def version(self):
        return '1.0'

    def _predicate(self, raw_crash, processed_crash, processor):
        # implement the predicate as a boolean expression to be
        # returned by this method
        return (
            raw_crash.UserComment is not None
            and raw_crash.EmailAddress is not None
            and processed_crash.signature == 'EnterBaseline'
        )

    def _action(self, raw_crash, processed_crash, processor):
        self._add_classification(
            # add the support classification to the processed_crash
            processed_crash,
            # choose the value of your classification on the next line
            'contact about EnterBaseline',
            # any extra data about the classification (if any)
            None,
            # the place to log that a classification has been assigned
            processor.config.logger,
        )

What's inside the raw_crash and processed_crash that my classifier can access?

The raw_crash and the processed_crash are represented in persistent storage in the form of a JSON-compatible mapping. When passed to a classifier, they are in the form of a DotDict, a DOM-like structure accessed with '.' notation. There are no "out of bounds" fields within the crash. The classifier code runs in a privileged environment, so all classifiers must be fully vetted before they can be put into production. Some example fields:

raw_crash.UserComment

raw_crash.ProductName

raw_crash.BuildID

processed_crash.json_dump.system_info.os

processed_crash.json_dump.crash_info.crash_address

processed_crash.json_dump.threads[thread_number].frames[frame_number].function

processed_crash.upload_file_minidump_flash1.json_dump.modules[1].filename
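The minimal stand-in below illustrates what '.' access over a JSON-compatible mapping means. It is not the DotDict class Socorro actually uses; it is a hypothetical sketch. It shows that nested mappings, including mappings inside lists, are reachable with dots. Keys that are not valid Python identifiers, such as "Add-ons" in the raw crash, still need item access.

```python
def _wrap(value):
    """Wrap nested dicts (and dicts inside lists) so they also allow '.' access."""
    if isinstance(value, dict):
        return DotDict(value)
    if isinstance(value, list):
        return [_wrap(item) for item in value]
    return value


class DotDict(dict):
    """Hypothetical dict subclass whose keys can also be read as attributes."""

    def __getattr__(self, name):
        # Only called when normal attribute lookup fails, so dict methods still work.
        try:
            return _wrap(self[name])
        except KeyError:
            raise AttributeError(name) from None


crash = DotDict({
    "json_dump": {
        "system_info": {"os": "Windows NT"},
        "threads": [{"frames": [{"function": "NtWaitForMultipleObjects"}]}],
    },
})
print(crash.json_dump.system_info.os)                 # Windows NT
print(crash.json_dump.threads[0].frames[0].function)  # NtWaitForMultipleObjects
```

In this stand-in, reading a field that is absent raises `AttributeError`, so `hasattr(raw_crash, "UserComment")` is one way to test for presence.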

Here's the form of a raw_crash. This is what Socorro receives from crashing instances of Firefox.

{

"AdapterDeviceID" : "0x104a",

"AdapterVendorID" : "0x10de",

"Add-ons" : "%7B972ce4c6-7e08-4474-a285-3208198ce6fd%7D:25.0.1",

"BuildID" : "20131112160018",

"CrashTime" : "1386534180",

"EMCheckCompatibility" : "true",

"EmailAddress": "...@..."

"FlashProcessDump" : "Sandbox",

"id" : "{ec8030f7-c20a-464f-9b0e-13a3a9e97384}",

"InstallTime" : "1384605839",

"legacy_processing" : 0,

"Notes" : "AdapterVendorID: ... ",

"PluginContentURL" : "http://www.j...",

"PluginFilename" : "NPSWF32_11_7_700_169.dll",

"PluginName" : "Shockwave Flash",

"PluginVersion" : "11.7.700.169",

"ProcessType" : "plugin",

"ProductID" : "{ec8030f7-c20a-464f-9b0e-13a3a9e97384}",

"ProductName" : "Firefox",

"ReleaseChannel" : "release",

"StartupTime" : "1386531556",

"submitted_timestamp" : "2013-12-08T20:23:08.450870+00:00",

"Theme" : "classic/1.0",

"throttle_rate" : 10,

"timestamp" : 1386534188.45089,

"Vendor" : "Mozilla",

"Version" : "25.0.1",

"URL" : "http://...",

"UserComment" : "Horrors!",

"Winsock_LSP" : "MSAFD Tcpip ... "

}
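The "Add-ons" value in the raw crash is URL-encoded: `%7B` and `%7D` decode to `{` and `}`. Assuming the field holds comma-separated `id:version` entries (only one entry is shown above, so the separator is an assumption), a classifier could decode it roughly as follows. `parse_addons` is a hypothetical helper, not a Socorro API; its output has the same `[id, version]` shape as the processed crash's "addons" list.

```python
from urllib.parse import unquote


def parse_addons(raw_value):
    """Hypothetical helper: turn a raw 'Add-ons' string into (id, version) pairs.

    Assumes comma-separated 'id:version' entries with URL-encoded ids.
    """
    pairs = []
    for entry in raw_value.split(","):
        if not entry:
            continue  # tolerate an empty field or a trailing comma
        # Split on the last raw ':' before decoding, so encoded characters
        # in the id cannot be mistaken for separators.
        addon_id, _, version = entry.rpartition(":")
        pairs.append((unquote(addon_id), unquote(version)))
    return pairs


raw_crash = {"Add-ons": "%7B972ce4c6-7e08-4474-a285-3208198ce6fd%7D:25.0.1"}
print(parse_addons(raw_crash["Add-ons"]))
# [('{972ce4c6-7e08-4474-a285-3208198ce6fd}', '25.0.1')]
```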

Here's the form of the processed_crash:

{

"additional_minidumps" : [

"upload_file_minidump_flash2",

"upload_file_minidump_browser",

"upload_file_minidump_flash1"

],

"addons" : [

[

"testpilot@labs.mozilla.com",

"1.2.3"

],

... more addons ...

],

"addons_checked" : true,

"address" : "0x77a1015d",

"app_notes" : "AdapterVendorID: 0x8086, ...",

"build" : "20131202182626",

"classifications" : {

"support" : {

"classification_version" : "1.0",

"classification_data" : null,

"classification" : "contact about EnterBaseline"

},

"skunk_works" : {

"classification_version" : "0.1",

"classification_data" : null,

"classification" : "not classified"

},

... additional classifiers ...

},

"client_crash_date" : "2013-12-05 23:59:13.000000",

"completeddatetime" : "2013-12-05 23:59:56.119158",

"cpu_info" : "GenuineIntel family 6 model 42 stepping 7 | 4",

"cpu_name" : "x86",

"crashedThread" : 0,

"crash_time" : 1386287953,

"date_processed" : "2013-12-05 23:59:38.160492",

"distributor" : null,

"distributor_version" : null,

"dump" : "OS|Windows NT|6.1.7601 Service Pack 1\n... PIPE DUMP ...",

"email" : null,

"exploitability" : "none",

"flash_version" : "11.9.900.152",

"hangid" : "fake-e167ea3d-8732-4bae-a403-352e32131205",

"hang_type" : -1,

"install_age" : 680,

"json_dump" : {

"system_info" : {

"os_ver" : "6.1.7601 Service Pack 1",

"cpu_count" : 4,

"cpu_info" : "GenuineIntel family 6 model 42 stepping 7",

"cpu_arch" : "x86",

"os" : "Windows NT"

},

"crashing_thread" : {

"threads_index" : 0,

"total_frames" : 55,

"frames" : [

{

"function_offset" : "0x15",

"function" : "NtWaitForMultipleObjects",

"trust" : "context",

"frame" : 0,

"offset" : "0x77a1015d",

"normalized" : "NtWaitForMultipleObjects",

"module" : "ntdll.dll",

"module_offset" : "0x2015d"

},

... more frames ...

]

},

"thread_count" : 10,

"status" : "OK",

"threads" : [

{

"frame_count" : 55,

"frames" : [

{

"function_offset" : "0x15",

"function" : "NtWaitForMultipleObjects",

"trust" : "context",

"frame" : 0,

"module" : "ntdll.dll",

"offset" : "0x77a1015d",

"module_offset" : "0x2015d"

},

... more frames ...

]

},

... more threads ...

],

"modules" : [

{

"end_addr" : "0x12e6000",

"filename" : "plugin-container.exe",

"version" : "26.0.0.5084",

"debug_id" : "8385BD80FD534F6E80CF65811735A7472",

"debug_file" : "plugin-container.pdb",

"base_addr" : "0x12e0000"

},

... more modules ...

],

"sensitive" : {

"exploitability" : "none"

},

"crash_info" : {

"crashing_thread" : 0,

"address" : "0x77a1015d",

"type" : "EXCEPTION_BREAKPOINT"

},

"main_module" : 0

},

"java_stack_trace" : null,

"last_crash" : null,

"os_name" : "Windows NT",

"os_version" : "6.1.7601 Service Pack 1",

"PluginFilename" : "NPSWF32_11_9_900_152.dll",

"pluginFilename" : null,

"pluginName" : null,

"PluginName" : "Shockwave Flash",

"PluginVersion" : "11.9.900.152",

"processor_notes" : "processor03_mozilla_com.89:2012; HybridCrashProcessor",

"process_type" : "plugin",

"product" : "Firefox",

"productid" : "{ec8030f7-c20a-464f-9b0e-13a3a9e97384}",

"reason" : "EXCEPTION_BREAKPOINT",

"release_channel" : "beta",

"ReleaseChannel" : "beta",

"signature" : "hang | F_1152915508___________________________________",

"startedDateTime" : "2013-12-05 23:59:49.142604",

"success" : true,

"topmost_filenames" : "F_388496358________________________________________",

"truncated" : false,

"uptime" : 668,

"url" : "https://...",

"user_comments" : null,

"user_id" : "",

"uuid" : "e167ea3d-8732-4bae-a403-352e32131205",

"version" : "26.0",

"Winsock_LSP" : "...",

... the next entries are additional crash dumps included in this crash ...

"upload_file_minidump_browser" : {

"address" : null,

"cpu_info" : "GenuineIntel family 6 model 42 stepping 7 | 4",

"cpu_name" : "x86",

"crashedThread" : null,

"dump" : "... same form as the 'dump' key above ...",

"exploitability" : "ERROR: something went wrong",

"flash_version" : "11.9.900.152",

"json_dump" : { ... same form as the "json_dump" key above ... },

"os_name" : "Windows NT",

"os_version" : "6.1.7601 Service Pack 1",

"reason" : "No crash",

"success" : true,

"truncated" : false,

"topmost_filenames" : [

... same form as "topmost_filenames" above ...

],

"signature" : "hang | whatever"

},

"upload_file_minidump_flash1" : {

... same form as "upload_file_minidump_browser" above ...

},

"upload_file_minidump_flash2" : {

... same form as "upload_file_minidump_browser" above ...

}

}
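Working with plain dicts (what the stored JSON deserializes to, rather than the DotDict a classifier actually receives), a classifier might pull the top of the crashing thread's stack and the signatures of the extra minidumps like this. `top_frames` and `additional_signatures` are hypothetical helpers, not Socorro APIs, and the defensive `.get` calls assume some keys can be missing or null, as `crashedThread` is for the browser dump above.

```python
def top_frames(processed_crash, limit=5):
    """Function names of the first `limit` frames on the crashing thread."""
    # crashing_thread may be absent or null, e.g. for a dump whose reason is "No crash".
    json_dump = processed_crash.get("json_dump") or {}
    crashing = json_dump.get("crashing_thread") or {}
    return [frame.get("function") for frame in crashing.get("frames", [])[:limit]]


def additional_signatures(processed_crash):
    """Signature of each extra minidump listed in additional_minidumps."""
    return {
        name: (processed_crash.get(name) or {}).get("signature")
        for name in processed_crash.get("additional_minidumps", [])
    }


# A trimmed-down processed_crash using only keys shown above.
processed_crash = {
    "additional_minidumps": ["upload_file_minidump_browser", "upload_file_minidump_flash1"],
    "json_dump": {
        "crashing_thread": {
            "threads_index": 0,
            "frames": [{"frame": 0, "function": "NtWaitForMultipleObjects", "module": "ntdll.dll"}],
        }
    },
    "upload_file_minidump_browser": {"signature": "hang | whatever"},
}

print(top_frames(processed_crash))             # ['NtWaitForMultipleObjects']
print(additional_signatures(processed_crash))  # {'upload_file_minidump_browser': 'hang | whatever', 'upload_file_minidump_flash1': None}
```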

http://www.twobraids.com/2013/12/socorro-support-classifiers.html


Pascal Finette: On Stage With A Legend |

A few weeks ago I had the incredible fortune to be on stage with Mike Watt, punk rock legend, founding member of bands such as Minutemen, Dos and fIREHOSE, bassist for the Stooges (Iggy Pop's band). You get the idea – the man is a true legend.

My dear friends at CASH Music, a non-profit that builds tools allowing musicians to represent themselves on the web, organized one of their amazing summits; this one was in LA, and it had me on stage with Mike talking about punk rock, open source and everything in between. It was epic. Here's the video:


Christian Heilmann: An open talk proposal – Accidental Arrchivism |

People who have seen me speak at one of the dozens of conferences I covered in the last year know that I am passionate about presenting and that I love covering topics from a different angle instead of doing a sales pitch or going through the motions of delivering a packaged talk over and over again.

For a few months now I have been pondering a talk quite different from the topics I normally cover – the open web, JavaScript and development – and I’d love to pitch it here, to an unknown audience, to find a conference it might fit. If you are organising a conference around digital distribution, tech journalism or publishing, I’d love to come and deliver it. A perfect setting would probably be a TEDx or Wired-like event. Without further ado, here is the pitch:

Accidental arrchivism

The subject of media and software piracy is covered in mainstream media with a lot of talk about greedy, unpleasant people who use their knowledge to steal information and make money with it: the image of the arrogant computer nerd, as perfectly displayed in Jurassic Park. There is also no shortage of poster children who fit this bill, and it is easy to bring up numbers that show how piracy is hurting a whole industry.

This kind of piracy, however, is just the tip of the iceberg when it comes to the whole subject matter. If you dig deeper you will find a complex structure of hierarchies, rules, quality control mechanisms and distribution formats in the piracy scene. These are in many cases superior to those of legal distributors and much more technologically and socially advanced.

In this talk Chris Heilmann will show the results of his research into the matter and present a more multi-faceted view of piracy – one that publishers and distributors could learn from. He will also show positive – if accidental – results of piracy and explain which needs, still unmet by legal release channels, the pirates fill and how that drives their success – not all of them being about things becoming “free”. You cannot kill piracy by making it illegal and applying scare tactics – its decentralised structure and the fact that it is already illegal make that impossible. A lot of piracy happens because of convenience of access. If legal channels embraced and understood some of the ways pirates work and the history of piracy, and offered a similar service, a lot of it would be rendered unnecessary.

If you are a conference organiser who’d be interested, my normal presentation rules apply:

- I want this to be a keynote, or closing keynote, not a talk in a side track in front of 20 people

- I want a good-quality recording to be published after the event. So far I have been most impressed with what Baconconf delivered on that front with the recording of my “Helping or Hurting” presentation.

- I’d like to get my travel expenses back. If your event is in London, Stockholm or the valley, this could be zero, as I spend a lot of time in these places.

Get in contact either via Twitter (@codepo8), Facebook (thechrisheilmann), LinkedIn, Google+ (+ChristianHeilmann) or email (catch-all email, the answer will come from another one).

If you are a fan of what I do right now and you’d be interested in seeing this talk, spread this pitch far and wide and give it to conference organisers. Thanks.

http://christianheilmann.com/2013/12/08/an-open-talk-proposal-accidental-arrchivism/


David Humphrey: An Hour of Code spawns hours of coding |

One of the topics my daughters and two of their friends asked me to cover this year in our home school is programming. They call it Code Club, and we have been learning HTML, JavaScript, and CSS together. We’ve been using Thimble to make things like this visualization of castles around the world. It’s been a lot of fun.

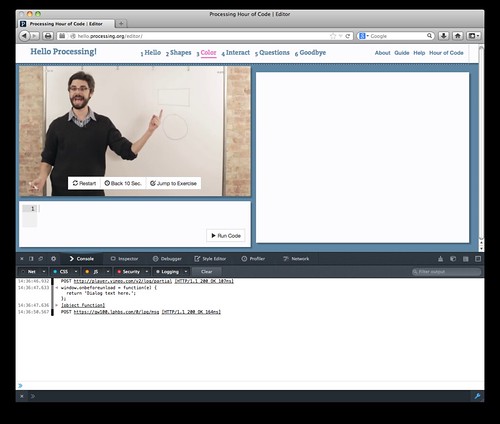

This past week I introduced them to the Processing programming language using this great new interactive tutorial. It was made for Computer Science Education Week and the Hour of Code, in which many organizations (including Mozilla Webmaker, who is also participating) have put together tutorials and learning materials for new programmers.

One of the things I love about the Processing Hour of Code tutorial is that it was made using two projects I worked on for Mozilla, namely Processing.js and Popcorn.js, and does something I always wanted to see people do with them: build in-browser, interactive, rich media, web programming curriculum. Everything you need to learn and code is all in one page, with nothing to install.

I decided to use the Processing tutorial for Code Club this past week, and let the girls try it out. I was a bit worried it would be too hard for them, but they loved it, and were able to have a great time with it, and understand how things worked. Here’s the owl two of the girls made:

The other two girls made a one-eyed Minion from Despicable Me. As they were preparing to show one another their creations, disaster struck, and the code for the Minion was lost. Some tears were shed, and we agreed to work on making it again.

Today we decided to see if we could fix the issue that caused us to lose our work in the first place. The Processing Hour of Code was designed to inspire new programmers to try their hand at programming, and what better way than to write some real code that will help other people and improve the tutorial?

What follows is a log I wrote as the girls worked on the problem. Without giving them all the answers, I gave them tips, explained things they didn’t understand, and let them have an organic experience of debugging and fixing a real bug. All three of us loved it, and these are the steps my daughters took along the way:

1) Go to http://hello.processing.org, click on “Click Here To Begin” and go to the Introduction.

2) Try to make the bug happen again. We tried many things to see if we could make the bug happen again, and lose our work. After experimenting for a while, we discovered that you could make the bug happen by doing the following:

- Go to the “Color” section

- Click “Jump to Exercise” on the video.

- Change the code in the code editor.

- Click on the blue area outside the code editor

- Press the Delete/Backspace key

- The web page goes back to the beginning, and now we’ve lost our code

3) Find a way to stop this from happening. We went to Google and tried to research some solutions. Here are some of the searches we tried, and what we found:

- “How do you make a web page not go back?”

- “How to keep the delete key from triggering the back button?”

- “How to stop a web page from losing my work hitting backspace”

- “what is the code to stop a web page from losing my work hitting backspace”

Most of these searches gave us the wrong info. Either we found ways to stop a web browser from doing Back when you press Delete, or else we found complicated code for ignoring the Delete key. We tried another search:

- “stop the page from closing”

This brought us to a question on a site called Stack Overflow, with an answer that looked interesting. It talked about using some code called window.onbeforeunload. We had never heard of this, so we did another search for it:

- “window.onbeforeunload”

Many pages came back with details on how to use it, and one of them was Mozilla’s page, which we read. In it we found a short example of how to use it, which we copied into our clipboard:

window.onbeforeunload = function(e) {
  return 'Dialog text here.';
};

We tried pasting this into the Processing code editor, but that didn’t work. Instead we needed to change the code for the hello.processing.org web page itself. Our dad showed us one way to do it.

4) We used the Developer Tools to modify the code for the hello.processing.org web page by pasting the code from Mozilla’s example into the Web Console (Tools > Web Developer > Web Console).

Now we tried again to trigger our bug, and it asked us if we wanted to leave or stay on the page. We fixed it!!!

We opened another tab and loaded hello.processing.org again, and we see that this version still has the old bug. We now need to make this fix for all versions.

5) We want to fix the actual code for the site. “Dad, how do we fix it? Doesn’t he have to fix it? How can we fix it for the whole world from here?” Great questions! First we have to find the code so we can make our change. We look at the site’s About page, and see the names of the people who made this web page listed under Credits. We do a search for their names and “Hello Processing”:

- “daniel shiffman scott garner scott murray hello processing”

In the results, we find a site where they were talking about the project, and Dan Shiffman was announcing it. In his announcement he says, “We would love as many folks as possible to test out the tutorial and find bugs.” He goes on to say: “You can file bug reports here: https://github.com/scottgarner/Processing-Hour-Of-Code/issues?state=open”. We are glad to read that he wants people to test it and tell him if they hit bugs. Now we know where to tell them about our bug.

6) At https://github.com/scottgarner/Processing-Hour-Of-Code we find the list of open issues (there were 5), and none of them mentioned our problem–maybe they don’t know about it. We also see all of the code for their site, and there is a lot of it.

7) We create a new account on GitHub so that we can tell them about the issue, and also fix their code. We then fork their project, and get our own version at https://github.com/threeamigos/Processing-Hour-Of-Code

8) Now we have to figure out where to put our code. It’s overwhelming just looking at it. Our dad suggests that we put the code in the page that caused our error, which was http://hello.processing.org/editor/index.html. On GitHub, we see that editor/index.html is an HTML file https://github.com/threeamigos/Processing-Hour-Of-Code/blob/gh-pages/editor/index.html.

9) Next we have to find the right place in this HTML file to paste our code. Our dad tells us we need to find a script block, and we quickly locate a bunch of them. We don’t understand how all of them work, but notice there is one at the bottom of the file, and decide to put our code there.

10) We clicked “Edit” on the file in the GitHub web page, and made the following change:

11) Finally, we made a Pull Request from our code to the original. We told them about the bug, and how it happens, and also that we’d fixed it, and that they could use our code. We’re excited to see their reply, and we hope they will use our code, and that it will help other new programmers too.


Arky: Mozilla Taiwan Localization Sprint |

Last week I traveled to Taipei for a localization sprint with the Mozilla Taiwan community. The community translates various Mozilla projects into Chinese (Traditional) (zh-TW). The goal of a localization sprint is to bring together new and experienced translators under one roof. Such events help promote knowledge sharing through peer learning and mentorship. Special thanks to Michael Hung, Estela Liu and Natasha Ma for making the Mozilla space available and making it inviting by providing pizza.

The event began with a short introduction to localization by Peter Chen, followed by a brief overview of various translation projects: translating Mozilla Support (SUMO) by Ernest Chiang, translating Webmaker by Peter Chen, translating addons.mozilla.org by Toby, translating Mozilla Developer Network (MDN) articles by Carl, translating Mozilla videos with the Amara tool by Irvin and translating Mozilla Links by Chung-Hui Fang. The speakers then organized participants into topic-specific working groups, based on each individual's interest.

It was interesting to see how people used various tools such as Narro, Pootle, Transifex and even Google Docs for translation. It gave me an opportunity to observe and note some of the potential problems in the translation process. At the end of the day, everyone gathered to share and present their group's work. They also took time to answer participants' questions. All in all it was a very productive and enjoyable event. Mozilla badges were issued to recognize the participants' contributions.

Check out the event photos and etherpad for additional details. The Mozilla Taiwan community will continue to translate during their weekly MozTwLab meetups, and a follow-up event is planned for spring 2014.

http://playingwithsid.blogspot.com/2013/12/Mozilla-Taiwan-L10N-sprint.html


Jason Orendorff: How EgotisticalGiraffe was fixed |

In October, Bruce Schneier reported that the NSA had discovered a way to attack Tor, a system for online anonymity.

The NSA did this not by attacking the Tor system or its encryption, but by attacking the Firefox web browser bundled with Tor. The particular vulnerability, code-named “EgotisticalGiraffe”, was fixed in Firefox 17, but the Tor browser bundle at the time included an older version, Firefox 10, which was vulnerable.

I’m writing about this because I’m a member of Mozilla’s JavaScript team and one of the people responsible for fixing the bug.

I still don’t know exactly what vulnerability EgotisticalGiraffe refers to. According to Mr. Schneier’s article, it was a bug in a feature called E4X. The security hole went away when we disabled E4X in Firefox 17.

You can read a little about this in Mozilla’s bug-tracking database. E4X was disabled in bugs 753542, 752632, 765890, and 778851, and finally removed entirely in bugs 833208 and 788293. Nicholas Nethercote and Ted Shroyer contributed patches. Johnny Stenback, Benjamin Smedberg, Jim Blandy, David Mandelin, and Jeff Walden helped with code reviews and encouragement. As with any team effort, many more people helped indirectly.

Thank you.

Now I will write as an American. I don’t speak for Mozilla on this or any topic. The views expressed here are my own and I’ll keep my political opinions out of it.

The NSA has twin missions: to gather signals intelligence and to defend American information systems.

From the outside, it appears the two functions aren’t balanced very well. This could be a problem, because there’s a conflict of interest. The signals intelligence folks are motivated to weaponize vulnerabilities in Internet systems. The defense folks, and frankly everyone else, would like to see those vulnerabilities fixed instead.

It seems to me that fixing them is better for national security.

In the particular case of this E4X vulnerability, mainly only Tor users were vulnerable. But it has also been reported that the NSA has bought security vulnerabilities “from private malware vendors”.

All I know about this is a line item in a budget ($25.1 million). I’ve seen speculation that the NSA wants these flaws for offensive use. It’s a plausible conjecture—but I sure hope that’s not the case. Let me try to explain why.

The Internet is used in government. It’s used in banks, hospitals, power plants. It’s used in the military. It’s used to handle classified information. It’s used by Americans around the world. It’s used by our allies. If the NSA is using security flaws in widely-used software offensively (and to repeat, no one says they are), then they are holding in their hands major vulnerabilities in American civilian and military infrastructure, and choosing not to fix them. It would be a dangerous bet: that our enemies are not already aware of those flaws, aren’t already using them against us, and can’t independently buy the same information for the same price. Also that deploying the flaws offensively won’t reveal them.

Never mind the other, purely civilian benefits of a more secure Internet. It just sounds like a bad bet.

Ultimately, the NSA is not responsible for Firefox in particular. That honor and privilege is ours. Yours, too, if you want it.

We have work to do. One key step is content process separation and sandboxing. Internet Explorer and Chrome have had this for years. It’s coming to Firefox. I’d be surprised if a single Firefox remote exploit known to anyone survives this work (assuming there are any to begin with). Firefox contributors from four continents are collaborating on it. You can join them. Or just try it out. It’s rough so far, but advancing.

I’m not doing my job unless life is constantly getting harder for the NSA’s SIGINT mission. That’s not a political statement. That’s just how it is. It’s the same for all of us who work on security and privacy. Not only at Mozilla.

If you know of a security flaw in Firefox, here’s how to reach us.

https://blog.mozilla.org/jorendorff/2013/12/06/how-egotisticalgiraffe-was-fixed/


Benoit Girard: Introducing Scroll-Graph |

For a while I’ve been warning people that the FPS counter is very misleading. There are many cases where the FPS might be high but the scrolling may still not be smooth. To give a better indicator of scrolling performance I’ve written Scroll-Graph, which can be enabled via layers.scroll-graph. Currently it requires OMTC+OGL, but if this is useful we can easily port it to OMTC+D3D. It works best on desktop for now; it will need a bit more work on mobile to be compatible with APZC.

To understand how Scroll-Graph works, let’s look at scrolling from the point of view of a physicist. Scrolling and panning should be smooth. Ideally, scrolling behaves like a page on a low-friction surface. Imagine that the page is on a low-friction ice rink and that every time you fling the page with your finger or the trackpad you’re applying force to the page. The friction of the ice applies a small force in the opposite direction. If you plot the velocity, you’d expect to see roughly something like this:

Now if we scroll perfectly, we expect the velocity of a layer to follow a similar curve. The important part is *not* the position of the velocity curve but its smoothness. Smooth scrolling means the page has a smooth velocity curve.

Now on to the fun part. Here are some examples of Scroll-Graph in the browser. Here I pan a page and get smooth scrolling with 2 minor imperfections (excuse the low-FPS GIF, the scrolling was smooth):
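To make the "smooth velocity curve" idea concrete, here is a rough sketch in Python (not Gecko's implementation, which this post does not show) that derives per-frame velocity from sampled scroll positions and scores jerkiness as the largest frame-to-frame change in velocity. The function names, the 16 ms frame time, and the metric itself are assumptions for illustration.

```python
def velocities(positions, frame_ms=16.0):
    """Per-frame velocity (pixels per ms) from successive scroll positions."""
    return [(b - a) / frame_ms for a, b in zip(positions, positions[1:])]


def max_velocity_jump(positions, frame_ms=16.0):
    """Largest change in velocity between consecutive frames.

    A friction-like deceleration gives small, steadily shrinking jumps;
    a frame that stalls or skips ahead shows up as a spike.
    """
    v = velocities(positions, frame_ms)
    return max((abs(b - a) for a, b in zip(v, v[1:])), default=0.0)


# Simulated fling: per-frame movement decays by a constant factor (friction).
smooth, pos, step = [], 0.0, 40.0
for _ in range(20):
    smooth.append(pos)
    pos += step
    step *= 0.9

# Same fling, but frame 10 stalls at frame 9's position and frame 11 catches up.
jerky = smooth[:10] + [smooth[9]] + smooth[11:]

print(max_velocity_jump(smooth))  # 0.25
print(max_velocity_jump(jerky))   # noticeably larger: the stall is a spike
```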

Now here’s an example of a jerky page scrolling. Note the Scroll-Graph is not smooth at all but very ‘spiky’ and it was noticeable to the eye:


http://benoitgirard.wordpress.com/2013/12/06/introducing-scroll-graph/


Frédéric Harper: My presentations are Creative Commons: share them, use them, improve them… |

Creative Commons: http://j.mp/1bNjxvC

I want more people to do public speaking. I want more people to share their passion about technology. I want more people to show the awesomeness of the Open Web, and help others be more open. So when someone asks me if he can take one of my presentations, my reaction is: of course! Of course you can: share it, use it, improve it, change it…

I uploaded all my presentations to my SlideShare account under the Creative Commons Attribution 2.5 Generic license. It’s easy to download the original format, but if you have any trouble, feel free to let me know. To be even clearer about what you can do with my material, I’ll add a Creative Commons logo to all my new presentations. Of course, all the content inside those presentations, like images, is also under a Creative Commons license. I also encourage you to check this site, as there is probably a recording of the presentation related to the slides you want to use: I started doing this a couple of weeks ago, so you won’t find one for older presentations. It can be a guide to help you understand the slides, the content, and how I delivered it; by no means do you have to share the information in the same way! Make it yours.

As I said in my presentation about public speaking: if you are just starting out on stage and want some feedback on your presentation, materials, and ways of delivering your own story, please let me know; I’ll be more than happy to help. So start now, and share your passion with others…

--

My presentations are Creative Commons: share them, use them, improve them… is a post on Out of Comfort Zone from Frédéric Harper


Nathan Froyd: reading binary structures with python |

Last week, I wanted to parse some Mach-O files with Python. “Oh sure,” you think, “just use the struct module and this will be a breeze.” I have, however, tried to do that:

import struct

class MyBinaryBlob:
    def __init__(self, buf, offset):
        self.f1, self.f2 = struct.unpack_from("BB", buf, offset)

and such an approach involves a great deal of copy-and-pasted code. And if you have some variable-length fields mixed in with fixed-length fields, using struct breaks down very quickly. And if you have to write out the fields to a file, things get even more messy. For this experiment, I wanted to do things in a more declarative style.

The desire was that I could say something like:

class MyBinaryBlob:
    field_names = ["f1", "f2"]
    field_kinds = ["uint8_t", "uint8_t"]

and all the necessary code to parse the appropriate fields out of a binary buffer would spring into existence. (Automagically having the code to write these objects to a buffer would be great, too.) And if a binary object contained something that would be naturally interpreted as a Python list, then I could write a minimal amount of code to do that during initialization of the object as well. I also wanted inheritance to work correctly, so that if I wrote:

class ExtendedBlob(MyBinaryBlob):
    field_names = ["f3", "f4"]
    field_kinds = ["int32_t", "int32_t"]

ExtendedBlob should wind up with four fields once it is initialized.

At first, I wrote things like:

import struct

def field_reader(fmt):
    size = struct.calcsize(fmt)
    def reader_sub(buf, offset):
        return struct.unpack_from(fmt, buf, offset)[0], size
    return reader_sub

fi = field_reader("i")
fI = field_reader("I")
fB = field_reader("B")

def initialize_slots(obj, buf, offset, slot_names, field_specs):
    total = 0
    for slot, reader in zip(slot_names, field_specs):
        x, size = reader(buf, offset + total)
        setattr(obj, slot, x)
        total += size

class MyBinaryBlob:
    field_names = ["f1", "f2"]
    field_specs = [fB, fB]

    def __init__(self, buf, offset):
        initialize_slots(self, buf, offset, self.field_names, self.field_specs)

Fields return their size to make it straightforward to add variable-sized fields, not just fixed-width fields that can be parsed by struct.unpack_from. This worked out OK, but I was writing out a lot of copy-and-paste constructors, which was undesirable. Inheritance was also a little weird, since the natural implementation looked like:

class ExtendedBlob(MyBinaryBlob):
    field_names = ["f3", "f4"]
    field_specs = [fi, fi]

    def __init__(self, buf, offset):
        super(ExtendedBlob, self).__init__(buf, offset)
        initialize_slots(self, buf, offset, self.field_names, self.field_specs)

but that second initialize_slots call needs to start reading at the offset resulting from reading MyBinaryBlob’s fields. I fixed this by storing a _total_size member in the objects and modifying initialize_slots:

def initialize_slots(obj, buf, offset, slot_names, field_specs):
    total = obj._total_size
    for slot, reader in zip(slot_names, field_specs):
        x, size = reader(buf, offset + total)
        setattr(obj, slot, x)
        total += size
    obj._total_size = total

which worked out well enough.
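Because each reader reports how many bytes it consumed, a variable-width field slots in next to the fixed-width ones without special handling. As a hedged sketch: the length-prefixed byte string below is a hypothetical encoding chosen for illustration (not a Mach-O structure), `counted_string_reader` is not from the original post, and `field_reader` is repeated from above so the block runs on its own.

```python
import struct


def field_reader(fmt):
    """Fixed-width reader, as in the post: returns (value, bytes consumed)."""
    size = struct.calcsize(fmt)

    def reader_sub(buf, offset):
        return struct.unpack_from(fmt, buf, offset)[0], size

    return reader_sub


def counted_string_reader(len_fmt="<I"):
    """Hypothetical variable-width reader: a length prefix followed by that
    many bytes. The reported size covers prefix plus payload, so the next
    field starts in the right place."""
    prefix = struct.calcsize(len_fmt)

    def reader_sub(buf, offset):
        (length,) = struct.unpack_from(len_fmt, buf, offset)
        start = offset + prefix
        return bytes(buf[start:start + length]), prefix + length

    return reader_sub


fB = field_reader("B")
fS = counted_string_reader()

# byte 7, then a 5-byte string, then byte 9
buf = bytes([7]) + struct.pack("<I", 5) + b"hello" + bytes([9])
offset = 0
values = []
for reader in (fB, fS, fB):
    value, size = reader(buf, offset)
    values.append(value)
    offset += size
print(values)  # [7, b'hello', 9]
```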

I realized that if I wanted to use this framework for writing binary blobs, I’d need to construct “bare” objects without an existing buffer to read them from. To do this, there had to be some static method on the class for parsing things out of a buffer. @staticmethod couldn’t be used in this case, because the code inside the method didn’t know what class it was being invoked on. But @classmethod, which received the invoking class as its first argument, seemed to fit the bill.
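The difference the paragraph describes is easy to see in isolation; `Base`, `Derived`, and the method names below are placeholders, not part of the parsing code:

```python
class Base:
    @staticmethod
    def make_static():
        # A staticmethod has no idea which class it was called through,
        # so the best it can do is hard-code one.
        return Base()

    @classmethod
    def make(cls):
        # A classmethod receives the invoking class, so subclasses get
        # instances of themselves without overriding anything.
        return cls()


class Derived(Base):
    pass


print(type(Derived.make_static()).__name__)  # Base
print(type(Derived.make()).__name__)         # Derived
```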

After some more experimentation, I wound up with a base class, BinaryObject:

import itertools

class BinaryObject(object):
    field_names = []
    field_specs = []

    def __init__(self):
        self._total_size = 0

    def initialize_slots(self, buf, offset, slot_names, field_specs):
        total = self._total_size
        for slot, reader in zip(slot_names, field_specs):
            x, size = reader(buf, offset + total)
            setattr(self, slot, x)
            total += size
        self._total_size = total

    @classmethod
    def from_buf(cls, buf, offset):
        # Determine our inheritance path back to BinaryObject
        inheritance_chain = []
        pos = cls
        while pos != BinaryObject:
            inheritance_chain.append(pos)
            bases = pos.__bases__
            assert len(bases) == 1
            pos = bases[0]
        inheritance_chain.reverse()

        # Determine all the field names and specs that we need to read.
        all_field_names = itertools.chain(*[c.field_names
                                            for c in inheritance_chain])
        all_field_specs = itertools.chain(*[c.field_specs
                                            for c in inheritance_chain])

        # Create the actual object and populate its fields.
        obj = cls()
        obj.initialize_slots(buf, offset, all_field_names, all_field_specs)
        return obj

Inspecting the inheritance hierarchy at runtime makes for some very compact code. (The single-inheritance assertion could probably be relaxed to an assertion that all superclasses except the first do not have field_names or field_specs class members; such a relaxation would make behavior-modifying mixins work well with this scheme.) Now my classes all looked like:

class MyBinaryBlob(BinaryObject):
    field_names = ["f1", "f2"]
    field_specs = [fB, fB]

class ExtendedBlob(MyBinaryBlob):
    field_names = ["f3", "f4"]
    field_specs = [fi, fi]

blob1 = MyBinaryBlob.from_buf(buf, offset)
blob2 = ExtendedBlob.from_buf(buf, offset)

with a pleasing lack of code duplication. Any code for writing can be written once in the BinaryObject class using a similar inspection of the inheritance chain.
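The post only says that writing can be handled once in the base class. Here is one hedged way to do it, as a self-contained sketch rather than the author's code: to make writing trivial it swaps the reader functions for struct format codes in a hypothetical `field_formats` attribute, which limits it to fixed-width fields, and `PackedObject` is an illustrative name. It walks the same single-inheritance chain that `from_buf` does.

```python
import struct


class PackedObject(object):
    """Hypothetical read/write variant of BinaryObject: each class lists
    struct format codes in `field_formats` instead of reader functions."""

    field_names = []
    field_formats = []

    @classmethod
    def _chain(cls):
        # Walk bases back to PackedObject, base class first. Unpacking into
        # a 1-tuple raises ValueError on multiple inheritance, like the
        # original's single-inheritance assertion.
        chain = []
        pos = cls
        while pos is not PackedObject:
            chain.append(pos)
            (pos,) = pos.__bases__
        chain.reverse()
        return chain

    @classmethod
    def _layout(cls):
        names, fmts = [], []
        for c in cls._chain():
            names.extend(c.field_names)
            fmts.extend(c.field_formats)
        # '<' = little-endian with no padding, so sizes are predictable.
        return names, "<" + "".join(fmts)

    @classmethod
    def from_buf(cls, buf, offset=0):
        names, fmt = cls._layout()
        obj = cls()
        for name, value in zip(names, struct.unpack_from(fmt, buf, offset)):
            setattr(obj, name, value)
        return obj

    def to_buf(self):
        names, fmt = self._layout()
        return struct.pack(fmt, *(getattr(self, n) for n in names))


class MyBinaryBlob(PackedObject):
    field_names = ["f1", "f2"]
    field_formats = ["B", "B"]


class ExtendedBlob(MyBinaryBlob):
    field_names = ["f3", "f4"]
    field_formats = ["i", "i"]


data = struct.pack("<BBii", 1, 2, -3, 4)
blob = ExtendedBlob.from_buf(data)
print(blob.f1, blob.f2, blob.f3, blob.f4)  # 1 2 -3 4
print(blob.to_buf() == data)               # True
```

Handling variable-width fields would need a writer function paired with each reader, which is where the post's reader-function design would come back in.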

But how does parsing additional things during construction work? Well, subclasses can define their own from_buf methods:

class ExtendedBlobWithList(BinaryObject):
    field_names = ["n_objs"]
    field_specs = [fI]

    @classmethod
    def from_buf(cls, buf, offset):
        obj = BinaryObject.from_buf.__func__(cls, buf, offset)
        # do extra initialization here
        for i in range(obj.n_objs):
            ...
        return obj

The trick here is that calling obj = BinaryObject.from_buf(buf, offset) wouldn’t do the right thing: that would only parse any members that BinaryObject had, and return an object of type BinaryObject instead of one of type ExtendedBlobWithList. Instead, we call BinaryObject.from_buf.__func__, which is the original, undecorated function, and pass the cls with which we were invoked, which is ExtendedBlobWithList, to do basic parsing of the fields. After that’s done, we can do our own specialized parsing, probably with SomeOtherBlob.from_buf or similar. (The _total_size member also comes in handy here, since you know exactly where to start parsing additional members.) You can even define from_buf methods that parse a bit, determine what class they should really be constructing, and construct an object of that type instead:

R_SCATTERED = 0x80000000

class Relocation(BinaryObject):
    field_names = ["_bits1", "_bits2"]
    field_specs = [fI, fI]
    __slots__ = field_names

    @classmethod
    def from_buf(cls, buf, offset):
        obj = BinaryObject.from_buf.__func__(Relocation, buf, offset)
        # OK, now for the decoding of what we just got back.
        if obj._bits1 & R_SCATTERED:
            return ScatteredRelocationInfo.from_buf(buf, offset)
        else:
            return RelocationInfo.from_buf(buf, offset)

This hides any detail about file formats in the parsing code, where it belongs.

Overall, I’m pretty happy with this scheme; it’s a lot more pleasant than bare struct.unpack_from calls scattered about.

https://blog.mozilla.org/nfroyd/2013/12/06/reading-binary-structures-with-python/
