Добавить любой RSS - источник (включая журнал LiveJournal) в свою ленту друзей вы можете на странице синдикации.

Исходная информация - http://planet.mozilla.org/.

Данный дневник сформирован из открытого RSS-источника по адресу http://planet.mozilla.org/rss20.xml, и дополняется в соответствии с дополнением данного источника. Он может не соответствовать содержимому оригинальной страницы. Трансляция создана автоматически по запросу читателей этой RSS ленты.

По всем вопросам о работе данного сервиса обращаться со страницы контактной информации.

[Обновить трансляцию]

Aki Sasaki: LWR (job scheduling) part v: some thoughts on the jobs and graphs db |

[10:57]so one thing - I think it may be premature to look at db models before looking at APIs

I think I agree with that, especially since it looks like LWR may end up looking very different than my initial views of it.

However, as I noted in part 3's preface, I'm going to go ahead and get my thoughts down, with the caveat that this will probably change significantly. Hopefully this will be useful in the high-level sense, at least.

jobs and graphs db

At the bare minimum, this will need graphs and jobs. But right now I'm thinking we may want the following tables (or collections, depending what db solution we end up choosing):

- graph sets

Graph sets would tie a set of graphs together. If we wanted to, say, launch b2g builds as a separate graph from the firefox mobile and firefox desktop graphs, we could still tie them together as a graph set. Alternately, we could create a big graph that represents everything. The benefit of keeping the separate graphs is it's easier to reference and retrigger jobs of a type: retrigger all the b2g jobs, retrigger all the Firefox desktop win32 PGO talos runs.

- graph templates

I'm still in the brainstorm mode for the templates. Having templates for graphs and jobs would allow us to generate graphs from the web app, allowing for a faster UI without having to farm out a job to the graph generation pool to clone a repo and generate a graph. These would have templatized bits like branch name that we can fill out to create a graph for a specific branch. It would also be one way we could keep track of changes in customized graphs or jobs (by diffing the template against the actual job or graph).

- graphs

This would be the actual dependency graphs we use for scheduling, built from the templates or submitted from external requests.

- job templates

This would work similar to the graph templates: how jobs would look, if you filled in the branch name and such, that we can easily work with in the webapp to create jobs.

- jobs

These would have the definitions of jobs for specific graphs, but not the actual job run information -- that would go in the "job runs" table.

- job runs

I thought of "job runs" as separate from "jobs" because I was trying to resolve how we deal with retriggers and retries. Do we embed more and more information inside the job dictionary? What happens if we want to run a new job Y just like completed job X, but as its own entity? Do we know how to scrub the previous history and status of job X, while still keeping the definition the same? (This is what I meant by "volatile" information in jobs). The "job run" table solves this by keeping the "jobs" table all about definitions, and the "job runs" table has the actual runtime history and status. I'm not sure if I'm creating too many tables or just enough here, right now.

If you're wondering what you might run, you might care about the graph sets, and {graph,job} templates tables. If you're wondering what has run, you would look at the graphs and job runs tables. If you're wondering if a specific job or graph were customized, you would compare the graph or job against the appropriate template. And if you're looking at retriggering stuff, you would be cloning bits of the graphs and jobs tables.

(I think Catlee has the job runs in a separate db, as the job queue, to differentiate pending jobs from jobs that are blocked by dependencies. I think they're roughly equivalent in concept, and his model would allow for acting on those pending jobs faster.)

I think the main thing here is not the schema, but my concerns about retries and retriggers, keeping track of customization of jobs+graphs via the webapp, and reducing the turnaround time between creating a graph in LWR and showing it on the webapp. Not all of these things will be as important for all projects, but since we plan on supporting a superset of gecko's needs with LWR, we'll need to support gecko's workflow at some point.

dependency graph repo

This repo isn't a mandatory requirement; I see it as a piece that could speed up and [hopefully] streamline the workflow of LWR. It could allow us to:

- refer to a set of job+graph definitions by a single SHA. That would make it easier to tie graphs- and jobs- templates to the source.

- easily diff, say, mozilla-inbound jobs against mozilla-central. You can do that if the jobs and graphs definitions live in-tree for each, but it's easier to tell if one revision differs from another revision than if one directory tree in a revision differs from that directory tree in another revision. Even in the same repo: it's hard to tell if m-c revision 2's job and graphs have changed from m-c revision 1, without diffing. The job-and-graph repo would [generally] only have a new revision if something has changed.

- pre-populate sets of jobs and graph templates in the db that people can use without re-generating them.

There's no requirement that the jobs+graph definitions live in this repo, but having it would make the webapp graph+job creation a lot easier.

We could create a branch definitions file in-tree (though it could live elsewhere; its location would be defined in LWR's config). The branch definitions could have trychooser-like options to turn parts of the graphs on or off: PGO, nightlies, l10n, etc. These would be referenced by the job and graph definitions: "enable this job if nightlies are enabled in the branch definitions", etc. So in the branch definitions, we could either point to this dependency graph repo+revision, or at a local directory, for the job+graph definitions. In the gecko model, if someone adds a new job type, that would result in a new jobs+graphs repo revision. A patch to point the branch-definitions file at the new revision would land in Try, first, then one of the inbound branches. Then it would get merged to m-c and then out to the other inbound and project branches; then it would ride the trains.

(Of course, in the above model, there's the issue of inbound having different branch definitions than mozilla-central, which is why I was suggesting we have overrides by branch name. I'm not sure of a better solution at the moment.)

The other side of this model: when someone pushes a new jobs+graphs revision, that triggers a "generate new jobs+graphs templates" job. That would enter new jobs+graphs for the new SHA. Then, if you wanted to create a graph or job based on that SHA, the webapp doesn't have to re-build those from scratch; it has them in the db, pre-populated.

chunks and customizations

-

For chunked jobs (most test jobs, l10n), we were brainstorming about having dynamic numbers of chunks. If only a single machine is free, it would grab the first chunk, finish, then ask LWR if there are more chunks to run. If only a handful of machines are available, LWR could lean towards starting

min_chunksjobs. If there are many machines idle, we can triggermax_chunksjobs in parallel. I'm not sure how plausible this is, but this could help if it doesn't add too much complexity. -

I think that while users should be able to adjust graph- or job-priority, we should have max priorities set per-branch or per-user, so we can keep chemspill release builds at the highest priority.

Similarly, I think we should allow customization of jobs via the webapp on certain branches, but

- we should mark them as customized (by, say, a flag, plus the diff between the template and the job), and

- we need to prevent customizing, say, nightly builds that get sent to users, or release builds.

This causes interesting problems when we want to clone a job or graph: do we clone a template, or the customized job or graph that contains volatile information? (I worked around this in my head by creating the schema above.)

Signed graphs and jobs, per-branch restrictions, or separate LWR clusters with different ACLs, could all factor into limiting what people can customize.

retries and retriggers

For retries, we need to track max [auto] retries, as well as job statuses per run. I played with this in my head: do we keep track of runs separately from job definitions? Or clone the jobs, in which case volatile status and customizing jobs become more of an issue? Keeping the job runs separate, and specifying whether they were an auto-retry or a user-generated retrigger, could help in preventing going beyond max-auto-retries.

Retriggers themselves are somewhat problematic if we mark jobs as skipped due to dependencies: if a job that was unsuccessful is retriggered and exits successfully, do we revisit previously skipped jobs? Or do we clone the graph when we retrigger, and keep all downstream jobs pending-blocked-by-dependencies? Does this graph mark the previous graph as a parent graph, or do we mark it as part of the same graph set?

(This is less of a problem currently, since build jobs run sendchanges in that cascading-waterfall type scheduling; any time you retrigger a build, it will retrigger all downstream tests, which is useful if that's what you want. If you don't want the tests, you either waste test machine time or human time in cancelling the tests. Explicitly stating what we want in the graph is a better model imo, but forces us to be more explicit when we retrigger a job and want the downstream jobs.)

Another possibility: I thought that instead of marking downstream jobs as skipped-due-to-dependencies, we could leave them pending-blocked-by-dependencies until they either see a successful run from their upstream dependencies, or hit their TTL (request timeout). This would remove some complexity in retriggering, but would leave a lot of pending jobs hanging around that could slow down the graph processing pool and skew our 15 minute wait time SLA metrics.

I don't think I have the perfect answers to these questions; a lot of my conclusions are based on the scope of the problem that I'm holding in my head. I'm certain that some solutions are better for some workflows and others are better for others. I think, for the moment, I came to the soft conclusion of a hand-wavy retriggering-portions-of-the-graph-via-webapp (or web api call, which does the equivalent).

A random semi-tangential thought: whichever method of pending- or skipped- solution we generate will probably significantly affect our 15 minute wait time SLA metrics, anyway; they may also provide more useful metrics like end-to-end-times. After we move to this model, we may want to revisit our metric of record.

lwr_runner.py

(This doesn't really have anything to do with the db, but I had this thought recently, and didn't want to save it for a part 6.)

While discussing this with the Gaia, A-Team, and perf teams, it became clear that we may need a solution for other projects that want to run simple jobs that aren't ported to mozharness. Catlee was thinking an equivalent process to the buildbot buildslave process: this would handle logging, uploads, status notifications, etc., without requiring a constant connection to the master. Previously I had worked with daemons like this that spawned jobs on the build farm, but then moved to launching scripts via sshd as a lower-maintenance solution.

The downsides of no daemon include having to solve the mach context problem on macs, having to deal with sshd on windows, and needing a remote logging solution in the scripts. The upsides include being able to add machines to the pool without requiring the daemon, avoiding platform-specific issues with writing and maintaining the daemon(s), and script changes are faster to roll out (and more granular) than upgrading a daemon across an entire pool.

if we create a mozharness/scripts/lwr_runner.py that takes a set of commands to run against a repo+revision (or set of repos+revisions), with pre-defined metrics for success and failure, then simpler processes don't need their own mozharness script; we could wrap the commands in lwr_runner.py. And we'd get all the logging, error parsing, and retry logic already defined in mozharness.

I don't think we should rule out either approach just yet. With the lwr_runner.py idea, both approaches seem viable at the moment.

In part 1, I covered where we are currently, and what needs to change to scale up.

In part 2, I covered a high level overview of LWR.

In part 3, I covered some hand-wavy LWR specifics, including what we can roll out in phase 1.

In part 4, I drilled down into the dependency graph.

We met with the A-team about this, a couple times, and are planning on working on LWR together!

Now I'm going to take care of some vcs-sync tasks, prep for this next meeting, and start writing some code.

|

|

Niko Matsakis: Structural arrays in Typed Objects |

Dave Herman and I were tossing around ideas the other day for a revision of the typed object specification in which we remove nominal array types. The goal is to address some of the awkwardness that we have encountered in designing the PJS API due to nominal array types. I thought I’d try writing it out. This is to some extent a thought experiment.

Description by example

I’ve had a hard time trying to identify the best way to present the idea, because it is at once so similar and so unlike what we have today. So I think I’ll begin by working through examples and then try to define a more abstract version.

Let’s begin by defining a new struct type to represent pixels:

var Pixel = new StructType({r: uint8, g: uint8,

b: uint8, a: uint8});

Today, if we wanted an array of pixels, we’d have to create a new type

to represent that array (new ArrayType(Pixel)). Under the new

system, each type would instead come “pre-equipped” with a

corresponding array type, which can be used to create both single and

multidimensional arrays. This type is accessible under the property

Array. For example, here I create three objects:

var pixel = new Pixel();

var row = new Pixel.Array(1024);

var image = new Pixel.Array([1024, 768]);

The first object, pixel, represents just a single pixel. Its type is

simply Pixel. The second object, row, repesents a single

dimensional array of 1024 pixels. I denote this using the following

notation [Pixel : 1024]. The third object, image, represents a

two-dimensional array of 1024x768 pixels, which I denote as

[Pixel : 1024 x 768].

No matter what dimensions they have, all arrays are associated with a single type object. In other words:

objectType(row) === objectType(image) === Pixel.Array

This implies that they share the same prototype as well:

row.__proto__ === image.__proto__ === Pixel.Array.prototype

Whenever you have an instance of an array, such as row or image,

you can access the elements of the array as you would expect:

var a = row[3]; // a has type Pixel

var b = image[1022]; // b has type [Pixel : 768]

var c = b[765]; // c has type Pixel

var d = image[1022][765]; // d has type Pixel

Note that each time you index into an array instance, you remove the “leftmost” (or “outermost”) dimension, until you are left with the core type.

As today, it is always possible to “redimension” an array, so long as the total number of elements are preserved:

// flat has type [Pixel : 786432]:

var flat = image.redim(1024*768);

// three has type [Pixel : 2 x 512 x 768]:

var three = image.redim([2,512,768]);

The variables flat, three, and image all represent pointers into

the same underlying data buffer, but with different underlying

dimensions.

Sometimes it is useful to embed an array into a struct. For example,

imagine a type Gradient that embeds two pixel colors:

var Gradient = new StructType({from: Pixel, to: Pixel})

Rather than having two fields, it might be convenient to express this

type using an array of length 2 instead. In the old system, we would

have used a fixed-length array type for this purpose. In the new system,

we invoke the method dim, which produces a dimensioned type:

var Gradient = new StructType({colors: Pixel.dim(2)})

Dimensioned types are very similar to the older fixed-length array types, except that they are not themselves types. They can only be used as the specification for a field type. When a dimensioned field is reference, the result is an instance of the corresponding array:

var gradient = new Gradient(); // gradient has type Gradient

var colors = gradient.colors; // colors has type [Pixel : 2]

var from = colors[0]; // from has type Pixel

More abstract description

The type T of an typed object can be defined using the following grammar:

T = S | [S : D]

S = scalar | C

D = N | D x N

Here S is what I call a single type. It can either be a scalar

type – like int32, float64, etc. – or a struct, denoted C (to

represent the fact that struct types are defined nominally).

UPDATE: This section has confused a few people. I meant for T to

represent the type of an instance, and hence it includes the specific

dimensions. There would only be on type object for all arrays, so if

we defined a U to represent the set of type objects, it would be S

| [S]. But when you instantiate an array [S] you give it a concrete

dimension. I realize that this is a bit of a confused notion of type,

where I am intermingling the “static” state (“this is an array type”)

and the dynamic portion (“the precise dimensions”). Of course, in this

language we’re defining types dynamically, so the analogy is imprecise

anyway.

For each struct C, there is a struct type definition R is defined

as follows:

R = struct C { (f: T) ... }

Here C is the name of the struct, f is a field name, and T is

the (possibly dimensioned) type of the field.

This description is kind of formal-ish, and it may not be obvious how

to map it to the examples I gave above. Each time a new StructType

instance is created, that instance corresponds to a distinct struct

name C. When a new array instance like image is created, its type

corresponds to [Pixel : 1024 x 768]. This grammar reflects the fact

that struct types are nominal, meaning that the type is tied to a

specific struct type object, but array types are structural – given

the element type and dimensions, we can construct an array type.

Why make this change?

As time goes by we’ve encountered more and more scenarios where the nominal nature of array types is awkward. The problem is that it seems very natural to be able to create an array type given the type of the elements and some dimensions. But in today’s system, because those array types are distinct objects, creating a new array type is both heavyweight and has significance, since the array type has a new prototype.

There are a number of examples from the PJS APIs. In fact, we already did an extensive redesign to accommodate nominal array types already. But let me give you instead an example of some code that is hard to write in today’s system, and which becomes much easier in the system I described above.

Intel has been developing some examples that employ PJS APIs to do transforms on images taken from the camera. Those APIs define a number of filters that are applied (or not applied) as the user selects. Each filter is just a function that is supplied with some information about the incoming image as well as the window size and so on. For example, the filter for detecting faces looks like this:

function face_detect_parallel(frame, len, w, h, ctx) {

var skin = isskin_parallel(frame, len, w, h);

var row_sums = uint32.array(h).buildPar(i => ...);

var col_sums = uint32.array(w).buildPar(i => ...);

...

}

I won’t go into the details of how the filter works. For our purposes,

it suffices to say that isskin_parallel computes a (two-dimensional)

array skin that contains, for each pixel, an indicator of whether

the pixel represents “skin” or not. The row_sums computation then

iterates over each row in the image and computes a sum of how many

pixels in that row contain skin. col_sums is similar except that the

value is computed for each column in the image.

Let’s take a closer look at the row_sums computation:

var row_sums = uint32.array(h).buildPar(i => ...);

// ^~~~~~~~~~~~~~~

What I am highlighting here is that this computation begins by

defining a new array type of length h. This is natural because the

height of the image can (potentially) change as the user resizes the

window. This means that we are defining new array types for every

frame.

This is bad for a number of reasons:

- It’s inefficient. Creating an array type involves some overhead in the engine, and if nothing else it means creating two or three objects.

- If we wanted to install methods on arrays or something like that, creating new array types all the time is problematic, since each will have a distinct prototype.

This pattern seems to come up a lot. Basically, it’s useful to be able to create arrays when you know the element type and the dimensions, and right now that means creating new array types.

What do we lose?

Nominal array types can be useful. They allow people to create local

types and attach methods. For example, if some library defines a struct

type Pixel, then (today) some other library could define:

var MyPixelArray = new ArrayType(Pixel);

MyPixelArray.prototype.myMethod = function(...) { ... }

I’m not too worried about this though. You can create wrapper types at various levels. Most languages I can think of – virtually all – use a structural approach rather than nominal for arrays (although the situations are not directly analogous). I think there is a reason for that.

Is there a compromise?

I suppose we could allow array types to be explicitly instantiated but keep the other aspects of this approach. This permits users to define methods and so on. However, it also means that it is not enough to have the element type and dimension to construct an array instance, one must instead pass in the array type and dimension.

http://smallcultfollowing.com/babysteps/blog/2013/12/12/structural-arrays-in-typed-objects/

|

|

Jack Moffitt: Building Rust Code - Using Make |

This series of posts is about building Rust code. In the first post I covered the current issues (and my solutions) around building Rust using external tooling. This post will cover using Make to build Rust projects.

The Example Crate

For this post, I'm going to use the rust-geom library as an example. It is a simple Rust library used by Servo to handle common geometric tasks like dealing with points, rectangles, and matrices. It is pure Rust code, has no dependencies, and includes some unit tests.

We want to build a dynamic library and the test suite, and the Makefile

should be able to run the test suite by using make check. As much as

possible, we'll use the same crate structure that

rustpkg uses so

that once rustpkg is ready for real use, the transition to it will be

painless.

Makefile Abstractions

Did you know that Makefiles can define functions? It's a little clumsy, but

it works and you can abstract a bunch of the tedium away. I'd never really

noticed them before dealing with the Rust and Servo build systems, which use

them heavily.

By using shell commands like shasum and sed, we can compute crate hashes,

and by using Make's eval function, we can dynamically define new

targets. I've created a rust.mk which can be included in a Makefile that

makes it really easy to build Rust crates.

Magical Makefiles

Let's look at a

Makefile for rust-geom

which uses rust.mk.

include rust.mk

RUSTC ?= rustc

RUSTFLAGS ?=

.PHONY : all

all: rust-geom

.PHONY : check

check: check-rust-geom

$(eval $(call RUST_CRATE, .))

It includes rust.mk, sets up some basic variables that control the compiler

and flags, and then defines the top level targets. The magic bit comes the

call to RUST_CRATE which takes a path to where a crate's lib.rs and

test.rs are located. In this case the path is the current directory, ..

RUST_CRATE finds the pkgid attribute in the crate and uses this to compute

the crate's name, hash, and the output filename for the library. It then

creates a target with the same name as the crate name, in this case

rust-geom, and a target for the output file for the library. It uses the

Rust compiler's support for dependency information so that it will know

exactly when it needs to recompile things.

If the crate contains a test.rs file, it will also create a target that

compiles the tests for the crates into an executable as well as a target to

run the tests. The executable will be named after the crate; for rust-geom it

will be named rust-geom-test. The check target is also named after the

crate, check-rust-geom.

The files lib.rs and test.rs are the files rustpkg itself uses by

default. This Makefile does not support the pkg.rs custom build logic, but

if you need custom logic, it is easy enough to modify this example. One

benefit of following in rustpkg's footsteps here is that this same crate

should be buildable with rustpkg without modification.

Behind the Scenes

rust.mk

is a a little ugly, but not too bad. It defines a few helper functions like

RUST_CRATE_PKGID and RUST_CRATE_HASH which are used by the main

RUST_CRATE function. The syntax is a bit silly because of the use of eval

and the need to escape $s, but it shouldn't be too hard to follow if you're

already familiar with Make syntax.

RUST_CRATE_PKGID = $(shell sed -ne 's/^#[ *pkgid *= *"(.*)" *];$$/\1/p' $(firstword $(1)))

RUST_CRATE_PATH = $(shell printf $(1) | sed -ne 's/^([^#]*)\/.*$$/\1/p')

RUST_CRATE_NAME = $(shell printf $(1) | sed -ne 's/^([^#]*\/){0,1}([^#]*).*$$/\2/p')

RUST_CRATE_VERSION = $(shell printf $(1) | sed -ne 's/^[^#]*#(.*)$$/\1/p')

RUST_CRATE_HASH = $(shell printf $(strip $(1)) | shasum -a 256 | sed -ne 's/^(.{8}).*$$/\1/p')

ifeq ($(shell uname),Darwin)

RUST_DYLIB_EXT=dylib

else

RUST_DYLIB_EXT=so

endif

define RUST_CRATE

_rust_crate_dir = $(dir $(1))

_rust_crate_lib = $$(_rust_crate_dir)lib.rs

_rust_crate_test = $$(_rust_crate_dir)test.rs

_rust_crate_pkgid = $$(call RUST_CRATE_PKGID, $$(_rust_crate_lib))

_rust_crate_name = $$(call RUST_CRATE_NAME, $$(_rust_crate_pkgid))

_rust_crate_version = $$(call RUST_CRATE_VERSION, $$(_rust_crate_pkgid))

_rust_crate_hash = $$(call RUST_CRATE_HASH, $$(_rust_crate_pkgid))

_rust_crate_dylib = lib$$(_rust_crate_name)-$$(_rust_crate_hash)-$$(_rust_crate_version).$(RUST_DYLIB_EXT)

.PHONY : $$(_rust_crate_name)

$$(_rust_crate_name) : $$(_rust_crate_dylib)

$$(_rust_crate_dylib) : $$(_rust_crate_lib)

$$(RUSTC) $$(RUSTFLAGS) --dep-info --lib $$<

-include $$(patsubst %.rs,%.d,$$(_rust_crate_lib))

ifneq ($$(wildcard $$(_rust_crate_test)),"")

.PHONY : check-$$(_rust_crate_name)

check-$$(_rust_crate_name): $$(_rust_crate_name)-test

./$$(_rust_crate_name)-test

$$(_rust_crate_name)-test : $$(_rust_crate_test)

$$(RUSTC) $$(RUSTFLAGS) --dep-info --test $$< -o $$@

-include $$(patsubst %.rs,%.d,$$(_rust_crate_test))

endif

endef

If you wanted, you could add the crate's target and the check target to the

all and check targets within this function, simplifying the main

Makefile. You could also have it generate an appropriate clean-rust-geom

target as well.

It's not going to win a beauty contest, but it will get the job done nicely.

Next Up

In the next post, I plan to show the same example, but using CMake.

http://metajack.im/2013/12/12/building-rust-code--using-make/

|

|

Vladimir Vuki'cevi'c: Two Presentations: PAX Dev 2013 and Advanced JS 2013 |

I have not blogged in a while, because I’m not a good person. Ahem. I’ve also been behind about posting presentations that I’ve done, so I’m catching up on that now.

The first one is a presentation I gave at PAX Dev 2013, about the nuts and bolts involed in bringing a C++ game (or really any app) to the web using Emscripten. The slides are available here, and have the steps involved in going from existing C++ source to getting that running on the web.

The second one is a presentation I gave at Advanced.JS, about how asm.js can be used in traditional JavaScript client-side apps to speed up processing or add new features that would be difficult to rewrite in pure JS. The slides are available here.

|

|

John O'Duinn: On Leaving Mozilla |

tl;dr: On 18nov, I gave my notice to Brendan and Bob that I will be leaving Mozilla, and sent an email internally at Mozilla on 26nov. I’m here until 31dec2013. Thats a lot of notice, yet feels right – its important to me that this is a smooth stable transition.

After they got over the shock, the RelEng team is stepping up wonderfully. Its great to see them all pitching in, sharing out the workload. They will do well. Obviously, at times like this, there are lots of details to transition, so please be patient and understanding with catlee, coop, hwine and bmoss. I have high confidence this transition will continue to go smoothly.

In writing this post, I realized I’ve been here 6.5 years, so thought people might find the following changes interesting:

1) How quickly can Mozilla ship a zero-day security release?

was: 4-6 weeks

now: 11 hours

2) How long to ship a “new feature” release?

was: 12-18 months

now: 12 weeks

3) How many checkins per day?

was: ~15 per day

now: 350-400 per day (peak 443 per day)

4) Mozilla hired more developers

increased number of developers x8

increased number of checkins x21

The point here being that the infrastructure improved faster then Mozilla could hire developers.

5) Mozilla added mobile+b2g:

was: desktop only

now: desktop + mobile + phoneOS – many of which ship from the *exact* same changeset

6) updated tools

was: cvs

now: hg *and* git (aside, I don’t know any other organization that ships product from two *different* source-code revision systems.)

7) Lifespan of human Release Engineers

was 6-12 months

now: two-losses-in-6-years (3 including me)

This team stability allowed people to focus on larger, longer term, improvements – something new hires generally cant do while learning how to keep the lights on.

This is the best infrastructure and team in the software industry that I know of – if anyone reading this knows of better, please introduce me! (Disclaimer: there’s a big difference between people who update website(s) vs people who ship software that gets installed on desktop or mobile clients… or even entire phoneOS!)

Literally, Release Engineering is a force multiplier for Mozilla – this infrastructure allows us to work with, and compete against, much bigger companies. As a organization, we now have business opportunities that were previously just not possible.

Finally, I want to say thanks:

- I’ve been here longer then a few of my bosses. Thanks to bmoss for his council and support over the last couple of years.

- Thanks to Debbie Cohen for making LEAD happen – causing organizational change is big and scary, I know its impacted many of us here, including me.

- Thanks to John Lilly and Mike Schroepfer (“schrep”) – for allowing me to prove there was another, better, way to ship software. Never mind that it hadn’t been done before. And thanks to aki, armenzg, bhearsum, catlee, coop, hwine, jhopkins, jlund, joey, jwood, kmoir, mgerva, mshal, nthomas, pmoore, rail, sbruno, for building it, even when it sounded crazy or hadn’t been done before.

- Finally, thanks to Brendan Eich, Mitchell Baker, and Mozilla – for making the “people’s browser” a reality… putting humans first. Mozilla ships all 90+ locales, even Khmer, all OS, same code, same fixes… all at the same time… because we believe all humans are equal. It’s a living example of the “we work for mankind, not for the man” mindset here, and is something I remain super proud to have been a part of.

take care

John.

|

|

Christian Heilmann: Zebra tables using nth-child and hidden rows? |

Earlier today my colleague Anton Kovalyov who works on the Firefox JavaScript Profiler ran across an interesting problem: if you have a table in the page and you want to colour every odd row differently to make it easier to read (christened as “Zebra Tables” by David F. Miller on Alistapart in 2004) the great thing is that we have support for :nth-child in browsers these days. Without having to resort to JavaScript, you can stripe a table like this.

Now, if you add a class of “hidden” to a row (or set its CSS property of display to none), you visually hide the element, but you don’t remove it from the document. Thus, the striping is broken. Try it out by clicking the “remove row 3” button:

The solution Anton found other people to use is a repeating gradient background instead:

This works to a degree but seems just odd with the fixed size of line-height (what if one table row spans more than one line?).

Jens Grochtdreis offered a pure CSS solution that on the first glance seems to do the job using a mixture of nth-of-type, nth-child and the tilde selector.

This works, until you remove more than one row. Try it by clicking the button again. :(

In essence, the issue we are facing here is that hiding something doesn’t remove it from the DOM which can mess with many things – assistive technology, search engines and, yes, CSS counters.

The solution to the problem is not to hide the parts you want to get rid of but really, physically remove them from the document, thus forcing the browser to re-stripe the table. Stuart Langridge offered a clever solution to simply move the table rows you want to hide to the end of the table using appendChild() and hide them by storing their index (for later retrieval) in an expando:

[…]rows[i].dataset.idx=i;table.appendChild(rows[i]) and [data-idx] { display:none }

That way the original even/odd pairs will get reshuffled. My solution is similar, except I remove the rows completely and store them in a cache object instead:

This solution also takes advantage of the fact that the result of querySelectorAll is not a live list and thus can be used as a copy of the original table.

As with everything on the web, there are many solutions to the same problem and it is fun to try out different ways. I am sure there is a pure CSS solution. It would also be interesting to see how the different ways perform differently. With a huge dataset like the JS Profiler I’d be tempted to guess that using a full table instead of a table scrolling in a viewport with recycling of table rows might actually be a bottleneck. Time will tell.

http://christianheilmann.com/2013/12/12/zebra-tables-using-nth-child-and-hidden-rows/

|

|

Michael Coates: Eliminate Application Attackers before Exploitation - Podcast OWASP AppSensor |

Podcast recorded at OWASP AppSecUSA on the AppSensor project.

-Michael Coates - @_mwc

http://michael-coates.blogspot.com/2013/12/eliminate-application-attackers-before.html

|

|

Sean McArthur: insist: Better assertions for nodejs |

There’s plenty of assertion libraries out there that give you all brand-new APIs with all sorts of assertion testing. I don’t need that. I actually like just using the assert module. Except one thing. The standard message for assert(false) is useless.

I saw better-assert, got excited, and then realized it only provided one method. All I wanted was the assert module, with a better message.

So I made insist. You can truly just drop it instead of assert, and pretend it’s assert. Also, unlike a few libraries who try to do this, insist plays well with multi-line assertions.

var assert = require('insist');

var someArr = [15, 20, 5, 30];

assert(someArr.every(function(val) {

return val > 10;

}));

// output: AssertionError: assert(someArr.every(function(val) {

// return val > 10;

// }));

I insist.

|

|

Daniel Holbert: Multi-line flexbox support in Nightly and Aurora 28 |

In prior Firefox versions, we support single-line flex containers, which let authors group elements into a row or a column, which can then grow, shrink, and/or align the elements depending on the available space.

But with multi-line flexbox support, authors can use the flex-wrap CSS property (or the flex-flow shorthand) to permit a flex container's children to wrap into several lines (i.e. rows or columns), with each line able to independently flex and align its children.

This lets authors easily create flexbox-based toolbars whose buttons automatically wrap when they run out of space:

Similarly, it allows authors to create homescreen layouts with app icons and flexible widgets that wrap to fill the screen:

(Note that the flexible blue widget, labeled "wide", changes its size depending on the available space in its line.)

Furthermore, authors can use the align-content property to control how extra space is distributed around the lines. (I don't have a compelling demo for that, but the flexbox spec has a good diagram of how that looks at the bottom of its align-content section.)

The above screenshots are taken from a simple interactive demo page that I whipped up. Follow the link if you want to try it out.

The work here was primarily done in bug 702508, bug 939896, bug 939901, and bug 939905. Thanks to David Baron, Cameron McCormack, and Mats Palmgren for reviewing the patches.

http://blog.dholbert.org/2013/12/multi-line-flexbox-support-in-nightly.html

|

|

Jonathan Watt: <input type=number> coming to Mozilla |

The support for that I've been working on for Mozilla is now turned on for Aurora 28 and Nightly builds. If you're interested in using here are a few things you should know:

- The current support should first ship in Firefox 28 (scheduled to release in March), barring any issues that would cause us to disable it.

- As of yet, locale specific user input is not supported. In other words user input that contains non-ASCII digits or a decimal separator other than the decimal point (for example, the comma) will not be accepted. If this turns out to be unacceptable then we may have to turn off support for Firefox 28, or else add a hack to at least accept comma. Ehsan has just finished battling to get ICU integrated into gecko's libXUL, so I should be able to fix this properly for Firefox 29.

- Unprivileged content is not currently allowed to access the CSS pseudo-elements that would let it style the different internal parts of the element (the text field, spinner and spin buttons). The only direct control content has over the styling of the internals is that it can remove the spinner by setting

-moz-appearance:textfield;on the input. (The logic and behavior that defines will still apply, just without those buttons.)

If you test the new support and find any bugs please report them, being sure to add ":jwatt" to the CC field and "" to the Summary field of the report.

Tags:

https://jwatt.org/2013/12/11/input-type-number-coming-to-mozilla

|

|

Josh Matthews: You are receiving this mail because you are mentoring this bug |

Good news! As of today, Bugzilla now sends out emails containing both an X-Bugzilla-Mentors header and a “You are mentoring this bug” message. This means that it’s now possible to highlight activity in your mentored bugs in your favourite email client! I use Gmail, so I’ve created a filter that searches for “You are mentoring this bug” and stars it; you might consider the same, or giving it a label so it’s easy to see at a glance if there’s something you should prioritize. For mail header filters, you’ll want to search for X-Bugzilla-Mentors containing your bugzilla email address.

Note: this only works if searching for the mentor tag in the whiteboard yields a unique result. If the tag reads mentor=ashish and there’s an another account with :ashish_d, the result will not be unique so no relevant header value or footer will be applied to any messages sent to Ashish.

Please make use of this! There is no longer a good excuse for questions in mentored bugs to go unanswered or overlooked; let’s make sure we’re providing the best possible mentoring experience to new contributors that we can.

|

|

Code Simplicity: The Secret of Fast Programming: Stop Thinking |

When I talk to developers about code complexity, they often say that they want to write simple code, but deadline pressure or underlying issues mean that they just don’t have the time or knowledge necessary to both complete the task and refine it to simplicity.

Well, it’s certainly true that putting time pressure on developers tends to lead to them writing complex code. However, deadlines don’t have to lead to complexity. Instead of saying “This deadline prevents me from writing simple code,” one could equally say, “I am not a fast-enough programmer to make this simple.” That is, the faster you are as a programmer, the less your code quality has to be affected by deadlines.

Now, that’s nice to say, but how does one actually become faster? Is it a magic skill that people are both with? Do you become fast by being somehow “smarter” than other people?

No, it’s not magic or in-born at all. In fact, there is just one simple rule that, if followed, will eventually solve the problem entirely:

Any time you find yourself stopping to think, something is wrong.

Perhaps that sounds incredible, but it works remarkably well. Think about it—when you’re sitting in front of your editor but not coding very quickly, is it because you’re a slow typer? I doubt it—”having to type too much” is rarely a developer’s productivity problem. Instead, the pauses where you’re not typing are what make it slow. And what are developers usually doing during those pauses? Stopping to think—perhaps about the problem, perhaps about the tools, perhaps about email, whatever. But any time this happens, it indicates a problem.

The thinking is not the problem itself—it is a sign of some other problem. It could be one of many different issues:

Understanding

The most common reason developers stop to think is that they did not fully understand some word or symbol.

This happened to me just the other day. It was taking me hours to write what should have been a really simple service. I kept stopping to think about it, trying to work out how it should behave. Finally, I realized that I didn’t understand one of the input variables to the primary function. I knew the name of its type, but I had never gone and read the definition of the type—I didn’t really understand what that variable (a word or symbol) meant. As soon as I looked up the type’s code and docs, everything became clear and I wrote that service like a demon (pun partially intended).

This can happen in almost infinite ways. Many people dive into a programming language without learning what (, ), [, ], {, }, +, *, and % really mean in that language. Some developers don’t understand how the computer really works. Remember when I wrote The Singular Secret of the Rockstar Programmer? This is why! Because when you truly understand, you don’t have to stop to think. It’s also a major motivation behind my book—understanding that there are unshakable laws to software design can eliminate a lot of the “stopping to think” moments.

So if you find that you are stopping to think, don’t try to solve the problem in your mind—search outside of yourself for what you didn’t understand. Then go look at something that will help you understand it. This even applies to questions like “Will a user ever read this text?” You might not have a User Experience Research Department to really answer that question, but you can at least make a drawing, show it to somebody, and ask their opinion. Don’t just sit there and think—do something. Only action leads to understanding.

Drawing

Sometimes developers stop to think because they can’t hold enough concepts in their mind at once—lots of things are relating to each other in a complex way and they have to think through it. In this case, it’s almost always more efficient to write or draw something than it is to think about it. What you want is something you can look at, or somehow perceive outside of yourself. This is a form of understanding, but it’s special enough that I wanted to call it out on its own.

Starting

Sometimes the problem is “I have no idea what code to start writing.” The simplest solution here is to just start writing whatever code you know that you can write right now. Pick the part of the problem that you understand completely, and write the solution for that—even if it’s just one function, or an unimportant class.

Often, the simplest piece of code to start with is the “core” of the application. For example, if I was going to write a YouTube app, I would start with the video player. Think of it as an exercise in continuous delivery—write the code that would actually make a product first, no matter how silly or small that product is. A video player without any other UI is a product that does something useful (play video), even if it’s not a complete product yet.

If you’re not sure how to write even that core code yet, then just start with the code you are sure about. Generally I find that once a piece of the problem becomes solved, it’s much easier to solve the rest of it. Sometimes the problem unfolds in steps—you solve one part, which makes the solution of the next part obvious, and so forth. Whichever part doesn’t require much thinking to create, write that part now.

Skipping a Step

Another specialized understanding problem is when you’ve skipped some step in the proper sequence of development. For example, let’s say our Bike object depends on the Wheels, Pedals, and Frame objects. If you try to write the whole Bike object without writing the Wheels, Pedals, or Frame objects, you’re going to have to think a lot about those non-existent classes. On the other hand, if you write the Wheels class when there is no Bike class at all, you might have to think a lot about how the Wheels class is going to be used by the Bike class.

The right solution there would be to implement enough of the Bike class to get to the point where you need Wheels. Then write enough of the Wheels class to satisfy your immediate need in the Bike class. Then go back to the Bike class, and work on that until the next time you need one of the underlying pieces. Just like the “Starting” section, find the part of the problem that you can solve without thinking, and solve that immediately.

Don’t jump over steps in the development of your system and expect that you’ll be productive.

Physical Problems

If I haven’t eaten enough, I tend to get distracted and start to think because I’m hungry. It might not be thoughts about my stomach, but I wouldn’t be thinking if I were full—I’d be focused. This can also happen with sleep, illness, or any sort of body problem. It’s not as common as the “understanding” problem from above, so first always look for something you didn’t fully understand. If you’re really sure you understood everything, then physical problems could be a candidate.

Distractions

When a developer becomes distracted by something external, such as noise, it can take some thinking to remember where they were in their solution. The answer here is relatively simple—before you start to develop, make sure that you are in an environment that will not distract you, or make it impossible for distractions to interrupt you. Some people close the door to their office, some people put on headphones, some people put up a “do not disturb” sign—whatever it takes. You might have to work together with your manager or co-workers to create a truly distraction-free environment for development.

Self-doubt

Sometimes a developer sits and thinks because they feel unsure about themselves or their decisions. The solution to this is similar to the solution in the “Understanding” section—whatever you are uncertain about, learn more about it until you become certain enough to write code. If you just feel generally uncertain as a programmer, it might be that there are many things to learn more about, such as the fundamentals listed in Why Programmers Suck. Go through each piece you need to learn until you really understand it, then move on to the next piece, and so on. There will always be learning involved in the process of programming, but as you know more and more about it, you will become faster and faster and have to think less and less.

False Ideas

Many people have been told that thinking is what smart people do, thus, they stop to think in order to make intelligent decisions. However, this is a false idea. If thinking alone made you a genius, then everybody would be Einstein. Truly smart people learn, observe, decide, and act. They gain knowledge and then use that knowledge to address the problems in front of them. If you really want to be smart, use your intelligence to cause action in the physical universe—don’t use it just to think great thoughts to yourself.

Caveat

All of the above is the secret to being a fast programmer when you are sitting and writing code. If you are caught up all day in reading email and going to meetings, then no programming happens whatsoever—that’s a different problem. Some aspects of it are similar (it’s a bit like the organization “stopping to think,”) but it’s not the same.

Still, there are some analogous solutions you could try. Perhaps the organization does not fully understand you or your role, which is why they’re sending you so much email and putting you in so many meetings. Perhaps there’s something about the organization that you don’t fully understand, such as how to go to fewer meetings and get less email. ![]() Maybe even some organizational difficulties can be resolved by adapting the solutions in this post to groups of people instead of individuals.

Maybe even some organizational difficulties can be resolved by adapting the solutions in this post to groups of people instead of individuals.

-Max

|

|

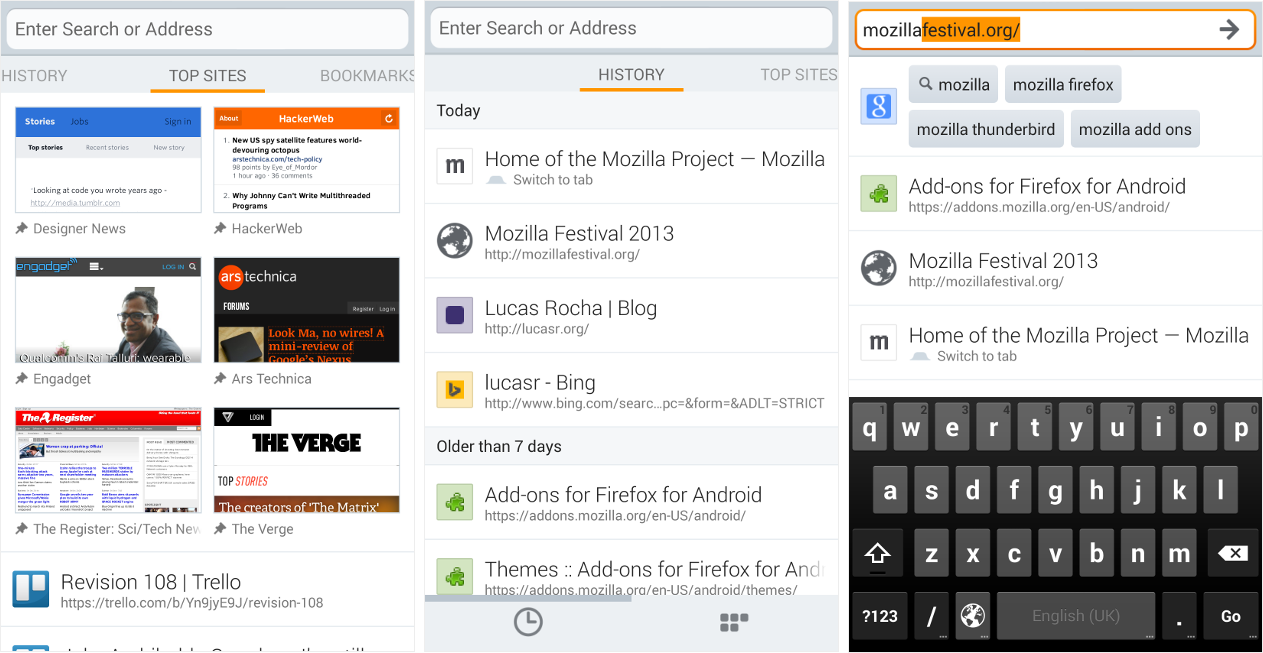

Lucas Rocha: Firefox for Android in 2013 |

Since our big rewrite last year, we’ve released new features of all sizes and shapes in Firefox for Android—as well as tons of bug fixes, of course. The feedback has been amazingly positive.

This was a year of consolidation for us, and I think we’ve succeeded in getting Firefox for Android in a much better place in the mobile browser space. We’ve gone from an (embarrassing) 3.5 average rating on Google Play to a solid 4.4 in just over a year (!). And we’re wrapping up 2013 as a pre-installed browser in a few devices—hopefully the first of many!

We’ve just released Firefox for Android 26 today, our last release this year. This is my favourite release by a mile. Besides bringing a much better UX, the new Home screen lays the ground for some of the most exciting stuff we’ll be releasing next year.

A lot of what we do in Firefox for Android is so incremental that it’s sometimes hard to see how all the releases add up. If you haven’t tried Firefox for Android yet, here is my personal list of things that I believe sets it apart from the crowd.

All your stuff, one tap

The new Home in Firefox for Android 26 gives you instant access to all your data (history, bookmarks, reading list, top sites) through a fluid set of swipable pages. They are easily accessible at any time—when the app starts, when you create a new tab, or when you tap on the location bar.

You can always search your browsing data by tapping on the location bar. As an extra help, we also show search suggestions from your default search engine as well as auto-completing domains you’ve visited before. You’ll usually find what you’re looking for by just typing a couple of letters.

Great for reading

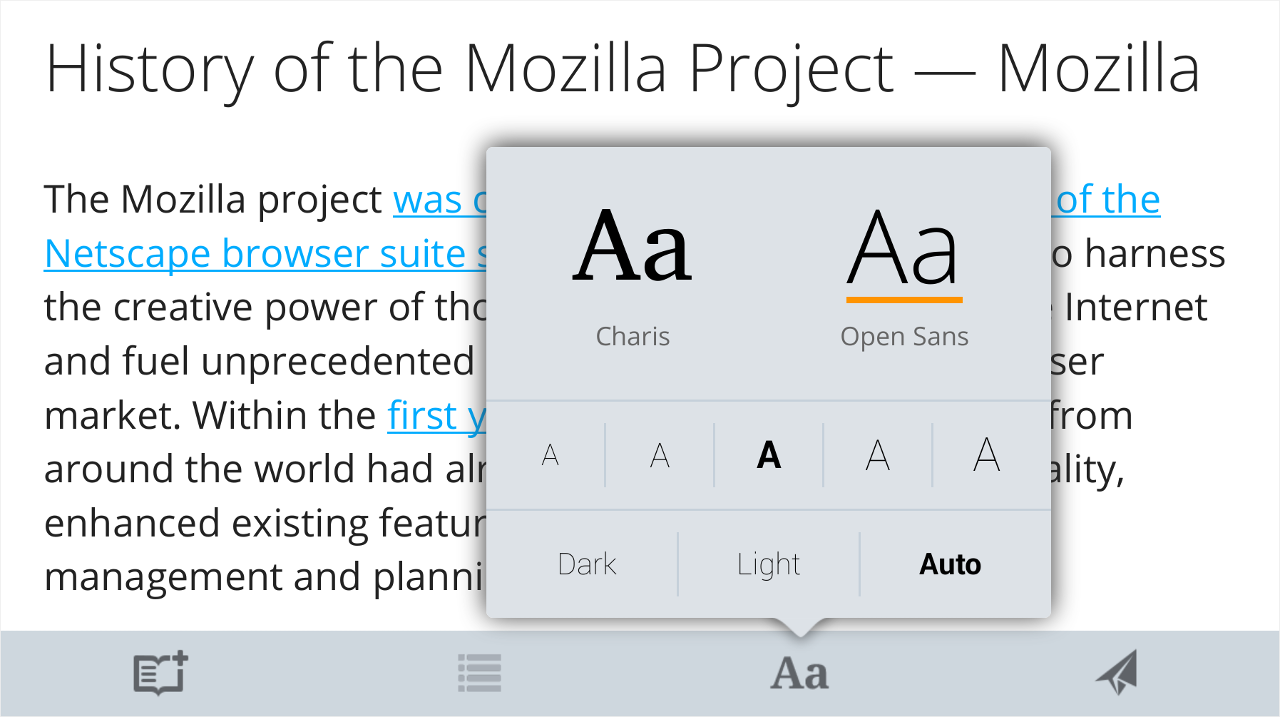

Firefox for Android does a couple of special things for readers. Every time you access a page with long-form content—such as a news article or an essay—we offer you an option to switch to Reader Mode.

Reader Mode removes all the visual clutter from the original page and presents the content in a distraction-free UI—where you can set your own text size and color scheme for comfortable reading. This is especially useful on mobile browsers as there are still many websites that don’t provide a mobile-friendly layout.

Secondly, we bundle nice default fonts for web content. This makes a subtle yet noticeable difference on a lot of websites.

Last but not least, we make it very easy to save content to read later—either by adding pages to Firefox’s reading list or by using our quickshare feature to save it to your favourite app, such as Pocket or Evernote.

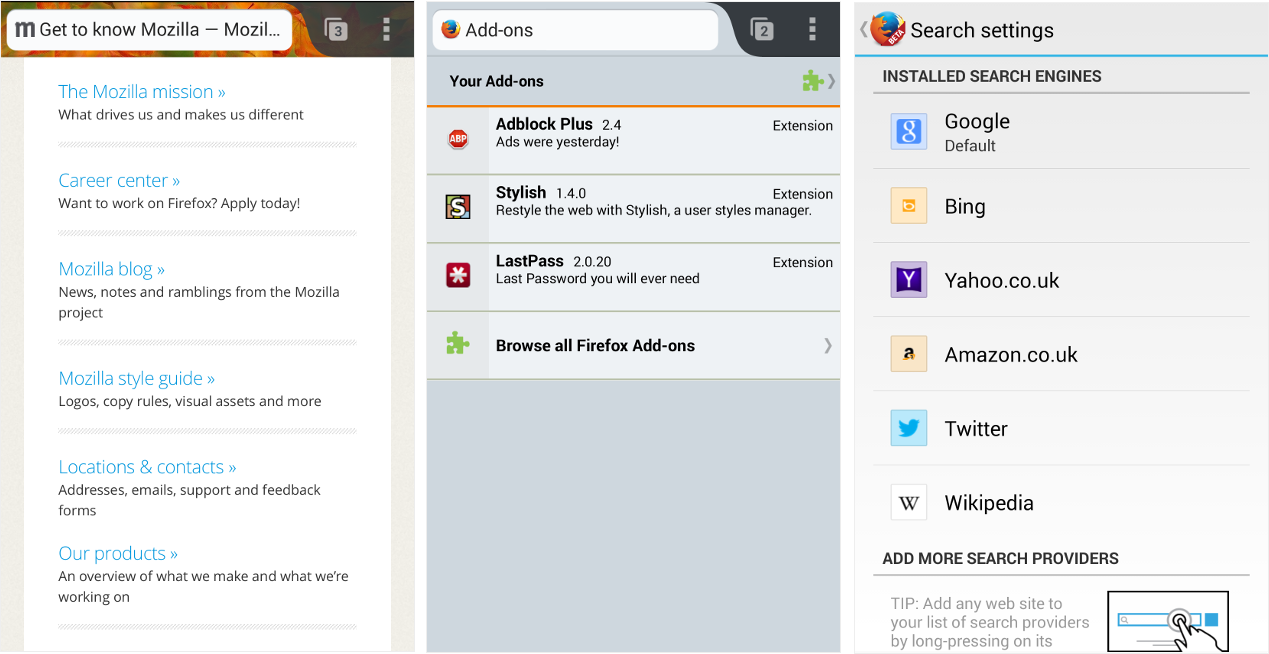

Make it yours

Add-ons are big in desktop Firefox. And we want Firefox for Android to be no different. We provide several JavaScript APIs that allow developers to extend the browser with new features. As a user, you can benefit from add-ons like Adblock Plus and Lastpass.

If you’re into blingy UIs, you can install some lightweight themes. Furthermore, you can install and use any web search engine of your choice.

Smooth panning and zooming

An all-new panning and zooming framework was built as part of the big native rewrite last year. The main focus areas were performance and reliability. The (mobile) graphics team has released major improvements since then and some of this framework is going to be shared across most (if not all) platforms soon.

From a user perspective, this means you get consistently smooth panning and zooming in Firefox for Android.

Fast-paced development

We develop Firefox for Android through a series of fast-paced 6-week development cycles. In each cycle, we try to keep a balance between general housekeeping (bug fixes and polishing) and new features. This means you get a better browser every 6 weeks.

Open and transparent

Firefox for Android is the only truly open-source mobile browser. There, I said it. We’re a community of paid staff and volunteers. We’re always mentoring new contributors. Our roadmap is public. Everything we’re working on is being proposed, reviewed, and discussed in Bugzilla and our mailing list. Let us know if you’d like to get involved by the way :-)

That’s it. I hope this post got you curious enough to try Firefox for Android today. Do we still have work to do? Hell yeah. While 2013 was a year of consolidation, I expect 2014 to be the year of excitement and expansion for Firefox on Android. This means we’ll have to set an even higher bar in terms of quality and, at the same time, make sure we’re always working on features our users actually care about.

2014 will be awesome. Can’t wait! In the meantime, install Firefox for Android and let us know what you think!

|

|

Byron Jones: comment tagging deployed to bmo |

i’ve been working on a bugzilla enhancement which allows you to tag individual comments with arbitrary strings, which was deployed today.

comment tagging features:

automatic collapsing of comments

the bugzilla administrator can configure a list of comment tags which will result in those comments being collapsed by default when a bug is loaded.

this allows obsolete or irrelevant comments to be hidden from the information stream.

comment grouping/threading

bugzilla shows a list of all comment tags in use on the bug, and clicking on a tag will expand those comments while collapsing all others.

this allows for simple threading of comments without diverging significantly from the current bugzilla user interface, api, and schema. you’ll be able to tag all comments relating to the same topic, and remove comments no longer relevant to that thread by removing the tag.

highlighting importing comments

on bugs with a lot of information, it can be time consuming for people not directly involved in the bug to find the relevant comments. applying comment tags to the right comments assists this, and may negate the need for information to be gathered outside of bugzilla.

for example:

- tagging a comment with “STR” (steps to reproduce) will help the qa team quickly find the information they need to verify the fix

- writing a comment summarising a new feature and tagging it with “docs” will help the generation of documentation for mdn or similar

implementation notes

- the “add tag” input field has an auto-complete drop-down, drawing from existing tags weighted by usage count

- by default editbugs membership is required to add tags to comments

- comment tags are not displayed unless you are logged in to bugzilla

- tags are added and removed via xhr, changes are immediately visible to the changer without refreshing the page

- tagging comments will not trigger bugmail, nor alter a bug’s last-modified date

- tags added by other users (or on other tabs) will generally not be visible without a page refresh

Filed under: bmo, mozilla

http://globau.wordpress.com/2013/12/11/comment-tagging-deployed-to-bmo/

|

|

Michael Kaply: Firefox 17 ESR EOL Today |

Firefox 24 ESR should be officially released today which means Firefox 17 ESR users will be automatically upgraded to Firefox 24 ESR. I want to take this opportunity to remind everyone of a major change that happened in Firefox 21 that will impact everyone upgrading to Firefox 24 ESR.

The location of a number of important files that are used to customize Firefox has changed. Here’s the list:

- defaults/preferences -> browser/defaults/preferences

- defaults/profile -> browser/defaults/profile

- extensions -> browser/extensions

- searchplugins -> browser/searchplugins

- plugins -> browser/plugins

- override.ini -> browser/override.ini

If you find that anything you’ve customized is not working anymore, these changes are probably the reason.

|

|

Atul Varma: Clarifying Coding |

With the upcoming Hour of Code, there’s been a lot of confusion as to the definition of what “coding” is and why it’s useful, and I thought I’d contribute my thoughts.

Rather than talking about “coding”, I prefer to think of “communicating with computers”. Coding, depending on its definition, is one of many ways that a human can communicate with a computer; but I feel that the word “communicating” is more powerful than “coding” because it gets to the heart of why we use computers in the first place.

We communicate with computers for many different reasons: to express ourselves, to create solutions to problems, to reuse solutions that others have created. At a minimum, this requires basic explorational literacy: knowing how to use a mouse and keyboard, using them to navigate an operating system and the Web, and so forth. Nouns in this language of interaction include terms like application, browser tab and URL; verbs include click, search, and paste.

These sorts of activities aren’t purely consumptive: we express ourselves every time we write a Facebook post, use a word processor, or take a photo and upload it to Instagram. Just because someone’s literacies are limited to this baseline doesn’t mean they can’t do incredibly creative things with them.

And yet communicating with computers at this level may still prevent us from doing what we want. Many of our nouns, like application, are difficult to create or modify using the baseline literacies alone. Sometimes we need to learn the more advanced skills that were used to create the kinds of things that we want to build or modify.

This is usually how coders learn how to code: they see the digital world around them and ask, “how was that made?” Repeatedly asking this question of everything one sees eventually leads to something one might call “coding”.

This is, however, a situation where the journey may be more important than the destination: taking something you really care about and asking how it’s made–or conversely, taking something imaginary you’d like to build and asking how it might be built–is both more useful and edifying than learning “coding” in the abstract. Indeed, learning “coding” without a context could easily make it the next Algebra II, which is a terrifying prospect.

So, my recommendation: don’t embark on a journey to “learn to code”. Just ask “how was that made?” of things that interest you, and ask “how might one build that?” of things you’d like to create. You may or may not end up learning how to code; you might actually end up learning how to knit. Or cook. Or use Popcorn Maker. Regardless of where your interests lead you, you’ll have a better understanding of the world around you, and you’ll be better able to express yourself in ways that matter.

|

|

Byron Jones: happy bmo push day! |

the following changes have been pushed to bugzilla.mozilla.org:

- [941104] Default secure group for Mozilla Communities should not be “core-security”

- [843457] PROJECT environment variable is not honored when mod_perl is enabled

- [938161] sql_date_format() method for SQLite has an incorrect default format

- [928057] When custom fields are added, a DBA bug should be created to update metrics permissions

- [945501] create “Firefox :: Developer Tools: User Stories” component and enable the User Story extension on this component

- [853509] Bugzilla version not displayed on the index page

- [929321] create a script to delete all bugs from the database, and generate two dumps (with and without bugs)

- [781672] checksetup.pl fails to check the version of the latest Apache2::SizeLimit release (it throws “Invalid version format (non-numeric data)”)

- [938300] vers_cmp() incorrectly compares module versions

- [922226] redirect when attachments contain reviewboard URLs

- [943636] SQL error in quicksearch API when providing just a bug ID

- [945799] I cannot change a review from me to someone else

- [937020] consider hosting mozilla skin fonts on bmo

- [942029] review suggestions only shows the first mentor

- [869989] Add X-Bugzilla-Mentors field to bugmail headers and an indication to mentors in bugmail body

- [942725] add the ability to tag comments with arbitrary tags

- [905384] Project Kickoff Form: Change Drop Down Options for When do the items need to be ordered by? and Currency Request for Total Cost in Finance Questions

more information about comment tagging can be found in this blog post.

discuss these changes on mozilla.tools.bmo.

Filed under: bmo, mozilla

http://globau.wordpress.com/2013/12/10/happy-bmo-push-day-75/

|

|

Andrew Truong: Treat People the Way You Want to Be Treated |

We at Mozilla are a global community connected by a common cause. That's the logic and is straight forward right? Well, it happens in daily life and obviously it can be felt and seen over IRC as some are responded to and some aren't. We have our ups and downs in daily life but we judge each other based on our first impression all the time and based off of physical appearance. We immediately jump to conclusions and to what we feel after meeting that person and that could've been a positive or a negative experience. Now, when we are to interact with those on projects or in groups, we have second thoughts and start doubting that person's ability to work. However, if we flashback to the first impression we made, what could we have done different? Were you treating the other individual rudely? Or was the other individual treating you rudely? This creates the immediate first impression and then we start treating that person the way we were treated. And we continue to do that in daily life. However, if we change our act and treat that person with dignity and respect even though they came off as a rude and grumpy person in the first place. We never knew if that person had a bad day or if that person had some serious issues that was stuck to them and needed to be resolved. In all's end, if we treat people the way we want to be treated, we could change the world and obviously make the world a better place to live and work in. There should be no conflicts between individuals because of race, ethnicity or anything. However, there are people that don't understand the "treat people the way you want to be treated" philosophy because just recently I asked a fellow classmate of mine to help me do something as we are doing this as a class for a Christmas party that is planned for the surrounding communities and they refused. I was partially left in and charge and to lead. In the end, I asked them why they didn't do what I asked, and the response I received? "I'm not a slave". NOW, you make the call, "treat people the way you want to be treated?" or "give them a second chance?"

|

|

Benjamin Kerensa: Best Web Browser: Firefox |

It was great to see that Mozilla Firefox was picked by Linux Journal Reader’s for a second year in a row as the best web browser and with a 2% gain in popularity. Additionally, Firefox OS did pretty good and ranked in the Top 5 for mobile operating systems and I expect next year Firefox OS will take 1st or 2nd place.

Tomorrow, Mozilla Firefox should see a release of Firefox 26.0 to stable and I can tell you this release comes baked with all the goodness you all have come to expect from Firefox. Going back to the Linux Journal Reader’ Choice Awards I really think this continues to be a strong indicator that Linux Users across all distros really enjoy using Firefox and that Firefox remaining a default on many distros is a win for Linux Users considering the popularity of the browser.

Tomorrow, Mozilla Firefox should see a release of Firefox 26.0 to stable and I can tell you this release comes baked with all the goodness you all have come to expect from Firefox. Going back to the Linux Journal Reader’ Choice Awards I really think this continues to be a strong indicator that Linux Users across all distros really enjoy using Firefox and that Firefox remaining a default on many distros is a win for Linux Users considering the popularity of the browser.

If you are a Linux User I highly encourage you to get involved in testing the Nightly and Aurora builds of Firefox. I would also invite you to check out Firefox OS and the app development community (with a newly refreshed MDN!) that is filling the Firefox Marketplace with great apps.

http://feedproxy.google.com/~r/BenjaminKerensaDotComMozilla/~3/uGEO5y7Q0RY/best-web-browser-firefox

|

|

Michael Verdi: The bonuses of really short videos |

One of the nice things about using YouTube for videos on support.mozilla.org is that we get audience retention stats. Here’s what they look like for a minute-long video I made about two years ago.

This video answers the “How do I set my home page?” question at about 23 seconds in. After that the audience really starts to drop off as the video goes into supplementary topics.

This video answers the “How do I set my home page?” question at about 23 seconds in. After that the audience really starts to drop off as the video goes into supplementary topics.

The newer videos I’ve made focus mainly on one thing. This video is only 21 seconds long so it gets right to the point. In this case the average viewer watches the entire thing. Pretty cool!

So shorter is better in this case. Tell me something I don’t already know. Well, my point is that a minute long video is generally considered pretty short. Turns out you might want to think about making videos WAY shorter.

And here’s another bonus for super short videos. They’re much easier to localize. I made this 29 second Firefox OS video as an experiment. After creating an English version, I was able to re-shoot it with the interface in Spanish and drop in localized narration (recorded while I re-shot the video) in about an hour. That’s so awesome. I want to do much more of that next year.

https://blog.mozilla.org/verdi/373/the-bonuses-of-really-short-videos/

|

|