Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

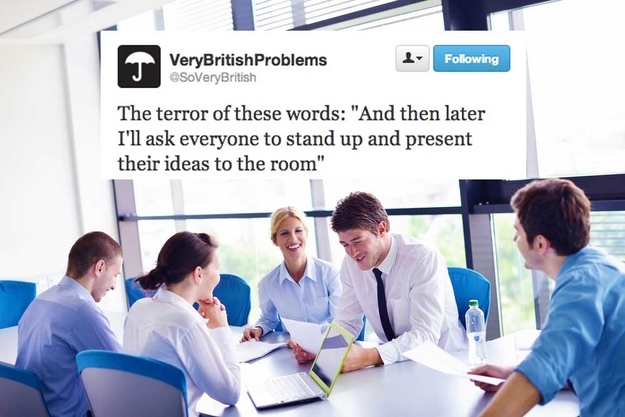

Christian Heilmann: 16 Questions you need to answer before you give a conference talk

When it comes to giving a talk at a conference the thing people freak out the most about is the delivery. Being in front of a large audience on stage is scary and for many people unthinkable.

I don’t want to discourage anyone from doing this – it is amazing and it feels great to inspire people. The good news is that by covering the things here you will go on stage with the utmost confidence and you will dazzle.

I wanted to note down the things people don’t consider about presenting at conferences. There is no shortage of posts claiming that evangelism is “only presenting at conferences”. Furthermore it is deemed only worth-while when done by “real developers who work on the products”. This is the perfect scenario, of course. Before you judge “just presenting” as a simple task consider the following questions. If you are a professional presenter and evangelist you need to have them covered. More importantly, consider the time needed to do that work and how you would fit it into your daily job of delivering code.

- 1) Do you know the topic well enough?

- 2) Are you sure about your facts?

- 3) Are you aware of the audience?

- 4) What’s your story line? How do you package the idea?

- 5) Where are the materials you talk about?

- 6) Are you sure you can release the talk the way you wrote it?

- 7) When are the materials due?

- 8) What does the conference need from you?

- 9) How do you get to the event?

- 10) Do you have all you need? Is everything going to be fine while you’re gone?

- 11) Who pays what – how do you get your money back?

- 12) Can you afford the time? Do you need to extend your trip to make it worth while?

- 13) Have you prepared for a presentation in the conference environment?

- 14) How do you measure if this was a success?

- 15) How do you make this re-usable for your company?

- 16) Are you ready to deal with the follow-up?

1) Do you know the topic well enough?

If you talk about your own product, this is easy. It is also easy to be useless. Writing a demo for a talk that can’t fail is cheating. It is also hard to repeat for the audience. It is a theoretical exercise, and not a feat of delivery. Writing a product in a real work environment means dealing with other people’s demands and issues. It also means you have to re-think what you do a lot, and that makes for better products. How you built your product does not make it a best practice. It worked for you. OK, but is that repeatable by your audience or can they learn from your approach?

To give a great talk, you need to do a lot of homework:

- you need to read what the competition did,

- learn what is applicable,

- you need to understand how the topic you cover can be beneficial for the audience and

- you need to do research about the necessary environment of your talk topic and issues people may run into when they try to repeat what you did.

2) Are you sure about your facts?

It is easy to make grandiose statements and wrap them in the right rhetoric to be exciting. It is also easy to cause a lot of trouble with those. If you make a promise on stage, make sure it is yours to fulfill. You don’t want to get your colleagues into trouble or delivery stress by over-promising.

You are likely to present to an audience who know their stuff and are happy to find holes in your argumentation. Therefore you need to do your homework. Check your facts, keep them up-to-date and don’t repeat truisms of the past.

Get the newest information, and verify that it is fact before adding it to your talk. This also means checking various sources and cross-referencing them. Legwork, research.

Work with your colleagues who will need to deliver what you talk about and maintain it in the future. Ensure they are up to the task. Remember: you are not likely to be the maintainer, so delegate. And delegation means you need to give others a heads-up about what is coming their way.

3) Are you aware of the audience?

Your job is to educate and entertain the audience of the conference. To achieve this, you need to cater your talk to the audience. Therefore you need to understand them before you go forward. For this, you need to follow some steps:

Get information about the audience from the conference organisers:

- How big is the audience?

- When is your talk – a talk at the end of the day is harder to take in than one in the morning.

- What is their experience level – you write different materials for experts than for novices. Peer pressure dictates that everyone tells you they are experts. They might still not understand what you do unless you explain it well.

- What is the language of the audience? Are they native speakers of your language or do you need to cater for misunderstandings? Do your pop references and jokes make sense in that country, or are they offensive or confusing? If there is live translation at the event you will have to be slower and pause for the translators to catch up. You will also need to deliver your slides up-front and have a list of technical words that shouldn’t be translated. Your slide deck also needs to be printable for translators to use.

Then think about what you’d like to get out of your talk:

- Try to think like your audience – what excites a group of designers is not the same as what a group of developers or project managers needs

- Look at the rest of the line-up. Is your talk unique or will you clash or repeat with another talk at the same event?

4) What’s your story line? How do you package the idea?

A presentation is nothing without a story. Much like a movie you need to have an introduction, a climax and a conclusion. Intersperse this with some anecdotes and real life comparisons and you have something people can enjoy and learn from. Add some humour and some open questions to engage and encourage. How do you do that? What fits this talk and this audience?

5) Where are the materials you talk about?

If you want the audience to repeat what you talk about you need to make sure your materials are available.

- Your code examples need to be for example on GitHub, need to work and need documentation.

- If you talk about a product of your company make sure the resource isn’t going away by the time you give your talk.

- Your slides should be on the web somewhere as people always ask for them right after your talk.

6) Are you sure you can release the talk the way you wrote it?

It is simple to pretend that we are a cool crowd and everyone gets a good geek joke. It is also easy to offend people and cause a Twitter storm and an ugly aftermath in the tech press.

It is also easy to get into legal trouble for bad-mouthing the competition or using media willy-nilly. Remember one thing: fair use is a myth and not applicable internationally. Any copyrighted material you use in your talk can become a problem. The simplest way to work around that is to only use Creative Commons licensed materials.

That might mean you don’t use the cool animated GIF of the latest TV show. And that might be a good thing. Do you know if the audience knows about this show and gets your reference? Thinking internationally and questioning your cultural bias can be the difference between inspiring and confusing, or even frustrating if you talk about great products that aren’t available in the country you present in. Don’t write your talk from your perspective. Think what the audience can do with your material and if they can understand it.

7) When are the materials due?

There are quite a few things that affect the time you have to prepare your presentation:

- Your company might have a policy to have your slides reviewed by legal and marketing. This sounds terrible and smacks of censorship. But it can save your arse. It might also make you aware of things you can hint at that aren’t public knowledge yet.

- The conference might need your talks upfront. Every conference wants at least an outline and your speaker bio to publish on their web site.

- Do you have enough time for research, creation and designing your slides before you travel? Can you upload your deck to the web in case your machine breaks down?

8) What does the conference need from you?

Depending on what the conference does for you, they also want you to help them. That can include:

- some promotion on your social media channels

- information about your travel and accommodation

- visa requirements and signing releases about filming and material distribution rights

- invoices for expenses you have on their behalf

- information on your availability for speaker dinners, event parties or other side activities of the main event

This needs time allocated to it. In general you can say a one day conference will mean you’re out for three.

9) How do you get to the event?

Traveling is the biggest unknown in your journey to the stage. All kinds of things can go wrong. You want to make sure you book the right flights and hotels and have a base close to the event. All this costs money, and you will find conference organisers and your company try to cut down on cost whenever they can. So be prepared for some inconvenience.

Booking and validating your travel adds an extra two days to your conference planning. Getting from your home to the event and back can be a packed and stressful journey. Try to avoid getting to the event stressed; you need to concentrate on what you are there for. Add at least two buffer days before and after the event.

Booking your own travel is great. You are not at the mercy of people spelling your name wrong or asking you for all kinds of information like your passport and date of birth. But it also means you need to pay for it in some way or another and you want that money back. Finding a relaxed and affordable schedule is a big task, and something you shouldn’t take too lightly.

10) Do you have all you need? Is everything going to be fine while you’re gone?

You are going to be out for a while. Both from work and your home. Make sure that in your absence you have people in place that can do the job you normally do. Make sure you covered all the needs like passport and visa issues and you know everything about the place you go to.

Prepare to get sick and have medication with you. It can happen and it should not be the end of the trip. Stay healthy, bring some safe food and plan for time to work out in between sitting cramped on planes and in cars.

Do you know how to get to the hotel and around the city you present in? Do you speak the language? Is it safe? Is there someone to look after your home when you’re gone? Prepare for things going wrong and you having to stay longer. Planes and trains and automobiles fail all the time.

11) Who pays what – how do you get your money back?

Conference participation costs money. You need to pay for travel, accommodation, food, wireless, mobile roaming costs and many more unknowns. Make sure you get as much paid for you as possible. Many conference organisers will tell you that you can pay for things and keep receipts. That means you will have to spend quite some time with paperwork and chasing your money. The same applies to company expense procedures. You need to deal with this as early as possible. The longer you wait, the harder it gets.

12) Can you afford the time? Do you need to extend your trip to make it worth while?

A local event is pretty easy to sell to your company. Flying somewhere else and having both the cost of money and time involved is harder. Even when the conference organiser pays, it means you are out of the office and not available for your normal duty. One way to add value for your company is to add meetings with local clients and press. These need to be organised and planned. So ensure you cater for that.

13) Have you prepared for a presentation in the conference environment?

It is great to use online editors to write your talk. It means people can access your slides and materials. It also means you are independent of your computer – which can and will behave oddly as soon as you are on stage. Online materials are much less useful once you are offline on stage. Conference wireless is anything but reliable. Make sure you are not dependent on a good connection. Ask about the available resolution of the conference projection equipment. Don’t expect sound to be available. And above all – bring your own connectors and power cables and converters.

14) How do you measure if this was a success?

Your company gave you time and money to go to a conference and give a talk. How do you make this worth their while? You have to show something on your return. Make sure you measure the feedback your talk got. See if you can talk to people at the event your company considers important. Collect all this during the event and analyse and compact it as soon as you can. This sounds simple, but it is tough work to analyse sentiment. A Twitter feed of a conference is pretty noisy.

15) How do you make this re-usable for your company?

If you work for a larger company, they expect of you as an evangelist to make your materials available for others. A lot of companies believe in reusable slide decks – for better or worse. Prepare a deck covering your topic that is highly annotated with delivery notes. Prepare to present this to your company. Whilst hardly anything is reusable, you are expected to make that happen.

16) Are you ready to deal with the follow-up?

After the conference you have to answer emails, contact requests and collect and note down your leads. Most companies expect a conference report of some sort. Many expect you to do some internal presentation to debrief you. What you did costs a lot of time and money and you should be prepared to prove your worth.

You’ll get more requests about your talk, the products you covered and people will ask you to speak at other events. And thus the cycle starts over.

Summary

That’s just a few of the things you have to consider as a presenter at conferences. I didn’t deal with stress, jet lag, demands, loneliness and other mental influences here. Next time you claim that people “talk instead of being a real coder” consider if what you’re saying is valid criticism or sounds a bit like “bro, do you even lift?”. Of course there are flim-flam artists and thinly veiled sales people calling themselves evangelists out there. But that doesn’t mean you should consider them the norm.

Stuart Colville: Removing leading whitespace in ES6 template strings

In some recent code at work we're using fairly large strings for error descriptions.

JavaScript has always made it hard to deal with long strings, especially when you need to format them across multiple lines. The solutions have always been that you need to concatenate multiple strings like so:

    var foo = "So here is us, on the " +
              "raggedy edge. Don't push me, " +
              "and I won't push you.";

Or use line-continuations:

    var foo = "So here is us, on the \
    raggedy edge. Don't push me, \
    and I won't push you.";

Neither of these is pretty. The latter was originally strictly illegal in ECMAScript (hattip Joseanpg):

A 'LineTerminator' character cannot appear in a string literal, even if preceded by a backslash \. The correct way to cause a line terminator character to be part of the string value of a string literal is to use an escape sequence such as \n or \u000A.

There's a rather wonderful bug on bugzilla discussing the ramifications of changing behaviour to strictly enforce the spec back in 2001. In case you're wondering it was WONTFIXED.

In ECMAScript 5th edition this part was revised to the following:

A line terminator character cannot appear in a string literal, except as part of a LineContinuation to produce the empty character sequence. The correct way to cause a line terminator character to be part of the String value of a string literal is to use an escape sequence such as \n or \u000A.

Anyway, enough with the history lesson. ES6 is now on the horizon, and if you're already starting to use it, either via the likes of Babel or as features become available natively, it provides some interesting possibilities.

Diving in with ES6 template strings

Having seen template strings I jumped right in only to find this happening.

    var raggedy = 'raggedy';
    console.log(`So here is us, on the
                 ${raggedy} edge. Don't push
                 me, and I won't push you.`);

So that looks neat, right? But hang on, what does it output?

    So here is us, on the
                 raggedy edge. Don't push
                 me, and I won't push you.

Dammit - so by default template strings preserve leading whitespace like heredoc in PHP (which is kind of a memory I'd buried).

OK, so reading more on the subject of ES6 template strings, it turns out that processing them differently is possible with tag functions. Tags are functions that allow you to process the template string how you want.

Making a template tag

With that in mind I thought OK, I'm going to make a tag to make template strings have no leading whitespace on multiple lines but still handle var interpolation as by default.

Here's the code (ES6). Note that a simpler version is linked to at the end of this post if you're not using full-fat ES6 via Babel:

    export function singleLineString(strings, ...values) {
      // Interweave the strings with the
      // substitution vars first.
      let output = '';
      for (let i = 0; i < values.length; i++) {
        output += strings[i] + values[i];
      }
      output += strings[values.length];

      // Split on newlines.
      let lines = output.split(/(?:\r\n|\n|\r)/);

      // Rip out the leading whitespace.
      return lines.map((line) => {
        return line.replace(/^\s+/gm, '');
      }).join(' ').trim();
    }

The way tags work is that they're passed an array of strings, and the rest of the args are the vars to be substituted. Note: as we're using ES6 in this example we've collected values into an array by using rest parameter syntax (...values). If the original source string was set up as follows:

    var two = 'two';
    var four = 'four';
    var templateString = `one ${two} three ${four}`;

Then strings is ["one ", " three ", ""] and values is ["two", "four"].

The loop up front is just dealing with interleaving the two lists to do the var substitution in the correct position.
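To make the argument shapes concrete, here is a minimal sketch of a tag that simply reports what it was given. The `inspect` helper and the `parts` and `rebuilt` variables are hypothetical names used only for illustration; they are not part of the original post.

```javascript
// Hypothetical helper for illustration: returns what a tag function receives.
function inspect(strings, ...values) {
  // Copy `strings` into a plain array (the original also carries a `raw` property).
  return { strings: Array.from(strings), values: values };
}

var two = 'two';
var four = 'four';
var parts = inspect`one ${two} three ${four}`;

console.log(parts.strings); // the three literal chunks: "one ", " three ", ""
console.log(parts.values);  // the two substituted values: "two", "four"

// Interleave the two lists. There is always exactly one more string than values,
// so starting the reduce from the first string lines everything up.
var rebuilt = parts.strings.reduce(function (out, str, i) {
  return out + parts.values[i - 1] + str;
});
console.log(rebuilt); // one two three four
```

The `reduce` call does the same job as the explicit loop in the tag above; which one reads better is largely a matter of taste.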

The next step is to split on newlines to get a list of lines. Then the leading whitespace is removed from each line. Lastly the list is joined with a space between each line and the result is trimmed to remove any trailing space.

The net effect is that you get an easy way to have a long multiline string formatted nicely with no unexpected whitespace.

    var raggedy = 'raggedy';
    console.log(singleLineString`So here is us, on the
                                 ${raggedy} edge. Don't push
                                 me, and I won't push you.`);

Outputs:

    So here is us, on the raggedy edge. Don't push me, and I won't push you.

Trying this out in the browser

As template strings are becoming more and more available - here's a version without the fancy ES6 syntax which should work in anything that supports template strings:

See http://jsbin.com/yiliwu/4/edit?js,console
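For readers who cannot open the link, one way such a version might look is sketched below. This is an assumption about the general approach, not the code behind the JS Bin link: it swaps the rest parameter for the `arguments` object and the arrow function for a plain function expression. The `message` variable is a hypothetical name.

```javascript
// Sketch only: not the code behind the JS Bin link.
function singleLineString(strings) {
  // Everything after the first argument is a substitution value.
  var values = Array.prototype.slice.call(arguments, 1);

  // Interleave the literal strings with the substitution values.
  var output = '';
  for (var i = 0; i < values.length; i++) {
    output += strings[i] + values[i];
  }
  output += strings[values.length];

  // Split on newlines, strip each line's leading whitespace,
  // then rejoin with single spaces and trim the ends.
  var lines = output.split(/(?:\r\n|\n|\r)/);
  return lines.map(function (line) {
    return line.replace(/^\s+/, '');
  }).join(' ').trim();
}

var raggedy = 'raggedy';
var message = singleLineString`So here is us, on the
                               ${raggedy} edge. Don't push
                               me, and I won't push you.`;
console.log(message);
// So here is us, on the raggedy edge. Don't push me, and I won't push you.
```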

All in all it's not too bad. Hopefully someone else will find this useful. Let us know if you have suggestions or alternative solutions.

https://muffinresearch.co.uk/removing-leading-whitespace-in-es6-template-strings/

Air Mozilla: Mozilla Weekly Project Meeting

The Monday Project Meeting

https://air.mozilla.org/mozilla-weekly-project-meeting-20151012/

Air Mozilla: Preview: Rally for the User

A peek at what's coming in the Rally for the User

Mozilla WebDev Community: Extravaganza – October 2015

Once a month, web developers from across Mozilla get together to see who can score the highest car emissions rating. While we scour the world for dangerous combustibles, we find time to talk about the work that we’ve shipped, share the libraries we’re working on, meet new folks, and talk about whatever else is on our minds. It’s the Webdev Extravaganza! The meeting is open to the public; you should stop by!

You can check out the wiki page that we use to organize the meeting, or view a recording of the meeting in Air Mozilla. Or just read on for a summary!

Shipping Celebration

The shipping celebration is for anything we finished and deployed in the past month, whether it be a brand new site, an upgrade to an existing one, or even a release of a library.

One and Done on Heroku

First up was bsilverberg with the news that One and Done, Mozilla’s contributor task board, has successfully migrated from the Mozilla-hosted Stackato PAAS to Heroku. One and Done takes advantage of a few interesting features of Heroku, such as App Pipelines and Review Apps.

Pontoon Sync Improvements and New Leaderboards

Next was Osmose (that’s me!) sharing a few new features on Pontoon, a site for submitting translations for Mozilla software:

- mathjazz added a new “Latest Activity” column to project and locale listings.

- jotes added time-based filters to the leaderboard as well as several performance improvements to the page.

- I added incfile support to the new sync process, as well as a few other fixes such that all Pontoon projects are now using the new sync. Yay!

Air Mozilla iTunes Video Podcast

Peterbe stopped by to share the news that Air Mozilla now has an iTunes-compatible podcast feed for all of its videos. The feed has already been approved by Apple and is available on the iTunes Store.

DXR Static File Cachebusting

ErikRose shared the news that DXR now adds hashes to static file filenames so that updates to the static files don’t get messed up by an old local cache. Interestingly, instead of relying on popular tools like Grunt or Webpack, Erik opted to implement the hashing using some logic in a Makefile plus a little bit of Python. You can check out the pull request to see the details of the change.
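As a rough illustration of the general idea only (DXR's real implementation, per the summary above, is a Makefile plus some Python), a content hash can be folded into a static file's name so that any change to the file produces a new URL. The `hashedName` helper, the eight-character digest length, and the example path are all assumptions made for this sketch.

```javascript
var crypto = require('crypto');
var path = require('path').posix; // forward slashes, as in URLs

// Hypothetical helper: derive a cache-busting filename from a file's contents.
function hashedName(filename, contents) {
  // Short hex digest of the contents; 8 characters is an arbitrary choice here.
  var digest = crypto.createHash('sha256').update(contents).digest('hex').slice(0, 8);
  var ext = path.extname(filename);        // e.g. ".css"
  var base = path.basename(filename, ext); // e.g. "site"
  return path.join(path.dirname(filename), base + '.' + digest + ext);
}

// Different contents give different names, so a stale cached copy is never reused.
var a = hashedName('static/css/site.css', 'body { color: black; }');
var b = hashedName('static/css/site.css', 'body { color: navy; }');
console.log(a !== b); // true
```

Templates then need to reference the hashed name rather than the original one, which is the part a build step usually automates.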

Replacing localForage with localStorage

Next we went back to Peterbe, who recently replaced localForage, a library that abstracts several different methods of storing data locally in the browser, with localStorage, the blocking, built-in storage solution that ships with browsers. He also shared a blog post that showed that not only is localStorage simpler to use, it was actually faster in his specific use case.
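For a sense of how small a direct localStorage layer can be, here is a minimal sketch; it is not Peterbe's code. The `saveJSON`, `loadJSON` and `memoryStorage` names are hypothetical, and the in-memory stand-in exists only so the example runs outside a browser. In a page you would pass `window.localStorage` instead.

```javascript
// Hypothetical wrapper; `storage` is anything with the Web Storage
// getItem/setItem methods, such as window.localStorage in a browser.
function saveJSON(storage, key, value) {
  // localStorage only stores strings, so serialize first.
  storage.setItem(key, JSON.stringify(value));
}

function loadJSON(storage, key, fallback) {
  var raw = storage.getItem(key); // null when the key is absent
  return raw === null ? fallback : JSON.parse(raw);
}

// In-memory stand-in so the sketch also runs outside a browser.
function memoryStorage() {
  var data = {};
  return {
    getItem: function (k) {
      return Object.prototype.hasOwnProperty.call(data, k) ? data[k] : null;
    },
    setItem: function (k, v) { data[k] = String(v); }
  };
}

var store = memoryStorage(); // in a browser: window.localStorage
saveJSON(store, 'settings', { theme: 'dark' });
console.log(loadJSON(store, 'settings', {}).theme); // dark
console.log(loadJSON(store, 'missing', 'n/a'));     // n/a
```

Both calls are synchronous, which is the "blocking" trade-off mentioned above: simpler code, at the cost of doing the work on the main thread.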

Mozilla.org Hosted on Deis

Next was jgmize sharing the news that mozilla.org is now hosted on a Deis cluster. Currently a small amount of production data is being hosted by the cluster, but a larger rollout is planned for the near future. Giorgos is responsible for the entire Jenkins setup, including a neat deployment pipeline display, an Ansible playbook, and the deployment pipeline scripts. Pmac ported over hundreds of Apache redirects to Python and wrote a comprehensive set of tests for them as well.

Roundtable

The Roundtable is the home for discussions that don’t fit anywhere else.

Farewell to Wenzel

Lastly, I wanted to specifically call out wenzel, whose last day as a paid contributor for Mozilla was last Friday. Wenzel has been a Mozillian for 9 years, starting as an intern. He’s contributed to almost every major Mozilla web property, and will be missed.

This month’s winner was willkg, with an impressive 200% rating, generating more pollution than the fuel he put in. Local science expert Dr. Potch of Mr. Potch’s Questionable Ethics and Payday Loan Barn was quoted as saying that willkg’s score was “possible”.

If you’re interested in web development at Mozilla, or want to attend next month’s Extravaganza, subscribe to the dev-webdev@lists.mozilla.org mailing list to be notified of the next meeting, and maybe send a message introducing yourself. We’d love to meet you!

See you next month!

https://blog.mozilla.org/webdev/2015/10/12/extravaganza-october-2015/

Yunier José Sosa Vázquez: Mozilla's proposed principles for content blocking

This is a translation of the original article published on The Mozilla Blog, written by Denelle Dixon-Thayer.

Content blocking has become a hot topic across the Web and in mobile ecosystems. It was already becoming pervasive on the desktop, and now Apple has made it possible to develop iOS applications whose purpose is to block content. This caused the latest wave of activity, concern and focus. We need to pay attention.

Content blocking is not going away; it is now part of our online experience. But the landscape is not well understood, which makes it hard to know how best to advance a healthy, open Web. Users want it, whether to avoid seeing ads, to protect themselves from unwanted tracking, to improve load speed, or to reduce data consumption, and we need to address how the industry should respond. We wanted to start by hacking on proposed principles for content blocking. The growing availability and use of content blockers tells us that users want to control their experience.

This is a good thing. But some content blockers could be harmful in ways that may not be obvious. For example, if content blocking creates new gatekeepers who can pick winners and losers in the publishing space, or who favor their own content over that of others, that ultimately harms competition and innovation. In the long run, users could lose as much control as they gain. The same is true if the commercial model of the Web is not part of the content blocking debate.

In my last post, I conveyed our intention to engage with this landscape, not only through analysis and research, but also through experimentation, product development and advocacy.

To help guide our efforts, and hopefully to inform others, we have developed three proposed “content blocking principles” that would help advance the beneficial effects of content blocking and minimize the risks. We want your help with them. Just as our data privacy principles help guide our data practices, these content blocking principles will help guide what we build and what we support across the industry.

Content is not inherently good or bad, with some notable exceptions such as malware. So these principles are not about which content is acceptable to block and which is not. They speak to how and why content can be blocked, and how the user can be kept at the center of that process.

At Mozilla, our mission is to ensure an open and trusted Web that puts our users in control. For content blocking, this is what we think that means:

- Content neutrality: Content blocking software should focus on addressing potential user needs (such as performance, security and privacy) rather than on blocking specific types of content (such as advertising).

- Transparency and control: Content blocking software should give users transparency and meaningful controls over the needs it is trying to address.

- Openness: Blocking should maintain a level playing field and should block under the same criteria regardless of the source of the content. Publishers and other content providers should have ways to participate in an open Web ecosystem, rather than being placed in a permanent penalty box that closes off the Web to their products and services.

Tell us what you think of these proposed principles on your social channels using #contentblocking, and join us on Friday, October 9 at 11 a.m. PT for our #BlockParty, a conversation about the problems and possible solutions around content blocking. We look forward to working with our users, our partners and the rest of the Web ecosystem to advance our shared goal of a healthy, open Web.

Source: Mozilla Hispano

http://firefoxmania.uci.cu/los-principios-propuestos-por-mozilla-para-el-bloqueo-de-contenido/

The Mozilla Blog: Katharina Borchert to Join Mozilla Leadership Team as Chief Innovation Officer

We are excited to announce that Katharina Borchert will be transitioning from our Board of Directors to join the Mozilla leadership team as our new Chief Innovation Officer starting in January.

Mozilla was founded and has thrived based upon the principles of open innovation. We bring together thousands of people from around the world to build great products and empower others with technology, know-how and opportunity to advance the open Web. As Mozilla’s new Chief Innovation Officer, Katharina will be broadly responsible for fostering and further enriching Mozilla’s culture of open innovation and driving our process for identifying new opportunities, prioritizing them, and funding focused explorations across the whole of Mozilla’s global community.

Katharina has a background that includes more than a decade of new business growth and technological innovation in media and journalism, most recently as CEO at Spiegel Online, the online division of one of Europe’s most influential magazines. Prior to that, Katharina was Editor-in-Chief and CEO at WAZ Media Group, where she completely reimagined the way local and regional journalism could be done, launching a new portal, “Der Westen”, that was based heavily upon user participation and integrated social media, and was one of the earliest with a focus on location-based data in journalism.

Most recently at Mozilla she has served on our Board of Directors since early 2014, and we have come to rely upon her keen strategic insights, broad knowledge of the Internet landscape and her significant executive leadership experience. She has become a strategic ally — a true Mozillian, who cares deeply about the issues of privacy, online safety and transparency that are all critical to the future of a healthy Internet and core to our mission.

Our success comes from fostering a true culture of open innovation in the world and our focus on product and technological innovation remains central to the fulfillment of our mission.

The entire Mozilla community welcomes you, Katharina.

Twitter: https://twitter.com/lyssaslounge

John Ford: Splitting out taskcluster-base into component libraries

Taskcluster serverside components are currently built using the suite of libraries in the taskcluster-base npm package. This package is many things: config parsing, data persistence, statistics, json schema validators, pulse publishers, a rest api framework and some other useful tools. Having these all in one single package means that each time a contributor wants to hack on one part of our platform, she'll have to figure out how to install and run all of our dependencies. This is annoying when it's waiting for a libxml.so library build, but just about impossible for contributors who aren't on the Taskcluster platform team. You need

http://blog.johnford.org/2015/10/splitting-out-taskcluster-base-into.html

|

|

Daniel Pocock: RTC Quick Start becoming a book, now in beta |

The Real-Time Communications (RTC) Quick Start Guide started off as a web site but has recently undergone bookification.

As of today, I'm calling this a beta release, although RTC is a moving target and even when a 1.0 release is confirmed, the book will continue to evolve.

An important aspect of this work is that the book needs to be freely and conveniently available to maximize participation in Free RTC. (This leads to the question of which free license I should choose.)

The intended audience for this book is people familiar with setting up servers and installing packages, IT managers, system administrators, support staff and product managers.

There are other books that already explain things like how to set up Asterisk. The RTC Quick Start Guide takes a more strategic view. While other books discuss low-level details, like how to write individual entries in the Asterisk extensions.conf file, the RTC Quick Start Guide looks at the high level questions about how to create a network of SIP proxies, XMPP servers and Asterisk boxes and how to modularize configuration and distribute it across these different components so it is easier to manage and support.

Some chapters give very clear solutions, such as DNS setup, while other chapters present a discussion of issues that are managed differently at each site, such as user and credential storage.

One of the key themes of the book is the balance between Internet architecture and traditional telephony, with specific recommendations about mixing named user accounts with extension numbers.

Many people have tried SIP or XMPP on Linux in the past and either found themselves overwhelmed by all the options in Asterisk (start with a SIP proxy, it is easier) or struggling with NAT issues (this has improved with the introduction of ICE and TURN, explained in a chapter on optimizing connectivity).

The guide also looks at the next generation of RTC solutions based on WebRTC and gives specific suggestions to people deploying it with JSCommunicator.

Help needed

There are many ways people can help with this effort.

The most important thing is for people to try it out. If you follow the steps in the guide, does it help you reach a working solution? Give feedback on the Free RTC mailing list or raise bug reports against any specific packages if they don't work for you.

The DocBook5 source code of the book is available on GitHub and people can submit changes as pull requests. There are various areas where help is needed: improvements to the diagrams, extra diagrams and helping add details to the chapter about phones, apps and softphones.

How could the book be made more useful for specific sectors such as ISPs, hosting providers, the higher education sector or other domains that typically lead in the deployment of new technology?

There is a PDF version of the book for download; it has been created using the default templates and stylesheets. How could the appearance be improved? Should I look at options for having it made available in print?

The book is only available in English at the moment. What is the best strategy for supporting translations and engaging with translators?

Are there specific communities or projects that the book should be aligned with, such as The Linux Documentation Project? (Note: RTC is not just for Linux and people use many of these components on BSD-like platforms, Windows, Mac and Android).

Which is the best license to choose to engage contributors and translators and ensure the long term success of the project?

Another great way to help is to create links to the book from other web sites and documents.

Any questions or feedback on these topics would be very welcome through the Free RTC mailing list.

http://danielpocock.com/rtc-quick-start-becoming-a-book-now-in-beta

|

|

The Mozilla Blog: Mozilla, GSMA Publish Study on Mobile Opportunity in Emerging Markets |

Mozilla has released a new report — mzl.la/localcontent — co-authored with the GSMA. Titled “Approaches to local content creation: realising the smartphone opportunity,” our report explores how the right tools, coupled with digital literacy education, can empower mobile-first Web users as content creators and develop a sustainable, inclusive mobile Web.

In emerging markets like India, Bangladesh and Kenya, many individuals access the Web exclusively through smartphones. Indeed, by the end of 2020, there will be 3 billion people using the mobile internet across the developing world.

Improved access is heartening, but a dearth of local content in these regions can render the mobile Web irrelevant, leaving users with little reason to engage. This leads to lost opportunities, and a diminished mobile ecosystem.

In this report, Mozilla and the GSMA explore solutions to this challenge. Mozilla and the GSMA sought to answer three key questions:

- What level of digital literacy is necessary to empower mobile-first users?

- What kind of tools enable users to easily create content?

- Can the right training and tools significantly impact the digital ecosystem in mobile-first countries?

Our findings draw on a range of data: 12 weeks of ethnographic research in emerging markets throughout Africa, Asia and the Americas; one year of user-centered design, testing and iteration of Webmaker for Android, Mozilla’s app for spurring local content creation; two pilot studies exploring the relationship between digital skills and mobile Web usage; and additional analysis.

We explored a range of topics — from language to learning to networks — but reached a central conclusion: Higher skill levels and a larger amount of relevant content would benefit a range of actors, including the mobile industry, government and civil society. If the right investments are made, many more newcomers to the internet will enjoy the benefits of online life, and will be able to create value for themselves and others in the process.

Additional lessons learned include:

- There is a latent appetite to create content in emerging markets. Research participants and user testers were hugely excited and enthusiastic about the idea of creating for the Internet.

- Sharing is a powerful motivator for original content creation. In some of our testing contexts, we have observed that the social incentives for “sharing” content provide the hook that leads a user to generate his or her own original content.

- Designing for the “next billion” requires the same focus and respect for the user as designing for those already online. Simply because some are new to the Web, or to content creation, does not mean that those creating platforms and applications should underrate their ability to handle and even enjoy complexity.

- Users do not want to create the same content as everyone else. During workshops, participants continually broke free of our restrictions, escaping the templates and the grids they were provided with, to experiment with more open-ended and fun concepts.

To read the full report, visit mzl.la/localcontent.

Mozilla and the GSMA began their partnership in 2014, motivated by the growing number of people coming online through smartphones and the desire to spark local content creation. To read past research findings, visit mzl.la/research.

|

|

This Week In Rust: This Week in Rust 100 |

Hello and welcome to another issue of This Week in Rust! Rust is a systems language pursuing the trifecta: safety, concurrency, and speed. This is a weekly summary of its progress and community. Want something mentioned? Tweet us at @ThisWeekInRust or send us an email! Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub. If you find any errors in this week's issue, please submit a PR.

This week's edition was edited by: nasa42, brson, and llogiq.

Updates from Rust Community

News & Blog Posts

- Safety = Freedom.

- This week in Redox 1. Redox is an upcoming OS written in Rust.

- This week in Servo 36.

- Conference report: Friendly, diverse Rust Camp.

- Neither necessary nor sufficient - why Rust shipped without GC.

- Rustfmt-ing Rust.

- Virtual structs part 4: Extended enums and thin traits.

- Macros. Plans on an overhaul of the syntax extension and macro systems in Rust.

- Lints that collect data per crate.

- Stuff the identity function does (in Rust).

- Rust in detail: Writing scalable chat service from scratch.

- Formalizing Rust.

Notable New Crates & Projects

- Plumbum. Conduit-like data processing library for Rust.

- rust-keepass. Crate to use KeePass databases in Rust.

- Rusoto. AWS client libraries for Rust.

- fired. Fast init system for Redox OS.

- Rust Release Explorer.

- cargo-edit. A utility for adding cargo dependencies from the command line.

- Rust MetroHash. Rust implementation of MetroHash - a high quality, high performance hash algorithm.

- rose_tree. An implementation of the rose tree data structure for Rust.

- daggy. A directed acyclic graph data structure for Rust.

- hdfs-rs. libhdfs binding and wrapper APIs for Rust.

- cargo.el. Emacs minor mode for Cargo.

Updates from Rust Core

102 pull requests were merged in the last week.

Notable changes

- Turn on MIR construction unconditionally.

- Make function pointers implement traits for up to 12 parameters.

- Implement Read, BufRead, Write and Seek for Cursor>.

- Shrink metadata size. libcore.rlib reduced from 19121 kiB to 15934 kiB - 20% win.

- Implement RFC 1238: nonparametric dropck.

- Integer parsing should accept leading plus.

- Add AsRef/AsMut impls to Box/Rc/Arc.

- rustc: Don't lint about isize/usize in FFI.

- rustc: Improve the dep-info experience.

- Cargo: Fix make and make doc on Mac OS X 10.11 El Capitan.

- Book: Add documentation on custom allocators.

- Book: New "Syntax Index" chapter.

- rust.vim: Add rudimentary support for the playpen.

- sublime-rust: Major changes. (PR has a list of all changes).

New Contributors

- Carlos Liam

- Chris C Cerami

- Craig Hills

- Cristi Cobzarenco

- Daniel Carral

- Daniel Keep

- glendc

- Joseph Caudle

- J. Ryan Stinnett

- Kyle Robinson Young

- panicbit

- Yoshito Komatsu

Approved RFCs

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

- 1228: Place left arrow syntax (place <- expr).

- 1260: Allow a re-export for main.

- Amend #911 const-fn to allow unsafe const functions.

Final Comment Period

Every week the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now. This week's FCPs are:

- Allow overlapping implementations for marker traits.

- Ordered ranges 2.0. Replace range(Bound::Excluded(&min), Bound::Included(&max)) with range().ge(&min).lt(&max).

- Stabilize OS string to bytes conversions.

- Implement .drain(range) and .drain() respectively as appropriate on collections.

New RFCs

Upcoming Events

- 10/13. San Diego Rust Meetup #9.

- 10/14. RustBerlin Hack and Learn.

- 10/19. Rust Paris.

- 10/20. Rust Hack and Learn Hamburg.

If you are running a Rust event please add it to the calendar to get it mentioned here. Email Erick Tryzelaar or Brian Anderson for access.

fn work(on: RustProject) -> Money

No jobs listed for this week. Tweet us at @ThisWeekInRust to get your job offers listed here!

Crate of the Week

The Crate of this week is Conrod, a simple to use immediate-mode GUI library written in pure Rust. Thanks to 7zf.

Conrod forgoes the traditional "wrap the default GUI for each operating system/desktop environment" approach and uses OpenGL to present a simple but colorful and powerful GUI in its own style. There are a good number of widgets implemented already, and writing GUI code with Conrod is a breeze thanks to the very streamlined interface.

Quote of the Week

War is Unsafe

Freedom is Safety

Ignorance is Type-checked

(The new trifecta for rust-lang.org)

Thanks to ruudva for the tip. Submit your quotes for next week!

http://this-week-in-rust.org/blog/2015/10/12/this-week-in-rust-100/

|

|

Mike Shal: Linux Namespacing Pitfalls |

Linux namespaces are a powerful feature for running processes with various levels of containerization. While working on adding them to the tup build system, I stumbled through some problems along the way. For a rough primer on getting started with user and filesystem namespaces, along with how they're used for dependency detection in tup, read on!

http://gittup.org/blog/2015/10/16-linux-namespacing-pitfalls/

|

|

Chris Cooper: RelEng & RelOps Weekly Highlights - October 9, 2015 |

The beginning of October means autumn in the Northern hemisphere. Animals get ready for winter as the leaves change colour, and managers across Mozilla struggle with deliverables for Q4. Maybe we should just investigate that hibernating thing instead.

Modernize infrastructure: Releng, Taskcluster, and A-team sat down a few weeks ago to hash out an updated roadmap for the buildbot-to-taskcluster migration (https://docs.google.com/document/d/1CfiUQxhRMiV5aklFXkccLPzSS-siCtL00wBCCdS4-kM/edit). As you can see from the document, our nominal goal this quarter is to have 64-bit linux builds *and* tests running side-by-side with the buildbot equivalents, with a stretch goal to actually turn off the buildbot versions entirely. We’re still missing some big pieces to accomplish this, but Morgan and the Taskcluster team are tackling some key elements like hooks and coalescing schedulers over the coming weeks.

Aside from Taskcluster, the most pressing releng concern is release promotion. Release promotion entails taking an existing set of builds that have already been created and passed QA and “promoting” them to be used as a release candidate. This represents a fundamental shift in how we deliver Firefox to end users, and as such is both very exciting and terrifying at the same time. Much of the team will be working on this in Q4 because it will greatly simplify a future transition of the release process to Taskcluster.

Improve CI pipeline: Vlad and Alin have 10.10.5 tests running on try and are working on greening up tests (https://bugzil.la/1203128)

Kim started discussion on dev.planning regarding reducing frequency of linux32 builds and tests https://groups.google.com/forum/#!topic/mozilla.dev.planning/wBgLRXCTlaw (Related bug: https://bugzil.la/1204920)

Windows tests take a long time, in case you hadn’t noticed. This is largely due to e10s (https://wiki.mozilla.org/Electrolysis), which has effectively doubled the number of tests we need to run per push. We’ve been able to absorb this extra testing on other platforms, but Windows 7 and Windows 8 have been particularly hard hit by the increased demand, often taking more than 24 hours to work through the backlog from the try server. While e10s is a product decision and ultimately in the best interest of Firefox, we realize the current situation is terrible in terms of turnaround time for developer changes. Releng will be investigating updating our hardware pool for Windows machines in the new year. In the interim, please be considerate with your try usage, i.e. don’t test on Windows unless you really need to. If you can help fix e10s bugs so as to make that the default on beta/release ASAP, that would be awesome.

Release: The big “moment-in-time” release of Firefox 42 approaches. Rail is on the hook for releaseduty for this cycle, and is overseeing beta 5 builds currently.

Operational: Kim increased the size of the tst-emulator64 spot pool (https://bugzil.la/1204756) so we’ll be able to enable additional Android 4.3 tests on debug once we have SETA data for them (https://bugzil.la/1201236).

Coop (me) spent last week in Romania getting to know our Softvision contractors in person. Everyone was very hospitable and took good care of me. Alin and Vlad took full advantage of the visit to get better insight into how the various releng systems are interconnected. Hopefully this will pay off with them being able to take on more challenging bugs to advance the state of buildduty. Already they’re starting to investigate how they could help contribute to the slave loan tool. Alin and Vlad will also be joining us for Mozlando in December, so look forward to more direct interaction with them there.

See you next week!

|

|

Nick Cameron: Macros |

We're currently planning an overhaul of the syntax extension and macro systems in Rust. I thought it would be a good opportunity to cover some background on macros and some of the issues with the current system. (Note that we're not considering anything really radical for the new systems, but hopefully the improvements will be a little bit more than incremental.) In this blog post I'd like to talk a bit about macros in general. In later posts I'll try to cover some more Rust-specific things and some areas (like hygiene) in more detail. If you're a Lisp (or Rust macro) expert, this post will probably be very dull.

What are macros?

Macros are a syntactic programming language feature. A macro use is expanded according to a macro definition. Macros usually look somewhat like functions, however, macro expansion happens entirely at compile-time (never at runtime), and usually in the early stages of compilation - sometimes as a preprocessing step (as in C), sometimes after parsing but before further analysis (as in Rust).

Macro expansion is usually a completely syntactic operation. That is, it operates on the program text (or the AST) without knowledge about the meaning of that text (such as type analysis).

At its simplest, macro expansion is textual substitution. For example (in C):

    #define FOO 42
    int x = FOO;

is expanded by the preprocessor to

    int x = 42;

by simply replacing FOO with 42.

Likewise, with arguments, we just substitute the actual arguments into the macro definition, and then the macro into the source:

    #define MIN(X, Y) ((X) < (Y) ? (X) : (Y))
    int x = MIN(10, 20);

expands to

    int x = ((10) < (20) ? (10) : (20));

After expansion, the expanded program is compiled just like a regular program.
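The substitution described above can be sketched in a few lines of Python. This is only an illustration of the idea, not how a real C preprocessor works: the `expand` function and the two macro tables are made up for this example, arguments are split on commas without handling nested parentheses or string literals, and expansion is a single pass with no rescanning.

```python
import re

# Hypothetical macro tables for this sketch (not a real preprocessor API).
OBJECT_MACROS = {"FOO": "42"}
FUNCTION_MACROS = {"MIN": (["X", "Y"], "((X) < (Y) ? (X) : (Y))")}


def substitute(body, params, args):
    """Replace each parameter name in body with the matching argument text."""
    mapping = dict(zip(params, args))
    pattern = r"\b(" + "|".join(map(re.escape, params)) + r")\b"
    return re.sub(pattern, lambda m: mapping[m.group(1)], body)


def expand(source):
    """Expand function-like macros first, then object-like ones (single pass)."""
    for name, (params, body) in FUNCTION_MACROS.items():
        # Naive assumption: arguments contain no commas or parentheses.
        call = re.compile(r"\b" + re.escape(name) + r"\(([^()]*)\)")
        source = call.sub(
            lambda m: substitute(
                body, params, [a.strip() for a in m.group(1).split(",")]
            ),
            source,
        )
    for name, replacement in OBJECT_MACROS.items():
        source = re.sub(r"\b" + re.escape(name) + r"\b", replacement, source)
    return source
```

Calling `expand("int x = MIN(10, 20);")` reproduces the expansion shown above. Note that the expander knows nothing about types or scopes; it only rewrites text.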

What is macro hygiene?

The naive implementation of macros described above can easily go wrong, for example:

    static int a = 42;

    #define ADD_A(X) ((X) + a)

    void foo() {
        int a = 0;
        int x = ADD_A(10);
    }

You might expect x to be 52 at runtime, but it isn't; it is 10. That's because the expansion is:

    static int a = 42;

    void foo() {
        int a = 0;
        int x = ((10) + a);
    }

There is nothing special about a, it is just a name, so the usual scoping rules apply and we get the a in scope at the macro use site, not the macro definition site as you might expect.

These kinds of unexpected results occur because C macros are unhygienic. A hygienic macro system (as in Lisp or Rust) would preserve the scoping of the macro definition, so post-expansion, the a from the macro would still refer to the global a rather than the a in foo.

This is the simplest kind of macro hygiene. Once we get into the complexities of hygiene, it turns out there is no great definition. Hygiene applies to variables declared inside a macro (can they be referenced outside it?) as well as applying in some sense to aspects such as privacy. Implementing hygiene gets complex when macro definitions can include macro uses and further macro definitions. To make things even worse, sometimes perfect hygiene is too strong and you want to be able to bend the rules in a (hopefully) safe way.

How can macros be implemented?

Macros can be implemented as simple textual substitution, by manipulating tokens after lexing, or by manipulating the AST after parsing. Conceptually though we simply replace a macro use with the definition, whether the use and definition are represented by text, tokens, or AST nodes. There are some interesting details about exactly how lexing, parsing, and macro expansion interact. But, the most interesting implementation aspect is the algorithm used to maintain hygiene (which I'll cover in a later post).

How macros are implemented also depends on how macros are defined. The simple examples I gave above just substitute the macro definition for the macro use. macro_rules macros in Rust and syntax-rules macros in Scheme allow for pattern matching of arguments in the macro definition, so different code is substituted for the macro use depending on the arguments.

How macros are defined also affects how and when they are lexed and parsed. (C macros are not parsed until substitution is completely finished. Rust macros are lexed into tokens before expansion and parsed afterwards.)
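As a rough illustration of pattern-matched definitions, here is a Python sketch in which a macro has several rules and the expander picks one based on the shape of the arguments. It is a deliberately crude stand-in: real macro_rules and syntax-rules patterns match on syntax, whereas this toy only looks at the number of arguments. The `RULES` table, the `expand_use` function and the `max` macro are all invented for this example.

```python
from string import Template

# Hypothetical rule table: each macro has several (parameter names, template)
# rules, loosely modelled on multi-arm macro definitions.
RULES = {
    "max": [
        (("a",), Template("$a")),
        (("a", "b"), Template("if $a > $b { $a } else { $b }")),
    ],
}


def expand_use(name, args):
    """Pick the first rule whose arity matches the arguments and fill it in."""
    for params, template in RULES[name]:
        if len(params) == len(args):
            return template.substitute(dict(zip(params, args)))
    raise ValueError(f"no rule of {name} matches {len(args)} argument(s)")
```

With this table, `expand_use("max", ["x"])` and `expand_use("max", ["x", "y"])` substitute different code for the same macro name, which is the point of pattern matching in the definition.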

Procedural macros

The macros described so far simply replace a macro use with the macro definition. The macro expander might manipulate the macro definition (to implement hygiene or pattern matching), but the macro definition does not affect the expansion other than providing input. In a procedural macro system, each macro is defined as a program. When a macro use is encountered, the macro is executed (at compile time still) with the macro arguments as input. The macro use is replaced by the result of execution.

For example (using a made up macro language, which should be understandable to Rust programmers. Note though that Rust procedural macros work nothing like this):

    proc_macro! foo(x) {
        let mut result = String::new();
        for i in 0..10 {
            result.push_str(x)
        }
        result
    }

    fn main() {
        let a = "foo!(bar)"; // Hand-waving about string literals.
    }

will expand to

    fn main() {
        let a = "barbarbarbarbarbarbarbarbarbar";
    }

A procedural macro is a generalisation of the syntactic macros described so far. One could imagine implementing a syntactic macro as a procedural macro by returning the text of the syntactic macro after manually substituting the arguments.
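To make the idea concrete, here is a small Python sketch of a procedural expander. It is a toy built on assumptions: `PROC_MACROS`, `expand_proc` and the `name!(argument)` call syntax are invented for illustration, and Rust's real procedural macros work nothing like this. Each macro is an ordinary function that runs at expansion time and returns the replacement text. The `min_macro` entry shows a syntactic macro written as a procedural one by substituting the arguments by hand.

```python
import re


def foo(x):
    """Procedural macro body: runs at expansion time, returns replacement text."""
    result = ""
    for _ in range(10):
        result += x
    return result


def min_macro(args):
    """A syntactic macro expressed procedurally: substitute arguments manually."""
    x, y = [a.strip() for a in args.split(",")]
    return f"(({x}) < ({y}) ? ({x}) : ({y}))"


# Hypothetical registry mapping macro names to the programs implementing them.
PROC_MACROS = {"foo": foo, "MIN": min_macro}

# Matches uses such as foo!(bar); arguments may not contain parentheses.
MACRO_USE = re.compile(r"\b(\w+)!\(([^()]*)\)")


def expand_proc(source):
    """Replace every known macro use with the output of running the macro."""

    def run(match):
        name, argument = match.group(1), match.group(2)
        macro = PROC_MACROS.get(name)
        if macro is None:
            return match.group(0)  # unknown macro: leave the text untouched
        return macro(argument)

    return MACRO_USE.sub(run, source)
```

The expander itself stays trivial; all of the interesting behaviour lives in the macro functions, which is what distinguishes this style from plain substitution.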

|

|

Nathan Froyd: gecko include file statistics |

I was inspired to poke at which files were most heavily #include’d and which files contributed the most text as a result of their #include’ing after seeing the simplicity of Libre Office’s script for doing so. I had to rewrite it in Python, as the obvious modifications to the awk script weren’t working, and I had no taste for debugging awk code. I’ve put the script up as a gist:

It’s intended to be run from a newly built objdir on Linux like so:

python includebloat.py .

The ability to pick a subdirectory of interest:

python includebloat.py dom/bindings/

was useful to me when I was testing the script, so I wasn’t groveling through several thousand files at a time.

The output lines are formatted like so:

total_size file_size num_of_includes filename

and are intended to be manipulated further via sort, etc. The script might work on Mac and Windows, but I make no promises.
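For readers who want a feel for the computation, the following is a minimal Python sketch that produces rows in the format above. It is not the script from the gist. It assumes the input is the text of make-style dependency files (rules of the form `target: dep1 dep2`, with backslash line continuations, as compilers can emit), and the names `parse_deps` and `include_stats` are made up for this example. Paths containing spaces or colons are not handled.

```python
import os
from collections import Counter


def parse_deps(text):
    """Return the dependency paths named in one make-style dependency file."""
    deps = set()
    # Join backslash-continued lines, then look at each "target: deps" rule.
    for rule in text.replace("\\\n", " ").splitlines():
        _target, sep, rest = rule.partition(":")
        if sep and rest.strip():
            deps.update(rest.split())
    return deps


def include_stats(dep_texts, size_of=os.path.getsize):
    """Return (total_size, file_size, num_of_includes, filename) rows.

    total_size is the file's size multiplied by the number of dependency
    files that mention it. Rows are sorted with the largest total first.
    """
    counts = Counter()
    for text in dep_texts:
        counts.update(parse_deps(text))
    rows = []
    for filename, count in counts.items():
        size = size_of(filename)
        rows.append((size * count, size, count, filename))
    return sorted(rows, reverse=True)
```

The `size_of` parameter defaults to reading sizes from disk, but passing a different callable makes the function easy to try on made-up data.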

The results were…interesting, if not especially helpful at suggesting modifications for future work. I won’t show the entirety of the script’s output, but here are the top twenty files by total size included (size of the file on disk multiplied by number of times it appears as a dependency), done by filtering the script’s output through sort -n -k 1 -r | head -n 20 | cut -f 1,4 -d ' ':

    332478924 /usr/lib/gcc/x86_64-linux-gnu/4.9/include/avx512fintrin.h
    189877260 /home/froydnj/src/gecko-dev.git/js/src/jsapi.h
    161543424 /usr/include/c++/4.9/bits/stl_algo.h
    141264528 /usr/include/c++/4.9/bits/random.h
    113475040 /home/froydnj/src/gecko-dev.git/xpcom/glue/nsTArray.h
    105880002 /usr/include/c++/4.9/bits/basic_string.h
    92449760 /home/froydnj/src/gecko-dev.git/xpcom/glue/nsISupportsImpl.h
    86975736 /usr/include/c++/4.9/bits/random.tcc
    76991387 /usr/include/c++/4.9/type_traits
    72934768 /home/froydnj/src/gecko-dev.git/mfbt/TypeTraits.h
    68956018 /usr/include/c++/4.9/bits/locale_facets.h
    68422130 /home/froydnj/src/gecko-dev.git/js/src/jsfriendapi.h
    66917730 /usr/include/c++/4.9/limits
    66625614 /home/froydnj/src/gecko-dev.git/xpcom/glue/nsCOMPtr.h
    66284625 /usr/include/x86_64-linux-gnu/c++/4.9/bits/c++config.h
    63730800 /home/froydnj/src/gecko-dev.git/js/public/Value.h
    62968512 /usr/include/stdlib.h
    57095874 /home/froydnj/src/gecko-dev.git/js/public/HashTable.h
    56752164 /home/froydnj/src/gecko-dev.git/mfbt/Attributes.h
    56126246 /usr/include/wchar.h

How does avx512fintrin.h get included so much? It turns out that <algorithm> pulls in much more than min, max, or swap. In this case, std::shuffle requires std::uniform_int_distribution from <random>, which brings in several of the /usr/include/c++/4.9-related files in the above list.

If you are compiling with SSE2 enabled (as is the default on x86-64 Linux), then <random> includes <x86intrin.h>, which is a clearinghouse for all sorts of x86 intrinsics, even though all we need is a few typedefs and intrinsics for SSE2 code. Minus points for GCC header cleanliness here.

What about the top twenty files by number of times included (filter the script’s output through sort -n -k 3 -r | head -n 20 | cut -f 3,4 -d ' ')?

    2773 /home/froydnj/src/gecko-dev.git/mfbt/Char16.h
    2268 /home/froydnj/src/gecko-dev.git/mfbt/Attributes.h
    2243 /home/froydnj/src/gecko-dev.git/mfbt/Compiler.h
    2234 /home/froydnj/src/gecko-dev.git/mfbt/Types.h
    2204 /home/froydnj/src/gecko-dev.git/mfbt/TypeTraits.h
    2132 /home/froydnj/src/gecko-dev.git/mfbt/Likely.h
    2123 /home/froydnj/src/gecko-dev.git/memory/mozalloc/mozalloc.h
    2108 /home/froydnj/src/gecko-dev.git/mfbt/Assertions.h
    2079 /home/froydnj/src/gecko-dev.git/mfbt/MacroArgs.h
    2002 /home/froydnj/src/gecko-dev.git/xpcom/base/nscore.h
    1973 /usr/include/stdc-predef.h
    1955 /usr/include/x86_64-linux-gnu/gnu/stubs.h
    1955 /usr/include/x86_64-linux-gnu/bits/wordsize.h
    1955 /usr/include/x86_64-linux-gnu/sys/cdefs.h
    1955 /usr/include/x86_64-linux-gnu/gnu/stubs-64.h
    1944 /usr/lib/gcc/x86_64-linux-gnu/4.9/include/stddef.h
    1942 /home/froydnj/src/gecko-dev.git/mfbt/Move.h
    1941 /usr/include/features.h
    1921 /opt/build/froydnj/build-mc/js/src/js-config.h
    1918 /usr/lib/gcc/x86_64-linux-gnu/4.9/include/stdint.h

Not a lot of surprises here. A lot of these are basic definitions for C++ and/or Gecko (such as mfbt/Move.h).

There don’t seem to be very many obvious wins, aside from getting GCC to clean up its header files a bit. Getting us to the point where we can use <type_traits> instead of our own homegrown mfbt/TypeTraits.h would be a welcome development. Making js/src/jsapi.h less of a mega-header might help some, but brings a burden of “did I remember to include the correct JS header files”, which probably devolves into people cutting-and-pasting complete lists, which isn’t a win. Splitting up nsISupportsImpl.h seems like it could help a little bit, though with unified compilation, I suspect we’d likely wind up including all the split-up files at once anyway.

https://blog.mozilla.org/nfroyd/2015/10/09/gecko-include-file-statistics/

|

|

QMO: Firefox 42 Beta 7 Testday, October 16th |

Greetings Mozillians!

We are holding the Firefox 42.0 Beta 7 Testday next Friday, October 16th. The main focus of this event is the Control Center feature. As usual, there will be unconfirmed bugs to triage and resolved bugs to verify.

Detailed participation instructions are available in this etherpad.

No previous testing experience is required so feel free to join us on the #qa IRC channel and our moderators will make sure you’ve got everything you need to get started.

Hope to see you all on Friday!

Let’s make Firefox better together!

https://quality.mozilla.org/2015/10/firefox-42-beta-7-testday-october-16th/

|

|

John O'Duinn: The “Distributed” book-in-progress: Early Release#1 now available! |

My previous post described how O’Reilly does rapid releases, instead of waterfall-model releases, for book publishing. Since then, I’ve been working with the folks at O’Reilly to get the first milestone of my book ready.

As this is the first public deliverable of my first book, I had to learn a bunch of mechanics, asking questions and working through many, many details. Very time consuming, and all new-to-me, hence my recent silence. The level of detailed coordination is quite something – especially when you consider how many *other* books O’Reilly has in progress at the same time.

One evening, while in the car to a social event with friends, I looked up the “not-yet-live” page to show to friends in the car – only to discover it was live. Eeeeek! People could now buy the 1st milestone drop of my book. Exciting, and scary, all at the same time. Hopefully, people like it, but what if they don’t? What if I missed an important typo in all the various proof-reading sessions? I barely slept at all that night.

In O’Reilly language, this drop is called “Early Release #1 (ER#1)”. Now that ER#1 is out, and I have learned a bunch about the release mechanics involved, the next milestone drop should be more routine. Which is good, because we’re doing these every month. Oh, and like software: anyone who buys ER#1 will be prompted to update when ER#2 is available later in Oct, and prompted again when ER#3 is available in Nov, and so on.

You can buy the book-in-progress by clicking here, or clicking on the thumbnail of the book cover. And please, do let me know what you think – Is there anything I should add/edit/change? Anything you found worked for you, as a “remotie” or person in a distributed team, which you wish you knew when you were starting? If you were going to set up a distributed team today, what would you like to know before you started?

To make sure that any feedback doesn’t get lost or caught in spam filters, I’ve set up a special email address (feedback at oduinn dot com) although I’ve already been surprised by feedback via twitter and linkedin. Thanks again to everyone for their encouragement, proof-reading help and feedback so far.

Now, it’s time to brew more coffee and get back to typing.

John.

http://oduinn.com/blog/2015/10/08/distributed_er1_now_available/

|

|

The Mozilla Blog: Proposed Principles for Content Blocking |

Content blocking has become a hot issue across the Web and mobile ecosystems. It was already becoming pervasive on desktop, and now Apple’s iOS has made it possible to develop iOS applications whose purpose is to block content. This caused the most recent flurry of activity, concern and focus. We need to pay attention.

Content blocking is not going away – it is now part of our online experience. But the landscape isn’t well understood, making it harder to know how best to advance a healthy, open Web. Users want it – whether to avoid the display of ads, protect against unwanted tracking, improve load speed, or reduce data consumption – and we need to address how we as an industry should respond. We wanted to start by hacking on proposed principles for content blocking. The growing availability and use of content blockers tells us that users want to control their experience.

This is a good thing. But some content blocking could be harmful in ways that may not be obvious. For example, if content blocking creates new gatekeepers who can pick winners and losers in the publishing space or who favor their own content over others’, it ultimately harms competition and innovation. In the long run, users could lose as much control as they gain. The same happens if the commercial model of the Web is not part of the content blocking debate.

In my last post, I conveyed our intention to engage with this landscape, not solely through analysis and research, but also through experimentation, product development, and advocacy.

To help guide our efforts and hopefully inform others, we’ve developed three proposed “content blocking principles” that would help advance the beneficial effects of content blocking while minimizing the risks. We want your help hacking on them. Just as our data privacy principles help guide our data practices, these content blocking principles will help guide what we build and what we support across the industry.

Content is not inherently good or bad – with some notable exceptions, such as malware. So these principles aren’t about what content is OK to block and what isn’t. They speak to how and why content can be blocked, and how the user can be maintained at the center through that process.

At Mozilla, our mission is to ensure a Web that is open and trusted and that puts our users in control. For content blocking, here is what we think that means:

- Content Neutrality: Content blocking software should focus on addressing potential user needs (such as performance, security, and privacy) instead of blocking specific types of content (such as advertising).

- Transparency & Control: The content blocking software should provide users with transparency and meaningful controls over the needs it is attempting to address.

- Openness: Blocking should maintain a level playing field and should block under the same principles regardless of the source of the content. Publishers and other content providers should be given ways to participate in an open Web ecosystem, instead of being placed in a permanent penalty box that closes off the Web to their products and services.

Tell us what you think of these proposed principles on your social channels using #contentblocking, and join us on Friday, October 9 at 11am PT for our #BlockParty, a conversation around the problems and possible solutions to the content blocking question. We look forward to working with our users, our partners and the rest of the Web ecosystem to advance our shared goal of a healthy, open Web.

https://blog.mozilla.org/blog/2015/10/07/proposed-principles-for-content-blocking/

|

|

Air Mozilla: Product Coordination Meeting |

This is a weekly status meeting, every Wednesday, that helps coordinate the shipping of our products (across 4 release channels) in order to ensure that...


|

|

David Burns: A new Marionette version available for Selenium Users with Java, .NET and Ruby support |

If you have been wanting to use Marionette but couldn't because you don't work in Python, now is your chance! If you use Java, .NET, or Ruby, you can now use it too. All the latest downloads of the Marionette executable are available from the releases page of our development GitHub repository. We will move this to the Mozilla organization as we get closer to a full release.

There is also a new page on MDN that walks you through the process of setting up Marionette and using it. There are examples for all the language bindings currently supported.

Since you are awesome early adopters, it would be great if you could raise bugs.

I am not expecting everything to work, but below is a quick list of things that I know don't work.

- No support for self-signed certificates

- No support for actions

- No support for the logging endpoint

- getPageSource is not available. This will be added at a later stage; it was a slightly contentious part of the specification.

- I am sure there are other things we don't remember

Switching frames needs to be done with either a WebElement or an index. Windows can only be switched by window handle. This is how it is currently discussed in the specification.
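To make the frame and window rules concrete, here is a minimal sketch in Python. It assumes a driver object exposing the standard Selenium Python attributes `switch_to.frame`, `switch_to.window`, `current_window_handle` and `window_handles`. The helper names `switch_to_frame` and `switch_to_other_window` are hypothetical and are not part of Marionette or Selenium. Rejecting name strings up front is my reading of the restriction described above, not confirmed driver behaviour.

```python
from typing import Any


def switch_to_frame(driver: Any, target: Any) -> None:
    """Switch to a frame by index or by WebElement, never by name."""
    # Assumption: only an index or a WebElement is accepted, so a
    # name/id string is rejected here before it reaches the driver.
    if isinstance(target, str):
        raise TypeError("frame must be an index or a WebElement, not a name")
    driver.switch_to.frame(target)


def switch_to_other_window(driver: Any) -> str:
    """Switch to the first window whose handle differs from the current one."""
    current = driver.current_window_handle
    for handle in driver.window_handles:
        if handle != current:
            # Windows are addressed by handle only.
            driver.switch_to.window(handle)
            return handle
    raise LookupError("no other window is open")
```

With a real session you would pass in the driver you already created, for example `switch_to_frame(driver, 0)` for the first frame on the page, or pass an element you located beforehand.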

If in doubt, raise bugs!

Thanks for being an early adopter and thanks for raising bugs as you find them!

|

|