You can add any RSS feed (including a LiveJournal journal) to your friends feed on the syndication page.

Original source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. This syndication was created automatically at the request of readers of this RSS feed.

For any questions about this service, please use the contact information page.

Air Mozilla: Mozilla Weekly Project Meeting, 19 Oct 2015

The Monday Project Meeting

https://air.mozilla.org/mozilla-weekly-project-meeting-20151019/

Emma Irwin: How to Write a Good ‘Open’ Task

In Whistler earlier this year, we gathered together a group of code contributors to better understand what barriers, frustrations, ambitions and successes they experienced contributing code to Mozilla projects. Above all other topics, the ‘task description’ surfaced as the biggest reason for abandoning projects. Yet this, the doorway to participation, is given the least attention of all.

As a result, I’ve paid close attention recently to how projects use tasks to invite participation, and experimented a bit in our own Participation Github repository. Probably the best way to understand what makes a task truly ‘open’ is to witness, in ‘real time’, how contributors navigate issue queues. I had such an opportunity this week at the ‘Codeathon for Humanity’ at Grace Hopper Open Source Day, and earlier while leading an Open Hatch Comes to Campus Day at the University of Victoria.

Based on all of these experiences, I want to share my thoughts on how we’re currently using tasks, and on how to write a good ‘open’ task. The act of creating tasks in an open repository is not itself an invitation to participate. Let’s be honest about the ‘types of tasks’ we’re creating, and then design properly for the ones we intend for participation.

The Garbage or ‘Reminder to Self’ Task: I see these everywhere, and witness the havoc they wreak on contributors. Garbage tasks create a lot of noise in issue queues: they are the expired, the ‘now irrelevant’ and the ‘notes to self’ variety of tasks that are no longer, or never were, intended for participation. Because these issues sit so low on priority lists, their descriptions are often non-existent.

Meta Task: Contains a broader picture of goals, and contains more than one action. Could also be called a Feature Task as completion is usually a collaboration between individuals and extends beyond a single milestone or heartbeat. Meta tasks are a great way to track how smaller tasks can lead to bigger impact. For a project they make sense, but for a contributor it’s hard to understand how to break off a piece of work, and what’s already being done. The Participation Team prefixes Meta task descriptions, but perhaps we can be even clearer.

Storytelling Task: I see lots of these as well: tasks already assigned to, or implicitly meant for, certain individuals or groups. You can recognize them because the comments are more of a conversation, a place to share status and invite feedback. Storytelling tasks are a great way to bring the story of a team’s work to the center of all activities and to make room for questions and feedback, but it seems important that we recognize them as just that. If a task is not actually open to participation (other than commenting), I think we need to provide mechanisms for filtering here as well.

Open or Contributor/Volunteer Task: A clear ‘ask’, with action items suitable for completion by an individual. Components of a good open task are:

Appropriate Tag(s)

Helping people filter to Open Issues is a huge win for project and contributor. We’ve been using a tag called ‘volunteer task’ for this purpose, although we may change the name based on feedback. It’s our most viewed tag.

Clear Title

Far too many task titles look like placeholders for work someone means to do themselves: ‘Upgrade theme’, ‘Fix Div Tags’. Better titles avoid jumping to assumptions: ‘Upgrade X Theme to use version x.x Jquery Library’.

Referenced Meta/Parent Task

Creating and referencing a Meta Task is a great way to connect open tasks to the impact of the work being done. Meta tasks also help generate a sense of collaboration and community that makes work feel meaningful. I’ve heard over and over again that the most compelling contribution opportunities are those that clearly show how an individual can have an impact on a bigger picture.

Prerequisite

What skills, knowledge or personal goals should someone attempting this task possess? This can be skill level or time commitment, but it can also be a mechanism for outreach to people whose personal goals relate to the opportunity. It can also act as a filter, helping people recognize personal limitations that would make this task a poor match.

Short Description

Think of this as the Tweetable, or TL;DR, version of the longer description. Challenge yourself to bring the key points into the short description. Use bullet points instead of long paragraphs of text. Link to longer documentation (and make sure your permissions allow anonymous viewing).

Participation Steps

I’ve written a lot about designing participation in steps, and I believe that breaking things down this way benefits both contributor and project. I know this probably feels tedious, especially for smaller bugs, but at a minimum it means linking to a template explaining ‘how to get started’. Example steps might be:

- Read Documentation

- Build your local environment

- Update the README with any issues you found during the build

- Introduce yourself on Discourse

- Assign yourself this task and leave a comment.

Value to Contributor

I sometimes include this, and in my opinion this is where ‘mentored bugs’ could plug in, rather than a bug being only about mentoring.

Although some of this might feel like a lot of work, it actually filters out a large number of questions, helps contributors connect more quickly to opportunity and helps build trust in the process.

Here’s one I used last heartbeat

I also consider Participation Personas when I design in case they help you as well.

Armen Zambrano: Fixes for Sheriffs' Treeherder backfilling and scheduling support

A few weeks ago we added alerts to pulse_actions, and we've fixed a couple of bugs since then:

- Backfilling of a test job of pushes with coalesced builds should not test against the wrong build

- Thanks @KWierso for reporting it!

- Filling in a revision on aurora partially fails

- Better documentation

- Moved under the Mozilla Org on GitHub

- Added auto deploy to Heroku from the 'heroku' branch

This work by Zambrano Gasparnian, Armen is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 3.0 Unported License.

http://feedproxy.google.com/~r/armenzg_mozilla/~3/DtHpOhZhOTk/fixes-for-sheriffs-treeherder.html

QMO: Firefox 42.0 Beta 7 Testday Results

Hi everyone!

Last Friday, October 16th, we held the Firefox 42.0 Beta 7 Testday. It was a successful event (please see the results section below), so a big Thank You goes to everyone involved.

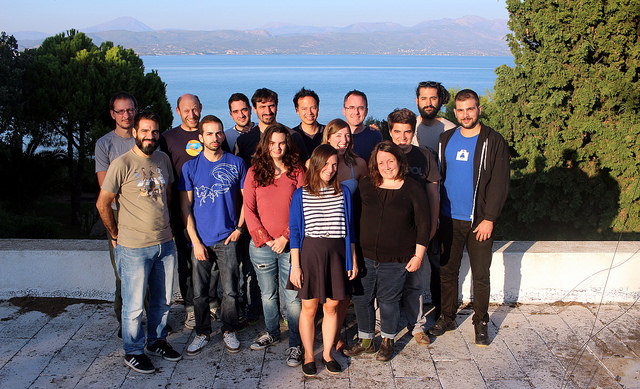

First of all, many thanks to our active contributors: Mohammed Adam, Moin Shaikh, Luna Jernberg, Bolaram Paul, Preethi Dhinesh, Mani Kumar Reddy Kancharla, Syed Muhammad Mahmudul Haque, Hossain Al Ikram, Nazir Ahmed Sabbir, Md. Rahimul Islam, Md. Rakibul Islam Ratul, Sajedul Islam, Khalid Syfullah Zaman, Rezaul Huque Nayeem, Saheda Reza Antora, Apel Mahmud, Md. Asiful Kabir, Mohammad Maruf Islam, Forhad Hossain, Md. Badiuzzaman Pranto, Rayhan, Sujit Debnath, Md. Faysal Alam Riyad, Mahay Alam Khan, Masud Rana.

Secondly, a big thank you to all our active moderators.

Results:

- no new issues found related to tested areas

- 9 bugs were verified: 969474, 1090305, 1174431, 1181300, 1183044, 1187007, 1187482, 1203661 and 1204365.

- 1 fixed bug was set to affected for Firefox 42.0: 1126330.

We hope to see you all at our next events; all the details will be posted on QMO!

https://quality.mozilla.org/2015/10/firefox-42-0-beta-7-testday-results/

Daniel Stenberg: GOTO Copenhagen

I was invited to speak at the GOTO Copenhagen conference, which took place on October 5-6, 2015. It was a conference previously unknown to me, and it attracted over a thousand attendees to a hotel in central Copenhagen. According to the info desk, about 800 of them were from Denmark.

My talk was about HTTP/2 (again), which I guess won’t make any reader of this blog raise their eyebrows. I’d say there were about 200 people in the audience, as the room was fairly full. That is probably one of the bigger audiences I’ve talked HTTP/2 to so far.

This Week In Rust: This Week in Rust 101

Hello and welcome to another issue of This Week in Rust! Rust is a systems language pursuing the trifecta: safety, concurrency, and speed. This is a weekly summary of its progress and community. Want something mentioned? Tweet us at @ThisWeekInRust or send us an email! Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub. If you find any errors in this week's issue, please submit a PR.

This week's edition was edited by: nasa42, brson, and llogiq.

Updates from Rust Community

News & Blog Posts

- The little book of Rust macros.

- Rust and IDEs. The plan for better integration of Rust with IDEs.

- This week in Redox 2. Redox is an upcoming OS written in Rust.

- This Week In Servo 37.

- Rust to the rescue (of Ruby). Call Rust code from Ruby.

- Hacking Servo for noobs.

- Building an SQL database with 10 Rust beginners.

- Using a Rust DLL from C#.

- Creating a C API for a Rust library.

- A simple link checker built with Rust.

Notable New Crates & Projects

- Rust-Bio. A bio-informatics library for Rust.

- Rust64. A C64 emulator written in Rust.

- Simplemad. A Rust interface for the MPEG audio decoding library libmad.

- pine. Process line output.

- JSONlite. A simple, self-contained, serverless, zero-configuration JSON document store.

- medio. Rust bindings to the Medium.com API.

Updates from Rust Core

100 pull requests were merged in the last week.

See the subteam report for 2015-10-16 for details.

Notable changes

- rust_trans: struct arguments over FFI were passed incorrectly in some situations. Change cabi_x86_64 to better model the SysV ABI.

- Move desugarings to lowering step.

- Implement conversion traits for primitive integer types.

- Remove the push_unsafe! and pop_unsafe! macros.

- Add #[derive(Clone)] to std::fs::Metadata.

- Add into_inner and get_mut to Mutex and RwLock.

- Reject + and - when parsing floats.

- Add Shared pointer and have {Arc, Rc} use it.
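Two of the library changes above are easy to show in a few lines. This is a sketch written against today's stable std rather than the October 2015 nightly, so details may differ from the PRs as merged; it reads "conversion traits for primitive integer types" as the lossless From conversions such as u32::from(u8). The helper names widen, bump_and_take and append_and_take are made up for illustration.

```rust
use std::sync::{Mutex, RwLock};

/// Lossless widening through From: a u8 always fits in a u32,
/// so there is no error case to handle.
pub fn widen(byte: u8) -> u32 {
    u32::from(byte)
}

/// Owning the Mutex means no other thread can hold the lock, so `get_mut`
/// hands out `&mut` without locking; `into_inner` then moves the value out.
/// Both return a LockResult because the lock may have been poisoned.
pub fn bump_and_take(mut counter: Mutex<u32>) -> u32 {
    *counter.get_mut().unwrap() += 1;
    counter.into_inner().unwrap()
}

/// The same pair of methods on RwLock.
pub fn append_and_take(mut text: RwLock<String>, suffix: &str) -> String {
    text.get_mut().unwrap().push_str(suffix);
    text.into_inner().unwrap()
}
```

Because get_mut requires exclusive access to the lock itself, the borrow checker rather than a runtime lock guarantees there is no contention.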

New Contributors

- billpmurphy

- David Ripton

- Fabiano Beselga

- kickinbahk

- Marcello Seri

- nxnfufunezn

- Robert Gardner

Approved RFCs

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

Final Comment Period

Every week the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now. This week's FCPs are:

- Allow overlapping implementations for marker traits.

- Promote the libc crate from the nursery.

- Enable the compiler to cache incremental work products.

- Add some additional utility methods to OsString and OsStr.

New RFCs

- Changes to the compiler to support IDEs.

- Prevent unstable items from causing name resolution conflicts with downstream code.

- Amend recover with a PanicSafe bound.

- Add "panic-safe" or "total" alternatives to the existing panicking slicing syntax.

- Refine the unguarded-escape-hatch from RFC 1238.

- Allow a custom panic handler.

Upcoming Events

- 10/20. Rust Hack and Learn Hamburg.

- 10/28. Columbus Rust Society.

- 10/28. Rust Amsterdam.

- 10/28. RustBerlin Hack and Learn.

If you are running a Rust event please add it to the calendar to get it mentioned here. Email Erick Tryzelaar or Brian Anderson for access.

fn work(on: RustProject) -> Money

No jobs listed for this week. Tweet us at @ThisWeekInRust to get your job offers listed here!

Crate of the Week

This week's Crate of the Week is Glium, a safe Rust wrapper for OpenGL. Thanks to DroidLogician for the suggestion. Submit your suggestions for next week!

OpenGL is a time-honored standard, which also means its API has seen enough growth to make it look like you might find Sleeping Beauty if you look deep enough. Also, it was created when multi-core wasn't exactly on the radar, so many things are not thread safe. Caveat error! Glium pre-verifies whatever it can, so that errors are either impossible at compile time or turn into a panic before they can crash anything (so you at least get a helpful message instead of random garbage). It caches the context and manages your buffers using Rust's standard RAII idiom, and thereby brings some much-needed sanity to OpenGL programming.
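To make the RAII point concrete, here is a minimal sketch of the idiom: a buffer handle that releases its resource in Drop. It is not Glium's API. FakeDriver and Buffer are hypothetical names, and the "driver" merely records which buffer ids are live so the example runs without an OpenGL context.

```rust
use std::cell::RefCell;
use std::rc::Rc;

/// Hypothetical stand-in for a GL driver: it only tracks live buffer ids.
#[derive(Default)]
struct FakeDriver {
    next_id: u32,
    live: Vec<u32>,
}

/// Owns one buffer id for as long as the value exists.
struct Buffer {
    id: u32,
    driver: Rc<RefCell<FakeDriver>>,
}

impl Buffer {
    fn new(driver: &Rc<RefCell<FakeDriver>>) -> Buffer {
        let mut d = driver.borrow_mut();
        d.next_id += 1;
        let id = d.next_id;
        d.live.push(id);
        Buffer { id, driver: Rc::clone(driver) }
    }
}

impl Drop for Buffer {
    fn drop(&mut self) {
        // The RAII step: release is tied to the owner's lifetime, so the
        // buffer cannot be leaked or released twice by forgetful code.
        self.driver.borrow_mut().live.retain(|&id| id != self.id);
    }
}
```

Because release lives in Drop, an early return or an unwinding panic still frees the buffer.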

Quote of the Week

S-s-s-s-stack alloc Queen, no C++ though Might drop that pointer, no nullable though Tell golang, "Yo, don't you got enough mem to slow?" Tell 'em Kangaroo Rust, I'll box your flow

Advanced pattern matching - possible Don't go against Rusty - impossible Runtime will leave your CPU on popsicle Man these h*es couldn't be any less logical

http://this-week-in-rust.org/blog/2015/10/19/this-week-in-rust-101/

Josh Matthews: Creating a C API for a Rust library

Yoric has been doing great work porting Firefox’s client backend to Rust for use in Servo (see telemetry.rs), so I decided to create a C API to allow using it from other contexts. You can observe the general direction of my problem-solving by looking at the resulting commits in the PR, but I drew a lot of inspiration from html5ever’s C API.

There are three main problems that require solving for any new C API to a Rust library:

- writing low-level bindings to call whatever methods are necessary on high-level Rust types

- writing equivalent C header files for the low-level bindings

- adding automated tests to ensure that all of the pieces work

Low-level bindings:

Having never used telemetry.rs before, I wrote my bindings by looking at the reference commit for integrating the library into Servo, as well as examples/main.rs. This worked out well for me for this afternoon hack, since I didn’t need to spend any time reading through the internals of the library to figure out what was important to expose. This did bite me later when I realized that the implementation for Servo is significantly more complicated than the example code, which caused me to redesign several aspects of the API to require explicitly passing around global state (as can be seen in this commit).

My workflow here was to categorize the library usage that I saw in Servo, which yielded three main areas that I needed to expose through C: initialization/shutdown, definition and recording of histogram types, and serialization. In each case I sketched out some functions that made sense (e.g. count_histogram.record(1) became unsafe extern "C" fn telemetry_count_record(count: *mut count_t, value: libc::uint)) and wrote placeholder structures to verify that everything compiled.
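The sketch-then-compile step described above might look like this for the count histogram. CountHistogram is a hypothetical stand-in for the real telemetry.rs type, as is its record method. The post's signature uses libc::uint; this sketch swaps in std::os::raw::c_uint to avoid depending on the libc crate. The attribute is written #[unsafe(no_mangle)], the spelling accepted by Rust 1.82 and later; 2015-era compilers used the bare #[no_mangle] seen in the post.

```rust
use std::os::raw::c_uint;

/// Hypothetical stand-in for the real telemetry.rs count histogram:
/// it simply keeps every recorded value.
pub struct CountHistogram {
    values: Vec<c_uint>,
}

impl CountHistogram {
    pub fn record(&mut self, value: c_uint) {
        self.values.push(value);
    }
}

/// Exposed to C only as an opaque type: the header would declare just
/// `struct count_t;`, so C never sees (or depends on) the fields.
#[allow(non_camel_case_types)]
pub struct count_t {
    inner: CountHistogram,
}

/// C-callable counterpart of `count_histogram.record(value)`.
#[unsafe(no_mangle)]
pub unsafe extern "C" fn telemetry_count_record(count: *mut count_t, value: c_uint) {
    // Assumed contract: `count` came from this library's constructor and
    // has not been freed. A null pointer is ignored instead of dereferenced.
    if let Some(count) = unsafe { count.as_mut() } {
        count.inner.record(value);
    }
}
```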

Next I implemented type constructors and destructors, and decided not to expose any Rust structure members to C. This allowed me to use types like Vec in my implementation of the global telemetry state, which both improved the resulting API ergonomically (many fewer _free functions are required) and allowed me to write more concise and correct code. This decision also allowed me to define a destructor on a type exposed to C; this would usually be forbidden due to changing the low-level representation of the type in ways visible to C if the structure members were exposed. Generally these API methods took the form of the following:

    #[no_mangle]
    pub unsafe extern "C" fn telemetry_new_something(telemetry: *mut telemetry_t, ...) -> *mut something_t {
        // Heap-allocate the value, then hand ownership to C as a raw pointer.
        let something = Box::new(something::new(...));
        Box::into_raw(something)
    }

    #[no_mangle]
    pub unsafe extern "C" fn telemetry_free_something(something: *mut something_t) {
        // Reclaim ownership from the raw pointer; dropping the Box frees the value.
        let something = Box::from_raw(something);
        drop(something);
    }

The use of Box in this code places the enclosed value on the heap, rather than the stack, which allows us to return to the caller without the value being deallocated. However, because C deals in raw pointers rather than Rust’s Box type, we are forced to convert (i.e. reinterpret_cast) the box into a raw pointer that C can understand. This also means that the memory pointed to will not be deallocated until the Rust code explicitly asks for it, which is accomplished by converting the pointer back into an owned Box upon request.
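A self-contained version of the constructor/destructor pair, showing that the value survives the return to C and is dropped exactly once when the free function reclaims it. The telemetry argument and the elided constructor parameters are left out, something_t's contents are made up, and the DROPS counter exists only so the round trip can be observed; none of this is taken from telemetry.rs.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};

/// Counts destructor runs (illustration only).
static DROPS: AtomicUsize = AtomicUsize::new(0);

/// Hypothetical opaque type; C only ever sees `*mut something_t`.
#[allow(non_camel_case_types)]
pub struct something_t {
    label: String,
}

impl Drop for something_t {
    fn drop(&mut self) {
        DROPS.fetch_add(1, Ordering::SeqCst);
    }
}

/// Constructor: allocate on the heap, then give up ownership to the caller.
#[unsafe(no_mangle)]
pub extern "C" fn telemetry_new_something() -> *mut something_t {
    let something = Box::new(something_t { label: String::from("example") });
    // into_raw deliberately leaks the Box: nothing is freed on return.
    Box::into_raw(something)
}

/// Destructor: take ownership back so the normal Drop runs exactly once.
#[unsafe(no_mangle)]
pub unsafe extern "C" fn telemetry_free_something(something: *mut something_t) {
    if something.is_null() {
        return; // mirror free(NULL) being a no-op
    }
    // Caller's contract: the pointer came from telemetry_new_something
    // and is never used again after this call.
    drop(unsafe { Box::from_raw(something) });
}
```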

Once I had meaningful types, I filled out the API methods that were used for operating on them. The types used in the public API are very thin wrappers around the full-featured Rust types, so this step was mostly boilerplate like this:

    #[no_mangle]
    pub unsafe extern "C" fn telemetry_record_flag(flag: *mut flag_t) {
        (*flag).inner.record();
    }

The code for serialization was the most interesting part, since it required some choices. The Rust API requires passing a Sender and allows the developer to retrieve the serialized results at any point in the future through the JSON API. In the interests of minimizing the amount of work required on a Saturday afternoon, I chose to expose a synchronous API that waits on the result from the receiver and returns the pretty-printed result in a string, rather than attempting to model any kind of Sender/Receiver or JSON APIs. Even this ended up causing some trouble, since telemetry.rs supports stable, beta and nightly versions of Rust right now. Rust 1.5 contains some nice ffi::CString APIs for passing around C string representations of Rust strings, but these are named differently in Rust 1.4 and don’t exist in Rust 1.3. To solve this problem, I ended up defining an opaque type named serialization_t which wraps a CString value, along with a telemetry_borrow_string API function to extract a C string from it. The resulting API works across all versions of Rust, even if it feels a bit clunky.
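One way to build the opaque serialization_t wrapper just described, using only CString::new and CString::as_ptr, both stable since Rust 1.0. The borrowed pointer stays valid only until the wrapper is freed. make_serialization and telemetry_free_serialization are hypothetical names (the post does not show them), and the JSON is assumed to have been rendered already.

```rust
use std::ffi::CString;
use std::os::raw::c_char;

/// Opaque to C: the header would declare only `struct serialization_t;`.
#[allow(non_camel_case_types)]
pub struct serialization_t {
    text: CString,
}

/// Hypothetical producer standing in for the synchronous serialize call.
/// Returns null if the text has an interior NUL byte, which a C string
/// cannot represent.
pub fn make_serialization(json: &str) -> *mut serialization_t {
    match CString::new(json) {
        Ok(text) => Box::into_raw(Box::new(serialization_t { text })),
        Err(_) => std::ptr::null_mut(),
    }
}

/// Borrow a NUL-terminated view; valid only until the wrapper is freed.
#[unsafe(no_mangle)]
pub unsafe extern "C" fn telemetry_borrow_string(s: *const serialization_t) -> *const c_char {
    unsafe { (*s).text.as_ptr() }
}

/// Hypothetical destructor releasing the wrapper and its string together.
#[unsafe(no_mangle)]
pub unsafe extern "C" fn telemetry_free_serialization(s: *mut serialization_t) {
    if !s.is_null() {
        drop(unsafe { Box::from_raw(s) });
    }
}
```

Keeping the CString inside an owned wrapper means C never frees Rust memory with its own allocator; it only hands the wrapper back.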

C header files

The next step was writing a header file that matched the public types and functions exposed by my low-level bindings (like an inverse bindgen). This was a straightforward application of writing out function prototypes that match, since all of the types I expose are opaque structs (i.e. struct telemetry_t;).

The most interesting part of this step was writing a C program that linked against my Rust library and included my new header file. I ported a simple Rust test from one I added earlier to the low-level bindings, then wrote a straightforward Makefile to get the linking right:

    CC ?= gcc
    CFLAGS += -I../../capi/include/
    LDFLAGS += -L../../capi/target/debug/ -ltelemetry_capi
    OBJ := telemetry.o

    %.o: %.c
    	$(CC) -c -o $@ $< $(CFLAGS)

    telemetry: $(OBJ)
    	$(CC) -o $@ $^ $(LDFLAGS)

This worked! Running the resulting binary yielded the same output as the test that used the C API from Rust, which seemed like a successful result.

Automated testing

Following html5ever's example, my prior work defined a separate crate for the C API (libtelemetry_capi), which meant that the default cargo test would not exercise it. Until this point I had been running Cargo from the capi subdirectory, and running the makefile from the examples/capi subdirectory. Continuing to steal from html5ever's prior art, I created a script that would run the full suite of Cargo tests for the non-C API parts, then run cargo test --manifest-path capi/Cargo.toml, followed by make -C examples/capi, and made Travis use that as its script to run all tests.

These changes led me to discover a problem with my Makefile - any changes to the Rust code for the C API would not cause the example to be rebuilt, so I didn't actually have effective local continuous integration (the changes still would have been picked up on Travis). Accordingly, I added a DEPS variable to the makefile that looked like this:

    DEPS := ../../capi/include/telemetry.h ../../capi/target/debug/libtelemetry_capi.a Makefile

which causes the example to be rebuilt any time any of the C header, the underlying static library, or the Makefile itself are changed. The result is that whenever I'm modifying telemetry.rs, I can now make changes, run ./travis-build.sh and feel confident that I haven't inadvertently broken the C API.

http://www.joshmatthews.net/blog/2015/10/creating-a-c-api-for-a-rust-library/

Air Mozilla: Webdev Beer and Tell: October 2015

Once a month web developers across the Mozilla community get together (in person and virtually) to share what cool stuff we've been working on in...

Frédéric Wang: Open Font Format 3 released: Are browser vendors good at math?

Version 3 of the Open Font Format was officially published as an ISO standard early this month. One of the interesting new features is that Microsoft's MATH table has been integrated into this official specification. Hopefully, this will encourage type designers to create more math fonts and OS vendors to integrate them into their systems. But are browser vendors ready to use Open Font Format for native MathML rendering? Here is a table of important Open Font Format features for math rendering and (to my knowledge) the current status in Apple, Google, Microsoft and Mozilla products.

| Feature | Rationale | Apple | Google | Microsoft | Mozilla |
|---|---|---|---|---|---|
| Pre-installed math fonts | Make mathematical rendering possible with the default system fonts. | OSX: obsolete STIX; iOS: no | Android: no; Chrome OS: no | Windows: Cambria Math; Windows Phone: no? | Firefox OS: no |
| MATH table allowed in Web fonts | Work around the lack of pre-installed math fonts or let authors provide a custom math style. | WebKit: yes (no font sanitizer?) | Blink: yes (OTS) | Trident: yes (no font sanitizer?) | Gecko: yes (OTS) |
| USE_TYPO_METRICS OS/2 fsSelection flag taken into account | Math fonts contain tall glyphs (e.g. integrals in display style), so using the "typo" metrics avoids excessive line spacing for the math text. | WebKit: no | Blink: yes | Trident: yes | Gecko: yes (gfx/) |
| Open Font Format Features | Good mathematical rendering requires some glyph substitutions (e.g. ssty, flac and dtls). | WebKit: yes | Blink: yes | Trident: yes | Gecko: yes |
| Ability to parse the MATH table | Good mathematical rendering requires a lot of font data. | WebKit: yes (WebCore/platform/graphics/) | Blink: no | Trident: yes (LineServices) | Gecko: yes (gfx/) |
| Using the MATH table for native MathML rendering | The MathML specification does not provide detailed rules for mathematical rendering. | WebKit: for operator stretching (WebCore/rendering/mathml/) | Blink: no | Trident: no | Gecko: yes (layout/mathml/) |
| Total score | | 4/6 | 3/6 | 4.5/6 | 5/6 |

update: Daniel Cater provided a list of pre-installed fonts on Chrome OS stable, confirming that no fonts with a MATH table are available.
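For a sense of what "ability to parse the MATH table" begins with, here is a minimal Rust sketch that reads the MATH table header (a version followed by three 16-bit offsets to the MathConstants, MathGlyphInfo and MathVariants subtables) and tests the USE_TYPO_METRICS bit (bit 7) of the OS/2 fsSelection field. It assumes the caller has already located the raw table bytes in the font file, and it is not taken from any of the engines in the table.

```rust
/// Header of an OpenType/OFF MATH table; each offset is measured
/// from the start of the MATH table itself.
#[derive(Debug, PartialEq)]
pub struct MathHeader {
    pub major_version: u16,
    pub minor_version: u16,
    pub math_constants_offset: u16,
    pub math_glyph_info_offset: u16,
    pub math_variants_offset: u16,
}

/// Read a big-endian u16 (OpenType data is big-endian); None if truncated.
fn read_u16(data: &[u8], at: usize) -> Option<u16> {
    let bytes = data.get(at..at + 2)?;
    Some(u16::from_be_bytes([bytes[0], bytes[1]]))
}

pub fn parse_math_header(table: &[u8]) -> Option<MathHeader> {
    let header = MathHeader {
        major_version: read_u16(table, 0)?,
        minor_version: read_u16(table, 2)?,
        math_constants_offset: read_u16(table, 4)?,
        math_glyph_info_offset: read_u16(table, 6)?,
        math_variants_offset: read_u16(table, 8)?,
    };
    // Only major version 1 is defined; reject other major versions.
    if header.major_version != 1 {
        return None;
    }
    Some(header)
}

/// USE_TYPO_METRICS is bit 7 of the OS/2 table's fsSelection field.
pub fn uses_typo_metrics(fs_selection: u16) -> bool {
    fs_selection & (1 << 7) != 0
}
```

A real renderer would go on to follow the three offsets into the subtables; this only shows the entry point.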

Rubén Martín: Participation, next steps

Following up on my previous post, I’m happy to say that during the last quarter we did an amazing job (and accomplished a lot) as the Participation team at Mozilla. We were working as a team with a clear long-term mandate for the first time, and I think that was the key to keeping everyone motivated.

This quarter I’ll be focusing on similar topics, some are follow-ups from previous quarter while others are new and challenging:

- Continue with volunteer coaching. Keep having 1:1s with key volunteers in different communities and helping them chart their path as contributors, so that they both bring value to and get value from their work.

- Support the German community in moving forward with their plans, and integrate the new efforts we are driving on the ground around the November campaign with the existing volunteers.

- Keep working on the Mozilla Reps program and facilitate changes to adapt the program to the current environment, as well as design opportunities to contribute with impactful activities.

- Experiment around tools and processes to improve how communities work and visualize their activities.

This is an exciting moment for participation at Mozilla, and I’m sure it will give the organization a key advantage in 2016 that will allow Mozilla to reach new frontiers.

Other team members posts:

- Francisco Picolini: [Participation] What I’ve been doing

- Guillermo Movia: A framework to coach and mentor mozillians

- Rosana Ardila: Empowering volunteers and communities by longer term planning

http://www.nukeador.com/16/10/2015/participation-next-steps/

Support.Mozilla.Org: What’s up with SUMO – 16th October

Hello, SUMO Nation!

It’s been a while, but we’re back with more updates for you! We hope you’re feeling better than some of us (*cough cough*) – rumour has it that home-made onion syrup is the best cure around, so maybe a bit of old school domestic alchemy will be required… Never mind the coughing, here are the updates!

Welcome, New Contributors!

Contributors of the last two weeks

- Toad-Hall for amazing patience and skills in dealing with a very frustrated user and keeping the situation under control!

- Peter for his awesome 200+ contributions for Slovenian KB translations this year (including 99% of Thunderbird) – great stuff!

- Vnisha Srivastav for being a great SUMO teacher for Ashish!

- Rahul Talreja for being a great SUMO mentor for Daksh!

- Ashickur, Safwan, Seeam, and Raiyad for the SUMO Bangladesh Tour 2015, and getting all these contributors on board in Sylhet! Keep rocking all of Bangladesh!

We salute you!

Last SUMO Community meeting

- You can read the notes here and see the video on our YouTube channel.

Reminder: the next SUMO Community meeting…

- …is going to take place on Monday, 19th of October. Join us!

- If you want to add a discussion topic to the upcoming live meeting agenda:

- Start a thread in the Community Forums, so that everyone in the community can see what will be discussed and voice their opinion here before Monday (this will make it easier to have an efficient meeting).

- Please do so as soon as you can before the meeting, so that people have time to read, think, and reply (and also add it to the agenda).

Developers

- The notes from the most recent Platform meeting can be found here.

- You can see the current state of the backlog our developers are working on here.

- The next Platform meeting will take place on the 29th of October (Kadir is AFK next week, so we’re cancelling the meeting).

Community

- The most important update in the last two weeks: Etherpad = Etherpad Lite – read all about it here.

- Reminder: let us know what you think about contributor information at SUMO.

- If you think you can benefit from getting a second-hand device to help you with contributing to SUMO, you know where to find us.

Support Forum

- Some details from the last SUMO Day: 91% answer rate across the board, 94% for Firefox. Great effort!

- Save the date: the next SUMO Questions Day is coming up on the 22nd of October!

Knowledge Base

- Webmaker content in the KB is getting updated – watch for more up-to-date stuff!

- Check here for other upcoming article updates.

- Save the date: the next SUMO KB Day is coming up on the 29th of October!

L10n

- Huge thanks to everyone rocking the KB last week!

- Watch out for new Webmaker content to update both on your dashboards and in Verbatim / Pontoon!

- Help needed! Let’s build a database of English departments at universities around the world – this will help us find new SUMO l10ns in the future.

Firefox

- for Android

- The documentation for version 42 is wrapping up

- for Desktop

- The 41.0.1 release did fix the Flash/Farmville crash/hang issues – hooray!

- This (and other factors) led to a very, very low crash rate; double hooray!

- Firefox 41 has been around for a while, so… it’s time to start talking Firefox 42!

- Windows 64 bit support is coming in version 42, but you’ll have to dive deeper (into the FTP site) to get it. Mind you, there will be no support for Silverlight, Java, or other NPAPI plugins except for Flash, which is whitelisted. Apart from that, expect more security, better resource usage and fewer crashes.

- The Norton Toolbar fix is coming late October – more updates here.

- for iOS

- The global launch should happen before the end of this year, but we can’t spoil the surprise for you just yet, sorry!

- Firefox OS

- Version 2.5 is coming out in November. It will be geared towards our community and developers. We have some placeholder articles in place, but in the meantime we’re pointing curious users to MDN.

https://blog.mozilla.org/sumo/2015/10/16/whats-up-with-sumo-16th-october/

Robert O'Callahan: Hobbiton

On Sunday afternoon I visited Hobbiton with a few Mozillians. It's a two-hour drive from Auckland to the "Lord of the Rings"/"Hobbit" Hobbiton movie set near Matamata ... a pleasant, if slightly tedious drive through country that looks increasingly Shire-like as you approach the destination. We arrived ahead of schedule and had plenty of time to browse the amusingly profuse gift shop before our tour started.

I'm an enthusiastic fan of The Lord Of The Rings and easily seduced by the rural fantasy of the Shire, but I enjoyed the tour even more than I expected. It's spring and the weather was fine, and Hobbiton is, among other things, a wonderful garden; it looked and smelled gorgeous. The plants, the gently worn footpaths, the spacing of the hobbit holes, the verisimilitude of the props, all artfully designed to suggest artlessness; it all powerfully suggests the place could be real, would be delightful to live in if only it was real, that it should be real. Adding to that the mythology of the movies and Tolkien's books, it was an intoxicating collision between fantasy and reality that kept me slightly off balance mentally.

After the tour proper we entered the Green Dragon Inn for a drink and dinner. The Inn continues the combination of fantasy and reality: carefully designed Shire-props next to "fire exit" signs, real drinks in pottery mugs. The highlight was the dinner feast: food piled on tables, looking like an Epicurean fantasy but actually real, copious and delicious. It's very memorable, great fun, and a bit silly in the best way.

After the dinner there was a walk back through Hobbiton in the dark, with lanterns, admiring the hobbit holes lit to suggest candles and firelight. It was gorgeous again.

Hobbiton is apparently a popular place to propose marriage. One happened on our tour, and another couple in our tour had done so two days before; they couldn't remember the rest of the tour after the proposal, so were repeating it.

The dinner tour is ridiculously expensive, but I thought it was very special and well worth it.

Daniel Pocock: Enterprise-grade SIP coming to Telepathy

I've just announced the reSIProcate connection manager for the Telepathy framework on the Linux desktop.

As the announcement states, it is still a proof-of-concept. It was put together very rapidly just to see how well the reSIProcate project and its build system interacts with TelepathyQt, the Qt framework and DBus.

That said, I've been able to make calls from GNOME Empathy to Jitsi, Lumicall and Asterisk.

Why this is important

Telepathy is the default communications framework installed on many Linux desktops. Yet none of the standard connection managers offers thorough support for NAT traversal. NAT traversal is essential for calling people who are not on the same LAN, whether it is just making a call across town or across the world. The XMPP connection manager offers limited support for NAT traversal if one party in the call is using a Google account, but with Google deprecating XMPP support and not everybody wanting to use Google anyway, that is not a stable foundation for free operating systems.

The reSIProcate solution

TURN is the standard (RFC 5766) for relaying media streams when two users want to communicate across the boundaries of NAT networks. There are several TURN servers packaged on Linux now. TURN has also been adopted for WebRTC, so it is a good foundation to build on.

reSIProcate's reCon library provides a high-level telephony API supporting TURN as well as DTLS-SRTP encryption and many other enterprise-grade features.

It also has thorough support for things like DNS NAPTR and SRV records, so you can just put in your SIP address and password and it can discover the correct SIP server, port number and transport protocol from DNS queries. This helps solve another common problem, the complexity of configuring some other softphones.
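In SIP, this kind of discovery is standardized by RFC 3263, which chains a NAPTR lookup (to pick a transport) into SRV lookups (to pick a host and port). The following Python sketch illustrates only the SRV step: the RFC 2782 rule that clients try lower priority values first and, within one priority, choose records by weighted random selection. It is not reSIProcate's code. The `SrvRecord` type, the function name, and the `example.org` hosts are hypothetical, and the sketch assumes the DNS answers have already been fetched.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class SrvRecord:
    priority: int  # lower value is tried first
    weight: int    # relative share among records with the same priority
    port: int
    target: str    # host name of the SIP server


def order_srv_records(records: List[SrvRecord], rng: random.Random) -> List[SrvRecord]:
    """Return records in the order a client should try them, per RFC 2782."""
    ordered: List[SrvRecord] = []
    for prio in sorted({r.priority for r in records}):
        # RFC 2782 places zero-weight records first within a priority group;
        # sorted() is stable, so the remaining records keep their order.
        group = sorted((r for r in records if r.priority == prio),
                       key=lambda r: r.weight != 0)
        while group:
            total = sum(r.weight for r in group)
            pick = rng.randint(0, total)  # inclusive of both ends
            running = 0
            for i, r in enumerate(group):
                running += r.weight
                if running >= pick:
                    # First record whose running weight sum reaches the pick.
                    ordered.append(group.pop(i))
                    break
    return ordered


# Usage with made-up records: the two priority-10 servers share load 60/40,
# and the priority-20 server is only a fallback.
records = [
    SrvRecord(10, 60, 5061, "sip1.example.org"),
    SrvRecord(10, 40, 5061, "sip2.example.org"),
    SrvRecord(20, 0, 5060, "backup.example.org"),
]
for rec in order_srv_records(records, random.Random()):
    print(rec.target, rec.port)
```

The weighted choice is repeated until the group is empty, so every record is eventually tried. Only the order varies, in proportion to the weights.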

Screenshots

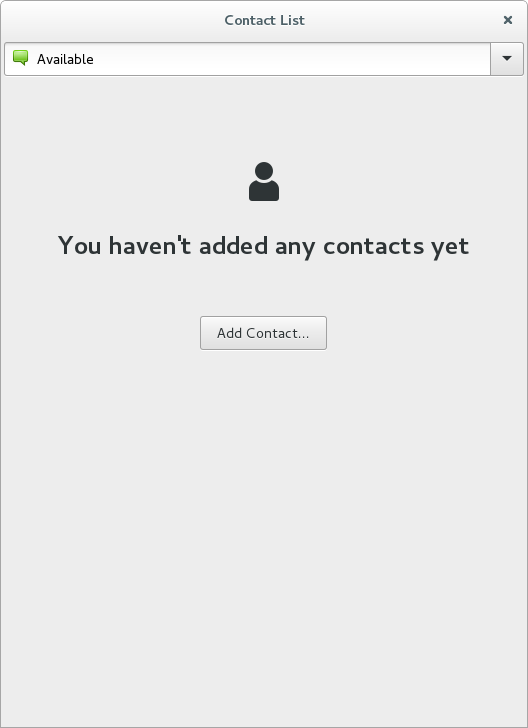

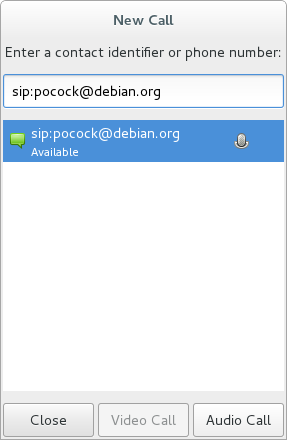

A SIP buddy list isn't supported yet:

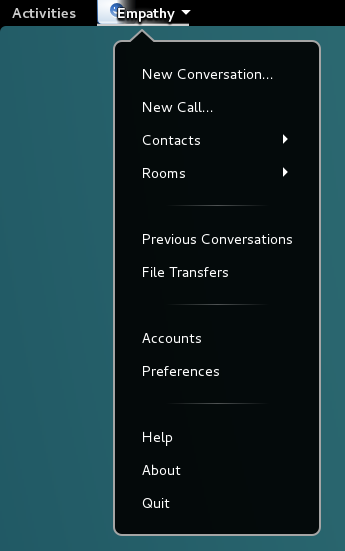

We have to use the Empathy menu to start calls (New Call...) or access account settings:

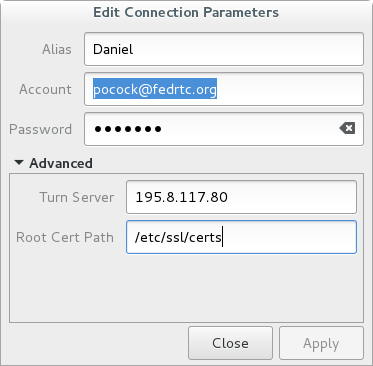

The account settings are very simple. Everything can be discovered using NAPTR and SRV queries, saving the user typing. For now, the TURN server is entered manually but that can also be discovered using an SRV query.

Placing a call from my fedrtc.org Fedora SIP account to my debian.org SIP account:

Project status and direction

More work is required to make this connection manager fully functional. It is available now for testing against other products and to get feedback about how the design should evolve.

http://danielpocock.com/enterprise-grade-sip-coming-to-telepathy

|

|

Mozilla Open Policy & Advocacy Blog: Data retention in Deutschland |

Tomorrow (Friday) the German legislature (the Bundestag) is set to vote on a mandatory data retention law that would require telecommunications and internet service providers to store the location data, SMS and call metadata, and IP addresses of everyone in Germany. Ordinarily, we can look to Germany to be a leader on privacy, which is why it’s so disappointing to see the German government advance legislation that places all users at risk.

While this legislation isn’t as bad as other data retention proposals we’ve seen (e.g., in France, the US, and Canada), to highlight the many dangers of mandatory data retention as a practice and express our opposition to this legislation, we sent a letter, signed by Denelle Dixon-Thayer, Mozilla’s Chief Business and Legal Officer, to every member of the Bundestag. You can read the letter here in English and here in German.

The Mozilla community has also been speaking out against this legislation. Working with local German partners Digitale Gesellschaft and netzpolitik.org we created a petition enabling German-speaking Mozillians to call on the Bundestag to reject this legislation. So far thousands of users have taken action! While it’s always inspiring to see users mobilizing to protect the open Web, this is particularly exciting for us as it is Mozilla’s first advocacy campaign in a language other than English, as well as the first outside of the United States. The Mozilla Policy Team was also in Berlin last week to speak to German lawmakers about this bill.

While it’s likely that this data retention law will pass the Bundestag, we’re confident that it will be struck down by German courts. Indeed, this wouldn’t be the first time that the German courts put a stop to data retention practices. In 2010, the German Federal Constitutional Court struck down Germany’s last data retention law, and in April of last year, the Court of Justice of the EU, the highest court in Europe, issued a sweeping condemnation of mandatory data retention and invalidated the Data Retention Directive (which required every EU country to enact a data retention mandate). This makes it all the more disappointing that the German government is pushing ahead with trying to bring data retention back from the dead, even as other countries across Europe have been repealing their old data retention laws.

We’ll continue to monitor the situation in Germany and to oppose mandatory data retention laws elsewhere in the world. To take action on the law before the Bundestag, click here!

https://blog.mozilla.org/netpolicy/2015/10/15/data-retention-in-deutschland/

|

|

Morgan Phillips: Better Secret Injection with TaskCluster Secrets |

- Secret usage isn't tracked.

- Secrets exist in an environment even when they aren't being used.

- It encourages secrets to be stored in contexts (environment variables and flat files) which are prone to leakage.

In TaskCluster Secrets, each submitted payload (encrypted at rest) is associated with an OAuth scope. The scope also defines which actions a user may take against the secret. For example, to write a secret named 'someNamespace:foo' you'd need the OAuth scope 'secrets:set:someNamespace:foo', to read it you'd need 'secrets:get:someNamespace:foo', and so on.

By tying each secret to a scope, we're able to build an interesting workflow for access from within tasks. In short, we can generate and inject temporary credentials with read-only access. This forces secrets to be accessed via the API and yields the following benefits:

- Secrets are only brought into a task as they are requested.

- Every secret access is logged via the API server.

- Secrets are only revealed when called by name, instead of being exposed to anyone who simply runs env.
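As a rough illustration of the one-scope-per-secret idea, this Python sketch checks whether a set of granted scopes allows a given action on a named secret. It assumes the naming used in this post ('secrets:get:<name>' to read, 'secrets:set:<name>' to write) and the TaskCluster convention that a trailing '*' in a granted scope matches any suffix. The helper names are hypothetical and are not part of the TaskCluster Secrets API.

```python
from typing import Iterable


def scope_satisfies(granted: str, required: str) -> bool:
    """True if one granted scope covers the required scope.

    Assumes a trailing '*' in the granted scope matches any suffix.
    """
    if granted.endswith("*"):
        return required.startswith(granted[:-1])
    return granted == required


def can_access(granted_scopes: Iterable[str], action: str, secret_name: str) -> bool:
    """Check whether any granted scope allows `action` ('get' or 'set') on a secret."""
    required = f"secrets:{action}:{secret_name}"
    return any(scope_satisfies(g, required) for g in granted_scopes)


# A read-only wildcard grant covers every key under one repository,
# but it never allows writes.
token_scopes = ["secrets:get:myOrg/myRepo:*"]
print(can_access(token_scopes, "get", "myOrg/myRepo:myKey"))
print(can_access(token_scopes, "set", "myOrg/myRepo:myKey"))
```

Because the action is part of the scope string, a token that carries only 'secrets:get:...' scopes is read-only by construction.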

1.) Submit a write request to the TaskCluster GitHub API:

PUT taskcluster-github.com/v1/write-secret/org/myOrg/repo/myRepo/key/myKey {githubToken: xxxx, secret: {password: xxxx}, expires: '2015-12-31'}

2.) The GitHub OAuth token is used to verify org/repo ownership. Once ownership is verified, we generate a temporary account and write the following secret on behalf of the repo owner:

myOrg/myRepo:myKey {secret: {password: xxxx}, expires: '2015-12-31'}

3.) CI jobs are launched alongside HTTP proxies, which attach an OAuth header to outgoing requests to taskcluster-secrets.com/v1/.... The attached token has the scope:

secrets:get:myOrg/myRepo:*

which allows any secret set by the owner of the myOrg/myRepo repository to be accessed.

4.) Within a CI task, a secret can be retrieved with a simple HTTP call such as:

curl $SECRETS_PROXY_URL/v1/secret/myOrg/myRepo:myKey

Easy, secure, and 100% logged.
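The curl call in the last step can also be made from a script. This Python sketch builds the same URL from the SECRETS_PROXY_URL environment variable and fetches it with the standard library. It assumes the proxy returns a JSON body, which the post does not specify, and the function names are hypothetical. The proxy, not this code, attaches the OAuth header, so the script never handles credentials.

```python
import json
import os
import urllib.parse
import urllib.request


def secret_url(proxy_base: str, org: str, repo: str, key: str) -> str:
    """Build <proxy>/v1/secret/<org>/<repo>:<key>, matching the curl example."""
    name = f"{org}/{repo}:{key}"
    # Keep '/' and ':' literal so the path has the same shape as the curl call.
    return f"{proxy_base.rstrip('/')}/v1/secret/{urllib.parse.quote(name, safe='/:')}"


def fetch_secret(org: str, repo: str, key: str) -> dict:
    """Fetch one secret through the in-task proxy (assumes a JSON response)."""
    url = secret_url(os.environ["SECRETS_PROXY_URL"], org, repo, key)
    with urllib.request.urlopen(url) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Fetching one secret per call, by name, keeps the benefit described above: every access passes through the API server and is logged there.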

|

|

Michael Kaply: Windows 10, Permission Manager and more |

There are some changes coming to Firefox that might impact enterprise users, so I wanted to make folks aware of them.

Firefox 42 has a change to the permission manager so that permissions are based on the origin, not just the host. This means that code like this in your AutoConfig file:

Services.perms.add(NetUtil.newURI("http://" + hostname), ...

will only apply to http. You need to be explicit with both:

Services.perms.add(NetUtil.newURI("http://" + hostname), ...

Services.perms.add(NetUtil.newURI("https://" + hostname), ...

Existing permissions will be migrated to both http and https. I've updated the CCK2 to account for this, but you'll need to rebuild your distribution.
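To see why the AutoConfig calls above need both schemes, here is a toy Python model in which permissions are keyed by origin (scheme, host and port) rather than by host alone. It is not Firefox's permission manager. The class, the helper that mimics migrating an old host-only permission to both http and https, and the "popup" permission type are all hypothetical.

```python
from typing import Dict, Tuple
from urllib.parse import urlsplit

Origin = Tuple[str, str, int]

DEFAULT_PORTS = {"http": 80, "https": 443}


def origin_of(url: str) -> Origin:
    """Reduce a URL to its (scheme, host, port) origin."""
    parts = urlsplit(url)
    port = parts.port or DEFAULT_PORTS[parts.scheme]
    return (parts.scheme, parts.hostname, port)


class OriginPermissions:
    """Toy permission store keyed by origin instead of host."""

    def __init__(self) -> None:
        self._perms: Dict[Tuple[Origin, str], bool] = {}

    def add(self, url: str, perm_type: str) -> None:
        self._perms[(origin_of(url), perm_type)] = True

    def allowed(self, url: str, perm_type: str) -> bool:
        return self._perms.get((origin_of(url), perm_type), False)


def migrate_host_permissions(store: OriginPermissions, host: str, perm_type: str) -> None:
    """Re-add a legacy host-only permission for both http and https."""
    for scheme in ("http", "https"):
        store.add(f"{scheme}://{host}", perm_type)


store = OriginPermissions()
store.add("http://intranet.example.com", "popup")
print(store.allowed("http://intranet.example.com/page", "popup"))   # http origin matches
print(store.allowed("https://intranet.example.com/page", "popup"))  # https is a different origin
```

The second lookup fails for the same reason an AutoConfig file that only registers the http:// URI no longer covers https.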

Firefox 44 has some major changes coming to JavaScript let/const behavior that could impact your AutoConfig file. I'll be making sure the CCK2 is compatible, but you should make sure you're testing with the latest Firefox 44. Obviously this will impact the Firefox 45 ESR as well, so you'll want to do lots of testing there.

Finally, I got a question about the Firefox/Windows 10 page appearing even when you have upgrade pages turned off. Unfortunately this page bypasses the existing upgrade code. To make sure it doesn't appear, set the preference browser.usedOnWindows10 to true.

https://mike.kaply.com/2015/10/15/windows-10-permission-manager-and-more/

|

|

Rosana Ardila: Empowering volunteers and communities by longer term planning |

Last year Mozilla went through a rigorous planning process for 2015, and the Participation 2015 Strategic Plan came out of this process. The plan was open for feedback, and through it we structured the year around a plan with a three-year vision.

When the Reps Council and Peers met in Paris in March, one of the topics that emerged as being of crucial importance for communities was exactly that: how to change the way that we currently approach our goals and aspirations by changing the way we plan. Planning is inevitable for getting projects off the ground and working together. It has always been present in our local and regional communities and in any individual initiative, but until now we haven’t been very intentional in making it explicit or in providing a framework for it.

Over the last few months, the Participation team, in collaboration with local communities, has been working on a framework for mid-term planning that is currently being tested with three communities: Indonesia, Germany and Mexico. The idea behind this framework is to help communities:

- craft a vision for themselves as a community and the impact they want to have

- choose and define their goals

- set measurable objectives

- draft roadmaps

We have seen this approach used in many organizations around the world, and we are testing it now with our communities to understand how it fits within our culture and what it might unlock. Our working hypothesis is that this planning framework will help us plan holistically, thinking about both community development and community impact, and that this will unlock great potential:

- by crafting a vision we will be able to see how all the different initiatives relate to one another, especially the relationship between a healthy, developing community and the impact it can have

- we will be able to be more ambitious, not only in the size of our initiatives but in the type of impact we have, which will help us start exploring the road of open innovation

- through earlier planning we’ll be able to achieve better outcomes, which will lead to more impact

- we will be building community and personal development into the planning process, making sure that we keep investing in Mozillians

The approach is not only to set a framework but to accompany communities during the planning process, providing guidance when needed but also getting feedback about the process and how it can work in the Mozilla environment. The two main documents for planning are the Regional Community Planning Guide and the Planning for Impact presentation with the framework for choosing community goals.

I will be diving into both documents in my next blog post, as well as into the support system we’ll be offering to communities that want to use this planning framework. This is just the first draft, so please let me know your thoughts, feedback and ideas!

http://ombrosa.co/2015/10/15/empowering-volunteers-and-communities-by-longer-term-planning/

|

|

Air Mozilla: Web QA Weekly Meeting, 15 Oct 2015 |

This is our weekly gathering of Mozilla's Web QA team filled with discussion on our current and future projects, ideas, demos, and fun facts.

|

|

Allison Naaktgeboren: Changes to Searching on Firefox for Android |

Searching for stuff, either in your own history or on the web, is an integral part of the browsing process. The UI for searching is important to a great user experience. For us, that UI is part of what we call the Awesomescreen. Firefox Desktop has an Awesome bar; we have an Awesomescreen. Look for these changes in Firefox 44.

New Search Suggestions from Your Search History

Previously, if you opted into search suggestions, we only showed you suggestions from your default search engine (if your default search engine supported them). Now we can also show you things you’ve searched for in the past on your mobile device. These suggestions from your search history are denoted by a clock icon. Anthony Lam designed the shiny new UI you’ll see below.

We’ve also updated the layout and styling of the search suggestion ‘buttons’ to help you spot the one you want faster and make the whole process easier on your eyes.

http://www.allisonnaaktgeboren.com/changes-to-searching-on-firefox-for-android/

|

|

Cameron Kaiser: And now for something completely different: The Ricoh Theta m15 panoramic camera and QTVR LIVES! |

Anyway, ObTenFourFox news. The two biggest crash bugs are hopefully repaired, i.e., issues 308 and 309. Issue 308 turned out to be related to a known problem with our native PowerPC irregexp implementation where pretty much anything in the irregexp native macroassembler that makes an OS X ABI-compliant function call goes haywire, craps on stack frames, etc. for reasons I have been unable to determine (our Ion ABI to PowerOpen ABI thunk works fine everywhere else). In this situation, the affected sites uncovered an edge case in checking for backreferences with UTF-16 text that (surprise) makes an ABI-compliant call to an internal irregexp function and stomps over rooted objects on the stack. I've just rewritten it all by hand the same way I did for growing the backtrack stack and that works, and that should be the last such manifestation of this problem. Assuming it sticks, the partial mitigation for this problem that I put in 38.3 will no longer be necessary and will be pulled in 38.5 since it has a memory impact.

Issue 309 was a weird one. This was another Apple Type Services-barfing-on-a-webfont bug that at first blush looked like we just needed to update the font blacklist (issue 261), except it would reliably crash even 10.4 systems which are generally immune. Turns out that null font table blobs were getting into Harfbuzz (our exclusive font shaper) from ATS that we weren't able to detect until it was too late, so I just wallpapered a bit in Harfbuzz to handle the edge case and then added the fonts to the blocklist as well. This doesn't affect Firefox, which has used CoreText exclusively ever since they went 10.6+. Both fixes will be in 38.4.0.

The problem with restoring from LZ4-compressed bookmark backups remains (issue 307) and I still don't know what's wrong. It doesn't appear to be an endian problem, at least, which would have been relatively easy to correct. The workaround is to simply not compress the backups (i.e., roll back to 31) until I figure out why it isn't functioning correctly; this workaround will also be in 38.4.0. I've also been doing some work on seeking within our MP3 audio implementation, which is the last major hurdle before enabling it by default. It won't be enabled in 38.4.0, but there might be enough of it working at the time of release for you to test it before I publicly roll it out (hopefully 38.5).

Also, some of you might have been surprised that I didn't post anything at the time about Mozilla's new plan to pull plugins (TenFourFox has of course been plugin-free since 6.0). I didn't comment on it frankly because I always figured it was inevitable, for many of the same reasons I've laid out before ad nauseam. Use SandboxSafari if you really need them.

Anyway, moving on to today's post, one of my hobbies is weird cameras. For example, I use a Fujifilm Real 3D W3 camera for 3-D images, which is a crummy 2D camera but takes incredible 3D pictures like nothing else. The camera takes both stills and video that I can display on my 3D HDTV, or I can use a custom tool (which I wrote, natch) to break apart its MPO images into right and left JPEGs and merge them into "conventional" anaglyphs with RedGreen (from the author of iCab, as it happens). I haven't figured out yet how to decode its AVI video into L/R channels, but I'm working on it. Post in the comments if you know how.

Panoramas have also been a longtime interest of mine, facilitated by QuickTime VR, another great software technology Apple completely forgot about (QTVR works fine through 10.5 but 10.6+ QuickTime X dropped it as a "legacy" format; you have to install QuickTime 7 to restore support). Most mobile devices still do a pretty crappy job on panos, and even though iOS and Android's respective panorama modes have definitely improved, they could scarcely have gotten worse. My original panorama workflow was to take one of my Nikon cameras and put it on a tripod, march out angles, and laboriously stitch them together with Hugin or QTVR Authoring Studio. QTVR Authoring Studio, by the way, works perfectly in Classic under 10.4, yet another reason I remain on Tiger forever. Unfortunately this process was not fun to shoot or edit, required much fiddling with exposure settings if lighting conditions were variable, usually had some bad merge areas that required many painstaking hours with an airbrush, and generally yielded an up-down field of view as roomy as an overgrown mail slot even though the image quality was quite good.

The best way to do panoramas is to get every single angle at once with a catadioptric camera. These can be added as external optics -- unfortunately with varying levels of compromises -- to an existing camera, or you can do substantially better by getting a camera expressly built for that purpose, which in this case is the Ricoh Theta m15.

The Ricoh Theta cameras are two fisheye lenses glommed together for a 360 degree view in both axes generated as an equirectangular image; the newest member, the Theta S, just came out (but too late for my trip). The m15 comes in four colours, all of them silly, but mine is blue. You can either take free shots with the button, or you can control it with a smartphone (iOS and Android apps available) over Wi-Fi using either the official Ricoh app or the free Android HDR one (tripod strongly advised).

The m15 doesn't take exceptional images in low light, and the resolution is a bit low (6.4 megapixels at 3584x1792, but remember that it's two images that the camera firmware glues together, and there's an awful lot of spherical aberration due to the design). But it does work, and you shouldn't be scared by the reviews and instructions saying you need a current Intel Mac or Windows PC to view your images. That's a damn lie, of course -- connect the camera over USB and Image Capture will happily download them (even 10.4), or have the smartphone apps download the images to your phone and send them over Bluetooth or E-mail them. Either way, the images work perfectly as QTVR panos; you don't need the Ricoh desktop software. When you load the image into QTVR Authoring Studio and make sure it's oriented horizontally, it "just works" with no tweaking necessary:

And here's a frame from the result, in QuickTime 7 (rendered out at 1024x768, high quality, 100% Photo JPEG compression):

Unlike the ugly map-on-a-sphere distortion the Ricoh apps cause, the QTVR pano looks just like "you're there," and you can share it with all your friends without any software other than QuickTime. You can look all the way up and down, ignoring the camera's limp effort to edit itself and its base out, and there are no stitch lines or agonizing hours of retouching. With just a few seconds of processing, I'm back along the side of Interstate H3, looking over Kaneohe Bay once again. A perfect memory, perfectly captured. Isn't that what you bought the camera for in the first place?

The new Theta S bumps the resolution to 14.4 megapixels (5376x2688), and both the m15 and S are capable of video with the S offering 1080p quality. In fact, I'm so pleased with even the lower resolution of the m15 that I'll be picking up an S very soon, and my suspicion is it will work just as well. It's wonderful to see that an old tool like QTVR Authoring Studio still works flawlessly with current cameras, and given that QTVR-AS was never written for OS X, it's another example of how Classic is the best reason to still own a Power Mac.
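Both Theta resolutions quoted here have a 2:1 aspect ratio, which is what an equirectangular frame covering 360 degrees horizontally and 180 degrees vertically needs. As a small worked check, this Python sketch computes the pixel counts and the angle one pixel spans for each camera. For the S, 5376x2688 works out to about 14.45 million pixels, which the post gives as 14.4. The sketch also maps a pixel to yaw and pitch under a common equirectangular convention (yaw 0 at the image centre, pitch positive upward). That convention, and the function names, are assumptions rather than anything Ricoh documents here.

```python
from typing import Tuple


def megapixels(width: int, height: int) -> float:
    return width * height / 1_000_000


def degrees_per_pixel(width: int) -> float:
    """Horizontal angle covered by one pixel in a 360-degree-wide image."""
    return 360.0 / width


def pixel_to_angles(x: float, y: float, width: int, height: int) -> Tuple[float, float]:
    """Map pixel (x, y) to (yaw, pitch) in degrees.

    Assumes yaw runs -180..180 from left to right and pitch runs
    90..-90 from top to bottom of the equirectangular image.
    """
    yaw = (x / width) * 360.0 - 180.0
    pitch = 90.0 - (y / height) * 180.0
    return yaw, pitch


for name, (w, h) in {"Theta m15": (3584, 1792), "Theta S": (5376, 2688)}.items():
    print(f"{name}: {megapixels(w, h):.2f} MP, {degrees_per_pixel(w):.3f} deg/pixel")
```

By this arithmetic, each m15 pixel spans roughly a tenth of a degree. That is one reason the m15's images look soft next to a stitched pano from a conventional lens, even though they need no stitching at all.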

http://tenfourfox.blogspot.com/2015/10/and-now-for-something-completely.html

|

|