Source: http://planet.mozilla.org/.

This journal is generated from the open RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

Nick Cameron: Rustfmt-ing Rust

http://www.ncameron.org/blog/rustfmt-ing-rust/

http://featherweightmusings.blogspot.com/2015/10/rustfmt-ing-rust.html

Rustfmt is a tool for formatting Rust code. It has seen some rapid and impressive development over the last few months, thanks to some awesome contributions from @marcusklaas, @cassiersg, and several others. It is far from finished, but it is a powerful and useful tool. I would like Rustfmt to be a standard part of every Rustacean's toolkit. In particular, I would like Rustfmt to be used on every check-in of the Rust repo (and other large projects). For this to be possible, running Rustfmt on Rust must work without crashes, without generating poor formatting, and the whole repo must be pre-formatted so that future changes are not polluted with tonnes of formatting churn.

To work towards this, I've been running Rustfmt on some crates and modules. I would love to have some help doing this! It should be fairly easy to do with no experience with Rust or Rustfmt necessary (and you certainly don't need to know about compiler or library implementation). Hopefully you'll learn a fair bit about the Rust source code and Rustfmt in the process. This blog post is all about how to help.

For this blog post, I'll assume you don't know much about the world of Rust and go over the background etc. in a little detail. Feel free to ping me on IRC or GitHub (nrc in both places) if you need any help.

Background

The Rust repo

The Rust repo can be found at https://github.com/rust-lang/rust. It contains the source code for the Rust compiler and the standard library. When people talk about contributing to the Rust project, they often mean this repo. There are also other important repos in the rust-lang org.

Rustfmt

Rustfmt is a tool for formatting Rust code. Rustfmt is not finished by a long shot, and there are plenty of bugs, as well as some code it doesn't even try to reformat yet. You can find the rustfmt source at https://github.com/nrc/rustfmt.

The problem

We would like to be able to run Rustfmt on the Rust repo. Ideally, we'd like to run it as part of the test suite to make sure the code is properly styled. However, there are two problems with this: having not run Rustfmt on it before, there will be a lot of changes the first time it is run; and there are lots of bugs in Rustfmt which we haven't found and which prevent us from using it on a project the size of Rust.

The solution

Pick a module or sub-module (best to start small, I wouldn't try whole crates), run Rustfmt on it, and inspect the result. If there are problems (or Rustfmt crashes while running), file an issue against Rustfmt. If it succeeds (possibly with some manual fixups), make a PR of the changes and land it!

Details

Running rustfmt

First off you'll need to build rustfmt. For that you'll need the source code and an up to date version of the nightly compiler (scroll down to the bottom). Then to build, just run cargo build in the directory where you cloned Rustfmt. You can check it worked by running cargo test.

The most common reasons for a build failing are not using the nightly version of the compiler, or using one which is not new enough - Rustfmt lives on the bleeding edge of Rust development!

You'll also need a clone of the rust repo. Once you have that, pick a module to work on. You'll have to identify the main file for that module which will be lib.rs or mod.rs. Then to reformat, run something like target/debug/rustfmt --write-mode=overwrite ~/rust/src/librustc_trans/save/mod.rs from the directory you installed Rustfmt in (you will need to change the path to reflect the module you want to reformat).

Rustfmt issues

If Rustfmt crashes during formatting, please get a backtrace by re-running with RUST_BACKTRACE=1. File an issue on the Rustfmt repo. If you can make a minimal test case, that is very much appreciated. The crash should tell you which file Rustfmt failed on. My technique for getting a test case is to copy that file to a temporary file and cut code until I have the smallest program which still gives the same crash. Note that input to Rustfmt does not have to be valid Rust, it only needs to parse.
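The cut-until-minimal technique can be roughly automated. What follows is a minimal sketch in Python, not part of Rustfmt. The names `reduce_lines` and `still_fails` are hypothetical; the predicate stands in for "running Rustfmt on this text still gives the same crash", which in practice would mean writing the candidate to a temporary file and invoking Rustfmt on it.

```python
from typing import Callable, List


def reduce_lines(lines: List[str], still_fails: Callable[[List[str]], bool]) -> List[str]:
    """Greedily drop chunks of lines while the failure still reproduces.

    `still_fails` is a hypothetical, caller-supplied predicate that reports
    whether the candidate input still triggers the same crash.
    """
    chunk = max(len(lines) // 2, 1)
    while chunk >= 1:
        i = 0
        while i < len(lines):
            candidate = lines[:i] + lines[i + chunk:]
            if candidate and still_fails(candidate):
                lines = candidate  # the cut kept the crash, so keep the cut
            else:
                i += chunk         # this chunk is needed, move past it
        chunk //= 2                # retry with smaller cuts
    return lines
```

This is a greedy reduction, so the result is small but not guaranteed to be the smallest possible input. Cutting by line can also produce text that no longer parses, in which case the predicate should simply report that the original crash did not reproduce.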

If formatting succeeds, then take a look at the diff to see what has been changed. You can use git diff. I find it easier to push to GitHub and look at their diff. If there are places which you think should have been re-formatted, but weren't, or which were formatted poorly, please submit an issue to the Rustfmt repo. Include the diff of the change which you think is poor, or the code which was not reformatted.

Most cases of poor formatting should block landing the changes to the Rust repo. Some cases will be acceptable, but we could do better. In those cases please file an issue and submit a PR too, but leave a reference to the issue with the PR.

Fixups

Some code will need fixing up after Rustfmt is done. There are two reasons for this: in some places Rustfmt won't reformat the code yet, but it moves around surrounding code in a way which makes this a problem. In other places you might want non-standard formatting, for example if you have a 3x3 array which represents a matrix, you might want this on three lines even though rustfmt can fit it on one line.

For the first case you can manually edit the code. Check that Rustfmt preserves the changed code by running Rustfmt again and checking there are no changes.

For the second case, you can use the #[rustfmt_skip] attribute. This can be placed on functions, modules, and most other items. Again, after fixing up the source code and adding the attribute, check that Rustfmt does not make any further changes (and report as a bug if it does).
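Checking that a second Rustfmt run makes no further changes can be scripted. The following is a small sketch in Python under stated assumptions: `formatter_is_stable` and `digest` are hypothetical helper names, and the command is passed in by the caller rather than hard-coded.

```python
import hashlib
import subprocess
from pathlib import Path
from typing import List


def digest(path: Path) -> str:
    """Return a SHA-256 hex digest of the file's current contents."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def formatter_is_stable(command: List[str], path: Path) -> bool:
    """Run `command` with `path` appended and report whether the file is unchanged.

    Raises subprocess.CalledProcessError if the command exits non-zero.
    """
    before = digest(path)
    subprocess.run(command + [str(path)], check=True)
    return digest(path) == before
```

With the invocation used earlier in this post, a call might look like `formatter_is_stable(["target/debug/rustfmt", "--write-mode=overwrite"], Path("~/rust/src/librustc_trans/save/mod.rs").expanduser())`. This only compares the file passed on the command line; files of submodules reformatted in the same run would need to be checked separately, for example with git diff.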

Rust PRs

And if it all works out, then submit a PR to Rust! Do this from your branch on GitHub. When submitting the PR, you can put r? @nrc in the first PR comment (or any subsequent comment) to ensure I get pinged for review. Or, if you know the files you worked on are frequently reviewed by a particular person, you can use the same method to request review from them. In that case, please cc @nrc so that I can keep track of what is getting formatted.

Examples

Some example PRs I've done and the issues filed on Rustfmt:

- librustc_trans/save - #262

- libgraphviz - #266, #263

- liballoc - #319, #320, #321, #322

- librustc_front - #339, #340, #341, #342, #366, #372, #384

- librustc_trans/save (again) - no issues, and see how much better rustfmt got in less than a month since the first PR!

Mozilla WebDev Community: Using peep on Heroku

I recently moved a Django app to Heroku which was using peep, rather than pip, for package installation. By default Heroku will use pip to install your required packages when it sees a requirements.txt file in the root of your project, and the option to use peep instead does not exist.

Luckily one of my colleagues, pmac, was kind enough to create and share a Heroku buildpack which uses peep instead of pip for package installation. All you need to do to make use of this buildpack is issue the following command using the Heroku CLI for your app:

heroku buildpacks:set https://github.com/pmclanahan/heroku-buildpack-python-peep

Of course, make sure your requirements.txt is peep-compatible.

Happy peeping!

https://blog.mozilla.org/webdev/2015/10/06/using-peep-on-heroku/

Air Mozilla: Webdev Extravaganza: October 2015

Once a month web developers across the Mozilla community get together (in person and virtually) to share what cool stuff we've been working on.

Selena Deckelmann: [berlin] TaskCluster Platform: A Year of Development

Back in September, the TaskCluster Platform team held a workweek in Berlin to discuss upcoming feature development, focus on platform stability and monitoring and plan for the coming quarter’s work related to Release Engineering and supporting Firefox Release. These posts are documenting the many discussions we had there.

Jonas kicked off our workweek with a brief look back on the previous year of development.

Prototype to Production

In the last year, TaskCluster went from an idea with a few tasks running to running all of FirefoxOS aka B2G continuous integration, which is about 40 tasks per minute in the current environment.

Architecture-wise, not a lot of major changes were made. We went from CloudAMQP to Pulse (in-house RabbitMQ). And shortly, Pulse itself will be moving its backend to CloudAMQP! We introduced task statuses, and then simplified them.

On the implementation side, however, a lot changed. We added many features and addressed a ton of docker worker bugs. We killed Postgres and added Azure Table Storage. We rewrote the provisioner almost entirely, and moved to ES6. We learned a lot about babel-node.

We introduced the first alternative to the Docker worker, the Generic worker. For the first time, Release Engineering created a worker, the Buildbot Bridge.

We have several new users of TaskCluster! Brian Anderson from Rust created a system for testing all Cargo packages for breakage against release versions. We’ve had a number of external contributors create builds for FirefoxOS devices. We’ve had a few Github-based projects jump on taskcluster-github.

Features that go beyond BuildBot

One of the goals of creating TaskCluster was to not just get feature parity, but go beyond and support exciting, transformative features to make developer use of the CI system easier and fun.

Some of the features include:

- Interactive sessions

- Live logging (mentioned in our createArtifact() docs and visible in the task-inspector for a task)

- Public-first task statuses

- Easy Indexing

- Storage in S3 (see createArtifact() documentation)

- Public first, reference-style APIs

- Support for remote device lab workers

Features coming in the near future to support Release

Release is a special use case that we need to support in order to take on Firefox production workload. The focus of development work in Q4 and beyond includes:

- Secrets handling to support Release and ops workflows. In Q4, we should see secrets.taskcluster.net go into production and UI for roles-based management.

- Scheduling support for coalescing, SETA and cache locality. In Q4, we’re focusing on an external data solution to support coalescing and SETA.

- Private data hosting. In Q4, we’ll be using a roles-based solution to support these.

http://www.chesnok.com/daily/2015/10/05/berlin-taskcluster-platform-a-year-of-development/

Dave Townsend: Delivering Firefox features faster

Over time Mozilla has been trying to reduce the amount of time between developing a feature and getting it into a user’s hands. Some time ago we would do around one feature release of Firefox every year; more recently we’ve moved to doing one feature release every six weeks. But it still takes at least 12 weeks for a feature to get to users. In some cases we can speed that up by landing new things directly on the beta/aurora branches, but the more we do this the harder it is for release managers to track the risk of shipping a given release.

The Go Faster project is investigating ways that we can speed up getting changes to users. System add-ons are one piece of this that will let us deliver updates to core Firefox features more often than the regular six week releases. Instead of being embedded in the rest of the code certain features will be developed as standalone system add-ons.

Building features as add-ons gives us more flexibility in how we deliver the features to users. System add-ons will ship in two different ways. First, every Firefox release will include a default set of system add-ons. These are the latest versions of the features at the time the Firefox build was produced. Later, at runtime, Firefox will contact Mozilla’s update servers to ask for the current list of system add-ons. If there are new or updated versions listed, Firefox will download and install them, giving users access to the newest features without needing to update the entire application.
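The "new or updated versions" decision can be illustrated with a short Python sketch. This is not Firefox's actual update protocol or code: the function names, the add-on ids, and the dotted numeric version format are all assumptions made for the example.

```python
from typing import Dict, List, Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn a dotted numeric version such as "2.10" into a comparable tuple."""
    return tuple(int(part) for part in version.split("."))


def addons_to_install(installed: Dict[str, str], offered: Dict[str, str]) -> List[str]:
    """Return ids of add-ons that are new, or newer, in `offered` than in `installed`.

    Both arguments map a (hypothetical) add-on id to its version string.
    """
    updates = []
    for addon_id, offered_version in sorted(offered.items()):
        current = installed.get(addon_id)
        if current is None or parse_version(offered_version) > parse_version(current):
            updates.append(addon_id)
    return updates
```

Comparing parsed tuples rather than raw strings matters here: as strings, "2.10" would sort before "2.9", while as tuples (2, 10) is correctly newer than (2, 9).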

Building a feature as an add-on gives developers a lot of benefits too. Developers will be able to work on and test new features without doing custom Firefox builds. Users can even try out new features by just installing the add-ons. Once the feature is ready to ship it ships as an add-on with no code changes necessary for integration into Firefox. This is something we’ve attempted to do before with things like Test Pilot and pdf.js, but system add-ons make this process much smoother and reduce the differences between how the feature runs as an add-on and how it runs when shipped in the application.

The basic support for system add-ons is already included in current nightly builds and Firefox 44 should be the first release that we could use to deliver features like this if we choose. If you’re interested in the details you can read the client implementation plan or follow along the tracking bug for the client side of the feature.

http://www.oxymoronical.com/blog/2015/10/Delivering-Firefox-features-faster

Air Mozilla: Mozilla Weekly Project Meeting

The Monday Project Meeting

https://air.mozilla.org/mozilla-weekly-project-meeting-20151005/

Selena Deckelmann: TaskCluster Platform: 2015Q3 Retrospective

Welcome to TaskCluster Platform’s 2015Q3 Retrospective! I’ve been managing this team this quarter and thought it would be nice to look back on what we’ve done. This report covers what we did for our quarterly goals. I’ve linked to “Publications” at the bottom of this page, and we have a TaskCluster Mozilla Wiki page that’s worth checking out.

High level accomplishments

- Dramatically improved stability of TaskCluster Platform for Sheriffs by fixing TreeHerder ingestion logic and regexes, adding better logging and fixing bugs in our taskcluster-vcs and mozilla-taskcluster components

- Created and Deployed CI builds on three major platforms:

- Added Linux64 (CentOS), Mac OS X cross-compiled builds as Tier2 CI builds

- Completed and documented a prototype Windows 2012 build in AWS and its task configuration

- Deployed auth.taskcluster.net, enabling better security, better support for self-service authorization and easier contributions from outside our team

- Added region biasing based on cost and availability of spot instances to our AWS provisioner

- Managed the workload of two interns, and significantly mentored a third

- Onboarded Selena as a new manager

- Held a workweek to focus attention on bringing our environment into production support of Release Engineering

Goals, Bugs and Collaborators

We laid out our Q3 goals in this etherpad. Our chosen themes this quarter were:

- Improve operational excellence — focus on sheriff concerns, data collection,

- Facilitate self-serve consumption — refactoring auth and supporting roles for scopes, and

- Exploit opportunities to differentiate from other platforms — support for interactive sessions, docker images as artifacts, github integration and more blogging/docs.

We had 139 Resolved FIXED bugs in TaskCluster product.

We also resolved 7 bugs in FirefoxOS, TreeHerder and RelEng products/components.

We received significant contributions from other teams: Morgan (mrrrgn) designed, created and deployed taskcluster-github; Ted deployed Mac OS X cross compiled builds; Dustin reworked the Linux TC builds to use CentOS, and resolved 11 bugs related to TaskCluster and Linux builds.

An additional 9 people contributed code to core TaskCluster, intree build scripts and task definitions: aus, rwood, rail, mshal, gerard-majax, mihneadb@gmail.com, htsai, cmanchester, and echen.

The Big Picture: TaskCluster integration into Platform Operations

Moving from B2G to Platform was a big shift. The team had already made a goal of enabling Firefox Release builds, but it wasn’t entirely clear how to accomplish that. We spent a lot of this quarter learning things from RelEng and prioritizing. The whole team spent the majority of our time supporting others’ use of TaskCluster through training and support, developing task configurations and resolving infrastructure problems. At the same time, we shipped docker-worker features, provisioner biasing and a new authorization system. One tricky infra issue that John and Jonas worked on early in the quarter was a strange AWS Provisioner failure that came down to an obscure missing dependency. We had a few git-related tree closures that Greg worked closely on and ultimately committed fixes to taskcluster-vcs to help resolve. Everyone spent a lot of time responding to bugs filed by the sheriffs and requests for help on IRC.

It’s hard to overstate how important the Sheriff relationship and TreeHerder work was. A couple teams had the impression that TaskCluster itself was unstable. Fixing this was a joint effort across TreeHerder, Sheriffs and TaskCluster teams.

When we finished, useful errors were finally being reported by tasks and starring became much more specific and actionable. We may have received a partial compliment on this from philor. The extent of artifact upload retries, for example, was made much clearer and we’ve prioritized fixing this in early Q4.

Both Greg and Jonas spent many weeks meeting with Ed and Cam, designing systems, fixing issues in TaskCluster components and contributing code back to TreeHerder. These meetings also led to Jonas and Cam collaborating more on API and data design, and this work is ongoing.

We had our own “intern” who was hired on as a contractor for the summer, Edgar Chen. He did some work with the docker-worker, implementing Interactive Sessions, and did analysis on our provisioner/worker efficiency. We made him give a short, sweet presentation on the interactive sessions. Edgar is now at CMU for his sophomore year and has referred at least one friend back to Mozilla to apply for an internship next summer.

Pete completed a Windows 2012 prototype build of Firefox that’s available from Try, with documentation and a completely automated process for creating AMIs. He hasn’t created a narrated video with dueling, British-English accented robot voices for this build yet.

We also invested a great deal of time in the RelEng interns. Jonas and Greg worked with Anhad on getting him productive with TaskCluster. When Anthony arrived, we also onboarded him. Jonas worked closely with him to get him started on a new project, hooks.taskcluster.net. To take these two bits of work from RelEng on, I pushed TaskCluster’s roadmap for generic-worker features back a quarter and Jonas pushed his stretch goal of getting the big graph scheduler into production to Q4.

We worked a great deal with other teams this quarter on taskcluster-github, supporting new Firefox and B2G builds, RRAs for the workers and generally telling Mozilla about TaskCluster.

Finally, we spent a significant amount of time interviewing, and then creating a more formal interview process that includes a coding challenge and structured-interview type questions. This is still in flux, but the first two portions are being used and refined currently. Jonas, Greg and Pete spent many hours interviewing candidates.

Berlin Work Week

Toward the end of the quarter, we held a workweek in Berlin to focus our next round of work on critical RelEng and Release-specific features as well as production monitoring planning. Dustin surprised us with delightful laser cut acrylic versions of the TaskCluster logo for the team! All team members reported that they benefited from being in one room to discuss key designs, get immediate code review, and demonstrate work in progress.

We came out of this with 20+ detailed documents from our conversations, greater alignment on the priorities for Platform Operations and a plan for trainings and tutorials to give at Orlando. Dustin followed this up with a series of ‘TC Topics’ Vidyo sessions targeted mostly at RelEng.

Our Q4 roadmap is focused on key RelEng features to support Release.

Publications

Our team published a few blog posts and videos this quarter:

- TaskCluster YouTube channel with two generic worker videos

- On Planet Taskcluster:

- Monitoring TaskCluster Infrastructure (garndt)

- Building Firefox for Windows 2012 on Try (pmoore)

- TaskCluster Component Loader (jhford)

- Getting started with TaskCluster APIs (jonasfj)

- De-mystifying TaskCluster intree scheduling (garndt)

- Running phone builds on TaskCluster (wcosta)

- On Air Mozilla

- Interactive Sessions (Edgar Chen)

- TaskCluster GitHub, Continuous integration for Mozillians by Mozillians (mrrrgn)

http://www.chesnok.com/daily/2015/10/05/taskcluster-platform-2015q3-retrospective/

Yunier José Sosa Vázquez: How to: change your default browser in Windows 10

The arrival of the latest version of Windows caused quite a stir among users when they saw that their default browser had been changed. Mozilla reacted, and its CEO Chris Beard sent a letter to his Microsoft counterpart Satya Nadella asking the company not to go backwards on user choice and control.

Current versions of the red panda should change this, but if for some reason they don't and you would like to get Firefox or another browser back as your default, I recommend following these steps:

- Click the menu button, then select Options.

- In the General panel, click Make Default.

- The Windows Settings app will open on the Choose default apps screen.

- Scroll down and click the entry under Web browser. In this case, the icon will show either Microsoft Edge or Choose your default browser.

- On the Choose an app screen, click Firefox to set it as the default browser.

- Firefox now appears as your default browser. Close the window to save your changes.

And that's it, you will have Firefox back as your default and preferred browser.

Source: Mozilla Support

http://firefoxmania.uci.cu/como-se-hace-cambiar-tu-navegador-predeterminado-en-windows-10/

The Servo Blog: This Week In Servo 36

In the last week, we landed 69 PRs in the Servo repository!

Glenn wrote a short report on how webrender is coming along. Webrender is a new renderer for Servo which is specialized for web content. The initial results are quite promising!

Notable additions

- Patrick and Corey reduced allocator churn in our DOMstring code and string joining code

- Josh restyled [...] so that [...] works.

- Vladimir’s Windows work continues

- Corey implemented [...]

- Martin simplified our stacking context creation code

New Contributors

Screenshots

Snazzy new form widgets:

Meetings

At last week’s meeting, we discussed webrender, and pulling app units out into a separate crate.

Daniel Stenberg: Talked HTTP/2 at ApacheCon

I was invited as one of the speakers at the ApacheCon core conference in Budapest, Hungary on October 1-2, 2015.

I was once again spreading the news about HTTP/2, why it was made and how it works and of course: updated numbers on adoption right now.

The talk was unfortunately not filmed, but I’ve put my slides for this version of my talk online. Readers of this blog and those who’ve seen my presentations before will recognize large parts of it.

Following my talk was a talk about mod_http2, the Apache module for HTTP/2 that will be coming in the upcoming 2.4.17 release of Apache Httpd, explained by its author Stefan Eissing. The name of the module was actually a bit of a surprise to me since it has been known as just mod_h2 for its entire lifetime up until now.

William A Rowe took us through the state of TLS for the main Apache servers and yeah, the state seems to be pretty good and they’re coming along really well. TLS and then HTTPS is important as that’s really a prerequisite for HTTP/2.

I also got to listen to Mark Thomas explain the agonies of making Tomcat support HTTP/2, and then perhaps especially how ALPN and a good set of ciphers are hard to get in Java.

Jean-Frederic Clere then explained how to activate HTTP/2 on all the Apache servers (Tomcat, httpd and Traffic Server) and a little about their HTTP/2 state, followed by an explanation of how they worked on Tomcat to make it use OpenSSL for the TLS layer (including ALPN) to avoid the deadlock of decent TLS support in Java.

All in all, a great track and splendid talks with deep technical content. Exactly the way I like it. Thanks everyone. ApacheCon certainly delivered for me! Twas fun.

http://daniel.haxx.se/blog/2015/10/05/talked-http2-at-apachecon/

Mike Conley: The Joy of Coding – Ep’s 23 – 29

Wow! I’ve been away from this blog for too long. I also haven’t posted any new episodes for The Joy of Coding. I also haven’t been keeping up with my Things I’ve Learned posts.

Time to get back in the saddle. First things first, here are 7 episodes of The Joy of Coding that have aired. Unfortunately, I haven’t put together summaries for any of them, but I’ve put their agendas near the videos, so that might give some clues.

Here we go!

Episode 23

Episode 24

Episode 25

Episode 26

Episode 27

Episode 28

Episode 29

http://mikeconley.ca/blog/2015/10/04/the-joy-of-coding-eps-23-29/

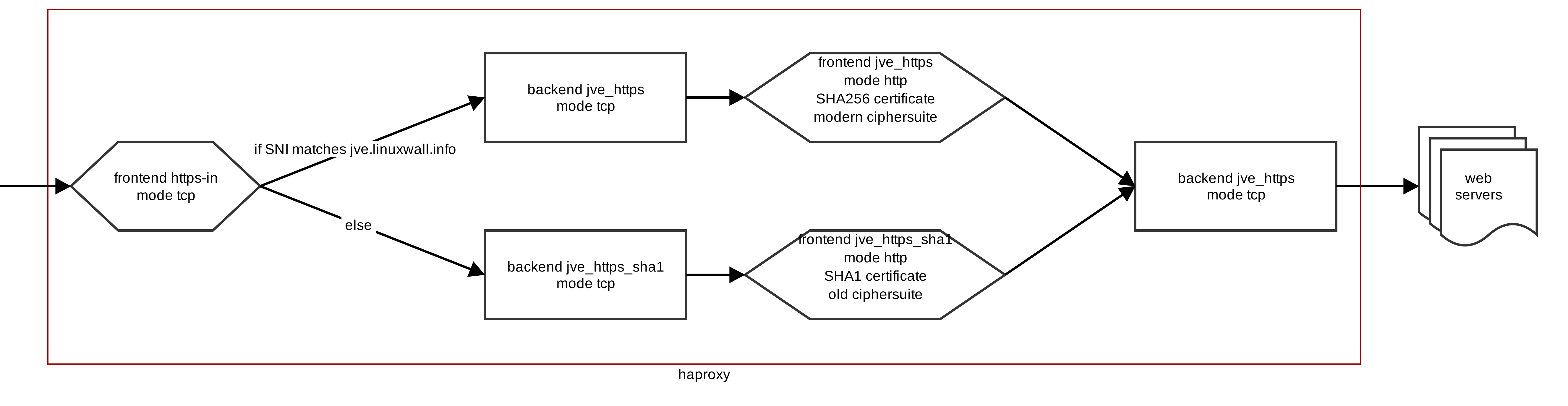

Julien Vehent: SHA1/SHA256 certificate switching with HAProxy

SHA-1 certificates are on their way out, and you should upgrade to a SHA-256 certificate as soon as possible... unless you have very old clients and must maintain SHA-1 compatibility for a while.

If you are in this situation, you need to either force your clients to upgrade (difficult) or implement some form of certificate selection logic: we call that "cert switching".

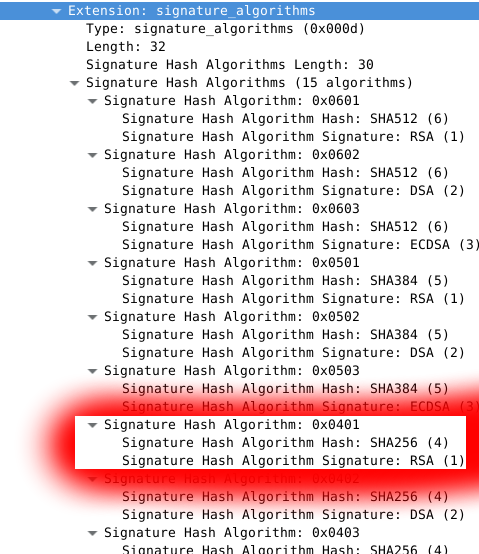

The most deterministic selection method is to serve SHA-256 certificates to clients that present a TLS1.2 CLIENT HELLO that explicitly announces their support for SHA256-RSA (0x0401) in the signature_algorithms extension.
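To make the check concrete, here is a minimal Python sketch of inspecting the body of a signature_algorithms extension for the SHA256-RSA pair. It assumes the TLS 1.2 layout (a two-byte list length followed by two-byte hash/signature pairs) and that the caller has already extracted the extension body from the CLIENT HELLO. The function name is hypothetical.

```python
import struct

# In TLS 1.2, each entry is (hash, signature): sha256 = 0x04, rsa = 0x01.
SHA256_RSA = (0x04, 0x01)


def announces_sha256_rsa(extension_data: bytes) -> bool:
    """Check the body of a signature_algorithms extension for SHA256-RSA."""
    if len(extension_data) < 2:
        return False
    (list_len,) = struct.unpack("!H", extension_data[:2])
    pairs = extension_data[2:2 + list_len]
    if len(pairs) != list_len or list_len % 2:
        return False  # truncated or malformed list
    # Step through the list two bytes at a time so pairs stay aligned.
    return any((pairs[i], pairs[i + 1]) == SHA256_RSA for i in range(0, list_len, 2))
```

Stepping in twos avoids a false match when the bytes 0x04 0x01 happen to straddle two adjacent entries.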

Modern web browsers will send this extension. However, I am not aware of any open source load balancer that is currently able to inspect the content of the signature_algorithms extension. It may come in the future, but for now the easiest way to achieve cert switching is to use HAProxy SNI ACLs: if a client presents the SNI extension, direct it to a backend that presents a SHA-256 certificate. If it doesn't present the extension, assume that it's an old client that speaks SSLv3 or some broken version of TLS, and present it a SHA-1 cert.

This can be achieved in HAProxy by chaining frontend and backends:

global
    ssl-default-bind-ciphers ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-AES256-GCM-SHA384:DHE-RSA-AES128-GCM-SHA256:DHE-DSS-AES128-GCM-SHA256:kEDH+AESGCM:ECDHE-RSA-AES128-SHA256:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA:ECDHE-ECDSA-AES128-SHA:ECDHE-RSA-AES256-SHA384:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA:ECDHE-ECDSA-AES256-SHA:DHE-RSA-AES128-SHA256:DHE-RSA-AES128-SHA:DHE-DSS-AES128-SHA256:DHE-RSA-AES256-SHA256:DHE-DSS-AES256-SHA:DHE-RSA-AES256-SHA:!aNULL:!eNULL:!EXPORT:!DES:!RC4:!3DES:!MD5:!PSK

frontend https-in
    bind 0.0.0.0:443
    mode tcp
    tcp-request inspect-delay 5s
    tcp-request content accept if { req_ssl_hello_type 1 }
    use_backend jve_https if { req.ssl_sni -i jve.linuxwall.info }
    # fallback to backward compatible sha1
    default_backend jve_https_sha1

backend jve_https
    mode tcp
    server jve_https 127.0.0.1:1665

frontend jve_https
    bind 127.0.0.1:1665 ssl no-sslv3 no-tlsv10 crt /etc/haproxy/certs/jve_sha256.pem tfo
    mode http
    option forwardfor
    use_backend jve

backend jve_https_sha1
    mode tcp
    server jve_https 127.0.0.1:1667

frontend jve_https_sha1
    bind 127.0.0.1:1667 ssl crt /etc/haproxy/certs/jve_sha1.pem tfo ciphers ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-AES256-GCM-SHA384:DHE-RSA-AES128-GCM-SHA256:DHE-DSS-AES128-GCM-SHA256:kEDH+AESGCM:ECDHE-RSA-AES128-SHA256:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA:ECDHE-ECDSA-AES128-SHA:ECDHE-RSA-AES256-SHA384:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA:ECDHE-ECDSA-AES256-SHA:DHE-RSA-AES128-SHA256:DHE-RSA-AES128-SHA:DHE-DSS-AES128-SHA256:DHE-RSA-AES256-SHA256:DHE-DSS-AES256-SHA:DHE-RSA-AES256-SHA:ECDHE-RSA-DES-CBC3-SHA:ECDHE-ECDSA-DES-CBC3-SHA:AES128-GCM-SHA256:AES256-GCM-SHA384:AES128-SHA256:AES256-SHA256:AES128-SHA:AES256-SHA:AES:DES-CBC3-SHA:HIGH:!aNULL:!eNULL:!EXPORT:!DES:!RC4:!MD5:!PSK:!aECDH:!EDH-DSS-DES-CBC3-SHA:!EDH-RSA-DES-CBC3-SHA:!KRB5-DES-CBC3-SHA
    mode http
    option forwardfor
    use_backend jve

backend jve
    rspadd Strict-Transport-Security:\ max-age=15768000
    server jve 172.16.0.6:80 maxconn 128

The configuration above receives inbound traffic in the frontend called "https-in". That frontend is in TCP mode and inspects the CLIENT HELLO coming from the client for the value of the SNI extension. If that value exists and matches our target site, it sends the connection to the backend named "jve_https", which redirects to a frontend also named "jve_https" where the SHA256 certificate is configured and served to the client.

If the client fails to present a CLIENT HELLO with SNI, or presents a SNI that doesn't match our target site, it is redirected to the "jve_https_sha1" backend, then to its corresponding frontend where a SHA1 certificate is served. That frontend also supports an older ciphersuite to accommodate older clients.

Both frontends eventually redirect to a single backend named "jve" which sends traffic to the destination web servers.

This is a very simple configuration, and eventually it could be improved using better ACLs (HAProxy regularly adds new ones), but for a basic cert switching configuration, it gets the job done!
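The routing rule applied by the "https-in" frontend can be restated as a few lines of Python. This is only an illustration of the decision, not something HAProxy runs. The function name is hypothetical, and the backend names and hostname are the ones from the configuration above.

```python
from typing import Optional

TARGET_SITE = "jve.linuxwall.info"


def choose_backend(sni: Optional[str], target: str = TARGET_SITE) -> str:
    """Mirror the frontend's rule: a matching SNI gets SHA-256, all else SHA-1.

    `sni` is None when the client sent no SNI extension. The comparison is
    case-insensitive, like the `-i` flag on the ACL.
    """
    if sni is not None and sni.lower() == target.lower():
        return "jve_https"
    return "jve_https_sha1"
```

Defaulting to the SHA-1 backend is the conservative choice: a client that cannot be positively identified as modern still gets a certificate it can validate.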

|

|

Yunier Jos'e Sosa V'azquez: VI Taller Internacional de Tecnolog'ias de Software Libre y C'odigo Abierto |

Como parte de la XVI Convenci'on y Feria Internacional Inform'atica Habana 2016 a realizarse en nuestra capital a partir del 14 y hasta el 18 de marzo de 2016, se desarrollar'a el VI Taller Internacional de Tecnolog'ias de Software Libre y C'odigo Abierto, un evento donde las personas podr'an debatir en torno a diferentes tem'aticas como por ejemplo: la adopci'on de tecnolog'ias de software libre y c'odigo abierto, el desarrollo y personalizaci'on de sistemas operativos, y aspectos econ'omicos, legales y sociales.

Como parte del taller de software libre y c'odigo abierto en las tem'aticas se tratar'a lo siguiente:

Como parte del taller de software libre y c'odigo abierto en las tem'aticas se tratar'a lo siguiente:

- Adoption of free and open source software technologies: Experiences in leading and carrying out migrations to free and open source applications. Deployment of free technologies in the public and private sectors. Maturity models for selecting free technologies. The role of free software in sustainable development.

- Building and customizing operating systems based on open sources: Development of custom free operating systems for desktop computers, servers, and mobile phones. Embedded free software. Building GNU/Linux distributions.

- Economic, legal, and social aspects: Economic feasibility studies for adopting free software. Applicable business models. Social impact of free software. Intellectual property, copyright, and licensing of free technologies. Regulatory framework for the use of free software.

- Free software technologies: Free software for the cloud, security in free technologies. Open standards in solution development. OpenData. OpenCloud. Open source data management technologies. Free software as a service, open source enterprise applications. Open source mobile technologies.

The event's web page also lists several useful details for people who wish to participate, most notably:

Important Dates

- Submission of abstracts and papers: October 20, 2015

- Notification of acceptance: November 20, 2015

- Submission of the final paper for publication: December 7, 2015

Writing Guidelines

Papers will be submitted according to the main topics of the various events held as part of the Convention, subject to the following specifications:

- They must be delivered in files compatible with the open document format

- 10-page limit

- The page size will be letter (8.5” x 11”, or 21.59 cm x 27.94 cm), with 2 cm margins on each side, written in two columns

- Arial must be used, at 11 points for headings and 10 points for body text, with single line spacing

- Papers will be written in one of the event's languages (Spanish or English)

Paper structure:

- Title

- Title (in English)

- Author and co-authors

- Affiliation and contact details

- Abstract and keywords (in Spanish and English)

- Introduction

- Content

- Conclusions

- Acknowledgments (optional)

- Bibliographic references

To make it easier to prepare your paper according to the Convention's specifications, download the template (.doc) and replace its text with your own.

You must submit your paper through the Paper Management Platform (Plataforma para la Gestión de Ponencias); remember to also attach a brief curriculum vitae of the lead author. Presentations for papers to be given at the in-person event must be handed in by the speakers at the Audiovisual Media Reception Office, in the Palacio de Convenciones, one day before the session. The Convention's talks, presentations, and other materials will be published on a compact disc with its own ISBN.

|

|

Luis Villa: Software that liberates people: feels about FSF@30 and OSFeels@1 |

tl;dr: I want to liberate people; software is a (critical) tool to that end. There is a conference this weekend that understands that, but I worry it isn’t FSF’s.

This morning, social network chatter reminded me of FSF’s 30th birthday celebration. These travel messages were from friends for whom I have a great deal of love and respect, and who represent a movement to which I essentially owe my adult life.

Despite that, I had lots of mixed feels about the event. I had a hard time capturing why, though.

While I was still processing these feelings, late tonight, Twitter reminded me of a new conference also going on this weekend, appropriately called Open Source and Feelings. (I badly wanted to submit a talk for it, but a prior commitment kept me from both it and FSF@30.)

I saw the OSFeels agenda for the first time tonight. It includes:

- Design and empathy (learning to build open software that empowers all users, not just the technically sophisticated)

- Inclusive development (multiple talks about this, including non-English, family, and people of color) (so that the whole planet can access, and participate in developing, open software)

- Documentation (so that users understand open software)

- Communications skills (so that people feel welcome and engaged to help develop open software)

This is an agenda focused on liberating human beings by developing software that serves their needs, and engaging them in the creation of that software. That is incredibly exciting. I’ve long thought (following Sen and Nussbaum’s capability approach) that it is not sufficient to free people; they must be empowered to actually enjoy the benefits of that freedom. This is a conference that seems to get that, and I can’t wait to go (and hopefully speak!) next year.

The Free Software Foundation event’s agenda:

- licenses

- crypto

- boot firmware

- federation

These are important topics. But there is clearly a difference in focus here — technology first, not people. No mention of community, or of design.

This difference in focus is where this morning’s conflicted feels came from. On the one hand, I support FSF, because they’ve done an incredible amount to make the world a better place. (OSFeels can take open development for granted precisely because FSF fought so many battles about source code.) But precisely because I support FSF, I’d challenge it, in the next 15 years, to become more clearly and forcefully dedicated to liberating people. In this world, FSF would talk about design, accessibility, and inclusion as much as licensing, and talk about community-building protocols as much as communication protocols. This is not impossible: LibrePlanet had at least some people-focused talks (e.g.), and inclusion and accessibility are a genuine concern of staff, even if they didn’t rise to today’s agenda. But it would still be a big change, because at the deepest level, it would require FSF to see source code as just one of many requirements for freedom, rather than “the point of free software”.

At the same time, OSFeels is clearly filled with people who see the world through a broad, thoughtful ethical lens. It is a sad sign, both for FSF and how it is perceived, that such a group uses the deliberately apolitical language of openness rather than the language of a (hopefully) aligned ethical movement — free software. I’ll look forward to the day (maybe FSF’s 45th (or 31st!) birthday) that both groups can speak and work together about their real shared concern: software that liberates people. I’d certainly have no conflicted feelings about signing up for a conference on that :)

http://lu.is/blog/2015/10/02/software-that-liberates-people-feels-about-fsf30-and-osfeels1/

|

|

David Humphrey: How to become a Fool Stack Programmer |

At least once in your career as a programmer, and hopefully more than once and with deliberate regularity, it is important to leave the comfort of your usual place along the stack and travel up or down it. While you usually fix bugs and add features using a particular application, tool, or API and work on top of some platform, SDK, or operating system, this time you choose to climb down a rung and work below. In doing so you are working on instead of with something.

There are a number of important outcomes of changing levels. First, what you previously took for granted, and simply used as such, suddenly comes into view as a thing unto itself. This layer that you've been standing on, the one that felt so secure...it turns out to have also been built, just like the things you build from above! It sounds obvious, but in my experience, the effect it has on your approach from this point forward is drastic. Second, and very much related to the first, your likelihood to lash out when you encounter bugs or performance and implementation issues gets abated. You gain empathy and understanding.

If all you ever do is use an implementation, tool, or API, and never build or maintain one, it's easy to take them for granted, and speak about them in detached ways: Why would anyone do it this way? Why is this so slow? Why won't they fix this bug after 12 years! And then, Why are they so stupid as to have done this thing?

Now, the same works in the other direction, too. Even though there are more people operating at the higher level who therefore need to descend to do what I'm talking about, those underneath must also venture above ground. If all you've ever done is implement things, and never gone and used such implementations to build real things, you're just as guilty, and equally, if not more, likely to snipe and complain about people above you, who clearly don't understand how things really work. It's tantalizingly easy to dismiss people who haven't worked at your level: it's absolutely true that most of them don't understand your work or point of view. The way around this problem is not to wait and hope that they will come to understand you, but to go yourself, and understand them.

Twitter, HN, reddit, etc. are full of people at both levels making generalizations, lobbing frustration and anger at one another, and assuming that their level is the only one that actually matters (or exists). Fixing this problem globally will never happen; but you can do something at the personal level.

None of us enjoys looking foolish or revealing our own ignorance. And one of the best ways to avoid both is to only work on what we know. What I'm suggesting is that you purposefully move up or down the stack and work on code, and with tools, people, and processes that you don't know. I'm suggesting that you become a Fool, at least in so far as you allow yourself to be humbled by this other world, with its new terminology, constraints, and problems. By doing this you will find that your ability to so easily dismiss the problems of the other level will be greatly reduced. Their problems will become your problems, and their concerns your concerns. You will know that you've correctly done what I'm suggesting when you start noticing yourself referring to "our bug" and "how we do this" instead of "their" and "they."

Becoming a Fool Stack Programmer is not about becoming an expert at every level of the stack. Rather, its goal is to erase the boundary between the levels such that you can reach up or down in order to offer help, even if that help is only to offer a kind word of encouragement when the problem is particularly hard: these are our problems, after all.

I'm grateful to this guy who first taught me this lesson, and encouraged me to always keep moving up the stack.

|

|

Jan de Mooij: Bye Wordpress, hello Jekyll! |

This week I migrated this blog from Wordpress to Jekyll, a popular static site generator. This post explains why and how I did this; maybe it will be useful to someone.

Why?

Wordpress powers thousands of websites, is regularly updated, and has a ton of features. Why did I abandon it?

I still think Wordpress is great for a lot of websites and blogs, but I felt it was overkill for my simple website. It had so many features I never used and this came at a price: it was hard to understand how everything worked, it was hard to make changes and it required regular security updates.

This is what I like most about Jekyll compared to Wordpress:

- Maintenance, security: I don't blog often, yet I still had to update Wordpress every few weeks or months. Even though the process is pretty straight-forward, it got cumbersome after a while.

- Setup: Setting up a local Wordpress instance with the same content and configuration was annoying. I never bothered so the little development I did was directly on the webserver. This didn't feel very good or safe. Now I just have to install Jekyll, clone my repository and generate plain HTML files. No database to set up. No webserver to install (Jekyll comes with a little webserver, see below).

- Transparency: With Wordpress, the blog posts were stored somewhere in a MySQL database. With Jekyll, I have Markdown files in a Git repository. This makes it trivial to back up, view diffs, etc.

- Customizability: After I started using Jekyll, customizing this blog (see below) was very straight-forward. It took me less than a few hours. With Wordpress I'm sure it'd have taken longer and I'd have introduced a few security bugs in the process.

- Performance: The website is just some static HTML files, so it's fast. Also, when writing a blog post, I like to preview it after writing a paragraph or so. With Wordpress it was always a bit tedious to wait for the website to save the blog post and reload the page. With Jekyll, I save the markdown file in my text editor and, in the background, `jekyll serve` immediately updates the site, so I can just refresh the page in the browser. Everything runs locally.

- Hosting: In the future I may move this blog to GitHub Pages or another free/cheaper host.

Why Jekyll?

I went with Jekyll because it's widely used, so there's a lot of documentation and it'll likely still be around in a year or two. Octopress is also popular but under the hood it's just Jekyll with some plugins and changes, and it seems to be updated less frequently.

How?

I decided to use the default template and customize it where needed. I made the following changes:

- Links to previous/next post at the end of each post, see post.html

- Pagination on the homepage, based on the docs. I also changed the home page to include the contents instead of just the post title.

- Archive page, a list of posts grouped by year, see archive.html

- Category pages. I wrote a small plugin to generate a page + feed for each category. This is based on the example in the plugin documentation. See _plugins/category-generator.rb and _layouts/category.html

- List of categories in the header of each post (with a link to the category page), see post.html

- Disqus comments and number of comments in the header of each post, based on the docs, see post.html. I was able to export the Wordpress comments to Disqus.

- In _config.yml I changed the post URL format ("permalink" option) to not include the category names. This way links to my posts still work.

- Some minor tweaks here and there.

I still want to change the code highlighting style, but that can wait for now.

Conclusion

After using Jekyll for a few hours, I'm a big fan. It's simple, it's fun, it's powerful. If you're tired of Wordpress and Blogger, or just want to experiment with something else, I highly recommend giving it a try.

http://jandemooij.nl/blog/2015/10/03/bye-wordpress-hello-jekyll

|

|

Mozilla Addons Blog: Add-on Compatibility for Firefox 42 |

Firefox 42 will be released on November 3rd. Here’s the list of changes that went into this version that can affect add-on compatibility. There is more information available in Firefox 42 for Developers, so you should also give it a look.

General

- Remove support for the prefixed mozRequestAnimationFrame.

- Convert newTab.xul to newTab.xhtml.

- Provide visual indicator as to which tab is causing sound. This required some changes to the tab XBL bindings, so it can break add-ons that change tab behavior or tab appearance.

- Badged button (like social buttons) causes havoc with overflow and panel menu. This was broken for a while, but it’s fixed now.

- Calling Map/Set/WeakMap() (without `new`) should throw.

XPCOM

- Split nsIContentPolicy::TYPE_SUBDOCUMENT into TYPE_FRAME and TYPE_IFRAME. This should only affect your add-on if it implements nsIContentPolicy.

- Use origin for nsIPermissionManager. Permissions in the Permission Manager used to be handled per-host, and now they will be handled per-origin. This means that https://mozilla.org and http://mozilla.org have different permission entries. This changes some methods in the Permission Manager so they accept URIs instead of strings.
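To make the per-host versus per-origin distinction concrete, here is a small Python sketch. It does not use any Firefox or XPCOM API; `host_key` and `origin_key` are hypothetical helpers introduced only to show why the two URLs from the example above share a host but not an origin (scheme, host, and port).

```python
from typing import Optional, Tuple
from urllib.parse import urlsplit

# Default ports used when a URL does not specify one explicitly.
DEFAULT_PORTS = {"http": 80, "https": 443}


def host_key(url: str) -> Optional[str]:
    """Per-host key: only the host name matters."""
    return urlsplit(url).hostname


def origin_key(url: str) -> Tuple[str, Optional[str], Optional[int]]:
    """Per-origin key: scheme, host, and port all matter."""
    parts = urlsplit(url)
    port = parts.port or DEFAULT_PORTS.get(parts.scheme)
    return (parts.scheme, parts.hostname, port)


secure = "https://mozilla.org"
insecure = "http://mozilla.org"

# Same host, so a per-host store would keep a single entry for both URLs.
print(host_key(secure) == host_key(insecure))
# Different origins, so a per-origin store keeps two separate entries.
print(origin_key(secure) == origin_key(insecure))
```

An add-on that stored or looked up permissions by host string would, under this model, need to pass the full URI so the scheme is not lost.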

New

- Create API for add-ons to add large header image to speed-dial home panels. This API is available for add-ons on Firefox for Android.

Please let me know in the comments if there’s anything missing or incorrect on these lists. If your add-on breaks on Firefox 42, I’d like to know.

The automatic compatibility validation and upgrade for add-ons on AMO will happen in the coming weeks, so keep an eye on your email if you have an add-on listed on our site with its compatibility set to Firefox 41.

https://blog.mozilla.org/addons/2015/10/02/compatibility-for-firefox-42/

|

|

Joel Maher: Hacking on a defined length contribution program |

Contribution takes many forms where each person has different reasons to contribute or help people contribute. One problem we saw a need to fix was when a new contributor came to Mozilla and picked up a “good first bug”, then upon completion was left not knowing what to do next and picking up other random bugs. The essential problem is that we had no clear path defined for someone to start making more substantial improvements to our projects. This can easily lead toward no clear mentorship as well as a lot of wasted time setting up and learning new things. In response to this, we decided to launch the Summer of Contribution program.

Back in May we announced two projects to pilot this new program: perfherder and developer experience. In the announcement we asked that interested hackers commit to dedicating 5-10 hours/week for 8 weeks to one of these projects. In return, we would act as a dedicated mentor and do our best to ensure success.

I want to outline how the program was structured, what worked well, and what we want to do differently next time.

Program Structure

The program worked well enough, with some improvising. Here is what we started with:

- we created a set of bugs that would be good to get new contributors started and working for a few weeks

- anybody could express interest via email/irc; we envisioned taking 2-3 participants, based on what we thought we could handle as mentors

That was it. We improvised a little by:

- accepting more than 2-3 people to start (4-6); we had a problem saying no

- letting folks ramp up and just keep working (there was no official start date)

- blogging about who was involved and what they would be doing (intro to the perfherder team, intro to the dx team)

- setting up communication channels with contributors like etherpad, email, wunderlist, bugzilla, irc

- setting up regular meetings with contributors

- picking an end date

- summarizing the program (wlach’s perfherder post, jmaher’s dx post)

What worked well

A lot worked very well, specifically advertising by blog post and newsgroup post, and then setting the expectation of a longer contribution cycle rather than a couple of weeks. Both :wlach and I have a good history of onboarding contributors, and we feel that being patient, responding quickly, communicating effectively and regularly, and treating contributors as team members goes a long way. Onboarding is easier if you spend the time to create docs for setup (we have the ateam bootcamp). Without mentors who are ready to onboard, there is no chance of making a program like this work.

Setting aside a pile of bugs to work on was successful. The first contribution is hard as there is so much time required for setup, so many tools and terms to get familiar with, and a lot of process to learn. After the first bug is completed, what comes next? Assuming it was enjoyable, one of two paths is usually taken:

- Ask the person who reviewed your code, or was nice to you on IRC, what is next

- Find another bug and ask to work on it

Both of these are OK models, but there is a trap where you could end up with a bug that is hard to fix, not well defined, outdated/irrelevant, or requires a lot of new learning/setup. We can avoid this trap by building on the experience of the first bug: working on the same feature, but on a bug that is a bit more challenging.

A few more thoughts on the predefined set of bugs to get started:

- These should not be easily discoverable as “good first bugs”, because we want people who are committed to this program to work on them, rather than people just looking for an easy way to get involved.

- They should all have a tracking bug, tag, or other method for easily seeing the entire pool of bugs

- All bugs should be important to have fixed, but not urgent; think “we would like to fix this later this quarter or next quarter”. If we do not have some form of urgency around getting the bugs fixed, our eagerness to help out in mentoring and reviewing will be low. A lot of times while working on a feature there are followup bugs; those are good candidates!

- There should be an equal number (5-10) of starter bugs, next bugs, and other bugs

- Keep in mind this is a starter list: imagine 2-3 contributors hacking on this for a month; they will be able to complete them all.

- This list can grow as the project continues

Another thing that worked is that we tried to work in public channels (irc, bugzilla) as much as possible, instead of always private messaging or communicating by email. We also told other team members and users of the tools that there would be new team members for the next few months. This really helped the contributors see the value of the work they were doing while introducing them to a larger software team.

Blog posts were successful at communicating and helping keep things public while giving more exposure to the newer members on the team. One thing I like to do is ensure a contributor has a Mozillians profile as well as links to other discoverable things (bugzilla id, irc nick, github id, twitter, etc.) and some information about why they are participating. In addition to this, we also highlighted achievements in the fortnightly Engineering Productivity meeting and any other newsgroup postings we were doing.

Lastly, I would like to point out that having a dedicated mentor was successful. As a contributor it is not always comfortable to ask questions, or deal with reviews from a lot of new people. Having someone to chat with every day you are hacking on the project is nice. Being a mentor doesn’t mean reviewing every line of code, but it does mean checking in on contributors regularly, ensuring bugs are not stuck waiting for needinfo/reviews, and helping set expectations of how work is to be done. In an ideal world, after working on a project like this, a contributor would continue on and try to work with a new mentor to grow their skills in working with others as well as with different code bases.

What can we do differently next time?

A few small things are worth improving on for our next cycle. Here are a few things we plan on doing differently:

- Advertising 4-5 weeks prior and having a defined start/end date (e.g. November 20th – January 15th)

- Really limiting this to a specific number of contributors, ideally 2-3 per mentor.

- Setting acceptance criteria up front. This could be solving 2 easy bugs prior to the start date.

- Posting an announcement welcoming the new team members, posting another announcement at the halfway mark, and posting a completion announcement highlighting the great work.

- Setting up a weekly meeting schedule that includes status per person, great achievements, problems, and some kind of learning (guest speaker, Q&A, etc.). This meeting should be unique per project.

- Have a simple process for helping folks transition out if they have less time than they thought. This will happen, and we need to account for it so the remaining contributors get the most out of the program.

In summary, we found this to be a great experience and are looking to do another program in the near future. We named this Summer of Contribution for our first time around, but that limits when it can take place and doesn’t respect the fact that the southern hemisphere is experiencing winter during that time. With that in mind, :maja_zf suggested calling it Quarter of Contribution, and we plan to announce our next iteration in the coming weeks!

https://elvis314.wordpress.com/2015/10/02/hacking-on-a-defined-length-contribution-program/

|

|

Will Kahn-Greene: Dennis v0.7 released! New lint rules and more tests! |

What is it?

Dennis is a Python command line utility (and library) for working with localization. It includes:

- a linter for finding problems in strings in .po files like invalid Python variable syntax which leads to exceptions

- a template linter for finding problems in strings in .pot files that make translators' lives difficult

- a statuser for seeing the high-level translation/error status of your .po files

- a translator for strings in your .po files to make development easier
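To illustrate the kind of problem the linter is meant to catch, here is a minimal sketch of a check for python-format variables that go missing or get mangled in a translation. It is not Dennis's implementation or API: `PY_FORMAT_VAR`, `format_vars`, and `missing_vars` are hypothetical names, the sample strings are made up, and the regular expression covers only a common subset of Python's %-format syntax.

```python
import re

# Named %-format variables such as %(count)d or %(name)s.
# Assumption: a simplified pattern, not the full Python format grammar.
PY_FORMAT_VAR = re.compile(r"%\((\w+)\)[#0\- +]*\d*(?:\.\d+)?[diouxXeEfFgGcrs]")


def format_vars(text: str) -> set:
    """Return the names of well-formed %(name)x variables in text."""
    return set(PY_FORMAT_VAR.findall(text))


def missing_vars(msgid: str, msgstr: str) -> set:
    """Variables present in the source string but not in the translation."""
    return format_vars(msgid) - format_vars(msgstr)


msgid = "Hello, %(name)s! You have %(count)d new messages."
good = "Bonjour, %(name)s ! Vous avez %(count)d nouveaux messages."
# One variable was renamed and one lost its conversion character.
bad = "Bonjour, %(nom)s ! Vous avez %(count) nouveaux messages."

print(missing_vars(msgid, good))
print(missing_vars(msgid, bad))

# At runtime the bad translation raises instead of rendering.
try:
    bad % {"name": "Ada", "count": 3}
except (KeyError, ValueError) as exc:
    print("formatting failed:", repr(exc))
```

Catching this at lint time matters because the exception otherwise only appears for users of the affected locale.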

v0.7 released!

It's been 10 months since the last release. In that time, I:

- Added a lot more tests and fixed bugs discovered with those tests.

- Added lint rule for bad format characters like %a (#68)

- Missing python-format variables is now an error (#57)

- Fix notype test to handle more cases (#63)

- Implement rule exclusion (#60)

- Rewrite --rule spec verification to work correctly (#61)

- Add --showfuzzy to status command (#64)

- Add untranslated word counts to status command (#55)

- Change Var to Format and use gettext names (#48)

- Handle the standalone } case (#56)

I thought I was close to 1.0, but now I'm less sure. I want to unify the .po and .pot linters and generalize them so that we can handle other l10n file formats. I also want to implement a proper plugin system so that it's easier to add new rules and so that other people can create separate Python packages that implement rules, tokenizers and translators. Plus I want to continue fleshing out the tests.

At the (glacial) pace I'm going at, that'll take a year or so.

If you're interested in dennis development, helping out or have things you wish it did, please let me know. Otherwise I'll just keep on keepin on at the current pace.

Where to go for more

For more specifics on this release, see here: https://dennis.readthedocs.org/en/v0.7/changelog.html#version-0-7-0-october-2nd-2015

Documentation and quickstart here: https://dennis.readthedocs.org/en/v0.7/

Source code and issue tracker here: https://github.com/willkg/dennis

Source code and issue tracker for Denise (Dennis-as-a-service): https://github.com/willkg/denise

47 out of 80 Silicon Valley companies say their last round of funding depended solely on having dennis in their development pipeline and translating their business plan into Dubstep.

|

|