Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

Wil Clouser: Ten years of addons.mozilla.org

Ten years ago, Ben Smedberg and Wolf landed the first version of the code that would make up AMO. At the end of 2004 it was still called update.mozilla.org, written in PHP, and was just over 200k, compressed.

Behold the home page of update.mozilla.org.

This moved through, I think, one major revision, “update-beta”, which added a database table called main that held all the add-on info. This came with some additional fancy styles:

I started around 16 months after this foundation was laid. AMO was essentially flat HTML files sprinkled with PHP code and database queries, just like everyone says not to do now. I think the code was running on a single server named Chameleon at the time and as we released versions of Firefox it would get overwhelmed and become unresponsive. Most of the work at this time was adding in layers of caching like Smarty and keeping everything in memcached.

We had a major rewrite in planning (one with more dynamic possibilities) and I chose CakePHP as the foundation. That release happened and brought another redesign with it:

Check out how those gorgeous pale yellow and faded blue bars complement each other. Also note that we’re still claiming to be in beta at this point.

The Adblock detail page in 2006. Rated 5 out of 5 by someone named Nick!

Finally, at the end of 2006 the infamous chopper design appeared and took over the top third of our site:

Customizability was the message we were trying to communicate with this design. There were some concerns voiced at the time…

This version followed the first quickly. The header was reduced slightly, the content got some more margin, and we started using whitespace to keep things looking simple. On the other hand, you’ll notice our buttons getting more complicated – this was the beginning of our button horrors as we started to customize them for each visitor’s device.

Both chopper designs were relatively short-lived and, I want to say around early 2008, we moved to another infamous design – the green candy bar.

Here we are emphasizing search. And how. There was a rumor we actually stole this design from another site at the time – I assure you that wasn’t the case.

I don’t remember the actual date on this one, but let’s say 2009 since we seem to redesign this every year. This was a complete departure from our previous work and the design was done from scratch.

Not a bad looking design and one that served us well.

Finally, our current design:

I still think this is a good looking site.

This design also marked the first year we had a separate mobile site.

I’ve had the draft for this post written for years and I think it’s time just to hit publish on it – feel free to comment about all the things I forgot. There should probably also be some disclaimer about me writing this on a Friday afternoon and I take no responsibility for misinformation or wrong dates.

Thanks to fligtar for having screenshots of all the old versions!

http://micropipes.com/blog/2014/02/21/ten-years-of-addons-mozilla-org/

Ian Bicking: Collaboration as a Skeuomorphism for Agents

In concept videos and imaginings about the Future Of Computing we often see Intelligent Agents: smart computer programs that work on your behalf.

But to be more specific, I’m interested in agents that don’t work through formal rules. An SMTP daemon acts on your behalf, routing messages to your intended destination, but it does so in an entirely formal way, one that is “correct” or “incorrect”. And if such agents act with initiative, it is initiative based on formal rules, and those formal rules ultimately lead back to the specific intentions of whoever wrote the rules, the rules defined in terms of unambiguous inputs.

Progress on intelligent agents seems to be thin. Gmail sorts some stuff for us in a “smart” way. There are some smart command-based interfaces like Siri, but they are mostly smart frontends for formalized backends, and they lack initiative. Maybe we get an intelligent alert or two, but it’s a tiny minority given all the dumb alerts we get.

One explanation is that we don’t have intelligent agents because we haven’t figured out intelligence. But whatever, intelligent is as intelligent does; if this were the only reason then I would expect to see more dumb attempts at intelligent agents.

It seems worth approaching this topic with more mundane attempts. But there are reasons we (“we” being “us technologists”) don’t.

Intelligent agents will be chronically buggy

If we want agents to do things where it’s not clear what to do, then sometimes they are going to do the wrong thing. It might be a big-scale wrong thing, like they buy airplane tickets and we wanted them to buy concert tickets. Or a small thing, like we want them to buy airplane tickets and something changed about the interface to buy those tickets and now the agent is just confused.

Intelligent agents will be accepting rules from the people they are working for, from normal people. Then normal users become programmers in a sense. Maybe it’s a hand-holding cute and fuzzy programming language based on natural language, but it is the nature of programming that you will create your own bugs. Only a minority of bugs are created because you expressed yourself incorrectly; most bugs are because you thought it through incorrectly, and no friendly interface can fix that.

How then do we deal with buggy intelligent agents, while also allowing them to do useful things?

There are two things that come to mind: logging and having the agent check before doing something. Both are hard in practice.

Logging: this lets you figure out who was responsible for a bad action, or the reasoning behind an action.

Programmers do this all the time to understand their programs, but for an intelligent agent the user is also a developer. When you ask your agent to watch for something, or you ask it to act under certain circumstances, then you’ve programmed it, and you may have programmed it wrong. Fixing that doesn’t mean looking at stack traces, but there have to be some techniques.

You don’t want to have to take users into the mind of the person who programmed the agent. So how can you log actions so they are understandable?

Checking: you’ll want your agent to check in with you before doing some things. Like before actually buying something. Sometimes you’ll want the agent to check in even more often, not because you expect the agent to do something impactful, but because it might do something impactful due to a bug. Or you are just getting to know each other.

Among people this kind of check-in is common, and we have a rich language to describe intentions and to implicitly get support for those intentions. With computer interactions it’s a little less clear: how does an agent talk about what it thinks it should do? How do we know what it says it thinks it should do is what it actually plans to do?

Collaboration

We deal with lots of intelligent agents all the time: each other. We can give each other instructions, and in this way anyone can program another human. We report back to each other about what we did. We can tell each other when we are confused, or unable to complete some operation. We can confirm actions. Confirmation is almost like functional testing, except often it’s the person who receives the instructions who initiates the testing. And all of this is rooted in empathy: understanding what someone else is doing because it’s more-or-less how you would do it.

It’s in these human-to-human interactions we can find the metaphors that can support computer-based intelligent agents.

But there’s a problem: computer-based intelligent agents perform best at computer-mediated tasks. But we usually work alone when we personally perform computer-mediated tasks. When we coordinate these tasks with each other we often resort to low-fidelity check-ins, an email or IM. We don’t even have ways to delegate except via the wide categories of permission systems. If we want to build intelligent agents on the intellectual framework of person-to-person collaboration, we need much better person-to-person collaboration for our computer-based interactions.

(I will admit that I may be projecting this need onto the topic because I’m very interested in person-to-person collaboration. But then I’m writing this post in the hope I can project the same perspective onto you, the reader.)

My starting point is the kind of collaboration embodied in TogetherJS and some follow-on ideas I’m experimenting with. In this model we let people see what each other are “doing” — how they interact with a website. This represents a kind of log of activity, and the log is presented as human-like interactions, like a recording.

But I imagine many ways to enter into collaboration: consider a mode for teaching, where one person is trying to tell the other person how to do something. In this model the helper is giving directed instructions (“click here”, “enter this text”). For teaching it’s often better to tell than to do. But this is also an opportunity to check in: if my intelligent agent is instructing me to do some action (perhaps one I don’t entirely trust it to do on my behalf) then I’m still confirming every specific action. At the same time the agent can benefit me by suggesting actions I might not have figured out on my own.

Or imagine a collaboration system where you let someone pull you in part way through their process. A kind of “hey, come look at this.” This is where the diligent intelligent agent can spend its time checking for things, and then bring your attention when it’s appropriate. Many of the same controls we might want for interacting with other people (like a “busy” status) apply well to the agent who also wants to get our attention, but should maybe wait.

Or imagine a “hey, what are you doing, let me see” collaboration mode, where I invite myself to see what you are doing. Maybe I’ve set up an intelligent agent to check for some situation. Anytime you set up any kind of detector like this, you’ll wonder: is it really still looking? Is it looking for the right thing? I think it should have found something, why didn’t it? This is where it would be nice to be able to peek into the agent’s actions, to watch it doing its work.

If applications become more collaboration-aware there are further possibilities. For instance, it would be great if I could participate in a collaboration session in GitHub and edit a file with someone else. Right now the other person can only “edit” if they also have permission to “save”. As GitHub is now this makes sense, but if collaboration tools were available we’d have a valid use case where only one of the people in the collaboration session could save, while the other person can usefully participate. There’s a kind of cooperative interaction in that model that would be perfect for agents.

We can imagine agents participating already in the collaborative environments we have. For instance, when a continuous integration system detects a regression on a branch destined for production, it could create its own GitHub pull request to revert the changes that led to a regression. On Reddit there’s a bot that I’ve encountered that allows Subreddits to create fairly subtle rules, like allow image posts only on a certain day, ban short comments, check for certain terms, etc. But it’s not something that blocks submission (it’s not part of Reddit itself), instead it uses the same moderator interface that a person does, and it can use this same process to explain to people why their posts were removed, or allow other moderators to intervene when something valid doesn’t happen to fit the rules.

What about APIs?

In everything I’ve described agents are interacting with interfaces in the same way a human interacts with the interface. It’s like everything is a screen scraper. The more common technique right now is to use an API: a formal and stabilized interface to some kind of functionality.

I suggest using the interfaces intended for humans, because those are the interfaces humans understand. When an agent wants to say “I want to submit this post” if you can show the human the filled-in form and show that you want to hit the submit button, you are using what the person is familiar with. If the agent wants to say “this is what I looked for” you can show the data in the context the person would themselves look to.

APIs usually don’t have a staging process like you find in interfaces for humans. We don’t expect humans to act correctly. So we have a shopping cart and a checkout process; you don’t just submit a list of items to a store. You have a composition screen with preview, or interstitial preview. Dangerous or destructive operations get a confirmation step, one that could be just as applicable a warning for an agent as it is for a human.

None of this invalidates the reasons to use an API. And you can imagine APIs with these intermediate steps built in. You can imagine an API where each action can also be marked as “stage-only” and then returns a link where a human can confirm the action. You can imagine an API where each data set returned is also returned with the URL of the equivalent human-readable data set. You can imagine delegation APIs, where instead of giving a category of access to an agent via OAuth, you can ask for some more selective access. All of that would be great, but I don’t think there’s any movement towards this kind of API design. And why would there be? There’s no one eager to make use of it.
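As a rough illustration of the “stage-only” idea, here is a minimal in-memory sketch. Everything in it is hypothetical: the `StagedActionAPI` class, its `submit` and `confirm` methods, and the `example.invalid` confirmation URL are invented for this example and do not correspond to any existing API.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class StagedActionAPI:
    """Toy in-memory API where an agent can stage actions for human approval."""

    base_url: str = "https://example.invalid/confirm/"  # hypothetical URL
    pending: dict = field(default_factory=dict)   # token -> staged action
    executed: list = field(default_factory=list)  # actions actually carried out

    def submit(self, action: str, stage_only: bool = False) -> dict:
        """Run the action immediately, or park it and return a confirmation link."""
        if not stage_only:
            self.executed.append(action)
            return {"status": "done", "action": action}
        token = uuid.uuid4().hex
        self.pending[token] = action
        return {
            "status": "staged",
            "token": token,
            "confirm_url": self.base_url + token,
        }

    def confirm(self, token: str) -> dict:
        """Called when the human follows the link and approves the staged action."""
        action = self.pending.pop(token)  # raises KeyError for unknown tokens
        self.executed.append(action)
        return {"status": "done", "action": action}


api = StagedActionAPI()
staged = api.submit("buy 2 concert tickets", stage_only=True)
# Nothing has been bought yet; the agent only hands the link to its human.
result = api.confirm(staged["token"])
```

The point of the design is that the agent never holds the power to complete the action by itself; it can only prepare it and ask.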

That fancy Skeuomorphism term from the title

A Skeuomorphism is something built to be reminiscent of an existing tool, not out of any necessity, but because it provides some sense of familiarity. Our calendar software looks like a physical calendar. We talk of “folders”. We make our buttons look depressible even though it is all a simulation of a physical control.

This has come to mind when I talk of using the same metaphors for interacting with a computer program that we do for interacting with a human.

When we need a new way for people to work with computers a lot of success has come from finding bridges between our existing practices and a computer-based practice. The desktop instead of the command line, the use of cards on mobile, the many visual metaphors that we use, the way we phrase emails as letters, etc. Sometimes these are just scaffolding while people get used to the new systems (maybe flat design is an example). And of course you can pick the wrong metaphors (or go too far).

In this case the metaphor isn’t using the representation of a physical object in the computer, but using the representation of a fellow human as a stand-in for a program.

The goal is enabling a whole list of maybe actions. Maybe “intelligent” doesn’t really mean “knowledgeable and smart” but “is not formally verifiable as correct” and “successfully addresses a domain that cannot be fully understood”. You don’t need formal AI for these kinds of tasks. Heuristics don’t need to be sophisticated. But we need interfaces where a computer can make attempts without demanding correctness. And human interaction seems like the perfect model for that.

http://www.ianbicking.org/blog/2014/02/collaboration-as-a-skeuomorphism-for-agents.html

Ben Hearsum: This week in Mozilla RelEng – February 21st, 2014

Highlights:

- Rail spent some time optimizing spot instance bidding, which helps lower our AWS bill.

- Massimo improved one of our major AWS usage reports, which will also help us be more efficient with our usage.

- Ahal got us uploading qemu.logs to blobber, making it easier to debug some types of failures.

- Mike got his patch for “hg purge” landed, which has improved try build times!

- Callek moved all of our in-house test machines onto in-house masters, which takes additional load off of our AWS VPN link (which reduces the chance of tree closures due to it failing).

- Dan continued his work on moving MozBase tests out of the build jobs, which improves build and test turnaround time.

- John started figuring out how to make our signing servers support 64-bit Windows binaries, which is one thing (out of many) that we need to do before shipping 64-bit Windows builds.

- Catlee continued work on Jacuzzi implementation, making good progress on some of the Buildbot implementation that we need.

- Nick made additional progress on fixing our release automation to support shipping Release builds to Beta users for extra testing before we ship for real.

Completed work (resolution is ‘FIXED’):

- Buildduty

- mac-signing4's nagios is having issues

- Report impaired AWS non slave machines

- upload a new talos.zip to capture recent talos changes

- Add swap to linux build machines with <12GB RAM

- builds-4hr.js.gz not updating, all trees closed

- Please upload mozfile-1.1 to http://pypi.pub.build.mozilla.org/pub

- some test machines unable to connect to their masters

- All trees closed due to timeouts as usw2 slaves download or clone

- General Automation

- Need helix-eng builds for test automation

- Rooting analysis mozconfig should be in the tree

- Periodic PGO and non-unified builds shouldn’t be running again on pushes that already have them

- Implement ghetto “gaia-try” by allowing test jobs to operate on arbitrary gaia.json

- Make Pine use mozharness production & limit the jobs run per push

- Save qemu.log to blobber

- decommission final set of KVM buildbot masters back to IT

- Compression for blobber

- Temporarily revert the change to m3.medium AWS instances to see if they are behind the recent increase in test timeouts

- Do our own hg purge

- Lots of Command Queue nagios alerts from new AWS masters

- Loan Requests

- Please loan t-xp32-ix- instance to dminor

- Loan glandium a m3.medium test slave

- Loan jgilbert a Linux M1/R test slave

- Need a slave for bug 818968

- Loan Win8 test slave to Jim Mathies for soft keyboard investigation work

- Slave loan request for a tst-linux64-ec2 vm

- Other

- Platform Support

- Release Automation

- Releases

- Releases: Custom Builds

- Repos and Hooks

- Tools

In progress work (unresolved and not assigned to nobody):

- Balrog: Backend

- Buildduty

- Setup tegras that are returning from loan

- Deploy python 2.7.3 to all build machines

- rewrite watch pending to cope better with spot requests that aren’t being fulfilled

- General Automation

- mozilla-central should contain a pointer to the revision of all external B2G repos, not just gaia

- Don’t reset mock environments if we don’t have to

- Self-serve should be able to request arbitrary builds on a push (not just retriggers or complete sets of dep/PGO/Nightly builds)

- Schedule multimedia b2g mochitests for emulator-jb on Cedar

- Provision enough in-house master capacity

- Provide B2G Emulator builds for Darwin x86

- Do debug B2G desktop builds

- keep buildbot master twistd logs longer

- [tracking bug] Run desktop unittests on Ubuntu

- Add support for add-if-not instruction added by bug 759469 to the mar generation scripts

- Configure holly like Aurora properly

- [Meta] Some “Android 4.0 debug” tests fail

- b2g build improvements

- Run mozbase unit tests from test package

- fx desktop builds in mozharness

- Move Firefox Desktop repacks to use mozharness

- add --dump-config and --dump-config-hierarchy to mozharness

- port MockReset to mozharness

- allow for multiple basedirs to be passed to PurgeMixin.purge_builds()

- allow mozharness’s tbox_print_summary() to be used outside of unittests

- Use spot instances for regular builds

- Create SpiderMonkey builds for Windows on TBPL again

- Do nightly builds with profiling disabled

- Windows ix test machines don’t always start buildbot

- Don’t clobber the source checkout

- Add Linux32 debug SpiderMonkey ARM simulator build

- aws sanity check shouldn’t report instances that are actively doing work as long running

- Allow the possibility of spidermonkey builds on more platforms

- [tarako][build]create “tarako” build

- move off of dev-master01

- Schedule JB emulator builds and tests on cedar

- [tracking bug] migrate hosts off of KVM

- Run jit-tests from test package

- Intermittent Linux spot builder “command timed out: 2700 seconds without output, attempting to kill” while trying to install mock

- Loan Requests

- Loan felipe an AWS unit test machine

- need a loaner bld-linux64-ec2 instance

- Slave loan request for Matt Woodrow

- Loan an ami-6a395a5a instance to Aaron Klotz

- Please lend Andreas Tolfsen a linux64 EC2 m3.medium slave

- Please loan dminor instance to build Android 2.3 Emulator

- Loan :sfink a linux64 bld-centos6-hp-005

- Slave loan request for a tst-linux64-ec2 machine

- Other

- Platform Support

- Migrate ESR branches to win64-rev2

- Setup in-house buildbot masters for remaining in-house testers

- signing win64 builds is busted

- Windows slaves often get permission denied errors while rm’ing files

- Deploy ndk-stack on foopies

- do initial planning on “chunks” of machines to move.

- slave pre-flight tasks

- Deploy patched version of Mesa 8.0.4

- Release Automation

- Support modifying update-settings.ini when doing update verify

- Create SSL products in bouncer as part of release automation

- Figure out how to offer release build to beta users

- cache MAR + installer downloads in update verify

- Releases: Custom Builds

- Repos and Hooks

- Tools

- [Tracking bug] – Assisted/Auto Landing from Bugzilla to tip of $branch

- Store the timing of actions in slaveapi

- Move trychooser hg extension into the new https://hg.mozilla.org/hgcustom/version-control-tools repo

- Use MozillaPulse from pypi

- cut over build/* repos to the new vcs-sync system

- slaveapi still files IT bugs for some slaves that aren’t actually down

- Replace dev-master01 for dev-master1 in our repos

http://hearsum.ca/blog/this-week-in-mozilla-releng-february-21th-2014/

Roberto A. Vitillo: Main-thread IO Analysis

- The goal of this post is to show that main-thread IO matters and not to “shame” this or that add-on, or platform subsystem. There is only so much I can do alone by filing bugs and fixing some of them. By divulging knowledge about the off-main thread IO API and why it matters, my hope is that Firefox and add-on developers alike will try to exploit it the next time an opportunity arises. I changed the title of this post accordingly to reflect my intentions.

- Please note that just because some code performs IO on the main-thread, it doesn’t necessarily mean that it can be avoided. This has to be evaluated case by case.

Recently a patch landed in Firefox that allows telemetry to collect aggregated main-thread disk IO timing per filename. Each filename comes with its accumulated time spent and the number of operations performed for open(), read(), write(), fsync() and stat(). Yoric wrote an interesting article some time ago on why main-thread IO can be a serious performance issue.

I collected a day’s worth of Nightly data using Telemetry’s map-reduce framework and filtered out all those filenames that were not common enough (< 10%) among the submissions. What follows are some interesting questions we could answer thanks to the newly collected data.

- How common are the various operations?

  The most common operation is stat() followed by open(); all other operations are very uncommon, with a third quartile of 0.

- Is there a correlation between the number of operations and the time spent doing IO on a file?

  There is an extremely weak correlation, which is what one would expect. Generally, what really makes a difference is the amount of bytes read and written, for instance, not the number of actual operations performed.

- Does having an SSD lower the time spent doing IO on a file?

  A Mann-Whitney test confirms that the distributions of time conditioned on “having an SSD” and “not having an SSD” do indeed seem to be statistically different. On average, having an SSD lowers the time by a factor of 2.7.
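For readers unfamiliar with the Mann-Whitney test, here is a minimal standard-library sketch of the idea. It is not the analysis code used for this post: the timing values are made up, the `mann_whitney_u` helper is written for this example, and the p-value uses the plain normal approximation without tie or continuity corrections, which is crude for samples this small.

```python
import math


def mann_whitney_u(xs, ys):
    """Return (U, two-sided p) for xs vs ys using the normal approximation."""
    # U counts the pairs in which the x value exceeds the y value;
    # a tie contributes half a pair.
    u = sum(
        1.0 if x > y else 0.5 if x == y else 0.0
        for x in xs
        for y in ys
    )
    n1, n2 = len(xs), len(ys)
    mean = n1 * n2 / 2
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail of the normal
    return u, p


# Made-up per-file IO times in milliseconds, for illustration only.
ssd_ms = [12, 15, 9, 20, 11, 14]
hdd_ms = [31, 40, 28, 55, 36, 33]

u, p = mann_whitney_u(ssd_ms, hdd_ms)
print(f"U = {u}, p = {p:.4f}")
```

A small p-value suggests the two samples are unlikely to come from the same distribution. For real data a library routine that handles ties and exact small-sample p-values would be a better choice.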

The above facts apply to all submissions, but what we are really interested in is discerning those files for which the accumulated time is particularly high. An interesting exercise is to compare some distributions of the top 100 files (in terms of time) against the distributions of the general population to determine if bad timings are correlated with older machines:

- Does the distribution of Windows versions differ between the population and the top 100?

  Fisher’s test confirms that the distributions do not differ (with high probability), i.e. the OS version doesn’t seem to influence the timings.

- Does the distribution of CPU architectures (32 vs 64 bit) differ between the population and the top 100?

  Fisher’s test confirms that the distributions do not seem to differ.

- Does the distribution of the disk type differ between the population and the top 100?

  The distributions do not seem to differ according to Fisher’s test.

Can we deduce something from all this? It seems that files that behave badly do so regardless of the machine’s configuration. That is, removing the offending operations performing IO in the main-thread will likely benefit all users.

Which brings us to the more interesting question of how we should prioritize the IO operations that have to be moved off the main-thread. I am not a big fan of looking only at aggregates of data since outliers turn out to be precious more often than not. Let’s have a look at the distribution of the top 100 outliers.

Whoops, looks like httpDataUsage.dat, places.sqlite-wal, omni.ja and prefs.js behave particularly badly quite often. How bad is bad? For instance, one entry of httpDataUsage.dat performs about 100 seconds of IO operations (38 stat & 38 open), i.e. ~1.3 seconds per operation!
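The per-operation figure follows directly from the numbers quoted above; a tiny sketch of the arithmetic, using the rounded 100-second total:

```python
total_io_seconds = 100  # approximate accumulated time quoted above
stat_calls = 38
open_calls = 38

operations = stat_calls + open_calls
seconds_per_operation = total_io_seconds / operations
print(f"{operations} operations, {seconds_per_operation:.2f} s each")
# prints "76 operations, 1.32 s each"
```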

Now that we have a feeling of the outliers, let’s have a look at the aggregated picture. Say we are interested in those files most users hit (> 90%) and rank them by their third quartile:

In general the total time spent doing IO is about 2 to 3 orders of magnitude lower than for the outliers.
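The “hit by most users, ranked by third quartile” selection can be sketched as follows. This is an illustration, not the Telemetry map-reduce job: the input shape (one dict per submission mapping filename to accumulated IO time), the `rank_by_third_quartile` helper, and the sample numbers are all assumptions made for this example.

```python
from collections import defaultdict
from statistics import quantiles


def rank_by_third_quartile(submissions, min_share=0.9):
    """Rank files seen in more than min_share of submissions by their Q3 time.

    submissions: list of dicts mapping filename -> accumulated IO time (ms).
    """
    times = defaultdict(list)
    for submission in submissions:
        for filename, elapsed in submission.items():
            times[filename].append(elapsed)

    ranked = []
    for filename, values in times.items():
        share = len(values) / len(submissions)
        # quantiles() needs at least two data points on older Python versions.
        if share > min_share and len(values) >= 2:
            q3 = quantiles(values, n=4)[2]  # third of the three quartile cuts
            ranked.append((filename, q3))
    return sorted(ranked, key=lambda item: item[1], reverse=True)


# Made-up submissions, for illustration only.
submissions = [
    {"prefs.js": 40, "omni.ja": 5, "rare.dat": 900},
    {"prefs.js": 10, "omni.ja": 7},
    {"prefs.js": 30, "omni.ja": 6},
    {"prefs.js": 20, "omni.ja": 8},
]
ranked = rank_by_third_quartile(submissions)
```

Note how `rare.dat` drops out despite its large time: it appears in only a quarter of the submissions, which is exactly the kind of outlier the aggregate view hides.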

But what happened to the add-ons? It turns out that the initial filtering we applied on our dataset removed most of them. Let’s rectify the situation by taking a dataset with the single hottest entry for each file, in terms of time, and aggregate the filenames by extension:
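One way to express that aggregation, as a sketch under assumptions: the input is taken to be an iterable of `(filename, io_time_ms)` pairs, and the `hottest_by_extension` helper and sample values are invented for this example rather than taken from the actual analysis.

```python
import os
from collections import defaultdict


def hottest_by_extension(entries):
    """Keep the single hottest entry per file, then group times by extension.

    entries: iterable of (filename, io_time_ms) pairs.
    """
    hottest = {}
    for filename, elapsed in entries:
        hottest[filename] = max(elapsed, hottest.get(filename, 0))

    by_extension = defaultdict(list)
    for filename, elapsed in hottest.items():
        extension = os.path.splitext(filename)[1] or "(none)"
        by_extension[extension].append(elapsed)
    return dict(by_extension)


# Made-up entries, for illustration only.
entries = [
    ("a.sqlite", 5),
    ("a.sqlite", 50),
    ("b.sqlite", 7),
    ("prefs.js", 3),
    ("lock", 1),
]
grouped = hottest_by_extension(entries)
```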

To keep track of some of the statistics presented here, mreid and I prepared a dashboard. Time to file some bugs!

http://ravitillo.wordpress.com/2014/02/21/main-thread-io-hall-of-shame/

Eric Shepherd: Don’t be afraid to share

The most important rule of contributing to the developer documentation on MDN is this:

Don’t be afraid to contribute, even if you can’t write well and can’t make it beautiful. It’s easier for the writing team to clean up information written by true experts than it is to become experts ourselves.

Seriously. There aren’t that many of us on the writing team, even including our awesome non-staff contributors. We sadly don’t have enough time to become experts at all the things that need to be documented on MDN.

Even if all you do is copy your notes onto a page on MDN and click “Save,” that’s a huge help for us. Even pasting raw text into the wiki is better than not helping at all. Honest!

We’ll review your contribution and fix everything, including:

- Grammar; you don’t have to have perfect (or even good) writing skills—that’s what the writing team is here for!

- Style; we’ll make sure the content matches the MDN style guide.

- Organization; we’ll move your content, if appropriate, so that it’s in the right place on the site.

- Cross-linking; we’ll add links to other related content.

- Structure and frills; we’ll ensure that the layout and structure of your article is consistent, and that it uses any advanced wiki features that can help get the point across (such as live samples and macros).

It’s not your job to make awesome documentation. That’s our job. So don’t let fear prevent you from sharing what you know.

http://www.bitstampede.com/2014/02/21/dont-be-afraid-to-share/

Galaxy Kadiyala: MozCoffee Bangalore v4.0

After three disastrous MozCoffee meetups, finally we had Mozillians joining us at our fourth MozCoffee!

Thanks to Bram Pitoyo for joining us at the meet-up. Bram works for Mozilla in New Zealand as a UX designer and is in town for the Meta Refresh conference.

We had two Mozilla Reps and five Mozillians at the meet-up.

#casualcoffeee #web #design #startups with @neocatalyst @brampitoyo @kaustavdm @Shiva_Kolli @GalaxyK @mozilla pic.twitter.com/6a1OYc7Dzq

— Akash Devaraju (@SkyKOG) February 16, 2014

From left: +Galaxy Kadiyala, +Jaydev Ajit Kumar, +Bram Pitoyo, +Sagar Hasanadka, +Shivagangadhar Kolli, +Akash Devaraju, +Kaustav Das Modak

We did not have a proper agenda prepared, but we had a chat with Bram about Marketplace, FirefoxOS, SuMo, UX, FSA and ways for new Mozillians to contribute.

Kaustav, Bram and I walked like crazy through the streets of Bangalore to find a mobile shop for FirefoxOS research.

Firefox Student Ambassadors is very popular among college students. We are getting a lot of requests from FSAs to conduct events at their colleges. Pretty soon we will be doing an event for FSAs at a college in Bangalore. Keep watching our Mozilla India social media channels for updates on the event.

Wow! Had great time with #MOZILLIANS Casual coffee made me #enthusiastic @brampitoyo @GalaxyK @kaustavdm @SkyKOG @neocatalyst @Shiva_Kolli

— Sagar Hasanadka (@Hasanadka) February 17, 2014

David Boswell: Opportunities to connect with contributors at scale

Mozilla has a goal this year to grow the number of active contributors by 10 times. We’ll be able to achieve this by tapping into several different opportunities that let us connect with new contributors at scale. Some of those are:

- 70,000+ people (~200/day) will reach out through the Get Involved page this year to let us know they want to contribute

- 100,000+ people have already taken their first steps on the QA contribution pathway by installing and using Nightly builds

- 60,000+ people have subscribed to a newsletter of weekly contribution opportunities

- 10,000+ people have signed up to the Student Ambassadors program

The number of people who have reached out to us through those tools is a powerful example of how Mozilla’s mission resonates and gets people excited to want to help.

We can connect these people to Mozilla initiatives—we just need to get better at identifying and sharing out contribution opportunities and making use of the tools above.

If you have information to add about these tools or know of other opportunities to connect with people at scale, please feel free to update this etherpad. We’ll take these notes and make a guide for people interested in building communities around their projects.

http://davidwboswell.wordpress.com/2014/02/20/opportunities-to-connect-with-contributors-at-scale/

Eric Shepherd: Healthy docs are happy docs

Among the first steps we need to take for our “Year of Content” this year is to review our existing documentation to find where improvements need to be made. This involves going through articles on MDN and determining how “healthy” they are. If a doc is reasonably healthy, we can leave it alone. If it’s unhealthy, we need to give it some loving care to fix it up.

Today, I’d like to share with you what constitutes a healthy document. Only once we know that can we dive into more depth as to how to fix those articles that need help.

Check the content’s age

Look to see how recently the content was updated. If it’s been a long time, odds are good that it’s out of date. The degree to which it’s out of date depends, of course, on what the topic is and whether or not there’s been development work in that area recently. Content about CSS is a lot more likely to go quickly stale than material about XPCOM, for example.

Review the articles in each area of the site, checking how long it’s been since each was last changed, and use your judgement as to whether it’s been too long.

Do the pages have quicklinks?

Quicklinks appear in the left sidebar on MDN pages to offer links to related content. We are in the process of phasing out the old “See also” block at the end of articles in favor of quicklinks. If you see pages that have a section entitled “See also”, they need to be updated. Similarly, many areas of the site have standard quicklink macros that should be used, such as HTMLRef or CSSRef. These automatically construct advanced sidebars which link to related material as well as all other HTML elements or CSS properties.

Pages with no “See also” section or quicklinks may need some added; it’s often useful to provide additional links like these. However, they’re not mandatory.

Are there any KumaScript errors?

This is a big one. Any page that presents a big red KumaScript error box at the top of the page is an immediate “drop everything and fix this” alert. If you don’t know how to fix these (or aren’t able to), visit #mdn on IRC or drop me an email.

Are there appropriate examples and images?

Developers learn best and fastest by example. Any page about a developer technology that doesn’t have good code samples—with explanations of how they work—is probably flawed. Take note of pages that are missing examples, or have overly simplistic (or excessively complicated) examples. Also, most examples should be presented using our live sample system, which allows us to demonstrate inline the results of executing a piece of code.

Similarly, if an article would benefit from screenshots or other images (or videos) to help explain a topic, and doesn’t have any, that’s a detriment to its health, too.

Are there compatibility tables?

Every page about an API or technology should have a compatibility table that indicates what browsers and platforms support it. This includes both tutorials and reference pages. If these are missing, users will have a hard time evaluating whether or not a technology is ready for prime time.

On a related note, pages that are written from the perspective of using them from a particular browser are also flawed. Much of our content is still very Firefox-specific, which isn’t appropriate. MDN’s content should be browser-agnostic, supporting our developers regardless of what platforms and browsers their users are on. We need to find and get rid of boxes and banners that say a technology was added in Firefox version X and move that information into proper compatibility tables.

Do reference pages link to specifications?

Every reference page should include a link to the specification or specifications in which that API was defined. We have a standard table format for presenting this information, as well as a set of macros that help construct them.

Are pages formatted properly?

All pages on MDN should follow our style guide. Admittedly, our style guide is a bit in flux at the moment, and not all of it is written down yet. However, that will change soon (certainly by the time we dive deeply into this work), so it deserves a mention here. Content that deviates from the style guide needs updating.

Are pages tagged correctly?

Increasingly, we’re using macros for everything from generating landing pages and menus to search filtering to building to-do lists. This depends heavily on pages being tagged correctly. Articles that don’t follow our tagging standards need to be updated so that all of these features of the MDN Web site work correctly.

Do pages need review?

Healthy articles have been fully reviewed; they have no review requests flagged for them. New articles are automatically flagged for both editorial and technical review, and these reviews may be requested at any time after the article has been created, as well. These reviews help to ensure the documentation’s quality and clarity.

Make sure there aren’t any dead ends

Every article should have links to other pages. Articles that don’t cross-link properly to other content become dead ends in which users get lost. Be sure that terms are properly linked to relevant content elsewhere on MDN; if there aren’t appropriate links to other pages, the articles aren’t healthy.

Do pages use macros properly?

We use macros on MDN for a lot of things. It’s important that they’re used consistently and correctly. By using macros to generate content, we can create more content, and more consistent content, more quickly. For example, when linking to API interface objects, use the DOMXRef macro instead of directly linking to the object’s documentation. This macro can adapt quickly to site changes and design changes. In addition, it automatically creates appropriate tooltips and automatically handles other tasks to improve the site’s usability.

http://www.bitstampede.com/2014/02/20/healthy-docs-are-happy-docs/

|

|

Nathan Froyd: finding addresses of virtual functions |

Somebody on #developers this morning wanted to know if there was an easy way to find the address of the virtual function that would be called on a given object…without a debugger. Perhaps this address could be printed to a logfile for later analysis. Perhaps it just sounds like an interesting exercise.

Since my first attempt had the right idea, but was incomplete in some details, I thought I’d share the actual solution. The solution is highly dependent on the details of the C++ ABI for your particular platform; the code below is for Linux and Mac. If somebody wants to write up a solution for Windows, where the size of pointer-to-member functions can vary (!), I’d love to see it.

#include <stddef.h>

#include <string.h>

/* AbstractClass may have several different concrete implementations. */

class AbstractClass {

public:

virtual int f() = 0;

virtual int g() = 0;

};

/* Return the address of the `f' function of `aClass' that would be

called for the expression:

aClass->f();

regardless of the concrete type of `aClass'.

It is left as an exercise for the reader to templatize this function for

arbitrary `f'. */

void*

find_f_address(AbstractClass* aClass)

{

/* The virtual function table is stored at the beginning of the object. */

void** vtable = *(void***)aClass;

/* This structure is described in the cross-platform "Itanium" C++ ABI:

http://mentorembedded.github.io/cxx-abi/abi.html

The particular layout replicated here is described in:

http://mentorembedded.github.io/cxx-abi/abi.html#member-pointers */

struct pointerToMember

{

/* This field has separate representations for non-virtual and virtual

functions. For non-virtual functions, this field is simply the

address of the function. For our case, virtual functions, this

field is 1 plus the virtual table offset (in bytes) of the function

in question. The least-significant bit therefore discriminates

between virtual and non-virtual functions.

"Ah," you say, "what about architectures where function pointers do

not necessarily have even addresses?" (ARM, MIPS, and AArch64 are

the major ones.) Excellent point. Please see below. */

size_t pointerOrOffset;

/* This field is only interesting for calling the function; it

describes the amount that the `this' pointer must be adjusted

prior to the call. However, on architectures where function

pointers do not necessarily have even addresses, this field has the

representation:

2 * adjustment + (virtual_function_p ? 1 : 0) */

ptrdiff_t thisAdjustment;

};

/* Translate from the opaque pointer-to-member type representation to

the representation given above. */

pointerToMember p;

int ((AbstractClass::*m)()) = &AbstractClass::f;

memcpy(&p, &m, sizeof(p));

/* Compute the actual offset into the vtable. Given the differing meaning

of the fields between architectures, as described above, and that

there's no convenient preprocessor macro, we have to do this

ourselves. */

#if defined(__arm__) || defined(__mips__) || defined(__aarch64__)

/* No adjustment required to `pointerOrOffset'. */

static const size_t pfnAdjustment = 0;

#else

/* Strip off the lowest bit of `pointerOrOffset'. */

static const size_t pfnAdjustment = 1;

#endif

size_t offset = (p.pointerOrOffset - pfnAdjustment) / sizeof(void*);

/* Now grab the address out of the vtable and return it. */

return vtable[offset];

}
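
The comment above leaves templatizing the function as an exercise. One possible sketch follows. It assumes the Itanium C++ ABI described in the post, a class whose vtable pointer sits at offset zero, and a call that needs no `this` adjustment. The names `find_virtual_address`, `Base`, `A` and `B` are hypothetical and exist only for illustration. Unlike the original, this version returns a null pointer when handed a non-virtual member function.

```cpp
#include <cstddef>
#include <cstring>

/* Hypothetical generalization of find_f_address for any virtual member
   function.  Assumes the Itanium C++ ABI (Linux, Mac); it is not valid
   for the Microsoft ABI. */
template <typename Class, typename Ret, typename... Args>
void* find_virtual_address(Class* obj, Ret (Class::*method)(Args...))
{
  /* Same layout as the pointerToMember struct in the post. */
  struct pointerToMember {
    size_t pointerOrOffset;
    ptrdiff_t thisAdjustment;
  };
  static_assert(sizeof(pointerToMember) == sizeof(method),
                "unexpected pointer-to-member layout");

  pointerToMember p;
  memcpy(&p, &method, sizeof(p));

#if defined(__arm__) || defined(__mips__) || defined(__aarch64__)
  /* The discriminator bit lives in the adjustment field. */
  const bool isVirtual = (p.thisAdjustment & 1) != 0;
  const size_t vtableOffset = p.pointerOrOffset;
#else
  /* The discriminator bit is the lowest bit of the pointer field. */
  const bool isVirtual = (p.pointerOrOffset & 1) != 0;
  const size_t vtableOffset = p.pointerOrOffset - 1;
#endif
  if (!isVirtual)
    return nullptr;

  /* The vtable pointer is assumed to be the first word of the object. */
  void** vtable;
  memcpy(&vtable, static_cast<const void*>(obj), sizeof(vtable));
  return vtable[vtableOffset / sizeof(void*)];
}

/* Hypothetical classes used only to exercise the helper. */
struct Base {
  virtual int f() = 0;
  virtual int g() = 0;
  virtual ~Base() {}
};
struct A : Base {
  int f() override { return 1; }
  int g() override { return 2; }
};
struct B : Base {
  int f() override { return 3; }
  int g() override { return 4; }
};
```

A call such as `find_virtual_address(basePtr, &Base::f)` should then yield the address of `A::f` or `B::f`, depending on the dynamic type behind `basePtr`.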

https://blog.mozilla.org/nfroyd/2014/02/20/finding-addresses-of-virtual-functions/

|

|

Brian King: Reps Leads Meetup Summary |

The Mozilla Reps Council and module Peers met for 2 days over this weekend to solidify plans for 2014 and re-calibrate the vision and goals of Reps in general to align with our ambitious organisational goals around growing community. Traditionally billed as Council meetings, these bi-yearly sessions are designed to get the project leaders together to work on planning and strategy for the program. The program has made a huge impact, but to ensure continued impact we have to continually assess the program to make improvements and work on future strategy. The Council provides the general vision of the program and oversees day-to-day operations globally. The Peers oversee the Council and provide input and vision on the program in general. Also at the meeting were guests William Reynolds (Community Tools, including reps.mozilla.org), Konstantina Papadea (Budget and Swag), Michelle Thorne (Foundation), Marcia Knous (QA), and Rosana Ardila (SUMO).

2014

Our 2014 goal is simple:

Mozilla Reps in 2014 are more engaged, empowered and enabled.

What Happened?

We started day one with a question to attendees to frame the weekend:

How can we improve the Reps program to better enable Mozilla Communities that have impact?

This was the measure for everything we would do for the rest of the time. The two primary topic areas on day one were program structure / goals, and leadership. We broke into sub-groups with the remit of coming up with the bottlenecks and problems in these areas. Solutions were for other sessions. In calling out what we felt were important issues, we could provide focus for the rest of the weekend. We grouped items into three different categories that we felt caught all we wanted to capture – Council and Peers, Mentors, Reps.

Mixed through day one were other sessions. Prefaced by Michelle talking about Teach The Web/Webmaker plans for the year and how Reps can support them, we had a general discussion about events. With an average of 3 daily globally, events are central to the program, and will continue to evolve to meet the needs of everyone at Mozilla. Tristan Nitot came in to talk about TRIBE and personal development. Kate Naszradi dialed in really early California time to give us an update on the fast-growing Firefox Student Ambassadors Program. Then Mary Colvig and David Boswell joined to discuss the topic ‘Enable Communities that have Impact’, aka One Million Mozillians: how we are going to scale by 10x in 2014. To round out the day we did a recap, and there were mixed feelings on what we achieved, which fed into a new framework for day two.

For day two, we basically threw out the written schedule, and decided to hack. There was a hunger for finding solutions to the issues we identified on day one and starting to fix them. Out of the breakouts we had came a Trello board with specific tasks.

Also on day two: Pete Scanlon dropped in for a discussion on Engagement, the team’s role in 2014 Mozilla goals, and how Reps fit in and can make an impact. We had a few more discussions on important topics that bubbled up such as the Reps application process. We collaborated. We argued. We laughed.

Huge thanks to Henrik Mitsch who did a fantastic job as facilitator over the weekend. Without him it would have been chaos!

Tasks

Here are some of the important topics that surfaced:

- Program Purpose

- Visibility of Council

- Council Accountability

- Council On-boarding

- Council and Budget

- Leadership

- Mentors Scaling

- Mentors Training

- Reps Portal

- Reps Activity

For these and other buckets, we brainstormed solutions and then broke them down into tasks. For sure, there is a lot of work to do, but it can be done. One of the challenges coming out of previous ReMo work events was the implementation of work items. There were various reasons for this, and we are coming up with new work models to fix it. The folks at the meeting are primarily tasked with this, but we will be looking to mentors and all Reps for help.

What Next?

The program purpose is clear and articulated on the wiki, but the scope and mechanisms for working with us have perhaps not been clear. We will fix that, to make it more accessible. Thanks to Michelle for writing a great case-study of Webmaker working with Reps. After we prioritise tasks, we’ll be sharing out with everyone for feedback. We all have a stake in Mozilla Reps.

We can help your team, your community, you. We’ll be telling you how. But this is a two-way street. Tell us how we can support you.

Very soon I will have some more exciting Reps news for you. Watch this space.

http://brian.kingsonline.net/talk/2014/02/reps-leads-meetup-summary/

|

|

Frédéric Harper: Web first |

Creative Commons: http://j.mp/1dPimfh

I have evangelised mobile first and responsive web design for years now. Not that I’ll stop, but I’ll switch my primary focus to something even more important, something I’m calling web first!

I’m a big fan of giving a great experience to the users, and I know you are too. Unfortunately, that means native applications for many developers, startups, and companies. I agree that when it comes to mobile, HTML5 isn’t quite there yet, and it’s why we are developing Firefox OS at Mozilla, but still, the web is a strong platform, and you can give a decent to a very good experience to your customers.

Because native applications seem to give a better integrated experience for users on their smartphones, why should you start with the browser experience first, or web first as I wrote earlier? There are many reasons, but the most important one is that you will give access to your application to everybody with an internet connection! No discrimination about the OS or the device: everybody who has access to a web browser will be able to use your application.

Reaching more people, and building the foundation for native applications are also important advantages. If you really want to build a native application, do it after. If your web architecture was well done, you’ll already have mostly everything you need to communicate between your server, and your native application if necessary (login, saving, processing…).

I feel that web first is so important that I want to promote it a lot more: I even bought webfirst.org to create a small site with a manifesto about this (I’ll need designers’ help)! So next time you want to build an application, think web first!

--

Web first is a post on Out of Comfort Zone from Frédéric Harper

|

|

Mitchell Baker: A quick note |

I’ve been quiet since the Town Hall on Directory Tiles. That’s because I’ve been traveling and pretty wrapped up in the trip. I know there is a lot to think about with the Directory Tiles, a lot of good questions and topics raised.

I’m on my way to some more meetings in Europe and then Mobile World Congress. So I may be pretty quiet until MWC is over. Please don’t think that means I’ve zoned out on this topic. I know it’s important.

|

|

Andrew Truong: Have to? vs. Want to? |

I'm sure that all of us know the difference between a need and a want, but do we know the difference between a "have to" and a "want to"? It sounds basic and easy to comprehend, but there's an analogy behind it.

Between a "want to" and a "have to" there's a line that can't be crossed; you need to watch your step. I am a SUMO contributor. It's not a necessity for me to contribute, and neither is it for any of us, because the opportunity to leave Mozilla to advance your life and career is always available. I enjoy helping users who come to SUMO with an issue. Our users may overreact at times, but most of the time they turn out to be friendly and nice people once we resolve their issue.

I want to... I need to... What do I want and what do I need? A "want to" is something that I prefer to have or prefer to choose, but a "have to" is a necessity, something that must be part of our daily life. There are times when you need to be enlightened in order to know whether what you are doing is necessary and whether it's beneficial. An argument I had with a schoolmate of mine got me thinking about wants and have-tos. When we are requested to do something, most of the time it's a "want to": because it was only a request, we have the right to say no. Now imagine it was something you had to do and you said no; would this not give a bad impression and leave a mark against you with the requester? We need to think clearly before we react, and we can always ask whether something is what they want us to do or something we have to do. Having things cleared up for us makes assignments and other things easier to complete. We mustn't be stubborn, because being stubborn will not get us anywhere in life, and we need to remember to keep an open mind at all times.

Now, let's remember to keep an open mind and remember to think if something is a want to or if it's a have to!

Read this on my blog: http://feer56.ca/?p=53

Enjoyed this content? Please donate to help keep my blog up and alive: http://feer56.ca/?page_id=64

|

|

Pascal Finette: Putting My Money Where My Mouth Is |

I quit my job at Google.

Over the last couple of months it became increasingly clear to me that I need to be deep in the trenches of entrepreneurship. That I care too much about founders, startups and ecosystems to do anything else. That I need to be where the action is.

In my blood.

I am incredibly excited to take 2014 to follow my hunches, work with amazing folks like you, grow the startup movement around the world, preach the gospel of entrepreneurship, develop a couple of projects and see where the wind takes me.

What's next?

I founded an advisory and business development firm specializing in open innovation and entrepreneurship (ten|x). I will do a whole bunch of public speaking. I have some exciting plans for The Heretic. I relaunched my website. I allow myself to be open for opportunities, projects and whacky ideas.

Life is freakin' amazing.

Now - let's put our money where our mouth is and get to work!

http://blog.finette.com/2014/02/19/putting-my-money-where-my-mouth-is

|

|

Pierros Papadeas: Contribution Activity Metrics – An Intro |

Yesterday was my 3rd anniversary of joining Mozilla as a paid contributor. We’ve come a long way over those 3 years, and especially in my domain (community building) we experimented with, deployed and pioneered new ideas on how to build a global community.

For me, Mozilla Reps was the experimentation focus. Together with William Quiviger we created a program with a specific set of tools to help Mozillians organize and/or attend events, recruit and mentor new contributors, document and share activities, and support their local communities better. Other parts of the organization, namely Webmaker, SuMo, Addons, QA and Coding, also ramped up their contributor engagement efforts and explored new ways to interact with contributors and incentivize contributions in their areas.

Experimentation and growth inherently surface some needs: the need for visibility, for a deeper understanding of the inner workings of your community, and for hard figures to look at when assessing the progress of a program, so that informed decisions can be made about its future. To meet those needs, each team tried to create its own metrics around its contribution systems (websites, tools, communities), with a narrow scope covering only its immediate needs.

Starting late 2012, a meta team of community builders was formed across Mozilla (Community Building Team aka CBT), thanks to the efforts of David Boswell trying to get us all on the same page. It was easy to see early-on that we needed to standardize our language around contribution metrics, align and define a unified way forward. The advantages of a unified approach were obvious. For the first time, we would be able to track contributions across all Mozilla projects and cross compare activity metrics for each project. Establishing a common language and definition of a contribution would also be the key to unlock all communications between community builders in Mozilla. We would be speaking the same language and measure activity the same way. For sure interpretations, thresholds and actions after the metrics might differ for each project, but hey! we would have de-duplicated a lot of efforts after all!

Since late December 2013, (almost 2 months now) it is my honor to be part of a newly formed Community Building Team under David. In my new role, I am tasked to lead the Systems and Data working group, delivering these unified tools and language around contribution metrics in Mozilla. You can learn more about the Systems and Data working group reading this intro post. For over a year now, this (initially informal) Working Group has been conceptualizing and rethinking the way we measure contribution across Mozilla, and with this newly formed team the time has come to deliver those. We are well underway on building the first iteration of those tools and getting the first results published.

This post is just the first of many to come in a series of posts around Contribution Activity Metrics and our progress so far. If you love metrics and you are interested in community building, you can join us by being part of the Working Group.

People are doing awesome stuff in Mozilla. Let’s measure them!

|

|

Pete Moore: Weekly review 2014-02-19 |

Accomplishments & status:

Bug 866260 – cut over build/* repos to the new vcs-sync system

This was the main focus of my week. The bug has been resolved/closed. All build/* repositories on hg.m.o are getting mirrored to github mozilla account successfully.

During the testing, I discovered differences between the staging repository I had set up on github, and the existing production repository being served by the legacy system. The differences turned out to be errors in the production legacy system that had gone unnoticed (see Bug 973015 – legacy vcs-sync process doesn’t properly distinguish branch names from bookmark names). This was a great start for the new sync system, as it immediately solved this issue.

We went live on Monday (17 Feb), and no issues have been reported so far.

Many many thanks to both Hal Wine and Aki Sasaki for supporting me tremendously with this bug.

Bug 875599 – Delete dead code in tools repo

Created/tested/had reviewed/released a new patch to close this (rather old) bug.

Queue hygiene - I wanted to get rid of this bug, which had been lingering a while and, although not high priority, was relatively quick to resolve.

Bug 862910 – cache MAR + installer downloads in update verify and Bug 710461 - Be smarter about downloading mar files in update verify

Created an initial patch for this (see https://github.com/petemoore/build-tools/compare/bug862910), but after thinking about it some more, I have a better idea of how to approach this: hopefully reusing the parallelism introduced in Bug 628796 (final verification logs should have a seperator between requests) to also offer some speed advantages, and to avoid retesting the same actions multiple times.

Bug 876715 – Determine how to update watcher

Currently testing if this can be closed (checking all tegras still have new watcher, and that none have regressed back to old one, e.g. due to being reimaged with an old image, etc).

Bug 969461 – disable & delete mirror of gaia-ui-tests

Reviewed changes for Hal. All good.

Bug 799719 – (vcs-sync) tracker to retire legacy vcs2vcs

This is a tracking bug for all the vcs sync migration work.

Bug 971372 - wrong users sudo permissions on github-sync[1-4]

With Hal’s help, got sudo access to the vcs sync boxes. Closed/resolved.

Blocked/Waiting on:

"Request for remaining issues regarding hg.mozilla.org"

Working with Ben Kero on this topic - I currently have no bug number…

To look at over the next week:

Bug 847640 – db-based mapper on web cluster

This is the new work which will unblock gecko-dev and l10n rollout into new vcs2vcs system.

Bug 929336 – permanent location for vcs-sync mapfiles, status json, logs

Need to work out where to put all this stuff.

Bug 905742 – Provide B2G Emulator builds for Darwin x86

Updated status to say I’ll be working on it next week.

Areas to develop:

To be discussed.

Quarterly goal tracking:

Notes:

1) Coop’s trip to Germany:

https://releng.etherpad.mozilla.org/241

Anything missing?

2) Flights to Portland - need to discuss - see email

3) TRIBE - no places left in April. Will go next available opportunity.

I probably need to assign this to somebody else - I just don’t have time to look at it at the moment. Suggestions?

Actions:

- Discuss caching strategy with bhearsum

- Check possible flight connections myself if maybe a Friday night flight

- Try to sign up for multiple TRIBE courses at once, to avoid not being able to get a place for a later course, if I secure place at foundation course

|

|

Chris Pearce: How to prefetch video/audio files for uninterrupted playback in HTML5 video/audio |

http://blog.pearce.org.nz/2014/02/how-to-prefetch-videoaudio-files-for.html

|

|

Selena Deckelmann: Printing flashcards on 3x5 index cards |

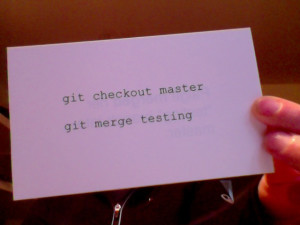

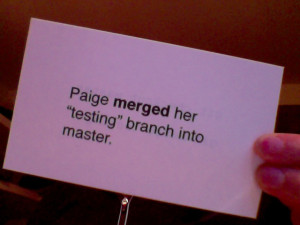

I’m making a few sets of these for a meetup tomorrow night:

In the past, I’d printed out text from a spreadsheet tool and then used a lot of tape and scissors to make the magic happen.

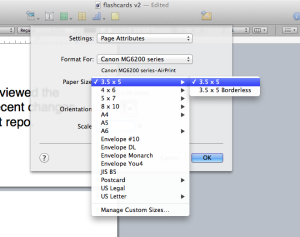

I wanted to step up my game a little and print things directly onto index cards. Printing is a little tricky on most printers because you won’t be able to print double-sided unless you have a very fancy printer. I have a Canon MG6220 (mostly for printing photos).

Here’s how to set up a word processing app to print out cards (I used Pages):

- Configure the page layout to be 3'' x 5''. Here’s the Page Setup dialog box for Pages:

- Add your flashcards to the document! Add the cards with the front and back of each flashcard as single, adjacent pages. A sample PDF of what this looks like is here.

- To make the printing work, I set up 2 printing presets – one called ‘front flashcards’ and the other called ‘back flashcards’. The ‘front’ is set up to print odd pages only, and ‘back’ is set up to print even pages only and in reverse order.

- Print the front side of the cards using your ‘front flashcards’ preset.

- Flip the stack of cards over, face down, and put them back into the paper feeder (YMMV with this in the event that your printer is set up differently than mine).

- Print the other side of the flashcards using your ‘back flashcards’ preset.

That’s it!
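
For anyone scripting the two print passes rather than using presets, the page order described above can be sketched in C++ as follows. The struct and function names are hypothetical; the only logic taken from the steps is "odd pages for the fronts, even pages in reverse order for the backs".

```cpp
#include <vector>

// Page numbers to send to the printer in each pass (hypothetical helper).
struct PrintPasses {
  std::vector<int> front;  // odd pages, ascending
  std::vector<int> back;   // even pages, descending
};

// Each card occupies two adjacent pages: front on the odd page,
// back on the following even page.
PrintPasses flashcardPrintOrder(int cardCount)
{
  PrintPasses passes;
  const int pageCount = 2 * cardCount;
  for (int page = 1; page <= pageCount; page += 2)
    passes.front.push_back(page);
  for (int page = pageCount; page >= 2; page -= 2)
    passes.back.push_back(page);
  return passes;
}
```

For three cards this gives pages 1, 3, 5 for the first pass and 6, 4, 2 for the second. Whether the reversed order lines up with the flipped stack depends on how your printer feeds paper, as noted in the steps.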

|

|

Robert O'Callahan: World Famous In Newmarket |

The Newmarket Business Association has made a series of videos promoting Newmarket. They're interesting, if a little over-produced for my taste. Their Creativity in Newmarket video has a nice plug for the Mozilla office. Describing our office as a "head office" is a bit over the top, but there you go.

http://robert.ocallahan.org/2014/02/world-famous-in-newmarket.html

|

|

Jan de Mooij: Using segfaults to interrupt JIT code |

(I just installed my own blog so I decided to try it out by writing a bit about interrupt checks.)

Most browsers allow the user to interrupt JS code that runs too long, for instance because it’s stuck in an infinite loop. This is especially important for Firefox as it uses a single process for chrome and content (though that’s about to change), so without this dialog a website could hang the browser forever and the user is forced to kill the browser and could lose work. Firefox will show the slow script dialog when a script runs for more than 10 seconds (power users can customize this).

SpiderMonkey

Firefox uses a separate (watchdog) thread to interrupt script execution. It triggers the “operation callback” (by calling JS_TriggerOperationCallback) every second. Whenever this happens, SpiderMonkey promises to call the operation callback as soon as possible. The browser’s operation callback then checks the execution limit, shows the dialog if necessary and returns true to continue execution or false to stop the script.

How this works internally is that JS_TriggerOperationCallback sets a flag on the JSRuntime, and the main thread is responsible for checking this flag every now and then and invoking the operation callback if it’s set. We check this flag, for instance, on JS function calls and loop headers. We have to do this both for scripts running in the interpreter and the JITs, of course. For example, consider this function:

function f() {

for (var i=0; i<100000000; i++) {

}

}

Until Firefox 26, IonMonkey would emit the following code for this loop:

0x228a3b9: cmpl $0x0,0x2b764ec // interrupt check

0x228a3c0: jne 0x228a490

0x228a3c6: cmp $0x5f5e100,%edx

0x228a3cc: jge 0x228a3da

0x228a3d2: add $0x1,%edx

0x228a3d5: jmp 0x228a3b9

Note that the loop itself is only 4 instructions, but we need 2 more instructions for the interrupt check. These 2 instructions can measurably slow down tight loops like this one. Can we do better?
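
For reference, the flag-polling scheme described above can be modelled in C++ roughly as follows. This is a sketch, not SpiderMonkey code: `g_interruptRequested`, `triggerOperationCallback` and `runLoop` are hypothetical stand-ins for the JSRuntime flag, JS_TriggerOperationCallback and the compiled loop.

```cpp
#include <atomic>
#include <cstdint>

// Hypothetical stand-in for the flag stored on the JSRuntime.
static std::atomic<bool> g_interruptRequested{false};

// What a watchdog thread would call (models JS_TriggerOperationCallback).
void triggerOperationCallback()
{
  g_interruptRequested.store(true, std::memory_order_relaxed);
}

// Models the loop from f(): the flag is polled at every loop header.
// `callback` returns true to continue execution or false to stop the script.
// Returns the number of iterations completed.
uint64_t runLoop(uint64_t limit, bool (*callback)())
{
  uint64_t i = 0;
  for (; i < limit; i++) {
    // Interrupt check: this is the extra compare-and-branch in the listing.
    if (g_interruptRequested.load(std::memory_order_relaxed)) {
      g_interruptRequested.store(false, std::memory_order_relaxed);
      if (!callback())
        break;
    }
  }
  return i;
}
```

In real use the flag would be set from a separate thread while the loop runs. The check is cheap, but it sits on every iteration, and that is the cost the rest of the post tries to remove.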

OdinMonkey

OdinMonkey is our ahead-of-time (AOT) compiler for asm.js. When developing Odin, we (well, mostly Luke) tried to shave off as much overhead as possible; for instance, we wanted to get rid of bounds checks and interrupt checks where possible to close the gap with native code. The result is that Odin does not emit loop interrupt checks at all! Instead, it makes clever use of signal handlers.

When the watchdog thread wants to interrupt Odin execution on the main thread, it uses mprotect (Unix) or VirtualProtect (Windows) to clear the executable bit of the asm.js code that’s currently executing. This means any asm.js code running on the main thread will immediately segfault. However, before the kernel terminates the process, it gives us one last chance to interfere: because we installed our own signal handler, we can trap the segfault and, if the address is inside asm.js code, we can make the signal handler return to a little trampoline that calls the operation callback. Then we can either jump back to the faulting pc or stop execution by returning from asm.js code. (Note that handling segfaults is serious business: if the faulting address is not inside asm.js code, we have an unrelated, “real” crash and we must be careful not to interfere in any way, so that we don’t sweep real crashes under the rug.)

This works really well and is pretty cool: asm.js code has no runtime interrupt checks, just like native code, but we can still interrupt it and show our slow script dialog.
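
The general shape of this trick can be sketched on a POSIX system as below. This is a simplified model and not Odin's implementation. It revokes access to a data page that the loop touches instead of clearing the executable bit on code, and it uses `siglongjmp` in place of a hand-written trampoline. All names are hypothetical.

```cpp
#include <setjmp.h>
#include <signal.h>
#include <sys/mman.h>
#include <unistd.h>
#include <cstddef>

static char* g_guardPage = nullptr;  // page the running loop keeps touching
static size_t g_pageSize = 0;
static sigjmp_buf g_trampoline;      // where the handler sends execution

static void faultHandler(int sig, siginfo_t* info, void*)
{
  char* addr = static_cast<char*>(info->si_addr);
  if (g_guardPage && addr >= g_guardPage && addr < g_guardPage + g_pageSize) {
    // The fault is ours: divert the interrupted loop to the trampoline.
    siglongjmp(g_trampoline, 1);
  }
  // Unrelated, "real" crash: restore the default action and return, so the
  // faulting instruction re-executes and crashes normally.
  signal(sig, SIG_DFL);
}

// Maps the guard page and installs the handler.
bool setupInterruptiblePage()
{
  g_pageSize = static_cast<size_t>(sysconf(_SC_PAGESIZE));
  void* p = mmap(nullptr, g_pageSize, PROT_READ | PROT_WRITE,
                 MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
  if (p == MAP_FAILED)
    return false;
  g_guardPage = static_cast<char*>(p);

  struct sigaction sa = {};
  sa.sa_sigaction = faultHandler;
  sigemptyset(&sa.sa_mask);
  sa.sa_flags = SA_SIGINFO;
  // Some systems report protection faults as SIGBUS instead of SIGSEGV.
  return sigaction(SIGSEGV, &sa, nullptr) == 0 &&
         sigaction(SIGBUS, &sa, nullptr) == 0;
}

// Watchdog side: revoke access so the next touch of the page faults.
bool requestInterrupt()
{
  return mprotect(g_guardPage, g_pageSize, PROT_NONE) == 0;
}

// Main-thread side: a loop with no interrupt checks at all.
// Returns true once it has been interrupted.
bool runUntilInterrupted()
{
  if (sigsetjmp(g_trampoline, 1) != 0) {
    // "Trampoline": reached from the signal handler. Restore access; this
    // is where the operation callback would be invoked.
    mprotect(g_guardPage, g_pageSize, PROT_READ | PROT_WRITE);
    return true;
  }
  volatile char* page = g_guardPage;
  for (;;) {
    char c = *page;  // faults as soon as access has been revoked
    (void)c;
  }
}
```

In real use, `requestInterrupt()` would be called from the watchdog thread while `runUntilInterrupted()` is spinning on the main thread. Calling it beforehand makes the first touch of the page fault immediately. The address check in the handler mirrors the care the post asks for with unrelated crashes.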

IonMonkey

A while later, Brian Hackett wanted to see if we could make IonMonkey (our optimizing JIT) as fast as OdinMonkey on asm.js code. This means he also had to eliminate interrupt checks for normal JS code running in Ion (we don’t bother doing this for our Baseline JIT as we’ll spend most time in Ion code anyway).

The first thought is to do exactly what Odin does: mprotect all Ion-code, trigger a segfault and return to some trampoline where we handle the interrupt. It’s not that simple though, because Ion-code can modify the GC heap. For instance, when we store a boxed Value, we emit two machine instructions on 32-bit platforms, to store the type tag and the payload. If we use signal handlers the same way Odin does, it’s possible we store the type tag but are interrupted before we can store the payload. Everything will be fine until the GC traces the heap and crashes horribly. Even worse, an attacker could use this to access arbitrary memory.
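
A small simulation shows why the half-finished store is dangerous. It assumes a made-up layout of two 32-bit words per Value with hypothetical tag constants (`TAG_INT32`, `TAG_OBJECT`); the real encoding differs, but the hazard is the same.

```javascript
// Toy model of a boxed Value on a 32-bit platform: [tag, payload].
// Tag values and names are hypothetical, chosen only for illustration.
const TAG_INT32 = 1;
const TAG_OBJECT = 2;

function makeSlot(tag, payload) {
  const words = new Uint32Array(2);
  words[0] = tag;
  words[1] = payload;
  return words;
}

// A store is two separate writes. `interruptAfterTag` models being
// diverted to the signal handler between them.
function storeValue(slot, tag, payload, interruptAfterTag) {
  slot[0] = tag;
  if (interruptAfterTag) return false; // the payload write never happens
  slot[1] = payload;
  return true;
}

// The slot holds an integer chosen by the script...
const tornSlot = makeSlot(TAG_INT32, 0x41414141);
// ...and the store of an object pointer is cut off after the tag.
storeValue(tornSlot, TAG_OBJECT, 0x7f001000, true);

// For comparison, an uninterrupted store.
const intactSlot = makeSlot(TAG_INT32, 0x41414141);
storeValue(intactSlot, TAG_OBJECT, 0x7f001000, false);
```

After the torn store the slot claims to be an object, but its "pointer" is still the integer the script picked. A GC tracing this slot would follow that value as if it were an address.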

There’s another problem: when we call into C++ from Ion code, the register allocator tracks GC pointers stored in registers or on the stack, so that the garbage collector can mark them. If we call the operation callback at arbitrary points though, we don’t have this information. This is a problem because the operation callback is also used to trigger garbage collections, so it has to know where all GC pointers are.

What Brian implemented instead is the following:

- The watchdog thread will mprotect all Ion code (we had to use a separate allocator for Ion code so that we can do this efficiently).

- The main thread will segfault and call our signal handler.

- The signal handler unprotects all Ion-code again and patches all loop backedges (jump instructions) to jump to a slow, out-of-line path instead.

- We return from the signal handler and continue execution until we reach the next (patched) loop backedge and call the operation callback, show the slow script dialog, etc.

- All loop backedges are patched again to jump to the loop header.
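
The steps above can be sketched as a toy simulation. A backedge is modeled as a replaceable function reference rather than a patched jump instruction, page protection is a boolean, and the watchdog and the fault are invoked by hand. All names (`createIonCode`, `patchAllBackedges`, `watchdogProtect`, `onSegfault`, `runIonLoop`) are hypothetical.

```javascript
// Toy model of backedge patching. Hypothetical names, not engine code.
function patchAllBackedges(code, target) {
  for (const edge of code.backedges) edge.jump = target;
}

function createIonCode(backedgeCount) {
  const code = { pagesProtected: false, callbacksRun: 0, backedges: [] };
  // Normal backedge: jump straight back to the loop header.
  code.toLoopHeader = () => {};
  // Patched backedge: slow out-of-line path that runs the operation
  // callback and then restores every backedge (step 5).
  code.toInterruptPath = () => {
    code.callbacksRun++;
    patchAllBackedges(code, code.toLoopHeader);
  };
  for (let i = 0; i < backedgeCount; i++) {
    code.backedges.push({ jump: code.toLoopHeader });
  }
  return code;
}

// Step 1: the watchdog thread protects all Ion code.
function watchdogProtect(code) {
  code.pagesProtected = true;
}

// Steps 2 and 3: the fault lands in the handler, which unprotects the
// code and points every backedge at the interrupt path.
function onSegfault(code) {
  code.pagesProtected = false;
  patchAllBackedges(code, code.toInterruptPath);
}

// Step 4: the loop has no check of its own; it just follows its backedge.
function runIonLoop(code, iterations) {
  for (let i = 0; i < iterations; i++) {
    code.backedges[0].jump();
  }
}

const ionCode = createIonCode(3);
runIonLoop(ionCode, 10); // no interrupt requested yet
const callbacksBefore = ionCode.callbacksRun;
watchdogProtect(ionCode);
onSegfault(ionCode);
const patchedCount = ionCode.backedges.filter(
  (edge) => edge.jump === ionCode.toInterruptPath
).length;
runIonLoop(ionCode, 10); // first backedge taken hits the slow path
```

The point of the design shows up in `runIonLoop`: the loop body never tests a flag, so the common case pays nothing, and the slow path is reached only after the backedges have been rewritten.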

Note that this only applies to the loop interrupt check. There's another interrupt check when we enter a script, but for JIT code we combine it with the stack overflow check: when we trigger the operation callback, the watchdog thread also sets the stack limit to a very high value so that the stack check always fails, and we end up in the VM, where we can handle the operation callback and reset the stack limit.
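
The stack limit trick can be modeled in a few lines. This sketch assumes a stack that grows downward (so the check passes while the stack pointer is at or above the limit) and uses invented constants and names (`NORMAL_STACK_LIMIT`, `FORCE_FAIL_LIMIT`, `jitRuntime`, `enterScript`, `slowPathInVM`).

```javascript
// Toy model of folding the script-entry interrupt check into the
// stack overflow check. Hypothetical names and values.
const NORMAL_STACK_LIMIT = 0x1000;
const FORCE_FAIL_LIMIT = 0xffffffff; // above any stack pointer used here

const jitRuntime = { stackLimit: NORMAL_STACK_LIMIT, interruptPending: false };

// Watchdog side: request the callback and poison the stack limit.
function triggerOperationCallback(rt) {
  rt.interruptPending = true;
  rt.stackLimit = FORCE_FAIL_LIMIT;
}

// Slow path in the VM: tell a requested interrupt from a real overflow.
function slowPathInVM(rt, stackPointer) {
  if (rt.interruptPending) {
    rt.interruptPending = false;
    rt.stackLimit = NORMAL_STACK_LIMIT; // reset the limit
    if (stackPointer >= rt.stackLimit) return "handled-interrupt";
  }
  return "stack-overflow";
}

// The only check JIT code performs on script entry.
function enterScript(rt, stackPointer) {
  if (stackPointer >= rt.stackLimit) return "fast-path";
  return slowPathInVM(rt, stackPointer);
}

const entryBefore = enterScript(jitRuntime, 0x8000);
triggerOperationCallback(jitRuntime);
const entryDuring = enterScript(jitRuntime, 0x8000);
const entryAfter = enterScript(jitRuntime, 0x8000);
const entryOverflow = enterScript(jitRuntime, 0x800);
```

A single comparison on entry serves both purposes, and the extra work of telling the two cases apart happens only on the slow path.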

Conclusion

Firefox 26 and newer use signal handlers and segfaults for interrupting Ion code. This was a measurable speedup, especially for tight loops. For example, the empty for-loop I posted earlier now takes about 33% less time (43 ms down to 29 ms). It helps more interesting loops as well; for instance, Octane-crypto got ~8% faster.

http://www.jandemooij.nl/blog/2014/02/18/using-segfaults-to-interrupt-jit-code/
