You can add any RSS feed (including a LiveJournal journal) to your friends feed on the syndication page.

Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated whenever that feed is updated. It may not match the content of the original page. This syndication was created automatically at the request of readers of this RSS feed.

For any questions about this service, see the contact information page.


Erik Vold: A Blockbuster Firefox

Anita Elberse was on Charlie Rose a while back to talk about her book “Blockbusters: Hit-making, Risk-taking, and the Big Business of Entertainment”, and that interview had a large impact on me. In the interview she brilliantly emphasizes the importance of a blockbuster product or feature to a business. She was talking primarily about the entertainment sector, and I do not think that Firefox is entertainment, but I think the idea still applies quite well.

In its first year after release, Google Chrome had a large number of blockbuster features. Here are just a few off the top of my head:

- The Unibar: Google Chrome made the search bar redundant.

- Built-in Flash: Google Chrome came with Flash built right into the browser, removing the need to install a plug-in to make a huge portion of the web (like charlierose.com) just work.

- Simple Extension APIs: Google Chrome made it incredibly easy to make simple add-ons and release them.

- Speed: Google Chrome nailed this when it was released; it was much faster than the rest of the market.

- Rapid Releases: Google Chrome was able to update itself.

- Built-in DevTools: Google Chrome had a solid, fast, Firebug-like feature built in.

- Per-Window Private Browsing

This list is short, but it was effective. Right after I tell people that I work on Firefox, I constantly hear them say that they used to use Firefox, that they now use Google Chrome, and that they love the Unibar or Google Chrome’s speed.

The fact that Firefox has caught up to Google Chrome on these features doesn’t matter. It’s like releasing a movie about ants right after a competing studio released a movie about ants, the effect isn’t the same because the latter seems like a copy, even if it is superior.

Now my concern with Mozilla is that it is too focused on catching up with Google Chrome and not focused enough on being better by creating a blockbuster feature.

Firefox has caught up, or is working on catching up, in many areas, and we’ve spent a lot of capital to do so. Rapid Release is done, Simple Extension APIs are mostly done, Speed is mostly done, per-window private browsing is done, and the Shumway project is tackling the Flash issue. There are other projects too: Australis, which as far as I can tell is achieving UI parity, pdf.js for a built-in PDF reader, and I’m sure that I’m missing some others.

It’s my opinion that simply catching up, or even achieving moderate superiority on feature clones, isn’t going to bring new or old users to Firefox. Only a blockbuster feature can do that.

So calling projects that bring us parity with Google Chrome “innovation” is quite difficult for me to accept. I do see how the implementations are innovative, but at the moment I am referring to design. In fact, it’s hard for me to believe that any of the projects listed above will increase Firefox’s market share, and some of them, like Australis, are at risk of moderately damaging our market share.

I contend that Firefox, or more specifically Mozilla, needs to design a blockbuster feature, or preferably many blockbuster features. This is what the add-on community is good at, and in my opinion that community is what made Firefox great to begin with. Some good examples of blockbuster attempts were Greasemonkey, Ubiquity, Ad Block, NoScript, Stylish, Tab Groups, Tree Style Tabs, and Collusion. There are also good examples of semi-blockbuster features that were built into Firefox, like the Add-on Manager redesign, Social API, DevTools Tilt, and the DevTools Responsive Design mode. I’m not saying these were all successful; some were not. But they are all well-crafted attempts, and they bring Firefox prestige and grow the community.

It’s late here now and this post is already longer than I am used to writing, so I will have to save the rest for part 2+, where I suggest how Firefox can innovate in the future. The truth is that the Mozilla community is teeming with bright minds, and I would just like to conclude by imploring you to think about blockbuster features, then try to build them! Join the #jetpack channel on irc.mozilla.org if you need help with that latter bit.

Think big Mozillians, at long last it is time to forget about what Google Chrome is doing.

http://work.erikvold.com/firefox/2014/02/12/a-blockbuster-firefox.html

Asa Dotzler: Netiquette

“Netiquette, a colloquial portmanteau of network etiquette or Internet etiquette, is a set of social conventions that facilitate interaction over networks, ranging from Usenet and mailing lists to blogs and forums.”*

I appreciate your enthusiasm for all topics Mozilla. And I love a good back-and-forth in the comments. I also want to keep discussion here productive so I’m letting y’all know that I have been and will continue to remove wildly off-topic comments or other attempts at hijacking a discussion.

If you’re trying to get my opinion on some unrelated issue, other forums work much better; Twitter’s probably the best.

* “Etiquette in technology.” Wikipedia: the free encyclopedia.

Asa Dotzler: Super-cool Firefox OS Support App

There’s a great new app in the Marketplace called Mozilla Support. It gives you offline access to User Support articles for Firefox browsers and Firefox OS. Can’t get enough of troubleshooting problems for friends and family? Install Mozilla Support on your Firefox OS phone (or tablet) today!

http://asadotzler.com/2014/02/11/super-cool-firefox-os-support-app/

Sean McArthur: intel v0.5

intel is turning into an even more awesome logging framework than before, as if that were possible! Today I released version 0.5.0, and I’m here to hawk its newness. You can check out the full changelog yourself, but I want to highlight a couple of bits.

JSON function strings

intel.config is really powerful when coupled with some JSON config files, but Formatters and Filters could never be configured 100% from config, because you could pass either one a custom function to customize it to your little kidney’s content, and it’s not possible to include typical functions in JSON. Much sad face. So, the formatFn and filterFn options allow you to write a function in a string, and intel will try to parse it into a function. Such JSON.
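Here is a minimal sketch of the general technique, assuming the function source is stored as a plain JSON string. This is not intel's actual parser. The `parseFnString` helper and the `record.level` field are hypothetical. Because `new Function` evaluates code, this approach is only appropriate for config files you trust.

```javascript
// Hypothetical sketch: not intel's implementation, just the general technique.
// JSON cannot hold real functions, so the function is stored as source text.
const config = JSON.parse(
  '{ "filterFn": "function (record) { return record.level >= 20; }" }'
);

// Turn function source text into a callable. `new Function` evaluates code,
// so only use this on config files you trust.
function parseFnString(source) {
  const fn = new Function('return (' + source + ');')();
  if (typeof fn !== 'function') {
    throw new TypeError('config value is not a function: ' + source);
  }
  return fn;
}

const filter = parseFnString(config.filterFn);
console.log(filter({ level: 30 })); // true
console.log(filter({ level: 10 })); // false
```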

Logger.trace

A new lowest level was introduced, lower than even VERBOSE, and that’s TRACE. Likewise, Logger.trace behaves like console.trace, providing a stack trace with your message. If you don’t enable loggers with TRACE level logging, then no stacks will be traced, and everything will choo-choo along snappy-like.
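To show why disabled TRACE logging stays cheap, here is a hypothetical sketch of level gating. The numeric levels and the `makeLogger` helper are assumptions for illustration, not intel's internals. The stack is only captured, via `new Error().stack`, after the level check passes.

```javascript
// Hypothetical level gating; the numbers and makeLogger() are illustrative,
// not intel's actual internals.
const LEVELS = { TRACE: 10, VERBOSE: 20, DEBUG: 30, INFO: 40, WARN: 50, ERROR: 60 };

function makeLogger(minLevel, sink) {
  const threshold = LEVELS[minLevel];
  return {
    trace(message) {
      // Bail out before doing any work if TRACE is below the logger's level.
      if (LEVELS.TRACE < threshold) return false;
      // Capturing a stack is the expensive part; it only happens when enabled.
      const stack = new Error(message).stack;
      sink.push({ level: 'TRACE', message, stack });
      return true;
    },
  };
}

const records = [];
makeLogger('DEBUG', records).trace('skipped');  // TRACE disabled: nothing recorded
makeLogger('TRACE', records).trace('captured'); // recorded along with a stack trace
console.log(records.length); // 1
```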

Full dbug integration

This is the goods. intel is an awesome application logging library, since it lets you powerfully and easily be a logging switchboard: everything you want to know goes everywhere you want. However, stand-alone libraries have no business deciding where logs go. Libraries should simply provide logging when asked to, and shut up otherwise. That’s why libraries should use something like dbug. Since v0.4, intel has been able to integrate somewhat with dbug, but with 0.5, it can hook directly into it, meaning fewer bugs and better performance. Examples!

// hood/lib/csp.js
var dbug = require('dbug')('hood:csp');

exports.csp = function csp(options) {
  dbug('csp options:', options);
  if (!options.policy) {
    dbug.warn('no policy provided. are you sure?');
  }
  // ...
};

// myapp/server.js
var intel = require('intel');
intel.console({ debug: 'hood' });
// will see: 'myapp.node_modules.hood.csp:DEBUG csp options: {}'

Dare I say, using intel and dbug together gives the best logging solution for libraries and apps.

Ben Hearsum: Status update on smaller pools of build machines

Last week glandium and I wrote a bit about how shrinking the size of our build pools would help get results out faster. This week, a few of us are starting work on implementing that. Last week I called these smaller pools “hot tubs”, but we’ve since settled on the name “jacuzzis”.

We had a few discussions about this in the past few days and quickly realized that we don’t have a way of knowing upfront exactly how many machines to allocate to each jacuzzi. This number will vary based on the frequency of the builders in the jacuzzi (periodic, nightly, or on-change) as well as the number of pushes to the builders’ branches. Because of this, we are firmly committed to making these allocations dynamically adjustable. It may take us many attempts to get the allocations right, so we need to make adjusting them as painless as possible. This will also enable us to shift allocations if there’s a sudden change in load (e.g., mozilla-inbound is closed for a day, but b2g-inbound gets twice the usual number of pushes).

There are two major pieces that need to be worked on: writing a jacuzzi allocator service, and making Buildbot respect it.

The allocator service will be a simple JSON API with the following interface. Below, $buildername is a specific type of job (e.g., “Linux x86-64 mozilla-inbound build”), $machinename is something like “bld-linux64-ec2-023”, and $poolname is something like “bld-linux64”:

- GET /builders/$buildername – Returns a list of machines that are allowed to perform this type of build.

- GET /machines/$machinename – Returns a list of builders that this machine is allowed to build for.

- GET /allocated/$poolname – Returns a list of all machines in the given pool that are allocated to any builder.
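Here is a minimal sketch of how the three endpoints might answer. It assumes an in-memory allocation table, similar in spirit to the static files the post mentions. The machine names, the `handle` function, and the `{ machines }` / `{ builders }` response shapes are all hypothetical; the post does not describe the service's actual JSON format.

```javascript
// Hypothetical allocation data; real pools and jacuzzis would differ.
const POOLS = new Map([
  ['bld-linux64', ['bld-linux64-ec2-021', 'bld-linux64-ec2-022', 'bld-linux64-ec2-023']],
]);
const JACUZZIS = new Map([
  ['Linux x86-64 mozilla-inbound build', ['bld-linux64-ec2-023']],
]);

// Map a request path to { status, body } for the three GET endpoints.
function handle(path) {
  const [, kind, rawName = ''] = path.split('/');
  const name = decodeURIComponent(rawName);
  if (kind === 'builders' && JACUZZIS.has(name)) {
    // Machines allowed to perform this type of build.
    return { status: 200, body: { machines: JACUZZIS.get(name) } };
  }
  if (kind === 'machines') {
    // Builders this machine may build for (empty if it is unallocated).
    const builders = [...JACUZZIS]
      .filter(([, machines]) => machines.includes(name))
      .map(([builder]) => builder);
    return { status: 200, body: { builders } };
  }
  if (kind === 'allocated' && POOLS.has(name)) {
    // Machines in this pool that belong to any jacuzzi.
    const allocated = new Set([...JACUZZIS.values()].flat());
    return { status: 200, body: { machines: POOLS.get(name).filter(m => allocated.has(m)) } };
  }
  // Unknown builder, pool, or endpoint.
  return { status: 404, body: null };
}
```

Returning 404 for builders the allocator does not know about is what allows the masters to fall back to their usual behavior.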

Making Buildbot respect the allocator is going to be a little tricky. Buildbot requires an explicit list of machines that can perform jobs from each builder, and if we implement jacuzzis by adjusting this list, we won’t be able to adjust them dynamically. However, we can change some runtime code to override that list after talking to the allocator. We also need to make sure that we can fall back in cases where the allocator is unaware of a builder or is unavailable.

To do this, we’re adding code to the Buildbot masters that will query the jacuzzi server for allocated machines before it starts a pending job. If the jacuzzi server returns a 404 (indicating that it’s unaware of the builder), we’ll get the full list of allocated machines from the /allocated/$poolname endpoint. We can subtract this from the full list of machines in the pool and try to start the job on one of the remaining ones. If the allocator service is unavailable for a long period of time we’ll just choose a machine from the full list.

This implementation has the nice side effect of allowing for a gradual roll out — we can simply leave most builders undefined on the jacuzzi server until we’re comfortable enough to roll it out more widely.
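The master-side fallback can be sketched as a pure function. This is a hypothetical illustration, not the actual Buildbot code. It makes three assumptions: `query` is a helper that returns `{ status, body }` or throws when the allocator is unreachable, and the `{ machines }` body shape is invented for the sketch. The post's plan falls back to the full list only after the allocator has been unavailable for a long period; the sketch falls back immediately to keep it short.

```javascript
// Hypothetical sketch of choosing eligible machines; not the real Buildbot code.
// `query(path)` is an assumed helper returning { status, body } and throwing
// when the allocator cannot be reached.
function eligibleMachines(builderName, poolName, poolMachines, query) {
  let res;
  try {
    res = query('/builders/' + encodeURIComponent(builderName));
  } catch (err) {
    // Allocator unavailable: use the full pool (immediately, for simplicity).
    return poolMachines.slice();
  }
  if (res.status === 200) {
    // Builder has a jacuzzi: only its machines (that this pool knows) may run it.
    return res.body.machines.filter(m => poolMachines.includes(m));
  }
  if (res.status === 404) {
    // Unknown builder: any pool machine not allocated to some jacuzzi.
    let allocated;
    try {
      allocated = query('/allocated/' + encodeURIComponent(poolName));
    } catch (err) {
      return poolMachines.slice();
    }
    if (allocated.status !== 200) return poolMachines.slice();
    const taken = new Set(allocated.body.machines);
    return poolMachines.filter(m => !taken.has(m));
  }
  // Any other response: treat it like an unavailable allocator.
  return poolMachines.slice();
}
```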

In order to get things going as quickly as possible I’ve implemented the jacuzzi allocator as static files for now, supporting only two builders on the Cedar tree. Catlee is working on writing the Buildbot code described above, and Rail is adjusting a few smaller tools to support this. John Hopkins, Taras, and Glandium were all involved in brainstorming and planning yesterday, too.

We’re hoping to get something working on the Cedar branch in production ASAP, possibly as early as tomorrow. While we fiddle with different allocations there we can also work on implementing the real jacuzzi allocator.

Stay tuned for more updates!

http://hearsum.ca/blog/status-update-on-smaller-pools-of-build-machines/

Austin King: *coin all things to decentralize the web

It’s easy to see a popular service, such as Google Maps, as a neutral, free public utility.

A map service turns our phones into futuristic tricorders that change our lives and make it trivial to get around and discover new things.

But these services are not neutral public utilities.

I care about Redecentralizing the web. But how do we do it?

Here is a crazy idea inspired by bitcoin… plus I wanted to get a post out on “fight mass surveillance day”.

A hard part of something like Diaspora, which aimed to be a decentralized social network, is getting enough nodes up and running. Only a very small % of the population will step up and run a server.

When email was born, a very large % of the internet-using population could also run a server, because if you used the internet back then, there were like a hundred of you. You knew that there were servers and clients, etc.

People set up SMTP servers, email flowed, and all was good.

Over time, as the internet and the web became popular, this % of the population became insignificant. Our current web architecture rewards centralized players.

If email was invented today, schools, businesses… everyone would be on one of a handful of email services!

So I started asking: who are the people running their own services today?

Bitcoin miners operate the bitcoin transaction network, which plays a role similar to the one the VISA network plays for credit card transactions. The miners are uncoordinated citizens who run the bitcoin network because they earn bitcoins over time. The network has a secure protocol that balances risks and rewards from many angles.

What if we de-centralized and rewarded service administrators with this same mechanic?

Think about:

- mapcoin

- wikipediacoin

- searchcoin

- babelfishcoin

- instantmessagecoin

- photosharingcoin

- dictionarycoin

- recipecoin

Running a mapcoin node provides several facilities:

- access to map tiles

- access to compute for analyzing traffic data

- access to compute for processing map updates and settlement

By running a mapcoin node, you pay for electricity, a network connection, and a fast computer with big disks. Every now and then, you “win the lotto” and earn a mapcoin. Sysadmins rewarded.
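The “lotto” here is Bitcoin-style proof of work. Every hash attempt is a ticket, so a node that does more work holds more tickets. Below is a generic sketch with a toy difficulty. No mapcoin protocol actually exists, so the block data string and difficulty are made up. A real scheme would also need to verify that the node actually served tiles or processed updates, which this sketch does not attempt.

```javascript
// Generic proof-of-work sketch (Bitcoin-style hash puzzle), not a real
// mapcoin protocol; the block data and difficulty are illustrative.
const crypto = require('crypto');

// Try nonces until SHA-256(blockData + nonce) starts with `difficulty` hex zeros.
// Each attempt wins with probability 16^-difficulty, so expected attempts are 16^difficulty.
function mine(blockData, difficulty) {
  const target = '0'.repeat(difficulty);
  for (let nonce = 0; ; nonce++) {
    const hash = crypto.createHash('sha256').update(blockData + nonce).digest('hex');
    if (hash.startsWith(target)) return { nonce, hash };
  }
}

const { nonce, hash } = mine('map tile update #1', 3);
console.log(nonce, hash);
```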

Also, we web developers can build great UIs that consume mapcoin data.

We can compete on great user experiences. Users aren’t locked into a single vendor. The map data is a collective commons.

Once someone has done the hard work of balancing risk and reward and baking it into a mapcoin protocol, OpenStreetMap could endorse it and find a new sustainability model that would radically reduce its hosting costs.

Currency markets would take care of translating mapcoin into bitcoin or “real money”.

Existence proofs:

- Bitcoin is a currency, but also the coin that rewards the people running its payment network

- Dogecoin is a Whuffie, or social coin, and there is a currency market for it

- SolarCoin is based on Bitcoin technology, but in addition to the usual way of generating coins through mining, crunching numbers to try and solve a cryptographic puzzle, people can earn them as a reward for generating solar energy.

- DNSChain (thanks Eric Mill)

*Coin all things to solve the de-centralization problem… what do you think?

Benjamin Kerensa: The Day We Fight Back!

Tomorrow is The Day We Fight Back, and it’s good to know Mozilla is lending its name to support this day of action, which focuses on restoring privacy and ending spying by intelligence agencies. You can sign up and add the code, or, if you use WordPress, install the plugin and help support TDWFB!

http://feedproxy.google.com/~r/BenjaminKerensaDotComMozilla/~3/fGHF8PsvIQg/day-fight-back

Eric Shepherd: What documentation belongs on MDN?

On Friday, I blogged about how to go about ensuring that material that needs to be documented on the Mozilla Developer Network site gets taken care of. Today, I’m going to go over how you can tell if something should be documented on MDN. Believe it or not, it’s not really that hard to figure out!

We cover a lot on MDN; it’s not just about open Web technology. We also cover how to build and contribute to Firefox and Firefox OS, how to create add-ons for Firefox, and much more.

A quick guide to deciding where documentation should go

The quick and dirty way to decide if something should be documented on MDN can be summed up by answering the following questions. Just walk down the list until you get an answer.

- Does this information affect any kind of developer at all?
  - If the answer is “no,” then your document doesn’t belong on MDN, but might belong on SUMO.
- Is the information about a technology that has reached a reasonably stable point?
  - If the answer is “no,” then it may eventually belong on MDN, but not yet. You might want to put your information on wiki.mo, though.
- Is the information about a Web- or app-accessible technology, regardless of browser or platform?
  - If “yes,” then write about it on MDN!
- Is the information about Mozilla platform internals, building, or the like?
  - If “yes,” then write about it on MDN!
- Is the information about Firefox OS?
  - If “yes,” then write about it on MDN!
- Is the information about add-ons for Firefox or other Mozilla applications?
  - If “yes,” then write about it on MDN!
- If you get to this line and haven’t gotten a “yes” yet, the answer is probably “no.” But you can always ask on #mdn on IRC to get a second opinion!
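The checklist works as a short decision procedure. Here it is encoded as a hypothetical function. The boolean field names are invented for illustration and are not part of any MDN tool.

```javascript
// Hypothetical encoding of the checklist; field names are illustrative only.
function whereToDocument(topic) {
  // Walk down the questions in order and stop at the first answer.
  if (!topic.affectsDevelopers) return 'not MDN (maybe SUMO)';
  if (!topic.reasonablyStable) return 'not yet (maybe wiki.mo)';
  if (topic.webOrAppAccessible || topic.platformInternals ||
      topic.firefoxOS || topic.addons) {
    return 'MDN';
  }
  // No "yes" so far: probably not, but a second opinion is cheap.
  return 'probably not MDN (ask in #mdn)';
}

console.log(whereToDocument({ affectsDevelopers: true, reasonablyStable: true, firefoxOS: true })); // MDN
```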

A more detailed look at what to document on MDN

Now let’s take a more in-depth look at what topics’ documentation belongs on MDN. Much of this information is duplicated from the MDN article “Does this belong on MDN?”, whose purpose is probably obvious from its title.

Open Web technologies

It should be fairly obvious that we document any technology that can be accessed from Web content or apps. That includes, but isn’t limited to:

- Open Web apps

- CSS

- HTML

- JavaScript

- APIs (the DOM, WebGL, WebSockets, IndexedDB, etc.)

- MathML

- SVG

This also includes APIs that are specific to Firefox OS, even those that are restricted to privileged or certified apps.

Firefox OS

An important documentation area these days is Firefox OS. We cover this from multiple perspectives; there are four target audiences for our documentation here: Web app developers, Firefox OS platform developers, developers interested in porting Firefox OS to new platforms, and wireless carriers who want to customize their users’ experience on the devices they sell. We cover all of these!

That means we need documentation for these topics, among others:

- Open Web apps

- Building and installing Firefox OS

- Contributing to the Firefox OS project

- Customizing Gaia

- Porting Firefox OS

The Mozilla platform, Firefox, and add-ons

As always, we continue to document Mozilla internals on MDN. This documentation focuses primarily on developers building and contributing to projects such as Firefox, as well as add-on developers, and includes topics such as:

What don’t we cover?

There’s one last thing to consider: the types of content we don’t include on MDN. Your document doesn’t belong on MDN if the answer to any of the following questions is “yes.”

- Is it a planning document?

- Is it a design document for an upcoming technology?

- Is it about a technology that’s still evolving rapidly and not yet “ready for prime time?”

- Is it a proposal document?

- Is it a technology that’s not exposed to the Web and is specific to a non-Mozilla browser? If it’s not exposed to the Web, but is part of Mozilla code, then you just might want to document it on MDN.

Wrap-up

Hopefully this gives you a better feel for the kinds of things we document on MDN. If you learned anything, I’ve done my job.

Over the next few days, I’ll be continuing my blitz of documentation about documentation process, how to help ensure the work you do is documented thoroughly and well, and how to get along with writers in general.

http://www.bitstampede.com/2014/02/10/what-documentation-belongs-on-mdn/

Nicholas Nethercote: Nuwa has landed

A big milestone for Firefox OS was reached this week: after several bounces spread over several weeks, Nuwa finally landed and stuck.

Nuwa is a special Firefox OS process from which all other app processes are forked. (The name “Nuwa” comes from the Chinese creation goddess.) It allows lots of unchanging data (such as low-level Gecko things like XPCOM structures) to be shared among app processes, thanks to Linux’s copy-on-write forking semantics. This greatly increases the number of app processes that can be run concurrently, which is why it was the #3 item on the MemShrink “big ticket items” list.

One downside of this increased sharing is that it renders about:memory’s measurements less accurate than before, because about:memory does not know about the sharing, and so will over-report shared memory. Unfortunately, this is very difficult to fix, because about:memory’s reports are generated entirely within Firefox, whereas the sharing information is only available at the OS level. Something to be aware of.

Thanks to Cervantes Yu (Nuwa’s primary author), along with those who helped, including Thinker Li, Fabrice Desré, and Kyle Huey.

https://blog.mozilla.org/nnethercote/2014/02/11/nuwa-has-landed/

|

|

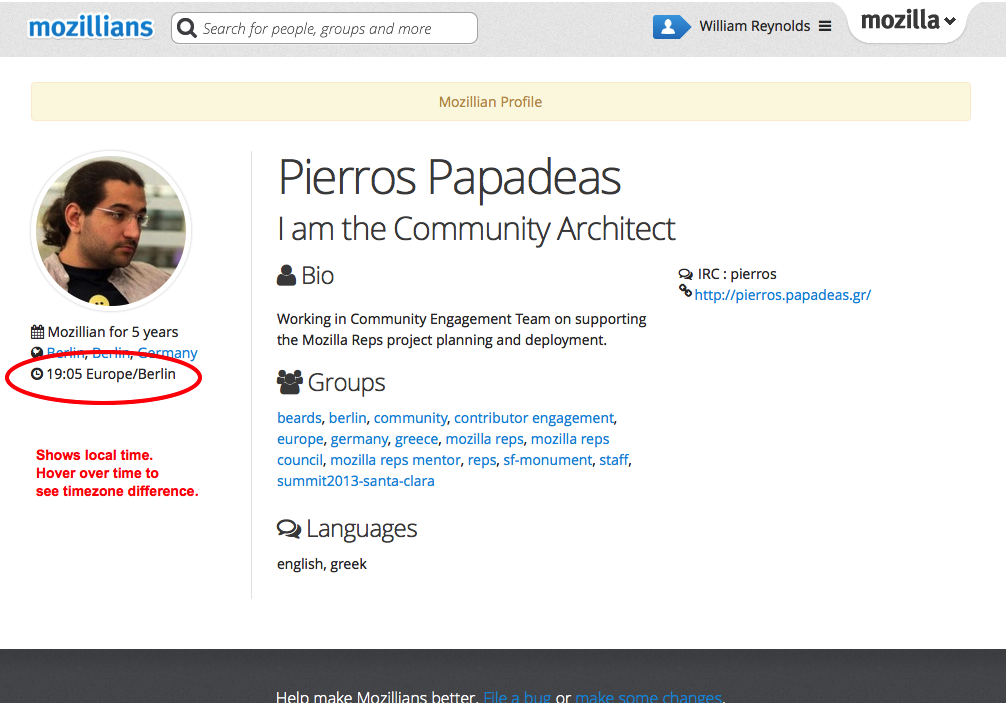

William Reynolds: What time is it for that Mozillian? |

Since Mozilla is a globally distributed project, I work with people in many different timezones. When I want to chat with someone, I often want to know what time it is in their local area. Profiles on mozillians.org now show the person’s local time.

Even better, if you hover over the time on the profile, you will see how many hours that person is ahead or behind you. The site uses your browser’s current timezone for that calculation, so as you travel, the timezone difference will update. And that’s quite handy for a bunch of mobile Mozillians.
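One way to compute the “hours ahead or behind” figure in a browser is with the standard `Intl` APIs. This is a sketch under assumptions, not mozillians.org's code. It assumes each profile stores an IANA time zone name such as `Asia/Kolkata`, and the helper names are hypothetical. Because the viewer's zone is read from the browser at display time, the difference updates as the viewer travels.

```javascript
// Hypothetical sketch; mozillians.org's real implementation may differ.
// Offset of `timeZone` from UTC, in minutes, at the instant `date`.
function utcOffsetMinutes(timeZone, date) {
  const fmt = new Intl.DateTimeFormat('en-US', {
    timeZone, hourCycle: 'h23',
    year: 'numeric', month: '2-digit', day: '2-digit',
    hour: '2-digit', minute: '2-digit', second: '2-digit',
  });
  const p = {};
  for (const { type, value } of fmt.formatToParts(date)) p[type] = value;
  // Read the wall-clock fields back as if they were UTC, then compare with the instant.
  const wallAsUTC = Date.UTC(+p.year, +p.month - 1, +p.day, +p.hour, +p.minute, +p.second);
  const instant = Math.floor(date.getTime() / 1000) * 1000; // drop milliseconds
  return (wallAsUTC - instant) / 60000;
}

// Hours that `theirZone` is ahead (+) or behind (-) of `myZone` at `date`.
function hoursAhead(theirZone, myZone, date = new Date()) {
  return (utcOffsetMinutes(theirZone, date) - utcOffsetMinutes(myZone, date)) / 60;
}

// The viewer's own zone comes from the browser's current settings.
const myZone = Intl.DateTimeFormat().resolvedOptions().timeZone;
console.log(hoursAhead('Asia/Kolkata', myZone));
```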

http://dailycavalier.com/2014/02/what-time-is-it-for-that-mozillian/

Doug Belshaw: Weeknote 06/2014

Last week I was in Toronto for a Webmaker workweek. It was a little different so I’m going to eschew the usual bullet points for a more image-based review.

Sunday

After assembling on Saturday evening and Sunday morning, the #TeachTheWeb team came together to ensure we could get started as soon as possible. We discussed the semantics and nomenclature around Webmaker resources and Michelle walked us through a potential production cycle:

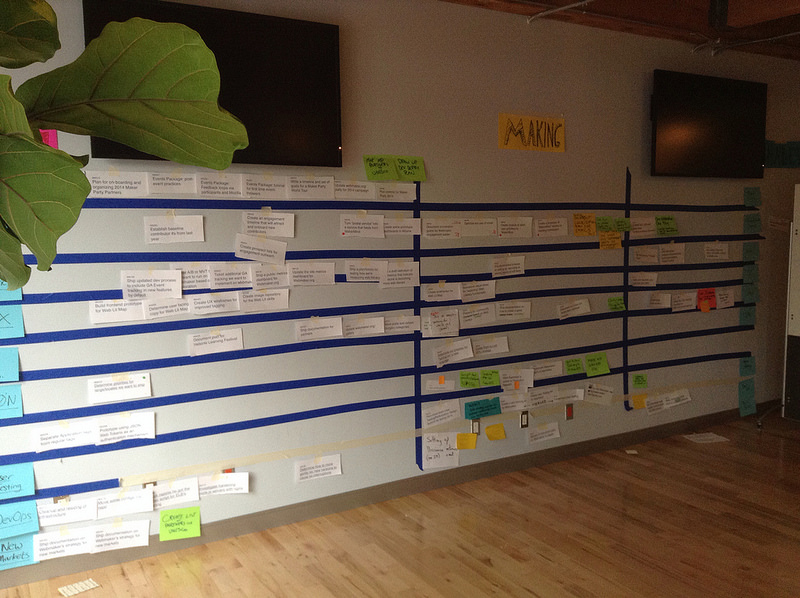

Next, we put up all the scrum tasks for the workweek:

…and then we played dodgeball on trampolines. Obviously.

Monday

As is often the case when you get people face-to-face, we spent a good chunk of the day ensuring that we were on the same page. This involved some wrangling around semantics, mental models and what’s in and out of scope for the team and #TeachTheWeb in general.

Happily, we moved many scrum tasks from ‘To Make’ to ‘Making’:

Tuesday

We were ready to hit the ground running and started off with a plan for the infrastructure that will support #TeachTheWeb courses. Laura gave the context of conversations she’s had with P2PU (who we may be employing to build this out).

We discussed this plan with Brett and the UX team, and agreed a way forward.

Kat and Karen did a great job of scoping out a teaching kit for remix:

The final part of the day was focused on scoping out the rest of the stuff we need to do this week. Things like assessment, badges and metrics/evaluation.

Wednesday

There was a bit of finishing off to do in the morning before the first demos at lunchtime. I did some preliminary thinking about badging, Kat and Karen continued their work on the teaching kit for ‘Remixing’, while Michelle and Laura interacted with other teams to make sure we’re all on the same page.

Everyone who took a turn having the production cycle and ideas for teach.webmaker.org explained to them gave us questions and feedback. Laura and Michelle made the necessary changes and then started planning out what the rest of 2014 will look like.

For a while we’ve been talking about some kind of bookmarklet that allows people to tag resources they find around the web. Just as you might bookmark something with Delicious or pin something to a Pinterest board, so webmakers could use the MakeAPI to surface resources related to specific parts of the Web Literacy Map. I was delighted when Atul stepped up to have a go at it, and so I created a ‘canonical’ list of tags to help with that.

In the evening we went tobogganing, courtesy of Geoffrey MacDougall. Which was epic.

Thursday

Some people couldn’t stay for the entire week, so Chris brought forward the demo sessions from Friday morning to last thing on Thursday. It was great to see how much progress had been made on things that were just ideas earlier in the week. In particular, the UX team had some really interesting ideas about how a new ‘Explore’ tab could work. You can read more about that on Cassie McDaniel’s blog.

Bobby Richter and the rest of the AppMaker team have been doing some amazing work with making mobile webapp development a reality. Karen and I worked on getting towards finishing a first draft of a new Webmaker whitepaper.

Afterwards, Jon Buckley suggested we all go to the Backyard Axe-Throwing League. I wasn’t great at it, but it turns out some of my colleagues (including JP and Luke) are alarmingly good:

Friday

Not everyone could stay until Friday because of other commitments, so the opening circle was noticeably smaller. We took the opportunity to have the kinds of face-to-face meetings that are difficult to have even over a video connection.

I headed to the airport around 3pm with Laura and Paula, then managed to sleep for some of the overnight flight home.

Rob Campbell: Updated Console Keyboard Shortcuts in Firefox

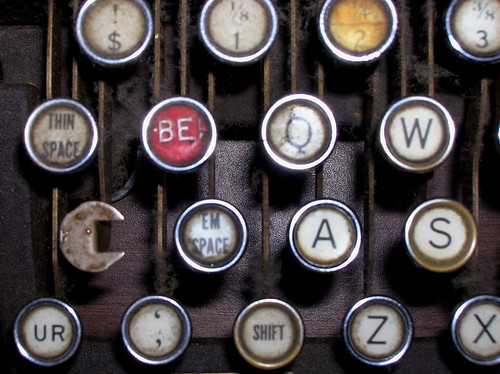

“keyboard” by Mark Lane on Flickr

Cmd-Alt-K on OS X or Ctrl-Shift-K on Linux or Windows will now always focus the console input line. It will no longer close the entire toolbox; to do that, you’ll have to use one of the other global toolbox commands, Cmd-Alt-I (or Ctrl-Shift-I) or F12.

Keyboard shortcuts.

They are the bane of Firefox Developer Tools engineers. Ask us in #devtools for a new keyboard shortcut to do X and you will be greeted by a chorus of groans. There just aren’t enough keys on a keyboard for everything we need to use them for.

I recently added a change that automatically focuses the Console’s input line when you click anywhere in the console’s output area (bug 960695). This spawned a series of follow-ups.

The first of these was the addition of Page Up and Page Down controls on the input line (bug 962531). Now, when the input line is focused, the Page Up and Page Down keys scroll the output area. If the autocomplete popup is active, it’ll scroll too. It’s a little thing that’s nice to have when you’re flipping through all of the completions for, say, the global window object.

The most important change we landed this weekend is to the Console’s main keyboard shortcut, Cmd-Alt-K on OS X or Ctrl-Shift-K on Linux or Windows. This key will now always focus the console input line. It will no longer close the entire toolbox; to do that, you’ll have to use one of the other global toolbox commands, Cmd-Alt-I (or Ctrl-Shift-I) or F12. (See bug 612253 for details and history.)

Try it out. Tell us if you hate it. You can reach us here, IRC, or on the twitters.

And there’s still more to do. I filed bug 967044 to make the Home and End keys do the right thing in the Console’s input line. We have this “metabug” tracking all of the open shortcut bugs in Firefox Devtools. Are we missing any? Let us know!

http://robcee.net/2014/updated-console-keyboard-shortcuts-in-firefox/

Gervase Markham: How Mozilla Is Different

We’re replacing Firefox Sync with something different… and not only did we publish the technical documentation of how the crypto works, but it contains a careful and clear analysis of the security improvements and weaknesses compared with the old one. We don’t just tell you “Trust us, it’s better, it’s the new shiny.”

The bottom line is that in order to get easier account recovery and device addition, and to allow the system to work on slower devices such as Firefox OS phones, your security has become dependent on the strength of your chosen Sync password, when it was not before. (Before, Sync didn’t even have passwords.) This post is not about whether that’s the right trade-off or not; I just want to say that it’s awesome that we are open and up front about it.
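Generic password-based key derivation shows why password strength now matters. The sketch below uses Node's `crypto.pbkdf2Sync`. It is illustrative only and does not reproduce the new Sync system's actual protocol, salts, or parameters. The salt string and iteration count are hypothetical.

```javascript
// Illustrative only: not the real Firefox Sync / Accounts key-stretching scheme.
const crypto = require('crypto');

// Derive a 32-byte key from a password. Anyone who learns the salt and
// iteration count can run this same computation on guessed passwords, so the
// key is only as hard to recover as the password is hard to guess.
function deriveKey(password, salt, iterations = 100000) {
  return crypto.pbkdf2Sync(password, salt, iterations, 32, 'sha256');
}

const salt = 'example-salt'; // hypothetical salt
const key = deriveKey('correct horse battery staple', salt);
console.log(key.toString('hex'));
```

Stretching (the high iteration count) makes each guess slower but cannot rescue a password that is easy to guess.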

http://feedproxy.google.com/~r/HackingForChrist/~3/gj9nkG8AoDU/

Ludovic Hirlimann: PGP Key signing Party in London March 25th 2014

On March 25th, 2014, I’m organizing a PGP key signing party in the Mozilla London office after working hours.

If you value privacy and email, you should probably attend. To organize the meeting, I need to know the number of participants, so I’m asking people to register using Eventbrite.

For those who don’t know how a PGP key signing party works, you should read this: http://sietch-tabr.tumblr.com/post/61392468398/pgp-key-signing-party-at-mozilla-summit-2013-details

Soledad Penades: Firefox OS Simulator is now a component in Bugzilla

So you can now file bugs under Product = Firefox OS, Component = Simulator.

Or look for existing bugs!

PS. Aren’t you AMAZE at my mad bugzilla skillzzz? I created direct links for you to file or search Firefox OS Simulator bugs. Ahhh, the power of query strings in URLs…!
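The “power of query strings” trick comes down to building links like these. Bugzilla's standard entry points `enter_bug.cgi` and `buglist.cgi` both accept `product` and `component` parameters. The `bugzillaLink` helper is hypothetical, and the exact links from the original post are not reproduced.

```javascript
// Build Bugzilla links from query-string parameters; bugzillaLink() is a
// hypothetical helper, while enter_bug.cgi / buglist.cgi are standard Bugzilla scripts.
const BUGZILLA = 'https://bugzilla.mozilla.org';

function bugzillaLink(script, params) {
  const url = new URL('/' + script, BUGZILLA);
  // URLSearchParams handles escaping (spaces become '+').
  for (const [key, value] of Object.entries(params)) url.searchParams.set(key, value);
  return url.toString();
}

const simulatorBugs = { product: 'Firefox OS', component: 'Simulator' };
console.log(bugzillaLink('enter_bug.cgi', simulatorBugs));
// https://bugzilla.mozilla.org/enter_bug.cgi?product=Firefox+OS&component=Simulator
console.log(bugzillaLink('buglist.cgi', simulatorBugs)); // search existing bugs
```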

http://soledadpenades.com/2014/02/10/firefox-os-simulator-is-now-a-component-in-bugzilla/

Priyanka Nag: A dream come true....FOSDEM

I never knew the dream would come true so soon, and it turned out to be a dream come true++ scenario for me: I was not only invited to FOSDEM, I was invited to give a talk there.

I still remember the exact feeling when I received the email that read "Your talk proposal for the Mozilla DevRoom at FOSDEM 2014 has been accepted!". I was on cloud nine, too overwhelmed to share the news even with the people sitting next to me (I was at my workplace at the time).

Between receiving the invitation and the day of travel, things moved so fast that before I had realized it, I was already on the flight to Brussels.

Day 0 in Brussels

Brussels welcomed us with an awesome climate: the temperature was 0°C when we landed. I was warm in my jacket, but I remember not being able to feel my hands after being out for some time. The hotel was in a great location, so central that we had seen most of Brussels just by taking a walk around it on the first day. In the evening I met the rest of the Mozillians over dinner. I was literally the youngest one on the team, and I bet I was the most scared (about the talk) of them all. Later that night, we had the FOSDEM beer party, where I had the chance to meet some of the awesomest minds of the Open Source world. I wish I could have stayed longer at the party that night, but my unfinished slides didn't allow it. I left early and rushed back to my room to complete my slides and run through them, just to be prepared for the big day.

Day 1 in Brussels

The bus we took to the venue was jam-packed, and I was astonished to learn that most of the passengers (about 95%) were actually travelling to the same place I was. Someone on the bus joked, 'If we could hijack this bus now, we could build the Operating System of our dreams. This bus has some of the best brains in the world.'

As soon as I entered the ULB campus, I was thrilled! I had never expected such a crowd at an Open Source event.

It was only at 5pm, when my talk was next in line, that my blood pressure started rising. I was scared like hell, but somehow, the moment I got on the stage, held that mic, and saw a few familiar faces wishing me luck, I found an unknown strength to address a crowd of 280+ people. This was my first ever talk on a platform like FOSDEM, addressing a crowd this huge!

Me... in action at FOSDEM

For the next 30 minutes, I simply lived my dream. I knew this was the moment I had dreamt about all my life.

I'm not the best judge of my own performance, but the tweets I received during and after the talk were really encouraging.

Day 2 in Brussels

The next day was more about enjoyment and less about responsibility. I went around all the buildings, visiting the booths, talking to people, building contacts (and, of course, collecting swag). I met some awesome people that day, some of whom it would probably be an understatement to call "geniuses". I also got to meet a few people I had previously interacted with online but had never met in person.

Meeting Quim Gil at FOSDEM 2014

The most amazing part of the day was when I was told that someone had come to the Mozilla booth looking for me. When I reached the booth, a man was waiting to interview me about my journey in the FOSS world. He said that my story could encourage other girls to get involved in Open Source development.

Trust me when I say that there is no feeling better than knowing that your story could make a difference to someone else's life.

I wish FOSDEM lasted longer than two days :P

This FOSDEM was a really big event in my life, and I hope I get an invitation to the next one as well ;)

My slides can be found here:

http://www.slideshare.net/priynag/women-and-technology-31005551

The recording of my talk can be found here:

Video Link

http://priyankaivy.blogspot.com/2014/02/a-dream-come-truefosdem.html

Paul Rouget: Servo Layout Engine: Parallelizing the Browser

Servo Layout Engine: Parallelizing the Browser

During FOSDEM, Josh Matthews gave a talk about Servo. You can find his slides here: joshmatthews.net/fosdemservo

Video:

Ron Piovesan: Selling my iPhone for seven figures…

My phone’s got what can no longer be gotten…

The game is no longer available through online stores, but it still works on phones that had previously downloaded it.

As a result, some online users have offered to sell their smartphones still containing the Flappy Bird app for large sums of money.

BBC News – Flappy Bird creator removes game from app stores.

http://ronpiovesan.com/2014/02/10/selling-my-iphone-for-seven-figures/

Benjamin Kerensa: Attending Scale12x

Where are you going to be next week?

I know where I will be. I will be attending Scale12x (Southern California Linux Expo) in Los Angeles, CA. I will be there most of the week, working with Casey and Joanna to staff the Mozilla booth, where we will be evangelizing Firefox OS. This is going to be Mozilla's second year at the Southern California Linux Expo, and I have to say we're really excited to be back after the strong interest in Firefox OS and other Mozilla projects last year.

I'm also really looking forward to checking out some of the talks by community contributors at UbuCon, which is taking place at Scale12x. If you are attending Scale12x and want to catch up over a beer or lunch/dinner, hit me up on Twitter and we will set something up.

Also be sure to check out Where’s Mozilla to learn about other events Mozilla will have a presence at!

http://feedproxy.google.com/~r/BenjaminKerensaDotComMozilla/~3/JFN-v1s-r3M/attending-scale12x

Hub Figuière: The open content

Open content is content that is also available openly.

In short: people claim they don't blog anymore, yet they write lengthy posts on Google+, a closed platform that doesn't let you pull its content via RSS, discriminates on names, and in the end is just the Google black hole, since it seems only Google fanboys and employees use it.

This also applies to Facebook, Twitter (to a lesser extent, just because of the 140-character limit) and so on.

Sorry this is not the Internet I want. It is 2014, time to take it back.

http://www.figuiere.net/hub/blog/?2014/02/09/845-the-open-content
