
Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.


Asa Dotzler: Firefox OS Happenings: week ending 2014-02-07

In the last week, 86 Mozilla contributors fixed about 180 bugs and features tracked in Bugzilla at the Firefox OS product and Gonk OS.

A special thanks to new contributor Sukant Garg [:gargsms] who fixed his first Gaia bug this week, “966211 – Screen should not timeout when I have a running stopwatch”.

Exciting discussions in Firefox OS land include “You’re not crazy – the browser really is gone.” and related “wow. such rocketbar.”, both in mozilla.dev.gaia.

On the website compatibility front, we’ve had some great recent wins that let us stop special casing Yahoo, Scribd, Urbanspoon, deviantART, and several OLX regional sites. Thanks to the dev teams at those sites and to Mozilla’s awesome Web Compatibility team.

The big Firefox OS news item this week is the Line messaging app for Firefox OS.

Finally, if all goes well, I’ll have the Firefox OS Tablet Contributor Program application form ready next week with tablets shipping to our community by the end of the month. We’re still sorting out some details that could delay things a wee bit, but we’re getting very close, so stay tuned. (And if things are going really well, I’ll also have some news about a second tablet device coming to the program.)

http://asadotzler.com/2014/02/07/firefox-os-happenings-week-ending-2014-02-07/


Gervase Markham: Why Is Email So Hard?

I want programs on my machine to be able to send mail. Surely that’s not too much to ask. If I wanted it to Tweet, I’m sure there are a dozen libraries out there I could use. But sending mail… that requires the horror that is sendmail.rc or trying to configure Postfix or Exim, which is the approximate equivalent of using an ocean-going liner to cross a small creek.

Or does it? I just discovered “ssmtp”, which is supposed to be a program which does the very simple thing of accepting mail and passing it on to a configured mailserver – GMail, your own, or whatever. All you have to do is tell it your server name, username and password. Simple, right?

Turns out, not so simple. I kept getting auth failures, whichever server I tried. ssmtp’s verbose mode seemed to show no password being sent, but that’s because it doesn’t show passwords in logs. Once I finally configured a mailserver on port 25 (so no encryption) and broke out Wireshark, it turned out that ssmtp was sending a blank password. But why?

The config file doesn’t have sample entries for the “username” and “password” parameters, so I typed them in. Turns out, the config key for the password is “AuthPass”, not “AuthPassword”. And ssmtp doesn’t think to even say “Hey, you are trying to log in without a password. No-one does that. Are you sure you’re not on crack?” It happily sends off a blank password, because that’s clearly what you wanted if you left the password out entirely.
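A small pre-flight check can catch this kind of mistake before any mail is sent. The sketch below is not part of ssmtp: the function name check_ssmtp_auth and the default config path are assumptions, and it assumes plain Key=value lines with no spaces around the equals sign. It only reports when a file sets AuthUser without a non-empty AuthPass.

```shell
#!/bin/sh
# Hypothetical helper, not shipped with ssmtp: warn when a config file
# sets AuthUser but has no non-empty AuthPass line.
check_ssmtp_auth() {
    conf="${1:-/etc/ssmtp/ssmtp.conf}"   # default path is an assumption
    # A misspelled key such as AuthPassword= does not match ^AuthPass=
    if grep -qi '^AuthUser=' "$conf" && ! grep -qi '^AuthPass=.' "$conf"; then
        echo "warning: AuthUser is set but AuthPass is missing or empty in $conf"
        return 1
    fi
    echo "ok: $conf"
}
```

Calling check_ssmtp_auth on a file that contains AuthPassword=... instead of AuthPass=... prints the warning and returns a non-zero status, which is the hint the post wishes ssmtp had given.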

http://feedproxy.google.com/~r/HackingForChrist/~3/_joSZ5EsLWo/


Ben Hearsum: This week in Mozilla RelEng – February 7th, 2014 – new format (again)!

I’ve heard from a few people that the big long list of bugs I’ve been providing for the past few weeks is a little difficult to read. Starting with this week I’ll be supplementing that with some highlights at the top that talk about some of the more important things that were worked on throughout the week.

Highlights:

- Aki did some work on improving our documentation about things that need to happen on merge days. Historically, this has been a hairy process, and cleaning this up will help us complete it more quickly and without mistakes.

- Armen worked on extracting data to show how much CPU is used on EC2 machines. This data is a step on the road to more efficient use of them.

- Hal started work to enable the tree closure hook on trees that don’t yet have it. Enabling that will help sheriffs minimize tree closures of large branches (eg, mozilla-inbound) by having better control over incoming load.

- Catlee, Callek, Mike, Simone, and Massimo all attended TRIBE Session 1.

- Nick made it possible for us to ship Firefox 27.0 to our Beta users before we ship it to the Release channel. This gives us a better opportunity to find hard to reproduce bugs such as bug 865701 before we ship.

- Many people both in and outside of RelEng helped debug and fix network load issues that caused massive tree closures. Catlee wrote an in-depth blog post on this for those interested.

- Rail switched many of our EC2 machines from m3.xlarge to the cheaper (but just as fast) c3.xlarge instances.

- I did some experiments with smaller pools of build machines which is a start towards more intelligent build machine selection.

Completed work (resolution is ‘FIXED’):

- Buildduty

- Jobs not being scheduled

- Re-purpose mw32-ix-slave##, linux-ix-slave##, linux64-ix-slave##, bld-linux64-ix-05[1-3], mw32-ix-ref and linux-ix-ref as b-2008-ix-#### (rev2) machines

- Jobs not being scheduled

- Please update version of mock available on internal pypi

- Self-serve is down

- Hamachi nightlies on b2g18 are burning with “Error: Couldn’t find /builds/slave/b2g_m-b18_ham_ntly-00000000000/build/out/host/linux-x86/bin/fs_config”

- Revision mapper down

- deploy gcc 4.7.2 with newer binutils to tooltool server

- nightly builds failing because of switch to ftp-ssl

- Deploy and updated tools check out to all foopies

- upload a new talos.zip file

- General Automation

- b2g merge day work

- B2G emulator tests are broken on Pine and Cedar

- [tracker] deal with machines that will be obsoleted after the esr17 EOL

- Tracking bug for 3-feb-2014 migration work

- No Firefox linux nightly l10n builds since Jan 30

- limit pvtbuilds uploads

- Add ‘latest’ directory for mozilla-central TBPL b2g builds

- android nightlies failing to submit to balrog because of forbidden domain

- Compression for blobber

- Expose system resource usage of jobs

- Schedule debug emulator tests and builds on pine

- Schedule gaia-ui-tests on cedar against emulator builds

- Switch update server for Buri from OTA to FOTA by using the solution seen in bug 935059

- [Tracking bug] automation support for B2G v1.3.0

- Make gecko and gaia archives from device builds publicly available

- Loan Requests

- Please loan t-xp32-ix- instance to dminor

- Slave tst-linux64-ec2 or talos-r3-fed for :marshall_law

- Please loan t-w864-ix- instance to dminor

- Other

- Add AWS networks to inventory

- Try harder to get a fast slave

- recreate bad instances in us-west-2

- a bunch of usw2 slaves failed to puppetize

- Platform Support

- s/m3.xlarge/c3.xlarge/

- Build and deploy a patched gcc 4.7.3

- The clock of some Fedora machines is out of sync

- Migrate mozilla-release and comm-release to win64-rev2

- Releases

- Add SeaMonkey 2.24 Beta 1 to bouncer

- Manually create SSL products for Firefox 27

- tracking bug for build and release of Firefox 24.3.0 ESR

- tracking bug for build and release of Thunderbird 24.3.0

- Disable Aurora 29 daily updates until merge to mozilla-aurora has stabilized

- Add SeaMonkey 2.24 to bouncer

- Generate partial updates from 27.0build1 (27.0rc) to 28.0b1

- Yandex partner repack changes for Fx 27 release

- Releases: Custom Builds

- Update Bing favicon to match current branding for partner builds and add-ons

- Clean up MSN bundles and restore MSN add-on to the correct URL

- Repos and Hooks

- Tools

- slaveapi’s reboot action shouldn’t create a bug for a slave unless it was unable to reboot it

- Support for instance type changes for aws_watch_pending.py

- Don’t display “long loans” in aws sanity report twice

- getting ambigous results for long running instances -> tst-w64-ec2-001 and tst-w64-ec2-002

- Teach slave health to file tracking bugs about b-2008-ix-*

- Mirror https://github.com/mozilla/gaia-ui-tests to hg

- Make git-hg mapfile public

- Loaner EC2 instances should not auto startup

- Standardize on single quotes in slave health

- # of constructors no longer being measured correctly

- Move js into libs as much as possible

In progress work (unresolved and not assigned to nobody):

- Balrog: Backend

- Buildduty

- General Automation

- Create two S3 buckets and make them available from build slaves

- Provision enough in-house master capacity

- Tracking bug for 17-mar-2014 migration work

- Provide B2G Emulator builds for Darwin x86

- Get existing metrofx talos tests running on release/project branches

- Create IAM roles for EC2 instances

- Rooting analysis mozconfig should be in the tree

- gaia-ui tests need to dump a stack when the process crashes

- Sometimes building files more than once on mac and linux

- Make Pine use mozharness production & limit the jobs run per push

- take ownership of and fix relman merge scripts

- Run desktop mochitests-browser-chrome on Ubuntu

- Use spot instances for regular builds

- switch to https for build/test downloads and hg

- split Android 4.0 robocop (rc) into 5 chunks

- Run win64 unit tests at bootup on tst-w64-ec2-xxx fork

- Implement ghetto “gaia-try” by allowing test jobs to operate on arbitrary gaia.json

- Remove ‘update_files’ logic from B2G unittest mozharness scripts

- Please add non-unified builds to mozilla-central

- Cloning of hg.mozilla.org/build/tools and hg.mozilla.org/integration/gaia-central often times out with “command timed out: 1200 seconds without output, attempting to kill”, “command timed out: 1800 seconds without output, attempting to kill”

- Flags passed to jit-test from mozharness should match flags in make check.

- final verification should report remote IP addresses

- b2g builds failing with AttributeError: ‘list’ object has no attribute ‘values’ | caught OS error 2: No such file or directory while running ['./gonk-misc/add-revision.py', '-o', 'sources.xml', '--force', '.repo/manifest.xml']

- keep buildbot master twistd logs longer

- Build the Gecko SDK from Firefox, rather than XULRunner

- Run unittests on Win64 builds

- submit release builds to balrog automatically

- Split up mochitest-bc on desktop into ~30minute chunks

- [tracking bug] migrate hosts off of KVM

- Run jit-tests from test package

- [tarako][build]create “tarako” build

- Use mach to invoke printconfigsetting.py

- Loan Requests

- request for a Windows 7 build slave

- Loan felipe an AWS unit test machine

- loan request for graydon [Ubuntu 64]

- Slave loan request for a talos-mtnlion-r5 machine

- Please lend Andreas Tolfsen a linux64 EC2 m3.medium slave

- loan linux slave to jmaher

- Slave loan request for a tst-linux64-ec2 machine

- Need a slave for bug 818968

- Other

- Create a long term archive of opt+debug builds on M-I for Regression Hunting

- s/m1.medium/m3.medium/

- Add b2g-inbound, fx-team, and mozilla-central to regression archive

- [tracking] infrastructure improvements

- Set up cron jobs to copy mozilla-inbound dep builds to S3

- s/m1.large/m3.large/

- Use mozmake for some windows builds

- Don’t clobber unrelated trees

- [tracking] move services from cruncher to production

- The stage.m.o:/mnt/cm-ixstore01 mount is filling up

- Platform Support

- [tracker] move jobs away from obsoleted machines

- AWS machines should run b2g emulator reftests with GALLIUM_DRIVER=softpipe

- Windows slaves often get permission denied errors while rm’ing files

- Determine number of iX machines to request for 2014

- Run Windows 8 unit tests on Date branch

- Create a Windows-native alternate to msys rm.exe to avoid common problems deleting files

- Release Automation

- Releases

- tracking bug for build and release of Firefox and Fennec 28.0b1

- Set up testing updates for 27.0 and older betas to 27.0 build1

- tracking bug for build and release of Firefox and Fennec 27.0

- Add SeaMonkey 2.25 Beta 1 to bouncer

- Releases: Custom Builds

- Repos and Hooks

- Mercurial server should encourage best practices

- Add a hook to detect changesets with wrong file metadata

- Add every tree to treestatus, and add the treeclosure hook to every tree’s repo

- Tools

- tracker to retire legacy vcs2vcs

- Report Bug # in AWS loan report

- update merge day documentation

- Add an option to trychooser to select Talos profiling options

- permanent location for vcs-sync mapfiles, status json, logs

- cut over build/* repos to the new vcs-sync system

- slaveapi still files IT bugs for some slaves that aren’t actually down

- db-based mapper on web cluster

- Create a Comprehensive Slave Loan tool

http://hearsum.ca/blog/this-week-in-mozilla-releng-february-7th-2014-new-format-again/


Jeff Griffiths: FOSDEM 2014: JS & Hardware

I had the privilege of attending and speaking at FOSDEM this past weekend on our plans to help web developers extend the Firefox Developer Tools:

It's now Time for @canuckistani to speak about the essential developer tools! Cc @mozilla #fosdem pic.twitter.com/hR1I8eMdRm

Mozilla FR (@mozilla_fr), February 1, 2014

(The slides are here, and I'll post again when the video is available.)

If you've never been, FOSDEM is a pretty unusual conference - it's free for attendees, hosted at ULB university in Brussels, and seems to be the heart and soul ( or at least the key annual event and social event ) for the FOSS community in Europe. Since attending my first FOSDEM in 2012 I've had this deep impression that FOSS communities are distinctly different in Europe than elsewhere, and this was reconfirmed this year.

The Mozilla room was open all day Saturday and was very busy, particularly for talks on JS and Firefox OS. Likewise Sunday's JavaScript room was completely overflowing whenever we tried to get in, which was unfortunate because there were some really interesting talk topics I wanted to see.

Another strong trend that I noticed had an even larger impact this year was the huge array of talks and projects around FOSS and open / embedded hardware. It's really incredible to see the diversity of activity around new low-power devices running an open source stack - there was an entire devroom dedicated to the 'internet of things'.

I really like how squarely Firefox OS is placed between these two trends - people want to write mobile html5 apps, and people want to embed software in all manner of devices. Mozilla is ( quite rightly ) very focused on shipping for phones right now, but we're starting to see the types of experiments with other devices that I think are inevitable:

- Gigaom: "Firefox may beat Google to a web-based slate: Hello, Firefox OS tablet"

- "APC runs the Firefox OS, built for keyboard and mouse input."

- "Mozilla partners with Panasonic to bring Firefox OS to the TV"

This is the great side-effect of working in the open as we do - people don't need to ask us permission to do these things, they just fork a github repo and get started.


Wil Clouser: Improving our process; Part 1: Identifying Priorities

One of our stress points is juggling too many projects. It’s easy to hear ideas pitched from excited people and agree that we should do them, but it’s hard to balance that with all the other commitments we’ve already made. At some point we have too many “top priority” projects and people are scrambling to keep up with just the bare minimum – forgoing any hope of actually getting the enhancements done.

With a large number of projects, developers begin working independently to finish them which increases feelings of isolation, contributes to poor communication (“I don’t want to interrupt you”), and delays code review until someone can context switch over to look at it. Basically we’ve hit a point where – on large enough projects – working separately is slowing down our delivery and lowering our team morale.

In addition to being spread too thinly, there is also the question of why are we working on the projects we’re working on. In theory, it’s because they support our company goals, but that can often get muddled in the heat of the moment and interpretations and definitions (and even memory) can affect what we actually work on.

The work week helped highlight these two concerns and after several discussions (thanks to everyone who participated) we came up with some solutions. I’ll address them in reverse order – firstly, to determine priorities (the why), we wanted a more objective and public way of ranking the importance of projects. A public list of projects not only lines up with our open philosophy, but helps developers and the community know who is working on what, and also helps anyone requesting features get a feeling for a delivery timeline. Our solution was to build a grid with 16 relevant fields which we rank from 0-5 and then sum to get a final score. The fields are categorized and are broken down as follows:

- Who finds value from the request? Separate points for consumers, developers, operators, OEMs, and Mozilla employees.

- How does the request align with the company goals[1]? Separate points for each goal.

- How does the request align with the 2014 Marketplace goals? Separate points for the three goals.

- Lastly, is there internal necessity? Separate points for Technological Advancement, Legal Compliance, and Urgency.

Once we have a request, a champion for the request meets with representatives from the product, UX, and developer teams, as well as any other relevant folks. We discuss the request and the value in the specific areas and then add up the score to get a number representative of priority. Clearly this isn’t a hard and fast science, but it’s a lot more objective than a gut feeling. It lets us justify decisions with reasoning, helps us think of all affected groups, and makes sure we’re working on what is important. If something has a high score we’ll start talking about timelines and moving things around, keeping in mind commitments we’ve made, planning and research that needs done, and any synchronizing we need to do with other dependent teams.
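The arithmetic behind the grid is a plain sum of 0-5 ratings, so with 16 fields the highest possible score is 80. As a rough sketch only: the function name priority_score and the ratings below are made up for illustration, and the example rates just the eight fields named in the list above (the five audience groups and the three internal-necessity fields), since the individual company and Marketplace goals are not spelled out here.

```shell
#!/bin/sh
# Sum a list of per-field ratings, each an integer from 0 to 5.
# priority_score is a hypothetical name used only for this illustration.
priority_score() {
    total=0
    for rating in "$@"; do
        case "$rating" in
            [0-5]) total=$((total + rating)) ;;
            *) echo "rating out of range: $rating" >&2; return 1 ;;
        esac
    done
    echo "$total"
}

# Made-up ratings for an imaginary request:
#   consumers developers operators OEMs Mozilla-employees  -> 4 5 1 0 2
#   technological-advancement legal-compliance urgency     -> 3 0 2
priority_score 4 5 1 0 2 3 0 2   # prints 17
```

A rating outside 0-5 is rejected rather than silently summed, which keeps scores comparable between requests.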

The other issue – people trying to do too much – is easily solved in a conversation but will likely be a challenge in practice. The plan is to cap our active tasks to 3 or 4 at any one time. Anything else will queue up behind them or displace them as appropriate. The exact number may change based on size of the team and size of the project, but my goal is to get 2 to 4 people working on a project together and with our current numbers that’s about what we can do.

I’m working on a real-time public view of our tasks but I’m traveling this week and haven’t had a chance to finish it. I’ll post again with a link once it is finished.

[1] Sorry for the non-public link here and the unlinked Marketplace goals. I’m told there will be a publicly accessible link on Feb 24th and I’ll update this post then.

http://micropipes.com/blog/2014/02/07/improving-our-process-part-1-identifying-priorities/


Planet Mozilla Interns: Mihnea Dobrescu-Balaur: A GitHub river for Elasticsearch

Elasticsearch is a great tool, allowing users to store and query giant text datasets with speed and ease. However, the thing I like most about elasticsearch is the workflows that you can build around it and its idioms. One of these idioms is the so-called river.

A river is an easy way to set up a continuous flow of data that goes into your elasticsearch datastore. It is more convenient than the classical way of manually indexing data because once configured, all the data will be updated automatically. This reduces complexity and also helps build a real-time system.

There are already a few rivers out there, like the twitter and wikipedia rivers, but there wasn't any GitHub river until now.

Using the GitHub river

The GitHub river allows us to periodically index a repository's public events. If you provide authentication data (as seen in the README), it can also index data for private repositories.

To get a taste of the workflow possibilities this opens, we will index some data from the lettuce repo and explore it in a pretty kibana dashboard.

Assuming you have elasticsearch already installed, you first need to install the river. Make sure you restart elasticsearch after this so it picks up the new plugin.

# if you don't have plugin in your $PATH, it's in $ELASTICSEARCH/bin/plugin.
plugin -i com.ubervu/elasticsearch-river-github/1.2.1

Now we can create our GitHub river:

curl -XPUT localhost:9200/_river/gh_river/_meta -d '{
    "type": "github",
    "github": {
        "owner": "gabrielfalcao",
        "repository": "lettuce",
        "interval": 3600
    }
}'

Right after this, elasticsearch will start indexing the most recent 300 (GitHub API policy) public events of gabrielfalcao/lettuce. Then, after one hour, it will check again for new events.

The data is accessible in the gabrielfalcao-lettuce index, where you will find a different document type for every GitHub event type.
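To point the river at another repository, the same definition can be generated from a small helper. This is a sketch under assumptions: river_meta is a hypothetical name, not part of the plugin, and the commented curl calls need a local elasticsearch with the river installed. The second call uses the standard _search endpoint to peek at one indexed document.

```shell
#!/bin/sh
# Print the river definition shown above for an arbitrary repository.
# river_meta is a hypothetical helper name, not part of the plugin.
river_meta() {
    owner="$1"; repo="$2"; interval="${3:-3600}"   # interval in seconds
    printf '{"type": "github", "github": {"owner": "%s", "repository": "%s", "interval": %s}}\n' \
        "$owner" "$repo" "$interval"
}

# With elasticsearch running locally (uncomment to run):
# curl -XPUT localhost:9200/_river/gh_river/_meta -d "$(river_meta gabrielfalcao lettuce 3600)"
# curl 'localhost:9200/gabrielfalcao-lettuce/_search?pretty&size=1'
```

The helper only builds the request body, so it can be checked without a running server.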

Visualizing the data with Kibana

In order to make some sense of this data, let's get Kibana up and running. First, you need to download and extract the latest Kibana build. To access it, open kibana-latest/index.html in your favorite web browser.

What you see now is the default Kibana start screen. You could go ahead and configure your own dashboard, but to speed things up I suggest you import the dashboard I set up. First, download the JSON file that defines the dashboard. Then, at the top-right corner of the page, go to Load > Advanced > Choose file and select the downloaded JSON.

That's it! You now have a basic dashboard set up that shows some key graphs based on the GitHub data you have in elasticsearch. Furthermore, thanks to the river and the way the dashboard is set up, you will get new data every hour and the dashboard will refresh accordingly.

Besides repository events, the river also fetches all the issue data and open pull requests. Checking those out in Kibana is left as an exercise for the reader.

Happy hacking!

http://www.mihneadb.net/post/a-github-river-for-elasticsearch


Lawrence Mandel: Manager Hacking presents: A Year In the Financial Life of an Average Mozillian

Mozilla Manager Hacking is proud to announce an exciting discussion with our CFO, Jim Cook, on “A Year in the Financial Life of an Average Mozillian”. This interactive discussion is designed to map the daily, monthly, and yearly activities of an average employee to our financial statements. Jim’s compelling and creative presentation continues his financial series, which aims to bring awareness and open dialogue to how we think about Mozilla’s finances. Please come prepared to know more, do more and do better!

Event: Open Financial Discussion: A Year In the Financial Life of an Average Mozillian

Presenters: Jim Cook and Winnie Aoeiong

Date/Time: 11 February 2014 @ 10 – 11a.m. PDT

Location: 10FWD MTV / SF Floor 1 Commons / Tor Commons / YVR Commons / PDX Commons / Lon Commons / Par Salle des Fetes / Air Mozilla

Open to employees and vouched Mozillians

Tagged: finance, manager hacking, mozilla, presentation


Eric Shepherd: Getting on the developer documentation team’s radar

If you’re a contributor to the Firefox project—or even a casual reader of the Mozilla Developer Network documentation content—you will almost certainly at times come upon something that needs to be documented. Maybe you’re reading the site and discover that what you’re looking for simply isn’t available on MDN. Or maybe you’re implementing an entire new API in Gecko and want to be sure that developers know about it.

Let’s take a look at the ways that you can make this happen.

Requesting documentation team attention

In order to ensure something is covered on MDN, you need to get the attention of the writing team. There are two official methods to do this; which you use depends on various factors, including personal preference and the scope of the work to be done.

The dev-doc-needed keyword

For several years now, we’ve made great use of the dev-doc-needed keyword on bugs. Any bug that you think even might benefit from (or require) a documentation update should have this keyword added to it. Full stop.

Documentation request bugs

The other way to request developer documentation is to file a documentation request bug. This can be used for anything from simple tweaks to documentation to requesting that entirely new suites of content be written. This can be especially useful if the documentation you feel needs to be created encompasses multiple bugs, but, again, can be used for any type of documentation that needs to be written.

Getting ahead: how to get your request to the top of the pile

Just flagging bugs or filing documentation requests is a great start! By doing either (or both) of those things, you’ve gotten onto our list of things to do. But we have a lot of documentation to write: as of the date of this blog post, we have 1233 open documentation requests. As such, prioritization becomes an important factor.

You can help boost your request’s chances of moving toward the top of the to-do list! Here are some tips:

- If your technology is an important one to Mozilla’s mission, be sure to say so in your request. Let us know it’s important, and why.

- Give us lots of information! Be sure we have links to specifications and design documents. Make sure it’s clear what needs to be done. The less we have to hunt for information, the easier the work is to get finished, and the sooner we’re likely to pick your request off the heap.

- Ping us in IRC on #mdn. Ideally, find the MDN staff member in charge of curating your topic area, or the topic driver for the content area your technology falls under, and talk to them about it.

- Email the appropriate MDN staff member, or ping the dev-mdc mailing list, to let us know about your request.

- For large new features or technologies, try to break up your request into multiple, more manageable pieces. If we can document your technology in chunks, we can prioritize sections of it among other requests, making it easier to manage your request efficiently.

I’ll blog again soon with a more complete guide to writing good documentation requests.

Conclusion

Be sure to check out the article “Getting documentation updated” on MDN; this covers much of what I’ve said here. Upcoming posts will go over how to decide if something should be covered on MDN at all, and how to communicate with the developer documentation team in order to make sure that the documentation we produce is as good as possible.

http://www.bitstampede.com/2014/02/07/getting-on-the-docs-teams-radar/


Marco Zehe: Switching back to Windows

Yes, you read correctly! After five years on a Mac as my private machine, I am switching back to a Windows machine in a week or so, depending on when Lenovo’s shipment arrives.

You are probably asking yourself why I am switching back. In this post, I’ll try to give some answers to that question, explain my very personal views on the matters that prompted this switch, and give you a bit of an insight into how I work and why OS X and VoiceOver no longer really fit that bill for me.

A bit of history

When I started playing with a Mac in 2008, I immediately realised the potential of the approach Apple was taking. Bundling a screen reader with the operating system had been done before, on the GNOME desktop, for example, but Apple’s advantage is that they control the hardware and software back to front and always know what’s inside their boxes. So a blind user is always guaranteed to get a talking Mac when they buy one.

On Windows and Linux, the problem is that the hardware used is unknown to the operating system. On pre-installed systems, this is usually being taken care of, but on custom-built machines with standard OEM versions of Windows or your Linux distro downloaded from the web, things are different. There may be this shiny new sound card that just came out, which your dealer put in the box, but which neither operating system knows about, because there are no drivers. And gone is the dream of a talking installation! So, even when Windows 8 now allows Narrator to be turned on very early in the installation process in multiple languages even, and Orca can be activated early in a GNOME installation, this all is of no use if the sound card cannot be detected and the speech synthesizer cannot output its data through connected speakers.

And VoiceOver had quite some features already when I tried it in OS X 10.5 Leopard: It had web support, e-mail was working, braille displays, too, the Calendar was one of the most accessible on any desktop computer I had ever seen, including Outlook’s calendar with the various screen readers on Windows, one of which I had even worked on myself in earlier years, and some third-party apps were working, too. In fact, my very first Twitter client ran on the Mac, and it was mainstream.

There was a bit of a learning curve, though. VoiceOver’s model of interacting with things is quite different from what one might be used to on Windows at times. Especially interacting with container items such as tables, text areas, a web page and other high-level elements can be confusing at first. If you are not familiar with VoiceOver, interacting means zooming into an element. A table suddenly gets rows and columns, a table row gets multiple cells, and each cell gets details of the contained text when interacting with each of these items consecutively.

In 2009, Apple advanced things even further when they published Snow Leopard (OS X 10.6). VoiceOver now had support for the trackpads of modern MacBooks, and when the Magic TrackPad came out later, it also just worked. The Item Chooser, VoiceOver’s equivalent of a list of links or headings, included more items to list by, and there was now support for so-called web spots, both user-defined and automatic. A feature VoiceOver calls Commanders allowed the assignment of commands to various types of keystrokes, gestures, and others. If you remember: Snow Leopard cost 29 US dollars, and aside from a ton of new features in VoiceOver, it obviously brought all the great new features that Snow Leopard had in store for everyone. A common saying was: Other screen readers needed 3 versions for this many features and would have charged several hundred dollars of update fees. And it was a correct assessment!

In 2011, OS X 10.7 Lion came out, bringing a ton of features for international users. Voices well-known from iOS were also made available in desktop formats for over 40 languages, international braille tables were added, and it was no longer required to purchase international voices separately from vendors such as AssistiveWare. This meant that users in more countries could just try out VoiceOver on any Mac in an Apple retail store or a reseller’s place. There were more features such as support for WAI-ARIA landmarks on the web, activities, which are either application or situation-specific sets of VoiceOver settings, and better support for the Calendar, which got a redesign in this update.

First signs of trouble

But this was also the time when first signs of problems came up. Some things just felt unfinished. For example: The international braille support included grade 2 for several languages, including my mother tongue German. German grade 2 has a thing where by default, nothing is capitalized. German capitalizes many more words than English, for example, and it was agreed a long time ago that only special abbreviations and expressions should be capitalized. Only in learning material, general orthographic capitalization rules should be used. In other screen readers, capitalization can be turned on or off for German and other language grade 2 (or even grade 1). Not so in VoiceOver for both OS X and iOS. One is forced to use capitalization. This makes reading quite awkward. And yes, makes, because this remains an issue in both products to this date. I even entered a bug into Apple’s bug tracker for this, but it was shelved at some point without me being notified.

Some other problems with braille also started to surface. For some inexplicable reason, I often have to press routing buttons twice until the cursor appears at the spot I want it to when editing documents. While you can edit braille verbosity where you can define what pieces of information are being shown for a given control type, you cannot edit what gets displayed as the control type text. A “closed disclosure triangle” always gets shown as such, same as an opened one. On a 14 cell display, this takes two full-length displays, on a 40 cell one, it wastes most of the real estate and barely leaves room for other things.

Other problems also gave a feeling of unfinished business. The WAI-ARIA landmark announcement, working so well on iOS, was very cumbersome to listen to on OS X. The Vocalizer voices used for international versions had a chipmunk effect that was never corrected and, while funny at first, turned out to be very annoying in day-to-day use.

OK, enthusiastic Mac fanboy that I was, I thought: let’s report these issues and wait for the updates to trickle in. But none of the 10.7 updates really fixed the issues I was having.

Then a year later, Mountain Lion, AKA OS X 10.8, came out, bringing a few more features, but far fewer than the versions before. Granted, it was only a year between these two releases, whereas the two cycles before had been two years each, but the features that did come in weren’t too exciting. There was a bit of polish here and there with drag and drop, one could now sort the columns of a table and press and hold buttons, and a few more little things. Safari learned a lot of new HTML5 and more WAI-ARIA and was less busy, but that was about it. Oh yes, and one could now access all items in the upper right corner of the screen. But again, not many of the previously reported problems were solved, except for the chipmunk effect.

There were also signs of real problems. I have a Handy Tech Evolution braille display as a desktop braille display, and that had serious problems from one Mountain Lion update to the next, making it unusable with the software. It took two or three updates, distributed over four or five months, before that was solved, basically turning the display into a useless piece of space-waster.

And so it went on

And 10.9 AKA Mavericks again only brought a bit of polish, but also introduced some serious new bugs. My Handy Tech BrailleStar 40, a laptop braille display, is no longer working at all. It simply isn’t being recognized when plugged into the USB port. Handy Tech are aware of the problem, so I read, but since Apple is in control of the Mac braille display drivers, who knows when a fix will come, if at all in a 10.9 update. And again, old bugs have not been fixed. And new ones have been introduced, too.

Mail, for example, is quite cumbersome in conversation view now. While 10.7 and 10.8 were at least consistent in displaying multiple items in a table-like structure, 10.9 simply puts the whole mail in as an embedded character you have to interact with to get at the details. It also never keeps its place, always jumping to the left-most item, the newest message in the thread.

The Calendar has taken quite a turn for the worse, being much more cumbersome to use than in previous versions. The Calendar UI seems to be a subject of constant change anyway, according to comments from sighted people, and although it is technically accessible, it is no longer really usable, because there are so many layers and sometimes unpredictable focus jumps and interaction oddities.

However, having said that, an accessible calendar is one thing I am truly going to miss when I switch back to Windows. I know various screen readers take stabs at making the Outlook calendar accessible, and it gets broken very frequently, too. At least the one on OS X is accessible. I will primarily be doing calendaring from my iOS devices in the future. There, I have full control over things in a hassle-free manner.

iBooks, a new addition to the product, is a total accessibility disaster with almost all buttons unlabeled, and the interface being slow as anything. Even the update issued shortly after the initial Mavericks release didn’t solve any of those problems, and neither did the 10.9.1 update that came out a few days before Christmas 2013.

From what I hear, Activities seem to be pretty broken in this release, too. I don’t use them myself, but heard that a friend’s activities all stopped working, triggers didn’t fire, and even setting them up fresh didn’t help.

Here comes the meat

And here is the first of my reasons why I am switching back to Windows: All of the above simply added up to a point where I lost confidence that Apple is still as dedicated to VoiceOver on the Mac as they were a few years ago. Old bugs aren’t being fixed, and new ones are introduced and, even though beta testers (of whom I was one) reported them, often not addressed (like the Mail and Calendar problems, or iBooks). Oh yes, Pages, after four years, finally became more accessible recently, and Keynote can now run presentations with VoiceOver, but these points still don’t negate the fact that VoiceOver itself is no longer receiving the attention it needs as an integrated part of the operating system.

The next point is one that has already been debated quite passionately on various forums and blogs in the past: VoiceOver is much less efficient when browsing the web than screen readers on Windows are. Going from element to element is not really snappy, jumping to headings or form fields often has a delay, depending on the size and complexity of a page, and the way Apple chose to design their modes requires too much thinking on the user’s part. There is single letter quick navigation, but you have to turn on quick navigation with the cursor keys first, and enable the one letter quick navigation separately once in the VoiceOver utility. When cursor key quick navigation is on, you only navigate via left and right arrow keys sequentially, not top to bottom as web content, which is still document-based for the most part, would suggest. The last used quick navigation key also influences the item chooser menu. So if I moved to a form field last via quick navigation, but then want to choose a link from the item chooser, the item chooser opens to the form fields first. I have to left arrow to get to the links. Same with headings. For me, that is a real slow-down.

Also, VoiceOver is not good at keeping its place within a web page. As with all elements, once interaction stops, then starts again, VoiceOver starts interaction at the very first element. Conversations in Adium or Skype, and even the Messages app supplied by Apple, all suffer from this. One cannot jump into and out of the HTML area without losing one’s place. Virtual cursors on Windows in various screen readers are very good at remembering the spot they were at when focus left the area. And even Apple’s VoiceOver keystroke to jump to related elements, which is supposed to jump between the input and HTML area in such conversation windows, is a subject of constant breakage, re-fixing, and other unpredictability. It does not even work right in Apple’s own Messages app in most cases.

Over-all, there are lots of other little things when browsing the web which add up to make me feel I am much less productive when browsing the web on a Mac than I am on Windows.

Next is VoiceOver’s paradigm of having to interact with many elements. One item where this also comes into play is text. If I want to read something in detail, be it on the web, a file name, or basically anything, I have to interact with the element, or elements, before I get to the text level, read word by word or character by character, and then stop interaction as many times as I started it to get back to where I was before wanting to read in detail. Oh yes, there are commands to read and spell by character, word, and sentence, but because VoiceOver uses the Control+Option keys as its modifiers, and the letters for those actions are all located on the left-hand side of the keyboard, it means I have to take my right hand off its usual position to press these keys while the left hand holds the Control and Option keys. MacBooks as well as the Apple Wireless Keyboard don’t have Control and Option keys on both sides, and my hand cannot be bent in a fashion that I can grab these keys all with one hand. Turning the VoiceOver key lock mechanism on and off for this would make the situation even more cumbersome.

And this paradigm of interaction is also applied to the exploration of screen content by TrackPad. You have to interact or stop interacting with items constantly to get a feel for the whole screen. And even then, I often feel I never get a complete picture. Unlike on iOS, where I always have a full view of a screen. Granted, a desktop screen displays far more information than could possibly fit on a TrackPad without being useless millimeter touch targets, but still the hassle of interaction led to me not using the TrackPad at all except for some very seldom specific use cases. We’re talking about a handful of instances per year.

Next problem I am seeing quite often is the interaction braille gives me. In some cases, the output is just a dump of what speech is telling me. In other cases, it is a semi-spatial representation of the screen content. In yet another instance, it may be a label with some chopped off text to the right, or to the left, with the cursor not always in predictable positions. I already mentioned the useless grade 2 in German, and the fact that I often have to press the routing button at least twice before the cursor gets where I want it to go. The braille implementation in VoiceOver gives a very inconsistent impression, and feels unfinished, or done by someone who is not a braille reader and doesn’t really know the braille reader’s needs.

Next problem: Word processing. Oh yes, Pages can do tables in documents now, and other stuff also became more accessible, but again because of the paradigms VoiceOver uses, getting actual work done is far more cumbersome than on Windows. One has, for example, to remember to decouple the VoiceOver cursor from the actual system focus and leave that inside the document area when one wants to execute something on a tool bar. Otherwise, focus shifts, too, and a selection one may have made gets removed, rendering the whole endeavor pointless. Oh yes, and one has to turn the coupling back on later, or habits will result in unpredictable results because the system focus didn’t move where one would have expected it to. And again, VoiceOver’s horizontally centered navigation paradigm. Pages of a document in either Pages or Nisus Writer Pro appear side by side, when they are visually probably appearing below one another. Each page is its own container element. All of this leaves me with the impression that I don’t have as much control over my word processing as I have in MS Word or even the current snapshot builds of OpenOffice or LibreOffice on Windows. I also get much more information that I don’t have to look for explicitly, for example the number of the current page. NVDA, but probably others, too, have multilingual document support in Word. I immediately hear which spell checking is being used in a particular paragraph or even sentence.

There are some more issues which have not been addressed to this day. There is no PDF reader I know of on OS X that can deal with tagged (accessible) PDFs. Even when tags are present, Preview doesn’t do anything with them, giving the more or less accurate text extraction that one gets from untagged PDFs. As a result, there is no heading navigation, no table semantic output, and none of the other things that accessible PDFs support.

And the fact that there is no accessible Flash plug-in for web browsers on OS X also has caused me to switch to a Windows VM quite often just to be able to view videos embedded in blogs or articles. Oh yeah, HTML5 video is slowly coming into more sites, but the reality is that Flash is probably still going to be there for a couple of years. This is not Apple’s fault, the blame here is solely to be put on Adobe for not providing an accessible Flash plug-in, but it is one more thing that adds to me not being as productive on a Mac as I want to be on a desktop computer.

Conclusion

In summary: By all of the above, I do not mean to say that Apple did a bad job with VoiceOver to begin with. On the contrary: Especially with iOS, they have done an incredibly good job for accessibility in the past few years. And the fact that you can nowadays buy a Mac and install and configure it fully in your language is highly commendable, too! I will definitely miss the ability to configure my system alone, without sighted assistance, should I need to reinstall Windows. As I said above, that is still not fully possible without assistance. It is just the adding up of things that I found over the years that caused me to realize that some of the design decisions Apple has made for OS X, bugs that were not addressed or things that get broken and not fixed, and the fact that apps are either accessible or they aren’t, and there’s hardly any in-between, are not compatible with my way of working with a desktop computer or laptop in the longer term. For iOS, I have a feeling Apple are still full-steam ahead with accessibility, introducing great new features with each release, and hopefully also fixing braille problems as discussed by Jonathan Mosen in this great blog post. For OS X, I am no longer as convinced their heart is in it. I also have a feeling that OS X itself may become a second-class citizen behind iOS soon, but that, again, is only my personal opinion.

So there you have it. This is why I am going to be using a Lenovo notebook with NVDA as my primary screen reader for my private use from now on. I will still be using a Mac for work of course, but for my personal use, the Mac is being replaced. I want to be fast, effective, productive, and be sure my assistive technology doesn’t suddenly stop working with my braille display or be susceptible to business decisions placing less emphasis on it. Screen readers on Windows are being made by independent companies or organizations with their own business models. And there is choice. If one no longer fits particular needs, another will most likely do the trick. I do not have that on OS X.

http://www.marcozehe.de/2014/02/07/switching-back-to-windows/

|

|

Pierros Papadeas: Mozilla Contribution Madlibs |

Michelle Marovich is preparing to run the Designing for Participation workshop for the People team. She’s looking for some real world examples that can help make the concepts concrete for everyone, so she set up a Contribution Madlib template for people to fill out.

Here is mine, on a contribution idea I have had for some time now.

I want to get more people to contribute to Mozilla Location Service, I need thousands of people to help me on it therefore I will reach out to all established geo-communities and post on all relevant forums and mailing lists in order to publicize the work.

Then I will organize regular open meetings and local meetups with communities on the ground in order to engage with the people who are interested. I break the work down into tasks by creating local teams that can self-organize the work that needs to be done locally.

I communicate those tasks by a form of an online game, so people become more engaged with the contribution opportunities. So that we can work effectively together, I always make sure that we have an open channel on IRC with people on call to answer questions. I continue to raise awareness of the work by evangelizing what the team is doing on the already established channels of the Geolocation industry.

I communicate decisions and progress by delegating this to the people on the Location Services team that are handling progress reports. When we achieve a milestone, reach a goal, or someone does something amazing I recognize them by cool prizes, badges and local recognition titles.

|

|

William Reynolds: Madlibs for mozillians.org contributions |

Michelle Marovich is organizing a Design for Participation workshop, and she has created a fun Contribution Madlibs template for people to fill out. I completed a version for how the community tools team works on mozillians.org:

We want to improve the value of mozillians.org, we need several people to collaborate with us on it therefore we will share our plans and contribution opportunities on our project wiki page and a blog syndicated on Planet Mozilla in order to publicize the work.

Then we mentor those people and communicate regularly on our project channels in order to engage with the people who are interested.

We break the work down into tasks by creating bugs for various skills and amounts of effort.

We communicate those tasks by marking them on Bugzilla and linking to them from our project wiki page and our IRC channel.

So that we can work effectively together, we always make sure that people can ask questions, give feedback and share ideas on our discussion forum and IRC channel.

We continue to raise awareness of the work by blogging about it as well as sharing it with Mozillians at the project meeting, the Grow Mozilla meeting and by email.

We communicate decisions and progress by posting to different discussion forums, syncing up in our weekly meeting and commenting on bugs.

When we achieve a milestone, reach a goal, or someone does something amazing we recognize them by personally thanking them and recognizing their hard work publicly.

If you want to get involved with mozillians.org, check out our project wiki page to learn how to get started.

http://dailycavalier.com/2014/02/madlibs-for-mozillians-org-contributions/

|

|

Nicholas Nethercote: A slimmer and faster pdf.js |

TL;DR: Firefox’s built-in PDF viewer is on track to gain some drastic improvements in memory consumption and speed when Firefox 29 is released in late April.

Firefox 19 introduced a built-in PDF viewer which allows PDF files to be viewed directly within Firefox. This is made possible by the pdf.js project, which implements a PDF viewer entirely in HTML and JavaScript.

This is a wonderful feature that makes the reading of PDFs on websites much less disruptive. However, pdf.js unfortunately suffers at times from high memory consumption. Enough, in fact, that it is currently the #5 item on the MemShrink project’s “big ticket items” list.

Recently, I made four improvements to pdf.js, each of which reduces its memory consumption greatly on certain kinds of PDF documents.

Image masks

The first improvement involved documents that use image masks, which are bitmaps that augment an image and dictate which pixels of the image should be drawn. Previously, the 1-bit-per-pixel (a.k.a 1bpp) image mask data was being expanded into 32bpp RGBA form (a typed array) in a web worker, such that every RGB element was 0 and the A element was either 0 or 255. This typed array was then passed to the main thread, which copied the data into an ImageData object and then put that data to a canvas.

The change was simple: instead of expanding the bitmap in the worker, just transfer it as-is to the main thread, and expand its contents directly into the ImageData object. This removes the RGBA typed array entirely.

I tested two documents on my Linux desktop, using a 64-bit trunk build of Firefox. Initially, when loading and then scrolling through the documents, physical memory consumption peaked at about 650 MiB for one document and about 800 MiB for the other. (The measurements varied somewhat from run to run, but were typically within 10 or 20 MiB of those numbers.) After making the improvement, the peak for both documents was about 400 MiB.
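The mask change described above can be sketched roughly as follows. This is not the pdf.js code; `expandMaskInto` is a hypothetical helper, and the bit order (most significant bit first), the row padding to whole bytes, and the choice that a set bit means opaque are all assumptions made for illustration. A plain `Uint8ClampedArray` stands in for the `data` array of an ImageData object.

```javascript
// Hypothetical helper: expand a packed 1bpp mask straight into an RGBA
// destination buffer, so no intermediate full-size RGBA array is created.
// Assumptions: rows are padded to whole bytes, bits are MSB-first, and a
// set bit means "opaque".
function expandMaskInto(mask, width, height, rgba) {
  const rowBytes = (width + 7) >> 3; // bytes per packed mask row
  let dest = 0;
  for (let y = 0; y < height; y++) {
    const rowStart = y * rowBytes;
    for (let x = 0; x < width; x++) {
      const byte = mask[rowStart + (x >> 3)];
      const bit = (byte >> (7 - (x & 7))) & 1;
      rgba[dest] = 0;                 // R
      rgba[dest + 1] = 0;             // G
      rgba[dest + 2] = 0;             // B
      rgba[dest + 3] = bit ? 255 : 0; // A is either 0 or 255
      dest += 4;
    }
  }
  return rgba;
}

// Usage: a 3x2 mask with rows 1,0,1 and 0,1,0.
const mask = new Uint8Array([0b10100000, 0b01000000]);
const out = expandMaskInto(mask, 3, 2, new Uint8ClampedArray(3 * 2 * 4));
```

In a browser, the destination would be the `data` property of an ImageData object, which is then put to the canvas; only the small packed mask needs to cross from the worker to the main thread.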

Image copies

The second improvement involved documents that use images. This includes scanned documents, which consist purely of one image per page.

Previously, we would make five copies of the 32bpp RGBA data for every image.

1. The web worker would decode the image’s colour data (which can be in several different colour forms: RGB, grayscale, CMYK, etc.) from the PDF file into a 24bpp RGB typed array, and the opacity (a.k.a. alpha) data into an 8bpp A array.

2. The web worker then combined the RGB and A arrays into a new 32bpp RGBA typed array. The web worker then transferred this copy to the main thread. (This was a true transfer, not a copy, which is possible because it’s a typed array.)

3. The main thread then created an ImageData object of the same dimensions as the typed array, and copied the typed array’s contents into it.

4. The main thread then called putImageData() on the ImageData object. The C++ code within Gecko that implements putImageData() then created a new gfxImageSurface object and copied the data into it.

5. Finally, the C++ code also created a Cairo surface from the gfxImageSurface.

Copies 4 and 5 were in C++ code and are both very short-lived. Copies 1, 2 and 3 were in JavaScript code and so lived for longer; at least until the next garbage collection occurred.

The change was in two parts. The first part involved putting the image data to the canvas in tiny strips, rather than doing the whole image at once. This was a fairly simple change, and it allowed copies 3, 4 and 5 to be reduced to a tiny fraction of their former size (typically 100x or more smaller). Fortunately, this caused no slow-down.

The second part involved decoding the colour and opacity data directly into a 32bpp RGBA array in simple cases (e.g. when no resizing is involved), skipping the creation of the intermediate RGB and A arrays. This was fiddly, but not too difficult.
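The strip-wise writing from the first part of the change might look roughly like the sketch below. It is an illustration only, not the pdf.js implementation: `putInStrips`, `fillStrip` and `putStrip` are hypothetical names, and in a browser `putStrip` would presumably wrap an ImageData of strip size and a call to the canvas context's putImageData() at the right vertical offset.

```javascript
// Hypothetical sketch: process an image in fixed-height strips so that only
// one strip-sized RGBA buffer is alive at a time, instead of a full-image one.
// fillStrip(buffer, firstRow, rowCount) writes pixel data into the buffer;
// putStrip(buffer, firstRow, rowCount) hands the strip to the drawing target.
function putInStrips(width, height, stripHeight, fillStrip, putStrip) {
  const strip = new Uint8ClampedArray(width * stripHeight * 4); // reused
  for (let firstRow = 0; firstRow < height; firstRow += stripHeight) {
    const rowCount = Math.min(stripHeight, height - firstRow);
    // The last strip may be shorter, so expose only the rows actually used.
    const view = strip.subarray(0, width * rowCount * 4);
    fillStrip(view, firstRow, rowCount);
    putStrip(view, firstRow, rowCount);
  }
  return strip.length; // size in bytes of the single reusable buffer
}

// Usage: a 4x10 image written in strips of 4 rows (4, 4 and 2 rows).
const calls = [];
const stripBytes = putInStrips(
  4, 10, 4,
  (buf) => buf.fill(255),
  (buf, firstRow, rowCount) => calls.push([firstRow, rowCount, buf.length])
);
```

The design point is that the peak size of the intermediate buffers depends on the strip height, not on the image height.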

If you scan a US letter document at 300 dpi, you get about 8.4 million pixels, which is about 1 MiB of data. (A4 paper is slightly larger.) If you expand this 1bpp data to 32bpp, you get about 32 MiB per page. So if you reduce five copies of this data to one, you avoid about 128 MiB of allocations per page.
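The figures above can be checked with a few lines of arithmetic. The only inputs are the US letter size (8.5 by 11 inches) and the 300 dpi resolution; the variable names are just for illustration.

```javascript
const MiB = 1024 * 1024;

const widthPx = 8.5 * 300;          // 2550 pixels
const heightPx = 11 * 300;          // 3300 pixels
const pixels = widthPx * heightPx;  // 8,415,000 pixels, i.e. about 8.4 million

const bytes1bpp = pixels / 8;       // one bit per pixel
const bytes32bpp = pixels * 4;      // four bytes (RGBA) per pixel

// Going from five 32bpp copies to one avoids four copies per page.
const bytesAvoided = 4 * bytes32bpp;

console.log((bytes1bpp / MiB).toFixed(1));    // "1.0"
console.log((bytes32bpp / MiB).toFixed(1));   // "32.1"
console.log((bytesAvoided / MiB).toFixed(1)); // "128.4"
```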

Black and white scanned documents

The third improvement also involved images. Avoiding unnecessary RGBA copies seemed like a big win, but when I scrolled through large scanned documents the memory consumption still grew quickly as I scrolled through more pages. I eventually realized that although four of those five copies had been short-lived, one of them was very long-lived. More specifically, once you scroll past a page, its RGBA data is held onto until all pages that are subsequently scrolled past have been decoded. (The memory is eventually freed; it just takes longer than we’d like.) And fixing it is not easy, because it involves page-prioritization code that isn’t easy to change without hurting other aspects of pdf.js’s performance.

However, I was able to optimize the common case of simple (e.g. unmasked, with no resizing) black and white images. Instead of expanding the 1bpp image data to 32bpp RGBA form in the web worker and passing that to the main thread, the code now just passes the 1bpp form directly. (Yep, that’s the same optimization that I used for the image masks.) The main thread can now handle both forms, and for the 1bpp form the expansion to the 32bpp form also only happens in tiny strips.

I used a 226 page scanned document to test this. At about 34 MiB per page, that’s over 7,200 MiB of pixel data when expanded to 32bpp RGBA form. And sure enough, prior to my change, scrolling quickly through the whole document caused Firefox’s physical memory consumption to reach about 7,800 MiB. With the fix applied, this number reduced to about 700 MiB. Furthermore, the time taken to render the final page dropped from about 200 seconds to about 25 seconds. Big wins!

The same optimization could be done for some non-black and white images (though the improvement will be smaller). But all the examples from bug reports were black and white, so that’s all I’ve done for now.

Parsing

The fourth and final improvement was unrelated to images. It involved the parsing of the PDF files. The parsing code reads files one byte at a time, and constructs lots of JavaScript strings by appending one character at a time. SpiderMonkey’s string implementation has an optimization that handles this kind of string construction efficiently, but the optimization doesn’t kick in until the strings have reached a certain length; on 64-bit platforms, this length is 24 characters. Unfortunately, many of the strings constructed during PDF parsing are shorter than this, so in order to create a string of length 20, for example, we would also create strings of length 1, 2, 3, …, 19.

It’s possible to change the threshold at which the optimization applies, but this would hurt the performance of some other workloads. The easier thing to do was to modify pdf.js itself. My change was to build up strings by appending single-char strings to an array, and then using Array.join to concatenate them together once the token’s end is reached. This works because JavaScript arrays are mutable (unlike strings which are immutable) and Array.join is efficient because it knows exactly how long the final string will be.

On a 4,155 page PDF, this change reduced the peak memory consumption during file loading from about 1130 MiB to about 800 MiB.
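As a rough illustration of the Array.join approach (not the actual parser.js code), a token reader might collect single-character strings in an array and join them once at the end. The function name `readToken`, the delimiter callback and the sample input are assumptions made for this sketch.

```javascript
// Hypothetical tokenizer fragment: collect one-character strings in an array
// and join them once, instead of growing a string with += on every byte.
function readToken(bytes, start, isDelimiter) {
  const parts = [];
  let i = start;
  while (i < bytes.length && !isDelimiter(bytes[i])) {
    parts.push(String.fromCharCode(bytes[i]));
    i++;
  }
  // A single concatenation produces the final string; no intermediate
  // strings of length 1, 2, 3, ... are created along the way.
  return { token: parts.join(""), next: i };
}

// Usage: read up to the first space (0x20).
const input = Uint8Array.from("http://stacks.math rest", (c) => c.charCodeAt(0));
const result = readToken(input, 0, (b) => b === 0x20);
// result.token is "http://stacks.math" and result.next is 18
```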

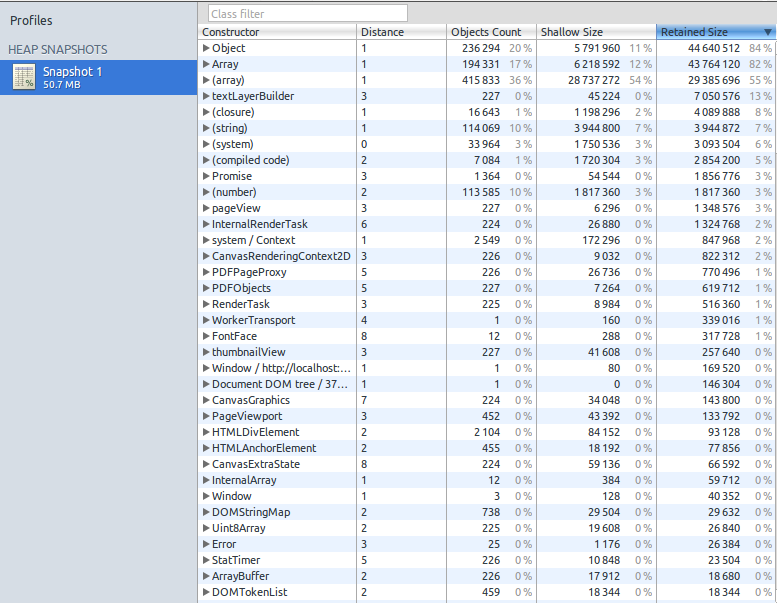

Profiling

The fact that I was able to make a number of large improvements in a short time indicates that pdf.js’s memory consumption has not previously been closely looked at. I think the main reason for this is that Firefox currently doesn’t have much in the way of tools for profiling the memory consumption of JavaScript code (though the devtools team is working right now to rectify this). So I will explain the tricks I used to find the places that needed optimization.

Choosing test cases

First I had to choose some test cases. Fortunately, this was easy, because we had numerous bug reports about high memory consumption which included test files. So I just used them.

Debugging print statements, part 1

For each test case, I looked first at about:memory. There were some very large “objects/malloc-heap/elements/non-asm.js” entries, which indicate that lots of memory is being used by JavaScript array elements. And looking at pdf.js code, typed arrays are used heavily, especially Uint8Array. The question is then: which typed arrays are taking up space?

To answer this question, I introduced the following new function.

    // Logs each allocation with a call-site tag, then allocates as usual.
    function newUint8Array(length, context) {
      dump("newUint8Array(" + context + "): " + length + "\n");
      return new Uint8Array(length);
    }

I then replaced every instance like this:

var a = new Uint8Array(n);

with something like this:

var a = newUint8Array(n, 1);

I used a different second argument for each instance. With this in place, when the code ran, I got a line printed for every allocation, identifying its length and location. With a small amount of post-processing, it was easy to identify which parts of the code were allocating large typed arrays. (This technique provides cumulative allocation measurements, not live data measurements, because it doesn’t know when these arrays are freed. Nonetheless, it was good enough.) I used this data in the first three optimizations.
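The post-processing step is not shown in the post; one way it could be done is sketched below. The line format follows the dump() call above (`newUint8Array(<context>): <length>`), while the function name `summarizeAllocations` and the sample log lines are invented for illustration.

```javascript
// Hypothetical post-processing: sum the logged lengths per call-site tag
// from lines of the form "newUint8Array(<context>): <length>".
function summarizeAllocations(logText) {
  const totals = new Map();
  const pattern = /^newUint8Array\((.+)\): (\d+)$/;
  for (const line of logText.split("\n")) {
    const match = pattern.exec(line.trim());
    if (!match) continue; // ignore unrelated output
    const context = match[1];
    const entry = totals.get(context) || { count: 0, bytes: 0 };
    entry.count += 1;
    entry.bytes += Number(match[2]);
    totals.set(context, entry);
  }
  // Largest cumulative allocators first.
  return [...totals.entries()].sort((a, b) => b[1].bytes - a[1].bytes);
}

// Usage with made-up sample output.
const sampleLog = [
  "newUint8Array(1): 1024",
  "some other output",
  "newUint8Array(2): 4000000",
  "newUint8Array(1): 2048",
].join("\n");
const ranked = summarizeAllocations(sampleLog);
```

As noted above, totals computed this way are cumulative allocation sizes per call site, not live memory.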

Debugging print statements, part 2

Another trick involved modifying jemalloc, the heap allocator that Firefox uses. I instrumented jemalloc’s huge_malloc() function, which is responsible for allocations greater than 1 MiB. I printed the sizes of allocations, and at one point I also used gdb to break on every call to huge_malloc(). It was by doing this that I was able to work out that we were making five copies of the RGBA pixel data for each image. In particular, I wouldn’t have known about the C++ copies of that data if I hadn’t done this.

Notable strings

Finally, while looking again at about:memory, I saw some entries like the following, which are found by the “notable strings” detection.

> | | | | | | +----0.38 MB (00.03%) -- string(length=10, copies=6174, "http://sta")/gc-heap

> | | | | | | +----0.38 MB (00.03%) -- string(length=11, copies=6174, "http://stac")/gc-heap

> | | | | | | +----0.38 MB (00.03%) -- string(length=12, copies=6174, "http://stack")/gc-heap

> | | | | | | +----0.38 MB (00.03%) -- string(length=13, copies=6174, "http://stacks")/gc-heap

> | | | | | | +----0.38 MB (00.03%) -- string(length=14, copies=6174, "http://stacks.")/gc-heap

> | | | | | | +----0.38 MB (00.03%) -- string(length=15, copies=6174, "http://stacks.m")/gc-heap

> | | | | | | +----0.38 MB (00.03%) -- string(length=16, copies=6174, "http://stacks.ma")/gc-heap

> | | | | | | +----0.38 MB (00.03%) -- string(length=17, copies=6174, "http://stacks.mat")/gc-heap

> | | | | | | +----0.38 MB (00.03%) -- string(length=18, copies=6174, "http://stacks.math")/gc-heap

It doesn’t take much imagination to realize that strings were being built up one character at a time. This looked like the kind of thing that would happen during tokenization, and I found a file called parser.js and looked there. And I knew about SpiderMonkey’s optimization of string concatenation and asked on IRC about why it might not be happening, and Shu-yu Guo was able to tell me about the threshold. Once I knew that, switching to use Array.join wasn’t difficult.

What about Chrome’s heap profiler?

I’ve heard good things in the past about Chrome/Chromium’s heap profiling tools. And because pdf.js is just HTML and JavaScript, you can run it in other modern browsers. So I tried using Chromium’s tools, but the results were very disappointing.

Remember the 226 page scanned document I mentioned earlier, where over 7,200 MiB of pixel data was created? I loaded that document into Chromium and used the “Take Heap Snapshot” tool, which gave the following snapshot.

At the top left, it claims that the heap was just over 50 MiB in size. Near the bottom, it claims that 225 Uint8Array objects had a “shallow” size of 19,608 bytes, and a “retained” size of 26,840 bytes. This seemed bizarre, so I double-checked. Sure enough, the operating system (via top) reported that the relevant chromium-browser process was using over 8 GiB of physical memory at this point.

So why the tiny measurements? I suspect what’s happening is that typed arrays are represented by a small header struct which is allocated on the GC heap, and it points to the (much larger) element data which is allocated on the malloc heap. So if the snapshot is just measuring the GC heap, in this case it’s accurate but not useful. (I’d love to hear if anyone can confirm or refute this hypothesis.) I also tried the “Record Heap Allocations” tool but it gave much the same results.

Status

These optimizations have landed in the master pdf.js repository, and were imported into Firefox 29, which is currently on the Aurora branch, and is on track to be released on April 29.

The optimizations are also on track to be imported into the Firefox OS 1.3 and 1.3T branches. I had hoped to show that some PDFs that were previously unloadable on Firefox OS would now be loadable. Unfortunately, I am unable to load even the simplest PDFs on my Buri (a.k.a. Alcatel OneTouch), because the PDF viewer app appears to consistently run out of gralloc memory just before the first page is displayed. Ben Kelly suggested that Async pan zoom (APZ) might be responsible, but disabling it didn’t help. If anybody knows more about this please contact me.

Finally, I’ve fixed most of the major memory consumption problems with the PDFs that I’m aware of. If you know of other PDFs that still cause pdf.js to consume large amounts of memory, please let me know. Thanks.

https://blog.mozilla.org/nnethercote/2014/02/07/a-slimmer-and-faster-pdf-js/

|

|

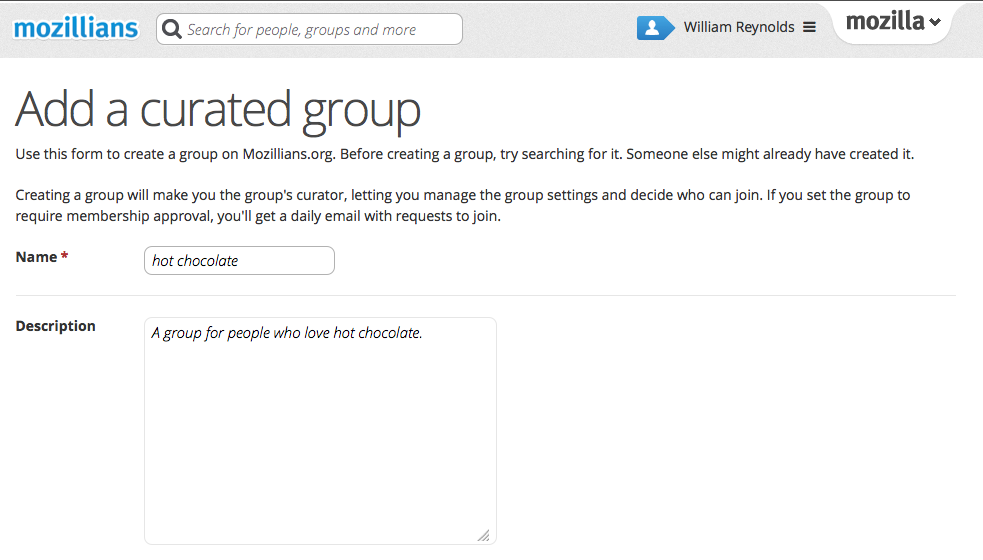

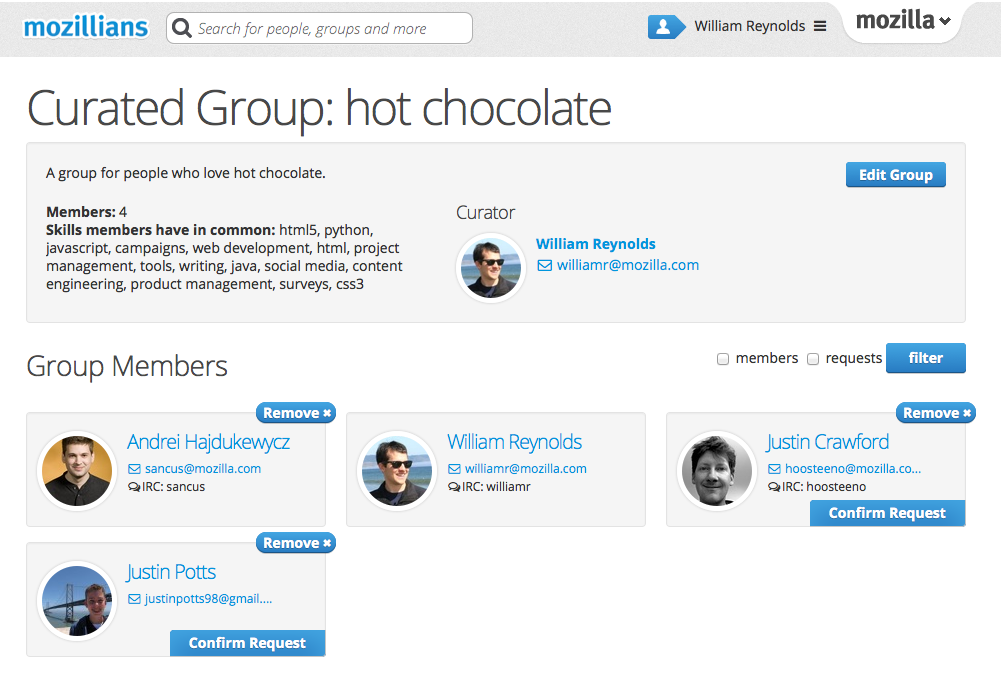

William Reynolds: mozillians.org groups now have curators and other goodnesss |

On mozillians.org, Mozilla’s community directory, there are hundreds of self-organized groups of people based on a variety of interests. The Community Tools team has released some big improvements for how you can create, manage and view groups in order to provide more value in connecting with fellow Mozillians.

New ways to curate groups

Starting today, all new groups will have a curator, which is the person who created the group. The curator has the ability to set the general information of the group, manage some settings and moderate the membership of the group.

The general information on curated groups now includes the fields that have been shown on functional area groups for a while. These fields include a description, IRC channel, website, and wiki page. The group curator can also decide if the group is accepting new members by default or only by request.

In the past, users created groups simply by typing words into a field on their profiles. Now, users create groups from the groups page.

If a group is set to accept new members by request, users can request to join the group and the curator will be able to manage those requests from the group’s page. Also, daily email notifications will be sent out to let the curator know when there are membership requests. Curators are able to filter group members to quickly see who is in the group and who has requested membership.

All the groups previously created still exist, and users can join and leave those groups freely.

Give it a try

I’m excited to see how Mozillians will use the new functionality for groups. You can create a new group from the [Browse Groups] page. Please file bugs for issues and enhancement ideas. If you have questions or feedback, please post on our discussion forum or stop by #commtools on IRC.

http://dailycavalier.com/2014/02/mozillians-org-groups-now-have-curators-and-other-goodnesss/

|

|

Frédéric Harper: Have you ever thought about your personal brand? |

Creative Commons: http://j.mp/JPFq23ic

Do you think personal branding is not for you? Why should you care about your own brand? After all, it’s not like you are an actor or the lead singer for a rock band. In fact, it’s never been more important for you to think about yourself as a brand.

I think it’s so important that I’ve done presentations on the subject, and now I’m writing a book for developers with Apress: How to Be a Rock Star Developer (the title isn’t final). Thinking about yourself as a brand will provide rocket fuel for your career. You’ll find better jobs or become the “go-to guy” in certain situations; you’ll become known for your expertise and leadership; people will seek your advice and point of view; you’ll get paid better to speak, write, or consult. As a developer, there are many tools you can use to scale, and this book will help you understand how to get visibility, make a real impact, and achieve your goals. No need to be a marketing expert or a personal branding guru: be yourself, and get your dream job or get to the next level of your career.

In the book, you will learn what personal branding is, and why you should care about it. You’ll also learn what the key themes of a good brand are, and how you can find the ingredients to build your own, unique brand. Most importantly, you’ll understand how to work your magic to make it happen, and capitalize on what makes you unique. You’ll also learn:

- How to use sites like Stack Overflow and GitHub to build your expertise and your reputation.

- How to promote your brand unobtrusively in a way that attracts better-paying jobs, consulting gigs, industry invitations, or contract work.

- How to become visible to the movers and shakers in your specific category of development.

- How to exert power and influence to help yourself and others.

I’m writing this post today for two reasons:

- You can register now to be notified when the book and the e-book become available (there is no obligation to buy the book if you change your mind, and you’ll receive one, and only one, email, or two counting the confirmation);

- I’m offering to speak about this topic at your conference or user group.

The primary audience for this book will be developers, or any technical person, but anyone, even someone who isn’t working in IT, will be able to benefit from it: you’ll just need to look past the developer-specific examples and tricks. This topic is a passion for me, as thinking about myself as a brand helped me get where I am today. My goals with these presentations and the book are to help you understand that, in today’s world, it’s critical to get visibility, have an impact, and of course, do epic shit!

P.S.: Thanks in advance to all of you who will share the love!

--

Have you ever thought about your personal brand? is a post on Out of Comfort Zone from Frédéric Harper


|

|

Ben Hearsum: Experiments with smaller pools of build machines |

Since the Firefox 3.0 days we’ve been using a pool of identical machines to build Firefox. It started off with a few machines per platform, and has since expanded into many, many more (close to 100 on Mac, close to 200 on Windows, and many more hundreds on Linux). This machine pooling is one of the main things that has enabled us to scale to support so many more branches, pushes, and developers. It means that we don’t need to close the trees when a single machine fails (anyone remember fx-win32-tbox?), and it makes it easier to buy extra capacity like we’ve done with our use of Amazon’s EC2.

However, this doesn’t come without a price. On mozilla-inbound alone there are more than 30 different jobs that a Linux build machine can run. Multiply that by 20 or 30 branches and you get a Very Large Number. With so many different types of jobs available, a machine rarely ends up doing the same job twice in a row. This means that a very high percentage of our build jobs are clobbers. Even with ccache enabled, these take much more time to do than an incremental build.
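To put a rough number on "rarely", here is a back-of-the-envelope sketch. It assumes a deliberately simplified model that is mine, not how the scheduler actually works: each machine's next job is drawn uniformly at random from all job types, and a build is only incremental when it repeats the previous job on that machine. The job and branch counts come from the paragraph above; the hot tub size of 5 job types is purely hypothetical.

```python
def job_type_count(jobs_per_branch: int, branches: int) -> int:
    """Distinct (job, branch) combinations a pool machine might be asked to run."""
    return jobs_per_branch * branches


def repeat_probability(job_types: int) -> float:
    """Chance the next job matches the previous one, assuming uniform random assignment."""
    if job_types < 1:
        raise ValueError("need at least one job type")
    return 1.0 / job_types


# "more than 30 different jobs" times "20 or 30 branches"
low = job_type_count(30, 20)
high = job_type_count(30, 30)

# Hypothetical: a small pool restricted to 5 job types on a single branch.
hot_tub_types = 5

print(f"job types in the big pool: {low} to {high}")
print(f"chance of a repeat (big pool): {repeat_probability(high):.2%} to {repeat_probability(low):.2%}")
print(f"chance of a repeat (5 job types): {repeat_probability(hot_tub_types):.0%}")
```

Real scheduling is not uniform, so the exact percentages mean little; the point is only that shrinking the set of job types a machine can run sharply raises the odds of landing on a warm object directory.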

This week I’ve run a couple of experiments using a smaller pool of machines (“hot tubs”) to handle a subset of job types on mozilla-inbound. The results have been somewhat staggering. A hot tub with 3 machines returned results in an average of 60% of the time our production pool did, but coalesced 5x the number of pushes. A hot tub with 6 machines returned results in an average of 65% of the time our production pool did, and only coalesced 1.4x the number of pushes. For those interested, the raw numbers are available.

With more tweaks to the number of machines and job types in a hot tub I think we can make these numbers even better – maybe even to the point where we both give results sooner and reduce coalescing. We also have some technical hurdles to overcome in order to implement this in production. Stay tuned for further updates on this effort!

http://hearsum.ca/blog/experiments-with-smaller-pools-of-build-machines/

|

|

Eric Shepherd: Topic curation on MDN: Inventory and evaluate |

The first step in the process of beginning to properly curate the content on MDN is for us to inventory and evaluate our existing content. This lets us find the stuff that needs to be updated, the pages that need to be deleted, and the content that we’re outright missing.

We’re still working on refining the details of how this process will work—so feel free to drop into the #mdn channel to discuss your ideas. That said, there are some basic things that clearly need to be done.

Each of the content curators will coordinate this work in their own topic areas, working with other community members, the topic drivers for the subtopics in those topic areas, and anyone else who wants to help.

Eliminate the junk

First, we need to get rid of the stuff that outright doesn’t need to be on MDN. There are a few categories of this:

- Content already marked as “junk”.

- Inappropriate or spam content.

- Dangerously wrong content.

- Duplicate pages; sometimes people create a new page that replicates content that already exists. We need to decide which one is best in these cases and get rid of the others. This will sometimes involve merging bits of useful content from multiple pages together first.

- Select dangerously obsolete content. In general, we try to preserve obsolete content, instead labeling it as such. However, if the content is actually dangerous in some way, we may consider actually removing it entirely.

Develop an organization plan

The next task is to finalize our content organization plan. We’ve been discussing this plan for a long, long time now, and in fact in the summer of 2013, we finalized a plan. Unfortunately, when we started to actually implement it, we realized that in practice, it’s rather confusing. So we’re going to take another crack at it to resolve the frustrating and confusing aspects of our hierarchy plan. In particular, there are aspects of our new Web platform documentation layout that just didn’t work in the real world, and we’ll be working to fix that soon.

As soon as this organization work gears up in earnest again, you’ll know, because we’ll be asking for help!

Move content to where it belongs

Once we know what the site layout is going to be, we need to start moving content so that everything is in the right place. This involves two activities.

First, we need to move content that’s already been organized once into the new correct places. Most content that’s already been recently reorganized probably is in the right place already, or at least close to it. We just need to go through all this material and make sure it’s in its final, correct home under the new site layout.

The second, and much bigger, job is to go through the many thousands of pages that have never been properly organized and move them to where they belong. We have entire sets of documentation content that are entirely located at the top of MDN’s content hierarchy, even though they belong in a subtree somewhere. These need to be found and fixed.

For example, most of the documentation of Gecko internals is actually located at the top of the site’s hierarchy. Likewise with NSPR documentation, among others. All of that needs to be moved to where it should be.

In many cases, this will also involve building out the site hierarchy itself; that is, creating the landing pages and menus that guide readers through levels of material until they reach the specific documentation they’re looking for. That’s for people like me, who like to browse through a documentation set until they find what they want, instead of relying entirely on search.

What’s incomplete?

The next step is to go through these pages and figure out which ones aren’t complete, or are in need of updates. There are plenty of these. We need to label them appropriately and file documentation request bugs to get them on the list of things to be updated.

What’s missing?

Once all of that is done, we need to go through each topic area and figure out what material is missing. What APIs are entirely undocumented? What method links go to empty pages? What pages are just stubs with no useful information? What technologies or topics are missing tutorials and examples?

All of these are things we need to catalog so that we can close the gaps in our documentation.

There’s lots more to be done, but this article is long enough. We’ll take a look at some of the other tasks that need to be taken on in future posts.

http://www.bitstampede.com/2014/02/06/topic-curation-on-mdn-inventory-and-evaluate/

|

|

Daniel Glazman: Next Game Frontier, The conference dedicated to Web Gaming |

Last October, I was attending the famous Paris Web conference in Paris, France. In the main lobby of the venue, two Microsoftees (David Catuhe and David Rousset) were demo'ing a game based on their own Open Source framework, babylon.js. Yes, Microsoftees and an Open Source JS framework over WebGL... I was looking at their booth and at the people queuing to try the game, and I started explaining to them that there are conferences about Gaming, there are conferences about Web technologies in general and html5 in particular, but there is no conference dedicated to Gaming based on Web technologies...

To my surprise, the two Davids reacted very positively to my proposal and we started immediately discussing a plan for such a conference.

People, I am immensely happy to announce the First Edition of the Next Game Frontier conference, the conference dedicated to Web Gaming, co-organized this year by Microsoft and Samsung Electronics.

Web site: Next Game Frontier

Location: Microsoft France campus, Issy-les-Moulineaux, France

Date: 13th of March 2014