Добавить любой RSS - источник (включая журнал LiveJournal) в свою ленту друзей вы можете на странице синдикации.

Исходная информация - http://planet.mozilla.org/.

Данный дневник сформирован из открытого RSS-источника по адресу http://planet.mozilla.org/rss20.xml, и дополняется в соответствии с дополнением данного источника. Он может не соответствовать содержимому оригинальной страницы. Трансляция создана автоматически по запросу читателей этой RSS ленты.

По всем вопросам о работе данного сервиса обращаться со страницы контактной информации.

[Обновить трансляцию]

Felipe Gomes: Hello 2014 |

Happy new year everyone! During the holidays I had been thinking about my goals for 2014, and I thought it’d be a good exercise to remember what I’ve done in the past year, the projects I worked on, etc. It’s nice to look back and see what was accomplished last year and think about how to improve it. So here’s my personal list; it doesn’t include everything but it includes the things I found most important:

Related to performance/removing main-thread-IO:

- Rewrote the storage for the AddonRepository module in the work with Irving to make the Addons Manager async.

- Did the Downloads API file/folder/mimetype launcher code and storage migrator for the new JS downloads API project.

- After Drew made the contentprefs API async I help update a number of the API consumers to async.

Related to hotfixes:

- Created a hotfix to decrease the update check interval which brought more users to up-to-date Firefox versions, who were lagging behind by never seeing the update message.

- Worked on a hotfix to deactivate pdf.js if necessary, when we were releasing it to all users for the first time. Fortunately we didn’t have to use it.

- Assisted in the work of another hotfix that was being prepared to change the update fingerprints but also luckily wasn’t needed.

- Wrote down some documentation about all of the hotfix process to streamline the creation of future ones.

Related to electrolysis:

- Worked on the tab crashed page

- Made

- Added support for form autocomplete

- Worked on improving the event handling for events that are handled by both processes

Other things:

- For mochitests: worked on fixing some of the optional parameters like –repeat which weren’t working for all kinds of mochitests, and added the –run-until-failure option.

- Added the –debug-on-failure option which works together with the –jsdebugger option.

- Helped organize the projects of Desktop Firefox for the Google Summer of Code and guide many students through the proposal phase while they drafted their proposals.

- Mentored one student for GSOC who created an add-on to visualize memory usage information from about:memory.

Events attended:

- FISL – The yearly 7k-people free software forum in Brazil

- The MozSummit Assembly meeting to help plan the summit, and the summit of course

- A Firefox OS campaign in Brazil

- The Firefox Performance week

And:

- Reviewed/feedbacked about 300 patches

That’s it. Looking forward to a great 2014!

|

|

Gervase Markham: about:credits – Name Order and Non-ASCII Characters |

The Mozilla project credits those who have “made a significant investment of time, with useful results, into Mozilla project-governed activities”, and who apply for inclusion, in the about:credits list, which appears in every browser product Mozilla has ever made.

Historically, the system of scripts which I used to manage the list were not as internationalized as they could be. In particular, it did not support sorting on a name component other than the last-written one. Also, although I don’t know of technical reasons why this is so, many non-English names are present without the appropriate accents on the letters.

But I’m pleased to say that any issues here are now fixed. So, if your name is not rendered or sorted as you would like on about:credits (either due to name component ordering or lack of accents) please email me and I will correct it for you.

http://feedproxy.google.com/~r/HackingForChrist/~3/gjPhqSm9lzI/

|

|

Gervase Markham: How To Run a Productive Community Discussion |

I ran a session at the Mozilla Summit with the (wordy) title of “Building a Framework to Enable Mozilla to Effectively Communicate Across Our Community”.

The outcome of the Brussels session, cleaned up, was this document which explains how to run a useful and productive community consultation and discussion. So if you propose something to a colleague, they say “you should ask the community about that”, and a cold sweat breaks out on your forehead, this is the document for you.

Many thanks for Zach Beauvais for doing the initial wrangling of the session notes. Feedback on the current document is very welcome.

http://feedproxy.google.com/~r/HackingForChrist/~3/HjOh0SqVhr0/

|

|

Jess Klein: The Participatory Design Culture and Terms of Badges |

So let's take a second to talk about collaboration -- or as I like to think of it: the conversation:

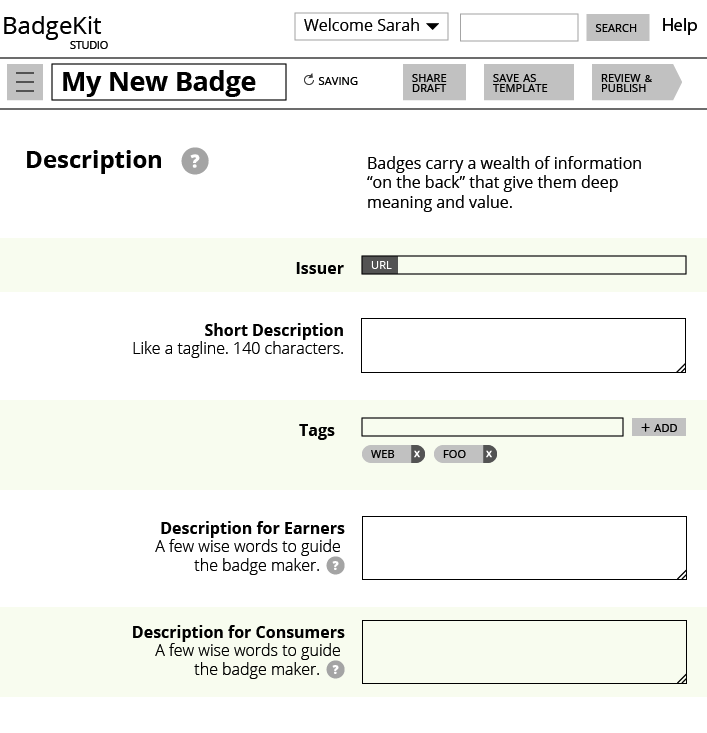

Before I get too far along in the solution, let me guide you through some potential use cases for participatory design within the badge making experience.

- As a badge issuer I might want people to:

- be able to use my badge 'as is' and re-issue it as their own.

- They can re-use the art

- They can re-use the metadata

- They can re-use the badge name

- They can re-use the badge criteria

- be able to 'fork' and modify my badge and re-issue it as their own.

- be able to tweak my badge's metadata

- be able to publicly issue my badge

- be able to only issue the badge privately

- use the badge artwork that I designed

- be able to issue this badge to <13 span="" users="">

- be able to issue this badge with age restrictions

- be able to issue this badge only to >13 users (etc)

- be able to localize the badge

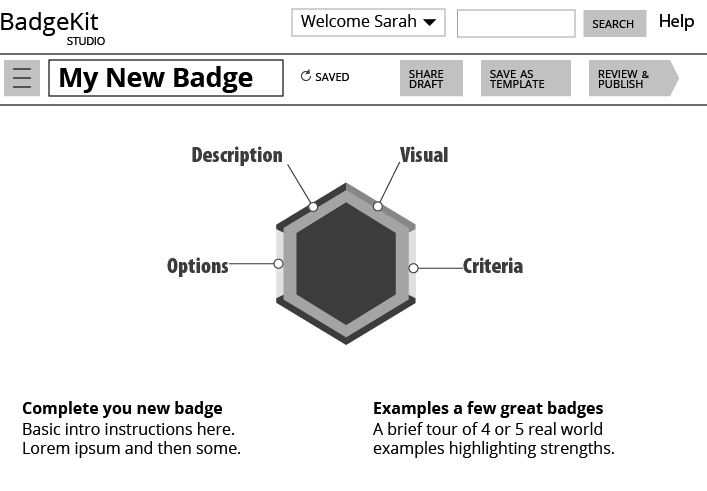

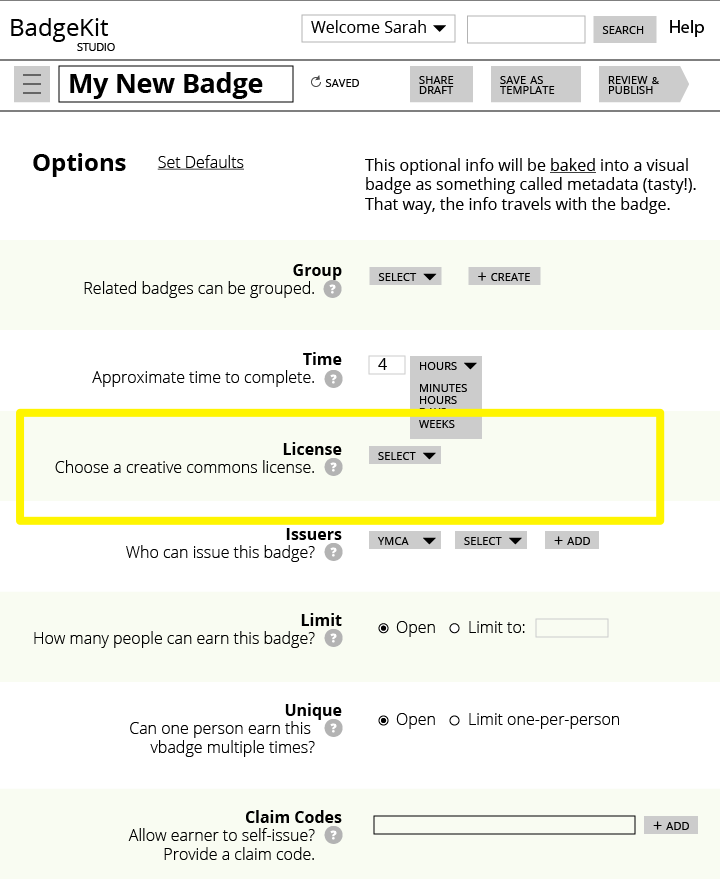

As a badge maker is using this part of the BadgeKit site, they can define many parts of the badge - from visual design to badge description. Additionally, as you can see in the wireframe, in the optional information we could enable users to add licensing and set parameters for the project. This is really not an answer to the intellectual property question, but just some context.

- Pros: automatic, the default, commonly understood

- Cons: Doesn't encourage this type of creating

- Pros:

- More closely matches this project's underlying purpose--cooperation, collaboration...

- This sharing model allows people to quickly use, change, and share badges

- Cons:

- Seems to apply more acutely to the core software (vs. the bi-product)

- PRO: A unique license structure could allow users to badge component parts or whole badges.

- CON: This seems a bit over the top, considering how many great licensing frameworks are already in existence. This would be another thing that badge issuers would need to conform to, and really, who is going to police it ?

The response from my colleague Michelle Thorne was so strong, I have to add it here: " Please, please, please don't make a new license! It will cause confusion and lack of interoperability. This is more restrictive than all rights reserved. Instead, consider a "badge licensing policy" which suggests how to license and attribute based on existing licensing standards, like Creative Commons."

- Pros:

- Alligns w/ this project's purpose

- Flexible, so that it offers a media maker the ability to state what level of remixability she is comfortable with

- Allows someone to fulfill ambition of encouraging dialogue, collaboration, etc., w/o sacrificing control, etc.

- Cons:

- New, not universally recognized or understood --> I'd argue these are some of the best known copyright licenses with lots of adoption. Education space already using widely.

- Not made for source code. So, worth considering how to use CC for creative work (like badge design and descriptions) and source code (but not metadata) like the OBI.

- Adrienne K. , Goss. "Student Note: Codifying a Commons: Copyright, Copyleft, and the Creative Commons Project." Chicago-Kent Law Review. (2007): 963. http://scholarship.kentlaw.iit.edu/cgi/viewcontent.cgi?article=3609&context=cklawreview.

- Montagnani, Maria Lilla. "A New Interface Between Copyright Law and Technology: How User-Generated Content Will Shape the Future of Online Distribution." Cardozo Arts & Entertainment Law Journal. (2009): 719. http://www.cardozoaelj.com/wp-content/uploads/2013/04/Montagnani.pdf.

- Elkin-Koren, Niva. "Intellectual Property and Public Values: What Contracts Cannot Do: The Limits of Private Ordering in Facilitating a Creative Commons." Fordham Law Review. (2005): 375.

- Loren, Lydia Pallas. "Building a Reliable Semicommons of Creative Works: Enforcement of Creative Commons Licenses and Limited Abandonment of Copyright." George Mason Law Review. (2007): 271. http://www.georgemasonlawreview.org/doc/14-2_Loren.pdf.

- West, Ashley. "Little Victories: Promoting Artistic Progress through the Enforcement of Creative Commons Attribution and Share-Alike Licenses."Florida State University Law Review. (2009): 903. http://www.law.fsu.edu/journals/lawreview/downloads/364/west.pdf.

- Participate: Designing with User Generated Content : http://nypl.bibliocommons.com/item/show/19472503052907_participate

Please note: I am not a lawyer, nor do I pretend to be. I am merely researching the subject.

----------------

http://jessicaklein.blogspot.com/2014/01/the-participatory-design-culture-and.html

|

|

Fr'ed'eric Buclin: Bugzilla 5.0 moved to HTML5 |

A quick note to let you know that starting with Bugzilla 4.5.2, which should be released soon (a few weeks at most), it now uses the HTML5 doctype instead of the HTML 4.01 Transitional one. This means that cool new features from HTML5 can now be implemented in the coming Bugzilla 5.0. A lot of cleanup has been required to remove all obsolete HTML elements and attributes, and to move all hardcoded styles into CSS files, and to replace many

http://lpsolit.wordpress.com/2014/01/09/bugzilla-5-0-moved-to-html5/

|

|

Michael Kaply: Can Firefox do this? |

A lot of what I’ve learned about customizing Firefox came from many different people asking me a question like – “Can you do this in Firefox?”

For instance:

- Can you remove the Set as Background Image menuitem?

- Can you easily change the Firefox branding?

- Can you turn off private browsing?

- Can you block access to local files?

- Can you disable safe mode?

Through the research I did into these types of questions, I learned a lot about how Firefox works and how to modify it to meet the needs of various people and organizations. And a lot of what I learned ended up in the new CCK2.

Have you ever asked the question “Can you do this in Firefox?”

What did you want to do?

|

|

Jonas Finnemann Jensen: Custom Telemetry Dashboards |

In the past quarter I’ve been working on analysis of telemetry pings for the telemetry dashboard, I previously outlined the analysis architecture here. Since then I’ve fixed bugs, ported scripts to C++, fixed more bugs and given the telemetry-dashboard a better user-interface with more features. There’s probably still a few bugs around, decent logging is still missing, but data aggregated is fairly stable and I don’t think we’re going to make major API changes anytime soon.

So I think it’s time to let others consume the aggregated histograms, enabling the creation of custom dashboard. In the following sections I’ll demonstrate how to get started with telemetry.js and build a custom filtered dashboard with CSV export.

Getting Started with telemetry.js

On the server-side the aggregated histograms for a given channel, version and measure is stored in a single JSON file. To reduce storage overhead we use a few tricks, such as translating filter-paths to identifiers, appending statistical fields at the end of a histogram array and computing bucket offsets from specification. This makes the server-side JSON files rather hard to read. Furthermore, we would like the flexibility to change this format, move files to a different server or perhaps do a binary encoding of histograms. To facilitate this, data access is separated from data storage with telemetry.js. This is a Javascript library to be included from telemetry.mozilla.org/v1/telemetry.js. We promise that best efforts will be made to ensure API compatibility of the telemetry.js version hosted at telemetry.mozilla.org.

I’ve used telemetry.js to create the primary dashboard hosted at telemetry.mozilla.org, so the API grants access to the data used here. I ‘ve also written extensive documentation for telemetry.js. To get you started consuming the aggregates presented on the telemetry dashboard, I’ve posted the snippet below to a jsfiddle. The code initializes telemetry.js, then proceeds load the evolution of a measure over build dates. Once loaded the code prints a histogram for each build date of the 'CYCLE_COLLECTOR' measure within 'nightly/27'. Feel free to give it a try…

Telemetry.init(function() { Telemetry.loadEvolutionOverBuilds( 'nightly/28', // from Telemetry.versions() 'CYCLE_COLLECTOR', // From Telemetry.measures('nightly/28', callback) function(histogramEvolution) { histogramEvolution.each(function(date, histogram) { print("--------------------------------"); print("Date: " + date); print("Submissions: " + histogram.submissions()); histogram.each(function(count, start, end) { print(count + " hits between " + start + " and " + end); }); }); } ); });

Warning: the channel/version string and measure string shouldn’t be hardcoded. The list of channel/versions available is returned by Telemetry.versions() and Telemetry.measure('nightly/27', callback) invokes callback with a JSON object of measures available for the given version/channel (See documentation). I understand that it can be tempting to hardcode these values for some special dashboard, and while we don’t plan to remove data, it would be smart to test that the data is available and show a warning if not. Channel, version and measure names may be subject to change as they are changed in the repository.

CSV Export with telemetry.jquery.js

One of the really boring and hard-to-get-right parts of the telemetry dashboard is the list of selectors used to filter histograms. Luckily, the user-interface logic for selecting channel, version, measure and applying filters is implemented as a reusable jQuery widget called telemetry.jquery.js. There’s no dependency on jQuery UI, just jquery.ui.widget.js which contains the jQuery widget factory. This library makes it very easy to write a custom dashboard if you just want write the part that presents a filtered histogram.

The snippet below shows how to create a histogramfilter widget and bind to the histogramfilterchange event, which is fired whenever the selected histogram is changed and loaded. With this setup you don’t need to worry about loading, filtering or maintaining state as the synchronizeStateWithHash option sets the filter-path as window.location.hash. If you want to have multiple instances of the histogramfilter widget, you might want to disable the synchronizeStateWithHash option, and read the state directly instead, see jQuery stateful plugins tutorial for how to get/set a option like state dynamically.

Telemetry.init(function() { // Create histogram-filter from jquery.telemetry.js $('#filters').histogramfilter({ // Synchronize selected histogram with window.location.hash synchronizeStateWithHash: true, // This demo fetches histograms aggregated by build date evolutionOver: 'Builds' }); // Listen for histogram-filter changes $('#filters').bind('histogramfilterchange', function(event, data) { // Check if histogram is loaded if (data.histogram) { update(data.histogram); } else { // If data.histogram is null, then we're loading... } }); });

The options for histogramfilter is will documented in the source for telemetry.jquery.js. This file can be found in the telemetry-dashboard repository, but it should be distributed with custom dashboards, as backwards compatibility isn’t a priority for this library. There is a few extra features hidden in telemetry.jquery.js, which let’s you implement custom elements, choose useful defaults, change behavior, limit available histogram kinds and a few other things.

|

|

Gregory Szorc: Why do Projects Support old Python Releases |

I see a number of open source projects supporting old versions of Python. Mercurial supports 2.4, for example. I have to ask: why do projects continue to support old Python releases?

Consider:

- Python 2.4 was last released on December 19, 2008 and there will be no more releases of Python 2.4.

- Python 2.5 was last released on May 26, 2011 and there will be no more releases of Python 2.5.

- Python 2.6 was last released on October 29, 2013 and there will be no more releases of Python 2.6.

- Everything before Python 2.7 is end-of-lifed

- Python 2.7 continues to see periodic releases, but mostly for bug fixes.

- Practically all of the work on CPython is happening in the 3.3 and 3.4 branches. Other implementations continue to support 2.7.

- Python 2.7 has been available since July 2010

- Python 2.7 provides some very compelling language features over earlier releases that developers want to use

- It's much easier to write dual compatible 2/3 Python when 2.7 is the only 2.x release considered.

- Python 2.7 can be installed in userland relatively easily (see projects like pyenv).

Given these facts, I'm not sure why projects insist on supporting old and end-of-lifed Python releases.

I think maintainers of Python projects should seriously consider dropping support for Python 2.6 and below. Are there really that many people on systems that don't have Python 2.7 easily available? Why are we Python developers inflicting so much pain on ourselves to support antiquated Python releases?

As a data point, I successfully transitioned Firefox's build system from requiring Python 2.5+ to 2.7.3+ and it was relatively pain free. Sure, a few people complained. But as far as I know, not very many new developers are coming in and complaining about the requirement. If we can do it with a few thousand developers, I'm guessing your project can as well.

Update 2014-01-09 16:05:00 PST: This post is being discussed on Slashdot. A lot of the comments talk about Python 3. Python 3 is its own set of considerations. The intended focus of this post is strictly about dropping support for Python 2.6 and below. Python 3 is related in that porting Python 2.x to Python 3 is much easier the higher the Python 2.x version. This especially holds true when you want to write Python that works simultaneously in both 2.x and 3.x.

http://gregoryszorc.com/blog/2014/01/08/why-do-projects-support-old-python-releases

|

|

Fr'ed'eric Harper: Trace a line between the web, and your private life |

Creative Commons: http://j.mp/1cB9LKZ

People often tell me that I share a lot of things on the Web: it’s true. As weird as it seems to some people, I traced a line between my private life, and what I’m sharing online.

It’s important in today’s world to trace a line between your personal life, and the web itself. With services like Facebook, Twitter, Flickr, Instagram, and more, it has never been so easy to share every little moment of your life with friends, family, but also with strangers. I won’t talk about the privacy rights (or not) on these websites as I’m not an expert on this topic, but the truth is that mostly everything you put on the web, will probably live there forever (kind of), and someone, somewhere, will have access to it. Don’t get me wrong, I like the web, but I used to tell people that if you want something to stay private, just don’t put it on the web (even if you carefully set the privacy settings, and targeted who will see what you want to share).

My rule is quite simple as there are few things I don’t want to share online: I don’t want people to know where I’m living (neighborhood is ok), and I don’t want people to have my phone number (I hate this way of communicating, too intrusive for me). This is my line between privacy, and the web… what is yours?

--

Trace a line between the web, and your private life is a post on Out of Comfort Zone from Fr'ed'eric Harper

Related posts:

- No web version! Why do you hate me? We are living in a world of mobile application. Everyone,...

- Do you have a positive impact on other’s life? I like to share: it’s why I’m doing public speaking,...

- Music is my life I must say that I’m a music lover. I like...

|

|

Selena Deckelmann: My nerd story: it ran in the family, but wasn’t inevitable |

This is about how I came to identify as a hacker. It was inspired by Crystal Beasley’s post. This is unfortunately also related to recent sexist comments from a Silicon Valley VC about the lack of women hackers. I won’t bother to hate-link, as others have covered his statements fully elsewhere.

I’ve written and talked about my path into the tech industry before, and my thoughts about how to get more women involved. But I didn’t really ever start the story where it probably should have been started: in my grandfather’s back yard.

I spent the first few years of my life in Libby, MT. Home of the Libby Dam, an asbestos mine and loggers. My grandfather, Bob, was a TV repairman in this very remote part of Montana. He was also a bit of a packrat.

I can still picture the backyard in my mind — a warren of pathways through busted up TVs, circuit boards, radios, transistors, metal scrap, wood and hundreds of discarded appliances that Grandpa would find broken and eventually would fix.

His garage was similarly cramped — filled with baby jars, coffee cans and bizarre containers of electronic stuff, carefully sorted. Grandpa was a Ham, so was my uncle and Grandma. I don’t remember exactly when it happened, but at some point my uncle taught me Morse code. I can remember writing notes full of dots and dashes and being incredibly excited to learn a code and to have the ability to write secret messages.

I remember soldering irons and magnifying lens attachments to glasses. We had welding equipment and so many tools. And tons of repaired gadgets, rescued from people who thought they were dead for good.

Later, we had a 286 and then a 386 in the house. KayPro, I think, was the model. I’d take off the case and peer at the dust bunnies and giant motherboard of that computer. I had no idea what the parts were back then, but it looked interesting, a lot like the TV boards I’d seen in piles when I was little.

I never really experimented with software or hardware and computers as a kid. I was an avid user of software. Which, is something of a prelude to my first 10 years of my professional life as a sysadmin. I’m a programmer now, but troubleshooting and dissecting other people’s software problems still feels the most natural.

Every floppy disk we had was explored. I played Dig Dug and Mad Libs on an Apple IIe like a champ. I mastered PrintShop and 8-in-1, the Office-equivalent suite we had at the time. And if something went wrong with our daisy wheel printer, I was on it – troubleshooting paper jams, ribbon outages and stuck keys.

My stepdad was a welder, and he tried to get me interested in mechanical things (learning how to change the oil in my car was a point of argument in our family). But, to be honest, I really wasn’t that into it beyond fixing paper jams so that I could get a huge banner printed out.

I was good at pretty much all subjects in school apart from spelling and gym class. I LOVED to read — spending all my spare time at the local library for many years, and then consuming books (sometimes two a day) in high school. My focus was: get excellent grades, get a scholarship, get out of Montana.

And there were obstacles. We moved around pretty often, and my new schools didn’t always believe that I’d taken certain classes, or that grades I’d gotten were legit. I had school counselors tell me that I shouldn’t take math unless I planned “to become a mathematician.” I was required to double up on math classes to “make up” algebra and pre-algebra I’d taken one and two years earlier. I gave and got a lot of shit from older kids in classes because I was immature and a smartass. I got beat up on buses, again because I was a smartass and had a limited sense of self-preservation.

The two kids I knew who were into computers in high school were really, really nerdy. I was awkward, in Orchestra, and didn’t wear the kind of cool girl clothes you need to make it socially. I wasn’t exactly at the bottom of the social heap, but I was pretty close for most of high school. And avoiding those kids who hung out after school in the computer lab was something I knew to do to save myself social torture.

Every time I hear people say that all we need to do is offer computer science classes to get more girls coding, I remember myself at that age, and exactly what I knew would happen to me socially if I openly showed an interest in computers.

I lucked out my first year in college. I met a guy in my dorm who introduced me to HTML and the web in 1994. He spent hours telling me story after story about kids hacking hotel software and stealing long distance. He introduced me to Phrack and 2600. He and his friends helped me build my first computer. I remember friends saying stuff to me like, “You really did that?” at the time. Putting things together seemed perfectly natural and fun, given my childhood spent around family doing exactly the same thing.

It took two more years before I decided that I wanted to learn how to program, and that I maybe wanted to get a job doing computer-related work after college. What I do now has everything to do with those first few months in 1994 when I just couldn’t tear myself away from the early web, or the friends I made who all did the same thing.

Jen posted an awesome chart of her nerd story. I made one sorta like it, but couldn’t manage to make it as terse.

Whether it was to try and pursue a music performance career after my violin teacher encouraged me, starting out as a chemistry major after a favorite science teacher in high school told me I should, or moving into computer science after several years of loving, mischievous fun with a little band of hackers; what made the difference in each of these major life decisions was mentorship and guidance from people I cared about.

Maybe that’s a no-brainer to people reading this. I say it because sometimes people think that we come to our decisions about what we do with our lives in a vacuum. That destiny or natural affinity are mostly at work. I’m one anecdata point against destiny being at work, and I have heard lots of stories like mine. Especially from new PyLadies.

Not everyone is like me, but I think plenty of people are. And those people who are like me — who could have picked one of many careers, who like computers but weren’t in love or obsessed with them at a young age — could really use role models, fun projects and social environments that are low-risk in middle school, high school and college. And as adults.

Making that happen isn’t easy, but it’s worth sharing our enthusiasm and creating spaces safe for all kinds of people to explore geeky stuff.

Thanks to an early childhood enjoyment of electronics and the thoughtfulness of a few young men in 1994, I became the open source hacker I am today.

|

|

Christian Heilmann: This developer wanted to create a language selector and asked for help on Twitter. You won’t believe what happened next! |

Hey, it works for Upworthy, right?

Yesterday in a weak moment Dhananjay Garg asked me on Twitter to help him with a coding issue and I had some time to do that. Here’s a quick write-up of what I created and why.

Dhananjay had posted his problem on StackOverflow and promptly got an answer that wasn’t really solving or explaining the issue, but at least linked to W3Schools to make matters worse (no, I am not bitter about the success of either resource).

The problem was solved, yes (assignment instead of comparison – = instead of === ) but the code was still far from efficient or easily maintainable which was something he asked about.

The thing Dhananjay wanted to achieve was to have a language selector for a web site that would redirect to documents in different languages and store the currently selected one in localStorage to redirect automatically on subsequent visits. The example posted has lots and lots of repetition in it:

function english() { if(typeof(Storage)!=="undefined") { localStorage.clear(); localStorage.language="English"; window.location.reload(); } } function french() { if(typeof(Storage)!=="undefined") { localStorage.clear(); localStorage.language="French"; window.location.href = "french.html"; } } window.onload = function() { if (localStorage.language === "Language") { window.location.href = "language.html"; } else if (localStorage.language === "English") { window.location.href = "eng.html"; } else if (localStorage.language === "French") { window.location.href = "french.html"; } else if (localStorage.language === "Spanish") { window.location.href = "spanish.html"; } |

This would be done for 12 languages, meaning you’ll have 12 functions all doing the same with different values assigned to the same property. That’s the first issue here, we have parameters on functions to avoid this. Instead of having a function for each language, you write a generic one:

function setLanguage(language, url) { localStorage.clear(); localStorage.language = language; window.location.href = url; } } |

Then you could call for example setLanguage(‘French’, ‘french.html’) to set the language to French and do a redirect. However, it seems dangerous to clear the whole of localStorage every time when you simply redefine one of its properties.

The onload handler reading from localStorage and redirecting accordingly is again riddled with repetition. The main issue I see here is that both the language values and the URLs to redirect to are hard-coded and a repetition of what has been defined in the functions setting the respective languages. This would make it easy to make a mistake as you repeat values.

A proposed solution

Assuming we really want to do this in JavaScript (I’d probably do a .htaccess re-write on a GET parameter and use a cookie) here’s the solution I came up with.

First of all, text links to set a language seem odd as a choice and do take up a lot of space. This is why I decided to go with a select box instead. As our functionality is dependent on JavaScript to work, I do not create any static HTML at all but just use a placeholder form in the document to add our language selector to:

span> id="languageselect">> |

Instead of repeating the same information in the part of setting the language and reading out which one has been set onload, I store the information in one singular object:

var locales = { 'us': {label: 'English',location:'english.html'}, 'de': {label: 'German',location: 'german.html'}, 'fr': {label: 'Francais',location: 'french.html'}, 'nl': {label: 'Nederlands',location: 'dutch.html'} }; |

I also create locale strings instead of using the name of the language as the condition. This makes it much easier to later on change the label of the language.

This is generally always a good plan. If you find yourself doing lots of “if – else if” in your code use an object instead. That way to get to the information all you need to do is test if the property exists in your object. In this case:

if (locales['de']) { // or locales.de - the bracket allows for spaces console.log(locales.de.label) // -> "German" } |

The rest is then all about using that data to populate the select box options and to create an accessible form. I explained in the comments what is going on:

// let's not leave globals (function(){ // when the browser knows about local storage… if ('localStorage' in window) { // Define a single object to hold all the locale // information - notice that it is a good idea // to go for a language code as the key instead of // the text that is displayed to allow for spaces // and all kind of special characters var locales = { 'us': {label: 'English',location:'english.html'}, 'de': {label: 'German',location: 'german.html'}, 'fr': {label: 'Francais',location: 'french.html'}, 'nl': {label: 'Nederlands',location: 'dutch.html'} }; // get the current locale. If nothing is stored in // localStorage, preset it to 'en' var currentlocale = window.localStorage.locale || 'en'; // Grab the form element var selectorContainer = document.querySelector('#languageselect'); // Add a label for the select box - this is important // for accessibility selectorContainer.innerHTML = '+ 'Select Language'; // Create a select box var selector = document.createElement('select'); selector.name = 'language'; selector.id = 'languageselectdd'; var html = ''; // Loop over all the locales and put the right data into // the options. If the locale matches the current one, // add a selected attribute to the option for (var i in locales) { var selected = (i === currentlocale) ? 'selected' : ''; html += 'i+'" '+selected+'>'+ locales[i].label+''; } // Set the options of the select box and add it to the // form selector.innerHTML = html; selectorContainer.appendChild(selector); // Finish the form HTML with a submit button selectorContainer.innerHTML += ''; // When the form gets submitted… selectorContainer.addEventListener('submit', function(ev) { // grab the currently selected option's value var currentlocale = this.querySelector('select').value; // Store it in local storage as 'locale' window.localStorage.locale = currentlocale; // …and redirect to the document defined in the locale object alert('Redirect to: '+locales[currentlocale].location); //window.location = locales[currentlocale].location; // don't send the form ev.preventDefault(); }, false); } })(); |

Surely there are different ways to achieve the same, but the important part here is that an object is a great way to store information like this and simplify the whole process. If you want to add a language now, all you need to do is to modify the locales object. Everything is maintained in one place.

|

|

Robert Nyman: Geek Meet January 2014 with Ade Oshineye |

Sold out

All seats have been taken. Please write a comment to be put on a waiting list, there are always a number of cancellations, so there’s still a chance.

It’s finally time for another Geek Meet! Man, it’s been a while, but now it’s actually happening again!

Introducing Ade Oshineye

I’m proud to introduce Ade Oshineye (Twitter, Google+), Developer Advocate at Google. I’ve had the pleasure of seeing Ade present and to learn from him, and happy to have him come to Stockholm and talk at Geek Meet.

He works on the stack of protocols, standards and APIs that power the social web, which in practice means looking after the Google+ APIs and +1 button with a focus on Europe, Middle East and Africa (EMEA). He’s also the co-author of O’Reilly’s “Apprenticeship Patterns: Guidance for the aspiring software craftsman” and part of the small team behind http://developerexperience.org/, which is an attempt to successfully apply the techniques of user experience professionals to products and tools for developers.

The presentations

Ade will give two presentations during the evening:

Cross-context user journeys

In this talk he will explore the problem of cross-context user journeys. These are user journeys which cross devices (imagine starting your shopping list on your home machine and finishing it on your work computer), contexts (for example desktop to mobile or app to web site) and platforms (a user with an Android phone and an iPad).

He’ll show you how:

- Embedded webviews are becoming the world’s most popular browsers

- What happens when you don’t test your site in new contexts (such as inside apps like Google+,GMail, Facebook and Twitter)

- The limits of responsive design

- How to cross contexts using technologies like x-callback-url, deep

- Links, intents and identity via social login

Using Youtube & Freebase to build recommendation systems

In this talk he’ll show you how to use Youtube watch history and the Freebase ontology to build a simple recommendation system. We’ll walk through the code for the system and talk about how you can build similar things using these datasets and datasets you already own.

Time & place

This Geek Meet will be sponsored by Creuna, and will take place January 30th at 18:00 in their office at Kungsholmsgatan 23 in Stockholm. Creuna will also provide beer/wine and pizza to every attendee, all free of charge.

Sign up now!

Please sign up with a comment below. Please only sign up if you know you can attend. There are 150 seats available, and you can only sign up yourself. Please use a valid name and e-mail address, since this will be used to identify you at the event to get in.

Follow Geek Meet

Geek Meet is available in a number of channels:

The hash tag used is #geekmeetsthlm.

Sold out

All seats have been taken. Please write a comment to be put on a waiting list, there are always a number of cancellations, so there’s still a chance.

|

|

James Long: Stop Writing JavaScript Compilers! Make Macros Instead |

The past several years have been kind to JavaScript. What was once a mediocre language plagued with political stagnation is now thriving with an incredible platform, a massive and passionate community, and a working standardization process that moves quickly. The web is the main reason for this, but node.js certainly has played its part.

ES6, or Harmony, is the next batch of improvements to JavaScript. It is near finalization, meaning that all interested parties have mostly agreed on what is accepted. It's more than just a new standard; Chrome and Firefox have already implemented a lot of ES6 like generators, let declarations, and more. It really is happening, and the process that ES6 has gone through will pave the way for quicker, smaller improvements to JavaScript in the future.

There is much to be excited about in ES6. But the thing I am most excited about is not in ES6 at all. It is a humble little library called sweet.js.

Sweet.js implements macros for JavaScript. Stay with me here. Macros are widely abused or badly implemented so many of you may be in shock right now. Is this really a good idea?

Yes, it is, and I hope this post explains why.

Macros Done Right

There are lots of different notions of "macros" so let's get that out of the way first. When I say macro I mean the ability to define small things that can syntactically parse and transform code around them.

C calls these strange things that look like #define foo 5 macros, but they really aren't macros like we want. It's a bastardized system that essentially opens up a text file, does a search-and-replace, and saves. It completely ignores the actual structure of the code so they are pointless except for a few trivial things. Many languages copy this feature and claim to have "macros" but they are extremely difficult and limiting to work with.

Real macros were born from Lisp in the 1970's with defmacro (and these were based on decades of previous research, but Lisp popularized the concept). It's shocking how often good ideas have roots back into papers from the 70s and 80s, and even specifically from Lisp itself. It was a natural step for Lisp because Lisp code has exactly the same syntax as its data structures. This means it's easy to throw data and code around and change its meaning.

Lisp went on to prove that macros fundamentally change the ecosystem of the language, and it's no surprise that newer languages have worked hard to include them.

However, it's a whole lot harder to do that kind of stuff in other languages that have a lot more syntax (like JavaScript). The naive approach would make a function that takes an AST, but ASTs are really cumbersome to work with, and at that point you might as well just write a compiler. Luckily, a lot of research recently has solved this problem and real Lisp-style macros have been included in newer languages like julia and rust.

And now, JavaScript.

A Quick Tour of Sweet.js

This post is not a tutorial on JavaScript macros. This post intends to explain how they could radically improve JavaScript's evolution. But I think I need to provide a little meat first for people who have never seen macros before.

Macros for languages that have a lot of special syntax take advantage of pattern matching. The idea is that you define a macro with a name and a list of patterns. Whenever that name is invoked, at compile-time the code is matched and expanded.

macro define {

rule { $x } => {

var $x

}

rule { $x = $expr } => {

var $x = $expr

}

}

define y;

define y = 5;

The above code expands to:

var y;

var y = 5;

when run through the sweet.js compiler.

When the compiler hits define, it invokes the macro and runs each rule against the code after it. When a pattern is matched, it returns the code within the rule. You can bind identifiers & expressions within the matching pattern and use them within the code (typically prefixed with $) and sweet.js will replace them with whatever was matched in the original pattern.

We could have written a lot more code within the rule for more advanced macros. However, you start to see a problem when you actually use this: if you introduce new variables in the expanded code, it's easy to clobber existing ones. For example:

macro swap {

rule { ($x, $y) } => {

var tmp = $x;

$x = $y;

$y = tmp;

}

}

var foo = 5;

var tmp = 6;

swap(foo, tmp);

swap looks like a function call but note how the macro actually matches on the parentheses and 2 arguments. It might be expanded into this:

var foo = 5;

var tmp = 6;

var tmp = foo;

foo = tmp;

tmp = tmp;

The tmp created from the macro collides with my local tmp. This is a serious problem, but macros solve this by implementing hygiene. Basically they track the scope of variables during expansion and rename them to maintain the correct scope. Sweet.js fully implements hygiene so it never generates the code you see above. It would actually generate this:

var foo = 5;

var tmp$1 = 6;

var tmp$2 = foo;

foo = tmp$1;

tmp$1 = tmp$2;

It looks a little ugly, but notice how two different tmp variables are created. This makes it extremely powerful to create complex macros elegantly.

But what if you want to intentionally break hygiene? Or what you want to process certain forms of code that are too difficult for pattern matching? This is rare, but you can do this with something called case macros. With these macros, actual JavaScript code is run at expand-time and you can do anything you want.

macro rand {

case { _ $x } => {

var r = Math.random();

letstx $r = [makeValue(r)];

return #{ var $x = $r }

}

}

rand x;

The above would expand to:

var x$246 = 0.8367501533161177;

Of course, it would expand to a different random number every time. With case macros, you use case instead of rule and code within the case is run at expand-time and you use #{} to create "templates" that construct code just like the rule in the other macros. I'm not going to go deeper into this now, but I will be posting tutorials in the future so follow my blog if you want to here more about how to write these.

These examples are trivial but hopefully show that you can hook into the compilation phase easily and do really powerful things.

Macros are modular, Compilers are not!

One thing I like about the JavaScript community is that they aren't afraid of compilers. There are a wealth of libraries for parsing, inspecting, and transforming JavaScript, and people are doing awesome things with them.

Except that doesn't really work for extending JavaScript.

Here's why: it splits the community. If project A implements an extension to JavaScript and project B implements a different extension, I have to choose between them. If I use project A's compiler to try to parse code from project B, it will error.

Additionally, each project will have a completely different build process and having to learn a new one every time I want to try out a new extension is terrible (the result is that fewer people try out cool projects, and fewer cool projects are written). I use Grunt, so every damn time I need to write a grunt task for a project if one doesn't exist already.

For example, traceur is a really cool project that compiles a lot of ES6 features into simple ES5. However, it only has limited support for generators. Let's say I wanted to use regenerator instead, since it's much more awesome at compiling yield expressions.

I can't reliably do that because traceur might implement ES6 features that regenerator's compiler doesn't know about.

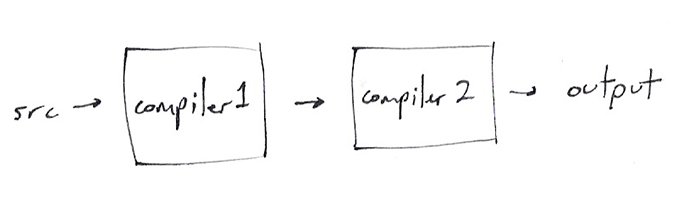

Now, for ES6 features we kind of get lucky because it is a standard and compilers like esprima have included support for the new syntax, so lots of projects will recognize it. But passing code through multiple compilers is just not a good idea. Not only is it slower, it's not reliable and the toolchain is incredibly complicated.

The process looks like this:

I don't think anyone is actually doing this because it doesn't compose. The result is that we have big monolothic compilers and we're forced to choose between them.

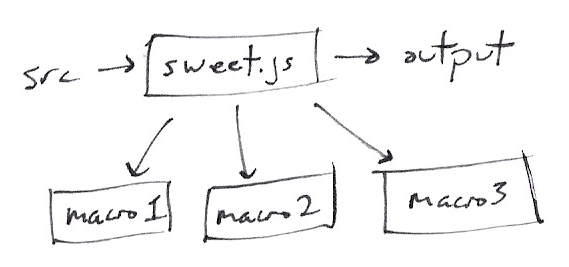

Using macros, it would look more like this:

There's only one build step, and we tell sweet.js which modules to load and in what order. sweet.js registers all of the loaded macros and expands your code with all them.

You can setup an ideal workflow for your project. This is my current setup: I configure grunt to run sweet.js on all my server-side and client-side js (see my gruntfile). I run grunt watch whenever I want to develop, and whenever a change is made grunt compiles that file automatically with sourcemaps. If I see a cool new macro somebody wrote, I just npm install it and tell sweet.js to load it in my gruntfile, and it's available. Note that for all macros, good sourcemaps are generated, so debugging works naturally.

Think about that. Imagine being able to pull in extensions to JavaScript that easily. What if TC39 actually introduced future JavaScript enhancements as macros? What if JavaScript actually adopted macros natively so that we never even had to run the build step?

This could potentially loosen the shackles of JavaScript to legacy codebases and a slow standardization process. If you can opt-in to language features piecemeal, you give the community a lot of power to be a part of the conversation since they can make those features.

Speaking of which, ES6 is a great place to start. Features like destructuring and classes are purely syntactical improvements, but are far from widely implemented. I am working on a es6-macros project which implements a lot of ES6 features as macros. You can pick and choose which features you want and start using ES6 today, as well as any other macros like Nate Faubion's execellent pattern matching library.

A good example of this is in Clojure, the core.async library offers a few operators that are actually macros. When a go block is hit, a macro is invoked that completely transforms the code to a state machine. They were able to implement something similar to generators, which lets you pause and resume code, as a library because of macros (the core language doesn't know anything about it).

Of course, not everything can be a macro. The ECMA standardization process will always be needed and certain things require native implementations to expose complex functionality. But I would argue that a large part of improvements to JavaScript that people want could easily be implemented as macros.

That's why I'm excited about sweet.js. Keep in mind it is still in early stages but it is actively being worked on. I will teach you how to write macros in the next few blog posts, so please follow my blog if you are interested.

(Thanks to Tim Disney and Nate Faubion for reviewing this)

http://jlongster.com/Stop-Writing-JavaScript-Compilers--Make-Macros-Instead

|

|

Mike Hommey: Shared compilation cache experiment |

One known way to make compilation faster is to use ccache. Mozilla release engineering builds use it. In many cases, though, it’s not very helpful on developer local builds. As usual, your mileage may vary.

Anyways, one of the sad realizations on release engineering builds is that the ccache hit rate is awfully low for most Linux builds. Much lower than for Mac builds. According to data I gathered a couple months ago on mozilla-inbound, only about a quarter of the Linux builds have a ccache hit rate greater than 50% while more than half the Mac builds have such a hit rate.

A plausible hypothesis for most of this problem is that the number of build slaves being greater on Linux, a build is less likely to occur on a slave that has a recent build in cache. And while better, the Mac cache hit rates were not really great either. That’s due to the fact that consecutive pushes, that share like > 99% code in common, are most usually not built on the same slave.

With this in mind, at Taras’s request, I started experimenting, before the holiday break, with sharing the ccache contents. Since a lot of our builds are running on Amazon Web Services (AWS), it made sense to run the experiment with S3.

After setting up some AWS instances as custom builders (more on this in a subsequent post) with specs similar to what we use for build slaves, I took past try pushes and replayed them on my builder instances, with a proof of concept, crude implementation of ccache-like compilation caching on S3. Both the build times and cache hit rate looked very promising. Unfortunately, I didn’t get the corresponding try build stats at the time, and it turns out the logs are now gone from the FTP server, so I had to rerun the experiment yesterday, against what was available, which is the try logs from the past two weeks.

So, I ran 629 new linux64 opt builds using between 30 and 60 builders. Which ended up being too much because the corresponding try pushes didn’t all trigger linux64 opt builds. Only 311 of them did. I didn’t start this run with a fresh compilation cache, but obviously, so do try builders, so it’s fair game. Of my 629 builds, 50 failed. Most of those failures were due to problems in the corresponding try pushes. But a few were problems with S3 that I didn’t handle in the PoC (sometimes downloading from S3 fails for some reason, and that would break the build instead of falling back to compiling locally), or with something fishy happening with the way I set things up.

Of the 311 builds on try, 23 failed. Of those 288 successful builds, 8 lack ccache stats, because in some cases (like a failure during “make check”) the ccache stats are not printed. Interestingly, only 81 of the successful builds ran on AWS, while 207 ran on Mozilla-owned machines. This unfortunately makes build time comparisons harder.

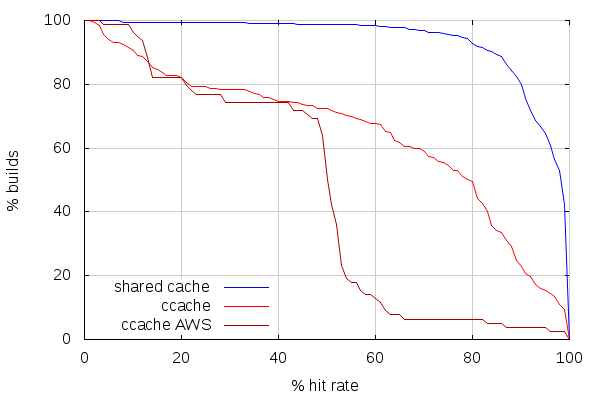

With that being said, here is how cache hit rates compare between non-AWS build slaves using ccache, AWS build slaves using ccache and my AWS builders using shared cache:

The first thing to note here is that this quite doesn’t match my observations from a few months ago on mozilla-inbound. But that could very be related to the fact that try and mozilla-inbound pushes have different patterns.

The second thing to note is how few builds have more than 50% hit rate on AWS build slaves. A possible explanation is that AWS instances are started with a prefilled but old ccache (because looking at the complete stats shows the ccache storage is almost full), and that a lot of those AWS slaves are new (we recently switched to using spot instances). It would be worth checking the stats again after a week of try builds.

While better, non-AWS slaves are still far from efficient. But the crude shared cache PoC shows very good hit rates. In fact, it turns out most if not all builds with less than 50% hit rate are PGO or non-unified builds. As most builds are neither, the cache hit rate for the first few of those is low.

This shows another advantage of the shared cache: a new slave doesn’t have to do slow builds before doing faster builds. It gets the same cache hit rate as slaves that have been running for longer. Which, on AWS, means we could actually shutdown slaves during low activity periods, without worrying about losing the cache data on ephemeral storage.

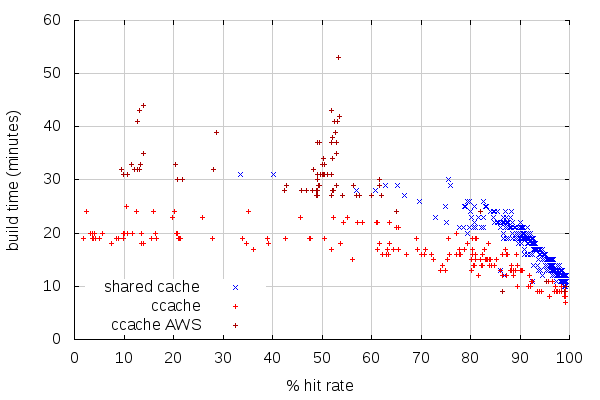

With such good hit rates, we can expect good build times. Sadly, the low number of high ccache hit rate builds on AWS slaves makes the comparison hard. Again, coming back with new stats in a week or two should make for better numbers to compare against.

(Note that I removed, from this graph, non-unified and PGO builds, which have very different build times)

At first glance, it would seem builds with the shared cache are slower, but there are a number of factors to take into account:

- The non-AWS build slaves are generally faster than the AWS slaves, which is why the builds with higher hit rates are generally faster with ccache.

- The AWS build slaves have pathetic build times.

- As the previous graph showed, the hit rates are very good with the shared cache, which places most of those builds on the right end of this graph.

This is reflected on average build times: with shared cache, it is 14:20, while it is 15:27 with ccache on non-AWS slaves. And the average build time for AWS slaves with ccache is… 31:35. Overall, the average build time on try, with AWS and non-AWS build slaves, is 20:03. So on average, shared cache is a win over any setup we’re currently using.

Now, I need to mention that when I say the shared cache implementation I used is crude, I do mean it. For instance, it doesn’t re-emit warnings like ccache does. But more importantly, it’s not compressing anything, which makes its bandwidth use very high, likely making things slower than they could be.

I’ll follow-up with hopefully better stats in the coming weeks. I may gather stats for inbound, as well. I’ll also likely test the same approach with Windows builds some time soon.

|

|

Jeff Griffiths: (de) centralization |

I'm a big fan of Zombie movies and comics. I suspect I am not alone in this, Zombies have never been more popular than they are right now. One of my favourite parts about these movies is how they typically end: a small group of survivors in a post-apocalyptic wasteland, scrappily starting to build a new civilization amongst the rubble of the old.

Being a nerd, it's a fun thought experiment to wonder how a world in this state would use the technology all around them and communicate, re-build the internet, re-connect with each other. What would the web look like?

This low-power future implies a lot about the devices we'd be using. Low power mobile devices might be the only thing we could run - all these data centers running Twitter & Facebook would go dark after a few weeks. Imagine this scene: a small encampment of survivors generating power using improvised hydroelectric from a small river, charging deep cycle marine batteries and using power inverters to power a minimum number of AC devices.

The good news is that as a survivor you wouldn't be short on entertainment. Just raid a nearby Walmart / Bestbuy and you've have all the movies you'd want. Power any laptop or tablet from your dodgy bicycle-powered generators or batteries, and you've got movie night to keep you sane in a world gone mad!

Communications would be much harder. All the equipment required to run our modern mobile data networks is completely dependant on high-power infrastructure. Like with power we would need to improvise, fall back on sneakernet meetups, shortwave radio for voice communications, eventually longer range wireless tech like WiMAX. We'd need software that's just as home being offline as it is syncing local changes to remote data.

Interestingly enough, this idea of 'offline first' apps and eventually consistent data is exacly what we need for mobile web apps right now. The toolset you would need to provide service with relatively unreliable infrastructure is basically what's described by the 'offline first' project:

It strikes me that the conditions we imagine for ourselves in a post-apocalyptic world aren't that different than thouse that exist right now in 3rd and 2nd world countries, after a natural disaster or even on certain networks in the heart of Silicon Valley ( I'm looking at you AT&T ). The tools we deliver to these environments would be no different, and having our apps and data handle bad networks just makes sense given how bad networks can be even when there isn't a disaster happening.

|

|

Gregory Szorc: On Multiple Patches in Bugs |

There is a common practice at Mozilla for developing patches with multiple parts. Nothing wrong with that. In fact, I think it's a best practice:

- Smaller, self-contained patches are much easier to grok and review than larger patches.

- Smaller patches can land as soon as they are reviewed. Larger patches tend to linger and get bit rotted.

- Smaller patches contribute to a culture of being fast and nimble, not slow and lethargic. This helps with developer confidence, community contributions, etc.

There are some downsides to multiple, smaller patches:

- The bigger picture is harder to understand until all parts of a logical patch series are shared. (This can be alleviated through commit messages or reviewer notes documenting future intentions. And of course reviewers can delay review until they are comfortable.)

- There is more overhead to maintain the patches (rebasing, etc). IMO the solutions provided by Mercurial and Git are sufficient.

- The process overhead for dealing with multiple patches and/or bugs can be non-trivial. (I would like to think good tooling coupled with continuous revisiting of policy decisions is sufficient to counteract this.)

Anyway, the prevailing practice at Mozilla seems to be that multiple patches related to the same logical change are attached to the same bug. I would like to challenge the effectiveness of this practice.

Given:

- An individual commit to Firefox should be standalone and should not rely on future commits to unbust it (i.e. bisects to any commit should be safe).

- Bugzilla has no good mechanism to isolate review comments from multiple attachments on the same bug, making deciphering simultaneous reviews on multiple attachments difficult and frustrating. This leads to review comments inevitably falling through the cracks and the quality of code suffering.

- Reiterating the last point because it's important.

I therefore argue that attaching multiple reviews to a single Bugzilla bug is not a best practice and it should be avoided if possible. If that means filing separate bugs for each patch, so be it. That process can be automated. Tools like bzexport already do it. Alternatively (and even better IMO), we ditch Bugzilla's code review interface (Splinter) and integrate something like ReviewBoard instead. We limit Bugzilla to tracking, high-level discussion, and metadata aggregation. Code review happens elsewhere, without all the clutter and chaos that Bugzilla brings to the table.

Thoughts?

http://gregoryszorc.com/blog/2014/01/07/on-multiple-patches-in-bugs

|

|

Christian Heilmann: Finding real happiness in our jobs |

Want to comment? There are threads on Google+ and Facebook

It is 2014 and you are reading a post that will be talk at a web development conference. We’re lucky. We are super lucky. We are part of an elite group of people who are in a market where companies come to court us. We are in a position to say no to some contracts and we are so tainted by now that we complain when companies we work for have lesser variety in the free lunches – things that proverbially don’t exist – than the other companies we hear about.

I’ve talked about this before in my “Reasons to be cheerful” and “The prestige of being a web developer” presentations and I stand by my word: there is nothing more exciting in IT than being a web developer. It is the only platform that has the openness, the decentralisation and the ever constant evolution that is necessary to meet the demands of people out there when it comes to interacting with computers. All of this, however, relies on things we seem to have forgotten about: longevity, reaching who we work for and constantly improving the platform we are dependent on.

The ebbing revolution

What do I mean by that? Well, I am disappointed. When the web came about and became widely available – even at a 56k connection – it was a revolution. It blew everything that came before it away:

- Merchants could sell products 24/7 and reach people far, far away without having to open a shop locally or print catalogues and get them delivered.

- Writers and other creatives could distribute without being hindered by contracts of publishers and an assurance of making money for everyone involved before getting creative.

- The fixed state of the interface was no more. I could resize the font if I needed it to be bigger, I could copy and paste some text into a translation engine to make the content accessible to me.

- Content distribution was without quality loss. Papers were sent in digital formats, openly readable by all instead of being faxed or sent as a Word document on a floppy disk

It was a shock to the system, a world-wide distribution platform and everybody was invited. It scared the hell out of old-school publishers and it still scares the hell out of governments and other people who’d like to keep information controlled and maybe even hidden. Much like the printing press scared the powers in charge when it was invented as it brought literacy to the people who otherwise weren’t allowed to be literate.

In Renaissance Europe, the arrival of mechanical movable type printing introduced the era of mass communication which permanently altered the structure of society. The relatively unrestricted circulation of information and (revolutionary) ideas transcended borders, captured the masses in the Reformation and threatened the power of political and religious authorities; the sharp increase in literacy broke the monopoly of the literate elite on education and learning and bolstered the emerging middle class.

The web is all that; and more. If we allow it to blossom and change and not turn into TV on steroids where people go to consume and forget about creation.

But enough about history, let’s fast forward through a period of abundance and ridiculous projects for us: the first Dotcom bubble, the current entrepreneurial avalanche and move on to the now.

We’re losing the web

The web was good to all of us – damn good – and it is slipping away from us. When the president of the United States of America declares that people should learn to “code” and not to “make”, I get worried (code is only a small part of building product to help humans, you also need interfaces and data). When schools get iPads instead of computers with editors I get worried. When we measure what we do by comparing us with the success of software products built for closed environments and in a fixed state then we have a real problem with our self-image.

The web replaced a large amount of desktop apps of old and made them hardware and location independent. Now we go back full cycle to a world where consuming web content means downloading and installing an app on your device – if it is available for yours, that is. This is not innovation – it is staying safe in an old monetization model of controlling the consumption and being able to make the consumption environment become outdated so people have to buy the newer, shinier, more expensive one. Giving up control is still anathema for many of our clients – but this is actually what the web is about. You give up control and trade it for lots more users and very simple software update cycles. The same way we have to finally get it into our heads that the user controls the experience and we can not force them into a certain resolution, hardware or browser. Then we reach the most users.

However, there is a problem: the larger part of the web still runs on outdated ideas and practices and therefore easily can be seen as inferior to native apps.

The web of others

When was the last time you saw a product used by millions and making a real difference to people being built with the things we all are excited about? I am not talking about the things that keep us busy – the Instagrams, the Twitters, the Imgurs – these are time sinks and escapism. I am talking about the things that keep the world moving. The banking sites, the booking systems, the email systems, the travel sites, government web sites, educational institutions. The solutions we promised when the web boomed to replace the clunky old desktop software, phone numbers and faxes.

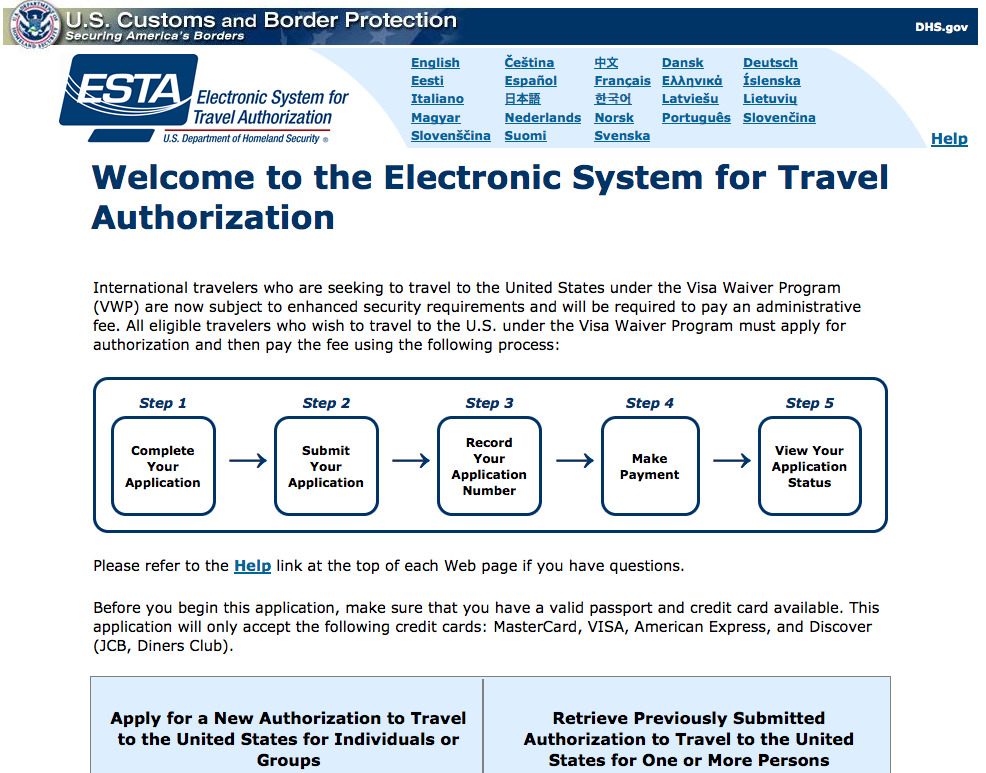

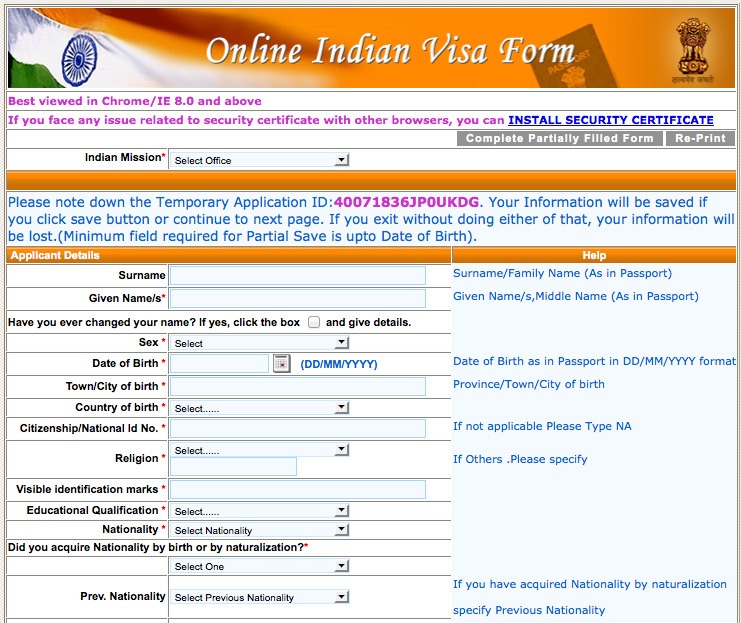

Case in point: check out the beauty of the ESTA Visa waiver web site where you need to fill in your personal information if you want to travel to the States. Still, it beats the Indian counterpart which only works in Chrome or IE8, and from time to time has no working SSL certificate.

How come there is a big gap between what universities teach and what we do – we who are seen as magicians by people who just use the web? Of course universities are not there to teach the most current, unstable, state of affairs, but it has been over 15 years and a lot of coursework is still stuck with pre-CSS practices.

One main issue I see is a massive disconnect between the people who pay us and what they care about and our wants and needs. This becomes painfully obvious when you contract, build something and a few months later have to remove it from your portfolio as the maintenance crew completely changed or messed up the product.

Many times our clients don’t get excited about the things we get excited about. All they really want is to have the product delivered on budget, on time and in a state that does the job. I call this the “good enough” problem. Reaching a milestone in the product cycle is more important than the quality of the product. How many times have you heard a sentence like “yes, this is not up to our standard but it needs to get out the door. We’ll plan for a cleanup later”. That cleanup never happens though. And that frustrates us.

Navel gazing

So what do we do when we get frustrated? We look for other ways to get excited; quick wins and things that sound innovative and great. This is a very natural survival instinct – we deserve to be happy and if what we are asked to do doesn’t make us happy, we find other ways.

If the people who caused our frustration don’t understand what we do, we find solace in talking to those who do, namely us. We blog on design blogs, we create quick scripts and tools that are almost the same as another one we use (but not quite) and we get a warm fuzzy feeling when people applaud, upvote and tweet what we did for a day. We keep solving the same issues that have been solved, but this time we do it in node.js and python and ruby and not in PHP (just as an example). We run in circles but every year we get better and shinier shoes to run with. We keep busy.

This has become an epidemic. We are not fixing projects, we are not really communicating with the people who mess up all of our good intentions. We don’t even talk to the people who build the software platforms our work is dependent on. Instead, we come up with more and more plugins, build scripts, pre-processors and other abstractions that sound amazing and promise to make us more effective. This is navel gazing, it is escapism, and it won’t help us a bit in our quest to build great products with the web. The straw-man we erected is that our platform isn’t good, our workflow is broken and that standards are not evolving fast enough and that browsers always lag behind. Has this not always been the case? And how did we get here if everything is so terrible and in need of replacement? Is live reloading really that much of a lifesaver or is switching with cmd+tab and hitting cmd+r not something that could become muscle memory, like handling all the gears and pedals when driving a car?

Ironically we solve the problem of the people who we blame not to care about our work – we concentrate on delivering more in a shorter amount of time with lesser able developers. We’re heading toward an assembly line mentality where products are built by putting together things we don’t understand to create other things that can be shipped in vast numbers and lacking individual touches.

Finding genuine happiness

What it boils down to is motivation and sincere happiness. There is an excellent TED talk out there by Dan Ariely called “What makes us feel good about our work?” and it contains some very interesting concepts.

Dan’s research

As part of his research, Dan conducted an experiment: people in a study were asked to look at sheets of paper and count letters that are double. They are told that they get paid a certain amount of money for each sheet they work through. Every time they finish with a page the interviewers renegotiated the money people get, giving less and less with each sheet. The idea was to test when people say “no, that is not worth my time”.

The study tested three scenarios:

- In the first one people would put their name on the sheet, do the task and give the final sheet to a supervisor. This one would look at the sheet, nod and put in on a pile on the table.

- In the second scenario no name was added to the sheet and the supervisor would not look at the sheet, but instead just put it on the pile.

- In the third scenario no name was added and the supervisor would just shred the paper in front of the study participant without looking at either.

This lead to some very interesting findings. First of all, although people saw their papers being shredded without being looked at, they still did not cheat, although it’d be easy money. Secondly there was not much of a gap between the minimum viable price per sheet in the scenario where the paper was shredded to the one that was not looked at.

The most important part was not what happened to the paper or if money would still flow – it was adding a sense of ownership by adding a name and being recognised for having done the work. Validation was the most important part that kept people going.

The reward of creation

The other study was asking people to fold origami objects with or without detailed explanations. Instead of just paying people to do that, the supervisor afterwards asked people for how much these should be sold. Other participants were asked how much they would pay for the final products, not knowing how much work went into them. Not surprisingly the creators of the origami structures valued them much higher than the ones who were asked to buy them. The origami structures done without detailed explanations were of lesser visual quality, which lead the prospective buyers to value them even less, whereas the makers ranked them higher, as more work went into them.

Dan calls this the IKEA principle – if you make people assemble something with very simple instructions but that still needed some extra work people value it much more. The other example he showed that explained this were ready-made cake bake mixtures. These were not a big seller, although they promised homemakers to save a lot of time. They became much more successful when the company making them removed the egg powder and asked people to add an egg. This meant people customised the cake – if only a bit – and got a feeling that they achieved something when they bake a cake. Furthermore, adding fresh eggs made for higher quality cakes at the same time.

This all sounds amazingly familiar, doesn’t it?

Finding real happiness in our jobs

There is a reason I am so happy to be a web developer: I fought for it. I didn’t start in a time where advice I got was to “use build script X and library Y and you are done”. I didn’t start in a time where browsers had developer tools built in. I did start in a time where browsers were not even remotely rendering the same and there was no predictable way to tell why things went wrong. That’s why I can enjoy what we have. That’s why I can marvel at the fact that I could do a button with rounded corners, drop shadows and a gradient in a few lines of CSS now and make it smoothly transition to another state without a single line of script.

This is happiness we don’t allow newcomers into our market to find these days as we force-feed them quick solutions that make us more effective but to them are just tools to put together. Instead of explaining outlines and brush strokes and pens we give them a paint-by-number template and wonder when there is not much excitement once they finished painting it out. We don’t allow people to feel ownership, to discover on their own terms how to do things, and thus we get a new generation of developers who see the web as a half-baked development environment compared to others. We don’t make newcomers happy by keeping them from making mistakes or find out about bugs. We bore them instead as we don’t challenge them.

Instead of creating a weekly abstraction to a problem and declaring it better than anything the W3C agrees on maybe it is time for us “in the know” to take our ideas elsewhere. It is time to make the whole web better, not the shiny echosphere we work in. This means we should talk more to product managers, project managers, CEOs, CMS creators, framework people, enterprise software developers – all the people that we see as not caring for what we care for and we’ve been ignoring as being boring.

These are the people who build the enterprise solutions we are baffled by. These are the people who need to deal with compliance and certifcations before they can work. And for that, they need standards, not awesomesauce.js or amazingstuff.io.

We chickened out having these hard and tiring conversations for too long. But they are worth having. Only when people realise just how much a labour of love a working, progressively enhancing interface that works across devices is we will get the recognition we deserve. We will not have our papers shredded in front of us, but get the nod instead. Right now we are being paid ridiculous amounts of money to do something other people do not want to care about. This is cushy, but leaves us empty and unappreciated on a intellectual level.

This is not the time to feel important about ourselves. This is not the time to make us more effective without people knowing what we do. This is the time to be experts who care for the platform that enabled us to be who we are. Each and everyone of you has it in you to make sure that what we do right now will continue to be good to us. This is the time to bring that out and use it.

Want to comment? There are threads on Google+ and Facebook

http://christianheilmann.com/2014/01/07/finding-real-happiness-in-our-jobs/

|

|

Byron Jones: happy bmo push day! |

the following changes have been pushed to bugzilla.mozilla.org:

- [942599] Documentation about possible_duplicates() lists ‘products’ as argument instead of ‘product’

- [748095] Bugzilla crashes when the shutdownhtml parameter is set and using a non-cookie based authentication method

- [918384] backport upstream bug 756048 to bmo/4.2 to allow setting bug/attachment flags using the webservices

- [956135] backport upstream bug 952284 to bmo/4.2 to hide tags set to private comments in the bug activity table

- [956052] backport upstream bug 945535 to bmo/4.2 for performance improvement in bugs with large number of attachments

discuss these changes on mozilla.tools.bmo.

Filed under: bmo, mozilla

http://globau.wordpress.com/2014/01/07/happy-bmo-push-day-78/

|

|

Robert Nyman: Looking at 2013 |

Now 2013 is over, 2014 has started, and it’s a new year with new possibilities, challenges and experiences. I thought I’d take a look back at what 2013 was like for me.

Thinking about all the things that happened in 2013, it’s hard to fathom just how many things one can experience in one year, places to see and things to do.

I never really got to write a post about my 2012, but I was quite happy with my my Summing up 2011 post. This time around, I contemplated what I wanted to cover and share, and came to the conclusion to focus on travel and places.

One thing that I also realized is that I’ve been blogging far too little this year, and rather just sharing small stories and anecdotes on Facebook (and some of the stories below are taken/inspired from those posts, marked as quotes). In 2014 I really want to get going with writing more here, and I hope I’ll live up to it!

Traveling and speaking

Since I started to keep track a few years back of how much and far I travel, 2013 was the year with most travel days and kilometers so far: 99 travel days, 211,074 km covered, visiting 27 cities. A big amount of that was covered in the fall, which was packed with lots of interesting trips and experiences.

I’ve spent the last days categorizing and gathering my pictures from 2013 in a good structure, and all photos are available on Flickr – there you’ll find both all travel pics as well as those from other things I did throughout the year.

When it comes to speaking, I became Lanyrd’s most well-traveled speaker, with having given presentations in 27 countries at 66 events. That number has now gone up to 29 countries and 72 events; I really look forward to my 30th country I will speak in! Any takers? ![]()

Also, all the slides and videos from my talks are available on Lanyrd.