Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated whenever that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.


Florian Quèze: Firefox is awesome |

"Hey, you know what? Firefox is awesome!" someone exclaimed at the other end of the coworking space a few weeks ago. This piqued my curiosity, and I moved closer to see what she was so excited about.

When I saw the feature she was delighted to find, it reminded me of a similar situation several years ago. In high school, I was trying to convince a friend to switch from IE to Mozilla. The arguments about respecting web standards didn't convince him. He tried Mozilla anyway to please me, and found one feature that excited him.

He had been trying to save some images from webpages, and for some reason it was difficult to do (possibly because of context menu hijacking, which was common at the time, or maybe because the images were displayed as a background, …). He had even written some Visual Basic code to parse the saved HTML source code and find the image URLs, and then downloaded them, but the results weren't entirely satisfying.

Now with Mozilla, he could just right click, select "View Page Info", click on the "Media" tab, and find a list of all the images of the page. I remember how excited he looked for one second, until he clicked a background image in the list and the preview stayed blank; he then clicked the "Save as" button anyway and… nothing happened. Turns out that "Save as" button was just producing an error in the Error Console. He then looked at me, very disappointed, and said that my Mozilla wasn't ready yet.

After that disappointment, I didn't insist much on him using Mozilla instead of IE (I think he did switch anyway a few weeks or months later).

A few months later, as I had time during summer vacations, I tried to create an add-on for the last thing I could do with IE but not Firefox: list the hostnames that the browser connects to when loading a page (the add-on, View Dependencies, is on AMO). I used this to maintain a hosts file that was blocking ads on the network's gateway.

Working on this add-on project caused me to look at the existing Page Info code to find ideas about how to look through the resources loaded by the page. While doing this, I stumbled on the problem that was causing background image previews to not be displayed. Exactly 10 years ago, I created a patch, created a Bugzilla account (I had been lurking on Bugzilla for a while already, but without creating an account as I didn't feel I should have one until I had something to contribute), and attached the patch to the existing bug about this background preview issue.

Two days later, the patch was reviewed (thanks db48x!), I addressed the review comment, attached a new patch, and it was checked in.

I remember how excited I was to verify the next day that the bug was gone in the next nightly, and how I checked that the code in the new nightly was actually using my patch.

A couple months later, I fixed the "Save as" button too in time for Firefox 1.0.

Back to 2014. The reason why someone in my coworking space was finding Firefox so awesome is that "you can click 'View Page Info', and then view all the images of the page and save them." Wow. I hadn't heard anybody talking to me about Page Info in years. I did use it a lot several years ago, but don't use it that much these days. I do agree with her that Firefox is awesome, not really because it can save images (although that's a great feature other browsers don't have), but because anybody can make it better for his/her own use, and by doing so make it awesome for millions of other people, now and in the future. Like I did, ten years ago.

|

|

Raniere Silva: MathML July Meeting |

MathML July Meeting

Note

Sorry for the delay in writing this.

This is a report about the Mozilla MathML July IRC Meeting (see the announcement here). The topics of the meeting can be found in this PAD (local copy of the PAD) and the IRC log (local copy of the IRC log) is also available.

In the last 4 weeks the MathML team closed 4 bugs and worked on one other. These are only the ones tracked by Bugzilla.

The next meeting will be on July 14th at 8pm UTC (note that it will be at a different time from the last meeting, more information below). Please add topics in the PAD.

|

|

Nikhil Marathe: ServiceWorkers in Firefox Update: July 26, 2014 |

It’s been over 2 months since my last post, so here is an update. But first, a link to the latest build (and this time it won’t expire!). For instructions on enabling all the APIs, see the earlier post.

Download builds

Registration lifecycle

The patches related to ServiceWorker registration have landed in Nightly builds! unregister() still doesn’t work in Nightly (but does in the build above), since Bug 1011268 is waiting on review.

The state mechanism is not available. But the bug is easy to fix and I encourage interested Gecko contributors (existing and new) to give it a shot.

Also, the ServiceWorker specification changed just a few days ago, so Firefox still has the older API with everything on ServiceWorkerContainer. This bug is another easy-to-fix bug.

Fetch

Ben Kelly has been hard at work implementing Headers and some of them have landed in Nightly. Unfortunately that isn’t of much use right now since the Request and Response objects are very primitive and do not handle Headers. We do have a spec updated Fetch API, with Request, Response and fetch() primitives. What works and what doesn’t?

- Request and Response objects are available and the fetch event will hand your ServiceWorker a Request, and you can return it a Response and this will work! Only the Response("string body") form is implemented. You can of course create an instance and set the status, but that’s about it.

- fetch() does not work on ServiceWorkers! In documents, only the fetch(URL) form works.

- One of our interns, Catalin Badea, has taken over implementing Fetch while I’m on vacation, so I’m hoping to publish a more functional API once I’m back.

postMessage()/getServiced()

Catalin has done a great job of implementing these, and they are waiting for review. Unfortunately I was unable to integrate his patches into the build above, but he can probably post an updated build himself.

Push

Another of our interns, Tyler Smith, has implemented the new Push API! This is available for use on navigator.pushRegistrationManager and your ServiceWorker will receive the Push notification.

Cache/FetchStore

Nothing available yet.

Persistence

Currently neither ServiceWorker registrations nor scripts are persisted or available offline. Andrea Marchesini is working on the former, and will be back from vacation next week to finish it off. Offline script caching is currently unassigned. It is fairly hairy, but we think we know how to do it. Progress on this should happen within the next few weeks.

Documentation

Chris Mills has started working on MDN pages about ServiceWorkers.

Contributing to ServiceWorkers

As you can see, while Firefox is not in a situation yet to implement full-circle offline apps, we are making progress. There are several employees and two superb interns working on this. We are always looking for more contributors. Here are various things you can do:

The ServiceWorker specification is meant to solve your needs. Yes, it is hard to figure out what can be improved without actually trying it out, but I really encourage you to step in there, ask questions and file issues to improve the specification before it becomes immortal.

Improve Service Worker documentation on MDN. The ServiceWorker spec introduces several new concepts and APIs, and the better documented we have them, the faster web developers can use them. Start here.

There are several Gecko implementation bugs, here ordered in approximately increasing difficulty:

- 1040924 - Fix and re-enable the serviceworker tests on non-Windows.

- 1043711 - Ensure ServiceWorkerManager::Register() can always extract a host from the URL.

- 1041335 - Add mozilla::services Getter for nsIServiceWorkerManager.

- 982728 - Implement ServiceWorkerGlobalScope update() and unregister().

- 1041340 - ServiceWorkers: Implement [[HandleDocumentUnload]].

- 1043004 - Update ServiceWorkerContainer API to spec.

- 931243 - Sync XMLHttpRequest should be disabled on ServiceWorkers.

- 1003991 - Disable https:// only load for ServiceWorkers when Developer Tools are open.

- Full list

#content channel on irc.mozilla.org.

http://blog.nikhilism.com/2014/07/serviceworkers-in-firefox-update-july.html

|

|

Chris McDonald: Negativity in Talks |

I was at a meetup recently, and one of the organizers was giving a talk. They came across some PHP in the demo they were doing, and cracked a joke about how bad PHP is. The crowd laughed and cheered along with the joke. This isn’t an isolated incident; it happens during talks or discussions all the time. That doesn’t mean it is acceptable.

When I broke into the industry, my first gig was writing Perl, Java, and PHP. All of these languages have stigmas around them these days. Perl has its magic and the fact that only neckbeard sysadmins write it. Java is the ‘I just hit tab in my IDE and the code writes itself!’ and other comments on how ugly it is. PHP, possibly the most made fun of language, doesn’t even get a reason most of the time. It is just ‘lulz php is bad, right gaise?’

Imagine a developer who is just getting started. They are ultra proud of their first gig, which happens to be working on a Drupal site in PHP. They come to a user group for a different language they’ve read about and think sounds neat. They then hear speakers that people appear to respect making jokes about the job they are proud of, the crowd joining in on this negativity. This is not inspiring to them, it just reinforces the impostor syndrome most of us felt as we started into tech.

So what do we do about this? If you are a group organizer, you already have all the power you need to make the changes. Talk with your speakers when they volunteer or are asked to speak. Let them know you want to promote a positive environment regardless of background. Consider writing up guidelines for your speakers to agree to.

How about as just an attendee? The best bet is probably speaking to one of the organizers. Bring it to their attention that their speakers are alienating a portion of their audience with the language trash talking. Approach it as a problem to be fixed in the future, not as if they intended to insult.

Keep in mind I’m not opposed to direct comparison between languages. “I enjoy the lack of type inference because it makes the truth table much easier to understand than, for instance, PHP’s.” This isn’t insulting the whole language, it isn’t turning it into a joke. It is just illustrating a difference that the speaker values.

Much like other negativity in our community, this will be something that will take some time to fix. Keep in mind this isn’t just having to do with user group or conference talks. Discussions around a table suffer from this as well. The first place one should address this problem is within themselves. We are all better than this pandering, we can build ourselves up without having to push others down. Let’s go out and make our community much more positive.

|

|

Tarek Ziadé: ToxMail experiment |

I am still looking for a good e-mail replacement that is more respectful of my privacy.

This will never happen with the existing e-mail system due to the way it works: when you send an e-mail to someone, even if you encrypt the body of your e-mail, the metadata will transit from server to server in clear, and the final destination will store it.

Every PGP UX I have tried is terrible anyways. It's just too painful to get things right for someone that has no knowledge (and no desire to have some) of how things work.

What I am aiming for now is a separate system to send and receive mails with my close friends and my family. Something that my mother can use like regular e-mails, without any extra work.

I guess some kind of "Darknet for E-mails" where there are no intermediate servers between my mailbox and my mom's mailbox, and no way for an eavesdropper to get the content.

Ideally:

- end-to-end encryption

- direct network link between my mom's mail server and me

- based on existing protocols (SMTP/IMAP/POP3) so my mom can use Thunderbird or I can set her up a Zimbra server.

Project Tox

The Tox Project aims to replace Skype with a more secure instant messaging system. You can send text, voice and even video messages to your friends.

It's based on NaCl for the crypto bits and in particular the crypto_box API, which provides high-level functions to generate public/private key pairs and encrypt/decrypt messages with them.

The other main feature of Tox is its Distributed Hash Table that contains the list of nodes that are connected to the network with their Tox Id.

When you run a Tox-based application, you become part of the Tox network by registering to a few known public nodes.

To send a message to someone, you have to know their Tox Id and send an encrypted message using the crypto_box API and the keypair magic.

Tox was created as an instant messaging system, so it has features to add/remove/invite friends, create groups etc. but its core capability is to let you reach out to another node given its id, and communicate with it. And that can be any kind of communication.

So e-mails could transit through Tox nodes.

Toxmail experiment

Toxmail is my little experiment to build a secure e-mail system on top of Tox.

It's a daemon that registers to the Tox network and runs an SMTP service that converts outgoing e-mails to text messages that are sent through Tox. It also converts incoming text messages back into e-mails and stores them in a local Maildir.

Toxmail also runs a simple POP3 server, so it's actually a full stack that can be used through an e-mail client like Thunderbird.
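The conversion between e-mails and text messages can be illustrated with Python's standard library alone. The sketch below is not Toxmail's actual code: the function names, the addresses, and the temporary Maildir location are assumptions made for illustration, and the Tox transport itself is left out entirely.

```python
import email
import mailbox
import os
import tempfile
from email.message import EmailMessage
from email.policy import default


def email_to_text(msg: EmailMessage) -> str:
    """Flatten an e-mail (headers and body) into a single text payload."""
    return msg.as_string()


def text_to_maildir(payload: str, maildir_path: str) -> str:
    """Parse a received text payload back into an e-mail and store it."""
    msg = email.message_from_string(payload, policy=default)
    box = mailbox.Maildir(maildir_path, create=True)
    return box.add(msg)  # key of the stored message


# Hypothetical outgoing e-mail, as the local SMTP service might receive it.
outgoing = EmailMessage()
outgoing["From"] = "me@tox"
outgoing["To"] = "mom@tox"
outgoing["Subject"] = "Hello"
outgoing.set_content("Dinner on Sunday?")

payload = email_to_text(outgoing)  # the text that would travel over Tox

# The path must not exist yet so that Maildir creates its sub-directories.
maildir_path = os.path.join(tempfile.mkdtemp(), "Maildir")
key = text_to_maildir(payload, maildir_path)  # what the receiving side does
stored = mailbox.Maildir(maildir_path)[key]
```

Because the whole message, headers included, travels as one string, the receiving side can rebuild it without any extra framing.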

You can just create a new account in Thunderbird, point it to the Toxmail SMTP and POP3 local services, and use it like another e-mail account.

When you want to send someone an e-mail, you have to know their Tox Id, and use TOXID@tox as the recipient.

For example:

7F9C31FE850E97CEFD4C4591DF93FC757C7C12549DDD55F8EEAECC34FE76C029@tox

When the SMTP daemon sees this, it tries to send the e-mail to that Tox-ID. What I am planning to do is to have an automatic conversion of regular e-mails using a lookup table the user can maintain. A list of contacts where you provide for each entry an e-mail and a tox id.
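Recipient handling along these lines could look like the following sketch. The function name and the shape of the lookup table are hypothetical, and the accepted ID lengths are an assumption: 64 hex characters as in the example above, optionally followed by 12 more.

```python
import re
from typing import Dict, Optional

# Assumption: a Tox ID is 64 hex characters (as in the example above),
# optionally followed by 12 more hex characters.
TOX_RECIPIENT = re.compile(r"^([0-9A-Fa-f]{64}(?:[0-9A-Fa-f]{12})?)@tox$")


def resolve_tox_id(recipient: str, contacts: Dict[str, str]) -> Optional[str]:
    """Return the Tox ID for a recipient, or None for a regular address."""
    match = TOX_RECIPIENT.match(recipient)
    if match:
        return match.group(1).upper()
    # Hypothetical user-maintained table: regular e-mail address -> Tox ID.
    return contacts.get(recipient.lower())
```

A `None` result would mean the recipient is not reachable through Tox, which is the case where regular routing would have to take over.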

End-to-end encryption, no intermediates between the user and the recipient. Ya!

Caveats & Limitations

For ToxMail to work, it needs to be registered to the Tox network all the time.

This limitation can be partially solved by adding in the SMTP daemon a retry feature: if the recipient's node is offline, the mail is stored and it tries to send it later.

But for the e-mail to go through, the two nodes have to be online at the same time at some point.
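A store-and-retry feature of this kind might be sketched as below. The class and the `send` callable are hypothetical: `send` stands in for whatever actually delivers a payload over Tox, and is assumed to return `False` when the recipient's node is offline.

```python
from collections import deque
from typing import Callable, Deque, Tuple

Mail = Tuple[str, str]  # (tox_id, payload)


class RetryQueue:
    """Keep undelivered mails and try them again later."""

    def __init__(self, send: Callable[[str, str], bool]) -> None:
        self._send = send  # returns True when the recipient was reachable
        self._pending: Deque[Mail] = deque()

    def submit(self, tox_id: str, payload: str) -> None:
        """Try to deliver immediately; store the mail if that fails."""
        if not self._send(tox_id, payload):
            self._pending.append((tox_id, payload))

    def retry_pending(self) -> int:
        """Try each stored mail once; return how many are still waiting."""
        for _ in range(len(self._pending)):
            tox_id, payload = self._pending.popleft()
            if not self._send(tox_id, payload):
                self._pending.append((tox_id, payload))
        return len(self._pending)
```

Calling `retry_pending()` on a timer would cover the case where both nodes eventually overlap online; a real daemon would also need to persist the queue across restarts.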

Maybe a good way to solve this would be to have Toxmail run on a Raspberry Pi plugged into the home internet box. That'd make sense actually: run your own little mail server for all your family/friends conversations.

One major problem though is what to do with e-mails that are to be sent to recipients that are part of your toxmail contact list, but also to recipients that are not using Toxmail. I guess the best thing to do is to fall back to the regular routing in that case, and let the user know.

Anyways, lots of fun playing with this in my spare time.

The prototype is being built here, using Python and the PyTox binding:

https://github.com/tarekziade/toxmail

It has reached a state where you can actually send and receive e-mails :)

I'd love to have feedback on this little project.

|

|

Kevin Ngo: Poker Sess.28 - Building an App for Tournament Players |

Re-learning how to fish.

Nothing like a win to get things back on track. I went back to my bread-and-butter, the Saturday freerolls at the Final Table. Through the dozen or so times I've played these freerolls, I've amassed an insane ROI. After three hours of play, we chopped four ways for $260.

Building a Personalized Poker Buddy App

I have been thinking about building a personalized mobile app for myself to assist me in all things poker. I always try to look to my hobbies for inspiration to build and develop. With insomnia at time of writing, I was reading Rework by 37signals to pass the time. It said to make use of fleeting inspiration as it came. And this idea may click. The app will have two faces. A poker tracker on one side, and a handy tournament pocket tool on the other.

The poker tracker would track and graph earnings (and losings!) over time. Data is beautiful, and a solid green line slanting from the lower-left to the upper-right would impart some motivation. My blog (and an outdated JSON file) has been the only means I have for bookkeeping. I'd like to be able to easily input results on my phone immediately after a tournament (for those times I don't feel like blogging after a bust).

The pocket tool will act as a "pre-hand" reference during late stage live tournaments. It would give recommendations, factoring in several conditions and situations, on what I should do next hand. It will be optimized to be usable before a hand since phone-use during a hand is illegal. The visuals will be obfuscated and surreptitious (maybe style it like Facebook...) such that neighboring players do not catch on. I'd input the blinds, antes, number of players, table dynamics, my stack size, and my position to determine the range of hands I can profitably open-shove.

It can also act as a post-hand reference, containing Harrington's pre-flop strategy charts and some hand vs hand race percentages.

I'd pay for this, and I'd think other live tournament players would be interested as well. I have been in sort of an entrepreneur mood lately, grabbing Rework and $100 Start-Up. I have domain knowledge, a need for a side project, a niche, and something I could dogfood.

The Poker Bits

Won the morning freeroll, busted at the final table of the afternoon freeroll. The biggest mistake that stuck out was calling off a river bet with AQ on a KxxQx board against a maniac (who was obviously high). He raised UTG, I flatted in position with AQ. I called his half-pot cbet with a gutshot and an overcard, keeping his maniac image in mind. I improved on the turn and called a min-bet. Then I called a good-sized bet on the river. I played this way given his image and the pot odds, but I should have 3bet pre, folded flop, raised turn, or folded river given the triple barrel.

I have been doing well in online tournaments as well. A first place finish in a single-table tourney, and a couple of high finishes in 500-man multi-table tourneys. Though I have been doing terribly in cash games. It's weird, I used to play exclusively 6-max cash, but since I started playing full ring tourneys, I haven't gotten reaccustomed to it. I prefer the flow of tourneys; it has a start and an end, players become increasingly aggressive, and the blinds make it feel like I'm always on the edge. Conversely, cash games are a boring grind.

Session Conclusions

- Went Well: playing more conservative, decreasing cbet%, improving hand reading

- Mistakes: should have folded a couple of one-pair hands to double/triple barrels, playing terribly at cash games, playing AQ preflop bad

- Get Better At: understanding verbal poker tells from Elwood's new book

- Profit: +$198

|

|

Jeff Walden: New mach build feature: build-complete notifications on Linux |

Spurred on by gps's recent mach blogging (and a blogging dry spell to rectify), I thought it’d be worth noting a new mach feature I landed in mozilla-inbound yesterday: build-complete notifications on Linux.

On OS X, mach build spawns a desktop notification when a build completes. It’s handy when the terminal where the build’s running is out of view — often the case given how long builds take. I learned about this feature when stuck on a loaner Mac for a few months due to laptop issues, and I found the notification quite handy. When I returned to Linux, I wanted the same thing there. evilpie had already filed bug 981146 with a patch using DBus notifications, but he didn’t have time to finish it. So I picked it up and did the last 5% to land it. Woo notifications!

(Minor caveat: you won’t get a notification if your build completes in under five minutes. Five minutes is probably too long; some systems build fast enough that you’d never get a notification. gps thinks this should be shorter and ideally configurable. I’m not aware of an existing bug for this.)
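The caveat boils down to a duration check before notifying. The sketch below is not mach's code: the function name and the `notify` callable are hypothetical, and the threshold is exposed as a parameter only to show what a configurable cutoff could look like.

```python
from typing import Callable

NOTIFY_THRESHOLD_SECONDS = 5 * 60  # the five-minute cutoff described above


def maybe_notify(elapsed_seconds: float,
                 notify: Callable[[str], None],
                 threshold: float = NOTIFY_THRESHOLD_SECONDS) -> bool:
    """Send a notification only for builds that took at least `threshold`."""
    if elapsed_seconds < threshold:
        return False  # short build: the terminal is probably still in view
    notify("Build complete")
    return True
```

With a lower `threshold`, fast machines would get notifications too.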

http://whereswalden.com/2014/07/25/new-mach-build-feature-build-complete-notifications-on-linux/

|

|

Florian Quèze: Converting old Mac minis into CentOS Instantbird build slaves |

A while ago, I received a few retired Mozilla minis. Today 2 of them started their new life as CentOS6 build slaves for Instantbird, which means we now have Linux nightlies again! Our previous Linux build slave, running CentOS5, was no longer able to build nightlies based on the current mozilla-central code, and this is the reason why we haven't had Linux nightlies since March. We know it's been a long wait, but to help our dear Linux testers forgive us, we started offering 64bit nightly builds!

For the curious, and for future reference, here are the steps I followed to install these two new build slaves:

Partition table

The Mac minis came with a GPT partition table and an hfs+ partition that we don't want. While the CentOS installer was able to detect them, the grub it installed there didn't work. The solution was to convert the GPT partition table to the older MBR format. To do this, boot into a modern linux distribution (I used an Ubuntu 13.10 live dvd that I had around), install gdisk (sudo apt-get update && sudo apt-get install gdisk) and use it to edit the disk's partition table:

sudo gdisk /dev/sda

Press 'r' to start recovery/transformation, 'g' to convert from GPT to MBR, 'p' to see the resulting partition table, and finally 'w' to write the changes to disk (instructions initially from here).

Exit gdisk.

Now you can check the current partition table using gparted. At this point I deleted the hfs+ partition.

Installing CentOS

The version of CentOS needed to use the current Mozilla build tools is CentOS 6.2. We tried before using another (slightly newer) version, and we never got it to work.

Reboot on a CentOS 6.2 livecd (press the 'c' key at startup to force the mac mini to look for a bootable CD).

Follow the instructions to install CentOS on the hard disk.

I customized the partition table a bit (50000MB for /, 2048MB of swap space, and the rest of the disk for /home).

The only non-obvious part of the CentOS install is that the boot loader needs to be installed on the MBR rather than on the partition where the system is installed. When the installer asks where grub should be installed, set it to /dev/sda (the default is /dev/sda2, and that won't boot). Of course I got this wrong in my first attempts.

Installing Mozilla build dependencies

First, install an editor that is usable to you. I typically use emacs, so: sudo yum install emacs

The Mozilla Linux build slaves use a specifically tweaked version of gcc so that the produced binaries have low runtime dependencies, but the compiler still has the build time feature set of gcc 4.7. If you want to use something as old as CentOS6.2 to build, you need this specific compiler.

The good thing is, there's a yum repository publicly available where all the customized mozilla packages are available. To install it, create a file named /etc/yum.repos.d/mozilla.repo and make it contain this:

[mozilla]
name=Mozilla
baseurl=http://puppetagain.pub.build.mozilla.org/data/repos/yum/releng/public/CentOS/6/x86_64/
enabled=1
gpgcheck=0

Adapt the baseurl to finish with i386 or x86_64 depending on whether you are making a 32 bit or 64 bit slave.
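If several slaves have to be set up, the repository file can be generated per architecture. This is only a convenience sketch: the function name is made up, and the template simply reuses the values shown above.

```python
REPO_TEMPLATE = """[mozilla]
name=Mozilla
baseurl=http://puppetagain.pub.build.mozilla.org/data/repos/yum/releng/public/CentOS/6/{arch}/
enabled=1
gpgcheck=0
"""


def mozilla_repo(arch: str) -> str:
    """Return the contents of mozilla.repo for a 32 bit or 64 bit slave."""
    if arch not in ("i386", "x86_64"):
        raise ValueError("arch must be 'i386' or 'x86_64', got %r" % arch)
    return REPO_TEMPLATE.format(arch=arch)
```

The returned text is what would be written to /etc/yum.repos.d/mozilla.repo.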

After saving this file, you can check that it had the intended effect by running this command to list the packages from the mozilla repository: repoquery -q --repoid=mozilla -a

You want to install the version of gcc473 and the version of mozilla-python27 that appear in that list.

You also need several other build dependencies. MDN has a page listing them:

yum groupinstall 'Development Tools' 'Development Libraries' 'GNOME Software Development'

yum install mercurial autoconf213 glibc-static libstdc++-static yasm wireless-tools-devel mesa-libGL-devel alsa-lib-devel libXt-devel gstreamer-devel gstreamer-plugins-base-devel pulseaudio-libs-devel

Unfortunately, two dependencies were missing on that list (I've now fixed the page):

yum install gtk2-devel dbus-glib-devel

At this point, the machine should be ready to build Firefox.

Instantbird, because of libpurple, depends on a few more packages:

yum install avahi-glib-devel krb5-devel

And it will be useful to have ccache:

yum install ccache

Installing the buildbot slave

First, install the buildslave command, which unfortunately doesn't come as a yum package, so you need to install easy_install first:

yum install python-setuptools python-devel mpfr

easy_install buildbot-slave

python-devel and mpfr here are build time dependencies of the buildbot-slave package, and not having them installed will cause compiling errors while attempting to install buildbot-slave.

We are now ready to actually install the buildbot slave. First let's create a new user for buildbot:

adduser buildbot

su buildbot

cd /home/buildbot

Then the command to create the local slave is:

buildslave create-slave --umask=022 /home/buildbot/buildslave buildbot.instantbird.org:9989 linux-sN password

The buildbot slave will be significantly more useful if it starts automatically when the OS starts, so let's edit the crontab (crontab -e) to add this entry:

@reboot PATH=/usr/local/bin:/usr/bin:/bin /usr/bin/buildslave start /home/buildbot/buildslave

The reason why the PATH environment variable has to be set here is that the default path doesn't contain /usr/local/bin, but that's where the mozilla-python27 packages installs python2.7 (which is required by mach during builds).

One step in the Instantbird builds configured on our buildbot uses hg clean --all, and this requires the purge mercurial extension to be enabled, so let's edit ~buildbot/.hgrc to look like this:

$ cat ~/.hgrc

[extensions]

purge =

Finally, ssh needs to be configured so that successful builds can be uploaded automatically. Copy and adapt ~buildbot/.ssh from an existing working build slave. The files that are needed are id_dsa (the ssh private key) and known_hosts (so that ssh doesn't prompt about the server's fingerprint the first time we upload something).

Here we go, working Instantbird linux build slaves! Figuring out all these details for our first CentOS6 slave took me a few evenings, but doing it again on the second slave was really easy.

http://blog.queze.net/post/2014/07/25/Converting-old-Mac-minis-into-CentOS-Instantbird-build-slaves

|

|

Aki Sasaki: on leaving mozilla |

Today's my last day at Mozilla. It wasn't an easy decision to move on; this is the best team I've been a part of in my career. And working at a company with such idealistic principles and the capacity to make a difference has been a privilege.

Looking back at the past five-and-three-quarter years:

- I wrote mozharness, a versatile scripting harness. I strongly believe in its three core concepts: versatile locking config; full logging; modularity.

  - By my count, over 85% of our buildbot builders (build+test job types) are currently running through mozharness scripts. Given over 100k build+test jobs in a day, that's a sizeable amount. So many people across so many teams contributed to this, and we're not done yet. Once desktop builds are in mozharness and we discontinue Android 2.2 support, that number should be close to, if not over, 90%.

- I helped FirefoxOS (b2g) ship, and it's making waves in the industry. Internally, the release processes are well on the path to maturing and stabilizing, and b2g is now riding the trains.

  - Merge day: Releng took over ownership of merge day, and b2g increased its complexity exponentially.

    "Listening to @escapewindow explain merge day processes is like looking Cthulhu in the eyes. Sanity draining away rapidly" (Laura Thomson (@lxt), April 29, 2014)

    I don't think it's quite that bad :) I whittled it down from requiring someone's full mental capacity for three out of every six weeks, to several days of precisely following directions.

  - I rewrote vcs-sync to be more maintainable and robust, and to support gecko-dev and gecko-projects. Being able to support both mercurial and git across many hundreds of repos has become a core part of our development and automation, primarily because of b2g. The best thing you can say about a mission critical piece of infrastructure like this is that you can sleep through the night or take off for the weekend without worrying if it'll break. Or go on vacation for 3 1/2 weeks, far from civilization, without feeling guilty or worried.

- I helped ship three mobile 1.0's. I learned a ton, and I don't think I could have gotten through it by myself; John and the team helped me through this immensely.

  - On mobile, we went from one or two builds on a branch to full tier-1 support: builds and tests on checkin across all of our integration-, release-, and project- branches. And mobile is riding the trains.

  - We Sim-shipped 5.0 on Firefox desktop and mobile off the same changeset. Firefox 6.0b2, and every release since then, was built off the same automation for desktop and mobile. Those were total team efforts.

  - I will be remembered for the mobile pedalboard. When we talked to other people in the industry, this was more on-device mobile test automation than they had ever seen or heard of; their solutions all revolved around manual QA. (full set)

  - And they are like effin bunnies; we later moved on to shoe rack bunnies, rackmounted bunnies, and now more and more emulator-driven bunnies in the cloud, each numbering in the hundreds or more. I've been hands off here for quite a while; the team has really improved things leaps and bounds over my crude initial attempts.

- I brainstormed next-gen build infrastructure. I started blogging about this back in January 2009, based largely around my previous webapp+db design elsewhere, but I think my LWR posts in Dec 2013 had more of an impact. A lot of those ideas ended up in TaskCluster; mozharness scripts will contain the bulk of the client-side logic. We'll see how it all works when TaskCluster starts taking on a significant percentage of the current buildbot load :)

I will stay a Mozillian, and I'm looking forward to seeing where we can go from here!

|

|

Vaibhav Agrawal: Let's have more green trees |

I have been working on making jobs ignore intermittent failures for mochitests (bug 1036325) on try servers to prevent unnecessary oranges, and save resources that go into retriggering those jobs on tbpl. I am glad to announce that this has been achieved for desktop mochitests (linux, osx and windows). It doesn’t work for android/b2g mochitests but they will be supported in the future. This post explains how it works in detail and is a bit lengthy, so bear with me.

Let's see the patch in action. Here is an example of an almost green try push:

Note: one bc1 orange job is because of a leak (Bug 1036328)

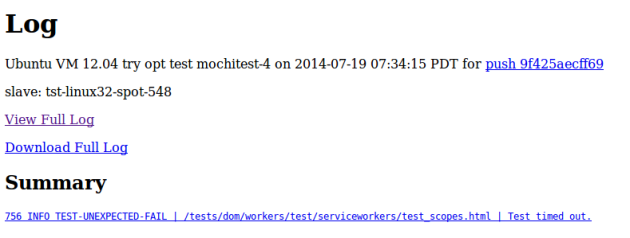

In this push, the intermittents were suppressed, for example this log shows an intermittent on mochitest-4 job on linux :

Even though there was an intermittent failure for this job, the job remains green. We can determine if a job produced an intermittent by inspecting the number of tests run for the job on tbpl, which will be much smaller than normal. For example in the above intermittent mochitest-4 job it shows “mochitest-plain-chunked: 4696/0/23” as compared to the normal “mochitest-plain-chunked: 16465/0/1954”. Another way is looking at the log of the particular job for “TEST-UNEXPECTED-FAIL”.

The algorithm behind getting a green job even in the presence of an intermittent failure is that we recognize the failing test, and run it independently 10 times. If the test fails < 3 times out of 10, it is marked as intermittent and we leave it. If it fails >=3 times out of 10, it means that there is a problem in the test turning the job orange.
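The rule described above can be sketched in a few lines of Python. This is only an illustration of the 10-run, 3-failure threshold, not the code in the mochitest harness; `classify_failure` and the `run_test` callable are hypothetical names introduced for the example.

```python
def classify_failure(run_test, attempts=10, threshold=3):
    """Rerun a failing test and decide whether the failure is intermittent.

    run_test is a hypothetical callable that returns True when the test passes.
    """
    failures = sum(1 for _ in range(attempts) if not run_test())
    # Fewer than `threshold` failures: treat the test as intermittent (job stays green).
    # Otherwise the failure is considered real (job turns orange).
    return "intermittent" if failures < threshold else "real"


if __name__ == "__main__":
    # Simulated outcomes: the test fails twice in ten runs.
    outcomes = iter([False, True, True, False, True, True, True, True, True, True])
    print(classify_failure(lambda: next(outcomes)))
```

With two failures out of ten the sketch reports an intermittent; three or more failures would be reported as a real failure.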

Next, to test the case of a “real” failure, I wrote a unit test and tested it out in the try push:

This job is orange and the log for this push is:

In this summary, a test fails more than three times and hence we get a real failure. The important line in this summary is:

3086 INFO TEST-UNEXPECTED-FAIL | Bisection | Please ignore repeats and look for ‘Bleedthrough’ (if any) at the end of the failure list

This tells us that the bisection procedure has started and we should look out for future “Bleedthrough”, that is, the test causing the failure. And at the last line it prints the “real failure”:

TEST-UNEXPECTED-FAIL | testing/mochitest/tests/Harness_sanity/test_harness_post_bisection.html | Bleedthrough detected, this test is the root cause for many of the above failures

Aha! So we have found a permanent failing test and it is probably due to some fault in the developer’s patch. Thus, the developers can now focus on the real problem rather than being lost in the intermittent failures.

This patch has landed on mozilla-inbound and I am working on enabling it as an option on trychooser (More on that in the next blog post). However if someone wants to try this out now (works only for desktop mochitest), one can hack in just a single line:

options.bisectChunk = 'default'

such as in this diff inside runtests.py and test it out!

Hopefully, this will also take us a step closer to AutoLand (automatic landing of patches).

Other Bugs Solved for GSoC:

[1028226] – Clean up the code for manifest parsing

[1036374] – Adding a binary search algorithm for bisection of failing tests

[1035811] – Mochitest manifest warnings dumped at start of each robocop test

A big shout out to my mentor (Joel Maher) and other a-team members for helping me in this endeavour!

https://vaibhavag.wordpress.com/2014/07/26/lets-have-more-green-trees/

|

|

Just Browsing: Taming Gruntfiles |

Every software project needs plumbing.

If you write your code in JavaScript chances are you’re using Grunt. And if your project has been around long enough, chances are your Gruntfile is huge. Even though you write comments and indent properly, the configuration is starting to look unwieldy and is hard to navigate and maintain (see ngbp’s Gruntfile for an example).

Enter load-grunt-config, a Grunt plugin that lets you break up your Gruntfile by task (or task group), allowing for a nice navigable list of small per-task Gruntfiles.

When used, your Grunt config file tree might look like this:

    ./
    |_ Gruntfile.coffee
    |_ grunt/
       |_ aliases.coffee
       |_ browserify.coffee
       |_ clean.coffee
       |_ copy.coffee
       |_ watch.coffee
       |_ test-group.coffee

watch.coffee, for example, might be:

    module.exports = {
      sources:
        files: [
          '/**/*.coffee',
          '/**/*.js'
        ]
        tasks: ['test']
      html:
        files: ['/**/*.html']
        tasks: ['copy:html', 'test']
      css:
        files: ['/**/*.css']
        tasks: ['copy:css', 'test']
      img:
        files: ['/img/**/*.*']
        tasks: ['copy:img', 'test']
    }

and aliases.coffee:

    module.exports = {
      default: [
        'clean'
        'browserify:libs'
        'browserify:dist'
      ]
      dev: [
        'clean'
        'connect'
        'browserify:libs'
        'browserify:dev'
        'mocha_phantomjs'
        'watch'
      ]
    }

By default, load-grunt-config reads the task configurations from the grunt/ folder located on the same level as your Gruntfile. If there’s an aliases.js|coffee|yml file in that directory, load-grunt-config will use it to load your task aliases (which is convenient because one of the problems with long Gruntfiles is that the task aliases are hard to find).

Other files in the grunt/ directory define configurations for a single task (e.g. grunt-contrib-watch) or a group of tasks.

Another nice thing is that load-grunt-config takes care of loading plugins; it reads package.json and automatically calls loadNpmTasks for all the Grunt plugins it finds.

To sum it up, for a bigger project, your Gruntfile can get messy. load-grunt-config helps combat that by introducing structure into the build configuration, making it more readable and maintainable.

Happy grunting!

http://feedproxy.google.com/~r/justdiscourse/browsing/~3/YqKdljiix8M/

|

|

Gregory Szorc: Please run mach mercurial-setup |

Hey there, Firefox developer! Do you use Mercurial? Please take the time right now to run mach mercurial-setup from your Firefox clone.

It's been updated to ensure you are running a modern Mercurial version. More awesomely, it has support for a couple of new extensions to make you more productive. I think you'll like what you see.

mach mercurial-setup doesn't change your hgrc without confirmation. So it is safe to run to see what's available. You should consider running it periodically, say once a week or so. I wouldn't be surprised if we add a notification to mach to remind you to do this.

http://gregoryszorc.com/blog/2014/07/25/please-run-mach-mercurial-setup

|

|

Gervase Markham: Now We Are Five… |

10 weeks old, and beautifully formed by God :-) The due date is 26th January 2015.

http://feedproxy.google.com/~r/HackingForChrist/~3/Fr9A7i_QIQg/

|

|

Maja Frydrychowicz: Database Migrations ("You know nothing, Version Control.") |

This is the story of how I rediscovered what version control doesn't do for you. Sure, I understand that git doesn't track what's in my project's local database, but to understand is one thing and to feel in your heart forever is another. In short, learning from mistakes and accidents is the greatest!

So, I've been working on a Django project and as the project acquires new features, the database schema changes here and there. Changing the database from one schema to another and possibly moving data between tables is called a migration. To manage database migrations, we use South, which is sort of integrated into the project's manage.py script. (This is because we're really using playdoh, Mozilla's augmented, specially-configured flavour of Django.)

South is lovely. Whenever you change the model definitions in your Django project, you ask South to generate Python code that defines the corresponding schema migration, which you can customize as needed. We'll call this Python code a migration file. To actually update your database with the schema migration, you feed the migration file to manage.py migrate.

These migration files are safely stored in your git repository, so your project has a history of database changes that you can replay backward and forward. For example, let's say you're working in a different repository branch on a new feature for which you've changed the database schema a bit. Whenever you switch to the feature branch you must remember to apply your new database migration (migrate forward). Whenever you switch back to master you must remember to migrate backward to the database schema expected by the code in master. Git doesn't know which migration your database should be at. Sometimes I'm distracted and I forget. :(

As always, it gets more interesting when you have project collaborators because they might push changes to migration files and you must pay attention and remember to actually apply these migrations in the right order. We will examine one such scenario in detail.

Adventures with Overlooked Database Migrations

Let's call the actors Sparkles and Rainbows. Sparkles and Rainbows are both contributing to the same project and so they each regularly push or pull from the same "upstream" git repository. However, they each use their own local database for development. As far as the database goes, git is only tracking South migration files. Here is our scenario.

- Sparkles pushes Migration Files 1, 2, 3 to upstream and applies these migrations to their local db in that order.

- Rainbows pulls Migration Files 1, 2, 3 from upstream and applies them to their local db in that order.

All is well so far. The trouble is about to start.

- Sparkles reverses Migration 3 in their local database (backward migration to Migration 2) and pushes a delete of the Migration 3 file to upstream.

- Rainbows pulls from upstream: the Migration 3 file no longer exists at HEAD but it must also be reversed in the local db! Alas, Rainbows does not perform the backward migration. :(

- Life goes on and Sparkles now adds Migration Files 4 and 5, applies the migrations locally and pushes the files to upstream.

- Rainbows happily pulls Migration Files 4 and 5 and applies them to their local db.

Notice that Sparkles' migration history is now 1-2-4-5 but Rainbows' migration history is 1-2-3-4-5, even though 3 is no longer part of the up-to-date project!
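The mismatch between the two histories can be spotted mechanically. The sketch below is plain Python rather than anything South provides; `orphaned_migrations` is a hypothetical helper that compares the migrations recorded as applied in a local database with the migration files present in the current checkout.

```python
def orphaned_migrations(applied, available):
    """Return applied migrations whose files are missing from the checkout.

    applied:   migration names recorded in the local database, in order.
    available: migration file names present at HEAD.
    """
    available = set(available)
    return [name for name in applied if name not in available]


if __name__ == "__main__":
    files_at_head = ["1", "2", "4", "5"]       # Migration 3 was deleted upstream
    rainbows_db = ["1", "2", "3", "4", "5"]    # Rainbows never reversed Migration 3
    print(orphaned_migrations(rainbows_db, files_at_head))
```

Any name the helper returns is a migration that should have been reversed before its file disappeared from the repository.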

At some point Rainbows will encounter Django or South errors, depending on the nature of the migrations, because the database doesn't match the expected schema. No worries, though, it's git, it's South: you can go back in time and fix things.

I was recently in Rainbows' position. I finally noticed that something was wrong with my database when South started refusing to apply the latest migration from upstream, telling me "Sorry! I can't drop table TaskArea, it doesn't exist!"

    tasks:0011_auto__del_taskarea__del_field_task_area__add_field_taskkeyword_name
    FATAL ERROR - The following SQL query failed: DROP TABLE tasks_taskarea CASCADE;
    The error was: (1051, "Unknown table 'tasks_taskarea'")
    >snip
    KeyError: "The model 'taskarea' from the app 'tasks' is not available in this migration."

In my instance of the Sparkles-Rainbows story, Migration 3 and Migration 5 both drop the TaskArea table; I'm trying to apply Migration 5, and South grumbles in response because I had never reversed Migration 3. As far as South knows, there's no such thing as a TaskArea table.

Let's take a look at my migration history, which is conveniently stored in the database itself:

select migration from south_migrationhistory where app_name="tasks";

The output is shown below. The lines of interest are 0010_auto__del and 0010_auto__chg; I'm trying to apply migration 0011 but I can't, because it's the same migration as 0010_auto__del, which should have been reversed a few commits ago.

    +-----------------------------------------------------------------------------+
    | migration                                                                   |
    +-----------------------------------------------------------------------------+
    | 0001_initial                                                                |
    | 0002_auto__add_feedback                                                     |
    | 0003_auto__del_field_task_allow_multiple_finishes                           |
    | 0004_auto__add_field_task_is_draft                                          |
    | 0005_auto__del_field_feedback_task__del_field_feedback_user__add_field_feed |
    | 0006_auto__add_field_task_creator__add_field_taskarea_creator               |
    | 0007_auto__add_taskkeyword__add_tasktype__add_taskteam__add_taskproject__ad |
    | 0008_task_data                                                              |
    | 0009_auto__chg_field_task_team                                              |
    | 0010_auto__del_taskarea__del_field_task_area__add_field_taskkeyword_name    |
    | 0010_auto__chg_field_taskattempt_user__chg_field_task_creator__chg_field_ta |
    +-----------------------------------------------------------------------------+

I want to migrate backwards until 0009, but I can't do that directly because the migration file for 0010_auto__del is not part of HEAD anymore, just like Migration 3 in the story of Sparkles and Rainbows, so South doesn't know what to do. However, that migration does exist in a previous commit, so let's go back in time.

I figure out which commit added the migration I need to reverse:

    # Display commit log along with names of files affected by each commit.
    # Once in less, I searched for '0010_auto__del' to get to the right commit.
    $ git log --name-status | less

With that key information, the following sequence of commands tidies everything up:

    # Switch to the commit that added migration 0010_auto__del
    $ git checkout e67fe32c
    # Migrate backward to a happy migration; I chose 0008 to be safe.
    # ./manage.py migrate [appname] [migration]
    $ ./manage.py migrate oneanddone.tasks 0008
    $ git checkout master
    # Sync the database and migrate all the way forward using the most up-to-date migrations.
    $ ./manage.py syncdb && ./manage.py migrate

|

|

Mark Finkle: Firefox for Android: Collecting and Using Telemetry |

Firefox 31 for Android is the first release where we collect telemetry data on user interactions. We created a simple “event” and “session” system, built on top of the current telemetry system that has been shipping in Firefox for many releases. The existing telemetry system is focused more on the platform features and tracking how various components are behaving in the wild. The new system is really focused on how people are interacting with the application itself.

Collecting Data

The basic system consists of two types of telemetry probes:

- Events: A telemetry probe triggered when the user takes an action. Examples include tapping a menu, loading a URL, sharing content or saving content for later. An Event is also tagged with a Method (how was the Event triggered) and an optional Extra tag (extra context for the Event).

- Sessions: A telemetry probe triggered when the application starts a short-lived scope or situation. Examples include showing a Home panel, opening the awesomebar or starting a reading viewer. Each Event is stamped with zero or more Sessions that were active when the Event was triggered.

We add the probes into any part of the application that we want to study, which is most of the application.
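To make the relationship between the two probe types concrete, here is a minimal Python sketch of the model described above. It is not the Firefox for Android implementation; the class and method names (`Telemetry`, `start_session`, `send_event`) and the example method and session labels are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Event:
    action: str                      # what the user did, e.g. "reload"
    method: str                      # how the Event was triggered
    extra: Optional[str] = None      # optional extra context
    sessions: Tuple[str, ...] = ()   # Sessions active when the Event fired


class Telemetry:
    """Hypothetical recorder that stamps each Event with the active Sessions."""

    def __init__(self):
        self._active = []
        self.events = []

    def start_session(self, name):
        self._active.append(name)

    def stop_session(self, name):
        self._active.remove(name)

    def send_event(self, action, method, extra=None):
        event = Event(action, method, extra, tuple(self._active))
        self.events.append(event)
        return event


if __name__ == "__main__":
    telemetry = Telemetry()
    telemetry.start_session("awesomebar")          # short-lived scope begins
    print(telemetry.send_event("loadurl", "actionbar", "typed"))
    telemetry.stop_session("awesomebar")
    print(telemetry.send_event("reload", "menu"))  # no Session active here
```

Keeping the active Sessions on the recorder means individual probes only need to name the action and method; the context is attached automatically.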

Visualizing Data

The raw telemetry data is processed into summaries, one for Events and one for Sessions. In order to visualize the telemetry data, we created a simple dashboard (source code). It’s built using a great little library called PivotTable.js, which makes it easy to slice and dice the summary data. The dashboard has several predefined tables so you can start digging into various aspects of the data quickly. You can drag and drop the fields into the column or row headers to reorganize the table. You can also add filters to any of the fields, even those not used in the row/column headers. It’s a pretty slick library.
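The kind of slicing a pivot table performs can be illustrated with a short Python sketch. This is not how the dashboard or PivotTable.js works internally; `pivot` is a hypothetical helper, and the record fields and method labels are assumed for the example.

```python
from collections import Counter, defaultdict


def pivot(records, row_field, col_field):
    """Count records grouped by a row field and a column field."""
    table = defaultdict(Counter)
    for record in records:
        table[record[row_field]][record[col_field]] += 1
    return {row: dict(cols) for row, cols in table.items()}


if __name__ == "__main__":
    summary = [
        {"action": "reload", "method": "menu"},
        {"action": "reload", "method": "menu"},
        {"action": "home_remove", "method": "contextmenu"},
    ]
    print(pivot(summary, "action", "method"))
```

Swapping the two field arguments corresponds to dragging a field from the row headers to the column headers.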

Acting on Data

Now that we are collecting and studying the data, the goal is to find patterns that are unexpected or might warrant a closer inspection. Here are a few of the discoveries:

Page Reload: Even in our Nightly channel, people seem to be reloading the page quite a bit. Way more than we expected. It’s one of the Top 2 actions. Our current thinking includes several possibilities:

- Page gets stuck during a load and a Reload gets it going again

- Networking error of some kind, with a “Try again” button on the page. If the button does not solve the problem, a Reload might be attempted.

- Weather or some other update-able page where a Reload shows the current information.

We have started projects to explore the first two issues. The third issue might be fine as-is, or maybe we could add a feature to make updating pages easier? You can still see high uses of Reload (reload) on the dashboard.

Remove from Home Pages: The History page, primarily, and the Top Sites page see high use of Remove (home_remove) to delete browsing information from the Home pages. People do this a lot; again, it’s one of the Top 2 actions. People will also do this repeatedly, over and over, clearing the entire list manually. Firefox has a Clear History feature, but it must not be very discoverable. We also see people asking for easier ways of clearing history in our feedback, but it wasn’t until we saw the telemetry data that we understood how badly this was needed. This led us to add some features:

- Since the History page was the predominant source of the Removes, we added a Clear History button right on the page itself.

- We added a way to Clear History when quitting the application. This was a bit tricky since Android doesn’t really promote “Quitting” applications, but if a person wants to enable this feature, we add a Quit menu item to make the action explicit and in their control.

- With so many people wanting to clear their browsing history, we assumed they didn’t know that Private Browsing existed. No history is saved when using Private Browsing, so we’re adding some contextual hinting about the feature.

These features are included in Nightly and Aurora versions of Firefox. Telemetry is showing a marked decrease in Remove usage, which is great. We hope to see the trend continue into Beta next week.

External URLs: People open a lot of URLs from external applications, like Twitter, into Firefox. This wasn’t totally unexpected, it’s a common pattern on Android, but the degree to which it happened versus opening the browser directly was somewhat unexpected. Close to 50% of the URLs loaded into Firefox are from external applications. Less so in Nightly, Aurora and Beta, but even those channels are almost 30%. We have started looking into ideas for making the process of opening URLs into Firefox a better experience.

Saving Images: An unexpected discovery was how often people save images from web content (web_save_image). We haven’t spent much time considering this one. We think we are doing the “right thing” with the images as far as Android conventions are concerned, but there might be new features waiting to be implemented here as well.

Take a look at the data. What patterns do you see?

Here is the obligatory UI heatmap, also available from the dashboard:

http://starkravingfinkle.org/blog/2014/07/firefox-for-android-collecting-and-using-telemetry/

|

|

Gregory Szorc: Repository-Centric Development |

I was editing a wiki page yesterday and I think I coined a new term which I'd like to enter the common nomenclature: repository-centric development. The term refers to development/version control workflows that place repositories - not patches - first.

When collaborating on version controlled code with modern tools like Git and Mercurial, you essentially have two choices on how to share version control data: patches or repositories.

Patches have been around since the dawn of version control. Everyone knows how they work: your version control system has a copy of the canonical data and it can export a view of a specific change into what's called a patch. A patch is essentially a diff with extra metadata.

When distributed version control systems came along, they brought with them an alternative to patch-centric development: repository-centric development. You could still exchange patches if you wanted, but distributed version control allowed you to pull changes directly from multiple repositories. You weren't limited to a single master server (that's what the distributed in distributed version control means). You also didn't have to go through an intermediate transport such as email to exchange patches: you communicate directly with a peer repository instance.

Repository-centric development eliminates the middle man required for patch exchange: instead of exchanging derived data, you exchange the actual data, speaking the repository's native language.

One advantage of repository-centric development is it eliminates the problem of patch non-uniformity. Patches come in many different flavors. You have plain diffs. You have diffs with metadata. You have Git style metadata. You have Mercurial style metadata. You can produce patches with various lines of context in the diff. There are different methods for handling binary content. There are different ways to express file adds, removals, and renames. It's all a hot mess. Any system that consumes patches needs to deal with the non-uniformity. Do you think this isn't a problem in the real world? Think again. If you are involved with an open source project that collects patches via email or by uploading patches to a bug tracker, have you ever seen someone accidentally upload a patch in the wrong format? That's patch non-uniformity. New contributors to Firefox do this all the time. I also see it in the Mercurial project. With repository-centric development, patches never enter the picture, so patch non-uniformity is a non-issue. (Don't confuse the superficial formatting of patches with the content, such as an incorrect commit message format.)

Another advantage of repository-centric development is it makes the act of exchanging data easier. Just have two repositories talk to each other. This used to be difficult, but hosting services like GitHub and Bitbucket make this easy. Contrast with patches, which require hooking your version control tool up to wherever those patches are located. The Linux Kernel, like so many other projects, uses email for contributing changes. So now Git, Mercurial, etc all fulfill Zawinski's law. This means your version control tool is talking to your inbox to send and receive code. Firefox development uses Bugzilla to hold patches as attachments. So now your version control tool needs to talk to your issue tracker. (Not the worst idea in the world I will concede.) While, yes, the tools around using email or uploading patches to issue trackers or whatever else you are using to exchange patches exist and can work pretty well, the grim reality is that these tools are all reinventing the wheel of repository exchange and are solving a problem that has already been solved by git push, git fetch, hg pull, hg push, etc. Personally, I would rather hg push to a remote and have tools like issue trackers and mailing lists pull directly from repositories. At least that way they have a direct line into the source of truth and are guaranteed a consistent output format.

Another area where direct exchange is huge is multi-patch commits (branches in Git parlance) or where commit data is fragmented. When pushing patches to email, you need to insert metadata saying which patch comes after which. Then the email import tool needs to reassemble things in the proper order (remember that the typical convention is one email per patch and email can be delivered out of order). Not the most difficult problem in the world to solve. But seriously, it's been solved already by git fetch and hg pull! Things are worse for Bugzilla. There is no bullet-proof way to order patches there. The convention at Mozilla is to add Part N strings to commit messages and have the Bugzilla import tool do a sort (I assume it does that). But what if you have a logical commit series spread across multiple bugs? How do you reassemble everything into a linear series of commits? You don't, sadly. Just today I wanted to apply a somewhat complicated series of patches to the Firefox build system I was asked to review so I could jump into a debugger and see what was going on so I could conduct a more thorough review. There were 4 or 5 patches spread over 3 or 4 bugs. Bugzilla and its patch-centric workflow prevented me from importing the patches. Fortunately, this patch series was pushed to Mozilla's Try server, so I could pull from there. But I haven't always been so fortunate. This limitation means developers have to make sacrifices such as writing fewer, larger patches (this makes code review harder) or involving unrelated parties in the same bug and/or review. In other words, deficient tools are imposing limited workflows. No bueno.
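To show what the Part N convention asks of an import tool, here is a small Python sketch that orders patch subjects by their Part N marker. It does not reflect any actual Bugzilla import tool; `order_patches`, the regular expression, and the example subjects (including the bug number) are made up for illustration.

```python
import re

# Matches "Part 3", "part 12", "Part3" and similar markers in a commit message.
PART_RE = re.compile(r"\bpart\s*(\d+)\b", re.IGNORECASE)


def order_patches(subjects):
    """Sort commit subjects by their 'Part N' marker.

    Subjects without a marker keep their relative order and sort last.
    """
    def key(subject):
        match = PART_RE.search(subject)
        return (match is None, int(match.group(1)) if match else 0)

    return sorted(subjects, key=key)


if __name__ == "__main__":
    received = [
        "Bug 000000 - Part 10: Update tests",
        "Bug 000000 - Part 2: Wire up the new helper",
        "Bug 000000 - Part 1: Add the helper",
    ]
    for subject in order_patches(received):
        print(subject)
```

The numeric comparison matters: a plain string sort would place Part 10 before Part 2. Even so, this only works within one bug; it cannot reassemble a series spread across several bugs, which is the limitation described above.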

It is a fair criticism to say that not everyone can host a server or that permissions and authorization are hard. Although I think concerns about impact are overblown. If you are a small project, just create a GitHub or Bitbucket account. If you are a larger project, realize that people time is one of your largest expenses and invest in tools like proper and efficient repository hosting (often this can be GitHub) to reduce this waste and keep your developers happier and more efficient.

One of the clearest examples of repository-centric development is GitHub. There are no patches in GitHub. Instead, you git push and git fetch. Want to apply someone else's work? Just add a remote and git fetch! Contrast with first locating patches, hooking up Git to consume them (this part was always confusing to me - do you need to retroactively have them sent to your email inbox so you can import them from there?), and finally actually importing them. Just give me a URL to a repository already. But the benefits of repository-centric development with GitHub don't stop at pushing and pulling. GitHub has built code review functionality into pushes. They call these pull requests. While I have significant issues with GitHub's implementation of pull requests (I need to blog about those some day), I can't deny the utility of the repository-centric workflow and all the benefits around it. Once you switch to GitHub and its repository-centric workflow, you more clearly see how lacking patch-centric development is and quickly lose your desire to go back to the 1990's state-of-the-art methods for software development.

I hope you now know what repository-centric development is and will join me in championing it over patch-based development.

Mozillians reading this will be very happy to learn that work is under way to shift Firefox's development workflow to a more repository-centric world. Stay tuned.

http://gregoryszorc.com/blog/2014/07/24/repository-centric-development

|

|

Sean Bolton: Why Do People Join and Stay Part Of a Community (and How to Support Them) |

[This post is inspired by notes from a talk by Douglas Atkin (currently at AirBnB) about his work with cults, brands and community.]

We all go through life feeling like we are different. When you find people that are different the same way you are, that’s when you decide to join.

As humans, we each have a unique self narrative: “we tell ourselves a story about who we are, what others are like, how the world works, and therefore how one does (or does not) belong in order to maximize self.” We join a community to become more of ourselves – to exist in a place where we feel we don’t have to self-edit as much to fit in.

A community must have a clear ideology – a set of beliefs about what it stands for – a vision of the world as it should be rather than how it is, that aligns with what we believe. Communities form around certain ways of thinking first, not around products. At Mozilla, this is often called “the web we want” or ‘the web as it should be.’

When joining a community people ask two questions: 1) Are they like me? and 2) Will they like me? The answers to these two fundamental human questions determine whether a person will become and stay part of a community. In designing a community it is important to support potential members in answering these questions – be clear about what you stand for and make people feel welcome. The welcoming portion requires extra work in the beginning to ensure that a new member forms relationships with people in the community. These relationships keep people part of a community. For example, I don’t go to a book club purely for the book, I go for my friends Jake and Michelle. Initially, the idea of a book club attracted me but as I became friends with Jake and Michelle, that friendship continually motivated me to show up. This is important because as the daily challenges of life show up, social bonds become our places of belonging where we can recharge.

These social ties must be mixed with doing significant stuff together. In designing how community members participate, a very helpful tool is the community commitment curve. This curve describes how a new member can invest in low barrier, easy tasks that build commitment momentum so the member can perform more challenging tasks and take on more responsibility. For example, you would not ask a new member to spend 12 hours setting up a development environment just to make their first contribution. This ask is too much for a new person because they are still trying to figure out ‘are they like me?’ and ‘will they like me?’ In addition, their sense of contribution momentum has not been built – 12 hours is a lot when your previous task is 0 but 12 is not so much when your previous was 10.

The community commitment curve is a powerful tool for community builders because it forces you to design the small steps new members can take to get involved and shows structure to how members take on more complex tasks/roles – it takes some of the mystery out! As new members invest small amounts of time, their commitment grows, which encourages them to invest larger amounts of time, continually growing both time and commitment, creating a fulfilling experience for the community and the member. I made a template for you to hack your own community commitment curve.

Social ties combined with a well designed commitment curve, for a clearly defined purpose, are a powerful combination in supporting a community.

|

|

Marco Bonardo: Unified Complete coming to Firefox 34 |

The awesomebar in Firefox Desktop has been so far driven by two autocomplete searches implemented by the Places component:

- history: managing switch-to-tab, adaptive and browsing history, bookmarks, keywords and tags

- urlinline: managing autoFill results

Moving on, we plan to improve the awesomebar contents making them even more awesome and personal, but the current architecture complicates things.

Some of the possible improvements suggested include:

- Better identify searches among the results

- Allow the user to easily find their favorite search engine

- Always show the action performed by Enter/Go

- Separate searches from history

- Improve the styling to make each part more distinguishable

When working on these changes we don't want to spend time fighting with outdated architecture choices:

- Having concurrent searches makes it hard to insert new entries and ensure the order is appropriate

- Having the first popup entry disagree with the autoFill entry makes it hard to guess the expected action

- There's quite some duplicate code and logic

- All of this code predates nice stuff like Sqlite.jsm, Task.jsm, Preferences.jsm

- The existing code is scary to work with and sometimes obscure

Due to these reasons, we decided to merge the existing components into a single new component called UnifiedComplete (toolkit/components/places/UnifiedComplete.js), that will take care of both autoFill and popup results. While the component has been rewritten from scratch, we were able to re-use most of the old logic that was well tested and appreciated. We were also able to retain all of the unit tests, that have been also rewritten, making them use a single harness (you can find them in toolkit/components/places/tests/unifiedcomplete/).

So, the actual question is: which differences should I expect from this change?

- The autoFill result will now always agree with the first popup entry. Note that the behavior didn't change: we will still autoFill up to the first '/'. This means a new popup entry is inserted as the top match.

- All initialization is now asynchronous, so the UI should not lag anymore at the first awesomebar search

- The searches are serialized differently, so responsiveness timing may differ; it should usually improve

- Installed search engines will be suggested along with other matches to improve their discoverability

The component is currently disabled, but I will shortly push a patch to flip the pref that enables it. The preference that controls whether the new or old components are used is browser.urlbar.unifiedcomplete; you can already set it to true in your current Nightly build to enable it.

This also means the old components will shortly be deprecated and won't be maintained anymore. That won't happen until we are completely satisfied with the new component, but you should start looking at the new one if you use autocomplete in your project. Regardless, we'll add console warnings at least 2 versions before complete removal.

If you notice anything wrong with the new awesomebar behavior, please file a bug in Toolkit/Places and make it block Bug UnifiedComplete so we are notified of it and can improve the handling before we reach the first Release.

http://blog.bonardo.net/2014/07/24/unified-complete-coming-to-firefox-34

|

|

Christian Heilmann: [Video]: The web is dead? – My talk at TEDx Thessaloniki |

Today the good folks at TEDx Thessaloniki released the recording of my talk “The web is dead”.

I gave you the slides and notes earlier here on this blog, but here’s another recap of what I talked about:

- The excitement for the web is waning – instead apps are the cool thing

- At first glance, the reason for this is that apps deliver much better on a mobile form factor and have better, high-fidelity interaction patterns

- If you scratch the surface of this message, though, you find a few disturbing points:

- Everything in apps is a numbers game – the marketplaces with the most apps win, and the apps with the most users are the ones that will get more users, as those are the most promoted ones

- The form factor of an app was not really a technical necessity. Instead it makes software and services a consumable. The full control of the interface and the content of the app lies with the app provider, not the users. On the web you can change the display of content to your needs, you can even translate and have content spoken out for you. In apps, you get what the provider allows you to get

- The web allowed anyone to be a creator. The curb to mount from reader to writer was incredibly low. In the apps world, it becomes much harder to become a creator of functionality.

- Content creation is easy in apps, if you create the content the app makers want you to. The question is who the owner of that content is, who is allowed to use it and whether you have the right to stop app providers from analysing and re-using your content in ways you don’t want them to. Likes and upvotes/downvotes aren’t really content creation. They are easy to do and don’t mean much, but they make sure the app creator has traffic and interaction on their app – something that VCs like to see.

- Apps are just another form factor to control software for the benefit of the publisher. Much like movies on DVDs are great, because when you scratch them you need to buy a new one, publishers can now let software services become outdated, break and change, forcing you to buy a new one instead of enjoying new features cropping up automatically.

- Apps lack the data interoperability of the web. If you want your app to succeed, you need to keep the users locked into yours so they don’t go off and look at others. As a result, most apps are created to be highly addictive, with constant stimulation and calls to action to do more with them. In essence, the business models of apps these days force developers to create needy, bullying tamagotchi and call them innovation.

http://christianheilmann.com/2014/07/24/video-the-web-is-dead-my-talk-at-tedx-thessaloniki/

|

|

Jennie Rose Halperin: Video about Open Source and Community |

Building and Leveraging an Open Source Developer Community.

This talk by Jade Wang is really great. Thanks to Adam Lofting for turning me on to it!

http://jennierosehalperin.me/video-about-open-source-and-community/

|

|