Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

Mozilla Localization (L10N): L10n Report: December 2020 Edition

Welcome!

New localizers

Are you a locale leader and want us to include new members in our upcoming reports? Contact us!

New community/locales added

New content and projects

What’s new or coming up in Firefox desktop

Upcoming deadlines:

- Firefox 85 is currently in beta and will be released on January 26. The deadline to update localization is on January 17 (see this older l10n report to understand why it moved closer to the release date).

As anticipated in the last report, this release cycle is longer than usual (6 weeks instead of 4), to accommodate the end-of-year holidays in Europe and the US.

The number of new strings remains pretty low, but expect this to change during the first half of 2021, when we should have new content, thanks to a mix of new features and old interfaces revisited. There will also be changes to improve consistency around the use of Title Case and Sentence case for English. This won’t result in new strings to translate for other locales, but it’s a good reminder that each locale should set and follow its own rules, and they should be documented in style guides.

Since we’re at the end of the year, here are a few numbers for Firefox:

- We currently ship Firefox in 96 languages. You should be proud of that accomplishment, since it makes Firefox the most widely localized browser on the market [1].

- Nightly ships with 10 additional locales. Some of them are very close to shipping in stable builds; hopefully that will happen in 2021.

[1] Disclaimer: other browsers make it quite difficult to understand which languages are effectively supported (there’s no way to switch language, and they don’t necessarily work in the open). Other vendors seem to also have a low entry barrier when it comes to adding a new language, and listing it as available. On the other hand, at Mozilla we require high priority parts to be completely translated, or very close, and a sustainable community before shipping.

What’s new or coming up in mobile

In many regards, you can surely say about 2020 “What a year…” – and that also applies to mobile at Mozilla.

We shipped products, dropped some… Let’s take a closer look at what’s happened over the year.

In 2020, we shipped the all new Firefox for Android browser (“Fenix”) in 94 languages, which is a great accomplishment. Thank you again to all the localizers who have contributed to this project and its global launch. Your work has ensured that Firefox for Android remains a successful product around the world. We are humbled and grateful for that.

As for the latest release that comes out in December, we will be able to try out a few new features, such as a tab grid view and the ability to delete downloads.

2020 also brought improvements and cool new features to Firefox for iOS – especially since the iOS update to version 14. To list only a few:

- We now have the ability to set Firefox for iOS as the default browser on iOS devices (try it out if you haven’t yet!)

- We can add a Firefox widget to our homescreen

- We can also add Firefox to the Today Widget, which allows us to open new tabs quickly or visit links that we’ve copied to our clipboard

But 2020 has also been a year when we have had to drop some mobile projects, such as Scryer, Firefox Lite and Firefox for Fire TV. We thank you all for the hard work on these products.

Firefox Reality should be available until at least 2021, but not much l10n work is to be expected. We are still figuring things out with regard to Lockwise, and we will keep you posted once we know more.

We are looking forward to 2021 and to continuing to ship great localized mobile projects. Thank you all for your ongoing work and support!

What’s new or coming up in web projects

mozilla.org

This year, mozilla.org saw the long-awaited migration from the .lang to the .ftl format. The change gives localizers greater flexibility to localize the site more naturally in their language, and localized content is now pushed to production automatically a few times an hour, instead of manually once a day. The new file structure ensures consistent usage of brand and product names across the site. The threshold to activate a locale has been lowered, in the hope that it will attract more localizers to participate.

In the past seven months or so, 90+ files have been migrated, added, or updated in the new format, and more are still in the works. New pages will be more content-heavy and more informational.

Another major change is, instead of creating a new What’s New Page (or WNP) with every Firefox release, the team has decided to promote the evergreen WNP page with stable content for an extended period of time. If Firefox desktop is offered in your locale, please make sure this page is fully localized.

The mozilla.org team would like to take the opportunity to express their deep gratitude to all of our community localizers, all over the world. Your work is critical to Mozilla’s global impact and essential for making Mozilla’s products available to the widest possible audience. 61% of 2020 visits were in locales served primarily by community localizers. In those locales, non-Firefox visits to our website grew by 10% this year and downloads increased by 13%! Mozilla’s audience is all over the world, and we couldn’t reach it without you, the localizers who bring www.mozilla.org to your communities. Thank you!

Firefox Accounts

This year, a limited payment feature was added to a few select markets. Next year, the payment feature will be expanded to support PayPal and to cover more European regions. The team is working on the details and we will learn more soon.

Common Voice

Despite a challenging year, the project saw significant growth in dataset collection in the past six months: an additional 2,000 hours added, 6 more languages (Hindi, Lithuanian, Luganda, Thai, Finnish, Hungarian), and over 7 million clips total! Check out which languages have the most hours on Discourse.

Project WebThings

Given the year 2020 has turned out to be, you may not recall that Mozilla’s strategy for Project WebThings this year was to successfully establish it as an independent, community-led open source project. The important work needed to complete that transition took place in November and December. Through the efforts of community members the project streamlined its name to just “WebThings”, established a new home at webthings.io, relocated all the project’s code and assets on GitHub, created a new set of backend services to support and maintain the global network of users’ WebThings Gateways, and provided a simple path for transitioning Gateways to the new community infrastructure through the release of WebThings Gateway 1.0. Though a lot changed, Pontoon continues to be our platform for localization and the WebThings team has been continually delighted by the contributions there, with teams supporting thirty-four languages. You’ll still find WebThings discussion on Discourse, including several more detailed announcements about the community’s newfound independence and plans for 2021.

What’s new or coming up in Foundation projects

Wagtail has become the first CMS to be fully integrated & automated with Pontoon — and managing translations doesn’t require writing or manually deploying a single line of code. This will dramatically reduce the amount of time required to make some content localizable and get it published.

Mozilla Foundation worked directly with Torchbox, Wagtail’s editor, and sponsored the development of the Wagtail Localize plugin, adding localization support into Wagtail. This solution will not only work for us, but for any organization using this free and open source CMS!

Wagtail is currently used on the Foundation website, the Mozilla Festival website and on the Donate websites. A lot of effort went into designing a system to manage content at a granular level for each locale, so that you will only translate content that is relevant for your locale. For instance, in Pontoon you won’t see content that is used for performing A/B tests in English, or custom content that is only relevant to other locales. Another nice feature is that your work gets automatically published on production within a few minutes. You can read more about the changes for the Mozilla donate websites here and you can expect even more content to be localized via Wagtail Localize in 2021!

What’s new or coming up in SuMo

We’ve had a few releases, including Firefox 83 in mid-November and Firefox 84 just this week. Some of the following articles are completely new but most are only updated. You can also keep track of SUMO’s new articles on our sprint wiki page in the above links.

Here are the recent articles that have been translated:

- Firefox 83

- Firefox 84

- Firefox for Android

What’s new or coming up in Pontoon

Team insights

We’ve just landed a new feature on team dashboards – Insights – which shows an overview of the translation and review activity of each team. Stay tuned for more details in a blog post that will be published soon.

Editor refactor

In order to simplify adding new editor implementations to Pontoon, Adrian refactored the frontend editor code using React hooks. All features should work exactly the same as before, but perform better.

Upgraded to Django 3

Thanks to Philipp, Pontoon has been upgraded from Django 2.2 to Django 3.1. The process also brought several related library upgrades and new dependency management using pip-compile.

New test automation

We have moved our test automation from Travis CI to GitHub Actions. Thanks to Axel and Flod for taking care of it! We’ve also split automation into multiple tasks, which comes with several benefits.

Front-end bugfixes

Several frontend bugs have been fixed by our new contributor Mitch. Perhaps the most interesting one: changing the text to uppercase directly in JS files instead of using the text-transform property of CSS. Reason? The text-transform property is not reliable for some locales. Welcome to the team, Mitch!
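To see why upper-casing can depend on the locale, here is a minimal sketch in plain JavaScript. It is not Pontoon's code: upperCaseFor is a hypothetical helper name, and Turkish is used only as one well-known example of a language with its own casing rules.

```javascript
// Upper-case a UI label using the casing rules of a given locale.
// `upperCaseFor` is a hypothetical helper, not taken from Pontoon.
function upperCaseFor(text, locale) {
  return text.toLocaleUpperCase(locale);
}

// Locale-insensitive upper-casing maps "i" to the plain capital "I".
console.log("title".toUpperCase());

// Turkish distinguishes dotted and dotless i, so "i" becomes "İ" (U+0130).
console.log(upperCaseFor("title", "tr"));
```

Doing the conversion in script makes the choice of locale explicit in the code, rather than leaving it to how the browser resolves the language of the element being styled.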

Project config improvements

Thanks to Jotes, we’ve landed several improvements and bugfixes for the project config support. Jotes also migrated several unit tests from using the Django test framework to pytest.

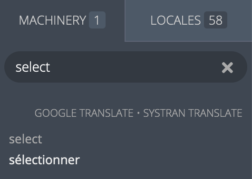

Upcoming changes to Machinery

April and Jotes have made good progress on the implementation of the Concordance search. It will be added to the Machinery panel and will allow you to search all your past translations without leaving the translate view. Two related changes have already landed – you can now reset a custom search with the click of a button, and when you copy a custom search result, it gets added to the editor instead of replacing its content.

Friends of the Lion

Image by Elio Qoshi

- Huge kudos to Alejandro, Manuel, and Sergio, who only joined the Galician community recently but have already accomplished a lot! All three are studying for a Master’s degree in Translation Technologies and wanted to get some hands-on experience in the field; an open-source company like Mozilla can help expand that experience. In a short month, they studied all the onboarding documents, familiarized themselves with Pontoon and the localization process, then single-handedly brought the mozilla.org site to the best shape it has seen in recent years: 36k+ words localized. While they are finishing up their studies, based on their experience with this project, they want to share with us some of the fruits of their effort that might benefit Pontoon and future projects. Thank you all so much, we can’t wait!

Know someone in your l10n community who’s been doing a great job and should appear here? Contact one of the l10n-drivers and we’ll make sure they get a shout-out (see list at the bottom)!

Useful Links

- #l10n-community channel on Matrix

- Dev.l10n mailing list and Dev.l10n.web mailing list – where project updates happen. If you are a localizer, then you should be following these

- Telegram (contact one of the l10n-drivers below so we will add you)

- L10n blog

Questions? Want to get involved?

- If you want to get involved, or have any questions about l10n, reach out to:

- Delphine – l10n Project Manager for mobile

- Peiying (CocoMo) – l10n Project Manager for mozilla.org, marketing, and legal

- Francesco Lodolo (flod) – l10n Project Manager for desktop

- Théo Chevalier – l10n Project Manager for Mozilla Foundation

- Matjaz – Pontoon dev

- Jeff Beatty (gueroJeff) – l10n-drivers manager

Did you enjoy reading this report? Let us know how we can improve by reaching out to any one of the l10n-drivers listed above.

https://blog.mozilla.org/l10n/2020/12/22/l10n-report-december-2020-edition/

David Humphrey: SnowyOwls.ca

tldr; I made you a little birding web app for Christmas with Begin.com and Next.js to help you find Snowy Owls in Canada. The code is here.

On the Healing Powers of a Side Project

I've finally finished the semester, and am ready for a holiday. The past three weeks have been non-stop marking: labs, assignments, quizzes, tests, projects, you name it.

I find that these long marking periods go better for me if I also work on a side project in parallel. When you teach programming, and your marking involves reading and reviewing a million lines of code, you start to yearn for opportunities to write some code of your own. I always need a project, so while I work through my marking pile, I give myself little breaks to implement bits of my chosen side project. It keeps me moving forward and happy.

Owls as Inspiration

I've been obsessed with owls throughout the pandemic. I wrote previously about our woods' new Barred Owl, and how we installed a nest box for it (UPDATE: we've had a second Barred Owl move in, so everything seems to be going to plan). Our Great Horned Owls have started hooting to each other every evening, which is amazing to listen to as we go for our walks.

But as the snow begins to fall each December, my attention turns to another owl: the Snowy Owl. Normally at this time of year I'm seeing Snowy Owls on my long commutes to and from work. With COVID, I'm not out driving anymore, and as such, I'm not having as easy a time finding them.

I decided that this year's marking-side-project would be a tool to help people find Snowy Owls near where they live. I've long wanted to play with eBird and the eBird API, and hoped that I could get recent sighting data this way. To use the eBird API, you have to create an account and then request an API key. After that you can do all sorts of interesting queries to get current or historical data about sightings by species, region, or location.
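As a rough illustration of what such a query might look like (this is not the code from the SnowyOwls.ca repo), the sketch below builds a request for recent sightings of one species in one region. The endpoint shape, the X-eBirdApiToken header, the back parameter, and the snoowl1 species code reflect one reading of the eBird API 2.0 documentation and should be treated as assumptions to verify; recentSightingsRequest is a hypothetical helper name.

```javascript
// Base URL for eBird API 2.0 (assumed; check the official docs).
const EBIRD_BASE = "https://api.ebird.org/v2";

// Build the URL and headers for "recent observations of a species in a region".
// The path layout and header name are assumptions, not confirmed by the post.
function recentSightingsRequest(regionCode, speciesCode, apiKey, daysBack = 30) {
  const url = new URL(`${EBIRD_BASE}/data/obs/${regionCode}/recent/${speciesCode}`);
  url.searchParams.set("back", String(daysBack)); // how many days to look back
  return {
    url: url.toString(),
    headers: { "X-eBirdApiToken": apiKey },
  };
}

// Example: Snowy Owl (species code "snoowl1" is assumed) in Canada ("CA").
const request = recentSightingsRequest("CA", "snoowl1", "YOUR_API_KEY");
console.log(request.url);
```

The returned object can be passed to fetch(request.url, { headers: request.headers }). Keeping the URL construction separate from the network call makes it easy to check without an API key.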

My goals for the project were these:

- Had to be shipped at the end of my marking period

- Include a write-up with some general info on Snowy Owls and advice on how to find them. I would need to do research for this part.

- Include some historical data (i.e., charts) from eBird of sightings in Canada to show when Snowy Owls are most often seen

- Create an interactive, live map of current sightings for the past month

- Create a sortable tabular view of the same data, with locations and how old the sightings are
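The last goal, a table of sightings with locations and ages, can be sketched as a small data transformation. This is an illustration only: the locName and obsDt field names and the "YYYY-MM-DD HH:MM" timestamp format are assumptions about the sighting records, the helper names are hypothetical, and timestamps are treated as UTC for simplicity.

```javascript
const MS_PER_DAY = 24 * 60 * 60 * 1000;

// Parse "2020-12-20 14:30" or "2020-12-20" into UTC milliseconds.
// The format is an assumption about the sighting data.
function parseObsDate(obsDt) {
  const match = /^(\d{4})-(\d{2})-(\d{2})(?: (\d{2}):(\d{2}))?$/.exec(obsDt);
  if (!match) throw new Error(`Unrecognized date: ${obsDt}`);
  const [, y, m, d, hh = "0", mm = "0"] = match;
  return Date.UTC(Number(y), Number(m) - 1, Number(d), Number(hh), Number(mm));
}

// Turn raw sightings into table rows, newest first.
function toTableRows(sightings, now) {
  return sightings
    .map((s) => ({
      location: s.locName,
      ageDays: Math.floor((now - parseObsDate(s.obsDt)) / MS_PER_DAY),
    }))
    .sort((a, b) => a.ageDays - b.ageDays);
}

// Example with made-up data and a fixed "now" so the result is repeatable.
const rows = toTableRows(
  [
    { locName: "Field A", obsDt: "2020-12-01 08:00" },
    { locName: "Harbour B", obsDt: "2020-12-21 09:30" },
  ],
  Date.UTC(2020, 11, 22, 12, 0)
);
console.log(rows);
```

Passing now in as a parameter, rather than calling Date.now() inside the function, keeps the age calculation easy to test.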

Serverless with Begin + Next.js

I also wanted to use this project to learn a few new technologies. I decided to write the back-end as AWS Lambda functions using Begin, and build the front-end in React with Next.js.

First, I've been meaning to try Begin for over a year. I've been following along with Brian LeRoux's work on arc.codes and Begin, and every time it comes up in my feed, I tell myself "next time, I'll try this." Well, this is the time!

Second, I have to teach a bunch of web and front-end React courses next term. I also need to learn Next.js for a few of the projects. Picking React and Next.js seemed like an easy way to refresh myself.

Thoughts on Begin.com

Begin is a platform for deploying modern web apps (CDN, data, lambda functions, etc). I've worked a lot with static hosting platforms like GitHub Pages, Vercel, and Netlify, but never with Begin. One of my goals for 2021 (first time I've written that, goodbye 2020!) is to learn more about AWS. My institution has become part of AWS Educate, which means that my students and I can get access to AWS services for our courses.

I thought that Begin would be nice, because it lets me try AWS, but with training wheels on. I'm not on the hook for configuring or securing anything directly. Begin gives me a code-based, declarative pipeline from GitHub to AWS, including S3, CloudFront, Lambda, API Gateway, DynamoDB, and probably other stuff I don't know about. I push to my main branch, and my code is linted, tested, built, and deployed to staging. Similarly, if I push a tag, everything goes to production. They have a very generous free tier, which blows away what you get from the other JAM stack providers I've used.

Things that I like about Begin:

- I bring a git repo, and they connect everything else within AWS. I'm not ready or interested (yet) in twisting all the dials in AWS, so this is a fantastic option for me. For the most part, I never felt like complexity of the underlying AWS infrastructure or configs "leaked" into my project.

- Begin is technology/framework agnostic. I could have written this web app 50 different ways, and they'd all be workable with Begin. You aren't forced into a particular framework or set of tooling.

- Almost everything is configured via files in the repo vs. enormous web consoles. Begin does have a nice web console where you can see what's happening and change how certain things work. But the bulk of what you do is done declaratively. I also like that you work at a high enough level that you aren't stuck authoring thousand-line YAML files.

- The docs are fairly complete and had most of what I needed. I've read them all multiple times, and often when I thought something hadn't been covered, I'd go back and discover that the details were in fact there.

- I love that everything you need for a web app is here. Almost every platform I use is missing something: static hosting I expect, and serverless functions are becoming mandatory, too; but to also have a database built in is amazing. Just about every project you build has some need for data, and Begin has it. I used it to build a simple analytics back-end (thanks to Wes for the sample code I used to write my own), and with more time could have done a lot more. It's really flexible.

- Being able to bring my own domain and have it hooked up automatically with SSL certs. I didn't have to fiddle with nginx or certbot. Amazing.

- The various pieces of what you're building fit together in ways that make sense. I've used some other JAM stack platforms, and their functions often felt bolted-on as an after-thought vs. part of the initial offering. With Begin I have a src/http/* directory for all my functions, and my root directory holds my React app, while shared code lives in src/shared. I like working in monorepos, and this feels like a good design, with everything in easy reach.

- A lot of what Begin's CI/CD pipeline does for you, I can do myself with GitHub Actions. But, here I didn't have to do any of it. Having the entire pipeline preconfigured was excellent, and let me work more quickly.

Things that I found confusing or difficult with Begin:

- The name. It's impossible to Google for bugs, docs, StackOverflow, etc. My general search algorithm uses the technology name as a prefix. But literally every technology you work with has "begin" in their docs, so that doesn't work. I never really figured out a good workflow for finding things with Google. Netlify, Vercel, etc. don't have this problem.

- Edge cases with the tooling. I ran into a bunch of situations where the docs and tools didn't match my experience. For example:

- the docs claim you can generate boilerplate code for your http functions. I started from one of their sample projects that didn't have any http functions. As a result, when I wanted to add my functions, nothing was installed or wired up correctly (e.g., no sandbox). The docs assume this is all done for you, so when you have to do it yourself, you end up having to dig around in other sample projects to see how they do their configs and setup. This isn't easy, though, because there aren't very many complex apps to use as an example (or I couldn't find them, see naming issues above). I'm used to working without docs, so I got most things working on my own. But I think this could be improved.

- Getting my custom domain set up with Begin was difficult (for me). Their docs discuss a number of suggested domain name registrars. Based on this I chose Namecheap and bought my domain, snowyowls.ca. Begin wants you to create CNAME records for staging.snowyowls.ca and www.snowyowls.ca. I followed all the docs carefully but couldn't get it. Eventually I found out that Namecheap needs me to include the .www and .staging suffixes for my CNAMEs. I still don't have all the HTTP to HTTPS redirects working the way they should. Probably this is something that people who do this all the time would know, or could figure out, but it seems like Begin is aiming for devs who do need help with this. I wasted a lot of time on issues like this.

- ENOSPC errors during install or build steps. Begin has hard limits on the size of your development directory (~500M), and also each of your functions (~5M). As a result of these ceilings, I was constantly banging my head. So many of the packages I normally reach for were suddenly too big. This includes many dev dependencies I rely on (Prettier, Jest, and all the ESLint plugins I usually use). I would get everything working locally, push my code, and have Begin fail with ENOSPC. Sometimes it would be the total size of my repo; other times a particular function was overweight; still other times the error would be wrong, and trying a rebuild would make it work. In all cases you get an opaque ENOSPC error and that's it. I had to rewrite a bunch of modules to inline, stripped-down versions of code I needed. I don't know how Netlify gets around this while Begin can't (or hasn't), but I think it's a major blocker for them, and will hurt adoption. (As an aside: we joke about how massive node_modules is, but seriously, the fact that I can't fit a reasonable JS dev environment in 500M without a lot of extra work is totally ridiculous. The environmental impact of having to download close to 1G of deps every time I want to write a single line of JS in a new project, or for every CI run, is a problem we could and should solve.)

Overall, I enjoyed working with Begin on this project. Once I figured out how everything worked, I was able to work quickly with their pipeline. It was great writing my back-end and front-end together, and being able to include persistent data for analytics. I have zero concern about scaling, security, cost surprises, etc.

Begin is great and you should try it. I'll use it again for sure.

Thoughts on Next.js

When I'm writing React code, I usually use create-react-app. It works well for small projects, but I find its lack of opinion on lots of things means you have to supplement it with all kinds of extra packages. I find this frustrating, because I want to focus on my project, not on picking winners in the endless front-end arms race. At the other extreme you have something like Angular, which I also have to teach next term. It has an opinion on everything, almost none of which I share. It's too stifling, and I usually want something in between.

Another option is Gatsby, which I spent a lot of time using last year on another project. However, it doesn't really fit with the kind of apps I like to write (i.e., dynamic client-side apps vs data-driven static sites). Next.js seems to offer an interesting alternative, with lots of good decisions already made for me and excellent developer experience, but without a strictness that would limit me from customizing the things I have to. I was excited by what I read about the version 10 release, and wanted to give it a try.

Things that I like about Next.js:

- Their "zero config" really is exactly that. I didn't have to spend any time on setup or fighting with configs.

- The choice between server-side rendering (SSR), pre-rendering at build time (SSG), or client-side rendering. I use a mix of pre-rendering and client-side rendering, and it works great. When I'm done I use npm run build and npm run export and I have a statically built out/ directory that Begin can upload to S3.

- useSWR is perhaps the best data fetching library I've ever used. Period. I absolutely love it, and I'll use it on every React app I build in the future.

- The filesystem routing is easy to use. Drop a file in pages/ and it's a page in the app.

- I love that all static assets in public/ are automatically available at the root within the web app (e.g., public/favicon.ico is href="/favicon.ico").
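The file-to-URL conventions described in the last two points can be modelled with a couple of small functions. This is only a sketch of the naming convention, not Next.js's actual router: routeForPage and urlForPublicAsset are hypothetical names, pages/map.js is an assumed file name, and dynamic segments are deliberately left out.

```javascript
// Map a file under pages/ to the URL path it would be served at.
// Only simple static pages are handled; dynamic routes are out of scope.
function routeForPage(filePath) {
  const withoutPrefix = filePath.replace(/^pages\//, "");
  const withoutExt = withoutPrefix.replace(/\.(js|jsx|ts|tsx)$/, "");
  const withoutIndex = withoutExt.replace(/(^|\/)index$/, "");
  return "/" + withoutIndex;
}

// Map a file under public/ to the root-relative URL it is exposed at.
function urlForPublicAsset(filePath) {
  return "/" + filePath.replace(/^public\//, "");
}

console.log(routeForPage("pages/index.js"));
console.log(routeForPage("pages/map.js"));
console.log(urlForPublicAsset("public/favicon.ico"));
```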

Honestly, there is so little Next.js code in my repo that it's hard to discuss it or even find it as I scroll through the repo! Everything is just my React app, with a few cli tools I don't have to configure. This is what I want.

Things that I found confusing or difficult with Next.js:

- A lot of the awesome things you read about Next.js 10 doing require a server. For example, the

- I initially really enjoyed the simplicity of the Next.js Router. However, a few weeks into the project, I started to run into problems. For example, scroll position restoration when navigating between pages is horribly broken. I had to write my own custom scroll restoration code, which was a total pain and waste of time. If next.js is going to call them "pages" it really needs to track scroll position between navigations like a regular web page does. I also had problems getting Next.js and Begin to play nice with deep linking into my app. For example, I have a page called /map but going directly to that page renders the home page. I'm not sure whose bug this is, Begin, Next.js, or mine, but I'd like it fixed.

- I had to write my own custom dev server to get Begin's sandbox and Next.js to play nicely together locally. With create-react-app, proxying a backend is already baked in. It was a bit annoying to have to stop work on my project in order to implement this myself. Again, I think that Next.js assumes you have a server, and my use case doesn't seem to be their primary one. It would be good if they put the serverless use case on an equal footing. There is a serverless build target, but it didn't do what I wanted (or I don't understand how to leverage it, yet).

- I don't like having to choose between React and HTML. Next.js assumes you're only working with React components. Yes, I want to use React components, but I also care a lot about the HTML that surrounds them. Next.js lets you escape this and override the HTML, but it's not the default.

I'd use Next.js again. It's closer to what I want than Gatsby or create-react-app. I love how minimal it is (a few CLI calls, some components, and hooks). But I also think there's still room for another "React Distro" that does some things differently. Maybe the type of web projects I want to build are the problem, but I really enjoy making web sites and apps at the same time. I don't want to choose. I want both.

Conclusion: Stay Safe and Go Find Some Owls

As we enter our tenth month of the pandemic, I wanted to make something for the current moment. Christmas won't be the same this year: we won't be able to celebrate or visit our parents, siblings, or their families; I can't get together with any friends for a meal; and many of the usual traditions our family has are off the table. I'm sad at all of it.

I can't fix any of this, but I wanted to do something to give some small bit of joy over the holidays. While the pandemic forces us to avoid each other, we're still allowed to go outside, to drive in the country, to walk in the park or along the shoreline, and to look for Snowy Owls.

As I was finishing up the app's code, I noticed that a new owl had been spotted 15 minutes from our house. My wife and I drove off into the falling snow in search of it, creeping along an old fence line stretched across a farmer's field. It was really beautiful to be out, to be hopeful, and to be focused on what is yet to come.

Merry Christmas.

Kartikaya Gupta: 9 years and change

I should probably note here that November 20 was my last day as a Mozilla employee. In theory, that shouldn't really change much, given the open-source nature of Mozilla. In practice, of course, it does. I did successfully set up a non-staff account and migrate things to that, so I still retain some level of access. I intend to continue contributing; however, my contributions will likely be restricted to things that don't require paging in huge chunks of code, or require large chunks of time. In other words, mostly cleanup-type stuff, or smaller bugfixes/enhancements.

I still believe that the Mozilla mission to make the Internet healthier is important, but over the course of the past year, I've come to realize that there are other problems facing society that are perhaps more important and fundamental. That, combined with more companies opening up remote positions, provided me with an opportunity that I decided to take. In January, I'll be starting work on the Cash App Platform team at Square, and hopefully will be able to help them move the needle on economic empowerment.

Working at Mozilla was in many ways a dream come true. It was truly an honour to work alongside so many world-class engineers, on so many different problems. I'm going to miss it, for sure, but I am also excited to see what the future holds.

A final note: if you follow this blog via Planet Mozilla, keep in mind that only posts I tag as "mozilla" show up there, and those posts will be much fewer in number going forward (not that they were particularly numerous before...). I have an RSS feed (yes, RSS is still a thing) if you care to follow that.

Spidermonkey Development Blog: SpiderMonkey Newsletter 8 (Firefox 84-85)

Support.Mozilla.Org: SUMO Updates – Looking back on 2020

A lot happened in 2020, even as the world we live in changed dramatically in just a year. Amidst all that, I feel even more grateful that the passion in our community remains, despite all the internal changes in the organization, as we take our time to put the pieces back together and refocus our lenses in order to welcome 2021.

I want to take this opportunity to reflect back and celebrate what we have accomplished together in 2020:

H1: Transition to Conversocial and get the community strategy project off the ground

We begin our journey in Berlin this year for our last all hands before the world goes into the state we are right now. The discussion at that time has also helped us to shape the community strategy project which we’ve been working on since the end of 2019. We also moved our main communication to Matrix, We received a lot of positive feedback during the transition period which made us confident for the full transition. We also managed to move our Social Support platform from Buffer Reply to Conversocial in March.

We’ve done a lot of progress on the onboarding project too, as part of the community strategy project. Now we have the design and the copy ready for implementation, which still needs to be scheduled.

H2: Introducing Play Store Support officially and getting the base metrics agreed

In Q3, we focused our efforts on helping the mobile team transition from Fennec to Fenix. We used the rest of the year to work on the remaining areas of the community strategy project that we had re-evaluated. One of the most important pieces is the base metrics for the community, which I can’t wait to share with you at the beginning of next year. On top of that, I’m also putting together a plan to leverage this page as a guidelines center for contributors moving forward.

Recently, we also ran an experiment with tagging on the support forum. This small experiment serves as a stepping stone for the larger tagging strategy project that we will be working on as a team next year.

—

I also want to acknowledge how difficult 2020 was. “Difficult” is probably not even the right word. Despite the combination of uncertainty, turmoil, and frustration that we experienced, I’m grateful that we could remain focused and accomplish all of these things together.

“Thank you” is barely enough to express how grateful we are for all the contributions, discussions, ideas, and feedback that we shared throughout 2020. Nevertheless, thank you for always believing in and being part of Mozilla’s mission.

Let’s keep on rocking the free web through 2021 and beyond!

Kiki

https://blog.mozilla.org/sumo/2020/12/18/sumo-updates-looking-back-on-2020/

|

|

Data@Mozilla: This Week in Glean: Glean in 2021 |

(“This Week in Glean” is a series of blog posts that the Glean Team at Mozilla is using to try to communicate better about our work. They could be release notes, documentation, hopes, dreams, or whatever: so long as it is inspired by Glean.)

All “This Week in Glean” blog posts are listed in the TWiG index (and on the Mozilla Data blog).

A year ago the Glean project was different. We had just released Glean v22.1.0, Fenix (aka Firefox for Android aka Firefox Daylight) had not been released yet, and Project FOG was just an idea for the year to come.

2020 changed that, but 2020 changed a lot. What didn’t change was my main assignment: I kept working all throughout the year on the Glean SDK, fixing bugs, expanding its capabilities, enabling more platforms and integrating it into more products. Of course this was only possible because the whole team did that as well.

In September I took over the tech lead role for the SDK from Alessio. One part of this role includes thinking bigger and sketching out the future for the Glean SDK.

Let’s look at this future and what ideas we have for 2021.

(One note: right now these are ideas more than a plan. The list includes neither a timeline nor all the other work that maintenance involves.)

The vision

The Glean SDK is a fully self-servable telemetry SDK, usable across different platforms. It enables product owners and engineers to instrument their products and rely on their data collection, while following Mozilla policies & privacy standards.

The ideas

In the past weeks I started a list of things I want the Glean SDK to do next. This is a short and incomplete list of ideas that have not yet been proposed or accepted. For 2021 we will need to fit this in with the larger Glean project, including plans and ideas for the pipeline and tooling. Oh, and I need to talk with my team to actually decide on the plan and allocate who does what when.

Metric types

When we set out to revamp our telemetry system we built it with the idea of offering higher-level metric types that give more meaning to individual data points, allowing more correct data collection and better aggregation, analysis and visualisation of this data. We’re not there yet. We currently support more than a dozen metric types across all platforms equally, with the same user-friendly APIs in all supported languages. We also know that this still does not cover all intended use cases that, for example, Firefox Desktop wants. In 2021 we will probably need to work on a few more types, better ergonomics and especially documentation on which metric type is appropriate when.

Revamped testing APIs

Engineers should instrument their code where appropriate and use the collected data to analyse behavior and performance in the wild. The Glean SDK ensures their data is reliably collected and sent to our pipeline. But we cannot ensure that the way the data is collected is correct or whether the metric even makes sense. That’s why we encourage accompanying each metric recording with tests that ensure data is collected under the right circumstances and that it’s the right data for the given test case. Only that way will folks be able to verify and analyse the incoming data later and rely on their results.

The available testing APIs in the Glean SDK provide a bare minimum. For each metric type one can check which data it currently holds. That’s probably easy to validate for simpler metrics such as strings, booleans and counters, but as soon as you have more complex ones, like any of the distributions or a timespan, it gets harder. Additionally we offer a way to check if any errors occurred during recording, which are usually also reported in the data. But here again developers need to know which errors can happen under what circumstances for which metric type.
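To make the idea concrete, here is a toy sketch in Python of a test that checks both the value a metric holds and the errors recorded along the way. `ToyCounter`, its methods and `record_page_loads` are stand-ins invented for this illustration, not the Glean SDK's actual API, and the rule that non-positive increments are counted as errors is an assumption of the sketch.

```python
from collections import Counter


class ToyCounter:
    """A toy stand-in for a counter metric (not the real Glean API)."""

    def __init__(self):
        self._value = 0
        self._errors = Counter()

    def add(self, amount=1):
        # Assumption of this sketch: non-positive increments are rejected
        # and counted as an "invalid_value" error instead of being stored.
        if amount <= 0:
            self._errors["invalid_value"] += 1
            return
        self._value += amount

    def test_get_value(self):
        """Return the value currently held by the metric."""
        return self._value

    def test_get_num_recorded_errors(self, error_type):
        """Return how many errors of the given type were recorded."""
        return self._errors[error_type]


def record_page_loads(counter, loads):
    """Hypothetical instrumented code: add each reported batch of page loads."""
    for amount in loads:
        counter.add(amount)


counter = ToyCounter()
record_page_loads(counter, [1, 2, -5])
```

A test for the instrumented code would then assert on both sides: the stored value should be 3, and exactly one error should have been recorded for the rejected `-5`.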

Lastly, we want developers to reach for custom pings when the default pings are not sufficient. Testing these is currently near impossible.

Testing these different usages of the SDK can and should be improved. We already have the first proposals and bugs for this:

- Custom Ping Unit Tests proposes a simple callback-like API to validate data in custom pings

- Testing parameter in Glean metric definitions proposes to make existing tests/testing documentation for metrics more visible

- Improvements to Glinter, the Glean linter (e.g. opinions on naming of things)

(Note: as of writing these documents might be inaccessible to the wider public, but they will be made public once we gather wider feedback.)

For sure we will have more ideas on this in 2021.

UniFFI – generate all the code

The Glean SDK is cross-platform by shipping a core library written in Rust with language bindings on top that connect it with the target platform. This has the advantage that most of the logic is written once and works across all the targets we have. But this also has the downside that each language binding sort of duplicates the user-visible API in their target language again. Currently all metric type implementations need to happen in Rust first, followed by implementations in the language bindings.

All of the implementation work today is mostly manual, but usually follows the same approach. The language binding implementations should not hold additional state, but currently some still do.

Implementing new metric types, fixing bugs or improving the recording APIs of existing ones results in a lot of busy work replicating the same code patterns in all the languages (of which we now have 7: Kotlin, Swift, Python, C#, Rust, JavaScript and C++). If we also come up with new metric types next year this becomes worse.
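The appeal of generating bindings can be shown with a deliberately tiny sketch: describe the user-visible API once, then render it per language from templates. This is not how UniFFI works internally; `COUNTER_API`, `TEMPLATES` and `generate_signatures` are hypothetical names, and the type mappings are simplified assumptions.

```python
# Hypothetical description of one metric type's user-visible API.
COUNTER_API = {"type": "Counter", "methods": [("add", [("amount", "int")])]}

# Per-language templates: signature pattern, parameter pattern, type names.
# The syntax and type mappings are simplified for illustration.
TEMPLATES = {
    "kotlin": ("fun {name}({params})", "{pname}: {ptype}", {"int": "Int"}),
    "swift": ("func {name}({params})", "{pname}: {ptype}", {"int": "Int32"}),
    "python": ("def {name}(self, {params}):", "{pname}: {ptype}", {"int": "int"}),
}


def generate_signatures(api, language):
    """Render every method of `api` as a signature line for `language`."""
    signature, param, types = TEMPLATES[language]
    lines = []
    for name, params in api["methods"]:
        rendered = ", ".join(
            param.format(pname=pname, ptype=types[ptype]) for pname, ptype in params
        )
        lines.append(signature.format(name=name, params=rendered))
    return lines
```

Adding a method to `COUNTER_API` then updates every language at once, which is the busy work a real generator would take off our hands.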

A while ago some of my colleagues started the UniFFI project, a multi-language bindings generator for Rust. For a couple of reasons the Glean SDK cannot rely on that yet.

In 2021 I want us to work towards the goal of using UniFFI (or something like it) to reduce the manual work required on each language binding. This should reduce our general workload supporting several languages and help us avoid accidental bugs on implementing (and maintaining) metric types.

This is a rather short list of things that focuses only on the SDK. Let’s see what we get to tackle in 2021.

End of 2020

The This Week in Glean series is going into winter hiatus until the second week of January 2021. Thanks to everyone who contributed to 25 This Week in Glean blog posts in 2020:

Alessio, Travis, Mike, Chutten, Bea, Raphael, Anthony, Will & Daosheng

https://blog.mozilla.org/data/2020/12/18/this-week-in-glean-glean-in-2021/

|

|

Jan-Erik Rediger: This Week in Glean: Glean in 2021 |

(“This Week in Glean” is a series of blog posts that the Glean Team at Mozilla is using to try to communicate better about our work. They could be release notes, documentation, hopes, dreams, or whatever: so long as it is inspired by Glean.)

All "This Week in Glean" blog posts are listed in the TWiG index (and on the Mozilla Data blog). This article is cross-posted on the Mozilla Data blog.

|

|

Mozilla Privacy Blog: Continuing to Protect our Users in Kazakhstan |

|

|

Mozilla GFX: moz://gfx newsletter #54 |

Hey all, Jim Mathies here, the new Mozilla Graphics Team manager. We haven’t had a Graphics Newsletter since July, so there’s lots to catch up on. TL;DR – We’re shipping our Rust-based WebRender backend to a very wide audience as of Firefox 84. Read on for more detail on our progress.

WebRender Current Status

The release audience for WebRender has expanded quite a bit over the last six months. As a result we expect to achieve nearly 80% desktop coverage by the end of the year and have a goal of shipping to 100% of our user base by next summer.

Operating System Support

MacOS – As of Firefox 84, we are shipping to all versions of MacOS including the latest Big Sur release.

Windows 10 – We are currently shipping to all versions of Windows 10.

Windows 8/8.1 – We are currently shipping to all versions of Windows 8.

Windows 7 – We are currently shipping to a subset of Windows 7 users. Users currently excluded are running versions of the operating system which have not received the first major platform update. Prior to this update Windows 7 lacked features WebRender relies on for painting to a window managed by a separate process. We are working on adding a fallback mechanism that moves composition into the parent browser process to work around this. We hope to ship support for this fallback mechanism in Firefox 85.

Android – We are currently shipping to devices that leverage the Mali-G chipset, Pixel devices, and a majority of Adreno 5 and 6 devices. Mali-T GPUs are our next big release target. Once we get Mali-T support out the door, we’ll have achieved 70% coverage for our mobile user base.

Linux – We have a little announcement to make here: Firefox 84 will ship with an accelerated WebRender backend, for the first time ever, to a subset of Linux users. The target cohort leverages X11, Gnome, and recent Mesa library versions. We plan to expand this rollout to more desktop configurations over time. Stay tuned!

Qualified Hardware

A note about qualified hardware – we have the ability to restrict who receives the new pipeline based on a combination of hardware parameters – GPU manufacturer, generation, driver versions, battery power, video refresh rate, dual monitor configurations, and even screen size. We leveraged these filters heavily during our initial rollout to target specific cohorts. Thankfully most of these filters are no longer in use as our target audience has expanded greatly over the last six months.

As of Firefox 83, we are shipping to a majority of nVidia and AMD GPUs, and to all Intel GPUs newer than Generation 6. We actually shipped to Generation 6 GPUs in 83 but had to back off when some users reported rendering glitches on Reddit. The fix for this issue landed in 85 and has since been uplifted to 84 for rollout to Release, bringing WebRender support to the vast majority of modern Intel chipsets in Firefox 84.

The remaining GPUs we plan to target include older Intel Generation 4.5 and 5, a batch of mobile specific ‘LP’ Intel chipsets, and various older AMD/ATI/nVidia chipsets that represent the long tail of compatible chipsets from these manufacturers.

If you’re curious to see if your device is qualified and running with the new pipeline, visit about:support in a tab and view the Graphics section for this information.

The Long Tail

WebRender is an accelerated rendering backend. This means we leverage the power of your graphics hardware to speed up getting pixels to the screen. Unfortunately there are some hardware configurations which will never be able to support this type of rendering pipeline. That’s a problem, because without 100% WebRender coverage for the Firefox user base, we’ll never be able to remove the old pipeline code these users rely on today. Our solution here involves a new fallback mechanism that performs rendering in software. Since WebRender currently supports an OpenGL based hardware backend, software fallback is essentially a software implementation of certain OpenGL ES3 features tailored for WebRender support. We’ve recently started testing software fallback in Nightly and are seeing better than expected performance. We’re not ready to ship this implementation yet but we’re getting closer. Once ready, software fallback will provide WebRender support to the ‘long tail’ of lower-end hardware, uncommon configurations, and users with specific issues like bad drivers.

Shipping software fallback will allow us to close the loop on 100% WebRender coverage, at which point the Graphics Team can move forward on new and interesting projects we’ve been itching to get to for a while now.

WebGPU

Independent of WebRender rollout we are continuing our work on Firefox’s WebGPU implementation currently available for testing in Nightly builds. The specification is on track to reach MVP status in the near future, after which it will go through a period of feedback and change on the road to the release of the final specification sometime in 2021.

Looking Beyond WebRender

WebRender development and shipping has taken a few years to accomplish. We’re finally at a point where the team is starting to think about what we’ll work on once we’ve shipped WebRender to our entire user population. There’s lots to do! An overall theme is currently emerging in our planning – Visual Quality and Performance! We’re investigating various opportunities to extend the WebRender pipeline deeper into Gecko’s layout engine, HDR features, improved color management, performance improvements for SVG and Canvas, and improvements in power consumption. We’ll post more about these projects in future posts, stay tuned!

Happy New Year from the Mozilla Graphics Team!

https://mozillagfx.wordpress.com/2020/12/17/moz-gfx-newsletter-54-2/

|

|

Mozilla Addons Blog: Friend of Add-ons: Andrei Petcu |

Please meet our newest Friend of Add-ons, Andrei Petcu! Andrei is a developer and a free software enthusiast. Over the last four years, he has developed several extensions and themes for Firefox, assisted users with troubleshooting browser issues, and helped improve Mozilla products by filing issues and contributing code.

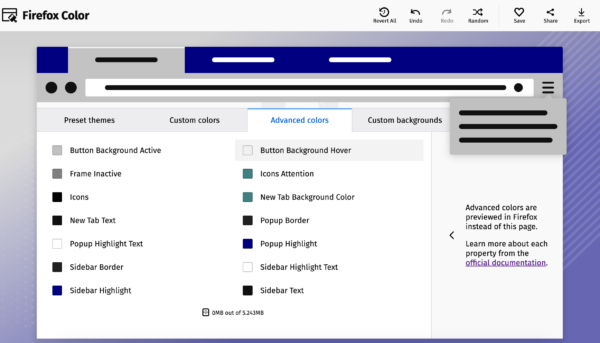

Andrei made a significant contribution to the add-ons community earlier this year by expanding Firefox Color’s ability to customize the browser. He hadn’t originally planned to make changes to Firefox Color, but he became interested in themer.dev, an open-source project that lets users create custom themes for their development environments. After seeing another user ask if themer could create a custom Firefox theme, Andrei quickly investigated implementation options and set to work.

Once a user creates a Firefox theme using themer.dev, they can install it in one of two ways: they can submit the theme through addons.mozilla.org (AMO) and then install the signed .xpi file, or they can apply it as a custom theme through Firefox Color without requiring a signature.

For the latter, there was a small problem: Firefox Color could only support customizations to the most popular parts of the browser’s themeable areas, like the top bar’s background color, the search bar color, and the colors for active and inactive tabs. If a user wanted to modify unsupported areas, like the sidebar or the background color of a new tab page, they wouldn’t be able to see those modifications if they applied the theme through Firefox Color; they would need to install it via a signed .xpi file.

Andrei reached out with a question: if he submitted a patch to Firefox Color that would expand the number of themeable areas, would it be accepted? Could he go one step further and add another panel to the Firefox Color site so users could explore customizing those areas in real time?

We were enthusiastic about his proposal, and not long after, Andrei began submitting patches to gradually add support. Thanks to his contributions, Firefox Color users can now customize 29 (!) more areas of the browser. You can play with modifying these areas by navigating to the “Advanced Colors” tab of color.firefox.com (make sure you have the Firefox Color extension installed to see these changes live in your browser!).

If you’re a fan of minimalist themes, you may want to install Firefox Color to try out Andrei’s flat white or flat dark themes. He has also created examples of using advanced colors to subtly modify Firefox’s default light and dark themes.

We hope designers enjoy the flexibility to add more fine-grained customization to their themes for Firefox (even if they use their powers to make Firefox look like Windows 95).

Currently, Andrei is working on a feature to let users import and export passwords in about:logins. Once that wraps up, he plans to contribute code to the new Firefox for Android.

On behalf of the entire Add-ons Team, thank you for all of your wonderful contributions, Andrei!

If you are interested in getting involved with the add-ons community, please take a look at our current contribution opportunities.

To browse themes for Firefox, visit addons.mozilla.org. You can also learn how to make your custom themes for Firefox on Firefox Extension Workshop.

The post Friend of Add-ons: Andrei Petcu appeared first on Mozilla Add-ons Blog.

https://blog.mozilla.org/addons/2020/12/17/friend-of-add-ons-andrei-petcu/

|

|

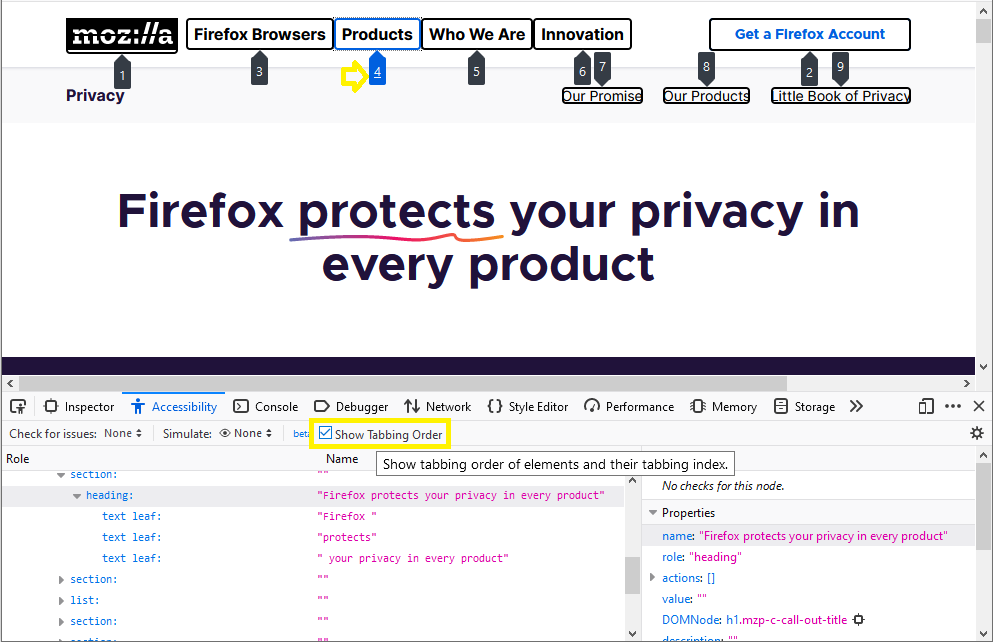

Hacks.Mozilla.Org: Improving Cross-Browser Testing, Part 1: Web Application Testing Today |

Testing web applications can be a challenge. Unlike most other kinds of software, they run across a multitude of platforms and devices. They have to be robust regardless of form factor or choice of browser.

We know this is a problem developers feel: when the MDN Developer Needs Assessment asked web developers for their top pain points, cross-browser testing was in the top five in both 2019 and 2020.

Analysis of the 2020 results revealed a subgroup, comprising 13% of respondents, for whom difficulties writing and running tests were their overall biggest pain point with the web platform.

At Mozilla, we see that as a call to action. With our commitment to building a better Internet, we want to provide web developers the tools they need to build great web experiences – including great tools for testing.

In this series of posts we will explore the current web-application testing landscape and explain what Firefox is doing today to allow developers to run more kinds of tests in Firefox.

The WebDriver Standard

Most current cross-browser test automation uses WebDriver, a W3C specification for browser automation. The protocol used by WebDriver originated in Selenium, one of the oldest and most popular browser automation tools.

To understand the features and limitations of WebDriver, let’s dive in and look at how it works under the hood.

WebDriver provides an HTTP-based synchronous command/response protocol. In this model, clients such as Selenium — called a local end in WebDriver parlance — communicate with a remote end HTTP server using a fixed set of steps:

- The local end sends an HTTP request representing a WebDriver command to the remote end.

- The remote end takes implementation-specific steps to carry out the command, following the requirements of the WebDriver specification.

- The remote end returns an HTTP response to the local end.

This remote end HTTP server could be built into the browser itself, but the most common setup is for all the HTTP processing to happen in a browser-specific driver binary. This accepts the WebDriver HTTP requests and converts them into an internal format for the browser to consume.

For example when automating Firefox, geckodriver converts WebDriver messages into Gecko’s custom Marionette protocol, and vice versa. ChromeDriver and SafariDriver work in a similar way, each using an internal protocol specific to their associated browser.

Example: A Simple Test Script

To understand this better, let’s take a simple example: navigating to a page, finding an element, and testing a property on that element. From the point of view of a test author, the code to implement this might look like:

browser.go("http://localhost:8000/index.html")

element = browser.querySelectorAll(".test")[0]

assert element.tag == "div"

Each line of code in this example causes a single HTTP request from the local end to the remote end, representing a single WebDriver command.

The program does not continue until the local end receives the corresponding HTTP response. In the initial browser.go call, for example, the remote end will only send its response once the browser has finished loading the requested page.

On the wire that program generates the following HTTP traffic (some unimportant details omitted for brevity):

POST /session/25bc4b8a-c96e-4e61-9f2d-19c021a6a6a4/url HTTP/1.1
Content-Length: 43

{"url": "http://localhost:8000/index.html"}

At this point the browser performs the network operations to navigate to the requested URL, http://localhost:8000/index.html. Once that page has finished loading, the remote end sends the following response back to the automation client.

HTTP/1.1 200 OK
content-type: application/json; charset=utf-8
content-length: 14

{"value":null}

Next comes the request to find the element with class test:

POST /session/25bc4b8a-c96e-4e61-9f2d-19c021a6a6a4/elements HTTP/1.1
Content-Length: 43

{"using": "css selector", "value": ".test"}

HTTP/1.1 200 OK
content-type: application/json; charset=utf-8
content-length: 90

{"value":[{"element-6066-11e4-a52e-4f735466cecf":"0d861ba8-6901-46ef-9a78-0921c0d6bb5a"}]}

And finally the request to get the element tag name:

GET /session/25bc4b8a-c96e-4e61-9f2d-19c021a6a6a4/element/0d861ba8-6901-46ef-9a78-0921c0d6bb5a/name HTTP/1.1

HTTP/1.1 200 OK
content-type: application/json; charset=utf-8
content-length: 15

{"value":"div"}

Even though the three lines of the code involve significant network operations, the control flow is simple to understand and easy to express in a large range of common programming languages. That’s very different from the situation inside the browser itself, where an apparently simple operation like loading a page has a large number of asynchronous steps.

The fact that the remote end handles all that complexity makes it much easier to write automation clients.
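As a rough illustration of how little a local end needs to do, the following Python sketch builds the three requests from the trace above as plain (method, path, body) triples. The function names and the triple representation are assumptions made for this example; a real client would also create a session, send the requests over HTTP and handle errors.

```python
import json

# Key the WebDriver specification uses to mark a serialized element reference.
ELEMENT_KEY = "element-6066-11e4-a52e-4f735466cecf"


def navigate(session_id, url):
    """Build the (method, path, body) triple for a navigation command."""
    return "POST", f"/session/{session_id}/url", json.dumps({"url": url})


def find_elements(session_id, css_selector):
    """Build the request that looks up elements by CSS selector."""
    body = json.dumps({"using": "css selector", "value": css_selector})
    return "POST", f"/session/{session_id}/elements", body


def tag_name(session_id, element_id):
    """Build the request that reads an element's tag name (it has no body)."""
    return "GET", f"/session/{session_id}/element/{element_id}/name", None


def first_element_id(response_body):
    """Extract the first element id from a find-elements response body."""
    return json.loads(response_body)["value"][0][ELEMENT_KEY]


SESSION = "25bc4b8a-c96e-4e61-9f2d-19c021a6a6a4"
method, path, body = navigate(SESSION, "http://localhost:8000/index.html")
```

Each helper maps one line of the test script to one request, and `first_element_id` shows the only piece of state the client carries from one command to the next: the opaque element reference returned by the remote end.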

Flexibility

In the simple model above, the local end talks directly to the driver binary which is in control of the browser. But in real test deployment scenarios, the situation may be more complex; arbitrary HTTP middleware can be deployed between the local end and the driver.

One common application of this is to provide provisioning capabilities. Using an intermediary such as Selenium Grid, a single WebDriver HTTP endpoint can front a large number of OS and browser combinations, proxying the commands for each test to the requested machine.

The well-understood semantics of HTTP, combined with the wealth of existing tooling make this kind of setup relatively easy to build and deploy at scale, even over untrusted, possibly high latency, networks like the internet.
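A minimal sketch of the routing decision such an intermediary makes might look like the following. This is not how Selenium Grid is implemented; the `NODES` table, the host names and both functions are hypothetical, and only the `browserName` and `platformName` capability names come from the WebDriver specification.

```python
# Hypothetical table mapping (browser, platform) to a driver endpoint.
NODES = {
    ("firefox", "linux"): "http://node-1.example:4444",
    ("chrome", "windows"): "http://node-2.example:9515",
}


def pick_node(capabilities):
    """Choose the upstream driver that matches the requested capabilities."""
    key = (capabilities.get("browserName"), capabilities.get("platformName"))
    try:
        return NODES[key]
    except KeyError:
        raise LookupError(f"no node provides {key}") from None


def upstream_url(capabilities, path):
    """Rewrite an incoming WebDriver path to the chosen node's URL."""
    return pick_node(capabilities) + path
```

Because every command is an ordinary HTTP request, the proxy only has to pick a node once per session and forward the remaining requests unchanged.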

This is important to services such as SauceLabs and BrowserStack which run automation on remote servers.

Limitations of HTTP-Based WebDriver

The synchronous command/response model of HTTP imposes some limitations on WebDriver. Since the browser can only respond to commands, it’s hard to model things which may happen in the browser outside the context of a specific request.

A clear example of this is alerts. Alerts can appear at any time, so every WebDriver command has to specifically check for an alert being present before running.

Similar problems occur with logging; the ideal API would send log events as soon as they are generated, but with HTTP-based WebDriver this isn’t possible. Instead, a logging API requires buffering on the browser side, and the client must accept that it may not receive all log messages.

Concerns about standardizing a poor, unreliable API mean that logging features have not yet made it into the W3C specification for WebDriver, despite being a common user request.
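The buffering problem is easy to reproduce in a few lines. The sketch below models a browser-side log buffer with a fixed capacity that a client drains by polling; `LogBuffer` and its capacity of 3 are invented for illustration and do not correspond to any particular driver.

```python
from collections import deque


class LogBuffer:
    """Browser-side buffer that a polling client drains on request."""

    def __init__(self, capacity):
        # A deque with maxlen silently discards the oldest entry when full.
        self._entries = deque(maxlen=capacity)

    def record(self, message):
        """Store a log message generated by the browser."""
        self._entries.append(message)

    def drain(self):
        """Return and clear everything still buffered (the "get logs" command)."""
        entries = list(self._entries)
        self._entries.clear()
        return entries


buffer = LogBuffer(capacity=3)
for n in range(5):
    buffer.record(f"message {n}")
received = buffer.drain()
```

Five messages were generated between two polls, but `received` only holds the last three. The client has no way to learn about the first two, which is exactly the unreliability described above.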

One of the reasons WebDriver adopted the HTTP model despite these limitations was the simplicity of the programming model. With a fully blocking API, one could easily write WebDriver clients using only language features that were mainstream in the early 2000s.

Since then, many programming languages have gained first-class support for handling events and asynchronous control flow. This means that some of the underlying assumptions that went into the original WebDriver protocol — like asynchronous, event-driven code being too hard to write — are no longer true.

DevTools Protocols

As well as automation via WebDriver, modern browsers also provide remote access for the use of the browser’s DevTools. This is essential for cases where it’s difficult to debug an issue on the same machine where the page itself is running, like an issue that only occurs on mobile.

Different browsers provide different DevTools features, which often require explicit support in the engine and expose implementation details that are not visible to web content. Therefore it’s unsurprising that each browser engine has a unique DevTools protocol, shaped by its particular requirements.

In DevTools, there’s a core requirement that the UI must respond to events emitted by the browser engine. Examples include logging console messages and network requests as they come in so that a user can follow progress.

This means that DevTools protocols don’t use the command/response paradigm of HTTP. Instead, they use a bidirectional protocol in which messages may originate from either the client or the browser. This allows the DevTools to update in real time, responding to changes in the browser as they happen.
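
One way to picture such a bidirectional protocol is a client that tells responses from events by whether the message carries a command id. The sketch below assumes JSON messages with `id`, `method`, `params`, and `result` fields; the `MessageRouter` class and the method and event names are hypothetical and not taken from any specific browser's protocol.

```python
import json


class MessageRouter:
    """Routes messages on a hypothetical bidirectional connection."""

    def __init__(self):
        self._next_id = 1
        self.pending = {}     # command id -> method awaiting a response
        self.responses = {}   # command id -> result payload
        self._listeners = {}  # event name -> list of callbacks

    def build_command(self, method, params=None):
        """Serialize a client-initiated command and remember its id."""
        command_id = self._next_id
        self._next_id += 1
        self.pending[command_id] = method
        return json.dumps(
            {"id": command_id, "method": method, "params": params or {}}
        )

    def on(self, event, callback):
        """Register a callback for a browser-initiated event."""
        self._listeners.setdefault(event, []).append(callback)

    def receive(self, raw):
        """Handle one message from the browser: a response or an event."""
        message = json.loads(raw)
        if "id" in message:
            # Response to a command the client sent earlier.
            self.pending.pop(message["id"], None)
            self.responses[message["id"]] = message.get("result")
        else:
            # Browser-initiated event; no command asked for it.
            for callback in self._listeners.get(message["method"], []):
                callback(message.get("params", {}))


router = MessageRouter()
console = []
router.on("console.message", console.append)

sent = json.loads(router.build_command("page.navigate", {"url": "https://example.org/"}))
# The browser emits an event before it answers the command.
router.receive(json.dumps({"method": "console.message", "params": {"text": "hello"}}))
router.receive(json.dumps({"id": sent["id"], "result": {"status": "done"}}))
print(console, router.responses)
```

Because events do not need a matching request, the client can surface console output or network activity the moment the browser reports it.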

Remote automation isn’t a core use case of DevTools. Some operations that are common in one case are rare in the other. For example, client-initiated navigation is present in almost all automated tests, but is rare in DevTools.

Nevertheless, the low-level control needed when debugging means it’s possible to write many automation features on top of the DevTools protocol feature set. Indeed, in some browsers such as Chrome, the browser-internal message format used to bridge the gap between the WebDriver binary and the browser itself is in fact the DevTools protocol.

This has inevitably led to the question of whether it’s possible to build automation on top of the DevTools protocol directly. With languages offering better support for asynchronous control flow, and modern web applications demanding more low-level control for testing, libraries such as Google’s Puppeteer have taken DevTools protocols and constructed automation-specific client libraries on top.

These libraries support advanced features such as network request interception which are hard to build on top of HTTP-based WebDriver. The typically promise-based APIs also feel more like modern front-end programming, which has made these tools popular with web developers.

Even mainly WebDriver-based tools are adding features which can’t be realised through WebDriver alone. For example, some of the new features in Selenium 4, such as access to console logs and better support for HTTP Authentication, require bidirectional communication, and will initially only be supported in browsers which can speak Chrome’s DevTools protocol.

DevTools Difficulties

Although using DevTools for automation is appealing in terms of the feature set, it’s also fraught with problems.

DevTools protocols are browser-specific and can expose a lot of internal state that’s not part of the Web Platform. This means that libraries using DevTools features for automation are typically tied to a specific rendering engine.

They are also beholden to changes in those engines; the tight coupling to the engine internals means DevTools protocols usually offer very limited guarantees of stability.

For the DevTools themselves this isn’t a big problem; the same team usually owns the front-end and the back-end so any refactor just has to update both the client and server at the same time, and cross-version compatibility is not a serious concern. But for automation, it imposes a significant burden on both the client library developer and the test authors.

With WebDriver a single client can work with any supported browser release. With DevTools-based automation, a new client may be required for each browser version. This is the case for Puppeteer, for example, where each Puppeteer release is tied to a particular version of Chromium.

The fact that DevTools protocols are browser-specific makes it very challenging to use them as the foundation for cross-browser tooling. Some automation clients, like Cypress and Microsoft’s Playwright, have made heroic efforts here, eschewing WebDriver but still supporting multiple browsers.

Using a combination of existing DevTools protocols and custom protocols implemented through patches to the underlying browser code or via WebExtensions, they provide features not possible in WebDriver whilst supporting several browser engines.

Requiring such a large amount of code to be maintained by the automation library, and putting the library on the treadmill of browser engine updates, makes maintenance difficult and gives the library authors less time to focus on their core automation features.

Summary and Next Steps

As we have seen, the web application testing ecosystem is becoming fragmented. Most cross-browser testing uses WebDriver, a W3C specification that all major browser engines support.

However, limitations in WebDriver’s HTTP-based protocol mean that automation libraries are increasingly choosing to use browser-specific DevTools protocols to implement advanced features, forgoing cross-browser support when they do.

Test authors shouldn’t have to choose between access to functionality, and browser-specific tooling. And client authors shouldn’t be forced to keep up with the often-breakneck pace of browser engine development.

In our next post, we’ll describe some work Mozilla has done to bring previously Chromium-only test tooling to Firefox.

Acknowledgements

Thanks to Tantek Celik, Karl Dubost, Jan Odvarko, Devin Reams, Maire Reavy, Henrik Skupin, and Mike Taylor for their valuable feedback and suggestions.

The post Improving Cross-Browser Testing, Part 1: Web Application Testing Today appeared first on Mozilla Hacks - the Web developer blog.

https://hacks.mozilla.org/2020/12/cross-browser-testing-part-1-web-app-testing-today/

Daniel Stenberg: curl supports NASA |

Not everyone understands how open source is made. I received the following email from NASA a while ago.

Subject: Curl Country of Origin and NDAA Compliance

Hello, my name is [deleted] and I am a Supply Chain Risk Management Analyst at NASA. As such, I ensure that all NASA acquisitions of Covered Articles comply with Section 208 of the Further Consolidated Appropriations Act, 2020, Public Law 116-94, enacted December 20, 2019. To do so, the Country of Origin (CoO) information must be obtained from the company that develops, produces, manufactures, or assembles the product(s). To do so, please provide an email response or a formal document (a PDF on company letterhead is preferred, but a simple statement is sufficient) specifically identifying the country, or countries, in which Curl is developed and maintained

If the country of origin is outside the United States, please provide any information you may have stating that testing is performed in the United States prior to supplying products to customers. Additionally, if available, please identify all authorized resellers of the product in question.

Lastly, please confirm that Curl is not developed by, contain components developed by, or receive substantial influence from entities prohibited by Section 889 of the 2019 NDAA. These entities include the following companies and any of their subsidiaries or affiliates:

Hytera Communications Corporation

Huawei Technologies Company

ZTE Corporation

Dahua Technology Company

Hangzhou Hikvision Digital Technology Company

Finally, we have a time frame of 5 days for a response.

Thank you,

My answer

Okay, I first considered going with strong sarcasm in my reply due to the complete lack of understanding, and the implied threat in that last line. What would happen if I didn’t respond in time?

Then it struck me that this could be my chance to once and for all get a confirmation of whether curl is actually already used in space or not. So I went with an informative and friendly tone.

Hi [name],

I will answer to these questions below to the best of my ability, and maybe you can answer something for me?

curl (https://curl.se) is an open source project that creates two products, curl the command line tool and libcurl the library. I am the founder, lead developer and core maintainer of the project. To this date, I have done about 57% of the 26,000 changes in the source code repository. The remaining 43% have been done by 841 different volunteers and contributors from all over the world. Their names can be extracted from our git repository: https://github.com/curl/curl

You can also see that I own most, but not all, copyrights in the project.

I am a citizen of Sweden and I’ve been a citizen of Sweden during the entire time I’ve done all and any work on curl. The remaining 841 co-authors are from all over the world, but primarily from western European countries and the US. You could probably say that we live primarily “on the Internet” and not in any particular country.

We don’t have resellers. I work for an American company (wolfSSL) where we do curl support for customers world-wide.

Our testing is done universally and is not bound to any specific country or region. We test our code substantially before release.

Me knowingly, we do not have any components or code authored by people at any of the mentioned companies.

So finally my question: can you tell me anything about where or for what you use curl? Is it used in anything in space?

Regards,

Daniel

Used in space?

Of course, my attempt was completely in vain: the answer I got back was very brief and just said…

“We are using curl to support NASA’s mission and vision.”

Credits

Space ship image by Elias Sch. from Pixabay

Hacks.Mozilla.Org: 2020 MDN Web Developer Needs Assessment now available |

The 2020 MDN Web Developer Needs Assessment (DNA) report is now available! This post takes you through what we’ve accomplished in 2020 based on the findings in the inaugural report, key takeaways of the 2020 survey, and what our next steps are as a result.

What We’ve Accomplished

In December 2019, Mozilla released the first Web Developer Needs Assessment survey report. This was a very detailed study of web developers globally and their main pain points with the web platform, designed with input from nearly 30 stakeholders representing product advisory board member organizations (and others) including browser vendors, the W3C, and industry. You can find a full list in the report itself (PDF, 1.4MB download).

We learned that while more than 3/4 of respondents are very satisfied or satisfied with the Web platform, their 4 biggest frustrations included having to support specific browsers (e.g., IE11), dealing with outdated or inaccurate documentation for frameworks and libraries, avoiding or removing a feature that doesn’t work across browsers, and testing across browsers.

Mozilla and other web industry orgs took action based on these results, for example:

- MDN prioritized documentation projects that were most needed by the industry.

- Mozilla’s browser engineering team incorporated the findings into its future planning and prioritisation work.

- A cross-industry effort improved cross-browser support for Flexbox (see Closing the gap (in flexbox) for more details).

- Google used the results to help understand and prioritize the key areas of developer frustration, and used the developer satisfaction scores as a success metric going forward.

- The results provided valuable input to several standardization and pre-standardization discussions at W3C’s annual TPAC meeting.

- Microsoft used the Web DNA as one of their primary research tools when planning investments in the web platform and the surrounding ecosystem of content and tools (for example webhint.io), and it directly impacted how they now think about areas like cross-browser testing, legacy browser compatibility, best practices hinting, and more.

The 2020 Survey Results

The inaugural results were so useful that in 2020 we decided to run it again, with the same level of collaboration between browser vendors and other stakeholders.

This year we expanded the survey to include some new questions about accessibility tools and web testing, which were requested by some of the survey stakeholders as key areas of interest that should be explored more this time round. We also hired an experienced data scientist to conduct analysis and employ data science best practices.

We ran the survey from October 12 through November 2, 2020 and secured a similarly wide distribution of respondents.

Browser compatibility remains the top pain point, and it is also interesting to note that overall satisfaction with the platform hasn’t changed much, with 77.7% being very satisfied or satisfied with the Web in 2020.

New for this year are the results of our segmentation analysis of the needs, which yielded seven distinct segments. Each one has wildly different needs that surface as the most frustrating when compared to the overall mean scores:

- Documentation Disciples — Their top frustrations are outdated documentation for frameworks and libraries and outdated documentation for HTML, CSS, and JavaScript, as well as supporting specific browsers.

- Browser Beaters — Their top frustrations are clustered around issues with browser compatibility, design and layout. Like Documentation Disciples, they also find having to support specific browsers more frustrating than the overall mean.

- Progressive Programmers — Their top frustrations are clustered around lack of APIs, lack of support for Progressive Web Apps (PWAs), and using web technologies. For them, browser related needs were typically less frustrating than the overall mean.

- Testing Technicians — Needs statements relating to testing, whether end-to-end, front-end, or testing across browsers, caused the most frustration for this segment. Like Progressive Programmers, this segment also finds browser compatibility needs less frustrating than the overall mean, with the exception of testing across browsers.

- Keeping Currents — The need statements that this segment found most frustrating were keeping up with a large number of new and existing tools and frameworks and keeping up with changes to the web platform. Sticking with the themes from 2019, this segment is concerned with the Pace of Change of the web platform.