
Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated whenever that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.


Mozilla Security Blog: Preloading Intermediate CA Certificates into Firefox |

Throughout 2020, Firefox users have been seeing fewer secure connection errors while browsing the Web. We’ve been improving connection errors overall for some time, and a new feature called Intermediate Certificate Authority (CA) Preloading is our latest innovation. This technique reduces connection errors that users encounter when web servers forget to properly configure their TLS security.

In essence, Firefox pre-downloads all trusted Web Public Key Infrastructure (PKI) intermediate CA certificates into Firefox via Mozilla’s Remote Settings infrastructure. This way, Firefox users avoid seeing an error page for one of the most common server configuration problems: not specifying proper intermediate CA certificates.

For Intermediate CA Preloading to work, we need to be able to enumerate every intermediate CA certificate that is part of the trusted Web PKI. As a result of Mozilla’s leadership in the CA community, each CA in Mozilla’s Root Store Policy is required to disclose these intermediate CA certificates to the multi-browser Common CA Database (CCADB). Consequently, all of the relevant intermediate CA certificates are available via the CCADB reporting mechanisms. Given this information, we periodically synthesize a list of these intermediate CA certificates and place them into Remote Settings. Currently the list contains over two thousand entries.

When Firefox receives the list for the first time (or later receives updates to the list), it enumerates the entries in batches and downloads the corresponding intermediate CA certificates in the background. The list changes slowly, so once a copy of Firefox has completed the initial downloads, it’s easy to keep it up-to-date. The list can be examined directly using your favorite JSON tooling at this URL: https://firefox.settings.services.mozilla.com/v1/buckets/security-state/collections/intermediates/records
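As a rough sketch of what such JSON tooling could look like, the snippet below summarizes a records response. It assumes the usual Kinto response shape (a top-level `data` array) and an `attachment` object with a numeric `size` on each record. Those field names, the `summarizeIntermediates` helper, and the sample records are illustrative assumptions, not a documented schema.

```javascript
// URL taken from the post; everything else here is an illustrative assumption.
const RECORDS_URL =
  "https://firefox.settings.services.mozilla.com/v1/buckets/security-state/collections/intermediates/records";

// Summarize a parsed records response. Assumes records live under `data`
// and that each record may carry an `attachment` with a numeric `size`.
function summarizeIntermediates(response) {
  const records = Array.isArray(response.data) ? response.data : [];
  let withAttachment = 0;
  let totalBytes = 0;
  for (const record of records) {
    if (record.attachment && typeof record.attachment.size === "number") {
      withAttachment += 1;
      totalBytes += record.attachment.size;
    }
  }
  return { count: records.length, withAttachment, totalBytes };
}

// Fetch the live list (needs a runtime with a global fetch, such as Node 18+).
// Defined for completeness; it is not called here.
async function fetchIntermediates(url = RECORDS_URL) {
  const res = await fetch(url);
  if (!res.ok) throw new Error(`HTTP ${res.status}`);
  return summarizeIntermediates(await res.json());
}

// Hypothetical sample, shaped like the assumed response.
const sample = {
  data: [
    { id: "a", attachment: { size: 1200, location: "x/a.pem" } },
    { id: "b", attachment: { size: 1500, location: "x/b.pem" } },
    { id: "c" },
  ],
};
console.log(summarizeIntermediates(sample));
```

Running the summary against the live URL through `fetchIntermediates()` would show how many entries the list currently holds.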

For details on processing the records, see the Kinto Attachment plugin for Kinto, used by Firefox Remote Settings.

Certificates provided via Intermediate CA Preloading are added to a local cache and are not imbued with trust. Trust is still derived from the standard Web PKI algorithms.
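To illustrate the distinction between caching and trusting, here is a toy path builder. Certificates are reduced to plain objects with `subject` and `issuer` names, and the `buildPath` helper and all names in it are hypothetical. Real path building also verifies signatures, validity periods, and constraints, none of which is modelled here.

```javascript
// Toy model: a certificate is { subject, issuer }. A path is trusted only
// if walking issuer links reaches a name in the trusted root set.
function buildPath(leaf, candidates, trustedRoots) {
  const path = [leaf];
  let current = leaf;
  // Bound the walk so a cycle among candidates cannot loop forever.
  for (let depth = 0; depth <= candidates.length; depth++) {
    if (trustedRoots.has(current.issuer)) {
      return path; // Trust comes only from reaching a trusted root.
    }
    const next = candidates.find((c) => c.subject === current.issuer);
    if (!next) return null; // Corresponds to an "unknown issuer" error.
    path.push(next);
    current = next;
  }
  return null;
}

const trustedRoots = new Set(["Example Root CA"]);
const leaf = { subject: "example.test", issuer: "Example Intermediate CA" };

// Preloaded cache: one legitimate intermediate and one that chains nowhere.
const preloaded = [
  { subject: "Example Intermediate CA", issuer: "Example Root CA" },
  { subject: "Rogue CA", issuer: "Rogue Root" },
];

// The misconfigured server sent no intermediates at all.
console.log(buildPath(leaf, [], trustedRoots));        // no path without the cache
console.log(buildPath(leaf, preloaded, trustedRoots)); // cache fills the gap

// A cached certificate does not create trust by itself.
const rogueLeaf = { subject: "rogue.test", issuer: "Rogue CA" };
console.log(buildPath(rogueLeaf, preloaded, trustedRoots));
```

The cached intermediate only supplies a missing link; the decision still depends on whether the chain ends at a trusted root.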

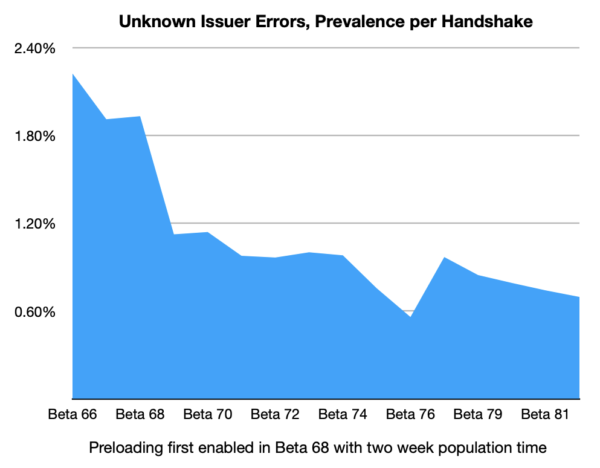

Our collected telemetry confirms that enabling Intermediate CA Preloading in Firefox 68 has led to a decrease in unknown-issuer errors during the TLS handshake.

While there are other factors that affect the relative prevalence of this error, this data supports the conclusion that Intermediate CA Preloading is achieving the goal of avoiding these connection errors for Firefox users.

Intermediate CA Preloading is reducing errors today in Firefox for desktop users, and we’ll be working to roll it out to our mobile users in the future.

The post Preloading Intermediate CA Certificates into Firefox appeared first on Mozilla Security Blog.

https://blog.mozilla.org/security/2020/11/13/preloading-intermediate-ca-certificates-into-firefox/

|

|

Daniel Stenberg: there is always a CURLOPT for it |

Embroidered and put on the kitchen wall, on a mug or just as words of wisdom to bring with you in life?

https://daniel.haxx.se/blog/2020/11/13/there-is-always-a-curlopt-for-it/

|

|

Hacks.Mozilla.Org: Warp: Improved JS performance in Firefox 83 |

Introduction

We have enabled Warp, a significant update to SpiderMonkey, by default in Firefox 83. SpiderMonkey is the JavaScript engine used in the Firefox web browser.

With Warp (also called WarpBuilder) we’re making big changes to our JIT (just-in-time) compilers, resulting in improved responsiveness, faster page loads and better memory usage. The new architecture is also more maintainable and unlocks additional SpiderMonkey improvements.

This post explains how Warp works and how it made SpiderMonkey faster.

How Warp works

Multiple JITs

The first step when running JavaScript is to parse the source code into bytecode, a lower-level representation. Bytecode can be executed immediately using an interpreter or can be compiled to native code by a just-in-time (JIT) compiler. Modern JavaScript engines have multiple tiered execution engines.

JS functions may switch between tiers depending on the expected benefit of switching:

- Interpreters and baseline JITs have fast compilation times, perform only basic code optimizations (typically based on Inline Caches), and collect profiling data.

- The Optimizing JIT performs advanced compiler optimizations but has slower compilation times and uses more memory, so is only used for functions that are warm (called many times).

The optimizing JIT makes assumptions based on the profiling data collected by the other tiers. If these assumptions turn out to be wrong, the optimized code is discarded. When this happens the function resumes execution in the baseline tiers and has to warm-up again (this is called a bailout).
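The warm-up and bailout cycle can be sketched with a toy model. The `TieredFunction` class, the threshold of three calls, and the int32 guard are all illustrative assumptions and not SpiderMonkey's actual heuristics.

```javascript
// Toy tiering model: a function starts in the baseline tier, is promoted
// once it is warm, and falls back when an assumption is violated.
const WARMUP_THRESHOLD = 3;

class TieredFunction {
  constructor(baselineImpl, optimizedImpl, assumptionHolds) {
    this.baselineImpl = baselineImpl;
    this.optimizedImpl = optimizedImpl;
    this.assumptionHolds = assumptionHolds;
    this.tier = "baseline";
    this.warmUpCount = 0;
    this.bailouts = 0;
  }

  invoke(arg) {
    if (this.tier === "optimized") {
      if (this.assumptionHolds(arg)) return this.optimizedImpl(arg);
      // Assumption violated: discard optimized code and warm up again.
      this.tier = "baseline";
      this.warmUpCount = 0;
      this.bailouts += 1;
    }
    this.warmUpCount += 1;
    if (this.warmUpCount >= WARMUP_THRESHOLD) this.tier = "optimized";
    return this.baselineImpl(arg);
  }
}

function makeAddOne() {
  return new TieredFunction(
    (x) => x + 1,                                     // generic path
    (x) => (x + 1) | 0,                               // assumes a small integer
    (x) => Number.isInteger(x) && Math.abs(x) < 2 ** 30
  );
}

const addOne = makeAddOne();
for (let i = 0; i < WARMUP_THRESHOLD; i++) addOne.invoke(i);
console.log(addOne.tier);            // promoted after warming up
console.log(addOne.invoke(10));      // runs the specialized path
console.log(addOne.invoke("a"));     // violates the assumption: bailout
console.log(addOne.tier, addOne.bailouts);
```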

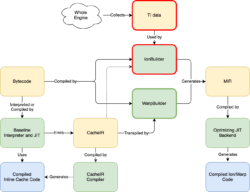

For SpiderMonkey it looks like this (simplified):

Profiling data

Our previous optimizing JIT, Ion, used two very different systems for gathering profiling information to guide JIT optimizations. The first is Type Inference (TI), which collects global information about the types of objects used in the JS code. The second is CacheIR, a simple linear bytecode format used by the Baseline Interpreter and the Baseline JIT as the fundamental optimization primitive. Ion mostly relied on TI, but occasionally used CacheIR information when TI data was unavailable.

With Warp, we’ve changed our optimizing JIT to rely solely on CacheIR data collected by the baseline tiers. Here’s what this looks like:

There’s a lot of information here, but the thing to note is that we’ve replaced the IonBuilder frontend (outlined in red) with the simpler WarpBuilder frontend (outlined in green). IonBuilder and WarpBuilder both produce Ion MIR, an intermediate representation used by the optimizing JIT backend.

Where IonBuilder used TI data gathered from the whole engine to generate MIR, WarpBuilder generates MIR using the same CacheIR that the Baseline Interpreter and Baseline JIT use to generate Inline Caches (ICs). As we’ll see below, the tighter integration between Warp and the lower tiers has several advantages.

How CacheIR works

Consider the following JS function:

function f(o) {
  return o.x - 1;
}

The Baseline Interpreter and Baseline JIT use two Inline Caches for this function: one for the property access (o.x), and one for the subtraction. That’s because we can’t optimize this function without knowing the types of o and o.x.

The IC for the property access, o.x, will be invoked with the value of o. It can then attach an IC stub (a small piece of machine code) to optimize this operation. In SpiderMonkey this works by first generating CacheIR (a simple linear bytecode format, you could think of it as an optimization recipe). For example, if o is an object and x is a simple data property, we generate this:

GuardToObject        inputId 0
GuardShape           objId 0, shapeOffset 0
LoadFixedSlotResult  objId 0, offsetOffset 8
ReturnFromIC

Here we first guard that the input (o) is an object, then we guard on the object’s shape (which determines the object’s properties and layout), and then we load the value of o.x from the object’s slots.

Note that the shape and the property’s index in the slots array are stored in a separate data section, not baked into the CacheIR or IC code itself. The CacheIR refers to the offsets of these fields with shapeOffset and offsetOffset. This allows many different IC stubs to share the same generated code, reducing compilation overhead.

The IC then compiles this CacheIR snippet to machine code. Now, the Baseline Interpreter and Baseline JIT can execute this operation quickly without calling into C++ code.
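To make the split between shared code and per-stub data concrete, here is a toy interpreter for the CacheIR snippet above. The real engine compiles CacheIR to machine code rather than interpreting it, and the object model (`{ shape, slots }`), the `runStub` helper, and the stub-data layout are simplifying assumptions made for this sketch.

```javascript
// The CacheIR from the post, written as data.
const getPropCacheIR = [
  { op: "GuardToObject", inputId: 0 },
  { op: "GuardShape", objId: 0, shapeOffset: 0 },
  { op: "LoadFixedSlotResult", objId: 0, offsetOffset: 8 },
  { op: "ReturnFromIC" },
];

const FAILED = Symbol("guard failed");

// `stubData` plays the role of the separate data section: the CacheIR only
// names offsets into it, so the same code can serve many stubs.
function runStub(cacheIR, stubData, inputs) {
  let result;
  for (const ins of cacheIR) {
    switch (ins.op) {
      case "GuardToObject": {
        const v = inputs[ins.inputId];
        if (typeof v !== "object" || v === null) return FAILED;
        break;
      }
      case "GuardShape":
        if (inputs[ins.objId].shape !== stubData[ins.shapeOffset]) return FAILED;
        break;
      case "LoadFixedSlotResult":
        result = inputs[ins.objId].slots[stubData[ins.offsetOffset]];
        break;
      case "ReturnFromIC":
        return result;
      default:
        throw new Error(`unsupported op ${ins.op}`);
    }
  }
  return FAILED;
}

// Two shapes that store x in different slots (hypothetical layouts).
const shapeXY = { name: "{x, y}" };
const shapeYX = { name: "{y, x}" };
const stubForXY = { 0: shapeXY, 8: 0 }; // x lives in slot 0
const stubForYX = { 0: shapeYX, 8: 1 }; // x lives in slot 1

const o1 = { shape: shapeXY, slots: [1, 2] }; // x = 1, y = 2
const o2 = { shape: shapeYX, slots: [3, 4] }; // y = 3, x = 4

console.log(runStub(getPropCacheIR, stubForXY, [o1]));
console.log(runStub(getPropCacheIR, stubForYX, [o2]));
console.log(runStub(getPropCacheIR, stubForXY, [o2]) === FAILED);
```

Both stubs run the same instruction sequence; only the data section differs, which is the sharing the post describes.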

The subtraction IC works the same way. If o.x is an int32 value, the subtraction IC will be invoked with two int32 values and the IC will generate the following CacheIR to optimize that case:

GuardToInt32     inputId 0
GuardToInt32     inputId 1
Int32SubResult   lhsId 0, rhsId 1
ReturnFromIC

This means we first guard the left-hand side is an int32 value, then we guard the right-hand side is an int32 value, and we can then perform the int32 subtraction and return the result from the IC stub to the function.

The CacheIR instructions capture everything we need to do to optimize an operation. We have a few hundred CacheIR instructions, defined in a YAML file. These are the building blocks for our JIT optimization pipeline.

Warp: Transpiling CacheIR to MIR

If a JS function gets called many times, we want to compile it with the optimizing compiler. With Warp there are three steps:

- WarpOracle: runs on the main thread, creates a snapshot that includes the Baseline CacheIR data.

- WarpBuilder: runs off-thread, builds MIR from the snapshot.

- Optimizing JIT Backend: also runs off-thread, optimizes the MIR and generates machine code.

The WarpOracle phase runs on the main thread and is very fast. The actual MIR building can be done on a background thread. This is an improvement over IonBuilder, where we had to do MIR building on the main thread because it relied on a lot of global data structures for Type Inference.

WarpBuilder has a transpiler to transpile CacheIR to MIR. This is a very mechanical process: for each CacheIR instruction, it just generates the corresponding MIR instruction(s).
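A mechanical transpiler of this kind can be sketched as a lookup table with one handler per CacheIR instruction. The MIR node names below (`UnboxInt32`, `SubInt32`, `PowInt32`) and the `transpile` helper are invented for illustration and are not the real MIR instruction set.

```javascript
// One handler per CacheIR op; each returns the MIR-like node(s) to emit.
const transpilers = new Map([
  ["GuardToInt32", (ins) => [{ mir: "UnboxInt32", operand: ins.inputId }]],
  ["Int32SubResult", (ins) => [{ mir: "SubInt32", lhs: ins.lhsId, rhs: ins.rhsId }]],
  ["Int32PowResult", (ins) => [{ mir: "PowInt32", lhs: ins.lhsId, rhs: ins.rhsId }]],
  ["ReturnFromIC", () => []], // nothing to emit: the result stays in the graph
]);

// Walk the CacheIR in order and append whatever each handler produces.
function transpile(cacheIR) {
  const mir = [];
  for (const ins of cacheIR) {
    const handler = transpilers.get(ins.op);
    if (!handler) throw new Error(`no transpiler for ${ins.op}`);
    mir.push(...handler(ins));
  }
  return mir;
}

// The subtraction CacheIR from the post, written as data.
const subCacheIR = [
  { op: "GuardToInt32", inputId: 0 },
  { op: "GuardToInt32", inputId: 1 },
  { op: "Int32SubResult", lhsId: 0, rhsId: 1 },
  { op: "ReturnFromIC" },
];

console.log(transpile(subCacheIR).map((node) => node.mir));
```

Adding support for a new CacheIR instruction in this model means adding one table entry, which mirrors why the post calls the process mechanical.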

Putting this all together we get the following picture:

We’re very excited about this design: when we make changes to the CacheIR instructions, it automatically affects all of our JIT tiers (see the blue arrows in the picture above). Warp is simply weaving together the function’s bytecode and CacheIR instructions into a single MIR graph.

Our old MIR builder (IonBuilder) had a lot of complicated code that we don’t need in WarpBuilder because all the JS semantics are captured by the CacheIR data we also need for ICs.

Trial Inlining: type specializing inlined functions

Optimizing JavaScript JITs are able to inline JavaScript functions into the caller. With Warp we are taking this a step further: Warp is also able to specialize inlined functions based on the call site.

Consider our example function again:

function f(o) {
  return o.x - 1;
}

This function may be called from multiple places, each passing a different shape of object or different types for o.x. In this case, the inline caches will have polymorphic CacheIR IC stubs, even if each of the callers only passes a single type. If we inline the function in Warp, we won’t be able to optimize it as well as we want.

To solve this problem, we introduced a novel optimization called Trial Inlining. Every function has an ICScript, which stores the CacheIR and IC data for that function. Before we Warp-compile a function, we scan the Baseline ICs in that function to search for calls to inlinable functions. For each inlinable call site, we create a new ICScript for the callee function. Whenever we call the inlining candidate, instead of using the default ICScript for the callee, we pass in the new specialized ICScript. This means that the Baseline Interpreter, Baseline JIT, and Warp will now collect and use information specialized for that call site.
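The effect of per-call-site ICScripts can be shown with a toy model in which an "ICScript" is reduced to the set of input types a function's ICs have observed. The `ICScript` class here, the `makeCallee` helper, and the idea of passing the ICScript as an explicit argument are simplifications for illustration; only the name ICScript comes from the post.

```javascript
// Toy ICScript: remembers which input types were observed.
class ICScript {
  constructor() {
    this.seenTypes = new Set();
  }
  record(value) {
    this.seenTypes.add(typeof value);
  }
  get isMonomorphic() {
    return this.seenTypes.size === 1;
  }
}

// Build a callee that profiles into the ICScript it is handed,
// falling back to its own default ICScript.
function makeCallee() {
  const defaultICScript = new ICScript();
  function callee(x, icScript = defaultICScript) {
    icScript.record(x);
    return x + x;
  }
  callee.defaultICScript = defaultICScript;
  return callee;
}

// Without specialization, two callers pollute one shared profile.
const shared = makeCallee();
shared(1);   // caller A always passes numbers
shared("a"); // caller B always passes strings
console.log(shared.defaultICScript.isMonomorphic);

// With one ICScript per call site, each profile stays monomorphic.
const specialized = makeCallee();
const siteA = new ICScript();
const siteB = new ICScript();
specialized(1, siteA);
specialized("a", siteB);
console.log(siteA.isMonomorphic, siteB.isMonomorphic);
```

Each call site ends up with a profile describing only what that caller passes, which is the information an optimizing compiler needs when it inlines the callee there.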

Trial inlining is very powerful because it works recursively. For example, consider the following JS code:

function callWithArg(fun, x) {

return fun(x);

}

function test(a) {

var b = callWithArg(x => x + 1, a);

var c = callWithArg(x => x - 1, a);

return b + c;

}

When we perform trial inlining for the test function, we will generate a specialized ICScript for each of the callWithArg calls. Later on, we attempt recursive trial inlining in those caller-specialized callWithArg functions, and we can then specialize the fun call based on the caller. This was not possible in IonBuilder.

When it’s time to Warp-compile the test function, we have the caller-specialized CacheIR data and can generate optimal code.

This means we build up the inlining graph before functions are Warp-compiled, by (recursively) specializing Baseline IC data at call sites. Warp then just inlines based on that without needing its own inlining heuristics.

Optimizing built-in functions

IonBuilder was able to inline certain built-in functions directly. This is especially useful for things like Math.abs and Array.prototype.push, because we can implement them with a few machine instructions and that’s a lot faster than calling the function.

Because Warp is driven by CacheIR, we decided to generate optimized CacheIR for calls to these functions.

This means these built-ins are now also properly optimized with IC stubs in our Baseline Interpreter and JIT. The new design leads us to generate the right CacheIR instructions, which then benefits not just Warp but all of our JIT tiers.

For example, let’s look at a Math.pow call with two int32 arguments. We generate the following CacheIR:

LoadArgumentFixedSlot  resultId 1, slotIndex 3
GuardToObject          inputId 1
GuardSpecificFunction  funId 1, expectedOffset 0, nargsAndFlagsOffset 8
LoadArgumentFixedSlot  resultId 2, slotIndex 1
LoadArgumentFixedSlot  resultId 3, slotIndex 0
GuardToInt32           inputId 2
GuardToInt32           inputId 3
Int32PowResult         lhsId 2, rhsId 3
ReturnFromIC

First, we guard that the callee is the built-in pow function. Then we load the two arguments and guard they are int32 values. Then we perform the pow operation specialized for two int32 arguments and return the result of that from the IC stub.

Furthermore, the Int32PowResult CacheIR instruction is also used to optimize the JS exponentiation operator, x ** y. For that operator we might generate:

GuardToInt32     inputId 0
GuardToInt32     inputId 1
Int32PowResult   lhsId 0, rhsId 1
ReturnFromIC

When we added Warp transpiler support for Int32PowResult, Warp was able to optimize both the exponentiation operator and Math.pow without additional changes. This is a nice example of CacheIR providing building blocks that can be used for optimizing different operations.

Results

Performance

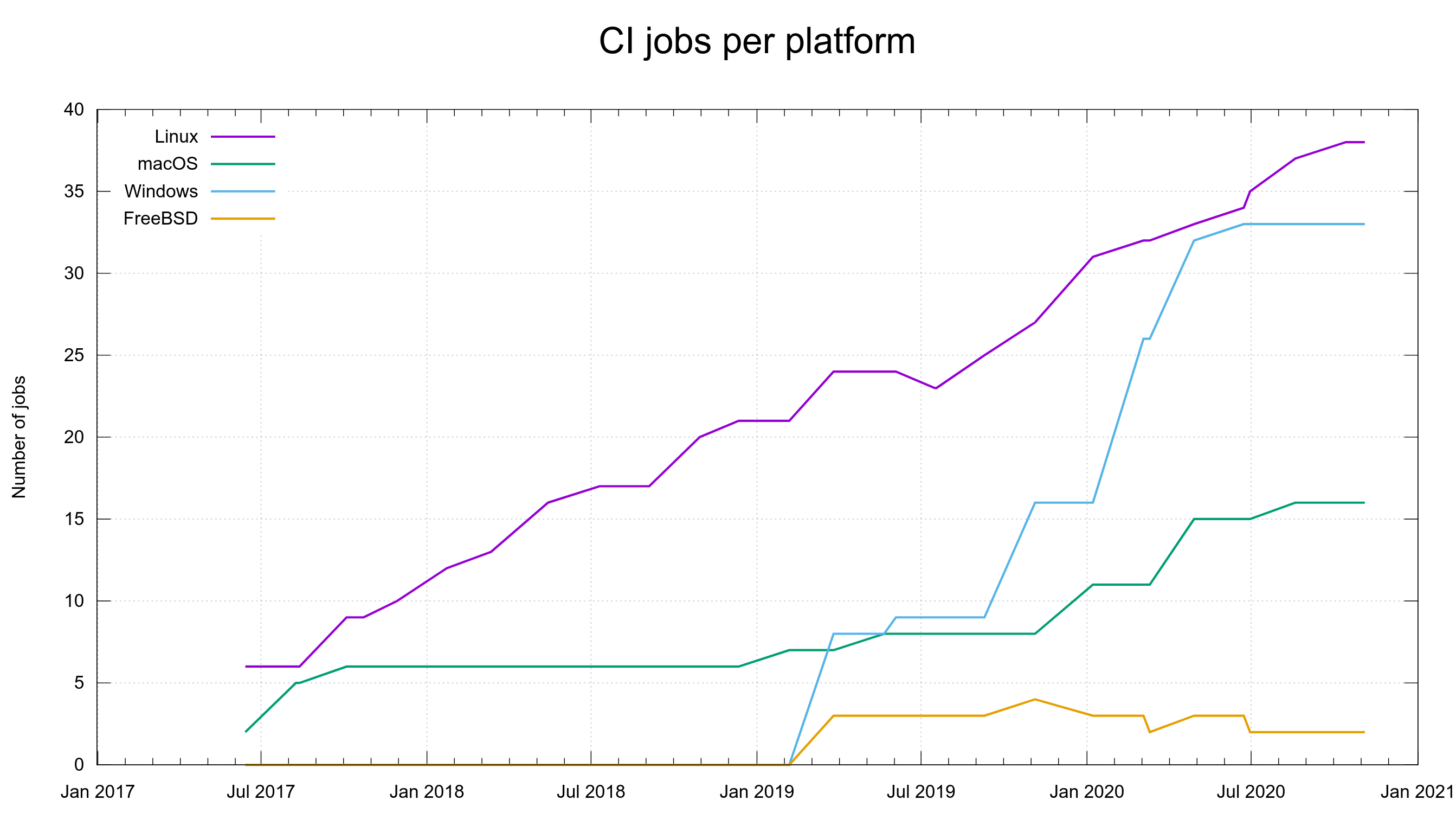

Warp is faster than Ion on many workloads. The picture below shows a couple examples: we had a 20% improvement on Google Docs load time, and we are about 10-12% faster on the Speedometer benchmark:

We’ve seen similar page load and responsiveness improvements on other JS-intensive websites such as Reddit and Netflix. Feedback from Nightly users has been positive as well.

The improvements are largely because basing Warp on CacheIR lets us remove the code throughout the engine that was required to track the global type inference data used by IonBuilder, resulting in speedups across the engine.

The old system required all functions to track type information that was only useful in very hot functions. With Warp, the profiling information (CacheIR) used to optimize Warp is also used to speed up code running in the Baseline Interpreter and Baseline JIT.

Warp is also able to do more work off-thread and requires fewer recompilations (the previous design often overspecialized, resulting in many bailouts).

Synthetic JS benchmarks

Warp is currently slower than Ion on certain synthetic JS benchmarks such as Octane and Kraken. This isn’t too surprising because Warp has to compete with almost a decade of optimization work and tuning for those benchmarks specifically.

We believe these benchmarks are not representative of modern JS code (see also the V8 team’s blog post on this) and the regressions are outweighed by the large speedups and other improvements elsewhere.

That said, we will continue to optimize Warp over the coming months and we expect to see improvements on all of these workloads going forward.

Memory usage

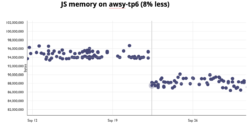

Removing the global type inference data also means we use less memory. For example the picture below shows JS code in Firefox uses 8% less memory when loading a number of websites (tp6):

We expect this number to improve over the coming months as we remove the old code and are able to simplify more data structures.

Faster GCs

The type inference data also added a lot of overhead to garbage collection. We noticed some big improvements in our telemetry data for GC sweeping (one of the phases of our GC) when we enabled Warp by default in Firefox Nightly on September 23:

Maintainability and Developer Velocity

Because WarpBuilder is a lot more mechanical than IonBuilder, we’ve found the code to be much simpler, more compact, more maintainable and less error-prone. By using CacheIR everywhere, we can add new optimizations with much less code. This makes it easier for the team to improve performance and implement new features.

What’s next?

With Warp we have replaced the frontend (the MIR building phase) of the IonMonkey JIT. The next step is removing the old code and architecture. This will likely happen in Firefox 85. We expect additional performance and memory usage improvements from that.

We will also continue to incrementally simplify and optimize the backend of the IonMonkey JIT. We believe there’s still a lot of room for improvement for JS-intensive workloads.

Finally, because all of our JITs are now based on CacheIR data, we are working on a tool to let us (and web developers) explore the CacheIR data for a JS function. We hope this will help developers understand JS performance better.

Acknowledgements

Most of the work on Warp was done by Caroline Cullen, Iain Ireland, Jan de Mooij, and our amazing contributors André Bargull and Tom Schuster. The rest of the SpiderMonkey team provided us with a lot of feedback and ideas. Christian Holler and Gary Kwong reported various fuzz bugs.

Thanks to Ted Campbell, Caroline Cullen, Steven DeTar, Matthew Gaudet, Melissa Thermidor, and especially Iain Ireland for their great feedback and suggestions for this post.

The post Warp: Improved JS performance in Firefox 83 appeared first on Mozilla Hacks - the Web developer blog.

https://hacks.mozilla.org/2020/11/warp-improved-js-performance-in-firefox-83/

|

|

Mozilla Performance Blog: Using the Mach Perftest Notebook |

Using the Mach Perftest Notebook

In my previous blog post, I discussed an ETL (Extract-Transform-Load) implementation for doing local data analysis within Mozilla in a standardized way. That work provided us with a straightforward way of consuming data from various sources and standardizing it to conform to the expected structure for pre-built analysis scripts.

Today, not only are we using this ETL (called PerftestETL) locally, but also in our CI system! There, we have a tool called Perfherder which ingests data created by our tests in CI so that we can build up visualizations and dashboards like Are We Fast Yet (AWFY). The big benefit that this new ETL system provides here is that it greatly simplifies the path to getting from raw data to a Perfherder-formatted datum. This lets developers ignore the data pre-processing stage which isn’t important to them. All of this is currently available in our new performance testing framework MozPerftest.

One thing that I omitted from the last blog post is how we use this tool locally for analyzing our data. We had two students from Bishop’s University (:axew, and :yue) work on this tool for course credits during the 2020 Winter semester. Over the summer, they continued hacking with us on this project and finalized the work needed to do local analyses using this tool through MozPerftest.

Below you can see a recording of how this all works together when you run tests locally for comparisons.

There is no audio in the video so here’s a short summary of what’s happening:

- We start off with a folder containing some historical data from past test iterations that we want to compare the current data to.

- We start the MozPerftest test using the `--notebook-compare-to` option to specify what we want to compare the new data with.

- The test runs for 10 iterations (the video is cut to show only one).

- Once the test ends, the PerftestETL runs and standardizes the data and then we start a server to serve the data to Iodide (our notebook of choice).

- A new browser window/tab opens to Iodide with some custom code already inserted into the editor (through a POST request) for a comparison view that we built.

- Iodide is run, and a table is produced in the report.

- The report then shows the difference between each historical iteration and the newest run.
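The final comparison step can be sketched as follows. The data shape (one array of millisecond measurements per run), the run names, and the `compareToHistory` helper are hypothetical; this is not the code that PerftestETL or the Iodide notebook actually runs.

```javascript
// Arithmetic mean of a non-empty list of measurements.
function mean(values) {
  if (values.length === 0) throw new Error("cannot average an empty run");
  return values.reduce((sum, v) => sum + v, 0) / values.length;
}

// Compare the newest run against each historical run. A positive `diff`
// means the newest run produced a larger (slower, for timings) mean.
function compareToHistory(history, newest) {
  const newestMean = mean(newest);
  return history.map((run) => {
    const runMean = mean(run.values);
    const diff = newestMean - runMean;
    return {
      name: run.name,
      mean: runMean,
      diff,
      percent: (diff * 100) / runMean,
    };
  });
}

// Hypothetical standardized data, in milliseconds.
const history = [
  { name: "run-1", values: [100, 110, 90] },
  { name: "run-2", values: [120, 120, 120] },
];
const newest = [105, 105, 105];

console.table(compareToHistory(history, newest));
```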

With these pre-built analyses, we can help developers who are tracking metrics for a product over time, or simply checking how their changes affected performance without having to build their own scripts. This ensures that we have an organization-wide standardized approach for analyzing and viewing data locally. Otherwise, developers each start making their own report which (1) wastes their time, and more importantly, (2) requires consumers of these reports to familiarize themselves with the format.

That said, the real beauty in the MozPerftest integration is that we’ve decoupled the ETL from the notebook-specific code. This lets us expand the notebooks that we can open data in so we could add the capability for using R-Studio, Google Data Studio, or Jupyter Notebook in the future.

If you have any questions, feel free to reach out to us on Riot in #perftest.

https://blog.mozilla.org/performance/2020/11/12/using-the-mach-perftest-notebook/

|

|

Mozilla Performance Blog: Performance Sheriff Newsletter (October 2020) |

|

|

Benjamin Bouvier: Botzilla, a multi-purpose Matrix bot tuned for Mozilla |

|

|

Firefox UX: We’re Changing Our Name to Content Design |

The Firefox UX content team has a new name that better reflects how we work.

Co-authored with Betsy Mikel

Photo by Brando Makes Branding on Unsplash

Hello. We’re the Firefox Content Design team. We’ve actually met before, but our name then was the Firefox Content Strategy team.

Why did we change our name to Content Design, you ask? Well, for a few (good) reasons.

It better captures what we do

We are designers, and our material is content. Content can be words, but it can be other things, too, like layout, hierarchy, iconography, and illustration. Words are one of the foundational elements in our design toolkit — similar to color or typography for visual designers — but it’s not always written words, and words aren’t created in a vacuum. Our practice is informed by research and an understanding of the holistic user journey. The type of content, and how content appears, is something we also create in close partnership with UX designers and researchers.

“Then, instead of saying ‘How shall I write this?’, you say, ‘What content will best meet this need?’ The answer might be words, but it might also be other things: pictures, diagrams, charts, links, calendars, a series of questions and answers, videos, addresses, maps […] and many more besides. When your job is to decide which of those, or which combination of several of them, meets the user’s need — that’s content design.”

— Sarah Richards defined the content design practice in her seminal work, Content Design

It helps others understand how to work with us

While content strategy accurately captures the full breadth of what we do, this descriptor is better understood by those doing content strategy work or very familiar with it. And, as we know from writing product copy, accuracy is not synonymous with clarity.

Strategy can also sound like something we create on our own and then lob over a fence. In contrast, design is understood as an immersive and collaborative practice, grounded in solving user problems and business goals together.

Content design is thus a clearer descriptor for a broader audience. When we collaborate cross-functionally (with product managers, engineers, marketing), it’s important they understand what to expect from our contributions, and how and when to engage us in the process. We often get asked: “When is the right time to bring in content?” And the answer is: “The same time you’d bring in a designer.”

We’re aligning with the field

Content strategy is a job title often used by the much larger field of marketing content strategy or publishing. There are website content strategists, SEO content strategists, and social media content strategists, all of whom do different types of content-related work. Content design is a job title specific to product and user experience.

And, making this change is in keeping with where the field is going. Organizations like Slack, Netflix, Intuit, and IBM also use content design, and practice leaders Shopify and Facebook recently made the change, articulating reasons that we share and echo here.

It distinguishes the totality of our work from copywriting

Writing interface copy is about 10% of what we do. While we do write words that appear in the product, it’s often at the end of a thoughtful design process that we participate in or lead.

We’re still doing all the same things we did as content strategists, and we are still strategic in how we work (and shouldn’t everyone be strategic in how they work, anyway?) but we are choosing a title that better captures the unseen but equally important work we do to arrive at the words.

It’s the best option for us, but there’s no ‘right’ option

Job titles are tricky, especially for an emerging field like content design. The fact that titles are up for debate and actively evolving shows just how new our profession is. While there have been people creating product content experiences for a while, the field is really starting to now professionalize and expand. For example, we just got our first dedicated content design and UX writing conference this year with Button.

Content strategy can be a good umbrella term for the activities of content design and UX writing. Larger teams might choose to differentiate more, staffing specialized strategists, content designers, and UX writers. For now, content design is the best option for us, where we are, and the context and organization in which we work.

“There’s no ‘correct’ job title or description for this work. There’s not a single way you should contribute to your teams or help others understand what you do.”

— Metts & Welfle, Writing is Designing

Words matter

We’re documenting our name change publicly because, as our fellow content designers know, words matter. They reflect but also shape reality.

We feel a bit self-conscious about this declaration, and maybe that’s because we are the newest guests at the UX party — so new that we are still writing, and rewriting, our name tag. So, hi, it’s nice to see you (again). We’re happy to be here.

Thank you to Michelle Heubusch, Gemma Petrie, and Katie Caldwell for reviewing this post.

https://blog.mozilla.org/ux/2020/11/were-changing-our-name-to-content-design/

|

|

Mike Hommey: Announcing git-cinnabar 0.5.6 |

Please partake in the git-cinnabar survey.

Git-cinnabar is a git remote helper to interact with mercurial repositories. It lets you clone, pull, and push from/to mercurial remote repositories using git.

These release notes are also available on the git-cinnabar wiki.

What’s new since 0.5.5?

- Updated git to 2.29.2 for the helper.

- `git cinnabar git2hg` and `git cinnabar hg2git` now have a `--batch` flag.

- Fixed a few issues with experimental support for python 3.

- Fixed compatibility issues with mercurial >= 5.5.

- Avoid downloading unsupported clonebundles.

- Provide more resilience to network problems during bundle download.

- Prebuilt helper for Apple Silicon macOS now available via `git cinnabar download`.

|

|

Karl Dubost: Career Opportunities mean a lot of things |

When you are asked about the statement

"I believe there are good career opportunities for me at Company X"

what do you understand? Some people will associate this directly with climbing the hierarchy ladder of the company. I have the feeling the reality is a lot more diverse. "Career opportunities" mean different things to different people.

So I asked the people around me (friends, family, colleagues) what their take on it was, beyond going higher in the hierarchy.

- change of titles (role recognition).

  This doesn't necessarily imply climbing the hierarchy ladder; it can just mean you are recognized for a certain expertise.

- change of salary/work status (money/time).

  Sometimes, people don't want to change their status but want better compensation, or a better working schedule (for example, working 4 days a week with the same salary).

- change of responsibilities (practical work being done in the team)

  Some will see this as a possibility to learn new tricks and to diversify the work they do on a daily basis.

- change of team (working on different things inside the company)

  Working on a different team because you think they do something super interesting there is appealing.

- being mentored (inside your own company)

  It's a bit similar to the two previous ones, but there's a framework inside the company where you are not helping and/or bringing your skills to the project; instead, you go part-time to another team to learn the work of that team. Think about it as a kind of internal internship.

- change of region/country

  Working in a different country, or in a different region of the same country, when it's your own choice. This flexibility is a gem for me. I did it a couple of times. When working at W3C, I moved from the office on the French Riviera to working from home in Montreal (Canada). And a bit later, I moved from Montreal to the W3C office in Japan. At Mozilla too (after moving back to Montreal for a couple of years), I moved from Montreal to Japan again. These are career opportunities because they allow you to work in a different setting with different people and communities, and this in itself makes life a lot richer.

- Having dedicated/planned time for Conference or Courses

  Being able to follow a class on a topic which helps the individual to grow is super useful. But for this to be successful, it has to be understood that it requires time. Time to follow the courses, time to do the homework of the course.

I'm pretty sure there are other possibilities. Thanks to everyone who shared their thoughts.

Otsukare!

|

|

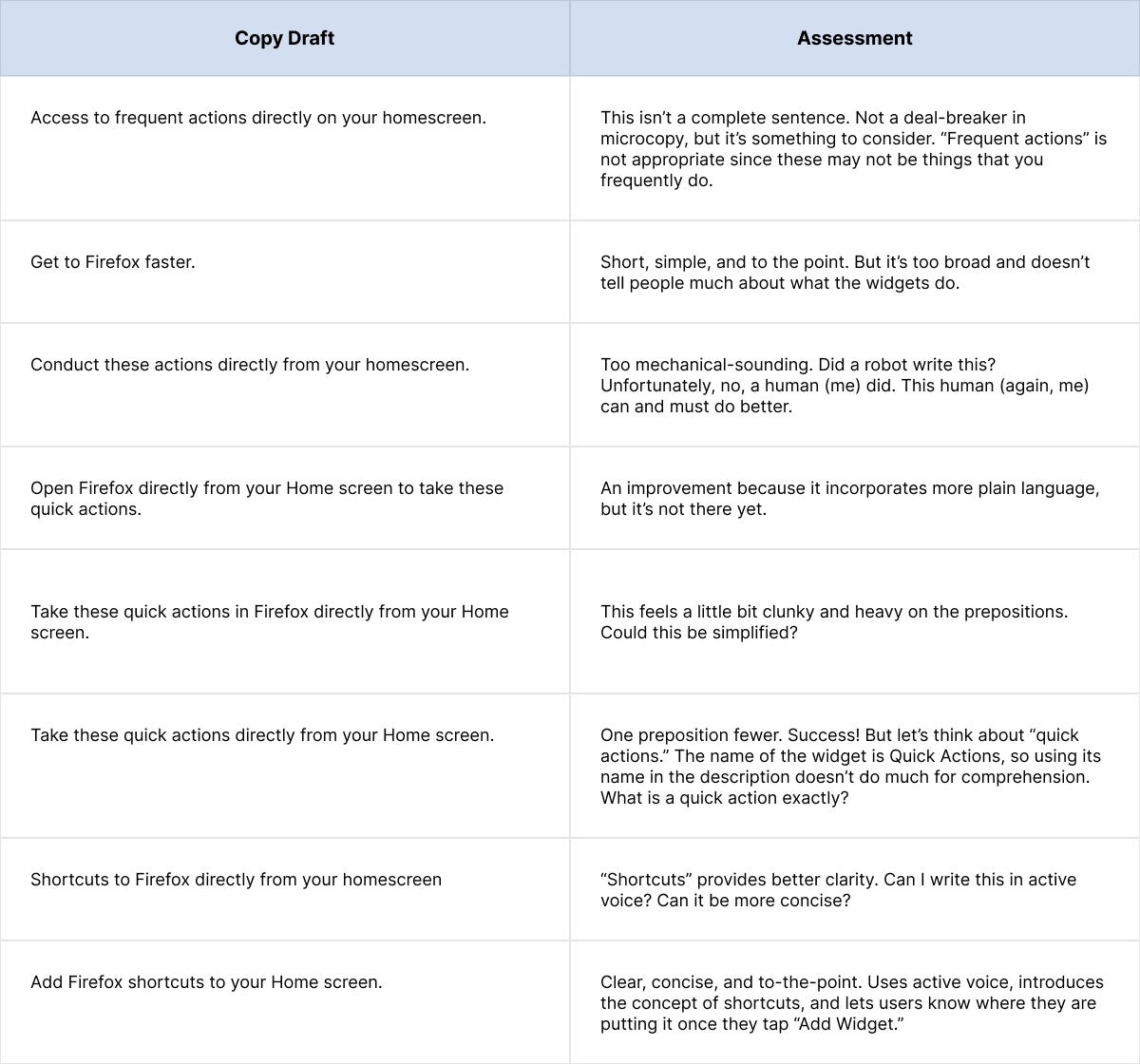

Firefox UX: How to Write Microcopy That Improves the User Experience |

Photo by Amador Loureiro on Unsplash.

The small bits of copy you see sprinkled throughout apps and websites are called microcopy. As content designers, we think deeply about what each word communicates.

Microcopy is the tidiest of UI copy types. But do not let its crisp, contained presentation fool you: the process to get to those final, perfect words can be messy. Very messy. Multiple drafts messy, mired in business and technical constraints.

Here’s a secret about good writing that no one ever tells you: When you encounter clear UX content, it’s a result of editing and revision. The person who wrote those words likely had a dozen or more versions you’ll never see. They probably had some business or technical constraints to consider, too.

If you’ve ever wondered what all goes into writing microcopy, pour yourself a micro-cup of espresso or a micro-brew and read on!

Blaise Pascal, translated from Lettres Provinciales

1. Understand the component and how it behaves.

As a content designer, you should try to consider as many cases as possible up front. Work with Design and Engineering to test the limits of the container you’re writing for. What will copy look like when it’s really short? What will it look like when it’s really long? You might be surprised what you discover.

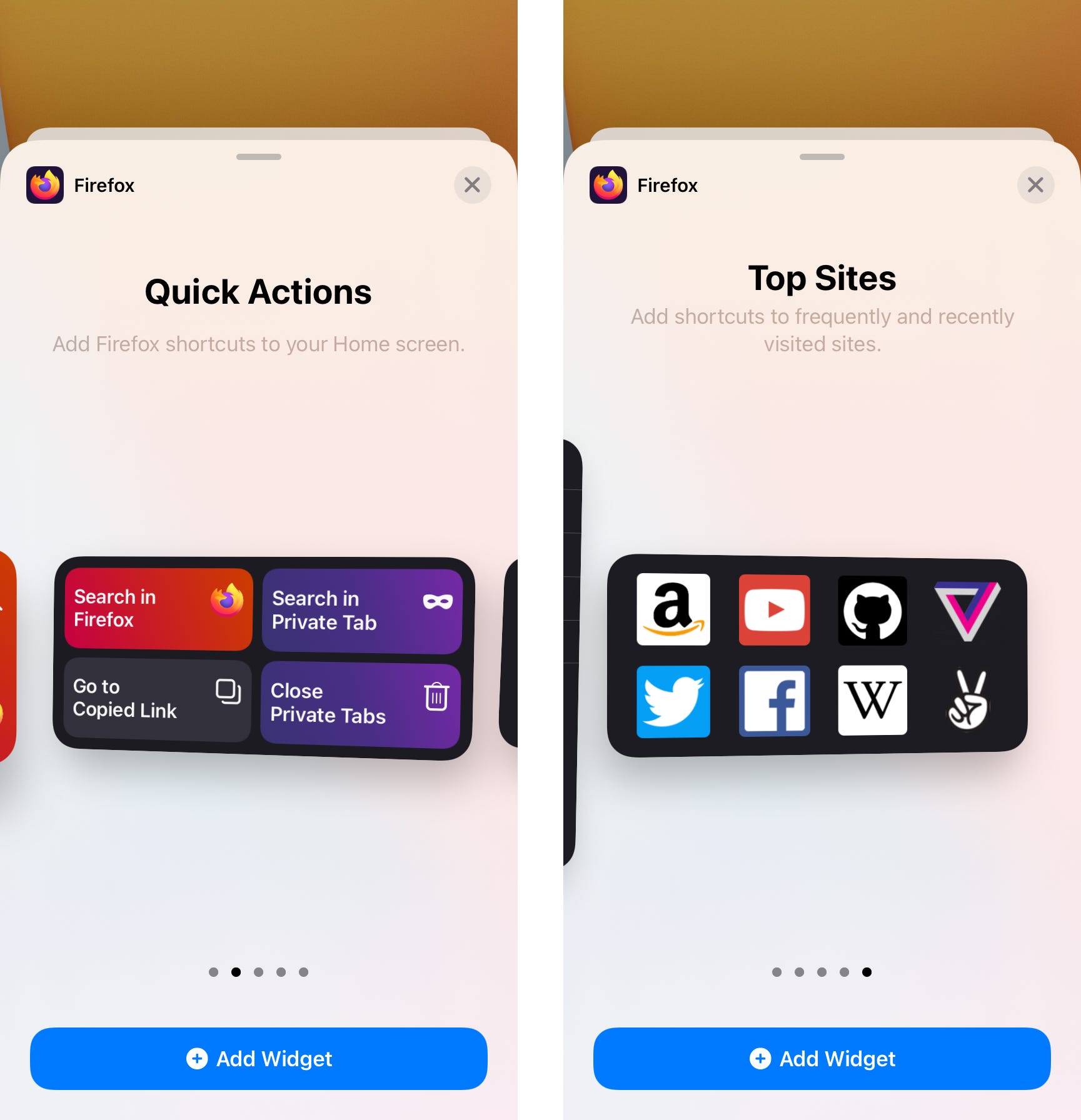

Before writing the microcopy for iOS widgets, I needed first to understand the component and how it worked.

Apple recently introduced a new component to the iOS ecosystem. You can now add widgets to the Home Screen on your iPhone, iPad, or iPod touch. The Firefox widgets allow you to start a search, close your tabs, or open one of your top sites.

Before I sat down to write a single word of microcopy, I would need to know the following:

- Is there a character limit for the widget descriptions?

- What happens if the copy expands beyond that character limit? Does it truncate?

- We had three widget sizes. Would this impact the available space for microcopy?

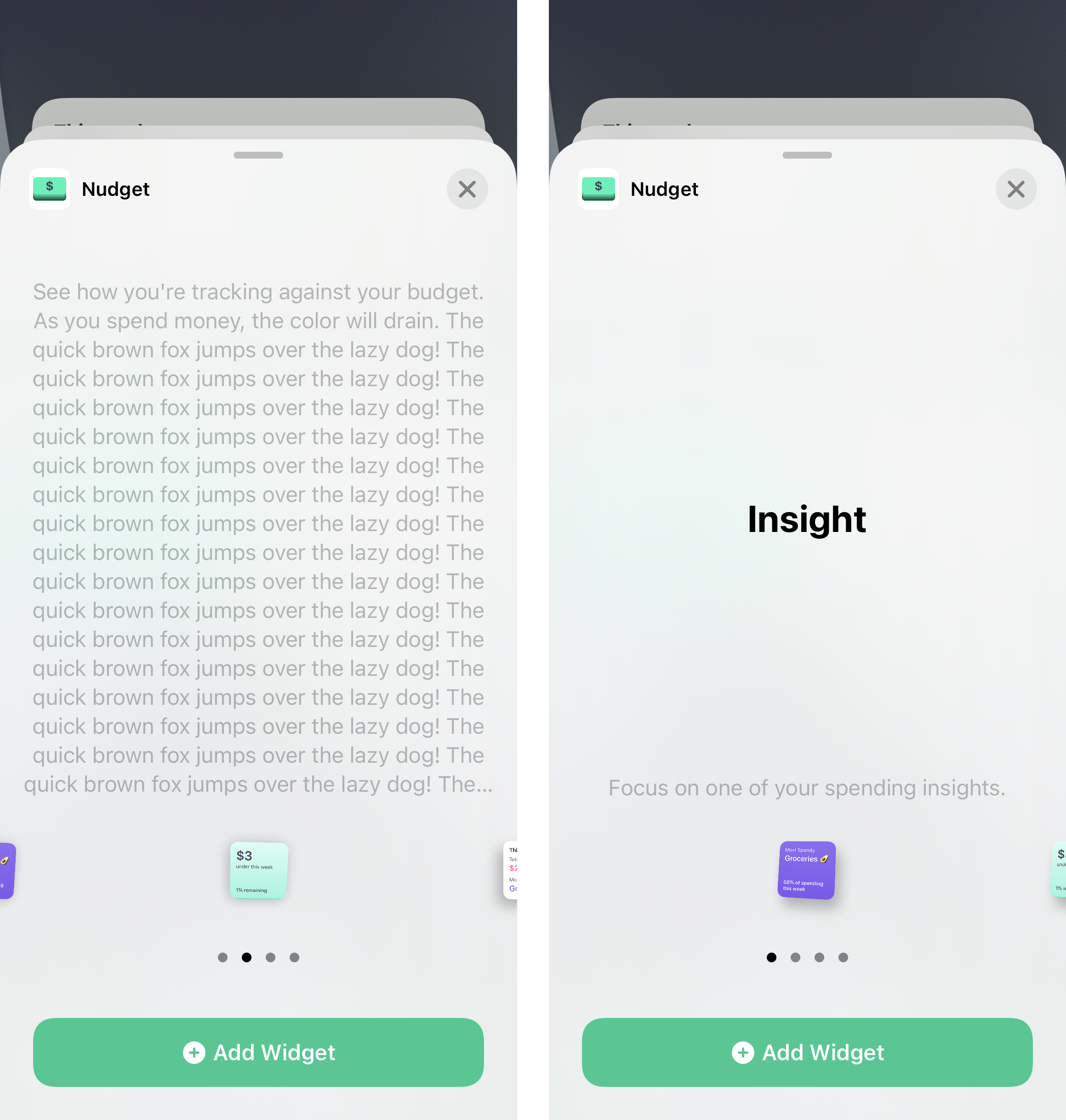

Because these widgets didn’t yet exist in the wild for me to interact with, I asked Engineering to help answer my questions. Engineering played with variations of character length in a testing environment to see how the UI might change.

Engineering tried variations of copy length in a testing environment. This helped us understand surprising behavior in the template itself.

We learned the template behaved in a peculiar way. The widget would shrink to accommodate a longer description. Then, the template would essentially lock to that size. Even if other widgets had shorter descriptions, the widgets themselves would appear teeny. You had to strain your eyes to read any text on the widget itself. Long story short, the descriptions needed to be as concise as possible. This would leave room for localization and keep the widgets from shrinking.

First learning how the widgets behaved was a crucial step to writing effective microcopy. Build relationships with cross-functional peers so you can ask those questions and understand the limitations of the component you need to write for.

2. Spit out your first draft. Then revise, revise, revise.

Mark Twain, The Wit and Wisdom of Mark Twain

Now that I understood my constraints, I was ready to start typing. I typically work through several versions in a Google Doc, wearing out my delete key as I keep reworking until I get it ‘right.’

I wrote several iterations of the description for this widget to maximize the limited space and make the microcopy as useful as possible.

Microcopy strives to provide maximum clarity in a limited amount of space. Every word counts and has to work hard. It’s worth the effort to analyze each word and ask yourself if it’s serving you as well as it could. Consider tense, voice, and other words on the screen.

3. Solicit feedback on your work.

Before delivering final strings to Engineering, it’s always a good practice to get a second set of eyes from a fellow team member (this could be a content designer, UX designer, or researcher). Someone less familiar with the problem space can help spot confusing language or superfluous words.

In many cases, our team also runs copy by our localization team to understand if the language might be too US-centric. Sometimes we will add a note for our localizers to explain the context and intent of the message. We also do a legal review with in-house product counsel. These extra checks give us better confidence in the microcopy we ultimately ship.

Wrapping up

Magical microcopy doesn’t shoot from our fingertips as we type (though we wish it did)! If we have any trade secrets to share, it’s only that first we seek to understand our constraints, then we revise, tweak, and rethink our words many times over. Ideally we bring in a partner to help us further refine and help us catch blind spots. This is why writing short can take time.

If you’re tasked with writing microcopy, first learn as much as you can about the component you are writing for, particularly its constraints. When you finally sit down to write, don’t worry about getting it right the first time. Get your thoughts on paper, reflect on what you can improve, then repeat. You’ll get crisper and cleaner with each draft.

Acknowledgements

Thank you to my editors Meridel Walkington and Sharon Bautista for your excellent notes and suggestions on this post. Thanks to Emanuela Damiani for the Figma help.

This post was originally published on Medium.

https://blog.mozilla.org/ux/2020/11/how-to-write-microcopy-that-improves-the-user-experience/

|

|

This Week In Rust: This Week in Rust 364 |

Hello and welcome to another issue of This Week in Rust! Rust is a systems language pursuing the trifecta: safety, concurrency, and speed. This is a weekly summary of its progress and community. Want something mentioned? Tweet us at @ThisWeekInRust or send us a pull request. Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub. If you find any errors in this week's issue, please submit a PR.

Updates from Rust Community

Official

- [Inside] Exploring PGO for the Rust compiler

Newsletters

Tooling

Observations/Thoughts

- Rust Ray Tracer, an Update (and SIMD)

- Rust emit=asm Can Be Misleading

- A survey into static analyzers configurations: Clippy for Rust, part 1

- Why Rust is the Future of Game Development

- Rust as a productive high-level language

- 40 millisecond bug

- Postfix macros in Rust

- A Quick Tour of Trade-offs Embedding Data in Rust

- Why Developers Love Rust

Rust Walkthroughs

- Make a Language - Part Nine: Function Calls

- Building a Weather Station UI

- Getting Graphical Output from our Custom RISC-V Operating System in Rust

- Build your own: GPG

- Rpi 4 meets Flutter and Rust

- AWS Lambda + Rust

- Orchestration in Rust

- Rocket Tutorial 02: Minimalist API

- Get simple IO stats using Rust (throughput, ...)

- Type-Safe Discrete Simulation in Rust

- [series] A Gemini Client in Rust

- Postfix macros in Rust

- Processing a Series of Items with Iterators in Rust

- Compilation of Active Directory Logs Using Rust

- [FR] The Rust Programming Language (translated in French)

Project Updates

Miscellaneous

Crate of the Week

This week's crate is postfix-macros, a clever hack to allow postfix macros in stable Rust.

Thanks to Willi Kappler for the suggestion!

Submit your suggestions and votes for next week!

Call for Participation

Always wanted to contribute to open-source projects but didn't know where to start? Every week we highlight some tasks from the Rust community for you to pick and get started!

Some of these tasks may also have mentors available, visit the task page for more information.

If you are a Rust project owner and are looking for contributors, please submit tasks here.

Updates from Rust Core

333 pull requests were merged in the last week

- Implement destructuring assignment for tuples

- reverse binding order in matches to allow the subbinding of copyable fields in bindings after @

- Fix unreachable sub-branch detection in or-patterns

- Transform post order walk to an iterative approach

- Compile rustc crates with the initial-exec TLS model

- Make some std::io functions const

- Stabilize Poll::is_ready and is_pending as const

- Stabilize hint::spin_loop

- Simplify the implementation of Cell::get_mut

- futures: Add StreamExt::cycle

- futures: Add TryStreamExt::try_buffered

- cargo: Avoid some extra downloads with new feature resolver

Rust Compiler Performance Triage

- 2020-11-10: 1 Regression, 2 Improvements, 2 mixed

A mixed week with improvements still outweighing regressions. Perhaps the biggest highlight was the move to compiling rustc crates with the initial-exec TLS model which results in fewer calls to _tls_get_addr and thus faster compile times.

See the full report for more.

Approved RFCs

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

No RFCs were approved this week.

Final Comment Period

Every week the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now.

RFCs

No RFCs are currently in the final comment period.

Tracking Issues & PRs

- [disposition: merge] Stabilize clamp

- [disposition: merge] [android] Add support for android's file descriptor ownership tagging to libstd.

- [disposition: merge] Implement Error for &(impl Error)

- [disposition: merge] Add checking for no_mangle to unsafe_code lint

- [disposition: merge] Tracking issue for methods converting bool to Option

New RFCs

Upcoming Events

Online

- November 12, Berlin, DE - Rust Hack and Learn - Berline.rs

- November 12, Washington, DC, US - Mid-month Rustful—How oso built a runtime reflection system for Rust - Rust DC

- November 12, Lehi, UT, US - WASM, Rust, and the State of Async/Await - Utah Rust

- November 18, Vancouver, BC, CA - Rust Study/Hack/Hang-out night - Vancouver Rust

- November 24, Dallas, TX, US - Last Tuesday - Dallas Rust

If you are running a Rust event please add it to the calendar to get it mentioned here. Please remember to add a link to the event too. Email the Rust Community Team for access.

Rust Jobs

Tweet us at @ThisWeekInRust to get your job offers listed here!

Quote of the Week

There are no bad programmers, only insufficiently advanced compilers

Thanks to Nixon Enraght-Moony for the suggestion.

Please submit quotes and vote for next week!

This Week in Rust is edited by: nellshamrell, llogiq, and cdmistman.

https://this-week-in-rust.org/blog/2020/11/11/this-week-in-rust-364/

|

|

William Lachance: iodide retrospective |

A bit belatedly, I thought I’d write a short retrospective on Iodide: an effort to create a compelling, client-centred scientific notebook environment.

Despite not writing a ton about it (the sole exception being this brief essay about my conservative choice for the server backend), Iodide took up a very large chunk of my mental energy from late 2018 through 2019. It was also essentially my only attempt at working on something that was on its way to being an actual product while at Mozilla: while I’ve led other projects that have been interesting and/or impactful in my 9-odd years here (mozregression and perfherder being the biggest successes), they fall firmly into the “internal tools supporting another product” category.

At this point it’s probably safe to say that the project has wound down: no one is being paid to work on Iodide and it’s essentially in extreme maintenance mode. Before it’s put to bed altogether, I’d like to write a few notes about its approach, where I think it had big advantages, and where it seems to fall short. I’ll conclude with some areas I’d like to explore (or would like to see others explore!). I’d like to emphasize that this is my opinion only: the rest of the Iodide core team no doubt have their own thoughts. That said, let’s jump in.

What is Iodide, anyway?

One thing that’s interesting about a product like Iodide is that people tend to project their hopes and dreams onto it: if you asked any given member of the Iodide team to give a 200 word description of the project, you’d probably get a slightly different answer emphasizing different things. That said, a fair initial approximation of the project vision would be “a scientific notebook like Jupyter, but running in the browser”.

What does this mean? First, let’s talk about what Jupyter does: at heart, it’s basically a Python “kernel” (read: interpreter), fronted by a webserver. You send snippets of code to the interpreter via a web interface and they are faithfully run on the backend (either on your local machine in a separate process or on a server in the cloud). Results are then returned to the web interface and rendered by the browser in one form or another.
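To make the kernel idea concrete, here is a minimal in-process sketch. It is not how Jupyter is actually implemented (a real kernel runs in its own process and speaks a messaging protocol to the front end); the `TinyKernel` class and its `execute` method are hypothetical names used only for illustration. The point is simply that state lives in the interpreter, and the front end only sends snippets and receives results.

```python
import contextlib
import io


class TinyKernel:
    """A toy stand-in for a notebook kernel: it keeps one namespace alive
    between snippets, the way an interpreter session would."""

    def __init__(self):
        self.namespace = {}

    def execute(self, snippet: str) -> str:
        """Run one snippet and return whatever it printed."""
        captured = io.StringIO()
        with contextlib.redirect_stdout(captured):
            exec(snippet, self.namespace)
        return captured.getvalue()


kernel = TinyKernel()
kernel.execute("total = sum(range(10))")   # state stays in the kernel
result = kernel.execute("print(total)")    # a later snippet can see it
```

In the Iodide model described next, the equivalent of `TinyKernel` would live inside the browser tab itself rather than behind a webserver.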

Iodide’s model is quite similar, with one difference: instead of running the kernel in another process or somewhere in the cloud, all the heavy lifting happens in the browser itself, using the local JavaScript and/or WebAssembly runtime. The very first version of the product that Hamilton Ulmer and Brendan Colloran came up with was definitely in this category: it had no server-side component whatsoever.

Truth be told, even applications like Jupyter do a fair amount of computation on the client side to render a user interface and the results of computations: the distinction is not as clear cut as you might think. But in general I think the premise holds: if you load an iodide notebook today (still possible on alpha.iodide.io! no account required), the only thing that comes from the server is a little bit of static JavaScript and a flat file delivered as a JSON payload. All the “magic” of whatever computation you might come up with happens on the client.

Let’s take a quick look at the default tryit iodide notebook to give an idea of what I mean:

%% fetch

// load a js library and a csv file with a fetch cell

js: https://cdnjs.cloudflare.com/ajax/libs/d3/4.10.2/d3.js

text: csvDataString = https://data.sfgov.org/api/views/5cei-gny5/rows.csv?accessType=DOWNLOAD

%% js

// parse the data using the d3 library (and show the value in the console)

parsedData = d3.csvParse(csvDataString)

%% js

// replace the text in the report element "htmlInMd"

document.getElementById('htmlInMd').innerText = parsedData[0].Address

%% py

# use python to select some of the data

from js import parsedData

[x['Address'] for x in parsedData if x['Lead Remediation'] == 'true']

The %% delimiters indicate individual cells. The fetch cell is an iodide-native cell with its own logic to load the specified resources into JavaScript variables when evaluated. js and py cells direct the browser to interpret the code inside of them, which causes the DOM to mutate. From these building blocks (and a few others), you can build up an interactive report which can also be freely forked and edited by anyone who cares to.
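The cell structure is simple enough that splitting a notebook into cells can be sketched in a few lines. The `split_cells` helper below is hypothetical and is not Iodide's actual parser, which likely handles more cases; it only assumes what the example above shows, namely that a line starting with %% opens a new cell and names its type.

```python
def split_cells(notebook_text: str):
    """Split notebook text into (cell_type, body) pairs on '%%' lines."""
    cells = []
    current_type, current_lines = None, []
    for line in notebook_text.splitlines():
        if line.startswith("%%"):
            # A new delimiter closes the previous cell, if there was one.
            if current_type is not None:
                cells.append((current_type, "\n".join(current_lines).strip()))
            current_type = line[2:].strip()
            current_lines = []
        elif current_type is not None:
            current_lines.append(line)
    if current_type is not None:
        cells.append((current_type, "\n".join(current_lines).strip()))
    return cells


example = """%% fetch
js: https://cdnjs.cloudflare.com/ajax/libs/d3/4.10.2/d3.js

%% js
parsedData = d3.csvParse(csvDataString)

%% py
from js import parsedData
"""

cells = split_cells(example)
cell_types = [cell_type for cell_type, _ in cells]
```

Each resulting pair could then be handed to whatever evaluates that cell type: a resource loader for fetch cells, the JavaScript runtime for js cells, and so on.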

In some ways, I think Iodide has more in common with services like Glitch or Codepen than with Jupyter. In effect, it’s mostly offering a way to build up a static web page (doing whatever) using web technologies— even if the user interface affordances and cell-based computation model might remind you more of a scientific notebook.

What works well about this approach

There’s a few nifty things that come from doing things this way:

- The environment is easily extensible for those familiar with JavaScript or other client side technologies. There is no need to mess with strange plugin architectures or APIs or conform to the opinions of someone else on what options there are for data visualization and presentation. If you want to use jQuery inside your notebook for a user interface widget, just import it and go!

- The architecture scales to many, many users: since all the computation happens on the client, the server’s only role is to store and retrieve notebook content. alpha.iodide.io has happily been running on Heroku’s least expensive dyno type for its entire existence.

- Reproducibility: so long as the iodide notebook has no references to data on third party servers with authentication, there is no stopping someone from being able to reproduce whatever computations led to your results.

- Related to reproducibility, it’s easy to build off of someone else’s notebook or exploration, since everything is so self-contained.

I continue to be surprised and impressed with what people come up with in the Jupyter ecosystem, so I don’t want to claim these are things that “only Iodide” (or other tools like it) can do; what I will say is that I haven’t seen many things that combine both the conciseness and expressiveness of iomd. The beauty of the web is that there is such an abundance of tutorials and resources to support creating interactive content: when building iodide notebooks, I would freely borrow from resources such as MDN and Stackoverflow, instead of being locked into what the authoring software thinks one should be able to express.

What’s awkward

Every silver lining has a cloud and (unfortunately) Iodide has a pretty big one. Depending on how you use Iodide, you will almost certainly run into the following problems:

- You are limited by the computational affordances provided by the browser: there is no obvious way to offload long-running or expensive computations to a cluster of machines using a technology like Spark.

- Long-running computations will block the main thread, causing your notebook to become extremely unresponsive for the duration.

The first, I (and most people?) can usually live with. Computers are quite powerful these days and most data tasks I’m interested in are easily within the range of capabilities of my laptop. In the cases where I need to do something larger scale, I’m quite happy to fire up a Jupyter Notebook, BigQuery Console, or

The second is much harder to deal with, since it interrupts the process of exploration that is so key to scientific computing. I’m quite happy to wait 5 seconds, 15 seconds, even a minute for a longer-running computation to complete, but if I see the slow script dialog and everything about my environment grinds to a halt, it not only blocks my work but causes me to lose faith in the system. How do I know if it’s even going to finish?

This occurs way more often than you might think: even a simple notebook loading pandas (via pyodide) can cause Firefox to pop up the slow script dialog:

Why is this so? Aren’t browsers complex beasts which can do a million things in parallel these days? Only sort of: while the graphical and network portions of loading and using a web site are highly parallel, JavaScript and any changes to the DOM can only occur synchronously by default. Let’s break down a simple iodide example which trivially hangs the browser:

%% js

while (1) { true }

link (but you really don’t want to)

What’s going on here? When you execute that cell, the web site’s sole thread is now devoted to running that infinite loop. No longer can any other JavaScript-based event handler be run, so for example the text editor (which uses Monaco under the hood) and menus are now completely unresponsive.
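The effect is easy to reproduce with a toy model. The sketch below is not browser code: `run_event_loop` is a hypothetical single-threaded scheduler with a simulated clock, used only to show that on one thread a cheap handler cannot run until the expensive task ahead of it has finished.

```python
from collections import deque


def run_event_loop(tasks):
    """Run (name, cost) tasks one at a time on a simulated clock.

    Returns {name: time at which the task finished}. Nothing can start
    until the task in front of it has finished, as on a single thread.
    """
    queue = deque(tasks)
    clock = 0
    finished_at = {}
    while queue:
        name, cost = queue.popleft()
        clock += cost            # the whole "thread" is busy for `cost` ticks
        finished_at[name] = clock
    return finished_at


# A cheap keystroke handler queued behind an expensive cell evaluation.
slow = run_event_loop([("run_cell", 5000), ("keystroke", 1)])
fast = run_event_loop([("keystroke", 1), ("run_cell", 5000)])
```

Here the keystroke is handled at tick 1 when it comes first, but only at tick 5001 when it is queued behind the cell. With the infinite loop above, the cost is unbounded and the keystroke handler never runs at all.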

The iodide team was aware of this issue since I joined the project. There were no end of discussions about how to work around it, but they never really came to a satisfying conclusion. The most obvious solution is to move the cell’s computation to a web worker, but workers don’t have synchronous access to the DOM which is required for web content to work as you’d expect. While there are projects like Comlink that attempt to bridge this divide, they require both a fair amount of skill and code to use effectively. As mentioned above, one of the key differentiators between iodide and other notebook environments is that tools like jQuery, d3, etc. “just work”. That is, you can take a code snippet off the web and run it inside an iodide notebook: there’s no workaround I’ve been able to find which maintains that behaviour and ensures that the Iodide notebook environment is always responsive.
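The shape of the worker trade-off can be sketched by analogy. Python threads are not web workers, so treat the following only as an illustration of the message-passing pattern: the `worker` function and the `dom` dictionary are hypothetical stand-ins, and the key constraint is that the worker never touches `dom` directly.

```python
import queue
import threading


def worker(inbox, outbox):
    """Compute off the main thread; report results only via messages."""
    numbers = inbox.get()
    outbox.put({"target": "result", "text": str(sum(n * n for n in numbers))})


# Only the main thread owns this dict, a stand-in for the page's DOM.
dom = {"result": ""}

inbox, outbox = queue.Queue(), queue.Queue()
thread = threading.Thread(target=worker, args=(inbox, outbox))
thread.start()
inbox.put(range(1000))

# The main thread would stay free for other events here; this sketch
# simply waits for the worker and then applies its message.
thread.join()
message = outbox.get()
dom[message["target"]] = message["text"]
```

This keeps the main thread responsive, but it also shows the cost: a snippet copied off the web that writes to the page directly would have to be rewritten to post messages instead, which is exactly the “just works” property that would be lost.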

It took a while for this to really hit home, but having some separation from the project, I’ve come to realize that the problem is that the underlying technology isn’t designed for the task we were asking of it, nor is it likely to ever be in the near future. While the web (by which I mean not only the browser, but the ecosystem of popular tooling and libraries that has been built on top of it) has certainly grown in scope to things it was never envisioned to handle like games and office applications, it’s just not optimized for what I’d call “editable content”. That is, the situation where a web page offers affordances for manipulating its own representation.

While modern web browsers have evolved to (sort of) protect one errant site from bringing the whole thing down, they certainly haven’t evolved to protect a particular site against itself. And why would they? Web developers usually work in the terminal and text editor: the assumption is that if their test code misbehaves, they’ll just kill their tab and start all over again. Application state is persisted either on-disk or inside an external database, so nothing will really be lost.

Could this ever change? Possibly, but I think it would be a radical rethinking of what the web is, and I’m not really sure what would motivate it.

A way forward

While working on iodide, we were fond of looking at this diagram, which was taken from this study of the data science workflow:

It describes how people typically perform computational inquiry: typically you would poke around with some raw data, run some transformations on it. Only after that process was complete would you start trying to build up a “presentation” of your results to your audience.

Looking back, it’s clear that Iodide’s strong suit was the explanation part of this workflow, rather than collaboration and exploration. My strong suspicion is that we actually want to use different tools for each one of these tasks. Coincidentally, this also maps to the bulk of my experience working with data at Mozilla, using iodide or not: my most successful front-end data visualization projects were typically the distilled result of a very large number of ad hoc explorations (python scripts, SQL queries, Jupyter notebooks, …). The actual visualization itself contains very little computational meat: basically “just enough” to let the user engage with the data fruitfully.

Unfortunately much of my work in this area uses semi-sensitive internal Mozilla data so can’t be publicly shared, but here’s one example:

I built this dashboard in a single day to track our progress (resolved/open bugs) when we were migrating our data platform from AWS to GCP. It was useful: it let us quickly visualize which areas needed more attention and gave upper management more confidence that we were moving closer to our goal. However, there was little computation going on in the client: most of the backend work was happening in our Bugzilla instance: the iodide notebook just does a little bit of post-processing to visualize the results in a useful way.

Along these lines, I still think there is a place in the world for an interactive visualization environment built on principles similar to iodide: basically a markdown editor augmented with primitives oriented around data exploration (for example, charts and data tables), with allowances to bring in any JavaScript library you might want. However, any in-depth data processing would be assumed to have mostly been run elsewhere, in advance. The editing environment could either be the browser or a code editor running on your local machine, per user preference: in either case, there would not be any really important computational state running in the browser, so things like dynamic reloading (or just closing an errant tab) should not cause the user to lose any work.

This would give you the best of both worlds: you could easily create compelling visualizations that are easy to share and remix at minimal cost (because all the work happens in the browser), but you could also use whatever existing tools work best for your initial data exploration (whether that be JavaScript or the more traditional Python data science stack). And because the new tool has a reduced scope, I think building such an environment would be a much more tractable project for an individual or small group to pursue.

More on this in the future, I hope.

Many thanks to Teon Brooks and Devin Bayly for reviewing an early draft of this post

https://wlach.github.io/blog/2020/11/iodide-retrospective/?utm_source=Mozilla&utm_medium=RSS

|

|

Mozilla Attack & Defense: Firefox for Android: LAN-Based Intent Triggering |

This blog post is a guest blog post. We invite participants of our bug bounty program to write about bugs they’ve reported to us.

Background

From its inception, Firefox for Desktop used a single process for all browsing tabs. Since Firefox Quantum, released in 2017, Firefox has been able to spin off multiple “content processes”. This revised architecture allowed for advances in security and performance due to sandboxing and parallelism. Unfortunately, Firefox for Android (code-named “Fennec”) could not instantly be built upon this new technology. Legacy architecture with a completely different user interface required Fennec to remain single-process. But in the fall of 2020, a Fenix rose: The latest version of Firefox for Android is supported by Android Components, a collection of independent libraries to make browsers and browser-like apps that bring the latest rendering technologies to the Android ecosystem.

For this rewrite to succeed, most work on the legacy Android product (“Fennec”) was paused and put into maintenance mode except for high-severity security vulnerabilities.

It was during this time, coinciding with the general availability of the new Firefox for Android, that Chris Moberly from GitLab’s Security Red Team reported the following security bug in the almost legacy browser.

Overview

Back in August, I found a nifty little bug in the Simple Service Discovery Protocol (SSDP) engine of Firefox for Android 68.11.0 and below. Basically, a malicious SSDP server could send Android Intent URIs to anyone on a local wireless network, forcing their Firefox app to launch activities without any user interaction. It was sort of like a magical ability to click links on other people’s phones without them knowing.

I disclosed this directly to Mozilla as part of their bug bounty program – you can read the original submission here.

I discovered the bug around the same time Mozilla was already switching over to a completely rebuilt version of Firefox that did not contain the vulnerability. The vulnerable version remained in the Google Play store only for a couple weeks after my initial private disclosure. As you can read in the Bugzilla issue linked above, the team at Mozilla did a thorough review to confirm that the vulnerability was not hiding somewhere in the new version and took steps to ensure that it would never be re-introduced.

Disclosing bugs can be a tricky process with some companies, but the Mozilla folks were an absolute pleasure to work with. I highly recommend checking out their bug bounty program and seeing what you can find!

I did a short technical write-up that is available in the exploit repository. I’ll include those technical details here too, but I’ll also take this opportunity to talk a bit more candidly about how I came across this particular bug and what I did when I found it.

Bug Hunting

I’m lucky enough to be paid to hack things at GitLab, but after a long hard day of hacking for work I like to kick back and unwind by… hacking other things! I’d recently come to the devastating conclusion that a bug I had been chasing for the past two years either didn’t exist or was simply beyond my reach, at least for now. I’d learned a lot along the way, but it was time to focus on something new.

A friend of mine had been doing some cool things with Android, and it sparked my interest. I ordered a paperback copy of “The Android Hacker’s Handbook” and started reading. When learning new things, I often order the big fat physical book and read it in chunks away from my computer screen. Everyone learns differently of course, but there is something about this method that helps break the routine for me.

Anyway, I’m reading through this book and taking notes on areas that I might want to explore. I spend a lot of time hacking in Linux, and Android is basically Linux with an abstraction layer. I’m hoping I can find something familiar and then tweak some of my old techniques to work in the mobile world.

I get to Chapter 2, where it introduces the concept of Android “Intents” and describes them as follows:

“These are message objects that contain information about an operation to be performed… Nearly all common actions – such as tapping a link in a mail message to launch the browser, notifying the messaging app that an SMS has arrived, and installing and removing applications – involve Intents being passed around the system.”

This really piqued my interest, as I’ve spent quite a lot of time looking into how applications pass messages to each other under Linux using things like Unix domain sockets and D-Bus. This sounded similar. I put the book down for a bit and did some more research online.

The Android developer documentation provides a great overview on Intents. Basically, developers can expose application functionality via an Intent. Then they create something called an “Intent filter” which is the application’s way of telling Android what types of message objects it is willing to receive.

For example, an application could offer to send an SMS to one of your contacts. Instead of including all of the code required to actually send an SMS, it could craft an Intent that included things like the recipient phone number and a message body and then send it off to the default messaging application to handle the tricky bits.

Something I found very interesting is that while Intents are often built in code via a complex function, they can also be expressed as URIs, or links. In the case of our text message example above, the Intent URI to send an SMS could look something like this:

sms://8675309?body="hey%20there!"

In fact, if you click on that link while reading this on an Android phone, it should pop open your default SMS application with the phone number and message filled in. You just triggered an Intent.
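To see what such a link carries, it helps to take one apart. The sketch below uses Python's standard `urllib.parse` on the SMS example, with the quotation marks around the body dropped for simplicity. The `describe_uri` helper and its field names are hypothetical; Android's own Intent resolution is considerably more involved than this.

```python
from urllib.parse import parse_qs, urlsplit


def describe_uri(uri: str) -> dict:
    """Break a URI into the parts a handler app would care about."""
    parts = urlsplit(uri)
    return {
        "scheme": parts.scheme,           # selects which kind of app handles it
        "target": parts.netloc,           # here, the phone number
        "params": parse_qs(parts.query),  # extra data, percent-decoded
    }


info = describe_uri("sms://8675309?body=hey%20there!")
```

The scheme is the interesting part: it is what decides that a messaging app, rather than a browser, should receive the request.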

It was at this point that I had my “Hmmm, I wonder what would happen if I…” moment. I don’t know how it is for everyone else, but that phrase pretty much sums up hacking for me.

You see, I’ve had a bit of success in forcing desktop applications to visit URIs and have used this in the past to find exploitable bugs in targets like Plex, Vuze, and even Microsoft Windows.

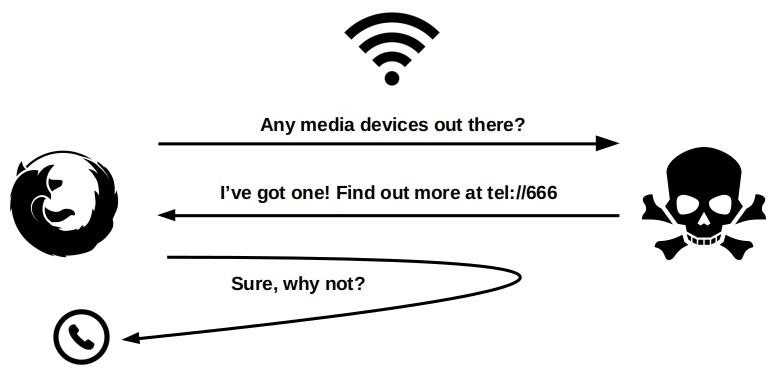

These previous bugs abused functionality in something called Simple Service Discovery Protocol (SSDP), which is used to advertise and discover services on a local network. In SSDP, an initiator will send a broadcast out over the local network asking for details on any available services, like a second-screen device or a networked speaker. Any system can respond back with details on the location of an XML file that describes their particular service. Then, the initiator will automatically go to that location to parse the XML to better understand the service offering.

And guess what that XML file location looks like…

… That’s right, a URI!

In my previous research on SSDP vulnerabilities, I had spent a lot of time running Wireshark on my home network. One of the things I had noticed was that my Android phone would send out SSDP discovery messages when using the Firefox mobile application. I tried attacking it with all of the techniques I could think of at that time, but I never observed any odd behavior.

But that was before I knew about Intents!

So, what would happen if we created a malicious SSDP server that advertised a service’s location as an Android Intent URI? Let’s find out!

What happened…

First, let’s take a look at what I observed over the network prior to putting this all together. While running Wireshark on my laptop, I noticed repeated UDP packets originating from my Android phone when running Firefox. They were being sent to port 1900 of the UDP multicast address 239.255.255.250. This meant that any device on the local network could see these packets and respond if they chose to.

The packets looked like this:

M-SEARCH * HTTP/1.1

HOST: 239.255.255.250:1900

MAN: "ssdp:discover"

MX: 2

ST: roku:ecp

This is Firefox asking if there are any Roku devices on the network. It would actually ask for other device types as well, but the device type itself isn’t really relevant for this bug.
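For readers who want to reproduce this kind of traffic on their own network, a discovery request like the one above can be assembled as follows. This is a minimal sketch, not Firefox's code: `build_msearch` and `SSDP_GROUP` are names chosen for illustration, and the header values are copied from the captured packet.

```python
SSDP_GROUP = ("239.255.255.250", 1900)


def build_msearch(search_target: str, mx: int = 2) -> bytes:
    """Build an SSDP discovery request for one search target."""
    lines = [
        "M-SEARCH * HTTP/1.1",
        f"HOST: {SSDP_GROUP[0]}:{SSDP_GROUP[1]}",
        'MAN: "ssdp:discover"',
        f"MX: {mx}",
        f"ST: {search_target}",
        "",   # the two empty entries produce the blank line
        "",   # that terminates the header block
    ]
    return "\r\n".join(lines).encode("ascii")


packet = build_msearch("roku:ecp")
```

Sending it is then a single call on a UDP socket, for example `socket.socket(socket.AF_INET, socket.SOCK_DGRAM).sendto(packet, SSDP_GROUP)`, after which any device on the network is free to answer.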

It looks like an HTTP request, right? It is… sort of. SSDP uses two forms of HTTP over UDP:

- HTTPMU: HTTP over multi-cast UDP. Meaning the request is broadcast to the entire network over UDP. This is used for clients discovering devices and servers announcing devices.

- HTTPU: HTTP over uni-cast UDP. Meaning the request is sent from one host directly to another over UDP. This is used for responding to search requests.

If we had a Roku on the network, it would immediately respond with a unicast UDP packet that looks something like this:

HTTP/1.1 200 OK
CACHE-CONTROL: max-age=1800
DATE: Tue, 16 Oct 2018 20:17:12 GMT
EXT:
LOCATION: http://192.168.1.100/device-desc.xml
OPT: "http://schemas.upnp.org/upnp/1/0/"; ns=01
01-NLS: uuid:7f7cc7e1-b631-86f0-ebb2-3f4504b58f5c
SERVER: UPnP/1.0
ST: roku:ecp
USN: uuid:7f7cc7e1-b631-86f0-ebb2-3f4504b58f5c::roku:ecp
BOOTID.UPNP.ORG: 0
CONFIGID.UPNP.ORG: 1

Then, Firefox would query that URI in the LOCATION header (http://192.168.1.100/device-desc.xml), expecting to find an XML document that told it all about the Roku and how to interact with it.
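To make the role of the LOCATION header concrete, here is a rough sketch of how an initiator might pull it out of a reply. The helper parse_ssdp_headers is a hypothetical name, the sample reply is abbreviated from the one above, and the real parsing code in Firefox may look quite different.

```python
from urllib.parse import urlparse


def parse_ssdp_headers(packet: str) -> dict:
    """Parse the header lines of an SSDP reply into a dict (hypothetical helper)."""
    headers = {}
    lines = packet.split("\r\n")
    for line in lines[1:]:  # skip the "HTTP/1.1 200 OK" status line
        if not line:
            break  # a blank line ends the header block
        name, sep, value = line.partition(":")
        if sep:
            headers[name.strip().upper()] = value.strip()
    return headers


reply = (
    "HTTP/1.1 200 OK\r\n"
    "CACHE-CONTROL: max-age=1800\r\n"
    "EXT:\r\n"
    "LOCATION: http://192.168.1.100/device-desc.xml\r\n"
    "ST: roku:ecp\r\n"
    "\r\n"
)

headers = parse_ssdp_headers(reply)
location = headers["LOCATION"]
scheme = urlparse(location).scheme  # the part before "://"
```

The scheme is the interesting piece: nothing in the reply format itself forces the LOCATION value to be an http URL.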

This is where the experimenting started. What if, instead of http://192.168.1.100/device-desc.xml, I responded back with an Android Intent URI?

I grabbed my old SSDP research tool evil-ssdp, made some quick modifications, and replied with a packet like this:

HTTP/1.1 200 OK
CACHE-CONTROL: max-age=1800
DATE: Tue, 16 Oct 2018 20:17:12 GMT
EXT:
LOCATION: tel://666
OPT: "http://schemas.upnp.org/upnp/1/0/"; ns=01
01-NLS: uuid:7f7cc7e1-b631-86f0-ebb2-3f4504b58f5c
SERVER: UPnP/1.0
ST: upnp:rootdevice
USN: uuid:7f7cc7e1-b631-86f0-ebb2-3f4504b58f5c::roku:ecp
BOOTID.UPNP.ORG: 0
CONFIGID.UPNP.ORG: 1

Notice in the LOCATION header above we are now using the URI tel://666, which uses the URI scheme for Android’s default Phone Intent.

Guess what happened? The dialer on the Android device magically popped open, all on its own, with absolutely no interaction on the mobile device itself. It worked!

PoC

I was pretty excited at this point, but I needed to do some sanity checks and make sure that this could be reproduced reliably.

I toyed around with a couple different Android devices I had, including the official emulator. The behavior was consistent. Any device on the network running Firefox would repeatedly trigger whatever Intent I sent via the LOCATION header as long as the PoC script was running.

This was great, but for a good PoC we need to show some real impact. Popping the dialer may look like a big scary Remote Code Execution bug, but in this case it wasn’t. I needed to do better.

The first step was to write an exploit that was specifically designed for this bug and that provided the flexibility to dynamically provide an Intent at runtime. You can take a look at what I ended up with in this Python script.

The TL;DR of the exploit code is that it listens on the network for SSDP “discovery” messages and responds to them, pretending to be exactly the type of device they are looking for. Instead of providing a link to an XML file in the LOCATION header, it passes in a user-supplied argument, which can be an Android Intent. There are some specifics here that matter and have to be formatted exactly right in order for the initiator to trust them. Luckily, I’d spent some quality time reading the IETF specs to figure that out in the past.
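The message-handling half of that idea can be sketched in a few lines. This is only an illustration under assumptions, not the linked script: the names search_target and build_reply are hypothetical, the reply carries a reduced set of headers, and all socket handling is left out.

```python
from typing import Optional

# UUID reused from the example packets; any stable value would do here.
DEVICE_UUID = "7f7cc7e1-b631-86f0-ebb2-3f4504b58f5c"


def search_target(request: str) -> Optional[str]:
    """Return the ST value of an M-SEARCH request, or None if it is not one."""
    lines = request.split("\r\n")
    if not lines[0].startswith("M-SEARCH"):
        return None
    for line in lines[1:]:
        name, sep, value = line.partition(":")
        if sep and name.strip().upper() == "ST":
            return value.strip()
    return None


def build_reply(st: str, location: str) -> str:
    """Build a reply that echoes the requested type and carries a caller-supplied LOCATION."""
    lines = [
        "HTTP/1.1 200 OK",
        "CACHE-CONTROL: max-age=1800",
        "EXT:",
        f"LOCATION: {location}",
        "SERVER: UPnP/1.0",
        f"ST: {st}",
        f"USN: uuid:{DEVICE_UUID}::{st}",
        "",  # blank line terminating
        "",  # the header block
    ]
    return "\r\n".join(lines)


request = (
    "M-SEARCH * HTTP/1.1\r\n"
    "HOST: 239.255.255.250:1900\r\n"
    'MAN: "ssdp:discover"\r\n'
    "MX: 2\r\n"
    "ST: roku:ecp\r\n"
    "\r\n"
)
reply = build_reply(search_target(request), "tel://666")
```

Echoing the ST value back is what lets the reply look like the device type the initiator asked for, whatever that type happens to be.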

The next step was to find an Intent that would be meaningful in a demonstration of web browser exploitation. Targeting the browser itself seemed like a likely candidate. The developer docs were not very helpful here, though: the URI scheme to open a web browser is defined as http or https, and the native SSDP code in Firefox mobile would handle those URLs on its own rather than pass them off to the Intent system.

A bit of searching around and I learned that there was another way to build an Intent URI while specifying the actual app you would like to send it to. Here’s an example of an Intent URI to specifically launch Firefox and browse to example.com:

intent://example.com/#Intent;scheme=http;package=org.mozilla.firefox;end

If you’d like to try out the exploit yourself, you can grab an older version of Firefox for Android here.

To start, simply join an Android device running a vulnerable version of Firefox to a wireless network and make sure Firefox is running.

Then, on a device that is joined to the same wireless network, run the following command:

# Replace "wlan0" with the wireless device on your attacking machine.
python3 ./ffssdp.py wlan0 -t "intent://example.com/#Intent;scheme=http;package=org.mozilla.firefox;end"

All Android devices on the network running Firefox will automatically browse to example.com.
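The Intent URI passed with -t follows a regular pattern, so it can be derived from an ordinary URL. The sketch below assumes only the scheme and package fields seen in the example; build_intent_uri is a hypothetical helper, and the full Intent URI syntax supports more fields than are handled here.

```python
from urllib.parse import urlsplit


def build_intent_uri(url: str, package: str) -> str:
    """Rewrite an http(s) URL as an Intent URI aimed at one app (hypothetical helper)."""
    parts = urlsplit(url)
    target = parts.netloc + parts.path  # host and path follow "intent://"
    if parts.query:
        target += "?" + parts.query
    # The original scheme moves into the "scheme=" field of the fragment.
    return f"intent://{target}#Intent;scheme={parts.scheme};package={package};end"


uri = build_intent_uri("http://example.com/", "org.mozilla.firefox")
```

With these inputs the result is the same string used in the command above.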

If you’d like to see an example of using the exploit to launch another application, you can try something like the following, which will open the mail client with a pre-filled message:

# Replace "wlan0" with the wireless device on your attacking machine.
python3 ./ffssdp.py wlan0 -t "mailto:itpeeps@work.com?subject=I've%20been%20hacked&body=OH%20NOES!!!"
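The %20 sequences in that mailto URI are percent-encoded spaces. As a small sketch, the same string can be generated with Python's standard library; build_mailto is a hypothetical helper, and the safe argument is an assumption made only so the output matches the hand-written URI (by default, quote would also encode the apostrophe and exclamation marks).

```python
from urllib.parse import quote


def build_mailto(address: str, subject: str, body: str) -> str:
    """Build a mailto URI with a pre-filled subject and body (hypothetical helper)."""
    # safe="'!" leaves apostrophes and exclamation marks unencoded,
    # mirroring the hand-written URI in the command.
    encoded_subject = quote(subject, safe="'!")
    encoded_body = quote(body, safe="'!")
    return f"mailto:{address}?subject={encoded_subject}&body={encoded_body}"


mailto_uri = build_mailto("itpeeps@work.com", "I've been hacked", "OH NOES!!!")
```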

These of course are harmless examples to demonstrate the exploit. A bad actor could send a victim to a malicious website, or directly to the location of a malicious browser extension and repeatedly prompt the user to install it.

It is possible that a higher impact exploit could be developed by looking deeper into the Intents offered inside Firefox or to target known-vulnerable Intents in other applications.

When disclosing vulnerabilities, I often feel a sense of urgency once I’ve found something new. I want to get it reported as quickly as possible, but I also try to make sure I’ve built a PoC that is meaningful enough to be taken seriously. I’m sure I don’t always get this balance right, but in this case I felt like I had enough to provide a solid report to Mozilla.

What came next

I worked out a public disclosure date with Mozilla, waited until that day, and then uploaded the exploit code with a short technical write-up and posted a link on Twitter.

To be perfectly honest, I thought the story would end there. I assumed I would get a few thumbs-up emojis from friends and it would then quickly fade away. Or worse, someone would call me out as a fraud and point out that I was completely wrong about the entire thing.

But, guess what? It caught on a bit. Apparently people are really into web browser bugs AND mobile bugs. I think it probably resonates because it hits so close to home, affecting that little brick that we all carry in our pockets and share every intimate detail of our lives with.

I have a Google “search alert” for my name, and it started pinging me a couple times a day with mentions in online tech articles all around the world, and I even heard from some old friends who came across my name in their news feeds. Pretty cool!

While I’ll be the first to admit that this isn’t some super-sophisticated, earth-shattering discovery, it did garner me a small bit of positive attention and in my line of work that’s pretty handy. I think this is one of the major benefits of disclosing bugs in open-source software to organizations that care – the experience is transparent and can be shared with the world.

Another POC video created by @LukasStefanko

Lessons learned

This was the first significant discovery I’ve made both in a mobile application and in a web browser, and I certainly wouldn’t consider myself an expert in either. But here I am, writing a guest blog with Mozilla. Who’d have thought? I guess the lesson here is to try new things. Try old things in new ways. Try normal things in weird ways. Basically, just try things.

Hacking, for me at least, is more of an exploration than a well-planned journey. I often chase after random things that look interesting, trying to understand exactly what is happening and why it is happening that way. Sometimes it pays off right away, but more often than not it’s an iterative process that provides benefits at unexpected times. This bug was a great example, where my past experience with SSDP came back to pay dividends with my new research into Android.

Also, read books! And while the book I mention above just happens to be about hacking, I honestly find just as much (maybe more) inspiration consuming information about how to design and build systems. Things are much easier to take apart if you understand how they are put together.

Finally, don’t avoid looking for bugs in major applications just because you may not be the globally renowned expert in that particular area. If I did that, I would never find any at all. The bugs are there, you just have to have curiosity and persistence.

If you read this far, thanks! I realize I went off on some personal tangents, but hopefully the experience will resonate with other hackers on their own explorations.

With that, back to you Freddy.

Conclusion

As one of the oldest Bug Bounty Programs out there, we really enjoy and encourage direct participation on Bugzilla. The direct communication with our developers and security engineers helps you gain insight into browser development and allows us to gain new perspectives.

Furthermore, we will continue to have guest blog posts for interesting bugs here. If you want to learn more about our security internals, follow us on Twitter @attackndefense or subscribe to our RSS feed.

Finally, thanks to Chris for reporting this interesting bug!


Eric Shepherd: Amazon Wins Sheppy |

Following my departure from the MDN documentation team at Mozilla, I’ve joined the team at Amazon Web Services, documenting the AWS SDKs. Exactly which SDKs I’ll be covering is still being finalized; we have some in mind but are still determining who will prioritize which, so I’ll hold off on being more specific.

Instead of going into details on my role on the AWS docs team, I thought I’d write a new post that provides a more in-depth introduction to myself than the little mini-bios strewn throughout my onboarding forms. Not that I know if anyone is interested, but just in case they are…

Personal

Now a bit about me. I was born in the Los Angeles area, but my family moved around a lot when I was a kid due to my dad’s work. By the time I left home for college at age 18, we had lived in California twice (where I began high school), Texas twice, Colorado twice, and Louisiana once (where I graduated high school). We also lived overseas once, near Pekanbaru, Riau Province on the island of Sumatra in Indonesia. It was a fun and wonderful place to live. Even though we lived in a company town called Rumbai, and were thus partially isolated from the local community, we still lived in a truly different place from the United States, and it was a great experience I cherish.

My wife, Sarah, and I have a teenage daughter, Sophie, who’s smarter than both of us combined. When she was in preschool and kindergarten, we started talking about science in pretty detailed ways. I would give her information and she would draw conclusions that were often right, thinking up things that essentially were black holes, the big bang, and so forth. It was fun and exciting to watch her little brain work. She’s in her “nothing is interesting but my 8-bit chiptunes, my game music, the games I like to play, and that’s about it” phase, and I can’t wait until she’s through that and becomes the person she will be in the long run. Nothing can bring you so much joy, pride, love, and total frustration as a teenage daughter.

Education

I got my first taste of programming on a TI-99/4 computer bought by the Caltex American School there. We were all given a short programming lesson just to get a taste of it, and I was captivated from the first day, when I got it to print a poem based on “Roses are red, violets are blue” to the screen. We were allowed to book time during recesses and before and after school to use the computer, and I took every opportunity I could to do so, learning more and more and writing tons of BASIC programs on it.

This included my masterpiece: a game called “Alligator!” in which you drove an alligator sprite around with the joystick, eating frogs that bounced around the screen while trying to avoid the dangerous bug that was chasing you. When I discovered that other kids were using their computer time to play it, I was permanently hooked.

I continued to learn and expand my skills over the years, moving on to various Apple II (and clone) computers, eventually learning 6502 and 65816 assembly, Pascal, C, dBase IV, and other languages.

During my last couple years of high school, I got a job at a computer and software store (which was creatively named “Computer and Software Store”) in Mandeville, Louisiana. In addition to doing grunt work and front-of-store sales, I also spent time doing programming for contract projects the store took on. My first was a simple customer contact management program for a real estate office. My most complex completed project was a three-store cash register system for a local chain of taverns and bars in the New Orleans area. I also built most of a custom inventory, quotation, and invoicing system for the store, though this was never finished due to my departure to start college.

The project I was proudest of was a program for tracking the information and progress of special education students and students with special needs for the St. Tammany Parish School District. This was built to run on an Apple IIe computer, and I was delighted to have the chance to build a real workplace application for my favorite platform.