Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of this RSS feed's readers.

Mozilla Performance Blog: Dynamic Test Documentation with PerfDocs |

After over twenty years, Mozilla is still going strong. But over that much time, there are bound to be changes in responsibilities. This brings unique challenges to test maintenance when the original creators leave and knowledge of a test’s purpose and inner workings possibly disappears. This is especially true when it comes to performance testing.

Our first performance testing framework is Talos, which was built in 2007. It’s a fantastic tool that is still used today for performance testing very specific aspects of Firefox. We currently have 45 different performance tests in Talos, and together they produce as many as 462 metrics. Having said that, maintaining the tests themselves is a challenge because some of the people who originally built them are no longer around. For these tests, the last person who touched the code and is still around usually becomes the maintainer. But with a lack of documentation on the tests themselves, this becomes a difficult task when you consider that a modification could change what is being measured and move the test away from its original purpose.

Over time, we’ve built another performance testing framework called Raptor which is primarily used for page load testing (e.g. measuring first paint, and first contentful paint). This framework is much simpler to maintain and keep up with its purpose but the settings used for the tests change often enough that it becomes easy to forget how we set up the test, or what pages are being tested exactly. We have a couple other frameworks too, with the newest one (which is still in development) being MozPerftest – there might be a blog post on this in the future. With this many frameworks and tests, it’s easy to see how test maintenance over the long term can turn into a bit of a mess when it’s left unchecked.

To overcome this issue, we decided to implement a tool to dynamically document all of our existing performance tests in a single interface while also being able to prevent new tests from being added without proper documentation or, at the least, an acknowledgement of the existence of the test. We called this tool PerfDocs.

Currently, we use PerfDocs to document tests in Raptor and MozPerftest (with Talos in the plans for the future). At the moment in Raptor, we only document the tests that we have, along with the pages that are being tested. Given that Raptor is a simple framework whose main purpose is to measure page loads, this documentation gives us enough without getting overly complex. However, we do plan to add much more information to it in the future (e.g. what branches the tests run on, and what the test settings are).

The PerfDocs integration with MozPerftest is far more interesting though and you can find it here. In MozPerftest, all tests have a mandatory requirement of having metadata in the test itself. For example, here’s a test we have for measuring the start-up time on our mobile browsers which describes things such as the browsers that it runs on, and even the owner of the test. This lets us force the test writer to think about maintainability as we move into the future rather than simply writing it and forgetting it. For that Android VIEW test, you can find the generated documentation here. If you look through the documented tests that we have, you’ll notice that we also don’t have a single person listed as a maintainer. Instead, we refer to the team that built it as the maintainer. Furthermore, the tests actually exist in the folders (or code) that those teams are responsible for so they don’t need to exist in the frameworks folder giving us more accountability for their maintenance. By building tests this way, with documentation in mind, we can ensure that as time goes on, we won’t lose information about what is being tested, its purpose, along with who should be responsible for maintaining it.

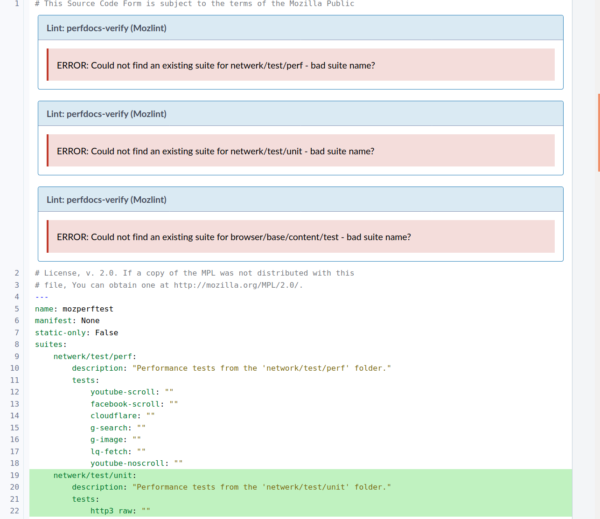

Lastly, as I alluded to above, outside of generating documentation dynamically we also ensure that any new tests are properly documented before they are added into mozilla-central. This is done for both Raptor and MozPerftest through a tool called review-bot which runs tests on submitted patches in Phabricator (the code review tool that we use). When a patch is submitted, PerfDocs will run to make sure that (1) all the tests that were documented actually exist, and (2) all the tests which exist are actually documented. This way, we can prevent our documentation from becoming outdated with tests that don’t exist anymore, and ensure that all tests are always documented in some way.
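The two checks can be pictured as a pair of set differences. The sketch below is only an illustration of that idea, not PerfDocs' actual code; the function name `verify_docs` and the test names in the usage example are hypothetical.

```python
def verify_docs(documented, existing):
    """Compare documented test names against the tests found in the tree.

    Returns a list of human-readable problems; an empty list means the
    documentation and the tests agree.
    """
    documented, existing = set(documented), set(existing)
    problems = []
    # (1) every documented test must actually exist
    for name in sorted(documented - existing):
        problems.append(f"documented but missing: {name}")
    # (2) every existing test must be documented
    for name in sorted(existing - documented):
        problems.append(f"exists but undocumented: {name}")
    return problems


if __name__ == "__main__":
    # Hypothetical test names, for illustration only.
    for problem in verify_docs({"suite-a", "suite-old"}, {"suite-a", "suite-new"}):
        print(problem)
```

A review step built on a check like this would fail the patch whenever the returned list is non-empty.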

The review-bot left a complaint on this patch which was adding new suites. This one is because we could not find the actual tests.

In the future, we hope to be able to expand this tool and its features from our performance tests to the massive box of functional tests that we have. If you think having 462 metrics to track is a lot, consider the thousands of tests we have for ensuring that Firefox functionality is properly tested.

This project started in Q4 of 2019, with myself [:sparky], and Alexandru Ionescu [:alexandrui] building up the base of this tool. Then, in early H1-2020, Myeongjun Go [:myeongjun], a fantastic volunteer contributor, began hacking on this project and brought us from lightly documenting Raptor tests to having links to the tested pages in it, and even integrating PerfDocs into MozPerftest.

If you have any questions, feel free to reach out to us on Riot in #perftest.

https://blog.mozilla.org/performance/2020/11/03/dynamic-test-documentation-with-perfdocs/

Daniel Stenberg: HSTS your curl |

HTTP Strict Transport Security (HSTS) is a standard HTTP response header for sites to tell the client that for a specified period of time into the future, that host is not to be accessed with plain HTTP but only using HTTPS. It is documented in RFC 6797 from 2012.

The idea is of course to reduce the risk of man-in-the-middle attacks when the server resources might be accessible via both HTTP and HTTPS, perhaps due to legacy or just as an upgrade path. Every access to the HTTP version is then a risk that you get back tampered content.

Browsers preload

These headers have been supported by the popular browsers for years already, and they also have a system set up for preloading a set of sites. Sites that exist in their preload list then never get accessed over HTTP since they know of their HSTS state already when the browser is fired up for the first time.

The entire .dev top-level domain is even in that preload list so you can in fact never access a web site on that top-level domain over HTTP with the major browsers.

With the curl tool

Starting in curl 7.74.0 (scheduled to be released in December 2020), curl has experimental support for HSTS. Experimental means it isn’t enabled by default and we discourage use of it in production.

You instruct curl to understand HSTS and to load/save a cache with HSTS information using --hsts <file name>. The HSTS cache saved into that file is then updated on exit, and if you do repeated invocations with the same cache file, it will effectively avoid clear text HTTP accesses for as long as the HSTS headers tell it.
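To make the cache behaviour concrete, here is a minimal sketch of the idea in Python. It is not curl's implementation and does not use curl's cache file format; the class `HstsCache` and its methods are hypothetical, only the `max-age` and `includeSubDomains` directives from RFC 6797 are handled, and port numbers in URLs are left untouched for simplicity.

```python
import time
from urllib.parse import urlsplit, urlunsplit


class HstsCache:
    """Toy in-memory HSTS store keyed by host name (hypothetical, not curl's)."""

    def __init__(self):
        self._entries = {}  # host -> (expiry timestamp, include_subdomains)

    def store(self, host, header, now=None):
        """Record a Strict-Transport-Security header value seen over HTTPS."""
        now = time.time() if now is None else now
        max_age, include_sub = None, False
        for part in header.split(";"):
            name, _, value = part.strip().partition("=")
            if name.lower() == "max-age":
                max_age = int(value.strip('"'))
            elif name.lower() == "includesubdomains":
                include_sub = True
        if max_age is None:
            return  # max-age is required; ignore headers without it
        if max_age == 0:
            self._entries.pop(host.lower(), None)  # max-age=0 removes the entry
        else:
            self._entries[host.lower()] = (now + max_age, include_sub)

    def applies(self, host, now=None):
        """True if host, or a parent with includeSubDomains, has a live entry."""
        now = time.time() if now is None else now
        labels = host.lower().split(".")
        for i in range(len(labels)):
            entry = self._entries.get(".".join(labels[i:]))
            if entry and entry[0] > now and (i == 0 or entry[1]):
                return True
        return False

    def upgrade(self, url, now=None):
        """Rewrite an http:// URL to https:// when the host is covered."""
        parts = urlsplit(url)
        if parts.scheme == "http" and parts.hostname and self.applies(parts.hostname, now):
            return urlunsplit(("https",) + tuple(parts[1:]))
        return url
```

Once an entry is stored for a host, later plain HTTP URLs for that host are rewritten until the entry expires, which is the effect repeated invocations with the same cache file are meant to have.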

I envision that users will simply use a small HSTS cache file for specific use cases rather than anyone ever really wanting to have or use a “complete” preload list of domains such as the one the browsers use, as that’s a huge list of sites and for most use cases just completely unnecessary to load and handle.

With libcurl

Possibly, this feature is more useful and appreciated by applications that use libcurl for HTTP(S) transfers. With libcurl the application can set a file name to use for loading and saving the cache but it also gets some added options for more flexibility and powers. Here’s a quick overview:

CURLOPT_HSTS – lets you set a file name to read/write the HSTS cache from/to.

CURLOPT_HSTS_CTRL – enable HSTS functionality for this transfer

CURLOPT_HSTSREADFUNCTION – this callback gets called by libcurl when it is about to start a transfer and lets the application preload HSTS entries – as if they had been read over the wire and been added to the cache.

CURLOPT_HSTSWRITEFUNCTION – this callback gets called repeatedly when libcurl flushes its in-memory cache and allows the application to save the cache somewhere and similar things.

Feedback?

I trust you understand that I’m very very keen on getting feedback on how this works, on the API and your use cases. Both negative and positive. Whatever your thoughts are really!

Data@Mozilla: This week in Glean: Glean.js |

(“This Week in Glean” is a series of blog posts that the Glean Team at Mozilla is using to try to communicate better about our work. They could be release notes, documentation, hopes, dreams, or whatever: so long as it is inspired by Glean.)

In a previous TWiG blog post, I talked about my experiment on trying to compile glean-core to Wasm. The motivation for that experiment was the then upcoming Glean.js workweek, where some of us were going to take a pass at building a proof-of-concept implementation of Glean in Javascript. That blog post ends on the following note:

My conclusion is that although we can compile glean-core to Wasm, it doesn’t mean that we should do that. The advantages of having a single source of truth for the Glean SDK are very enticing, but at the moment it would be more practical to rewrite something specific for the web.

When I wrote that post, we hadn’t gone through the Glean.js workweek and were not sure yet if it would be viable to pursue a new implementation of Glean in Javascript.

I am not going to keep up the suspense though. We were able to implement a proof of concept version of Glean that works in Javascript environments during that workweek. It:

- Persisted data throughout application runs (e.g. client_id);

- Allowed for recording event metrics;

- Sent Glean schema compliant pings to the pipeline.

And all of this, we were able to make work on:

- Static websites;

- Svelte apps;

- Node.js servers;

- Electron apps;

- Node.js command line applications;

- Node.js server applications;

- Qt/QML apps.

Check out the code for this project on: https://github.com/brizental/gleanjs

The outcome of the workweek confirmed it was possible and worth it to go ahead with Glean.js. Over the past few weeks, the Glean SDK team has officially started working on the roadmap for this project’s MVP.

Our plan is to have an MVP of Glean.js that can be used on webextensions by February 2021.

The reason for our initial focus on webextensions is that the Ion project has volunteered to be Glean.js’ first consumer. Support for static websites and Qt/QML apps will follow. Other consumers such as Node.js servers and CLIs are not part of the initial roadmap.

Although we learned a lot by building the POC, we were probably left with more open questions than answered ones. The Javascript environment is a very special one and when we set out to build something that can work virtually anywhere that runs Javascript, we were embarking on an adventure.

Each Javascript environment has different resources the developer can interact with. Let’s think, for example, about persistence solutions: on web browsers we can use localStorage or IndexedDB, but on Node.js servers / CLIs we would need to go another way completely and use Level DB or some other external library. What is the best way to deal with this and what exactly are the differences between environments?

The issue of having different resources is not even the most challenging one. Glean defines internal metrics and their lifetimes, and internal pings and their schedules. This is important so that our users can do base analysis without having any custom metrics or pings. The hardest open question we were left with was: what pings should Glean.js send out of the box and what should their scheduling look like?

Because Glean.js opens up possibilities for such varied consumers, from websites to CLIs, defining scheduling that will be universal for all of its consumers is probably not even possible. If we decide to tackle these questions for each environment separately, we are still facing tricky consumers and consumers that we are not used to, such as websites and web extensions.

Websites specifically come with many questions: how can we guarantee client side data persistence if a user can easily delete all of it by running some code in the console or tweaking browser settings? What is the best scheduling for pings if each website can have so many different usage lifecycles?

We are excited to tackle these and many other challenges in the coming months. Development of the roadmap can be followed on Bug 1670910.

https://blog.mozilla.org/data/2020/11/02/this-week-in-glean-glean-js/

Dustin J. Mitchell: Taskcluster's DB (Part 2) - DB Migrations |

This is part 2 of a deep-dive into the implementation details of Taskcluster’s backend data stores. Check out part 1 for the background, as we’ll jump right in here!

Azure in Postgres

As of the end of April, we had all of our data in a Postgres database, but the data was pretty ugly. For example, here’s a record of a worker as recorded by worker-manager:

    partition_key | testing!2Fstatic-workers
    row_key       | cc!2Fdd~ee!2Fff
    value         | {
                        "state": "requested",
                        "RowKey": "cc!2Fdd~ee!2Fff",
                        "created": "2015-12-17T03:24:00.000Z",
                        "expires": "3020-12-17T03:24:00.000Z",
                        "capacity": 2,
                        "workerId": "ee/ff",
                        "providerId": "updated",
                        "lastChecked": "2017-12-17T03:24:00.000Z",
                        "workerGroup": "cc/dd",
                        "PartitionKey": "testing!2Fstatic-workers",
                        "lastModified": "2016-12-17T03:24:00.000Z",
                        "workerPoolId": "testing/static-workers",
                        "__buf0_providerData": "eyJzdGF0aWMiOiJ0ZXN0ZGF0YSJ9Cg==",
                        "__bufchunks_providerData": 1
                    }
    version       | 1
    etag          | 0f6e355c-0e7c-4fe5-85e3-e145ac4a4c6c

To reap the goodness of a relational database, that would be a “normal”[*] table: distinct columns, nice data types, and a lot less redundancy.

All access to this data is via some Azure-shaped stored functions, which are also not amenable to the kinds of flexible data access we need:

- _load

- _create

- _remove

- _modify

- _scan

[*] In the normal sense of the word; we did not attempt to apply database normalization.

Database Migrations

So the next step, which we dubbed “phase 2”, was to migrate this schema to one more appropriate to the structure of the data.

The typical approach is to use database migrations for this kind of work, and there are lots of tools for the purpose. For example, Alembic and Django both provide robust support for database migrations – but they are both in Python.

The only mature JS tool is knex, and after some analysis we determined that it both lacked features we needed and brought a lot of additional features that would complicate our usage. It is primarily a “query builder”, with basic support for migrations. Because we target Postgres directly, and because of how we use stored functions, a query builder is not useful. And the migration support in knex, while effective, does not support the more sophisticated approaches to avoiding downtime outlined below.

We elected to roll our own tool, allowing us to get exactly the behavior we wanted.

Migration Scripts

Taskcluster defines a sequence of numbered database versions. Each version corresponds to a specific database schema, which includes the structure of the database tables as well as stored functions. The YAML file for each version specifies a script to upgrade from the previous version, and a script to downgrade back to that version. For example, an upgrade script might add a new column to a table, with the corresponding downgrade dropping that column.

    version: 29
    migrationScript: |-
      begin
        alter table secrets add column last_used timestamptz;
      end
    downgradeScript: |-
      begin
        alter table secrets drop column last_used;
      end
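As a rough illustration of how numbered versions and their scripts fit together, the sketch below picks the scripts needed to move a database from one version to another. It is written in Python for brevity and is not Taskcluster's tool; the `Version` class and the `plan` function are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Version:
    number: int
    migration_script: str   # upgrades from number - 1 to number
    downgrade_script: str   # downgrades from number back to number - 1


def plan(versions, current, target):
    """Return the scripts to run, in order, to move from current to target."""
    by_number = {v.number: v for v in versions}
    if target >= current:
        # Upgrade: run each version's migration script in ascending order.
        return [by_number[n].migration_script for n in range(current + 1, target + 1)]
    # Downgrade: undo versions one at a time, in descending order.
    return [by_number[n].downgrade_script for n in range(current, target, -1)]
```

Because every version carries both scripts, the same table of versions serves upgrades and rollbacks.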

So far, this is a pretty normal approach to migrations. However, a major drawback is that it requires careful coordination around the timing of the migration and deployment of the corresponding code. Continuing the example of adding a new column, if the migration is deployed first, then the existing code may execute INSERT queries that omit the new column. If the new code is deployed first, then it will attempt to read a column that does not yet exist.

There are workarounds for these issues. In this example, that could mean adding a default value for the new column in the migration, or writing the queries such that they are robust to a missing column. Such queries are typically spread around the codebase, though, and it can be difficult to ensure (by testing, of course) that they all operate correctly.

In practice, most uses of database migrations are continuously-deployed applications – a single website or application server, where the developers of the application control the timing of deployments. That allows a great deal of control, and changes can be spread out over several migrations that occur in rapid succession.

Taskcluster is not continuously deployed – it is released in distinct versions which users can deploy on their own cadence. So we need a way to run migrations when upgrading to a new Taskcluster release, without breaking running services.

Stored Functions

Part 1 mentioned that all access to data is via stored functions. This is the critical point of abstraction that allows smooth migrations, because stored functions can be changed at runtime.

Each database version specifies definitions for stored functions, either introducing new functions or replacing the implementation of existing functions.

So the version: 29 YAML above might continue with

    methods:
      create_secret:
        args: name text, value jsonb
        returns: ''
        body: |-
          begin
            insert
            into secrets (name, value, last_used)
            values (name, value, now());
          end
      get_secret:
        args: name text
        returns: record
        body: |-
          begin
            update secrets
            set last_used = now()
            where secrets.name = get_secret.name;

            return query
            select name, value from secrets
            where secrets.name = get_secret.name;
          end

This redefines two existing functions to operate properly against the new table.

The functions are redefined in the same database transaction as the migrationScript above, meaning that any calls to create_secret or get_secret will immediately begin populating the new column.

A critical rule (enforced in code) is that the arguments and return type of a function cannot be changed.
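A check of this kind could look roughly like the following. This is a sketch of the idea in Python, not the code Taskcluster uses; `check_signatures` is a hypothetical name, and the method dictionaries simply mirror the `args` and `returns` fields of the YAML above.

```python
def check_signatures(previous, current):
    """Return the names of redefined methods whose args or return type changed.

    Both arguments map a method name to a dict with "args" and "returns" keys.
    Methods that are new in `current` are allowed and never reported.
    """
    changed = []
    for name, new in current.items():
        old = previous.get(name)
        if old is None:
            continue  # a brand new function may have any signature
        if (old["args"], old["returns"]) != (new["args"], new["returns"]):
            changed.append(name)
    return sorted(changed)
```

An empty result means every redefined function kept its signature, so callers written against the previous version keep working.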

To support new code that references the last_used value, we add a new function:

    get_secret_with_last_used:
      args: name text
      returns: record
      body: |-
        begin
          update secrets
          set last_used = now()
          where secrets.name = get_secret_with_last_used.name;

          return query
          select name, value, last_used from secrets
          where secrets.name = get_secret_with_last_used.name;
        end

Another critical rule is that DB migrations must be applied fully before the corresponding version of the JS code is deployed.

In this case, that means code that uses get_secret_with_last_used is deployed only after the function is defined.

All of this can be thoroughly tested in isolation from the rest of the Taskcluster code, both unit tests for the functions and integration tests for the upgrade and downgrade scripts. Unit tests for redefined functions should continue to pass, unchanged, providing an easy-to-verify compatibility check.

Phase 2 Migrations

The migrations from Azure-style tables to normal tables are, as you might guess, a lot more complex than this simple example. Among the issues we faced:

- Azure-entities uses a multi-field base64 encoding for many data-types, that must be decoded (such as __buf0_providerData/__bufchunks_providerData in the example above)

- Partition and row keys are encoded using a custom variant of urlencoding that is remarkably difficult to implement in pl/pgsql

- Some columns (such as secret values) are encrypted.

- Postgres generates slightly different ISO8601 timestamps from JS’s Date.toJSON()
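For a sense of what the decoding involves, here is how the first two issues look outside the database, using the worker record shown earlier. This is a Python sketch, not the pl/pgsql the team wrote. It assumes, based only on that example, that keys use `!` where URL-encoding uses `%`, that composite row keys are joined with `~`, and that a buffer property is stored as a chunk count plus separately base64-encoded chunks; the real encoding has more cases than this.

```python
import base64
from urllib.parse import unquote


def decode_key(encoded):
    """Decode one key component, treating '!' as the escape character."""
    return unquote(encoded.replace("!", "%"))


def decode_row_key(encoded):
    """Split a composite key on '~' and decode each component."""
    return [decode_key(part) for part in encoded.split("~")]


def decode_buffer(value, name):
    """Decode each base64 chunk of a buffer property and concatenate them."""
    count = value[f"__bufchunks_{name}"]
    chunks = (value[f"__buf{i}_{name}"] for i in range(count))
    return b"".join(base64.b64decode(chunk) for chunk in chunks)
```

Applied to the record above, the partition key decodes to the `workerPoolId` value, and the row key decodes to the `workerGroup` and `workerId` values.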

We split the work of performing these migrations across the members of the Taskcluster team, supporting each other through the tricky bits, in a rather long but ultimately successful “Postgres Phase 2” sprint.

0042 - secrets phase 2

Let’s look at one of the simpler examples: the secrets service.

The migration script creates a new secrets table from the data in the secrets_entities table, using Postgres’s JSON function to unpack the value column into “normal” columns.

The database version YAML file carefully redefines the Azure-compatible DB functions to access the new secrets table.

This involves unpacking function arguments from their JSON formats, re-packing JSON blobs for return values, and even some light parsing of the condition string for the secrets_entities_scan function.

It then defines new stored functions for direct access to the normal table. These functions are typically similar, and more specific to the needs of the service. For example, the secrets service only modifies secrets in an “upsert” operation that replaces any existing secret of the same name.

Step By Step

To achieve an extra layer of confidence in our work, we landed all of the phase-2 PRs in two steps. The first step included migration and downgrade scripts and the redefined stored functions, as well as tests for those. But critically, this step did not modify the service using the table (the secrets service in this case). So the unit tests for that service use the redefined stored functions, acting as a kind of integration-test for their implementations. This also validates that the service will continue to run in production between the time the database migration is run and the time the new code is deployed. We landed this step on GitHub in such a way that reviewers could see a green check-mark on the step-1 commit.

In the second step, we added the new, purpose-specific stored functions and rewrote the service to use them. In services like secrets, this was a simple change, but some other services saw more substantial rewrites due to more complex requirements.

Deprecation

Naturally, we can’t continue to support old functions indefinitely: eventually they would be prohibitively complex or simply impossible to implement. Another deployment rule provides a critical escape from this trap: Taskcluster must be upgraded at most one major version at a time (e.g., 36.x to 37.x). That provides a limited window of development time during which we must maintain compatibility.
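A deployment script could guard this rule with a check along these lines. The sketch is purely illustrative: `can_upgrade` is a hypothetical helper, not part of Taskcluster, and it assumes version strings that start with the major number. Treating downgrades as disallowed is also an assumption of the sketch, not something the rule above states.

```python
def can_upgrade(deployed, target):
    """Allow an upgrade only within the same major version or to the next one.

    Versions are strings such as "36.1.0"; only the leading major number is
    compared. Moving to a lower major version is rejected (an assumption).
    """
    deployed_major = int(deployed.split(".")[0])
    target_major = int(target.split(".")[0])
    return 0 <= target_major - deployed_major <= 1
```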

Defining that window is surprisingly tricky, but essentially it’s two major revisions. Like the software engineers we are, we packaged up that tricky computation in a script, and include the lifetimes in some generated documentation.

What’s Next?

This post has hinted at some of the complexity of “phase 2”. There are lots of details omitted, of course!

But there’s one major detail that got us in a bit of trouble.

In fact, we were forced to roll back during a planned migration – not an engineer’s happiest moment.

The queue_tasks_entities and queue_artifacts_entities tables were just too large to migrate in any reasonable amount of time.

Part 3 will describe what happened, how we fixed the issue, and what we’re doing to avoid having the same issue again.

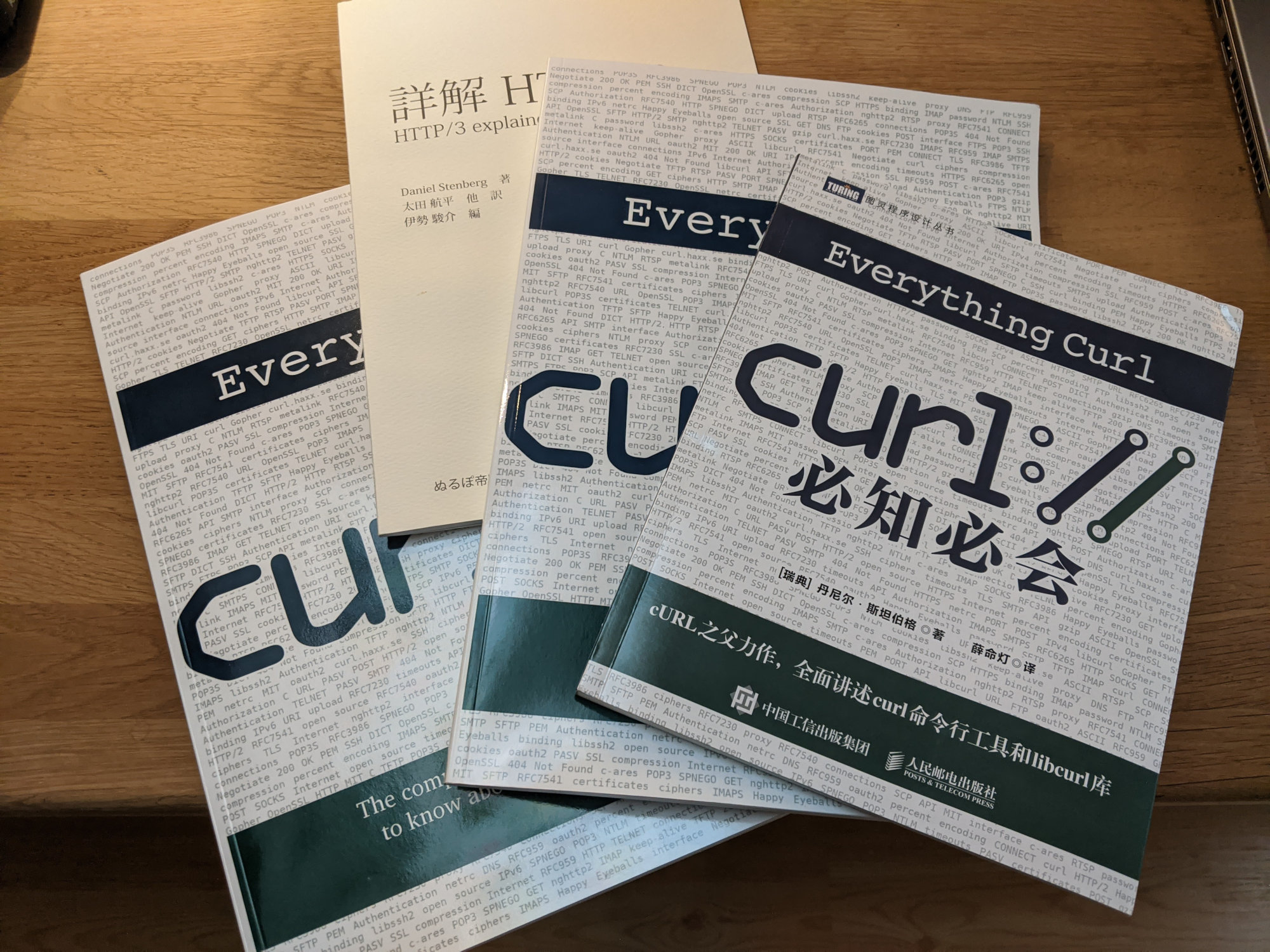

Daniel Stenberg: Everything curl in Chinese |

The other day we celebrated everything curl turning 5 years old, and not too long after that I got myself this printed copy of the Chinese translation in my hands!

This version of the book is available for sale on Amazon and the translation was done by the publisher.

The book’s full contents are available on github and you can read the English version online on ec.haxx.se.

If you would be interested in starting a translation of the book into another language, let me know and I’ll help you get started. Currently the English version consists of 72,798 words so it’s by no means an easy feat to translate! My two other, smaller books, http2 explained and HTTP/3 explained, have been translated into twelve(!) and ten languages this way (and there might be more languages coming!).

Unfortunately I don’t read Chinese so I can’t tell you how good the translation is!

https://daniel.haxx.se/blog/2020/10/29/everything-curl-in-chinese/

Mozilla Addons Blog: Contribute to selecting new Recommended extensions |

Recommended extensions—a curated list of extensions that meet Mozilla’s highest standards of security, functionality, and user experience—are in part selected with input from a rotating editorial board of community contributors. Each board runs for six consecutive months and evaluates a small batch of new Recommended candidates each month. The board’s evaluation plays a critical role in helping identify new potential Recommended additions.

We are now accepting applications for community board members through 18 November. If you would like to nominate yourself for consideration on the board, please email us at amo-featured [at] mozilla [dot] org and provide a brief explanation why you feel you’d make a keen evaluator of Firefox extensions. We’d love to hear about how you use extensions and what you find so remarkable about browser customization. You don’t have to be an extension developer to effectively evaluate Recommended candidates (though indeed many past board members have been developers themselves), however you should have a strong familiarity with extensions and be comfortable assessing the strengths and flaws of their functionality and user experience.

Selected contributors will participate in a six-month project that runs from December – May.

Here’s the entire collection of Recommended extensions, if you are curious to explore what’s currently curated.

Thank you and we look forward to hearing from interested contributors by the 18 November application deadline!

The post Contribute to selecting new Recommended extensions appeared first on Mozilla Add-ons Blog.

https://blog.mozilla.org/addons/2020/10/29/contribute-to-selecting-new-recommended-extensions/

Hacks.Mozilla.Org: MDN Web Docs evolves! Lowdown on the upcoming new platform |

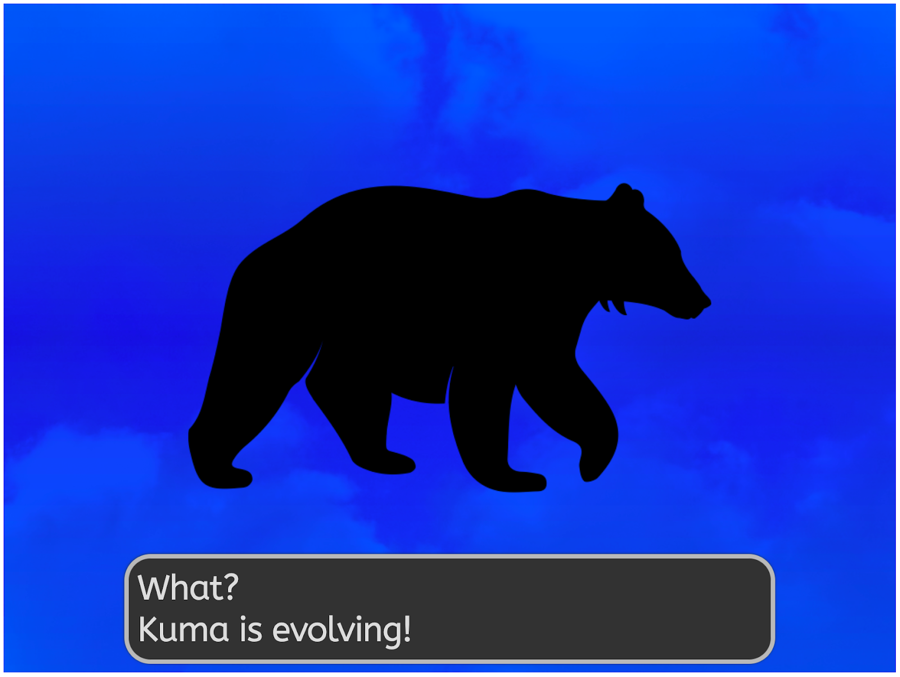

The time has come for Kuma — the platform that powers MDN Web Docs — to evolve. For quite some time now, the MDN developer team has been planning a radical platform change, and we are ready to start sharing the details of it. The question on your lips might be “What does a Kuma evolve into? A KumaMaMa?”

For those of you not so into Pokémon, the question might instead be “How exactly is MDN changing, and how does it affect MDN users and contributors?”

For general users, the answer is easy: there will be very little change to how we serve the great content you use every day to learn and do your jobs.

For contributors, the answer is a bit more complex.

The changes in a nutshell

In short, we are updating the platform to move the content from a MySQL database to being hosted in a GitHub repository (codename: Project Yari).

The main advantages of this approach are:

- Less developer maintenance burden: The existing (Kuma) platform is complex and hard to maintain. Adding new features is very difficult. The update will vastly simplify the platform code — we estimate that we can remove a significant chunk of the existing codebase, meaning easier maintenance and contributions.

- Better contribution workflow: We will be using GitHub’s contribution tools and features, essentially moving MDN from a Wiki model to a pull request (PR) model. This is so much better for contribution, allowing for intelligent linting, mass edits, and inclusion of MDN docs in whatever workflows you want to add it to (you can edit MDN source files directly in your favorite code editor).

- Better community building: At the moment, MDN content edits are published instantly, and then reverted if they are not suitable. This is really bad for community relations. With a PR model, we can review edits and provide feedback, actually having conversations with contributors, building relationships with them, and helping them learn.

- Improved front-end architecture: The existing MDN platform has a number of front-end inconsistencies and accessibility issues, which we’ve wanted to tackle for some time. The move to a new, simplified platform gives us a perfect opportunity to fix such issues.

The exact form of the platform is yet to be finalized, and we want to involve you, the community, in helping to provide ideas and test the new contribution workflow! We will have a beta version of the new platform ready for testing on November 2, and the first release will happen on December 14.

Simplified back-end platform

We are replacing the current MDN Wiki platform with a JAMStack approach, which publishes the content managed in a GitHub repo. This has a number of advantages over the existing Wiki platform, and is something we’ve been considering for a number of years.

Before we discuss our new approach, let’s review the Wiki model so we can better understand the changes we’re making.

Current MDN Wiki platform

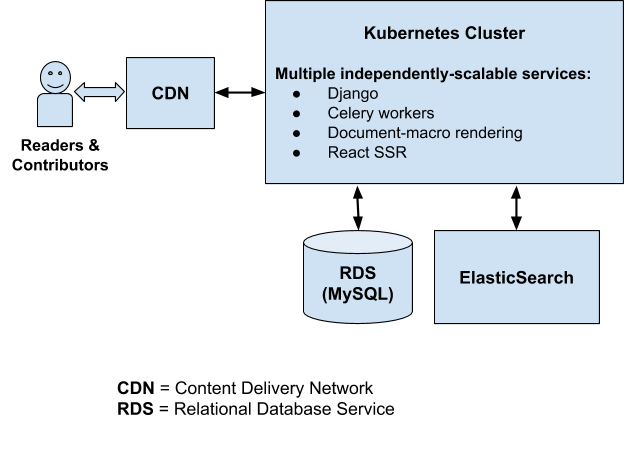

It’s important to note that both content contributors (writers) and content viewers (readers) are served via the same architecture. That architecture has to accommodate both use cases, even though more than 99% of our traffic comprises document page requests from readers. Currently, when a document page is requested, the latest version of the document is read from our MySQL database, rendered into its final HTML form, and returned to the user via the CDN.

That document page is stored and served from the CDN’s cache for the next 5 minutes, so subsequent requests — as long as they’re within that 5-minute window — will be served directly by the CDN. That caching period of 5 minutes is kept deliberately short, mainly due to the fact that we need to accommodate the needs of the writers. If we only had to accommodate the needs of the readers, we could significantly increase the caching period and serve our document pages more quickly, while at the same time reducing the workload on our backend servers.

You’ll also notice that because MDN is a Wiki platform, we’re responsible for managing all of the content, and tasks like storing document revisions, displaying the revision history of a document, displaying differences between revisions, and so on. Currently, the MDN development team maintains a large chunk of code devoted to just these kinds of tasks.

New MDN platform

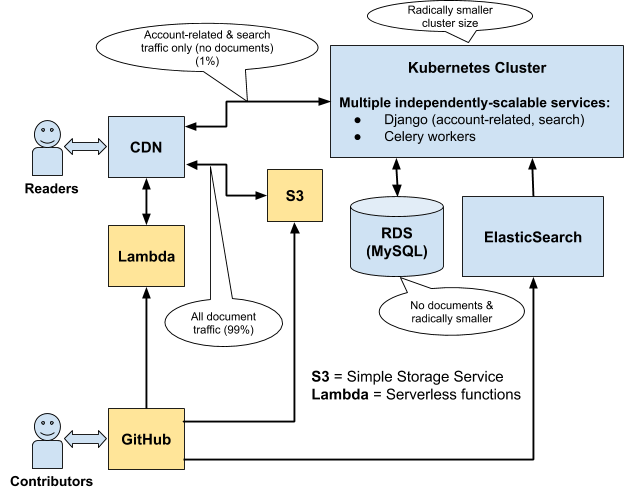

With the new JAMStack approach, the writers are served separately from the readers. The writers manage the document content via a GitHub repository and pull request model, while the readers are served document pages more quickly and efficiently via pre-rendered document pages served from S3 via a CDN (which will have a much longer caching period). The document content from our GitHub repository will be rendered and deployed to S3 on a daily basis.

You’ll notice, from the diagram above, that even with this new approach, we still have a Kubernetes cluster with Django-based services relying on a relational database. The important thing to remember is that this part of the system is no longer involved with the document content. Its scope has been dramatically reduced, and it now exists solely to provide APIs related to user accounts (e.g. login) and search.

This separation of concerns has multiple benefits, the most important three of which are as follows:

- First, the document pages are served to readers in the simplest, quickest, and most efficient way possible. That’s really important, because 99% of MDN’s traffic is for readers, and worldwide performance is fundamental to the user experience.

- Second, because we’re using GitHub to manage our document content, we can take advantage of the world-class functionality that GitHub has to offer as a content management system, and we no longer have to support the large body of code related to our current Wiki platform. It can simply be deleted.

- Third, and maybe less obvious, is that this new approach brings more power to the platform. We can, for example, perform automated linting and testing on each content pull request, which allows us to better control quality and security.

New contribution workflow

Because MDN content is soon to be contained in a GitHub repo, the contribution workflow will change significantly. You will no longer be able to click Edit on a page, make and save a change, and have it show up nearly immediately on the page. You’ll also no longer be able to do your edits in a WYSIWYG editor.

Instead, you’ll need to use git/GitHub tooling to make changes, submit pull requests, then wait for changes to be merged, the new build to be deployed, etc. For very simple changes such as fixing typos or adding new paragraphs, this may seem like a step back — Kuma is certainly convenient for such edits, and for non-developer contributors.

However, making a simple change is arguably no more complex with Yari. You can use the GitHub UI’s edit feature to directly edit a source file and then submit a PR, meaning that you don’t have to be a git genius to contribute simple fixes.

For more complex changes, you’ll need to use the git CLI tool, or a GUI tool like GitHub Desktop, but then again git is such a ubiquitous tool in the web industry that it is safe to say that if you are interested in editing MDN, you will probably need to know git to some degree for your career or course. You could use this as a good opportunity to learn git if you don’t know it already! On top of that there is a file system structure to learn, and some new tools/commands to get used to, but nothing terribly complex.

Another possible challenge to mention is that you won’t have a WYSIWYG to instantly see what the page looks like as you add your content, and in addition you’ll be editing raw HTML, at least initially (we are talking about converting the content to markdown eventually, but that is a bit of a ways off). Again, this sounds like a step backwards, but we are providing a tool inside the repo so that you can locally build and preview the finished page to make sure it looks right before you submit your pull request.

Looking at the advantages now, consider that making MDN content available as a GitHub repo is a very powerful thing. We no longer have spam content live on the site, with us then having to revert the changes after the fact. You are also free to edit MDN content in whatever way suits you best — your favorite IDE or code editor — and you can add MDN documentation into your preferred toolchain (and write your own tools to edit your MDN editing experience). A lot of engineers have told us in the past that they’d be much happier to contribute to MDN documentation if they were able to submit pull requests, and not have to use a WYSIWYG!

We are also looking into a powerful toolset that will allow us to enhance the reviewing process, for example as part of a CI process — automatically detecting and closing spam PRs, and as mentioned earlier on, linting pages once they’ve been edited, and delivering feedback to editors.

Having MDN in a GitHub repo also offers much easier mass edits; blanket content changes have previously been very difficult.

Finally, the “time to live” should be acceptable — we are aiming to have a quick turnaround on the reviews, and the deployment process will be repeated every 24 hours. We think that your changes should be live on the site in 48 hours as a worst case scenario.

Better community building

Currently MDN is not a very lively place in terms of its community. We have a fairly active learning forum where people ask beginner coding questions and seek help with assessments, but there is not really an active place where MDN staff and volunteers get together regularly to discuss documentation needs and contributions.

Part of this is down to our contribution model. When you edit an MDN page, either your contribution is accepted and you don’t hear anything, or your contribution is reverted and you … don’t hear anything. You’ll only know either way by looking to see if your edit sticks, is counter-edited, or is reverted.

This doesn’t strike us as very friendly, and I think you’ll probably agree. When we move to a git PR model, the MDN community will be able to provide hands-on assistance in helping people to get their contributions right — offering assistance as we review their PRs (and offering automated help too, as mentioned previously) — and also thanking people for their help.

It’ll also be much easier for contributors to show how many contributions they’ve made, and we’ll be adding in-page links to allow people to file an issue on a specific page or even go straight to the source on GitHub and fix it themselves, if a problem is encountered.

In terms of finding a good place to chat about MDN content, you can join the discussion on the MDN Web Docs chat room on Matrix.

Improved front-end architecture

The old Kuma architecture has a number of front-end issues. Historically we have lacked a well-defined system that clearly describes the constraints we need to work within, and what our site features look like, and this has led to us ending up with a bloated, difficult to maintain front-end code base. Working on our current HTML and CSS is like being on a roller coaster with no guard-rails.

To be clear, this is not the fault of any one person, or any specific period in the life of the MDN project. There are many little things that have been left to fester, multiply, and rot over time.

Among the most significant problems are:

- Accessibility: There are a number of accessibility problems with the existing architecture that really should be sorted out, but were difficult to get a handle on because of Kuma’s complexity.

- Component inconsistency: Kuma doesn’t use a proper design system — similar items are implemented in different ways across the site, so implementing features is more difficult than it needs to be.

When we started to move forward with the back-end platform rewrite, it felt like the perfect time to again propose the idea of a design system. After many conversations leading to an acceptable compromise being reached, our design system — MDN Fiori — was born.

Front-end developer Schalk Neethling and UX designer Mustafa Al-Qinneh took a whirlwind tour through the core of MDN’s reference docs to identify components and document all the inconsistencies we are dealing with. As part of this work, we also looked for areas where we can improve the user experience, and introduce consistency through making small changes to some core underlying aspects of the overall design.

This included a defined color palette, simple, clean typography based on a well-defined type scale, consistent spacing, improved support for mobile and tablet devices, and many other small tweaks. This was never meant to be a redesign of MDN, so we had to be careful not to change too much. Instead, we played to our existing strengths and made rogue styles and markup consistent with the overall project.

Besides the visual consistency and general user experience aspects, our underlying codebase needed some serious love and attention, so we decided on a complete rethink. Early on in the process it became clear that we needed a base library that was small, nimble, and minimal. Something uniquely MDN, but that could be reused wherever the core aspects of the MDN brand were needed. For this purpose we created MDN-Minimalist, a small set of core atoms that power the base styling of MDN, in a progressively enhanced manner, taking advantage of the beautiful new layout systems we have access to on the web today.

Each component that is built into Yari is styled with MDN-Minimalist, and also has its own style sheet that lives right alongside to apply further styles only when needed. This is an evolving process as we constantly rethink how to provide a great user experience while staying as close to the web platform as possible. The reason for this is twofold:

- First, it means less code. It means less reinventing of the wheel. It means a faster, leaner, less bandwidth-hungry MDN for our end users.

- Second, it helps address some of the accessibility issues we have begrudgingly been living with for some time, which are simply not acceptable on a modern web site. One of Mozilla’s accessibility experts, Marco Zehe, has given us a lot of input to help overcome these. We won’t fix everything in our first iteration, but our pledge to all of our users is that we will keep improving and we welcome your feedback on areas where we can improve further.

A wise person once said that the best way to ensure something is done right is to make doing the right thing the easy thing to do. As such, along with all of the work already mentioned, we are documenting our front-end codebase, design system, and pattern library in Storybook (see Storybook files inside the yari repo) with companion design work in Figma (see typography example) to ensure there is an easy, public reference for anyone who wishes to contribute to MDN from a code or design perspective. This in itself is a large project that will evolve over time. More communication about its evolution will follow.

The future of MDN localization

One important part of MDN’s content that we have talked about a lot during the planning phase is the localized content. As you probably already know, MDN offers facilities for translating the original English content and making the localizations available alongside it.

This is good in principle, but the current system has many flaws. When an English page is moved, the localizations all have to be moved separately, so pages and their localizations quite often go out of sync and get in a mess. And a bigger problem is that there is no easy way of signalling that the English version has changed to all the localizers.

General management is probably the most significant problem. You often get a wave of enthusiasm for a locale, and lots of translations done. But then after a number of months interest wanes, and no-one is left to keep the translations up to date. The localized content becomes outdated, which is often harmful to learning, becomes a maintenance time-suck, and as a result, is often considered worse than having no localizations at all.

Note that we are not saying this is true of all locales on MDN, and we are not trying to downplay the amount of work volunteers have put into creating localized content. For that, we are eternally grateful. But the fact remains that we can’t carry on like this.

We did a bunch of research, and talked to a lot of non-native-English speaking web developers about what would be useful to them. Two interesting conclusions were made:

- We stand to experience a significant but manageable loss of users if we remove or reduce our localization support. 8 languages cover 90% of the accept-language headers received from MDN users (en, zh, es, ja, fr, ru, pt, de), while 14 languages cover 95%. We predict that we would lose at most 19% of our traffic if we dropped L10n entirely.

- Machine translations are an acceptable solution in most cases, if not a perfect one. We looked at the quality of translations provided by automated solutions such as Google Translate and got some community members to compare these translations to manual translations. The machine translations were imperfect, and sometimes hard to understand, but many people commented that a non-perfect language that is up-to-date is better than a perfect language that is out-of-date. We appreciate that some languages (such as CJK languages) fare less well than others with automated translations.

So what did we decide? With the initial release of the new platform, we are planning to include all translations of all of the current documents, but in a frozen state. Translations will exist in their own mdn/translated-content repository, to which we will not accept any pull requests. The translations will be shown with a special header that says “This is an archived translation. No more edits are being accepted.” This is a temporary stage until we figure out the next step.

Note: In addition, the text of the UI components and header menu will be in English only, going forward. They will not be translated, at least not initially.

After the initial release, we want to work with you, the community, to figure out the best course of action to move forward with for translations. We would ideally rather not lose localized content on MDN, but we need to fix the technical problems of the past, manage it better, and ensure that the content stays up-to-date.

We will be planning the next phase of MDN localization with the following guiding principles:

- We should never have outdated localized content on MDN.

- Manually localizing all MDN content in a huge range of locales seems infeasible, so we should drop that approach.

- Losing ~20% of traffic is something we should avoid, if possible.

We are making no promises about deliverables or time frames yet, but we have started to think along these lines:

- Cut down the number of locales we are handling to the top 14 locales that give us 95% of our recorded accept-language headers.

- Initially include non-editable Machine Learning-based automated translations of the “tier-1” MDN content pages (i.e. a set of the most important MDN content that excludes the vast long tail of articles that get no, or nearly no views). Ideally we’d like to use the existing manual translations to train the Machine Learning system, hopefully getting better results. This is likely to be the first thing we’ll work on in 2021.

- Regularly update the automated translations as the English content changes, keeping them up-to-date.

- Start to offer a system whereby we allow community members to improve the automated translations with manual edits. This would require the community to ensure that articles are kept up-to-date with the English versions as they are updated.

Sign up for the beta test

As we mentioned earlier, we are going to launch a beta test of the new MDN platform on November 2nd. We would love your help in testing out the new system and contribution workflow, letting us know what aspects seem good and what aspects seem painful, and suggesting improvements.

If you want to be notified when the new system is ready for testing, please let us know using this form.

Acknowledgements

I’d like to thank my colleagues Schalk Neethling, Ryan Johnson, Peter Bengtsson, Rina Tambo Jensen, Hermina Condei, Melissa Thermidor, and anyone else I’ve forgotten who helped me polish this article with bits of content, feedback, reviews, edits, and more.

The post MDN Web Docs evolves! Lowdown on the upcoming new platform appeared first on Mozilla Hacks - the Web developer blog.

https://hacks.mozilla.org/2020/10/mdn-web-docs-evolves-lowdown-on-the-upcoming-new-platform/

Dustin J. Mitchell: Taskcluster's DB (Part 1) - Azure to Postgres

This is a deep-dive into some of the implementation details of Taskcluster. Taskcluster is a platform for building continuous integration, continuous deployment, and software-release processes. It’s an open source project that began life at Mozilla, supporting the Firefox build, test, and release systems.

The Taskcluster “services” are a collection of microservices that handle distinct tasks: the queue coordinates tasks; the worker-manager creates and manages workers to execute tasks; the auth service authenticates API requests; and so on.

Azure Storage Tables to Postgres

Until April 2020, Taskcluster stored its data in Azure Storage tables, a simple NoSQL-style service similar to AWS’s DynamoDB. Briefly, each Azure table is a list of JSON objects with a single primary key composed of a partition key and a row key. Lookups by primary key are fast and parallelize well, but scans of an entire table are extremely slow and subject to API rate limits. Taskcluster was carefully designed within these constraints, but that meant that some useful operations, such as listing tasks by their task queue ID, were simply not supported. Switching to a fully-relational datastore would enable such operations, while easing deployment of the system for organizations that do not use Azure.
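The difference between a primary-key lookup and a full scan can be sketched with an in-memory stand-in. The `EntityTable` class and the sample task fields below are hypothetical and only model the access pattern; they are not the Azure Storage or Taskcluster API.

```javascript
// Hypothetical in-memory stand-in for a key-value table: every entity is a
// JSON object stored under a (partitionKey, rowKey) primary key.
class EntityTable {
  constructor() {
    this.rows = new Map();
  }

  static key(partitionKey, rowKey) {
    return JSON.stringify([partitionKey, rowKey]);
  }

  insert(partitionKey, rowKey, value) {
    this.rows.set(EntityTable.key(partitionKey, rowKey), value);
  }

  // Primary-key lookup: one direct access, regardless of table size.
  load(partitionKey, rowKey) {
    return this.rows.get(EntityTable.key(partitionKey, rowKey));
  }

  // Any other query has to visit every row and filter.
  scan(predicate) {
    let visited = 0;
    const matches = [];
    for (const value of this.rows.values()) {
      visited += 1;
      if (predicate(value)) matches.push(value);
    }
    return { matches, visited };
  }
}

const taskTable = new EntityTable();
taskTable.insert('task-1', 'task', { taskId: 'task-1', taskQueueId: 'proj/linux' });
taskTable.insert('task-2', 'task', { taskId: 'task-2', taskQueueId: 'proj/windows' });
taskTable.insert('task-3', 'task', { taskId: 'task-3', taskQueueId: 'proj/linux' });

const byKey = taskTable.load('task-2', 'task');
const byQueue = taskTable.scan((t) => t.taskQueueId === 'proj/linux');
console.log(byKey.taskQueueId, byQueue.matches.length, byQueue.visited);
```

Listing tasks by queue touches every row here, which is why such an operation was impractical on a table that only offers fast access by primary key.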

Always Be Migratin’

In April, we migrated the existing deployments of Taskcluster (at that time all within Mozilla) to Postgres. This was a “forklift migration”, in the sense that we moved the data directly into Postgres with minimal modification. Each Azure Storage table was imported into a single Postgres table of the same name, with a fixed structure:

    create table queue_tasks_entities(
      partition_key text,
      row_key text,
      value jsonb not null,
      version integer not null,
      etag uuid default public.gen_random_uuid()
    );
    alter table queue_tasks_entities add primary key (partition_key, row_key);

The importer we used was specially tuned to accomplish this import in a reasonable amount of time (hours). For each known deployment, we scheduled a downtime to perform this migration, after extensive performance testing on development copies.

We considered options to support a downtime-free migration. For example, we could have built an adapter that would read from Postgres and Azure, but write to Postgres. This adapter could support production use of the service while a background process copied data from Azure to Postgres.

This option would have been very complex, especially in supporting some of the atomicity and ordering guarantees that the Taskcluster API relies on. Failures would likely lead to data corruption and a downtime much longer than the simpler, planned downtime. So, we opted for the simpler, planned migration. (We’ll revisit the idea of online migrations in part 3.)
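For illustration only, the adapter that was considered and rejected might look roughly like the following. This is a simplified sketch, not code from Taskcluster: the two `Map` objects stand in for the backends, and `makeMigrationAdapter` and `copyRow` are hypothetical names.

```javascript
// Hypothetical stores: plain Maps stand in for the two backends.
function makeMigrationAdapter(postgres, azure) {
  return {
    // Reads prefer Postgres and fall back to Azure for rows not yet copied.
    load(key) {
      return postgres.has(key) ? postgres.get(key) : azure.get(key);
    },
    // Writes go to Postgres only, so Azure is never modified again.
    write(key, value) {
      postgres.set(key, value);
    },
  };
}

// Background copier: moves one row, but never overwrites a newer Postgres row.
function copyRow(postgres, azure, key) {
  if (!postgres.has(key) && azure.has(key)) {
    postgres.set(key, azure.get(key));
  }
}

const azureStore = new Map([['a', 'old-a'], ['b', 'old-b']]);
const postgresStore = new Map();
const adapter = makeMigrationAdapter(postgresStore, azureStore);

adapter.write('a', 'new-a');             // updated during the migration
copyRow(postgresStore, azureStore, 'a'); // must not clobber the newer value
copyRow(postgresStore, azureStore, 'b');

console.log(adapter.load('a'), adapter.load('b'));
```

Even this toy version ignores deletions and concurrent modifications. Those are the kinds of atomicity and ordering concerns that made the real option unattractive.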

The database for Firefox CI occupied about 350GB. The other deployments, such as the community deployment, were much smaller.

Database Interface

All access to Azure Storage tables had been via the azure-entities library, with a limited and very regular interface (hence the _entities suffix on the Postgres table name).

We wrote an implementation of the same interface, but with a Postgres backend, in taskcluster-lib-entities.

The result was that none of the code in the Taskcluster microservices changed.

Not changing code is a great way to avoid introducing new bugs!

It also limited the complexity of this change: we only had to deeply understand the semantics of azure-entities, and not the details of how the queue service handles tasks.

Stored Functions

As the taskcluster-lib-entities README indicates, access to each table is via five stored database functions:

- _load

- _create

- _remove

- _modify

- _scan

Stored functions are functions defined in the database itself, that can be redefined within a transaction. Part 2 will get into why we made this choice.

Optimistic Concurrency

The modify function is an interesting case.

Azure Storage has no notion of a “transaction”, so the azure-entities library uses an optimistic-concurrency approach to implement atomic updates to rows.

This uses the etag column, which changes to a new value on every update, to detect and retry concurrent modifications.

While Postgres can do much better, we replicated this behavior in taskcluster-lib-entities, again to limit the changes made and avoid introducing new bugs.

A modification looks like this in JavaScript:

    await task.modify(task => {
      if (task.status !== 'running') {
        task.status = 'running';
        task.started = now();
      }
    });

For those not familiar with JS notation, this is calling the modify method on a task, passing a modifier function which, given a task, modifies that task.

The modify method calls the modifier and tries to write the updated row to the database, conditioned on the etag still having the value it did when the task was loaded.

If the etag does not match, modify re-loads the row to get the new etag, and tries again until it succeeds.

The effect is that updates to the row occur one-at-a-time.

This approach is “optimistic” in the sense that it assumes no conflicts, and does extra work (retrying the modification) only in the unusual case that a conflict occurs.
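The retry loop described above can be sketched as follows. This is a minimal, synchronous model under stated assumptions: the single in-memory `row`, the `load` and `writeIfMatch` helpers, and the `beforeWrite` hook are all hypothetical and exist only to make the conflict visible. It is not the taskcluster-lib-entities implementation.

```javascript
// Hypothetical single-row store; `etag` changes on every successful write.
let nextEtag = 1;
const row = { value: { status: 'pending' }, etag: 0 };

function load() {
  // Return a copy so callers cannot mutate the stored row directly.
  return { value: { ...row.value }, etag: row.etag };
}

// Conditional write: succeeds only if the row is unchanged since it was loaded.
function writeIfMatch(value, expectedEtag) {
  if (row.etag !== expectedEtag) return false;
  row.value = value;
  row.etag = nextEtag++;
  return true;
}

function modify(modifier, { beforeWrite = () => {} } = {}) {
  let attempts = 0;
  for (;;) {
    attempts += 1;
    const snapshot = load();
    modifier(snapshot.value);
    beforeWrite(attempts); // demo hook: lets us simulate a concurrent writer
    if (writeIfMatch(snapshot.value, snapshot.etag)) return attempts;
    // etag mismatch: somebody else wrote first, so reload and try again
  }
}

// Simulate another writer sneaking in during the first attempt only.
const attempts = modify(
  (task) => {
    if (task.status !== 'running') task.status = 'running';
  },
  {
    beforeWrite: (n) => {
      if (n === 1) writeIfMatch({ status: 'pending', note: 'touched' }, row.etag);
    },
  },
);

console.log(attempts, row.value);
```

The modifier runs twice here. The second run starts from the row written by the simulated competitor, so neither update is lost.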

What’s Next?

At this point, we had fork-lifted Azure tables into Postgres and no longer required an Azure account to run Taskcluster. However, we hadn’t yet seen any of the benefits of a relational database:

- data fields were still trapped in a JSON object (in fact, some kinds of data were hidden in base64-encoded blobs)

- each table still only had a single primary key, and queries by any other field would still be prohibitively slow

- joins between tables would also be prohibitively slow

Part 2 of this series of articles will describe how we addressed these issues. Then part 3 will get into the details of performing large-scale database migrations without downtime.

Mozilla Addons Blog: Extensions in Firefox 83

In addition to our brief update on extensions in Firefox 83, this post contains information about changes to the Firefox release calendar and a feature preview for Firefox 84.

Thanks to a contribution from Richa Sharma, the error message logged when tabs.sendMessage is passed an invalid tabId is now much easier to understand. It had regressed to a generic message due to a previous refactoring.

End of Year Release Calendar

The end of 2020 is approaching (yay?), and as usual people will be taking time off and will be less available. To account for this, the Firefox Release Calendar has been updated to extend the Firefox 85 release cycle by 2 weeks. We will release Firefox 84 on 15 December and Firefox 85 on 26 January. The regular 4-week release cadence should resume after that.

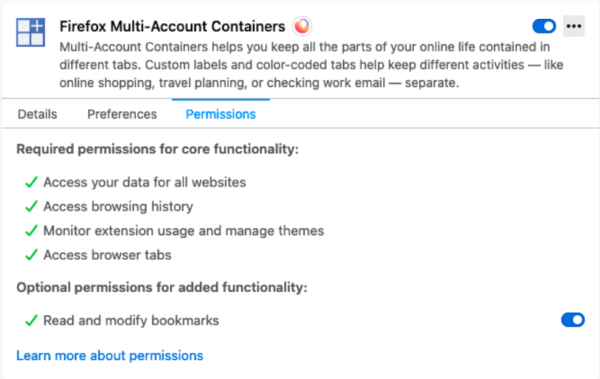

Coming soon in Firefox 84: Manage Optional Permissions in Add-ons Manager

Starting with Firefox 84, currently available on the Nightly pre-release channel, users will be able to manage optional permissions of installed extensions from the Firefox Add-ons Manager (about:addons).

We recommend that extensions using optional permissions listen for the browser.permissions.onAdded and browser.permissions.onRemoved API events. This ensures the extension is aware of the user granting or revoking optional permissions.
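A minimal sketch of such listeners is shown below. The helper `trackOptionalPermissions`, the `updateUi` callback mentioned in the comment, and the fake event objects are hypothetical and exist only so the example can run outside a browser. In an extension, the real `browser.permissions` object would be passed in.

```javascript
// `permissionsApi` is expected to be `browser.permissions` inside an extension.
// It is passed in as a parameter so the sketch can also run outside a browser.
function trackOptionalPermissions(permissionsApi, onChange) {
  const granted = new Set();

  permissionsApi.onAdded.addListener((added) => {
    // `added.permissions` lists API permissions; `added.origins` lists host patterns.
    for (const name of added.permissions || []) granted.add(name);
    onChange([...granted]);
  });

  permissionsApi.onRemoved.addListener((removed) => {
    for (const name of removed.permissions || []) granted.delete(name);
    onChange([...granted]);
  });

  return granted;
}

// In an extension background script this would be something like:
//   trackOptionalPermissions(browser.permissions, updateUi);

// Minimal fake of the event API, for demonstration outside the browser.
function fakeEvent() {
  const listeners = [];
  return {
    addListener: (fn) => listeners.push(fn),
    fire: (arg) => listeners.forEach((fn) => fn(arg)),
  };
}
const fakePermissions = { onAdded: fakeEvent(), onRemoved: fakeEvent() };

let lastSeen = [];
trackOptionalPermissions(fakePermissions, (list) => { lastSeen = list; });
fakePermissions.onAdded.fire({ permissions: ['bookmarks', 'history'], origins: [] });
fakePermissions.onRemoved.fire({ permissions: ['history'], origins: [] });

console.log(lastSeen);
```

Keeping the extension's own view of granted permissions in sync this way lets it enable or disable features as soon as the user changes a permission in about:addons.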

The post Extensions in Firefox 83 appeared first on Mozilla Add-ons Blog.

https://blog.mozilla.org/addons/2020/10/28/extensions-in-firefox-83/

Will Kahn-Greene: Everett v1.0.3 released!

What is it?

Everett is a configuration library for Python apps.

Goals of Everett:

- flexible configuration from multiple configured environments

- easy testing with configuration

- easy documentation of configuration for users

From that, Everett has the following features:

- is composable and flexible

- makes it easier to provide helpful error messages for users trying to configure your software

- supports auto-documentation of configuration with a Sphinx autocomponent directive

- has an API for testing configuration variations in your tests

- can pull configuration from a variety of specified sources (environment, INI files, YAML files, dict, write-your-own)

- supports parsing values (bool, int, lists of things, classes, write-your-own)

- supports key namespaces

- supports component architectures

- works with whatever you're writing--command line tools, web sites, system daemons, etc

v1.0.3 released!

This is a minor maintenance update that fixes a couple of minor bugs, addresses a Sphinx deprecation issue, drops support for Python 3.4 and 3.5, and adds support for Python 3.8 and 3.9 (largely adding those environments to the test suite).

Why you should take a look at Everett

At Mozilla, I'm using Everett for a variety of projects: Mozilla symbols server, Mozilla crash ingestion pipeline, and some other tooling. We use it in a bunch of other places at Mozilla, too.

Everett makes it easy to:

- deal with different configurations between local development and server environments

- test different configuration values

- document configuration options

First-class docs. First-class configuration error help. First-class testing. This is why I created Everett.

If this sounds useful to you, take it for a spin. It's a drop-in replacement for python-decouple and os.environ.get('CONFIGVAR', 'default_value') style of configuration so it's easy to test out.

Enjoy!

Where to go for more

For more specifics on this release, see here: https://everett.readthedocs.io/en/latest/history.html#october-28th-2020

Documentation and quickstart here: https://everett.readthedocs.io/

Source code and issue tracker here: https://github.com/willkg/everett

Wladimir Palant: What would you risk for free Honey?

Honey is a popular browser extension built by the PayPal subsidiary Honey Science LLC. It promises nothing less than preventing you from wasting money on your online purchases. Whenever possible, it will automatically apply promo codes to your shopping cart, thus saving you money without you lifting a finger. And it even runs a reward program that will give you some money back! Sounds great, what’s the catch?

With such offers, the price you pay is usually your privacy. With Honey, it’s also security. The browser extension is highly reliant on instructions it receives from its server. I found at least four ways for this server to run arbitrary code on any website you visit. So the extension can mutate into spyware or malware at any time, for all users or only for a subset of them – without leaving any traces of the attack like a malicious extension release.


The trouble with shopping assistants

Please note that there are objective reasons why it’s really hard to build a good shopping assistant. The main issue is how many online shops there are. Honey supports close to 50 thousand shops, yet I easily found a bunch of shops that were missing. Even the shops based on the same engine are typically customized and might have subtle differences in their behavior. Not just that, they will also change without an advance warning. Supporting this zoo is far from trivial.

Add to this the fact that with most of these shops there is very little money to be earned. A shopping assistant needs to work well with Amazon and Shopify. But supporting everything else has to come at close to no cost whatsoever.

The resulting design choices are the perfect recipe for a privacy nightmare:

- As much server-side configuration as somehow possible, to avoid releasing new extension versions unnecessarily

- As much data extraction as somehow possible, to avoid manual monitoring of shop changes

- Bad code quality with many inconsistent approaches, because improving code is costly

I looked into Honey primarily due to its popularity, it being used by more than 17 million users according to the statement on the product’s website. Given the above, I didn’t expect great privacy choices. And while I haven’t seen anything indicating malice, the poor choices made still managed to exceed my expectations by far.

Unique user identifiers

By now you are probably used to reading statements like the following in companies’ privacy statements:

None of the information that we collect from these events contains any personally identifiable information (PII) such as names or email addresses.

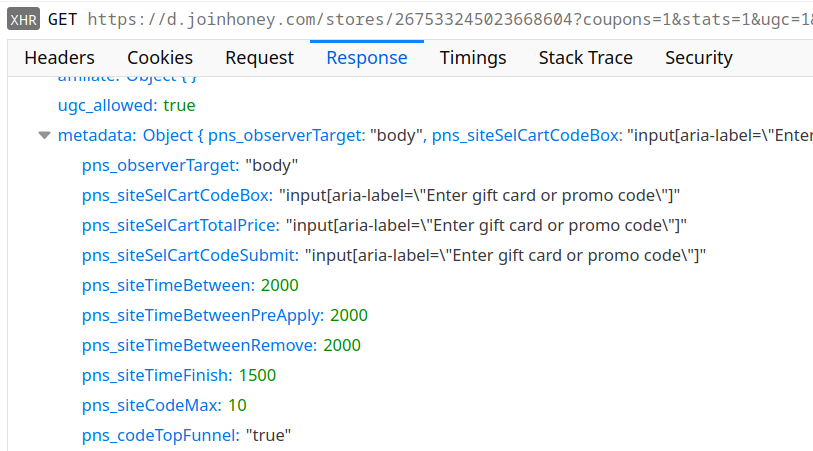

But of course a persistent semi-random user identifier doesn’t count as “personally identifiable information.” So Honey creates several of those and sends them with every request to its servers:

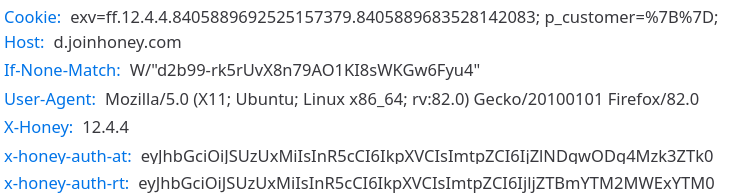

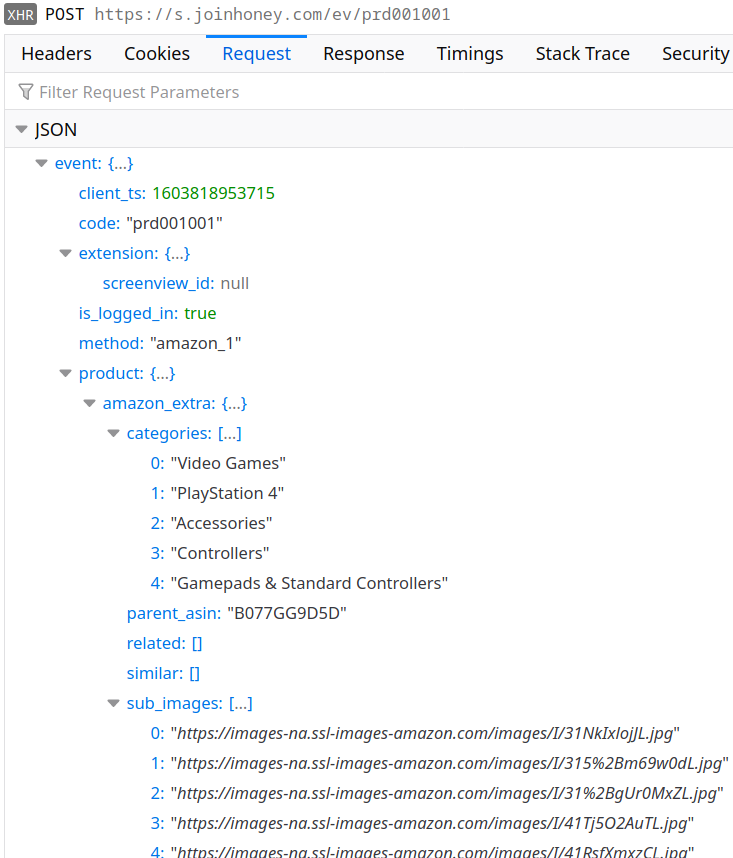

Here you see the exv value in the Cookie header: it is a combination of the extension version, a user ID (bound to the Honey account if any) and a device ID (locally generated random value, stored persistently in the extension data). The same value is also sent with the payload of various requests.

If you are logged into your Honey account, there will also be x-honey-auth-at and x-honey-auth-rt headers. These are an access and a refresh token respectively. It’s not that these are required (the server will produce the same responses regardless) but they once again associate your requests with your Honey account.

So that’s where this Honey privacy statement is clearly wrong: while the data collected doesn’t contain your email address, Honey makes sure to associate it with your account among other things. And the account is tied to your email address. If you were careless enough to enter your name, there will be a name associated with the data as well.

Remote configure everything

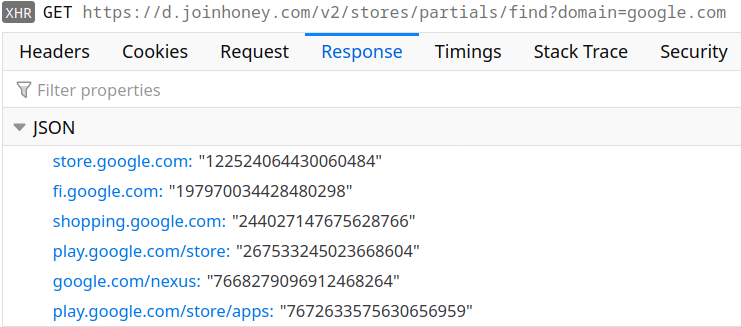

Out of the box, the extension won’t know what to do. Before it can do anything at all, it first needs to ask the server which domains it is supposed to be active on. The result is currently a huge list with some of the most popular domains like google.com, bing.com or microsoft.com listed.

Clearly, not all of google.com is an online shop. So when you visit one of the “supported” domains for the first time within a browsing session, the extension will request additional information:

Now the extension knows to ignore all of google.com but the shops listed here. It still doesn’t know anything about the shops however, so when you visit Google Play for example there will be one more request:

The metadata part of the response is most interesting as it determines much of the extension’s behavior on the respective website. For example, there are optional fields pns_siteSelSubId1 to pns_siteSelSubId3 that determine what information the extension sends back to the server later:

Here the field subid1 and similar are empty because pns_siteSelSubId1 is missing in the store configuration. Were it present, Honey would use it as a CSS selector to find a page element, extract its text and send that text back to the server. Good if somebody wants to know what exactly people are looking at.

Mind you, I only found this functionality enabled on amazon.com and macys.com, yet the selectors provided appear to be outdated and do not match anything. So is this some outdated functionality that is no longer in use and that nobody bothered removing yet? Very likely. Yet it could jump to life any time to collect more detailed information about your browsing habits.
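To make the mechanism concrete, the following sketch shows how optional selector fields in a store configuration could be turned into reported values. It is an illustration under stated assumptions, not the extension's actual code: `collectSubIds` and the fake document are hypothetical, and `querySelector` is used here only for simplicity.

```javascript
// Hypothetical helper: `metadata` mimics a store configuration and `doc` is
// anything with a querySelector method (the real `document` in a browser).
function collectSubIds(metadata, doc) {
  const result = {};
  for (const n of [1, 2, 3]) {
    const selector = metadata[`pns_siteSelSubId${n}`];
    // A missing selector yields an empty field, like the empty subid1 above.
    const element = selector ? doc.querySelector(selector) : null;
    result[`subid${n}`] = element ? element.textContent.trim() : '';
  }
  return result;
}

// Fake document with a single matching element, for demonstration only.
const fakeDoc = {
  querySelector: (sel) =>
    (sel === '#productTitle' ? { textContent: '  Example Product ' } : null),
};

const subIds = collectSubIds({ pns_siteSelSubId1: '#productTitle' }, fakeDoc);
console.log(subIds);
```

Because the selectors come from the server, whoever controls the configuration decides which page text ends up in these fields.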

The highly flexible promo code applying process

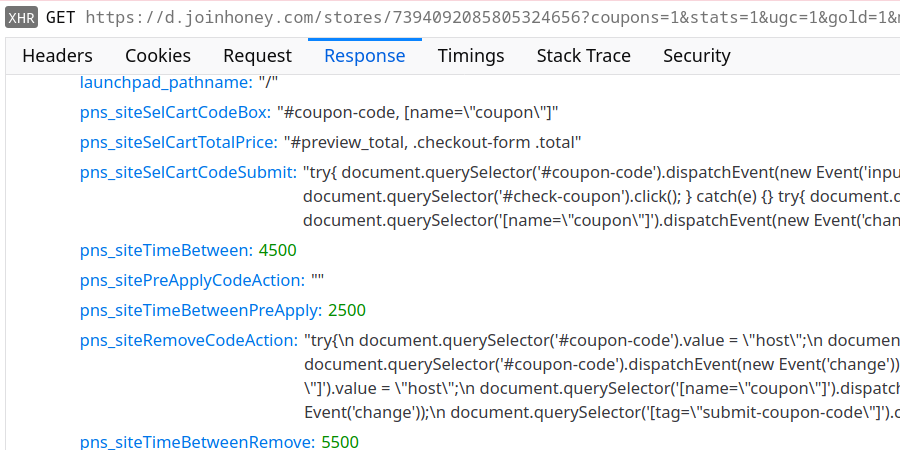

As you can imagine, the process of applying promo codes can vary wildly between different shops. Yet Honey needs to do it somehow without bothering the user. So while store configuration normally tends to stick to CSS selectors, for this task it will resort to JavaScript code. For example, you get the following configuration for hostgator.com:

The JavaScript code listed under pns_siteRemoveCodeAction or pns_siteSelCartCodeSubmit will be injected into the web page, so it could do anything there: add more items to the cart, change the shipping address or steal your credit card data. Honey requires us to put lots of trust into their web server, isn’t there a better way?

Turns out, Honey actually found one. Allow me to introduce a mechanism labeled as “DAC” internally for reasons I wasn’t yet able to understand:

The acorn field here contains base64-encoded JSON data. It’s the output of the acorn JavaScript parser: an Abstract Syntax Tree (AST) of some JavaScript code. When reassembled, it turns into this script:

    let price = state.startPrice;
    try {
      $('#coupon-code').val(code);
      $('#check-coupon').click();
      setTimeout(3000);
      price = $('#preview_total').text();
    } catch (_) {
    }
    resolve({ price });

But Honey doesn’t reassemble the script. Instead, it runs it via a JavaScript-based JavaScript interpreter. This library is explicitly meant to run untrusted code in a sandboxed environment. All one has to do is make sure that the script only gets access to safe functionality.

But you are wondering what this $() function is, aren’t you? It almost looks like jQuery, a library that I called out as a security hazard on multiple occasions. And indeed: Honey chose to expose full jQuery functionality to the sandboxed scripts, thus rendering the sandbox completely useless.
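To make the sandboxing argument concrete, here is a minimal sketch of an AST-walking interpreter. It is not the library Honey uses and it is not a secure sandbox; it only handles the handful of node types needed for the demo. The `evaluate` function, the `fakeJQuery` stand-in and the `SAVE10` code are made up for illustration. The point is that the interpreted script can only reach what the host puts into its scope, so handing it `$` hands it everything `$` can do.

```javascript
// Tiny interpreter for a hand-written ESTree-style AST (illustration only).
function evaluate(node, scope) {
  switch (node.type) {
    case "Program": {
      let last;
      for (const statement of node.body) last = evaluate(statement, scope);
      return last;
    }
    case "ExpressionStatement":
      return evaluate(node.expression, scope);
    case "Literal":
      return node.value;
    case "Identifier":
      // Only names the host explicitly placed in the scope are reachable.
      if (!Object.prototype.hasOwnProperty.call(scope, node.name))
        throw new ReferenceError(node.name + " is not defined in the sandbox");
      return scope[node.name];
    case "CallExpression": {
      const args = node.arguments.map(arg => evaluate(arg, scope));
      if (node.callee.type === "MemberExpression") {
        // Method call: keep the receiver so that `this` is bound correctly.
        const receiver = evaluate(node.callee.object, scope);
        return receiver[node.callee.property.name](...args);
      }
      return evaluate(node.callee, scope)(...args);
    }
    default:
      throw new Error("Unsupported node type: " + node.type);
  }
}

// AST for: $("#coupon-code").val("SAVE10")
const ast = {
  type: "Program",
  body: [{
    type: "ExpressionStatement",
    expression: {
      type: "CallExpression",
      callee: {
        type: "MemberExpression",
        object: {
          type: "CallExpression",
          callee: { type: "Identifier", name: "$" },
          arguments: [{ type: "Literal", value: "#coupon-code" }]
        },
        property: { type: "Identifier", name: "val" }
      },
      arguments: [{ type: "Literal", value: "SAVE10" }]
    }
  }]
};

// The server ships the tree as base64-encoded JSON; decode before running.
const payload = btoa(JSON.stringify(ast));
const decoded = JSON.parse(atob(payload));

// Stand-in for jQuery that merely records what the script did with it.
const calls = [];
function fakeJQuery(selector) {
  return { val(value) { calls.push([selector, value]); return this; } };
}

evaluate(decoded, { $: fakeJQuery });  // script reaches the page through $

let blocked = false;
try {
  evaluate(decoded, {});               // same script, nothing exposed
} catch (error) {
  blocked = error instanceof ReferenceError;
}
console.log(calls, blocked);
```

With an empty scope the script cannot touch anything. Once the real jQuery is in the scope, the interpreter no longer restricts what the script can do to the page.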

Why did they even bother with this complicated approach? Beats me. I can only imagine that they had trouble with shops using Content Security Policy (CSP) in a way that prohibited execution of arbitrary scripts. So they decided to run the scripts in their own interpreter instead of handing them to the browser’s JavaScript engine, so that CSP couldn’t stop them.

When selectors aren’t actually selectors

So if the Honey server turned malicious, it would have to enable Honey functionality on the target website, then trick the user into clicking the button to apply promo codes? It could even make that attack more likely to succeed because some of the CSS code styling the button is conveniently served remotely, so the button could be made transparent and spanning the entire page – the user would be bound to click it.

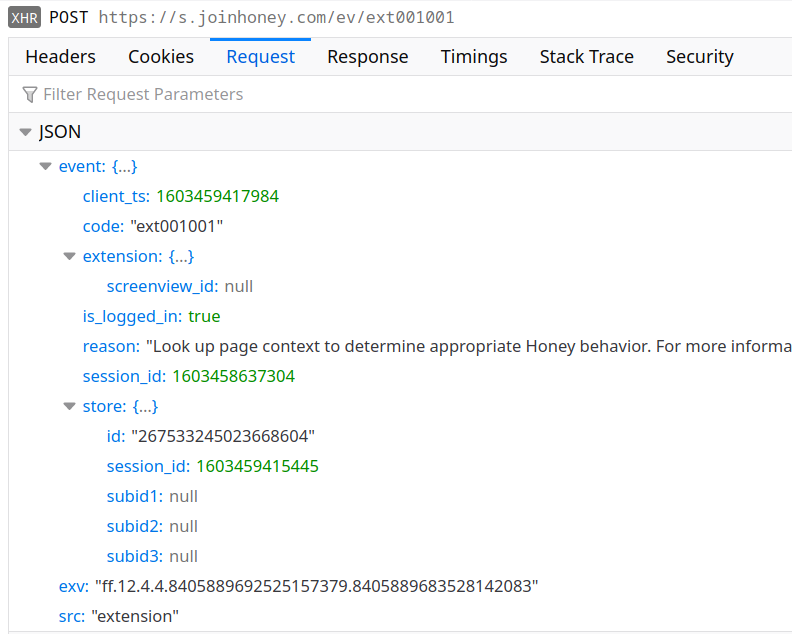

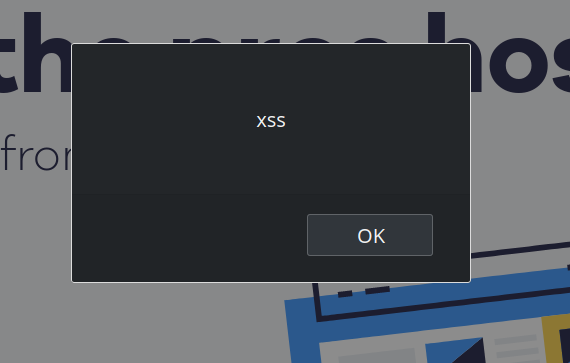

No, that’s still too complicated. Those selectors in the store configuration, what do you think: how are these turned into actual elements? Are you saying document.querySelector()? No, guess again. Is anybody saying “jQuery”? Yes, of course it is using jQuery for extension code as well! And that means that every selector could be potentially booby-trapped.

In the store configuration pictured above, pns_siteSelCartCodeBox field has the selector #coupon-code, [name="coupon"] as its value. What if the server replaces the selector by

This message actually appears multiple times because Honey will evaluate this selector a number of times for each page. It does that for any page of a supported store, unconditionally. Remember that whether a site is a supported store or not is determined by the Honey server. So this is a very simple and reliable way for this server to leverage its privileged access to the Honey extension and run arbitrary code on any website (Universal XSS vulnerability).
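The underlying issue is that jQuery’s `$()` treats a string that looks like HTML as markup to create elements from, not as a selector, whereas `document.querySelector()` throws on anything that isn’t a valid selector. A defensive check on server-supplied selectors could look roughly like the sketch below. `safeSelector` is a hypothetical helper, not part of Honey or jQuery, and rejecting every string containing `<` is a deliberately blunt assumption.

```javascript
// Hypothetical guard for selectors received from a remote server.
// Assumption: no legitimate store selector needs a "<" character.
function safeSelector(selector) {
  if (typeof selector !== "string" || selector.includes("<"))
    throw new TypeError("Refusing selector that contains markup: " + String(selector));
  return selector;
}

// The selector from the store configuration passes unchanged.
const accepted = safeSelector('#coupon-code, [name="coupon"]');

// A value that jQuery would turn into an element is rejected.
let rejected = false;
try {
  safeSelector("<div>not a selector</div>");
} catch (error) {
  rejected = error instanceof TypeError;
}
console.log(accepted, rejected);
```

Passing selectors to `document.querySelector()` directly would avoid the problem without any extra check, since that API never creates elements.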

How about some obfuscation?

Now we have simple and reliable, but isn’t it also too obvious? What if somebody monitors the extension’s network requests? Won’t they notice the odd JavaScript code?

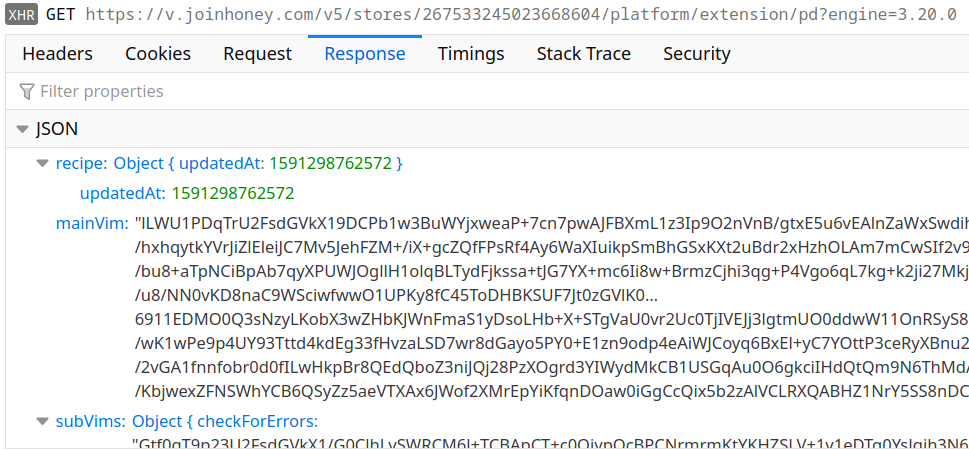

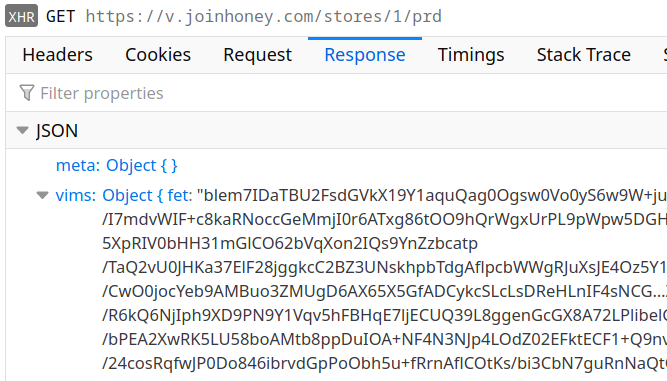

That scenario is actually rather unlikely, considering for example how long Avast has been spying on their users with barely anybody noticing. But Honey developers are always up for a challenge. And their solution was aptly named “VIM” (no, they definitely don’t mean the editor). Here is one of the requests downloading VIM code for a store:

This time, there is no point decoding the base64-encoded data: the result will be binary garbage. As it turns out, the data here has been encrypted using AES, with the start of the string serving as the key. But even after decrypting you won’t be any wiser: the resulting JSON data has all key names replaced by numeric indices and values are once again encrypted.

You need the following script to decrypt the data (requires CryptoJS):

    const keys = [
      "alternate", "argument", "arguments", "block", "body", "callee", "cases",
      "computed", "consequent", "constructor", "declaration", "declarations",
      "discriminant", "elements", "expression", "expressions", "finalizer",
      "handler", "id", "init", "key", "kind", "label", "left", "method", "name",
      "object", "operator", "param", "params", "prefix", "properties", "property",
      "quasi", "right", "shorthand", "source", "specifiers", "superClass", "tag",
      "test", "type", "update", "value"
    ];

    function decryptValue(obj)
    {
      if (Array.isArray(obj))
        return obj.map(decryptValue);
      if (typeof obj != "object" || !obj)
        return obj;

      let result = {};
      for (let key of Object.keys(obj))
      {
        let value = obj[key];
        if (key.startsWith("_"))
          key = keys[parseInt(key.substr(1), 10)];
        if (typeof value == "string")
          value = CryptoJS.AES.decrypt(value.slice(1), value[0] + "+" + key).toString(CryptoJS.enc.Utf8);
        else
          value = decryptValue(value);
        result[key] = value;
      }
      return result;
    }

    var data = "" ;
    data = JSON.parse(CryptoJS.AES.decrypt(data.slice(10), data.slice(0, 10)).toString(CryptoJS.enc.Utf8));
    console.log(decryptValue(data));

What you get is once again the Abstract Syntax Tree (AST) of some JavaScript code. The lengthy chunks of JavaScript code are for example categorizing the pages of a shop, determining what kind of logic should apply to these. And the sandboxing is once again ineffective, with the code being provided access to jQuery for example.

So here is a mechanism, providing the server with a simple way to run arbitrary JavaScript code on any website it likes, immediately after the page loads and with sufficient obfuscation that nobody will notice anything odd. Mission accomplished?

Taking over the extension

Almost. So far we were talking about running code in the context of websites. But wouldn’t running code in the context of the extension provide more flexibility? There is a small complication: the Content Security Policy (CSP) mechanism disallows running arbitrary JavaScript code in the extension context. At least that’s the case with the Firefox extension due to the Mozilla Add-ons requirements; on Chrome the extension simply relaxed CSP protection.

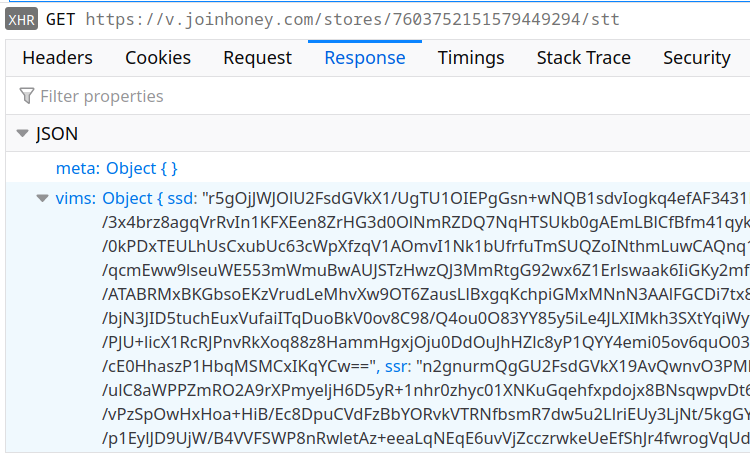

But that’s not really a problem of course. As we’ve already established, running the code in your own JavaScript interpreter circumvents this protection. And so the Honey extension also has VIM code that will run in the context of the extension’s background page:

It seems that the purpose of this code is extracting user identifiers from various advertising cookies. Here is an excerpt:

    var cs = {
      CONTID: {
        name: 'CONTID',
        url: 'https://www.cj.com',
        exVal: null
      },
      s_vi: {
        name: 's_vi',
        url: 'https://www.linkshare.com',
        exVal: null
      },
      _ga: {
        name: '_ga',
        url: 'https://www.rakutenadvertising.com',
        exVal: null
      },
      ...
    };

The extension conveniently grants this code access to all cookies on any domain. This is only the case on Chrome however; on Firefox the extension doesn’t request access to cookies. That’s most likely to address concerns that Mozilla Add-ons reviewers had.

The script also has access to jQuery. With the relaxed CSP protection of the Chrome version, this allows it to load any script from paypal.com and some other domains at will. These scripts will be able to do anything that the extension can do: read or change website cookies, track the user’s browsing in arbitrary ways, inject code into websites or even modify server responses.

On Firefox the fallout is more limited. So far I could only think of one rather exotic possibility: add a frame to the extension’s background page. This would allow loading an arbitrary web page that would stay around for the duration of the browsing session while being invisible. This attack could be used for cryptojacking for example.

About that privacy commitment…

The Honey Privacy and Security policy states:

We will be transparent with what data we collect and how we use it to save you time and money, and you can decide if you’re good with that.

This sounds pretty good. But if I still have you here, I want to take a brief look at what this means in practice.

As the privacy policy explains, Honey collects information on availability and prices of items with your help. Opening a single Amazon product page results in numerous requests like the following:

The code responsible for the data sent here is only partly contained in the extension; much of it is loaded from the server:

Yes, this is yet another block of obfuscated VIM code. That’s definitely an unusual way to ensure transparency…

On the bright side, this particular part of Honey functionality can be disabled. That is, if you find the “off” switch. Rather counter-intuitively, this setting is part of your account settings on the Honey website:

Don’t know about you, but after reading this description I would be no wiser. And if you don’t have a Honey account, it seems that there is no way for you to disable this. Either way, from what I can tell this setting won’t affect other tracking like pns_siteSelSubId1 functionality outlined above.

On a side note, I couldn’t fail to notice one more interesting feature not mentioned in the privacy policy. Honey tracks ad blocker usage, and it will even re-run certain tracking requests from the extension if blocked by an ad blocker. So much for your privacy choices.

Why you should care

In the end, I found that the Honey browser extension gives its server very far reaching privileges, but I did not find any evidence of these privileges being misused. So is it all fine and nothing to worry about? Unfortunately, it’s not that easy.

While the browser extension’s codebase is massive and I certainly didn’t see all of it, it’s possible to make definitive statements about the extension’s behavior. Unfortunately, the same isn’t true for a web server that one can only observe from outside. The fact that I only saw non-malicious responses doesn’t mean that it will stay that way in the future or that other people will have the same experience.

In fact, if the server were to invade users’ privacy or do something outright malicious, it would likely try to avoid detection. One common way is to only do it for accounts that accumulated a certain amount of history. As security researchers like me usually use fairly new accounts, they won’t notice anything. Also, the server might decide to limit such functionality to countries where litigation is less likely. So somebody like me living in Europe with its strict privacy laws won’t see anything, whereas US citizens would have all of their data extracted.

But let’s say that we really trust Honey Science LLC given its great track record. We even trust PayPal who happened to acquire Honey this year. Maybe they really only want to do the right thing, by any means possible. Even then there are still at least two scenarios for you to worry about.

The Honey server infrastructure makes an extremely lucrative target for hackers. Whoever manages to gain control of it will gain control of the browsing experience for all Honey users. They will be able to extract valuable data like credit card numbers, impersonate users (e.g. to commit ad fraud), take over users’ accounts (e.g. to demand ransom) and more. Now think again how much you trust Honey to keep hackers out.

But even if Honey had perfect security, they are also a US-based company. And that means that at any time a three letter agency can ask them for access, and they will have to grant it. That agency might be interested in a particular user, and Honey provides the perfect infrastructure for a targeted attack. Or the agency might want data from all users, something that they are also known to do occasionally. Honey can deliver that as well.

And that’s the reason why Mozilla’s Add-on Policies list the following requirement:

Add-ons must be self-contained and not load remote code for execution

So it’s very surprising that the Honey browser extension in its current form is not merely allowed on Mozilla Add-ons but also marked as “Verified.” I wonder what kind of review process this extension got that none of the remote code execution mechanisms have been detected.

Edit (2020-10-28): As Hubert Figuière pointed out, extensions acquire this “Verified” badge by paying for the review. All the more interesting to learn what kind of review has been paid here.

While Chrome Web Store is more relaxed on this front, their Developer Program Policies also list the following requirement:

Developers must not obfuscate code or conceal functionality of their extension. This also applies to any external code or resource fetched by the extension package.

I’d say that the VIM mechanism clearly violates that requirement as well. As I have yet to discover a working mechanism to report violations of Chrome’s Developer Program Policies, it remains to be seen whether this will have any consequences.

https://palant.info/2020/10/28/what-would-you-risk-for-free-honey/


Patrick Cloke: django-render-block 0.8 (and 0.8.1) released! |

A couple of weeks ago I released version 0.8 of django-render-block. This was followed up with a 0.8.1 to fix a regression.

django-render-block is a small library that allows you to render a specific block from a Django (or Jinja) template. This is frequently used for emails when …

https://patrick.cloke.us/posts/2020/10/27/django-render-block-0.8-released/


The Talospace Project: Firefox 82 on POWER goes PGO |