
Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.


Hacks.Mozilla.Org: Dweb: Building a Resilient Web with WebTorrent

In this series we are covering projects that explore what is possible when the web becomes decentralized or distributed. These projects aren’t affiliated with Mozilla, and some of them rewrite the rules of how we think about a web browser. What they have in common: These projects are open source, and open for participation, and share Mozilla’s mission to keep the web open and accessible for all.

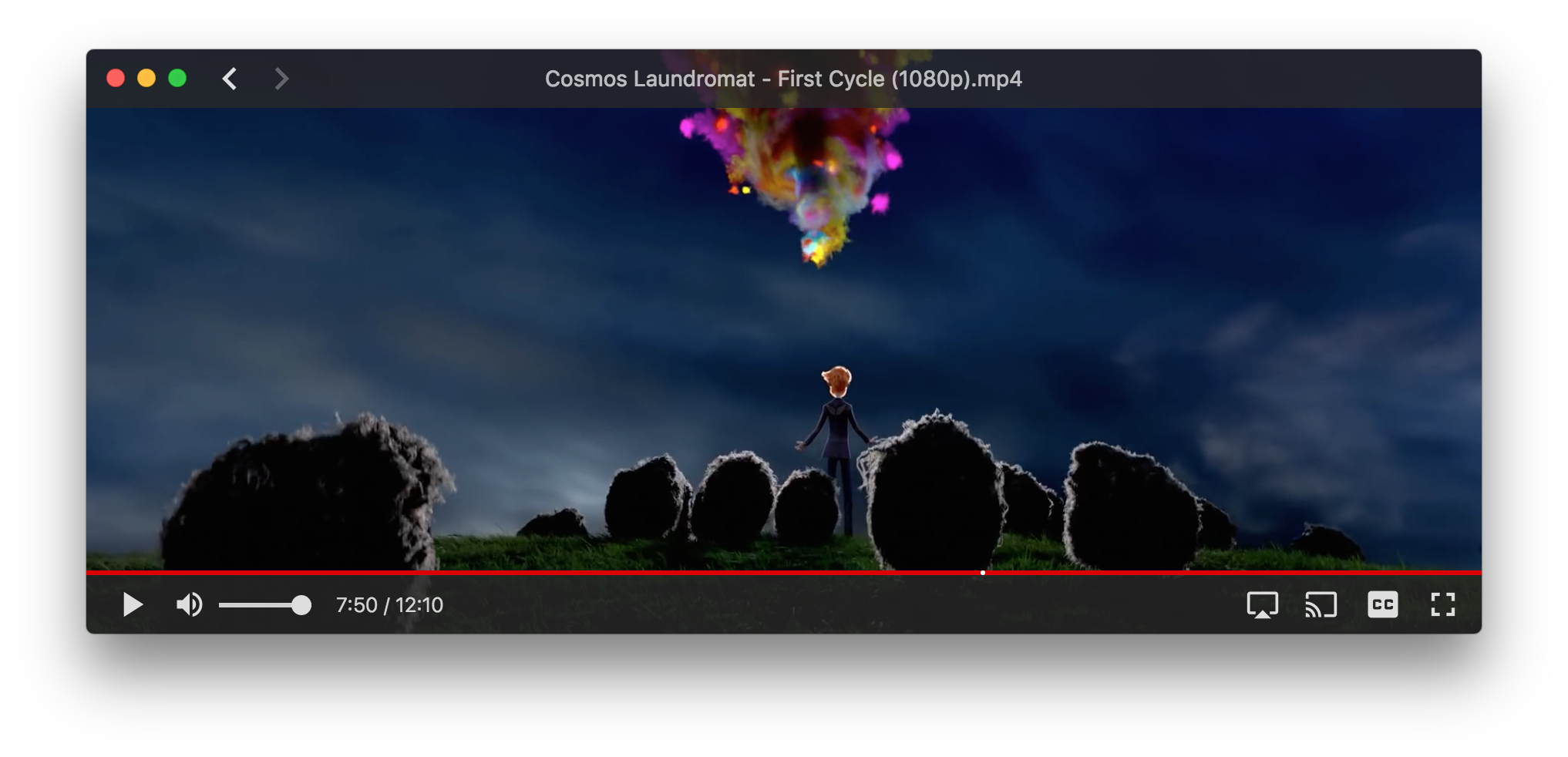

The web is healthy when the financial cost of self-expression isn’t a barrier. In this installment of the Dweb series we’ll learn about WebTorrent – an implementation of the BitTorrent protocol that runs in web browsers. This approach to serving files means that websites can scale with as many users as are simultaneously viewing the website – removing the cost of running centralized servers at data centers. The post is written by Feross Aboukhadijeh, the creator of WebTorrent, co-founder of PeerCDN and a prolific NPM module author… 225 modules at last count! –Dietrich Ayala

What is WebTorrent?

WebTorrent is the first torrent client that works in the browser. It’s written completely in JavaScript – the language of the web – and uses WebRTC for true peer-to-peer transport. No browser plugin, extension, or installation is required.

Using open web standards, WebTorrent connects website users together to form a distributed, decentralized browser-to-browser network for efficient file transfer. The more people use a WebTorrent-powered website, the faster and more resilient it becomes.

Architecture

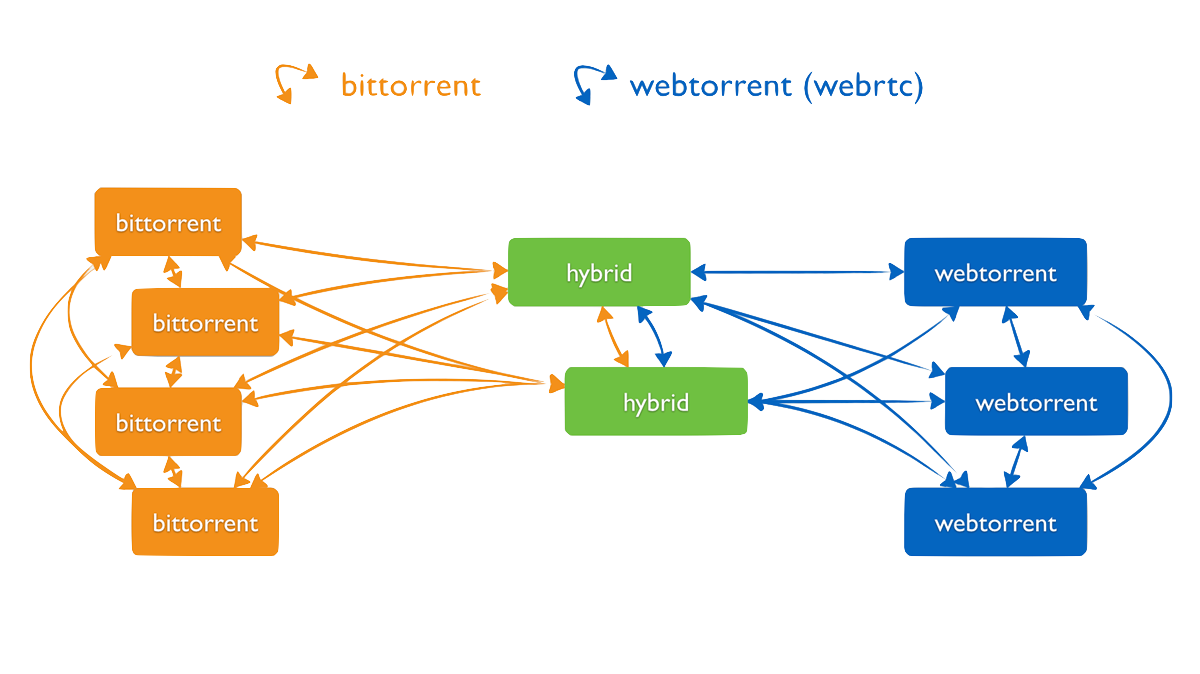

The WebTorrent protocol works just like the BitTorrent protocol, except it uses WebRTC instead of TCP or uTP as the transport protocol.

In order to support WebRTC’s connection model, we made a few changes to the tracker protocol. Therefore, a browser-based WebTorrent client or “web peer” can only connect to other clients that support WebTorrent/WebRTC.

Once peers are connected, the wire protocol used to communicate is exactly the same as in normal BitTorrent. This should make it easy for existing popular torrent clients like Transmission and uTorrent to add support for WebTorrent. Vuze already has support for WebTorrent!

Getting Started

It only takes a few lines of code to download a torrent in the browser!

To start using WebTorrent, simply include the webtorrent.min.js script on your page. You can download the script from the WebTorrent website or link to the CDN copy.

This provides a WebTorrent function on the window object. There is also an npm package available.

var client = new WebTorrent()

// Sintel, a free, Creative Commons movie
var torrentId = 'magnet:...' // Real torrent ids are much longer.

var torrent = client.add(torrentId)

torrent.on('ready', () => {
  // Torrents can contain many files. Let's use the .mp4 file
  var file = torrent.files.find(file => file.name.endsWith('.mp4'))

  // Display the file by adding it to the DOM.
  // Supports video, audio, image files, and more!
  file.appendTo('body')
})

That’s it! Now you’ll see the torrent streaming into a <video> tag in the webpage!

Learn more

You can learn more at webtorrent.io, or by asking a question in #webtorrent on Freenode IRC or on Gitter. We’re looking for more people who can answer questions and help people with issues on the GitHub issue tracker. If you’re a friendly, helpful person and want an excuse to dig deeper into the torrent protocol or WebRTC, then this is your chance!

https://hacks.mozilla.org/2018/08/dweb-building-a-resilient-web-with-webtorrent/


Tim Taubert: Bitslicing, an Introduction

Bitslicing (in software) is an implementation strategy enabling fast, constant-time implementations of cryptographic algorithms immune to cache and timing-related side channel attacks.

This post intends to give a brief overview of the general technique, not requiring much of a cryptographic background. It will demonstrate bitslicing a small S-box, talk about multiplexers, LUTs, Boolean functions, and minimal forms.

What is bitslicing?

Matthew Kwan coined the term about 20 years ago after seeing Eli Biham present his paper A Fast New DES Implementation in Software. He later published Reducing the Gate Count of Bitslice DES showing an even faster DES building on Biham’s ideas.

The basic concept is to express a function in terms of single-bit logical operations – AND, XOR, OR, NOT, etc. – as if you were implementing a logic circuit in hardware. These operations are then carried out for multiple instances of the function in parallel, using bitwise operations on a CPU.

In a bitsliced implementation, instead of having a single variable storing, say, an 8-bit number, you have eight variables (slices): the first stores the left-most bit of the number, the next stores the second bit from the left, and so on. The parallelism is bounded only by the target architecture’s register width.
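To make the slice layout concrete, here is a minimal sketch in C, assuming eight 8-bit inputs are processed together. The helper names to_slices, from_slices and slices_roundtrip_check are hypothetical and chosen only for illustration; they do not come from any of the referenced implementations.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical helper: convert eight 8-bit values into eight slices.
 * slices[0] collects the left-most (most significant) bit of every input,
 * slices[1] the second bit from the left, and so on.
 * Bit j of each slice belongs to input number j. */
void to_slices(const uint8_t in[8], uint8_t slices[8]) {
    for (int bit = 0; bit < 8; bit++) {
        uint8_t s = 0;
        for (int j = 0; j < 8; j++) {
            uint8_t b = (uint8_t)((in[j] >> (7 - bit)) & 1u);
            s |= (uint8_t)(b << j);
        }
        slices[bit] = s;
    }
}

/* Inverse: rebuild the eight original values from their slices. */
void from_slices(const uint8_t slices[8], uint8_t out[8]) {
    for (int j = 0; j < 8; j++) {
        uint8_t v = 0;
        for (int bit = 0; bit < 8; bit++) {
            uint8_t b = (uint8_t)((slices[bit] >> j) & 1u);
            v |= (uint8_t)(b << (7 - bit));
        }
        out[j] = v;
    }
}

/* Usage example: slicing and unslicing must give back the inputs. */
void slices_roundtrip_check(void) {
    const uint8_t in[8] = { 0x80, 0x01, 0xFF, 0x00, 0xA5, 0x3C, 0x7E, 0x10 };
    uint8_t slices[8], out[8];
    to_slices(in, slices);
    from_slices(slices, out);
    for (int j = 0; j < 8; j++) {
        assert(out[j] == in[j]);
    }
}
```

With wider registers the same idea applies unchanged: 64-bit slices would simply carry one bit from each of 64 inputs.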

What’s it good for?

Biham applied bitslicing to DES, a cipher designed to be fast in hardware. It uses eight different S-boxes, which were usually implemented as lookup tables. Table lookups in DES, however, are rather inefficient, since one has to collect six bits from different words, combine them, and afterwards put each of the four resulting bits in a different word.

Speed

In classical implementations, these bit permutations would be implemented with a combination of shifts and masks. In a bitslice representation though, permuting bits really just means using the “right” variables in the next step; this is mere data routing, which is resolved at compile-time, with no cost at runtime.

Additionally, the code is extremely linear so that it usually runs well on heavily pipelined modern CPUs. It tends to have a low risk of pipeline stalls, as it’s unlikely to suffer from branch misprediction, and it offers plenty of opportunities for optimal instruction reordering for efficient scheduling of data accesses.

Parallelization

With a register width of n bits, as long as the bitsliced implementation is no more than n times slower to run a single instance of the cipher, you end up with a net gain in throughput. This only applies to workloads that allow for parallelization. CTR and ECB mode always benefit, CBC and CFB mode only when decrypting.

Constant execution time

Constant-time, secret independent computation is all the rage in modern applied cryptography. Bitslicing is interesting because by using only single-bit logical operations the resulting code is immune to cache and timing-related side channel attacks.

Fully Homomorphic Encryption

The last decade brought great advances in the field of Fully Homomorphic Encryption (FHE), i.e. computation on ciphertexts. If you have a secure crypto scheme and an efficient NAND gate you can use bitslicing to compute arbitrary functions of encrypted data.

Bitslicing a small S-box

Let’s work through a small example to see how one could go about converting arbitrary functions into a bunch of Boolean gates.

Imagine a 3-to-2-bit S-box function, a component found in many symmetric encryption algorithms. Naively, this would be represented by a lookup table with eight entries, e.g. SBOX[0b000] = 0b01, SBOX[0b001] = 0b00, etc.

uint8_t SBOX[] = { 1, 0, 3, 1, 2, 2, 3, 0 };

This AES-inspired S-box interprets three input bits as a polynomial in GF(2^3) and computes its inverse mod P(x) = x^3 + x^2 + 1, with 0^-1 := 0. The result plus (x^2 + 1) is converted back into bits and the MSB is dropped.

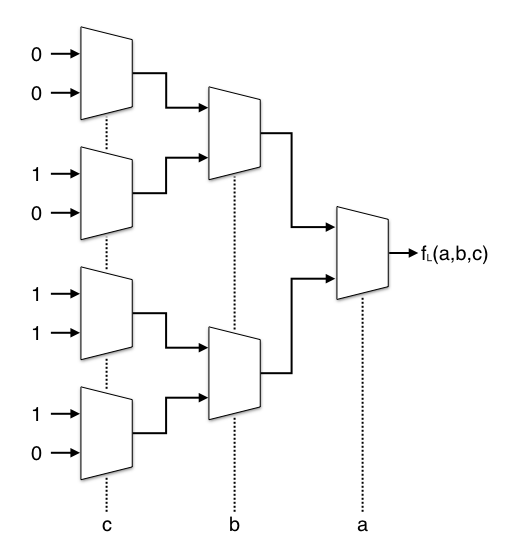

You can think of the above S-box’s output as being a function of three Boolean variables, where for instance f(0,0,0) = 0b01. Each output bit can be represented by its own Boolean function, i.e. f_L(0,0,0) = 0 and f_R(0,0,0) = 1.

LUTs and Multiplexers

If you’ve dealt with FPGAs before you probably know that these do not actually implement Boolean gates, but allow Boolean algebra by programming Look-Up-Tables (LUTs). We’re going to do the reverse and convert our S-box into trees of multiplexers.

Multiplexer is just a fancy word for data selector. A 2-to-1 multiplexer selects one of two input bits. A selector bit decides which of the two inputs will be passed through.

bool mux(bool a, bool b, bool s) { return s ? b : a; }

Here are the LUTs, or rather truth tables, for the Boolean functions f_L(a,b,c) and f_R(a,b,c):

 abc | SBOX      abc | f_L()     abc | f_R()
-----|------    -----|-------   -----|-------
 000 | 01        000 | 0         000 | 1
 001 | 00        001 | 0         001 | 0
 010 | 11        010 | 1         010 | 1
 011 | 01  --->  011 | 0    +    011 | 1
 100 | 10        100 | 1         100 | 0
 101 | 10        101 | 1         101 | 0
 110 | 11        110 | 1         110 | 1
 111 | 00        111 | 0         111 | 0

The truth table for f_L(a,b,c) is (0, 0, 1, 0, 1, 1, 1, 0) or 2Eh. We can also call this the LUT-mask in the context of an FPGA. For each output bit of our S-box we need an 8-to-1 multiplexer (three selector bits), and that in turn can be built from 2-to-1 multiplexers.
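As a rough sketch of how a LUT-mask encodes a Boolean function, the following C code reads single output bits straight out of the masks. The mask for the right output bit, B2h, follows from the f_R column of the truth table (1, 0, 1, 1, 0, 0, 1, 0). The names lut_eval and sbox_from_luts are hypothetical, and the code only illustrates the encoding; it is not meant as a constant-time implementation.

```c
#include <assert.h>
#include <stdint.h>

/* LUT-masks read off the truth tables: the entry for input 000 is the
 * left-most bit, the entry for input 111 the right-most bit. */
#define LUT_MASK_L 0x2Eu /* f_L: 0,0,1,0,1,1,1,0 */
#define LUT_MASK_R 0xB2u /* f_R: 1,0,1,1,0,0,1,0 */

/* Hypothetical helper: evaluate a 3-input Boolean function given as an
 * 8-bit LUT-mask. The inputs form the index abc, with a as the MSB. */
uint8_t lut_eval(uint8_t mask, uint8_t a, uint8_t b, uint8_t c) {
    assert(a <= 1 && b <= 1 && c <= 1);
    unsigned index = ((unsigned)a << 2) | ((unsigned)b << 1) | (unsigned)c;
    return (uint8_t)((mask >> (7u - index)) & 1u);
}

/* Reassemble the 2-bit S-box output from the two single-bit functions. */
uint8_t sbox_from_luts(uint8_t a, uint8_t b, uint8_t c) {
    return (uint8_t)((lut_eval(LUT_MASK_L, a, b, c) << 1) |
                     lut_eval(LUT_MASK_R, a, b, c));
}
```

For example, sbox_from_luts(0, 1, 0) combines f_L = 1 and f_R = 1 into 0b11, matching SBOX[0b010].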

Multiplexers in Software

Let’s take the mux() function from above and make it constant-time. As stated earlier, bitslicing is competitive only through parallelization, so, for demonstration, we’ll use uint8_t arguments to later compute eight S-box lookups in parallel.

uint8_t mux(uint8_t a, uint8_t b, uint8_t s) { return (a & ~s) | (b & s); }

If the n-th bit of s is zero it selects the n-th bit in a, if not it forwards the n-th bit in b. The wider the target architecture’s registers, the bigger the theoretical throughput – but only if the workload can take advantage of the level of parallelization.
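A small worked example may help to see the per-lane behaviour. The sketch below repeats the constant-time mux() and checks a few concrete values; the function name mux_lanes_example and the chosen constants are illustrative assumptions only.

```c
#include <assert.h>
#include <stdint.h>

/* Constant-time 2-to-1 multiplexer working on eight lanes at once. */
uint8_t mux(uint8_t a, uint8_t b, uint8_t s) {
    return (uint8_t)((a & ~s) | (b & s));
}

/* Hypothetical usage example with hand-picked values. */
void mux_lanes_example(void) {
    /* Selector 0x0F: lanes 0..3 take their bit from b, lanes 4..7 from a. */
    assert(mux(0xAA, 0x55, 0x0F) == 0xA5);
    /* All-zero and all-one selectors pass a or b through unchanged. */
    assert(mux(0xAA, 0x55, 0x00) == 0xAA);
    assert(mux(0xAA, 0x55, 0xFF) == 0x55);
}
```

Each bit position is an independent lane, so one call performs eight unrelated selections without any data-dependent branch.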

A first implementation

The two output bits will be computed separately and then assembled into the final value returned by SBOX(). Each multiplexer in the above diagram is represented by a mux() call. The first four take the LUT-masks 2Eh and B2h as inputs.

The diagram shows Boolean functions that only work with single-bit parameters. We use uint8_t, so instead of 1 we need to use ~0 to get 0b11111111.

uint8_t SBOXL(uint8_t a, uint8_t b, uint8_t c) {
  uint8_t c0 = mux( 0,  0, c);
  uint8_t c1 = mux(~0,  0, c);
  uint8_t c2 = mux(~0, ~0, c);
  uint8_t c3 = mux(~0,  0, c);

  uint8_t b0 = mux(c0, c1, b);
  uint8_t b1 = mux(c2, c3, b);

  return mux(b0, b1, a);
}

uint8_t SBOXR(uint8_t a, uint8_t b, uint8_t c) {
  uint8_t c0 = mux(~0,  0, c);
  uint8_t c1 = mux(~0, ~0, c);
  uint8_t c2 = mux( 0,  0, c);
  uint8_t c3 = mux(~0,  0, c);

  uint8_t b0 = mux(c0, c1, b);
  uint8_t b1 = mux(c2, c3, b);

  return mux(b0, b1, a);
}

void SBOX(uint8_t a, uint8_t b, uint8_t c, uint8_t* l, uint8_t* r) {
  *l = SBOXL(a, b, c);
  *r = SBOXR(a, b, c);
}

That wasn’t too hard. SBOX() is constant-time and immune to cache timing attacks. Not counting the negation of constants (~0) we have 42 gates in total and perform eight lookups in parallel.

Assuming, for simplicity, that a table lookup is just one operation, the bitsliced version is about five times as slow. If we had a workload that allowed for 64 parallel S-box lookups we could achieve eight times the current throughput by using uint64_t variables.
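A 64-lane variant might look like the following sketch, which simply widens the multiplexer tree to uint64_t. The names mux64, sbox_l64, sbox_r64 and sbox64_self_check are hypothetical; the LUT-masks 2Eh and B2h are the ones derived from the truth tables.

```c
#include <assert.h>
#include <stdint.h>

#define ALL_ONES (~UINT64_C(0))

/* The constant-time multiplexer, widened to 64 lanes. */
uint64_t mux64(uint64_t a, uint64_t b, uint64_t s) {
    return (a & ~s) | (b & s);
}

/* Left output bit (LUT-mask 2Eh), 64 lookups at once. */
uint64_t sbox_l64(uint64_t a, uint64_t b, uint64_t c) {
    uint64_t c0 = mux64(0, 0, c);
    uint64_t c1 = mux64(ALL_ONES, 0, c);
    uint64_t c2 = mux64(ALL_ONES, ALL_ONES, c);
    uint64_t c3 = mux64(ALL_ONES, 0, c);
    return mux64(mux64(c0, c1, b), mux64(c2, c3, b), a);
}

/* Right output bit (LUT-mask B2h), 64 lookups at once. */
uint64_t sbox_r64(uint64_t a, uint64_t b, uint64_t c) {
    uint64_t c0 = mux64(ALL_ONES, 0, c);
    uint64_t c1 = mux64(ALL_ONES, ALL_ONES, c);
    uint64_t c2 = mux64(0, 0, c);
    uint64_t c3 = mux64(ALL_ONES, 0, c);
    return mux64(mux64(c0, c1, b), mux64(c2, c3, b), a);
}

/* Self-check: lane j carries input index j % 8 and must match the table. */
void sbox64_self_check(void) {
    static const uint8_t table[8] = { 1, 0, 3, 1, 2, 2, 3, 0 };
    uint64_t a = 0, b = 0, c = 0;
    for (int j = 0; j < 64; j++) {
        uint64_t idx = (uint64_t)(j % 8);
        a |= ((idx >> 2) & 1) << j;
        b |= ((idx >> 1) & 1) << j;
        c |= (idx & 1) << j;
    }
    uint64_t l = sbox_l64(a, b, c);
    uint64_t r = sbox_r64(a, b, c);
    for (int j = 0; j < 64; j++) {
        unsigned out = (unsigned)((((l >> j) & 1) << 1) | ((r >> j) & 1));
        assert(out == table[j % 8]);
    }
}
```

The gate count per call stays the same as in the uint8_t version; only the number of lookups served by each gate grows from eight to 64.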

A better mux() function

mux() currently needs three operations. Here’s another variant using XOR:

uint8_t mux(uint8_t a, uint8_t b, uint8_t s) { uint8_t c = a ^ b; return (c & s) ^ a; }

Now there still are three gates, but the new version lends itself often to easier optimization as we might be able to precompute a ^ b and reuse the result.
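To illustrate the reuse, here is a minimal sketch in which two multiplexers share the same data inputs but use different selectors, so a ^ b only has to be computed once. The names mux_pre, mux_pair and mux_pair_self_check are hypothetical, as is the scenario itself.

```c
#include <assert.h>
#include <stdint.h>

/* XOR-based multiplexer with the a ^ b term supplied by the caller. */
uint8_t mux_pre(uint8_t a_xor_b, uint8_t a, uint8_t s) {
    return (uint8_t)((a_xor_b & s) ^ a);
}

/* Two multiplexers over the same data inputs but different selectors:
 * a ^ b is computed once, so this costs five gates instead of six. */
void mux_pair(uint8_t a, uint8_t b, uint8_t s0, uint8_t s1,
              uint8_t *out0, uint8_t *out1) {
    uint8_t d = (uint8_t)(a ^ b);
    *out0 = mux_pre(d, a, s0);
    *out1 = mux_pre(d, a, s1);
}

/* Self-check against the AND/OR formulation of the multiplexer. */
void mux_pair_self_check(void) {
    uint8_t a = 0xAA, b = 0x55, s0 = 0x0F, s1 = 0xC3, r0, r1;
    mux_pair(a, b, s0, s1, &r0, &r1);
    assert(r0 == (uint8_t)((a & ~s0) | (b & s0)));
    assert(r1 == (uint8_t)((a & ~s1) | (b & s1)));
}
```

The saving per pair is small, but in a larger circuit the same XOR term can show up many times.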

Simplifying the circuit

Let’s optimize our circuit manually by following these simple rules:

- mux(a, a, s) reduces to a.
- Any X AND ~0 will always be X.
- Anything AND 0 will always be 0.
- mux() with constant inputs can be reduced.

With the new mux() variant there are a few XOR rules to follow as well:

- Any X XOR X reduces to 0.
- Any X XOR 0 reduces to X.
- Any X XOR ~0 reduces to ~X.

Inline the remaining mux() calls, eliminate common subexpressions, repeat.

void SBOX(uint8_t a, uint8_t b, uint8_t c, uint8_t* l, uint8_t* r) {
  uint8_t na = ~a;
  uint8_t nb = ~b;
  uint8_t nc = ~c;

  uint8_t t0 = nb & a;
  uint8_t t1 = nc & b;
  uint8_t t2 = b | nc;
  uint8_t t3 = na & t2;

  *l = t0 | t1;
  *r = t1 | t3;
}

Using the laws of Boolean algebra and the rules formulated above I’ve reduced the circuit to nine gates (down from 42!). We actually couldn’t simplify it any further.
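One way to gain confidence in a hand-optimized circuit is to check it against the original lookup table. The sketch below feeds all eight possible inputs through the nine-gate circuit in a single call, one input per lane; the names SBOX_TABLE and sbox_check are assumptions made for this example.

```c
#include <assert.h>
#include <stdint.h>

static const uint8_t SBOX_TABLE[8] = { 1, 0, 3, 1, 2, 2, 3, 0 };

/* The nine-gate circuit, with explicit casts to keep the types narrow. */
void SBOX(uint8_t a, uint8_t b, uint8_t c, uint8_t *l, uint8_t *r) {
    uint8_t na = (uint8_t)~a;
    uint8_t nb = (uint8_t)~b;
    uint8_t nc = (uint8_t)~c;

    uint8_t t0 = nb & a;
    uint8_t t1 = nc & b;
    uint8_t t2 = b | nc;
    uint8_t t3 = na & t2;

    *l = t0 | t1;
    *r = t1 | t3;
}

/* Hypothetical exhaustive check: lane j carries the input index j = abc,
 * so a = 0xF0, b = 0xCC and c = 0xAA enumerate all eight inputs. */
void sbox_check(void) {
    uint8_t l, r;
    SBOX(0xF0, 0xCC, 0xAA, &l, &r);
    for (int j = 0; j < 8; j++) {
        uint8_t out = (uint8_t)((((l >> j) & 1) << 1) | ((r >> j) & 1));
        assert(out == SBOX_TABLE[j]);
    }
}
```

Because the input space has only eight entries, a single bitsliced evaluation covers it completely; larger S-boxes would need several calls or wider registers.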

Next: Circuit Minimization

Finding the minimal form of a Boolean function is an NP-complete problem. Manual optimization is tedious but doable for a tiny S-box such as the example used in this post. It will not be as easy for multiple 6-to-4-bit S-boxes (DES) or an 8-to-8-bit one (AES).

There are simpler and faster ways to build those circuits, and deterministic algorithms to check whether we reached the minimal form. I will try to find the time to cover these in an upcoming post, in the not too distant future.

https://timtaubert.de/blog/2018/08/bitslicing-an-introduction/


Nick Cameron: Rustfmt 1.0 release candidate

The current version of Rustfmt, 0.99.2, is the first 1.0 release candidate. It is available on nightly and beta (technically 0.99.1 there) channels, and from the 13th September will be available with stable Rust.

1.0 will be a huge milestone for Rustfmt. As part of its stability guarantees, its formatting will be frozen (at least until 2.0). That means any sub-optimal formatting still around will be around for a while. So please help test Rustfmt and report any bugs or sub-optimal formatting.

Rustfmt's formatting is specified in RFC 2436. Rustfmt does not reformat comments, string literals, or many macros/macro uses.

To install Rustfmt: rustup component add rustfmt-preview. To run use rustfmt main.rs (replacing main.rs with the file (and submodules) you want to format) or cargo fmt. For more information see the README.


The Mozilla Blog: Welcome Amy Keating, our incoming General Counsel

I’m excited to announce that Amy Keating will be joining us in September as Mozilla’s new General Counsel.

Amy will work closely with me to help scale and reinforce our legal capabilities. She will be responsible for all aspects of Mozilla’s legal work including product counseling, commercial contracts, licensing, privacy issues and legal support to the Mozilla Foundation.

“Mozilla’s commitment to innovation and an internet that is open and accessible to all speaks to me at a personal level, and I’ve been drawn to serving this kind of mission throughout my career,” said Amy Keating, Mozilla incoming General Counsel. “I’m grateful for the opportunity to learn from Mozilla’s incredible employees and community and to help promote the principles that make Mozilla a trusted and unique voice in the world.”

Amy joins Mozilla from Twitter, Inc. where she has been Vice President, Legal and Deputy General Counsel. When she joined Twitter in 2012, she was the first lawyer focused on litigation, building out the functions and supporting the company as both the platform and the employee base grew in the U.S. and internationally. Her role expanded over time to include oversight of Twitter’s product counseling, regulatory, privacy, employment legal, global litigation, and law enforcement legal response functions. Prior to Twitter, Amy was part of Google, Inc.’s legal team and began her legal career as an associate at Bingham McCutchen LLP.

From her time at Twitter and prior, Amy brings a wealth of experience and a deep understanding of the product, litigation, regulatory, international, intellectual property and employment legal areas.

Join me in welcoming Amy to Mozilla!

Denelle

The post Welcome Amy Keating, our incoming General Counsel appeared first on The Mozilla Blog.

https://blog.mozilla.org/blog/2018/08/14/welcome-amy-keating-our-incoming-general-counsel/


Mozilla Addons Blog: Building Extension APIs with Friend of Add-ons Oriol Brufau

Please meet Oriol Brufau, our newest Friend of Add-ons! Oriol is one of 23 volunteer community members who have landed code for the WebExtensions API in Firefox since the technology was first introduced in 2015. You may be familiar with his numerous contributions if you have set a specific badge text color for your browserAction, highlighted multiple tabs with the tabs.query API, or have seen your extension’s icon display correctly in about:addons.

While our small engineering team doesn’t always have the resources to implement every approved request for new or enhanced WebExtensions APIs, the involvement of community members like Oriol adds considerable depth and breadth to technology that affects millions of users. However, the Firefox code base is large, complex, and full of dependencies. Contributing code to the browser can be difficult even for experienced developers.

As part of celebrating Oriol’s achievements, we asked him to share his experience contributing to the WebExtensions API with the hope that it will be helpful for other developers interested in landing more APIs in Firefox.

When did you first start contributing code to Firefox? When did you start contributing code to WebExtensions APIs?

I had been using Firefox Nightly, reporting bugs and messing with code for some time, but my first code contribution wasn’t until February 2016. This was maybe not the best choice for my first bug. I managed to fix it, though I didn’t have much idea about what the code was doing, and my patch needed some modifications by Jonathan Kew.

For people who want to start contributing, it’s probably a better idea to search Bugzilla for a bug with the ‘good-first-bug’ keyword. (Editor’s note: you can find mentored good-first-bugs for WebExtensions APIs here.)

I started contributing to the WebExtensions API in November 2017, when I learned that legacy extensions would stop working even if I had set the preference to enable legacy extensions in Nightly. Due to the absence of good compatible alternatives to some of my legacy add-ons, I tried to write them myself, but I couldn’t really do what I wanted because some APIs were buggy or lacked various features. Therefore, I started making proposals for new or enhanced APIs, implementing them, and fixing bugs.

What were some of the challenges to building and landing code for the WebExtensions API?

I wasn’t very familiar with WebExtensions APIs, so understanding their implementation was a bit difficult at first. Also, debugging the code can be tricky. Some code runs in the parent process and some in the content one, and the debugger can make Firefox crash.

Initially, I used to forget about testing for Android. Sometimes I had a patch that seemed to work perfectly for Linux, but it couldn’t land because it broke some Android tests. In fact, not being able to run Android tests locally on my PC is a big annoyance.

What resources did you use to overcome those challenges?

I use https://searchfox.org, a source code indexing tool for Firefox, which makes it easy to find the code that I want to modify, and I received some help from mentors in Bugzilla.

Reading the documentation helps but it’s not very detailed. I usually need to look at the Firefox or Chromium code in order to answer my questions.

Did any of your past experiences contributing code to Firefox help you create and land the WebExtensions APIs?

Yes. Despite being unfamiliar with WebExtensions APIs at first, I had considerable experience with searching code using Searchfox, using ‘./mach build fast’ to recompile only the frontend, running tests, managing my patches with Mercurial, and getting them reviewed and landed.

Also, I already had commit access level 1, which allows me to run tests in the try servers. That’s helpful for ensuring everything works on Android.

What advice would you give people who want to build and land WebExtensions APIs in Firefox?

1. I didn’t find explanations for how the code is organized, so I would first summarize it.

The code is mainly distributed into three different folders:

- /browser/components/extensions/

- /mobile/android/components/extensions/

- /toolkit/components/extensions/

The ‘browser’ folder contains the code specific to Firefox desktop, the ‘android’ is specific to Firefox for Android, and ‘toolkit’ contains code shared for both.

Some APIs are defined directly in ‘toolkit’, and some are defined differently in ‘browser’ and ‘android’ but they can still share some code from ‘toolkit’.

2. APIs are defined using JSON schemas. They are located in the ‘schemas’ subdirectory of the folders above, and describe the API properties and methods, determine which kind of parameters are accepted, etc.

3. The actual logic of the APIs is written in JavaScript files in the ‘parent’ and ‘child’ subdirectories, mostly the former.

Is there anything else you would like to add?

The existing WebExtensions APIs are now more mature, useful and reliable than they were when support for legacy extensions was dropped. It’s great that promising new APIs are on the way!

Thanks, Oriol! It is a pleasure to have you in the community and we wish you all the best in your future endeavors.

If you are interested in contributing to the WebExtensions API and are new to Firefox’s infrastructure, we recommend that you onboard to the Firefox codebase and then land a patch for a good-first-bug. If you are more familiar with Firefox infrastructure, you may want to implement one of the approved WebExtensions API requests.

For more opportunities to contribute to the add-ons ecosystem, please visit our Contribution wiki.

The post Building Extension APIs with Friend of Add-ons Oriol Brufau appeared first on Mozilla Add-ons Blog.

https://blog.mozilla.org/addons/2018/08/14/building-extension-apis-oriol-brafau/


Mozilla Localization (L10N): L10N Report: August Edition

Please note some of the information provided in this report may be subject to change as we are sometimes sharing information about projects that are still in early stages and are not final yet.

Welcome!

After a quick pause in July, your primary source of localization information at Mozilla is back!

New content and projects

What’s new or coming up in Firefox desktop

As localization drivers, we’re currently working on rethinking and improving the experience of multilingual users in Firefox. While this is a project that will span several releases of Firefox, the first part of this work already landed in Nightly (Firefox 63): it’s a language switcher in Preferences, hidden behind the intl.multilingual.enabled preference, that currently allows switching to another language already installed on the system (via language packs).

The next step will be to allow installing a language pack directly from Preferences (for the release version), and to install dictionaries when the user chooses to add a new language. For that reason, we’re creating a list of dictionaries for each locale. For more details, and to discover how you can help, read this thread on dev-l10n.

We’re also working on building a list of native language names to use in the language switcher; once again, check dev-l10n for more info.

Quite a few strings landed in the past weeks for Nightly:

- Pages for certificate errors have a new look. To test them, you currently need to change the setting browser.security.newcerterrorpage.enabled to true in about:config. The testing instructions available in our documentation remain valid.

- There’s a whole new section dedicated to Content blocking in preferences, enabled by default in Nightly.

What’s new or coming up in mobile

It’s summer time in the western hemisphere, which means many projects (and people!) are taking a break – which also means not many strings are expected to land in mobile land during this period.

One notable thing is that Firefox iOS v13 was just released, and Marathi is a new locale this time around. Congratulations to the team.

On the Firefox for Android front, Bosnian (bs), Occitan (oc) and Triqui (trs) are new locales that shipped with the current release version, v61. And we just added English from Canada (en-CA) and Ligurian (lij) to our Nightly v63 multi-locale build, which is available through the Google Play Store. Congratulations to everyone!

Other than that, most mobile projects are on a bit of a hiatus for the rest of the month. However, do expect some new and exciting projects to come in the pipeline over the course of the next few weeks. Stay tuned for more information!

What’s new or coming up in web projects

AMO

About two weeks ago, over 160 sets of curated add-on titles and descriptions were landed in Pontoon. Once localized, they will be included in a Shield Study to be launched on August 13. The study will run for about 2 months. This is probably the largest and longest study the AMO team has conducted.

The current Disco Pane (about:addons) lists curated extensions and themes which are manually programmed. TAAR (Telemetry Aware Add-on Recommender) is a new machine-learning extension discovery system that makes personalized recommendations based on information available in Firefox standard Telemetry. Based on TAAR’s potential to enhance content discovery by surfacing more diversified and personalized recommendations, the team wants to integrate TAAR as a product feature of Disco Pane. It’s called “Disco-TAAR”.

The localized titles and descriptions should make users more likely to install an add-on, and to install more than one. To be part of this study, you need to make sure your locale has completed at least 80% of the AMO strings by August 12.

Common Voice

Like many of you, the team is taking a summer break. However, when they come back, they promise to introduce a new home page at the beginning of next month. There should be no localization impact.

There are three ways to contribute to this project:

- Web part (through Pontoon)

- Sentence collection

- Recording

We now have 70 locales showing interest in the project. Many have reached 100% completion or close to it. Congratulations on reaching the first milestones. However, your work shouldn’t stop here. The sentence collection is a major challenge that all the teams face before the fun recording part can begin. Ruben Martin from the Open Innovation team addresses the challenges in this blog post. If you want to learn more about the Common Voice project, sign up to Discourse where lots of discussions take place.

What’s new or coming up in Foundation projects

August fundraising emails will be sent to the English audience only: the team realizes that a lot of people, especially Europeans, are away on holiday and won’t be reading emails. A lot of localizers are likely to be away as well, so they decided it was best to skip this email and focus on the September fundraiser.

The Internet Health Report team has started working on next year’s report and is planning to send a localized email in French, German and Spanish to collect examples of projects that improve the health of the internet, links to great new research studies or ideas for topics they should include in the upcoming report.

As for the localized campaign work, it is slowing down in August for several reasons, one of them being an ongoing process to hire two new team members to expand the advocacy campaigns in Europe: a campaign manager, and a partnership organizer. If you know potential candidates, it would be great if you could forward them these offers!

That being said, you can expect some movement on the Copyright campaign towards the end of the month as the next vote is currently scheduled on September 12th.

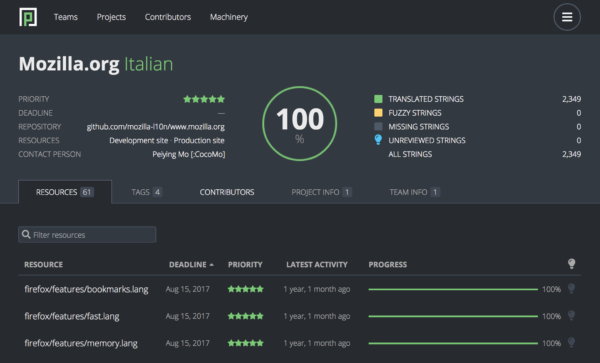

What’s new or coming up in Pontoon

File priorities and deadlines

The most critical piece of information coming from the tags feature is file priority. It used to be pretty hard to discover, because it was only available in the rarely used Tags tab. We fixed that by exposing file priority in the Resources tab of the Localization dashboard. Similarly, we also added a deadline column to the localization dashboard.

Read-only locales

Pontoon introduced the ability to enable locales for projects in read-only mode. They act the same as regular (read-write) locales, except that users cannot make any edits through Pontoon (by submitting or reviewing translations or suggestions, uploading files or performing batch actions).

That allows us to access translations from locales that do not use Pontoon for localization of some of their projects, which brings a handful of benefits. For example, dashboards and the API will now present full project status across all locales, all Mozilla translations will be accessible in the Locales tab (which is not the case for localizations like the Italian Firefox at the moment) and the Translation Memory of locales currently not available in Pontoon will improve.

Expect more details about this feature as soon as we start enabling read-only locales.

Translation display improvements

Thanks to a patch by Vishal Sharma, translations status in the History tab is now also shown to unauthenticated users and non-Translators. We’ve also removed rejected suggestions from the string list and the editor.

Events

- The Jakarta l10n event took place last weekend with the local community. Some of the things we worked on include: localizing Rocket browser in Javanese and Sundanese, localizing corresponding SUMO articles, refining style guides… and much more!

- Want to showcase an event coming up that your community is participating in? Reach out to any l10n-driver and we’ll include that (see links to emails at the bottom of this report)

Accomplishments

Common Voice

Many communities have made significant progress in the third step: donating their voices. In July, recordings were made in the following languages and more.

- 33 hours English

- 60 hours Catalan

- 30 hours Mandarin

- 24 hours Kabyle

- 18 hours French

- 14 hours German

- 9 other languages at < 10 hours

For the complete list of all the languages, check the language status dashboard.

Useful Links

- Dev.l10n mailing list and Dev.l10n.web mailing list – where project updates happen. If you are a localizer, then you should be following this

- Facebook group: it’s new! Come check it out!

- Telegram (contact one of the l10n-drivers below so we will add you)

- L10n blog

- #l10n irc channel: this wiki page will help you get set up with IRC. For L10n, we use the #l10n channel for all general discussion. You can also find a list of IRC channels in other languages here.

Questions? Want to get involved?

- If you want to get involved, or have any question about l10n, reach out to:

- Delphine – l10n Project Manager for mobile

- Peiying – l10n Project Manager for mozilla.org, marketing, and legal

- Francesco Lodolo (flod) – l10n Project Manager for desktop

- Théo Chevalier – l10n Project Manager for Mozilla Foundation

- Axel (Pike) – l10n Tech Team Lead

- Staś – l20n/FTL tamer

- Zibi (gandalf) – L10n/Intl Platform Software Engineer

- Matjaz – Pontoon dev

- Adrian – Pontoon dev

- Jeff Beatty (gueroJeff) – l10n-drivers manager

Did you enjoy reading this report? Let us know how we can improve by reaching out to any one of the l10n-drivers listed above.

https://blog.mozilla.org/l10n/2018/08/14/l10n-report-august-edition-2/


Robert O'Callahan: Diagnosing A Weak Memory Ordering Bug

For the first time in my life I tracked a real bug's root cause to incorrect usage of weak memory orderings. Until now weak memory bugs were something I knew about but had subconsciously felt were only relevant to wizards coding on big iron, partly because until recently I've spent most of my career using desktop x86 machines.

Under heavy load a Pernosco service would assert in Rust's std::thread::Thread::unpark() with the error "inconsistent state in unpark". Inspecting the code led to the disturbing conclusion that the only way to trigger this assertion was memory corruption; the value of self.inner.state should always be between 0 and 2 inclusive, and if so then we shouldn't be able to reach the panic. The problem was nondeterministic but I was able to extract a test workload that reproduced the bug every few minutes. I tried recording it in rr chaos mode but was unable to reproduce it there (which is not surprising in hindsight since rr imposes sequential consistency).

With a custom panic handler I was able to suspend the process in the panic handler and attach gdb to inspect the state. Everything looked fine; in particular the value of self.inner.state was PARKED so we should not have reached the panic. I disassembled unpark() and decided I'd like to see the values of registers in unpark() to try to determine why we took the panic path, in particular the value of self.inner (a pointer) loaded into RCX and the value of self.inner.state loaded into RAX. Calling into the panic handler wiped those registers, so I manually edited the binary to replace the first instruction of the panic handler with UD2 to trigger an immediate core-dump before registers were modified.

The core-dump showed that RCX pointed to some random memory and was not equal to self.inner, even though we had clearly just loaded it from there! The value of state in RAX was loaded correctly via RCX, but was garbage because we were loading from the wrong address. At this point I formed the theory that the issue was a low-level data race, possibly involving relaxed memory orderings, particularly because the call to unpark() came from the Crossbeam implementation of Michael-Scott lock-free queues. I inspected the code and didn't see an obvious memory ordering bug, but I also looked at the commit log for Crossbeam and found that a couple of memory ordering bugs had been fixed a long time ago; we were stuck on version 0.2 while the released version is 0.4. Upgrading Crossbeam indeed fixed our bug.

Observation #1: stick to sequential consistency unless you really need the performance edge of weaker orderings.

Observation #2: stick to sequential consistency unless you are really, really smart and have really really smart people checking your work.

Observation #3: it would be really great to have user-friendly tools to verify the correctness of unsafe, weak-memory-dependent code like Crossbeam's.

Observation #4: we need a better way of detecting when dependent crates have known subtle correctness bugs like this (security bugs too). It would be cool if the crates.io registry knew about deprecated crate versions and cargo build warned about them.

http://robert.ocallahan.org/2018/08/for-first-time-in-my-life-i-tracked.html

|

|

Firefox Nightly: Symantec Distrust in Firefox Nightly 63 |

As of today, TLS certificates issued by Symantec are distrusted in Firefox Nightly.

You can learn more about what this change means for websites and our release schedule for that change in our Update on the Distrust of Symantec TLS Certificates post published last July by the Mozilla security team.

The Symantec distrust is already effective in Chrome Canary which means that visitors to a web site with a Symantec certificate which was not replaced now get a warning page:

(left is Chrome Canary, right is Firefox Nightly)


We strongly encourage website operators to replace their distrusted Symantec certificate as soon as possible before this change hits the Firefox 63 release planned for October 23.

If you are a Firefox Nightly user, you can also get involved and help this transition by contacting the support channels of these websites to warn them about this change!

https://blog.nightly.mozilla.org/2018/08/14/symantec-distrust-in-firefox-nightly-63/

|

|

Mike Hoye: Licensing Edgecases |

While I’m not a lawyer – and I’m definitely not your lawyer – licensing questions are on my plate these days. As I’ve been digging into one, I’ve come across what looks like a strange edge case in GPL licensing compliance that I’ve been trying to understand. Unfortunately it looks like it’s one of those Affero-style, unforeseen edge cases that (as far as I can find…) nobody’s tested legally yet.

I spent some time trying to understand how the definition of “linking” applies in projects where, say, different parts of the codebase use disparate, potentially conflicting open source licenses, but all the code is interpreted. I’m relatively new to this area, but generally speaking, outside of copying and pasting, “linking” appears to be the critical threshold for whether or not the obligations imposed by the GPL kick in, and I don’t understand what that means for, say, JavaScript or Python.

I suppose I shouldn’t be surprised by this, but it’s strange to me how completely the GPL seems to be anchored in early Unix architectural conventions. Per the GPL FAQ, unless we’re talking about libraries “designed for the interpreter”, interpreted code is basically data. Using libraries counts as linking, but in the eyes of the GPL any amount of interpreted code is just a big, complicated config file that tells the interpreter how to run.

At a glance this seems reasonable but it seems like a pretty strange position for the FSF to take, particularly given how much code in the world is interpreted, at some level, by something. And honestly: what’s an interpreter?

The text of the license and the interpretation proposed in the FAQ both suggest that as long as all the information that a program relies on to run is contained in the input stream of an interpreter, the GPL – and if their argument sticks, other open source licenses – simply… doesn’t apply. And I can’t find any other major free or open-source licenses that address this question at all.

It just seems like such a weird place for an oversight. And given the often-adversarial nature of these discussions, given the stakes, there’s no way I’m the only person who’s ever noticed this. You have to suspect that somewhere in the world some jackass with a very expensive briefcase has an untested legal brief warmed up and ready to go arguing that a CPU’s microcode is an “interpreter” and therefore the GPL is functionally meaningless.

Whatever your preferred license of choice, that really doesn’t seem like a place we want to end up; while this interpretation may be technically correct it’s also very-obviously a bad-faith interpretation of both the intent of the GPL and that of the authors in choosing it.

The position I’ve taken at work is that “are we technically allowed to do this” is a much, much less important question than “are we acting, and seen to be acting, as good citizens of the larger Open Source community”. So while the strict legalities might be blurry, seeing the right thing to do is simple: we treat the integration of interpreted code and codebases the same way we’d treat C/C++ linking, respecting the author’s intent and the spirit of the license.

Still, it seems like something the next generation of free and open-source software licenses should explicitly address.

|

|

Shing Lyu: Chatting with your website visitors through Chatra |

When I started the blog, I didn’t add a message board below each article because I don’t have the time to deal with spam. By the broken windows theory, if I leave spam unattended my blog will soon become a landfill for spammers. But nowadays many e-commerce and brand sites have a live chat box, which solves my problem: I can simply ignore spam, while interested readers can ask questions and provide feedback easily. That’s why when my sponsor, Chatra.io, approached me with their great tool, I fell in love with it right away and had to share it with everyone.

How it works

First, sign up for a free account here, and you’ll be logged into a clean and modern chat interface.

You’ll get a JavaScript widget snippet (on the “Set up & customize” page or by email), which you can easily place onto your site (even if you don’t have a backend, like this site). A chat button will immediately appear on your site. Your visitors can now send you messages, and you can choose to reply to them right away or follow up later using the web dashboard, desktop app, or mobile app.

What I love about Chatra

Easy setup and clean UI

As you can see, setup is simply pasting a block of code into your blog template (or using their app or plugin for your platform), and it works right away. The chat interface is modern and clean; you can “get it” in no time if you have ever used a chat app.

Considerations for bloggers who can’t be online all day

You might wonder, “I don’t have an army of customer service agents, so how can I keep everyone happy when I’m the only one replying to messages?” Chatra has already considered that with messenger mode, which can receive messages 24/7 even if you are offline. A bot will automatically reply to your visitor and ask for their contact details, so you can follow up later by email. Every live or missed message can be configured to be sent to your email, so you can check them in batches later. Message history is also preserved even if the visitor leaves and comes back later, so you get the context of what they were saying. It’s also important to set expectations for your visitors, letting them know you are working alone and can’t reply super fast. That brings us to the next point: customizable welcome messages and prompts.

Customizable and programmable

Almost everything in Chatra is customizable, from the welcome message and chat button text to the automatic reply content. So instead of saying “We are a big team and we’ll reply in 10 mins, guaranteed!”, you can say something along the lines of “Hi, I’m running this site alone and I’d love to hear from you. I’ll get back to you within days”. Besides customizing the look and feel and the tone of voice, you can also set up triggers that automatically initiate a chat when certain criteria are met. For example, you can send an automated message when a visitor has been reading an article for more than 1 minute. Of course, you can further customize the experience using the developer API.

Out-of-the-box Google Analytics integration

One thing I really care about is understanding how my visitors interact with the site, and how I can optimize the content and UX to further engage them. I do that through Google Analytics. Much to my amazement, Chatra detected my Google Analytics configuration and automatically sends relevant events to my Google Analytics tracking, without me setting up anything. I can directly create goals based on the events and track the conversion funnel leading to a chat.

Pricing and features

Chatra has a free and a paid plan, and also a 2-week trial period that allows you to test everything before paying. The number of registered agents, connected websites and concurrent chats is unlimited in all plans. The free plan has basic features, including mobile apps, Google Analytics integration, some API options, etc., and allows 1 agent to be online at a time, which is sufficient for a one-person website like mine. But you can have several agents taking turns chatting: when one goes offline, another connects. And even if the online spot is already taken, other agents can still access the dashboard and read chats.

The paid plan starts at $15 per month and gives you access to all features, including automatic triggers and visitors online list, saved replies, typing insights, visitor information, integration with services like Zapier, Slack, Help Scout and more, and allows as many agents online as paid for. Agents on the paid plan can also take turns chatting, so there’s no need to pay for all of them.

Conclusion

All in all, Chatra is a nice tool to further engage your visitors. The free plan is generous enough for most small-scale websites. If you scale up in the future, their paid plan is affordable and pays for itself after a few successful sales. So if you want an easy and convenient way to chat with your visitors, gain feedback and get more insight into your users, you should give Chatra a try with this link now.

https://shinglyu.github.io/web/2018/08/13/chatting-with-your-website-visitors-through-chatra.html

|

|

Mozilla Security Blog: TLS 1.3 Published: in Firefox Today |

On Friday the IETF published TLS 1.3 as RFC 8446. It’s already shipping in Firefox and you can use it today. This version of TLS incorporates significant improvements in both security and speed.

Transport Layer Security (TLS) is the protocol that powers every secure transaction on the Web. The version of TLS in widest use, TLS 1.2, is ten years old this month and hasn’t really changed that much from its roots in the Secure Sockets Layer (SSL) protocol, designed back in the mid-1990s. Despite the minor number version bump, this isn’t the minor revision it appears to be. TLS 1.3 is a major revision that represents more than 20 years of experience with communication security protocols, and four years of careful work from the standards, security, implementation, and research communities (see Nick Sullivan’s great post for the cool details).

Security

TLS 1.3 incorporates a number of important security improvements.

First, it improves user privacy. In previous versions of TLS, the entire handshake was in the clear which leaked a lot of information, including both the client and server’s identities. In addition, many network middleboxes used this information to enforce network policies and failed if the information wasn’t where they expected it. This can lead to breakage when new protocol features are introduced. TLS 1.3 encrypts most of the handshake, which provides better privacy and also gives us more freedom to evolve the protocol in the future.

Second, TLS 1.3 removes a lot of outdated cryptography. TLS 1.2 included a pretty wide variety of cryptographic algorithms (RSA key exchange, 3DES, static Diffie-Hellman) and this was the cause of real attacks such as FREAK, Logjam, and Sweet32. TLS 1.3 instead focuses on a small number of well understood primitives (Elliptic Curve Diffie-Hellman key establishment, AEAD ciphers, HKDF).

Finally, TLS 1.3 is designed in cooperation with the academic security community and has benefitted from an extraordinary level of review and analysis. This included formal verification of the security properties by multiple independent groups; the TLS 1.3 RFC cites 14 separate papers analyzing the security of various aspects of the protocol.

Speed

While computers have gotten much faster, the time data takes to get between two network endpoints is limited by the speed of light and so round-trip time is a limiting factor on protocol performance. TLS 1.3’s basic handshake takes one round-trip (down from two in TLS 1.2) and TLS 1.3 incorporates a “zero round-trip” mode in which the client can send data to the server in its first set of network packets. Put together, this means faster web page loading.
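As a rough illustration of the round-trip arithmetic above, the sketch below multiplies a round-trip time by the number of handshake round trips. The 100 ms figure and the function and dictionary names are assumptions made for the example; the model ignores TCP connection setup and server processing time.

```python
# Back-of-the-envelope handshake cost. Only the round-trip counts come from
# the post; everything else here is illustrative.
HANDSHAKE_ROUND_TRIPS = {
    "TLS 1.2 full handshake": 2,
    "TLS 1.3 full handshake": 1,
    "TLS 1.3 zero round-trip": 0,
}


def handshake_delay_ms(rtt_ms: float, round_trips: int) -> float:
    """Time spent waiting on the network before application data can flow."""
    return rtt_ms * round_trips


if __name__ == "__main__":
    rtt_ms = 100.0  # hypothetical round-trip time, not a measurement
    for mode, trips in HANDSHAKE_ROUND_TRIPS.items():
        print(f"{mode}: {handshake_delay_ms(rtt_ms, trips):.0f} ms")
```

On a link like that, the saving is one full round trip per new connection, and the whole handshake wait when the zero round-trip mode applies.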

What Now?

TLS 1.3 is already widely deployed: both Firefox and Chrome have fielded “draft” versions. Firefox 61 is already shipping draft-28, which is essentially the same as the final published version (just with a different version number). We expect to ship the final version in Firefox 63, scheduled for October 2018. Cloudflare, Google, and Facebook are running it on their servers today. Our telemetry shows that around 5% of Firefox connections are TLS 1.3. Cloudflare reports similar numbers, and Facebook reports that an astounding 50+% of their traffic is already TLS 1.3!

TLS 1.3 was a big effort with a huge number of contributors, and it’s great to see it finalized. With the publication of the TLS 1.3 RFC we expect to see further deployments from other browsers, servers and toolkits, all of which makes the Internet more secure for everyone.

The post TLS 1.3 Published: in Firefox Today appeared first on Mozilla Security Blog.

https://blog.mozilla.org/security/2018/08/13/tls-1-3-published-in-firefox-today/

|

|

Firefox Test Pilot: Send: Going Bigger |

Send encrypts your files in the browser. This is good for your privacy because it means only you and the people you share the key with can decrypt them. For me, as a software engineer, the challenge with doing it this way is the limited API set available in the browser to “go full circle”. There are a few things that make it a difficult problem.

The biggest limitation on Send today is the size of the file. This is because we load the entire thing into memory and encrypt it all at once. It’s a simple and effective way to handle small files but it makes large files prone to failure from running out of memory. What size of file is too big also varies by device. We’d like everyone to be able to send large files securely regardless of what device they use. So how can we do it?

The first challenge is to not load and encrypt the file all at once. RFC 8188 specifies a standard for an encrypted content encoding over HTTP that is designed for streaming. This ensures we won’t run out of memory during encryption and decryption by breaking the file into smaller chunks. Implementing the RFC as a Stream gives us a nice way to represent our encrypted content.
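To make the chunking idea concrete, here is a minimal sketch in Python rather than the browser JavaScript that Send uses. It only shows how a file can be walked record by record so that memory use stays bounded by the record size. It performs no encryption and is not an implementation of RFC 8188. The function name and the record size in the usage note are assumptions for the example.

```python
import io
from typing import BinaryIO, Iterator, Tuple


def iter_records(stream: BinaryIO, record_size: int) -> Iterator[Tuple[int, bytes, bool]]:
    """Yield (sequence_number, chunk, is_last) without loading the whole stream.

    Assumes a buffered stream whose read(n) returns n bytes unless the end
    of the data is reached. A real implementation would encrypt each chunk
    before yielding it; that step is deliberately left out here.
    """
    if record_size <= 0:
        raise ValueError("record_size must be positive")
    seq = 0
    current = stream.read(record_size)
    while True:
        # Read one chunk ahead so the final record can be flagged as such.
        upcoming = stream.read(record_size)
        is_last = not upcoming
        yield seq, current, is_last
        if is_last:
            return
        current = upcoming
        seq += 1
```

For example, `list(iter_records(io.BytesIO(b"abcdefgh"), 3))` yields three records, with only the final one flagged as last. At most two chunks are held in memory at any time.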

With a stream instead of a Blob we run into another challenge when it’s time to upload. Streams are not fully supported by the fetch API in all the browsers we want to support yet, including Firefox. We can work around this though, with WebSockets.

Now we’re able to encrypt, upload, download, and decrypt large files without using too much memory. Unfortunately, there’s one more problem to face before we’re able to save a file. There’s no easy way to download a stream from JavaScript to the local filesystem. The standard way to download data from memory as a file is with createObjectURL, which needs a Blob. Streaming the decrypted data as a download requires a trip through a ServiceWorker. StreamSaver.js is a nice implementation of the technique. Here again we run into browser support as a limiting factor. There isn’t a workaround that doesn’t require having the whole file in memory, which is another case of our original problem. But streams will be stable in Firefox soon, so we’ll be able to support large files as soon as they’re available.

In the end, it’s quite complicated to do end-to-end encryption of large files in the browser compared to small ones, but it is possible. It’s one of many improvements we’re working on for Send this summer that we’re excited about.

As always, you’re welcome to join us on GitHub, and thank you to everyone who’s contributed so far.

Send: Going Bigger was originally published in Firefox Test Pilot on Medium, where people are continuing the conversation by highlighting and responding to this story.

https://medium.com/firefox-test-pilot/send-going-bigger-75a499e397df?source=rss----46b1a2ddb811---4

|

|

Princi Vershwal: Vector Tile Support for OpenStreetMap’s iD Editor |

Protocolbuffer Binary Format (.pbf) and Mapbox Vector Tiles (.mvt) are two popular formats for sharing map data. Prior to this GSoC project, the iD editor in OSM supported GPX data. GPX is an XML schema designed as a common GPS data format for software applications. It can be used to describe waypoints, tracks, and routes.

The main objective of the project was to add support for vector tile data to iD. MVT and PBF files contain the data of a particular tile. These files store data in Protocolbuffer binary format and can hold various sets of data, such as the names of cities or train stations. This data can be in the form of points, lines or polygons. A vector tile looks something like this:

The goal is to draw the data of these tiles in iD so that it shows up on the screen like this:

For implementing the feature the following steps were followed:

1. Creating a new layer: A new mvt layer is created that accepts a pbf/mvt file. The d3_request library is used to read the data in arraybuffer format.

2. Converting data to GeoJSON: The arraybuffer data is converted to GeoJSON format before being passed to the drawMvt function.

For converting vector tile data to GeoJSON, Mapbox provides two libraries:

1. vt2geojson

2. vector-tile-js

vt2geojson is great for converting vector tiles to GeoJSON from remote URLs or local files, but it works with Node.js only.

For iD we used Mapbox’s vector-tile-js, which reads Mapbox Vector Tiles and gives access to their layers and features; these features can then be converted to GeoJSON.

3. MVT drawing: This GeoJSON data is passed directly to the D3 draw functions, which render the data. (iD already uses D3 for all of its drawing.)

All the work related to the above steps is here.

4. The next step was writing tests for the above code. The tests are here.
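The conversion in step 2 comes down to mapping tile-local coordinates to longitude and latitude. The sketch below shows that Web Mercator arithmetic for a single point and wraps the result in a GeoJSON Feature. It is written in Python for illustration and is not the code iD or vector-tile-js uses. The function names are hypothetical, and the extent of 4096 is assumed as the usual tile grid size.

```python
import math


def tile_point_to_lon_lat(px, py, z, x, y, extent=4096):
    """Convert a point in tile-local units to (lon, lat) in degrees.

    (z, x, y) identify the tile; (px, py) run from 0 to `extent` inside it,
    with the origin at the tile's top-left corner.
    """
    size = extent * (2 ** z)  # width of the whole world in tile units
    lon = (px + extent * x) * 360.0 / size - 180.0
    merc_y = 180.0 - (py + extent * y) * 360.0 / size
    lat = 360.0 / math.pi * math.atan(math.exp(merc_y * math.pi / 180.0)) - 90.0
    return lon, lat


def point_feature(px, py, z, x, y, properties, extent=4096):
    """Build a GeoJSON Point feature from one tile-local point."""
    lon, lat = tile_point_to_lon_lat(px, py, z, x, y, extent)
    return {
        "type": "Feature",
        "geometry": {"type": "Point", "coordinates": [lon, lat]},
        "properties": properties,
    }
```

The centre of the single zoom-0 tile, `tile_point_to_lon_lat(2048, 2048, 0, 0, 0)`, maps to longitude 0 and latitude 0 up to floating-point rounding, which is a quick sanity check for the formula.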

Performance Testing

- Choosing data : The data which was used to create the vector tiles for testing is this : https://data.cityofnewyork.us/Environment/2015-Street-Tree-Census-Tree-Data/pi5s-9p35/data

It is a dense data consisting of only points. - Creating MVTs : Vector tiles were created using the above data using a tool called tippecanoe. Mapbox’s tippecanoe is used to build vector tilesets from large (or small) collections of GeoJSON, Geobuf, or CSV features.

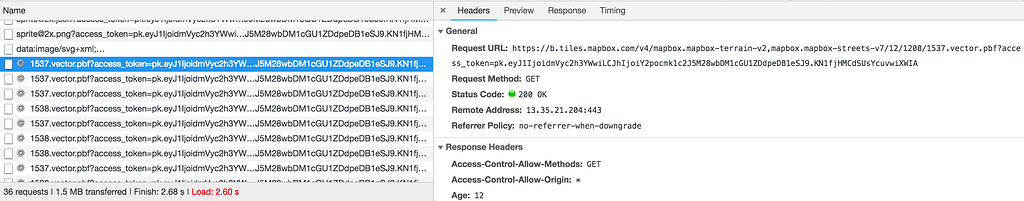

- Tippecanoe converts GeoJSON data to mbtiles format, these files contain data for more than one tile. Mapbox/mbview was used to view these tiles in localhost and extract individual tiles from the network tab.

4. This URL when passed to iD draws the vector tile like this :

URL used : http://preview.ideditor.com/master/#background=Bing&disable_features=boundaries&map=9.00/39.7225/-74.0153&mvt=https://a.tiles.mapbox.com/v4/mapbox.mapbox-terrain-v2,mapbox.mapbox-streets-v7/12/1207/1541.vector.pbf?access_token= ‘pk.0000.1111’

# replace value with your mapbox public access token

Some More Interesting Stuff

There is much more that can be done with vector tiles. One thing is better styling of the drawings. The immediate next step is to give different colors to the different layers of the tile data.

For more discussion, you can follow here.

My earlier blogs can be found here.

|

|

Robert O'Callahan: The Parallel Stream Multiplexing Problem |

Imagine we have a client and a server. The client wants to create logical connections to the server (think of them as "queries"); the client sends a small amount of data when it opens a connection, then the server sends a sequence of response messages and closes the connection. The responses must be delivered in-order, but the order of responses in different connections is irrelevant. It's important to minimize the start-to-finish latency of connections, and the latency between the server generating a response and the client receiving it. There could be hundreds of connections opened per second and some connections produce thousands of response messages. The server uses many threads; a connection's responses are generated by a specific server thread. The client may be single-threaded or use many threads; in the latter case a connection's responses are received by a specific client thread. What's a good way to implement this when both client and server are running in the same OS instance? What if they're communicating over a network?

This problem seems quite common: the network case closely resembles a Web browser fetching resources from a single server via HTTP. The system I'm currently working on contains an instance of this internally, and communication between the Web front end and the server also looks like this. Yet even though the problem is common, as far as I know it's not obvious or well-known what the best solutions are.

A standard way to handle this would be to multiplex the logical connections into a single transport. In the local case, we could use a pair of OS pipes as the transport, a client-to-server pipe to send requests and a server-to-client pipe to return responses. The client allocates connection IDs and the server attaches connection IDs to response messages. Short connections can be very efficient: a write syscall to open a connection, a write syscall to send a response, maybe another write syscall to send a close message, and corresponding read syscalls. One possible problem is server write contention: multiple threads sending responses must make sure the messages are written atomically. In Linux this happens "for free" if your messages are all smaller than PIPE_BUF (4096), but if they aren't you have to do something more complicated, the simplest being to hold a lock while writing to the pipe, which could become a bottleneck for very parallel servers. There is a similar problem with client read contention, which is mixed up with the question of how you dispatch received responses to the thread reading from a connection.
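A minimal sketch of the framing such a multiplexed transport needs is shown below: each message carries its connection ID and length, and the reader splits the byte stream back into per-connection message lists. The header layout, the names, and the choice to cap frames at 4096 bytes (the PIPE_BUF value quoted above) are assumptions for illustration.

```python
import struct
from collections import defaultdict

# Hypothetical frame header: 32-bit connection ID, 16-bit payload length,
# both in network byte order.
HEADER = struct.Struct("!IH")
ATOMIC_LIMIT = 4096  # PIPE_BUF value quoted in the post


def encode_frame(conn_id: int, payload: bytes) -> bytes:
    """Prefix a payload with its connection ID and length."""
    frame = HEADER.pack(conn_id, len(payload)) + payload
    if len(frame) > ATOMIC_LIMIT:
        raise ValueError("frame too large to be written to the pipe in one atomic write")
    return frame


def demultiplex(data: bytes) -> dict:
    """Split a buffer of complete frames into {conn_id: [payload, ...]}."""
    per_connection = defaultdict(list)
    offset = 0
    while offset < len(data):
        conn_id, length = HEADER.unpack_from(data, offset)
        offset += HEADER.size
        per_connection[conn_id].append(data[offset:offset + length])
        offset += length
    return dict(per_connection)
```

Because every frame stays within the limit, each server thread could emit a frame with a single write call and rely on the pipe to keep frames from interleaving. Larger responses would need to be split into several frames or serialized with a lock, as the paragraph above notes.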

A better local approach might be for the client to use an AF_UNIX socket to send requests to the server, and with each request message pass a file descriptor for a fresh pipe that the server should use to respond to the client. It requires a few more syscalls but client threads require no user-space synchronization, and server threads require no synchronization after the dispatch of a request to a server thread. A pool of pipes in the client might help.
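The descriptor-passing idea can be sketched with Python's socket.send_fds and socket.recv_fds, which are available on Unix from Python 3.9. The client creates a fresh pipe per logical connection and sends its write end along with the request, and the server writes responses straight into that pipe. The function names and the reply format are hypothetical, and error handling is omitted.

```python
import os
import socket


def open_connection(client_sock: socket.socket, request: bytes) -> int:
    """Send a request plus the write end of a fresh pipe; return the read end."""
    read_fd, write_fd = os.pipe()
    socket.send_fds(client_sock, [request], [write_fd])
    os.close(write_fd)  # the in-flight copy keeps the pipe's write side open
    return read_fd


def serve_one(server_sock: socket.socket) -> None:
    """Receive one request and stream three made-up responses into its pipe."""
    request, fds, _flags, _addr = socket.recv_fds(server_sock, 4096, 1)
    reply_fd = fds[0]
    try:
        for i in range(3):
            os.write(reply_fd, b"%s:%d\n" % (request, i))
    finally:
        os.close(reply_fd)  # closing the pipe signals the end of the connection


def read_all(fd: int) -> bytes:
    """Read a connection's responses until the server closes the pipe."""
    chunks = []
    while True:
        chunk = os.read(fd, 4096)
        if not chunk:
            break
        chunks.append(chunk)
    os.close(fd)
    return b"".join(chunks)
```

Each client thread reads only from its own pipe, so responses need no connection IDs and no user-space dispatch. The cost is the extra pipe creation and descriptor transfer for every connection.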

The network case is harder. A naive approach is to multiplex the logical connections over a TCP stream. This suffers from head-of-line-blocking: a lost packet can cause delivery of all messages to be blocked while the packet is retransmitted, because all messages across all connections must be received in the order they were sent. You can use UDP to avoid that problem, but you need encryption, retransmits, congestion control, etc so you probably want to use QUIC or something similar.
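Head-of-line blocking can be seen in a toy model. Given the arrival time of each packet, a message is delivered once it and everything ordered before it have arrived. With one ordered stream, a late packet delays every later message. With per-connection ordering, only its own connection waits. The function name and the timings are made up for illustration.

```python
def delivery_times(conn_ids, arrival_times, per_connection_ordering):
    """Return the time at which each message can be handed to the application.

    conn_ids[i] is the logical connection of the i-th message in send order,
    and arrival_times[i] is when its packet finally arrives, retransmits
    included.
    """
    latest_arrival = {}
    delivered = []
    for conn_id, arrived in zip(conn_ids, arrival_times):
        # One shared ordering domain models a single TCP-like stream.
        domain = conn_id if per_connection_ordering else None
        latest_arrival[domain] = max(latest_arrival.get(domain, 0), arrived)
        delivered.append(latest_arrival[domain])
    return delivered


if __name__ == "__main__":
    conns = ["a", "b", "a", "a"]
    arrivals = [1, 50, 3, 4]  # the second packet was lost and retransmitted
    print(delivery_times(conns, arrivals, per_connection_ordering=False))
    print(delivery_times(conns, arrivals, per_connection_ordering=True))
```

In the single-stream case the two later messages on connection "a" wait for the retransmitted "b" packet, even though they arrived long before it.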

The Web client case is interesting. You can multiplex over a WebSocket much like a TCP stream, with the same disadvantages. You could issue an HTTP request for each logical connection, but this would limit the number of open connections to some unknown maximum, and could have even worse performance than the WebSocket if the browser and server don't negotiate QUIC + HTTP2. A good solution might be to multiplex the connections into an RTCDataChannel in non-ordered mode. This is probably quite simple to implement in the client, but fairly complex to implement in the server because the RTCDataChannel protocol is complicated (for good reasons AFAIK).

This multiplexing problem seems quite common, and its solutions interesting. Maybe there are known best practices or libraries for this, but I haven't found them yet.

http://robert.ocallahan.org/2018/08/the-parallel-stream-multiplexing-problem.html

|

|

Cameron Kaiser: TenFourFox FPR9b2 available |

The WiFi fix in beta 1 was actually to improve HTML5 geolocation accuracy, and Chris T has confirmed that it does, so that's been updated in the release notes. Don't worry, you are always asked before your location is sent to a site.

On the Talos II side, I've written an enhancement to KVMPPC allowing it to actually monkeypatch Mac OS X with an optimized bcopy in the commpage. By avoiding the overhead of emulating dcbz's behaviour on 32-bit PPC, this hack improves the T2's Geekbench score by almost 200 points in Tiger. Combined with another small routine to turn dcba hints into nops so they don't cause instruction faults, this greatly reduces stalls and watchdog tickles when running Mac apps in QEMU. I'll have a formal article on that with source code for the grubby proletariat shortly, plus a big surprise launch of something I've been working on very soon. Watch this space.

http://tenfourfox.blogspot.com/2018/08/tenfourfox-fpr9b2-available.html

|

|

Daniel Stenberg: A hundred million cars run curl |

One of my hobbies is to collect information about where curl is used. The following car brands feature devices, infotainment and/or navigation systems that use curl - in one or more of their models.

These are all brands about which I've found information online (for example curl license information), received photos of or otherwise been handed information by what I consider reliable sources (like involved engineers).

Do you have curl in a device installed in another car brand?

List of car brands using curl

Baojun, BMW, Buick, Cadillac, Chevrolet, Ford, GMC, Holden, Mazda, Mercedes, Nissan, Opel, Renault, Seat, Skoda, Subaru, Suzuki, Tesla, Toyota, VW and Vauxhall.

All together, this is a pretty amazing number of installations. This list contains seven (7) of the top-10 car brands in the world 2017! And all the top-3 brands. By my rough estimate, something like 40 million cars sold in 2017 had curl in them. Presumably almost as many in 2016 and a little more in 2018 (based on car sales stats).

Not too shabby for a little spare time project.

How to find curl in your car

Sometimes the curl open source license is included in a manual (it includes my name and email, offering more keywords to search for). That's usually how I've found out many uses purely online.

Sometimes the curl license is included in the "open source license" screen within the actual infotainment system. Those tend to list hundreds of different components and without any search available, you often have to scroll for many minutes until you reach curl or libcurl. I occasionally receive photos of such devices.

Related: why is your email in my car and I have toyota corola.

https://daniel.haxx.se/blog/2018/08/12/a-hundred-million-cars-run-curl/

|

|

Mike Hommey: Announcing git-cinnabar 0.5.0 |

Git-cinnabar is a git remote helper to interact with mercurial repositories. It lets you clone, pull and push from/to mercurial remote repositories, using git.

These release notes are also available on the git-cinnabar wiki.

What’s new since 0.4.0?

- git-cinnabar-helper is now mandatory. You can either download one with git cinnabar download on supported platforms or build one with make.

- Performance and memory consumption improvements.

- Metadata changes require running git cinnabar upgrade.

- Mercurial tags are consolidated in a separate (fake) repository. See the README file.

- Updated git to 2.18.0 for the helper.

- Improved memory consumption and performance.

- Improved experimental support for pushing merges.

- Support for clonebundles for faster clones when the server provides them.

- Removed support for the .git/hgrc file for mercurial specific configuration.

- Support any version of Git (was previously limited to 1.8.5 minimum)

- Git packs created by git-cinnabar are now smaller.

- Fixed incompatibilities with Mercurial 3.4 and >= 4.4.

- Fixed tag cache, which could lead to missing tags.

- The prebuilt helper for Linux now works across more distributions (as long as libcurl.so.4 is present, it should work)

- Properly support the pack.packsizelimit setting.

- Experimental support for initial clone from a git repository containing git-cinnabar metadata.

- Now can successfully clone the pypy and GNU octave mercurial repositories.

- More user-friendly errors.

Development process changes

It took about 6 months between version 0.3 and 0.4. It took more than 18 months to reach version 0.5 after that. That’s a long time to wait for a new version, considering all the improvements that have happened under the hood.

From now on, the release branch will point to the last tagged release, which is roughly the same as before, but won’t be the default branch when cloning anymore.

The default branch when cloning will now be master, which will receive changes that are acceptable for dot releases (0.5.x). These include:

- Changes in behavior that are backwards compatible (e.g. adding new options which default to the current behavior).

- Changes that improve error handling.

- Changes to existing experimental features, and additions of new experimental features (that require knobs to be enabled).

- Changes to Continuous Integration/Tests.

- Git version upgrades for the helper.

The next branch will receive changes for the next “major” release, which as of writing is planned to be 0.6.0. These include:

- Changes in behavior.

- Changes in metadata.

- Stabilizing experimental features.

- Removing backwards compatibility with older metadata (< 0.5.0).
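The branch layout described above could be used as in the following sketch, which picks one of the three branches explicitly when cloning. The repository URL and the function name `clone_cinnabar` are assumptions that do not appear in these notes; `git clone --branch` is the standard git option for checking out a branch other than the default.

```shell
# Sketch: clone git-cinnabar at one of the branches named in these notes.
# The URL below is an assumption; adjust it to wherever the project is hosted.
CINNABAR_URL="https://github.com/glandium/git-cinnabar.git"

# clone_cinnabar is a hypothetical helper: clone_cinnabar <branch> <destination>
clone_cinnabar() {
    branch="$1"
    dest="$2"
    case "$branch" in
        # release: last tagged release; master: 0.5.x changes; next: 0.6.0 work.
        release|master|next) ;;
        *) echo "unknown branch: $branch" >&2; return 1 ;;
    esac
    git clone --branch "$branch" "$CINNABAR_URL" "$dest"
}
```

For example, `clone_cinnabar release ~/git-cinnabar` would track the last tagged release, which is no longer what a plain clone gives you.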


Mozilla VR Blog: This Week in Mixed Reality: Issue 15 |

This week is mainly about bug fixing and getting some new features to launch.

Browsers

Firefox Reality is in the bug fixing phase, keeping the team very busy:

- The team has reviewed the report from testing with actual users. Lots of changes in progress.

- Burning down bugs in Firefox Reality. Big ones this week include refactoring immersive mode and improving loading times.

- Fixing broken OAuth logins and the opening of pages in new windows.

Social

A bunch of bug fixes and improvements to Hubs by Mozilla:

- Support for single-sided objects, which reduces rendering time on things like walls where you only need to see one side.

- Fixes for the pen drawing tool

Content Ecosystem

- Magic Leap hardware is now publicly available!


Hacks.Mozilla.Org: MDN Changelog for July 2018: CDN tests, Goodbye Zones, and BCD |


QMO: Firefox DevEdition 62 Beta 18 Testday, August 17th |

Greetings Mozillians!

We are happy to let you know that on Friday, August 17th, we are organizing the Firefox 62 DevEdition Beta 18 Testday. We’ll be focusing our testing on the Activity Stream, React Animation Inspector, and Toolbars & Window Controls features. We will also be verifying fixed bugs and triaging unconfirmed bugs.

Check out the detailed instructions via this etherpad.

No previous testing experience is required, so feel free to join us on the #qa IRC channel, where our moderators will offer guidance and answer your questions.

Join us and help us make Firefox better!

See you on Friday!

https://quality.mozilla.org/2018/08/firefox-devedition-62-beta-18-testday-august-17th/
