You can add any RSS feed (including a LiveJournal journal) to your friends feed on the syndication page.

Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated whenever that feed is updated. It may not match the content of the original page. This syndicated feed was created automatically at the request of readers of this RSS feed.

For any questions about how this service works, use the contact information page.

Mozilla Addons Blog: Add-ons in 2017 |

A little more than a year ago we started talking about where add-ons were headed, and what the future would look like. It’s been busy, and we wanted to give everyone an update as well as provide guidance on what to expect in 2017.

Over the last year, we’ve focused as a community on foundational work building out WebExtensions support in Firefox and addons.mozilla.org (AMO), reducing the time it takes for listed add-ons to be reviewed while maintaining the standards we apply to them, and getting Add-ons ready for e10s. We’ve made a number of changes to our process and products to make it easier to submit, distribute, and discover add-ons through initiatives like the signing API and a revamped Discovery Pane in the add-ons manager. Finally, we’ve continued to focus on communicating changes to the developer community via direct outreach, mailing lists, email campaigns, wikis, and the add-ons blog.

As we’ve mentioned, WebExtensions are the future of add-ons for Firefox, and will continue to be where we concentrate efforts in 2017. WebExtensions are decoupled from the platform, so the changes we’ll make to Firefox in the coming year and beyond won’t affect them. They’re easier to develop, and you won’t have to learn about Firefox internals to get up and running. It’ll be easier to move your add-ons over to and from other browsers with minimal changes, as we’re making the APIs compatible – where it makes sense – with products like Opera, Chrome, and Edge.

By the end of 2017, and with the release of Firefox 57, we’ll move to WebExtensions exclusively, and will stop loading any other extension types on desktop. To help ensure any new extensions work beyond the end of 2017, starting with Firefox 53 AMO will stop accepting new extensions for signing unless they are WebExtensions. Throughout the year we’ll expand the set of APIs available, add capabilities to Firefox that don’t yet exist in other browsers, and put more WebExtensions in front of users.

There are a lot of moving parts, and we’ll be tracking more detailed information – including a timeline and roadmap – on the WebExtensions section of the Mozilla Wiki. If you’re interested in getting involved with the add-on community and WebExtensions, we have a few ways you can do that. We’re looking forward to the next year, and will continue to post updates and additional information here on the Add-ons blog.

For more information on Add-ons and WebExtensions, see:

Note: Edited to better identify specifics around Firefox 53

|

|

Air Mozilla: The Joy of Coding - Episode 81 |

mconley livehacks on real Firefox bugs while thinking aloud.

|

|

Air Mozilla: Weekly SUMO Community Meeting Nov. 23, 2016 |

This is the Sumo weekly call

https://air.mozilla.org/weekly-sumo-community-meeting-nov-23-2016/

|

|

Firefox Nightly: These Weeks in Firefox: Issue 6 |

Highlights

- A reminder that Firefox ESR 52 will be the last version to support WinXP

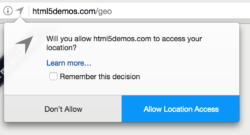

- past reports that the new permission doorhanger UI has landed!

- Permission prompts can no longer be dismissed accidentally

- Available actions are more prominent, not just the main one

- Working on followups in mozilla-central. Notice problems? File them blocking this bug.

- ralin reports that the new HTML-based visual video controls have landed!

- florian and MattN report that the insecure password warning in the URL bar will ship in Firefox 51!

- They also note that work on the contextual warning for insecure passwords made great progress and is currently slated for Firefox 52.

- Flip security.insecure_field_warning.contextual.enabled to true and signon.autofillForms.http to false to test the feature.

- kitcambridge wants people to know that a new add-on has been released to let you examine your own Sync data. As always, the source is also available.

- He also has a call-to-action to validate your bookmarks data (see this mailing list thread and this follow-up post for how to contribute anonymized validator data)
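The two prefs named above for testing the contextual insecure-password warning can simply be flipped in about:config. If you would rather keep test prefs in a profile's user.js file (which Firefox reads at startup), a minimal Python sketch like the following could generate the lines. The helper name `user_js_lines` is hypothetical and not part of any Mozilla tooling.

```python
import json

# Prefs named in the notes for testing the contextual insecure-password warning.
TEST_PREFS = {
    "security.insecure_field_warning.contextual.enabled": True,
    "signon.autofillForms.http": False,
}


def user_js_lines(prefs):
    """Render a dict of prefs as user.js lines, e.g. user_pref("name", true);"""
    # json.dumps yields JavaScript-compatible literals:
    # true/false for booleans, double-quoted strings, plain numbers.
    return [f"user_pref({json.dumps(name)}, {json.dumps(value)});"
            for name, value in prefs.items()]


if __name__ == "__main__":
    print("\n".join(user_js_lines(TEST_PREFS)))
```

Using `json.dumps` for both name and value avoids hand-writing quoting rules for each value type.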

Contributor(s) of the Week

- Please welcome Joe Hildebrand, who has joined Mozilla as Director of Engineering for the Firefox browsers!

- Resolved bugs (excluding employees): https://mzl.la/2gcieyv

- New Contributors

Project Updates

Add-ons

- The team has published a blog post on what’s new for WebExtensions in Firefox 52

- They’re also testing out chrome.storage.sync and working on getting backend storage into release quality

Electrolysis (e10s)

- Turning on e10s-multi on Nightly (with 2 content processes) is blocked on Talos regressions and a few test failures. This should happen sometime soon during the Nightly 53 cycle (and hold on Nightly)

Firefox Core Engineering

- ddurst reports that there is going to be an aggressive push to update “orphaned” Firefox installs (44-47, inclusive) between now and the end of 2016

- “Orphaned” = not updated since 2 versions back.

- Concerted effort to encourage people to update from 42-47 to current (50). 42-25 isn’t a huge chunk, but this is a much more aggressive push.

- What causes orphans?

- Some are just users being slow to update

- Blocked by security suites (Avast was trying to update on users’ behalf). Some of these are worked out with vendors; some are actual problems.

- Some are related to network latency (bugs have been filed and fixed), but ddurst’s team just got a machine that’s not updating and not affected by latency.

- 3.x upgrades

- This is something we’ll turn our attention to once the 42+ orphan level is deemed acceptable

- Issuing updates as minor for 3.x and lower, hopefully
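The notes define an "orphaned" install only loosely. As a rough illustration, assuming "not updated since 2 versions back" means lagging more than two major versions behind the current release (50 at the time), the classification can be sketched in Python. `is_orphaned` and `push_targets` are hypothetical helpers, not Mozilla's update-server logic.

```python
CURRENT_RELEASE = 50  # current Firefox release when these notes were written


def is_orphaned(installed_major: int, current_major: int = CURRENT_RELEASE) -> bool:
    """Assumed reading: an install is orphaned if it lags more than
    two major versions behind the current release."""
    return current_major - installed_major > 2


def push_targets(versions, low: int = 44, high: int = 47):
    """Return the orphaned versions inside the push window (inclusive bounds)."""
    return [v for v in versions if low <= v <= high and is_orphaned(v)]


if __name__ == "__main__":
    print([v for v in range(42, 51) if is_orphaned(v)])  # [42, 43, 44, 45, 46, 47]
    print(push_targets(range(40, 51)))  # [44, 45, 46, 47]
```

Under this reading, every version in the 44-47 push window counts as orphaned, while 48 and 49 do not.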

Form Auto-fill

- MattN wants to draw everyone’s attention to the intent to implement for DOM Web Payments API. That team is coordinating schedules with the Form Auto-fill team.

Platform UI and Other Platform Audibles

- mconley reports that the ability to style -moz-appearance: none checkbox and radio input form fields has landed on central! Aiming to try to uplift to 52.

- daleharvey made it so that we can show the login manager autocomplete popup on focus (this bug was reported 10 years ago!)

- scottwu has a patch up for review that adds a datepicker (to complement the timepicker that was recently added). Link to very detailed spec.

- A patch from MSU students Fred_ and miguel to combine the e10s and non-e10s will likely land this week (but preffed off)

- A patch by MSU student beachjar that vertically centers the will re-land soon now that he’s fixed this bug

Privacy / Security

- nhnt11 has simplified the way we open captive portal tabs

Quality of Experience

- mikedeboer reports that the demo / ideation phase for the new Theming API is nearly finished

- An engineering plan in the making, and almost ready for feedback rounds

- The team is looking at performance measures now (memory, new window creation, tab opening, etc)

- dolske has some onboarding updates:

- 51 Beta is now live with the latest automigration test/data-gathering. (No wizard on startup, migrates and gives option to undo)

- Gijs is starting work on allowing automigration-undo even after activating Sync

- In discussions with verdi about an updated first-run experience (nutshell: simplified, focus on new-tab page so user can quickly see familiar auto-migrated data, starting point for low-key introduction of features)

- Fewer tabs, sign in to FxA, and then directly to about:newtab. (If you migrated from Chrome, your top sites will be there)

Search

- florian reports that recent Places changes improved the performance of coloring visited links in a page and the performance of searching through history

- The team has also fixed the display of Open Search providers on sites that provide a huge amount of them

Sync / Firefox Accounts

- Sync tracker improvements have landed! Please file bugs if you have bookmark sync enabled and see missing or scrambled bookmarks.

- 4 Mozillians have tried the About Sync validator and filed bugs. Keep them coming. (Email to kcambridge@mozilla.com)

- A new Sync storage server written in Go is coming soon

Here are the raw meeting notes that were used to derive this list.

Want to help us build Firefox? Get started here!

Here’s a tool to find some mentored, good first bugs to hack on.

https://blog.nightly.mozilla.org/2016/11/23/these-weeks-in-firefox-issue-6/

|

|

Doug Belshaw: How to create a sustainable web presence |

In the preface to his 2002 book, Small Pieces Loosely Joined, Dave Weinberger writes:

What the Web has done to documents it is doing to just about every institution it touches. The Web isn’t primarily about replacing atoms with bits so that we can, for example, shop on line or make our supply chains more efficient. The Web isn’t even simply empowering groups, such as consumers, that have traditionally had the short end of the stick. Rather, the Web is changing our understanding of what puts things together in the first place. We live in a world that works well if the pieces are stable and have predictable effects on one another. We think of complex institutions and organizations as being like well-oiled machines that work reliably and almost serenely so long as their subordinate pieces perform their designated tasks. Then we go on the Web, and the pieces are so loosely joined that frequently the links don’t work; all too often we get the message (to put it palindromically) “404! Page gap! 404!” But, that’s ok because the Web gets its value not from the smoothness of its overall operation but from its abundance of small nuggets that point to more small nuggets. And, most important, the Web is binding not just pages but us human beings in new ways. We are the true “small pieces” of the Web, and we are loosely joining ourselves in ways that we’re still inventing.

In the fourteen years since Weinberger wrote these words, living in a blended physical/digital world, where things don’t always have stable, predictable effects, has become our everyday lived experience.

As a result, those of us with the required mindset to deal with the impermanence of technologies are constantly riding the wave of innovation on the web, experimenting with different facets of our online identities, and learning new skills that may be immediately useful, or perhaps put to work further down the line.

On the other hand, those with the opposite, a deficit mindset, tend to flock to places of perceived stability: places that, in internet terms, have ‘always’ been around. These might be social networking platforms such as Facebook which ‘everyone’ uses, search engines like Google, or email providers such as Microsoft. This attitude then seeps into the way we approach our projects at work: we look to either repurpose the platforms with which we’re already familiar, or create a one-stop shop where everything related to the project should reside.

I’d argue that we should use the medium of the web in the way that it works best; that is, to go back to the title of Weinberger’s book, as ‘small pieces, loosely joined’. Although I don’t claim any originality or credit for doing so, I want to use my own web presence as an example of this approach.

1. Canonical URL

The one thing that those with a deficit mindset do get right is that you should have a single place to point people towards, one place that serves as a focus of attention. For me, that’s dougbelshaw.com:

As you can see, at the time of writing, this serves as an introduction to my work, as well as providing links to my other places and accounts online. This approach would work equally well for a project, or for an organisation.

Note that I control this space. I own (well, rent) it, and I can make it look however I want, rather than having to follow templates from a provider.

2. Horses for courses

This blog post that you’re reading is on a separate blog from my main blog. I use this one for anything relating to digital/new literacies and, as such, it provides a way for those interested in this aspect of my work to get a ‘clean’ feed, without the ‘noise’ of my other work.

While you can get a similar effect by creating categories within, say, WordPress-powered blogs, in my experience separate blogs work better. It also means that if one blog goes down for whatever reason and is inaccessible to your audience, you still have another place where you can publish.

I also try to experiment with new technologies when creating different blogs, as it means that you have to work with different affordances, and develop different skills, as a result of using various platforms. Just like when you speak a foreign language there are idiomatic ways of saying things, so outlets and platforms have their own peculiarities and nuances.

3. Going with the flow

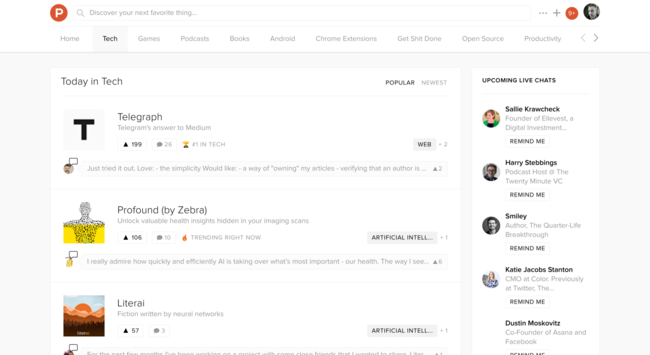

New outlets and platforms come out on a weekly basis. Some gain traction, some don’t. Over time, it becomes easier to tell which of those featured on Product Hunt are likely to gain mass adoption. There’s a whole other post to be written around Open Source versus proprietary solutions, and free versus paid.

As you can see from the above screenshot, Product Hunt today features Telegraph, a new blogging platform similar to Medium, from the makers of [Telegram](telegram.co) (a messaging service I already use). So, of course, I’ll explore that and sign up for an account. Then, when I’m ready to start a new blog I’ll have it in the toolkit.

On the other hand, Telegraph may prove to be a place to which I syndicate my posts. By that, I mean more than just sharing the links on social networks. As I do with many of the other posts I write, I’ll import this post into both Medium and into LinkedIn. After all, I’m in the business of trying to get as many people to see my work as possible.

Conclusion

There’s more to be written on this subject, but I hope this serves as a primer for getting started with a ‘small pieces, loosely joined’ approach to your web presence. Whether it’s for you as an individual, for a project you’re working on, or for your organisation, it’s an approach that works like the web!

Image by Paulo Simões Mendes

Comments? Questions? I’m @dajbelshaw on Twitter, or you can email me: hello@dynamicskillset.com

|

|

Marco Zehe: JavaScript is not an enemy of accessibility! |

When I started making my social media rounds this morning, I came across Jeffrey Zeldman’s call to action for this year’s Blue Beanie Day on November 30th. But I respectfully disagree with a number of points he makes in his post about JavaScript frameworks and their accessibility implications.

Frameworks aren’t inaccessible because they’re frameworks

First, I agree that the spread of new JS frameworks is a temporary problem for accessibility, since many developers who build these frameworks indeed do not know about, or take into account, web standards such as correct HTML semantics or, where these do not work, standards like WAI-ARIA. But if they did, the output those frameworks generate would be just as accessible as plain HTML typed into a plain text editor.

So the problem with inaccessible output is not that it comes from frameworks, but that these frameworks are being told the wrong thing. And in my opinion, the solution cannot be to crawl back into a niche, do only plain HTML and CSS, and ignore JavaScript. It was invented 18 years ago and is actively being improved to provide better user experiences. Ignoring it would be like going back to Windows 95 and hoping the computer still capable of running it lasts another month or two.

I strongly believe that this problem can be solved. The solution to this comes in three main parts.

Education

Web standards and their accessibility implications must become part of any and all university curricula that teach web development. A theme that resurfaces in every conversation I have with hiring managers is that engineers fresh from university have never even heard of accessibility.

And guess who should be teaching the younger generation these basics? Right: us, the seasoned accessibility advocates who have worked in this field for 15 or 20 years and know this stuff. We must push for many more solutions to this teaching problem than we have in the past.

Fix up frameworks and teach others by doing so

The second angle to attack this problem from is fixing existing frameworks and pushing those fixes upstream via pull requests or whatever means is used in that particular project’s environment. And be vocal about it. Explain why you’re doing certain things and what the accessibility and usability benefits are. I know a lot of good people who already do this successfully and have helped make the world a lot better again for many who rely on assistive technologies while accessing web content, and also for those who benefit from these fixes without using such assistive technologies.

And even if you’re someone who doesn’t have the coding skills to fix up the frameworks yourself, open pull requests. Describe the issues, maybe link to some resources about web accessibility. And tell others you opened that pull request, so they may become aware and jump in to provide their skills.

But first and foremost, be friendly when you do those things. The framework authors or community aren’t your enemy. They may lack some knowledge, but that is probably just a matter of improper education at their university (see above), or lack of awareness. Both can be changed, and this is only achieved by being friendly, empathic, and not by putting them in a defensive position by being rude.

Push on implementors to improve standards support

A third point, just as important, is to push implementors, in an equally friendly tone, to agree on technical issues in standards bodies, implement better support for new widgets, and improve support for existing ones (for example, styling of form elements), and thus give developers less reason to reinvent the wheel with every framework. The better the browser tooling is, the more accessible the web becomes from this angle, too.

JavaScript is not our enemy

JavaScript and the frameworks built with it are not our enemy. They are neither the enemy of accessibility nor of web standards in general. Nor are the people who thought up those frameworks to make other developers’ lives easier. They want to do good things for the web just as accessibility advocates do; the two groups just need to work together more.

https://www.marcozehe.de/2016/11/23/javascript-not-enemy-accessibility/

|

|

Daniel Stenberg: curl security audit |

“the overall impression of the state of security and robustness of the cURL library was positive.”

I asked for, and we were granted, a security audit of curl from the Mozilla Secure Open Source program a while ago. Mozilla brought in a third-party company to do the job and footed the bill for it. The auditing company is called Cure53.

I applied for the security audit because I feel that we’ve had some security related issues lately and I’ve had the feeling that we might be missing something so it would be really good to get some experts’ eyes on the code. Also, as curl is one of the most used software components in the world a serious problem in curl could have a serious impact on tools, devices and applications everywhere. We don’t want that to happen.

Scans and tests and all

We run static analyzers on the code frequently, with a zero-warnings tolerance. The daily clang-analyzer scan hasn’t found a problem in a long time, and the Coverity scan, run once every few weeks, occasionally finds something suspicious, but we always fix those immediately.

We have thousands of tests and unit tests that we run non-stop on the code on multiple platforms running multiple build combinations. We also use valgrind when running tests to verify memory use and check for potential memory leaks.

Secrecy

The audit itself, the report, and the work on fixing the issues were all done on closed mailing lists, without revealing to the world what was really going on, all as our fine security process describes.

There are several downsides to fixing things secretly. One of the primary ones is that we get far fewer eyes on the fixes, and not many people are involved when discussing solutions or approaches to the issues at hand. Another is that our test infrastructure is built for, and runs only, public code, so the fixes can’t really be fully tested until they are merged into the public git repository.

The report

We got the report on September 23, 2016 and it certainly gave us a lot of work.

The audit report has now been made public and is very interesting reading if you’re into security, C code and curl hacking. I find the report very clear and well written; it spells out each problem very accurately and even shows proof-of-concept code snippets and exploit examples to drive the points home.

Quoted from the report intro:

As for the approach, the test was rooted in the public availability of the source code belonging to the cURL software and the investigation involved five testers of the Cure53 team. The tool was tested over the course of twenty days in August and September of 2016 and main efforts were focused on examining cURL 7.50.1. and later versions of cURL. It has to be noted that rather than employ fuzzing or similar approaches to validate the robustness of the build of the application and library, the latter goal was pursued through a classic source code audit. Sources covering authentication, various protocols, and, partly, SSL/TLS, were analyzed in considerable detail. A rationale behind this type of scoping pointed to these parts of the cURL tool that were most likely to be prone and exposed to real-life attack scenarios. Rounding up the methodology of the classic code audit, Cure53 benefited from certain tools, which included ASAN targeted with detecting memory errors, as well as Helgrind, which was tasked with pinpointing synchronization errors with the threading model.

They identified no fewer than twenty-three (23) potential problems in the code, nine of which were deemed security vulnerabilities. But I’d also like to emphasize that they also said this:

At the same time, the overall impression of the state of security and robustness of the cURL library was positive.

Resolving problems

In the curl security team we decided to downgrade one of the 9 vulnerabilities to a “plain bug”, since the required attack scenario was very complicated and the risk deemed small, and we merged two of the issues and treated them as a single one. That left us with 7 security vulnerabilities. Whoa, that’s a lot. The largest number we’d ever fixed in a single release before was 4.

I consider handling security issues in the project to be one of my most important tasks; pretty much all other jobs are down-prioritized in comparison. So with a large queue of security work, a lot of bug fixing and work on features basically had to halt.

You can get a fairly detailed description of our work on fixing the issues in the fix and validation log. The report, the log and the advisories we’ve already posted should cover enough details about these problems and associated fixes that I don’t feel a need to write about them much further.

More problems

Just because we had our hands full with an audit report doesn’t mean that the world stops, right? While working through the issues one by one, we also received 4 additional security issues, reported by three independent individuals.

All these issues gave me a really busy period and it felt great when we finally shipped 7.51.0 and announced all those eleven fixes to the world and I could get a short period of relief until the next tsunami hits.

|

|

Matjaz Horvat: Set up your own Pontoon instance in 5 minutes |

Heroku is a cloud Platform-as-a-Service (PaaS) that we have been using for Pontoon for over a year and a half now. Despite some specifics that are not particularly suitable for our use case, it has proved to be a very reliable and easy-to-use deployment model.

Thanks to the amazing Jarek, you can now freely deploy your own Pontoon instance in just a few simple steps, without leaving the web browser and with very little configuration.

To start the setup, click the Deploy to Heroku button in your fork, the upstream repository, or this blog post. You will need to log in to Heroku or create an account first.

Next, you’ll be presented with the configuration page. All settings are optional, so you can simply scroll to the bottom of the page and click Deploy.

Still, I suggest you set the App Name, for an easy-to-remember URL, and the Admin email and password, which are required for logging in (instead of Firefox Accounts, custom Heroku deployments use a conventional login form).

When setup completes, you’re ready to View your personal Pontoon instance in your browser or Manage App in the Heroku Dashboard.

This method is also pretty convenient for quickly testing or demonstrating any Pontoon improvements you might want to provide, without setting up a development environment locally. Simply click Deploy to Heroku from the README file in your fork after you have pushed the changes.

If you’re searching for inspiration on what to hack on, we have some ideas!

http://horv.at/blog/set-up-your-own-pontoon-instance-in-5-minutes/

|

|

Mozilla Addons Blog: webextensions-examples and Hacktoberfest |

Hacktoberfest is an event organized by DigitalOcean in partnership with GitHub. It encourages contributions to open source projects during the month of October. This year the webextensions-examples project participated.

“webextensions-examples” is a collection of simple but complete and installable WebExtensions, that demonstrate how to use the APIs and provide a starting point for people writing their own WebExtensions.

We had a great response: contributions from 8 new volunteers in October. Contributions included 4 brand-new complete examples:

- emoji-substitution: shows how to use a content script to modify web pages. “emoji-substitution” replaces text in web pages with the corresponding emoji.

- list-cookies: shows how to use the cookies API, by showing a popup that lists all the cookies in the active tab.

- selection-to-clipboard: shows how to copy text to the system clipboard, by injecting a content script into pages that copies the selection to the clipboard on mouse-up events.

- window-manipulator: shows how to use the windows API to manipulate browser windows. It adds options to resize, open, close, and minimize windows.
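The text-replacement idea behind emoji-substitution can be illustrated outside the browser. This is a minimal Python sketch of the substitution step only, assuming a hypothetical two-entry word-to-emoji map; the actual example is a JavaScript content script that runs inside web pages, and none of the names below come from it.

```python
import re

# Hypothetical sample map (keys must be lowercase); the real example defines its own list.
EMOJI_MAP = {
    "apple": "\U0001F34E",  # red apple
    "heart": "\u2764",      # heavy black heart
}


def substitute_emoji(text: str, mapping: dict = EMOJI_MAP) -> str:
    """Replace whole-word, case-insensitive matches of mapping keys with emoji."""
    if not mapping:
        return text
    # Longest keys first, so a longer word wins when keys share a prefix.
    words = sorted(mapping, key=len, reverse=True)
    pattern = re.compile(r"\b(?:" + "|".join(map(re.escape, words)) + r")\b",
                         re.IGNORECASE)
    # Look up the matched word in lowercase to find its emoji.
    return pattern.sub(lambda m: mapping[m.group(0).lower()], text)


if __name__ == "__main__":
    print(substitute_emoji("An Apple a day"))
```

The `\b` word boundaries keep the sketch from rewriting substrings, so "pineapple" is left alone while "Apple" is replaced.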

So thanks to DigitalOcean, to the add-ons team for helping me review PRs, and most of all, to our new contributors:

https://blog.mozilla.org/addons/2016/11/22/webextensions-examples-and-hacktoberfest/

|

|

Christian Heilmann: Interviewing a depressed internet at The Internet Days |

This morning I gave one of two keynotes at the Internet Days in Stockholm, Sweden. Suffice to say, I felt well chuffed to be presenting at such a prestigious event and opening up for a day that will be closed by an interview with Edward Snowden!

Frankly, I freaked out and wanted to do something special. So, instead of doing a “state of the web” talk or some “here is what’s amazing right now”, I thought it would be fun to analyse how the web is doing. As such, I pretended to be a psychiatrist who interviews a slightly depressed internet on the verge of a midlife crisis.

The keynote is already available on YouTube.

The slides are on Slideshare:

Here is the script, which – of course – I failed to stick to. The bits in strong are my questions, the rest the answers of a slightly depressed internet.

Hello again, Internet. How are we doing today?

Not good, and I don’t quite know why.

It’s not that you feel threatened again, is it?

No, no, that’s a thing of the past. It was a bit scary for a while when the tech press gave up on me and everybody talked to those other guys: Apps.

But it seems that this is well over. People don’t download apps anymore. I think the reason is that they were too pushy. Deep down, people don’t want to be locked in. They don’t want to have to deal with software issues all the time. A lot of apps felt like very needy Tamagotchis – always wanting huge updates and asking for access to all kinds of data. Others even flat out told you that you’re in the wrong country or that the phone you spent $500 on last month is not good enough any longer.

No, I really think people are coming back to me.

That’s a good thing, isn’t it?

Yes, I suppose. But, I feel bad. I don’t think I make a difference any longer. People see me as a given. They see me as plumbing and aren’t interested in how I work. They don’t see me as someone to talk to and create things with. They just want to consume what others do. And – much like running clear water – they don’t understand that it isn’t a normal thing for everybody. It is actually a privilege to be able to talk to me freely. And I feel people are not using this privilege.

I want to matter, I want to be known as a great person to talk to.

Oh dear, this sounds like you’re heading into a midlife crisis. Promise me you won’t do something stupid like buying a fancy sports car to feel better…

It’s funny you say that. I don’t want to, but I have no choice. People keep pushing me into things. Things I never thought I needed to be in.

But, surely that must feel good. Change is good, right?

Yes, and no. Of course it feels great that people realise I’m flexible enough to power whatever. But there are a few issues with that. First of all, I don’t want to power things that don’t solve issues but are blatant consumerism. When people talk about “internet of things” it is mostly about solving inconveniences of the rich and bored instead of solving real problems. There are a few things that I love to power. I’m great at monitoring things and telling you if something is prone to breaking before it does. But these are rare. Most things I am pushed into are borderline silly and made to break. Consume more, do less. Constant accumulation of data with no real insight or outcome. Someone wants that data. It doesn’t go back to the owner of the things in most cases – or only a small part of it as a cute graph. I don’t keep that data either. But I get dizzy with the amount of traffic I need to deal with.

And the worst is that people connect things to me without considering the consequences. Just lately a lot of these things ganged up on me and took down a large bit of me for a while. The reason was that none of those things ever challenged their owners to set them up properly. A lack of strong passwords allowed a whole army of things that shouldn’t need to communicate much to shout and start a riot.

So, you feel misused and not appreciated?

Yes, many are bored of how I look now and want me to be flashier than before. It’s all about escaping into a world of one’s own. A world that is created for you and can be consumed without effort. I am not like this. I’m here to connect people. To help collaborative work. I want to empower people to communicate on a human level.

But, that’s what people do, isn’t it? People create more data than ever.

Maybe. Yes, I could be, but I don’t think I am. Sure, people talk to me. People look into cameras and take photos and send them to me. But I don’t get explanations. People don’t describe what they do and they don’t care if they can find it again later. Being first and getting quick likes is more important. It’s just adding more stuff, over and over.

So, people talk to you and it is meaningless? You feel like all you get is small talk?

I wish it were small. People have no idea how I work and have no empathy for how well connected the people they talk to are. I get huge files that could be small. And they don’t contain any information on what they are. I need to rely on others to find that information for me.

When it comes down to it, I am stupid. That’s why I am still around. I am so simple, I can’t break.

The ones using me to get information from humans use intelligent systems to try to decipher what it all means. Take the chat systems you talked about. These analyse images and turn them into words. They detect faces, emotions and text. This data goes somewhere, I don’t know where and most of the users don’t care. These systems then mimic human communication and give the recipient intelligent answers to choose from. Isn’t that cool?

People use me to communicate with other people and use language created for them by machines. Instead of using us, humans are becoming the transport mechanism for machines to talk to each other and mine data in between.

This applies to almost all content on the web – it is crazy. Algorithms write the news feeds; not humans.

Why do you think that is?

Speed. Constant change. Always new, always more, always more optimised. People don’t take time to verify things. It sickens me.

Why do you care?

You’re right, I shouldn’t be bothered. But I don’t feel good. I feel slow and sluggish.

Take a tweet. 140 letters, right?

Loading a URL that is a tweet on a computer downloads 3 megabytes of code. I feel – let’s name it – fat. I want to talk to everybody, but I am fed too much extra sugar and digital carbs. Most of the new people who are eager to meet me will never get me.

This is because this company wants to give users their site functionality as soon as possible. But it feels more sinister to me. It is not about using me; it is about replacing me and keeping people inside of one system.

I am meant to be a loosely connected network of on-demand solutions. These used to be small, not a whopping 3 megabytes to read 140 characters.

People still don’t get that about me. Maybe it is because I got two fathers and no mother. Some people get angry about this and don’t understand it. Maybe a woman’s touch would have made a better system.

But the worst is that I am almost in my 30s and I still haven’t got an income.

What do you mean by that?

There seems to be no way to make money with me. All everybody does is show ads for products and services people may or may not buy.

Ask for money to access something on me and people will not use it. Or find an illegal copy of the same thing. We raised a whole generation of people who don’t understand that creating on me is free, but not everything that I show is free to use and to consume.

Ads are making me sick and obese. Many of them don’t stop at showing people products. They spy on the people who use me, mine how they interact with things and sell that data to others. In the past, only people who use me for criminal purposes did that. Blackmail and such.

Can’t you do anything about that?

I can’t, but some people try. There are ad blockers, new browsers that remove ads and proxy services. But to me these are just laxatives. I still need to consume the whole lot and then I need to get rid of it again. That’s not a healthy way to lose weight.

Some of them have nasty side effects, as you never know who watches the watchmen.

What do you need to feel better?

I want to communicate with humans again. I want to see real content, human interactions and real emotions. I don’t want to have predictable creativity and quick chuckles. I want people to use me for what I was meant to do – real communication and creativity.

Yes, you said that. What’s stopping you?

Two kinds of people. The ones who know my weaknesses and use me to attack others and bully them. This makes me really sick, and I find it disappointing that those who act out of malice are better at using me than others.

The second kind of people are those who don’t care. They have me, but they don’t understand that I could bring prosperity, information and creativity to everyone on the planet.

I need to lose some weight and I need to be more open to everybody out there again. Not dependent on a shiny and well-connected consumption device.

So, you’re stuck in between bullies and sheep?

Your words. Not mine. I like people. Some of my best friends are people. I want to keep empowering them.

However, if we don’t act now, those who always tried to control me will have won. Not because they are good at it, but because the intelligent, kind and good people of the web allowed the hooligans to take over. And that allows those who want to censor and limit me to do so in the name of “security”.

You sound worried…

I am. We all should be. This is not a time to be silent. This is not a time to behave. This is a time that needs rebels.

People who use their words, their kindness and their willingness to teach others the benefits of using me rather than consuming through me. Hey, maybe there are quite a few here who listened to me and will help me get fit and happy again.

The feedback so far was amazing. People loved the idea and I had a whole day of interviews, chats, podcasts and more. Tomorrow will be fun, too, I am sure.

https://www.christianheilmann.com/2016/11/22/interviewing-a-depressed-internet-at-the-internet-days/


Ted Mielczarek: sccache, Mozilla’s distributed compiler cache, now written in Rust |

We build a lot of code at Mozilla. Every time someone pushes changes to the code that makes up Firefox we build the application on multiple platforms in a variety of build configurations. This means that we’re constantly looking for ways to make the build faster–to get faster results from our builds and tests and to use less machine time so that we can use fewer machines for builds and save money.

A few years ago my colleague Mike Hommey did some work to see if we could deploy a shared compiler cache. We had been using ccache for many of our builds, but since we use ephemeral build machines in AWS and we also have a large pool of build machines, it doesn’t help as much as it does on a developer’s local machine. If you’re interested in the details, I’d recommend you go read his series of blog posts: Shared compilation cache experiment, Shared compilation cache experiment, part 2, Testing shared cache on try and Analyzing shared cache on try. The short version is that the project (which he named sccache) was extremely successful and improved our build times in automation quite a bit. Another nice win was that he added support for Microsoft Visual C++ in sccache, which is not supported by ccache, so we were finally able to use a compiler cache on our Windows builds.
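The core idea behind a shared compiler cache can be sketched in a few lines of Python. This is a toy model under stated assumptions, not sccache’s code: the cache key here is simply a SHA-256 over the compiler name, its arguments and the preprocessed source, and an in-memory dict stands in for the shared storage that would let many ephemeral build machines reuse each other’s results. CompilerCache and run_compiler are hypothetical names.

```python
import hashlib
from typing import Callable, Dict, List


class CompilerCache:
    """Toy compiler cache (hypothetical): maps a hash of the compile inputs to an object file."""

    def __init__(self) -> None:
        # Stand-in for a shared backend reachable by every build machine.
        self.store: Dict[str, bytes] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def key(compiler: str, args: List[str], preprocessed_source: bytes) -> str:
        h = hashlib.sha256()
        h.update(compiler.encode())
        for arg in args:
            # NUL separators keep ["-a", "b"] and ["-ab"] from hashing the same.
            h.update(b"\0" + arg.encode())
        h.update(b"\0\0" + preprocessed_source)
        return h.hexdigest()

    def compile(self, compiler: str, args: List[str], preprocessed_source: bytes,
                run_compiler: Callable[[], bytes]) -> bytes:
        k = self.key(compiler, args, preprocessed_source)
        if k in self.store:
            self.hits += 1  # cache hit: skip the real compiler entirely
            return self.store[k]
        self.misses += 1  # cache miss: compile once, then keep the result for others
        obj = run_compiler()
        self.store[k] = obj
        return obj


cache = CompilerCache()
src = b"int answer(void) { return 42; }\n"


def fake_compiler() -> bytes:
    return b"OBJ:" + src  # pretend object file


cache.compile("cc", ["-O2", "-c"], src, fake_compiler)
cache.compile("cc", ["-O2", "-c"], src, fake_compiler)
print(cache.hits, cache.misses)  # 1 1
```

The second identical compile never calls the compiler, which is exactly the win on a large pool of fresh machines where a purely local ccache would start cold every time.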

This year we started a concerted effort to drive build times down even more, and we’ve made some great headway. Some of the ideas for improvement we came up with would involve changes to sccache. I started looking at making changes to the existing Python sccache codebase and got a bit frustrated. This is not to say that Mike wrote bad code; he does fantastic work! By the nature of its design, sccache does a lot of concurrent work, and Python just does not excel at that kind of workload. When I talked with Mike, he mentioned that he had originally planned to write sccache in Rust, but at the time Rust had not had its 1.0 release and the ecosystem just wasn’t ready for the work he needed to do. I had spent several months learning Rust after attending an “introduction to Rust” training session and I thought it’d be a good time to revisit that choice. (I went back and looked at some meeting notes and in late April I wrote a bullet point “Got distracted and started rewriting sccache in Rust”.)

As with all good software rewrites, the reality of things made it into a much longer project than anticipated. (In fairness to myself, I did set it aside for a few months to spend time on another project.) After seven months of part-time work on the project it’s finally gotten to the point where I’m ready to put it into production usage, replacing the existing Python tool. I did a series of builds on our Try server to compare performance of the existing sccache and the new version, mostly to make sure that I wasn’t going to cause regressions in build time. I was pleasantly surprised to find that the Rust version gave us a noticeable improvement in build times! I hadn’t done any explicit work on optimizing it, but some of the improvements are likely due to the process startup overhead being much lower for a Rust binary than a Python script. It actually lowered the time we spent running our configure script by about 40% on our Linux builds and 20% on our OS X builds, which makes some sense because configure invokes the compiler quite a few times, and when using ccache or sccache it will invoke the compiler using that tool.

My next steps are to tackle the improvements that were initially discussed. One is making sccache usable for developers’ local builds; since Windows developers currently can’t use ccache, this should help quite a bit there. We also want to make it possible for developers to use sccache and get cache hits from the builds that our automation has already done. I’d also like to spend some time polishing the tool a bit so that it’s usable by a wider audience outside of Mozilla. It solves real problems that I’m sure other organizations face as well, and it’d be great for others to benefit from our work. Plus, it’s pretty nice to have an excuse to work in Rust.


Air Mozilla: Mozilla Weekly Project Meeting, 21 Nov 2016 |

The Monday Project Meeting


https://air.mozilla.org/mozilla-weekly-project-meeting-20161121/


Tarek Ziadé: Smoke testing Swagger-based Web Services |

Swagger has come a long way. The project got renamed ("Open API") and it seems to have a vibrant community.

If you are not familiar with it, it's a specification to describe your HTTP endpoints (spec here) that has been around for a few years now, and it seems to be getting really mature at this point.

I was surprised to find out how many tools are available now. The dedicated page has a serious list of tools.

There's even a Flask-based framework used by Zalando to build microservices.

Using Swagger makes a lot of sense for improving the discoverability and documentation of JSON web services. But in my experience, with these kinds of specs it's always the same issue: unless they provide a real advantage for developers, they are not maintained and eventually get removed from projects.

So that's what I am experimenting on right now.

One use case that interests me the most is to see whether we can automate part of the testing we're doing at Mozilla on our web services by using Swagger specs.

That's why I've started to introduce Swagger on a handful of projects, so we can experiment on tools around that spec.

One project I am experimenting on is called Smwogger, a silly contraction of Swagger and Smoke (I am a specialist in stupid project names).

The plan is to see if we can fully automate smoke tests against our APIs. That is, a very simple test scenario against a deployment, to make sure everything looks OK.

In order to do this, I have added an extension to the spec, called x-smoke-test, where developers can describe a simple scenario to test the API with a couple of assertions. There are a couple of tools like that already, but I wanted to see whether we could have one that could be 100% based on the spec file and not require any extra coding.

Since every endpoint has an operation identifier, it's easy enough to describe it and have a script (==Smwogger) that plays it.
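To illustrate why operation identifiers make this easy, here is a small Python sketch that builds an index from operationId to HTTP method and path template, given a spec already parsed into a dict. It is an assumption-laden illustration, not Smwogger's code: index_operations is a hypothetical name, and the spec fragment is hand-written, loosely modeled on the shavar run in the README extract that follows.

```python
from typing import Any, Dict, Tuple

# Keys of a Swagger/OpenAPI path item that describe HTTP operations.
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}


def index_operations(spec: Dict[str, Any]) -> Dict[str, Tuple[str, str]]:
    """Map each operationId in a parsed spec to its (HTTP method, path template)."""
    index: Dict[str, Tuple[str, str]] = {}
    for path, item in spec.get("paths", {}).items():
        for method, operation in item.items():
            if method.lower() not in HTTP_METHODS or not isinstance(operation, dict):
                continue  # skip non-operation keys such as "parameters"
            op_id = operation.get("operationId")
            if op_id:
                index[op_id] = (method.upper(), path)
    return index


# Hypothetical, hand-written spec fragment (not the real shavar.yaml).
spec = {
    "paths": {
        "/__heartbeat__": {"get": {"operationId": "getHeartbeat"}},
        "/downloads": {"post": {"operationId": "getDownloads"}},
    }
}
print(index_operations(spec))
# {'getHeartbeat': ('GET', '/__heartbeat__'), 'getDownloads': ('POST', '/downloads')}
```

With such an index, a scenario only has to name operations; the method and URL come from the spec itself, so no extra test code is needed.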

Here's my first shot at it... The project is at https://github.com/tarekziade/smwogger and below is an extract from its README

Running Smwogger

To add a smoke test for your API, add an x-smoke-test section to your YAML or JSON file, describing your smoke test scenario.

Then you can run the test by pointing Smwogger at the Swagger spec URL (or the path to a file):

$ bin/smwogger smwogger/tests/shavar.yaml

Scanning spec... OK

This is project 'Shavar Service'

Mozilla's implementation of the Safe Browsing protocol

Version 0.7.0

Running Scenario

1:getHeartbeat... OK

2:getDownloads... OK

3:getDownloads... OK

If you need to get details about the requests and responses sent, you can use the -v option:

$ bin/smwogger -v smwogger/tests/shavar.yaml

Scanning spec... OK

This is project 'Shavar Service'

Mozilla's implementation of the Safe Browsing protocol

Version 0.7.0

Running Scenario

1:getHeartbeat...

GET https://shavar.somwehere.com/__heartbeat__

>>>

HTTP/1.1 200 OK

Content-Type: text/plain; charset=UTF-8

Date: Mon, 21 Nov 2016 14:03:19 GMT

Content-Length: 2

Connection: keep-alive

OK

<<<

OK

2:getDownloads...

POST https://shavar.somwehere.com/downloads

Content-Length: 30

moztestpub-track-digest256;a:1

>>>

HTTP/1.1 200 OK

Content-Type: application/octet-stream

Date: Mon, 21 Nov 2016 14:03:23 GMT

Content-Length: 118

Connection: keep-alive

n:3600

i:moztestpub-track-digest256

ad:1

u:tracking-protection.somwehere.com/moztestpub-track-digest256/1469223014

<<<

OK

3:getDownloads...

POST https://shavar.somwehere.com/downloads

Content-Length: 35

moztestpub-trackwhite-digest256;a:1

>>>

HTTP/1.1 200 OK

Content-Type: application/octet-stream

Date: Mon, 21 Nov 2016 14:03:23 GMT

Content-Length: 128

Connection: keep-alive

n:3600

i:moztestpub-trackwhite-digest256

ad:1

u:tracking-protection.somwehere.com/moztestpub-trackwhite-digest256/1469551567

<<<

OK

Scenario

A scenario is described by providing a sequence of operations to perform, given their operationId.

For each operation, you can make some assertions on the response by providing values for the status code and some headers.

Example in YAML

x-smoke-test:
  scenario:
  - getSomething:
      response:
        status: 200
        headers:
          Content-Type: application/json
  - getSomethingElse:
      response:
        status: 200
  - getSomething:
      response:
        status: 200

If a response does not match, an assertion error will be raised.
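The playback and assertion logic described above can be sketched in Python. This is a hypothetical illustration, not Smwogger's implementation: the call hook stands in for whatever actually performs the HTTP request, and header values are compared exactly (only header names are case-insensitive here).

```python
from typing import Any, Callable, Dict, List, Mapping, Tuple, Union

Step = Union[str, Dict[str, Any]]          # "opId" or {"opId": {...options...}}
Response = Tuple[int, Mapping[str, str]]   # (status code, headers)


def check_response(op_id: str, expected: Mapping[str, Any],
                   status: int, headers: Mapping[str, str]) -> None:
    """Raise AssertionError if the response does not match the expectations."""
    if "status" in expected and status != expected["status"]:
        raise AssertionError(f"{op_id}: expected status {expected['status']}, got {status}")
    # HTTP header names are case-insensitive, so compare them lowercased.
    actual = {name.lower(): value for name, value in headers.items()}
    for name, value in (expected.get("headers") or {}).items():
        got = actual.get(name.lower())
        if got != value:
            raise AssertionError(f"{op_id}: expected {name}: {value}, got {got}")


def run_scenario(scenario: List[Step], call: Callable[[str], Response]) -> List[str]:
    """Play each step in order; `call` is a hypothetical hook performing the request."""
    report = []
    for number, step in enumerate(scenario, start=1):
        if isinstance(step, str):
            op_id, options = step, {}
        else:
            (op_id, options), = step.items()  # each step is a one-key mapping
            options = options or {}
        status, headers = call(op_id)
        check_response(op_id, options.get("response") or {}, status, headers)
        report.append(f"{number}:{op_id}... OK")
    return report


scenario = [
    {"getSomething": {"response": {"status": 200,
                                   "headers": {"Content-Type": "application/json"}}}},
    {"getSomethingElse": {"response": {"status": 200}}},
]
fake_call = lambda op_id: (200, {"content-type": "application/json"})
print(run_scenario(scenario, fake_call))
# ['1:getSomething... OK', '2:getSomethingElse... OK']
```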

Posting data

When you are posting data, you can provide the request body content in the operation under the request key.

Example in YAML

x-smoke-test:
  scenario:
  - postSomething:
      request:
        body: This is the body I am sending.
      response:
        status: 200

Replacing Path variables

If some of your paths are using template variables, as defined by the swagger spec, you can use the path option:

x-smoke-test:
  scenario:
  - postSomething:
      request:
        body: This is the body I am sending.
        path:
          var1: ok
          var2: blah
      response:
        status: 200

You can also define global path values that will be looked up when formatting paths. In that case, variables have to be defined in a top-level path section:

x-smoke-test:
  path:
    var1: ok
  scenario:
  - postSomething:
      request:
        body: This is the body I am sending.
        path:
          var2: blah
      response:
        status: 200
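Filling a Swagger-style path template from these two sections can be sketched with Python's ChainMap. This is a hypothetical sketch, not Smwogger's code: format_path is an illustrative name, the /things/{var1}/{var2} template is invented, and the precedence (an operation's own path values shadow the top-level ones) is an assumption.

```python
from collections import ChainMap
from typing import Mapping


def format_path(template: str, operation_path: Mapping[str, str],
                global_path: Mapping[str, str]) -> str:
    """Fill Swagger-style {name} placeholders in a path template.

    Assumption: values from the operation's own path section shadow the
    top-level x-smoke-test path section; a missing variable raises KeyError.
    """
    return template.format_map(ChainMap(dict(operation_path), dict(global_path)))


# Hypothetical template using the var1/var2 names from the examples above.
print(format_path("/things/{var1}/{var2}", {"var2": "blah"}, {"var1": "ok"}))  # /things/ok/blah
```

ChainMap looks keys up in order without copying or merging the dicts, which keeps the precedence rule explicit in a single line.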

Variables

You can extract values from responses in order to reuse them in subsequent operations, whether it's to replace variables in path templates or to create a body.

For example, if getSomething returns a JSON dict with a "foo" value, you can extract it by declaring it in a vars section inside the response key:

x-smoke-test:
  path:
    var1: ok
  scenario:
  - getSomething:
      request:
        body: This is the body I am sending.
        path:
          var2: blah
      response:
        status: 200
        vars:
          foo:
            query: foo
            default: baz

Smwogger will use the query value to know where to look in the response body and extract the value. If the value is not found and default is provided, the variable will take that value.
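The README does not spell out the query syntax beyond "where to look in the response body", so this Python sketch assumes a hypothetical dotted path into a JSON body (for example items.0.id). It applies default when nothing is found; raising an error when there is neither a value nor a default is our own choice. query_json and extract_vars are illustrative names, not Smwogger's API.

```python
import json
from typing import Any, Dict, Mapping

_MISSING = object()  # sentinel so that null/false values in the body still count as found


def query_json(body: Any, query: str) -> Any:
    """Follow a dotted path such as "items.0.id" (assumed syntax) through parsed JSON."""
    current = body
    for part in query.split("."):
        if isinstance(current, dict) and part in current:
            current = current[part]
        elif isinstance(current, list) and part.isdigit() and int(part) < len(current):
            current = current[int(part)]
        else:
            return _MISSING
    return current


def extract_vars(raw_body: str, var_specs: Mapping[str, Mapping[str, Any]]) -> Dict[str, Any]:
    """Build the variables declared under a response's vars section."""
    body = json.loads(raw_body)
    extracted: Dict[str, Any] = {}
    for name, spec in var_specs.items():
        value = query_json(body, spec["query"])
        if value is _MISSING:
            if "default" not in spec:
                raise KeyError(f"{name}: nothing found at {spec['query']!r} and no default")
            value = spec["default"]
        extracted[name] = value
    return extracted


specs = {"foo": {"query": "foo", "default": "baz"}}
print(extract_vars('{"foo": "bar"}', specs))  # {'foo': 'bar'}
print(extract_vars('{"other": 1}', specs))    # {'foo': 'baz'}
```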

Once the variable is set, it will be reused by Smwogger for subsequent operations, to replace variables in path templates.

The path formatting is done automatically. Smwogger will look first at variables defined in operations, then at the path sections.

Conclusion

None for now. This is an ongoing experiment. But happy to get your feedback on github!

https://ziade.org/2016/11/21/smoke-testing-swagger-based-web-services/


Nick Desaulniers: Static and Dynamic Libraries |

This is the second post in a series on memory segmentation. It covers working with static and dynamic libraries in Linux and OSX. Make sure to check out the first on object files and symbols.

Let’s say we wanted to reuse some of the code from our previous project in our next one. We could continue to copy around object files, but let’s say we have a bunch and it’s hard to keep track of all of them. Let’s combine multiple object files into an archive or static library. Similar to a more conventional zip file or “compressed archive,” our static library will be an uncompressed archive.

We can use the ar command to create and manipulate a static archive.

$ ar -rv libhello.a x.o y.o

The -r flag will create the archive named libhello.a and add the files x.o and y.o to its index. I like to add the -v flag for verbose output.

Then we can use the familiar nm tool I introduced in the previous post to examine the content of the archives and their symbols.


Some other useful flags for ar are -d to delete an object file (e.g. ar -d libhello.a y.o) and -u to update existing members of the archive when their source and object files are updated. Not only can we run nm on our archive; otool and objdump both work too.
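To make “uncompressed archive” concrete, here is a small Python sketch of the classic ar layout as we understand it: an 8-byte "!<arch>\n" magic string, then for each member a 60-byte fixed-width text header (name, mtime, uid, gid, octal mode, decimal size and a closing "`\n") followed by the raw member bytes, padded to an even offset. write_ar and list_ar are hypothetical helpers that only handle a toy archive; real archives produced by ar typically also carry a symbol-index member and may use GNU or BSD long-name conventions, which this sketch ignores.

```python
import io
from typing import Dict, List, Tuple

AR_MAGIC = b"!<arch>\n"


def write_ar(members: Dict[str, bytes]) -> bytes:
    """Write a minimal ar archive with short, GNU-style ("name/") member names."""
    out = io.BytesIO()
    out.write(AR_MAGIC)
    for name, data in members.items():
        if len(name) > 15:
            raise ValueError("this sketch only handles short member names")
        header = (
            f"{name + '/':<16}"   # name, terminated by '/'
            f"{0:<12}"            # mtime
            f"{0:<6}"             # owner uid
            f"{0:<6}"             # group gid
            f"{0o644:<8o}"        # file mode, written in octal
            f"{len(data):<10}"    # member size in bytes, decimal
            "`\n"                 # header terminator
        ).encode("ascii")         # always 60 bytes given the limits above
        out.write(header)
        out.write(data)           # member bytes are stored as-is: no compression
        if len(data) % 2:
            out.write(b"\n")      # pad each member to an even offset
    return out.getvalue()


def list_ar(archive: bytes) -> List[Tuple[str, bytes]]:
    """Return (name, data) for every member of a simple archive."""
    if not archive.startswith(AR_MAGIC):
        raise ValueError("not an ar archive")
    pos = len(AR_MAGIC)
    members = []
    while pos < len(archive):
        header = archive[pos:pos + 60]
        name = header[0:16].decode("ascii").rstrip().rstrip("/")
        size = int(header[48:58].decode("ascii"))
        members.append((name, archive[pos + 60:pos + 60 + size]))
        pos += 60 + size + (size % 2)
    return members


archive = write_ar({"x.o": b"\x7fELF fake x", "y.o": b"\x7fELF fake y"})
for member_name, member_data in list_ar(archive):
    print(member_name, len(member_data))
```

Because the headers are plain fixed-width text, the member bytes sit in the file verbatim, which is why tools like nm can index straight into an archive.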

Now that we have our static library, we can statically link it to our program and see the resulting symbols. The .a suffix is typical for archive files on both OSX and Linux.


Our compiler understands how to index into archive files and pull out the functions it needs to combine into the final executable. If we use a static library to statically link all functions required, we can have one binary with no dependencies. This can make deployment of binaries simple, but also greatly increase their size. Upgrading large binaries incrementally becomes more costly in terms of space.

While static libraries allowed us to reuse source code, static linkage does not allow us to reuse memory for executable code between different processes. I really want to put off talking about memory benefits until the next post, but know that the solution to this problem lies in “dynamic libraries.”

While having a single binary file keeps things simple, it can really hamper memory sharing and incremental relinking. For example, if you have multiple executables that are all built with the same static library, unless your OS is really smart about copy-on-write page sharing, then you’re likely loading multiple copies of the same exact code into memory! What a waste! Also, when you want to rebuild or update your binary, you spend time performing relocation again and again with static libraries. What if we could set aside object files that we could share amongst multiple instances of the same or even different processes, and perform relocation at runtime?

The solution is known as dynamic libraries. If static libraries and static linkage were Atari controllers, dynamic libraries and dynamic linkage are Steel Battalion controllers. We’ll show how to work with them in the rest of this post, but I’ll prove how memory is saved in a later post.

Let’s say we want to create a shared version of libhello. Dynamic libraries typically have different suffixes per OS, since each OS has its preferred object file format. On Linux the .so suffix is common, .dylib on OSX, and .dll on Windows.


The -shared flag tells the linker to create a special file called a shared library. The -fpic option converts absolute addresses to relative addresses, which allows different processes to load the library at different virtual addresses and share memory.

Now that we have our shared library, let’s dynamically link it into our executable.


Dynamic linking essentially produces an incomplete binary; you can verify this with nm. At runtime, we’ll delay startup to perform some memory mapping early on in the process start (performed by the dynamic linker) and pay slight costs for trampolining into position-independent code.

Let’s say we want to know what dynamic libraries a binary is using. You can either query the executable (most executable object file formats contain a header the dynamic linker will parse and pull in libs) or observe the executable while running it. Because each major OS has its own object file format, they each have their own tools for these two checks. Note that statically linked libraries won’t show up here, since their object code has already been linked in and thus we’re not able to differentiate between object code that came from our first party code vs third party static libraries.

On OSX, we can use otool -L to check which .dylibs will get pulled in.


So we can see that a.out depends on libhello.dylib (and expects to find it in the same directory as a.out). It also depends on a shared library called libSystem.B.dylib. If you run otool -L on libSystem itself, you’ll see it depends on a bunch of other libraries, including a C runtime, a malloc implementation, a pthreads implementation, and more. If you want to find the final resting place of where a symbol is defined without digging with nm and otool, you can fire up your trusty debugger and ask it.


You’ll see a lot of output since puts is treated as a regex. You’re looking for the Summary line that has an address and is not a symbol stub. You can then check your work with otool and nm.

If we want to observe the dynamic linker in action on OSX, we can use dtruss.


On Linux, we can simply use ldd or readelf -d to query an executable for a list of its dynamic libraries.


We can then use strace to observe the dynamic linker in action on Linux.


What’s this LD_LIBRARY_PATH thing? That’s shell syntax for setting an environment variable just for the duration of that command (as opposed to exporting it so it stays set for multiple commands). Unlike OSX’s dynamic linker, which was happy to look in the cwd for libhello.dylib, on Linux we must supply the cwd if the dynamic library we want to link in is not in the standard search path.
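The Linux search path is easiest to reason about as an ordered list. This Python sketch follows the order documented in the glibc ld.so(8) man page as we understand it: DT_RPATH (only if the binary has no DT_RUNPATH), then LD_LIBRARY_PATH (ignored in secure-execution mode, e.g. for setuid programs), then DT_RUNPATH, then the /etc/ld.so.cache lookup, then the default directories. library_search_dirs and its parameters are hypothetical, and the sketch deliberately leaves out the cache step, $ORIGIN expansion and other details.

```python
from typing import List, Optional, Sequence


def library_search_dirs(
    rpath: Sequence[str] = (),
    runpath: Sequence[str] = (),
    ld_library_path: Optional[str] = None,
    default_dirs: Sequence[str] = ("/lib", "/usr/lib"),  # /lib64, /usr/lib64 on some 64-bit systems
    secure_execution: bool = False,
) -> List[str]:
    """Directories a glibc-style loader would try for a dependency name without a slash.

    Simplified: the /etc/ld.so.cache lookup (between RUNPATH and the defaults)
    and $ORIGIN expansion are left out.
    """
    dirs: List[str] = []
    if rpath and not runpath:
        dirs.extend(rpath)  # DT_RPATH only counts when DT_RUNPATH is absent
    if ld_library_path and not secure_execution:
        # Assumption: an empty entry (e.g. a leading ':') is treated as the current directory.
        dirs.extend(entry or "." for entry in ld_library_path.split(":"))
    dirs.extend(runpath)
    dirs.extend(default_dirs)
    return dirs


print(library_search_dirs(ld_library_path="."))  # ['.', '/lib', '/usr/lib']
```

Note how LD_LIBRARY_PATH=. simply puts the current directory ahead of the defaults, which is why it makes the example above find libhello.so.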

But what is the standard search path? Well, there’s another environment variable we can set to see this: LD_DEBUG. On Linux, for example, LD_DEBUG=libs makes the dynamic linker print the directories it searches for each library.


LD_DEBUG is pretty useful; LD_DEBUG=help lists the other categories it supports.


For some cool stuff, I recommend checking out LD_DEBUG=symbols and LD_DEBUG=statistics.

Going back to LD_LIBRARY_PATH, libraries you create and want to reuse between projects usually go into /usr/local/lib, and their headers into /usr/local/include.


Unfortunately, it’s a loose convention that’s broken down over the years, and things are scattered all over the place. You can also run into dependency and versioning issues, which I don’t want to get into here, by placing libraries here instead of keeping them in-tree or out-of-tree of the source code of a project. Just know that when you see a library like libc.so.6, the numeric suffix is a major version number that follows semantic versioning. For more information, you should read Michael Kerrisk’s excellent book The Linux Programming Interface. This post is based on his chapters 41 and 42 (but with more info on tooling and OSX).

If we were to place our libhello.so into /usr/local/lib (on Linux you then need to run sudo ldconfig) and move x.h and y.h to /usr/local/include, we could compile against it like any other installed library.


Note that rather than give a full path to our library, we can use the -l flag followed by the name of our library with the lib prefix and .so suffix removed.
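How the linker turns -lhello back into a file can be sketched in Python. This roughly mimics what the GNU ld manual describes for ELF systems: search directories in order (the -L directories first, then the defaults) and, within each directory, prefer libhello.so over libhello.a unless only static archives are acceptable, as with -static. resolve_l_flag is a hypothetical helper; real ld also handles -Bstatic/-Bdynamic toggles, sysroots, linker scripts and more.

```python
import tempfile
from pathlib import Path
from typing import Iterable, Optional


def resolve_l_flag(name: str, search_dirs: Iterable[str],
                   static_only: bool = False) -> Optional[Path]:
    """Roughly mimic how GNU ld on ELF systems resolves -l<name>."""
    suffixes = [".a"] if static_only else [".so", ".a"]
    for directory in search_dirs:          # first directory with a match wins
        for suffix in suffixes:            # within a directory, .so before .a
            candidate = Path(directory) / f"lib{name}{suffix}"
            if candidate.is_file():
                return candidate
    return None


with tempfile.TemporaryDirectory() as d:
    (Path(d) / "libhello.a").touch()
    (Path(d) / "libhello.so").touch()
    print(resolve_l_flag("hello", [d]).name)                    # libhello.so
    print(resolve_l_flag("hello", [d], static_only=True).name)  # libhello.a
```

One consequence worth knowing: a static libhello.a in an earlier directory beats a shared libhello.so in a later one, because directory order is checked first.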

When working with shared libraries and external code, there are three flags I use pretty often.


For finding the specific flags needed for compilation where dynamic linkage is required, a tool called pkg-config can be used. I’ve had less than stellar experiences with the tool, as it puts the onus on the library author to maintain the .pc files, and on the user to have them installed in the right place where pkg-config looks. When they do exist and are installed properly, the tool works well.


Using another neat environment variable, LD_PRELOAD, we can hook into the dynamic linkage process and inject our own shared libraries to be linked instead of the expected libraries. Let’s say libgood.so and libmalicious.so both define a symbol for a function (the same symbol name and function signature). We can get a binary that links in libgood.so’s function to instead call libmalicious.so’s version.
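Why does the preloaded library win? In a simplified model of glibc’s global symbol lookup, the dynamic linker searches the executable first, then the preloaded libraries, then the regular dependencies, and uses the first definition it finds. The Python below only simulates that ordering with dictionaries; it loads nothing, and do_work is a hypothetical symbol name.

```python
from typing import Dict, List, Optional, Tuple

Library = Tuple[str, Dict[str, str]]  # (library name, {symbol: description of its definition})


def global_scope(executable: Library, preloads: List[Library], needed: List[Library]) -> List[Library]:
    # Simplified lookup order: the program itself, then preloaded libraries, then its dependencies.
    return [executable, *preloads, *needed]


def resolve(symbol: str, scope: List[Library]) -> Optional[str]:
    for name, symbols in scope:
        if symbol in symbols:
            return name  # first definition wins, which is what makes interposition work
    return None


exe: Library = ("a.out", {"main": "program entry"})
libgood: Library = ("libgood.so", {"do_work": "the real implementation"})
libmalicious: Library = ("libmalicious.so", {"do_work": "an impostor"})

print(resolve("do_work", global_scope(exe, [], [libgood])))              # libgood.so
print(resolve("do_work", global_scope(exe, [libmalicious], [libgood])))  # libmalicious.so
```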


Manually invoking the dynamic linker from our code, we can even man in the middle library calls (call our hooked function first, then invoke the original target). We’ll see more of this in the next post on using the dynamic linker.
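For a quick taste of loading a library from running code, Python’s ctypes is handy: on POSIX systems ctypes.CDLL opens a library with dlopen, and looking up an attribute such as libc.strlen resolves the symbol with dlsym. This sketch only loads the C library and calls one function; hooking calls as described above needs more machinery. load_libc is a hypothetical helper, and CDLL(None) is POSIX-only.

```python
import ctypes
import ctypes.util


def load_libc() -> ctypes.CDLL:
    """Open the C library through dlopen (what ctypes.CDLL uses on POSIX systems)."""
    path = ctypes.util.find_library("c")  # e.g. "libc.so.6" on glibc; may be None
    # CDLL(None) is dlopen(NULL): the program's global namespace, where libc is already loaded.
    return ctypes.CDLL(path) if path else ctypes.CDLL(None)


libc = load_libc()
strlen = libc.strlen  # attribute access performs a dlsym lookup for "strlen"
strlen.argtypes = [ctypes.c_char_p]
strlen.restype = ctypes.c_size_t
print(strlen(b"hello"))  # 5
```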

As you can guess, readjusting the search paths for dynamic libraries is a security concern, as it lets good and bad folks change the expected execution paths. Guarding against the use of these env vars becomes a rabbit hole that gets pretty tricky to solve without the heavy-handed use of statically linking dependencies.

In the previous post, I alluded to undefined symbols like puts. puts is part of libc, which is probably the most shared dynamic library on most computing devices, since almost every program makes use of the C runtime. (I think of a “runtime” as implicit code that runs in your program that you didn’t necessarily write yourself. Usually a runtime is provided as a library that gets implicitly linked into your executable when you compile.) You can statically link against libc with the -static flag, on Linux at least (OSX makes this difficult: “Apple does not support statically linked binaries on Mac OS X”).

I’m not sure what the benefit would be to mixing static and dynamic linking, but after searching the search paths from LD_DEBUG=libs for shared versions of a library, if any static ones are found, they will get linked in.

There’s also an interesting form of library called a “virtual dynamic shared object” (vDSO) on Linux. I haven’t covered memory mapping yet, but know that it exists, is usually hidden for libc, and that you can read more about it via man 7 vdso.

One thing I find interesting, and don’t quite understand how to recreate, is that somehow glibc on Linux is also executable: running libc.so.6 directly prints version information about the library.


Also, note that linking against third party code has licensing implications (of course) of particular interest when it’s GPL or LGPL. Here is a good overview which I’d summarize as: code that statically links against LGPL code must also be LGPL, while any form of linkage against GPL code must be GPL’d.

Ok, that was a lot. In the previous post, we covered Object Files and Symbols. In this post we covered hacking around with static and dynamic linkage. In the next post, I hope to talk about manually invoking the dynamic linker at runtime.

http://nickdesaulniers.github.io/blog/2016/11/20/static-and-dynamic-libraries/


Jack Moffitt: Servo Interview on The Changelog |

The Changelog has just published an episode about Servo. It covers the motivations and goals of the project, some aspects of Servo performance and use of the Rust language, and even has a bit about our wonderful community. If you’re curious about why Servo exists, how we plan to ship it to real users, or what it was like to use Rust before it was stable, I recommend giving it a listen.

https://metajack.im/2016/11/21/servo-interview-on-the-changelog/


Tantek Celik: Happy Third Birthday to the Homebrew Website Club! |

Three years ago (2013-324) we held the first Homebrew Website Club meetup at Mozilla San Francisco.

In the tradition of the Homebrew Computer Club, I wrote up the Homebrew Website Club Newsletter Volume 1 Issue 1 which has been largely replaced by Kevin Marks's excellent live-tweeting and summary postings after each San Francisco meetup.

Since then Homebrew Website Clubs have sprung up in over a dozen cities world wide and continue regularly (fortnightly or monthly) in nine cities across four time zones and three countries, six of which started in 2016!

New Homebrew Website Club cities this year, along with their start dates and a subsequent photo from one of their meetups this year:

- 2016-01-13: Los Angeles, California

- 2016-05-25: Nürnberg, GERMANY

- 2016-06-15: Bellingham, Washington

- 2016-09-21: Baltimore, Maryland

- 2016-10-05: Birmingham, ENGLAND - no photo! (yet) Hope to see one soon!

- 2016-06-15: London, ENGLAND

We have also seen a surge in cities with folks that are interested in starting up a Homebrew Website Club. Many existing cities started with just two people and grew slowly and steadily over time. All it takes is two individuals, committed to supporting each other in a fortnightly (or monthly) gathering to share what they have done recently on their personal websites, and what they aspire to create next.

Find your city on this wiki page and add yourself! Then hop in the #indieweb chat channel and say hi!

- indieweb.org/Homebrew_Website_Club#Getting_Started_or_Need_Restarting

- #indieweb chat on the Freenode IRC network

- Tips on how to organize and run a Homebrew Website Club

Pick a venue, talk about all things independent web, take a fun photo like the Nürnberg animated GIF above, or like this recent one in San Francisco, and post it on the wiki page for the event.

Remember to keep it fun as well as productive. Even if all you do is get together and finish writing a blog post and posting it on your indieweb site, that’s a good thing. Especially these days, the more people we can encourage to write authentic content and publish on their own sites, the better.

Previously: Congrats 2 years of HWC!

http://tantek.com/2016/324/b1/happy-birthday-homebrew-website-club


Support.Mozilla.Org: SUMO Show & Tell: A Small Fox on a Big River |

Hey there, SUMO Nation!

It is with great joy that I present to you a guest post by one of the most involved people I’ve met in my (still relatively short) time at SUMO. Seburo has been a core contributor in the community from his first day on board, and I can only hope we get to enjoy his presence among us in the future. He is one of those people whose photo could be put under “Mozillian” in the encyclopedias of this world… But, let’s not dawdle and move on to his words…

This is the outline of a presentation given at the London All-Hands as part of a session where the SUMO contributors had the opportunity to talk about the work that they have been involved in.

Towards the end of 2015, I noticed that we were getting an increasing number of requests on the SUMO Support Forum asking how users could put Firefox for Android (Fennec, the small fox of this tale) on their Amazon Kindle Fire tablet (the big river). At first it was just one or two questions, but the more I saw, the more I realised that there were some key facts driving the questions:

- We know that users like using Firefox for Android on their mobile devices. It enables them to use Firefox as their user agent on the web when they are away from their laptop or desktop.

- We know that people like using Android, possibly the world’s most popular operating system. People recognise and take comfort from the little green Android logo when they see it alongside a device they wish to purchase, and they appreciate the ease of use and depth of support at a lower price point than its competitors.

- With a little research, it became clear why people have the Kindle Fire tablet: the price point. In the UK at the time it was retailing for £60, almost half the price of a comparable device from the big-name brand leader.

What was confusing and confounding users was that, having purchased a device at a great price that runs an operating system they know, they could not find Firefox for Android in the Amazon app store. SUMO does not support such a configuration, but with the number of questions coming through, I realised that there must be something we could do.

I started helping some users sideload Firefox for Android onto their devices, and through answering questions I soon found myself using a fairly standard text that users found solved their problem. As I refined it, it made sense for this to be included within the SUMO Knowledge Base, the user-facing guide to using Mozilla software. But before I could do this, there was one key issue for which I needed the help of the truly amazing Firefox Mobile team…which version of Firefox for Android to use.

Whilst Firefox and Firefox Beta are seen as the best product versions, I was advised that they would only get updates through Google Play, which is not ideal if the user is on the Amazon variant of Android. I was advised that Aurora was the version to use, as it would get important security updates, and I was pointed in the direction of the site where it could be downloaded. In addition to this, the Firefox Mobile team helped shape some of the language I was going to use and helped check my draft article (I did say that they are amazing…!).

Whilst there is no change to our support of the Kindle platform (the article carries a “health warning” to that effect), I think of this work as a great example of how several different teams, both staff and contributors, can come together to help find a user focussed solution.

Thank you for sharing your story, Seburo. An inspiring tale of initiating a positive change that affects many users and makes Firefox more available as a result.

Do you have a story you would like to share with us? Let us know in the comments!

https://blog.mozilla.org/sumo/2016/11/19/sumo-show-and-tell-a-small-fox-on-a-big-river/

Robert O'Callahan: Overcoming Stereotypes One Parent At A Time |

I just got back from a children's sports club dinner where I hardly knew anyone and apparently I was seated with the other social leftovers. It turned out the woman next to me was very nice and we had a long conversation. She was excited to hear that I do computer science and software development, and mentioned that her daughter is starting university next year and strongly considering CS. I gave my standard pitch about why CS is a wonderful career path --- hope I didn't lay it on too thick. The daughter apparently is interested in computers and good at maths, and her teachers think she has a "logical mind", so that all sounded promising and I said so. But then the mother started talking about how that "logical mind" wasn't really a girly thing and asking whether the daughter might be better doing something softer like design. I pushed back and asked her not to make assumptions about what women and men might enjoy or be capable of, and mentioned a few of the women I've known who are extremely capable at hard-core CS. I pointed out that while CS isn't for everyone and I think people should try to find work they're passionate about, the demand and rewards are often greater for people in more technical roles.

This isn't the first time I've encountered mothers to a greater or lesser extent steering their daughters away from more technical roles. I've done a fair number of talks in high schools promoting CS careers, but at least for girls maybe targeting their parents somehow would also be worth doing.

I'll send this family some links to Playcanvas and other programming resources and hope that they, plus my sales pitch, will make a difference. One of the difficulties here is that you never know or find out whether what you did matters.

http://robert.ocallahan.org/2016/11/overcoming-stereotypes-one-parent-at.html

Emma Humphries: Redirecting Needinfos |

One of the many features of bugzilla.mozilla.org (BMO) that I love is needinfo. Rather than assigning a bug to someone to get more information (as you would do in JIRA), you set the needinfo flag on the person you need to ask. That way you don't have to unassign and reassign a bug, and you can have multiple needinfo requests in flight (like when I ask platform engineers who should be recommended reviewers in their components).

Nathan Froyd found a great feature I had not known about in BMO. If you are needinfo'ed, but not the person with the answer, you can redirect the request so that it's transparent to the person asking.

I'm a little embarrassed that I didn't know this feature, but I'll be adding it to future BMO trainings I give.

ETA: I'm looking at bug 1287461, and this may not be working as intended; investigating.

Emma Humphries: Red Hat Women in Open Source Award |

Red Hat is taking nominations for the Women in Open Source award, with USD 2,500 for the winner.

Nominations are due by the Friday after USA Thanksgiving (November 23rd,) so if you or people you know would benefit from the recognition of this award, consider nominating yourself or them.

The rules say the award is open to "an individual who identifies gender as female" so I don't know if they are inclusive of non-binary/genderqueer open source contributors. If you, dear reader, can clarify that, please do in the comments.
