You can add any RSS feed (including a LiveJournal journal) to your friends feed on the syndication page.

Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated whenever that feed is updated. It may not match the content of the original page. This syndicated feed was created automatically at the request of readers of this RSS feed.

For any questions about how this service works, see the contact information page.


Robert O'Callahan: Welcoming Richard Dawkins |

Richard Dawkins wants New Zealand to invite Trump/Brexit-refugee scientists to move here to create "the Athens of the modern world".

I appreciate the compliment he pays my country (though, to be honest, I don't know why he singled us out). I would be delighted to see that vision happen, but in reality it's not going to. Every US election the rhetoric ratchets up and people promise to move here, but very very few of them follow through. Even Dawkins acknowledges it's a pipe-dream. This particular dream is inconceivable because "the Athens of the modern world" would need a gigantic amount of steady government funding for research, and that's not going to happen here.

To be honest, it's a little frustrating to keep hearing people talk about moving to New Zealand without following through ... it feels like being treated more as a rhetorical device than as a real place and people. That said, I personally would be delighted to welcome any science/technology people who really want to move here, and New Zealand's immigration system makes that relatively easy. I'd be especially delighted for Richard Dawkins to follow his own lead.

http://robert.ocallahan.org/2016/11/richard-dawkins-wants-new-zealand-to.html

|

|

Support.Mozilla.Org: What’s Up with SUMO – 10th November |

Greetings, SUMO Nation!

How have you been? Many changes around and we haven’t been slacking either – we are getting closer to the soft launch of the new community platform (happening next week), so be there when it happens :-) More details below…

Welcome, new contributors!

- alexandre.huat

- …and probably more of you, but you haven’t made yourselves known, so all the newcomer glory is reserved for Alexandre this week ;-)

If you just joined us, don’t hesitate – come over and say “hi” in the forums!

Contributors of the week

- All the forum supporters who tirelessly helped users out for the last week.

- All the writers of all languages who worked tirelessly on the KB for the last week.

We salute you!

SUMO Community meetings

- LATEST ONE: 9th of November – you can read the notes here and see the video at AirMozilla.

- NEXT ONE: happening on the 16th of November!

- If you want to add a discussion topic to the upcoming meeting agenda:

- Start a thread in the Community Forums, so that everyone in the community can see what will be discussed and voice their opinion here before Wednesday (this will make it easier to have an efficient meeting).

- Please do so as soon as you can before the meeting, so that people have time to read, think, and reply (and also add it to the agenda).

- If you can, please attend the meeting in person (or via IRC), so we can follow up on your discussion topic during the meeting with your feedback.

Community

- We are going to retire the main SUMO mailing list, since it has not been used much for quite a while. So, you should remove sumo(AT)lists.mozilla.org from your contact lists.

- Reminder: read Seburo’s post about SUMO’s diversity on the Mozilla Discourse forums – and join the discussion there!

- Reminder: Got ideas for SUMO badges highlighting people’s “soft” skills? Talk to Roland or Michal.

- Final reminder: The malware issue is still ongoing, but there is a very easy method of making sure users don’t fall into any fake update traps – they should upgrade to version 49 as soon as possible!

Platform

- Check the notes from the last meeting in this document (and don’t forget about our meeting recordings).

- One of the highlights is the proposed community forum structure – if you care deeply about it, take a peek into the notes!

- The soft launch is happening next week, so…

- You can preview the current migration test site here.

- Drop your feedback into the same feedback document as usual.

- We will use all of next week for testing and reporting bugs for the structure, UX bits, data mapping, etc. On Thursday (17th of November), we’ll decide whether we’re ready to go for real (or not yet, which will mean more work on making the site look and work the way it should).

- Reminder: The post-migration feature list can be found here (courtesy of Roland – ta!)

- We are still looking for user stories to focus on immediately after the migration (also known as “Phase 1”)

- If you are interested in test-driving the new platform now, please contact Madalina. Got feedback about the staging site? Drop it here!

Social

- Reminder: email Sierra at sreedATmozilla.com to get a scheduled training date for Respond and get on board as soon as you complete it.

- All those of you who already rock the social using the new tool – you are legends!

- It’s the final countdown for the Army of Awesome (the tool, not the people). It will be going away in about a week. Once more, huuuuuuuge thank yous to all of those who used it to great effect!

Support Forum

- The Flash vulnerability issue has been resolved with the latest update, please take a look at the Bugzilla thread for more details. If you see people reporting Flash issues, please ask them to update to the latest version!

- Moderators, please take a look at the new staging site (talk to Rachel to get access if you haven’t used it before).

- If you’re interested in crisis management ideas, contact Rachel to get access to her draft document describing that.

- No big news from the forums this week, which is good – it means things are progressing (rather) smoothly – thank you all!

- Don’t forget that the support forum for forum supporters is there for you if you need more help helping others ;-)

Knowledge Base & L10n

- 360 revisions were submitted over the last 7 days to the KB – keep rocking, SUMO l10ns!

- We are a week away from the next scheduled release (November 15th, due to a rescheduling). What does that mean?

- Only Joni or other admins can introduce and/or approve last-minute changes to next release content, and only Joni or other admins can set new content to RFL (ready for localization); localizers should focus on this content

- All other existing content is deprioritized, but can be edited and localized as usual

- Final reminder! Here’s the list of articles that are required for the version 50 release:

- https://support.mozilla.org/kb/search-contents-current-page-text-or-links/locales

- https://support.mozilla.org/kb/mixed-content-blocking-firefox/locales

- https://support.mozilla.org/kb/how-do-i-turn-do-not-track-feature/locales

- https://support.mozilla.org/kb/tabs-organize-websites-single-window/locales

- https://support.mozilla.org/kb/using-tabs-firefox-android/locales

- https://support.mozilla.org/kb/whats-new-firefox-android/locales


- https://support.mozilla.org/kb/insecure-password-warning-firefox/locales

- We are still waiting for confirmation about the mechanics of l10n on the new platform – thank you for your patience!

- As for the launch locales, the spreadsheet listing them has been updated, and all the locales defined as “as archive” will be moved over as well (just the KB content, not the UI strings), but will not be made available to users until we have made sure there is enough of it and the quality is good enough.

Firefox

- for Desktop

- Version 50 coming November 15th! The forum thread is here, thanks to Philipp.

- for iOS

- No news from under the apple tree.


So, next week is (soft) migration week! Get ready to kick the tires of our new ride ;-) We’re all looking forward to a new start there – but with all of you, the best friends we could imagine to take on this adventure together with us. TTFN!

https://blog.mozilla.org/sumo/2016/11/10/whats-up-with-sumo-10th-november/

|

|

Air Mozilla: Connected Devices Weekly Program Update, 10 Nov 2016 |

Weekly project updates from the Mozilla Connected Devices team.


https://air.mozilla.org/connected-devices-weekly-program-update-20161110/

|

|

Christian Heilmann: Decoded Chats – fourth edition featuring Sarah Drasner on SVG |

At SmashingConf Freiburg I took some time to interview Sarah Drasner on SVG.

In this interview we covered what SVG can bring, how to use it sensibly and what pitfalls to avoid.

You can see the video and get the audio recording of our chat over at the Decoded blog:

Sarah is a dear friend and a lovely person and knows a lot about animation and SVG.

Here are the questions we covered:

- SVG used to be a major “this is the future of the web” topic and then it vanished for a while. What is the reason for the renewed interest in a format that old?

- SVG tooling still seems to lag behind what Flash gave us. Are there any good tools that have, for example, a full animation timeline?

- SVG syntax on first glance seems rather complex due to its XML format and lots of shortcut notations. Or is it just a matter of getting used to it?

- Coordinate systems seem easy to understand; however, when it comes to dynamic coordinate systems and vector basics, people get lost much more easily. When you teach, is this an issue?

- What about prejudices towards SVG? It is rumoured to be slow and very memory-intensive. Is this true?

- Tool presets seem to result in really large SVG files, which is why we need extra tools to optimise them. Is this improving with the new-found interest in SVG?

- There seems to be a “war of animation tools”. You can use SVG, CSS Animations, the Web Animations API, or JavaScript libraries. What can developers do about this? Should we learn all of them?

- There are security issues with linking to external SVG files which makes them harder to use than – for example – images. This can be discouraging and scary for implementers, what can we do there?

- Does SVG live in the uncanny valley between development and design?

- Is there one thing you’d love people to stop saying about SVG as it is not true but keeps coming up in conversations?

|

|

Yunier José Sosa Vázquez: Firefox will never again ask for passwords repeatedly |

For some years now, those of us who use proxy servers to access the Internet through Firefox have, one way or another, run into authentication problems. Annoying pop-up windows asking for a username and password appeared when you least expected them, and although the about:config page lets us push the browser's configuration to the limit, these “tricks” did not work at all.

After waiting for tangible progress on this problem, a few days ago the related bugs in Bugzilla were updated to RESOLVED FIXED, and I was pleasantly surprised when I tested it several times and confirmed that it works perfectly.

Our sincere thanks go to Honza Bambas, Gary Lockyer, Patrick McManus and everyone involved who in one way or another helped fix this bug.

This patch is available in the Nightly channel (currently 52). I invite everyone interested to download and test this version to find possible bugs before it reaches the Release channel (planned for March 2017).

If you liked this news, let us know what you think in a comment ;-).

https://firefoxmania.uci.cu/firefox-no-pedira-nunca-mas-repetidamente-contrasenas/

|

|

Air Mozilla: Reps Weekly Meeting Nov. 10, 2016 |

This is a weekly call with some of the Reps to discuss all matters about/affecting Reps and invite Reps to share their work with everyone.


|

|

Mozilla Reps Community: Rep of the Month – October 2016 |

Hossain al Ikram is a passionate contributor from the Bangladesh community. He has been a frontrunner for the QA community for the past two years and has set examples of remarkable leadership and valuable contribution across several functional areas. Ikram has shown great potential and is proving his mettle at every instance.

He is actively mentoring people from different countries for the QA initiative, and he recently helped the Indian community set up a QA team. He also organized the MozActivate campaign in Bangladesh. Check out some example QA events from Rajshahi, Sylhet and Chittagong, mentoring in Varenda or mentoring in Rajshahi. He also started a ToT for WebCompat, with more editions in November. You can read about his awesome work on his website.

Geraldo has been one of the most active members of the Brazilian community over the last 3 months: helping to coordinate Mozilla's presence at FISL (one of the biggest open source events in Brazil), engaging with the community, running events like the Sao Paulo workday or Latinoware, and even attending MozFest!

He is a very engaged Mozillian who also helps run events for WebCompat and SUMO hackathons. This November, you will see Geraldo doing more of his stuff at upcoming events, promoting the Mozilla mission, being an awesome Mozilla Club member, and spreading some #mozlove. Be sure to check his Medium account for more news about his work!

Please join us in congratulating them as Reps of the Month for October 2016!

https://blog.mozilla.org/mozillareps/2016/11/10/rep-of-the-month-october-2016/

|

|

Chris H-C: Data Science is Hard – Case Study: Latency of Firefox Crash Rates |

Firefox crashes sometimes. This bothers users, so a large amount of time, money, and developer effort is devoted to keep it from happening.

That’s why I like that image of Firefox Aurora’s crash rate from my post about Firefox’s release model. It clearly demonstrates the result of those efforts to reduce crash events:

So how do we measure crashes?

That picture I like so much comes from this dashboard I’m developing, and uses a very specific measure of both what a crash is, and what we normalize it by so we can use it as a measure of Firefox’s quality.

Specifically, we count the number of times Firefox or the web page content disappears from the user’s view without warning. Unfortunately, this simple count of crash events doesn’t give us a full picture of Firefox’s quality, unless you think Firefox is miraculously 30% less crashy on weekends:

So we need to normalize it based on some measure of how much Firefox is being used. We choose to normalize it by thousands of “usage hours” where a usage hour is one hour Firefox was open and running for a user without crashing.
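To make the normalization concrete, here is a minimal sketch of the crashes-per-thousand-usage-hours rate. The function name and the weekday/weekend figures are hypothetical. They were chosen so that the weekend has 30% fewer crashes and also 30% fewer usage hours. The normalized rate comes out the same for both, which is the point of normalizing.

```rust
/// Crashes per thousand usage hours. Returns None when no usage has been
/// recorded, since the rate is undefined rather than zero in that case.
/// (Hypothetical helper; not taken from the real dashboard code.)
fn crashes_per_khour(crashes: u64, usage_hours: f64) -> Option<f64> {
    if usage_hours <= 0.0 {
        return None;
    }
    Some(crashes as f64 / (usage_hours / 1000.0))
}

fn main() {
    // Hypothetical figures: the weekend has 30% fewer crashes, but also
    // 30% fewer usage hours, so the normalized rate is unchanged.
    let weekday = crashes_per_khour(1_300, 500_000.0);
    let weekend = crashes_per_khour(910, 350_000.0);
    println!("weekday: {:?}, weekend: {:?}", weekday, weekend);
}
```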

Unfortunately, this choice of crashes per thousand usage hours as our metric, and how we collect data to support it, has its problems. Most significant amongst these problems is the delay between when a new build is released and when this measure can tell you if it is a good build or not.

Crashes tend to come in quickly. Generally speaking, when a user’s Firefox disappears out from under them, they are quick to restart it. This means this new Firefox instance is able to send us information about that crash usually within minutes of it happening. So for now, we can ignore the delay between a crash happening and our servers being told about it.

The second part is harder: when should users tell us that everything is fine?

We could introduce code into Firefox that would tell us every minute that nothing bad happened… but could you imagine the bandwidth costs? Even every hour might be too often. Presently we record this information when the user closes their browser (or, if the user doesn’t close their browser, at the user’s local midnight).

The difference between the user experiencing an hour of un-crashing Firefox and that data being recorded is reporting delay. This tends not to exceed 24 hours.

If the user shuts down their browser for the day, there isn’t an active Firefox instance to send us the data for collection. This means we have to wait for the next time the user starts up Firefox to send us their “usage hours” number. If this was a Friday’s record, it could easily take until Monday to be sent.

The difference between the data being recorded and the data being sent is the submission delay. This can take an arbitrary length of time, but we tend to see a decent chunk of the data within two days’ time.

This data is being sent in throughout each and every day. Somewhere at this very moment (or very soon) a user is starting up Firefox and that Firefox will send us some Telemetry. We have the facilities to calculate at any given time the usage hours and the crash numbers for each and every part of each and every day… but this would be a wasteful approach. Instead, a scheduled task performs an aggregation of crash figures and usage hour records per day. This happens once per day and the result is put in the CrashAggregates dataset.
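The once-per-day aggregation step can be pictured as summing every record submitted so far into per-day totals. This is a sketch under assumptions. The Record shape, the day labels and the function name are hypothetical and do not reflect the real Telemetry schema or the CrashAggregates job. If a record for a given day arrives after the job has run, it is missing from that run's output. That gap is where submission delay shows up.

```rust
use std::collections::HashMap;

/// A hypothetical submitted record: which day it describes, how many
/// crashes it reports, and how many usage hours it covers.
struct Record {
    day: &'static str,
    crashes: u64,
    usage_hours: f64,
}

/// Sum crashes and usage hours per day, as a once-daily job might.
fn aggregate_by_day(records: &[Record]) -> HashMap<&'static str, (u64, f64)> {
    let mut totals: HashMap<&'static str, (u64, f64)> = HashMap::new();
    for r in records {
        let entry = totals.entry(r.day).or_insert((0, 0.0));
        entry.0 += r.crashes;
        entry.1 += r.usage_hours;
    }
    totals
}

fn main() {
    let records = [
        Record { day: "2016-11-09", crashes: 1, usage_hours: 3.5 },
        Record { day: "2016-11-09", crashes: 0, usage_hours: 8.0 },
        Record { day: "2016-11-10", crashes: 2, usage_hours: 1.5 },
    ];
    let totals = aggregate_by_day(&records);
    // Print days in order; HashMap iteration order is unspecified.
    let mut days: Vec<_> = totals.keys().collect();
    days.sort();
    for day in days {
        let (crashes, hours) = totals[day];
        println!("{}: {} crashes over {} usage hours", day, crashes, hours);
    }
}
```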

The difference between a crash or usage hour record being submitted and it being present in this daily derived dataset is aggregation delay. This can be anywhere from 0 to 23 hours.

This dataset is stored in one format (Parquet) but queried through another system (Presto, fronted by re:dash). This migration task is performed once per day, some time after the dataset is derived.

The difference between the aggregate dataset being derived and its appearance in the query interface is migration delay. This is roughly an hour or two.

Many queries run against this dataset and are scheduled sequentially or on an ad hoc basis. The query that supplies the data to the telemetry crash dashboard runs once per day at 2pm UTC.

The difference between the dataset being present in the query interface and the query running is query scheduling delay. This is about an hour.

This provides us with a handy equation:

latency = reporting delay + submission delay + aggregation delay + migration delay + query scheduling delay

With typical values, we’re seeing:

latency = 6 hours + 24 hours + 12 hours + 1 hour + 1 hour

latency = 44 hours, or roughly 2 days

And since submission delay is unbounded (and tends to be longer than 24 hours on weekends and over holidays), the latency is actually a range of probable values. We’re never really sure when we’ve heard from everyone.
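Writing out the sum of the typical values shows that it is 44 hours, a little under two days. Submission delay alone is more than half of that. The Delays struct below is hypothetical, and its fields mirror the terms of the latency equation, all in hours. Because submission delay is unbounded, this sketch gives a typical figure only, not an upper bound.

```rust
/// The five delay terms from the latency equation, in hours.
/// (Hypothetical struct; the values in main are the post's typical figures.)
struct Delays {
    reporting: f64,
    submission: f64,
    aggregation: f64,
    migration: f64,
    query_scheduling: f64,
}

impl Delays {
    /// latency = reporting + submission + aggregation + migration + query scheduling
    fn latency_hours(&self) -> f64 {
        self.reporting + self.submission + self.aggregation + self.migration + self.query_scheduling
    }
}

fn main() {
    let typical = Delays {
        reporting: 6.0,
        submission: 24.0,
        aggregation: 12.0,
        migration: 1.0,
        query_scheduling: 1.0,
    };
    let hours = typical.latency_hours();
    println!("{} hours = {:.1} days", hours, hours / 24.0); // prints "44 hours = 1.8 days"
}
```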

So what’s to blame, and what can we do about it?

The design of Firefox’s Telemetry data reporting system is responsible for reporting delay and submission delay: two of the worst offenders. Submission delay could be radically improved if we devoted engineering resources to submitting Telemetry (both crash numbers and “usage hour” reports) without an active Firefox running (using, say, a small executable that runs as soon as Firefox crashes or closes). Reporting delay will probably not be adjusted very much, as we don’t want to saturate our users’ bandwidth (or our own).

We can improve aggregation delay simply by running the aggregation, migration, and query multiple times a day, as information is coming in. Proper scheduling infrastructure can remove all the non-processing overhead from migration delay and query scheduling delay which can bring them easily down below a single hour, combined.
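The following rough illustration shows why more frequent runs help. It assumes that aggregation runs at evenly spaced times and that records arrive uniformly over the day. Under those assumptions, a record waits at most one run interval, and half an interval on average. With one run a day, that gives a 24-hour worst case and a 12-hour average, in line with the "0 to 23 hours" range and the 12-hour typical value above. The function name and the uniform-arrival assumption are for illustration only and do not come from the post.

```rust
/// Worst-case and average wait (in hours) between a record arriving and the
/// next aggregation run, assuming evenly spaced runs and uniform arrivals.
fn aggregation_wait_hours(runs_per_day: u32) -> (f64, f64) {
    assert!(runs_per_day > 0, "at least one run per day is needed");
    let interval = 24.0 / runs_per_day as f64;
    (interval, interval / 2.0)
}

fn main() {
    for &runs in &[1u32, 4, 24] {
        let (worst, avg) = aggregation_wait_hours(runs);
        println!("{:>2} runs/day: worst {:>4.1} h, average {:>4.1} h", runs, worst, avg);
    }
}
```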

In conclusion, even given a clear and specific metric and a data collection mechanism with which to collect all the data necessary to measure it, there are still problems when you try to use it to make timely decisions. There are technical solutions to these technical problems, but they require a focused approach to improve the timeliness of reported data.

:chutten

|

|

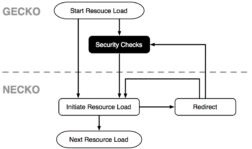

Mozilla Security Blog: Enforcing Content Security By Default within Firefox |

Enforcing Content Security Historically

Enforcing Content Security By Default

https://blog.mozilla.org/security/2016/11/10/enforcing-content-security-by-default-within-firefox/

|

|

The Rust Programming Language Blog: Announcing Rust 1.13 |

The Rust team is happy to announce the latest version of Rust, 1.13.0. Rust is a systems programming language focused on safety, speed, and concurrency.

As always, you can install Rust 1.13.0 from the appropriate page on our website, and check out the detailed release notes for 1.13.0 on GitHub. 1448 patches were landed in this release.

It’s been a busy season in Rust. We enjoyed three Rust conferences, RustConf, RustFest, and Rust Belt Rust, in short succession. It was great to see so many Rustaceans in person, some for the first time! We’ve been thinking a lot about the future, developing a roadmap for 2017, and building the tools our users tell us they need.

And even with all that going on, we put together a new release filled with fun new toys.

What’s in 1.13 stable

The 1.13 release includes several extensions to the language, including the long-awaited ? operator, improvements to compile times, and minor feature additions to cargo and the standard library. This release also includes many small enhancements to documentation and error reporting, by many contributors, that are not individually mentioned in the release notes.

This release contains important security updates to Cargo, which depends on curl and OpenSSL, which both published security updates recently. For more information see the respective announcements for curl 7.51.0 and OpenSSL 1.0.2j.

The ? operator

Rust has gained a new operator, ?, that makes error handling more pleasant by reducing the visual noise involved. It does this by solving one simple problem. To illustrate, imagine we had some code to read some data from a file:

    use std::fs::File;
    use std::io;
    use std::io::Read;

    fn read_username_from_file() -> Result<String, io::Error> {
        let f = File::open("username.txt");

        let mut f = match f {
            Ok(file) => file,
            Err(e) => return Err(e),
        };

        let mut s = String::new();

        match f.read_to_string(&mut s) {
            Ok(_) => Ok(s),
            Err(e) => Err(e),
        }
    }

This code has two paths that can fail: opening the file and reading the data from it. If either of these fails, we’d like to return an error from read_username_from_file. Doing so involves matching on the result of the I/O operations. In simple cases like this, though, where we are only propagating errors up the call stack, the matching is just boilerplate. Seeing it written out, in the same pattern every time, doesn’t provide the reader with a great deal of useful information.

With ?, the above code looks like this:

    fn read_username_from_file() -> Result<String, io::Error> {
        let mut f = File::open("username.txt")?;
        let mut s = String::new();

        f.read_to_string(&mut s)?;

        Ok(s)
    }

The ? is shorthand for the entire match statements we wrote earlier. In other words, ? applies to a Result value: if it was an Ok, it unwraps it and gives the inner value; if it was an Err, it returns from the function you’re currently in. Visually, it is much more straightforward. Instead of an entire match statement, now we are just using the single “?” character to indicate that here we are handling errors in the standard way, by passing them up the call stack.
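A small, self-contained example may help. The hypothetical function add_strs below is not from the announcement. It parses two strings and adds them. Each ? either unwraps the Ok value or returns the Err to the caller immediately. Strictly speaking, ? also passes the error through From::from. Here that conversion is a no-op, because the error type already matches the function's return type.

```rust
use std::num::ParseIntError;

/// Parse two integers and add them. Each `?` returns early with the
/// ParseIntError if parsing fails, just like the match-based version.
fn add_strs(a: &str, b: &str) -> Result<i32, ParseIntError> {
    let x = a.trim().parse::<i32>()?;
    let y = b.trim().parse::<i32>()?;
    Ok(x + y)
}

fn main() {
    println!("{:?}", add_strs("2", "40")); // prints "Ok(42)"
    println!("{}", add_strs("2", "forty").is_err()); // prints "true"
}
```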

Seasoned Rustaceans may recognize that this is the same as the try! macro that’s been available since Rust 1.0. And indeed, they are the same. Before 1.13, read_username_from_file could have been implemented like this:

    fn read_username_from_file() -> Result<String, io::Error> {
        let mut f = try!(File::open("username.txt"));
        let mut s = String::new();

        try!(f.read_to_string(&mut s));

        Ok(s)
    }

So why extend the language when we already have a macro? There are multiple reasons. First, try! has proved to be extremely useful, and is used often in idiomatic Rust. It is used so often that we think it’s worth having a sweet syntax. This sort of evolution is one of the great advantages of a powerful macro system: speculative extensions to the language syntax can be prototyped and iterated on without modifying the language itself, and in return, macros that turn out to be especially useful can indicate missing language features. This evolution, from try! to ?, is a great example.
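To illustrate the point about prototyping syntax with macros, here is a hypothetical my_try! macro. It was written for this example and is not copied from the standard library. It expands to the same match used in the first version of read_username_from_file, which is roughly the shape a try!-style macro takes. The error is passed through From::from, so a macro like this could also convert between error types.

```rust
use std::num::ParseIntError;

/// A hypothetical user-defined error-propagation macro: unwrap an Ok,
/// or return the (converted) Err from the enclosing function.
macro_rules! my_try {
    ($expr:expr) => {
        match $expr {
            Ok(val) => val,
            Err(err) => return Err(From::from(err)),
        }
    };
}

fn double(s: &str) -> Result<i32, ParseIntError> {
    let n = my_try!(s.parse::<i32>());
    Ok(n * 2)
}

fn main() {
    println!("{:?}", double("21")); // prints "Ok(42)"
    println!("{}", double("x").is_err()); // prints "true"
}
```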

One of the reasons try! needs a sweeter syntax is that it is quite unattractive when multiple invocations of try! are used in succession. Consider:

    try!(try!(try!(foo()).bar()).baz())

as opposed to

    foo()?.bar()?.baz()?

The first is quite difficult to scan visually, and each layer of error handling prefixes the expression with an additional call to try!. This brings undue attention to the trivial error propagation, obscuring the main code path, in this example the calls to foo, bar and baz. This sort of method chaining with error handling occurs in situations like the builder pattern.
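The builder-pattern case can be sketched as follows. RequestBuilder and its methods are hypothetical and were made up for this example. Each step validates its input and returns a Result. As a result, the chain reads left to right with a ? after each fallible call, instead of nesting try! invocations.

```rust
/// A hypothetical builder whose configuration steps can each fail.
#[derive(Debug)]
struct Request {
    url: String,
    timeout_secs: u32,
}

struct RequestBuilder {
    url: Option<String>,
    timeout_secs: u32,
}

impl RequestBuilder {
    fn new() -> Self {
        RequestBuilder { url: None, timeout_secs: 30 }
    }

    fn url(mut self, url: &str) -> Result<Self, String> {
        if url.is_empty() {
            return Err("empty url".to_string());
        }
        self.url = Some(url.to_string());
        Ok(self)
    }

    fn timeout(mut self, secs: u32) -> Result<Self, String> {
        if secs == 0 {
            return Err("timeout must be positive".to_string());
        }
        self.timeout_secs = secs;
        Ok(self)
    }

    fn build(self) -> Result<Request, String> {
        let url = self.url.ok_or_else(|| "missing url".to_string())?;
        Ok(Request { url: url, timeout_secs: self.timeout_secs })
    }
}

fn make_request(url: &str, secs: u32) -> Result<Request, String> {
    // With try! this would be: try!(try!(RequestBuilder::new().url(url)).timeout(secs)).build()
    RequestBuilder::new().url(url)?.timeout(secs)?.build()
}

fn main() {
    println!("{:?}", make_request("https://example.com", 10));
    println!("{:?}", make_request("", 10)); // prints Err("empty url")
}
```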

Finally, the dedicated syntax will make it easier in the future to produce nicer error messages tailored specifically to ?, whereas it is difficult to produce nice errors for macro-expanded code generally (in this release, though, the ? error messages could use improvement).

Though this is a small feature, in our experience so far, ? feels like a solid ergonomic improvement to the old try! macro. This is a good example of the kinds of incremental, quality-of-life improvements Rust will continue to receive, polishing off the rough corners of our already-powerful base language.

Read more about ? in RFC 243.

Performance improvements

There has been a lot of focus on compiler performance lately. There’s good news in this release, and more to come.

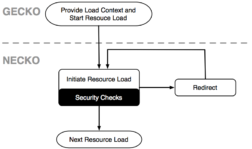

Mark Simulacrum and Nick Cameron have been refining perf.rust-lang.org, our tool for tracking compiler performance. It runs the rustc-benchmarks suite regularly, on dedicated hardware, and tracks the results over time. This tool records the results for each pass in the compiler and is used by the compiler developers to narrow commit ranges of performance regressions. It’s an important part of our toolbox!

We can use this tool to look at a graph of performance over the 1.13 development cycle, shown below. This cycle covered the dates from August 16 through September 29 (the graph begins from August 25th, though, and is filtered in a few ways to eliminate bogus, incomplete, or confusing results). There appear to be some big reductions, which are quantified on the corresponding statistics page.

The big improvement demonstrated in the graphs, on September 1, is from an optimization from Niko to cache normalized projections during translation. That is to say, during generation of LLVM IR, the compiler no longer recomputes concrete instances of associated types each time they are needed, but instead reuses previously-computed values. This optimization doesn’t affect all code bases, but in code bases that exhibit certain patterns, like futures-rs, where debug mode build-time improved by up to 40%, you’ll notice the difference.
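The idea behind this optimization is to compute something once and reuse it on later requests. A toy memo table can show that idea. This is a generic memoization sketch, not rustc's actual data structures. The Memo type, the string keys standing in for associated-type projections, and the uppercase "computation" are all hypothetical placeholders.

```rust
use std::collections::HashMap;

/// A toy memo table: each key is computed once, then served from the cache.
struct Memo {
    cache: HashMap<String, String>,
    computations: u32, // how many times the expensive path actually ran
}

impl Memo {
    fn new() -> Self {
        Memo { cache: HashMap::new(), computations: 0 }
    }

    fn normalize(&mut self, key: &str) -> String {
        if let Some(hit) = self.cache.get(key) {
            return hit.clone(); // reuse the previously computed value
        }
        self.computations += 1;
        // Placeholder for an expensive computation (hypothetical).
        let result = key.to_uppercase();
        self.cache.insert(key.to_string(), result.clone());
        result
    }
}

fn main() {
    let mut memo = Memo::new();
    for _ in 0..3 {
        memo.normalize("<Vec<u8> as IntoIterator>::Item");
    }
    println!("computed {} time(s) for 3 requests", memo.computations); // prints "computed 1 time(s) for 3 requests"
}
```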

Another such optimization, one that doesn’t affect every crate but does affect some in a big way, came from Michael Woerister, and improves compile time for crates that export many inline functions. When a function is marked #[inline], in addition to translating that function for use by the current crate, the compiler stores its MIR representation in the crate rlib, and translates the function to LLVM IR in every crate that calls it. The optimization Michael did is obvious in retrospect: there are some cases where inline functions are only for the consumption of other crates, and never called from the crate in which they are defined; so the compiler doesn’t need to translate code for inline functions in the crate where they are defined unless they are called directly. This saves the cost of rustc converting the function to LLVM IR and of LLVM optimizing and converting the function to machine code.

In some cases this results in dramatic improvements. Build times for the ndarray crate improved by 50%, and in the (unreleased) winapi 0.3 crate, rustc now emits no machine code at all.

But wait, there’s more still! Nick Nethercote has turned his focus to compiler performance as well, focusing on profiling and micro-optimizations. This release contains several fruits of his work, and there are more in the pipeline for 1.14.

Other notable changes


Macros can now be used in type position (RFC 873), and attributes can be applied to statements (RFC 16):

    // Use a macro to name a type
    macro_rules! Tuple {
        { $A:ty, $B:ty } => { ($A, $B) }
    }

    fn main() {
        let x: Tuple!(i32, i32) = (1, 2);

        // Apply a lint attribute to a single statement
        #[allow(non_snake_case)]
        let BAD_STYLE: Vec<i32> = Vec::new();
    }

Inline drop flags have been removed. Previously, in case of a conditional move, the compiler would store a “drop flag” inline in a struct (increasing its size) to keep track of whether or not it needs to be dropped. This means that some structs take up some unexpected extra space, which interfered with things like passing types with destructors over FFI. It also was a waste of space for code that didn’t have conditional moves. In 1.12, MIR became the default, which laid the groundwork for many improvements, including getting rid of these inline drop flags. Now, drop flags are stored in an extra slot on the stack frames of functions that need them.
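The sketch below shows what this means in practice. Guard is a hypothetical type with a destructor. The function maybe_consume moves it out only on one branch, which is the conditional-move case that needs a drop flag. Drop flags now live on the stack frame, so on 1.13 and later the struct itself is no larger than its single u32 field.

```rust
use std::mem::size_of;

/// A hypothetical type with a destructor.
struct Guard {
    id: u32,
}

impl Drop for Guard {
    fn drop(&mut self) {
        println!("dropping guard {}", self.id);
    }
}

fn maybe_consume(g: Guard, consume: bool) {
    if consume {
        // Conditional move: whether `g` still needs dropping at the end of
        // the function is only known at runtime, so the compiler tracks it
        // with a flag on this stack frame rather than inside `Guard`.
        drop(g);
    }
    // If `consume` was false, `g` is dropped here instead.
}

fn main() {
    println!("size_of::<Guard>() = {}", size_of::<Guard>()); // prints "size_of::<Guard>() = 4"
    maybe_consume(Guard { id: 1 }, true);
    maybe_consume(Guard { id: 2 }, false);
}
```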

1.13 contains a serious bug in code generation for ARM targets using hardware floats (which is most ARM targets). ARM targets in Rust are presently in our 2nd support tier, so this bug was not determined to block the release. Because 1.13 contains a security update, users that must target ARM are encouraged to use the 1.14 betas, which will soon get a fix for ARM.

Language stabilizations

- The Reflect trait is deprecated. See the explanation of what this means for parametricity in Rust.

- Stabilize macros in type position. RFC 873.

- Stabilize attributes on statements. RFC 16.

Library stabilizations

- checked_abs, wrapping_abs, and overflowing_abs

- RefCell::try_borrow and RefCell::try_borrow_mut

- Add assert_ne! and debug_assert_ne!

- Implement AsRef<[T]> for std::slice::Iter

- Implement CoerceUnsized for {Cell, RefCell, UnsafeCell}

- Implement Debug for std::path::{Components, Iter}

- Implement conversion traits for char

- SipHasher is deprecated. Use DefaultHasher.

- Implement more traits for std::io::ErrorKind
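Several of the newly stable library items can be exercised in a few lines. This sketch is written against today's standard library. For brevity it uses the later i32::MIN constant, where 1.13-era code would write i32::min_value(). The names check_stabilized_apis and hash_of are hypothetical.

```rust
use std::cell::RefCell;
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

/// Hash a value with DefaultHasher, the suggested replacement for SipHasher.
fn hash_of<T: Hash>(value: &T) -> u64 {
    let mut hasher = DefaultHasher::new();
    value.hash(&mut hasher);
    hasher.finish()
}

fn check_stabilized_apis() {
    // The *_abs family: i32::MIN has no positive counterpart, so it overflows.
    assert_eq!((-5i32).checked_abs(), Some(5));
    assert_eq!(i32::MIN.checked_abs(), None);
    assert_eq!(i32::MIN.wrapping_abs(), i32::MIN);
    assert_eq!(i32::MIN.overflowing_abs(), (i32::MIN, true));

    // RefCell::try_borrow / try_borrow_mut report conflicts instead of panicking.
    let cell = RefCell::new(vec![1, 2, 3]);
    {
        let _reader = cell.borrow();
        assert!(cell.try_borrow().is_ok());
        assert!(cell.try_borrow_mut().is_err());
    }
    assert!(cell.try_borrow_mut().is_ok());

    // Conversion traits for char.
    assert_eq!(u32::from('A'), 65);
    assert_eq!(char::from(65u8), 'A');

    // assert_ne! plus DefaultHasher: equal inputs hash equally.
    assert_eq!(hash_of(&"rust"), hash_of(&"rust"));
    assert_ne!("rust", "cargo");
}

fn main() {
    check_stabilized_apis();
    println!("all checks passed");
}
```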

Cargo features

See the detailed release notes for more.

Contributors to 1.13.0

We had 155 individuals contribute to 1.13.0. Thank you so much!

- Aaron Gallagher

- Abhishek Kumar

- aclarry

- Adam Medziński

- Ahmed Charles

- Aleksey Kladov

- Alexander von Gluck IV

- Alexandre Oliveira

- Alex Burka

- Alex Crichton

- Amanieu d’Antras

- Amit Levy

- Andrea Corradi

- Andre Bogus

- Andrew Cann

- Andrew Cantino

- Andrew Lygin

- Andrew Paseltiner

- Andy Russell

- Ariel Ben-Yehuda

- arthurprs

- Ashley Williams

- athulappadan

- Austin Hicks

- bors

- Brian Anderson

- c4rlo

- Caleb Jones

- CensoredUsername

- cgswords

- changchun.fan

- Chiu-Hsiang Hsu

- Chris Stankus

- Christopher Serr

- Chris Wong

- clementmiao

- Cobrand

- Corey Farwell

- Cristi Cobzarenco

- crypto-universe

- dangcheng

- Daniele Baracchi

- DarkEld3r

- David Tolnay

- Dustin Bensing

- Eduard Burtescu

- Eduard-Mihai Burtescu

- Eitan Adler

- Erik Uggeldahl

- Esteban Küber

- Eugene Bulkin

- Eugene R Gonzalez

- Fabian Zaiser

- Federico Ravasio

- Felix S. Klock II

- Florian Gilcher

- Gavin Baker

- Georg Brandl

- ggomez

- Gianni Ciccarelli

- Guillaume Gomez

- Jacob

- jacobpadkins

- Jake Goldsborough

- Jake Goulding

- Jakob Demler

- James Duley

- James Miller

- Jared Roesch

- Jared Wyles

- Jeffrey Seyfried

- JessRudder

- Joe Neeman

- Johannes Löthberg

- John Firebaugh

- johnthagen

- Jonas Schievink

- Jonathan Turner

- Jorge Aparicio

- Joseph Dunne

- Josh Triplett

- Justin LeFebvre

- Keegan McAllister

- Keith Yeung

- Keunhong Lee

- king6cong

- Knight

- knight42

- Kylo Ginsberg

- Liigo

- Manish Goregaokar

- Mark-Simulacrum

- Matthew Piziak

- Matt Ickstadt

- mcarton

- Michael Layne

- Michael Woerister

- Mikhail Modin

- Mohit Agarwal

- Nazim Can Altinova

- Neil Williams

- Nicholas Nethercote

- Nick Cameron

- Nick Platt

- Niels Sascha Reedijk

- Nikita Baksalyar

- Niko Matsakis

- Oliver Middleton

- Oliver Schneider

- orbea

- Panashe M. Fundira

- Patrick Walton

- Paul Fanelli

- philipp

- Phil Ruffwind

- Piotr Jawniak

- pliniker

- QuietMisdreavus

- Rahul Sharma

- Richard Janis Goldschmidt

- Scott A Carr

- Scott Olson

- Sean McArthur

- Sebastian Ullrich

- Sébastien Marie

- Seo Sanghyeon

- Sergio Benitez

- Shyam Sundar B

- silenuss

- Simonas Kazlauskas

- Simon Sapin

- Srinivas Reddy Thatiparthy

- Stefan Schindler

- Stephan Hügel

- Steve Klabnik

- Steven Allen

- Steven Fackler

- Terry Sun

- Thomas Garcia

- Tim Neumann

- Tobias Bucher

- Tomasz Miasko

- trixnz

- Tshepang Lekhonkhobe

- Ulrich Weigand

- Ulrik Sverdrup

- Vadim Chugunov

- Vadim Petrochenkov

- Vanja Cosic

- Vincent Esche

- Wesley Wiser

- William Lee

- Ximin Luo

- Yossi Konstantinovsky

- zjhmale

|

|

Sean McArthur: RustConf 2016 |

I got to attend RustConf in September[1], and felt these talks in particular may interest someone working on FxA[2]:

- The keynote isn’t too important to watch if you’re not that involved in Rust. It’s mostly about the 2017 plan. Worth mentioning, though, is that part of that plan is to target and improve Rust libraries for writing server software. A small piece of that is hyper, a Rust HTTP library I work on. Maybe we can start to consider writing some of our software in Rust in 2017.

Futures - The Rust community has been working rapidly on a very promising concept for writing asynchronous code in Rust. You are likely pretty comfortable with how Promises work in JavaScript. The Futures library in Rust feels very similar to JavaScript Promises, but! But! They compile down to an optimized state machine, without the need to allocate a whole bunch of closures like JavaScript does.
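To make the “state machine” point concrete, here is a minimal sketch of a hand-written future. It assumes the std::future::Future trait that was stabilized later (Rust 1.36), not the 2016 futures crate API the talk covered, and Countdown, noop_raw_waker, and block_on_counting are hypothetical names. The future is an enum whose variants are its states; each poll updates it in place rather than allocating a closure per step:

```rust
use std::future::Future;
use std::pin::Pin;
use std::task::{Context, Poll, RawWaker, RawWakerVTable, Waker};

/// A hand-written future: an explicit state machine that becomes ready
/// after counting down to zero, one step per poll.
pub enum Countdown {
    Waiting(u32),
    Done,
}

impl Future for Countdown {
    type Output = &'static str;

    fn poll(self: Pin<&mut Self>, cx: &mut Context<'_>) -> Poll<Self::Output> {
        // Countdown is Unpin, so we can take a plain &mut and update the state in place.
        let this = self.get_mut();
        match *this {
            Countdown::Waiting(0) => {
                *this = Countdown::Done;
                Poll::Ready("done")
            }
            Countdown::Waiting(n) => {
                *this = Countdown::Waiting(n - 1);
                // Signal that polling again will make progress.
                cx.waker().wake_by_ref();
                Poll::Pending
            }
            Countdown::Done => panic!("Countdown polled after completion"),
        }
    }
}

// A waker whose operations all do nothing; enough for the busy-polling loop below.
fn noop_raw_waker() -> RawWaker {
    unsafe fn clone(_: *const ()) -> RawWaker {
        noop_raw_waker()
    }
    unsafe fn noop(_: *const ()) {}
    static VTABLE: RawWakerVTable = RawWakerVTable::new(clone, noop, noop, noop);
    RawWaker::new(std::ptr::null(), &VTABLE)
}

/// Polls `fut` on the current thread until it is ready, returning the output
/// and how many times `poll` was called.
pub fn block_on_counting<F: Future>(fut: F) -> (F::Output, u32) {
    // SAFETY: every vtable function ignores its data pointer, so null is fine.
    let waker = unsafe { Waker::from_raw(noop_raw_waker()) };
    let mut cx = Context::from_waker(&waker);
    let mut fut = Box::pin(fut);
    let mut polls = 0;
    loop {
        polls += 1;
        if let Poll::Ready(out) = fut.as_mut().poll(&mut cx) {
            return (out, polls);
        }
    }
}
```

With this sketch, block_on_counting(Countdown::Waiting(2)) returns ("done", 3). A real executor would park the task until the waker fires instead of spinning.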

On top of the Futures library, the community is working on a library that is “futures + network IO”, and that’s tokio. It’s a framework designed to help anyone build a network protocol library. A big user of this is hyper. The examples in hyper show how expressive this pattern can be, while still being super fast.

How to do community RFCs - Rust has a method for the community to suggest improvements to the language, which they call RFCs. These are very similar in practice to Python’s PEPs. It’s been quite successful, and other notable projects have adopted it as well, such as Ember.js. In fact, the RFC process from Rust is what I looked at when we were adjusting how to do our FxA Features. This talk showed how truly impressive it is that the community can work together at designing a better feature.

Rust is a great way to learn how to do systems programming - This was a really special talk about how someone who may be scared of the ominous “systems programming” can actually dive right in without worrying about blowing off a (computer’s) leg. If you’ve mostly used “higher level” languages, and wondered how in the world to dive in, Julia has a great message for you.

If you don’t check out any other talk, at least look at this one. If videos aren’t your thing, try the written article form instead.

|

|

Air Mozilla: The Joy of Coding - Episode 79 |

mconley livehacks on real Firefox bugs while thinking aloud.


https://air.mozilla.org:443/the-joy-of-coding-episode-78-20161109/

|

|

Yunier José Sosa Vázquez: Firefox turns 12! |

On a day like today, but 12 years ago, Firefox 1.0 was officially released: a different browser, and an alternative to the IE of those days.

Since that glorious November 9, 2004, Firefox has introduced features that revolutionized the web, overcome obstacles, and navigated shrewdly to become one of the most important free software projects in the world. We should certainly be happy about that.

All that remains is to wish Firefox a happy birthday and many more years of life, and to thank the Mozilla Community for keeping it going.

A desktop wallpaper for the occasion.

I leave you with some photos from our previous celebrations.

|

|

Air Mozilla: Weekly SUMO Community Meeting Nov. 09, 2016 |

This is the sumo weekly call


https://air.mozilla.org:443/weekly-sumo-community-meeting-nov-09-2016/

|

|

Doug Belshaw: How to use a VPN to ensure good 'digital hygiene' while travelling |

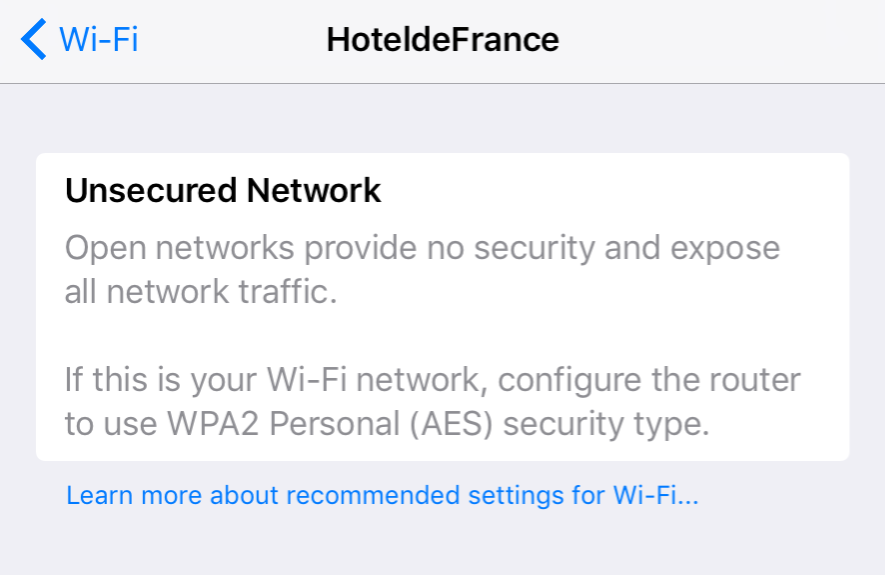

I travel reasonably often as part of my work. One trend I’ve noticed recently is for hotels to provide unsecured wifi, without even so much as a landing page. While this means a ‘frictionless’ experience for guests connecting to the internet, it’s also extremely bad practice from a security point of view.

Unless you know and trust the person or organisation providing your internet connection, you should proceed with caution. Your data are valuable - the business model of Facebook is testament to that! Protect your digital identity.

A Virtual Private Network (VPN) is a way to route your traffic through a trusted server. You could run your own, but the usual way is to pay for this kind of service to ensure there are no bandwidth bottlenecks. A nice little bonus to using VPNs is the ability to make it look like you are based in another country, meaning you get access to content that might be restricted in your own country.

I’m still a fan of iPREDator but it can be cumbersome to set up. That’s why I’m currently using TunnelBear as it’s super-simple to configure, works across all of my devices, and they promise not to keep any logs of your activity (which could be shared with the authorities, etc.)

Getting started on iOS

I’m not going to screenshot every step, but I’m sure you can figure it out.

1) Download TunnelBear from the App Store.

2) Open the app and sign up for a new account. You could use a throwaway email account like Mailinator if you’re willing to keep setting up new accounts, I guess.

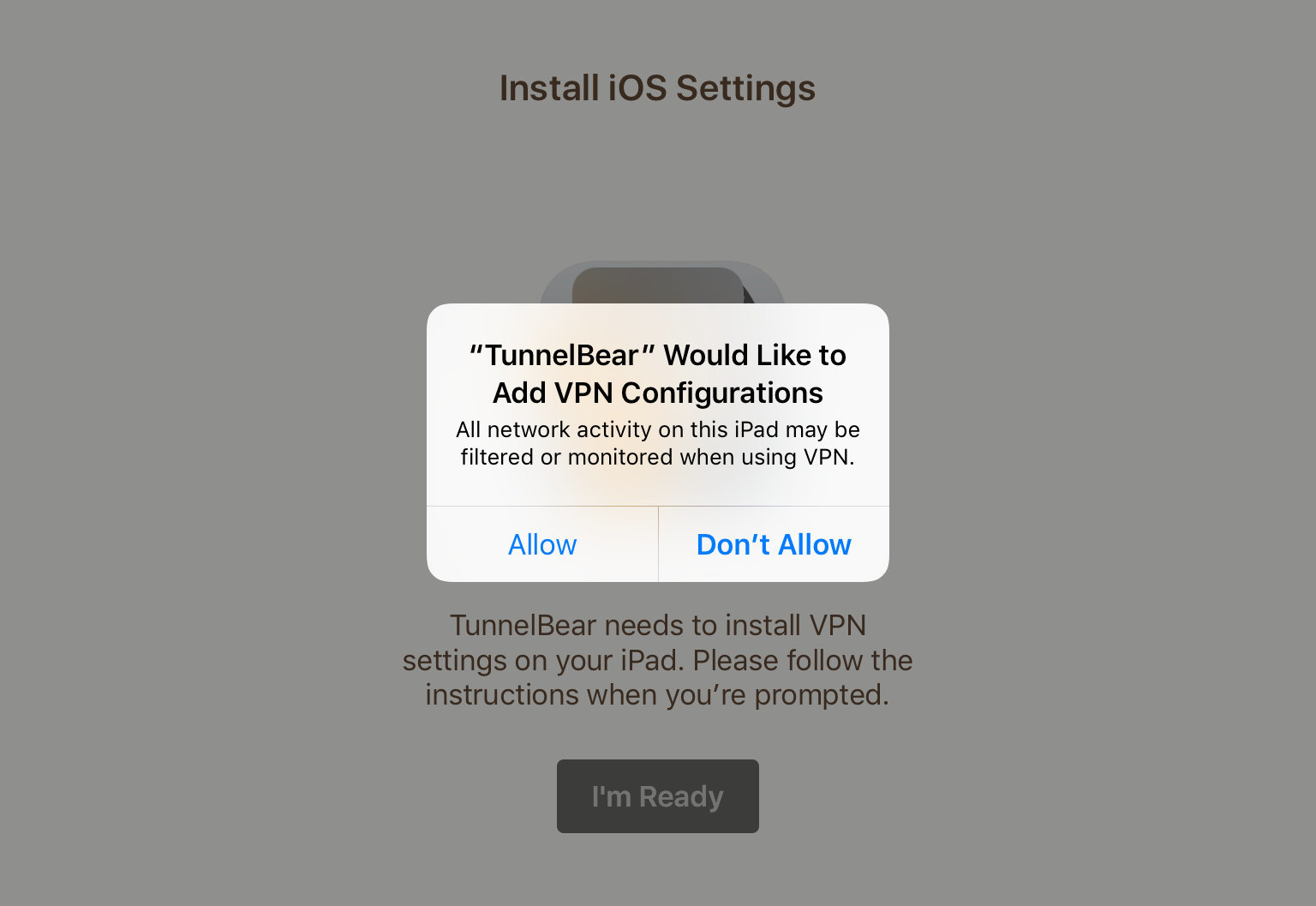

3) Allow TunnelBear to change the VPN settings for your device.

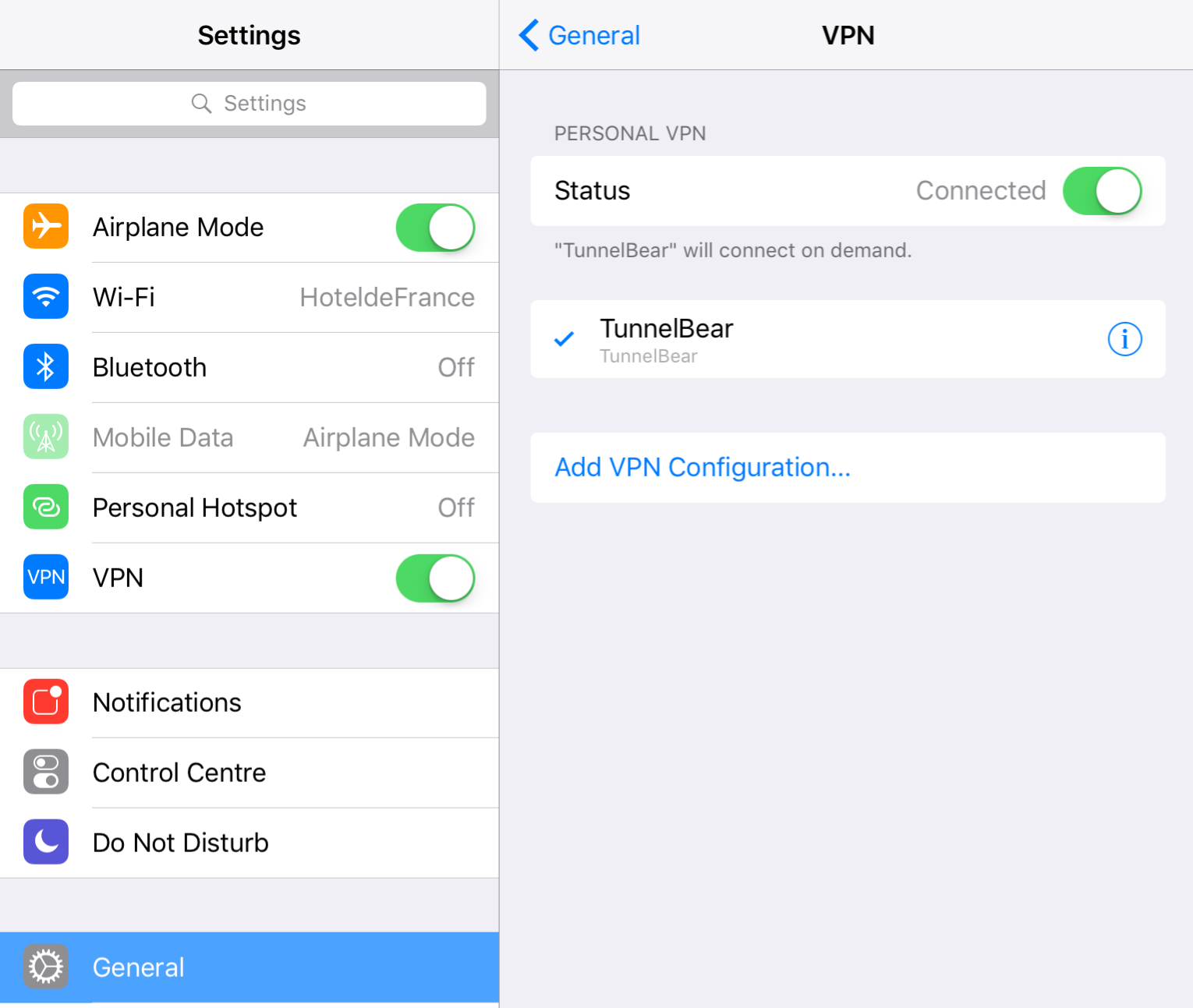

4) Once these VPN settings are installed, you don’t actually have to use the app, as you can connect by going to Settings -> General -> VPN and toggling the switch to ON.

When this toggle switch is on, all of your traffic is being routed through the VPN.

There are also TunnelBear apps for Mac and Windows, and a one-click install process for the Chrome and Opera web browsers. This is great news for users of Chromebooks (like me!).

I’d recommend setting up TunnelBear (or whatever VPN you choose) before travelling. That way, you don’t have to connect to an unsecured wifi network at all. For more advanced users, there are Tor and the Tor Browser, based on Firefox. This works slightly differently, bouncing your traffic around the internet, and is actually what I use on Android for private browsing.

Comments? Questions? I’m @dajbelshaw or you can email me: hello@dynamicskillset.com

|

|

Andy McKay: If you've got nothing to hide... |

When it was revealed that every Government in the world was using technology to spy unlawfully on every other country, there was an argument that this was acceptable "as long as you have nothing to hide". That argument is, of course, complete rubbish, and individual freedoms and rights to privacy are extremely important.

But we've just witnessed in the US another problem with this argument. It's really only acceptable "as long as you have nothing to hide from every single government and authority that might come after". For example, the current Canadian Liberal government might be "ok", but who's going to get elected next? And after that? What happens if one of those governments is like the one coming into the US?

There is a chilling example of this in history. In the Netherlands they recorded people's information in a census, and that included people's religion. In 1941 there were about 140,000 Dutch Jews living in the Netherlands. After the Nazi invasion there were an estimated 35,000 left. Because the Government had census data for them, it was easy for the Nazis to find and eliminate them using that data.

Some 75% of the Dutch-Jewish population perished, an unusually high percentage compared with other occupied countries in western Europe.

As Cory Doctorow said today:

All those times we said, "When you build mass surveillance, you don't just put power in the hands of those you trust, but also the next guy"

— Cory Doctorow (@doctorow) November 9, 2016

Most of my (admittedly small) readership lives in North America or Europe. That means there's likely a Government surveillance operation monitoring you. It could be internal (in my case CSIS) or external (in my case the NSA). Even worse, they share their information with each other through Five Eyes to get around laws, forming a:

"supra-national intelligence organisation that doesn't answer to the known laws of its own countries"

Edward Snowden, via Wikipedia

The US has just elected someone who repeatedly spews lies, racism, sexism, hatred and profiling and has said he will go after his enemies [1]. That person will soon oversee the largest surveillance operation in the history of mankind. A surveillance operation that spies on the majority of people reading this blog. That should worry you.

[1] By enemies, that's the press, women who accuse him of doing the things he says he does and so on. But you know all this right?

|

|

Niko Matsakis: Associated type constructors, part 4: Unifying ATC and HKT |

This post is a continuation of my posts discussing the topic of associated type constructors (ATC) and higher-kinded types (HKT):

- The first post focused on introducing the basic idea of ATC, as well as introducing some background material.

- The second post showed how we can use ATC to model HKT, via the “family” pattern.

- The third post did some exploration into what it would mean to support HKT directly in the language, instead of modeling them via the family pattern.

- This post considers what it might mean if we had both ATC and HKT in the language: in particular, whether those two concepts can be unified, and at what cost.

Unifying HKT and ATC

So far we have seen “associated-type constructors” and “higher-kinded types” as two distinct concepts. The question is, would it make sense to try and unify these two, and what would that even mean?

Consider this trait definition:

```rust
trait Iterable {
    type Iter<'a>: Iterator<Item=Self::Item>;
    type Item;
    fn iter<'a>(&'a self) -> Self::Iter<'a>;
}
```

In the ATC world-view, this trait definition would mean that you can now specify a type like the following:

```rust
<T as Iterable>::Iter<'a>
```

Depending on what the type T and lifetime 'a are, this might get “normalized”. Normalization basically means to expand an associated type reference using the types given in the appropriate impl. For example, we might have an impl like the following:

```rust
impl<A> Iterable for Vec<A> {
    type Item = A;
    // A Vec's borrowing iterator is the slice iterator.
    type Iter<'a> = std::slice::Iter<'a, A>;
    fn iter<'a>(&'a self) -> Self::Iter<'a> {
        self.as_slice().iter()
    }
}
```

In that case, <Vec<Foo> as Iterable>::Iter<'x> could be normalized to std::slice::Iter<'x, Foo>. This is basically exactly the same way that associated type normalization works now, except that we have additional type/lifetime parameters that are placed on the associated item itself, rather than having all the parameters come from the trait reference.

Associated type constructors as functions

Another way to view an ATC is as a kind of function, where the normalization process plays the role of evaluating the function when applied to various arguments. In that light, <Vec<Foo> as Iterable>::Iter could be viewed as a “type function” with a signature like lifetime -> type; that is, a function which, given a lifetime, produces a type:

```
<Vec<Foo> as Iterable>::Iter<'x>
^^^^^^^^^^^^^^^^^^^^^^^^^^^^ ^^
function                     argument
```

When I write it this way, it’s natural to ask how such a function is related to a higher-kinded type. After all, lifetime -> type could also be a kind, right? So perhaps we should think of <Vec<Foo> as Iterable>::Iter as a type of kind lifetime -> type? What would that mean?

Limitations on what can be used in an ATC declaration

Well, in the last post, we saw that, in order to ensure that inference is tractable, HKT in Haskell comes with pretty strict limitations on the kinds of “type functions” we can support. Whatever we choose to adopt in Rust, it would imply that we need similar limitations on ATC values that can be treated as higher-kinded.

That wouldn’t affect the impl of Iterable for Vec that we saw earlier. But imagine that we wanted Range<u32>, which is the type produced by 0..22, to act as an Iterable. Now, ranges like 0..22 are already iterable, so the type of an iterator could just be Self, and iter() can effectively just be clone(). So you might think you could just write:

```rust
impl Iterable for Range<u32> {
    type Item = u32;
    type Iter<'a> = Range<u32>;
    //              ^^^^^^^^^^ doesn't use `'a` at all
    fn iter(&self) -> Range<u32> {
        // Range is Clone but not Copy, so we clone rather than dereference.
        self.clone()
    }
}
```

However, this impl would be illegal, because Range<u32> doesn’t use the parameter 'a. Presuming we adopted the rule I suggested in the previous post, every value for Iter<'a> would have to use the 'a exactly once, as the first lifetime argument. So Foo<'a, u32> would be ok, as would &'a Bar, but Baz<'static, 'a> would not.

Working around this limitation with newtypes

You could work around this limitation by introducing a newtype. Something like this:

```rust
use std::marker::PhantomData;
use std::ops::Range;

struct RangeIter<'a> {
    range: Range<u32>,
    dummy: PhantomData<&'a ()>,
    //                 ^^ need to use `'a` somewhere
}
```

We can then implement Iterator for RangeIter<'a> and just proxy next() on to self.range.next(). But this is kind of a drag.
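A minimal sketch of that proxying, using the RangeIter newtype from the post. The new constructor is a hypothetical convenience added for the example:

```rust
use std::marker::PhantomData;
use std::ops::Range;

/// The newtype from the post: the lifetime `'a` appears only in the PhantomData.
pub struct RangeIter<'a> {
    range: Range<u32>,
    dummy: PhantomData<&'a ()>,
}

impl<'a> RangeIter<'a> {
    /// Hypothetical convenience constructor (not part of the original post).
    pub fn new(range: Range<u32>) -> Self {
        RangeIter { range, dummy: PhantomData }
    }
}

impl<'a> Iterator for RangeIter<'a> {
    type Item = u32;

    // Forward straight to the wrapped range; the newtype adds no behavior.
    fn next(&mut self) -> Option<u32> {
        self.range.next()
    }
}
```

The whole impl exists only to satisfy the “must mention 'a” rule, which is exactly the boilerplate the post calls a drag.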

An alternative: give users the choice

For a long time, I had assumed that if we were going to introduce HKT, we would do so by letting users define the kinds more explicitly. So, for example, if we wanted the member Iter to be of kind lifetime -> type, we might declare that explicitly. Using the <_> and <'_> notation I was using in earlier posts, that might look like this:

```rust
trait Iterable {
    type Iter<'_>;
}
```

Now the trait has declared that impls must supply a valid, partially applied struct/enum name as the value for Iter.

I’ve somewhat soured on this idea, for a variety of reasons. One big one is that we are forcing trait users to make this choice up front, when it may not be obvious whether a HKT or an ATC is the better fit. And of course it’s a complexity cost: now there are two things to understand.

Finally, now that I realize that HKT is going to require bounds, not having names for things means it’s hard to see how we’re going to declare those bounds. In fact, even the Iterable trait probably has some bounds; you can’t just use any old lifetime for the iterator. So really the trait probably includes a condition that Self: 'iter, meaning that the iterable thing must outlive the duration of the iteration:

```rust
trait Iterable {
    type Iter<'iter>: Iterator<Item=Self::Item>
        where Self: 'iter; // <-- bound I was missing before
    type Item;
    fn iter<'iter>(&'iter self) -> Self::Iter<'iter>;
}
```
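Associated type constructors did eventually ship in stable Rust, as generic associated types (GATs) in Rust 1.65, with essentially this bounded form. The sketch below assumes a Rust 1.65+ compiler. Two choices are assumptions of the sketch, not the post: the Vec impl clones elements so that its iterator's Item matches the trait's Item, and sum_twice is a hypothetical helper. The Range<u32> impl uses a value for Iter<'iter> that never mentions 'iter, which an ATC permits:

```rust
use std::ops::Range;

// The bounded trait in today's syntax: `where Self: 'iter` sits on the GAT.
pub trait Iterable {
    type Item;
    type Iter<'iter>: Iterator<Item = Self::Item>
    where
        Self: 'iter;

    fn iter<'iter>(&'iter self) -> Self::Iter<'iter>;
}

// A Vec's slice iterator yields `&A`, so this sketch clones to produce `A`.
impl<A: Clone> Iterable for Vec<A> {
    type Item = A;
    type Iter<'iter> = std::iter::Cloned<std::slice::Iter<'iter, A>>
    where
        Self: 'iter;

    fn iter<'iter>(&'iter self) -> Self::Iter<'iter> {
        self.as_slice().iter().cloned()
    }
}

// The impl the post wanted: `Iter<'iter>` ignores `'iter` entirely.
impl Iterable for Range<u32> {
    type Item = u32;
    type Iter<'iter> = Range<u32>
    where
        Self: 'iter;

    fn iter<'iter>(&'iter self) -> Self::Iter<'iter> {
        self.clone()
    }
}

/// Generic code can borrow any Iterable and iterate it more than once.
pub fn sum_twice<T: Iterable<Item = u32>>(t: &T) -> u32 {
    let first: u32 = t.iter().sum();
    let second: u32 = t.iter().sum();
    first + second
}
```

For example, sum_twice(&vec![1u32, 2, 3]) and sum_twice(&(0u32..4)) both return 12.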

Why focus on associated items?

You might wonder why I said that we should consider lifetime -> type rather than saying that Iterable::Iter would be something of kind type -> lifetime -> type. In other words, what about the input types to the trait itself?

It turns out that this idea doesn’t really make sense. First off, it would naturally affect existing associated types. So Iterator::Item, for example, would be something of kind type -> type, where the argument is the type of the iterator. <Range<u32> as Iterator>::Item would be the syntax for applying Iterator::Item to Range<u32>.

Since we can write generic functions with higher-kinded parameters like fn foo<I<_>>(), that means that I here might be Iterator::Item, and hence I<Range<u32>> would be equivalent to <Range<u32> as Iterator>::Item.

But remember that, to make inference tractable, we want to know that ?X<T> = ?Y<T> if and only if ?X = ?Y. That means that we could not allow <Range<u32> as Iterator>::Item to normalize to the same thing as <Range<u32> as SomeOtherTrait>::Foo. You can see that this doesn’t even remotely resemble associated types as we know them, which are just plain one-way functions.

Conclusions

This is kind of the “capstone” post for the series that I set out to write. I’ve tried to give an overview of what associated type constructors are; the ways that they can model higher-kinded patterns; what higher-kinded types are; and now what it might mean if we tried to combine the two ideas.

I hope to continue this series a bit further, though, and in particular to try and explore some case studies and further thoughts. If you’re interested in the topic, I strongly encourage you to hop over to the internals thread and take a look. There have been a lot of insightful comments there.

That said, currently my thinking is this:

- Associated type constructors are a natural extension to the language. They “fit right in” syntactically with associated types.

- Despite that, ATC would represent a huge step up in expressiveness, and open the door to richer traits. This could be particularly important for many libraries, such as futures.
  - I know that Rayon had to bend over backwards in some places because we lack any way to express an “iterable-like” pattern.

- Higher-kinded types as expressed in Haskell are not very suitable for Rust:
  - they don’t cover bounds, which we need;
  - the limitation to “partially applied” struct/enum names is not a natural fit, even if we loosen it somewhat.

- Moreover, adding HKT to the language would be a big complexity jump:
  - to use Rust, you already have to understand associated types, and ATC is not much more;
  - but adding the rules and associated syntax for HKT on top of that feels like a lot to ask.

So currently I lean towards accepting ATC with no restrictions and modeling HKT using families. That said, I agree that the family pattern has the potential to feel like a lot of “boilerplate”. I sort of suspect that, in practice, HKT would require a fair amount of its own boilerplate (i.e., to abstract away bounds and so forth), and/or not be suitable for Rust, but perhaps further exploration of example use-cases will be instructive in this regard.
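For readers who haven't seen the earlier posts, here is a simplified sketch of modeling HKT with a “family” in today's Rust, where associated type constructors shipped as generic associated types (Rust 1.65). The Collection trait, its methods, the family types, and build_two are illustrative assumptions, not the code from the series. A family is an ordinary type whose associated type constructor Member<T> stands in for a higher-kinded parameter of kind type -> type:

```rust
use std::collections::VecDeque;

/// A minimal collection interface, assumed for illustration.
pub trait Collection<T> {
    fn empty() -> Self;
    fn add(&mut self, value: T);
}

impl<T> Collection<T> for Vec<T> {
    fn empty() -> Self {
        Vec::new()
    }
    fn add(&mut self, value: T) {
        self.push(value)
    }
}

impl<T> Collection<T> for VecDeque<T> {
    fn empty() -> Self {
        VecDeque::new()
    }
    fn add(&mut self, value: T) {
        self.push_back(value)
    }
}

/// The family: `Member<T>` plays the role of a type-level function type -> type.
pub trait CollectionFamily {
    type Member<T>: Collection<T>;
}

pub struct VecFamily;
impl CollectionFamily for VecFamily {
    type Member<T> = Vec<T>;
}

pub struct VecDequeFamily;
impl CollectionFamily for VecDequeFamily {
    type Member<T> = VecDeque<T>;
}

/// Builds two collections with different element types from the same family,
/// which a single `C: Collection<T>` type parameter could not express.
pub fn build_two<F: CollectionFamily>() -> (F::Member<u32>, F::Member<String>) {
    let mut nums = <F::Member<u32> as Collection<u32>>::empty();
    nums.add(1);
    nums.add(2);
    let mut words = <F::Member<String> as Collection<String>>::empty();
    words.add(String::from("hi"));
    (nums, words)
}
```

Calling build_two::<VecFamily>() yields a Vec<u32> and a Vec<String>. The extra family types and impls are the kind of boilerplate the conclusion has in mind.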

Comments

Please leave comments on this internals thread.

|

|

Air Mozilla: Martes Mozilleros November 8, 2016 |

Bi-weekly meeting to talk (in Spanish) about the state of Mozilla, the community, and its projects.


|

|

Cameron Kaiser: Happy 6th birthday, TenFourFox |

Hail to the Chief!

http://tenfourfox.blogspot.com/2016/11/happy-6th-birthday-tenfourfox.html

|

|

Doug Belshaw: Digital literacies have a civic element |

My ‘Essential Elements of Digital Literacies’ (thesis / book) looks like this:

Unlike some other people who seemed to need a subject for their latest blog post or journal article, this wasn’t something I just sat down and thought about for half an hour. This was the result of a few years’ worth of work, and a large meta-analysis of theory and practice.

The elements that most people seem to take issue with when looking at the above diagram are ‘Confident’ and ‘Civic’. The top row, the four ‘skillsets’, seems to pose no problem, but people wonder how they can teach the bottom four ‘mindsets’, particularly the two just highlighted.

The latest episode of the Techgypsies podcast by Audrey Watters and Kin Lane does a great job of explaining the Civic element of digital literacies. Listen to the whole thing, as it’s fascinating, but the bit we’re interested in here starts at about the 20-minute mark.

Audrey and Kin use the ‘scandal’ around Hillary Clinton’s private email server as a lens to show how poor our understanding of everyday tech actually is. What I thought was particularly enlightening was their likening of the ‘learn to code’ movement to standard IT practices. In other words: “oh, this is too hard for you? well, just leave it to us and we’ll sort it out for you”. The implication is that passive, uncritical use of technology is fine unless, you know, you’re a ‘techie’.

In learning organisations, in businesses, and in families, there are practices built upon technologies that need to be learned. As Audrey and Kin outline, although it’s entirely unsexy, an understanding of the difference between POP, SMTP, and IMAP would have let people see the email ‘scandal’ as a non-event.

What I really appreciated was Audrey’s reframing of this kind of thing as a social studies issue. We shouldn’t have to have separate classes for this kind of thing any more. Instead, our society should have a baseline understanding of how the tech we use every day works. That also applies to web domains, and to the way that data flows around the web.

Of course, a lot of this is covered in Mozilla’s Web Literacy Map. Not all of what we need to know pertains to the ‘web’, of course - which is where the Essential Elements of Digital Literacies come in. They’re plural, context-dependent, and should be co-defined in your community. As well as raising awareness of the latest shiny technologies (e.g. blockchain, AI) we should be ensuring people are comfortable with the tech they’re using right now.

Questions? Comments? I’m @dajbelshaw or you can email me: hello@dynamicskillset.com

|

|