Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

Brian King: Activate Mozilla |

Today we are launching Activate Mozilla, a campaign where you can find the focus initiatives that support the organization's current goals, learn how to start participating, and mobilize your community around clearly defined activities.

One of the main asks from our community recently has been for more clarity on which things are most important to do to support Mozilla right now, and on how to participate. With this site we have a place to answer this question that is always up-to-date.

We are launching with three activities, focused on Rust, Web Compatibility, and Test Pilot. Within each activity we explain why this is important, some goals we are trying to reach, and provide step by step instructions on how to do the activity. We want you to #mozactivate by mobilizing your community, so don’t forget to share share share!

This is a joint effort between the Participation team and other teams at Mozilla. We’ll be adding more activities to the campaign over time.

|

|

Soledad Penades: dogetest.com |

This summer, Sam and I are exploring Servo’s capabilities and building cool demos to showcase them (and most especially WebRender, its engine “that aims to draw web content like a modern game engine”).

We could say there are two ways of experimenting. In one, you try things out as you think of them; in the other, you first look at what works and build a catalogue of resources to experiment with. An imperfect metaphor would be the difference between going into an art store and picking up a tool, trying something, then trying something else with another tool as you see fit (if the store allowed this), versus establishing which tools you can use before you go to the store to buy them for your experiment.

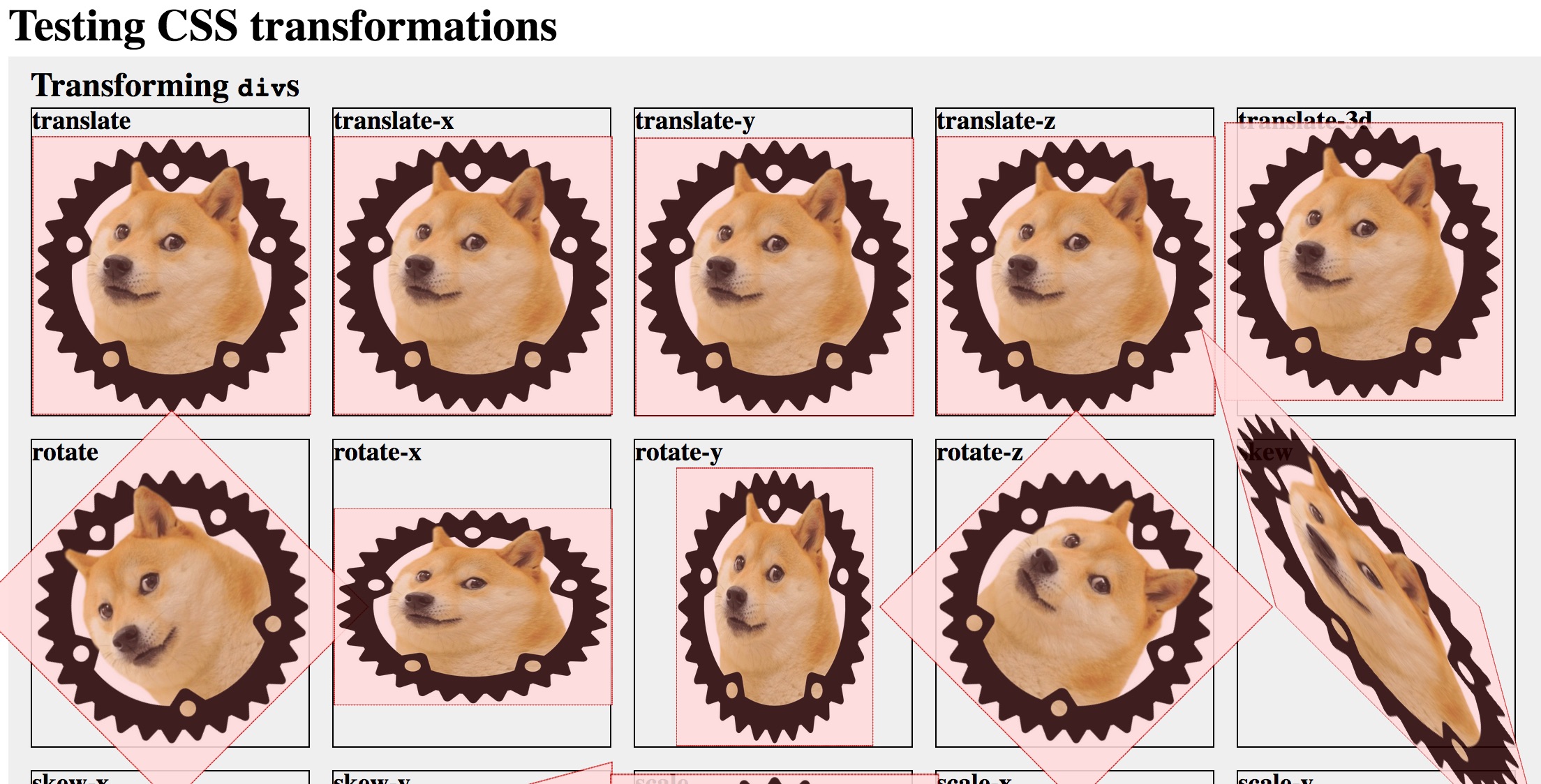

WebRender is very good at CSS, but we didn’t know for sure what worked. We kept having “ideas” but ran into walls of missing implementation, or we would forget that X or Y didn’t work, try to use that feature again, and find that it was still not working.

To avoid that, we built two demo/tests that repeatedly render the same type of element and a different feature applied to each one: CSS transformations and CSS filters.
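A test page of this kind can be generated from a list of features. The sketch below is a minimal illustration rather than the code behind the actual demos: the `buildTestGrid` function, the `doge` class name, and the particular transform values are assumptions chosen for the example.

```javascript
// Hypothetical list of CSS transforms to exercise; each value is standard CSS.
const transforms = [
  "rotate(45deg)",
  "scale(1.5)",
  "skewX(20deg)",
  "translate(10px, 10px)",
];

// Build one DIV per feature so every cell renders the same element
// with a single, different transform applied.
function buildTestGrid(features) {
  return features
    .map((value) => `<div class="doge" style="transform: ${value}" title="${value}"></div>`)
    .join("\n");
}

// In a browser, inject the grid into the page; elsewhere, just print the markup.
if (typeof document !== "undefined") {
  document.body.insertAdjacentHTML("beforeend", buildTestGrid(transforms));
} else {
  console.log(buildTestGrid(transforms));
}
```

Swapping the `transform` property for `filter` (with values such as `blur(2px)`) would give the equivalent page for CSS filters.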

This way we can quickly determine what works, and we can also compare the behaviour between browsers, which is really neat when things look “weird”. And each time we want to build a new demo, we can look at the demos and be “ah, this didn’t work, but maybe I could use that other thing”.

Our tests use two types of element for now: a DIV with the unofficial but de facto Servo logo (a doge inside a cog wheel), and an IFRAME with an image as well. We chose those elements for two reasons: because the DIV is a sort of minimum building block, and because IFRAMEs tend to be a little “difficult”, with them being their own document and so on. They raise the rendering bar, so to speak.

|

|

Cameron Kaiser: And now for something completely different: what you missed by not going to Vintage Computer Festival West XI |

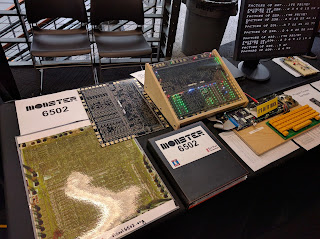

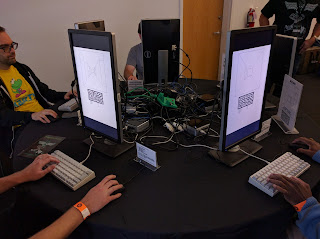

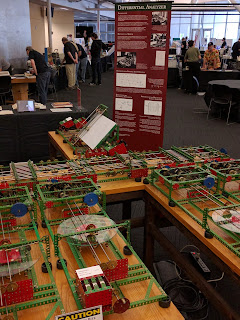

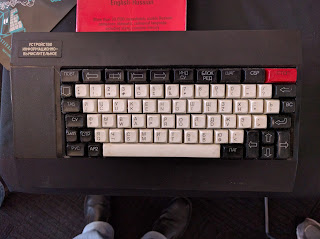

You could have seen the other great exhibits at the Computer History Museum, the complete Tomy Tutor family, hosted by my lovely wife and me that corrupted young minds from a very early age (for which I won a special award for Most Complete and 2nd place overall in the Microcomputer category), seen a really big MOS 6502 recreation, played Maze War, solved differential equations the old fashioned way, realized that in Soviet Russia computer uses you, given yourself terrible eyestrain, used a very credible MacPaint clone on a Tandy Color Computer, messed with Steve Chamberlain's mind, played with an unusual Un*x workstation powered by the similarly unusual (and architecturally troublesome) Intel i860, marveled at the very first Amiga 1000 (serial #1), attempted to steal an Apple I, snuck onto Infinite Loop on the way back south, and got hustled off by Apple security for plastering "Ready for PowerPC upgrade" stickers all over the MacBooks in the company store.

But you didn't. And now it's too late.*

*Well, you could always come next year.

http://tenfourfox.blogspot.com/2016/08/and-now-for-something-completely.html

|

|

William Lachance: Perfherder Quarter of Contribution Summer 2016: Results |

Following on the footsteps of Mike Ling’s amazing work on

|

|

William Lachance: Perfherder Quarter of Contribution: Summer 2016 Edition Results! |

Following on the footsteps of Mike Ling’s amazing work on

|

|

Air Mozilla: Prometheus and Grafana Presentation - Speaker Ben Kochie |

Ben Kochie, Site Reliability Engineer and Prometheus maintainer at SoundCloud, will be presenting the basics of Prometheus monitoring and Grafana reporting.

https://air.mozilla.org/prometheus-and-grafana-presentation-speaker-ben-kochie/

|

|

Air Mozilla: The Joy of Coding - Episode 67 |

mconley livehacks on real Firefox bugs while thinking aloud.

|

|

Air Mozilla: Weekly SUMO Community Meeting August 10, 2016 |

This is the sumo weekly call

https://air.mozilla.org/weekly-sumo-community-meeting-august-10-2016/

|

|

David Lawrence: Happy BMO Push Day! |

The following changes have been pushed to bugzilla.mozilla.org:

- [1286027] Display ReviewRequestSummary diffstat information in MozReview section

- [1290959] Create custom NDA bug entry form in Legal

- [1279368] Run Script to Remove Old Whiteboard Tag and Add New Keyword to affected bugs where we are creating new keywords.

- [1290499] Updates to Recruiting Bug Form

- [1287895] fetching Github pull requests should have better logging to help with debugging errors

Discuss these changes on mozilla.tools.bmo.

https://dlawrence.wordpress.com/2016/08/10/happy-bmo-push-day-25/

|

|

Gervase Markham: On Trial |

As many readers of this blog will know, I have cancer. I’ve had many operations over the last fifteen years, but a few years ago we decided that the spread was now wide enough that further surgery was not very pointful; we should instead wait for particular lesions to start causing problems, and only then treat them. (I have metastases in my lungs, liver, remaining kidney, leg, pleura and other places.)

Historically, chemotherapy hasn’t been an option for me. Broad spectrum chemotherapies work by killing anything growing fast; but my rather unusual cancer doesn’t grow fast (which is why I’ve lived as long as I have so far) and so they would kill me as quickly as they would kill it. And there are no targeted drugs for Adenoid Cystic Carcinoma, the rare salivary gland cancer I have.

However, recently my oncologist referred me to The Christie hospital in Manchester, which is doing some interesting research on cancer genetics. With them, I’m trying a few things, but the most immediate is that yesterday I entered a Phase 1 trial called AToM, which is trialling a couple of drugs in combination which may be able to help me.

The two drugs are an existing drug called olaparib, and a new one known only as AZD0156. Each of these drugs inhibits a different one of the seven or so mechanisms cells use to repair DNA after it’s been damaged. (Olaparib inhibits the PARP pathway; AZD0156 the ATM pathway.) Cells which realise they can’t repair themselves commit “cell suicide” (apoptosis). The theory is that these repair mechanisms are shakier in cancer cells than normal cells, and so cancer cells should be disproportionately affected (and so commit suicide more) if the mechanisms are inhibited.

As this is a Phase 1 trial, the goal is more about making sure the drug doesn’t kill people than about whether it works well, although the doses now being used are in the clinical range, and another patient with my cancer has seen some improvement. The trial document listed all sorts of possible side-effects, but the doctors say other patients are tolerating the combination well. Only experience will tell how it affects me. I’ll be on the drugs as long as I am seeing benefit (defined as “my cancer is not growing”). And, of course, hopefully there will be benefit to people in the future when and if this drug is approved for use.

In practical terms, the first three weeks of the trial are quite intensive in terms of the number of hospital visits required (and I live 2 hours’ drive from Manchester), and the following six weeks moderately intensive, so I may be less responsive to email than normal. I also won’t be doing any international travel.

http://feedproxy.google.com/~r/HackingForChrist/~3/mInXOrHtPfQ/

|

|

Air Mozilla: Connected Devices Meetup |

The Connected Devices team at Mozilla is an effort to apply our guiding principles to the Internet of Things. We have a lot to learn...

|

|

Air Mozilla: Intern Presentations 2016, 09 Aug 2016 |

Group 3 of the interns will be presenting what they worked on this summer.

|

|

Tim Taubert: Continuous Integration for NSS |

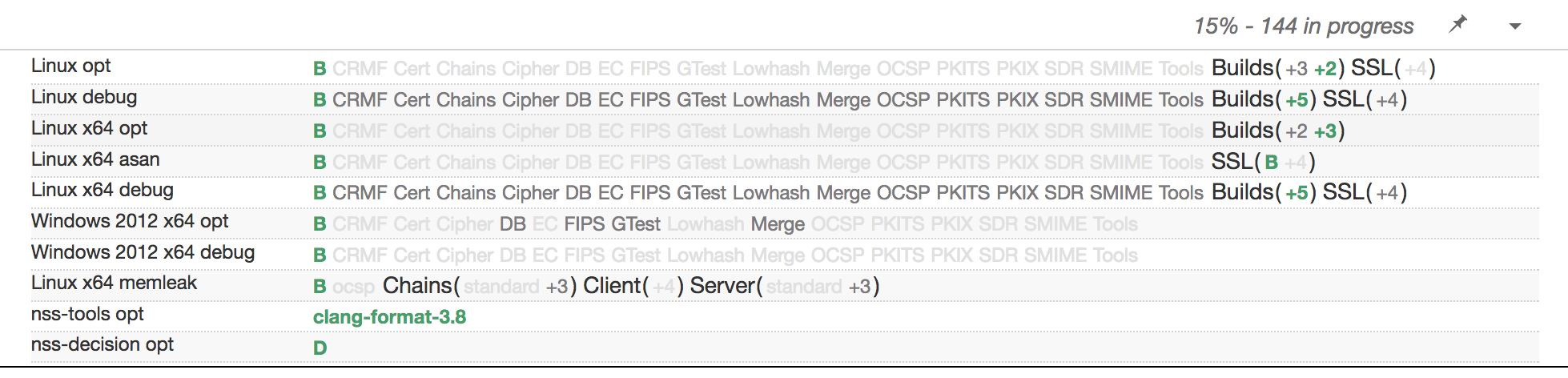

The following image shows our TreeHerder dashboard after pushing a changeset to the NSS repository. It is the result of only a few weeks of work (on our side):

Based on my experience from building a Taskcluster CI for NSS over the last few weeks, I want to share a rough outline of the process of setting this up for basically any Mozilla project, using NSS as an example.

What is the goal?

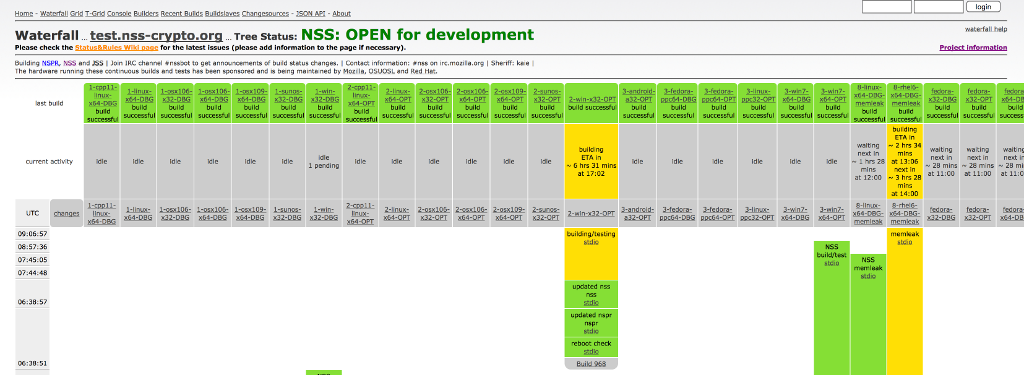

The development of NSS has for a long time been heavily supported by a fleet of buildbots. You can see them in action by looking at our waterfall diagram showing the build and test statuses of the latest pushes to the NSS repository.

Unfortunately, this setup is rather complex and the bots are slow. Build and test tasks are run sequentially and so on some machines it takes 10-15 hours before you will be notified about potential breakage.

The first thing that needs to be done is to replicate the current setup as closely as possible and then split monolithic test runs into many small tasks that can be run in parallel. Builds will be prepared by build tasks; test tasks will later download those pieces (called artifacts) to run tests.

A good turnaround time is essential; ideally one should know whether a push broke the tree after no more than 15-30 minutes. We want a TreeHerder dashboard that gives a good overview of all current build and test tasks, as well as an IRC and email notification system so we don’t have to watch the tree all day.

Docker for Linux tasks

To build and test on Linux, Taskcluster uses Docker. The build instructions for the image containing all NSS dependencies, as well as the scripts to build and run tests, can be found in the automation/taskcluster/docker directory.

For a start, the fastest way to get something up and running (or building) is to use ADD in the Dockerfile to bake your scripts into the image. That way you can just pass them as the command in the task definition later.

    # Add build and test scripts.
    ADD bin /home/worker/bin
    RUN chmod +x /home/worker/bin/*

Once you have NSS and its tests building and running in a local Docker container, the next step is to run a Taskcluster task in the cloud. You can use the Task Creator to spawn a one-off task, experiment with your Docker image, and with the task definition. Taskcluster will automatically pull your image from Docker Hub:

    {
      "created": " ... ",
      "deadline": " ... ",
      "payload": {
        "image": "ttaubert/nss-ci:0.0.21",
        "command": [
          "/bin/bash",
          "-c",
          "bin/build.sh"
        ],
        "maxRunTime": 3600
      }
    }

Docker and task definitions are well-documented, so this step shouldn’t be too difficult and you should be able to confirm everything runs fine. Now instead of kicking off tasks manually the next logical step is to spawn tasks automatically when changesets are pushed to the repository.

Using taskcluster-github

Triggering tasks on repository pushes should remind you of Travis CI, CircleCI, or AppVeyor, if you worked with any of those before. Taskcluster offers a similar tool called taskcluster-github that uses a configuration file in the root of your repository for task definitions.

If your master is a Mercurial repository then it’s very helpful that you don’t have to mess with it until you get the configuration right, and can instead simply create a fork on GitHub. The documentation is rather self-explanatory, and the task definition is similar to the one used by the Task Creator.

Once the WebHook is set up and receives pings, a push to your fork will make “Lisa Lionheart”, the Taskcluster bot, comment on your push and leave either an error message or a link to the task graph. If on the first try you see failures about missing scopes you are lacking permissions and should talk to the nice folks over in #taskcluster.

Move scripts into the repository

Once you have a GitHub fork spawning build and test tasks when pushing you should move all the scripts you wrote so far into the repository. The only script left on the Docker image would be a script that checks out the hg/git repository and then uses the scripts in the tree to build and run tests.

This step will pay off very early in the process: rebuilding and pushing the Docker image to Docker Hub is something that you really don’t want to do too often. All NSS scripts for Linux live in the automation/taskcluster/scripts directory.

    #!/usr/bin/env bash

    set -v -e -x

    if [ "$(id -u)" = 0 ]; then
        # Drop privileges by re-running this script.
        exec su worker "$0" "$@"
    fi

    # Do things here ...

Use the above snippet as a template for your scripts. It will set a few flags that help with debugging later, drop root privileges, and rerun it as the unprivileged worker user. If you need to do things as root before building or running tests, just put them before the exec su ... call.

Split build and test runs

Taskcluster encourages many small tasks. It’s easy to split the big monolithic test run I mentioned at the beginning into multiple tasks, one for each test suite. However, you wouldn’t want to rebuild NSS before every test run, so we should build it only once and then reuse the binaries. Taskcluster allows a task to leave artifacts after it runs, which can then be downloaded by subtasks.

    # Build.
    cd nss && make nss_build_all

    # Package.
    mkdir artifacts
    tar cvfjh artifacts/dist.tar.bz2 dist

The above snippet builds NSS and creates an archive containing all the binaries and libraries. You need to let Taskcluster know that there’s a directory with artifacts so that it picks those up and makes them available to the public.

    {
      "created": " ... ",
      "deadline": " ... ",
      "payload": {
        "image": "ttaubert/nss-ci:0.0.21",
        "artifacts": {
          "public": {
            "type": "directory",
            "path": "/home/worker/artifacts",
            "expires": " ... "
          }
        },
        "command": [ ... ],
        "maxRunTime": 3600
      }
    }

The test task then uses the $TC_PARENT_TASK_ID environment variable to determine the correct download URL, unpacks the build and starts running tests. Making artifacts automatically available to subtasks, without having to pass the parent task ID and build a URL, will hopefully be added to Taskcluster in the future.

    # Fetch build artifact.
    curl --retry 3 -Lo dist.tar.bz2 https://queue.taskcluster.net/v1/task/$TC_PARENT_TASK_ID/artifacts/public/dist.tar.bz2
    tar xvjf dist.tar.bz2

    # Run tests.
    cd nss/tests && ./all.sh
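If the rest of your tooling is written in Node, the same URL can be built there. This is a minimal sketch: the `artifactUrl` helper is hypothetical, and the URL pattern is copied from the curl command above.

```javascript
// Base URL of the Taskcluster queue, as used in the curl command above.
const QUEUE_BASE = "https://queue.taskcluster.net/v1";

// Hypothetical helper: build the download URL for a public artifact
// left behind by the parent (build) task.
function artifactUrl(parentTaskId, name) {
  if (!parentTaskId) {
    throw new Error("TC_PARENT_TASK_ID is not set");
  }
  return `${QUEUE_BASE}/task/${parentTaskId}/artifacts/public/${name}`;
}

// Only print a URL when the variable is present, e.g. inside a test task.
if (process.env.TC_PARENT_TASK_ID) {
  console.log(artifactUrl(process.env.TC_PARENT_TASK_ID, "dist.tar.bz2"));
}
```

Failing early when the variable is missing makes a misconfigured test task easier to spot than a 404 from the download.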

Writing decision tasks

Specifying task dependencies in your .taskcluster.yml file is unfortunately not possible at the moment. Even though the set of builds and tasks you want may be static you can’t create the necessary links without knowing the random task IDs assigned to them.

Your only option is to create a so-called decision task. A decision task is the only task defined in your .taskcluster.yml file and started after you push a new changeset. It will leave an artifact in the form of a JSON file that Taskcluster picks up and uses to extend the task graph, i.e. schedule further tasks with appropriate dependencies. You can use whatever tool or language you like to generate these JSON files, e.g. Python, Ruby, Node, …

    task:
      payload:
        image: "ttaubert/nss-ci:0.0.21"
        maxRunTime: 1800
        artifacts:
          public:
            type: "directory"
            path: "/home/worker/artifacts"
            expires: "7 days"
        graphs:
          - /home/worker/artifacts/graph.json

All task graph definitions including the Node.JS build script for NSS can be found in the automation/taskcluster/graph directory. Depending on the needs of your project you might want to use a completely different structure. All that matters is that in the end you produce a valid JSON file. Slightly more intelligent decision tasks can be used to implement features like try syntax.
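To illustrate why generating the graph solves the dependency problem, here is a minimal Node sketch of a decision script. It is not the NSS build script: the field names (`tasks`, `taskId`, `requires`, `task`) are assumptions about the graph format and should be checked against the Taskcluster documentation, and `makeTaskId`, the suite names, `bin/run_tests.sh`, and the `SUITE` variable are hypothetical.

```javascript
const crypto = require("crypto");

// Hypothetical stand-in for Taskcluster's random task IDs;
// any unique string serves for illustration.
function makeTaskId() {
  return crypto.randomBytes(16).toString("hex");
}

// Build a graph with one build task and one test task per suite.
// The "requires" links are possible because the IDs are generated here.
function buildGraph(image, suites) {
  const buildId = makeTaskId();
  const build = {
    taskId: buildId,
    task: { payload: { image, command: ["/bin/bash", "-c", "bin/build.sh"] } },
  };
  const tests = suites.map((suite) => ({
    taskId: makeTaskId(),
    requires: [buildId],
    task: {
      payload: {
        image,
        // Tell the test task which build to download its artifact from.
        env: { TC_PARENT_TASK_ID: buildId, SUITE: suite },
        command: ["/bin/bash", "-c", "bin/run_tests.sh"],
      },
    },
  }));
  return { tasks: [build, ...tests] };
}

// Print the graph; a decision task would write this to its graph.json artifact.
console.log(JSON.stringify(buildGraph("ttaubert/nss-ci:0.0.21", ["ssl", "cipher"]), null, 2));
```

Because the script picks the build task's ID itself, it can reference that ID in every test task, which is exactly what a static configuration file cannot do.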

mozilla-taskcluster for Mercurial projects

If you have all of the above working with GitHub but your main repository is hosted on hg.mozilla.org you will want to have Mercurial spawn decision tasks when pushing.

The Taskcluster team is working on making .taskcluster.yml files work for Mozilla-hosted Mercurial repositories too, but while that work isn’t finished yet you have to add your project to mozilla-taskcluster. mozilla-taskcluster will listen for pushes and then kick off tasks just like the WebHook.

TreeHerder Configuration

A CI is no CI without a proper dashboard. That’s the role of TreeHerder at Mozilla. Add your project to the end of the repository.json file and create a new pull request. It will usually take a day or two after merging until your change is deployed and your project shows up in the dashboard.

TreeHerder gets the per-task configuration from the task definition. You can configure the symbol, the platform and collection (i.e. row), and other parameters. Here’s the configuration data for the green B at the start of the fifth row of the image at the top of this post:

    {
      "created": " ... ",
      "deadline": " ... ",
      "payload": { ... },
      "extra": {
        "treeherder": {
          "jobKind": "build",
          "symbol": "B",
          "build": {
            "platform": "linux64"
          },
          "machine": {
            "platform": "linux64"
          },
          "collection": {
            "debug": true
          }
        }
      }
    }

IRC and email notifications

Taskcluster is a very modular system and offers many APIs. It’s built mostly with Node, and thus there are many Node libraries available to interact with the many parts. The communication between those is handled by Pulse, a managed RabbitMQ cluster.

The last missing piece we wanted is an IRC and email notification system, a bot that notifies about failures on IRC and sends emails to all parties involved. It was a piece of cake to write nss-tc that uses Taskcluster Node.JS libraries and Mercurial JSON APIs to connect to the task queue and listen for task definitions and failures.

A rough overview

I could have probably written a detailed post for each of the steps outlined here but I think it’s much more helpful to start with an overview of what’s needed to get the CI for a project up and running. Each step and each part of the system is hopefully more obvious now if you haven’t had too much interaction with Taskcluster and TreeHerder so far.

Thanks to the Taskcluster team, especially John Ford, Greg Arndt, and Pete Moore! They helped us pull this off in a matter of weeks and besides Linux builds and tests we already have Windows tasks, static analysis, ASan+LSan, and are in the process of setting up workers for ARM builds and tests.

https://timtaubert.de/blog/2016/08/continuous-integration-for-nss/

|

|

Soledad Penades: Web Animations: why and when to use them, and some demos we wrote |

There’s been much talk of Web Animations for a long time but it wasn’t quite clear what that meant exactly, or even when could we get that new thing working in our browsers, or whether we should actually care about it.

A few weeks ago, Belén, Sam and I met to brainstorm and build some demos to accompany the Firefox 48 release with early support for Web Animations. We had been asked on very short notice, but we reckoned we could build at least a handful of them.

We tried to look at this from a different, pragmatic perspective.

Most graphics API demos tend to be very technical and abstract, which tends to excite graphics people but means nothing to web developers. The problem web developers face is not making circles bounce randomly on the screen; it is displaying and formatting text on screen, and they have to write CSS and work with designers who want to play a bit with the CSS code to give it as much ‘swooosh’ as they envision. Any help we can provide to these developers so they can evaluate new APIs quickly and decide if it’s going to make their work easier is going to be highly appreciated.

So our demos focused less on moving DIVs around and more on delivering value, either to developers by offering side-by-side comparisons (JS vs CSS code and JS vs CSS performance) or to end users with an example of a style guide where designers can experiment with the animation values in situ, and another example where the user can adjust the amount of animation to their liking. We see this as a way of using the API to improve accessibility.

You can also download all the demos from the repo:

    git clone https://github.com/mozdevs/Animation-examples.git

And should you use CSS or should you use JS? The answer is: it depends.

Let’s look at the same animation defined with CSS and JS, taken straight from the CSS vs JS demo:

CSS:

    .rainbow {
      animation: rainbow 2s alternate infinite;
    }

    @keyframes rainbow {
      0% { background: #ff004d; }
      20% { background: #ff77ab; }
      50% { background: #00e756; }
      80% { background: #29adff; }
      100% { background: #ff77ab; }
    }

JavaScript:

    let el = document.querySelector('.rainbow');
    el.animate([
      { background: '#ff004d', offset: 0 },
      { background: '#ff77ab', offset: 0.20 },
      { background: '#00e756', offset: 0.5 },
      { background: '#29adff', offset: 0.80 },
      { background: '#ff77ab', offset: 1 }
    ], {
      duration: 2000,
      direction: 'alternate',
      iterations: Infinity
    });

As you can see, this is fairly similar.

CSS’s syntax for animations has been with us for a while. We know how it works, there is good support across browsers and there is tooling to generate keyframes. But once the keyframes are defined, there’s no going back. Modifying them on the fly is difficult unless you start messing with strings to build styles.

This is where the JS API proves its worth. It can tap into live animations, and it can create new animations. And you can create animations that are very tedious to define in CSS, even using preprocessors, or just plain impossible to predict: imagine that you want custom colour animations starting from an unknown colour A and going to another unknown colour B. Trying to pregenerate all these unknown transitions from the beginning would result in an enormous CSS file which is hard to maintain, and would not even be as accurate as a newly created animation could be.
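The "unknown colour A to unknown colour B" case could look roughly like this. It is a sketch, not code from the demos: the `colourAnimation` helper and the randomly chosen target colour are assumptions made for illustration, while `Element.animate()` is the same call used in the example above.

```javascript
// Build keyframes and timing for a colour animation whose endpoints
// are only known at runtime.
function colourAnimation(from, to, durationMs) {
  return {
    keyframes: [
      { background: from, offset: 0 },
      { background: to, offset: 1 },
    ],
    // Keep the final colour once the animation finishes.
    options: { duration: durationMs, fill: "forwards" },
  };
}

// In a browser, animate from the element's current colour to a random one.
if (typeof document !== "undefined") {
  const el = document.querySelector(".rainbow");
  if (el) {
    const current = getComputedStyle(el).backgroundColor;
    const target = `hsl(${Math.floor(Math.random() * 360)}, 80%, 60%)`;
    const { keyframes, options } = colourAnimation(current, target, 1000);
    el.animate(keyframes, options);
  }
}
```

Nothing has to be pregenerated: the two endpoints are read or computed at the moment the animation is created.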

There’s another advantage of using the Web Animations API versus animating with a library such as tween.js: the API is hardware accelerated and also runs on the compositor thread, which means incredibly smooth performance and pretty much perfect timing. When you animate DOM elements using JavaScript you have to create lots of strings to set the style property of these DOM elements. This will eventually trigger a pretty massive garbage collection, which will make your animations pause for a bit: the dreaded jank!

Finally, another cool feature of these native animations is that you can inspect them using the browser developer tools. And you can scrub back and forth, change their speed to slow them down or make them faster, etc. You cannot tap into JS-library-defined animations (e.g. tween.js) unless there’s support from the library, such as an inspector.

Essentially: Web Animations cheat by running at browser level. This is the best option if you want to animate DOM elements. For interpolating between plain values (e.g. a three.js object position vector x, y, z properties) you should use tween.js or similar.

If you want to know more here’s an article at Hacks talking about compositors, layers and other internals, and inspecting with DevTools. And with plenty of foxes!

The full API isn’t implemented in any browser yet, but both Firefox and Chrome stable have initial support already. There’s no excuse: start animating things already! I’m also excited that both browsers shipped this early support more or less simultaneously—makes things so much easier for developers.

With mega thanks to Belén for pushing this forward and to Sam for going out of his internship way to help us with this.

|

|

Myk Melez: A Mozilla App Outside Central |

After forking gecko-dev again for an experiment, I wondered if there was a better way to create a Mozilla app outside the mozilla-central repository. After all, that repository, mirrored as mozilla/gecko-dev on GitHub, is huge. And forking the same repo twice to the same GitHub account (or organization) requires cumbersome workarounds (and workarounds for the workarounds in some cases).

Plus, Mozilla apps don’t necessarily need to modify Gecko. But even when they do, they could share a single fork, with branches for each app, if there was a way to create a Mozilla app that imported gecko-dev as a dependency without having to live inside a fork of gecko-dev itself.

So I created an example app that does just that. It happens to use a shallow Git submodule to import gecko-dev, but it could use a Git subtree or any other dependency management tool.

The build process touches only one file in the gecko-dev subdirectory: it creates a symlink to the parent (app) directory to work around the limitation that Mozilla app directories must be subdirectories of gecko-dev.

To try it for yourself, clone the mykmelez/mozilla-app repo and build it:

    git clone --recursive --shallow-submodules https://github.com/mykmelez/mozilla-app
    cd mozilla-app/
    ./mach build && ./mach run

You should see a window like this:

And your clone will contain the app’s files, the gecko-dev submodule directory, and an obj directory containing the built app:

    > ls
    LICENSE app.mozbuild components gecko-dev modules moz.configure obj
    app branding confvars.sh mach moz.build mozconfig

Caveats:

- The app builds Gecko from source, so you still need Mozilla build prerequisites. On some platforms, you can install these via ./mach bootstrap.

- I’ve only tested it on Mac, but it probably works on Linux, and it probably doesn’t work on Windows (because of the symlink).

- Although the Mozilla application framework is mature and robust, it isn’t explicitly generalized for use beyond Firefox anymore, and it could change or break at any time.

https://mykzilla.org/2016/08/09/a-mozilla-app-outside-central/

|

|

Chris Cooper: RelEng & RelOps Weekly highlights - August 8, 2016 |

Two of our interns finished up their terms recently. The Toronto office feels a little emptier without them, or at least that’s how I imagine it since I don’t actually work in Toronto.

Modernize infrastructure:

Trunk-based Fennec debug builds+tests have been disabled in buildbot and moved to tier 1 in Taskcluster

Improve Release Pipeline:

Mihai finished migrating release sanity to Release Promotion. Release sanity helps to catch release issues that are susceptible to human process error. It has been live since 48.0b9, and has already helped catch a few issues that could have delayed the releases!

Improve CI Pipeline:

Rob created an automated build using taskcluster-github to create new Taskcluster Windows AMIs whenever the workertype manifest (or OpenCloudConfig) is updated.

David Burns posted a status update to dev.platform about the ongoing build system improvements.

Operational:

Balrog has been successfully migrated to the new Docker-based CloudOps infrastructure, which will make development and maintenance much easier.

We’ve ended the experiment of providing Firefox code mirrors on bitbucket. This will allow us to shut down some legacy infrastructure shortly.

We’ve shut down legacy vcs-sync!

Nearly everything that was indexed on MXR has been indexed on DXR! A few stragglers remain, but are very low-use trees. Additionally, mxr.mozilla.org now brings up an interstitial page which offers a link to DXR instead of the hardhat.

Many betas died to bring you e10s.

Release:

We’ve released Firefox 48 which brings many long-awaited features to our users.

Outreach

Our amazing interns Francis Kang and Connor Sheehan have finished their tenure with us. They did grace us with their intern presentations before they left, which you can replay on Air Mozilla:

- Diving in to the unknown (Francis) - https://air.mozilla.org/diving-into-the-unknown/

- Release Promotion: Keeping tabs on automation (Connor) - https://air.mozilla.org/release-promotion-keeping-tabs-on-automation/

Hiring:

We’ve filled the two release engineering positions we had advertised. It felt like we had an embarrassment of riches at some points during that hiring process. Thanks to everyone who took the time to apply.

See you next week!

|

|

Air Mozilla: Mozilla Weekly Project Meeting, 08 Aug 2016 |

The Monday Project Meeting

https://air.mozilla.org/mozilla-weekly-project-meeting-20160808/

|

|

Mozilla Security Blog: MWoS 2015: Let’s Encrypt Automation Tooling |

The Mozilla Winter of Security of 2015 has ended, and the participating teams of students are completing their projects.

The Certificate Automation tooling for Let’s Encrypt project wrapped up this month, having produced an experimental proof-of-concept patch for the Nginx webserver to tightly integrate the ACME automated certificate management protocol into the server operation.

The MWoS team, my co-mentor Richard Barnes, and I would like to thank Klaus Krapfenbauer, his advisor Martin Schmiedecker, and the Technical University of Vienna for all the excellent research and work on this project.

Below is Klaus’ end-of-project presentation on AirMozilla, as well as further details on the project.

MWoS Let’s Encrypt Certificate Automation presentation on AirMozilla

Developing an ACME Module for Nginx

Author: Klaus Krapfenbauer

Note: The module is an incomplete proof-of-concept, available at https://github.com/mozilla/mwos-letsencrypt-2015

The 2015-2016 Mozilla Winter of Security included a project to implement an ACME client within a well-known web server, to show the value of automated HTTPS configuration when used with Let’s Encrypt. Projects like Caddy Server showed the tremendous ease-of-use that could be attained, so for this project we sought to patch-in such automation to a mainstay web server: Nginx.

The goal of the project is to build a module for a web server to make securing your web site even easier. Instead of configuring the web server, getting the certificate (e.g. with the Let’s Encrypt Certbot) and installing the certificate on the web server, you just need to configure your web server. The rest of the work is done by the built-in ACME module in the web server.

Nginx

This project didn’t specify which particular web server we should develop on. We evaluated several, including Apache, Nginx, and Stunnel. Since the goal is to help as many people as possible in securing their web sites we narrowed to the two most widely-used: Nginx and Apache. Ultimately, we decided to work with Nginx since it has a younger code base to develop with.

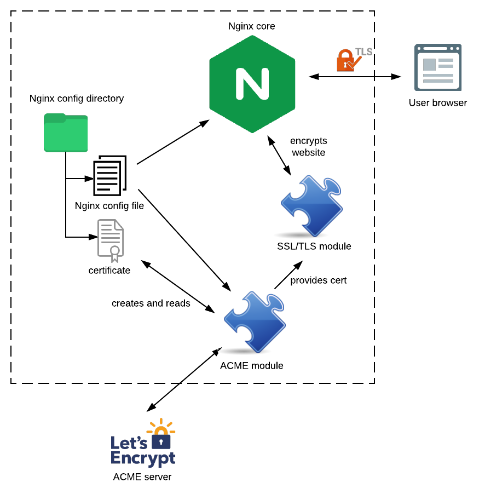

Nginx has a module system with different types of modules for different purposes. There are load-balancer modules, which pass traffic to multiple backend servers; filter modules, which transform the data of a website (for example, encrypting it, as the SSL/TLS module does); and handler modules, which create the content of a web request (e.g. the HTTP handler loads the HTML file from disk and serves it). In addition to their purpose, the module types also differ in how they hook into the server core, which makes the choice crucial when you start to implement an Nginx module. In our case none of the types were suitable, which introduced some difficulties, discussed later.

The ACME module

The Nginx module should be a replacement of the traditional workflow involving the ACME Certbot. Therefore the features of the module should resemble the features of the Certbot. This includes:

- Generate and store a key-pair

- Register an account on an ACME server

- Create an authorization for a domain

- Solve the HTTP challenge for the domain authorization

- At a later date, support the other challenge types

- Retrieve the certificate from the ACME server

- Renew a certificate

- Configure the Nginx SSL/TLS module to use the certificate

To provide the necessary information for all the steps in the ACME protocol, we introduced new Nginx configuration directives:

- A directive to activate the module

- Directive(s) for the recovery contact of the ACME account (optional)

  - An array of URIs like “mailto:” or “tel:”

Everything else is gathered from the default Nginx directives. For example, the domain for which the certificate is issued is taken from the Nginx configuration directive “server_name”.
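To make the idea concrete, here is a minimal sketch of reading the domains from “server_name” directives. It is only an illustration: the real module works in C on Nginx’s already-parsed configuration, whereas this sketch uses a regular expression on raw text, and the function name `server_names` and the sample configuration are assumptions made for the example.

```python
import re


def server_names(config_text):
    """Collect every name listed in server_name directives.

    Hypothetical helper for illustration; the actual module reads these
    values from Nginx's parsed configuration rather than from raw text.
    """
    names = []
    # A directive is "server_name", then whitespace-separated names, then ";".
    for match in re.finditer(r"\bserver_name\s+([^;]+);", config_text):
        names.extend(match.group(1).split())
    return names


# Assumed sample configuration, modeled on the example in this post.
EXAMPLE = """
server {
    listen 443 ssl;
    server_name example.com www.example.com;
    acme;
}
"""

print(server_names(EXAMPLE))
```

Each name found this way is a candidate domain for the certificate request.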

As the ACME module is an extension of the Nginx server itself, it’s a part of the software and therefore uses the Nginx config file for its own configuration and stores the certificates in the Nginx config directory. The ACME module communicates with the ACME server (e.g. Let’s Encrypt, but it could be any other server speaking the ACME protocol) for gathering the certificate, then configures the SSL/TLS module to use this certificate. The SSL/TLS module then does the encryption work for the website’s communication to the end user’s browser.

Let’s look at the workflow of setting up a secure website. In a world without ACME, anyone who wanted to set up an encrypted website had to:

- Create a CSR (certificate signing request) with all the information needed

- Send the CSR over to a CA (certificate authority)

- Pay the CA for getting a signed certificate

- Wait for their reply containing the certificate (this could take hours)

- Download the certificate and put it in the right place on the server

- Configure the server to use the certificate

With the ACME protocol and the Let’s Encrypt CA you just have to:

- Install an ACME client

- Use the ACME client to:

  - Enter all your information for the certificate

  - Get a certificate

  - Automatically configure the server

That’s already a huge improvement, but with the ACME module for Nginx it’s even simpler. You just have to:

- Activate the ACME module in the server’s configuration

Pretty much everything else is handled by the ACME module. So it does all the steps the Let’s Encrypt client does, but fully automated during the server startup. This is how easy it can and should be to encourage website admins to secure their sites.

The minimal configuration work for the ACME module is to just add the “acme” directive to the server context in the Nginx config for which you would like to activate it. For example:

    …
    http {
        …
        server {
            listen 443 ssl;
            server_name example.com;
            acme;
            …
            …
            location / {
                …
            }
        }
    }
    …

Experienced challenges

Designing and developing the ACME module was quite challenging.

As mentioned earlier, there are different types of modules which enhance different portions of the Nginx core server. The default Nginx module types are: handler modules (which create content on their own), filter modules (which convert website data – like the SSL/TLS module does) and load-balancer modules (which route requests to backend servers). Unfortunately, the ACME module and its inherent workflow do not fit any of these types. Our module breaks these conventions: it has its own configuration directives, and requires hooks into both the core and other modules. Nginx’s module system was not designed to accommodate our module’s needs, so we had very limited choice about when we could perform the ACME protocol communication.

The ACME module serves to configure the existing SSL/TLS module, which performs the actual encryption of the website. Our module needs to control the SSL/TLS module to some degree in order to provide the ACME-retrieved encryption certificates. Unfortunately, the SSL/TLS module checks for the existence and validity of the certificates during the Nginx configuration parsing phase, while the server is starting. This means the ACME module must complete its tasks before that check runs, that is, before configuration parsing finishes. Because of those limitations, we decided to handle all the certificate gathering at the moment the “acme” configuration directive is parsed during server startup. After getting the certificates, the ACME module then updates the in-memory configuration of the SSL/TLS module to use those new certificates.
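The ordering constraint can be pictured with a toy model. This is a simplified sketch, not how Nginx is implemented: directives are handled one by one, the “acme” handler fetches the certificate on the spot, and a stand-in for the SSL/TLS module’s check runs once parsing ends. All names here (`load_server_block`, `fetch_certificate`, `ConfigError`) are hypothetical.

```python
class ConfigError(Exception):
    """Raised when the toy SSL check finds no certificate."""


def load_server_block(directives, fetch_certificate):
    """Process (name, args) directives in order, as a toy config parser.

    fetch_certificate is a caller-supplied stand-in for the whole ACME
    exchange; it receives the server name and returns a certificate.
    """
    state = {"server_name": None, "ssl": False, "certificate": None}
    for name, args in directives:
        if name == "listen":
            state["ssl"] = "ssl" in args
        elif name == "server_name":
            state["server_name"] = args[0]
        elif name == "acme":
            # Certificate gathering happens here, while parsing is still
            # in progress, so the result exists before the check below.
            # This relies on server_name appearing before acme.
            state["certificate"] = fetch_certificate(state["server_name"])
    # Stand-in for the SSL/TLS module's validation during startup.
    if state["ssl"] and state["certificate"] is None:
        raise ConfigError("ssl enabled but no certificate configured")
    return state


block = [("listen", ["443", "ssl"]), ("server_name", ["example.com"]), ("acme", [])]
state = load_server_block(block, lambda domain: "certificate for " + domain)
print(state["certificate"])
```

Without the “acme” directive the same block fails the check, which mirrors why the certificate work cannot simply be postponed until after startup.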

Another architectural problem arose when implementing the ACME HTTP challenge-response. To authorize a domain using the ACME HTTP challenge, the server needs to respond with a particular token at a well known URL path in its domain. Basically, it must publish this token like a web server publishes any other site. Unfortunately, at the time the ACME module is processing, Nginx has not yet started: There’s no web server. If the ACME module exits, permitting web server functions to begin (and keeping in mind the SSL/TLS module certificate limitations from before), there’s no simple mechanism to resume the ACME functions later. Architecturally, this makes sense for Nginx, but it is inconvenient for this project. Faced with this dilemma, for the purposes of this proof-of-concept, we decided to launch an independent, tiny web server to service the ACME challenge before Nginx itself properly starts.
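As a rough illustration of such a tiny challenge server, the sketch below uses Python’s standard library rather than the C code of the module. It assumes the token and the expected response (the key authorization) have already been obtained from the ACME server; the function names and the default port 8080 are choices made for this example, and a real HTTP challenge is validated on port 80.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

# Well-known path prefix under which the ACME HTTP challenge is published.
CHALLENGE_PREFIX = "/.well-known/acme-challenge/"


def challenge_response(path, token, key_authorization):
    """Return (status, body) for a requested path."""
    if path == CHALLENGE_PREFIX + token:
        return 200, key_authorization.encode("ascii")
    return 404, b""


def make_handler(token, key_authorization):
    """Build a request handler bound to one challenge (hypothetical helper)."""

    class ChallengeHandler(BaseHTTPRequestHandler):
        def do_GET(self):
            status, body = challenge_response(self.path, token, key_authorization)
            self.send_response(status)
            self.send_header("Content-Type", "application/octet-stream")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, format, *args):
            pass  # keep the sketch quiet

    return ChallengeHandler


def serve_challenge_once(token, key_authorization, port=8080):
    """Answer a single request, then return so the real server can start."""
    server = HTTPServer(("", port), make_handler(token, key_authorization))
    try:
        server.handle_request()
    finally:
        server.server_close()
```

Calling `serve_challenge_once(token, key_authorization)` blocks until one request arrives, which matches the idea of finishing the challenge before Nginx begins serving.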

Conclusion

As discussed, the limitations of an Nginx module prompted some suboptimal architectural design decisions. As in many software projects, the problem is that we want something from the underlying framework which it wasn’t designed to do. The current architectural design of the ACME module should be considered a proof-of-concept.

There are potential changes that would improve the architecture of the module and the communication between the Nginx core, the SSL/TLS module and the ACME module. These changes, of course, have pros and cons which merit discussion.

One change would be deferring the retrieval of the certificate to a time after the configuration is parsed. This would require spoofing the SSL/TLS module with a temporary certificate until the newly retrieved certificate is ready. This is a corner-case issue that arises just for the first server start when there is no previously retrieved certificate already stored.

Another change is the challenge-response: A web server inside a web server (whether with a third party library or not) is not clean. Therefore perhaps the TLS-SNI or another challenge type in the ACME protocol could be more suitable, or perhaps there is some method to start Nginx while still permitting the module to continue work.

Finally, the communication to the SSL/TLS module is very hacky.

Current status of the project & future plans

The current status of the module can be roughly described as a proof-of-concept in a late development stage. The module creates an ephemeral key-pair, registers with the ACME server, requests the authentication challenge for the domain and starts to answer the challenge. As the proof of concept isn’t finished yet, we intend to carry on with the project.

Many thanks

This project was an exciting opportunity to help realize the vision of securing the whole web. Personally, I’d like to give special thanks to J.C. Jones and Richard Barnes from the Mozilla Security Engineering Team who accompanied and supported me during the project. Also special thanks to Martin Schmiedecker, my professor and mentor at SBA Research at the Vienna University of Technology, Austria. Of course I also want to thank the whole Mozilla organization for holding the Mozilla Winter of Security and enabling students around the world to participate in some great IT projects. Last but not least, many thanks to the Let’s Encrypt project for allowing me to participate and play a tiny part in such a great security project.

https://blog.mozilla.org/security/2016/08/08/mwos-2015-lets-encrypt-automation-tooling/

|

|

Michael Kaply: First Beta of e10s CCK2 is Available |

The first version of the CCK2 Wizard that supports e10s is available. This is not production ready, so please don’t use it for production.

It has full backwards compatibility to Firefox 31, so you don’t need to use different CCK2 Wizards for older Firefox versions. Assuming there are no major bug reports, this will be released officially at the end of the week.

Please report bugs on GitHub. Support issues go to cck2.freshdesk.com.

https://mike.kaply.com/2016/08/08/first-beta-of-e10s-cck2-is-available/

|

|

Matjaz Horvat: Improving string filters |

A new filter is available in Pontoon that allows you to search for translations submitted within a specified time range. Do you want to review all changes since the last merge day or check out work from the last week? It’s now easier than ever.

The time range can be selected using manual input, the calendar widget, or a few predefined shortcuts. Perhaps more conveniently, you can select it on the chart that displays translation submissions over time, so you aren’t shooting in the dark while defining the range.

With the recent additions to string filters, we’ve also slightly redesigned the filter panel to make it more structured. Translation counts are now shown as well, so you don’t need to apply a filter only to learn there are no matches.

We have more plans for improving filters in the future, most notably with the ability to combine multiple filters, so that you could e.g. display all suggestions submitted yesterday by Joe.
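Combining filters can be thought of as requiring every individual filter to match. The sketch below is purely illustrative and is not Pontoon’s code: the `Translation` record, the filter functions, and the reading of “suggestion” as an unapproved translation are all assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Translation:
    """Hypothetical record; not Pontoon's actual data model."""
    author: str
    submitted: datetime
    approved: bool


def by_author(name):
    return lambda t: t.author == name


def in_time_range(start, end):
    return lambda t: start <= t.submitted <= end


def is_suggestion(t):
    # Assumption for this sketch: a suggestion is an unapproved translation.
    return not t.approved


def combine(*filters):
    """A translation matches only if every filter accepts it."""
    return lambda t: all(f(t) for f in filters)


translations = [
    Translation("Joe", datetime(2016, 8, 7, 10, 0), approved=False),
    Translation("Joe", datetime(2016, 8, 1, 9, 0), approved=False),
    Translation("Ann", datetime(2016, 8, 7, 12, 0), approved=False),
    Translation("Joe", datetime(2016, 8, 7, 15, 0), approved=True),
]

# "All suggestions submitted yesterday by Joe", taking 2016-08-07 as yesterday.
yesterday = in_time_range(datetime(2016, 8, 7), datetime(2016, 8, 8))
wanted = combine(is_suggestion, yesterday, by_author("Joe"))
matches = [t for t in translations if wanted(t)]
print(len(matches))
```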

Coming up next

Our current focus is dashboard redesign, which will add some features currently only available on external dashboards. For more details on what’s currently in the oven, check out our roadmap. If you’d like to see a specific feature come to life early, get involved!

|

|