
Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated whenever that feed is updated. It may not match the content of the original page. This syndicated feed was created automatically at the request of readers of this RSS feed.


Manish Goregaokar: Starting at Mozilla

I got a job!

I’m now working at Mozilla as a Research Engineer, on Servo.

I started two weeks ago, and so far I’m really enjoying it! I feel quite lucky to get to work on an open source project, with an amazing and helpful team. Getting to do most of my work in Rust is great, too :)

So far I’ve been working on the network stack (specifically, “making fetch happen”), and I’ll probably be spending time on DOM things as well.

Really excited to see how this goes!

http://manishearth.github.io/blog/2016/06/04/starting-at-mozilla/


About:Community: Firefox 47 new contributors

With the release of Firefox 47, we are pleased to welcome the 41 developers who contributed their first code change to Firefox in this release, 33 of whom were brand new volunteers! Please join us in thanking each of these diligent and enthusiastic individuals, and take a look at their contributions:

http://blog.mozilla.org/community/2016/06/04/firefox-47-new-contributors/


Kim Moir: Submissions for Releng 2016: due by July 1, 2016

Picture by howardignatius - Creative Commons Attribution-NonCommercial-NoDerivs 2.0 Generic (CC BY-NC-ND 2.0) https://www.flickr.com/photos/howardignatius/14482954049/sizes/l

http://relengofthenerds.blogspot.com/2016/06/submissions-for-releng-2016-due-by-july.html


Support.Mozilla.Org: What’s Up with SUMO – 3rd June

Hello, SUMO Nation!

Due to a technical glitch (someone didn’t remember how to press the right buttons here, but don’t look at me… I’m innocent! Almost… ;-)) we’re a day late, but we are here, and… so is the latest & greatest news from the world of SUMO!

Welcome, new contributors!

Contributors of the week

- The forum supporters who helped users out for the last week.

- The writers of all languages who worked on the KB for the last week.

We salute you!

Most recent SUMO Community meeting

- You can read the notes here and see the video on our YouTube channel and at AirMozilla.

The next SUMO Community meeting…

- …is happening on WEDNESDAY the 8th of June – join us!

- Reminder: if you want to add a discussion topic to the upcoming meeting agenda:

- Start a thread in the Community Forums, so that everyone in the community can see what will be discussed and voice their opinion here before Wednesday (this will make it easier to have an efficient meeting).

- Please do so as soon as you can before the meeting, so that people have time to read, think, and reply (and also add it to the agenda).

- If you can, please attend the meeting in person (or via IRC), so we can follow up on your discussion topic during the meeting with your feedback.

Community

- PLATFORM UPDATE TIME! We are currently testing Lithium’s capabilities. You can learn more about Lithium here. Please note that this does not mean we have reached a final decision regarding the future of SUMO’s technical backend. We will be sharing more information with you shortly. If you’re interested in playing with a sandbox environment, let Michal know.

- Mozillians in Jakarta can expect a SUMO event coming up sometime soon (thanks to Kelimutu!)

- Have you read the first SUMO Release Report?

- Ongoing reminder #1: if you think you can benefit from getting a second-hand device to help you with contributing to SUMO, you know where to find us.

- Ongoing reminder #2: we are looking for more contributors to our blog. Do you write on the web about open source, communities, SUMO, Mozilla… and more? Do let us know!

- Ongoing reminder #3: want to know what’s going on with the Admins? Check this thread in the forum.

Social

- Ongoing reminder: We have a training out there for all those interested in Social Support – talk to Madalina or Costenslayer on #AoA (IRC) for more information.

Support Forum

- We are getting ready for the version 47 release of Firefox for Desktop and Mobile next week! Are there any common responses, upcoming bugs, or features that you, the beta users, have a head start on? We want to know about it!

- Remember, if you’re new to the support forum, come over and say hi!

Knowledge Base & L10n

- New goals for all localizers in your dashboards!

- Reminder: Firefox for Android v47 documents are ready – get localizing!

- Reminder: L10n hackathons everywhere! Find your people and get organized!

Firefox

- for Android

- Version 46 support discussion thread.

- Reminder: version 47 will stop supporting Gingerbread. High time to update your Android installations. For all those who are already sad, a little teaser of what’s coming up (if you upgrade your Android OS, that is), aside from the usual Firefox mobile goodness:

- you’ll be able to turn off web fonts,

- the favicons will be removed from the URL bar to prevent spoofing

- Version 48 articles will be coming after June 18, courtesy of Joni!

- for Desktop

- For the main thread on our forums about Firefox 46, click here.

- Version 47 is coming next week! Release highlights include:

- Synced tabs will be highlighted,

- Silverlight is no longer required for Netflix, nor Flash for YouTube,

- VP9 & other assorted awesomeness,

- …but still no mandatory signed plug-in checks, bummer!

- for iOS

- Firefox for iOS 4.0 IS HERE!

- Firefox for iOS 5.0 should be with us in approximately 4 weeks! It should be out as early as June 21 (subject to Apple’s approval) and Joni is writing English articles for it already. Release highlights include bidirectional bookmark sync!

That’s it for this week! To round it all off nicely, go ahead and watch Kevin Kelly’s visit to Mozilla, during which he talked about the near future trends in technology. See you next week!

https://blog.mozilla.org/sumo/2016/06/03/whats-up-with-sumo-3rd-june/


Anjana Vakil: Warming up to Mercurial

When it comes to version control, I’m a Git girl. I had to use Subversion a little bit for a project in grad school (not distributed == not so fun). But I had never touched Mercurial until I decided to contribute to Mozilla’s Marionette, a testing tool for Firefox, for my Outreachy application. Mercurial is the main version control system for Firefox and Marionette development,[1] so this gave me a great opportunity to start learning my way around the hg. Turns out it’s really close to Git, though there are some subtle differences that can be a little tricky. This post documents the basics and the trip-ups I discovered. Although there’s plenty of other info out there, I hope some of this might be helpful for others (especially other Gitters) using Mercurial or contributing to Mozilla code for the first time. Ready to heat things up? Let’s do this!

Getting my bearings on Planet Mercury

OK, so I’ve been working through the Firefox Onramp to install Mercurial (via the bootstrap script) and clone the mozilla-central repository, i.e. the source code for Firefox. This is just like Git; all I have to do is:

$ hg clone

(Incidentally, I like to pronounce the hg command “hug”, e.g. “hug clone”. Warm fuzzies!)

Cool, I’ve set foot on a new planet! …But where am I? What’s going on?

Just like in Git, I can find out about the repo’s history with hg log. Adding some flags makes this even more readable: I like to --limit the number of changesets (change-whats? more on that later) displayed to a small number, and show the --graph to see how changes are related. For example:

$ hg log --graph --limit 5

or, for short:

$ hg log -Gl5

This outputs something like:

@ changeset: 300339:e27fe24a746f
|\ tag: tip
| ~ fxtree: central
| parent: 300125:718e392bad42
| parent: 300338:8b89d98ce322
| user: Carsten "Tomcat" Book <cbook@mozilla.com>
| date: Fri Jun 03 12:00:06 2016 +0200
| summary: merge mozilla-inbound to mozilla-central a=merge
|
o changeset: 300338:8b89d98ce322
| user: Jean-Yves Avenard <jyavenard@mozilla.com>
| date: Thu Jun 02 21:08:05 2016 +1000
| summary: Bug 1277508: P2. Add HasPendingDrain convenience method. r=kamidphish
|
o changeset: 300337:9cef6a01859a
| user: Jean-Yves Avenard <jyavenard@mozilla.com>
| date: Thu Jun 02 20:54:33 2016 +1000
| summary: Bug 1277508: P1. Don't attempt to demux new samples while we're currently draining. r=kamidphish
|
o changeset: 300336:f75d7afd686e
| user: Jean-Yves Avenard <jyavenard@mozilla.com>
| date: Fri Jun 03 11:46:36 2016 +1000
| summary: Bug 1277729: Ignore readyState value when reporting the buffered range. r=jwwang
|
o changeset: 300335:71a44348d3b7
| user: Jean-Yves Avenard <jyavenard@mozilla.com>
~ date: Thu Jun 02 17:14:03 2016 +1000
  summary: Bug 1276184: [MSE] P3. Be consistent with types when accessing track buffer. r=kamidphish

Great! Now what does that all mean?

Some (confusing) terminology

Changesets/revisions and their identifiers

According to the official definition, a changeset is “an atomic collection of changes to files in a repository.” As far as I can tell, this is basically what I would call a commit in Gitese. For now, that’s how I’m going to think of a changeset, though I’m sure there’s some subtle difference that’s going to come back to bite me later. Looking forward to it!

Changesets are also called revisions (because two names are better than one?), and each one has (confusingly) two identifying numbers: a local revision number (a small integer), and a global changeset ID (a 40-digit hexadecimal hash, more like Git’s commit IDs, which hg log abbreviates to its first 12 digits). These are what you see in the output of hg log above in the format:

changeset: <revision number>:<changeset ID>

For example,

changeset: 300339:e27fe24a746f

is the changeset with revision number 300339 (its number in my copy of the repo) and changeset ID e27fe24a746f (its number everywhere).

Why the confusing double-numbering? Well, apparently because revision numbers are “shorter to type” when you want to refer to a certain changeset locally on the command line; but since revision numbers only apply to your local copy of the repo and will “very likely” be different in another contributor’s local copy, you should only use changeset IDs when discussing changes with others. But on the command line I usually just copy-paste the hash I want, so length doesn’t really matter. So… I’m just going to ignore revision numbers and always use changeset IDs, OK Mercurial? Cool.
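To make the two numbering schemes concrete, here is a toy model in Python (not Mercurial’s code). It assumes a simplified ID, a SHA-1 over the parent IDs and a description, whereas real changeset IDs also cover file contents and metadata; the names `changeset_id` and `LocalCopy` are hypothetical. Two copies receive the same changesets in a different order, so their local revision numbers disagree while the IDs match.

```python
import hashlib


def changeset_id(parent_ids, description):
    """Simplified global ID: SHA-1 over the parent IDs and a description.
    (Real Mercurial also hashes file contents and metadata.)"""
    h = hashlib.sha1()
    for parent in sorted(parent_ids):
        h.update(parent.encode())
    h.update(description.encode())
    return h.hexdigest()  # 40 hex digits; `hg log` prints the first 12


class LocalCopy:
    """One clone of a repository. A revision number is just the position
    at which a changeset arrived in *this* copy."""

    def __init__(self):
        self.order = []

    def receive(self, node):
        if node not in self.order:
            self.order.append(node)

    def rev(self, node):
        return self.order.index(node)


root = changeset_id([], "initial commit")
fix_a = changeset_id([root], "fix bug A")
fix_b = changeset_id([root], "fix bug B")

mine, theirs = LocalCopy(), LocalCopy()
for node in (root, fix_a, fix_b):
    mine.receive(node)
for node in (root, fix_b, fix_a):  # same changesets, pulled in another order
    theirs.receive(node)

print(mine.rev(fix_a), theirs.rev(fix_a))  # prints: 1 2
print(fix_a[:12])  # the same short ID in both copies
```

The revision number only tells you where a changeset sits in one particular clone, which is why it is useless when talking to another contributor.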

Branches, bookmarks, heads, and the tip

I know Git! I know what a “branch” is! - Anjana, learning Mercurial

Yeeeah, about that… Unfortunately, this term in Gitese is a false friend of its Mercurialian equivalent.

In the land of Gitania, when it’s time to start work on a new bug/feature, I make a new branch, giving it a feature-specific name; do a bunch of work on that branch, merging in master as needed; then merge the branch back into master whenever the feature is complete. I can make as many branches as I want, whenever I want, and give them whatever names I want.

This is because in Git, a “branch” is basically just a pointer (a reference or “ref”) to a certain commit, so I can add/delete/change that pointer whenever and however I want without altering the commit(s) at all. But on Mercury, a branch is simply a “diverged” series of changesets; it comes to exist simply by virtue of a given changeset having multiple children, and it doesn’t need to have a name. In the output of hg log --graph, you can see the branches on the left hand side: continuation of a branch looks like |, merging |\, and branching |/. Here are some examples of what that looks like.
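A rough sketch of the difference in Python, using a made-up history: in Mercurial an unnamed branch exists wherever a changeset has more than one child, while a Git branch is only a name pointing at a commit. The helpers `children_of`, `fork_points` and `merges` are hypothetical, for illustration only.

```python
from collections import defaultdict

# Made-up history: changeset -> list of parent changesets.
parents = {
    "c0": [],
    "c1": ["c0"],
    "c2": ["c1"],        # one line of work starts at c1...
    "c3": ["c1"],        # ...and so does another: c1 now has two children
    "c4": ["c2", "c3"],  # a merge joins the two lines again
}


def children_of(parents):
    kids = defaultdict(list)
    for node, ps in parents.items():
        for p in ps:
            kids[p].append(node)
    return kids


def fork_points(parents):
    """Changesets with more than one child: where an unnamed branch starts."""
    return sorted(n for n, ks in children_of(parents).items() if len(ks) > 1)


def merges(parents):
    """Changesets with two parents."""
    return sorted(n for n, ps in parents.items() if len(ps) > 1)


# In Git, a branch is just a movable name for a commit; adding, renaming or
# deleting one changes nothing in `parents`.
git_branches = {"master": "c2", "my-feature": "c3"}

print(fork_points(parents))  # prints: ['c1']
print(merges(parents))       # prints: ['c4']
```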

Confusingly, Mercurial also has named branches, which are intended to be longer-lived than branches in Git, and actually become part of a commit’s information; when you make a commit on a certain named branch, that branch is part of that commit forever. This post has a pretty good explanation of this.

Luckily, Mercurial does have an equivalent to Git’s branches: they’re called bookmarks. Like Git branches, Mercurial bookmarks are just handy references to certain commits. I can create a new one thus:

$ hg bookmark my-awesome-bookmark

When I make it, it will point to the changeset I’m currently on, and if I commit more work, it will move forward to point to my most recent changeset. Once I’ve created a bookmark, I can use its name pretty much anywhere I can use a changeset ID, to refer to the changeset the bookmark is pointing to: e.g. to switch to the bookmark I can do hg up my-awesome-bookmark. I can see all my bookmarks and the changesets they’re pointing to with the command:

$ hg bookmarks

which outputs something like:

loadvars 298655:fe18ebae0d9c
resetstats 300075:81795401c97b
* my-awesome-bookmark 300339:e27fe24a746f

When I’m on a bookmark, it’s “active”; the currently active bookmark is indicated with a *.
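As a mental model only, here is a hypothetical `ToyRepo` class in Python that mimics the bookmark behaviour described above: making a bookmark points it at the current changeset and makes it active, `hg up NAME` switches to it, and committing advances only the active bookmark. It is a simplification, not how Mercurial stores bookmarks.

```python
class ToyRepo:
    """Tiny model of bookmarks as described in the post (not hg's code)."""

    def __init__(self, root):
        self.parent = {root: None}
        self.current = root      # the changeset marked @ in `hg log --graph`
        self.bookmarks = {}      # bookmark name -> changeset
        self.active = None       # marked with * in `hg bookmarks`

    def bookmark(self, name):
        # like `hg bookmark NAME`: point NAME at the current changeset
        self.bookmarks[name] = self.current
        self.active = name

    def update(self, target):
        # like `hg up TARGET`; in this model, only a bookmark name activates
        if target in self.bookmarks:
            self.current = self.bookmarks[target]
            self.active = target
        elif target in self.parent:
            self.current = target
            self.active = None
        else:
            raise KeyError(f"unknown changeset or bookmark: {target}")

    def commit(self, node):
        self.parent[node] = self.current
        self.current = node
        if self.active is not None:
            self.bookmarks[self.active] = node  # only the active bookmark moves


repo = ToyRepo("c0")
repo.bookmark("loadvars")
repo.bookmark("my-awesome-bookmark")  # now the active one
repo.commit("c1")
print(repo.bookmarks)  # prints: {'loadvars': 'c0', 'my-awesome-bookmark': 'c1'}
```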

OK, maybe I was wrong about branches, but at least I know what the “HEAD” is! - Anjana, a bit later

Yeah, nope. I think of the “HEAD” in Git as the branch (or commit, if I’m in “detached HEAD” state) I’m currently on, i.e. a pointer to (the pointer to) the commit that would end up the parent of whatever I commit next. In Mercurial, this doesn’t seem to have a special name like “HEAD”, but it’s indicated in the output of hg log --graph by the symbol @. However, Mercurial documentation does talk about heads, which are just the most recent changesets on all branches (regardless of whether those branches have names or bookmarks pointing to them or not).[2] You can see all those with the command hg heads.

The head which is the most recent changeset, period, gets a special name: the tip. This is another slight difference from Git, where we can talk about “the tip of a branch”, and therefore have several tips. In Mercurial, there is only one. It’s labeled in the output of hg log with tag: tip.
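The two definitions can be checked against a small made-up history. In this Python sketch (hypothetical helpers, with the list index standing in for the local revision number), heads are the changesets that nothing lists as a parent, and the tip is simply the changeset with the highest revision number, which is therefore always one of the heads.

```python
# Made-up history; the list index plays the role of the local revision number.
history = [
    ("c0", []),
    ("c1", ["c0"]),
    ("c2", ["c1"]),
    ("c3", ["c1"]),  # c1 has two children: the history diverges here
    ("c4", ["c2"]),
]


def heads(history):
    """Changesets that no other changeset names as a parent."""
    has_child = {p for _, parents in history for p in parents}
    return [node for node, _ in history if node not in has_child]


def tip(history):
    """The changeset added most recently, i.e. the highest revision number."""
    return history[-1][0]


print(heads(history))  # prints: ['c3', 'c4']
print(tip(history))    # prints: c4
```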

Recap: Mercurial glossary

| Term | Meaning |
|---|---|
| branch | a “diverged” series of changesets; doesn’t need to have a name |
| bookmark | a named reference to a given commit; can be used much like a Git branch |
| heads | the last changesets on each diverged branch, i.e. changesets which have no children |
| tip | the most recent changeset in the entire history (regardless of branch structure) |

All the world’s a stage (but Mercury’s not the world)

Just like with Git, I can use hg status to see the changes I’m about to commit before committing with hg commit. However, what’s missing is the part where it tells me which changes are staged, i.e. “to be committed”. Turns out the concept of “staging” is unique to Git; Mercurial doesn’t have it. That means that when you type hg commit, any changes to any tracked files in the repo will be committed; you don’t have to manually stage them like you do with git add (hg add is only used to tell Mercurial to track a new file that it’s not tracking yet).

However, just like you can use git add --patch to stage individual changes to a certain file a la carte, you can use the now-standard record extension to commit only certain files or parts of files at a time with hg commit --interactive. I haven’t yet had occasion to use this myself, but I’m looking forward to it!
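Here is a toy comparison in Python of what ends up in a commit with and without a staging area. The file names and contents are made up, and the two functions are hypothetical models of the behaviour described above, not either tool’s implementation: Mercurial takes every modified tracked file, Git takes only what was put in the index.

```python
# Made-up repository state: path -> file contents.
committed = {"runner.py": "v1", "test_runner.py": "v1"}
working = {"runner.py": "v2", "test_runner.py": "v2", "notes.txt": "draft"}
tracked = set(committed)  # notes.txt was never added, so neither tool commits it


def hg_commit(tracked, committed, working):
    """No staging area: every modified tracked file is committed."""
    return sorted(p for p in tracked if working.get(p) != committed.get(p))


def git_commit(index, committed):
    """Staging area: only what was put in the index is committed."""
    return sorted(p for p, contents in index.items() if committed.get(p) != contents)


index = dict(committed)
index["runner.py"] = working["runner.py"]  # like `git add runner.py`

print(hg_commit(tracked, committed, working))  # prints: ['runner.py', 'test_runner.py']
print(git_commit(index, committed))            # prints: ['runner.py']
```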

Turning back time

I can mess with my Mercurial history in almost exactly the same way as I would in Git, although whereas this functionality is built into Git, in Mercurial it’s provided by extensions. I can use the rebase extension to rebase a series of changesets (say, the ones leading up to the active bookmark) onto a given changeset (say, the latest change I pulled from central) with hg rebase, and I can use the hg histedit command provided by the histedit extension to reorder, edit, and squash (or “fold”, to use the Mercurialian term) changesets like I would with git rebase --interactive.

My Mozilla workflow

In my recent work refactoring and adding unit tests for Marionette’s Python test runner, I use a workflow that goes something like this.

I’m gonna start work on a new bug/feature, so first I want to make a new bookmark for work that will branch off of central:

$ hg up central

$ hg bookmark my-feature

Now I go ahead and do some work, and when I’m ready to commit it I simply do:

$ hg commit

which opens my default editor so that I can write a super great commit message. It’s going to be informative and formatted properly for MozReview/Bugzilla, so it might look something like this:

Bug 1275269 - Add tests for _add_tests; r?maja_zf

Add tests for BaseMarionetteTestRunner._add_tests:
Test that _add_tests populates self.tests with correct tests;
Test that invalid test names cause _add_tests to
throw Exception and report invalid names as expected.

After working for a while, it’s possible that some new changes have come in on central (this happens about daily), so I may need to rebase my work on top of them. I can do that with:

$ hg pull central

followed by:

$ hg rebase -d central

which rebases the commits in the branch that my bookmark points to onto the most recent changeset in central. Note that this assumes that the bookmark I want to rebase is currently active (I can check if it is with hg bookmarks).
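Conceptually, a rebase replays the same changes on top of a new parent, and because a changeset’s ID depends on its parent, every replayed changeset gets a new ID. Here is a minimal Python sketch under that assumption; the `node_id` hash and the `old-central`/`new-central` base names are made up, and real IDs also cover file contents and metadata.

```python
import hashlib


def node_id(parent, description):
    # Simplified ID: real changeset IDs also cover file contents and metadata.
    return hashlib.sha1(f"{parent}\n{description}".encode()).hexdigest()[:12]


def build(base, descriptions):
    """Stack changesets, oldest first, on top of `base`."""
    stack, parent = [], base
    for desc in descriptions:
        node = node_id(parent, desc)
        stack.append({"id": node, "parent": parent, "desc": desc})
        parent = node
    return stack


def rebase(stack, dest):
    """Replay the same changes, in order, on top of `dest`."""
    return build(dest, [c["desc"] for c in stack])


mine = build("old-central", ["Bug 1275269 - Add tests for _add_tests",
                             "Address review comments"])
rebased = rebase(mine, "new-central")
for before, after in zip(mine, rebased):
    print(before["id"], "->", after["id"], before["desc"])
```

The commit messages survive unchanged, but the IDs do not, which is why a rebased changeset should be treated as a new changeset when talking to others.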

Then maybe I commit some more work, so that now I have a series of commits on my bookmark. But perhaps I want to reorder them, squash some together, or edit commit messages; no problemo, I just do a quick:

$ hg histedit

which opens an editor listing all the changesets on my bookmark. I can edit that list to pick, fold (squash), or edit changesets in pretty much the same way I would using git rebase --interactive.
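The pick/fold plan can be modelled as a list of actions applied top to bottom, where fold combines a changeset with the one above it (the equivalent of squash in git rebase --interactive). This Python function is a hypothetical illustration of that rule, not histedit’s code; it only tracks which commit messages end up together and ignores the new IDs that folded changesets receive. The changeset IDs `aaa`, `bbb`, `ccc` and two of the messages are made up.

```python
def histedit(changesets, plan):
    """Apply a plan of (action, changeset) pairs, top to bottom.

    'pick' keeps a changeset; 'fold' combines it with the changeset above it.
    Returns, for each resulting changeset, the commit messages it combines.
    """
    result = []
    for action, node in plan:
        if action == "pick":
            result.append([changesets[node]])
        elif action == "fold":
            if not result:
                raise ValueError("nothing above to fold into")
            result[-1].append(changesets[node])
        else:
            raise ValueError(f"unsupported action in this sketch: {action}")
    return result


# Hypothetical changesets on a bookmark, oldest first.
changesets = {
    "aaa": "Bug 1275269 - Add tests for _add_tests",
    "bbb": "Refactor test runner setup",
    "ccc": "Fix typo in _add_tests test",
}

# Move the refactor first, then squash the typo fix into the tests.
plan = [("pick", "bbb"), ("pick", "aaa"), ("fold", "ccc")]
print(histedit(changesets, plan))
```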

When I’m satisfied with the history, I push my changes to review:

$ hg push review

My special Mozillian configuration of Mercurial, which a wizard helped me set up during installation, magically prepares everything for MozReview and then asks me if I want to

publish these review requests now (Yn)?

To which I of course say Y (or, you know, realize I made a horrible mistake, say n, go back and re-do everything, and then push to review again).

Then I just wait for review feedback from my mentor, and perhaps make some changes and amend my commits based on that feedback, and push those to review again.

Ultimately, once the review has passed, my changes get merged into mozilla-inbound, then eventually mozilla-central (more on what that all means in a future post), and I become an official contributor. Yay! :)

So is this goodbye Git?

Nah, I’ll still be using Git as my go-to version control system for my own projects, and another Mozilla project I’m contributing to, Perfherder, has its code on GitHub, so Git is the default for that.

But learning to use Mercurial, like learning any new tool, has been educational! Although my progress was (and still is) a bit slow as I get used to the differences in features/workflow (which, I should reiterate, are quite minor when coming from Git), I’ve learned a bit more about version control systems in general, and some of the design decisions that have gone into these two. Plus, I’ve been able to contribute to a great open-source project! I’d call that a win. Thanks Mercurial, you deserve a hg. :)

Further reading

- Understanding Mercurial and Mercurial for Git users on the Mercurial wiki

- Mercurial for git developers from the Python Developer’s Guide

- Mercurial Tips from Mozilla developer Andreas Tolfsen (:ato)

- Mercurial vs Git; it’s all in the branches by Felipe Contreras

Notes

[1] However, there is a small but ardent faction of Mozilla devs who refuse to stop using Git. Despite being a Gitter, I chose to forgo this option and use Mercurial because a) it’s the default, so most of the documentation etc. assumes it’s what you’re using, and b) I figured it was a good chance to get to know a new tool.


Benjamin Smedberg: Concert This Sunday: The King of Instruments and the Instrument of Kings

This coming Sunday, I will be performing a concert with trumpeter Kyra Hill as part of the parish concert series. I know that many of the readers of my site don’t live anywhere near Johnstown, Pennsylvania, but if you do, we’d love to have you, and it’ll be a lot of fun.

2:30 p.m.

Our Mother of Sorrows Church

415 Tioga Street, Johnstown PA

Why you should come

- You’ll get to hear what wise men riding camels sneaking away from Herod sounds like.

- If that’s not your thing, what about eight minutes of non-stop fantasy based on the four notes of the bell tower of Westminster?

- Or a trumpet playing Gregorian chant?

- During the intermission, there will be a pipe organ petting zoo. If you’ve always wondered what all those knobs and buttons are for, here’s your chance to find out!

I am proud that almost all of the music in this program was written in the last 100 years: there are compositions dating from 1919, 1929, 1935, 1946, 1964, 2000, and 2004. Unlike much of the classical music world, which got lost around 1880 and never recovered, organ music has seen an explosion of music and musical styles that continues to the present day. Of course there is the obligatory piece by J.S. Bach, because how could you have an organ concert without any Bach? But beyond that, there are pieces by such modern greats as Alan Hovhaness, Marcel Dupré, Louis Vierne, and Olivier Messiaen.

It’s been a while since I performed a full-length concert; it has been fun to get back in the swing of regular practice and getting pieces up to snuff. I hope you find it as enjoyable to listen to as it has been for me to prepare!


Kim Moir: DevOpsDays Toronto recap

Glenn Gould Studios, CBC, Toronto.

Statue of Glenn Gould outside the CBC studios that bear his name.

Day 1

The day started out with an introduction from the organizers and a brief overview of the history of DevOpsDays. They also made a point of reminding everyone that they had agreed to the code of conduct when they bought their ticket. I found this explicit mention of the code of conduct quite refreshing.

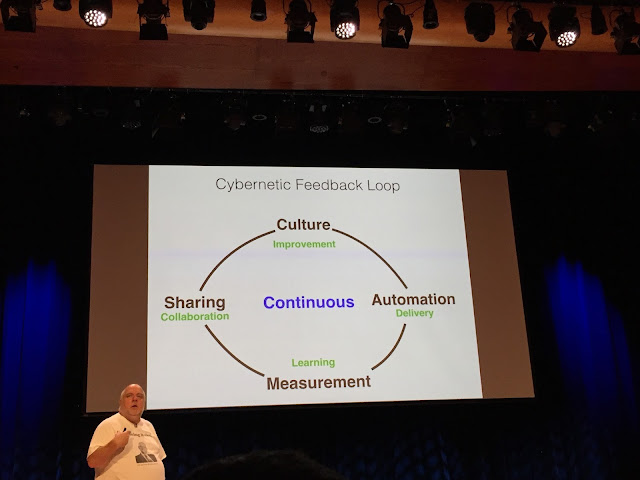

The first talk of the day was by John Willis, evangelist at Docker. He gave an overview of the state of enterprise DevOps. I found this a fresh perspective because, having worked in open source communities for so long, I really don't know what happens in enterprises with respect to DevOps.

John providing an overview of what DevOps encompasses.

DevOps is a continuous feedback loop.

He talked a lot about how important empathy is in our jobs. He mentioned that Netflix has a slide deck that describes its company culture. He doesn't know if this is still the case, but he had heard that if you showed up for an interview at Netflix without having read the culture deck, you would be automatically disqualified from further interviews. Etsy and Spotify have similar open documents describing their culture.

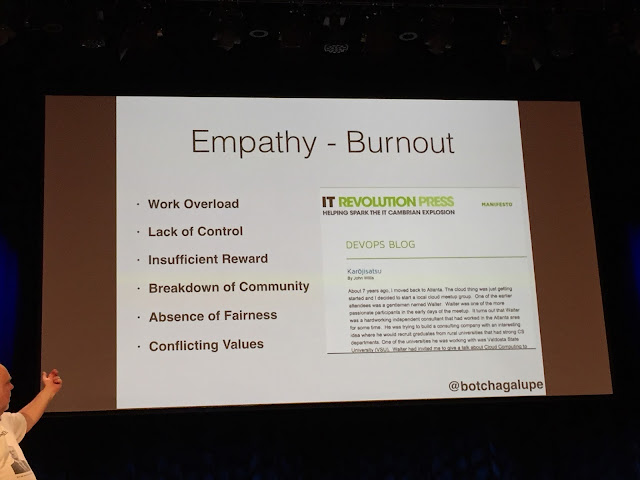

Here he discusses the research by Christina Maslach on the six sources of burnout.

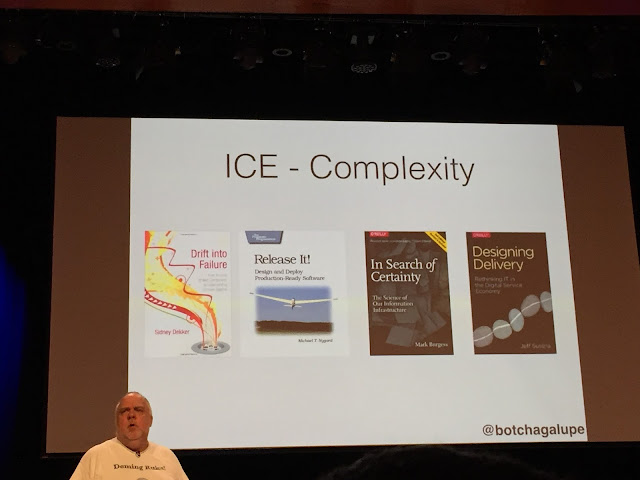

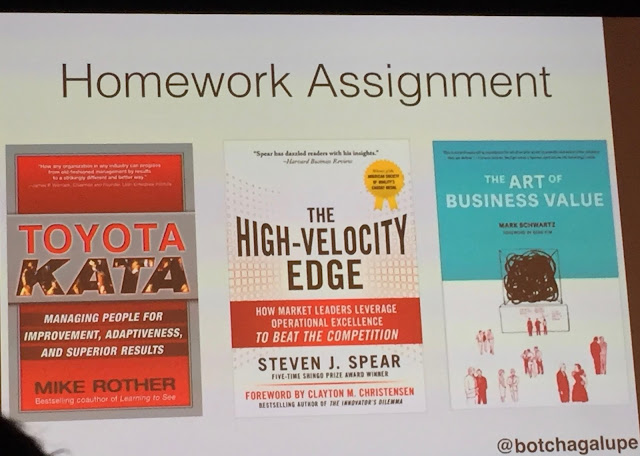

He gave us some reading to do. I've read the "Release It!" book, which is excellent and has some fascinating stories of software failure in it; I've added the other books to my already long reading list.

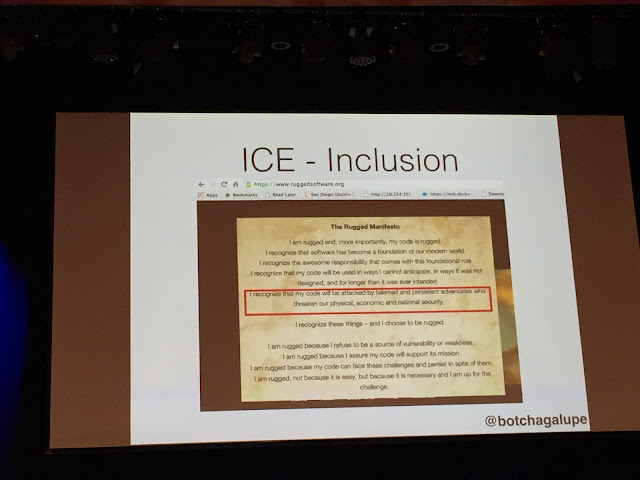

The Rugged Manifesto, and realizing that the code you write will always be under attack by malicious actors. ICE stands for Inclusivity, Complexity and Empathy.

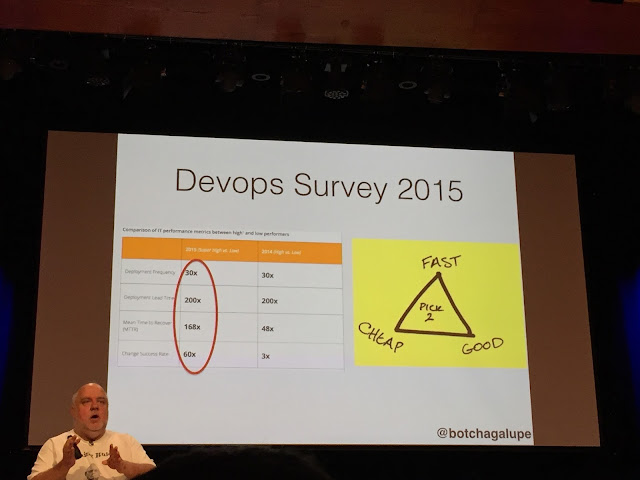

He stated that it's a long-standing mantra that you can have any two of fast, cheap, or good, but recent research shows that today we can make many changes quickly, and if there is a failure, the mean time to recovery is short.

He left us with some more books to read.

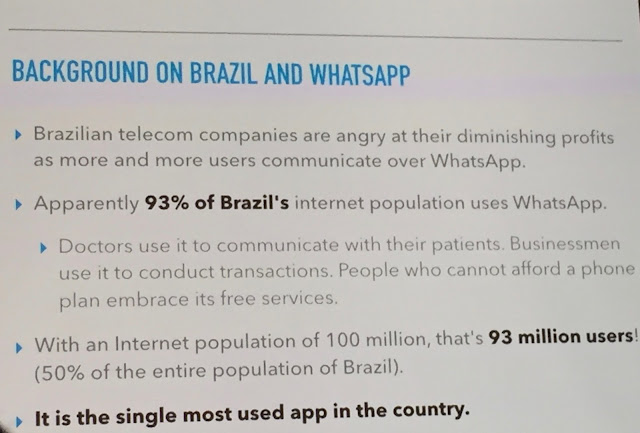

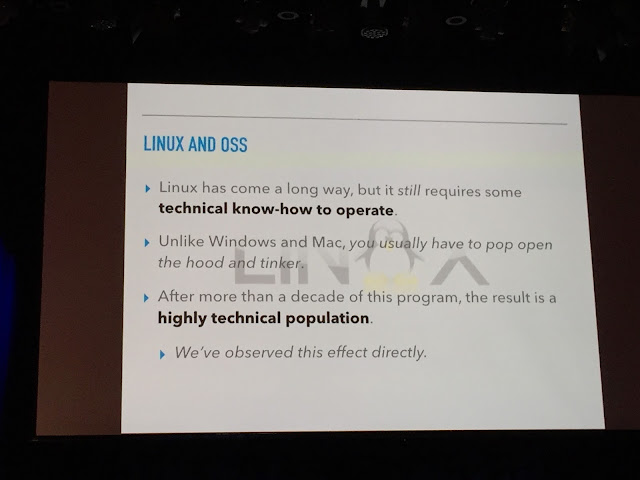

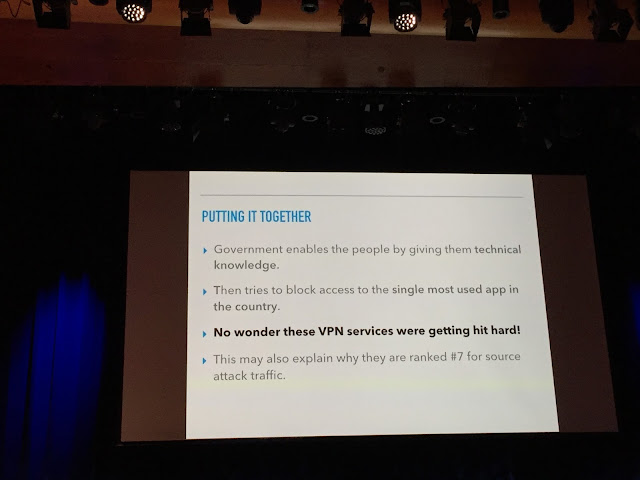

The second talk was a really interesting one by Hany Fahim, CEO of VM Farms. It was a short mystery novella describing how VM Farms' servers suddenly experienced a huge traffic spike when the Brazilian government banned WhatsApp as a result of a legal order. I love a good war story.

Hany described how, one day, VM Farms suddenly saw a huge increase in traffic.

This was a really important point. When your system is failing to scale, it's important to decide if it's a valid increase in traffic or malicious.

Looking on Twitter, they found that a court in Brazil had recently ruled that WhatsApp would be blocked for 48 hours. Users started circumventing the block via VPN. Looking at their logs, they determined that most of the traffic was coming from IP addresses in Brazil and that connections were spending a long time in SSL handshakes.

The government of Brazil had encouraged the use of open source software over Windows, so users had become more technically literate and were able to circumvent blocks via VPN.

In conclusion, switching to multi-core HAProxy fixed a lot of issues. Also, Twitter was, and continues to be, a great source of information on activity happening in other countries. WhatsApp service was restored and then banned a second time, and their servers were able to keep up with the demand.
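The diagnosis step in Hany's story, deciding whether a spike is real users or an attack by looking at where requests come from and how long handshakes take, could be sketched in Python as below. The record fields and sample values are made up for illustration (IPs are from documentation ranges); a real analysis would parse the load balancer's logs and resolve client IPs to countries with a GeoIP database. This is not VM Farms' tooling.

```python
from collections import Counter
from statistics import median


def summarize(records):
    """Busiest source country, its share of requests, and the median handshake time."""
    if not records:
        raise ValueError("no records to summarize")
    counts = Counter(r["country"] for r in records)
    top_country, top_count = counts.most_common(1)[0]
    return {
        "top_country": top_country,
        "top_share": top_count / len(records),
        "median_handshake_ms": median(r["handshake_ms"] for r in records),
    }


# Made-up sample records; countries assumed already resolved from client IPs.
sample = [
    {"ip": "203.0.113.5", "country": "BR", "handshake_ms": 850},
    {"ip": "203.0.113.9", "country": "BR", "handshake_ms": 920},
    {"ip": "198.51.100.7", "country": "CA", "handshake_ms": 40},
    {"ip": "203.0.113.12", "country": "BR", "handshake_ms": 780},
]
print(summarize(sample))
```

A lopsided country share combined with slow handshakes (for example, clients on long VPN routes) points towards a real but unusual population of users rather than, say, a flood from a handful of IPs; the numbers alone don't decide it, which is where the Twitter context came in.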

After lunch, we were back to more talks. The organizers came on stage for a while to discuss the afternoon's agenda. They also remarked that one individual had violated the code of conduct and had been removed from the conference. So the conference not only had a code of conduct, but also took action when it was violated.

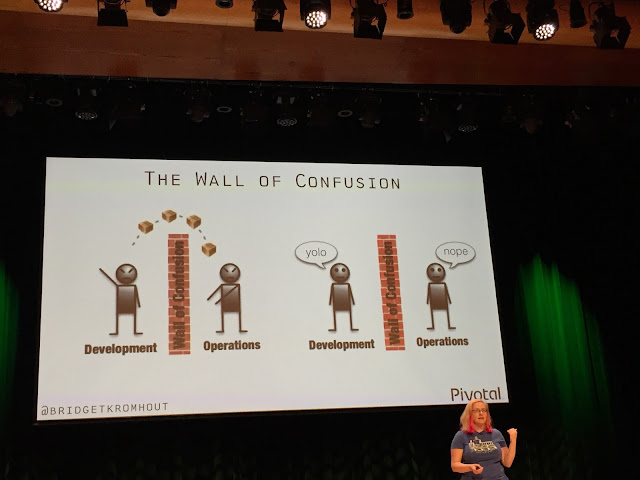

Next up, Bridget Kromhout from Pivotal gave a talk entitled Containers will not Fix your Broken Culture.

I first saw Bridget speak at Beyond the Code in Ottawa in 2014 about scaling the streaming services for Drama Fever on AWS. At the time, I was moving our mobile test infrastructure to AWS so I was quite enthralled with her talk because 1) it was excellent 2) I had never seen another woman give a talk about scaling services on AWS. Representation matters.

The summary of the talk last week was that no matter what tools you adopt, you need to communicate with each other about the cultural changes required to implement new services. A new microservices architecture is great, but if the teams implementing these services are not talking to each other, the implementation will not succeed.

Bridget pointing out that the technology we choose to implement is often about what is fashionable.

Shoutout to Jennifer Davis and Katherine Daniels' Effective DevOps book. (Note: I've read it on Safari online and it is excellent. The chapter on hiring is especially good.)

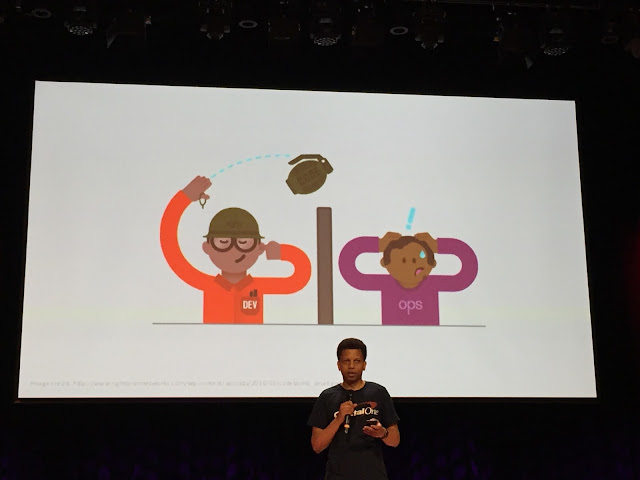

Loved this poster about the wall of confusion between development and operations.

In the afternoon, there were lightning talks and then open spaces. Open spaces are free-flowing discussions where the topic is voted upon ahead of time. I attended ones on infrastructure automation, CI/CD at scale and my personal favourite, horror stories. I do love hearing how distributed systems can go down and how to recover. I found the conversations useful, but some of them seemed to be dominated by a few voices. I think it would be better if the person who suggested the topic for an open space also volunteered to moderate the discussion.

Day 2

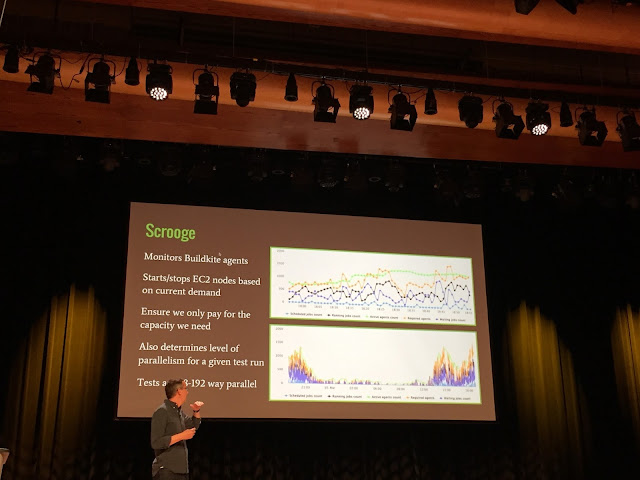

The second day started out with a fantastic talk by John Arthorne of Shopify on scaling their deployment pipeline. As a side note, John and I worked together for more than a decade on Eclipse while we were both at IBM, so it was great to catch up with him after the talk. He started by giving some key platform characteristics. Stores on Shopify have flash sales that cause traffic spikes, so they need to be able to scale for these bursts of traffic.

From commit to deploy in 10 minutes. Everyone can deploy. This has two purposes: Make sure the developer stays involved in the deploy process. If it only takes 10 minutes, they can watch to make sure that their deploy succeeds. If it takes longer, they might move on to another task. Another advantage of this quick deploy process is that it can delight customers with the speed of deployment. They also deploy in small batches to ensure that the mean time to recover is small if the change needs to be rolled back.

Buildkite is a third-party build and test orchestration service. They wrote a tool called Scrooge that manages the number of EC2 nodes based on current demand to reduce their AWS bills. (This is similar to what Mozilla releng does with cloud-tools.)
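Demand-based scaling of this kind can be reduced to a sizing rule. The sketch below is a hypothetical rule in Python (queued jobs divided by jobs per node, rounded up, then clamped to a minimum and maximum), not Scrooge's actual logic; the function names, parameters and defaults are assumptions.

```python
import math


def desired_nodes(pending_jobs, jobs_per_node, min_nodes=1, max_nodes=50):
    """Hypothetical sizing rule: enough nodes for the queue, within bounds."""
    if jobs_per_node <= 0:
        raise ValueError("jobs_per_node must be positive")
    wanted = math.ceil(pending_jobs / jobs_per_node)
    return max(min_nodes, min(max_nodes, wanted))


def scaling_action(current_nodes, target_nodes):
    """What an autoscaler would do to move from current to target."""
    if target_nodes > current_nodes:
        return ("launch", target_nodes - current_nodes)
    if target_nodes < current_nodes:
        return ("terminate", current_nodes - target_nodes)
    return ("hold", 0)


# A quiet period: 10 queued jobs, 4 jobs per node, 8 nodes currently running.
target = desired_nodes(pending_jobs=10, jobs_per_node=4)
print(scaling_action(current_nodes=8, target_nodes=target))  # prints: ('terminate', 5)
```

The minimum keeps some capacity warm for the next burst and the maximum caps the bill; where to set those bounds is a judgment call for each team.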

Shopify uses an open source orchestration tool called ShipIt. I was sitting next to my colleague Armen at the conference, and he started chuckling at this point because at Mozilla we also wrote an application called ship-it, which release management uses to kick off Firefox releases. Shopify also has an overall view of the ShipIt deployment process, which allows developers to see the percentage of nodes where their change has been deployed. One of the questions after the talk was why they use AWS for their deployment pipeline when they use machines in data centres for their actual customers. Answer: they use AWS where resiliency is not an issue.

Building containers is computationally expensive. He noted that a lot of engineering resources went into optimizing the layers in the Docker containers so that changes are isolated to the smallest layer. They built a service called Locutus to build the containers on commit and push them to a registry. It employs caching to make the builds smaller.
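Why layer order matters for Docker build caching: a layer's cache key depends on its own step and on every layer below it, so a change near the bottom forces everything above it to be rebuilt. This Python sketch models that rule with hypothetical step strings (the `@v1`/`@v2` suffixes stand in for file checksums); it is not Locutus and not Docker's exact cache-key algorithm.

```python
import hashlib


def layer_keys(steps):
    """Cache key per layer: a hash of the step plus the key of the layer below,
    so changing one layer changes the keys of every layer above it."""
    keys, below = [], ""
    for step in steps:
        below = hashlib.sha256(f"{below}\n{step}".encode()).hexdigest()
        keys.append(below)
    return keys


def layers_to_rebuild(steps, cache):
    """Indexes of layers whose keys are not in the cache."""
    return [i for i, key in enumerate(layer_keys(steps)) if key not in cache]


# Hypothetical build: dependencies first, frequently changing app code last.
steps = ["FROM base-image", "COPY Gemfile.lock @v1", "RUN bundle install", "COPY app/ @v1"]
cache = set(layer_keys(steps))

app_change = steps[:3] + ["COPY app/ @v2"]
dep_change = [steps[0], "COPY Gemfile.lock @v2"] + steps[2:]
print(layers_to_rebuild(app_change, cache))  # prints: [3]
print(layers_to_rebuild(dep_change, cache))  # prints: [1, 2, 3]
```

Putting the code that changes on every commit in the top layer means a typical commit only rebuilds that one layer.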

He emphasized the need to empower developers to use DevOps practices by giving them tools and showing them how to use them. For instance, if they need to run Docker to test something, walk them through it so they will know how to do it next time.

He had excellent advice on how to work on projects outside of work to showcase skills for future employers.

Diversity and Inclusion

As an aside, whenever I'm at a conference I note the number of people in the "not a white guy" group. This conference had an all-male organizing committee, but not all of them were white. (I recognize that not all diversity is visible, e.g. mental health, gender identity, sexual orientation, immigration status, etc.) There was only one woman speaker, but there were a few non-white speakers. There were very few women attendees. I'm not sure what process was used to reach out to potential speakers other than the CFP.

There were slides that showed diverse developers which was refreshing.

Loved Roderick's ops vs dev slide.

I learned a lot at the conference and am thankful for all the time that the speakers took to prepare their talks. I enjoyed all the conversations I had learning about the challenges people face in their organizations implementing continuous integration and deployment. It also made me appreciate the culture of relentless automation, continuous integration and deployment that we have at Mozilla.

I don't know who said this during the conference but I really liked it

It was interesting to learn how all these people are making their companies heart beat stronger via DevOps practices and tools.

http://relengofthenerds.blogspot.com/2016/06/devopsdays-toronto-recap.html


Mozilla Addons Blog: Developer profile: Luís Miguel (aka Quicksaver)

“I bleed add-on code,” says Luís Miguel.

Always a heavy user of bookmarks and feeds, Luís Miguel one day decided to customize his Firefox sidebar to better accommodate his personal tastes (he didn’t like how the ever-present sidebar pushed web content aside). But Luís soon discovered he could only go so far solving this problem with minor CSS tweaks.

That’s when he resolved to develop a full-blown add-on. He created OmniSidebar, an elegant extension that allows you to slide your sidebar into view with a simple gesture, among other rich interface features.

Another of Luís’ add-ons, FindBar Tweak, has a similar origin story; Luís identified a personal desire first, then built an add-on to address it. In this case he wanted a quick way to scan code for just the parts applicable to his work.

“Earlier today I was working with pinned tabs, and I had to research how they happen,” explains Luís. “Looking for the word ‘pinned’ in Firefox’s code using FindBar Tweak, I was able to easily read through only the relevant bits in a few seconds, because it not only takes me directly to what I’m looking for, but also helps me visually make sense of what is actually relevant.”

Luís has created four other add-ons, including the popular Tab Groups. Luís spoke with me briefly about his unique interest in add-ons…

It sounds like you basically create all of your add-ons based on personal browsing needs. Is that an accurate characterization?

Luís Miguel: Yeah, that’s a fair way to put it. Everything has been about making my Firefox behave and interact with me the way I prefer it to, from quickly finding what I’m looking for in a page, to having my feeds and bookmarks readily accessible without screwing up the rest of the page layout, properly organizing all my buttons in the browser, keeping a clean window but always ready to interact in whatever way I need it to. The “anything goes” approach of Firefox add-ons makes them a very fun playground for me in that respect.

As a procrastination enthusiast, I’ve found that when the process—towards whatever goal—is optimal, fast, and effective, I am the most motivated to do it. So I look for every way to enhance productivity in every task I do, not just for developing, even just casual browsing really.

Puzzle Bars conveniently pulls up your customized toolbar with a simple swing of the mouse.

Although, that’s not entirely the case anymore. There’s a lot in my add-ons that comes from user suggestions and requests, many [features] I don’t really use myself. But if it’s to improve the add-on and I have the availability to do it, how can I say no to the most awesome and supportive users a developer could ask for?

Beyond add-ons you’ve created, do you do other types of development?

LM: I’ve contributed a few patches for Firefox itself through Bugzilla, but that’s about it. When I set out to do something, I focus on it, so right now I bleed add-on code.

Are you working on any new add-ons you want to tell us about?

LM: I do have a couple of ideas for new add-ons, but those probably won’t happen for a while, mostly because I have to focus on my current ones a lot, as they will need to be ported to WebExtensions eventually. Since my add-ons are mostly UI-modifications at their base, that will be… challenging.

I have been working on Tab Groups 2 though, which is a major rewrite of almost everything, to hopefully make it more stable and perform better. It’s turning out really awesome.

What other add-ons—ones you haven’t created—are your favorites and why?

LM: I have around 30 add-ons enabled in my main profile at any one time. A few favorites come to mind…

AdBlock Plus is always at the top of my list. I dislike waiting a long time for pages to fully load because of several blinking boxes, or misleading ads.

Download Panel Tweaker for a sleek, direct, and fully functional downloads panel.

Tab Mix Plus for optimized opening, closing, moving, and loading tab behavior. There’s just so much it can do!

Stylish for a few nits here and there, and the custom glass style I made for myself, which I’ve found no other theme or add-on out there can even come close to.

I especially like Turn Off the Lights. It helps me focus on whatever video I’m watching. I tend to get distracted easily. If there’s something in the corner of my eye that stands out for some reason, I will look at it. So that functionality is simple and yet very effective.

Thanks for chatting with us, Luís! I can think of no better way to close this conversation than by sharing a recent installment of your web series…

https://blog.mozilla.org/addons/2016/06/02/developer-profile-luis-miguel-aka-quicksaver/

|

|

Air Mozilla: Reps weekly, 02 Jun 2016 |

This is a weekly call with some of the Reps to discuss all matters about/affecting Reps and invite Reps to share their work with everyone.

|

|

Air Mozilla: Web QA Team Meeting, 02 Jun 2016 |

This is our weekly gathering of Mozilla's Web QA team filled with discussion on our current and future projects, wonderful ideas, glorious demos, and a...

|

|

Air Mozilla: Web QA Team Meeting, 02 Jun 2016 |

Weekly Web QA team meeting - please feel free and encouraged to join us for status updates, interesting testing challenges, cool technologies, and perhaps a...

|

|

Roberto A. Vitillo: What is it like to be a Data Engineer at Mozilla? |

When people ask me what I do at Mozilla and I tell them I work with data, more often than not their reaction is something like “Wait, what? What kind of data could Mozilla possibly have?” But let’s start from the beginning of my journey. Even though I have worked on data systems since my first internship, I didn’t start my career at Mozilla officially as a Data Engineer. I was hired to track, investigate, and fix performance problems in the Firefox browser; an analyst of sorts, given my background in optimizing large scientific applications for the ATLAS experiment at the LHC.

The thing with applications for which you have some control over how they are used is that you can “just” profile your known use cases and go from there. Profiling a browser is an entirely different story. Every user might stress the browser in different ways, and you can’t just sample stacks and indiscriminately collect data from your users without privacy and performance consequences. If that wasn’t enough, there are as many different hardware/software configurations as there are users. Even with the same configuration, a different driver version can make a huge difference. This is where Telemetry comes in: an opt-in data acquisition system that collects general performance metrics, like garbage collection timings, and hardware information, like the GPU model, across our user base. In case you are wondering what data I am talking about, why don’t you have a look? You are just one click away from about:telemetry, a page that shows the data that Telemetry collects.

Imagine receiving terabytes of data with millions of payloads per day, each containing thousands of complex hierarchical measurements, and being tasked with making sense of that data and finding patterns in it. How would you do it? Step 1 is getting the data; how hard can it possibly be, right? To do that, I had to fire off a script to launch an EC2 instance, ssh into it, and from there run a custom, non-distributed MapReduce (MR) system written in Python. This was a couple of years ago; you might be wondering why anyone would use a custom MR system instead of something like Hadoop back then. It turns out that it was simple enough to support the use cases the performance team had at the time, and when something went wrong it was easy to debug. Trust me, debugging a full-fledged distributed system can be painful.

The system was very simple: the data was partitioned on S3 according to a set of dimensions, like submission date, and the MR framework allowed the user to define which values to use for each dimension. My first assignment was to get back the ratio of users that had a solid state disk. Sounds simple enough, yet it took me a while to get the data I wanted out of the system. The debug cycle was very time consuming; I had to edit my job, try to run it and, if something went wrong, start all over again. An analysis for a single day could take hours to complete and, as is often the case with data, if something that was supposed to be a string turned out to be an integer because of data corruption, and I failed to handle that case, the whole thing exploded.
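The post doesn't include that framework's code, so the following is only a minimal sketch of the idea under stated assumptions: an in-process (non-distributed) MapReduce over partitions keyed by dimensions such as submission date, plus a mapper that computes the SSD ratio and skips malformed values instead of letting one bad record blow up the whole job. The partition layout and the `system.disk.is_ssd` payload field are hypothetical, not the real Telemetry schema.

```python
from collections import defaultdict
from typing import Any, Dict, Iterable, Iterator, List, Set, Tuple

# A partition is (dimension values, payloads): a stand-in for a set of files on S3.
Partition = Tuple[Dict[str, str], List[Dict[str, Any]]]


def select_partitions(partitions: Iterable[Partition],
                      filters: Dict[str, Set[str]]) -> Iterator[Dict[str, Any]]:
    """Yield payloads from partitions whose dimensions match every filter."""
    for dims, payloads in partitions:
        if all(dims.get(name) in allowed for name, allowed in filters.items()):
            yield from payloads


def run_mapreduce(records, mapper, reducer):
    """Single-process MapReduce: map every record, group by key, reduce each group."""
    groups = defaultdict(list)
    for record in records:
        for key, value in mapper(record):
            groups[key].append(value)
    return {key: reducer(key, values) for key, values in groups.items()}


def ssd_mapper(payload: Dict[str, Any]):
    """Emit ("ssd", 1) or ("hdd", 1); skip missing or corrupted values."""
    system = payload.get("system")
    disk = system.get("disk") if isinstance(system, dict) else None
    is_ssd = disk.get("is_ssd") if isinstance(disk, dict) else None
    if isinstance(is_ssd, bool):  # a string like "yes" or an int counts as corrupt
        yield ("ssd" if is_ssd else "hdd"), 1


def ssd_ratio(counts: Dict[str, int]) -> float:
    total = counts.get("ssd", 0) + counts.get("hdd", 0)
    return counts.get("ssd", 0) / total if total else 0.0


if __name__ == "__main__":
    partitions = [
        ({"submission_date": "20140101"}, [
            {"system": {"disk": {"is_ssd": True}}},
            {"system": {"disk": {"is_ssd": False}}},
            {"system": {"disk": {"is_ssd": "yes"}}},  # corrupted: skipped
        ]),
        ({"submission_date": "20140102"}, [{"system": {"disk": {"is_ssd": True}}}]),
    ]
    counts = run_mapreduce(select_partitions(partitions, {"submission_date": {"20140101"}}),
                           ssd_mapper, lambda key, values: sum(values))
    print(counts, ssd_ratio(counts))  # {'ssd': 1, 'hdd': 1} 0.5
```

Skipping bad records in the mapper is one design choice; a real job would likely also count them, so silent data loss shows up somewhere.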

There weren’t many people running Telemetry analyses back then; it was just too painful. In time, as more metrics were added and I tried to answer more questions with data, I decided to step back for a moment and try something new. I played around with different systems like Pig, mrjob, and Spark. Though Spark has a rich API with far more operators than map and reduce, what ultimately sold me was the fact that it had a read–eval–print loop (REPL). If your last command failed, you could just retry it without having to rerun the whole job from the beginning! I initially used a Scala API to run my analyses, but I soon started missing some of the Python libraries I had grown used to for analysis, like pandas, scipy and matplotlib; the Scala counterparts just weren’t on par. I wanted to bring others on board, and convincing someone to write analyses in a familiar language was a lot easier. So I built a tiny wrapper around Spark’s Python API that lets users run queries on our data from a Jupyter notebook, and in time the number of people using Telemetry for their analytics needs grew.

I deliberately ignored many design problems that had to be solved. What storage format should be used? How should the data be partitioned? How should the cluster(s) be provisioned; EMR, ECS or something else entirely? How should recurrent jobs be scheduled?

Telemetry is one of the most complex data sources we have, but there are many others, like the server logs from a variety of services. Others were solving problems similar to the ones I faced, but with different approaches, so we joined forces. Fast-forward a couple of years and Telemetry has changed a lot. A Heka pipeline pre-processes and validates the Telemetry data before dumping it on S3. Scala jobs convert the raw JSON data into more manageable derived Parquet datasets that can then be read by Spark with Python, Scala or R. The scope of Telemetry has expanded to drive not only performance needs but business ones as well. Derived datasets can be queried with SQL through Presto, and re:dash allows us to build and share dashboards among peers. Jobs can be scheduled and monitored with Airflow. Telemetry-based analyses are being run at all levels of the organization: no longer are a few people responsible for answering every imaginable data-related question. There are interns running statistically rigorous analyses on A/B experiments and Senior Managers building retention plots for their products. Analyses are being shared as Jupyter notebooks and peer-reviewed on Bugzilla. It’s every engineer’s responsibility to know how to measure how well their own feature or product is performing.

There is this popular meme about how Big Data is like teenage sex: everyone talks about it, nobody really knows how to do it, everyone thinks everyone else is doing it, so everyone claims they are doing it. The truth is that there are only so many companies out there that can claim a user base with millions of users. This is one of the things I love the most about Mozilla: we aren’t as big as the giants, but we aren’t small either. As an engineer, I get to solve challenging problems with the agility, flexibility and freedom of a startup. Our engineers work on interesting projects, and there is a lot of room for trying new things, disrupting the status quo and pushing beyond our comfort zone.

What’s the life of a Data engineer at Mozilla nowadays? How do we spend our time and what are our responsibilities?

Building the data pipeline

We build, maintain, monitor and deploy our data infrastructure and make sure that it works reliably and efficiently. We strive to use the smallest number of technologies that can satisfy our needs and work well in concert.

What kind of data format (e.g. Avro, Parquet) and compression codec (e.g. Snappy, LZO) should be used for the data? Should the data be stored on HDFS or S3? How should it be partitioned and bucketed? Where should the metadata (e.g. partition columns) be stored? Should the data be handled in real time or in batch? Should views be recomputed incrementally or from scratch? Does summary data need to be accurate, or can probabilistic data structures be used? Those are just some of the many decisions a data engineer is expected to make.
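To make the last question concrete: one common probabilistic structure for summary data is HyperLogLog, which estimates the number of distinct items (say, distinct clients per day) using a few kilobytes of registers instead of storing every ID. The post doesn't say which structures Mozilla uses; this is a minimal, self-contained sketch of the basic algorithm, with SHA-1 as an arbitrarily chosen hash and without later bias-correction refinements.

```python
import hashlib
import math


class HyperLogLog:
    """Minimal HyperLogLog distinct-count estimator (basic algorithm only)."""

    def __init__(self, p: int = 12) -> None:
        if not 7 <= p <= 16:
            raise ValueError("p must be between 7 and 16")
        self.p = p
        self.m = 1 << p                              # number of registers
        self.registers = [0] * self.m
        self.alpha = 0.7213 / (1 + 1.079 / self.m)   # bias constant, valid for m >= 128

    def add(self, item: str) -> None:
        # 64-bit hash from the first 8 bytes of SHA-1 (an assumption for this sketch)
        h = int.from_bytes(hashlib.sha1(item.encode("utf-8")).digest()[:8], "big")
        index = h >> (64 - self.p)                   # first p bits pick a register
        rest = h & ((1 << (64 - self.p)) - 1)        # remaining 64 - p bits
        rank = (64 - self.p) - rest.bit_length() + 1  # position of the leftmost 1-bit
        if rank > self.registers[index]:
            self.registers[index] = rank

    def count(self) -> float:
        z = sum(2.0 ** -r for r in self.registers)
        estimate = self.alpha * self.m * self.m / z
        zeros = self.registers.count(0)
        if estimate <= 2.5 * self.m and zeros:
            # Small-range correction: fall back to linear counting
            estimate = self.m * math.log(self.m / zeros)
        return estimate
```

With `p=12` the sketch keeps 4,096 small registers, and HyperLogLog's standard error is roughly 1.04/√m, about 1.6% here. Adding the same ID twice never changes a register, which is what makes the estimate a distinct count, and two sketches can be merged by taking the register-wise maximum.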

We contribute to internal projects. Heka, for example, a stream processing system, is used in a multitude of ways: from loading and parsing log files to performing real-time analysis, graphing, and anomaly detection.

At Mozilla, we love open source. When we built our stream processing system, we open sourced it. When different data systems like Spark or Presto need some custom glue to make them play ball, we extend them to fit our needs. Everything we do is out in the open; many of our discussions happen either on Bugzilla or on public mailing lists. Whatever we learn, we share with the outside world.

Supporting other teams

Engineers, managers, and executives alike come to us with questions that they would like to answer with data but don’t yet know quite how to. We try to understand which metrics best answer their questions, assuming they can be answered in the first place. If the required metrics are missing, we add them to our pipeline. This could mean adding instrumentation to Firefox or, if the desired metrics can be derived from simpler ones that are available in the raw data, extending our derived datasets.

Once the data is queryable, we teach our users how to access it with, e.g., Spark, Presto or Heka, depending on where the dataset is stored and what their requirements are. Supporting our users’ analysis needs also teaches us about common patterns and inefficiencies. For example, if a considerable number of queries need to group a dataset in a certain way, we might opt to build a pre-grouped version of the dataset to improve performance.
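A minimal sketch of the pre-grouping idea: aggregate the raw rows once by the dimensions that queries keep grouping on, then answer those queries from the much smaller table. The column names (`date`, `channel`) are hypothetical, and plain Python stands in for what would really be a Spark or Presto job.

```python
from collections import Counter
from typing import Dict, Iterable, Sequence


def build_pregrouped(rows: Iterable[Dict[str, str]], dims: Sequence[str]) -> Counter:
    """One pass over the raw data: count rows per combination of `dims`."""
    counts: Counter = Counter()
    for row in rows:
        counts[tuple(row[d] for d in dims)] += 1
    return counts


def count_where(pregrouped: Counter, dims: Sequence[str], **filters: str) -> int:
    """Answer a count query from the pre-grouped table instead of rescanning raw rows."""
    position = {d: i for i, d in enumerate(dims)}
    return sum(n for key, n in pregrouped.items()
               if all(key[position[d]] == v for d, v in filters.items()))
```

The trade-off is that only queries over the chosen dimensions benefit; anything filtering on another column still has to go back to the raw dataset.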

We strive to review many of our users’ analyses. It’s far too easy to make mistakes when analyzing data since the final deliverables, like plots, can look reasonable even in the presence of subtle bugs.

Doing data science

We build alerting mechanisms that monitor our data to warn us when something is off. From representing time-series with hierarchical statistical models to using SAX for anomaly detection, the fun never stops.
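For readers unfamiliar with SAX (Symbolic Aggregate approXimation): it z-normalizes a time series, averages it over equal-width segments (Piecewise Aggregate Approximation), and maps each average to a letter using breakpoints that cut the standard normal distribution into equiprobable regions. Anomaly detectors then work on the resulting words, for example by flagging rare ones. This is a generic sketch of the discretization step only, not Mozilla's alerting code.

```python
import bisect
from statistics import NormalDist, fmean, pstdev
from typing import Sequence


def sax(series: Sequence[float], segments: int, alphabet_size: int = 4) -> str:
    """Convert a time series into a SAX word of length `segments`."""
    if segments <= 0 or len(series) % segments != 0:
        raise ValueError("this sketch requires len(series) to be a multiple of segments")
    if not 2 <= alphabet_size <= 26:
        raise ValueError("alphabet_size must be between 2 and 26")
    mu, sigma = fmean(series), pstdev(series)
    # 1. z-normalize (a flat series maps to all zeros)
    z = [(x - mu) / sigma if sigma > 0 else 0.0 for x in series]
    # 2. Piecewise Aggregate Approximation: mean of each equal-width segment
    width = len(z) // segments
    paa = [fmean(z[i * width:(i + 1) * width]) for i in range(segments)]
    # 3. Breakpoints split N(0, 1) into `alphabet_size` equiprobable regions
    breakpoints = [NormalDist().inv_cdf(k / alphabet_size) for k in range(1, alphabet_size)]
    letters = "abcdefghijklmnopqrstuvwxyz"
    return "".join(letters[bisect.bisect_right(breakpoints, v)] for v in paa)
```

For example, a steadily rising series `sax([1, 2, 3, 4, 5, 6, 7, 8], segments=4)` yields `"abcd"`, while a late spike such as `[0, 0, 0, 0, 0, 0, 10, 10]` yields `"bbbd"`.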

We create dashboards for general use cases, like the display of daily aggregates partitioned by a set of dimensions or to track engagement ratios of products & features.

We validate hypotheses with A/B tests. For example, one of the main features we are going to ship this year, Electrolysis, requires a clear understanding of its performance impact before it ships. We designed and ran A/B tests to compare a wide variety of performance metrics with and without Electrolysis enabled, using statistically sound methods.
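The post doesn't name the statistical methods used. As one generic example of a sound way to compare a metric between two arms, here's a sketch of a two-sided permutation test on the difference in means. It makes no normality assumption, which is useful for skewed performance timings; the function name and defaults are illustrative.

```python
import random
from statistics import fmean
from typing import Sequence


def permutation_test(control: Sequence[float], treatment: Sequence[float],
                     n_resamples: int = 10_000, seed: int = 0) -> float:
    """Two-sided permutation test on the difference in means; returns a p-value."""
    rng = random.Random(seed)  # fixed seed so the result is reproducible
    observed = abs(fmean(treatment) - fmean(control))
    pooled = list(control) + list(treatment)
    n_control = len(control)
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)  # random relabeling under the null hypothesis
        diff = abs(fmean(pooled[n_control:]) - fmean(pooled[:n_control]))
        if diff >= observed:
            extreme += 1
    # The +1 terms count the observed labeling itself, so p is never exactly 0.
    return (extreme + 1) / (n_resamples + 1)
```

A small p-value means a difference this large would rarely appear if the "with" and "without" labels were interchangeable. Many metrics tested at once would still need a multiple-comparison correction on top of this.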

There is also no shortage of analyses that are more exploratory in nature. For example, we recently noticed a small spike in crashes whenever a new release is shipped. Is it a measurement artifact due to crashes that happened during the update? Or maybe there is something off that causes Firefox to crash after a new version is installed, and if so what is it? We don’t have an answer to this conundrum yet.

The simpler it is to get answers, the more questions will be asked.

This has been my motto for a while. Being data driven doesn’t mean having a siloed team of wizards and engineers that poke at data. Being data driven means that everyone in the company knows how to access the data they need in order to answer the questions they have; that’s the ultimate goal of a data engineering team. We are getting there, but there is still a lot to do. If you have read this far, are passionate about data and have relevant skills, check out our jobs page.

https://robertovitillo.com/2016/06/02/so-what-do-you-do-at-mozilla/

|

|

Doug Belshaw: Some thoughts and recommendations on the future of the Open Badges backpack and community |

Intro

Back in January of this year, Mozilla announced a ‘continued commitment’ to, but smaller role in, the Open Badges ecosystem. That was as expected: a couple of years ago Mozilla and the MacArthur Foundation had already spun out a non-profit in the form of the Badge Alliance.

That Mozilla post included this paragraph:

We will also reconsider the role of the Badge Backpack. Mozilla will continue to host user data in the Backpack, and ensure that data is appropriately protected. But the Backpack was never intended to be the central hub for Open Badges — it was a prototype, and the hope has forever been a more federated and user-controlled model. Getting there will take time: the Backpack houses user data, and privacy and security are paramount to Mozilla. We need to get the next iteration of Backpack just right. We are seeking a capable person to help facilitate this effort and participate in the badges technical community. Of course, we welcome code contributions to the Backpack; a great example is the work done by DigitalMe.

Last month, digitalme announced that they have a contract with Mozilla to work on both the Open Badges backpack and the wider technical infrastructure. As Kerri Lemoie pointed out late last year, there’s no one at Mozilla working on Open Badges right now. However, that’s a feature rather than a bug: the ecosystem is in the hands of the community, where it belongs.

Tim Riches, CEO of digitalme, states that their first priority will be to jettison the no-longer-supported Mozilla Persona authentication system used for the Open Badges backpack: “To improve user experience across web and mobile devices our first action will be to replace Persona with Passport.js. This will also provide us with the flexibility to enable user to login with other identity providers in the future such as Twitter, Linkedin and Facebook. We will also be improving stability and updating the code base.”

In addition, digitalme are looking at how the backpack can be improved from a user point of view:

“We will be reviewing additional requirements for the backpack and technical infrastructure gathered from user research at MozFest supported by The Nominet Trust in the UK, to create a roadmap for further development, working closely with colleagues from Badge Alliance.”

Some of the technical work was outlined at the beginning of the year by Nate Otto, Director of the Badge Alliance. On that roadmap is “Federated Backpack Protocol: Near and Long-term Solutions”. As the paragraph from the Mozilla post notes, federation is something that has been promised for a long time: at least the last four years.

Federation is technically complex. In fact, even explaining it is difficult. The example I usually give is around the way email works. When you send an email, you don’t have to think about which provider the recipient uses (e.g. Outlook365, GMail, Fastmail, etc.) as it all just works. Data is moved around the internet leading to the intended person receiving a message from you.

The email analogy breaks down a bit if you push it too hard, but in the Open Badges landscape, the notion of federation is crucial. It allows badge recipients to store their badges wherever they choose. At the moment, we’ve effectively got interoperable silos; there’s no easy way for users to move their badges between platforms.

As Nate mentions in another post, building a distributed system is hard not just because of technical considerations, but because it involves co-ordinating multiple people and organisations.

It is much harder to build a distributed ecosystem than a centralized one, but it is in this distributed ecosystem, with foundational players like Mozilla playing a part, that we will build a sustainable and powerful ecosystem of learning recognition that reflects the values of the Web.

Tech suggestions

I’m delighted that there are some very smart and committed people working on the technical side of the Open Badges ecosystem. For example, yesterday’s community call (which unfortunately I couldn’t make) resurrected the ‘tech panel’. One thing that’s really important is to ensure that the *user experience* across the Open Badges ecosystem is unambiguous; people who have earned badges need to know where they’re putting them and why. At the moment, we’ve got three services wrapped up together in badge issuing platforms such as Open Badge Academy:

One step towards federation would be to unpick these three aspects at the ecosystem level. For example, providing an ‘evidence store’ could be something that all badge platforms buy into. This would help avoid problems around evidence disappearing if a badge provider goes out of business (as Achievery did last year).

A second step towards federation would be for the default (Mozilla/Badge Alliance) badge backpack to act as a conduit to move badges between systems. Every badge issuing platform could/should have a ‘store in backpack’ feature. If we reinterpret the ‘badge backpack’ metaphor as a place where you securely store (but don’t necessarily display) your badges, this would encourage providers to compete on badge display.

The third step towards federation is badge discoverability. Numbers are hard to come by within the Open Badges ecosystem, as the specification was explicitly developed to put learners in control. Coupled with Mozilla’s (valid) concerns around security and privacy, it’s difficult both to get statistics around Open Badges and to discover relevant badges. Although Credmos is having a go at the latter, more could be done at the ecosystem level. Hopefully this will be solved with the move to Linked Data in version 2.0 of the specification.
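One reason numbers are hard to come by is visible in the badge assertion itself. As I understand the Open Badges 1.x format, an assertion can carry a recipient object whose `identity` is a salted SHA-256 hash of the earner's email (written as `sha256$` followed by the hex digest) rather than the address itself, so a party who already knows an email can verify it, but nobody can enumerate earners from the assertions. In this minimal sketch the helper names are hypothetical; only the recipient fields (`type`, `hashed`, `salt`, `identity`) are meant to follow the specification, which should be checked before relying on the details.

```python
import hashlib
import secrets
from typing import Dict, Union


def hash_identity(email: str, salt: str) -> str:
    """Hashed identity in the form 'sha256$' + hex digest of (email + salt)."""
    return "sha256$" + hashlib.sha256((email + salt).encode("utf-8")).hexdigest()


def make_recipient(email: str) -> Dict[str, Union[str, bool]]:
    """Build a recipient object that never contains the plaintext email."""
    salt = secrets.token_hex(8)  # random per-assertion salt
    return {"type": "email", "hashed": True, "salt": salt,
            "identity": hash_identity(email, salt)}


def recipient_matches(recipient: Dict[str, Union[str, bool]], email: str) -> bool:
    """Check whether a recipient object was issued to `email`."""
    if not recipient.get("hashed"):
        return recipient.get("identity") == email
    algorithm, _, _ = str(recipient["identity"]).partition("$")
    if algorithm != "sha256":
        raise ValueError(f"unsupported hash algorithm: {algorithm}")
    expected = hash_identity(email, str(recipient.get("salt", "")))
    return secrets.compare_digest(str(recipient["identity"]), expected)
```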

Community suggestions

While I’m limited in the technical contributions I can make to the Badge Alliance, something I’m committed to is helping the community move forward in new and interesting ways. Although Nate wrote a community plan back in March, I still think we can do better in helping those new to the ecosystem. Funnelling people into a Slack channel leads to tumbleweeds, by and large. As I mentioned on a recent community call, I’d like to see an instance of Discourse, which would provide a knowledge base and a place for the community to interact in more targeted ways than the blunt instrument that is the Open Badges Google Group.

Something which is, to my mind, greatly missed in the Open Badges ecosystem, is the role that Jade Forester played in curating links and updates for the community via the (now defunct) Open Badges blog. Since she moved on from Mozilla and the Badge Alliance, that weekly pulse has been sorely lacking. I’d like to see some of the advice in the Community Building Guide being followed. In fact, Telescope (the free and Open Source tool it’s written about) might be a good crowdsourced solution.

Finally, I’d like to see a return of working groups. While I know that technically anyone can set one up any time and receive the blessing of the Badge Alliance, we should find ways to either resurrect or create new ones. Open Badges is a little bit too biased towards (U.S.) formal education at the moment.

Conclusion

The Badge Alliance community needs to be more strategic and mindful about how we interact going forwards. The ways that we’ve done things up until now have worked to get us here, but they’re not necessarily what we need to ‘cross the chasm’ and take Open Badges (even more) mainstream.

I’m pleased that Tim Cook is now providing some strategic direction for the Badge Alliance beyond the technical side of things. I’m confident that we can continue to keep up the momentum we’ve generated over the last few years, as well as continue to evolve to meet the needs of users at every point of the technology adoption curve.

Image CC BY-NC Thomas Hawk

http://dougbelshaw.com/blog/2016/06/02/badges-backpack-community/

|

|

Henrik Skupin: Firefox-ui-tests – Platform Operations Project of the Month |

Hello from Platform Operations! Once a month we highlight one of our projects to help the Mozilla community discover a useful tool or an interesting contribution opportunity.

This month’s project is firefox-ui-tests!

What are firefox-ui-tests?

Firefox UI tests are a suite of integration tests based on the Marionette automation framework, and they are mainly used for user-interface-centric testing of Firefox. The difference from pure Marionette tests is that Firefox UI tests interact with the chrome scope (browser interface) rather than the content scope (websites) by default. The tests also have access to a page object model called Firefox Puppeteer. It eases the interaction with all UI elements under test, and in particular makes interacting with the browser possible across different localizations of Firefox. That is a feature unique among all the other existing automated test suites.
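The page-object idea behind Firefox Puppeteer can be illustrated without Marionette itself. In this minimal sketch every name (`FakeDriver`, `TabBar`, the `new-tab-button` ID, the `newTab.label` string key) is hypothetical and not the real Puppeteer API: tests call methods on a page object, the page object locates elements by stable IDs, and any text comparison goes through the current locale's string table, so the same test can run unchanged against, say, a German build.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class FakeDriver:
    """Hypothetical stand-in for an automation driver such as Marionette."""
    labels: Dict[str, str]  # element id -> label shown in the UI
    clicked: List[str] = field(default_factory=list)

    def find_by_id(self, element_id: str) -> str:
        if element_id not in self.labels:
            raise LookupError(f"no element with id {element_id!r}")
        return element_id

    def click(self, element_id: str) -> None:
        self.clicked.append(element_id)

    def label_of(self, element_id: str) -> str:
        return self.labels[element_id]


class TabBar:
    """Page object: tests use its methods, never raw selectors or English text."""
    NEW_TAB_BUTTON = "new-tab-button"  # hypothetical stable element id

    def __init__(self, driver: FakeDriver, strings: Dict[str, str]) -> None:
        self.driver = driver
        self.strings = strings  # string table for the build's locale

    def open_new_tab(self) -> None:
        self.driver.click(self.driver.find_by_id(self.NEW_TAB_BUTTON))

    def new_tab_label_ok(self) -> bool:
        # Compare against the localized string, not a hard-coded English label
        expected = self.strings["newTab.label"]
        return self.driver.label_of(self.NEW_TAB_BUTTON) == expected
```

If the button's ID or localized string key ever changes, only the page object needs updating, not every test that opens a tab.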

Where Firefox UI tests are used

As of today, the Firefox UI functional tests are executed for each code check-in on integration and release branches, but only on Linux64 debug builds due to current Taskcluster restrictions. Once more platforms are available, testing will be expanded accordingly.

But as mentioned earlier, we also want to test localized builds of Firefox. For that, the developer and release builds, for which all locales exist, have to be used. Those tests run in our own CI system, called mozmill-ci, which is driven by Jenkins. Due to limited test machine capacity, only a handful of locales are tested, but this will change soon with the complete move to Taskcluster. With mozmill-ci we also test updates of Firefox to ensure that there is no breakage for our users after an update.

What are we working on?

Current work is fully dedicated to bringing more visibility of our test results to developers. We want to get there with the following sub-projects:

- Bug 1272228 – Get test results out of the Tier-3 level on Treeherder, which is hidden by default, and have them reported as Tier-2 or even Tier-1. This will drastically reduce the number of regressions introduced for our tests.

- Bug 1272145 – Tests should be located close to the code which actually gets tested. So we want to move as many Firefox UI tests as possible from testing/firefox-ui-tests/tests to individual browser or toolkit components.

- Bug 1272236 – To increase stability and coverage of Firefox builds, including all the various locales, we want all of our tests for nightly builds on Linux64 to be executed via Taskcluster.

How to run the tests

The tests are located in the Firefox development tree, which allows us to keep them up-to-date when changes in Firefox are introduced. But that also means that before the tests can be executed, a full checkout of mozilla-central has to be made. Depending on the connection it might take a while, so take the chance to grab a coffee while waiting.

Once the repository has been cloned, make sure that the build prerequisites for your platform are met. Once done, follow these configure and build steps to build Firefox. The build step is actually optional, since the tests can also use a Firefox build downloaded from mozilla.org.

When the Firefox build is available, the tests can be run. A tool that allows a simple invocation of the tests is called mach, and it is located in the root of the repository. Call it with various arguments to run different sets of tests or a different binary. Here are some examples:

# Run integration tests with the Firefox you built

./mach firefox-ui-functional

# Run integration tests with a downloaded Firefox

./mach firefox-ui-functional --binary %path%

# Run update tests with an older downloaded Firefox

./mach firefox-ui-update --binary %path%

There are some more arguments available. For an overview, consult our MDN documentation or run e.g. ./mach firefox-ui-functional --help.

Useful links and references

- Project URL: https://wiki.mozilla.org/Auto-tools/Projects/Firefox_UI_Tests

- User documentation: https://developer.mozilla.org/en-US/docs/Mozilla

- Firefox Puppeteer documentation: http://firefox-puppeteer.readthedocs.io/en/latest/

How to get involved

If the above sounds interesting to you, and you are willing to learn more about test automation, the firefox-ui-tests project is definitely a good place to get started. We have a couple of open mentored bugs, and can create even more, depending on individual requirements and knowledge in Python.

Feel free to get in contact with us in the #automation IRC channel by looking for whimboo or maja_zf.

http://www.hskupin.info/2016/06/02/firefox-ui-tests-platform-operations-project-of-the-month/

|

|

Mozilla Addons Blog: Add-ons Update – Week of 2016/06/01 |

I post these updates every 3 weeks to inform add-on developers about the status of the review queues, add-on compatibility, and other happenings in the add-ons world.

The Review Queues

In the past 3 weeks, 1277 listed add-ons were reviewed:

- 1192 (93%) were reviewed in fewer than 5 days.

- 68 (5%) were reviewed between 5 and 10 days.

- 17 (1%) were reviewed after more than 10 days.

There are 67 listed add-ons awaiting review.

You can read about the recent improvements in the review queues here.

If you’re an add-on developer and are looking for contribution opportunities, please consider joining us. Add-on reviewers get invited to Mozilla events and earn cool gear with their work. Visit our wiki page for more information.

Firefox 48 Compatibility

The compatibility blog post for Firefox 48 is up. The bulk validation should be run in the coming weeks.

As always, we recommend that you test your add-ons on Beta and Firefox Developer Edition to make sure that they continue to work correctly. End users can install the Add-on Compatibility Reporter to identify and report any add-ons that aren’t working anymore.

Extension Signing

The wiki page on Extension Signing has information about the timeline, as well as responses to some frequently asked questions. The current plan is to remove the signing override preference in Firefox 48. This was pushed back because we don’t have the unbranded builds needed for add-on developers to test on Beta and Release.

Multiprocess Firefox

If your add-on isn’t compatible with multiprocess Firefox (e10s) yet, please read this guide. Note that in the future Firefox will only support extensions that explicitly state multiprocess compatibility, so make sure you set the right flag:

- WebExtensions are compatible by default and don’t need any flags to be set.

- SDK extensions need to set the multiprocess permission in package.json.

- All other extensions need to set the multiprocessCompatible flag in install.rdf.
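As a quick pre-release check, a minimal sketch like the following can confirm that an extension declares multiprocess compatibility in the two manifest formats listed above: `"permissions": {"multiprocess": true}` in an SDK add-on's package.json, and `em:multiprocessCompatible` set to `true` in install.rdf. The function names are hypothetical, and this is not an official Mozilla tool.

```python
import json
import xml.etree.ElementTree as ET

EM_NS = "http://www.mozilla.org/2004/em-rdf#"
FLAG = f"{{{EM_NS}}}multiprocessCompatible"  # ElementTree's {namespace}name form


def sdk_declares_multiprocess(package_json: str) -> bool:
    """True if an SDK add-on's package.json sets permissions.multiprocess to true."""
    data = json.loads(package_json)
    permissions = data.get("permissions", {})
    return isinstance(permissions, dict) and permissions.get("multiprocess") is True


def rdf_declares_multiprocess(install_rdf: str) -> bool:
    """True if install.rdf sets em:multiprocessCompatible to true.

    RDF/XML allows the property either as a child element or as an
    attribute, so both spellings are checked."""
    root = ET.fromstring(install_rdf)
    for element in root.iter():
        if element.tag == FLAG and (element.text or "").strip() == "true":
            return True
        if element.get(FLAG, "").strip() == "true":
            return True
    return False
```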

https://blog.mozilla.org/addons/2016/06/01/add-ons-update-82/

|

|

Mozilla Addons Blog: June 2016 Featured Add-ons |

Pick of the Month: Smart HTTPS (Encrypt Your Communications)

by ilGur

Automatically changes HTTP addresses to secure HTTPS and, if loading errors occur, reverts to HTTP.

“Using it is as easy as installing.”
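The behavior described for Smart HTTPS (upgrade first, fall back on error) can be sketched in a few lines. This is not the add-on's code; `fetch` is a hypothetical loader that raises `OSError` when a page fails to load.

```python
from typing import Callable, Tuple
from urllib.parse import urlsplit


def upgrade_to_https(url: str) -> str:
    """Rewrite an http:// URL to https://, dropping an explicit :80 port."""
    parts = urlsplit(url)
    if parts.scheme != "http":
        return url
    netloc = parts.netloc[:-3] if parts.netloc.endswith(":80") else parts.netloc
    return parts._replace(scheme="https", netloc=netloc).geturl()


def load_with_fallback(url: str, fetch: Callable[[str], str]) -> Tuple[str, str]:
    """Try the HTTPS version first; if loading fails, retry the original URL."""
    secure = upgrade_to_https(url)
    try:
        return secure, fetch(secure)
    except OSError:
        if secure == url:  # already HTTPS: nothing to fall back to
            raise
        return url, fetch(url)
```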

Featured: YouTube No Buffer (Stop Autoplaying)

by James Fray

A lightweight extension that prevents YouTube from auto-buffering videos.

“I needed this, as YouTube makes the browser too slow. Thanks.”

Featured: Weather Forecast Plus

by Alexis Jaksone

Easily check the weather anywhere in the world right from the convenience of your toolbar.

“Simple, unobtrusive, snappy.”

Nominate your favorite add-ons

Featured add-ons are selected by a community board made up of add-on developers, users, and fans. Board members change every six months, so there’s always an opportunity to participate. Stay tuned to this blog for the next call for applications. Here’s further information on AMO’s featured content policies.

If you’d like to nominate an add-on for featuring, please send it to amo-featured@mozilla.org for the board’s consideration. We welcome you to submit your own add-on!

https://blog.mozilla.org/addons/2016/06/01/june-2016-featured-add-ons/

|

|

Air Mozilla: OC16 Mitchell Baker Mozilla |

Mozilla Executive Chairwoman Mitchell Baker talks about the digitization of everything.

|

|

Support.Mozilla.Org: SUMO Release Report: Firefox 46 and Firefox for iOS 4.0 |

Hello, SUMO Nation!

We have a document to share with you, and we’re also sharing its entirety with you below (if you are allergic to shared documents).

It explains what has happened during and after the launch of Firefox 46 and Firefox for iOS 4.0 on the SUMO front – so you can see how much work was done by everyone in the community in those busy days.

If you have questions, as always, go to our forums and fire away!

Without further ado, here is the…

SUMO Release Report: Firefox 46 and Firefox for iOS 4.0

Knowledge Base and Localization

Articles Created and Updated

| Article | Voted “helpful” (English/US only) | Global views |
|---|---|---|
| **Desktop (April 28-May 16)** | | |
| Firefox 46 Crashes at startup on OS X | 38% | 1483 |
| Add-on signing in Firefox | 75% | 53 |
| **Android (April 28-May 16)** | | |
| Firefox is no longer supported on this version of Android | 73% | 1818 |
| Open links in the background for later viewing with Firefox for Android | 76-85% | 2372 |
| Add-on signing in Firefox for Android | 70-73% | 6455 |

Localization Coverage

| Article | % of top 10 locales localized into | % of top 20 locales localized into |
|---|---|---|
| **Desktop (April 28-May 16)** | | |
| Firefox 46 Crashes at startup on OS X | 80%* | 50%* |
| Add-on signing in Firefox | 100% | 100% |
| **Android (April 28-May 16)** | | |
| Firefox is no longer supported on this version of Android | 40%* | 25%* |
| Open links in the background for later viewing with Firefox for Android | 100% | 65%* |
| Add-on signing in Firefox for Android | 100% | 65%* |

- At the moment, our l10n tool does not surface the latest content (it focuses on the most frequently visited articles), so we rely on one-to-one communication to inform contributors about new launch articles. This may lead to lower localization rates right after a launch.

- Of the top 20 locales, 8 in the bottom half are under-localized due to a lack of active l10n community members. We are working on addressing this in the coming months through community efforts (e.g. for Turkish and Arabic).

- Two of the top 20 locales are from the Nordics (Swedish, Finnish), where English is widely spoken, which impacts community interest in localizing content into their native languages.

Support Forum Threads

Solved top viewed threads

- Unable to launch Firefox 46 in RHEL 6.6 – Solved

- Recently updated firefox and now print margins are metric, how do I reset to inches? – Solved

- Please update flash

Unsolved top viewed threads

- “This browser does not support video playback.” Video playback Error on Twitter: Windows 10 – 80 views

- Workaround – Linux tooltip color contrast

- Great malware troubleshooting – Adware keeps Taking Over Firefox, Firefox@helper2

Bugs Created from Forum threads

- MMNk Malware crashing in iOS

- Blank page loading issue in Firefox 46:

- Hitman pro Alert crash:

- Firefox no longer starting on older hardware, as well as SSE2:

- Issue where Kaspersky did not properly decode Facebook

Social Support Highlights

| Top 5 Contributors | Replies |
|---|---|
| Andrew Truong | 70 |
| Jhonatas Rodrigues Machado | 17 |
| Noah Y | 15 |
| Swarnava Sengupta | 4 |
| Magno Reis | 2 |

- Jhonatas started contributing later in the release cycle, but has been doing a lot of great work since he joined SUMO.

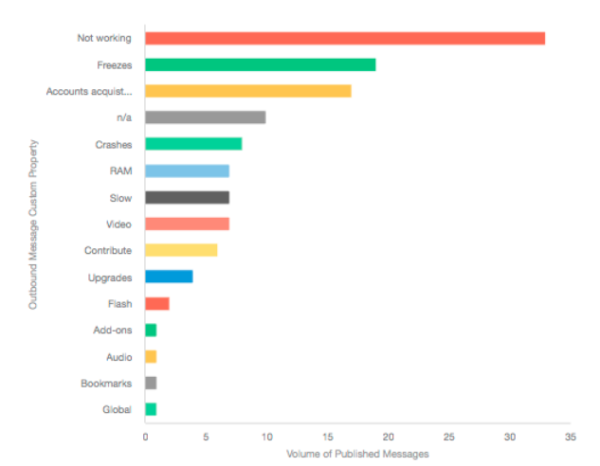

Trending issues

These topics are categorized by our social support team when they submit a response to a user.

“Not Working” is an easy categorization, but doesn’t give us much information. As time goes on we will work to refine the buckets that seem too general.

https://blog.mozilla.org/sumo/2016/06/01/sumo-release-report-firefox-46-and-firefox-for-ios-4-0/

|

|

Air Mozilla: The Joy of Coding - Episode 59 |

mconley livehacks on real Firefox bugs while thinking aloud.

|

|

Chris Lord: Open Source Speech Recognition |

I’m currently working on the Vaani project at Mozilla, and part of my work on that allows me to do some exploration around the topic of speech recognition and speech assistants. After looking at some of the commercial offerings available, I thought that if we were going to do some kind of add-on API, we’d be best off aping the Amazon Alexa skills JS API. Amazon Echo appears to be doing quite well and people have written a number of skills with their API. There isn’t really any alternative right now, but I actually happen to think their API is quite well thought out and concise, and maps well to the sort of data structures you need to do reliable speech recognition.
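One reason the Alexa skills model suits reliable recognition is that each skill enumerates the phrases it expects, which yields a small, closed vocabulary for a recognizer to match against. The sketch below derives such a phrase table and vocabulary from sample utterances. It assumes the line-based `IntentName phrase` format used by the Alexa sample skills of the time, with `{Slot}` placeholders. The `Grammar` type and the `buildGrammar` function are hypothetical and do not describe how the author's prototype works.

```typescript
// Hypothetical: turn Alexa-style sample utterances ("IntentName some phrase",
// one per line) into a per-intent phrase table plus a closed vocabulary.
interface Grammar {
  phrases: Map<string, string[]>; // intent name -> sample phrases
  vocabulary: Set<string>;        // every literal word the skill expects to hear
}

function buildGrammar(sampleUtterances: string): Grammar {
  const phrases = new Map<string, string[]>();
  const vocabulary = new Set<string>();
  for (const rawLine of sampleUtterances.split("\n")) {
    const line = rawLine.trim();
    const firstSpace = line.indexOf(" ");
    if (firstSpace < 0) continue; // blank line or intent with no phrase
    const intent = line.slice(0, firstSpace);
    const phrase = line.slice(firstSpace + 1).trim();
    const list = phrases.get(intent) ?? [];
    list.push(phrase);
    phrases.set(intent, list);
    for (const word of phrase.split(/\s+/)) {
      // {Slot} placeholders are filled from slot values, not heard literally.
      if (!word.startsWith("{")) vocabulary.add(word.toLowerCase());
    }
  }
  return { phrases, vocabulary };
}
```

A vocabulary of a few dozen words is far easier for an offline recognizer such as pocketsphinx to handle reliably than open dictation, which is the "limit the scope" point made later in the post.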

So, skipping forward a bit, I decided to prototype with Node.js and some existing open source projects to implement an offline version of the Alexa skills JS API. Today it’s gotten to the point where it’s actually usable (for certain values of usable), and I’ve just spent the last 5 minutes asking it to tell me Knock-Knock jokes, so rather than waste any more time on that, I thought I’d write about it instead.

If you want to try it out, check out this repository and run npm install in the usual way. You’ll need pocketsphinx installed for that to succeed (install sphinxbase and pocketsphinx from GitHub). You’ll also need espeak installed, and some skills for it to do anything interesting, so check out the Alexa sample skills and symlink the ‘samples’ directory as a directory called ‘skills’ in your ferris checkout directory. After that, just run the included example file with node and talk to it via your default recording device (hint: say ‘launch wise guy’).
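Saying ‘launch wise guy’ implies a routing step between the recognizer's transcript and a skill. The sketch below shows one way that step could work. It turns "launch <skill name>" into an Alexa-style `LaunchRequest`, and it turns a phrase that matches one of the active skill's sample utterances into an `IntentRequest`. The `request.type`, `request.intent.name` and `request.intent.slots` fields follow the Alexa request format, but most of that envelope is omitted. The `route` function, the `Routed` type and the exact-match strategy are hypothetical. Exact matching assumes the recognizer is constrained to the listed phrases, and slot filling is left out.

```typescript
// Minimal subset of the Alexa request envelope (most fields omitted).
type AlexaRequest =
  | { request: { type: "LaunchRequest" } }
  | {
      request: {
        type: "IntentRequest";
        intent: { name: string; slots: Record<string, { name: string; value: string }> };
      };
    };

interface Routed {
  skill: string;
  body: AlexaRequest;
}

// Hypothetical router. `skills` maps an invocation name (e.g. "wise guy") to its
// sample utterances ("IntentName phrase" lines); `active` is the launched skill.
function route(transcript: string, skills: Map<string, string[]>, active: string | null): Routed | null {
  const text = transcript.trim().toLowerCase();
  const launch = /^launch (.+)$/.exec(text);
  if (launch && skills.has(launch[1])) {
    return { skill: launch[1], body: { request: { type: "LaunchRequest" } } };
  }
  if (active === null) return null; // no skill running, nothing to dispatch to
  for (const line of skills.get(active) ?? []) {
    const space = line.indexOf(" ");
    if (space < 0) continue;
    const intent = line.slice(0, space);
    const phrase = line.slice(space + 1).trim().toLowerCase();
    if (phrase === text) {
      return { skill: active, body: { request: { type: "IntentRequest", intent: { name: intent, slots: {} } } } };
    }
  }
  return null; // transcript did not match any known phrase
}
```

The resulting request object can then be handed to the skill's existing handler code, which is what makes reusing unmodified Alexa sample skills possible.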

Hopefully someone else finds this useful – I’ll be using this as a base to prototype further voice experiments, and I’ll likely be extending the Alexa API further in non-standard ways. What was quite neat about all this was just how easy it all was. The Alexa API is extremely well documented, Node.js is also extremely well documented and just as easy to use, and there are tons of libraries (of varying quality…) to do what you need to do. The only real stumbling block was pocketsphinx’s lack of documentation (there’s no documentation at all for the Node bindings and the C API documentation is pretty sparse, to say the least), but thankfully other members of my team are much more familiar with this codebase than I am and I could lean on them for support.

I’m reasonably impressed with the state of lightweight open source voice recognition. This is easily good enough to be useful if you can limit the scope of what you need to recognise, and I find the Alexa API is a great way of doing that. I’d be interested to know how close the internal implementation is to how I’ve gone about it if anyone has that insider knowledge.

http://chrislord.net/index.php/2016/06/01/open-source-speech-recognition/

|

|