You can add any RSS source (including a LiveJournal journal) to your friends feed on the syndication page.

Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated whenever that feed is updated. It may not match the content of the original page. This syndicated feed was created automatically at the request of readers of this RSS feed.

For any questions about how this service works, see the contact information page.


Mitchell Baker: Chairing Mozilla’s Board |

Building a Network of People

In a previous post, I gave an overview of the five general areas I focus on in my role as Founder and Executive Chair of Mozilla. The first of the five areas is the traditional “Chair of the Board” role. Here I’ll give a bit of detail about one initiative I’m currently working on in this area.

In the overview post, I gave the following description of this part of my role:

I work on mission focus, governance, development and operation of the Board and the selection, support and evaluation of the most senior executives. […] Less traditionally, this portion of my role includes an ongoing activity I call “weaving all aspects of Mozilla into a whole.” Mozilla is an organizationally complex mixture of communities, legal entities and programs. Unifying this into “one Mozilla” is important.

My current focus chairing the Board is on building a network of people who can help us identify and recruit potential Board level contributors and senior advisors. I view this as a multi-year development program. There are a few reasons for this:

- Mozilla is an unusual organization and it takes a while to understand us.

- We intend to increase diversity across a number of axes, from gender to geography.

A conscious effort to expand the set of people who interact with us, and from whom Board-level candidates might come, is critical. So while we are looking to expand each of the Mozilla Foundation and Mozilla Corporation Boards in 2016, the goal here is much longer-term and broader.

This work is also part of the development of the Mozilla Leadership Network (MLN), a new initiative being developed by the Mozilla Foundation team. The idea underpinning the Mozilla Leadership Network is that Mozilla is most effective when many people feel connected to us and feel Mozilla gives extra impact to their actions on behalf of an open Internet. The MLN seeks to provide these connection points. We hope the MLN will include a wide range of people, from students figuring out their path in life to accomplished professionals and recognized thought leaders.

Both Mozilla and I have a long history of connecting with individual contributors and with local and regional communities. This year I’ve added this additional focus on senior advisors and potential Board level contributors. This is an ongoing process. So far I’ve had three or four brainstorming sessions focused on expanding the network of people we might want to get to know. From this point, we do some information gathering to get to know more about people we now have pointers to. Then we start to get to know people, to see (a) who has a good feel for the open Internet, Mozilla, or the kinds of initiatives we’re focused on; and (b) who has both interest and time to engage with Mozilla more.

I’ve had detailed conversations with nine of the people we’ve identified, sometimes multiple times and for hours. Of these, approximately 75% are women and two thirds are located outside the US, reflecting our interest in increasing our diversity along multiple axes.

These conversations are not about “do you want to be a board member?”. They are pretty detailed explorations of a person’s sense of the Open Internet, Mozilla’s role, our initiatives, challenges and opportunities. The conversations are invitations to engage with Mozilla at any level and explorations of how appealing our current initiatives might be.

In the next week or so I’ll do a follow up post about the Board search in particular.

The Mozilla communities are part of what I love most about Mozilla. I remain deeply involved with our current communities through the work of the Participation team. This new focus broadens the possibilities. The number of people who look to Mozilla to help build an Internet that is open and accessible is both motivating and inspiring.

http://blog.lizardwrangler.com/2016/06/01/chairing-mozillas-board/

|

|

Project Tofino: Tofino Project Goals Update |

This is the first in a fortnightly series of blog posts providing updates on the Tofino Project’s progress. Expect Product design updates…

|

|

Air Mozilla: Weekly SUMO Community Meeting June 1, 2016 |

This is the sumo weekly call


https://air.mozilla.org/weekly-sumo-community-meeting-june-1-2016/

|

|

Daniel Glazman: BlueGriffon 2.0 released |

I am happy to announce that BlueGriffon 2.0 is now available from http://www.bluegriffon.org .

http://www.glazman.org/weblog/dotclear/index.php?post/2016/06/01/BlueGriffon-2.0-released

|

|

Karl Dubost: PWA - Heatmap Discussions Compilation |

PWA stands for Progressive Web Apps. In French, it sounds like "Pouah!", which could be roughly translated as "Yuck!". That in itself could be a good summary of these last few days.

No URLs have been harmed during this compilation (apart from one person who posted on Medium; if you care about URLs, don't post on Medium). I'm just keeping the list around to remember the context.

15 June 2015

Note the year… 2015

Progressive Web Apps: Escaping Tabs Without Losing Our Soul, Alex Russell:

URLs and links as the core organizing system: if you can’t link to it, it isn’t part of the web

18 March 2016

Progressive web apps: the long game, Remy Sharp:

TL;DR: There's a high barrier of entry to add to home screen that will create a trust between our users and our web apps. A trust that equals the trust of an app store installed app. This is huge.

23 May 2016

@adactio, Jeremy Keith:

Strongly disagree with Lighthouse wanting “Manifest's display property set to standalone/fullscreen to allow launching without address bar.”
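The audit Jeremy Keith objects to boils down to a check on the Web App Manifest's `display` member. The sketch below is not Lighthouse's actual code; `launchesWithoutAddressBar` is a hypothetical name, and the only assumption is the four display modes and the `browser` default defined in the W3C Web App Manifest spec.

```javascript
// Display modes from the W3C Web App Manifest spec, from least to most
// browser UI: "fullscreen", "standalone", "minimal-ui", "browser".
const NO_ADDRESS_BAR_MODES = new Set(["fullscreen", "standalone"]);

// Hypothetical check mirroring the quoted audit text; not Lighthouse's source.
function launchesWithoutAddressBar(manifestJson) {
  const manifest = JSON.parse(manifestJson);
  // The spec's default display mode is "browser" when the member is absent.
  const display = manifest.display ?? "browser";
  return NO_ADDRESS_BAR_MODES.has(display);
}

console.log(launchesWithoutAddressBar('{"display": "standalone"}')); // true
console.log(launchesWithoutAddressBar('{"display": "minimal-ui"}')); // false
console.log(launchesWithoutAddressBar('{"name": "Demo"}'));          // false
```

Only `fullscreen` and `standalone` pass. `minimal-ui` keeps a small set of navigation controls, which the spec says may include some way of viewing the document's address, so a rule that rewards only the first two modes effectively rewards hiding the URL.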

24 May 2016

Regressive Web Apps, Jeremy Keith:

Chrome developers have decided that displaying URLs is not “best practice”. It was filed as a bug.

On URLs in Progressive Web Apps, Bruce Lawson:

What do you think? How can we best allow the user to see the current URL in a discoverable way?

25 May 2016

The Ideal Web Experience, Dion Almaer:

We aren’t yet at the ideal web experience, but I think that we are at least poised better with the technology and thinking around progressive web apps. It is all well and good to expect every development team behind a website to be craftsmen with infinite time to build the perfect experience, but the world isn’t like that. There are a slew of problems, a ton of legacy, and piles of work to do. All we can hope for is that this work is prioritized.

26 May 2016

DRAMA!

@slightlylate, Alex Russell:

@dalmaer @xeenon @adactio @owencm: if you think the URL is going to get killed on my watch then you aren't paying any attention whatsoever.

27 May 2016

Beyond Progressive Web Apps, Matthias Beitl:

This is where things collide with existing functionality. Swiping from the top is already tied to the OS, not the current app. Well, this is how things are today, we can find ways to make it work. Not just for URLs, who says there is no common ground for a slide-from-top menu that works for both apps and URLs. All implementation details aside, browsers can only go so far, the operating system must be designed to take care of those needs.

28 May 2016

State of the gap, Remy Sharp:

With time, and persistence, users (us included) will come to expect PWAs to work. If it's on my homescreen, it'll work. The same way as any good native app might work today.

30 May 2016

Regression toward being mean, Jeremy Keith:

Simply put, in this instance, I don’t think good intentions are enough.

Not The Post I Wanted To Be Writing…, Alex Russell:

This matters because URL sharing is the proto-social behavior. It’s what enabled the web to spread and prosper, and it’s something we take for granted. In fact, if you look at any of my talks, you’ll see that I call it out as the web’s superpower.

31 May 2016

Progressive Web Apps and our regressive approach, Christian Heilmann:

The idea that a PWA is progressively enhanced and means that it could be a web site that only converts in the right environment is wonderful. We can totally do that. But we shouldn’t pretend that this is the world we need to worry about right now. We can do that once we solved the issue of web users not wanting to pay for anything and show growth numbers on the desktop. For now, PWAs need to be the solution for the next mobile users. And this is where we have an advantage over native apps. Let’s use that one.

1 June 2016

You got to love the new Web… Pouah! This is the URL of an article in the PWA environment. Yuck!

https://www.washingtonpost.com/pwa/#https://www.washingtonpost.com/news/the-switch/wp/2016/05/27/newspapers-escalate-their-fight-against-ad-blockers/

I guess it's why they want to hide it.
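The article's real address survives intact after the `#`. Because everything after `#` is a fragment, the server only receives the `/pwa/` path, and the article address lives purely on the client. A minimal sketch using the standard WHATWG `URL` class to recover it; `articleUrlFromShell` is a hypothetical helper, and it assumes the shell always carries the full article URL in the fragment, as in the example above.

```javascript
// Recover the article address that the PWA shell carries in its fragment.
// Assumption: the shell puts the full article URL right after "#".
function articleUrlFromShell(shellUrl) {
  const fragment = new URL(shellUrl).hash.slice(1); // drop the leading "#"
  if (!fragment) return null;                       // nothing embedded
  try {
    return new URL(fragment).href;                  // must parse as an absolute URL
  } catch {
    return null;                                    // fragment was not a URL
  }
}

const shell = "https://www.washingtonpost.com/pwa/#https://www.washingtonpost.com/news/the-switch/wp/2016/05/27/newspapers-escalate-their-fight-against-ad-blockers/";
console.log(new URL(shell).pathname);    // "/pwa/": the only path the server sees
console.log(articleUrlFromShell(shell)); // the embedded article URL
```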

Otsukare!

|

|

Tantek Celik: Running for the W3C Advisory Board Again |

From Open Friendly To Open Habits

Almost three years ago, after I’d been elected, but before I officially started my term, I started the Advisory Board’s public wiki page:

In that time the page has grown and had sections moved around, but in general it has been edited by nearly every member of the AB. In three years we have achieved a new level of acceptance of openness in the AB. I would say the AB is now, both as individuals and as a whole, "open friendly", that is, friendly to and supportive of being open.

That being said I’m still doing much more editing than others, which is not by design but by habit and necessity.

On any given discussion it still takes some careful thinking on the part of the AB about whether we can make the discussion public, how we should make it public, what a good summary would be, etc. There are rarely any obvious answers here, so the only way to get better at it is by practicing: we practice in meetings, discuss / decide how to openly communicate, and repeat.

My role is still very much one of taking the initiative and encouraging such open documentation (sometimes doing it too).

My goal for the next period is to effect cultural transfer so that I’m no longer doing more/most of the edits on our open wiki page, and instead have us all collectively reminding each other to publicly document decisions, priorities, summaries.

My goal is to eliminate my specific role in this regard, so that open documentation simply becomes accepted as the way the AB works, as a habit, both as individuals and as a whole.

I don’t know if such a cultural change is possible in two years’ time, but I think we have to try.

Open Priorities

Any group of people with as broad a mission as the AB can potentially spend their time on any number of things. To operate more effectively as a board (not just a set of elected individuals), and to help the broader W3C community provide us feedback, we publish our priorities openly, and we re-evaluate them annually (potentially semi-annually, with new members joining in June / July).

https://www.w3.org/wiki/AB#Priorities_2016

I think this is pretty important, as it communicates what it is that we, the AB as a whole, think is most important to work on, and, with our initials, what we as individuals feel we can most effectively contribute to.

I am going to touch on the few that I see as both important for the AB, and areas that I am bringing my personal skills and experience to help solve:

Maintenance

Maintenance is about how specifications are maintained, making sure they actually are, and, frankly, obsoleting dead specs. It is perhaps the most important long-term priority for W3C.

This gets at the heart of one of the biggest philosophical disagreements in modern specification development.

Whether to have "living" specifications that you can depend on to always be updated, but where you’re not sure which parts are stable, or to have "stable" specifications that you can depend on not to change underneath you, but where you cannot be sure you are seeing the latest errata and fixes.

Or worse, a stable specification which "those in the know" know to ignore because it’s been abandoned, but which a new developer (market entrant) does not know, wastes time trying to implement, and then eventually gets frustrated with W3C or standards as a whole because of the time they wasted.

Right now there are unmaintained specifications on the W3C’s Technical Reports (TR) page. And there are also obsolete specifications as well. Can you tell quickly which are which? I doubt anyone can. This is a problem. We have to fix this.

Best practices for new specifications

Best practices for the Recommendation track. Yes, we have a maintenance problem we need to fix, but that’s only half the equation; the other half is getting better at producing maintainable specifications that hopefully we won’t have to obsolete!

The biggest change happening here at W3C, instigated and promoted by many of us on the AB, is a focus on incubating ideas and proposals before actually working on them in a working group.

This incubation approach is fairly new to W3C, and frankly, to standards organizations as a whole.

It completely changes the political dynamic from trusting a bunch of authoritative individuals to come up with the right ideas, design, etc. a priori and via discussion, compromise, and consensus, to instead forcing some amount of early implementation testing, filtering, feedback, and iteration.

The reality is that this is a huge philosophical change, and frankly a huge shift in the balance of power & influence of the participants, from academics to engineers, from architects to hackers. So if you were an idealist academic that had a perfect design that you thought everyone should just implement, you’re going to find yourself facing more challenges, which may be frustrating. Similarly if you were a high level enterprise architect, used to commanding whole teams of people to implement your top-down designs.

Incubation shifts power to builders, to implementers, and this is seen as a good thing, because if you cannot convince someone (including yourself and your time) to implement something, even partially, to start with, you should probably reconsider whether it is worth implementing, its use-cases, etc.

By prototyping ideas early, we can debunk bad (e.g. excessively complex) ideas more quickly. By applying the time costs of implementation, we quickly confront the reality that no feature is free, and nothing should be included for completeness’ sake. Everything has a cost, and thus must provide obvious user value.

Incubation works not only to double-check ideas, but also to provide secondary and tertiary perspectives that help chisel away inessential aspects of a feature or idea: aspects that might otherwise be far too easy to include, for politeness’ sake, by those seeking consensus at the cost of avoiding essential conflict and debate.

I bring a particularly strong perspective to the incubation discussions, and that is from my experience with IndieWebCamp, where not only do we strongly encourage such incubation of any idea, we require it of the person proposing the idea! And not just in a library, but deployed on their personal website as part of their identity online. We call this practice selfdogfooding, and it has helped reduce and minimize many a feature, or whole specifications.

Security

Lastly but perhaps most user-visible, security (and privacy), in particular fear of losing either, is perhaps the number one user-visible challenge to the open web. Articles like this one are being written fairly frequently:

Privacy Concerns Curb Online Commerce, Communication

Some of this is perception, some of it is a result of nearly monthly "data breaches" at major web sites / data silos, and some of it comes from fears of mass involuntary surveillance, both by governments and by private corporations seeking to use any information to better target their advertisements.

The key aspects we can work on and improve at W3C are related to the very specifications we design. At a minimum, we can work to minimize possible security threats from new features or specifications. On the upside, we can promote specifications that provide better security than previous works. There’s lots to do here, and lots of groups are working on various aspects of security at W3C, including the W3C Technical Architecture Group (TAG).

The AB’s role in security is to help these groups coordinate and share approaches, and to provide encouragement in W3C processes to promote awareness and consideration of security across the entire web platform.

Looking Forward

That is a lot to work on, and those are only three of the W3C AB’s priorities for 2016. I hope to continue working on them, and help make a difference in each over the next two years.

Thanks for all your feedback and support. Let’s keep making the open web the best platform the world has ever seen.

Update: 2016-06-01

Update: I’ve been re-elected to the AB for another two years, starting 2016-07-01. Congrats to my fellow elected AB members!

http://tantek.com/2016/152/b1/running-for-w3c-advisory-board-again

|

|

Cameron Kaiser: Extending TenFourFox 38 |

Yes, it was a lovely wedding. Thanks for your well-wishes.

I've been slogging through the TenFourFox 43.0 port, and while the JIT is coming along slowly and I'm making bug fixes as I find them, I still have a few critical failures to smoke out and I'd say I'm still about two weeks away from tackling 45. Thus, we'll definitely have a TenFourFox 38.9 consisting of high-priority cross-platform security fixes backported from Firefox 45ESR, and there may need to be a 38.10 while TenFourFox 45 stabilizes. This 38.9, which I remind you does not correlate to any official Firefox version, will come out parallel to 45.3, and I should have test builds by this weekend for the scheduled Tuesday release. TenFourFox 38.9 will also include a fix for a JIT bug that I discovered in 43 that also affects 38.

I'm also accumulating post-45 features I want to add to our fork of Gecko 45 to keep it relevant for a bit longer. Once 45 gets off the ground, I'm considering having an unstable and stable branch again with features moving from unstable to stable as they bake in beta testing, though unlike Mozilla our cycles will probably be 12 weeks instead of 6 due to our smaller user base. These features are intentionally ones that are straightforward to port; I'm not going to do massive surgery like what was required for Classilla to move forward. I definitely learned my lesson about that.

More later.

http://tenfourfox.blogspot.com/2016/05/extending-tenfourfox-38.html

|

|

Jen Kagan: day 6: brain to launch & a close reading |

the project i’m working on, min-vid, is a firefox add-on that lets you minimize a video and keep it in the corner of the browser so you can watch while you’re reading/doing other stuff on the page behind it.

this is an idea straight out of dave’s brain.

getting this idea from dave’s brain to the firefox browser takes a lot of work and coordination. one of the first steps was for the dev team to decide on which features would add up to an ‘mvp,’ or ‘minimum viable product.’

a user experience designer looks at these mvp features and designs the human-readable interface around them. all the little x’s in the corners of browser windows, things that light up or expand or gray out when you mouse over them, windows that snap into place… they’re all designed by a person to work that way. in min-vid’s case, this person is named john. hey john.

so in addition to this set of mvp features, there are some basic logistical things that go into launching an open source product: a wiki page, a README that explains the thing and lets people know how to contribute, a name.

and then there are a thousand functionality issues to figure out. here’s a screenshot of a partial issue list:

one mvp feature of this product is vimeo support since lots of folks watch videos hosted by vimeo. dave is working on that, and it involves getting the vimeo api to talk to the firefox add-on api. looking at his code for the new vimeo support, i realized i didn’t understand the basic structure of the existing add-on.
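before any vimeo api call happens, the add-on has to recognize that a page or embed is a vimeo video at all. a minimal sketch of that first step, assuming the two common vimeo url shapes (`vimeo.com/<id>` and `player.vimeo.com/video/<id>`); `getVimeoId` is a hypothetical helper, not dave's min-vid code.

```javascript
// Hypothetical helper, not taken from min-vid: extract the numeric video ID
// from common Vimeo page and embed URLs, or return null.
function getVimeoId(pageUrl) {
  let url;
  try {
    url = new URL(pageUrl);
  } catch {
    return null; // not a valid absolute URL
  }
  const host = url.hostname.replace(/^www\./, "");
  // Page URLs look like vimeo.com/<id>; embeds like player.vimeo.com/video/<id>.
  const pattern =
    host === "vimeo.com" ? /^\/(\d+)\/?$/ :
    host === "player.vimeo.com" ? /^\/video\/(\d+)\/?$/ :
    null;
  if (!pattern) return null;
  const match = url.pathname.match(pattern);
  return match ? match[1] : null;
}

console.log(getVimeoId("https://vimeo.com/76979871"));             // "76979871"
console.log(getVimeoId("https://player.vimeo.com/video/76979871")); // "76979871"
console.log(getVimeoId("https://vimeo.com/channels/staffpicks"));  // null
```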

so i spent a big chunk of today doing what basically amounted to a close reading of the core add-on code. i copy/pasted it into github gists, where i added comments, questions, and links to helpful resources. then, i sent my marked up index.js file to dave and he responded with additional comments, answers, and more resources.

to folks (but mostly, ahem, my own internal monologue) who say “THAT IS NOT REAL WORK OR A GOOD USE OF TIME,” i say, “wrong this is actually a great learning exercise bye!”

http://www.jkitppit.com/2016/06/01/day-6-brain-to-launch-a-close-reading/

|

|

Emma Irwin: Mozfest 2016 for Participation |

Earlier this month a group of people met in Berlin to imagine and design Mozfest 2016.

Blending inspiration and ideas from open news, science, localization, youth, connected devices and beyond – we spent three glorious days collaborating and building a vision of a Mozfest like no other.

The Participation team emerged from this experience with a new vision for Mozillian participation we’re calling ‘Mozfest Space* Contributors’: roles designed to help every space in the building succeed at its goals. This is a very different approach from recent years, when our focus was on participating as facilitators, helpers and learners. With this new approach, we’re inviting contribution, ownership and responsibility in shaping the event. Super, super exciting. I hope you agree!

Exploring contributor roles within Spaces, we found amazing potential! Open Science imagined a ‘Science Translator’ role, helping people overcome scientific jargon to connect with ideas. The Web Literacy group has big plans for their physical space, one where a ‘Set Designer’ would be incredibly helpful in making those dreams come true.

Open News and others thought about ‘Help Desk’ leads, and more than one space has suggested that the addition of technical mentors and session translators would bring diversity and connection. Can you see yet why this will be amazing?

Outreach for contributors this year will be focused squarely on finding people with the skills, passion, vision and a commitment to supporting these spaces. In many cases roles will be a key part of planning in the months leading up to Mozfest.

Also, we’re already piloting this very idea, having recently selected Priyanka Nag and Mayur Patil to be part of the Participation team’s Mozfest planning. I’m so grateful for their help and leadership in making this a fantastic experience for wranglers and contributors alike.

On July 15th we’ll post all available roles, and launch the application process. You can find an FAQ here.

Sponsorship from the Participation Team for Mozfest 2016 will be for these roles only. The call for proposals will be run by the MozFest organizers, who will have a limited number of travel stipends available through that separate process.

* Space – an area of Mozfest with content and space built and activated under a certain theme (like Open Science, Youth Zone and Web Literacy)

* Space Wrangler – Person organizing and building a space at Mozilla

Special thanks to Elio from the Community Design Group for creating ‘Volunteer Role’ mockups!

Role avatars by freepik.

|

|

Mozilla Addons Blog: Using Google Analytics in Extensions |

As an add-on developer, you may want to have usage reporting integrated into your add-on. This allows you to understand how your users are using the add-on in real life, which can often lead to important insights and code updates that improve user experience.

The most popular way to do this is to inject the Google Analytics script into your codebase as if it were a web page. However, this is incompatible with our review policies. Injecting remote scripts into privileged code – or even content – is very dangerous and it’s not allowed. Fortunately, there are ways to send reports to Google Analytics without resorting to remote script injection, and they are very easy to implement.

Update: add-ons that use GA are required to have a privacy policy on AMO, and the data they send should be only what’s strictly necessary for usage reporting. This blog post is meant to show the safer ways of using GA, not advocate its unrestricted use.

I created a branch of one of my add-ons to serve as a demo. The add-on is a WebExtension that injects a content script into some AMO pages to add links that are useful to admins and reviewers. The diff for the branch shouldn’t take much time to read and understand. It mostly comes down to this XHR:

```javascript
// GA_TRACKING_ID, GA_CLIENT_ID and aType are defined elsewhere in the add-on.
let request = new XMLHttpRequest();
let message =
  "v=1&tid=" + GA_TRACKING_ID + "&cid=" + GA_CLIENT_ID + "&aip=1" +
  "&ds=add-on&t=event&ec=AAA&ea=" + encodeURIComponent(aType);
// Send the hit to the Measurement Protocol endpoint asynchronously.
request.open("POST", "https://www.google-analytics.com/collect", true);
request.send(message);
```

In my demo I do everything from the content script code. For add-ons with a more complex structure, you should set up a background script that does the reporting and have content scripts send messages to it if needed.
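A minimal sketch of that background-script split, assuming the standard WebExtension messaging calls `runtime.sendMessage` and `runtime.onMessage.addListener` (`browser.runtime` in Firefox). The message shape `{ type: "ga-event", action }` and the function names are hypothetical; `runtime` and `sendHit` are passed in so the logic can run outside a browser.

```javascript
// Background-script side: listen for reporting requests from content scripts.
// `sendHit` performs the actual POST to Google Analytics (e.g. the XHR above).
function registerReporter(runtime, sendHit) {
  runtime.onMessage.addListener((message) => {
    // Ignore unrelated messages from other parts of the add-on.
    if (!message || message.type !== "ga-event") return;
    sendHit(message.action);
  });
}

// Content-script side: hand the event to the background script instead of
// contacting Google Analytics directly.
function reportFromContentScript(runtime, action) {
  return runtime.sendMessage({ type: "ga-event", action });
}
```

In the add-on itself this would be wired up as `registerReporter(browser.runtime, postEventHit)` in the background script (with `postEventHit` being your own XHR wrapper) and `reportFromContentScript(browser.runtime, "link-click")` in the content script. Keeping the network request in one place means only the background script ever talks to Google Analytics.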

I set up my reporting so the hits are sent as events. You can read about reporting types and all the different parameters in the Google Analytics developer docs.
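Building the event hit by string concatenation works, but any value containing `&` or `=` would corrupt the payload. A sketch using the standard `URLSearchParams` class, assuming the same Measurement Protocol parameters as the XHR above (`v`, `tid`, `cid`, `aip`, `ds`, `t`, `ec`, `ea`); `buildEventHit` is a hypothetical name.

```javascript
// Builds a Measurement Protocol (v1) event hit body with the same parameters
// as the XHR above. URLSearchParams percent-encodes every value.
function buildEventHit({ trackingId, clientId, category, action }) {
  const params = new URLSearchParams({
    v: "1",          // protocol version
    tid: trackingId, // property ID, e.g. "UA-XXXX-Y"
    cid: clientId,   // anonymous client ID
    aip: "1",        // ask GA to anonymize the sender's IP
    ds: "add-on",    // data source
    t: "event",      // hit type
    ec: category,    // event category
    ea: action,      // event action
  });
  return params.toString();
}

console.log(buildEventHit({ trackingId: "UA-1-1", clientId: "abc", category: "AAA", action: "a&b" }));
// v=1&tid=UA-1-1&cid=abc&aip=1&ds=add-on&t=event&ec=AAA&ea=a%26b
```

The result can be passed straight to `request.send(...)`. Keeping `aip=1` and only the parameters listed here is in line with the update above about sending only what is strictly necessary.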

Thanks to the real-time tracking feature I could see that my implementation was working right away:

![]()

That’s it! With just a few lines of code you can set up Google Analytics for your add-on safely, in a way that meets our review guidelines.

https://blog.mozilla.org/addons/2016/05/31/using-google-analytics-in-extensions/

|

|

Nathan Froyd: why gecko data structures should be preferred to std:: ones |

In light of the recent announcement that all of our Tier-1 platforms now have a C++11-supporting standard library, I received some questions about whether we should continue encouraging the use of Gecko-specific data structures. My answer was “yes”, and as I was writing the justification for said answer, I felt that the justification was worth broadcasting to a wider audience. Here are the reasons I came up with; feel free to agree or disagree in the comments.

- Gecko’s data structures can be customized extensively for our purposes, whereas we don’t have the same control over the standard library. Our string classes, for instance, permit sharing structure between strings (whether via something like nsDependentString or reference-counted string buffers); that functionality isn’t currently supported in the standard library. While the default behavior on allocation failure in Gecko is to crash, our data structures provide interfaces for failing gracefully when allocations fail. Allocation failures in standard library data structures are reported via exceptions, which we don’t use. If you’re not using exceptions, allocation failures in those data structures simply crash, which isn’t acceptable in a number of places throughout Gecko.

- Gecko data structures can assume things about the environment that the standard library can’t. We ship the same memory allocator on all our platforms, so our hashtables and our arrays can attempt to make their allocation behavior line up with what the memory allocator efficiently supports. It’s possible that the standard library implementations we’re using do things like this, but it’s not guaranteed by the standard.

- Along similar lines as the first two, Gecko data structures provide better visibility for things like debug checks and memory reporting. Some standard libraries we support come with built-in debug modes, but not all of them, and not all debug modes are equally complete. Where possible, we should have consistent support for these sorts of things across all our platforms.

- Custom data structures may provide better behavior than standard data structures by relaxing the specifications provided by the standard. The WebKit team had a great blog post on their new mutex implementation, which optimizes for cases that OS-provided mutexes aren’t optimized for, either because of compatibility constraints or because of outside specifications. Chandler Carruth has a CppCon talk where he mentions the non-ideal interfaces in many of the standard library data structures. We can do better with custom data structures.

- Data structures in the standard library may provide inconsistent performance across platforms, or disagree on the finer points of the standard. Love them or hate them, Gecko’s data structures at least provide consistent behavior everywhere.

Most of these arguments are not new; if you look at the documentation for Facebook’s open-source Folly library, for instance, you’ll find a number of these arguments, if not expressed in quite the same way. Browsing through WebKit’s WTF library shows they have a number of the same things that we do in xpcom/ or mfbt/ as well, presumably for some of the same reasons.

All of this is not to say that our data structures are perfect: the APIs for our hashtables could use some improvements, our strings and nsTArray do a poor job of separating “data structure” from “algorithm”, nsDeque serves as an excellent excuse to go use the standard library instead, and XPCOM’s synchronization primitives should stop going through NSPR and use the underlying OS’s primitives directly (or simply be rewritten to use something like WebKit’s locking primitives, above). This is a non-exhaustive list; I have more ideas if people are interested.

Having a C++11 standard library on all platforms brings opportunities to remove dead polyfills; MFBT contains a number of these (Atomics.h, Tuple.h, TypeTraits.h, UniquePtr.h, etc.). But we shouldn’t flock to the standard library’s functionality just because it’s the standard. If the standard library’s functionality doesn’t fit our use cases, we should definitely write our own replacement(s) and use them widely.

|

|

David Lawrence: Happy BMO Push Day! |

the following changes have been pushed to bugzilla.mozilla.org:

- [1272384] inline user story changes should not be shown on bug-modal

- [1220807] The new UI doesn’t show anything for the review requester in the attachments table if their name contains :\s

- [1275567] ‘user story’ summary shouldn’t be visible in module header when user story is empty

discuss these changes on mozilla.tools.bmo.

https://dlawrence.wordpress.com/2016/05/31/happy-bmo-push-day-20/

|

|

Christian Heilmann: Progressive Web Apps and our regressive approach |

Custom made, cute, but not reusable

In the weeks following Google IO there was a lot of discussion about progressive web apps, Android Instant Apps and the value and role of URLs and links in the app world. We had commentary, ponderings, pathos, an explanation of said pathos after it annoyed people, and an excellent round-up on where we stand with web technology for apps.

My favourite is Remy Sharp’s post, which he concludes with:

I strongly believe in the concepts behind progressive web apps and even though native hacks (Flash, PhoneGap, etc.) will always be ahead, the web always gets there. Now, today, is an incredibly exciting time to build on the web.

PWAs beat anything we tried so far

As a card-carrying lover of the web, I am convinced that PWAs are a necessary step in the right direction. They are a very important change. So far, all our efforts to crack the advertised supremacy of native apps over the web have failed. We copied what native apps did. We tried to erode the system from within. We packaged our apps and let them compete in closed environments. The problem is that they couldn’t compete in quality. In some cases this might have been by design of the platform we tried to run them on. A large part of it is that “the app revolution” is powered by the age-old idea of planned obsolescence, something that is against everything the web stands for.

I made a lot of points about this in my TEDx talk “The Web is dead” two years ago:

We kept trying to beat native with the promises of the web: its open nature, its easy distribution, and its reach. These are interesting, but also work against the siren song of apps on mobile: that you are in control and that you can sell them to an audience of well-off, always up-to-date users. Whether this is true or not was irrelevant – it sounded great. And that’s what we need to work against. The good news is that we now have a much better chance than before. But more on that later.

Where to publish if you need to show quick results?

Put yourself in the shoes of someone who is not as excited about the web as we are, someone with short-term goals, like impressing a VC. As a publisher you have to decide what to support:

- iOS, a platform with incredible tooling, a predictable upgrade strategy and a lot of affluent users happy to spend money on products.

- Android, a platform with good tooling, massive fragmentation, a plethora of different devices on all kinds of versions of the OS (including custom ones by hardware companies) and users much less happy to spend money but expecting “free” versions.

- The web, a platform with tooling that’s going places, an utterly unpredictable and hard to measure audience that expects everything for free and will block your ads and work around your paywalls.

If all you care about is a predictable audience you can budget for, this doesn’t look too rosy for Android, and it looks abysmal for the web. The carrot of “but you can reach millions of people” doesn’t hold much weight when those people are not easy to convert to paying users.

To show growth you need numbers. You don’t get them by being part of a big world of links and resources. You get them by locking people into your app. You do it by adding a webview so links open inside it. This is short-sighted and borderline evil, but it works.

And yes, we are in this space. This is not about what technology to use, this is not about how easy it is to maintain your app. This is not about how affordable developers would be. The people who call the shots in the app market and make the money are not the developers. They are those who run the platforms and invest in the companies creating the apps.

The app honeymoon period is over

The great news is that this house of cards is tumbling. App download numbers are abysmally low, and mobile usage is concentrated in chat clients, OS services and social networks. The closed nature of marketplaces works heavily against random discovery. There is a thriving market of fake reviews, upvotes, offline advertising and keyword padding that makes the web SEO world of the last decade look like much less of a cesspool than we remember. End users are getting tired of having to install and uninstall apps and of continuously getting prompts to upgrade them.

This is a great time to break into this model. That Google finally came up with Instant Apps (after promising atomic updates for years) shows that we reached the breaking point. Something has to change.

Growth is on mobile and connectivity issues are the hurdle

Here’s the issue though: patience is not a virtue of our market. To make PWAs work, bring apps back to the web and have the link as the source of distribution instead of closed marketplaces, we need to act now. We need to show that PWAs solve the main issue of the app market: the next users are on mobile devices, yet not in places with great connectivity.

And this is where progressive web apps hit the sweet spot. You can have a damn small app shell that gets sweet and easy-to-upgrade content from the web. You control the offline experience and what happens on flaky connections. PWAs are a “try before you buy”, showing you immediately what you get before you go through the process of adding it to your home screen or downloading the whole app. That is exactly what Instant Apps are promising. Instant Apps have the problem that Android isn’t architected that way and that developers need to change their approach. The web was already built on this idea and the approach is second nature to us.

PWAs need to succeed on mobile first

The idea that a PWA is progressively enhanced, meaning it could be a website that only converts into an app in the right environment, is wonderful. We can totally do that. But we shouldn’t pretend that this is the world we need to worry about right now. We can do that once we have solved the issue of web users not wanting to pay for anything and can show growth numbers on the desktop. For now, PWAs need to be the solution for the next mobile users. And this is where we have an advantage over native apps. Let’s use it.

Open questions

Of course, there are many issues to consider:

- How do PWAs work with permissions? Can we ask permissions on demand and what happens when users revoke them? Instant apps have that same issue.

- How do I uninstall a PWA? Does removing the icon from my homescreen free all the data? Should PWAs have a memory management control?

- What about URLs? Should they display or not? Should there be a long-tap to share the URL? Personally, I’d find a URL bar above an app confusing. I never “hacked” the URL of an app – but I did use “share this app” buttons. With a PWA, this is sending a URL to friends, and that’s a killer feature.

- How do we deal with the issue of iOS not supporting Service Workers? What about legacy and third-party Android devices? Sure, PWAs fall back to normal HTML5 apps, but we’ve seen those fail to take off compared to native apps.

- What are the “must have” features of native apps that PWAs need to match? Which of the features people want can we offer without them being a hurdle or impossible to implement?

These are exciting times and I am looking forward to PWAs being the wedge in the cracks that are showing in closed environments. The web can win this, but we won’t get far if we demand features that only make sense on desktop and are in use by us – the experts. End users deserve to have an amazing, form-factor specific experience. Let’s build those. And for the love of our users, let’s build apps that let them do things, not only consume content. This is what apps are for.

https://www.christianheilmann.com/2016/05/31/progressive-web-apps-and-our-regressive-approach/


Daniel Stenberg: On billions and “users” |

At times when I’ve gone out (yes it happens), faced an audience and talked about my primary spare time project curl, I’ve said a few times in the past that we have one billion users.

Users?

OK, as this is open source I’m talking about, I can’t actually count my users and what really constitutes “a user” anyway?

If the same human runs multiple copies of curl (in different devices and applications), is that human then counted once or many times? If a single developer writes an application that uses libcurl and that application is used by millions of humans, is that one user or are they millions of curl users?

What about pure machine “users”? In the subway in one of the world’s largest cities, there’s an automated curl transfer being done for every person passing the ticket check point. Yet I don’t think we can count the passing (and unknowing) passengers as curl users…

I’ve had a few people approach me to object to my “curl has one billion users” statement. Surely not one in every seven humans on earth is writing curl command lines! We’re engineers and we’re picky with the definitions.

Because of this, I’m trying to stop talking about “number of users”. That’s not a proper metric for a project whose primary product is a library that is used by applications or within devices. I’m instead trying to assess the number of humans that are using services, tools or devices that are powered by curl. Fun challenge, right?

Who isn’t using?

I’ve tried to imagine what kind of person would not have or use any piece of hardware or application that includes curl during a typical day. I certainly can’t properly imagine all humans in this vast globe and how they all live their lives, but I quite honestly think that most internet connected humans in the world own or use something that runs my code. Especially if we include people who use online services that use curl.

curl is used in basically all modern TVs, a large percentage of all car infotainment systems, routers, printers, set top boxes, mobile phones and apps on them, tablets, video games, audio equipment, Blu-ray players, hundreds of applications, even in fridges and more. Apple alone has said it has one billion active devices, devices that use curl! Facebook uses curl extensively and it has 1.5 billion users every month. libcurl is commonly used by PHP sites, and PHP powers no less than 82% of the sites whose server-side language w3techs.com has been able to identify (out of the 10 million most visited sites in the world).

There are about 3 billion internet users worldwide. I seriously believe that most of those use something that is running curl, every day. Where the Internet is less used, so, of course, is curl.

Every human in the connected world uses something powered by curl every day

Frigging Amazing

It is an amazing feeling when I stop and really think about it. When I pause to let it sink in properly. My efforts and code have spread to almost every little corner of the connected world. What an amazing feat, and of course I didn’t think it would reach even close to this level. I still have a hard time fully absorbing it! What a collaborative success story, because I could never have gotten close to this without the help from others and the community we have around the project.

But it isn’t something I think about much or that makes me act very differently in my everyday life. I still work on the bug reports we get, respond to emails and polish off rough corners here and there as we go forward, and we keep shipping new curl releases every 8 weeks. Like we’ve done for years. Like I expect us and me to continue doing for the foreseeable future.

It is also a bit scary at times to think of the massive impact it could have if or when a really terrible security flaw is discovered in curl. We’ve had our fair share of security vulnerabilities through our history, but so far we’ve been spared the really terrible ones.

So I’m rich, right?

If I ever start to describe something like this to “ordinary people” (and trust me, I only very rarely try that), questions about money are never far away. Like how come I give it away for free, and the inevitable “what if everyone using curl would’ve paid you just a cent, then…”.

I’m sure I don’t need to tell you this, but I’ll do it anyway: I give away curl for free as open source and that is a primary reason why it has reached the point where it is today. It has made people want to help out and bring the features that made it attractive, and it has made companies willing to use and trust it. Had it not been open source, it would’ve died off already in the 90s. Forgotten and ignored. And someone else would’ve made an open source version instead and filled the void a curlless world would produce.

https://daniel.haxx.se/blog/2016/05/31/on-billions-and-users/


Asa Dotzler: thoughts on web performance and ad blockers |

I often use an ad blocker with my web browser. I do this not because I hate seeing ads. I block ads because I can’t take the performance hit.

Running an ad blocker, or using Firefox’s tracking protection, makes the web responsive again and a pleasure to use. Sites load fast, navigation is smooth, everything is just better in terms of performance when the ads and their scripts are removed from the web.

I don’t like the idea, though, that I’m depriving lots of great independent sites (some of them run by friends) of their ad revenue. Unfortunately, ads have grown worse and worse over the last decade. They are now just too much load, physically and cognitively, and the current state is unsustainable. Users are going to move to ad blockers if web sites and the big ad networks don’t clean up their act.

Sure, everyone moving to ad blockers would make the web feel speedy again, but it would probably mean we all lose a lot of great ad-supported content on the Web and that’s not a great outcome. One of the wonderful things about the web is the long-tail of independent content it makes available to the world — mostly supported by ads.

I think we can find a middle ground that sees ad-tech pull back to something that still generates reasonable returns but doesn’t destroy the experience of the web. I think we can reverse the flow of people off of the web into content silos and apps. But I don’t see that happening without some browser intervention. (Remember when browsers, Firefox leading the pack, decided pop-ups were a step too far? That’s the kind of intervention I’m thinking of.)

I’ve been thinking about what that could look like and how it could be deployed so it’s a win for publishers and users and so that the small and independent publishers especially don’t get crushed in the escalating battle between users and advertising networks.

Web publishers and readers both want sites to be blazing fast and easy to use. The two are very well aligned here. There’s less alignment around tracking and attention grabbing, but there’s agreement from both publishers and readers, I’m sure, that slow sites suck for everyone.

So, with this alignment on a key part of the larger advertising mess, let’s build a feedback loop that makes the web fast again. Browsers can analyze page load speed and perhaps bandwidth usage, figure out what part of that comes from the ads, and when it crosses a certain threshold warn the user with a dialog something like “Ads appear to be slowing this site. Would you like to block ads for a week?”
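The heuristic proposed above could be prototyped roughly as follows. This is a minimal Python sketch, not anything Firefox ships: the `Resource` record, the `is_ad` flag (assumed to come from some ad/tracker blocklist match), the naive attribution of load time by summing per-resource fetch times (which over-counts fetches that run in parallel), and the 50% thresholds are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple

# Hypothetical thresholds: offer the prompt when ads account for more than
# half of the page's attributed load time or transferred bytes.
TIME_THRESHOLD = 0.5
BYTE_THRESHOLD = 0.5

PROMPT = "Ads appear to be slowing this site. Would you like to block ads for a week?"


@dataclass
class Resource:
    url: str
    bytes_transferred: int
    load_ms: float  # time attributed to this resource (naive: parallel fetches are not de-duplicated)
    is_ad: bool     # assumed to come from an ad/tracker blocklist match


def ad_share(resources: Iterable[Resource]) -> Tuple[float, float]:
    """Return (fraction of load time, fraction of bytes) attributed to ads."""
    resources = list(resources)
    total_ms = sum(r.load_ms for r in resources)
    total_bytes = sum(r.bytes_transferred for r in resources)
    ad_ms = sum(r.load_ms for r in resources if r.is_ad)
    ad_bytes = sum(r.bytes_transferred for r in resources if r.is_ad)
    # Guard against empty pages so we never divide by zero.
    time_share = ad_ms / total_ms if total_ms else 0.0
    byte_share = ad_bytes / total_bytes if total_bytes else 0.0
    return time_share, byte_share


def should_offer_ad_block(resources: Iterable[Resource],
                          time_threshold: float = TIME_THRESHOLD,
                          byte_threshold: float = BYTE_THRESHOLD) -> bool:
    """Decide whether to show the 'block ads for a week' prompt."""
    time_share, byte_share = ad_share(resources)
    return time_share > time_threshold or byte_share > byte_threshold


if __name__ == "__main__":
    page = [
        Resource("https://example.com/article", 200_000, 300.0, False),
        Resource("https://ads.example.net/banner.js", 600_000, 900.0, True),
    ]
    print(ad_share(page))  # (0.75, 0.75)
    if should_offer_ad_block(page):
        print(PROMPT)
```

For the one-week block to create the incentive the post describes, a browser would also have to remember the decision per site and re-measure once it expires, so that publishers who slim down their ads get their readers back.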

If deployed at enough scale, sites would quickly see a drop-off in ad revenue if their ads started slowing the site down too much. But unlike current ad-blockers, sites would have the opportunity and the incentive to fix the problem and get the users back after a short period of time.

This also makes the ad networks clearly responsible for the pain they’re bringing and gets publishers and readers both on the same side of the debate. It should, in theory, push ad networks to lean down and *still* provide good returns and that’s the kind of competition we need to foster.

What do you all think? Could something like this work to make the web fast again?

https://asadotzler.com/2016/05/30/thoughts-on-web-performance-and-advertising/


Yunier José Sosa Vázquez: Update on Firefox support for OS X |

In a post on the Firefox Future Releases blog, Mozilla has announced that support for OS X 10.6, 10.7 and 10.8 will end next August, when a new version of the browser is released. After that date, Firefox will keep working on those platforms, but it will no longer receive new features or security fixes.

The main reason behind Mozilla’s decision is that Apple has ended support for these platforms. Without security updates, they become targets for attacks, are highly dangerous and put users’ security at risk. Mozilla strongly recommends that everyone still using these obsolete operating systems upgrade to a version of OS X that Apple currently supports.

Firefox ESR 45, for its part, will continue to support OS X 10.6, 10.7 and 10.8 until mid-2017. That is enough time for Firefox fans to migrate to secure platforms.

If you are a fan of Apple products and have an iPhone, iPad or iTouch, you should know that Firefox is also available for them, and you can download it from the Apple Store.

http://firefoxmania.uci.cu/actualizacion-en-el-soporte-de-firefox-para-os-x/


Daniel Stenberg: everybody runs this code all the time |

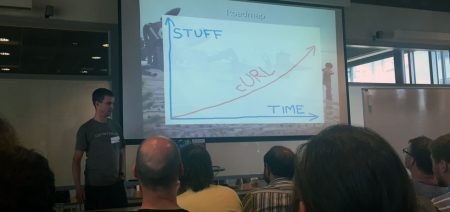

I was invited to talk about curl at the recent FOSS North conference in Gothenburg on May 26th. It was the first time the conference ran, but I think it went smoothly and the ~110 visitors seemed to have a good time. It was a single track and there was a fairly good and interesting mix of speakers and subjects, I think. They’re already planning to bring it back in spring 2017, so if you’re into FOSS and you’re in the Nordic region, consider this event next year…

I took on the subject of talking about my hacker ring^W^Wcurl project insights. Here’s my slide set:

At the event I sat down and had a chat with Simon Campanello, a reporter at IDG Techworld here in Sweden who subsequently posted this article about curl (in Swedish) and how our code has ended up getting used so widely.

https://daniel.haxx.se/blog/2016/05/30/everybody-runs-this-code/


Tantek Celik: Tomorrowland: A Change Of Perspective & A Flight To Paris |

One Saturday morning last August I got a ride with some friends from San Francisco to Mill Valley for the weekly San Francisco Running Company (SFRC) trail run. My favorite route is the ~7 mile round trip to Tennessee Valley Beach and back.

The SFRC crowd tends to be pretty quick, both those from November Project SF and other regulars. I kept up with the beach group most of the way but slowly fell behind as we got closer to the shore. I reached the beach just as everyone else was turning around from the sand. I still wanted to go touch the Pacific.

Running down to the surf by myself brought a lot of things to mind. I was inspired by the waves & rocks to try a handstand on the rocky black sand.

I didn’t think anyone was watching but not everyone had run back right away, and my friend Ali caught me handstanding at a distance with her iPhone 6.

I held it for a split second, long enough to feel the physical shift of perspective, and also gain a greater sense of the possible, of possibilities. Being upside down, feeling gravity the opposite of normal, makes you question the normal, question dominant views, dominant forces. The entire run back felt different. Different thoughts, different views of the trail. I stopped and took different photos.

They say never look back.

I say reflection is a source of insight, wisdom, and inspiration.

After returning to the SFRC store, we promptly drove back to SF and grabbed a quick bite at the Blue Front Cafe.

I went home to shower, change, pack, and take a car to the airport. Made it to the gate just as my flight started boarding and found a couple of my colleagues also waiting for the same direct flight to Paris.

After boarding and settling into my seat, the next moments were a bit fuzzy. I don’t remember if they fed us right after take-off or not, or if I took a nap immediately, or if I started to look through the entertainment options.

Whether I napped first or not, I do distinctly remember scrolling through new movie releases and coming upon Tomorrowland. When I saw the thumbnail of the movie poster I got a very different impression from when I saw the Metreon marquee three months earlier.

I decided to give it a try.

http://tantek.com/2016/150/b1/tomorrowland-change-perspective-flight-paris


Alex Vincent: Associate’s Degree in Computer Science (Emphasis in Mathematics) |

Hi, all. I know I’ve been really quiet lately, because I’ve been really busy. My full-time job is continuing along well, and I just completed an Associate in Arts degree, majoring in Computer Science with an emphasis in Mathematics, at Chabot College.

I have an online music course to take to complete my lower division education requirements, and then I’ll be starting in the fall quarter at California State University, East Bay on a Bachelor of Science degree, also majoring in Computer Science.

No, I don’t have any witty pearls of wisdom to offer in speeches, so I will defer to the expert in commencement speeches, Baz Luhrmann:


Eitan Isaacson: Here is a web interface for switching on your light |

As I mentioned in a previous post, I wanted to try out a more hackable wifi plug. I got a Kankun “smart” plug. As with the other one I have, the software is horrible. The good news is that they left SSH enabled on it.

Once I got SSH access, I got to work on a web app for it. I spent an hour styling a pretty button, look:

And when you turn it on it looks like this:

Anyway, if you want a pretty webby button for controlling your wifi socket, you can grab it here.

https://blog.monotonous.org/2016/05/28/here-is-a-web-interface-for-switching-on-your-light/
