Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

The Mozilla Blog: Help Us Spread the Word: Encryption Matters |

Today, the Internet is one of our most important global public resources. It’s open, free and essential to our daily lives. It’s where we chat, play, bank and shop. It’s also where we create, learn and organize.

All of this is made possible by a set of core principles. Like the belief that individual security and privacy on the Internet is fundamental.

Mozilla is devoted to standing up for these principles and keeping the Internet a global public resource. That means watching for threats. And recently, one of these threats to the open Internet has started to grow: efforts to undermine encryption.

Encryption is key to a healthy Internet. It’s the encoding of data so that only people with a special key can unlock it, such as the sender and the intended receiver of a message. Internet users depend on encryption every day, often without realizing it, and it enables amazing things. It safeguards our emails, search queries and medical data. It allows us to safely shop and bank online. And it protects journalists and their sources, human rights activists and whistleblowers.

Encryption isn’t a luxury — it’s a necessity. This is why Mozilla has always taken encryption seriously: it’s part of our commitment to protecting the Internet as a public resource that is open and accessible to all.

Government agencies and law enforcement officials across the globe are proposing policies that will harm user security through weakening encryption. The justification for these policies is often that strong encryption helps bad actors. In truth, strong encryption is essential for everyone who uses the Internet. We respect the concerns of law enforcement officials, but we believe that proposals to weaken encryption — especially requirements for backdoors — would seriously harm the security of all users of the Internet.

At Mozilla, we continue to push the envelope with projects like Let’s Encrypt, a free, automated Web certificate authority dedicated to making it easy for anyone to run an encrypted website. Developed in collaboration with the Electronic Frontier Foundation, Cisco, Akamai and many other technology organizations, Let’s Encrypt is an example of how Mozilla uses technology to make sure we’re all more secure on the Internet.

However, as more and more governments propose tactics like backdoors, technology alone will not be enough. We will also need to get Mozilla’s community — and the broader public — involved. We will need them to tell their elected officials that individual privacy and security online cannot be treated as optional. We can play a critical role if we get this message across.

We know this is a tough road. Most people don’t even know what encryption is. Or, they feel there isn’t much they can do about online privacy. Or, both.

This is why we are starting a public education campaign run with the support of our community around the world. In the coming weeks, Mozilla will release videos, blogs and activities designed to raise awareness about encryption. You can watch our first video today — it shows why controlling our personal information is so key. More importantly, you can use this video to start a conversation with friends and family to get them thinking more about privacy and security online.

If we can educate millions of Internet users about the basics of encryption and its connection to our everyday lives, we’ll be in a good position to ask people to stand up when the time comes. We believe that time is coming soon in many countries around the world. You can pitch in simply by watching, sharing and having conversations about the videos we’ll post over the coming weeks.

If you want to get involved or learn more about Mozilla’s encryption education campaign, visit mzl.la/encrypt. We hope you’ll join us to learn about and support encryption.

https://blog.mozilla.org/blog/2016/02/16/help-us-spread-the-word-encryption-matters/

|

|

David Lawrence: Happy BMO Push Day! |

The following changes have been pushed to bugzilla.mozilla.org:

- [1246413] Email::Address caches all email addresses

- [1235182] User Story should always be visible

- [1244602] rewrite the bmo -> reviewboard connector to create a bug instead of updating reviewboard

Discuss these changes on mozilla.tools.bmo.

https://dlawrence.wordpress.com/2016/02/16/happy-bmo-push-day-6/

|

|

Cameron Kaiser: More about the Talos POWER8 |

Phoronix has also done some early benchmarking on a test system Raptor gave them access to. This is notable because this means the damn thing actually exists. Although OpenBenchmarking calls it "57 cores," that's probably an artifact of SMT (my POWER6 has two cores and two threads per core, so AIX thinks it has four logical cores; my bet is that this is an 8-core system and POWER8 has eight threads per core, with some reserved for the kernel, hence "57"). Raptor has published their own set of benchmarks, but they tested against a Sandy Bridge Xeon and an AMD Opteron 6328, so I'm not sure how useful that comparison is; the Phoronix tests are against more current competitors, which I think is a fairer fight. Although they reported only three tests in that article, on the two tests where the Talos was on equal footing with the other systems (i.e., had access to the same acceleration or there weren't x86-specific optimized paths) it was nearly neck and neck with the top-ranked Xeon and Haswell i7s -- and remember you get the CPU and the motherboard for that $3K. Performance will only get better as the various Linux distros improve their support for the POWER8's capabilities. You can read some other interesting tidbits in the discussion thread.

So, again, if you're at all interested, please put in your (non-binding but do be serious) interest. Rumour has it their threshold is 2,000 units for a production run.

http://tenfourfox.blogspot.com/2016/02/more-about-talos-power8.html

|

|

Emily Dunham: Using Notty |

Using Notty

I recently got the “Hey, you’re a Rust Person!” question of how to install notty and interact with it.

A TTY was originally a teletypewriter. Linux users will have most likely encountered the concept of TTYs in the context of the TTY1 interface where you end up if your distro fails to start its window manager. Since you use ctrl + alt + f[1,2,...] to switch between these interfaces, it’s easy to assume that “TTY” refers to an interactive workspace.

Notty itself is only a virtual terminal. Think of it as a library meant as a building block for creating graphical terminal emulators. This means that a user who saw it on Hacker News and wants to play around should not ask “how do I install notty”, but rather “how do I run a terminal emulator built on notty?”.

Easy Mode

Get some Rust:

curl -sf https://raw.githubusercontent.com/brson/multirust/master/blastoff.sh | sh

multirust update nightly

Get the system dependencies:

sudo apt-get install libcairo2-dev libgdk-pixbuf2.0 libatk1.0 libsdl-pango-dev libgtk-3-dev

Run Notty:

git clone https://github.com/withoutboats/notty.git

cd notty/scaffolding

multirust run nightly cargo run

And there you have it! As mentioned in the notty README, “This terminal is buggy and feature poor and not intended for general use”. Notty is meant as a library for building graphical terminals, and scaffolding is only a minimal proof of concept.

|

|

Daniel Stenberg: Survey: a curl related event? |

Call it a conference, a meetup or a hackathon. As curl is about to turn 18 next month, I’m checking if there’s enough interest to try to put together a physical event to gather curl hackers and fans somewhere at some point. We’ve never done it in the past. Is the time ripe now?

Please tell us your views on this by filling out this survey that we run during this week only!

https://daniel.haxx.se/blog/2016/02/15/survey-a-curl-related-event/

|

|

This Week In Rust: This Week in Rust 118 |

Hello and welcome to another issue of This Week in Rust! Rust is a systems language pursuing the trifecta: safety, concurrency, and speed. This is a weekly summary of its progress and community. Want something mentioned? Tweet us at @ThisWeekInRust or send us an email! Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub. If you find any errors in this week's issue, please submit a PR.

This week's edition was edited by: Vikrant and llogiq.

Updates from Rust Community

News & Blog Posts

- Binding threads and processes to CPUs in Rust.

- The many kinds of code reuse in Rust.

- Code of heat conductivity. Llogiq on Rust's Code of Conduct.

- Rustic Bits. And on the small things that make code rustic.

- Why Rust's ownership/borrowing is hard.

- [video] Rust: Unlocking Systems Programming by Aaron Turon.

- This week in Servo 50.

- This week in Amethyst 4. Amethyst is a data-oriented game engine written in Rust.

Notable New Crates & Project Updates

- A MOS6502 assembler implemented as a Rust macro.

- Ketos. Lisp dialect scripting and extension language for Rust programs.

- Parity. Next Generation Ethereum Client, written in Rust.

- TensorFlow Rust. Rust language bindings for TensorFlow from Google.

- rpc-perf. A tool for benchmarking RPC services from Twitter.

- rust-lzma. A Rust crate that provides a simple interface for LZMA compression and decompression.

Updates from Rust Core

125 pull requests were merged in the last week.

See the triage digest and subteam reports for more details.

Notable changes

- [breaking batch] don't glob export ast::UnOp variants.

- [breaking batch] Rename and refactor ast::Pat_ variants.

- [breaking batch] Remove some unnecessary indirection from AST structures.

- Allow registering MIR-passes through compiler plugins.

- Add a new i586 Linux target.

- std: Deprecate all std::os::*::raw types.

- Workaround LLVM optimizer bug by not marking &mut pointers as noalias.

- Don't let remove_dir_all recursively remove a symlink.

- Split dummy-idx node to fix expand_givens DFS.

- Do not expect blocks to have type str.

- Add _post methods for blocks and crates.

- fix: read_link cannot open some files reported as symbolic links on windows.

New Contributors

- Adam Perry

- Carlos E. Garcia

- Daniel Robertson

- Felix Gruber

- Johan Lorenzo

- Kenneth Koski

- Masood Malekghassemi

- Richard Bradfield

- Scott Whittaker

- Thomas Winwood

Approved RFCs

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

Final Comment Period

Every week the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now. This week's FCPs are:

- Stabilize volatile read and write.

- Move some net2 functionality into libstd.

- Extend atomic compare_and_swap.

- Safe memcpy from one slice to another of the same type and length.

- Add maybe! macro equivalent to try!.

- Add additional try!(expr => return) that will return without value.

- Add a let...else expression, similar to Swift's guard let...else.

- Add macros to get the values of configuration flags.

- Implement Into, From and new trait IntegerCast for primitive integer types.

- Add retain_mut to Vec and VecDeque.

- Propose a design for specialization, which permits multiple impl blocks to apply to the same type/trait.

New RFCs

- Add octet-oriented interface to std::net::Ipv6Addr.

- Extend the pattern syntax for alternatives in match statement.

- Add #[clear_on_drop] and #[clear_stack_on_return] to securely clear sensitive data after use.

- Amend RFC 550 with misc. follow set corrections.

Upcoming Events

- 2/16. San Diego Rust: Eat – Drink – Rust! Downtown Rust Meetup.

- 2/17. Copenhagen Rust Group meetup.

- 2/17. Rust Los Angeles Monthly Meetup.

- 2/17. Rust Berlin: Leaf and Collenchyma.

- 2/18. Rust Hack and Learn Hamburg @ 4=1.

- 2/24. OpenTechSchool Berlin: Rust Hack and Learn.

- 2/25. Tokyo Rust Meetup #3.

If you are running a Rust event please add it to the calendar to get it mentioned here. Email Erick Tryzelaar or Brian Anderson for access.

fn work(on: RustProject) -> Money

- Research Engineer - Servo at Mozilla.

- Senior Research Engineer - Rust at Mozilla.

- PhD and postdoc positions at MPI-SWS.

Tweet us at @ThisWeekInRust to get your job offers listed here!

GSoC Project

Hi students! Looking for an awesome summer project in Rust? Look no further! Chris Holcombe from Canonical is an experienced GSoC mentor and has a project to implement CephX protocol decoding. Check it out here.

Crate of the Week

This week's Crate of the Week is rayon, which gives us par_iter()/par_iter_mut() functions that use an internal thread pool to easily parallelize data-parallel operations.

There's also rayon::join(|| .., || ..) for Fork-Join-style tasks. Apart from the ease of use, it also performs very well, comparable to hand-optimized code.

Thanks to LilianMoraru for the suggestion.

Submit your suggestions for next week!

https://this-week-in-rust.org/blog/2016/02/15/this-week-in-rust-118/

|

|

Mozilla Release Management Team: New value in the tracking flags: blocking |

For quite some time, we, Release Management, have been using tracking flags to keep a list of important bugs for the release. Until now, for tracking, we used the "+" value to achieve this objective. This value carries different meanings:

- critical bug

- important regression

- important bug or issue that release management wants to be fixed

However, just using "+" does not allow us to differentiate between nice-to-have bug fixes vs must-fixes that block the release. To address this issue, we decided to introduce a new value to the tracking flags list: blocking. For bugs with tracking-firefoxN flag set to blocking, we will delay or skip a release.

Liz, Ritu & Sylvestre

http://release.mozilla.org/tracking/flags/blocking/2016/02/14/blocking-value-tracking-flags.html

|

|

Jeff Walden: Quote of the day |

An excellent and concise explanation of why the First Amendment, and freedom of speech more broadly and more generally, matters to me:

Much of the Court’s opinion is devoted to deprecating the closed mindedness of our forebears…. Closed minded they were–as every age is, including our own, with regard to matters it cannot guess, because it simply does not consider them debatable. The virtue of a democratic system with a First Amendment is that it readily enables the people, over time, to be persuaded that what they took for granted is not so, and to change their laws accordingly.

It’s taken years of following SCOTUS particularly, and the legal sphere more generally, for me to realize that of all the issues out there, freedom of speech is the one I care about most. Without it, we can’t actually argue about all the other issues that matter, persuading each other, learning from each other, and so on. It is necessary for representative democracy to be able to freely discuss everything and attempt to persuade each other, for us to have any chance at sound policy. The late Justice Scalia gets it exactly right in this quote.

(Speech implications aside: I have no immediate opinion on the legal question in the case as I only discovered it today. For the policy question — which too many people will confuse with the legal question — I would agree with the case’s outcome.)

Rest in peace, Justice Scalia. I’ll miss your First Amendment votes, from flag burning to content neutrality and forum doctrine to (especially, for the reasons noted above) political speech (if not always), among the votes you cast and opinions you wrote. Others less inclined to agree with you might choose to remember you (or at least should remember you) as the justice whose vote ultimately struck down California’s Proposition 8, even as (especially as) you considered the legal question argle-bargle. As Cass Sunstein recognized, you were “one of the most important justices ever”, and the world of law will be worse without you.

(In the spirit of freedom of speech, I generally post all comments I receive, as written. I hope to do the same for this post. But if I must, I’ll moderate excessively vitriolic comments.)

|

|

Gareth Aye: "MathLeap Explained" in MathLeap’s Community Blog |

MathLeap is a web application that STEM teachers use to create self-grading assignments. Unlike other programs, MathLeap provides a…

|

|

Andy McKay: Writing a WebExtension |

Yesterday I spent 10 minutes throwing together an example WebExtension to show how a few APIs worked. It's not a complicated or sophisticated one by any means, it just shows a pop-up and links to trigger some tab APIs.

But when writing an add-on like that, you might notice a few things:

Thanks to about:debugging you can load the add-on straight from the directory and run it. There is no xpi, zip or compile step. It just works.

The menu is an HTML page with some JavaScript on it. That's familiar territory for any web developer.

The HTML and JavaScript reload each time I make a change. That's refreshingly simple and easy to develop with.

Those factors alone make developing that add-on so much easier than before. We are getting there.

|

|

Cameron Kaiser: 38.6.1 released |

|

|

Eric Rahm: Are they slim yet? |

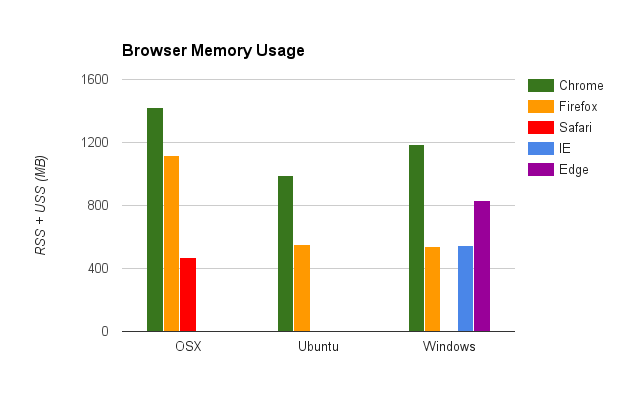

In my previous post I focused on how Firefox compares against itself with multiple content processes. In this post I’d like to take a look at how Firefox compares to other browsers.

For this task I automated as much as I could; the code is available as the atsy project on GitHub. My goal here is to allow others to repeat my work, point out flaws, push fixes, etc. I’d love for this to be a standardized test for comparing browsers on a fixed set of pages.

As with my previous measurements, I’m going with:

total_memory = RSS(parent) + sum(USS(children))
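As a rough illustration of this formula (a sketch, not the atsy code itself), the helper below adds a parent process's RSS to the USS of each child. The function name and the sample numbers are made up for the example; in practice the per-process values might come from a library such as psutil, which is an assumption here.

```python
def total_memory(parent_rss, children_uss):
    """Return RSS(parent) + sum(USS(children)), all in the same unit."""
    return parent_rss + sum(children_uss)


# Hypothetical numbers in MiB: one parent process and three content processes.
example_total = total_memory(310, [120, 95, 75])
print(example_total)
```

Counting USS (memory unique to a process) for the children means pages shared between processes are not counted again for every child.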

An aside on the state of WebDriver and my hacky workarounds

When various WebDriver implementations get fixed we can make a cleaner test available. I had a dream of automating the tests across browsers using the WebDriver framework, alas, trying to do anything with tabs and WebDriver across browsers and platforms is a fruitless endeavor. Chrome’s actually the only one I could get somewhat working with WebDriver.

Luckily Chrome and Firefox are completely automated. I had to do some trickery to get Chrome working, filed a bug, doesn’t sound like they’re interested in fixing it. I also had to do some trickery to get Firefox to work (I ended up using our marionette framework directly instead), there are some bugs, not much traction there either.

IE and Safari are semi-automated, in that I launch a browser for you, you click a button, and then hit enter when it’s done. Safari’s WebDriver extension is completely broken, nobody seems to care. IE’s WebDriver completely failed at tabs (among other things), I’m not sure where to file a bug for that.

Edge is mostly manual, its WebDriver implementation doesn’t support what I need (yet), but it’s new so I’ll give it a pass. Also you can’t just launch the browser with a file path, so there’s that. Also note I was stuck running it in a VM from modern.ie which was pretty old (they don’t have a newer one). I’d prefer not to do that, but I couldn’t upgrade my Windows 7 machine to 10 because Microsoft, Linux, bootloaders and sadness.

I didn’t test Opera, sorry. It uses blink so hopefully the Chrome coverage is good enough.

The big picture

The numbers

| OS | Browser | Version | RSS + USS |
|---|---|---|---|
| OSX 10.10.5 | Chrome Canary | 50.0.2627.0 | 1,354 MiB |
| OSX 10.10.5 | Firefox Nightly (e10s) | 46.0a1 20160122030244 | 1,065 MiB |
| OSX 10.10.5 | Safari | 9.0.3 (10601.4.4) | 451 MiB |
| Ubuntu 14.04 | Google Chrome Unstable | 49.0.2618.8 dev (64-bit) | 944 MiB |
| Ubuntu 14.04 | Firefox Nightly (e10s) | 46.0a1 20160122030244 (64-bit) | 525 MiB |
| Windows 7 | Chrome Canary | 50.0.2631.0 canary (64-bit) | 1,132 MiB |
| Windows 7 | Firefox Nightly (e10s) | 47.0a1 20160126030244 (64-bit) | 512 MiB |
| Windows 7 | IE | 11.0.9600.18163 | 523 MiB |
| Windows 10 | Edge | 20.10240.16384.0 | 795 MiB |

So yeah, Chrome’s using about 2X the memory of Firefox on Windows and Linux. Let’s just read that again. That gives us a bit of breathing room.

It needs to be noted that Chrome is essentially doing 1 process per page in this test. In theory it’s configurable and I would have tried limiting its process count, but as far as I can tell they’ve let that feature decay and it no longer works. I should also note that Chrome has its own version of memshrink, Project TRIM, so memory usage is an area they’re actively working on.

Safari does creepily well. We could attribute this to close OS integration, but I would guess I’ve missed some processes. If you take it at face value, Safari is using 1/3 the memory of Chrome, 1/2 the memory of Firefox. Even if I’m miscounting, I’d guess they still outperform both browsers.

IE was actually on par with Firefox which I found impressive. Edge is using about 50% more memory than IE, but I wouldn’t read too much into that as I’m comparing running IE on Windows 7 to Edge on an outdated Windows 10 VM.

|

|

Nicolas Mandil: The Internet, a human need or a human right ? |

In his post The Internet is a Global Public Resource, Mark Surman asks whether we think the Internet ranks with other primary human needs.

Yes, it’s time to make the Internet a mainstream concern. It’s important that Mozilla, a mainstream FLOSS software maker, supports this, because we will need involvement along the whole consumption chain to succeed.

The Internet is important in our lives, and yes, it is becoming critical with automation, IoT, AI… because we will delegate more levels of decision to it, from a resource for decisions to decision making itself. However, I don’t see it as an absolute human need. It’s more of a consumer need: a (really nice and powerful) convenience.

To me, the question is more about the social contract, the context the Internet is used in: the (current situation of the) Internet is critical with regard to human rights more than human needs. Before being a life requirement, it’s a social condition. It’s more about democracy than life. More about people’s interactions than people’s lives.

Hence, if it’s about the social contract, which in our societies is expressed in our constitutions, we should express how the current state of the Internet works for or against our nations’ fundamental laws, and the values and hierarchy of norms they carry (such as which matters more between security and liberty, in which context, …).

If, as Mark proposed, we consider the parallels with the environmental movement, the question there moved from preserving a living species, to the diversity of living species, to the balance of a system we are part of. With the Internet, it’s the same thing: we need to spread the idea that it’s not only about one particular thing on its own, it’s about its interaction with our system and its impact on the current balance, which can drastically change the system’s rules.

To succeed, we should take into account the current questioning in society (fears and hopes) and the cultural crisis, to show how alignment with our mission builds an alternative with answers. That’s a critical condition if we want to be cohesive enough to have a massive impact. The social contract is a common narrative that defines a common identity on which we base the judgment of our actions.

For me, in my country, France, I would like to see a campaign that questions liberty, equality and fraternity against the Internet in my daily life and choices.

https://repeer.org/2016/02/12/the-internet-a-humain-need-or-a-human-right/

|

|

Majken Connor: Building Mozilla’s Core Strength |

I was lucky enough to be invited to “Mozlando” and was really pleased with the 3 pillars Chris Beard revealed for Mozilla in 2016. Especially the concept of building core strength. As a ballet dancer I’ve actually used this analogy myself when making suggestions for projects I’ve been involved in. Of course the issue always is turning concepts into practice, getting people to actually apply them practically when they go back to work.

Community should be one of Mozilla’s core strengths. It’s been taken for granted, not very well understood, and no one seems to really be sure how to measure its strength let alone build it. There are some really great people trying to approach the problem from different angles, but I think we’re overlooking the basics and not applying knowledge and process that we already have.

Minimum Viable Product

I assume most of you reading this are familiar enough with Agile best practices to recognize this term. George Roter brought it with him to the Participation Team (or at least used the term more often than his predecessors). We need to identify the MVP for community strength at Mozilla. What is the least thing that needs to happen to be able to say we have a healthy community? We need to identify it, and then realign everything necessary until we’re capable of shipping it.

Mobilize the Base

I think anyone that works with a movement understands that if you can’t mobilize your base, then you’re lost. It’s the definition of a movement. Mozilla has hundreds and thousands of volunteers, and volunteers-in-waiting. It’s a massive untapped resource that should be the single litmus test of Mozilla’s success:

Can Mozilla mobilize its base?

The answer is pretty clear, whether or not it can, it doesn’t. I think this should be the single most important goal for 2016, and it supports all the initiatives already identified. Technical teams are focusing on making what’s already in the browser better. That’s great, because you need to give your base a product it can believe in if you want them to be moved to action. Done on its own, it’s much harder to come up with a direction, or a definition of success. But if you’re trying to get your base excited about your product again, all of a sudden you have something you can measure, and someone to whom you can listen to guide your progress.

Obviously where this weakness exposes itself the most is in non-technical teams and especially in marketing. In this age of viral advertising and the sharing economy, Mozilla came with a built-in network of savvy internet citizens. Getting our message out there should be our core strength. We should have a network of professionals who know how to leverage that network. We should be intentionally investing in attracting and retaining those members of the community that can be mobilized, and cutting out practices that don’t further this goal. No other problem should be prioritized above this.

This must be our core strength, our minimum viable product. We make products but ultimately we’re an organization built on a mission, on values, on a movement. We must be able to mobilize our base before we can accomplish anything else worth accomplishing.

No one should be able to beat us at this.

This isn’t a nice-to-have.

This is how we survive.

|

|

Chris Cooper: RelEng & RelOps Weekly Highlights - February 12, 2016 |

This past week, the release engineering managers – Amy, catlee, and coop (hey, that’s me!) – were in Washington, D.C. meeting up with the other managers who make up the platform operations management team. Our goal was to try to improve the ways we plan, prioritize, and cooperate. I thought it was quite productive. I’ll have more to say about it next week once I gather my thoughts a little more.

Everyone else was *very* busy while we were away. Details are below.

Modernize infrastructure:

Dustin deployed a change to the TaskCluster authorization service to support “named temporary credentials”. With this change, credentials can come with a unique name, allowing better identification, logging, and auditing. This is a part of Dustin’s work to implement “TaskCluster Login v3” which should provide a smoother and more flexible way to connect to TaskCluster and create credentials for all of the other tasks you need to perform.

Windows 10 in the cloud is being tested. All the ground work is done to make golden AMIs, mirroring the first stages of work done for Windows 7 in the cloud. Being able to perform some subset of Windows 10 testing in the cloud should allow us to purchase less hardware than we had originally anticipated for this quarter.

Improve CI pipeline:

One of the subjects discussed at Mozlando was improving the overall integration of localization (l10n) builds with our continuous integration (CI) system. Mike fixed an l10n packaging bug this week that I first remember looking at over 4 years ago. This fix allows us to properly test l10n packaging of Mac builds in a release configuration on check-in, thereby avoiding possible headaches later in the release cycle. (https://bugzil.la/700997)

Armen, Joel, Dustin, and Greg worked together to green up even more Linux test jobs in TaskCluster. Among other things, this involved upgrading to the latest Docker (1.10.0) and diagnosing some test runner scripts which use 1.3GB of RAM – not counting the Firefox binaries they run! This project has already been a long slog, but we are constantly making progress and will soon have all jobs in-tree at Tier 2.

Release:

Ben and Nick started designing a new Balrog feature that will make it possible to change update rules in response to certain events. Ben is planning to blog about this in more detail next week.

It was a busy week for releases. Many were shipped or are still in-flight:

- Firefox/Fennec 45.0b3

- Firefox/Fennec 45.0b4

- Firefox 44.0.1, quickly followed by Firefox/Fennec 44.0.2 due to a security issue

- Firefox 38.6.1esr

- Thunderbird 45.0b1 was shipped to Windows and Mac populations. We are planning a follow-up 45.0b2 in the near future to pick up the swap back to gtk2 for Linux users.

- Firefox 45.0b5 (in progress)

- Thunderbird 38.6.0 (in progress)

As always, you can find more specific release details in our post-mortem minutes: https://wiki.mozilla.org/Releases:Release_Post_Mortem:2016-02-10 and https://wiki.mozilla.org/Releases:Release_Post_Mortem:2016-02-17

Operational:

Kim landed a patch to enable Mac OS X 10.10.5 testing on try by default and disable 10.6 testing. This allowed us to disable some old r5 machines and install around 30 new 10.10.5 machines and enable them in production. Hooray for increased capacity! (https://bugzil.la/1239731)

See you next week!

|

|

Andrew Halberstadt: The Zen of Mach |

Mach is the Mozilla developer's Swiss Army knife. It gathers all the important commands you'll ever need to run, and puts them in one convenient place. Instead of hunting down documentation, or asking for help on IRC, often a simple |mach help| is all that's needed to get you started. Mach is great. But lately, mach is becoming more like the Mozilla developer's toolbox. It still has everything you need but it weighs a ton, and it takes a good deal of rummaging around to find anything.

Frankly, a good deal of the mach commands that exist now are either poorly written, confusing to use, or even have no business being mach commands in the first place. Why is this important? What's wrong with having a toolbox?

Here's a quote from an excellent article on engineering effectiveness from the Developer Productivity lead at Twitter:

Finally there’s a psychological aspect to providing good tools to engineers that I have to believe has a really (sic) impact on people’s overall effectiveness. On one hand, good tools are just a pleasure to work with. On that basis alone, we should provide good tools for the same reason so many companies provide awesome food to their employees: it just makes coming to work every day that much more of a pleasure. But good tools play another important role: because the tools we use are themselves software, and we all spend all day writing software, having to do so with bad tools has this corrosive psychological effect of suggesting that maybe we don’t actually know how to write good software. Intellectually we may know that there are different groups working on internal tools than the main features of the product but if the tools you use get in your way or are obviously poorly engineered, it’s hard not to doubt your company’s overall competence.

Working with good tools is a pleasure. Rather than breaking mental focus, they keep you in the zone. They do not deny you your zen. Mach is the frontline: it is the main interface to Mozilla for most developers. For this reason, it's especially important that mach and all of its commands are an absolute joy to use.

There is already good documentation for building a mach command, so I'm not going to go over that. Instead, here are some practical tips to help keep your mach command simple, intuitive and enjoyable to use.

Keep Logic out of It

As awesome as mach is, it doesn't sprinkle magic fairy dust on your messy jumble of code to make it smell like a bunch of roses. So unless your mach command is trivial, don't stuff all your logic into a single mach_commands.py. Instead, create a dedicated Python package that contains all your functionality, and turn your mach_commands.py into a dumb dispatcher. This Python package will henceforth be called the 'underlying library'.

Doing this makes your command more maintainable, more extensible and more reusable. It's a no-brainer!
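To make the split concrete, here is a minimal sketch of a dumb dispatcher. The `count_todos` function and the `count-todos` command are hypothetical names invented for illustration, and the `try`/`except` fallback is an assumption added only so the sketch also runs outside a mozilla-central checkout.

```python
# --- underlying library (hypothetical, e.g. mylib/core.py) -----------------
def count_todos(lines, marker="TODO"):
    """All real logic lives here, so it is testable and reusable without mach."""
    return sum(1 for line in lines if marker in line)


# --- mach_commands.py: nothing but a dispatcher ----------------------------
try:
    from mach.decorators import Command, CommandProvider
except ImportError:
    # Stand-ins (not part of mach) so the sketch runs without mach installed.
    def CommandProvider(cls):
        return cls

    def Command(*args, **kwargs):
        return lambda func: func


@CommandProvider
class MachCommands(object):
    @Command('count-todos', category='misc',
             description='Count TODO markers in the given lines.')
    def run_count_todos(self, lines=(), marker="TODO"):
        # In a real tree this would be a function-local import of the
        # underlying library; here the function is simply defined above.
        return count_todos(lines, marker=marker)
```

The dispatcher method contains no logic of its own, so the same `count_todos` function can also back a standalone CLI or a unit test.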

No Global Imports

Other than things that live in the stdlib, mozbuild or mach itself, don't import anything in a mach_commands.py's global scope. Doing this will evaluate the imported file any time the mach binary is invoked. No one wants your module to load itself when running an unrelated command or |mach help|.

It's easy to see how this can quickly add up to be a huge performance cost.

Re-use the Argument Parser

If your underlying library has a CLI itself, don't redefine all the arguments with @CommandArgument decorators. Your redefined arguments will get out of date, and your users will become frustrated. It also encourages a pattern of adding 'mach-only' features, which seem like a good idea at first but, as I explain in the next section, lead down a bad path.

Instead, import the underlying library's ArgumentParser directly. You can do this by using the parser argument to the @Command decorator. It'll even conveniently accept a callable so you can avoid global imports. Here's an example:

```python
from mach.decorators import (
    Command,
    CommandProvider,
)


def setup_argument_parser():
    # Imported lazily, so mymodule is only loaded when this command runs.
    from mymodule import MyModuleParser
    return MyModuleParser()


@CommandProvider
class MachCommands(object):
    @Command('mycommand', category='misc', description='does something',
             parser=setup_argument_parser)
    def mycommand(self, **kwargs):
        # arguments from MyModuleParser are in kwargs
        pass
```

If the underlying ArgumentParser has arguments you'd like to avoid exposing to your mach command, you can use argparse.SUPPRESS to hide them from the help.
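As a rough illustration of the argparse.SUPPRESS trick, the sketch below uses a made-up parser. The `build_parser` function, its `hide_internal` parameter and the `--legacy-harness` flag are all assumptions for the sake of the example, not part of any real module.

```python
import argparse


def build_parser(hide_internal=False):
    """Hypothetical stand-in for the underlying library's ArgumentParser."""
    parser = argparse.ArgumentParser(prog="mymodule")
    parser.add_argument("--verbose", action="store_true",
                        help="Print more output.")
    parser.add_argument(
        "--legacy-harness", action="store_true",
        # help=argparse.SUPPRESS keeps the flag working but leaves it out
        # of the generated usage and help text.
        help=argparse.SUPPRESS if hide_internal else "Use the old harness.")
    return parser
```

The hidden flag is still parsed normally, so existing scripts that pass it keep working. It just stops cluttering the help output.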

Don't Treat the Underlying Library Like a Black Box

Sometimes the underlying library is a huge mess. It can be very tempting to treat it like a black box and use your mach command as a convenient little fantasy-land wrapper where you can put all the nice things without having to worry about the darkness below.

This situation is temporary. You'll quickly make the situation way worse than before, as not only will your mach command devolve into a similar state of darkness, but now changes to the underlying library can potentially break your mach command. Just suck it up and pay a little technical debt now, to avoid many times that debt in the future. Implement all new features and UX improvements directly in the underlying library.

Keep the CLI Simple

The command line is a user interface, so put some thought into making your command usable and intuitive. It should be easy to figure out how to use your command simply by looking at its help. If you find your command's list of arguments growing to a size of epic proportions, consider breaking your command up into subcommands with a @SubCommand decorator.

Rather than putting the onus on your user to choose every minor detail, make the experience more magical than a Disney band.
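The same idea can be sketched in the underlying library with plain argparse subparsers, rather than mach's own decorator. The `mytool` program and its `run` and `clean` subcommands are hypothetical.

```python
import argparse


def build_parser():
    """Split one crowded CLI into focused subcommands (hypothetical tool)."""
    parser = argparse.ArgumentParser(prog="mytool")
    subparsers = parser.add_subparsers(dest="action")
    subparsers.required = True  # a subcommand must always be given

    run = subparsers.add_parser("run", help="Run the tests.")
    # Sensible default, so the user is not forced to choose every detail.
    run.add_argument("paths", nargs="*", default=["."],
                     help="Paths to test (defaults to the current directory).")

    clean = subparsers.add_parser("clean", help="Remove generated files.")
    clean.add_argument("--dry-run", action="store_true",
                       help="Only list what would be removed.")
    return parser
```

Each subcommand gets its own short help page, and options that only make sense for one action no longer show up for the others.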

Be Annoyingly Helpful When Something Goes Wrong

You want your mach command to be like one of those super helpful customer service reps. The ones with the big fake smiles and reassuring voices. When something goes wrong, your command should calm your users and tell them everything is ok, no matter what crazy environment they have.

Instead of printing an error message, print an error paragraph. Use natural language. Include all relevant paths and details. Format it nicely. Create separate paragraphs for each possible failure. But most importantly, only be annoying after something went wrong.
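Here is one way such an error paragraph might be put together. The function name, the wording and the missing-build scenario are all made up for illustration.

```python
import textwrap


def format_missing_build_error(objdir, command):
    """Return a multi-paragraph, plain-language error for a missing build."""
    template = textwrap.dedent("""\
        It looks like there is no finished build in:

            {objdir}

        |mach {command}| needs a build before it can run. To fix this,
        run |mach build| and then try |mach {command}| again.

        If you build into a different directory, check the MOZ_OBJDIR
        setting in your mozconfig.
    """)
    # Include the relevant path and command so the user does not have to guess.
    return template.format(objdir=objdir, command=command)
```

The message is only built when the failure actually happens, so the command stays quiet on the happy path.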

Use Conditions Liberally

A mach command will only be enabled if all of its condition functions return True. This keeps the global |mach help| free of clutter, and makes it painfully obvious when your command is or isn't supposed to work. A command that only works on Android shouldn't show up for a Firefox desktop developer. That only leads to confusion.

Here's an example:

```python
from mach.decorators import (
    Command,
    CommandProvider,
)
from mozbuild.base import (
    MachCommandBase,
    MachCommandConditions as conditions,
)


@CommandProvider
class MachCommands(MachCommandBase):
    # conditions takes a list of condition functions.
    @Command('mycommand', category='post-build', description='does stuff',
             conditions=[conditions.is_android])
    def mycommand(self):
        pass
```

If the user does not have an active fennec objdir, the above command will not show up by default in |mach help|, and trying to run it will display an appropriate error message.

Design Breadth First

Put another way, keep the big picture in mind. It's ok to implement a mach command with super specific functionality, but try to think about how it will be extended in the future and build with that in mind. We don't want a situation where we clone a command to do something only slightly differently (e.g. |mach mochitest| and |mach mochitest-b2g-desktop| from back in the day) because the original wasn't extensible enough.

It's good to improve a very specific use case that impacts a small number of people, but it's better to create a base upon which other slightly different use cases can be improved as well.

Take a Breath

Congratulations, now you are a mach guru. Take a breath, smell the flowers and revel in the satisfaction of designing a great user experience. But most importantly, enjoy coming into work and getting to use kick-ass tools.

|

|

Christian Heilmann: Answering some questions about developer evangelism |

I just had a journalist ask me to answer a few questions about developer evangelism and I did so on the train ride. Here are the un-edited answers for your perusal.

In your context, what’s a developer evangelist?

As defined quite some time ago in my handbook (http://developer-evangelism.com/):

“A developer evangelist is a spokesperson, mediator and translator between a company and both its technical staff and outside developers.”

This means first and foremost that you are a technical person who is focused on making your products understandable and maintainable.

This includes writing easy-to-understand code examples, writing documentation, and helping the engineering staff in your company find its voice and get out of the mindset of building things mostly for themselves.

It also means communicating technical needs and requirements to the non-technical staff and, in many cases, preventing marketing from over-promising or being too focused on your own products.

As a developer evangelist your job is to have the finger on the pulse of the market. This means you need to know about the competition and general trends as much as what your company can offer. Meshing the two is where you shine.

How did you get to become one?

I ran into the classic wall we have in IT: I’ve been a developer for a long time and advanced in my career to lead developer, department lead and architect. In order to advance further, the only path would have been management and discarding development. This is a big issue we have in our market: we seemingly value technical talent above all but we have no career goals to advance to beyond a certain level. Sooner or later you’d have to become something else. In my case, I used to be a radio journalist before being a developer, so I put the skillsets together and proposed the role of developer evangelist to my company. And that’s how it happened.

What are some of your typical day-to-day duties?

- Helping product teams write and document good code examples

- Find, filter, collate and re-distribute relevant news

- Answer pull requests, triage issues and find new code to re-use and analyse

- Help phrasing technical responses to problems with our products

- Keep in contact with influencers and ensure that their requests get answered

- Coach and mentor colleagues to become better communicators

- Prepare articles, presentations and demos

- Conference and travel planning

How often do you code?

As often as I can. Developer Evangelism is a mixture of development and communication. If you don’t build the things you talk about it is very obvious to your audience. You need to be trusted by your technical colleagues to be a good communicator on their behalf, and you can’t be that when all you do is powerpoints and attend meetings. At the same time, you also need to know when not to code and let others shine, giving them your communication skills to get people who don’t understand the technical value of their work to appreciate them more.

What’s the primary benefit enterprises hope to gain by employing developer evangelists?

The main benefit is developer retention and acquisition. Especially in the enterprise it is hard to attract new talent in today’s competitive environment. By showing that you care about your products and that you are committed to giving your technical staff a voice you give prospective hires a future goal that not many companies have for them. Traditional marketing tends to not work well with technical audiences. We have been promised too much too often. People trust the voice of people they can relate to, and for a technical audience that is a developer evangelist or advocate (as other companies prefer to call the role). A secondary benefit is that people start talking about your product on your behalf if they heard about it from someone they trust.

What significant challenges have you met in the course of your developer evangelism?

There is still quite some misunderstanding of the role. Developers keep asking you how much you code, assuming you betrayed the cause and run the danger of becoming yet another marketing shill. Non-technical colleagues and management have a hard time measuring your value and expect things to happen very fast. Marketing departments have been very good over the years at showing impressive numbers. For a developer evangelist this is tougher as developers hate being measured and don’t want to fill out surveys. The impact of your work is sometimes only obvious weeks or months later. That is an investment that is hard to explain at times. The other big challenge is that companies tend to think of developer evangelism as a new way of marketing and that people who used to do marketing can easily transition into the role by opening a GitHub account. They can’t. It is a technical role and your “street cred” in the developer world is something you need to have earned before you can transition. In the same way, you keep getting compared to developers and measured by how much code you’ve written. A large part of your job after a while is collecting feedback and measuring the success of your evangelism in terms of technical outcome. You need to show numbers and it is tough to get them as there are only 24 hours in a day.

Another massive issue is that companies expect you to be a massive fan of whatever they do when you are an evangelist there. This is one part, but it is also very important that you are the biggest constructive critic. Your job isn’t to promote a product right or wrong, your job is to challenge your company to build things people want and you can get people excited about without dazzling them.

What significant rewards have you achieved in the course of your developer evangelism?

The biggest win for me is the connections you form and to see people around you grow because you promote them and help them communicate better. One very tangible reward is that you meet exciting people you want to work with and then get a chance to get them hired (which also means a hiring bonus for you).

One main difference I found when transitioning was that when you get the outside excited your own company tends to listen to your input more. As developers we think our code speaks for itself, but seeing that we always get asked to build things we don’t want to should show us that by becoming better communicators we could lead happier lives with more interesting things to create.

What personality traits do you see as being important to being a successful developer evangelist?

You need to be a good communicator. You need to not be arrogant and sure that you and only you can build great things but instead know how to inspire people to work with you and let them take the credit. You need to have a lot of patience and a thick skin. You will get a lot of attacks and you will have to work with misunderstandings and prejudices a lot of times. And you need to be flexible. Things will not always go the way you want them to, and you simply cannot be publicly grumpy about this. Above all, it is important to be honest and kind. You can’t get away with lies and whilst bad-mouthing the competition will get you immediate results it will tarnish your reputation quickly and burn bridges.

What advice would you give to people who would like to become a developer evangelist?

Start by documenting your work and writing about it. Then get up to speed on your presenting skills. You do that by repetition and by not being afraid of failure. We all hate public speaking, and it is important to get past that fear. Mingle, go to meetups and events and analyse talks and articles of others and see what works for you and is easy for you to repeat and reflect upon. Excitement is the most important part of the job. If you’re not interested, you can’t inspire others.

How do you see the position evolving in the future?

Sooner or later we’ll have to make this an official job term across the market and define the skillset and deliveries better than we do now. Right now there is a boom and far too many people jump on the train and call themselves Developer “Somethings” without being technically savvy in that part of the market at all. There will be a lot of evangelism departments closing down in the nearer future as the honeymoon boom of mobile and apps is over right now. From this we can emerge more focused and cleaner.

A natural way to find evangelists in your company is to support your technical staff to transition into the role. Far too many companies right now try to hire from the outside and get frustrated when the new person is not a runaway success. They can’t be. It is all about trust, not about numbers and advertising.

https://www.christianheilmann.com/2016/02/12/answering-some-questions-about-developer-evangelism/

|

|

Karl Dubost: [worklog] A week with pretty funny bugs |

From the window the shadow of the matsu (

|

|

Mark Surman: MoFo 2016 Goals + KPIs |

Earlier this month, we started rolling out Mozilla Foundation’s new strategy. The core goal is to make the health of the open internet a mainstream issue globally. We’re going to do three things to make this happen: shape the agenda; connect leaders; and rally citizens. I provided an overview of this strategy in another post back in December.

As we start rolling out this strategy, one of our first priorities is figuring out how to measure both the strength and the impact of our new programs. A team across the Foundation has spent the past month developing an initial plan for this kind of measurement. We’ve posted a summary of the plan in slides (here) and the full plan (here).

Preparing this plan not only helped us get clear on the program qualities and impact we want to have, it also helped us come up with a crisper way to describe our strategy. Here is a high level summary of what we came up with:

1. Shape the agenda

Impact goal: our top priority issues are mainstream issues globally (e.g. privacy).

Measures: citations of Mozilla / MLN members, public opinion

2. Rally citizens

Strength goal: rally 10s of millions of people to take action and change how they — and their friends — use the web.

Measures: # of active advocates, list size

Impact goal: people make better, more conscious choices. Companies and governments react with better products and laws.

Measures: per campaign evaluation, e.g. educational impact or did we defeat bad law?

3. Connect leaders

Strength goal: build a cohesive, world class network of people who care about the open internet.

Measures: network strength; includes alignment, connectivity, reach and size

Impact goal: network members shape + spread the open internet agenda.

Measures: participation in agenda-setting, citations, influence evaluation

Last week, we walked through this plan with the Mozilla Foundation board. What we found: it turns out that looking at metrics is a great way to get people talking about the intersection of high level goals and practical tactics. E.g. we need to be thinking about tools other than email as we grow our advocacy work outside of Europe and North America.

If you’re involved in our community or just following along with our plans, I encourage you to open up the slides and talk them through with some other people. My bet is they will get you thinking in new and creative ways about the work we have ahead of us. If they do, I’d love to hear thoughts and suggestions. Comments, as always, welcome on this post and by email.

The post MoFo 2016 Goals + KPIs appeared first on Mark Surman.

http://marksurman.commons.ca/2016/02/11/mofo-2016-goals-kpis/

|

|

Christian Heilmann: Making ES6 available to all with ChakraCore – A talk at JFokus2016 |

Today I gave two talks at JFokus in Stockholm, Sweden. This is the one about JavaScript and ChakraCore.

Presentation: Making ES6 available to all with ChakraCore

Christian Heilmann, Microsoft

2015 was a year of massive JavaScript innovation and changes. Lots of great features were added to the language, but using them was harder than before as not all features are backwards compatible with older browsers. Now browsers have caught up, and with the open sourcing of ChakraCore you have a JavaScript runtime to embed in your products and reliably have ECMAScript support. Chris Heilmann of Microsoft tells the story of the language and the evolution of the engine and leaves you with a lot of tips and tricks on how to benefit from the new language features in a simple way.

I wrote the talk the night before, and thought I’d structure it the following way:

- Old issues

- The learning process

- The library/framework issue

- The ES6 buffet

- Standards and interop

- Breaking monopolies

Slides

The Slide Deck is available on Slideshare.

Screencast

A screencast of the talk is on YouTube

Resources:

- Learn ES2015 on BabelJS

- ES6 overview in 350 Bullet Points

- Exploring ES6 by Axel Rauschmayer

- Talk: Dependency Hell Just Froze Over by Stephan Bönnemann (JSConfEU 2015)

- Talk: You should use [insert library/framework], it’s the bestestest! by Paul Lewis (Full Frontal 2015)

- Big Rig

- The Cost of Frameworks

- this and arrow functions

- ECMAScript browser support table

- BabelJS Transpiler

- The Cost of Transpiling ES2015 to ES5

- The ES6 Conundrum

- ChakraCore and Time Travel Debugging

- Submitting ChakraCore to the NodeJS mainline

- Open Sourcing Chakra Core

- ChakraCore on GitHub

|

|