Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

Air Mozilla: Web QA Weekly Meeting, 07 Jan 2016 |

This is our weekly gathering of Mozilla's Web QA team, filled with discussion on our current and future projects, ideas, demos, and fun facts.

|

|

Air Mozilla: Reps weekly, 07 Jan 2016 |

This is a weekly call with some of the Reps to discuss all matters about/affecting Reps and invite Reps to share their work with everyone.

|

|

Alex Johnson: get_apk mozharness |

I recently learned object-oriented Python programming by working on Bug 1151954, which ported get_apk.sh to mozharness so it can be used with push_apk.py.

Thank you Sylvestre for the review and for being patient with me as I was learning how mozharness works.

Mozilla continues to help me learn and be a better programmer.

|

|

Mozilla Security Blog: Man-in-the-Middle Interfering with Increased Security |

According to the plan we published earlier for deprecating SHA-1, on January 1, 2016, Firefox 43 began rejecting new certificates signed with the SHA-1 digest algorithm. For Firefox users with unfiltered access to the Internet, this change probably went unnoticed, since there simply aren’t that many new SHA-1 certs being used. However, for Firefox users who are behind certain “man-in-the-middle” devices (including some security scanners and antivirus products), this change removed their ability to access HTTPS web sites. When a user tries to connect to an HTTPS site, the man-in-the-middle device sends Firefox a new SHA-1 certificate instead of the server’s real certificate. Since Firefox rejects new SHA-1 certificates, it can’t connect to the server.

How to tell if you’re affected

If you can access this article in Firefox, you’re fine. If you’re reading this in another browser, see if you can load the security blog (or any other HTTPS link) in Firefox. If the connection fails, click “Advanced” on the error page; if you see the error code “SEC_ERROR_CERT_SIGNATURE_ALGORITHM_DISABLED”, then you’re affected.

What to do if you’re affected

The easiest thing to do is to install the newest version of Firefox. You will need to do this manually, using an unaffected copy of Firefox or a different browser, since we only provide Firefox updates over HTTPS.

If you want to avoid reinstalling, advanced users can fix their local copy of Firefox by going to about:config and changing the value of “security.pki.sha1_enforcement_level” to 0 (which will accept all SHA-1 certificates).

You should also make sure that any systems you have that might be doing man-in-the-middle are up to date, for example, some anti-virus software or security scanning devices. Some vendors have removed the use of SHA-1 in recent updates.

Commitment to deprecate SHA-1

We are still committed to removing support for SHA-1 certificates from Firefox. The latest version of Firefox re-enables support for SHA-1 certificates to ensure that we can get updates to users behind man-in-the-middle devices, and to enable us to better evaluate how many users might be affected. Vendors of TLS man-in-the-middle systems should be working to update their products to use newer digest algorithms.

https://blog.mozilla.org/security/2016/01/06/man-in-the-middle-interfering-with-increased-security/

|

|

About:Community: Mozilla’s Strategic Narrative for 2016 and Beyond |

All around the world, passionate Mozillians are working to move Mozilla’s mission forward. But if you asked five different Mozillians what the mission is, you might get seven different answers.

At the end of last year, Mozilla’s CEO, Chris Beard, presented a clear articulation of Mozilla’s mission, vision and role, and of how our products will help us get there in the next five years. The goal of this Strategic Narrative is to create, for all of Mozilla, a concise, shared understanding of our goals that we can use to be more empowered as individuals to make decisions and identify opportunities to move Mozilla forward.

In order for Mozilla to achieve its mission, we cannot work alone. We need all of the thousands of Mozillians around the world aligned behind this, so that we can do incredible things at a pace and with a voice that is louder than ever before.

That is why one of the Participation Team’s six strategic initiatives for the first half of this year is to spread this narrative to as many Mozillians as possible so that in 2016 we can have our largest impact yet. We will also be creating a follow-up post that will do a deep dive specifically on the Participation Team strategy for 2016.

Understanding this strategy will be crucial for anyone looking to make an impact at Mozilla this year, as it will determine what we advocate for, how we focus our resources, and which projects we focus on for 2016.

As the year kicks off we will dive more deeply into this strategy and share more details of how the various teams and projects around Mozilla are working to further these goals.

The immediate call to action is to think about this in the context of your work and about how you will contribute to Mozilla next year, so that it helps shape your innovations, your ambitions and your impact for 2016.

We hope you will join the conversation and share your questions, comments, and plans for what you will do to drive the strategic narrative forward in 2016 on discourse here or share your thoughts on Twitter with the hashtag #Mozilla2016Strategy.

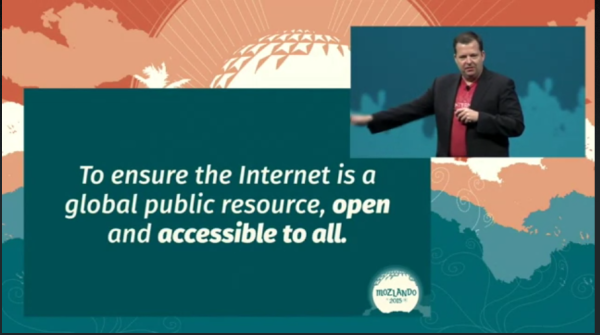

Mission, Vision & Strategy

Our Mission

To ensure the Internet is a global public resource open and accessible to all.

Our Vision

An Internet that truly puts people first. An Internet where individuals can shape their own experience. An Internet where people are empowered, safe and independent.

Our Role

Mozilla is a true advocate for you in your online life. We advocate for you both within your online experience & on your behalf for the health of the Internet.

Our Work

Our Pillars

- Products: We build people-centered products & educational experiences that help people unlock the full potential of their online life.

- Technology: We develop robust technical solutions that bring the Internet to life across multiple platforms.

- People: We develop community leaders and contributors who will invent, shape and defend the Internet.

How we lock-in positive change for the long-term.

The “how” matters just as much as the “what”. Our health and lasting impact depend upon how much our products and activities:

- Promote Interoperability, Open Source & Open Standards

- Grow & Nurture Communities

- Champion Policy & Legal Protections

- Educate and activate digital citizens

http://blog.mozilla.org/community/2016/01/06/mozillas-strategic-narrative-for-2016-and-beyond/

|

|

Roberto A. Vitillo: Telemetry meets Parquet |

In Mozilla’s Telemetry land, raw JSON pings are stored on S3 within files containing framed Heka records, which form our immutable, append-only master dataset. Reading the raw data with Spark can be slow for analyses that read only a handful of fields per record from the thousands available; not to mention the cost of parsing the JSON blobs in the first place.

We are slowly moving away from JSON to a more OLAP-friendly serialization format for the master dataset, which requires defining a proper schema. Given that we have been collecting data in big JSON payloads since the beginning of time, different subsystems have been using various, in some cases schema un-friendly, data layouts. That and the fact that we have thousands of fields embedded in a complex nested structure makes it hard to retrospectively enforce a schema that matches our current data, so a change is likely not going to happen overnight.

Until recently the only way to process Telemetry data with Spark was to read the raw data directly, which isn’t efficient but gets the job done.

Batch views

Some analyses require filtering out a considerable amount of data before the actual workload can start. To improve the efficiency of such jobs, we started defining derived streams, or so-called pre-computed batch views in lambda architecture lingo.

A batch view is regenerated or updated daily and, in a mathematical sense, it’s simply a function over the entire master dataset. The view usually contains a subset of the master dataset, possibly transformed, with the objective to make analyses that depend on it more efficient.

As a concrete example, we have run an A/B test on Aurora 43 in which we disabled or enabled Electrolysis (E10s) based on a coin flip (note that E10s is enabled by default on Aurora). In this particular experiment we were aiming to study the performance of E10s. The experiment had a lifespan of one week per user. As some of our users access Firefox sporadically, we couldn’t just sample a few days worth of data and be done with it as ignoring the long tail of submissions would have biased our results.

We clearly needed a batch view of our master dataset that contained only submissions for users that were currently enrolled in the experiment, in order to avoid an expensive filtering step.
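As a rough illustration of the idea that a batch view is simply a function over the master dataset, here is a minimal Rust sketch. It is not the Spark job used for the experiment: the `Ping` type, its fields, the experiment id string and the helper names are all hypothetical, and the sample data is made up.

```rust
// Hypothetical, heavily simplified ping; real Telemetry pings carry
// thousands of nested fields.
#[derive(Debug, Clone, PartialEq)]
struct Ping {
    client_id: u32,
    experiment: Option<String>, // experiment the client is enrolled in, if any
    e10s_enabled: bool,
}

// The batch view as a pure function of the master dataset: keep only
// submissions from clients enrolled in the given experiment.
fn experiment_view(master: &[Ping], experiment_id: &str) -> Vec<Ping> {
    master
        .iter()
        .filter(|p| p.experiment.as_deref() == Some(experiment_id))
        .cloned()
        .collect()
}

// Made-up sample data standing in for the master dataset.
fn sample_master() -> Vec<Ping> {
    vec![
        Ping { client_id: 1, experiment: Some("e10s-experiment".to_string()), e10s_enabled: true },
        Ping { client_id: 2, experiment: None, e10s_enabled: true },
        Ping { client_id: 3, experiment: Some("e10s-experiment".to_string()), e10s_enabled: false },
    ]
}

fn main() {
    let master = sample_master();
    let view = experiment_view(&master, "e10s-experiment");
    println!("kept {} of {} pings", view.len(), master.len());
    for ping in &view {
        println!("client {} e10s={}", ping.client_id, ping.e10s_enabled);
    }
}
```

Analyses that only care about the experiment can then start from the much smaller view instead of repeating the filter over the whole dataset.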

Parquet

We decided to serialize the data for our batch views back to S3 as Parquet files. Parquet is a columnar storage format that is slowly becoming the lingua franca of Hadoop’s ecosystem, as it can be read and written from e.g. Hive, Pig & Spark.

Conceptually it’s important to clarify that Parquet is just a storage format, i.e. a binary representation of the data, and it relies on object models, like the one provided by Avro or Thrift, to represent the data in memory. A set of object model converters is provided to map between the in-memory representation and the storage format.

Parquet supports efficient compression and encoding schemes, which are applied on a per-column level where the data tends to be homogeneous. Furthermore, as Spark can load Parquet files into a DataFrame, a Python analysis can potentially experience the same performance as a Scala one thanks to a unified physical execution layer.

A Parquet file is composed of:

- row groups, which contain a subset of rows – a row group is composed of a set of column chunks;

- column chunks, which contain values for a specific column – a column chunk is partitioned in a set of pages;

- pages, which are the smallest level of granularity for reads – compression and encoding are applied at this level of abstraction;

- a footer, which contains the schema and some other metadata.
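The nesting described in the list above can be sketched with a few Rust types. This only illustrates the logical layout: it is neither the Parquet on-disk encoding nor the API of any Parquet library, and all type, field and function names are invented for the example.

```rust
// Illustrative nesting only: this is neither the Parquet on-disk
// encoding nor the API of any Parquet library.
struct Page { values: Vec<i64> }                         // smallest unit of reading
struct ColumnChunk { column: String, pages: Vec<Page> }  // values of one column
struct RowGroup { columns: Vec<ColumnChunk> }            // a subset of the rows
struct Footer { schema: Vec<String> }                    // schema and metadata
struct ParquetFile { row_groups: Vec<RowGroup>, footer: Footer }

// Reading a single column only visits that column's chunks in each
// row group; chunks of other columns are skipped entirely.
fn read_column(file: &ParquetFile, name: &str) -> Vec<i64> {
    file.row_groups
        .iter()
        .flat_map(|rg| rg.columns.iter())
        .filter(|c| c.column == name)
        .flat_map(|c| c.pages.iter())
        .flat_map(|p| p.values.iter().copied())
        .collect()
}

// Helper that builds a column chunk from per-page value lists.
fn chunk(column: &str, pages: Vec<Vec<i64>>) -> ColumnChunk {
    ColumnChunk {
        column: column.to_string(),
        pages: pages.into_iter().map(|values| Page { values }).collect(),
    }
}

// Made-up file with two row groups and two columns, "a" and "b".
fn sample_file() -> ParquetFile {
    ParquetFile {
        row_groups: vec![
            RowGroup {
                columns: vec![
                    chunk("a", vec![vec![1, 2], vec![3]]),
                    chunk("b", vec![vec![10, 20, 30]]),
                ],
            },
            RowGroup {
                columns: vec![chunk("a", vec![vec![4]]), chunk("b", vec![vec![40]])],
            },
        ],
        footer: Footer { schema: vec!["a".to_string(), "b".to_string()] },
    }
}

fn main() {
    let file = sample_file();
    println!("schema: {:?}", file.footer.schema);
    println!("column a: {:?}", read_column(&file, "a"));
}
```

The point of the sketch is the access pattern: an analysis that needs one field out of thousands touches only the matching column chunks.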

As batch views typically use only a subset of the fields provided in our Telemetry payloads, the problem of defining a schema for such subset becomes a non-issue.

Benefits

Unsurprisingly, we have seen speed-ups of up to a couple of orders of magnitude in some analyses and a reduction of file size by up to 8x compared to files having the same compression scheme and content (no JSON, just framed Heka records).

Each view is generated with a Spark job. As a concrete example, E10sExperiment.scala generates the batch view for the E10s experiment cited above. Views can be easily added as most of the boilerplate code is taken care of. In the future Heka is likely going to produce the views directly, once it supports Parquet natively.

|

|

Doug Belshaw: Digital Skills and Digital Literacy: knowing the difference and teaching both |

I was delighted to be notified on Twitter that a recent Literacy Today article heavily features my work around digital literacies. The main article by Baha Mali is accompanied by a ‘sidebar’ by Ian O'Byrne (a friend and valuable contributor to the Web Literacy Map work I led at Mozilla).

The focus of the article is on the Eight Essential Elements of Digital Literacies that I outline in my thesis, TEDx talk, and ebook.

Update (8th January 2016): I’ve been asked by the editor of Literacy Today to remove the link to the PDF. However, it’s been selected as one of the articles which will become open-access in February 2016. The link will appear via the magazine website.

Comments? Questions? I’m @dajbelshaw or you can email me: mail@dougbelshaw.com / hello@dynamicskillset.com

|

|

Air Mozilla: The Joy of Coding - Episode 39 |

mconley livehacks on real Firefox bugs while thinking aloud.

|

|

Mozilla Addons Blog: Add-ons Update – Week of 2016/01/06 |

I post these updates every 3 weeks to inform add-on developers about the status of the review queues, add-on compatibility, and other happenings in the add-ons world.

The Review Queues

In the past 3 weeks, 1801 add-ons were reviewed:

- 1679 (93%) were reviewed in less than 5 days.

- 25 (1%) were reviewed between 5 and 10 days.

- 97 (5%) were reviewed after more than 10 days.

There are 471 listed add-ons awaiting review, and 6 unlisted add-ons awaiting review.

If you’re an add-on developer and would like to see add-ons reviewed faster, please consider joining us. Add-on reviewers get invited to Mozilla events and earn cool gear with their work. Visit our wiki page for more information.

Firefox 44 Compatibility

This compatibility blog post is live. The bulk compatibility validation should be run in the coming weeks.

As always, we recommend that you test your add-ons on Beta and Firefox Developer Edition to make sure that they continue to work correctly. End users can install the Add-on Compatibility Reporter to identify and report any add-ons that aren’t working anymore.

Extension Signing

The wiki page on Extension Signing has information about the timeline, as well as responses to some frequently asked questions. The current plan is to remove the signing override preference in Firefox 44.

Electrolysis

Electrolysis, also known as e10s, is the next major compatibility change coming to Firefox. In a nutshell, Firefox will now run in multiple processes, running content code in a different process than browser code.

This is the time to test your add-ons and make sure they continue working in Firefox. We’re holding regular office hours to help you work on your add-ons, so please drop in on Tuesdays and chat with us!

WebExtensions

If you read the post on the future of add-on development, you should know there are big changes coming. We’re investing heavily in the new WebExtensions API, so we strongly recommend that you start looking into it for your add-ons. You can track progress of its development at http://www.arewewebextensionsyet.com/.

We will be talking about development with WebExtensions at the upcoming FOSDEM. Come hack with us if you’re around!

https://blog.mozilla.org/addons/2016/01/06/add-ons-update-75/

|

|

About:Community: Participation Lab Notes: Participation Champions |

This year, during the 2016 planning process, an exciting opportunity arose to impact goals around participation across the organization. Chris Beard announced “invite participation” as one of the five top-line guiding principles for 2016 and each team was asked to show a clear connection from one of the top-line principles to their team’s goals for the year.

In order to capitalize on this momentum, the Participation Team formed a group called the Participation Champions to help improve the quality and maximize the impact of teams’ “invite participation” initiatives as they were being built.

Invite Participation Top-Line Guidance:

- Empowering people and building community to invent, shape and defend the Internet is core to who we are, and critical to the success of our mission.

- Participation is key to our ability to have impact at scale. It is both an end and a means. We need it in our work and on the Internet more broadly to achieve and sustain positive change.

- We must design participation into our products, innovation activities, and efforts to promote our cause.

The key asks for initiatives tied to “invite participation” were:

- Ensure that we are designing for participation.

- Find and rally allies, leaders and supporters to our cause, and support their work.

- Identify and invest in opportunities to pilot radical and modern participation

The Process

As each team began drafting their 2016 plans and identifying initiatives for the first half of the year, the Participation Team formed a group of 21 staff members from across 11 different teams, all of whom work directly and regularly with contributors. This group of Participation Champions met regularly with the purpose of reviewing the 2016 plans with a focus on “invite participation” to help identify opportunities and adjustments to plans that would increase their capacity to empower people and build community around Mozilla’s mission.

Our process was, first, to collectively review each “invite participation” initiative, specifically considering how it could be strengthened and identifying concerns or missed opportunities. After the collective review, each functional and supporting team was delegated to a different Participation Champion who looked deeply at that team’s goals, and made specific suggestions for strengthening participation in that area. These suggestions were based on both the feedback from the collective review and a more in-depth look at the overarching goals.

We decided that each Participation Champion would communicate those suggestions and feedback directly to the team owner and/or the planning point person. At the end of the quarter we came together to assess the impact of our approach, share what we learned and identify suggestions for future reviews.

The Outcome

Based on the final discussion we concluded that while we didn’t have an immediate and dramatic effect on the plans themselves, this exercise helped drive more conversations about participation, and added accountability to many teams who hadn’t realized that people would be reading or reviewing their “invite participation” plans.

At the end of this process we had three pairs of findings and suggestions that we will attempt to implement and incorporate into the next strategic planning process:

- Finding: Teams were willing to talk about increasing participation in their work but reluctant to change the label of initiative to “invite participation”.

- Suggestion: Change the way the drop-down menus are structured in the planning spreadsheets so that it’s easier for teams to choose multiple top-line guidances. Find ways to emphasize the importance of hitting on multiple top-line guidances instead of just one.

- Finding: Some parts of the process were uncomfortable because teams weren’t expecting or ready to have a conversation about their initiatives.

- Suggestion: Re-think the one-on-one conversation approach for the next planning process and have this kind of review surfaced formally at the start of the planning process so teams are more open to it and expect it.

- Finding: It was difficult to convey a crisp incentive for why teams should care about participation or incorporate it into their plans.

- Suggestions: Model the potential of participation through “bright light” projects and help individual teams identify their needs that could be met by participation. Additionally, work with managers to find ways to tie a team’s success in participation to points, deliverables, or other rewards.

We believe that these planning processes are an excellent opportunity to strengthen participation at Mozilla. Therefore in 6 months we’ll be reconvening the Participation Champions, and based on our experience this round, we’ll spend some time thinking about how we can help move participation forward in the next round of planning! If you’re interested in joining please let me know at lucy@mozilla.com.

http://blog.mozilla.org/community/2016/01/06/participation-lab-notes-participation-champions/

|

|

Daniel Pocock: Want to use free software to communicate with your family in Christmas 2016? |

Was there a friend or family member who you could only communicate with using a proprietary, privacy-eroding solution like Skype or Facebook this Christmas?

Would you like to be only using completely free and open solutions to communicate with those people next Christmas?

Developers

Even if you are not developing communications software, could the software you maintain make it easier for people to use "sip:" and "xmpp:" links to launch other applications? Would this approach make your own software more convenient at the same time? If your software already processes email addresses or telephone numbers in any way, you could do this.

If you are a web developer, could you make WebRTC part of your product? If you already have some kind of messaging or chat facility in your website, WebRTC is the next logical step.

If you are involved with the Debian or Fedora projects, please give rtc.debian.org and FedRTC.org a go and share your feedback.

If you are involved with other free software communities, please come to the Free-RTC mailing list and ask how you can run something similar.

Everybody can help

Do you know any students who could work on RTC under Google Summer of Code, Outreachy or any other student projects? We are particularly keen on students with previous experience of Git and at least one of Java, C++ or Python. If you have contacts in any universities who can refer talented students, that can also help a lot. Please encourage them to contact me directly.

In your workplace or any other organization where you participate, ask your system administrator or developers if they are planning to support SIP, XMPP and WebRTC. Refer them to the RTC Quick Start Guide. If your company web site is built with the Drupal CMS, refer them to the DruCall module, which can be installed by most webmasters without any coding.

If you are using Debian or Ubuntu in your personal computer or office and trying to get best results with the RTC and VoIP packages on those platforms, please feel free to join the new debian-rtc mailing list to discuss your experiences and get advice on which packages to use.

Everybody is welcome to ask questions and share their experiences on the Free-RTC mailing list.

Please also come and talk to us at FOSDEM 2016, where RTC is in the main track again. FOSDEM is on 30-31 January 2016 in Brussels; attendance is free and no registration is necessary.

This mission can be achieved with lots of people making small contributions along the way.

|

|

The Mozilla Blog: Firefox OS will Power New Panasonic UHD TVs Unveiled at CES |

Panasonic announced that Firefox OS will power the new Panasonic DX900 UHD TVs, the first LED LCD TVs in the world with Ultra HD Premium specification, unveiled today at CES 2016.

Panasonic TVs powered by Firefox OS are already available globally, enabling consumers to find their favorite channels, apps, videos, websites and content quickly and pin content and apps to their TV’s home screen.

Mozilla and Panasonic have been collaborating since 2014 to provide consumers with intuitive, optimized user experiences and allow them to enjoy the benefits of the open Web platform. Firefox OS is the first truly open platform built entirely on Web technologies that delivers more choice and control to users, developers and hardware manufacturers.

What’s New in Firefox OS For TVs

Panasonic TVs powered by Firefox OS already have intuitive and customizable home screens that allow consumers to access their favorite channels, apps, videos, websites and content through the TV home screen. You start off with three choices of “quick access” to Live TV, Apps and Devices – and you can also pin any app or content you like to your TV home screen.

The newest version of Firefox OS (2.5) is currently available to partners and developers and adds some exciting new features. This update will be made available to Panasonic DX900 UHD TVs powered by Firefox OS later this year.

This update will include a new way to discover Web apps and save them to your TV. Several major apps such as Vimeo, iHeartRadio, Atari, AOL, Giphy and Hubii are excited to work with Mozilla to provide TV optimized Web apps.

This update will also enable Panasonic DX900 UHD TVs powered by Firefox OS with features that sync Firefox across platforms for a seamless experience across devices, including a “send to TV” feature to easily share Web content from Firefox for Android to a Firefox OS powered TV.

Firefox OS Across Connected Devices

Connected devices and systems are rapidly emerging around us. We often refer to this as an Internet of Things, and it indeed is creating a network of connected resources, very much like the traditional internet. But now it also connects the physical world, creating a possibility to enjoy and manage our environment in new and interesting ways.

To create a healthy connected device environment, Mozilla believes it is critical to build an open and independent alternative to proprietary platforms. We are committed to giving people control over their online lives and are exploring new use cases in the world of connected devices that bring better user benefits and experiences.

We look forward to continuing our partnerships with developers, manufacturers and a community that shares our open values, as we believe their support and contributions are instrumental to success. Working with Panasonic to offer TVs powered by Firefox OS is an important part of our efforts.

More information:

Firefox OS Smart TV Demo Video

Firefox OS Smart TV Images

PANASONIC DX900 News Release

|

|

Nick Cameron: My thoughts on Rust in 2016 |

2015 was a huge year for Rust - we released version 1.0, we stabilised huge swathes of the language and libraries, we grew massively as a community, and we re-organised the governance of the project. I expect 2016 will not be as interesting at first blush (it's hard to beat a 1.0 release), but it will be a super-important year for Rust, and there is a lot of exciting stuff coming up. In this blog post I'll cover what I think will happen. This isn't an official position, and no promises...

2015

Before we get into my thoughts for the future, here are a few numbers for the past year.

In 2015, the Rust community:

- Created 331 RFCs,

- of which 161 were accepted and merged,

- 120 people submitted an RFC, six people submitted 10 or more, Alex Crichton submitted 23.

- Created 559 RFC issues.

- Merged 4630 PRs into the Rust repo,

- authored by 831 people, of whom 91 people authored 10 or more and 446 authored one, Steve Klabnik authored 551.

- Filed 4710 issues,

- of which 1611 are still open,

- from 1319 people, of whom 79 filed ten or more, Alex Crichton filed 159.

- Released six stable versions of Rust (1.0 - 1.5).

- Actually kept Rust stable - 96% of crates which compiled with 1.0 still compiled with 1.5.

You might be interested in what I thought this time last year.

2016

Much like the second half of 2015, I expect to see a lot of incremental improvements in 2016 - libraries being polished, compiler bugs fixed, tools improved, and users getting happier and more numerous. There are a fair number of big items on the horizon though - we've spent a lot of time recently planning, refactoring, and consolidating, and I expect that to bear fruit this year.

Language

Impl specialisation is the ability to have multiple impls of a trait for a type, if a most specialised one can be determined. It opens up opportunities for optimisation and solves some annoying issues. There is an RFC almost ready to be accepted and the implementation is well under way. A sure bet for early 2016.

We've talked about efficient inheritance (aka virtual structs) for a while now. There is some really nice overlap with specialisation and most of a plan has been drawn up. There are still some big questions, but I hope these can be overcome.

Another type system feature that's been talked about for a while is abstract return types (sometimes referred to as impl trait). This allows a trait (instead of a type) to be used as the return type of a function. The idea is simple, but there are some really niggly issues still to work out. Still, the demand is there, so it seems like something that could happen in 2016.
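To make the abstract return type idea concrete, here is a small sketch. It uses the `-> impl Trait` syntax as it exists in later stable Rust; the post itself does not fix a syntax, so treat the exact form as an assumption relative to the text. The function name is made up.

```rust
// The caller only learns that the result is "some iterator over u32";
// the concrete adaptor type (a Filter over a range) is never named.
fn evens_up_to(limit: u32) -> impl Iterator<Item = u32> {
    (0..=limit).filter(|n| n % 2 == 0)
}

fn main() {
    let evens: Vec<u32> = evens_up_to(10).collect();
    println!("{:?}", evens);
}
```

Without this feature the function would have to spell out the full iterator type or return a boxed trait object.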

A new system of allocators and placement box syntax would allow Rust to cater to a bunch of systems programming needs (e.g., specifying the allocator for a collection). It is also the gateway to garbage collected objects in Rust. Felix Klock has been working hard on the design and implementation, it should all come together this year.

The biggest language issue I'll be working on is a revamp of the macro system, in particular getting procedural macros on the road to becoming a fully-fledged, stable part of Rust. I've been exploring plans on this blog - look for the last few posts.

One area where Rust is a little weak is with error handling. We've been discussing ways to make that more ergonomic for a while now and the favourite plan is likely to be accepted as an RFC very soon. This proposal consists of a ? operator which acts like try! (e.g., you would write foo()? where today you write try!(foo())) and a try ... catch expression which allows for some processing of exceptions. I expect this proposal (or something very similar) to be accepted and implemented pretty soon.
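The `?` form described above can be sketched as follows. This assumes the operator propagates errors the way the post describes for `try!`, which is how it behaves in later stable Rust; the function name and inputs are made up, and the `try ... catch` part of the proposal is not shown.

```rust
use std::num::ParseIntError;

// Parses an integer and doubles it. On a parse failure, `?` returns the
// error to the caller immediately, which is what try!(...) did.
fn parse_and_double(input: &str) -> Result<i32, ParseIntError> {
    // Older style, shown for comparison only:
    //     let n = try!(input.trim().parse::<i32>());
    let n = input.trim().parse::<i32>()?;
    Ok(n * 2)
}

fn main() {
    match parse_and_double(" 21 ") {
        Ok(value) => println!("doubled: {}", value),
        Err(err) => println!("could not parse: {}", err),
    }
    match parse_and_double("not a number") {
        Ok(value) => println!("doubled: {}", value),
        Err(err) => println!("could not parse: {}", err),
    }
}
```

Because `?` is a postfix operator, several fallible calls can be chained on one line without nesting macro invocations.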

We might finally get to wave goodbye to drop flags. We can dream, anyway.

Libraries

On the library front, I predict that 2016 will be the year of the crate. The standard library is looking pretty good now, and I expect that it will get only minor polish. I expect that things from outside will move into it, but probably very slowly, at least until towards the end of the year. On the other hand, there will be a lot of interesting stuff happening outside the Rust repo, both in the nursery and elsewhere.

libc has already had a revamp, I expect it to get a bunch of polish and move towards stabilisation.

rand is another nursery crate which I expect to be getting more stable, I'm not sure if there are any big changes in its future.

One of the most exciting crates around is mio, which provides very low level support for async IO. I expect to see that develop a lot and to move towards official status this year.

There are also some really exciting crates for concurrency around: crossbeam, rayon, simple_parallel, and others. I have no idea where we'll end up in this area, but I'm excited to find out!

Tools

I think 2016 will be a really exciting year for Rust tools. There is a lot coming up and it is a high priority area. Some highlights:

- incremental compilation,

- IDEs,

- rustup/multirust rewrite and combined tool,

- better cross compilation support,

- better windows support,

- packaging for Linux distros,

- refactoring tools,

- rustfmt getting better,

- using Cargo to build rustc?

Community

It's been great seeing the community grow in 2015 and I hope it does even more of this in 2016. I'm looking forward to meeting more awesome people, seeing more interesting projects, more blog posts, more views on the language from different backgrounds, more meetups, more everything!

All being well, there should be at least one book published on Rust this year, hopefully more. Along with the online docs improving, I expect this will be a real boost for newcomers to Rust.

I don't know of any official plans, but I hope and expect that there will be another Rust Camp this year. Last year's event was so much fun, I'm sure a sequel would be amazing.

I also expect to see Rust getting more 'serious' - being used in production (and publicly so), being used in important open source projects, existing Rust projects maturing and becoming important in their own right, not just as Rust projects.

Some things I think won't happen

Higher kinded types - I think we all want them in some form, but it's a huge design challenge and there is already so much to be worked on. I'd be very surprised to see a solid RFC for HKT this year, never mind an implementation.

Backwards incompatibility - we've been pretty good at keeping stable and not breaking things, and I'm confident we can continue this trend.

2.0 - there's talk of having a backwards incompatible version bump at some point, but it's looking increasingly unlikely for 2016. We're doing well so far, and there is nothing obvious coming up which will require it. I'm not confident we can get away with never having 2.0, but I'd bet against it in 2016.

Happy new year

Whatever happens, I hope 2016 is a great year for the whole community. Happy new year!

|

|

QMO: Jamie Charlton: a versatile and engaged contributor |

Jamie Charlton has been involved with Mozilla since 2014 and contributes to various parts of the Mozilla project, including Firefox OS. He is from Wassaic, New York.

Hi Jamie! How did you discover the Web?

For the most part I grew up with the web, but mainly got into the web when I was 13-14 and I wanted to learn how to work with and code for the web.

How did you hear about Mozilla?

For the most part I always use Firefox due to the security settings and found out about Mozilla from Firefox.

How and why did you start contributing to Mozilla?

The irony of dumb luck – I started contributing to Mozilla on December 22, 2014. I had installed the Firefox Aurora because it was supposed to support more of the html5 gaming features that were up and coming and I wanted to see how well they worked, then I accidentally stumbled upon webIDE and was really intrigued by Firefox OS and filed my first bug that day.

Jamie (center) at the Work Week in Orlando, FL in December 2015.

Have you contributed to any other Mozilla projects in any other way?

Yes, several. I am a Mozilla rep, and I also help out in IRC running the nightly channel helping people with questions regarding anything to do with a nightly version of anything from Thunderbird, Firefox OS nightly, to Firefox nightly – anything anyone asks about.

What’s the contribution you’re the most proud of?

Odd question… none actually, to me this is just a lot of fun.

What advice would you give to someone who is new and interested in contributing to Mozilla?

Dive right in, don’t be afraid to mess up, if you mess up someone will help you fix it. First hand experience is the best way to learn and get involved.

If you had one word or sentence to describe Mozilla, what would it be?

Fun!

What exciting things do you envision for you and Mozilla in the future?

Well hopefully some more fun and a lot more projects based around Firefox OS.

Is there anything else you’d like to say or add to the above questions?

Have fun, and don’t take anything seriously – it’s more fun that way.

The Mozilla QA and Firefox OS teams would like to thank Jamie for his contributions.

Jamie has been extremely helpful in updating documentation and in filing Firefox OS bugs. – Marcia Knous

Dedicated and highly enthusiastic, Jamie_ has had a profound impact in QA. He has been learning and growing from the time he has started joining us, and continues to help Mozilla better the web. He also helps Mozilla grow and tries to help recruit QA locally.

Jamie_, we salute you and thank you for your contributions. We hope that there will be many more to come. – Naoki Hirata

https://quality.mozilla.org/2016/01/jamie-charlton-a-versatile-and-engaged-contributor/

|

|

Mark Côté: Review Board history |

A few weeks ago, mdoglio found an article from six years ago comparing Review Board and Splinter in the context of GNOME engineering. This was a fascinating read because, without having read this article in advance, the MozReview team ended up implementing almost everything the author talked about.

Firstly, I admit the comparison isn’t quite fair when you replace bugzilla.gnome.org with bugzilla.mozilla.org. GNOME doesn’t use attachment flags, which BMO relies heavily on. I haven’t ever submitted a patch to GNOME, but I suspect BMO’s use of review flags makes the review process at least a bit simpler.

The first problem with Review Board that he points out is that the “post-review command-line leaves a lot to be desired when compared to git-bz”. This was something we did early on in MozReview, albeit with Mercurial instead: the ability to push patches up to MozReview with the hg command. Admittedly, we need an extension, mainly because of interactions with BMO, but we’ve automated that setup with mach mercurial-setup to reduce the friction. Pushing commits is the area of MozReview that has seen the fewest complaints, so I think the team did a great job there in making it intuitive and easy to use.

Then we get to what the author describes as “a more fundamental problem”: “a review in Review Board is of a single diff”. As he continues, “More complex enhancements are almost always done as patchsets [emphasis his], with each patch in the set being kept as standalone as possible. … Trying to handle this explicitly in the Review Board user interface would require some substantial changes”. This was also an early feature of MozReview, implemented at the same time as hg push support. It’s a core philosophy baked into MozReview, the single biggest feature that distinguishes MozReview from pretty much every other code-review tool out there. It’s interesting to see that people were thinking about this years before we started down that road.

An interesting aside: he says that “a single diff … [is] not very natural to how people work with Git”. The article was written in 2009, as GitHub was just starting to gain popularity. GitHub users tend to push fix-up commits to address review points rather than editing the original commits. This is at least in part due to limitations present early on in GitHub: comments would be lost if the commit was updated. The MozReview team, in fact, has gotten some pushback from people who like working this way, who want to make a bunch of follow-up commits and then squash them all down to a single commit before landing. People who strongly support splitting work into several logical commits and updating them in place actually tend to be Mercurial users now, especially those who use the evolve extension, which can even track bigger changes like commit reordering and insertion.

Back to Review Board. The author moves on to how they’d have to integrate Review Board with Bugzilla: “some sort of single-sign-on across Bugzilla and Review Board”, “a bugzilla extension to link to reviews”, and “a Review Board extension to update bugs in Bugzilla”. Those are some of the first features we developed, and then later improved on.

There are other points he lists that we don’t have, like an “automated process to keep the repository list in Review Board in sync with the 600+ GNOME repositories”. Luckily many people at Mozilla work on just one repo: mozilla-central. But it’s true that we have to add others manually.

Another is “reduc[ing] the amount of noise for bug reporters”, which you get if you confine all patch-specific discussion to the review tool. We don’t have this yet; to ease the transition to Review Board, we currently mirror pretty much everything to Bugzilla. I would really like to see us move more and more of code-related discussion to Review Board, however. Hopefully as more people transition to using MozReview full time, we can get there.

Lastly, I have to laugh a bit at “it has a very slick and well developed web interface for reviewing and commenting on patches”. Clearly we thought so as well, but there are those that prefer the simplicity of Splinter, even in 2015, although probably mostly from habit. Trying to reconcile these two views is very challenging.

|

|

Benoit Girard: Multi-threaded WebGL on Mac |

(Follow Bug 1232742 for more details)

Today we enabled CGL’s ‘Multi-threaded OpenGL Execution’ mode on Nightly. There’s a lot of good information on that page so I won’t repeat it all here.

In short, even though OpenGL is already an asynchronous API, the driver must do some work to prepare the OpenGL command queue, which can be a bottleneck for certain content. Right now Firefox makes OpenGL calls on the main thread on all platforms, so it’s up to the driver where and how to schedule this work. Typically the command buffers are prepared synchronously at the moment of the call, and in some cases this can have a lot of overhead. If the WebGL application needs the full refresh window, say ~15ms, then the work done in the driver might start causing missed frames, degrading performance. Turning on this feature will move this work to another thread.
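To make the idea of moving command preparation off the calling thread concrete, here is a minimal sketch in Python. It is not how CGL or Firefox implements this; the names prepare_command, driver_worker and run_frame are hypothetical, and the "preparation" step is a stand-in for whatever work a real driver does.

```python
import queue
import threading


def prepare_command(command):
    """Hypothetical stand-in for the driver-side work of preparing a command."""
    return f"prepared:{command}"


def driver_worker(commands, results):
    """Drain the queue on a worker thread so the caller never blocks on preparation."""
    while True:
        command = commands.get()
        if command is None:  # sentinel: no more commands for this frame
            break
        results.append(prepare_command(command))


def run_frame(frame_commands):
    """Submit one frame's commands from the calling thread; preparation runs elsewhere."""
    commands = queue.Queue()
    results = []
    worker = threading.Thread(target=driver_worker, args=(commands, results))
    worker.start()
    for command in frame_commands:
        commands.put(command)  # cheap for the caller: it only enqueues
    commands.put(None)
    worker.join()  # this sketch waits at the end of the frame
    return results


if __name__ == "__main__":
    print(run_frame(["clear", "draw", "swap"]))
```

With a single worker and a FIFO queue the commands are still processed in submission order, which matters for a stateful API like OpenGL; the calling thread simply stops paying the preparation cost at the moment of each call.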

We decided to turn it on because we believe it will be overall beneficial for well optimized WebGL content that is CPU-bound as measured in the Unity Benchmark:

Overall this leads to a 15% score improvement. However, in some cases the scores are worse, because the feature only helps speed up demos that are bound by the CPU overhead of certain OpenGL calls.

Turning this on is an experiment to see the benefit of making OpenGL calls asynchronous. Based on the results, we may consider ‘remoting’ the OpenGL calls manually on more platforms for performance reasons; however, we’re still undecided since it’s a large new project.

https://benoitgirard.wordpress.com/2016/01/04/multi-threaded-webgl-on-mac/

|

|

David Humphrey: On Sabbatical |

As I begin the new year, I'm trying something new. I'll be taking a sabbatical for all of 2016. Normally at this time of year I'm starting new courses and beginning research projects that I'll lead in the spring/summer semester. I've been following that rhythm now for over 16 years, really giving it all I have, and I'm in need of a break. Coincidentally, I'll be turning 40 in a few months, and that means I've been in the classroom, in one form or another, for the past 35 years.

While I wouldn't count myself among those who enjoy 'new' for newness' sake (give me routine, discipline, and a long road!), I'm actually very grateful for the opportunity provided by my institution. Historically a sabbatical is something you do every seventh year, just as God rested on the seventh day and later instructed Moses to rest every seventh year. I'm aware that this isn't so common in private industry, and I think it means you end up losing good people to burnout with predictable regularity. At Seneca you can apply for one every 7 years (no guarantees), and I was lucky enough to have my application accepted.

The sabbatical has lots of conditions: first, I make only 80% of my salary; second, it's meant for people to use in order to accomplish some kind of project. For some, this means upgrading their education, writing a book, doing research, or developing curriculum. While I'm still figuring out a schedule, I do have a few plans for my year.

First, I need to retool. It's funny because I've done nothing but live at the edge of web technology since 2005, implementing web standards in Gecko, building experimental web technologies and libraries, and trying to port desktop-type things to the web. Yet in that same period things have changed, and changed radically. I've realized that I can't learn and understand everything I need to simply by adding it bit by bit to what I already know. I need to unlearn some things. I need to relearn some things. I need to go in new directions.

I'm the kind of person who likes being good at things and knowing what I'm doing, so I find this way of being a hard one. At the same time, I'm keenly aware of the benefits of not becoming (or remaining) comfortable. There's a lot of talk these days about the need for empathy in the workplace, and in the educational context, one kind of empathy is for a prof to really understand what it feels like to be a student who is learning all the time. I need to be humbled, again.

Second, I want to broaden not only my technological base, but also my community involvement, peers, and mentors. I've written before about how important it is to me to work with certain people vs. on certain problems; I still feel that way. During the past decade open source won. Now, everyone, everywhere does it, and we're better off for this fact. But it's also true that what we knew--what I knew--about open source in 2005 isn't really true anymore. The historical people, practices, and philosophies of 'open' have expanded, collapsed, shifted, and evolved. Even within my own community of Mozilla, things are totally different (for example, most of the people who were at Mozilla when I started aren't there today). I'm still excited about Mozilla, and plan to do a bunch with them (especially helping Mark build his ideas, and helping to maintain and grow Thimble); but I also need to go for a bunch of long walks and see who and what I meet out amongst other repos and code bases.

I'm going to try and write more as I go, so I'll follow this blog post with other such pieces, if you want to play along from home. I'm less likely to be on irc in 2016, but you can always chat with me on Twitter or send me mail. I'd encourage you to reach out if you have something you think I should be thinking about, or if you want to see if I'd be interested in working on something with you.

Happy New Year.

|

|

David Burns: The "power" of overworking |

The other week I was in Orlando, Florida for a Mozilla All-Hands. It is a week where around 1200 Mozillians get together to spend time with each other planning, coding, or solving some hard problems.

One of the topics that came up was how someone always seemed to be online. This comment was a little more than "they never seem to go offline from IRC". It was "they seem to be commenting on things around 20 hours a day". Overworking is a simple thing to do and when you love your job you can easily be pulled into this trap.

I use the word trap and I mean it!

If you are overworking you put yourself into this state where people come to expect that you will overwork. If you overwork, and have a manager who doesn't notice that you are overworking, when you do normal hours they begin to think that you are slacking. If you do have a manager who is telling you to stop overdoing it, you might then have colleagues who don't notice that you work all the hours. They then expect you to be this machine, doing everything and more. And those colleagues that notice you doing too many hours start to think your manager is a poor manager for not helping you have a good work/life balance.

At this point, everyone is starting to lose. You are not being as productive as you could be. Studies have shown that working more than 40 hours a week only marginally increases productivity, and this only lasts for a few weeks before productivity drops below the productivity you would have if you worked 40 hours a week.

The reasons for overworking can be numerous but the one that regularly stands out is imposter syndrome. "If I work 50 hours a week then people won't see me fail because I will hopefully have fixed it in time". This is a fallacy: people are happy to wait for problems to be fixed as long as it is in hand. Having one person be responsible for fixing things is a road to ruin. A good team is measured by how quickly they help colleagues. If you fall, there will be 2 people there to pick you up.

Before you start working more than 40 hours a week start thinking about the people this is going to impact. This is not only your colleagues, who start having to clean up technical debt, but your personal life. It is also your loved ones who are impacted. Missing an anniversary, a birthday, a dance/music recital. Work is never worth missing that!

If you are working more than 40 hours I suggest bringing this up in your next 1:1. Your manager will appreciate that you are doing some self care (if they are good managers) and work with you in making changes to your workload. They could be over promising their team and need to get this under control.

http://www.theautomatedtester.co.uk/blog/2016/the-power-of-overworking.html

|

|

The Servo Blog: This Week In Servo 46 |

In the last week, we landed 61 PRs in the Servo organization’s repositories. Given the New Year, the number of people out on vacation, and the repeated Linode DDOS events, it’s amazing that so many things landed! Great work, all.

This week, edunham moved us onto our own EC2 account for our CI, which both brings us more reliability (our automation was “fighting” for instances with another team’s) and allowed us to remove some of our most expensive fallback instances.

Notable Additions

- Simon renamed the rust-snapshot-hash file to rust-nightly-date

- larsberg fixed the long-broken TWiS RSS feed

- ckimes added an input event for HTMLInputElement

- jdm made button elements activateable, fixing reddit and github 2fa issues

- bholley split the layout wrappers up more, in support of the Stylo work

New Contributors

Screenshots

None this week.

Meetings

All were cancelled again, due to the New Year holiday.

|

|

About:Community: Firefox OS Participation in 2015 |

TL;DR

Though a great deal was achieved and accomplished through the Participation Team’s collaboration with Firefox OS, a major realization was that participation can only be as successful as a team’s readiness to incorporate participation into their workflow.

Problem Statement

Firefox OS was not as open a project as many others at Mozilla, and in terms of engineering was not structured well enough to scale participation. Firefox OS was identified as an area where Participation could make a major impact and a small group from the Participation Team was directed to work closely with them to achieve their goals. After the Firefox OS focus shift in 2015 from being partner driven development to user and developer driven, participation was identified as key to success.

The Approach

The Participation Team did an audit of the current state of participation. We interviewed cross-functional team members and did research into current contributions. Without any reliable data, our best guess was that there were less than 200 contributors, with technical contributions being much less than that. Launch teams around the world had contributed in non-technical capacities since 2013, and a new community was growing in Africa around the launch of the Klif device.

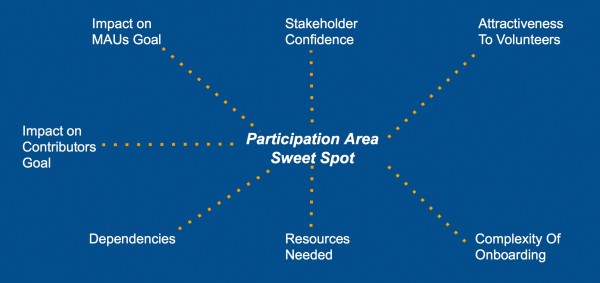

Based on a number of contribution areas (coding, ports, foxfooding, …), we created a matrix where we applied different weighted criteria. Criteria such as impact on organizational goals, for example, were given a greater weighting. Our intent was to create a “participation sweet spot” where we could narrow in on the areas of participation that could provide the most value to volunteers and the organization.
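As a rough illustration of how such a weighted matrix can be scored, here is a small Python sketch. The post does not give the actual criteria list, weights or scores, so every number below is invented for the example; only "impact on organizational goals" is named in the text, and the other two criteria names are assumptions.

```python
# Hypothetical weights: a criterion such as impact on organizational goals counts for more.
WEIGHTS = {"impact_on_goals": 3, "value_to_volunteers": 2, "team_readiness": 1}

# Hypothetical 1-5 scores for three of the contribution areas named in the text.
AREAS = {
    "coding": {"impact_on_goals": 5, "value_to_volunteers": 3, "team_readiness": 2},
    "ports": {"impact_on_goals": 3, "value_to_volunteers": 4, "team_readiness": 3},
    "foxfooding": {"impact_on_goals": 4, "value_to_volunteers": 5, "team_readiness": 4},
}


def weighted_score(scores, weights):
    """Sum each criterion score multiplied by that criterion's weight."""
    return sum(scores[criterion] * weight for criterion, weight in weights.items())


def rank_areas(areas, weights):
    """Return area names ordered from highest to lowest weighted score."""
    return sorted(areas, key=lambda name: weighted_score(areas[name], weights), reverse=True)


if __name__ == "__main__":
    for name in rank_areas(AREAS, WEIGHTS):
        print(name, weighted_score(AREAS[name], WEIGHTS))
```

The areas at the top of such a ranking would correspond to the "sweet spot"; changing the weights is how an organization expresses which criteria matter most.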

Participation Criteria

The result was to focus on five areas for technical contributions to Firefox OS:

- Foxfooding

- Porting

- Gaia Development

- Add-ons

- B2GDroid

In parallel, we built out the Firefox OS Participation team, a cross-functional team from engineering, project management, marketing, research and more areas. We had a cadence of weekly meetings, and communicated also on IRC and on an email list. A crucial meeting was a planning meeting in Paris in September, 2015, where we finalized details of the plan to start executing on.

The Firefox OS Participation Hub

As planning progressed, it became clear that a focal point for all activities would be the Firefox OS Participation Hub, a website for technical contributors to gather. The purpose was two-fold:

- To showcase and track technical contributions to Firefox OS. We would list ways to get involved, in multiple areas, but focusing on the “sweet spot” areas we had identified. The idea was not to duplicate existing content, but to gather it all in one place.

- To facilitate foxfooding, a place where users could go to get builds for their device and register their device. The more people using and testing Firefox OS, the better the product could become.

The Hub came to life in early November.

Challenges

There were a number of challenges that we faced along the way.

- Participation requires a large cultural shift, and cannot happen overnight. Mozilla is an open and open source organization, but because of market pressures and other factors Firefox OS was not a participatory project.

- One learning was that we should have embedded the program deeper in the Firefox OS organization earlier, doing more awareness building. This would have mitigated an issue where beyond the core Firefox OS Participation team, it was hard getting the attention of other Firefox OS team members.

- Timing is always hard to get right, because there will always be individual and team priorities and deliverables not directly related that will take precedence. During the program rollout, we were made acutely aware of this coming up to the release of Firefox OS 2.5.

For participation to really take hold and succeed, broader organizational and structural changes are needed. Making participation part of deliverables will go some way to achieving this.

Achievements

Despite the challenges mentioned above, the Firefox OS Participation team managed to accomplish a great deal in less than six months:

- we brought all key participation stakeholders on the Firefox OS team together and drafted a unified vision and strategy for participation

- we launched the Firefox OS Participation Hub, the first of its kind to learn about contribution opportunities and download latest Firefox OS builds

- we formed a “Porting Working Group” focused on porting Firefox OS to key devices identified, and enabling volunteers to port to more devices

- we distributed more than 500 foxfooding devices in more than 40 countries

- the “Mika Project” explored a new way to engage with, recognize and retain foxfooders through gamification – The “Save Mika!” challenge will be rolling out in 2016Q1

Moving Forward

Indeed, participation will be a major focus for Connected Devices in 2016. Participation can and will have a big impact on helping teams solve problems, do work in areas they need help with and achieve new levels of success. To be sure, before a major participation initiative can be successful, the team needs to be set up for success with 4 major components:

- Managerial buy-in. “It’s important to have permission to have participation in your work”. Going one step further, it needs to be part of day-to-day deliverables.

- Are their tools open? Do they have a process for bug triaging and mentorship? Is there good and easily discoverable documentation?

- Do they have an onboarding system in place for new team members (staff and volunteers)?

- Measurement. How can we systematically and reliably measure contributions, over time, to detect trends to act upon to maintain a healthy project? Some measurements are in place, but we need more.

We put Participation on the map for Firefox OS and as announced at Mozlando, the Connected Devices team will be doubling down its efforts to design for participation and work in the open.

We are excited about the challenges and opportunities ahead of us, and we can’t wait to provide regular updates on our progress with everyone. So as we enter the new year, please make sure to follow us on Discourse, Twitter, and Facebook for latest news and updates.

2016, here we come!

Brian, on behalf of the Participation Team.

(Originally posted on Brian King’s blog)

http://blog.mozilla.org/community/2016/01/04/firefox-os-participation-in-2015/

|

|