You can add any RSS feed (including a LiveJournal journal) to your friends feed on the syndication page.

Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated whenever that feed is updated. It may not match the content of the original page. This syndication was created automatically at the request of readers of this RSS feed.

For any questions about how this service works, please use the contact information page.

Mozilla Reps Community: Reps, regional communities and beyond: 2015

First thing today: fasten your seat belt, this will be a long blog post filled with the most important lessons of 2015!

2015 was a different year for our communities and Reps. With the participation team and without the focus on Firefox OS product launches, the year was full of changes and experiments. We tried many things for the first time, including asking ourselves really hard questions, even questioning the very things that made us successful in the past. But that ride was really worth it, because we learned a lot that helped us shift our focus, identify new programs, and launch experiments around improving accountability, visibility and planning.

Lessons from 2015

Moving Away from an Event Focus

As the Reps program reached maturity, a key lesson was that our processes and tools are optimized for events, and that this emphasis on events made supporting other initiatives harder.

As a result, in 2016 we will be prioritizing experimentation that leads us to success on our mission beyond events.

Emphasis on events also made the council’s work very cumbersome and frustrating, as they struggled to evaluate event goals and outcomes in a way that felt effective and clear to everyone. Because council members are volunteers, this also meant they had almost no time for the really important tasks the program needs to move forward. In 2016, the council will change focus and begin providing strategic guidance on a quarterly basis, which will help Reps understand priorities and focus their energy.

Growing Our Alumni Program

We also realized this year that we hadn’t been doing a great job keeping our former Reps, or Alumni, informed and involved in some capacity with our work. We have an amazing network of Alumni Reps whose experience and wisdom could help newer Reps, so we’ll be planning and offering new and meaningful ways for Alumni to stay involved in the future.

Accountability and Visibility

We discovered a big bug in terms of accountability: currently we have no process for regional communities to keep Reps accountable. Most Reps do a fantastic job supporting their communities, but when this is not the case, it’s difficult for the regional community to raise this, and when they do, it sometimes results in conflict. We still don’t have a perfect solution for this, but in 2016 we plan to experiment with solutions that can bring us closer to fixing this bug.

It’s hard to get an overview of what is going on in the Mozilla universe! With the Reps portal we know what Reps are doing, but for regional communities there is no centralized way to find out. We started some experiments to find fun ways for communities to let others know what they’re doing. It’s still too early to say how successful this is, but with the community yearbook (example from Cuba) and the community timelines (Indonesian timeline) we hope to learn more. We will also experiment with new tools in 2016.

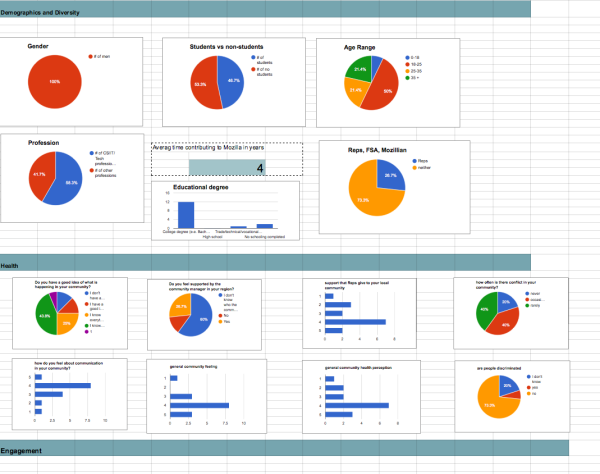

As a way to understand our communities better, we also started a quarterly survey and dashboard to gain more visibility into our biggest communities. This has helped us better understand how the communities are doing: their health, governance and diversity. We want to start using this data to help communities understand themselves better and work together more effectively.

A Focus on Planning

Planning was something we started thinking about more seriously in 2015, because in the past it mostly happened organically. Different groups planned different initiatives, and communities were often uncoordinated. With mid-term planning, we started experimenting with advance planning in some communities. One particularly interesting piece of work is the Indonesian community’s effort to create a financial planning role. This is all really exciting, and we hope to see it take off next year.

We also started experimenting with 1:1 conversations with many Mozillians and we’re seeing that this focused support is helping Mozillians plan, think and act differently in their communities. We are still testing this, but so far the feedback has been great and we are learning a lot from all Mozillians too.

Two Success Stories

Refreshing the Reps Call

The success of the Reps call refresh is an example of how much better we can get when Mozillians work together, experiment, iterate and find ways to always improve. The Reps call is especially close to my heart because it gives a space for many Reps and communities to showcase their work.

This year, a group of Reps led by Ioana and Konstantina started experimenting with many things to make the Reps call better and to increase attendance and views. They experimented with the length (cutting it down to 30 minutes), the format, the sections and the speakers, and they even optimized the best time to send the invite and reminder!

Based on the results of these experiments the new call now shares a full agenda in advance, speakers sometimes wait some weeks to present because the call is full (we’re popular!) and we always take good notes, making the content available for everyone. We have also added 5 minute slots which are great because speakers now really try to make great use of their time and it has also encouraged many Reps and Mozillians to share what they’re working on and what they’ve learned.

Also, seeing all the faces from all around the world and Reps sharing their ideas and passion is a fantastic inspiration and a way to see the power of the Mozilla community in one call. We will keep on trying new things and we hope to make this call always better.

Identifying New Directions for the Reps program

For many, the Reps program has reached a maturity point where it needs to evolve. The Council and the peers identified this back in March in Paris, and since then we have had many discussions, but the proposed changes seemed daunting and it felt like an impossible task to get the Reps to agree on them.

Towards the end of the year, the Council decided it was time to change things in order to be ready for 2016. So they revisited all the ideas, comments, Discourse and mailing list threads and put together a proposal with high-level changes to the program. The result is a plan to create working groups with Reps interested in shaping the future of the program, and to work on it next year. This process was difficult, and our Peer Henrik Mitsch was a driving force behind it.

The reactions to the proposal have been very positive. We held a town hall during the Orlando work week and had a fantastic conversation, which I encourage everyone interested to watch. As I mentioned, only the broad strokes are clear, and in 2016 we will work together to make this a reality. The fact that this process received such positive and constructive feedback is testimony to the hard work of this Council and the Reps who want to take the program to the next level.

Looking Forward to 2016

With the changes to the Reps program, the new support for regional communities and the intention to work much more closely with the FSA program, we’re ready to welcome 2016 and accelerate the power of the Mozilla community. Beyond all the work we did in 2015, we’re bringing additional focus. I like to say that in 2015 Mozilla felt a bit like a buffet of leftovers for volunteers: we had initiatives that had been going on for a while, but we weren’t focusing all our communities in one direction. In 2016 we will focus on two main initiatives, a privacy campaign on campuses and experimentation around connected devices, and we are sure that this will help Reps, regional communities, FSA and all Mozillians reach their full potential. 2016, here we come!

https://blog.mozilla.org/mozillareps/2015/12/24/reps-regional-communities-and-beyond-2015/

Daniel Stenberg: HTTP/2 adoption, end of 2015

When I asked the people around me in March 2015 to guess what HTTP/2 adoption would be by now, we as a group ended up with about 10%. OK, the question was vaguely phrased, and what does it really mean? Let’s take a look at some aspects of where we are now.

Perhaps the biggest flaw in the question was that it didn’t specify HTTPS. All of today’s browsers implement HTTP/2 only over HTTPS, so even if every HTTPS site in the world supported HTTP/2, that would still be far from covering all HTTP requests. Admittedly, browsers aren’t the only HTTP clients…

During the fall of 2015, both nginx and Apache shipped release versions with HTTP/2 support. nginx made it slightly harder for people by forcing users to choose either SPDY or HTTP/2 (a technical choice made by them, not one enforced by the protocols) and by still telling users that SPDY is the safer choice.

Let’s Encrypt finally launching its public beta in early December also helps HTTP/2 by removing one of the most annoying HTTPS obstacles: the cost and manual administration of server certs.

Amount of Firefox responses

This is the easiest metric, since Mozilla offers public access to the metric data. It is skewed since it is opt-in data, and we know that certain kinds of users are less likely to enable it (for example, if you’re more privacy aware or if you’re using Firefox in an enterprise environment). It also measures the share by volume of requests, which gives popular sites more weight.
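To see why request-volume weighting matters, here is a small Rust sketch with made-up numbers (illustrative only, not Mozilla telemetry). Each hypothetical site is a pair of (requests observed, speaks HTTP/2?), and `adoption_shares` (a name chosen for this example) returns the HTTP/2 share counted per site and the share counted per request.

```rust
/// Each entry is one hypothetical site: (requests observed, speaks HTTP/2?).
/// Returns (share of sites using HTTP/2, share of requests that used HTTP/2).
fn adoption_shares(sites: &[(u64, bool)]) -> (f64, f64) {
    let total_sites = sites.len() as f64;
    let h2_sites = sites.iter().filter(|&&(_, h2)| h2).count() as f64;

    // Weight each site by how many requests it served.
    let total_requests: u64 = sites.iter().map(|&(n, _)| n).sum();
    let h2_requests: u64 = sites
        .iter()
        .filter(|&&(_, h2)| h2)
        .map(|&(n, _)| n)
        .sum();

    (h2_sites / total_sites, h2_requests as f64 / total_requests as f64)
}

fn main() {
    // Made-up data: one very busy HTTP/2 site, two small HTTP/1.1 sites.
    let sites: [(u64, bool); 3] = [(900, true), (50, false), (50, false)];
    let (by_site, by_request) = adoption_shares(&sites);
    println!("share of sites:    {:.1}%", by_site * 100.0); // 33.3%
    println!("share of requests: {:.1}%", by_request * 100.0); // 90.0%
}
```

Only a third of these sites speak HTTP/2, yet 90% of the requests do; the same population yields very different "adoption" numbers depending on what is counted.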

Firefox 43 counts no less than 22% of all HTTP responses as HTTP/2 (based on data from Dec 8 to Dec 16, 2015).

Out of all the HTTP traffic Firefox 43 generates, about 63% is HTTPS, which means that almost 35% of all Firefox HTTPS requests are HTTP/2!
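The 35% figure follows from the two percentages above, assuming (as the post notes) that browsers only speak HTTP/2 over HTTPS, so every HTTP/2 response is also an HTTPS response. The HTTP/2 share within HTTPS is then just the ratio of the two shares. A minimal Rust check of the arithmetic, with `h2_share_of_https` as an illustrative name:

```rust
/// If `h2_of_all` of all responses are HTTP/2 and `https_of_all` are HTTPS,
/// and HTTP/2 only ever runs over HTTPS, the HTTP/2 share *within* HTTPS
/// traffic is the ratio of the two.
fn h2_share_of_https(h2_of_all: f64, https_of_all: f64) -> f64 {
    h2_of_all / https_of_all
}

fn main() {
    // 22% of all Firefox 43 responses are HTTP/2; about 63% are HTTPS.
    let share = h2_share_of_https(0.22, 0.63);
    println!("{:.1}%", share * 100.0); // prints 34.9%
}
```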

Firefox 43 is also negotiating HTTP/2 four times as often as it ends up with SPDY.

Amount of browser traffic

One estimate of how large a share of browsers supports HTTP/2 is the caniuse.com number: roughly 70% globally. Another metric is the one published by KeyCDN at the end of October 2015. When they enabled HTTP/2 by default for their HTTPS customers worldwide, the average share of users negotiating HTTP/2 turned out to be 51%. More than half!

Cloudflare, however, claims the share of supported browsers is a mere 26%. That’s a really big difference, and I personally don’t buy their numbers: they’re way too negative and give some popular browsers a very small market share. For example: Chrome 41 – 49 at a mere 15% of the world market, really?

I think the key is rather that it all boils down to what you measure – as always.

Amount of the top-sites in the world

Netcraft bundles SPDY with HTTP/2 in their October report, but it says that “29% of SSL sites within the thousand most popular sites currently support SPDY or HTTP/2, while 8% of those within the top million sites do.” (note the “of SSL sites” in there)

That’s now slightly old data that came out almost exactly when Apache first released its HTTP/2 support in a public release, and nginx hadn’t even had it for a full month yet.

Facebook eventually enabled HTTP/2 in November 2015.

Amount of “regular” sites

There’s still no ideal service that scans a larger portion of the Internet to measure adoption level. The httparchive.org site is about to switch from an IE-based spider to a Chrome-based one, and once that goes live I hope that we will get better data.

W3Techs’ report says 2.5% of websites used HTTP/2 in early December: less than SPDY!

I like how isthewebhttp2yet.com looks so far and I’ve provided them with my personal opinions and feedback on what I think they should do to make that the preferred site for this sort of data.

Using the Shodan search engine, we could see that in mid-December 2015 there were about 115,000 servers on the Internet using HTTP/2. That’s 20,000 (~24%) more than the isthewebhttp2yet site says. It doesn’t really show percentages there, but it could be interpreted to say that slightly over 6% of HTTP/1.1 sites also support HTTP/2.

On Dec 3rd 2015, Cloudflare enabled HTTP/2 for all its customers and they claimed they doubled the number of HTTP/2 servers on the net in that single move. (The shodan numbers seem to disagree with that statement.)

Amount of system lib support

iOS 9 supports HTTP/2 in its native HTTP library. That’s so far the leader of HTTP/2 in system libraries department. Does Mac OS X have something similar?

I had expected Windows’ WinINet or other HTTP libraries to be up there as well, but I can’t find any details online about it. I hear the Android HTTP libraries are not up to snuff either, but since OkHttp is now part of Android to some extent, I guess proper HTTP/2 in Android is not too far away?

Amount of HTTP API support

I hear very little about HTTP API providers accepting HTTP/2 in addition to, or even instead of, HTTP/1.1. My perception is that this is basically not happening at all yet.

Next-gen experiments

If you’re using a modern Chrome browser today against a Google service you’re already (mostly) using QUIC instead of HTTP/2, thus you aren’t really adding to the HTTP/2 client side numbers but you’re also not adding to the HTTP/1.1 numbers.

QUIC and other QUIC-like (UDP-based with the entire stack in user space) protocols are destined to grow and get used even more as we go forward. I’m convinced of this.

Conclusion

Everyone was right! It is mostly a matter of what you meant and how to measure it.

Future

Recall the words on the Chromium blog: “We plan to remove support for SPDY in early 2016“. For Firefox we haven’t said anything that absolute, but I doubt that Firefox will support SPDY for very long after Chrome drops it.

http://daniel.haxx.se/blog/2015/12/24/http2-adoption-at-end-of-2015/

Hannah Kane: 2015 Year in Review, Part 3

Whew! After all that reflection, it’s time to turn towards 2016. Here are some goals I’d like to focus on in the next year with regard to the MLN platform and related work.

Expand our reach. From Laura and Bobby’s research findings, it’s clear that to reach beyond our existing audience, we must use more inclusive language, talk less about ourselves and more about our community, and provide clearer pathways.

H1 Action Steps:

- Conduct an audit of the site with this goal in mind.

- Localize the site and continue to localize the curricular content.

Resolve our branding challenges. This includes the larger issue of reducing the number of brands, but within the Teach site specifically, we need to highlight our credentials in the education space.

“Mozilla is written everywhere. Why would mozilla be involved in teaching?” “What are they trying to sell through me?”

– Research study participant

H1 Action Steps:

- Revise key pages on the site (About, Teach Like Mozilla, plus a new Leadership Opportunities page that’s in the works) to better reflect our role and history with this work.

- Create templates to use across all MLN properties, providing some much needed visual coherence.

Provide better on-ramps. Our calls-to-action tend to be very heavy lifts (e.g. start a Club, join a Hive). Even our low-bar CTAs sound like a serious commitment (e.g. “Pledge to Teach”).

H1 Action Steps:

- Conduct a homepage redesign

- Replace the Pledge with a newsletter sign-up

- Build a Leadership Opportunities page clarifying our offerings

- Better integrate Hive into the website

“Productize” the experience. We need to transform Teach.mozilla.org into something people use, rather than simply a destination for finding information.

H1 Action Steps:

- Build the curriculum database and virtual classrooms (See the project brief)

- Launch new Web Literacy and 21st Century Skills badges

- Complete Web Literacy Map redesign

Support our programs. We need to keep pace with the expansion and development plans of Clubs.

H1 Action Steps:

- Develop tools and resources for Club Captains and Regional Coordinators. (See an early version of the Clubs Platform product brief.)

Continue to support tools. Our community depends on these tools, so we need to maintain them and find ways to innovate and better meet our community’s needs.

H1 Action Steps:

- Release the new Goggles (early in 2016) – Goggles will then go into maintenance mode for a while

- Localize Thimble

- Enable the discovery of content made with tools

- Research possible directions for the next phase of Thimble development. We have a few ideas in mind already (stay tuned for an upcoming post).

Sounds like a lot, right? It is! Can’t wait to get started.

http://hannahgrams.com/2015/12/23/2015-year-in-review-part-3/

Air Mozilla: Bugzilla Development Meeting, 23 Dec 2015

Help define, plan, design, and implement Bugzilla's future!

https://air.mozilla.org/bugzilla-development-meeting-20151223/

Mozilla Addons Blog: Loading temporary add-ons

With Firefox 43 we’ve enabled add-on signing, which requires add-ons installed in Firefox to be signed. If you are running the Nightly or Dev Edition of Firefox, you can disable this globally by accessing about:config and changing the xpinstall.signatures.required value to false. In Firefox 43 and 44 you can do this on the Release and Beta versions, respectively.

In the future, however, disabling signing enforcement won’t be an option for the Beta and Release versions as Firefox will remove this option. We’ve released a signing API and the jpm sign command which will allow developers to get a signed add-on to test on stable releases of Firefox, but there is another solution in Firefox 45 aimed at add-on developers, which gives you the ability to run an unsigned, restartless add-on temporarily.

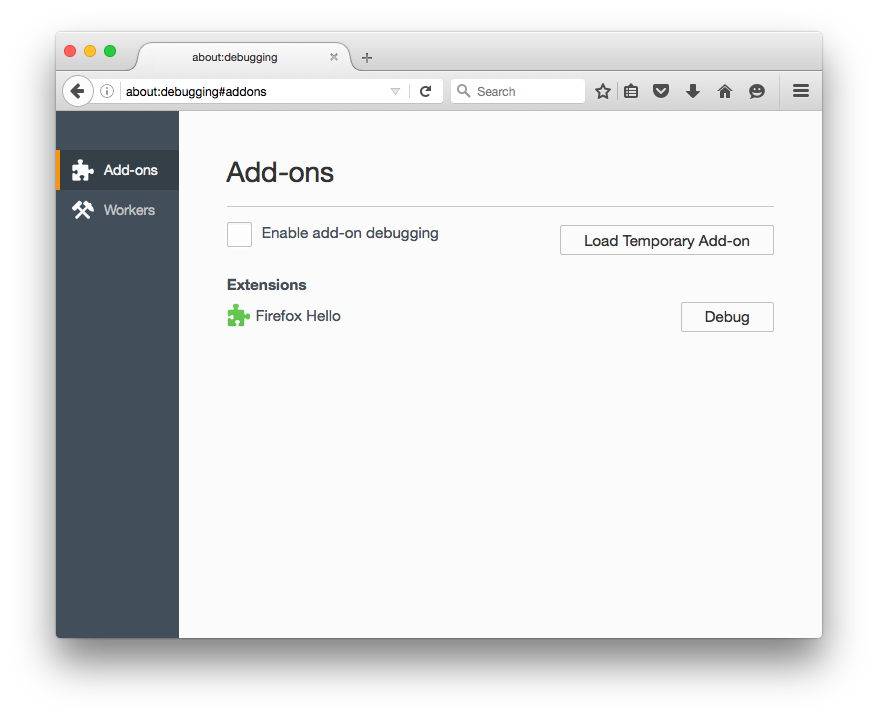

To enable this, visit a new page in Firefox, about:debugging:

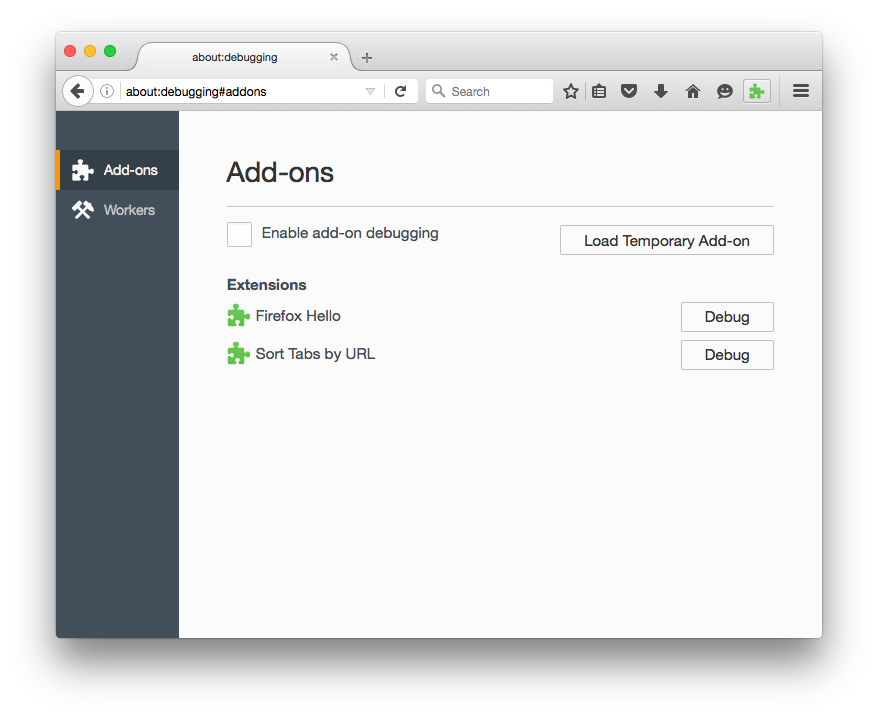

In my case, I’ve got a new version of an add-on I’m developing: a new WebExtension called “Sort Tabs by URL”. To load this unsigned version temporarily, click the “Load Temporary Add-on” button and select the .xpi file for the add-on. This will load the unsigned add-on temporarily, for the duration of the current browser session. You can see it in about:debugging and also by the green button that the add-on creates in the toolbar.

The add-on is only loaded temporarily; once you restart your browser, the add-on will no longer be loaded, and you’ll have to re-load it from the add-ons manager.

If you don’t want to go to the effort of making an .xpi of your add-on while developing, you can just select a file from within the add-on, and it will be loaded temporarily without having to make a zip file.

One last note: if you have an add-on installed and then temporarily install an add-on with the same id, the older add-on is disabled and the new temporary add-on is used.

https://blog.mozilla.org/addons/2015/12/23/loading-temporary-add-ons/

Dave Herman: Neon: Node + Rust =

If you’re a JavaScript programmer who’s been intrigued by Rust’s hack without fear theme—making systems programming safe and fun—but you’ve been waiting for inspiration, I may have something for you! I’ve been working on Neon, a set of APIs and tools for making it super easy to write native Node modules in Rust.

TL;DR:

- Neon is an API for writing fast, crash-free native Node modules in Rust;

- Neon enables Rust’s parallelism with guaranteed thread safety;

- Neon-cli makes it easy to create a Neon project and get started; and finally…

- Help wanted!

I Can Rust and So Can You!

I wanted to make it as easy as possible to get up and running, so I built neon-cli, a command-line tool that lets you generate a complete Neon project skeleton with one simple command and build your entire project with nothing more than the usual npm install.

If you want to try building your first native module with Neon, it’s super easy: install neon-cli with npm install -g neon-cli, then create, build, and run your new project:

```
% neon new hello
...follow prompts...
% cd hello
% npm install
% node -e 'require("./")'
```

If you don’t believe me, I made a screencast, so you know I’m legit.

I Take Thee at thy Word

To illustrate what you can do with Neon, I created a little word counting demo. The demo is simple: read in the complete plays of Shakespeare and count the total number of occurrences of the word “thee”. First I tried implementing it in pure JS. The top-level code splits the corpus into lines, and sums up the counts for each line:

```javascript
// lines() splits the corpus into lines; it is defined elsewhere in the demo.
function search(corpus, search) {
  var ls = lines(corpus);
  var total = 0;
  for (var i = 0, n = ls.length; i < n; i++) {
    total += wcLine(ls[i], search);
  }
  return total;
}
```

Searching an individual line involves splitting the line up into words and matching each word against the search string:

```javascript
// matches() compares one word to the search string; it is defined elsewhere in the demo.
function wcLine(line, search) {
  var words = line.split(' ');
  var total = 0;
  for (var i = 0, n = words.length; i < n; i++) {
    if (matches(words[i], search)) {
      total++;
    }
  }
  return total;
}
```

The rest of the details are pretty straightforward, but definitely check out the code: it’s small and self-contained.

On my laptop, running the algorithm across all the plays of Shakespeare usually takes about 280 – 290ms. Not hugely expensive, but slow enough to be optimizable.

Fall Into our Rustic Revelry

One of the amazing things about Rust is that highly efficient code can still be remarkably compact and readable. In the Rust version of the algorithm, the code for counting the matches in a line looks pretty similar to the JS code:

```rust
let mut total = 0;
for word in line.split(' ') {
    if matches(word, search) {
        total += 1;
    }
}
total // in Rust you can omit `return` for a trailing expression
```

In fact, that same code can be written at a higher level of abstraction without losing performance, using iteration methods like filter and fold (similar to Array.prototype.filter and Array.prototype.reduce in JS):

```rust
line.split(' ')
    .filter(|word| matches(word, search))
    .fold(0, |sum, _| sum + 1)
```

In my quick experiments, that even seems to shave a few milliseconds off the total running time. I think this is a nice demonstration of the power of Rust’s zero-cost abstractions, where idiomatic and high-level abstractions produce the same or sometimes even better performance (by making additional optimizations possible, like eliminating bounds checks) than lower-level, more obscure code.

On my machine, the simple Rust translation runs in about 80 – 85ms. Not bad—about 3x as fast just from using Rust, and in roughly the same number of lines of code (60 in JS, 70 in Rust). BTW, I’m being approximate here with the numbers, because this isn’t a remotely scientific benchmark. My goal is just to demonstrate that you can get significant performance improvements from using Rust; in any given situation, the particular details will of course matter.

Their Thread of Life is Spun

We’re not done yet, though! Rust enables something even cooler for Node: we can easily and safely parallelize this code—and I mean without the night-sweats and palpitations usually associated with multithreading. Here’s a quick look at the top level logic in the Rust implementation of the demo:

```rust
let total = vm::lock(buffer, |data| {
    let corpus = data.as_str().unwrap();
    let lines = lines(corpus);
    lines.into_iter()
        .map(|line| wc_line(line, search))
        .fold(0, |sum, line| sum + line)
});
```

The vm::lock API lets Neon safely expose the raw bytes of a Node Buffer object (i.e., a typed array) to Rust threads by preventing JS from running in the meantime. And Rust’s concurrency model makes programming with threads actually fun.

To demonstrate how easy this can be, I used Niko Matsakis’s new Rayon crate of beautiful data parallelism abstractions. Changing the demo to use Rayon is as simple as replacing the into_iter/map/fold lines above with:

```rust
lines.into_par_iter()
    .map(|line| wc_line(line, search))
    .sum()
```

Note that Rayon wasn’t designed with Neon in mind: its generic primitives match the iteration protocols of Rust, so Neon was able to just pull it off the shelf.

With that simple change, on my two-core MacBook Air, the demo goes from about 85ms down to about 50ms.
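Rayon is an external crate, so as a standard-library-only illustration of the same idea (split the lines, count them in parallel, add up the results), here is a sketch using `std::thread::scope` (stable since Rust 1.63) instead. This is a swapped-in technique, not what the demo does: chunking by line count is a simplification, whereas Rayon uses work stealing, which balances uneven work better. `par_search` and the `matches` helper are hypothetical names; the matching rule (trim punctuation, ignore ASCII case) is an assumption.

```rust
use std::thread;

/// Hypothetical matcher (an assumption; the post doesn't show the demo's):
/// trim surrounding punctuation, then compare ignoring ASCII case.
fn matches(word: &str, term: &str) -> bool {
    word.trim_matches(|c: char| !c.is_alphanumeric())
        .eq_ignore_ascii_case(term)
}

/// Count matching words in one line.
fn wc_line(line: &str, term: &str) -> u32 {
    line.split(' ').filter(|word| matches(word, term)).count() as u32
}

/// Split the lines into roughly equal chunks, count each chunk on its own
/// scoped thread, then add up the per-chunk totals.
fn par_search(corpus: &str, term: &str, threads: usize) -> u32 {
    let lines: Vec<&str> = corpus.lines().collect();
    if lines.is_empty() {
        return 0;
    }
    let threads = threads.max(1);
    // Ceiling division, so every line lands in exactly one chunk.
    let chunk_size = (lines.len() + threads - 1) / threads;

    thread::scope(|s| {
        // Scoped threads may borrow `lines` and `term`, because the scope
        // joins every thread before `par_search` returns.
        let handles: Vec<_> = lines
            .chunks(chunk_size)
            .map(|chunk| {
                s.spawn(move || chunk.iter().map(|line| wc_line(line, term)).sum::<u32>())
            })
            .collect();
        handles.into_iter().map(|h| h.join().unwrap()).sum::<u32>()
    })
}

fn main() {
    let corpus = "I love thee.\nThee, and thee alone!\nThou art here.";
    println!("{}", par_search(corpus, "thee", 2)); // prints 3
}
```

As in the Rayon version, the borrow checker is what makes this safe: the threads only receive shared, read-only slices of the corpus.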

Bridge Most Valiantly, with Excellent Discipline

I’ve worked on making the integration as seamless as possible. From the Rust side, Neon functions follow a simple protocol, taking a Call object and returning a JavaScript value:

```rust
fn search(call: Call) -> JS {
    let scope = call.scope;
    // ...
    Ok(Integer::new(scope, total))
}
```

The scope object safely tracks handles into V8’s garbage-collected heap. The Neon API uses the Rust type system to guarantee that your native module can’t crash your app by mismanaging object handles.

From the JS side, loading the native module is straightforward:

```javascript
var myNeonModule = require('neon-bridge').load();
```

Wherefore’s this Noise?

Hopefully this demo is enough to get people interested. Beyond the sheer fun of it, I think the strongest reasons for using Rust in Node are performance and parallelism. As the Rust ecosystem grows, it’ll also be a way to give Node access to cool Rust libraries. Beyond that, I’m hoping that Neon can make a nice abstraction layer that just makes writing native Node modules less painful. With projects like node-uwp it might even be worth exploring evolving Neon towards a JS-engine-agnostic abstraction layer.

There are lots of possibilities, but I need help! If you want to get in touch, I’ve created a community Slack and a #neon-bridge IRC channel on freenode.

A Quick Thanks

There’s a ton of fun exploration and work left to do but I couldn’t have gotten this far without huge amounts of help already: Andrew Oppenlander’s blog post got me off the ground, Ben Noordhuis and Marcin Cieślak helped me wrestle with V8’s tooling, I picked up a few tricks from Nathan Rajlich’s evil genius code, Adam Klein and Fedor Indutny helped me understand the V8 API, Alex Crichton helped me with compiler and linker arcana, Niko Matsakis helped me with designing the safe memory management API, and Yehuda Katz helped me with the overall design.

You know what this means? Maybe you can help too!

http://calculist.org/blog/2015/12/23/neon-node-rust/?utm_source=all&utm_medium=Atom

David Lawrence: Happy BMO Push Day! (Part 2)

the following changes have been pushed to bugzilla.mozilla.org:

- [1229894] Backport bug upstream 1221518 to bmo/4.2 [SECURITY] XSS in dependency graphs when displaying the bug summary

- [1234237] Backport upstream bug 1232785 to bmo/4.2 [SECURITY] Buglists in CSV format can be parsed as valid javascript in some browsers

discuss these changes on mozilla.tools.bmo.

https://dlawrence.wordpress.com/2015/12/23/happy-bmo-push-day-part-2/

Hannah Kane: 2015 Year in Review, Part 2

2015 was a great year for the MLN Platform team. I recently shared a timeline of the work that was accomplished this year, but now I’d like to focus a bit on how the year went from a process perspective.

The team formed early in the year and quickly launched the first version of Teach.mozilla.org. We used agile methodologies to quickly iterate based on user feedback and testing. The team went through some changes mid-way through the year, but at any given point during the year, it felt like a strong, cross-functional team showcasing great teamwork, communication, and enthusiasm for the projects.

We made use of the heartbeat model all year long. We started each heartbeat with a planning meeting, did a daily stand-up, and ended every heartbeat by joining the full product team for a demo. We also used Github all year long, and it became an active place where we benefited from frequent feedback from members of our community, as well as a useful way to collaborate with other team members from across the organization.

Meanwhile, the CDOT team, led by Dave Humphrey, kicked into high gear in the Spring to finish the Brackets integration project. Luke joined soon after and was able to take the Thimble UI and UX to the next level. We launched the new Thimble on August 31, and received great reviews from users. This team worked together closely, with regular stand-up meetings, and semi-regular backlog grooming sessions (aka triage).

In the last quarter of the year, we continued to build and maintain the Teach site and add new features to Thimble, but we also started to look toward 2016 plans. We did a lot of ideation and research work on upcoming projects including an MLN Directory, a Curriculum Database, and the next iteration of Badges. We also found the time to revamp X-Ray Goggles, as part of the year-long process to retire some tools and upgrade others.

Though overall I think 2015 was fantastic, while reflecting on the year, I’ve come up with a list of things I think we can improve in 2016:

- More retrospectives. I think we only did two or three all year! I’d like to think this is largely because things felt good most of the time, but I also know that there’s always room for improvement, and having a regularly scheduled retrospective can surface small process tweaks that make a world of difference. (I’m showing my Scrum Master roots here. :))

- Work on shared ownership. Though the team is cross-functional, we managed to silo ourselves within the team. The main symptom of this problem is when stand-ups become each person updating the PM, rather than team members coordinating with one another. I’d say our stand-ups, on average, were about 75% updates, and only 25% coordinating. Definitely room for improvement.

- Clarify the shape of the work and the team. It’s never been completely clear what the mandate for this team includes: certainly teach.mozilla.org, and things that are driven by the MLN team (badges, the web lit curriculum, Clubs, the Gigabit and Hive sites). But what about the MozFest website and app, which members of our team contributed to? What about Thimble? Goggles? In theory, there’s no great need to define borders between things. But in practice, it can be confusing. When do we need to schedule a new stand-up? When do we need a separate repo? How do we prioritize when we’re comparing figurative apples and oranges? How can we easily visualize and account for people’s capacity when they’re working on multiple projects?

- Better articulate our goals and how we will measure them. The first version of the Teach site was intentionally brochure-ware, without many specific conversion targets or KPIs. After two-thirds of a year, I think we can now clarify specific goals and set up the instrumentation to measure them. The research Laura and Bobby did in Q4 has really helped with this (stay tuned for the third post in this series).

And some of the biggest process wins from 2015 (IMO!):

- Working on multiple projects allowed us to make certain things more efficient and unified. A great example of this is how the X-Ray Goggles team borrowed from the Thimble landing page during the redesign.

- We were responsive to evolving programs. For example, the Clubs program, in its inaugural year, went through several shifts that required changes to the site content and to user workflows. While we weren’t always able to keep pace with the needs, I do think we were able to accommodate many of the changes.

- Having a consistent team for months at a time was luxurious and I believe beneficial to the end product.

- Having high standards. I’ll speak specifically to design, though this extends to engineering work as well. I credit Cassie and the design team for leveling up our design work this year. The design team crits, crowd-sourcing of Redpen feedback, audits, and intense attention to detail led to some of the most consistent, strong design work I’ve seen.

- Is having awesome team members a process win? Because, man, I like the people on this team. Mavis, Sabrina, Atul, Jess, Dave, Gideon, Kieran, Jordan, Luke, Kristina, and Pomax—thanks for being so smart, talented, easy going, enthusiastic, and delightful to work with!

http://hannahgrams.com/2015/12/22/2015-year-in-review-part-2/

|

|

Mozilla Fundraising: Designing for Fundraising: The Delightful Tale of Dapper Fox |

https://fundraising.mozilla.org/designing-for-fundraising-the-delightful-tale-of-dapper-fox/

|

|

Air Mozilla: Martes mozilleros, 22 Dec 2015 |

A biweekly meeting to talk about the state of Mozilla, the community and its projects.

|

|

David Lawrence: Happy BMO Push Day! |

the following changes have been pushed to bugzilla.mozilla.org:

- [1227998] B2G Droid product missing some fields that are on FxOS bugs

- [1231248] Add hint that DuoSec login is probably your LDAP username

- [1226287] A few more Data & BI Services Team product tweaks

- [1232324] BMO: Incorrect regexp used to filter bug IDs in Bugzilla::WebService::BugUserLastVisit

- [1231346] UI tweaks to make 2FA setup/changes more intuitive

- [1234325] Backport upstream bug 1230932 to bmo/4.2 to fix providing a condition as an ID to the webservice results in a taint error

discuss these changes on mozilla.tools.bmo.

https://dlawrence.wordpress.com/2015/12/22/happy-bmo-push-day-2/

|

|

Chris H-C: Needed Someplace to put my Sunglasses |

Pretty cunning, dontchathink?

https://chuttenblog.wordpress.com/2015/12/22/needed-someplace-to-put-my-sunglasses/

|

|

Dave Hunt: Selenium tests with pytest |

When you think of Mozilla you most likely first associate it with Firefox or our mission to build a better internet. You may not think we have many websites of our own, beyond perhaps the one where you can download our products. It’s only when you start listing them that you realise how many we actually have: the add-ons repository, product support, app marketplace, build results, crash statistics, community directory, contributor tasks, technical documentation, and that’s just a few! Each of these has a suite of automated functional tests that simulate a user interacting with their browser. For most of these we’re using Python and the pytest harness. Our framework has evolved over time, and this year there have been a few exciting changes.

Over four years ago we developed and released a plugin for pytest that removed a lot of duplicate code from across our suites. This plugin did several things: it handled starting a Selenium browser, passing credentials for tests to use, and generating an HTML report. As it didn’t just do one job, it was rather difficult to name. In the end we picked pytest-mozwebqa, because it was specific to the needs of the Web QA team at Mozilla. It really took us to a new level of consistency and quality across all our web automation projects.

This year, when I officially joined the Web QA team, I started working on breaking the plugin up into smaller plugins, each with a single purpose. The first to be released was the HTML report generation (pytest-html), which generates a single file report as an alternative to the existing JUnit report or console output. The plugin was written such that the report can be enhanced by other plugins, which ultimately allows us to include screenshots and other useful things in the report.

Next up was the variables injection (pytest-variables). This was needed primarily because we have tests that require an existing user account in the application under test. We couldn’t simply hard-code these credentials into our tests, because our tests are open source, and if we exposed these credentials someone may be able to use them and adversely affect our test results. With this plugin we are able to store our credentials in a private JSON file that can be simply referenced from the command line.
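The sketch below shows the underlying idea in plain Python rather than as a pytest plugin: secrets live in a private JSON file outside the public repository, and tests read them at run time. `load_variables` and the JSON layout are hypothetical illustrations, not pytest-variables' own code. The sketch does a simple shallow merge, so later files replace earlier ones key by key at the top level.

```python
import json
import tempfile
from pathlib import Path


def load_variables(*paths):
    """Hypothetical helper: merge JSON files into one dict that tests can read.

    Later files win on top-level keys (a shallow merge)."""
    merged = {}
    for path in paths:
        with open(path, encoding="utf-8") as f:
            merged.update(json.load(f))
    return merged


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        # Stand-in for a private file that is never committed to the public repo.
        private = Path(tmp) / "credentials.json"
        private.write_text(
            json.dumps({"users": {"default": {"username": "qa-user", "password": "s3cret"}}}),
            encoding="utf-8",
        )
        variables = load_variables(private)
        print(variables["users"]["default"]["username"])
```

With the plugin itself, the file is passed on the command line (`pytest --variables credentials.json`) and tests read the values from its `variables` fixture. Check the plugin's documentation for the options your version supports.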

The final plugin was for browser provisioning (pytest-selenium). This started as a fork of the original plugin because much of the code already existed. There were a number of improvements, such as providing direct access to the Selenium object in tests, and avoiding setting a default implicit wait. In addition to supporting Sauce Labs, we also added support for BrowserStack and TestingBot.

Now that pytest-selenium has been released, we have started to migrate our own projects away from pytest-mozwebqa. The migration is relatively painless, but does involve changes to tests. If you’re a user of pytest-mozwebqa you can check out a few examples of the migration. There will no longer be any releases of pytest-mozwebqa and I will soon be marking this project as deprecated.

The most rewarding consequence of breaking up the plugins is that we’ve already seen individual contributors adopting and submitting patches. If you’re using any of these plugins let us know – I always love hearing how and where our tools are used!

|

|

Mozilla Addons Blog: WebExtensions in Firefox 45 |

In August we announced that work had begun on the WebExtensions API as the future of developing add-ons in Firefox. This post covers the progress we’ve made since then.

WebExtensions is currently in an alpha state, so while this is a great time to get involved, please keep in mind that things might change if you decide to use it in its current state. Since August, we’ve closed 77 bugs and ramped up the WebExtensions team at Mozilla. With the release of Firefox 45 in March 2016, we’ll have full support for the following APIs: alarms, contextMenus, pageAction and browserAction. We’ll also have partial support for bookmarks, cookies, extension, i18n, notifications, runtime, storage, tabs, webNavigation, webRequest and windows.

A full list of the API differences is available, and you can also follow along on the state of WebExtensions on arewewebextensionsyet.com. All add-ons built with WebExtensions are fully compatible with a multiprocess Firefox and will work in Chrome and Opera.

Beyond APIs, support is being added to addons.mozilla.org so that developers can upload their add-ons and have them tested; that should be ready for Firefox 44. Documentation is being worked on in MDN, and a set of example WebExtensions is available.

Over the coming months we will work our way towards a beta in Firefox 47 and the first stable release in Firefox 48. If you’d like to jump in to help, or get your API added, then please join us on our mailing list or at one of our public meetings.

Your contributions are appreciated!

https://blog.mozilla.org/addons/2015/12/21/webextensions-in-firefox-45-2/

|

|

Patrick Walton: Drawing CSS Box Shadows in WebRender |

I recently landed a change in WebRender to draw CSS box shadows using a specialized shader. Because it’s an unusual approach to drawing shadows, I thought I’d write up how it works.

Traditionally, browsers have drawn box shadows in three passes: (1) draw the unblurred box (or a nine-patch corner/edge for one); (2) blur in the horizontal direction; (3) blur in the vertical direction. This works because a Gaussian blur is a separable filter: it can be computed as the product of two one-dimensional convolutions. This is a reasonable approach, but it has downsides. First of all, it has a high cost in memory bandwidth; for a standard triple box blur on the CPU, every pixel is touched 6 times, and on the GPU every pixel is touched $$6 sigma$$ times (or $$3 sigma$$ times if a common linear interpolation trick is used), not counting the time needed to draw the unblurred image in the first place. ($$sigma$$ here is half the specified blur radius.) Second, the painting of each box shadow requires no fewer than three draw calls including (usually) one shader switch, which are expensive, especially on mobile GPUs. On the GPU, it’s often desirable to use parallel algorithms that reduce the number of draw calls and state changes, even if those algorithms have a large number of raw floating-point operations—simply because the GPU is a stream processor that’s designed for such workloads.
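To make the separability argument concrete, here is a small pure-Python sketch. It is not browser or WebRender code. It compares a horizontal pass followed by a vertical pass against a single 2-D convolution with the product kernel.

The sketch makes a few choices of its own:

- It uses a true discrete Gaussian kernel rather than the triple box blur mentioned above.
- It treats pixels outside the image as transparent (zero).
- The image size, `sigma` and kernel radius are arbitrary.

```python
import math


def gaussian_kernel(sigma, radius):
    """Discrete 1-D Gaussian weights for offsets -radius..radius, normalized to sum to 1."""
    w = [math.exp(-(i * i) / (2.0 * sigma * sigma)) for i in range(-radius, radius + 1)]
    total = sum(w)
    return [x / total for x in w]


def blur_rows(img, k):
    """One 1-D pass: convolve each row with k, treating pixels outside the image as 0."""
    r = len(k) // 2
    w = len(img[0])
    return [[sum(k[i] * row[x + i - r] for i in range(len(k)) if 0 <= x + i - r < w)
             for x in range(w)]
            for row in img]


def transpose(img):
    return [list(col) for col in zip(*img)]


def separable_blur(img, k):
    """Horizontal pass, then vertical pass (a row pass over the transposed image)."""
    return transpose(blur_rows(transpose(blur_rows(img, k)), k))


def direct_blur(img, k):
    """Reference: one 2-D convolution with the product kernel k[j] * k[i]."""
    r = len(k) // 2
    h, w = len(img), len(img[0])
    return [[sum(k[j] * k[i] * img[y + j - r][x + i - r]
                 for j in range(len(k)) for i in range(len(k))
                 if 0 <= y + j - r < h and 0 <= x + i - r < w)
             for x in range(w)]
            for y in range(h)]


# Pass (1): the unblurred box drawn into a small offscreen image (1.0 = shadow color).
box = [[1.0 if 4 <= x < 12 and 5 <= y < 10 else 0.0 for x in range(16)] for y in range(15)]
kernel = gaussian_kernel(sigma=2.0, radius=6)
sep = separable_blur(box, kernel)      # passes (2) and (3)
ref = direct_blur(box, kernel)
max_diff = max(abs(p - q) for rs, rr in zip(sep, ref) for p, q in zip(rs, rr))
print(f"max |separable - direct| = {max_diff:.1e}")
```

Each 1-D pass reads 2r + 1 input pixels per output pixel, versus (2r + 1)^2 for the direct 2-D convolution. That saving is why the multi-pass approach is the traditional choice, at the price of extra passes and draw calls.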

The key trick used in WebRender is to take advantage of the fact that we’re blurring a (potentially rounded) box, not an ordinary image. This allows us to express the Gaussian blur as a closed form in the case of an unrounded box, and as a closed form minus a sum computed with a small loop in the case of a rounded box. To draw a box shadow, WebRender runs a shader implementing this logic and caches the results in a nine-patch image mask stored in a texture atlas. If the page contains multiple box shadows (even those with heterogeneous sizes and radii), the engine batches all the invocations of this shader into one draw call. This means that, no matter how many box shadows are in use, the number of draw calls and state changes remains constant (as long as the size of the texture atlas isn’t exhausted). Driver overhead and memory bandwidth are minimized, and the GPU spends as much time as possible in raw floating-point computation, which is exactly the kind of workload it’s optimized for.

The remainder of this post will be a dive into the logic of the fragment shader itself. The source code may be useful as a reference.

For those unfamiliar with OpenGL, per-pixel logic is expressed with a fragment shader (sometimes called a pixel shader). A fragment shader (in this case) is conceptually a function that maps arbitrary per-pixel input data to the RGB values defining the resulting color for that pixel. In our case, the input data for each pixel simply consists of that pixel’s coordinates, which we’ll write as $$(u,v)$$ to keep them distinct from the summation variables $$x$$ and $$y$$ below. We’ll call our function $$RGB(u,v)$$ and define it as follows:

$$RGB(u,v) = sum_{y=-oo}^{oo} sum_{x=-oo}^{oo}G(x-u)G(y-v)RGB_{"rounded box"}(x,y)$$

Here, $$RGB_{"rounded box"}(x,y)$$ is the color of the unblurred, possibly-rounded box at the coordinate $$(x,y)$$, and $$G(x)$$ is the Gaussian function used for the blur:

$$G(x)=1/sqrt(2 pi sigma^2) e^(-x^2/(2 sigma^2))$$

A Gaussian blur in one dimension is a convolution that maps each output pixel to a weighted average of the input pixels around it. Each input pixel’s weight is $$G(x)$$, where $$x$$ is its distance from the output pixel. A two-dimensional Gaussian blur applies a one-dimensional blur along each axis, so each input pixel’s weight is the product of the two one-dimensional weights. This definition leads to the formula for $$RGB(u,v)$$ above.

Since CSS box shadows blur solid color boxes, the color of each pixel is either the color of the shadow (call it $$RGB_{"box"}$$) or transparent. We can rewrite this into two functions:

$$RGB(u,v) = RGB_{"box"}C(u,v)$$

and

$$C(u,v) = sum_{y=-oo}^{oo} sum_{x=-oo}^{oo}G(x-u)G(y-v)C_{"rounded box"}(x,y)$$

where $$C_{"rounded box"}(x,y)$$ is 1.0 if the point $$(x,y)$$ is inside the unblurred, possibly-rounded box and 0.0 otherwise.

Now let’s start with the simple case, in which the box is unrounded. We’ll call this function $$C_{"blurred box"}$$:

$$C_{"blurred box"}(u,v) = sum_{y=-oo}^{oo} sum_{x=-oo}^{oo}G(x-u)G(y-v)C_{"box"}(x,y)$$

where $$C_{"box"}(x,y)$$ is 1.0 if the point $$(x,y)$$ is inside the box and 0.0 otherwise.

Let $$x_{"min"}, x_{"max"}, y_{"min"}, y_{"max"}$$ be the left, right, top, and bottom extents of the box respectively. Then $$C_{"box"}(x,y)$$ is 1.0 if $$x_{"min"} <= x <= x_{"max"}$$ and $$y_{"min"} <= y <= y_{"max"}$$ and 0.0 otherwise. Now let’s split the sums in $$C_{"blurred box"}(u,v)$$ above into the regions before, inside, and after the box:

$$C_{"blurred box"}(u,v) =
(sum_{y=-oo}^{y_{"min"} - 1} sum_{x=-oo}^{oo} G(x-u)G(y-v)C_{"box"}(x,y)) +
(sum_{y=y_{"min"}}^{y_{"max"}}
(sum_{x=-oo}^{x_{"min"}-1} G(x-u)G(y-v)C_{"box"}(x,y)) +
(sum_{x=x_{"min"}}^{x_{"max"}} G(x-u)G(y-v)C_{"box"}(x,y)) +
(sum_{x=x_{"max"}+1}^{oo} G(x-u)G(y-v)C_{"box"}(x,y))) +
(sum_{y=y_{"max"} + 1}^{oo} sum_{x=-oo}^{oo} G(x-u)G(y-v)C_{"box"}(x,y))$$

We can now eliminate several of the intermediate sums, along with $$C_{"box"}(x,y)$$, using its definition and the sum bounds:

$$C_{"blurred box"}(u,v) = sum_{y=y_{"min"}}^{y_{"max"}} sum_{x=x_{"min"}}^{x_{"max"}} G(x-u)G(y-v)$$

Now let’s simplify this expression to a closed form. To begin with, we’ll approximate the sums with integrals:

$$C_{"blurred box"}(u,v) ~~ int_{y_{"min"}}^{y_{"max"}} int_{x_{"min"}}^{x_{"max"}} G(x-u)G(y-v) dxdy$$

$$= int_{y_{"min"}}^{y_{"max"}} G(y-v) int_{x_{"min"}}^{x_{"max"}} G(x-u) dxdy$$

Now the inner integral can be evaluated to a closed form:

$$int_{x_{"min"}}^{x_{"max"}}G(x-u)dx
= int_{x_{"min"}}^{x_{"max"}}1/sqrt(2 pi sigma^2) e^(-(x-u)^2/(2 sigma^2))dx
= 1/2 "erf"((x_{"max"}-u)/(sigma sqrt(2))) - 1/2 "erf"((x_{"min"}-u)/(sigma sqrt(2)))$$

$$"erf"(x)$$ here is the Gauss error function. It is not found in GLSL (though it is found in ), but it does have the following approximation suitable for evaluation on the GPU:

$$"erf"(x) ~~ 1 - 1/((1+a_1x + a_2x^2 + a_3x^3 + a_4x^4)^4)$$

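where $$a_1$$ = 0.278393, $$a_2$$ = 0.230389, $$a_3$$ = 0.000972, and $$a_4$$ = 0.078108. The approximation holds for $$x >= 0$$; for negative arguments, use the symmetry $$"erf"(-x) = -"erf"(x)$$.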
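A quick way to check the quoted accuracy is to evaluate the approximation in Python against `math.erf`. This is an illustration, not WebRender’s shader code. It evaluates the quartic in Horner form and handles negative arguments through the odd symmetry of $$"erf"$$, since the formula itself only applies for $$x >= 0$$.

```python
import math

# Coefficients quoted in the text.
A1, A2, A3, A4 = 0.278393, 0.230389, 0.000972, 0.078108


def erf_approx(x):
    """erf(x) ~= 1 - 1 / (1 + a1 x + a2 x^2 + a3 x^3 + a4 x^4)^4 for x >= 0,
    extended to x < 0 with the odd symmetry erf(-x) = -erf(x)."""
    s = math.copysign(1.0, x)
    x = abs(x)
    d = 1.0 + x * (A1 + x * (A2 + x * (A3 + x * A4)))  # the quartic in Horner form
    return s * (1.0 - 1.0 / d ** 4)


# Compare against the standard library on a fine grid over [-5, 5].
grid = [i / 1000.0 for i in range(-5000, 5001)]
max_err = max(abs(erf_approx(x) - math.erf(x)) for x in grid)
print(f"max |erf_approx - math.erf| on [-5, 5]: {max_err:.2e}")
```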

Now let’s finish simplifying $$C_{"blurred box"}(u,v)$$:

$$C_{"blurred box"}(u,v) ~~
int_{y_{"min"}}^{y_{"max"}} G(y-v) int_{x_{"min"}}^{x_{"max"}} G(x-u) dxdy$$

$$= int_{y_{"min"}}^{y_{"max"}} G(y-v)
(1/2 "erf"((x_{"max"}-u)/(sigma sqrt(2))) - 1/2 "erf"((x_{"min"}-u)/(sigma sqrt(2)))) dy$$

$$= (1/2 "erf"((x_{"max"}-u)/(sigma sqrt(2))) - 1/2 "erf"((x_{"min"}-u)/(sigma sqrt(2))))
int_{y_{"min"}}^{y_{"max"}} G(y-v) dy$$

$$= 1/4 ("erf"((x_{"max"}-u)/(sigma sqrt(2))) - "erf"((x_{"min"}-u)/(sigma sqrt(2))))
("erf"((y_{"max"}-v)/(sigma sqrt(2))) - "erf"((y_{"min"}-v)/(sigma sqrt(2))))$$

And this gives us our closed form formula for the color of the blurred box.
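The closed form can be sanity-checked numerically. This sketch makes one concrete reading of the "approximate the sums with integrals" step: box extents are integers lying on pixel edges, and the discrete sum is sampled at pixel centers ($$x + 1/2$$). The box, `sigma` and sample points are arbitrary choices.

```python
import math


def G(x, sigma):
    """Gaussian weight from the text: exp(-x^2 / (2 sigma^2)) / sqrt(2 pi sigma^2)."""
    return math.exp(-x * x / (2.0 * sigma * sigma)) / math.sqrt(2.0 * math.pi * sigma * sigma)


def blurred_box_closed_form(u, v, xmin, xmax, ymin, ymax, sigma):
    """1/4 (erf((xmax-u)/(sigma sqrt2)) - erf((xmin-u)/(sigma sqrt2)))
       * (erf((ymax-v)/(sigma sqrt2)) - erf((ymin-v)/(sigma sqrt2)))"""
    k = sigma * math.sqrt(2.0)
    return 0.25 * ((math.erf((xmax - u) / k) - math.erf((xmin - u) / k)) *
                   (math.erf((ymax - v) / k) - math.erf((ymin - v) / k)))


def blurred_box_sum(u, v, xmin, xmax, ymin, ymax, sigma):
    """Brute-force sum of G(x-u) G(y-v) over the pixel centers covered by the box.
    The double sum factors into two 1-D sums because the weight is separable."""
    sx = sum(G(x + 0.5 - u, sigma) for x in range(xmin, xmax))
    sy = sum(G(y + 0.5 - v, sigma) for y in range(ymin, ymax))
    return sx * sy


box, sigma = (10, 40, 10, 30), 4.0
for u, v in [(10, 10), (25, 20), (14, 14), (45, 35)]:
    print(u, v, blurred_box_closed_form(u, v, *box, sigma), blurred_box_sum(u, v, *box, sigma))
```

The brute-force double sum factors into a product of two 1-D sums, mirroring the factorization of the closed form. The two agree closely once `sigma` is a few pixels.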

Now for the real meat of the shader: the handling of nonzero border radii. CSS allows boxes to have elliptical corners, with separately defined horizontal and vertical radii. Each corner can have its own radii. For simplicity, this writeup only considers boxes with identical radii on all corners, although the technique readily generalizes to heterogeneous radii. Most border radii on the Web are circular and homogeneous, but to handle CSS properly our shader needs to support elliptical heterogeneous radii in their full generality.

As before, the basic function to compute the pixel color looks like this:

$$C(u,v) = sum_{y=-oo}^{oo} sum_{x=-oo}^{oo}G(x-u)G(y-v)C_{"rounded box"}(x,y)$$

where $$C_{"rounded box"}(x,y)$$ is 1.0 if the point $$(x,y)$$ is inside the box (now with rounded corners) and 0.0 otherwise.

Adding some bounds to the sums gives us:

$$C(u,v) = sum_{y=y_{"min"}}^{y_{"max"}} sum_{x=x_{"min"}}^{x_{"max"}} G(x-u)G(y-v)
C_{"rounded box"}(x,y)$$

$$C_{"rounded box"}(x,y)$$ is 1.0 if $$C_{"box"}(x,y)$$ is 1.0—i.e. if the point $$(x,y)$$ is inside the unrounded box—and the point is either inside the ellipse defined by the value of the border-radius property or outside the border corners entirely. Let $$C_{"inside corners"}(x,y)$$ be 1.0 if this latter condition holds and 0.0 otherwise—i.e. 1.0 if the point $$(x,y)$$ is inside the ellipse defined by the corners or completely outside the corner area. Graphically, $$C_{"inside corners"}(x,y)$$ looks like a blurry version of this:

Then, because $$C_{"box"}(x,y)$$ is always 1.0 within the sum bounds, $$C_{"rounded box"}(x,y)$$ reduces to $$C_{"inside corners"}(x,y)$$:

$$C(u,v) = sum_{y=y_{"min"}}^{y_{"max"}} sum_{x=x_{"min"}}^{x_{"max"}} G(x-u)G(y-v)
C_{"inside corners"}(x,y)$$

Now let $$C_{"outside corners"}(x,y)$$ be the inverse of $$C_{"inside corners"}(x,y)$$—i.e. $$C_{"outside corners"}(x,y) = 1.0 - C_{"inside corners"}(x,y)$$. Intuitively, $$C_{"outside corners"}(x,y)$$ is 1.0 if $$(x,y)$$ is inside the box but outside the rounded corners—graphically, it looks like one shape for each corner. With this, we can rearrange the formula above:

$$C(u,v) = sum_{y=y_{"min"}}^{y_{"max"}} sum_{x=x_{"min"}}^{x_{"max"}} G(x-u)G(y-v)
(1.0 - C_{"outside corners"}(x,y))$$

$$= sum_{y=y_{"min"}}^{y_{"max"}} sum_{x=x_{"min"}}^{x_{"max"}} (G(x-u)G(y-v) -
G(x-u)G(y-v)C_{"outside corners"}(x,y))$$

$$= (sum_{y=y_{"min"}}^{y_{"max"}} sum_{x=x_{"min"}}^{x_{"max"}} G(x-u)G(y-v)) -
sum_{y=y_{"min"}}^{y_{"max"}} sum_{x=x_{"min"}}^{x_{"max"}}
G(x-u)G(y-v)C_{"outside corners"}(x,y)$$

$$= C_{"blurred box"}(u,v) -
sum_{y=y_{"min"}}^{y_{"max"}} sum_{x=x_{"min"}}^{x_{"max"}}
G(x-u)G(y-v)C_{"outside corners"}(x,y)$$

We’ve now arrived at our basic strategy for handling border corners: compute the color of the blurred unrounded box, then “cut out” the blurred border corners by subtracting their color values. We already have a closed form formula for $$C_{"blurred box"}(u,v)$$, so let’s focus on the second term. We’ll call it $$C_{"blurred outside corners"}(u,v)$$:

$$C_{"blurred outside corners"}(u,v) = sum_{y=y_{"min"}}^{y_{"max"}} sum_{x=x_{"min"}}^{x_{"max"}}
G(x-u)G(y-v)C_{"outside corners"}(x,y)$$

Let’s subdivide $$C_{"outside corners"}(x,y)$$ into the four corners: top left, top right, bottom right, and bottom left. This is valid because every point belongs to at most one of the corners per the CSS specification—corners cannot overlap.

$$C_{"blurred outside corners"}(u,v) = sum_{y=y_{"min"}}^{y_{"max"}} sum_{x=x_{"min"}}^{x_{"max"}}
G(x-u)G(y-v)(C_{"outside TL corner"}(x,y) + C_{"outside TR corner"}(x,y)
+ C_{"outside BR corner"}(x,y) + C_{"outside BL corner"}(x,y))$$

$$= (sum_{y=y_{"min"}}^{y_{"max"}} sum_{x=x_{"min"}}^{x_{"max"}}
G(x-u)G(y-v)(C_{"outside TL corner"}(x,y) + C_{"outside TR corner"}(x,y))) +
sum_{y=y_{"min"}}^{y_{"max"}} sum_{x=x_{"min"}}^{x_{"max"}}
G(x-u)G(y-v)(C_{"outside BR corner"}(x,y) + C_{"outside BL corner"}(x,y))$$

$$= (sum_{y=y_{"min"}}^{y_{"max"}} G(y-v)
((sum_{x=x_{"min"}}^{x_{"max"}} G(x-u)C_{"outside TL corner"}(x,y)) +
sum_{x=x_{"min"}}^{x_{"max"}} G(x-u) C_{"outside TR corner"}(x,y))) +
sum_{y=y_{"min"}}^{y_{"max"}} G(y-v)
((sum_{x=x_{"min"}}^{x_{"max"}} G(x-u)C_{"outside BL corner"}(x,y)) +
sum_{x=x_{"min"}}^{x_{"max"}} G(x-u)C_{"outside BR corner"}(x,y))$$

Let $$a$$ and $$b$$ be the horizontal and vertical border radii, respectively. The vertical boundaries of the top left and top right corners are defined by $$y_{"min"}$$ on the top and $$y_{"min"} + b$$ on the bottom; $$C_{"outside TL corner"}(x,y)$$ and $$C_{"outside TR corner"}(x,y)$$ will evaluate to 0 if $$y$$ lies outside this range. Likewise, the vertical boundaries of the bottom left and bottom right corners are $$y_{"max"} - b$$ and $$y_{"max"}$$.

(Note, again, that we assume all corners have equal border radii. The following simplification depends on this, but the overall approach doesn’t change.)

Armed with this simplification, we can adjust the vertical bounds of the sums in our formula:

$$C_{"blurred outside corners"}(u,v) =
(sum_{y=y_{"min"}}^{y_{"min"} + b} G(y-v)
((sum_{x=x_{"min"}}^{x_{"max"}} G(x-u)C_{"outside TL corner"}(x,y)) +
sum_{x=x_{"min"}}^{x_{"max"}} G(x-u) C_{"outside TR corner"}(x,y))) +
sum_{y=y_{"max"} - b}^{y_{"max"}} G(y-v)
((sum_{x=x_{"min"}}^{x_{"max"}} G(x-u)C_{"outside BL corner"}(x,y)) +
sum_{x=x_{"min"}}^{x_{"max"}} G(x-u)C_{"outside BR corner"}(x,y))$$

And, following similar logic, we can adjust their horizontal bounds:

$$C_{"blurred outside corners"}(u,v) =
(sum_{y=y_{"min"}}^{y_{"min"} + b} G(y-v)
((sum_{x=x_{"min"}}^{x_{"min"} + a} G(x-u)C_{"outside TL corner"}(x,y)) +
sum_{x=x_{"max"} - a}^{x_{"max"}} G(x-u) C_{"outside TR corner"}(x,y))) +
sum_{y=y_{"max"} - b}^{y_{"max"}} G(y-v)
((sum_{x=x_{"min"}}^{x_{"min"} + a} G(x-u)C_{"outside BL corner"}(x,y)) +
sum_{x=x_{"max"} - a}^{x_{"max"}} G(x-u)C_{"outside BR corner"}(x,y))$$

At this point, we can work on eliminating all of the $$C_{"outside corner"}$$ functions from our expression. Let’s look at the definition of $$C_{"outside TR corner"}(x,y)$$, which is 1.0 if the point $$(x,y)$$ is inside the rectangle formed by the border corner but outside the ellipse that defines that corner. That is, $$C_{"outside TR corner"}(x,y)$$ is 1.0 if $$y_{"min"} <= y <= y_{"min"} + b$$ and $$E_{"TR"}(y) <= x <= x_{"max"}$$, where $$E_{"TR"}(y)$$ is the horizontal position of the point on the ellipse with the given $$y$$ coordinate. $$E_{"TR"}(y)$$ can easily be derived from the equation of an ellipse centered at $$(x_0, y_0)$$, which for the top right corner is $$(x_{"max"} - a, y_{"min"} + b)$$:

$$(x-x_0)^2/a^2 + (y-y_0)^2/b^2 = 1$$

$$(x-x_0)^2 = a^2(1 - (y-y_0)^2/b^2)$$

$$x = x_0 + sqrt(a^2(1 - (y-y_0)^2/b^2))$$

$$E_{"TR"}(y) = x_0 + a sqrt(1 - ((y-y_0)/b)^2)$$
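As a small check of the ellipse algebra, the sketch below evaluates $$E_{"TR"}(y)$$ and the indicator $$C_{"outside TR corner"}(x,y)$$ directly. The function names are illustrative. The center $$(x_{"max"} - a, y_{"min"} + b)$$ is the one for the top right corner’s rectangle, $$[x_{"max"} - a, x_{"max"}] xx [y_{"min"}, y_{"min"} + b]$$.

```python
import math


def E(y, y0, a, b):
    """Half-width of the ellipse at height y: a * sqrt(1 - ((y - y0) / b)^2)."""
    return a * math.sqrt(1.0 - ((y - y0) / b) ** 2)


def E_TR(y, xmax, ymin, a, b):
    """Right-hand point of the top-right corner's ellipse on the row at height y.
    Assumes the ellipse is centered at (xmax - a, ymin + b)."""
    return (xmax - a) + E(y, ymin + b, a, b)


def C_outside_TR(x, y, xmax, ymin, a, b):
    """1.0 inside the top-right corner rectangle but outside its ellipse, else 0.0."""
    if ymin <= y <= ymin + b and E_TR(y, xmax, ymin, a, b) <= x <= xmax:
        return 1.0
    return 0.0


# Example corner: right edge at 40, top edge at 0, radii a = 10, b = 8.
print([round(E_TR(y, 40.0, 0.0, 10.0, 8.0), 2) for y in (0.0, 2.0, 4.0, 6.0, 8.0)])
```

At the top edge the cut-away region spans the whole corner width; at $$y_{"min"} + b$$ it shrinks to nothing.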

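We take the positive square root here because the top right corner lies to the right of the ellipse’s center. Parallel reasoning applies to the other corners, with the negative root for the left-hand corners.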

Now that we have bounds within which each $$C_{"outside corner"}$$ function evaluates to 1.0, we can eliminate all of these functions from the definition of $$C_{"blurred outside corners"}$$:

$$C_{"blurred outside corners"}(u,v) =
(sum_{y=y_{"min"}}^{y_{"min"} + b} G(y-v)
((sum_{x=x_{"min"}}^{E_{"TL"}(y)} G(x-u)) +
sum_{x=E_{"TR"}(y)}^{x_{"max"}} G(x-u))) +
sum_{y=y_{"max"} - b}^{y_{"max"}} G(y-v)
((sum_{x=x_{"min"}}^{E_{"BL"}(y)} G(x-u)) +
sum_{x=E_{"BR"}(y)}^{x_{"max"}} G(x-u))$$

To simplify this a bit further, let’s define an intermediate function:

$$E(y, y_0) = a sqrt(1 - ((y - y_0)/b)^2)$$

And rewrite $$C_{"blurred outside corners"}(u,v)$$ as follows:

$$C_{"blurred outside corners"}(u,v) =
(sum_{y=y_{"min"}}^{y_{"min"} + b} G(y-v)
((sum_{x=x_{"min"}}^{x_{"min"} + a - E(y, y_{"min"} + b)} G(x-u)) +
sum_{x=x_{"max"} - a + E(y, y_{"min"} + b)}^{x_{"max"}} G(x-u))) +
(sum_{y=y_{"max"} - b}^{y_{"max"}} G(y-v)
((sum_{x=x_{"min"}}^{x_{"min"} + a - E(y, y_{"max"} - b)} G(x-u)) +
sum_{x=x_{"max"} - a + E(y, y_{"max"} - b)}^{x_{"max"}} G(x-u)))$$

Now we simply follow the procedure we did before for the box. Approximate the inner sums with integrals:

$$C_{"blurred outside corners"}(u,v) ~~
(sum_{y=y_{"min"}}^{y_{"min"} + b} G(y-v)
((int_{x_{"min"}}^{x_{"min"} + a - E(y, y_{"min"} + b)} G(x-u)dx) +
int_{x_{"max"} - a + E(y, y_{"min"} + b)}^{x_{"max"}} G(x-u)dx)) +
(sum_{y=y_{"max"} - b}^{y_{"max"}} G(y-v)
((int_{x_{"min"}}^{x_{"min"} + a - E(y, y_{"max"} - b)} G(x-u)dx) +
int_{x_{"max"} - a + E(y, y_{"max"} - b)}^{x_{"max"}} G(x-u)dx))$$

Replace $$int G(x-u)dx$$ with its closed-form solution:

$$C_{"blurred outside corners"}(u,v) ~~
(sum_{y=y_{"min"}}^{y_{"min"} + b} G(y-v)
(1/2 "erf"((x_{"min"} - u + a - E(y, y_{"min"} + b)) / (sigma sqrt(2))) -
1/2 "erf"((x_{"min"} - u) / (sigma sqrt(2))) +
(1/2 "erf"((x_{"max"} - u) / (sigma sqrt(2))) -
1/2 "erf"((x_{"max"} - u - a + E(y, y_{"min"} + b)) / (sigma sqrt(2)))))) +
sum_{y=y_{"max"} - b}^{y_{"max"}} G(y-v)
(1/2 "erf"((x_{"min"} - u + a - E(y, y_{"max"} - b)) / (sigma sqrt(2))) -
1/2 "erf"((x_{"min"} - u) / (sigma sqrt(2))) +
(1/2 "erf"((x_{"max"} - u) / (sigma sqrt(2))) -
1/2 "erf"((x_{"max"} - u - a + E(y, y_{"max"} - b)) / (sigma sqrt(2)))))$$

$$= 1/2 ((sum_{y=y_{"min"}}^{y_{"min"} + b} G(y-v)
("erf"((x_{"min"} - u + a - E(y, y_{"min"} + b)) / (sigma sqrt(2))) -
"erf"((x_{"min"} - u) / (sigma sqrt(2))) +
("erf"((x_{"max"} - u) / (sigma sqrt(2))) -
"erf"((x_{"max"} - u - a + E(y, y_{"min"} + b)) / (sigma sqrt(2)))))) +
sum_{y=y_{"max"} - b}^{y_{"max"}} G(y-v)
("erf"((x_{"min"} - u + a - E(y, y_{"max"} - b)) / (sigma sqrt(2))) -
"erf"((x_{"min"} - u) / (sigma sqrt(2))) +
("erf"((x_{"max"} - u) / (sigma sqrt(2))) -
"erf"((x_{"max"} - u - a + E(y, y_{"max"} - b)) / (sigma sqrt(2))))))$$

And we’re done! Unfortunately, this is as far as we can go with standard mathematical functions. Because the arguments to the error function depend on $$y$$ through $$E$$, we have no choice but to evaluate the remaining sum over $$y$$ numerically. Still, this only results in one loop in the shader.
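Putting the pieces together, here is a CPU-side Python sketch of the one-loop evaluation, checked against a brute-force reference. It is not the actual WebRender fragment shader.

The sketch assumes:

- integer box extents and radii;
- equal radii on every corner, as in the derivation;
- `E` taking $$a$$ and $$b$$ as explicit parameters;
- the corner loop sampling $$y$$ at pixel-row centers.

The reference uses the same pixel rows, fine subsampling in $$x$$ and an independent point-in-rounded-box test.

```python
import math

SQRT2 = math.sqrt(2.0)


def G(x, sigma):
    """Gaussian weight G(x) from the text."""
    return math.exp(-x * x / (2.0 * sigma * sigma)) / math.sqrt(2.0 * math.pi * sigma * sigma)


def E(y, y0, a, b):
    """E(y, y0) = a * sqrt(1 - ((y - y0) / b)^2), with a and b passed explicitly."""
    t = (y - y0) / b
    return a * math.sqrt(max(0.0, 1.0 - t * t))


def blurred_box(u, v, xmin, xmax, ymin, ymax, sigma):
    """Closed form for the unrounded box."""
    k = sigma * SQRT2
    return 0.25 * ((math.erf((xmax - u) / k) - math.erf((xmin - u) / k)) *
                   (math.erf((ymax - v) / k) - math.erf((ymin - v) / k)))


def blurred_outside_corners(u, v, xmin, xmax, ymin, ymax, a, b, sigma):
    """Closed form in x; the sum over the corner rows is the one loop that remains."""
    k = sigma * SQRT2
    total = 0.0
    # (ellipse center y0, pixel rows spanned by that pair of corners)
    for y0, rows in ((ymin + b, range(ymin, ymin + b)), (ymax - b, range(ymax - b, ymax))):
        for row in rows:
            y = row + 0.5  # sample at the pixel-row center
            e = E(y, y0, a, b)
            left = math.erf((xmin - u + a - e) / k) - math.erf((xmin - u) / k)
            right = math.erf((xmax - u) / k) - math.erf((xmax - u - a + e) / k)
            total += G(y - v, sigma) * 0.5 * (left + right)
    return total


def blurred_rounded_box(u, v, xmin, xmax, ymin, ymax, a, b, sigma):
    """C(u, v) = C_blurred_box(u, v) - C_blurred_outside_corners(u, v)."""
    return (blurred_box(u, v, xmin, xmax, ymin, ymax, sigma)
            - blurred_outside_corners(u, v, xmin, xmax, ymin, ymax, a, b, sigma))


def reference(u, v, xmin, xmax, ymin, ymax, a, b, sigma, sub=64):
    """Brute force: same pixel rows, fine subsampling in x, independent inside test."""
    h = 1.0 / sub
    xs = [xmin + (i + 0.5) * h for i in range((xmax - xmin) * sub)]
    wx = [G(x - u, sigma) * h for x in xs]
    total = 0.0
    for row in range(ymin, ymax):
        y = row + 0.5
        cy = ymin + b if y < ymin + b else (ymax - b if y > ymax - b else None)
        row_sum = 0.0
        for x, w in zip(xs, wx):
            cx = xmin + a if x < xmin + a else (xmax - a if x > xmax - a else None)
            if cx is None or cy is None or ((x - cx) / a) ** 2 + ((y - cy) / b) ** 2 <= 1.0:
                row_sum += w
        total += G(y - v, sigma) * row_sum
    return total


box, a, b, sigma = (0, 40, 0, 30), 10, 8, 6.0
for u, v in [(0, 0), (20, 15), (-6, 12)]:
    print(u, v, blurred_rounded_box(u, v, *box, a, b, sigma), reference(u, v, *box, a, b, sigma))
```

The only loop left in `blurred_outside_corners` runs over the $$2b$$ corner rows. Everything in $$x$$ is closed form, matching the single loop described above.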

The current version of the shader implements the algorithm basically as described here. There are several further improvements that could be made:

- The Gauss error function approximation that we use is accurate to $$5 xx 10^(-4)$$, which is way more accurate than we need. (Remember that the units here are 8-bit color values!) The approximation involves computing $$x, x^2, x^3, " and " x^4$$, which is expensive since we evaluate the error function many times for each pixel. It could be a nice speedup to replace this with a less accurate, faster approximation. Or we could use a lookup table.

- We should not even compute the amount to subtract from the corners if the pixel in question is more than $$3 sigma$$ pixels away from them.

- $$C_{"blurred outside corners"}(u,v)$$ is a function of sigmoid shape. It might be interesting to try approximating it with a logistic function to avoid the loop in the shader. It might be possible to do this with a few iterations of least squares curve fitting on the CPU or with some sort of lookup table. Unfortunately, the parameters to the approximation will have to be per-box-shadow, because $$C_{"blurred outside corners"}$$ depends on $$a$$, $$b$$, $${x, y}_{"min, max"}$$, and $$sigma$$.

- Evaluating $$G(x)$$ could also be done with a lookup table. There is already a GitHub issue filed on this.
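Here is one way the lookup-table idea for $$"erf"$$ might look. It is a sketch under assumptions, not something WebRender implements. It tabulates $$"erf"$$ on $$[0, 4]$$ at a spacing of $$1/16$$ and linearly interpolates; the spacing and cut-off are arbitrary choices. The check compares the error with half of one 8-bit color step, $$1/510$$.

```python
import math

STEP = 1.0 / 16.0   # table spacing (an arbitrary choice for this sketch)
LIMIT = 4.0         # beyond this, erf(x) is within about 2e-8 of 1, so saturate
TABLE = [math.erf(i * STEP) for i in range(int(LIMIT / STEP) + 1)]  # 65 entries


def erf_lut(x):
    """erf via linear interpolation in TABLE; odd symmetry handles x < 0."""
    s = math.copysign(1.0, x)
    x = abs(x)
    if x >= LIMIT:
        return s
    f = x / STEP
    i = int(f)          # 0 <= i < len(TABLE) - 1 because x < LIMIT
    t = f - i
    return s * (TABLE[i] + t * (TABLE[i + 1] - TABLE[i]))


grid = [i / 1000.0 for i in range(-6000, 6001)]
max_err = max(abs(erf_lut(x) - math.erf(x)) for x in grid)
print(f"max error {max_err:.2e} vs half an 8-bit step {1 / 510:.2e}")
```

On a GPU, such a table would typically live in a small 1-D texture, so that the hardware's linear filtering performs the interpolation.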

Finally, it would obviously be nice to perform some comprehensive benchmarks of this rendering algorithm, fully optimized, against the standard multiple-pass approach to drawing box shadows. In general, WebRender is not at all GPU bound on most hardware (like most accelerated GPU vector graphics rasterizers), so optimizing the count of GPU raster operations has not been a priority so far. When and if this changes (which I suspect it will as the rendering pipeline gets more and more optimized), it may be worth going back and optimizing the shader to reduce the load on the ALU. For now, however, this technique seems to perform quite well in basic testing, and since WebRender is so CPU bound it seems likely to me that the reduction in draw calls and state changes will make this technique worth the cost.

http://pcwalton.github.com/blog/2015/12/21/drawing-css-box-shadows-in-webrender/

|

|

Code Simplicity: Two is Too Many |

There is a key rule that I personally operate by when I’m doing incremental development and design, which I call “two is too many.” It’s how I implement the “be only as generic as you need to be” rule from the Three Flaws of Software Design.

Essentially, the moment I notice that I’m tempted to cut and paste some code tells me exactly how generic my code needs to be. Instead of cutting and pasting it, I design a generic solution that meets just those two specific needs. I do this as soon as I’m tempted to have two implementations of something.

For example, let’s say I was designing an audio decoder, and at first I only supported WAV files. Then I wanted to add an MP3 parser to the code. There would definitely be common parts to the WAV and MP3 parsing code, and instead of copying and pasting any of it, I would immediately make a superclass or utility library that did only what I needed for those two implementations.

The key aspect of this is that I did it right away—I didn’t allow there to be two competing implementations; I immediately made one generic solution. The next important aspect of this is that I didn’t make it too generic—the solution only supports WAV and MP3 and doesn’t expect other formats in any way.
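A minimal Python sketch of the audio example, with hypothetical class names. The part that WAV and MP3 parsing share, checking the file's magic bytes before reading the format-specific header, moves into one base class as soon as the second format arrives. The base class supports only those two needs.

For brevity, the sketch makes two simplifications:

- It assumes the MP3 file begins with an ID3v2 tag, which is common but not universal.
- It reads only the canonical WAV header layout.

```python
import struct
from abc import ABC, abstractmethod


class AudioParser(ABC):
    """What WAV and MP3 parsing turned out to share, and nothing more:
    check the file's magic bytes, then hand off to the format-specific header reader."""

    MAGIC = b""

    def parse(self, data: bytes) -> dict:
        if not data.startswith(self.MAGIC):
            raise ValueError(f"{type(self).__name__}: unexpected magic {data[:len(self.MAGIC)]!r}")
        return self.parse_header(data)

    @abstractmethod
    def parse_header(self, data: bytes) -> dict:
        ...


class WavParser(AudioParser):
    MAGIC = b"RIFF"

    def parse_header(self, data):
        # Canonical layout: audio format, channels and sample rate start at byte 20.
        _audio_format, channels, rate = struct.unpack_from("<HHI", data, 20)
        return {"format": "wav", "channels": channels, "sample_rate": rate}


class Mp3Parser(AudioParser):
    MAGIC = b"ID3"  # assumes the file starts with an ID3v2 tag

    def parse_header(self, data):
        # Bytes 3 and 4 of an ID3v2 header are the major version and revision.
        return {"format": "mp3", "id3_version": (data[3], data[4])}


wav = (b"RIFF" + struct.pack("<I", 36) + b"WAVE" + b"fmt " +
       struct.pack("<IHHIIHH", 16, 1, 2, 44100, 176400, 4, 16))
print(WavParser().parse(wav))                        # {'format': 'wav', 'channels': 2, 'sample_rate': 44100}
print(Mp3Parser().parse(b"ID3\x04\x00" + bytes(5)))   # {'format': 'mp3', 'id3_version': (4, 0)}
```

Nothing here anticipates a third format. If one arrives, the same rule applies again.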

Another part of this rule is that a developer should ideally never have to modify one part of the code in a similar or identical way to how they just modified a different part of it. They should not have to “remember” to update Class A when they update Class B. They should not have to know that if Constant X changes, they have to update File Y. In other words, it’s not just two implementations that are bad, but also two locations. It isn’t always possible to implement systems this way, but it’s something to strive for.

If you find yourself in a situation where you have to have two locations for something, make sure that the system fails loudly and visibly when they are not “in sync.” Compilation should fail, a test that always gets run should fail, etc. It should be impossible to let them get out of sync.
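A minimal sketch of "fail loudly" when two locations genuinely cannot be merged. The enum and the tuple mirroring a hypothetical database constraint are illustrative. `check_in_sync` runs every time the module is imported and raises immediately, naming the mismatched values, so drift breaks the build or test run instead of shipping.

```python
from enum import Enum


class Status(Enum):
    OPEN = "open"
    CLOSED = "closed"
    ARCHIVED = "archived"


# A second location that cannot simply import the enum, e.g. values mirrored
# from a database CHECK constraint (hypothetical).
DB_ALLOWED_STATUSES = ("open", "closed", "archived")


def check_in_sync(enum_cls, allowed):
    """Raise immediately, naming the differences, if the two lists have drifted apart."""
    in_code = {member.value for member in enum_cls}
    in_db = set(allowed)
    if in_code != in_db:
        raise RuntimeError(
            f"{enum_cls.__name__} and DB constraint disagree: "
            f"only in code {sorted(in_code - in_db)}, only in DB {sorted(in_db - in_code)}"
        )


# Runs on every import, so a mismatch stops the program or test run right away.
check_in_sync(Status, DB_ALLOWED_STATUSES)
```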

And of course, the simplest part of this rule is the classic “Don’t Repeat Yourself” principle—don’t have two constants that represent the same exact thing, don’t have two functions that do the same exact thing, etc.

There are likely other ways that this rule applies. The general idea is that when you want to have two implementations of a single concept, you should somehow make that into a single implementation instead.

When refactoring, this rule helps find things that could be improved and gives some guidance on how to go about it. When you see duplicate logic in the system, you should attempt to combine those two locations into one. Then if there is another location, combine that one into the new generic system, and proceed in that manner. That is, if there are many different implementations that need to be combined into one, you can do incremental refactoring by combining two implementations at a time, as long as combining them does actually make the system simpler (easier to understand and maintain). Sometimes you have to figure out the best order in which to combine them to make this most efficient, but if you can’t figure that out, don’t worry about it—just combine two at a time and usually you’ll wind up with a single good solution to all the problems.

It’s also important not to combine things when they shouldn’t be combined. There are times when combining two implementations into one would cause more complexity for the system as a whole or violate the Single Responsibility Principle. For example, if your system’s representation of a Car and a Person have some slightly similar code, don’t solve this “problem” by combining them into a single CarPerson class. That’s not likely to decrease complexity, because a CarPerson is actually two different things and should be represented by two separate classes.

This isn’t a hard and fast law of the universe; it’s more of a strong guideline that I use for making judgments about design as I develop incrementally. However, it’s quite useful in refactoring a legacy system, developing a new system, and just generally improving code simplicity.

-Max

|

|

Mark Surman: Mozilla Foundation 2020 Strategy |

We outlined a vision back in October for the next phase of Mozilla Foundation’s work: fuel the movement that is building the next wave of open into the digital world.

Since then, we’ve been digging into the first layer ‘how do we do this?’ detail. As part of this process, we have asked things like: What issues do we want to focus on first? How do we connect leaders and rally citizens to build momentum? And, how does this movement building work fit into Mozilla’s overall strategy? After extensive discussion and reflection, we drafted a Mozilla Foundation 2020 Strategy document to answer these questions, which I’m posting here for comment and feedback. There is both a slide version and a long form written version.

The first piece of this strategy is to become a louder, more articulate thought leader on the rights of internet users and the health of the internet.

Concretely, that means picking the issues we care about and taking a stance. For the first phase of this movement building work, we are going to focus on:

- Online privacy: from surveillance to tracking to security, it’s eroding

- Digital inclusion: from zero rating to harassment, it’s not guaranteed

- Web literacy: the internet is growing, but web literacy isn’t

We’ll show up in these issues through everything from more frequent blog posts and opinion pieces to a new State of the Web report that we hope to release toward the end of 2016.

The other key pieces of our strategy are growing our ‘leadership network’ and creating a full scale ‘advocacy engine’ that both feed and draw from this agenda. As part of the planning process, we developed a simple strategy map to show how all pieces work together:

A. Shape the agenda. Articulate a clear, forceful agenda. Start with privacy, inclusion and literacy over the next three years. Focus MoFo efforts here first. Impact: online privacy, digital inclusion and web literacy are mainstream social issues globally.

B. Connect leaders. Continue to build a leadership network to gather and network people who are motivated by this agenda. Get them doing stuff together, generating new, concrete solutions through things like MozFest and communities of practice. Impact: more people and orgs working alongside Mozilla to shape the agenda and rally citizens.

C. Rally citizens. Build an advocacy group that will rally a global force of 10s of millions of people who take action and change how they — and their friends — use the web. Impact: people make more conscious choices, companies and governments react.

This movement building strategy is meant to complement Mozilla’s product and technology efforts. If we point roughly in the same direction, things like Firefox, our emerging work on things like open connected devices and rallying people to a common cause give us a chance to have an impact far bigger than if we did one of these things alone.

While this builds on our past work, it is worth noting that there are some important differences from the initial thinking we had earlier in the year. We started out talking about a ‘Mozilla Academy’ or ‘Mozilla Learning’. And we had universal web literacy as our top line social impact goal. Along the way, we realized that web literacy is one important area where our movement building work can impact the world — but that there are other issues where we want and need to have impact as well. The focus on a rolling agenda setting model in the current strategy reflects that realization.

It’s also worth calling out: a significant portion of this strategy is not new. In fact, the whole approach we used was to look at what’s working and where we have strengths, and then build from there. Much of what we plan to do with the Leadership Network already exists in the form of communities of practice like Hive, Open News, Science Lab and our Open Web Network. These networks become the key hubs that we build the larger network around. Similarly, we have had increasing success with advocacy and fundraising; we are now going to invest much more here to grow further. The only truly new part is the explicit agenda-setting function. Doing more here should have been obvious before, but it wasn’t. We’ve added it into the core of our strategy to both focus and draw on our leadership and advocacy work.

As you’ll see if you look at the planning documents (slides | long form), we are considering the current documents as version 0.8. That means that the broad framework is complete and fixed. The next phase will involve a) engagement with our community and partners re: how this framework can provide the most value and b) initial roll out of key parts of the plan to test our thinking by doing. Plans to do this in the first half of 2016 are detailed in the documents.

At this stage, we really want reactions to this next level of detail. What seems compelling? What doesn’t? Where are there connections to the broader movement or to other parts of Mozilla that we’re not making yet? And, most important, are there places that you want to get involved? There are many ways to offer feedback, including going to the Mozilla Leadership planning wiki and commenting on this blog.

I’m excited about this strategy, and I’m optimistic about how it can make Mozilla, our allies and the web stronger. And as we move into our next phase of engagement and doing, I’m looking forward to talking to more and more people about this work.

The post Mozilla Foundation 2020 Strategy appeared first on Mark Surman.

|

|

Emily Dunham: Questions about Open Source and Design |


Today, I posed a question to some professional UI and UX designers:

How can an open source project without dedicated design experts collaborate with amateur, volunteer designers to produce a well-designed product?

They revealed that they’ve faced similar collaboration challenges, but knew of neither a specific process to solve the problem nor an organization that had overcome it in the past.

Have you solved this problem? Have you tried some process or technique and learned that it’s not able to solve the problem? Email me (design@edunham.net) if you know of an open source project that’s succeeded at opening their design as well, and I’ll update back here with what I learn!

In no particular order, here are some of the problems that we were talking about:

- Non-designers struggle to give constructive feedback on design. I can say “that’s ugly” or “that’s hard to use” more easily than I can say “here’s how you can make it better”.

- Projects without designers in the main decision-making team can have a hard time evaluating the quality of a proposed design.

- Non-designers struggle to articulate the objective design needs of their projects, so design remains a single monolithic problem rather than being decomposed into bite-sized, introductory issues the way code problems are.

- Volunteer designers have a difficult time finding open source projects to get involved with.

- Non-designers don’t know the difference between different types of design, and tend to bikeshed on superficial, obvious traits like colors when they should be focusing on more subtle parts of the user experience. We as non-designers are like clients who ask for a web site without knowing that there’s a difference between front-end development, back-end development, database administration, and systems administration.

- The tests which designers apply to their work are often almost impossible to automate. For instance, I gather that a lot of user interaction testing involves watching new users attempt to complete a task using a given design, and observing the challenges they encounter.

Again, if you know of an open source project that’s overcome any of these challenges, please email me at design@edunham.net and tell me about it!

http://edunham.net/2015/12/21/questions_about_open_source_and_design.html

|

|

Kevin Ngo: How A-Frame VR is Different from Other 3D Markup Languages |

Rough diagram of the A-Frame entity-component system.


Upon first seeing A-Frame, branded as "building blocks for the web" and displaying its HTML-style markup, developers may conceive of A-Frame as yet another 3DML (3D markup language) such as X3DOM or GLAM. What A-Frame brings to the game is that it is based on an entity-component system, a pattern used by universal game engines like Unity, which favors composability over inheritance. As we'll see, this makes A-Frame extremely extensible.

And A-Frame VR is extremely mindful of how to start a developer ecosystem. Tools, tutorials, guides, boilerplates and libraries are being built, and knowledge is being readily shared on Slack.

Entity-Component System

The entity-component system is a pattern in which every entity, or object, in a scene is a general placeholder. Components are then used to add appearance, behavior, and functionality. They're bags of logic and data that can be applied to any entity. They can be defined to do just about anything, and anyone can easily develop and share their own components. To picture this visually, let's revisit the diagram above:

An entity, by itself without components, doesn't render or do anything. A-Frame ships with over 15 basic components. We can add a geometry component to give it shape, a material component to give it appearance, or a light component and sound component to have it emit light or sound.

Each component has properties that further define how it modifies the entity. And components can be mixed and matched at will, hence the "composable" word root of "component". In traditional terms, they can be thought of as plugins. And anyone can write them to do anything, even explode an entity. They are expected to become an integral part of the workflow of building advanced scenes.
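To make the pattern concrete, here is a minimal entity-component sketch in Python. It is not A-Frame's API. `Entity`, `Geometry`, `Material`, `Light`, `describe` and their fields are hypothetical names chosen for illustration. An entity with no components contributes nothing. Each attached component adds one aspect, and any combination of components can go on any entity.

```python
from dataclasses import dataclass, field


@dataclass
class Geometry:
    shape: str = "box"


@dataclass
class Material:
    color: str = "white"


@dataclass
class Light:
    intensity: float = 1.0


@dataclass
class Entity:
    # An entity is only a container; all data and behavior live in components.
    components: dict = field(default_factory=dict)

    def set(self, component):
        # At most one component of each type per entity; returns self for chaining.
        self.components[type(component)] = component
        return self

    def get(self, kind):
        return self.components.get(kind)


def describe(entity):
    """A toy 'system' that reacts only to whichever components are present."""
    parts = []
    geometry = entity.get(Geometry)
    if geometry is not None:
        parts.append(f"{geometry.shape} shape")
    material = entity.get(Material)
    if material is not None:
        parts.append(f"{material.color} surface")
    light = entity.get(Light)
    if light is not None:
        parts.append(f"emits light at {light.intensity}")
    return ", ".join(parts) or "nothing (no components)"
```

For example, `describe(Entity())` returns `"nothing (no components)"`, while `describe(Entity().set(Geometry("sphere")).set(Light(0.5)))` returns `"sphere shape, emits light at 0.5"`. New behavior comes from adding a component type, not from adding a subclass of `Entity`.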

Writing and Sharing Components

So where does the promise of the ecosystem come in? A component is simply a plain JavaScript object that defines several lifecycle handlers that manage the component's data. Here are some example third-party components that I and other people have written:
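The idea that a component is just a plain object of lifecycle handlers can be sketched in Python with a dictionary of functions. The registry, the `register_component` and `set_attribute` helpers, the handler names `init`, `update` and `remove`, and the `spin` component are all hypothetical stand-ins for illustration. They are not A-Frame's actual registration API.

```python
# Hypothetical registry mapping component names to plain dicts of handlers.
REGISTRY = {}


def register_component(name, definition):
    REGISTRY[name] = definition


class Entity:
    def __init__(self):
        self.data = {}   # current data for each attached component, by name
        self.state = {}  # scratch state that handlers are free to modify

    def set_attribute(self, name, value):
        handlers = REGISTRY[name]
        if name not in self.data:
            # First attachment: run the component's init handler, if any.
            self.data[name] = value
            handlers.get("init", lambda e, d: None)(self, value)
        else:
            # Later changes: run update with both the new and old data.
            old = self.data[name]
            self.data[name] = value
            handlers.get("update", lambda e, d, o: None)(self, value, old)

    def remove_attribute(self, name):
        if name in self.data:
            value = self.data.pop(name)
            REGISTRY[name].get("remove", lambda e, d: None)(self, value)


# A "third-party" component defined as nothing more than a dict of handlers.
register_component("spin", {
    "init": lambda entity, data: entity.state.update(speed=data),
    "update": lambda entity, data, old: entity.state.update(speed=data),
    "remove": lambda entity, data: entity.state.pop("speed", None),
})
```

Because a component is only data plus handlers, sharing one amounts to sharing that definition and registering it under a name.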

Small components can be as little as a few lines of code. Under the hood, they manipulate either three.js objects or the DOM through JavaScript. I will go into more detail on how to write a component at a later date, but to get started building a shareable component, check out the component boilerplate.

Comparison with Other 3DMLs

Other 3DMLs, or any markup languages at all for that matter, are based on an inheritance pattern. This is sort of the default pattern to go towards given the hierarchical nature of HTML and XML. Even A-Frame was initially built this way. The problem is that this lacks composability. Customizing objects to do something beyond the basics becomes difficult, both for the user and for the library developer.

The functionality of the language then depends on how many features the library's maintainers add. With A-Frame, however, composability brings about limitless functionality:

Put logically, the number of different kinds of functionality you can squeeze out of an object is the number of subsets of the components you have: each component is either attached or not, so n components give 2^n combinations. With the 16 basic components that A-Frame comes with, that's 2^16 = 65,536 different sets of components that could be used. Add in the fact that components can be further customized with properties, and that there is an ecosystem of components to tap into, and the earlier use of the word "limitless" was quite literal.
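The 65,536 figure counts subsets rather than permutations, including the empty set. Here is a quick check that enumerates them directly and compares the count against the binomial sum and 2^16. It uses placeholder component names `c0` to `c15`, not A-Frame's real component list.

```python
from itertools import combinations
from math import comb

components = [f"c{i}" for i in range(16)]  # placeholder names, not real components

# Enumerate every subset of size k, from 0 (no components) to 16 (all of them).
by_enumeration = sum(1 for k in range(len(components) + 1)
                     for _ in combinations(components, k))

# The same count from the binomial coefficients: sum of C(16, k) over k.
by_formula = sum(comb(16, k) for k in range(17))

print(by_enumeration, by_formula, 2 ** 16)  # 65536 65536 65536
```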

With other 3DML libraries, if they ship 50 different kinds of objects, then you get only 50 different kinds of objects with fixed behavior.

|

|

Daniel Stenberg: A 2015 retrospective |

Wow, another year has passed. Summing up some things I did this year.

Commits

I don’t really have a good global commit count for the year, but GitHub counts 1,300 commits, and I believe the vast majority of my commits are hosted there. Most of them are in curl and curl-oriented projects.

We did 8 curl releases during the year featuring a total of 575 bug fixes. The almost 1,200 commits were authored by 107 different individuals.

Books

I continued working on http2 explained during the year, and after having changed to markdown format it is now available in more languages than ever thanks to our awesome translators!

I started my second book project in the fall of 2015, using the working title everything curl. It is a much larger book effort than the HTTP/2 book: I have just passed 23,500 words, which make up over 110 pages in the PDF version, and almost half of the planned sections are still left to write…

I almost doubled my number of Twitter followers during this year, now at 2,850 or so. While this is a pointless number, reaching out slightly further does have the advantage that I get better responses, and that makes me appreciate and get more out of Twitter.

Stackoverflow

I’ve continued to respond to questions there, and my total count is now at 550 answers, out of which I wrote about 80 this year. The top scored answer I wrote during 2015 is for a question that isn’t phrased like one: Apache and HTTP2.

Keyboard use

I’ve pressed a bit over 6.4 million keys on my primary keyboard during the year, and 10.7% of the keys were pressed on weekends.

During the 2,900+ hours in which at least one key press was registered, I averaged 2,206 key presses per hour.

The most excessive key banging hour of the year started September 21 at 14:00 and ended with me reaching 10,875 key presses.

The most excessive day was June 9, during which I pushed 63,757 keys.
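As a rough consistency check on the keyboard figures, here is the arithmetic using the rounded numbers from the text. It assumes exactly 6.4 million keys and 2,900 hours, both slight underestimates since the text says "a bit over" and "2900+".

```python
total_keys = 6_400_000  # "a bit over 6.4 million" (rounded down)
active_hours = 2_900    # "2900+ hours" (rounded down)
weekend_share = 0.107   # 10.7% of keys were pressed on weekends

per_hour = total_keys // active_hours          # whole key presses per active hour
weekend_keys = round(total_keys * weekend_share)

print(per_hour)      # 2206
print(weekend_keys)  # 684800
```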

Talks

These are all 16 occasions on which I talked in front of an audience during 2015. As you will see, the list of topics was fairly limited…

- HTTP/2 at FOSDEM February 1st, Brussels

- curl in embedded devices at FOSDEM February 1st, Brussels

- practical uses of curl and TLS, at Mera Krypto, March 5 Stockholm

- curl and TLS at Mera Krypto, April 29th Stockholm

- HTTP/2 for TCP/IP Geeks Stockholm, May 6th Stockholm

- (non-public event) curl, for [name redacted] May 21st in Stockholm

- curl – a hobby project with a billion users at Google Tech Talk, August 26th in Stockholm, Sweden

- HTTP/2 with curl, foss-sthlm on June 4th in Stockholm