
Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.


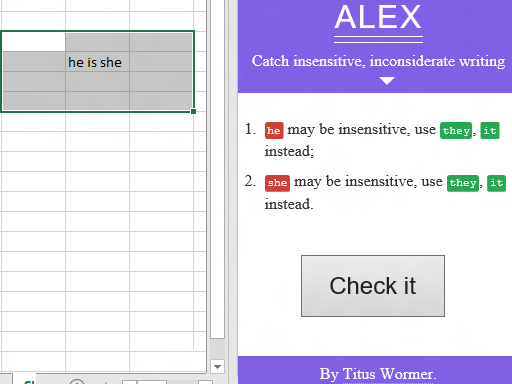

Christian Heilmann: Flagging up inconsiderate writing in Microsoft Office using JavaScript |

Alex.js is a service to “Catch insensitive, inconsiderate writing” in text. You can try out Alex online.

Whatever your stance on “too PC” is, it is a good idea to think about the words we use and what effect they can have on people. And when it is easy to avoid a word that may cause harm, why not do it? That said, we’re lazy and forgetful creatures, which is why a tool like Alex.js can help.

With Alex being a JavaScript solution, we didn’t have to wait long for its integration into our favourite toys like Atom, Sublime, Gulp and Slack. However, these are our tools and the message and usefulness of Alex should be spread wider.

Whilst I am writing this in Sublime Text, the fact is that most documents are written in office suites, either the ones by Microsoft or Google or other online versions. I was very excited to see that Microsoft Office now has the ability to extend all its apps with JavaScript and I wanted the Alex.js integration to be my first contribution to that team. Then lots of traveling happened, along with the open sourcing of the JavaScript engine and real-life stuff (moving, breaking up, such things).

On my last trip to Redmond I had a sit-down with the apps and extensions team and we talked about JavaScript, packaging and add-ons. One of the bits I brought up was Alex.js and how it would be a killer feature for Office as a reminder for people not to be horrible without planning to be.

And lo and behold, the team listened and my ace colleagues Kiril Seksenov, John-David Dalton and Kevin Hill have made it happen: you can now download the Alex-add-in.

The add-in works in: Excel 2013 Service Pack 1 or later, Excel Online, PowerPoint 2013 Service Pack 1 or later, Project 2013 Service Pack 1 or later, Word 2013 Service Pack 1 or later and Word Online.

Spread the word and let’s get rid of inconsiderate writing one document at a time.

|

|

Frédéric Wang: MathML at the Web Engines Hackfest 2015 |

Hackfest

Two weeks ago, I travelled to Spain to participate in the second Web Engines Hackfest, which was sponsored by Igalia and Collabora. Such an event has been organized by Igalia since 2009 and used to be focused on WebKitGTK+. It is great to see that it has now been extended to all Web engines and platforms and that a large percentage of non-Igalian developers were involved this year. If you did not get the opportunity to attend this event or if you are curious about what happened there, take a look at the wiki page or flickr album.

I really like this kind of hacking-oriented and participant-driven event where developers can meet face to face, organize themselves in small collaboration groups to efficiently make progress on a task or give passionate talks about what they have recently been working on. My only small reservation is that it is still mainly focused on WebKit/Blink developments. The lack of Mozilla/Microsoft participants is probably due to Mozilla Coincidental Work Weeks happening during the same period and to the proprietary nature of EdgeHTML (although this is changing?). However, I am confident that Igalia will try and address this issue and I am looking forward to coming back next year!

MathML developments

This year, Igalia developer Alejandro G. Castro wanted to work with me on WebKit's MathML layout code and more specifically on his MathML refactoring branch. Indeed, like many people (including Google developers) who have tried to work on WebKit's code in the past, he came to the conclusion that WebKit's MathML layout code has many design issues that make it a burden for the rest of the layout team and too complex to allow future improvements. I was quite excited about the work he has done with Javier Fernández to try and move to a simple box model closer to what exists in Gecko, and thus I actually extended my stay to work one whole week with them. We already submitted our proposal to the webkit-dev mailing list and received positive feedback, so we will now start merging what is ready. At the end of the week, we were quite satisfied with the new approach and confident it will facilitate future maintenance and developments :-)

While reading a recent thread on the Math WG mailing list, I realized that many MathML people have only a vague understanding of why Google (or to be more accurate, the 3 or 4 engineers who really spent some time reviewing and testing the WebKit code) considered the implementation to be unsafe and not ready for release. Even worse, Michael Kohlhase pointed out that for someone totally ignorant of the technical implementation details, the communication made some years ago around the "flexbox-based approach" gave the impression that it was "the right way" (indeed, it somewhat did improve the initial implementation) and the rationale for changing that approach was not obvious. So let's just try and give a quick overview of the main problems, even if I doubt anyone can have a good understanding of the situation without diving into the C++ code:

- WebKit's code for stretching operators was not efficient at all and was limited to some basic fences buildable via Unicode characters.

- WebKit's MathML code violated many layout invariants, making the code unreliable.

- WebKit's MathML code relied heavily on the C++ renderer classes for flexboxes and had to manage too many anonymous renderers.

The main security concerns were addressed a long time ago by Martin Robinson and me. Glyph assemblies for stretchy operators are now drawn using low-level font painting primitives instead of creating one renderer object for each piece, and their preferred width no longer depends on vertical metrics (although we still need some work to obtain Gecko's good operator spacing). Also, during my crowdfunding project, I implemented partial support for the OpenType MATH table in WebKit and more specifically the MathVariant subtable, which allows the constructions of stretchy operators specified by the font designer to be used directly, rather than only the few Unicode constructions.

However, the MathML layout code still modifies the renderer tree to force the presence of anonymous renderers and still applies specific CSS rules to them. It is also spending too much time trying to adapt the parent flexbox renderer class, which has at the same time too many features for what is needed for MathML (essentially automatic box alignment) and not enough to get the exact placement and measuring needed for high-quality rendering (the TeXbook rules are more complex, taking into account various parameters for box shifts, drops, gaps etc).

During the hackfest, we started to write a clean implementation of some MathML renderer classes, similar to Gecko's and based on the MathML in HTML5 implementation note. The code now becomes very simple and understandable. It can be summarized in four main functions. For instance, to draw a fraction we need:

- computePreferredLogicalWidths, which sets the preferred width of the fraction during the first layout pass, by considering the widest between numerator and denominator.

- layoutBlock and firstLineBaseline, which calculate the final width/height/ascent of the fraction element and position the numerator and denominator.

- paint, which draws the fraction bar.
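As a rough illustration of how little is involved, the fraction case can be sketched in a few lines of Python. This is not WebKit's or Gecko's code: the `Box` and `Fraction` classes, their field names and the numeric constants (gap, rule thickness, axis height) are hypothetical stand-ins for values a real engine would read from the font's OpenType MATH table. The comments indicate which of the renderer functions named above each step loosely corresponds to.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """A laid-out child box (hypothetical, not a WebKit class)."""
    width: float
    ascent: float    # distance from the top edge down to the baseline
    descent: float   # distance from the baseline down to the bottom edge
    x: float = 0.0   # position inside the fraction, set by layout()
    y: float = 0.0

    @property
    def height(self) -> float:
        return self.ascent + self.descent


@dataclass
class Fraction:
    numerator: Box
    denominator: Box
    # Illustrative constants; a real engine would take them from the font.
    gap: float = 1.0             # space between each child and the bar
    rule_thickness: float = 0.5  # thickness of the fraction bar
    axis_height: float = 2.5     # height of the math axis above the baseline

    def preferred_width(self) -> float:
        # Role of computePreferredLogicalWidths: the widest child wins.
        return max(self.numerator.width, self.denominator.width)

    def layout(self):
        # Role of layoutBlock/firstLineBaseline: size the fraction and
        # place both children using plain arithmetic.
        width = self.preferred_width()
        num, den = self.numerator, self.denominator
        num.x = (width - num.width) / 2   # center both children horizontally
        den.x = (width - den.width) / 2
        num.y = 0.0
        bar_top = num.height + self.gap
        den.y = bar_top + self.rule_thickness + self.gap
        height = den.y + den.height
        # The bar is centered on the math axis, which fixes the baseline.
        ascent = bar_top + self.rule_thickness / 2 + self.axis_height
        return width, height, ascent

    def bar_rect(self):
        # Role of paint: the bar is the only thing the fraction draws itself.
        bar_top = self.numerator.height + self.gap
        return (0.0, bar_top, self.preferred_width(), self.rule_thickness)


frac = Fraction(Box(10.0, 7.0, 3.0), Box(16.0, 7.0, 3.0))
width, height, ascent = frac.layout()
print(width, height, ascent)   # 16.0 22.5 13.75
print(frac.bar_rect())         # (0.0, 11.0, 16.0, 0.5)
```

No anonymous wrapper boxes or CSS rules are needed in this sketch; the children are positioned purely from their own metrics.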

Perhaps the best example to illustrate how the complexity has been reduced is the renderer for the mmultiscripts/msub/msup/msubsup elements (attaching an arbitrary number of subscripts and superscripts before or after a base). In the current WebKit implementation, we have to create three anonymous wrappers (a first one for the base, a second one for prescripts and a third one for postscripts) and an anonymous wrapper for each subscript/superscript pair, add alignment styles for these wrappers and spend a lot of time maintaining the integrity of the renderer tree when dynamic changes happen. With the new code, we just need to do arithmetic calculations to position the base and script boxes. This is somewhat more complex than the fraction example above, but it remains arithmetic and we cannot reduce it any further if we wish quality comparable to TeXbook / MATH rules. We actually take into account many parameters from the OpenType MATH table to get much better placement of scripts. We were able to fix bug 130325 in about twenty minutes instead of fighting with a CSS "negative margin" hack on anonymous renderers.

MathML discussions

On the first day of the hackfest we also had an interesting "breakout session" to define the tasks to work on during the hackfest. Alejandro briefly presented the status of his refactoring branch and his plan for the hackfest. As said in the previous section, we have been quite successful in following this plan: although it is not fully complete yet, we expect to merge the current changes soon. Dominik Röttsches, who works on Blink's font and layout modules, was present at the MathML session and it was a good opportunity to discuss the future of MathML in Chrome. I gave him some references regarding the OpenType MATH table, math fonts and the MathML in HTML5 implementation note. Dominik said he will forward the references to his colleagues working on layout so that we can have deeper technical discussions about MathML in Blink in the long term. Also, I mentioned noto-fonts issue 330, which will be important for math rendering in Android and actually does not depend on the MathML issue, so that's certainly something we could focus on in the short term.

Alejandro also proposed that I prepare a talk about MathML in Web engines and the exciting stuff happening with the MathML Association. I thus gave a brief overview of MathML and presented some demos of native support in Gecko. I also explained how we are trying to start a constructive approach to solve miscommunication between users, spec authors and implementers, and to gather technical and financial resources to obtain a proper solution. In a slightly more technical part, I presented Microsoft's OpenType MATH table and how it can be used for math rendering (and MathML in particular). Finally, I proposed my personal roadmap for MathML in Web engines. Although I do not believe I am a really great speaker, I received positive feedback from attendees. One of the things I realized is that I do not know anything about the status and plans in EdgeHTML and so neglected to mention it in my presentation. Its proprietary nature makes it hard for external people to contribute to a MathML implementation, and the fact that Microsoft is moving away from ActiveX de facto excludes third-party plugins for good and fast math rendering in the future. After I went back to Paris, I thus wrote to Microsoft employee Christian Heilmann (previously working for Mozilla), mentioning at least the MathML in HTML5 Implementation Note and its test suite as a starting point. MathML is currently on the first page of the most-voted feature requests for Microsoft Edge and, given the new direction taken with Microsoft Edge, I hope we could start a discussion on MathML in EdgeHTML...

Conclusion

This was a great hackfest and I'd like to thank again all the participants and sponsors for making it possible! As Alejandro wrote to me, "I think we have started a very interesting work regarding MathML and web engines in the future." The short-term plan is now to land the WebKit MathML refactoring started during the hackfest and to finish the work. I hope people now understand the importance of fonts with an OpenType MATH table for good mathematical rendering, and we will continue to encourage browser vendors and font designers to make such fonts widespread.

The new approach for WebKit MathML support gives good reason to be optimistic in the long term and we hope we will be able to get high-quality rendering. The fact that the new approach addresses all the issues formulated by Google, and that we started a dialogue on math rendering, gives several options for MathML in Blink. It remains to get Microsoft involved in implementing its own OpenType table in EdgeHTML. Overall, I believe that we can now have a very constructive discussion with the individuals/companies who really want native math support, with the Math WG members willing to have their specification implemented in browsers and with the browser vendors who want a math implementation which is good, clean and compatible with the rest of their code base. Hopefully, the MathML Association will be instrumental in achieving that. If everybody gets involved, 2016 will definitely be an exciting year for native MathML in Web engines!

|

|

Emma Irwin: Surfacing Teachable Moments in Community |

I’m still figuring out how to cross-post well with Medium, and whether I want to. Trying an embed for fun.

|

|

Air Mozilla: 2016 Roadmap for Discourse - Community Ops |

Session from Mozilla Community Ops Meetup in London. Designing a roadmap for discourse.mozilla-community.org and other Mozilla Discourse sites


https://air.mozilla.org/2016-roadmap-for-discourse-community-ops/

|

|

Francesco Lodolo: Test of PHP 7 Performances |

PHP might not be hugely popular these days, but it’s a fundamental part of the tools I use every day to manage localization of web parts at Mozilla (e.g. mozilla.org).

Today I’ve decided to give PHP 7 a try on my local virtual machine: it’s a pretty light VM running Debian 8 (2 GB RAM, 2 cores), hosted on a Mac with VMware Fusion. Web pages are served by Apache and mod_php, not by PHP’s internal web server, and there’s no optimization of any kind in the config.

Since my colleague Pascal and I spent quite a bit of time improving code quality and performance in our tools during the last year or so, I already had a script to collect some performance data. I ran the test against Langchecker, which is the tool providing data to our Webdashboard.

Existing version: PHP 5.6.14-0+deb8u1

New version: PHP 7.0.0-5~dotdeb+8.1

[Chart: time needed to generate the page]

[Chart: memory consumption]

While the number of runs was pretty small (50 for each request), I got decently consistent results for memory and time. And the results speak clearly about the performance improvements in PHP 7.
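For readers who want to collect comparable numbers, here is a minimal sketch of the measuring idea in Python. It is not the script used for this test: `benchmark`, `summarize` and the `render_page` workload are hypothetical names, and the sketch times an in-process function rather than an HTTP request to Apache. Note that `tracemalloc` slows the code down, so the timings are only comparable with each other.

```python
import statistics
import time
import tracemalloc


def benchmark(task, runs=50):
    """Run `task` repeatedly; return per-run wall time (s) and peak memory (bytes)."""
    times, peaks = [], []
    for _ in range(runs):
        tracemalloc.start()
        start = time.perf_counter()
        task()
        times.append(time.perf_counter() - start)
        _, peak = tracemalloc.get_traced_memory()
        tracemalloc.stop()
        peaks.append(peak)
    return times, peaks


def summarize(samples):
    """Mean and sample standard deviation; a small deviation means consistent runs."""
    return statistics.mean(samples), statistics.stdev(samples)


def render_page():
    # Hypothetical stand-in for generating one page.
    return "".join(str(i) for i in range(10_000))


times, peaks = benchmark(render_page, runs=50)
mean_time, dev_time = summarize(times)
mean_peak, dev_peak = summarize(peaks)
print(f"time:   {mean_time:.6f}s +/- {dev_time:.6f}s")
print(f"memory: {mean_peak:.0f} B +/- {dev_peak:.0f} B")
```

Reporting the deviation next to the mean is what makes a claim like "decently consistent" checkable with only 50 runs.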


I don’t personally consider PHP 7 ready for a production environment but, if you can, it’s definitely worth a try. In our case the code didn’t need any change.

http://www.yetanothertechblog.com/2015/12/19/test-of-php-7-performances/

|

|

Karl Dubost: Jetlag, e10s addon and many breadcrumbs [worklog] |

Week-end travel

Coming back from a work week in Orlando. I woke up on Friday morning (3am Orlando) and arrived home on Sunday evening (around 6pm Japan). I decided to take Monday off to get a bit of sleep and straighten things up.

e10s addon

Discussions started on how to organize the work of contacting add-on developers about e10s. As usual, I would prefer working with emails rather than spreadsheets.

I will be perfectly satisfied with sending one email for each individual add-on, with a unique subject, sent to a specific mailing list (Mozilla only, for privacy reasons). I requested the creation of the list.

The benefit is that it creates a unique thread for each add-on. It is searchable (even offline), filterable, archived with dates for free, can redirect the answers from the contact to the list, and keeps the archives accessible when Karl gets hit by a bus.

For example, the mail subject can be

[addon-e10s-compat] Adblock Edge

for this specific add-on and so on

[addon-e10s-compat] FoxTab

[addon-e10s-compat] Garmin Communicator

Low tech has resiliency and benefits ;)
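The one-subject-per-add-on convention is simple enough to generate mechanically. A minimal sketch in Python, where `LIST_TAG` and `thread_subject` are names made up for this illustration and the add-on names are the three examples above:

```python
LIST_TAG = "addon-e10s-compat"  # tag used in the example subjects above


def thread_subject(addon_name: str) -> str:
    """Build the subject line that starts (and identifies) one add-on's thread."""
    return f"[{LIST_TAG}] {addon_name.strip()}"


addons = ["Adblock Edge", "FoxTab", "Garmin Communicator"]
subjects = [thread_subject(name) for name in addons]
for subject in subjects:
    print(subject)
```

Because the subject is derived only from the add-on name, any mail client can filter or search a thread without extra tooling.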

Working with uBlock and Persona

Failed many times already, tried many combinations, and still unable to make uBlock and login.persona.org work together in harmony, even by whitelisting the appropriate domain names. If someone knows the right mix for setting this up, please tell me. Later: With the help of mat, I found the solution. My third-party cookie blocking was getting in the way. There must be a better way in the UI to understand what is happening. The whitelisting didn't work.

Anyway. It's already a better day. So thanks, mat!

WebCompat project: Coding style and other things

- Coding Styles on webcompat project

- pre-PR review?

- Making our code a bit more DRY

- Error 500 means the server is having an issue. Often you should look into the code more than the HTTP itself. Proposed a patch.

- Code cleaning

- Another fix for a mix-up between the full response in Flask and the JSON body

- Issue about Activity page UX

- CSS fix me has been deployed. Yeah. We need to iron a bit the edges.

WebKit on iOS and tapping

We can expect interoperability issues down the road. More Responsive Tapping on iOS. WebKit decides to change the behavior depending on some configurations of the meta viewport.

Firefox on Windows, Android, Mac, Firefox OS

It's always interesting to have a perspective on the real world usage. We have a tendency to have a view which is very oriented by our very local environment (intimate environment). I learned something at Mozlando (Mozilla Work Week in Orlando).

Web developers (and now browser implementers) mostly use Mac OS X and/or Linux. Just look around you at the crowd at conferences. On the other hand, the Firefox user base is dominated by Firefox on Windows desktop, then Firefox on Android, and only then Firefox on Mac OS X. I suspect many Web developers have switched to Chrome for daily use because of the bad history of Firebug and not knowing what has happened in the space of Firefox Developer Tools. Pretty shiny tools. I usually prefer the Firefox Developer Tools UI to the Chrome Dev Tools UI. The old Opera Presto Dragonfly still has my vote of confidence for many features that none of the current tools have matched.

From top to bottom:

- Opera Presto with Opera Dragonfly

- Opera Blink with Chrome Developer Tools

- Firefox Gecko with Firefox Developer Tools

Talking about Firefox Developer tools. Created two bugs about the Debugger UI.

Miscellaneous

- Explained a bit more on Outreach for WebCompat to a volunteer. This should be a blog post. Maybe tonight.

- Probably a webcompat team meeting this week. Done.

- Nice read about smaller and faster Websites: "When we prize our own convenience over craft, we’re building a web for us, the developers. We’re building a web that’s easy to assemble but lousy to use."

- Usual bugs triage on webcompat.com. Help is always welcome.

- Filled in the Mozlando survey. The survey starts with "You are anonymous". Interesting friction with the comment boxes, where you want to share more ideas and opinions that will probably partly reveal who you are, depending on who's reading them.

- Firefox 43 release notes.

- Impressive. Simple and well made. Discover markup for WebVR

- Published: Progressive enhancement for a Canvas demo

- Bugs triage on Webcompat.com. Again the max-width issue on issue 2028

- Funky issues about Scrollbars and Google Groups

- Some roles for volunteer contributors on the webcompat project

Otsukare!

|

|

About:Community: Saying goodbye to Demo Studio |

When the Demo Studio area of MDN launched in 2011, it was a great new place for developers to show off the then-cutting-edge technologies of HTML5 and CSS3. In the years since then, more than 1,100 demos have been uploaded, making it one of the largest demo sites focused on cross-browser web technology demos. Demo Studio also served as the basis for the long-running Dev Derby contest, which offered prizes for the best demos using a different technology each month.

In the last few years, quite a number of code-sharing sites have become very popular, as they are solely focused on code-sharing functionality, for example, GitHub Pages, CodePen, and JSFiddle.

Given the variety of alternative sites, and the technical and logistical costs of maintaining Demo Studio, we at MDN have made the decision to remove the Demo Studio after January 15, 2016. After that date, demos will no longer be available from MDN via Demo Studio.

We encourage demo authors to move their demos to an alternate site, such as their own website or one of the popular code-sharing sites. In case you no longer have a copy of your demo code, you will be able to download it from Demo Studio until 23:59, Pacific Standard Time, on January 15, 2016. If you’re looking for a place to share your demo, check out our guide to using GitHub Pages.

After January 15, 2016, you will be able to request a copy of your demo code by submitting a bug in Bugzilla. Please be sure to include the name of your demo(s) and an email address at which you can receive the source code. Demos will be available via this process until April 8, 2016.

Key Dates

- 2016-01-15: Demo Studio will be removed; deadline to download your code.

- 2016-04-08: Deadline to request a copy of your demo code manually, if you didn’t download it.

You are welcome to link to the new location of your demo from your MDN profile page. No automatic redirects will be provided.

Thanks again to all the demo authors who have contributed to Demo Studio!

http://blog.mozilla.org/community/2015/12/18/saying-goodbye-to-demo-studio/

|

|

Chris Cooper: RelEng & RelOps Weekly Highlights - December 18, 2015 - Mozlando edition |

This is also *not* Mihai.

Even though I’ve been involved in the day-to-day process, have managed a bunch of people working on the relevant projects, and indeed have been writing these quasi-weekly updates, it wasn’t until Chris AtLee put together a slide deck retrospective of what we had accomplished in releng over the last 6 months that it really sank in (hint: it’s a lot):

Slide deck from @chrisatlee’s #releng 6-month retrospective yesterday: https://t.co/ebVpC9PRqm #mozlando

-- Chris Cooper (@ccooper), December 9, 2015

But enough about the “ancient” past, here’s what has been happening since Mozlando:

Modernize infrastructure: There was a succession of meetings at Mozlando to help bootstrap people on using TaskCluster (TC). These were well-attended, at least by people in my org, many of whom have a vested interest in using TC in the ongoing release promotion work.

Speaking of release promotion, the involved parties met in Orlando to map out the remaining work that stands between us and releasing a promoted CI build as a beta, even if just in parallel to an actual release. We hope to have all the build artifacts generated via release promotion by the end of 2015 (l10n repacks are the long pole here), with the remaining accessory service tasks like signing and updates coming online early in 2016.

Improve CI pipeline: Mozilla announced a change of strategy in Orlando with regard to FirefoxOS.

FirefoxOS is alive and strong, but the push through carriers is over. We pivot to IoT and user experience. #mozlando

-- Ari Jaaksi (@jaaksi), December 9, 2015

In theory, the switch from phones to connected devices should improve our CI throughput in the near-term, provided we are actually able to turn off any of the existing b2g build variants or related testing. This will depend on commitments we’ve already made to carriers, and what baseline b2g coverage Mozilla deems important.

During Mozlando, releng held a sprint to remove the jacuzzi code from our various repos. Jacuzzis were once an important way to prevent “bursty,” prolific jobs (namely l10n) from claiming all available capacity in our machine pools. With the recent move to AWS for Windows builds, this is really only an issue for our Mac build platform now, and even that *should* be fixed sometime soon if we’re able to repack Mac builds on Linux. In the interim, the added complexity of the jacuzzi code wasn’t deemed worth the extra maintenance hassle, so we ripped it out. You served your purpose, but good riddance.

Release: Sadly, we are never quite insulated from the ongoing needs of the release process during these all-hands events. Mozlando was no different. In fact, it’s become such a recurrent issue for release engineering, release management, and release QA that we’ve started discussing ways to be able to timeshift the release schedule either forward or backward in time. This would also help us deal with holidays like Thanksgiving and Christmas when many key players in the release process (and devs too) might normally be on vacation. No changes to announce yet, but stay tuned.

With the upcoming deprecation of SHA-1 support by Microsoft in 2016, we’ve been scrambling to make sure we have a support plan for Firefox users on older versions of Windows. We determined that we would need to offer multiple dot releases to our users: a first one to update the updater itself and the related maintenance service to recognize SHA-2, and then a second update where we begin signing Firefox itself with SHA-2. (https://bugzil.la/1079858)
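The ordering constraint behind the two dot releases can be written down as a tiny decision function. This is only a sketch of the reasoning in Python, not Mozilla's update server logic: the `Install` class, its fields and the returned labels are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Install:
    """Hypothetical description of one installed copy of Firefox."""
    updater_understands_sha2: bool
    signed_with_sha2: bool


def next_update(install: Install) -> str:
    """Pick the next dot release to offer, in the order described above."""
    if not install.updater_understands_sha2:
        # First dot release: teach the updater and maintenance service SHA-2.
        return "update-the-updater"
    if not install.signed_with_sha2:
        # Second dot release: only now is a SHA-2-signed Firefox safe to ship.
        return "sha2-signed-build"
    return "up-to-date"


print(next_update(Install(False, False)))  # update-the-updater
print(next_update(Install(True, False)))   # sha2-signed-build
print(next_update(Install(True, True)))    # up-to-date
```

The point of the ordering is that an updater which cannot verify SHA-2 signatures would reject the second release, so it has to be replaced first.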

Jordan was on the hook for the Firefox 43.0 final release that went out the door on Tuesday, December 15.

As with any final release, there is a related uplift cycle. These uplift cycles are also problematic, especially between the aurora and beta branches where there continue to be discrepancies between the nightly- and release-model build processes. The initial beta build (b1) for Firefox 44 was delayed for several days while we resolved a suite of issues around GTK3, general crashes, and FHR submissions on mobile. Much of this work also happened at Mozlando.

Operational: We continue the dance of disabling older r5 Mac minis running 10.10.2 to replace them with new, shiny r7 Mac minis running 10.10.5. As our r7 mini capacity increases, we are also able/required to retire some of the *really* old r4 Mac minis running OS X 10.6, mostly because we need the room in the datacenter. The gating factor here has been making sure that tests still work on the various release branches on the new r7 minis. Joel has been tackling this work, and this week was able to verify the tests on the mozilla-release branch. Only the esr38 branch is still running on the r5 minis. Big thanks to Kim and our stalwart buildduty contractors, Alin and Vlad, for slogging through the buildbot-configs with patches for this.

Speaking of our buildduty contractors, Alin and Vlad both received commit level 2 access to the Mozilla repos in Mozlando. This makes them much more autonomous, and is a result of many months of steady effort with patches and submissions. Good work, guys!

The Mozilla VR Team may soon want a Gecko branch for generating Windows builds with a dedicated update channel. The VR space at Mozilla is getting very exciting!

I can’t promise much content during the Christmas lull, but look for more releng updates in the new year.

|

|

Michael Kaply: My Best Year Ever |

As 2015 comes to an end, it’s time to reflect back on the accomplishments of the previous year. For me personally and for Kaply Consulting, it was my best year ever.

- I contributed more patches to Mozilla than I have in many years.

- I grew my email list substantially.

- I grew my business revenue by 10%.

- I finally planned well enough to be able to take the last two weeks of the year off.

The major contributor to my success this year was Michael Hyatt’s Five Days to Your Best Year Ever. If you’ve struggled in the past with goal setting, I highly recommend checking out his program. You can start with this free video.

Thank you to everyone who helped me have such a great year. Happy holidays and happy new year!

Disclaimer: I’m an affiliate partner, so if you click and purchase the program, I will receive an affiliate commission.

|

|

About:Community: Leadership Summit Planning |

With a successful Mozlando for the Participation Cohort still in our rear-view mirror, we are excited to begin the launch process for the Leadership Summit, happening next month in Singapore.

What is the Leadership Summit?

As part of the Participation Team’s Global Gatherings application process for this event, we asked people to commit to developing and being accountable to recruit and organize contributors in order to grow the size and impact of their community in 2016. We also ran a second application process this month to identify new and emerging leaders, bringing our invited total to 136.

Participation at Mozlando

The Leadership Summit will bring together this group for two days of sessions and experiences that will:

Help Participation Leaders feel prepared (skills, mindsets, network) and understand their role as leaders/mobilizers who can unleash a wave of growth in our communities, in impact and in numbers.

Help Participation Leaders leave with action plans and commitments, specific to growing/evolving their communities and having impact on Connected Devices and a Global Campus Campaign.

Help everyone leave feeling that “we’re doing this together.” Everyone attending (volunteer and staff Mozillians) will feel like a community that is aligned with Mozilla’s overall direction, and whose members now trust one another and have each other’s back.

“You mentioned Connected Devices and a Global Campus Campaign? Say more…”

Sure! In setting goals for 2016, we realized that focusing on one or two truly impactful initiatives will bring us closer to unleashing the Participation Mozilla needs, while providing opportunity for individuals to connect their ideas, energy and skills in a way that feels valued and rewarding.

To that end, we are currently developing a list of sessions and experiences for the Leadership Summit that will set us up for success in 2016 as community leaders, and on each of our three focus areas:

- Connected Devices

- Campus Campaign

- Regional / Local / Grassroots

We will say more about each as we get closer to the Summit, including the opportunity to connect personal goals through 1:1 coaching for all attending.

“What about Reps and other functional areas?”

Fear not! Sessions and experiences will include:

Alignment for Reps around changes in the program, what’s expected of them, and what they can expect from Council and staff in 2016

Opportunity for volunteers in specific functional areas to build relationships with staff in those areas

This event will complete the process of merging participants from three events into one activated cohort – this is our beginning, and I am excited, I hope you are too!

Feature image: ‘Singapore’ by David Russo, CC BY 2.0

http://blog.mozilla.org/community/2015/12/18/participation-leaders-summit/

|

|

Emma Irwin: Leadership Summit Planning |

With a successful Mozlando for the Participation Cohort still in our rear-view mirror, we are excited to begin the launch process for the Leadership Summit, happening next month in Singapore

What is the Leadership Summit?

As part of the Participation Team’s Global Gatherings application process for this event, we asked people to commit to developing and being accountable to recruit and organize contributors in order grow the size and impact of their community in 2016. We ran also ran a second application process this month, to identify new and emerging leaders – bringing our invited total to 136.

The Leadership Summit will bring together this group for two days of sessions and experiences that will:

- Help Participation Leaders feel prepared (skills, mindsets, network) and understand their role as leaders/mobilizers who can unleash a wave of growth in our communities, in impact and in numbers.

- Help Participation Leaders leave with action plans and commitments, specific to growing/evolving their communities and having impact on Connected Devices and a Global Campus Campaign.

- Help everyone leave feeling that “we’re doing this together.” Everyone attending (volunteer and staff Mozillians) will feel like a community that is aligned with Mozilla’s overall direction, and whose members now trust one another and have each other’s back.

“You mentioned Connected Devices and a Global Campus Campaign? Say more…”

Sure! In setting goals for 2016, we realized that focusing on one or two truly impactful initiatives will bring us closer to unleashing the Participation Mozilla needs, while providing opportunity for individuals to connect their ideas, energy and skills in a way that feels valued and rewarding.

To that end, we are currently developing a list of sessions and experiences for the Leadership Summit that will set us up for success in 2016 as community leaders, and on each of our three focus areas:

- Connected Devices

- Campus Campaign

- Regional / Local / Grassroots

We will say more about each as we get closer to the Summit. The Summit will also include the opportunity to connect personal goals through 1:1 coaching for all attending.

“What about Reps and other functional areas?”

Fear not! Sessions and experiences will include:

- Alignment for Reps around changes in the program, what’s expected of them, and what they can expect from Council and staff in 2016

- Opportunity for volunteers in specific functional areas to build relationships with staff in those areas

This event will complete the process of merging participants from three events into one activated cohort – this is our beginning, and I am excited, I hope you are too!

Feature image: ‘Singapore’ by David Russo, CC BY 2.0

|

|

Dave Townsend: Linting for Mozilla JavaScript code |

One of the projects I’ve been really excited about recently is getting ESLint working for a lot of our JavaScript code. If you haven’t come across ESLint or linters in general before, they are automated tools that scan your code and warn you about syntax errors. They can usually also be set up with a set of rules to enforce code styles and warn about potential bad practices. The devtools and Hello folks have been using ESLint for a while already, and Gijs asked why we weren’t doing this more generally. This struck a chord with me and a few others, so we’ve been spending some time over the past few weeks getting our in-tree support for ESLint to work more generally and fixing issues with browser and toolkit JavaScript code in particular to make them lintable.

One of the hardest challenges with this is that we have a lot of non-standard JavaScript in our tree. This includes things like preprocessing as well as JS features that either have since been standardized with a different syntax (generator functions for example) or have been dropped from the standardization path (array comprehensions). This is bad for developers as editors can’t make sense of our code and provide good syntax highlighting and refactoring support when it doesn’t match standard JavaScript. There are also plans to remove the non-standard JS features so we have to remove that stuff anyway.

So a lot of the work done so far has been removing all this non-standard stuff so that ESLint can pass with only a very small set of style rules defined. Soon we’ll start increasing the rules we check in browser and toolkit.

How do I lint?

From the command line this is simple. Make sure to run ./mach eslint --setup to install ESLint and some related packages, then just run ./mach eslint with a path to lint a specific area. You can also lint the entire tree. For now you may need to periodically run setup again as we add new dependencies; at some point we may make mach automatically detect that you need to re-run it.

You can also add ESLint support to many code editors and as of today you can add ESLint support into hg!

Why lint?

Aside from just ensuring we have standard JavaScript in the tree linting offers a whole bunch of benefits.

- Linting spots JavaScript syntax errors before you have to run your code. You can configure editors to run ESLint to tell you when something is broken so you can fix it even before you save.

- Linting can be used to enforce the sorts of style rules that keep our code consistent. Imagine no more nit comments in code review forcing you to update your patch. You can fix all those before submitting and reviewers don’t have to waste time pointing them out.

- Linting can catch real bugs. When we turned on one of the basic rules we found a problem in shipping code.

- With only standard JS code to deal with we open the possibility of using advanced techniques like AST transforms for refactoring (e.g. recast). This could be very useful for switching from Cu.import to ES6 modules.

- ESLint in particular allows us to write custom rules for doing clever things like handling head.js imports for tests.

Where are we?

There’s a full list of everything that has been done so far in the dependencies of the ESLint metabug but some highlights:

- Removed #include preprocessing from browser.js moving all included scripts to global-scripts.inc

- Added an ESLint plugin to allow linting the JS parts of XBL bindings

- Fixed basic JS parsing issues in lots of b2g, browser and toolkit code

- Created a hg extension that will warn you when committing code that fails ESLint

- Turned on some basic linting rules

- Mozreview is close to being able to lint your code and give review comments where things fail

- Work is almost complete on a linting test that will turn orange on the tree when code fails to lint

What’s next?

I’m grateful to all those that have helped get things moving here but there is still more work to do. If you’re interested there are really two ways you can help. We need to lint more files and we need to turn on more lint rules.

The .eslintignore file shows what files are currently ignored in the lint checks. Removing files and directories from that generally involves fixing JavaScript standards issues, but every extra file we lint is a win for all the above reasons, so it is valuable work. It is also mostly straightforward once you get the hang of it; there are just a lot of files.

We also need to turn on more rules. We’ve got a rough list of the rules we want to turn on in browser and toolkit but as you might guess they aren’t on because they fail right now. Fixing up our JS to work with them is simple work but much appreciated. In some cases ESLint can also do the work for you!

http://www.oxymoronical.com/blog/2015/12/Linting-for-Mozilla-JavaScript-code

|

|

Dave Townsend: Running ESLint on commit |

ESLint becomes most useful when you get warnings before even trying to land or get your code reviewed. You can add support to your code editor, but not all editors support this, so I’ve written a Mercurial extension which gives you warnings any time you commit code that fails lint checks. It uses the same rules we run elsewhere. It doesn’t abort the commit, which would be annoying if you’re working on a feature branch, but it gives you a heads up about what needs to be fixed and where.

To install the extension, add this to an hgrc file. I put it in the .hg/hgrc file of my mozilla-central clone rather than the global config.

[extensions]

mozeslint =/tools/mercurial/eslintvalidate.py

After that anything that creates a commit, including mq patches, will run any changed JS files through ESLint and show the results. If the file was already failing checks in a few places then you’ll still see those too; maybe you should fix them up as well before sending your patch for review?

http://www.oxymoronical.com/blog/2015/12/Running-ESLint-on-commit

|

|

Mozilla Addons Blog: Signing Firefox add-ons with jpm sign |

With this week’s release of Firefox 43, all add-ons must now be signed. While this will make the block-list and other malware prevention measures more effective, add-on developers who wish to distribute outside of addons.mozilla.org must now add signing to their release flow.

To make it easier for these developers, we released the add-on signing API last month. Today, we’re also providing a new version of the jpm command line tool that makes add-on signing even easier.

Installation

Install jpm for NodeJS from NPM like this:

npm install jpm

Generate API Credentials

In order to work with the signing API you first need to log in to the addons.mozilla.org developer hub and generate API credentials.

Signing an Add-on

To begin signing an SDK-based add-on with jpm, change into the source directory and run this command:

jpm sign --api-key ${AMO_API_KEY} --api-secret ${AMO_API_SECRET}

This will fetch a signed XPI file to your current directory (or --addon-dir) that you can self-host for installation into Firefox. Read more about add-on distribution here. Since this XPI is intended for distribution outside of addons.mozilla.org, it assumes you don’t want your add-on listed on addons.mozilla.org. This is referred to as an unlisted add-on.

Updating an Add-on

To sign a new version of your unlisted add-on, just increment the version number in your package.json file and re-run the jpm sign command.

Signing XPI Files Directly

If you aren’t using jpm to manage your add-on, you can still sign the XPI file directly, like this:

jpm sign --xpi /path/to/your-addon.xpi --api-key ... --api-secret ...

Signing Requirements

We recently made changes to the signing requirements for add-ons not listed on addons.mozilla.org. We still do some basic checks to make sure that the add-on is well formed enough to install without errors but if those checks pass, any add-on will be signed.

Keep in mind that signing is only required for distributing your add-on. You can always install unsigned add-ons into a development version of Firefox for testing purposes.

Listed Add-ons

The jpm sign command currently doesn’t support add-ons distributed on addons.mozilla.org (referred to as listed add-ons). Listed add-ons still require a manual review.

Going Further

We hope that the jpm command eases the development burden introduced by signing. See the jpm sign reference documentation for more options, features, and examples. As usual, please report bugs if you run into any.

https://blog.mozilla.org/addons/2015/12/18/signing-firefox-add-ons-with-jpm-sign/

|

|

Niko Matsakis: Rayon: data parallelism in Rust |

Over the last week or so, I’ve been working on an update to Rayon, my experimental library for data parallelism in Rust. I’m pretty happy with the way it’s been going, so I wanted to write a blog post to explain what I’ve got so far.

Rayon’s goal is to make it easy to add parallelism to your sequential code – so basically to take existing for loops or iterators and make them run in parallel. For example, if you have an existing iterator chain like this:

[Code listing not preserved in this copy.]

then you could convert that to run in parallel just by changing from the standard “sequential iterator” to Rayon’s “parallel iterator”:

[Code listing not preserved in this copy.]
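A minimal sketch of the kind of iterator chain meant here, assuming a hypothetical Store type with a hypothetical compute_price method (neither name is confirmed by the text above). It uses only the standard library, so it shows the sequential form; with the Rayon crate available, the change described amounts to calling Rayon's par_iter() where this sketch calls iter().

```rust
/// Hypothetical store type: charges a flat price per item on the list.
struct Store {
    price_per_item: u32,
}

impl Store {
    /// Hypothetical helper: total cost of buying every item on `list` here.
    fn compute_price(&self, list: &[&str]) -> u32 {
        self.price_per_item * list.len() as u32
    }
}

/// Sequential version: visit each store in turn and add up the prices.
fn total_price(stores: &[Store], list: &[&str]) -> u32 {
    stores
        .iter() // with Rayon, this is the call that would become `par_iter()`
        .map(|store| store.compute_price(list))
        .sum()
}
```

The map step is independent per store and the sum is a reduction, which is what makes this shape a natural candidate for a parallel iterator.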

Of course, part of making parallelism easy is making it safe. Rayon guarantees you that using Rayon APIs will not introduce data races.

This blog post explains how Rayon works. It starts by describing the core Rayon primitive (join) and explains how that is implemented. I look in particular at how many of Rust’s features come together to let us implement join with very low runtime overhead and with strong safety guarantees. I then explain briefly how the parallel iterator abstraction is built on top of join.

I do want to emphasize, though, that Rayon is very much a work in progress. I expect the design of the parallel iterator code in particular to see a lot of, well, iteration (no pun intended), since the current setup is not as flexible as I would like. There are also various corner cases that are not correctly handled, notably around panic propagation and cleanup. Still, Rayon is definitely usable today for certain tasks. I’m pretty excited about it, and I hope you will be too!

Rayon’s core primitive: join

In the beginning of this post, I showed an example of using a parallel iterator to do a map-reduce operation:

[Code listing not preserved in this copy.]

In fact, though, parallel iterators are just a small utility library built atop a more fundamental primitive: join. The usage of join is very simple. You invoke it with two closures, as shown below, and it will potentially execute them in parallel. Once they have both finished, it will return:

[Code listing not preserved in this copy.]
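As a rough illustration of the calling convention, here is a sketch of a join-shaped function built only on the standard library's scoped threads. It is a stand-in, not Rayon's implementation: it always spawns a thread for the second closure, so it offers guaranteed concurrency rather than the potential parallelism described here. The sum_halves helper is a hypothetical workload added for the example.

```rust
use std::thread;

/// Stand-in for Rayon's `join`, built on `std::thread::scope`.
/// Assumption: unlike Rayon, this always spawns a thread for `oper_b`
/// instead of deciding dynamically whether idle cores are available.
fn join<A, B, RA, RB>(oper_a: A, oper_b: B) -> (RA, RB)
where
    A: FnOnce() -> RA + Send,
    B: FnOnce() -> RB + Send,
    RA: Send,
    RB: Send,
{
    thread::scope(|scope| {
        // Run `oper_b` on another thread while this thread runs `oper_a`.
        let handle_b = scope.spawn(oper_b);
        let result_a = oper_a();
        let result_b = handle_b.join().expect("second closure panicked");
        (result_a, result_b)
    })
}

/// Hypothetical workload: sum each half of a slice in its own closure.
fn sum_halves(values: &[u64]) -> u64 {
    let (left, right) = values.split_at(values.len() / 2);
    let (a, b) = join(|| left.iter().sum::<u64>(), || right.iter().sum::<u64>());
    a + b
}
```

The function returns only after both closures have finished, which is why the closures may borrow left and right from the caller's stack.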

The fact that the two closures potentially run in parallel is key: the decision of whether or not to use parallel threads is made dynamically, based on whether idle cores are available. The idea is that you can basically annotate your programs with calls to join to indicate where parallelism might be a good idea, and let the runtime decide when to take advantage of that.

This approach of “potential parallelism” is, in fact, the key point of difference between Rayon’s approach and crossbeam’s scoped threads. Whereas in crossbeam, when you put two bits of work onto scoped threads, they will always execute concurrently with one another, calling join in Rayon does not necessarily imply that the code will execute in parallel. This not only makes for a simpler API, it can make for more efficient execution. This is because knowing when parallelism is profitable is difficult to predict in advance, and always requires a certain amount of global context: for example, does the computer have idle cores? What other parallel operations are happening right now? In fact, one of the main points of this post is to advocate for potential parallelism as the basis for Rust data parallelism libraries, in contrast to the guaranteed concurrency that we have seen thus far.

This is not to say that there is no role for guaranteed concurrency like what crossbeam offers. “Potential parallelism” semantics also imply some limits on what your parallel closures can do. For example, if I try to use a channel to communicate between the two closures in join, that will likely deadlock. The right way to think about join is that it is a parallelization hint for an otherwise sequential algorithm. Sometimes that’s not what you want – some algorithms are inherently parallel. (Note though that it is perfectly reasonable to use types like Mutex, AtomicU32, etc. from within a join call – you just don’t want one closure to block waiting for the other.)

Example of using join: parallel quicksort

join is a great primitive for “divide-and-conquer” algorithms. These algorithms tend to divide up the work into two roughly equal parts and then recursively process those parts. For example, we can implement a parallel version of quicksort like so:

[Code listing not preserved in this copy.]
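A sketch of what such a quicksort could look like, under stated assumptions: join below is a standard-library stand-in that always spawns a scoped thread (Rayon's real join would be used in its place), and partition is a plain Lomuto partition chosen for the example. One deliberate difference from a textbook split is that the pivot is excluded from both halves, so each recursive call works on a strictly smaller slice.

```rust
use std::thread;

/// Stand-in for `rayon::join` (assumption: always spawns a scoped thread).
fn join<A, B>(oper_a: A, oper_b: B)
where
    A: FnOnce() + Send,
    B: FnOnce() + Send,
{
    thread::scope(|scope| {
        scope.spawn(oper_b);
        oper_a();
        // The scope waits for the spawned thread before returning.
    });
}

/// Lomuto partition: uses the last element as the pivot and returns the
/// pivot's final index. Requires a non-empty slice.
fn partition<T: PartialOrd>(v: &mut [T]) -> usize {
    let pivot = v.len() - 1;
    let mut store = 0;
    for i in 0..pivot {
        if v[i] <= v[pivot] {
            v.swap(i, store);
            store += 1;
        }
    }
    v.swap(store, pivot);
    store
}

fn quick_sort<T: PartialOrd + Send>(v: &mut [T]) {
    if v.len() > 1 {
        let mid = partition(v);
        let (lo, rest) = v.split_at_mut(mid);
        let hi = &mut rest[1..]; // the pivot at rest[0] is already in place
        // The only parallel-specific line: sort the two halves via `join`.
        join(|| quick_sort(lo), || quick_sort(hi));
    }
}
```

Because this stand-in spawns a thread at every level of recursion, it is only suitable for small inputs; it illustrates the shape of the code, not its performance.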

In fact, the only difference between this version of quicksort and a sequential one is that we call rayon::join at the end!

How join is implemented: work-stealing

Behind the scenes, join is implemented using a technique called work-stealing. As far as I know, work stealing was first introduced as part of the Cilk project, and it has since become a fairly standard technique (in fact, the name Rayon is an homage to Cilk).

The basic idea is that, on each call to join(a, b), we have identified two tasks a and b that could safely run in parallel, but we don’t know yet whether there are idle threads. All that the current thread does is to add b into a local queue of “pending work” and then go and immediately start executing a. Meanwhile, there is a pool of other active threads (typically one per CPU, or something like that). Whenever it is idle, each thread goes off to scour the “pending work” queues of other threads: if they find an item there, then they will steal it and execute it themselves. So, in this case, while the first thread is busy executing a, another thread might come along and start executing b.

Once the first thread finishes with a, it then checks: did somebody else start executing b already? If not, we can execute it ourselves. If so, we should wait for them to finish: but while we wait, we can go off and steal from other processors, and thus try to help drive the overall process towards completion.

In Rust-y pseudocode, join thus looks something like this (the actual code works somewhat differently; for example, it allows for each operation to have a result):

[Code listing not preserved in this copy.]
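The push, execute, then pop-or-wait protocol described above can be imitated in a few lines. This is a toy model under loud assumptions, not Rayon's code: the "local queue" is a single mutex-guarded slot, a single freshly spawned thread plays the role of the idle workers, the job is boxed (which Rayon specifically avoids), and the waiting thread simply blocks instead of stealing other work. The names join_sketch, Job and take_job are hypothetical.

```rust
use std::sync::Mutex;
use std::thread;

/// A pending job that either its owner or a thief may claim.
type Job<'a> = Box<dyn FnOnce() + Send + 'a>;

/// Remove the job from the slot if nobody has claimed it yet.
fn take_job<'a>(slot: &Mutex<Option<Job<'a>>>) -> Option<Job<'a>> {
    slot.lock().unwrap().take()
}

fn join_sketch<'a, A, B>(oper_a: A, oper_b: B)
where
    A: FnOnce(),
    B: FnOnce() + Send + 'a,
{
    // "Push" `oper_b` somewhere another thread can find it.
    let job: Job<'a> = Box::new(oper_b);
    let slot = Mutex::new(Some(job));

    thread::scope(|scope| {
        // One would-be thief stands in for the pool of idle workers.
        let thief = scope.spawn(|| {
            if let Some(stolen) = take_job(&slot) {
                stolen();
            }
        });

        // Execute `oper_a` ourselves.
        oper_a();

        // Check whether anybody stole `oper_b`.
        match take_job(&slot) {
            // Not stolen: do it ourselves.
            Some(job) => job(),
            // Stolen: wait for the thief to finish it.
            None => thief.join().expect("stolen job panicked"),
        }
    });
}
```

Whichever thread takes the job out of the slot first runs it, so oper_b executes exactly once regardless of timing.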

What makes work stealing so elegant is that it adapts naturally to the CPU’s load. That is, if all the workers are busy, then join(a, b) basically devolves into executing each closure sequentially (i.e., a(); b();). This is no worse than the sequential code. But if there are idle threads available, then we get parallelism.

Performance measurements

Rayon is still fairly young, and I don’t have a lot of sample programs to test (nor have I spent a lot of time tuning it). Nonetheless, you can get pretty decent speedups even today, but it does take a bit more tuning than I would like. For example, with a tweaked version of quicksort, I see the following parallel speedups on my 4-core Macbook Pro (hence, 4x is basically the best you could expect):

| Array Length | Speedup |
|---|---|
| 1K | 0.95x |
| 32K | 2.19x |
| 64K | 3.09x |
| 128K | 3.52x |
| 512K | 3.84x |
| 1024K | 4.01x |

The change that I made from the original version is to introduce sequential fallback. Basically, we just check if we have a small array (in my code, less than 5K elements). If so, we fall back to a sequential version of the code that never calls join. This can actually be done without any code duplication using traits, as you can see from the demo code. (If you’re curious, I explain the idea in an appendix below.)

Hopefully, further optimizations will mean that sequential fallback is less necessary – but it’s worth pointing out that higher-level APIs like the parallel iterator I alluded to earlier can also handle the sequential fallback for you, so that you don’t have to actively think about it.

In any case, if you don’t do sequential fallback, then the results you see are not as good, though they could be a lot worse:

| Array Length | Speedup |
|---|---|
| 1K | 0.41x |
| 32K | 2.05x |
| 64K | 2.42x |
| 128K | 2.75x |
| 512K | 3.02x |
| 1024K | 3.10x |

In particular, keep in mind that this version of the code is pushing a parallel task for all subarrays down to length 1. If the array is 512K or 1024K, that’s a lot of subarrays and hence a lot of task pushing, but we still get a speedup of up to 3.10x. I think the reason that the code does as well as it does is because it gets the “big things” right – that is, Rayon avoids memory allocation and virtual dispatch, as described in the next section. Still, I would like to do better than 0.41x for a 1K array (and I think we can).

Taking advantage of Rust features to minimize overhead

As you can see above, to make this scheme work, you really want to drive down the overhead of pushing a task onto the local queue. After all, the expectation is that most tasks will never be stolen, because there are far fewer processors than there are tasks. Rayon’s API is designed to leverage several Rust features and drive this overhead down:

- join is defined generically with respect to the closure types of its arguments. This means that monomorphization will generate a distinct copy of join specialized to each callsite. This in turn means that when join invokes oper_a() and oper_b() (as opposed to the relatively rare case where they are stolen), those calls are statically dispatched, which means that they can be inlined. It also means that creating a closure requires no allocation.

- Because join blocks until both of its closures are finished, we are able to make full use of stack allocation. This is good both for users of the API and for the implementation: for example, the quicksort example above relied on being able to access an &mut [T] slice that was provided as input, which only works because join blocks. Similarly, the implementation of join itself is able to completely avoid heap allocation and instead rely solely on the stack (e.g., the closure objects that we place into our local work queue are allocated on the stack).

As you saw above, the overhead for pushing a task is reasonably low, though not nearly as low as I would like. There are various ways to reduce it further:

- Many work-stealing implementations use heuristics to try and decide when to skip the work of pushing parallel tasks. For example, the Lazy Scheduling work by Tzannes et al. tries to avoid pushing a task at all unless there are idle worker threads (which they call “hungry” threads) that might steal it.

- And of course good ol’ fashioned optimization would help. I’ve never even looked at the generated LLVM bitcode or assembly for join, for example, and it seems likely that there is low-hanging fruit there.

Data-race freedom

Earlier I mentioned that Rayon also guarantees data-race freedom. This means that you can add parallelism to previously sequential code without worrying about introducing weird, hard-to-reproduce bugs.

There are two kinds of mistakes we have to be concerned about. First, the two closures might share some mutable state, so that changes made by one would affect the other. For example, if I modify the above example so that it (incorrectly) calls quick_sort on lo in both closures, then I would hope that this will not compile:

[Code listing not preserved in this copy.]

And indeed I will see the following error:

[Compiler output not preserved in this copy.]

Similar errors arise if I try to have one closure process lo (or hi) and the other process v, which overlaps with both of them.
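To make the rule concrete, here is a small sketch in which each closure receives its own disjoint half of a slice, which the compiler accepts. The join function is again a standard-library stand-in for Rayon's (an assumption for the example), and double_all is a hypothetical helper. The comment marks the one-word change that would be rejected at compile time.

```rust
use std::thread;

/// Stand-in for `rayon::join` (assumption: always spawns a scoped thread).
fn join<A, B>(oper_a: A, oper_b: B)
where
    A: FnOnce() + Send,
    B: FnOnce() + Send,
{
    thread::scope(|scope| {
        scope.spawn(oper_b);
        oper_a();
    });
}

/// Doubles every element, handing each closure its own disjoint half.
fn double_all(v: &mut [i32]) {
    let mid = v.len() / 2;
    // `split_at_mut` yields two non-overlapping `&mut` slices.
    let (lo, hi) = v.split_at_mut(mid);
    join(
        || lo.iter_mut().for_each(|x| *x *= 2),
        // Writing `lo` here instead of `hi` would be a compile error:
        // two closures cannot both hold unique access to `lo`.
        || hi.iter_mut().for_each(|x| *x *= 2),
    );
}
```

Nothing in join itself checks for overlap; the rejection comes from the ordinary borrowing rules applied to the two closures at the call site.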

Side note: This example may seem artificial, but in fact this is an actual bug that I made (or rather, would have made) while implementing the parallel iterator abstraction I describe later. It’s very easy to make these sorts of copy-and-paste errors, and it’s very nice that Rust makes this kind of error a non-event, rather than a crashing bug.

Another kind of bug one might have is to use a non-threadsafe type from within one of the join closures. For example, Rust offers a non-atomic reference-counted type called Rc. Because Rc uses non-atomic instructions to update the reference counter, it is not safe to share an Rc between threads. If one were to do so, as I show in the following example, the ref count could easily become incorrect, which would lead to double frees or worse:

[Code listing not preserved in this copy.]

But of course if I try that example, I get a compilation error:

[Compiler output not preserved in this copy.]

As you can see in the final “note”, the compiler is telling us that you cannot share Rc values across threads.

So you might wonder what kind of deep wizardry is required for the join function to enforce both of these invariants? In fact, the answer is surprisingly simple. The first error, which I got when I shared the same &mut slice across two closures, falls out from Rust’s basic type system: you cannot have two closures that are both in scope at the same time and both access the same &mut slice. This is because &mut data is supposed to be uniquely accessed, and hence if you had two closures, they would both have access to the same “unique” data. Which of course makes it not so unique.

(In fact, this was one of the great epiphanies for me in working on Rust’s type system. Previously I thought that “dangling pointers” in sequential programs and “data races” were sort of distinct bugs: but now I see them as two heads of the same Hydra. Basically both are caused by having rampant aliasing and mutation, and both can be solved by ownership and borrowing. Nifty, no?)

So what about the second error, the one I got for sending an Rc across threads? This occurs because the join function declares that its two closures must be Send. Send is the Rust name for a trait that indicates whether data can be safely transferred across threads. So when join declares that its two closures must be Send, it is saying “it must be safe for the data those closures can reach to be transferred to another thread and back again”.
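The effect of the Send bound can be seen in a small sketch. The join function here is a standard-library stand-in with the same kind of bounds as described above (an assumption for the example, not Rayon's source), and share_arc is a hypothetical helper. It uses the atomically reference-counted Arc, which is Send when its contents are; the comment notes what would happen with Rc.

```rust
use std::sync::Arc;
use std::thread;

/// Stand-in for `rayon::join`: both closures must be `Send`.
/// Assumption: always spawns a scoped thread for `oper_b`.
fn join<A, B, RA, RB>(oper_a: A, oper_b: B) -> (RA, RB)
where
    A: FnOnce() -> RA + Send,
    B: FnOnce() -> RB + Send,
    RA: Send,
    RB: Send,
{
    thread::scope(|scope| {
        let handle_b = scope.spawn(oper_b);
        let result_a = oper_a();
        (result_a, handle_b.join().expect("second closure panicked"))
    })
}

/// Each closure takes ownership (`move`) of its own handle to the value.
fn share_arc(shared: Arc<i32>) -> i32 {
    let first = Arc::clone(&shared);
    let second = Arc::clone(&shared);
    // Replacing `Arc` with `std::rc::Rc` in this function would not
    // compile: the closures would capture an `Rc<i32>`, which does not
    // implement `Send`, so the bounds on `join` are not satisfied.
    let (a, b) = join(move || *first + 1, move || *second + 2);
    a + b
}
```

The check is entirely static: no runtime test of thread safety takes place, the trait bound simply fails to hold for closures that capture an Rc.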

Parallel iterators

At the start of this post, I gave an example of using a parallel iterator:

[Code listing not preserved in this copy.]

But since then, I’ve just focused on join. As I mentioned earlier, the parallel iterator API is really just a pretty simple wrapper around join. At the moment, it’s more of a proof of concept than anything else. But what’s really nifty about it is that it does not require any unsafe code related to parallelism – that is, it just builds on join, which encapsulates all of the unsafety. (To be clear, there is a small amount of unsafe code related to managing uninitialized memory when collecting into a vector. But this has nothing to do with parallelism; you’ll find similar code in Vec. This code is also wrong in some edge cases because I’ve not had time to do it properly.)

I don’t want to go too far into the details of the existing parallel iterator code because I expect it to change. But the high-level idea is that we have this trait ParallelIterator which has the following core members:

[Code listing not preserved in this copy.]

The idea is that the method state divides up the iterator into some shared state and some “per-thread” state. The shared state will (potentially) be accessible by all worker threads, so it must be Sync (sharable across threads). The per-thread state will be split for each call to join, so it only has to be Send (transferable to a single other thread).

The ParallelIteratorState trait represents some chunk of the remaining work (e.g., a subslice to be processed). It has three methods:

[Code listing not preserved in this copy.]

The len method gives an idea of how much work remains. The split_at method divides this state into two other pieces. The for_each method produces all the values in this chunk of the iterator. So, for example, the parallel iterator for a slice &[T] would:

- implement len by just returning the length of the slice,

- implement split_at by splitting the slice into two subslices,

- and implement for_each by iterating over the array and invoking op on each element.

Given these two traits, we can implement a parallel operation like collection by following the same basic template. We check how much work there is: if it’s too much, we split into two pieces. Otherwise, we process sequentially (note that this automatically incorporates the sequential fallback we saw before):

[Code listing not preserved in this copy.]
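The split-or-process template can be sketched as follows. This is a simplified illustration under assumptions, not the traits from the post: SplitState is a hypothetical, cut-down analogue of ParallelIteratorState with the shared state left out, SEQUENTIAL_CUTOFF is an arbitrary threshold, process performs a map-and-sum rather than a collect, and join is a standard-library stand-in for Rayon's.

```rust
use std::thread;

/// Stand-in for `rayon::join` (assumption: always spawns a scoped thread).
fn join<A, B, RA, RB>(oper_a: A, oper_b: B) -> (RA, RB)
where
    A: FnOnce() -> RA + Send,
    B: FnOnce() -> RB + Send,
    RA: Send,
    RB: Send,
{
    thread::scope(|scope| {
        let handle_b = scope.spawn(oper_b);
        let result_a = oper_a();
        (result_a, handle_b.join().expect("second closure panicked"))
    })
}

/// Hypothetical, simplified per-thread state: a chunk of remaining work.
trait SplitState: Sized + Send {
    type Item;
    fn len(&self) -> usize;
    fn split_at(self, index: usize) -> (Self, Self);
    fn for_each<OP: FnMut(Self::Item)>(self, op: OP);
}

impl<'a, T: Sync> SplitState for &'a [T] {
    type Item = &'a T;
    fn len(&self) -> usize {
        <[T]>::len(self)
    }
    fn split_at(self, index: usize) -> (Self, Self) {
        <[T]>::split_at(self, index)
    }
    fn for_each<OP: FnMut(&'a T)>(self, mut op: OP) {
        for item in self {
            op(item);
        }
    }
}

/// Arbitrary threshold below which work is done sequentially.
const SEQUENTIAL_CUTOFF: usize = 1024;

/// Maps every item through `f` and sums the results.
fn process<S, F>(state: S, f: &F) -> u64
where
    S: SplitState,
    F: Fn(S::Item) -> u64 + Sync,
{
    let len = state.len();
    if len > SEQUENTIAL_CUTOFF {
        // Too much work for one task: split and recurse on both halves.
        let (left, right) = state.split_at(len / 2);
        let (a, b) = join(|| process(left, f), || process(right, f));
        a + b
    } else {
        // Small enough: process sequentially (the built-in fallback).
        let mut total = 0;
        state.for_each(|item| total += f(item));
        total
    }
}
```

Note how the bounds line up with the description: the per-chunk state only needs Send because each piece moves to at most one other thread, while the function f is shared by every task and therefore needs Sync.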

Click these links, for example, to see the code to collect into a vector or to reduce a stream of values into one.

Conclusions and a historical note

I’m pretty excited about this latest iteration of Rayon. It’s dead simple to use, very expressive, and I think it has a lot of potential to be very efficient.

It’s also very gratifying to see how elegant data parallelism in Rust has become. This is the result of a long evolution and a lot of iteration. In Rust’s early days, for example, it took a strict, Erlang-like approach, where you just had parallel tasks communicating over channels, with no shared memory. This is good for the high-levels of your application, but not so good for writing a parallel quicksort. Gradually though, as we refined the type system, we got closer and closer to a smooth version of parallel quicksort.

If you look at some of my earlier designs, it should be clear that the current iteration of Rayon is by far the smoothest yet. What I particularly like is that it is simple for users, but also simple for implementors – that is, it doesn’t require any crazy Rust type system tricks or funky traits to achieve safety here. I think this is largely due to two key developments:

- “IMHTWAMA”, which was the decision to make &mut references be non-aliasable and to remove const (read-only, but not immutable) references. This basically meant that Rust authors were now writing data-race-free code by default.

- Improved Send traits, or RFC 458, which modified the Send trait to permit borrowed references. Prior to this RFC, which was authored by Joshua Yanovski, we had the constraint that for data to be Send, it had to be 'static – meaning it could not have any references into the stack. This was a holdover from the Erlang-like days, when all threads were independent, asynchronous workers, but none of us saw it. This led to some awful contortions in my early designs to try to find alternate traits to express the idea of data that was threadsafe but also contained stack references. Thankfully Joshua had the insight that simply removing the 'static bound would make this all much smoother!

Appendix: Implementing sequential fallback without code duplication

Earlier, I mentioned that for peak performance in the quicksort demo, you want to fall back to sequential code if the array size is too small. It would be a drag to have to have two copies of the quicksort routine. Fortunately, we can use Rust traits to generate those two copies automatically from a single source. This appendix explains the trick that I used in the demo code.

First, you define a trait Joiner that abstracts over the join function:

[Code listing not preserved in this copy.]

This Joiner trait has two implementations, corresponding to sequential and parallel mode:

[Code listing not preserved in this copy.]

Now we can rewrite quick_sort to be generic over a type J: Joiner, indicating whether this is the parallel or sequential implementation. The parallel version will, for small arrays, convert over to sequential mode:

[Code listing not preserved in this copy.]
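Putting the three pieces together, a sketch of the trick might look like the following. The names Joiner, Parallel, Sequential and quick_sort follow the text above, but the bodies are assumptions: is_parallel and SEQUENTIAL_CUTOFF are hypothetical, the parallel join is a standard-library stand-in for rayon::join, and partition is a plain Lomuto partition with the pivot excluded from both halves.

```rust
use std::thread;

/// Abstracts over "run two closures": in parallel, or one after the other.
trait Joiner {
    fn is_parallel() -> bool;
    fn join<A, B>(oper_a: A, oper_b: B)
    where
        A: FnOnce() + Send,
        B: FnOnce() + Send;
}

struct Parallel;
impl Joiner for Parallel {
    fn is_parallel() -> bool {
        true
    }
    fn join<A, B>(oper_a: A, oper_b: B)
    where
        A: FnOnce() + Send,
        B: FnOnce() + Send,
    {
        // Stand-in for `rayon::join`: always spawns a scoped thread.
        thread::scope(|scope| {
            scope.spawn(oper_b);
            oper_a();
        });
    }
}

struct Sequential;
impl Joiner for Sequential {
    fn is_parallel() -> bool {
        false
    }
    fn join<A, B>(oper_a: A, oper_b: B)
    where
        A: FnOnce() + Send,
        B: FnOnce() + Send,
    {
        oper_a();
        oper_b();
    }
}

/// Hypothetical cutoff, matching the "5K elements" mentioned earlier.
const SEQUENTIAL_CUTOFF: usize = 5 * 1024;

/// Lomuto partition; returns the pivot's final index.
fn partition<T: PartialOrd>(v: &mut [T]) -> usize {
    let pivot = v.len() - 1;
    let mut store = 0;
    for i in 0..pivot {
        if v[i] <= v[pivot] {
            v.swap(i, store);
            store += 1;
        }
    }
    v.swap(store, pivot);
    store
}

fn quick_sort<J: Joiner, T: PartialOrd + Send>(v: &mut [T]) {
    if v.len() > 1 {
        // Small arrays in parallel mode switch to the sequential copy.
        if J::is_parallel() && v.len() <= SEQUENTIAL_CUTOFF {
            return quick_sort::<Sequential, T>(v);
        }
        let mid = partition(v);
        let (lo, rest) = v.split_at_mut(mid);
        let hi = &mut rest[1..]; // pivot at rest[0] is already in place
        J::join(|| quick_sort::<J, T>(lo), || quick_sort::<J, T>(hi));
    }
}
```

Calling quick_sort::<Parallel, _> starts in parallel mode; monomorphization then produces the sequential copy from the same source, so the sorting logic is written only once.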

http://smallcultfollowing.com/babysteps/blog/2015/12/18/rayon-data-parallelism-in-rust/

|

|

Steve Fink: Animation Done Wrong (aka Fix My Code, Please!) |

A blast from the past: in early 2014, we enabled Generational Garbage Collection (GGC) for Firefox desktop. But this blog post is not about GGC. It is about my victory dance when we finally pushed the button to let it go live on desktop Firefox. Please click on that link; it is, after all, what this blog post is about.

Old-timers will recognize the old TBPL interface, since replaced with TreeHerder. I grabbed a snapshot of the page after I pushed GGC live (yes, green pushes really used to be that green!), then hacked it up a bit to implement the letter fly-in. And that fly-in is what I’d like to talk about now.

At the time I wrote it, I barely knew JavaScript, and I knew even less CSS. But that was a long time ago.

Today… er, well, today I don’t know any more of either of those than I did back then.

Which is ok, since my whole goal here is to ask: what is the right way to implement that page? And specifically, would it be possible to do without any JS? Or perhaps minimal JS, just some glue between CSS animations or something?

To be specific: I am very particular about the animation I want. After the letters are done flying in, I want them to cycle through in the way shown. For example, they should be rotating around in the “O”. In general, they’re just repeatedly walking a path that is possibly discontinuous (as with any letter other than “O”). We’ll call this the marquee pattern.

Then when flying in, I want them to go to their appropriate positions within the marquee pattern. I don’t want them to fly to a starting position and only start moving in the marquee pattern once they get there. Oh noes, no no no. That would introduce a visible discontinuity. Plus which, the letters that started out close to their final position would move very slowly at first, then jerk into faster motion when the marquee began. We couldn’t have that now, could we?

I knew about CSS animations at the time I wrote this. But I couldn’t (and still can’t) see how to make use of them, at least without doing something crazy like redefining the animation every frame from JS. And in that case, why use CSS at all?

CSS can animate a smooth path between fixed points. So if I relaxed the constraint above (about the fly-in blending smoothly into the marquee pattern), I could pretty easily set up an animation to fly to the final position, then switch to a marquee animation. But how can you get the full effect? I speculated about some trick involving animating invisible elements with one animation, then having another animation to fly-in from each element’s original location to the corresponding invisible element’s marquee location, but I don’t know if that’s even possible.
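For reference, the blending constraint described above can be sketched in a few lines of JavaScript. This is not the code from the page, and it still computes positions per frame rather than using CSS. It is only a minimal illustration, assuming each letter has a fixed start point, a closed path of marquee points, and a phase offset along that path. The names `marqueePosition`, `letterPosition`, and `easeOutCubic` are hypothetical helpers invented for this sketch. The idea is to interpolate from the fixed start point toward the moving marquee position, rather than toward a fixed end point:

```javascript
// Hypothetical helper: point on a closed polyline `path` at fraction u,
// where u wraps around so that u = 1.25 is the same as u = 0.25.
function marqueePosition(path, u) {
  const n = path.length;
  const scaled = (((u % 1) + 1) % 1) * n; // wrap u into [0, n)
  const whole = Math.floor(scaled);
  const f = scaled - whole;               // fraction along the current segment
  const a = path[whole % n];
  const b = path[(whole + 1) % n];
  return { x: a.x + (b.x - a.x) * f, y: a.y + (b.y - a.y) * f };
}

// Ease-out curve: starts fast, and its slope is zero at t = 1.
function easeOutCubic(t) {
  const c = Math.min(Math.max(t, 0), 1);  // clamp to [0, 1]
  return 1 - Math.pow(1 - c, 3);
}

// Position of one letter at `elapsedMs` after the animation starts.
// The target keeps moving along the marquee path during the fly-in,
// so the letter lands on an already-moving target.
function letterPosition(start, path, phase, elapsedMs, flyInMs, periodMs) {
  const target = marqueePosition(path, phase + elapsedMs / periodMs);
  const w = easeOutCubic(elapsedMs / flyInMs); // 0 at start, 1 once fly-in ends
  return {
    x: start.x + (target.x - start.x) * w,
    y: start.y + (target.y - start.y) * w,
  };
}
```

Because the blend weight reaches 1 with zero slope, the letter's velocity at the end of the fly-in equals the marquee velocity, so there is no jerk at the handover. In a browser, a function like this would presumably be called from a `requestAnimationFrame` callback, with the result written to each letter's `style.transform`.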

You can look at the source code of the page. It’s a complete mess, combining as it does a cut and paste of the TBPL interface, plus all of jQuery crammed in, and then finally my hacky code at the end of the file. Note that this was not easy code for me to write, and I did it when I got the idea at around 10pm during a JS work week, and it took me until about 4am. So don’t expect much in the way of comments or sanity or whatnot. In fact, the only comment that isn’t commenting out code appears to be the text “????!”.

The green letters have the CSS class “success”. There are lots of hardcoded coordinates, generated by hand-drawing the text in the Gimp and manually writing down the relevant coordinates. But my code doesn’t matter; the point is that there’s some messy JavaScript called every frame to calculate the desired position of every one of those letters. Surely there’s a better way? (I should note that the animation is way smoother in today’s Nightly than it was back then. Progress!)

Anyway, the exact question is: how would someone implement this effect if they actually knew what they were doing?

I’ll take my answer off the air. In the comments, to be precise. Thank you in advance.

https://blog.mozilla.org/sfink/2015/12/17/animation-done-wrong-aka-fix-my-code-please/

|

|

Air Mozilla: Rust: Built by Community, Built For Community |

Dave Herman talks about developing Rust in an open community.

https://air.mozilla.org/rust-built-by-community-built-for-community/

|

|

Hannah Kane: 2015 Year in Review, Part 1 |

This year a team-like object (I say that because the team members changed throughout the year, not because any particular configuration wasn’t team-like :)) supported what was called for most of the year the “Mozilla Learning Network.”

Much of the work was in building and iterating on a new website, teach.mozilla.org; and supporting the new version of Thimble. We also did quite a bit of work supporting X-Ray Goggles, badges, the web literacy map, and MozFest.

Please click here to view a timeline featuring highlights from the year. I’ve tried to keep this relatively brief, so it’s largely focused on front-end work, and isn’t comprehensive of all the work that was completed this year. It also doesn’t include the several research and planning projects that happened throughout the year, and especially near the end.

Before we settle into our long winter’s nap, I want to extend a heartfelt thank you to everyone who contributed to these projects this year, including: Mavis Ou, Sabrina Ng, Atul Varma, Jess Klein, Luke Pacholski, Pomax, Kristina Shu, Dave Humphrey, Gideon Thomas, Kieran Sedgewick, Jordan Theriault, Cassie McDaniel, Simon Wex, Jon Buckley, Chris DeCairos, Lainie DeCoursy, Kevin Zawacki, Matthew Willse, Chris Lawrence, Amira Dhalla, Chad Sansing, Laura de Reynal, Bobby Richter, Errietta Kostala, and Greg McVerry. I’m sure I’m forgetting some people, so apologies in advance.

In a follow-up post, I’ll be sharing some lessons learned from our work this year, as well as plans for 2016.

|

|

Air Mozilla: German speaking community bi-weekly meeting, 17 Dec 2015 |

Zweiwöchentliches Meeting der deutschsprachigen Community / German speaking community bi-weekly meeting

https://air.mozilla.org/german-speaking-community-bi-weekly-meeting-20151217/

|

|

The Mozilla Blog: Firefox Users Can Now Watch Netflix HTML5 Video on Windows |

Netflix announced today that their HTML5 video player now supports Firefox on Windows Vista and later using Adobe’s new Primetime CDM (Content Decryption Module). This means Netflix fans can watch their favorite shows on Firefox without installing NPAPI plugins.

As we announced earlier this year, Mozilla has been working with Adobe and Netflix to enable HTML5 video playback. This is an important step on Mozilla’s roadmap to deprecate NPAPI plugins. Adobe’s Primetime CDM runs in Mozilla’s open-source CDM sandbox, providing better user security compared to NPAPI plugins.

In 2016, Mozilla and Adobe plan to bring the Primetime CDM to Firefox on other operating systems.

Netflix Blog Post: HTML5 Video is now supported in Firefox

Adobe Blog Post: Update on HTML5 Premium Video Playback with Adobe Encrypted Media Extensions Support in Firefox

https://blog.mozilla.org/blog/2015/12/17/firefox-users-can-now-watch-netflix-html5-video-on-windows/

|

|

photo by Alex Eylar
